- ¹School of Medicine, California University of Science and Medicine, Colton, CA, United States

- ²Northwell Cancer Institute, New Hyde Park, NY, United States

Artificial intelligence (AI) is increasingly embedded in oncology. While initial technical evaluations emphasize diagnostic accuracy and efficiency, the impact of AI on patient–physician interaction (PPI), which underpins trust, communication, comprehension, and shared decision-making, remains underexplored. In this review, we examine current patient- and physician-facing AI technologies in cancer care. Among these technologies, chatbots, large language model (LLM) agents, and extended reality (XR) applications have shown the most promise to date. Survey data suggest that oncologists recognize AI's potential to improve efficiency but remain cautious about liability and the erosion of relational care. Whether AI ultimately improves cancer care depends critically on its design, validation, and governance, and on human guidance and gatekeeping in care delivery.

1 Introduction

Artificial intelligence (AI) has entered nearly every facet of cancer care, including screening, diagnostics, treatment selection, and survivorship. As deep learning technology continues to improve, AI increasingly mediates how patients and clinicians communicate and make decisions. Patient-facing chatbots and large language models (LLMs) can answer questions on demand, synthesize complex information into plain language, and scaffold decision discussions. Furthermore, immersive extended reality (XR) platforms can extend communication beyond text into embodied, visual explanations that may reduce anxiety and foster shared decision-making (SDM) for patients with cancer. Without appropriate guidance, however, these tools can convey overstated confidence and miss nuance, undercutting trust at the bedside (1–6).

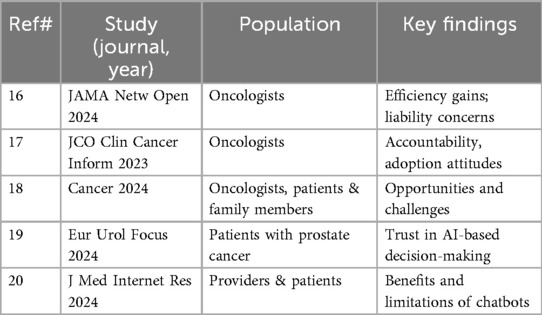

Professional discourse mirrors this ambivalence. Recent surveys show that oncologists value AI for automating administrative tasks and enhancing efficiency but are concerned about accountability when errors occur, unequal patient access, and loss of empathy in clinical encounters. These attitudes highlight AI's double-edged role: a potential partner in communication, yet a possible source of eroded trust if poorly governed.

This review explicitly frames the oncology AI literature through the lens of PPI. Unlike prior reviews focused on diagnostic accuracy, we examine how AI affects communication quality, comprehension, decisional conflict, satisfaction, and trust, while acknowledging professional perspectives and ongoing debates about responsibility.

2 Methods

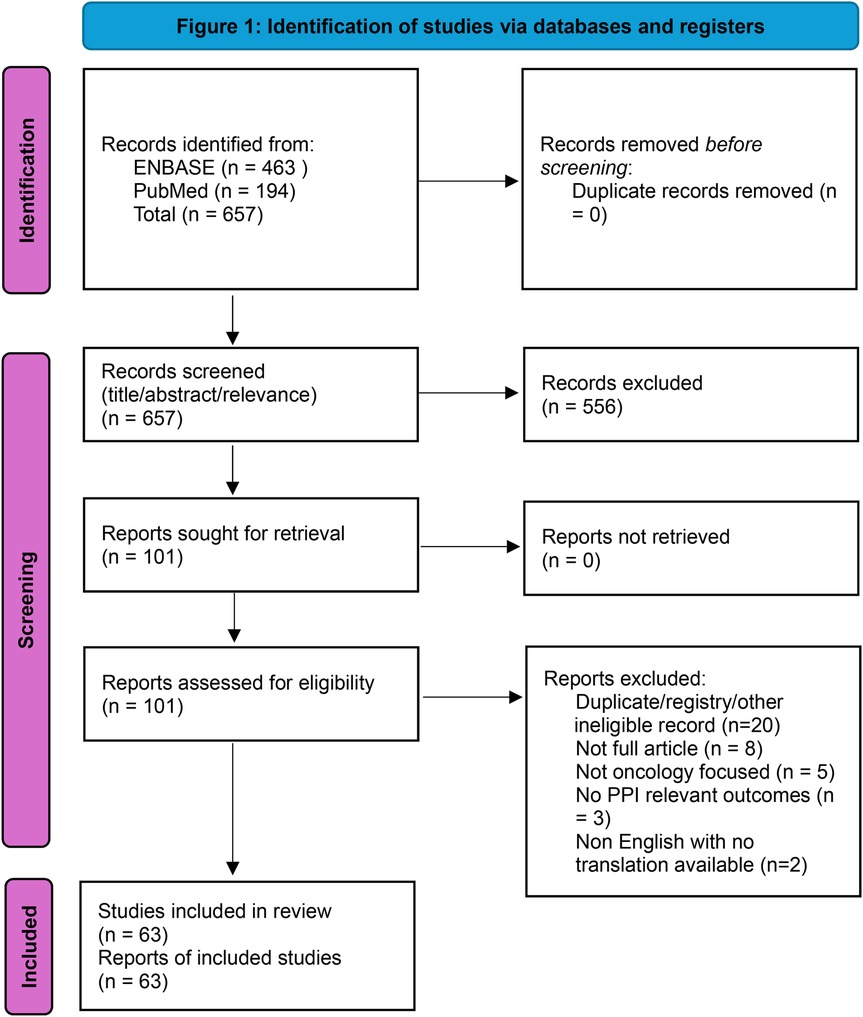

We followed PRISMA 2020 guidance in a streamlined format appropriate for this review. Searches covered PubMed and Embase (no lower date limit; last update September 2025) using: (chatbot) AND (“artificial intelligence” OR AI) AND (cancer OR oncology) AND education. Filters restricted results to English-language, peer-reviewed, full-text articles. Conference abstracts, books, letters, and editorials were excluded. Title/abstract screening was followed by full-text assessment against prespecified inclusion criteria: oncology context; chatbot/LLM or closely related patient-facing AI; and PPI-relevant outcomes (e.g., communication quality, comprehension/readability, decisional conflict, trust, satisfaction, equity).

We identified 657 records (PubMed n = 194, Embase n = 463). After screening titles/abstracts, 101 reports were retrieved for full-text review. Following eligibility assessment, 63 unique studies met inclusion criteria. Several duplicate entries were identified across databases (e.g., trial registrations and overlapping records) and removed during full-text deduplication. Two XR breast cancer studies suggested by peer reviewers were screened against the same criteria and included. Data extraction captured cancer type, AI modality, care phase, study design, comparators, PPI outcomes, and guardrail domains. Supplementary Table 1 lists all included studies. A PRISMA-style flow diagram is presented in Figure 1.
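The counts reported above imply how many records were excluded at each stage. As a minimal Python sketch (the `PrismaFlow` class is a hypothetical helper, not part of the authors' workflow), the exclusions can be derived from the reported totals; full-text exclusions here include the duplicates removed during deduplication. The sketch does not model the two reviewer-suggested XR studies, because the text does not state whether they fall within the 63.

```python
from dataclasses import dataclass


@dataclass
class PrismaFlow:
    """Hypothetical container for PRISMA-style record counts."""
    pubmed: int
    embase: int
    full_text_retrieved: int
    included: int

    @property
    def identified(self) -> int:
        # Total records returned by both database searches
        return self.pubmed + self.embase

    @property
    def excluded_at_screening(self) -> int:
        # Records dropped during title/abstract screening
        return self.identified - self.full_text_retrieved

    @property
    def excluded_at_full_text(self) -> int:
        # Reports dropped at full-text review (ineligible or duplicate)
        return self.full_text_retrieved - self.included


# Counts as reported in the Methods text
flow = PrismaFlow(pubmed=194, embase=463, full_text_retrieved=101, included=63)
print(flow.identified, flow.excluded_at_screening, flow.excluded_at_full_text)
```

Keeping the stage totals as derived properties means a corrected input count automatically propagates to every downstream box of the flow diagram.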

3 Results

3.1 Overview

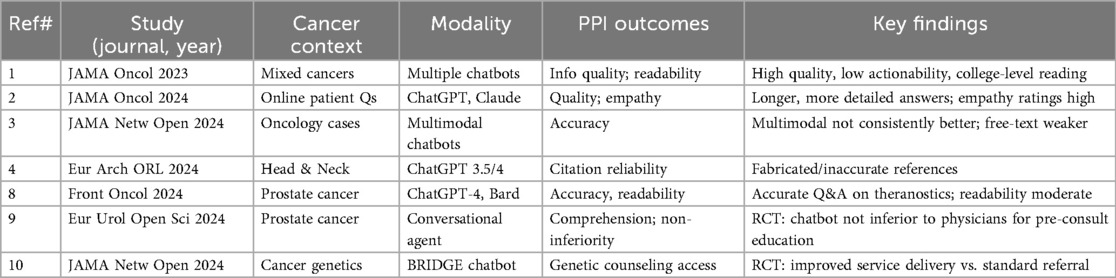

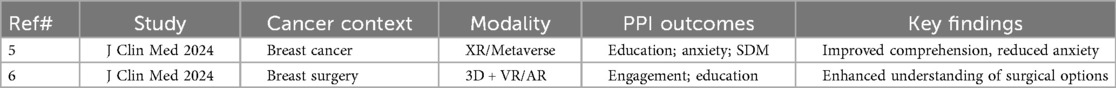

A large subset of the included studies evaluated chatbots and LLMs answering cancer questions or assisting reasoning. Across studies, chatbots often produced readable, detailed answers but showed variable accuracy and inconsistent citation behavior, with risks of fabricated references (4–6). Multimodal systems did not consistently outperform text-only models and struggled on free-text reasoning (3). In imaging, randomized evidence from mammography (MASAI trial) shows AI can reduce reading workload while maintaining safety—indirectly supporting PPI by freeing clinician time (7). XR studies in breast cancer suggest immersive education lowers anxiety and strengthens SDM (5, 6).

3.2 Chatbots and conversational agents

A 2023 JAMA Oncology analysis using validated instruments found that chatbots produced high-quality consumer health information, although readability was at a college level and actionability was limited (1). A 2024 JAMA Oncology study reported that chatbots generated longer, more detailed responses to patient questions than oncologists did, with empathy ratings at least comparable to those of oncologists, but safety concerns remained unresolved (2). A 2024 JAMA Network Open study found that multimodal chatbots were not consistently more accurate than unimodal ones, especially on open-ended tasks (3). Work in otolaryngology–head and neck surgery highlighted citation fabrication as a major limitation for trust (4). Key evaluations of oncology chatbots and LLMs are summarized in Table 1, including these landmark studies as well as studies of prostate cancer theranostics, randomized patient-facing chatbot trials, and cancer genetics applications (8–10).
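Study (1) reports readability at a college level, but the text does not say which instrument was used. As an illustration only, the sketch below assumes the Flesch-Kincaid Grade Level, one widely used formula: 0.39 × (words/sentences) + 11.8 × (syllables/words) − 15.59, where a score above 12 roughly indicates text beyond a high-school reading level. The function names are hypothetical, and the syllable counter is a crude vowel-group heuristic rather than a validated tool.

```python
import re


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level computed from raw counts."""
    if words <= 0 or sentences <= 0:
        raise ValueError("need at least one word and one sentence")
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


def count_syllables(word: str) -> int:
    """Crude heuristic: count vowel groups, dropping a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and count > 1:
        count -= 1
    return max(count, 1)


def grade_of_text(text: str) -> float:
    """Approximate grade level of a passage using the heuristics above."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    syllables = sum(count_syllables(w) for w in words)
    return flesch_kincaid_grade(len(words), sentences, syllables)


plain = "The cat sat."
jargon = "Adjuvant chemotherapy reduces recurrence."
print(round(grade_of_text(plain), 2), round(grade_of_text(jargon), 2))
```

A short jargon-heavy sentence scores far higher than a plain one because the syllables-per-word term dominates the formula; validated readability software would be needed for any real evaluation.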

3.3 Decision-support and digital pathology tools

Tools such as OncoKB and AI-derived pathology biomarkers translate genomic and histologic data into treatment recommendations. For example, OncoKB categorizes cancer mutations by therapeutic actionability, which helps oncologists frame genomic results for patients during molecular tumor board discussions. Similarly, Armstrong et al. showed that an AI-derived digital pathology biomarker could predict which men with high-risk prostate cancer benefit from long-term androgen deprivation therapy, informing physician–patient discussions about treatment intensification. These tools influence PPI indirectly by shaping clinical dialogue, particularly when clinicians contextualize their outputs for patients. Survey studies reinforce that clinicians expect to remain accountable for interpretation, underscoring the need for AI to serve as support rather than as a surrogate (11, 12).

3.4 XR/metaverse applications

Two breast cancer studies illustrated the ability of immersive AI tools to clarify surgical planning and enhance survivorship education (5, 6). For instance, one study used virtual reality (VR) to simulate breast reconstruction outcomes, enabling patients to better visualize surgical choices and report less decisional conflict. Another integrated XR into survivorship education, and patients demonstrated higher comprehension of follow-up care and greater satisfaction with counseling. Overall, patients exposed to XR/VR environments reported improved comprehension, reduced anxiety, and greater engagement in SDM. These studies are summarized in Table 2.

3.5 Risks and guardrails

As AI applications have entered oncology care, several potential risks have been identified: (1) unverifiable claims that undermine trust (1–4); (2) bias and inequity in access and training data; (3) workflow misfit and unclear liability that increase clinician burden; and (4) relational harms, such as reduced empathy when technology displaces human dialogue. Guardrails include verifiability, transparency, clinician oversight, equity-focused design, and adherence to reporting standards such as CONSORT-AI, TRIPOD+AI, and CHART (13–15).

3.6 Professional perspectives

Surveys highlight that oncologists value efficiency gains but remain cautious about liability, accountability, and loss of empathy. For example, one international survey found that while 75% of oncologists believed AI could streamline documentation, fewer than 30% trusted AI with independent decision-making. Another U.S.-based survey reported that most respondents expected empathy and accountability to remain clinician responsibilities, even if AI were adopted (16–20). Table 3 summarizes key surveys and professional perspectives, including attitudes toward accountability, willingness to adopt AI, and perceptions of patient trust.

4 Discussion

AI in oncology holds potential to reshape the contours of patient–physician communication. At the most basic level, chatbots make cancer knowledge more accessible, translating medical jargon into plain language (1–3). Several studies highlight that patients value such accessibility, particularly in low-resource settings where direct oncologist consultation may be limited. But accessibility without accuracy is hollow; hallucinations and citation fabrication remain major threats (4). Patients increasingly verify AI outputs online, and fabricated references can erode trust more rapidly than incomplete answers. Trust, once broken, is difficult to restore—making verifiability a non-negotiable design requirement. In practice, this points to a model where AI outputs are mediated through human gatekeeping mechanisms: clinicians or trained staff vet AI-generated information before it reaches patients, or present AI summaries with their own interpretive framing. Such oversight maintains the benefits of accessibility while safeguarding against misinformation, aligning with survey data showing that patients and clinicians alike expect physicians to remain accountable for final interpretation.
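The human gatekeeping model described above can be sketched as a simple review workflow. The following minimal Python example is hypothetical and is not a system described in the reviewed studies: an AI-generated draft is held as pending, any cited DOI missing from a curated verified list blocks release (targeting fabricated references), and only clinician-approved drafts, optionally carrying the clinician's own framing note, are released to the patient. The names `DraftAnswer`, `review`, and `release_to_patient` and the verified-DOI list are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class DraftAnswer:
    """Hypothetical AI-generated answer awaiting human review."""
    question: str
    text: str
    cited_dois: list
    status: Status = Status.PENDING_REVIEW
    clinician_note: str = ""


def unverified_citations(draft: DraftAnswer, verified_dois: set) -> list:
    # Any DOI absent from the curated list is treated as unverifiable.
    return [doi for doi in draft.cited_dois if doi not in verified_dois]


def review(draft: DraftAnswer, verified_dois: set,
           clinician_approves: bool, note: str = "") -> DraftAnswer:
    # Unverifiable references block release regardless of clinician approval.
    flagged = unverified_citations(draft, verified_dois)
    if flagged:
        draft.status = Status.REJECTED
        draft.clinician_note = "Unverifiable references: " + ", ".join(flagged)
    elif clinician_approves:
        draft.status = Status.APPROVED
        draft.clinician_note = note
    else:
        draft.status = Status.REJECTED
        draft.clinician_note = note
    return draft


def release_to_patient(draft: DraftAnswer) -> Optional[str]:
    # Only clinician-approved drafts ever reach the patient.
    if draft.status is not Status.APPROVED:
        return None
    if draft.clinician_note:
        # The clinician's interpretive framing travels with the AI text.
        return f"{draft.text}\n\nClinician note: {draft.clinician_note}"
    return draft.text


# Example run (hypothetical data; the second DOI is deliberately fake).
verified = {"10.1001/jamaoncol.2023.2947"}
good = review(DraftAnswer("What is adjuvant therapy?", "Draft answer A",
                          ["10.1001/jamaoncol.2023.2947"]),
              verified, clinician_approves=True,
              note="Discussed at today's visit.")
bad = review(DraftAnswer("Is this supplement safe?", "Draft answer B",
                         ["10.0000/fabricated.001"]),
             verified, clinician_approves=True)
print(release_to_patient(good))
print(release_to_patient(bad))  # None: blocked by the citation check
```

Placing the citation check before clinician approval is one design choice among several; it means a busy reviewer cannot accidentally release an answer whose references fail verification.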

A second central theme is the evolving role of empathy across oncology settings. Evidence from 2024 suggests that some chatbots generated responses rated as more empathetic than those of oncologists, raising the possibility that tone-optimized AI could complement strained clinician bandwidth in high-volume settings (2). Beyond general cancer information, chatbots are also being deployed in pediatric, survivorship, and palliative care contexts, where communication needs are unique. For instance, co-design pilots in pediatric and adolescent and young adult (AYA) oncology demonstrated feasibility and patient empowerment but also highlighted literacy barriers (21). Survivorship interventions reported improved engagement and self-management, although accuracy limitations persisted. Palliative care evaluations found chatbots useful in structuring sensitive conversations but limited in empathy and nuance (22). Professional surveys reinforce this nuance, noting that oncologists value efficiency gains but remain cautious about accountability and relational harms (16–20). Taken together, these findings suggest that chatbots may best serve as supplemental tools, able to introduce information and reinforce self-management, while clinicians remain central to contextual, emotional, and relational care.

XR and metaverse studies broaden the discussion. By immersing patients in their own anatomy and surgical options, XR fosters active participation in decision-making (5, 6). These immersive approaches may be especially impactful in breast cancer surgery, where anxiety and decisional conflict are common. The XR evidence suggests that AI can scaffold communication not only through words but also through visualization. Importantly, these approaches can complement rather than compete with text-based chatbots or LLMs: while conversational agents provide accessible, on-demand explanations, XR models offer experiential visualization that can reinforce those explanations and anchor them in the patient's own body. Prototype “virtual nursing” models already blend conversational AI with immersive guidance, hinting at a future in which multimodal platforms combine verbal and visual support to enhance comprehension and engagement. A key open question is whether such benefits will generalize beyond single-institution studies in high-resource settings.

Decision-support systems (e.g., OncoKB, AI pathology biomarkers) occupy another layer. Although not directly conversational, they shape PPI indirectly: the quality of oncologist–patient discussions hinges on how confidently and transparently clinicians can explain AI-derived recommendations (11, 12). For example, the FDA-cleared ArteraAI digital pathology test predicts which prostate cancer patients may benefit from androgen deprivation therapy, enabling more precise treatment discussions. Such tools illustrate how AI-derived recommendations can advance precision medicine while placing the onus on clinicians to interpret results carefully. Here, liability concerns loom large. Surveys show that most oncologists expect to remain responsible for AI-informed decisions, highlighting the need for governance frameworks that preserve accountability while distributing responsibility fairly.

Across all modalities, the equity dimension cannot be ignored. Bias in training data disproportionately affects marginalized populations, the readability of AI-generated text often exceeds average literacy levels, and XR platforms risk widening the digital divide. Addressing these inequities requires deliberate design choices, including multilingual support, culturally competent training data, and evaluation in diverse populations (16–20). Equity is not a side issue but a central determinant of whether AI improves or undermines relational care. Sustained investment is also needed: technologies must be developed with inclusive language models, low-bandwidth XR options, and educational scaffolds that adapt to varied health literacy. Such investments can help ensure that AI reduces rather than reinforces disparities.

Our synthesis aligns with and extends professional discourse. ASCO and other professional bodies stress the ethical stakes of AI in oncology, calling for transparency, interpretability, and explicit measurement of communication outcomes (23). Yet most published studies stop at technical accuracy or single use-case evaluations. Few directly measure trust, decisional conflict, or patient satisfaction, leaving a substantial evidence gap.

Looking ahead, several priorities emerge. First, prospective trials must move beyond accuracy and efficiency to relational endpoints such as trust, comprehension, decisional conflict, and satisfaction, using validated tools. Without such measures, we cannot know whether AI is truly strengthening PPI. Second, hybrid human–AI models deserve systematic evaluation: chatbots may triage questions, XR can visualize options, and decision-support systems can suggest treatments, but oncologists must integrate, contextualize, and humanize these outputs. Third, governance and accountability frameworks are essential. Surveys show that oncologists demand clear lines of responsibility, without which adoption will remain limited and trust fragile (16–18). Fourth, equity and access must be central: tools must be evaluated across literacy levels, languages, and socioeconomic contexts, and XR interventions must be assessed for accessibility to avoid deepening disparities (16–20). Finally, education and training are needed to prepare the next generation of oncologists to critically appraise AI outputs, integrate them into relational communication, and maintain empathy.

5 Conclusion

Artificial intelligence in oncology, as it currently stands, is a double-edged sword whose relational impact depends critically on design, governance, and integration. Chatbots and XR show promise for education, engagement, and anxiety reduction, while decision-support tools can enhance diagnostic and treatment precision, potentially improving quality of care. Yet the risks of hallucination, inequity, liability confusion, and erosion of empathy remain substantial. Oncology must commit to designing, evaluating, and governing AI in ways that supplement and partner with clinicians, and in doing so strengthen the human connection at the heart of care. Future studies should prioritize relational outcomes and partnership, ensuring that AI serves as an ally in patient–physician interaction rather than an interloper.

Author contributions

BT: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Visualization, Writing – original draft, Writing – review & editing, Software. C-KT: Conceptualization, Project administration, Resources, Supervision, Validation, Writing – review & editing, Methodology.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdgth.2025.1633577/full#supplementary-material

References

1. Pan A, Musheyev D, Bockelman D, Loeb S, Kabarriti AE. Assessment of artificial intelligence chatbot responses to top searched queries about cancer. JAMA Oncol. (2023) 9(10):1437–40. doi: 10.1001/jamaoncol.2023.2947

2. Chen D, Parsa R, Hope A, Hannon B, Mak E, Eng L, et al. Physician and artificial intelligence chatbot responses to cancer questions from social media. JAMA Oncol. (2024) 10(7):956–60. doi: 10.1001/jamaoncol.2024.0836

3. Chen D, Huang RS, Jomy J, Wong P, Yan M, Croke J, et al. Performance of multimodal artificial intelligence chatbots evaluated on clinical oncology cases. JAMA Netw Open. (2024) 7(10):e2437711. doi: 10.1001/jamanetworkopen.2024.37711

4. Lechien JR, Briganti G, Vaira LA. Accuracy of ChatGPT-3.5 and -4 in providing scientific references in otolaryngology–head and neck surgery. Eur Arch Otorhinolaryngol. (2024) 281(4):2159–65. doi: 10.1007/s00405-023-08441-8

5. Żydowicz WM, Skokowski J, Marano L, Polom K. Navigating the metaverse: a new virtual tool with promising real benefits for breast cancer patients. J Clin Med. (2024) 13(15):4337. doi: 10.3390/jcm13154337

6. Żydowicz WM, Skokowski J, Marano L, Polom K. Advancements in breast cancer surgery through three-dimensional imaging, virtual reality, augmented reality, and the emerging metaverse. J Clin Med. (2024) 13(3):915. doi: 10.3390/jcm13030915

7. Lång K, Josefsson V, Larsson AM, Larsson S, Högberg C, Sartor H, et al. Artificial intelligence-supported screen reading versus standard double reading in the mammography screening with artificial intelligence trial (MASAI). Lancet Oncol. (2023) 24(8):936–44. doi: 10.1016/S1470-2045(23)00298-X

8. Bilgin GB, Bilgin C, Childs DS, Orme JJ, Burkett BJ, Packard AT, et al. Performance of ChatGPT-4 and Bard chatbots in responding to common patient questions on prostate cancer 177Lu-PSMA-617 therapy. Front Oncol. (2024) 14:1386718. doi: 10.3389/fonc.2024.1386718

9. Baumgärtner K, Byczkowski M, Schmid T, Muschko M, Woessner P, Gerlach A, et al. Effectiveness of the medical chatbot PROSCA to inform patients about prostate cancer: results of a randomized controlled trial. Eur Urol Open Sci. (2024) 69:80–8. doi: 10.1016/j.euros.2024.08.022

10. Kaphingst KA, Kohlmann WK, Lorenz Chambers R, Bather JR, Goodman MS, Bradshaw RL, et al. Uptake of cancer genetic services for chatbot vs standard-of-care delivery models: the BRIDGE randomized clinical trial. JAMA Netw Open. (2024) 7(9):e2432143. doi: 10.1001/jamanetworkopen.2024.32143

11. Chakravarty D, Gao J, Phillips SM, Kundra R, Zhang H, Wang J, et al. OncoKB: a precision oncology knowledge base. JCO Precis Oncol. (2017) 2017:PO.17.00011. doi: 10.1200/PO.17.00011

12. Armstrong AJ, Liu VYT, Selvaraju RR, Chen E, Simko JP, DeVries S, et al. Development and validation of an artificial intelligence digital pathology biomarker to predict benefit of long-term hormonal therapy and radiotherapy in men with high-risk prostate cancer across multiple phase III trials. J Clin Oncol. (2025):JCO2400365. doi: 10.1200/JCO.24.00365

13. Liu X, Cruz Rivera S, Moher D, Calvert MJ, Denniston AK, SPIRIT-AI and CONSORT-AI Working Group. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Lancet Digit Health. (2020) 2(10):e537–48. doi: 10.1016/S2589-7500(20)30218-1

14. Collins GS, Moons KGM, Dhiman P, Riley RD, Beam AL, Van Calster B, et al. TRIPOD+AI: updated guidance for reporting clinical prediction models that use regression or machine learning methods. Br Med J. (2024) 385:e078378. doi: 10.1136/bmj-2023-078378

15. Huo B, Collins GS, Chartash D, Thirunavukarasu AJ, Flanagin A, Iorio A, et al. Reporting guideline for chat bot health advice studies: the CHART statement. JAMA Netw Open. (2025) 8(8):e2530220. doi: 10.1001/jamanetworkopen.2025.30220

16. Hantel A, Walsh TP, Marron JM, Kehl KL, Sharp R, Van Allen E, et al. Perspectives of oncologists on the ethical implications of using artificial intelligence for cancer care. JAMA Netw Open. (2024) 7(3):e244077. doi: 10.1001/jamanetworkopen.2024.4077

17. Hildebrand RD, Chang DT, Ewongwoo AN, Ramchandran KJ, Gensheimer MF. Study of patient and physician attitudes toward automated prognostic models for metastatic cancer. JCO Clin Cancer Inform. (2023) 7:e2300023. doi: 10.1200/CCI.23.00023

18. National Cancer Institute (CBIIT). Survey reveals oncologists’ views on using AI in cancer care. April 17, 2024.

19. Rodler S, Kopliku R, Ulrich D, Kaltenhauser A, Casuscelli J, Eismann L, et al. Patients’ trust in artificial intelligence-based decision-making for localized prostate cancer: results from a prospective trial. Eur Urol Focus. (2024) 10(4):654–61. doi: 10.1016/j.euf.2023.10.020

20. Laymouna M, Ma Y, Lessard D, Schuster T, Engler K, Lebouché B. Roles, users, benefits, and limitations of chatbots in health care. J Med Internet Res. (2024) 26:e56930. doi: 10.2196/56930

21. Hasei J, Hanzawa M, Nagano A, Maeda N, Yoshida S, Endo M, et al. Empowering pediatric, adolescent, and young adult patients with cancer utilizing generative AI chatbots to reduce psychological burden and enhance treatment engagement: a pilot study. Front Digit Health. (2025) 7:1543543. doi: 10.3389/fdgth.2025.1543543

22. Kim MJ, Admane S, Chang YK, Shih KSK, Reddy A, Tang M, et al. Chatbot performance in defining and differentiating palliative care, supportive care, and hospice care. J Pain Symptom Manage. (2024) 67(5):e381–e391. doi: 10.1016/j.jpainsymman.2024.01.008

Keywords: artificial intelligence, oncology, patient–physician interaction, chatbots, extended reality, communication, shared decision-making

Citation: Thind BS and Tsao C-K (2025) Artificial intelligence in oncology: promise, peril, and the future of patient–physician interaction. Front. Digit. Health 7:1633577. doi: 10.3389/fdgth.2025.1633577

Received: 24 May 2025; Accepted: 10 October 2025;

Published: 4 November 2025.

Edited by:

Eric Druyts, Pharmalytics Group, Canada

Reviewed by:

Luigi Marano, Academy of Applied Medical and Social Sciences, Poland

Copyright: © 2025 Thind and Tsao. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Birpartap S. Thind, birpartap.thind@md.cusm.edu
