- Department of Agronomy, Food, Natural Resources, Animals and Environment, University of Padua, Legnaro, Italy

Genomic selection has been increasingly implemented in the animal breeding industry, and it is becoming a routine method in many livestock breeding contexts. However, its use is still limited in several small-population local breeds, which are, nonetheless, an important source of genetic variability of great economic value. A major roadblock for their genomic selection is accuracy when population size is limited: to improve breeding value accuracy, variable selection models that assume heterogenous variance have been proposed over the last few years. However, while these models might outperform traditional and genomic predictions in terms of accuracy, they also carry a proportional increase of breeding value bias and dispersion. These mutual increases are especially striking when genomic selection is performed with a low number of phenotypes and high shrinkage value—which is precisely the situation that happens with small local breeds. In our study, we tested several alternative methods to improve the accuracy of genomic selection in a small population. First, we investigated the impact of using only a subset of informative markers regarding prediction accuracy, bias, and dispersion. We used different algorithms to select them, such as recursive feature eliminations, penalized regression, and XGBoost. We compared our results with the predictions of pedigree-based BLUP, single-step genomic BLUP, and weighted single-step genomic BLUP in different simulated populations obtained by combining various parameters in terms of number of QTLs and effective population size. We also investigated these approaches on a real data set belonging to the small local Rendena breed. Our results show that the accuracy of GBLUP in small-sized populations increased when performed with SNPs selected via variable selection methods both in simulated and real data sets. In addition, the use of variable selection models—especially those using XGBoost—in our real data set did not impact bias and the dispersion of estimated breeding values. We have discussed possible explanations for our results and how our study can help estimate breeding values for future genomic selection in small breeds.

Introduction

Genomic information has been successfully implemented in animal breeding due to its effectiveness in bringing significant improvements in accuracy (Blasco and Toro, 2014). These improvements in accuracy can lead to an increase in the rate of genetic gains and have reduced the cost of progeny testing by allowing to preselect animals with great genetic merit early (Meuwissen et al., 2001). Combining these advancements with the progressively reduced cost of genotyping makes single-nucleotide polymorphism (SNP) panels a promising tool to select small local breeds (Biscarini et al., 2015).

SNP marker information allows for better modeling of Mendelian sampling than the traditional pedigree-based best linear unbiased prediction (PBLUP) (VanRaden, 2008a), which used only pedigree information. The genomic BLUP (GBLUP) method was developed to replace the pedigree-based relationships for genomic relationships estimated from SNP markers, which captured the genomic similarity between animals but are limited to the use of only genotyped animals (Habier et al., 2013). In addition, Legarra et al. (2009) proposed a naive method, single-step GBLUP (ssGBLUP), in which genotyped and non-genotyped animals are jointly combined under the assumption that the genomic and pedigree relationship matrixes are multivariate and normally distributed. Due to its straightforward computational approach (Misztal et al., 2013) and unbiased breeding values predictions, compared to the GBLUP with its multistep approach (Masuda et al., 2018), the ssGBLUP has become a routine method for genomic evaluations in many livestock breeds and species (Aguilar et al., 2010; Christensen and Lund, 2010).

However, one major challenge in using (ss)GBLUP remains the accuracy of estimation when phenotyped animals are limited in number, such as in local breeds (Meuwissen et al., 2001). For example, Karaman et al. (2016) reported that GBLUP showed lower performance than that of models using only SNPs selected through a Bayesian hierarchical model as Bayes B and Bayes C, but only when phenotyped animals were few. Indeed, when presented with a small number of animals and many SNP markers (n < p), models that select a number of priority SNPs (variable selection models) and models that assume heterogenous variance can lead to improvements in EBV accuracy. These models can accomplish this by reducing the number of variables to estimate and by preventing overfitting linked to high-dimensional data (Gianola 2013). Frouin et al. (2020) went as far as deriving the prediction accuracy of GBLUP as a function of the ratio n/p, while Pocrnic et al. (2019) regarded the accuracy of GBLUP as not only strictly dependent on the number of SNPs but also on the number of independent chromosome segments.

Several studies thus focused on relaxing the assumption of ssGBLUP that all SNPs must show a common variance by applying different weights to the SNPs when the G matrix is calculated. Methods such as weighted ssGBLUP (WssGBLUP) (Wang et al., 2014) were widely reported to outperform ssGBLUP’s accuracy of prediction (Gualdrón Duarte et al., 2014; Gualdrón Duarte et al., 2020; Mehrban et al., 2021; Ren et al., 2021), but their use led to a proportional increase of breeding value bias and dispersion (Mancin et al., 2021b; Botelho et al., 2021; Cesarani et al., 2021; Mehrban et al., 2021).

Moreover, it is unclear how models considering heterogenous variances account for selection since only k-fold cross-validation is usually applied (Zhu et al., 2021). In real-life breeding scenarios, time cross-validation should be considered (Liu, 2010) because this validation method mimics the true accumulation of information across time. The estimated breeding values (EBVs) are in fact used to select young bulls, and after 3–5 years, the bulls will receive daughter information; it is thus desirable that EBVs would highly correlate to the final EBVs. However, the few studies that evaluated the impact of WssGBLUP using time cross-validation with small samples of individuals (e.g., Cesarani et al., 2021) found higher bias and overdispersion. These mutual increases are relevant when a low number of phenotypes and high shrinkage values are present, and the reasons behind the loss of these unbiased properties in heterogenous SNP regression or GBLUP are still not entirely clear.

This issue is not trivial as the bias and the slope of the regression (dispersion) need to be considered, especially when proven, and young animals are mixed in the population as young candidates will have unfair EBVs (Legarra and Reverter, 2017).

Thus, the abovementioned issues of lack of accuracy of ssGBLUP when used in contexts with a few animals have not been conclusively resolved. For this reason, in the present study, we intend to explore alternative methods to improve accuracy in small populations within a single-step framework. A possible solution could come from implementing a naïve approach, where instead of giving each SNP a specific weight, we removed the non-informative ones or variable selection models. Thus, we aimed to investigate the impact, in terms of accuracy of predictions, dispersion, and bias, of reducing the dimensionality of the G matrix by constructing it using only a subset of informative markers.

In order to accomplish this, we tried different machine learning and variable selection algorithms with the aim to identify the most informative SNPs by indirect prediction. These algorithms were as follows: least absolute shrinkage and selection operator (LASSO), spike-and-slab LASSO (SSLASSO), recursive feature elimination using ridge regression (RfeRR), recursive feature elimination using support vector machine regression (RfeSVM), and extreme gradient boost (XGBoost).

We aimed to test suitable procedures for genomic estimation by considering both the abovementioned variable selection models ssGBLUP and the predictions of BLUP, classical ssGBLUP, and WssGBLUP. To do that, we created different simulated populations and also considered a local population, the Rendena cattle. We then used different cross-validation methods to assess our results.

Materials and Methods

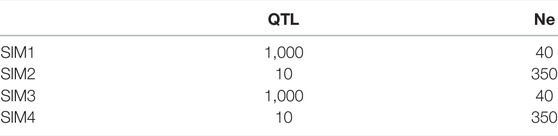

For a graphical representation of our methodology for testing BLUP models, see Figure 1.

FIGURE 1. Graphical representation of our methodology for testing model predictions both in simulated and real populations. Each replicate of a simulated population is represented with a circle; SIM1 is polygenic with low Me, SIM2 polygenic with high Me, SIM3 is oligogenic with low Me, and SIM4 is oligogenic with high Me. Each phenotype (both real and simulated) is represented with an arrow.

Data sets

Simulated Data sets

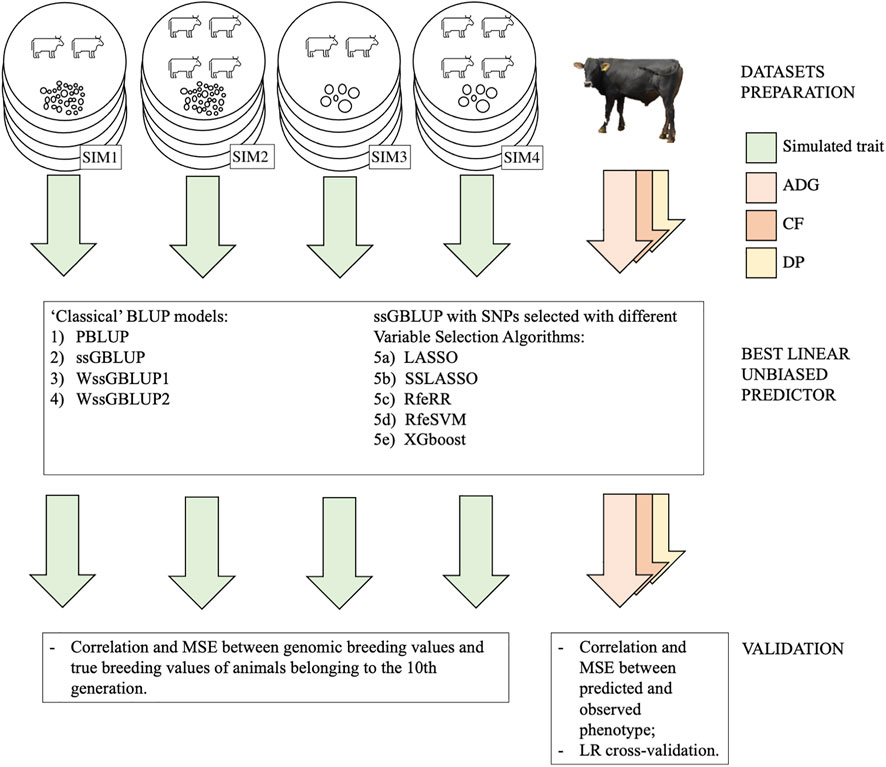

Simulations were performed with the QMSim simulation program (Sargolzaei and Schenkel, 2009). A total of four different populations were simulated based on various combinations of quantitative trait locus (QTL) number and effective population size (Ne). Each simulation was replicated five times.

All simulations were generated starting from the historical population using a similar structure to that used by Pocrnic et al. (2019): we created an initial bottleneck contracting the historical population size from 5,000 to 1,000 animals in 1,250 generations and then expanded it to 25,000. In the first generation, 10 bovine autosomes were simulated, placing evenly spaced 80,000 ca. biallelic SNPs with equal allele frequencies and a recurrent mutation rate of 2.5e−5 per generation. The number of SNPs per chromosome was set to 8,000, while the QTL number changed according to different simulation strategies. In two of the four simulations, one biallelic and randomly distributed QTL per chromosome was sampled from a gamma distribution with a shape parameter equal to 0.4 (oligogenic scenarios). In the other two simulations, 100 QTLs per chromosome were generated using the same parameter (polygenic scenarios). In all these simulations, 10 discrete generations were created by randomly mating 750 females and a different number of sires according to the simulation strategies. In two scenarios, one oligogenic and one polygenic, we assumed a large Ne, with 100 males per generation used as sires, while in simulations with a low Ne, only 10 males per generation were used as sires. The following four populations were, thus, created by mixing different numbers of QTL and different Ne values, and five replicates for each population were generated:

• SIM1 polygenic population with small Ne

• SIM2 polygenic population with large Ne

• SIM3 oligogenic population with small Ne

• SIM4 oligogenic population with large Ne

The effective population size and number of QTLs in the four different simulated populations are reported in Table 1, and numbers of -genotyped animals are reported in Table 2 (2,250 animals). We simulated a single trait with heritability of 0.3, close to the heritability of the traits in the real data set further described. To do that, we obtained a single phenotype record per animal by adding an overall mean of 1.0 to the sum of the QTL effects together with a residual effect. As in the study by Pocrnic et al. (2019), only phenotypes from generations 8 to 9 were retrieved, and genomic information of animals belonging to generations 8 to 10 was used for further analysis (750 × 3 = 2,250 animals). The structure of simulated populations is reported in Table 2. Before proceeding with genomic prediction, SNPs with a minor allele frequency (MAF <0.01) and with high linkage disequilibrium (LD > 80) were removed using the SNPrune program (Calus and Vandenplas, 2018).

Real Data set

A real data set containing information from the performance test evaluations of young bulls belonging to the Rendena cattle breed was provided by the National Breeders Association of Rendena (ANARE). ANARE also provided herdbook information about the whole population traced back to the 1950s, whereas genomic data of bulls were, in part, provided by ANARE (PSRN DualBreeding, www.dualbreeding.it) and, in part, obtained under academic funding (SID Project, BIRD183281). Rendena is a small local population (6,384 heads for 249 breeding males and 6,135 breeding females belonging to 202 herds censed on 31.12.2020; fao/dad.is.org) bred for the dual-purpose attitude of milk and meat. Rendena is native to the Northeastern Alps in Italy but is now widespread also in the adjacent lowland territory on the right side of the Brenta River in the Veneto region (Po Valley; Guzzo et al., 2018).

The real phenotypes considered in this study were single individual records of average daily gain (ADG), in vivo estimates of carcass fleshiness (CF) and dressing percentage (DP) collected in the years 1985–2020. These traits have been extensively described in Guzzo et al. (2019) and Mancin et al. (2021b). The Illumina Bovine LD GGP v3, comprising 26,497 SNP markers (low-density panel: LD), and Bovine 150K Array GGP v3 Bead Chip, including 138,974 SNPs (Illumina Inc, San Diego, CA, United States; high-density panel: HD), were used for genotyping Rendena cattle.

The LD panel belonging to 1,416 individuals with 26,497 SNPs was imputed on the HD panel with 138,974 SNPs belonging to 554 bulls. The overlap between the two panels was about 60%. Information about data quality control and imputation is reported in greater detail by Mancin et al. (2022). In addition to the previous study, further quality control was performed by removing SNPs with high linkage disequilibrium (>80), using SNPrune (Calus and Vandenplas, 2018): this removed a total of 28,049 SNPs. An amount of 85,331 SNPs was finally retained for analysis. Overall, the study considered 1,691 young bulls with only phenotypic information, 1,739 animals with only genotypic information, and 687 animals with both phenotypic and genotypic information. The data structure of the real data set used for genomic prediction is reported in Table 2.

Prediction Models

The breeding values for the single trait of the four simulated populations and the three performance test traits of the real Rendena data set were estimated using several BLUP models. First, we used four ‘classical’ BLUP models:

1) standard pedigree best linear unbiased prediction (PBLUP, described in Pedigree Best Linear Unbiased Predictor);

2) single-step genomic BLUP (ssGBLUP, described in Single-Step Genomic Best Linear Unbiased Predictor);

3) small shrinkage weighted single-step genomic BLUP (WssGBLUP1, described in Weighted Single-Step Genomic Best Linear Unbiased Predictor);

4) high shrinkage weighted single-step genomic BLUP (WssGBLUP2, described in Weighted Single-Step Genomic Best Linear Unbiased Predictor).

Then, we performed five ssGBLUPs with preselected SNPs (described in 2.2.4). SNP selection was achieved using the following algorithms:

5a) least absolute shrinkage and selection operator [LASSO, described in Least Absolute Shrinkage and Selection Operator (LASSO)];

5b) spike-and-slab LASSO (SSLASSO, described in Spike-and-Slab LASSO);

5c) recursive feature elimination using ridge regression [RfeRR, described in Recursive Feature Elimination Using Ridge Regression (RfeRR)];

5d) recursive feature elimination using support vector machine [RfeSVM, described in Recursive Feature Elimination Using Support Vector Machine (RfeSVM)];

5e) extreme gradient boosting (XGBoost, described in Boosting Ensemble).

Pedigree Best Linear Unbiased Predictor

PBLUP was the first method used to estimate predictors, and it is described by the following equation (Henderson, 1975):

where y is the vector of phenotypic observations, X is the matrix of the incidence of fixed effects, and b is the vector of these effects. In the real data set, fixed effects are represented by the contemporary group (young bulls tested at the same period in the same pen; 142 levels) and parity group of dams in four classes (Guzzo et al., 2019). In the simulated data sets, X was substituted by a vector of 1’s; thus, b stands for the mean of the models. Matrix Z represents the incidence matrix that relates the random genetic additive effect, included in vector

Single-Step Genomic Best Linear Unbiased Predictor

We used ssGBLUP as a benchmark to evaluate the impact of other models (see further, WssGBLUP and ssGBLUP with selected SNPs). The ssGBLUP method presents the same structure of equation as in 2.2.1, except for the (co)variance matrix of random genetic effects, which is substituted by H, as described by Aguilar et al. (2010):

where

The G matrix was built using the first methods proposed by VanRaden (2008b):

where p is the allele frequency of the ith locus and M is a matrix of SNP content centered by twice the current allele frequencies. Since the frequencies of the current genotyped population are used to center G, pedigree and genomic matrices have different bases, G was adjusted so the average diagonal and off-diagonal matched the averages of diagonal and off-diagonal in A22, as described by Vitezica et al. (2011).

Weighted Single-Step Genomic Best Linear Unbiased Predictor

The WssGBLUP is the third method we used (two models, each with a different CT value, as explained below). This approach is equal to model 2.2.2, except for the matrix G, built following the second method of VanRaden (2008a), as shown below:

where p is the allele frequency of the ith locus, M is a matrix of SNP content centered by twice the current allele frequencies, and D is the diagonal matrix in which SNP specific weights are contained. The iterative algorithm reported by Zhang et al. (2016) has been used as a weighting strategy. The SNP weights presented in D were obtained as a function of the estimated SNP effect (

1. The initial parameter was set as

2. GEBV (

3. The SNP effect (

4.

5. The weight of SNPs is standardized by maintaining a constant genetic variance among iterations:

6. Matrix G is then recreated by including the new weights:

7. Set

We created two different WssGBLUP models with two different CT values: WssGBLUP1 had a CT value of 1.105, while WssGBLUP2 had a CT value of 1.250. This process was carried out to grant WssGBLUP1 the lowest possible shrinkage effect and WssGBLUP2 the highest possible shrinkage effect. For both models, the maximum number of iterations was set to five. For simplicity, we reported only two WssGBLUP predictions instead of the 10 analyzed in this study (combination of two CT values and five iterations). Thus, we retained two opposite WssGBLUP scenarios: WssGBLUP1, which presents the lowest SNPs shrinkage effect, and WssGBLUP2, which provides the highest shrinkage effect.

Single-Step Linear Unbiased Predictor With Only Informative SNPs

The last group of models (five models) consisted of ssGBLUP in which the G matrix of 2.2.2 was constructed using SNPs obtained after applying the different variable selection algorithms (described below, Section 2.4). The number of columns in Z is, thus, different for each trait and each data set.

Model Computations

A was built with the pedigree information tracking back up to three generations in all models. In addition, according to Cesarani et al. (2019), the variance components of each data set were estimated under PBLUP models by tracing back all animals in the pedigree. Variance components were estimated using the AIReml algorithm (Gilmour et al., 1995). All genetic and genomic prediction analyses were performed using the BLUPF90 family of programs (Aguilar et al., 2018). The consistency of all this information is reported in Table 2. Preliminary analysis, such as LD calculation, was conducted using preGSf90 (Aguilar et al., 2018, belonging to the BLUPF90 family of programs).

Featured Selection Algorithms

The EBVs of the target trait were used to map the major SNP markers associated with the phenotype, using five different statistical approaches. The genome content was considered a covariance matrix, while EBVs of genotyped animals

Least Absolute Shrinkage and Selection Operator

In the high-dimensional information literature, many penalized likelihood approaches have been proposed. Given the baseline

where

A grid search was performed to find the optimal values obtained by testing values from 0 to 20 in increments of 0.1. These values were used to maximize the LASSO model performance, based on the highest coefficient of determination and the lowest mean squared error (MSE) in the training set. To carry out this calculation, we used the glmet R package (Friedman et al., 2010).

Spike-and-Slab LASSO

Spike-and-slab LASSO (SSLASSO) was proposed by Ročková and George (2018). It is based on the idea that every penalized likelihood has a Bayesian interpretation (Bai et al., 2021). For instance, the LASSO penalization is equivalent to a Laplace distribution regulated by hyperparameter

The SSLASSO is the equivalent to a two-point mixture of Laplace distributions defined as follows:

where

The Bayesian prior can be rearranged in a penalized likelihood context by taking this marginal logarithm as a prior (Bai et al., 2021); after some derivation, the following can be obtained:

where

SSLASSO was computed using the SSLASSO R package (Ročková and George, 2018), error variances were assumed to be unknown, and a self-adaptive penalty was set. In so doing,

Recursive Feature Elimination Using Ridge Regression

Similar to LASSO, ridge regression is based on a principle of penalized likelihood, with a penalty equal to

After that, a recursive feature elimination using penalized ridge regression was performed as follows. In each iteration, the SNP effect

Recursive Feature Elimination Using the Support Vector Machine

The SVM is a kernel-based supervised learning technique, often used for regression analysis. Depending on the kernel function considered, the SVM can map linear or nonlinear relationships between phenotypes and SNP markers. The best kernel function to map genotype to phenotype was determined in different training subsets: a five-fold split was used to determine which kernel function was a better fit for the data, either with linear, polynomial, or radial basis. We found that performing the SVM with a linear basis function outperformed the polynomial and radial basis function of about 12.5% in predictive ability.

The general model for the SVM (Evgeniou and Pontil, 2005; Hastie et al., 2009) can be described as follows:

where

After that, recursive feature elimination using the SVM was performed using the same procedure described for RfeRR in the study by Sanz et al. (2018).

Boosting Ensemble

The boosting approach (XGBoost) is an ensemble technique that combines gradient descent error minimization with boosting, aiming to convert weak regression tree models into strong learners (Hastie et al., 2009; Natekin and Knoll, 2013). This ensemble process combines different predictor variables sequentially in the regression tree model, using regularization via selection and shrinkage of the predictors to control the residual from the previous model (Friedman, 2002). In addition, the XGBoost can use parallel computation to use more regularized models to prevent overfitting. The XGBoost approach can be described as follows:

where

Effective Population Size Calculations

The effective population size (Ne) has been computed from the individual increase in inbreeding (

where

Validation

Validation in the Simulated Data set

Quality of prediction was measured as the correlation and MSE between the genomic breeding values estimated under different models and the true breeding values for animals belonging to the 10th generation, that is, the last generation of animals, including individuals without phenotypes but with genotype.

Validation in the Real Data set

In the real data set, two different cross-validation methods were applied. The first method we used to cross validate predictive ability was to calculate both the correlation and MSE between predicted and observed phenotypes. In this case, five-fold cross-validation with 10 iterations was performed. Since not all animals were genotyped in each iteration, 1/5 of non-genotyped and 1/5 of genotyped animals were masked. The current study considered predictive ability metrics only for genotyped animals; however, results about non-genotyped animals were also obtained (Supplementary Figure S1).

Linear regression (LR) (Legarra and Reverter, 2018) was used as the second cross-validation method. It compares the prediction performances of different models on groups of focal individuals born after a given date, in this case, the young bulls. LR is particularly suited to the specific needs of the Rendena population since predicting the future performance of young bulls without phenotype is one of the main objectives of the breeding plans for performance tests (Mancin et al., 2021a).

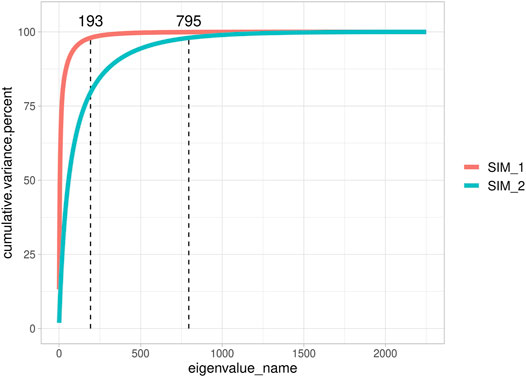

The LR method evaluates the goodness of a model by comparing its performance in a complete data set and a partial data set. The complete data set contains the whole amount of information or it is the data set used for prediction. A partial data set is referred to as the complete data set with some animals with the phenotype removed, usually young animals known as candidates to selection. According to Macedo et al. (2020), we built partial data sets by excluding phenotypes since a target recent birth year of young bulls (since 2012–2020; since 2014–2020; and since 2017–2020) to describe possible variations and random deviations of the estimator; consistencies are reported on Table 3. LR considered the following three parameters: bias, dispersion, and accuracy. Bias is the difference between the expected breeding values estimated under the complete and partial data sets. Dispersion was calculated as the regression coefficient considering the breeding values from the complete data set on the ones estimated from the partial data and accuracy as correlations between the two breeding values.

TABLE 3. Description of different validation sets used in cross-validation. The first and last years of birth of animals in the training data set and the number of animals (individuals) used in the validation cohort are reported.

Results

Genomic Structure

Genomic Structure in Simulated Data sets

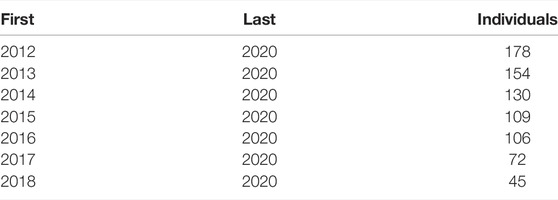

Figure 2 highlights the different genomic assets of small Ne populations (SIM1 and SIM3; 10 sires per generation) and large Ne populations (SIM2 and SIM4; 200 sires per generation). Since the different number of QTLs assumed for the populations with the same Ne (that is, 10 vs. 1000 QTL) did not impact G matrix dimensionality, only SIM1 and SIM2 were plotted for simplicity. In SIM1, 193 eigenvalues were necessary to explain 98% of G matrix variance, while in SIM2, 795 eigenvalues were necessary to explain 98% of G matrix variance. When only ten sires per generation were used, it was possible to observe different subpopulations (Supplementary Figure S2); however, no population structure was found when plotting the first two eigenvalues. On the other hand, SIM2 appeared homogenous, and individuals seemed almost unrelated. In addition, when LD per chromosome was calculated, a greater value was observed in SIM1 (0.161 ± 0.076) than that in SIM2 (0.067 ± 0.054; data not shown). An Ne value of 81.18 ± 4 was determined for SIM1 and SIM3 and 1869 ± 546 for SIM2 and SIM4.

FIGURE 2. Cumulative explained variance of all eigenvalues of the genomic relationship matrix of two representative simulated populations.

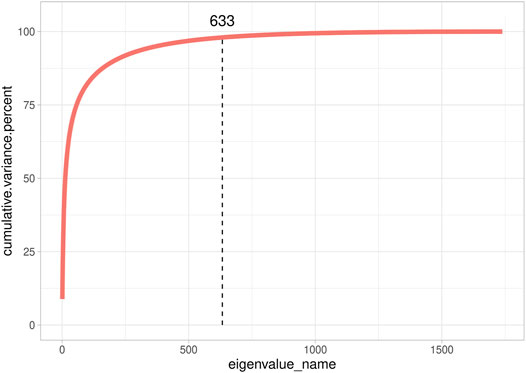

Genomic Structure in the Real Data set

We also investigated G’s dimensionality on the real data set of the Rendena cattle population (Figure 3). The real data set presented a situation closer to SIM1 and SIM3 than to SIM2 and SIM4. It showed, indeed, an average Ne value of 108.2 ± 0.74 calculated from pedigree data. It is possible to observe a few clusters in the genomic relationship matrix (Figure 3); however, they are not as straightforward as in SIM. We, therefore, can note that no population structure is present in Rendena breed (i.e., no subpopulations), which is in line with previous research (Mancin et al., 2022). Only 633 eigenvalues explained 98% of G variance; thus, the scenario was closer to SIM2 than SIM1. In addition, we observed an average LD of 0.187 ± 0.107 per chromosome (Mancin et al., 2022).

FIGURE 3. Cumulative explained variance of all eigenvalues of the genomic relationship matrix of Rendena populations.

SNPs Retained by Variable Selection Models

SNPs Retained in Simulated Data sets

Figure 4 reports the impact of the different algorithms in terms of the number of informative markers retained. Specifically, we were interested in identifying the impact that different G matrix dimensionality and number of QTLs had on the number of SNPs considered informative. In all simulations, LASSO and SSLASSO retained the lowest number of SNPs (roughly 2,000 SNPs averaged across simulations), and they presented lower intra- and between-scenario variability. On the contrary, RfeSVM and RfeRR algorithms retained higher numbers of SNPs, on average 12,000 for RfeRR and 7,000 for RfeSVM. RfeSVM also presented an extreme variability across scenarios (Figure 4). XGBoost retained an intermediate number of SNPs, with an average of 3,000 SNPs retained across simulations. As shown in Figure 4, different numbers of QTLs did not affect the number of SNPs retained by each algorithm. In fact, no difference was observed between SIM1 vs. SIM3 and SIM2 vs. SIM4; only LASSO and SSLASSO algorithms seem to be slightly affected by the number of QTLs. Interestingly, the dimensionality of the G matrix seems to be more influential as scenarios with higher Ne presented a higher number of SNPs (SIM1 and SIM2). The XGBoost is the only algorithm where this trend was not seen. Crucially, we observed that the negative gap in model accuracy present in simulations with lower QTL (SIM3 and SIM4) fades when variable selection models are introduced.

FIGURE 4. Bar plot representing the number of SNPs retained by each algorithm on the four simulated population; error bars represent standard deviation.

SNPs Retained in the Real Data set

We showed the impact of variable selection methods regarding the number of informative markers retained in the Rendena population in Figure 5. Although the number of initial SNPs was similar to that of the simulated populations, in general, the algorithms retained a higher number of SNPs in the real data set. Similar to what was reported in the simulated data, LASSO and SSLASSO were the most restrictive algorithms of SNP selection, with an average of 2,000 SNPs retained across the simulations. The XGBoost was the second most restrictive algorithm in terms of SNPs retained by the models, about 3,000 on average. RfeSVM and RfeRR algorithms retained almost half of the SNPs presented in the panels (40,000 SNPs). No clear patterns were identified across different phenotypes: some algorithms found a greater number of SNPs in certain traits and some in others. For example, the lowest number of informative markers retained by RFE algorithms was identified on the DP trait, but the opposite situation occurred for XGBoost, where the number of informative SNPs retained for DP was almost twice the number of informative SNPs retained for other traits.

FIGURE 5. Bar plot representing the number of SNPs retained by each algorithm on the three phenotypes of the Rendena population.

Breeding Value Prediction

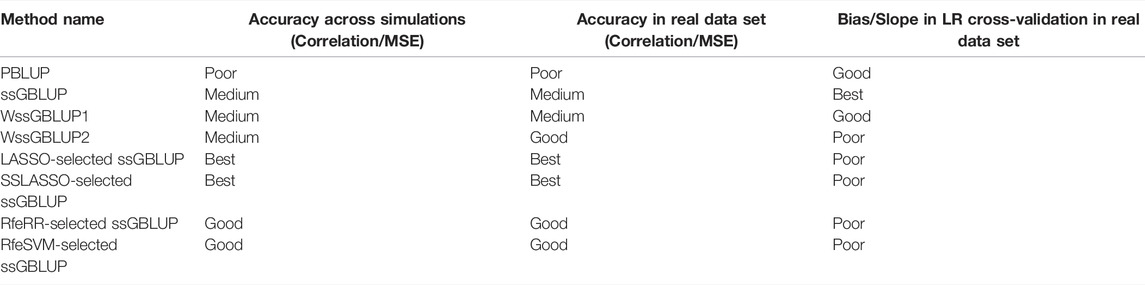

We compared the prediction accuracy of four ‘classical’ models for BLUP and ssGBLUP with five different SNP preselection strategies. The models are detailed in Materials and Methods and summarized as follows: 1) PBLUP; 2) single ssGBLUP; 3) WssGBLUP1; 4) WssGBLUP2; 5a) ssGBLUP with SNPs preselected via LASSO; 5b) ssGBLUP with SNPs preselected via SSLASSO; 5c) ssGBLUP with SNPs preselected via RfeRR; 5d) ssGBLUP with SNPs preselected via RfeSVM; and 5e) ssGBLUP with SNPs preselected via XGBoost. Table 4 provides a qualitative summary of the results, described in the following paragraphs.

TABLE 4. Summary of results obtained using the nine models considered in the study and the cross-validations applied.

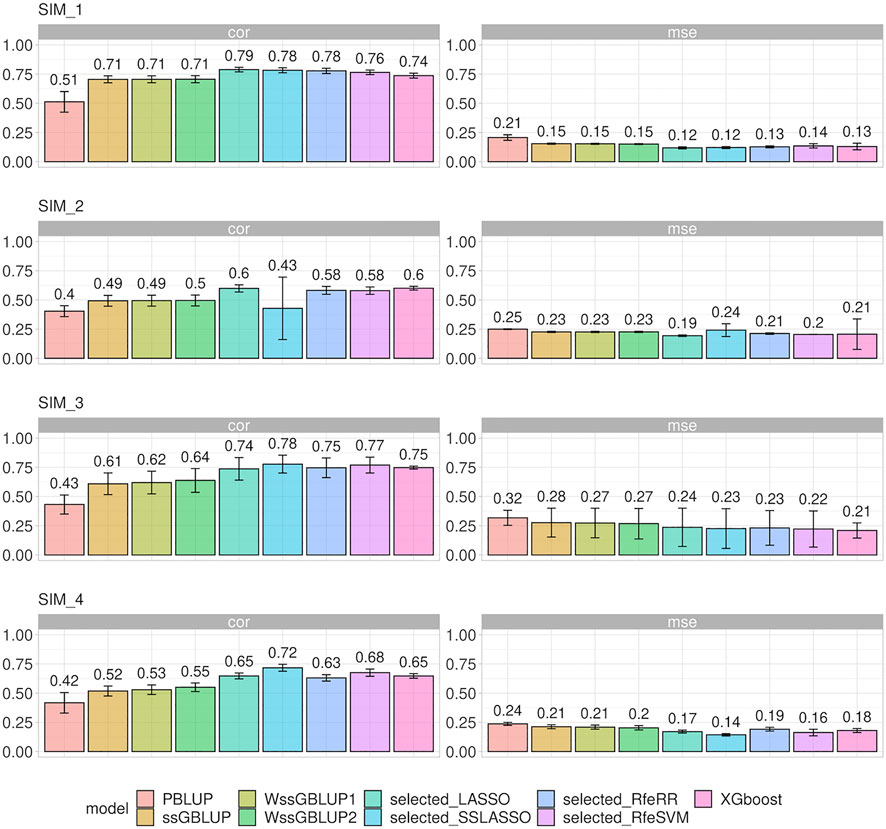

Breeding Value Prediction in Simulated Data sets

Different prediction model accuracies are reported in Figure 6, with correlation and MSE as comparison metrics. MSE values were comparable to those obtained for correlations. Standard BLUP models achieved the lowest accuracy. A substantial increase in accuracy was observed in ssGBLUP models, that is, when genomic data were integrated: this increase of accuracy was more relevant for populations with small Ne (SIM1 and SIM3).

FIGURE 6. Bar plot representing correlation (corr) and mean squared error (MSE) between predicted and true breeding values on the four different simulated populations. Error bars represent standard deviations.

A slightly greater accuracy than that in ssGBLUP was observed when a heterogenous distribution of SNPs was considered within the matrix G (WssGBLUP). The gap in accuracy was greater in the populations with few QTLs (SIM3 and SIM4), especially for WssGBLUP2. On the other hand, the increase in accuracy for SIM1 and SIM2 under WssGBLUP was almost close to zero. A substantial variation in accuracy values was observed when ssGBLUP was performed with G matrixes constructed with selected SNPs; however, the accuracy of the prediction performance of each variable selection model changed according to the simulation structure. Generally, SSLASSO presented the highest increase in accuracy among the genetic models in all simulations, except for SIM2, where we observed a dramatic drop in accuracy. On the other hand, LASSO achieved greater accuracy on both SIM1 and SIM2. Other algorithms presented an intermediate increase in accuracy among the genetic models in all simulations, namely, RfeRR, RfeSVM, and XGBoost, with different rankings in different scenarios.

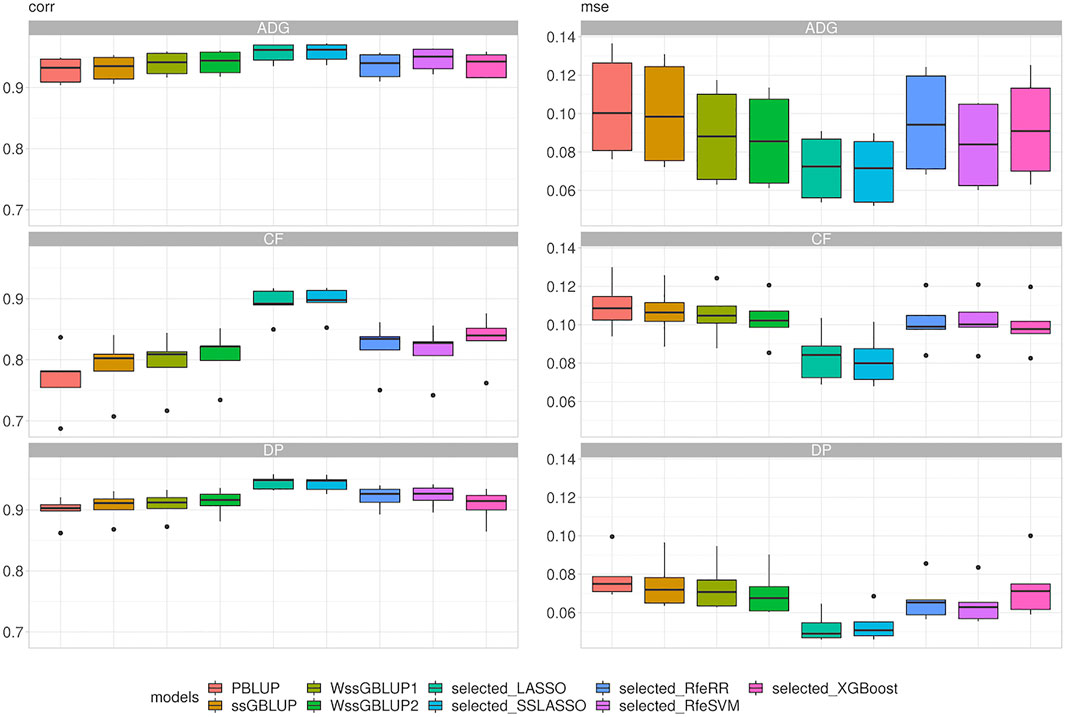

Breeding Value Prediction in Real Data set

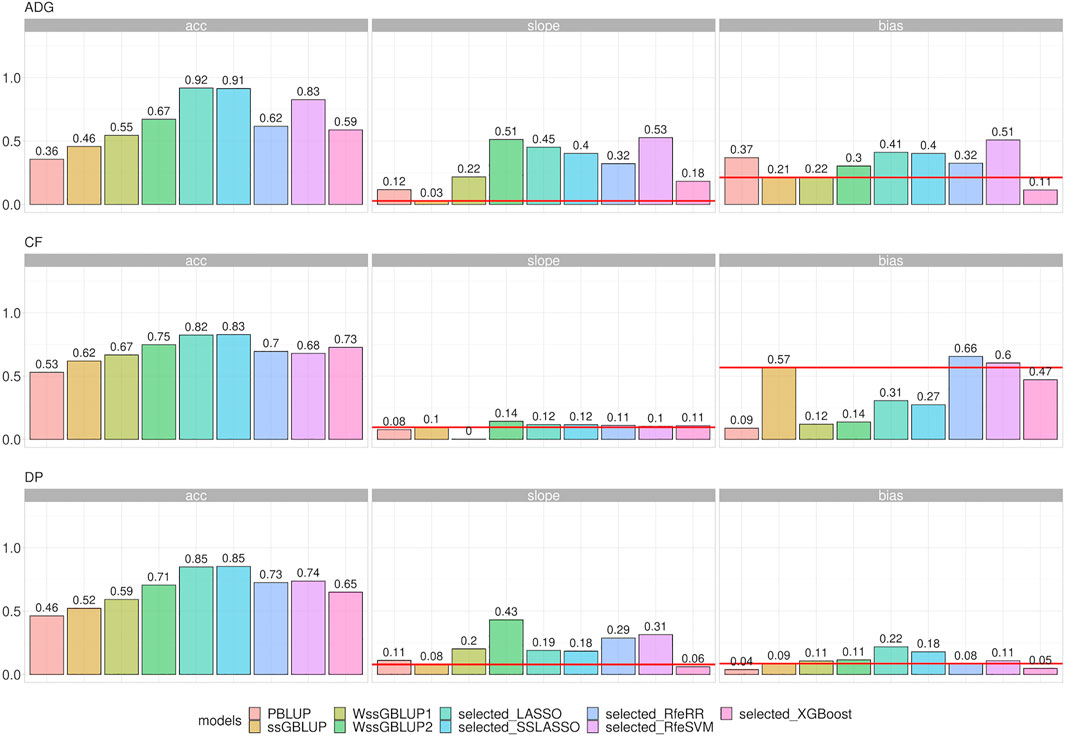

With our real data sets, we were first interested in evaluating the performance of these models in terms of prediction; then, we wanted to evaluate the feasibility of introducing them in a real breeding plan scenario. This point was achieved using LR cross-validation methods (Legarra and Reverter, 2018). Figure 7 presents the results of repeated five-fold cross-validation. The integrations of genomic data led again to a substantial increase in accuracy: the PBLUP presented the overall lowest correlation (r) values (r from 0.36 to 0.53). The ssGBLUP presented the lowest correlation values among genomic models (r from 0.46 to 0.62), while a slight increment was observed for WssGBLUP1 (from 0.55 to 0.67) and for WssGBLUP2 (from 0.67 to 0.75). As with simulated data, variable selection models improved model accuracy substantially. Again, the highest correlations were found for LASSO and SSLASSO, with values of r ranging from 0.83 to 0.92, while other algorithms presented intermediate values (r around 0.70). This pattern was observed across all traits. MSE reflected the results obtained with correlations.

FIGURE 7. Box plot representing correlation (corr) and mean squared error (MSE) between predicted and true breeding values of phenotypes recorded in Rendena performance testing stations. Target phenotypes are ADG: average daily gain; CF: in vivo carcass fleshiness; DP: in vivo dressing percentage.

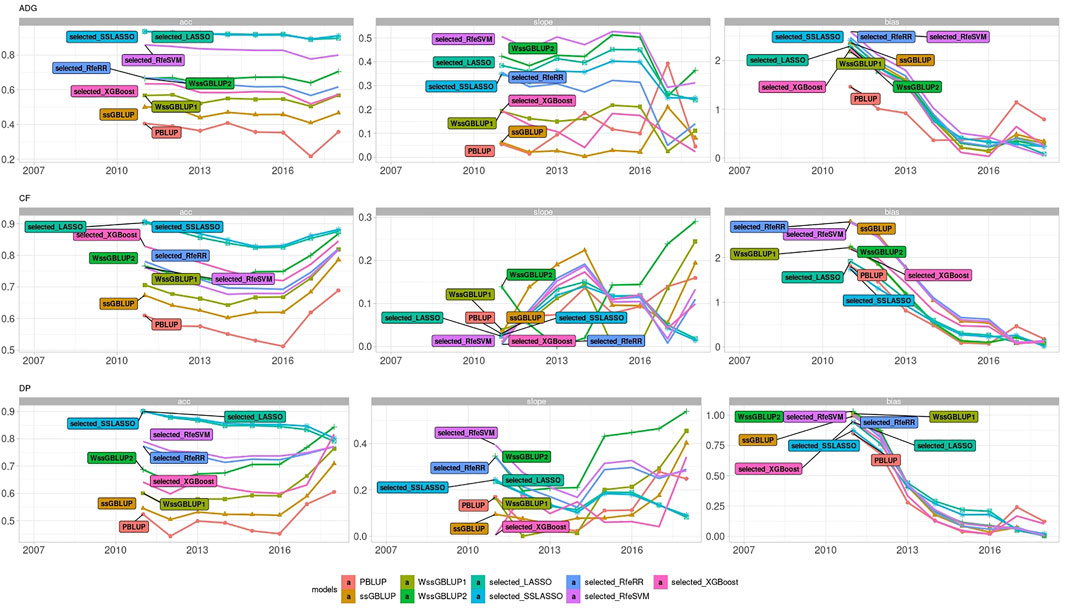

LR methods evaluated dispersion and bias in addition to accuracy. Figure 8 represents the different results obtained using LR cross-validation methods in various validation sets from 2015–2020. This set of years was chosen as representative of all seven validation cohorts. Figure 9 reports the summary statistics of all seven validation cohorts.

FIGURE 8. Bar plots representing accuracy, dispersion, and bias of the Rendena data set estimated using LR cross-validation in the validation cohort of 2015–2020. Dispersion was defined as 1, the absolute value of dispersion, while bias as absolute values of bias divided by its genetic variance to improve assessment of model rankings. Horizontal lines represent the values of ssGBLUP to permit easier comparison among models.

FIGURE 9. Line plot representing accuracy, dispersion, and bias of Rendena data set estimated using LR cross-validation in the validation cohort of 2021. Dispersion was represented as 1, absolute value of dispersion, while bias as absolute values of bias divided by its genetic variance.

Accuracy trends of the real data set measured with the LR method were similar to the accuracies obtained with five-fold cross-validation. However, looking at the other statistics (slope and bias), we can observe that LASSO, SSLASSO RfeRR, and RfeSVM cannot be considered suitable variable selection approaches in real breeding plans due to their higher bias and dispersion values, especially if compared with ssGBLUP. Conversely, XGBoost was the only model with similar or even lower bias and dispersion values than ssGBLUP but with greater accuracy. As seen in Figure 9, we demonstrate that these trends are consistent over different validation cohorts.

Discussion

The present study had two objectives: testing if reducing the number of SNPs used to construct G could lead to an increase in the accuracy of (ss)GBLUP and whether this method could be introduced in genomic evaluations of a real population with a small size, such as the Rendena breed.

In our study, using both simulated and real data sets, we demonstrated that the accuracy of (ss)GBLUP increases when it is performed when the number of SNPs to construct G was reduced. This finding agrees with that of the extensive literature supporting the increased accuracy of Bayesian variable selection models in many different species (Lourenco et al., 2014; Mehrban et al., 2021; Yoshida et al., 2018; Zhu et al., 2021). For example, Akbarzadeh et al. (2021) integrated only a subset of chosen SNPs into the GBLUP framework based on a classical GWAS analysis (i.e., 1, 5, 10, and 50% of significant SNPs). A slightly greater accuracy than that in the canonical GBLUP was observed when G was constructed using only the best 10 and 50% SNPs; contrariwise, models using the 1 and 5% of the SNP prediction underperformed. Furthermore, Akbarzadeh et al. (2021) reported a dramatic decline in performance when the same percentage of SNPs was randomly chosen. We tried preliminary tests of a similar approach—construction of the G matrix using the top 500, 1,000, and 50,000 SNPs ranked by their absolute SNP effect values calculated through back solutions—in Rendena breed; however, we immediately discarded this approach because of the extreme bias and inflated breeding value predictions (these findings are reported by Mancin et al., 2022 in press). In addition, choosing so few and unrepresentative SNPs reduced a lot the compatibility between A and G matrices, and thus ssGBLUP properties were affected (Misztal et al., 2013).

Li et al. (2018) and then Piles et al. (2021) showed how using different methods to select the most informative SNPs could significantly improve the performance of the variable selection models. Li et al. (2018) constructed the G matrix using the best 400, 1,000, and 3,000 SNPs, ranking SNPs effects by three different machine learning models. As in the previous case, an increase in accuracy was obtained only with a certain number of selected SNPs (1,000 SNPs), while a lower accuracy than that in the canonical GBLUP was observed with a lower number of SNPs. In addition, Piles et al. (2021) and Azodi et al. (2019) showed that by combining different variable selection algorithms with various parametric and nonparametric prediction models (i.e., ensemble predictions), it is possible to obtain a consistent increase in accuracy compared to models without variable selection. However, our study has not explored these scenarios since prediction methods other than ssGBLUP or ssSNP-BLUP (Fernando et al., 2017) do not seem to bring any concrete improvement for livestock traits (Abdollahi-Arpanahi et al., 2020). Furthermore, ssGBLUP and ssSNP-BLUP are the only methods that allow combining straightforwardly non-genotyped animals with genotyped ones—a crucial feature for a real-life routine selection plan and something that the other algorithms cannot do.

Our result that reducing the number of parameters positively impacts accuracy is also supported by Frouin et al. (2020). In that study, it was demonstrated that the error of the prediction tends to linearly increase when n > p until the “irreducible” error

Our simulated results support the abovementioned theory, as simulations with lower Ne presented higher accuracy of ssGBLUP (SIM1, SIM3). Furthermore, differences between scenarios emerge when comparing simulations differing for their number of QTLs. ssGBLUP showed lower performance in SIM3 and SIM4 (QTL10) than in SIM1 and SIM2 (QTL1000); however, this discrepancy in accuracy decreases by applying variable selection. This result agrees with that by Daetwyler et al. (2010), which demonstrated that SNP selection via Bayes B presents substantial advantages when the number of QTLs is small compared to the number of independent chromosome segments.

As mentioned above, Bayesian SNP regression, or (ss)GBLUP using a weighted realized relationship matrix (Tiezzi and Maltecca, 2015; Zhang et al., 2016), always provides higher prediction accuracy than models assuming homogenous variance among SNPs (GBLUP or SNP-BLUP). However this increase in accuracy is often connected with increases in bias, especially when time cross-validation is used (Mehrban et al., 2021), instead of five-fold or leave-one-out cross-validation (Zhu et al., 2021). However, when the goal is to achieve the “best predictor”, namely, a value closer as possible to real one, models assuming heterogenous variances and models with variable selection can be identified as the best models. They have, indeed, the highest MSE, intended as bias-variance trade-off (Gianola, 2013). In this regard, LASSO and SSLASSO, thus, appeared as “best models” for both simulated and real data. We showed that (SS)LASSO regression performs automatic feature selection, especially in the presence of linearly correlated features, such as SIM1 and SIM3, since their simultaneous presence will increase the value of the cost function. Thus, LASSO regression will try to shrink the coefficient of the less important SNPs to 0 to select the best features.

However, in real-life breeding scenarios, time cross-validation must be considered (Liu, 2010; Legarra and Reverter, 2017) as this procedure simulates the natural accumulation of information across time. Only a few studies evaluated the impact of heterogenous or variable selection models using time cross-validation with small samples of individuals. Cesarani et al. (2021) and Mancin et al. (2021b) found higher bias and overdispersion values in WssGBLUP than in ssGBLUP.

The same pattern emerged when we performed LR cross-validation (Mancin et al., 2021b; Cesarani et al., 2021), namely, that higher shrinkages or selected SNPs have high accuracy but carry higher bias and dispersion values. Specifically, (SS)LASSO models showed the best accuracy in all three traits when measured with LR. Conversely, other feature selection models and WssGBLUP presented lower accuracy. Among the variable selection models, we found slightly lower values of accuracy in the XGBoost; however, we suggest that XGBoost could be regarded as the best variable selection model among those tested as it is the only model that presented higher accuracy than ssGBLUP, at a net of better bias and dispersion.

Several questions persist about the use of these models in routine evaluation. One of these issues concerns the implementation of preselected SNPs in multitrait models. However, this is a recurring problem not only when the G matrix is built with preselected SNPs but also more in general whenever models take into account the specific genomic architecture of traits, as WssGBLUP does. A possible solution to bypass this issue might be using multiple G matrix prediction models, one for each trait: yet, this is not computationally straightforward. A preliminary selection of SNPs by multiobjective optimization framework algorithms, as in Garcia (2019), could be a more concrete approach for future studies.

Another possible concern about the large-scale use of variable selection ssGBLUP is the fluctuations of SNPs across generations. Similarly to the issue with multitrait models, this regards all genomic selections (Hidalgo et al., 2020); however, it is true that with respect to other methods, such as Bayesian SNP regression, generation-by-generation recalibration of SNP preselection algorithms can be highly computationally demanding, especially when algorithms such as XGBoost are chosen. Finally, SNP preselection could be influenced by variability in SNP frequency across animals or more in general in the presence of population structure, as with subpopulations. Nonetheless, in our study, the PCA plots referring to SIM1 (Supplementary Materials S2), where some clusters are present, show that variable selection models overcome this issue quite effectively. It would be interesting to choose one or more variable selection models in future studies and evaluate their impact on more stratified populations.

Besides increasing the EBV accuracies, developing an optimal strategy for SNP variable selection in high-density panels will be particularly useful in local breeds. It would in fact allow the use of informative but lower density and cheaper panels, accounting for the best SNPs suitable for the target trait and population. Furthermore, given that small breeds cannot attract the same level of technological investment as their cosmopolitan counterparts (e.g., Holstein), decreasing the costs of genomic selection could be critical to help guarantee their selection, and thus their survival.

Aside from the economic factors, the importance of developing ad hoc selection methods for small-population cattle, especially for local breeds, is of primary importance for their conservation. Maintaining genetic progress for the productive characters and at the same time keeping intact the genetic variability and the distinct characteristics of the breeds can be guaranteed through breeding plans implementing careful selection (Biscarini et al., 2015). These plans are needed to preserve genetic variability within livestock local populations, a goal which, in the medium term, is critical for the animal husbandry industry to ensure the conservation of native breeds, their productive and reproductive efficiency, health, survival, and overall resilience to future changing environmental pressures (Mastrangelo et al., 2014).

Conclusion

Genomic information, especially the single-step GBLUP technique, has brought great improvements to selection and breeding decisions in livestock. However, these methods still present methodological issues when applied to populations with a small size, such as local and endemic cattle breeds. Our rigorous testing of different algorithms for variable selection of informative SNPs has highlighted that prediction accuracy of variable selection ssGBLUP (especially that of XGBoost) was greater than that of other ssGBLUP methods, without the inflated bias and dispersion that accompany the weighted ssGBLUP. Our use of machine learning models could thus represent a solution to the issue of genomic selection in small populations. Local cattle breeds are an often untapped resource of genetic diversity and have great potential to adapt to varying environmental conditions. The methods presented here might, thus, be used in their conservation, study, and increase their economic competitiveness.

Data Availability Statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below https://datadryad.org/stash/share/O6ld-ZCZLhAUtXmVpOlXkhkffVagc1_Stfnqktk907w.

Ethics Statement

Ethical review and approval were not required for the animal study because this study did not require any specific ethics permit. The cattle samples belonged to commercial private herds and were not experimentally manipulated. The samples were collected by technicians from the Breeders Association of Rendena.

Author Contributions

Idea: EM; conceptualization: EM, CS, BT, LM, and RV; methodology: EM and LM; formal analysis: EM and LM; support to analysis: BT; investigation: BT, CS, RM, and EM; resources: RM and RV; data curation: EM and RM; writing—original draft preparation: EM and BT writing—review and editing: LM, CS, and RM. All authors have read and agreed to the published version of the manuscript.

Funding

The study was funded by the DUALBREEDING project (CUP J61J18000030005) and by BIRD183281.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors, and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

The authors are grateful to the National Breeders Association of Rendena (ANARE) for data support.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fgene.2022.814264/full#supplementary-material

References

Abdollahi-Arpanahi, R., Gianola, D., and Peñagaricano, F. (2020). Deep Learning versus Parametric and Ensemble Methods for Genomic Prediction of Complex Phenotypes. Genet. Sel. Evol. 52, 1–15. doi:10.1186/s12711-020-00531-z

Aguilar, I., Tsuruta, S., Masuda, Y., Lourenco, D. A. L., Legarra, A., and Misztal, I. (2018). “BLUPF90 Suite of Programs for Animal Breeding,” in The 11th World Congress of Genetics Applied to Livestock Production (Auckland, New Zealand, 11, 751.

Aguilar, I., Misztal, I., Johnson, D. L., Legarra, A., Tsuruta, S., and Lawlor, T. J. (2010). Hot Topic: A Unified Approach to Utilize Phenotypic, Full Pedigree, and Genomic Information for Genetic Evaluation of Holstein Final Score. J. Dairy Sci. 93, 743–752. doi:10.3168/jds.2009-2730

Akbarzadeh, M., Dehkordi, S. R., Roudbar, M. A., Sargolzaei, M., Guity, K., Sedaghati-khayat, B., et al. (2021). GWAS Findings Improved Genomic Prediction Accuracy of Lipid Profile Traits: Tehran Cardiometabolic Genetic Study. Sci. Rep. 11, 1–9. doi:10.1038/s41598-021-85203-8

Alvarenga, A. B., Veroneze, R., Oliveira, H. R., Marques, D. B. D., Lopes, P. S., Silva, F. F., et al. (2020). Comparing Alternative Single-step GBLUP Approaches and Training Population Designs for Genomic Evaluation of Crossbred Animals. Front. Genet. 11, 263. doi:10.3389/fgene.2020.00263

Azodi, C. B., Bolger, E., McCarren, A., Roantree, M., de los Campos, G., and Shiu, S.-H. (2019). Benchmarking Parametric and Machine Learning Models for Genomic Prediction of Complex Traits. G3 Genes, Genomes, Genet. 9, 3691–3702. doi:10.1534/g3.119.400498

Bai, R., Ročková, V., and George, E. I. (2021). Spike-and-Slab Meets LASSO: A Review of the Spike-And-Slab LASSO. Handb. Bayesian Sel., 81–108. –108. doi:10.1201/9781003089018-4

Biscarini, F., Nicolazzi, E. L., Stella, A., Boettcher, P. J., and Gandini, G. (2015). Challenges and Opportunities in Genetic Improvement of Local Livestock Breeds. Front. Genet. 6, 33. doi:10.3389/fgene.2015.00033

Blasco, A., and Toro, M. A. (2014). A Short Critical History of the Application of Genomics to Animal Breeding. Livestock Sci. 166, 4–9. doi:10.1016/j.livsci.2014.03.015

Botelho, M. E., Lopes, M. S., Mathur, P. K., Knol, E. F., Guimarães, S. E. F., Marques, D. B. D., et al. (2021). Applying an Association Weight Matrix in Weighted Genomic Prediction of Boar Taint Compounds. J. Anim. Breed. Genet. 138, 442–453. doi:10.1111/jbg.12528

Calus, M. P. L., and Vandenplas, J. (2018). SNPrune: An Efficient Algorithm to Prune Large SNP Array and Sequence Datasets Based on High Linkage Disequilibrium. Genet. Sel. Evol. 50, 1–11. doi:10.1186/s12711-018-0404-z

Cesarani, A., Biffani, S., Garcia, A., Lourenco, D., Bertolini, G., Neglia, G., et al. (2021). Genomic Investigation of Milk Production in Italian buffalo. Ital. J. Anim. Sci. 20, 539–547. doi:10.1080/1828051X.2021.1902404

Cesarani, A., Pocrnic, I., Macciotta, N. P. P., Fragomeni, B. O., Misztal, I., and Lourenco, D. A. L. (2019). Bias in Heritability Estimates from Genomic Restricted Maximum Likelihood Methods under Different Genotyping Strategies. J. Anim. Breed. Genet. 136, 40–50. doi:10.1111/jbg.12367

Chen, T., and Guestrin, C. (2016). “XGBoost,” in Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA (ACM), 785–794. doi:10.1145/2939672.2939785

Cherkassky, V., and Ma, Y. (2004). Practical Selection of SVM Parameters and Noise Estimation for SVM Regression. Neural Networks 17, 113–126. doi:10.1016/S0893-6080(03)00169-2

Christensen, O. F., and Lund, M. S. (2010). Genomic Prediction when Some Animals Are Not Genotyped. Genet. Sel. Evol. 42, 2–8. doi:10.1186/1297-9686-42-2

Evgeniou, T., and Pontil, M. (2005). “Support Vector Machines: Theory and Applications,” in Machine. Editor L. Wang (Berlin, Heidelberg: Springer Berlin Heidelberg). doi:10.1007/b95439

Falconer, D. S., and Mackay, T. F. C. (1996). Introduction to Quantitative Genetics, 4. Harlow, Essex, UK Ed: Longmans Green & Co., 464.

Fragomeni, B. O., Lourenco, D. A. L., Legarra, A., VanRaden, P. M., and Misztal, I. (2019). Alternative SNP Weighting for Single-step Genomic Best Linear Unbiased Predictor Evaluation of Stature in US Holsteins in the Presence of Selected Sequence Variants. J. Dairy Sci. 102, 10012–10019. doi:10.3168/jds.2019-16262

Fragomeni, B. O., Lourenco, D. A. L., Masuda, Y., Legarra, A., and Misztal, I. (2017). Incorporation of Causative Quantitative Trait Nucleotides in Single-step GBLUP. Genet. Sel. Evol. 49, 1–11. doi:10.1186/s12711-017-0335-0

Friedman, J., Hastie, T., and Tibshirani, R. (2010). Regularization Paths for Generalized Linear Models via Coordinate Descent. J. Stat. Softw. 33, 1–22. doi:10.18637/jss.v033.i01

Friedman, J. H. (2002). Stochastic Gradient Boosting. Comput. Stat. Data Anal. 38, 367–378. doi:10.1016/S0167-9473(01)00065-2

Frouin, A., Dandine-Roulland, C., Pierre-Jean, M., Deleuze, J.-F., Ambroise, C., and Le Floch, E. (2020). Exploring the Link between Additive Heritability and Prediction Accuracy from a Ridge Regression Perspective. Front. Genet. 11, 1–15. doi:10.3389/fgene.2020.581594

Gianola, D. (2013). Priors in Whole-Genome Regression: The Bayesian Alphabet Returns. Genetics 194, 573–596. doi:10.1534/genetics.113.151753

Gilmour, A. R., Thompson, R., and Cullis, B. R. (1995). Average Information REML: An Efficient Algorithm for Variance Parameter Estimation in Linear Mixed Models. Biometrics 51, 1440–1450. doi:10.2307/2533274

Gualdrón Duarte, J. L., Cantet, R. J., Bates, R. O., Ernst, C. W., Raney, N. E., and Steibel, J. P. (2014). Rapid Screening for Phenotype-Genotype Associations by Linear Transformations of Genomic Evaluations. BMC Bioinformatics 15, 1–11. doi:10.1186/1471-2105-15-246

Gualdrón Duarte, J. L., Gori, A.-S., Hubin, X., Lourenco, D., Charlier, C., Misztal, I., et al. (2020). Performances of Adaptive MultiBLUP, Bayesian Regressions, and Weighted-GBLUP Approaches for Genomic Predictions in Belgian Blue Beef Cattle. BMC Genomics 21, 1–18. doi:10.1186/s12864-020-06921-3

Habier, D., Fernando, R. L., and Garrick, D. J. (2013). Genomic BLUP Decoded: A Look into the Black Box of Genomic Prediction. Genetics 194, 597–607. doi:10.1534/genetics.113.152207

Hastie, T., Tibshirani, R., and Friedman, J. (2009). The Elements of Statistical Learning. New York, NYNew York: Springer.

Henderson, C. R. (1975). Best Linear Unbiased Estimation and Prediction under a Selection Model. Biometrics 31, 423–447. doi:10.2307/2529430

Karaman, E., Cheng, H., Firat, M. Z., Garrick, D. J., and Fernando, R. L. (2016). An Upper Bound for Accuracy of Prediction Using GBLUP. PLoS One 11, e0161054–18. doi:10.1371/journal.pone.0161054

Legarra, A., Aguilar, I., and Misztal, I. (2009). A Relationship Matrix Including Full Pedigree and Genomic Information. J. Dairy Sci. 92, 4656–4663. doi:10.3168/jds.2009-2061

Legarra, A., and Reverter, A. (2017). Can We Frame and Understand Cross-Validation Results in Animal Breeding? Proc. Assoc. Advmt. Anim. Breed. Genet. 22, 73–80.

Legarra, A., and Reverter, A. (2018). Semi-parametric Estimates of Population Accuracy and Bias of Predictions of Breeding Values and Future Phenotypes Using the LR Method. Genet. Sel. Evol. 50, 1–18. doi:10.1186/s12711-018-0426-6

Li, B., Zhang, N., Wang, Y.-G., George, A. W., Reverter, A., and Li, Y. (2018). Genomic Prediction of Breeding Values Using a Subset of SNPs Identified by Three Machine Learning Methods. Front. Genet. 9, 1–20. doi:10.3389/fgene.2018.00237

Macedo, F. L., Christensen, O. F., Astruc, J.-M., Aguilar, I., Masuda, Y., and Legarra, A. (2020). Bias and Accuracy of Dairy Sheep Evaluations Using BLUP and SSGBLUP with Metafounders and Unknown Parent Groups. Genet. Sel. Evol. 52, 1–10. doi:10.1186/s12711-020-00567-1

Mancin, E., Lourenco, D., Bermann, M., Mantovani, R., and Misztal, I. (2021a). Accounting for Population Structure and Phenotypes from Relatives in Association Mapping for Farm Animals: A Simulation Study. Front. Genet. 12. doi:10.3389/fgene.2021.642065

Mancin, E., Tuliozi, B., Pegolo, S., Sartori, C., and Mantovani, R. (2022). Genome Wide Association Study of Beef Traits in Local Alpine Breed Reveals the Diversity of the Pathways Involved and the Role of Time Stratification. Front. Genet. 12, 1–22. doi:10.3389/fgene.2021.746665

Mancin, E., Tuliozi, B., Sartori, C., Guzzo, N., and Mantovani, R. (2021b). Genomic Prediction in Local Breeds: The Rendena Cattle as a Case Study. Animals 11, 1815–1819. doi:10.3390/ani11061815

Mastrangelo, S., Saura, M., Tolone, M., Salces-Ortiz, J., Di Gerlando, R., Bertolini, F., et al. (2014). The Genome-wide Structure of Two Economically Important Indigenous Sicilian Cattle Breeds1. J. Anim. Sci. 92, 4833–4842. doi:10.2527/jas.2014-7898

Masuda, Y., VanRaden, P. M., Misztal, I., and Lawlor, T. J. (2018). Differing Genetic Trend Estimates from Traditional and Genomic Evaluations of Genotyped Animals as Evidence of Preselection Bias in US Holsteins. J. Dairy Sci. 101, 5194–5206. doi:10.3168/jds.2017-13310

Mehrban, H., Naserkheil, M., Lee, D. H., Cho, C., Choi, T., Park, M., et al. (2021). Genomic Prediction Using Alternative Strategies of Weighted Single-step Genomic BLUP for Yearling Weight and Carcass Traits in Hanwoo Beef Cattle. Genes 12, 266. doi:10.3390/genes12020266

Meuwissen, T. H. E., Hayes, B. J., and Goddard, M. E. (20011). Prediction of Total Genetic Value Using Genome-wide Dense Marker Maps. Genetics 157, 1819–1829. doi:10.1093/genetics/157.4.1819

Meyer, D., Dimitriadou, E., Hornik, K., Weingessel, A., Leisch, F., Chang, C.-C., et al. (2020). Misc Functions of the Department of Statistics. Probability Theory Group, e1071. (TU Wien), 1–63.

Misztal, I., Aggrey, S. E., and Muir, W. M. (2013). Experiences with a Single-step Genome Evaluation. Poult. Sci. 92, 2530–2534. doi:10.3382/ps.2012-02739

Mitchell, R., and Frank, E. (2017). Accelerating the XGBoost Algorithm Using GPU Computing. Peerj Comput. Sci. 3, e127. doi:10.7717/peerj-cs.127

Natekin, A., and Knoll, A. (2013). Gradient Boosting Machines, a Tutorial. Front. Neurorobot. 7, 1–21. doi:10.3389/fnbot.2013.00021

Piles, M., Bergsma, R., Gianola, D., Gilbert, H., and Tusell, L. (2021). Feature Selection Stability and Accuracy of Prediction Models for Genomic Prediction of Residual Feed Intake in Pigs Using Machine Learning. Front. Genet. 12. doi:10.3389/fgene.2021.611506

Pocrnic, I., Lourenco, D. A. L., Masuda, Y., and Misztal, I. (2019). Accuracy of Genomic BLUP when Considering a Genomic Relationship Matrix Based on the Number of the Largest Eigenvalues: A Simulation Study. Genet. Sel. Evol. 51, 1–10. doi:10.1186/s12711-019-0516-0

Ren, D., An, L., Li, B., Qiao, L., and Liu, W. (2021). Efficient Weighting Methods for Genomic Best Linear-Unbiased Prediction (BLUP) Adapted to the Genetic Architectures of Quantitative Traits. Heredity 126, 320–334. doi:10.1038/s41437-020-00372-y

Ročková, V., and George, E. I. (2018). The Spike-And-Slab LASSO. J. Am. Stat. Assoc. 113, 431–444. doi:10.1080/01621459.2016.1260469

Sanz, H., Valim, C., Vegas, E., Oller, J. M., and Reverter, F. (2018). SVM-RFE: Selection and Visualization of the Most Relevant Features through Non-linear Kernels. BMC Bioinformatics 19, 432. doi:10.1186/s12859-018-2451-4

Sargolzaei, M., and Schenkel, F. S. (2009). QMSim: a Large-Scale Genome Simulator for Livestock. Bioinformatics 25, 680–681. doi:10.1093/bioinformatics/btp045

Tibshirani, R. (1996). Regression Shrinkage and Selection via the Lasso. J. R. Stat. Soc. Ser. B (Methodological) 58, 267–288. doi:10.1111/j.2517-6161.1996.tb02080.x

VanRaden, P. M. (2008a). Efficient Methods to Compute Genomic Predictions. J. Dairy Sci. 91, 4414–4423. doi:10.3168/jds.2007-0980

VanRaden, P. M. (2008b). Efficient Methods to Compute Genomic Predictions. J. Dairy Sci. 91, 4414–4423. doi:10.3168/jds.2007-0980

Vitezica, Z. G., Aguilar, I., Misztal, I., and Legarra, A. (2011). Bias in Genomic Predictions for Populations under Selection. Genet. Res. 93, 357–366. doi:10.1017/S001667231100022X

Wang, H., Misztal, I., Aguilar, I., Legarra, A., Fernando, R. L., Vitezica, Z., et al. (2014). Genome-wide Association Mapping Including Phenotypes from Relatives without Genotypes in a Single-step (ssGWAS) for 6-week Body Weight in Broiler Chickens. Front. Genet. 5, 134. doi:10.3389/fgene.2014.00134

Zhang, X., Lourenco, D., Aguilar, I., Legarra, A., and Misztal, I. (2016). Weighting Strategies for Single-step Genomic BLUP: An Iterative Approach for Accurate Calculation of GEBV and GWAS. Front. Genet. 7, 151. doi:10.3389/fgene.2016.00151

Keywords: genomic selection accuracy, single-step GBLUP, SNP selection methods, machine learning, local breed cattle, Rendena, genomic selection

Citation: Mancin E, Mota LFM, Tuliozi B, Verdiglione R, Mantovani R and Sartori C (2022) Improvement of Genomic Predictions in Small Breeds by Construction of Genomic Relationship Matrix Through Variable Selection. Front. Genet. 13:814264. doi: 10.3389/fgene.2022.814264

Received: 13 November 2021; Accepted: 22 March 2022;

Published: 18 May 2022.

Edited by:

Mudasir Ahmad Syed, Sher-e-Kashmir University of Agricultural Sciences and Technology, IndiaReviewed by:

Yi Liu, University of Chicago, United StatesBreno De Oliveira Fragomeni, University of Connecticut, United States

Copyright © 2022 Mancin, Mota, Tuliozi, Verdiglione, Mantovani and Sartori. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Beniamino Tuliozi, YmVuaWFtaW5vLnR1bGlvemlAdW5pcGQuaXQ=

†These authors have contributed equally to this work and share first authorship

‡These authors have contributed equally to this work

Enrico Mancin†

Enrico Mancin† Lucio Flavio Macedo Mota

Lucio Flavio Macedo Mota Beniamino Tuliozi

Beniamino Tuliozi Roberto Mantovani

Roberto Mantovani Cristina Sartori

Cristina Sartori