- 1Center for Clinical Translational Science, College of Nursing, The Ohio State University, Columbus, OH, United States

- 2Division of Biostatistics, College of Public Health, The Ohio State University, Columbus, OH, United States

- 3Department of Medicine, University of Louisville, Louisville, KY, United States

- 4College of Pharmacy, The Ohio State University, Columbus, OH, United States

Background: Accreditation of graduate academic programs in clinical research requires demonstration of program achievement of Joint Task Force for Clinical Trial Competency-based standards. Evaluation of graduate programs includes enrollment, student grades, skills-based outcomes, and completion rates, in addition to other measures. Standardized measures of competence would be useful.

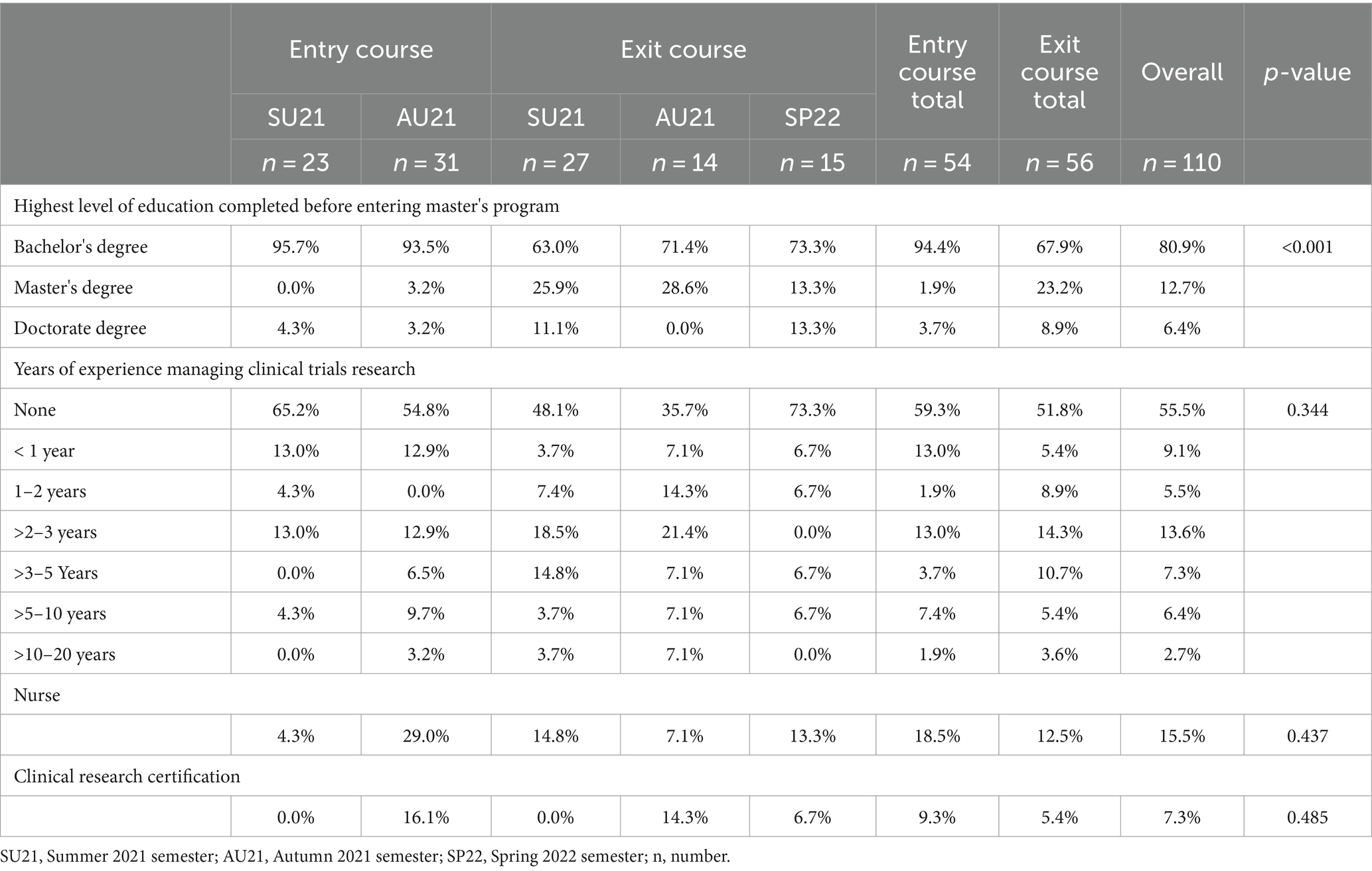

Methods: We used the Competency Index for Clinical Research Professionals (CICRP) in a separate-sample pretest-posttest study to measure self-confidence or self-efficacy in clinical research competency, comparing cohorts of students entering and completing a master’s degree program in clinical research across three semesters (summer 2021 – spring 2022). The CICRP is a 20-item Likert scale questionnaire (0 = not at all confident; 10 = extremely confident).

Results: In the study sample of 110 students (54 in the entry course, 56 in the exit course), 80.9% overall entered the program with only a baccalaureate degree and 55.5% had no prior experience in managing clinical trial research. Cronbach’s alpha for the instrument showed a high level of internal consistency (range 0.93–0.98). Median CICRP item rating range at entry was [1, 6] and at exit [7, 10]. Mean CICRP total score (sum of 20 items) at entry was 72.7 (SD 41.9) vs. 167.0 (SD 21.1) at exit (p < 0.001). Mean total score at program entry increased with increasing years of clinical trial management experience, but this relationship was attenuated at program exit.

Conclusion: This is the first use of the CICRP for academic program evaluation. The CICRP may be a useful tool for competency-based academic program evaluation, in addition to other measures of program excellence.

1 Introduction

Academic programs in clinical research have evolved over the past two decades to provide an educational pathway for clinical research professionals for chosen career paths in clinical research. Academic programs may range from associate degrees, undergraduate or graduate certificates, undergraduate degrees and master’s degrees in clinical research management and regulatory affairs. Many of these programs are distance-based and asynchronous, enrolling students nationally and internationally. Other graduate programs also support more advanced clinical translational research and regulatory science education for doctorally prepared clinical translational scientists (e.g., physicians, pharmacologists, and basic scientists).

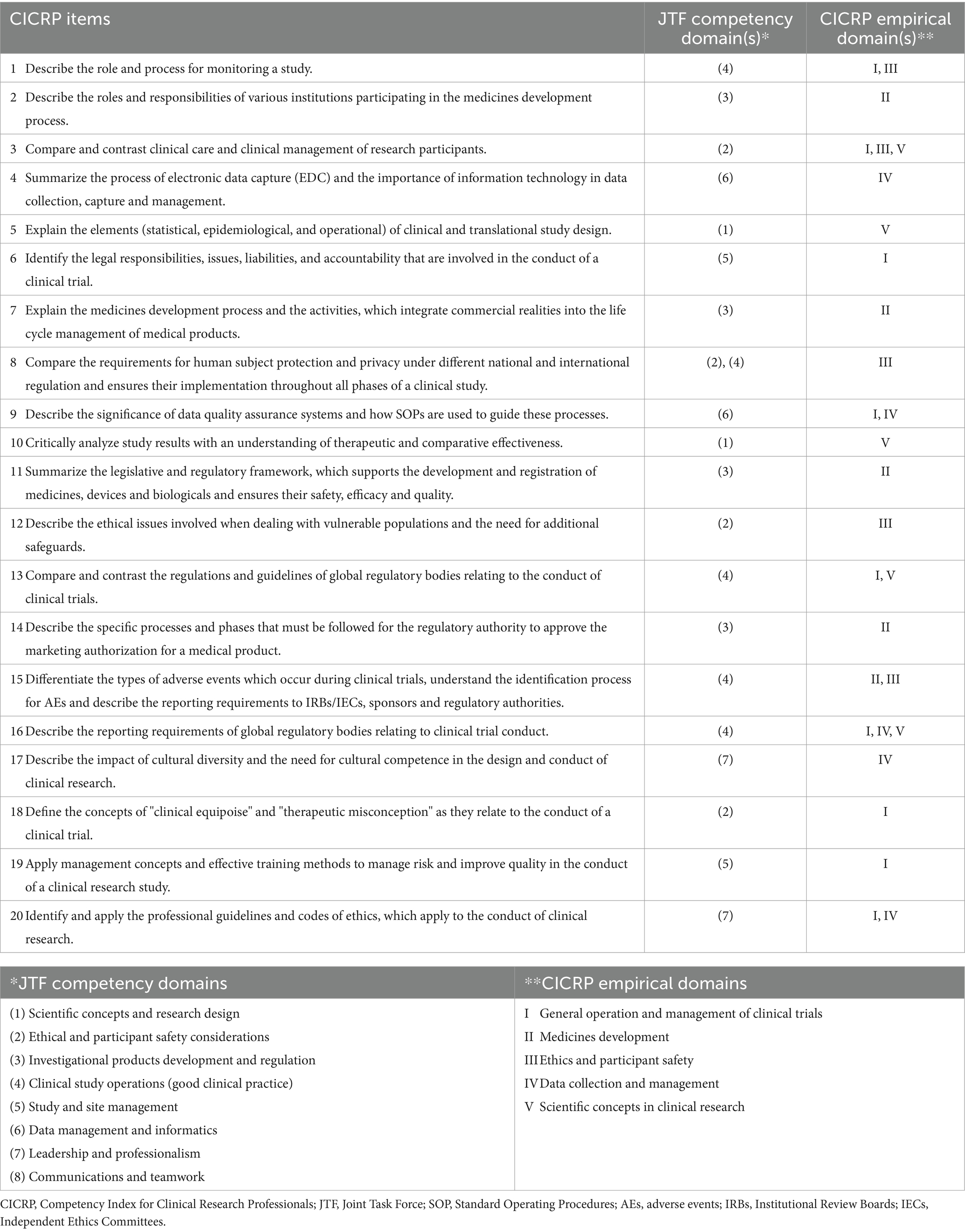

The Joint Task Force (JTF) for Clinical Trial Competency is an international team of investigators, educators, sponsors and clinical research professionals that has developed a framework (the JTF Framework) defining the knowledge, skills and attitudes necessary for conducting safe, ethical, and high-quality clinical research. This group published core competencies in clinical research, harmonizing evolving work in role-based competencies at the time (1, 2) (Figure 1). Subsequent research on the JTF Framework included a global survey of competency relevance to roles and training needs in clinical trials (3). Since that time, the JTF Framework has been updated to include illustrated leveling and project management. The JTF website is maintained by the Multi-Regional Clinical Trials Center at Harvard University (4–6).

Figure 1. Joint task force clinical trial competency framework (3). Reprinted with permission from Barbara Bierer, Director, Multiregional Clinical Trials Center (MRCT) at https://mrctcenter.org/clinical-trial-competency/. Copyright 2024 Brigham and Women’s Hospital Division of Global Health Equity.

In 2018, a factor analysis of the global survey data for non-investigator, clinical research professionals working in the United States and Canada resulted in a short-form 20-item competency index assessment tool called the Competency Index for Clinical Research Professionals (CICRP) (Table 1) that used a 0–10 Likert scale (7). The tool analysis included five empirical domain subscales: I. General Operation and Management of Clinical Trials, II. Medicines Development, III. Ethics and Participant Safety, IV. Data Collection and Management, and V. Scientific Concepts in Clinical Research (CICRP-I). The scale was used in a subsequent study exploring the use of the index to compare self-perceived self-efficacy in performing clinical trial skills among clinical research professionals (CRPs) working at academic medical center settings, other site settings and students of academic programs in clinical research. This study assessed the importance of clinical trial experience and academic education in CRPs (8). This index, known as CICRP-II, measured routine functions and advanced functions of clinical research professionals (8).

The Consortium of Academic Programs in Clinical Research established an accreditation pathway for academic programs in clinical research. Accreditation is offered by the Commission on Accreditation of Allied Health Education Programs (CAAHEP) and is administered by the Committee on Accreditation of Academic Programs in Clinical Research (CAAPCR) (9). The CAAPCR accreditation standards incorporate the JTF Competency Framework for competency-based clinical research educational programs. The self-study process requires gathering numerous student, course, program and institutional evaluation materials and data to address the specific requirements of the CAAPCR standards and guidelines. Program evaluation measures include enrollment; retention and graduation metrics; and student and course demonstration of achieving clinical research competencies by analysis of competency-based course assignments mapped to program goals, course objectives and the JTF Framework.

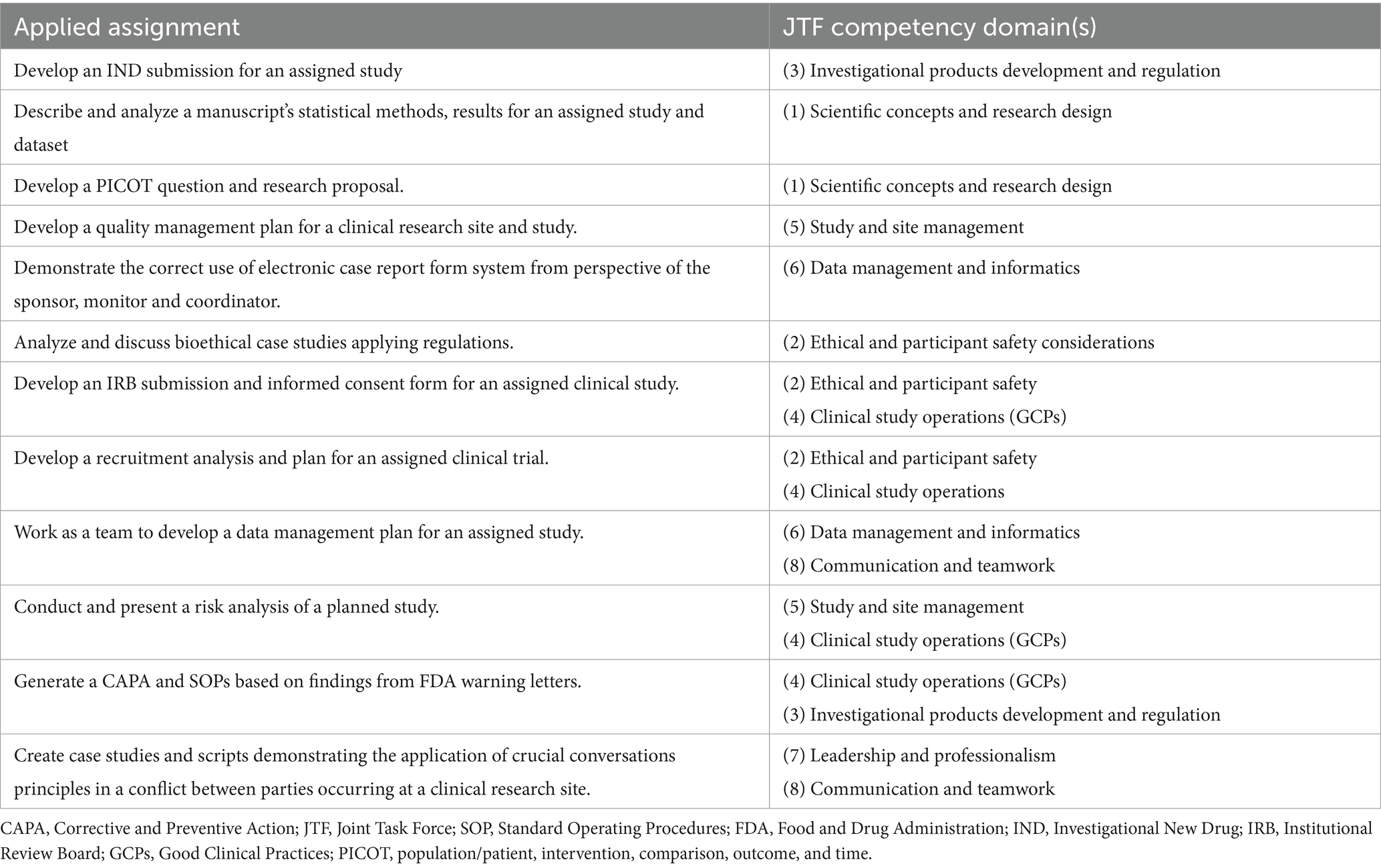

The authors are reporting on the use of the 20-item CICRP instrument as an evaluation tool in a 100% online asynchronous master’s degree program in clinical research (Master of Clinical Research, MCR) with specializations in both clinical research management and regulatory affairs at a midwestern public institution in the United States with a major academic medical center. Students complete 12 graduate courses (36 credit hours total) consisting of seven core courses, four specialization courses and a culminating project course. Students are accepted into the program three times per year (spring, summer, and autumn) using a holistic admissions method, including a required undergraduate GPA of 3.0. Prior clinical research experience is not a prerequisite for admission. Courses are delivered using a well-established learning management system adopted by the university and taught by faculty with experience in clinical research, clinical trials, pharmacology, bioethics, and biostatistics. The program curriculum is mapped to the JTF Framework with a heavy distribution of JTF competencies across the core courses and more focused JTF competencies across the specialization courses. The final course allows students to select one of five culminating project options: develop an integrative review, develop a research protocol/proposal, develop a manuscript on a clinical research topic, develop and perform a clinical research-related project, or work with a mentor in a focused research opportunity. Another deliverable in the culminating project course is the development of an ePortfolio that includes evidence of acquired JTF competency skillsets and an essay on each of the JTF competency domains, in which students reflect on their learning in each domain and on their future learning and experiential goals as clinical research professionals.

We included applied real-world assignments to provide authentic learning for students and to enhance the competency-based nature of our asynchronous learning environment. Table 2 provides examples from a subset of applied competency-based assignments found in courses in the curriculum. Furthermore, our courses were structured using program-designed, learner-centric module templates, applying collaborative learning pedagogy including forming a course community, providing opportunities for interactive discussion, and requiring ongoing teacher scaffolding through frequent input. This pedagogy is in keeping with best practices for online collaborative education (10). The program requires that students earn a final grade of B- or above in all completed courses and maintain an overall GPA of 3.0 to graduate.

Table 2. Subset of authentic applied assignments in the master’s program core courses aligned to JTF competency domains.

While the master’s program evaluated competence for clinical research professional roles through students’ assignments, ePortfolios and culminating projects, a standardized assessment tool was lacking. The program aimed to supplement the existing measures of competency by including the CICRP questionnaire as a program evaluation tool. The purpose of this study is to describe the results of entry versus exit course assessments using the CICRP tool for academic competency-based program evaluation.

2 Methods

2.1 CICRP survey instrument

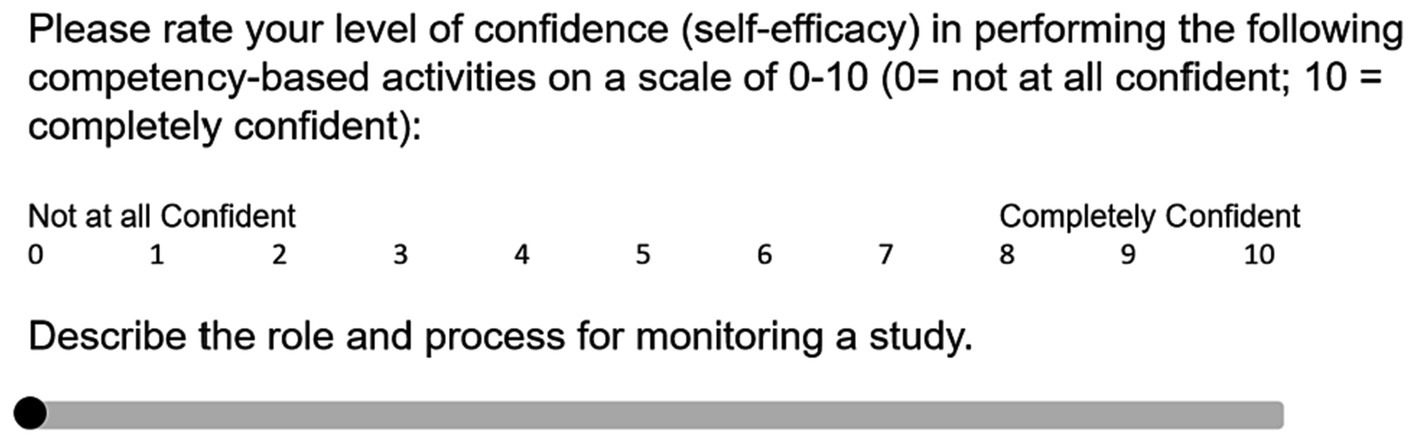

For the purpose of using the CICRP instrument as a program evaluation tool, we used composite scores from the 20-item scale without the CICRP I or II subscale analyses (6, 11). Using a separate-sample pre-post study design, we administered the CICRP questionnaire to students in the entry and exit courses of our clinical research master’s degree program during the 2021–2022 academic year. We created a QualtricsXM (Provo, Utah) survey instrument including the 20 CICRP items asking students to rate their self-efficacy in performing each item (Table 1) using a Likert slider scale from 0 to 10 (0 = not at all confident; 10 = extremely confident) (Figure 2). The survey required a response to each item, and if the respondent intended to select zero, a click or tap on zero was required. Students could participate in the survey on a desktop or a mobile device. We posted links to the survey in the learning management system in course modules and on the course calendar. We also sent reminders to take the CICRP survey through course announcements that generated a notice to their student E-mail inbox.

Figure 2. Illustration of CICRP question in QualtricsXM using a slider scale. CICRP, Competency Index for Clinical Research Professionals.

We included the CICRP QualtricsXM survey as a required non-graded assessment in the beginning of the students’ initial program course (course number MCR 7770) and again at the end of their final program course (course number MCR 7599). We designed this study to measure and compare entering and exiting students to assess whether the CICRP tool had utility for program evaluation. Prior to commencing the survey, students were provided with an informed consent for this study with a prompt to proceed if they consented. The survey study was granted exempt approval by the institution’s Institutional Review Board.

2.2 Statistical methods

We describe the two student groups entering and exiting the program by highest degree at program entry, years of clinical research experience, whether they were nurses, and whether they held clinical research certification (12, 13). The QualtricsXM data set includes the CICRP ratings of the students from the entry course in semesters Summer 2021 (SU21) and Autumn 2021 (AU21) (the entry course is not offered during spring semester). In the exit course, the CICRP survey was conducted with students toward the end of the course during SU21, AU21 and Spring 2022 (SP22). For each of the 20 survey items we used the CICRP Likert scale from zero to 10 (7). The sum of the 20 CICRP items is a good tool to evaluate clinical trial core competencies overall (11); we denote this sum as the CICRP total score (range 0–200).

Because each CICRP item contains 11 categories (0, 1,…,10) it would be reasonable to treat the rating scale as continuous interval data (14, 15). For each item, a higher rating means greater perceived efficacy for that item, while a higher CICRP total score signifies greater perceived competency.
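As a minimal sketch of the scoring rule described above, the following Python function sums 20 item ratings into a total score and checks that each rating lies on the 0–10 scale. The function name and the example ratings are hypothetical and are not taken from the study data or from the authors' analysis code.

```python
from typing import Sequence

N_ITEMS = 20                     # the CICRP has 20 items
MIN_RATING, MAX_RATING = 0, 10   # Likert slider range used in the survey


def cicrp_total_score(ratings: Sequence[int]) -> int:
    """Sum the 20 item ratings into a CICRP total score (range 0-200)."""
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} ratings, got {len(ratings)}")
    for rating in ratings:
        if not MIN_RATING <= rating <= MAX_RATING:
            raise ValueError(f"rating {rating} is outside {MIN_RATING}-{MAX_RATING}")
    return sum(ratings)


# Hypothetical respondent (illustrative values only, not study data):
# ten items rated 7 and ten items rated 9.
example_ratings = [7] * 10 + [9] * 10
example_total = cicrp_total_score(example_ratings)
print(example_total)
```

A respondent who rates every item 0 scores 0, and one who rates every item 10 scores 200, which matches the stated range of the total score.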

CICRP total scores were first directly compared between the students taking the program entry course and the program exit course. Students entered their student email ID in the tool to ensure no duplicate entries. When the dataset was downloaded, those identifiers were removed before analysis to preserve anonymity. We used linear regression to adjust for potential confounders such as “semester” and “highest degree at program entry.”

3 Results

3.1 Participant characteristics

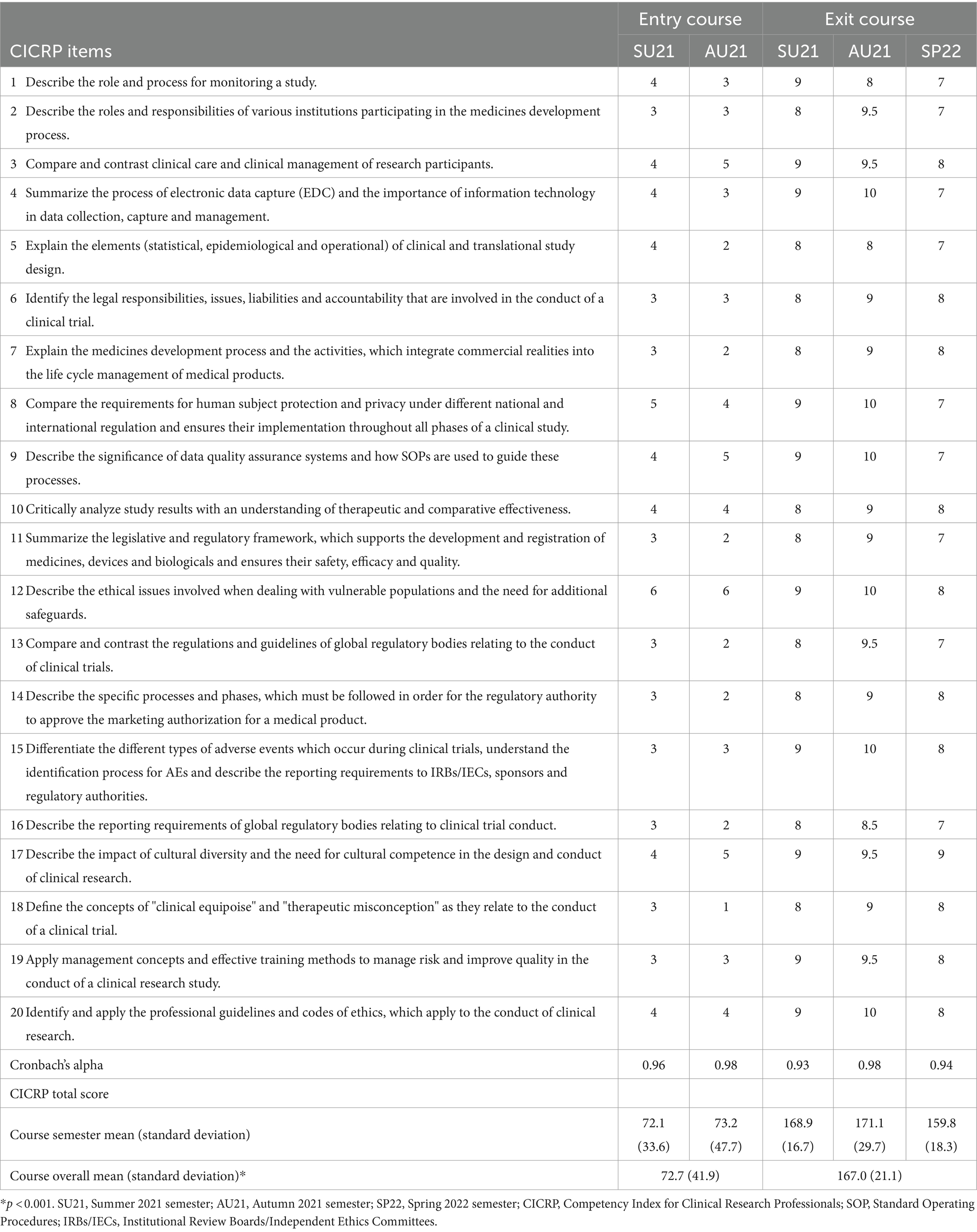

All students were required to complete the CICRP survey as a non-graded assignment. The number of students in the entry course taking the CICRP was 23 in SU21 and 31 in AU21 (total n = 54). The number of students taking the CICRP in the exit course was 27 in SU21, 14 in AU21 and 15 in SP22 (total n = 56) (Table 3).

As required by the program, all students had a bachelor’s degree; however, in the exit course, a greater proportion of students had entered the program already holding a graduate degree (5.6% in the entry course vs. 32.1% in the exit course, p < 0.001). We asked students, “What is your current level of experience in clinical research?” Of the students taking the CICRP in the entry course, 59.3% indicated they had no experience, while 25.9% had more than 2 years of experience. Among students taking the CICRP in their exit course, responses showed somewhat higher levels of experience, but the difference was not statistically significant: 51.8% indicated no prior experience, while 33.9% indicated more than 2 years (p = 0.344). The program aims to increase its enrollment of nurses, so the question “Are you a nurse?” provides data for correlational analyses in the future.

3.2 CICRP total scores

Our analysis explored the question, “Does the Master of Clinical Research program have any significant effect on the improvement of students’ self-efficacy in clinical trial core competencies in terms of the CICRP ratings?” We calculated Cronbach’s alpha (16) for each assessment, with values ranging from 0.93 to 0.98 (Table 4), showing a high degree of internal consistency. The range of combined entry course median item ratings was 0–6, and the range of exit course median item ratings was 7–10.
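For readers unfamiliar with the statistic, Cronbach’s alpha for a k-item scale can be computed from the item variances and the variance of the total score. The sketch below uses the standard formula with sample variances. The function name and the toy responses are hypothetical, and the source does not state which software or variance convention the authors used.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items table of ratings.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    n_respondents = len(responses)
    k = len(responses[0])
    if n_respondents < 2 or k < 2:
        raise ValueError("need at least 2 respondents and 2 items")
    # Sample variance of each item (column) across respondents
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's total score
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Toy data (not study data): three respondents who answer every item identically,
# so the items are perfectly consistent with one another.
toy_responses = [[1, 1, 1], [2, 2, 2], [3, 3, 3]]
toy_alpha = cronbach_alpha(toy_responses)
print(round(toy_alpha, 3))
```

In this toy case alpha equals 1, the upper bound. Values near that bound, such as those reported here, indicate that the items vary together closely.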

We conducted parametric and non-parametric two-sample tests to see whether the group of individuals leaving the program had a significantly higher mean CICRP total score than the group of individuals entering the program: 167.0 (SD 21.1) vs. 72.7 (SD 41.9), respectively. Both Welch’s two-sample t-test and the Wilcoxon rank-sum test showed highly significant differences between the group of students entering the program and the group leaving the program (p < 0.001).
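The size of this difference can be checked approximately from the reported summary statistics alone. The sketch below computes Welch’s t statistic and the Welch–Satterthwaite degrees of freedom from the means, standard deviations and group sizes given in the text. It is an illustration based on rounded values, not a reproduction of the authors’ analysis, which used the raw scores; the function name is hypothetical.

```python
import math
from typing import Tuple


def welch_t_from_summary(
    mean1: float, sd1: float, n1: int,
    mean2: float, sd2: float, n2: int,
) -> Tuple[float, float]:
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = sd1 ** 2 / n1   # squared standard error of the mean, group 1
    v2 = sd2 ** 2 / n2   # squared standard error of the mean, group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Reported values: exit course 167.0 (SD 21.1), n = 56;
# entry course 72.7 (SD 41.9), n = 54.
t_stat, df = welch_t_from_summary(167.0, 21.1, 56, 72.7, 41.9, 54)
print(f"t = {t_stat:.1f}, df = {df:.1f}")
```

With these inputs the statistic is roughly 14.8 on about 78 degrees of freedom, which is consistent with the reported p < 0.001.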

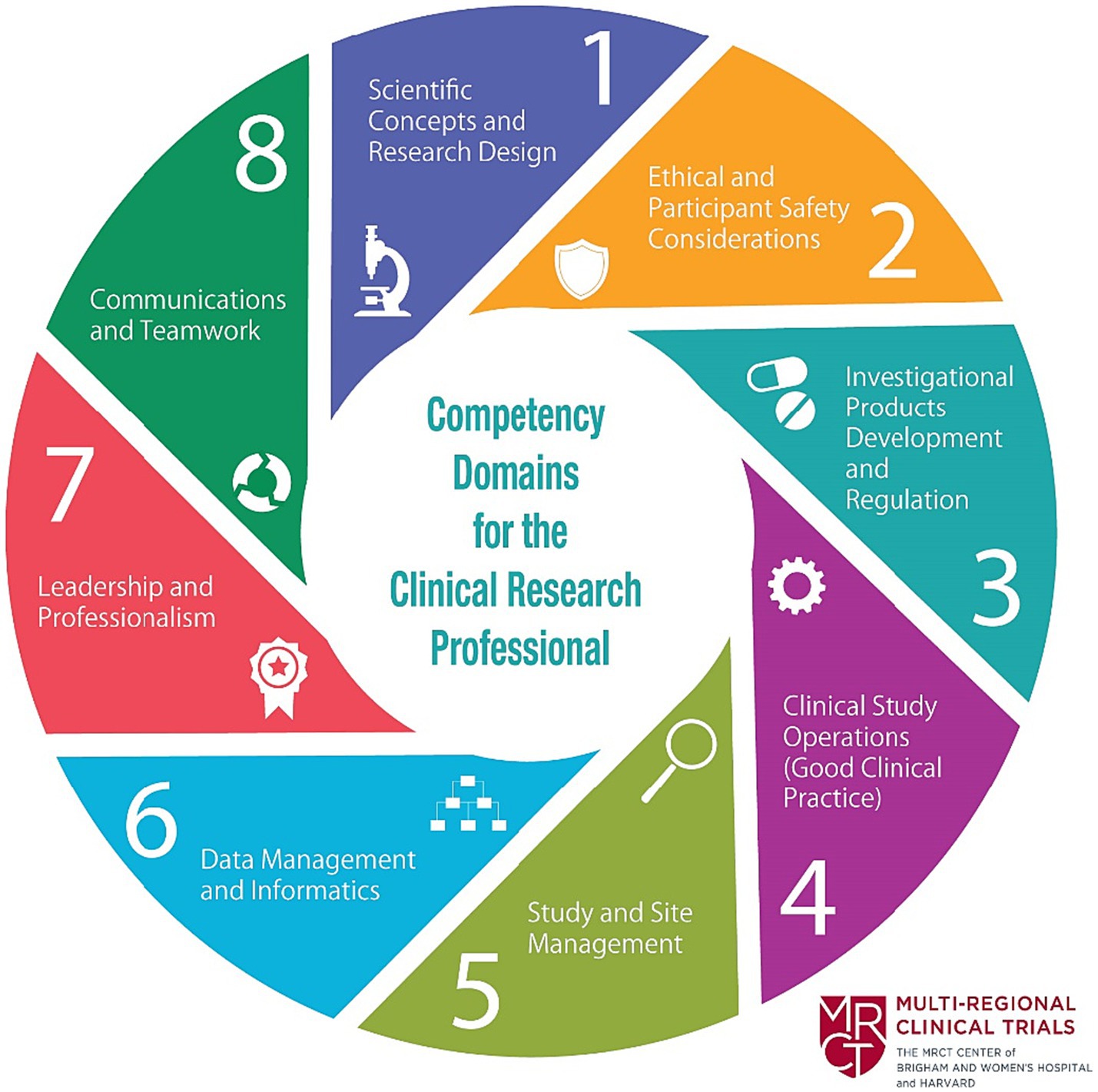

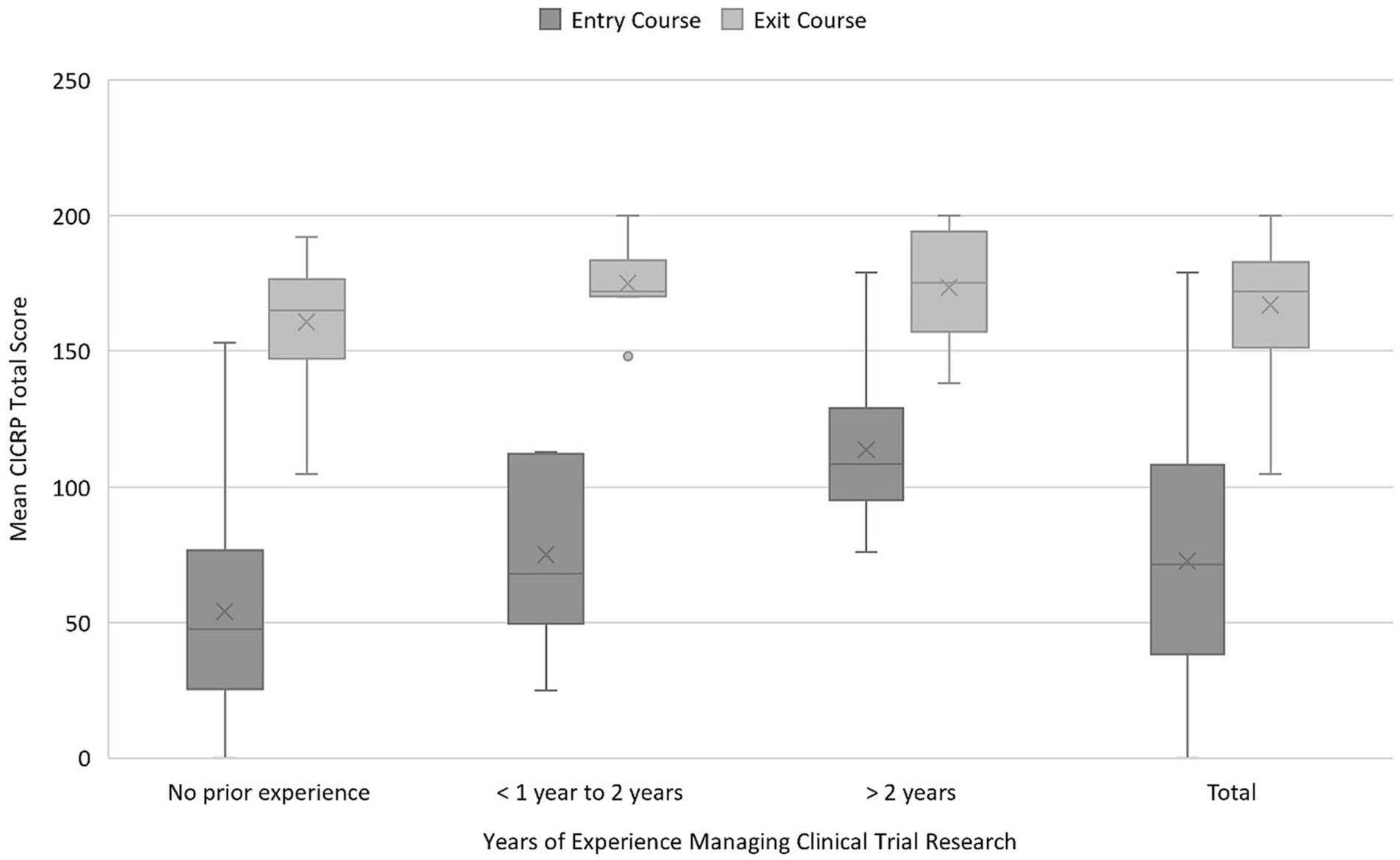

Correlations between years of experience and median total scores of each group were difficult to accurately calculate because of the large percentage of students who had no or < 1 year of clinical research experience at the time of the survey. Those in other experience categories were too few to draw meaningful conclusions. However, when combining years of experience into three categories, a significant increase in mean CICRP total score is seen at each experience level between program entry and program exit: no prior experience 54.1 (SD 35.9) vs. 160.7 (SD 21.7), <1 to 2 years 75.2 (SD 33.5) vs. 174.9 (SD 14.8), >2 years 113.9 (SD 28.4) vs. 173.4 (SD 20.3) (p < 0.001) (Figure 3).

Figure 3. Mean CICRP total score by years of experience. CICRP, Competency Index for Clinical Research Professionals.

We further fitted a linear regression of CICRP total scores on course, semester, highest degree at program entry, years of experience, nurse status, and clinical research certification to estimate the effect of course while adjusting for the other available covariates. Adjusting for these covariates, individuals taking the exit course had a mean CICRP total score 92.690 points higher than individuals taking the entry course (p < 0.001). The diagnostic plot of the linear regression did not show signs of fundamental deviation from a normal distribution, and generalized variance-inflation factors did not show signs of collinearity. However, Levene’s test indicated significant differences in the variances of the course and semester groups. Therefore, we used a general linear model (17) allowing different variances for different course and semester groups. The general linear model produced a better fit in terms of the diagnostic plot, but the result was very close to that of the ordinary linear model, with a course coefficient of 94.750 (p < 0.001). We also carried out backward selection of variables based on the change in the course coefficient and p-values to omit unnecessary adjustment and to improve the precision of the estimate of the course coefficient, but we did not find any noticeable changes in that estimate. The CICRP total scores for these data demonstrate relatively consistent results for students entering and completing the master’s program.
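To illustrate the variance check mentioned above, the following sketch computes Levene’s W statistic from absolute deviations around each group mean. The source does not say whether the authors centered on the mean or the median, so the mean-centered form is an assumption here, and the function name and example groups are hypothetical rather than study data.

```python
from statistics import mean
from typing import Sequence


def levene_w(groups: Sequence[Sequence[float]]) -> float:
    """Levene's W statistic using absolute deviations from each group mean."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of every score from its own group mean
    z = [[abs(x - mean(g)) for x in g] for g in groups]
    z_group_means = [mean(zi) for zi in z]
    z_grand_mean = sum(sum(zi) for zi in z) / n_total
    # Between-group and within-group sums of squares of the deviations
    between = sum(
        len(zi) * (m - z_grand_mean) ** 2 for zi, m in zip(z, z_group_means)
    )
    within = sum((v - m) ** 2 for zi, m in zip(z, z_group_means) for v in zi)
    return ((n_total - k) / (k - 1)) * between / within


# Two made-up groups with equal spread, then two with unequal spread.
w_equal = levene_w([[1, 2, 3, 4], [11, 12, 13, 14]])
w_unequal = levene_w([[1, 2, 3, 4], [10, 20, 30, 40]])
print(w_equal, round(w_unequal, 2))
```

W is compared against an F distribution with k - 1 and N - k degrees of freedom. A large W, as in the second example, suggests unequal group variances and motivates a model that allows the variance to differ by group.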

4 Discussion

As clinical research competency-based educational programs prepare for accreditation, having a standardized competency evaluation measure such as the CICRP could be a useful program evaluation tool. Competency indexes have been used to evaluate clinical research trainees and educational programs in translational research. The Clinical Research Appraisal Inventory (CRAI) was a 92-item set of competencies for clinical and translational investigators. Robinson et al. created and evaluated a 12-item abbreviated CRAI instrument that was used to evaluate investigator trainees and their acquisition of perceived competence in clinical research (18, 19). Our study presents a potential program evaluation tool for usefulness in assessing whether our competency-based academic program is meeting the JTF Competency needs of students targeting clinical research professional roles. The assessment tool had high Cronbach’s alpha demonstrating a high level of internal consistency. Moreover, these data from our program demonstrate acquisition of competence in the areas of scientific concepts and research design and investigational product development, areas that have been shown to be deficits in the field (20).

A limitation of our study is that it did not compare pre-test and post-test total scores matched to individual students (entry versus exit). Rather, we compared entering students as a cohort (those taking the entry course) to graduating students (those taking the final course) as an initial pilot to determine the feasibility of the index for program evaluation. Furthermore, we found that the graduating cohort in our study appeared to have greater levels of clinical research experience than those entering the program. This may be partially because students in our cohort gained employment in clinical research during their tenure as students. The graduate students enrolled in our professional master’s degree vary in their progression through the program. Some take one to two courses per semester (part-time), while others take three to four courses per semester (full-time). Moreover, some students take semesters off for professional or personal reasons and return at varying time-points, which was especially common during the COVID-19 pandemic. Ideally, we would have assessed individual students and compared total scores at program entry and exit; however, for feasibility purposes we initially wanted to evaluate the tool for usefulness in program evaluation. Future assessments should match individual students’ pre- and post-CICRP total scores and conduct more in-depth assessments of correlations. Another limitation of this study is that it is applicable to students in a specific United States (U.S.) master’s degree program and may not be applicable to students in other U.S. programs or students internationally.

5 Conclusion

The Competency Index for Clinical Research Professionals (CICRP) is a short form (20-item) competency index for the JTF Clinical Trial Competencies. It is a useful tool to measure self-efficacy in clinical trial skillsets for clinical research professionals. Used as a pre-test and post-test for students entering and graduating from a graduate-level clinical research academic program, the tool may contribute to evaluating the effectiveness of the program, in addition to other program evaluation criteria such as course deliverables, student ePortfolios, grade point average (GPA), completion rates and successful employment as clinical research professionals. Future research on the use of the tool in program evaluation is warranted.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ohio State University IRB Columbus, United States. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

CJ: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Writing – original draft, Writing – review & editing, Software, Validation, Visualization. XL: Data curation, Formal analysis, Investigation, Methodology, Validation, Writing – original draft, Writing – review & editing, Software, Visualization. CH: Investigation, Validation, Writing – original draft, Writing – review & editing, Methodology. JF: Data curation, Investigation, Project administration, Validation, Writing – original draft, Writing – review & editing. MN: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported in part by the following grants from the National Center for Advancing Clinical Translational Science (NCATS): Grant # UL1TR002733 & # UM1TR004548, The Ohio State University Center for Clinical Translational Science.

Acknowledgments

The authors thank Michael Griffin, The Ohio State University, College of Nursing, for his assistance with the Qualtrics survey instruments.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Jones, CT, Parmentier, J, Sonstein, S, Silva, H, Lubejko, B, Pidd, H, et al. Defining competencies in clinical research: issues in clinical research education. Res. Pract. (2012) 13:99–107.

2. Sonstein, SA, Seltzer, J, Li, R, Jones, CT, Silva, H, and Daemen, E. Moving from compliance to competency: a harmonized core competency framework for the clinical research professional. Clin Res. (2014) 28:17–23. doi: 10.14524/CR-14-00002R1.1

3. Sonstein, S, Silva, H, Jones, CT, Calvin-Naylor, N, Halloran, L, and Yrivarren, JL. Global self-assessment of competencies, role relevance, and training needs among clinical research professionals. Clin Res. (2016) 30:38–45. doi: 10.14524/CR-16-0016

4. Joint Task Force for Clinical Trial Competency (2020). JTF core competency framework. MRCT. Available at: https://mrctcenter.org/clinical-trial-competency/framework/domains/ (Accessed February 26, 2023).

5. Sonstein, SA, Palladino-Kim, L, Ichhpurani, N, Padbidri, R, White, SA, Aldinger, CE, et al. Incorporating competencies related to project management into the joint taskforce Core competency framework for clinical research professionals. Ther Innov Regul Sci. (2022) 56:206–11. doi: 10.1007/s43441-021-00369-7

6. Sonstein, S, Brouwer, RN, Gluck, W, Kolb, R, Aldinger, C, Bierer, B, et al. Leveling the joint task force core competencies for clinical research professionals. Ther Innov Regul Sci. (2020) 54:1–20. doi: 10.1007/s43441-019-00024-2

7. Hornung, CA, Jones, CT, Calvin-Naylor, NA, Kerr, J, Sonstein, SA, Hinkley, T, et al. Competency indices to assess the knowledge, skills and abilities of clinical research professionals. Int J Clin Trials. (2018) 5:46–53. doi: 10.18203/2349-3259.ijct20180130

8. Hornung, CA, Kerr, J, Gluck, W, and Jones, CT. The competency of clinical research coordinators: the importance of education and experience. Ther Innov Regul Sci. (2021) 55:1231–8. doi: 10.1007/s43441-021-00320-w

9. Commission on Accreditation of Allied Health Education Programs. (2023). Clinical research. Available at: https://caahep-public-site-5be3d9.webflow.io/committees-on-accreditation/clinical-research-professional (Accessed February 26, 2023).

10. Redmond, P, and Lock, JV. A flexible framework for online collaborative learning. Internet High Educ. (2006) 9:267–76. doi: 10.1016/j.iheduc.2006.08.003

11. Hornung, C, Ianni, P, Jones, C, Samuels, E, and Ellingrod, V. Indices of clinical research coordinators’ competence. J Clin Transl Sci. (2019) 3:75–81. doi: 10.1017/cts.2019.381

12. Association of Clinical Research Professionals. (2018). ACRP certification. ACRP. Available at: https://www.acrpnet.org/professional-development/certifications/ (Accessed February 26, 2023).

13. Society of Clinical Research Associates (2018). Certification program overview. SoCRA. Available at: https://www.socra.org/certification/program-overview/ (Accessed February 26, 2023).

14. Boone, HN, and Boone, DA. Analyzing likert data. J Ext. (2012) 50:1–5. doi: 10.34068/joe.50.02.48

15. Harpe, SE. How to analyze Likert and other rating scale data. Curr Pharm Teach Learn. (2015) 7:836–50. doi: 10.1016/j.cptl.2015.08.001

16. Tavakol, M, and Dennick, R. Making sense of Cronbach’s alpha. Int J Med Educ. (2011) 2:53–5. doi: 10.5116/ijme.4dfb.8dfd

17. Galecki, A, and Burzykowski, T. Linear mixed-effects models using R: a step-by-step approach. New York, NY: Springer (2013).

18. Robinson, G, Switzer, GE, Cohen, ED, Primack, BA, Kapoor, WN, Seltzer, DL, et al. A shortened version of the clinical research appraisal inventory: CRAI-12. Acad Med. (2013) 88:1340–5. doi: 10.1097/ACM.0b013e31829e75e5

19. Robinson, GF, Moore, CG, McTigue, KM, Rubio, DM, and Kapoor, WN. Assessing competencies in a master of science in clinical research program: the comprehensive competency review. Clin Transl Sci. (2015) 8:770–5. doi: 10.1111/cts.12322

Keywords: clinical trial competency, program evaluation, competency-based education, academic program in clinical research, clinical research professionals

Citation: Jones CT, Liu X, Hornung CA, Fritter J and Neidecker MV (2024) The competency index for clinical research professionals: a potential tool for competency-based clinical research academic program evaluation. Front. Med. 11:1291667. doi: 10.3389/fmed.2024.1291667

Edited by:

Michael J. Wolyniak, Hampden–Sydney College, United States

Reviewed by:

Marc Henri De Longueville, UCB Pharma, Belgium

Jun Hyun Kim, Inje University Ilsan Paik Hospital, Republic of Korea

Copyright © 2024 Jones, Liu, Hornung, Fritter and Neidecker. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Carolynn Thomas Jones, jones.5342@osu.edu
