- 1 Cognitive Neuroscience, Research Centre Jülich, Institute of Neuroscience and Medicine (INM-3), Jülich, Germany

- 2 Melbourne School of Psychological Sciences, The University of Melbourne, Melbourne, VIC, Australia

- 3 Department of Individual Differences and Psychological Assessment, University of Cologne, Cologne, Germany

- 4 Department of Neurology, Faculty of Medicine, University Hospital Cologne, University of Cologne, Cologne, Germany

Accurate metacognitive judgments, such as forming a confidence judgment, are crucial for goal-directed behavior but decline with older age. Besides changes in the sensory processing of stimulus features, there might also be changes in the motoric aspects of giving responses that account for age-related changes in confidence. In order to assess the association between confidence and response parameters across the adult lifespan, we measured response times and peak forces in a four-choice flanker task with subsequent confidence judgments. In 65 healthy adults from 20 to 76 years of age, we showed divergent associations of each measure with confidence, depending on decision accuracy. Participants indicated higher confidence after faster responses in correct but not incorrect trials. They also indicated higher confidence after less forceful responses in errors but not in correct trials. Notably, these associations were age-dependent as the relationship between confidence and response time was more pronounced in older participants, while the relationship between confidence and response force decayed with age. Our results add to the notion that confidence is related to response parameters and demonstrate noteworthy changes in the observed associations across the adult lifespan. These changes potentially constitute an expression of general age-related deficits in performance monitoring or, alternatively, index a failing mechanism in the computation of confidence in older adults.

Introduction

Humans can report a subjective sense of confidence that is closely related to the accuracy of their actions. This ongoing monitoring of decisions and their execution is called metacognition and includes the evaluation of behavior and the detection of occurring errors (Fleming and Dolan, 2012). Accurate metacognitive judgments should lead to adaptive behavioral adjustments and are thus crucial for all activities. Undetected errors (i.e., an example of incorrect metacognitive judgments) might have severe implications for real-life scenarios because they may not trigger the required adjustments for future actions and decisions (Wessel et al., 2018). Research on perceptual metacognition (i.e., metacognitive evaluations of perceptual decisions) has evolved largely separately in the fields of decision confidence and error detection, which were suggested to share the same underlying mechanisms (Boldt and Yeung, 2015; Charles and Yeung, 2019). From this point of view, low confidence in the accuracy of a decision (e.g., expressed in a confidence rating as “certainly wrong”) would be equivalent to reporting the detection of an error (e.g., signaled by a designated key press).

Metacognitive performance across the adult lifespan seems to depend on the cognitive domain being assessed. In the memory domain, evidence points toward largely preserved metacognitive abilities with older age (Dodson et al., 2007; Hertzog and Curley, 2018; Zakrzewski et al., 2021; although for aspects of memory metacognition that declined with age, see Chua et al., 2009; Tauber and Dunlosky, 2012). In the perceptual domain, in contrast, tasks require fundamentally different cognitive processes and research showed a rather robust decline in metacognitive performance across age (Rabbitt, 1990; Palmer et al., 2014; Niessen et al., 2017; Wessel et al., 2018). This study is intended to assess perceptual metacognition only. When participants were asked to report committed errors in an easy choice-reaction task, the detection rates declined with age, even when task performance was comparable (Harty et al., 2017; Niessen et al., 2017). In our previous publication, using the same dataset described in this study (Overhoff et al., 2021), we asked participants to rate their confidence after each decision on a four-point scale instead of reporting detected errors, and we concordantly revealed a decline in metacognitive performance across the lifespan. The accuracy of these ratings (i.e., the degree to which they matched the observed performance) decreased gradually with higher age, reflecting that older adults were less aware of their errors and rated correct responses with lower confidence compared to younger adults. Previous studies investigating age-related changes in metacognitive performance using confidence ratings are rare and yielded contradicting results. A recent large online study investigated metacognitive performance in 304 participants across the lifespan in a gamified setting using a visual perception task (McWilliams et al., 2022). Despite lower mean confidence with older age, the results revealed no effect of age on the accuracy of metacognitive judgments. 
In contrast, a laboratory-based study with 60 participants using a similar task observed a significant decline in metacognitive accuracy with older age (Palmer et al., 2014; see also Filippi et al., 2020). Thus, while findings regarding age-related changes in perceptual metacognition are mixed, the question of which factors are related to this selective decline, when it is found, remains open.

In order to understand the age-related decline in metacognitive performance, it is essential to understand the basic mechanisms underlying the computation of confidence. It is still unclear which information is used to compute confidence, and how input from different sources is weighted (Charles and Yeung, 2019; Feuerriegel et al., 2021). For instance, confidence has been related to the strength of stimulus evidence, stimulus discriminability (Yeung and Summerfield, 2012; Charles and Yeung, 2019; Turner et al., 2021b), or instructed time pressure (Vickers and Packer, 1982). Furthermore, growing evidence suggests that the interoceptive feedback of a motor action while giving a response might be another source of information contributing to the formation of confidence about the decision (Kiani et al., 2014; Fleming et al., 2015; Palser et al., 2018; Gajdos et al., 2019; Siedlecka et al., 2021; Turner et al., 2021a). Fleming and colleagues (2015) investigated the interaction of confidence and motor-related activity by delivering single-pulse transcranial magnetic stimulation (TMS) to the dorsal premotor cortex. This perturbation did not affect task performance, but crucially, it did affect the accuracy of subsequent confidence judgments. This finding indicates that action-specific cortical activations might contribute to confidence. In line with this assumption, confidence ratings have been shown to be more accurate if the preceding decision required a motor action (Pereira et al., 2020; Siedlecka et al., 2021). For instance, Siedlecka et al. (2021) recently showed that metacognitive accuracy was higher after decisions requiring a key press than decisions which were indicated without a motor action. Taken together, these findings suggest that features of the motor response indicating a given decision might influence the confidence ratings about this decision. 
Therefore, further investigations of how confidence is reflected in different response parameters are warranted.

A response can be characterized by different dimensions. The most commonly used output variable is time, usually response time or movement time. A robust finding across studies is a negative relationship between response times for the initial decision and subsequent confidence ratings (Fleming et al., 2010; Kiani et al., 2014; Rahnev et al., 2020). Intuitively, one might assume that the degree of confidence is expressed in the time taken to make the decision, i.e., the less confident we are about a decision, the longer it should take to respond. However, another possible explanation is that the monitoring system uses the interoceptive signal of a movement produced by the response as an informative cue about the difficulty of the decision (Kiani et al., 2014; Fleming and Daw, 2017). Accordingly, if an easy decision led to a fast response, the internal read-out could boost subjective confidence. A recent study provided evidence for the directional effect of movement time (i.e., the time from lifting to dropping a marble) on confidence (Palser et al., 2018). In this study, movement speed was experimentally manipulated by instructing participants to move faster than they naturally would, and this manipulation reduced metacognitive accuracy.

Nevertheless, temporal parameters do not capture all aspects of a movement. For instance, subthreshold motor activity (i.e., partial responses) cannot be detected by classical RT recordings but only by recording muscle activity. Yet such partial responses have also been shown to affect reported confidence (Ficarella et al., 2019; Gajdos et al., 2019). An informative motor parameter of a response is the applied force, which is often quantified as peak force, i.e., the maximum exerted force during a response action. Notably, peak force and response time index distinct processes as they show divergent behavioral patterns (i.e., small to no correlation) across experimental manipulations (Franz and Miller, 2002; Stahl and Rammsayer, 2005; Cohen and van Gaal, 2014). Therefore, the measurement of peak force might provide unique information about cognitive processes, which in turn might enrich our understanding of age-related impairments in metacognition because previous studies have already suggested a close relationship between peak force and metacognitive judgments (Stahl et al., 2020; Turner et al., 2021a).

Recently, Turner et al. (2021a) examined the relationship between confidence and response force by explicitly manipulating the degree of physical effort that had to be exerted to give a response. When participants were prompted to submit their response to a perceptual decision with varying force levels, participants reported higher confidence in their decisions when their response peak force was higher. Notably, requiring participants to produce a specific (and comparably high) degree of force (as mandated in the experiment) is fundamentally different from measuring naturally occurring force patterns of a response (in terms of a dependent measure). The latter was done, for example, in a study by Bode and Stahl (2014), who found that naturally occurring peak force was lower in errors compared to correct responses. It was suggested that this might indicate a process in which low force in error trials signifies an unsuccessful attempt to stop the already initiated response, which requires early and fast error detection (Ko et al., 2012; Bode and Stahl, 2014; Stahl et al., 2020). However, error detection or confidence was not directly assessed, rendering comparison between these two studies difficult.

The relationship between different response parameters and confidence has not been systematically assessed in the context of healthy aging. While response and movement times are slower and more variable with older age, findings of age-related changes in response force are inconsistent (Salthouse, 2000; Bunce et al., 2004; Dully et al., 2018). Some studies showed delayed and altered electrophysiological signatures of motor processing in older age [e.g., lateralized readiness potential (LRP)/movement-related potential (MRP) and mu/beta desynchronization; Sailer et al., 2000; Falkenstein et al., 2006; Quandt et al., 2016]. When required to produce visually cued levels of force, older participants’ force output was found to be more variable (Vaillancourt and Newell, 2003), which may be restricted to very old age though (Sosnoff and Newell, 2006). In contrast, electromyographic or force recordings of motor responses revealed similar patterns in younger and older adults (Van Der Lubbe et al., 2002; Yordanova et al., 2004; Falkenstein et al., 2006; Dully et al., 2018). Notably, these studies did not assess error detection or confidence. Therefore, it is warranted to specifically examine the associations between confidence and response time and between confidence and response force and to investigate whether these associations change across the adult lifespan.

The present study constitutes the first comprehensive assessment of the association between metacognitive accuracy and two main response parameters across the adult lifespan. We intended to answer the following questions: First, what are the relationships between decision confidence and response time on the one hand, and peak force of a response (as it naturally occurs, i.e., without specific instruction or experimental manipulation) on the other hand? Second, do these relationships between confidence and response parameters change with age? Additionally, we were interested in investigating the potential moderating effect of accuracy because many studies on decision confidence only assessed the relationship between a given response parameter and confidence in correct responses. However, evidence suggests that meaningful differences in confidence levels within correct responses and within errors exist (Charles et al., 2013). Concerning response time, for instance, the well-known negative relationship with confidence is inverted for errors when the confidence rating allows participants to indicate error detection (i.e., when a rating scale is used that ranges from certainty in being correct to certainty in being wrong; Pereira et al., 2020). In order to answer these questions, we employed a conflict task, which is often used in studies of error monitoring (Vissers et al., 2018; Maier et al., 2019), while studies of decision confidence typically use signal detection tasks where stimuli are difficult to discriminate and errors are rarely detected (Resulaj et al., 2009; Fleming et al., 2016).

We expected significant associations between confidence judgments and parameters of the response (Fleming et al., 2015; Pereira et al., 2020; Rahnev et al., 2020; Turner et al., 2021a). In particular, response time was expected to decrease with higher confidence for correct trials (Kiani et al., 2014; Dotan et al., 2018; Rahnev et al., 2020) and to increase with higher confidence for errors (Pereira et al., 2020). We tentatively hypothesized a positive relationship between response force and confidence for errors and correct responses (Ko et al., 2012; Bode and Stahl, 2014; Turner et al., 2021a).

Most importantly, we intended to explore age-related changes in the associations between response parameters and confidence without having a priori hypotheses about the direction of possible effects due to a lack of previous studies on this topic. If we find divergent patterns across the lifespan, this might encourage research on the causal relationship between response parameters and confidence.

Materials and methods

Participants

Eighty-two participants were recruited and received monetary compensation for their participation in the experiment. Inclusion criteria were, amongst others, no history of or current neurological or psychiatric disease, which excluded participants with diagnosed Parkinson’s disease, a condition characterized by altered patterns of response force (Franz and Miller, 2002). Data from seventeen participants had to be discarded due to symptoms of depression (N = 1, Beck’s Depression Inventory score higher than 17; BDI; Hautzinger, 1991), poor behavioral performance (N = 8, more than 30% invalid trials or an error rate higher than chance, here 75% given the four response options), or a behavioral pattern that was indicative of an insufficient understanding or implementation of task demands (N = 8, inspection of individual datasets for a combination of errors in the color discrimination test described below, near chance task performance, frequent invalid trials, and biased use of single response keys). This resulted in a final sample for analysis of sixty-five healthy, right-handed adults [age = 45.5 ± 2.0 years (all results are indicated as mean ± standard error of the mean; SEM); age range = 20 to 76 years with a near equal distribution of participants across decades; <40 years: 28, 40–59 years: 20, >60 years: 17; 26 female, 39 male] with normal or corrected-to-normal visual acuity, no color-blindness, no signs of cognitive impairment (i.e., no Mini-Mental-State Examination score below the cut-off of 24; MMSE; Folstein et al., 1975) and no history of psychiatric or neurological diseases.

The current study’s data have been used previously (Overhoff et al., 2021). The same exclusion criteria regarding the neuropsychological assessment and the task performance were applied, resulting in the same subsample included in the analyses. In the previous publication, we thoroughly examined the metacognitive performance and its relation to behavioral parameters (response accuracy, response time, behavioral adjustments) as well as two electrophysiological potentials (i.e., the error/correct negativity, Ne, and the error/correct positivity, Pe; for detailed results and discussion thereof, see Overhoff et al., 2021). We did not report or analyze any response force measures in the previous publication.

The experiment was approved by the ethics committee of the German Psychological Society (DGPs). All participants gave written informed consent, and the study followed the Declaration of Helsinki.

Stimuli

The experiment consisted of a color version of the Flanker task (Eriksen and Eriksen, 1974) with four response options intended to increase conflict and thereby the number of errors while ensuring feasibility for participants of all ages. Four target colors were mapped onto both hands’ index and middle fingers. In each trial, we presented one central, colored target square flanked by two squares, one on the left and one on the right side. Participants had to respond to the central target by pressing the key under the corresponding finger. The flankers were presented slightly before the target appeared to increase their distracting effect. Flankers could be of the same color as the target (congruent condition), of one of three additional neutral colors that were not mapped to any response (neutral condition), or of another target color (incongruent condition).

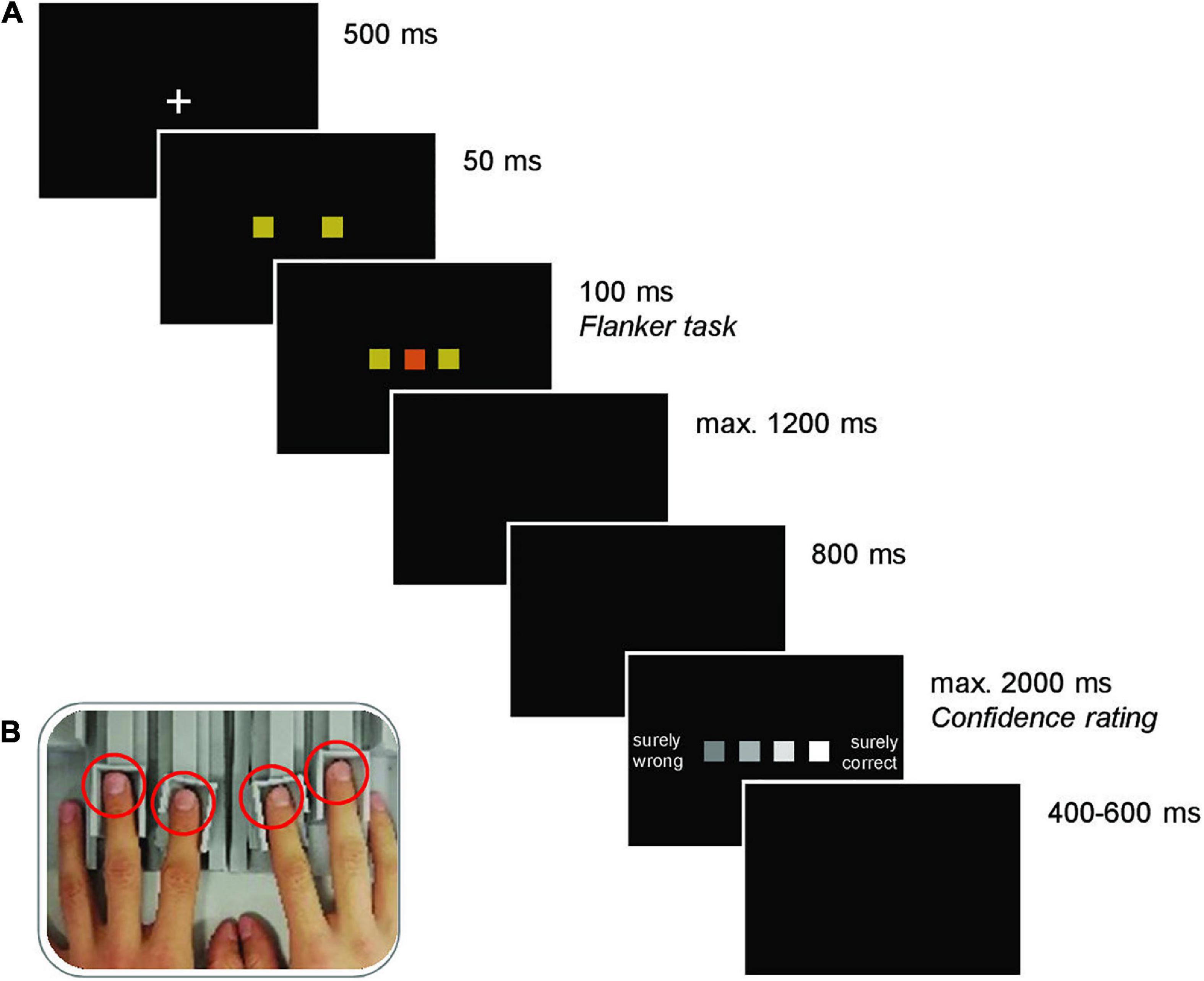

Experimental paradigm

Each trial started with the presentation of a white fixation cross on black background for 500 ms. The fixation cross was replaced by the two flankers, followed by the target after 50 ms, and the two flankers and the target remained on screen for another 100 ms. Participants pressed a key with their left or right index or middle finger to indicate their decision (see Figure 1B). Participants were instructed to respond as fast and accurately as possible. A black screen was presented until a response was registered (max. 1200 ms) and for an additional 800 ms before the confidence rating was presented. For this rating, participants indicated their confidence in the decision on a four-point scale comprising the options “surely wrong,” “maybe wrong,” “maybe correct,” and “surely correct” (max. 2000 ms). A jittered intertrial interval of 400 to 600 ms preceded the subsequent trial. If no response was registered in the decision task, the participants received feedback about being too slow, and the trial was terminated. The sequence of an experimental trial is depicted in Figure 1A.

Figure 1. (A) Trial structure (here, incongruent condition illustrated). Each trial commenced with the presentation of a fixation cross. Subsequently, two colored squares (flankers) were presented, and a third square (of the same or a different color; target) was added shortly after. The stimuli disappeared after 100 ms and the ensuing black screen, where participants were instructed to make a response by pressing one of four response keys mapped onto one color each, remained until a response was registered (max. 1200 ms). If no response was given, the German words for “too slow” were shown, and the trial was terminated. Otherwise, after another black screen, the confidence rating scale was presented, which remained on the screen until a judgment (the four fingers were mapped onto the squares according to their spatial location) was made (max. 2000 ms). The next trial started after another black screen of random duration between 400 and 600 ms. (B) Force-sensitive response keys. Left and right index and middle fingers (red circles) were placed on adjustable finger rests.

Procedures

Prior to testing, we collected demographic details, and the participants conducted a brief color discrimination test without any time pressure or cognitive load to ensure that they were capable of correctly discriminating the stimulus colors used in the experiment. The neuropsychological tests for assessing the exclusion criteria (BDI; MMSE; Edinburgh Handedness Inventory, EHI; Oldfield, 1971; see Supplementary Table 1) were administered after the main experiment.

Participants first performed 18 practice trials without confidence rating, receiving feedback about their accuracy, which could be repeated once if necessary. Two practice blocks of 72 trials without feedback or the option to repeat followed. Another practice block of 18 trials then introduced the confidence rating. The actual experiment consisted of five blocks with 72 trials each, with optional breaks after each block. The electroencephalogram (EEG) was recorded throughout the testing session. Note that the EEG results have been reported in our previous publication (Overhoff et al., 2021). The main experiment lasted approximately 40 min.

Apparatus

The participants were seated in a noise-insulated and dimly lit testing booth at a viewing distance of 70 cm to the screen (LCD monitor, 60 Hz). A chin rest minimized non-task related movements.

For response recording, we used force-sensitive keys with a sampling rate of 1024 Hz, which provide a higher temporal resolution than standard keyboards (Figure 1B; Stahl et al., 2020). The keys were calibrated to the fingers’ weight before and during the experiment. The keys could be adjusted to the hand size, and a comfortable hand position was ensured by a wrist rest. An applied force was registered as a response when it exceeded a threshold of 40 cN.

The color discrimination test was programmed using Presentation software (Neurobehavioral Systems, version 14.5) and the main task using uVariotest software (version 1.978).

Analysis

Response time (RT) was defined as the time from target stimulus presentation to the initial crossing of the response force threshold of 40 cN by any response key. Peak force (PF) was defined as the maximum of a force pulse following the crossing of the threshold. Additionally, we measured the time from response onset to the time of the PF (only used for the exclusion of trials).
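The definitions above can be illustrated with a minimal Python sketch that derives RT, PF, and time to PF from a sampled force trace of a single key. The sampling rate and threshold come from the text; the function name, the single-key input, and the rule that a force pulse ends once the force falls back to or below the threshold are assumptions made for illustration only and do not describe the recording software used in the study.

```python
SAMPLING_RATE_HZ = 1024   # sampling rate of the force keys (from the text)
THRESHOLD_CN = 40.0       # response threshold in centinewtons (from the text)


def extract_rt_and_pf(force_cn, target_onset_sample=0):
    """Return (rt_ms, peak_force_cn, time_to_peak_ms), or None if no response.

    force_cn: sequence of force samples (cN) of one key in one trial.
    target_onset_sample: index of the sample at which the target appeared.
    """
    # Response onset: first sample after target onset that exceeds the threshold.
    onset = None
    for i in range(target_onset_sample, len(force_cn)):
        if force_cn[i] > THRESHOLD_CN:
            onset = i
            break
    if onset is None:
        return None

    # Assumption: the force pulse lasts until the force drops back
    # to or below the threshold (or the recording ends).
    end = onset
    while end < len(force_cn) and force_cn[end] > THRESHOLD_CN:
        end += 1
    pulse = list(force_cn[onset:end])

    peak_force = max(pulse)
    peak_index = onset + pulse.index(peak_force)

    ms_per_sample = 1000.0 / SAMPLING_RATE_HZ
    rt_ms = (onset - target_onset_sample) * ms_per_sample
    time_to_peak_ms = (peak_index - onset) * ms_per_sample
    return rt_ms, peak_force, time_to_peak_ms
```

With four keys, the same function could be applied to each key, taking the key with the earliest threshold crossing as the response, in line with the definition of RT as the initial crossing by any response key.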

We excluded the following trials from the analysis: invalid trials (i.e., trials in which the response was too slow), responses without a confidence rating, responses with an RT below 200 ms (indicating premature responding), responses with a PF below 40 cN (indicating incomplete or aborted responding), responses with a time to PF of more than three standard deviations above the mean (indicating that the response was not of the expected ballistic nature), and trials with recording artifacts (implausible time between response onset and time of the PF, incorrect identification of the response key in case of multiple responses).
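As a rough illustration, the exclusion rules could be applied to a list of trials as in the following Python sketch. The dictionary keys, the function name, and the choice to compute the time-to-PF cut-off over all responded trials passed to the function are assumptions; the text does not state whether the cut-off was computed per participant. Recording artifacts are not handled here because they required inspection of the raw recordings.

```python
from statistics import mean, stdev


def filter_trials(trials, min_rt_ms=200.0, min_pf_cn=40.0, sd_cutoff=3.0):
    """Keep only trials that pass the exclusion rules described above.

    Each trial is a dict with the hypothetical keys
    'rt_ms', 'pf_cn', 'time_to_peak_ms' (None if no response) and 'rated' (bool).
    """
    # Invalid (too slow) trials have no registered response.
    responded = [t for t in trials if t["rt_ms"] is not None]

    # Cut-off for the time to peak force: mean + 3 SD (assumed pooling, see lead-in).
    ttp = [t["time_to_peak_ms"] for t in responded]
    cutoff = mean(ttp) + sd_cutoff * stdev(ttp) if len(ttp) > 1 else float("inf")

    kept = []
    for t in responded:
        if not t["rated"]:                 # no confidence rating given
            continue
        if t["rt_ms"] < min_rt_ms:         # premature response
            continue
        if t["pf_cn"] < min_pf_cn:         # incomplete or aborted response
            continue
        if t["time_to_peak_ms"] > cutoff:  # not of the expected ballistic nature
            continue
        kept.append(t)
    return kept
```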

As a first step, to characterize the distribution of the behavioral parameters of interest independent of confidence, we computed paired samples t-tests at the group level to compare RT, PF, and their dispersion between correct and incorrect trials. For the investigation of age-related effects, we used a series of linear regressions with the predictor age for each of the following variables: error rates (ER; the proportion of valid responses that were incorrect), mean confidence ratings, mean RT and PF, and standard deviation of RT and PF. The latter analyses were performed separately for errors and correct responses.
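The two group-level analyses can be sketched in plain Python as follows. This is a minimal illustration of a paired-samples t statistic and a single-predictor linear regression, not the code used in the study; the function names are hypothetical, and p-values are omitted because they require the t distribution, which the standard library does not provide.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for two matched lists."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    t = mean(d) / (stdev(d) / math.sqrt(n))
    return t, n - 1


def simple_regression(x, y):
    """Ordinary least squares with one predictor (e.g., age predicting mean RT)."""
    n = len(x)
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = my - slope * mx
    rss = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(rss / (n - 2) / sxx)   # standard error of the slope
    t = slope / se
    # With a single predictor, the F statistic equals the squared t statistic.
    return {"slope": slope, "intercept": intercept, "se": se,
            "t": t, "F": t * t, "df": (1, n - 2)}
```

Because each regression has a single predictor, F with (1, n − 2) degrees of freedom equals t squared, which is why both statistics can be reported side by side for the same test.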

Next, data were analyzed using generalized linear mixed-effects models (GLMMs) with a beta distribution using the glmmTMB package (version 1.0.2.1; Brooks et al., 2017) in R (version 4.0.5; R Core Team, 2021). We chose this modeling approach because the beta distribution is assumed to better account for data that are not normally distributed and doubly bounded (i.e., having an upper and a lower bound; here: 1, “surely wrong,” and 4, “surely correct”), which applies to our confidence data (Verkuilen and Smithson, 2012). All continuous predictor variables were mean centered and scaled for model fitting, and confidence was scaled to the open interval (0,1; i.e., the range is slightly compressed to avoid boundary observations; Verkuilen and Smithson, 2012). Analyses were again conducted separately for correct responses and errors.
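The preprocessing described above can be sketched as follows. The exact compression formula is not given in the text; the sketch assumes the commonly used transformation y'' = (y'(N − 1) + 0.5) / N, where y' is the rating rescaled to [0, 1] and N is the number of observations. The function names and the use of the sample standard deviation for scaling are likewise assumptions.

```python
from statistics import mean, stdev


def squeeze_to_open_unit_interval(ratings, low=1.0, high=4.0):
    """Map ratings from [low, high] into the open interval (0, 1).

    Assumed compression: y'' = (y' * (N - 1) + 0.5) / N,
    with y' = (y - low) / (high - low) and N = number of observations.
    """
    n = len(ratings)
    unit = [(r - low) / (high - low) for r in ratings]
    return [(u * (n - 1) + 0.5) / n for u in unit]


def z_score(values):
    """Mean-center and scale a continuous predictor (sample SD assumed)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]
```

The compression shrinks towards 0.5 by an amount that decreases with the number of observations, so with several hundred trials the ratings 1 and 4 end up very close to, but never exactly at, 0 and 1.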

We examined the effects of age and the two parameters (RT, PF) of the response on confidence ratings using the following regression model structures (separately for the subsets of errors and correct responses):

(1) Confidence ∼ Age + (RT | Participant)

(2) Confidence ∼ RT*Age + (RT | Participant)

(3) Confidence ∼ PF*Age + (RT | Participant)

(4) Confidence ∼ RT*PF*Age + (RT | Participant)

Response time (RT) and PF were used as fixed effects, and age was included as a covariate due to its documented negative effect on metacognitive accuracy (i.e., a negative effect on confidence for correct responses and a positive effect on confidence for errors; Palmer et al., 2014; Overhoff et al., 2021). For the most complex model, we considered an interaction term between all three factors, as RT and PF are known to vary across age (Dully et al., 2018), and previous work suggests potential interactions between RT and PF (Bode and Stahl, 2014; Gajdos et al., 2019). We fitted random intercepts for participants, allowing their mean confidence ratings to differ. If possible and the models converged, random slopes by participant were added for the predictors of interest to account for individual differences in the degree to which these were related to the confidence ratings (Barr et al., 2013). Models were checked for singularity and multicollinearity by calculating the variance inflation factor (VIF) using the performance package (version 0.7.2; Lüdecke et al., 2021).

We compared model fits including all effects of interest (model 4) to models including only one (models 2, 3) or no effect of interest (model 1) using likelihood ratio tests, and computed Wald z-tests to determine the significance of each coefficient. This means that, if a model including one predictor of interest (e.g., RT) fits the data better than a model including no effect of interest, this predictor has a relevant effect on confidence, and its inclusion in the model allows for a better prediction of participants’ ratings.
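The logic of a likelihood ratio test can be made concrete with a small Python sketch. The test statistic is twice the difference in log-likelihood between the more complex and the simpler model and is compared against a chi-square distribution with degrees of freedom equal to the number of additional parameters. The log-likelihood values used in the test below are hypothetical, and the closed-form survival function is only valid for even degrees of freedom, which is sufficient for differences of 2 and 4 parameters.

```python
import math


def chi2_sf_even_df(x, df):
    """Upper-tail probability of a chi-square distribution with even df.

    Uses the closed form exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form requires a positive, even df")
    half = x / 2.0
    return math.exp(-half) * sum(half ** k / math.factorial(k)
                                 for k in range(df // 2))


def likelihood_ratio_test(loglik_reduced, loglik_full, df_diff):
    """Return (chi-square statistic, p-value) for two nested models."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf_even_df(stat, df_diff)
```

For example, a statistic of 8.64 on 4 degrees of freedom yields a p-value of approximately 0.071 with this function.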

To follow up on significant interaction effects between age and the predictors of interest, we calculated slopes for three values of age (the mean and one standard deviation above and below the mean). Additionally, for statistical analysis of the transitions between these values, we computed Johnson-Neyman intervals using an adapted version of the johnson_neyman function of the interactions package (version 1.1.0; Long, 2019). This analysis reveals whether the statistical effect of the response parameters on confidence is conditional on the entire range of the moderator age, or just a sub-range, thus providing bounds for where the observed interaction effect is significant.

Results

Overview of response parameters

On average, participants had an error rate of 15.4 ± 1.6 %. Correct trials had a mean RT of 709.2 ± 11.5 ms and were faster [t(64) = –3.01, p = 0.004] and had a smaller standard deviation [t(64) = –5.53, p < 0.001] than error trials with an RT of 734.3 ± 13.9 ms. The mean peak force (PF) was higher for correct trials (236.2 ± 13.1 cN) compared to errors [191.7 ± 10.3 cN; t(64) = 5.51, p < 0.001] but did not differ in its standard deviation [t(64) = –0.21, p = 0.836; see Supplementary Figure 1].

Effect of age on response parameters

We have already reported the relationship between age and error rate, RT, and confidence in our previous publication (Overhoff et al., 2021) based on a slightly different subset of trials to the one used here (due to additional force-related exclusions of trials in this study). Our initial results were confirmed using a series of linear regression analyses, each using age as the predictor for one of the following variables (for an overview, see Supplementary Table 2): We found that, at group level, the error rate increased with age [F(1,63) = 34.12, p < 0.001, β = 0.005, SE = 0.001, t = 5.84]. RT increased with age for correct [F(1,63) = 27.07, p < 0.001, β = 3.115, SE = 0.599, t = 5.20] and incorrect responses [F(1,63) = 10.16, p = 0.002, β = 2.568, SE = 0.806, t = 3.19], while age did not significantly predict PF for either type of response [correct: F(1,63) = 0.02, p = 0.884, β = 0.120, SE = 0.816, t = 0.15; error: F(1,63) = 1.33, p = 0.254, β = 0.734, SE = 0.637, t = 1.15]. RTs were more variable with higher age for correct responses [F(1,63) = 7.43, p = 0.008, β = 0.477, SE = 0.175, t = 2.73], but not errors [F(1,63) = 0.04, p = 0.837, β = 0.053, SE = 0.255, t = 0.21]. Similar to the mean PF, the standard deviation of PF did not change with age [correct: F(1,63) = 0.15, p = 0.704, β = 0.164, SE = 0.429, t = 0.38; error: F(1,63) = 0.08, p = 0.774, β = 0.137, SE = 0.475, t = 0.30]. These results are illustrated in the Supplementary Figure 2.

The mean confidence (in the decision being correct, on a scale from 1 to 4) for correct responses (3.82 ± 0.02 for the entire sample) decreased with age [F(1,63) = 22.42, p < 0.001, β = –0.007, SE = 0.002, t = –4.74]. This finding indicates that the older participants were, the less confident they were in being correct when responding correctly. Conversely, the mean confidence for errors (2.35 ± 0.08 for the entire sample) increased with age [F(1,63) = 21.96, p < 0.001, β = 0.019, SE = 0.004, t = 4.69; see Supplementary Figure 2]. Hence, the older the participants were, the less sure they were that the decision was wrong when making an error. We have recently described this phenomenon as an age-related tendency to use the middle of the confidence scale, pointing toward increased uncertainty in older adults (Overhoff et al., 2021).

Modeling of confidence

Variance inflation factors across all models with interactions were <2.03, indicating low collinearity (<5; James et al., 2013) between the predictors, and the models were not overfitted, as the fits proved not to be singular.

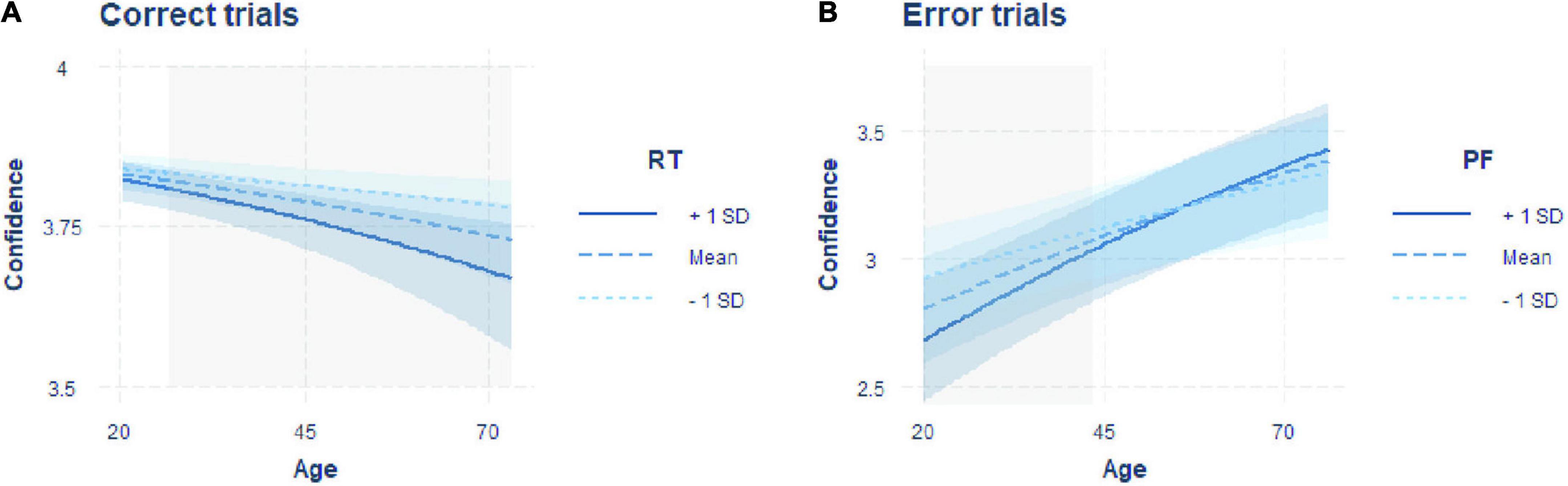

Confidence in correct decisions

We computed likelihood ratio tests to compare the model fit of the winning model to the three other models. These tests revealed that for correct decisions, model 2 [i.e., Confidence ∼ RT*Age + (RT | Participant)], which included the interaction between RT and age, fitted the data best. It was superior to model 1 (the null model), which included only the fixed effect of age [χ2(2) = 38.15, p < 0.001], and model 3, which included only the interaction between PF and age [χ2(2) = 33.71, p < 0.001]. Moreover, model 4, which included the full interaction between PF, RT and age, did not improve the fit further [χ2(4) = 8.64, p = 0.071].

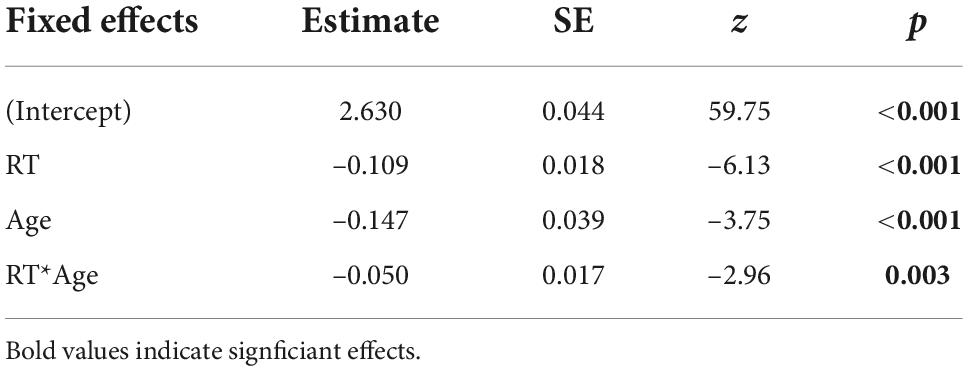

The best fitting model showed significant negative effects of age [β = –0.147, SE = 0.039, z = –3.75, p < 0.001] and RT [β = –0.109, SE = 0.018, z = –6.13, p < 0.001] on confidence and a significant interaction between the two factors [β = –0.050, SE = 0.017, z = –2.96, p = 0.003; Table 1]. Given that we found a significant interaction between RT and age, we computed simple slopes for three values of age (the mean and one SD above and below the mean). The analysis revealed that the negative effect of RT on confidence (i.e., higher confidence for faster responses) increased with older age [Figure 2A; –1SD (younger adults): β = –0.097, SE = 0.026, z = –3.75, p = 0.001; mean (middle-aged adults): β = –0.147, SE = 0.018, z = –8.32, p < 0.001; +1SD (older adults): β = –0.197, SE = 0.023, z = –8.53, p < 0.001]. The Johnson-Neyman technique revealed that the effect of RT on confidence became significant from around 24 years of age onward (higher bound of insignificant interaction effect: 24.41; Figure 2A).

Table 1. Regression coefficients (Estimate), standard errors (SE), and associated z- and p-values from the winning generalized linear (beta distribution) mixed-effects model for predicting confidence in correct responses.

Figure 2. Interaction plots including the predictors of the models predicting confidence best for errors and correct responses. (A) Regression of age on confidence in correct trials with the moderator RT. (B) Regression of age on confidence in error trials with the moderator PF. Regressions are shown for the moderator fixed on the mean (dashed line) and one standard deviation above (solid line) and below (dotted line) the mean. Blue shaded areas indicate confidence intervals, and gray shaded areas indicate the age range in which a significant effect of RT (in correct trials) or PF (in error trials) on confidence is observed, resulting in the significant interaction effect.

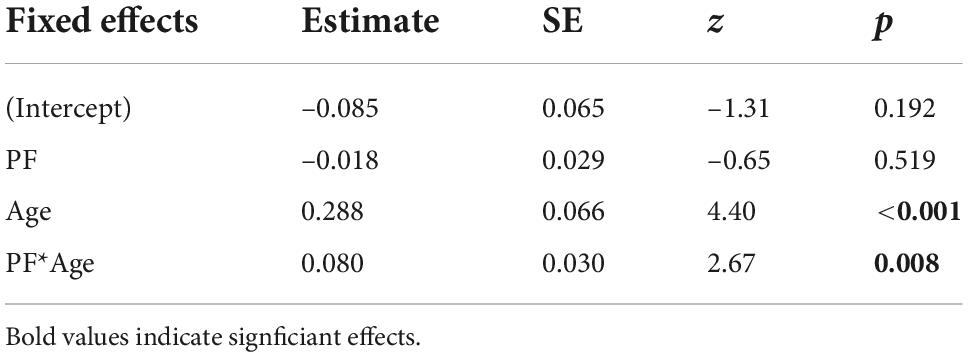

Confidence in erroneous decisions

For errors, the best fitting model was model 3 [i.e., Confidence ∼ PF*Age + (RT | Participant)], which included the interaction between PF and age. The likelihood ratio tests revealed that this model fitted the data better than model 1 (the null model) [χ2(2) = 8.38, p = 0.015] and model 2, which included the interaction between RT and age [χ2(2) = 5.36, p < 0.001]. Model 4, which included the interaction between all three factors, did not improve the model fit further [χ2(4) = 4.47, p = 0.347].

The winning model showed a significant effect of age [β = 0.288, SE = 0.066, z = 4.40, p < 0.001] and a significant interaction between PF and age [β = 0.080, SE = 0.030, z = 2.67, p = 0.008], but no main effect of PF [β = –0.018, SE = 0.029, z = –0.65, p = 0.519; Table 2]. To further unpack the interaction effect, we ran a simple slope analysis. This analysis showed a negative relationship between confidence and PF only for younger adults, while with increasing age, the slope was not significantly different from zero [Figure 2B: –1SD (younger adults): β = –0.098, SE = 0.038, z = –2.60, p = 0.009; mean (middle-aged adults): β = –0.018, SE = 0.029, z = –0.645, p = 0.519; +1SD (older adults): β = 0.061, SE = 0.045, z = 1.37, p = 0.170]. Computation of the Johnson-Neyman interval showed that above an age of about 44 years (lower bound of significant interaction effect: 43.501), PF was no longer significantly associated with confidence (Figure 2B).
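The simple slopes reported for the error model follow from the fixed-effect estimates by simple arithmetic. The following is a minimal sketch under the assumption that age entered the model as a z-standardized predictor, so that one SD below and above the mean corresponds to z = -1 and z = +1 (this scaling is an assumption, not stated in this section). The function and constant names are illustrative.

```python
def simple_slope(b_focal, b_interaction, moderator_z):
    """Slope of the focal predictor at a given standardized moderator value."""
    return b_focal + b_interaction * moderator_z


# Fixed-effect estimates as reported for the winning error model.
B_PF = -0.018        # main effect of peak force
B_PF_X_AGE = 0.080   # PF x age interaction

for label, z in [("-1 SD (younger)", -1.0), ("mean", 0.0), ("+1 SD (older)", 1.0)]:
    print(f"{label}: slope of PF = {simple_slope(B_PF, B_PF_X_AGE, z):+.3f}")
```

With these inputs the slopes are -0.098, -0.018, and 0.062. The last value differs from the reported 0.061 only in the third decimal, which is within the rounding error of the three-decimal coefficients.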

Table 2. Regression coefficients (Estimate), standard errors (SE), and associated z- and p-values from the winning generalized linear (beta distribution) mixed-effects model for predicting confidence in incorrect responses.

Discussion

This study investigated age-related changes in the relationship of the temporal and motor response parameters RT and PF with decision confidence in a perceptual conflict task. Overall, higher confidence was related to faster and less forceful responses. We could further show that, across the entire sample, confidence was associated with both parameters, and these effects were moderated by performance accuracy: While RT was related to confidence in correct responses, peak force was related to confidence in error trials. Finally, age interacted with the response parameters so that with higher age, the effect of RT on confidence was more pronounced, while the effect of PF on confidence was diminished.

We will first focus on the observed age-related associations between confidence and response parameters and subsequently discuss possible interpretations within two different theoretical frameworks.

Behavioral correlates of confidence

In a complex conflict task, we replicated one of the most robust findings on decision confidence, namely a negative relationship between RT and confidence (Fleming et al., 2010; Rahnev et al., 2020). In correct trials, the higher participants rated their confidence in a decision, the faster they had made the decision. In addition, we found a negative relationship between PF and confidence for errors (higher PF was related to lower confidence for the younger participants), which has not been reported before. Observing the latter association is interesting per se because participants’ attention was not directed to the applied force in any way (i.e., participants were not aware of the PF assessment), whilst the relevance of speed had been stressed in the instructions. A recent study (Turner et al., 2021a) showed, in a sample of young participants, that when higher levels of force had to be produced to report the (correct) decision, participants’ confidence ratings were higher. While these results do not mirror ours, the two sets of findings cannot be directly compared, as their study was conceptually different from our study design and explicitly required participants to produce different force ranges. However, these studies together highlight the added value of assessing response force. In our study, the differential effect of RT and PF for correct and error trials, respectively, further highlights that these are dissociable parameters of a response, supporting the model of Ulrich and Wing (1991; see also Jaśkowski et al., 2000; Armbrecht et al., 2013), according to which RT and response force are not just two sides of the same coin. Further, our findings stress the significance of including incorrect responses as a distinct response type in the corresponding analyses.

Interestingly, the observed associations between the response parameters and confidence differed across the studied age range. While age-related changes in metacognitive performance, and error detection in particular, have been studied across tasks and domains (Palmer et al., 2014; Harty et al., 2017; Niessen et al., 2017; McWilliams et al., 2022), specific characteristics of confidence judgments have rarely been investigated in the context of healthy aging. The current study revealed a stronger association with increasing age between confidence and RT in correct trials and a weaker association between confidence and PF in errors. Importantly, control analyses (see Results and for illustration, Supplementary Figure 1) revealed increased mean and dispersion of RTs with older age, which may have caused the pronounced negative relationship between confidence and RT, but no effect of age on the force output. This may appear counterintuitive, but it is in line with previous studies showing similar response force for younger and older adults (except for very old adults above 70 years of age; Van Der Lubbe et al., 2002; Sosnoff and Newell, 2006). It was suggested that older adults may have difficulties in visuo-motor integration in tasks that require meeting a visually cued target force rather than in the pure motor execution (Sailer et al., 2000; Slifkin et al., 2000; Mattay et al., 2002). This implies that age-related changes in the relationship between confidence and PF in our study were – most likely – not due to generally altered patterns of PF.

In sum, this study provides the first comprehensive quantification of the interrelation between confidence and two behavioral response parameters across age. Additionally, it bridges the gap between two related research fields by combining approaches from error detection and decision confidence studies (Rabbitt, 1966; Sosnoff and Newell, 2006; Cohen and van Gaal, 2014; Kiani et al., 2014; Palmer et al., 2014; Stahl et al., 2020; Thurm et al., 2020; Turner et al., 2021a). In our previous study, we showed that age-related changes in metacognitive accuracy were associated with altered patterns of electrophysiological correlates of confidence (Overhoff et al., 2021), which is complemented by the present description of age-related changes in behavioral correlates of confidence. This characterization in a sample of participants covering a broad age range constitutes a further step in identifying and understanding age-related changes in metacognitive performance.

In the following, we present two complementary, not mutually exclusive interpretations of the observed age-related variations. In the first part, we attempt to explain our findings under the assumption that response characteristics simply co-occur with the build-up of confidence. In contrast, in the second part, we assume that response parameters comprise additional information about the decision accuracy that is integrated into confidence during its formation process.

Response parameters as the expression of confidence

One possible framework for explaining the experimental findings is to assume that the level of decision confidence is expressed in the RT or PF of the response indicating this decision, either because confidence defines the response parameters or because a common process drives both confidence and the two parameters. In other words, if a participant is highly confident in a decision, this will affect the speed and the force with which they report this decision. Research has identified multiple stimulus-related characteristics that alter the accuracy of confidence judgments, like relative and absolute evidence strength (Peters et al., 2017; Ko et al., 2022) or evidence reliability (Boldt et al., 2017). If sensory evidence is unambiguous, an easy decision will accordingly lead to high certainty of having made a correct response. In turn, if the participant nevertheless responds incorrectly but changes their mind and detects this error, the certainty of having made an error will be high (i.e., resulting in a low confidence rating). It is intuitive to imagine that high certainty of having made a correct or incorrect response (which is identical to very high or very low confidence, respectively) will lead to faster and more forceful responses.

Notably, neither RT nor PF showed the pattern of change that would be expected if one or both parameters simply mirrored a decline in confidence with age. Arguably, it might still be possible to explain the differential interactions with age by assuming that multiple other sources (e.g., perception, attention, response selection, motor processes) cause the observed relationships between confidence and the two response parameters. If aging impacts (some of) these sources differentially, this might result in altered associations between confidence, RT and PF, as observed here. For instance, a cognitive process that is differentially susceptible in older compared to younger adults might affect the RT-confidence relationship but spare the relationship between PF and confidence. However, as we did not systematically investigate these other processes in the present study, we can neither support nor rule out these assumptions.

Modulation of confidence by response parameters

Alternatively, our findings could also be interpreted in line with recent studies postulating that parameters of a response indicating a decision may serve as an additional source of evidence that is integrated into confidence judgments about this decision – especially in ambiguous situations (Gajdos et al., 2019; Filevich et al., 2020; Pereira et al., 2020; Wokke et al., 2020; Turner et al., 2021a). These studies showed that confidence could be altered, for instance, by applying TMS to the dorsal premotor cortex or by instructing participants to move faster (Fleming et al., 2015; Palser et al., 2018). Using very different methodological approaches, these studies jointly indicate that the post-decisional evidence accumulation might incorporate response characteristics of the initial decision into the subsequent confidence rating. Although our study design, which assessed the relationship of confidence with RT and naturally occurring PF, precludes any conclusions regarding the causal direction of effects, it is nevertheless interesting to reflect on our results within this framework.

Looking at the overall relationship between confidence and the two response parameters, our differential findings for errors and correct responses suggest serial processing. First, the RT-related information might be “read out” by the monitoring system and serve as an interoceptive cue about the difficulty of a decision. This assumption is in line with previous work (Fleming et al., 2010; Kiani et al., 2014; Dotan et al., 2018; Gajdos et al., 2019; Rahnev et al., 2020). This interpretation would suggest that the decision-makers arrive at a higher confidence judgment because they also register having responded faster [e.g., via the efference copy (Latash, 2021) or the later representation of their action].

However, this proposed mechanism might exclusively operate in correct trials to refine confidence judgments. For the relationship between confidence and RT in error trials, which were on average slower than correct trials, it must be considered that a variety of aspects can cause errors (e.g., lack of attention, perceptual lapse), and the response profiles of errors are similarly heterogeneous. Therefore, in case of conflict (which is present in error trials), RT might no longer yield reliable information about the task requirements, and the monitoring system might probe PF instead as an alternative response parameter to compensate for the lack of reliable RT when computing confidence. In support, recent work indicated that within a similar speed range for responses, the PF in error trials was related to decision confidence (Stahl et al., 2020). Hence, while RT might not differentiate confidence levels in errors, variations in PF may well capture this information and could therefore be integrated into the final confidence judgment.

Given the frequently described decline of metacognitive abilities with older age, which was also shown in our previous analysis of the current data set [by using the Phi correlation coefficient (Nelson, 1984) for the analysis of metacognitive accuracy (Overhoff et al., 2021)], it seems likely that the older adults were lacking relevant input for the computation of confidence, making it harder for them to accurately rate their decisions. Consequently, one possibility is that the stronger association between confidence and RT in older adults might reflect a compensation mechanism. To explain, while our study does not allow for firm conclusions as to why metacognitive accuracy declined in older adults, it appears that the input to the performance monitoring system was diminished (or not adequate anymore) and did not allow for computing confidence with the same level of accuracy as in younger adults. Therefore, it is possible that the stronger reliance on RT (in correct trials) might reflect the attempt to compensate for this by relying more on other sources of input, like the interoceptive feedback about the response speed (Fleming et al., 2010; Palser et al., 2018). However, it remains unclear whether this compensation fails, as, despite more substantial reliance on RT information, confidence judgments were still poorer compared to younger adults. This could be plausible, for example, if the monitoring of response parameters itself might also become poorer with increasing age. Alternatively, it is also possible that this compensation was indeed (somewhat) successful, and without incorporating RT information more strongly, confidence judgments would be even worse. Ultimately, our study cannot resolve this question.

For error trials, we observed that the relationship between confidence and response force diminished with age. One explanation might be related to the finding that healthy aging has been associated with diminished neural specificity for errors (Park et al., 2010; Endrass et al., 2012; Harty et al., 2017; Overhoff et al., 2021), meaning that older adults might have generally been worse at detecting the errors in the first place. A recent fMRI study has extended these findings by showing that the activity related to error awareness was specifically reduced in older adults (Sim et al., 2020). Based on these findings, our results could be interpreted as another instance of an age-related error-specific processing deficit. This functional processing deficit might also extend to the sensorimotor feedback of the produced force. The read-out of the response force – which might be used to infer confidence in case of errors – might thus not be readily accessible by older adults and potentially contribute to the demonstrated deficits in metacognitive accuracy.

Limitations

While the simultaneous recording of two response parameters for each response constitutes a strength of the present study, treating RT and PF as equivalent may be problematic. We have discussed RT and PF as separate but comparable features of motor activity, even though their apparent relevance differed largely. Force was produced without constraints, while the time to report the decision was limited to 1200 ms and exerted considerable time pressure on the participants. Therefore, it would be interesting to examine changes in the modulation of confidence by RT and PF without limiting the time to respond. Moreover, since the RT in a given trial represents the sum of the time for stimulus-related processes (between stimulus onset and the start of the response movement) and the time for motor-related processes (e.g., movement time – the time between starting and terminating a response movement), future studies should additionally assess movement time and its relation to confidence.

As mentioned above, this study cannot resolve the question of causality of the observed associations. Based on the described literature, it is reasonable to speculate that our findings can be explained within the framework of response dynamics informing confidence judgments, and the discussion of this interpretation should be understood as a proposal of avenues for future research. Having established the associations between confidence and naturally occurring response parameters in this study, future work could directly manipulate RT and/or PF, for example, by providing visual feedback about the required speed/force, or via instruction (Palser et al., 2018; Turner et al., 2021a). We have carefully outlined that alternative explanations are equally plausible and acknowledge that both lines of interpretation may be valid in part.

Finally, a thorough characterization of age-related changes in behavioral and neural correlates of confidence is needed to explain normal and abnormal impairments related to aging and might prove valuable to predict the onset of cognitive decline or age-related (neurodegenerative) diseases such as dementia (Wilson et al., 2015).

Conclusion

Corroborating recent evidence, we revealed significant associations between decision confidence and the parameters of the responses indicating this decision. Furthermore, we extended these findings by showing that confidence was associated with fine-grained changes in the time taken to report a decision and the force invested in this response. These relationships were moderated by the accuracy of the response, and, most importantly, changed markedly across the adult life span. This notion should encourage the recording of response force in behavioral experiments whenever possible, as it might uncover specific effects that cannot be revealed by measuring other response parameters, like response times. While a causal explanation of these findings was beyond the scope of this study, one possible interpretation is that the observed age-related changes in the pattern of associations reflect a mechanism in the computation of confidence and may even constitute one aspect of the frequently observed decline in metacognitive ability with older age.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving human participants were reviewed and approved by the German Psychological Society (DGPs). The patients/participants provided their written informed consent to participate in this study.

Author contributions

HO, YK, JS, PW, SB, and EN contributed to the conceptualization and methodology. HO wrote the scripts, wrote the original draft, and performed the experiments and the formal analysis, guided by EN. YK, JS, PW, SB, EN, and GF helped interpret the results. JS, PW, SB, and EN provided the supervision. GF, PW, JS, and SB provided the resources. All authors reviewed and edited the manuscript.

Funding

GF and PW were funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) – Project-ID 431549029 (SFB 1451). SB was funded by the Australian Research Council (Discovery Project Grant; DP160103353). Open access publication was funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) – Project-ID 491111487. YK and HO were funded by a Jülich-University of Melbourne Postgraduate Academy (JUMPA) scholarship.

Acknowledgments

We thank all colleagues from the Institute of Neuroscience and Medicine (INM-3), Cognitive Neuroscience, the Department of Individual Differences and Psychological Assessment, and the Decision Neuroscience Lab at the Melbourne School of Psychological Sciences for valuable discussions and their support.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnagi.2022.969074/full#supplementary-material

References

Armbrecht, A.-S., Gibbons, H., and Stahl, J. (2013). Effects of response force parameters on medial-frontal negativity. PLoS One 8:e54681. doi: 10.1371/journal.pone.0054681

Barr, D. J., Levy, R., Scheepers, C., and Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. J. Mem. Lang. 68, 255–278. doi: 10.1016/j.jml.2012.11.001

Bode, S., and Stahl, J. (2014). Predicting errors from patterns of event-related potentials preceding an overt response. Biol. Psychol. 103, 357–369. doi: 10.1016/j.biopsycho.2014.10.002

Boldt, A., de Gardelle, V., and Yeung, N. (2017). The impact of evidence reliability on sensitivity and bias in decision confidence. J. Exp. Psychol. Hum. Percept. Perform. 43, 1520–1531. doi: 10.1037/XHP0000404

Boldt, A., and Yeung, N. (2015). Shared neural markers of decision confidence and error detection. J. Neurosci. 35, 3478–3484. doi: 10.1523/JNEUROSCI.0797-14.2015

Brooks, M. E., Kristensen, K., Van Benthem, K. J., Magnusson, A., Berg, C. W., Nielsen, A., et al. (2017). glmmTMB balances speed and flexibility among packages for zero-inflated generalized linear mixed modeling. R. J. 9, 378–399. doi: 10.3929/ethz-b-000240890

Bunce, D., Macdonald, S. W. S., and Hultsch, D. F. (2004). Inconsistency in serial choice decision and motor reaction times dissociate in younger and older adults. Brain Cogn. 56, 320–327. doi: 10.1016/j.bandc.2004.08.006

Charles, L., Van Opstal, F., Marti, S., and Dehaene, S. (2013). Distinct brain mechanisms for conscious versus subliminal error detection. Neuroimage 73, 80–94. doi: 10.1016/j.neuroimage.2013.01.054

Charles, L., and Yeung, N. (2019). Dynamic sources of evidence supporting confidence judgments and error detection. J. Exp. Psychol. Hum. Percept. Perform. 45, 39–52. doi: 10.1037/xhp0000583

Chua, E., Schacter, D. L., and Sperling, R. (2009). Neural basis for recognition confidence. Psychol. Aging 24, 139–153. doi: 10.1037/a0014029

Cohen, M. X., and van Gaal, S. (2014). Subthreshold muscle twitches dissociate oscillatory neural signatures of conflicts from errors. Neuroimage 86, 503–513. doi: 10.1016/j.neuroimage.2013.10.033

Dodson, C. S., Bawa, S., and Krueger, L. E. (2007). Aging, metamemory, and high-confidence errors: A misrecollection account. Psychol. Aging 22, 122–133. doi: 10.1037/0882-7974.22.1.122

Dotan, D., Meyniel, F., and Dehaene, S. (2018). On-line confidence monitoring during decision making. Cognition 171, 112–121. doi: 10.1016/j.cognition.2017.11.001

Dully, J., McGovern, D. P., and O’Connell, R. G. (2018). The impact of natural aging on computational and neural indices of perceptual decision making: A review. Behav. Brain Res. 355, 48–55. doi: 10.1016/j.bbr.2018.02.001

Endrass, T., Schreiber, M., and Kathmann, N. (2012). Speeding up older adults: Age-effects on error processing in speed and accuracy conditions. Biol. Psychol. 89, 426–432. doi: 10.1016/j.biopsycho.2011.12.005

Eriksen, B. A., and Eriksen, C. W. (1974). Effects of noise letters upon the identification of a target letter in a nonsearch task. Percept. Psychophys. 16, 143–149.

Falkenstein, M., Yordanova, J., and Kolev, V. (2006). Effects of aging on slowing of motor-response generation. Int. J. Psychophysiol. 59, 22–29. doi: 10.1016/J.IJPSYCHO.2005.08.004

Feuerriegel, D., Murphy, M., Konski, A., Mepani, V., Sun, J., Hester, R., et al. (2021). Electrophysiological correlates of confidence differ across correct and erroneous perceptual decisions. bioRxiv doi: 10.1101/2021.11.22.469610

Ficarella, S. C., Rochet, N., and Burle, B. (2019). Becoming aware of subliminal responses: An EEG/EMG study on partial error detection and correction in humans. Cortex 120, 443–456. doi: 10.1016/j.cortex.2019.07.007

Filevich, E., Koß, C., and Faivre, N. (2020). Response-related signals increase confidence but not metacognitive performance. eNeuro 7, 1–14. doi: 10.1523/ENEURO.0326-19.2020

Filippi, R., Ceccolini, A., Periche-Tomas, E., and Bright, P. (2020). Developmental trajectories of metacognitive processing and executive function from childhood to older age. Q. J. Exp. Psychol. 73, 1757–1773. doi: 10.1177/1747021820931096

Fleming, S. M., and Daw, N. D. (2017). Self-evaluation of decision-making: A general Bayesian framework for metacognitive computation. Psychol. Rev. 124, 91–114. doi: 10.1037/REV0000045

Fleming, S. M., and Dolan, R. J. (2012). The neural basis of metacognitive ability. Philos. Trans. R. Soc. B Biol. Sci. 367, 1338–1349. doi: 10.1098/rstb.2011.0417

Fleming, S. M., Maniscalco, B., Ko, Y., Amendi, N., Ro, T., and Lau, H. (2015). Action-specific disruption of perceptual confidence. Psychol. Sci. 26, 89–98. doi: 10.1177/0956797614557697

Fleming, S. M., Massoni, S., Gajdos, T., and Vergnaud, J.-C. (2016). Metacognition about the past and future: Quantifying common and distinct influences on prospective and retrospective judgments of self-performance. Neurosci. Conscious. 2016:niw018. doi: 10.1093/NC/NIW018

Fleming, S. M., Weil, R. S., Nagy, Z., Dolan, R. J., and Rees, G. (2010). Relating introspective accuracy to individual differences in brain structure. Science 329, 1541–1543. doi: 10.1126/SCIENCE.1191883

Folstein, M. F., Folstein, S. E., and McHugh, P. R. (1975). “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J. Psychiatr. Res. 12, 189–198. doi: 10.1016/0022-3956(75)90026-6

Franz, E. A., and Miller, J. (2002). Effects of response readiness on reaction time and force output in people with Parkinson’s disease. Brain 125, 1733–1750. doi: 10.1093/brain/awf192

Gajdos, T., Fleming, S. M., Saez Garcia, M., Weindel, G., and Davranche, K. (2019). Revealing subthreshold motor contributions to perceptual confidence. Neurosci. Conscious. 5, 1–8. doi: 10.1093/nc/niz001

Harty, S., Murphy, P. R., Robertson, I. H., and O’Connell, R. G. (2017). Parsing the neural signatures of reduced error detection in older age. Neuroimage 161, 43–55. doi: 10.1016/j.neuroimage.2017.08.032

Hertzog, C., and Curley, T. (2018). “Metamemory and cognitive aging,” in Oxford research encyclopedia of psychology, (Oxford: Oxford University Press), doi: 10.1093/acrefore/9780190236557.013.377

James, G., Witten, D., Hastie, T., and Tibshirani, R. (2013). An introduction to statistical learning: With applications in R. New York, NY: Springer.

Jaśkowski, P., Van Der Lubbe, R. H. J., Wauschkuhn, B., Wascher, E., and Verleger, R. R. (2000). The influence of time pressure and cue validity on response force in an S1-S2 paradigm. Acta Psychol. (Amst). 105, 89–105. doi: 10.1016/S0001-6918(00)00046-9

Kiani, R., Corthell, L., and Shadlen, M. N. (2014). Choice certainty is informed by both evidence and decision time. Neuron 84, 1329–1342. doi: 10.1016/j.neuron.2014.12.015

Ko, Y. H., Feuerriegel, D., Turner, W., Overhoff, H., Niessen, E., Stahl, J., et al. (2022). Divergent effects of absolute evidence magnitude on decision accuracy and confidence in perceptual judgements. Cognition 225:105125. doi: 10.1016/j.cognition.2022.105125

Ko, Y. T., Alsford, T., and Miller, J. (2012). Inhibitory effects on response force in the stop-signal paradigm. J. Exp. Psychol. Hum. Percept. Perform. 38, 465–477. doi: 10.1037/a0027034

Latash, M. (2021). Efference copy in kinesthetic perception: A copy of what is it? J. Neurophysiol. 125, 1079–1094. doi: 10.1152/jn.00545.2020

Long, J. A. (2019). Interactions: Comprehensive, user-friendly toolkit for probing interactions. R package version 1.1.0. Available online at: https://cran.r-project.org/package=interactions

Lüdecke, D., Ben-Shachar, M. S., Patil, I., Waggoner, P., and Makowski, D. (2021). Performance: An R package for assessment, comparison and testing of statistical models. J. Open Source Softw. 6:3139. doi: 10.21105/joss.03139

Maier, M. E., Ernst, B., and Steinhauser, M. (2019). Error-related pupil dilation is sensitive to the evaluation of different error types. Biol. Psychol. 141, 25–34. doi: 10.1016/j.biopsycho.2018.12.013

Mattay, V. S., Fera, F., Tessitore, A., Hariri, A. R., Das, S., Callicott, J. H., et al. (2002). Neurophysiological correlates of age-related changes in human motor function. Neurology 58, 630–635. doi: 10.1212/WNL.58.4.630

McWilliams, A., Bibby, H., Steinbeis, N., David, A. S., and Fleming, S. M. (2022). Age-related decreases in global metacognition are independent of local metacognition and task performance. PsyArXiv 1–23. doi: 10.31234/osf.io/nmhxv

Nelson, T. (1984). A comparison of current measures of the accuracy of feeling-of-knowing predictions. Psychol. Bull. 95, 109–133. doi: 10.1037/0033-2909.95.1.109

Niessen, E., Fink, G. R., Hoffmann, H. E. M. M., Weiss, P. H., and Stahl, J. (2017). Error detection across the adult lifespan: Electrophysiological evidence for age-related deficits. Neuroimage 152, 517–529. doi: 10.1016/j.neuroimage.2017.03.015

Oldfield, R. C. (1971). The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9, 97–113. doi: 10.1016/0028-3932(71)90067-4

Overhoff, H., Ko, Y. H., Feuerriegel, D., Fink, G. R., Stahl, J., Weiss, P. H., et al. (2021). Neural correlates of metacognition across the adult lifespan. Neurobiol. Aging 108, 34–46. doi: 10.1016/j.neurobiolaging.2021.08.001

Palmer, E. C., David, A. S., and Fleming, S. M. (2014). Effects of age on metacognitive efficiency. Conscious. Cogn. 28, 151–160. doi: 10.1016/j.concog.2014.06.007

Palser, E. R., Fotopoulou, A., and Kilner, J. M. (2018). Altering movement parameters disrupts metacognitive accuracy. Conscious. Cogn. 57, 33–40. doi: 10.1016/j.concog.2017.11.005

Park, J., Carp, J., Hebrank, A., Park, D. C., and Polk, T. A. (2010). Neural specificity predicts fluid processing ability in older adults. J. Neurosci. 30, 9253–9259. doi: 10.1523/JNEUROSCI.0853-10.2010

Pereira, M., Faivre, N., Iturrate, I., Wirthlin, M., Serafini, L., Martin, S., et al. (2020). Disentangling the origins of confidence in speeded perceptual judgments through multimodal imaging. Proc. Natl. Acad. Sci. U.S.A. 117, 8382–8390. doi: 10.1073/pnas.1918335117

Peters, M. A. K., Thesen, T., Ko, Y. D., Maniscalco, B., Carlson, C., Davidson, M., et al. (2017). Perceptual confidence neglects decision-incongruent evidence in the brain. Nat. Hum. Behav. 1, 1–21. doi: 10.1038/s41562-017-0139

Quandt, F., Bönstrup, M., Schulz, R., Timmermann, J. E., Zimerman, M., Nolte, G., et al. (2016). Spectral variability in the aged brain during fine motor control. Front. Aging Neurosci. 8:1–9. doi: 10.3389/FNAGI.2016.00305

R Core Team (2021). R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing.

Rabbitt, P. M. A. (1966). Errors and error correction in choice-response tasks. J. Exp. Psychol. 71, 264–272. doi: 10.1037/h0022853

Rabbitt, P. M. A. (1990). Age, IQ and awareness, and recall of errors. Ergonomics 33, 1291–1305. doi: 10.1080/00140139008925333

Rahnev, D., Desender, K., Lee, A. L. F., Adler, W. T., Aguilar-Lleyda, D., Akdoğan, B., et al. (2020). The confidence database. Nat. Hum. Behav. 4, 317–325. doi: 10.1038/s41562-019-0813-1

Resulaj, A., Kiani, R., Wolpert, D. M., and Shadlen, M. N. (2009). Changes of mind in decision-making. Nature 461, 263–266. doi: 10.1038/nature08275

Sailer, A., Dichgans, J., and Gerloff, C. (2000). The influence of normal aging on the cortical processing of a simple motor task. Neurology 55, 979–985. doi: 10.1212/WNL.55.7.979

Salthouse, T. A. (2000). Aging and measures of processing speed. Biol. Psychol. 54, 35–54. doi: 10.1016/S0301-0511(00)00052-1

Siedlecka, M., Koculak, M., and Paulewicz, B. (2021). Confidence in action: Differences between perceived accuracy of decision and motor response. Psychon. Bull. Rev. 28, 1698–1706. doi: 10.3758/s13423-021-01913-0

Sim, J., Brown, F., O’Connell, R., and Hester, R. (2020). Impaired error awareness in healthy older adults: An age group comparison study. Neurobiol. Aging 96, 58–67. doi: 10.1016/j.neurobiolaging.2020.08.001

Slifkin, A. B., Vaillancourt, D. E., and Newell, K. M. (2000). Intermittency in the control of continuous force production. J. Neurophysiol. 84, 1708–1718. doi: 10.1152/JN.2000.84.4.1708

Sosnoff, J. J., and Newell, K. M. (2006). Aging, visual intermittency, and variability in isometric force output. Available online at: https://academic.oup.com/psychsocgerontology/article/61/2/P117/558376 (Accessed October 7, 2021).

Stahl, J., Mattes, A., Hundrieser, M., Kummer, K., Mück, M., Niessen, E., et al. (2020). Neural correlates of error detection during complex response selection: Introduction of a novel eight-alternative response task. Biol. Psychol. 156:107969. doi: 10.1016/j.biopsycho.2020.107969

Stahl, J., and Rammsayer, T. H. (2005). Accessory stimulation in the time course of visuomotor information processing: Stimulus intensity effects on reaction time and response force. Acta Psychol. (Amst). 120, 1–18. doi: 10.1016/j.actpsy.2005.02.003

Tauber, S. K., and Dunlosky, J. (2012). Can older adults accurately judge their learning of emotional information? Psychol. Aging 27, 924–933. doi: 10.1037/A0028447

Thurm, F., Li, S. C., and Hämmerer, D. (2020). Maturation- and aging-related differences in electrophysiological correlates of error detection and error awareness. Neuropsychologia 143:107476. doi: 10.1016/j.neuropsychologia.2020.107476

Turner, W., Angdias, R., Feuerriegel, D., Chong, T. T., Hester, R., and Bode, S. (2021a). Perceptual decision confidence is sensitive to forgone physical effort expenditure. Cognition 207:104525. doi: 10.1016/j.cognition.2020.104525

Turner, W., Feuerriegel, D., Andrejević, M., Hester, R., and Bode, S. (2021b). Perceptual change-of-mind decisions are sensitive to absolute evidence magnitude. Cogn. Psychol. 124:101358. doi: 10.1016/j.cogpsych.2020.101358

Ulrich, R., and Wing, A. M. (1991). A recruitment theory of force-time relations in the production of brief force pulses: The parallel force unit model. Psychol. Rev. 98, 268–294. doi: 10.1037/0033-295X.98.2.268

Van Der Lubbe, R. H. J., and Verleger, R. (2002). Aging and the Simon task. Psychophysiology 39, 100–110. doi: 10.1111/1469-8986.3910100

Vaillancourt, D. E., and Newell, K. M. (2003). Aging and the time and frequency structure of force output variability. J. Appl. Physiol. 94, 903–912. doi: 10.1152/JAPPLPHYSIOL.00166.2002

Verkuilen, J., and Smithson, M. (2012). Mixed and mixture regression models for continuous bounded responses using the beta distribution. J. Educ. Behav. Stat. 37, 82–113. doi: 10.3102/1076998610396895

Vickers, D., and Packer, J. (1982). Effects of alternating set for speed or accuracy on response time, accuracy and confidence in a unidimensional discrimination task. Acta Psychol. (Amst). 50, 179–197. doi: 10.1016/0001-6918(82)90006-3

Vissers, M. E., Ridderinkhof, K. R., Cohen, M. X., and Slagter, H. A. (2018). Oscillatory mechanisms of response conflict elicited by color and motion direction: An individual differences approach. J. Cogn. Neurosci. 30, 468–481. doi: 10.1162/jocn_a_01222

Wessel, J. R., Dolan, K. A., and Hollingworth, A. (2018). A blunted phasic autonomic response to errors indexes age-related deficits in error awareness. Neurobiol. Aging 71, 13–20. doi: 10.1016/j.neurobiolaging.2018.06.019

Wilson, R. S., Boyle, P. A., Yu, L., Barnes, L. L., Sytsma, J., Buchman, A. S., et al. (2015). Temporal course and pathologic basis of unawareness of memory loss in dementia. Neurology 85, 984–991. doi: 10.1212/WNL.0000000000001935

Wokke, M. E., Achoui, D., and Cleeremans, A. (2020). Action information contributes to metacognitive decision-making. Sci. Rep. 10, 1–15. doi: 10.1038/s41598-020-60382-y

Yeung, N., and Summerfield, C. (2012). Metacognition in human decision-making: Confidence and error monitoring. Philos. Trans. R. Soc. B Biol. Sci. 367, 1310–1321. doi: 10.1098/rstb.2011.0416

Yordanova, J., Kolev, V., Hohnsbein, J., and Falkenstein, M. (2004). Sensorimotor slowing with ageing is mediated by a functional dysregulation of motor-generation processes: Evidence from high-resolution event-related potentials. Brain 127, 351–362. doi: 10.1093/brain/awh042

Keywords: aging, confidence, metacognitive accuracy, response parameters, response force

Citation: Overhoff H, Ko YH, Fink GR, Stahl J, Weiss PH, Bode S and Niessen E (2022) The relationship between response dynamics and the formation of confidence varies across the lifespan. Front. Aging Neurosci. 14:969074. doi: 10.3389/fnagi.2022.969074

Received: 14 June 2022; Accepted: 15 November 2022; Published: 15 December 2022.

Edited by:

Chiara Spironelli, University of Padua, Italy

Reviewed by:

Christopher Hertzog, Georgia Institute of Technology, United States

Elisa Di Rosa, University of Padua, Italy

Copyright © 2022 Overhoff, Ko, Fink, Stahl, Weiss, Bode and Niessen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Helen Overhoff, h.overhoff@fz-juelich.de
