- 1Institute of Biomedical Engineering, Chinese Academy of Medical Sciences and Peking Union Medical College, Tianjin, China

- 2School of Electronics and Information Engineering, Tianjin Polytechnic University, Tianjin, China

- 3Institute of Traditional Chinese Medicine, Tianjin University of Traditional Chinese Medicine, Tianjin, China

- 4School of Microelectronics, Tianjin University, Tianjin, China

Brain-controlled wheelchairs (BCWs) have the potential to improve the quality of life of people with motor disabilities. Users need a great deal of training to learn and improve BCW control, and control performance is crucial for patients in daily use. For safety and efficiency, this paper builds an indoor simulated training environment to improve BCW control performance. The environment mainly provides BCW implementation, simulated training scenario setup, path planning and recommendation, simulated operation, and scoring. The BCW is based on steady-state visual evoked potentials (SSVEP), and filter bank canonical correlation analysis (FBCCA) is used to analyze the electroencephalography (EEG) signals. Five tasks are designed: individual accuracy, simple linear path, obstacle avoidance, comprehensive steering scenario, and an evaluation task. Ten healthy subjects were recruited and carried out a 7-day training experiment to assess the performance of the training environment. Scores and the number of commands consumed are used to evaluate the improvement before and after training. The results indicate that the average accuracy is 93.55% and improves from 91.05% in the first stage to 96.05% in the second stage (p = 0.001). Meanwhile, the average score increases from 79.88 in the first session to 96.66 in the last session and tends to become stable (p < 0.001). The average number of commands and collisions needed to complete the tasks decreases significantly with or without the approximate shortest path (p < 0.001). These results show that the subjects' BCW control performance improves and verify the feasibility and effectiveness of the proposed simulated training environment.

Introduction

A brain-computer interface (BCI) provides a new communication and control channel between the human brain and the external world without depending on peripheral nerves and muscles, which helps users interact with an external environment directly (Wolpaw et al., 2002; Naseer and Hong, 2015). There are various non-invasive methods for obtaining brain signals in BCI systems such as electroencephalography (EEG) (Abiri et al., 2019), functional near-infrared spectroscopy (Khan and Hong, 2017; Hong et al., 2018), functional magnetic resonance imaging (Sitaram et al., 2008), and magnetoencephalography (Mellinger et al., 2007). Specifically, brain-controlled wheelchair (BCW) is a particular device based on BCI, which is able to provide assistance and potentially improve the quality of life for people who have no ability to control a wheelchair by conventional interfaces due to some diseases, such as motor neuron diseases, total paralysis, stroke, etc. (Rebsamen et al., 2010). Technically, the signals obtained from spontaneous or evoked brain activities are used to generate and send commands operating the wheelchair. The common types include event-related desynchronization (ERD)/event-related synchronization (ERS)-based BCW (Tanaka et al., 2005; Wang and Bezerianos, 2017), P300-based BCW (Iturrate et al., 2009; Rebsamen et al., 2010; Yu et al., 2017), steady-state somatosensory evoked potentials (SSSEP)-based BCW (Kim et al., 2018), steady-state visual evoked potentials (SSVEP)-based BCW (Diez et al., 2013; Müller et al., 2013) and hybrid BCW (Long et al., 2012; Cao et al., 2014; Li et al., 2014).

To our knowledge, the first report of BCW was published by Tanaka and coworkers, in which the motor imagery (MI) tasks were adopted to control a wheelchair and the accuracy is close to 80% (Tanaka et al., 2005). Rebsamen et al. (2006) combined P300 and path guidance to steer the wheelchair in an office-like environment without complex sensors. Müller et al. (2015) used low and high frequency SSVEP to control the BCW and the corresponding average accuracies of disabled subjects are 54% and 51%, but the high frequency stimuli are more comfortable. In recent years, systems with shared control and different levels of artificial intelligence were introduced into BCW to improve the driving safety (Rebsamen et al., 2007; Galán et al., 2008; Satti et al., 2011; Tang et al., 2018). Some simulated systems were also built to test the feasibility of the related designs. According to reports by Leeb et al. (2007), a tetraplegic is able to control movements of the wheelchair through EEG in a virtual environment. Gentiletti et al. (2009) designed a simulation platform based on P300 and verified the practicability through wheelchair control, and Herweg et al. (2016) reported a virtual environment for wheelchair control based on P300 as well. Through audio-cued MI-based BCI, Francisco et al. (2013) achieved wheelchair control in virtual and real environments. Besides, Wang and coworkers also proposed multiple patterns of MI to implement the movement control of the virtual automatic car (Wang et al., 2019). In terms of the simulated systems based on SSVEP-based BCI or hybrid BCI, Bi et al. (2014) applied SSVEP-based BCI to control a simulated vehicle and Li et al. (2018) combined hybrid BCI with computer vision to build a simulated driving system.

However, practical application remains a critical problem in the development of BCW (Yu et al., 2017). It is difficult and, to some extent, dangerous for patients to control a BCW in complex situations, especially for naive users (Bi et al., 2013; Fernández-Rodríguez et al., 2016). In addition, the proficiency and efficiency of BCW control are crucial for patients in daily use (Fernández-Rodríguez et al., 2016). Therefore, efficient systems that improve users' BCW control performance are desirable. Many recent studies have shown that training is an effective way to improve the performance of subjects in BCI (Herweg et al., 2016; Wan et al., 2016; Yao et al., 2019). Hence, we expect that training can improve users' ability to control a BCW, an approach that has rarely been adopted in previous reports. Training is also useful for calibrating parameters (e.g., threshold values) and testing BCW performance (Gentiletti et al., 2009; Li et al., 2018). Furthermore, rehabilitation experts believe that the motivation of patients in training plays an important role in the recovery of motor control (Kaufman and Becker, 1986; Griffiths and Hughes, 1993; Maclean et al., 2000). Training in a simulated environment is a good choice because of its safety, convenience, and low cost (Bi et al., 2013). It has been shown that people with disabilities are able to perform motor learning and task training in a virtual environment and transfer the learned skills to real-world performance (Kenyon and Afenya, 1995; Rose et al., 2000). In some cases, the skills even extend to other untrained tasks with good effect (Todorov et al., 1997; Holden, 2005). At the same time, simulated training in a familiar indoor environment (e.g., home or hospital) is more helpful, because users (patients) spend most of their time at home or in hospital.

Nevertheless, previous research has proposed few indoor simulated environments for BCW control training that cover different situations and levels of difficulty. In addition, effective training experiments and evaluation methods are also necessary. Taking these factors into account, this paper presents an indoor simulated training environment based on SSVEP-based BCI. The environment integrates training and control across the whole process: BCW implementation, training scenario setting, path planning and recommendation, simulated control training, and finally scoring. A 7-day simulated training experiment with four training sessions and five tasks was conducted to evaluate its feasibility. SSVEP-based BCI was chosen for the following reasons. First, from the aspect of practical application, SSVEP-based BCI is more suitable for issuing BCW control commands because of its fast command issuing and more stable performance (Bi et al., 2013, 2014). In most situations, view switching between the stimuli and the environment also needs to be trained repeatedly. Second, SSVEP-based BCI generally requires only short calibration and training of the system, so the performance improvement obtained in the 7-day training experiment is convincing.

The hypothesis of this paper is that training in our simulated training environment increases accuracy and scores and, eventually, enhances the performance of users who have never used a BCW before. In this study, 10 healthy subjects were recruited and asked to carry out training experiments to assess the feasibility and performance of the indoor simulated training environment. The paper is organized as follows. Section “Materials and Methods” introduces the methods and materials of the system. Section “Results” presents the experimental results. Section “Discussion” discusses them. Finally, conclusions are given in section “Conclusion.”

Materials and Methods

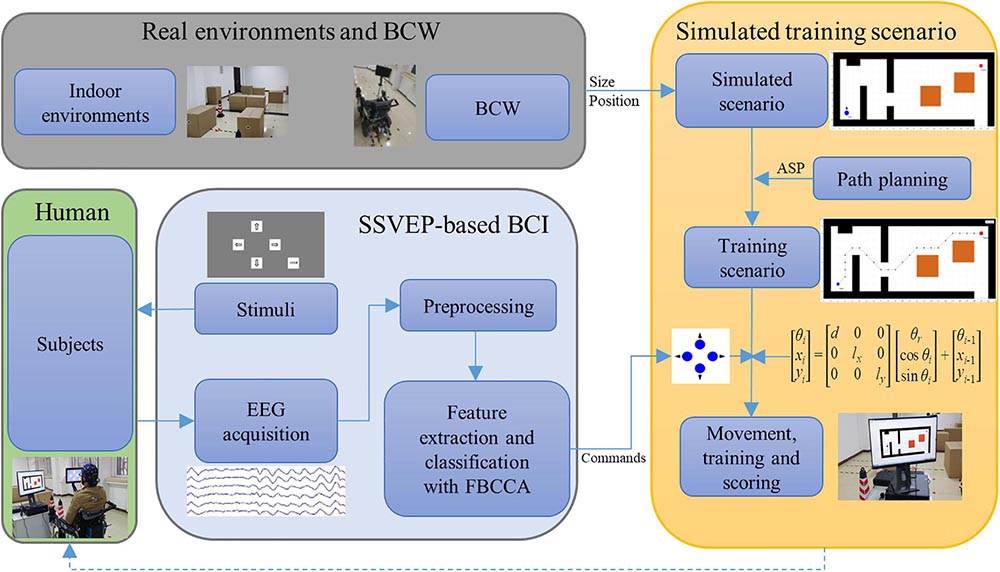

The schematic of the indoor simulated training environment is shown in Figure 1. The main functions of the environment include SSVEP-based BCI input, training scenario setup, path planning and recommendation, simulated operation, and scoring. The SSVEP-based BCI uses a sampled sinusoidal stimulation method to evoke SSVEP (Nikolay et al., 2013) and utilizes filter bank canonical correlation analysis (FBCCA) to improve the detection accuracy of SSVEP by incorporating fundamental and harmonic frequency components (Chen et al., 2015). The simulated scenario is modeled on a real indoor environment with the BCW and objects (obstacles) placed in it. The approximate shortest path (ASP) is provided by the A-star algorithm owing to its simplicity and efficiency (Koenig and Likhachev, 2005; Le et al., 2018). Subjects are able to steer through the scenario with or without path planning.

System Hardware

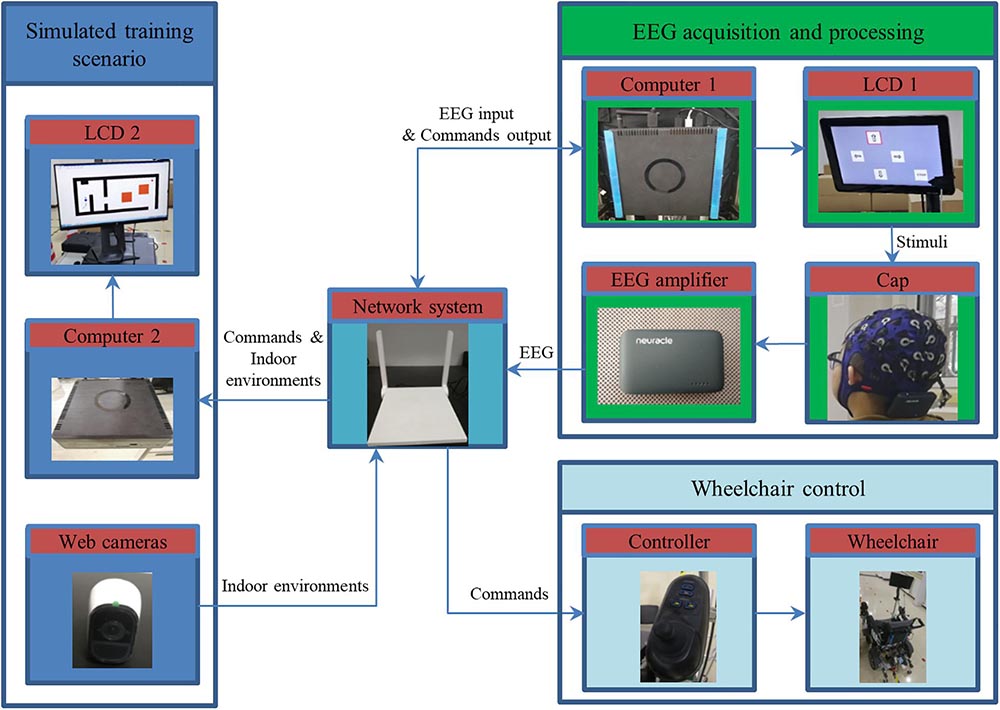

The hardware of the system is shown in Figure 2 and comprises three parts: EEG acquisition and processing, wheelchair control, and the simulated training scenario. Data exchange between the parts is implemented over the network (router). The hardware mainly consists of a wheelchair, two liquid-crystal display (LCD) screens, a minicomputer, a wireless EEG amplifier, three web cameras, and a server computer.

The minicomputer (Computer 1) has an Intel Core CPU (i5-7500T, 64-bit), 32 GB of RAM, and an 8 GB video card (Nvidia GTX 1070). The stimulus programs and data analysis run on Computer 1 using MATLAB (MathWorks, Inc.). The stimuli are presented on a 12.1-inch LCD screen (LCD 1) with a resolution of 1280 × 800 pixels and a 60 Hz refresh rate. EEG data are recorded by a wireless EEG amplifier (Neuracle, Inc.) with an EEG cap. An ordinary powered wheelchair (DYW-459-46A6, 0.55 m wide and 1.10 m long) is used with an added wireless module, so it can be controlled both by the joystick and over Wi-Fi. In addition, a server computer (Computer 2) runs the training program and displays the training scenario on a 26-inch LCD screen (LCD 2), and three web cameras connected to the server capture the state of the room. The parameters (e.g., size and position) of the BCW and obstacles in the simulated scenario are set according to the images captured by the cameras.

Stimulation and Data Acquisition

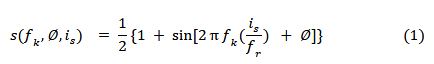

The functional modules of our SSVEP-based BCW mainly include stimuli, EEG acquisition, feature extraction and classification, and control. The screen luminance of the stimulus sequence s(fk,∅,is) is modulated by the sampled sinusoidal stimulation method as the following equation (Wang et al., 2010; Nikolay et al., 2013):

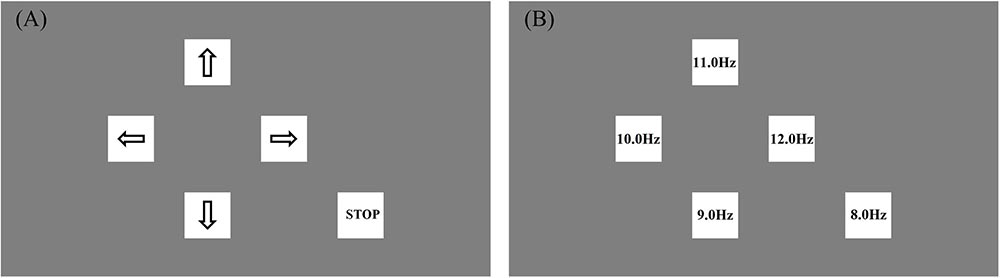

where fk is the stimulus frequency; ∅ represents the initial phase; is is the frame index of the stimulus (is = 0, 1, 2, …); and fr is the refresh rate of the screen, set at 60 Hz. To obtain a stronger response and avoid harmonic interference, fk is commonly chosen in the range from 8 Hz to less than 16 Hz (Pastor et al., 2003; Chen et al., 2014). The number of stimulus targets is determined by fk and ∅. The basic control intentions (commands) of the BCW are turn-right, move-forward, turn-left, move-backward, and stop, so k is an integer from 1 to 5. The corresponding frequencies fk are set at 12, 11, 10, 9, and 8 Hz, and ∅ is set at 0. Figure 3 shows the distribution and frequency values of the stimulus targets.
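As a minimal sketch of the sampled sinusoidal stimulation, the per-frame luminance of each target can be generated as below. It assumes the form commonly used in the cited literature, s(fk, ∅, is) = ½{1 + sin[2π fk (is / fr) + ∅]}; the exact expression is the one given in the equation above. The function name `stimulus_sequence` and the pairing of commands with frequencies in their listed order are illustrative assumptions, not details confirmed by the text.

```python
import math

REFRESH_RATE_HZ = 60  # f_r, the screen refresh rate stated in the text

# Assumption: commands and frequencies are paired in the order they are listed.
TARGETS_HZ = {
    "turn-right": 12,
    "move-forward": 11,
    "turn-left": 10,
    "move-backward": 9,
    "stop": 8,
}


def stimulus_sequence(freq_hz, phase=0.0, n_frames=120, refresh_hz=REFRESH_RATE_HZ):
    """Return the luminance in [0, 1] for frame indices 0 .. n_frames - 1."""
    return [
        0.5 * (1.0 + math.sin(2.0 * math.pi * freq_hz * (i / refresh_hz) + phase))
        for i in range(n_frames)
    ]


# A 2 s stimulus at a 60 Hz refresh rate corresponds to 120 frames.
sequences = {cmd: stimulus_sequence(f) for cmd, f in TARGETS_HZ.items()}
```

Because the luminance is sampled at the refresh rate, any frequency below half the refresh rate can be rendered, not only those that divide 60 Hz evenly.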

Six Ag/AgCl electrodes (O1, Oz, O2, PO3, POz, and PO4 of the international 10–20 system) over the occipital and parietal areas are used to acquire SSVEP, with the ground electrode at the midpoint between FPz and Fz and the reference electrode at the vertex (Cz). The six channels are acquired at a sampling rate of 250 Hz with electrode impedances below 10 kΩ. The recorded data XEEG are processed by a 1–100 Hz band-pass filter and a 50 Hz notch filter. An event trigger signal occupies another channel to synchronize the EEG data with the stimulus events.

Data Analysis

For accurate and efficient feature extraction and classification, FBCCA is adopted for frequency detection in this study (Chen et al., 2015). FBCCA extends canonical correlation analysis (CCA) to improve its accuracy in the frequency detection of SSVEP. It decomposes the full frequency range of the EEG into sub-bands and calculates the correlation coefficient between each sub-band and the reference signal. The maximum weighted sum of the correlation coefficients determines the classification result.

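In CCA, the reference signal Yfk corresponding to the stimulus frequency fk is as follows (Lin et al., 2007):

$$
Y_{f_k} =
\begin{bmatrix}
\sin(2\pi f_k t) \\
\cos(2\pi f_k t) \\
\vdots \\
\sin(2\pi N_h f_k t) \\
\cos(2\pi N_h f_k t)
\end{bmatrix}
\tag{2}
$$

where Nh is the number of harmonics and t runs from 1/fs to Ns/fs, in which fs is the sampling rate and Ns is the number of sampling points. The linear combinations of the two variables are x = XTWX and y = YTWY, and the maximum correlation coefficient ρ(x, y) is calculated via:

$$
\rho(x, y) = \max_{W_X, W_Y}
\frac{E\left[W_X^{T} X Y^{T} W_Y\right]}
{\sqrt{E\left[W_X^{T} X X^{T} W_X\right]\, E\left[W_Y^{T} Y Y^{T} W_Y\right]}}
\tag{3}
$$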

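In FBCCA, we first obtain the maximum correlation coefficient between each sub-band and the reference signals by CCA and then calculate the weighted sum over the full frequency range. The weighted sum of the maximum correlation coefficients is expressed as follows (Chen et al., 2015):

$$
\tilde{\rho}_k = \sum_{n=1}^{N} \left(n^{-a} + b\right)
\left[\rho\!\left(X_{SB_n}^{T} W_X(X_{SB_n}, Y_{f_k}),\; Y_{f_k}^{T} W_Y(X_{SB_n}, Y_{f_k})\right)\right]^{2}
\tag{4}
$$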

where ρ(·) is the maximum correlation coefficient calculated by Eq. (3); n (n = 1, …, N) and k (k = 1, …, 5) index the sub-bands and the stimulus frequencies, respectively. XSBn represents the signal of the n-th sub-band obtained from XEEG by filter bank analysis; that is, a series of band-pass filters is used to extract the sub-bands. Since the useful harmonic frequencies are below 90 Hz, we set N = 7 here, and the n-th sub-band ranges from n × 8 Hz to 88 Hz. WX(XSBn,Yfk) and WY(XSBn,Yfk) are the weight vectors consisting of the first (maximum) pair of canonical variables in the CCA between XSBn and Yfk. The empirical values adopted for a, b, and Nh are 1.25, 0.25, and 5 (Chen et al., 2015). The frequency of the Yfk that yields the maximum weighted sum is taken as the stimulus frequency. Finally, the classification results are transformed into commands and sent to the wheelchair or the simulated training scenario through the TCP/IP protocol.
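The filter-bank bookkeeping described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' MATLAB implementation: the weight w(n) = n^(−a) + b and the use of squared correlations follow the FBCCA formulation of Chen et al. (2015), the CCA step itself is omitted (the per-sub-band correlations are passed in as inputs), and all function names are hypothetical.

```python
import math

A, B = 1.25, 0.25      # empirical constants a and b quoted in the text
N_SUBBANDS = 7         # N
N_HARMONICS = 5        # Nh
FS_HZ = 250            # sampling rate fs
STIM_FREQS_HZ = [12, 11, 10, 9, 8]


def subband_edges(n):
    """Pass-band (low, high) in Hz of the n-th sub-band, n = 1 .. N."""
    return (n * 8.0, 88.0)


def subband_weight(n):
    """Assumed sub-band weight w(n) = n^(-a) + b."""
    return n ** (-A) + B


def reference_signal(freq_hz, n_samples, fs_hz=FS_HZ, n_harmonics=N_HARMONICS):
    """Sine/cosine rows for each harmonic, with t running from 1/fs to Ns/fs."""
    times = [s / fs_hz for s in range(1, n_samples + 1)]
    rows = []
    for h in range(1, n_harmonics + 1):
        rows.append([math.sin(2 * math.pi * h * freq_hz * t) for t in times])
        rows.append([math.cos(2 * math.pi * h * freq_hz * t) for t in times])
    return rows


def fbcca_score(subband_corrs):
    """Weighted sum of squared sub-band correlations for one stimulus frequency."""
    return sum(subband_weight(n) * r ** 2
               for n, r in enumerate(subband_corrs, start=1))


def classify(corrs_per_freq):
    """corrs_per_freq[k] holds the N sub-band correlations for STIM_FREQS_HZ[k]."""
    scores = [fbcca_score(c) for c in corrs_per_freq]
    return STIM_FREQS_HZ[scores.index(max(scores))]


# Made-up correlations for illustration: the third frequency (10 Hz) dominates.
example = [[0.2] * 7, [0.3] * 7, [0.6] * 7, [0.25] * 7, [0.1] * 7]
detected = classify(example)
```

The weights decay with n, so the lower sub-bands, which contain the fundamental and carry most of the SSVEP energy, contribute most to the decision.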

Simulated Training Scenario

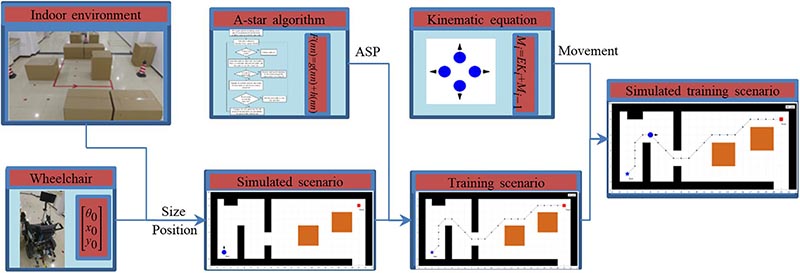

A common room with about 13.2 m length and 5.4 m width is the prototype of the simulated scenario. The simulated training scenario provides an operator interface to set up different situations. Therefore, the obstacles and SSVEP-based BCW can be placed in the room or scenario according to the demand for training. Figure 4 illustrates the diagram of the simulated training scenario.

The space of the simulated scenario is divided into a grid map. Given the width (0.55 m) and length (1.10 m) of the wheelchair, the side length of each square grid corresponds to 0.6 m, so the BCW occupies about two grids. Grids that contain obstacles are defined as obstacle grids and cannot be traversed; the rest are defined as freedom grids. The coordinates of the nodes are obtained from the vertices of the respective grids. The positions of obstacles are acquired by the web cameras fixed on the walls. The dimensions of the obstacles are treated as known quantities and entered into the training scenario in rectangular coordinates. The start point, the goal point, and the obstacle placement are determined according to the training. In step-by-step BCW control, four parameters (the moving distances of move-forward and move-backward, and the rotation angles of turn-right and turn-left) can be set for different situations. The simulated kinematic equation of the BCW is given by:

where Mi is the motion coordinate vector and i denotes the i-th step operation (i = 1, 2, …). E is the individual matrix determined by the situation (e.g., turning with or without motion), and Ki is the kinematic vector. θi is the heading angle (with initial value θ0); θr is the rotation angle, taken as positive when turning left (d = 1) and negative when turning right (d = −1). (xi, yi) is the position of the BCW, with the initial value (x0, y0) at the start point. l is the moving distance, which is adjustable.
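A minimal sketch of a step-by-step update consistent with these definitions is given below. It assumes a simple planar model in which a turn command changes only the heading by d·θr and a move command shifts the position by ±l along the current heading; the matrix E and vector Ki are not modelled explicitly, and the names `Pose` and `step` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float
    y: float
    theta: float  # heading angle in radians


def step(pose, command, move_dist, turn_angle):
    """Apply one discrete command and return the new pose."""
    if command == "turn-left":       # d = +1
        return Pose(pose.x, pose.y, pose.theta + turn_angle)
    if command == "turn-right":      # d = -1
        return Pose(pose.x, pose.y, pose.theta - turn_angle)
    if command in ("move-forward", "move-backward"):
        sign = 1.0 if command == "move-forward" else -1.0
        return Pose(
            pose.x + sign * move_dist * math.cos(pose.theta),
            pose.y + sign * move_dist * math.sin(pose.theta),
            pose.theta,
        )
    if command == "stop":
        return pose
    raise ValueError(f"unknown command: {command}")


# Example: move 0.3 m, turn left twice by pi/4, then move 0.3 m again.
pose = Pose(0.0, 0.0, 0.0)
for cmd in ["move-forward", "turn-left", "turn-left", "move-forward"]:
    pose = step(pose, cmd, move_dist=0.3, turn_angle=math.pi / 4)
```

After these four commands the wheelchair faces along the positive y-axis and sits at approximately (0.3, 0.3).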

Path Planning

Considering the operating space and the required accuracy, the A-star algorithm is used to search for the ASP. For safety, the minimum distance between the BCW and obstacles is set equal to half the diagonal length of a grid (about 0.42 m in the real environment). The central initial position of the BCW is taken as the start point, and its neighboring freedom nodes are added to the queue list. The cost evaluation F of the path is calculated as follows (Koenig and Likhachev, 2005):

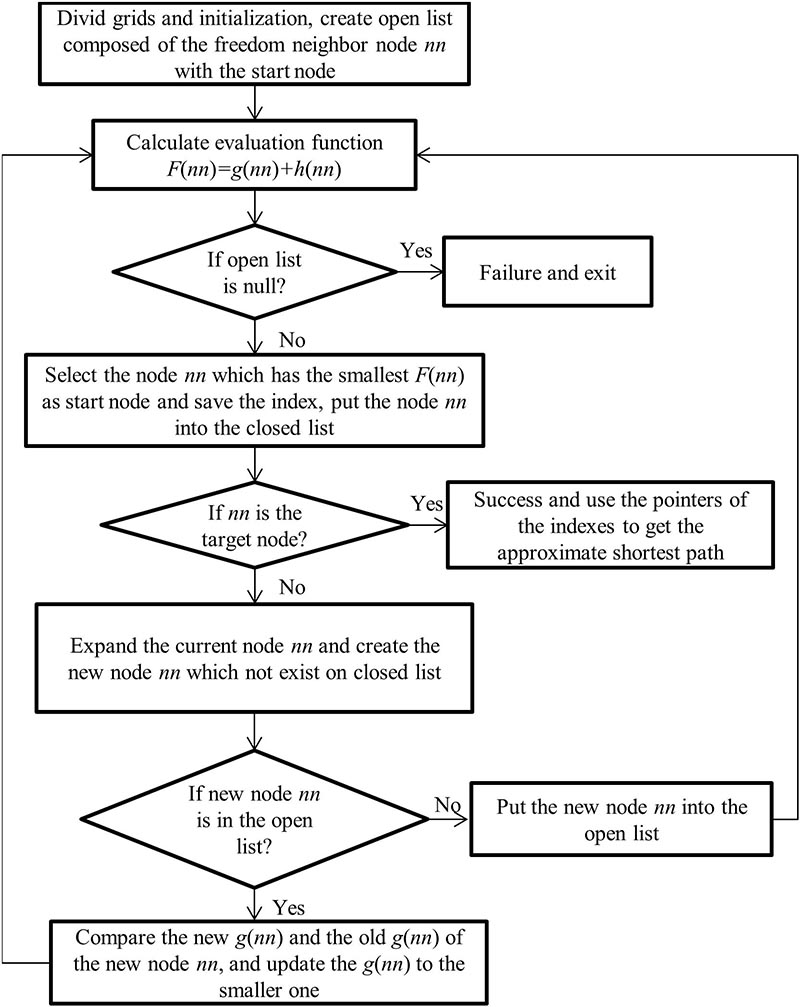

where nn is the next node among the neighboring freedom nodes on the path, g(nn) is the distance between nn and the start node along the path, and h(nn), which depends on the heuristic information, is the estimated distance between nn and the goal node. For the start point, the F values of all neighboring nodes are calculated, and the next node nn is the one with the minimum value of F. In the same way, at each iteration the node nn is replaced with its neighbor that has the minimum value of F, and the corresponding queue lists are updated. The iteration stops when the value of h equals zero. The approximate shortest path (ASP) is the line connecting the nodes with the minimum values of F. The flowchart of the A-star algorithm is shown in Figure 5.
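The search can be sketched with a textbook A-star on a small occupancy grid, as below. This is not the authors' MATLAB code and may differ in bookkeeping from the flowchart in Figure 5: it assumes eight-connected movement, a Euclidean heuristic, and a priority queue as the open list, and it ignores the safety distance. The function name `a_star` and the example grid are hypothetical.

```python
import heapq
import math


def a_star(grid, start, goal):
    """Return the path as a list of (row, col) cells, or None if unreachable.

    grid[r][c] == 1 marks an obstacle grid; 0 marks a freedom grid.
    """
    rows, cols = len(grid), len(grid[0])

    def h(node):
        # Heuristic: straight-line distance to the goal.
        return math.hypot(node[0] - goal[0], node[1] - goal[1])

    open_heap = [(h(start), 0.0, start)]   # entries are (F, g, node)
    g_cost = {start: 0.0}
    parent = {start: None}
    closed = set()

    while open_heap:
        _, g, node = heapq.heappop(open_heap)
        if node in closed:
            continue
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        closed.add(node)
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if dr == 0 and dc == 0:
                    continue
                nxt = (node[0] + dr, node[1] + dc)
                if not (0 <= nxt[0] < rows and 0 <= nxt[1] < cols):
                    continue
                if grid[nxt[0]][nxt[1]] == 1:
                    continue
                new_g = g + math.hypot(dr, dc)   # 1 for straight, sqrt(2) for diagonal
                if new_g < g_cost.get(nxt, math.inf):
                    g_cost[nxt] = new_g
                    parent[nxt] = node
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None


# A wall with a single gap in the last column.
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = a_star(grid, (0, 0), (2, 0))
```

In this example the only way past the wall is through cell (1, 3), so the returned path detours through that gap before heading back to the goal.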

For the convenience of design and realization, all programs are developed using the MATLAB environment.

Experiment

Participants

To test the feasibility and performance, 10 healthy subjects (7 males and 3 females, 24 ± 3 years old and with normal or corrected-to-normal vision) who had never participated in any experiment on SSVEP-based BCW were recruited in the online training experiment. All of them signed the consent forms before the experiment. The experiment was performed according to the standards of the Declaration of Helsinki, and the study was approved by the Research Ethics Committee of Chinese Academy of Medical Sciences, China.

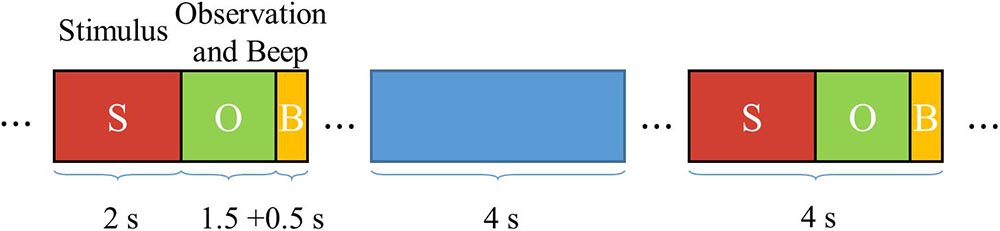

The SSVEP stimulus paradigm is exactly the same in all tasks; each trial includes a 2 s stimulus, a 1.5 s observation period, and a 0.5 s beep, as shown in Figure 6. During the 2 s stimulus, the subjects need to focus their attention on one of the stimulus targets. After the stimulus ends, subjects are asked to shift their gaze to the interface of the simulated training scenario as soon as possible to observe the “road conditions” and decide on the next state within 1.5 s. A 0.5 s beep before the next trial prompts the user to shift their gaze back to the stimulus targets.

Procedure

Each subject carries out a total of four sessions in the training experiment, with about 48 h of rest between consecutive sessions. A complete session consists of five (or four) different tasks and one questionnaire survey. After each task, subjects take a 3 min break. Task 1 (T1) is designed to test the individual accuracy of subjects. In Task 2 (T2), Task 3 (T3), and Task 4 (T4), the level of difficulty increases gradually, and subjects are asked to steer the simulated BCW from the start point to the goal point along the ASP. In Task 5 (T5), the ASP is not provided, and subjects should reach the goal point as safely and quickly as possible. In Session 1 (S1) and Session 3 (S3), subjects perform T1 to T4 (10 task runs in total): T1 is carried out first (once), followed by T2, T3, and T4 (three times each). In Session 2 (S2) and Session 4 (S4), subjects perform T1 to T5, with T5 also performed three times (13 task runs in total). Finally, the questionnaire is filled out at the end of each session.

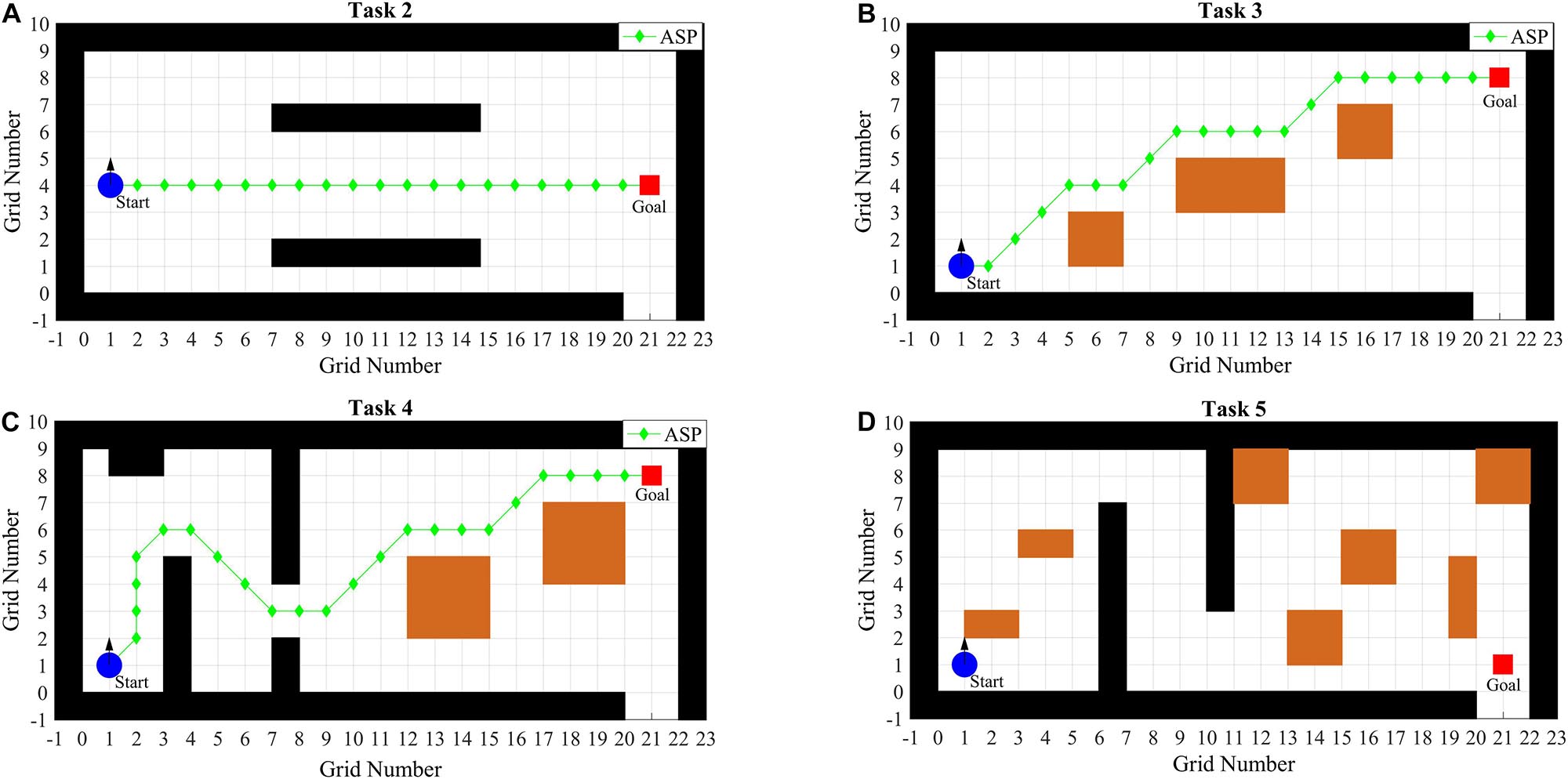

To simplify operation, the rotation angle of turn-right or turn-left is set to π/4, and the moving distance of move-forward or move-backward is set to half the side length of the grid, or to half the diagonal length when the heading angle is an odd multiple of π/4, using Formula (5). The scenarios of T2, T3, T4, and T5 are shown in Figure 7. The tasks are described as follows:
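A short worked example of the step-length rule, assuming the 0.6 m grid side given earlier: a straight step covers 0.3 m, while a diagonal step covers 0.6·√2/2 ≈ 0.424 m, which projects to exactly 0.3 m on each axis. Either way the simulated BCW stays on a half-grid lattice. The function name `move_distance` and the integer heading counter are illustrative assumptions.

```python
import math

GRID_SIDE_M = 0.6          # side length of one grid
TURN_ANGLE = math.pi / 4   # rotation per turn command


def move_distance(heading_steps, grid_side=GRID_SIDE_M):
    """Step length for a heading of heading_steps * pi/4.

    Odd counts mean a diagonal heading (half the diagonal length);
    even counts mean an axis-aligned heading (half the side length).
    """
    if heading_steps % 2 == 1:
        return grid_side * math.sqrt(2) / 2
    return grid_side / 2


straight = move_distance(0)   # heading along an axis
diagonal = move_distance(1)   # heading at pi/4
# Projection of the diagonal step onto the x-axis.
diagonal_dx = diagonal * math.cos(TURN_ANGLE)
```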

T1: Individual accuracy. To evaluate the accuracy of the individual and of the designed system, a random intentions (commands) task is carried out. A red triangle under a stimulus target serves as the “gaze” cue during each trial, and subjects should focus their attention on the cued target. Each target is cued in 20 randomly ordered trials (100 trials in total). The training scenario is a square with a side length of 40 grids and contains no obstacles.

T2: Simple linear path. The subjects are asked to go through the corridor along the ASP. This task, which contains no obstacles, helps the subjects become familiar with the simulated environment and practice moving in a straight line.

T3: Obstacle avoidance. Three obstacles are placed in the scenario, and the subjects are asked to turn left, turn right, or make a right-angle turn to avoid them along the ASP.

T4: Comprehensive scenario. The scenario includes a right-angle bend, an S-shaped bend, a corridor, a door, and obstacles. The ASP is provided as well.

T5: Evaluation task. S1 and S2 form the first stage of training; S3 and S4 form the second stage. T5 is designed to evaluate the training effect at the end of each stage. The difficulty of T5 is increased, and the ASP is not provided. The subjects should determine the control paths by themselves based on the experience obtained from training.

Scoring and Questionnaire

A score is designed to evaluate performance and to judge whether a task is successful. In step-by-step BCW movement, the time and distance needed to complete a task are closely related to the number of commands, so the improvement before and after training can be seen clearly from the number of commands. Likewise, the number of collisions gives a simple indication of safety during driving. Therefore, the performance of the subjects in the tasks is mainly measured by the number of commands and collisions. The number of operation commands along the ASP is adopted as a reference, indicating the best performance that the subjects can achieve. The subjects should avoid collisions as far as possible. The full score is set to 100. To encourage participants, a task is deemed to have failed only when the score is less than or equal to 0. Whether or not a single task is successful, the whole task or session should be completed unless the subject gives up voluntarily. The score is set as:

where Sc is the score of the subjects in the tasks. Na represents the total number of actual operation commands, and NASP is the number of operation commands according to ASP. C is the number of collisions.

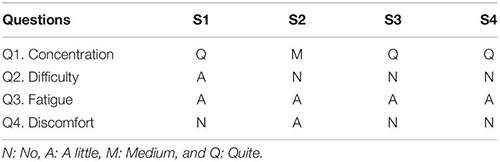

After each session, subjects are asked to answer a questionnaire with the following questions (Q1–Q4):

1. Can you stay concentrated in the whole session?

2. Do you think the tasks are difficult?

3. Do you feel tired in the tasks?

4. Do you feel uncomfortable about the stimuli?

The answer options are No (N), A little (A), Medium (M), and Quite (Q).

Performance Evaluation

To evaluate the improvement of subjects after training with the simulated training environment, the results of T1 to T4 in S1 and T5 in S2 are set as the control group and compared with those of other sessions. In addition to using paired-samples t-test to compare the results, one-way repeated-measures analysis of variance (ANOVA) is also applied to determine the statistical significance of differences in the accuracy.
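The paired comparison can be illustrated with a small standard-library sketch that computes the paired-samples t statistic from per-subject differences. The accuracy values below are hypothetical and are not the study data; the function name `paired_t` is likewise illustrative.

```python
import math
import statistics


def paired_t(before, after):
    """Return (t statistic, degrees of freedom) for paired samples."""
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)   # sample standard deviation
    return mean_diff / (sd_diff / math.sqrt(n)), n - 1


# Hypothetical accuracies (%) for five subjects, for illustration only.
first_session = [88, 92, 85, 95, 90]
last_session = [95, 96, 93, 98, 97]
t_stat, dof = paired_t(first_session, last_session)
```

The p-value follows from the t distribution with `dof` degrees of freedom; a statistics package such as SciPy (`scipy.stats.ttest_rel`) returns both values directly.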

Results

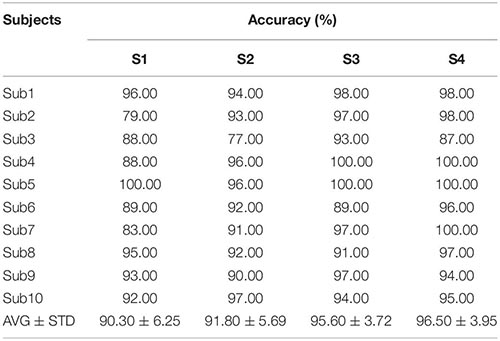

In T1, there is no obstacle in the scenario, and subjects need to gaze at the stimulus indicated by the cues. The accuracies, including the average values (AVG) and standard deviations (STD), are listed in Table 1. The maximum reaches 100.00%, and the grand average accuracy is 93.55%. The average accuracy increases gradually from S1 to S4, reaching 96.50 ± 3.95% in S4. A paired-sample t-test reveals an improvement in accuracy: the average accuracy improves from 91.05% in the first stage (S1 and S2) to 96.05% in the second stage (S3 and S4) (p = 0.001). Comparing S1 and S4, the average accuracy increases from 90.30% in S1 to 96.50% in S4 (p < 0.05). In addition, one-way repeated-measures ANOVA is applied to analyze the differences in accuracy in Table 1. The results show a statistically significant difference among the four sessions [F(3, 36) = 3.50, p < 0.05]. Pairwise comparisons reveal a significant difference between S1 and S4 (p < 0.05), but no significant difference between S1 and S2 (p > 0.05) or between S1 and S3 (p > 0.05). All of these results are considered acceptable.

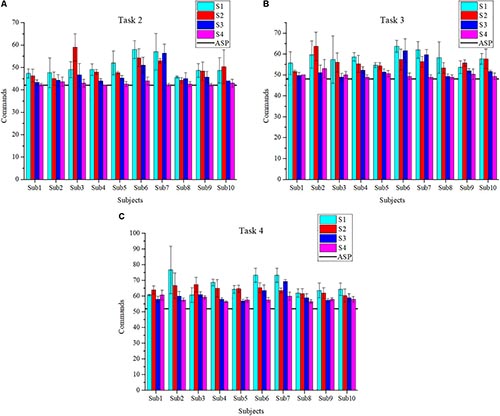

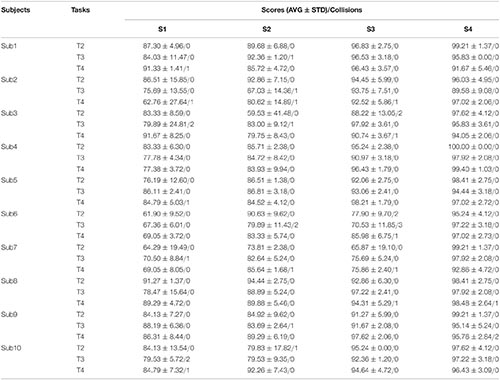

In T2, T3, and T4, ASPs are provided, which help subjects practice right-angle turns, S-shaped bends, crossing the corridor and door, and obstacle avoidance. The scores and the number of commands are used to assess the performance of subjects. The number of commands along the ASP is used as a reference: 42, 48, and 52 in T2, T3, and T4, respectively. To make the results easier to inspect, histograms of the average number of commands in each task are plotted in Figure 8. The collisions and average scores of each session in the experiment are also calculated and shown in Table 2.

In T2, the scenario is relatively simple, and subjects are asked to steer the BCW through the corridor along the ASP. Figure 8A shows that subjects perform progressively better, except for Sub3 in S2, who makes some mistakes and spends many commands on corrections in that session. An average of 59 commands is executed, far more than the 42 commands of the ASP. In S4, however, an average of 43 commands is used, which is very close to 42. T3 is mainly designed to practice turning and obstacle avoidance. As can be seen from Figure 8B, the overall performance of each subject tends to improve from S1 to S4. In addition, the results of S3 and S4 (second stage) are better than those of S1 and S2 (first stage). Figure 8C shows the average number of commands in T4. With the exception of Sub1 and Sub3, the average number of commands used by each subject to complete the task decreases gradually. The most impressive results belong to Sub2, whose average number of commands is 76.67, 66.67, 60.00, and 57.67 from S1 to S4.

In addition, a paired-sample t-test is adopted to analyze the difference between S1 and S4 across all subjects. The numbers of commands (average ± standard deviation) are 58.40 ± 9.14 in S1 and 50.32 ± 6.56 in S4. The average number of commands decreases by 8.08 and the standard deviation by 2.58; the difference is significant (p < 0.001). Note that the average number of commands across T2, T3, and T4 according to the ASP is only 48.67. This indicates that the operational proficiency of subjects improves through training with the ASP.

According to the scores in Table 2, the performance of subjects improves between S1 and S4. Sub7 shows the largest improvement in T2, where the average score increases by 34.92 (from 64.29 ± 19.49 in S1 to 99.21 ± 1.37 in S4). Meanwhile, a paired-sample t-test is used to analyze the difference between the S1 and S4 scores of all subjects. The average score of S4 (96.66 ± 3.70) is 16.78 higher than that of S1 (79.88 ± 12.64), and the difference is significant (p < 0.001). The standard deviation decreases by 8.94. These results further show that performance improves and becomes more stable after training. Regarding the total number of collisions in each task, there are five collisions in T2, 13 collisions in T3, which mainly occur in S1 and S2, and 14 collisions in T4, whose scenario is more difficult and comprehensive.

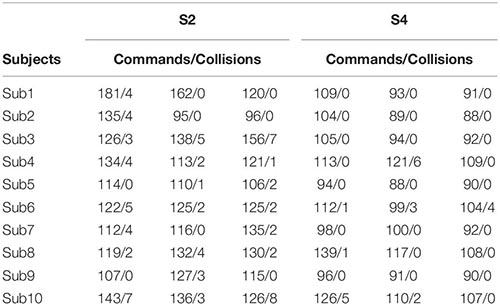

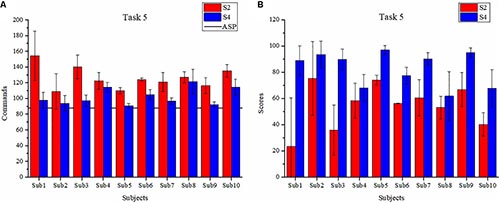

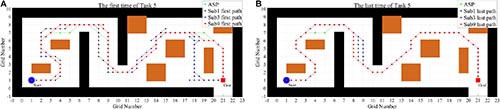

The main aim of T5 is to assess the training effect. The first T5 is scheduled at the end of S2, and the second is scheduled in S4. The number of commands and collisions of T5 in S2 and S4 are shown in Table 3, and the average number of commands and scores are shown in Figure 9.

As Table 3 shows, the mean number of collisions is 2.57 in S2, since the scenario is harder than that of T4 and the ASP is not provided. The average number of commands is 125.90, which is much higher than the number of commands along the ASP (88). In S4, the performance of subjects improves: the average number of commands is 102.30, and the number of collisions drops to 0.73. The average number of commands in S4 (102.30 ± 12.53) is 23.60 lower than in S2 (125.90 ± 18.35), and the difference is significant (paired-sample t-test, p < 0.001). In particular, the average number of commands in the last T5 is 97.1, which is close to the number of commands along the ASP.

As can be seen from Figure 9A, the average number of commands for all subjects shows an overall downward trend. In S4, the number of commands used by the subjects to complete T5 approaches the number of commands along the ASP (88). According to the scores shown in Figure 9B, the average scores in S4 are higher than those in S2. In S4, all subjects complete T5 with better performance, and the maximum score is 96.97 ± 3.47. The average T5 score in S4 (82.95 ± 15.13) is 28.30 higher than in S2 (54.65 ± 21.14), and the difference is significant (paired-sample t-test, p < 0.001). The standard deviation decreases by 6.01. These results verify that all subjects achieve improved BCW control performance.

For T5, Figure 10 shows the first and last paths of Sub1, Sub3, and Sub9, who earn the lowest, medium, and highest scores in the first test, respectively. The subjects steer largely according to their previous experience, and the actual paths are similar to the ASP, especially the last paths.

Figure 10. The specific paths of three subjects in the first T5 of S2 (A) and the last T5 of S4 (B).

The comprehensive results of the questionnaire are shown in Table 4. For each question, we calculate the average score of all subjects’ answers in each session and mark the alternative answer closest to the average score as the comprehensive result. Here, N, A, M, and Q correspond to scores of 25, 50, 75, and 100, respectively. As seen in Table 4, the subjects are able to stay concentrated and do not feel very uncomfortable throughout the experiment. The subjects feel a little fatigue in all sessions, which satisfies the mental workload required for training. According to the difficulty ratings, the tasks are acceptable, although subjects find them a little difficult at the beginning of the experiment. This indicates that the simulated training environment is usable by the subjects.
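The aggregation rule described above (average the answers, then report the closest alternative) can be sketched as follows. The score mapping comes from the text; the function name `comprehensive_result` and the tie-breaking rule (the lower-scored alternative wins when the average is exactly halfway) are assumptions, since the text does not say how ties are handled.

```python
# Score assigned to each alternative answer, as stated in the text.
ANSWER_SCORES = {"N": 25, "A": 50, "M": 75, "Q": 100}


def comprehensive_result(answers):
    """Return the alternative whose score is closest to the mean answer score.

    `answers` is a list of letters (one per subject) for a single question
    in a single session. Ties go to the lower-scored alternative (assumption).
    """
    if not answers:
        raise ValueError("at least one answer is required")
    average = sum(ANSWER_SCORES[a] for a in answers) / len(answers)
    # min() returns the first minimal element, and the dict is ordered from
    # the lowest to the highest score, which yields the tie rule above.
    return min(ANSWER_SCORES, key=lambda letter: abs(ANSWER_SCORES[letter] - average))


# Hypothetical answers for illustration only.
example_low = comprehensive_result(["A", "A", "M", "N"])   # average 50.0
example_high = comprehensive_result(["Q", "Q", "Q", "M"])  # average 93.75
```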

Discussion

Improving the proficiency and efficiency of BCW control is very important for users. The main aim of our study is to design and apply an indoor simulated training environment to train and improve users’ performance in using an SSVEP-based BCW, as evaluated by classification accuracy and scoring (or number of commands). By establishing a simulated environment from an overhead perspective, the system helps users understand the indoor situations they may encounter in daily life. The control proficiency of users is improved through repeated training in different situations, such as going straight, turning left or right, negotiating right-angle and S-shaped bends, crossing corridors and doors, and avoiding obstacles. Furthermore, the complexity of training is gradually increased to raise the mental workload of users. Training in this indoor simulated environment is therefore beneficial to users’ performance and helps them learn and become proficient in BCW control.

Following a similar approach, several simulated systems and neurofeedback training systems for BCI have been developed in the recent past. One study introduced a hybrid BCI that uses the MI-based mu rhythm and the P300 potential to control a brain-actuated simulated or real wheelchair (Long et al., 2012). All of the subjects accomplished the predefined tasks successfully and obtained an average accuracy of 83.10%. In another study, an SSVEP-based BCI was used to control a brain-controlled simulated vehicle (Bi et al., 2014). Four participants were required to perform a driving task online to test the system. The average accuracy was 76.87% and the mean ratio of task completion time to the nominal time (i.e., time optimality ratio) was 1.36. In more recent work, a simulated driving system based on hybrid BCI and computer vision was proposed to explore and verify the feasibility of human-vehicle collaborative driving (Li et al., 2018). The information transfer rate (ITR) in the online experiment reached 85.80 bits/min and the task success rate was 91.1%. In addition, a recently published paper designed a virtual automatic car based on multiple patterns of MI-based BCI (Wang et al., 2019). The participants were asked to navigate the virtual automatic car through six predefined destinations sequentially. The experimental results showed an average accuracy of 75% and a mean time optimality ratio of 1.28.

In contrast to the abovementioned simulated systems (Long et al., 2012; Bi et al., 2014; Li et al., 2018; Wang et al., 2019), which aimed to verify and test the feasibility and performance of the designed BCW or BCI systems, the present study designed and applied an indoor simulated training environment to improve the performance of users in BCW control. In the individual accuracy task, the average accuracy is 93.55% and the maximum individual accuracy is 100.00% (see Table 1). We obtain the time optimality ratio as the ratio of the actual number of commands used in a task to the nominal number of commands in ASP (4 s per command). The mean ratio of T2, T3, and T4 is 1.15, and the ratio is 1.30 in T5. These results substantiate that all subjects are able to use the SSVEP-based BCI to issue commands with high accuracy. As for ITR, according to the formula of Yuan et al. (2013), the average ITR is 28.26 ± 4.65 bits/min and the maximum is 34.83 bits/min (with 5 targets and 4 s per trial). ITR decreases as the duration of each trial increases; however, users need more time to observe the situation, since obstacles are usually close to the BCW in the indoor environment.
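The two quantities discussed above can be illustrated with a short sketch. It assumes the widely used Wolpaw-style ITR definition, which reproduces the reported maximum of 34.83 bits/min for 5 targets, 4 s per trial, and 100% accuracy; whether this matches the exact formula of Yuan et al. (2013) in every detail is an assumption. The function names are hypothetical, and pooling the S2 and S4 command averages to obtain the T5 ratio is our reading of the numbers, not a procedure stated in the text.

```python
import math


def itr_bits_per_min(n_targets, accuracy, trial_seconds):
    """Wolpaw-style information transfer rate in bits/min (assumed formula)."""
    if n_targets < 2:
        raise ValueError("at least two targets are required")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    bits = math.log2(n_targets)
    # The p*log2(p) terms vanish in the limits p -> 0 and p -> 1.
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits * 60.0 / trial_seconds


def command_ratio(actual_commands, nominal_commands):
    """Ratio of commands actually used to the nominal commands of ASP."""
    return actual_commands / nominal_commands


# Reported setting: 5 targets, 4 s per trial, best subject at 100% accuracy.
max_itr = itr_bits_per_min(5, 1.0, 4.0)

# T5 averages reported in the text: 125.90 (S2) and 102.30 (S4); ASP needs 88.
t5_ratio = command_ratio((125.90 + 102.30) / 2, 88)
```

With a fixed number of targets, halving the trial duration doubles the ITR at the same accuracy, which makes the trade-off between ITR and observation time explicit.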

In recent years, training has been used to improve the performance of some BCI systems. For MI-based BCIs, Kus et al. (2012) designed an asynchronous BCI based on MI and improved performance through neurofeedback training. After training, the participants used the asynchronous MI-based BCI to navigate a cursor through a maze and achieved a mean ITR of 4.51 bits/min and a mean accuracy of 74.84%. More recently, Yao et al. (2019) proposed a sensory stimulation training approach to improve the performance of a BCI based on somatosensory attentional orientation. Accuracy improved significantly, by 9.4%, between pre- and post-training, and the average accuracy after training was 78.6%. For P300-based BCIs, one study found that training can improve tactile P300-based BCI performance within a virtual wheelchair navigation task (Herweg et al., 2016). Mean accuracy improved from 88.43% in the first session to 92.56% in the last session, while the ITR increased from 4.5 bits/min to 4.98 bits/min. Similarly, another study investigated the effect of training on BCI performance with an auditory P300 multi-class speller paradigm (Baykara et al., 2016). Subjects were asked to spell several words by attending to animal sounds representing the coordinates of the letter in the matrix. The ITR increased from 3.72 bits/min to 5.63 bits/min after five training sessions. A previous study also demonstrated that alpha neurofeedback training improves SSVEP-based BCI performance (Wan et al., 2016). The training group showed an average increase of 16.5% in the SSVEP signal-to-noise ratio (SNR), and the average accuracy improved from 65.4% to 78.7%. Unlike the above training systems (Kus et al., 2012; Baykara et al., 2016; Herweg et al., 2016; Wan et al., 2016; Yao et al., 2019), the main purpose of this study is to achieve performance improvements in BCW control through the training environment. However, it is worth mentioning that the average accuracy is improved and the mean ITR is also increased (p < 0.001). Although the accuracy and ITR after training are higher than those reported in the above studies (Kus et al., 2012; Baykara et al., 2016; Herweg et al., 2016; Wan et al., 2016; Yao et al., 2019), we pay more attention to the improvement of users’ control ability and performance. With regard to control performance, the number of commands decreases significantly while the score increases significantly (p < 0.001). Taken together, these comparisons indicate that the performance of subjects in BCW control is improved and support the feasibility of the proposed environment.

Unlike previous simulated BCW systems (Leeb et al., 2007; Gentiletti et al., 2009; Huang et al., 2012; Francisco et al., 2013; Herweg et al., 2016), the training scenario in this work is modeled on a real indoor environment (e.g., home, hospital), and the positions and sizes of the objects are almost the same as in that environment. The work (operation steps and process) required for the subjects to drive the BCW from the starting point to the goal point in the simulated environment is basically the same as in the real environment, so users become familiar with the environment in advance while training. Meanwhile, the ASP obtained by path planning can be used to assist driving route selection in a real environment. The training scenario also provides an operator interface for adjusting parameters (e.g., size, shape, and obstacle placement) according to the actual situation, and users are able to choose routes commonly used in daily life for specific training. For the simulated wheelchair, the motion parameters (e.g., speed, rotation angle) can be set and adjusted to match different real motions. Furthermore, the simulated training environment is not limited to SSVEP-based BCW. To better satisfy the needs of practical applications, this paper uses an SSVEP-based BCI to obtain user commands as the control input for the environment. Given the characteristics of ERD/ERS-based BCI and P300-based BCI, better training effects can be expected with them.
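To make the idea of a planned reference path concrete, the following sketch finds a shortest path on a small 2D occupancy grid with the A* algorithm. This section does not state which planner produced the ASP, so A* on a 4-connected grid is used here purely as a familiar stand-in; the function name `a_star`, the grid encoding (0 for free, 1 for obstacle), and the example map are all hypothetical.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (obstacle) cells.

    Returns a list of (row, col) cells from start to goal, or None if the
    goal cannot be reached. Every move costs 1.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


# Hypothetical map: a wall with a single gap (a "door") in the middle row.
example_grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
example_path = a_star(example_grid, (0, 0), (2, 0))
```

In a setup like this, the number of moves along the returned path would play the role of the nominal command count against which a user's actual commands are compared.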

On the other hand, several aspects need further improvement. First, the evaluation matrix can be extended with more factors (e.g., fatigue), and in the future the scoring could be used to assess whether users are ready to carry out BCW control in the real environment. Second, the SSVEP-based BCI adopts a synchronous protocol, which cannot discriminate between the control and idle states of subjects. Adding an ON/OFF switch is one good solution to this problem (Cheng et al., 2002). Finally, the simulated scenario is designed in 2D space for the convenience of path planning and recommendation. Virtual reality (VR) technology can be used to build a higher-quality simulated environment (Rutkowski, 2016). In addition, although only 10 subjects took part and completed four training sessions within 7 days, the satisfactory results of the subjects demonstrate the feasibility and training effect of the environment. Next, we will conduct simulated training with ERD/ERS-based or P300-based BCW to further verify the performance of the environment, and invite more subjects (including disabled subjects) to participate in the experiment.

Conclusion

In this study, an indoor simulated training environment for SSVEP-based BCW control training is built. A 7-day training experiment, comprising an individual accuracy task and four other tasks with or without ASP, is designed and validated. The cases of right-angle and S-shaped bends, crossing corridors and doors, and obstacle avoidance are practiced. Scoring and the number of commands consumed are used to measure the performance and training effect of the subjects. The experimental results show that subjects can carry out BCW control training in our indoor simulated training environment and achieve a measurable improvement, which supports the feasibility and effectiveness of the environment.

Data Availability Statement

The datasets and programs generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by the Research Ethics Committee of Chinese Academy of Medical Sciences, China. The patients/participants provided their written informed consent to participate in this study.

Author Contributions

ML and KW designed the research. ML performed the research. XC, KW, and ML wrote the programs. ML, YC, and JZ analyzed the data. ML, KW, and JZ wrote the manuscript. HW, JW, and SX provided facilities and equipment.

Funding

This work was supported by the National Natural Science Foundation of China (Grant Nos. 61603416 and 81774148), the youth of Peking Union Medical College (Grant No. 3332016103), and CAMS Innovation Fund for Medical Sciences (CIFMS 2016-I2M-3-023).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to express their gratitude to the volunteers.

References

Abiri, R., Borhani, S., Sellers, E. W., Jiang, Y., and Zhao, X. (2019). A comprehensive review of EEG-based brain–computer interface paradigms. J. Neural Eng. 16:011001. doi: 10.1088/1741-2552/aaf12e

Baykara, E., Ruf, C. A., Fioravanti, C., Käthner, I., Simon, N., Kleih, S. C., et al. (2016). Effects of training and motivation on auditory P300 brain–computer interface performance. Clin. Neurophysiol. 127, 379–387. doi: 10.1016/j.clinph.2015.04.054

Bi, L., Fan, X., Jie, K., Teng, T., Ding, H., and Liu, Y. (2014). Using a head-up display-based steady-state visually evoked potential brain–computer interface to control a simulated vehicle. IEEE Trans. Intell. Transp. Syst. 15, 959–966. doi: 10.1109/TITS.2013.2291402

Bi, L., Fan, X., and Liu, Y. (2013). EEG-based brain-controlled mobile robots: a survey. IEEE Trans. Hum. Mach. Syst. 43, 161–176. doi: 10.1109/TSMCC.2012.2219046

Cao, L., Li, J., Ji, H., and Jiang, C. (2014). A hybrid brain computer interface system based on the neurophysiological protocol and brain-actuated switch for wheelchair control. J. Neurosci. Methods 229, 33–43. doi: 10.1016/j.jneumeth.2014.03.011

Chen, X., Chen, Z., Gao, S., and Gao, X. (2014). A high-ITR SSVEP-based BCI speller. Brain Comput. Interfaces 1, 181–191. doi: 10.1080/2326263X.2014.944469

Chen, X., Wang, Y., Gao, S., Jung, T.-P., and Gao, X. (2015). Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain–computer interface. J. Neural Eng. 12:046008. doi: 10.1088/1741-2560/12/4/046008

Cheng, M., Gao, X. R., Gao, S. G., and Xu, D. F. (2002). Design and implementation of a brain-computer interface with high transfer rates. IEEE Trans. Biomed. Eng. 49, 1181–1186. doi: 10.1109/tbme.2002.803536

Diez, P. F., Müller, S. M. T., Mut, V. A., Laciar, E., Avila, E., Bastos-Filho, T. F., et al. (2013). Commanding a robotic wheelchair with a high-frequency steady-state visual evoked potential based brain-computer interface. Med. Eng. Phys. 35, 1155–1164. doi: 10.1016/j.medengphy.2012.12.005

Fernández-Rodríguez, Á., Velasco-Álvarez, F., and Ron-Angevin, R. (2016). Review of real brain-controlled wheelchairs. J. Neural Eng. 13:061001. doi: 10.1088/1741-2560/13/6/061001

Francisco, V. -Á., Ricardo, R.-A., Leandro, D. S.-S., and Salvador, S.-R. (2013). Audio-cued motor imagery-based brain–computer interface: navigation through virtual and real environments. Neurocomputing 121, 89–98. doi: 10.1016/j.neucom.2012.11.038

Galán, F., Nuttin, M., Lew, E., Ferrez, P. W., Vanacker, G., Philips, J., et al. (2008). A brain-actuated wheelchair: asynchronous and non-invasive brain–computer interfaces for continuous control of robots. Clin. Neurophysiol. 119, 2159–2169. doi: 10.1016/j.clinph.2008.06.001

Gentiletti, G. G., Gebhart, J. G., Acevedo, R. C., Yáñez-Suárez, O., and Medina-Bañuelos, V. (2009). Command of a simulated wheelchair on a virtual environment using a brain-computer interface. IRBM 30, 218–225. doi: 10.1016/j.irbm.2009.10.006

Griffiths, L., and Hughes, D. (1993). Typification in a neuro-rehabilitation centre: scheff revisited? Soc. Rev. 41, 415–445. doi: 10.1111/j.1467-954X.1993.tb00072.x

Herweg, A., Gutzeit, J., Kleih, S., and Kübler, A. (2016). Wheelchair control by elderly participants in a virtual environment with a brain-computer interface (BCI) and tactile stimulation. Biol. Psychol. 121, 117–124. doi: 10.1016/j.biopsycho.2016.10.006

Holden, M. K. (2005). Virtual environments for motor rehabilitation: review. Cyberpsychol. Behav. 8, 187–211. doi: 10.1089/cpb.2005.8.187

Hong, K.-S., Khan, M. J., and Hong, M. J. (2018). Feature extraction and classification methods for hybrid fNIRS-EEG brain-computer interfaces. Front. Hum. Neurosci. 12:246. doi: 10.3389/fnhum.2018.00246

Huang, D., Qian, K., Fei, D., Jia, W., Chen, X., and Bai, O. (2012). Electroencephalography (EEG)-based brain–computer interface (BCI): a 2-D virtual wheelchair control based on event-related desynchronization/synchronization and state control. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 379–388. doi: 10.1109/TNSRE.2012.2190299

Iturrate, I., Antelis, J. M., Kubler, A., and Minguez, J. (2009). A noninvasive brain-actuated wheelchair based on a P300 neurophysiological protocol and automated navigation. IEEE Trans. Robot. 25, 614–627. doi: 10.1109/TRO.2009.2020347

Kaufman, S., and Becker, G. (1986). Stroke: health care on the periphery. Soc. Sci. Med. 22, 983–989. doi: 10.1016/0277-9536(86)90171-1

Kenyon, R. V., and Afenya, M. B. (1995). Training in virtual and real environments. Ann. Biomed. Eng. 23, 445–455. doi: 10.1007/BF02584444

Khan, M. J., and Hong, K.-S. (2017). Hybrid EEG–fNIRS-based eight-command decoding for BCI: application to quadcopter control. Front. Neurorobot. 11:6. doi: 10.3389/fnbot.2017.00006

Kim, K. T., Suk, H. I., and Lee, S. W. (2018). “Commanding a brain-controlled wheelchair using steady-state somatosensory evoked potentials,” in Proceedings of the IEEE Transactions on Neural Systems and Rehabilitation Engineering, Piscataway, NJ.

Koenig, S., and Likhachev, M. (2005). Fast replanning for navigation in unknown terrain. IEEE Trans. Robot. 21, 354–363. doi: 10.1109/TRO.2004.838026

Kus, R., Valbuena, D., Zygierewicz, J., Malechka, T., Graeser, A., and Durka, P. (2012). Asynchronous BCI based on motor imagery with automated calibration and neurofeedback training. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 823–835. doi: 10.1109/TNSRE.2012.2214789

Le, A., Prabakaran, V., Sivanantham, V., and Mohan, R. (2018). Modified A-star algorithm for efficient coverage path planning in tetris inspired self-reconfigurable robot with integrated laser sensor. Sensors 18:2585. doi: 10.3390/s18082585

Leeb, R., Friedman, D., Müller-Putz, L., Scherer, R., Slater, M., and Pfurtscheller, G. (2007). Self-paced (asynchronous) BCI control of a wheelchair in virtual environments: a case study with a tetraplegic. Comput. Intell. Neurosci. 2007:79642. doi: 10.1155/2007/79642

Li, J., Ji, H., Cao, L., Zang, D., Gu, R., Xia, B., et al. (2014). Evaluation and application of a hybrid brain computer interface for real wheelchair parallel control with multi-degree of freedom. Int. J. Neural Syst. 24:1450014. doi: 10.1142/s0129065714500142

Li, W., Duan, F., Sheng, S., Xu, C., Liu, R., Zhang, Z., et al. (2018). A human-vehicle collaborative simulated driving system based on hybrid brain–computer interfaces and computer vision. IEEE Trans. Cogn. Dev. Syst. 10, 810–822. doi: 10.1109/TCDS.2017.2766258

Lin, Z., Zhang, C., Wu, W., and Gao, X. (2007). Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Trans. Biomed. Eng. 54, 1172–1176. doi: 10.1109/TBME.2006.889197

Long, J., Li, Y., Wang, H., Yu, T., Pan, J., and Li, F. (2012). A hybrid brain computer interface to control the direction and speed of a simulated or real wheelchair. IEEE Trans. Neural Syst. Rehabil. Eng. 20, 720–729. doi: 10.1109/TNSRE.2012.2197221

Maclean, N., Pound, P., Wolfe, C., and Rudd, A. (2000). Qualitative analysis of stroke patients’ motivation for rehabilitation. BMJ 321, 1051–1054. doi: 10.1136/bmj.321.7268.1051

Mellinger, J., Schalk, G., Braun, C., Preissl, H., Rosenstiel, W., Birbaumer, N., et al. (2007). An MEG-based brain–computer interface (BCI). Neuroimage 36, 581–593. doi: 10.1016/j.neuroimage.2007.03.019

Müller, S. M. T., Bastos-Filho, T. F., and Filho, M. S. (2013). Proposal of a SSVEP-BCI to command a robotic wheelchair. J. Control Autom. Elect. Syst. 24, 97–105. doi: 10.1007/s40313-013-0002

Müller, S. M. T., Diez, P. F., Bastos-Filho, T. F., Sarcinelli-Filho, M., Mut, V., Laciar, E., et al. (2015). “Robotic wheelchair commanded by people with disabilities using low/high-frequency SSVEP-based BCI,” in World Congress on Medical Physics and Biomedical Engineering, ed. D. A. Jaffray, (Toronto: Springer International Publishing), 1177–1180. doi: 10.1007/978-3-319-19387-8_285

Naseer, N., and Hong, K.-S. (2015). fNIRS-based brain-computer interfaces: a review. Front. Hum. Neurosci. 9:3. doi: 10.3389/fnhum.2015.00003

Nikolay, V. M., Nikolay, C., Arne, R., Adrien, C., Marijn van, V., and Marc, M. V. H. (2013). Sampled sinusoidal stimulation profile and multichannel fuzzy logic classification for monitor-based phase-coded SSVEP brain–computer interfacing. J. Neural Eng. 10:036011. doi: 10.1088/1741-2560/10/3/036011

Pastor, M. A., Artieda, J., Arbizu, J., Valencia, M., and Masdeu, J. C. (2003). Human cerebral activation during steady-state visual-evoked responses. J. Neurosci. 23, 11621–11627. doi: 10.1523/jneurosci.23-37-11621.2003

Rebsamen, B., Burdet, E., Cuntai, G., Haihong, Z., Chee Leong, T., Qiang, Z., et al. (2006). “A brain-controlled wheelchair based on P300 and path guidance,” in Proceedings of the First IEEE/RAS-EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), (Pisa: IEEE Press), 1101–1106.

Rebsamen, B., Burdet, E., Guan, C., Zhang, H., Teo, C. L., Zeng, Q., et al. (2007). Controlling a wheelchair indoors using thought. IEEE Intell. Syst. 22, 18–24. doi: 10.1109/MIS.2007.26

Rebsamen, B., Guan, C., Zhang, H., Wang, C., Teo, C., Ang, M. H., et al. (2010). A brain controlled wheelchair to navigate in familiar environments. IEEE Trans. Neural Syst. Rehabil. Eng. 18, 590–598. doi: 10.1109/TNSRE.2010.2049862

Rose, F. D., Attree, E. A., Brooks, B. M., Parslow, D. M., Penn, P. R., and Ambihaipahan, N. (2000). Training in virtual environments: transfer to real world tasks and equivalence to real task training. Ergonomics 43, 494–511. doi: 10.1080/001401300184378

Rutkowski, T. M. (2016). Robotic and virtual reality BCIs using spatial tactile and auditory oddball paradigms. Front. Neurorobot. 10:20. doi: 10.3389/fnbot.2016.00020

Satti, A. R., Coyle, D., and Prasad, G. (2011). “Self-paced brain-controlled wheelchair methodology with shared and automated assistive control,” in Proceedings of the 2011 IEEE Symposium on Computational Intelligence, Cognitive Algorithms, Mind, and Brain (CCMB), (Paris: IEEE Press), 1–8.

Sitaram, R., Weiskopf, N., Caria, A., Veit, R., Erb, M., and Birbaumer, N. (2008). fMRI brain-computer interfaces. IEEE Signal Process. Mag. 25, 95–106. doi: 10.1109/msp.2008.4408446

Tanaka, K., Matsunaga, K., and Wang, H. (2005). Electroencephalogram-based control of an electric wheelchair. IEEE Trans. Robot. 21, 762–766. doi: 10.1109/TRO.2004.842350

Tang, J., Liu, Y., Hu, D., and Zhou, Z. (2018). Towards BCI-actuated smart wheelchair system. Biomed. Eng. Online 17:111. doi: 10.1186/s12938-018-0545-x

Todorov, E., Shadmehr, R., and Bizzi, E. (1997). Augmented feedback presented in a virtual environment accelerates learning of a difficult motor task. J. Mot. Behav. 29, 147–158. doi: 10.1080/00222899709600829

Wan, F., da Cruz, J. N., Nan, W., Wong, C. M., Vai, M. I., and Rosa, A. (2016). Alpha neurofeedback training improves SSVEP-based BCI performance. J. Neural Eng. 13:036019. doi: 10.1088/1741-2560/13/3/036019

Wang, H., and Bezerianos, A. (2017). Brain-controlled wheelchair controlled by sustained and brief motor imagery BCIs. Elect. Lett. 53, 1178–1180. doi: 10.1049/el.2017.1637

Wang, H., Li, T., Bezerianos, A., Huang, H., He, Y., and Chen, P. (2019). The control of a virtual automatic car based on multiple patterns of motor imagery BCI. Med. Biol. Eng. Comput. 57, 299–309. doi: 10.1007/s11517-018-1883-3

Wang, Y., Wang, Y., and Jung, T. (2010). Visual stimulus design for high-rate SSVEP BCI. Elect. Lett. 46, 1057–1058. doi: 10.1049/el.2010.0923

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain–computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/S1388-2457(02)00057-3

Yao, L., Sheng, X., Mrachacz-Kersting, N., Zhu, X., Farina, D., and Jiang, N. (2019). Sensory stimulation training for BCI system based on somatosensory attentional orientation. IEEE Trans. Biomed. Eng. 66, 640–646. doi: 10.1109/TBME.2018.2852755

Yu, Y., Zhou, Z., Liu, Y., Jiang, J., Yin, E., Zhang, N., et al. (2017). “Self-paced operation of a wheelchair based on a hybrid brain-computer interface combining motor imagery and P300 potential,” in Proceedings of the IEEE Transactions on Neural Systems and Rehabilitation Engineering, Piscataway, NJ.

Keywords: simulated environment, brain-controlled wheelchair, indoor training, steady-state visual evoked potentials, path recommendation

Citation: Liu M, Wang K, Chen X, Zhao J, Chen Y, Wang H, Wang J and Xu S (2020) Indoor Simulated Training Environment for Brain-Controlled Wheelchair Based on Steady-State Visual Evoked Potentials. Front. Neurorobot. 13:101. doi: 10.3389/fnbot.2019.00101

Received: 19 June 2019; Accepted: 19 November 2019;

Published: 08 January 2020.

Edited by:

Keum-Shik Hong, Pusan National University, South Korea

Reviewed by:

Erwei Yin, China Astronaut Research and Training Center, China

Ronald Lee Kirby, Dalhousie University, Canada

Copyright © 2020 Liu, Wang, Chen, Zhao, Chen, Wang, Wang and Xu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ming Liu, bGl1bWluZ190anVAMTYzLmNvbQ==
