Abstract

Managing noise in sensor input signals is one of the main problems limiting robot control performance. This article introduces a novel neuromorphic filter model based on a leaky integrate-and-fire (LIF) neuron cell, which encodes the primary information from a noisy input signal and delivers an output signal with significantly reduced noise in practically real time and with low energy consumption. A new approach to neural decoding based on the spiking frequency of the neuron cell is introduced to recover the primary signal information. Simulations of the neuromorphic filter demonstrate strong white-noise rejection while preserving the original noiseless signal with low information loss. The proposed filter model is compatible with CMOS design methodologies for implementing low-consumption smart sensors, with applications in fields such as robotics and the automotive industry, as demanded by Industry 4.0.

1. Introduction

The term neuromorphic, coined by Mead (1990), refers to Very Large Scale Integration (VLSI) systems that aim to reproduce biological neuron behaviors. Neuromorphic computing platforms are relatively simple in terms of the number of active elements (transistors) they need to replicate brain-like responses, compared with complex traditional digital units (microprocessors). Today, the convergence of electronics, computing science, and neuroscience offers abundant inspiration to explore novel hardware structures, algorithms, and innovative ways to process information more efficiently while keeping energy waste and material use low (Schuman et al., 2017). One of the most remarkable contributions of this interdisciplinary convergence is the conception of spiking neurons (SN), also called the third generation of artificial neurons (Maass, 1997). The main difference from previous generations is the inclusion of temporal information in the computing process, which makes it possible to process time-varying signals efficiently. Unfortunately, large-scale modeling of SN units is limited by the high computational cost of numerically solving the whole set of differential equations representing each SN unit. Therefore, it is more convenient to design and implement these units in silicon and in the analog domain, which bypasses this large numerical computation effort.

Traditional analog filters are designed to pass specific frequency-domain components of a signal and attenuate the rest. This approach has proven effective against noise that lies primarily outside the signal frequency range. However, linear filters cannot clean noisy signals when the disturbance lies in the same frequency range as the primary signal. In this context, digital filters, especially averaging filter techniques, take precedence at the cost of additional resources. For this reason, some filter proposals based on the use of several SNs have been made (Orchard et al., 2021; Sharifshazileh et al., 2021). Generally, integrated circuits hosting neuromorphic implementations have an inherent capacity to extract the primary features of their inputs, since the integrals tied to the SN model can be interpreted as an average-value operator over a time window. Thus, neuromorphic systems allow average filtering while retaining the benefits of analog circuits.

2. Methods

2.1. Neural Circuit

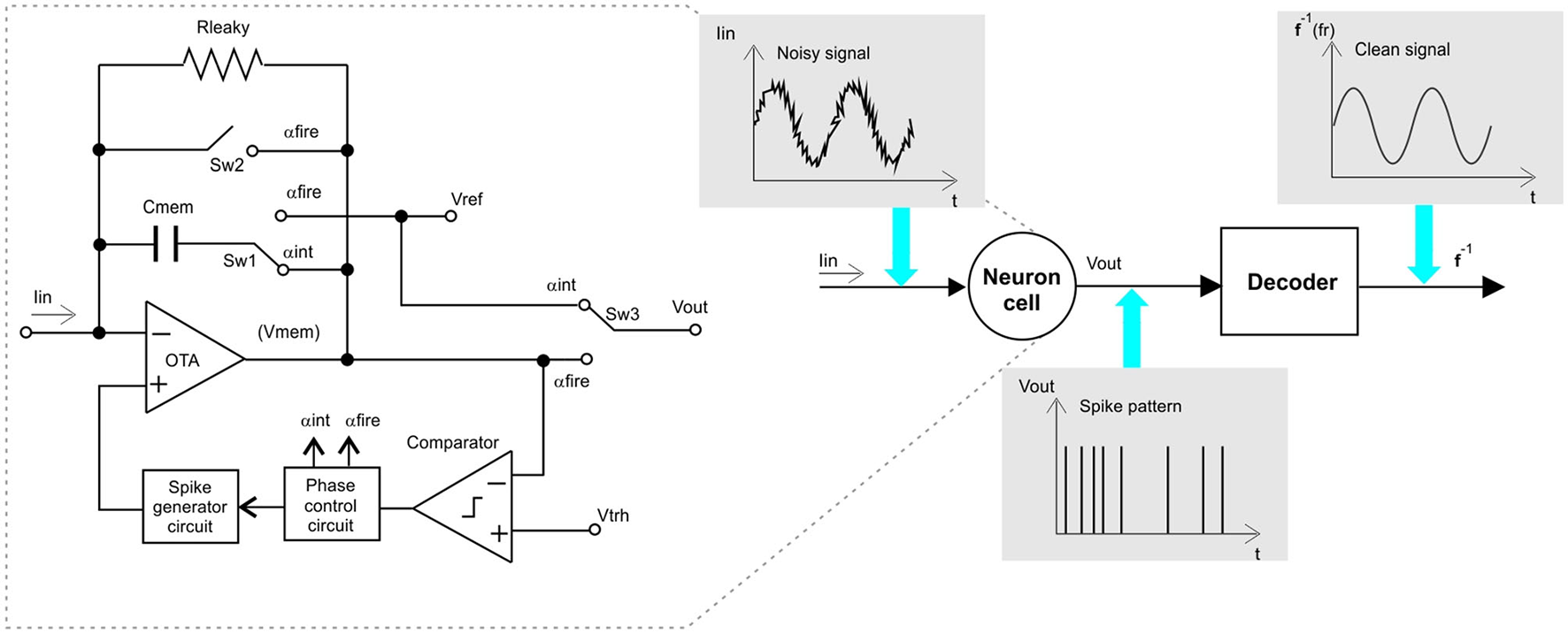

Several analog implementations of neural model circuits have been reported (Abbott, 1999; Wijekoon and Dudek, 2008; Zamarreño-Ramos et al., 2011; Wu et al., 2015; Zare et al., 2021). Throughout this study, the leaky integrate-and-fire neuron (IFN) circuit proposed in Wu et al. (2015) was used, as shown in Figure 1. However, the proposed methodology could easily be adapted to work with other neural circuit models. Wu's circuit is divided into two main parts: a leaky current integrator (LI) circuit that emulates the behavior of a neuron during depolarization, and a reset engine that returns the output voltage of the operational amplifier (Vmem) to a reference voltage level (Vref). It also generates a spike shape compatible with memristor technology, which allows weight adjustment during the learning phase.

Figure 1

Neuromorphic filter architecture, showing a spiking neuron CMOS circuit implementation, see Wu et al. (2015).

Wu's neural circuit, shown in Figure 1, operates in two modes: integration and firing. In integration mode, the OPAMP works as a leaky integrator of the current Iin flowing into its negative input, and the voltage at the OPAMP output decreases until it reaches a set threshold voltage, Vthr. The comparator circuit compares the membrane voltage, Vmem, with Vthr and generates a signal that activates the Phase Control block when the descending Vmem reaches Vthr. At this moment, the Phase Control block commands the Spike Generator block to initiate a spike event with a predefined waveform and switches the control signals, setting αfire to ON and αint to OFF. These control signals are complementary, and their current states reconfigure the neural circuit: if αint is ON, the OPAMP works in integration mode; if αfire is ON, the neuron is in firing mode. During integration mode, the neuron output Vout is held at Vref; at the same time, the spike generator block must hold Vref at the positive OPAMP input, which is buffered at the negative OPAMP input. In firing mode, Vout is connected to Vmem, generating a spike event and feeding Vmem back to the negative OPAMP input. At the end of firing mode, Cmem is reset to the Vref potential.

The equivalent model of the LI section is presented in Equation (1).

where Vp is the target voltage; while the circuit is in integration mode, Vp corresponds to Vthr.

Wu's circuit can be further simplified to implement the proposed methodology for filtering noisy signals. The simplification consists of establishing constant delays before switching states and restarting the integration phase. This imposes a period of neuron inactivity corresponding to the refractory period seen in biological brains. This behavior is modeled as shown below:

Once the neuron is in the refractory period, it maintains its state for a predefined period, after which it returns to the previous (integration) state.
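The integrate, fire, and refractory cycle described above can be sketched in a few lines of Python. This is a minimal forward-Euler illustration, not the authors' simulation code: the function name `simulate_lif`, the threshold excursion `v_swing` (standing in for |Vthr − Vref|), and the time step are assumptions introduced here, while Cmem, Rleak, and the 10 μs refractory period are the values quoted later in the text.

```python
import numpy as np

def simulate_lif(i_in, dt, c_mem=1e-6, r_leak=10e3, v_swing=0.05, t_ref=10e-6):
    """Forward-Euler leaky integrate-and-fire cell; returns spike times in seconds.

    v tracks the membrane excursion |Vmem - Vref|. A spike is registered when
    v reaches v_swing = |Vthr - Vref| (hypothetical value, not from the paper).
    """
    ref_steps = int(round(t_ref / dt))  # refractory period expressed in samples
    v = 0.0
    wait = 0
    spikes = []
    for k, i_k in enumerate(i_in):
        if wait > 0:
            # Refractory state: the input is ignored and v stays reset.
            wait -= 1
            continue
        # Current balance at the input node: Iin = v / Rleak + Cmem * dv/dt
        v += dt * (i_k - v / r_leak) / c_mem
        if v >= v_swing:
            spikes.append((k + 1) * dt)  # time at the end of this step
            v = 0.0                      # reset to the reference level
            wait = ref_steps
    return np.array(spikes)
```

With a constant input the cell fires at a fixed period, which is the deterministic behavior that the frequency-based decoding relies on.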

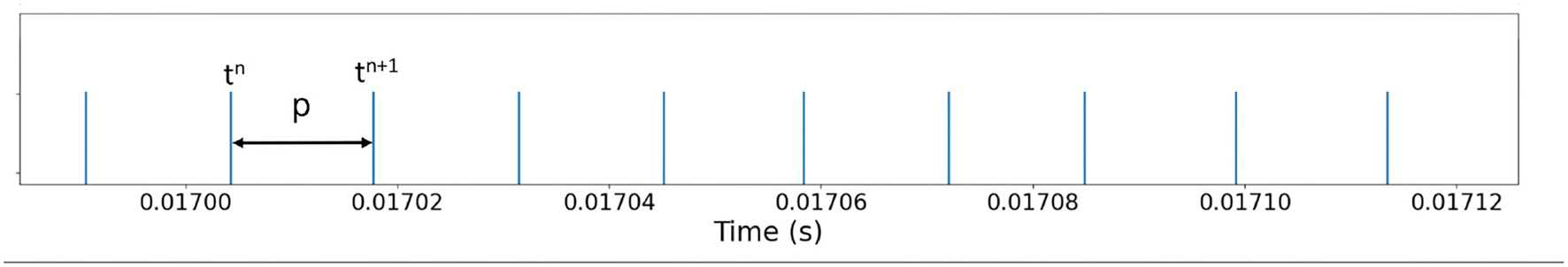

2.2. Tuning Curves

Since spike trains convey information through their timing, and all spike waveforms produced by neural circuit models are assumed to be identical (Gerstner et al., 2016), the membrane potential in neuromorphic circuits can be characterized simply by a list of events t0, t1, ..., tn, where 0 ≤ ti ≤ T and ti, with i = 0, 1, 2, ..., n, is the i-th spike time in an observed period T (Dayan and Abbott, 2001). Figure 2 shows a representation of this list.

Figure 2

Neuron circuit spike response. Since the spike waveform is not needed for the filtering process, spike events are registered as a list of the times at which the neuron circuit reaches the threshold voltage. The time elapsed between tn and tn+1 is denoted as p.

A simple way to characterize the response of a neuromorphic circuit is to count the number of voltage peaks fired during the presentation of a stimulus (input current). By repeating this operation for a number of different stimuli, it is possible to estimate a function, f, that describes the relationship between an input current, Iin, and a spike frequency, fr (Dayan and Abbott, 2001; Eliasmith, 2013). In this study, an alternative way to estimate the neuron frequency is proposed. Since neuromorphic circuits have no stochastic behavior, the same neuron frequency response will always be obtained for a given Iin. Therefore, by measuring the time elapsed between two consecutive spikes, p = tn − tn−1, the frequency is obtained as fr = 1/p.
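As a small illustration of the interval-based estimate, the sketch below turns a list of spike times into one frequency value per pair of consecutive spikes. The function name `instantaneous_rate` is hypothetical and chosen only for this example.

```python
import numpy as np

def instantaneous_rate(spike_times):
    """Spike frequency (Hz) from consecutive inter-spike intervals p = t_n - t_(n-1)."""
    p = np.diff(np.asarray(spike_times, dtype=float))
    return 1.0 / p

# Three spikes give two intervals (0.5 s and 0.25 s), hence two rate estimates.
rates = instantaneous_rate([0.0, 0.5, 0.75])
```

Unlike spike counting over a long window, this estimate is refreshed at every spike, which is what allows the decoded value to follow a time-varying input.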

2.3. Mean Value Theorem for Integrals

The time between consecutive spikes in the circuit presented in Figure 1 is determined by the mean value of the input current over that interval.

Rearranging elements from Equation (1), we find the next expression.

Equation (2) corresponds to Kirchhoff's current law, which sums all the currents at the input node, Iin = IRLeak + ICmem, where IRLeak(t) is the current through RLeak, whose behavior is unknown in advance; thus:

Now, integrating both sides of Equation (3) over the time interval between two consecutive neural events (spike occurrences) results in Equation (4). Internal values of the neuromorphic units are reset at the end of each neural event,

Solving integrals on both sides:

where the value Vmem = Vthr − Vref results at the end of the integration period. Equation (5) corresponds to the Mean Value Theorem for integrals (Stewart, 2018). Thus, a constant value of Iin exists such that, applied over the time interval tn − tn−1, it equals the value of the current IRLeak(t) over the same period. In particular, Iin can be seen as the mean value of the current over the period tn − tn−1 plus a constant value (CmemVmem).
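The steps of this section can be written out as follows. This is a sketch assembled from the definitions given in the text (the current balance Iin = IRLeak + ICmem, integration between consecutive spikes, and the membrane excursion Vthr − Vref), so the exact form and sign convention of the published Equations (3) to (5) are assumptions; the symbol \bar{I}_{in} for the equivalent constant input current is introduced here for illustration.

```latex
\begin{align}
I_{in}(t) &= I_{R_{Leak}}(t) + C_{mem}\,\frac{dV_{mem}(t)}{dt} \\
\int_{t_{n-1}}^{t_n} I_{in}(t)\,dt
  &= \int_{t_{n-1}}^{t_n} I_{R_{Leak}}(t)\,dt
   + C_{mem}\int_{t_{n-1}}^{t_n} \frac{dV_{mem}(t)}{dt}\,dt \\
\bar{I}_{in}\,\left(t_n - t_{n-1}\right)
  &= \int_{t_{n-1}}^{t_n} I_{R_{Leak}}(t)\,dt
   + C_{mem}\left(V_{thr} - V_{ref}\right)
\end{align}
```

Dividing the last line by p = tn − tn−1 expresses the equivalent input current as the time average of the leak current over the inter-spike interval plus a term that depends only on Cmem, the fixed membrane excursion, and p.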

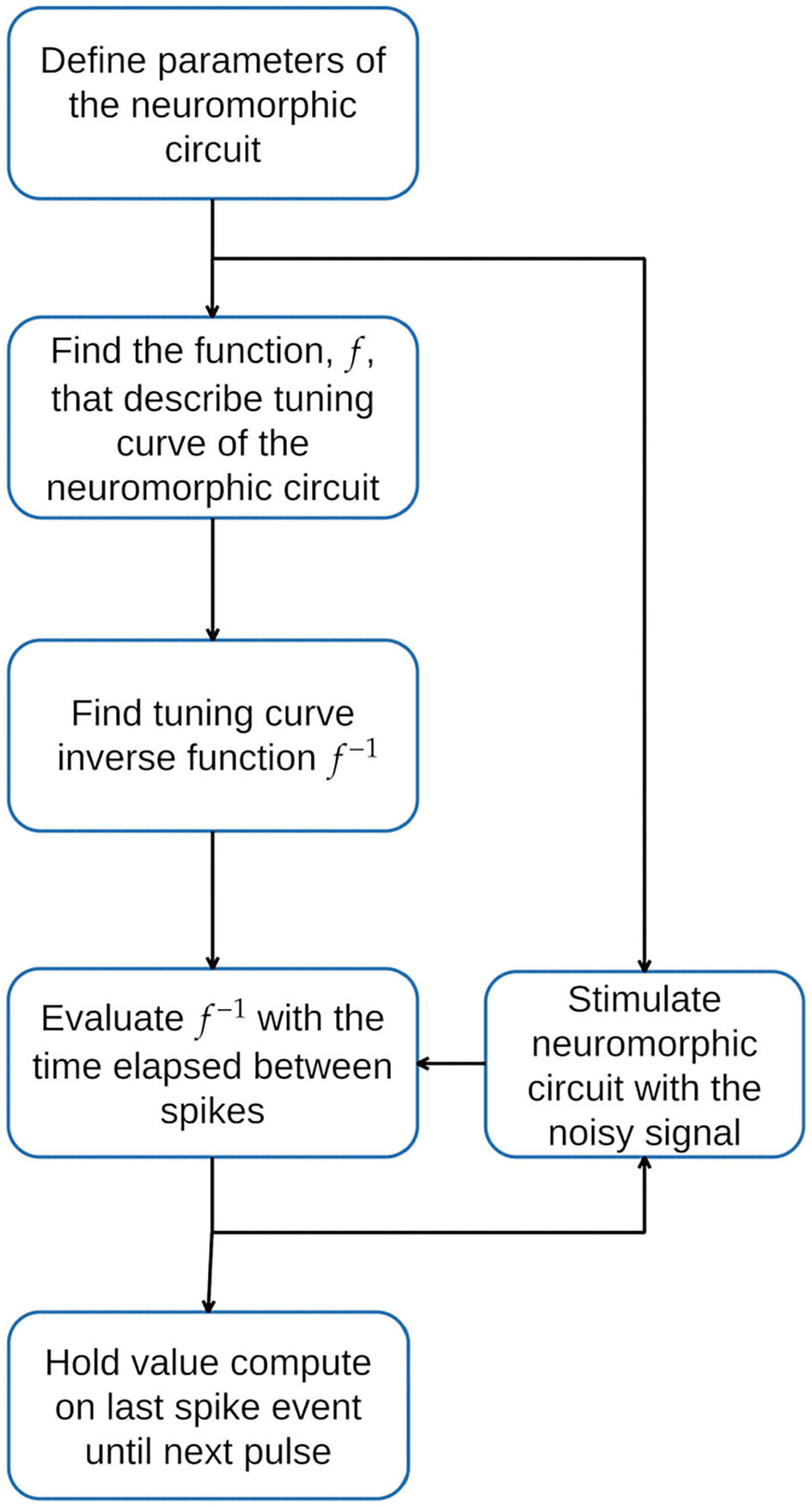

3. Proposed Methodology for a Neural Filter Design

Our proposal consists of using the tuning curve function of the neuromorphic circuit to estimate the Iin value in Equation (5). The methodology proposed for using a neural circuit as a signal filter is depicted in Figure 3.

Figure 3

Proposed Methodology. First, define the parameters of the neural circuit. Second, characterize the response to obtain the circuit tuning curve function, f, and its inverse function. Finally, stimulate the neural circuit with the noisy input signal and use the time elapsed since the last spike to evaluate the inverse function f^−1. The value computed using f^−1 is held until the next event occurs.

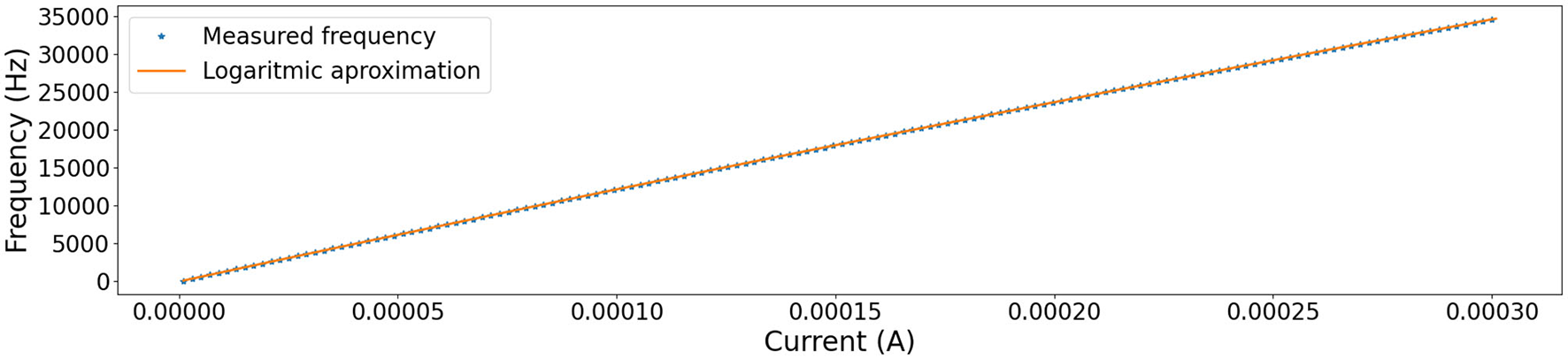

The tuning curve of the circuit, shown in Figure 4, is obtained by sweeping the current Iin of Equation (1) over the interval [0, 300] μA with the following electrical and timing parameters: Cmem = 1 μF, Rleak = 10 kΩ, and a refractory period of 10 μs. For each current, the time elapsed between membrane potential spikes is measured. The equation below is proposed as a prototype to estimate the function f.

The parameters a = 1724.8761, b = 21.6051, and c = −161.1285 are determined using nonlinear least-squares curve fitting (Virtanen et al., 2020).
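The characterization step can be illustrated with a short Python sketch. Note that it does not use the fitted prototype of Equation (6): as a stand-in, it uses the textbook closed-form firing rate of an ideal LIF cell under constant current and inverts it by table lookup with `numpy.interp`. The threshold excursion `V_SWING`, the sweep grid, and the function names are assumptions made for this example.

```python
import numpy as np

C_MEM, R_LEAK, T_REF = 1e-6, 10e3, 10e-6  # values quoted in the text
V_SWING = 0.05                            # |Vthr - Vref| in volts, hypothetical

def lif_rate(i_in):
    """Firing rate (Hz) of an ideal LIF cell driven by a constant current (A)."""
    i_in = np.atleast_1d(np.asarray(i_in, dtype=float))
    rate = np.zeros_like(i_in)
    fires = i_in * R_LEAK > V_SWING       # below this drive the cell never fires
    drive = i_in[fires] * R_LEAK
    t_charge = R_LEAK * C_MEM * np.log(drive / (drive - V_SWING))
    rate[fires] = 1.0 / (T_REF + t_charge)
    return rate

# Sweep the input current and tabulate the tuning curve f.
currents = np.linspace(10e-6, 300e-6, 300)
rates = lif_rate(currents)

def current_from_period(p):
    """Numerical f^-1: inter-spike interval p (s) -> equivalent input current (A)."""
    freq = 1.0 / np.asarray(p, dtype=float)
    # rates is strictly increasing over the sweep, so interpolation is well defined.
    return np.interp(freq, rates, currents)
```

A lookup table avoids committing to a particular functional form; a fitted closed-form expression, as used in the article, has the advantage of being directly realizable as a circuit.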

Figure 4

Tuning curve of the circuit used. Markers show the frequencies measured by sweeping the current Iin of Equation (1) over the interval 0−300 μA, with electrical and timing parameters Cmem = 1 μF, Rleak = 10 kΩ, and a refractory period of 10 μs. The orange line shows the approximation given by Equation (6). * refers to the measured spike frequency. - refers to the approximation of the measurements made by Equation (6).

Because Equation (6) is invertible, we can take two consecutive spikes and calculate the current from the period elapsed between them. That is to say, f^−1 computes the equivalent input current value in the system (Iin).

Where:

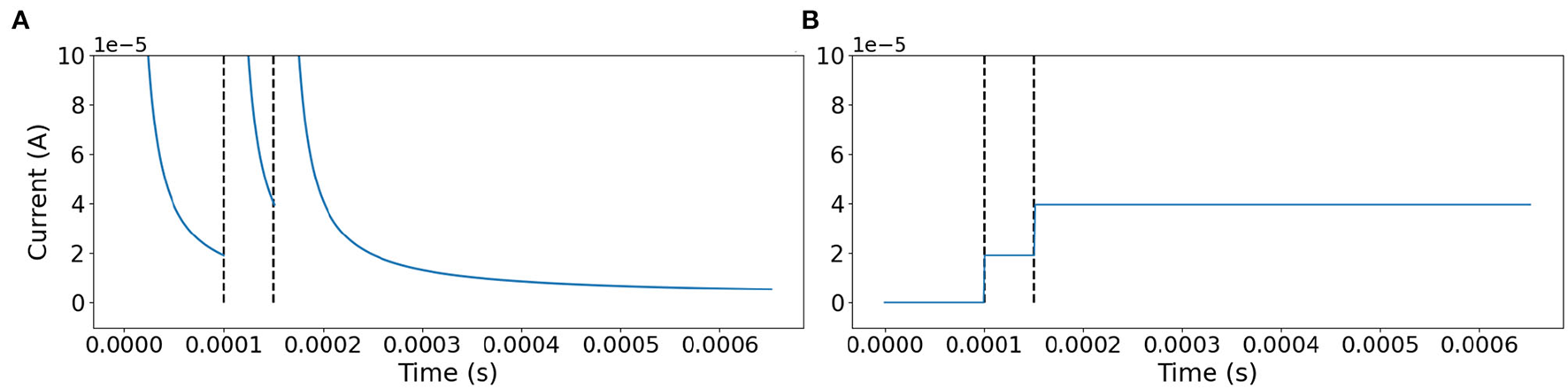

with p = tn − tn−1, where tn is the time of the n-th spike. To keep values on a more convenient time scale, α = 1 × 10^4 and β = 1 × 10^−6 are added as scale factors. Equation (7) is evaluated at each spike and the value is held until the next spike occurs (refer to Figure 5B).
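The evaluate-and-hold behavior can be sketched as below. The function `hold_decode` and its argument `inverse_tuning` are hypothetical names: `inverse_tuning` stands for any callable that maps an array of inter-spike intervals to current estimates, such as an implementation of Equation (7).

```python
import numpy as np

def hold_decode(spike_times, inverse_tuning, t):
    """Piecewise-constant reconstruction: decode at each spike, hold until the next.

    Returns NaN for query times before the second spike, where no interval exists.
    """
    spike_times = np.asarray(spike_times, dtype=float)
    t = np.asarray(t, dtype=float)
    periods = np.diff(spike_times)                   # p = t_n - t_(n-1)
    values = np.asarray(inverse_tuning(periods))     # one estimate per spike n >= 1
    # Index of the most recent spike at or before each query time.
    idx = np.searchsorted(spike_times, t, side="right") - 1
    out = np.full(t.shape, np.nan)
    valid = idx >= 1
    out[valid] = values[idx[valid] - 1]
    return out

# Toy usage with a stand-in decoder (1/p); spikes at 0, 1, and 3 seconds.
decoded = hold_decode([0.0, 1.0, 3.0], lambda p: 1.0 / p, [0.5, 1.0, 2.0, 3.5])
```

The output only changes at spike times, so the update rate of the reconstructed signal follows the firing rate of the neuron.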

Figure 5

Signal rebuild scheme. (A) Behavior of Equation (7) evaluated at each step of the simulation. (B) At each spike Equation (7) is evaluated with p equal to the time elapsed from the last spike, and the value is preserved until the next event is reached. The dotted line marks the time of two spikes.

Observe that Equation (7) is a decaying exponential function; therefore, it is possible to define a circuit that reproduces this behavior by using the capacitor discharge dynamics in a switched-capacitor scheme that works as follows. At each spike event, a low-impedance branch quickly charges a capacitor during the refractory period of the SN unit. Once the refractory period concludes, the charging branch is opened and the capacitor discharges through a branch with fixed impedance, such that the capacitor current behaves similarly to Equation (7) (refer to Figure 5A). When a new spike event occurs, the present value on the capacitor is registered and held until the next spike event.

This frequency-based scheme shows better performance than other previously introduced strategies (Dupeyroux et al., 2021; Guo et al., 2021), since it achieves good noise mitigation employing only one neuron.

4. Experiments and Results

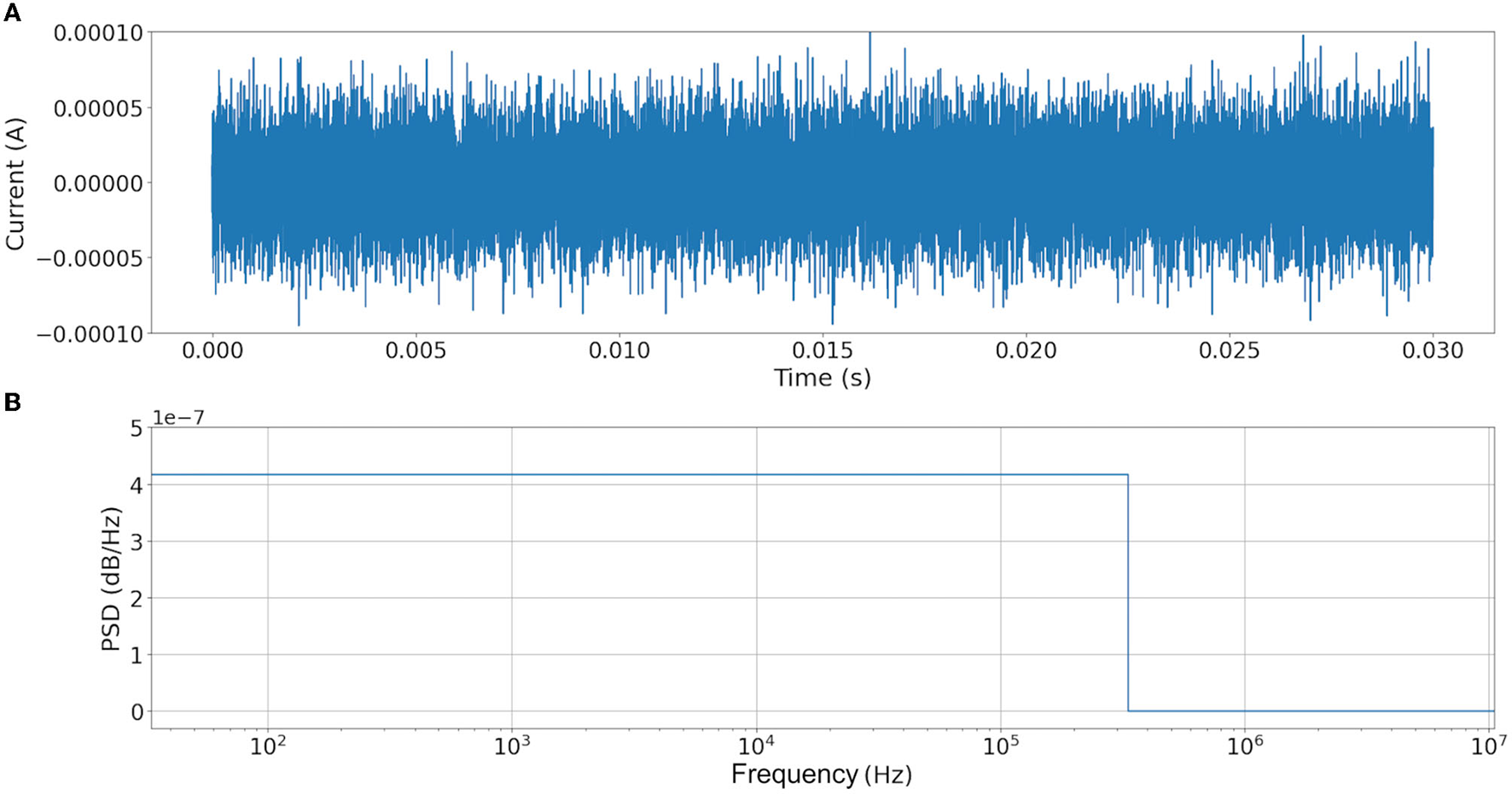

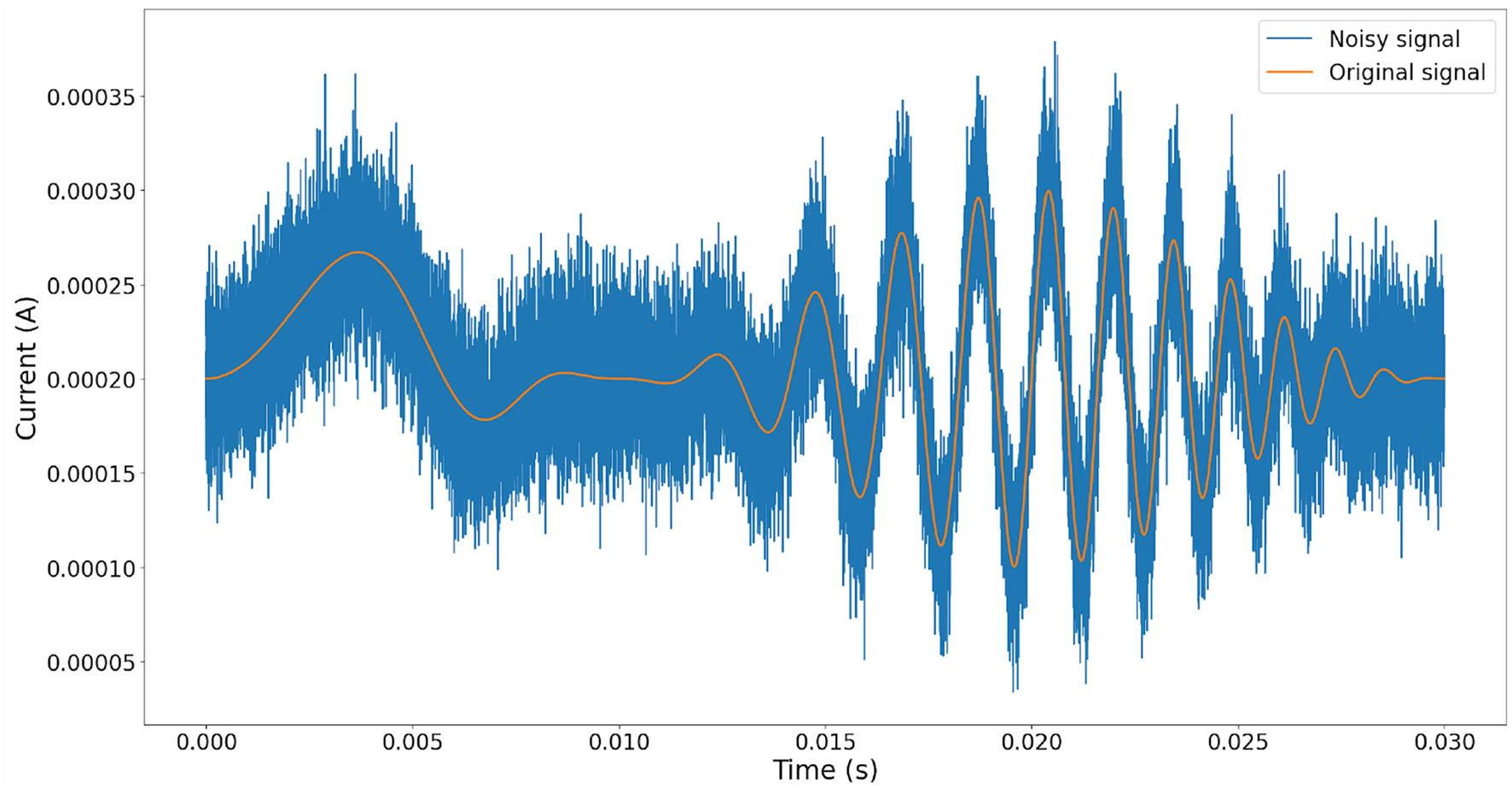

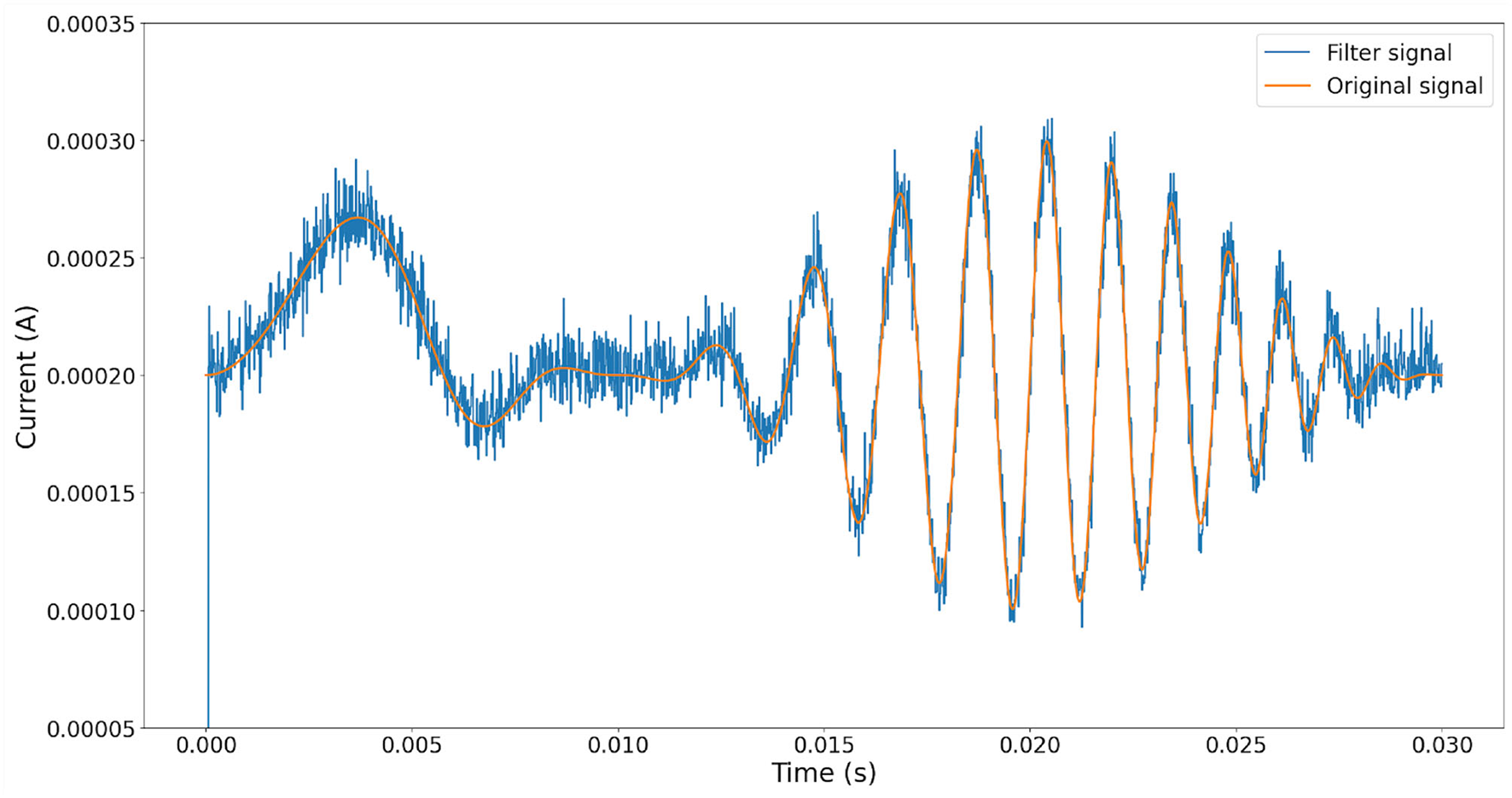

To demonstrate the performance of our proposal, the following experiment was conducted. First, synthetic white noise with a uniform frequency distribution is generated (Grinsted, 2022) to impose a critical noise condition on the clean signal (Figure 6). Second, the white noise is added to an arbitrary signal (refer to Figure 7). Finally, the noisy signal is used as the input for Equation (1), and the equivalent current output is computed using Equation (7). The results are shown in Figure 8.
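A test input of this kind can be sketched as follows. This is not the authors' generator: instead of shaping the noise spectrum as in the cited fftnoise tool, the sketch draws Gaussian white noise with NumPy, and the clean waveform, sampling rate, amplitudes, and the function name `make_noisy_signal` are all hypothetical. The only property carried over from the text is that the signal stays positive around an offset of 200 × 10^−6.

```python
import numpy as np

def make_noisy_signal(n=20000, fs=1e6, sigma=20e-6, seed=0):
    """Return (t, clean, noisy) for a positive test current with additive white noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(n) / fs
    # Hypothetical clean waveform: a 200 Hz sine riding on a 200 uA offset.
    clean = 200e-6 + 50e-6 * np.sin(2 * np.pi * 200.0 * t)
    noise = rng.normal(0.0, sigma, size=n)   # flat spectrum on average
    return t, clean, clean + noise

t, clean, noisy = make_noisy_signal()
```

Keeping the offset several noise standard deviations above zero ensures the input current never goes negative.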

Figure 6

Synthetic white noise generated for this study. (A) Noise signal added. (B) Fast Fourier Transform of the noise. The noise has a uniform frequency distribution between 1 Hz and 31.6228 × 10^4 Hz.

Figure 7

Comparison between the original signal and the noisy signal. For this experiment, the signal was computed evaluating the function +200 × 10^−6. Note that the signal must be positive at all times.

Figure 8

Comparison between the signal rebuilt from the spike frequency at the neuron output (blue line) and the original signal (orange line).

A significant noise reduction can be appreciated after the rebuilding operation. Figure 9 shows the Power Spectral Density of both the original and the filtered signals; the fundamental frequency is preserved, so we conclude that the recovered signal is a good approximation of the original one. Notice that the fundamental frequencies of the original signal are within the frequency range of the noise.

Figure 9

Power Spectral Density of the original signal and of the signal filtered by our proposal. We can observe that the primary frequencies are preserved with low degradation.

In order to compare this proposal with other approaches, the same noisy signal was filtered using linear filters (Chebyshev, Butterworth (Butterworth, 1930), and Elliptic) and a digital median filter (Tukey, 1977) (Virtanen et al., 2020). Figure 10 shows the output responses obtained from these standard filters. Figure 11 shows the error of each filter, computed as the difference between the original signal and the output of the corresponding filter. Additional experiments were conducted using Gaussian multiplicative noise and impulsive random noise; the results are shown in Figures 12 and 13.
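A reference filter bank of this kind can be assembled with `scipy.signal`, as sketched below. The 3rd order and the 55 kHz cutoff come from the figure captions; the sampling rate, passband ripple, stopband attenuation, median kernel size, and the name `apply_reference_filters` are assumptions for illustration, since the article does not state them.

```python
import numpy as np
from scipy import signal

FS = 1e6    # sampling rate in Hz (assumed)
FC = 55e3   # cutoff frequency quoted in the figure captions

# 3rd-order low-pass designs in second-order-section form for numerical robustness.
SOS_BANK = {
    "butterworth": signal.butter(3, FC, btype="low", fs=FS, output="sos"),
    "chebyshev1": signal.cheby1(3, 1, FC, btype="low", fs=FS, output="sos"),   # 1 dB ripple (assumed)
    "chebyshev2": signal.cheby2(3, 40, FC, btype="low", fs=FS, output="sos"),  # 40 dB stopband (assumed)
    "elliptic": signal.ellip(3, 1, 40, FC, btype="low", fs=FS, output="sos"),
}

def apply_reference_filters(x, kernel_size=51):
    """Run the noisy signal x through each reference filter; returns a dict of outputs."""
    x = np.asarray(x, dtype=float)
    out = {name: signal.sosfilt(sos, x) for name, sos in SOS_BANK.items()}
    out["median"] = signal.medfilt(x, kernel_size=kernel_size)  # kernel must be odd
    return out
```

`sosfilt` applies each filter causally, so every linear filter adds its own delay, which matters when the outputs are later compared sample by sample.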

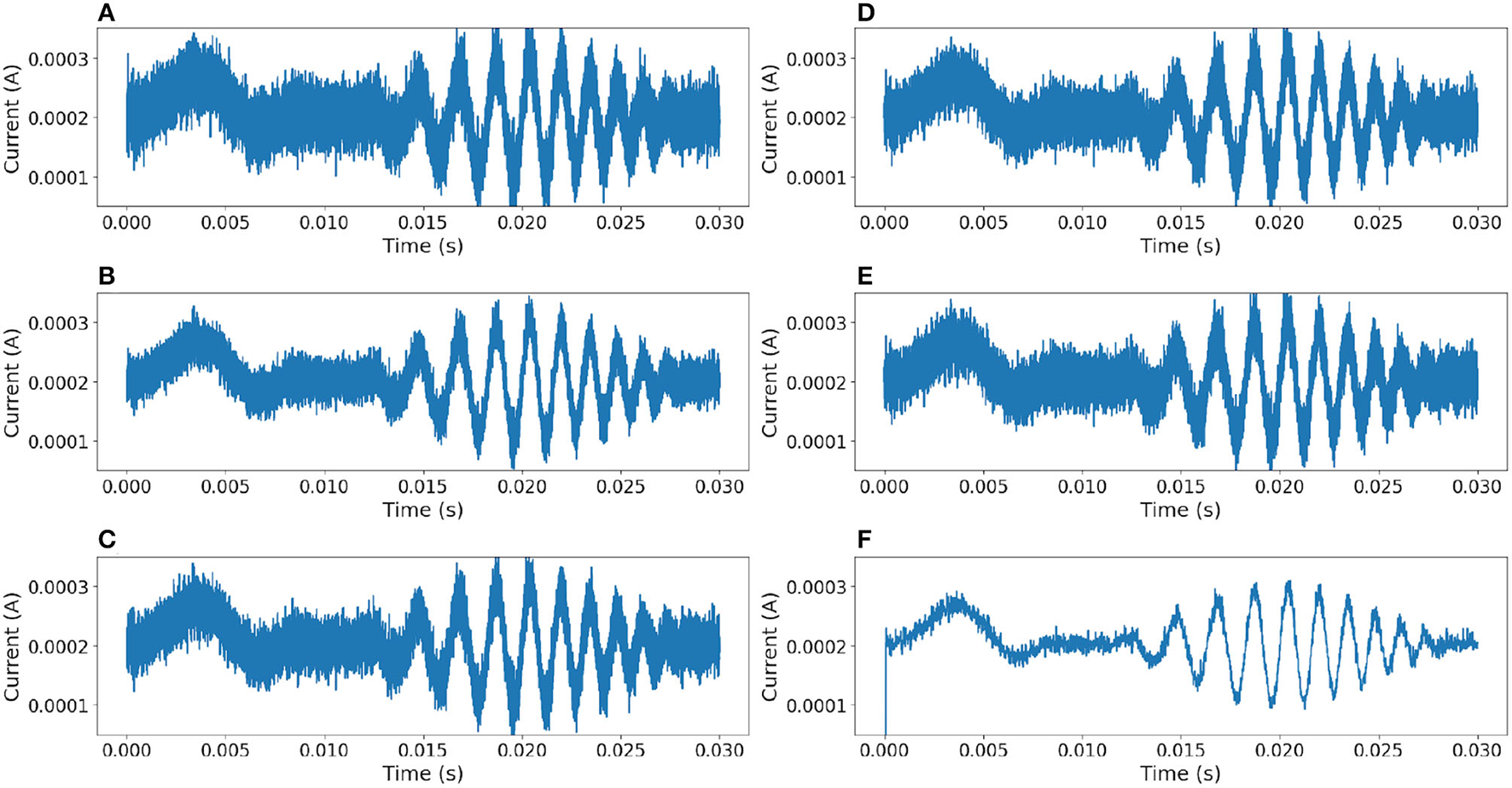

Figure 10

Comparison of results between different filters. (A) Median filter. (B) 3rd-order Chebyshev type 1 filter with cutoff frequency at 55kHz. (C) 3rd-order Chebyshev type 2 filter with cutoff frequency at 55kHz. (D) 3rd-order Butterworth filter with cutoff frequency at 55kHz. (E) 3rd-order Elliptic filter with cutoff frequency at 55kHz. (F) Neural filter proposed.

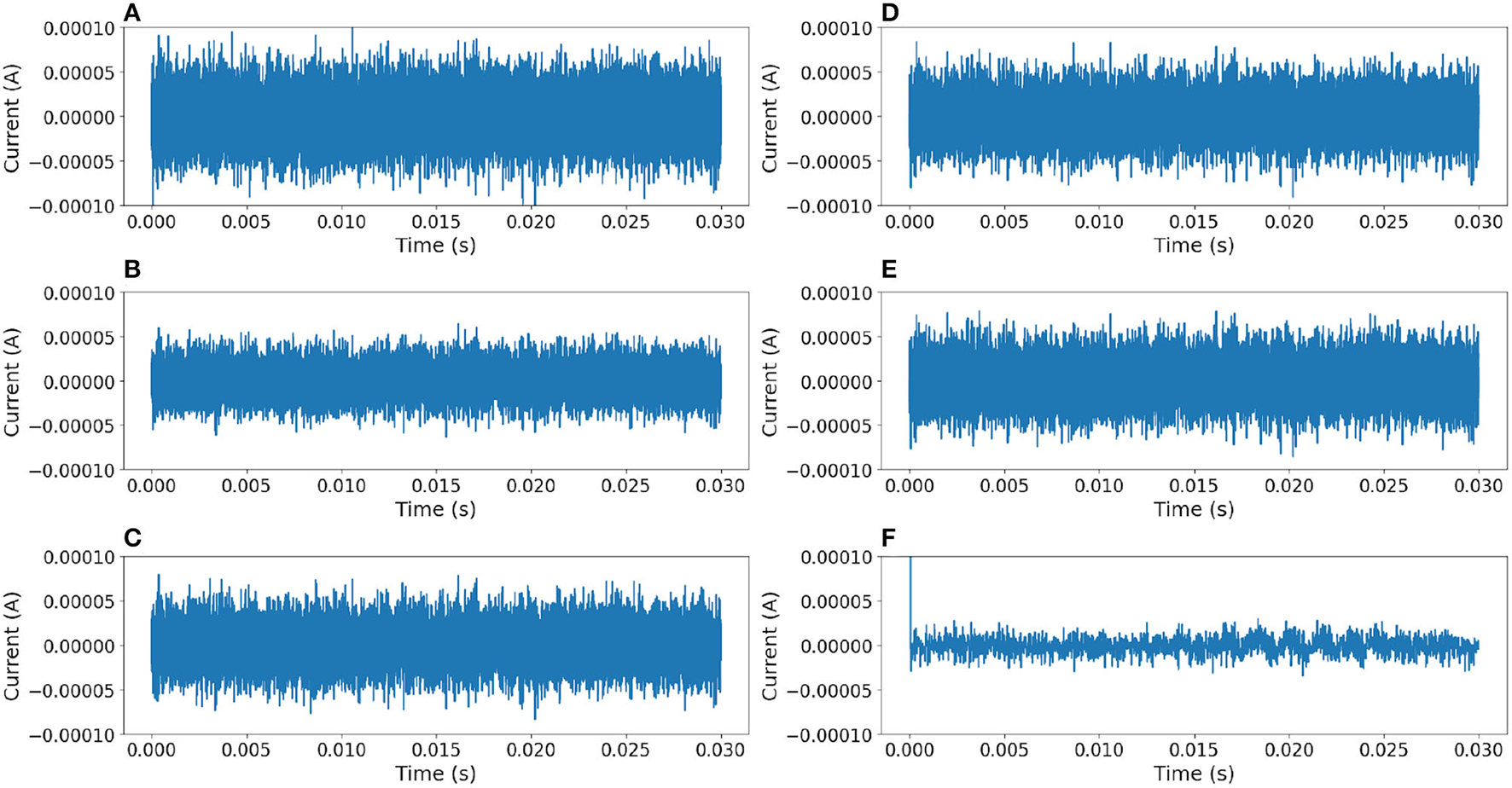

Figure 11

Comparison of error between outputs of different filters (additive white noise) and the original signal. The ideal error signal must be 0 at any time. (A) Median filter. (B) 3rd-order Chebyshev type 1 filter. (C) 3rd-order Chebyshev type 2 filter. (D) 3rd-order Butterworth filter. (E) 3rd-order Elliptic filter. (F) Neural filter proposed.

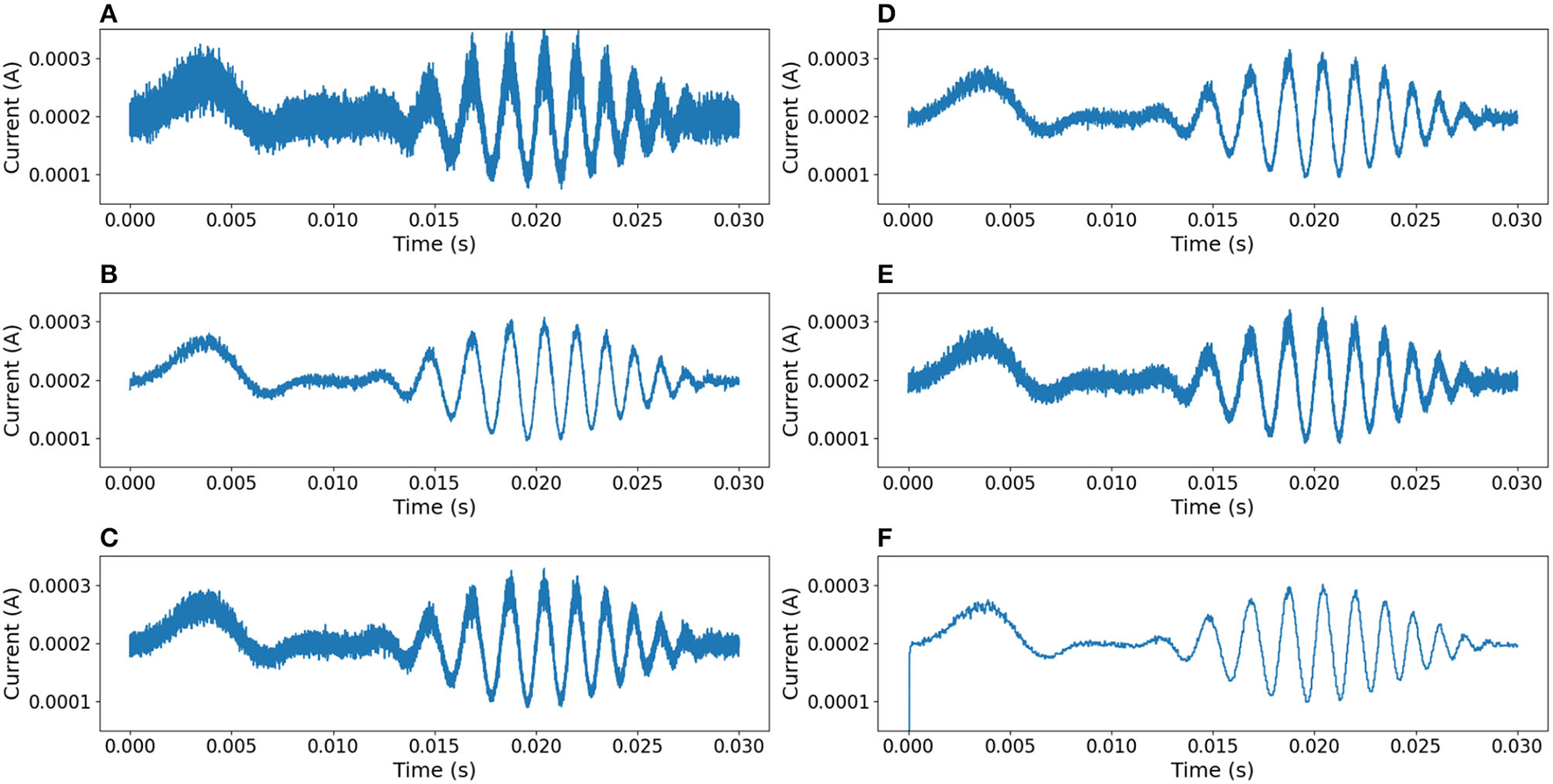

Figure 12

Comparison of results between different filters, using a signal affected by Gaussian Multiplicative Noise of 30%. (A) Median filter. (B) 3rd-order Chebyshev type 1 filter with cutoff frequency at 55kHz. (C) 3rd-order Chebyshev type 2 filter with cutoff frequency at 55kHz. (D) 3rd-order Butterworth filter with cutoff frequency at 55kHz. (E) 3rd-order Elliptic filter with cutoff frequency at 55kHz. (F) Neural filter proposed.

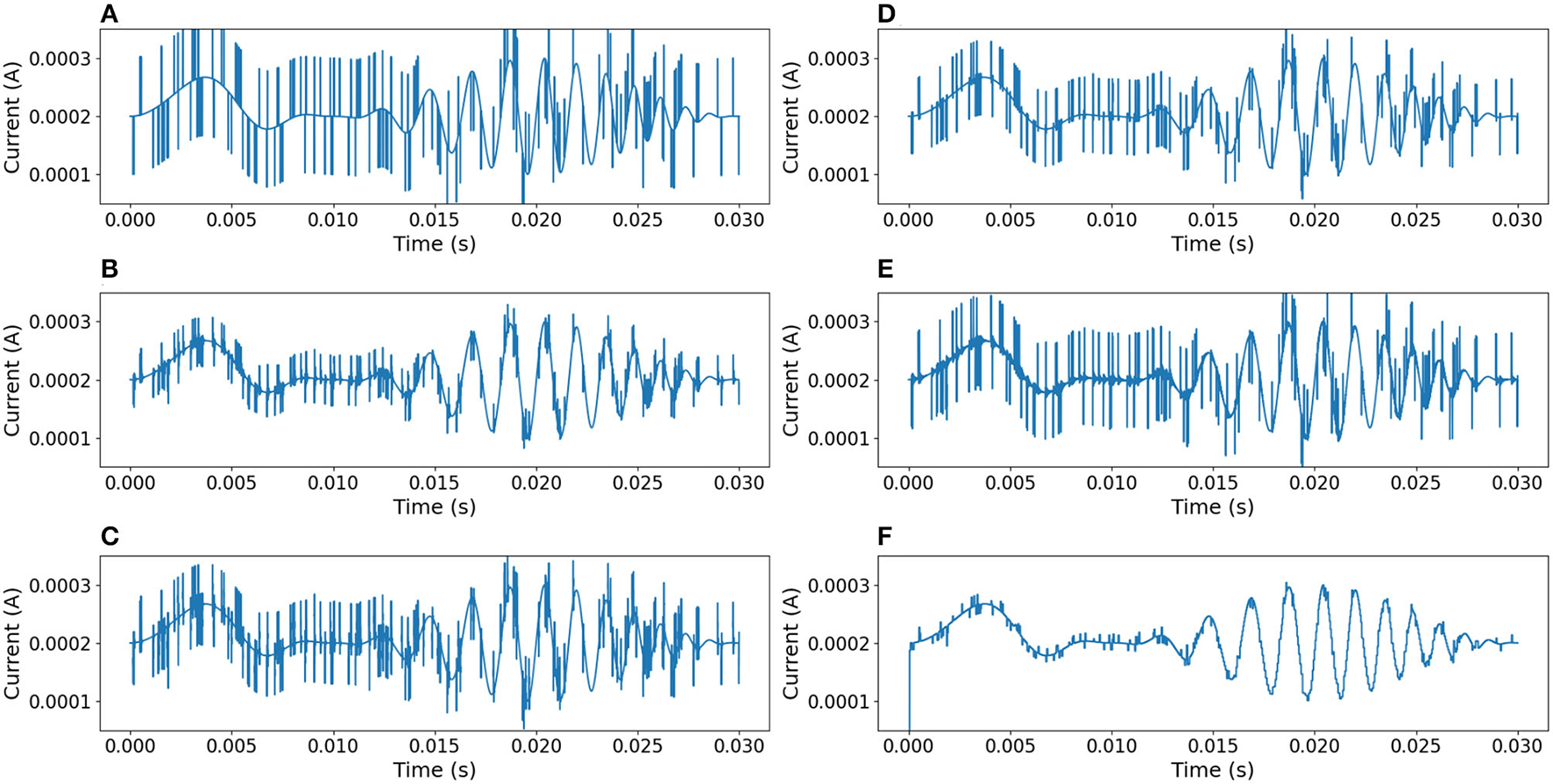

Figure 13

Comparison of results between different filters. The signal is affected by impulsive random noise (random pulses of 5μs and 0.1mA were added). (A) Median filter. (B) 3rd-order Chebyshev type 1 filter with cutoff frequency at 55kHz. (C) 3rd-order Chebyshev type 2 filter with cutoff frequency at 55kHz. (D) 3rd-order Butterworth filter with cutoff frequency at 55kHz. (E) 3rd-order Elliptic filter with cutoff frequency at 55kHz. (F) Neural filter proposed.

To define a figure of merit for the proposed filter, we measure the distance between each filter response and the original (noiseless) signal. Because each filter introduces a different time delay, Euclidean distance alone is not an appropriate choice: filtering techniques with minimum delay will tend to render better results. Therefore, Fast Dynamic Time Warping (FastDTW; Salvador and Chan, 2007) was used as a performance evaluation criterion. Error results for both Euclidean distance and FastDTW are shown in Table 1.
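The effect of delay on these metrics can be seen with a small sketch. It implements Euclidean distance, MSE, PSNR, and an exact dynamic-programming DTW; the exact DTW is a stand-in for FastDTW (which approximates it in linear time), and the PSNR definition with the reference peak amplitude is an assumption, since the article does not spell out its formula.

```python
import numpy as np

def euclidean(ref, est):
    return float(np.linalg.norm(np.asarray(ref, float) - np.asarray(est, float)))

def mse(ref, est):
    return float(np.mean((np.asarray(ref, float) - np.asarray(est, float)) ** 2))

def psnr(ref, est):
    """PSNR in dB, using the peak absolute value of the reference (assumed definition)."""
    peak = float(np.max(np.abs(ref)))
    return float(10.0 * np.log10(peak ** 2 / mse(ref, est)))

def dtw_distance(a, b):
    """Exact DTW with absolute-difference cost, O(len(a) * len(b)) time and memory."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    n, m = len(a), len(b)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(cost[n, m])

# The same pulse, once delayed by one sample relative to the other.
reference = [0, 0, 1, 2, 1, 0, 0]
shifted = [0, 1, 2, 1, 0, 0, 0]
```

For this pair the Euclidean distance is nonzero purely because of the one-sample shift, while the DTW distance is zero because the warping path absorbs the delay. The quadratic cost of exact DTW is the reason a linear-time approximation is preferable for long recordings.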

Table 1

| Filter | Euclidean | FastDTW | MSE | PSNR* |
|---|---|---|---|---|
| Additive white noise | | | | |
| Median filter | 0.257062 | 2.66536 | 4.40539 × 10^−9 | 15.6014 |
| 3rd-order Chebyshev type-1 filter | 0.0300501 | 0.246951 | 6.02007 × 10^−11 | 34.2452 |
| 3rd-order Chebyshev type-2 filter | 0.0575698 | 0.576117 | 2.20952 × 10^−10 | 28.5982 |
| 3rd-order Butterworth filter | 0.0378712 | 0.342871 | 9.56154 × 10^−11 | 32.2359 |
| Elliptic filter | 0.047365 | 0.463987 | 1.49563 × 10^−10 | 30.293 |
| Proposed neural filter | 0.043605 | 0.187784 | 1.26760 × 10^−10 | 31.0114 |
| Multiplicative Gaussian noise | | | | |
| Median filter | 0.0574893 | 0.543259 | 2.20335 × 10^−10 | 28.6104 |
| 3rd-order Chebyshev type-1 filter | 0.0278647 | 0.153496 | 5.17626 × 10^−11 | 34.901 |
| 3rd-order Chebyshev type-2 filter | 0.0365451 | 0.299232 | 8.90364 × 10^−11 | 32.5455 |
| 3rd-order Butterworth filter | 0.0306838 | 0.206129 | 6.27664 × 10^−11 | 34.0639 |
| Elliptic filter | 0.0338538 | 0.260648 | 7.64055 × 10^−11 | 33.21 |
| Proposed neural filter | 0.0453503 | 0.170839 | 1.37110 × 10^−10 | 30.6705 |
| Impulsive random noise | | | | |
| Median filter | 0.0653834 | 0.142627 | 2.85000 × 10^−10 | 27.4928 |
| 3rd-order Chebyshev type-1 filter | 0.0364574 | 0.172277 | 8.86096 × 10^−11 | 32.5664 |
| 3rd-order Chebyshev type-2 filter | 0.0488858 | 0.20908 | 1.59321 × 10^−10 | 30.0185 |
| 3rd-order Butterworth filter | 0.0468088 | 0.160894 | 1.46071 × 10^−10 | 30.3956 |
| Elliptic filter | 0.0486396 | 0.226337 | 1.57720 × 10^−10 | 30.0623 |
| Proposed neural filter | 0.0438345 | 0.173065 | 1.28098 × 10^−10 | 30.9658 |

Comparison between filter performance with Additive White Noise, Multiplicative Gaussian Noise, and Impulsive Random Noise.

Highlighted values represent the best performance according to the used criterion. A lower value implies better performance (*Higher value is better). The results shown in this table are the average values obtained from 15 conducted simulations.

5. Conclusion

This study presented the capacity and performance of simulated spiking neural network circuits to recover primary signal information from signals deliberately corrupted with noise. Our proposal works as an analog moving-average filter (refer to the Mean Value Theorem for Integrals section) that minimizes digital electronics, thus reducing the required number of transistors. Our frequency-based decoding scheme showed good noise rejection, especially for additive white noise, while maintaining good performance with other types of noise. It brings artificial intelligence closer to circuit technology to deliver innovative solutions for filtering white noise in the same frequency range as the original signal, with minimal latency and low information loss. It is also a promising approach for, e.g., the conception of future innovative lab-on-chip implementations, where increasing the signal-to-noise ratio, cost efficiency, and sensitivity is essential.

Funding

The authors gratefully acknowledge the financial support of projects SIP 20210124, 20221780, 20211657, 20220268, 20212044, 20221089, 20210788, and 20220226, and of COFAA and CONACYT FORDECYT-PRONACES 6005.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

LG-S proposed, developed, and programmed the neural filter code, conducted the simulation runs, and wrote the first draft of the manuscript. VP-P and HS proposed modifications to the encoding and decoding strategy architectures. ER-E reviewed the test bench for the filter experiments. JM-N helped with the neural modeling in Python. All authors contributed to the conception and design of the study and to manuscript revision, and read and approved the submitted version.

Acknowledgments

The authors would like to thank Instituto Politécnico Nacional, Secretaría de Investigación y Posgrado, Comisión de Operación y Fomento de Actividades Académicas, and CONACYT-México for the support provided to carry out this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The reviewer AZ declared a shared affiliation with the authors to the handling editor at the time of review.

References

1. Abbott, L. (1999). Lapicque's introduction of the integrate-and-fire model neuron (1907). Brain Res. Bull. 50, 303–304. doi: 10.1016/S0361-9230(99)00161-6

2. Butterworth, S. (1930). On the theory of filter amplifier. Exp. Wireless Wireless Eng. 7, 536–541.

3. Dayan, P., and Abbott, L. F. (2001). Theoretical Neuroscience, Computational and Mathematical Modeling of Neural Systems. Cambridge, MA: MIT Press. p. 14–16.

4. Dupeyroux, J., Stroobants, S., and de Croon, G. (2021). A toolbox for neuromorphic sensing in robotics.

5. Eliasmith, C. (2013). How to Build a Brain: A Neural Architecture for Cognition, 1st Edn. Oxford: Oxford University Press.

6. Gerstner, W., Kistler, W. M., Naud, R., and Paninski, L. (2016). Neuronal Dynamics. Cambridge: Cambridge University Press.

7. Grinsted, A. (2022). fftnoise - generate noise with a specified power spectrum.

8. Guo, W., Fouda, M. E., Eltawil, A. M., and Salama, K. N. (2021). Neural coding in spiking neural networks: a comparative study for robust neuromorphic systems. Front. Neurosci. 15, 638474. doi: 10.3389/fnins.2021.638474

9. Maass, W. (1997). Networks of spiking neurons: The third generation of neural network models. Neural Netw. 10, 1659–1671. doi: 10.1016/S0893-6080(97)00011-7

10. Mead, C. (1990). Neuromorphic electronic systems. Proc. IEEE 78, 1629–1636. doi: 10.1109/5.58356

11. Orchard, G., Frady, E. P., Dayan Rubin, D. B., Sanborn, S., Shrestha, S. B., Sommer, F. T., et al. (2021). “Efficient neuromorphic signal processing with Loihi 2,” in 2021 IEEE Workshop on Signal Processing Systems (SiPS) (Coimbra: IEEE).

12. Salvador, S., and Chan, P. (2007). Toward accurate dynamic time warping in linear time and space. Intell. Data Anal. 11, 561–580. doi: 10.3233/IDA-2007-11508

13. Schuman, C. D., Potok, T. E., Patton, R. M., Birdwell, J. D., Dean, M. E., Rose, G. S., et al. (2017). A survey of neuromorphic computing and neural networks in hardware. CoRR, abs/1705.06963. doi: 10.48550/arXiv.1705.06963

14. Sharifshazileh, M., Burelo, K., Sarnthein, J., and Indiveri, G. (2021). An electronic neuromorphic system for real-time detection of high frequency oscillations (HFO) in intracranial EEG. Nat. Commun. 12, 3095. doi: 10.1038/s41467-021-23342-2

15. Stewart, J. (2018). Calculus Early Transcendentals, 8th Edn. Belmont, CA: Cengage. p. 461–462.

16. Tukey, J. W. (1977). Exploratory Data Analysis. Princeton, NJ: Addison-Wesley Publishing Company.

17. Virtanen, P., Gommers, R., Oliphant, T. E., Haberland, M., Reddy, T., Cournapeau, D., et al. (2020). SciPy 1.0: fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272. doi: 10.1038/s41592-020-0772-5

18. Wijekoon, J. H., and Dudek, P. (2008). Compact silicon neuron circuit with spiking and bursting behaviour. Neural Netw. 21, 524–534. doi: 10.1016/j.neunet.2007.12.037

19. Wu, X., Saxena, V., Zhu, K., and Balagopal, S. (2015). A CMOS spiking neuron for brain-inspired neural networks with resistive synapses and in-situ learning. IEEE Trans. Circ. Syst. II. 62, 1088–1092. doi: 10.1109/TCSII.2015.2456372

20. Zamarreño-Ramos, C., Camuñas-Mesa, L. A., Pérez-Carrasco, J. A., Masquelier, T., Serrano-Gotarredona, T., and Linares-Barranco, B. (2011). On spike-timing-dependent-plasticity, memristive devices, and building a self-learning visual cortex. Front. Neurosci. 5, 26. doi: 10.3389/fnins.2011.00026

21. Zare, M., Zafarkhah, E., and Anzabi-Nezhad, N. S. (2021). An area and energy efficient LIF neuron model with spike frequency adaptation mechanism. Neurocomputing 465, 350–358. doi: 10.1016/j.neucom.2021.09.004

Summary

Keywords

neuromorphic, filter, CMOS, low-frequency, sensoring

Citation

García-Sebastián LM, Ponce-Ponce VH, Sossa H, Rubio-Espino E and Martínez-Navarro JA (2022) Neuromorphic Signal Filter for Robot Sensoring. Front. Neurorobot. 16:905313. doi: 10.3389/fnbot.2022.905313

Received

26 March 2022

Accepted

09 May 2022

Published

13 June 2022

Volume

16 - 2022

Edited by

Florian Röhrbein, Technische Universität Chemnitz, Germany

Reviewed by

Dante Mujica-Vargas, Centro Nacional de Investigación y Desarrollo Tecnológico, Mexico; Enrique Garcia-Trinidad, Tecnológico de Estudios Superiores de Huixquilucan, Mexico; Alejandro Zacarías, Instituto Politécnico Nacional (IPN), Mexico

Copyright

© 2022 García-Sebastián, Ponce-Ponce, Sossa, Rubio-Espino and Martínez-Navarro.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Luis M. García-Sebastián, lgarcias2020@cic.ipn.mx; Victor H. Ponce-Ponce, vponce@cic.ipn.mx

This article was submitted to Original Research Article, a section of the journal Frontiers in Neurorobotics
