- Department of Neurobiology, Weizmann Institute of Science, Rehovot, Israel

The computational challenges facing the brain vary between behavioral states. Engaged animals react according to incoming sensory information, while in relaxed and sleeping states consolidation of the learned information is believed to take place. Different states are characterized by different forms of cortical activity. We study a possible neuronal mechanism for generating these diverse dynamics and suggest their possible functional significance. Previous studies demonstrated that a brief synchronized increase in neural firing [Population Spike (PS)] can be generated in homogeneous recurrent neural networks with short-term synaptic depression (STD). Here we consider more realistic networks with clustered architecture. We show that the level of synchronization in neural activity can be controlled smoothly by network parameters. The network shifts from asynchronous activity to a regime in which clusters synchronize separately; the synchronization between the clusters then increases gradually until the network is fully synchronized. We examine the effects of different synchrony levels on the transmission of information by the network. We find that the regime of intermediate synchronization is preferential for the flow of information between sparsely connected areas. Based on these results, we suggest that the regime of intermediate synchronization corresponds to the engaged behavioral state of the animal, while global synchronization is exhibited during relaxed and sleeping states.

Introduction

Cortical activity has been shown to depend critically on the behavioral state of the animal. Experiments reveal that different frequency ranges are dominant in slow wave sleep (SWS), rapid eye movement sleep (REM) and different wake states (Steriade et al., 1993; Harris and Thiele, 2011). Various cortical states can also be seen in recordings of membrane potential in awake animals (Poulet and Petersen, 2008; Okun et al., 2010). The influence of behavioral states on network dynamics is observed throughout the cortex, beginning from the primary sensory cortices (Harris and Thiele, 2011).

Extracellular recordings from the somatosensory cortex (S1) and auditory cortex of rats showed that the response to a stimulus is larger in passive states than in the active state (Fanselow and Nicolelis, 1999; Castro-Alamancos, 2004; Otazu et al., 2009). Intracellular recordings from S1 of mice revealed larger fluctuations in the membrane potential and larger correlations between neighboring neurons during the quiet wake state than during the whisking state, while the mean firing rate of pyramidal neurons did not change significantly between these states. It appears that changes in neural dynamics originate from internal regulation, because sensory inputs have no significant effect on global properties of neural dynamics in any behavioral state (Poulet and Petersen, 2008; Gentet et al., 2010).

There are various models for generating synchronization of network activity (Sturm and Konig, 2001). In a recurrent network model with short-term synaptic depression (STD) there is a parameter regime in which short synchronized bursts of activity [Population Spikes (PSs)] can emerge spontaneously at a low frequency (Tsodyks et al., 2000; Loebel and Tsodyks, 2002). This type of synchronized event was confirmed experimentally in the auditory cortex (DeWeese and Zador, 2006). In this study we considered clustered networks divided into strongly interconnected groups of neurons. This clustered architecture was inspired by experimental studies on cortical connectivity (Song et al., 2005; Yoshimura et al., 2005).

It was previously proposed that network synchronization can ensure propagation of signals from one area to another in the sparsely connected cortex (Singer, 1993); thus controlling network synchronization may have an important functional role. Transitions between different behavioral and neural states can be accomplished by activation of different neuromodulatory systems (Steriade et al., 1993). These systems influence all of the forebrain in a diffusive way (Hasselmo, 1995) and can alter network dynamics via their effect on neurons and synaptic connections (Steriade et al., 1993; Marder and Thirumalai, 2002; Giocomo and Hasselmo, 2007). Synaptic depression can be regulated by neuromodulators that change the release probability in intracortical connections (Tsodyks and Markram, 1997; Wu and Saggau, 1997). Consequently, the emergence of PSs and their synchronization across strongly interconnected groups can be regulated, which results in controlling the flow of sensory information to distinct cortical areas.

Here we present a model that suggests a clear mechanism for controlling the level of synchronization in network activity. We show that the activity of a noisy clustered network with STD can be shifted smoothly from an asynchronous to a synchronous state by adjusting the release probability in recurrent connections. Synchronized activity can overcome the sparse connectivity between cortical areas; as a consequence, the flow of information from one cortical area to another can also be controlled.

Methods

Modeling Cortical Column

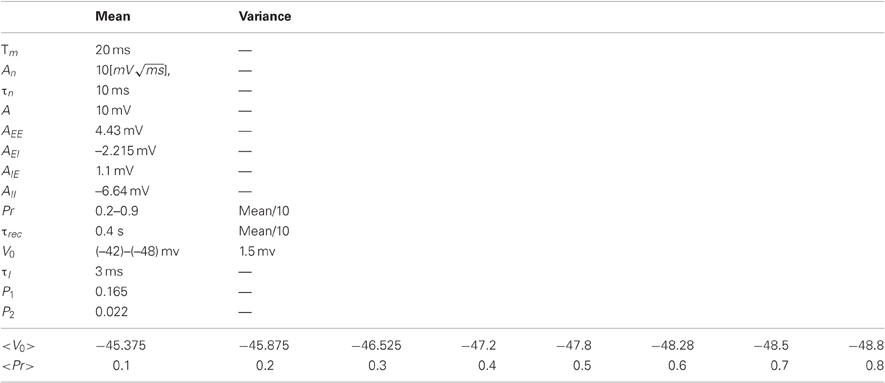

We represent a cortical column by a network of interconnected clusters; each one is divided into two units representing highly connected groups of excitatory and inhibitory neurons, respectively. Connections between units of different clusters are weaker than connections within clusters.

We used the rate model to describe the dynamics (Wilson and Cowan, 1972):

Ei (Ii) is the excitatory (inhibitory) rate variable for the corresponding unit in cluster i, and τE (τI) is the corresponding time constant. N is the number of clusters in the network. τref determines the neurons' refractory period. Every unit receives synaptic inputs from all other units with synaptic efficacies Jαβ (pre-synaptic α neuron projects to post-synaptic β neuron, α, β = E, I). eE (eI) is the mean background input, which represents inputs from other brain areas or, alternatively, the mean resting membrane potential relative to threshold. s(t) is the external sensory input, which is taken to be zero for spontaneous activity and otherwise consists of pulses with a certain duration (δs) and amplitude (As) that occur as a random refractory Poisson process with a constant rate of Hz (the minimal time interval between pulses is 1 s). For simplicity we chose a threshold-linear form of the neuronal gain function, [z]+ = max (z, 0). We further introduced fluctuations to the input, η(t), which is a time correlated noise with time constant τn and a standard deviation of :

We introduced synaptic depression in the excitatory-to-excitatory connections following previous modeling work (Tsodyks et al., 1998). These synaptic connections are scaled by a factor (Pr · xj), where xj is the average available synaptic resources in unit j, which decreases with unit activity and recovers to one with a time constant τd; Pr is the release probability (the same for all connections) and therefore the average fraction of synaptic resources that is utilized after each spike. The dynamics of the average available synaptic resources is governed by the following equation:

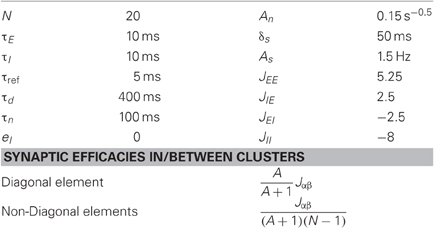
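As a rough illustration of the depression dynamics described above, the following Python sketch assumes the standard mean-field form dx/dt = (1 − x)/τd − Pr · x · E for a single unit firing at a fixed rate E. The equation form, the function names and the value τd = 0.8 s are assumptions made for illustration; they are not taken from Table 1.

```python
def simulate_resources(rate_hz, pr, tau_d, dt=1e-3, t_end=20.0, x0=1.0):
    """Euler-integrate the assumed form dx/dt = (1 - x)/tau_d - pr * x * rate_hz.

    rate_hz : firing rate E of the unit, held constant here (Hz)
    pr      : release probability Pr
    tau_d   : recovery time constant of the resources (s), hypothetical value
    Returns the available resources x at time t_end.
    """
    x = x0
    for _ in range(int(round(t_end / dt))):
        x += dt * ((1.0 - x) / tau_d - pr * x * rate_hz)
    return x


def steady_state_resources(rate_hz, pr, tau_d):
    """Fixed point of the assumed equation: x* = 1 / (1 + pr * tau_d * rate_hz)."""
    return 1.0 / (1.0 + pr * tau_d * rate_hz)


if __name__ == "__main__":
    for pr in (0.2, 0.5, 0.9):
        x_inf = steady_state_resources(2.0, pr, 0.8)
        # pr * x_inf is the steady-state scaling factor of the E-to-E efficacy
        print(f"Pr={pr:.1f}  x*={x_inf:.3f}  Pr*x*={pr * x_inf:.3f}")
```

Under this assumed form, a higher Pr leaves fewer resources available at a fixed firing rate, while the steady-state scaling factor Pr · x* = Pr/(1 + Pr · τd · E) still grows with Pr.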

The parameters used in the simulations are listed in Table 1. The synaptic efficacies were adjusted such that the mean synaptic input from all other clusters is A times smaller than the mean input from within the cluster (see Table 1). We implemented the transitions between behavioral states by changing Pr in the range of 0.2–0.9. In order to keep the firing rate constant (2 Hz), the external input eE was adapted according to an empirical relationship (Figure 2C).

Modeling Readout Population

The activity of the readout population is controlled by the following equation:

R is the firing rate variable, τR is its corresponding time constant and JR is the synaptic efficacy of the readout synapses. tsp is the spike time, Si,j is the number of spikes emitted by neuron i belonging to a unit j. Nn is the number of excitatory neurons from each cluster that are connected to the readout. We chose τR = 10 ms and JR = 1/N. In order to study the effect of sparse connectivity, we changed the number of feed-forward neurons belonging to each unit that are connected to the readout (Nn). The spike trains of neurons from a unit j were constructed as Poisson processes with a rate Ej.

Readout Performance

We quantified the readout population performance by defining a threshold for detecting network activity events. We adopt terms from the receiver operating characteristic (ROC) nomenclature: a true positive (TP) is a detected event that follows a stimulus, and a false positive (FP) is a spontaneous event. A false negative (FN) refers to the situation in which there was a stimulus but the readout population activity did not cross the threshold (Dayan and Abbott, 2001). We defined a time window for the network response to a stimulus by computing the peri-stimulus time histogram (PSTH) of the readout population. The response period was taken to be the time after a stimulus during which the PSTH is above its mean pre-stimulus value (spontaneous activity). A true negative event refers to a situation in which there was no stimulus and the activity did not cross the threshold. Because we simulated continuous activity, almost the entire duration of the simulation would count as true negative events, and including them in the readout performance would mask all other events. As a result, standard ROC analysis is not an appropriate measure of our network's performance. We therefore define a true positive ratio (TPR) as a measure of performance:

We quantified the ability of the network to signal the occurrence of the stimulus by calculating the maximal TPR (TPRmax) with respect to the readout population detection threshold, for each value of Pr and Nn (we assume that a readout neuron can learn the optimal threshold; therefore the threshold is not an important parameter in our examination).
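The performance measure can be sketched in Python as follows, taking the TPR to be TP/(TP + FP + FN), i.e., the number of events during stimulus divided by the sum of the total number of events and the FNs. The data layout (lists of peak times and amplitudes, and of response windows), the function names, and the choice to count each stimulus window at most once are assumptions of this sketch rather than details given in the text.

```python
def true_positive_ratio(peaks, windows, threshold):
    """TPR = TP / (TP + FP + FN) for one detection threshold.

    peaks   : list of (time, amplitude) for peaks of readout activity
    windows : list of (start, end) response periods, one per stimulus
    """
    detected = [t for t, amp in peaks if amp >= threshold]
    # TP: stimuli whose response window contains a detected event
    tp = sum(1 for s, e in windows if any(s <= t <= e for t in detected))
    # FN: stimuli without any detected event in their window
    fn = len(windows) - tp
    # FP: detected events outside every response window (spontaneous events)
    fp = sum(1 for t in detected if not any(s <= t <= e for s, e in windows))
    total = tp + fp + fn
    return tp / total if total else 0.0


def max_true_positive_ratio(peaks, windows):
    """Scan every observed peak amplitude as a candidate threshold."""
    thresholds = sorted({amp for _, amp in peaks})
    return max((true_positive_ratio(peaks, windows, th) for th in thresholds),
               default=0.0)


if __name__ == "__main__":
    # Hypothetical toy data: three stimuli, five activity peaks
    peaks = [(1.02, 5.0), (2.5, 1.0), (4.03, 4.0), (6.0, 3.0), (7.05, 0.5)]
    windows = [(1.0, 1.1), (4.0, 4.1), (7.0, 7.1)]
    print(max_true_positive_ratio(peaks, windows))
```

In this toy example a low threshold detects all three stimuli but also admits two spontaneous events, whereas a threshold of 4.0 misses the weakest response and rejects all spontaneous events; the latter gives the larger ratio, which is why the maximum over thresholds is reported.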

Synchrony Measure

We calculated the global synchrony in the network as a normalized standard deviation of network firing rate, following a previous work (Golomb and Hansel, 2000):

Ei corresponds to the firing rate of one unit (or of one neuron in the Integrate-and-Fire (I&F) network). The firing rate of a neuron in the I&F network was calculated with a sliding window of 50 ms.

This synchrony measure is between 0 and 1, with 0 for asynchronous activity and 1 for fully synchronized activity.
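A measure of this kind can be sketched in Python as below. The sketch assumes the commonly used form χ = sqrt(Var_t[population mean] / mean_i Var_t[Ei]), in the spirit of Golomb and Hansel (2000); the exact normalization used in the simulations is not reproduced here, and the function name is hypothetical.

```python
from math import sqrt
from statistics import fmean, pvariance


def synchrony(rates):
    """Normalized fluctuation of the population-averaged rate.

    rates : list of per-unit firing-rate time series of equal length.
    Returns a value between 0 (asynchronous) and 1 (fully synchronized).
    """
    n_steps = len(rates[0])
    # Population-averaged rate at each time step
    population = [fmean([unit[k] for unit in rates]) for k in range(n_steps)]
    # Temporal variance of each unit, averaged over units
    mean_unit_var = fmean([pvariance(unit) for unit in rates])
    if mean_unit_var == 0.0:
        return 0.0  # no fluctuations at all: treat as asynchronous
    return sqrt(pvariance(population) / mean_unit_var)


if __name__ == "__main__":
    in_phase = [[1.0, 3.0, 1.0, 3.0], [1.0, 3.0, 1.0, 3.0]]
    anti_phase = [[1.0, 3.0, 1.0, 3.0], [3.0, 1.0, 3.0, 1.0]]
    print(synchrony(in_phase), synchrony(anti_phase))
```

Identical traces give a value of 1 because the population average fluctuates as much as each unit does, whereas traces that cancel each other leave the population average flat and give 0.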

Integrate and Fire Network

Neurons were modeled as current-based leaky integrate-and-fire units (Dayan and Abbott, 2001). The membrane potential evolved according to the following equation:

where τm denotes the membrane time constant of a neuron, V0 is the neuron resting potential, Isyn is the recurrent synaptic current, ηi(t) represents a non-specific background current (to excitatory neurons only), which was modeled as a time correlated noise that is the same for every neuron in the same unit [see Equation (3)], and ξi(t) represents a non-specific background current that was modeled as Gaussian white noise (different for each neuron). In the following, we incorporated the input resistance of the neuron, Rin, into the currents, which were therefore measured in units of voltage (millivolts). Each time the membrane potential of a neuron reached threshold (−40 mV), a spike was emitted; then the neuron voltage was set to threshold voltage for 3 ms.

The synaptic current, Isyn, was modeled as a summation of post-synaptic currents (PSCs) from all the pre-synaptic neurons connected to neuron i. The excitatory-to-excitatory connections exhibit STD; therefore the synaptic current to an excitatory neuron follows the equation:

The synaptic current to an inhibitory neuron is controlled by the following equation:

τI is the synaptic current time constant, Ne and Ni are the number of excitatory and inhibitory neurons, respectively (Ne = 2000, Ni = 500).

The available synaptic resources (xi) decrease with every spike and recover with a time constant (τrec):

The membrane resting potential and the synaptic parameters (τrec, Pr) were Gaussian distributed across the neurons with mean and variance given in Table 2. As before, we implemented the transitions between behavioral states by changing ⟨Pr⟩ in the range of 0.2–0.8. The membrane resting potential of the excitatory cells was adjusted such that the mean firing rate of the excitatory neurons was ~2 Hz (the mean firing rate of the inhibitory neurons varied between 0.5 Hz and 1 Hz).

The clustered architecture was constructed by assigning different connection probabilities to pairs of neurons belonging to the same cluster (p1) and to pairs belonging to different clusters (p2), and different synaptic efficacies (the synaptic efficacies were five times larger in connections within a cluster than between clusters), such that the ratio between the mean synaptic inputs from within the cluster and from other clusters is p1 × 5/[p2 × (N − 1)] ≈ 2 (N is the number of groups, N = 20).

The stimulus was simulated as pulses of a constant current (Fin = 1.5 mV, duration of 50 ms) that occur as a random refractory Poisson process with a constant rate of Hz (the minimal time interval between pulses is 1 s).
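One simple way to generate such pulse onset times is sketched below in Python. It assumes that each inter-pulse interval is the 1 s minimal interval plus an exponentially distributed waiting time; this construction, the function name, and the rate of 0.5 Hz are illustrative assumptions, not the procedure or value used in the simulations.

```python
import random


def refractory_poisson_times(rate_hz, t_end, min_interval=1.0, seed=0):
    """Onset times of a Poisson process with a dead time after each pulse.

    rate_hz      : rate of the exponential waiting time (Hz), placeholder value
    t_end        : total duration (s)
    min_interval : minimal time between consecutive pulses (s)
    """
    rng = random.Random(seed)  # seeded for reproducibility
    times = []
    t = rng.expovariate(rate_hz)
    while t < t_end:
        times.append(t)
        # Next pulse: dead time plus an exponential waiting time
        t += min_interval + rng.expovariate(rate_hz)
    return times


if __name__ == "__main__":
    onsets = refractory_poisson_times(rate_hz=0.5, t_end=200.0)
    gaps = [b - a for a, b in zip(onsets, onsets[1:])]
    print(len(onsets), min(gaps))
```

With this construction the mean interval is the dead time plus 1/rate, so the effective pulse rate is lower than the nominal rate passed to the function.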

Results

Inspired by experimental studies of cortical connectivity (Song et al., 2005; Yoshimura et al., 2005), we modeled a cortical column as a clustered recurrent network and explored its spontaneous dynamics and response to sensory stimulations. The network is composed of several clusters, each one divided into two units representing highly connected groups of excitatory and inhibitory neurons, respectively. Connections between units of different clusters are weaker than connections within clusters (see Figure 1). We used rate equations for the network dynamics such that each unit is described by one variable representing its average firing rate (see “Methods”). The excitatory-to-excitatory connections were endowed with activity-dependent STD caused by depletion of synaptic resources. A fraction of the available synaptic resources is utilized in response to an action potential and then recovers with the corresponding time constant (Tsodyks et al., 1998). In biological terms, this fraction reflects the synaptic release probability (Pr). In addition to sensory input, each excitatory unit receives a random time-correlated noise current (see “Methods” for details of the model). The ability of the network to transfer information about the occurrence of the stimulus was explored by quantifying the response of a readout population to changes in network activity following stimuli.

Figure 1. Network Architecture. The network is composed of clusters; each one is divided into two units representing highly connected groups of excitatory and inhibitory neural populations. All clusters are connected to each other; the connections within clusters are stronger than the connections between clusters. Excitatory neurons project feed-forward connections to a readout population (R). The input to the readout population is a summation over spike trains. The spike train of neurons from a certain unit was constructed as a Poisson process with the corresponding rate.

Partial Synchronization in Clustered Networks

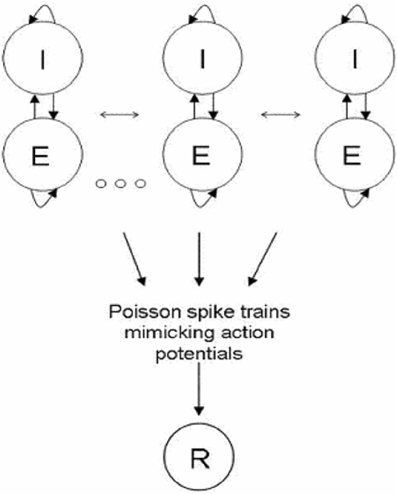

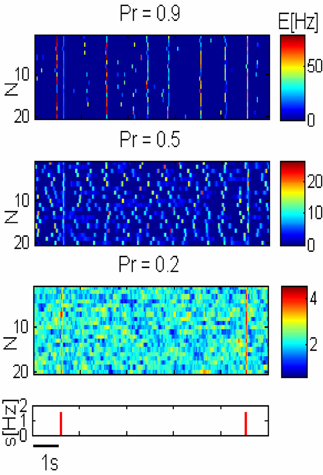

Previous theoretical studies have shown that including STD synapses in a homogeneous recurrent network can result in PSs as a transient network instability for a certain range of parameters (Tsodyks et al., 2000; Loebel and Tsodyks, 2002). In our network we add noise to the excitatory units such that the PSs are triggered by current fluctuations (Figure 2A). Noisy homogeneous networks with STD exhibit two dynamical regimes: asynchronous activity and global synchronous activity. During asynchronous activity the units fluctuate around their mean firing rate, while during synchronous activity spontaneous synchronized PSs can be observed. A new, intermediate dynamical regime exists in a clustered network, in which each unit can emit a PS as a result of synaptic input fluctuations, yet there is no complete synchronization between the units (Figure 2A). The synchronous activity can be controlled such that the network shifts gradually from a state of asynchronous activity to global synchronization (Figure 2B); consequently, there is a continuum of synchronization levels, as was suggested experimentally (Harris and Thiele, 2011).

Figure 2. Network dynamics across different states. (A) Spontaneous activity of the excitatory units (N is the unit label). The synchronization in the network increases as a result of the increase in the release probability (Pr). Pr = 0.2: asynchronous activity, Pr = 0.5: PSs can occur within units but they are not synchronized. Pr = 0.9: Synchronous activity, PSs occur simultaneously in different clusters. (B) The synchronization grows smoothly with the release probability. (C) The mean resting potential was adapted according to Pr such that the firing rate was kept constant across the conditions.

Transmission of synchronized changes in pre-synaptic activity via depressing synapses strongly depends on the release probability; in particular, the post-synaptic response becomes more transient as Pr increases (Tsodyks and Markram, 1997). We therefore conjectured that Pr is the natural parameter for controlling PSs in recurrent networks. Our simulations confirm this prediction (Figure 2A). Small fluctuations in external inputs cannot be enhanced by recurrent connections with low Pr, and increasing Pr above a certain value enables units to produce PSs. If Pr is not too large, a PS in one unit cannot initiate a PS in another unit; therefore there is no synchronization between the clusters. Increasing Pr further results in higher effective synaptic connections within and between the excitatory units, and in a higher PS amplitude, such that a PS in one unit triggers PSs in other units and the whole network synchronizes (Figure 2A).

While increasing Pr, we decrease the average external current into the excitatory units in order to keep the firing rate constant, thus constraining the dynamics (Figure 2C).

Varying these two parameters simultaneously is biologically plausible. For example, acetylcholine (ACh), a neuromodulator that is involved in regulating the transition between behavioral states (Steriade et al., 1993), both reduces the release probability in cortical pyramidal cells and depolarizes the membrane potential (McCormick and Prince, 1986; Giocomo and Hasselmo, 2007).

Optimal Dynamical State

It was previously suggested that synchronization of neuronal activity is important for signal propagation in the cortex (Abeles, 1991). We examined the effect of synchronization in the form of PSs on the flow of information between two networks representing two distinct areas in the brain. The first is the network described in the previous section, which receives the sensory stimuli, and the second is a readout population. The sensory input is taken to be an excitatory pulse that arrives at random times (see “Methods”). The response of the network to stimuli is shown in Figure 3.

Figure 3. Network response to pulse stimuli. Three upper panels: response of the three networks illustrated in Figure 2A to external inputs. Lower panel: inputs are shown as red pulses. Response amplitude grows with the release probability (Pr), but spontaneous global synchronization is observed with high Pr.

In order to consider how the network can transmit sensory stimuli to higher cortical areas, we added a readout population (R) that receives spike trains from the network (Figure 1). We modeled the spike trains emitted by excitatory neurons belonging to a certain unit as Poisson processes with the corresponding rate. Since the connectivity between different cortical areas is very sparse (Anderson et al., 1998; Douglas and Martin, 2007), we assume that the number of feed-forward neurons from each excitatory unit (Nn) that are connected to the readout population is small. The activity of the readout in response to stimuli is plotted in Figure 4.

Figure 4. Readout response to pulse stimuli. (A) Readout response to external inputs (network parameters as in the three networks illustrated in Figure 2A). (B) Examples of readout PSTH, ---- Pr = 0.2, –– Pr = 0.5, and —— Pr = 0.9. (C) Readout response amplitude grows with the release probability (Pr). Nn = 5 in all of the panels.

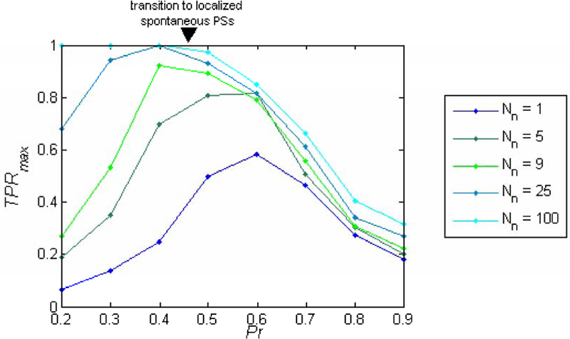

We quantified readout performance by defining events as peaks of readout activity that cross a threshold and calculated the ratio (TPR) between the number of events during stimulus (true positive events, TP) and the sum of the total number of events and FNs (see “Methods”). The TPR is a function of the threshold; we therefore characterize the performance by the maximum of the TPR (TPRmax). We assume that a readout can learn the optimal threshold, so the threshold is not a parameter of our model.

The behavior of TPRmax as a function of Pr depends on the sparseness of the readout connections (Nn). While for larger values of Nn the performance as a function of the release probability TPRmax(Pr) is a monotonically decreasing function of Pr (Figure 5), for small enough values of Nn (sparse connectivity) this function exhibits a peak at a certain value of Pr (Figure 5) which corresponds to the regime of intermediate synchronization in the network.

Figure 5. Readout performance as a function of the release probability. TPRmax is plotted as a function of the release probability (Pr) separately for each number of projecting neurons from each cluster (Nn). The optimum TPRmax depends on Nn; for Nn < 9 the maximum appears in the regime of localized spontaneous PSs, while with higher Nn, the maximum shifts to the regime of asynchronous activity. With dense connectivity, the performance becomes a monotonically decreasing function of Pr.

What Determines the Optimal State?

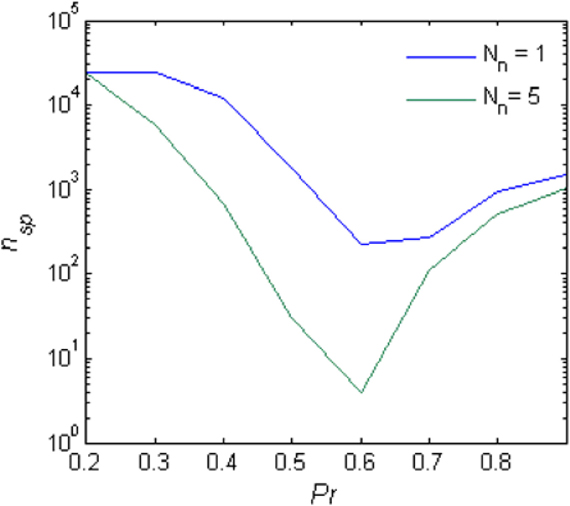

For small Pr, the amplitude of the network response to an external stimulus is low (Figure 3). This implies that for each input, only a small and highly variable fraction of neurons will emit a spike. Because the sampling of neurons by the readout is sparse, its response will be unreliable (within the noise level, Figure 4A). Consequently, the number of spontaneous events in the readout that cross the maximal PSTH value (nsp) is high. With large Pr values, the magnitude of the network's spontaneous events (PSs) is in the same range as that of the responses (Figure 3); hence, nsp is also high, independently of the number of sampled neurons (Figure 6). For intermediate levels of Pr, PSs synchronize across clusters in response to stimuli while there is no spontaneous synchronization of PSs; therefore, nsp is small. Low values of nsp enable good performance for an appropriately chosen threshold (Figure 4). With sparse connectivity, nsp has its minimal value in the regime of local PSs (Figure 6), which corresponds to the maximal TPRmax (Figure 5).

Figure 6. Spontaneous peaks in activity. The number of spontaneous events that cross the maximal PSTH value (nsp) as a function of the Pr.

In summary, network and readout responses to stimuli grow with Pr (Figures 3, 4), but networks with high Pr produce spontaneous synchronized events (Figure 3), which can be erroneously recognized by the readout as inputs (false alarms). Hence, the fully synchronized regime is not beneficial for the flow of sensory information. In the regime of intermediate synchronization, the network response to stimuli is stronger than in the asynchronous state, while there are fewer FP events (Figure 3). A stronger network response results in a higher probability that each neuron fires action potentials in response to stimuli, and consequently the flow of information in the sparsely connected cortex is more reliable. This advantage disappears when the sampling size (Nn) increases, and thus the optimal Pr shifts to smaller values.

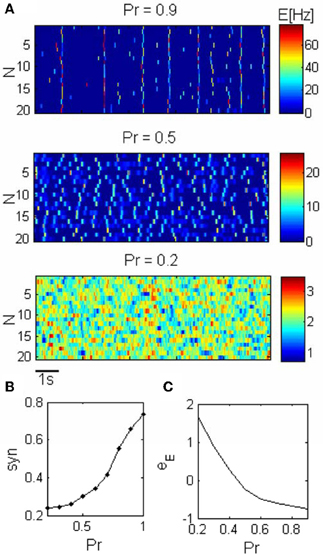

Integrate and Fire Networks

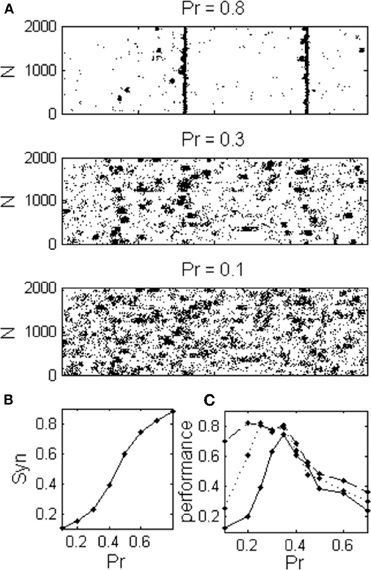

In order to verify that the results obtained with the rate model remain valid in a more realistic model, we simulated networks of I&F spiking neurons (see “Methods”). The three dynamical regimes (asynchronous dynamics, local synchronization and global synchronization) were also observed in I&F networks (Figures 7A,B). The optimal regime for information flow between sparsely connected networks is again the intermediate dynamical regime (Figure 7C). Only with denser sampling (more than Nn = 9, approximately 10% of the network neurons) does the performance become a monotonically decreasing function of Pr. The problem of sparse connectivity will be even more acute if we consider the more realistic case of a noisy readout (not shown).

Figure 7. Integrate and Fire network exhibits the same dynamical regimes as in the rate model. (A) Raster plot shows spontaneous activities of excitatory neurons during three different dynamical regimes corresponding to different values of the release probability; asynchronous, localized synchronization, and global synchronization. (B) Network exhibits a continuum range of synchronization (C) The optimal regime for information transfer depends on the connectivity to the readout population as in the rate model, (Nn: ——— one connection for every third cluster, ---- Nn = 1 and –– Nn = 9 connections from each cluster).

Discussion

We proposed a mechanism by which the synchronization of network activity can be generated and regulated. As previous studies showed, homogeneous networks with STD exhibit two dynamical regimes: asynchronous activity and globally synchronized activity in the form of PSs. In this study we modeled a cortical column as a clustered recurrent network. We report that networks with clustered architecture possess a new dynamical regime in which groups of neurons (clusters) emit PSs that are not fully synchronized between the groups. This regime is further divided into a continuum of states with gradually changing levels of global synchronization that can be controlled by network parameters, as was suggested by experiments (Harris and Thiele, 2011). We showed that a reduction in release probability results in de-synchronization of neuronal activity.

Our proposed mechanism for transition between dynamical states may be implemented in the cortex by neuromodulators such as ACh (Goard and Dan, 2009). ACh can regulate STD in the cortex by its effect on the probability of neurotransmitter release (Tsodyks and Markram, 1997), therefore controlling the synchronization that is generated in the cortex by the mechanism proposed in this study. Indeed, ACh is involved in the regulation of transition between behavioral states, in particular, its secretion increases in the cortex when the animal is in the alert state (Perry et al., 1999; Giocomo and Hasselmo, 2007).

Previous work demonstrated variable dynamic states, with different synchrony levels, in recordings of cortical activity in urethane-anesthetized rats. The data were fitted to a dynamical system such that each state was characterized by a different set of parameters. That work showed that the synchronized cortical states can be modeled as a self-exciting system, while the most desynchronized state is better approximated by linear dynamics (Curto et al., 2009). Here we used biologically plausible dynamics in which the cortical state is regulated via the release probability, which controls the non-linearity of the dynamics and thereby shifts it from non-linear in the synchronized state to approximately linear in the desynchronized state.

It has been suggested that synchronization of neuronal activity is beneficial for information flow in the sparsely connected cortex (Singer, 1993). In our model, neurons have a higher probability of firing during PSs as a result of the synchronous activity; PSs may therefore be a mechanism by which primary cortical populations overcome their sparse connectivity to remote areas in the brain in order to pass sensory information onward. However, this mechanism has some disadvantages: large activity during a PS synchronizes the clusters and increases the occurrence of false detections. In addition, depletion of the synaptic resources as a result of synchronous PSs prevents the column from responding to an incoming stimulus that appears shortly after a strong synchronous network event. Our simulation results imply that the best regime for information transfer is the one in which PSs occur within clusters but there is no synchronization across clusters.

Further suggestions can be made concerning the possible role of clustered architecture. Inspired by the patchy long-distance connections in primary sensory areas (Gilbert and Wiesel, 1989; Amir et al., 1993) and the binding theory (Singer, 1993), we can hypothesize that different clusters that belong to the same column and respond to the same feature (such as orientation or tone) are involved in the coding of complex stimuli (such as combinations of features) by synchronizing their activity with some of the clusters of other columns. Synchronous PSs would then increase the noise correlation between clusters that may encode different complex stimuli. The regime of asynchronous PSs within groups would therefore also be more beneficial for encoding these various feature combinations. Moreover, it may be interesting to examine the functional role of the other dynamical regimes. For example, it has been suggested that synchronous activity is important for plasticity (Singer, 1993; Sejnowski and Destexhe, 2000); it may therefore be interesting to examine the effect of PS generation and synchronization on memory consolidation.

In summary, our results illustrate that cortical networks can exhibit very different activity regimes, each most suitable for a particular behavioral state. In the case of sensory processing that we considered in this study, choosing the right regime is beneficial for signaling the sensory inputs to higher brain areas. We believe, however, that all cortical circuits are capable of performing several functions, and have to be tuned to the particular behavior depending on the computational demands of the area. The mechanisms by which the brain can achieve this tuning are probably diverse and should be a subject of further theoretical studies.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Sandro Romani and Michael Okun for valuable discussions. This study was supported by the Israeli Science Foundation.

References

Amir, Y., Harel, M., and Malach, R. (1993). Cortical hierarchy reflected in the organization of intrinsic connections in macaque monkey visual cortex. J. Comp. Neurol. 334, 19–46.

Anderson, J. C., Binzegger, T., Martin, K. A., and Rockland, K. S. (1998). The connection from cortical area V1 to V5, a light and electron microscopic study. J. Neurosci. 18, 10525–10540.

Castro-Alamancos, M. A. (2004). Absence of rapid sensory adaptation in neocortex during information processing states. Neuron 41, 455–464.

Curto, C., Sakata, S., Marguet, S., Itskov, V., and Harris, K. D. (2009). A simple model of cortical dynamics explains variability and state dependence of sensory responses in urethane-anesthetized auditory cortex. J. Neurosci. 29, 10600–10612.

Dayan, P., and Abbott, L. F. (2001). Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems. Cambridge, MA: Massachusetts Institute of Technology Press.

DeWeese, M. R., and Zador, A. M. (2006). Non-Gaussian membrane potential dynamics imply sparse, synchronous activity in auditory cortex. J. Neurosci. 26, 12206–12218.

Douglas, R. J., and Martin, K. A. (2007). Mapping the matrix: the ways of neocortex. Neuron 56, 226–238.

Fanselow, E. E., and Nicolelis, M. A. (1999). Behavioral modulation of tactile responses in the rat somatosensory system. J. Neurosci. 19, 7603–7616.

Gentet, L. J., Avermann, M., Matyas, F., Staiger, J. F., and Petersen, C. H. (2010). Membrane potential dynamics of GABAergic neurons in the barrel cortex of behaving mice. Neuron 65, 422–435.

Gilbert, C. D., and Wiesel, T. N. (1989). Columnar specificity of intrinsic horizontal and corticocortical connections in cat visual cortex. J. Neurosci. 9, 2432–2442.

Giocomo, L. M., and Hasselmo, M. E. (2007). Neuromodulation by glutamate and acetylcholine can change circuit dynamics by regulating the relative influence of afferent input and excitatory feedback. Mol. Neurobiol. 36, 184–200.

Goard, M., and Dan, Y. (2009). Basal forebrain activation enhances cortical coding of natural scenes. Nat. Neurosci. 12, 1444–1449.

Golomb, D., and Hansel, D. (2000). The number of synaptic inputs and the synchrony of large, sparse neuronal networks. Neural Comput. 12, 1095–1139.

Harris, K. D., and Thiele, A. (2011). Cortical state and attention. Nat. Rev. Neurosci. 12, 509–523.

Hasselmo, M. E. (1995). Neuromodulation and cortical function: modeling the physiological basis of behavior. Behav. Brain Res. 67, 1–27.

Loebel, A., and Tsodyks, M. (2002). Computation by ensemble synchronization in recurrent networks with synaptic depression. J. Comput. Neurosci. 13, 111–124.

Marder, E., and Thirumalai, V. (2002). Cellular, synaptic and network effects of neuromodulation. Neural Netw. 15, 479–493.

McCormick, D. A., and Prince, D. A. (1986). Mechanisms of action of acetylcholine in the guinea-pig cerebral cortex in vitro. J. Physiol. 375, 169–194.

Okun, M., Naim, A., and Lampl, I. (2010). The subthreshold relation between cortical local field potential and neuronal firing unveiled by intracellular recordings in awake rats. J. Neurosci. 30, 4440–4448.

Otazu, G. H., Tai, L. H., Yang, Y., and Zador, A. M. (2009). Engaging in an auditory task suppresses responses in auditory cortex. Nat. Neurosci. 12, 646–654.

Perry, E., Walker, M., Grace, J., and Perry, R. (1999). Acetylcholine in mind: a neurotransmitter correlate of consciousness? Trends Neurosci. 22, 273–280.

Poulet, J. F., and Petersen, C. H. (2008). Internal brain state regulates membrane potential synchrony in barrel cortex of behaving mice. Nature 454, 881–885.

Singer, W. (1993). Synchronization of cortical activity and its putative role in information processing and learning. Annu. Rev. Physiol. 55, 349–374.

Song, S., Sjöström, P. J., Reigl, M., Nelson, S., and Chklovskii, D. B. (2005). Highly nonrandom features of synaptic connectivity in local cortical circuits. PLoS Biol. 3:e68. doi: 10.1371/journal.pbio.0030068

Steriade, M., McCormick, D. A., and Sejnowski, T. J. (1993). Thalamocortical oscillations in the sleeping and aroused brain. Science 262, 679–685.

Sturm, A. K., and König, P. (2001). Mechanisms to synchronize neuronal activity. Biol. Cybern. 84, 153–172.

Tsodyks, M., Pawelzik, K., and Markram, H. (1998). Neural networks with dynamic synapses. Neural Comput. 10, 821–835.

Tsodyks, M., Uziel, A., and Markram, H. (2000). Synchrony generation in recurrent networks with frequency-dependent synapses. J. Neurosci. 20, RC50.

Tsodyks, M., and Markram, H. (1997). The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc. Natl. Acad. Sci. U.S.A. 94, 719–723.

Wilson, H. R., and Cowan, J. D. (1972). Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12, 1–24.

Wu, L. G., and Saggau, P. (1997). Presynaptic inhibition of elicited neurotransmitter release. Trends Neurosci. 20, 204–212.

Keywords: neural network, synchrony, synaptic depression

Citation: Mark S and Tsodyks M (2012) Population spikes in cortical networks during different functional states. Front. Comput. Neurosci. 6:43. doi: 10.3389/fncom.2012.00043

Received: 28 February 2012; Accepted: 11 June 2012; Published online: 13 July 2012.

Edited by:

Viktor Jirsa, Movement Science Institute, France

Copyright © 2012 Mark and Tsodyks. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and subject to any copyright notices concerning any third-party graphics etc.

*Correspondence: Misha Tsodyks, Department of Neurobiology, Weizmann Institute of Science, Rehovot 76100, Israel. e-mail: misha@weizmann.ac.il