- Department of Mathematics, University of Houston, Houston, TX, USA

Persistent activity observed during delayed-response tasks for spatial working memory (Funahashi et al., 1989) has commonly been modeled by recurrent networks whose dynamics is described as a bump attractor (Compte et al., 2000). We examine the effects of interareal architecture on the dynamics of bump attractors in stochastic neural fields. Lateral inhibitory synaptic structure in each area sustains stationary bumps in the absence of noise. Introducing noise causes bumps in individual areas to wander as a Brownian walk. However, coupling multiple areas together can help reduce the variability of the bump's position in each area. To examine this quantitatively, we approximate the position of the bump in each area using a small noise expansion that also assumes weak amplitude interareal projections. Our asymptotic results show the motion of the bumps in each area can be approximated as a multivariate Ornstein–Uhlenbeck process. This shows reciprocal coupling between areas can always reduce variability, if sufficiently strong, even if one area contains much more noise than the other. However, when noise is correlated between areas, the variability-reducing effect of interareal coupling is diminished. Our results suggest that distributing spatial working memory representations across multiple, reciprocally-coupled brain areas can lead to noise cancelation that ultimately improves encoding.

Introduction

Persistent spiking activity has been experimentally observed in prefrontal cortex (Funahashi et al., 1989; Miller et al., 1996), parietal cortex (Colby et al., 1996; Pesaran et al., 2002), superior colliculus (Basso and Wurtz, 1997), caudate nucleus (Hikosaka et al., 1989; Levy et al., 1997), and globus pallidus (Mushiake and Strick, 1995; McNab and Klingberg, 2008) during the retention interval of visuospatial working memory tasks. Often, the subject must remember a cue's location for several seconds (Funahashi et al., 1989). Delay period neurons persistently fire in response to a preferred cue orientation as described by a bell-shaped tuning curve. Networks of these neurons, with recurrent excitation between similarly tuned neurons and broadly tuned feedback inhibition, can generate spatially localized “bumps.” The position of these bumps encodes the remembered location of the cue (Compte et al., 2000).

Dynamic variability can degrade the accuracy of working memory over time though. Fluctuations in membrane voltage and synaptic conductance can lead to spontaneous spike or failure events at the edge of the bump, causing the bump to wander diffusively (Compte et al., 2000; Laing and Chow, 2001). Bump attractor networks are particularly prone to such diffusive error because bump positions lie on a line attractor where each location is neutrally stable (Amari, 1977). Interestingly, psychophysical data demonstrates spatial working memory error does scale linearly with delay time, suggesting the underlying process that degrades memory is diffusive (White et al., 1994; Ploner et al., 1998). Much theoretical work has examined network properties that might limit memory degradation. Several computational studies have explored networks built from bistable neuronal units, which sustain persistent states that are less susceptible to noise (Camperi and Wang, 1998; Koulakov et al., 2002; Goldman et al., 2003). In addition, synaptic facilitation has been shown to slow the drift of bump position due to internal variability (Itskov et al., 2011). Synaptic plasticity has also be shown to reduce diffusion of bumps in (Hansel and Mato, 2013). Finally, spatially heterogeneous recurrent excitation can reduce wandering of bumps quantizing the line attractor by stabilizing a finite set of bump locations (Kilpatrick and Ermentrout, 2013; Kilpatrick et al., 2013).

Complementary to these possibilities, we propose that interareal coupling across multiple areas of cortex may reduce error in working memory recall generated by dynamic fluctuations. Multiple representations of spatial working memory have been identified in different cortical areas (Colby et al., 1996). This distributed representation makes working memory information readily available for motor (Owen et al., 1996) and decision-making (Curtis and Lee, 2010) tasks. In addition, this redundancy may serve to reduce degrading effects of noise. It is known that several areas involved in oculomotor delayed response tasks are reciprocally coupled to one another (Constantinidis and Wang, 2004; Curtis, 2006). We presume the representation of a spatial working memory in a single area takes the form of a bump in a recurrently coupled neural field. Projections between areas share information about bump position across the multi-area network. Recently, (Folias and Ermentrout, 2011) showed several novel activity patterns emerge when considering neural fields with multiple areas. In addition, recent analyses of spatiotemporal dynamics of perceptual rivalry have exploited dual population neural field models, where activity in each area represents a single percept (Kilpatrick and Bressloff, 2010; Bressloff and Webber, 2012b). In this study, we focus on activity patterns where bumps in each area have positions that remain close.

Our study mostly focuses on a dual area model of spatial working memory, where each area provides a replicate representation of the presented cue. We begin by demonstrating the neutral stability of the bump position in each area, in the absence of noise and interareal projections. Upon including noise and interareal projections, we use a small-noise expansion to derive an effective stochastic differential equation for the position of the bump in each area. The effective system is a multivariate Ornstein–Uhlenbeck process, which we can analyze using diagonalization. The variance of this stochastic process decreases as the strength of connections between areas increases. Variance reduction relies on cancelations arising due to averaging noise between both areas. Thus, when noise is strongly correlated between areas, the effect of interareal coupling is negligible. Lastly, we show this analysis extends to the case of N (more than two) areas and that for sufficiently strong interareal connections, variance scales as 1/N.

Materials and Methods

Dual Area Model of Spatial Working Memory

We consider a recurrently coupled model commonly used for spatial working memory (Camperi and Wang, 1998; Ermentrout, 1998) and visual processing (Ben-Yishai et al., 1995). GABAergic inhibition (Gupta et al., 2000) typically acts faster than excitatory NMDAR kinetics (Clements et al., 1992), and we assume excitatory synapses contain a mixture of AMPA and NMDA components. Thus, we make the assumption that inhibition is slaved to excitation as in (Amari, 1977). We can then describe average activity u1(x, t) and u2(x, t) of neurons in either area by the system (Ben-Yishai et al., 1995; Folias and Ermentrout, 2011; Kilpatrick and Ermentrout, 2013)

where the effects of synaptic architecture are described by the convolution

for j, k = 1, 2, so the case j = k describes recurrent synaptic connections within a area and j ≠ k describes synaptic connections between areas (interareal). Several fMRI and electrode recordings have revealed correlations between activity in multiple cortical areas during spatial working memory tasks (Constantinidis and Wang, 2004; Curtis, 2006), such as parietal and prefrontal cortex (Chafee and Goldman-Rakic, 1998). However, it seems the strength of these correlations is often not on the order of the activity itself (di Pellegrino and Wise, 1993). For this reason, we presume the strength of interareal connections is weak 0 ≤ ε1/2 « 1. Note, we could choose to make them a different magnitude than the noise, but for analytical convenience, we choose interareal connection and noise magnitude to be roughly the same. Analysis could still be performed in other cases, but it would simply be more complicated. By setting τ = 1, we can assume that time evolves on units of the excitatory synaptic time constant, which we presume to be roughly 10 ms (Häusser and Roth, 1997). The function wjk(x − y) describes the strength (amplitude of wjk) and net polarity (sign of wjk) of synaptic interactions from neurons with stimulus preference y to those with preference x. Following previous studies, we presume the modulation of the recurrent synaptic strength is given by the cosine

so neurons with similar orientation preference excite one another and those with dissimilar orientation preference disynaptically inhibit one another (Ben-Yishai et al., 1995; Ferster and Miller, 2000). Lateral inhibitory type network architectures are supported by anatomical studies of the delay period neurons in prefrontal cortex (Goldman-Rakic, 1995). Our general analysis will apply to any even symmetric function of the distance x − y, but we typically compute things using (Equation 3) since it eases calculations. Finally, synaptic connections from area k to j are specified by the weight function wjk(x − y), and we typically take this to be the function

where Ej and Mj specify the strength of baseline excitation and modulation projecting to the jth area.

Output firing rates are given by taking the gain function f(u) of the synaptic input, which we usually proscribe to be (Wilson and Cowan, 1973)

and often take the high gain limit γ → ∞ for analytical convenience, so (Amari, 1977)

Effects of noise are described by the small amplitude (0 ≤ ε « 1) stochastic processes ε1/2Wj (x, t) that are white in time and correlated in space so that 〈dWj(x, t)〉 = 0 and

describing both local and shared noise in either area, j = 1, 2 with j ≠ k. For simplicity, we assume the local spatial correlations have a cosine profile Cj(x) = cj cos(x). We also typically assume the correlated noise component has cosine profile so Cc(x) = cc cos(x). Therefore, in the limit cc → 0, there are no interareal noise correlations, and in the limit cc → min (c1, c2), noise in each area is maximally correlated. For instance, when c1 = c2 = cc = 1, noise in each area is drawn from the same process.

Multiple-Area Model of Spatial Working Memory

To incorporate the effects of many coupled, redundant areas encoding a spatial working memory, we consider a model with N areas and arbitrary synaptic architecture, given by

where uj represents neural activity in the jth area where j = 1, …, N. As before, we set τ = 1, so each time unit corresponds to the roughly 10 ms timescale of excitatory synaptic conductance. The weight function wjk(x − y) represents the connection from neurons in area k with cue preference y to neurons in area j with cue preference x as described by (Equation 2). For comparison with numerical simulations, we take weight functions to be the cosines (Equation 3) and (Equation 4) and the firing rate function to be Heaviside (Equation 5). As in the dual area model, noises Wj(x, t) are white in time and correlated in space so that 〈dWj(x, t)〉 = 0 and

with j, k = 1,…, N, where local noise correlations are described when j = k and noise correlations between areas are described when j ≠ k. For comparison with numerical simulations, we consider Cjj (x) = cos(x) and Cjk (x) = cc cos(x) for all j ≠ k.

Numerical Simulation of Stochastic Differential Equations

The spatially extended model (Equation 1) was simulated using an Euler–Maruyama method with a timestep 10−4, using Riemann integration on the convolution term with 2000 spatial grid points. To compute and compare the variances 〈Δ1(t)2〉 for the dual and multiple area model, we simulated the system 5000 times. The position of the bump Δj at each timestep, in each simulation, was determined by the position x in each area j at which the maximal value of uj(x, t) was attained. The variance was then computed at each timepoint and compared to our asymptotic calculations.

Results

We will now study how interareal architecture affect the dynamics of bumps in multiple area stochastic neural fields. To start, we demonstrate that in the absence of reciprocal connectivity between areas bump attractors exist that are neutrally stable to perturbations that change their position, which has long been known (Amari, 1977; Camperi and Wang, 1998; Ermentrout, 1998). Introducing weak interareal connectivity can decrease the variability in bump position because noise that moves bumps in the opposite direction is canceled due to an attractive force introduced by connectivity. Perturbations that push bumps in the same direction are still integrated, so bumps wander due to dynamic fluctuations, but their effective variance is smaller than it would be without interareal synaptic connections. In the presence of noise correlations between areas, effects of noise cancelation are weaker since stochastic forcing in each area is increasingly similar. Our asymptotic analysis is able to explain all of this with its resulting multivariate Ornstein–Uhlenbeck process.

Bumps in the Noise-Free System

To begin, we seek stationary solutions to Equation (1) in the absence interareal connections and noise (ε → 0). Similar analyses have been carried out for bumps in single area populations (Ermentrout, 1998; Hansel and Sompolinsky, 1998). For this study, we assume recurrent connections are identical in all areas (wjj = w). Relaxing this assumption slightly does not dramatically alter our results. Note first stationary solutions take the form (u1(x, t), u2(x, t)) = (U1(x), U2(x)). In the absence of any interareal connections, we would not necessarily expect the peaks of these bumps to be at the same location. However, translation invariance of the system (Equation 1) allows us to set the center of both bumps to be x = 0 to ease calculations. The stationary bump solutions then satisfy the system

so the shape of each bump is only determined by the local connections w. For w given by Equation (3), since U1(x) and U2(x) are assumed to be peaked at x = 0, then by also assuming even symmetric solutions, we find

where we use cos(x − y) = cosx cosy + sinx siny. We can more easily compute the precise shape of these bumps in case of a Heaviside firing rate function (Equation 5). There is then an identical active region of each bump such that U1(x) > θ and U2(x)> θ when x ∈ (−a, a), so the Equation (8) become U1(x) = U2(x) = 2sina cosx. Applying self-consistency, U1(±a) = U2(±a) = θ, we can generate an implicit equation for the half-widths of the bumps a given by 2sina cosa = sin(2a) = θ. Solving this explicitly for a, we find two solutions on and . Only the bump associated with as is stable.

The bumps (Equation 7) are neutrally stable to perturbations in both directions, which can lead to encoding error once the effects of dynamic fluctuations are considered (Kilpatrick et al., 2013). Since the two areas are uncoupled, examining bumps' stability can be reduced to studying each bump's stability individually (see Kilpatrick and Ermentrout, 2013 for details). Translating a bump by a scaling of the spatial derivative U′(x), we find uj(x, t) = Uj(x) + ε1/2 U′j(x) eλt is associated with a zero eigenvalue (λ = 0), corresponding to neutral stability. To see this, we plug it into the corresponding bump equation of Equation (1) in the absence of noise and interareal connections and examine the linearization

Note, in the limit of infinite gain γ → ∞, a sigmoid f becomes the Heaviside (Equation 5), and

in the sense of the distributions. Equation (9) still hold in this case. Differentiating (Equation 7), and integrating by parts, we find

where the boundary terms vanish due to periodicity of the domain [−π, π]. Thus, the right hand side of Equation (9) vanishes, and λ = 0 is the only eigenvalue corresponding to translating perturbations. Thus, either bump (in area 1 or 2) is neutrally stable to perturbations that shifts its position in either direction (rightwards or leftwards), since the bump in each area experiences no force from the other bump.

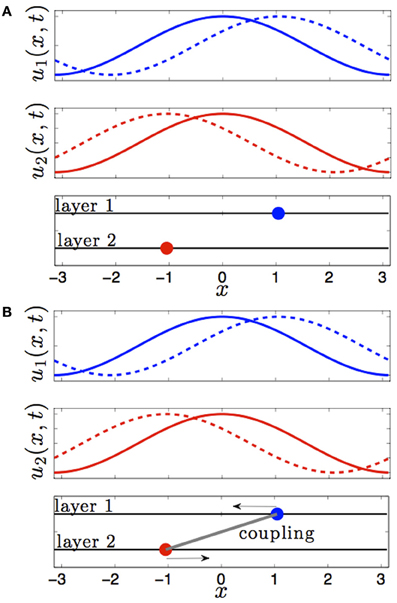

This changes when we consider the effect of interareal connectivity. Once the two areas of Equation (1) are reciprocally coupled, bumps are stable to perturbations that translate them in opposite directions of one another (see Figure 1). Interareal connections act as a restoring force between the two positions of each bump. We will demonstrate this in the subsequent section by deriving a linear stochastic system for the position of either bump in the presence of small noise and weak interareal connectivity. The restorative nature of interareal connectivity is revealed by the negative eigenvalue associated with the interaction matrix (Equation 15) of our stochastic system, as shown in Equation (18).

Figure 1. Effect of interareal coupling on the stability of bumps to translating perturbations. (A) In the absence of interareal coupling, bumps (solid) are neutrally stable to perturbations (dashed) that translate them in opposite directions. (B) In the presence of interareal coupling, bumps are linearly stable, as revealed by the negative eigenvalue in Equation (18), to perturbations that translate them in opposite directions.

Noise-Induced Wandering of Bumps

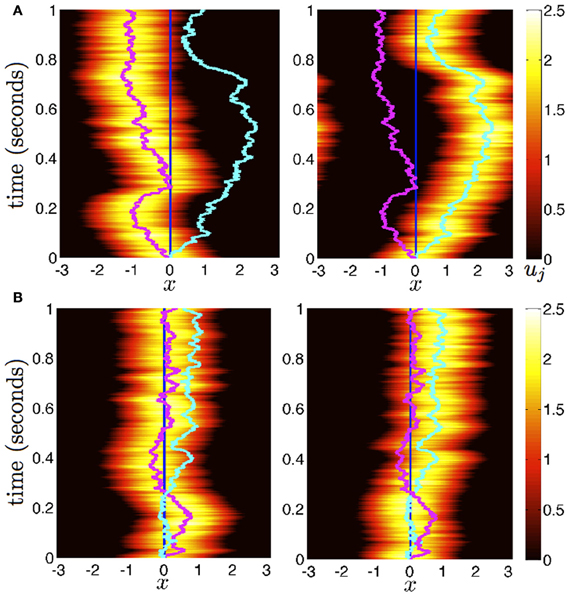

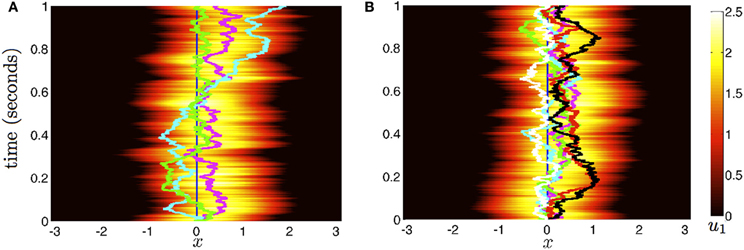

Now we consider the effects of small noise on the position of bumps in the presence of weak interareal connections. We start by presuming noise generates two distinct effects in the bumps (see Figure 2). First, noise causes both bumps to wander away from their initial positions, while still being pulled back into place by the bump in the other area. Bump position in areas 1 and 2 will be described by the time-varying stochastic variables Δ1(t) and Δ2(t). Second, noise causes fluctuations in the shape of both bumps, described by a correction Φj. To account for this, we consider the ansatz

Figure 2. Diffusion of bumps in the dual area stochastic neural field (Equation 1). (A) Without interareal connections (w12 = w21 ≡ 0), each bump executes Brownian motion about the domain, due to stochastic forces. (B) In the presence of interareal connections , the position of bump 1 (magenta) is attracted to the position of bump 2 (cyan) and vice versa. Due to the reversion of each bump to the position of the other, both bumps effectively wander the domain less. Local connectivity is described by the cosine (Equation 3); the firing rate function is Equation (5). Other parameters are threshold θ = 0.5 and noise amplitude ε = 0.025.

Armero et al. (1998) originally developed this approach to analyze of front propagation in stochastic PDE models. In stochastic neural fields, it has been modified to analyze wave propagation (Bressloff and Webber, 2012a) and bump wandering (Kilpatrick and Ermentrout, 2013). Plugging the ansatz (Equation 11) into the system (Equation 1) and expanding in powers of ε1/2, we find that at  (1), we have the bump solution (Equation 7). Proceeding to

(1), we have the bump solution (Equation 7). Proceeding to  (ε1/2), we find

(ε1/2), we find

where  is the 2 × 1 vector function

is the 2 × 1 vector function

Φ = (Φ1(x, t), Φ2(x, t))T; and is the linear operator

for any vector u = (u(x)v(x)T of integrable functions. Note that the nullspace of  includes the vectors (U′1, 0)T and (0, U′2)T, due to Equation (10). The last terms in the right hand side vector of Equation (12) arise due to interareal connections. We have linearized them under the assumption |Δ1 − Δ2| remains small, so

includes the vectors (U′1, 0)T and (0, U′2)T, due to Equation (10). The last terms in the right hand side vector of Equation (12) arise due to interareal connections. We have linearized them under the assumption |Δ1 − Δ2| remains small, so

where j = 1, 2 and k ≠ j. To make sure that a solution to Equation (12) exists, we require the right hand side is orthogonal to all elements of the null space of the adjoint  , which is defined

, which is defined

for any integrable vector p = (p(x)q(x))T. It then follows

We can show that the nullspace of  contains the vector f1 = (f′(U1)U′1, 0)T by plugging it into Equation (13) to yield

contains the vector f1 = (f′(U1)U′1, 0)T by plugging it into Equation (13) to yield

where 0 = (0, 0)T and we use Equation (10). We can also show the nullspace of  contains f2 = (0, f′(U2)U′2)T in the same way. Thus, we can ensure Equation (12) has a solution by taking the inner product of both sides of Equation (12) with the two null vectors to yield

contains f2 = (0, f′(U2)U′2)T in the same way. Thus, we can ensure Equation (12) has a solution by taking the inner product of both sides of Equation (12) with the two null vectors to yield

where we define the inner product 〈u, v〉 = ∫π−π u(x)v(x)dx. Therefore, the stochastic vector Δ(t) = (Δ1(t), Δ2(t))T obeys the multivariate Ornstein–Uhlenbeck process

where effects of interareal connections are described by the matrix

with

and (w12 * f(U2)) · U′1 and (w21 * f(U1)) · U′2 vanish upon integration since they are odd. Noise is described by the vector  with

with

The white noise term W has zero mean 〈W(t)〉 = 0 and variance described by pure diffusion so 〈W(t)WT(t)〉 = Dt with

where the associated diffusion coefficients of the variance are

where Fj(x) = f′(Uj(x))U′j(x) and covariance is described by the coefficient

In the next section, we analyze this stochastic system (Equation 14), showing how coupling between areas can reduce the variability of the bump positions Δ1(t) and Δ2(t).

Effect of Coupling on Bump Position Variance

To analyze the Ornstein–Uhlenbeck process (Equation 14), we start by diagonalizing the matrix K = V Λ V−1 using the eigenvalue decomposition

such that Λ is the diagonal matrix of eigenvalues; columns of V are right eigenvectors; and rows of V−1 are left eigenvectors. Eigenvalues λ1, λ2 and eigenvectors v1, v2 inform us of the effect of interareal coupling on linear stability. The eigenvalue λ1 = 0 corresponds to the neutral stability of the positions (Δ1, Δ2)T to translations in the same direction v1 = (1, 1)T. The negative eigenvalue λ2 = − (κ1 + κ2) corresponds to the linear stability introduced by interareal connections. The positions (Δ1, Δ2)T revert to one another when perturbations translate them in opposite directions v2 = (κ1, −κ2)T.

Diagonalizing K = V Λ V−1 using Equation (18), we can compute the mean and variance of the vector Δ(t) given by Equation (14). First, note that the mean 〈Δ(t)〉 = eKt Δ(0) (Gardiner, 2003), which we can compute

using the diagonalization eKt = VeΛt V−1. Since λ2 = −(κ1 + κ2) < 0,

Thus, the means of Δ1(t) and Δ2(t) always relax to the same position in long time, due to the linear stability introduced by connections between areas. Under the assumption they both begin at Δ1(0) = Δ2(0) = 0, the covariance matrix is given (Gardiner, 2003)

where D is the covariance coefficient matrix of the white noise vector W(t) given by Equation (17). To compute Equation (19), we additionally need the diagonalization KT = (V−1)T Λ VT, so eKT t = (V−1)T eΛ t VT. After multiplying and integrating (Equation 19), we find the elements of the covariance matrix

are

where the effective diffusion coefficients are

so that D+ and D− are variances of noises occurring along the eigendirections v1 and v2. The functions r1(t), r2(t) are exponentially saturating

The main quantities of interest to us are the variances (Equation 20) and (Equation 21) with which we can make a few observations concerning the effect of interareal connections on the variance of bump positions.

First, note the long term variance of either bump's position Δ1(t) and Δ2(t) will be the same, described by the averaged diffusion coefficient D+, since

As the effective coupling strengths κj are increased, we can expect the variances 〈Δj(t)2〉 approach these limits at faster rates since other portions of the variance decay at a rate proportional to |λ2| = κ1 + κ2.

Next, we study the case, across all times t, where connections between areas are the same (w12 ≡ w21 = wr) and noise within areas is identical (D1≡ D2 = Dl), the mean reversion rates will be the same (κ1 = κ2 = κ) and terms in Equation (23) cancel so Dr = 0. Thus, the variances will be identical (〈Δ1(t)2〉 = 〈Δ2 (t)2〉 = 〈Δ(t)2〉) and

This demonstrates the way in which correlated noise (Dc) contributes to the variance. When noise within each area is shared (Dc → Dl), there is no benefit to interareal coupling and 〈Δ(t)2〉 = Dlt (see Kilpatrick and Ermentrout, 2013). However, when any noise is not shared between areas (Dc < Dl), variance can be reduced by increasing coupling strength κ between areas. The variance 〈Δ(t)2〉 is monotone decreasing in κ since

Inequality holds because (1 + 4 κt) ≤ e4 κt is ensured by the Taylor series expansion of e4 κt when κt > 0.

Thus, variance is minimized in the limit

Therefore, strengthening interareal connections in both directions reduces the variance in bump position. On the other hand, in the limit of no interareal connections, we find limκ→0〈Δ(t)2〉 = Dlt, and the variance in a bump's position is determined entirely by local sources of noise.

Returning to asymmetric connectivity (κ1 ≠ κ2), we consider the case of feedforward connectivity from area 1 to 2 (w12≡ 0), κ1 = 0, so D+ = D1 and the formulas for the variances reduce to

so the pure diffusive term of both variances is wholly determined by the local noise of area 1. Then, only the position of the bump in area 2 possesses additional mean-reverting fluctuations in its position, which arise from local sources of noise that force it away from the position of the bump in area 1. In this situation, the variance of the bump in area 2's position is minimized when

Comparing this with Equation (26) we see that, since Dc ≤ D1, the variances 〈Δj(t)2〉 will always be higher in this case than in the case of very strong reciprocal coupling between both areas. Averaging information and noise between both areas decreases positional variance as opposed to one area simply receiving noise and information from another. Similar results have been recently identified in the context of studying synchrony of reciprocally coupled noisy oscillators (Ly and Ermentrout, 2010).

One important caveat is that if area 1 has more noise than area 2, the weighting of reciprocal connectivity, κ1 and κ2, should be balanced to minimize the variance. If the average diffusion coefficient D+ is weighted too heavily with the area having the larger variance, the area with less intrinsic noise can end up noisier than it would be without reciprocal connectivity. To see this in the extreme case feedforward coupling, note that if D2 < D1, then D2t < D1t < 〈Δ2(t)2〉. Thus, the variance of Δ2(t) increases as opposed to the uncoupled case where 〈Δ2(t)2〉 = D2t.

We now derive the optimal weighting of κ1 and κ2 to minimize the long term variance (Equation 25) for general asymmetric connectivity, in the absence of correlated noise Dc = 0. To do so, we fix κ2 and find the κ1 that minimizes D+, which happens to be

Thus, for identical noise D1 = D2, setting κ1 = κ2 minimizes D+. For much stronger noise in area 2 (D2 » D1), κ1 should be made relatively small. In the case of noise correlations between areas (Dc > 0), the optimal value of κ1 that minimizes (Equation 25) is

Calculating the Stochastic Motion of Bumps

We now compute the effective variances (Equation 20) and (Equation 21), considering the specific case of Heaviside firing rate functions (Equation 5), cosine synaptic weights (Equation 3) and (Equation 4). Doing so, we can compare our asymptotic results to those computed from numerical simulations. We compute the mean reversion terms κ1 and κ2 by noting the spatial derivative of each bump will be U′1(x) = U′2(x) = −2 sina sinx and the null vector components are

for j = 1, 2. Plugging these formulae into Equation (16), we find κ1 = ε1/2M1 and κ2 = ε1/2M2.

We first consider the case of uncorrelated noise between areas, so cc ≡ 0, meaning Dc = 0. We can compute the diffusion coefficients associated with the local noise in each area assuming cosine spatial correlations

We can then compute Equations (20) and (21) directly, for the case of no noise correlations between areas, by plugging in Equation (27).

For symmetric connections between areas, κ = ε1/2 M1 = ε1/2M2, as well as identical noise, c1 = c2 = 1, we have 〈Δ1(t)2〉 = 〈Δ2(t)2〉 = 〈Δ(t)2〉 and

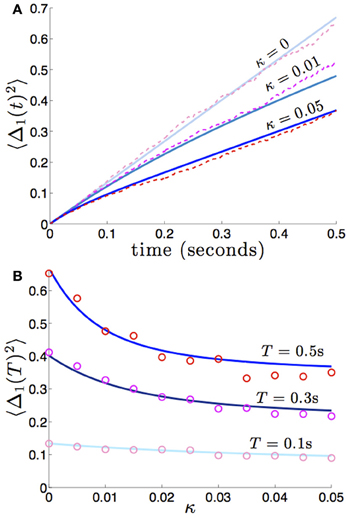

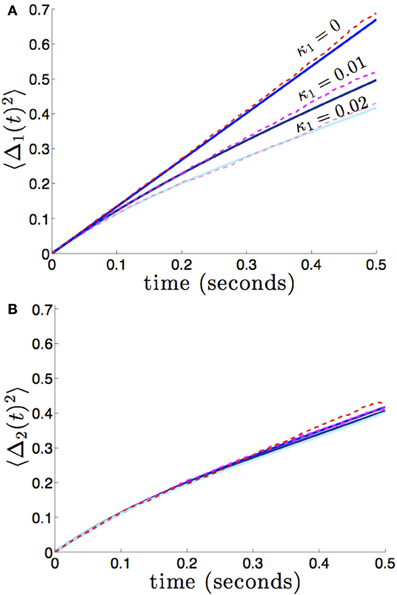

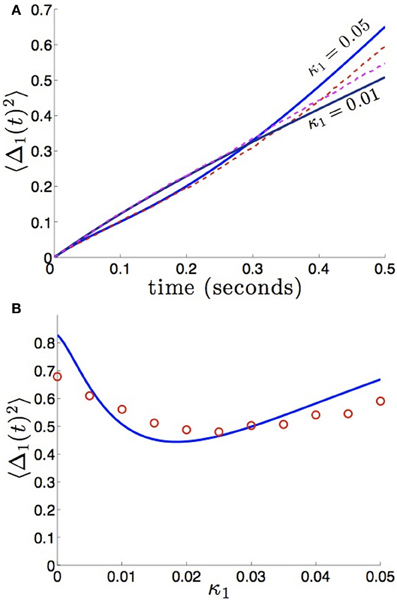

We compare the formula (28) to results we obtain from numerical simulations in Figure 3, finding our asymptotic formula (28) matches quite well. In addition, we compare our results for general (possibly asymmetric) reciprocal connectivity to results from numerical simulations in Figure 4. We also show in Figure 5, as predicted, when κ2 is held fixed, there is a finite optimal value of κ1 that minimizes variance 〈Δ1 (t)2〉. Therefore, reciprocal connectivity in multi-area networks should be balanced, in order to minimize positional variance of the stored bump.

Figure 3. Variance in the position of bumps as computed numerically (red shades) and from theory (blue shades) using Equation (28). Coupling between areas is symmetric , so 〈Δ1(t)2〉 = 〈Δ2(t)2〉, and there is no shared noise (cc = 0). (A) The increase in variance is slower for stronger amplitudes of interareal coupling κ. Notice variance climbs sublinearly for κ > 0, due to the mean-reversion caused by coupling. (B) Variance drops considerably more over low values of κ that over high values. Other constituent functions and parameters are the same as in Figure 2.

Figure 4. Variance in the position of bumps as it depends on asymmetric reciprocal connectivity (κ1 ≠ κ2) when noise in each area is independent and identical (c1 = c2 = 1). Fixing κ2 = 0.02 and varying κ1, we find (A) the variance 〈Δ1(t)2 of bump 1 decreases as coupling from area 2 to 1 (κ1) increases; (B) variance 〈Δ2(t)2〉 of bump 2 remain relatively unchanged. Other constituent functions and parameters are the same as in Figure 2.

Figure 5. Bump position variance depends non-monotonically on asymmetric connectivity strength. (A) For κ2 = 0.01 and high enough values of coupling (κ1 = 0.05), variance 〈Δ1(t)2〉 scales more quickly than for symmetric coupling (κ1 = 0.01). Layer 1 is being sourced by the noisier area 2. (B) Non-monotonic dependence of variance 〈Δ1(t)2〉 on projection strength from area 2 to area 1 κ1 is shown for fixed time T = 50 and κ2 = 0.01 fixed. Amplitude of noise in area 2 is twice that of area 1 (c1 = 1 and c2 = 2). Other constituent functions and parameters are the same as in Figure 2.

Next, we consider the case of correlated noise between areas, so cc > 0, meaning Dc > 0. In this case, the covariance terms in D+ and D− are non-zero. We can thus compute the diffusion coefficient associated with correlated noise

In the case of symmetric connections between areas, κ = ε1/2M1 = ε1/2M2, and identical internal noise, c1 = c2 = 1, we have 〈Δ1(t)2〉 = 〈Δ2(t)2〉 = 〈Δ(t)2〉 and

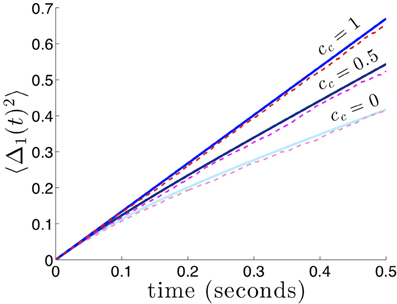

which reflects the fact that interareal connections do not reduce variability as much when there are strong noise correlations cc between areas. We demonstrate the accuracy of the theoretical calculation (Equation 29) as compared to numerical simulations in Figure 6. Numerical simulations also reveal the fact that stronger noise correlations between areas diminish the effectiveness of interareal connections at reducing bump position variance.

Figure 6. Variance in the position of bumps as noise correlation between areas is increased. Numerically computed variance (red shades) match theoretical curves from Equation (29), blue shades, very well. Reciprocal connectivity reduces variability the most when there is no correlated noise (cc = 0) between areas. As the shared noise between areas increased is amplitude (cc = 0.5, 1), the advantage of reciprocal connectivity is diminished. When cc = 1 changing κ does not affect the variance 〈Δ(t)2〉 (see formula (29) in the limit cc → 1). Other constituent functions and parameters are the same as in Figure 2.

Reduction of Bump Wandering in Multiple Areas

We now examine the effect of interareal connections in networks with more than two areas using the system (Equation 6). As with the dual area network without noise or interareal connectivity, stationary bump solutions take the form (u1, …, uN) = (U1(x),…, UN(x)), and translation invariance let us to set all bump peaks to be located at x = 0 so

As before, we presume wjj = w, and relaxing this assumption does not dramatically alter our results. Linear stability analysis of bumps proceeds along similar lines to the dual area network, so we omit those calculations and summarize the results. In the absence of interareal connections, each bump is neutrally stable to perturbation in either direction. In the presence of interareal connections, all bumps are only neutrally stable to translations that move them all in the same direction. Therefore, networks with more areas provide more perturbation cancelations.

To study how noise and interareal connections affect the trajectory of bump positions, we again note noise causes all bumps to wander away from their initial position, while being pulled back into place by projections from other areas (see Figure 7). The position of the bump in area j is described by the stochastic variable Δj. Noise also causes fluctuations in the shape of both bumps, which is described by the correction term Φj. Therefore, we presume the resulting state of the system satisfies the ansatz

where j = 1,…, N. Plugging this ansatz into Equation (6) and expanding in powers of ε1/2, we find that at  (1), we simply have the system of Equation (30) for the bump solutions. Proceeding to

(1), we simply have the system of Equation (30) for the bump solutions. Proceeding to  (ε1/2), we find

(ε1/2), we find

where  is an N × 1 vector whose jth entry is

is an N × 1 vector whose jth entry is

Φ = (Φ1(x, t), …, ΦN(x, t))T; and  is the linear operator

is the linear operator

for any integrable vector Ψ = (Ψ1(x),…, ΨN(x))T. The nullspace of  is spanned by the vectors (U′1, 0, …, 0)T; (0, U′2, 0, …, 0)T; …; and (0, …, 0, U′N)T, which can be seen by differentiating (Equation 30). The last terms on the right hand side of Equation (31) arise due to interareal connections. We have linearized them under the assumption that |Δk − Δj| remains small for all j, k. To ensure a solution to Equation (31), we require the right hand side is orthogonal to all elements of the null space of the adjoint operator

is spanned by the vectors (U′1, 0, …, 0)T; (0, U′2, 0, …, 0)T; …; and (0, …, 0, U′N)T, which can be seen by differentiating (Equation 30). The last terms on the right hand side of Equation (31) arise due to interareal connections. We have linearized them under the assumption that |Δk − Δj| remains small for all j, k. To ensure a solution to Equation (31), we require the right hand side is orthogonal to all elements of the null space of the adjoint operator  . The adjoint is defined with respect to the inner product

. The adjoint is defined with respect to the inner product

where ϒ = (ϒ1(x),…,ϒN(x))T is integrable. It then follows

Figure 7. Stochastic evolution of bump position in multi-area networks. (A) With weak coupling for j ≠ k) between N = 3 areas, the position of bumps 1 (magenta), 2 (cyan), and 3 (green) reverts to one another. We show only the evolution of activity u(x, t) in area 1. (B) For N = 6 areas and the same interareal coupling, the reduction in bump wandering is even more considerable. The trajectories of bumps in all areas (colored lines) stay close together. All other parameters are as in Figure 2.

The nullspace of  contains the vectors (f′(U1)U′1, 0, …, 0)T; (0, f′(U2)U′2, 0, … 0)T; …; and (0, …, 0, f′(UN)UN′), which can be shown by applying

contains the vectors (f′(U1)U′1, 0, …, 0)T; (0, f′(U2)U′2, 0, … 0)T; …; and (0, …, 0, f′(UN)UN′), which can be shown by applying  to them and using the formula generated by differentiating (Equation 30). Thus, to be sure (Equation 31) has a solution, we take the inner product of both sides of the equation with all N null vectors and isolate dΔj terms to yield the multivariate Ornstein–Uhlenbeck process

to them and using the formula generated by differentiating (Equation 30). Thus, to be sure (Equation 31) has a solution, we take the inner product of both sides of the equation with all N null vectors and isolate dΔj terms to yield the multivariate Ornstein–Uhlenbeck process

where effects of interareal connections are described by the matrix K ∈ ℝN×N where the diagonal and off-diagonal entries are given

for j = 1,…, N and k ≠ j, where

and we have used the fact that wjk * f(Uk) · U′j is an odd function for all j, k, so they vanish on integration. Stochastic forces are described by the vector

The white noise vector W(t) has zero mean 〈W(t)〉 = 0, and covariance matrix 〈W(t)WT(t)〉 = Dt where associated coefficients of the matrix D are

where Fj(x) = f′(Uj(x))U′j(x), which describe the variance within an area and

which describes covariance between areas. Since correlations are symmetric Cjk(x) = Ckj(x) for all j, k, then Djk = Dkj for all j, k.

A detailed analysis of the linear stochastic system (Equation 32) is difficult without some knowledge of the entries κjk. However, we can make a few general statements. We note that all eigenvalues of K must have negative real part or be zero, due to the Gerschgorin circle theorem (Feingold and Varga, 1962), which states that all eigenvalues a matrix K must lie in one of the disks with center Kjj and radius ∑k≠j |Kjk|. Since Kjj = − ∑k≠j κjk and Kjk = κjk, then

is the maximal possible eigenvalue, since κjk ≥ 0 for all j, k. Therefore, we expect N eigenpairs λj, vk associated with K, where λN ≤ λN − 1 ≤ … ≤ λ2 ≤ λ1 = 0. This means we can perform the diagonalization K = V Λ V−1, where Λ is the diagonal matrix of eigenvalues; columns of V are right eigenvectors; and rows of V−1 are left eigenvectors. Therefore, we can decompose the stochastic solution to Equation (32), when Δ(0) = 0 as

Thus, as we expect, any stochastic fluctuations in Equation (32) will be integrated or decay over time due to the exponential filters eλj(t − s). In addition, when Δ(0) = 0 the covariance matrix can be computed as

where D is the matrix of diffusion coefficients for the covariance 〈W(t) WT(t)〉. We now compute the covariance in the specific case of symmetric connectivity.

In the case of symmetric connectivity between areas, wjk = wr for all j ≠ k, so κjk = κ for all j ≠ k. Effects of connectivity between areas are described by the symmetric matrix

where JN is the N × N matrix of ones and I is the identity. The eigenvalues of JN are N, with multiplicity one, and zero, with multiplicity N − 1. Thus, the largest eigenvalue of K = κJN − N κI is λ1 = 0 with associated eigenvector v1 = (1,…, 1)T. All other eigenvalues are λj = −Nκ for j ≥ 2, with associated eigenvectors vj = e1 − ej, where j = 2, …, N and ej is the unit vector with a one in the jth row and zeros elsewhere. Our diagonalization of the symmetric matrix K = KT = V Λ V−1 then involves the diagonal matrix Λ of eigenvalues λj; the symmetric matrix V whose columns vj are right eigenvectors; and the symmetric matrix V−1 whose rows are left eigenvectors. The matrix V−1 takes the form

We can thus compute the covariance using the diagonalization eKt = eKTt = V eΛt V−1. In addition, we will assume each area receives noise with identical statistics (Djj = Dl) and there are identical noise correlations between areas (Djk = Dc for j ≠ k), so D = (Dl − Dc)I + DcJN. Multiplying and integrating (Equation 34), we find the diagonal entries (variances) of 〈Δ(t)ΔT(t)〉 are

and the off-diagonal entries (true covariances) are

As revealed by the diffusive term in Equation (35), the system still possesses a rotational symmetry, given by the action of rotating all the bumps in the same direction. Thus, the component of noise in this direction is not damped out by coupling. Thus, note that the long term variance of any bump's position Δj(t) will be approximately described by the averaged diffusion

As the strength of coupling κ or number of areas N is increased, the variances 〈Δj(t)2〉 approach this limit at a faster rate, since the other portions of variance decay at a rate proportional to |λ2| = Nκ. Note also that in the limit Dc → Dl, effects of coupling are negligible and the long term variance of each bump is determined by the diffusion introduced by its area's internal noise.

Returning to study the full variance Equation (35) for symmetric coupling and noise, we make a few observations. First, in the limit of purely correlated noise across areas (Dc → Dl), interareal connections have no effect, and 〈Δj(t)2〉 = Dlt for all areas and arbitrary coupling strength. However, if there is any independent noise in each area (Dc < Dl), variance 〈Δj(t)2〉 can always be reduced further by increasing coupling strength or the number of areas since

where inequality (1 + 2Nκt) ≥ e2Nκt holds due to the Taylor expansion of e2Nκt when Nκt ≥ 0, and

when N ≥ 2, since Dl ≥ Dc and due to the Taylor expansion of e2Nκt. Note, we have temporarily treated N as a continuous variable. Thus, we know the variance 〈Δj(t)2〉 to decrease with increasing κ and expect it to decrease with increasing N.

We can compute the variance 〈Δj(t)2〉 explicitly in the case of Heaviside firing rate functions (Equation 5), cosine synaptic weights (Equation 3) and (Equation 4). With these assumptions, as well as there being identical noise to all areas (cjj = 1 for all j, cjk = cc for j ≠ k), we find

so that

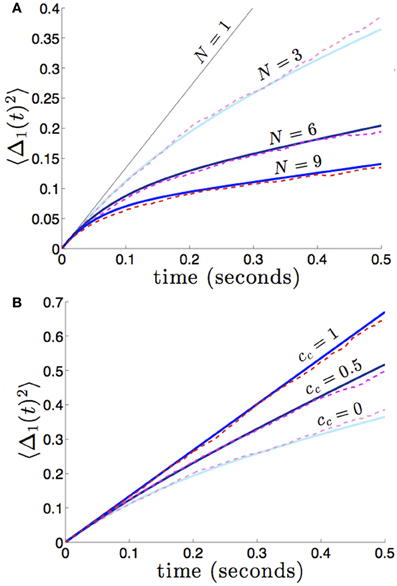

which reflects the fact that increasing the number of areas will decrease variability, when noise between areas is not too strongly correlated. We demonstrate the accuracy of this formula (36) in Figure 8. In numerical simulations, as predicted by our asymptotic calculations, the variance scales more slowly in time in networks with more areas.

Figure 8. (A) Variance in the position of the bump in the first area 〈Δ1(t)2〉 builds up more slowly in networks with more areas N, and we expect similar behavior in all other areas. Fixing the strength of interareal connections, for j ≠ k, we see that varying N decreases the variance 〈Δj(t)2〉. (B) As in dual area networks, increasing the level of noise correlations between areas diminishes the effectiveness of interareal connectivity as a noise cancelation mechanism. Other parameters are as in Figure 2.

Discussion

We have shown that interareal coupling in multi-area stochastic networks can reduce the diffusive wandering of bumps. Since bump attractors offer a well studied model of persistent activity underlying spatial working memory (Compte et al., 2000), our results provide a novel suggestion for how the memory networks may reduce error. Our calculations have exploited a small noise approximation for the position of the bump in each area (Armero et al., 1998; Bressloff and Webber, 2012a). Assuming connectivity between areas is weak, we have shown the equations describing bump positions reduce to a multivariate Ornstein–Uhlenbeck process. In this formulation, we find interareal connectivity stabilizes all but one eigendirection in the space of bump position movements. Neutral stability does still exist, so stochastic forces that move bumps in all areas in the same direction do not decay away. However, sources of noise that force bumps in opposite directions create bump movements that will decay with time. Thus, interareal connectivity provides a noise cancelation mechanism that operates by stabilizing the bumps in each area to stochastic forces that push them in opposite directions. (Polk et al., 2012) recently explored noise correlation statistics in persistent state networks that reduce wandering. Our work complements these results by studying synaptic architectures that limit persistent state diffusion.

Storing spatial working memories with neural activity that spans multiple brain areas does serve other purposes than potential noise cancelation. Delayed response tasks that lead to limb motion can generate persistent activity in the parietal cortex (Colby et al., 1996; Pesaran et al., 2002) so that motor responses can be readily executed. In addition, superior colliculus demonstrates sustained activity (Basso and Wurtz, 1997), which is an area also thought to underlie directed behavioral responses. Therefore, activity is distributed between areas providing short term information storage, like prefrontal cortex (Goldman-Rakic, 1995), and those responsible for motor responses and/or behavior. An additional effect of this delegation of activity is that reciprocal connections between areas may provide noise cancelation during the storage period of working memory. However, our work suggests distributing working memory-serving neural activity between areas that receive strongly correlated noise will not provide as effective cancelation.

Our work should be contrasted with several other results concerning the stabilization of networks that encode a continuous variable (Koulakov et al., 2002; Goldman et al., 2003; Cain and Shea-Brown, 2012; Kilpatrick et al., 2013). Pure integrators, which are usually line attractors, are notoriously fragile to parametric perturbations, so (Koulakov et al., 2002) suggested they may be made more robust by considering networks that integrate in discrete bursts, rather than continuously. This can be implemented by considering a population of bistable neural units so that firing rate integration of a stimulus occurs in a stairstep fashion, rather than a ramplike fashion (see Goldman et al., 2003 for example). Related ideas were recently implemented in a bump attractor model of spatial working memory (Kilpatrick et al., 2013), but quantization was implemented with synaptic architecture rather than single neural unit properties. As opposed to the approach of quantizing the space of possible stimulus representations, we have kept the representation space a continuum. Deleterious effects of noise are reduced by considering reciprocal connectivity between encoding areas that redundantly represent the stimulus. Due to noise cancelations, the encoding error of the network decreases as the number of areas is increased.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Amari, S. (1977). Dynamics of pattern formation in lateral-inhibition type neural fields. Biol. Cybern. 27, 77–87. doi: 10.1007/BF00337259

Armero, J., Casademunt, J., Ramirez-Piscina, L., and Sancho, J. M. (1998). Ballistic and diffusive corrections to front propagation in the presence of multiplicative noise. Phys. Rev. E 58, 5494–5500. doi: 10.1103/PhysRevE.58.5494

Basso, M. A., and Wurtz, R. H. (1997). Modulation of neuronal activity by target uncertainty. Nature 389, 66–69. doi: 10.1038/37975

Ben-Yishai, R., Bar-Or, R. L., and Sompolinsky, H. (1995). Theory of orientation tuning in visual cortex. Proc. Natl. Acad. Sci. U.S.A. 92, 3844–3848. doi: 10.1073/pnas.92.9.3844

Bressloff, P. C., and Webber, M. A. (2012a). Front propagation in stochastic neural fields. SIAM J. Appl. Dyn. Syst. 11, 708–740. doi: 10.1137/110851031

Bressloff, P. C., and Webber, M. A. (2012b). Neural field model of binocular rivalry waves. J. Comput. Neurosci. 32, 233–252. doi: 10.1007/s10827-011-0351-y

Cain, N., and Shea-Brown, E. (2012). Computational models of decision making: integration, stability, and noise. Curr. Opin. Neurobiol. 22, 1047–1053. doi: 10.1016/j.conb.2012.04.013

Camperi, M., and Wang, X. J. (1998). A model of visuospatial working memory in prefrontal cortex: recurrent network and cellular bistability. J. Comput. Neurosci. 5, 383–405. doi: 10.1023/A:1008837311948

Chafee, M. V., and Goldman-Rakic, P. S. (1998). Matching patterns of activity in primate prefrontal area 8a and parietal area 7ip neurons during a spatial working memory task. J. Neurophysiol. 79, 2919–2940.

Clements, J. D., Lester, R. A., Tong, G., Jahr, C. E., and Westbrook, G. L. (1992). The time course of glutamate in the synaptic cleft. Science 258, 1498–1501. doi: 10.1126/science.1359647

Colby, C. L., Duhamel, J. R., and Goldberg, M. E. (1996). Visual, presaccadic, and cognitive activation of single neurons in monkey lateral intraparietal area. J. Neurophysiol. 76, 2841–2852.

Compte, A., Brunel, N., Goldman-Rakic, P. S., and Wang, X. J. (2000). Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cereb. Cortex 10, 910–923. doi: 10.1093/cercor/10.9.910

Constantinidis, C., and Wang, X.-J. (2004). A neural circuit basis for spatial working memory. Neuroscientist 10, 553–565. doi: 10.1177/1073858404268742

Curtis, C. E. (2006). Prefrontal and parietal contributions to spatial working memory. Neuroscience 139, 173–180. doi: 10.1016/j.neuroscience.2005.04.070

Curtis, C. E., and Lee, D. (2010). Beyond working memory: the role of persistent activity in decision making. Trends Cogn. Sci. 14, 216–222. doi: 10.1016/j.tics.2010.03.006

di Pellegrino, G., and Wise, S. P. (1993). Visuospatial versus visuomotor activity in the premotor and prefrontal cortex of a primate. J. Neurosci. 13, 1227–1243.

Ermentrout, B. (1998). Neural networks as spatio-temporal pattern-forming systems. Rep. Progress Phys. 61, 353. doi: 10.1088/0034-4885/61/4/002

Feingold, D. G., and Varga, R. S. (1962). Block diagonally dominant matrices and generalizations of the gerschgorin circle theorem. Pacific J. Math. 12, 1241–1250.

Ferster, D., and Miller, K. D. (2000). Neural mechanisms of orientation selectivity in the visual cortex. Annu. Rev. Neurosci. 23, 441–471. doi: 10.1146/annurev.neuro.23.1.441

Folias, S. E., and Ermentrout, G. B. (2011). New patterns of activity in a pair of interacting excitatory-inhibitory neural fields. Phys. Rev. Lett. 107:228103. doi: 10.1103/PhysRevLett.107.228103

Funahashi, S., Bruce, C. J., and Goldman-Rakic, P. S. (1989). Mnemonic coding of visual space in the monkey's dorsolateral prefrontal cortex. J. Neurophysiol. 61, 331–349.

Goldman, M. S., Levine, J. H., Major, G., Tank, D. W., and Seung, H. S. (2003). Robust persistent neural activity in a model integrator with multiple hysteretic dendrites per neuron. Cereb. Cortex 13, 1185–1195. doi: 10.1093/cercor/bhg095

Goldman-Rakic, P. S. (1995). Cellular basis of working memory. Neuron 14, 477–485. doi: 10.1016/0896-6273(95)90304-6

Gupta, A., Wang, Y., and Markram, H. (2000). Organizing principles for a diversity of gabaergic interneurons and synapses in the neocortex. Science 287, 273–278. doi: 10.1126/science.287.5451.273

Hansel, D., and Mato, G. (2013). Short-term plasticity explains irregular persistent activity in working memory tasks. J. Neurosci. 33, 133–149. doi: 10.1523/JNEUROSCI.3455-12.2013

Hansel, D., and Sompolinsky, H. (1998). “Modeling feature selectivity in local cortical circuits,” in Methods in Neuronal Modeling: From Ions to Networks, chapter 13, eds C. Koch, and I. Segev (Cambridge: MIT), 499–567.

Häusser, M., and Roth, A. (1997). Estimating the time course of the excitatory synaptic conductance in neocortical pyramidal cells using a novel voltage jump method. J. Neurosci. 17, 7606–7625.

Hikosaka, O., Sakamoto, M., and Usui, S. (1989). Functional properties of monkey caudate neurons. iii. activities related to expectation of target and reward. J. Neurophysiol. 61, 814–832.

Itskov, V., Hansel, D., and Tsodyks, M. (2011). Short-term facilitation may stabilize parametric working memory trace. Front. Comput. Neurosci. 5:40. doi: 10.3389/fncom.2011.00040

Kilpatrick, Z. P., and Bressloff, P. C. (2010). Binocular rivalry in a competitive neural network with synaptic depression. SIAM J. Appl. Dyn. Syst. 9, 1303–1347. doi: 10.1137/100788872

Kilpatrick, Z. P., and Ermentrout, B. (2013). Wandering bumps in stochastic neural fields. SIAM J. Appl. Dyn. Syst. 12, 61–94. doi: 10.1137/120877106

Kilpatrick, Z. P., Ermentrout, B., and Doiron, B. (2013). Optimizing working memory with spatial heterogeneity of recurrent cortical excitation. (Submitted).

Koulakov, A. A., Raghavachari, S., Kepecs, A., and Lisman, J. E. (2002). Model for a robust neural integrator. Nat. Neurosci. 5, 775–782. doi: 10.1038/nn893

Laing, C. R., and Chow, C. C. (2001). Stationary bumps in networks of spiking neurons. Neural. Comput. 13, 1473–1494. doi: 10.1162/089976601750264974

Levy, R., Friedman, H. R., Davachi, L., and Goldman-Rakic, P. S. (1997). Differential activation of the caudate nucleus in primates performing spatial and nonspatial working memory tasks. J. Neurosci. 17, 3870–3882.

Ly, C., and Ermentrout, G. B. (2010). Coupling regularizes individual units in noisy populations. Phys. Rev. E Stat. Nonlin. Soft. Matter. Phys. 81(1 Pt 1):011911. doi: 10.1103/PhysRevE.81.011911

McNab, F., and Klingberg, T. (2008). Prefrontal cortex and basal ganglia control access to working memory. Nat. Neurosci. 11, 103–107. doi: 10.1038/nn2024

Miller, E. K., Erickson, C. A., and Desimone, R. (1996). Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J. Neurosci. 16, 5154–5167.

Mushiake, H., and Strick, P. L. (1995). Pallidal neuron activity during sequential arm movements. J. Neurophysiol. 74, 2754–2758.

Owen, A. M., Evans, A. C., and Petrides, M. (1996). Evidence for a two-stage model of spatial working memory processing within the lateral frontal cortex: a positron emission tomography study. Cereb. Cortex 6, 31–38. doi: 10.1093/cercor/6.1.31

Pesaran, B., Pezaris, J. S., Sahani, M., Mitra, P. P., and Andersen, R. A. (2002). Temporal structure in neuronal activity during working memory in macaque parietal cortex. Nat. Neurosci. 5, 805–811. doi: 10.1038/nn890

Ploner, C. J., Gaymard, B., Rivaud, S., Agid, Y., and Pierrot-Deseilligny, C. (1998). Temporal limits of spatial working memory in humans. Eur. J. Neurosci. 10, 794–797. doi: 10.1046/j.1460-9568.1998.00101.x

Polk, A., Litwin-Kumar, A., and Doiron, B. (2012). Correlated neural variability in persistent state networks. Proc. Natl. Acad. Sci. U.S.A. 109, 6295–6300. doi: 10.1073/pnas.1121274109

White, J. M., Sparks, D. L., and Stanford, T. R. (1994). Saccades to remembered target locations: an analysis of systematic and variable errors. Vis. Res. 34, 79–92. doi: 10.1016/0042-6989(94)90259-3

Keywords: neural field, bump attractor, spatial working memory, correlations, noise cancelation

Citation: Kilpatrick ZP (2013) Interareal coupling reduces encoding variability in multi-area models of spatial working memory. Front. Comput. Neurosci. 7:82. doi: 10.3389/fncom.2013.00082

Received: 29 April 2013; Paper pending published: 24 May 2013;

Accepted: 11 June 2013; Published online: 01 July 2013.

Edited by:

Ruben Moreno-Bote, Foundation Sant Joan de Deu, SpainReviewed by:

Albert Compte, Institut d'investigacions Biomèdiques August Pi i Sunyer, SpainMoritz Helias, Institute for Advanced Simulation, Germany

Copyright © 2013 Kilpatrick. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and subject to any copyright notices concerning any third-party graphics etc.

*Correspondence: Zachary P. Kilpatrick, Department of Mathematics, University of Houston, 651 Phillip G Hoffman Hall, Houston, 77204-3008 TX, USA e-mail:enBraWxwYXRAbWF0aC51aC5lZHU=