- Bionet Group, Department of Electrical Engineering, Columbia University in the City of New York, New York, NY, USA

We present algorithms for identifying multidimensional receptive fields directly from spike trains produced by biophysically-grounded neuron models. We demonstrate that only the projection of a receptive field onto the input stimulus space may be perfectly identified and derive conditions under which this identification is possible. We also provide detailed examples of identification of neural circuits incorporating spatiotemporal and spectrotemporal receptive fields.

1. Introduction

Recently, a novel class of system identification algorithms, called Channel Identification Machines (CIMs), has been developed for identifying dendritic processing in spiking neural circuits (Lazar and Slutskiy, 2010, 2012). In the simplest setting, CIMs allow one to identify a communication/processing channel in [Filter]-[Asynchronous Sampler] circuits, where the effect of the channel on an input signal can be described by a linear filter and the output of the channel is mapped, or encoded, into a time sequence by a non-linear asynchronous sampler. Such [Filter]-[Asynchronous Sampler] circuits are known in the literature as Time Encoding Machines (TEMs) and their specific embodiments in neuroscience include neural circuit models in which an analog dendritic stimulus processor is followed by a point neuron model encoding the aggregate dendritic current produced by the processor. A few examples of asynchronous samplers include asynchronous sigma/delta modulators (ASDMs), conductance-based point neuron models such as Hodgkin-Huxley, Morris-Lecar, Fitzhugh-Nagumo, Wang-Buzsáki, Hindmarsh-Rose, oscillators with multiplicative coupling and simpler models such as the integrate-and-fire neuron or the threshold-and-fire neuron (Brown et al., 2004; Lazar and Slutskiy, 2012, 2014a).

The above-mentioned asynchronous samplers incorporate the temporal dynamics of spike generation at the axon hillock of biological neurons and allow one to consider, in particular for neuroscience applications, more biologically-plausible non-linear spike generation (sampling) mechanisms. This is in contrast to existing methods, such as the generalized linear model (GLM), which typically assumes a simplified description of the spike generation dynamics by using a static non-linearity. The non-linear contribution of a dynamical system such as the Hodgkin-Huxley neuron model is not static, but dynamic and stimulus-driven (Lazar and Slutskiy, 2014a). It changes from one spike to the next and thus affects the estimation procedure if not properly taken into account. Furthermore, since the non-linearity does not fully capture the complex spike generation dynamics, the filters fit to that non-linearity may not provide an adequate description of the neural circuit, and in particular may confound dendritic processing and encoding (Lazar and Slutskiy, 2014a).

More recently, the CIM methodology has been extended to neural circuits in higher brain centers that receive multidimensional spike trains as input stimuli instead of continuous signals and to circuits with extensive lateral connections and feedback (Lazar and Slutskiy, 2014a). Together with TEMs and Time Decoding Machines (TDMs) for decoding stimuli from spike trains, CIMs provide a unified framework for studying encoding, decoding and identification in neural systems.

The motivation for multidimensional CIMs (Lazar and Slutskiy, 2013) is provided by the concept of a receptive field that is well established in neuroscience. Introduced in 1906 by Sherrington (Sherrington, 1906) to describe an area of the body surface capable of eliciting a reflex in response to a stimulus, the term “receptive field” has been extended to many different sensory modalities and spans many different types of neurons. For example, in the visual system, the receptive field of a photoreceptor is a 3-dimensional cone in space comprising all possible directions in which light can hit the photoreceptor. In the auditory system, receptive fields can correspond to certain spectral regions of audio stimuli. More broadly, the receptive field is that part of the the sensory space that can evoke a neuronal response (Dayan and Abbott, 2005). Spatial and spatiotemporal receptive fields have been successfully used in vision to model retinal ganglion cells in the retina as well as neurons in the lateral geniculate nucleus and the visual cortex (see DeAngelis et al., 1995 for a review). Similarly, spectrotemporal receptive fields have been used to describe responses of auditory neurons (Aertsen and Johannesma, 1981), neurons in cochlear nuclei (Clopton and Backoff, 1991) and neurons in the auditory cortex (Kowalski et al., 1996).

Circuits that process multidimensional feedforward input are often encountered in biological systems (e.g., the retina, Pillow et al., 2008). From a modeling standpoint, one can also consider spiking neural circuits in which, in addition to feedforward input, every neuron may receive lateral inputs from neurons in the same layer. Furthermore, back-propagating action potentials (Waters et al., 2005), or feedback, may affect computations performed within the dendritic tree. Until now, CIMs for circuits with lateral connectivity and feedback have only been considered in the context of scalar or vector-valued signals of one variable, e.g., functions of time u1(t), t ∈ ℝ.

In this paper we discuss multidimensional channel identification machines that allow one to identify signal transformations applied to multidimensional signals un(x1, …, xn), n ∈ ℕ, where xn typically designates the time variable. A few examples of multidimensional CIMs include (i) spatial CIMs, where the input signal u2(x, y) is a function of a two-dimensional space, describing, e.g., images; (ii) spectrotemporal CIMs, where the input signal u2(ν, t) is a function of spectrum and time, describing, e.g., auditory signals; (iii) spatiotemporal CIMs, where the input signal u3(x, y, t) is a function of space and time, describing, e.g., video signals. Signal transformations are performed by dendritic stimulus processors. They are modeled here as receptive fields whose output is encoded by spiking neurons with lateral connectivity (cross-feedback) and feedback.

The rigorous mathematical formalism employed here enables us to obtain two key results: (i) the projection of the multidimensional receptive field onto the input signal space can be perfectly identified, and (ii) the problem of identification of multidimensional receptive fields is dual to the problem of neural decoding. The duality result shows that the identification of a multidimensional receptive field is equivalent to the problem of encoding its projection with a neural circuit whose receptive field has an impulse response that is exactly the multidimensional input test signal. We provide an identification algorithm and give detailed examples arising in spiking models of audition and vision.

2. Methods

2.1. Modeling Multidimensional Stimuli and Their Processing

2.1.1. The space of input stimuli

A multidimensional communication/processing channel of interest is shown in Figure 1. An analog signal un(x1, …, xn) of n dimensions is passed through a channel with memory that describes a physical communication link or a signal processing stage. The output of the channel v is then mapped, or encoded, by an asynchronous sampler into the time sequence (tk)k∈ℤ. In the example shown in Figure 1, the asynchronous sampler is a (leaky) IAF neuron.

Figure 1. Multidimensional problem setting. A known multidimensional signal un(x1, x2, …, xn), is first passed through a communication/processing channel. A non-linear sampler then maps the output v of the channel into an observable time sequence (tk)k∈ℤ.

We model input signals as elements of a Reproducing Kernel Hilbert Space (RKHS) (Berlinet and Thomas-Agnan, 2004). For practical and computational reasons we choose to work with the multidimensional space of trigonometric polynomials  n defined below. However, the results are not limited to this particular RKHS.

n defined below. However, the results are not limited to this particular RKHS.

Definition 1. The space of trigonometric polynomials  n is a Hilbert space of complex-valued functions un = un (x1, …, xn), where

n is a Hilbert space of complex-valued functions un = un (x1, …, xn), where

defined over the domain = [0, T1] × [0, T2] × ··· × [0, Tn], where ul1…ln ∈ ℂ and

Here Ωi is the bandwidth in dimension xi, Li is the order, and Ti = 2πLi/Ωi is the period, for all i = 1, …, n, and  n is endowed with the inner product 〈·,·〉 :

n is endowed with the inner product 〈·,·〉 :  n ×

n ×  n → ℂ

n → ℂ

Note that given the inner product in (1), the set of elements el1 … ln(x1, …, xn) forms an orthonormal basis in  n. Moreover,

n. Moreover,  n is an RKHS with a reproducing kernel (RK) given by

n is an RKHS with a reproducing kernel (RK) given by

In what follows we provide two- and three-dimensional signal models that arise in audition and vision. For convenience, we use a simpler and widely-used notation.

Example 1. We model spectrotemporal stimuli u2(ν, t) as elements of an RKHS of trigonometric polynomials  2 defined on = [0, T1] × [0, T2], where T1 = 2πL1/Ω1, T2 = 2πL2/Ω2, with (Ω1, L1) and (Ω2, L2), being the (bandwidth, order) pairs in the spectral direction ν and in time t, respectively. A signal u2 ∈

2 defined on = [0, T1] × [0, T2], where T1 = 2πL1/Ω1, T2 = 2πL2/Ω2, with (Ω1, L1) and (Ω2, L2), being the (bandwidth, order) pairs in the spectral direction ν and in time t, respectively. A signal u2 ∈  2 can be written as

2 can be written as

where the coefficients ul1 l2 ∈ ℂ and functions

form an orthonormal basis for the (2L1 + 1)(2L2 + 1)-dimensional space  2.

2.

Example 2. We model spatiotemporal stimuli u3(x, y, t) as elements of an RKHS of trigonometric polynomials  3 defined on = [0, T1] × [0, T2] × [0, T3], where T1 = 2πL1/Ω1, T2 = 2πL2/Ω2, T3 = 2πL3/Ω3, with (Ω1, L1), (Ω2, L2), and (Ω3, L3), being the (bandwidth, order) pairs in spatial directions x and y and in time t, respectively. A visual stimulus u3 ∈

3 defined on = [0, T1] × [0, T2] × [0, T3], where T1 = 2πL1/Ω1, T2 = 2πL2/Ω2, T3 = 2πL3/Ω3, with (Ω1, L1), (Ω2, L2), and (Ω3, L3), being the (bandwidth, order) pairs in spatial directions x and y and in time t, respectively. A visual stimulus u3 ∈  3 can be written as

3 can be written as

where coefficients ul1 l2 l3 ∈ ℂ and functions

form an orthonormal basis for the (2L1 + 1)(2L2 + 1)(2L3 + 1)-dimensional space  3.

3.

2.1.2. Modeling multidimensional processing

In the simplest setting, the communication/processing channel is described by a receptive field with a kernel hn(x1, …, xn). The kernel is assumed to be causal in the time variable (if any) and BIBO-stable. We also assume that the kernel has a finite support of length Si ≤ Ti in each direction xi. In other words, each kernel hn belongs to the space Hn.

Definition 2. The filter kernel space Hn is given by

Since the length of the filter support is smaller than or equal to the period of the input signal in each dimension, we effectively require that for given Si and fixed input signal bandwidth Ωi, the order Li of the space  n satisfies Li ≥ Si · Ωi/(2π) for all i = 1, …, n.

n satisfies Li ≥ Si · Ωi/(2π) for all i = 1, …, n.

Definition 3. The operator  : Hn →

: Hn →  n given (by abuse of notation) by

n given (by abuse of notation) by

is called the projection operator.

Since  hn ∈

hn ∈  n, we have

n, we have

2.2. Multidimensional [Filter]-[IAF] Tems and Their T-Transforms

In this section we analyze the general single-input single-output (SISO) multidimensional TEM shown in Figure 1 and describe in detail its I/O behavior. We then provide two specific examples of SISO multidimensional TEMs that are often encountered in neuroscience.

Without loss of generality, we assume that memory effects in the neural circuit arise only in the temporal dimension t of the stimulus and interactions in other dimensions are multiplicative in their nature. Consequently, the output v of the multidimensional receptive field is given by a convolution in the temporal dimension and integration in all other dimensions, i.e.,

The temporal signal v(t) represents the total dendritic current flowing into the spike initiation zone, where it is encoded into spikes by a point neuron model, such as the (leaky) IAF neuron of Figure 1. The mapping of the multidimensional stimulus un into a temporal sequence (tk)k∈ℤ is described by the set of equations

also known as the t-transform (Lazar, 2004; Lazar and Tóth, 2004), where

Assuming the stimulus un(x1, …, xn − 1, t) ∈  n and using the kernel representation, we have

n and using the kernel representation, we have

where y = (y1, …, yn) and dy = dy1 dy2 … dyn.

Let us now define the linear functional  k :

k :  n → ℝ

n → ℝ

By the Riesz representation theorem there is a function ϕk ∈  n such that

n such that

We arrived at the following

Lemma 1. The SISO multidimensional TEM with a receptive field described by a kernel hn = hn(x1, …, xn − 1, t) provides irregular samples, or quantal measurements of the projection  hn of the kernel hn onto the input stimulus space

hn of the kernel hn onto the input stimulus space  n. In other words, the t-transform may be rewritten as an inner product

n. In other words, the t-transform may be rewritten as an inner product

for every inter-spike interval [tk, tk + 1), k ∈ ℤ, where ϕk ∈  n.

n.

Remark 1. The result above has a simple interpretation. First, information about the receptive field is encoded in the form of quantal measurements qk. These measurements can be readily computed from the spike times (tk)k∈ℤ. Second, the information about the receptive field is only partial and depends on the stimulus space  n used in identification. Specifically, qk's are measurements not of the original kernel hn but of its projection

n used in identification. Specifically, qk's are measurements not of the original kernel hn but of its projection  hn onto the space Hn.

hn onto the space Hn.

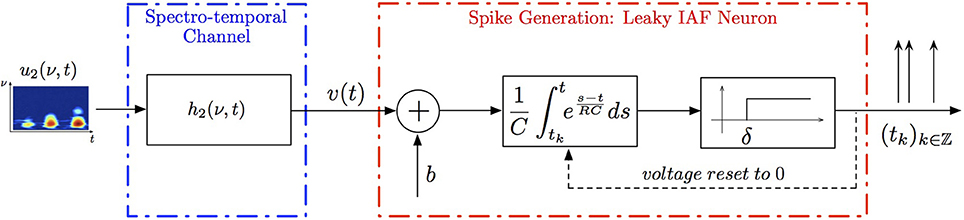

Example 3. A SISO Spectrotemporal TEM is shown in Figure 2. The signal u2(ν, t), (ν, t) ∈ = [0, T1] × [0, T2], is the input to a communication/processing channel with kernel h2(ν, t). The signal u2(ν, t) may represent the time-varying amplitude of a sound in a frequency band centered around ν and h2(ν, t) the spectrotemporal receptive field (STRF) (Kowalski et al., 1996). The output v of the kernel is encoded into a sequence of spike times (tk)k∈ℤ by the leaky integrate-and-fire neuron with a threshold δ, bias b and membrane time constant RC. A spectrotemporal TEM can be used to model the processing or transmission of, e.g., auditory stimuli characterized by a frequency spectrum varying in time (Kowalski et al., 1996). The operation of such a TEM can be fully described by the t-transform

with qk given by (4) for all k ∈ ℤ.

Assuming the spectrotemporal stimulus u2(ν, t) ∈  2, Equation (7) can be written as

2, Equation (7) can be written as

where  k :

k :  2 → ℝ is a linear functional. By the Riesz representation theorem (Berlinet and Thomas-Agnan, 2004), there exists a function ϕk ∈

2 → ℝ is a linear functional. By the Riesz representation theorem (Berlinet and Thomas-Agnan, 2004), there exists a function ϕk ∈  2 such that

2 such that

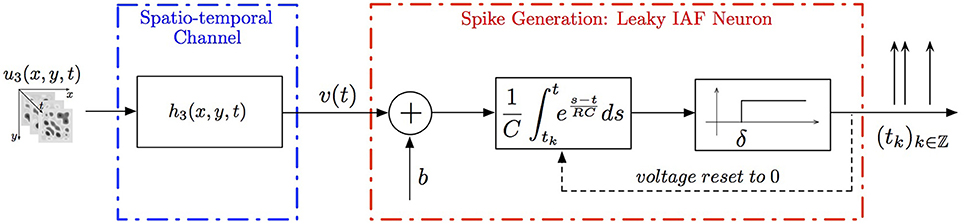

Example 4. A simple SISO Spatiotemporal TEM is shown in Figure 3. A video signal u3(x, y, t), (x, y, t) ∈ = [0, T1] × [0, T2]| × [0, T3], appears as an input to a communication/processing channel described by a filter with a kernel h3(x, y, t). The output v of the kernel is encoded into a sequence of spike times (tk)k∈ℤ by the leaky integrate-and-fire neuron.

A spatiotemporal TEM can be used to model the processing or transmission of, e.g., video stimuli characterized by a spatial component varying in time. The t-transform of such a TEM is given by

with qk given by (4) for all k ∈ ℤ.

Assuming the video stimulus u3(x, y, t) ∈  3, Equation (9) can be written as

3, Equation (9) can be written as

where  k:

k: 3 → ℝ is a linear functional. By the Riesz representation theorem, there is a function ϕk ∈

3 → ℝ is a linear functional. By the Riesz representation theorem, there is a function ϕk ∈  3 such that

3 such that

Example 5. A special case of the SISO Spatiotemporal TEM is the SISO Spatial TEM, in which the communication/processing channel affects only the spatial component of the spatiotemporal input signal. In other words, the output of the receptive field is given by

Note that because only the spatial component of the input is processed, a simpler stimulus that does not vary in time may be presented when identifying this system. For example, such a stimulus may be a static image u2(x, y). Then,

where  k :

k :  2 → ℝ is a functional. As before, by the Riesz representation theorem, there is a function ϕk ∈

2 → ℝ is a functional. As before, by the Riesz representation theorem, there is a function ϕk ∈  2 such that

2 such that

2.3. Identification Algorithms

In the previous section we demonstrated a relationship between the problem of identification of a receptive field and an irregular sampling problem. Namely, we showed that a projection  hn of the multidimensional receptive field hn is embedded in the output spike sequence of the neuron as samples, or quantal measurements, qk of

hn of the multidimensional receptive field hn is embedded in the output spike sequence of the neuron as samples, or quantal measurements, qk of  hn. A natural followup question to ask is how to reconstruct

hn. A natural followup question to ask is how to reconstruct  hn from these measurements. We have the following result.

hn from these measurements. We have the following result.

2.3.1. Feedforward multidimensional SISO CIM

Theorem 1. (SISO Multidimensional CIM)

Let {uin | uin ∈  n}Ni = 1 be a collection of N linearly independent stimuli at the input to a [Filter]-[Leaky IAF] circuit with a multidimensional receptive field hn ∈ Hn. If the number of signals N ≥ ∏n − 1p = 1(2Lp + 1) and the total number of spikes produced in response to all stimuli is greater than ∏np = 1(2Lp + 1) + N, then the filter projection

n}Ni = 1 be a collection of N linearly independent stimuli at the input to a [Filter]-[Leaky IAF] circuit with a multidimensional receptive field hn ∈ Hn. If the number of signals N ≥ ∏n − 1p = 1(2Lp + 1) and the total number of spikes produced in response to all stimuli is greater than ∏np = 1(2Lp + 1) + N, then the filter projection  hn can be perfectly identified from a collection of input/output (I/O) pairs {(uin, )}Ni = 1 as

hn can be perfectly identified from a collection of input/output (I/O) pairs {(uin, )}Ni = 1 as

where h = Φ+ q. Here [h]l = hl1, …, ln, Φ = [Φ1; Φ2; …; ΦN] and the elements of each matrix Φi are given by

with the column index l traversing all possible subscript combinations of l1, l2, …, ln for all k ∈ ℤ, i = 1, 2, …, N. Furthermore, q = [q1; q2; …; qN], [qi]k = qik and

for k ∈ ℤ, i = 1, …, N.

Proof: The representation (6) for stimuli uin takes the form

with ϕik ∈  n. Since

n. Since  hn ∈

hn ∈  n and ϕik ∈

n and ϕik ∈  n, we have

n, we have

and

and, therefore,

In matrix form we have qi = Φi h, with [qi]k = qik, where the elements [Φi]kl = ϕil1 … lnk, with the column index l traversing all possible subscript combinations of l1, l2, …, ln and [h]l = hl1, …, ln. Repeating for all signals i = 1, …, N, we obtain q = Φh with q = [q1; q2; …; qN] and Φ = [Φ1; Φ2; …; ΦN]. This system of linear equations can be solved for h, provided that the rank r(Φ) of the matrix Φ satisfies r(Φ) = ∏np = 1(2Lp + 1). A necessary condition for the latter is that the total number of spikes generated by all N neurons is greater or equal to ∏np = 1(2Lp + 1) + N. Then h = Φ+ q, where Φ+ denotes a pseudoinverse of Φ. To find the coefficients ϕil1 … lnk, we note that

Finally, we note that since the dendritic current v has a maximum bandwidth of Ωt, we only need 2Lt + 1 measurements to specify it. Thus, in response to each stimulus uin, the neuron can produce a maximum of only 2Lt + 1 informative measurements, or equivalently, 2Lt + 2 informative spikes on the interval [0, Tt]. It follows that if the neuron generates ν ≥ 2Lt + 2 spikes for each test signal, the minimum required number of signals is N = ∏n−1p = 1(2Lp + 1)(2Lt + 1)/(2Lt + 1) = ∏n−1p = 1(2Lp + 1). Similarly, if the neuron generates ν < 2Lt + 2 spikes for each signal, then the minimum required number of signals is .

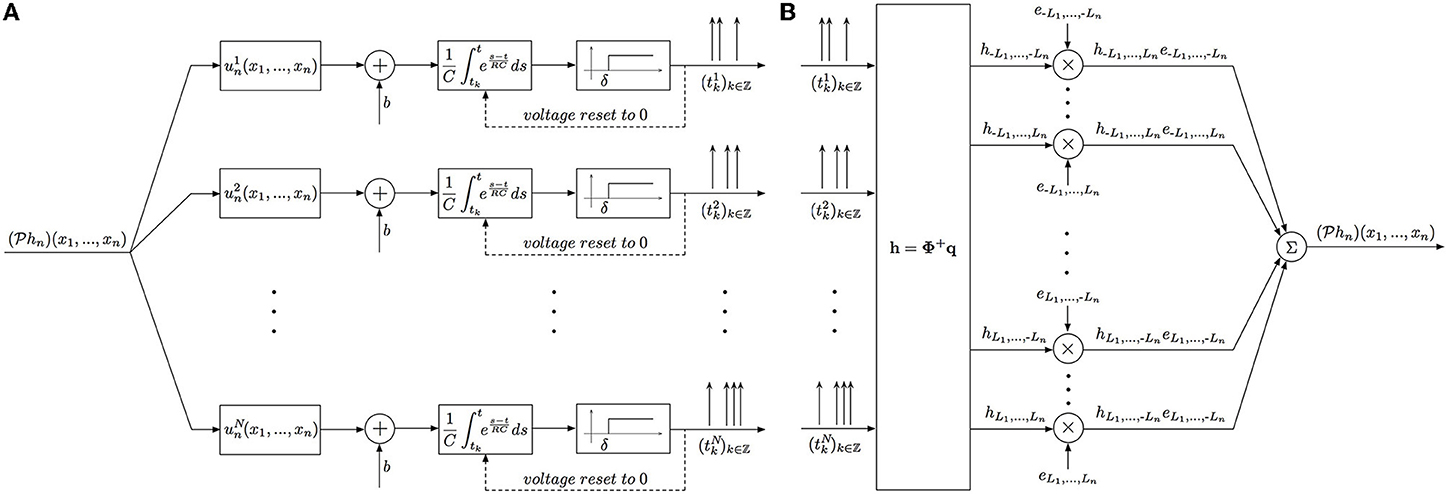

The block diagram of the identification procedure and algorithm are shown in Figure 4. Identification of the filter hn has been reduced to the encoding of the projection  hn with a SIMO TEM whose receptive fields are uin, i = 1, …, N.

hn with a SIMO TEM whose receptive fields are uin, i = 1, …, N.

Figure 4. Block diagram of the Multidimensional CIM. (A) Time encoding interpretation of the multidimensional channel identification problem. (B) Block diagram of the multidimensional channel identification machine.

2.3.2. SISO multidimensional CIM with lateral connectivity and feedback

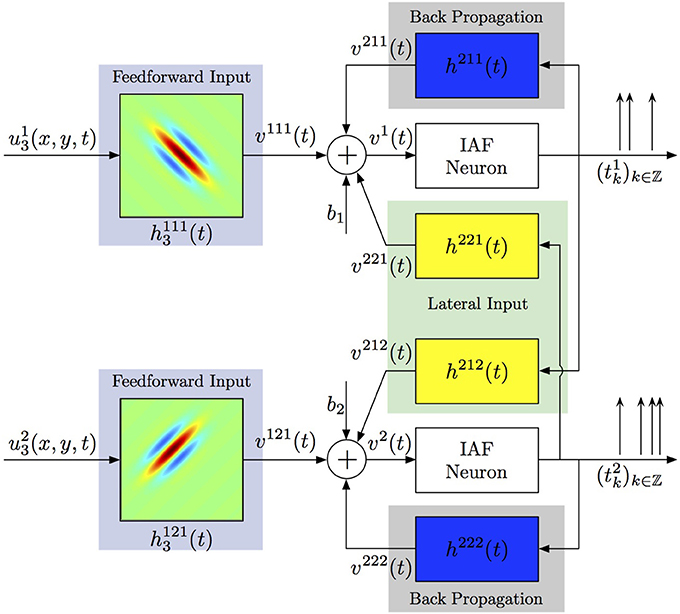

Generalizing the ideas above, one can consider more complex spiking neural circuits, in which every neuron may receive not only feedforward inputs, but also lateral inputs from neurons in the same layer and back-propagating action potentials may contribute to computations within the dendritic tree. A two-neuron circuit incorporating these considerations is shown in Figure 5. Each neuron j processes a visual stimulus uj3(x, y, t) using a distinct spatiotemporal receptive field h1j13(x, y, t), j = 1, 2. The processing of lateral inputs is described by the temporal receptive fields (cross-feedback filters) h221 and h212, while various signals produced by back-propagating action potentials are modeled by the temporal receptive fields (feedback filters) h211 and h222. The aggregate dendritic currents v1 and v2, produced by the receptive fields and affected by back propagation and cross-feedback, are encoded by IAF neurons into spike times (t1k)k∈ℤ, (t2k)k∈ℤ.

Figure 5. Identifying spatiotemporal receptive fields in circuits with lateral connectivity and feedback.

Theorem 2. (SISO multidimensional CIM with lateral connectivity and feedback)

Let {[u1,in, u2,in] | uj,in ∈  n, j = 1, 2}Ni = 1 be a collection of N linearly independent vector stimuli at the input to two neurons with multidimensional receptive fields h1j1n ∈ Hn, j = 1, 2, lateral receptive fields h212, h221 and feedback receptive fields h211 and h222. Let (t1k)k∈ℤ and (t2k)k∈ℤ be the sequences of spike times produced by the two neurons. If the number of test stimuli N ≥ ∏n − 1p = 1(2Lp + 1) + 2 and the total number of spikes produced by each neuron in response to all stimuli is greater than ∏np = 1(2Lp + 1) + 2(2Ln + 1) + N, then the filter projections

n, j = 1, 2}Ni = 1 be a collection of N linearly independent vector stimuli at the input to two neurons with multidimensional receptive fields h1j1n ∈ Hn, j = 1, 2, lateral receptive fields h212, h221 and feedback receptive fields h211 and h222. Let (t1k)k∈ℤ and (t2k)k∈ℤ be the sequences of spike times produced by the two neurons. If the number of test stimuli N ≥ ∏n − 1p = 1(2Lp + 1) + 2 and the total number of spikes produced by each neuron in response to all stimuli is greater than ∏np = 1(2Lp + 1) + 2(2Ln + 1) + N, then the filter projections  h211,

h211,  h212,

h212,  h221,

h221,  h222 and

h222 and  h1j1n, j = 1, 2, can be identified as (

h1j1n, j = 1, 2, can be identified as ( h211)(t) = h211l el(t), (

h211)(t) = h211l el(t), ( h212)(t) = h212l el(t), (

h212)(t) = h212l el(t), ( h221)(t) = h221l el(t) (

h221)(t) = h221l el(t) ( h222)(t) = h222l el(t) and

h222)(t) = h222l el(t) and

Here, the coefficients h211l, h212l, h221l, h222l and h1j1l are given by h = [Φ1; Φ2]+ q with q = [q11, …, q1N, q21, …, q2N]T, [qji]k = qjik and h = [h1; h2], where hj = [h1j1−Ln, …, −Ln, …, h1j1Ln, …, Ln, h2[(j mod 2) + 1]j−L, …, h2[(j mod 2) + 1]jL, h2jj−L, …, h2jjL]T, j = 1, 2, provided each matrix Φj has rank r(Φj) = ∏np = 1(2Lp + 1) + 2(2Ln + 1). The ith row of Φj is given by [Φ1ij, Φ2ij, Φ3ij], i = 1, …, N, with

and

l = −Ln, …, Ln. The entries [Φ1ij]kl are as given in Theorem 1.

Proof: Essentially the same as in Theorem 1, with an addition of lateral and feedback terms. Each additional temporal filter requires (2Ln + 1) additional measurements, corresponding to the number of bases in the temporal variable t.

3. Results

Figures 6–9, and corresponding figure legends demonstrate the performance of the multidimensional Channel Identification Machine.

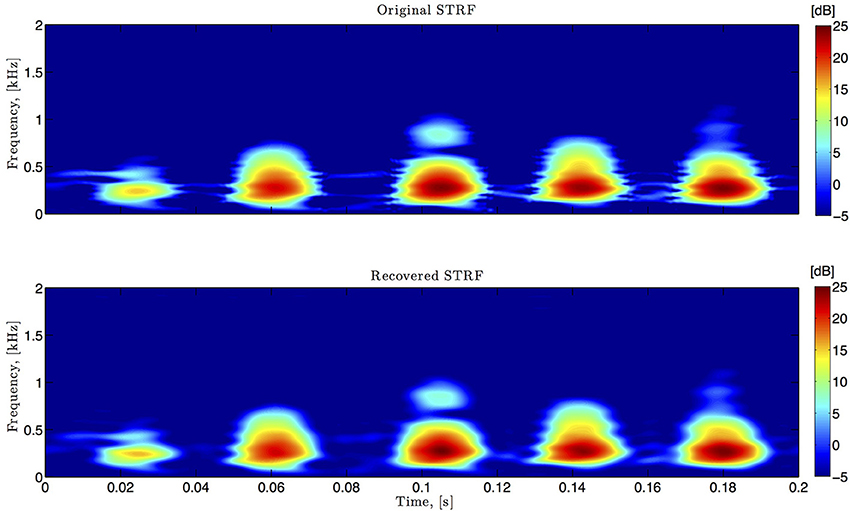

Figure 6. Spectro-temporal example. Original and identified spectrotemporal filters are shown in the top and bottom plots, respectively. Ω1 = 2π · 80 rad/s, L1 = 16, Ω2 = 2π · 120 rad/s, L2 = 24.

In simulations pertaining to the spectrotemporal receptive field (see also Figure 6), we used the short-time Fourier transform of an arbitrarily chosen 200 ms segment of the Drosophila courtship song as a model of the STRF. The space of spectrotemporal signals  2 had bandwidth Ω1 = 2π · 80 rad/s and order L1 = 16 in the spectral direction ν and bandwidth Ω2 = 2π · 120 rad/s and order L2 = 24 in the temporal direction t. The STRF appeared in cascade with an ideal IAF neuron (see Figure 2), whose parameters were chosen so that it generated a total of more than (2L1 + 1)(2L2 + 1) = 33 × 49 = 1, 617 measurements in response to all test signals. We employed a total of N = 40 spectrotemporal signals (which is larger than the (2L1 + 1) = 33 requirement of Theorem 1) in order to identify the STRF.

2 had bandwidth Ω1 = 2π · 80 rad/s and order L1 = 16 in the spectral direction ν and bandwidth Ω2 = 2π · 120 rad/s and order L2 = 24 in the temporal direction t. The STRF appeared in cascade with an ideal IAF neuron (see Figure 2), whose parameters were chosen so that it generated a total of more than (2L1 + 1)(2L2 + 1) = 33 × 49 = 1, 617 measurements in response to all test signals. We employed a total of N = 40 spectrotemporal signals (which is larger than the (2L1 + 1) = 33 requirement of Theorem 1) in order to identify the STRF.

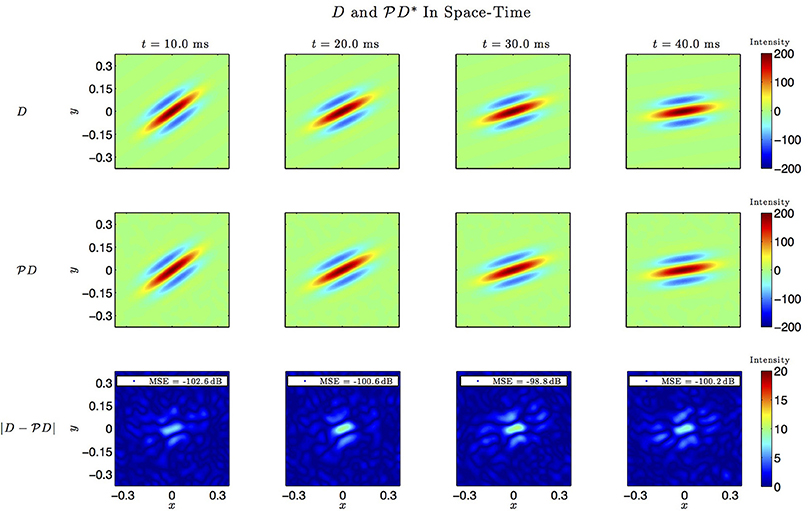

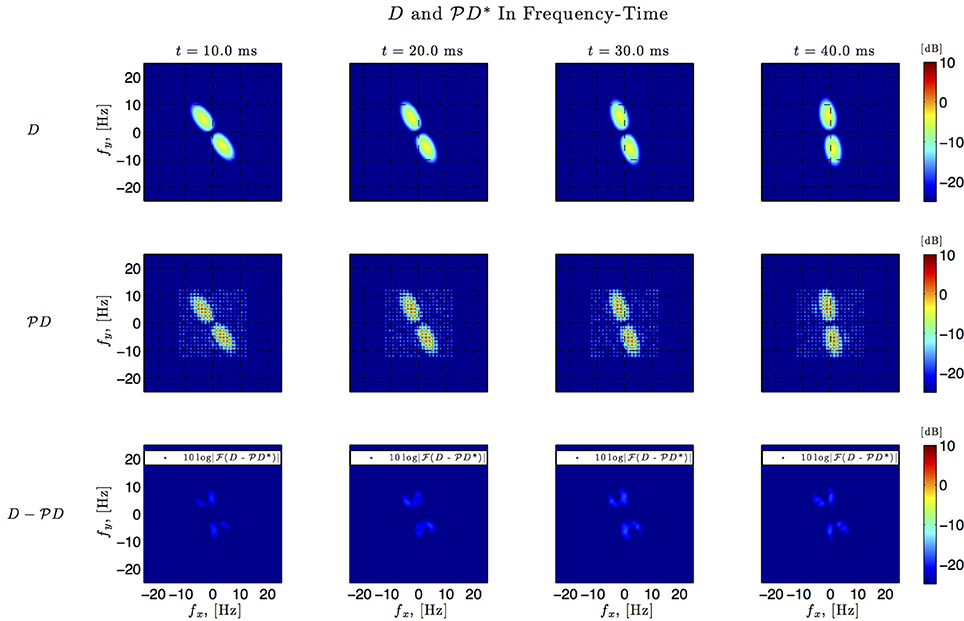

In simulations involving the spatiotemporal receptive field (see also Figures 7, 8 we used a spatial Gabor function that was either rotated, dilated or translated in space as a function of time. The space of spatiotemporal signals  3 had bandwidth Ω1 = 2π · 12 rad/s and order L1 = 9 in the spatial direction x, bandwidth Ω2 = 2π · 12 rad/s and order L2 = 9 in the spatial direction y, and bandwidth Ω3 = 2π · 100 rad/s and order L3 = 5 in the temporal direction t. The STRF appeared in cascade with an ideal IAF neuron (see Figure 2), whose parameters were chosen so that it generated a total of more than (2L1 + 1)(2L2 + 1)(2L3 + 1) = 19 × 19 × 11 = 3, 971 measurements in response to all test signals. In order to identify the projection

3 had bandwidth Ω1 = 2π · 12 rad/s and order L1 = 9 in the spatial direction x, bandwidth Ω2 = 2π · 12 rad/s and order L2 = 9 in the spatial direction y, and bandwidth Ω3 = 2π · 100 rad/s and order L3 = 5 in the temporal direction t. The STRF appeared in cascade with an ideal IAF neuron (see Figure 2), whose parameters were chosen so that it generated a total of more than (2L1 + 1)(2L2 + 1)(2L3 + 1) = 19 × 19 × 11 = 3, 971 measurements in response to all test signals. In order to identify the projection  h3 we employed a total of N = 400 spatiotemporal signals, a number that is larger than the (2L1 + 1)(2L2 + 1) = 361 requirement of Theorem 1).

h3 we employed a total of N = 400 spatiotemporal signals, a number that is larger than the (2L1 + 1)(2L2 + 1) = 361 requirement of Theorem 1).

Figure 7. Spatio-temporal example #1. Top row: Four frames of the original spatiotemporal kernel h3(x, y, t). Here, h3 is a spatial Gabor function rotating clockwise in space with time. Middle row: Four frames of the identified kernel. Ω1 = 2π · 12 rad/s, L1 = 9, Ω2 = 2π · 12 rad/s, L2 = 9, Ω3 = 2π · 100 rad/s, L3 = 5. Bottom row: Absolute error between four frames of the original and identified kernel.

Figure 8. Spatio-temporal example #1 in the frequency domain. Top row: Fourier amplitude spectrum of the four frames of the original spatiotemporal kernel h3(x, y, t) in Figure 7. Note that the frequency support is roughly confined to a square [−10, 10] × [−10, 10]. Middle row: Fourier amplitude spectrum of the four frames of the identified spatiotemporal kernel in Figure 7. Nine spectral lines (L1 = L2 = 9) in each spatial direction cover the frequency support of the original kernel. Bottom row: Absolute error between four frames of the original and identified kernel.

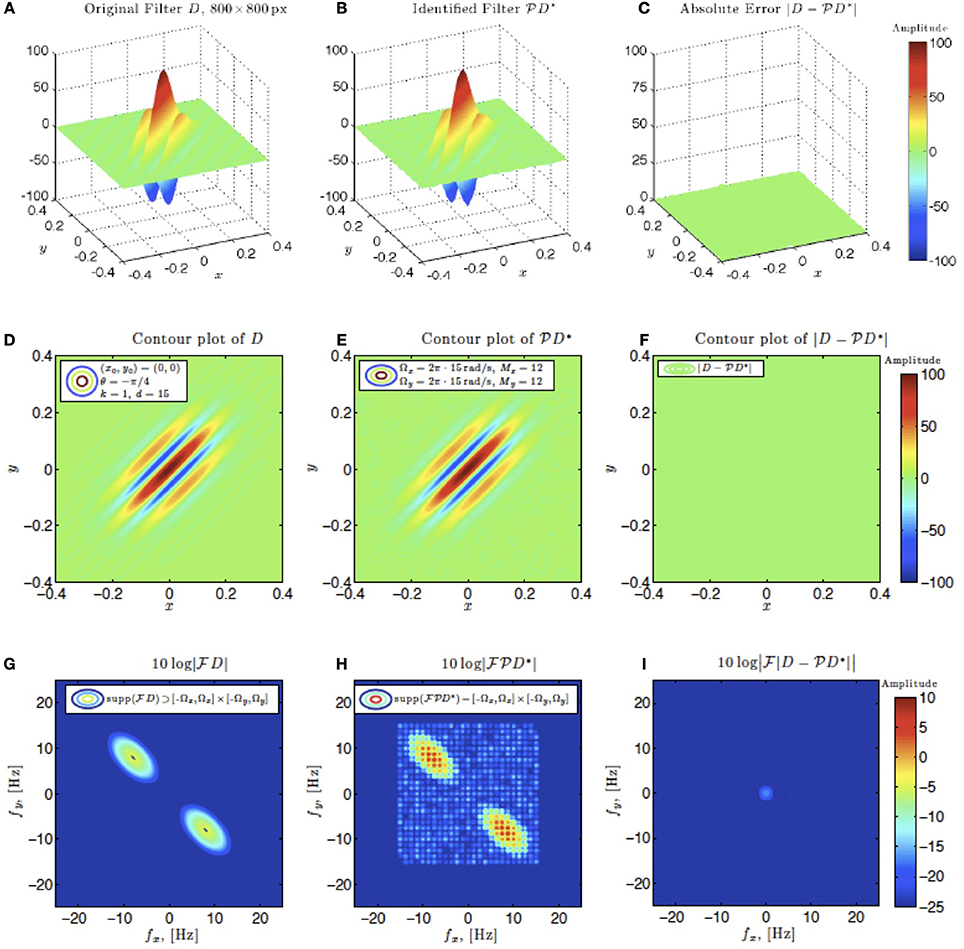

In simulations involving the spatial receptive field (see also Figure 9), we used a static spatial Gabor function. The space of spatial signals  2 had bandwidths Ω1 = Ω2 = 2π · 15 rad/s and L1 = L2 = 12 in spatial directions x and y. The STRF appeared in cascade with an ideal IAF neuron (see Figure 2 as a reference), whose parameters were chosen so that it generated a total of more than (2L1 + 1)(2L2 + 1) = 25 × 25 = 625 measurements in response to all test signals. In order to identify the projection

2 had bandwidths Ω1 = Ω2 = 2π · 15 rad/s and L1 = L2 = 12 in spatial directions x and y. The STRF appeared in cascade with an ideal IAF neuron (see Figure 2 as a reference), whose parameters were chosen so that it generated a total of more than (2L1 + 1)(2L2 + 1) = 25 × 25 = 625 measurements in response to all test signals. In order to identify the projection  h2 we employed a total of N = 688 spatial signals (a number that is larger than the (2L1 + 1)(2L2 + 1) = 625 requirement of Theorem 1).

h2 we employed a total of N = 688 spatial signals (a number that is larger than the (2L1 + 1)(2L2 + 1) = 625 requirement of Theorem 1).

Figure 9. Spatial example #1. Ω1 = Ω2 = 2π · 15 rad/s, L1 = L2 = 12. A minimum of N = 625 images are required for identification. 1.1 × N = 688 images were used. (A–C) Left to right: original spatial kernel h2(x, y), identified kernel and absolute error between the two. (D–F) Left to right: contour plots of the original spatial kernel h2(x, y), identified kernel and absolute error. (G–I) Fourier amplitude spectrum of signals in (D–E).

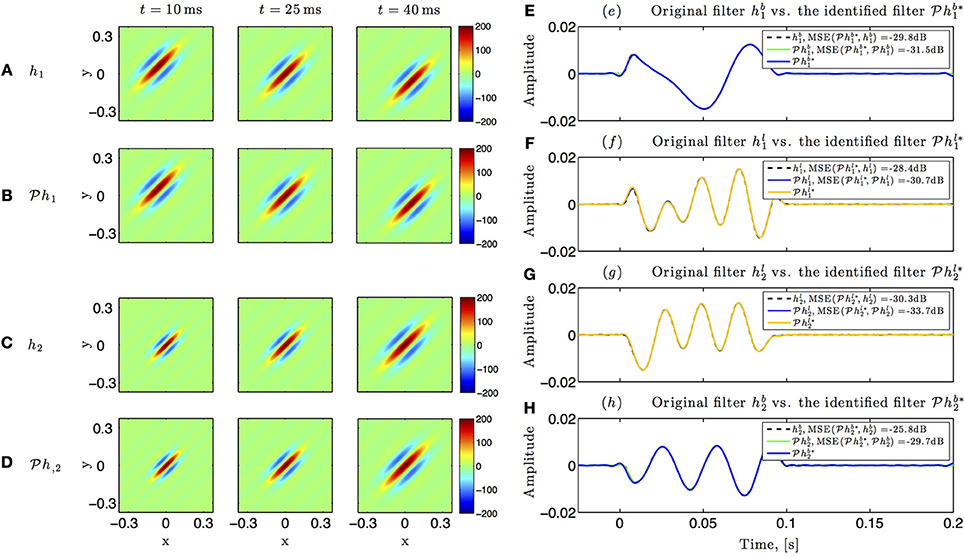

Identification results for the circuit in Figure 5 are shown in Figure 10. The spatiotemporal receptive fields used in this simulation were non-separable. The first receptive field was modeled as a single spatial Gabor function (at time t = 0) translated in space with uniform velocity as a function of time, while the second receptive field was a spatial Gabor function uniformly dilated in space as a function of time. Three different time frames of the original and the identified receptive field of the first neuron are shown in Figures 10A,B, respectively. Similarly, three time frames of the original and identified receptive field of the second neuron are respectively plotted in Figures 10C,D. The identified lateral and feedback kernels are visualized in plots (e-h) of Figure 10.

Figure 10. Identifying spatiotemporal receptive fields in circuits with lateral connectivity and feedback. (A–D) Identifying the feedforward spatiotemporal receptive fields of Figure 5. (E–H) Identifying the lateral connectivity and feedback filters of Figure 5.

4. Discussion

4.1. Implications for Multidimensional Encoding and Decoding

The duality between multidimensional channel identification and stimulus decoding problems allowed us to derive identification algorithms for estimation of receptive fields of arbitrary dimensions and precise conditions under which the identification is possible. At this point it is important to pause and analyze the relationship between the dual problems. As it often turns out, looking at a dual problem can provide a tremendous insight about the primal problem.

Interestingly, previous results for video time encoding and decoding machines provided only the necessary condition of having enough spikes to decode the video (Lazar et al., 2010; Lazar and Pnevmatikakis, 2011). This condition naturally follows from having to invert a matrix in order to compute the basis coefficients of the video signal. Since the matrix needs to be full rank to provide a unique solution, and there are a total of (2L1 + 1)(2L2 + 1)(2L3 + 1) coefficients involved, (2L1 + 1)(2L2 + 1)(2L3 + 1) + N spikes are needed from a population of N neurons (the number of spikes is larger than the number of needed measurements by N since every measurement qk is computed using two consecutive spikes tk and tk + 1.).

Note that a necessary condition only tells us that the number of spikes must have been greater than (2L1 + 1)(2L2 + 1)(2L3 + 1) + N if we were able to recover the video signal. In order to guarantee that the video can be recovered we need a sufficient condition.

The sufficient condition can be derived by drawing comparisons between the decoding and identification problems. In identification, estimation of a receptive field from a single trial is usually not possible, even if the neuron produces a large number of spikes (Lazar and Slutskiy, 2014b). Intuitively, this is because the output of the receptive field is just a function of time. In essence, all dimensions of the stimulus are compressed into just one—the temporal dimension—and we need only (2L3 + 1) measurements to specify a temporal function. As a result, only (2L3 + 1) measurements are informative and we do not gain any new information if the neuron is oversampling the temporal signal. Thus, if the neuron is producing at least (2L3 + 1) measurements per each test stimulus, we need N ≥ (2L1 + 1)(2L2 + 1) different trials to reconstruct a (2L1 + 1)(2L2 + 1)(2L3 + 1)-dimensional receptive field. Similarly, to decode a (2L1 + 1)(2L2 + 1)(2L3 + 1)-dimensional input stimulus, we need N ≥ (2L1 + 1)(2L2 + 1) neurons, with each neuron in the population producing at least (2L3 + 1) measurements. If each neuron produces less than (2L3 + 1) measurements, a larger population N is needed to faithfully encode the video signal.

More generally, if the n-dimensional input stimulus is an element of a (2L1 + 1)(2L2 + 1)…(2Ln + 1)-dimensional RKHS (where the last dimension is time), and the neuron is producing at least at least (2Ln + 1) + 1 spikes per test stimulus, a minimum of (2L1 + 1)(2L2 + 1)…(2Ln − 1 + 1) linearly independent stimuli, or trials with linearly independent stimuli, are needed to identify the receptive field. This condition is sufficient and by duality between channel identification and time encoding, complements the previous necessary condition derived for time decoding machines.

4.2. Extensions

In the derivations above we implicitly assumed that the I/O system was noiseless. In practice, noise is introduced either by the channel or the sampler itself (Lazar et al., 2010). In the presence of noise it is not possible to identify the projection  hn loss-free. However, the analysis/methodology presented above can be extended within an appropriate mathematical setting to I/O systems with noisy measurements. For example, we can still identify an optimal estimate

hn loss-free. However, the analysis/methodology presented above can be extended within an appropriate mathematical setting to I/O systems with noisy measurements. For example, we can still identify an optimal estimate  of

of  hn with respect to an appropriately defined cost function, e.g., by using the Tikhonov regularization method. The regularization methodology extensively discussed in Lazar and Slutskiy (2014a) can be adopted with minor modifications to our setting here.

hn with respect to an appropriately defined cost function, e.g., by using the Tikhonov regularization method. The regularization methodology extensively discussed in Lazar and Slutskiy (2014a) can be adopted with minor modifications to our setting here.

Finally we note that, for convenience and simplicity of notation, the asynchronous sampler used throughout this paper was the IAF neuron. Extensions of our results to neural circuits built with other biophysically-grounded neuron models can be readily obtained by adapting the methodology developed in Lazar and Slutskiy (2012, 2014a).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by AFOSR under grant #FA9550-12-1-0232 and by NIH under grant #R021 DC012440001.

References

Aertsen, A. M., and Johannesma, P. I. (1981). The spectro-temporal receptive field. A functional characteristic of auditory neurons. Biol. Cybern. 42, 133–143. doi: 10.1007/BF00336731

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Berlinet, A., and Thomas-Agnan, C. (2004). Reproducing Kernel Hilbert Spaces in Probability and Statistics. New York, NY: Kluwer Academic Publishers. doi: 10.1007/978-1-4419-9096-9

Brown, E., Moehlis, J., and Holmes, P. (2004). On the phase reduction and response dynamics of neural oscillator populations. Neural Comput. 16, 673–715. doi: 10.1162/089976604322860668

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Clopton, B. M., and Backoff, P. M. (1991). Spectraltemporal receptive fields of neurons of cochlear nucleus of guinea pig. Hear. Res. 52, 329–344. doi: 10.1016/0378-5955(91)90023-3

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Dayan, P., and Abbott, L. (2005). Theoretical Neuroscience: Computational and Mathematical Modeling of Neural Systems. Cambridge, MA: The MIT Press.

DeAngelis, G. C., Ohzawa, I., and Freeman, R. D. (1995). Receptive-field dynamics in the central visual pathways. Trends Neurosci. 18, 451–458. doi: 10.1016/0166-2236(95)94496-R

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Kowalski, N., Depireux, D. A., and Shamma, S. A. (1996). Analysis of dynamic spectra in ferret primary auditory cortex. i. characteristics of single-unit responses to moving ripple spectra. J. Neurophysiol. 76, 3503–3523.

Lazar, A. A. (2004). Time encoding with an integrate-and-fire neuron with a refractory period. Neurocomputing 58–60, 53–58. doi: 10.1016/j.neucom.2004.01.022

Lazar, A. A., and Pnevmatikakis, E. A. (2011). Video time encoding machines. IEEE Trans. Neural Netw. 22, 461–473. doi: 10.1109/TNN.2010.2103323

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Lazar, A. A., Pnevmatikakis, E. A., and Zhou, Y. (2010). Encoding natural scenes with neural circuits with random thresholds. Vision Res. 50, 2200–2212. doi: 10.1016/j.visres.2010.03.015

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Lazar, A. A., and Slutskiy, Y. B. (2010). Identifying dendritic processing. Adv. Neural Inf. Process. Syst. 23, 1261–1269.

Lazar, A. A., and Slutskiy, Y. B. (2012). Channel identification machines. Comput. Intell. Neurosci. 2012:209590. doi: 10.1155/2012/209590

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Lazar, A. A., and Slutskiy, Y. B. (2013). Multisensory encoding, decoding, and identification. Adv. Neural Inf. Process. Syst. 26, 3183–3191.

Lazar, A. A., and Slutskiy, Y. B. (2014a). Functional identification of spike-processing neural circuits. Neural Comput. 26, 264–305. doi: 10.1162/NECO-a-00543

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Lazar, A. A., and Slutskiy, Y. B. (2014b). Spiking neural circuits with dendritic stimulus processors: encoding, decoding, and identification in reproducing kernel Hilbert spaces. J. Comput. Neurosci. doi: 10.1007/s10827-014-0522-8. [Epub ahead of print]

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Lazar, A. A., and Tóth, L. T. (2004). Perfect recovery and sensitivity analysis of time encoded bandlimited signals. IEEE Trans. Circ. Syst. I 51, 2060–2073. doi: 10.1109/TCSI.2004.835026

Pillow, J. W., Shlens, J., Paninski, L., Sher, A., Litke, A. M., Chichilnisky, E. J., et al. (2008). Spatio-temporal correlations and visual signaling in a complete neuronal population. Nature 454, 995–999. doi: 10.1038/nature07140

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Sherrington, C. S. (1906). The Integrative Action of the Nervous System. New York, NY: C Scribner and Sons.

Waters, J., Schaefer, A., and Sakmann, B. (2005). Backpropagating action potentials in neurones: measurement, mechanisms and potential functions. Prog. Biophys. Mol. Biol. 87, 145–170. doi: 10.1016/j.pbiomolbio.2004.06.009

Pubmed Abstract | Pubmed Full Text | CrossRef Full Text | Google Scholar

Keywords: channel identification machines, spiking neural circuits, biophysical neuron models, system identification, multidimensional receptive fields, RKHS, time encoding

Citation: Lazar AA and Slutskiy YB (2014) Channel identification machines for multidimensional receptive fields. Front. Comput. Neurosci. 8:117. doi: 10.3389/fncom.2014.00117

Received: 01 April 2014; Accepted: 31 August 2014;

Published online: 26 September 2014.

Edited by:

Gabriel A. Silva, University of California, San Diego, USAReviewed by:

Gabriel A. Silva, University of California, San Diego, USAMassoud Louis Khraiche, University of California, San Diego, USA

Copyright © 2014 Lazar and Slutskiy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Aurel A. Lazar, Bionet Group, Department of Electrical Engineering, Columbia University, New York, NY 10027, USA e-mail:YXVyZWxAZWUuY29sdW1iaWEuZWR1

Aurel A. Lazar

Aurel A. Lazar Yevgeniy B. Slutskiy

Yevgeniy B. Slutskiy