- Center for Adaptive Systems, Graduate Program in Cognitive and Neural Systems, Departments of Mathematics & Statistics, Psychological & Brain Sciences, and Biomedical Engineering, Boston University, Boston, MA, United States

This article develops a model of how reactive and planned behaviors interact in real time. Controllers for both animals and animats need reactive mechanisms for exploration, and learned plans to efficiently reach goal objects once an environment becomes familiar. The SOVEREIGN model embodied these capabilities, and was tested in a 3D virtual reality environment. Neural models have characterized important adaptive and intelligent processes that were not included in SOVEREIGN. A major research program is summarized herein by which to consistently incorporate them into an enhanced model called SOVEREIGN2. Key new perceptual, cognitive, cognitive-emotional, and navigational processes require feedback networks which regulate resonant brain states that support conscious experiences of seeing, feeling, and knowing. Also included are computationally complementary processes of the mammalian neocortical What and Where processing streams, and homologous mechanisms for spatial navigation and arm movement control. These include: Unpredictably moving targets are tracked using coordinated smooth pursuit and saccadic movements. Estimates of target and present position are computed in the Where stream, and can activate approach movements. Motion cues can elicit orienting movements to bring new targets into view. Cumulative movement estimates are derived from visual and vestibular cues. Arbitrary navigational routes are incrementally learned as a labeled graph of angles turned and distances traveled between turns. Noisy and incomplete visual sensor data are transformed into representations of visual form and motion. Invariant recognition categories are learned in the What stream. Sequences of invariant object categories are stored in a cognitive working memory, whereas sequences of movement positions and directions are stored in a spatial working memory. Stored sequences trigger learning of cognitive and spatial/motor sequence categories or plans, also called list chunks, which control planned decisions and movements toward valued goal objects. Predictively successful list chunk combinations are selectively enhanced or suppressed via reinforcement learning and incentive motivational learning. Expected vs. unexpected event disconfirmations regulate these enhancement and suppressive processes. Adaptively timed learning enables attention and action to match task constraints. Social cognitive joint attention enables imitation learning of skills by learners who observe teachers from different spatial vantage points.

1. Perception, Learning, Invariant Recognition and Planning During Search and Navigation Cycles

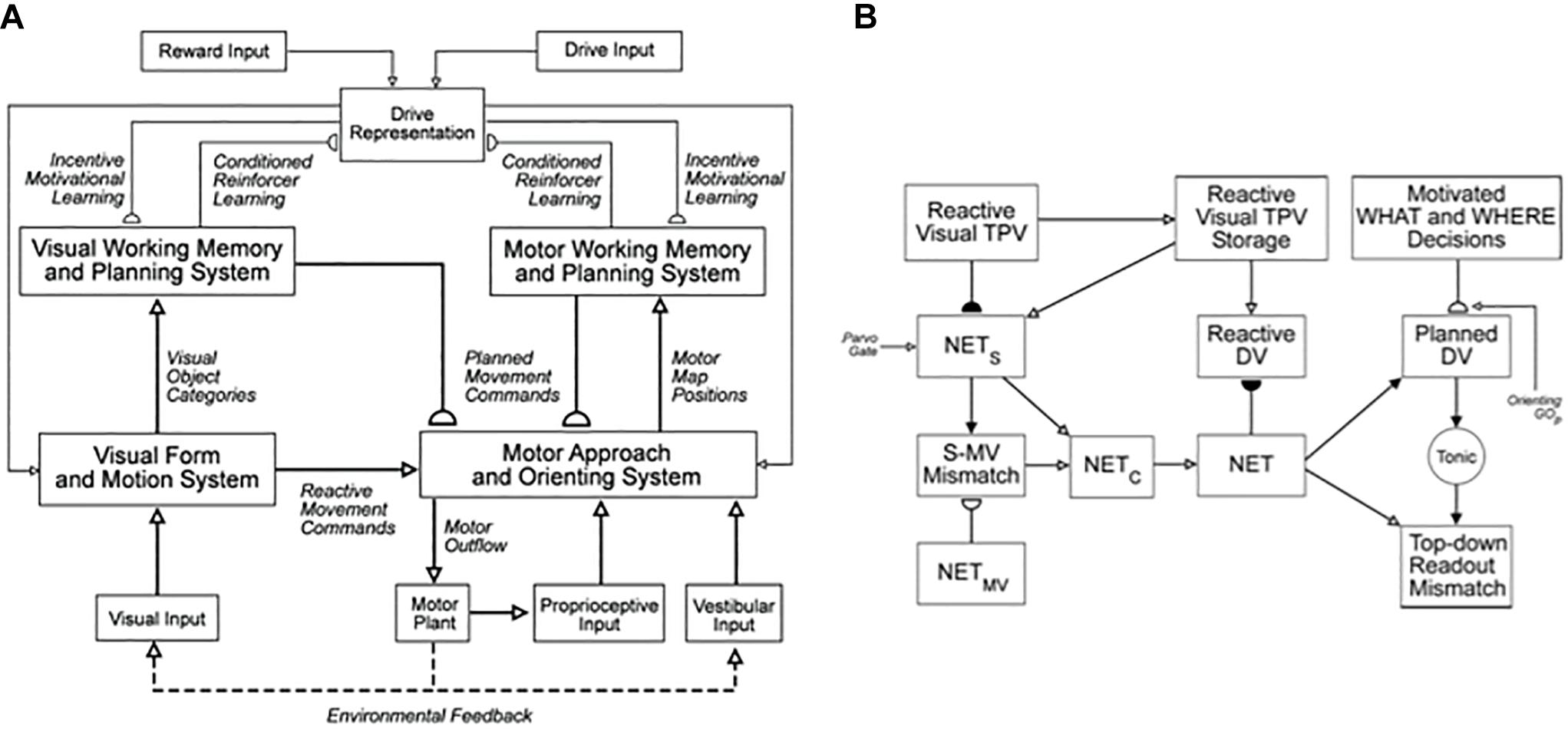

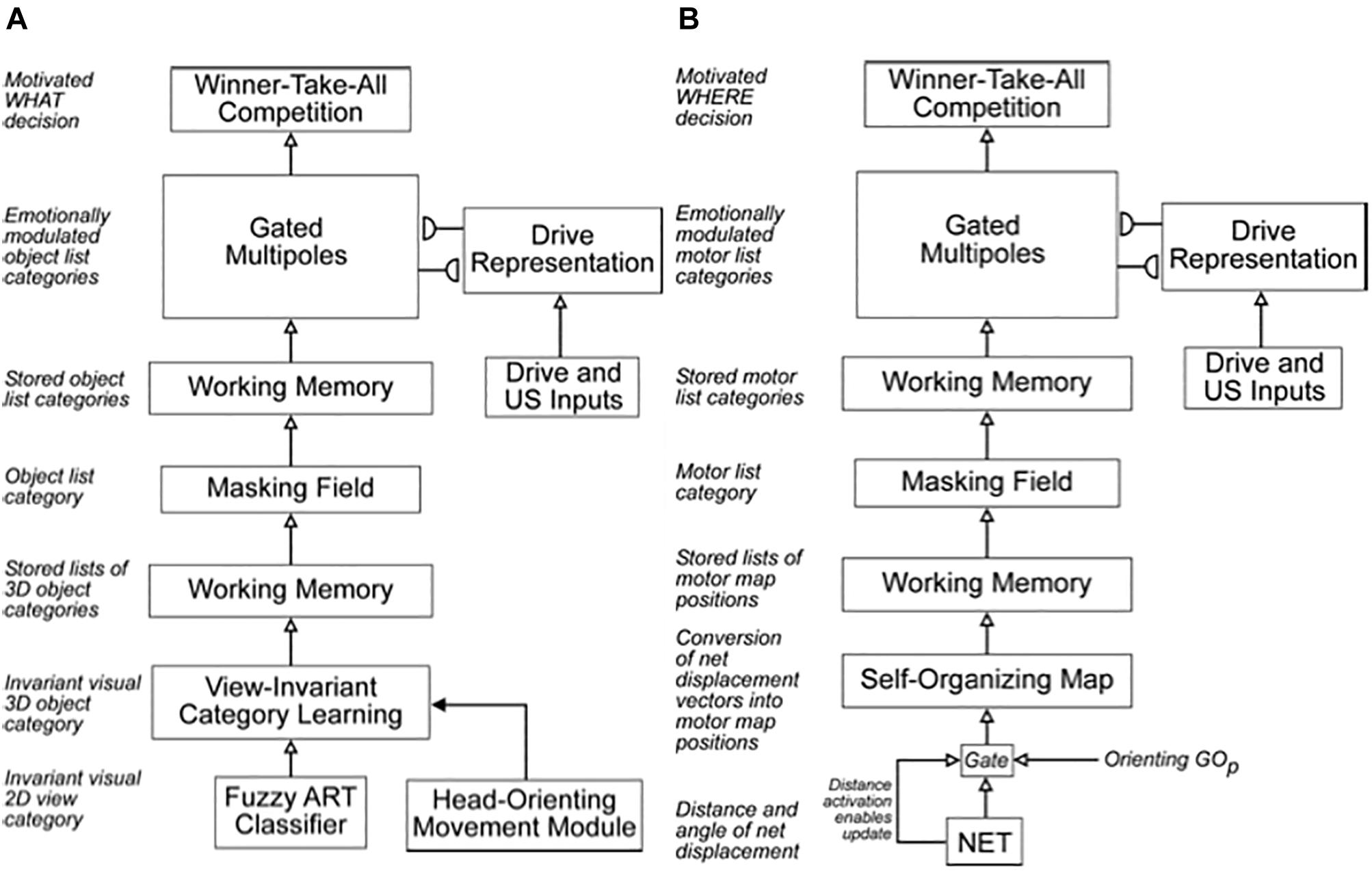

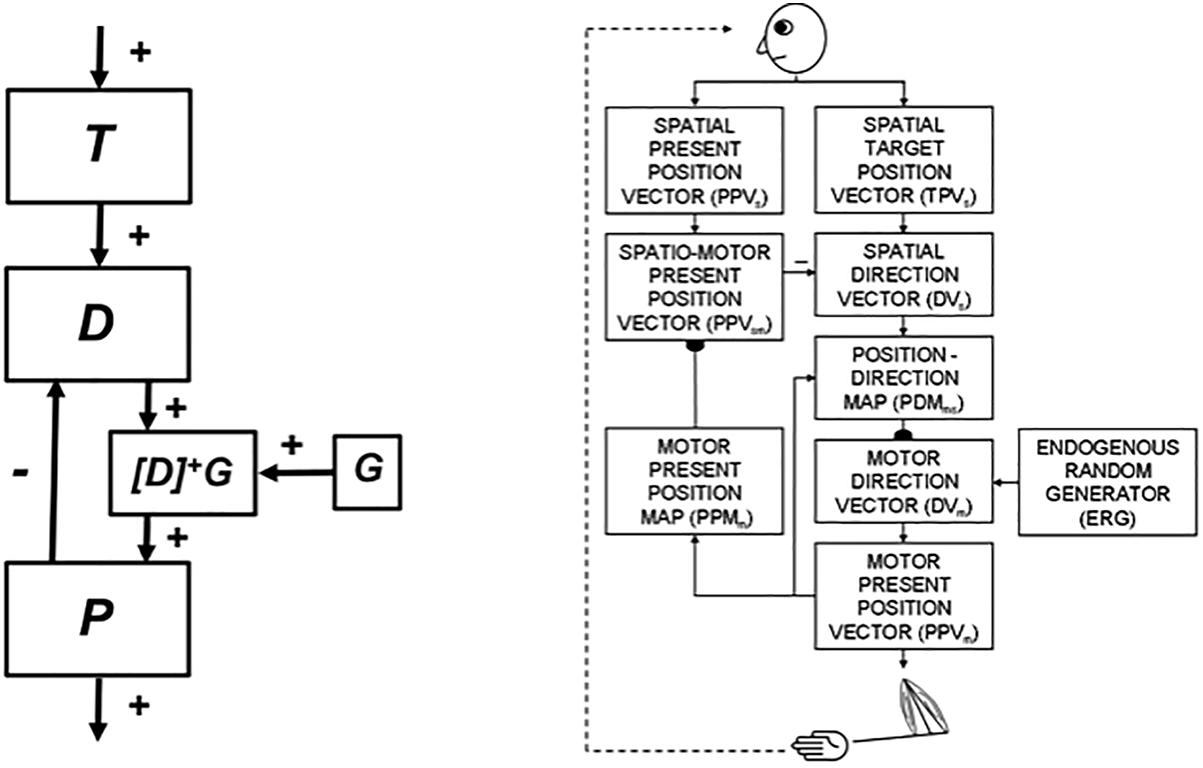

This article contributes to an emerging scientific and computational revolution aimed at understanding and designing increasingly autonomous adaptive intelligent algorithms and mobile agents. In particular, it summarizes an emerging neural architecture that is capable of visually searching and navigating an unfamiliar environment while it autonomously learns to recognize, plan, and efficiently navigate toward and acquire valued goal objects. This article accordingly reviews, and outlines how to extend, the SOVEREIGN architecture of Gnadt and Grossberg (2008) (Figure 1A). The purpose of that architecture is described in the subtitle of the article: An autonomous neural system for incrementally learning planned action sequences to navigate towards a rewarded goal.

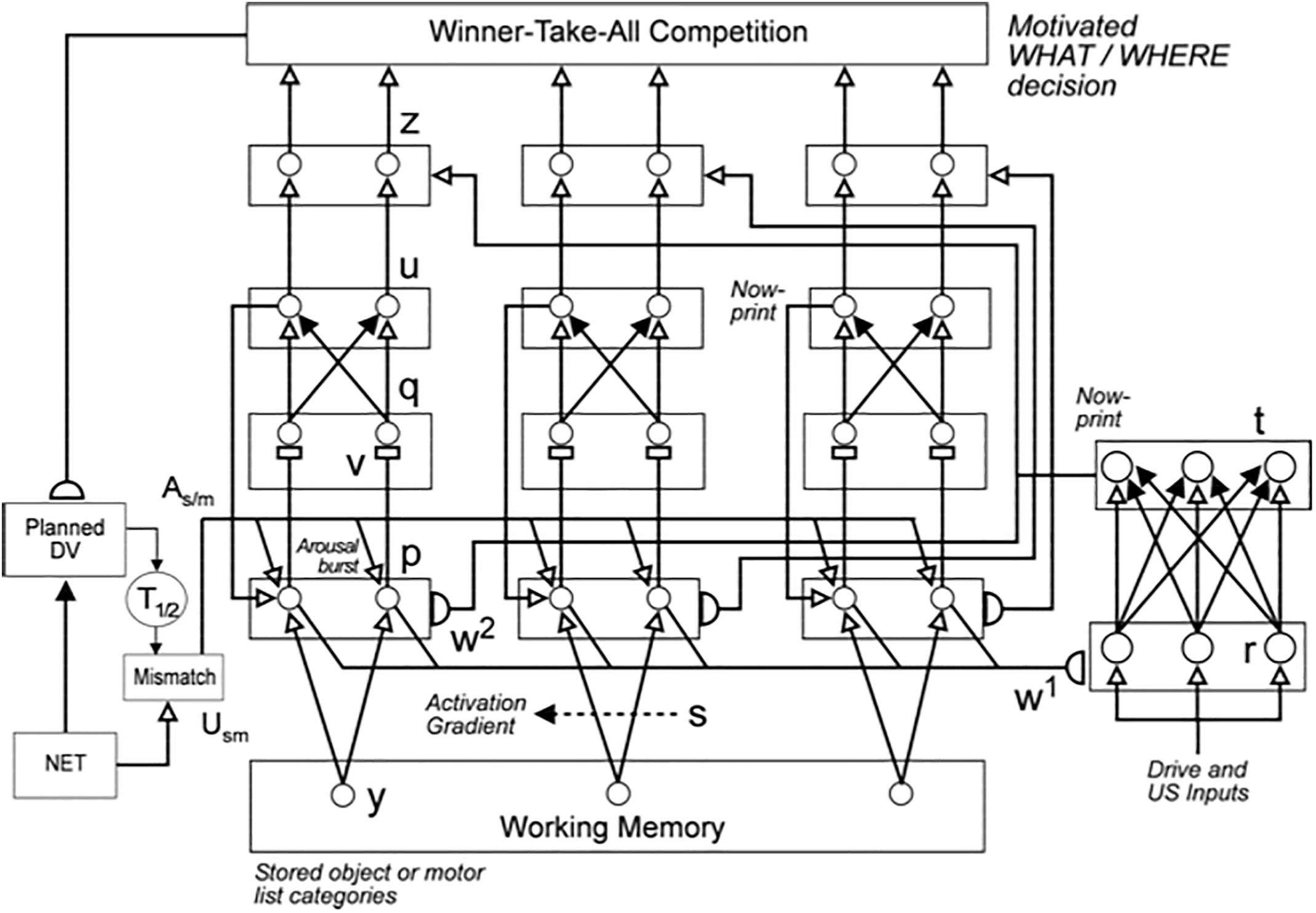

Figure 1. (A) The main interactions between functional systems of the SOVEREIGN model. (B) The Motor Approach and Orienting System flow diagram depicts the control hierarchy that generates outflow motor commands. See the text for details [Reprinted with permission from Gnadt and Grossberg (2008)].

The architecture was called SOVEREIGN because it describes how Self-Organizing, Vision, Expectation, Recognition, Emotion, Intelligent, and Goal-oriented Navigation processes interact during adaptive mobile behaviors. The term Self-Organizing emphasizes that SOVEREIGN’s learning is carried out autonomously and incrementally in real time, using unconstrained combinations of unsupervised or supervised learning. Expectation refers to the fact that key learning processes in SOVEREIGN learn expectations that match incoming data, or predict future outcomes. Good enough matches focus attention upon expected combinations of critical features, while mismatches drive memory searches to learn better representations of an environment. Recognition acknowledges that SOVEREIGN learns object categories, or “chunks,” whereby to recognize objects and events. Emotion denotes that SOVEREIGN carries out reinforcement learning whereby unfamiliar objects can learn to become conditioned reinforcers, as well as sources of incentive motivation that can maintain attention upon valued goals, while actions to acquire those goals are carried out. Reinforcement learning also supports the learning of value categories that can recognize valued combinations of homeostatic drive inputs. Intelligent means that SOVEREIGN includes processes whereby sequences, or lists, of objects and positions may be temporarily stored in cognitive and spatial working memories as they are experienced in real time. Stored sequences trigger learning of sequence categories or plans, also called list chunks, that recognize particular sequential contexts and learn to predict the most likely future outcomes as they are modulated by reinforcement learning and incentive motivational learning. Goal-oriented navigation means that SOVEREIGN includes circuits for controlling exploratory and planned movements while navigating unfamiliar and familiar environments.

1.1. Learning Routes as a Labeled Graph of Angles Turned and Distances Traveled

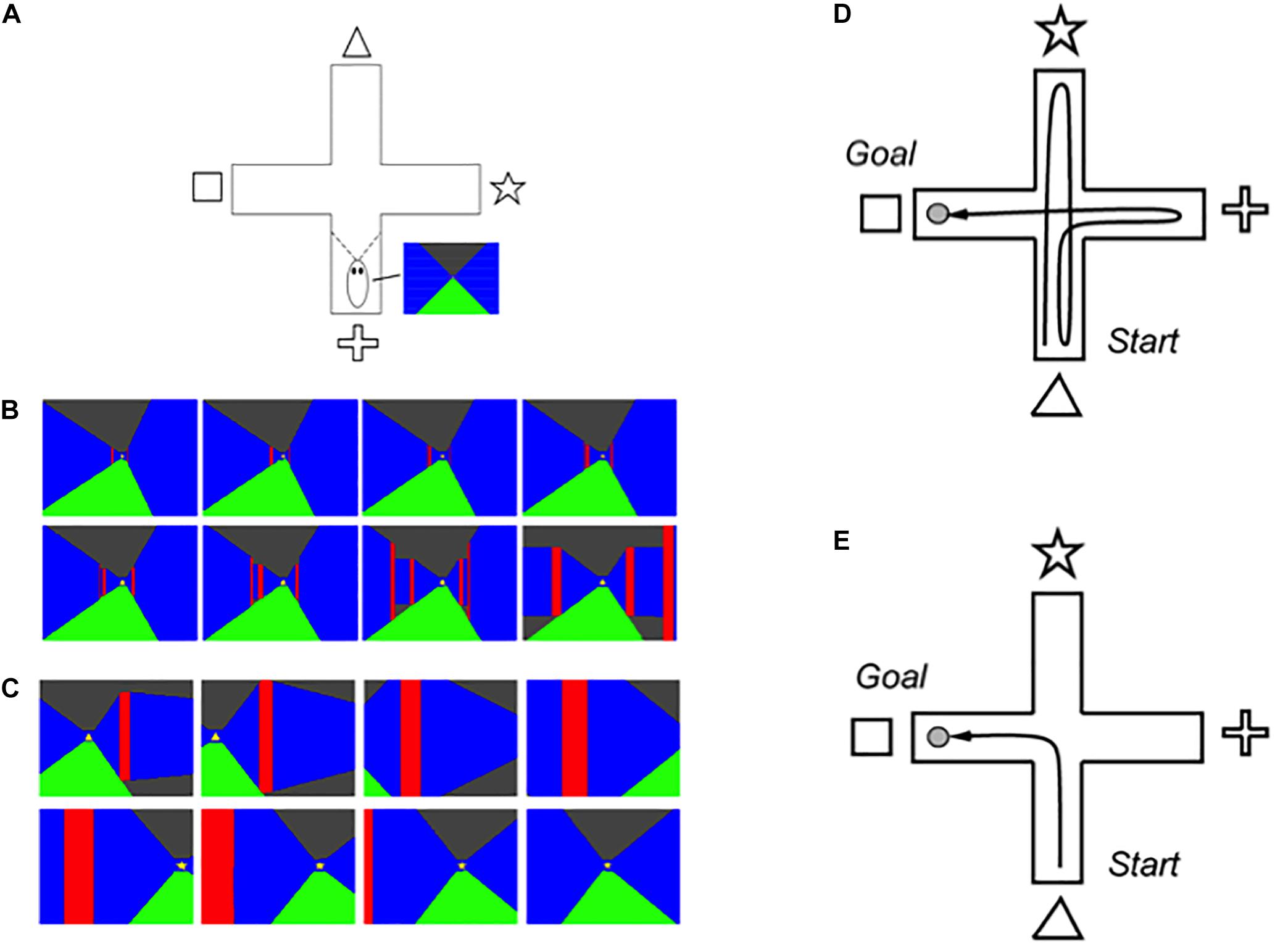

SOVEREIGN used these capabilities to simulate how an animal, or animat, can autonomously learn to reach valued goal objects through planned sequences of navigational movements within a virtual reality environment. Learning was simulated in a cross maze (Figure 2A) that was seen by the animat as a virtual reality 3D rendering of the maze as it navigated it through time. At the end of each corridor in the maze, a different visual cue was displayed (triangle, star, cross, and square). Sequences of virtual reality views on two navigational routes, shown in color for vividness, are summarized in Figures 2B,C, where the floor is green, the walls are blue, the ceiling in black, and the interior corners where pairs of maze corridors meet are in red. Figure 2B illustrates how the views change as the animat navigates straight down one corridor, and Figure 2C illustrates how the views change as the animal makes a turn from facing one corridor to facing a perpendicular one.

Figure 2. (A) The 3D graphical simulation of the virtual reality plus maze generates perspective views from any position within the maze. (B) Snapshots from the 3D virtual reality simulation depict changes in the scene during reactive homing toward the triangle cue. (C) During reactive approach to the triangle cue, visual motion signals trigger a reactive head orienting movement to bring the star cue into view. Two overhead views of a plus maze show (D) a typical initial exploratory reactive path, and (E) an efficient learned planned path to the goal [Adapted with permission from Gnadt and Grossberg (2008)].

SOVEREIGN incrementally learned how to navigate to a rewarded goal object in this cross maze, which is the perhaps the simplest environment that requires all of the SOVEREIGN designs to explore an unfamiliar visual environment (Figure 2D) while learning efficient routes whereby to acquire a valued goal, rather than less efficient or valued routes (Figure 2E). Several different types of neural circuits, systems, and learning are needed to achieve this competence. They will be described in the subsequent sections. The same mechanisms generalize to much more complex visual environments, especially because, as will be described below, all the perceptual, cognitive, and affective learning mechanisms scale to more complex environments and dynamically self-stabilize their memories using learned expectation and attention mechanisms, while the spatial and motor mechanisms are platform independent.

One key SOVEREIGN accomplishment is worthy of mention now because it illustrates how SOVEREIGN goes beyond reactive navigation to autonomously learn the most efficient routes whereby to acquire a valued goal, while rejecting less efficient routes that were taken early in the exploratory process. SOVEREIGN explains how arbitrary navigational trajectories can be incrementally learned as sequences of turns and linear movements until the next turn. In other words, the model explains how route-based navigation can learn a labeled graph of angles turned and distances that are traveled between turns. The angular and linear velocity signals that are experienced at such times are used in the model to learn the angles that a navigator turns, and the distances that are traveled in a straight path before the next turn.

The prediction that a labeled graph is learned during route navigation has recently received strong experimental support in Warren et al. (2017) who show how, when humans navigate in a virtual reality environment, such a labeled graph controls their navigational choices during route finding, novel detours, and shortcuts.

1.2. From SOVEREIGN to SOVEREIGN2: New Processes and Capabilities

SOVEREIGN did not include various brain processes and psychological functions of humans that are needed to realize a more sophisticated level of autonomous adaptive intelligence. This article summarizes some of the neural models that have been developed to explain these functions, and that can be consistently incorporated into an enhanced architecture called SOVEREIGN2. These processes have been rigorously modeled and parametrically simulated over a 40-year period, culminating in recent syntheses such as Grossberg (2013, 2017, 2018). They are reviewed heuristically here to bring together in one place the basic design principles, mechanisms, and architectures that they embody. Rigorous embodiment of all of these competences in SOVEREIGN2 will require a sustained research program. The current article provides a roadmap for that task.

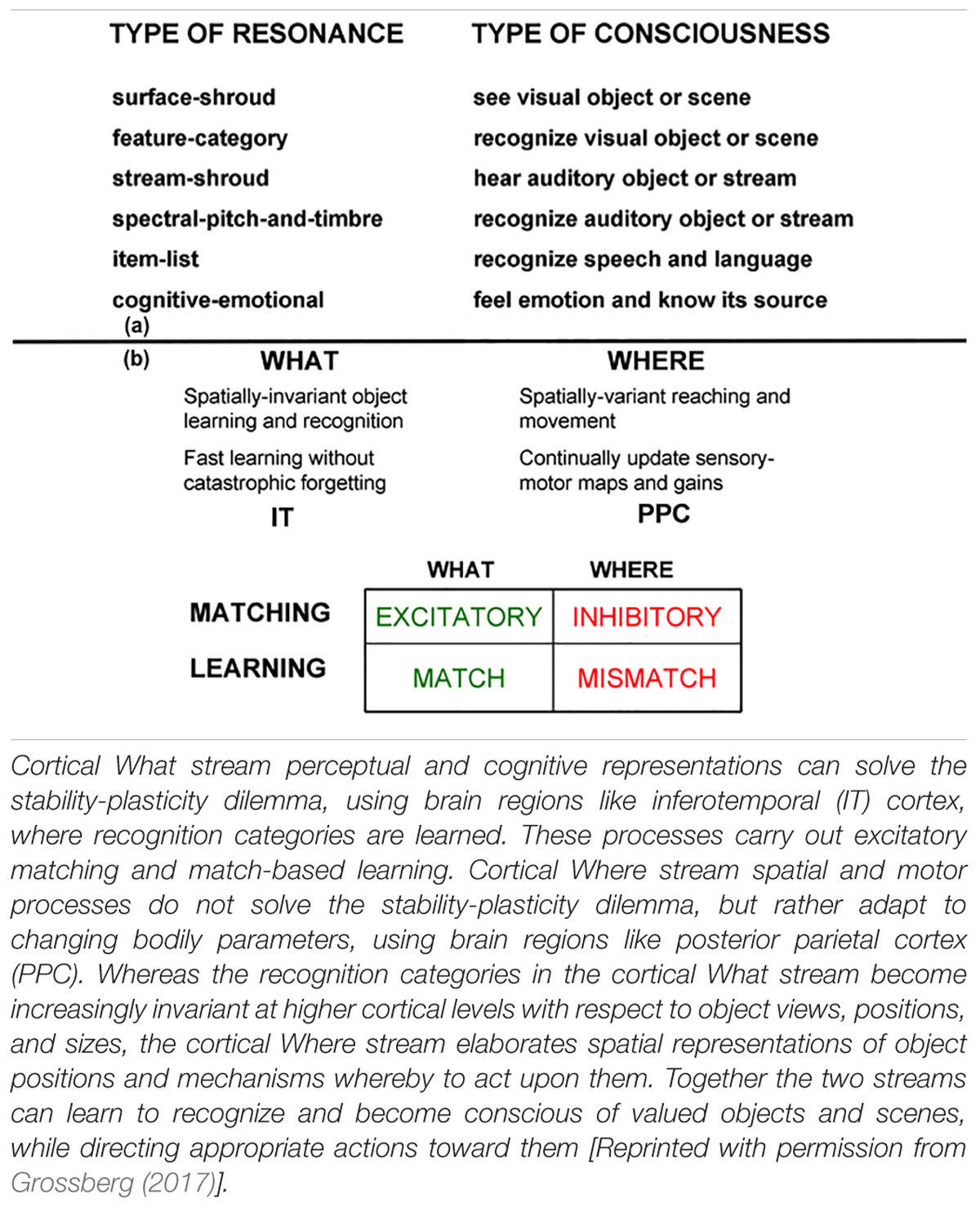

The most important new perceptual, cognitive, and navigational properties emerge within feedback networks that regulate one or another kind of attention as part of resonant brain states that support conscious experiences of seeing, feeling, and knowing. These resonant states are modeled as part of Adaptive Resonance Theory, or ART. Table 1a also lists resonances that arise during auditory processing. Auditory processing will not be considered below, but is described with the others in Grossberg (2017). SOVEREIGN2 will embody such resonant dynamics, including states that in humans support consciousness, because of a deep computational connection that has been modeled between conscious states and the choice of effective task-relevant actions. ART hereby provides explanations of what goes on in each of our brains when we consciously see, hear, feel, or know something; where it is going on; and why evolution may have been driven to discover conscious states of mind.

Table 1. (a) Types of resonances and the conscious experiences that they embody. (b) Complementary What and Where cortical stream properties.

Additional processes in SOVEREIGN2 include circuits for target tracking with smooth pursuit and saccadic eye or camera movements (see section 3.2); visual form and motion perception in response to noisy and incomplete sensor signals (see section 4.13); incremental unsupervised view-, size-, and position-specific object category learning and hypothesis testing in real time in response to arbitrarily large non-stationary databases that may include unexpected events (see sections 4.2–4.9, 6.2, and 6.3); incremental unsupervised learning of view-, size-, and position- invariant object categories during free scanning of a scene with eye or camera movements (see sections 4.1, 6.1, and 6.4); selective storage in working memory of task-relevant object, spatial, or motor event sequences (see sections 4.10, 6.9, 6.10, and 7); unsupervised learning of cognitive and motor plans based upon working memory storage of event sequences in real time, and Where’s Waldo search for currently valued goal objects (see sections 6.10 and 7); unsupervised learning of reaching behaviors that automatically supports accurate tool manipulation in space (see section 5.4); unsupervised learning of present position in space using path integration during spatial navigation (see sections 6.11 and 8); platform-independent navigational control using either leg or wheel movements (see section 5.6); unsupervised learning of adaptively timed actions and maintenance of motivated attention while these actions are executed (see sections 6.7 and 6.8); and social cognitive capabilities like joint attention and imitation learning whereby a classroom of robots can learn spatial skills by each observing a teacher from its own unique spatial perspective (see section 5.5).

2. Brains Assemble Equations and Microcircuits Into Modal Architectures: Contrast Deep Learning

ART architectures embody key design principles that are found in advanced brains, and which enable general-purpose autonomous adaptive intelligence to work. These designs have enabled biological neural networks to offer unified principled explanations of large psychological and neurobiological databases (e.g., see Grossberg, 2013, 2017, 2018) using just a small set of mathematical laws or equations-such as the laws for short-term memory or STM, medium-term memory or MTM, and long-term memory or LTM-and a somewhat larger set of characteristic microcircuits that embody useful combinations of functional properties-such as properties of cognitive and cognitive-emotional learning and memory, decision-making, prediction, and action. Just as in physics, only a few basic equations are used to explain and predict many facts about mind and brain, when they are embodied in a somewhat larger number of microcircuits that may be thought of as the “atoms” or “molecules” of intelligence. Specializations of these laws and microcircuits are then combined into larger systems that are called modal architectures, where the word “modal” stands for different modalities of intelligence, such as vision, speech, cognition, emotion, and action. Modal architectures are less general than a general-purpose von Neumann computer, but far more general than a traditional algorithm from AI.

As I will illustrate throughout this article, these designs embody computational paradigms that are called complementary computing, hierarchical resolution of uncertainty, and adaptive resonance. In addition, the paradigm of laminar computing shows how these designs may be realized in the layered circuits of the cerebral cortex and, in so doing, achieve even more powerful computational capabilities. These computational paradigms differ qualitatively from currently popular algorithms in AI and machine learning, notably Deep Learning (Hinton et al., 2012; LeCun et al., 2015) and its variants like Deep Reinforcement Learning (Mnih et al., 2013). Despite their successes in demonstrating various recent applications, these algorithms do not come close to matching the generality, adaptability, and intelligence that is found in models that more closely emulate brain designs. As just one of many problems, Deep Learning algorithms are susceptible to undergoing catastrophic forgetting, or an unexpected collapse of the memory of previously learned information while new information is being learned, a property that is shared by all variants of the classical back propagation algorithm (Grossberg, 1988). This kind of problem becomes increasingly destructive as a Deep Learning algorithm tries to learn from very large databases. The ART-based systems that are summarized below do not experience these problems.

No less problematic is that Deep Learning is just a feedforward adaptive filter. It does not carry out any of the basic kinds of information processing that are typically identified as “intelligent,” but which are carried out within ART and other biological learning algorithms that are embedded within neural network architectures. Deep Learning has none of the architectural features, such as learned top-down expectations, attentional focusing, and mismatch-mediated memory search and hypothesis testing, that are needed for stable learning in a non-stationary world of Big Data.

Perhaps these problems are why Geoffrey Hinton said in an Axios interview on September 15, 2017 (LeVine, 2017) that he is “deeply suspicious of back propagation…I don’t think it’s how the brain works. We clearly don’t need all the labeled data…My view is, throw it all away and start over” (italics mine). This essay illustrates that we do not need to start over.

Section 17 in Grossberg (1988) lists 17 different learning and performance properties of Back Propagation and Adaptive Resonance Theory. The third of the 17 differences between Back Propagation and ART is that ART does not need labeled data to learn. ART can learn using arbitrary combinations of unsupervised and supervised learning. ART also does not experience any of the computational problems that compromise Back Propagation and Deep Learning, including catastrophic forgetting.

3. Building Upon Three Basic Design Themes: Balancing Reactive and Planned Behaviors

The original SOVEREIGN architecture contributed models of three basic design themes about how advanced brains work. The first theme concerns how brains learn to balance between reactive and planned behaviors. During initial exploration of a novel environment, many reactive movements may occur in response to unfamiliar and unexpected environmental cues (Leonard and McNaughton, 1990). These movements may seem initially to be random, as an animal orients toward and approaches many stimuli (Figure 2D). As the animal becomes familiar with its surroundings, it learns to discriminate between objects likely to yield a reward and those that lead to punishment or to no valued consequences. Such approach-avoidance behavior is typically learned via reinforcement learning during a perception-cognition-emotion-action cycle in which an action and its consequences elicit sensory cues that are associated with them. When objects are out of direct viewing or reaching ranges, reactive exploratory movements may be triggered to bring them closer. Eventually, reactive exploratory behaviors are replaced by more efficient planned sequential trajectories within a familiar environment (Figure 2E). One of the main goals of SOVEREIGN was to explain how erratic reactive exploratory behaviors trigger learning to carry out organized planned behaviors, and how both reactive and planned behaviors may remain balanced so that planned behaviors can be carried out where appropriate, without losing the ability to respond quickly to novel reactive challenges.

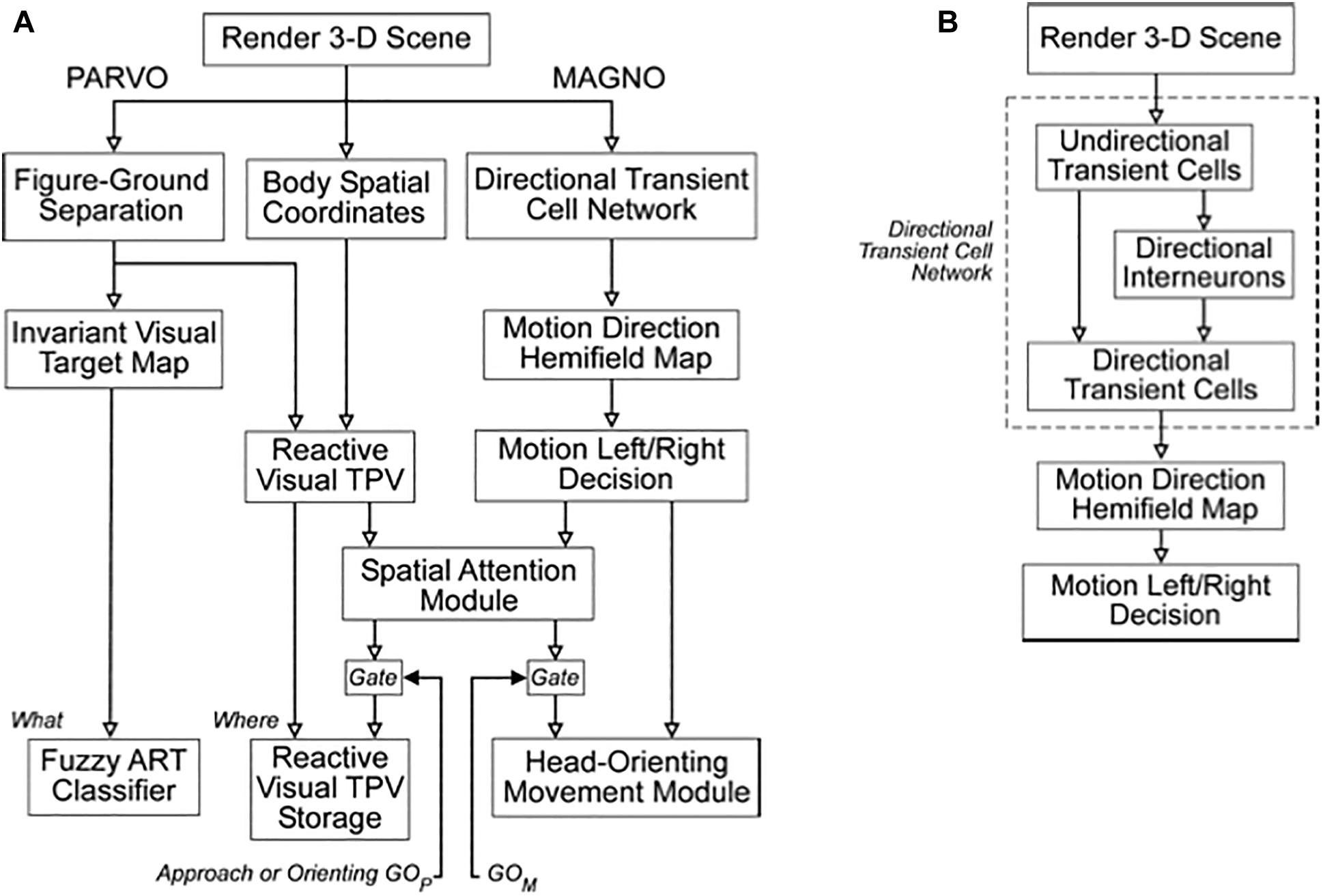

3.1. Parallel Streams for Computing Visual Form and Motion

One way that SOVEREIGN realizes a flexible balance between reactive and planned behaviors is its organization into parallel streams for computing visual form and motion. In Figure 3A, these streams are labeled PARVO and MAGNO, corresponding to contributions at early visual processing stages of parvocellular cells to form processing and magnocellular cells to motion processing (e.g., Maunsell and Newsome, 1987; DeYoe and Van Essen, 1988; Maunsell et al., 1990; Schiller et al., 1990). Roughly speaking, the form stream supports sustained attention upon foveated objects, whereas the motion stream attracts attention and bodily movements in response to sudden changes, including motions, in the periphery. sections 3.2 and 4.13 will further describe how SOVEREIGN carries out form processing and will outline how SOVEREIGN2 can achieve much more powerful form processing capabilities. Figure 3B provides a more detailed summary of the early motion processing that enables SOVEREIGN to track objects moving at variable speeds (Chey et al., 1997; Berzhanskaya et al., 2007). Orienting movements to a source of motion were controlled algorithmically in SOVEREIGN; e.g., see the Head-Orienting Movement Module in Figure 3A.

Figure 3. (A) The Visual Form System (PARVO) and Motion System (MAGNO) flow diagrams depict the stages of visual processing in SOVEREIGN. (B) Detailed stages of motion processing within the Motion System are shown in this diagram. The Directional Transient Cell Network module comprises multiple stages of processing. The Motion Left/Right Decision generates signals that are capable of eliciting a reactive left or right head-orienting signal. The several transient cell stages enable the Motion System to retain its directional selectivity in response to motions at variable speeds. See the text for details.

3.2. Log Polar Retinas and Fixating Unpredictably Moving Targets With Eye Movements

Many primate retinas have a localized region of high visual acuity that is called the fovea, with resolution decreasing with distance from the fovea (see Supplementary Figure S4) to realize a cortical magnification factor whereby spatial representations of retinal inputs in the visual cortex get coarser as they move from the foveal region to the periphery (Daniel and Whitteridge, 1961; Fischer, 1973; Tootell et al., 1982; Schwartz, 1984; Polimeni et al., 2006). The cortical magnification factor is approximated by a log-polar function, which allows a huge reduction in the number of cells that are needed to see Schwartz (1984), Wallace et al. (1984), Schwartz et al. (1995). However, because of this retinal organization, eye and head movements are needed to move the fovea to look at objects of interest.

Both smooth pursuit movements and saccadic eye movements are used to keep the fovea looking at objects of interest. During a smooth pursuit movement, as the eyes track a moving target in a given direction, the entire scene moves in the opposite direction on the retina (Supplementary Figure S1). Why does not this background motion interfere with tracking by causing an involuntary motion, called nystagmus, in the opposite direction than the target is moving? How does accurate tracking continue, even after the eye catches up with the moving target, so that there is no net speed of the target on the fovea, and thus no retinal slip signals from the foveal region of the eyes to move them toward the target?

Remarkably, both of these questions seem to have the same answer, which includes the fact that the background motion facilitates tracking, rather than interfering with it, in the manner that is summarized in Supplementary Figures S1, S2. Supplementary Figure S1 summarizes the fact that, for fixed target speed, as the target speed on the retina decreases due to increasingly good target tracking, the background speed in the opposite direction on the retina increases. Supplementary Figure S2 schematizes the smooth pursuit eye movement, or SPEM, model of Pack et al. (2001) of how cells in the dorsal Medial Superior Temporal region (MSTd), which are activated by the background motion, excite cells that are sensitive to the opposite direction in the ventral MST (MSTv) region. The MSTv cells are the ones that control the movement commands whereby the eyes pursue the moving target. When the eyes catch up to the target, they can maintain accurate foveation even in the absence of retinal slip signals, because background motion signals compensate for the reduced retina speed of the target, and can thus be used to accurately move the eyes in the desired direction at the target speed (Supplementary Figure S1). This kind of SPEM model can replace the Head-Orienting Movement Module in SOVEREIGN if an animat with orienting eyes or cameras is used.

When a valued target suddenly changes its speed or direction of motion, then smooth pursuit movements may be insufficient. Ballistic saccadic movements can then catch up with the target. Animals such as humans and monkeys can coordinate smooth pursuit and ballistic saccadic eye movements to catch up efficiently. Indeed, the current speed and direction of smooth pursuit when the target suddenly changes its speed or direction may be used to calibrate a ballistic saccade with the best chance to catch up. This kind of predictive coordination is achieved by the SAC-SPEM model of Grossberg et al. (2012). The sheer number of brain regions that work together to accomplish such coordination (Supplementary Figure S3) will challenge future mobile robotic designers to embody this tracking competence in the simplest possible way.

4. Building Upon Three Basic Design Themes: Complementary Computing, Hierarchical Resolution of Uncertainty, and Adaptive Resonance

The second design theme is that advanced brains are organized into parallel processing streams with computationally complementary properties (Grossberg, 2000, 2017). Complementary computing means that each stream’s properties are related to those of a complementary stream much as a key fits into a lock, or two pieces of a puzzle fit together. The mechanisms that enable each stream to compute one set of properties prevent it from computing a complementary set of properties. As a result, each of these streams exhibits complementary strengths and weaknesses. Interactions between these processing streams use multiple processing stages to overcome their complementary deficiencies and generate psychological properties that lead to successful behaviors. This interactive multi-stage process is called hierarchical resolution of uncertainty.

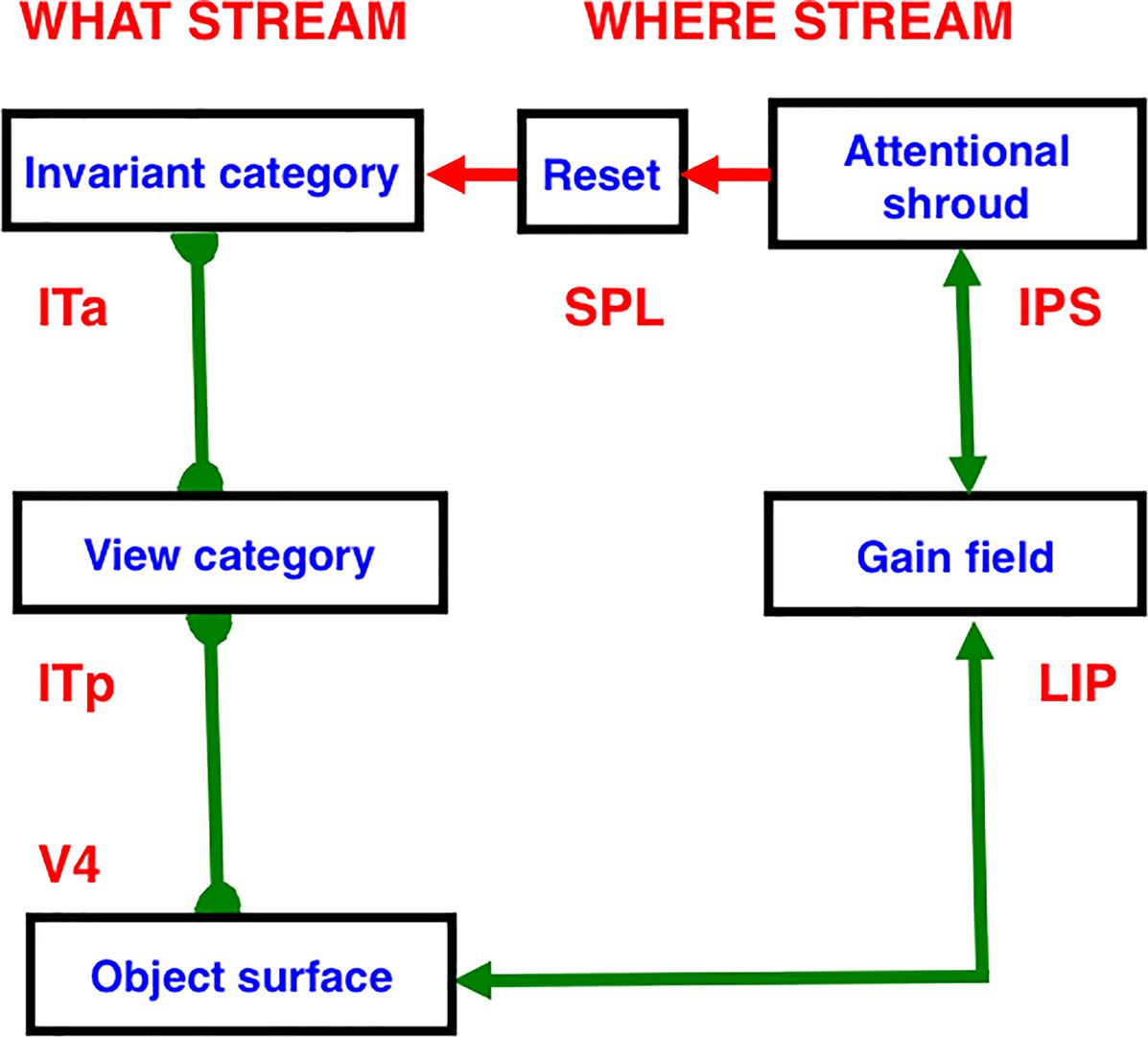

Two of these complementary streams are the ventral What cortical stream for object perception and recognition, and the dorsal Where (or Where/How) cortical processing stream for spatial representation and action (Ungerleider and Mishkin, 1982; Mishkin, 1982; Mishkin et al., 1983; Goodale et al., 1991; Goodale and Milner, 1992). Key properties of these cortical processing streams have been shown to be computationally complementary (Table 1b).

4.1. Invariant Object Category Learning

One of several basic reasons for this particular kind of complementarity is that the cortical What stream learns object recognition categories that become substantially invariant under changes in an object’s view, size, and position at higher cortical processing stages, such as at the anterior inferotemporal cortex (ITa) and beyond (Tanaka, 1997, 2000; Booth and Rolls, 1998; Fazl et al., 2009; Cao et al., 2011; Chang et al., 2014). These invariant object categories have a compact representation that enables valued objects to be recognized without causing the combinatorial explosion that would have occurred if our brains needed to store every individual exemplar of every object that was ever experienced. However, because they are invariant, these categories cannot, by themselves, locate and act upon a desired object in space. Cortical Where stream spatial and motor representations can locate objects and trigger actions toward them, but cannot recognize them. By interacting together, the What and Where streams can consciously see and recognize valued objects and direct appropriate goal-oriented actions toward them.

The original SOVEREIGN model explained simple properties of how such invariant categories are learned as an animal, or animat, explores a novel environment. It used log-polar preprocessing of input images, followed by coarse-coding and algorithmic shift operations, to generate size-invariant and position-invariant input images. These preprocessed images were then input to a Fuzzy ART classifier (Carpenter et al., 1991b) for learning invariant visual 2D view-specific categories whereby SOVEREIGN could recognize an object at variable distances. These view-specific categories were converted into categories that were view-invariant, as well as positionally invariant and size-invariant, by algorithmically associating multiple view-specific categories with a shared view-invariant category (Figure 4A).

Figure 4. (A) The Visual Working Memory and Planning System computes motivationally reinforced representations of sequences of 3D object categories. (B) The Motor Working Memory and Planning System computes motivationally reinforced representations of sequences of motor map positions/directions. See the text for details. [Reprinted with permission from Gnadt and Grossberg (2008)].

Since SOVEREIGN was published, the 3D ARTSCAN SEARCH model was developed to explain how humans and other primates may accomplish incremental unsupervised learning of view-, position-, and size-invariant categories, without any algorithmic shortcuts, and how these invariant categories can be used to trigger a cognitively or motivationally driven Where’s Waldo search for a desired object in a cluttered scene (Fazl et al., 2009; Grossberg, 2009b; Cao et al., 2011; Foley et al., 2012; Chang et al., 2014; Grossberg et al., 2014). These important Recognition and Where’s Waldo search capabilities, which will be further discussed in sections 6.1 and 6.4, can also be incorporated into SOVEREIGN2 instead of the bottom two category learning processes in Figure 4A.

4.2. Adaptive Resonance Theory: A Universal Design for Autonomous Classification and Prediction

The ART in the Fuzzy ART algorithm abbreviates Adaptive Resonance Theory, which was introduced in 1976 (Grossberg, 1976a,b) and developed into the most advanced cognitive and neural theory of how advanced brains learn to attend, recognize, and predict objects and events in complex changing environments that may be filled with unexpected events. ART currently has an unrivalled explanatory and predictive range about how processes of consciousness, learning, expectation, attention, resonance, and synchrony interact in advanced brains. Along the way, all of the foundational hypotheses of ART have been confirmed by later psychological and neurobiological experiments. See Grossberg (2013, 2017, 2018) for recent reviews and syntheses.

ART’s significance is highlighted by the fact that its design principles and mechanisms can be derived from a thought experiment whose simple assumptions are familiar to us all as facts that we experience ubiquitously in our daily lives. These facts embody environmental constraints which, taken together, define a multiple constraint problem that evolution has solved in order to enable humans and other higher animals to be able to autonomously learn to attend, recognize, and predict their unique and changing worlds. Such a competence is essential in autonomous adaptive mobile agents, which is why some ART algorithms were already algorithmically implemented in SOVEREIGN.

4.3. Predictive Brain: Intention, Attention, and Resonance Solve the Stability-Plasticity Dilemma

One of the critical properties of ART that enable it to support open-ended incremental autonomous learning is that resonant states can trigger rapid learning about a changing world while solving the stability-plasticity dilemma. This dilemma asks how can our brains learn quickly without being forced to forget previously learned, but still useful, memories just as quickly?

The stability-plasticity dilemma was articulated before the catastrophic forgetting problem was stated (French, 1999), and clarifies that it is a problem of balance between fast learning and stable memory. Catastrophic forgetting means that an unpredictable part of previously learned memories can rapidly and unpredictably collapse during new learning. This problem becomes particularly acute when learning any kind of Big Data problem, notably during the kind of open-ended incremental learning that an autonomous adaptive robot might need to do as it navigates unfamiliar environments. A catastrophic collapse of previous memories while trying to completely learn about a huge database, not to mention a database that is continually changing through time, is intolerable in any application that can have serious real world consequences. Popular machine learning algorithms such as Back Propagation and its recent variant, Deep Learning (Hinton et al., 2012; LeCun et al., 2015), do not solve the catastrophic forgetting problem. In brief, Deep Learning is unreliable.

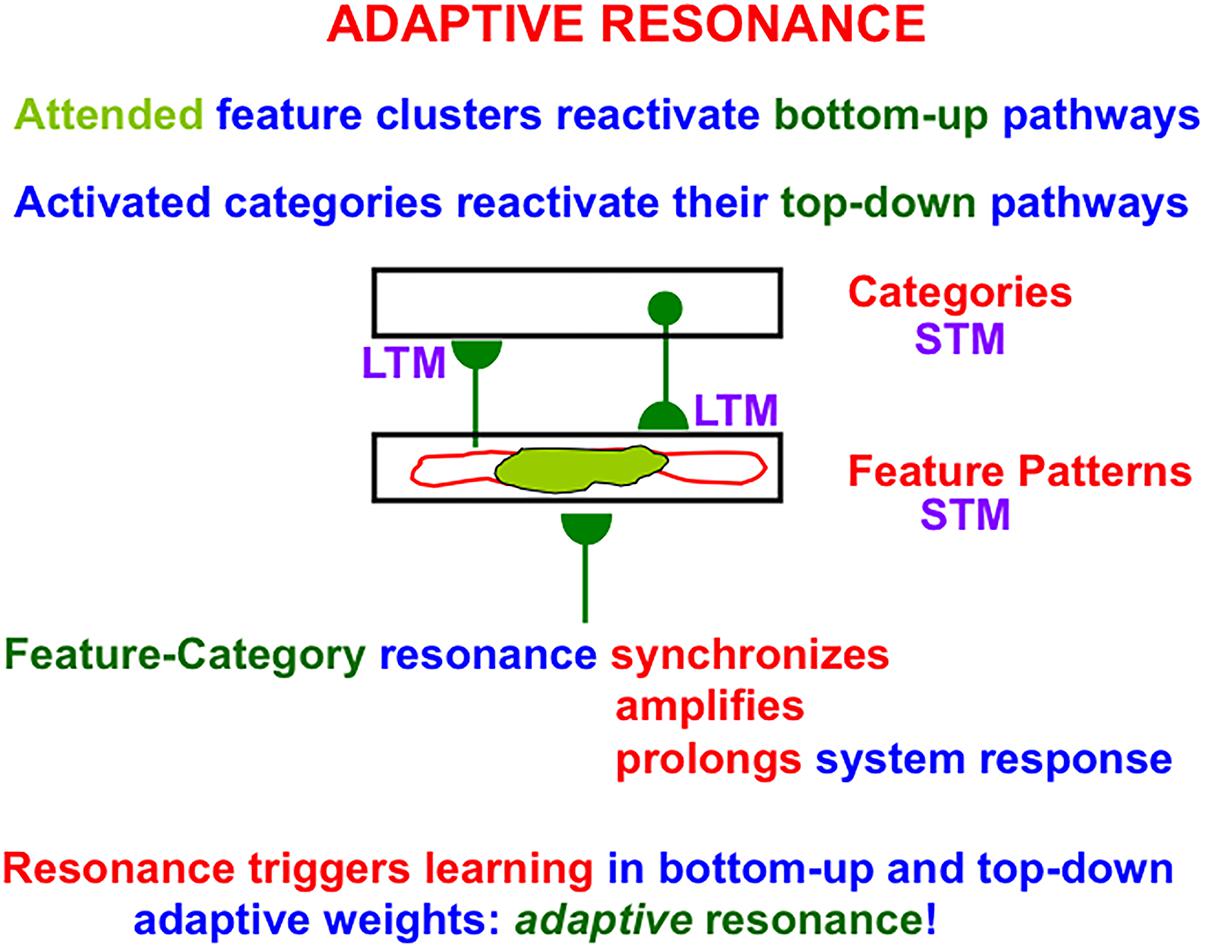

A resonant brain state is a dynamical state during which neuronal firings across a brain network are amplified and synchronized when they interact via reciprocal excitatory feedback signals during an attentive matching process that occurs between bottom-up and top-down pathways. In the case of learning recognition categories, the bottom-up pathways are adaptive filters that tune their adaptive weights, or LTM traces, to more reliably activate the category that best matches the input feature patterns that activate them. The top-down pathways are learned recognition expectations whose LTM traces focus attention upon a prototype of critical features that best predict the active category. As will be explained in greater detail below (see Figure 7 below), a resonance of this kind is called a feature-category resonance in order to distinguish it from the multiple other kinds of resonances that dynamically stabilize learning in different brain systems.

A resonance represents a system-wide consensus that the attended information is worthy of being learned. It is because resonances can trigger fast learning that they are called adaptive resonances, and why the theory that explicates them is called Adaptive Resonance Theory. ART’s proposed solution of the stability-plasticity dilemma mechanistically links the process of stable learning and memory with the mechanisms of Consciousness, Expectation, Attention, Resonance, and Synchrony that enable it. Due to their mechanistic linkage, these processes are often abbreviated as the CLEARS processes.

ART hereby predicts that interactions among CLEARS mechanisms solve the stability-plasticity dilemma. That is why humans and other higher animals are intentional and attentional beings who use learned expectations to pay attention to salient objects and events, why “all conscious states are resonant states,” and how brains can learn both many-to-one maps (representations whereby many object views, positions, and sizes learn to activate the same invariant object category), and one-to-many maps (learned representations that enable us to expertly know many things about individual objects and events).

As will be explained in greater detail below, the link between Consciousness, Learning, and Resonance is a particularly important one for understanding both characteristically human experiences and how future machine learning algorithms may embody them.

4.4. Object Attention Dynamically Stabilizes Learning Using the ART Matching Rule

ART solves the stability-plasticity dilemma by using learned expectations and attentional focusing to selectively process only those data that are predicted to be relevant in any given situation. Because of the CLEARS relationships, such selective attentive processing also solves the stability-plasticity dilemma.

For this to work, the correct laws of object attention need to be used. ART has predicted how object attention is realized in human and other advanced primate brains (e.g., Grossberg, 1980, 2013; Carpenter and Grossberg, 1987a, 1991). In order to dynamically stabilize learning, the learned expectations that focus attention obey a top-down, modulatory on-center, off-surround network. This network is said to obey the ART Matching Rule.

In such a network, when a bottom-up input pattern is received at a processing stage, it can activate its target cells, if nothing else is happening. When a top-down expectation pattern is received at this stage, it can provide excitatory modulatory, or priming, signals to cells in its on-center, and driving inhibitory signals to cells in its off-surround, if nothing else is happening. The on-center is modulatory because the off-surround network also inhibits the on-center cells, and these excitatory and inhibitory inputs are approximately balanced (“one-against-one”). When a bottom-up input pattern and a top-down expectation are both active, cells that receive both bottom-up excitatory inputs and top-down excitatory priming signals can fire (“two-against-one”), while other cells are inhibited. In this way, only cells can fire whose features are “expected” by the top-down expectation, and an attentional focus starts to form at these cells. As a result only attended feature patterns are learned. The system wherein category learning takes place is thus called an attentional system.

The property of the ART Matching Rule that bottom-up sensory activity may be enhanced when matched by top-down signals is in accord with an extensive neurophysiological literature showing the facilitatory effect of attentional feedback (e.g., Sillito et al., 1994; Luck et al., 1997; Roelfsema et al., 1998). This property contradicts popular models, such as Bayesian Explaining Away models, in which matches with top-down feedback cause only suppression (Mumford, 1992; Rao and Ballard, 1999). A related problem is that suppressive matching circuits cannot solve the stability-plasticity dilemma.

An ART expectation is a top-down, adaptive, and specific event that activates its target cells during a match within the attentional system. “Adaptive” means that the top-down pathways contain adaptive weights that can learn to encode a prototype of the recognition category that activates it. “Specific” means that each top-down expectation reads out its learned prototype pattern. One psychophysiological marker of such a resonant match is the processing negativity, or PN, event-related potential (Grossberg, 1978, 1984b; Näätänen, 1982; Banquet and Grossberg, 1987).

4.5. ART Is a Self-Organizing Production System: Lifelong Learning of Expertise

The above properties of an expectation are italicized because, as will be seen below, they are computationally complementary to those of an orienting system that enables ART to autonomously learn about arbitrarily many novel events in a non-stationary environment without experiencing catastrophic forgetting. As will be explained more fully below, if a top-down expectation mismatches an incoming bottom-up input pattern too much, the orienting system is activated and drives a memory search and hypothesis testing for either a better-matching category if the input represents information that is familiar to the network, or a novel category if it is not.

Taken together, the ART attentional and orienting systems constitute a self-organizing production system that can learn to become increasingly expert about the world that it experiences throughout the life span of the individual or machine into which it is embedded.

4.6. ART Can Carry Out Open-Ended Stable Learning of Huge Non-stationary Databases

Our ability to achieve learning throughout life can be stated in another way that emphasizes its critical importance in human societies no less than in designing autonomous adaptive robots with real intelligence: Without stable memories of past experiences, we could learn very little about the world, since our present learning would wash away previous memories unless we continually rehearsed them. But if we had to continuously rehearse everything that we learned, then we could learn very little, because there is just so much time in a day to rehearse. Having an active top-down matching mechanism greatly amplifies the amount of information that humans can quickly learn and stably remember about the world. This capability, in turn, sets the stage for developing a sense of self, which requires that we can learn and remember a record of many experiences that are uniquely ours over a period of years.

With appropriately implemented ART algorithms on board, a SOVEREIGN2 robot can continue to learn indefinitely for its entire lifespan.

4.7. Large-Scale Machine Learning Applications in Engineering and Technology

ART enables a general-purpose category learning, recognition, and prediction capability that has already been used in multiple large-scale applications in engineering and technology. When it is embodied completely enough in SOVEREIGN2, then SOVEREIGN2 can also be used to carry out such applications, and can do so with the advantage being able to navigate environments where these applications occur.

Fielded applications include: airplane design (including the Boeing 777); medical database diagnosis and prediction; remote sensing and geospatial mapping and classification; multidimensional data fusion; classification of data from artificial sensors with high noise and dynamic range (synthetic aperture radar, laser radar, multi-spectral infrared, night vision); speaker-normalized speech recognition; sonar classification; music analysis; automatic rule extraction and hierarchical knowledge discovery; machine vision and image understanding; mobile robot controllers; satellite remote sensing image classification; electrocardiogram wave recognition; prediction of protein secondary structure; strength prediction for concrete mixes; tool failure monitoring; chemical analysis from ultraviolet and infrared spectra; design of electromagnetic systems; face recognition; familiarity discrimination; and power transmission line fault diagnosis. Some of these applications are listed at http://techlab.bu.edu/resources/articles/C5/.

4.8. Mathematically Provable ART Learning Properties Support Large-Scale Applications

It is because the good learning properties of ART have been mathematically proved and tested with comparative computer simulation benchmarks that ART has been used with confidence in these applications (e.g., Carpenter and Grossberg, 1987a,b, 1990; Carpenter et al., 1989, 1991a,b, 1992, 1998).

These theorems prove how ART can rapidly learn, from arbitrary combinations of unsupervised and supervised trials, to categorize complex, and arbitrarily large, non-stationary databases, dynamically stabilize their learned memories, directly access the globally best matching categories with no search during recognition, and use these categories to predict the most likely outcomes in a given situation.

In particular, ART provably solved the catastrophic forgetting problem that other approaches to machine learning have failed to solve.

4.9. ART Solves the Explainable AI Problem and Extracts Knowledge Hierarchies From Data

ART offers a solution of another problem that other researchers in machine learning and AI are still seeking. The learned weights of the fuzzy ARTMAP algorithm (Carpenter et al., 1992) translate, at any stage of learning, into fuzzy IF-THEN rules that “explain” why the learned predictions work. Understanding why particular predictions are made is no less important than their predictive success in applications that have life or death consequences, such as medical database diagnosis and prediction, to which ART has been successfully applied. This problem has not yet been solved in traditional AI, as illustrated by the current DARPA Explainable AI program (XAI1).

In addition, ART can self-organize hierarchical knowledge structures from masses of incomplete and partially incompatible data taken from multiple observers who do not communicate with each other, and who may use different combinations of object names and sensors to incrementally collect their data at different times, locations, and scales (Carpenter et al., 2005; Carpenter and Ravindran, 2008). If swarms of SOVEREIGN2 robots collect data in this distributed way, then they can share it wirelessly to self-organize such cognitive hierarchies of rules.

4.10. Cognitive and Spatial Working Memories and Plans

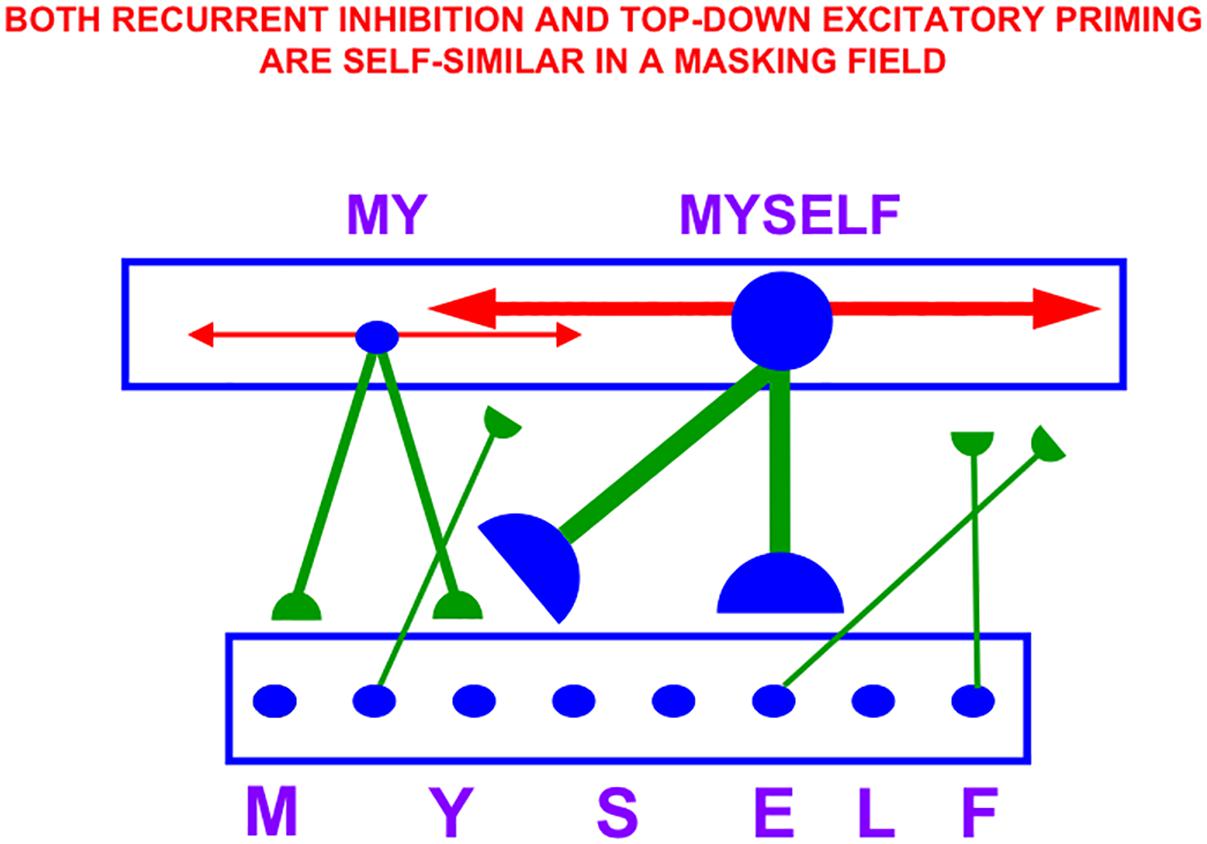

Figure 4A also summarizes higher cognitive and cognitive-emotional processes that are modeled in SOVEREIGN. Together with Figure 4B, these contribute to SOVEREIGN’s Intelligent and Goal-oriented navigation processing whereby cognitive working memories (Figure 4A) and spatial working memories (Figure 4B) provide the information whereby cognitive plans (Figure 4A) and spatial plans (Figure 4B) are learned and used to control actions to acquire valued goals. The cognitive working memory temporarily stores the temporal order of sequences of invariant object categories that represent recently experienced objects. These sequences are themselves categorized during learning of cognitive plans, or list chunks, that fire selectively in response to particular stored object sequences. Such a network of list chunks is called a Masking Field (Grossberg, 1978; Cohen and Grossberg, 1986, 1987; Grossberg and Myers, 2000; Grossberg and Kazerounian, 2011; Kazerounian and Grossberg, 2014). The corresponding spatial working memory and Masking Field in Figure 4B do the same thing for the stored sequences of navigational movements—notably combinations of turns and straight excursions in space—that SOVEREIGN carries out while exploring the maze. These processes will be discussed further in sections 6.9, 6.10, and 7, notably how they need to be enhanced in SOVEREIGN2 to achieve selective processing and storage of only task-relevant sequences of information.

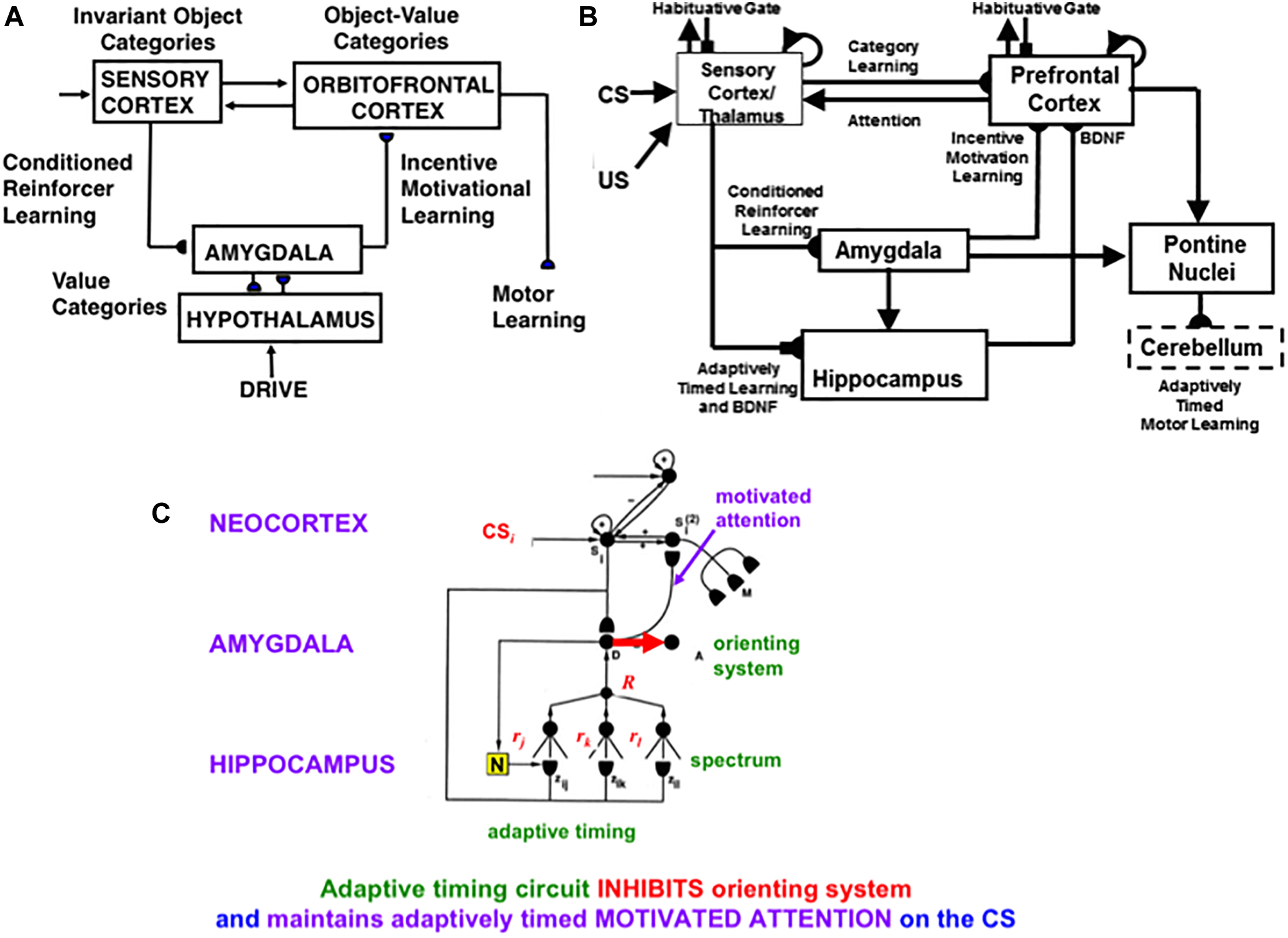

4.11. Reinforcement Learning and Incentive Motivation to Acquire Valued Goals

These cognitive and spatial processes do not themselves compute indices of predictive success and failure. The processes that accomplish goal-oriented selectivity—including gated multipoles and drive representations—occur next (See Figure 12 below). These reinforcement learning and incentive motivational processes enable SOVEREIGN to select, amplify, and sustain in working memory those previous event sequences that have led to predictive success in the past, and to use these list categories to predict the actions most likely to achieve valued goals in the future. These processes will be further discussed in sections 6.5–6.7.

4.12. Prefrontal Regulation of Cognitive and Cognitive-Emotional Dynamics

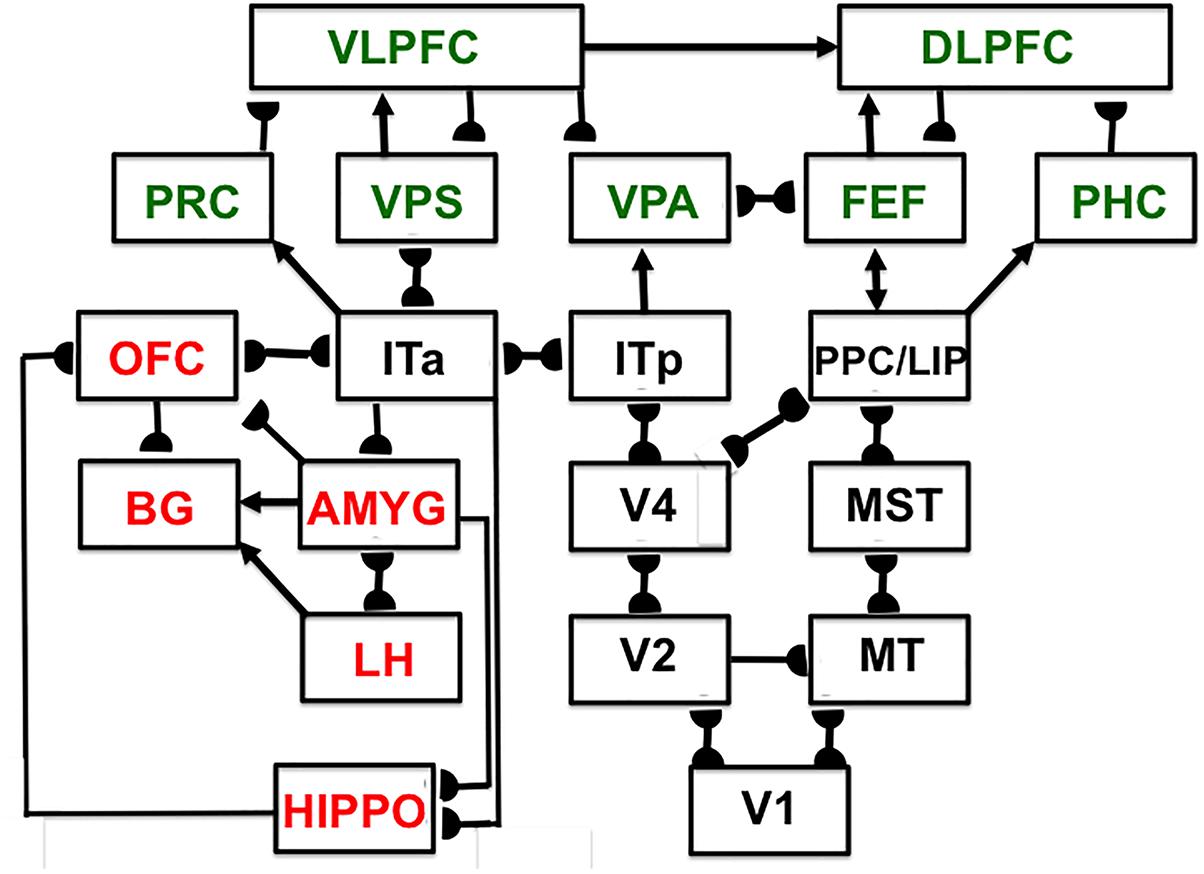

Since SOVEREIGN appeared, the predictive Adaptive Resonance Theory, or pART, model (Grossberg, 2018) has proposed how several parts of the prefrontal cortex (PFC) learn to interact with multiple brain regions to carry out cognitive and spatial working memory, planning, and cognitive-emotional processes. The seven prefrontal cortical regions marked in green in Figure 5 illustrate this complexity. As one of its several explanatory accomplishments, pART clarifies how a top-down cognitive prime from the PFC can bias object attention in the What cortical stream to anticipate expected objects and events, while it also focuses spatial attention in the Where cortical stream to trigger actions that acquire currently valued objects (Fuster, 1973; Baldauf and Desimone, 2014; Bichot et al., 2015). Section 7 will summarize several of these enhanced capabilities of pART. As these enhanced capabilities of pART are incorporated into SOVEREIGN2, it will be able to carry out more sophisticated cognitive, cognitive-emotional, and Where’s Waldo search capabilities than can the SOVEREIGN or the 3D ARTSCAN SEARCH models.

Figure 5. Macrocircuit of the main brain regions, and connections between them, that are modeled in the predictive Adaptive Resonance Theory (pART) model of working memory and cognitive-emotional dynamics. Abbreviations in green denote brain regions used in working memory dynamics, whereas abbreviations in red denote brain regions used in cognitive-emotional dynamics. Black abbreviations refer to brain regions that process visual data during visual perception and are used to learn visual object categories. Arrows denote non-adaptive excitatory synapses. Hemidiscs denote adaptive excitatory synapses. Many adaptive synapses are bidirectional, thereby supporting synchronous resonant dynamics among multiple cortical regions. The output signals from the basal ganglia that regulate reinforcement learning and gating of multiple cortical areas are not shown. Also not shown are output signals from cortical areas to motor responses. V1, striate, or primary, visual cortex; V2 and V4, areas of prestriate visual cortex; MT, middle temporal cortex; MST, medial superior temporal area; ITp, posterior inferotemporal cortex; ITa, anterior inferotemporal cortex; PPC, posterior parietal cortex; LIP, lateral intraparietal area; VPA, ventral prearcuate gyrus; FEF, frontal eye fields; PHC, parahippocampal cortex; DLPFC, dorsolateral hippocampal cortex; HIPPO, hippocampus; LH, lateral hypothalamus; BG, basal ganglia; AMGY, amygdala; OFC, orbitofrontal cortex; PRC, perirhinal cortex; VPS, ventral bank of the principal sulcus; VLPFC, ventrolateral prefrontal cortex. See text for further details. [Reprinted with permission from Grossberg (2018)].

The pART model embodies several different kinds of brain resonances. In particular, the Fuzzy ART classifier in Figure 4A is an algorithmic realization of the kind of feature-category resonance that links cortical areas V4 and ITp in Figure 5. Such a resonance focuses attention upon salient combinations of features while it triggers learning in the bottom-up adaptive filters and top-down learned expectations that bind the attended feature patterns to the object categories that are used to recognize them. Adaptive Resonance Theory, or ART, explicates several different kinds of brain resonances and their different functional roles, as will be further discussed in sections 4.15 and 4.16.

4.13. From Incomplete Early Sensory Representations to Conscious Awareness and Effective Action

Hierarchical resolution of uncertainty occurs even at the earliest cortical processing levels. One of the most important consequences of hierarchical resolution of uncertainty arises from the fact that the perceptual representations that are computed at early processing stages may not be able to control effective actions. These processing stages did not have to be included in SOVEREIGN because it directly processed simplified virtual reality images (Figure 2). SOVEREIGN thus did not have to deal with problems that are raised when images are processed by noisy detectors that are made from biological or physical components.

For example, visual images that are registered on the retina of a human eye are noisy and incomplete due to the existence of the blind spot and retinal veins, which prevent visual features from being registered on the retina at their positions (Supplementary Figure S4). Supplementary Figure S5 illustrates this problem with the simple example of a line that is occluded by the blind spot and some retinal veins. The parts of the line that are occluded need to be completed at higher processing stages before actions like looking and reaching can be directed to these positions. Processes of boundary completion and surface filling-in are needed to generate a sufficiently complete, context-sensitive, and stable visual surface representation upon which subsequent actions can be based (Grossberg, 1994, 1997, 2013, 2017).

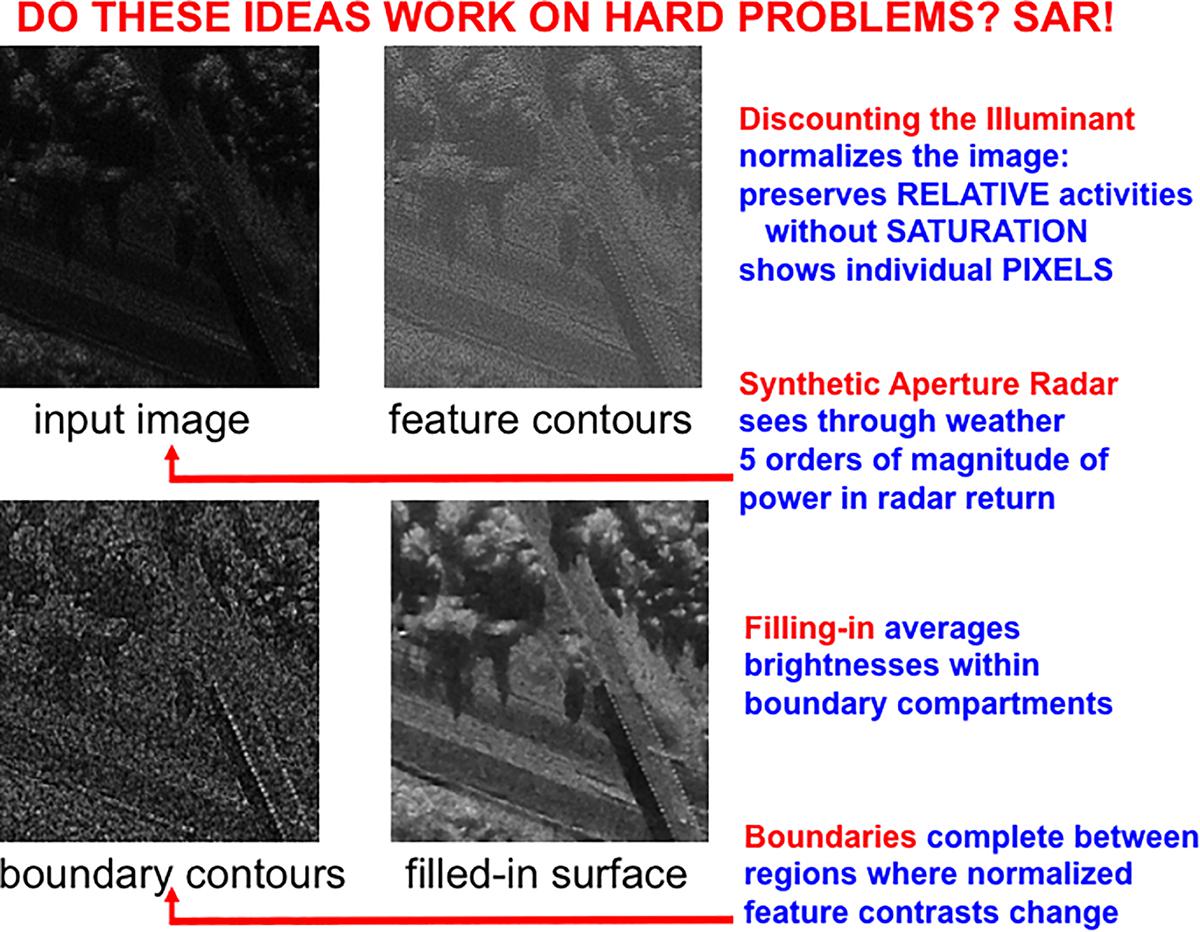

The front end of SOVEREIGN2 can be consistently extended to include these boundary completion and surface filling-in processes, instead of the Render 3-D Scene and Figure-Ground Separation processes in Figure 3A. SOVEREIGN2 can then function even using sensory detectors that may be pixelated or degraded in various ways due to use. Such detectors include artificial sensors such as Synthetic Aperture Radar, Laser Radar, and Multispectral Infrared. Synthetic Aperture Radar, or SAR, can be used to process images that can see through the weather. Figure 6 shows a computer simulation of how a SAR image can be processed by boundary completion and surface filling-in processes that compensate for sensor failures.

Figure 6. A Synthetic Aperture Radar (SAR) input image (upper left panel) is normalized (upper right panel) before it is used to compute boundaries (lower left panel) that join statistically regular pixel contrasts. Then the still highly pixelated normalized image fills-in the compartments defined by the boundaries (lower right image) to generate a representation of a scene in upper New York State in which a diagonal road crosses over a highway in a wooded area. See text for details. [Adapted with permission from Mingolla et al. (1999)].

Boundary completion and surface filling-in processes illustrate one of the best known examples of complementary computing (Grossberg, 1984a, 1994, 1997; Grossberg and Mingolla, 1985): Boundaries are completed inwardly between pairs or greater numbers of inducers in an oriented fashion. Boundary completion is also triggered after the processing stage where cortical complex cells pool signals from simple cells that are sensitive to opposite contrast polarities, thus becoming insensitive to direction of contrast. Because they pool over opposite contrast polarities-including achromatic black–white contrasts, and chromatic red–green and blue–yellow contrasts-boundaries cannot represent conscious visual qualia. That is, all boundaries are invisible. Surface filling-in of brightness and color spread outwardly in an unoriented fashion until they hit a boundary, or attenuate due to their spatial spread. Surface filling-in is also sensitive to direction of contrast. All conscious percepts of visual qualia are surface percepts. These three pairs of properties (inward vs. outward, oriented vs. unoriented, and insensitive vs. sensitive to direction of contrast) are manifestly complementary.

4.14. Why Did Evolution Discover Consciousness? Conscious States Control Adaptive Actions

The above review of some of the early processing stages in the visual system provides a foundation for understanding how ART provides a rigorous computational proposal both for what happens in each brain and how and where it happens as it learns to consciously see, hear, feel, or know something, as well as for why evolution was driven to discover conscious states in the first place (Grossberg, 2017). In particular, as noted above, in order to resolve the computational uncertainties caused by complementary computing, the brain needs to use multiple processing stages that include interactions between pairs of complementary cortical processing streams to realize a hierarchical resolution of uncertainty.

Because the light that falls on our retinas may be occluded by the blind spot, multiple retinal veins, and all the other retinal layers through which light passes before it reaches the light-sensitive photoreceptors (Supplementary Figures S4, S5), these retinal images are highly noisy and incomplete. Using them to control actions like looking and reaching could lead to incorrect, and potentially disastrous, actions.

In order to compute the functional units of visual perception, namely 3D boundaries and surfaces, three pairs of computationally complementary uncertainties need to be resolved using a hierarchical resolution of uncertainty. If this is indeed the case, then why do not the earlier processing stages undermine behavior by causing incorrect, and potentially disastrous, actions to be taken? In the case of visual perception, the proposed answer is that brain resonance, and with it conscious awareness of visual qualia, is triggered at the cortical processing stage that represents 3D surface representations, after they are complete, context-sensitive, and stable enough to control visually based actions like attentive looking and reaching. The conscious state is an “extra degree of freedom” that selectively “lights up” this surface representation and enables our brains to selectively use it to control adaptive actions.

ART hereby links the evolution of consciousness to the ability of advanced brains to learn how to control adaptive actions. In the case of visual perception, this surface representation is predicted to occur in prestriate visual cortical area V4, where a surface-shroud resonance that supports conscious seeing is predicted to be triggered between V4 and the posterior parietal cortex, or PPC (Figure 5), before it propagates both top-down to V2 and V1 and bottom-up to the PFC. The PPC is in the dorsal Where cortical stream. An attentional shroud is spatial attention that fits itself to the shape of an attended object surface (Tyler and Kontsevich, 1995). An active surface-shroud resonance maintains spatial attention on the surface throughout the duration of the resonance. When spatial attention shifts, the resonance collapses and another object can be attended.

While a surface-shroud resonance is still active, it regulates saccadic eye movement sequences that foveate salient features on the attended object surface. These properties mechanistically explain the distinction between two different functional roles of PPC: its control of top-down attention from PPC to V4 and its control of the intention to move, a distinction that has been reported in both psychophysical and neurophysiological experiments (e.g., Andersen et al., 1985; Gnadt and Andersen, 1988; Snyder et al., 1997, 1998). How spatial attention regulates the learning of invariant object categories during free scanning of a scene using its intentional choice of scanning eye movements that foveate sequences of salient surface features will be summarized in section 6.4.

The proposed link between consciousness and action is relevant to the design of future autonomous adaptive robots, and provides a new computational perspective for discussing whether machine consciousness is possible, and how it may be necessary to control a robot’s choice of context-appropriate actions.

4.15. Synchronized Resonances for Seeing and Knowing: Visual Neglect and Agnosia

Many psychological and neurobiological data have been explained using ART resonances. For example, surface-shroud resonances for conscious seeing and feature-category resonances for conscious knowing of visual events can synchronize via shared visual representations in the prestriate cortical areas V2 and V4 when a person sees and knows about a familiar object (Figure 5). A lesion of the parietal cortex in one hemisphere can prevent a surface-shroud resonance from forming, thereby leading to the clinical syndrome of visual neglect, whereby an individual may draw only one half of the world, dress only one half of the body, and make erroneous reaches. A lesion of the inferotemporal cortex can prevent a feature-category resonance from forming, thereby leading to the clinical syndrome of visual agnosia, whereby a human can accurately reach for an object without knowing anything about it. See Grossberg (2017) for mechanistic explanations.

4.16. Classification of Adaptive Resonances for Seeing, Hearing, Feeling, Knowing, and Acting

In addition to the surface-shroud resonances that supports conscious seeing and the feature-category resonances that support conscious knowing, ART explains what resonances support hearing and feeling, and how resonances supporting knowing are synchronously linked to them. All of these resonances support different kinds of learning that solve the stability-plasticity dilemma; e.g., visual and auditory learning, reinforcement learning, cognitive recognition learning, and cognitive speech and language learning.

In summary, surface-shroud resonances support conscious percepts of visual qualia. Feature-category resonances support conscious learning and recognition of visual objects and scenes. Both kinds of resonances may synchronize during conscious seeing and recognition, so that we know what a familiar object is as we see it. Stream-shroud resonances support conscious percepts of auditory qualia. Spectral-pitch-and-timbre resonances support conscious learning and recognition of sources in auditory streams. Stream-shroud and spectral-pitch-and-timbre resonances may synchronize during conscious hearing and recognition of auditory streams. Item-list resonances support conscious learning and recognition of speech and language. They may synchronize with stream-shroud and spectral-pitch-and-timbre resonances during conscious hearing of speech and language, and build upon the selection of auditory sources by spectral-pitch-and-timbre resonances in order to recognize the acoustical signals that are grouped together within these streams. Cognitive-emotional resonances support conscious percepts of feelings, as well as learning and recognition of the objects or events that cause these feelings. Cognitive-emotional resonances can synchronize with resonances that support conscious qualia and knowledge about them.

These resonances embody parametric properties of individual conscious experiences that enable effective actions to be chosen without interference from earlier processing stages. For example, surface-shroud resonances help to control looking and reaching; stream-shroud resonances help to control auditory communication, speech, and language; and cognitive-emotional resonances help to acquire valued goal objects. In autonomous adaptive systems that solve the stability-plasticity dilemma using ART dynamics, formal mechanistic homologs of such different states of resonant consciousness may be needed to choose the different kinds of actions that they control. More information will be summarized below about cognitive-emotional resonances in sections 6.5–6.7.

5. Building Upon Three Basic Design Themes: Homologous Circuits for Reaching and Navigating

A third design theme that is realized by the SOVEREIGN model is that advanced brains use homologous circuits to compute arm movements during reaching behaviors, and body movements during spatial navigation. In particular, both navigational movements and arm movements are controlled by circuits which share a similar mismatch learning law—called a Vector Associative Map, or VAM (Gaudiano and Grossberg, 1991, 1992; see section 5.3)—that enables learned calibration of difference vectors in the manner described below. This proposed homology clarifies how navigational and arm movements can be coordinated when a body navigates toward a goal object before grasping it. SOVEREIGN used difference vectors to model navigational movements. It did not, however, include a controller for arm movements that could grasp a valued object when it came within range. The text below indicates how unsupervised incremental learning in SOVEREIGN2 realizes such a capability and can, moreover, do so using a tool (see section 5.4).

5.1. Arm Movement Control Using Difference Vectors and Volitional GO Signals

Neural models of arm movement trajectory control, such as the Vector Integration to Endpoint, or VITE, model (Bullock and Grossberg, 1988) (Figure 8, left panel) and their refinements (e.g., Bullock et al., 1993) (Figure 8, right panel) propose how cortical arm movement control circuits compute a representation of where the arm wants to move (i.e., the target position vector T) and subtracts from it an outflow representation of where the arm is now (i.e., the present position vector P). The resulting difference vector D between target position T and present position P represents the direction and distance that the arm needs to move to reach its goal position. Basal ganglia (BG) volitional signals of various kinds, such as a GO signal G, transform the difference vector D into a motor trajectory that can move with variable speed by multiplying D with G, before this product is integrated by P. Because P integrates the product DG, DG represents the commanded outflow movement speed. Then P moves at a speed that increases with G, other things being equal. As P approaches T, D approaches zero, along with the outflow speed DG, so the movement terminates at the desired target position.

5.2. Computing Present Position for Spatial Navigation From Vestibular Signals: Place Cells

Because the arm is attached to the body, the present position of the arm can be computed using outflow, or corollary discharge, commands P that are derived directly from the movement commands to the arm itself (Figure 8, left panel). In contrast, when a body moves with respect to the world, no such immediately available present position command is available. The ability to compute a difference vector between a target position and the present position of the body-in order to determine the direction and distance that the body needs to navigate to acquire the target-requires more elaborate brain machinery. At the time SOVEREIGN was published, computation of such a Present Position Vector, called NET in SOVEREIGN, used an algorithm to estimate the information that vestibular signals compute in vivo.

SOVEREIGN breaks down spatial navigation into sequences of straight excursions in fixed directions, after which a head/body turn changes the direction before another straight excursion occurs. In vivo, vestibular signals provide angular velocity and linear velocity signals that can be integrated to compute these head/body angles and straight movement distances. The SOVEREIGN algorithm adds the head/body turn angles, as well as the body approach distances for each straight excursion, to compute NET. Then, as Figure 1B summarizes, NET is subtracted from the Reactive Visual TPV Storage to compute a Reactive DV, which controls the next straight movement in space. Each head/body turn resets NET to allow the next NET estimate to be computed. Using such computations, SOVEREIGN was able to learn how to navigate toward valued goals in structured environments like the maze in Figure 2.

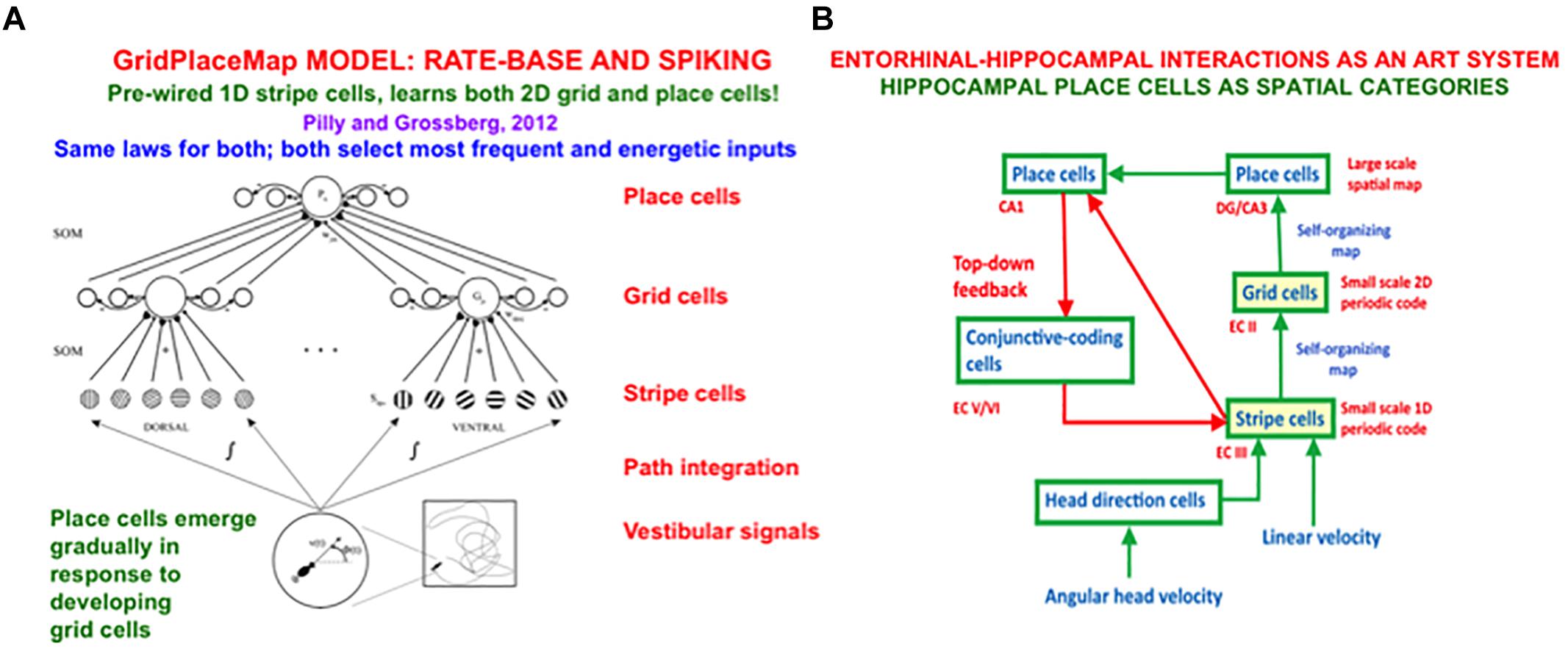

In sufficiently advanced terrestrial animals, from rats to humans, an animal’s position in space is computed from a combination of both visual and path integration information. The visual information is derived from 3D perceptual representations that are completed by processes such as boundary completion and surface filling-in. The path integration information is derived from vestibular angular velocity and linear velocity signals that are activated by an animal’s navigational movements. This vestibular information is transformed by entorhinal grid cells and hippocampal place cells into representations of the animal’s present position in space (O’Keefe and Nadel, 1978; Hafting et al., 2005). The GridPlaceMap model simulated how these cells learn their spatial representations as the animal navigates realistic trajectories (e.g., Grossberg and Pilly, 2014). Key properties of the GridPlaceMap model and some of the grid cell and place cell data that it can explain are summarized in section 8.

When SOVEREIGN2 replaces the algorithmic computations of NET in Figure 1B by circuits that learn grid and place cells, it can then autonomously learn spatial NET estimates as the animat navigates novel environments that may be far more complicated than the plus maze in Figure 2. When such a self-organized NET estimate is used to compute a difference vector between the present and target positions, a volitional GO signal can move the animat toward the desired target, just as in the case of an arm movement.

5.3. From VITE to VAM: How a Circular Reaction Drives Mismatch Learning to Calibrate VITE

In order for VITE dynamics to work properly, its difference vectors need to be properly calibrated. In particular, when T and P represent the same position in space, D must equal zero. However, T and P are computed in two different networks of cells. It is too much to expect that the activities of these two networks, and the gains of the pathways that carry their signals to D, become perfectly matched without the benefit of some kind of learning. The Vector Associative Map, or VAM model explains how this kind of learning occurs (Gaudiano and Grossberg, 1991, 1992). In brief, the VAM model corrects this problem using a form of mismatch learning that adaptively changes the gains in the T-to-D pathways until they match those in the P-to-D pathways, so that when T = P, D = 0.

The VAM model does this using what has been called a circular reaction since the pioneering work of Jean Piaget on infant development (Piaget, 1945, 1951, 1952). All infants normally go through a babbling phase, and it is during such a babbling phase that a circular reaction can be learned. In particular, during a visual circular reaction, babies endogenously babble, or spontaneously generate, hand/arm movements to multiple positions around their bodies. As their hands move in front of them, their eyes automatically, or reactively, look at their moving hands. While the baby’s eyes are looking at its moving hands, the baby learns an associative map from its hand positions to the corresponding eye positions, and from eye positions to hand positions. Learning of the map between eye and hand in both directions constitutes the “circular” reaction.

After map learning occurs, when a baby, child, or adult looks at a target position with its eyes, this eye position can use the learned associative map to generate a movement command to reach the corresponding position in space. In order for the command to be read out, a volitional GO signal from the BG-notably from the substantia nigra pars reticulata, or SNr—opens the corresponding movement gate (Prescott, 2008). Such a gate-opening signal realizes “the will to act.” Then the hand/arm can reach to the foveated position in space under volitional control. Because our bodies continue to grow for many years as we develop from babies into children, teenagers, and adults, these maps continue updating themselves throughout our lives.

In a VAM, endogenous babbling is accomplished by an Endogenous Random Generator, or ERG+, that sends random signals to P that cause the arm to automatically babble a movement in its workspace. This movement is thus not under volitional control. When P gets activated, in addition to causing the arm to move, it sends signals that input an inhibitory copy of itself to D.

The ERG has an opponent organization. It is the ERG ON, or ERG+, component that energizes the babbled arm moment. When ERG+ momentarily shuts off, ERG OFF, or ERG-, is disinhibited and opens a gate that lets P get copied at T, where it is stored. At this moment, both T and P represent the same position in space. If the model were correctly calibrated, the excitatory T-to-D and inhibitory P-to-D signals that input to D in response to the same positions at T and P would cancel, causing D to equal zero. If D is not zero under these circumstances, then the signals are not properly calibrated. The VAM model uses such non-zero D vectors as mismatch teaching signals that adaptively calibrate the T-to-D signals. As perfect calibration is achieved, D approaches zero, at which time mismatch learning self-terminates.

Another refinement of VITE showed how arm movements can compensate for variable loads and obstacles, and interpreted the hand/arm trajectory formation stages in terms of identified cells in motor and parietal cortex, whose temporal dynamics during reaching behaviors were quantitatively simulated (Bullock et al., 1998; Cisek et al., 1998).

5.4. Motor-Equivalent Reaching With Clamped Joints and Tools: The DIRECT Model

Yet another VITE model refinement, called the DIRECT model (Figure 8, right panel), builds upon VAM calibration to propose how motor-equivalent reaching is learned (Bullock et al., 1993). Motor-equivalent reaching explains how, during movement planning, either arm, or even the nose, could be moved to a target position, depending on which movement system receives a GO signal.

The DIRECT model also begins to learn by using a circular reaction that is energized by an ERG (Figure 8, right panel). Motor-equivalent reaching emphasizes that reaching is not just a matter of combining visual and motor information to transform a target position on the retina into a target position in body coordinates. Instead, these visual and motor signals are first combined to learn a representation of the space around the actor which can then be downloaded to move any of several motor effectors.

Remarkably, after the DIRECT model uses its circular reaction to learn its spatial representations and transformations, its motor-equivalence properties enable it to accurately move an arm, even when its joints are clamped, to any position in its workspace on the first try. DIRECT can also manipulate a tool in space. The conceptual importance of this result cannot be overemphasized: Without measuring tool length or angle with respect to the hand, the model can move the tool’s endpoint to touch the target’s position correctly under visual guidance on its first try, in a single reach without later corrective movements, and without additional learning. In other words, the spatial affordance for tool use, a critical foundation of human societies, follows from the brain’s ability to learn a circular reaction for motor-equivalent reaching in space. Adding these reaching capabilities to SOVEREIGN2 will enable it to use tools to manipulate target objects after it navigates to them.

5.5. Social Cognition: Joint Attention and Imitation Learning Using CRIB Circular Reactions

The DIRECT model shows how the spatial affordance for tool use could arise as a result of the circular reactions that enable reaching behaviors to develop. With DIRECT on board, a child, monkey, or robot could then volitionally reach objects with its own hand, or even using a tool like a stick. If a monkey happened to pick up a stick in this way, put it into an ant hill, and pulled it out with some ants on it, it could learn this skill to eat ants in the future whenever it wanted to do so. However, another monkey looking at this skill could not learn it from the first one without further brain machinery, because the two monkeys experience this event from two different spatial vantage points. This additional brain machinery is needed for social cognitive skills to be learned, including the learning of joint attention and imitation learning. These are competences upon which all human societies have built.

Grossberg and Vladusich (2010) develops the Circular Reactions for Imitative Behavior, or CRIB, model to explain how imitation learning utilizes inter-personal circular reactions that take place between teacher and learner, notably how a learner can follow a teacher’s gaze to fixate a valued goal object, and distinguishes them from the classical intra-personal circular reactions of Piaget that take place within a single learner, such as the one that enables reaching behaviors to be learned. After a learner can volitionally reach objects on its own, it can also learn, using an inter-personal circular reaction, to reach an object at which a teacher is looking, such as a stick with which to retrieve ants from an anthill. By building upon intra-personal circular reactions that are capable of learning motor-equivalent reaches, the CRIB model hereby clarifies how a pupil can learn from a teacher to manipulate a tool in space.

In order to achieve joint attention and imitation learning, the learner needs to be able to bridge the gap between the teacher’s coordinates and its own. In the neurobiological literature, this capability is often attributed to mirror neurons that fire either if an individual is carrying out an action or just watching someone else perform the same action (Rizzolatti and Craighero, 2004; Rizzolatti, 2005). This attribution does not, however, mechanistically explain how the properties of mirror neurons arise. The CRIB model proposes that the “glue” that binds these two coordinate systems, or perspectives, together is a surface-shroud resonance. How this works is modeled in Grossberg and Vladusich (2010). It is also known that a breakdown of joint attention can cause severe social difficulties in individuals with autism. How these and other breakdowns in learning cause symptoms of autism are modeled by the iSTART model (Grossberg and Seidman, 2006).

If CRIB-like social cognition capabilities are incorporated into a “classroom” of SOVEREIGN2 robots, they can then all learn sensory-motor skills from a teacher who they see from different vantage points.

5.6. Platform Independent Movement Control

If SOVEREIGN2 is used to control an embodied mobile robot, then an important design choice is whether to use legs or wheels with which to navigate. Difference vector (DV) control of direction and distance that is gated by a GO signal can be used in either case.

To help guide the development of a legged robot, neural network models have shown how leg movements can be performed with different gaits, such as walk or run in bipeds, and walk, trot, pace, and gallop in quadrupeds, as the GO signal size increases (Pribe et al., 1997).

An example of DV-GO control in a wheeled mobile robot was developed by Zalama et al. (1995) and Chang and Gaudiano (1998) and tested on robots such as the Khepera and Pioneer 1 mobile robots to demonstrate VAM learning of how to approach rewards and avoid obstacles in a cluttered environment, with no prior knowledge of the geometry of the robot or of the quality, number, or configuration of the robot’s sensors. Learning in one environment generalized to other environments because it is based on the robot’s egocentric frame of reference. The robot also adapted on line to miscalibrations produced by wheel slippage, changes in wheel sizes, and changes in the distance between the wheels.

In summary, both navigational movements in the world and movements of limbs with respect to the body use a difference vector computational strategy.

Sections 6–8 provide a deeper and broader conceptual and mechanistic insight into the themes that the earlier sections have introduced.

6. Resonant Dynamics for Perception, Cognition, Affect, and Planning

6.1. Invariant Object Category Learning Uses Feature-Category Resonances and Surface-Shroud Resonances

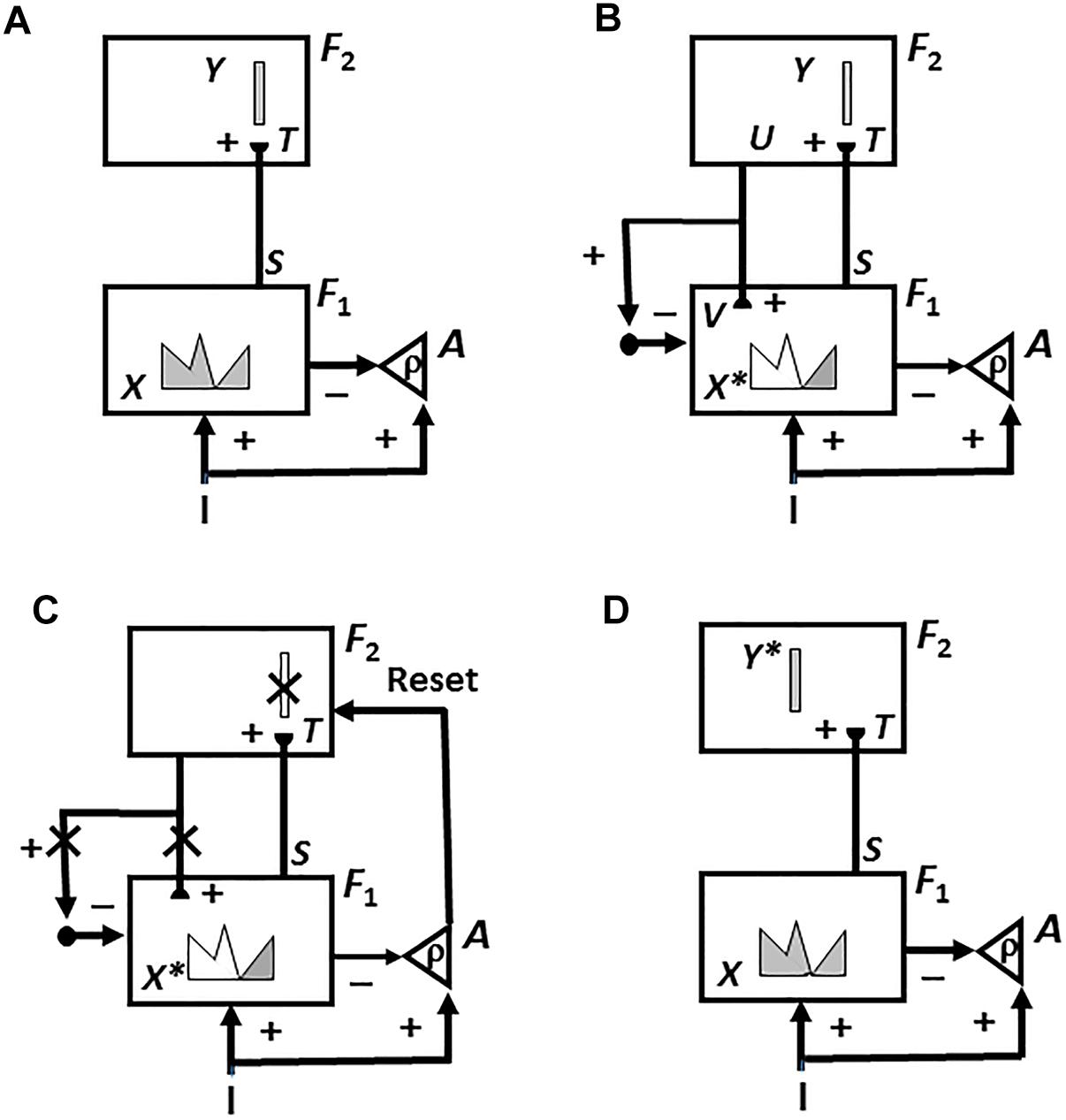

Many of the enhanced capabilities of SOVEREIGN2 will use resonant processes. In particular, in order for SOVEREIGN2 to learn view-, position-, and size-invariant object categories as it scans a scene with eye, or camera, movements, two different types of resonances need to be coordinated: feature-category resonances and surface-shroud resonances. In vivo, view-, position-, and size-specific visual percepts in the striate and prestriate visual cortices V1, V2, and V4 are transformed into view-, position-, and size-specific object recognition categories in the posterior inferotemporal cortex (ITp) via feature-category resonances (Table 1a and Figure 7) within the What cortical stream.

Figure 7. During an adaptive resonance, attended feature patterns interact with recognition categories, both stored in short-term memory (STM), via positive feedback pathways that can synchronize, amplify, and prolong the resonating cell activities. Such a resonance can trigger learning in the adaptive weights, or long-term memory (LTM) traces, within both the bottom-up adaptive filter pathways and the top-down learned expectation pathways. In the present example, the resonance is a feature-category resonance (see Table 1a).