Abstract

Hierarchical structures constitute a wide array of brain areas, including the visual system. One of the important questions regarding visual hierarchical structures is to identify computational principles for assigning functions that represent the external world to hierarchical structures of the visual system. Given that visual hierarchical structures contain both bottom-up and top-down pathways, the derived principles should encompass these bidirectional pathways. However, existing principles such as predictive coding do not provide an effective principle for bidirectional pathways. Therefore, we propose a novel computational principle for visual hierarchical structures as spatio-temporally efficient coding underscored by the efficient use of given resources in both neural activity space and processing time. This coding principle optimises bidirectional information transmissions over hierarchical structures by simultaneously minimising temporal differences in neural responses and maximising entropy in neural representations. Simulations demonstrated that the proposed spatio-temporally efficient coding was able to assign the function of appropriate neural representations of natural visual scenes to visual hierarchical structures. Furthermore, spatio-temporally efficient coding was able to predict well-known phenomena, including deviations in neural responses to unlearned inputs and bias in preferred orientations. Our proposed spatio-temporally efficient coding may facilitate deeper mechanistic understanding of the computational processes of hierarchical brain structures.

Introduction

It is well-established that a wide array of brain areas has a hierarchical structure, including the visual system (Felleman and Van Essen, 1991; Mesulam, 1998; Harris et al., 2019; Hilgetag and Goulas, 2020). Studies have identified a link between hierarchical structures and gene expression (Burt et al., 2018; Hansen et al., 2021), suggesting that hierarchical structures are genetically determined a priori. Given that one of the major functions of the brain is to represent the external world (deCharms and Zador, 2000; Kriegeskorte and Diedrichsen, 2019), an ensuing question arises: How do a priori hierarchical brain structures attain functions to represent the external world? This question can be addressed by identifying a fundamental neural coding principle that assigns representational functions to hierarchical structures.

The traditional view of the neural coding of visual hierarchical structures is bottom-up visual information processing, whereby simple features are processed in a lower visual hierarchy and more complex features created by integrating simple features are processed in a higher visual hierarchy (Hubel and Wiesel, 1962, 1968; Riesenhuber and Poggio, 1999, 2000; Serre et al., 2007; DiCarlo et al., 2012; Yamins et al., 2014). However, this view does not consider the role of top-down pathways that are abundant even in the early visual system, such as the lateral geniculate nucleus (Murphy and Sillito, 1987; Wang et al., 2006) and primary visual cortex (Zhang et al., 2014; Muckli et al., 2015; Huh et al., 2018).

The role of top-down visual processing is especially prominent in predictive coding (Rao and Ballard, 1999; Spratling, 2017). According to predictive coding, a higher hierarchy performs top-down predictions of neural responses in a lower hierarchy. Both inference and learning of predictive coding are based on the minimisation of bottom-up prediction errors. Predictive coding has been used to explain the neural responses corresponding to prediction errors (Friston, 2005) and extends from the explanations of perception to action (Friston, 2010; Clark, 2013). A recent study combined predictive coding with sparse coding (i.e., sparse deep predictive coding) and demonstrate that it could enhance perceptual explanatory power (Boutin et al., 2021).

Nevertheless, predictive coding has several theoretical shortcomings. Since inference in predictive coding aims to minimise prediction errors, the hierarchical structure would require an additional information processing subsystem to perform this inference. In addition, because bottom-up transmitted information contains only prediction errors, predictive coding requires the presence of error units (biological neurons) in the hierarchical structure to represent this prediction error, yet such error units remain as hypothetical entities and evidence for prediction error responses is limited in some conditions (Solomon et al., 2021).

In a hierarchical structure in which information is exchanged in both directions, if the information represented by the upper and lower hierarchies at the same time is different, it is difficult to obtain a stable neural response on time domain for an external input. The reason is that if the information represented by the upper and lower hierarchy are different, different information is exchanged, and thus the information represented next time may be also different. These inter-connected structures could also produce chaotic dynamics (Rubinov et al., 2009; Tomov et al., 2014). Nevertheless, neural responses in the early visual system can be decoded as external inputs. These decodable neural responses include both neuronal spikes (Berens et al., 2012; Zavitz et al., 2016) and blood-oxygen-level-dependent responses (Kamitani and Tong, 2005; Brouwer and Heeger, 2009). Therefore, it is important to find neural coding principles that enable decodable stable neural representations in hierarchical structures on time domain.

Existing efficient coding (Attneave, 1954; Barlow, 1961; Laughlin, 1981) does not require additional information processing subsystems or virtual error units, which are shortcomings of predictive coding. Unfortunately, however, existing efficient coding does not consider hierarchical structures on the time domain to deal with the above issue. Many other ideas have been proposed for representation learning (Bengio et al., 2013). Properties of the visual cortex have been successfully studied using sparse coding (Olshausen and Field, 1996). Sparse coding has been successfully implemented using artificial neural networks such as restricted Boltzmann machines for representation learning (Goodfellow et al., 2011). However, these sparse coding studies have the disadvantage that they do not take into account the passage of time, which is an important aspect in the operation of the real brain. The hierarchical structure of the brain, which ascends to the upper hierarchy from the input by the bottom-up pathway and then descends to the input by the top-down pathway again, resembles the structure of autoencoders (Bourlard and Kamp, 1988; Hinton and Zemel, 1993) of artificial neural networks. Many autoencoders have also been used for representation learning (Bengio et al., 2013). Typical examples include sparse autoencoders (Ranzato et al., 2006), denoising autoencoders (Vincent et al., 2008), and contractive autoencoders (Rifai et al., 2011). However, these examples do not take into account the passage of time.

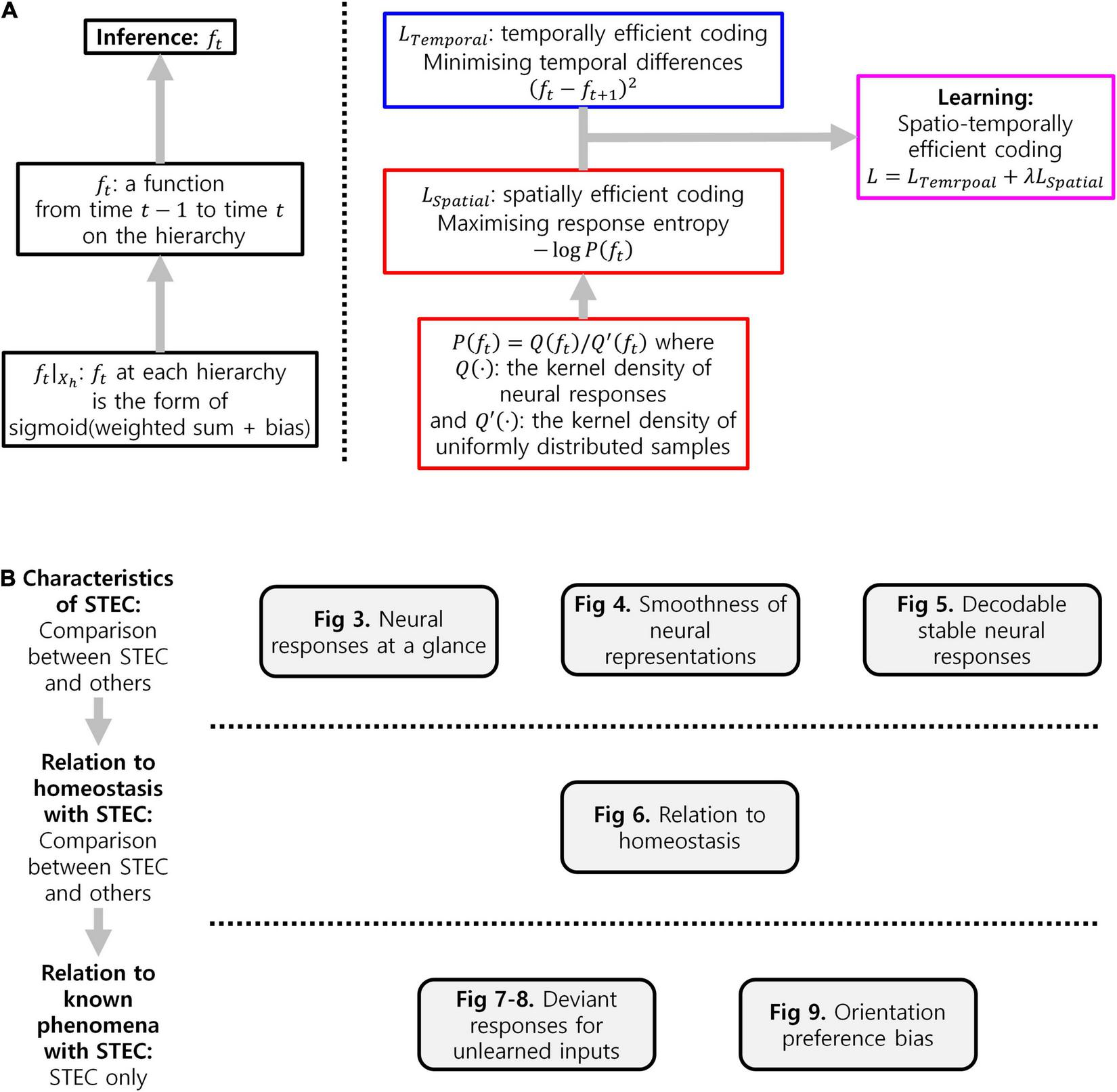

We, therefore, propose a novel computational principle for hierarchical structures as spatio-temporally efficient coding underscored by the efficient use of given resources in both neural activity space and processing time (Figure 1). Spatio-temporally efficient coding minimises temporal differences of neural responses (temporally efficient coding), and maximises activity space of neural responses for different external stimuli (spatially efficient coding). We call the combination of temporally efficient coding and spatially efficient coding as spatiotemporal efficient coding. By spatio-temporally efficient coding, neural responses change smoothly but dynamically. Those dynamical changes of neural responses for the changing external world differs from the slow feature analysis (Wiskott and Sejnowski, 2002; Berkes and Wiskott, 2005; Creutzig and Sprekeler, 2008) which also minimises temporal differences of neural responses. Similar to slow feature analysis, there have been studies on the properties of cells in the visual cortex using temporal coherence to obtain slow representations (Hurri and Hyvärinen, 2002; Zou et al., 2011). However, such studies using temporal coherence lack the aspect of dynamically reacting to changes in external input or neural responses of other hierarchies.

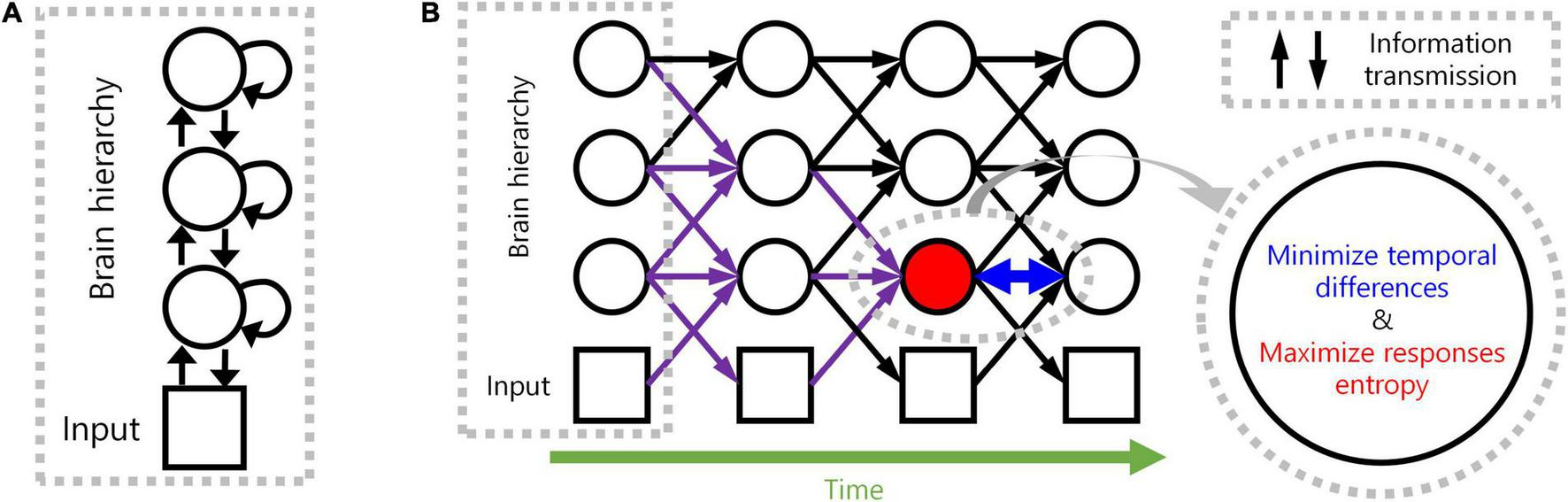

FIGURE 1

Spatio-temporally efficient coding. (A) This illustration depicts a hierarchical structure of the brain. Open black circles indicate an ensemble of neuronal units in each hierarchy of the brain. Open black square indicates visual input. Back arrows indicate information transmissions of bottom-up (upward arrow), recurrent (loop arrow), and top-down (downward arrow). (B) This illustration depicts a hierarchical structure of the brain and learning objectives. The hierarchical structure learns to represent input from the external world, which is depicted as black squares (e.g., visual input). Open black circles indicate an ensemble of neuronal units in each hierarchy of the brain. Inference based on spatio-temporally efficient coding is made by neuronal units as bottom-up, recurrent, and top-down information transmissions over time (black arrows). Learning in spatio-temporally efficient coding consists of two objectives: minimising the temporal differences between present and future neural responses and maximising the informational entropy of neural responses. For example, information transmissions (purple arrows) are optimised to minimise the temporal differences between present neural responses at the corresponding hierarchy (red filled circle) and future neural responses (circle to the right of the red filed circle) while concurrently maximising the informational entropy of neural responses at the corresponding hierarchy (red filled circle).

Temporally efficient coding decreases the conditional entropy of neural response given stimulus, H(X|S) where X indicates neural response and S indicates stimulus. Spatially efficient coding increases H(X). Spatio-temporally efficient coding, thus, increases the Shannon mutual information I(X; S) = H(X)−H(X|S) simultaneously in both terms: H(X) and −H(X|S). This is also the definition of another existing efficient coding (Friston, 2010). In this manner, spatio-temporally efficient coding in hierarchical structures can also be seen as an extension of existing efficient coding into hierarchical structures on time domain.

Spatio-temporally efficient coding enables rapid stabilisation of neural responses, smooth neural representations, and decodable stable neural responses. Through simulations, these properties (smooth temporal trajectory of neural responses, smooth neural representations, decodable stable neural responses; Figures 2–4) and predictable phenomena (deviant neural response for unlearned inputs, orientation preference bias; Figures 5–7) were confirmed.

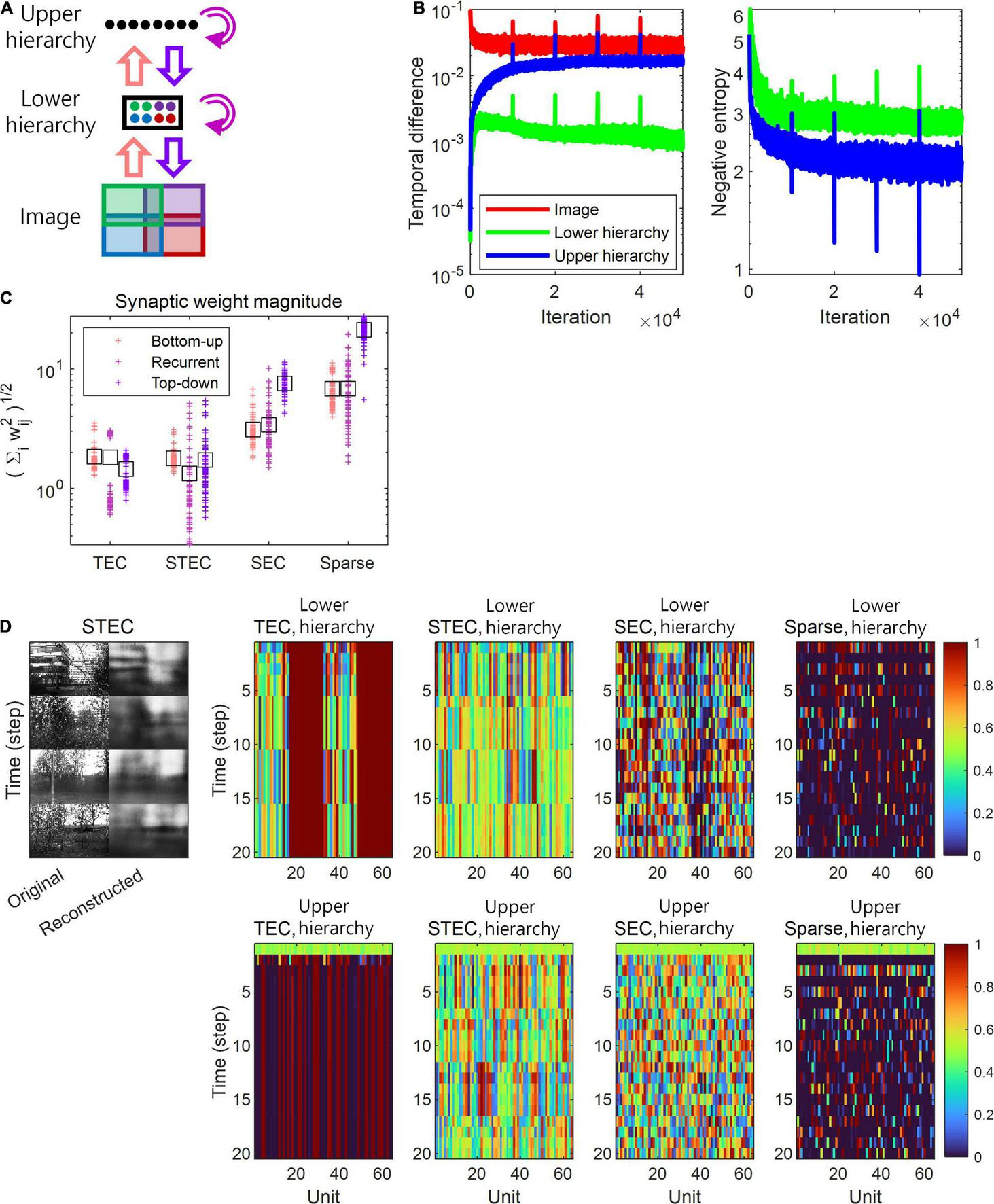

FIGURE 2

A simulation of spatio-temporally efficient coding. (A) Architecture of the simulation model. An input image is divided into four subspaces with overlaps, denoted by different colours. A subset of neuronal units in lower hierarchy receive corresponding parts of the image (matching colours). There is no spatial correspondence between lower and upper hierarchies. Pink, magenta, and purple arrows indicate bottom-up, recurrent, and top-down information transmissions,respectively. (B) Learning curves of STEC. Left panel: mean temporal difference. Right panel: mean negative entropy. As the negative entropy of the lower and upper hierarchies decreases, it can be observed that the temporal difference increases and then stabilises or decreases again. Vertical axis has the logarithm scale. (C) The magnitude of synaptic weights on lower hierarchy units is compared for different conditions, where STEC, the balanced condition between temporally and spatially efficient coding objectives; TEC, temporally efficient coding alone; SEC, spatially efficient coding alone which is existing efficient coding; Sparse, sparse coding. Each cross indicates the L2 norm of synaptic weights for each unit. Black squares indicate the mean. (D) Left: Four different original input images and their reconstructions from the neuronal responses of lower hierarchy. Right: Representative neural responses for different conditions. The neural response is the output of the sigmoid function and is therefore normalised to a range between 0 and 1.

Materials and Methods

Spatio-Temporally Efficient Coding

A possible approach to overcome the shortcomings of both bottom-up processing and predictive coding in visual hierarchical structures is to make bottom-up information transmissions similarly to top-down information transmissions across hierarchies, instead of transmitting bottom-up prediction errors. For example, context-independent bottom-up predictions and context-dependent top-down predictions (Teufel and Fletcher, 2020). Such bidirectional information transmissions eliminate the necessity for hypothetical error units, while presumably elucidating the neural responses of hierarchical structures underlying bottom-up feature integration and top-down predictive coding. A neural coding principle underlying bidirectional information transmissions of hierarchical structures can be found in the theory of efficient coding that draws upon the efficient use of given resources (Laughlin, 2001; Bullmore and Sporns, 2012), which crucially include limited time resources related to processing speed (Griffiths et al., 2015; Lieder and Griffiths, 2020). A possible solution to promote the most efficient use of limited time resources by the bidirectional information transmission system is to render present neural responses similar to future ones before the occurrence of future neural responses. This can be achieved by minimising the temporal differences between present and future neural responses. Accordingly, we consider this temporal difference minimisation as our learning principle, referred to as temporally efficient coding. Here, inference simply refers to a bidirectional information transmission mediated by top-down and bottom-up pathways. Unlike inference in predictive coding, which requires further error minimisation, inference in temporally efficient coding involves simple single-step information transmission.

Temporally efficient coding involves a trivial solution: neural responses do not change to changes in external events. This trivial solution is comparable to the dark-room problem of predictive coding or free-energy principle, where an agent stays and is unchanged in a dark room with no surprise or unpredicted parts (Friston et al., 2012; Clark, 2013). We circumvent this issue by adding a complementary neural coding (learning) principle that maximises the informational entropy of neural responses to alter neural responses to changing external events. It maximises the neural response space available to represent the external world under the constraints of both the number of neurons and maximum firing rates. Maximal entropy coding indicates that the system uses spatial resources of neural responses efficiently (Attneave, 1954; Barlow, 1961; Laughlin, 1981), referred to as spatially efficient coding. By combining spatially efficient coding and temporally efficient coding, we propose a neural coding principle termed spatio-temporally efficient coding (Figure 1).

Spatially efficient coding has the same objective as existing efficient coding (Barlow, 1961; Laughlin, 1981), which minimises informational redundancy because it increases the difference between neural responses. Temporally efficient coding, on the other hand, can be regarded to increase informational redundancy, as it reduces the difference between neural responses. Two seemingly opposing coding objectives can be reconciled by isolating mechanisms that decrease the differences between consecutive neural responses on time domain and those that increase the differences between neural responses to the apparently different external world. In the real brain, it can be explained that different mechanisms are applied depending on the degree of difference in neural response. In the implementation of the present study, two coding objectives were applied in different ways. As an implementation of temporally efficient coding, we minimise the difference between consecutive neural responses on the time. In spatially efficient coding, the neural responses to the apparently different external stimuli are implemented in a minibatch method that simultaneously learns different images. We maximised the difference between neural responses to different images within each time step (see section “Implementation of Spatio-Temporally Efficient Coding”).

Temporally efficient coding trains the present neural response to be similar to the future neural response in order to efficiently use a given time resource. The temporal trajectory of the neural response is smoothed as the difference between the present and future neural responses is minimised. It thereby minimises the size of the space represented, when a single stimulus (stimulus in the external world) is represented on the time domain. In other words, it reduces neuronal noise (also see, Figure 2D) which is defined as the uncertainty of a neural response for given stimulus (Borst and Theunissen, 1999). This is to decrease the conditional entropy of neural response given stimulus, H(X|S) where X indicates neural response and S indicates stimulus. Spatially efficient coding increases H(X). Spatio-temporally efficient coding, thus, increases the Shannon mutual information I(X; S) = H(X)−H(X|S) simultaneously in both terms: H(X) and −H(X|S). This is also the definition of another existing efficient coding (Friston, 2010). Spatio-temporally efficient coding in hierarchical structures, therefore, can also be seen as an extension of existing efficient coding into hierarchical structures on time domain.

Neural system homeostasis is associated with maximisation of mutual information between neural responses and external stimuli (Toyoizumi et al., 2005; Sullivan and de Sa, 2006). Since spatio-temporally efficient coding increases the Shannon mutual information between neural responses and external stimuli, it is related to homeostasis. In particular, temporal difference minimisation of neural responses in temporally efficient coding is reminiscent of homeostasis of energy metabolism. Smoothing the temporal trajectory of a neural responses reduces the variance of the neural response distribution so that the neural response stays within a certain range. This is also a consequence of the homeostatic plasticity (Turrigiano and Nelson, 2004) of the brain (also see, Figure 8).

As mentioned earlier, smoothing the temporal trajectories of neural responses reduces the spatial extent of neural responses to static stimuli. This leads to a rapid stabilisation of the neural response to the static stimulus (also see, Figures 2D, 4); decodable stable neural representations which is the main subject of the present study. The rapid stabilisation of neural responses shows the characteristic of temporally efficient coding, which makes efficient use of given time resources. Another effect of this smoothing the temporal trajectory of neural response is to render smooth neural representations that locally preserves the structure of the external world. If a stimulus is static or changes smoothly, making the temporal trajectory of the neural response smooth is to render a similar neural response to the similar stimuli. This is smooth neural representations that locally preserves the structure of the external world (also see, Figure 3).

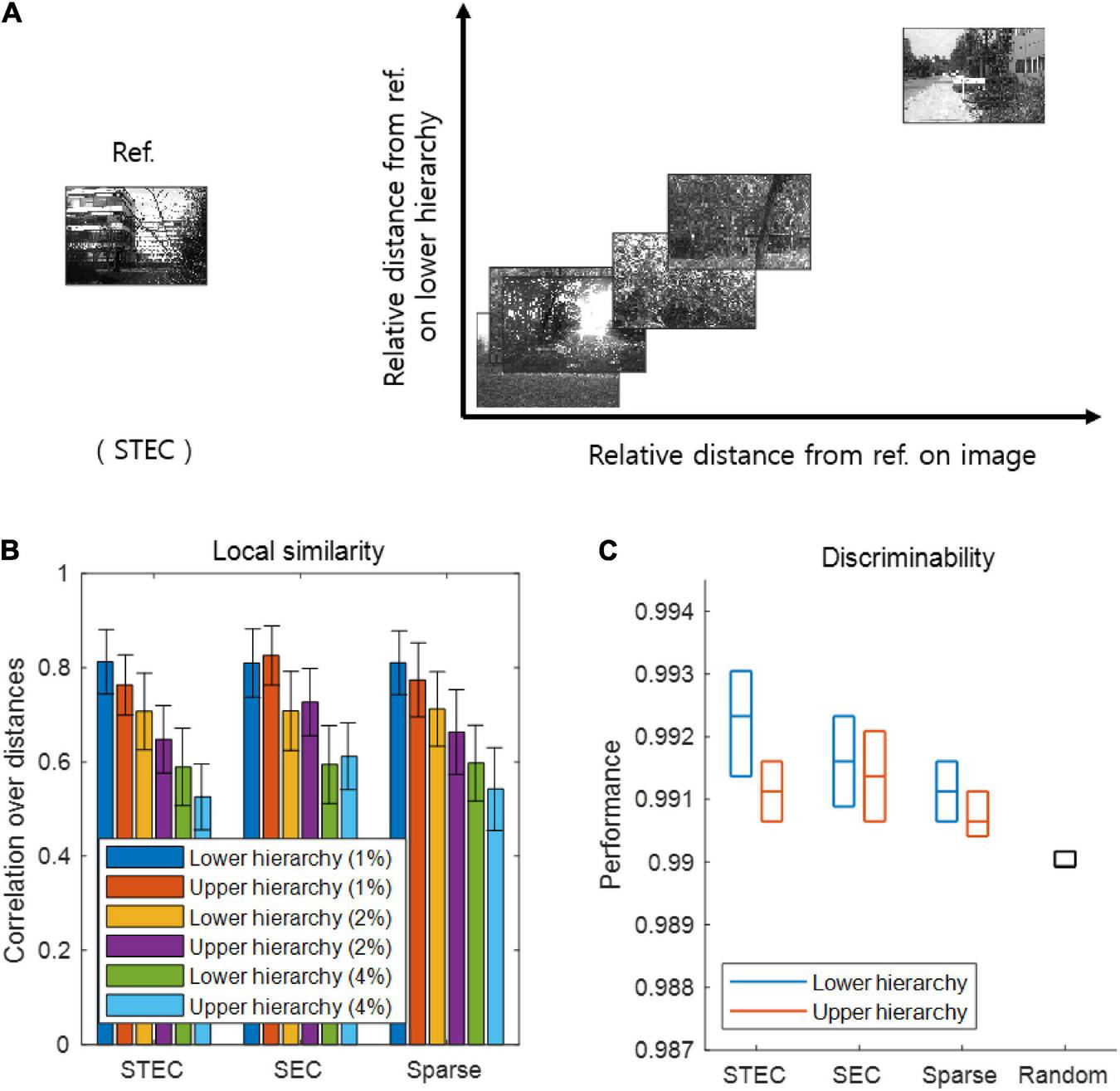

FIGURE 3

Smooth neural representations of visual images by spatio-temporally efficient coding. (A) Examples of relative Euclidean distances from the reference image (Ref. left) to other images in the natural scene image space are depicted on the horizontal axis. Relative Euclidean distances from the neural representations of the reference image at hierarchy 1 to those of corresponding other images are depicted on the vertical axis. (B) Local similarity based on correlations of distances between overall natural scene images (N = 42 out of 4212: 1%, N = 84 out of 4212: 2%; N = 168 out of 4212: 4%) (global feature based distance) with those of corresponding neural responses (Euclidean distance). Error bars indicate standard deviations. (C) Discriminability of neural responses for images (N = 4170 out of 4212; 99%). Three horizontal lines in each box indicates 25, 50, and 75 % levels of data, respectively.

Implementation of Spatio-Temporally Efficient Coding

In the present study, visual information processing in hierarchical structures was established as biologically inspired temporal processing. Specifically, visual information processing is described as a function ft for both image Ximage and neural responses Xh in each hierarchy h such that it maps from Ximage and Xh at time t-1 to those at time t:

Where Xh,t–1 and Xh,t are the neural responses Xh at time t-1 and t, respectively, at hierarchy h. For convenience, Xh =0 denotes Ximage, in particular Xh =0, t denotes the image presented at time t. The details of the visual information processing ft are as follows: If h > 0, then,

Where Xh,t is an Xh value vector at time t, ft(⋅)|Xh,t indicates restricting the range of the function value ft(⋅) to Xh,t, Wh+1,h is a synaptic weight matrix from hierarchy h+1 to h, T is the transpose of a matrix, bh is a bias vector at hierarchy h, and σ(⋅) is a sigmoid function. The terms of , , and indicate top-down, recurrent, and bottom-up information transmissions, respectively. In case of h + 1 > H the term would be omitted. If h = 0, then,

If h > 0, Xh,t = ft|Xh,t. On the other hand, when h = 0, Xh,t is the image presented at time t, not ft|Xh,t. This function ft makes inferences using spatio-temporally efficient coding. Unlike inference in predictive coding (Rao and Ballard, 1999; Spratling, 2017) that requires additional processes such as minimisation of prediction errors, inference in spatio-temporally efficient coding is a function value ft itself.

Learning in spatio-temporally efficient coding minimises the ensuing objectives of both temporally and spatially efficient coding. The objective of temporally efficient coding is given by:

Where ◦ is the function composition, xh =0,t͂, image =n indicates that the image is fixed to the nth sample throughout the temporal processing (i.e., Xh = 0,t−2 = Xh = 0,t−1 = Xh = 0,t is nth image sample), squaring is operated component-wise. In case of h = 0, which is the image presented at time t+1 while is the function value as inference. The function composition ft◦ft−1 of two functions (inferences) in the LTemporal allows the simultaneous learning of all information transmissions across all hierarchies because the depth of hierarchy is H = 2 in the present study (bottom-up, recurrent, and top-down information transmission across the hierarchy of depth H = 2). The inimisation of the given objective minimises the temporal differences between present and future neural responses. The objective of temporally efficient coding is to render present neural responses similar to future neural responses that has not yet arrived. By doing so, it uses the given time resources efficiently. This minimisation of temporal difference creates a learning effect in which the temporal trajectory of the neural response becomes smooth. The expected effect of this learning effect is to quickly stabilise neural responses when a static external stimulus is given (also see, Figures 2D, 3). In that aspect, it is also to use the given time resources efficiently.

The objective of spatially efficient coding is given by,

Where h > 0 in Xh (so, ) and P(⋅) is a probability. Because the term LSpatial is an estimation of negative informational entropy, minimising the objective maximises the informational entropy of ft. This objective increases the entropy of each neuron, and it can be seen that an increase in marginal entropy in each neuron will increase the joint entropy of the entire system. Therefore, spatially efficient coding maximises the entropy of individual neurons as in Laughlin’s study (Laughlin, 1981) and consequently the entropy of the entire system. The objective of spatially efficient coding is to render different neural responses to different inputs from the external world. We, therefore, can overcome the trivial solution of temporally efficient coding, where there is no change in neural response despite changes in the external world. This thereby does not conflict with the learning of temporally efficient coding, which decreases differences in consecutive neural responses in the time domain to inputs of one stream.

To minimise LSpatial, which is the objective of spatially efficient coding, it is necessary to calculate the probability P(⋅) in LSpatial (eq. 5). Instead of calculating the exact probabilities, we obtained pre-normalised densities in the sense of probabilities without a partition function. As the value of the partition function is fixed, it does not affect the minimisation process. Note that, when h > 0, Xh,t is the space of all possible neural responses, i.e., Xh,t = [0, 1]dimXh,t. Let x ∈ Xh,t be a value of . Kernel density estimation was used to obtain P(x). Using a Gaussian kernel with width 0.1(dimXh,t)1/2, the neural response density Q(x) and compensation density Q′(x) at x ∈ Xh,t were obtained. Then, the pre-normalised density of interest is,

The neural response density Q(x) is obtained by kernel density estimation of neural responses on Xh,t. The compensation density Q′(x) is obtained using pseudo-uniformly generated samples on Xh,t instead of the neural responses (For sparseness constraint, i.e., sparse neural responses, Q′(x) is obtained using pseudo-uniformly generated samples on [−1, 1]dim Xh,t instead of Xh,t = [0, 1]dim Xh,t, so that Q′(x) has a fat distribution around zero. See, Figure 5) The compensation density is necessary to compensate for the non-uniform intrinsic expectation of Q(⋅) resulting from the fact that Xh,t is bounded. At the boundary of Xh,t, the density of neural responses, Q(x), measured by kernel density estimation is decrease. This intrinsic decrease corresponds to Q′(x). We compensated for the decrease by dividing Q(x) by Q′(x).

Finally, the objective of spatio-temporally efficient coding is a linear combination of those two objectives:

Where λ is a regularisation parameter. A smaller λ indicates a greater emphasis on the temporally efficient coding objective, whereas a larger λ indicates the opposite. As mentioned previously, temporally efficient coding decreases the conditional entropy of neural response given stimulus, H(X|S) where X indicates neural response and S indicates stimulus. Spatially efficient coding increases H(X). Hence, the regularisation parameter λ can be seen as controlling the balance between H(X) and −H(X|S). It is a modification of fixed balance of Shannon mutual information I(X; S) = H(X)−H(X|S) of the existing efficient coding (Friston, 2010).

Temporal trajectories of neural responses are smoothed by temporally efficient coding, but this does not mean just slow neural representations. By spatially efficient coding, different neural responses to different inputs from the external world should be exhibited. Therefore, it should show rapid changes in neural responses to sudden changes in the external world (fast representation). This is the difference from slow feature analysis (Wiskott and Sejnowski, 2002; Berkes and Wiskott, 2005; Creutzig and Sprekeler, 2008) or temporal coherence (Hurri and Hyvärinen, 2002; Zou et al., 2011), which targets slow neural representations. On the one hand, with temporally efficient coding, changes in neural responses should be smoothed out quickly when the external input is not changing (fast stabilisation).

Suppose that Gaussian noise is added to a series of temporally correlated external inputs (e.g., static images or smoothly moved images + Gaussian noise). If these noisy external inputs are still temporally correlated, then smoothing the temporal trajectory of neural responses (by temporally efficient coding objective LTemporal) is rendering temporally similar neural responses to temporally similar inputs. It is also smooth neural representations that locally preserves the structure of the external world. Moreover, if the noise is provided independently of the input, the effect of the noise on neural representations will be dispelled by multiple independent trials. Therefore, temporally efficient coding objective LTemporal increases the fidelity of the neural representation with respect to a Gaussian noise.

Smooth neural representation also means making different neural responses to different inputs. In other words, it increases discriminability for different inputs. This is achieved by spatially efficient coding objective LSpatial that maximises the entropy of neural responses. Assumed that Gaussian noise is added to external inputs. Let Dtrue be a binary-valued random variable for the discrimination between two actually different inputs such that Dtrue = 1 means the discrimination that two noised inputs differ and Dtrue = 0 means the discrimination that two noised inputs are same. Dtrue is probabilistic because of the Gaussian noise mentioned earlier. Let Dresponse be a binary-valued random variable for the discrimination between two neural responses for inputs such that Dresponse = 1 means the discrimination that two neural responses differ and Dresponse = 0 means the discrimination that two neural responses are same. Let Ptrue and Presponse be the probability mass functions of Dtrue and Dresponse, respectively. Spatially efficient coding objective LSpatial decreases the Kullback–Leibler divergence from Presponse to Ptrue, i.e., DKL(Ptrue||Presponse). Hence it also decreases the binary cross entropy H(Ptrue) + DKL(Ptrue||Presponse) where H(⋅) is an informational entropy.

In the present study, the depth H of hierarchies was set to 2, the minimum depth to realise both bottom-up and top-down pathways in the same hierarchy. Images with 64×96 size were divided into four overlapping 38×58 patches. Each patch was connected to 16 of 64 lower hierarchy units. Further, 64 lower hierarchy units were fully connected to 64 upper hierarchy units. All units in each hierarchy are fully connected to each other (Figure 2A).

Because L = LTemporal + λLSpatial is differentiable, the minimisation of the objective in spatio-temporally efficient coding was performed with a gradient descent. The Adam optimiser (Kingma and Ba, 2015) was used to perform the stochastic gradient descent with momentum. The parameters of the Adam optimiser used in this study were α = 0.001, β1 = 0.9, β2 = 0.999, and=10−8. The optimisation lasted 104 iterations for each repetition and restarted with five repetitions. For each iteration, the duration of temporal processing ft was five (i.e., t ∈ [1,5]), and the minibatch size was 40. For a given image, the duration of temporal processing of ft was given as five (i.e., t ∈ [1,5]) in both learning and inference, because four time steps are required for the image information to reach the top hierarchy and return over H = 2 hierarchies, in addition to one time step to obtain future neural responses. In learning, after temporal processing of five durations was finished, new temporal processing begins, and the initial values of neural responses of new temporal processing were the last neural responses values of the previous temporal processing. In our simulations, we repeatedly exposed the hierarchical structure to natural scene images, which enabled it to learn the bidirectional information transmissions between top-down and bottom-up hierarchies using spatio-temporally efficient coding with a range of the balancing parameter λ. Successful learning was confirmed by minimising or stabilising L during learning (see, Figure 2B). Further, we verified that the learned hierarchical structure could successfully reconstruct an input image, as shown in Figure 2D.

The appendix of the summary for all mathematical formulations is available at the Supplementary Material.

Simulation codes for spatio-temporally efficient coding are available from https://github.com/DuhoSihn/Spatio-temporally efficient-coding (Sihn, 2021).

The flowchart of details of implementation of spatio-temporally efficient coding (Figure 9A) and experimental workflow in the present study (Figure 9B) were summarised in Figure 9.

Datasets

For the simulations, van Hateren’s natural scene image dataset (van Hateren and van der Schaaf, 1998) was used. The dataset was downloaded from https://pirsquared.org/research/#van-hateren-database. The images were downsized to 64×96 pixels. For the comparison tests, the MNIST handwritten digit dataset (Lecun et al., 1998) was used. The dataset was downloaded from http://yann.lecun.com/exdb/mnist/. The images were resized to 64×96 pixels to fit the images used in the simulations. All image data were rescaled between 0 and 1.

Computation of Receptive Fields

Images of 64×96 size with randomly selected 16×16 subregions were created to compute the receptive field. These 64×96 images had a value of one in the 16×16 subregions and zero otherwise. Receptive fields were calculated based on the average neural response when the visual hierarchy (Xh = 1×…×Xh = H) was exposed to these images. Specifically, the value of the receptive field at a pixel was defined as the average neural response to an image with a value of one at this pixel. This averaging method was inspired by the previous study in case of binary neural responses (firing or not) (Ohzawa et al., 1996). The difference is that the previous study was for image averaging and the present study was for neural response averaging.

Results

Balancing Between Temporally and Spatially Efficient Coding

The temporally efficient coding objective that minimises the temporal difference between present and future neural responses smooths the temporal trajectory of neural responses. The spatially efficient coding objective that maximises the informational entropy of neural responses renders different neural responses to different inputs. We can predict that when the temporally efficient coding objective and the spatially efficient coding objective are properly balanced, different neural responses to different stimuli and temporally smooth neural responses to the same stimuli can be expected. This means that when the external input changes, the neural response changes quickly, and when the external input does not change, the neural response quickly stabilises. If the temporally efficient coding objective is overweighted, it can be predicted to arrive at a trivial solution in which the neural response does not change despite changes in external input. Conversely, if the spatially efficient coding objective is overweighted, it can be predicted that the temporal trajectory of the neural response is not smooth, resulting in neuronal noise. Three regularisation parameters λ ∈ {0, 10, 1000} were used to confirm these predictions through simulations, and each λ corresponds to each simulation condition: the temporally efficient coding overweighted (λ = 0), the balanced condition of two coding objectives (λ = 10), and the condition of the spatially efficient coding overweighted (λ = 1000), respectively. The selection of λ ∈ {0, 10, 1000} made the comparison between spatio-temporally efficient coding and other neural codings. Putting λ = 0 renders that L = LTemporal in the eq. (7), consequentially making that the temporally efficient coding objective is overweighted (TEC condition). Putting λ = 1000 renders that LTemporal lose its influence such that L≈LSpatial in the eq. (7), consequentially making that the spatially efficient coding objective is overweighted (SEC condition). Putting λ = 10 renders the appropriate balance between LTemporal and LSpatial, representing the balanced condition of two objectives (STEC condition). The SEC condition is a condition in which only the spatially efficient coding objective is considered, and represents the existing efficient coding (Barlow, 1961; Laughlin, 1981). Sparse coding (Olshausen and Field, 1996, 1997) was chosen to compare with other neural coding principles where temporal factors were not considered. The sparseness constraint (see section “Implementation of Spatio-Temporally Efficient Coding”) is applied to λ = 1000 to implement sparse coding (Sparse condition).

Based on the simulation, we confirmed this phenomenon by observing that, under STEC condition (λ = 10), when the external input changes, the neural response changes rapidly, and when the external input does not change, the neural response quickly stabilises. In addition, we confirmed that under TEC condition (λ = 0, control), we arrive at the trivial solution in which the neural response does not change even when the external input changes. We also confirmed that under SEC condition (λ = 1000) and Sparse condition (λ = 1000 + sparseness constraint), the temporal trajectory of the neural response is not smooth, resulting in neuronal noise (Figure 2D). This also shows the problem of applying existing efficient coding (Barlow, 1961; Laughlin, 1981) and sparse coding (Olshausen and Field, 1996, 1997) directly to the hierarchical structure on time domain.

A change in balance between the two objectives also altered the relative strengths between bottom-up and top-down synaptic connections. In order to find out whether the strength of the bottom-up and top-down synaptic weights is related to the balance between the two objectives, the synaptic weights at various λ values were investigated. The synaptic weights were defined as where wij is an ijth component of a synaptic weight matrix W which is defined at Implantation of spatio-temporally efficient coding section. Simulation results demonstrated that top-down synaptic strengths from upper to lower hierarchy were increased compared to bottom-up synaptic strengths from images to lower hierarchy as λ increased, with an emphasis on spatially efficient coding (Figure 2C, see section “Materials and Methods” for details on the computation of synaptic strength). This finding suggests that the balance between spatially and temporally efficient coding could be related to the balance between bottom-up and top-down synaptic strengths in the brain.

Smooth Neural Representations of the External World

As a major function of the brain is to represent the external world (deCharms and Zador, 2000; Kriegeskorte and Diedrichsen, 2019), we investigated whether spatio-temporally efficient coding creates appropriate neural representations of the external world. Spatio-temporally efficient coding can be expected to smooth the temporal trajectory of neural responses while having different neural responses to different external inputs. This effect may render smooth neural representations that locally preserves the structure of the external world, i.e., similar neural responses for similar external stimuli. To confirm this by simulation, we compared similarities between natural scene images and neural responses to those images. We quantified this by examining the relations between a natural scene image space and a neural response space. Specifically, we first measured the global feature based distance (Di Gesù and Starovoitov, 1999) from a natural scene image (i.e., reference image) to other natural scene images in the image space and the Euclidean distances from the neural responses for the reference image to those for other compared images in the neural response space (Figure 3A). It has been proven that global feature based distance reflects differences in images in a way that humans actually perceive (Di Gesù and Starovoitov, 1999). When calculating the distances between neural responses, we used the neural responses at five time steps after receiving a given image based on the assumption that the neural responses became temporally steady after five steps of temporal processing (see Figure 1B).

As a further analysis, we measured local similarity and discriminability based on distances defined in the natural scene image space and the neural response space. Local similarity indicates how well the similarity among the relatively similar images is reflected in the neural responses to those images, representing one of measures that similar neural responses for similar external stimuli. The local similarity was measured the Pearson’s linear correlation between the distances in the image space and those in the neural response space. A high correlation indicates that neural responses tend to be similar when the visual system perceives natural scenes close to each other (in the sense of global feature based distance). We set each image sample as a reference and repeated the calculations for the correlation across all image samples. We defined local similarity as the average correlation obtained by using the distances from one image to its neighbouring images only (1, 2, and 4% of all images).

Discriminability is a measure complementary to local similarity, which measures whether neural responses to different images are different. We measured discriminability as the proportion of dissimilar neural responses among dissimilar images. This dissimilarity was defined as 99% of all, based on certain distances (the global feature based distance for images, the Euclidean distance for neural responses). Specifically, the discriminability for an image sref was defined as |{s ∈ images |dS(s, sref) > θS and dX(f(s), f(sref)) > θX}| divided by |{s ∈ images |dS(s, sref) > θS}| where |{⋅}| is the set size, dS(s, sref) is the distance on the natural scene image space, dX(f(s), f(sref)) is the distance on the neural response space, θS is the threshold which indicates 99% of all images, and θX is the threshold which indicates 99% of all neural responses. A high discriminability indicates that if the image is different, the neural response will also be different.

If the learned neural representations are smooth, i.e., locally preserving the structure of the external world, both local similarity and discriminability will be high. We can predict that when the temporally efficient coding objective and spatially efficient coding objective are balanced (STEC condition), neural representations are smooth, so that local similarity and discriminability are high. From the simulations, we confirmed that local similarity and discriminability do not lower in STEC condition than SEC or Sparse condition (Figures 3B,C).

Decodable Stable Neural Representations

In a hierarchical structure in which information is exchanged in both directions, if the information represented by the upper and lower hierarchies at the same time is different, it is difficult to obtain a stable neural response on time domain for an external input. The reason is that if the information represented by the upper and lower hierarchy are different, different information is exchanged, and thus the information represented next time may be also different. These inter-connected structures could also produce chaotic dynamics (Rubinov et al., 2009; Tomov et al., 2014).

The objective of temporally efficient coding is to render present neural responses similar to future neural responses that has not yet arrived. This minimisation of temporal difference creates a learning effect in which the temporal trajectory of the neural response becomes smooth. It thereby minimises the size of the space represented, when a single stimulus is represented on the time domain. In other words, it reduces neuronal noise which is defined as the uncertainty of a neural response for given stimulus (Borst and Theunissen, 1999). The expected effect of this learning effect is to quickly stabilise neural responses when a static external stimulus is given. The objective of spatially efficient coding is to render different neural responses to different stimuli. The expected effect of spatio-temporally efficient coding is to render decodable stable neural representations which is an appropriate function to hierarchical brain structures on the time domain.

To confirm these expectations, we performed four simulation experiments. The first simulation is to present multiple images for five time step for each image and check the neural responses for those images. When each image was given, it was checked whether the neural response was quickly stabilised (Figure 4A). The second simulation measures the amount of noise in the neural responses when each image is presented during 25 time step. Neuronal noise is defined as the uncertainty of a neural response for given stimulus (Borst and Theunissen, 1999), so we measured the conditional entropy of neural responses during each five time step as neuronal noise. That is, for each t′ ∈ {0, 5, 10, 15, 20} where s is an image, S is the set of all images, H(⋅|⋅) is the conditional entropy, and ft is the neural response at time t. Probability was estimated by kernel density estimation without a partition function, and thus shifted conditional entropy was measured. We checked the amount of neuronal noise (Figure 4B). In the third simulation, we checked whether the neural responses to each image stabilised over time. To do this, we measured how confused the neural response over time (9 time step) to one image was with the neural response to a similar image. This is represented by the confusion index. Confusion index for image s at time t = |ft(s)−f10(s)|/|f10(s)−f10(s′)|where ft(s) is the neural response for image s at time t and s′ is the nearest image of s based on the global feature based distance (Di Gesù and Starovoitov, 1999). If the confusion index is less than 1, it can be said that the neural response is well stabilised. We checked whether the neural response was stabilised by reducing the confusion index to less than 1 (Figure 4C). In fourth simulation, we checked how decodable the neural responses was. To measure how decodable the neural response is, the neural responses of time steps 1,…, 9 were decoded using the neural responses of time steps 10 and 11 as a training set. The naïve Bayes classifier (Hastie et al., 2009) was selected as the decoder, and each of the 4212 natural scene images was defined as one class (Figure 4D).

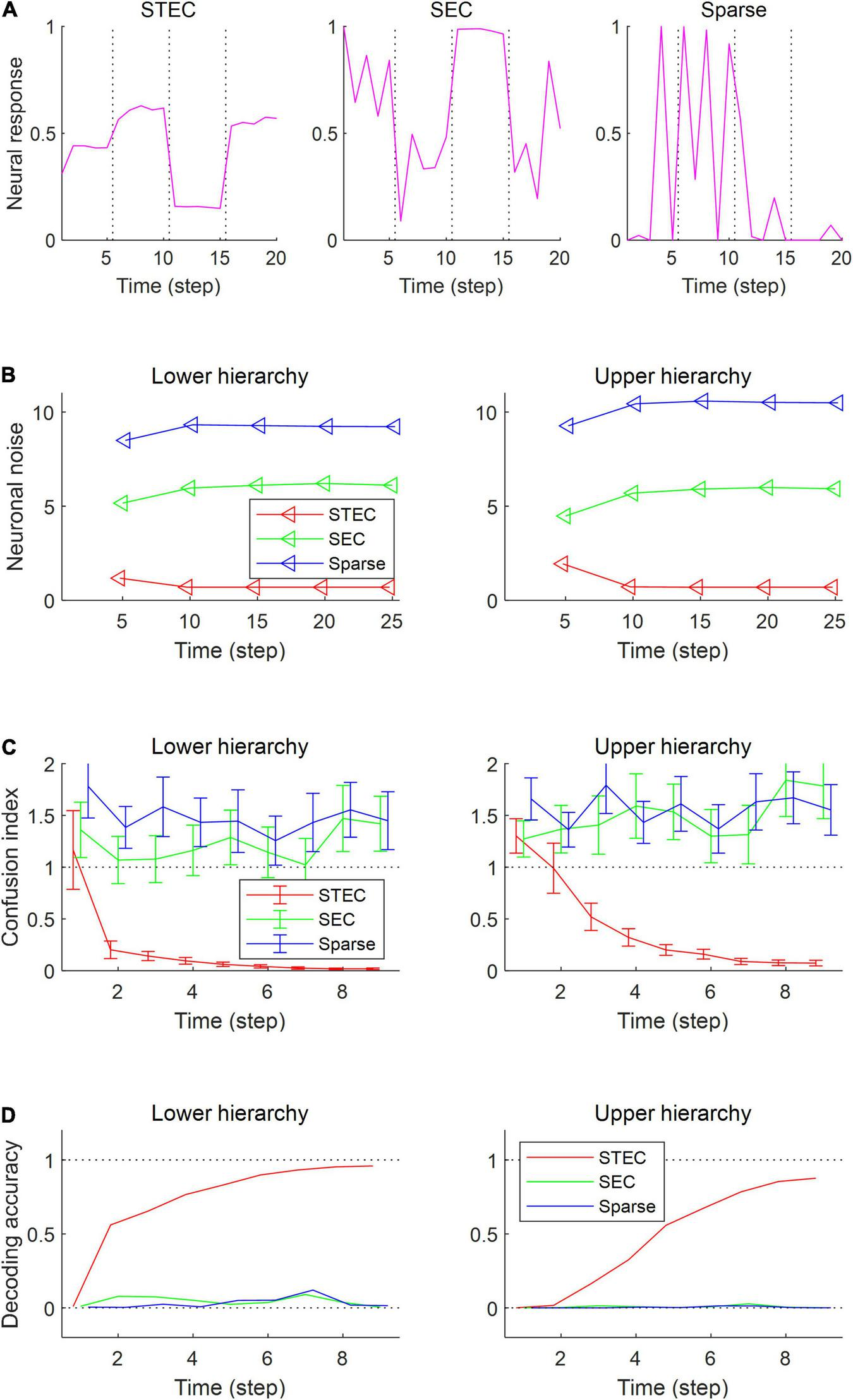

FIGURE 4

Decodable stable neural representations via spatio-temporally efficient coding. (A) Examples of neural responses in the lower hierarchy. These were neural responses of 3rd units in Figure 2D. Dotted vertical black lines indicate the presentations of new external input. (B) Neuronal noise that measured as shifted conditional entropy of neural responses given stimulus. Conditional entropy was measured by collecting neural responses at every five time step. (C) Confusion index that a measure of how much it confuses neural responses to one stimulus with neural responses to another similar stimulus. The dotted lines denote the confusion index of 1 which indicates the confusion. Error bars indicate standard deviations. (D) Decoding accuracy via the naïve Bayes classifier. The upper dotted line denotes the maximum decoding accuracy, 1. The lower dotted line denotes the chance level, 1/4212. Error bars indicate standard deviations.

As a result of the simulation, different neural responses to different stimuli were shown in the STEC condition, and these neural responses were rapidly stabilised. On the other hand, neural responses were less stabilised under SEC and sparse conditions (Figure 4A). The factor affecting the stabilisation of the neural response was the amount of neuronal noise. To quantify the amount of neural noise, conditional entropy of neural responses given stimulus was measured. Conditional entropy was measured by collecting neural responses at every five time step. The STEC condition showed lower neuronal noise than the SEC and Sparse conditions (Figure 4B).

We checked whether the neural response was well stabilised through the confusion index. Under STEC condition, the confusion index was kept below 1 on average for the majority of time steps at each hierarchy (Figure 4C). This means that the neural representations are rapidly stabilised in STEC condition. On the other hand, the confusion index was not sufficiently reduced in SEC and Sparse condition (Figure 4C). This means that sufficient stabilisation of neural representations is difficult with only existing efficient coding or sparse coding.

Finally, we checked whether the neural response is decodable. In the STEC condition, the decoding accuracy gradually increased over time, suggesting decodable stable neural representations. On the other hand, the decoding accuracy was low in SEC and Sparse conditions.

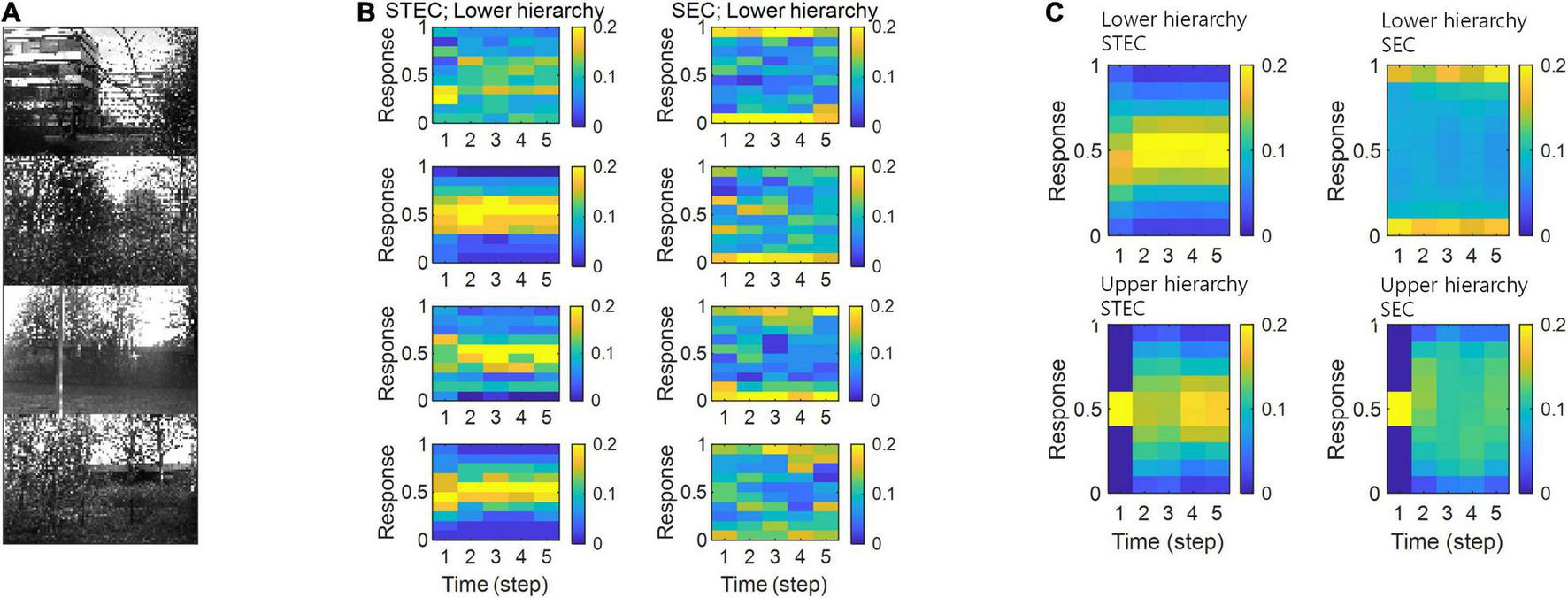

Relation to Homeostasis

Neural system homeostasis is associated with maximisation of mutual information between neural responses and external stimuli (Toyoizumi et al., 2005; Sullivan and de Sa, 2006). Since spatio-temporally efficient coding increases the Shannon mutual information between neural responses and external stimuli, it is related to homeostasis. In particular, temporal difference minimisation of neural responses in temporally efficient coding is reminiscent of homeostasis of energy metabolism. Smoothing the temporal trajectory of a neural responses reduces the variance of the neural response distribution so that the neural response stays within a certain range. We confirmed this through simulation. It was checked whether the neural response had a middle value, not an extreme value such as 0 or 1, which is homeostasis of neural responses. In STEC condition emphasising temporally efficient coding objective, neural responses were concentrated at the middle value than in SEC condition (Figure 8). Figure 8B showed specific cases corresponding to stimuli in Figure 8A. Figure 8C showed the distribution of overall neural responses, indicating that this is general phenomena of STEC. This is also a consequence of the homeostatic plasticity (Turrigiano and Nelson, 2004) of the brain.

Deviant Neural Responses to Unlearned Inputs

The visual system often responds selectively to sensory inputs (Margoliash, 1983; Waydo et al., 2006). Even for the type of sensory inputs to which the visual system is responsive, unfamiliar inputs induce larger neural responses compared to familiar inputs (Huang et al., 2018; Issa et al., 2018). These large neural responses to unfamiliar inputs are thought to be due to prediction errors (Issa et al., 2018). On the one hand, spatio-temporally efficient coding renders smooth neural representations, i.e., locally preserving the structure of the external world. Because unlearned inputs differ from learned inputs, if their neural representations are smooth, their neural representations will also differ. By STEC homeostasis, since the neural response to the learned input has a middle value (Figure 8), the neural responses to the unlearned input (if these differ from the neural responses to the learned input) may be closed to extreme values such as 0 or 1. These are deviant neural responses to unlearned inputs. Accordingly, we can expect that spatio-temporally efficient coding could predict the phenomenon of deviant neural responses to unlearned inputs without the introduction of prediction error responses mediated by error units. We checked whether the distribution of neural responses to unlearned input is close to extreme values such as 0 or 1, unlike the distribution of neural responses to learned input.

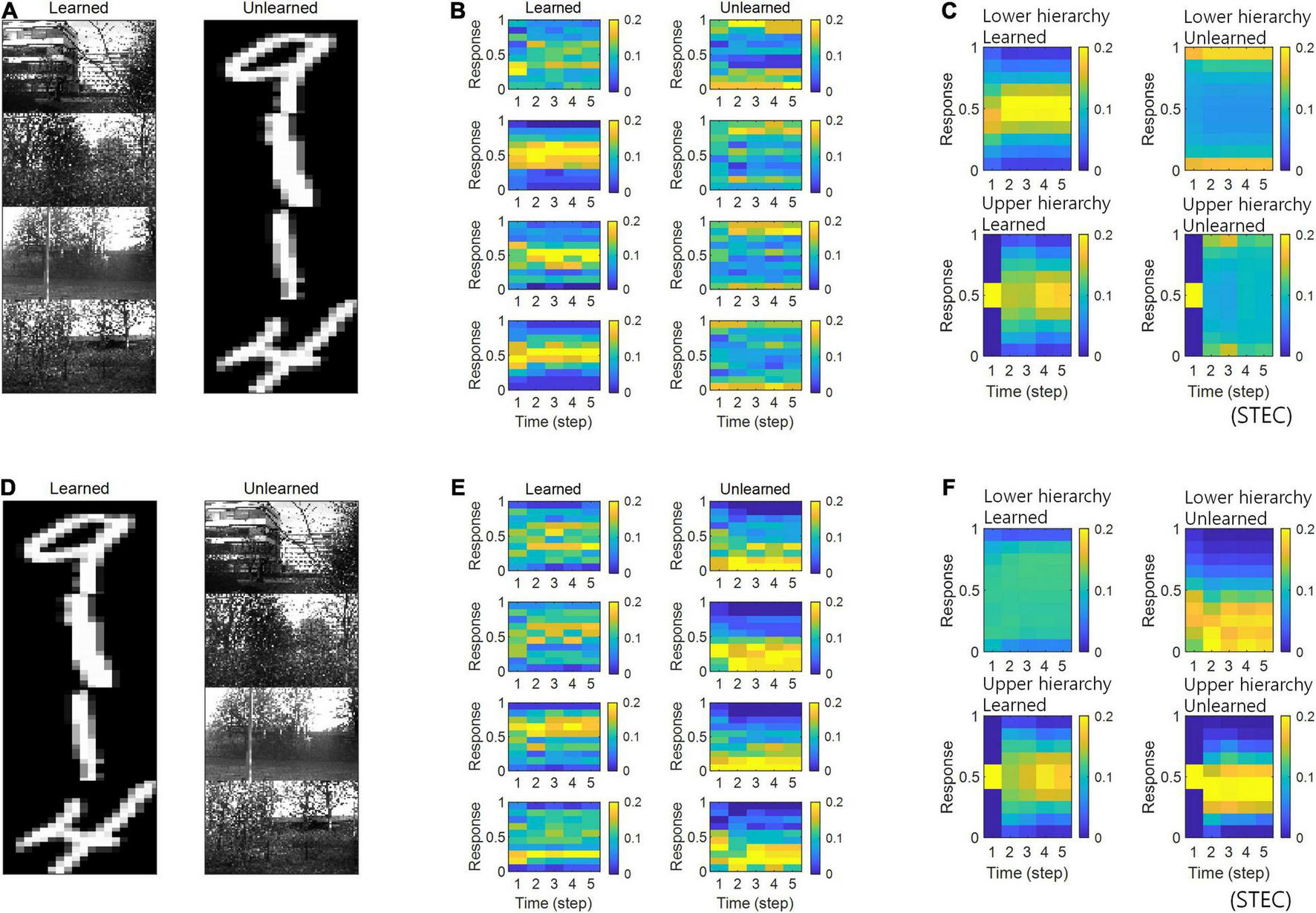

In the simulations, the visual hierarchical structure learned to be learned with natural scene images, and novel handwritten digit images were used as unlearned visual inputs (Figure 5A). The simulation results revealed that neural responses were distributed over middle values for learned images and over smaller or larger values for unlearned inputs (Figures 5B,C), suggesting that spatio-temporally efficient coding could predict the phenomenon of deviant neural responses to unlearned inputs. The same conclusion could also be reached if the learned images were handwritten digit images and the unlearned images were natural scene images (Figures 5D–F).

FIGURE 5

Neural response distributions for learned and unlearned inputs. (A) Natural scene images are used as learned inputs (for learning in the visual hierarchical structure) and handwritten digit images are used as unlearned inputs (not used in learning). (B) Examples of neural response distributions of lower hierarchy units for each of the five time steps of bidirectional information transmissions, corresponding to images in (A). The colour scale indicates the proportion. (C) Overall neural response distributions in response to all input images (either learned or unlearned) at lower and upper hierarchies, respectively. (D–F) Are similar to (A–C), but handwritten digit images are used as learned inputs and natural scene images are used as unlearned inputs.

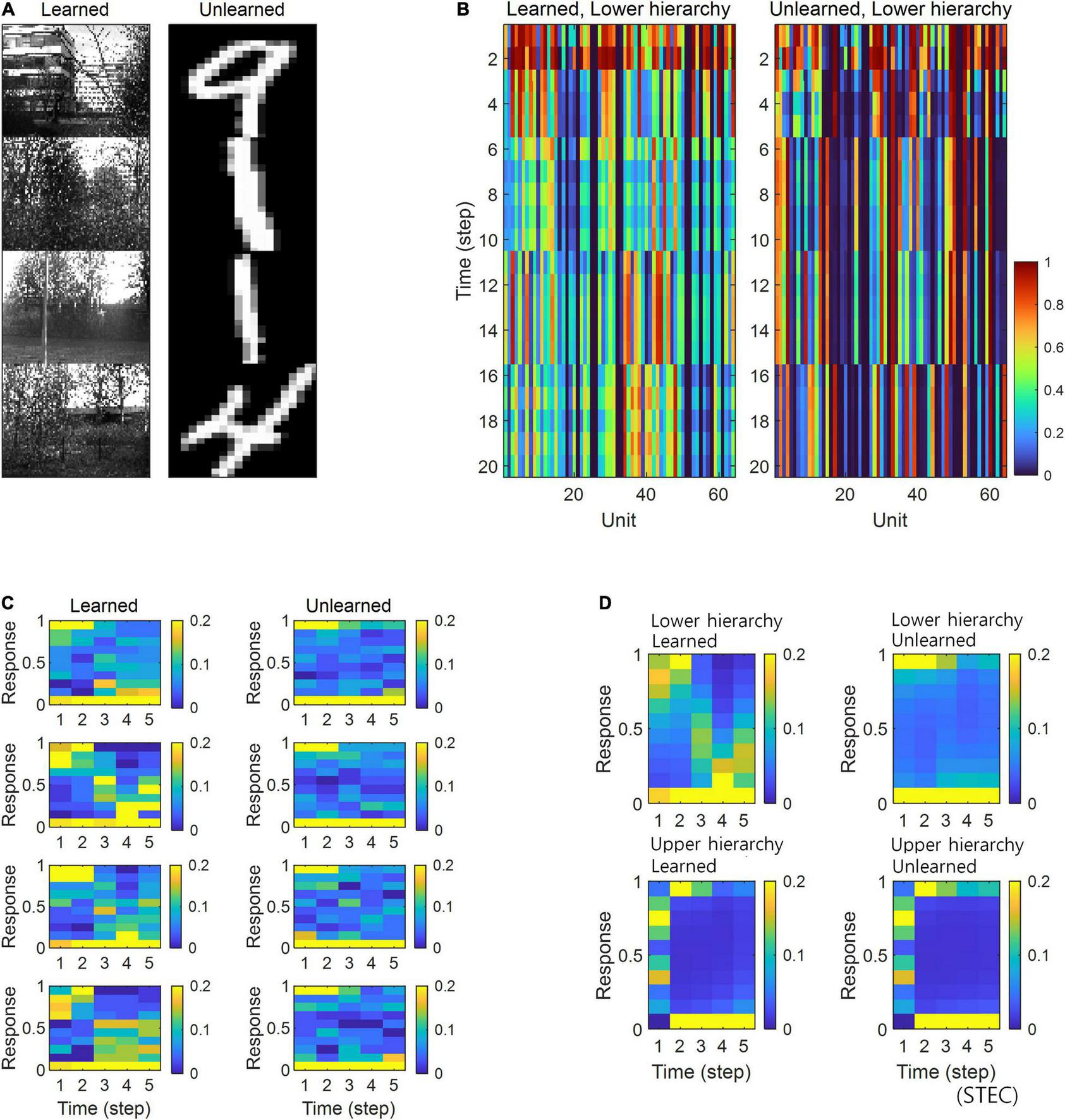

Simulations in the present study demonstrated that neural responses were distributed around smaller or larger extremes for unlearned inputs and around intermediate values for learned inputs (Figures 5B,C). In a separate analysis, we allowed the neural responses to learned inputs be distributed only around lower values (see section “Implementation of Spatio-Temporally Efficient Coding,” λ = 5), similar to sparse coding (Olshausen and Field, 1996, 1997), and observed that neural responses of the lower hierarchy to unlearned inputs exhibited higher values (Figure 6). Under this sparseness constraint, when an external stimulus was first given, the neural response surged from zero to a large value and then stabilised again (Figures 6C,D).

FIGURE 6

Neural response distributions for learned and unlearned inputs under sparseness constraint. (A) Natural scene images are used as learned inputs (for learning in the visual hierarchical structure) and handwritten digit images are used as unlearned inputs (not used in learning). (B) Lower hierarchy neural responses for consecutive external inputs in (A). The neural response is the output of the sigmoid function and is therefore normalised to a range between 0 and 1. (C) Examples of neural response distributions of lower hierarchy units for each of the five time steps of bidirectional information transmissions, corresponding to images in (A). Unlike (B), it is a neural response when images are presented separately rather than consecutively. The colour scale indicates the proportion. (D) Overall neural response distributions in response to all input images (either learned or unlearned) at lower and upper hierarchies, respectively.

Preferred Orientation Biases of Receptive Fields

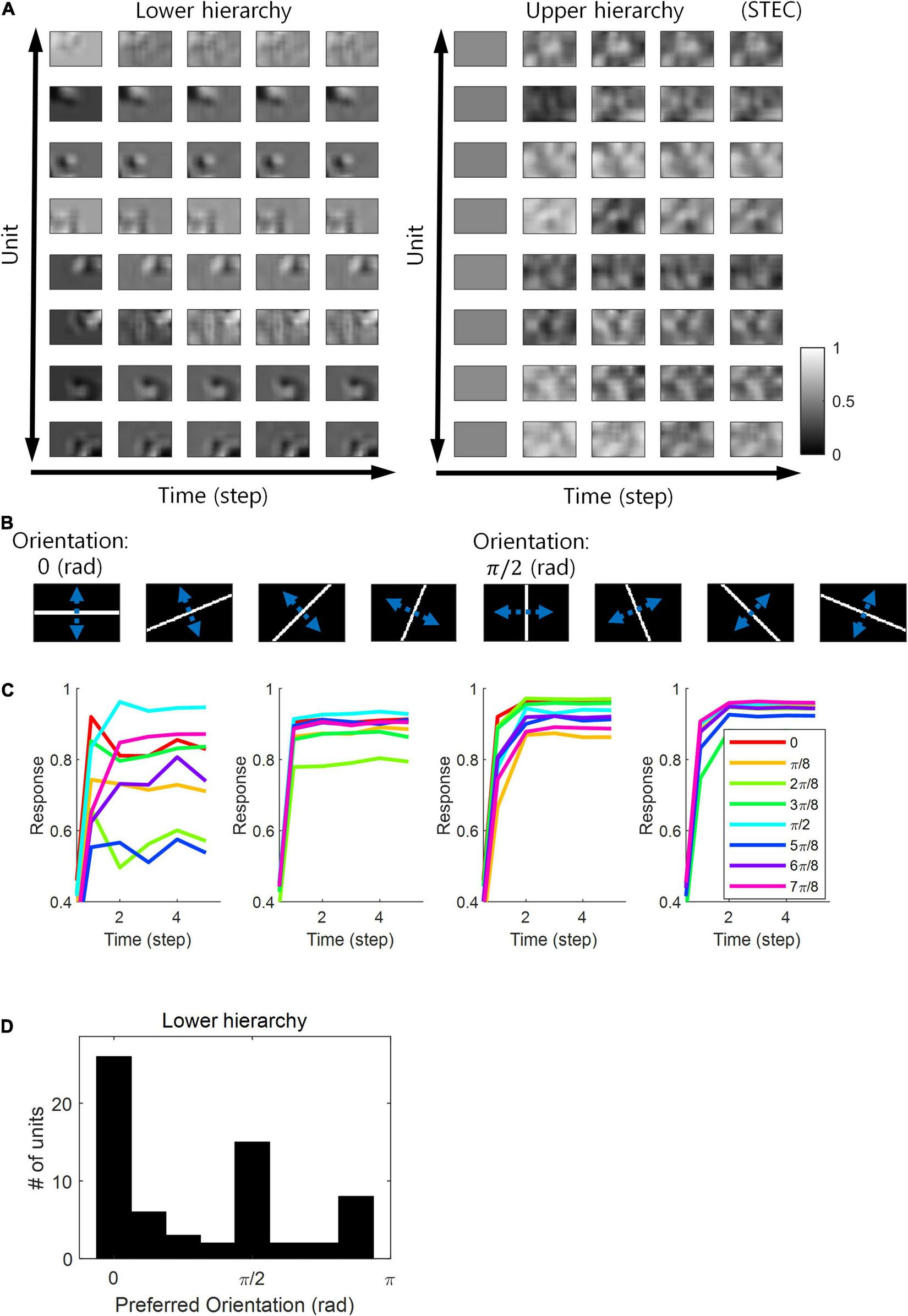

Neurons in the visual system prefer horizontal and vertical orientations over oblique orientations (Furmanski and Engel, 2000; Li et al., 2003). Indeed, orientation discrimination is more sensitive to horizontal and vertical orientations than to oblique orientations (Girshick et al., 2011). This is a bias toward cardinals (Girshick et al., 2011). Since smooth neural representations of spatio-temporally efficient coding reflects the structure of the external world well, we predicted that it would also reflect the environmental statistics of natural scenes. We investigated whether units in the visual hierarchical structure that learned by spatio-temporally efficient coding of natural scene images exhibited such biases. We checked whether the neural responses of the units were highest to the horizontal/vertical orientation stimuli. Units in the lower hierarchy had the Gabor-like visual receptive fields, while units in the upper hierarchy had more complex visual receptive fields (Figure 7A) (also see section “Computation of Receptive Fields”). Because the units had an oriented Gabor-like receptive field, we used oriented bar stimuli to measure the unit’s preferred orientation. We presented a static bar oriented in one of eight angles that moved in the direction perpendicular to the orientation angle (Figure 7B) and defined the response of each unit to that orientation by the largest response during presentation. We then defined the preferred orientation of each unit as the orientation that elicited the largest response. Example neural responses were shown in Figure 7C. Simulation results revealed that units clearly prefer horizontal and vertical orientations over oblique orientations (Figure 7D), consistent with the orientation bias of visual cortical neurons and in accordance with context-independent bottom-up prediction (Teufel and Fletcher, 2020). This means that smooth neural representations when well-balanced between temporally efficient coding objective and spatially efficient coding objective reflect the environmental statistics of natural scenes.

FIGURE 7

Orientation preference. (A) Examples of visual receptive fields of lower and upper hierarchy units. (B) Orientation images used to test the visual orientation preference of neuronal units at hierarchy 1 in the visual hierarchical structure that learned by the spatio-temporally efficient coding of natural scene images. White bars in each of the eight orientations are moved in the perpendicular directions denoted by blue dotted arrows. (C) Examples of lower hierarchy neural responses for bar stimuli. Each colour indicates each orientation. The neural responses of each orientation were obtained from the bar positions with the largest neural responses at time step 5. These four example units correspond to 1st, 3rd, 5th, and 7th units in (A). (D) Histograms of the orientation preference at time step.

Discussion

The present study aimed to find computational principles that enables visual hierarchical structures to attain the function to represent external visual information. To address the lack of neural coding principles to encompass both bottom-up and top-down pathways, we propose spatio-temporally efficient coding as a novel computational model. As a principled way of efficiently using given resources in both neural activity space and processing time, this coding principle optimises bidirectional information transmissions over hierarchical structures by simultaneously minimising temporally differences in neural responses and maximising entropy in neural representations. Simulation results showed that the proposed spatio-temporally efficient coding assigned the function of appropriate neural representations of natural scenes to a visual hierarchical structure on time domain and that it could predict deviations in neural responses to unlearned inputs and a bias in preferred orientations, which are well known characteristics of the visual system.

Appropriate neural representations in the present study were decodable stable neural representations which is an appropriate function to hierarchical brain structures on the time domain. To demonstrate this, we compared spatio-temporally efficient coding (STEC condition) with existing efficient coding (SEC condition) and sparse coding (Sparse condition) as shown in Figure 4. Only spatio-temporally efficient coding showed decodable stable neural representations. Existing predictive coding is conceptually problematic to apply to such real-time information processing (Hogendoorn and Burkitt, 2019). It would be interesting to compare the results with the conceptually improved predictive coding as well. Temporal coherence (Hurri and Hyvärinen, 2002; Zou et al., 2011) or slow feature analysis (Wiskott and Sejnowski, 2002; Berkes and Wiskott, 2005; Creutzig and Sprekeler, 2008) elicits smooth changes in neural responses, similar to temporally efficient coding in the present study. However, they were not used as comparative models because they are not intended to elicit different neural responses to different external inputs and are not suitable for direct application to the bidirectional multiple hierarchical structure of in the present study. Nevertheless, they can be substituted to the role of temporally efficient coding in the present study.

Since its initial proposal (Attneave, 1954; Barlow, 1961), spatially efficient coding has been validated experimentally (Laughlin, 1981). However, observed correlations between neurons, which maximise entropy to a lesser extent compared to mere spatially efficient coding assuming no inter-neuronal correlations, have yet to be incorporated into the principle of spatially efficient coding. Empirically observed neuronal correlations may drive computational processes of the brain away from strict spatially efficient coding. Recent studies suggest that biological visual systems are intermediate between strict spatially efficient coding and correlated neural responses (Stringer et al., 2019). Therefore, to create biologically plausible computational models, it is necessary to mitigate the spatially efficient coding objective by combining firing-rate-dependent correlations (de la Rocha et al., 2007). This enables more accurate information transmissions of visual perception mediated by visual hierarchical structures. As we focused on integrating spatially efficient coding with temporally efficient coding for computation in hierarchical structures, this study did not incorporate the correlations between neurons in spatially efficient coding, which will be pursued in follow-up studies.

Based on our simulations, we observed that the learning of bidirectional information transmission networks with spatio-temporally efficient coding was hindered when the balancing parameter λ was too small or too large (Figure 2D). Therefore, it was necessary to confine λ within a certain range, in which the magnitude of λ affected neural responses such that a larger λ rendered responses more variable (Figure 2D). Such increased variability is likely to originate from recurrent responses via higher hierarchies. This was confirmed by the observation that top-down synaptic weights become larger than bottom-up synaptic weights when λ increased (Figure 2C). Although a large λ attenuated the appropriateness of neural representations (Figure 3), it rendered stronger top-down synaptic connections in lower hierarchy (Figure 2C), which is consistent with the previous finding that top-down synaptic connections are stronger than bottom-up connections in the lateral geniculate nucleus (Sillito et al., 2006). As to why top-down synaptic weights increase with a larger λ value (Figure 2C), we speculate that learning via spatio-temporally efficient coding may increase the range of neural responses to maximise entropy through top-down pathways. While the bottom-up pathways originating from external inputs are invariant during learning, the top-down pathways originating from higher hierarchy neural responses are more flexible to adjustment to maximise entropy during learning. An increase in these top-down synaptic weights predicts impairment of eye movement tracking partially occluded visual targets in schizophrenic patients (Adams et al., 2012). This may be due to an increased higher hierarchy’s influence in patients with schizophrenia. The increased higher hierarchy’s influence in our simulations is that they do not stabilise neural responses sufficiently to distinguish a given input from other inputs (Figure 4C).

The deviant neural responses to unlearned inputs observed in this study (Figure 5) arise from smooth neural representations for learned inputs (distributed over the middle value). As such, neural representations for learned inputs extrude neural responses to unlearned inputs into a range of deviant neural responses. We conjecture that the visual system may generate deviant neural responses via a similar mechanism.

Spatio-temporally efficient coding predicted a bias in preferred orientations (Figure 7). In this regard, spatially efficient coding alone has been reported to predict bias in preferred orientations (Ganguli and Simoncelli, 2014). Notably, spatio-temporally efficient coding was able to predict this bias well, even when λ was low, that is, when the spatially efficient coding objective was less weighted (Figure 7D). Therefore, this bias prediction should be viewed as a result of spatio-temporally efficient coding, not as a result of spatially efficient coding alone.

The trial-to-trial variability of neural responses can be widely observed in the brain (Malins et al., 2018; Daniel et al., 2019; Nogueira et al., 2020; Li et al., 2021; Zhang et al., 2022). It is observed as both electrophysiological signals (Daniel et al., 2019; Li et al., 2021) and blood-oxygenation-level-dependent signals (Malins et al., 2018; Zhang et al., 2022). These trial-to-trial variability of neural responses are neuronal noise. Assuming strict efficient coding, neurons must code different information to minimise redundancy. This makes neural coding susceptible to neuronal noise (Pryluk et al., 2019). If we allow some redundancy, this coding can be made more robust to neuronal noise (Pryluk et al., 2019). In the real brain, neurons are known to perform robust coding (not strict efficient coding) against neuronal noise by having smooth tuning curves (Stringer et al., 2019). These smooth tuning curves are observed from many experimental data (Chen and Hafed, 2018; Kutter et al., 2018; Chettih and Harvey, 2019; Christensen et al., 2019), supporting the mitigation of strict efficient coding. Spatio-temporally efficient coding makes the neural responses distributed around the middle value by minimising the temporal difference of the neural responses (Figure 8). This is deviant from strict efficient coding as it does not reach entropy maximisation. Therefore, it may be closer to the coding principle of the real brain.

FIGURE 8

Neural response distributions. (A) Examples of natural scene images. (B) Examples of neural response distributions of lower hierarchy units for each of the five time steps of bidirectional information transmissions, corresponding to images in (A). The colour scale indicates the proportion. (C) Overall neural response distributions in response to all input images (either STEC or SEC) at lower and upper hierarchies, respectively. The colour scale indicates the proportion.

FIGURE 9

Summary. (A) Flowchart of details of implementation of spatio-temporally efficient coding. (B) Experimental workflow in the present study.

The present study has several limitations. First, for simplicity, our simulation model contained only two hierarchies. However, it is necessary to explore how spatio-temporally efficient coding operates in models with more hierarchies. We also modelled 64 neuronal units at each hierarchy, as we assumed that this would be sufficient to represent the natural scene images used in this study. Nevertheless, the interactions between the number of neuronal units, levels of hierarchy, and spatio-temporally efficient coding require further investigation. Second, we demonstrated that the visual hierarchical structure could learn to represent static natural scene images with spatio-temporally efficient coding, but future follow-up studies will investigate whether the visual hierarchical structure learns to represent moving scenes using the same coding principle (also see, Sederberg et al., 2018). Finally, the scope of the present study was limited to the visual system given that its hierarchical structure is well documented, but spatio-temporally efficient coding may be applied to other systems (e.g., somatosensory system) or to movements and planning. Recent efficient coding researches are expanding their scope to perception generalisation (Sims, 2018), subjective value (Polanía et al., 2019), and memory (McPherson and McDermott, 2020). It seems that spatio-temporally efficient coding can also broaden its scope.

Conclusion

In the present study, we proposed spatio-temporally efficient coding, inspired by the efficient use of given resources in neural systems, as a neural coding mechanism to assign representational functions to the hierarchical structures of the visual system. Simulations demonstrated that the visual hierarchical structure could represent the external world (i.e., natural scenes) appropriately using bidirectional information transmissions (Figures 2–4). Furthermore, spatio-temporally efficient coding predicted the well-known properties of visual cortical neurons, including deviations in neural responses to unlearned images (Figures 5, 6) and bias in preferred orientations (Figure 7). Our proposed spatio-temporally efficient coding may facilitate deeper mechanistic understanding of the computational processes of hierarchical brain structures.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Statements

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found in the article/Supplementary Material.

Author contributions

DS: conceptualisation, methodology, software, validation, formal analysis, investigation, resources, data curation, writing – original draft, writing – review and editing, visualisation, supervision, and project administration. S-PK: writing – original draft, writing – review and editing, visualisation, supervision, project administration, and funding acquisition. Both authors contributed to the article and approved the submitted version.

Funding

This research was supported by the Brain Convergence Research Programs of the National Research Foundation (NRF), funded by the Korean Government (MSIT) (Nos. NRF-2019M3E5D2A01058328 and 2021M3E5D2A01019542).

Acknowledgments

This research was previously uploaded to the preprint server bioRxiv (Sihn and Kim, 2021). Available at: https://www.biorxiv.org/content/10.1101/2021.08.13.456321v4.abstract.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2022.890447/full#supplementary-material

References

1

AdamsR. A.PerrinetL. U.FristonK. (2012). Smooth pursuit and visual occlusion: active inference and oculomotor control in schizophrenia.PLoS One7:e47502. 10.1371/journal.pone.0047502

2

AttneaveF. (1954). Some informational aspects of visual perception.Psychol. Rev.61183–193. 10.1037/h0054663

3

BarlowH. B. (1961). “Possible principles underlying the transformations of sensory messages,” in Sensory Communication, ed.RosenblithW. A. (Cambridge, MA: MIT Press), 217–234.

4

BengioY.CourvilleA.VincentP. (2013). Representation learning: a review and new perspectives.IEEE Trans. Pattern Anal. Mach. Intellig.351798–1828. 10.1109/TPAMI.2013.50

5

BerensP.EckerA. S.CottonR. J.MaW. J.BethgeM.ToliasA. S. (2012). A fast and simple population code for orientation in primate V1.J. Neurosci.3210618–10626. 10.1523/JNEUROSCI.1335-12.2012

6

BerkesP.WiskottL. (2005). Slow feature analysis yields a rich repertoire of complex cell properties.J. Vis.5579–602. 10.1167/5.6.9

7

BorstA.TheunissenF. E. (1999). Information theory and neural coding.Nat. Neurosci.2947–957. 10.1038/14731

8

BourlardH.KampY. (1988). Auto-association by multilayer perceptrons and singular value decomposition.Biol. Cybernet.59291–294. 10.1007/BF00332918

9

BoutinV.FranciosiniA.ChavaneF.RuffierF.PerrinetL. (2021). Sparse deep predictive coding captures contour integration capabilities of the early visual system.PLoS Comput. Biol.17:e1008629. 10.1371/journal.pcbi.1008629

10

BrouwerG. J.HeegerD. J. (2009). Decoding and reconstructing color from responses in human visual cortex.J. Neurosci.2913992–14003. 10.1523/JNEUROSCI.3577-09.2009

11

BullmoreE.SpornsO. (2012). The economy of brain network organization.Nat. Rev. Neurosci.13336–349. 10.1038/nrn3214

12

BurtJ. B.DemirtaşM.EcknerW. J.NavejarN. M.JiJ. L.MartinW. J.et al (2018). Hierarchy of transcriptomic specialization across human cortex captured by structural neuroimaging topography.Nat. Neurosci.211251–1259. 10.1038/s41593-018-0195-0

13

ChenC.-Y.HafedZ. M. (2018). Orientation and contrast tuning properties and temporal flicker fusion characteristics of primate superior colliculus neurons.Front. Neural Circ.12:58. 10.3389/fncir.2018.00058

14

ChettihS. N.HarveyC. D. (2019). Single-neuron perturbations reveal feature-specific competition in V1.Nature567334–340. 10.1038/s41586-019-0997-6

15

ChristensenR. K.LindénH.NakamuraM.BarkatT. R. (2019). White noise background improves tone discrimination by suppressing cortical tuning curves.Cell Rep.292041.e4–2053.e4. 10.1016/j.celrep.2019.10.049

16

ClarkA. (2013). Whatever next? Predictive brains, situated agents, and the future of cognitive science.Behav. Brain Sci.36181–204. 10.1017/S0140525X12000477

17

CreutzigF.SprekelerH. (2008). Predictive coding and the slowness principle: an information-theoretic approach.Neural Comput.201026–1041. 10.1162/neco.2008.01-07-455

18

DanielE.MeindertsmaT.AraziA.DonnerT. H.DinsteinI. (2019). The Relationship between trial-by-trial variability and oscillations of cortical population activity.Sci. Rep.9:16901. 10.1038/s41598-019-53270-7

19

de la RochaJ.DoironB.Shea-BrownE.JosicK.ReyesA. (2007). Correlation between neural spike trains increases with firing rate.Nature448802–806. 10.1038/nature06028

20

deCharmsR. C.ZadorA. (2000). Neural representation and the cortical code.Annu. Rev. Neurosci.23613–647. 10.1146/annurev.neuro.23.1.613

21

Di GesùV.StarovoitovV. (1999). Distance-based functions for image comparison.Pattern Recogn. Lett.20207–214. 10.1016/S0167-8655(98)00115-9

22

DiCarloJ. J.ZoccolanD.RustN. C. (2012). How does the brain solve visual object recognition?Neuron73415–434. 10.1016/j.neuron.2012.01.010

23

FellemanD. J.Van EssenD. C. (1991). Distributed hierarchical processing in the primate cerebral cortex.Cereb. Cortex11–47. 10.1093/cercor/1.1.1

24

FristonK. (2005). A theory of cortical responses.Philos. Trans. R. Soc. B360815–836. 10.1098/rstb.2005.1622

25

FristonK. (2010). The free-energy principle: a unified brain theory?Nat. Rev. Neurosci.11127–138. 10.1038/nrn2787

26

FristonK.ThorntonC.ClarkA. (2012). Free-energy minimization and the dark-room problem.Front. Psychol.3:130. 10.3389/fpsyg.2012.00130

27

FurmanskiC. S.EngelS. A. (2000). An oblique effect in human primary visual cortex.Nat. Neurosci.3535–536. 10.1038/75702

28

GanguliD.SimoncelliE. P. (2014). Efficient sensory encoding and bayesian inference with heterogeneous neural populations.Neural Comput.262103–2134. 10.1162/NECO_a_00638

29

GirshickA. R.LandyM. S.SimoncelliE. P. (2011). Cardinal rules: visual orientation perception reflects knowledge of environmental statistics.Nat. Neurosci.14926–932. 10.1038/nn.2831

30

GoodfellowI. J.CourvilleA.BengioY. (2011). “Spike-and-slab sparse coding for unsupervised feature discovery,” in Proceedings of the Conference on Neural Information Processing Systems, Granada.

31

GriffithsT. L.LiederF.GoodmanN. D. (2015). Rational use of cognitive resources: levels of analysis between the computational and the algorithmic.Top. Cogn. Sci.7217–229. 10.1111/tops.12142

32

HansenJ. Y.MarkelloR. D.VogelJ. W.SeidlitzJ.BzdokD.MisicB. (2021). Mapping gene transcription and neurocognition across human neocortex.Nat. Hum. Behav.51240–1250. 10.1038/s41562-021-01082-z

33

HarrisJ. A.MihalasS.HirokawaK. E.WhitesellJ. D.ChoiH.BernardA.et al (2019). Hierarchical organization of cortical and thalamic connectivity.Nature575195–202. 10.1038/s41586-019-1716-z

34

HastieT.TibshiraniR.FriedmanJ. (2009). The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd Edn. Cham: Springer.

35

HilgetagC. C.GoulasA. (2020). ‘Hierarchy’ in the organization of brain networks.Philos. Trans. R. Soc. B375:20190319. 10.1098/rstb.2019.0319

36

HintonG. E.ZemelR. S. (1993). “Autoencoders, minimum description length and Helmholtz free energy,” in Proceedings of the Conference on Neural Information Processing Systems, Denver, CO.

37

HogendoornH.BurkittA. N. (2019). Predictive coding with neural transmission delays: a real-time temporal alignment hypothesis.eNeuro6:ENEURO.0412-18.2019. 10.1523/ENEURO.0412-18.2019

38

HuangG.RamachandranS.LeeT. S.OlsonC. R. (2018). Neural correlate of visual familiarity in macaque area V2.J. Neurosci.388967–8975. 10.1523/JNEUROSCI.0664-18.2018

39

HubelD. H.WieselT. N. (1962). Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex.J. Physiol.160106–154. 10.1113/jphysiol.1962.sp006837

40

HubelD. H.WieselT. N. (1968). Receptive fields and functional architecture of monkey striate cortex.J. Physiol.195215–243. 10.1113/jphysiol.1968.sp008455

41

HuhC. Y. L.PeachJ. P.BennettC.VegaR. M.HestrinS. (2018). Feature-specific organization of feedback pathways in mouse visual cortex.Curr. Biol.28114.e5–120.e5. 10.1016/j.cub.2017.11.056

42

HurriJ.HyvärinenA. (2002). “Temporal coherence, natural image sequences, and the visual cortex,” in Proceedings of the Conference on Neural Information Processing Systems, Vancouver.

43

IssaE. B.CadieuC. F.DiCarloJ. J. (2018). Neural dynamics at successive stages of the ventral visual stream are consistent with hierarchical error signals.eLife7:e42870. 10.7554/eLife.42870

44

KamitaniY.TongF. (2005). Decoding the visual and subjective contents of the human brain.Nat. Neurosci.8679–685. 10.1038/nn1444

45

KingmaD. P.BaJ. (2015). “Adam: a method for stochastic optimization,” in Proceedings of the 3th International Conference on Learning Representations, San Diego, CA.

46

KriegeskorteN.DiedrichsenJ. (2019). Peeling the onion of brain representations.Annu. Rev. Neurosci.42407–432. 10.1146/annurev-neuro-080317-061906

47

KutterE. F.BostroemJ.ElgerC. E.MormannF.NiederA. (2018). Single neurons in the human brain encode numbers.Neuron100753.e4–761.e4. 10.1016/j.neuron.2018.08.036

48

LaughlinS. B. (1981). A simple coding procedure enhances a neuron’s information capacity.Z. Naturforsch.36910–912. 10.1515/znc-1981-9-1040

49

LaughlinS. B. (2001). Energy as a constraint on the coding and processing of sensory information.Curr. Opin. Neurobiol.11475–480. 10.1016/S0959-4388(00)00237-3

50