- 1Department of Linguistics, University of California, Berkeley, Berkeley, CA, United States

- 2Department of Linguistics, University of Washington, Seattle, WA, United States

Training deep neural networks on well-understood dependencies in speech data can provide new insights into how they learn internal representations. This paper argues that acquisition of speech can be modeled as a dependency between random space and generated speech data in the Generative Adversarial Network architecture and proposes a methodology to uncover the network's internal representations that correspond to phonetic and phonological properties. The Generative Adversarial architecture is uniquely appropriate for modeling phonetic and phonological learning because the network is trained on unannotated raw acoustic data and learning is unsupervised without any language-specific assumptions or pre-assumed levels of abstraction. A Generative Adversarial Network was trained on an allophonic distribution in English, in which voiceless stops surface as aspirated word-initially before stressed vowels, except if preceded by a sibilant [s]. The network successfully learns the allophonic alternation: the network's generated speech signal contains the conditional distribution of aspiration duration. The paper proposes a technique for establishing the network's internal representations that identifies latent variables that correspond to, for example, presence of [s] and its spectral properties. By manipulating these variables, we actively control the presence of [s] and its frication amplitude in the generated outputs. This suggests that the network learns to use latent variables as an approximation of phonetic and phonological representations. Crucially, we observe that the dependencies learned in training extend beyond the training interval, which allows for additional exploration of learning representations. The paper also discusses how the network's architecture and innovative outputs resemble and differ from linguistic behavior in language acquisition, speech disorders, and speech errors, and how well-understood dependencies in speech data can help us interpret how neural networks learn their representations.

1. Introduction

How to model language acquisition is among the central questions in linguistics and cognitive science in general. Acoustic speech signal is the main input for hearing infants acquiring language. By the time acquisition is complete, humans are able to decode and encode information from or to a continuous speech stream and construct a grammar that enables them to do so (Saffran et al., 1996, 2007; Kuhl, 2010). In addition to syntactic, morphological, and semantic representations, the learner needs to learn phonetic representations and phonological grammar: to analyze and in turn produce speech as a continuous acoustic stream represented by mental units called phonemes. Phonological grammar manipulates these discrete units and derives surface forms from stored lexical representations. The goal of linguistics and more specifically, phonology, is to explain how language-acquiring children construct a phonological grammar, how the grammar derives surface outputs from inputs, and what aspects of the grammar are language-specific in order to tease them apart from those aspects that can be explained by general cognitive processes or historical developments (de Lacy, 2006; Moreton, 2008; Moreton and Pater, 2012a,b; de Lacy and Kingston, 2013; Beguš, 2018b).

Computational models have been invoked for the purpose of modeling language acquisition and phonological grammar ever since the rise of computational methods and computationally informed linguistics (for an overview of the literature, see Alderete and Tupper, 2018a; Dupoux, 2018; Jarosz, 2019; Pater, 2019). One of the major shortcomings of the majority of the existing proposals is that learning is modeled with an already assumed level of abstraction (Dupoux, 2018). In other words, most of the proposals model phonological learning as symbol manipulation of discrete units that already operates on the abstract, discrete phonological level. The models thus require strong assumptions that phonetic learning has already taken place, and that phonemes as discrete units have already been inferred from continuous speech data (for an overview of the literature, see Oudeyer, 2005, 2006; Dupoux, 2018).

This paper proposes that language acquisition can be modeled with Generative Adversarial Networks (Goodfellow et al., 2014). More specifically, phonetic and phonological computation is modeled as the mapping from random space to generated data of a Generative Adversarial Network (Goodfellow et al., 2014) trained on raw unannotated acoustic speech data in an unsupervised manner (Donahue et al., 2019). To the author's knowledge, language acquisition has not been modeled within the GAN framework despite several advantages of this architecture. The characteristic feature of the GAN architecture is an interaction between the Generator network that outputs raw data and the Discriminator that distinguishes real data from Generator's outputs (Goodfellow et al., 2014). A major advantage of the GAN architecture is that learning is completely unsupervised, the networks include no language-specific elements, and that, as is argued in Section 4 below, phonetic learning is modeled simultaneously with phonological learning. The discussion on the relationship between phonetics and phonology is highly complex (Kingston and Diehl, 1994; Cohn, 2006; Keyser and Stevens, 2006). Several opposing proposals, however, argue that the two interact at various different stages and are not dissociated from each other (Hayes, 1999; Pierrehumbert, 2001; Fruehwald, 2016, 2017). A network that models learning of phonetics from raw data and shows signs of phonological learning is likely one step closer to reality than models that operate with symbolic computation and assume phonetic learning has already taken place independently of phonology (and vice versa).

We argue that the latent variables in the input of the Generator network can be modeled as approximates to phonetic or potentially phonological representations that the Generator learns to output into a speech signal by attempting to maximize the error rate of a Discriminator network that distinguishes between real data and generated outputs. The Discriminator network thus has a parallel in human speech: the imitation principle (Nguyen and Delvaux, 2015). The Discriminator's function is to enforce that the Generator's outputs resemble (but do not replicate) the inputs as closely as possible. The GAN network thus incorporates both the pre-articulatory production elements (the Generator) as well as the imitation principle (the Discriminator) in speech acquisition. While other neural network architectures might be appropriate for modeling phonetic and phonological learning as well, the GAN architecture is unique in that it combines a network that produces innovative data (the Generator) with a network that forces imitation in the Generator. Unlike, for example, autoencoder networks, the Generative Adversarial network lacks a direct connection between the input and output data and generates innovative data rather than data that resembles the input as closely as possible.

We train a Generative Adversarial Network architecture implemented for audio files in Donahue et al. (2019) (WaveGAN) on raw speech data that contains information for an allophonic distribution: word-initial pre-vocalic aspiration of voiceless stops ([ˈphɪt] ~ [ˈspɪt]). The data is curated in order to control for non-desired effects, which is why only sequences of the shape #TV and #sTV1 are fed to the model. This allophonic distribution is appropriate for testing learnability in a GAN architecture, because the dependency between the presence of [s] and duration of VOT is not strictly local. To be sure, the dependency is local in phonological terms, as [s] and T are two segments and immediate neighbors, but in phonetic terms, a period of closure intervenes between the aspiration and the period (or absence thereof) of frication noise of [s]. It is not immediately clear whether a GAN model is capable of learning such non-local dependencies. To our knowledge, this is the first proposal that tests whether neural networks are able to learn an allophonic distribution based on raw acoustic data.

The hypothesis of the computational experiment presented in Section 4 is the following: if VOT duration is conditioned on the presence of [s] in output data generated from noise by the Generator network, it means that the Generator network has successfully learned a phonetically non-local allophonic distribution. Because the allophonic distribution is not strictly local and has to be learned and actively controlled by speakers (i.e., is not automatic), evidence for this type of learning is considered phonological learning in the broadest sense. Conditioning the presence of a phonetic feature based on the presence or absence of a phoneme that is not automatic is, in most models, considered part of phonology and is derived with phonological computation. That the tested distribution is non-automatic and has to be actively controlled by the speakers is evident from L1 acquisition: failure to learn the distribution results in longer VOT durations in the sT condition documented in L1 acquisition (see Section 5.1).

The results suggest that phonetic and phonological learning can be modeled simultaneously, without supervision, directly from what language-acquiring infants are exposed to: raw acoustic data. A GAN model trained on an allophonic distribution is successful in learning to generate acoustic outputs that contain this allophonic distribution (VOT duration). Additionally, the model outputs innovative data for which no evidence was available in the training data, allowing a direct comparison between human speech data and the GAN's generated output. As argued in Section 4.2, some outputs are consistent with human linguistic behavior and suggest that the model recombines individual sounds, resembling phonemic representations and productivity in human language acquisition (Section 5).

This paper also proposes a technique for establishing the Generator's internal representations. The inability to uncover networks' representations has been used as an argument against neural network approaches to linguistic data (among others in Rawski and Heinz, 2019). We argue that the internal representation of a network can be, at least partially, uncovered. By regressing annotated dependencies between the Generator's latent space and output data, we identify values in the latent space that correspond to linguistically meaningful features in generated outputs. This paper demonstrates that manipulating the chosen values in the latent space has phonetic effects in the generated outputs, such as the presence of [s] and the amplitude of its frication. In other words, the GAN learns to use random noise as an approximation of phonetic (and potentially phonological) representations. This paper proposes that dependencies, learned during training in a latent space that is limited by some interval, extend beyond this interval. This crucial step allows for the discovery of several phonetic properties that the model learns.

By modeling phonetic and phonological learning with neural networks without any language-specific assumptions, the paper also addresses a broader question of how many language-specific elements are needed in models of grammar and language acquisition. Most of the existing models require at least some language-specific devices, such as rules in rule-based approaches or pre-determined constraints with features and feature matrices in connectionist approaches. The model proposed here lacks language-specific assumptions (similar to the exemplar-based models). Comparing the performance of substance-free models with competing proposals and human behavior should result in a better understanding of what aspects of phonological grammar and acquisition are domain-specific (Section 5).

In the following, we first survey existing theories of phonological grammar and literature on computational approaches to phonology (Section 2). In Section 3, we present the model in Donahue et al. (2019) based on Radford et al. (2015) and provide acoustic and statistical analysis of the training data. The network's outputs are first acoustically analyzed and described in Sections 4.1 and 4.2. In Section 4.3, we present a technique for establishing the network's internal representations and test it with two generative tests. In Section 4.5, we analyze phonetic properties of the network's internal representations. Section 5 compares the outputs of the model with L1 acquisition, speech impairments, and speech errors.

2. Previous Work

In the generative tradition, phonological grammar derives surface phonetic outputs from phonological inputs (Chomsky and Halle, 1968). For example, /p/ is an abstract unit that can surface (be realized) with variations at the phonetic level. English /p/ is realized as aspirated [ph] (produced with a puff of air) word-initially before stressed vowels, but as unaspirated plain [p] (without the puff of air) if [s] immediately precedes it. This distribution is completely predictable and derivable with a simple rule (Iverson and Salmons, 1995), which is why the English phoneme /p/ as an abstract mental unit is unspecified for aspiration (or absence thereof) in the underlying representation (/ˈpɪt/ “pit” and /ˈspɪt/ “spit”). The surface phonetic outputs after the phonological derivation had taken place are [ˈphɪt] with the aspiration and [ˈspɪt] without the aspiration.

One of the main objectives of phonological theory is to explain how the grammar derives surface outputs, i.e., phonetic signals, from inputs, i.e., phonemic representations. Two influential proposals have been in the center of this discussion, the rule-based approach and Optimality Theory. The first approach uses rewrite rules (Chomsky and Halle, 1968) or finite state automata (Heinz, 2010; Chandlee, 2014) to derive outputs from inputs through derivation. A connectionist approach called Optimality Theory (Prince and Smolensky, 2004) and related proposals such as Harmonic Grammar and Maximum Entropy (MaxEnt) grammar (Legendre et al., 1990, 2006; Goldwater and Johnson, 2003; Wilson, 2006; Hayes and Wilson, 2008; Pater, 2009; Hayes and White, 2013; White, 2014, 2017), on the other hand, model phonological grammar as input-output pairing: the grammar chooses the most optimal output given an input. These models were heavily influenced by the early advances in neural network research (Alderete and Tupper, 2018a; Pater, 2019). Modeling linguistic data with neural networks has seen a rapid increase in the past few years (Alderete et al., 2013; Avcu et al., 2017; Kirov, 2017; Alderete and Tupper, 2018a; Dupoux, 2018; Mahalunkar and Kelleher, 2018; Weber et al., 2018; Prickett et al., 2019, for cautionary notes, see Rawski and Heinz, 2019). One of the promising implications of neural network modeling is the ability to test generalizations that the models produce without language-specific assumptions (Pater, 2019).

In opposition to the generative approaches, there exists a long tradition of usage-based models in phonology (Bybee, 1999; Silverman, 2017) which diverges from the generative approaches in some crucial aspects. Exemplar models (Johnson, 1997, 2007; Pierrehumbert, 2001; Gahl and Yu, 2006; Kaplan, 2017), for example, assume that phonetic representations are stored as experiences or exemplars. Grammatical behavior emerges as a consequence of generalization (or computation) over a cloud of exemplars (Johnson, 2007; Kaplan, 2017). In this framework, there is no direct need for a separate underlying representation from which the surface outputs are derived (or optimized). Several phenomena in phonetics and phonology have been successfully derived within this approach (for an overview, see Kaplan, 2017), and the framework allows phonology to be modeled computationally. The computational models often involve interacting agents learning some simplified phonetic properties (e.g., de Boer, 2000; Wedel, 2006; Kirby and Sonderegger, 2015).

The majority of existing computational models in phonology (including finite state automata, the MaxEnt model and the existing neural network methods) model learning as symbol manipulation and operate with discrete units—either with completely abstract made-up units or with discrete units that feature some phonetic properties that can be approximated as phonemes. This means that either phonetic and phonological learning are modeled separately or one is assumed to have already been completed (Martin et al., 2013; Dupoux, 2018). This is true for both proposals that model phonological distributions or derivations (Alderete et al., 2013; Futrell et al., 2017; Kirov, 2017; Prickett et al., 2019) and featural organizations (Faruqui et al., 2016; Silfverberg et al., 2018). The existing models also require strong assumptions about learning: underlying representations, for example, are pre-assumed and not inferred from data (Kirov, 2017; Prickett et al., 2019).

Most models in the subset of the proposals that operate with continuous phonetic data assume at least some level of abstraction and operate with already extracted features (e.g., formant values) on limited “toy” data (e.g., Pierrehumbert, 2001; Kirby and Sonderegger, 2015, for a discussion, see Dupoux, 2018). Guenther and Vladusich (2012), Guenther (2016) and Oudeyer (2001, 2002, 2005, 2006) propose models that use simple neural maps that are based on actual correlates of neurons involved in speech production in the human brain (based on various brain imaging techniques). Their models, however, do not operate with raw acoustic data (or require extraction of features in a highly abstract model of articulators; Oudeyer, 2005, 2006), require a level of abstraction in the input to the model, and do not model phonological processes—i.e., allophonic distributions. Phonological learning in most of these proposals is thus modeled as if phonetic learning (or at least a subset of phonetic learning) has already taken place: the initial state already includes phonemic inventories, phonemes as discrete units, feature matrices that have already been learned, or extracted phonetic values.

Prominent among the few models that operate with raw phonetic data are Gaussian mixture models for category-learning or phoneme extraction (Lee and Glass, 2012; Schatz et al., 2019). Schatz et al. (2019) propose a Dirichlet process Gaussian mixture model that learns categories from raw acoustic input in an unsupervised learning task. The model is trained on English and Japanese data and the authors show that the asymmetry in perceptual [l]~[r] distinction between English and Japanese falls out automatically from their model. The primary purpose of the proposal in Schatz et al. (2019) is modeling perception and categorization: they model how a learner is able to categorize raw acoustic data into sets of discrete categorical units that have phonetic values (i.e., phonemes). No phonological processes are modeled in the proposal.

A number of earlier works in the connectionist approach included basic neural network architectures to model mapping from some simplified phonetic space to the discrete phonological space (McClelland and Elman, 1986; Gaskell et al., 1995; Plaut and Kello, 1999; Kello and Plaut, 2003). Input to most of these models is not raw acoustic data (except in Kello and Plaut, 2003), but already extracted features. Learning in these models is also not unsupervised: the models come pre-specified with discretized phonetic or phonological units. None of the models are generative and do not model learning of phonological processes, but rather of classifying a simplified phonetic space with already available phonological elements.

Recently, neural network models for unsupervised feature extraction have seen success in modeling acquisition of phonetic features from raw acoustic data (Räsänen et al., 2016; Eloff et al., 2019; Shain and Elsner, 2019). The model in Shain and Elsner (2019), for example, is an autoencoder neural network that is trained on pre-segmented acoustic data. The model takes as input segmented acoustic data and outputs values that can be correlated to phonological features. Learning is, however, not completely unsupervised as the network is trained on pre-segmented phones. Thiollière et al. (2015) similarly propose an architecture that extracts units from unsupervised speech data. Other proposals for unsupervised acoustic analysis with neural network architecture are similarly primarily concerned with unsupervised feature extraction (Kamper et al., 2015). These proposals, however, do not model learning of phonological distributions, but only of feature representations, do not show a direct relationship between individual variables in the latent space and acoustic outputs (as in Section 4.4 and Figure 14), and crucially are not generative, meaning that the models do not output innovative data, but try to replicate the input as closely as possible (e.g., in the autoencoder architecture).

As argued below, the model based on a Generative Adversarial Network (GAN) learns not only to generate innovative data that closely resemble human speech, but also learns internal representations that resemble phonological learning with unsupervised phonetic learning from raw acoustic data. Additionally, the model is generative and outputs both the conditional allophonic distributions and innovative data that can be compared to productive outputs in human speech acquisition.

3. Materials

3.1. The Model: Donahue et al. (2019) Based on Radford et al. (2015)

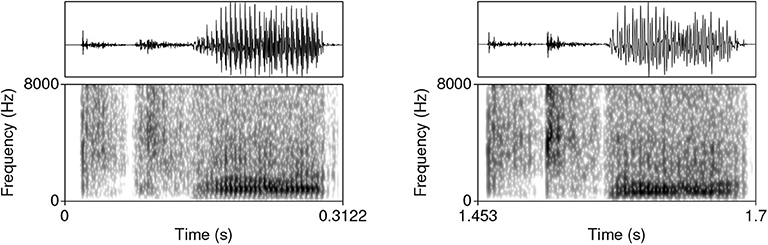

Generative Adversarial Networks, proposed by Goodfellow et al. (2014), have seen a rapid expansion in a variety of tasks, including but not limited to computer vision and image generation (Radford et al., 2015). The main characteristic of GANs is the architecture that involves two networks: the Generator network and the Discriminator network (Goodfellow et al., 2014). The Generator network is trained to generate data from random noise, while the Discriminator is trained to distinguish real data from the outputs of the Generator network (Figure 1). The Generator is trained to generate data that maximizes the error rate of the Discriminator network. The training results in a Generator (G) network that takes random noise as its input (e.g., multiple variables with uniform distributions) and outputs data such that the Discriminator is inaccurate in distinguishing the generated from the real data (Figure 1).

Figure 1. A diagram showing the Generative Adversarial architecture as proposed in Goodfellow et al. (2014) and Donahue et al. (2019), trained on data from the TIMIT database in this paper.

Applying the GAN architecture to time-series data such as a continuous speech stream poses several challenges. Recently, Donahue et al. (2019) proposed an implementation of a Deep Convolutional Generative Adversarial Network proposed by Radford et al. (2015) for audio data (WaveGAN); the model along with the code in Donahue et al. (2019) were used for training in this paper. The model takes 1 s long raw audio files as inputs, sampled at 16 kHz with 16-bit quantization. The audio files are converted into a vector and fed to the Discriminator network as real data. Instead of the two-dimensional 5 × 5 filters, the WaveGAN model uses one-dimensional 1 × 25 filters and larger upsampling. The main architecture is preserved as in DCGAN, except that an additional layer is introduced in order to generate longer samples (Donahue et al., 2019). The Generator network takes as input z, a vector of one hundred uniformly distributed variables () and outputs 16,384 data points, which constitutes the output audio signal. The network has five 1D convolutional layers (Donahue et al., 2019). The Discriminator network takes 16,384 data points (raw audio files) as its input and outputs a single value. The Discriminator's weights are updated five times per each update of the Generator. The initial GAN design as proposed by Goodfellow et al. (2014) trained the Discriminator network to distinguish real from generated data. Training such models, however, posed substantial challenges (Donahue et al., 2019). Donahue et al. (2019) implement the WGAN-GP strategy (Arjovsky et al., 2017; Gulrajani et al., 2017), which means that the Discriminator is trained “as a function that assists in computing the Wasserstein distance” (Donahue et al., 2019). The WaveGAN model (Donahue et al., 2019) uses ReLU activation in all but the last layer for the Generator network, and Leaky ReLU in all layers in the Discriminator network (as recommended for DCGAN in Radford et al., 2015). For exact dimensions of each layer and other details of the model, see Donahue et al. (2019).

3.2. Training Data

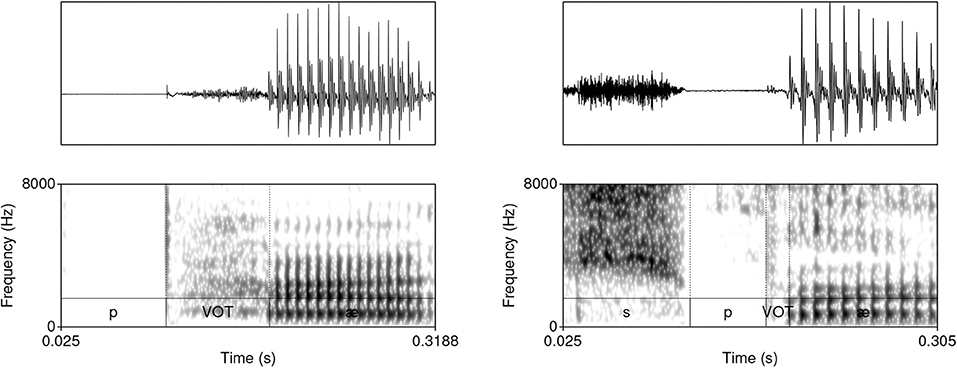

The model was trained on the allophonic distribution of voiceless stops in English. As already mentioned in Section 1, voiceless stops /p, t, k/ surface as aspirated (produced with a puff of air) [ph, th, kh] in English in word-initial position when immediately followed by a stressed vowel (Lisker, 1984; Iverson and Salmons, 1995; Vaux, 2002; Vaux and Samuels, 2005; Davis and Cho, 2006). If an alveolar sibilant [s] precedes the stop, however, the aspiration is blocked and the stop surfaces as unaspirated [p, t, k] (Vaux and Samuels, 2005). A minimal pair illustrating this allophonic distribution is [ˈphɪt] “pit” vs. [ˈspɪt] “spit.” The most prominent phonetic correlate of this allophonic distribution is the difference in Voice Onset Time (VOT) duration (Lisker and Abramson, 1964; Abramson and Whalen, 2017) between the aspirated and unaspirated voiceless stops. VOT is the duration between the release of the stop ([p, t, k]) and the onset of periodic vibration in the following vowel.

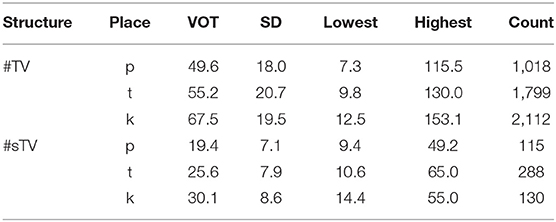

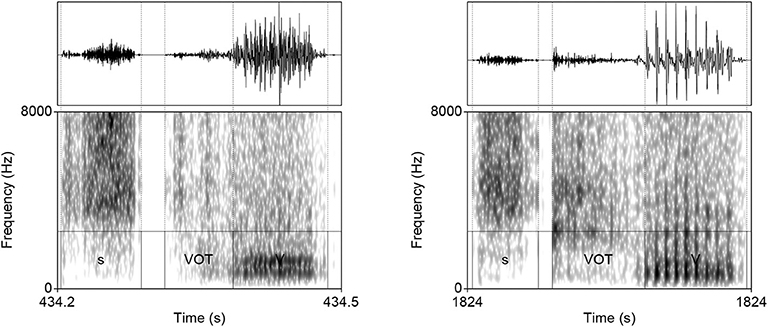

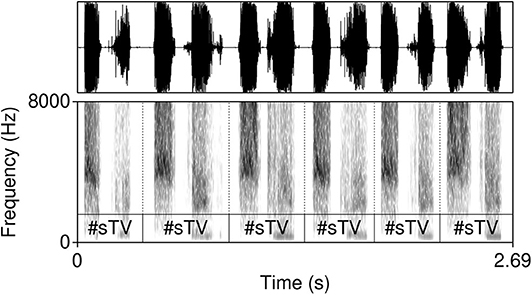

The model was trained on data from the TIMIT database (Garofolo et al., 1993)2. The corpus was chosen because it is one of the largest currently available hand-annotated speech corpora, the recording quality is relatively high, and the corpus features a relative high degree of variability. The database includes 6,300 sentences, 10 sentences per 630 speakers from 8 major dialectal areas in the US (Garofolo et al., 1993). The training data consist of 16-bit ·wav files with 16 kHz sampling rate of word initial sequences of voiceless stops /p, t, k/ (= T) that were followed by a vowel (#TV) and word initial sequences of /s/ + /p, t, k/, followed by a vowel (#sTV). The training data includes 4,930 sequences with the structure #TV (90.2%) and 533 (9.8%) sequences with the structure #sTV (5,463 total). Figure 2 illustrates typical training data: raw audio files with speech data, but limited to two types of sequences, #TV and #sTV. Figure 2 also illustrates that the duration of VOT depends on a condition that is not immediately adjacent in phonetic terms: the absence/presence of [s] is interrupted from the VOT duration by a period of closure in the training data. That VOT is significantly shorter if T is preceded by [s] in the training data is confirmed by a Gamma regression model: β = −0.84, t = −49.69, p < 0.0001 (for details, see Section 1, Supplementary Materials).

Figure 2. Waveforms and spectrograms (0−8, 000 Hz) of [phæ] (left) and [spæ] (right) illustrating typical training data with annotations from TIMIT. Only the raw audio data (in ·wav format) were used in training. The annotation illustrates a substantially longer duration of VOT in word-initial stops when no [s] precedes.

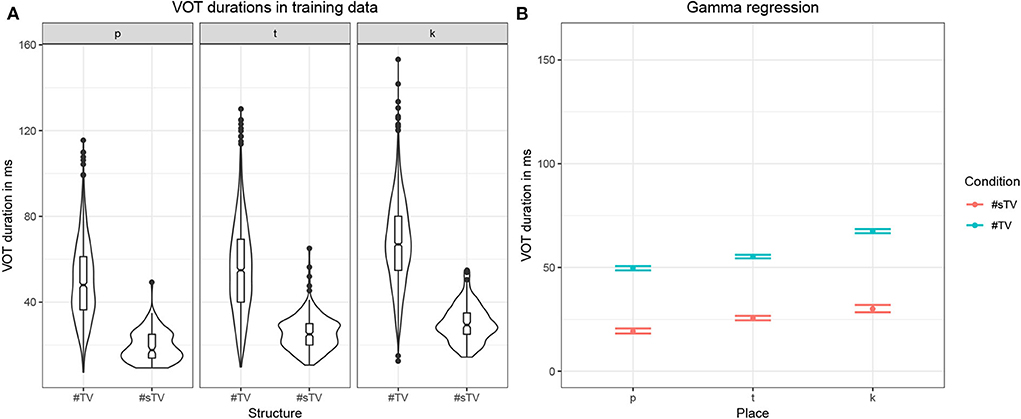

Both stressed and unstressed vowels are included in the training data. Including both stressed and unstressed vowels is desirable, as this condition crucially complicates learning and makes the task for the model more challenging as well as more realistic. Aspiration is less prominent in word-initial stops not followed by a stressed vowel. This means that in the condition #TV, the stop will be either fully aspirated (if followed by a stressed vowel) or unaspirated (if followed by an unstressed vowel). Violin plots in Figure 3 illustrate that aspiration of stops before an unstressed vowel can be as short as in the #sTV condition. In the #sTV condition, the stop is never aspirated. Learning of two conditions is more complex if the dependent variable in one condition can range across the variable in the other condition.

Figure 3. (A) Violin plots with box-plots of durations in ms of VOT in the training data based on two conditions: when word-initial #TV sequence is not preceded by [s] (#TV) and when it is preceded by [s] (#sTV) across the three places of articulation: [p], [t], [k]. (B) Fitted values of VOT durations with 95% confidence intervals from the Gamma (with log-link) regression model in Table S1 in Supplementary Materials.

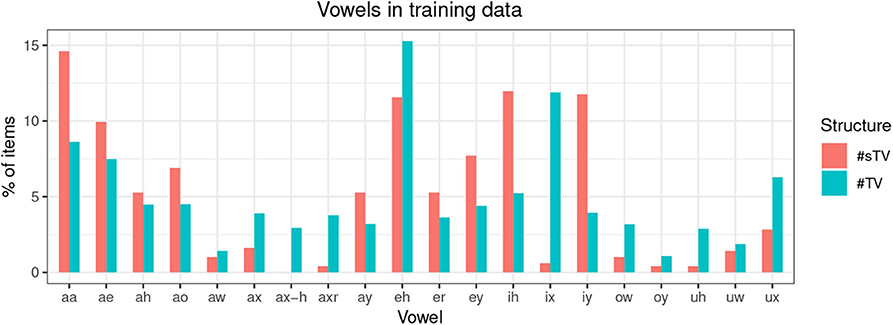

The training data is not completely naturalistic: #TV and #sTV sequences are sliced from continuous speech data. This, however, has a desirable effect. The primary purpose of this paper is to test whether a GAN model can learn an allophonic distribution from data that consists of raw acoustic inputs. If the entire lexicon was included in the training data, the distribution of VOT duration could be conditioned on some other distribution, not the one this paper is predominately interested in: the presence or absence of [s]. It is thus less likely that the distribution of VOT duration across the main condition of interest, the presence of [s], is conditioned on some other unwanted factor in the model precisely because of the balanced design of the training data. The only condition that can potentially influence learning is the distribution of vowels across the two conditions. Figure 4, however, shows that vowels are relatively equally distributed across the two conditions, which means that vowel identity likely does not influence the outcomes substantially. Finally, vowel duration (or the equivalent of speech rate in real data) and identity are not controlled for in the present experiment. To control for vowel duration, VOT duration would have to be modeled as a proportion of the following vowel duration. Several confounds that are not easy to address would be introduced, the main of which is that vowel identification is problematic for generated inputs with fewer training steps. Because the primary interest of the experiment is the difference in VOT durations between two groups (the presence and absence of [s]) and substantial differences in vowel durations (or speech rate) between the two groups are not expected, we do not anticipate the results to be substantially influenced by speech rate.

Figure 4. Distribution of training items according to vowel identity as described in TIMIT in ARPABET, where aa = ɑ, ae = æ, ah = ʌ, ao = ɔ, aw = aʊ, ax = ə, ax-h = , axr = ɚ, ay = aɪ, eh = ɛ, er = ɝ, ey = eɪ, ih = ɪ, ix = ɨ, iy = i, ow = oʊ, oy = oɪ, uh = ʊ, uw = u, ux = ʉ in the International Phonetic Alphabet.

4. Experiment

4.1. Training and Generation

The purpose of this paper is to model phonetic and phonological learning. For this reason, the data was generated and examined at different points as the Generator network was in the process of being trained. For the purpose of modeling learning, it is more informative to probe the networks with fewer training steps, which allows a comparison between the model's outputs and L1 acquisition (Section 5.1). Outputs of the network are analyzed after 12,255 steps (Section 4.2). The number of steps was chosen as a compromise between quality of output data and the number of epochs in the training. Establishing the number of training steps at which an effective acoustic analysis can be performed is at this point somewhat arbitrary. We generated outputs of the Generator model trained after 1,474, 4,075, 6,759, 9,367, and 12,255 steps and manually inspected them. The model trained after 12,255 steps was considered the first that allowed a reliable acoustic analysis based on quality of the generated outputs. It would be informative to test how accuracy of labeled data improves with training steps, but this is left for future work. The model was trained on a single NVIDIA K80 GPU. The network was trained at an approximate pace of 40 steps per 300 s. In Section 4.2, we present measurements of VOT durations in the #sTV and #TV conditions in the generated outputs and discuss linguistically interpretable innovative outputs that violate the training data. In Section 4.3.1, we propose a technique for recovering the Generator network's internal representations; in Section 4.4 we illustrate that manipulating these variables has a phonetically meaningful effect in the output data.

4.2. VOT Duration

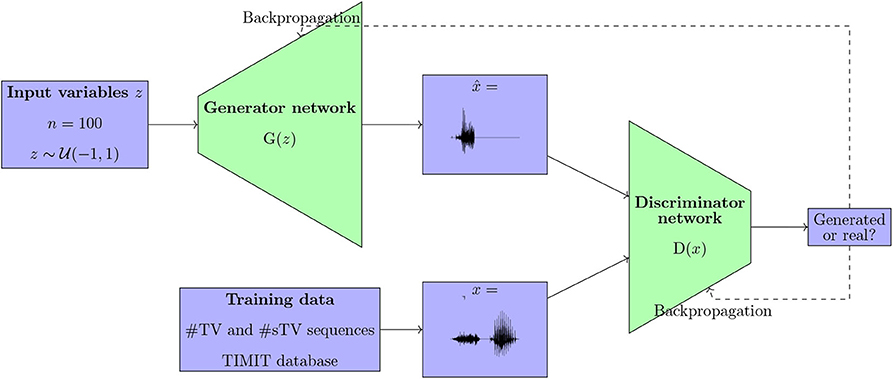

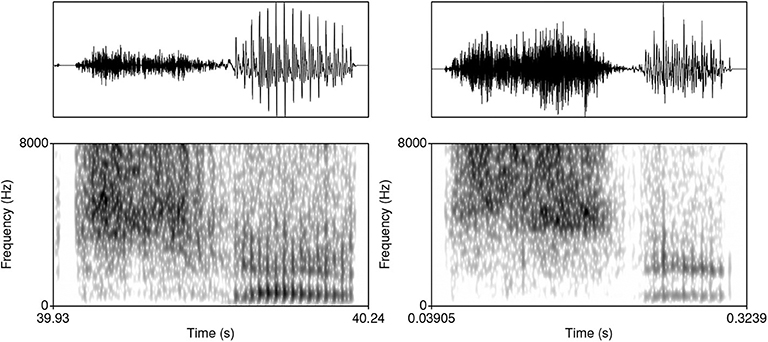

The Generator network after 12,255 steps (~ 716 epochs) generates acoustic data that appear close to actual speech data. Figure 5 illustrates a typical generated sample of #TV (left) and #sTV (right) structures with a substantial difference in VOT durations.

Figure 5. Waveforms and spectrograms (0–8,000 Hz) of typical generated samples of #TV (left) and #sTV (right) sequences from a Generator trained after 12,255 steps.

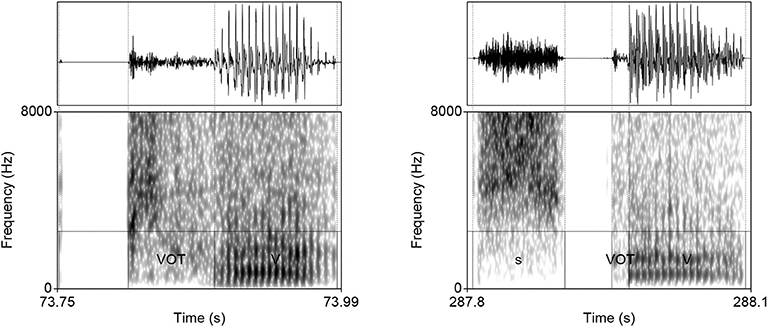

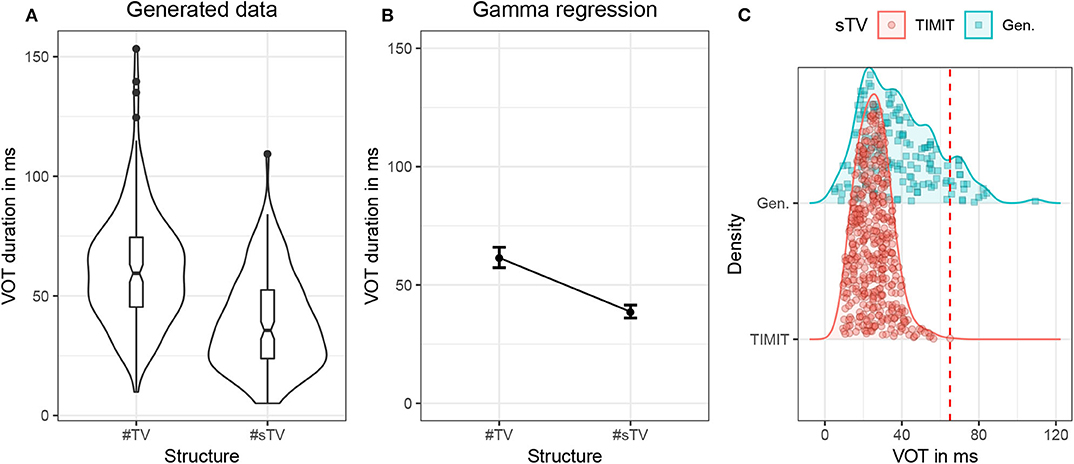

To test whether the Generator learns the conditional distribution of VOT duration, 2,000 samples were generated and manually inspected. First, VOT duration was manually annotated in all #sTV sequences. There were altogether 156 such sequences. To perform significance testing on a similar sample size, the first 158 sequences of the #TV structure were also annotated for VOT duration. VOT was measured from the release of closure to the onset of periodic vibration with clear formant structure. Altogether 314 generated samples were thus annotated. Only samples with structure that resembles real acoustic outputs and for which VOT could be determined were annotated. The proportion of inputs for which a clear #sTV or #TV sequence was not recognizable is relatively small: in only 8 of the first 175 annotated outputs (4.6%) was it not possible to estimate the VOT duration or whether the sequence is of the #TV or #sTV structure. Figure 6 shows the raw distribution of VOT durations in the generated samples that closely resembles the distribution in the training data (Figure 3).

Figure 6. (A) Violin plots with box-plots of durations in ms of VOT in the generated data based on two conditions: when word-initial #TV sequence is not preceded by [s] (#TV) and when it is preceded by [s] (#sTV). (B) Estimates of VOT duration based on fitted values with 95% confidence intervals across two conditions, #TV and #sTV in the generated data. (C) Duration (in ms) of VOT in the #sTV condition that compares the TIMIT training data (red circles) and generated outputs (green squares). The dashed line represents the longest VOT duration in the training data. The figure illustrates the proportion of outputs that violate the training data.

The results suggest that the network does learn the allophonic distribution: VOT duration is significantly shorter in the #sTV condition (β = −2.79, t = −78.34, p < 0.0001; for details of the statistical model, see Section 2, Supplementary Materials). Figure 6 illustrates estimates of VOT duration across the two conditions with 95% confidence intervals. The model, however, shows clear traces that the learning is imperfect and that the generator network fails to learn the distribution categorically. This is strongly suggested by the fact that VOT durations are substantially longer in the generated data compared to the training data. The difference in means between the #TV and #sTV conditions in the training data is 32.35 ms, while in the generated data the difference is 22.52 ms. The ratio between the two conditions in the training data is 2.34, while the generated data's ratio is 1.59.

Another aspect of generated data that also strongly suggests the learning is imperfect is the fact that the longest VOT durations in the #sTV condition in the generated data are substantially longer than the longest VOT durations in the training data, where the longest duration reaches 65 ms (see Table 1 and Figure 3). VOT in the generated data is in 19 out of 156 total #sTV sequences (or 12.2%) longer than 65.5 ms, the longest VOT in the training data. The longest three VOT durations in #sTV sequences are, for example, 109.35, 84.17, and 82.37 ms.

This generalization holds also in proportional terms. To control for the overall duration of segments in the output, we measure ratio of VOT duration and duration of the preceding [s] (i.e., thus controlling for “speech rate”). The highest ratio between the VOT duration and the duration of preceding [s] () in the training data is 0.773, which appears in an acoustically very different token compared to the generated outputs. The ratio in all other tokens in the training data are even lower, below 0.69. Several values of the ratios between VOT and [s] duration in the generated data are substantially higher compared to the training data. In the three outputs with longest absolute duration of VOT, the ratios are 1.91, 1.40, and 0.89. Other high ratios measured include, for example, 1.79, 1.72, 1.60, 1.50, 1.46. Figure 7 shows two such cases. It is clear that the generator fails to reproduce the conditioned durational distribution from the training data in these particular cases. In other words, while the Generator learns to output significantly shorter VOT durations when [s] is present in the output, it occasionally (in approximately 12.2% of cases) fails to observe this generalization and outputs a long VOT in the #sTV condition which is longer than any VOT duration in the #sTV condition in the training data. As will be argued in Section 5.1, the outcomes of this imperfect learning closely resemble L1 acquisition.

Figure 7. Waveforms and spectrograms (0–8,000 Hz) of two generated outputs of #sTV sequences in which the stop has longer VOT than any VOT in #sTV condition in the training data.

Longer VOT duration in the #sTV condition in the generated data compared to training data is not the only violation of the training data that the Generator outputs and that resembles linguistic behavior in humans. Among approximately 3,000 generated samples analyzed, we observe generated outputs that feature only frication noise of [s] and periodic vibration of the following vowel, but lack stop elements completely (e.g., closure and release of the stop). In other words, the generator occasionally outputs a linguistically valid and innovative #sV sequence for which no evidence was available in the training data. Such innovative sequences in which the segments are omitted or inserted are rare compared to innovative outputs with longer VOT—approximately two per 3,000 inspected cases (but the overall rate of outputs that are acoustically difficult to analyze is also small: 4.6%). All sequences containing [s] from the training data were manually inspected by the author and none of them contain a #sV sequence without a period of closure and VOT. The minimal duration of closure in #sTV sequences in the training data is 9.2 ms, and the minimal duration of VOT is 9.4 ms. Aspiration noise in stops that resembles frication of [s] and homorganic sequences of [s] followed by an alveolar stop [t] (#stV) are occasionally acoustically similar to the sequence without the stop (#sV) due to similar articulatory positions or because frication noise from [s] carries onto the homorganic alveolar closure which can be very short. Such data points in the training data can serve as the basis for the innovative output #sV. However, there is a clear fall and a second rise of noise amplitude after the release of the stop in #stV sequences. Figure 8 shows two cases of the Generator network outputting an innovative #sV sequence without any stop-like fall of the amplitude, for which no direct evidence exists in the training data.

Figure 8. Waveforms and spectrograms (0–8,000 Hz) of two generated outputs of the shape #sV. The sample on the left was generated after 16,715 steps.

Similarly, the Generator occasionally outputs a sequence with two stops and a vowel (#TTV). One potential source of such innovative sequences might be residual noise that is sometimes present during the period of closure in the TIMIT database. However, residual noise in the training data differs substantially from a clear aspiration noise in the generated #TTV sequences. Figure 9 illustrates two generated examples in which the vocalic period is preceded by two bursts, two periods of aspiration and a short period of silence between the aspiration noise of the first consonant and the burst of the second consonant that corresponds to closure of the second stop4. Spectrograms show the distribution of energy differs across the two bursts and aspiration noises, suggesting that the output represents a heterogranic cluster [pt] followed by a vowel.

Measuring overfitting is a substantial problem for Generative Adversarial Networks with no consensus on the most appropriate quantitative approach to the problem (Goodfellow et al., 2014; Radford et al., 2015). The risk with overfitting in a GAN architecture is that the Generator network would learn to fully replicate the input5. The best evidence against overfitting is precisely the fact that the Generator network outputs samples that substantially violate data distributions (Figures 7–9)6.

4.3. Establishing Internal Representations

Establishing what and how neural networks learn is a challenging task (Lillicrap and Kording, 2019). Exploration of latent space in the GAN architecture has been performed before (Radford et al., 2015; Donahue et al., 2017; Lipton and Tripathi, 2017), but to the author's knowledge, most previous work did not focus on discovering meaningful values (phonetic correlates) of each variable and has not fully used the potential to extend those variables to values well outside the training range (15 or 25). Below, we propose a technique for uncovering dependencies between the network's latent space and generated data based on logistic regression. We first use regression estimates to identify variables with a desired effect on the output by correlating the outputs of the Generator with its corresponding input variables that are uniformly distributed with an interval (−1, 1) during training. We then Generate outputs by setting the identified latent variables to values well beyond the training range (to 4.5, 15, or 25). This method has the potential to reveal the underlying values of latent variables and shed light on the network's internal representations. Using the proposed technique, we can estimate how the network learns to map from latent space to phonetically and phonologically meaningful units in the generated data.

To identify dependencies between the latent space and generated data, we correlate annotations of the output data with the variables in the latent space (in Section 4.3.1). As a starting point, we choose to identify correlates of the most prominent feature in the training data: the presence or absence of [s]. Any number of other phonetic features can be correlated with this approach (for future directions, see Section 6); applying this technique to other features and other alternations should yield a better understanding of the network's learning mechanisms. Focusing on more than the chosen feature, however, is beyond the scope of this paper.

4.3.1. Regression

First, 3,800 outputs from the Generator network were generated and manually annotated for the presence or absence of [s]. 271 outputs (7.13%) were annotated as involving a segment [s] which is similar to the percentage of data points with [s] in the training data (9.8%). Frication that resembled [s]-like aspiration noise after the alveolar stop and before high vowels was not annotated as including [s]7. Innovative outputs such as an #[s] without the following vowel or #sV sequences were annotated as including an [s].

The annotated data together with values of latent variables for each generated sample (z) were fit to a logistic regression generalized additive model (using the mgcv package; Wood, 2011 in R Core Team, 2018) with the presence or absence of [s] as the dependent variable (binomial distribution of successes and failures) and smooth terms of latent variables (z) as predictors of interest (estimated as penalized thin plate regression splines; Wood, 2011). Generalized additive models were chosen in order to avoid assumptions of linearity: it is possible that latent variables are not linearly correlated with features of interest in the output of the Generator network. The initial full model (FULL) includes smooths for all 100 variables in the latent space that are uniformly distributed within the interval (−1, 1) as predictors.

The models explored here do not serve for hypothesis testing, but for exploratory purposes: to identify variables, the effects of which are tested with two independent generative tests (see Sections 4.3.2 and 4.3.3). For this reason, several strategies to reduce the number of variables in the model with different shrinkage techniques are explored and compared: the latent variables for further analysis are then chosen based on combined results of different exploratory models.

First, we refit the model with modified smoothing penalty (MODIFIED), which allows shrinkage of the whole term (Wood, 2011). Second, we refit the model with original smoothing penalty (SELECT), but with an additional penalty for each term if all smoothing parameters tend to infinity (Wood, 2011). Finally, we identify non-significant terms by Wald test for each term (using anova.gam() with α = 0.05) and manually remove them from the model (EXCLUDED). 38 predictors are thus removed.

The estimated smooths appear mostly linear (Figure 11). We also fit the data to a linear logistic regression model (LINEAR) with all 100 predictors. To reduce the number of predictors, another model is fit (LINEAR EXCLUDED) with those predictors removed that do not improve fit (based on the AIC criterion when each predictor is removed from the full model). 23 predictors are thus removed. The advantage of the linear model is that predictors are parametrically estimated8.

While the number of predictors in the models is high even after shrinkage or exclusion, there is little multicollinearity in the data as the 100 variables are randomly sampled for each generation. The highest Variance Inflation Factor in the linear logistic regression models (LINEAR and LINEAR EXCLUDED) estimated with the vif() function (in the car package; Fox and Weisberg, 2019) is 1.287. All concurvity estimates in the non-linear models are below 0.3 (using concurvity() in Wood, 2011). While the number of successes per predictor is relatively low, it is unlikely that more data would yield substantially different results (as will be shown in Sections 4.3.2 and 4.3.3, the model successfully identifies those values that have direct phonetic correlates in the generated data).

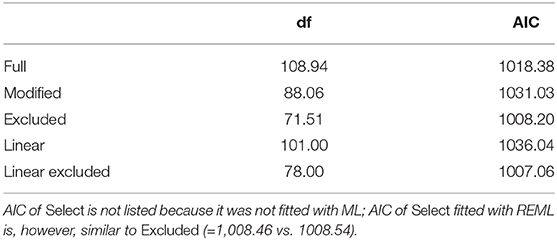

Six models are thus fit in an exploratory method to identify variables in the latent space that predict the presence of [s] in generated outputs. Table 2 lists AIC for each model. The LINEAREXCLUDED model has the lowest AIC score. All six models, however, yield similar results. For further tests based on Lasso regression and Random Forest models that also yield similar results, see Section 3 (Supplementary Materials).

Table 2. AIC values of five fitted models with corresponding degrees of freedom (df), fitted with Maximum Likelihood.

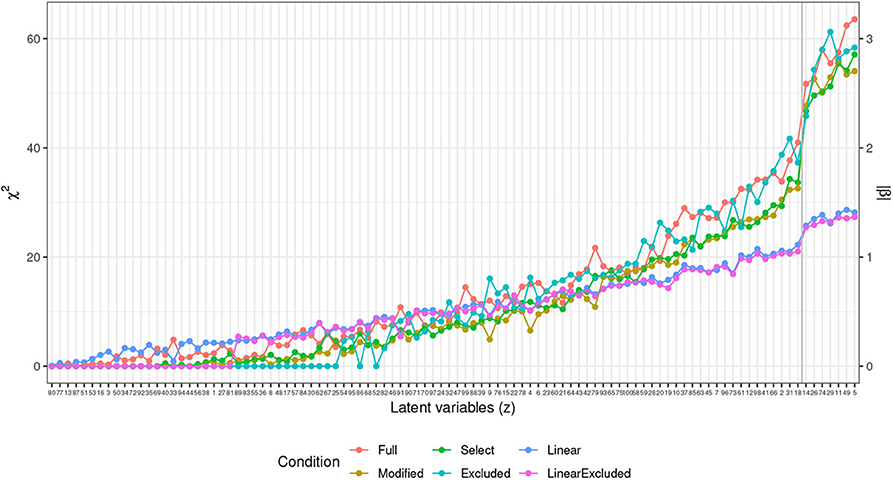

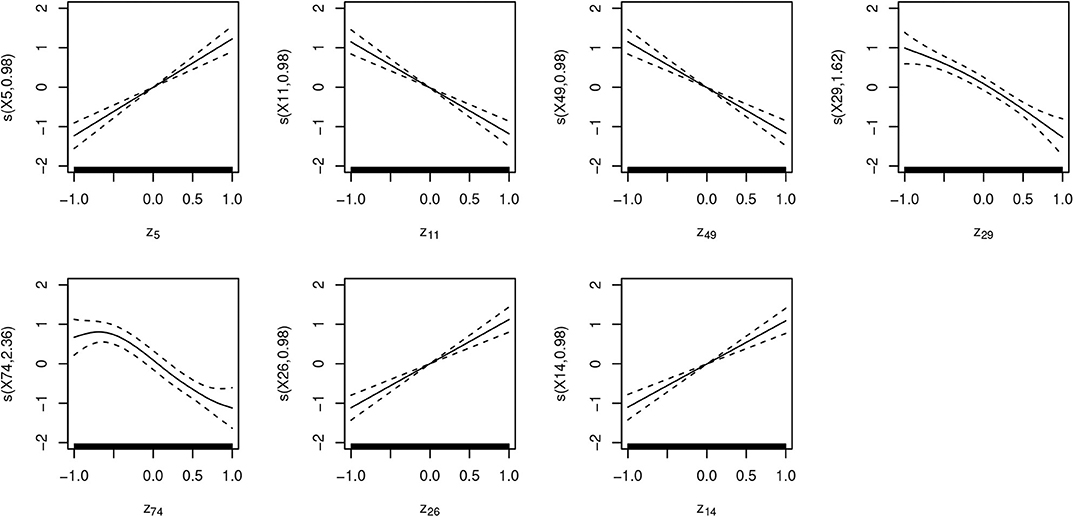

To identify the latent variables with the highest correlation with [s] in the output, we extract χ2 estimates for each term from the generalized additive models and estimates of slopes (β) from the linear model. Figure 10 plots those values in a descending order. The plot points to a substantial difference between the highest seven predictors and the rest of the latent space. Seven latent variables are thus identified (z5, z11, z49, z29, z74, z26, z14) as potentially having the largest effect on the presence or absence of [s] in output. Figure 11 plots smooths of the seven predictors (z5, z11, z49, z29, z74, z26, z14) from a non-linear model SELECT. The smooths show a linear or near-linear relationship between values of the chosen seven variables and the probability of [s] in the output.

Figure 10. Plot of χ2 values (left scale) for the 100 predictors across the four generalized additive models. For the two linear models (LINEAR and LINEAR EXCLUDED), estimates of slopes in absolute values (|β|) are plotted (right scale). The blue vertical line indicates the division between the seven chosen predictors and the rest of the predictor space with a clear drop in estimates between the first seven values (z5, z11, z49, z29, z74, z26, z14) and the rest of the space.

Figure 11. Plots of seven smooth terms with highest χ2 values in a generalized additive logistic regression model with all 100 latent variables (z) as predictors, estimated with penalty for each term (SELECT). Many of the predictors show linear correlation, which is why a linear logistic regression outputs similar estimates.

Several methods for finding the features that predict the presence or absence of [s] are thus used. Logistic regression is presented here because it is the simplest and easiest to interpret. In future work, a combination of techniques is recommended to be used for exploratory purposes in a similar way as proposed in this paper. Below, we conduct two independent generative tests to evaluate whether the proposed technique indeed identifies variables that correspond to presence of [s] in the output.

4.3.2. Generative Test 1

To conduct an independent generative test of whether the chosen values correlate with [s] in the output data of the Generator network, we set values of the seven identified predictors (z5, z11, z49, z29, z74, z26, z14) to the marginal value of 1 or −1 (depending on whether the correlation is positive or negative; see Figure 11) and generated 100 outputs. Altogether seven values in the latent space were thus manipulated, which represents only 7% (7/100) of all latent variables. Of the 100 outputs with manipulated values, 73 outputs included an [s] or [s]-like element, either with the stop closure and vowel or without them. The rate of outputs that contain [s] is thus significantly higher when the seven values are manipulated to the marginal levels compared to randomly chosen latent space. In the output data without manipulated values, only 271 out of 3,800 generated outputs (or 7.13%) contained an [s]. The difference is significant [].

High proportions of [s] in the output can be achieved with manipulation of single latent variables, but the values need to be highly marginal, i.e., extend well beyond the training space. Setting the z11 value outside the training interval to −15, for example, causes the Generator to output [s] in 87 out of 100 generated (87%) sequences, which is again significantly more than with randomly distributed input latent variables []. When z11 is −25, the rate goes up to 96 out of 100, also significantly different from random inputs [].

4.3.3. Generative Test 2

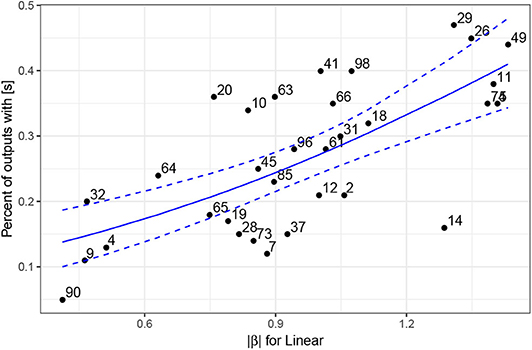

To further confirm that the regression models identify the variables involved with the presence of [s] in generated outputs, another generative experiment was conducted. In addition to manipulating the seven identified variables, we test the effect of other variables in the latent space on the presence of [s] in the output. If the regression estimates provide reliable information, the variables with higher estimates should have more of an effect on the presence of [s] in the output and vice versa. Testing the entire latent space would be too expensive, which is why we limit our tests to 25 variables with highest estimates from the regression models (which includes the seven chosen variables) and six additional variables with descending regression estimates. Altogether 31/100 variables or 31% of the latent variables are thus analyzed. The variables were chosen in the following way: first, we manipulate values of the first 25 variables with the highest estimates based on regression models in Figure 10 (7 chosen variables plus additional 18 variables for a total of 25). Because we want to test the effects of the latent variables as evenly as possible and also to test the effects of variables with the lowest regression estimates, we picked 6 additional variables that are distanced from the 25th highest variable in increments of 5 (random choice of variables might miss the variables with lowest estimates).

To perform the generative test of the correlation between the latent space and the proportion of [s] in the output, we set each of the 31 variables at a time to a marginal level well beyond the training interval (to ±4.5), while keeping the rest of the latent space randomly sampled, but constant. In other words, all variables are sampled randomly and held constant across all samples, with the exception of the variable in question at a time that is set to ±4.5. The ±4.5 value was chosen based on manual inspection of generated samples: as is clear from Figure 15, changes in amplitude of [s] become increasingly smaller when variables have a value greater than ±3.5. For effects of values beyond 4.5, see Figure 12.

Figure 12. Seven waveforms and spectrograms (0–8,000 Hz) of outputs from the Generator network with the value of z11 set at −25. In 96 out of 100 generated samples, the network outputs a sequence containing an [s]. With such a low value of z11 (that correlates with amplitude of frication noise), the amplitude of the frication noise reaches the maximum level of 1 in all outputs with [s].

One hundred outputs are generated for each of the 31 manipulated latent variables. Altogether 31 × 100 (3,100) outputs were thus analyzed and annotated for the presence or absence of [s] in the output. For example, when the effect of latent variable z11 on the proportion of [s] in the output is tested, we set its value to −4.5 while keeping other variables random. One-hundred samples are generated in which the other 99 variables are randomly distributed with the exception of the z11 variable (which is set at the marginal level). Samples are annotated for the presence or absence of [s] and the proportion of [s] in the output is calculated from the number of samples with [s] divided by the number of all samples. The same procedure is applied to the other 30 variables examined. To control for the unwanted effects of the latent space on the output, all 99 other variables with the exception of the one manipulated are kept constant across all 31 samples. The 31 data points of this proportion are thus the dependent variable in regression models (Figure 13) that test the correlation between the identified variables and [s] in the output.

Figure 13. Plot of absolute values of estimates from the Linear model for 31 analyzed latent variables z (numbered on the plot) and the percent of outputs that contain an [s] based on 100 generated samples. The blue solid line represents predicted values based on the beta regression model with estimates of the Linear model as the predictor; the dashed lines represent 95% confidence intervals.

A beta regression model with the proportion of [s] as the dependent variable and with estimates of the Linear model as the independent variable suggests that there exists a significant linear correlation between the estimates of the regression model and the actual proportion of generated outputs with [s]: β = 1.44, z = 5.07, p < 0.0001 (for details on model selection, see Section 4, Supplementary Materials). In other words, the technique for identifying latent variables that correlate with the presence of [s] in the output based on regression models (in Figure 10) successfully identifies such variables. This is confirmed independently: the proportion of generated outputs containing an [s] correlates significantly with its estimates from the regression models.

4.3.4. Interpretation

The regression models in Figure 10 identify those z-variables in the latent space that have the largest effect on the presence of [s] in the output. Figure 13 confirms that the generator outputs significantly higher proportions of [s] for some variables, while other variables have no effect on the presence of [s]. In other words, variables with lower regression estimates do not affect the proportion of [s] in the output. The proportion of [s] in the output when variables such as z90, z9, z4, z7 are manipulated is very close to the 7.13% of [s] in the output when all z-variables in the latent space are random. It thus appears that the Generator uses portions of the latent space to encode the presence of [s] in the output.

Some latent variables cause a high proportion of [s] in the output despite the regression model estimating their contribution lower than the seven identified latent variables (Figure 13) and vice versa. Outputs for variable z14 contain frication noise that falls between [s] and [s]-like aspiration, which were difficult to classify (also, the target for [s]-like outputs in this variable is closer to 2.5). The two variables with the highest proportion of [s] in the output that are estimated substantially lower than the seven variables are z41 and z98. There is a clear explanation for the discrepancy of the regression estimates and the rates of [s]-outputs for such variables. While outputs at the marginal values of the two variables (at ±4.5) do indeed contain a high proportion of [s]-outputs, the frication noise ceases during the (−1, 1) interval on which the model is trained. Because the regression model only sees the training interval (−1, 1) (annotations fed to the regression models are performed on this interval) and does not access outputs with variables outside of this interval, the estimates are consequently lower than the outputs at the marginal levels for these variables. There are only a handful of such variables, and since we are primarily interested in those variables that correspond to [s] both within the training interval and outside of it, we focus our analysis below on the seven variables identified in Section 4.4. The problem with variables in which [s]-outputs are present predominantly outside of the training interval is the possibility that the [s]-output in these types of cases is secondary/conditioned on some other distribution, because it was likely not encoded in the training stage.

While there is a consistent drop in estimates of the regression models after the seven identified variables (Figure 10) and while several independent generation tests confirm that the seven variables have the strongest effect on the presence of [s] in the output, the cutoff point between the seven variables and the rest of the latent space is still somewhat arbitrary. It is likely that other latent variables directly or indirectly influence the presence of [s] as well: the learning at this point is not yet categorical and several dependencies not discovered here likely affect the results. Nevertheless, further explorations of the latent space suggest the variables identified with the logistic regression (and other) models (Figure 10) are indeed the main variables involved with the presence or absence of [s] in the output.

Additionally, if at the value of z that so substantially exceeds the training interval (±4.5) the latent variable does not influence the outcomes substantially and only marginally increases the proportion of [s]-outputs, as is the case for the majority of the latent variables outside of the seven chosen ones, it is likely that its correlation with [s] in the output is secondary and that the variable does not contribute crucially to the presence of [s].

4.4. Interpolation and Phonetic Features

Fitting the annotated data and corresponding latent variables from the Generator network to generalized additive and linear logistic regression models identifies values in the latent space that correspond to the presence of [s] in the output. As will be shown below, this is not where exploration of the Generator's internal representations should end. We explore whether the mapping between the uniformly distributed input z-variables and the Generator's output signal that resembles speech can be associated with specific phonetic features in that output. The crucial step in this direction is to explore values of the latent space and their phonetic correlates in the output beyond the training interval, i.e., beyond (−1, 1). We observe that the Generator network, while being trained on latent space limited to the interval (−1, 1), learns representations that extend this interval. Even if the input latent variables (z) exceed the training interval, the Generator network outputs samples that closely resemble human speech. Furthermore, the dependencies learned during training extend outside of the (−1, 1) interval. As is argued in Section 4.5, exploring phonetic properties at these marginal values has the potential to reveal the actual underlying function of each latent variable.

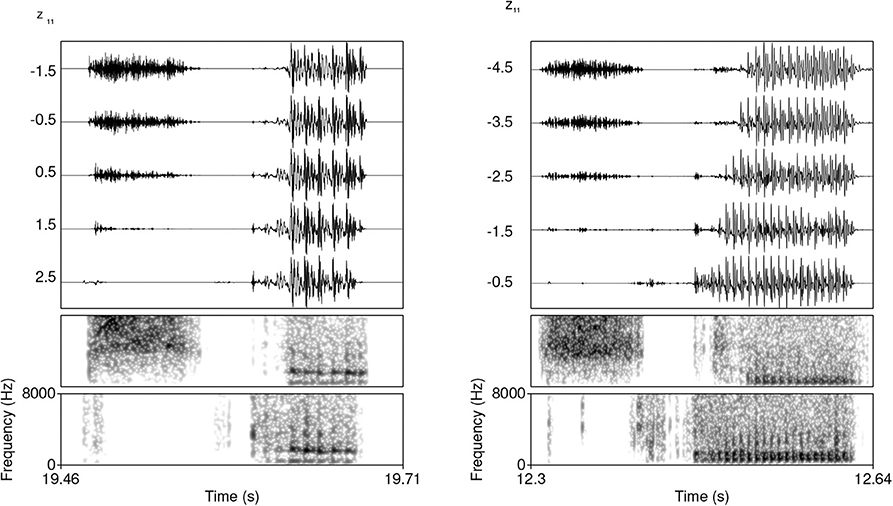

To explore phonetic correlates of the seven latent variables, we set each of the seven variables separately to the marginal value −4.5 and interpolate to its opposite marginal value 4.5 in 0.5 increments, while keeping randomly-sampled values of the other 99 latent variables z constant. Again, the ±4.5 value was chosen based on manual inspection of generated samples: amplitude of [s] ceases to change substantially past values around ±3.5 (Figure 15). Seven sets of generated samples are thus created, one for each of the seven z values: z5, z11, z14, z26, z29, z49, and z74 (with the other 99 z-values randomly sampled, but kept constant for all seven manipulated variables). Each set contains a subset of 19 generated outputs that correspond to the interpolated variables from −4.5 to 4.5 in 0.5 increments. Twenty-nine such sets that contained an [s] in at least one set are extracted for analysis (sets that lack an [s] were not analyzed).

A clear pattern emerges in the generated data: the latent variables identified as corresponding to the presence of [s] via regression (Figure 10) have direct phonetic correlates and cause changes in amplitude and the presence/absence of frication noise of [s] when each of the seven values in the latent space are manipulated to the chosen values, including values that exceed the training interval. In other words, by manipulating the identified latent variables, we control the presence/absence of [s] in the output as well as the amplitude of its frication noise.

Figure 14 illustrates this effect. Frication noise of [s] gradually decreases by increasing the value of z11 until it completely disappears from the output. The exact value of z11 for which the [s] disappears differs across examples and likely interacts with other features. It is possible that frication noise in the training has a higher amplitude in some conditions, which is why such cases require a higher magnitude of manipulation of z11. The figure also shows that as the frication noise of [s] disappears, aspiration of a stop in what appear to be #TV sequences starts surfacing and replaces the frication noise of [s]. Occasionally, frication noise of [s] gradually transforms into aspiration noise. The exact transformation is likely dependent on the 99 other z-variables held constant and their underlying phonetic effects. Regardless of these underlying phonetic effects, manipulating the chosen variables has a clear effect of causing [s] to appear in the output and controlling its amplitude.

Figure 14. Waveforms and two spectrograms (both 0−8, 000 Hz) of generated data with z11 variable manipulated and interpolated. The values on the left of waveforms indicate the value of z11. The two spectrograms represent the highest and the lowest value of z11. A clear attenuation of the frication noise is visible until complete disappearance.

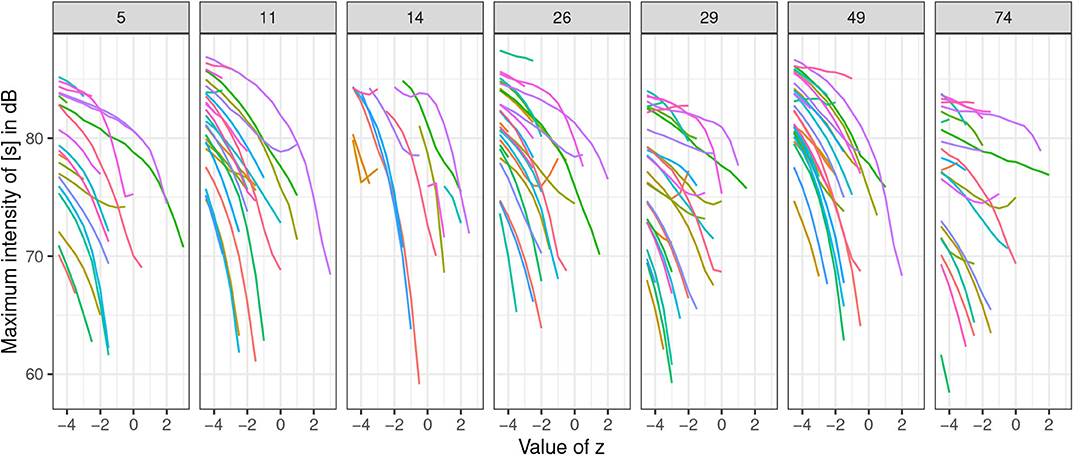

To test the significance of the effects of the seven identified features on the presence of [s] and the amplitude of its frication noise, the 29 generated sets of 19 outputs (with z-value from −4.5 to 4.5) for each of the seven variables were analyzed. The outputs were manually annotated for [s] and the following vowel. Outputs gradually change from #sTV to #TV. Only sequences containing an [s] were analyzed; as soon as [s] stops in the output, annotations were stopped and the outputs were not further analyzed. Altogether 161 trajectories were thus annotated; the total number of data points measured is 1,088 because each trajectory contains a number of measurements of the interpolated values of z. For each datapoint, maximum intensity of the fricative and maximum intensity of the vowel were extracted in Praat (Boersma and Weenink, 2015) with a 13.3 ms window length (with parabolic interpolation)9. Figure 15 illustrates how manipulating the values of z of the chosen variables from the marginal value ±4.5 decreases frication noise in the output until [s] is completely absent.

Figure 15. Plots of maximum intensity (in dB) of the fricative part in #sTV sequences when values of the seven z-variables are interpolated from the marginal values ±4.5 in 0.5 increments. Each set of generated samples with the randomly sampled latent variables held constant is colored with the same color across the seven z-variables. Values of z5, z14, and z26 are inverted for clarity purposes.

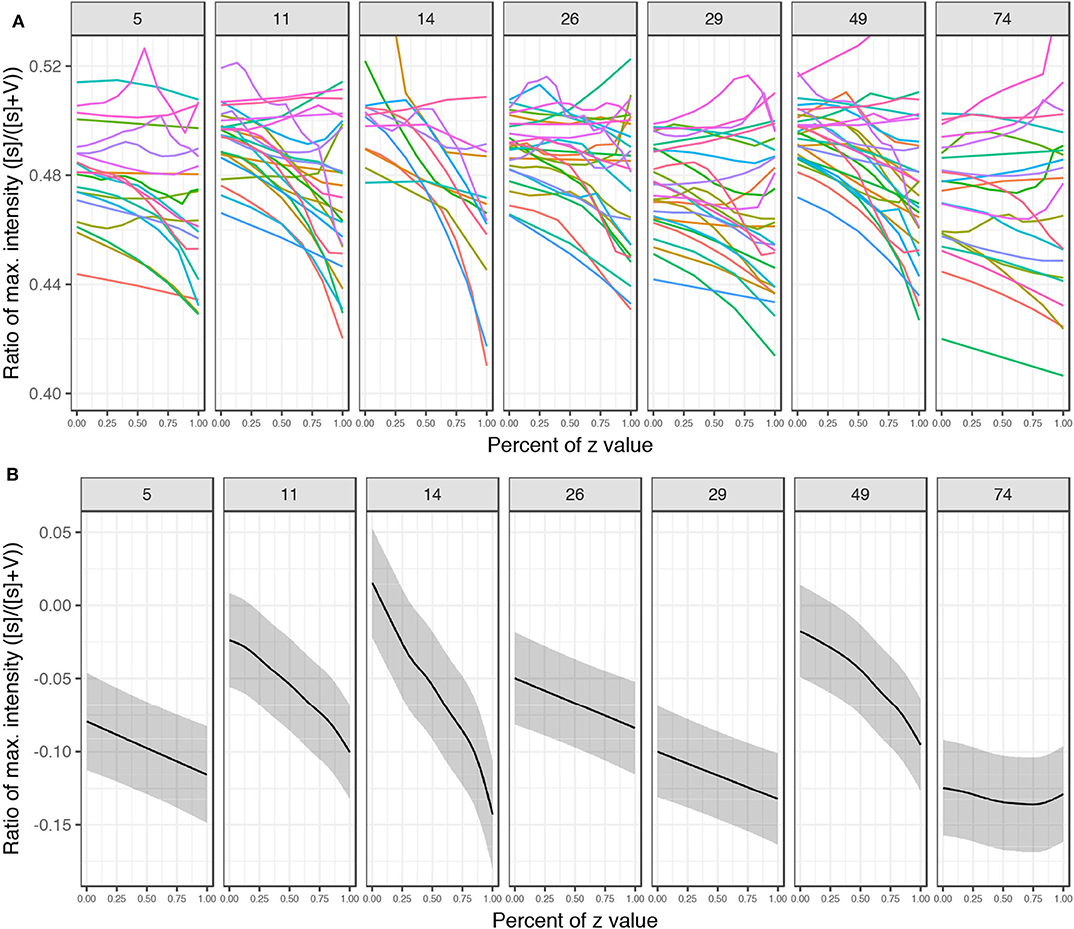

To test whether the decreased frication noise is not part of a general effect of decreased amplitude, we perform significance tests on the ratio of maximum intensity between the frication noise of [s] and the following vowel in the #sTV sequences. Figure 16 plots the ratio of maximum intensity of the fricative divided by the sum of two maximum intensities: of the fricative ([s]) and of the vowel (V). The manipulated z-values are additionally normalized to the interval [0,1], where 0 represents the most marginal value with [s] (usually ±4.5; referred to as STRONG henceforth) and 1 represents the last value before [s] disappears (WEAK). Note that the point at which [s] is not present in the output anymore, but the vowel still surfaces (which would yield the ratio at 0) is not included in the model.

Figure 16. (A) Plots of ratios of maximum intensity between the frication of [s] and phonation of the vowel in #sTV sequences across the seven variables. The interpolated values are normalized where 0 represents the most marginal value of z with [s] in the output and 1 represents the value of z right before [s] ceases from the output. Four marginal values are left out from the plot (but are included in the models). Each set of generated samples with the randomly sampled latent variables held constant is colored with the same color across the seven z-variables. (B) Predicted values with 95% CIs of the ratio based on beta regression generalized additive model (Table S3, Supplementary Materials) across the several variables with normalized values.

The data were fit to a beta regression generalized additive mixed model (in the mgcv package; Wood, 2011) with the ratio as the dependent variable, the seven chosen variables as the parametric term, thin-plate smooths for each variable and random smooths (with first order of penalty; Baayen et al., 2016; Sóskuthy, 2017) for (i) trajectory and for (ii) value of other variables in the latent space of the Generator network. Figure 16 plots the normalized trajectories of the ratio and predicted values based on the generalized additive model. All smooths (except for z74) are significantly different from 0 (all coefficients in Table S3, Supplementary Materials) and the plots show a clear negative trajectory. In other words, maximum intensity of [s] is increasingly attenuated compared to the intensity of the vowel as z approaches the opposite value from the one identified as predicting the presence of [s] until it completely disappears from the output.

The seven variables thus strongly correspond to the presence or absence of [s] in the output; by manipulating the chosen variables to the identified values we can attenuate frication noise of [s] and cause its presence or complete disappearance in the generated data. Again, the discovery of these features is possible because we extend the initial training interval and test predictions on marginal values. In Section 4.5, we analyze further phonetic correlates of each of the seven variables.

4.5. Phonetic Values of Latent Variables

Interpolation of latent variables reveals that the presence of [s] is not controlled by a single latent variable, but by at least seven of them. Additionally, there appears to be no categorical cut-off point in the magnitude of the effect between the variables, only a steep drop of regression estimates (Figure 10) and a decline of outputs with [s] in generated data (Figure 13). This suggests that the learning at this stage is gradient and probabilistic rather than fully categorical.

The different latent variables that correspond to the presence of [s], however, are not phonetically vacuous: individually, they have distinct phonetic correspondences. The generated samples reveal that the variables' secondary effect (besides outputting [s] and controlling its intensity) are likely spectral properties of the frication noise. The seven variables are thus similar in the sense that manipulation of their values results in the presence of [s] by controlling its frication noise. They crucially differ, however, in the effects on the spectral properties of the outputs.

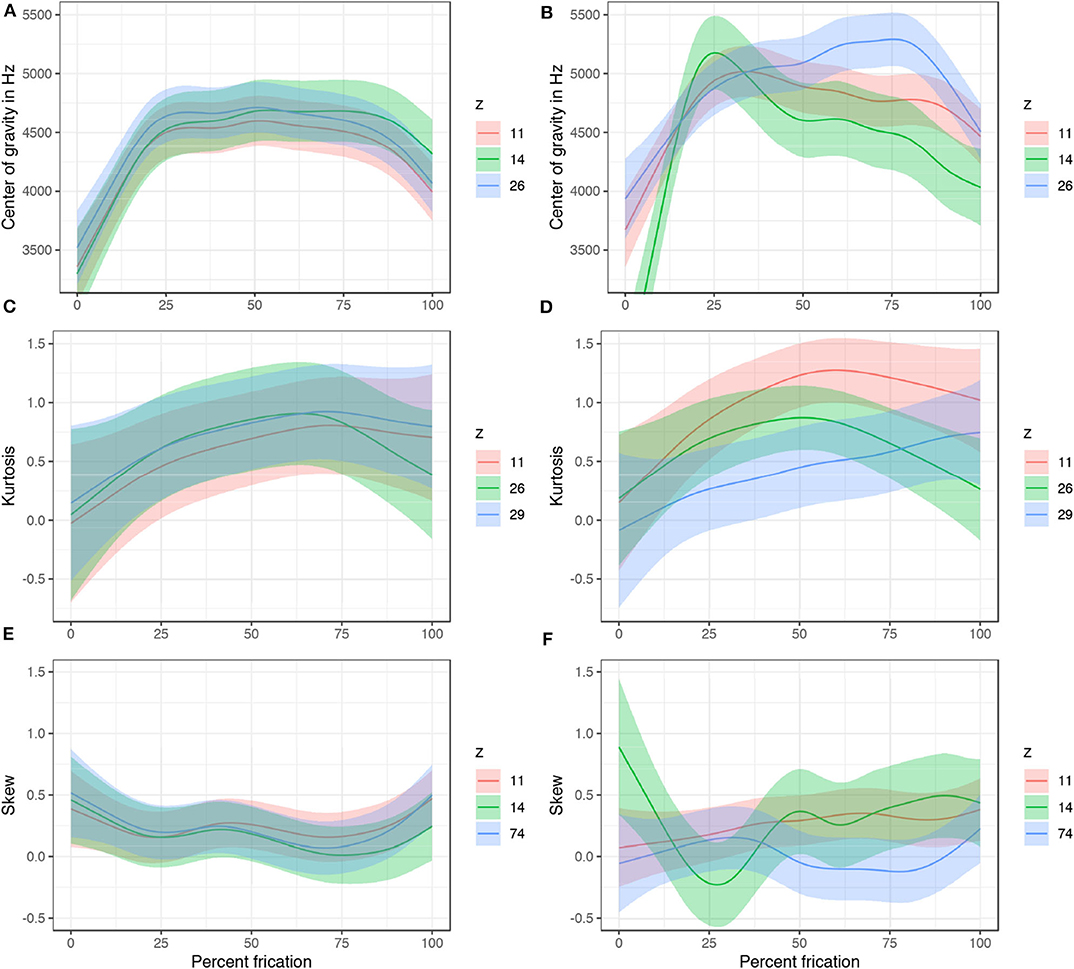

To test this prediction, spectral properties of the output fricatives are analyzed. The same 29 sets of generated samples are used in the analysis; one z-value is manipulated in each set while other variables are sampled randomly and held constant. The marginal values of the variables were chosen for this test: the values with the strongest presence of [s] (which in most cases is ±4.5; henceforth STRONG) and the value before which [s] ceases from the output (henceforth WEAK). Center of gravity (COG), kurtosis, and skew of the frication noise were analyzed with and extracted with a script from Rentz (2017) in Praat (Boersma and Weenink, 2015). Period of frication is sliced into 10% intervals. The data includes 161 trajectories (from the 29 generated sets) and 161 × 10 = 1, 610 unique data points. COG, kurtosis, and skew based on power spectra are measured in each of these 1,610 intervals with 750–8,000 Hz Hann band pass filter (100 Hz smoothing). Results were fit to six generalized additive mixed models with COG, kurtosis, and skew as the dependent variables (3 for each of the levels STRONG and WEAK). The parametric terms included the seven latent variables z. The smoothing terms included smooths for latent variable z11 and difference smooths for the other six variables z. The model also includes random smooths for each fricative (from 10 to 100% with 10 knots) and for each of the 29 generated sets with equal random values of other 99 z-variables (with 7 knots; random smooths are fitted with first order of penalty, see Baayen et al., 2016; Sóskuthy, 2017). The models were fit with correction for autocorrelation with ρ-values ranging from 0.15 to 0.7.

Spectral properties of the generated fricatives are generally not significantly different at the value of z right before [s] disappears from the outputs (WEAK; left column in Figure 17). As values of z increase toward the marginal levels (in most cases, ±4.5), however, clear differentiation in spectral properties emerge between some of the seven z-variables (STRONG; right column in Figure 17). The trajectory for center of gravity, for example, significantly differs between z11 and most of the other six variables. Overall kurtosis is significantly different when z11 is manipulated, compared to, for example, z26 and z29. Similarly, while z74 does not significantly attenuate amplitude of [s], it significantly differs in skew trajectory of [s]. The main function of z74 is thus likely in its control of spectral properties of frication of [s] (e.g., skew). For all coefficients and significant and non-significant relationship of the six models, see Tables S4–S9, Supplementary Materials.

Figure 17. A subset of predicted values of COG, kurtosis, and skew with 95% CIs in two conditions: WEAK with z-variables at the value before [s] ceases from the output (left column) and STRONG (right column) with the most marginal value with [s]-output (±4.5 in most cases). Predicted values are based on generalized additive models in Tables S4–S9 (Supplementary Materials). The plots show a clear differentiation from no significant differences in COG, kurtosis, and skew, to clear significant overall differences and trajectory differences as the z-values move from WEAK toward the marginal (STRONG) values. Difference smooths for the presented variables are in Figure S1, Supplementary Materials.

In sum, manipulating the latent variables that correspond to [s] in the output not only attenuates frication noise (when vocalic amplitude is controlled for) and causes [s] to surface or disappear from the output, but the different z-variables likely correspond to different phonetic features of the frication noise. At the level before the frication noise ceases from the output, there are no differences in spectral moments between the latent variables. By setting the values to the marginal levels well beyond the training interval, however, significant differences emerge both in overall levels as well as in trajectories of COG, kurtosis, and skew. It is thus likely that the variables collectively control the presence or absence of [s], but that individually, they control various phonetic features — spectral properties of the frication noise.

5. Discussion

The Generator network trained after 12,255 steps learns to generate outputs that closely resemble human speech in the training data. The results of the experiment in Section 4.2 suggest that the generated outputs from the Generator network replicate the conditional distribution of VOT duration in the training data. The Generator network thus not only learns to output signal that resembles human speech from noise (input variables sampled from a uniform distribution), but also learns to output shorter VOT durations when [s] is present in the signal. While this distribution is phonologically local, it is non-local in phonetic terms as a period of closure necessarily intervenes between [s] and VOT. It is likely, however, that minor local dependencies (such as burst or vowel duration) also contribute to this distribution. While it is currently not possible to disambiguate between the local and non-local effects, it is desirable for a model to capture all possible dependencies, as speech production and perception often employ several cues as well.

5.1. Parallels in Human Behavior

Several similarities emerge between the training of the Generative Adversarial networks and L1 acquisition. The training data in the GAN model is of course not completely naturalistic (even though the inputs are raw audio recordings of speech data): the network is trained on only a subset of sound sequences that a language learning infant is exposed to. The purpose of these comparisons is not to suggest the GAN model learns the data in exactly the same manner as human infants, but to suggest that clear similarities exist in behavior between the proposed model and human behavior in speech acquisition. Such comparisons have both the potential to inform computational models of human cognition and conversely, shed light on the question of how neural networks learn the data.

While the generated outputs contain evidence that the network learns the conditional distribution of VOT duration, a proportion of outputs violates this distribution. In fact, in approximately 12.2% of the #sTV sequences, the Generator outputs VOT durations that are longer than any VOT duration in the #sTV condition in the training data. This suggests that the model learns the conditional distribution, but that the learning is imperfect and the Generator occasionally violates the distribution. Crucially, these outputs that violate the training data closely resemble human behavior in L1 acquisition. Infants acquiring VOT in English undergo a period in which they produce VOT durations substantially longer compared to the adult input, not only categorically in all stops (Macken and Barton, 1980; Catts and Jensen, 1983; Lowenstein and Nittrouer, 2008), but also in the position after the sibilant [s]. McLeod et al. (1996) studied acquisition of #sTV and #TV sequences in 2;0 to 2;11 year old children. Unlike the Generator network, children often simplify the initial clusters from #sTV to a single stop #TV. What is parallel to the outputs of the Generator, however, is that the VOT duration of the simplified stop is overall significantly shorter in underlying #sTV sequences, but there exists a substantial period of variation and occasionally the language-acquiring children output long-lag VOT durations there (McLeod et al., 1996, for similar results in language-delayed children, see Bond, 1981). Bond and Wilson (1980) present a similar study, but include older children that do not simplify the #sT cluster. This group behaves exactly parallel to the Generator's network: the overall duration of VOT in the #sTV sequences is shorter compared to the #TV sequences, but the longest duration of any VOT is attested once in the #sTV, not in the #TV condition (Bond and Wilson, 1980). The children thus learn both to articulate the full #sT cluster and to output a shorter VOT durations in the cluster condition. Occasionally, however, they output a long-lag VOT in the #sTV condition that violates the allophonic distribution and is longer than any VOT in the #TV condition.