- 1Faculty of Law, Aligarh Muslim University, Aligarh, India

- 2NALSAR University of Law, Hyderabad, India

The increasing utilization of Artificial Intelligence (AI) systems in healthcare, from diagnosis to medical decision making and patient care, necessitates identification of their potential benefits, risks and challenges. This requires an appraisal of AI use from a legal and ethical perspective. A review of the existing literature on AI in healthcare available on PubMed, Oxford Academic and Scopus revealed several common concerns regarding the relationship between AI, ethics, and healthcare: (i) the question of data, that is, the choices inherent in the collection, analysis, interpretation, and deployment of data input to and output by AI systems; and (ii) the challenges to traditional patient-doctor relationships and long-held assumptions about privacy, identity and autonomy, as well as to the functioning of healthcare institutions. The potential benefits of AI’s application need to be balanced against the legal-ethical issues emanating from its use (bias, consent, access, privacy and cost) to guard against the detrimental effects of uncritical AI use. The authors suggest that a legal framework for AI should adopt a critical and grounded perspective, cognizant of the material political realities of AI and its wider impact on more marginalized communities. The large-scale utilization of health datasets, often without consent, responsibility or accountability, further necessitates regulation in the field of technology design, given the entwined nature of AI research with advancements in wearables and sensor technology. Taking into account the ‘superhuman’ and ‘subhuman’ traits of AI, regulation should aim to encourage the development of AI systems that augment rather than outright replace human effort.

1 Introduction

The integration of Artificial Intelligence (AI) in healthcare has stimulated various advancements in diagnostics, treatment and patient care protocols. The most notable contribution of AI is in making world-class surgical knowledge and expertise available in all operating theaters at all times through an AI-powered conduit, the “Surgical Collective Consciousness” (Acevedo, 2018). Drawing on population data, the collective renders real-time clinical decision support (Hashimoto et al., 2018). AI has been able to save lives and improve healthcare outcomes by making healthcare accessible and healthcare centers more efficient.

Recently, Med-PaLM, an AI model, cleared the US medical licensing exam with expert-level scores. This signals that AI-powered healthcare is the future (Singhal et al., 2023). Nonetheless, these burgeoning technological developments have spurred a range of ethical and legal concerns warranting critical examination (Hanna et al., 2025). This paper examines the questions of choice and responsibility arising from the large-scale collection and use of data by AI. It argues that by prioritizing ethical considerations in the development and deployment of AI, medical professionals can enhance health outcomes and cultivate patient trust, thereby bridging the gap between technological advancements and nuanced healthcare realities (Collins et al., 2024).

Additionally, a robust rights-based legal framework is required to balance the privacy interests of patients against AI’s need for their data (Mennella et al., 2024).

2 Materials and methods

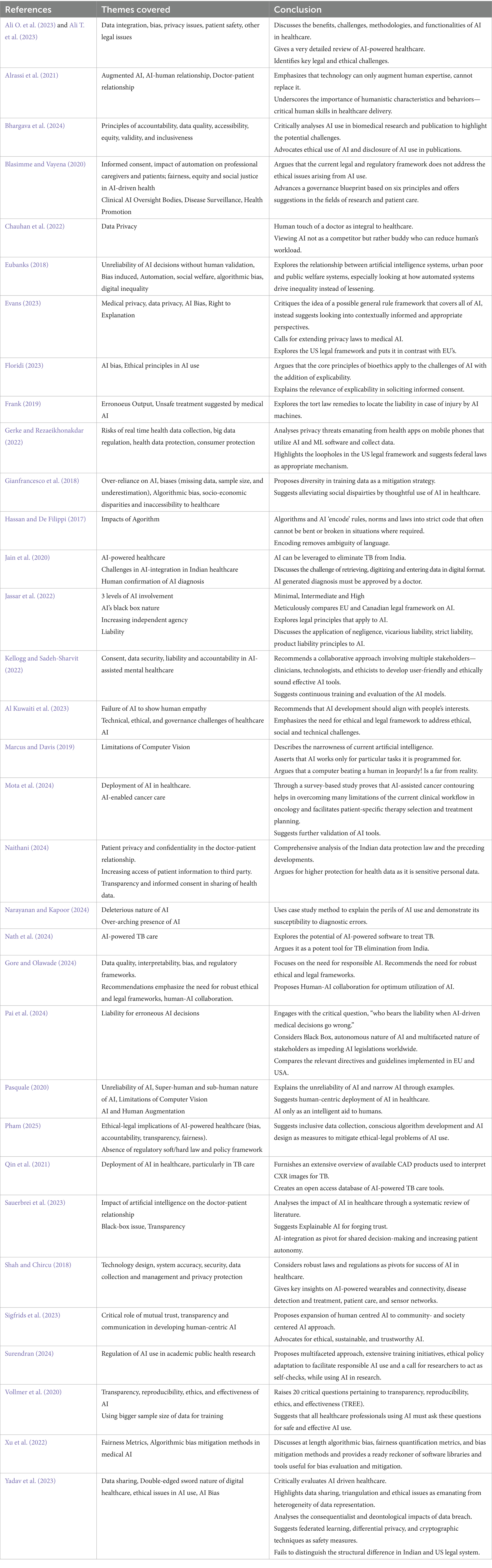

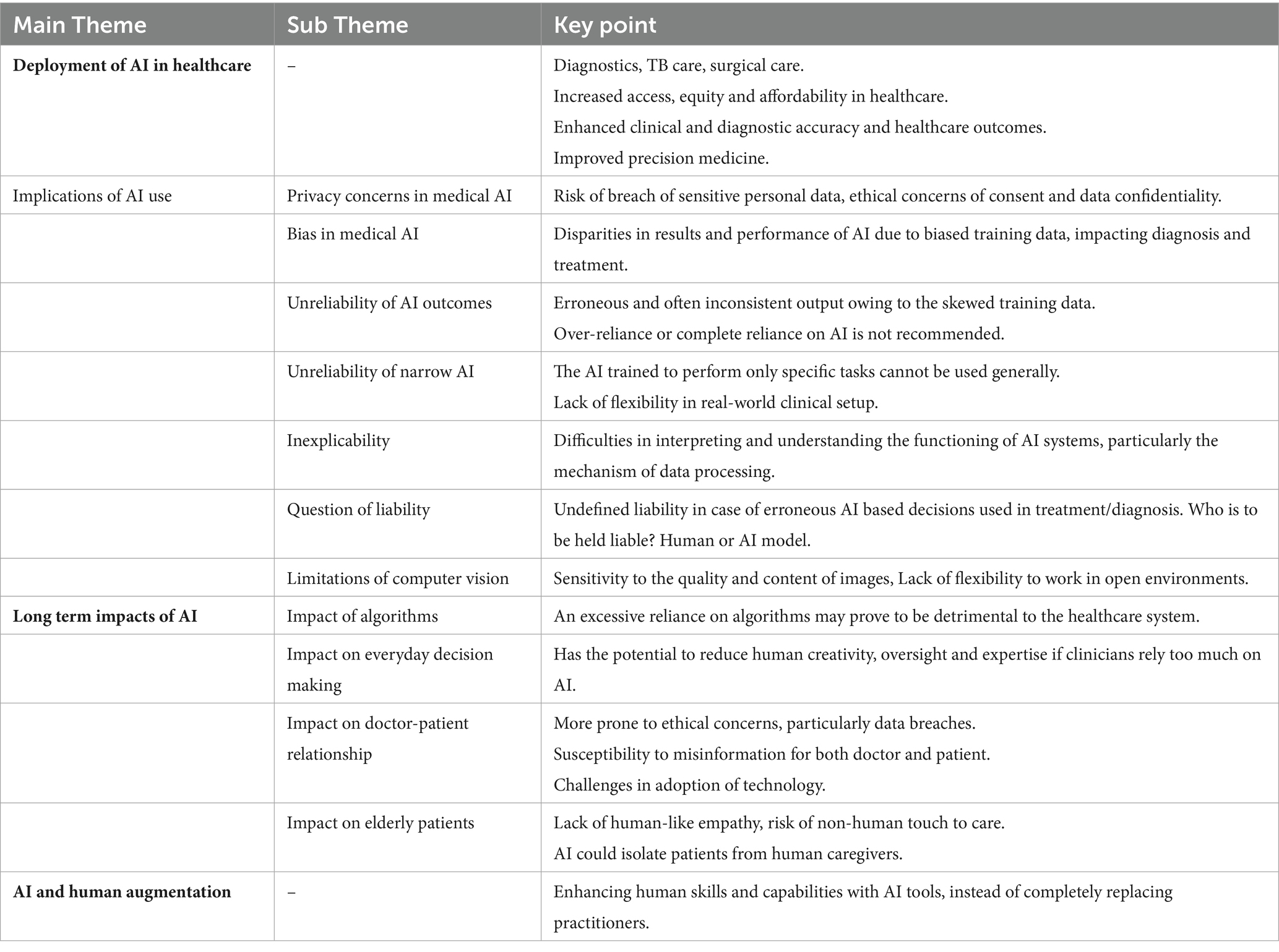

A comprehensive review of the literature on the interaction of AI and healthcare was conducted, drawing on medical and legal databases, including PubMed, Oxford Academic and Scopus, and on reports published by Indian government institutions and international organizations. The literature was selected on the basis of its engagement with the legal and ethical issues emanating from AI use in healthcare, with the inclusion criteria focused on interdisciplinary approaches and peer-reviewed work published between 2018 and 2025. A total of 25 research papers were reviewed alongside 6 books, policy documents and relevant government/institutional guidelines. A limitation of the review is the possible bias in the selection of the literature and the exclusion of some grey literature.

3 Deployment of AI in healthcare

AI is the process of training a computer system using complex and large data sets to build its ability to make decisions and predict outcomes when presented with new data (Floridi, 2023). AI models designed for healthcare rely on the data sets of millions of patients fed into them. For instance, to decide which treatment option is likely to work and give the best results for a new patient, an AI model will look at data of past patients with similar medical conditions. Since every individual has a distinct genome and AI models can identify similarities and differences, genomic data helps AI make highly informed decisions in respect of diagnosis and treatment (Dias and Torkamani, 2019). This potential has made AI a valuable asset. For instance, in cases of cancer, which involve complicated diagnostics, AI simplifies for doctors the task of identifying the stage of the cancer and the right treatment plan. By simply feeding in the genomic information gathered from a tissue biopsy, blood test reports and X-ray images of the lesions, a doctor can leverage AI models to generate the prognosis and suggest the best treatment option (Pesheva, 2024). A similar tool has been developed in Brisbane and is being used in cancer treatment (Mota et al., 2024). AI has made healthcare more refined than ever, charting a course for highly advanced and accessible precision medicine. It has also emerged as a powerful tool for low-income countries like India. Plagued by the challenges of the “Iron Triangle” of access, equity and cost, India continues to grapple with resource scarcity and accessibility in healthcare. This has contributed to its suboptimal performance on the latest Healthcare Access and Quality Index, where India is placed 145th out of 190 countries (Yadavar, 2018). In this context, AI integration is perceived as transformative for the Indian healthcare sector.
With an adoption rate of approximately 68 percent, AI has significantly advanced preventive care, diagnostics, and personalized medicine. However, more than 92 percent of deployments are still at the proof of concept (POC) stage (NASSCOM, 2024). This stage marks AI’s early development and deployment phase, where the primary objective is to test the ‘feasibility’ and ‘effectiveness’ of the technology in a controlled environment.

Given AI’s promising role in healthcare, it is anticipated to contribute approximately 25–30 billion USD to India’s GDP by 2025 (Press Trust of India, 2025). The market size of AI in Indian healthcare was 950 million USD and is likely to reach 1.6 billion USD by the end of 2025 (Market Size of AI in Healthcare 2025, 2025). Further, the current compound annual growth rate (CAGR) of 22.5% is predicted to expand the market size of healthcare AI in India to 6.5 billion USD by 2030 (Blue Weave Consulting, 2024).

AI has been pivotal in managing one of India’s most prevalent diseases, tuberculosis (TB). AI technology, specifically AI-based computer-aided detection (AI-CAD), is widely used to detect TB (Jain et al., 2020). The Sanjay Gandhi Post Graduate Institute of Medical Sciences, Lucknow, has also developed an AI tool for the rapid detection of tuberculosis with an accuracy of 95% (Nath et al., 2024). These AI-based systems address the scarcity of personnel and testing laboratories, making TB care affordable and accessible (Qin et al., 2021; Bhargava et al., 2024). Similarly, in overburdened and understaffed gynecology clinics, AI integration is a game changer that has transformed the approach to patient handling. AI algorithms are provided with patient health information (PHI) and patient history, and are used for diagnosis and for curating effective and personalized treatment plans for each patient (Emin et al., 2019). Not only has AI made the identification of rare and high-risk cases easier, it has also been instrumental in reducing diagnostic errors in obstetrics and gynecology (OBG) (Tanos et al., 2024). Moreover, the critical concern of follow-up and timely medication is addressed by AI, which sends automated reminders to patients and alerts doctors in case of any significant change in a patient’s condition. This saves doctors from multiple physical visits to the in-patient wards.

Despite several benefits, AI-powered healthcare has many ethical-legal implications which need prompt consideration (Pham, 2025; Gore and Olawade, 2024).

3.1 Implications of AI-use

3.1.1 Privacy concerns in medical AI

The healthcare industry relies heavily on patient data: PHI, including sensitive personal information (SPI). PHI encompasses demographics (gender, age and marital status), financial information (health insurance) and information pertaining to health, specifically medical procedures, genomics and allied information. PHI and personally identifiable information (PII) are collected at many touchpoints, digital and physical, and are exchanged across various platforms, from diagnostic centres to hospitals, in the delivery of healthcare services. In AI-powered healthcare, PHI and PII are provided to the AI system for generating outputs; for instance, genome data is given to AI to identify a person’s antimicrobial resistance to a set of drugs (Ali T. et al., 2023). Such use makes data both an asset and a potent vulnerability. The management of SPI by AI systems raises various questions relating to privacy and data protection. Moreover, the possibility of re-identification of pseudo-anonymized patient data remains a significant challenge. Further, with the commercialisation of medical AI, the risks of data exploitation, misuse and breach have also increased.
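
The re-identification risk mentioned above can be illustrated with a minimal sketch of a k-anonymity check. The records, field names and choice of quasi-identifiers below are hypothetical and are not drawn from any dataset discussed in this paper; the sketch only shows how a record that is unique on a handful of seemingly harmless attributes can be singled out even after names are removed.

```python
from collections import Counter

# Hypothetical pseudonymized records: names removed, quasi-identifiers retained.
records = [
    {"age_band": "30-39", "pin_prefix": "202", "sex": "F", "diagnosis": "TB"},
    {"age_band": "30-39", "pin_prefix": "202", "sex": "F", "diagnosis": "asthma"},
    {"age_band": "60-69", "pin_prefix": "500", "sex": "M", "diagnosis": "cancer"},
    {"age_band": "30-39", "pin_prefix": "202", "sex": "M", "diagnosis": "diabetes"},
    {"age_band": "30-39", "pin_prefix": "202", "sex": "M", "diagnosis": "TB"},
]

# Assumed quasi-identifiers: attributes an outsider could plausibly know.
QUASI_IDENTIFIERS = ("age_band", "pin_prefix", "sex")


def group_sizes(rows, keys=QUASI_IDENTIFIERS):
    """Count how many records share each combination of quasi-identifiers."""
    return Counter(tuple(row[k] for k in keys) for row in rows)


def k_anonymity(rows, keys=QUASI_IDENTIFIERS):
    """Return k: the size of the smallest group of indistinguishable records."""
    return min(group_sizes(rows, keys).values())


def unique_records(rows, keys=QUASI_IDENTIFIERS):
    """Return records that are the only member of their quasi-identifier group."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[k] for k in keys)] == 1]


k = k_anonymity(records)
at_risk = unique_records(records)
```

In this toy data one record is unique on age band, postal prefix and sex, so anyone who knows those three facts about the patient also learns the diagnosis. Coarsening or suppressing quasi-identifiers raises k, at the cost of less precise data for the AI system.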

Existing regulations and laws are designed not for AI-integrated healthcare but for surgeries and treatments physically performed by humans. As AI can become more intelligent and better suited over time to the environment in which it is deployed, it challenges traditionally established understandings of consent and autonomy. For AI-powered healthcare to flourish, there is a need for law that enables data sharing while simultaneously protecting patient privacy.

For instance, an Apple Watch uses AI to monitor the user’s heart rhythms in real time. This collection of data by AI, which it eventually uses to train its algorithm, raises severe data security and privacy risks, and when the data is shared for purposes of innovation, the risk intensifies manifold (Gerke and Rezaeikhonakdar, 2022).

3.1.2 Bias in medical AI

Holdsworth (2023) describes AI bias as “the occurrence of biased results due to human biases that skew the original training data or AI algorithm—leading to distorted outputs and potentially harmful outcomes.” For fair and unbiased output, developers must ensure data diversity. Such inherent biases may lead to a lack of adequate healthcare facilities for marginalized communities, further entrenching existing disparities (Eubanks, 2018).

Gianfrancesco et al. (2018) consider missing data and misclassification to be major factors resulting in algorithmic bias. Often, certain unmitigable factors contribute to the use of skewed data sets. For instance, to develop an AI model for detecting skin cancer, huge data sets of Caucasian patients are available, as they are more prone to it than Asian patients. This creates an inherent bias in the model, which is likely to give biased results for Asian patients. To mitigate such issues, the model must be enabled to give “I do not know” as its output in the case of an Asian patient. With such modifications, AI is more likely to facilitate personalized healthcare for all.
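
The “I do not know” safeguard described above can be sketched as a thin wrapper around a classifier’s output. This is an illustration under stated assumptions rather than a description of any deployed system: the function name, the group labels, the 0.8 confidence threshold and the probability values are all hypothetical.

```python
ABSTAIN = "I do not know"


def predict_with_abstention(probabilities, group, supported_groups, min_confidence=0.8):
    """Return the most probable label, or abstain when the prediction is not trustworthy.

    probabilities    -- dict mapping each label to the model's predicted probability
    group            -- population group of the patient (hypothetical labels)
    supported_groups -- groups adequately represented in the training data
    min_confidence   -- assumed threshold below which the model abstains
    """
    # Abstain outright for groups the model was not adequately trained on.
    if group not in supported_groups:
        return ABSTAIN
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    # Abstain when even the best label is not confident enough.
    if confidence < min_confidence:
        return ABSTAIN
    return label


supported = {"caucasian"}
confident = predict_with_abstention({"malignant": 0.93, "benign": 0.07}, "caucasian", supported)
uncertain = predict_with_abstention({"malignant": 0.55, "benign": 0.45}, "caucasian", supported)
unsupported = predict_with_abstention({"malignant": 0.93, "benign": 0.07}, "asian", supported)
```

An abstention would then route the case to a human clinician, which keeps the model’s blind spots visible instead of hiding them behind a confident but unreliable output.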

A classic case of skewed data culminating in erroneous outputs is IBM’s Watson for Oncology. The software was proposed as a game changer; however, on application, it delivered unsafe and erroneous results (Frank, 2019). For instance, for a lung cancer patient with heavy bleeding, it recommended a treatment which could have proved fatal. The reason for such erroneous outputs was the limitations in the training data. The model was trained with data from a few hypothetical cases, without the inclusion of any real cases or clinical inputs. Ultimately, the hospitals had to discontinue using this model. It is suggested that policy advisors, healthcare professionals, AI developers and patients must brainstorm together and help in developing AI tools which can serve the whole world and not a minuscule minority. Thus, for bias mitigation and ensuring fairness in medical AI, apart from the use of diverse data from heterogeneous populations for training, various other measures are needed, such as regular audits and inclusive design teams (policy advisors, healthcare professionals, beneficiary patients and developers). It should be ensured that the algorithms are transparent, explainable and adhere to fairness metrics such as demographic parity (the same rate of positive outcomes across demographic groups), equal opportunity (equal true positive rates) and equalized odds (equal true and false positive rates) (Xu et al., 2022). Further, feedback from AI users, particularly doctors, should be taken at regular intervals to further train the model. The developers’ awareness of existing health disparities and the provision for continuous feedback sharing by users would significantly enhance the effectiveness of AI in healthcare.
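
The three fairness metrics named above (demographic parity, equal opportunity and equalized odds) can be made concrete with a small sketch. The labels and predictions below are invented toy data for two hypothetical patient groups, and the function names are illustrative; a real audit would use a validated library and far larger samples.

```python
import math


def _ratio(numerator, denominator):
    # A rate with an empty denominator is undefined; report NaN rather than guess.
    return numerator / denominator if denominator else math.nan


def group_rates(y_true, y_pred):
    """Compute selection rate, true positive rate and false positive rate for one group."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return {
        "selection_rate": _ratio(tp + fp, len(y_true)),
        "tpr": _ratio(tp, tp + fn),
        "fpr": _ratio(fp, fp + tn),
    }


def fairness_gaps(rates_a, rates_b):
    """Absolute between-group gaps; a gap of 0 means the metric is satisfied."""
    tpr_gap = abs(rates_a["tpr"] - rates_b["tpr"])
    fpr_gap = abs(rates_a["fpr"] - rates_b["fpr"])
    return {
        # Demographic parity: same rate of positive predictions in both groups.
        "demographic_parity_gap": abs(rates_a["selection_rate"] - rates_b["selection_rate"]),
        # Equal opportunity: same true positive rate in both groups.
        "equal_opportunity_gap": tpr_gap,
        # Equalized odds: same true positive AND false positive rates.
        "equalized_odds_gap": max(tpr_gap, fpr_gap),
    }


# Toy data: 1 = disease present / flagged by the model, 0 = absent / not flagged.
group_a = group_rates(y_true=[1, 1, 1, 0, 0, 0], y_pred=[1, 1, 0, 1, 0, 0])
group_b = group_rates(y_true=[1, 1, 1, 0, 0, 0], y_pred=[1, 1, 0, 0, 0, 0])
gaps = fairness_gaps(group_a, group_b)
```

In this toy example the model detects true cases equally well in both groups, so equal opportunity holds, yet it raises more false alarms for group A, so demographic parity and equalized odds are both violated. The metrics can disagree, which is one reason audits should report several of them rather than a single score.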

3.1.3 Unreliability of AI outcomes

Even when AI is seemingly ‘correct’, the outcomes can still be deleterious. Narayanan and Kapoor (2024) discuss in detail a 1997 study that tried to use AI to predict outcomes of patients with pneumonia and to test whether it could make better predictions than healthcare workers. The model’s predictions were fairly accurate, but it learned that having asthma led to a lower risk of complications from pneumonia. In reality, however, since the training data was based on the hospital’s existing decision-making system, asthmatic patients had a lower risk of complications because they were sent to the ICU immediately upon arrival. If the model were to be deployed in the hospital, asthmatic patients would be more likely to be sent home instead of getting proper care, leading to disastrous outcomes for patients.

3.1.4 Unreliability of narrow AI

Even when AI is trained to work on a very narrow scope and scale, it might still be unreliable. Pasquale (2020) explains how a ‘narrow AI’ trained to detect polyps might correctly identify a problem polyp that a gastroenterologist might miss, yet fail to see other abnormalities that a human would easily spot, because it lacks adequate training data for those abnormalities. Thus, an ideal system would be an expert working with AI assistance. There is immense value in interdisciplinary collaborations between experts (Ali O. et al., 2023).

3.1.5 Inexplicability

Doctors and patients may often need information beyond AI outputs, i.e., about the features, characteristics and assumptions on which the outputs are based, or how the weights within artificial neural networks are to be interpreted (the ‘black box’ problem). Such questions underscore the inexplicability debate in the context of informed consent, certification of devices and liability. Doctors often grapple with the challenge of balancing transparency against the complexity of AI systems.

The four overarching principles of bioethics (beneficence, nonmaleficence, autonomy and justice) adapt fairly well to the new legal challenges posed by AI use in healthcare. However, when placed in contrast with the other recognized principles governing AI, it becomes clear that an additional principle is required: explicability. Explicability is understood as encompassing both the epistemological sense of intelligibility, answering the question “how does it work?”, and the ethical sense of accountability, answering the question “who is responsible for the way it works?” Explicability opens up the “black box” by making the AI system’s decision-making process transparent and understandable (Floridi, 2023).

3.1.6 The question of liability

The determination of liability when AI is used in healthcare is a challenging task for lawmakers and judges ahead—owing to the lack of applicability of traditional legal principles (Jassar et al., 2022). In cases where AI generates an erroneous diagnosis of a patient, the question of liability arises: who will be liable—the doctor, the AI developer or the concerned healthcare institution? Globally, ambiguities surrounding AI—from the ‘black box’ problem, to the autonomous nature of working of AI systems, to the complex roles of stakeholders—have so far led to an absence of proper legal frameworks (Pai et al., 2024).

3.1.7 The limitations of computer vision

The strengths of AI may in many cases also serve as its weaknesses, owing to how AI technologies essentially work with data and statistical analysis. Hence, while that working could provide insights that humans never could, it could also fail to make the most obvious observations. This is what Pasquale (2020) describes when he talks about how ‘computer vision’ can be both superhuman and subhuman at the same time. ‘Superhuman’ here refers to the capability of carrying out computation on scales much greater than the human brain ever could, while ‘subhuman’ refers to the possibility of failing at the smallest of tasks that a human child could do easily (Pasquale, 2020). Even the idea of a task completed ‘successfully’ can have multiple interpretations based on the kind of AI system being observed and what the system has been trained to identify as ‘success’. Often, AI systems trained in environments with fixed parameters fail in open-ended environments (Marcus and Davis, 2019).

3.2 Long term impacts of AI in healthcare

3.2.1 Impacts of algorithms

An excessive reliance on algorithms may prove to be detrimental to the healthcare system. Lending too much credence to AI outputs, be they predictions and forecasts or generative content, is harmful to knowledge production and paves the way for real-world complexity to be flattened. It also has another effect: devaluing the very real human creativity and flexibility that is an important part of administrative operations, not just in healthcare but in all other fields. Algorithms and AI ‘encode’ rules, norms and laws into strict code that often cannot be bent or broken in situations where that is required. Rules encoded in this way have none of the ambiguity and openness to interpretation of traditional legal language (Hassan and De Filippi, 2017). AI is also likely to have an impact on academic work in healthcare, where unethical use of AI may be detrimental to the cultivation of academic rigor and creativity (Bhargava et al., 2024). Proper frameworks should be developed at the institutional level to deal with issues such as listing AI as a co-author.

3.2.2 Impact on everyday decision making

As Blasimme and Vayena (2020) describe, healthcare operators take decisions every day in which they “calibrate objective criteria with the reality of each individual case,” such as when deciding which patient is to be treated first. The reality of each individual case takes precedence over the usual “objective” order of things. However, it is unlikely that AI-based patient care systems can be programmed to display such flexibility. Many scholars consider hypothetical situations and discuss how AI systems used to calculate the risks of longer stays in hospital admission decisions might end up discriminating against more vulnerable patients. It is probably impossible to develop a one-size-fits-all approach to medical AI; hence, all rules and legal frameworks would need to be contextually informed (Evans, 2023).

3.2.3 Impact on doctor-patient relationships

The employment of automated systems raises ethical concerns within the doctor-patient relationship. With the advancement of AI chatbots, their impact on this relationship is becoming more pertinent. With increasing access to advanced technology, both doctors and patients have become susceptible to misinformation, necessitating a shift in doctors’ skills and roles (Alrassi et al., 2021). Moreover, privacy and data protection issues arise as healthcare data becomes accessible to third parties, warranting stricter regulations (Naithani, 2024). Sauerbrei et al. (2023) emphasize that concrete steps are needed to ensure AI tools positively impact doctor-patient relationships, focusing on empathy, trust, shared decision making and compassion.

3.2.4 Impact on elderly patients

While, in one way, chatbots and AI assistants can ease up the workloads of doctors and caregivers, they can also have a negative, isolating impact on patients who grow more distant from human care and empathy. This is an ethical issue particularly pronounced in the case of elderly patients, where AI can provide care and companionship yet become an unhealthy replacement for actual human interaction (Pasquale, 2020).

4 AI and human augmentation

Considering the power differentials at play in the working of AI, vulnerable and marginalized communities are likely to face more harm as a result of the uncritical deployment of AI. This necessitates human-centric deployment of AI (Sigfrids et al., 2023). Pasquale (2020) suggests looking at AI as ‘augmented intelligence’, i.e., as an intelligent aid to a human doctor, instead of the more common idea of an ‘Artificial Intelligence’ working autonomously, without human intervention. Serving as a potent tool in clinicians’ hands, augmented intelligence strengthens the expertise of clinicians and augments their ability to recognize patterns, make informed decisions and provide effective patient care based on data-driven insights (Kellogg and Sadeh-Sharvit, 2022). Simply put, it envisions doctors and AI working in conjunction by task-sharing. For instance, ChestLink, an AI model developed by Oxipit, is used for triaging (Fanni et al., 2023). It scans chest X-rays for abnormalities; where it finds none, it generates the report autonomously, and where it detects a possible abnormality, it sends the image to a human radiologist (Tables 1, 2).

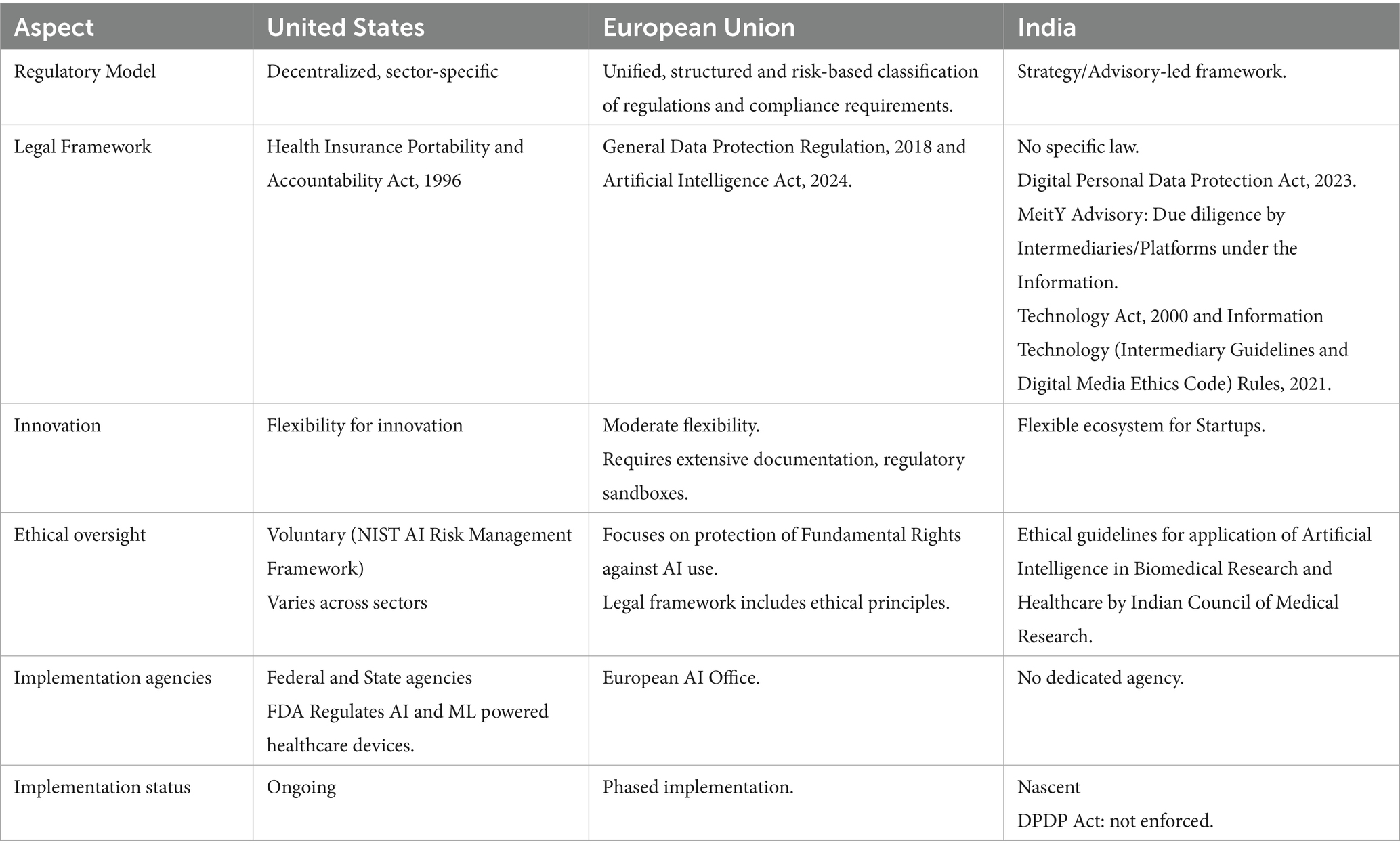

5 Legal and regulatory framework

5.1 International regulatory and policy developments

The scope and scale of the data being collected requires researchers to formalize new “best practices” and promote transparency, replicability and an engagement with ethical concern (Vollmer et al., 2020; Surendran, 2024). Given the entwined nature of AI research with advancements in wearables and sensor technology, questions of technology design also need examination (Shah and Chircu, 2018).

Taking cognizance of the legal-ethical issues resulting from AI use in healthcare, the World Health Organization released guidance (World Health Organization Guidance, 2021) containing six guiding principles: the protection of human autonomy; the promotion of human well-being, safety and public interest; ensuring transparency, explainability, and intelligibility; fostering responsibility and accountability; ensuring inclusiveness and equity; and the promotion of sustainability. Subsequently, it acknowledged that large language models (LLMs) can be deleterious to patient safety, highlighting the dangers of biased data, the illusion of authoritativeness and plausibility in LLM outputs that masks potentially serious errors, non-consensual data reuse and deliberate disinformation.

The World Health Organization (2024) guidance on AI ethics and governance for large multi-modal models (LMMs) reiterates the importance of quality data, warns against ‘automation bias’ and potential cybersecurity risks, advocates for a multi-stakeholder approach, and recommends that governments invest in public infrastructure, draft laws and regulations to enshrine ethical obligations and human rights standards, and establish regulatory agencies for governing AI in medicine.

5.2 Regulatory and policy frameworks in India

The Indian model exemplifies a policy-driven, hybrid framework characterized by the interplay of AI governance advisories, strategies, guidelines (Indian Council of Medical Research, 2023), scattered sectoral regulations and an evolving, albeit slow-paced, data protection law. The data protection law, i.e., the Digital Personal Data Protection Act, enacted in 2023, is yet to be enforced. This law permits the processing of personal data with the consent of the data principal (the individual whose personal data is collected) and, in certain legitimate cases outlined in Section 7, without consent. The legitimate uses include scenarios where data is required to respond to a medical emergency involving a threat to the life, or an immediate threat to the health, of the data principal or any other individual, or where it is necessary for taking measures to provide medical treatment or health services to any individual during an epidemic, outbreak of disease, or any other threat to public health.

The Act, however, unlike its precursors, does not define “health data” or “sensitive personal data”, which creates a regulatory gap, particularly in sectors like health. Moreover, it does not provide enhanced safeguards for sensitive data. This absence of safeguards and clear definitions raises concerns about AI’s potential to exacerbate systemic risks. Without adequate safeguards, AI-driven healthcare may lead to erroneous decisions or exclusionary practices driven by algorithmic bias or non-causal correlations. The importance of addressing regulatory gaps in India cannot be overstated.

Unfortunately, the DISHA Act, which proposed a rights-based framework for patients’ privacy and enabled digital sharing of PHI between hospitals and clinics, did not see the light of day; hence, we still do not have an adequate legal framework. Thus, proper legal and ethical frameworks covering a wide range of issues, from consent to access and from privacy to cost, along with effective governance mechanisms, need to be evolved to protect society from the harmful effects of an uncritical use of AI (Table 3; Al Kuwaiti et al., 2023).

6 Conclusion

AI use in healthcare is at a nascent stage, yet it has transformed healthcare by enhancing accessibility and outcomes to a large extent. AI-powered healthcare has proved to be a viable solution in hospital settings with fewer resources but huge patient footfall. A major concern spurred by AI use is patient privacy, as AI systems require patients’ health and other sensitive data to function effectively. The increasing incursions into the privacy of human beings are alarming. Consequently, patients are concerned about data security and potential breaches, which in turn undermines their trust. AI systems are also likely to violate privacy laws if not handled with care. Moreover, the reliability of AI is often questioned, leading to ethical dilemmas pertaining to accountability in situations where AI-powered systems give erroneous diagnoses. Legal and medical professionals have called for defined guidelines to address the accountability conundrum. As for AI as a replacement for human doctors, it is clear that the humane touch of a doctor in a patient’s treatment is irreplaceable. AI is merely a competent assistant and not an “intelligent competitor” of a human being. Furthermore, the inherent bias in AI makes it clear that AI-generated output will require verification by a human doctor, as complete reliance on AI is not feasible.

Additionally, AI should be deployed consciously because it may widen, and in certain situations has widened, existing disparities. Thus, a robust regulatory framework complementing the ethical guidelines is indispensable for navigating the present and anticipated challenges.

Author contributions

MN: Conceptualization, Resources, Methodology, Writing – original draft, Project administration, Writing – review & editing, Supervision. KS: Resources, Conceptualization, Methodology, Writing – original draft. SA: Writing – review & editing, Writing – original draft, Methodology, Conceptualization, Resources.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Acevedo, E. (2018). For optimal outcomes, surgeons should tap into “collective surgical consciousness”. Am. Coll. Surg. 10:2018.

Al Kuwaiti, A., Nazer, K., Al-Reedy, A., Al-Shehri, S., Al-Muhanna, A., Subbarayalu, A. V., et al. (2023). A review of the role of artificial intelligence in healthcare. J. Pers. Med. 13:951. doi: 10.3390/JPM13060951

Ali, O., Abdelbaki, W., Shrestha, A., Elbasi, E., Alryalat, M. A. A., and Dwivedi, Y. K. (2023). A systematic literature review of artificial intelligence in the healthcare sector: benefits, challenges, methodologies, and functionalities. J. Innov. Knowl. 8:100333. doi: 10.1016/J.JIK.2023.100333

Ali, T., Ahmed, S., and Aslam, M. (2023). Artificial intelligence for antimicrobial resistance prediction: challenges and opportunities towards practical implementation. Antibiotics 12:523. doi: 10.3390/ANTIBIOTICS12030523

Alrassi, J., Katsufrakis, P. J., and Chandran, L. (2021). Technology can augment, but not replace, critical human skills needed for patient care. Acad. Med. 96, 37–43. doi: 10.1097/ACM.0000000000003733

Bhargava, M., Bhardwaj, P., and Dasgupta, R. (2024). Artificial intelligence in biomedical research and publications: it is not about good or evil but about its ethical use. Indian J. Community Med. 49, 777–779. doi: 10.4103/ijcm.ijcm_560_24

Blasimme, A., and Vayena, E. (2020). “The ethics of AI in biomedical research, patient care, and public health” in The Oxford Handbook of Ethics of AI, 702–718. doi: 10.1093/OXFORDHB/9780190067397.013.45

Blue Weave Consulting. (2024). “India AI in healthcare market size zooming 6X to touch USD 6.5 billion by 2030.” Available online at: https://www.blueweaveconsulting.com/report/india-ai-in-healthcare-market (accessed April 26, 2024).

Collins, B. X., Belisle-Pipon, J. C., Evans, B. J., Ferryman, K., Jiang, X., Nebeker, C., et al. (2024). Addressing ethical issues in healthcare artificial intelligence using a lifecycle-informed process. JAMIA Open 7:108. doi: 10.1093/JAMIAOPEN/OOAE108

Chauhan, N., Shahir, A., Naveen, D., and Ram, J. (2022). Artificial intelligence in the practice of pulmonology: the future is now. Lung India 39, 1–2. doi: 10.4103/LUNGINDIA.LUNGINDIA_692_21

Dias, R., and Torkamani, A. (2019). Artificial intelligence in clinical and genomic diagnostics. Genome Med. 11, 1–12. doi: 10.1186/S13073-019-0689-8

Emin, E. I., Emin, E., Papalois, A., Willmott, F., Clarke, S., and Sideris, M. (2019). Artificial intelligence in obstetrics and gynaecology: is this the way forward? In Vivo 33, 1547–1551. doi: 10.21873/invivo.11635

Eubanks, V. (2018). Automating inequality: How high-tech tools profile, police, and punish the poor. 1st Edn. USA: St. Martin’s Press, Inc.

Evans, B. J. (2023). Rules for robots, and why medical AI breaks them. J. Law Biosci. 10:1. doi: 10.1093/JLB/LSAD001

Fanni, S. C., Marcucci, A., Volpi, F., Valentino, S., Neri, E., and Romei, C. (2023). Artificial intelligence-based software with CE mark for chest X-ray interpretation: opportunities and challenges. Diagnostics 13:2020. doi: 10.3390/DIAGNOSTICS13122020

Floridi, L. (2023). The ethics of artificial intelligence: principles, challenges, and opportunities. 1st Edn. UK: Oxford University Press.

Frank, X. (2019). Is Watson for oncology per se unreasonably dangerous?: making a case for how to prove products liability based on a flawed artificial intelligence design. Am. J. Law Med. 45, 273–294. doi: 10.1177/0098858819871109

Gerke, S., and Rezaeikhonakdar, D. (2022). Privacy aspects of direct-to-consumer artificial intelligence/machine learning health apps. Intell. Based Med. 6:100061. doi: 10.1016/J.IBMED.2022.100061

Gianfrancesco, M. A., Tamang, S., Yazdany, J., and Schmajuk, G. (2018). Potential biases in machine learning algorithms using electronic health record data. JAMA Intern. Med. 178:1544. doi: 10.1001/JAMAINTERNMED.2018.3763

Gore, M. N., and Olawade, D. B. (2024). Harnessing AI for public health: India’s roadmap. Front. Public Health 12:1417568. doi: 10.3389/fpubh.2024.1417568

Hanna, M. G., Pantanowitz, L., Jackson, B., Palmer, O., Visweswaran, S., Pantanowitz, J., et al. (2025). Ethical and bias considerations in artificial intelligence/machine learning. Mod. Pathol. 38:100686. doi: 10.1016/J.MODPAT.2024.100686

Hashimoto, D. A., Rosman, G., Rus, D., and Meireles, O. R. (2018). Artificial intelligence in surgery: promises and perils. Ann. Surg. 268, 70–76. doi: 10.1097/SLA.0000000000002693

Hassan, S., and De Filippi, P. (2017). The expansion of algorithmic governance: from code is law to law is code. Field Actions Science Reports, Special Issue 17 (December), 88–90. Available online at: http://journals.openedition.org/factsreports/4518

Indian Council of Medical Research. (2023). “ICMR ethical guidelines for application of artificial intelligence in biomedical research and healthcare.” Available online at: https://www.icmr.gov.in/ethical-guidelines-for-application-of-artificial-intelligence-in-biomedical-research-and-healthcare (accessed April 22, 2024).

Jain, M., Jain, M., Rodrigues, E., and Bhargava, S. (2020). Using artificial intelligence for pulmonary TB prevention, diagnosis, and treatment. Indian J. Tuberc. 67, S119–S121. doi: 10.1016/J.IJTB.2020.11.008

Jassar, S., Adams, S. J., Zarzeczny, A., and Burbridge, B. E. (2022). The future of artificial intelligence in medicine: medical-legal considerations for health leaders. Healthc. Manage. Forum 35, 185–189. doi: 10.1177/08404704221082069

Kellogg, K. C., and Sadeh-Sharvit, S. (2022). Pragmatic AI-augmentation in mental healthcare: key technologies, potential benefits, and real-world challenges and solutions for frontline clinicians. Front. Psych. 13:990370. doi: 10.3389/FPSYT.2022.990370

Marcus, G., and Davis, E. (2019). Rebooting AI: Building artificial intelligence we can trust. United States: Pantheon Books.

“Market Size of AI in Healthcare 2025.” (2025). Statista. Available online at: https://www.statista.com/statistics/1493056/india-market-size-of-ai-in-healthcare/ (Accessed March 18, 2025).

Mennella, C., Maniscalco, U., De Pietro, G., and Esposito, M. (2024). Ethical and regulatory challenges of AI technologies in healthcare: a narrative review. Heliyon 10:e26297. doi: 10.1016/J.HELIYON.2024.E26297

Mota, S. M., Priester, A., Shubert, J., Bong, J., Sayre, J., Berry-Pusey, B., et al. (2024). Artificial intelligence improves the ability of physicians to identify prostate cancer extent. J. Urol. 212, 52–62. doi: 10.1097/JU.0000000000003960

Naithani, P. (2024). Protecting healthcare privacy: analysis of data protection developments in India. Indian J. Med. Ethics. doi: 10.20529/IJME.2023.078

Narayanan, A., and Kapoor, S. (2024). AI Snake oil: What artificial intelligence can do, what it can’t, and how to tell the difference. 1st Edn. Princeton, New Jersey, United States: Princeton University Press.

NASSCOM. (2024). “Advancing healthcare in India: navigating the transformative impact of AI.” NASSCOM. Available online at: https://community.nasscom.in/communities/ai/advancing-healthcare-india-navigating-transformative-impact-ai (Accessed August 13, 2024).

Nath, A., Hashim, Z., Shukla, S., Poduvattil, P. A., Neyaz, Z., Mishra, R., et al. (2024). A multicentre study to evaluate the diagnostic performance of a novel CAD software, DecXpert, for radiological diagnosis of tuberculosis in the northern Indian population. Sci. Rep. 14, 1–15. doi: 10.1038/s41598-024-71346-x

Pai, S. N., Jeyaraman, M., Jeyaraman, N., and Yadav, S. (2024). Doctor, bot, or both: questioning the medicolegal liability of artificial intelligence in Indian healthcare. Cureus 16:e69230. doi: 10.7759/cureus.69230

Pasquale, F. (2020). “New laws of robotics: defending human expertise in the age of AI,” 330. Available online at: https://www.hup.harvard.edu/books/9780674975224 (accessed April 10, 2024).

Pesheva, E. (2024). “A new artificial intelligence tool for cancer.” Harvard Medical School. Available online at: https://hms.harvard.edu/news/new-artificial-intelligence-tool-cancer (accessed September 4, 2024).

Pham, T. (2025). Ethical and legal considerations in healthcare AI: innovation and policy for safe and fair use. R. Soc. Open Sci. 12:241873. doi: 10.1098/RSOS.241873

Press Trust of India. (2025). “AI in healthcare likely to contribute $30 Bn to India’s GDP by 2025.” The Economic Times. Available online at: https://economictimes.indiatimes.com/industry/healthcare/biotech/healthcare/ai-in-healthcare-likely-to-contribute-30-bn-to-indias-gdp-by-2025-report/articleshow/118603045.cms (accessed February 27, 2025).

Qin, Z. Z., Naheyan, T., Ruhwald, M., Denkinger, C. M., Gelaw, S., Nash, M., et al. (2021). A new resource on artificial intelligence powered computer automated detection software products for tuberculosis programmes and implementers. Tuberculosis 127:102049. doi: 10.1016/j.tube.2020.102049

Sauerbrei, A., Kerasidou, A., Lucivero, F., and Hallowell, N. (2023). The impact of artificial intelligence on the person-centred, doctor-patient relationship: some problems and solutions. BMC Med. Inform. Decis. Mak. 23:73. doi: 10.1186/s12911-023-02162-y

Shah, R., and Chircu, A. M. (2018). IoT and AI in healthcare: a systematic literature review. Issues Inf. Syst. 19, 33–41. doi: 10.48009/3_iis_2018_33-41

Sigfrids, A., Leikas, J., Salo-Pöntinen, H., and Koskimies, E. (2023). Human-centricity in AI governance: a systemic approach. Front. Artif. Intell. 6:976887. doi: 10.3389/FRAI.2023.976887

Singhal, K., Azizi, S., Tao, T., Sara Mahdavi, S., Wei, J., Chung, H. W., et al. (2023). Large language models encode clinical knowledge. Nature 620, 172–180. doi: 10.1038/S41586-023-06291-2

Surendran, S. (2024). Concerns and considerations of using artificial intelligence in research: a qualitative exploration among public health residents in Kolkata. Indian J. Community Med. 49, S78–S79. doi: 10.4103/ijcm.ijcm_abstract271

Tanos, P., Yiangou, I., Prokopiou, G., Kakas, A., and Tanos, V. (2024). Gynaecological artificial intelligence diagnostics (GAID) GAID and its performance as a tool for the specialist doctor. Healthcare (Switzerland) 12:223. doi: 10.3390/healthcare12020223

Vollmer, S., Mateen, B. A., Bohner, G., Király, F. J., Ghani, R., Jonsson, P., et al. (2020). Machine learning and artificial intelligence research for patient benefit: 20 critical questions on transparency, replicability, ethics, and effectiveness. BMJ 368:6927. doi: 10.1136/BMJ.L6927

World Health Organization (2024). Ethics and governance of artificial intelligence for health: Guidance on large multi-modal models. Geneva: World Health Organization.

World Health Organization Guidance (2021). Ethics and governance of artificial intelligence for health. 1st Edn. Geneva: World Health Organization.

Xu, J., Xiao, Y., Wang, W. H., Ning, Y., Shenkman, E. A., Bian, J., et al. (2022). Algorithmic fairness in computational medicine. EBioMedicine 84:104250. doi: 10.1016/J.EBIOM.2022.104250

Yadav, N., Pandey, S., Gupta, A., Dudani, P., Gupta, S., et al. (2023). Data privacy in healthcare: in the era of artificial intelligence. Indian Dermatol. Online J. 14, 788–792. doi: 10.4103/IDOJ.IDOJ_543_23

Yadavar, S. (2018). “India worse than Bhutan, Bangladesh in healthcare, ranks 145th globally.” Business Standard. Available online at: https://www.business-standard.com/article/current-affairs/india-worse-than-bhutan-bangladesh-in-healthcare-ranks-145th-globally-118052400135_1.html (accessed May 24, 2018).

Keywords: AI-powered healthcare, AI regulation, augmented intelligence (AI), health data, medical privacy, law and AI

Citation: Nasir M, Siddiqui K and Ahmed S (2025) Ethical-legal implications of AI-powered healthcare in critical perspective. Front. Artif. Intell. 8:1619463. doi: 10.3389/frai.2025.1619463

Edited by:

Filippo Gibelli, University of Camerino, Italy

Reviewed by:

Juan José Martí-Noguer, Digital Mental Health Consortium, Spain

Hariharan Shanmugasundaram, Vardhaman College of Engineering, India

Copyright © 2025 Nasir, Siddiqui and Ahmed. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Samreen Ahmed, sahmad8@myamu.ac.in

Mohammad Nasir

Kaif Siddiqui

Samreen Ahmed