- 1German Research Center for Artificial Intelligence (DFKI), Interactive Machine Learning Department, Saarbrücken, Germany

- 2Applied Artificial Intelligence, University of Oldenburg, Oldenburg, Germany

The scanpath is an important concept in eye tracking. It refers to a person's eye movements over a period of time, commonly represented as a series of alternating fixations and saccades. Machine learning has been increasingly used for the automatic interpretation of scanpaths over the past few years, particularly in research on passive gaze-based interaction, i.e., interfaces that implicitly observe and interpret human eye movements, with the goal of improving the interaction. This literature review investigates research on machine learning applications in scanpath analysis for passive gaze-based interaction between 2012 and 2022, starting from 2,425 publications and focussing on 77 publications. We provide insights on research domains and common learning tasks in passive gaze-based interaction and present common machine learning practices from data collection and preparation to model selection and evaluation. We discuss commonly followed practices and identify gaps and challenges, especially concerning emerging machine learning topics, to guide future research in the field.

1 Introduction

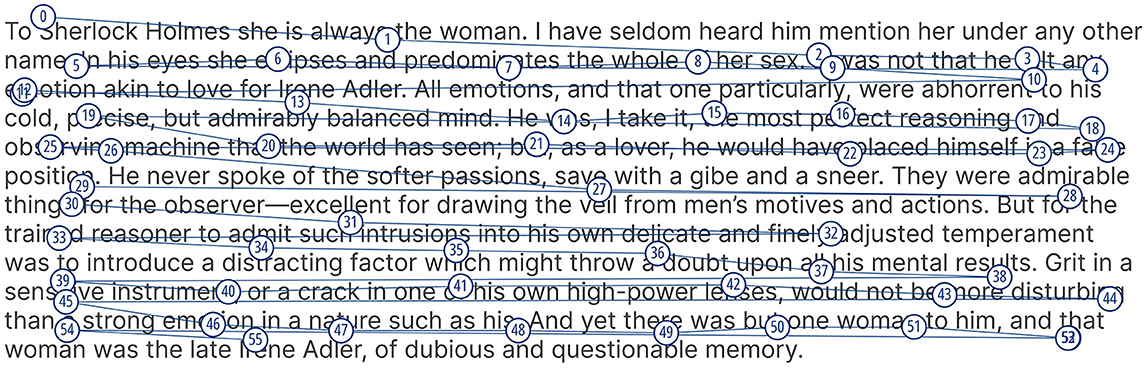

Eye tracking is a technology that records eye movements and gaze locations over time (Carter and Luke, 2020), and has seen increased usage in research over recent years. The scanpath is an important concept in eye tracking, and it refers to the trace of a user's eye movements across space over a period of time (Holmqvist et al., 2011). Scanpaths are closely associated with two eye tracking terms: fixations and saccades. Fixations describe the state when the eyes remain relatively still for a time period lasting between a few tens of milliseconds up to a few seconds, while saccades are the rapid eye movements from one fixation to another (Holmqvist et al., 2011). The combination of both fixations and saccades produces a scanpath. Figure 1 shows a visual encoding of a scanpath where the numbered circles represent fixations, the lines connecting them represent saccades, and both are superimposed on top of a text stimulus. Scanpaths are often regarded as one of the most commonly used methods for analyzing and representing human eye movements (Blascheck et al., 2017; Li et al., 2021).

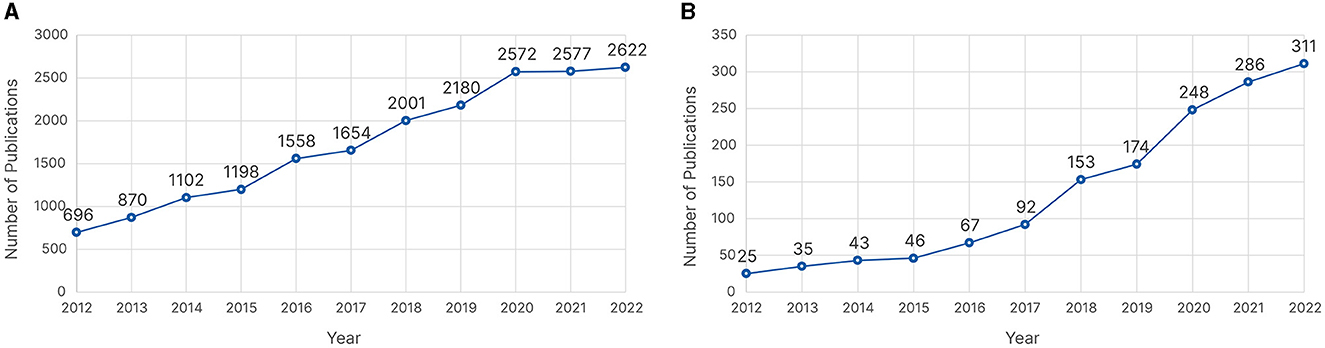

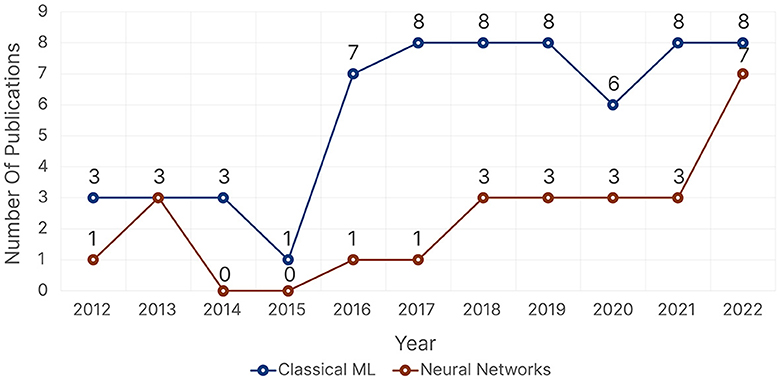

The latest Artificial Intelligence (AI) Index Annual Report revealed that AI research has more than doubled since 2010 (Maslej et al., 2023). This prompted us to investigate whether this trend can also be found in eye tracking research. A query to the Dimensions AI database1 for eye tracking publications2 from 2012 to 2022 showed an almost fourfold increase in the number of publications, as shown in Figure 2A. This growth has occurred despite a slowdown due to the COVID-19 pandemic. By further refining our search query to include some common machine learning (ML) keywords,3 we observed a rapid increase in publications, with more than a tenfold increase from 2012 to 2022, as shown in Figure 2B. This shows that the eye tracking community has been rapidly adopting ML algorithms in their research, aligning with the statement of Maslej et al. (2023). These findings encouraged us to conduct a literature review where we focus on a specific topic within eye tracking research to provide an overview of how ML has been used. Because of the importance of scanpaths and because automated scanpath analysis has been under investigation since the late nineties (Brandt and Stark, 1997), we decided to focus on scanpaths for passive gaze-based interaction, Passive gaze-based applications use eye tracking as a supporting modality to monitor and understand a user's behavior without the user actively interacting with the system using their gaze (Qvarfordt, 2017; Duchowski, 2018).

Figure 2. Number of publications from 2012 to 2022 retrieved from Dimension AI on 27 November 2023 for (A) eye tracking as whole and (B) eye tracking alongside machine learning.

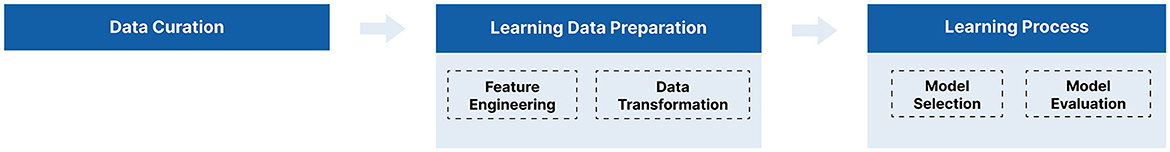

This paper provides a practical overview of how ML has been used for scanpath analysis in passive gaze-based applications. Our contribution lies in reporting and summarizing findings from 77 publications between 2012 and 2022. We begin by examining the different research domains and passive gaze-based learning tasks to see the current trends in the field. Afterwards, we structure our ML findings in the order of a general ML workflow based on those from Amershi et al. (2019) and Souza et al. (2022). An ML workflow is a high-level overview of the different steps needed for an ML project. It consists of three main steps: the data curation, where a dataset is acquired; the learning data preparation, where the dataset is transformed into a proper format ready to be processed by the ML algorithm; and the learning process, where the ML algorithm is trained on the dataset, and is evaluated afterwards. We provide a comprehensive overview of how ML has been used in scanpath analysis for passive gaze-based interaction to highlight research gaps that could benefit from further investigation; we do so by providing answers to these questions:

Q1. What research domains have used machine learning to analyze scanpaths, and what passive gaze-based learning tasks have they focused on?

Q2. Which research domains and passive gaze-based learning tasks have yet to use machine learning in scanpath processing?

Q3. What are the commonly followed machine learning practices, in line with a general machine learning workflow, that have been used for scanpath analysis in passive gaze-based applications?

Q4. Which machine learning topics have yet to be investigated for scanpath analysis in passive gaze-based interaction, and what benefits could they provide?

2 Review methodology

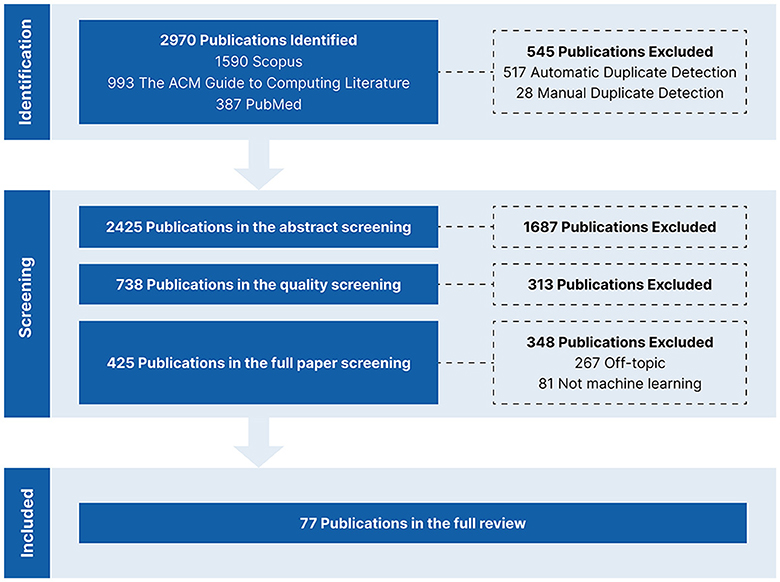

A literature review mainly consists of four main phases: the identification phase, where we retrieve publications from databases using keywords; the screening phase, where we classify the retrieved publications as either relevant or irrelevant to our topic; the data extraction phase, where we extract the information and insights that we want to report from the relevant publications; and the reporting phase where we report our findings. We followed the PRISMA4 framework (Page et al., 2021) throughout the review to make sure we did not overlook any step in our reporting. Figure 3 shows our PRISMA flow diagram to summarize and provide an overview of the different phases in our review.

2.1 Identification

We searched for English research articles on eye tracking and scanpaths published between 2012 and 2022 in three databases: Scopus,5 The ACM Guide to Computing Literature,6 and PubMed.7 This is in line with the AMSTAR8 guidelines of querying at least two databases for a literature review (Shea et al., 2017). Our search query consisted of two terms connected via an AND operator, and each term consisted of multiple keywords connected via an OR operator as follows: (“Eye Tracking” OR “Eye-Tracking” OR “Eyetracking” OR “Eye Movement”) AND (“Scan Path*” OR “Scanpath*” OR “Visual Scanning” OR “Gaze Pattern”). We defined the query based on a preliminary search of related publications. We included the word stem for the most common spellings of Scanpath and Eye Tracking. Adding an asterisk * allows search engines, with regular expression support, to return results containing the singular or plural of a word and corresponding compound words. In addition, to make sure we did not overlook any publication, we included the keywords Gaze Pattern and Eye Movement. We decided not to include any ML keywords in the query because we did not want to overlook or miss relevant publications; ML is very diverse, and we might have gotten limited results if we had only focused on certain keywords. The databases retrieved publications with at least one of the keywords in their title or abstract. As shown in Figure 3, we retrieved 2,970 publications from all three databases. We used the Zotero9 reference management tool to automatically detect duplicates, which we manually double-checked. We discarded 545 duplicates: 517 were identified by Zotero, and 28 by manual inspection.

2.2 Screening

We started the screening phase with 2,425 publications. We conducted the initial screening step based on the abstracts. We discarded any review papers, workshop papers, demo papers, extended abstracts, book chapters, and any publication that was clearly out of scope. We ended the initial screening by discarding 1,687 publications and retaining 738 publications for further analysis.

Afterwards, we conducted an intermediate screening step based on the venue quality.10 For journals, we only retained journals with an impact factor of three or higher. For conferences, we only retained A-ranked conferences. In addition, to ensure that we did not discard any specialized venues regardless of their rank or impact factor, we retained any venue, whether journal or conference, with five or more publications. We conducted the quality-based intermediate screening to ensure that we focused on publications from top venues. This might have led to discarding some relevant papers, but we believe this step was crucial for quality assurance. We ended the intermediate screening by discarding 313 publications and retaining 425 publications.

For the final screening step, we screened the full papers to make sure they were within our scope and that they used ML to process scanpaths. We discarded 267 publications that were not passive gaze-based applications or for scanpath processing, and 81 publications that did not use ML for scanpath processing. We ended the screening phase with 77 publications for information extraction.

2.3 Information extraction

We started the information extraction phase with 77 publications, which included 48 journal publications and 29 conference publications. The top three venues with respect to the number of remaining publications were The ACM Symposium on Eye Tracking Research and Applications, (ETRA)11 The ACM International Conference on Multimodal Interaction (ICMI),12 and Vision Research Journal.13 Moving forward, we present the different insights we extracted from the publications in the order of the ML workflow shown in Figure 6. However, we first discuss the research domains and learning tasks across the 77 publications. This step precedes the ML workflow but should help identify the current research state and possible challenges and gaps. We attached an Excel sheet as Supplementary material to this review, which includes all the information extraction phase details. It includes the publications' metadata, research domain categorization, learning tasks, used ML algorithms, and extracted scanpath features. In addition, it lists all experiments and corresponding results per publication.

3 Domains and tasks

Across the 77 publications included in this review, we identified four main research domains and ten main passive gaze-based learning tasks, with an additional domain and task labeled as Other for those with fewer than three publications each. We categorized publications based on the ML task of interest for which the scanpaths were processed, which led to some publications falling into multiple domains and tasks. We begin this section with an overview of the research domains, followed by the learning tasks.

3.1 Research domains

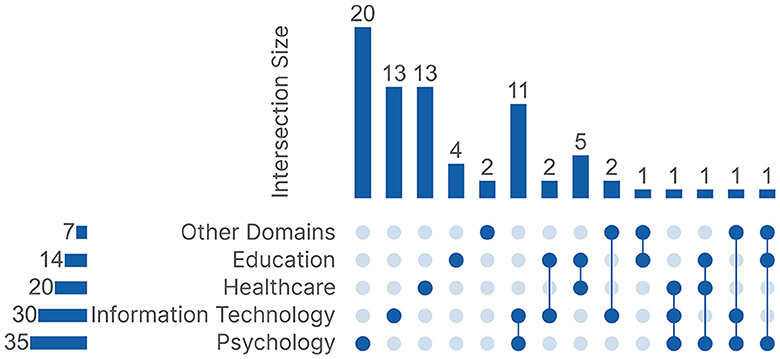

Research domains present a high-level perspective on the focus of studies on ML for scanpath processing in passive gaze-based applications. We found that the publications primarily fell under five research domains: Education, Healthcare, Psychology, Information Technology, and Other Domains, which includes domains with fewer than three publications each. Each publication was categorized based on what it wanted to infer from the ML results. Some publications focused on multiple tasks, which led them to be categorized under multiple domains. Figure 4 shows an UpSet Plot (Lex et al., 2014) to visualize the different connections between all five research domains. Of the 77 publications, 52 were categorized under a single domain, and 25 were categorized under multiple domains.

3.1.1 Education (n = 14 publications)

Education focuses on publications directed toward teaching and learning processes. In addition to designing and evaluating educational material, theories, and technologies. For example, how dental students from different semesters differ in their visual behavior while viewing medical scans (Castner et al., 2018) or predicting learning behavior while doing complex tasks (Giannakos et al., 2019).

3.1.2 Healthcare (n = 20 publications)

Healthcare focuses on diagnosing various health conditions, such as vision loss (Crabb et al., 2014) and different psychiatric, learning, neurodevelopmental, and mood disorders (Atyabi et al., 2023). In addition to any task related to healthcare professionals, such as comparing their visual behavior (Castner et al., 2018), or monitoring their attention (Khosravan et al., 2019).

3.1.3 Psychology (n = 35 publications)

Psychology holds a wide range of publications aimed at understanding and predicting human behavior and cognitive processes. This includes exploring perceptual and behavioral differences across different groups (Abdi Sargezeh et al., 2019), monitoring stress levels (Kim et al., 2022) and cognitive load (Ktistakis et al., 2022), assessing reading behaviors (Kelton et al., 2019), understanding emotion perception and attention patterns (Kanan et al., 2015), and predicting user tasks and decisions in various contexts (Coutrot et al., 2018). The domains Psychology and Healthcare are closely related. We assigned a publication to Healthcare if it focused on diagnosis only. If the underlying visual behavior was investigated as well, such as in Hayes and Henderson (2018), we assigned both domains.

3.1.4 Information Technology (n = 30 publications)

Information Technology has two main types of publications: those that focus on the technical implementation and the methodology more than the actual task, e.g., Li et al. (2021), and those that focus on technology tasks such as assessing password strength (Abdrabou et al., 2021), user experience (UX) evaluation (Moon et al., 2021), adaptive visualizations (Fu and Steichen, 2022), and contributing to fields like affective computing and cognitive modeling (Alghofaili et al., 2019).

3.1.5 Other Domains (n = 7 publications)

Other Domains holds various publications which could not be clearly assigned to only one of the other four domains. This includes publications related to driving (Lethaus et al., 2013), aviation (Peysakhovich et al., 2022), maritime (Li et al., 2022), marketing and product design (Moacdieh and Sarter, 2017), and linguistics (Reich et al., 2022).

3.2 Learning tasks

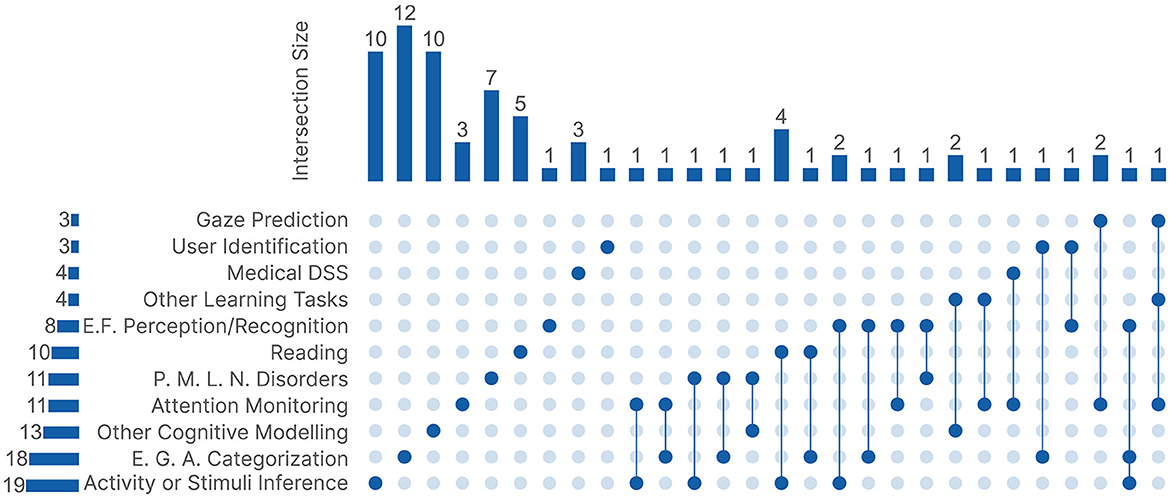

Learning tasks offer a more detailed perspective on the research focus across the 77 publications. Similar to the research domains, some publications were categorized under multiple learning tasks. This was mainly because of publications that developed novel processing methods and tested them on different tasks. We had ten main task groups alongside an extra group Other Tasks, which includes tasks with fewer than three publications each. Figure 5 shows an UpSet Plot to visualize the connections between all 11 learning tasks. Similar to the research domains, out of the 77 publications, 52 were categorized under only one learning task.

Figure 5. The UpSet plot between the 11 learning tasks. Medical DSS, Medical Decision Support System; E.F., emotion or face; P.M.L.N., psychiatric, mood, learning, or neurodevelopmental; E.G.A, Experience, Gender, or Age Categorization.

3.2.1 Activity, or Stimuli Inference (n = 19 publications)

Activity, or Stimuli Inference holds publications that focus on predicting the activity or stimulus based on the assumption that different stimuli and activities produce different scanpath data. For activity prediction, the activities were often diverse, including viewing natural images, web surfing, or watching a video (Greene et al., 2012; Kanan et al., 2014; Haass et al., 2016; Martinez et al., 2017; Coutrot et al., 2018; Hild et al., 2018; Srivastava et al., 2018; Kucharský et al., 2020; Lan et al., 2020). However, some publications focused on specific tasks such as driving cars (Lethaus et al., 2013), piloting plans (Peysakhovich et al., 2022), or reading text (Biedert et al., 2012; Kelton et al., 2019). For stimuli prediction, publications used different videos, images, or text types to study the visual behavior unique to each stimulus (Greene et al., 2012; Lanatà et al., 2013; Kanan et al., 2014; Coutrot et al., 2016, 2018; Fuhl et al., 2019; Necka et al., 2019; Lan et al., 2020; Wang et al., 2020; Li et al., 2021).

3.2.2 Experience, Gender, or Age Categorization (n = 18 publications)

Experience, Gender, or Age Categorization holds publications that focus on the assumption that certain groups of people have unique visual behavior characteristics. This was used to differentiate between different genders (Coutrot et al., 2016; Galdi et al., 2016; Abdi Sargezeh et al., 2019; Atyabi et al., 2023), and age groups (Glady et al., 2013; Galdi et al., 2016; Chaby et al., 2017; French et al., 2017; Atyabi et al., 2023). Other publications focused on identifying characteristic visual behavior associated with different levels of experience within certain professions such as dentists (Castner et al., 2018, 2020, 2022), radiographers and radiologists (Gandomkar et al., 2017, 2018; Li et al., 2019), pilots (Lounis et al., 2021), and students (Pejić et al., 2021). While others focused on different levels of experience, but within a certain task such as history learning (Sáiz-Manzanares et al., 2021), crossword puzzles (Sáiz Manzanares et al., 2020), and coding comprehension (Harada and Nakayama, 2021).

3.2.3 Attention Monitoring (n = 11 publications)

Attention Monitoring holds publications that focus on monitoring the visual attention behavior over the course of a specific activity or a period of time (Jiang et al., 2016; Shi et al., 2017; Xu et al., 2018; Abdelrahman et al., 2019; Khosravan et al., 2019; Lounis et al., 2021; Xia et al., 2021; Peysakhovich et al., 2022). This was sometimes related to other tasks, such as reaction or response time prediction (Moacdieh and Sarter, 2017; Li et al., 2022), or modeling a participant's conversation engagement (Ishii et al., 2013).

3.2.4 Emotion, or Face Perception/Recognition (n = 8 publications)

Emotion, or Face Perception/Recognition holds publications that focus on the visual behavior associated with viewing different faces or emotion-inducing stimuli to predict the recognized face or the perceived emotion. These publications explicitly used stimuli depicting different emotions, such as anger and happiness (Lanatà et al., 2013; Kanan et al., 2015; Chaby et al., 2017; Shi et al., 2017), or different people's faces (Coutrot et al., 2016; Chaby et al., 2017; Chuk et al., 2017a; Król and Król, 2019; Necka et al., 2019). This task group focused explicitly on those types of stimuli, which meant that if a publication focused, for example, on different activities to induce certain emotions, it was not included in this task group.

3.2.5 Reading (n = 10 publications)

Reading holds publications that focus on aspects related to reading behavior. It is built on the assumption that reading could be a unique indication toward understanding a participant's or a text's characteristics. This includes predicting text difficulty (Wang et al., 2020; Reich et al., 2022), text relevance to a trigger question (Bhattacharya et al., 2020a), the type of text document (Lan et al., 2020), reading comprehension (Reich et al., 2022; Southwell et al., 2022), whether the reader is a native speaker of the text language (Reich et al., 2022), and whether the participant is reading or skimming the text (Biedert et al., 2012; Kelton et al., 2019). In addition to specialized use cases such as understanding visual behavior indicative of correct and incorrect responses to a reading task (Nakayama and Hayashi, 2014), evaluating computer science skills (Harada and Nakayama, 2021), and evaluating sarcasm understandability (Mishra et al., 2016).

3.2.6 Other Cognitive Modeling (n = 13 publications)

Other Cognitive Modeling holds publications that focus on cognitive modeling tasks. However, the previous learning tasks could fully or partially be considered forms of cognitive modeling. This task group does not include any of the learning tasks from any of the previous groups. This group holds tasks such as predicting confidence (Smith et al., 2018), confusion (Sims and Conati, 2020), stress (Kim et al., 2022), cognitive workload (Ktistakis et al., 2022), navigation aid requirement (Alghofaili et al., 2019), and cognitive processing (Raptis et al., 2017; Roy et al., 2020). In addition, it holds publications that focus on understanding the visual behavior indicative of success or failure (Giannakos et al., 2019; Appel et al., 2022; Fu and Steichen, 2022), modeling how different disorders affect the viewing behavior of natural images (Hayes and Henderson, 2018), evaluating gaze behavior associated with weak and strong password creation (Abdrabou et al., 2021), and perception of product design (Moon et al., 2021).

3.2.7 Psychiatric, Mood, Learning, or Neurodevelopmental Disorders (n = 11)

Psychiatric, Mood, Learning, or Neurodevelopmental Disorders holds publications that focus on different tasks related to multiple disorders such as Autism Spectrum Disorder (ASD) (Hayes and Henderson, 2018; Król and Król, 2019; Liaqat et al., 2021; Li et al., 2021; Kanhirakadavath and Chandran, 2022; Varma et al., 2022; Atyabi et al., 2023), schizophrenia (Benson et al., 2012; Nikolaides et al., 2016), bipolar disorder (Chung et al., 2018), depression (Chung et al., 2018; Zhang et al., 2022), Attention Deficit Disorder (ADD) (Hayes and Henderson, 2018), and dyslexia (Hayes and Henderson, 2018).

3.2.8 Medical Decision Support System (n = 4 publications)

Medical Decision Support System holds publications that focus only on medical tasks, such as diagnosing vision loss (Crabb et al., 2014; David et al., 2019; Krishnan et al., 2021) and understanding visual attention on medical images (Khosravan et al., 2019). There is an overlap between this task group and the previous task group, i.e., Psychiatric, Mood, Learning, or Neurodevelopmental Disorders. However, due to the nature of the publications in the previous task group, we did not want to add them underneath Medical Decision Support Systems. So, we created a distinction between both groups.

3.2.9 User Identification (n = 3 publications)

User Identification holds publications that focus on collecting data from multiple participants to try and identify each participant based on their unique visual behavior (Kanan et al., 2015; George and Routray, 2016; Pejić et al., 2021).

3.2.10 Gaze Prediction (n = 3 publications)

Gaze Prediction holds publications that focus on predicting future gaze behavior based on previous gaze behavior depicted in scanpath data (Jiang et al., 2016; Xu et al., 2018; Xia et al., 2021).

3.2.11 Other Tasks (n = 4 publications)

Other Tasks holds various publications that could not be clearly assigned to only one of the other 11 learning tasks. This includes security tasks for evaluating password strength (Abdrabou et al., 2021), evaluating product design and user experience (Moacdieh and Sarter, 2017; Moon et al., 2021), and visual search (Xu et al., 2018).

4 Machine learning and scanpaths

Our goal in this review is to present how ML is used to process scanpaths for passive gaze-based applications. The previous section explored the different research domains and passive gaze-based learning tasks. This section focuses on the ML process, which is organized according to an ML workflow. Figure 6 shows the workflow that we followed, which is based on the workflows from Amershi et al. (2019) and Souza et al. (2022). This workflow is divided into three main parts: Data Curation, Learning Data Preparation, and Learning Process.

In the Data Curation, we outline the specifications for both the apparatus and the participants involved in the data collection studies. Additionally, we report on the use of external datasets by some publications as an alternative to conducting user studies. In the Learning Data Preparation, we discuss the various scanpath representation formats and features, and the different strategies for partitioning the data into training and testing subsets for ML algorithms. In the Learning Process, we examine the different ML algorithms, with a more detailed focus on neural networks, and evaluation metrics reported across the various publications. We present our findings throughout each step and draw connections, when appropriate, to the different research domains and ML algorithms. This should provide a more tailored experience across the full topic of interest and highlight the standard practices followed in the field.

4.1 Data Curation

This section focuses on the practical steps required to prepare a properly labeled and ready-to-use dataset. We report the specifications of the used eye trackers and information regarding participant selection to help guide an informed decision. In addition, we report the external datasets used by some publications.

4.1.1 Apparatus

An important step in eye tracking studies is to decide on the eye tracker frequency. We could not find consistent reporting across all 77 publications because some studies reported the frequency of the eye tracker, others reported the downsampling frequency, and a few reported the eye tracker model without detailing any frequencies. For studies that reported a downsampling frequency, it was used instead of the data collection frequency. For those that did not report a frequency, the default frequency of the device found online was documented. If a publication used multiple datasets or conducted separate user studies, we treated each one separately. However, if a publication conducted a single user study and later examined different use cases using the same dataset, it was recorded only once.

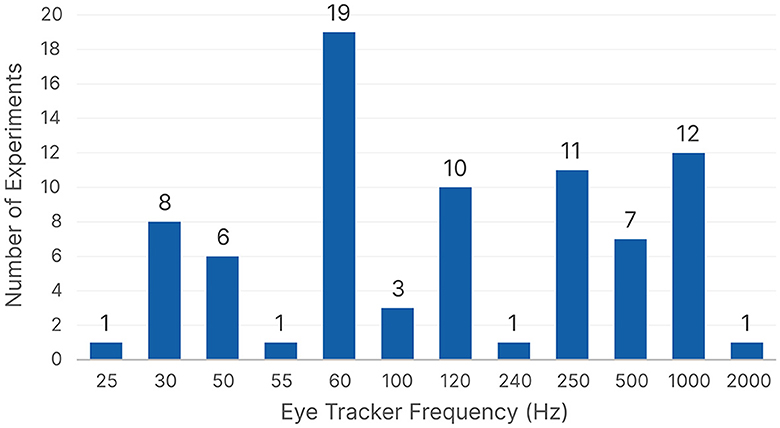

Figure 7 shows the distribution of the eye tracker frequencies. We can see that 60 Hz is the most frequently used eye tracker frequency, followed by 1,000 Hz. However, most studies that reported a downsampling frequency used a 1,000 Hz eye tracker, which was then downsampled to either 500 Hz (Benson et al., 2012; Mishra et al., 2016; Necka et al., 2019; Kucharský et al., 2020) or 250 Hz (Coutrot et al., 2016, 2018; George and Routray, 2016). Upon further investigation, we found that 60 Hz was the most common frequency across all five domains except for Psychology, which had 1,000 Hz as the most popular choice. When we considered the type of ML algorithm used afterwards in the processing, we found that 60 Hz was still the most common frequency for traditional ML, but 120 Hz was the most common choice for neural networks. We can see that across almost all domains and ML algorithms, 60 Hz is the most common choice, followed by 1,000, 250, and 120 Hz, respectively.

4.1.2 Participant demographics and group distribution

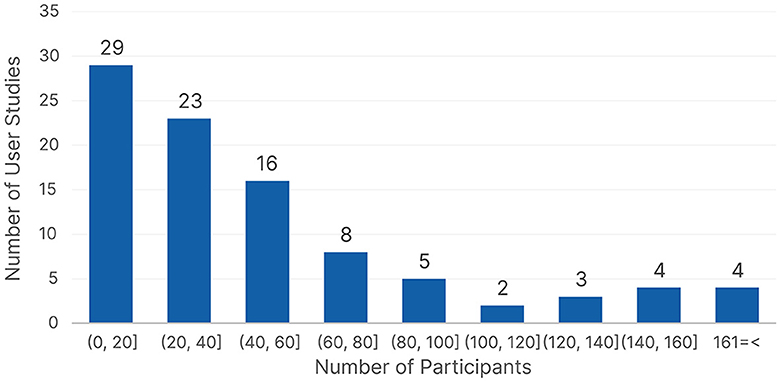

Another important aspect of user studies is to determine the number of participants. We found a total of 94 reported datasets across the different publications because a few publications conducted multiple user studies or used more than one dataset. Figure 8 shows the number of participants reported across the different datasets, we had 57 unique dataset sizes, so we grouped them to provide better insights. More than 50% of the reported datasets used data from up to 40 participants. We found that using 24 participants (n = 8), followed by eight participants (n = 7) were the most popular single values. Only three publications (Benson et al., 2012; Coutrot et al., 2016; Appel et al., 2022) used more than 200 participants; all three publications used existing datasets and did not conduct their own user studies. Upon further investigation, we found that Information Technology, Psychology, and Education preferred using data with up to 40 participants, but Healthcare preferred larger datasets with 61–80 participants. The insights did not change drastically when looking at the data with respect to the type of ML algorithm, with collecting data from up to 40 participants being the most frequent strategy for both traditional ML and neural network algorithms. We can see that there is a tendency to collect data from up to 40 participants, with a slight preference for around 20 participants throughout the different domains and ML algorithms.

Out of the 94 reported datasets, only 44 reported the number of females and males. Fifteen datasets were balanced, with female participants making between 40 and 55% of the data. Only eight datasets were skewed toward having more female participants, with female participants making up between 60 and 80% of the data. While 21 datasets had more male participants, with female participants making up <40% of the data. We had 27 datasets with distinct control and target groups. For any diagnosis, the healthy participants were the control group; for any experience categorization, the experienced professionals were the control group; and for age categorization, older participants were the control group. Sixteen datasets had an almost balanced split, with the target group making between 40 and 55% of the data, with seven datasets having a perfect 50-50 split. The target group across the remaining 11 datasets made between 56 and 78% of the data.

Reporting the demographic information of the dataset is important because it could lead to future contributions. For example, if a study wants to focus on data from only one gender or for participants belonging to a certain age group and so on. In addition, diverse datasets concerning gender and age have a better chance of providing insights that could lead to better generalizability. We could not provide similar insights toward the number of stimuli or participants' ages, because they were not consistently reported. Some publications reported the number of trials, some reported the length of the recording, and others reported the number of collected gaze points. There was an inconsistency in the reporting for us to extract meaningful insights, which could also come back to the range of learning tasks the publications covered.

4.1.3 External datasets

Eighteen publications opted to use already available datasets for a total of 23 different reported datasets. Only three datasets were used by two different publications: the OSIE dataset (Xu et al., 2014), the MIT dataset (Judd et al., 2009), and the dataset from Coutrot and Guyader (2014). While the publications for the SedentaryActivity dataset (Srivastava et al., 2018) and the dataset from Greene et al. (2012) are both covered within this review. Table 1 lists all 23 datasets alongside the learning tasks that they were used for. We wanted to report these datasets because they might be available online or via correspondence with their respective authors. This could help researchers save the tedious time, effort, and expenses required for collecting a dataset and conducting a user study.

Table 1. Table listing the 23 reported external datasets, alongside the learning tasks they were used for and by which publication.

4.2 Learning data preparation

In the learning data preparation, we focus on preparing the dataset as a suitable input to the ML algorithm. This section focuses on the different scanpath representation formats and the different methods for splitting a dataset into training and testing subsets. This is part of formulating the standard ML practices for scanpath processing.

4.2.1 Scanpath representation formats

We found that there were six main formats for representing scanpaths as suitable inputs to an ML algorithm: Visual Encoding (n = 10 Publications), String Representation (n = 12 Publications), Time Series (n = 9 Publications), Graph Representation (n = 4 Publications), Feature Engineering (n = 64 Publications), and Hidden Markov Modeling (n = 6 Publications). Visual Encoding is when a scanpath, i.e., the combination of fixations and saccades, is projected on top of either a stimulus, as shown in Figure 1, or an empty space; this is the format most commonly associated with scanpaths. String Representation is the name we gave to any sequence of symbols representing a scanpath, which Holmqvist et al. (2011) referred to as symbol sequences; this format includes Area Of Interest (AOI) strings where each symbol represents a fixation or a dwell on an AOI, saccade amplitude and direction-based strings, and fixation duration strings. Time Series Representation is when a scanpath is formatted as an ordered series of coordinates, sometimes accompanied by the duration. Graph Representation is when the gaze data in a scanpath are clustered or grouped to create nodes and edges representing a graph structure. Feature Engineering is a very general term representing when features, e.g., fixation duration, are computed to represent certain aspects of a scanpath. Hidden Markov Modeling (HMM) is a type of feature engineering, but because it has been mentioned explicitly by six publications and not as part of feature engineering (Kanan et al., 2014, 2015; Coutrot et al., 2016, 2018; Jiang et al., 2016; Necka et al., 2019), we decided to treat it separately. A HMM is a statistical method used to analyze data that changes over time; the unique feature of HMMs is that they are based on Markov processes, which are memoryless stochastic processes; this means that the probability distribution of the next state depends solely on the current state, not on the sequence of events that led to it (Coutrot et al., 2018).

Feature engineering is the most used format, with 64 publications, but before focusing more on it, we wanted to investigate the other five formats without feature engineering in the picture. We found that the string representation format was the most common representation format for Education and tied with time series for Information Technology. Psychology had the majority of HMM studies, which was tied as the most popular choice, along with time series representation. Lastly, Healthcare had visual encoding as the most popular format. When we considered the learning tasks, we found that Experience, Gender, or Age Categorization and Attention Monitoring were the two tasks with high usage of string representation, while Activity, or Stimuli Inference and Emotion, or Face Perception/Recognition were the two tasks with high usage of HMM. The remaining tasks were not as frequent in using formats other than feature engineering. Lastly, when we considered the type of ML algorithm, we found that string representation was the most common choice for traditional ML algorithms, while visual encoding was the most common format for neural networks, followed closely by time series data.

4.2.1.1 Feature engineering

We focused on features directly related to scanpaths, fixations, saccades, or AOIs for feature engineering. We excluded any blink features, pupil features, or features computed from additional modalities, such as keyboard keystrokes (Giannakos et al., 2019; Wang et al., 2020), thermal imaging (Abdelrahman et al., 2019), Electroencephalogram (EEG) (Shi et al., 2017; Giannakos et al., 2019; Moon et al., 2021), and physiological monitoring wristbands (Giannakos et al., 2019). Moving forward, when we refer to feature or relevant feature, this is what we mean.

Across the reviewed publications, we found 126 different reported features. Most publications extracted up to 10 features (n = 57 Publications) with an average of five features per publication. However, we had two extreme outliers, i.e., Giannakos et al. (2019) extracted 36 features, and Sáiz-Manzanares et al. (2021) extracted 26 features, but both of them used feature selection methods afterwards, especially since Giannakos et al. (2019) had even more features computed from other modalities.

We categorized each feature based on its type into fixation-based, saccade-based, AOI-based, and scanpath. Saccade-based features were the most diverse and numerous (n = 63 features). Fixation-based features came in second (n = 40 Features), followed by full scanpath features (n = 35 Features) and AOI-based features (n = 28 Features), respectively. Fixation-based features, e.g., fixation count, and saccade-based features, e.g., saccade duration, require the computation of fixations or saccades, respectively, in order to correctly extract the features. Scanpath features, e.g., scanpath length, require the full scanpath to be constructed in order to compute the feature, and AOI-based features, e.g., fixation count per AOI, take the AOIs into consideration for feature extraction. Some features belong to more than one group, e.g., fixation count per AOI is considered both a fixation-based and an AOI-based feature.

Despite having more saccade-based features, fixation-based features were more commonly used per publication. Average fixation duration was the most commonly used feature (n = 24 Publications), followed by total fixation duration (n = 16 Publications) and total fixation count (n = 16 Publications). Scanpath length (n = 13 Publications) was the most common full scanpath feature, followed by total scanpath duration (n = 6 Publications). Meanwhile, saccade amplitude (n = 7 Publications), total saccade count (n = 7 Publications), and total saccade duration (n = 7 Publications) were the most commonly used saccade-based features. For AOI-based features, fixation duration per AOI (n = 9 Publications) and fixation count per AOI (n = 9 Publications) were the most common.

The distribution of the most used features across the research domains and learning tasks showed similar behavior to the overall view, with average fixation duration, total fixation duration, total fixation count, fixation count per AOI, scanpath length, total saccade count, and total saccade duration being the main features used across all five research domains and most of the learning tasks. We found that Activity, or Stimuli Inference and Reading publications showed very similar tendencies to use the same set of features, which could be due to the fact that multiple publications within the Activity, or Stimuli Inference task group used reading as an activity within their user studies. Experience, Gender, or Age Categorization publications tended to use a wide range of feature types but were the most frequent users of scanpath and AOI-based features, which are mostly due to using scanpath comparison metrics, where they compare pairs of scanpaths and use the scores as inputs to the ML algorithm. When considering traditional ML and neural networks, we found that traditional ML publications used all feature types. Almost all of the features, i.e., 118 out of 126 features, were for traditional ML algorithms. However, neural networks focused on a less diverse set of features, i.e., 46 out of 126 features.

After the general insights toward the different features, we wanted to highlight three feature groups. The first feature group contains two unique features that were only used by one publication each. Scanpath Spatial Density was only used by Moon et al. (2021), and Saccade Duration Per AOI was only used by Wang et al. (2020). The second feature group contains different algorithms for quantifying the difference between pairs of scanpaths. They were used by 11 publications (Glady et al., 2013; Jiang et al., 2016; French et al., 2017; Shi et al., 2017; Castner et al., 2018; Król and Król, 2019; Li et al., 2019; Necka et al., 2019; Sáiz Manzanares et al., 2020; Liaqat et al., 2021; Appel et al., 2022). Across the publications, we found the following algorithms: Levenshtein Distance (Levenshtein, 1966), Mannan Distance (Mannan et al., 1997), Uniform Distance model, City Block Distance, Euclidian Distance, Hausdorff Distance, Frechett Distance, Vector-Based Comparison (Jarodzka et al., 2010), Dynamic Time Warping (DTW) (Müller, 2007), FastDTW (Salvador and Chan, 2007), Contrast mining (Dong and Bailey, 2012), Needleman-Wunsch Algorithm (Needleman and Wunsch, 1970), in addition to SubsMatch (Kübler et al., 2017) and ScanMatch (Cristino et al., 2010) which both use the Needleman-Wunsch Algorithm. These algorithms were interesting since they were mostly used alone as inputs to the ML algorithms without any additional features. For further reading, the separate sources for each algorithm or systematic reviews focusing on scanpath comparison algorithms, e.g., Anderson et al. (2015); Fahimi and Bruce (2021), could be helpful. The third feature group contains feature extraction methods that were used alone without extracting additional features. Three publications, i.e., Castner et al. (2020); Li et al. (2021); Kanhirakadavath and Chandran (2022), used a Convolutional Neural Network (CNN)-based architecture to extract the features from scanpaths, which were then fed into another ML model for the learning process. Li et al. (2021) presented the Oculomotor Behavior Framework (OBF), which is a framework that makes use of a convolutional, recurrent, and transformer-based architecture to prepare the data and extract features which can then be used to teach other ML models. Hayes and Henderson (2018) used Successor Representation Scanpath Analysis (SRSA) (Hayes et al., 2011, 2015), which uses temporal difference learning to capture statistical regularities in scanpaths, to quantify the differences between scanpaths. Kucharský et al. (2020) used the Scanpath Transition Probability Matrix, which is a matrix that represents the probability of moving from one state, or AOI, to another, as a feature extraction method to transform their scanpaths. Finally, Pejić et al. (2021) used the Sequence Graph Transform (SGT) algorithm (Ranjan et al., 2022), which is a feature embedding function commonly used in data mining. The majority of these methods are a bit complex, but they provide a single algorithm to transform the scanpaths before the learning process. The only outlier was Lethaus et al. (2013), who used a simple feature, i.e., fixation duration within each AOI, as their single feature of interest.

4.2.2 Data transformation

Data transformation is the final step in preparing scanpath data. It focuses on splitting the data into different subsets for training and testing. This is crucial to ensure that ML models perform well and generalize to unseen data. One common method for data splitting is the holdout method, where the dataset is divided into training, testing, and, sometimes, validation subsets. The training subset is used to train the model, the testing subset is used to evaluate its performance, and the validation subset is used to fine-tune the model parameters. There are various strategies for performing the holdout split. We found that the most commonly used method was to use separate datasets or sessions (n = 9 Experiments), where complete sessions or datasets are used for training, and the remaining sessions or different datasets are used for testing. Without a validation subset, an 80-20 split (n = 7 Experiments) and a 50-50 split (n = 5 Experiments) were the most common training-testing splits. However, with the addition of a validation subset, a 70-20-10 split was the most common training-testing-validation split. User-dependent (n = 10 Experiments) is a special case where each user's data is split into training and testing subsets to create a unique model for each user.

Resampling is another approach that encompasses cross-validation and bootstrap resampling. Bootstrap resampling was only used by Nikolaides et al. (2016) to generate multiple random replicas of the original dataset for training and testing the model, but it replaces the selected samples back into the original dataset once again, allowing it to be picked multiple times. By generating multiple smaller subsets, they are able to estimate the distribution of the model performance afterwards. On the other hand, cross-validation, which encompasses multiple strategies, was a lot more common. K-fold Cross-validation (n = 25 Experiments) works by splitting the data into K equal-sized groups where K−1 groups are used for training the model, and one group is used for testing the model; this is then repeated K times (K-fold) to ensure that each group has been used in the training and testing. From the publications that reported the value of K, we found multiple options, with K = 10 being the most common, followed by K = 5. Leave-one-out Cross-validation (n = 25 Experiments) is a special type of cross-validation where instead of dividing the data into K groups, one sample or stimulus is used for testing the model and the remaining samples or stimuli are used for training the model; this is then repeated over the full dataset to ensure that each sample or stimulus has been used in testing the model. Another type of cross-validation is Leave-user(s)-out Cross-validation (n = 24 Experiments), where the full data from a single user or a group of users are used for testing the model, while the data from the remaining users are used to train the model, which is then repeated for all users; this ensures that the data belonging to a certain user appears either in the training or the testing subsets and not in both.

We can see that Cross-validation accounts for the majority of the reported ML experiments, making it the most preferable method in the literature we reviewed. Cross-validation is more computationally expensive than the holdout method. However, training and testing the model on multiple different data splits provides a better indication of the model's performance.

4.3 Learning process

The learning process is the last step in the ML workflow. At this point, the learning task should have been well-defined, the data curation should have been finished, either by using an already available dataset or conducting a user study to collect a new dataset, and the learning data preparation to decide on the scanpath representation format, the scanpath features, and the data split should have all been done. A lot of these decisions are closely related to the learning step. This section discusses the commonly used ML algorithms for scanpath processing, the commonly reported best-performing models, and the most commonly used evaluation metrics. In addition, we focus more on the neural networks due to their complexity.

4.3.1 Model selection

ML algorithms are often split into three main categories: supervised learning models, unsupervised learning models, and reinforcement learning (RL) models. Supervised learning is when we have a labeled dataset. It can be used for classification, where we predict a discrete value, e.g., gender, or regression, where we predict a continuous value, e.g., stock prices. Unsupervised Learning is when we have an unlabeled dataset. It is often used for dimensionality reduction or clustering problems. Reinforcement Learning is a different type of problem where a model tries to learn how to behave through trial and error (Kaelbling et al., 1996), e.g., autonomous driving.

In our reviewed papers, we had 67 publications that used supervised learning models, 24 that used unsupervised learning models, and only one publication by Jiang et al. (2016) that used the Least-Squares Policy Iteration (LSPI) RL. We found that publications tested more than one algorithm on average before deciding on the best-performing one. Supervised learning publications tested, on average, two models (2.075 ± 1.636). Some publications evaluated only one model, while others evaluated up to six (Roy et al., 2020; Krishnan et al., 2021) or seven (Lanatà et al., 2013; Ktistakis et al., 2022) models. However, unsupervised learning publications tended to focus, on average, on just one model (1.304 ± 0.765).

4.3.1.1 Supervised learning

For supervised learning problems, we found a total of 43 different models, which we grouped into 12 categories: Bayesian Algorithms, CNN-based Architectures, Decision Trees, Ensemble Models (Random forest, Bagged Tree, Gradient Boosting, XGBoost, Adaboost, Gradient Boosted Decision Trees, Decision jungle), Linear Models (Linear Regression, Logistic Regression, Lasso, Multiple Regression), Linear or Quadratic Discriminant Analysis (LDA & QDA), Multilayer Perceptron (MLP), Nearest Neighbors, Gaussian Processes, Recurrent Neural Networks (RNNs), Support Vector Machines (SVMs), and Other Neural Networks which includes networks that were not specified or that did not fall into either CNN-based, RNNs, or MLP such as Feedforward–backpropagation (FFBP) network, Probabilistic Neural Networks (PNNs), Radial Basis Function Neural Networks, and Kohonen Self-Organizing Map.

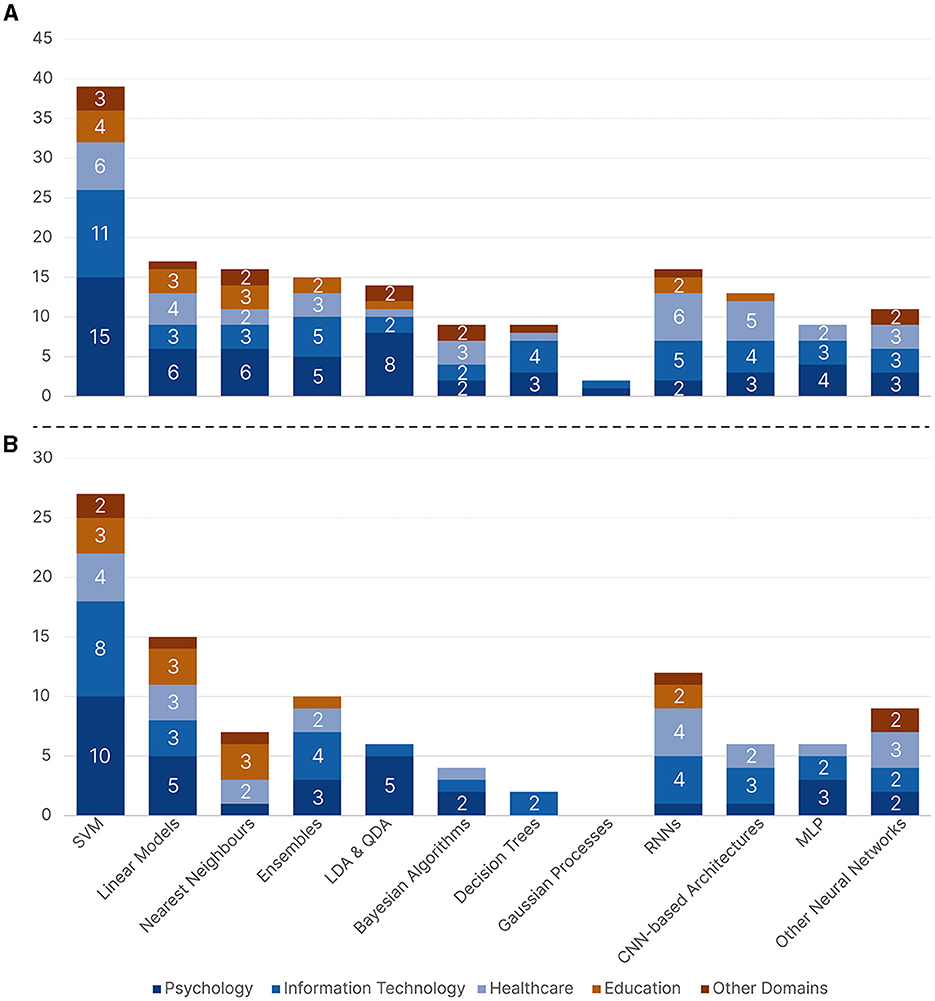

Figure 9A shows the distribution of the reported supervised learning algorithms with respect to the five research domains. SVM models were the overall most common across all five research domains, except for Healthcare, which had RNNs tied with SVMs as the most common models. Almost all research domains were more inclined toward more traditional ML algorithms as opposed to neural networks. When looking at traditional ML algorithms aside from SVMs, Information Technology used Ensemble and Decision Tree models, which are models that usually require hyperparameter optimization, more often than other domains. While both Psychology and Education used simple models such as Linear, Nearest Neighbors, and LDA & QDA models. When focusing on neural networks, Healthcare and Information Technology were the two domains with increased neural network usage, as opposed to Education, which was the domain with the least usage of neural networks. Figure 9B shows the distribution of the best-performing supervised learning algorithms with respect to the five research domains. The distribution did not change much; SVM still came on top as the most commonly reported best-performing model, followed by Linear models and RNNs, respectively. However, Decision Trees, Bayesian, and Gaussian models were less likely to be chosen as the best-performing models.

Figure 9. A bar chart showing the supervised machine learning algorithms across all five research domains where (A) contains all the reported algorithms and (B) contains the reported best-performing algorithms.

4.3.1.2 Unsupervised learning

Twenty-four out of the 77 reviewed publications used unsupervised ML algorithms. They were used for clustering, dimensionality reduction, feature selection, feature generation using Autoencoders, which were only used by Xia et al. (2021), and data generation using Generative Adversarial Networks (GANs), which were only used by Fuhl et al. (2019). We found four types of clustering algorithms: Partitioning Clustering (K-Means, Fuzzy K-Means), Density-Based Clustering (DBSCAN), Hierarchical Clustering, and Grid-Based Clustering (BIRCH). In addition, we found two types of dimensionality reduction and feature selection algorithms: Principal Component Analysis (PCA), and Manifold Learning which includes the Isomap Algorithm used by Chaby et al. (2017), and t-distributed Stochastic Neighbor Embedding (t-SNE) used by Król and Król (2019).

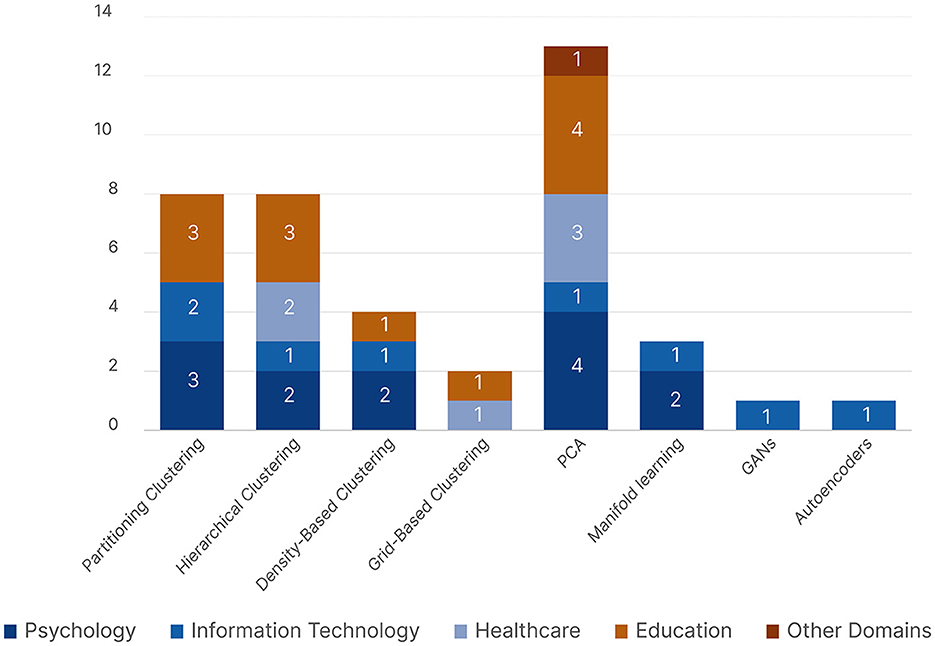

Figure 10 shows the distribution of the unsupervised learning algorithms with respect to the five research domains. Psychology and Education were the main research domains to use unsupervised learning. PCA was the most common unsupervised learning algorithm and the only one used by all five research domains. We can see that both Partitioning and Hierarchical Clustering were more popular than Density-Based and Grid-Based Clustering. Information Technology, represented by Fuhl et al. (2019); Xia et al. (2021), was the only domain to use unsupervised neural networks.

Figure 10. A bar chart showing all the reported unsupervised machine learning algorithms across all five research domains.

4.3.2 Neural network insights

Artificial neural networks are a subset of ML that are inspired to simulate neurons similar to a human brain (Krogh, 2008). Neural networks are often more complex than traditional ML algorithms and often require larger datasets and fine-tuning. In order to provide a comprehensive overview of how ML is used in scanpath analysis for passive gaze-based interaction, we focus in this section on the different details related to neural networks reported across the reviewed publications. We start with the preprocessing steps, which are the same for traditional ML algorithms as well, then the network hyperparameters, and finally, the commonly followed network architectures.

4.3.2.1 Preprocessing

Across the publications that reported any preprocessing, we found that there were seven main preprocessing groups: Artifact Removal, Data Scaling, Data Augmentation, Coordinate Transformation, Windowing, Image Processing, and Fixation Filtering.

Artifact Removal involves removing any unwanted data points, such as blinks, noise, or outliers. Most publications reported using the eye tracking manufacturer's software for blink detection and removal. However, it was different for other artifacts. For example, Alghofaili et al. (2019) used a Moving Average filter, and George and Routray (2016) used a Savitzky-Golay filter to remove noise, while Lan et al. (2020) used a Sliding Window Median filter to remove outliers. Data Scaling consists of data standardization, which re-scales the data to have a mean of zero and a standard deviation of one, and data normalization, which transforms the data to the same scale to ensure uniformity; for example, the minimum value and the maximum value get transformed into zero and one, respectively, and every intermediate value gets transformed into a decimal value between zero and one. Windowing refers to sliding windows that split continuous data into smaller subsets, or expanding windows, which add or expand a window with new values over time. Data Augmentation is a technique to increase the data size artificially. This can be achieved by rotating or flipping existing images, resampling existing data, or interpolating new values between existing ones, e.g., SMOTE (Chawla et al., 2002) is an interpolation-based technique to increase the size of the minority class. We also considered Zero-Padding as a form of data augmentation because it is used to create equal-sized time series samples or windows by augmenting them with zeros. Coordinate Transformation involves changing the data coordinate system. Image Processing encompasses a group of four different processes. Gray Scaling converts an image to different gray tones ranging between black and white. Color Coding encodes different temporal or spatial information, e.g., fixation duration or saccade direction, as different colors or shades when constructing scanpaths. Image Rescaling changes the size of an image while preserving the content, a common step when using CNNs, e.g., Atyabi et al. (2023) rescaled their images from 1,680 × 1,050 pixels to 100 × 100. Extracting Image Patches also changes the size of the input image, but by cropping the image into smaller patches, mostly around fixations or certain AOIs. Finally, Fixation filtering involves limiting fixations above or below a certain duration, e.g., George and Routray (2016) limited their fixations to a minimum of 12 ms and a maximum of 100 ms, while David et al. (2019) limited them between 80 and 1,300 ms.

4.3.2.2 Network hyperparameters

A neural network set of hyperparameters depends on multiple factors, such as the size of the dataset, the model complexity, and the learning task. However, we are reporting the different hyperparameters to provide an overview since they are all related to scanpaths processing and passive gaze-based applications.

Batch Size determines the number of training samples used in one iteration. It can be optimized by evaluating different values, e.g., Smith et al. (2018) evaluated an MLP with batch sizes of 5, 10, 25, and 50. However, most publications used only one value, 64 and 100 being the most common. Number of Epochs refers to the total number of times that a network passes through the entire training dataset during the learning process. The most common values were 50, 100, and 500 epochs, while larger values, e.g., 1,000 and above, were not that common. Early Stopping is commonly associated with larger numbers of epochs to prevent the network from overfitting and for computational efficiency by avoiding unnecessary epochs. However, only six publications reported using early stopping. Regularization also helps prevent the network from overfitting. Dropout and L2 regularization were the two most common techniques. Dropout ignores randomly selected neurons during training, which helps prevent overfitting by ensuring that the network does not rely too heavily on any single neuron. The values 0.5 and 0.2 were the most commonly reported dropout rates across the different networks. L2 Regularization, sometimes called weight decay, adds a penalty to the loss function based on the magnitude of the neurons' weights. This penalty discourages the learning process from assigning too much importance to any single neuron. Loss Function evaluates how well the network was able to model the training data. Binary cross-entropy was by far the most common loss function and is used for binary classification problems. Cross-entropy is used for multi-class classification problems, while MAE and MSE were also reported, and they are typically used for regression problems where the goal is to minimize the difference between the predicted and actual values. Activation Function determines the output of the neural network. Sigmoid activation was the most reported function and is used for binary classification problems. Softmax is used for multi-class classification problems. ReLu was also used often because of the publications that used CNNs for feature extraction. Linear and Gaussian activation functions were also reported, and they are used for regression problems. Learning Rate determines the step size toward finding the minimum loss value. The most common learning rate was 0.001, followed by 0.1, which was also the largest reported learning rate. Other less common values ranged from 1e−6 up to 0.05. Optimization Algorithm changes the network parameters to reduce the loss, and the Adam optimizer (Kingma and Ba, 2017) was almost exclusively used by all of the publications.

4.3.2.3 Network architectures

For the network architectures, we report them based on whether the network was used for feature extraction or for making a prediction. We start with the networks used for feature extraction. Xia et al. (2021) used the Autoencoder by Krizhevsky and Hinton (2011) to extract features from a dataset comprising 3,378 samples. The Autoencoder had an encoder composed of five fully connected layers, each with sizes of 675, 4,096, 2,048, 1,024, and 512 sequentially. This was followed by a bottleneck layer and, finally, a decoder that mirrored the encoder's structure but in reverse order. For regularization, they applied a weight decay of 0.0002. They evaluated using 50 and 100 epochs and batch sizes of 100 and 200. However, using convolutional layers was more common for feature extraction. Fuhl et al. (2019) used a GAN composed of a generator with multiple convolutional and deconvolutional layers, and a discriminator with convolutional layers to convert 5,000 scanpaths into emojis. They used the Cycle Consistency Loss function and a batch size of one. Chung et al. (2018), Castner et al. (2020), and Kanhirakadavath and Chandran (2022) all used CNNs for their feature extraction. All four publications, i.e., Chung et al. (2018); Fuhl et al. (2019); Castner et al. (2020), and Kanhirakadavath and Chandran (2022) essentially followed a similar structure by having multiple convolutional layers, each followed by a mixture of ReLu activation, Max Pooling, or Batch Normalization. They all used Dropout functions and Fully Connected layers, aside from Castner et al. (2020), who used a VGG-16 (Simonyan and Zisserman, 2015) network pre-trained on the ImageNet dataset (Deng et al., 2009), but without a Dropout or a Fully Connected layer. Li et al. (2021) were the only ones to use a combination of convolutional and recurrent layers in their framework called OBF. OBF consisted of an encoder and four decoders. The encoder had a single convolutional layer followed by a Leaky ReLu, Average Pooling, and two Gated Recurrent Units (GRUs) (Cho et al., 2014). Each decoder had two GRUs followed by Batch Normalization, Sigmoid Activation, and a Contrastive Learning Siamese Network, which is a neural network that encodes two scanpath segments using identical subnetworks to pull similar scanpath segments closer in the feature space and pushes dissimilar ones apart.

Using neural networks for predictions was more common. Lethaus et al. (2013) and Li et al. (2022) used shallow ANNs, with Li et al. (2022) using a linear activation function and Bayesian regularization. Benson et al. (2012) used a PNN, and George and Routray (2016) and French et al. (2017) used RBF and FFBP Networks, respectively. However, RNNs, CNNs, and MLPs were much more common. For the reported RNNs, all of them used LSTMs (Hochreiter and Schmidhuber, 1997) aside from David et al. (2019) who used GRUs with a Gumbel Softmax activation followed by Log Transformation. The reported LSTM networks usually had either one or two layers, each followed by a mixture of Batch Normalization, Dropout, and Sigmoid activation, before final fully connected layers. Liaqat et al. (2021) were the only exception with five LSTM layers. All of the used LSTMs used an internal Tangent activation function. The publications used different LSTM sizes, but 512 (Alghofaili et al., 2019), 200 (Xia et al., 2021; Castner et al., 2022), and 128 (Chung et al., 2018; Xu et al., 2018) were common values. The reported CNNs were quite similar, consisting of a mixture of two to four convolutional layers, each followed by a mixture of ReLu, Dropout, and Max Pooling functions, then fully connected layers before the final activation function. The only exceptions were Atyabi et al. (2023), who used 32 convolutional layers, and Liaqat et al. (2021), who used a pre-trained ResNet-18 (He et al., 2015). The VTNet from Sims and Conati (2020) was also interesting because they combined a CNN that processed scanpath images in parallel to a GRU that processed the raw gaze data. The 2-layer CNN and the 1-layer GRU with 256 units were combined afterwards using two fully connected layers and a Softmax activation. The reported MLPs were not often provided with a lot of details. Smith et al. (2018), Li et al. (2021), and Liaqat et al. (2021), all reported similar architectures of using two or three layers each followed by ReLu, Batch Normalization, and Dropout, before a final fully connected layer.

4.3.3 Model evaluation

Across all 77 publications, multiple publications reported multiple model evaluation metrics. However, they all focused on a specific metric either in the abstracts, discussions, or conclusions, so we noted the main metrics from the different publications. Accuracy was the choice of most publications, as it was reported 44 times, followed by Area under the ROC Curve (AUC) score being reported seven times. Other notable mentions are the F1 score (n = 5) and Recall, i.e., True Positive Rate (TPR) (n = 4). Error rates were also used, especially for evaluating clustering algorithms, which include Mean Absolute Error (MAE), Mean Squared Error (MSE), Normalized Root Mean Squared Error, and Root Mean Squared Error.

5 Discussion

In this review, we presented an overview of the current state of using ML for processing scanpaths in passive gaze-based applications extracted from 77 publications from 2012 to 2022. The overview consisted of two main parts: the first focused on the possible research domains and learning tasks, while the second focused on the different stages of the ML workflow, shown in Figure 6. In this section, we discuss both parts to answer the questions raised in the introduction.

5.1 Q1. What research domains have used machine learning to analyse scanpaths, and what passive gaze-based learning tasks have they focused on?

Based on the specific ML task for which the scanpaths were analyzed, we categorized the publications under two dimensions: research domains and learning tasks. We had a total of five research domains and 11 learning tasks, and each publication was categorized under at least one domain and one learning task. The five research domains were: Education, Healthcare, Psychology, Information Technology, and Other Domains. Other Domains includes Driving, Aviation, Maritime, Marketing and Product Design, and Linguistics, but they each had less than three publications, so we combined them under one group. The 11 learning tasks were: Activity, or Stimuli Inference, Experience, Gender, or Age Categorization, Attention Monitoring, Emotion, or Face Perception/Recognition, Reading, Cognitive Modeling, Psychiatric, Mood, Learning, or Neurodevelopmental Disorders, Medical Decision Support System, User Identification, Gaze Prediction, and Other Tasks. Other Tasks includes Security and Privacy, Product Design Evaluation, User Experience Evaluation, and Visual Search, but they each had less than three publications, so we combined them under one group.

Looking at each dimension separately does not provide the full picture of the research landscape. Figure 11 shows a heatmap of the intersection and distribution of the learning tasks across the research domains. We see that each domain has one or two learning tasks where most of the contribution was focused. The majority of Psychology publications focused on Activity, or Stimuli Inference tasks, with 15 out of 19 Activity, or Stimuli Inference publications being psychology-related, while the remaining four publications, i.e., Lethaus et al. (2013); Srivastava et al. (2018); Fuhl et al. (2019), and Peysakhovich et al. (2022) focused on predicting the task or stimuli without further investigation of the underlying visual behavior. Information Technology was the only research domain seen across all the learning tasks, except for Medical Decision Support System. We found that multiple publications focused more on the technological implementation (n = 15 Publications) for different learning tasks and only used the task as a way to evaluate the technology, which is why we categorized these publications under Information Technology; this caused it to have a large intersection with other research domains across the different learning tasks. Both Information Technology and Psychology show quite similar behavior in the distribution of a few learning tasks, mainly caused by publications on Human-Computer Interaction (HCI); this can be seen in Activity, or Stimuli Inference, which was also the most common learning task within Information Technology, and in Other Cognitive Modeling and Reading. Healthcare had all the Medical Decision Support System publications, a large number of Experience, Gender, or Age Categorization publications due to the focus on healthcare professionals' visual behavior, and all of Psychiatric, Mood, Learning, or Neurodevelopmental Disorders publications except for Król and Król (2019) because they focused on understanding the difference between ASD and Typically Developing (TD) children in their face-scanning patterns, and not on pure diagnosis. We initially expected Psychiatric, Mood, Learning, or Neurodevelopmental Disorders publications to be fully categorized under Psychology; however, most of them did not focus on studying the actual underlying visual behavior or cognitive process associated with each task, but rather on the diagnosis. Education had the majority of Experience, Gender, or Age Categorization publications; this is because most of the publications focused on differentiating between different experience levels to provide insights to improve the learning process. Four out of the seven publications categorized under Other Domains focused on Attention Monitoring.

Our two-dimensional categorization is quite similar to the approach followed in the seminal research work of Duchowski (2002), but with a few key differences. Regarding the research domains, we did not encounter neuroscience publications, i.e., publications that focus on the neural components of vision. We had three publications that used eye tracking alongside EEG devices (Shi et al., 2017; Giannakos et al., 2019; Moon et al., 2021), but they did not qualify as such because they focused on various tasks and not on how the changes in one signal translated to the other signal and so on. In addition, Duchowski (2002) had Industrial Engineering and Human Factors as a domain, but we split it into its components of Aviation and Driving. Lastly, we did not want to name a domain as Computer Science because all of our publications fall under computer science with their focus on ML; we opted for Information Technology as a substitute to avoid causing confusion. Duchowski (2002) stated that broader applications will emerge with the improvement in computer and graphical systems. This statement holds true and can be seen in our categorization of more than eleven distinct passive gaze-based applications across our review. Our tasks differ from their task categorization because we focused on what the authors wanted to infer from the ML algorithms and not the actual visual activity the participants were undergoing; for example, publications on Scene Perception were mostly categorized under Activity, or Stimuli Inference or Attention Monitoring. However, we had a lot in common, such as reading and visual search tasks.

5.2 Q2. Which research domains and passive gaze-based learning tasks have yet to use machine learning in scanpath processing?

After identifying the research domains and learning tasks, we saw that there were research domains that have yet to use ML in scanpath processing for passive gaze-based learning tasks. This section highlights some domains and tasks that we think could warrant more focus in future research.

Regarding the research domains, Sports is an interesting domain for eye tracking to understand decision-making for both players and referees, but surprisingly, despite finding sport-related publications in our initial pool, none of them used ML to process scanpaths. Gaming is also quite popular as an important application domain, with certain eye trackers being marketed toward gaming purposes,14 but we were unable to find hits, even in the initial pool of publications. Aside from completely missing domains, we expected to find more publications on Linguistics, but we were only left with just one publication. We can see that a lot of domains still have a large room for exploration where ML processing of scanpaths might be a suitable solution. Multidisciplinary publications, i.e., publications categorized under more than one domain, could also be a promising research direction. The UpSet plot in Figure 4 shows that 52 publications out of 77 were categorized under just one domain. This can be further supported by the fact that most of the remaining 25 publications had an intersection with Information Technology due to focusing mainly on the technology rather than the task, which left even fewer pure multidisciplinary publications. Education and Healthcare had a total of six common publications, which was due to Experience, Gender, or Age Categorization publications that focused on how different levels of experience affect the visual behavior of healthcare professionals. This opens two possible future research directions, one direction where the learning task could be utilized in exploring the differences between professionals in other domains aside from Healthcare to see if the established findings can be generalized and transferred to other professions. The other direction focuses on exploring different learning tasks in the Education domain as it still has a large room for improvement given that it was the research domain with the least diversity.

Regarding the learning tasks, Evaluating Product Design and User Experience only had two publications despite being an active area for scanpath analysis in general. This could mean they still depend on manual evaluation without ML automation to infer insights. Extended Reality (XR) publications were also not as common as we had initially expected, especially with the advances in headsets with integrated eye tracking, which increased the popularity of their intersection (Plopski et al., 2022). We only had three publications that used Virtual Reality (VR). Alghofaili et al. (2019) focused on predicting if a participant needed help in navigating a virtual environment, Kim et al. (2022) focused on stress and cognitive load monitoring, and Xu et al. (2018) focused on scanpath prediction. Scanpaths have the benefit of retaining temporal information; if the order of actions is important, then scanpaths offer valuable insights. We believe that the intersection of XR applications, both VR and Augmented Reality (AR), and scanpath processing could open opportunities for both domains and tasks; it also presents opportunities for research into solving technical challenges regarding adapting current efforts to 3D setups. There are already multiple available toolkits that could help in this regard. Kapp et al. (2021) presented ARETT, a toolkit for reliable eye tracking data acquisition in AR; it was later tested in visual Attention Monitoring and identifying the objects in a user's view in an AR setup (Barz et al., 2021b). Evangelista Belo et al. (2022) presented AUIT, a toolkit for designing adaptive user interfaces (UIs) in XR, which can be extended to work with gaze data. Creating adaptive UIs is an interesting research area because it can make use of ML algorithms that use various passive gaze-based cues to change the UI in response. For example, any of the tasks in Other Cognitive Monitoring, e.g., stress, can be a trigger to change the UI in a way to facilitate the user's experience; or Experience, Age, or Gender Categorization tasks, e.g., detecting the age group of the user to present a different UI to children, teenagers, adults, and seniors in a way to keep them safe and offer them a tailored experience. This shows that certain tasks still have room for further investigation and that other tasks are more mature, which also allows for investigating novel ML methods since you could compare them against already established benchmarks.

5.3 Q3. What are the commonly followed ML practices, in line with a general ML workflow, that have been used for scanpath analysis in passive gaze-based applications?

We present our answer to this question following our general workflow shown in Figure 6. For each section, i.e., data curation, learning data preparation, and learning process, we summarize the key takeaways that can be used as guidelines for future investigations.

5.3.1 Data curation

For the data curation, we focused on three main points: the eye tracker frequency, the number of study participants, and the participants' demographic information. We found that the majority of publications, i.e., 59 out of 77, preferred to collect their own datasets. However, some publications used already available datasets, which we summarized in Table 1.

Across all the reported datasets, we found that 60 Hz was the most frequently used eye tracking frequency, followed by 250 and 1,000 Hz. Using frequencies <60 Hz was not that common, with only 25% of datasets using frequencies <60 Hz. Eye trackers operating at lower frequencies are often less expensive, but they could lead to sampling errors and not being able to compute certain features accurately. Andersson et al. (2010) argued that sampling errors can be mitigated by collecting more samples but cease to be a problem for frequencies above 200 Hz. Analysis of fixations becomes more stable from 60 Hz, but to correctly detect and analyze saccades, the eye tracker should have at least a 120 Hz sampling frequency (Leube et al., 2017), while some saccade-based features would require frequencies above 200 Hz (Andersson et al., 2010). Sixty Hz might be the most common due to being more affordable, but to avoid these issues, we would argue that an eye tracker of 250 Hz would be better to avoid sampling errors and if we needed to study fast eye movements and saccade-based features.

For the number of participants, we found that collecting data from up to 40 participants was the most frequent strategy, with 24 participants being the most popular choice. However, Healthcare publications preferred larger datasets with up to 80 participants. We cannot provide a one-size-fits-all answer because it depends on the number of collected samples, the research question, the study design, and additional factors such as available time and funding. The rule that is mostly followed for eye tracking studies related to usability testing is to collect data from at least 30 participants (Eraslan et al., 2016), but similar statements cannot be made across the full landscape. The provided numbers are just an overview of the field of what is commonly found across the literature, but for a more informed decision to compute the number of participants, we would recommend reading publications such as (Martínez-Mesa et al., 2014; Brysbaert, 2019). In addition, the Supplementary material has full details for more detailed insights toward the number of participants used by each domain or learning task.

We found inconsistencies in reporting demographic information across the reviewed literature, such as the participants' genders, nationalities, and ages. Reporting this information could benefit future use of the datasets, e.g., focusing on use cases for certain genders or age groups. We recommend using checklists such as the one from Dunn et al. (2023), which provides a list of information that should be included when reporting eye tracking studies and the reasons behind their inclusion.

5.3.2 Learning data preparation

For the learning data preparation, we focused on two main points: how scanpaths are formatted as suitable inputs to an ML algorithm and how the data is split into training, testing, and, sometimes, validation subsets.