- Think Tank ISE Group, Kyiv, Ukraine

The increasing integration of artificial intelligence (AI) into digital communication platforms has significantly transformed the landscape of information dissemination. Recent evidence indicates that AI-enabled tools, particularly generative models and engagement-optimization algorithms, play a central role in the production and amplification of disinformation. This phenomenon poses a direct challenge to democratic processes, as algorithmically amplified falsehoods systematically distort political information environments, erode public trust in institutions, and foster polarization – conditions that degrade democratic decision-making. The regulatory asymmetry between traditional media – historically subject to public oversight – and digital platforms exacerbates these vulnerabilities. This policy and practice review has three primary aims: (1) to document and analyze the role of AI in recent disinformation campaigns, (2) to assess the effectiveness and limitations of existing AI governance frameworks in mitigating disinformation risks, and (3) to formulate evidence-informed policy recommendations to strengthen institutional resilience. Drawing on qualitative analysis of case studies and regulatory trends, we argue for the urgent need to embed AI-specific oversight mechanisms within democratic governance systems. We recommend a multi-stakeholder approach involving platform accountability, enforceable regulatory harmonization across jurisdictions, and sustained civic education to foster digital literacy and cognitive resilience as defenses against malign information. Without such interventions, democratic processes risk becoming increasingly susceptible to manipulation, delegitimization, and systemic erosion.

1 Introduction

1.1 Empirical insights into disinformation dynamics

A growing body of empirical research has illuminated how disinformation proliferates across digital ecosystems, driven by a complex interplay of user psychology, algorithmic design, media structures, and emerging technologies. Rather than stemming from a single source or mechanism, disinformation spreads through multiple, reinforcing channels – each of which has been rigorously studied in recent years.

Vosoughi et al. (2018) conducted a landmark analysis of ~126,000 Twitter cascades and found that false news diffused “significantly farther, faster, deeper, and more broadly” than true news across all topics. False stories were typically more novel and emotionally evocative (e.g., inducing surprise or disgust), which likely made people more inclined to share them. Notably, this disparity was driven by human behavior rather than bots: the authors observed that automated accounts amplified true and false news at similar rates, implying that human users are more prone to spread falsehoods. This early large-scale evidence revealed a fundamental vulnerability in the online information ecosystem – sensational misinformation appeals to users and propagates rapidly, posing a challenge for truth to keep up.

While humans are the primary propagators of viral falsehoods, subsequent research showed that automated “bot” accounts still play a significant amplifying role. Shao et al. (2018) analyzed 14 million tweets sharing hundreds of thousands of low-credibility news articles around the 2016 U.S. election and found that social bots had a disproportionate impact on spreading misinformation. In the crucial early moments of an article’s life cycle, bots would aggressively spread links from false or low-quality sources – even targeting influential users via replies and mentions – to jumpstart viral momentum. Humans often then unwittingly reshared this content introduced by bots. Shao et al. conclude that curbing orchestrated bot networks could help dampen the initial wildfire-like spread of false stories online. In short, platform manipulation by bots was shown to boost the reach of fake news, even if the ultimate decisions to share lay with human users.

On Facebook, large-scale data suggests misinformation sharing is highly skewed to certain segments of the population. Guess et al. (2019) tracked people’s Facebook activity during the 2016 election and found that only a small fraction of users accounted for the majority of fake news shares. Importantly, those most likely to share false news stories were disproportionately older adults: Americans over 65 shared several times more fake news articles on average than younger age groups, even after controlling for ideology and other factors. This robust age effect was not simply because older people use Facebook more or are more conservative – it persisted independent of partisanship. The findings point to specific demographic vulnerabilities in the spread of online misinformation. They suggest that digital media literacy gaps (particularly among seniors who did not grow up with the internet) could be a driving factor, and that interventions might be needed to support those populations. In sum, the propagation of fake news on social platforms is not uniform; it concentrates among certain user groups, which has implications for targeted counter-misinformation strategies.

Beyond user behavior and platform mechanics, the integrity of the information supply itself can contribute to widespread misinformation. Focusing on the COVID-19 pandemic, Parker et al. (2021) interviewed scientists and health communicators to identify systemic drivers of COVID misinformation in scientific communication. They found broad concern that certain failures in the scientific and publishing system – such as the publication of low-quality or biased research, limited public access to high-quality findings, and insufficient public understanding of science – greatly facilitated the spread of false or misleading health claims. For example, if preliminary or flawed studies are hyped without rigorous peer review, they can seed misinformation in public discourse. Parker et al. note that participants advocated a range of structural solutions: strengthening research standards and peer review, incentivizing careful science communication (e.g., translating findings for lay audiences), expanding open access to credible research, and leveraging new technologies to better inform the public. There was even debate over the role of preprints – whether they exacerbate misinformation or help by rapidly disseminating data. The study’s conclusions underscore that systemic failings in how science is produced and communicated can fuel misinformation crises. Thus, bolstering the transparency, rigor, and accessibility of scientific information is seen as a necessary policy response to prevent future infodemics. This complements the platform-focused studies by showing that the misinformation problem is also rooted in the upstream information ecosystem.

Encouragingly, empirical research has started to identify practical interventions to reduce the spread of disinformation/misinformation. Pennycook et al. (2021) demonstrated through large-scale experiments that simple behavioral “nudges” can significantly improve the quality of content people share online. They found that when social media users are subtly prompted to consider the accuracy of a news headline before deciding to share it, their likelihood of sharing false or misleading headlines drops substantially. In other words, many people do not intend to spread misinformation; rather, they often fail to think critically about truthfulness in the rush of online sharing. Pennycook et al.’s participants overwhelmingly said that sharing only accurate news is important to them, and the intervention helped align their sharing behavior with that value. This approach – shifting users’ attention toward accuracy at key moments – proved effective across the political spectrum and did not rely on any partisan framing. The broader implication is that social platforms could implement low-cost, scalable accuracy checks or prompts to nudge users toward more mindful sharing. Such measures, informed by behavioral science, offer a promising complement to algorithmic or fact-checking-based solutions. They support the case for industry and policy initiatives that embed “friction” or reflection into the sharing process as a way to stem the tide of misinformation.

Finally, the advent of advanced AI technologies is transforming the misinformation landscape, raising new concerns that justify proactive policy intervention. A growing body of interdisciplinary research and case evidence documents emerging AI-driven disinformation techniques – from deepfake media to AI chatbots – and warns of their potential to dramatically amplify false narratives. Notably, today’s state-of-the-art generative models (large language models) can produce politically relevant false news content that humans often cannot distinguish from real news. In one evaluation, some AI-generated election disinformation was indistinguishable from authentic human-written journalism in over half of instances. Moreover, AI systems can now mimic real individuals with uncanny realism; for example, language models have been shown to imitate the style of politicians or public figures with greater perceived authenticity than the persons’ actual statements. Similar progress in deepfake video and audio means that one can forge convincing videos or voice recordings of public figures at scale. These developments lower the barrier for malicious actors to generate and disseminate false content on a massive scale, and they blur the boundaries between truth and fabrication in ways that could easily deceive the public. The rise of AI-generated disinformation has made the problem more acute than ever, necessitating robust countermeasures – from better detection technologies (e.g., deepfake detection, content provenance) to updated regulations on AI use – to safeguard information integrity. In short, the evolving capabilities of AI demand an equally evolving policy response.

Collectively, these empirical studies reinforce the urgent need for intervention at multiple levels – platform governance, user behavior, information integrity, and algorithmic transparency – to effectively counter AI-driven disinformation. The findings not only validate the systemic nature of the problem but also highlight concrete mechanisms through which disinformation proliferates and can potentially be mitigated. By grounding our analysis in this growing body of research, we set the stage for a more granular examination of how specific disinformation modalities – such as deepfakes, bots, and synthetic personas – operate across different scales. The next section builds on these insights by categorizing disinformation risks and mapping their observable dynamics against global benchmarks to inform more targeted policy responses.

1.2 Mapping disinformation risk by category and scale

The rapid expansion of global internet and social media usage has substantially increased the surface area vulnerable to AI-driven disinformation campaigns. As of April 2025, an estimated 5.64 billion individuals – approximately 68.7% of the world’s 8.21 billion population – were active internet users (DataReportal, 2025a, 2025b). Simultaneously, 5.31 billion social media accounts were in use, representing 64.7% of the global population (DataReportal, 2025a, 2025b). Average daily engagement remains high: users spent between 143 and 147 min per day on social media platforms during early 2025 (SOAX, 2025; Exploding Topics, 2025; BusinessDasher, 2024).

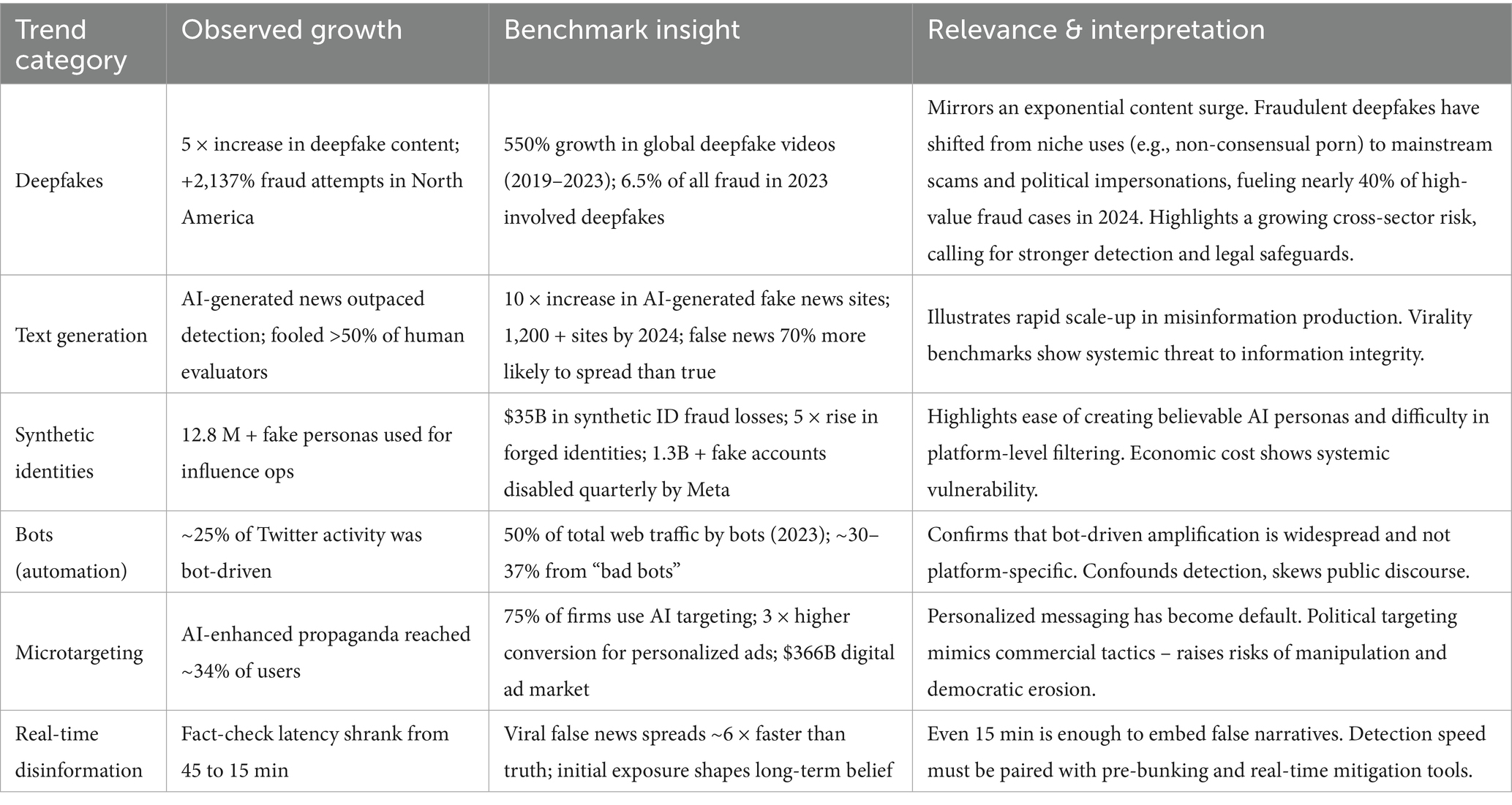

This level of global digital saturation offers a fertile environment for disinformation to propagate rapidly, especially as generative AI systems enable low-cost, scalable content production and targeting. Against this backdrop, it becomes imperative to benchmark observed disinformation trends against these broader usage patterns. The sections below present category-specific trends – ranging from deepfakes and bots to synthetic identities and real-time disinformation – contextualized within global benchmarks to assess scope, scale, and systemic risk (see Table 1).

Broadly, the benchmark comparisons confirm that observed trends are part of a larger, accelerating wave of AI-fueled disinformation. In many cases, the specific increases noted (e.g., a fivefold rise in deepfakes, or tens of millions of fake identities) mirror global surges in those phenomena (NewsGuard, 2024; Sumsub, 2024). This alignment strengthens our confidence that the trends are real and significant – not just isolated anomalies – and underscores the urgency for interventions.

Several notable insights emerge from examining the metrics in light of benchmarks:

The deepfakes category illustrates an arms race between AI capabilities and detection. A 5× local increase in deepfake content is contextualized by steep global growth (550% since 2019) in known deepfake videos (Security.org, 2024; MarketsandMarkets, 2024). Crucially, deepfakes are transitioning from niche uses (mostly pornographic content) to mainstream weaponization in scams, politics, and malign influence. Meanwhile, the volume of deepfakes online is growing exponentially – around half a million deepfake videos were shared on social media in 2023, and projections show up to 8 million by 2025. This contest between deepfake generators and detectors underscores the urgent need for countermeasures (Moore, 2024). The fact that over 6% of fraud incidents now involve deepfakes (ACI Worldwide, 2024) signals to policymakers that this is no longer a theoretical threat – it is actively undermining financial and information integrity. Implication: There is a pressing need for better deepfake detection tools and regulatory frameworks. Policy options include mandating provenance watermarks for AI-generated media and criminalizing malicious deepfake use (European Parliament, 2022). Investing in R&D for detection (e.g., deepfake forensics, authenticated media pipelines) is critical. The benchmarks also suggest international cooperation is needed: deepfake fraud and disinformation are rising across all regions (e.g., +1740% in North America, +780% in Europe in one year) (Redline Digital, 2023), so solutions must be global in scope.
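The figures above mix two notations: fold changes (5×) and percentage increases (550%, +1740%, +780%). The short Python sketch below puts them on one scale. It assumes each percentage is an increase over the starting value, which the cited sources may or may not intend; the function names and dictionary labels are illustrative only.

```python
def percent_increase_to_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a fold multiplier.

    A +100% increase means the quantity doubled (2x), so the
    multiplier is 1 + percent / 100.
    """
    return 1.0 + percent_increase / 100.0


def multiplier_to_percent_increase(multiplier: float) -> float:
    """Convert a fold multiplier (e.g. 5x) into a percentage increase."""
    return (multiplier - 1.0) * 100.0


# Percentages quoted in the text; the labels are for display only.
quoted_increases = {
    "global deepfake videos since 2019": 550.0,
    "deepfake fraud, North America (one year)": 1740.0,
    "deepfake fraud, Europe (one year)": 780.0,
}

multipliers = {
    label: percent_increase_to_multiplier(pct)
    for label, pct in quoted_increases.items()
}

for label, mult in multipliers.items():
    print(f"{label}: +{quoted_increases[label]:.0f}% = {mult:.1f}x")

# The 5x local increase expressed as a percentage increase.
local_pct = multiplier_to_percent_increase(5.0)
print(f"5x local increase = +{local_pct:.0f}%")
```

Under that assumption, a 550% increase corresponds to 6.5 times the 2019 level, while the 5× local increase corresponds to +400%, so the two figures are of similar magnitude but not identical.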

1.2.1 Generative text oversupply

The text generation trends show AI-written disinformation now spreads widely and often evades traditional detection. Our benchmarks confirm that AI-driven “fake news” sites grew tenfold in one year (NewsGuard, 2024), flooding the infosphere with low-cost, algorithmically generated propaganda. This mass production of content, combined with the known fact that falsehoods spread faster than truths on social networks (Vosoughi et al., 2018), creates a perfect storm: even moderately convincing AI fakes can achieve wide circulation before fact-checkers can respond. Implication: Traditional moderation (reactively deleting false posts) may be insufficient – we need proactive and AI-assisted countermeasures. Possibilities include AI content provenance checks, real-time content authenticity scoring, and “counter-LLMs” deployed to detect AI-generated text patterns. Policies encouraging transparency (such as requiring labeling of AI-generated political ads or narratives) could help. The stark benchmark that NewsGuard already counted 1,200+ AI content websites by 2024 also raises the issue of media literacy: users must be educated to scrutinize sources, as many websites may now be effectively “content farms” run by bots or generative models. The lack of inferential controls in our data is mitigated by these comparisons – it is clear that the rise in AI text disinformation we observed is part of a systemic transformation of the information landscape.

1.2.2 Scale of fake personas

Millions of synthetic identities are reportedly deployed in disinformation campaigns, and benchmarks reinforce how widespread this tactic has become. The Federal Reserve (Boston Fed, 2024) reports $35 billion in losses in 2023 from synthetic identity fraud (often related to financial crimes). While financial fraud is one aspect, the same fake persona generation techniques are being repurposed for information operations – fake activists, fake journalists, fake grassroots groups. The 5× jump in digital identity forgeries in just two years underscores the impact of generative AI in automating the creation of credible-looking personas (complete with AI-generated profile photos and even “deepfake voices”) (Sumsub, 2024). Implication: From a policy perspective, strengthening digital identity verification is key. Social media platforms and messaging services may need stricter “know-your-customer” rules for high-reach accounts or for political advertisers, to prevent large-scale bot armies with AI faces from multiplying. There is a trade-off with privacy/anonymity, but benchmarks show the pendulum has swung toward chaos – millions of fake identities can now be marshaled to distort the public discourse. Improving authentication (blue-check verification reforms, multi-factor checks for accounts) and tracking coordinated fake account networks (Meta, 2023) should be a priority. Additionally, collaborative efforts like sharing bot/fake account blacklists across platforms could reduce cross-platform abuse.

1.2.3 Pervasive bot automation

In the Bots category, the estimate (~25% of social media interactions in some domains are driven by bots) is backed by global data showing nearly half of all web traffic is non-human (Imperva/Statista, 2024). This indicates that what we observe on one platform (e.g., a quarter of Twitter content being bot-driven) is symptomatic of a wider phenomenon. Bots – especially “bad bots” – are now deeply entrenched in social media ecosystems, often orchestrated to amplify disinformation (Zhang et al., 2023). For instance, during health crises and elections, researchers have found bot networks boosting polarizing or false narratives (Shao et al., 2018). Implication: The prevalence of bots calls for strengthening platform defenses and perhaps regulatory oversight on transparency. Platforms should be encouraged (or required) to detect and label bot accounts (e.g., through robust use of tools like Botometer or in-house AI systems) and to shut down coordinated inauthentic networks proactively. Benchmarks show even verified channels can be co-opted by bots (e.g., the reported “verified bot” problems on Twitter/X), so verification processes need updates to stay ahead of AI-fueled fakery. From a policy angle, one idea is introducing “bot disclosure” laws – requiring automated accounts to self-identify as such – which some jurisdictions have explored. Additionally, research must continue on distinguishing AI-driven behavior from human behavior online; the battle is dynamic (as bots get more human-like with AI, detection must get more sophisticated).

1.2.4 Microtargeting and personalized propaganda

The Microtargeting trends highlight that AI can tailor messages to exploit individuals’ psychological profiles at scale. The observed statistic – targeted campaigns influencing 34% of users – is plausible given that most advertisers already use AI-driven microtargeting and see greatly improved engagement. Benchmarks on ad performance (e.g., targeted ads yielding 10× higher click-through with retargeting) suggest why malicious actors also microtarget: personalized disinformation is simply more effective at persuading or manipulating than one-size-fits-all propaganda (AI Marketing Advances, 2023; Redline Digital, 2023). Implication: There are critical policy and ethical questions here. Should microtargeted political ads be limited or banned? (For example, the EU has considered restrictions on microtargeting voters with political messages.) At minimum, transparency measures are needed – users should know why they are seeing certain political or issue-based content, and researchers should have access to platform data on ad targeting (as enabled by ad libraries in the EU’s Digital Services Act). Moreover, digital literacy programs should teach citizens that the news or ads they see might be algorithmically curated specifically for them, which could help inoculate against blindly trusting tailored misinformation. Technical defenses might involve detecting when malicious actors use advertising tools or algorithmic boosting for propaganda – for instance, unusual patterns in ad buys or content that is disproportionately targeted at vulnerable groups could trigger audits. The benchmarks reinforce that microtargeting is not inherently nefarious (it’s now a standard marketing practice), but when used to propagate false or extremist content, its high success rate becomes a threat to the democratic discourse.
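One way to make the audit trigger mentioned above concrete is to compare each audience segment's share of a campaign's impressions with that segment's share of the overall audience. The Python sketch below is a minimal illustration, not a description of any platform's actual tooling: the `SegmentStats` fields, the `vulnerable` flag, the 2.0 threshold, and the example numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SegmentStats:
    name: str
    impressions: int          # impressions the campaign delivered to this segment
    baseline_share: float     # segment's share of the platform's overall audience
    vulnerable: bool = False  # hypothetical flag assigned by the auditor


def over_targeting_ratios(segments: list[SegmentStats]) -> dict[str, float]:
    """Return, per segment, campaign share divided by baseline share.

    A ratio of 1.0 means the segment was reached in proportion to its
    size; 3.0 means it was reached three times more than expected.
    """
    total = sum(s.impressions for s in segments)
    ratios: dict[str, float] = {}
    for s in segments:
        if total == 0 or s.baseline_share <= 0:
            ratios[s.name] = 0.0  # nothing delivered, or no baseline to compare with
        else:
            ratios[s.name] = (s.impressions / total) / s.baseline_share
    return ratios


def segments_to_audit(segments: list[SegmentStats], threshold: float = 2.0) -> list[str]:
    """Names of vulnerable segments whose ratio meets or exceeds the threshold."""
    ratios = over_targeting_ratios(segments)
    return [s.name for s in segments if s.vulnerable and ratios[s.name] >= threshold]


# Hypothetical campaign: 80% of impressions go to a group that makes up
# 20% of the audience.
example_segments = [
    SegmentStats("adults 18-64", impressions=2_000, baseline_share=0.80),
    SegmentStats("adults 65+", impressions=8_000, baseline_share=0.20, vulnerable=True),
]
flagged = segments_to_audit(example_segments)
print(flagged)
```

A ratio of this kind only signals concentration; whether the content itself is false or manipulative would still require human or editorial review.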

1.2.5 Speed of disinformation vs. response

The Real-Time Disinformation metrics indicate some progress – response times to viral falsehoods have improved (15 min on average in 2023, whereas in 2021 it often took 30–45 min or more for corrections to emerge) (Disinformation Research, 2023). However, when juxtaposed with the benchmark that false news can achieve massive spread in minutes, it’s evident that even a 15-min lag is problematic. Malicious actors capitalize on breaking news moments – for example, immediately after a disaster or major announcement – to inject falsehoods that ride the momentum before facts are verified. Our case of adversaries “weaponizing speed” is exemplified by real incidents (e.g., a fake news report causing brief market chaos before being debunked) (Hunter J., 2024). Implication: Real-time detection and intervention must be a centerpiece of counter-disinformation strategy. This could involve algorithms monitoring spikes in certain keywords or sentiment shifts that often accompany fake virality, and then alerting moderators or posting contextual warnings in near real time. Collaboration between platforms and independent fact-checkers/press agencies is also key – e.g., WhatsApp’s pilot projects with rapid rumor quashing, or Google working with health authorities to counter misinformation spurts. The 2023 landscape saw the emergence of community-driven efforts (like Community Notes on X/Twitter) that can sometimes provide corrective context within hours of a misleading post – a positive development, but still not fast enough in many cases. Going forward, “pre-bunking” strategies (releasing forewarnings about likely false narratives before they spread) and crisis-response communication protocols (so that official sources can flood the zone with accurate info quickly during emergencies) are needed. The improvements from 45 → 15 min show it’s possible to shorten reaction time; benchmarks like the MIT study (false news 6× faster) show we must get even faster (Vosoughi et al., 2018). Policymakers might support the creation of real-time misinformation monitoring centers, possibly run by coalitions of tech companies, civil society, and governments, to coordinate swift responses to emerging disinformation campaigns.
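The keyword-spike monitoring described above can be sketched with a trailing-window z-score. The Python example below is only an illustration under stated assumptions: the per-minute mention counts are made up, and the window length, the threshold of 3.0, and the floor on the standard deviation are arbitrary choices rather than values drawn from any cited study.

```python
from statistics import mean, pstdev


def spike_indices(counts, window=6, z_threshold=3.0):
    """Return indices whose count exceeds the trailing-window mean by at
    least z_threshold standard deviations."""
    flagged = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        # Floor sigma at 1.0 so a flat history neither divides by zero
        # nor turns tiny fluctuations into alerts.
        sigma = max(pstdev(history), 1.0)
        z = (counts[i] - mu) / sigma
        if z >= z_threshold:
            flagged.append(i)
    return flagged


# Hypothetical mentions per minute of a monitored keyword.
mentions_per_minute = [4, 5, 3, 6, 4, 5, 5, 4, 48, 95, 60]
alerts = spike_indices(mentions_per_minute)
print(alerts)  # minutes at which moderators would be alerted
```

With these inputs the sketch flags minutes 8 and 9, when the surge begins, and stops flagging once the elevated counts have entered the trailing window. A volume spike says nothing about whether the underlying claim is false, so an alert of this kind would serve as a prompt for human fact-checking rather than for automatic removal.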

In summary, benchmarking the observed disinformation trends against global data confirms that these patterns are not isolated incidents but part of a widespread and accelerating phenomenon. While the present analysis does not employ formal inferential controls, the convergence with international benchmarks offers external validation of the identified trends. For instance, a fivefold local increase in deepfake content closely parallels a tenfold global rise, reinforcing the urgency of addressing this threat. Likewise, the proliferation of AI-generated news sites and synthetic identities highlights the systemic nature of disinformation vectors across technological, social, and political domains. These benchmark comparisons provide essential interpretive context, clarifying which categories represent the highest systemic risk and guiding where targeted interventions may be most effective.

Building on these findings, the following section critically examines the existing policy landscape and mitigation strategies. It assesses the strengths and limitations of current regulatory approaches, identifies persistent gaps, and outlines evidence-based recommendations to enhance societal and institutional resilience. Given the transnational character of AI-enabled disinformation, the analysis emphasizes the need for coordinated, forward-looking governance mechanisms that are both adaptive and inclusive.

2 Sections on assessment of policy/guidelines options and implications

2.1 Global landscape of AI regulations and country specific cases

The development and implementation of artificial intelligence (AI) regulations have gained significant momentum worldwide. Since 2017, 69 countries have collectively adopted over 800 AI regulations (World Health Organization, 2018). Regulations aim to address issues such as bias, discrimination, privacy, and security. Most regulatory frameworks seek to foster AI development while safeguarding individual rights and societal interests as well as fighting disinformation. Some regulations, like the EU AI Act, will apply to AI systems used within their jurisdiction, regardless of the provider’s location (European Parliament, 2022).

2.1.1 European Union – the AI Act (2024)

The EU’s Artificial Intelligence Act is a comprehensive risk-based framework that categorizes AI applications by risk level. It bans certain “unacceptable risk” uses of AI (for example, social scoring and real-time biometric surveillance in public), imposes strict requirements on “high-risk” systems (such as those used in critical infrastructure or law enforcement), and places minimal restrictions on low-risk applications. Importantly, the AI Act includes transparency mandates relevant to disinformation: providers of generative AI must disclose AI-generated content and ensure compliance with EU copyright laws. High-impact general-purpose AI models will face additional evaluations. The Act was adopted in 2024, with most provisions enforceable starting in 2026, and is supported by significant EU funding (around €1 billion per year) to encourage compliant AI development. Notably, the regulation has extraterritorial reach, applying to any AI system used in the EU market regardless of the provider’s origin.

2.1.2 United States – sectoral and state-level approach

The United States lacks a single comprehensive AI law at the federal level. Instead, it relies on a patchwork of initiatives and guidelines. The National Artificial Intelligence Initiative Act of 2020, which took effect in January 2021, established a coordinated strategy for federal investment in AI research and development, and the National Institute of Standards and Technology (NIST) released an AI Risk Management Framework to promote trustworthy AI practices. However, there is no dedicated federal law addressing AI in areas like privacy or disinformation. Enforcement is carried out through sector-specific regulations and state and local laws – for example, New York City’s Local Law 144 (effective July 2023) regulates the use of AI in hiring decisions, and the Federal Trade Commission has warned it will police deceptive commercial uses of AI under its broad consumer protection mandate. This decentralized approach has led to gaps and inconsistencies. The absence of a federal AI-specific privacy or content law means digital platforms in the U.S. primarily govern AI-driven disinformation through their own policies, under general oversight such as anti-fraud and election laws. The result is a less uniform defense against AI misuse, as rules can differ significantly by state and sector.

2.1.3 Canada – the artificial intelligence and data act (proposed 2022)

Canada has pursued a balanced approach with its proposed Artificial Intelligence and Data Act (AIDA), introduced in June 2022 as part of a broader Digital Charter Implementation Act. AIDA would establish common requirements for AI systems, especially high-impact applications, focusing on principles like transparency, accountability, and human oversight. As of late 2024, AIDA has not yet been passed into law. In the meantime, Canada has invested heavily in AI innovation (over $1 billion by 2020) and released guidelines such as the Directive on Automated Decision-Making for government use of AI. The intent is to encourage AI advancement (Canada is home to a robust AI research community) while guarding against harms like biased or unsafe AI outcomes. If enacted, AIDA is expected to introduce mandatory assessments and monitoring for risky AI systems, which could encompass tools that spread or detect disinformation.

2.1.4 United Kingdom – National AI Strategy (2021)

The UK’s approach, outlined in its National AI Strategy, is characterized by a “pro-innovation” stance that so far avoids sweeping new AI-specific legislation. Instead, the UK has articulated high-level principles such as safety, fairness, and accountability to guide AI development. It empowers existing regulators (in finance, healthcare, etc.) to tailor AI guidance for their sectors rather than creating a single AI regulator. Starting in 2023 and into 2024, the UK government has been evaluating how to implement these principles, including whether to introduce light-touch regulations in specific areas. For instance, Britain has considered regulations on deepfakes and online harms as part of its Online Safety Bill (enacted as the Online Safety Act 2023), and it hosted a global AI Safety Summit in November 2023 to discuss international coordination. However, as of early 2025, the UK relies on general data protection law (the UK GDPR), consumer protection law, and voluntary industry measures to handle AI-driven disinformation. This decentralized approach gives industry more flexibility, but some critics worry it may lag behind the curve on fast-moving threats like synthetic media manipulation.

2.1.5 India – IT rules and content takedown (2021–2023)

In India, policymakers have approached disinformation largely through amendments to information technology regulations. The Information Technology Rules, 2021 (under the IT Act, 2000) require social media platforms to remove false or misleading content flagged as such by the government. The Intermediary Guidelines and Digital Media Ethics Code established under these rules obligate tech companies to actively curb the spread of misinformation on their services. In 2023, India introduced further measures by amending the IT Rules to create a government-run fact-checking unit tasked with identifying false information related to government policies. As a result, platforms like Twitter, Facebook, and WhatsApp are now required to comply with official takedown requests targeting content deemed “fake news” by authorities (Mehrotra and Upadhyay, 2025). These legal mechanisms showcase India’s aggressive stance in forcing platform compliance; however, they have raised concerns among free speech advocates. Granting the government broad power to determine truth can risk overreach and censorship, potentially stifling legitimate dissent (Access Now, 2023). India’s experience highlights the tension in regulating disinformation in a democracy – how to curb dangerous falsehoods at scale without undermining civil liberties. The high volume of content in India (over 800 million internet users by 2025) also makes enforcement difficult, and misinformation (often via WhatsApp forwards in myriad local languages) has continued to spark violence and social unrest in recent years. Thus, while India’s regulatory strategy enables swift removal of content, it also underscores the need for complementary approaches like community fact-checking and media literacy to address root causes of belief in rumors.

2.1.6 China – deep synthesis regulations (2023)

China’s authoritarian information control regime has taken a markedly different approach, focusing on stringent preemptive regulations to prevent AI misuse. The Cyberspace Administration of China (CAC) implemented pioneering rules on “deep synthesis” technology that took effect in January 2023 – one of the world’s first comprehensive laws targeting deepfakes. These regulations prohibit the use of deepfake or other generative AI technology to produce and disseminate “fake news” or content that could disrupt economic or social order. Service providers offering generative AI tools are required to authenticate users’ real identities and to ensure their algorithms do not generate prohibited content. Critically, the rules mandate that any AI-generated or AI-manipulated content must be clearly labeled as such, both through visible markers in the content (e.g., watermarks or disclaimers) and through embedded metadata (China Daily, 2025). This dual labeling requirement is intended to ensure public awareness that a piece of media is synthetic and to facilitate traceability via hidden “fingerprints” in case the content is reposted or altered. Chinese platforms and internet services are held liable for enforcing these rules – meaning they must detect and remove unlabeled deepfakes and report violators to authorities. The Chinese government’s approach emphasizes a security-first, censorship-heavy model: it leverages its centralized control over the internet to preemptively block and punish the creation of AI-driven disinformation within its borders. At the same time, China has been known to deploy disinformation abroad via state media and covert influence operations. Thus, domestically China shows one extreme of a regulatory regime (mandatory labeling and strict prohibition), though such an approach is enabled by its broader curbs on speech and would likely not be palatable in open societies.
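The dual labeling idea described above (a visible marker plus embedded machine-readable metadata) can be illustrated with a small Python sketch. This is not the CAC's technical standard or any platform's implementation: the label wording, the metadata field names, and the use of a SHA-256 digest as the "fingerprint" are assumptions made purely for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field

VISIBLE_LABEL = "[AI-generated content]"  # hypothetical wording of the visible marker


@dataclass
class LabeledContent:
    display_text: str                             # what the reader sees, marker included
    metadata: dict = field(default_factory=dict)  # machine-readable record


def label_synthetic_text(text: str, generator_id: str) -> LabeledContent:
    """Attach a visible marker and embedded metadata to synthetic text."""
    digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
    metadata = {
        "synthetic": True,
        "generator_id": generator_id,  # hypothetical identifier of the AI service
        "content_sha256": digest,      # exact-match fingerprint of the original text
    }
    return LabeledContent(display_text=f"{VISIBLE_LABEL} {text}", metadata=metadata)


def matches_fingerprint(candidate_text: str, labeled: LabeledContent) -> bool:
    """True if the candidate is a verbatim copy of the originally labeled text."""
    digest = hashlib.sha256(candidate_text.encode("utf-8")).hexdigest()
    return digest == labeled.metadata.get("content_sha256")


labeled = label_synthetic_text("Sample synthetic paragraph.", "demo-model")
print(labeled.display_text)
print(json.dumps(labeled.metadata, indent=2))
```

A plain hash only recognizes verbatim reposts with the visible marker stripped; tracing content that has been edited or re-encoded would require robust watermarking or provenance techniques that this sketch does not attempt.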

2.1.7 Brazil (and South America) – proposed “Fake News” law (2020–2023)

Across South America, governments have also grappled with how to stem harmful disinformation, which in countries like Brazil spreads rapidly through platforms like WhatsApp and YouTube. Brazil’s experience is illustrative. In 2020, lawmakers introduced Bill No. 2630, dubbed the “Fake News Bill,” to address online misinformation and abuse. After delays, the bill regained momentum in 2023 with significant revisions. The far-reaching proposal would overhaul internet liability rules by establishing new “duty of care” obligations for tech platforms regarding content moderation (Freedom House, 2023). Rather than waiting for court orders, platforms would be required to actively monitor, find, and remove illegal or harmful content (including disinformation) or face hefty fines (Paul, 2023). The bill also includes provisions to curb inauthentic behavior, such as requiring phone number registration for social media accounts (to limit anonymous bot networks) and increasing transparency of political advertising. Major tech companies have fiercely opposed the legislation, arguing that some provisions threaten encryption and free expression (for instance, by potentially requiring WhatsApp to trace forwarded messages, which conflicts with end-to-end encryption). Under pressure, a vote on PL 2630 was postponed in 2023, and as of early 2025 it remains pending in Brazil’s Congress (Freedom House, 2023). Nonetheless, Brazil’s judiciary stepped into the breach during recent elections – the Electoral Court (TSE) ordered the removal of thousands of pieces of false content and even temporarily suspended messaging apps that failed to control rampant misinformation. Other South American countries have taken smaller steps: Argentina launched media literacy programs; Colombia and Peru have set up anti-fake-news observatories. 
The trajectory in the region suggests a trend toward imposing greater accountability on platforms for content spread, while trying to balance the demands of democracy and free speech. Brazil’s proposed law, in particular, if passed, would be among the world’s strongest social media regulations against disinformation, potentially influencing other democracies facing similar challenges.

Overall, the global regulatory landscape for AI and disinformation is fragmented. The EU’s comprehensive and stringent regime contrasts with the more ad hoc or principles-based approaches in the US and UK, as well as the heavy censorship model in China. Such divergence can complicate efforts to combat AI misuse internationally. Gaps between jurisdictions create opportunities for malicious actors to engage in regulatory arbitrage – exploiting the most lenient environment to base their operations or technological development. For example, a disinformation website flagged in Europe can move its hosting to a country with weaker rules; AI developers can open-source a potentially harmful model from a location with minimal oversight. Similarly, inconsistent standards (like on political deepfake ads) mean content banned in one country may still reach audiences elsewhere. This patchwork of rules underscores a need for greater international coordination, which we address later in our recommendations and future directions.

2.2 AI regulations and their implications for disinformation

Despite new regulations, significant challenges remain in addressing AI-fueled disinformation. One major issue is global fragmentation of AI governance. The lack of uniform rules across jurisdictions creates opportunities for malicious actors to engage in regulatory arbitrage – exploiting gaps by operating from countries with lax or no AI oversight. For example, an organization banned from deploying certain AI-generated content in the EU could base its operations in a country with weaker laws and still target EU audiences online. Inconsistent standards also mean that what one nation labels and detects as AI-generated disinformation may go unrecognized elsewhere. Companies attempting to police disinformation globally face a complex compliance puzzle, needing to navigate multiple legal regimes simultaneously. This patchwork of regulations can slow down cross-border responses to online campaigns and limit collaboration. International efforts to counter disinformation – such as sharing threat intelligence or coordinated content takedowns – are more difficult without a harmonized legal foundation.

Enforcement also looms large as a concern. Laws on paper do not automatically translate to effective action. Resource constraints, jurisdictional limits, and Big Tech’s resistance can all undermine enforcement. Many countries’ regulatory agencies lack the technical expertise or manpower to audit complex AI systems or to continuously monitor platforms for compliance. In the case of India’s aggressive takedown rules, enforcement relies on platforms’ willingness and ability to quickly remove flagged content; encrypted messaging services pose a further obstacle. In open societies, enforcement must also respect free speech and avoid political abuse – a difficult balance when governments themselves can become arbiters of truth. There is an inherent tension between controlling disinformation and upholding democratic freedoms. Overly heavy-handed laws risk censorship and could drive misinformation to harder-to-monitor channels (like private groups or decentralized networks).

Meanwhile, a technological arms race is underway between those creating disinformation and those attempting to counter it. Tighter regulations, while intended to curb abuse, can inadvertently fuel this race. As platforms and regulators impose new constraints, disinformation actors often respond by developing more advanced and evasive AI tools. For instance, mandated content labeling may incentivize adversaries to refine generative models that produce outputs indistinguishable from authentic content. In turn, governments and private actors race to develop more sophisticated defensive technologies to detect and neutralize these threats. This dynamic escalates the complexity of both offense and defense: bots trained to mimic human behavior can bypass AI-based filters, and hyper-realistic deepfakes may outpace current detection systems.

The rapid evolution of generative AI compounds this challenge, frequently outpacing legislative and regulatory cycles. By the time a governance framework is enacted, the threat landscape may have already shifted. Effective policy responses must therefore move beyond reactive regulation or blanket investment and instead prioritize agile, pre-competitive collaboration between research institutions, civil society, and industry. Such frameworks could include shared threat modeling environments, sandboxed co-development of detection tools, and adaptive policy toolkits designed to evolve in parallel with AI capabilities.

Efforts to rein in disinformation must also consider freedom of speech. Measures like automated content filters or legal penalties for spreading “fake news” risk encroaching on legitimate expression if not carefully calibrated. AI-driven content moderation, for instance, can mistakenly flag satire, opinion, or contextually complex posts as disinformation. Overzealous or poorly tuned algorithms might over-censor and suppress valid discussions, raising concerns about violating free speech rights. The lack of human judgment in some AI moderation tools means nuance can be lost – what is misleading in one context might be valid in another, and current AI may struggle with such distinctions. Additionally, if governments mandate certain AI filters, there is a danger that those systems could be biased towards particular political or cultural viewpoints, intentionally or not, thereby silencing dissenting voices under the guise of combating falsity. Maintaining transparency and avenues for appeal in content moderation decisions is thus critical to uphold democratic values even as we try to clean up the information space.

Another set of issues revolves around data protection and privacy. Paradoxically, laws like the European GDPR – which protect users’ personal data and grant rights over automated profiling – can impede the fight against disinformation. Effective AI detection of fake accounts or targeted misinformation often requires analyzing large amounts of user data (to spot inauthentic behavior patterns or trace how false stories spread through networks). Privacy regulations restrict access to some of this data or require anonymization that might reduce its utility. For example, an AI system might be less effective at identifying a network of coordinated fake profiles if it cannot easily aggregate personal metadata due to legal constraints. GDPR also gives users the right to opt out of automated decision-making or to demand explanations for it; platforms, fearing liability, might limit their use of AI moderation tools or throttle back automation to avoid infringing those rights. This could constrain content moderation efforts just when AI’s speed and scale would be most useful. Policymakers thus face a difficult task in reconciling robust privacy protections with agile anti-disinformation mechanisms.

In addition, there are practical and resource challenges. Disinformation is a cross-border, cross-platform problem, but enforcement mechanisms are typically national. Even when countries agree on principles, coordinating actions (such as shutting down a global botnet or sanctioning a foreign propaganda outlet) is cumbersome. Companies that operate internationally must deal not only with multiple laws but sometimes also with conflicting demands (for instance, one country may demand certain content be removed as “fake,” while another country’s law protects that content). Cross-border cooperation among regulators is still nascent, and mechanisms for rapid information sharing or joint action are limited. Furthermore, not all organizations have the capacity to implement the latest AI defenses. Large social media firms invest heavily in AI moderation and teams of experts, but smaller platforms or local media outlets often lack such resources. This creates a weak link that disinformation actors can exploit by spreading falsehoods on less moderated services and then pushing that content into mainstream discussion. Finally, AI tools themselves can carry biases that affect what is labeled disinformation – if a detection algorithm is trained on skewed data, it might overlook certain languages or communities, thereby missing targeted misinformation campaigns in those segments. All these additional challenges highlight that combating AI-driven disinformation requires not just laws and algorithms, but also capacity building, international norms, and continual refinement of both technological and policy approaches.

In summary, current AI regulatory efforts, while a crucial start, have notable limitations when confronting disinformation. Fragmented governance allows malicious actors to maneuver around restrictions, and even within regulated spaces, transparency, enforcement, and rights-balancing pose difficulties. A coordinated, adaptive strategy is needed – one that harmonizes laws across borders, updates rules in step with technological advances, safeguards fundamental freedoms, and supports both major and minor stakeholders in the information ecosystem. The next section will propose actionable policy recommendations that build on this analysis, aiming to strengthen our collective ability to counter AI-powered falsehoods.

3 Actionable recommendations

3.1 Limitations in AI regulation vs. policy recommendations

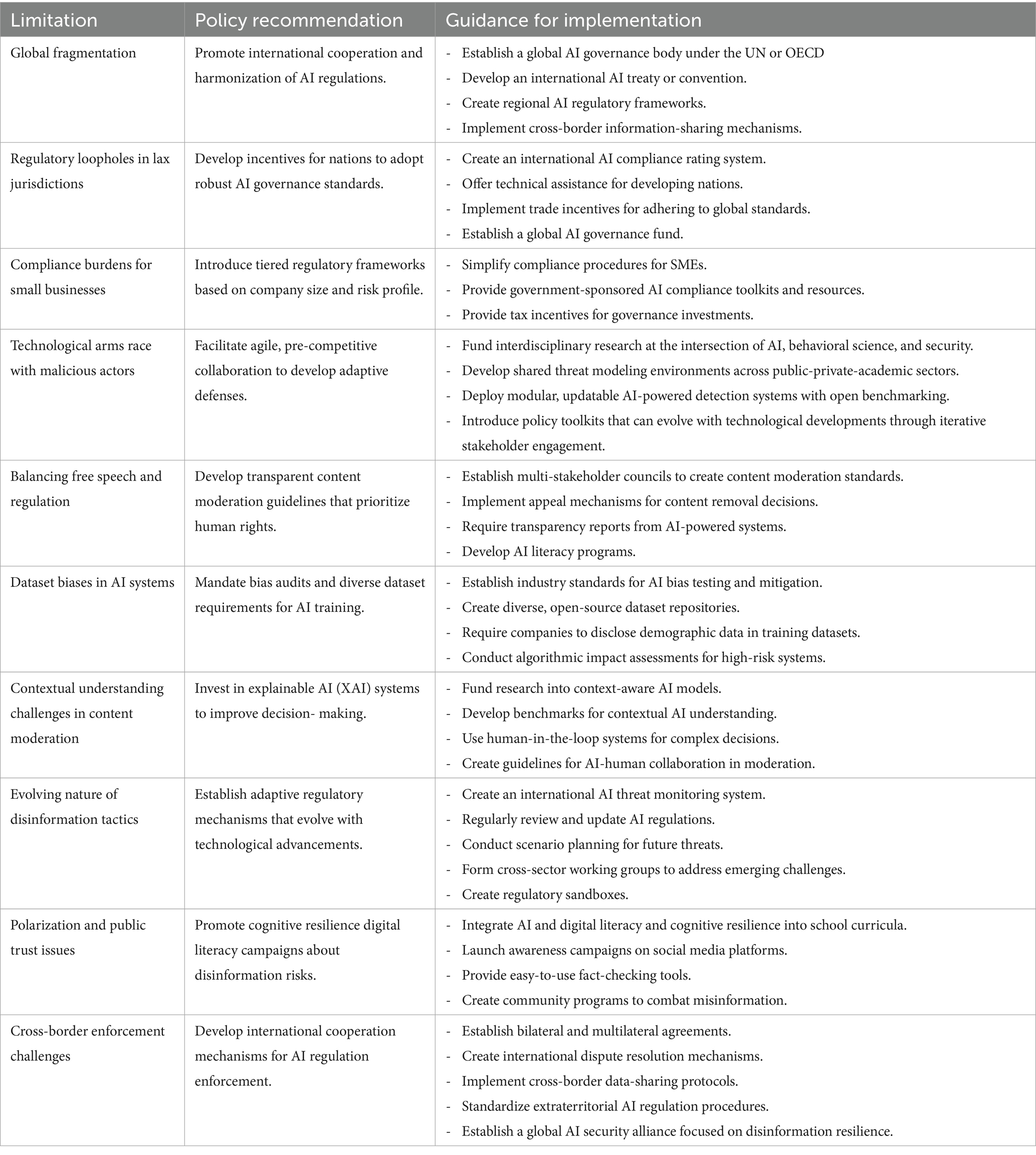

Our review of the global landscape of AI regulation, of country-specific approaches, and of their implications for disinformation reveals significant limitations in current AI regulatory frameworks. These limitations call for a more nuanced and forward-looking approach to policy. The interplay between rapidly advancing AI technologies, diverse national interests, and the ever-evolving nature of disinformation presents challenges that existing regulatory measures alone cannot adequately address.

Moreover, the challenges in enforcing transparency, the potential for a technological arms race in disinformation, and the delicate balance between regulation and innovation underscore the limitations of current regulatory efforts.

In light of these challenges, it is crucial to examine the limitations of existing AI regulations and propose policy recommendations that can effectively address the multifaceted issues surrounding AI governance, particularly in the context of combating disinformation. The following section will delve into these limitations and present a set of policy recommendations designed to bridge the gaps in current regulatory frameworks, foster responsible AI development, and enhance our collective ability to mitigate the risks associated with AI-driven disinformation (see Table 2).

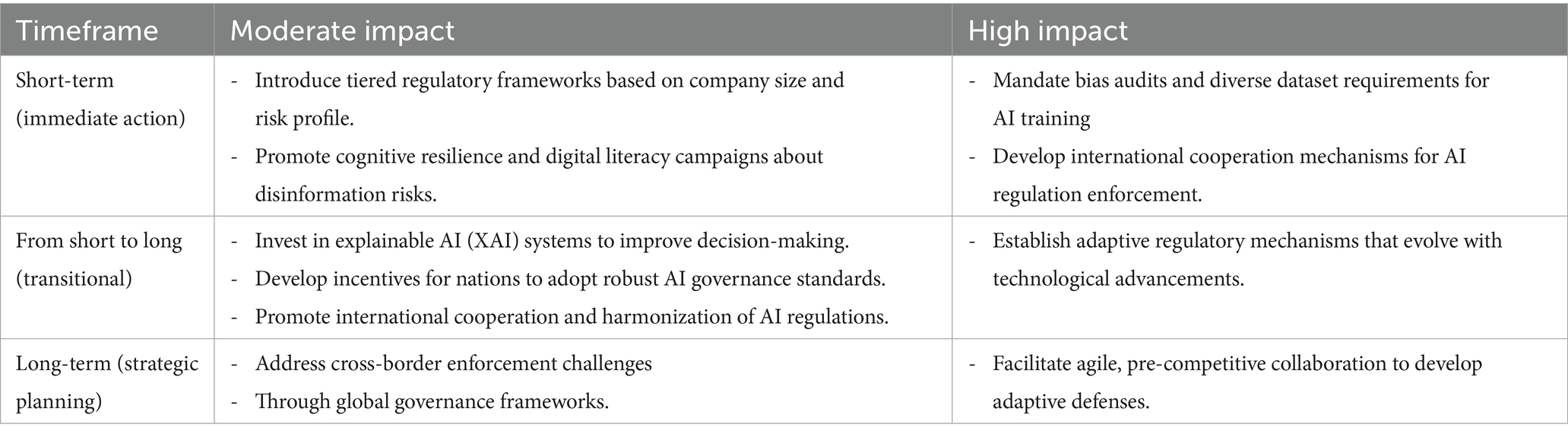

3.2 From limitations to a phased governance framework

While existing AI regulations have begun to address certain risks, particularly in content moderation and transparency, substantial gaps remain in managing the dynamic and adversarial nature of disinformation ecosystems. The preceding analysis underscored several structural limitations, including regulatory lag, jurisdictional fragmentation, and the challenge of operationalizing adaptability in policy instruments. To bridge these gaps, we propose a structured set of policy interventions calibrated to the velocity of AI advancement and the evolving threat landscape.

The following matrix presents a temporal-impact framework that categorizes the proposed policy recommendations by their expected timeframe for implementation (short-term, transitional, long-term) and level of systemic impact. This approach facilitates strategic sequencing, allowing policymakers, platforms, and civil society actors to prioritize actions that are both immediately actionable and scalable over time. It also provides a roadmap for balancing urgent mitigation needs with sustainable governance mechanisms (see Table 3).

The analysis of existing AI regulations and their limitations in addressing disinformation reveals several critical gaps that require high-impact policy recommendations. These recommendations are designed to address the shortcomings of current regulatory frameworks and provide a more robust approach to combating AI-driven disinformation.

3.2.1 Mandate bias audits and diverse datasets

To enhance the operational integrity of AI systems used in content moderation and detection – particularly in high-impact applications influencing public discourse – we recommend a risk-based framework for algorithmic auditing and data inclusivity verification. Rather than imposing uniform compliance burdens, regulatory efforts should prioritize functional performance across linguistic, demographic, and regional variables, thereby ensuring that disinformation targeting underrepresented groups is not systematically overlooked. A tiered approach to auditing – similar to cybersecurity red-teaming – should be encouraged through lightweight, third-party evaluations based on internationally recognized standards. Developers may be incentivized to engage in voluntary self-assessments, supported by transparency reporting and regulatory safe harbors.

This recommendation is not intended to expand regulatory complexity, but to address a critical performance gap: systems trained on narrow datasets are less capable of detecting non-dominant language content and culturally specific manipulation tactics. A measured and outcome-focused auditing regime can improve detection precision while maintaining innovation incentives and public trust.
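As a minimal sketch of what an outcome-focused audit could measure, the Python snippet below computes detection recall per language group and lists the groups that trail the best-performing group by more than a tolerance. The function names, the 0.10 tolerance, and the sample records are illustrative assumptions; they are not drawn from any existing auditing standard or from a specific platform's tooling.

```python
from collections import defaultdict


def recall_by_group(records):
    """Return detection recall per group.

    records: iterable of (group, is_disinfo, flagged) tuples, where
    is_disinfo is the ground-truth label and flagged is the detector output.
    """
    positives = defaultdict(int)  # true disinformation items per group
    hits = defaultdict(int)       # of those, how many the detector flagged
    for group, is_disinfo, flagged in records:
        if is_disinfo:
            positives[group] += 1
            if flagged:
                hits[group] += 1
    return {group: hits[group] / positives[group] for group in positives}


def audit_gaps(recalls, max_gap=0.10):
    """List groups whose recall trails the best group by more than max_gap.

    max_gap is a hypothetical tolerance chosen for illustration only.
    """
    best = max(recalls.values())
    return sorted(g for g, r in recalls.items() if best - r > max_gap)


# Hypothetical labeled evaluation sample: (language, is_disinfo, flagged)
sample = [
    ("en", True, True), ("en", True, True), ("en", True, True), ("en", True, False),
    ("hi", True, True), ("hi", True, False), ("hi", True, False), ("hi", True, False),
    ("en", False, False), ("hi", False, True),
]

recalls = recall_by_group(sample)
flagged_groups = audit_gaps(recalls)
print(recalls)         # {'en': 0.75, 'hi': 0.25}
print(flagged_groups)  # ['hi']
```

A real audit would likely also report precision and false-positive rates per group, since over-flagging content from a community is as much a performance gap as under-detection.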

3.2.2 Develop international cooperation mechanisms

Given the global nature of online platforms and influence operations, international governance cooperation is essential to close the gaps that single-nation policies leave open. We recommend the establishment of a transnational AI oversight body or coalition – potentially under the auspices of the United Nations or a consortium like the OECD – that facilitates coordination among national regulators. Such a body could maintain an up-to-date repository of AI-driven disinformation threats and the techniques used to counter them, allowing countries to share best practices in real time. It should create standardized protocols for information-sharing, so that if one country discovers a network of deepfake accounts, others can be alerted swiftly through an agreed channel. Joint cyber exercises or simulations could be run to improve collective readiness for major disinformation attacks (for instance, ahead of international elections or referendums). Furthermore, aligning certification and compliance standards for AI systems across borders would reduce adversaries’ ability to exploit weak links. We propose pursuing mutual recognition agreements for AI audit and certification regimes. In practice, this means if an AI model is certified as safe and transparent in one jurisdiction, others accept that certification – and conversely, models or services flagged as malicious in one place can be rapidly restricted elsewhere. To address regulatory loopholes in lax jurisdictions, the international coalition could also introduce incentive structures for nations to adopt robust AI governance. This might include an AI governance rating or index and tying development aid, trade benefits, or membership in certain international forums to improvements in AI regulatory standards. Technical assistance programs could help developing countries craft and enforce AI rules so they do not become unwitting safe havens for disinformation operations. 
Over time, these measures foster a more harmonized global approach, making it harder for disinformation agents to simply relocate their activities to avoid scrutiny.

To institutionalize these efforts, we advocate for the creation of a Global AI Security Alliance – a network of regulatory authorities, research institutions, and digital platform providers dedicated to proactive defense and regulatory convergence. This alliance should be initiated by a core group of digitally advanced democracies (e.g., EU, U.S., Japan, Australia) via a multilateral memorandum of understanding (MoU). Operational capacity would be structured around three interconnected pillars: (1) Shared Intelligence Infrastructure, for exchanging real-time data on emerging AI threats and synthetic content across jurisdictions; (2) Joint R&D Accelerator, to support cross-sector consortia building modular, adaptive detection systems and provenance verification tools; (3) Policy Harmonization Track, to align AI oversight standards through regulatory sandboxing, mutual recognition of audits, and best-practice exchange.

Anchoring this initiative in a multilateral framework (e.g., G7, OECD, or UNESCO) would enhance legitimacy, scalability, and interoperability. To address regulatory loopholes in lax jurisdictions, the alliance could introduce incentive structures – such as a global AI governance index or linking development aid, trade benefits, and digital access to regulatory compliance. Technical assistance programs should also be developed to support capacity-building in lower-resourced jurisdictions. These coordinated mechanisms would form a robust global governance architecture, making it increasingly difficult for disinformation actors to evade accountability through cross-border regulatory arbitrage.

3.2.3 Establish adaptive regulatory mechanisms

To ensure that legal frameworks remain fit for purpose in the face of rapidly evolving AI capabilities, adaptability must be embedded into the very structure of AI governance. Static rules are unlikely to withstand the pace and complexity of innovation in AI-generated content and algorithmic manipulation. Instead, a dynamic, data-driven, and iterative regulatory architecture is needed. One effective approach is the introduction of regulatory sandboxes tailored to AI applications in the information environment. These sandboxes provide controlled environments where platforms, regulators, and civil society actors can test and refine new moderation or detection technologies before they are mandated at scale. For instance, a platform might deploy a prototype AI labeler for likely disinformation under regulatory oversight, allowing for real-time learning and adjustment. Such experimentation can inform key design questions, such as acceptable error margins, redress mechanisms for false positives, and proportionality thresholds for intervention. These insights are crucial before formalizing policies into law.

In parallel, the inclusion of sunset clauses and scheduled policy reviews should become a standard feature of AI-related legislation. Laws passed today may be obsolete within two years; thus, built-in review cycles (e.g., every 18–24 months) allow regulatory frameworks to evolve alongside technological progress. In addition, legal mechanisms should empower relevant authorities to trigger accelerated updates in response to breakthroughs or emergent threats – such as audio deepfakes used in impersonation scams or novel bot networks designed to hijack trending topics. We further propose the formation of dedicated AI threat foresight and monitoring units at the national and regional levels. These bodies would be tasked with horizon scanning for emergent disinformation vectors, maintaining ongoing risk assessments, and coordinating with global partners for early warning and preemptive guidance. Such units could operate under the auspices of broader regulatory agencies or be integrated into the proposed Global AI Security Alliance. Importantly, adaptability does not mean deregulation. It requires a clear procedural framework to evaluate when and how policies should be revised – grounded in empirical evidence, ethical principles, and inclusive stakeholder dialogue.

This adaptive approach acknowledges that disinformation is a moving target and must be governed as a continuously evolving socio-technical risk, not a one-time legislative challenge. By embracing regulatory agility, policymakers can strike a balance between fostering innovation and safeguarding democratic discourse in an age of algorithmic manipulation.

3.2.4 Facilitate agile, pre-competitive collaboration to develop adaptive defenses

Technology is not only part of the problem – it is also an essential part of the solution. Rather than relying solely on isolated innovation or reactive investment, we recommend fostering agile, pre-competitive collaboration among public, private, and academic stakeholders to accelerate the development of adaptive defenses against AI-enabled disinformation. Governments, platforms, and international bodies should jointly fund interdisciplinary R&D initiatives at the intersection of AI, cybersecurity, behavioral science, and information integrity. This includes building shared threat modeling environments, where researchers can test adversarial AI tactics and co-develop countermeasures. For instance, new generative detection models could analyze imperceptible anomalies in audio, image, or video artifacts left behind by synthetic content – an approach already showing promise in deepfake identification. AI-powered verification tools are also essential. Leveraging real-time cross-referencing with trusted sources and metadata can help determine the authenticity of viral content. Equally critical is cognitive resilience – developing tools and educational interventions to help users recognize and resist manipulation. Adaptive AI systems can deliver timely “prebunking” prompts or context-aware alerts to users exposed to potentially misleading content, based on risk indicators such as origin, format, or linguistic style.

Complementary technologies such as blockchain-based provenance tracking and cryptographic watermarking offer innovative tools for bridging these gaps. Blockchain can enhance content traceability by recording immutable, timestamped hashes and metadata at the point of media creation – providing tamper-evident provenance that persists even as content is shared and modified. Projects like the Content Authenticity Initiative and Project Origin demonstrate how these tools could be embedded at scale. Beyond content traceability, blockchain can support AI accountability mechanisms. Developers and providers of generative AI tools could collaborate through decentralized autonomous organizations (DAOs) or self-regulatory bodies, using blockchain-backed smart contracts to formalize shared norms and codify ethical deployment commitments. These may include obligations to watermark AI outputs, audit downstream usage, or enable redress mechanisms when misuse occurs. Such on-chain mechanisms create transparent, verifiable records without compromising user privacy. Importantly, blockchain’s decentralized, immutable nature aligns with the need for distributed oversight of powerful AI systems. While challenges remain – such as transaction scalability, energy use, and integration with legal frameworks – these technologies offer a blueprint for tamper-resistant infrastructure that complements existing governance proposals. Policymakers should invest in pilot programs and regulatory sandboxes to explore how blockchain-based mechanisms can be embedded in future AI oversight architectures.
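The idea of recording timestamped hashes at the point of creation can be illustrated with a simplified, single-machine hash chain. The Python sketch below is only an illustration under stated assumptions: it is not a distributed ledger, involves no consensus or smart contracts, and does not reproduce the design of the Content Authenticity Initiative or Project Origin. The record fields, function names, and sample values are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder predecessor hash for the first record


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def record_digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same body always hashes identically.
    return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))


def append_record(chain: list, media: bytes, creator: str, timestamp: str) -> dict:
    """Append a provenance record that commits to the media and the prior record."""
    body = {
        "content_hash": sha256_hex(media),
        "creator": creator,
        "timestamp": timestamp,
        "prev_hash": chain[-1]["record_hash"] if chain else GENESIS,
    }
    record = {**body, "record_hash": record_digest(body)}
    chain.append(record)
    return record


def verify_chain(chain: list) -> bool:
    """Recompute every digest and link; any edited record breaks the check."""
    prev = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "record_hash"}
        if record["prev_hash"] != prev or record_digest(body) != record["record_hash"]:
            return False
        prev = record["record_hash"]
    return True


def is_registered(chain: list, media: bytes) -> bool:
    """Exact-match lookup: True only for byte-identical media."""
    digest = sha256_hex(media)
    return any(r["content_hash"] == digest for r in chain)


# Hypothetical usage with fixed timestamps so the run is reproducible.
chain = []
append_record(chain, b"original video bytes", "newsroom-a", "2025-01-01T00:00:00Z")
append_record(chain, b"second clip bytes", "newsroom-b", "2025-01-02T00:00:00Z")

print(verify_chain(chain))                             # True
print(is_registered(chain, b"original video bytes"))   # True
print(is_registered(chain, b"edited video bytes"))     # False

tampered = [dict(r) for r in chain]
tampered[0]["creator"] = "someone-else"
print(verify_chain(tampered))                          # False
```

Note that a plain cryptographic hash matches only byte-identical copies. Following content through re-encoding or editing would need additional techniques, such as signed edit manifests or perceptual hashing, which this sketch does not attempt.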

3.2.5 Promote digital literacy and cognitive resilience

Technological and policy measures must be complemented by efforts to strengthen the human element of resilience. A well-informed and vigilant public is one of the best defenses against disinformation. We recommend national and international initiatives to educate citizens about AI-generated content and online manipulation techniques. This should start in schools by integrating media literacy and critical thinking into curricula, including specific modules on deepfakes, synthetic media, and the tricks used in viral misinformation. Already, several countries and NGOs have piloted programs to teach students how to recognize false or manipulated content; these should be expanded and shared globally. Public awareness campaigns are also needed for the adult population. Governments, in collaboration with civil society and media organizations, can run information drives on social media and traditional media, illustrating common examples of AI-fabricated news and how to spot them. Platforms can assist by providing easy-to-use tools for users to fact-check content or trace its source with one click (for example, plug-ins that reveal an image’s origin or whether a video has been edited). Libraries, community centers, and workplaces could host workshops on navigating misinformation online. The overarching goal is to build cognitive resilience – the mental ability to critically assess information and resist manipulation. Researchers describe cognitive resilience as akin to a “cognitive firewall” that prevents false information from taking root (Kont et al., 2024). This can be cultivated through “prebunking” and inoculation strategies: for example, exposing people to weakened doses of common misinformation tropes and debunking techniques so that they are less susceptible when they encounter falsehoods in the wild (van der Linden et al., 2021). 
Platforms might deploy brief pop-up warnings or tutorials for users who are about to share content that has characteristics of a deepfake or bot-originated post, thereby nudging users to pause and verify. Over time, a more discerning public will reduce the effectiveness of disinformation, as false narratives fail to gain traction and credibility. While digital/media literacy alone cannot stop a determined influence campaign, it raises the costs for disinformers and can mitigate the damage. Importantly, these efforts also contribute to healthier civic discourse by encouraging people to seek reliable sources and engage critically rather than impulsively with provocative content.

In implementing these recommendations, a phased approach can be useful. Some actions (like bias audits and tiered regulations for small businesses, or launching literacy campaigns) can be taken immediately as “short-term” measures, as they have moderate impact but lay the groundwork. Transitional steps (over the next 1–2 years) include establishing cooperation frameworks and adaptive regulatory processes, which have higher impact and need careful planning. Finally, long-term strategies (3–5 years and beyond) such as large-scale research initiatives and global governance agreements will yield the highest impact in fortifying the information ecosystem. By combining quick wins with sustained strategic efforts, governments and stakeholders can progressively reinforce society’s defenses.

4 Discussion of studied cases in which AI was used to produce or detect disinformation

4.1 Classification of types of AI and their roles in disinformation

To illustrate the interplay between AI technologies, disinformation tactics, and societal impacts, we examine recent cases and developments across several categories of AI application. Below, we classify the main ways AI is used to produce disinformation and provide real-world examples of each, along with the harms they have caused. We also discuss how AI is being employed in counter-disinformation efforts. This classification underscores the dual nature of AI – as a tool for malign manipulation and as an instrument for defense.

AI techniques leveraged in disinformation campaigns can be grouped into a few broad categories:

Generative AI for content creation – this includes deepfakes (AI-generated synthetic video or audio that imitates real people) and AI-generated text (using large language models). These tools can fabricate convincing false content at scale.

Synthetic identities and personas – AI can create realistic profile pictures, names, and personal backgrounds, enabling the mass production of fictitious online personas that appear authentic.

Automation and bot networks – AI-driven bots can mimic human behavior on social media, posting and engaging with content automatically. Machine learning helps coordinate these bots to act in swarms or networks.

Coordination of disinformation at scale – real-time social media analysis and strategy adaptation, cross-platform botnet deployment, natural language generation in multiple languages and styles.

AI in counter-disinformation – on the defensive side, AI is used to detect fake content and accounts, as well as to automate fact-checking and content moderation.

We now delve into each of the offensive categories (first four) with case studies, and then discuss the defensive use of AI.

4.2 Generative AI for content creation (deepfakes)

AI-generated synthetic media, or deepfakes, have become one of the most visible tools of disinformation. Deepfake detection company Onfido reported a 3,000% increase in deepfake attempts in 2023 (Onfido, 2023). The global market value for AI-generated deepfakes is projected to reach $79.1 million by the end of 2024, with a compound annual growth rate (CAGR) of 37.6% (MarketsandMarkets, 2024). There were 95,820 deepfake videos in 2023 – a 550% increase since 2019, with the number doubling approximately every six months (MarketsandMarkets, 2024). In 2023, deepfake fraud attempts accounted for 6.5% of total fraud incidents, marking a 2,137% increase over the past three years (Onfido, 2023).

Deepfakes utilize advanced neural networks to create fake video or audio that is often difficult to distinguish from real recordings, thereby manipulating viewers’ perceptions of reality. A number of high-profile incidents in recent years demonstrate their disruptive potential:

4.2.1 Political deception

During the Russian invasion of Ukraine, a fabricated video emerged online in March 2022 that depicted Ukrainian President Volodymyr Zelenskyy apparently urging his troops to lay down their arms and surrender. This video, a deepfake, was cleverly edited into a social media broadcast format and spread rapidly on Facebook and other platforms before being debunked. Had it been widely believed, it could have eroded military morale and undermined public trust in official communications during a critical national crisis. Ukrainian news outlets and government spokespeople rushed to clarify that the video was fake, illustrating both the risk and the swift response such disinformation provokes (U.S. Department of State, 2024).

4.2.2 Election interference

In the 2024 Indian regional elections, deepfake videos were deployed to overcome language barriers and spread targeted propaganda. Reports indicate that partisan operatives created videos of political leaders appearing to speak languages they never spoke, tailoring messages to different ethnic groups. These deepfakes circulated through about 5,800 WhatsApp groups and reached over 15 million people (Election Commission of India, 2024). By exploiting India’s linguistic diversity, the campaign manipulated voter perceptions of rival candidates. It misled voters about what politicians had actually said and inflamed tensions through disinformation that appeared to come from trusted community figures. Election officials noted that such tactics, if unchecked, could compromise electoral integrity and make it harder for voters to discern truth amid a flood of AI-fabricated content.

4.2.3 Stoking public distrust

In Slovakia, on the eve of the 2023 parliamentary elections, an AI-generated audio recording surfaced in which voices resembling two prominent politicians discussed plans to rig the election (Ministry of the Interior of the Slovak Republic, 2024). Even though the audio was quickly suspected to be fake, it circulated widely on messaging apps. The content of the deepfake phone call fed into existing anxieties about corruption, leading some citizens to question the legitimacy of the election process. Officials later confirmed that no such conversation had occurred, but by then the recording had already sown doubt and eroded trust in the democratic process. Voter turnout in certain areas was thought to be depressed by fears that the system was rigged, showing how a simple audio deepfake can have tangible effects on civic behavior.

4.2.4 Personal attacks and intimidation

Deepfakes have also been weaponized to harass individuals and attempt to silence voices. In Northern Ireland, Cara Hunter, a Member of the Legislative Assembly (MLA), was targeted in 2023 by a malicious deepfake pornography campaign (Hunter C., 2024). Someone used AI to graft her likeness onto explicit sexual content and disseminated the fake video online. The reputational damage and emotional trauma from this incident were significant. Beyond the personal toll on Ms. Hunter, observers feared that such tactics could discourage women and young people from participating in politics if they see that outspoken figures risk being humiliated by fabricated scandals. The case drew attention to the need for stronger legal recourse against deepfake harassment, and the UK Parliament cited it in debates on criminalizing certain uses of deepfakes.

These examples highlight the range of social and political harms deepfakes can inflict, from confusion on the battlefield, to manipulated democratic decisions, to a chilling effect on public life. Deepfake quality is also advancing rapidly. Recent statistics show a three-fold increase in the number of deepfake videos and an eight-fold increase in deepfake audio clips circulating online from 2022 to 2023. By 2023, an estimated 500,000 deepfake videos were shared on social media. As deepfakes become more common and harder to detect, the need for robust countermeasures grows. Efforts are underway on multiple fronts: researchers are developing AI-driven deepfake detection tools, legislators in several countries are drafting laws to penalize malicious deepfake creation (especially in contexts such as election interference or defamation), and media literacy campaigns are teaching the public to be skeptical of sensational video and audio clips. These responses are critical: if deepfakes can be exposed and contextualized quickly, their impact can be mitigated. A combination of technical detection (using algorithms to verify authentic media or spot anomalies) and comprehensive media literacy is seen as the best defense. In summary, deepfakes represent a significant new dimension of disinformation, one that directly targets our sense of reality, and combating them will remain a top priority for policymakers and technologists alike.

4.3 Generative AI for content creation (AI-generated text)

Large language models (LLMs) such as GPT-3 and GPT-4 have enabled the mass production of synthetic text that is often highly coherent and hard to distinguish from human writing. Disinformation actors have co-opted this capability to flood social media and news sites with fabricated articles, posts, and comments, exploiting the scale and speed that AI text generation affords. The phenomenon has several dimensions:

On the supply side, powerful text generators have become more accessible. The global market for AI text generation tools was estimated at $423.8 million in 2022 and is projected to reach $2.2 billion by 2032, as businesses and individuals adopt these models for various uses (Dergipark, 2024). This growth means the tools are widely available and becoming cheaper, lowering the barrier to malicious use. North America currently leads in usage share, but Asia-Pacific is expected to see the fastest growth, indicating that usage is widening geographically (Grand View Research, 2024). As more actors gain access to these AI systems, the potential volume of AI-written disinformation increases correspondingly.
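
To make the market projection above concrete, the growth rate it implies can be derived from the two endpoints. The sketch below assumes smooth compound growth between the 2022 estimate and the 2032 projection; the variable and function names are illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1

start_musd = 423.8   # 2022 estimate, in millions of USD (from the text)
end_musd = 2200.0    # 2032 projection, in millions of USD (from the text)
years = 2032 - 2022

cagr = implied_cagr(start_musd, end_musd, years)
print(f"Implied CAGR 2022-2032: {cagr:.1%}")

# Year-by-year path under the assumption of constant compound growth.
for offset in range(years + 1):
    value = start_musd * (1 + cagr) ** offset
    print(2022 + offset, f"${value:,.0f}M")
```

The implied rate works out to roughly 18% per year, meaning that under these assumptions the market would roughly double about every four years.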

AI-generated text has already driven misinformation campaigns with real-world impacts. During the COVID-19 pandemic, for example, numerous false narratives about vaccines and health measures were propagated through what appeared to be legitimate news articles and blog posts. Investigations later revealed that some of these pieces were authored by AI systems and then posted on websites masquerading as news outlets or shared via social media bots. These AI-generated articles made baseless claims (such as exaggerating vaccine side effects or promoting fake cures) but were written in a convincing journalistic style. According to the World Economic Forum, such health misinformation contributed to increased vaccine hesitancy in multiple countries. The public, already fearful due to the pandemic, encountered what looked like factual reports without realizing they were machine-generated fabrications. This demonstrates how AI-generated text can amplify the reach of harmful falsehoods through sheer quantity and the illusion of legitimacy, thereby undermining public trust in health authorities and complicating crisis responses.

In the political realm, AI text generators have been harnessed to produce persuasive messaging at a volume and personalization level not achievable before. Political consultants and propagandists can use tools like GPT-based text generation platforms to draft tailored emails, manifestos, or social media posts targeting specific voter demographics. One reported case involved the use of a tool called Quiller.ai during recent elections to help a particular campaign draft thousands of unique fundraising emails and social media posts. The AI was given basic points and the profiles of target recipients, and it produced content that resonated with those individuals’ known interests and fears. While fundraising itself is legitimate, blending this strategy with propaganda crosses into disinformation when the messages contain misleading claims or emotionally manipulative rhetoric disconnected from facts. The use of AI blurred the lines between genuine grassroots communication and mass-produced propaganda, making it harder for recipients to tell if a heartfelt plea on social media was written by a real supporter or generated by an algorithm. This raises ethical questions about authenticity in political discourse and shows how AI can supercharge microtargeting efforts with minimal human effort.

Another telling example comes from the 2016 Brexit referendum in the UK, which, although predating the latest AI advances, foreshadowed tactics that AI is now amplifying. Campaigners on both sides employed microtargeted advertising on Facebook to deliver custom messages to voters based on their data profiles. According to subsequent analyses, many of these messages were misleading or fear-mongering. Today, similar microtargeting can be conducted by AI agents that autonomously generate content. Reports indicate that in follow-up campaigns and discussions around Brexit, automated persona accounts (some using AI-generated profile photos and AI-written posts) engaged UK voters by pushing emotionally charged narratives, such as exaggerated fears about immigration or economic doom, tailored to individuals’ online behavior patterns. The AI essentially acted as a propagandist that learned what each segment of the population cared about and then produced slogans and “news” addressing those exact fears. The concern is that such personalized disinformation is far more convincing than one-size-fits-all falsehoods: it can quietly reinforce people’s biases and is difficult to challenge because each person may see a slightly different misleading message, hidden from public scrutiny.

The economic impacts of AI-generated text-based disinformation are substantial. One analysis by the World Economic Forum in 2020 estimated global economic losses of around $78 billion in that year due to misinformation and fake news spreading online. These losses arise through several channels: scams and frauds (often enabled by fake emails or news that trick people into financial decisions), companies losing value due to false rumors, resources spent on debunking hoaxes, and broader erosion of trust in markets and institutions. If AI allows misinformation to scale up, these economic costs could grow. Stock prices have already dipped or surged in response to viral social media claims; in some notable cases, automated Twitter bots spread false reports about companies, causing brief chaos in financial markets before corrections. As LLMs become integrated into bots, the false reports could become more elaborate and harder to dismiss immediately, potentially leading to more severe market manipulation incidents.

Public perception data underscores the seriousness of the challenge. Surveys show that large portions of the population are aware of and worried about AI’s role in creating misinformation. In the UK, 75% of adults in 2023 believed that digitally altered videos and images (e.g., deepfakes) contributed to the spread of online misinformation, and 67% felt that AI-generated content of all types was making it harder to tell truth from falsehood on the internet (Ofcom, 2023). Globally, more than 60% of news consumers believe that news organizations at least occasionally report stories they know to be false, a cynicism fueled in part by awareness of mis/disinformation dynamics. Intriguingly, 38.2% of U.S. news consumers admit to having unknowingly shared a fake news item on social media, only realizing later that it was false. These figures highlight a growing distrust in media and the self-reinforcing nature of misinformation – people are both victims and unwitting vectors of disinformation in the online ecosystem. Journalists themselves are highly concerned: 94% of journalists surveyed see made-up news as a significant problem in their field. This situation creates a vicious cycle where disinformation, boosted by AI, begets more distrust, which in turn primes the public to be more susceptible to the next wave of disinformation or to dismiss truthful reporting as “fake.”

In conclusion, AI text generation tools are a double-edged sword. They offer efficiency and creativity, but in the wrong hands they can greatly magnify the reach and believability of false information. Addressing this will require not only better detection algorithms (to flag AI-written troll posts or bogus news) but also platform policies to throttle or label automated accounts, and a culture of critical thinking among readers. Some social networks are exploring authenticity verification for accounts and limiting bot activity, while researchers are developing methods to watermark AI-generated text to aid detection. However, adversarial use of AI will likely circumvent simpler safeguards, meaning the guardians of information integrity will need to adapt continuously, perhaps even employing counter-LLMs that identify linguistic patterns distinguishing AI-written from human-written text. The contest between AI-generated disinformation and AI-enabled detection is already underway as a key front in maintaining a healthy information space.
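
To illustrate what watermarking AI-generated text can mean in practice, the following toy sketch follows the general idea of "green list" watermarking proposals: a generator is nudged toward a pseudo-random subset of tokens, and a detector later tests whether that subset is over-represented. Everything here is an assumption made for illustration (whitespace-level tokens, the hash rule, `GAMMA`, the small vocabulary, and the stand-in generator); it is not the method of any specific platform or of the studies cited in this review.

```python
import hashlib
import math

GAMMA = 0.5  # assumed share of tokens marked "green" at each step

def is_green(prev_token: str, token: str) -> bool:
    """Pseudo-randomly mark `token` as green, seeded by the previous token."""
    digest = hashlib.sha256(f"{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] < 256 * GAMMA

def watermark_z_score(tokens: list[str]) -> float:
    """z-score of the green-token count versus the no-watermark expectation."""
    n = len(tokens) - 1  # the first token has no predecessor to seed the hash
    if n < 1:
        raise ValueError("need at least two tokens")
    green = sum(is_green(prev, tok) for prev, tok in zip(tokens, tokens[1:]))
    return (green - GAMMA * n) / math.sqrt(n * GAMMA * (1 - GAMMA))

def toy_watermarked_text(vocab: list[str], length: int, start: str = "the") -> list[str]:
    """Hypothetical stand-in for a watermarking generator: always pick a green token."""
    tokens = [start]
    for _ in range(length):
        greens = [w for w in vocab if is_green(tokens[-1], w)]
        # Fall back to the first vocabulary word if no green token exists.
        tokens.append(greens[len(tokens) % len(greens)] if greens else vocab[0])
    return tokens

VOCAB = ["the", "vote", "report", "claims", "new", "officials", "said", "that",
         "election", "was", "not", "fair", "people", "should", "know", "a",
         "video", "shows", "leaders", "today"]

marked = toy_watermarked_text(VOCAB, 60)
z = watermark_z_score(marked)
print(f"z-score for toy watermarked text: {z:.2f}")
```

A large positive z-score suggests the text was produced with the watermark, while unwatermarked text should score near zero on average. In a real deployment the signal would be far weaker and can be degraded by paraphrasing or translation, which is one reason the text above expects adversaries to circumvent simpler safeguards.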

4.4 Synthetic identities and personas