- 1Department of Health Professions Education, Faculty of Interdisciplinary Sciences, Datta Meghe Institute of Higher Education and Research, Wardha, India

- 2Department of Biomedical Laboratory Science, George Washington University, Washington, DC, United States

- 3School of Health and Care Sciences, University of Lincoln, Lincoln, United Kingdom

- 4Department of Cardiovascular and Pulmonary Physiotherapy, Career College, Bhopal, India

- 5Department of Radiodiagnosis, Jawaharlal Nehru Medical College, Datta Meghe Institute of Higher Education and Research, Wardha, India

1 Introduction

Generative artificial intelligence (genAI) systems are progressively transforming health science education and research, for example by assisting clinicians with diagnosis and with structuring specific intervention regimens (Mir et al., 2023). These technologies also help educators produce simple, concept-based educational modules tailored to students' needs. Large language models (LLMs) and analogous models show potential for automating and streamlining literature reviews, rapidly generating interpretations and conclusions, and drafting manuscripts within seconds (Mir et al., 2023; Al Kuwaiti et al., 2023; Gupta et al., 2024), thereby offering considerable convenience and efficiency. Furthermore, many researchers contend that genAI could fully automate research processes, including drafting proposals, analyzing data, and composing final reports (Almansour and Alfhaid, 2024; Preiksaitis and Rose, 2023). These merits suggest a promising future for genAI, particularly in domains that demand large-scale data processing and iterative analyses.

However, despite these evident benefits, concerns persist regarding the quality of AI-generated outputs. These outputs may contain inaccuracies that contribute to misinformation, logical inconsistencies, outdated or unverified claims, and hallucinated references, all of which undermine the academic credibility of writing (Athaluri et al., 2023; Farrelly and Baker, 2023; Sittig and Singh, 2024). In addition, a lack of transparency about training datasets exacerbates ethical challenges and biases (Norori et al., 2021). As genAI is advanced and refined at a rapid pace, it is necessary to critically evaluate whether these systems encourage critical thinking and human intelligence or subtly undermine them. This raises an important question: as healthcare professionals progressively integrate genAI into clinical and academic domains, are we unintentionally relinquishing the cognitive abilities that have propelled scientific and clinical advancements? The dilemma lies in balancing the efficiency of genAI with the preservation of essential human cognitive skills, such as critical thinking, ethical reasoning, and independent problem-solving. At its core, this dilemma concerns how health professionals, medical trainees, and early-career researchers apply genAI outputs. Overreliance risks fostering passive dependence on algorithm-generated outcomes in settings where time and cognitive capacity are consistently constrained. Expertise in scientific accuracy, ethical judgment, and diagnostic reasoning is cultivated through active engagement. This includes integrating knowledge in innovative and contextually relevant ways, critically evaluating evidence, and grappling with uncertainty, none of which genAI can substitute for (Passerini et al., 2025; Shoja et al., 2023).

2 GenAI and the risk of cognitive complacency

The risk of cognitive complacency is gaining global attention as industry and academia race to implement genAI tools. Publications related to AI have rapidly increased, reflecting both enthusiasm and apprehension. Because genAI tools process information faster, medical professionals and researchers may become overdependent on them, which reduces opportunities for independent problem-solving and critical thinking (Shoja et al., 2023; Zhai et al., 2024). Such overreliance risks producing a workforce adept at using genAI but deficient in analytical and cognitive competencies. Additionally, precision is paramount in medical research, and biases embedded in the data used to train genAI can propagate false information (Norori et al., 2021). The inability of plagiarism detection software to recognize genAI-generated text undermines conventional safeguards of ethical integrity, because such output may be mistaken for genuine scholarly writing (Farrelly and Baker, 2023), though some exceptions exist (Elkhatat et al., 2023; Weber-Wulff et al., 2023). Moreover, a growing body of research warns against excessive reliance on genAI for academic work. Although genAI can generate sophisticated answers to assignments, it may encourage students to trade critical thinking for speed, eroding the metacognitive skills essential to patient care and clinical reasoning (Fan et al., 2024; Eachempati et al., 2024).

The ecosystem of scholarly communication therefore stands at a crossroads. Will the next generation of scientists and clinicians accept genAI-derived findings uncritically, prioritizing output over insight, or will they develop the intellectual resilience needed to evaluate those outcomes critically? In healthcare, the capacity to incorporate evolving evidence into public health policy, interpret subtle patient cues, and navigate cultural sensitivities cannot be fully governed by algorithms or encoded in pre-trained genAI models. This concern is particularly relevant because these higher-order cognitive functions require emotional intelligence, sensitivity to nuance, and analytical judgment, which are fundamental to fostering innovative and ethical healthcare in the future. The credibility of scientific research rests on ethical conduct, peer evaluation, and reproducibility (Prager et al., 2019), and failing to critically evaluate genAI-produced research risks eroding these fundamental principles. Therefore, to ensure research integrity and transparency and to mitigate overreliance on automated results, it is essential to establish genAI usage policies and integrate them into academic practice (Athaluri et al., 2023).

3 GenAI as a tool for augmenting human intelligence

Prominent journals warn that, if genAI tools remain unmonitored, a global “feedback loop” risks reinforcing pre-existing knowledge patterns at the expense of transformative inquiry (Kwong et al., 2024). Furthermore, a recent analysis argues that true intelligence is characterized by the ability to solve novel, previously unencountered problems rather than by recycling pre-existing solutions (Gignac and Szodorai, 2024). The analysis brings conceptual clarity to the distinction between “intelligence” and “achievement” in both human and artificial domains (Gignac and Szodorai, 2024). Contemporary generative AI systems are trained extensively on existing datasets and may mimic expertise. However, they frequently fail to demonstrate true intelligence, because their outputs depend predominantly on prior data exposure (Gignac and Szodorai, 2024). Users who consistently rely on such familiar patterns risk intellectual complacency rather than original thought. This distinction is particularly significant in the health sciences, where true innovation emerges from confronting novel challenges rather than from revisiting established solutions.

GenAI should function as an assistive tool that enhances cognitive capabilities, embodying the concept of “augmented intelligence,” which emphasizes its role in complementing, rather than replacing, human intellect and expertise (Monteith et al., 2024). It is crucial to strike a balance between human supervision and the efficiencies of genAI. Although genAI can optimize specific tasks, genuine depth and innovation arise from uniquely human capacities for critical reflection and rigorous interrogation (Sittig and Singh, 2024). Human-centered AI operates as a two-dimensional framework that strategically balances automation with human control, aiming to enhance both technological reliability and human agency. This approach fosters the development of trustworthy, reliable, and safe AI-assisted computer programs while enhancing human self-efficacy, performance, creativity, and responsibility (Shneiderman, 2020). The ethical use of genAI rests on key principles such as fairness, unbiased decision-making, dataset transparency, accountability, autonomy, and data privacy (Jobin et al., 2019; Habli et al., 2020).

Academia and industry should collaboratively oversee the development of more advanced genAI, ensuring that scientific rigor (transparency and reproducibility) and critical peer review govern the evolution of these technologies. Without such oversight, claims that artificial general intelligence is imminent risk going unchallenged, promoting a narrative of effortless problem-solving that sidesteps human contribution (Sittig and Singh, 2024). In contrast, maintaining rigorous standards and embedding critical thinking in genAI integration ensures that researchers and clinicians remain the architects of their intellectual landscapes. If properly contextualized, genAI can become a powerful collaborator rather than a technological crutch.

4 Institutional responsibility in AI adoption

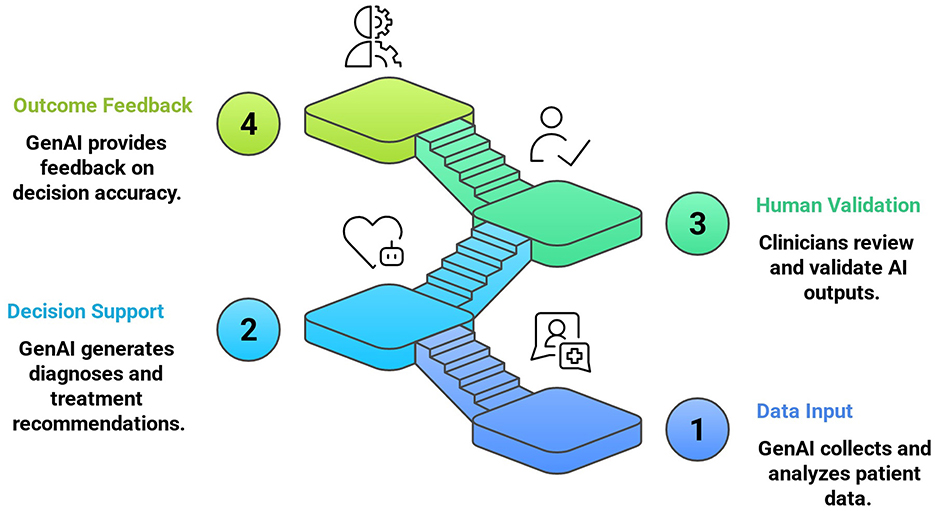

Graduate programs play a pivotal role in steering the ethical incorporation of genAI into the health sciences. GenAI-assisted medical education mainly involves technologies such as cloud computing, wearable devices, 5G, big data analysis, virtual reality, and the Internet of Things (Sun et al., 2023). Medical institutions must prioritize human supervision of research projects and clinical applications involving genAI (Janumpally et al., 2025) to ensure patient safety and ethical integrity (Figure 1). For instance, genAI is increasingly used for surgical training without direct patient involvement (Bhuyan et al., 2025). Western Michigan University exemplifies this approach by integrating more than 100 h of AI-simulation training into its medical curriculum, which allows students to engage with realistic patient scenarios under faculty supervision (Bhuyan et al., 2025). Similarly, the University of Illinois College of Medicine successfully runs its Artificial Intelligence in Medicine (AI-MED) program, which cultivates the critical appraisal skills required to assess and implement genAI in medical research. This initiative not only enhances proficiency in scientific writing and communication but also promotes the responsible dissemination of findings within the medical community (AI-MED, 2025). Likewise, the National University of Singapore includes mandatory undergraduate training in bioinformatics and AI in medicine (Feigerlova et al., 2025). However, overreliance on genAI poses significant risks. GenAI can produce inappropriate drug recommendations and misdiagnoses, such as failing to detect lesions or tumors, thereby endangering patients' lives (Saadat et al., 2024). For instance, an external validation of a widely implemented proprietary sepsis prediction model found that its accuracy was insufficient despite broad adoption, raising concerns about missed cases and delayed intervention (Wong et al., 2021). These failures illustrate the critical need for human oversight and highlight the consequences of overdependence on AI systems.

A key challenge in genAI education is curriculum standardization. It is hampered by resistance from some researchers and educators who lack the skills to detect genAI-produced content or who rely on unreliable detection tools. This problem stems both from the inability of plagiarism detection software to distinguish genAI-produced content from human-written text and from health professionals' limited knowledge of, and competency with, genAI technologies. For instance, tools such as PlagiarismCheck, CrossPlag, and ZeroGPT often fail to detect genAI content, because rapidly evolving models are increasingly able to mimic human writing, making identification more challenging (Weber-Wulff et al., 2023; Elkhatat et al., 2023).

Curricula should therefore frame genAI not as a shortcut but as a tool whose outputs demand scrutiny. Academic institutions should prioritize AI ethics in curriculum development, equipping future researchers and clinicians with the skills needed to engage critically with genAI-generated content (Katznelson and Gerke, 2021; Bahroun et al., 2023). The need is real: one study found that dental faculty instructors often mistook AI-generated reflections for student work, raising concerns about academic integrity and authorship (Brondani et al., 2024). Academic projects that require students to synthesize literature systematically or design research using their own cognitive abilities can help students and trainees identify biases, challenge assumptions, and detect conceptual gaps in conclusions derived from genAI (Ganjoo et al., 2024). Moreover, healthcare professionals and students must be trained in AI literacy. This training should cover technical foundations, real-world applications, human-genAI interaction, the critical thinking needed to distinguish false genAI claims from reality, and prompt engineering. It should also include hands-on experience through case studies and practical exercises that apply genAI tools in simulated and real-life settings (Charow et al., 2021; Walter, 2024; Sridharan and Sequeira, 2024). Assignments that require students to cross-check AI-generated information against reputable sources can foster critical thinking and responsible use of technology. This approach not only strengthens students' research skills but also prepares them to navigate the ethical challenges of emerging AI technologies in their professional careers (Ganjoo et al., 2024; Masters et al., 2025). Integrating critical thinking into AI literacy courses ensures that students grasp genAI's capabilities, limitations, ethical implications, and societal impact. By fostering analytical skills, educators help students become both technically proficient and ethically responsible. Furthermore, health professional educators must validate genAI tools for accuracy, precision, and personal data privacy. They must also evaluate instructional strategies, teaching efficiency, learning outcomes, and feedback mechanisms to implement genAI-based educational programs effectively (Feigerlova et al., 2025; Reddy, 2024; Shokrollahi et al., 2023).

GenAI should be framed as a tool for enhancing learning, not as a replacement for traditional educational methods. Journals and academic bodies should enforce transparency in research submissions assisted by genAI. Specifically, disclosing AI use in manuscripts, ensuring human verification of AI-generated data, and maintaining ethical standards in publication practice are essential measures for preserving the credibility of scholarly communication in an AI-augmented academic environment (Koul, 2023; Yang et al., 2024). Encouraging transparency in the use of genAI by both researchers and publishers fosters an environment where original reasoning is not overshadowed by the ease of prompt engineering.

5 Conclusion

By embracing these principles, we can steer generative AI to augment human cognitive effort rather than replace it. Instead of treating efficiency and authenticity as competing values, we can align them, using AI's processing power to handle repetitive tasks while reserving human judgment for higher-level functions such as ethical reflection, contextual interpretation, and hypothesis generation. In doing so, we encourage a future in which technology catalyzes genuine innovation, inspiring thinkers to use genAI judiciously rather than dependently. It is essential to resist the allure of seamless genAI outputs that lack the precision and depth of human intellect. The challenge is not to reject AI (quite the contrary) but to integrate it in ways that uphold the ethical, critical, and creative dimensions of the health sciences. By doing so, we preserve the human spark that transforms data into discovery, complexity into clarity, and knowledge into wisdom.

Author contributions

WN: Conceptualization, Writing – original draft, Writing – review & editing. RG: Writing – original draft, Writing – review & editing. MR: Writing – review & editing. AP: Writing – original draft, Writing – review & editing. GM: Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The manuscript is supported by Datta Meghe Institute of Higher Education and Research, India.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

AI-MED (2025). Artificial Intelligence in Medicine (AI-MED). University of Illinois College of Medicine. Available online at: https://medicine.uic.edu/education/md/scholarly-concentration-programs/ai-med/ (accessed March 12, 2025).

Al Kuwaiti, A., Nazer, K., Al-Reedy, A., Al-Shehri, S., Al-Muhanna, A., Subbarayalu, A. V., et al. (2023). A review of the role of artificial intelligence in healthcare. J. Pers. Med. 13:951. doi: 10.3390/jpm13060951

Almansour, M., and Alfhaid, F. M. (2024). Generative artificial intelligence and the personalization of health professional education: a narrative review. Medicine 103:e38955. doi: 10.1097/MD.0000000000038955

Athaluri, S. A., Manthena, S. V., Kesapragada, V. S. R. K. M., Yarlagadda, V., Dave, T., and Duddumpudi, R. T. S. (2023). Exploring the boundaries of reality: investigating the phenomenon of artificial intelligence hallucination in scientific writing through chatGPT references. Cureus 15:e37432. doi: 10.7759/cureus.37432

Bahroun, Z., Anane, C., Ahmed, V., and Zacca, A. (2023). Transforming education: a comprehensive review of generative artificial intelligence in educational settings through bibliometric and content analysis. Sustainability 15:12983. doi: 10.3390/su151712983

Bhuyan, S. S., Sateesh, V., Mukul, N., Galvankar, A., Mahmood, A., Nauman, M., et al. (2025). Generative artificial intelligence use in healthcare: opportunities for clinical excellence and administrative efficiency. J. Med. Syst. 49:10. doi: 10.1007/s10916-024-02136-1

Brondani, M., Alves, C., Ribeiro, C., Braga, M. M., Garcia, R. C. M., Ardenghi, T., et al. (2024). Artificial intelligence, chatGPT, and dental education: implications for reflective assignments and qualitative research. J. Dent. Educ. 88, 1671–1680. doi: 10.1002/jdd.13663

Charow, R., Jeyakumar, T., Younus, S., Dolatabadi, E., Salhia, M., Al-Mouaswas, D., et al. (2021). Artificial intelligence education programs for health care professionals: scoping review. JMIR. Med. Educ. 7:e31043. doi: 10.2196/31043

Eachempati, P., Komattil, R., Coelho, C., and Nagraj, S. K. (2024). Natural stupidity versus artificial intelligence: where is health professions education headed? BDJ In Pract. 37:403. doi: 10.1038/s41404-024-2944-y

Elkhatat, A. M., Elsaid, K., and Almeer, S. (2023). Evaluating the efficacy of AI content detection tools in differentiating between human and AI-generated text. Int. J. Educ. Integr. 19:17. doi: 10.1007/s40979-023-00140-5

Fan, Y., Tang, L., Le, H., Shen, K., Tan, S., Zhao, Y., et al. (2024). Beware of metacognitive laziness: effects of generative artificial intelligence on learning motivation, processes, and performance. Br. J. Educ. Technol. 56, 489–530. doi: 10.1111/bjet.13544

Farrelly, T., and Baker, N. (2023). Generative artificial intelligence: implications and considerations for higher education practice. Educ. Sci. 13:1109. doi: 10.3390/educsci13111109

Feigerlova, E., Hani, H., and Hothersall-Davies, E. (2025). A systematic review of the impact of artificial intelligence on educational outcomes in health professions education. BMC. Med. Educ. 25:129. doi: 10.1186/s12909-025-06719-5

Ganjoo, R., Rankin, J., Lee, B., and Schwartz, L. (2024). Beyond boundaries: exploring a generative artificial intelligence assignment in graduate, online science courses. J. Microbiol. Biol. Educ. 25:e00127-24. doi: 10.1128/jmbe.00127-24

Gignac, G. E., and Szodorai, E. T. (2024). Defining intelligence: bridging the gap between human and artificial perspectives. Intelligence 104:101832. doi: 10.1016/j.intell.2024.101832

Gupta, N., Khatri, K., Malik, Y., Kanwal, A., Aggarwal, S., and Dahuja, A. (2024). Exploring prospects, hurdles, and road ahead for generative artificial intelligence in orthopedic education and training. BMC. Med. Educ. 24:1544. doi: 10.1186/s12909-024-06592-8

Habli, I., Lawton, T., and Porter, Z. (2020). Artificial intelligence in health care: accountability and safety. Bull. World. Health. Organ. 98, 251–256. doi: 10.2471/BLT.19.237487

Janumpally, R., Nanua, S., Ngo, A., and Youens, K. (2025). Generative artificial intelligence in graduate medical education. Front. Med. 11:1525604. doi: 10.3389/fmed.2024.1525604

Jobin, A., Ienca, M., and Vayena, E. (2019). The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1, 389–399. doi: 10.1038/s42256-019-0088-2

Katznelson, G., and Gerke, S. (2021). The need for health AI ethics in medical school education. Adv. Health. Sci. Educ. Theory. Pract. 26, 1447–1458. doi: 10.1007/s10459-021-10040-3

Koul, P. A. (2023). Disclosing use of artificial intelligence: promoting transparency in publishing. Lung India 40, 401–403. doi: 10.4103/lungindia.lungindia_370_23

Kwong, J. C. C., Nickel, G. C., Wang, S. C. Y., and Kvedar, J. C. (2024). Integrating artificial intelligence into healthcare systems: more than just the algorithm. NPJ Digit. Med. 7, 1–3. doi: 10.1038/s41746-024-01066-z

Masters, K., MacNeil, H., Benjamin, J., Carver, T., Nemethy, K., Valanci-Aroesty, S., et al. (2025). Artificial intelligence in health professions education assessment: AMEE guide no. 178. Med. Teach. 1–15. doi: 10.1080/0142159X.2024.2445037

Mir, M. M., Mir, G. M., Raina, N. T., Mir, S. M., Mir, S. M., Miskeen, E., et al. (2023). Application of artificial intelligence in medical education: current scenario and future perspectives. J. Adv. Med. Educ. Prof. 11, 133–140. doi: 10.30476/JAMP.2023.98655.1803

Monteith, S., Glenn, T., Geddes, J. R., Achtyes, E. D., Whybrow, P. C., and Bauer, M. (2024). Differences between human and artificial/augmented intelligence in medicine. Comput. Hum. Behav. 2:100084. doi: 10.1016/j.chbah.2024.100084

Norori, N., Hu, Q., Aellen, F. M., Faraci, F. D., and Tzovara, A. (2021). Addressing bias in big data and AI for health care: a call for open science. Patterns 2:100347. doi: 10.1016/j.patter.2021.100347

Passerini, A., Gema, A., Minervini, P., Sayin, B., and Tentori, K. (2025). Fostering effective hybrid human-LLM reasoning and decision making. Front. Artif. Intell. 7:1464690. doi: 10.3389/frai.2024.1464690

Prager, E. M., Chambers, K. E., Plotkin, J. L., McArthur, D. L., Bandrowski, A. E., and Bansal, N. (2019). Improving transparency and scientific rigor in academic publishing. J. Neurosci. Res. 97, 377–390. doi: 10.1002/jnr.24340

Preiksaitis, C., and Rose, C. (2023). Opportunities, challenges, and future directions of generative artificial intelligence in medical education: scoping review. JMIR. Med. Educ. 9:e48785. doi: 10.2196/48785

Reddy, S. (2024). Generative AI in healthcare: an implementation science informed translational path on application, integration and governance. Implement. Sci. 19:27. doi: 10.1186/s13012-024-01357-9

Saadat, A., Siddiqui, T., Taseen, S., and Mughal, S. (2024). Revolutionising impacts of artificial intelligence on health care system and its related medical in-transparencies. Ann. Biomed. Eng. 52, 1546–1548. doi: 10.1007/s10439-023-03343-6

Shneiderman, B. (2020). Human-centered artificial intelligence: reliable, safe and trustworthy. Int. J. Hum. Comput. Interact. 36, 495–504. doi: 10.1080/10447318.2020.1741118

Shoja, M. M., Van de Ridder, J. M. M., and Rajput, V. (2023). The emerging role of generative artificial intelligence in medical education, research, and practice. Cureus 15:e40883. doi: 10.7759/cureus.40883

Shokrollahi, Y., Yarmohammadtoosky, S., Nikahd, M. M., Dong, P., Li, X., and Gu, L. (2023). A comprehensive review of generative AI in healthcare. arXiv preprint arXiv:2310.00795. doi: 10.48550/arXiv.2310.00795

Sittig, D. F., and Singh, H. (2024). Recommendations to ensure safety of AI in real-world clinical care. JAMA. 333, 457–458. doi: 10.1001/jama.2024.24598

Sridharan, K., and Sequeira, R. P. (2024). Artificial intelligence and medical education: application in classroom instruction and student assessment using a pharmacology and therapeutics case study. BMC. Med. Educ. 24:431. doi: 10.1186/s12909-024-05365-7

Sun, L., Yin, C., Xu, Q., and Zhao, W. (2023). Artificial intelligence for healthcare and medical education: a systematic review. Am. J. Transl. Res. 15, 4820–4828.

Walter, Y. (2024). Embracing the future of artificial intelligence in the classroom: the relevance of AI literacy, prompt engineering, and critical thinking in modern education. Int. J. Educ. Technol. High. Educ. 21:15. doi: 10.1186/s41239-024-00448-3

Weber-Wulff, D., Anohina-Naumeca, A., Bjelobaba, S., Foltýnek, T., Guerrero-Dib, J., Popoola, O., et al. (2023). Testing of detection tools for AI-generated text. Int. J. Educ. Integr. 19:26. doi: 10.1007/s40979-023-00146-z

Wong, A., Otles, E., Donnelly, J. P., Krumm, A., McCullough, J., DeTroyer-Cooley, O., et al. (2021). External validation of a widely implemented proprietary sepsis prediction model in hospitalized patients. JAMA. Intern. Med. 181, 1065–1070. doi: 10.1001/jamainternmed.2021.2626

Yang, K., Raković, M., Liang, Z., Yan, L., Zeng, Z., Fan, Y., et al. (2024). Modifying AI, enhancing essays: how active engagement with generative AI boosts writing quality. arXiv preprint arXiv:2412.07200. doi: 10.1145/3706468.3706544

Keywords: generative artificial intelligence, cognitive capacity, augmented intelligence, healthcare sciences, large language models, critical thinking

Citation: Naqvi WM, Ganjoo R, Rowe M, Pashine AA and Mishra GV (2025) Critical thinking in the age of generative AI: implications for health sciences education. Front. Artif. Intell. 8:1571527. doi: 10.3389/frai.2025.1571527

Received: 05 February 2025; Accepted: 28 April 2025;

Published: 21 May 2025.

Edited by:

Diego Zapata-Rivera, Educational Testing Service, United States

Reviewed by:

Burcu Arslan, Educational Testing Service, United States

Francisco Javier López-Flores, Michoacana University of San Nicolás de Hidalgo, Mexico

Copyright © 2025 Naqvi, Ganjoo, Rowe, Pashine and Mishra. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rohini Ganjoo, rganjoo@gwu.edu

Waqar M. Naqvi, Rohini Ganjoo, Michael Rowe, Aishwarya A. Pashine, Gaurav V. Mishra