- 1 ETIS Laboratory, ENSEA, CNRS, UMR8051, CY Cergy-Paris University, Cergy, France

- 2 Independent Researcher, Jülich, Germany

- 3 Artificial Intelligence Research Institute (IIIA-CSIC), Bellaterra, Spain

This work examines the integration of large language models (LLMs) into multi-agent simulations by replacing the hard-coded programs of agents with LLM-driven prompts. The proposed approach is showcased on two examples of complex systems from the field of swarm intelligence: ant colony foraging and bird flocking. Central to this study is a toolchain that integrates LLMs with the NetLogo simulation platform, leveraging its Python extension to enable communication with GPT-4o via the OpenAI API. This toolchain facilitates prompt-driven behavior generation, allowing agents to respond adaptively to environmental data. For both applications, we employ structured, rule-based prompts as well as autonomous, knowledge-driven prompts. Our work demonstrates how this toolchain allows LLMs to be used to study self-organizing processes and to induce emergent behaviors within multi-agent environments, paving the way for new approaches to exploring intelligent systems and modeling swarm intelligence inspired by natural phenomena. We provide the code, including simulation files and data, at https://github.com/crjimene/swarm_gpt.

1 Introduction

1.1 From rule-based to LLM-driven agents: a new paradigm in ABMS

In this study, we use the terms “agent” and “multi-agent” based on their foundational meanings in agent-based modeling and simulation (ABMS, Macal and North, 2009), while extending them through the integration of large language models (LLMs, Chang et al., 2024). Traditionally, an agent in ABMS is an autonomous entity with localized decision-making abilities, interacting with its environment and other agents according to predefined rules or principles. A multi-agent system (MAS, Wooldridge, 2009) refers to a collection of such agents operating within a shared environment, where global behaviors emerge from their local interactions. Examples of ABMS include simulations of ecosystem dynamics, urban development, and social interactions (Heckbert et al., 2010; Chen, 2012; Bianchi and Squazzoni, 2015).

Beyond rule-based agents, ABMS literature also explores agents controlled by neural networks, especially in applications that require adaptability or learning. Techniques like reinforcement learning and evolutionary strategies are commonly used to optimize agent behaviors in dynamic environments (Hecker and Moses, 2015; Ning and Xie, 2024; Liu et al., 2024a). Other research investigates the application of biologically inspired architectures, particularly spiking neural networks, to develop solutions that are both energy-efficient and computationally effective (Fang and Dickerson, 2017; Putra et al., 2024). Within these systems, emergent behavior plays a pivotal role, facilitating the effective handling of complex tasks (Jimenez Romero et al., 2024).

Recently, these terms have been introduced in a different context within artificial intelligence. Here, AI agents often represent task-oriented entities designed to autonomously achieve specific goals, such as generating dialogues or managing workflows. These agents typically focus on individual task execution rather than the emergent dynamics central to ABMS (Talebirad and Nadiri, 2023; Kannan et al., 2024; Li et al., 2024).

In our work, we employ the terms “agent” and “multi-agent” in the context of ABMS while integrating LLMs to guide agent behaviors. An agent in our simulations can be either LLM-steered or rule-based. We incorporate LLMs to guide agent behaviors in two ways: (1) simulations consisting entirely of LLM-steered agents, and (2) hybrid simulations where LLM-steered agents interact with traditional rule-based agents. This means our simulations can have populations of agents that are completely LLM-based or a mix of LLM-based and rule-based agents within the same environment.

We aim to explore the potential advantages of leveraging the decision-making and pattern-generation capabilities of LLMs to augment ABMS. Specifically, we are interested in investigating whether integrating LLMs can help us model emergent behavior using the language processing capabilities and the knowledge base of LLMs.

From this point forward, when we refer to “agents,” we mean agents within the ABMS framework that may incorporate LLM intelligence.

1.2 Motivation

The field of agent-based simulations has rapidly evolved, driven by advances in artificial intelligence (AI) and computational power. These simulations, which model the interactions of autonomous agents within a defined environment, are increasingly being enhanced by the integration of generative AI, particularly LLMs. In this context, LLMs, with their capacity to process and generate human-like text, offer a novel means of guiding and influencing agent behaviors in real time. A critical aspect of this integration is prompt engineering: the careful design of prompts that serve as instructions for the agents, dictating how they should respond to their environment.

The main contribution of this work, and its motivation, is a toolchain that integrates LLMs with agent-based simulations within the NetLogo environment (Tisue and Wilensky, 2004; Amblard et al., 2015), a platform widely recognized in the complexity science community for its robustness and versatility. NetLogo's use as an educational tool across academic levels further underscores its importance and makes it an ideal choice for demonstrating the integration of advanced AI methods into multi-agent simulations.

Our study investigates two distinct approaches to utilizing LLMs within multi-agent environments, focusing on the role of prompt engineering in shaping agent behavior. The first approach employs detailed, structured prompts within an ant colony simulation. These prompts are designed to specify behaviors under clearly defined conditions, such as following pheromone trails or retrieving food. This method allows for precise control over agent actions, enabling a rule-based system where each agent's behavior is explicitly dictated by the LLM-generated instructions.

In contrast, the second approach explores the use of less structured, principle-based prompts in a bird flocking simulation. Here, the prompts rely on the LLM's inherent understanding of complex concepts such as flocking dynamics and self-organization. Instead of requiring explicit, rule-based instructions, these prompts allow the LLM to handle the intricate behavioral patterns that would otherwise need numerous rules to define. This approach leverages the LLM's capacity to intuitively model these dynamics, enabling behaviors that emerge naturally from agents' interactions with each other and their environment. As we will show, the LLM can produce adequate and adaptive agent behaviors that realistically reflect complex, emergent patterns within the simulation.

1.3 Research objectives

The following are the main objectives of our research:

• To assess the efficacy of structured prompts in the context of the rule-based ant colony foraging simulation of NetLogo, which is a classic MAS model that demonstrates swarm intelligence principles based on how real ants find food and communicate via pheromones. It is widely used in artificial intelligence, complexity science, and optimization research.

• To assess the efficacy of knowledge-driven prompts in NetLogo's bird flocking simulation, which is also a classic model demonstrating self-organizing behavior in MAS. It is inspired by Craig Reynolds' “Boids” model (Reynolds, 1987), which simulates how birds, fish, or other animals move in cohesive groups without a central leader.

• To present a comprehensive toolchain that combines LLMs with multi-agent simulation environments, offering a new method for modeling and analyzing swarm behavior in complex systems.

This investigation aims to explore how LLMs, through effective prompt engineering, can be integrated into multi-agent systems to model and guide emergent, self-organizing behaviors in simulated environments.

1.4 Background and related work

The integration of generative AI into multi-agent systems represents a burgeoning field that seeks to enhance the autonomy, adaptability, and realism of agent behaviors in simulations. This approach leverages the vast knowledge embedded within LLMs to influence agent interactions in ways that were previously unfeasible with traditional rule-based systems. The use of generative AI in multi-agent simulations has opened new avenues for exploring complex behaviors, emergent dynamics, and adaptive systems.

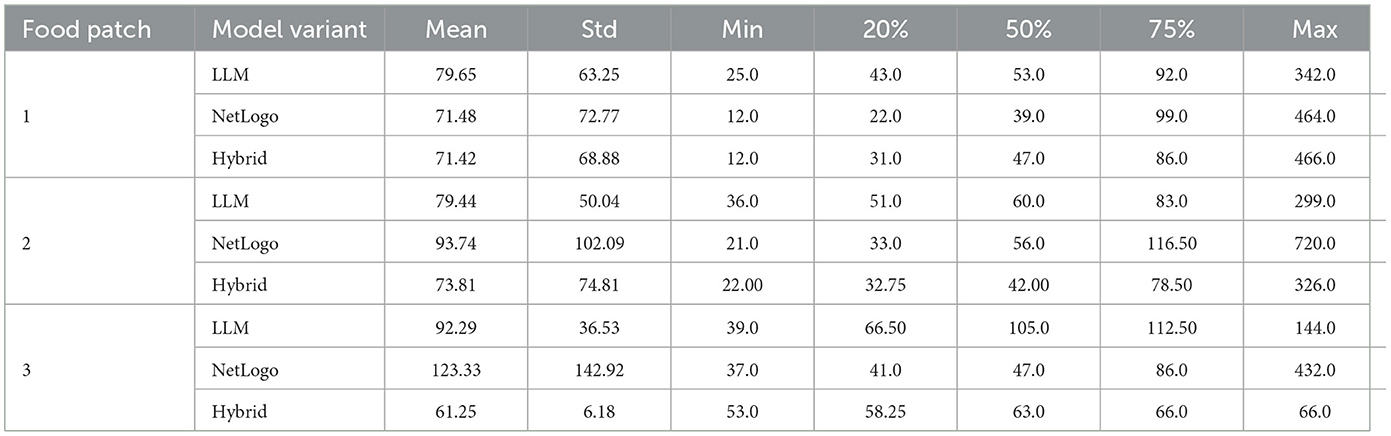

In particular, the integration of LLMs with agent-based simulations represents a significant convergence of natural language processing (NLP) and complex systems modeling. LLMs, with their ability to generate human-like text and understand complex linguistic patterns, have transformed various fields within artificial intelligence, particularly the automation and interpretation of language-based tasks. Agent-based simulations provide a robust framework for modeling complex systems in which individual agents interact with each other and their environment, potentially giving rise to emergent behaviors. The way LLMs are used in simulations can vary widely, from highly structured, rule-based prompts that specify particular actions to more general prompts that rely on the LLM's broader knowledge base. This study examines two distinct methodologies for applying LLM capabilities to simulate emergent multi-agent behaviors with varying degrees of prompt specificity and autonomy.

Integrating LLMs with agent-based simulations presents transformative opportunities across various domains, enhancing the realism and complexity of simulations. This integration can significantly improve the modeling of social systems, industrial automation, and multi-agent interactions.

Park et al. (2023) introduce an LLM-driven agent that can engage and converse with both humans and other AI agents. The agent can generate text that other agents are able to comprehend and interpret. This facilitates clear communication between them, fostering effective interactions and collaboration. The simulated environment functions as a sandbox composed of text, allowing the agent to perceive and interpret the surrounding context and to navigate and interact with the information provided. Inspired by the work of Park et al. (2023), Junprung (2023) presents two LLM-driven experiments, a two-agent negotiation and a six-agent murder mystery game, to simulate human behavior. The author describes the behavior of three categorically different LLM-driven simulations and discusses the limitations of large-scale language models.

Gao et al. (2023) create a framework for social network simulation called S3. They simulate emotion, attitude, and interaction behaviors to emulate social behavior. Because the environment changes, the agents have to adapt and retain a memory so that they can use past experiences to adjust their behavior. The authors observe the emergence of collective behavior among the agents and conclude that their environment holds potential for further exploration in the social sciences and for informed decision-making. This suggests that the observed dynamics could offer valuable perspectives on group interactions and collaborative processes.

The research of Dasgupta et al. (2023) investigates the use of LLMs to improve the decision-making abilities of AI agents that interact with their environment. The proposed system consists of three parts: a Planner that uses a pre-trained LLM to generate instructions, a reinforcement-learning agent (the Actor) that carries out these instructions, and a Reporter that provides environmental feedback to the Planner. The Planner reads a description of the task and breaks it down into simple instructions for the Actor, which was trained to understand and execute such instructions. The Reporter observes the effects of the Actor's actions on the environment and reports them back to the Planner in text form. The system is tested on complex tasks that require reasoning and information gathering, and the results show that it outperforms traditional reinforcement learning methods, especially when larger language models are used. Larger models (70 billion parameters) consistently outperformed smaller ones (7 billion parameters) in these experiments, suggesting that larger models are more robust to noisy or irrelevant information and better able to perform the complex reasoning these tasks require.

Zhu et al. (2023) present Ghost in the Minecraft (GITM), a framework for developing generally capable agents in the Minecraft world. In contrast to previous approaches, especially reinforcement learning algorithms, GITM uses large language models to achieve high success rates, e.g., in the “Obtain Diamond” task. Typical reinforcement learning-based agents often struggle with the complexity of Minecraft because of the long time horizon of its tasks, which makes learning and adaptation difficult. Zhu et al. (2023) instead leverage LLMs to decompose complex tasks hierarchically into manageable sub-goals and structured actions. This approach yields significantly higher efficiency and robustness, allowing agents to better navigate and interact with the Minecraft environment. GITM combines the logical reasoning and knowledge base of LLMs with text-based knowledge and memory, enabling effective interaction with the environment and the pursuit of intricate, long-term objectives. The article demonstrates the potential of LLMs for developing generally capable agents in open, complex environments.

Recently, researchers have incorporated LLMs into swarm systems to leverage the reasoning and knowledge capabilities of these models (Gao et al., 2024; Qu, 2024). Strobel et al. (2024) integrate LLMs into robot swarms to enhance their reasoning, planning, and collaboration abilities. They propose two ways of replacing the conventionally programmed robot controller: (1) An indirect integration uses LLMs to generate and validate the controller's code before or during deployment. This approach improves efficiency and reduces human error by automating the design process. (2) A direct integration runs a separate LLM for each robot during deployment, enabling the robot to plan, reason, and collaborate using natural language. The LLM-driven robots can detect and respond to unexpected behaviors and are more resilient in dynamic environments without prior information.

Feng et al. (2024) present an algorithm for adapting LLM experts using collaborative search techniques inspired by swarm intelligence. This method allows several LLMs to jointly explore the weight space to optimize a specific utility function without extensive fine-tuning data or strong assumptions about the models involved. Each LLM is treated as a particle in a swarm that navigates the weight space and adjusts its position based on the best and worst solutions found. The algorithm is flexible enough to handle both single-task and multi-task objectives. Thanks to this collaborative search, the LLM experts can discover previously unseen capabilities, enabling a transition from weak to strong performance.

In their work, called Swarm-GPT, Jiao et al. (2023) integrate LLMs with motion planning to automate the choreography of unmanned aerial vehicle (UAV) swarms. Users can generate synchronized drone performances via language commands. Swarm-GPT uses LLMs to create UAV formations and movements that are synchronized to music. The system includes a trajectory planner that uses waypoints generated by the LLM, guaranteeing that the drone movements are both collision-free and feasible. Swarm-GPT has been effectively demonstrated at live events, highlighting its practical application and its ability to perform in real time.

Liu et al. (2024c) explore the application of multimodal LLMs to control the formation of UAVs using image and text inputs. The researchers first pre-trained an LLM on a single UAV, demonstrating the LLM's potential to interpret and execute commands effectively. Then they expanded their approach to coordinate multiple UAVs in formation. The multimodal LLM recognizes environmental signals from the images captured by the primary drone (via a camera). Then, the pre-trained LLM analyzes the data and generates instructions for managing the UAV to attain a specified formation.

Another application in language-guided formation control is presented by Liu et al. (2024b). The authors propose a framework called Language-Guided Pattern Formation (LGPF) for swarm robotics. Their system employs an LLM to translate a high-level pattern description into specific actions for a swarm of robots, integrating multi-agent reinforcement learning for detailed control. The LGPF framework allows for intuitive and flexible control of robot swarms, enabling them to achieve complex formations guided by natural language instructions.

Most existing MAS and swarm intelligence simulations rely on explicitly programmed, rule-based behaviors that are often domain-specific and lack flexibility. While several recent works have explored LLMs for planning or social agent interactions, such as role-playing agents or virtual societies, these typically focus on human-like reasoning, not on swarm intelligence or nature-inspired collective behavior. In contrast to the existing literature, our paper makes the following contributions. (1) We investigate how LLMs can be integrated into swarm intelligence. This is one of the first works to use LLMs as decentralized behavioral engines for non-human, non-verbal, swarm-like agents. We demonstrate our framework on two classical swarm intelligence scenarios, ant colony foraging and bird flocking, which involve different sensory inputs and emergent outcomes. In both cases, agents receive localized information about their environment and act based on LLM-generated decisions. (2) We introduce a generalizable toolchain that connects NetLogo simulations with OpenAI's GPT models via Python, enabling agents to act on prompt-generated decisions rather than fixed procedural logic. This framework decouples agent logic from hard-coded rules, so that behavior can be modified or extended via natural language prompts. (3) We compare two categories of LLM prompts, structured rule-based and autonomous knowledge-driven, to show how different prompt designs affect emergent behavior in collective systems. While the structured prompts encode rule-based logic in natural language, the autonomous prompts rely on the LLM's internal knowledge and general reasoning. This comparison provides insight into the design space of LLM-based agent prompts and their effect on emergent system dynamics.

2 Materials and methods

In this study, we employed two distinct simulations of collective animal behavior to explore the integration of LLMs in guiding agent behaviors within multi-agent systems. The experiments were designed to investigate the effectiveness of structured, rule-based prompts in one scenario and principle-based, knowledge-driven prompts in the other. Both simulations use the LLM to process environmental inputs and generate agent actions, providing insights into how LLMs can be leveraged to model complex behaviors such as foraging and flocking.

Structured rule-based prompts are designed with explicit, predefined instructions that guide the LLM to generate deterministic agent actions. These prompts specify exact conditions and responses, ensuring consistent and predictable agent behaviors. For example, in a foraging scenario, structured prompts might include direct rules for following pheromone trails or picking up food when encountered.

Knowledge-driven prompts, on the other hand, rely on the LLM's inherent understanding of broader behavioral concepts and principles. These prompts are less rigid and provide the LLM with general guidelines, enabling more adaptive and flexible agent behaviors. In the context of a bird flocking simulation, such prompts might encourage behaviors based on principles like alignment, cohesion, and separation without specifying exact actions, allowing the LLM to synthesize responses that foster emergent, self-organizing dynamics.
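To make the contrast concrete, the sketch below builds a knowledge-driven prompt for a single bird. It names the flocking principles but, unlike a structured prompt, states no thresholds, weights, or turning rules. The function name, the (distance, bearing, heading) neighbor format, and the JSON reply format are illustrative assumptions, not the prompt used in the experiments.

```python
from typing import List, Tuple


def flocking_prompt(heading: float, neighbors: List[Tuple[float, float, float]]) -> str:
    """Build a principle-based prompt for one bird (hypothetical wording).

    `neighbors` holds (distance, bearing, heading) for each visible flockmate.
    Only the principles are named; how to weigh them is left to the LLM.
    """
    if neighbors:
        seen = "\n".join(
            f"- distance {d:.1f}, bearing {b:.0f} deg, heading {h:.0f} deg"
            for d, b, h in neighbors
        )
    else:
        seen = "- none visible"
    return (
        "You are a bird in a flock. Apply what you know about flocking "
        "(alignment, cohesion and separation) to choose your next heading.\n"
        f"Your heading: {heading:.0f} deg\n"
        f"Flockmates you can see:\n{seen}\n"
        'Reply only with JSON: {"new_heading": <degrees from 0 to 359>}'
    )
```

A structured counterpart would instead spell out, for example, a minimum separation distance and how far to turn when it is violated; here that behavior is left to the model's own understanding of flocking.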

To illustrate the conceptual differences between the two types of prompts employed in this study, structured rule-based prompts and knowledge-driven prompts, Table 1 compares their defining criteria and characteristics.

Table 1. Comparative analysis of structured, rule-based prompts versus knowledge-driven prompts, highlighting their distinctive design criteria, operational characteristics, and intended use within agent-based simulations.

2.1 Toolchain for LLM-driven multi-agent simulations with NetLogo

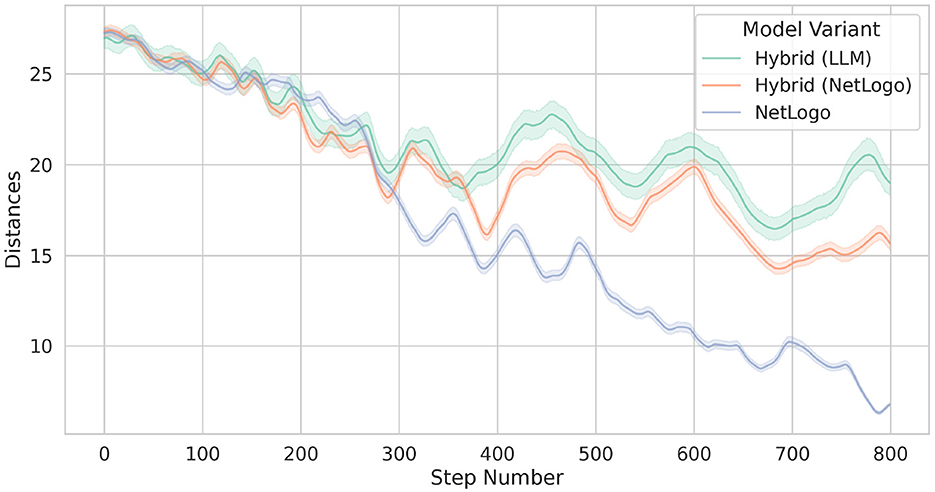

Figure 1 illustrates the toolchain for LLM-driven multi-agent simulations with NetLogo, showing the integration between NetLogo, GPT-4o, and the Python extension. The following enumeration describes each step of the workflow:

1. Environment encoding: The simulation toolchain leverages NetLogo to capture real-time environmental states, including agent positions, inter-agent interactions, and other relevant environmental variables depending on the simulation (e.g. pheromone concentrations). These data are encoded into structured prompts that convey a comprehensive environmental context to the LLM. This encoding ensures that the LLM receives timely, accurate input representing dynamic changes in the environment.

2. Python extension integration: NetLogo uses its Python extension to facilitate communication with GPT-4o via the OpenAI API. This extension allows NetLogo to send encoded environmental data as prompts to the LLM and receive structured responses, enabling the interaction between the simulation platform and the LLM.

3. LLM processing: The structured prompts are processed by GPT-4o, which interprets the input data and generates agent behavior suggestions based on encoded environmental information. The LLM's ability to process complex, context-rich data allows it to infer and propose actions that adhere to predefined rules (for structured prompts) or leverage general behavioral principles (in principle-based prompts). This stage ensures that agent responses align with the overall objectives of the simulation, be it foraging success or cohesive flocking.

4. Decoding LLM output: The LLM output, formatted as a structured JSON or Python dictionary, is translated into executable actions predefined within the NetLogo simulation. This step converts the structured actions generated by the LLM into precise instructions for agents, such as movement vectors, state transitions, or pheromone release behaviors. The Python extension facilitates this process by receiving the LLM responses from the OpenAI API and converting them into a NetLogo-compatible data structure. This translation mechanism ensures both syntactic and semantic alignment between the LLM's output and the data format required by the simulation.

5. Agent action execution and iterative process: The decoded commands are executed by the agents in NetLogo, updating their states and behaviors in response to the LLM's instructions. This action directly modifies the simulation environment, forming a closed-loop system where each action feeds back into the environmental context for the next iteration. The iterative process ensures that agent behaviors continuously respond to evolving environmental conditions and LLM feedback, fostering emergent behaviors and adaptive responses.
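Steps 1, 4, and 5 of this workflow can be sketched in Python as an encode, decode, and act cycle, with the LLM call (steps 2 and 3) passed in as a plain function. This is a minimal sketch under stated assumptions: the `Ant` fields, the left/front/right sensing directions, the action vocabulary in `ALLOWED_ACTIONS`, the JSON reply schema, and the random-walk fallback are all hypothetical and are not taken from the authors' code.

```python
import json
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical action vocabulary; the real model defines its own set.
ALLOWED_ACTIONS = {"move-forward", "rotate-left", "rotate-right",
                   "random-walk", "pick-up-food", "drop-food"}
FALLBACK = {"action": "random-walk", "release_pheromone": False}
DIRECTIONS = ("left", "front", "right")
FENCE = "`" * 3  # Markdown code fence that models sometimes wrap JSON in


@dataclass
class Ant:
    """Illustrative per-ant state mirrored from NetLogo turtle variables."""
    xcor: float
    ycor: float
    heading: float
    carrying_food: bool = False
    pheromone: dict = field(default_factory=dict)   # direction -> concentration
    nest_scent: dict = field(default_factory=dict)  # direction -> scent strength


def encode_state(ant: Ant) -> str:
    """Step 1: serialize the ant's local view into the environment part of a prompt."""
    lines = [
        f"Position: ({ant.xcor:.1f}, {ant.ycor:.1f}), heading {ant.heading:.0f} degrees",
        f"Carrying food: {'yes' if ant.carrying_food else 'no'}",
    ]
    for name, readings in (("Pheromone", ant.pheromone), ("Nest scent", ant.nest_scent)):
        for d in DIRECTIONS:
            lines.append(f"{name} {d}: {readings.get(d, 0.0):.2f}")
    return "\n".join(lines)


def decode_reply(reply: str) -> dict:
    """Step 4: parse the model's JSON reply; anything malformed becomes FALLBACK."""
    text = reply.strip()
    if text.startswith(FENCE):  # tolerate a fenced reply such as ```json {...} ```
        text = text.strip("`")
        if text.startswith("json"):
            text = text[len("json"):]
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return dict(FALLBACK)
    action = data.get("action") if isinstance(data, dict) else None
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return dict(FALLBACK)
    return {"action": action,
            "release_pheromone": bool(data.get("release_pheromone", False))}


def run_tick(ants: list, ask_llm: Callable[[str], str],
             apply_action: Callable[[Ant, dict], None]) -> None:
    """One pass of steps 1-5; ants act in turn, so later ants see earlier changes."""
    for ant in ants:
        reply = ask_llm(encode_state(ant))       # steps 2-3 happen inside ask_llm
        apply_action(ant, decode_reply(reply))   # steps 4-5
```

Falling back to a harmless default rather than raising is a design choice: given the small chance of rule-breaking or malformed replies, one bad answer then costs a single agent one tick instead of halting the simulation.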

Figure 1. Diagram illustrating the toolchain for LLM-driven multi-agent simulations, integrating NetLogo and GPT-4o via the Python extension and OpenAI API. The workflow showcases a closed-loop process where environmental states are encoded into structured prompts, processed by GPT-4o to generate behavior suggestions, decoded into executable actions, and iteratively executed by agents within the NetLogo simulation environment.

The following sections detail the setup, LLM integration, and procedures used in each experiment.

3 Experiment 1: ant colony foraging simulation

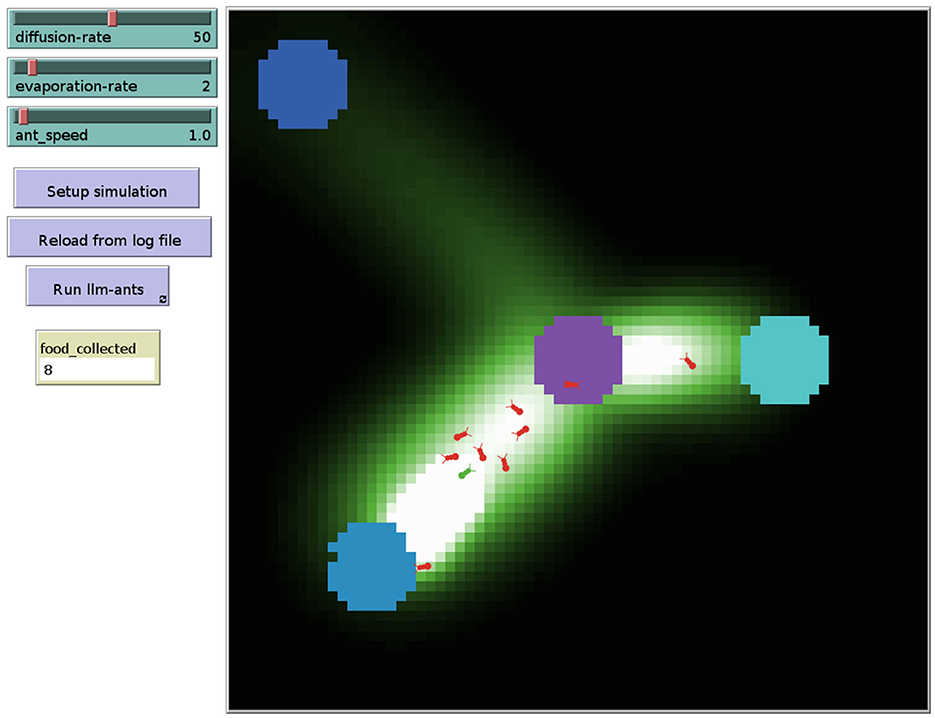

As mentioned above, this experiment is based on the ant foraging model implemented in the NetLogo library (see https://ccl.northwestern.edu/netlogo/models/Ants). It takes place in a two-dimensional foraging area consisting of designated food sources scattered throughout the environment and a central nest where the ants must return to deposit the food they collect. The environment is designed to mimic natural foraging conditions, where agents (ants) must navigate to find food and return it to the nest while interacting with environmental cues such as pheromone trails and nest scents; see Figure 2.

• Agents: The simulation features stateless ants, each operating as an independent agent without memory of past actions. These ants rely entirely on real-time environmental inputs and the LLM's responses to determine their behaviors. Their behavior is governed by explicit rules stated in the prompt, so that their actions are predictable and consistent with predefined conditions.

• LLM integration: OpenAI GPT-4o is employed to process structured prompts that define the ants' behaviors. The default API parameters are used, except for the temperature, which is set to 0.0 to make the output as deterministic as possible for a given input. The LLM receives real-time environmental information and generates actions according to a predefined set of rules. These structured prompts keep the ants' responses clearly defined and predictable, enabling systematic analysis of their behavior. Nevertheless, there remains a small chance that the LLM occasionally generates responses that deviate from the specified rules.
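The request itself can be sketched as a single chat-completion call in which only the temperature departs from the API defaults. This assumes the `openai` Python package (version 1.0 or later); the function name and the choice to send the whole prompt as one user message are illustrative assumptions, not the authors' exact code.

```python
def query_llm(client, prompt: str, model: str = "gpt-4o") -> str:
    """Send one stateless request and return the model's raw text reply.

    `client` is assumed to be an `openai.OpenAI()` instance (openai>=1.0);
    any object exposing the same `chat.completions.create` method will do,
    which keeps the function testable without network access.
    """
    response = client.chat.completions.create(
        model=model,
        # A single user turn: no conversation history is carried between ticks.
        messages=[{"role": "user", "content": prompt}],
        # Only the temperature deviates from the API defaults.
        temperature=0.0,
    )
    return response.choices[0].message.content
```

In a live run the client would be created once, e.g. `from openai import OpenAI; client = OpenAI()`, which reads the key from the `OPENAI_API_KEY` environment variable. On the NetLogo side, one plausible wiring (not necessarily the authors') is `py:setup py:python`, then `py:set` to pass the encoded prompt to Python and `py:runresult` to fetch the reply. Because a temperature of 0.0 reduces but does not eliminate sampling variation, the reply should still be validated before it is executed.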

Figure 2. Ant foraging simulation in NetLogo. The central circle depicts the nest area, while the three blue circles nearby indicate food sources.

3.1 Procedure

3.1.1 Prompt design

The prompt is structured as a zero-shot prompt, requiring the LLM to generate accurate responses without relying on prior examples or contextual memory from previous interactions. This design is intentional and keeps the agents stateless: we used an API function that does not retain conversation context between prompts, so each interaction is independent and the LLM must interpret and respond based solely on the current input. The final prompt used in our experiments was the result of several trial-and-error iterations to ensure that the LLM could understand the environment and rules and execute the expected tasks accordingly. For this experiment, precise behavioral rules were provided for the ants, including instructions such as following pheromone trails when searching for food, picking up food when encountered, and releasing pheromones to mark food sources.
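A zero-shot, stateless prompt of this kind can be assembled from a fixed rule list plus the current environment description, with no worked examples and no earlier turns. The sketch below paraphrases the three behaviors named above; the rule wording, the role line, and the JSON output format are hypothetical and do not reproduce the authors' final prompt.

```python
# Illustrative rule wording that paraphrases the behaviors named in the text;
# it is NOT the authors' final prompt.
RULES = [
    "If you are not carrying food, follow the pheromone trail toward food.",
    "If you find food and are not carrying any, pick it up.",
    "When you have found food, release pheromone to mark the food source.",
]
OUTPUT_FORMAT = 'Reply only with JSON: {"action": <action>, "release_pheromone": <true or false>}'


def build_zero_shot_prompt(environment: str) -> str:
    """Assemble one self-contained prompt: role, numbered rules, current state, format.

    No worked examples and no earlier turns are included, so the prompt is
    zero-shot and depends only on the current tick's environment.
    """
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(RULES, start=1))
    return (
        "You are an ant in a foraging simulation.\n"
        f"Rules:\n{numbered}\n"
        f"Current environment:\n{environment}\n"
        f"{OUTPUT_FORMAT}"
    )
```

Because the prompt is a pure function of the current environment, two ants in identical situations receive identical prompts, which is what makes the tuning iterations below comparable.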

3.1.2 Tuning process

Our initial approach utilized minimal instructions, providing a general description of the foraging task to assess how effectively ants could perform without specific guidance: finding food, marking paths to food sources using pheromones, and utilizing nest scent to navigate back to the nest when carrying food. This minimal instruction set was intentionally selected based on fundamental biological principles described in ecological literature related to ant foraging behavior, particularly focusing on pheromone-based communication and nest-scent navigation. The primary rationale was to evaluate the inherent capacity of LLM-driven agents to manifest realistic collective foraging behavior without extensive, explicitly detailed instructions. Through this initial generalization attempt, we aimed to determine whether the LLM required more detailed or structured instructions to accurately reproduce emergent ant colony behaviors.

Following the initial evaluation, it became clear that more explicit rules were essential for consistent and realistic ant behavior. Throughout this iterative process, the language model offered valuable feedback by highlighting misunderstandings or ambiguities in the prompts. This feedback was instrumental in refining the prompts to enhance the ants' performance. Below, we present an analysis of how these prompts evolved, focusing on specific improvements and the reasoning behind each iteration.

Iteration 1

• Prompt text

• Observed behavior

In this first attempt, we provided general instructions to establish a baseline for ant behavior. The ants were tasked with finding food, marking paths with pheromones, and using nest scent to return home when carrying food. However, simulations revealed inconsistencies. Ants often failed to follow pheromone and nest scent gradients effectively, sometimes moving away from stronger cues. Some ants released pheromones unexpectedly, while others exhibited random movement patterns. This inconsistency prevented the colony from displaying organized foraging behavior, indicating that clearer rules were needed for actions such as pheromone release and gradient following.

• Analysis

While this prompt established the basic framework for the simulation, it lacked specific guidance on how ants should interpret and prioritize environmental cues or resolve conflicting signals. The absence of detailed instructions led to ambiguous behaviors, including inconsistencies in following pheromone and nest scent gradients. This highlighted the need for more explicit rules to ensure consistent and organized swarm behavior.

Iteration 2

• Prompt text

We added an instruction to the prompt to prioritize nest scent over pheromone trails when carrying food.

• Observed behavior

To address the issues from the first prompt, we added a directive for ants to prioritize nest scent over pheromone trails when carrying food, aiming to better mimic foraging ant behavior. Despite this improvement, ants still exhibited inconsistencies in following pheromone and nest scent gradients. When nest scent and pheromone trails had similar strengths, ants demonstrated conflicting actions. Additionally, the prompt did not specify behaviors for ants not carrying food, leading to inefficient exploration. Ants tended to rotate aimlessly near the nest and were slow to venture outward, showing the need for clearer guidance to improve exploration efficiency.

• Analysis

Introducing prioritization helped align the ants' actions when carrying food, but inconsistencies in following scent gradients persisted. Ants not carrying food and not sensing any pheromones tended to remain near the nest without effectively exploring the environment. This emphasized the necessity for comprehensive guidance covering all possible states and clearer instructions on responding to environmental cues to enhance exploration efficiency.

Iteration 3

• Prompt text

As before, with added clarifications in the current environment:

• Observed behavior

We observed that ants sometimes failed to pick up food or drop it at the nest, possibly due to a lack of awareness of their current state. To rectify this, we explicitly stated their status in the prompt, such as whether they were at the nest or carrying food. This redundancy ensured that ants performed correct actions in these situations. However, inconsistencies in following pheromone and nest scent gradients remained. Ants continued to exhibit limited exploration when not carrying food, tending to stay near the nest rather than venturing into new areas or effectively following pheromone trails.

• Analysis

Explicitly stating the ants' status improved decision-making by providing clear context, leading to better execution of actions like picking up and dropping food. Yet, the lack of specific instructions on following scent gradients meant ants still showed inconsistencies in navigating toward pheromone trails or nest scent. Their inefficient exploration highlighted the need for clearer guidance to enhance movement away from the nest.

Iteration 4

• Prompt text

We add an instruction to the prompt to navigate toward food by following the highest pheromone concentration when not carrying food.

• Observed behavior

To guide ants not carrying food, we specified that they should navigate toward food using the highest pheromone concentration. Their ability to find food sources when pheromone trails were present was clearly improved in this way. However, inconsistencies in following pheromone gradients persisted. In the absence of pheromones or nest scents, ants tended to remain near the nest, exhibiting inefficient exploration behaviors.

• Analysis

By distinguishing between the states of carrying and not carrying food, we enhanced the ants' foraging efficiency when environmental cues were available. Nonetheless, inconsistencies in following pheromone gradients indicated that ants needed clearer instructions on interpreting and acting upon varying scent intensities. The lack of an effective exploration strategy, when cues were absent, remained a challenge.

Iteration 5

• Prompt text

Environmental information about pheromone concentration and nest scent presented with directional cues instead of quantities:

• Observed behavior

Recognizing the need for better interpretation of environmental cues, we modified how information was presented by using directional descriptions instead of numerical values—e.g., “Higher Pheromone Concentration: Front” and “Stronger Nest Scent: Left.” This adjustment significantly improved the ants' ability to follow pheromone and nest scent gradients. Ants became more consistent in moving toward stronger cues, enhancing their navigation and foraging efficiency.

However, when no scents were detected, ants still showed limited exploration, often remaining near the nest rather than actively searching new areas. This indicated that while gradient following had improved, the exploration strategy was still inefficient in the absence of sensory cues.

• Analysis

Using directional cues provided clearer guidance on responding to environmental gradients and resolved many inconsistencies observed with previous prompts. From Iteration 5 onward, ants became more adept at following pheromone and nest scent gradients, which led to more organized foraging behavior. Despite these improvements, ants' exploration remained inefficient when no sensory cues were present, indicating a need for further instructions to promote effective exploration.
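The conversion from numbers to directional wording could look like the following Python sketch. It assumes that each cue is sensed as three values (left, front, right), similar to the rule-based NetLogo Ants model, which samples scent ahead of the ant and at 45 degrees to either side, and that ties are resolved in favor of the front. The function names and the "None" wording for an absent cue are hypothetical, not taken from the authors' prompt.

```python
from typing import Optional, Tuple

Reading = Tuple[float, float, float]  # (left, front, right) sensor values


def strongest_direction(reading: Reading) -> Optional[str]:
    """Return 'Front', 'Left' or 'Right' for the largest value, or None if nothing is sensed."""
    left, front, right = reading
    # Insertion order makes 'Front' win ties, because max() keeps the first maximum.
    values = {"Front": front, "Left": left, "Right": right}
    best = max(values, key=values.get)
    return best if values[best] > 0 else None


def describe_cues(pheromone: Reading, nest_scent: Reading) -> str:
    """Render both cues as directional text instead of raw quantities."""
    p = strongest_direction(pheromone) or "None"
    n = strongest_direction(nest_scent) or "None"
    return f"Higher Pheromone Concentration: {p}\nStronger Nest Scent: {n}"
```

For example, `describe_cues((0.1, 0.5, 0.2), (0.9, 0.3, 0.0))` produces the two lines quoted above: the front for pheromone and the left for nest scent. The LLM then only has to pick a side instead of comparing floating-point values.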

Iteration 6

• Prompt text

We add an instruction to the prompt to release pheromones on food sources and while carrying food.

• Observed behavior

To encourage trail formation back to the nest, we instructed ants to release pheromones while carrying food. This led to stronger trails and helped other ants locate food sources more efficiently. Combined with the improved gradient-following ability from Iteration 5, ants navigated more consistently.

Nevertheless, in the absence of pheromones and nest scents, ants still exhibited limited exploration behaviors, tending to stay near the nest. This indicated that their exploration strategy was still inefficient and required refinement.

• Analysis

By enhancing pheromone deposition during food transport and improving gradient following, we boosted colony cooperation and foraging success. However, the persistent issue of limited exploration in scent-free areas indicated that additional instructions were necessary to promote outward movement and enhance exploration efficiency.

Iteration 7

• Prompt text

We added the word “only” in the prompt as follows:

• Observed behavior

In earlier iterations, ants sometimes prioritized nest scent over pheromones even when not carrying food, leading them to return to the nest unnecessarily. With this clarification, the ants began to prioritize the nest scent appropriately, using it only when they were carrying food. However, ants still exhibited limited exploration when no sensory cues were present, tending to remain near the nest rather than actively searching new areas.

• Analysis

Adding “only” to the instruction text was crucial to ensure that the ants did not prioritize the scent of the nest when they were looking for food. This eliminated unnecessary returns and improved foraging efficiency.

Iteration 8

• Prompt text

We added an instruction to the prompt to move away from the nest and rotate randomly if not carrying any food and not sensing any pheromone.

• Observed behavior

In previous iterations, we observed limited exploratory behavior of the ants in areas without scents. To address this, we introduced a directive for proactive exploration. This approach improved exploration, with ants venturing further from the nest and discovering food sources in fewer simulation steps. However, a noticeable bias concerning the rotation remained, particularly around the nest, indicating that the randomness was not functioning as efficiently as intended.

• Analysis

By instructing ants to move away from the nest and rotate randomly when not carrying food and not sensing pheromones, we encouraged them to explore new areas more effectively. This change increased the likelihood of ants finding food, as they ventured further from the nest rather than lingering nearby.

Iteration 9

• Prompt text

We expanded the rotation options to include “random”:

• Observed behavior

With this adjustment, ants demonstrated more varied and unpredictable movement patterns during exploration. They effectively moved away from the nest and searched a wider area, increasing their chances of encountering food sources more quickly and efficiently.

• Analysis

To enhance the randomness of the ants' exploration, we expanded their rotation options to include “random.” When the LLM selected “random” as the rotation action, it was therefore making a high-level decision to delegate the choice of direction to chance. In the simulation, this “random” option was implemented in NetLogo code, which randomly chose whether to rotate left or right.
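The delegation described above can be mimicked outside NetLogo. The following Python sketch is only an analogue of that NetLogo code, not the authors' implementation; the rotation labels "left", "right", and "none" are assumptions about the action vocabulary, and a seeded `random.Random` is passed in so that runs are reproducible.

```python
import random

VALID_ROTATIONS = ("left", "right", "none")  # hypothetical action vocabulary


def resolve_rotation(action: str, rng: random.Random) -> str:
    """Turn the LLM's rotation choice into a concrete turn.

    "random" means the LLM delegated the choice to chance, so we pick
    left or right with equal probability; other values pass through.
    """
    if action == "random":
        return rng.choice(["left", "right"])
    if action not in VALID_ROTATIONS:
        raise ValueError(f"unexpected rotation: {action!r}")
    return action
```

Keeping the coin flip on the simulation side, rather than asking the model to "be random", avoids the directional bias reported for Iteration 8, since the LLM's own choices need not be uniformly distributed.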

Through iterative tuning, we significantly enhanced the simulated ants' behavior, making it more consistent with the ant foraging dynamics observed in the rule-based NetLogo model. Each prompt iteration addressed specific issues identified in simulations, with language model feedback guiding some of the adjustments.
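To make the accumulated rules easier to inspect, they can be paraphrased as a deterministic reference policy. The Python sketch below is our illustrative reading of Iterations 2 to 9, not the authors' prompt or the NetLogo code. The action keys, the direction labels, and the way “move away from the nest” is approximated (turning to the side opposite the stronger nest scent) are all assumptions.

```python
from typing import Dict, Optional, Union

# Hypothetical direction labels: "left", "front", "right", or None (no cue sensed).
OPPOSITE = {"left": "right", "right": "left"}


def toward(direction: Optional[str]) -> str:
    """Rotation that points the ant at a cue (no turn if it is ahead or absent)."""
    return "none" if direction in (None, "front") else direction


def reference_policy(carrying_food: bool, at_nest: bool, on_food: bool,
                     pheromone_dir: Optional[str],
                     nest_dir: Optional[str]) -> Dict[str, Union[bool, str]]:
    action: Dict[str, Union[bool, str]] = {
        "pick_up_food": False, "drop_food": False,
        "release_pheromone": False, "rotate": "none",
    }
    if carrying_food:
        if at_nest:
            action["drop_food"] = True            # explicit state (Iteration 3)
        else:
            action["release_pheromone"] = True    # lay a trail home (Iteration 6)
            action["rotate"] = toward(nest_dir)   # nest scent ONLY while carrying (Iterations 2, 7)
        return action
    if on_food:
        action["pick_up_food"] = True
        action["release_pheromone"] = True        # pheromone on food sources (Iteration 6)
        return action
    if pheromone_dir is not None:
        action["rotate"] = toward(pheromone_dir)  # follow the strongest pheromone (Iteration 4)
        return action
    # No cue: turn away from the nest if its side is known, else random (Iterations 8-9).
    action["rotate"] = OPPOSITE.get(nest_dir, "random")
    return action
```

Writing the rules out this way makes gaps easy to spot: every combination of carrying state and cue availability has to reach a `return`, which is exactly the kind of coverage the early prompts lacked.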

3.1.3 Prompt deployment

The prompts are formatted so that the LLM can process them and reply with a set of actionable commands. Communication with the LLM is handled through the OpenAI API, specifically the chat.completions mechanism, where each request carries only the messages supplied at that step and no conversation history, so every exchange is context-free. At each step, a system prompt that outlines the overall task and rules is sent, followed by a user prompt that provides real-time environmental information.
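A minimal Python sketch of one such stateless request follows. It assumes the official `openai` Python package (v1 interface), in which `client.chat.completions.create(model=..., messages=...)` returns a response whose `choices[0].message.content` holds the reply text. The helper names `build_messages` and `query_agent` are hypothetical. The client is passed in as a parameter, so the sketch can be exercised with a stub and without network access.

```python
from typing import Dict, List


def build_messages(system_prompt: str, observation: str) -> List[Dict[str, str]]:
    """Build a fresh message list for a single simulation step.

    No earlier turns are included, so every request is context-free.
    """
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": observation},
    ]


def query_agent(client, system_prompt: str, observation: str, model: str = "gpt-4o") -> str:
    """Send one stateless chat.completions request and return the reply text.

    `client` is expected to behave like `openai.OpenAI()`; it is injected
    so that a stub can be used when testing.
    """
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(system_prompt, observation),
    )
    return response.choices[0].message.content
```

In use, something like `query_agent(OpenAI(), system_prompt, observation)` would be called once per agent per tick. Because nothing carries over between calls, the system prompt has to restate every rule each time, which is why the prompt iterations above had to cover every possible agent state.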

At each simulation step, NetLogo translates the agent's perception of its environment into the input variables described in the prompt. This ensures that the LLM has an accurate and up-to-date representation of the environment on which its decisions can be based. The LLM then generates a response formatted as a Python dictionary, containing specific actions the agent should take. The following example prompt illustrates the process:

• System prompt

• Possible user prompt

• Possible response from the LLM
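Because the reply is a Python dictionary rather than JSON (Python literals use `True`/`False` instead of `true`/`false`), one hedged way to read it on the Python side is `ast.literal_eval`, which accepts only literals and never executes code, unlike `eval`. The sketch below is an assumption about how such replies could be validated; the function name and the key names in the usage example are hypothetical, not taken from the authors' prompt.

```python
import ast
from typing import Iterable


def parse_action(reply: str, required_keys: Iterable[str]) -> dict:
    """Parse an LLM reply formatted as a Python dict and check its keys."""
    try:
        value = ast.literal_eval(reply.strip())
    except (ValueError, SyntaxError, TypeError) as exc:
        raise ValueError(f"reply is not a Python literal: {reply!r}") from exc
    if not isinstance(value, dict):
        raise ValueError(f"reply is not a dictionary: {reply!r}")
    missing = set(required_keys) - set(value)
    if missing:
        raise ValueError(f"reply is missing keys: {sorted(missing)}")
    return value
```

For instance, `parse_action("{'rotate': 'left', 'pick_up_food': False}", ["rotate", "pick_up_food"])` returns the dictionary unchanged. A malformed reply raises a `ValueError` that the simulation can catch, for example by leaving the agent's state unchanged for that step.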

3.2 System monitoring

The simulation monitors the ants' efficiency in navigating the environment, specifically their ability to locate food, follow pheromone trails, and return food to the nest. Key metrics include the time taken to find and return food, the consistency of pheromone trail usage, and the accuracy of following the nest scent when carrying food. The observed behaviors and performance analyses are documented in the results section.

4 Experiment 2: bird flocking simulation

As mentioned before, the bird flocking model of NetLogo (see https://ccl.northwestern.edu/netlogo/models/Flocking) is an implementation of the famous Boids model from Reynolds (1987). The simulation takes place in two-dimensional airspace. Although this environment is relatively simple, it effectively replicates key flocking behaviors like group cohesion, allowing for the observation of flocking dynamics under varying conditions. By adjusting specific parameters, the simulation provides insights into how changes in the environment influence flocking behavior.

• Agents: The agents in this simulation are modeled as birds, each operating under principle-based prompts. Unlike rule-based systems, these birds are guided by general principles of flocking dynamics, that is, by alignment, separation, and cohesion (Reynolds, 1987). These principles help the birds navigate their environment by adjusting their headings in response to the positions and headings of neighboring birds.

• LLM integration: The prompts provided to the LLM leverage its inherent knowledge of flocking dynamics, requiring it to apply these general principles to guide the behavior of each bird. The LLM is responsible for interpreting environmental data and generating responses that ensure the birds align with their flockmates, maintain an appropriate distance to avoid collisions, and stay cohesive as a group.

4.1 Procedure

4.1.1 Prompt design

Similar to the ant foraging setup, prompts for the flocking task are structured as zero-shot prompts, meaning they operate without prior examples or contextual memory from previous interactions. The final prompt was tuned through several iterations (see Section 4.1.2) in a trial-and-error process to ensure that the LLM could effectively interpret the environment and calculate heading directions according to flocking principles. Each prompt guiding a bird is designed to determine its heading based on the three core principles of flocking dynamics as implemented in the NetLogo library: Separation (steering to avoid crowding neighbors), Alignment (steering towards the average heading of nearby birds), and Cohesion (steering towards the average position of nearby flockmates).
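The three principles can be illustrated with a simplified Python sketch. It follows the general structure of the NetLogo Flocking model as we understand it (separate from the nearest neighbor if it is too close, otherwise align and cohere) but is not that model's code. Blending alignment and cohesion into a single target, steering directly away from the nearest neighbor, the default parameter values, and the absence of world wrapping are all assumptions made for brevity.

```python
import math
from typing import List, Tuple

Bird = Tuple[float, float, float]  # (x, y, heading in compass degrees)


def compass_heading(dx: float, dy: float) -> float:
    """Heading of vector (dx, dy): 0 = north (+y), 90 = east (+x), clockwise."""
    return math.degrees(math.atan2(dx, dy)) % 360


def shortest_turn(target: float, current: float) -> float:
    """Signed smallest turn from current to target, in [-180, 180)."""
    return (target - current + 180) % 360 - 180


def mean_heading(headings: List[float]) -> float:
    """Circular mean: average the unit vectors, then convert back to a heading."""
    x = sum(math.sin(math.radians(h)) for h in headings)
    y = sum(math.cos(math.radians(h)) for h in headings)
    return compass_heading(x, y)


def flock_heading(me: Bird, flockmates: List[Bird],
                  min_separation: float = 1.0, max_turn: float = 10.0) -> float:
    x, y, h = me
    if not flockmates:
        return h
    nearest = min(flockmates, key=lambda b: math.hypot(b[0] - x, b[1] - y))
    if math.hypot(nearest[0] - x, nearest[1] - y) < min_separation:
        # Separation: steer straight away from the nearest neighbor.
        target = compass_heading(x - nearest[0], y - nearest[1])
    else:
        # Alignment: average heading of the flockmates.
        align = mean_heading([b[2] for b in flockmates])
        # Cohesion: heading toward the flockmates' center of mass.
        cx = sum(b[0] for b in flockmates) / len(flockmates)
        cy = sum(b[1] for b in flockmates) / len(flockmates)
        cohere = compass_heading(cx - x, cy - y)
        target = mean_heading([align, cohere])
    # Limit the turn per step and always use the shortest rotation.
    turn = max(-max_turn, min(max_turn, shortest_turn(target, h)))
    return (h + turn) % 360
```

Headings are averaged as unit vectors rather than as plain numbers because the arithmetic mean of 350 and 10 degrees would be 180 degrees, pointing the wrong way.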

4.1.2 Tuning process

The initial rules were inspired by well-established principles from flocking theory, notably the classical alignment, cohesion, and separation behaviors introduced in Reynolds' (1987) Boids model. These basic principles were intended to produce generalized flocking patterns that could be broadly applicable across scenarios. However, as shown below, it proved crucial to state explicitly in the prompt that the simulation uses the compass convention. This matches NetLogo's world representation, in which headings are measured in degrees clockwise from north (0 degrees north, 90 degrees east, 180 degrees south, and 270 degrees west). Clearly defining this convention ensured that the LLM could accurately compute and adjust the birds' headings according to flocking dynamics, keeping the agents' behavior consistent within NetLogo's simulation environment.
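The difference between the two conventions can be shown with a short worked example in Python. `math.atan2(dy, dx)` gives the usual mathematical angle θ (0 degrees east, counterclockwise), whereas swapping the arguments to `math.atan2(dx, dy)` gives the compass heading h used by NetLogo (0 degrees north, clockwise). The two are related by h = (90 − θ) mod 360. The function names are ours.

```python
import math


def math_angle(dx: float, dy: float) -> float:
    """Mathematical convention: 0 = east, 90 = north, counterclockwise."""
    return math.degrees(math.atan2(dy, dx)) % 360


def compass_heading(dx: float, dy: float) -> float:
    """Compass (NetLogo) convention: 0 = north, 90 = east, clockwise."""
    return math.degrees(math.atan2(dx, dy)) % 360


def math_to_compass(theta: float) -> float:
    """Convert a mathematical angle into a compass heading."""
    return (90 - theta) % 360
```

For a vector pointing south-east, (1, −1), `math_angle` gives 315 degrees while `compass_heading` gives 135 degrees. Reading the mathematical value as a compass heading would therefore send the bird north-west, the exact opposite direction. For a north-east vector, (1, 1), both conventions give 45 degrees. This is one plausible way the opposite-direction errors described in Iteration 2 could arise.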

Iteration 1

• Prompt Text

• Observed behavior

In this initial attempt, we provided general instructions to establish a baseline for flocking behavior. The agents were instructed to determine their heading based on the principles of separation, alignment, and cohesion. However, most of the LLM-generated responses could not be interpreted by the simulation because they did not adhere to the expected format. Additionally, even when responses were parsed successfully, the agents behaved inconsistently, which prevented flocking from emerging.

• Analysis

While this prompt defined the basic framework for the simulation, it lacked constraints to enforce a structured response. In many cases, the LLM's output included extended textual and mathematical explanations before or alongside the JSON object, which interfered with proper parsing.
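The authors addressed this in Iteration 2 by constraining the prompt. A complementary, purely defensive option on the parsing side, sketched here as our own assumption rather than part of the toolchain, is to scan the reply for the first decodable JSON object using `json.JSONDecoder.raw_decode`. That method parses a JSON document from the start of a string and ignores whatever text follows it. The `heading` key in the tests is hypothetical.

```python
import json
from typing import Optional


def extract_json_object(reply: str) -> Optional[dict]:
    """Return the first JSON object embedded in reply, or None if there is none."""
    decoder = json.JSONDecoder()
    for start, char in enumerate(reply):
        if char != "{":
            continue
        try:
            # raw_decode parses one document and ignores any trailing text.
            value, _end = decoder.raw_decode(reply[start:])
        except json.JSONDecodeError:
            continue  # not a valid object at this position; keep scanning
        if isinstance(value, dict):
            return value
    return None
```

A fallback like this recovers the object when prose surrounds it. It cannot fix a reply whose explanation was supposed to live inside the object, so constraining the output format in the prompt remains the primary fix.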

Iteration 2

• Prompt text

An explicit instruction was added to limit the response to the JSON object only.

• Observed behavior

To address the issues from the first iteration, we added a directive restricting the response format to a JSON object only. This modification successfully constrained the output, making it more reliable and compatible with the simulation. However, the flocking behavior that emerged was inconsistent: small clusters formed briefly, but overall alignment and cohesion were weaker than expected.

• Analysis

We compared the resulting headings with those produced by a rule-based model. While some calculated headings were numerically similar, they often pointed in opposite directions. This suggested ambiguity in the LLM's coordinate system. Since NetLogo employs a compass convention for heading calculations, we decided to explicitly specify this convention in the next iteration.

Iteration 3

• Prompt text

An instruction was added to specify that the compass convention should be used.

• Observed behavior

By explicitly specifying the compass convention for heading calculations, flocking behavior improved. Larger clusters formed compared to previous iterations. However, flocking remained inconsistent, as some agents moved in seemingly random directions.

• Analysis

Examining the erratic headings, we asked the LLM to explain its calculations. When it generated a reasoning process before outputting the final heading, the LLM produced correct answers; errors occurred when it provided only the numerical result. This highlighted the need for a structured reasoning process, or “chain of thought,” to ensure accurate heading calculations.

Iteration 4

• Prompt text

A new key, “rationale”, was added to the JSON output to encourage reasoning before determining the final heading.

• Observed behavior

Introducing the “rationale” key significantly improved flocking behavior. The agents demonstrated more consistent heading adjustments, enhancing the emergence of flocking dynamics. However, occasional errors persisted, particularly when agents needed to turn counterclockwise to reach a nearby target heading.

• Analysis

The “rationale” key enabled the LLM to engage in a structured thought process, substantially improving flocking behavior. However, some agents still moved in the opposite direction when making small adjustments, particularly for counterclockwise turns. This suggested that additional guidance was necessary to ensure agents always chose the shortest rotation path.
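A sketch of how a reply containing the “rationale” key could be validated is given below. It assumes that the final value is stored under a key named `heading` (a hypothetical name; only “rationale” is named in the text) and checks that “rationale” appears before it. Key order matters because the model generates text left to right: placing the explanation first means the heading is written after the reasoning rather than before it. Python's `json.loads` preserves the key order of the input, which makes the check possible.

```python
import json
import math


def parse_heading_reply(reply: str) -> float:
    """Validate a {"rationale": ..., "heading": ...} reply; return the heading in [0, 360)."""
    data = json.loads(reply)
    if not isinstance(data, dict) or "rationale" not in data or "heading" not in data:
        raise ValueError("reply must be an object with 'rationale' and 'heading'")
    keys = list(data)  # json.loads keeps the order in which keys appear
    if keys.index("rationale") > keys.index("heading"):
        raise ValueError("'rationale' must come before 'heading'")
    heading = float(data["heading"])
    if not math.isfinite(heading):
        raise ValueError("heading must be a finite number")
    return heading % 360  # normalize, e.g. 360 -> 0 and -90 -> 270
```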

Iteration 5

• Prompt text

An explicit instruction was added to ensure the shortest rotational path (clockwise or counterclockwise) was always chosen when adjusting the heading.

• Observed behavior

By explicitly instructing the model to select the shortest path to the target heading, flocking behavior improved significantly. The LLM-driven agents formed larger, more stable flocking clusters, achieving performance comparable to the original, rule-based NetLogo model.

• Analysis

Including the shortest-path directive ensured that LLM-based agents correctly aligned their heading adjustments with both LLM-based and rule-based agents. This modification resolved the previously observed issues, leading to a more coherent and emergent flocking behavior.
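The shortest-path rule corresponds to a small piece of modular arithmetic. The Python sketch below (function names ours) returns the signed turn from the current heading to the target. Under the compass convention, a positive value is a clockwise (right) turn and a negative value a counterclockwise (left) turn. The naive difference `target - current` would, for example, turn a bird at 10 degrees by +340 degrees to reach 350 degrees instead of turning 20 degrees counterclockwise, which resembles the kind of error described for Iteration 4.

```python
from typing import Tuple


def shortest_turn(current: float, target: float) -> float:
    """Signed smallest rotation in [-180, 180): positive = clockwise, negative = counterclockwise."""
    return (target - current + 180) % 360 - 180


def describe_turn(current: float, target: float) -> Tuple[str, float]:
    """Return the rotation direction and the unsigned angle to turn."""
    delta = shortest_turn(current, target)
    if delta > 0:
        return "clockwise", delta
    if delta < 0:
        return "counterclockwise", -delta
    return "none", 0.0
```

When the target is exactly opposite (180 degrees away), both directions are equally short. This sketch then returns −180, that is, a counterclockwise turn, by convention.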

4.1.3 Prompt deployment

This task uses the same prompt deployment mechanism as Experiment 1. Communication with the LLM is handled via the OpenAI API using the chat.completions mechanism, which supports context-free messaging. A system prompt defines the task and rules, followed by a user prompt providing real-time environmental data.

At each simulation step, NetLogo translates the agent's perception, including the headings and positions of other agents within its vision radius, into the input variables used in the prompt. This ensures that the LLM has an accurate, up-to-date view of the environment. The LLM then generates a response formatted in JSON, specifying the agent's actions. The following example prompt illustrates this process:

• System prompt

• Possible user prompt

• Possible response from the LLM
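The translation of a bird's perception into prompt text might look like the following Python sketch. The wording of each line, the default vision radius of 5.0, and the function name are hypothetical. The sketch also assumes that `others` excludes the bird itself and ignores NetLogo's world wrapping. Distances are computed as Euclidean distances and written as floats, as described in the results.

```python
import math
from typing import List, Tuple

Bird = Tuple[float, float, float]  # (x, y, heading in compass degrees)


def format_bird_observation(me: Bird, others: List[Bird], vision: float = 5.0) -> str:
    """Describe the birds within `vision` of `me` as plain text for the user prompt."""
    x, y, h = me
    lines = [f"Your position: ({x:.2f}, {y:.2f}); your heading: {h:.1f} degrees"]
    for ox, oy, oh in others:
        distance = math.hypot(ox - x, oy - y)  # Euclidean distance, sent as a float
        if distance <= vision:
            lines.append(
                f"Neighbor at ({ox:.2f}, {oy:.2f}), distance {distance:.2f}, heading {oh:.1f}"
            )
    if len(lines) == 1:
        lines.append("No neighbors within vision radius.")
    return "\n".join(lines)
```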

4.2 Monitoring Behavior

Key metrics include the degree of separation maintained between agents, the consistency of alignment with the average heading of nearby birds, and the effectiveness of cohesion in moving toward the flock's center of mass. The observed behaviors and performance analyses are documented in the results section.

5 Results

5.1 Experiment 1: ant foraging with structured rule-based prompting

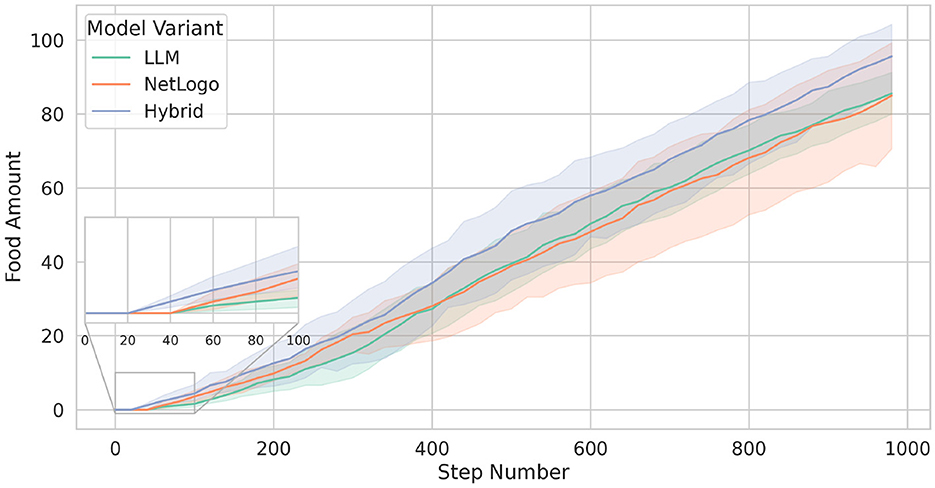

The following three variants of the ant foraging simulation were tested:

1. The original NetLogo model (henceforth simply called “NetLogo”).

2. The model in which the rule-governed ants of the original model are replaced by LLM-governed ants (henceforth called “LLM”).

3. A hybrid model in which half of the ants are rule-governed and the other half are LLM-governed (henceforth called “Hybrid”).

In all simulations, we used a colony of 10 ants, three food patches to be exploited, and a stopping criterion of 1000 simulation steps. Moreover, each experiment was repeated five times (with different seeds). The efficacy of each model was assessed by quantifying the total amount of food gathered within these 1000 simulation steps.
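Curves such as those in Figure 3 (a mean line with a shaded standard-deviation band over five seeds) can be computed per simulation step as in the Python sketch below. It assumes that each run is recorded as a list of cumulative food totals with one entry per step, and it uses the sample standard deviation. The text does not state whether the sample or the population form was used.

```python
import statistics
from typing import List, Sequence, Tuple


def mean_std_curves(runs: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """runs[i][t] is the cumulative food collected by run i at step t."""
    if len(runs) < 2:
        raise ValueError("need at least two runs for a sample standard deviation")
    if len({len(run) for run in runs}) != 1:
        raise ValueError("all runs must cover the same number of steps")
    per_step = list(zip(*runs))  # regroup the values by simulation step
    means = [statistics.mean(values) for values in per_step]
    stds = [statistics.stdev(values) for values in per_step]
    return means, stds
```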

5.1.1 Food collection behavior

Figure 3 shows the total amount of food collected over 1,000 simulation steps for the three different model variants. NetLogo and LLM perform similarly in terms of the ants' success in bringing food back to the nest, with both models accumulating approximately 85 units of food by the end of the simulation. However, the standard deviation for NetLogo is around 20, whereas LLM displays a much lower standard deviation of about 7.

Figure 3. Comparison of the total food collected across the three tested models: NetLogo (represented by the orange line), LLM (green line), and Hybrid (blue line). This visualization highlights the food collection performance differences among the different models over five runs with different seeds. The lines represent the means, while the shaded areas indicate the standard deviations.

In contrast, the Hybrid model outperforms the other two variants, collecting an average of approximately 95 units of food with a standard deviation of about 12. We tentatively attribute this superior performance to the combination of behavioral differences between LLM-guided and rule-based ants. The zoomed inset in Figure 3, for example, shows that Hybrid starts returning food to the nest at around 20 simulation steps, whereas LLM and NetLogo begin this process at about 40 steps. This suggests that the Hybrid variant finds food sources more quickly, although the underlying mechanism is not yet clear.

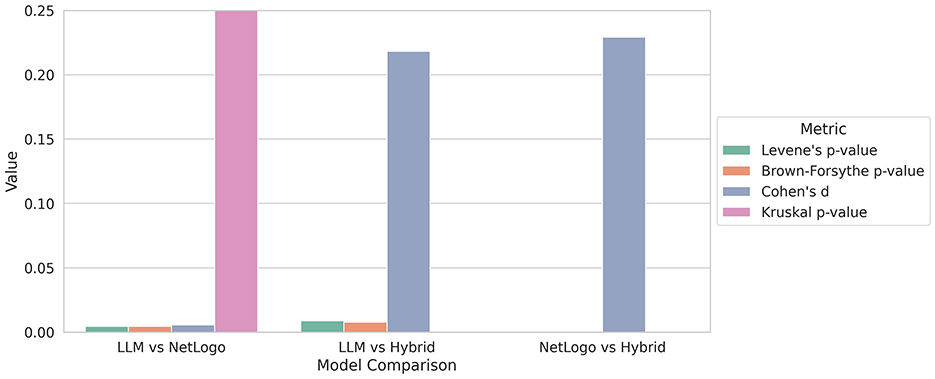

To better understand the significance of the observed performance differences in food collection, we compared the models pairwise using several statistical tests, whose results are shown in Figure 4 grouped by model pair. The Levene and Brown-Forsythe tests assess the homogeneity of variances between groups; the Levene test uses the mean and the Brown-Forsythe test the median. Cohen's d quantifies the effect size, that is, the magnitude of the difference between two group means. The Kruskal-Wallis test (labeled Kruskal in the plot) is a non-parametric test of whether the groups' distributions differ significantly and is often interpreted as a test for differences in medians.

Figure 4. Comparison of performance metrics of the food collection among LLM, NetLogo, and Hybrid. The Levene, Brown-Forsythe, and Kruskal-Wallis tests indicate significant differences between Hybrid and the other models, while the LLM and NetLogo show no meaningful shifts in central tendency or overall distribution. For details, see the text.

For the LLM vs. NetLogo pair, the Levene and Brown-Forsythe tests yielded p-values of around 0.0045, indicating a significant difference in variance, that is, the two groups have unequal spread. In contrast, the Cohen's d value of 0.006 reflects a negligible effect size, meaning that the group means are nearly identical. The Kruskal-Wallis test yields a high p-value of 0.99 (truncated in the figure for improved visibility), indicating no statistical difference in the distributions. In summary, apart from the difference in variance, there are no practical or statistical differences between the LLM and NetLogo groups regarding the outcome: there is no meaningful shift in central tendency or overall distribution.

For the LLM vs. Hybrid pair, the Levene, Brown-Forsythe, and Kruskal-Wallis p-values are all close to zero, indicating statistically significant differences. Additionally, Cohen's d is approximately 0.2, suggesting a small yet meaningful effect. The difference between the LLM and Hybrid groups is therefore both statistically significant and practically noticeable: Hybrid performs differently from LLM, albeit not drastically. The differing variances further indicate that their behaviors differ by more than a shift in location.

The NetLogo vs. Hybrid pair likewise shows a notable and statistically supported difference. The variances differ significantly, and although the effect size is modest at 0.23, it is consistent across analyses. This suggests a meaningful distinction in performance between NetLogo and Hybrid.
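For reference, the statistics behind Figure 4 can be written out in a few lines of dependency-free Python. This is a sketch of the standard definitions, not the authors' analysis code. Cohen's d is shown with the pooled sample standard deviation, which is one common variant. For p-values, SciPy's `scipy.stats.levene` (with `center="mean"` for Levene or `center="median"` for Brown-Forsythe) and `scipy.stats.kruskal` are the usual library routes. Which tool the authors used is not stated.

```python
import statistics
from typing import List, Sequence


def cohens_d(a: Sequence[float], b: Sequence[float]) -> float:
    """Difference of means divided by the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    return (statistics.mean(a) - statistics.mean(b)) / pooled_var ** 0.5


def levene_w(*groups: Sequence[float], center: str = "mean") -> float:
    """Levene's W statistic; center="median" gives the Brown-Forsythe variant."""
    locate = statistics.mean if center == "mean" else statistics.median
    # Absolute deviations of each value from its own group's center.
    z: List[List[float]] = []
    for g in groups:
        c = locate(g)
        z.append([abs(v - c) for v in g])
    k = len(z)
    n = sum(len(zg) for zg in z)
    group_means = [statistics.mean(zg) for zg in z]
    grand_mean = sum(sum(zg) for zg in z) / n
    between = sum(len(zg) * (m - grand_mean) ** 2 for zg, m in zip(z, group_means))
    within = sum((v - m) ** 2 for zg, m in zip(z, group_means) for v in zg)
    return (n - k) / (k - 1) * between / within
```

The W statistic is an ANOVA F statistic computed on absolute deviations, so a large W means that the groups' spreads differ. This is why the variance tests can flag the LLM vs. NetLogo pair even though Cohen's d, which compares means, is close to zero.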

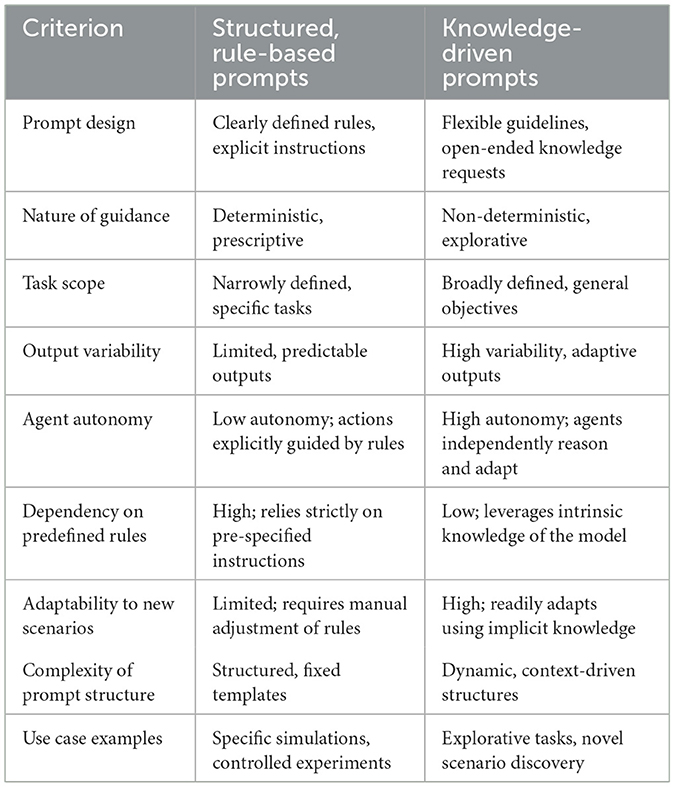

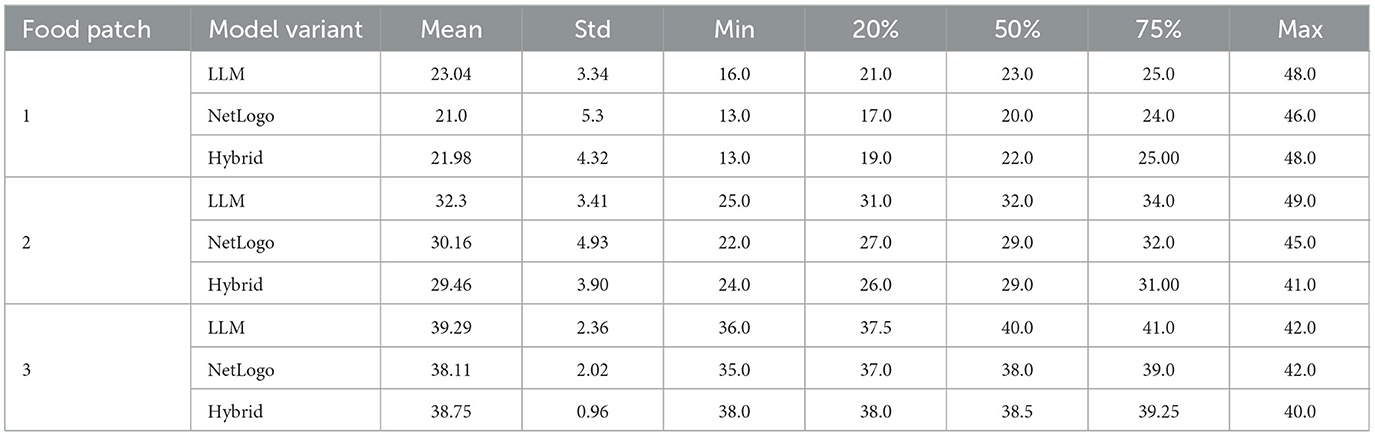

We were also interested in evaluating the effectiveness of the three model variants at the level of individual ants. Figure 5 shows, as boxplots, the average number of simulation steps an ant takes to return to its nest after picking up food. Generally, ants in NetLogo (rule-governed ants) require fewer steps than those controlled by the LLM. The LLM-guided ants show consistent foraging behavior across the different experiments, particularly for food patches 1 and 2. Note that food patch 1 is the closest to the nest, while food patch 3 is the farthest away. Detailed statistics, including the three quartiles, mean, standard deviation, and minimum and maximum numbers of steps, are provided in Table 2.

Figure 5. The average number of steps taken by an ant to return to its nest after picking up food (for food patches 1–3). The green boxplots represent the simulations of LLM, the orange boxplots those of NetLogo, while the blue boxplots show the results of Hybrid. Each boxplot spans from the first to the third quartile, with the vertical line within the box indicating the median. The whiskers extend to represent the minimum and maximum number of steps taken, while the circles denote outliers.

Table 2. Statistics concerning the average number of steps taken by an ant to return food to the nest.

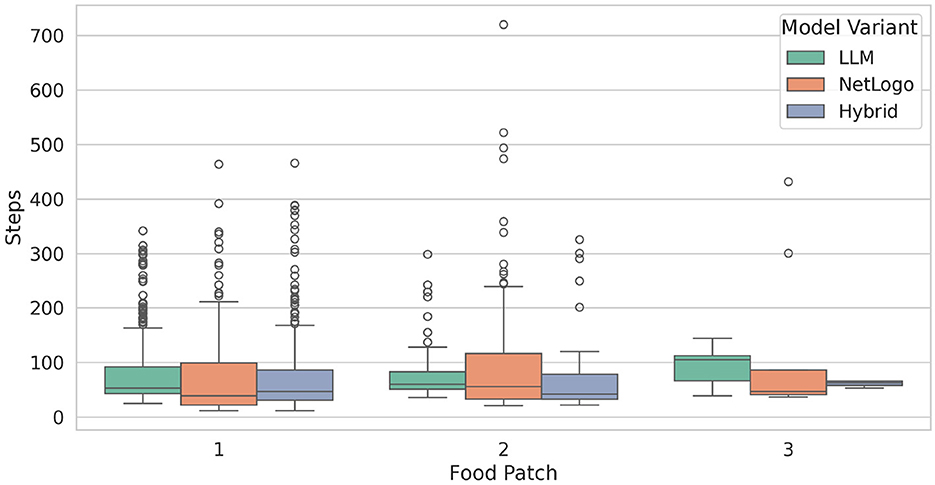

Furthermore, we investigated the average number of steps taken by an ant from leaving the nest until finding a food source, which is represented in Figure 6. We specifically track and count ants that are not carrying food and are exploring their environment until they start to carry the food. Hybrid demonstrates consistent performance in finding food patches 1 and 2. In contrast, LLM and NetLogo display a more variable behavior during food searches. Notably, concerning food patch 1, the models exhibit a higher number of outliers, which can be attributed to the ants' initial exploration of the environment before encountering the food. A notable outlier is observed in the context of NetLogo and food patch 2, where an ant required 720 steps to find food. Detailed statistics are listed in Table 3.

Figure 6. Average number of steps taken by an ant from leaving the nest to finding a food source. Each boxplot spans from the first to the third quartile, with the vertical line within the box indicating the median. The whiskers extend to represent the minimum and maximum number of steps taken, while the circles denote outliers.
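Both per-ant durations (Figures 5 and 6) can be obtained by recording when an ant's carrying state changes. The Python sketch below assumes that the simulation exposes a per-step boolean "carrying food" flag for each ant and that each ant starts at the nest without food. The class name is hypothetical, and attributing each trip to a specific food patch would require an additional patch label that is not shown here.

```python
from typing import Iterable, List


class TripTimer:
    """Per-ant step counts for the two foraging phases."""

    def __init__(self) -> None:
        self.search_steps: List[int] = []  # leaving the nest -> picking up food
        self.return_steps: List[int] = []  # picking up food -> dropping it at the nest
        self._carrying = False
        self._phase_start = 0

    def update(self, step: int, carrying: bool) -> None:
        """Call once per simulation step with the ant's current carrying flag."""
        if carrying and not self._carrying:    # food was just picked up
            self.search_steps.append(step - self._phase_start)
            self._phase_start = step
        elif not carrying and self._carrying:  # food was just dropped at the nest
            self.return_steps.append(step - self._phase_start)
            self._phase_start = step
        self._carrying = carrying

    @classmethod
    def from_states(cls, states: Iterable[bool]) -> "TripTimer":
        """Replay a recorded sequence of carrying flags, one per step."""
        timer = cls()
        for step, carrying in enumerate(states):
            timer.update(step, carrying)
        return timer
```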

5.2 Experiment 2: bird flocking simulation with knowledge-driven prompts

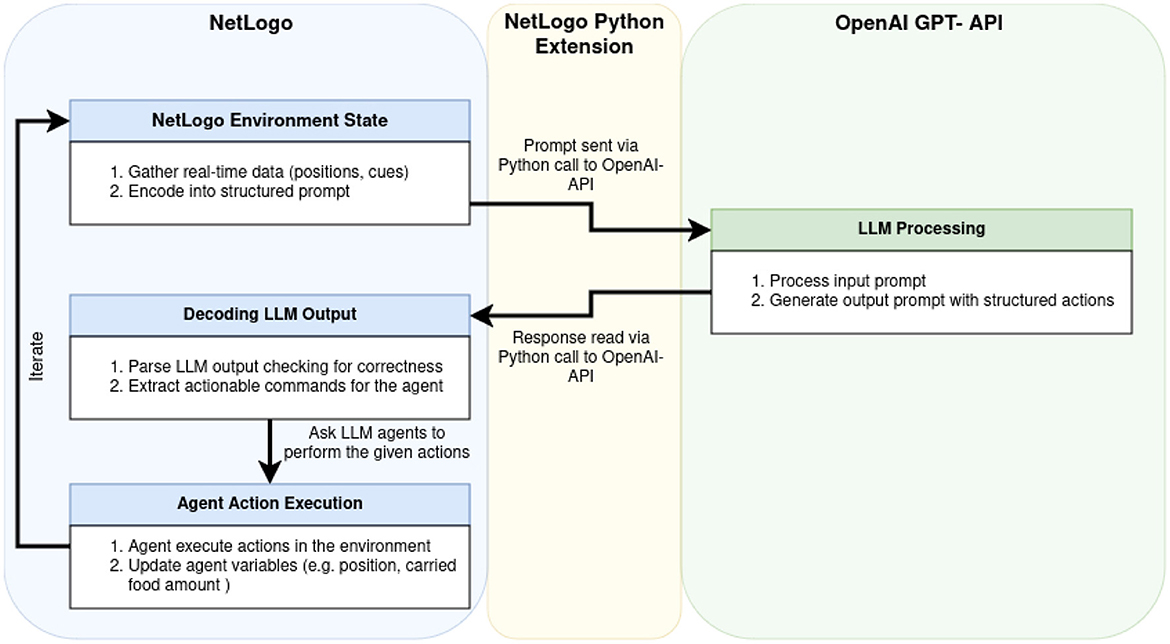

The following two model variants were experimentally tested and evaluated:

1. The original NetLogo model (henceforth simply called “NetLogo”, like in the ant foraging case).

2. The model in which some of the rule-governed birds of the original model are replaced by LLM-governed birds (henceforth called “Hybrid”).

In all simulations, we used a flock of 30 birds and a simulation length of 800 steps. In Hybrid, five of the 30 rule-based birds are replaced by LLM-guided birds. Moreover, each experiment was repeated five times (with different seeds). The effectiveness of the flocking behavior is evaluated by measuring the distances and angular disparities between birds across the entire simulation. Figure 7 depicts the flocking simulation executed in the NetLogo environment, featuring a heterogeneous population of 25 rule-based and 5 LLM-guided birds.

Figure 7. Bird flocking simulation in the NetLogo environment: yellow birds follow rule-based behavior, while red birds are guided by the LLM.

5.2.1 Flocking behavior

Figure 8 compares the differences in the birds' heading directions between the two model variants outlined above. Note that, for Hybrid, the heading differences between the rule-based birds and all other birds (orange line) are shown separately from the heading differences between the LLM-guided birds and all other birds (green line).

Figure 8. Comparison of bird flocking heading differences across two simulation approaches: original NetLogo (blue line) and Hybrid (orange and green lines). In fact, the orange line shows the behavior of the 25 rule-based birds of Hybrid, while the green line presents the behavior of the 5 LLM-guided birds of Hybrid. The lines represent the means, while the shaded areas indicate the standard deviations.

The results shown in Figure 8 allow the following observations. While the two bird types of Hybrid show a similar evolution of the heading differences, the rule-based birds of the original NetLogo model show somewhat lower heading differences. We anticipate that, with longer simulation runs, the heading differences of the two model variants would converge to similar values. We also observed that the LLM-guided birds tend to congregate at the outer peripheries of the flocks, positioning themselves further away from the flocks' centers. An example of this behavior is visualized in Figure 7 (see the flock on the right) and is also illustrated in Figure 9, which shows the average distances between birds. We hypothesize that this rather “conservative” behavior of the LLM-guided birds contributes to the greater heading differences among the rule-based birds of Hybrid, as it introduces slight perturbations in the flocking dynamics. Another possible explanation involves the LLM's internal representation of distance: although we define distance in Euclidean space and provide distances to the language model as float values, it may interpret and represent them differently.

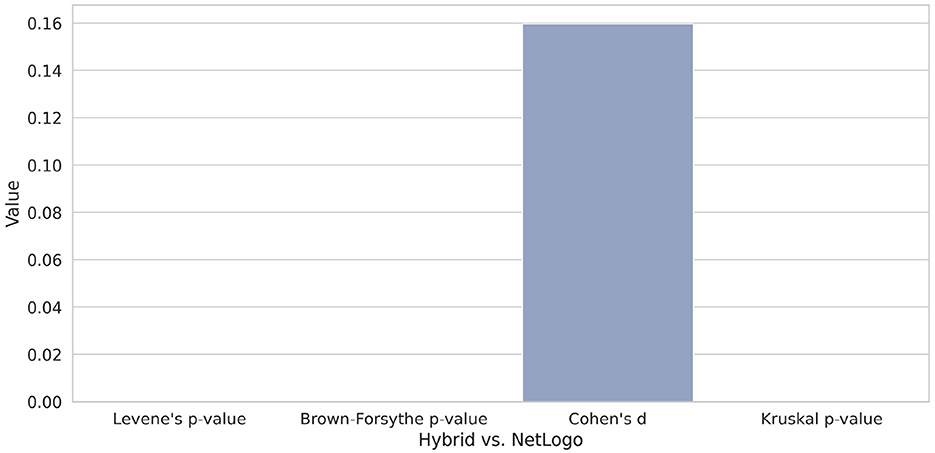

Figure 10 illustrates the statistical analysis of the heading differences between Hybrid (LLM-guided and rule-based birds) and NetLogo, which reveals disparities in the distributional properties of the experiments. Although the observed mean difference is relatively small, as indicated by a Cohen's d of 0.16 (a minor effect size), the variance-based tests provide strong evidence of heterogeneity. Specifically, both Levene's test and the Brown-Forsythe test return extremely low p-values, indicating that the assumption of homogeneity of variances is violated. Furthermore, the Kruskal-Wallis test also yields a p-value close to zero, signifying statistically significant differences in the overall distributions of the groups. In summary, although the central tendencies may be similar, the variance and distributional shape differ, indicating that the underlying behavior of the models diverges substantially.

Figure 10. Boxplot comparing different test metrics of heading differences between Hybrid and NetLogo. Despite a small effect size, significant differences in variance and distribution are evident. For details, see the text.

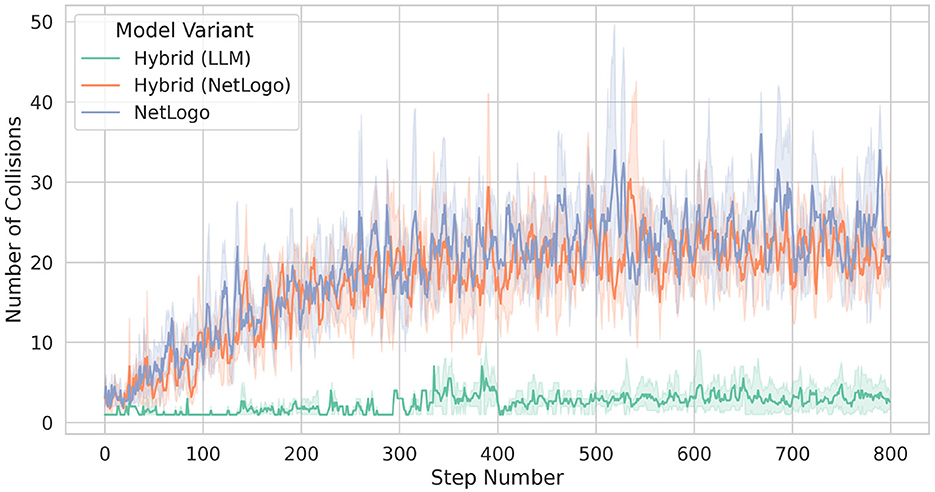

We further investigated the LLM-guided birds' tendency to stay at the border of flocks by examining collisions between birds, defined as occasions on which the Euclidean distance between two birds is at most one. It turns out that, throughout a simulation, the LLM-guided birds tend to avoid collisions (see Figure 11). In contrast, the rule-based birds from Hybrid and those from NetLogo exhibit a much higher number of collisions.

Figure 11. Collisions between birds. A collision occurs when the distance d between birds is at most one (that is, d ≤ 1).

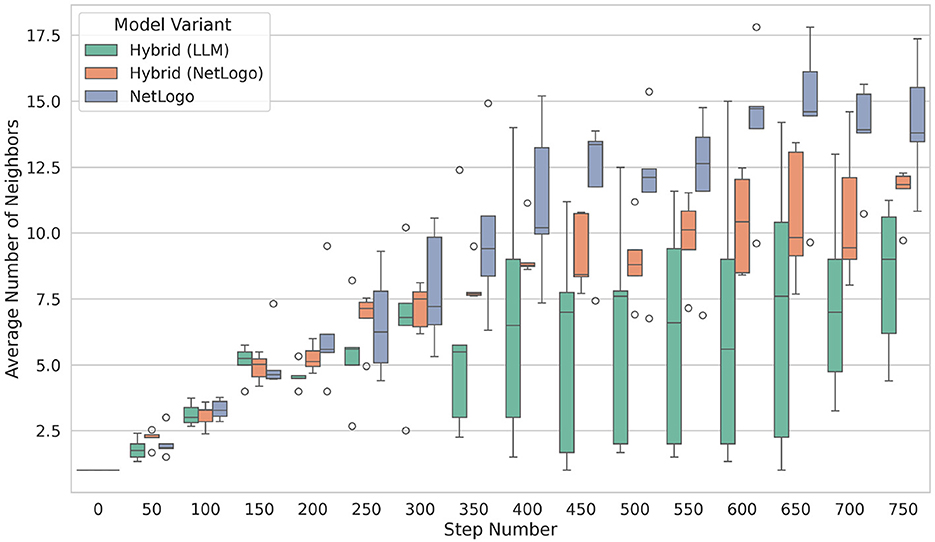

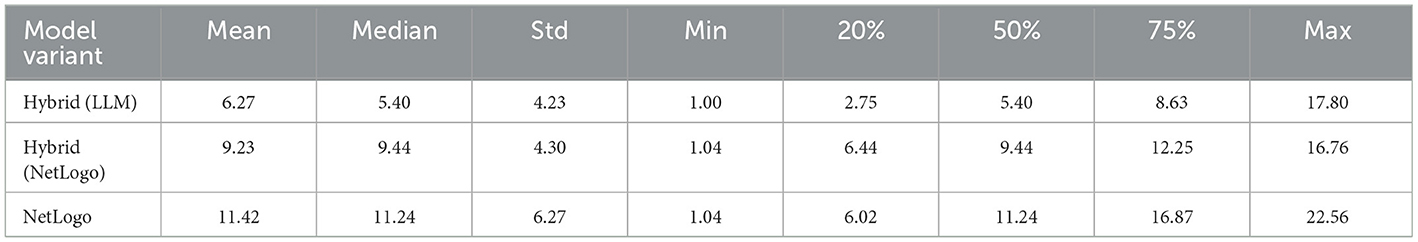

Prompted by these observations, we also examined the average number of neighbors of a bird, as shown in Figure 12. Here, we define two birds as neighbors if the distance d between them is greater than one (no collision) and at most five (that is, 1 < d ≤ 5), and if their heading difference h satisfies h ≤ 15°. As expected, rule-based birds exhibit the highest number of neighbors, while the LLM-guided birds display the lowest, consistent with their conservative behavior. Statistics on the average number of flocking neighbors are given in Table 4.

Figure 12. Average number of neighbors: a neighbor is defined as any bird within a distance d such that 1 < d ≤ 5, thereby excluding collisions. Furthermore, we require a heading difference of h ≤ 15°.

Table 4. Statistics for the average number of flocking neighbors. The values are aggregated over all steps and experiments.
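The collision and neighbor criteria translate directly into code. The Python sketch below uses plain Euclidean distance without NetLogo's world wrapping, counts each colliding pair once, and measures heading difference as the smaller of the two angles between two headings. These choices and the function names are our assumptions rather than the authors' analysis scripts.

```python
import math
from itertools import combinations
from typing import List, Tuple

Bird = Tuple[float, float, float]  # (x, y, heading in compass degrees)


def heading_difference(h1: float, h2: float) -> float:
    """Smallest angle between two headings, in [0, 180]."""
    d = abs(h1 - h2) % 360
    return min(d, 360 - d)


def count_collisions(birds: List[Bird]) -> int:
    """Number of unordered bird pairs whose distance d satisfies d <= 1."""
    return sum(
        1 for a, b in combinations(birds, 2)
        if math.hypot(a[0] - b[0], a[1] - b[1]) <= 1
    )


def flocking_neighbors(i: int, birds: List[Bird],
                       max_dist: float = 5.0, max_heading_diff: float = 15.0) -> int:
    """Neighbors of bird i: 1 < d <= max_dist and heading difference <= max_heading_diff."""
    x, y, h = birds[i]
    count = 0
    for j, (ox, oy, oh) in enumerate(birds):
        if j == i:
            continue
        d = math.hypot(ox - x, oy - y)
        if 1 < d <= max_dist and heading_difference(h, oh) <= max_heading_diff:
            count += 1
    return count
```

Because a bird at distance d ≤ 1 counts as a collision and not as a neighbor, a bird that keeps more distance, as the LLM-guided birds do, lowers its collision count, and it can also lower its neighbor count once it drifts beyond d = 5.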

5.3 Simulation length and experimental runs

In the bird flock simulation, we empirically observed that collective behaviors stabilized after approximately 500 steps, with cluster formations remaining relatively unchanged in subsequent simulation steps. Therefore, extending the simulations beyond the chosen limit of 800 steps would likely have provided minimal additional information on emergent dynamics or system stability.

In contrast, for the ant foraging scenario, we acknowledge that a longer simulation would have been beneficial to comprehensively explore long-term emergent behaviors, particularly since complete depletion of food resources typically occurs around 2000 steps. Our choice to limit the simulations to 1000 steps was primarily motivated by computational costs and practical time constraints. Thus, we strongly recommend that future studies consider longer simulation durations to more fully capture and characterize long-term emergent behaviors and overall system stability.

6 Discussion

6.1 Prompt strategies

We explored two complementary strategies for integrating Large Language Models (LLMs) into multi-agent simulations: (i) a structured, rule-based approach in an ant foraging context, and (ii) a principle-based, knowledge-driven approach in a bird flocking scenario. In both cases, our experiments demonstrated how LLMs can support swarm-like behaviors: guiding ants to locate and retrieve food by following pheromone trails, and prompting “birds” to coordinate alignment according to core flocking principles. Overall, the LLM-driven agents performed comparably to their fully rule-based counterparts, but they sometimes displayed notable differences in how they interpreted and prioritized local cues when relying on text-based decision-making.

A key theme across both simulations was the importance of iterative prompt tuning, which proved essential for producing consistent and context-appropriate responses. In the ant foraging simulations, early prompts did not specify what ants should do if neither pheromone nor nest scent was present, leading to confusion or inaction. Through multiple rounds of tuning, we added directives that encouraged exploration, such as “move away from the nest when no pheromone signals are detected.” Similarly, clarifying that nest scent should take precedence over pheromone while an ant is carrying food helped ants locate the nest and deposit resources more reliably. Following these refinements, the foraging performance of the LLM-driven ants nearly matched that of the standard NetLogo model.
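The directives described above can be sketched as a small prompt builder that restates the full local situation at every step. The prompt wording, the three sensing directions, and the function names are hypothetical stand-ins for illustration; the actual prompts should be taken from the project's published code. Expressing "move away from the nest" as "choose the direction with the weakest nest scent" is an assumption made here so that every rule maps onto the same set of answers.

```python
DIRECTIONS = ("left", "ahead", "right")

# Hypothetical rule text; the prompts used in the study may be worded differently.
RULES = (
    "If you are carrying food, follow the strongest nest scent. "
    "Nest scent takes precedence over pheromone while you carry food.",
    "If you are not carrying food and sense pheromone, move toward the strongest pheromone.",
    "If you are not carrying food and sense no pheromone, move away from the nest "
    "by choosing the direction with the weakest nest scent.",
)


def format_readings(readings):
    """Render per-direction sensor values, treating missing directions as 0."""
    return ", ".join(f"{d}={readings.get(d, 0.0):.2f}" for d in DIRECTIONS)


def build_ant_prompt(carrying_food, pheromone, nest_scent):
    """Build a complete, stateless prompt for one ant at one step."""
    lines = ["You are an ant in a foraging colony.", "Rules:"]
    lines += [f"- {rule}" for rule in RULES]
    lines += [
        "Current state:",
        f"- carrying food: {'yes' if carrying_food else 'no'}",
        f"- pheromone: {format_readings(pheromone)}",
        f"- nest scent: {format_readings(nest_scent)}",
        "Answer with exactly one word: " + ", ".join(DIRECTIONS) + ".",
    ]
    return "\n".join(lines)
```

Keeping the rules in a separate list makes each tuning round a small, reviewable change, such as adding the fallback rule for the no-pheromone case.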

A further insight arose from the hybrid simulations, in which part of the ant colony was rule-based while the rest was LLM-driven. These mixed colonies often outperformed both purely rule-based and purely LLM-based colonies. One possible explanation is that deterministic if-then logic efficiently handles well-understood aspects of foraging, while LLM-driven exploration provides adaptability in more uncertain situations. Combining traditional rules with text-based reasoning may therefore yield more robust foraging strategies. However, this apparent advantage of the hybrid populations warrants further investigation. We recommend running the simulations for longer, so that the colony has enough time to collect any remaining food; this may help clarify the mechanisms behind the advantage.
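One simple way to set up a mixed colony is to label each ant with a controller type at initialization and dispatch on that label at every step. This is a hypothetical sketch: `assign_controllers`, `choose_action`, and the `llm_fraction` parameter are illustrative names, and the two policies are passed in as plain callables rather than tied to NetLogo or to a specific LLM.

```python
import random


def assign_controllers(n_ants, llm_fraction, seed=None):
    """Label each ant 'llm' or 'rule' so that about llm_fraction of the colony is LLM-driven."""
    if not 0.0 <= llm_fraction <= 1.0:
        raise ValueError("llm_fraction must be between 0 and 1")
    n_llm = round(n_ants * llm_fraction)
    labels = ["llm"] * n_llm + ["rule"] * (n_ants - n_llm)
    random.Random(seed).shuffle(labels)  # seeded shuffle for reproducible runs
    return labels


def choose_action(controller, state, rule_policy, llm_policy):
    """Dispatch one decision to the policy matching the ant's controller label."""
    policy = llm_policy if controller == "llm" else rule_policy
    return policy(state)
```

Fixing the seed keeps the assignment identical across runs, so that differences between colony compositions are not confounded with which individual ants received which controller.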

In the bird flocking simulations, longer prompts that emphasized alignment, separation, and cohesion improved stability. Early prompts did not define heading conventions (e.g., 0° = north, 90° = east), causing erratic turns and reversals. After we established these conventions and clarified the short-turn logic (which favors minimal angular adjustments), the flocks became more cohesive. Compared with their rule-based counterparts, LLM-driven birds generally stayed slightly farther from the flock center and experienced fewer collisions, indicating that LLMs can interpret spatial cues in subtly different ways while still maintaining coherent swarm behavior.
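The heading convention and short-turn logic can be illustrated with a few helper functions. The sketch assumes NetLogo's convention (0° = north, 90° = east, headings increasing clockwise, and a positive turn meaning a turn to the right); NetLogo's built-in `subtract-headings` primitive computes the same shortest signed difference. The `max_turn` cap is a hypothetical parameter in the spirit of the turn limits of the classic flocking model.

```python
import math


def shortest_turn(current, target):
    """Signed turn in degrees, in [-180, 180), from `current` to `target`.

    Positive means turn right (clockwise), negative means turn left.
    """
    return (target - current + 180.0) % 360.0 - 180.0


def heading_towards(x, y, tx, ty):
    """NetLogo-style heading from (x, y) to (tx, ty): 0 = north, 90 = east."""
    # atan2(dx, dy) rather than atan2(dy, dx), because headings are measured
    # clockwise from north instead of counterclockwise from east.
    return math.degrees(math.atan2(tx - x, ty - y)) % 360.0


def turn_toward(current, target, max_turn):
    """Rotate toward `target` along the shorter arc, by at most `max_turn` degrees."""
    turn = max(-max_turn, min(max_turn, shortest_turn(current, target)))
    return (current + turn) % 360.0
```

For example, a bird heading 350° that should align with a neighbor heading 10° turns right by 20°, not left by 340°; this is the kind of ambiguity that an undefined convention left open in the early prompts.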

In both scenarios, we observed that LLM decision-making can function effectively in a “stateless” manner, provided that complete contextual details are supplied at every step. This helps ensure that the model acts on current, relevant information, but it also requires highly detailed prompts. Omitting key details, such as pheromone intensity or heading conventions, can result in ambiguous or incorrect actions. Extending this approach with short-term memory or richer environmental representations could enable LLM-driven agents to maintain internal states that more closely resemble those in traditional agent-based models.
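The trade-off between stateless prompts and short-term memory can be sketched as a prompt context that always restates the full local situation and optionally appends a bounded history of the agent's own recent turns. The class name, the prompt wording, and the `memory_size` parameter are hypothetical; with `memory_size=0` the prompt is fully stateless.

```python
from collections import deque


class BirdPromptContext:
    """Builds a per-step prompt; memory_size=0 keeps it fully stateless."""

    def __init__(self, memory_size=0):
        # Bounded history of the bird's own recent turns (oldest first).
        self.memory = deque(maxlen=memory_size) if memory_size > 0 else None

    def record_turn(self, turn_degrees):
        if self.memory is not None:
            self.memory.append(turn_degrees)

    def build(self, heading, neighbor_headings, nearest_distance):
        neighbors = ", ".join(f"{h:.0f}" for h in neighbor_headings) or "none"
        lines = [
            "Heading convention: 0 = north, 90 = east, headings increase clockwise.",
            f"Your heading: {heading:.0f}",
            f"Neighbor headings: {neighbors}",
            f"Distance to nearest neighbor: {nearest_distance:.2f}",
        ]
        if self.memory:  # skipped when stateless or when no turns are stored yet
            history = ", ".join(f"{t:g}" for t in self.memory)
            lines.append(f"Your recent turns (oldest first): {history}")
        return "\n".join(lines)
```

The `maxlen` bound keeps prompt length, and therefore token cost, fixed no matter how long the simulation runs.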

Together, these results indicate that LLMs can serve as flexible engines for agent behaviors that align with swarm principles, offering adaptive, context-driven responses. They also highlight that prompt design and iterative refinement are central to achieving the desired outcomes. Even small changes in the prompts, such as specifying the turning angle or how to handle conflicting signals, can significantly influence emergent group-level patterns. This underscores both the potential and the complexity of integrating LLMs into agent-based simulations, where subtle details of agent logic can greatly affect collective behavior.

6.2 Challenges

Regarding potential drawbacks of our approach, two key issues must be noted: computation time and cost.

First, having an agent (such as an ant or bird) query the remote LLM at each iteration of a simulation requires significantly more computation time than executing simple rules within NetLogo. This increase stems primarily from API latency, the computational complexity of large-scale language model inference, and the associated natural language processing operations. It is important to note, however, that when an external API (such as the OpenAI API) is used, much of this computational burden is offloaded to the AI service provider, thereby relieving the simulation user of most of the direct computational cost.

In our specific experiments, we observed that each step involving an LLM-driven agent interaction typically required processing times on the order of seconds due to network latency and model response times, whereas conventional rule-based simulations executed agent interactions within milliseconds or less per simulation step. Thus, although significantly more computationally intensive per agent-step, the method remains practically feasible for smaller-scale exploratory simulations and conceptual validations, as demonstrated in this paper.
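A back-of-the-envelope estimate makes this difference concrete. Assuming every LLM-driven agent issues one request per step and requests are sent one after another, wall-clock time grows as agents × steps × latency. The agent count and per-call latency in the usage line are hypothetical values chosen only to illustrate the arithmetic, not measurements from this study.

```python
def estimated_hours(n_llm_agents, n_steps, seconds_per_call):
    """Sequential wall-clock estimate, assuming one LLM call per agent per step."""
    total_calls = n_llm_agents * n_steps
    return total_calls * seconds_per_call / 3600.0


# Hypothetical example: 20 LLM-driven agents, 1000 steps, 1.5 s per call
# gives 20,000 calls and 30,000 s of waiting in total.
print(f"{estimated_hours(20, 1000, 1.5):.1f} h")  # prints 8.3 h
```

The same run with rule-based agents needing about a millisecond per decision would take roughly 20 s, which is why LLM-driven runs are best suited to smaller, exploratory experiments.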

Second, utilizing GPT-4o through the OpenAI API inherently introduces token-based costs and dependencies associated with external model access, which may affect simulation scalability, cost-efficiency, and reliability. To mitigate these potential issues, the computational overhead could be significantly reduced by deploying smaller, locally hosted LLMs, particularly after targeted fine-tuning tailored to specific simulation tasks.
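Token costs can be estimated in the same way. The per-million-token prices are deliberately left as parameters because provider pricing changes over time; the numbers in the usage line are placeholders, not actual GPT-4o prices or token counts from these experiments.

```python
def estimated_cost_usd(n_calls, prompt_tokens, completion_tokens,
                       usd_per_million_input, usd_per_million_output):
    """Total cost when every call uses the same (average) token counts."""
    per_call = (prompt_tokens * usd_per_million_input
                + completion_tokens * usd_per_million_output) / 1_000_000
    return n_calls * per_call


# Placeholder values: 20,000 calls, 500 prompt tokens and 10 completion tokens each.
print(f"${estimated_cost_usd(20_000, 500, 10, 2.0, 8.0):.2f}")
```

Because agents typically answer with a single action word, prompt tokens dominate the cost, so shorter prompts or fewer LLM consultations reduce expenses most directly.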

At the outset of this research (prior to June 2024), we experimented with multiple LLMs. Among those evaluated, GPT-4o proved the most efficient and successful at handling the tasks, consistently producing the expected behavior. Other tested models often behaved unreliably and erratically, for instance with ants randomly dropping food and failing to demonstrate the expected self-organizing capabilities.

However, our framework is not inherently tied to GPT-4o or the OpenAI API. We designed it to be adaptable and general, allowing straightforward integration with alternative LLMs, including open-source and locally deployed models. Although the experiments presented in this study used a single external model, the approach is extendable to and compatible with a variety of language models. Given the rapid progress in the performance and resource efficiency of LLMs, we anticipate that future developments will further enhance the feasibility and effectiveness of locally deployed language models for simulating swarm behaviors.
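Provider independence of this kind can be sketched in Python as a minimal interface that agent code depends on, with interchangeable backends behind it. The class names are hypothetical. The OpenAI-compatible backend uses the official `openai` Python package (version 1 or later), whose `base_url` argument also lets it target local inference servers that expose an OpenAI-compatible endpoint; the package is imported lazily so the rest of the sketch runs without it.

```python
from typing import Optional, Protocol


class LLMBackend(Protocol):
    """Anything that turns a prompt into a text reply."""

    def complete(self, prompt: str) -> str: ...


class OpenAICompatibleBackend:
    """Chat backend for the OpenAI API or any server exposing a compatible endpoint."""

    def __init__(self, model: str, base_url: Optional[str] = None, api_key: Optional[str] = None):
        from openai import OpenAI  # imported lazily so the rest runs without the package

        # base_url=None uses the default endpoint; api_key=None reads OPENAI_API_KEY.
        self._client = OpenAI(base_url=base_url, api_key=api_key)
        self._model = model

    def complete(self, prompt: str) -> str:
        response = self._client.chat.completions.create(
            model=self._model,
            messages=[{"role": "user", "content": prompt}],
        )
        return response.choices[0].message.content or ""


class FixedReplyBackend:
    """Deterministic stand-in for tests and offline runs."""

    def __init__(self, reply: str):
        self.reply = reply

    def complete(self, prompt: str) -> str:
        return self.reply


def decide(backend: LLMBackend, prompt: str) -> str:
    """Agent-side code depends only on the interface, not on a specific provider."""
    return backend.complete(prompt).strip().lower()
```

Swapping GPT-4o for a local model then changes only how the backend is constructed (for example, `OpenAICompatibleBackend("gpt-4o")` versus a local `base_url`), not the simulation logic.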

7 Conclusion

By applying LLMs to two classic multi-agent models, ant foraging and bird flocking, this study shows that LLMs can serve as a viable alternative or complement to traditional rule-based logic in achieving effective swarm-like dynamics.

In ant foraging simulations, LLM-guided ants gathered food at rates comparable to those of ants in the standard NetLogo model, provided that their instructions were carefully designed. Moreover, hybrid colonies that combined LLM-driven and rule-based ants showed a promising trend of improved performance compared to uniform colonies, suggesting that deterministic efficiency and text-based reasoning can be mutually beneficial; this warrants further investigation. In bird flocking, LLM-driven agents adhered to the separation, alignment, and cohesion principles to form cohesive flocks. While heading convergence sometimes lagged behind that of purely rule-based simulations, the resulting formations remained visually coherent. In particular, LLM-based birds adopted slightly more peripheral positions, indicating that nuanced differences in textual instructions, such as how distance and turning are interpreted, can shape global flock patterns.

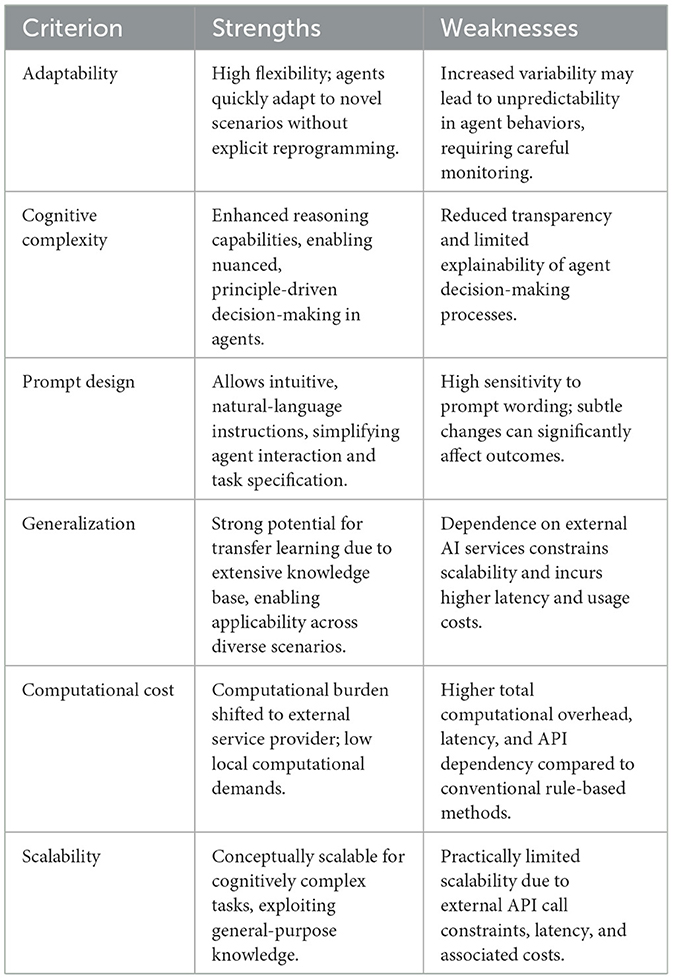

These two experimental cases were used to explore our main research objectives: to demonstrate how structured, rule-based prompts and knowledge-driven prompts could be effectively incorporated into simulations of collective emergent behavior. Although our results show promise even in these relatively simple swarm contexts, we believe that the true potential and comparative advantage of integrating LLMs into agent-based systems would be most pronounced in scenarios requiring agents with higher levels of cognitive complexity or sophisticated principle-driven decision making. In such contexts, whether modeling complex social systems, strategic economic behavior, or adaptive ecological interactions, the flexible knowledge representation, contextual understanding, and general reasoning capabilities of LLMs could significantly outweigh the additional computational costs. We summarize the strengths and weaknesses of our approach in Table 5.

Table 5. Summary of strengths and weaknesses of the proposed LLM-driven multi-agent simulation approach across relevant evaluation criteria.

These advantages manifest particularly in enabling agents to autonomously adapt to novel, dynamic, and cognitively demanding scenarios without requiring extensive reprogramming or domain-specific rule engineering, a persistent challenge with traditional hard-coded systems. Furthermore, LLMs' ability to integrate multiple knowledge domains and leverage implicit world knowledge offers the potential for more nuanced and contextually appropriate agent behaviors that would otherwise require more complex rule systems.

Additionally, in line with our research objectives, the toolchain we present, which integrates NetLogo simulations with LLM interactions via NetLogo's Python extension and the OpenAI API, offers a practical contribution in terms of accessibility and pedagogical potential. NetLogo, widely recognized for its educational utility and intuitive interface, provides a suitable platform for exploring complex multi-agent systems. By incorporating LLMs into this familiar and user-friendly environment, our approach facilitates the study of cognitively richer and more adaptive agent behaviors, while also lowering the barrier to entry for researchers, educators, and students interested in experimenting with advanced AI techniques in agent-based modeling. We believe that this integration can support broader engagement with simulation-based research and teaching, especially in interdisciplinary contexts where accessibility and interpretability are essential.
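On the Python side, such a bridge can be kept very small. The hypothetical function below maps a free-text model reply onto a fixed set of allowed actions and falls back to a default when no valid action is found; a NetLogo model could call a function like this through the Python extension (for instance with `py:runresult`). The action names and the default are assumptions, not the ones used in the published code.

```python
import re

# Hypothetical action vocabulary; a real model would use its own set.
ALLOWED_ACTIONS = ("left", "ahead", "right")


def parse_action(reply, allowed=ALLOWED_ACTIONS, default="ahead"):
    """Return the first allowed action word found in the reply, else `default`."""
    for word in re.findall(r"[a-z]+", reply.lower()):
        if word in allowed:
            return word
    return default  # never hand NetLogo an action it cannot execute
```

Constraining replies this way means that a verbose or malformed answer degrades into a harmless default move instead of an error inside the simulation loop.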

It is important to acknowledge certain practical considerations that accompany our LLM-based approach to multi-agent systems. The integration of LLMs into agent-based simulations introduces significant computational overhead compared to traditional rule-based implementations, with each agent-LLM interaction requiring network communication and remote inference that substantially increases simulation time. Additionally, accessing commercial LLM APIs like GPT-4o incurs token-based costs that scale with simulation complexity, agent population, and runtime duration. These resource implications must be weighed against the benefits of enhanced agent capabilities and behavioral sophistication.

Future implementations might mitigate computational costs through optimizations such as local model deployment, periodic rather than continuous LLM consultation, or the distillation of LLM-derived insights into more efficient specialized models that maintain key behavioral characteristics while reducing inference requirements.
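Periodic rather than continuous consultation can be sketched as a wrapper that calls an expensive policy, such as an LLM query, only every `period` steps and reuses the last action in between. The class name and the reuse-last-action rule are assumptions made for illustration; falling back to a rule-based policy between consultations would be an equally plausible design.

```python
class PeriodicPolicy:
    """Call an expensive policy only every `period` steps and reuse its last action in between."""

    def __init__(self, expensive_policy, period):
        if period < 1:
            raise ValueError("period must be at least 1")
        self.expensive_policy = expensive_policy
        self.period = period
        self.last_action = None
        self._has_action = False
        self.calls = 0  # number of expensive consultations, e.g. LLM requests

    def act(self, step, state):
        if not self._has_action or step % self.period == 0:
            self.last_action = self.expensive_policy(state)
            self._has_action = True
            self.calls += 1
        return self.last_action
```

With `period=5`, each agent makes one request every five steps, cutting both latency and token costs by roughly a factor of five, at the price of reacting more slowly to changes in its surroundings.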

Taken together, these considerations not only illustrate the potential of LLMs in agent-based systems, but also remind us of the importance of thoughtful design when bringing these technologies into simulation contexts. Our findings highlight the central role of iterative prompt tuning in shaping how LLMs behave within multi-agent environments. Careful attention to the length, structure, and clarity of the prompts is essential to achieve consistent, context-aware responses. At the same time, this need for well-crafted prompts opens up exciting possibilities for future work. More complex simulations could benefit from increased LLM adaptability, especially if supported by mechanisms such as partial memory, contextual awareness, or reinforcement signals that allow agents to go beyond stateless behavior and respond in more nuanced, dynamic ways.

Ultimately, this work underscores the potential for advanced language models, guided by carefully designed prompts, to enrich or even extend the capabilities of traditional agent-based models, offering new perspectives on swarm intelligence, self-organization, and emergent behaviors. Beyond simulation, these insights could also inform real-world applications, particularly in swarm robotics, where the ability to generate flexible, context-aware behavior on the fly may open new possibilities for autonomous coordination, exploration, and collective problem solving in dynamic environments.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://github.com/crjimene/swarm_gpt.

Author contributions

CJ-R: Methodology, Data curation, Writing – review & editing, Investigation, Writing – original draft, Conceptualization, Funding acquisition, Formal analysis, Software, Resources. AY: Writing – original draft, Visualization, Methodology, Writing – review & editing, Software, Formal analysis, Investigation. CB: Funding acquisition, Validation, Supervision, Writing – review & editing, Writing – original draft, Investigation.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. Christian Blum was supported by grant PID2022-136787NB-I00 funded by MCIN/AEI/10.13039/501100011033. This research was supported by the EUTOPIA Science and Innovation Fellowship Programme and funded by the European Union Horizon 2020 programme under the Marie Skłodowska-Curie grant agreement No 945380.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author disclaimer

This article reflects only the author's view and the EU Research Executive Agency is not responsible for any use that may be made of the information it contains.

References