- ¹ Department of Methodology of Behavioral Sciences, Faculty of Psychology and Speech Therapy, Universitat de València, València, Spain

- ² Department of Psychology, University of Turin, Turin, Italy

Introduction: This correlational study investigates the psychological and contextual factors associated with the adoption of artificial intelligence (AI) technologies among Italian high school students. Building on the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2), the study extends the model by incorporating Problematic Internet Use (PIU) and Attitudes Toward AI (ATAI) to better account for habitual AI use and behavioural intentions.

Method: A sample of 933 students (mean age = 16.20 years, SD = 1.29; 54.98% female) completed a survey assessing key UTAUT2 dimensions, psychological traits, and patterns of AI tool use in educational contexts. Confirmatory factor analysis (CFA) was used to evaluate the factor structure of the adapted UTAUT2 questionnaire. Multiple regression was used to investigate the factors predicting habit formation and behavioural intention related to AI use.

Results: Confirmatory factor analysis supported the structural validity of the adapted UTAUT2 model. Multiple regression analyses revealed that Performance Expectancy, Social Influence, Hedonic Motivation, and Schoolwork-related AI use were significant predictors of both habit and behavioural intention. PIU showed a robust association with habitual use, suggesting a spillover effect from compulsive Internet behavior to AI engagement. ATAI was associated only with behavioural intention, indicating its role in initial adoption rather than sustained use. Demographic and contextual factors (e.g., school type, citizenship) showed additional effects.

Discussion: These findings contribute to a more comprehensive understanding of adolescent AI engagement by highlighting the role of compulsive tendencies and motivational beliefs. The study underscores the importance of designing inclusive, age-appropriate interventions to promote balanced and informed AI use in educational settings.

Introduction

Artificial Intelligence (AI) technologies have become increasingly pervasive, shaping how individuals interact with digital environments (Moravec et al., 2024). This is particularly true for adolescents, who are at the forefront of using these tools because of their extensive exposure to the digital world, but who may lack the maturity to manage the associated risks. From productivity tools to creative applications, AI systems offer novel capabilities that are transforming educational, professional, and personal domains. As these technologies spread, understanding the factors that influence their use and the resulting behavioural patterns is critical, particularly among high school students, who represent future workforce entrants and early adopters of technological innovations (Ryzhko et al., 2023).

In education, AI could lay the foundation for a profound redefinition of teaching practices and learning processes, opening up both new opportunities and significant challenges. While AI holds considerable potential to improve the quality of education, its impact is not without uncertainties and risks, which calls for critical reflection on the responsible and balanced use of AI in education (AIED). An important aspect of this shift is understanding not only how AI works, but also how its use affects students, so that its benefits can be maximized and its potential negative effects mitigated, especially for younger users. In this context, high school students constitute a unique demographic for studying AI adoption. Adolescents are in a transitional phase characterized by identity formation and cognitive development (Erikson, 1968). At the same time, they are growing up in a digital environment (Prensky, 2001), which makes them both early adopters and potentially vulnerable users of new technologies. In this phase, their technological engagement often intersects with educational tasks, social interactions and entertainment, making them an ideal population in which to explore AI usage patterns and attitudes (Ali et al., 2024). Existing models, such as the extended Unified Theory of Acceptance and Use of Technology (UTAUT2), provide a robust framework for examining the determinants of technology adoption, integrating constructs such as Performance Expectancy, Effort Expectancy, and Hedonic Motivation (Tamilmani et al., 2021). However, the application of UTAUT2 to AI use among adolescents remains underexplored, as does the role of demographic, contextual, and psychological factors that could extend the model to account for AI-related behaviors (Ali et al., 2024; Cabrera-Sanchez et al., 2021).

Artificial intelligence in education

The connection between AI development and education has deep roots that go back to the early stages of AI research. Cognitive scientists such as Simon and Newell combined their studies of machine learning with a deep interest in understanding human learning processes. These scientists saw AI not only as a technical innovation, but also as a key tool for advancing educational theories (Doroudi, 2023). Over time, the focus gradually shifted from the pursuit of “Strong AI,” an AI designed to replicate human cognitive abilities (Searle, 1990), to the development of more practical and targeted systems known as “Weak AI,” which are designed to emulate human outcomes in specific domains. The introduction of tools such as ChatGPT, freely accessible since November 2022 and widely regarded as the first Generative Artificial Intelligence (GAI) platform to reach a mass audience, marked a turning point. AI use among adolescents has increased rapidly, especially in the education sector, where this age group has emerged as one of the key user demographics (Mogavi et al., 2024). According to Klarin et al. (2024), ChatGPT is currently the AI tool most used by adolescents, primarily to complete school assignments. Apart from its early arrival on the mass market, its popularity can probably be attributed to its ease of use, versatility and effectiveness in text generation, which are key features of technologies based on large language models (LLMs).

As a result of the growing accessibility of AI systems and their technological advances, academic research on AIED has experienced significant growth, with a notable increase in publications (Xia et al., 2023; Klarin et al., 2024). This growth reflects not only the increasing adoption of these technologies, but also the growing recognition of their transformative potential in education (Roy and Swargiary, 2024).

In the school environment, AI has the potential to be used for tasks such as translating, writing texts, and completing assignments. It may introduce new opportunities for personalized learning, by providing adaptive and tailored experiences for each student. For example, AI could be used to adapt educational content to the specific needs of individual students, especially in critical areas such as science, technology, engineering, and mathematics (STEM) (Panjwani-Charania and Zhai, 2023). AI support could be particularly valuable for students who face challenges, such as students with disabilities, learning disabilities, or language disadvantages, as they can benefit from support that is tailored to their unique characteristics and needs (Reiss, 2021). These applications could promote the integration of students with different cultural and linguistic backgrounds in the classroom (Salas-Pilco et al., 2022). AI systems can also be used to provide individualized tutoring by responding to students’ questions and offering explanations on complex topics, adapting the level of detail and language to each student’s needs (Roy and Swargiary, 2024).

Risks, challenges, and conflicting findings

Beyond the positive aspects, several researchers have expressed significant concerns about the potential risks of AI use, highlighting in particular issues related to students’ digital health, wellbeing, and cognitive development. Some authors warn that excessive reliance on AI tools could encourage superficial learning habits and hinder the development of fundamental skills, such as critical thinking (Mogavi et al., 2024). If students consistently rely on AI to complete tasks or find solutions, they might not fully develop their creativity and independent thinking skills, potentially compromising the achievement of meaningful goals, such as independent writing (Costa and Murphy, 2025). The systematic use of AI could therefore pose risks to the development of important cognitive skills, such as planning and problem solving (Klarin et al., 2024).

As this is a relatively new and rapidly developing field, research on the psychological and social impact of students’ use of AI provides ambivalent and often contradictory results. Some studies emphasize the risks associated with AI, particularly the negative impact on students’ health, digital wellbeing and socio-emotional development. Others, however, emphasize the potential of AIED and present it as a significant opportunity for learning and personal development.

Several researchers warn of the risk of technological dependency resulting from the overuse of AI in educational activities. Klarin et al. (2024) and Mogavi et al. (2024) caution that reliance on AI for educational and academic tasks could lead students to become overly dependent on these tools. Similarly, León-Domínguez (2024) suggests that unregulated use of AI could even lead to technology addiction. By contrast, studies such as that of Roy and Swargiary (2024) indicate that student motivation and engagement increase in AI-supported learning activities, suggesting that these tools could promote more active and participatory learning experiences.

The effects of AI on students’ empathy and socio-emotional skills also show conflicting perspectives. Lai et al. (2023) find that emotional awareness and the ability to manage emotions decrease in adolescents who frequently use AI, raising concerns about their emotional development. In contrast, Wang et al. (2024) suggest that AI, by offering students personalized and inclusive learning, could contribute to developing greater social sensitivity, laying the foundation for potential openness to different cultures and perspectives.

At the relational level, there are different views on the impact of AI. On the one hand, Neugnot-Cerioli and Muss Laurenty (2024) point out the risk that prolonged use of AI could hinder the development of meaningful relationships in the real world and possibly lead to social isolation. On the other hand, a study conducted in China by Xie et al. (2022) offers an opposing perspective: students who use AI show higher social adaptability scores than peers who do not, suggesting that AIED may actually improve social integration and adaptability. Furthermore, Lai et al. (2023) note that the use of AI could reduce direct interactions between students and educators, thereby limiting opportunities for socio-emotional learning. However, Neugnot-Cerioli (2024) suggests that AI could provide an inclusive learning environment for students with communication barriers, such as students with autism spectrum disorders, by giving them tools to overcome relational barriers and thereby supporting their participation and social development.

The development and use of AI is a transformative phenomenon that is rapidly expanding and involving an ever-increasing number of people. AIED presents adolescents with scenarios that are rich in potential and at the same time raise critical questions. It is important to carefully assess the psychological and educational implications of AI use among adolescents, especially given the delicate developmental stage they are in. On the one hand, AI appears to offer tools for personalized learning, potentially opening new avenues for more effective educational outcomes. It could also hold promise for overcoming language and cultural barriers, potentially promoting inclusion in diverse school environments. On the other hand, concerns have been raised about the potential risk of technological dependency and the possible long-term impact on the acquisition of important skills and on overall wellbeing. While research on AIED is still at an early stage, several studies raise important questions about the psychological and social impact of intensive and prolonged use of AI.

Enthusiasm for new technological possibilities must therefore be tempered by critical reflection on potential risks. Policy makers and educational institutions are at the forefront of addressing these challenges. The Artificial Intelligence Act, recently adopted by the European Parliament (2023), emphasizes the strategic importance of AI in education. Similarly, UNESCO has published an AI competency framework for students aimed at promoting the inclusive, sustainable, and responsible use of these technologies (Miao and Cukurova, 2024). This student-focused framework emphasizes the need to train students not only in the technical use of AI, but also in the critical understanding of its social and environmental impacts. The guide is structured around two main dimensions: the development of fundamental competencies.

Classical models of technology acceptance and adoption

In recent decades, technology acceptance models have served as a basic theoretical framework for explaining the reasons why people choose to adopt new technologies. An important starting point is the Theory of Reasoned Action (TRA) developed by Fishbein and Ajzen (1975). According to TRA, individuals act on the basis of behavioural intentions, which are primarily derived from two factors: attitude towards the behavior, i.e., how positively or negatively a person evaluates the performance of a certain action, and subjective norms, i.e., the perceived expectations of people who are considered important (such as friends, family members or teachers) with regard to this behavior. If a person believes that important people expect them to perform a certain behavior, this belief will influence their decision to perform it or not in practice.

Building on these concepts, Ajzen (1991) extended the TRA by formulating the Theory of Planned Behavior (TPB), which introduced the notion of perceived behavioural control: the individual’s belief about whether they have the means to actually perform a behavior (in the present context, using a technology). Drawing on these premises, Davis (1989) developed the Technology Acceptance Model (TAM). This model identifies two main determinants of technology use: perceived usefulness, i.e., how much the individual believes the technology will improve their performance, and perceived ease of use, i.e., how easy the user believes the technology will be to use. According to the TAM, these factors explain why a person intends to use a technology in everyday life or at work.

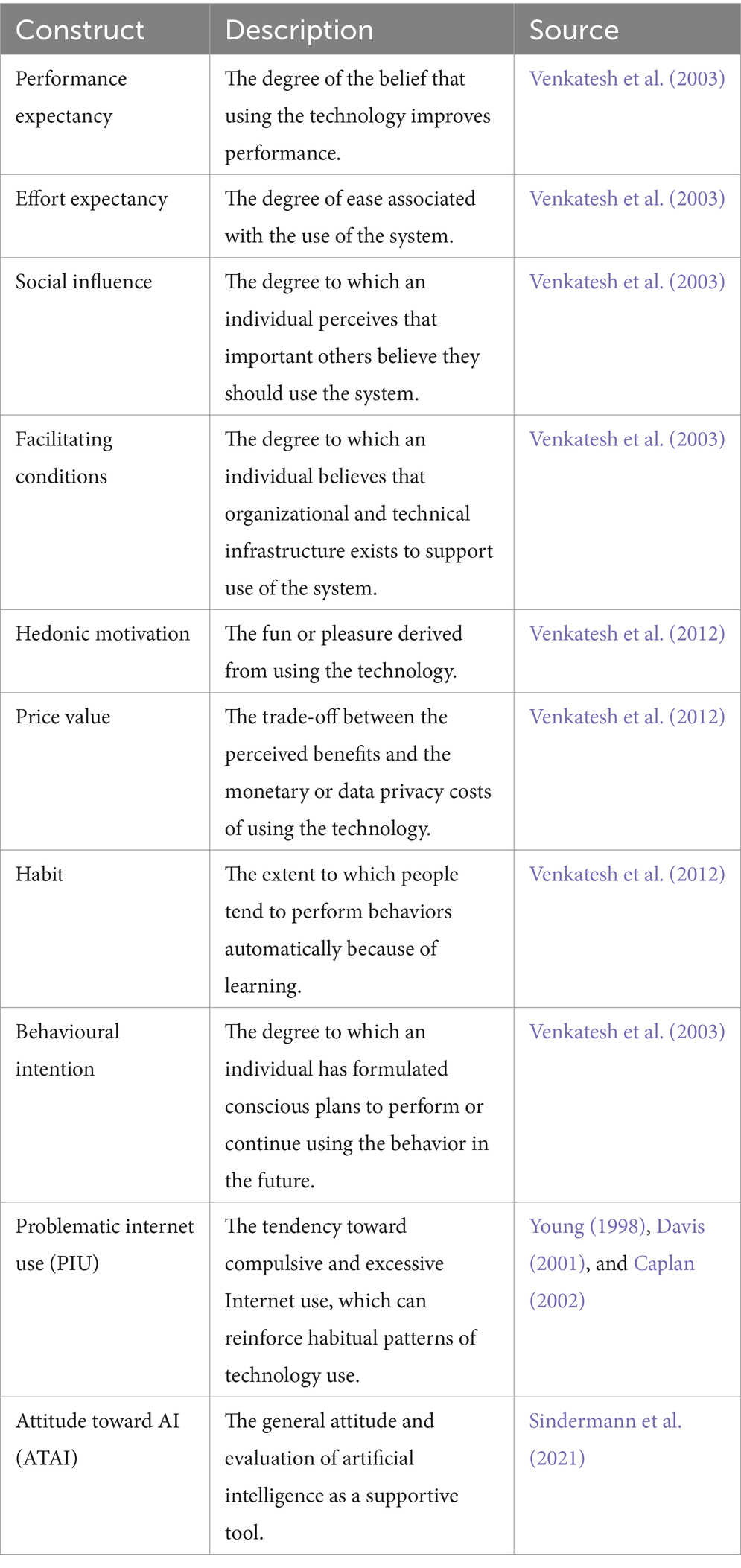

To overcome the limitations of relying on separate models, Venkatesh et al. (2003) proposed the Unified Theory of Acceptance and Use of Technology (UTAUT), which integrates key constructs from eight earlier models, including TRA, TPB and TAM, into a single framework that combines individual elements with contextual factors to explain technology adoption decisions. UTAUT identifies four key constructs to measure technology acceptance: Performance Expectancy, i.e., the perceived usefulness of a technology in improving one’s performance (e.g., a student evaluates whether AI can help them learn better or faster); Effort Expectancy, which refers to the perceived ease of use of the technology; Social Influence, which measures how much the opinion of significant others (friends, teachers, parents) influences the decision to use the technology; and Facilitating Conditions, i.e., the extent to which individuals believe they have the resources and technical support needed to use the technology.

A further development of this model, UTAUT2 (Venkatesh et al., 2012), added three constructs, Hedonic Motivation, Habit and Price Value, to account for technology use in everyday consumer contexts (Tamilmani et al., 2021). Hedonic Motivation refers to the pleasure and curiosity associated with using technology; Habit is understood as the routine and continuous use of technology; Price Value represents the balance between the perceived benefits and the costs borne by the user. Recent studies of university students (Soliman et al., 2024; Soliman et al., 2025) have also confirmed that UTAUT2 provides a robust structure for modelling the adoption of AI tools in educational contexts.

Integration of PIU and ATAI into the UTAUT2 model

Recent studies show the importance of considering psychological variables to explain complex behaviors such as the repeated and sometimes problematic use of digital tools.

The concept of Problematic Internet Use (PIU) was developed to describe situations in which the use of digital tools goes beyond instrumental use and has negative effects on psychological and social well-being (Young, 1998; Davis, 2001; Caplan, 2002). In this context, the Internet Addiction Test (IAT) developed by Young (1998) is still one of the most frequently used instruments for measuring PIU, including in educational settings. This phenomenon is particularly relevant for adolescents, who are more susceptible to forms of addiction and excessive technology use because of their developmental stage (Waldo, 2014). According to Davis (2001), users with a tendency towards PIU are more likely to approach and quickly familiarize themselves with innovative digital tools, which reinforces constant usage habits. This link makes PIU a relevant construct to integrate into models such as UTAUT2, as it may reinforce habit formation, that is, the regular and routine use of AI (Kuss and Griffiths, 2011).

A second important aspect concerns attitudes towards the use of technology and, in the case of this study, towards AI (Attitude Toward Artificial Intelligence, ATAI). Recent research has developed instruments that specifically measure these attitudes and show how they can influence the intention to use AI (Sindermann et al., 2021). However, as Turós et al. (2025) have shown in a study of high school students, positive attitudes towards AI do not necessarily translate into actual repeated or routine use, especially for school activities such as completing assignments. This discrepancy suggests that integrating ATAI into the UTAUT2 framework can contribute to a better understanding of the differences between intention, actual use and habit formation.

The integration of PIU and ATAI within the UTAUT2 framework in the present study therefore aims to provide a more comprehensive perspective on the psychological and motivational factors that influence the development of habitual AI use and future adoption intentions in high school students. See Table 1 for a list of the constructs included in the model and their definitions.

The present study

Although UTAUT2 provides an effective framework for systematically investigating the factors that influence technology adoption, and allows contextual and demographic factors such as gender, school type or cultural background to be included to reflect the specific educational environment (Tamilmani et al., 2021; Soliman et al., 2025), its application to the study of AI use among high school students remains limited (Strzelecki, 2024).

A second aspect that is still insufficiently developed concerns the integration of psychological constructs within this framework. While some previous studies have considered dimensions such as technology-related anxiety (Li et al., 2024; Chai et al., 2020), the integration of PIU (Young, 1998; Davis, 2001; Caplan, 2002) and ATAI (Sindermann et al., 2021) into UTAUT2 in the context of AI adoption among adolescents remains poorly explored (Huang et al., 2024; Turós et al., 2025). Further research is therefore needed to understand how PIU may act as an indicator of compulsive behavior and of risks to digital wellbeing, and how ATAI translates into sustained behavioural adoption.

In light of the previous considerations, this study extends the UTAUT2 framework to investigate AI use among Italian high school students, examining how demographic characteristics, school contexts, and psychological variables influence two key outcomes: habitual use of AI technologies and the intention to use them. Specifically, we investigate the role of PIU and ATAI, providing a more comprehensive model of technology adoption. PIU captures behavioural compulsivity, reflecting usage patterns rooted in habit and dependence, while ATAI addresses the motivational and evaluative aspects that influence intentional engagement. Together, they provide a nuanced understanding of how external (e.g., social pressure, facilitating conditions) and internal (e.g., attitudes, compulsive tendencies) factors interact to shape AI adoption behaviors.

In addition, the study examines high school students’ current patterns of AI use as a factor that may drive habit formation and the intention to use AI in the future. Current AI use reflects how these technologies are already being integrated into students’ daily lives, providing a foundation for habitual engagement and future use. Whether AI is used for practical, creative, or academic purposes, frequent interaction in these contexts is likely to strengthen habits through positive reinforcement and to shape students’ willingness to continue or expand their use in the future.

Specifically, this research addresses the following objectives:

1. To assess the applicability of the UTAUT2 framework to high school students in the context of AI technologies, evaluating the relevance of constructs such as Performance Expectancy, Effort Expectancy, Hedonic Motivation, and Facilitating Conditions, while incorporating psychological and contextual factors specific to this population.

2. To identify the factors influencing habitual use of, and behavioural intentions toward, AI technologies among Italian high school students, including individual demographic traits, school-related contextual variables, psychological constructs such as Problematic Internet Use (PIU) and Attitudes Toward AI (ATAI), and current AI use.

In pursuing these aims, the present study adopts a non-experimental, correlational approach based on questionnaire data. We aim to provide novel findings by extending the UTAUT2 framework to the examination of AI technology adoption among high school students. By integrating demographic variables and psychological constructs within a validated technology acceptance model, the present study aims to offer a more comprehensive understanding of how students’ individual characteristics jointly influence AI usage habits and behavioural intentions.

Method

Participants

The initial sample comprised 1,035 high school students aged between 14 and 20 years (M = 16.18, SD = 1.28); 55.88% identified as female, 1.40% as non-binary, and 85.50% reported Italian citizenship. A total of 102 participants completed only the demographic section and were therefore excluded from subsequent analyses. The resulting study sample consisted of 933 high school students from Italy, aged between 14 and 20 years (M = 16.20, SD = 1.29). Among the participants, 54.98% identified as female, 1.29% identified as non-binary, and 85.64% indicated they were Italian citizens. The largest group of students (51.8%) attended a lyceum, students from vocational schools made up 26.8%, and those in technical schools formed the smallest group (21.4%).

Participants completed the questionnaire in their classrooms. To address potential biases inherent in self-report data, we assured participants of anonymity and confidentiality and clearly stated that there were no right or wrong answers, in order to reduce social desirability and encourage honest responses. Participation was completely voluntary and carried no incentives or rewards. All instruments were pre-tested with a pilot group of 25 students to ensure the clarity and age-appropriateness of the adapted items. Based on student feedback, no adjustments to the instrument were required, as it was deemed comprehensible, relevant, and suitable for the target age group. Prior to data collection, ethical approval was obtained from the ethics committee at the authors’ affiliated university (IRB N. 856,434).

Measures

Unified theory of acceptance and use of technology 2

To assess the determinants of AI use, this study employed an adapted version of the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2) model (Venkatesh et al., 2012). UTAUT2 is a robust framework for understanding technology acceptance, encompassing key constructs such as Performance Expectancy, Effort Expectancy, Social Influence, Facilitating Conditions, Hedonic Motivation, Price Value, Habit, and Behavioural Intention. The original UTAUT2 items were modified to specifically address the context of AI use in this study. For instance, phrases like “mobile Internet” in the original questionnaire were replaced with “Artificial Intelligence.” Participants responded to each item using a 5-point Likert scale ranging from 1 (Strongly Disagree) to 5 (Strongly Agree). Example items include: “I believe that artificial intelligence is useful in my studies” (Performance Expectancy); “Learning to use artificial intelligence is easy for me” (Effort Expectancy); “People who influence my behavior believe I should use artificial intelligence” (Social Influence); and “Using artificial intelligence is fun” (Hedonic Motivation).

AI usage patterns

First, we asked participants to report on their currently used AI tools using an open-ended question [“Which artificial intelligence applications do you use (e.g., ChatGPT, Google Gemini, Algor Education, etc.)?”]. Based on manual coding of student responses, ChatGPT was the most widely used tool, with 68.6% of respondents reporting its use. This was followed by Google Translate at 4.7%, Bing Copilot at 3.7%, Google Gemini at 3.2%, Photomath at 3.0%, and MyAI Snapchat at 2.9%. Reverso and Canva both had a usage rate of 1.4%, while Algor and Character each had 0.8%. PizzaGPT was used by 0.5% of participants, and Claude had the lowest usage rate, at just 0.1%.

Next, we asked students to report how they used artificial intelligence (e.g., for translation, creating texts, doing school assignments, creating images for social media, etc.). The analysis of responses revealed that 53.59% of students reported using AI for school support or homework tasks, 38.59% for content creation, 36.98% for translation, and 22.72% for information search or research; students could report more than one type of use. Each category was coded as a binary indicator (0 = no, 1 = yes) capturing whether a participant engaged in that specific type of AI use.
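As an illustration of the binary coding described above, the sketch below turns manually coded answers into 0/1 indicators and computes the share of students per category. It assumes each open-ended answer has already been coded into a set of category labels; the labels in `CATEGORIES`, the function names and the toy data are hypothetical and do not reproduce the study's coding scheme.

```python
# Hypothetical category labels; the study's actual coding scheme may differ.
CATEGORIES = ["school_support", "content_creation", "translation", "information_search"]


def to_binary_indicators(coded_answers):
    """Convert each student's set of coded categories into 0/1 indicators."""
    return [{cat: int(cat in answer) for cat in CATEGORIES} for answer in coded_answers]


def prevalence(indicators):
    """Percentage of students scoring 1 on each indicator (categories may overlap)."""
    n = len(indicators)
    return {cat: 100 * sum(row[cat] for row in indicators) / n for cat in CATEGORIES}


# Toy example: four students; two report two uses each, one reports none.
coded = [
    {"school_support", "translation"},
    {"school_support", "content_creation"},
    {"translation"},
    set(),
]
rows = to_binary_indicators(coded)
print(rows[0])  # {'school_support': 1, 'content_creation': 0, 'translation': 1, 'information_search': 0}
print(prevalence(rows))  # {'school_support': 50.0, 'content_creation': 25.0, 'translation': 50.0, 'information_search': 0.0}
```

Because a single student can contribute a 1 to several indicators, the toy prevalence values add up to 125%, mirroring how the reported category percentages exceed 100% in total.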

Problematic internet use

The study employed an adaptation of the 8-item instrument designed by Young (1998) to assess participants’ tendencies toward Problematic Internet Use (PIU). This instrument includes items exploring behaviors and attitudes related to Internet use. Example items are: “Do you feel the need to use the Internet with increasing amounts of time in order to achieve satisfaction?” and “Do you use the Internet as a way of escaping from problems or of relieving a dysphoric mood (e.g., feelings of helplessness, guilt, anxiety, depression)?”. Participants were asked to reflect on the frequency of the behaviors described in each question and to select the response that best described their situation on the following scale: 0 = Never, 1 = Rarely, 2 = Occasionally, 3 = Often, 4 = Very Often. In the present study, Cronbach’s alpha was acceptable (α = 0.78).

Attitude towards artificial intelligence

To assess participants’ attitudes towards artificial intelligence, we adapted the Attitude Towards Artificial Intelligence (ATAI) scale developed by Sindermann et al. (2021). This scale is designed to measure general attitudes towards AI across different cultural contexts. The ATAI scale consists of five items presented as statements related to AI, with which participants indicate their level of agreement on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree). The items capture a range of sentiments towards AI, including fear, trust, perceived existential threat, potential benefits, and concerns about job displacement: 1. AI frightens me; 2. I trust AI; 3. AI will destroy humanity; 4. AI will benefit humanity; 5. AI will cause the loss of many jobs. The scale’s brevity and straightforward wording make it suitable for use with diverse populations, including high school students. In the present study, Cronbach’s alpha was acceptable (α = 0.70).
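The ATAI items mix negatively worded statements (items 1, 3 and 5) with positively worded ones (items 2 and 4). The text does not state how the scale score was formed; the sketch below assumes, hypothetically, that the negative items are reverse-scored on the 1 to 5 scale and that the score is the item mean, so that higher values indicate a more positive attitude toward AI. The function name and constants are illustrative only.

```python
# Assumption: items 1, 3 and 5 are reverse-scored; the source does not say so.
REVERSED_ITEMS = {1, 3, 5}
SCALE_MIN, SCALE_MAX = 1, 5


def score_atai(responses):
    """Mean ATAI score from a dict {item_number: answer on the 1-5 scale}."""
    if set(responses) != {1, 2, 3, 4, 5}:
        raise ValueError("expected answers to all five ATAI items")
    adjusted = []
    for item, answer in sorted(responses.items()):
        if not SCALE_MIN <= answer <= SCALE_MAX:
            raise ValueError(f"item {item}: answer {answer} is outside 1-5")
        if item in REVERSED_ITEMS:
            answer = SCALE_MIN + SCALE_MAX - answer  # 1<->5, 2<->4, 3 unchanged
        adjusted.append(answer)
    return sum(adjusted) / len(adjusted)


# A student who strongly disagrees with every negative item and strongly
# agrees with both positive items receives the maximum score.
print(score_atai({1: 1, 2: 5, 3: 1, 4: 5, 5: 1}))  # 5.0
```

Reverse-scoring before averaging keeps all five items pointing in the same direction, which is also a precondition for a meaningful internal-consistency coefficient such as Cronbach's alpha.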

Data analysis

To assess the applicability of the UTAUT2 model to AI use, Confirmatory Factor Analysis (CFA) was performed. This analysis evaluated the structural validity of the model, focusing on factor loadings, covariances, and standard model fit indices, including Chi-square (χ²), Comparative Fit Index (CFI), Tucker-Lewis Index (TLI), Standardized Root Mean Square Residual (SRMR), and Root Mean Square Error of Approximation (RMSEA). We consider values of CFI > 0.95, TLI > 0.95, and RMSEA < 0.05 as an indication of good model fit, and CFI and TLI > 0.90 and RMSEA < 0.08 as an indication of acceptable fit (Hu and Bentler, 1999; Marsh et al., 2004).
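The indices and cutoffs listed above can be written out as a short sketch. It uses the standard maximum-likelihood point-estimate formulas; CFI and TLI additionally need the chi-square of the baseline (independence) model, which the text does not report. Software such as lavaan may return slightly different values, for example when a scaled or robust chi-square or a different sample-size convention is used, so this is illustrative rather than a reproduction of the reported output.

```python
import math


def rmsea(chi2, df, n):
    """RMSEA point estimate from a model chi-square (N - 1 convention)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_m, df_m, chi2_b, df_b):
    """Comparative Fit Index; *_b refers to the baseline (independence) model."""
    d_m = max(chi2_m - df_m, 0.0)
    d_b = max(chi2_b - df_b, d_m)
    return 1.0 if d_b == 0 else 1.0 - d_m / d_b


def tli(chi2_m, df_m, chi2_b, df_b):
    """Tucker-Lewis Index (non-normed fit index)."""
    ratio_b = chi2_b / df_b
    return (ratio_b - chi2_m / df_m) / (ratio_b - 1.0)


def classify_fit(cfi_value, tli_value, rmsea_value):
    """Apply the cutoffs stated in the text: good, acceptable, otherwise poor."""
    if cfi_value > 0.95 and tli_value > 0.95 and rmsea_value < 0.05:
        return "good"
    if cfi_value > 0.90 and tli_value > 0.90 and rmsea_value < 0.08:
        return "acceptable"
    return "poor"


print(classify_fit(0.95, 0.94, 0.0513))  # acceptable
```

Under these rules, the final model reported in the Results (CFI = 0.95, TLI = 0.94, RMSEA = 0.0513) falls in the acceptable band: CFI and TLI do not exceed 0.95, and RMSEA is just above 0.05.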

Reliability was assessed using Cronbach’s alpha (α) and McDonald’s omega (ω) to evaluate the internal consistency of the constructs. These analyses were conducted in R using the lavaan (Rosseel, 2012) and psych (Revelle, 2019) packages.
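For reference, both reliability coefficients can be sketched in a few lines of Python with NumPy. Alpha uses the usual ratio of summed item variances to total-score variance; the omega function assumes a one-factor model with standardized loadings and uncorrelated residuals, which is one common formulation and not necessarily the exact routine used by the psych package. Function names are illustrative.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha; rows are respondents, columns are the items of one scale."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    sum_item_var = x.var(axis=0, ddof=1).sum()  # sum of individual item variances
    total_var = x.sum(axis=1).var(ddof=1)       # variance of the summed scale score
    return k / (k - 1) * (1.0 - sum_item_var / total_var)


def omega_total(loadings):
    """McDonald's omega from standardized loadings of a single-factor model,
    assuming uncorrelated residuals with variances 1 - loading**2."""
    lam = np.asarray(loadings, dtype=float)
    common = lam.sum() ** 2
    unique = (1.0 - lam ** 2).sum()
    return common / (common + unique)


print(round(omega_total([0.7, 0.7, 0.7, 0.7]), 3))  # 0.794
```

Alpha equals omega only when all loadings are equal (tau-equivalence); with unequal loadings, alpha tends to be the lower of the two, which is one reason to report both.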

Subsequently, multiple regression analyses were conducted with Habit and Behavioural Intention related to AI use as outcome variables. The predictors included demographic characteristics (e.g., age, gender, Italian citizenship, type of secondary school), students’ GPA, AI usage contexts (e.g., translation, information retrieval, schoolwork, content creation), PIU, ATAI, and the key constructs from the UTAUT2 model (i.e., Performance Expectancy, Effort Expectancy, Social Influence, Facilitating Conditions, Hedonic Motivation, Price Value). The regression models were designed to assess the unique contribution of each predictor while statistically controlling for the other variables in the model. Standardized beta coefficients (β) were used to interpret effect sizes, and model fit was evaluated using R² and adjusted R² to account for explained variance and model complexity. Significance was set at p < 0.05. These analyses were performed in SPSS, version 29.
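A minimal sketch of how standardized betas, R² and adjusted R² relate, using NumPy least squares on z-scored variables (a standardized beta, b·s_x/s_y, equals the slope obtained when both outcome and predictors are z-scored). The school-type helper assumes dummy coding with vocational school as the reference category, consistent with how school-type effects are reported in the Results; all function names and labels are hypothetical, and this is not the SPSS procedure itself.

```python
import numpy as np


def adjusted_r2(r2, n, p):
    """Adjusted R^2 for n observations and p predictors."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def standardized_ols(X, y):
    """OLS on z-scored predictors and outcome.

    Returns (standardized betas, R^2, adjusted R^2). Every column of X must vary.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    design = np.column_stack([np.ones(n), Xz])  # intercept + z-scored predictors
    coef, *_ = np.linalg.lstsq(design, yz, rcond=None)
    resid = yz - design @ coef
    r2 = 1.0 - (resid @ resid) / (yz @ yz)  # yz is centred, so yz @ yz is the total SS
    return coef[1:], r2, adjusted_r2(r2, n, p)


def school_dummies(school_types, reference="vocational"):
    """Dummy-code school type against a reference category (hypothetical labels)."""
    levels = sorted(set(school_types) - {reference})
    return levels, [[int(s == level) for level in levels] for s in school_types]


print(round(adjusted_r2(0.36, 933, 19), 3))  # 0.347
```

The adjustment penalizes the number of predictors p: with n = 933 and a hypothetical p = 19, an R² of 0.36 shrinks only to about 0.347, because the sample is large relative to the number of predictors.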

Results

Model fit of UTAUT2

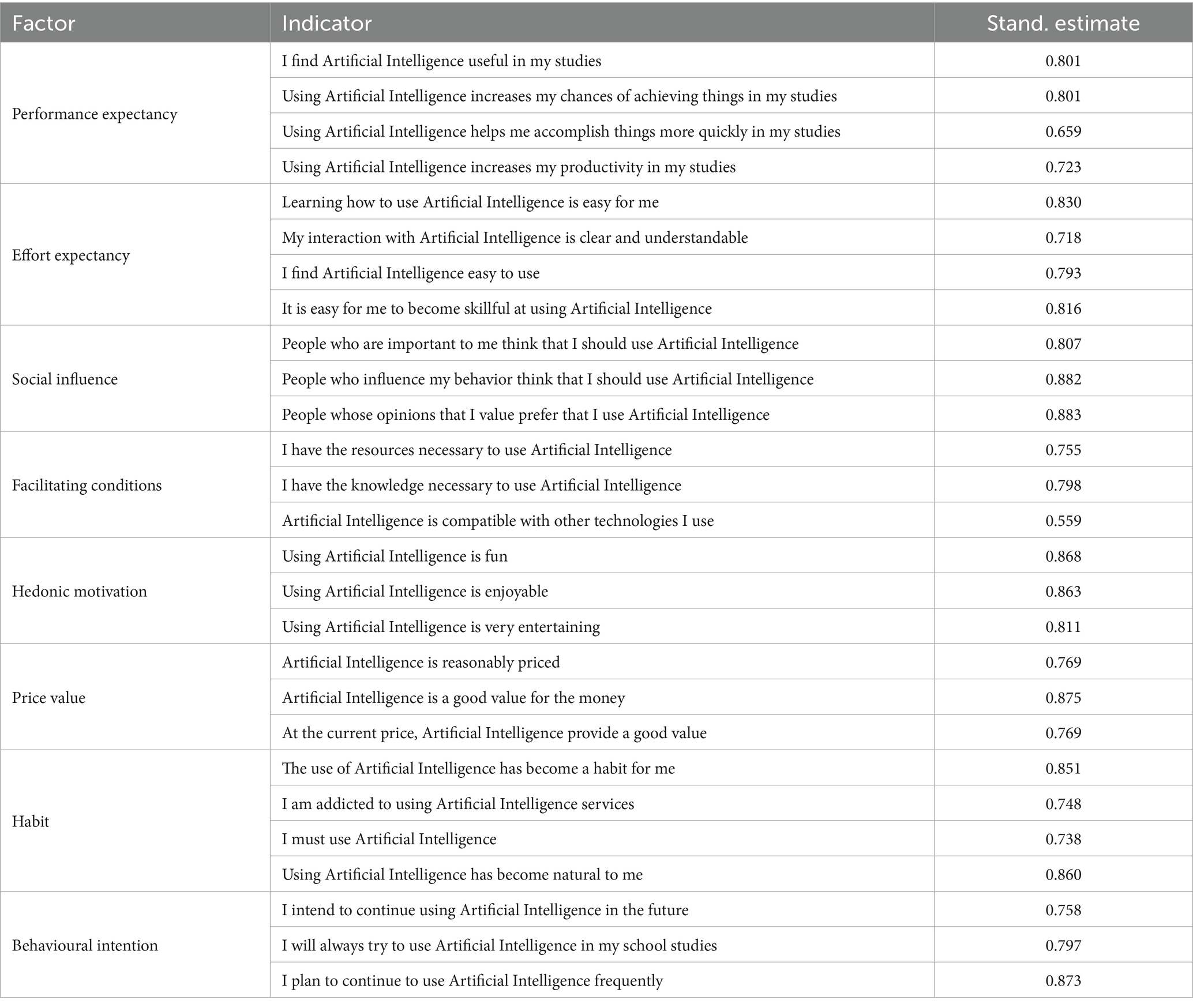

The chi-square test was significant (χ² = 1,152, df = 322, p < 0.001), which is common with large samples because the test is highly sensitive to sample size. However, other fit indices indicated acceptable model fit: CFI = 0.94, TLI = 0.93, SRMR = 0.0507, RMSEA = 0.0516 (90% CI [0.0484, 0.0549]). All items had standardized loadings > 0.50, except for Item 15 (“I can get help from others when I have difficulties using artificial intelligence”) from the Facilitating Conditions dimension, which had a standardized loading of 0.20. This item was therefore removed. When the model was re-estimated, the chi-square test remained significant (χ² = 1,050, df = 296, p < 0.001), and the fit indices again indicated acceptable fit: CFI = 0.95, TLI = 0.94, SRMR = 0.0485, RMSEA = 0.0513 (90% CI [0.048, 0.0547]).
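The drop in degrees of freedom from 322 to 296 after removing one item can be checked with a short calculation. The sketch assumes eight correlated factors (the eight UTAUT2 constructs listed under Measures), simple structure and no correlated residuals; under those assumptions the reported values correspond to 28 items before and 27 items after removing Item 15. These item counts are inferred from the degrees of freedom, not stated in the text.

```python
def cfa_df(n_items, n_factors):
    """Degrees of freedom of a simple-structure CFA with correlated factors.

    Assumes each item loads on exactly one factor, factor variances are fixed
    to 1, all factor pairs are freely correlated and residuals are uncorrelated.
    """
    observed_moments = n_items * (n_items + 1) // 2  # unique variances and covariances
    free_parameters = (
        n_items                               # one loading per item
        + n_items                             # one residual variance per item
        + n_factors * (n_factors - 1) // 2    # factor correlations
    )
    return observed_moments - free_parameters


print(cfa_df(28, 8), cfa_df(27, 8))  # 322 296
```

Removing a single item deletes 28 observed moments (its variance and its 27 covariances) but only 2 free parameters (its loading and residual variance), so the degrees of freedom fall by 26.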

Table 2 presents the factor loadings for the retained items. All standardized factor loadings were above 0.50 and statistically significant (p < 0.001), indicating strong relationships between the items and their respective constructs. All factor correlations were also statistically significant (p < 0.001), with latent correlations ranging from 0.246 to 0.801.

Cronbach’s alpha (α) and McDonald’s omega (ω) were calculated to assess the reliability of the constructs, and all values met acceptable thresholds. Performance Expectancy showed α = 0.829 (ω = 0.832) and Effort Expectancy α = 0.867 (ω = 0.869). Social Influence and Hedonic Motivation exhibited strong reliability, with α = 0.892 (ω = 0.893) and α = 0.886 (ω = 0.888), respectively. Price Value and Habit also showed high reliability, with α = 0.844 (ω = 0.847) and α = 0.879 (ω = 0.888), respectively. Behavioural Intention had α = 0.846 (ω = 0.849), while Facilitating Conditions showed a slightly lower but acceptable α = 0.737 (ω = 0.750). Overall, the scales showed adequate to good internal consistency.
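
The two reliability coefficients can be sketched as follows: Cronbach’s α from the item and total-score variances, α = k/(k − 1) · (1 − Σ s²ᵢ / s²_total), and McDonald’s ω (total) from the standardized loadings λ of a single-factor model, ω = (Σλ)² / ((Σλ)² + Σ(1 − λ²)). This is a minimal standard-library illustration with hypothetical toy data and loadings; the ω formula assumes standardized loadings and uncorrelated residuals, and it is not necessarily the exact routine used in the study.

```python
import statistics

def cronbach_alpha(items: list[list[float]]) -> float:
    """items[j] holds the scores of all respondents on item j."""
    k = len(items)
    # Sum of the k item variances (sample variances, n - 1 denominator).
    item_var_sum = sum(statistics.variance(item) for item in items)
    # Variance of each respondent's total score across the k items.
    totals = [sum(scores) for scores in zip(*items)]
    return k / (k - 1) * (1 - item_var_sum / statistics.variance(totals))

def mcdonald_omega(loadings: list[float]) -> float:
    """Omega total from standardized one-factor loadings."""
    common = sum(loadings) ** 2
    # The standardized residual variance of each item is 1 - loading^2.
    unique = sum(1 - lam ** 2 for lam in loadings)
    return common / (common + unique)

# Hypothetical toy inputs, not the study's data.
parallel_items = [[1, 2, 3, 4], [1, 2, 3, 4], [1, 2, 3, 4]]
print(round(cronbach_alpha(parallel_items), 3))   # 1.0
print(round(mcdonald_omega([0.7, 0.7, 0.7]), 3))  # 0.742
```

Identical items give α = 1, whereas uncorrelated items give α = 0; ω differs from α mainly when loadings vary across items, which is why the two coefficients are close but not identical for most scales above.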

Regression analyses predicting habit and behavioural intention related to AI use

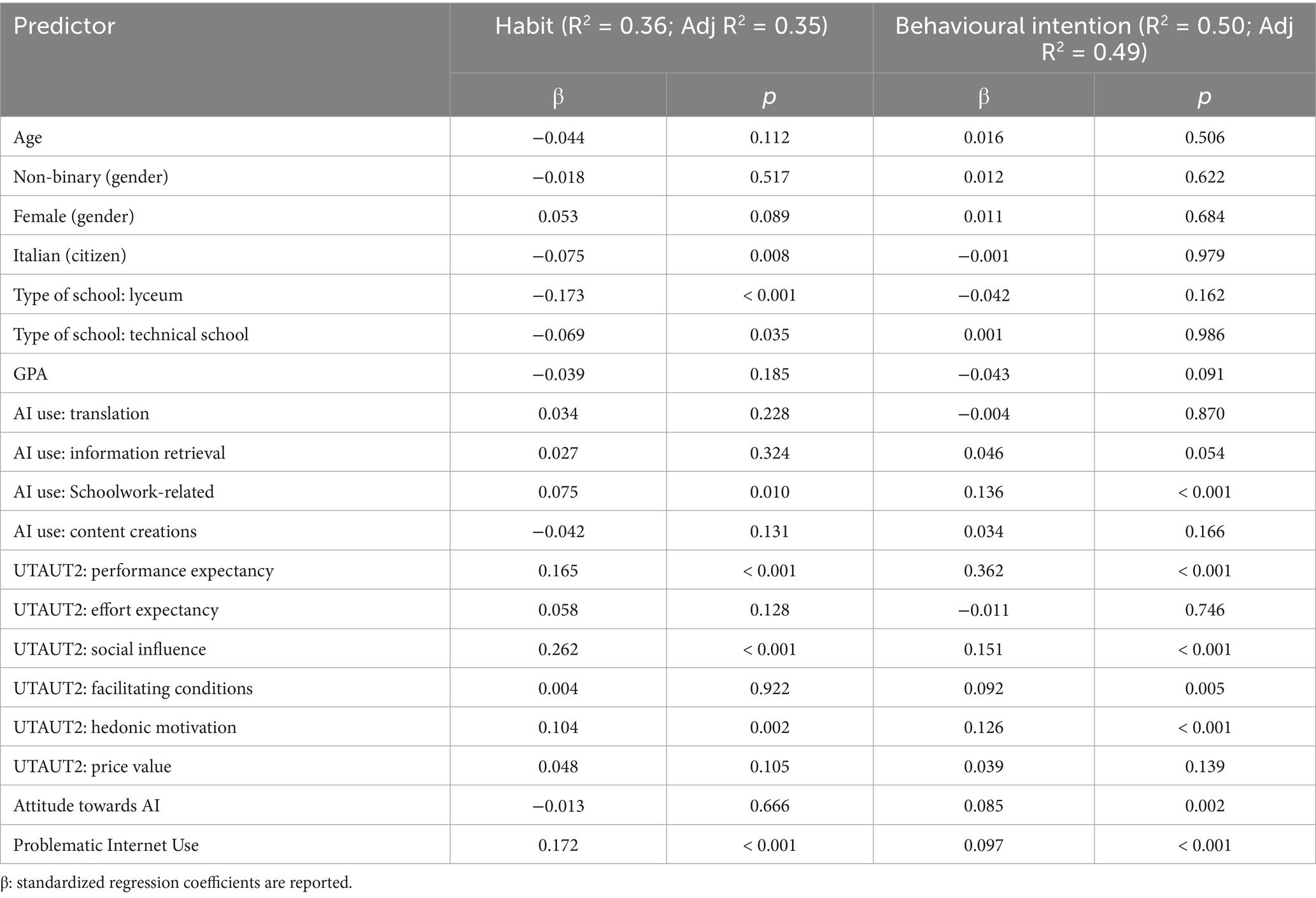

The regression analyses revealed distinct predictors for Habit and Behavioural Intention, and the two models differed in explanatory power (see Table 3). The model for Habit explained 36% of the variance (R² = 0.36; adjusted R² = 0.35), whereas the model for Behavioural Intention explained 50% (R² = 0.50; adjusted R² = 0.49).
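
The small gap between R² and adjusted R² follows from the usual penalty for the number of predictors p relative to the sample size n: adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1). The sketch below assumes n = 933 (no cases dropped) and p = 18, our own count of the predictors listed in the Methods when school type is coded as two dummy variables and gender as a single indicator. Both values are assumptions, since the analytic sample size and predictor coding are not restated in this section; under them, the rounded results match the reported adjusted R² values.

```python
def adjusted_r2(r2: float, n: int, p: int) -> float:
    """Adjusted R^2 for a model with p predictors fitted to n cases."""
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

# Assumed: n = 933 and p = 18 (our count of the listed predictors).
N_CASES, N_PREDICTORS = 933, 18
for outcome, r2 in [("Habit", 0.36), ("Behavioural Intention", 0.50)]:
    adj = adjusted_r2(r2, N_CASES, N_PREDICTORS)
    print(f"{outcome}: R2 = {r2:.2f}, adjusted R2 = {adj:.2f}")
```

With roughly 50 cases per predictor, the penalty is only about one percentage point, so R² and adjusted R² lead to the same substantive conclusions here.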

For Habit, significant positive predictors included Performance Expectancy (β = 0.165, p < 0.001), Social Influence (β = 0.262, p < 0.001), Hedonic Motivation (β = 0.104, p = 0.003), schoolwork-related use of AI (β = 0.075, p = 0.010), and Problematic Internet Use (PIU) scores (β = 0.172, p < 0.001). Students with Italian citizenship reported lower Habit than students without Italian citizenship (β = −0.075, p = 0.008). Additionally, compared with students attending vocational schools, students attending a lyceum (β = −0.173, p < 0.001) or a technical school (β = −0.069, p = 0.038) reported lower Habit related to AI use. Interestingly, Attitudes Toward AI (ATAI) did not significantly predict Habit (β = −0.013, p = 0.666).
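
The school-type coefficients above are contrasts against vocational schools, which implies treatment (dummy) coding with vocational school as the reference category. A minimal sketch of such coding follows; the category labels and the helper name are hypothetical stand-ins for however school type was recorded in the survey.

```python
# Hypothetical labels; vocational school is the reference category,
# so it is encoded as all zeros and absorbed into the intercept.
SCHOOL_DUMMIES = ("lyceum", "technical")

def encode_school(school_type: str) -> tuple[int, int]:
    """Return (is_lyceum, is_technical) indicators for one student."""
    if school_type not in ("vocational", *SCHOOL_DUMMIES):
        raise ValueError(f"unknown school type: {school_type!r}")
    return tuple(int(school_type == level) for level in SCHOOL_DUMMIES)

for school in ("vocational", "lyceum", "technical"):
    print(school, encode_school(school))
```

Under this coding, the negative β for lyceum and technical school corresponds to lower Habit relative to vocational students, holding the other predictors constant.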

For Behavioural Intention, significant positive predictors were Performance Expectancy (β = 0.362, p < 0.001), Social Influence (β = 0.151, p < 0.001), Hedonic Motivation (β = 0.126, p < 0.001), Facilitating Conditions (β = 0.092, p = 0.005), schoolwork-related use of AI (β = 0.136, p < 0.001), PIU (β = 0.097, p < 0.001), and ATAI (β = 0.085, p = 0.002). Use of AI for information retrieval approached significance (β = 0.046, p = 0.057), whereas the remaining predictors, including Effort Expectancy, Price Value, and the demographic variables, did not contribute significantly to the model.

These results suggest that habitual AI use is primarily associated with social and performance-related factors, enjoyment, task relevance, and PIU. Behavioural Intention, by contrast, appears to be shaped by a broader set of factors, with ATAI emerging as a significant predictor of intention only. The associations of Facilitating Conditions and Social Influence with Behavioural Intention further point to the role of external support and the social environment in encouraging AI adoption.

Discussion

The present study provides a comprehensive examination of the adoption of artificial intelligence (AI) among Italian high school students, extending the Unified Theory of Acceptance and Use of Technology 2 (UTAUT2) framework (Tamilmani et al., 2021) with psychological and contextual factors associated with AI usage habits and behavioural intentions.

Our findings offer nuanced insights into AI adoption among adolescents. Both models explained a substantial share of variance (36% for Habit and 50% for Behavioural Intention), highlighting the complex interplay of factors shaping engagement with AI. Notably, Performance Expectancy was positively associated with both Habit and Behavioural Intention, suggesting that students who perceive greater benefits from AI also tend to engage with it more. Its comparatively large coefficient for Behavioural Intention (β = 0.362) indicates that perceived instrumental value may play a crucial role in shaping future intentions to use the technology (Grassini et al., 2024; Moravec et al., 2024).

Social Influence also emerged as a key variable for both Habit and Behavioural Intention, underscoring the importance of peer dynamics and social contexts in technology adoption (Ryzhko et al., 2023). This finding aligns with previous research emphasizing the role of social networks in technology acceptance, particularly among younger populations (Ali et al., 2024). Perceived peer behavior seems to play an important role: when students observe or sense that their classmates are actively using AI, they may feel encouraged or indirectly motivated to adopt similar practices. This tendency may be reinforced by a fear of falling behind, expressed either as a desire to acquire technological skills that improve academic performance and provide a competitive advantage, or as conformity to social norms, trends, and habits that are seen as essential for social integration and success.

Hedonic Motivation showed significant, though comparatively weaker, associations with both Habit and Behavioural Intention. This suggests that although most students reported using AI primarily for academic purposes, such as homework support, the perceived value of AI use may also depend on the intrinsic enjoyment it provides (Shuhaiber et al., 2025). The remaining UTAUT2 variables showed mixed or non-significant associations with Habit and Behavioural Intention. Among these, Facilitating Conditions was significantly associated with Behavioural Intention but not with Habit. This finding aligns with previous research highlighting the importance of initial support mechanisms in fostering users’ intention to adopt a technology (Habibi et al., 2023). By contrast, Social Influence, Performance Expectancy, and Hedonic Motivation appear to be more closely linked to habit formation.

Effort Expectancy was not associated with Behavioural Intention, possibly reflecting the intuitive and user-friendly nature of AI tools such as ChatGPT, which require minimal learning effort. This may be particularly relevant for adolescents, often referred to as “digital natives” (Prensky, 2001), who have grown up immersed in digital environments. Their continuous exposure to technology may have shaped distinct cognitive patterns and usage habits that make it easier for them to adopt new tools demanding little additional effort. Price Value also showed no significant association with either Habit or Behavioural Intention, an outcome that warrants further consideration. According to Venkatesh et al. (2012), and as supported by the systematic review of Tamilmani et al. (2021), price value is typically conceptualized in terms of direct monetary cost, reflecting users’ evaluation of whether the benefits of a technology justify its financial expense. Some students may have interpreted this construct narrowly, considering only the economic cost and deeming it irrelevant because they use free or freemium AI tools such as ChatGPT. Others may have adopted a broader interpretation, viewing “cost” in terms of sharing data or providing feedback in exchange for usage (LaRose et al., 2008; Soliman et al., 2025). This divergence in interpretation may partly account for the non-significant association of Price Value with Habit and Behavioural Intention.

Beyond the UTAUT2 variables, we found several significant associations with Habit and Behavioural Intention. Schoolwork-related AI use was positively associated with both, suggesting that educational utility is a primary motive for using AI, a pattern consistent with the reported surge in school-related use of large language models (LLMs) among secondary school students (Zhu et al., 2025). In contrast, use of AI for content creation and translation showed no significant associations with Habit or Behavioural Intention, although both were relevant usage categories in our sample. This suggests that while students actively employ AI for these purposes, such applications may be less strongly linked to long-term engagement with the technology.

The study also revealed contextual variations. Students without Italian citizenship reported stronger AI usage habits, suggesting that cultural and potentially socioeconomic factors may shape technology adoption. This aligns with findings that international students, who often face cultural and language barriers, may be more inclined to use AI tools to support their academic performance (Ittefaq et al., 2025). Students from vocational schools also reported higher Habit than those in technical schools and lyceums, indicating potential institutional variations in technological engagement (Baek et al., 2024). Together, these findings suggest that AI use is not uniform but context-dependent, and may be influenced by the goals, expectations, and challenges specific to each student group (Acosta-Enriquez et al., 2024).

Of note, Attitudes Toward AI (ATAI) showed a positive association with Behavioural Intention but not with Habit, suggesting that general attitudes toward AI support initial adoption decisions but are not sufficient to sustain habitual use (Sindermann et al., 2021; Turós et al., 2025). This is consistent with prior findings that attitudes shape intention but may need reinforcement mechanisms to translate into long-term behavior. Finally, an intriguing and novel finding of the present study is that Problematic Internet Use (PIU) was significantly associated with both Habit and Behavioural Intention related to AI use. This finding aligns with literature indicating that PIU is associated with a higher propensity to adopt and engage with new technologies, and it extends this pattern to the domain of artificial intelligence. Prior research has identified PIU as a predictor of early adoption and increased use of digital platforms, often motivated by novelty seeking, escapism, and social compensation (Kuss and Griffiths, 2017; Montag et al., 2021). The current study builds on this foundation by suggesting that these same psychological drivers may now be directed toward AI, possibly resulting in habitual engagement with AI tools and intentional efforts to integrate AI into everyday life. This points to a possible spillover effect, in which problematic online behaviors do not remain confined to traditional Internet use but extend to emerging technologies such as AI, possibly serving as a coping mechanism rather than as a means to functional outcomes (Caplan, 2002; Davis, 2001). In turn, excessive AI use, possibly fueled by PIU, could hinder the development of critical cognitive skills such as planning, problem-solving, and independent thinking (Lai et al., 2023; Xie et al., 2022).
This represents a paradox: AI is used to improve performance, but its overuse may diminish the very capacities needed for autonomous learning. These interpretations must be treated with caution due to the cross-sectional design. It remains unclear whether compulsive engagement with AI stems from general PIU or from specific psychological effects unique to human-like AI systems (Sindermann et al., 2021; Turós et al., 2025).

From a theoretical standpoint, this study supports the applicability of UTAUT2 for modeling adolescent AI adoption, consistent with findings in university populations (Soliman et al., 2024; Tamilmani et al., 2021). The results also demonstrate the added value of integrating psychological constructs into the model, especially PIU, which helps explain compulsive behavior patterns not captured by UTAUT2 alone. Furthermore, this study directly addresses the research gaps described in the literature review. While UTAUT2 has been widely used to explain technology adoption in general (Tamilmani et al., 2021), its application to AI use among high school students is still limited (Strzelecki, 2024). By testing UTAUT2 in this specific context, the study provides new evidence that key constructs such as Performance Expectancy and Social Influence are strongly associated with Behavioural Intention and Habit, supporting the model’s original assumptions and extending its relevance to high school students. The study also addresses the limited integration of psychological constructs into the UTAUT2 framework for this age group, capturing the role of compulsive tendencies and attitudes that the standard UTAUT2 model does not fully explain (Huang et al., 2024).

In view of the observed associations between perceived utility of AI, social influence and the potential for problematic use patterns, promoting balanced and conscious use of AI among high school students appears to require carefully designed interventions. International guidelines such as the UNESCO AI Competency Frameworks for teachers and students (Miao and Cukurova, 2024) provide valuable guidance for the development of AI competency initiatives that go beyond technical education and promote a critical, ethical and human-centered understanding of AI systems. These frameworks emphasize the importance of students becoming not only informed users, but also responsible co-creators who are aware of both the opportunities and risks associated with the use of AI. At the same time, the role of teachers as learning guides is fundamental and could be strengthened accordingly. As recent pedagogical approaches such as Situated Learning Episodes with AI (ESLAI) (Panciroli et al., 2023) and the Artificial Intelligence-Technological Pedagogical Content Knowledge (AI-TPACK, Ning et al., 2024) show, teacher education may benefit from integrating AI into a robust instructional design framework that combines disciplinary knowledge, pedagogical strategies and computational thinking. The Synergy between People and Artificial Intelligence for Collaborative Education (S.P.Ai.C.E.) model (Messina and Panciroli, 2025) supports this approach by providing educators with tools to critically select, test and validate AI applications in rapidly evolving technological contexts. From a practical perspective, the deliberate and collaborative use of AI can support teachers in implementing established methodological approaches by acting as a didactic mediator. 
For example, within the consolidated framework of Universal Design for Learning (CAST, 2018), AI might be used to create accessible teaching materials such as concept maps (Novak and Gowin, 1984) and mind maps (Buzan and Buzan, 1993), as well as to produce text simplifications or translate learning content for students who are not yet fully proficient in the language of instruction. Such concrete applications may allow teachers to tailor the content to the different needs of the students while maintaining the pedagogical depth of the lesson.

Limitations and future research

While our study provides valuable insights, several limitations warrant acknowledgment. First, the study employed a cross-sectional design, which limits the ability to draw causal inferences regarding the observed relationships among psychological, contextual, and technological variables. Although our model identifies significant predictors of habitual and intentional AI use, longitudinal studies are needed to establish the temporal ordering and directionality of these associations.

Second, the exclusive reliance on self-report measures introduces the potential for common-method bias. Despite efforts to minimize social desirability effects, such as guaranteeing anonymity and emphasizing the absence of right or wrong answers, the use of a single method of data collection may have inflated some of the associations among variables. Future research would benefit from employing multi-method approaches, including behavioural data (e.g., logs of AI usage), peer or teacher reports, or experimental paradigms, to strengthen the validity of findings and reduce method variance.

Lastly, given the rapid evolution of AI technologies and their applications in education, future studies should adopt dynamic and iterative research designs to capture changes in usage patterns and psychological correlates over time. Incorporating qualitative methodologies may also help uncover deeper motivations and concerns that underlie adolescent engagement with AI, providing richer context for interpreting quantitative findings.

Conclusion

By integrating psychological constructs within the UTAUT2 framework, this research advances the understanding of AI technology adoption among adolescents. The findings emphasize the multifaceted nature of technological engagement, highlighting the interplay of social, psychological, and contextual factors in shaping digital behaviors.

The study highlights the central role of Social Influence, Performance Expectancy, and Hedonic Motivation in relation to both Behavioural Intention and Habit, underscoring how peer networks and the perceived utility and enjoyment of AI are associated with technology adoption. Notably, the use of AI to enhance academic performance, such as completing school assignments, reinforces the importance of guiding students toward critical and mindful engagement with these technologies.

A significant and novel contribution of this study lies in the identification of a possible spillover effect from PIU to AI adoption. This relationship suggests that dependency-driven behaviors may influence habitual AI engagement, potentially shifting its use from a functional academic tool to a form of digital escapism. While AI’s utilitarian potential seems to align with students’ performance expectations and may support the achievement of academic goals, compulsive and problematic use could pose a critical risk that should not be overlooked. Such risks might include negative consequences for students’ wellbeing, learning processes, and overall cognitive development. This finding underscores the need for targeted strategies and policies to foster a balanced relationship with AI, ensuring that its adoption supports learning and academic success without compromising students’ cognitive development or digital wellbeing. Educational institutions and teachers are at the forefront of this effort. They should aim to equip students with the skills to critically evaluate AI-generated content, understand its limitations, including biases and inaccuracies, and maintain human oversight. Most of all, they should focus on helping students develop an awareness of the potential risks associated with problematic AI use, which could negatively impact their learning, health, and wellbeing. Integrating AI into targeted educational activities could help clarify its role as a tool that complements, rather than replaces, students’ learning processes.

Looking ahead, this research opens several avenues for future studies. Cross-cultural comparisons, subgroup analyses, longitudinal designs, and objective measures of AI usage would provide a deeper understanding of how technological habits evolve over time. Moreover, exploring the perspectives of teachers and the adoption of AI for pedagogical purposes could yield practical insights for designing effective interventions and training programs.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Institutional Review Boards of the University of Turin. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians/next of kin. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

LC: Investigation, Data curation, Writing – original draft. CL: Investigation, Supervision, Writing – review & editing. LB-R: Writing – review & editing, Formal analysis, Methodology, Software. DM: Methodology, Software, Formal analysis, Writing – original draft, Supervision.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frai.2025.1614993/full#supplementary-material

References

Acosta-Enriquez, B. G., Farroñan, E. V. R., Zapata, L. I. V., Garcia, F. S. M., Rabanal-León, H. C., Angaspilco, J. E. M., et al. (2024). Acceptance of artificial intelligence in university contexts: a conceptual analysis based on UTAUT2 theory. Heliyon 10:e38315. doi: 10.1016/j.heliyon.2024.e38315

Ajzen, I. (1991). The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 50, 179–211. doi: 10.1016/0749-5978(91)90020-T

Ali, I., Warraich, N., and Butt, K. (2024). Acceptance and use of artificial intelligence and AI-based applications in education: a meta-analysis and future direction. Inf. Dev. 41, 859–874. doi: 10.1177/02666669241257206

Baek, C., Tate, T., and Warschauer, M. (2024). “ChatGPT seems too good to be true”: college students’ use and perceptions of generative AI. Comput. Educ. Artif. Intell. 7:100294. doi: 10.1016/j.caeai.2024.100294

Buzan, T., and Buzan, B. (1993). The mind map book: how to use the radiant thinking to maximize your brain’s untapped potential. London: Penguin Book Ltd.

Cabrera-Sanchez, J., Ramos, A., Liébana-Cabanillas, F., and Shaikh, A. (2021). Identifying relevant segments of AI applications adopters—expanding the UTAUT2's variables. Telematics Informatics 58:101529. doi: 10.1016/j.tele.2020.101529

Caplan, S. E. (2002). Problematic internet use and psychosocial well-being: development of a theory-based cognitive-behavioral measurement instrument. Comput. Hum. Behav. 18, 553–575. doi: 10.1016/S0747-5632(02)00004-3

CAST (2018). Universal Design for Learning Guidelines version 2.2. Available online at: http://udlguidelines.cast.org (Accessed June 28, 2025).

Chai, C. S., Wang, X., and Xu, C. (2020). An extended theory of planned behavior for the modelling of Chinese secondary school students’ intention to learn artificial intelligence. Mathematics 8:2089. doi: 10.3390/math8112089

Costa, C., and Murphy, M. (2025). Generative artificial intelligence in education: (what) are we thinking? Learn. Media Technol., 1–12. doi: 10.1080/17439884.2025.2518258

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13, 319–340. doi: 10.2307/249008

Davis, R. A. (2001). A cognitive-behavioral model of pathological internet use. Comput. Hum. Behav. 17, 187–195. doi: 10.1016/S0747-5632(00)00041-8

Doroudi, S. (2023). The intertwined histories of artificial intelligence and education. Int. J. Artif. Intell. Educ. 33, 885–928. doi: 10.1007/s40593-022-00313-2

European Parliament (2023). Artificial Intelligence Act: deal on comprehensive rules for trustworthy AI. Available online at: https://www.europarl.europa.eu/news/en/press-room/20231206IPR15699/artificial-intelligence-act-deal-on-comprehensive-rules-for-trustworthy-ai (Accessed June 28, 2025).

Fishbein, M., and Ajzen, I. (1975). Belief, attitude, intention, and behavior: An introduction to theory and research. Reading, MA: Addison-Wesley.

Grassini, S., Aasen, M. L., and Møgelvang, A. (2024). Understanding university students’ acceptance of ChatGPT: insights from the UTAUT2 model. Appl. Artif. Intell. 38:2371168. doi: 10.1080/08839514.2024.2371168

Habibi, A., Muhaimin, M., Danibao, B. K., Wibowo, Y. G., Wahyuni, S., and Octavia, A. (2023). ChatGPT in higher education learning: acceptance and use. Comput. Educ. Artif. Intell. 5:100190. doi: 10.1016/j.caeai.2023.100190

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55. doi: 10.1080/10705519909540118

Huang, S., Lai, X., Ke, L., Li, Y., Wang, H., Zhao, X., et al. (2024). AI technology panic—is AI dependence bad for mental health? A cross-lagged panel model and the mediating roles of motivations for AI use among adolescents. Psychol. Res. Behav. Manag. 17, 1087–1102. doi: 10.2147/PRBM.S440889

Ittefaq, M., Zain, A., Arif, R., Ahmad, T., Khan, L., and Seo, H. (2025). Factors influencing international students’ adoption of generative artificial intelligence: the mediating role of perceived values and attitudes. J. Int. Stud. 15, 127–154. doi: 10.32674/fnwdpn48

Klarin, J., Hoff, E., Larsson, A., and Daukantaitė, D. (2024). Adolescents’ use and perceived usefulness of generative AI for schoolwork: exploring their relationships with executive functioning and academic achievement. Front. Artif. Intell. 7:1415782. doi: 10.3389/frai.2024.1415782

Kuss, D. J., and Griffiths, M. D. (2011). Online social networking and addiction: a review of the psychological literature. Int. J. Environ. Res. Public Health 8, 3528–3552. doi: 10.3390/ijerph8093528

Kuss, D. J., and Griffiths, M. D. (2017). Social networking sites and addiction: ten lessons learned. Int. J. Environ. Res. Public Health 14:311. doi: 10.3390/ijerph14030311

Lai, T., Zeng, X., Xu, B., Xie, C., Liu, Y., Wang, Z., et al. (2023). The application of artificial intelligence technology in education influences Chinese adolescent’s emotional perception. Curr. Psychol. 43, 5309–5317. doi: 10.1007/s12144-023-04727-6

LaRose, R., Rifon, N. J., and Enbody, R. J. (2008). Promoting personal responsibility for internet safety. Commun. ACM 51, 71–76. doi: 10.1145/1325555.1325569

León-Domínguez, U. (2024). Potential cognitive risks of generative transformer-based AI chatbots on higher order executive functions. Neuropsychology 38, 293–308. doi: 10.1037/neu0000948

Li, W., Zhang, X., Li, J., Yang, X., Li, D., and Liu, Y. (2024). An explanatory study of factors influencing engagement in AI education at the K-12 level: an extension of the classic TAM model. Sci. Rep. 14:13922. doi: 10.1038/s41598-024-64363-3

Marsh, H. W., Hau, K. T., and Wen, Z. (2004). In search of golden rules: comment on hypothesis-testing approaches to setting cutoff values for fit indexes and dangers in overgeneralizing Hu and Bentler’s (1999) findings. Struct. Equ. Model. Multidiscip. J. 11, 320–341. doi: 10.1207/s15328007sem1103_2

Messina, S., and Panciroli, C. (2025). Rethinking teaching with GenAI: theoretical models and operational tools. J. Inclus. Methodol. Technol. Learn. Teach., 5. Available online at: https://inclusiveteaching.it/index.php/inclusiveteaching/article/view/273 (Accessed June 28, 2025)

Miao, F., and Cukurova, M. (2024). AI competency framework for teachers. UNESCO. doi: 10.54675/ZJTE2084

Mogavi, R. H., Zhou, P., Kwon, Y. D., Hosny, A., Tlili, A., Bassanelli, S., et al. (2024). ChatGPT in education: a blessing or a curse? A qualitative study exploring early adopters’ perceptions towards its educational applications and impact. Comput. Hum. Behav. Artif. Hum. 2:100027. doi: 10.1016/j.chbah.2023.100027

Montag, C., Lachmann, B., Herrlich, M., and Zweig, K. (2021). Addictive features of social media/messenger platforms and freemium games against the background of psychological and economic theories. Int. J. Environ. Res. Public Health 16:2612. doi: 10.3390/ijerph16142612

Moravec, V., Hynek, N., Gavurová, B., and Kubák, M. (2024). Everyday artificial intelligence unveiled: societal awareness of technological transformation. Oeconomia Copernicana 15, 367–406. doi: 10.24136/oc.2961

Neugnot-Cerioli, M., and Muss Laurenty, O. (2024). The future of child development in the AI era: Cross-disciplinary perspectives between AI and child development experts [Preprint]. arXiv. Available online at: https://arxiv.org/abs/2405.19275

Ning, Y., Zhang, C., Xu, B., Zhou, Y., and Wijaya, T. T. (2024). Teachers’ AI-TPACK: Exploring the relationship between knowledge elements. Sustainability 16:978. doi: 10.3390/su16030978

Novak, J. D., and Gowin, D. B. (1984). Learning how to learn. Cambridge, UK: Cambridge University Press. doi: 10.1017/CBO9781139173469

Panciroli, C., Allegra, M., Gentile, M., and Rivoltella, P. C. (2023). “Towards AI literacy: a proposal of a framework based on the episodes of situated learning” in Ital-IA 2023: 3rd National Conference on artificial intelligence (Pisa, Italy: CINI). Available online at: https://www.ital-ia2023.it/submission/177/paper

Panjwani-Charania, S., and Zhai, S. (2023). “AI for students with learning disabilities: a systematic review” in Uses of artificial intelligence in STEM education. eds. X. Zhai and J. Krajcik (Oxford, UK: Oxford University Press), 471–495. doi: 10.1093/oso/9780198882077.003.0021

Prensky, M. (2001). Digital natives, digital immigrants. On the Horizon 9, 1–6. doi: 10.1108/10748120110424816

Reiss, M. J. (2021). The use of AI in education: practicalities and ethical considerations. Lond. Rev. Educ. 19, 1–14. doi: 10.14324/LRE.19.1.05

Revelle, W. (2019). Psych: procedures for psychological, psychometric, and personality research. [R package]. Available online at: https://cran.r-project.org/package=psych.

Rosseel, Y. (2012). Lavaan: an R package for structural equation modeling. J. Stat. Softw. 48, 1–36. doi: 10.18637/jss.v048.i02

Roy, K., and Swargiary, K. (2024). Exploring the impact of AI integration in education: a mixed-methods study. SSRN. doi: 10.2139/ssrn.4857648

Ryzhko, O., Krainikova, T., and Krainikov, E. (2023). Models of the use of artificial intelligence in the context of life practices of Ukrainian youth. Obraz 43, 111–122. doi: 10.21272/obraz.2023.3(43)-111-122

Salas-Pilco, S. Z., Xiao, K., and Oshima, J. (2022). Artificial intelligence and new Technologies in Inclusive Education for minority students: a systematic review. Sustainability 14:13572. doi: 10.3390/su142013572

Searle, J. R. (1990). Is the brain’s mind a computer program? Sci. Am. 262, 26–31. doi: 10.1038/scientificamerican0190-26

Shuhaiber, A., Kuhail, M. A., and Salman, S. (2025). ChatGPT in higher education-a student's perspective. Comput. Hum. Behav. Rep. 17:100565. doi: 10.1016/j.chbr.2024.100565

Sindermann, C., Sha, P., Zhou, M., Wernicke, J., Schmitt, H. S., Li, M., et al. (2021). Assessing the attitude towards artificial intelligence: introduction of a short measure in German, Chinese, and English language. Künstl. Intell. 35, 109–118. doi: 10.1007/s13218-020-00689-0

Soliman, M., Ali, R. A., Khalid, J., Mahmud, I., and Wanamina, B. A. (2024). Modelling continuous intention to use generative artificial intelligence as an educational tool among university students: findings from PLS-SEM and ANN. J. Comput. Educ. doi: 10.1007/s40692-024-00333-y

Soliman, M., Ali, R. A., Mahmud, I., and Noipom, T. (2025). Unlocking AI-powered tools adoption among university students: a fuzzy-set approach. J. Inform. Commun. Technol. 24, 1–28. doi: 10.32890/jict2025.24.1.1

Strzelecki, A. (2024). Students’ acceptance of ChatGPT in higher education: an extended unified theory of acceptance and use of technology. Innov. High. Educ. 49, 223–245. doi: 10.1007/s10755-023-09686-1

Tamilmani, K., Rana, N., Wamba, S., and Dwivedi, R. (2021). The extended unified theory of acceptance and use of technology (UTAUT2): a systematic literature review and theory evaluation. Int. J. Inf. Manag. 57:102269. doi: 10.1016/j.ijinfomgt.2020.102269

Turós, M., Nagy, R., and Szűts, Z. (2025). What percentage of secondary school students do their homework with the help of artificial intelligence? A survey of attitudes towards artificial intelligence. Comput. Educ. Artif. Intell. 8:100394. doi: 10.1016/j.caeai.2025.100394

Venkatesh, V., Morris, M. G., Davis, G. B., and Davis, F. D. (2003). User acceptance of information technology: toward a unified view. MIS Q. 27, 425–478. doi: 10.2307/30036540

Venkatesh, V., Thong, J. Y. L., and Xu, X. (2012). Consumer acceptance and use of information technology: extending the unified theory of acceptance and use of technology. MIS Q. 36, 157–178. doi: 10.2307/41410412

Waldo, A. D. (2014). Correlates of internet addiction among adolescents. Psychology 5, 1999–2008. doi: 10.4236/psych.2014.518203

Wang, S., Wang, F., Zhu, Z., Wang, J., Tran, T., and Du, Z. (2024). Artificial intelligence in education: a systematic literature review. Expert Syst. Appl. 252:124167. doi: 10.1016/j.eswa.2024.124167

Xia, Q., Chiu, T. K. F., Zhou, X.-Y., Chai, C. S., and Cheng, M.-T. (2023). Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Comput. Educ. Artif. Intell. 4:100118. doi: 10.1016/j.caeai.2022.100118

Xie, C., Ruan, M., Lin, P., Wang, Z., Lai, T., Xie, Y., et al. (2022). Influence of artificial intelligence in education on adolescents’ social adaptability: a machine learning study. Int. J. Environ. Res. Public Health 19:7890. doi: 10.3390/ijerph19137890

Young, K. S. (1998). Internet addiction: the emergence of a new clinical disorder. Cyberpsychol. Behav. 1, 237–244. doi: 10.1089/cpb.1998.1.237

Keywords: artificial intelligence in education, UTAUT2 framework, adolescent technology use, problematic internet use, behavioural intention

Citation: Caffaratti LB, Longobardi C, Badenes-Ribera L and Marengo D (2025) AI adoption among adolescents in education: extending the UTAUT2 with psychological and contextual factors. Front. Artif. Intell. 8:1614993. doi: 10.3389/frai.2025.1614993

Edited by:

Kelly Merrill, University of Cincinnati, United States

Reviewed by:

Mohamed Soliman, Prince of Songkla University, Thailand

Mazen Alzyoud, Al al-Bayt University, Jordan

Katerina Velli, University of Macedonia, Greece

Jesús Catherine Saldaña Bocanegra, Universidad César Vallejo, Peru

Copyright © 2025 Caffaratti, Longobardi, Badenes-Ribera and Marengo. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Claudio Longobardi, Claudio.longobardi@unito.it
