- 1Centre for Cancer Screening, Prevention and Early Diagnosis, Wolfson Institute of Population Health, Queen Mary University of London, London, United Kingdom

- 2Department of Public Health and Primary Care, University of Cambridge, Cambridge, United Kingdom

- 3Centre for Health Psychology, University of Manchester, Manchester, United Kingdom

In response to an increased focus on acceptability research in healthcare, this perspective paper highlights the challenges of assessing acceptability and the need for, and importance of, further work to develop best practice guidelines for evaluating the acceptability of cancer screening. We report the results of a rapid consultation consensus survey carried out to explore the extent of conceptual, methodological, and translational challenges shared by those involved in assessing the acceptability of cancer screening. Our findings demonstrate that the current lack of consensus regarding the conceptualization and definition of acceptability is a key challenge which gives rise to further methodological and translational issues. The implications of the challenges experienced by those assessing the acceptability of cancer screening are discussed from a behavioral science perspective.

Background

In recent years there has been increasing interest in the concept of acceptability in healthcare, driven by a growing global focus on patient-centered care. In 2016, the World Health Organization called for central changes to the ways that health systems operate (1). It set out a strategy for the provision of health services that respond to the needs and preferences of individuals throughout their lifetime, and this is reflected in the aims of bodies such as the Patient-Centered Outcomes Research Institute (PCORI) (2). Increasing recognition of the importance of public and patient acceptability is also reflected in healthcare intervention frameworks. The UK Medical Research Council's latest framework for developing and evaluating complex interventions includes acceptability as a key element (3). In response to rising demand for a definition and measure of acceptability, the Theoretical Framework of Acceptability (TFA) was published in 2017. It consists of seven key domains for evaluating healthcare interventions: affective attitude, burden, perceived effectiveness, ethicality, intervention coherence, opportunity costs, and self-efficacy (4), and has since received over 3,000 citations (Google Scholar).

In the context of cancer screening, acceptability is further endorsed by researchers and regulatory bodies. The CanTest framework advocates for patient and clinician acceptability to be evaluated during the development and implementation of early cancer detection tests, including screening (5). Similarly, the UK National Screening Committee stipulates that tests used in screening programmes should be acceptable to the target population and that there should be evidence that the screening programme is “clinically, socially, and ethically acceptable to health professionals and the public.” (6). This has become increasingly relevant given recent innovations in early cancer detection (such as risk-stratification, the use of Artificial Intelligence, and emerging biomarkers and technologies) which are expected to inform the delivery of established and emerging screening programmes (such as lung cancer screening) (7, 8). Understanding the acceptability of current and emerging practice is important to identify factors which may influence participation and experiences of taking part in cancer screening. This information can be used to inform the introduction of new screening programmes and guide public health communications about changes to existing practice. Importantly, there are widespread and deep-rooted inequalities in cancer screening uptake.

Individuals experiencing higher socioeconomic deprivation and those from minoritised ethnic groups, as well as other marginalized groups (e.g., LGBTQ+ people, those with physical and learning disabilities and people with mental illness) face additional barriers to taking part and show lower rates of participation across national screening programmes (9–15). Acceptability research is therefore further justified to identify the specific needs and preferences of underserved populations to inform the equitable implementation of emerging innovations in cancer screening and reduce existing disparities.

Challenges in acceptability research: a rapid consultation consensus survey

Given the growing importance of evaluating public acceptability of current and emerging practices in cancer screening, sound methodological approaches for researching acceptability are required. Following several cross-institution cancer behavioral science research seminars in 2022, and a well-attended symposium at the UK Society for Behavioral Medicine conference in 2023, the authors identified various shared challenges of conducting acceptability research in cancer screening. To determine if these challenges were experienced by other researchers, we conducted a survey to gather stakeholder consensus among the wider research community. This survey sought to better understand the challenges of conceptualizing, conducting, assessing and interpreting acceptability research in the context of cancer screening.

The anonymous online survey was hosted on Qualtrics. Participants were given study information and agreed to a consent statement before completing the survey. This study was approved by the Queen Mary University of London Research Ethics Committee (reference: QME23.0283) and study recruitment took place between 8 April and 22 July 2024. All participants were recruited using convenience sampling and snowballing techniques. Authors invited cancer screening stakeholders (n = 61) to complete the survey either via LinkedIn or direct email, and participants were encouraged to share the survey link with others in the field.

Survey items were developed to assess participants' involvement and experience of assessing the acceptability of cancer screening and early detection. These included their methodological approaches and the challenges they face in conceptualizing and defining acceptability, using theories/frameworks, and translating acceptability outcomes (Figure 1). Participants were asked to indicate “how much have each of the following been a challenge for you” on a 4-point Likert scale ranging from “not at all challenging” to “very challenging.” There was also a “Not sure/Don't know” and a “not relevant to my work” option. Free-text boxes were included for participants to offer further detail about experienced challenges. The survey is available on the OSF project page “Challenges of assessing acceptability in the context of cancer screening.”

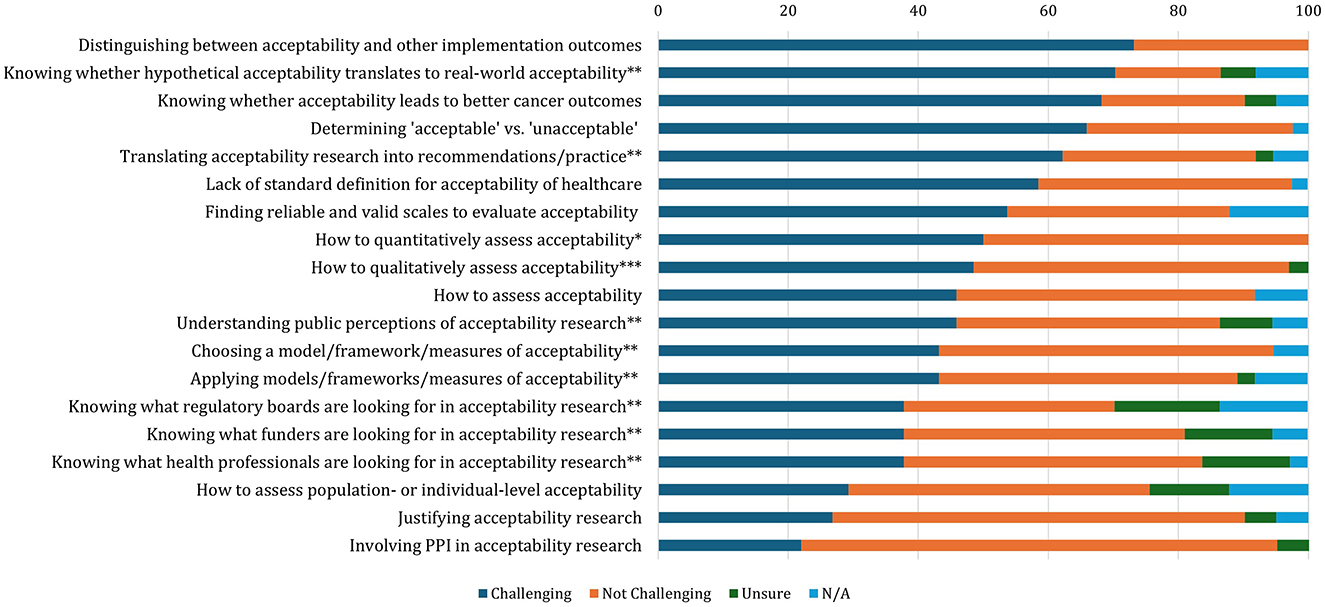

Figure 1. Extent of challenges experienced by researchers in the context of cancer screening (N = 41). Responses: *n = 26; **n = 37; ***n = 33. Responses to the items were collapsed into dichotomous responses: Not Challenging (“Not At All Challenging,” “A Little Challenging”) and Challenging (“Quite Challenging,” “Very Challenging”).

Responses to the challenge items were collapsed into dichotomous outcomes: “Not Challenging” (“Not At All Challenging” and “A Little Challenging”) and “Challenging” (“Quite Challenging” and “Very Challenging”). The number and percentage of individuals who responded “Challenging” vs. “Not Challenging” for each item are reported in Figure 1 and Supplementary Table S1, as well as those who selected “Not Sure/Don't Know” and “Not relevant to my work.”
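The collapsing step described above can be illustrated with a minimal sketch. It is not the authors' analysis code. The function name `summarise_item`, the example responses, and the choice of denominator (all responses to the item, including “Not Sure/Don't Know” and “Not relevant to my work”) are assumptions made for illustration only.

```python
from collections import Counter

# Map each survey response option to its collapsed category.
# Option labels follow the survey description; matching is case-insensitive.
COLLAPSE = {
    "not at all challenging": "Not Challenging",
    "a little challenging": "Not Challenging",
    "quite challenging": "Challenging",
    "very challenging": "Challenging",
    "not sure/don't know": "Not Sure/Don't Know",
    "not relevant to my work": "Not relevant to my work",
}


def summarise_item(responses):
    """Return {category: (count, percent)} for one survey item.

    Assumption: percentages use every response to the item as the
    denominator; the source does not spell out the denominator.
    """
    collapsed = [COLLAPSE[r.strip().lower()] for r in responses]
    total = len(collapsed)
    if total == 0:
        return {}
    counts = Counter(collapsed)
    return {cat: (n, round(100 * n / total, 1)) for cat, n in counts.items()}


# Hypothetical responses to a single item (invented, not study data).
example = (
    ["Very challenging"] * 3
    + ["Quite challenging"] * 2
    + ["A little challenging"] * 3
    + ["Not at all challenging"] * 1
    + ["Not sure/Don't know"] * 1
)
summary = summarise_item(example)
print(summary["Challenging"])      # (5, 50.0)
print(summary["Not Challenging"])  # (4, 40.0)
```

Keeping the two non-substantive options as separate categories, rather than dropping them, mirrors the reporting described for Figure 1 and Supplementary Table S1.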

The survey was completed by 41 participants which limits analyses beyond descriptive statistics. We used direct and snowball sampling to reach a range of stakeholders, but this means we do not know how many participants were contacted indirectly through snowballing, and therefore it is not possible to report a response rate.

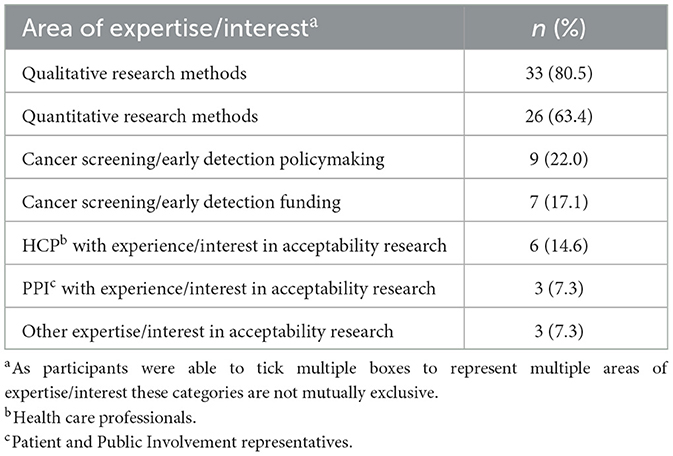

Most participants had experience of qualitative and/or quantitative acceptability research (80.5 and 63.4%, respectively; Table 1). Other stakeholders included policymakers (22.0%), healthcare professionals (14.6%) and Patient and Public Involvement (PPI) representatives (7.3%).

The survey related to both cancer screening and early detection research. However, the following results are interpreted in the screening context. The extent of the challenges experienced by participants involved in assessing the acceptability of cancer screening is outlined in Figure 1. Although many of these challenges are inter-related, key concerns relate to a lack of consensus about (1) how to conceptualize cancer screening acceptability, (2) what methods to use to evaluate it, and (3) how this translates to evidence-based practice. These challenges are discussed in turn.

Conceptual challenges

Defining acceptability and distinguishing it from other constructs

The challenge endorsed by the greatest proportion (73.2%, n = 30) of survey respondents was distinguishing between acceptability and other implementation outcomes. Although there is broad consensus that it is important to assess acceptability in screening (6, 16) and other healthcare interventions (3), there is a widespread failure to define the concept. Without a clear definition, it is profoundly challenging to design and evaluate healthcare interventions in the cancer screening domain (4).

A variety of definitions have been employed across healthcare literature, underlining the opacity of the concept. The term “social acceptability” has been used to indicate a mutually shared or communal opinion about the nature of the intervention [e.g., “the patients' assessment of the acceptability, suitability, adequacy or effectiveness of care and treatment” (17)]. However, the use of acceptability within this definition renders it inherently circular and poorly operationalised. The systematic review used to develop the widely used TFA defines acceptability as “A multi-faceted construct that reflects the extent to which people delivering or receiving a healthcare intervention consider it to be appropriate, based on anticipated or experienced cognitive and emotional responses.” (4). We argue that this definition is also challenging to utilize meaningfully in the cancer screening context. It is unclear whether the term “appropriate” is a proxy for acceptability or is in fact one of a multitude of dimensions that comprise acceptability. Further, the development of the TFA definition of acceptability was theoretically based and may therefore be lacking important insight from the screening-eligible public. The finding, that conceptualizing acceptability remains one of the most challenging issues faced by researchers in cancer screening (Figure 1), suggests that the existing definitions are not wholly appropriate for use in this setting.

The heterogeneity of acceptability research to date is compounded by widespread conflation with several other evaluation and implementation outcomes (2, 3). These include feasibility, satisfaction, appropriateness, utility, tolerability, and preference (18). Further complication arises from the fact that other outcomes are often used as proxies for acceptability; for example, intended or actual uptake. However, it is not clear how far such terms relate to acceptability and by what mechanisms. Additionally, although acceptability and behavior appear intuitively linked, it is possible that an individual may engage in a behavior that they do not find acceptable and vice versa, making it challenging to dissect the relationship between the two. This was captured in survey respondents' comments:

“Acceptability should be related and at the same time differentiated from usability (another vague notion) and actual intentions. Another aspect to be explored is ‘preferences'… What are the drivers of acceptability to intentions and then on to implementation intentions and actual carrying out of the behavior?”

“As a health economist, we use predicted uptake calculated from discrete choice experiment (DCE) surveys. Thinking about it, acceptability is different to uptake, but they are related. For example, in DCE's, researchers can quantify the strength and magnitude of respondents' preferences for specific attributes of a service, e.g., distance to the screening location.”

The relationship between hypothetical and real-world acceptability

To date, much of the existing acceptability research in the context of cancer screening has been hypothetical or prospective. Overall, 70% (n = 37) of survey respondents reported that they find it challenging to know whether acceptability measured in this way translates to experiential acceptability and whether acceptability research leads to better implementation outcomes. This necessitates future investigation into whether acceptability measured in practice, or even retrospectively, differs from prospective acceptability.

Lack of clarity on how to evaluate acceptability

Healthcare services are complex adaptive systems and screening programmes represent complex multidimensional interventions. Moreover, the spectrum of human behaviors and emotional responses to cancer screening is equally varied and nuanced. Due to the apparent level of complexity in this setting, it is challenging to ascertain which aspect(s) of an intervention need to be considered acceptable to fulfill criteria like those proposed by the UK National Screening Committee (6, 16). For example, should researchers be considering acceptability of a screening programme in general, the screening test itself, the approach to defining the eligible population or the frequency of screening? If acceptability should be measured for each of these components, this raises the question of how these individual measures could be reconciled into a single outcome representing the totality of screening acceptability, as explained by a participant:

“…considering whether acceptability should be defined as someone finding the intervention tolerable vs someone actively like/wanting to engage in the intervention – this might differ for different types of intervention.”

Methodological challenges

Choosing an appropriate model, framework or measure of acceptability

The experiences of those conducting acceptability research in cancer screening call attention to a need to better conceptualize and define acceptability to enable its assessment and evaluation in a context-specific manner, with clear guidance on quantitative measures and qualitative assessments. We posit that doing so should involve stakeholders across research, policy, and practice, including those delivering and receiving the offer of engaging with cancer screening tests.

In response to the growing recognition of acceptability of healthcare as a distinct construct, several frameworks/measures have been developed, but the application of these depends on the evaluative context of acceptability in healthcare. For instance, the Consolidated Framework of Implementation Research (CFIR) has been used to evaluate the societal and organizational acceptability of risk-stratified cancer screening (19). However, beyond its “inner setting,” the CFIR and other frameworks drawn from implementation science (3, 20) offer minimal guidance for measuring acceptability from an individual-level perspective. Frameworks and measures for the latter perspective are often confined to a specific health context, for example, the Treatment Acceptability Questionnaire for psychological support (21), or to interventions such as digital health apps, which tend to use the Technology Acceptance Model (22). This may explain the popularity of the generic TFA (4, 23), which has been cited across numerous healthcare domains, including cancer screening, with 43.9% (n = 18) of our survey respondents using this theoretical framework.

Notwithstanding the TFA's popularity, its applicability to cancer screening research is mixed. Quantitative applications of the TFA to cancer screening have identified cognitive and affective dimensions of acceptability consistent with the TFA's conceptualization of acceptability (24–26). However, the separation of cognitive domains (e.g., intervention coherence, perceived effectiveness, and ethicality) from affective domains is problematic due to the duality of rational and emotional influences on attitudes to cancer screening (27–29). Several qualitative studies have used the TFA to inform topic guides (30, 31) and have found considerable overlap between some TFA domains, and unsuitability of others for different cancer screening contexts. Nor is it clear to what extent the generic domains of the TFA capture cancer screening preferences, values, and experience (30, 32) and how these map onto cognitive and affective responses. Notably, the TFA appears to have been applied with greater effect to more discrete health interventions, such as vaginal rings (33) rather than interventions with multiple procedural and/or contextual components such as cancer screening (30, 32).

Sekhon et al. suggest the TFA accommodates a range of stakeholder perspectives based on “how appropriate an intervention is perceived to be by those delivering or receiving it.” (4). Nevertheless, a comprehensive assessment of cancer screening acceptability may require the application of a range of theoretical frameworks. For instance, the TFA is primarily informed by a psychological model of health behavior and, therefore, may be more applicable for assessing acceptability from the perspective of patients rather than those delivering an intervention. The need to adapt ways of assessing acceptability from stakeholder perspectives is exemplified by recent research assessing views on risk-based cervical cancer screening which effectively incorporates both the CFIR and TFA for health professionals and patients, respectively (34). This leads to a question of whether existing models of healthcare acceptability, such as the TFA and CFIR, can and ought to be adapted to a cancer screening context or whether we need to develop a specific framework. Interestingly, 36.1% (n = 13) and 38.9% (n = 16) of survey respondents thought developing a framework of acceptability for cancer screening would be “quite useful” or “very useful,” respectively, suggesting that adapting existing models may be a useful starting point.

Knowing what would indicate something is “acceptable” or “unacceptable”

How to determine what is acceptable vs. unacceptable, in terms of where the cut-off or distinction lies, was reported as challenging by 65.9% of the survey respondents, making this the fourth most commonly reported of the listed challenges. The following two participants' responses highlight the lack of guidance for defining appropriate thresholds of acceptability:

“Deciding when something is acceptable or unacceptable is a challenge to come to an overall conclusion- e.g., using the TFA you get a lot of detail about different dimensions of acceptability but bringing this to an overall conclusion is a challenge and I think more guidance would be helpful.”

“Deciding on whether something is ‘acceptable' based on mixed views and feedback. With qualitative research, you generate a lot of data on different aspects of acceptability, so it's hard [to] decide whether something is firmly acceptable or not to inform recommendations.”

Therefore, a key challenge in this area is determining acceptability thresholds. This reaffirms the need for a standardized conceptualization of acceptability of cancer screening.

Translation challenges

Stakeholder perspectives are essential to help improve the assessment of acceptability in the cancer screening context. Concerningly, over a third of survey respondents struggled to understand what health professionals, funders and regulatory boards are looking for in terms of acceptability research, as well as how such research should be appraised. Regulatory bodies like the UK NSC may need to clarify their criteria for screening implementation [that screening programmes are “acceptable to health professionals and the public” (6)]. Including a range of perspectives in the development of theories and measures of acceptability will be essential to ensure research is generating useful evidence. One survey participant with lived experience of cancer commented:

“[I] know there are no simple answers to this but involving PPI/partners in research is crucial, listening to them and working to look at most suitable models rather than suggesting their contribution is ‘anecdotal' and somehow less worthy than academic theoretical models.”

Although fewer than one in five participants found involving PPI in acceptability research challenging, 46% (n = 17) of participants agreed that it was a challenge to know how the public perceive acceptability research. This suggests that ongoing discussions about the direction of acceptability research in cancer screening should involve members of the public and align with their values, preferences and priorities.

Limitations

This study has several limitations. The rapid consultation survey involved a small, UK-based convenience sample, limiting its representativeness for those engaged in evaluating and measuring the acceptability of cancer screening. However, the survey aligns with our aim to offer an initial agenda to promote wider discussion and scope for further research at both national and international levels. Further research should also explore the perspectives of those involved in delivering healthcare services related to cancer screening (i.e., those who may be involved in supporting research studies), as they may highlight different challenges in defining acceptability.

While efforts were made to specify our focus on cancer screening, some of the challenges under discussion may intersect with those of existing health evaluations and intervention frameworks (2, 3, 19, 20).

Conclusions

Despite the increasing focus on acceptability as a health implementation outcome, there is a clear need to conceptualize and define what acceptability means in the context of cancer screening. To support meaningful assessment and evaluation by both those delivering and receiving cancer screening, two approaches may be considered: (1) adapting existing healthcare acceptability frameworks to identify relevant dimensions and thresholds for cancer screening, or (2) developing a specific framework tailored to this context. We suggest that the latter may be necessary to capture the inherently adaptive and process-oriented nature of cancer screening. In either case, the incorporation of a range of stakeholder perspectives, especially those of the screening-eligible public, will be key. Further research with a more representative sample population will be required to address these considerations.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://osf.io/fcgq6/.

Ethics statement

The studies involving humans were approved by Queen Mary University of London Research Ethics Committee. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

CK-J: Formal analysis, Project administration, Writing – original draft, Writing – review & editing, Methodology. NS-B: Data curation, Formal analysis, Methodology, Project administration, Writing – original draft, Writing – review & editing. SB: Writing – original draft, Writing – review & editing. LT: Writing – original draft, Writing – review & editing. LM: Writing – review & editing. JW: Supervision, Writing – original draft, Writing – review & editing. EL: Methodology, Writing – review & editing. EK: Writing – review & editing, Methodology. LG: Conceptualization, Investigation, Methodology, Project administration, Supervision, Writing – review & editing. SS: Project administration, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was funded by Barts Charity (G-001520; MRC& U0036).

Acknowledgments

We thank Dr. Samantha Quaife and all members of the Acceptability Researchers' Forum.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.


Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcacs.2025.1608009/full#supplementary-material

References

1. World Health Organization. Framework on Integrated People-Centred Health Services. Report by the Secretariat. Sixty-Ninth World Health Assembly (2016).

2. Dissemination and Implementation Framework and Toolkit | PCORI. (2016). Available online at: https://www.pcori.org/implementation-evidence/putting-evidence-work/dissemination-and-implementation-framework-and-toolkit (Accessed October 21, 2025).

3. Skivington K, Matthews L, Simpson SA, Craig P, Baird J, Blazeby JM, et al. A new framework for developing and evaluating complex interventions: update of Medical Research Council guidance. BMJ. (2021) 374:n2061. doi: 10.1136/bmj.n2061

4. Sekhon M, Cartwright M, Francis JJ. Acceptability of healthcare interventions: an overview of reviews and development of a theoretical framework. BMC Health Serv Res. (2017) 17:88. doi: 10.1186/s12913-017-2031-8

5. Walter FM, Thompson MJ, Wellwood I, Abel GA, Hamilton W, Johnson M, et al. Evaluating diagnostic strategies for early detection of cancer: the CanTest framework. BMC Cancer. (2019) 19:586. doi: 10.1186/s12885-019-5746-6

6. Public Health England. Criteria for a Population Screening Programme - GOV.UK. (2022). Available online at: https://www.gov.uk/government/publications/evidence-review-criteria-national-screening-programmes/criteria-for-appraising-the-viability-effectiveness-and-appropriateness-of-a-screening-programme (Accessed February 28, 2025).

7. Cancer Research UK. Early Detection and Diagnosis: A Roadmap for the Future. (2020). Available online at: https://www.cancerresearchuk.org/sites/default/files/early_detection_diagnosis_of_cancer_roadmap.pdf (Accessed February 28, 2025).

8. O'Dowd EL, Lee RW, Akram AR, Bartlett EC, Bradley SH, Brain K, et al. Defining the road map to a UK national lung cancer screening programme. Lancet Oncol. (2023) 24:e207–18. doi: 10.1016/S1470-2045(23)00104-3

9. Douglas E, Waller J, Duffy SW, Wardle J. Socioeconomic inequalities in breast and cervical screening coverage in England: are we closing the gap? J Med Screen. (2016) 23:98–103. doi: 10.1177/0969141315600192

10. Gimeno García AZ. Factors influencing colorectal cancer screening participation. Gastroenterol Res Pract. (2012) 2012:483417. doi: 10.1155/2012/483417

11. Power E, Miles A, Von Wagner C, Robb K, Wardle J. Uptake of colorectal cancer screening: system, provider and individual factors and strategies to improve participation. Future Oncol. (2009) 5:1371–88. doi: 10.2217/fon.09.134

12. Szczepura A, Price C, Gumber A. Breast and bowel cancer screening uptake patterns over 15 years for UK south Asian ethnic minority populations, corrected for differences in socio-demographic characteristics. BMC Public Health. (2008) 8:346. doi: 10.1186/1471-2458-8-346

13. Chan DNS, Law BMH, Au DWH, So WKW, Fan N. A systematic review of the barriers and facilitators influencing the cancer screening behaviour among people with intellectual disabilities. Cancer Epidemiol. (2022) 76:102084. doi: 10.1016/j.canep.2021.102084

14. Tuschick E, Barker J, Giles EL, Jones S, Hogg J, Kanmodi KK, et al. Barriers and facilitators for people with severe mental illness accessing cancer screening: a systematic review. Psycho Oncol. (2024) 33:e6274. doi: 10.1002/pon.6274

15. Merten JW, Pomeranz JL, King JL, Moorhouse M, Wynn RD. Barriers to cancer screening for people with disabilities: a literature review. Disabil Health J. (2015) 8:9–16. doi: 10.1016/j.dhjo.2014.06.004

16. Wilson JMG, Jungner G. Principles and Practice of Screening for Disease. Geneva, Switzerland: WHO (1968). Public Health Papers.

17. Staniszewska S, Crowe S, Badenoch D, Edwards C, Savage J, Norman W. The PRIME project: developing a patient evidence-base. Health Expect. (2010) 13:312–22. doi: 10.1111/j.1369-7625.2010.00590.x

18. Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. (2011) 38:65–76. doi: 10.1007/s10488-010-0319-7

19. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. (2009) 4:50. doi: 10.1186/1748-5908-4-50

20. Klaic M, Kapp S, Hudson P, Chapman W, Denehy L, Story D, et al. Implementability of healthcare interventions: an overview of reviews and development of a conceptual framework. Implement Sci. (2022) 17:1–20. doi: 10.1186/s13012-021-01171-7

21. Hunsley J. Development of the treatment acceptability questionnaire. J Psychopathol Behav Assess. (1992) 14:55–64. doi: 10.1007/BF00960091

22. Davis FD. User acceptance of information technology: system characteristics, user perceptions and behavioral impacts. Int J Man Mach Stud. (1993) 38:475–87. doi: 10.1006/imms.1993.1022

23. Sekhon M, Cartwright M, Francis JJ. Development of a theory-informed questionnaire to assess the acceptability of healthcare interventions. BMC Health Serv Res. (2022) 22:279. doi: 10.1186/s12913-022-07577-3

24. Hill E, Nemec M, Marlow L, Sherman S, Waller J. Maximising the acceptability of extended time intervals between screens in the NHS Cervical Screening Programme: an online experimental study. J Med Screen. (2021) 28:333–40. doi: 10.1177/0969141320970591

25. Kelley-Jones C, Scott SE, Waller J. Acceptability of de-intensified screening for women at low risk of breast cancer: a randomised online experimental survey. BMC Cancer. (2024) 24:1–13. doi: 10.1186/s12885-024-12847-w

26. Scott S, Rauf B, Waller J. “Whilst you are here…” Acceptability of providing advice about screening and early detection of other cancers as part of the breast cancer screening programme. Health Expect. (2021) 24:1868–78. doi: 10.1111/hex.13330

27. Ferrer R, Klein WMP, Persoskie A, Avishai-Yitshak A, Sheeran P. The Tripartite Model of Risk Perception (TRIRISK): distinguishing deliberative, affective, and experiential components of perceived risk. Ann Behav Med. (2016) 50:653–63. doi: 10.1007/s12160-016-9790-z

28. Slovic P, Finucane ML, Peters E, MacGregor DG. The affect heuristic. Eur J Oper Res. (2007) 177:1333–52. doi: 10.1016/j.ejor.2005.04.006

29. Slovic P, Finucane ML, Peters E, MacGregor DG. Risk as analysis and risk as feelings: some thoughts about affect, reason, risk, and rationality. Risk Anal. (2004) 24:311–22. doi: 10.1111/j.0272-4332.2004.00433.x

30. Kelley-Jones C, Scott S, Waller J. UK women's views of the concepts of personalised breast cancer risk assessment and risk-stratified breast screening: a qualitative interview study. Cancers. (2021) 13:5813. doi: 10.3390/cancers13225813

31. Nemec M, Waller J, Barnes J, Marlow LAV. Acceptability of extending HPV-based cervical screening intervals from 3 to 5 years: an interview study with women in England. BMJ Open. (2022) 12:e058635. doi: 10.1136/bmjopen-2021-058635

32. Taylor LC, Hutchinson A, Law K, Shah V, Usher-Smith JA, Dennison RA. Acceptability of risk stratification within population-based cancer screening from the perspective of the general public: a mixed-methods systematic review. Health Expect. (2023) 26:989–1008. doi: 10.1111/hex.13739

33. Sekhon M, Van Der Straten A. Pregnant and breastfeeding women's prospective acceptability of two biomedical HIV prevention approaches in Sub Saharan Africa: a multisite qualitative analysis using the Theoretical Framework of Acceptability. PLoS ONE. (2021) 16:e0259779. doi: 10.1371/journal.pone.0259779

Keywords: acceptability, cancer screening, early detection, implementation science, theoretical framework of acceptability, Consolidated Framework of Implementation Research, consensus survey

Citation: Kelley-Jones C, Schmeising-Barnes N, Bonfield S, Taylor L, McWilliams L, Waller J, Lidington E, Katsampouris E, Gatting L and Scott SE (2025) Challenges of assessing acceptability in the context of cancer screening: a behavioral science perspective. Front. Cancer Control Soc. 3:1608009. doi: 10.3389/fcacs.2025.1608009

Received: 08 April 2025; Accepted: 05 November 2025;

Published: 25 November 2025.

Edited by:

Edvins Miklaševičs, Riga Stradinš University, Latvia

Reviewed by:

Claudia Parvanta, University of South Florida, United States

Copyright © 2025 Kelley-Jones, Schmeising-Barnes, Bonfield, Taylor, McWilliams, Waller, Lidington, Katsampouris, Gatting and Scott. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Charlotte Kelley-Jones

†These authors have contributed equally to this work and share first authorship

‡These authors have contributed equally to this work and share last authorship
