Abstract

Bycatch is the most significant threat to marine mammals globally. National governments are increasingly required, under international agreements and treaties, to assess fisheries catch and bycatch of non-target species. Questionnaire surveys are a low-cost method for collecting the data needed to estimate fisheries catch and bycatch of vulnerable species, including marine mammals. They can be particularly advantageous when bycatch is being investigated on large spatial and temporal scales, or in data-poor areas. This review aims to provide guidance on how to design and conduct questionnaire studies investigating marine mammal bycatch. To do so, a systematic review was conducted of the methods used in 91 peer-reviewed and grey literature questionnaire studies of marine mammal bycatch published from 1990 to 2023. Literature was searched, screened and analysed following the RepOrting standards for Systematic Evidence Syntheses (ROSES) protocols. A narrative synthesis and critical evaluation of the methods used were conducted, and best practice recommendations are proposed. The recommendations include suggestions for how to generate representative samples, the steps that should be followed when designing a questionnaire instrument, how to collect reliable data, how to reduce under-reporting and interviewer bias, and how weighting or model-based bycatch estimation techniques can be used to reduce sampling bias. The review's guidance and best practice recommendations provide much-needed resources for developing and employing questionnaire studies that produce robust bycatch estimates for marine mammal populations where such estimates are currently missing. The recommendations can be used by scientists and decision-makers across the globe.
Whilst the focus of this review is on using questionnaires to investigate marine mammal bycatch, the information and recommendations will also be useful for those investigating bycatch of any other non-target species.

1 Introduction

Globally, more than 25% of marine mammal species are considered threatened with extinction and are classified as Critically Endangered, Endangered or Vulnerable on the IUCN Red List (IUCN, 2023). Fisheries bycatch, or non-target species catch, is the most widespread and dominant threat to marine mammals (Read et al., 2006; Avila et al., 2018; Brownell et al., 2019). In 2006, it was estimated that around 650,000 marine mammals die annually as bycatch around the globe (Read et al., 2006). Gillnets have been identified as the key gear of bycatch concern for odontocete, mysticete, pinniped and sirenian species (e.g. Read et al., 2006; Reeves et al., 2013; Brownell et al., 2019). However, depending on geographical area and species, trawls, encircling nets, longlines and traps have also been identified as significant contributors to marine mammal bycatch (Smith, 1983; Read et al., 2006; Campbell et al., 2008; Fernández-Contreras et al., 2010; Hamer et al., 2012; Brownell et al., 2019).

The importance of reducing bycatch to prevent further extirpation and extinction of marine mammal species has been recognised globally, for example in the United Nations' 2030 Global Biodiversity Framework targets, European Union regulations and the United States' Import Provision regulations (EU Regulation, 2019; CBD, 2022; 16 U.S.C. 1371(a)(2)). The United States Import Provisions require countries exporting fisheries products to the United States to conduct bycatch assessments, set safe bycatch limits and provide evidence that bycatch levels are below these limits for all exporting fisheries.

Bycatch assessments are necessary to investigate potential impacts of bycatch on marine mammal populations (Wade, 2018; Wade et al., 2021). Assessments can be used to ascertain whether bycatch levels are sustainable and to guide conservation efforts to reduce bycatch (Wade, 1998; Curtis et al., 2015). Wade et al. (2021) set out best practices for conducting assessments. Assessments require key data on population abundance, population dynamics (e.g. reproductive rate), carrying capacity and bycatch estimates (Wade et al., 2021). Hammond et al. (2021) provided a detailed and thorough overview for how to estimate abundance for marine mammal populations. Data for producing bycatch estimates can be collected through (i) onboard fisheries observer programs including dedicated observer programmes or Video Remote Electronic Monitoring (VREM), (ii) self-reporting (e.g. log books), (iii) strandings programs, and/or (iv) questionnaires/interviews (hereafter referred to as questionnaires). Model or ratio-based calculations can then be used to produce bycatch estimates using collected data (Authier et al., 2021; Moore et al., 2021).

Onboard fisheries observer programs can be considered the gold standard method for collecting bycatch rate data (Moore et al., 2021). This is because the data they collect are less susceptible to under-reporting bias (bias that arises when an answer lower than the true answer is recorded, for any reason) than data from self-reporting, questionnaire or stranding-based studies. However, observer program data can still be susceptible to under-reporting if observers are not solely focused on observing marine mammal bycatch. Additionally, bias may arise in observer data if observed vessels alter their fishing practices in the presence of observers. The safety of placing observers on vessels can also be called into question under certain socio-political circumstances. VREM has more recently been used to observe fisheries bycatch and has similar advantages and disadvantages to onboard observer programs, with fewer safety concerns (e.g. Brown et al., 2021; Puente et al., 2023). However, cameras used in VREM may fail to detect bycatch events if they are placed in locations with obscured views of the catch, or if they are turned off. Strandings programs can also be used to collect bycatch data (e.g. Baird et al., 2002). However, they require well-established reporting networks and considerable cooperation between researchers and authorities, and their bycatch data are also subject to under-reporting bias. Observer, VREM and strandings programs all require considerable time, personnel and associated cost, particularly when conducted across wide spatial and temporal scales. Self-reporting is a low-cost data collection method. However, self-reported data are often incomplete and unreliable, with bycatch events under-reported (Sampson, 2011; Mangi et al., 2016; Gilman et al., 2019).
Questionnaire studies are low-cost and can be conducted using minimal personnel and time to produce bycatch data over wide spatial and temporal scales. Such wide scales may be particularly advantageous where large bycatch knowledge gaps exist. Bycatch data reported through questionnaires may also be subject to under-reporting bias and memory decay (Bradburn et al., 1987). However, questionnaires’ advantageous attributes may make them the most appropriate method to use under many circumstances.

To produce useful and robust bycatch estimates using data collected from questionnaires, a study's participants (the sample) should be representative of the wider target group that the study aims to investigate (the sampling frame). This can be achieved using a probability sampling approach such as simple random sampling (Moore et al., 2010). Probability sampling approaches are those that give each member of the sampling frame an equal probability of being sampled (Oppenheim, 1992; Fowler, 2009). The questionnaire instrument (the form containing the questions) and the implementation procedure (how the questionnaire is conducted, e.g. in person or online) must be designed to yield reliable responses from participants with minimised error. Unreliable responses and errors may arise from the participant not understanding the questions, not knowing or recalling the information, or intentionally providing incorrect information (Fowler, 2009). Further, any fishery effort data used to extrapolate bycatch from the sample to the whole sampling frame should be accurate, to limit bias in the estimate. Ethical considerations, such as ensuring participant anonymity, must also be addressed to protect those who participate in the study (e.g. Zappes et al., 2013).

There are numerous peer-reviewed articles and textbooks providing guidance on questionnaire study design (e.g. Oppenheim, 1992; Fowler, 2009; Lohr, 2021). However, this guidance is often lengthy, is based on social science studies, and is not tailored to fisheries, let alone to the assessment of marine mammal fisheries bycatch. Moore et al. (2010) and Pilcher and Kwan (2012) provided brief guidance on how to design and conduct questionnaires to investigate marine mammal bycatch, and both identified the need for consultation with social scientists. Whilst efforts have been made to provide useful guidelines for marine mammal bycatch questionnaire study design, no comprehensive guide is available that covers all phases of a questionnaire study, from planning to generating the bycatch estimate.

The purpose of this review is to fill the resource gap in marine mammal bycatch questionnaire study design by: (i) conducting a systematic review of questionnaire-based studies used to investigate marine mammal bycatch, available in the peer-reviewed and grey literature published between 1990 and 2023; (ii) providing a narrative synthesis and critical evaluation of the reviewed literature; and (iii) providing best practice recommendations for designing questionnaires to assess marine mammal fisheries bycatch.

2 Methodology

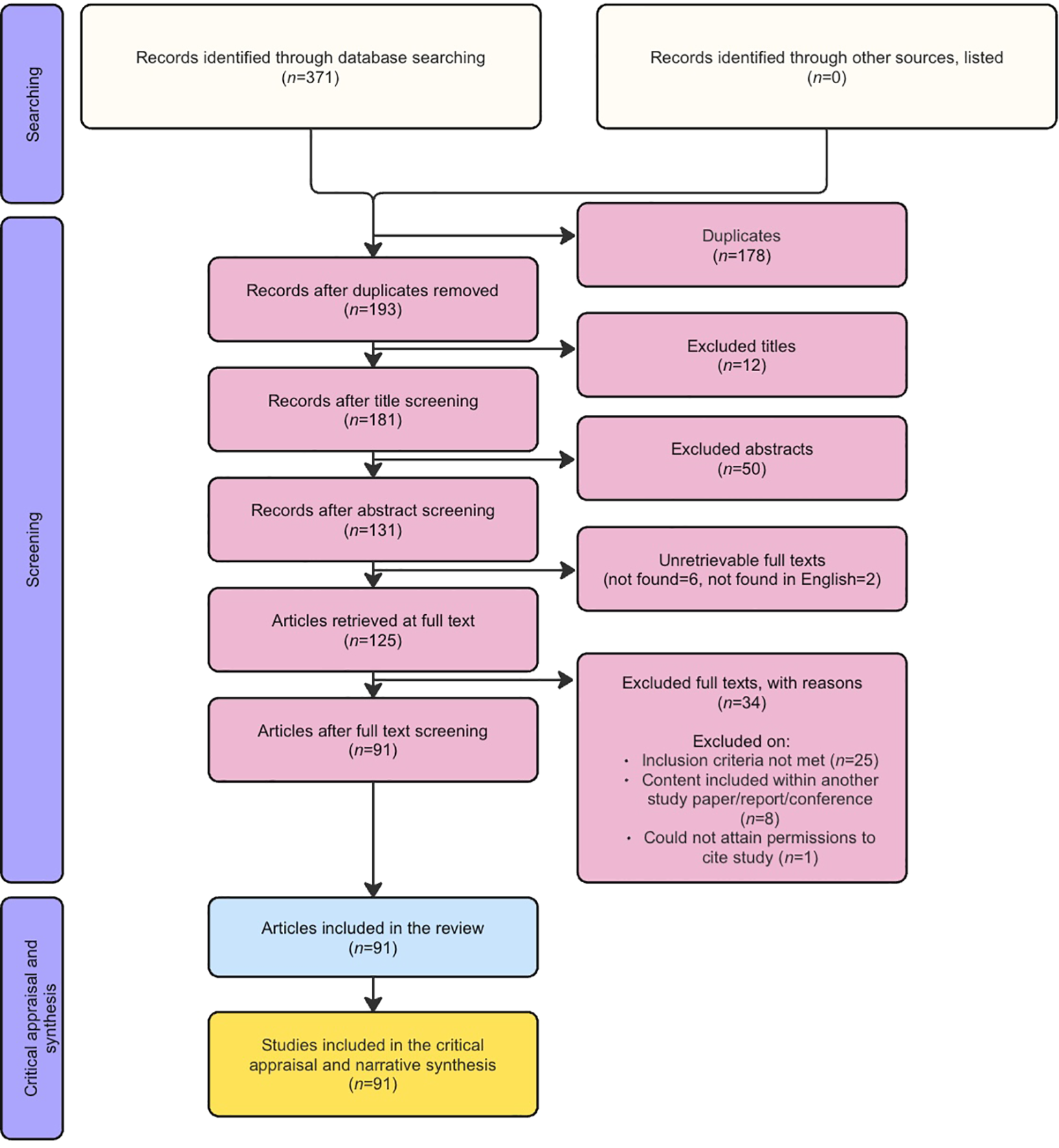

Literature search, screening, data extraction, synthesis and analysis were done following an adapted version of the RepOrting standards for Systematic Evidence Syntheses (ROSES) protocol for systematic reviews (Haddaway et al., 2017). The method’s approach is summarised in Figure 1.

Figure 1

ROSES flow diagram documenting the steps taken to source, screen and appraise studies in the systematic review.

2.1 Literature searching and screening

Literature was searched on Scopus, Web of Science and ProQuest Natural Science. The search included all literature up to and including the search date of 23/05/2023. Grey literature (e.g. conference proceedings and unpublished reports) was included to maximise coverage of the available literature. Article titles, abstracts and keywords were searched using the search string ‘(questionnaire* OR interview*) AND (“marine mammal*” OR “aquatic mammal*” OR cetacean* OR mysticete* OR whale* OR odontocete* OR delphinid* OR dolphin* OR porpoise* OR sirenian* OR dugong* OR pinniped* OR seal* OR “sea lion*” OR otariid* OR mustelid* OR otter*) AND (bycatch OR by-catch OR “accidental catch” OR “accidental capture” OR “incidental catch” OR “incidental capture” OR “non-target catch” OR “interactions with fisheries” OR “fisheries interactions”)’. The metadata of the search results were exported to Excel. Duplicates were identified in R (v4.1.2) using the duplicates() function across titles, abstracts and DOI numbers, then removed (R Core Team, 2023). Any remaining duplicates were removed manually during the screening process. After duplicates were removed, the initial literature search produced a total of 193 items.
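The de-duplication step was carried out in R. Purely as an illustration, the Python sketch below shows one way to flag duplicates across titles, abstracts and DOIs exported from several databases. The record layout (dicts with hypothetical `doi`, `title` and `abstract` keys) and the normalisation rule are assumptions, not the authors' procedure.

```python
import re


def normalise(text):
    """Lower-case and replace runs of punctuation/whitespace with one space, so
    trivial formatting differences between databases do not hide a duplicate."""
    if not text:
        return ""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def remove_duplicates(records, fields=("doi", "title", "abstract")):
    """Keep the first occurrence of each record. A later record is dropped if any
    non-empty field in `fields` matches (after normalisation) a value already
    seen in that same field."""
    seen = {field: set() for field in fields}
    unique = []
    for record in records:
        keys = {field: normalise(record.get(field)) for field in fields}
        if any(keys[f] and keys[f] in seen[f] for f in fields):
            continue  # duplicate of an earlier record
        for field, key in keys.items():
            if key:
                seen[field].add(key)
        unique.append(record)
    return unique


# Hypothetical exported records
records = [
    {"title": "Dolphin bycatch in gillnets", "doi": "10.1000/xyz1"},
    {"title": "Dolphin Bycatch in Gillnets.", "doi": ""},  # same title, other formatting
    {"title": "Seal interactions with fisheries", "doi": "10.1000/XYZ1"},  # same DOI
    {"title": "Porpoise bycatch survey", "doi": "10.1000/xyz2"},
]
print([r["title"] for r in remove_duplicates(records)])
```

Exact matching after normalisation will still miss near-duplicates (e.g. a title with a typo), which is why a manual check during screening remains useful.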

The literature was screened in three stages: titles were screened in Stage 1, abstracts in Stage 2 and the methods sections of the full texts in Stage 3. The inclusion criterion was whether the literature used questionnaires that included at least one question investigating marine mammal bycatch rate, bycatch count, bycatch occurrence or bycatch distribution. Literature in which questions focused on identifying threats (e.g. “Is bycatch a threat?” or “List the threats facing this species”) was not included. Questionnaires could be conducted in person, by telephone, by mail or online (e.g. by e-mail). At each stage, each literature item was assessed against the inclusion criterion. Items that met the criterion, or for which it was unclear whether the criterion was met, passed to the next stage. If full texts in English could not be located, the authors were contacted before 23/03/2023, and the item was excluded if no response was received by 31/12/2023. Screening was conducted by the first author, who had not authored any of the reviewed articles, eliminating any bias associated with self-inclusion/exclusion. Screening titles (Stage 1) left 181 articles, screening abstracts (Stage 2) left 131 articles, and screening full texts (Stage 3) left 91 articles to be included in the analyses (Figure 1). A list of all literature excluded at Stage 3, and the reasons for exclusion, is detailed in Supplementary Material 1.
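The rule that an item advances unless it is clearly excluded can be expressed as a small filter. In this hypothetical sketch each stage is a function returning a `Decision`; in the review these judgements were made manually by the first author, so the keyword-based stage functions in the usage example are placeholders only. The final stage is assumed to return only `INCLUDE` or `EXCLUDE`, since no later stage exists to resolve uncertainty.

```python
from enum import Enum


class Decision(Enum):
    INCLUDE = "include"
    EXCLUDE = "exclude"
    UNCLEAR = "unclear"


def screen(items, stages):
    """Pass items through successive screening stages (title, abstract, full text).

    An item advances when a stage returns INCLUDE or UNCLEAR; only a clear
    EXCLUDE removes it. Returns the surviving items and the number remaining
    after each stage. The last stage should not return UNCLEAR."""
    remaining = list(items)
    counts = []
    for stage in stages:
        remaining = [item for item in remaining if stage(item) is not Decision.EXCLUDE]
        counts.append(len(remaining))
    return remaining, counts


# Placeholder stage functions standing in for the reviewer's manual judgement
def title_stage(title):
    return Decision.EXCLUDE if "acoustics" in title else Decision.UNCLEAR


def abstract_stage(title):
    return Decision.EXCLUDE if "threat ranking" in title else Decision.INCLUDE


def full_text_stage(title):
    relevant = "bycatch" in title or "interactions" in title
    return Decision.INCLUDE if relevant else Decision.EXCLUDE


papers = [
    "gillnet bycatch interviews",
    "whale song acoustics",
    "dolphin fisheries interactions questionnaire",
    "threat ranking survey",
]
included, counts = screen(papers, [title_stage, abstract_stage, full_text_stage])
print(included, counts)
```

Recording the count after each stage gives the numbers needed for a ROSES-style flow diagram.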

2.2 Data extraction

Descriptive meta-data (e.g. DOI, title, country of research) and data on the questionnaire methods used were extracted. Method data were extracted on the studies’ sampling strategy, questionnaire design, questionnaire implementation procedure and data analysis. Extraction focused on the studies’ measures to reduce bias, increase reliability and to ensure that ethical standards were met. Extracted data were entered into an Excel file. If it was concluded that there was not enough information available in the text on a data topic, ‘No Data (ND)’ was entered into the data extraction file. If a data topic was not applicable (e.g. due to the survey procedure followed), ‘Not Applicable (NA)’ was entered. In addition to the extracted data, descriptive data on the economic status of the study country were obtained from the World Bank (World Bank, 2024). The completed table containing all extracted data can be found in Supplementary Material 2.

2.3 Data analysis and synthesis

Descriptive statistics (percentages and counts) were used to summarise the metadata and the methodological techniques used. No statistics were produced for the ‘NA’ or ‘ND’ data categories. For each study element (the studies' sampling procedure, questionnaire design, questionnaire implementation procedure and analysis), a narrative synthesis and critical evaluation of the methods used in the reviewed literature were conducted, and best practice recommendations were distilled.
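A minimal sketch of the descriptive summary for one extracted field, assuming the extraction sheet codes missing information as the strings `"NA"` and `"ND"`. Whether percentages are expressed relative to all reviewed studies or only to studies with an informative value is a choice that should be stated; Table 1 uses sub-denominators for some items (marked with asterisks), so the sketch makes the denominator explicit.

```python
from collections import Counter

MISSING = {"NA", "ND"}  # 'Not Applicable' and 'No Data' codes from the extraction sheet


def summarise(values, denominator=None):
    """Return {category: (percent, count)} for one extracted field.

    'NA' and 'ND' entries are never summarised. By default the denominator is
    the number of informative entries; pass `denominator` to express counts as
    a share of a different base (e.g. all reviewed studies)."""
    counts = Counter(v for v in values if v not in MISSING)
    total = denominator if denominator is not None else sum(counts.values())
    return {category: (round(100 * n / total, 1), n) for category, n in counts.items()}


# Hypothetical field from 11 studies
field = ["Yes"] * 6 + ["No"] * 2 + ["ND"] * 2 + ["NA"]
print(summarise(field))                  # share of informative entries
print(summarise(field, denominator=11))  # share of all 11 studies
```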

3 Results and discussion

Of the reviewed studies, 85.7% (n=78) were peer-reviewed original research and the remainder were technical reports (11.0%; n=10), PhD or Master's theses (2.2%; n=2) or book chapters (1.1%; n=1) (Table 1). Most studies (90.1%; n=82) were conducted in a single country; the remainder were conducted in up to 18 countries. The largest numbers of studies were conducted in Europe (27.5%; n=25) and Asia (27.5%; n=25), followed by South America (18.7%; n=17), North America (9.8%; n=9) and Oceania (2.1%; n=2) (Table 1). The highest number of studies was conducted in Brazil (7.6%; n=7), followed by Spain (5.5%; n=5), then Canada, China, Greece, India, Malaysia, Peru, Portugal and Tanzania (4.4%; n=4 each). The largest share of studies (49.5%; n=45) was conducted in high-income countries, with 40.7% (n=37) conducted in upper-middle-income countries, 40.7% (n=37) in lower-middle-income countries and 7.7% (n=7) in low-income countries (World Bank, 2024) (Table 1). Odontocetes were the focus of the highest number of studies (75.9%; n=69), followed by pinnipeds (19.7%; n=18) and mysticetes (16.5%; n=15) (Table 1). It was common for studies to have a single focal species (46.2%; n=42). Of these, the common bottlenose dolphin (Tursiops truncatus) was the focus of the most studies (14.3%; n=6), followed by the dugong (Dugong dugon) (9.5%; n=4) and the Mediterranean monk seal (Monachus monachus) (9.5%; n=4) (Table 1).

Table 1

(A) Metadata

| Publication type | Peer-reviewed | Technical report | PhD/Master's thesis | Book chapter |
|---|---|---|---|---|
| % | 85.7 | 11.0 | 2.2 | 1.1 |
| n | 78 | 10 | 2 | 1 |

| Countries per study | Single country | Multiple countries |
|---|---|---|
| % | 90.1 | 9.9 |
| n | 82 | 9 |

| Continent | Europe | Asia | South America | North America | Oceania |
|---|---|---|---|---|---|
| % | 27.5 | 27.5 | 18.7 | 9.8 | 2.1 |
| n | 25 | 25 | 17 | 9 | 2 |

| Country income status | High-income | Upper-middle-income | Lower-middle-income | Low-income |
|---|---|---|---|---|
| % | 49.5 | 40.7 | 40.7 | 7.7 |
| n | 45 | 37 | 37 | 7 |

| Taxon | Odontocete | Pinniped | Mysticete |
|---|---|---|---|
| % | 75.9 | 19.7 | 16.5 |
| n | 69 | 18 | 15 |

| Species focus | Single species | Multiple species |
|---|---|---|
| % | 46.2 | 53.9 |
| n | 42 | 49 |

| Focal species of studies with single species focus | Tursiops truncatus | Dugong dugon | Monachus monachus |
|---|---|---|---|
| % | 14.3 | 9.5 | 9.5 |
| n | 6 | 4 | 4 |

(B) Sampling approach

| Survey type | Census | Sample |
|---|---|---|
| % | 6.6 | 93.4 |
| n | 6 | 85 |

| Sampling approach | Purposive sampling | Convenience sampling | Snowball sampling | Cluster sampling | Stratified sampling |
|---|---|---|---|---|---|
| % | 29.6 | 27.4 | 23.1 | 75.8 | 4.4 |
| n | 27 | 25 | 21 | 69 | 4 |

| Reporting | Reported sampling rate | Reported non-response rate |
|---|---|---|
| % | 49.0 | 16.5 |
| n | 44 | 15 |

(C) Questionnaire instrument

| Design step | Ensured questions were simple | Assessed questions for leading question bias | Wrote questions so they could be read aloud | Described question order | Included interviewer instructions |
|---|---|---|---|---|---|
| % | 6.6 | 3.3 | 23.3* | 51.6 | 26.1** |
| n | 6 | 3 | 7* | 47 | 6** |

*Only includes studies that included the full questionnaire.

**Of in-person or telephone questionnaires where the survey form was included.

(D) Questionnaire implementation procedure

| Mode | In person | Telephone | Online | |
|---|---|---|---|---|
| % | 89.0 | 8.9 | 5.5 | 2.2 |
| n | 81 | 8 | 5 | 2 |

| Reporting | Reported group or one-to-one format | Reported interviewer age | Reported interviewer gender | Reported interviewer training |
|---|---|---|---|---|
| % | 34.1 | 2.5*** | 6.2*** | 23.8*** |
| n | 31 | 2*** | 5*** | 20*** |

***Of studies with interviewers.

(E) Data analysis

| Estimation method | Ratio-based methods | Model-based methods |
|---|---|---|
| % | 37.4 | 0.0 |
| n | 34 | 0 |

| Fisheries effort data unit | Number of vessels | Number of fishers | Time spent fishing |
|---|---|---|---|
| % | 46.1 | 23.1 | 23.1 |
| n | 12 | 6 | 6 |

| Source of fishery effort data | Government | Current study | Previous studies |
|---|---|---|---|
| % | 50.0 | 30.8 | 7.7 |
| n | 13 | 8 | 2 |

Summary statistics (proportions and counts) of the meta-data and questionnaire methods used within the reviewed literature. See Supplementary Material 2 for further detail and see Table 2 for definitions. Country income status attained from the World Bank (2024).

Sub-table (A) summarises studies' metadata (continents, countries, economic status classifications and taxa are non-exclusive), (B) summarises studies' sampling strategies (sampling approach and survey type are non-exclusive), (C) summarises studies' questionnaire instruments, (D) summarises studies' questionnaire implementation procedures, and (E) summarises studies' data analyses.

3.1 Sampling

3.1.1 Sampling frame

Within the reviewed literature, studies’ sampling frames varied from having a narrow focus (e.g. Alaska’s Copper River Delta salmon drift-net fishers) to a broad focus (e.g. all Swedish fishers) (Wynne, 1990; Lunneryd and Westerberg, 1997). A representative sample can be attained with either scale if the sampling frame is well defined (e.g. Wynne, 1990; Norman, 2000; Lunneryd et al., 2003) and comprehensive (i.e. lists all members of the target group to be studied). Lesage et al. (2006) and Hale et al. (2011) were able to use a list of all licensed fishers in their sampling frame. However, where such lists are not available, larger groups or communities can be listed instead, such as in Whitty (2016). Well defined and comprehensive sampling frames are recommended.

3.1.2 Sampling approach

Within the reviewed literature there was a general lack of detail on the sampling approach used to select questionnaire interviewees. Censuses, as defined in Table 2, were used in 6.6% of studies (n=6) (Table 1). Where a sampling approach was used, one study (1.1%) (Moore et al., 2010) used probability sampling, compared to 63 studies (69.2%) which used non-probability sampling methods (Table 1).

Table 2

| Term | Definition |
|---|---|
| Census | A survey where all members of the sampling frame participate. |
| Central tendency bias | Bias in a respondent’s answer that arises when a respondent selects a mid-range answer from a list of available answer choices. A type of response bias. |
| Closed questions | Questions for which answers are restricted to a limited set. |
| Cluster sampling | A multi-level sampling approach where coarser clusters of individuals (e.g. villages) are sampled before individuals are sampled from within the clusters. |
| Drop-out rate | The proportion of respondents who start the survey but ‘drop out’ before finishing it. |
| Indicator | A variable that can be used to measure the concept of interest. |
| Interval data | Numerical scale data with known and equal distances between values but with no true zero. |
| Interviewer bias | A type of bias that arises from the influence of the interviewer on the participants’ responses. |
| Introductory statement | A short description of the study, what is requested of the participant and the participant’s ethical rights. |
| Knowledge bias | Bias in a respondent’s answer that arises when a respondent does not know the answer but provides an answer anyway. A type of response bias. |
| Leading question bias | Bias in a respondent’s answer that arises when a respondent provides an incorrect answer because they were led to give that answer by the suggestive nature of a question. A type of response bias. |
| Model-based bycatch estimate | A method to produce a bycatch estimate where Bycatch Per Unit Effort (BPUE) is calculated by fitting models to data on the frequency of bycatch for a given effort, as a function of various potential explanatory covariates. Models can then be used to predict the total bycatch in the sampling frame. |
| Nominal data | Data that can be divided into mutually exclusive categories where there are no relationships between the categories (e.g. primary fishing gears). |
| Non-probability sampling | Using non-random or non-systematic approaches to select the sample, leading to each member of the sampling frame not having an equal probability of being selected. |
| Non-response bias | A form of sampling bias that arises when the non-responders are a biased sample of the sampling frame. |
| Non-response rate | The proportion of individuals identified for sampling who do not participate because they cannot be located or choose not to take part. |
| Open questions | Questions for which answers are not restricted to a limited set. |
| Opportunity or Convenience sampling | Participants are selected because they are easy or convenient to access. A type of non-probability sampling. |
| Ordinal data | Data that can be divided into ranked categories where there is no available information on the degree to which one rank differs from the next (e.g. lots, some, none). |
| Pre-testing (field) | Testing the questionnaire with a sample of the sampling frame to ensure the questionnaire flows well, to test timings, and to ensure questions are understood as intended. |
| Pre-testing (laboratory) | Testing the questionnaire in a laboratory setting (e.g. with the interviewer team) to ensure the questionnaire flows well, to test timings, and to ensure questions are understood as intended. |
| Probability sampling | Using random or systematic approaches to select the sample, giving each member of the sampling frame an equal probability of being selected. |
| Purposive sampling | Participants are selected because they possess a characteristic that is desired for sampling. A type of non-probability sampling. |
| Questionnaire implementation procedure | All of the elements of a questionnaire study related to how the questionnaire is conducted (e.g. in person or online, one-to-one or in groups, interviewers used). |
| Questionnaire instrument | The form containing the questions and any introductory statement or interviewer instructions. |
| Ratio data | Numerical scale data with known and equal distances between values and a true zero (e.g. bycatch count per year). |
| Ratio-based bycatch estimate | A method to produce a bycatch estimate where BPUE is calculated by, for example and most simply, dividing the number of bycatch events in the sample by the number of vessels sampled, then extrapolating this figure to the total number of vessels in the sampling frame. |
| Recall bias | Bias in a respondent’s answer that arises when a respondent cannot remember the answer but provides an answer anyway. A type of response bias. |
| Response bias | Bias in a respondent’s answer as the result of any factor. |
| Response fatigue bias | Bias in a respondent’s answer that arises when a respondent gives an incorrect answer because they are tired and so are not giving their full attention and effort to the survey. A type of response bias. |
| Sample | The members of the sampling frame that participate in the survey. |
| Sample rate | The proportion of the sampling frame sampled. |
| Sample size | The number of participants in the sample. |
| Sampling approach | The method used to select the sample participants from the sampling frame for the study. |
| Sampling bias | A bias that arises from the sample not being representative of the sampling frame. |
| Sampling frame | The total population that is being investigated. |
| Simple random sampling | Participants are selected at random from a list of all members of the sampling frame using a random number generator. A type of probability sampling. |
| Snowball sampling | The first participant is identified via any sampling method, then this participant identifies further participants, and so on. A type of non-probability sampling. |
| Social desirability bias | Bias in a respondent’s answer that arises when a respondent gives an incorrect answer because the truthful answer may be socially undesirable. A type of response bias. |
| Stratified sampling | The sampling frame is divided into sub-groups, allowing for each sub-group to be sampled independently. |
| Systematic sampling | Participants are selected systematically (e.g. every 10th vessel in a list of vessels) from a list of all members of the sampling frame. A type of probability sampling. |
| Under-reporting bias | Bias in a respondent’s answer that arises when a respondent gives an incorrect answer that is lower than the true answer for any reason. Reasons may involve fear of prosecution, fear of changing laws or because truthful answers may be socially undesirable. A type of response bias. |
| Weighting | A method to correct for non-representative sampling whereby the data of each respondent are multiplied by a weighting factor. Weighting factors can be calculated as the proportion of the category in the sampling frame divided by the proportion of the category in the sample, so that over-represented categories are down-weighted. |

Definitions of key terms for designing and conducting marine mammal bycatch questionnaires (Adapted from: Oppenheim, 1992; Choi and Pak, 2005; Rugg and Petre, 2006; Fowler, 2009; Agresti, 2013).
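The simplest ratio-based estimate defined above can be written in a few lines. This sketch assumes one reported bycatch count per sampled vessel, the vessel as the effort unit, and a representative sample; the input numbers are hypothetical. It returns a point estimate only and does not quantify uncertainty.

```python
def ratio_bycatch_estimate(bycatch_per_vessel, vessels_in_frame):
    """Simplest ratio-based estimate: total bycatch reported by the sampled
    vessels divided by the number of vessels sampled (BPUE, with the vessel as
    the effort unit), multiplied by the number of vessels in the sampling frame.

    `bycatch_per_vessel` holds one reported bycatch count per sampled vessel.
    Assumes the sample is representative of the frame."""
    if not bycatch_per_vessel:
        raise ValueError("at least one sampled vessel is required")
    bpue = sum(bycatch_per_vessel) / len(bycatch_per_vessel)
    return bpue, bpue * vessels_in_frame


# Hypothetical: six interviewed skippers report last year's bycatch,
# in a fishery with 250 licensed vessels
bpue, total = ratio_bycatch_estimate([0, 2, 1, 0, 3, 0], vessels_in_frame=250)
print(bpue, total)
```

If the effort unit were fishing days rather than vessels, the same ratio would be formed with days in both the denominator and the extrapolation.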

Purposive, convenience and snowball sampling (defined in Table 2) are types of non-probability sampling. These approaches use non-random or non-systematic methods to select the sample, meaning that members of the sampling frame do not have an equal probability of being selected (Fowler, 2009; Oppenheim, 1992). Such approaches therefore result in sampling bias, as defined in Table 2. Purposive sampling was used in 29.6% of studies (n=27) (Table 1). For example, Cruz et al. (2014) sampled islands where squid (Loligo sp.) catch was higher, and Lunneryd and Westerberg (1997) interviewed fishers with a high likelihood of bycatch. Such sampling approaches would produce a biased sample unless the sampling frame was redefined to reflect only the type of fishers interviewed. If a village chief, port master or similar selects fishers to participate (e.g. Svarachorn et al., 2023), this could be a form of either purposive or convenience sampling, which could lead to an unrepresentative sample if not addressed during analysis. Maynou et al. (2011) and González and De Larrinoa (2013) sampled retired fishers. Interviewing retired fishers may reduce under-reporting bias arising from fishers fearing changes to their fishing practices. However, retired fishers can only provide historical bycatch data, and their responses may be influenced by recall bias, as defined in Table 2. Convenience sampling was used in 27.4% of studies (n=25) (e.g. Goetz et al., 2014; Dewhurst-Richman et al., 2020; Mustika et al., 2021) (Table 1). This approach may be suitable when only limited time is available at the port. Snowball sampling was used in 23.1% of studies (n=21) (Table 1). This approach may be appropriate for difficult-to-reach populations, where fishers are dispersed and perhaps not registered to a community.
Snowball sampling may also reduce non-response rates and increase honesty as a new participant may trust the interviewer if they are introduced by someone who has already completed a questionnaire. However, as a non-probability sampling method, snowball sampling will still yield a biased sample. Zollett (2008) used this approach, aiming to reduce bias by sampling until no new names arose.

Numerous studies within the review stated that they used random sampling (e.g. Zappes et al., 2016; Revuelta et al., 2018; Alexandre et al., 2022). However, their descriptions of the sampling approach aligned more closely with convenience or purposive sampling. A key difference between these approaches is that random sampling is a probability sampling method, whereas convenience and purposive sampling are both non-probability methods. The lack of studies using probability sampling methods may be due in part to the lack of available fisher or vessel lists. In such instances, adapted versions of simple random sampling or systematic sampling, which are both probability sampling methods defined in Table 2, could be employed. Here, a random number generator or a systematic rule could be used to select which of the fishers encountered at a fishing port are sampled. Pilcher and Kwan (2012) suggested similar adapted methods. However, this approach would not give all members of the sampling frame an equal chance of being sampled, as some fishers may not be at the port. Ayissi and Jiofack (2014) conducted interviews mostly in the afternoon and on Sundays, when most fishers would be on land. Similarly, Mohamed (2017) conducted interviews when fishers were on their way to or back from fishing, landing their catch, or repairing their gear. Taking such fishing habits into account may increase the representativeness of the sample.
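An adapted systematic sampling rule for fishers encountered at a port might look like the sketch below: a random start is drawn once, then every k-th fisher encountered is interviewed. The function name and the interval of five are hypothetical, and the caveat above still applies, since fishers absent from the port during the visit have no chance of selection.

```python
import random


def systematic_selector(interval, rng=None):
    """Adapted systematic sampling for fishers encountered one at a time (e.g. at
    a landing site) when no list of the sampling frame exists.

    A random start between 1 and `interval` is drawn once; the fisher met at
    that position and every `interval`-th fisher after it is selected. Returns
    a function to call once per encountered fisher; it returns True if that
    fisher should be interviewed."""
    rng = rng or random.Random()
    start = rng.randint(1, interval)
    position = 0

    def select():
        nonlocal position
        position += 1
        return position >= start and (position - start) % interval == 0

    return select


# Interview one in five fishers met during a port visit (hypothetical)
select = systematic_selector(5, rng=random.Random(42))
picked = [encounter for encounter in range(1, 31) if select()]
print(picked)
```

Passing a seeded `random.Random` makes the selection reproducible, which helps when the sampling procedure must be reported.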

Cluster sampling, defined in Table 2, was a popular method among the reviewed studies, being employed by 75.8% (n=69) of them (Table 1). Villages or islands, for example, were used as clusters (Cruz et al., 2014; Leeney et al., 2015). If clusters are of different sizes and a fixed number of fishers is interviewed in each, fishers in different clusters will have different probabilities of being sampled. Area probability sampling is a form of cluster sampling that can be used to address this issue, in which clusters of individuals are stratified geographically based on their size. This approach was used by Poonian et al. (2008), who stratified by the number of boats in a community. Cluster sampling may be a suitable approach where no list of fishers or vessels is available, or where it is not financially and logistically viable to sample participants selected from a country-wide list (Moore et al., 2010). However, for a given sample size, cluster sampling may produce less precise results than non-cluster sampling, because fishers within the same cluster tend to resemble one another.
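The unequal selection probabilities described above can be made concrete. This sketch assumes a two-stage design in which m of M clusters are chosen with equal probability and a fixed number k of fishers is interviewed in each chosen cluster, so a fisher in cluster c is sampled with probability (m/M)(k/N_c). The village names and sizes are hypothetical. Selecting clusters with probability proportional to size is a standard alternative design that equalises these probabilities.

```python
def inclusion_probabilities(cluster_sizes, clusters_selected, fishers_per_cluster):
    """Per-fisher inclusion probability in each cluster under two-stage sampling:
    `clusters_selected` of the M clusters are chosen with equal probability, then
    a fixed number of fishers is interviewed in each chosen cluster.

    P(fisher in cluster c is sampled) = (m / M) * (k / N_c), where N_c is the
    number of fishers in cluster c and k is capped at N_c."""
    M = len(cluster_sizes)
    probabilities = {}
    for cluster, size in cluster_sizes.items():
        k = min(fishers_per_cluster, size)
        probabilities[cluster] = (clusters_selected / M) * (k / size)
    return probabilities


# Hypothetical villages and their numbers of fishers
villages = {"A": 20, "B": 80, "C": 40, "D": 60}
probs = inclusion_probabilities(villages, clusters_selected=2, fishers_per_cluster=10)
weights = {village: 1 / p for village, p in probs.items()}  # design weights
print(probs)
print(weights)
```

Here an interviewed fisher from village B stands for 16 fishers in the frame and one from village A for only 4; analysing the data unweighted would therefore over-represent small villages.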

Stratified sampling, as defined in Table 2, was used by few of the reviewed studies (4.4%, n=4), with respondents stratified by gear type (e.g. Goetz et al., 2014; Alexandre et al., 2022) or port size (e.g. Moore et al., 2010) (Table 1). Stratification allows variance to be calculated for each stratum, reducing the uncertainty of the resulting bycatch estimates. It also allows different survey effort to be applied to each stratum, which may be useful if a particular stratum is known to have a higher variance. If a stratified sampling approach is used, the sample size for each stratum should be proportional to the size of that stratum within the sampling frame (Poonian et al., 2008; Moore et al., 2010; Goetz et al., 2014). Stratification can also be conducted after data collection (post-stratification). Here, the data are divided into strata after they have been collected, and weighting (as defined in Table 2) can be used to correct for differences between the composition of the sample and that of the sampling frame (see section 3.4.1).
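Proportional allocation sets each stratum's sample size to n_h = n × N_h / N, where N_h is the stratum size in the sampling frame. Because these values are rarely whole numbers, some rounding rule is needed. The sketch below uses largest-remainder rounding, which is an assumption of this example rather than a rule taken from the cited studies. The gear types and vessel counts are hypothetical.

```python
import math


def proportional_allocation(stratum_sizes, n):
    """Allocate a total sample of n across strata in proportion to each
    stratum's share of the sampling frame (n_h = n * N_h / N).

    Largest-remainder rounding is used so the allocations sum exactly to n."""
    total = sum(stratum_sizes.values())
    exact = {h: n * size / total for h, size in stratum_sizes.items()}
    alloc = {h: math.floor(x) for h, x in exact.items()}
    shortfall = n - sum(alloc.values())
    # Hand out the remaining interviews to the strata with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda h: exact[h] - alloc[h], reverse=True)
    for h in by_remainder[:shortfall]:
        alloc[h] += 1
    return alloc


# Hypothetical frame of vessels by gear type and a total sample of 60 interviews.
frame = {"gillnet": 420, "longline": 130, "trawl": 50}
print(proportional_allocation(frame, 60))
```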

We recommend that probability sampling methods are used if fisher or vessel lists are available. Moore et al. (2021) made a similar recommendation, advocating simple random sampling. If non-probability sampling is unavoidable, for example due to the absence of fisher lists, cluster sampling should be employed and, if feasible, an adapted random or systematic sampling approach should be followed within clusters. Where non-probability sampling methods are used, weighting or model-based bycatch estimation techniques are recommended during analysis to address sampling bias. The only exception to these recommendations is when investigating themes, where representativeness may not be a requirement.
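Post-stratification is one weighting approach, and a minimal sketch of it is given below. It assumes that the number of fishers (or vessels) per stratum in the sampling frame is known. The stratum names, counts and responses are hypothetical, and model-based estimation, the alternative mentioned above, is not shown.

```python
def post_stratified_mean(responses, frame_counts):
    """Post-stratified estimate of mean reported bycatch per fisher (or vessel).

    responses: list of (stratum, reported_bycatch) pairs from respondents.
    frame_counts: number of fishers (or vessels) per stratum in the sampling frame.
    Each respondent in stratum h is given the weight N_h / n_h, so strata that
    are under-represented in the sample count for more."""
    values = {}
    for stratum, bycatch in responses:
        values.setdefault(stratum, []).append(bycatch)
    missing = set(frame_counts) - set(values)
    if missing:
        # A stratum with no respondents cannot be weighted up; strata may need collapsing.
        raise ValueError(f"no respondents in strata: {sorted(missing)}")
    weighted_total = sum(frame_counts[h] / len(ys) * sum(ys) for h, ys in values.items())
    return weighted_total / sum(frame_counts.values())


# Hypothetical data: large-port fishers are over-represented in the sample.
frame = {"large_port": 100, "small_port": 300}
sample = [("large_port", 2), ("large_port", 1), ("large_port", 3),
          ("large_port", 2), ("small_port", 0), ("small_port", 1)]
print(post_stratified_mean(sample, frame))
```

In this example, the unweighted mean is 1.5 animals per fisher. The post-stratified mean is lower because the more numerous small-port fishers, who reported less bycatch, are weighted up.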

3.1.3 Sample size

Within the review, all studies reported sample size (median: 151; range: 5–2670). However, only 49% (n=44) of the studies (excluding censuses) reported the sample rate, as defined in Table 2 (mean: 24.5%; range: 1–63%) (Table 1). Reporting the sample rate is important because it helps readers judge how representative the sample is.

Numerous approaches can be employed to determine sample size. Sample size formulas generally require inputs on (i) the acceptable level of variance for the final estimate, (ii) the size of the sampling frame, and (iii) the expected level of bycatch occurrence (e.g. Krejcie and Morgan, 1970; Aragón-Noriega et al., 2010; Perneger et al., 2015; Uakarn et al., 2021). Sample sizes that generate a bycatch estimate with a coefficient of variation (CV) of 0.3 have been deemed appropriate when setting bycatch reference points (Wade, 1998; Moore et al., 2021). Bycatch rates may be known from previous studies, or a pilot study can be conducted to collect these data. Where marine mammal populations have low occurrence and density, per-vessel bycatch rates are often very low (Martin et al., 2015; Gray and Kennelly, 2018), and large samples will therefore be required to estimate bycatch with adequate precision. We recommend that formulas such as those described above are used to calculate sample size. However, if the aim of the study is to investigate themes present in the sampling frame rather than to produce representative bycatch estimates, other approaches may be appropriate. For example, sample size can be set by continuing to interview new participants until no new themes or answers arise (e.g. Seminara et al., 2019; Alves et al., 2012; Zappes et al., 2013). All studies should additionally report how the sample size was selected (e.g. López et al., 2003).
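The sketch below works through two such calculations. The first function implements the Krejcie and Morgan (1970) formula with its usual inputs: a chi-square value of 3.841 (one degree of freedom at 95% confidence), an assumed proportion P = 0.5 and a margin of error d = 0.05. The second assumes that bycatch events per vessel follow a Poisson distribution. Under that assumption, the CV of an estimated rate is approximately 1/√(nλ), and solving for n gives the number of vessels needed to reach the CV of 0.3 mentioned above. The rate of 0.05 animals per vessel-year is hypothetical. The sketch also ignores the finite population correction, and overdispersed (clumped) bycatch would require larger samples than it suggests.

```python
import math


def krejcie_morgan(N, chi2=3.841, P=0.5, d=0.05):
    """Krejcie and Morgan (1970): s = chi2*N*P*(1-P) / (d^2*(N-1) + chi2*P*(1-P)),
    rounded up to a whole number of respondents."""
    s = chi2 * N * P * (1 - P) / (d ** 2 * (N - 1) + chi2 * P * (1 - P))
    return math.ceil(s)


def vessels_for_target_cv(rate_per_vessel, target_cv=0.3):
    """Assuming Poisson bycatch with mean rate_per_vessel per reporting period,
    CV(estimated rate) ~ 1 / sqrt(n * rate), so n = 1 / (target_cv^2 * rate).
    Ignores the finite population correction."""
    return math.ceil(1 / (target_cv ** 2 * rate_per_vessel))


print(krejcie_morgan(1000))         # sampling frame of 1000 fishers
print(vessels_for_target_cv(0.05))  # hypothetical 0.05 animals per vessel-year
```

The two functions answer different questions. The first sizes a sample to estimate a proportion, such as the share of fishers who experience any bycatch. The second sizes a sample to estimate a rare-event rate to a given precision, which is closer to the bycatch reference point use case.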

3.1.4 Non-response

Very few studies (16.5%, n=15) in the review reported non-response rates (Table 1). Non-response rates influence how representative a sample is of the sampling frame, as the final dataset only represents those who participated. If non-responders shared a specific characteristic (e.g. having experienced a high frequency of bycatch), the sample will be less representative than if non-responders were a random subset of the sampling frame. Non-response can therefore lead to non-response bias, as defined in Table 2, and should be reported. Methods to investigate non-response bias are described in section 3.4.1.

3.2 Questionnaire instrument

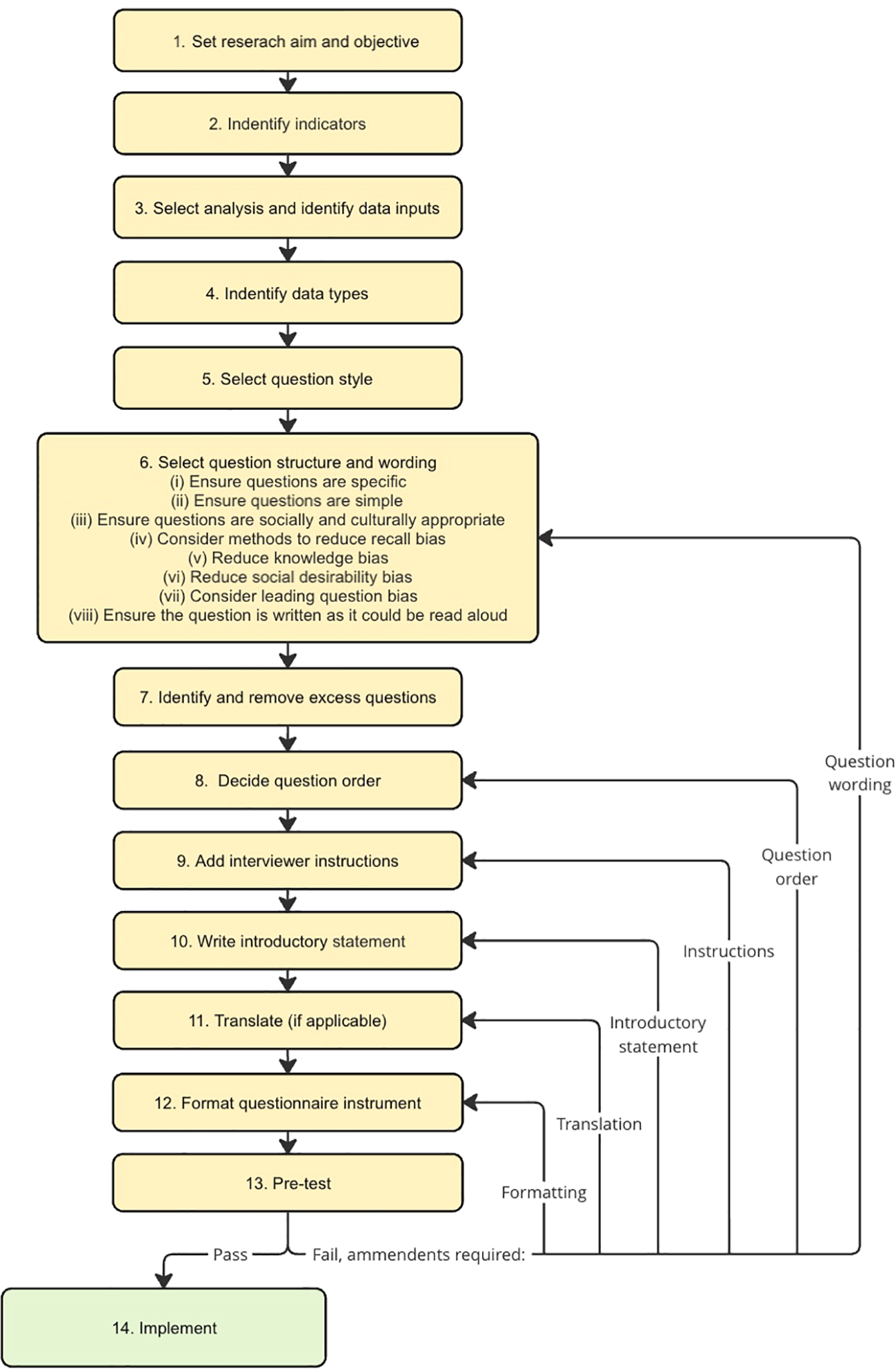

A questionnaire instrument should be built following a clear and transparent process that facilitates reproducibility, as described in, for example, Fowler (2009), Hague (2006), Malhotra (2009) and Patel and Joseph (2016). However, the reviewed literature generally lacked detail on the design process. Here, we set out 14 recommended steps to follow when designing a questionnaire instrument (sections 3.2.1 to 3.2.14). The steps are also summarised in Figure 2.

Figure 2

Questionnaire design flow diagram devised following Fowler (2009), Hague (2006), Malhotra (2009), Patel and Joseph (2016) and the reviewed literature.

3.2.1 Set research aim and objectives

The aim of the study should be clearly defined, e.g. ‘To assess the impact of small-scale fisheries bycatch on harbour porpoise (Phocoena phocoena) in Bulgaria’. Specific and realistic objectives that address the aim should follow, e.g. ‘Objective 1: Estimate harbour porpoise bycatch rates for different fishing gear in Bulgarian small-scale fisheries’. The number of objectives should be kept small, but together they should allow the stated aim to be addressed. A clearly defined aim and associated objectives can prevent the questionnaire from becoming too long, which helps reduce drop-out and response fatigue bias (see section 3.2.7). When selecting the time period covered by the questionnaire, it is important to consider that recall bias increases with the time elapsed since a bycatch event (Bradburn et al., 1987). A number of studies (18.6%, n=17) asked about bycatch over a one-year period. In general, we support investigating bycatch over this time period if questionnaires are one-off rather than recurring (see section 3.3.2). If entanglements are rare, as for grey whales (Eschrichtius robustus), fishers may recall these events better (Baird et al., 2002). In such instances, reporting on longer time periods may be appropriate.

3.2.2 Identify indicators

The indicators, or variables that can be used to measure the concept of interest, should be identified for each objective. Following on from the example objective on harbour porpoise bycatch above, the indicator would be annual harbour porpoise Bycatch Per Unit Effort (BPUE) for each fishery.

3.2.3 Select analysis and identify data inputs

The analysis or calculation required to produce the indicator (e.g. BPUE) should be identified. The inputs needed for this analysis can then be identified (e.g. number of porpoises caught in a one-year period, number of fishers interviewed).
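The sketch below shows one possible calculation and its inputs. It assumes that effort is recorded as fishing days per year and that each response records gear type, bycatch and effort; all field names and values are hypothetical. If effort is instead measured per fisher interviewed, as in the example inputs above, the denominator would be the number of fishers. Totals are pooled before dividing (a ratio estimator), so fishers with more effort have more influence than they would if each fisher's own rate were averaged.

```python
from collections import defaultdict


def bpue_by_gear(responses):
    """Bycatch per unit effort for each gear type, computed as the ratio of
    total reported bycatch to total reported effort across respondents."""
    bycatch = defaultdict(float)
    effort = defaultdict(float)
    for r in responses:
        bycatch[r["gear"]] += r["porpoises_caught"]
        effort[r["gear"]] += r["fishing_days"]
    return {gear: bycatch[gear] / effort[gear] for gear in effort if effort[gear] > 0}


# Hypothetical one-year recall responses from four fishers.
responses = [
    {"gear": "gillnet", "porpoises_caught": 2, "fishing_days": 100},
    {"gear": "gillnet", "porpoises_caught": 0, "fishing_days": 80},
    {"gear": "gillnet", "porpoises_caught": 1, "fishing_days": 20},
    {"gear": "longline", "porpoises_caught": 0, "fishing_days": 150},
]
print(bpue_by_gear(responses))  # porpoises per fishing day, by gear
```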

3.2.4 Identify data types

The data types required for each input should be identified. There are four data type categories that questionnaire responses fall into: nominal (data that can be divided into mutually exclusive categories where there are no relationships between the categories), ordinal (data that can be divided into ranked categories where there is no available information on the degree to which one rank differs from the next), interval (numerical scale data with known and equal distances between values but with no true zero) or ratio data (numerical scale data with known and equal distances between values and a true zero) (Rugg and Petre, 2006; Fowler, 2009; Agresti, 2013).

3.2.5 Select question style

It needs to be determined whether each question should be closed (answers are restricted to a limited set) or open (answers are not restricted to a limited set). Closed questions, as used by e.g. Pusineri et al. (2013) and Leaper et al. (2022), may be preferred as they are less open to interpretation and make data recording and processing quick and easy. In contrast, open questions have been shown to aid recall of past events, reducing recall bias (Huntington, 2000). We recommend that closed questions are used where possible. However, open questions may be considered if recall bias is likely to be an issue.

In the medical field, Tourangeau et al. (1991) found that question style can affect reporting. Specifically, when participants were asked how many cigarettes they smoke per day, closed multiple-choice questions yielded higher responses than open questions. This may be because multiple-choice answer options at the higher end of the scale normalise smoking behaviour and reduce social desirability bias (bias that arises when a respondent gives an incorrect answer because the truthful answer may be socially undesirable). The same effect is possible for fishers answering questions on bycatch, as both smoking and bycatch may be seen as taboo topics. However, no studies have investigated the influence of closed and open questions on bycatch reporting.

3.2.6 Select question structure and wording

The use of filtering or screening questions should be considered, as they can aid the flow of the questionnaire. For example, Pusineri and Quillard (2008) asked the filtering question ‘Have you ever caught a dolphin?’ before asking more detailed questions on bycatch events. Screening questions could alternatively involve asking respondents to ‘describe a species’ before asking questions about it, as Zappes et al. (2016) and Wise et al. (2007) did. Revuelta et al. (2018) showed images of three different locally occurring species and asked fishers to identify them. Moore et al. (2010) suggested including a species that does not occur in the study area to test the validity of fishers’ responses. If fishers cannot adequately identify a species, species could be grouped at a higher taxonomic level (e.g. Bengil et al., 2020; Lopes et al., 2016). Similarly, participants could be screened for their map-use skills by asking them to draw their own map, as was done by Zappes et al. (2016). Alternatively, respondents could be screened based on an ‘honesty’, ‘sincerity’ or ‘interest’ rating given by the interviewer (e.g. Van Waerebeek et al., 1997; Shirakihara and Shirakihara, 2013; Li Veli et al., 2023).
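The filter and screening logic described above can be sketched as follows. The filter question is taken from Pusineri and Quillard (2008). The follow-up question wording, the function names and the non-occurring ‘decoy’ species (in the spirit of Moore et al., 2010) are illustrative assumptions. How flagged respondents are then treated is a study-specific decision.

```python
# Hypothetical follow-up questions, asked only after a positive filter answer.
DETAILED_BYCATCH_QUESTIONS = [
    "When did the most recent event occur?",
    "What gear were you using?",
    "How many animals were caught?",
]


def next_questions(filter_answer):
    """Filter question ('Have you ever caught a dolphin?'): skip the detailed
    bycatch module unless the respondent answers 'yes'."""
    if filter_answer.strip().lower() == "yes":
        return list(DETAILED_BYCATCH_QUESTIONS)
    return []


def passes_decoy_check(species_identified, decoy_species):
    """Screening question: a respondent who claims to have seen a species that
    does not occur in the study area is flagged (returns False)."""
    return decoy_species not in species_identified


print(next_questions("Yes"))
print(passes_decoy_check({"common dolphin", "harbour porpoise"}, "decoy_species"))
```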

When selecting question structure and wording, it is also possible to use two different questions to collect data for the same input, in order to validate the data collected for an indicator. For example, Mustika et al. (2021) used this technique by asking about fishers’ bycatch both over the past two years and over their lifetime.
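Paired questions of this kind allow a simple internal consistency check during data processing. In the hypothetical sketch below (field names assumed), a respondent who reports more bycatch in the past two years than over their lifetime is flagged for follow-up. ‘Do not know’ answers, stored as None, are skipped. The check can expose contradictory answers, but it cannot show that consistent answers are accurate.

```python
def inconsistent_respondents(responses):
    """Return IDs of respondents whose bycatch count for the past two years
    exceeds their lifetime count. 'Do not know' answers (None) are skipped."""
    flagged = []
    for r in responses:
        recent, lifetime = r["past_two_years"], r["lifetime"]
        if recent is None or lifetime is None:
            continue
        if recent > lifetime:
            flagged.append(r["id"])
    return flagged


responses = [
    {"id": "F01", "past_two_years": 1, "lifetime": 6},
    {"id": "F02", "past_two_years": 3, "lifetime": 2},     # internally inconsistent
    {"id": "F03", "past_two_years": None, "lifetime": 4},  # 'Do not know'
]
print(inconsistent_respondents(responses))
```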

We suggest drafting questions and then assessing each question using the eight criteria set out below.

(i) Ensure Questions are Specific

Questions must be specific to ensure that all respondents interpret each question as the question was intended to be interpreted. Using species- or gear- identification cards can increase question specificity and uniform understanding (e.g. Mohamed, 2017; Alexandre et al., 2022; Li Veli et al., 2023).

(ii) Ensure Questions are Simple

Within the review, 6.6% (n=6) of the studies reported that question wording was assessed to ensure it was simple (e.g. Alves et al., 2012; Seminara et al., 2019; Popov et al., 2023) (Table 1). Simple questions, i.e. those using simple terminology and requiring only one mental step or focus, reduce response bias, defined in Table 2 (Burton and Blair, 1991). Simple questions are therefore recommended. If technical terminology is unavoidable, then definitions should be provided. If a question is not understood by the respondent, single-question non-response or questionnaire drop-out may occur. If a question is not understood, interviewers may rephrase it to increase understanding, as in Leeney et al. (2015). However, rephrasing is not advised, as questions may not be rephrased consistently across participants, which reduces reliability.

(iii) Ensure Questions are Socially and Culturally Appropriate

Questions must be worded with the study context in mind. For example, if designing a question to ask in what month a bycatch event occurred, it is important to consider whether the study country identifies months with names or numbers. If asking questions regarding participant age, consider whether or not it is rude to directly ask for a participant’s age, or whether it would be more polite to ask for their date of birth (Choi and Pak, 2005).

(iv) Consider Methods to Reduce Recall Bias

Cerchio et al.’s (2015) interviewees approximated dates of bycatch events using landmark life events. Similarly, Leeney et al.’s (2015) interviewees reduced their own recall bias by using historical events to help them remember dates of bycatch. Bradburn et al. (1987) suggested that if questions cover a long time span, recall is aided by focusing on recent events first and then working backwards in time. Props, such as species identification cards, could also be used to aid recall. If any aids are used, methods should be standardised across participants.

(v) Reduce Knowledge Bias

Knowledge bias, defined in Table 2, can be reduced by providing a ‘Do not know’ answer option and by recording ‘No response’ or ‘No data’ if fishers are unsure or cannot provide an answer (e.g. Lunneryd and Westerberg, 1997; Whitty, 2016). Multiple studies used species identification cards to aid correct species identification (e.g. Van Waerebeek et al., 1997; Jaaman et al., 2005; Li Veli et al., 2023). However, Moore et al. (2010) and Lopes et al. (2016) found that, even with identification cards, fishers were still not confident in identifying species. Knowledge bias can be further reduced by using screening questions, as described above. Including a ‘Do not know’ option and using species identification cards are both recommended.

(vi) Reduce Social Desirability Bias

To reduce social desirability bias, questions can be asked in a passive style. For example, Whitty (2016) asked ‘Have you ever accidentally had a dolphin entangled in your fishing gear?’, Dewhurst-Richman et al. (2020) asked ‘Do you ever find dolphins caught in your net?’ and Basran and Rasmussen (2021) asked ‘Have you ever witnessed whales, dolphins, or porpoises entangled in the fishing gear deployed by your vessel?’. Choi and Pak (2005) suggested that, in an attempt to reduce social desirability bias, a preamble can be added to questions on taboo subjects to normalise answers that interviewees may be ashamed to give. Zollett (2008) used this technique by asking ‘Bycatch records show that dolphins are occasionally caught in fishing gear. Have you ever caught one?’. Asking bycatch questions in a passive style is recommended.

(vii) Consider Leading Question Bias

The wording of each question should be assessed for its potential to be suggestive or leading. Within the review, 3.3% (n=3) of studies ensured that their questions were not leading (Table 1). Leading question bias, as defined in Table 2, could be reduced by using non-suggestive language, by ensuring that all answer options are available for multiple choice questions and by using a clean map for each fisher for any map-based questions (e.g. Moore et al., 2010; Turvey et al., 2013; Goetz et al., 2014; Dewhurst-Richman et al., 2020).

Leading questions can, however, be used deliberately to reduce under-reporting arising from social desirability bias. For example, Dewhurst-Richman et al. (2020) asked fishers to describe ‘all’ bycatch events, as opposed to ‘any’ bycatch events. This wording assumes that there has been more than one bycatch event and may encourage truthful reporting. When using multiple choice questions, the answer options available may affect responses. No studies have investigated the effect of available multiple choice answer options on marine mammal bycatch reporting. However, Schwarz and Hippler (1995) and Holbrook et al. (2007) found that, when reporting on smoking, respondents reported a higher frequency of smoking when smaller option bins were used. This may be because respondents see that higher frequencies are possible, which reduces social desirability bias. Alternatively, it may be due to central tendency bias (as defined in Table 2), as the central bins would represent higher frequencies than if larger bins were used. Further, Krosnick and Presser (2010) found that adding bins at the high end of the spectrum can reduce under-reporting bias.

(viii) Ensure the Question is Written as it could be Read Aloud

In the review, of the 30 studies (33.0%) that included a full questionnaire, 23.3% (n=7) wrote questions as they would be read aloud (e.g. Whitty, 2016; Revuelta et al., 2018; Dewhurst-Richman et al., 2020) (Table 1). Questions should be written exactly as they would be read aloud, to eliminate the need for the interviewer to rephrase them. Such rephrasing could lead to unreliable data, as rephrased questions might not be worded consistently and may elicit biased responses.

3.2.7 Identify and remove excess questions

Every question should be assessed to ensure it is essential to meeting the objectives. Excess questions should be removed, as in Norman (2000). Removing non-essential questions helps to reduce participation time, response fatigue bias and drop-out rate. It may also be possible to remove some essential questions by obtaining the answers from other sources, such as port officials. Within the review, questionnaires lasted 30 minutes on average (min. 8 minutes; max. 120 minutes). Questionnaires shorter than 30 minutes have been recommended and used to reduce drop-out rate (White et al., 2005; Moore et al., 2010; Dewhurst-Richman et al., 2020), and we suggest following these recommendations. Five studies within the review had both long and short versions of their questionnaires, with the short form used if a fisher did not have sufficient time available (e.g. Poonian et al., 2008; Moore et al., 2010; Pilcher et al., 2017). This approach may increase response rates. However, when conducting bycatch questionnaires in Sierra Leone, Moore et al. (2010) found that the percentage of fishers reporting bycatch was higher among those completing the short form than among those completing the long form. This may be because the short form did not ask about fishing habits, so fishers may have been less concerned about potential repercussions for their fishing practices.

3.2.8 Decide question order

In the reviewed literature, question order was described for 51.6% (n=47) of studies (Table 1). Of these, the majority (57.4%; n=27) followed a logical flow, starting with easier and broader socio-demographic questions (e.g. ‘How old are you?’) and progressing to more difficult questions on sensitive or harder-to-recall topics (Table 1) (e.g. Wambiji, 2007). Questions on bycatch were always placed in the middle or towards the end of the questionnaire; no study started with questions on bycatch. Starting with easier questions and progressing to more difficult ones can reduce response fatigue and increase honesty in answers, and is therefore recommended for future studies.

When deciding question order, it should also be considered that the questionnaire may be cut short. It is therefore suggested that data for any primary objective are collected before data for any secondary objective or any validating questions. It is also important to consider whether question order may influence responses, as demonstrated by Weinstein and Roediger (2010). For example, Dmitrieva et al. (2013) asked questions on fishing gear damage from seals before asking bycatch questions, which could lead to more honest bycatch reporting. Similarly, Hale et al. (2011) asked about perceptions of seals before asking about seal bycatch; if perceptions were negative, this may also have influenced bycatch reporting. Pilcher et al. (2017) asked ‘Do fishers in other villages catch dugongs?’ before asking about fishers’ own bycatch. If respondents reported that fishers in other villages catch dugongs, acknowledging that bycatch occurs elsewhere may have made them more comfortable reporting their own bycatch.

3.2.9 Add interviewer instructions

Within the reviewed literature, where the full questionnaire was provided (33.0% of studies; n=30), interviewer instructions were included in 26.1% (n=6) of the questionnaires (Table 1). If a survey is conducted in person or by telephone, instructions for interviewers should be added to the survey form to aid the flow of the questionnaire. Instructions can also help ensure that every questionnaire is conducted in the same way, which can improve the reliability of the dataset (Fowler, 2009).

3.2.10 Write introductory statement

Within the literature, there was a lack of detail on the information presented in introductory statements, as defined in Table 2. While 35.1% of studies (n=32) reported including the study’s purpose, only 21% (n=19) reported stating that participation was not compulsory, 15.3% (n=14) that responses were confidential and 13.1% (n=12) the name of the research group responsible for the study. Whilst it is possible that introductory statements contained more than was reported, we assume that what was not reported was not included. If this assumption is correct, the majority of studies were not sufficiently rigorous in upholding ethical standards. While standards set by ethical boards may differ, in part due to differing legal contexts between countries, we suggest that a thorough introductory statement should include the name of the research group, the name of the interviewer (if applicable), the purpose of the study, that the questionnaire is anonymous or confidential, and that participation is voluntary. Note that for anonymity to be upheld, it should not be possible to link participants’ contact details back to their responses. Ensuring anonymity may also reduce social desirability bias and bias arising from fear of prosecution.

The introductory statement can also be used to reduce non-response and response bias. The statement can explain why the respondents are essential to the study, to motivate participation. For example, Zollett’s (2008) introductory statement included ‘I see you as the expert’. Jog et al. (2018) told fishers that they could decline to answer any question at any point. Stating that any question can be skipped may increase participation and decrease drop-out rate, whereas stressing how important it is to answer all questions may reduce single-question non-response. Asking respondents to say when they do not know the answer to a question can reduce knowledge bias. Stating that no legal action will be taken against participants as a result of their responses, or that the project is not affiliated with law enforcement, as in Van Waerebeek et al. (1997) and Ermolin and Svolkinas (2018), could reduce fear of prosecution and increase honest reporting. Adding a statement that bycatch is common may also reduce under-reporting. Baird et al. (2002) stated that the marine mammal population in question was healthy and increasing in size, which may limit fear of the introduction of fishing restrictions.

3.2.11 Translate (if applicable)

Any translation must be done carefully, taking into account all considerations made when designing the questionnaire instrument. We suggest that a detailed brief is given to the translator, and that a second translator, given the same brief, translates the questionnaire back into its original language to check that the translation is accurate. Lunn and Dearden (2006) recommended that translators have a background in fisheries, as many words may be topic-specific.

3.2.12 Format questionnaire instrument

Time and care should be taken to ensure that font style and size, and spacing on the questionnaire, are appropriate and aid flow. Suitable formatting will reduce non-response for online/mail questionnaires and aid correct data recording for all questionnaire types.

3.2.13 Pre-test

Within the review, pre-testing was reported in 18.7% of studies (n=17). Laboratory pre-testing (testing the questionnaire in a laboratory setting, such as with the interviewer team) and field pre-testing (testing the questionnaire with a sample of the sampling frame) are important steps to test timings, ensure questionnaire flow and ensure questions are understood as intended (Oppenheim, 1992; Fowler, 2009; Moore et al., 2010; Kallio et al., 2016). If time and budget allow, both types of pre-testing are recommended. If the questionnaire is to be conducted in a different language, the final pre-testing should occur in the language in which the questionnaire will be conducted. If pre-testing identifies that amendments are required, the step relevant to the type of amendment should be revisited and the amendments made, as set out in Figure 2. All subsequent steps should then be repeated to ensure their criteria are still met (Figure 2). Pre-testing should be repeated until no further amendments are required before the questionnaire is implemented.

3.2.14 Implement

Once the questionnaire has passed pre-testing with no amendments required, the questionnaire can be implemented.

3.3 Questionnaire implementation procedure

3.3.1 Survey type

Of the reviewed literature, 99.0% (n=90) of the studies identified the survey type (in-person, telephone, mail, online). However, only 6.6% (n=6) of studies detailed the rationale for their selection. In-person surveys were the most common (89.0%; n=81), followed by mail surveys (8.9%; n=8), telephone surveys (5.5%, n=5) and online surveys (2.2%; n=2) (Table 1). Multiple survey types were used in 4.4% (n=4) of studies.

3.3.1.1 In-person questionnaires

In-person questionnaires have been shown to have higher response rates than other survey types (Dillman et al., 2014). The approach also helps interviewers to build relationships with interviewees, which can increase trust in the interviewer and encourage honest responses. For these reasons, in-person questionnaires are recommended. To reduce non-response during in-person surveys, interviewers can return to the port multiple times if pre-identified fishers cannot be located there. Similarly, if an identified fisher does not wish to take part on a given day or at a given time, alternative times or locations can be suggested. The identified fisher can also be asked to nominate another fisher from their vessel to be interviewed instead. Working with a local person known to the fishers, who can introduce the interviewer to them, may also reduce non-response (e.g. Dmitrieva et al., 2013; Briceño et al., 2021).

Within the reviewed literature, in-person questionnaires took place in ports, harbours, fishing camps, fisher rendezvous points, fishers’ houses, fishing vessels, beaches and coffee shops. Some studies conducted questionnaires at a single site (e.g. Cheng et al., 2021), whereas others conducted them at multiple sites (e.g. Dmitrieva et al., 2013), which can lead to lower precision in estimates and to different biases between locations. Instead of traditional pen and paper, electronic tablets can be used to collect data, as in Li Veli et al. (2023). Tablets can make data entry and processing more efficient. However, considerable time may be required to set up the devices, train interviewers and troubleshoot errors. Additionally, devices may make some fishers feel uncomfortable if they are unfamiliar with them. Similarly, recording interviewees may make them feel uncomfortable and decrease honesty (Fisher and McGown, 1991). If participants are to be recorded, their consent must be obtained to meet ethical standards.

3.3.1.2 Telephone questionnaires

Telephone questionnaires are only appropriate when a list of the sample with phone numbers is available. Perhaps the biggest strength of telephone questionnaires is their low-cost nature. Further, call-backs to decrease non-response can be carried out with little additional cost. During telephone interviews, the interviewer still has the opportunity to build a degree of trust and rapport with the interviewee, similar to in-person questionnaires.

3.3.1.3 Mail and online questionnaires

Mail and online questionnaires are low-cost methods that may be particularly appropriate when a large sample is distributed across a wide geographical area or if a census is being conducted. As with telephone questionnaires, this survey type requires a list of the people to be sampled, with corresponding postal or email addresses. In other disciplines, self-administered questionnaires have been shown to reduce bias arising from taboo subjects, for example in reporting drug use (Tourangeau and Smith, 1996; Fowler, 2009). Similar patterns may be found in bycatch reporting. However, none of the reviewed studies investigated the influence of self-administration on bycatch reporting.

Within the review, mail survey non-response was reduced by using pre-paid and pre-addressed return envelopes (e.g. Norman, 2000; Baird et al., 2002; Basran and Rasmussen, 2021). Basran and Rasmussen (2021) employed a multi-step method for reducing non-response, where first, all fishing companies in the target sample were emailed, then non-responding companies were re-emailed, then finally non-responders were telephoned and re-emailed again.

3.3.2 One-off or returning

In the review, 83.5% (n=76) of studies conducted one-off questionnaires. In other instances, researchers conducted recurring interviews (12.1%, n=11). For example, Díaz López (2006) returned weekly for five years, Abdulqader et al. (2017) returned every two months for 18 months and Fontaine et al. (1994) conducted mail surveys twice, one year apart. Time-series questionnaires may reduce recall issues, as there is a shorter reporting period for participants to recall events. However, if questionnaires are being conducted in person, multiple site visits increase the costs of the study.

3.3.3 Compensation

Some form of compensation can be offered to participants to increase response rates. Outside the bycatch questionnaire literature, compensation has been shown to be effective at reducing non-response (Singer and Ye, 2013). Ambie et al. (2023) was the only study within the review that reported compensating fishers, giving gifts for participation. Ethical considerations should be made when deciding whether to offer compensation, as it may encourage a fisher to take part in a study they are not comfortable with. To avoid unethical practices, compensation should not be so large that it is difficult to turn down. Further, if compensation is too generous, it may attract a biased subset of the sampling frame. A small financial reward, a free drink or snack, or entry into a prize draw may be an appropriate level of compensation.

3.3.4 Reducing under-reporting bias

Within the review, only 34.1% (n=31) of in-person studies reported whether interviews were conducted in groups or one-to-one (Table 1). Reporting such information is important, as different interview dynamics may affect responses. Questionnaire respondents have been shown to provide more honest answers when interviews are conducted one-to-one (Tourangeau et al., 2000; Martins et al., 2022). We therefore recommend that, where possible, interviews are conducted one-to-one.

Authority figures can be present during interviews. For example, Jaaman et al. (2005) conducted questionnaires with a fisheries officer present, and Leeney et al. (2015) conducted questionnaires with a government official present. Other studies were conducted after the interviewer was introduced to the interviewee by someone trusted by the interviewee (e.g. Dmitrieva et al., 2013; Briceño et al., 2021). The presence of authority figures or introduction by a trusted person may increase or decrease under-reporting bias. However, without dedicated research effort on this topic, the effect of their presence is unknown and warrants investigation.

3.3.5 Pseudo-replication

Pseudo-replication can be reduced by interviewing fishers one-to-one and ensuring only one fisher per vessel is interviewed (e.g. Revuelta et al., 2018; Glain et al., 2001; Trukhanova et al., 2021). To ensure multiple fishers from the same vessel are not interviewed, data can be recorded on the vessel number or name, as in Majluf et al. (2002). However, asking fishers to provide such data may increase under-reporting bias, so these questions should be asked at the end of the survey. Any identifying information about vessels should be stored separately from questionnaire responses to uphold interviewee anonymity. Another approach to reduce pseudo-replication is to interview only captains or boat owners (e.g. Fontaine et al., 1994; Negri et al., 2012; Snape et al., 2018). Similarly, Turvey et al. (2013) and Liu et al. (2017) interviewed only one person per fishing family. Basran and Rasmussen (2021) accounted for pseudo-replication during data processing by removing sets of responses that were identical to other sets of responses.
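The two data-processing rules described above (retaining one interview per vessel, using recorded vessel names or numbers as in Majluf et al. (2002), and removing identical response sets, as in Basran and Rasmussen (2021)) can be sketched in Python as follows. This is an illustrative sketch, not the procedure used in either study: the data layout, the function name `remove_pseudo_replicates` and the example records are hypothetical. Vessel identifiers are held in a separate mapping, reflecting the recommendation to store identifying information apart from responses.

```python
from typing import Dict, Hashable, List


def remove_pseudo_replicates(
    responses: Dict[str, Dict[str, Hashable]],
    vessel_of: Dict[str, str],
) -> List[str]:
    """Return the IDs of interviews to retain for analysis (hypothetical helper).

    responses: interview ID -> answers (no identifying information).
    vessel_of: interview ID -> vessel name/number, kept in a separate table.
    """
    seen_vessels = set()
    seen_answer_sets = set()
    kept = []
    for interview_id, answers in responses.items():  # dicts keep insertion order
        vessel = vessel_of.get(interview_id)
        # Rule 1: keep only the first interview per vessel (interviews with no vessel ID are kept).
        if vessel is not None and vessel in seen_vessels:
            continue
        # Rule 2: drop an interview whose full answer set matches one already retained.
        signature = tuple(sorted(answers.items()))
        if signature in seen_answer_sets:
            continue
        if vessel is not None:
            seen_vessels.add(vessel)
        seen_answer_sets.add(signature)
        kept.append(interview_id)
    return kept


# Hypothetical example records.
responses = {
    "int01": {"gear": "gillnet", "porpoise_bycatch_last_year": 2},
    "int02": {"gear": "gillnet", "porpoise_bycatch_last_year": 0},  # same vessel as int01
    "int03": {"gear": "gillnet", "porpoise_bycatch_last_year": 2},  # same answers as int01
    "int04": {"gear": "longline", "porpoise_bycatch_last_year": 1},
}
vessel_of = {"int01": "V-17", "int02": "V-17", "int03": "V-22", "int04": "V-40"}
print(remove_pseudo_replicates(responses, vessel_of))  # ['int01', 'int04']
```

The second rule will also discard genuinely independent fishers who happen to give identical answers, which becomes more likely with short questionnaires and few response options, so the number of records removed by each rule is worth reporting.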

3.3.6 The interviewer

3.3.6.1 Interviewer selection

Within in-person or telephone studies in the review, 19.7% (n=16) of studies used local interviewers (Table 1). Zimmerhackel et al. (2015) and Leeney et al. (2015) both used interviewers known to the participants. Both of these approaches have been shown to lower non-response rates and increase trust in the interviewer (Groves and Couper, 1998; Majluf et al., 2002; Moore et al., 2010). We therefore recommend considering these approaches. Where interviewers were used in the reviewed literature, only 2.5% (n=2) of studies provided the age ranges of the interviewers and only 6.2% (n=5) provided their genders (Table 1). The demographics of the interviewers can influence responses, causing interviewer bias, as defined in Table 2 (Couper and Groves, 1999). It is therefore important to report interviewer demographics so that insight into potential bias can be gained.

When considering interviewers, selecting individuals who are interested in the topic, have prior knowledge of it and have previous questionnaire experience will help ensure that questionnaires are conducted as set out in the interviewer instructions and training (e.g. López et al., 2003; Maynou et al., 2011; Ermolin and Svolkinas, 2018) (see section 3.3.6.2). Multiple studies used a small team of interviewers (e.g. Alves et al., 2012; Zappes et al., 2016; Ayala et al., 2019) or selected interviewers with similar characteristics (e.g. Majluf et al., 2002; Pusineri and Quillard, 2008). Whilst either of these approaches may increase the reliability of the dataset, neither will eliminate interviewer bias. We therefore suggest that, if multiple interviewers are used, potential interviewer effects should be investigated (e.g. Goetz et al., 2014).
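One simple way to check for interviewer effects, sketched below in Python, is a permutation test of whether the proportion of respondents reporting bycatch differs between interviewers. This is an assumed approach for illustration and not necessarily the method of Goetz et al. (2014); the function names and example data are hypothetical. The test assumes respondents were allocated to interviewers independently of their bycatch experience. Where interviewers covered different ports or gears, interviewer and location are confounded, and a model that includes both (e.g. with interviewer as a random effect) would be more appropriate.

```python
import random
from collections import Counter
from typing import Sequence, Tuple


def chi_square_stat(interviewers: Sequence[str], reported: Sequence[bool]) -> float:
    """Pearson chi-squared statistic for an interviewer x (bycatch reported: yes/no) table."""
    n = len(reported)
    row_totals = Counter(interviewers)
    col_totals = Counter(reported)
    observed = Counter(zip(interviewers, reported))
    stat = 0.0
    for who, row_n in row_totals.items():
        for outcome, col_n in col_totals.items():
            expected = row_n * col_n / n
            stat += (observed[(who, outcome)] - expected) ** 2 / expected
    return stat


def interviewer_permutation_test(
    interviewers: Sequence[str],
    reported: Sequence[bool],
    n_perm: int = 5000,
    seed: int = 1,
) -> Tuple[float, float]:
    """Return (statistic, permutation p-value) for an interviewer-answer association.

    Answers are shuffled across interviewers to build the null distribution of
    "no interviewer effect".
    """
    rng = random.Random(seed)
    observed = chi_square_stat(interviewers, reported)
    shuffled = list(reported)
    as_extreme = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if chi_square_stat(interviewers, shuffled) >= observed - 1e-12:
            as_extreme += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return observed, (as_extreme + 1) / (n_perm + 1)


# Hypothetical data: did each respondent report any marine mammal bycatch?
interviewers = ["A"] * 10 + ["B"] * 10
reported = [True] * 8 + [False] * 2 + [True] * 3 + [False] * 7
stat, p_value = interviewer_permutation_test(interviewers, reported)
print(f"chi-squared = {stat:.2f}, permutation p = {p_value:.3f}")
```

A permutation p-value is used here because it does not rely on the large-sample chi-squared approximation, which can be poor when each interviewer conducts only a few interviews.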

3.3.6.2 Interviewer training

Within the review, where interviewers were used, only 23.8% (n=20) of studies reported conducting interviewer training (Table 1). Of these, two studies gave details on training length: Pusineri and Quillard (2008) conducted a 1-day training and a debrief after the first week of interviewing, and Moore et al. (2010) conducted 1-9 days of training across different countries. Fowler (2009) recommended that interviewer training should last 2-5 days. We support Fowler’s (2009) recommendation, with training length dependent on the prior knowledge and experience of the interviewer and the scope and length of the questionnaire. Adequate training is required to ensure that interviewers follow the questionnaire methodology whilst minimising sampling, interviewer and response bias and maximising response reliability (Moore et al., 2010). How an interviewer dresses and behaves can influence how a study is perceived and may also affect responses, so it is important that training also covers these topics. A full list of recommendations for what should be included in interviewer training is presented in Table 3.

Table 3

| No. | Topics to cover during interviewer training |
|---|---|
| 1 | Subject background (e.g. focal species ecosystem importance, fishery descriptions). |
| 2 | Study objectives. |
| 3 | How the collected data will be used. |
| 4 | Study funder. |
| 5 | Who is conducting the research. |
| 6 | Sampling strategy. |
| 7 | Sampling bias and how to reduce it. |
| 8 | Pseudo-replication and how to avoid it. |
| 9 | Questionnaire ethics (e.g. importance of anonymity and informed consent). |
| 10 | Bias and reliability issues and how to reduce them: |
| (i) | Be polite |
| (ii) | Be neutral |
| (iii) | Be friendly |
| (iv) | Treat the respondent with respect |
| (v) | How to dress (formal/informal, with respect to cultural norms) |
| (vi) | Listen carefully |
| (vii) | Do not rush |
| (viii) | Leave enough time between questions |
| (ix) | Do not interrupt |
| (x) | Follow the interviewer instructions |
| (xi) | Ask the questions as they are written |
| 11 | How to record data during the survey (e.g. do not leave blanks). |
| 12 | The importance of labelling data sheets. |
| 13 | How to enter data into a computer. |
| 14 | How to take field notes (e.g. on reasons for non-response). |
| 15 | How to use maps (if applicable). |
| 16 | A minimum of 10 supervised practice interviews. |

Interviewer training topics recommended for questionnaire studies investigating marine mammal bycatch, adapted from guidance in Fowler (2009); Moore et al. (2010); Pilcher and Kwan (2012); Leeney et al. (2015) and Whitty (2016).

3.4 Data analysis

3.4.1 Ratio- and model-based bycatch estimation methods

Within the review, 34 studies (37.4%) produced bycatch rate estimates (Table 1). All estimates were produced using ratio-based calculations (e.g. Vanhatalo et al., 2014; Fomin et al., 2014; Abdulqader et al., 2017). Bootstrap methods were the most common approach to calculating the associated uncertainty (17.6% of estimates; n=6) (e.g. Lesage et al., 2006; Turvey et al., 2013; Ayala et al., 2019). Chi-squared tests were used in seven studies to investigate the distribution of bycatch across different variables (e.g. islands, gears) (e.g. Zimmerhackel et al., 2015; Lopes et al., 2016; MacLennan et al., 2021). Model-based methods were used in nine studies to investigate the importance of explanatory variables for bycatch (e.g. Majluf et al., 2002; Goetz et al., 2014; Ambie et al., 2023).
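The ratio-based approach used in the reviewed studies, combined with a bootstrap interval, can be sketched as follows. The sketch assumes each respondent reports a bycatch count and a matching effort value (e.g. vessel-years or fishing days) over the same recall period; the function names and example values are hypothetical. Respondents are resampled as units, so each fisher's bycatch and effort stay paired, and a simple percentile interval is used; other bootstrap interval types exist.

```python
import random
from typing import List, Sequence, Tuple


def ratio_estimate(bycatch: Sequence[float], effort: Sequence[float]) -> float:
    """Ratio estimator: total reported bycatch divided by total reported effort."""
    return sum(bycatch) / sum(effort)


def _percentile(sorted_values: List[float], q: float) -> float:
    """Nearest-rank percentile of an already sorted list (q in [0, 1])."""
    idx = min(len(sorted_values) - 1, max(0, round(q * (len(sorted_values) - 1))))
    return sorted_values[idx]


def bootstrap_ratio_ci(
    bycatch: Sequence[float],
    effort: Sequence[float],
    n_boot: int = 2000,
    alpha: float = 0.05,
    seed: int = 42,
) -> Tuple[float, float, float]:
    """Ratio estimate with a percentile bootstrap interval (estimate, lower, upper).

    Respondents are resampled with replacement, keeping each respondent's
    bycatch and effort together so the pairing is preserved.
    """
    if len(bycatch) != len(effort) or not bycatch:
        raise ValueError("bycatch and effort must be non-empty and of equal length")
    if any(e <= 0 for e in effort):
        raise ValueError("effort must be positive for every respondent")
    rng = random.Random(seed)
    n = len(bycatch)
    replicates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        replicates.append(sum(bycatch[i] for i in idx) / sum(effort[i] for i in idx))
    replicates.sort()
    return (
        ratio_estimate(bycatch, effort),
        _percentile(replicates, alpha / 2),
        _percentile(replicates, 1 - alpha / 2),
    )


# Hypothetical data: animals bycaught per vessel in the last 12 months, 1 vessel-year each.
bycatch = [0, 1, 0, 2, 0, 0, 3, 1, 0, 0]
effort = [1] * 10
est, lo, hi = bootstrap_ratio_ci(bycatch, effort)
print(f"{est:.2f} animals per vessel-year (95% CI {lo:.2f}-{hi:.2f})")
```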

When using ratio-based methods to produce bycatch rates, sample representativeness can be investigated by comparing proportions of sample characteristics (e.g. genders, age groups, target catch) with sampling frame characteristics (e.g. Jog et al., 2018; Terribile et al., 2020). Goetz et al. (2014) was the only study in the review that used weighting to correct for non-representative sampling. This suggests that all other studies using non-probability sampling and ratio-based analysis methods are likely to contain some degree of bias arising from uncorrected non-representative sampling.
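Post-stratification is one common form of weighting; it is shown here as an assumed illustration, not as the specific weighting scheme used by Goetz et al. (2014). The sketch first compares sample and sampling frame proportions by stratum (e.g. gear type), then gives each respondent a weight equal to their stratum's share of the frame divided by its share of the sample, so under-sampled strata count for more. It assumes stratum sizes are known from the sampling frame (e.g. a vessel register) and that every stratum is represented in the sample; function names and data are hypothetical.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def compare_proportions(
    sample_strata: Sequence[str], frame_counts: Dict[str, int]
) -> Dict[str, Tuple[float, float]]:
    """Stratum -> (share of the sample, share of the sampling frame)."""
    n = len(sample_strata)
    N = sum(frame_counts.values())
    sample_counts = Counter(sample_strata)
    return {s: (sample_counts[s] / n, frame_counts[s] / N) for s in frame_counts}


def poststratification_weights(
    sample_strata: Sequence[str], frame_counts: Dict[str, int]
) -> Dict[str, float]:
    """Weight for each stratum = frame share / sample share."""
    sample_counts = Counter(sample_strata)
    if set(sample_counts) != set(frame_counts):
        raise ValueError("every stratum must appear in both the sample and the frame")
    n = len(sample_strata)
    N = sum(frame_counts.values())
    return {s: (frame_counts[s] / N) / (sample_counts[s] / n) for s in frame_counts}


def weighted_mean_bycatch(
    bycatch: Sequence[float], sample_strata: Sequence[str], frame_counts: Dict[str, int]
) -> float:
    """Post-stratified mean bycatch per respondent (e.g. per vessel)."""
    w = poststratification_weights(sample_strata, frame_counts)
    total_weight = sum(w[s] for s in sample_strata)
    return sum(w[s] * b for s, b in zip(sample_strata, bycatch)) / total_weight


# Hypothetical sample: gillnetters are over-represented relative to the frame.
strata = ["gillnet", "gillnet", "gillnet", "longline"]
bycatch = [2, 2, 2, 0]
frame = {"gillnet": 50, "longline": 50}
print(compare_proportions(strata, frame))
print(sum(bycatch) / len(bycatch), round(weighted_mean_bycatch(bycatch, strata, frame), 6))
```

In this hypothetical example, gillnet vessels make up three-quarters of the sample but half of the frame, so the unweighted mean (1.5 animals per vessel) overstates the post-stratified mean (1.0).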

Non-response bias can similarly be investigated by comparing the characteristics of non-responders (e.g. age, fishing gear) with those of the sampling frame. If non-responders are a biased subset of the sampling frame, and data are available on their characteristics, it is possible to impute responses for the non-responders. This could be done by fitting a regression model to the responders' answers as a function of their characteristics, and using the fitted model to predict responses for the non-responders (e.g. Lew et al., 2015). Other forms of imputation are discussed by Rubin et al. (1987).
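The regression-based imputation described above can be sketched with ordinary least squares in NumPy. The covariates, function name and example values are hypothetical, and this is not the model used by Lew et al. (2015). A linear model is used only for simplicity; for count data such as bycatch, a Poisson or negative binomial model may be more suitable. A single imputed value per non-responder also understates uncertainty, which multiple imputation (Rubin et al., 1987) is designed to address.

```python
import numpy as np


def impute_by_regression(X_resp, y_resp, X_nonresp) -> np.ndarray:
    """Predict a response for each non-responder from a least-squares fit to responders.

    X_resp, X_nonresp: characteristics known for everyone (rows = fishers, columns = covariates).
    y_resp: the responders' answers (e.g. reported bycatch in the last 12 months).
    """
    X_r = np.asarray(X_resp, dtype=float)
    X_n = np.asarray(X_nonresp, dtype=float)
    # Add an intercept column to both design matrices.
    A_r = np.column_stack([np.ones(len(X_r)), X_r])
    A_n = np.column_stack([np.ones(len(X_n)), X_n])
    coef, *_ = np.linalg.lstsq(A_r, np.asarray(y_resp, dtype=float), rcond=None)
    # Bycatch counts cannot be negative, so truncate predictions at zero.
    return np.clip(A_n @ coef, 0.0, None)


# Hypothetical covariates: [uses gillnets (1/0), years fishing].
X_resp = [[1, 10], [1, 25], [0, 5], [0, 30], [1, 15]]
y_resp = [3, 5, 0, 1, 4]
X_nonresp = [[1, 20], [0, 12]]
print(impute_by_regression(X_resp, y_resp, X_nonresp))
```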

Within the current review, no studies used model-based approaches to estimate bycatch rates or total bycatch. Model-based approaches allow the influence of a range of explanatory covariates on bycatch to be investigated, and only the covariates found to influence bycatch are then used to predict bycatch rates or total bycatch across the sampling frame. Model-based approaches have been used to estimate bycatch rates from fishery observer data. For example, Kindt-Larsen et al. (2023) fitted generalised linear mixed models to count data on the number of harbour porpoise gillnet bycatch events per vessel/area/day in the Baltic region, using explanatory variables including depth, mesh size and year; Cruz et al. (2018) fitted generalised additive models to data on the number of common dolphins (Delphinus delphis) bycaught in a single tuna capture event by Azores pole-and-line fishers, modelling this as a function of sea surface temperature and year; and Martin et al. (2015) fitted both standard and zero-inflated Poisson models to count data on humpback whale (Megaptera novaeangliae) bycatch events in a one-year period in the California drift gillnet fishery, as a function of covariates including location and month. Orphanides (2009) and Cruz et al. (2018) calculated both ratio- and model-based estimates. Orphanides (2009) found that the two techniques produced similar results. However, Cruz et al. (2018) found that model-based methods produced higher estimates (262 dolphins/fishing fleet/12-year reporting period, 95% CI: 249–274) than ratio-based methods (196 dolphins/fishing fleet/12-year reporting period, 95% CI: 186–205). Authier et al. (2021) used a simulation approach to demonstrate that model-based methods can produce less biased and more reliable estimates than ratio-based methods.
When comparing ratio- and model-based approaches, model-based methods can be advantageous, as they do not require sampling to have been probability-based or representative (Authier et al., 2021). Whilst the use of model-based methods to estimate bycatch is in its infancy, the ability of models to produce less biased estimates leads us to recommend adopting this approach in future studies, or using both approaches to produce comparative estimates. However, as models can only make valid predictions within the range of the explanatory variables, it is still important to employ sampling techniques that yield a representative sample.
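To illustrate the model-based approach, the sketch below fits a Poisson generalised linear model with a log link and a log(effort) offset by iteratively reweighted least squares, then sums the predicted bycatch over every unit in the sampling frame. It is a simplified, assumed example: it omits the random effects, smoothers and zero-inflation used in the studies cited above, and in practice an established implementation (e.g. R's `glm` or the Python `statsmodels` package) with checks for overdispersion would be preferable. Function names, covariates and data are hypothetical. The `within_sample_range` check reflects the point that predictions are only valid within the range of the explanatory variables in the sample.

```python
import numpy as np


def fit_poisson_glm(X, y, effort, n_iter: int = 100, tol: float = 1e-10) -> np.ndarray:
    """Poisson regression, log link, log(effort) offset, fitted by IRLS.

    X must already contain an intercept column. Returns the coefficient vector.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    offset = np.log(np.asarray(effort, dtype=float))
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        eta = X @ beta + offset
        mu = np.exp(eta)
        # Working response (offset removed); for the Poisson/log-link case the IRLS weights are mu.
        z = eta - offset + (y - mu) / mu
        XtW = X.T * mu
        beta_new = np.linalg.solve(XtW @ X, XtW @ z)
        converged = np.max(np.abs(beta_new - beta)) < tol
        beta = beta_new
        if converged:
            break
    return beta


def within_sample_range(X_sample, X_frame) -> bool:
    """True if every frame covariate value lies inside the range seen in the sample."""
    X_sample = np.asarray(X_sample, dtype=float)
    X_frame = np.asarray(X_frame, dtype=float)
    return bool(np.all(X_frame >= X_sample.min(axis=0)) and np.all(X_frame <= X_sample.max(axis=0)))


def predict_total_bycatch(beta, X_frame, effort_frame) -> float:
    """Sum predicted bycatch over every unit (e.g. vessel) in the sampling frame."""
    X_frame = np.asarray(X_frame, dtype=float)
    mu = np.exp(X_frame @ beta) * np.asarray(effort_frame, dtype=float)
    return float(mu.sum())


# Hypothetical sample: intercept + gillnet indicator; effort in vessel-years.
X_sample = [[1, 0], [1, 0], [1, 1], [1, 1]]
y_sample = [2, 2, 6, 6]
effort_sample = [1, 1, 2, 2]
beta = fit_poisson_glm(X_sample, y_sample, effort_sample)

# Hypothetical sampling frame: 10 non-gillnet vessels and 5 gillnet vessels.
X_frame = [[1, 0]] * 10 + [[1, 1]] * 5
effort_frame = [1] * 10 + [2] * 5
if within_sample_range(X_sample, X_frame):
    print(round(predict_total_bycatch(beta, X_frame, effort_frame), 3))  # 50.0
```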

3.4.2 Fisheries effort data

Within the review, 28.0% (n=26) of studies used fishery effort data to produce fishery-level estimates. The most commonly used fisheries effort metric was the total number of vessels in the sampling frame (46.1%, n=12), followed by the number of fishers in the sampling frame (23.1%, n=6) and a measure of time spent fishing (23.1%, n=6) (Table 1). Fishery effort data are often measured differently within and between countries (McCluskey and Lewison, 2008). For this reason, ‘per vessel’ is often used as a universal fishing effort metric.

Fisheries effort data were most commonly sourced from government departments or reports (50.0%, n=13) (e.g. Saudi Directorate of Fisheries, Swedish Agency for Marine and Water Management) or collected within the studies themselves (30.8%, n=8) (Table 1). Even where fishery effort data are available, they may be incorrect for numerous reasons. For example, the data may only contain estimates, figures may be out of date because people have left or joined the fishery, or the data may not account for illegal, unreported and unregulated (IUU) fishing effort. To ascertain the validity of fisheries effort data, the data should be ground-truthed. For example, Cappozzo et al. (2007) ground-truthed data against available coastguard data, and Negri et al. (2012) used coastguard and protected area personnel data. Van Waerebeek et al. (1997) found that ground-truthed figures were lower than the available fisheries effort figures. When fishery effort data are unavailable or incorrect, accurate data must be obtained. Moore et al. (2021) provide an overview of how to collect such data through fishery effort questionnaires, dockside monitoring, logbooks and catch data.
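Scaling a per-vessel bycatch rate to fleet level is a multiplication by the number of vessels, so any error in the vessel count propagates directly into the fleet total. The hypothetical sketch below reports the fleet-level estimate under alternative vessel counts (e.g. an official figure and a ground-truthed one) to show this sensitivity. All inputs and the function name are invented for illustration, and the interval assumes each vessel count is known without error, so it reflects only the sampling uncertainty in the rate.

```python
from typing import Dict, Tuple


def fleet_bycatch(
    rate_per_vessel: float,
    rate_interval: Tuple[float, float],
    vessel_counts: Dict[str, int],
) -> Dict[str, Tuple[float, float, float]]:
    """Scale a per-vessel bycatch rate to the whole fleet for each candidate vessel count.

    Returns source -> (estimate, lower, upper). The interval carries only the sampling
    uncertainty of the rate; the spread between sources shows sensitivity to effort data.
    """
    lower_rate, upper_rate = rate_interval
    if not 0 <= lower_rate <= rate_per_vessel <= upper_rate:
        raise ValueError("rate must lie inside its interval and be non-negative")
    return {
        source: (rate_per_vessel * n, lower_rate * n, upper_rate * n)
        for source, n in vessel_counts.items()
    }


# Hypothetical inputs: 0.7 animals per vessel per year (95% CI 0.3-1.2) and two
# competing vessel counts for the same fleet.
results = fleet_bycatch(0.7, (0.3, 1.2), {"official registry": 400, "ground-truthed count": 310})
for source, (est, lo, hi) in results.items():
    print(f"{source}: {est:.0f} (95% CI {lo:.0f}-{hi:.0f})")
```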

3.5 External validation of study results

Popov et al. (2023) and Pusineri and Quillard (2008) compared study results with official fisheries records, and Zollett (2008) compared questionnaire data with fishery observer data. Questionnaire data may also be validated using strandings data (e.g. Karamanlidis et al., 2008; Izquierdo-Serrano et al., 2022). Alternatively, Shirakihara and Shirakihara (2012) compared bycatch seasonality with the known seasonal occurrence of the species. Whilst external validation can provide insight into the accuracy of questionnaire data, the respondents or datasets used for validation may themselves be subject to bias.

4 Best practice recommendations

Through the completion of a systematic review of the methodologies of 91 marine mammal bycatch questionnaire studies from 1990-2023, a best practice guide for designing and conducting questionnaire studies was produced and is presented in Supplementary Material 3. We recommend using this guide when designing future questionnaire studies to investigate marine mammal bycatch, alongside the questionnaire design flow chart (Figure 2), the table of bycatch questionnaire definitions (Table 2) and the list of recommended interviewer training topics (Table 3). Recommendations can be used by scientists and decision-makers across the globe. Whilst the recommendations are intended for those using questionnaires to investigate marine mammal bycatch, the information will also be useful for those investigating bycatch of any other non-target species.

5 Conclusion