- 1 Physical Therapy Department, Prince Sultan Military College of Health Sciences, Dhahran, Saudi Arabia

- 2 Clinical Laboratory Sciences Department, Prince Sultan Military College of Health Sciences, Dhahran, Saudi Arabia

- 3 Basic Medical Sciences Unit, Prince Sultan Military College of Health Sciences, Dhahran, Saudi Arabia

- 4 Computer Skills Unit, Prince Sultan Military College of Health Sciences, Dhahran, Saudi Arabia

- 5 Department of Clinical Laboratory Sciences, Imam Abdulrahman Bin Faisal University, Dammam, Saudi Arabia

Introduction: The integration of artificial intelligence technology into healthcare education has been hampered by a number of difficulties. This study investigated faculty familiarity with, and perception of, AI applications in medical education in relation to various demographic and professional characteristics.

Methods: This observational study used a validated questionnaire distributed to health sciences colleges’ faculty in Saudi Arabia from January 1 to April 30, 2025.

Results: Knowledge rates among the 293 participating faculty were moderate across all groups, with an overall average of 58.9%. Knowledge varied significantly with years of experience (p < 0.001). Positive perception rates were high across all groups, with an overall average of 74.8%. Only 33.7% agreed that AI-generated outputs are reliable and accurate. A large proportion of the participants (72.3%) disagreed that AI use poses ethical concerns in medical education. Faculty opinions on the impact of AI on academic integrity varied.

Discussion: This study shows that there are still significant gaps in general knowledge, formal training, and ethical understanding, despite the supportive perception score of the health science faculty towards AI integration in medical education. Despite a general lack of knowledge and a lack of curricular content on AI applications, the results show considerable support for incorporating AI into medical education. Institutional readiness for the integration of AI in medical education is strongly influenced by obstacles, including a lack of knowledge, a shortage of skilled faculty, and fear of career threat.

Introduction

The general term artificial intelligence (AI) describes the technology that makes it possible for computers and robots to simulate human intelligence (1). The integration of AI technology into health professions education and teaching is anticipated to be a crucial component of any modern curriculum (2). AI has been applied to medical teaching and learning, assessment, medical research, and administration (3). The integration of AI into the teaching of the health sciences is a vital field of research and development. Because AI may enhance learning in both theoretical and practical domains, its role in health professions education is increasingly being recognized. AI techniques are used in simulation-based learning, diagnostic tools, evaluation processes, and healthcare decision-making. AI tools, including data analytics, machine learning, and natural language processing, have the potential to improve student performance and teaching strategies (4, 5). For example, ChatGPT has been reported to enhance higher-order thinking skills, affective-motivational states, and academic achievement, without discernible impact on self-efficacy and mental effort (6).

A rising number of medical specialties, including radiology, ophthalmology, pathology, and dermatology, are utilizing AI (7). Although the majority of AI approaches are used in training laboratories, they are also used in various other areas of medical education. By improving future healthcare providers’ knowledge and abilities, AI in medical education has the potential to improve overall medical care (8). As AI becomes more widely used in the healthcare sector, it is imperative that medical education embraces this technology so that future healthcare workers have the skills they need to deliver high-quality treatment. However, AI has not been widely used in curriculum review and assessment (9).

Faculty members are essential to the adoption and integration of AI into teaching methods since they have a high influence on the curriculum and pedagogy of health education (10). Faculty perspectives regarding AI in education are influenced by a variety of factors, including technological familiarity, the perceived advantages of AI, and ethical considerations. One of the main issues mentioned in the literature is the differences in faculty members’ levels of AI expertise (11). The lack of familiarity with AI technology may make many health sciences education faculty members reluctant to incorporate it into their syllabi. It has been widely reported that faculty members expressed a need for materials and professional development programs that emphasize AI literacy to integrate AI tools into their teaching strategies (12).

Opinions among faculty members regarding the integration of AI into health professions education vary. Many people see the potential benefits of AI, like increased productivity and improved clinical training, yet some are not comfortable with it. A previous survey found that most faculty members agree AI will play a significant role in health professions education in the years to come (13).

AI adoption in healthcare education has been hampered by a number of problems, including institutional difficulties, faculty reluctance, and technical constraints (4). Faculty members commonly cited the complexity of AI systems, the lack of technical support, and the potential for job displacement as reasons for not integrating AI into their syllabi (14). Faculty members have also raised concerns about the ethical issues surrounding AI in education, such as the possibility of data bias, threats to academic integrity, and the need to preserve privacy (10).

Most previous studies that assessed knowledge and perception of the use of AI in medical education included only students (15). Very few studies have investigated the knowledge and perception of healthcare college faculty towards the use of AI in medical education. Given the paucity of existing research, the increasing need for AI training of healthcare providers, and emerging AI literacy among faculty members, our objective was to assess the knowledge and perception of health sciences college faculty toward the use of AI in medical education.

This work aims to examine faculty’s familiarity with, usage of, and perception of AI in education across faculty populations defined by gender, years of experience as faculty, highest educational qualification, current position, and college. The study questions were: (1) What is the current overall prevalence and pattern of knowledge and perception rates towards AI usage in medical education among the faculty of the health sciences colleges? (2) How do gender, years of experience as faculty, highest educational qualification, current position, and college influence the knowledge and perception towards AI usage in medical education among the faculty of the health sciences colleges? (3) What is the relationship between faculty’s reported knowledge of and their perception towards AI usage in medical education?

Methods

This observational cross-sectional study used a structured questionnaire prepared by the research group based on the study’s objectives. Ethical approval of this study was obtained from the Institutional Review Board of Prince Sultan Military College of Health Sciences (IRB-2025-CD-011).

The participants were informed about the study’s goals and were guaranteed the privacy of their personal information as a condition of participating in it. All participants signed a written informed consent form electronically.

The research team developed a well-structured, validated, and pretested questionnaire in line with the study’s goals following a comprehensive review of the literature. The first section collected demographic and professional information (age, gender, college, years of experience as a medical educator, highest educational qualification, and current position). The second section assessed knowledge and understanding of AI, including participants’ evaluation of their own knowledge and past AI experience. The third section measured the participants’ perception of AI using five-point Likert scale questions covering the reliability and accuracy of AI, its ethical concerns, interference with academic integrity, and potential. The fourth section included questions on the participants’ practice and challenges of AI in medical education. A convenience sample of 60 faculty from the target group, who were not involved in the study, was given the final version of the questionnaire to test its reliability. The overall Cronbach’s alpha for the survey questions was 0.87.
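For readers less familiar with the reliability statistic reported above, the following is a minimal Python sketch of how Cronbach’s alpha can be computed from item-level responses. The function name `cronbach_alpha` and the toy pilot data are illustrative assumptions; they are not the authors’ SPSS procedure or the study data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents.

    `rows` is a list of equal-length lists; each inner list holds one
    respondent's numeric scores on the k questionnaire items.
    Formula: alpha = k/(k-1) * (1 - sum(item variances) / variance(total score)).
    """
    k = len(rows[0])
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Transpose so that each tuple holds all respondents' scores on one item.
    items = list(zip(*rows))
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical pilot data: four respondents answering two Likert items.
pilot = [[2, 3], [4, 3], [3, 5], [5, 5]]
print(round(cronbach_alpha(pilot), 3))
```

Sample variances are used for both the items and the total score, which is the usual convention; the result depends only on their ratio.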

Healthcare professions faculty from nine colleges in Saudi Arabia were recruited by simple random sampling between January 1 and April 30, 2025. The questionnaire was distributed to participants through a web link via several social media platforms. Data collectors located at the various college campuses assisted in the distribution of the questionnaire and ensured the inclusion of the targeted participants. The survey was available online through Google Forms from January 1st to March 30th, 2025.

Statistical analysis

Participants’ knowledge and perceptions about the application of AI in medical education were evaluated using a series of closed and open-ended questions. Only responses in which all questions were answered were included in the analysis. One point was allocated for each correct response to the multiple-choice questions and zero for each wrong one. Responses to the five-point Likert scale questions were rated as: strongly agree (5), agree (4), neutral (3), disagree (2), and strongly disagree (1). The average knowledge and perception scores were calculated out of total marks of 21 and 25 for knowledge and perception, respectively. The data were processed with SPSS software version 28.0 (SPSS, Chicago, Illinois). The internal consistency reliability of the questionnaire was evaluated using Cronbach’s alpha, and coefficients of 0.7 or above were taken to indicate acceptable internal consistency reliability. We used one-way ANOVA to test for significant differences in knowledge and perception across the various demographic and professional characteristics. Statistical significance was set at p < 0.05 for all analyses.
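The scoring and group comparison described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors’ SPSS workflow: the function names are hypothetical, the knowledge score is assumed to consist of 21 one-point items, and the perception score is assumed to be five Likert items (maximum 25). Only the F statistic and its degrees of freedom are computed here; a p-value would additionally require the F distribution, for example via `scipy.stats.f_oneway`.

```python
from statistics import mean

# Likert coding as stated in the text.
LIKERT = {
    "strongly agree": 5,
    "agree": 4,
    "neutral": 3,
    "disagree": 2,
    "strongly disagree": 1,
}
KNOWLEDGE_MAX = 21   # total knowledge marks reported in the text
PERCEPTION_MAX = 25  # total perception marks reported in the text


def knowledge_percent(correct_flags):
    """One point per correct answer, zero otherwise, as a percentage of 21."""
    return 100.0 * sum(1 for ok in correct_flags if ok) / KNOWLEDGE_MAX


def perception_percent(answers):
    """Sum of Likert codes as a percentage of 25 (assumes five items)."""
    return 100.0 * sum(LIKERT[a.lower()] for a in answers) / PERCEPTION_MAX


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of score groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Between-group sum of squares: group size times squared mean offset.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: squared deviations from each group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical example: one respondent, then three made-up experience groups.
print(perception_percent(["agree"] * 5))
print(one_way_anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))
```

In practice each group would hold the percentage scores of the faculty in one category (for example, one band of years of experience), and the resulting F would be compared against the 0.05 significance threshold stated above.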

Results

Out of 360 respondents, 293 (81.4%) completed the survey and were included in this study. Table 1 shows the demographic and professional characteristics of the health sciences colleges’ faculty members who took part in the study. Female faculty made up 56.0%, while males made up 44.0%. Of the participants, 13.0% had a bachelor’s degree, 25.9% had a master’s degree, and the majority (61.1%) were PhD holders. Assistant professors made up the largest group (38.2%), followed by lecturers (29.4%). Associate professors and professors made up 17.0% of the total, while teaching assistants and clinical instructors accounted for the remaining 13.0%. Of the total participants, 30.4% had fewer than 5 years of experience, while the rest were fairly evenly distributed across the remaining experience groups, indicating a balanced representation of young faculty and experienced professors. The colleges of applied health sciences represented the highest proportion of faculty (60.1%), followed by nursing (19.8%) and medicine (14.0%), while pharmacy and dental schools had low representations of 5.1% and 1.0%, respectively.

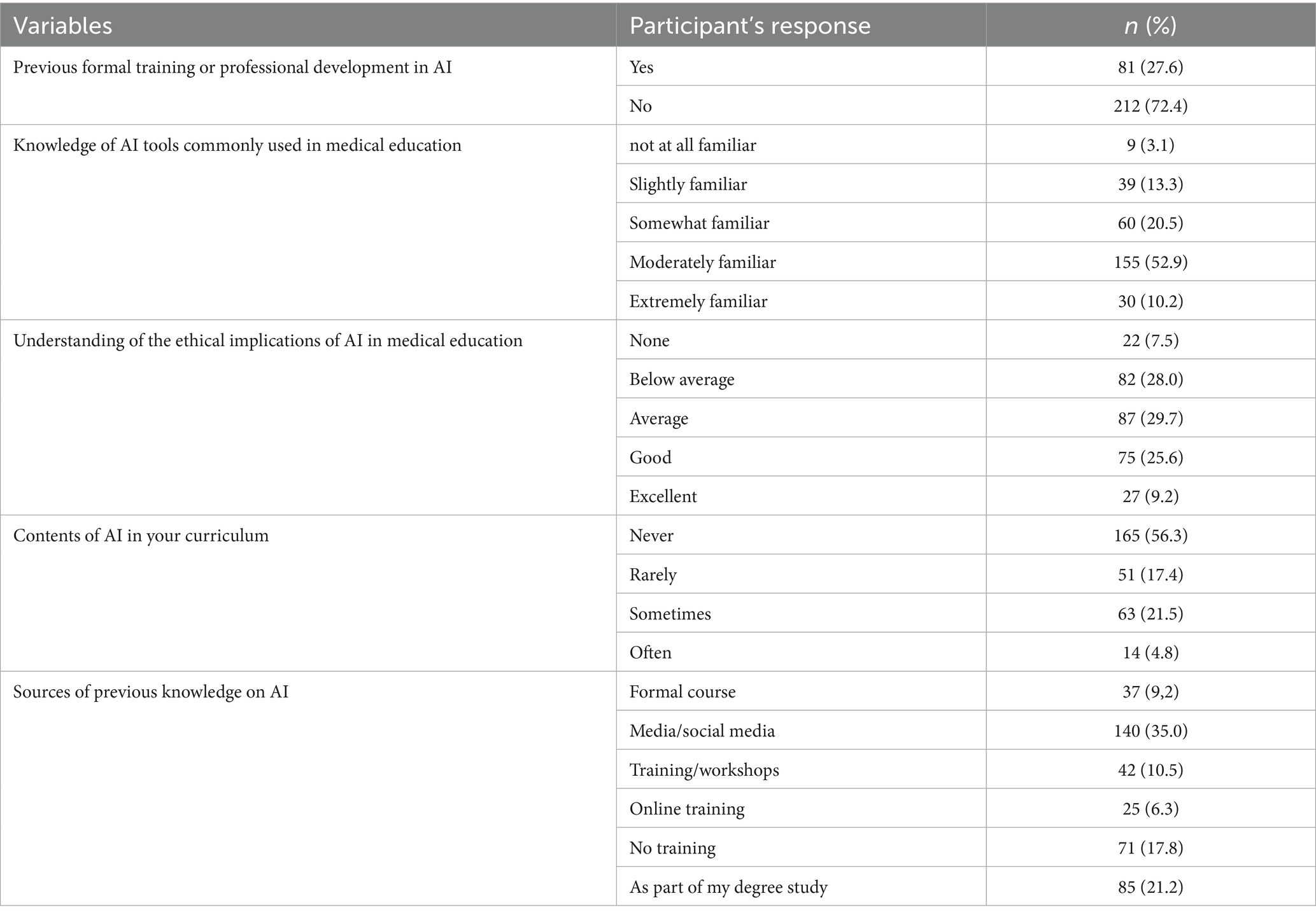

Table 1. Demographic and professional characteristics of health sciences faculty and their disposition toward AI in medical education.

Table 1 also shows the health sciences faculty’s general knowledge and perception scores towards the use of AI in medical education. In general, knowledge rates were moderate across all groups, with an overall average of 58.9%. Female faculty had a slightly higher knowledge rate (59.5%) than males (58.1%), with no significant difference (p = 0.50). Faculty with 6–10 years of experience were the most knowledgeable (62.9%), while those with 0–5 years were the least (57.3%) (p < 0.001). Knowledge varied with the participant’s academic qualification from 55.3% for master’s degree holders to 60.8% for bachelor’s degree holders (p = 0.35). Knowledge also varied across academic rankings, with clinical instructors and demonstrators showing slightly higher rates of 61.3% and 60.4%, respectively (p = 0.055). Knowledge by college affiliation ranged from 49.7% for Pharmacy to 61.7% for Medicine faculty (p = 0.584).

In general, perception rates were high across all groups, with an overall average of 74.8%. Females also demonstrated a higher positive perception (75.6%) than males (73.1%), although the difference was not significant (p = 0.063). Perception differed significantly with years of experience, reaching 76.2% among those with 11–15 years of experience (p = 0.040). All groups of academic rankings had similar perception rates (73.2–75.0%).

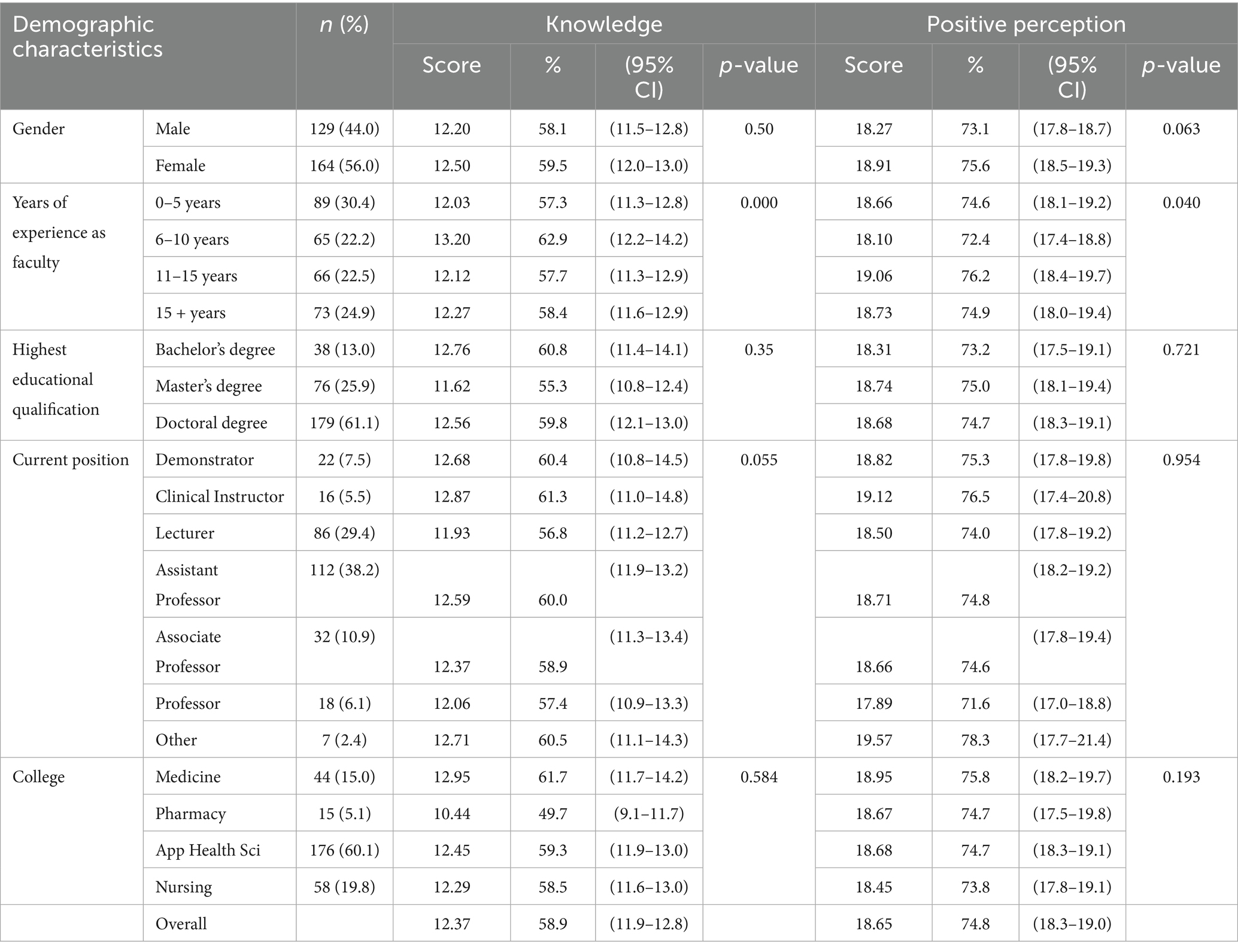

Table 2 shows the faculty’s knowledge on specific topics such as previous formal training, AI tools awareness, ethical understanding, curriculum inclusion, and source of knowledge. Out of the total participants, 212 (72.4%) had no formal training or professional development in AI, whereas only 81 (27.5%) reported that they had. Only 30 (10.2%) were very familiar with the regularly used AI tools in medical education, while 155 (52.9%) were somewhat familiar, 39 (13.3%) were unfamiliar, and 60 (20.5%) were somewhat familiar. As for participants’ knowledge of the ethical implications of AI in medical education, 7.5% reported no knowledge at all, 28.0% below average, 29.7% average, and 25.6% good knowledge. Only 9.2% of them reported excellent knowledge of the ethical implications of AI in medical education. Of the participants, 165 (56.3%) reported having no AI-related course material at their colleges, 51 (17.3%) said it was rarely taught, 63 (21.5%) said it was occasionally taught, and 14 (4.8%) said it was frequently taught.

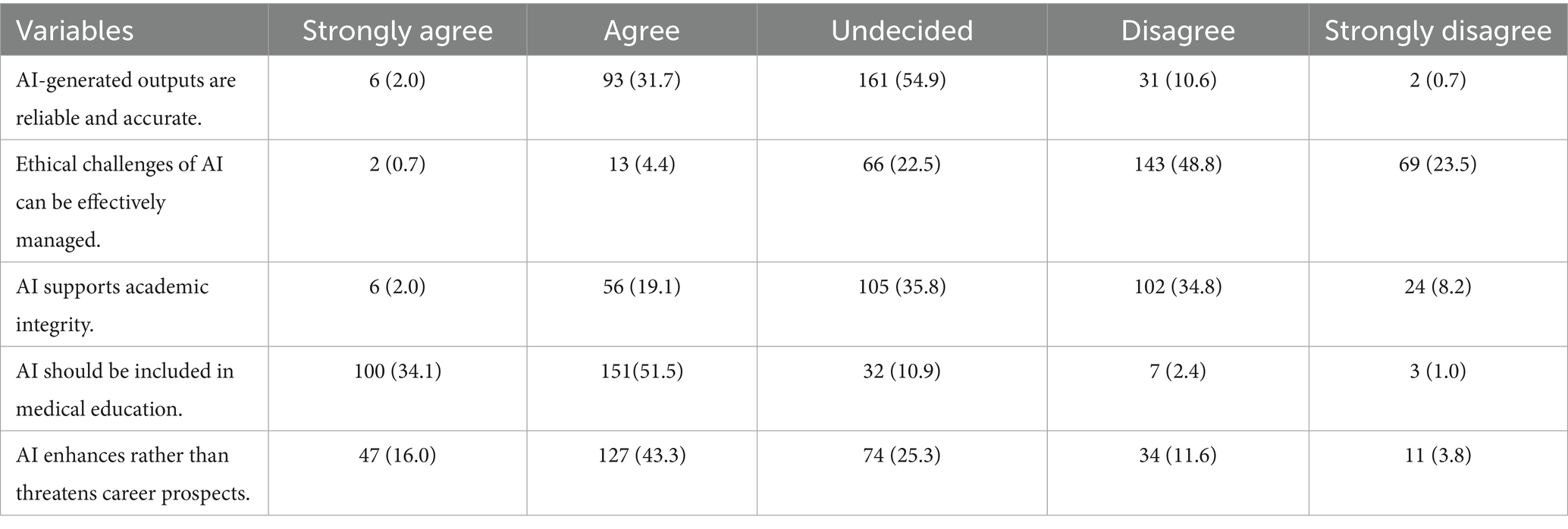

Table 3 shows the faculty perspective on AI’s reliability, ethics, integrity, integration, and career impact in medical education. Only 33.7% reported agreement on the reliability and accuracy of AI-generated outputs, and 11.3% reported disagreement, while the majority of respondents (54.9%) remained neutral. A large proportion of the participants (72.3%) reported disagreement that AI use poses ethical concerns in medical education.

Table 3. Faculty perceptions of AI in medical education: across reliability, ethics, integrity, integration, and career impact in numbers and percentages (in parentheses).

Faculty opinions on the impact of AI on academic integrity varied. While 35.8% were neutral, 21.1% agreed on the interference of AI with integrity, and 34.8% disagreed.

A majority of 85.6% reported agreement on the inclusion of AI in medical education.

While 59.3% perceived some level of threat to their careers from AI, 15.4% disagreed, and a quarter remained neutral.

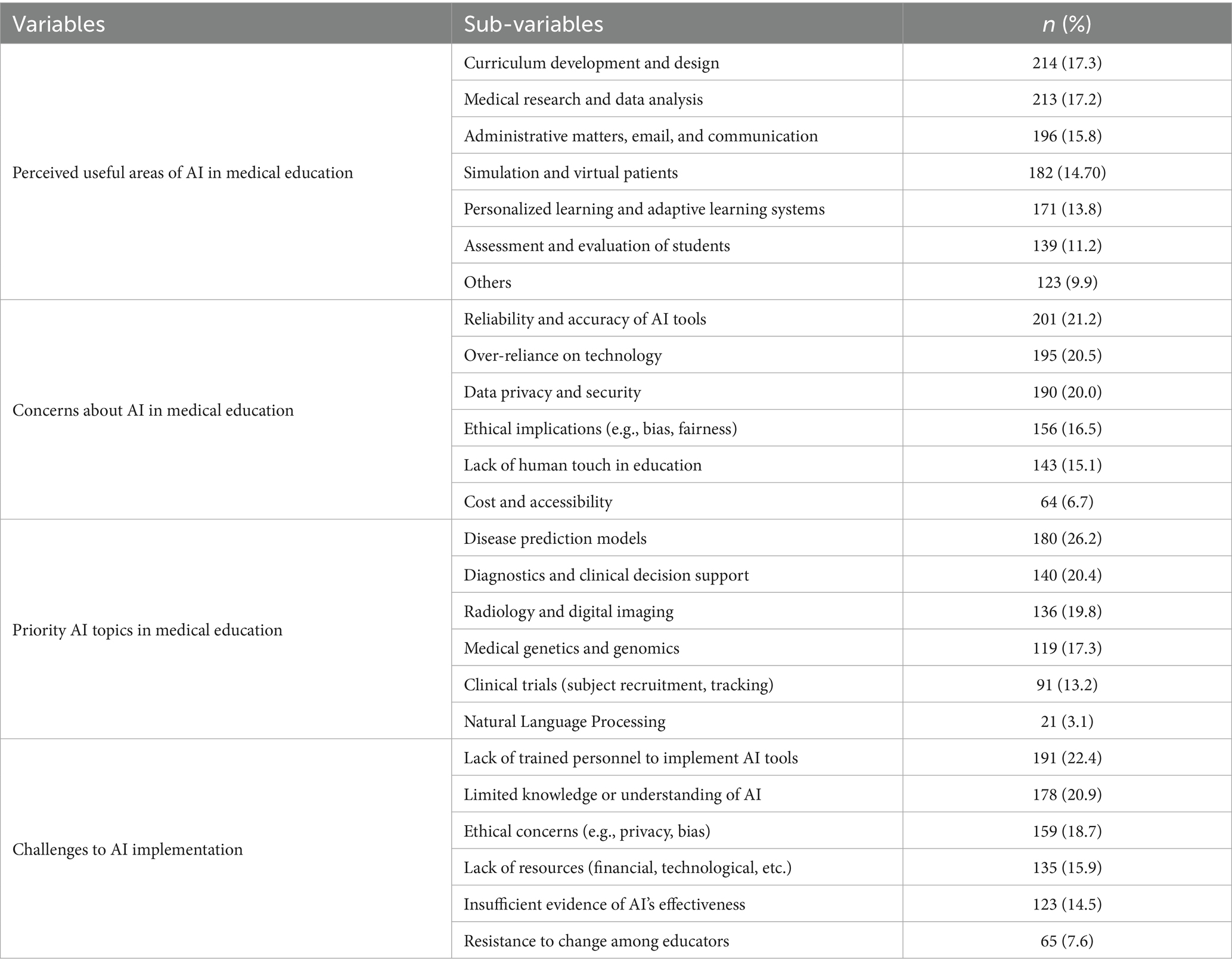

Table 4 shows the faculty perspectives on the relevance, benefits, and challenges of artificial intelligence integration in health professions education. AI was reported as most useful in curriculum development and design (17.3%) and medical research and data analysis (17.2%). Additional applications included administrative communication (15.8%), simulation and virtual patients (14.7%), and personalized/adaptive learning systems (13.8%). The top limitations reported were the accuracy and dependability of AI technologies (21.2%), over-reliance on technology (20.5%), and data privacy and security (20.0%). Ethical concerns reported included bias and fairness (15.0%) and the decline of human interaction in education (15.1%). Accessibility and expense concerns were less common (6.7%).

Table 4. Faculty perspectives on the relevance, benefits, and challenges of artificial intelligence integration in health professions education.

Discussion

This study offers valuable insights into the knowledge, perceptions, and readiness of the faculty members of health sciences colleges towards the use of rapidly emerging AI in medical education.

Lack of faculty knowledge and experience, as well as a lack of guidelines regarding AI in medical education, are some of the reasons why many health sciences programs do not currently incorporate AI into their curricula (16). According to the current study, only 27.5% of faculty members had formal AI training, and just 10.2% were very familiar with the common AI tools. A similar previous study reported that only 12.04% of health sciences college faculty were very familiar with AI applications in medical education (17). Another study indicated that 50% of faculty in medical colleges who were aware of AI topics were more likely to report that they did not have a basic understanding of AI technologies (18). The current findings indicate a significant knowledge gap among the faculty, which is alarming given that multiple studies have demonstrated that faculty readiness is a vital component of incorporating AI into medical education.

The study demonstrated statistically significant differences in knowledge rates based on years of experience (p < 0.001), with mid-career faculty (6–10 years of experience) having the highest knowledge rates, while differences across academic rankings did not reach significance (p = 0.055). Similar trends were noted in another report (19). This may be attributed to a cohort effect, whereby these individuals are both early adopters of technological innovation and sufficiently established in academia to pursue ongoing education. Numerous studies have evaluated the AI knowledge gap, but they mostly included students and healthcare practitioners rather than health science faculty (20).

Notably, all groups had shown favorable perception scores towards the integration of AI in medical education (overall mean = 74.8%), with those with 11–15 years of experience having the strongest positive perceptions (76.2%, p = 0.040). This is consistent with earlier research showing favorable attitudes towards AI in medical education (21). Several studies revealed varied attitudes toward AI in medical education; however, mostly about students (22). Most medical students and doctors acknowledged AI’s potential benefits, such as enhancing clinical judgment, research, and auditing skills, and streamlining administrative processes (20).

The current finding that 85.6% of participants favored the inclusion of AI in medical curricula supports the growing agreement in the literature advocating such inclusion (20, 23).

It is of concern that only 9.2% reported excellent knowledge of the ethical implications of AI and that 21.1% of faculty members thought AI compromised academic integrity. However, 72.3% of respondents denied that AI raises ethical issues in medical education, an unexpected view that points to the need for more in-depth investigation of digital ethics and AI biases, issues that have been raised in the literature (24).

The study’s respondents reported the most important applicable areas were disease prediction and prevention (31.6%), medical imaging and diagnostics (20.7%), and personalized treatment plans (20.4.3%). It has been widely reported that AI revolutionized medical practices by improving diagnostic accuracy (25). AI applications in diagnostic imaging have enabled early detection and improved treatment planning (26).

The incorporation of AI competences into medical curricula has been the subject of multiple studies, which proposed that they be made a required part of medical education in order to prepare future healthcare providers for clinical decision-making based on AI (27, 28).

In this study, AI was reported as most useful in curriculum development and design, medical research and data analysis. Additional applications included administrative communication, simulation and virtual patients, and personalized/adaptive learning systems. Assessment and evaluation, administrative matters, email, and communication were also mentioned.

Previous findings revealed a lack of standardized AI curriculum frameworks and notable global discrepancies in the use of AI in medical education. However, several studies highlighted the usefulness of AI in various areas of medical education, such as plagiarism detection, clinical simulations, and homework support (29, 30). In addition, others mentioned the improvement of self-learning and interdisciplinary teaching (31). Clinical simulations, curriculum development, automated feedback, and plagiarism detection are among applications of AI (32). The most prioritized topics for AI integration in curricula were disease prediction models and diagnostics/clinical decision support, followed by radiology and digital imaging, and medical genetics and genomics.

AI has been applied in medical teaching and learning through various systems such as intelligent tutoring systems (33), virtual patients (34), adaptive learning systems, distance education (35), and adaptive feedback (23). AI has also been applied in assessment processes, including optical mark recognition, automated essay scoring, and virtual reality and simulation assessment (35). Other applications of AI in medical education have been through academic medical researchers and administrative matters such as recruitment and admissions, curriculum design and review, management of staff and student records (19).

Although the majority of participants disagreed with the notion that AI creates ethical concerns, their replies to other open-ended questions show that they perceive specific ethical concerns. The most common limitations raised were over-reliance on technology, data privacy and security, and the accuracy and dependability of AI technologies. Additionally, respondents were concerned about ethical issues, including bias and fairness, and the loss of personal touch in education. The ethical implications of integrating AI into medical education are critical to ensuring that technological advancements enhance learning without compromising the core humanistic values of healthcare practice. The challenges of implementing AI in medical education were previously identified as the difficulty of assessing the effectiveness of AI applications, the privacy and confidentiality of data, and ethical judgment (19).

The most commonly reported challenges by the participants were the lack of trained personnel and limited knowledge or understanding of AI. Other limitations included ethical concerns, lack of resources, and insufficient evidence of AI effectiveness. Resistance to change among educators (7.6%) was the least reported.

The major concerns on the use of AI in medical education widely reported included the reliability and accuracy of AI-generated outputs, academic integrity and moral norms, data privacy and security, fairness and equal treatment, loss of human touch in education, and ethical implications such as bias and fairness (36).

The majority of previous studies have been conducted on students’ knowledge and perception towards the application of AI in medical education. This study fills a vital gap in the literature about the incorporation of AI in medical education, especially from the viewpoint of the faculty, which has not been well studied. By including academics from multiple health sciences fields, the study also highlights previously unreported discipline-specific trends in AI familiarity and perception and allows for cross-college comparisons. Our limitations are typical of other studies using a cross-sectional design and self-reported data.

The absence of robustness checks like sensitivity analyses or bootstrapping is one of the study’s limitations. These techniques could help resolve possible breaches of statistical assumptions and offer more assurance regarding the stability of the findings. Such methods could be useful in future research to improve the methodological rigor.

Conclusion

This study demonstrates that there are still considerable gaps in general knowledge, formal training, and ethical awareness, despite the health science faculty’s supportive attitude toward AI integration in medical education. These results are consistent with worldwide patterns and support pressing demands for faculty development, interdisciplinary curricular reform, and the integration of digital ethics. To fully utilize AI’s potential in influencing medical education in the future, these obstacles must be removed. The study offered data from stakeholder perspectives, primarily to create a foundation for more informed debates and decision-making.

Despite a general lack of knowledge and a lack of curricular content on AI applications, the results show considerable support for incorporating AI into medical education.

In general, institutional readiness for the integration of AI in medical education is strongly influenced by obstacles, including a lack of knowledge, a shortage of skilled faculty, and fear of career threat. The study also raised concerns about the need for proper training as well as the ethical ramifications of over-relying on AI systems.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

EZ: Conceptualization, Formal analysis, Methodology, Supervision, Writing – original draft, Writing – review & editing, Visualization. SE: Conceptualization, Formal analysis, Supervision, Visualization, Writing – original draft, Writing – review & editing, Investigation, Methodology, Validation, Resources. LM: Data curation, Formal analysis, Investigation, Methodology, Software, Visualization, Writing – original draft, Writing – review & editing, Validation. AbA: Investigation, Project administration, Software, Visualization, Writing – original draft, Writing – review & editing. HA: Investigation, Methodology, Project administration, Resources, Software, Writing – original draft, Writing – review & editing. AhA: Validation, Visualization, Writing – original draft, Writing – review & editing, Investigation, Methodology. ZA: Investigation, Software, Supervision, Validation, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Civaner, MM, Uncu, Y, Bulut, F, Chalil, EG, and Tatli, A. Artificial intelligence in medical education: a cross-sectional needs assessment. BMC Med Educ. (2022) 22:772. doi: 10.1186/s12909-022-03852-3

2. Connolly, C, Hernon, O, Carr, P, Worlikar, H, McCabe, I, Doran, J, et al. Artificial intelligence in interprofessional healthcare practice education–insights from the home health project, an exemplar for change. Comput Sch. (2023) 40:412–29. doi: 10.1080/07380569.2023.2247393

3. Feigerlova, E, Hani, H, and Hothersall-Davies, E. A systematic review of the impact of artificial intelligence on educational outcomes in health professions education. BMC Med Educ. (2025) 25:129. doi: 10.1186/s12909-025-06719-5

4. Moldt, J-A, Festl-Wietek, T, Fuhl, W, Zabel, S, Claassen, M, Wagner, S, et al. Assessing AI awareness and identifying essential competencies: insights from key stakeholders in integrating AI into medical education. JMIR Med Educ. (2024) 10:e58355. doi: 10.2196/58355

5. Leming, MJ, Bron, EE, Bruffaerts, R, Ou, Y, Iglesias, JE, Gollub, RL, et al. Challenges of implementing computer-aided diagnostic models for neuroimages in a clinical setting. NPJ Digit Med. (2023) 6:129. doi: 10.1038/s41746-023-00868-x

6. Deng, R, Jiang, M, Yu, X, Lu, Y, and Liu, S. Does ChatGPT enhance student learning? A systematic review and meta-analysis of experimental studies. Comput Educ. (2024) 2024:105224. doi: 10.1016/j.compedu.2024.105224

7. Kulkarni, S, Seneviratne, N, Baig, MS, and Khan, AHA. Artificial intelligence in medicine: where are we now? Acad Radiol. (2020) 27:62–70. doi: 10.1016/j.acra.2019.10.001

8. Nagi, F, Salih, R, Alzubaidi, M, Shah, H, Alam, T, Shah, Z, et al. Applications of artificial intelligence (AI) in medical education: a scoping review. Healthcare Transformation with Informatics and Artificial Intelligence. (2023). p. 648–651.

9. Shankar, PR. Artificial intelligence in health professions education. Arch Med Health Sci. (2022) 10:256–61. doi: 10.4103/amhs.amhs_234_22

10. Wang, J, and Li, J. Artificial intelligence empowering public health education: prospects and challenges. Front Public Health. (2024) 12:1389026. doi: 10.3389/fpubh.2024.1389026

11. Thompson, RA, Shah, YB, Aguirre, F, Stewart, C, Lallas, CD, and Shah, MS. Artificial intelligence use in medical education: best practices and future directions. Curr Urol Rep. (2025) 26:1–8. doi: 10.1007/s11934-025-01277-1

12. Smith, M, Sattler, A, Hong, G, and Lin, S. From code to bedside: implementing artificial intelligence using quality improvement methods. J Gen Intern Med. (2021) 36:1061–6. doi: 10.1007/s11606-020-06394-w

13. Johnson, M, Albizri, A, Harfouche, A, and Fosso-Wamba, S. Integrating human knowledge into artificial intelligence for complex and ill-structured problems: informed artificial intelligence. Int J Inf Manag. (2022) 64:102479. doi: 10.1016/j.ijinfomgt.2022.102479

14. Hassan, M, Kushniruk, A, and Borycki, E. Barriers to and facilitators of artificial intelligence adoption in health care: scoping review. JMIR Hum Factors. (2024) 11:e48633. doi: 10.2196/48633

15. Elhassan, SE, Sajid, MR, Syed, AM, Fathima, SA, Khan, BS, and Tamim, H. Assessing familiarity, usage patterns, and attitudes of medical students toward ChatGPT and other chat-based AI apps in medical education: cross-sectional questionnaire study. JMIR Med Educ. (2025) 11:e63065. doi: 10.2196/63065

16. Grunhut, J, Marques, O, and Wyatt, AT. Needs, challenges, and applications of artificial intelligence in medical education curriculum. JMIR Med Educ. (2022) 8:e35587. doi: 10.2196/35587

17. Rani, S, Kumari, A, Ekka, SC, Chakraborty, R, and Ekka, S. Perception of medical students and faculty regarding the use of artificial intelligence (AI) in medical education: a cross-sectional study. Cureus. (2025) 17:85348. doi: 10.7759/cureus.85348

18. Wood, EA, Ange, BL, and Miller, DD. Are we ready to integrate artificial intelligence literacy into medical school curriculum: students and faculty survey. J Med Educat Curri Develop. (2021) 8:23821205211024078. doi: 10.1177/23821205211024078

19. Chan, KS, and Zary, N. Applications and challenges of implementing artificial intelligence in medical education: integrative review. JMIR Med Educ. (2019) 5:e13930. doi: 10.2196/13930

20. Gordon, M, Daniel, M, Ajiboye, A, Uraiby, H, Xu, NY, Bartlett, R, et al. A scoping review of artificial intelligence in medical education: BEME guide no. 84. Med Teach. (2024) 46:446–70. doi: 10.1080/0142159X.2024.2314198

21. Salih, S. Perceptions of faculty and students about use of artificial intelligence in medical education: a qualitative study. Cureus. (2024) 16:e57605. doi: 10.7759/cureus.57605

22. Kimmerle, J, Timm, J, Festl-Wietek, T, Cress, U, and Herrmann-Werner, A. Medical students’ attitudes toward AI in medicine and their expectations for medical education. J Med Educat Curri Develop. (2023) 10:23821205231219346. doi: 10.1177/23821205231219346

23. Katona, J, and Gyonyoru, KIK. Integrating AI-based adaptive learning into the flipped classroom model to enhance engagement and learning outcomes. Computers and Education: Artificial Intelligence. (2025) 8:100392. doi: 10.1016/j.caeai.2025.100392

24. Masters, K. Ethical use of artificial intelligence in health professions education: AMEE guide no. 158. Med Teach. (2023) 45:574–84. doi: 10.1080/0142159X.2023.2186203

25. Aggarwal, R, Sounderajah, V, Martin, G, Ting, DS, Karthikesalingam, A, King, D, et al. Diagnostic accuracy of deep learning in medical imaging: a systematic review and meta-analysis. NPJ Digit Med. (2021) 4:65. doi: 10.1038/s41746-021-00438-z

26. Miyoshi, N. Use of AI in diagnostic imaging and future prospects. JMA J. (2025) 8:198–203. doi: 10.31662/jmaj.2024-0169

27. Lee, J, Wu, AS, Li, D, and Kulasegaram, KM. Artificial intelligence in undergraduate medical education: a scoping review. Acad Med. (2021) 96:S62–70. doi: 10.1097/ACM.0000000000004291

28. Pregowska, A, and Perkins, M. Artificial intelligence in medical education: typologies and ethical approaches. Ethics Bioethics. (2024) 14:96–113. doi: 10.2478/ebce-2024-0004

29. Boscardin, CK, Gin, B, Golde, PB, and Hauer, KE. ChatGPT and generative artificial intelligence for medical education: potential impact and opportunity. Acad Med. (2024) 99:22–7. doi: 10.1097/ACM.0000000000005439

30. Xie, Y, Seth, I, Hunter-Smith, DJ, Rozen, WM, and Seifman, MA. Investigating the impact of innovative AI chatbot on post-pandemic medical education and clinical assistance: a comprehensive analysis. ANZ J Surg. (2024) 94:68–77. doi: 10.1111/ans.18666

31. Skryd, A, and Lawrence, K. ChatGPT as a tool for medical education and clinical decision-making on the wards: case study. JMIR Form Res. (2024) 8:e51346. doi: 10.2196/51346

32. Abdellatif, H, Al Mushaiqri, M, Albalushi, H, Al-Zaabi, AA, Roychoudhury, S, and Das, S. Teaching, learning and assessing anatomy with artificial intelligence: the road to a better future. Int J Environ Res Public Health. (2022) 19:14209. doi: 10.3390/ijerph192114209

33. Randhawa, GK, and Jackson, M. The role of artificial intelligence in learning and professional development for healthcare professionals. Healthc Manag Forum. (2020). pp. 19–24.

34. Kononowicz, AA, Woodham, LA, Edelbring, S, Stathakarou, N, Davies, D, Saxena, N, et al. Virtual patient simulations in health professions education: systematic review and meta-analysis by the digital health education collaboration. J Med Internet Res. (2019) 21:e14676. doi: 10.2196/14676

35. Sharma, N, Doherty, I, and Dong, C. Adaptive learning in medical education: the final piece of technology enhanced learning? Ulster Med J. (2017) 86:198–200.

Keywords: artificial intelligence, faculty knowledge, faculty perspectives, health sciences colleges, medical education

Citation: Al Zahrani EM, Elsafi SH, Al Musallam LD, Alharbi AH, Aldossari HM, Alomar AM and Alkharraz ZS (2025) Faculty perspectives on artificial intelligence’s adoption in the health sciences education: a multicentre survey. Front. Med. 12:1663741. doi: 10.3389/fmed.2025.1663741

Edited by:

Predrag Jovanovic, University Clinical Center Tuzla, Bosnia and Herzegovina

Reviewed by:

Bo-Wei Zhao, Zhejiang University, China

Ejeatuluchukwu Obi, Nnamdi Azikiwe University, Nigeria

Copyright © 2025 Al Zahrani, Elsafi, Al Musallam, Alharbi, Aldossari, Alomar and Alkharraz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Salah H. Elsafi, salahelsafi@hotmail.com
