- ¹Faculty of Medical Sciences, Universidade do Estado do Rio de Janeiro (UERJ), Rio de Janeiro, Brazil

- ²Biomedical Center, Universidade do Estado do Rio de Janeiro (UERJ), Rio de Janeiro, Brazil

- ³Faculty of Medicine, Universidade Federal Fluminense (UFF), Niterói, Brazil

- ⁴Faculty of Medicine, Universidade Federal do Estado do Rio de Janeiro (UNIRIO), Rio de Janeiro, Brazil

- ⁵Faculty of Medicine, Universidade Federal do Rio de Janeiro (UFRJ), Rio de Janeiro, Brazil

Background: The evaluation of medical education in Brazil relies on instruments such as the National Student Performance Exam (ENADE) and the Preliminary Course Concept (PCC), which guide regulation and funding.

Objectives: To analyze national data from the 2023 ENADE for the medical program, describing variations by administrative category and region, and to discuss implications for building a fairer assessment model aligned with the principles of the Brazilian Unified Health System (SUS).

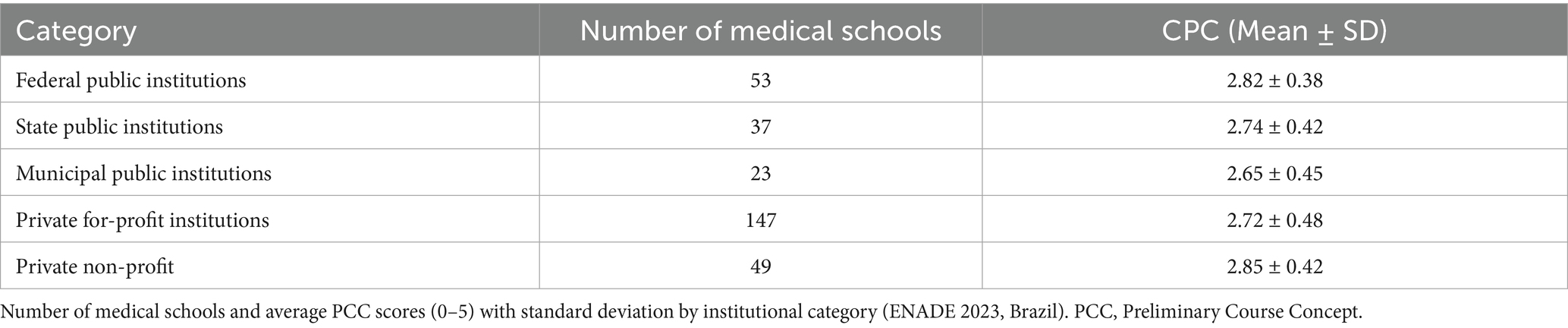

Methods: A descriptive study using consolidated data from 309 Medical Programs participating in ENADE 2023. Mean scores and standard deviations for the continuous PCC (scale 0–5) were calculated by institutional category (federal, state, and municipal public institutions, and private for-profit and non-profit institutions) and by geographic region.

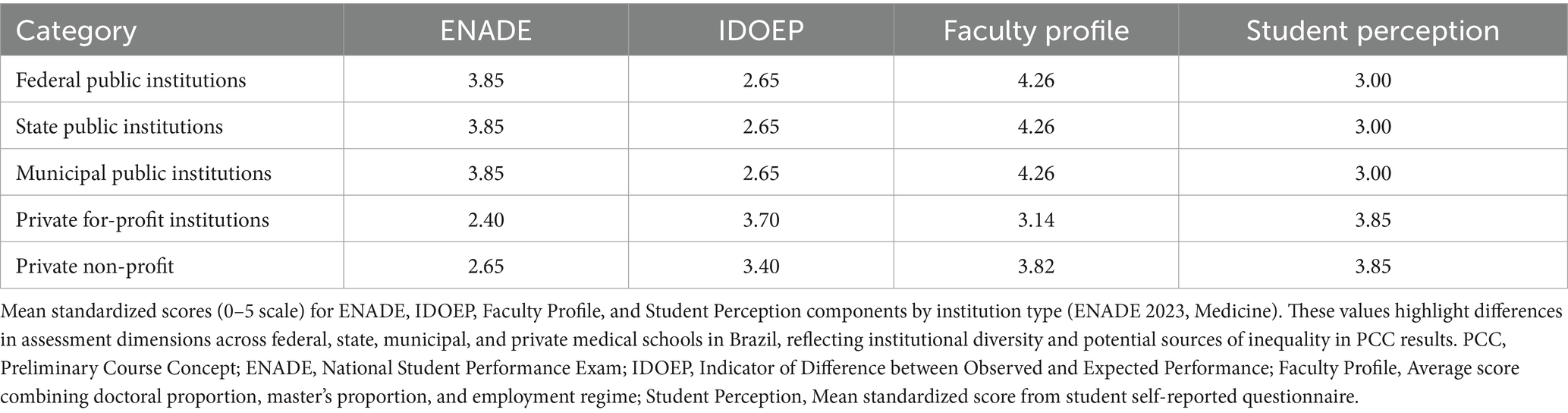

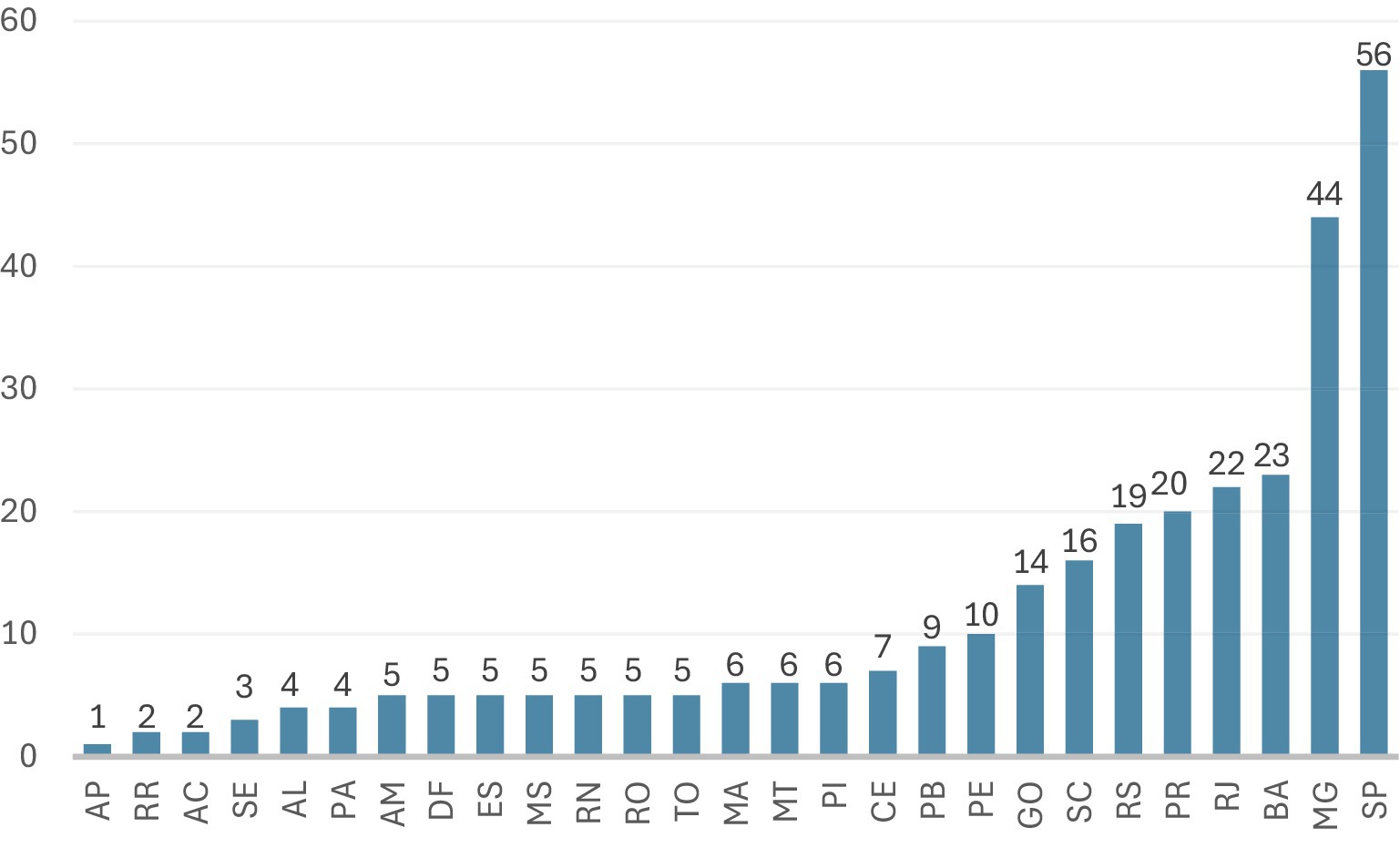

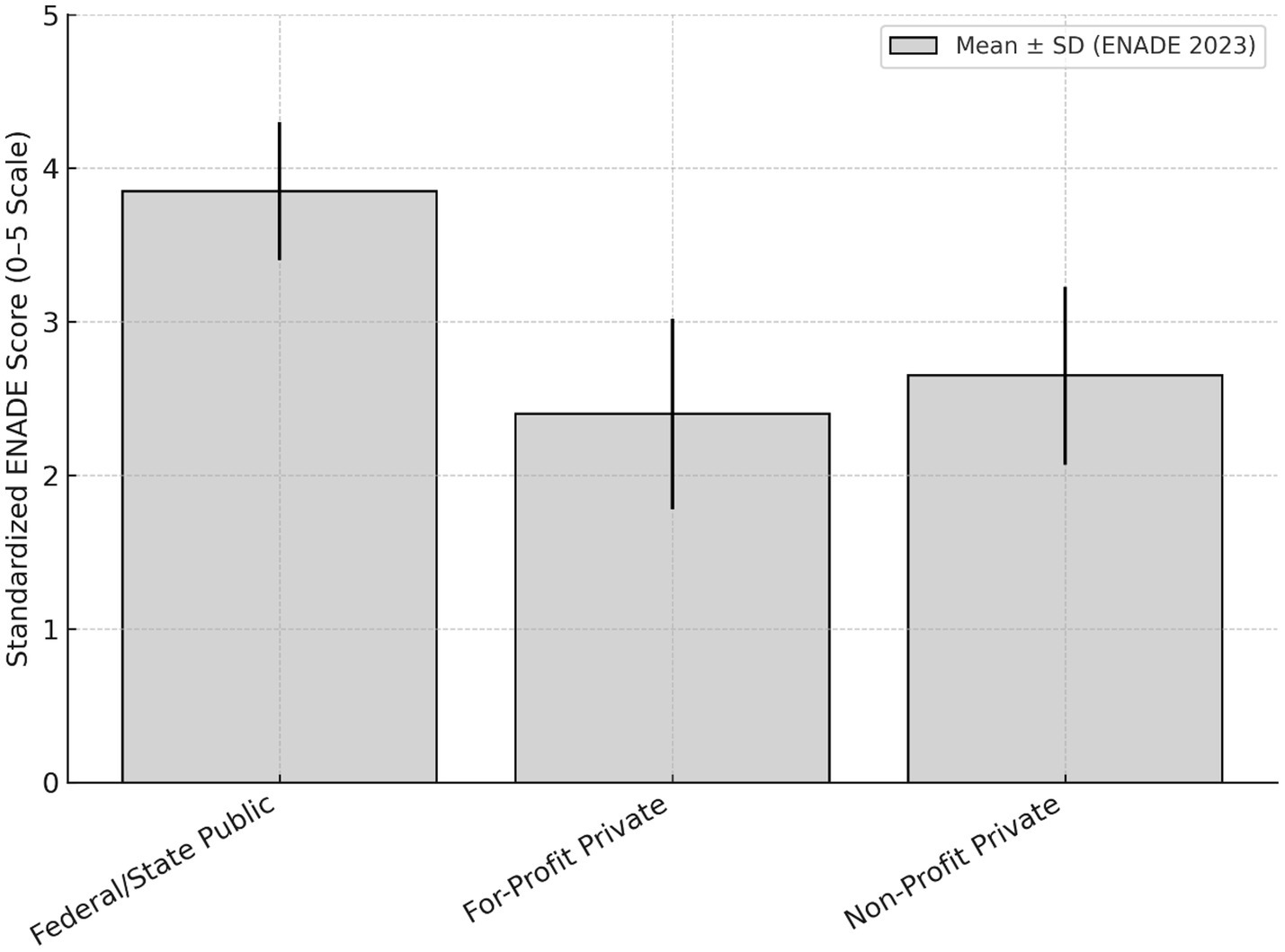

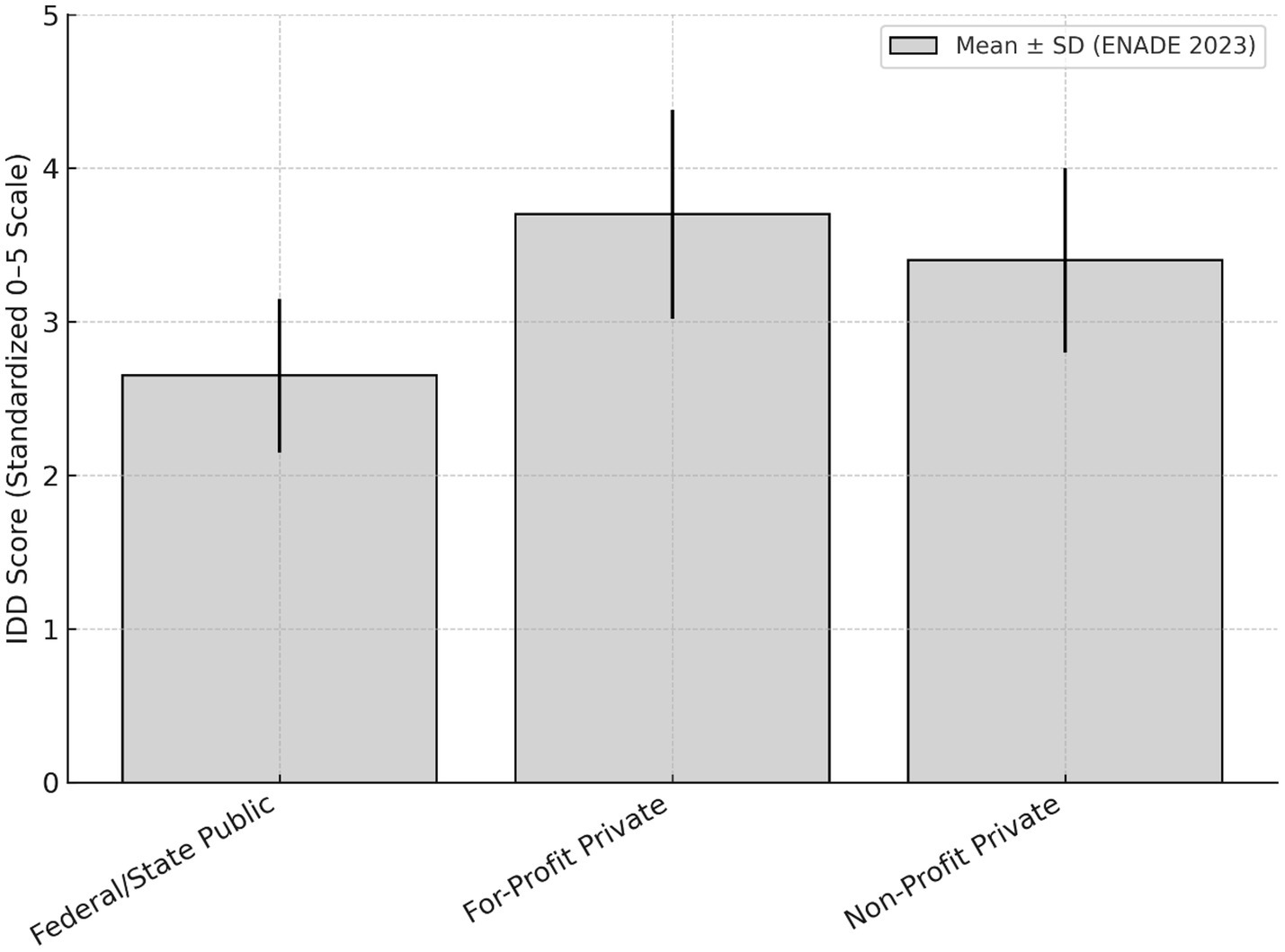

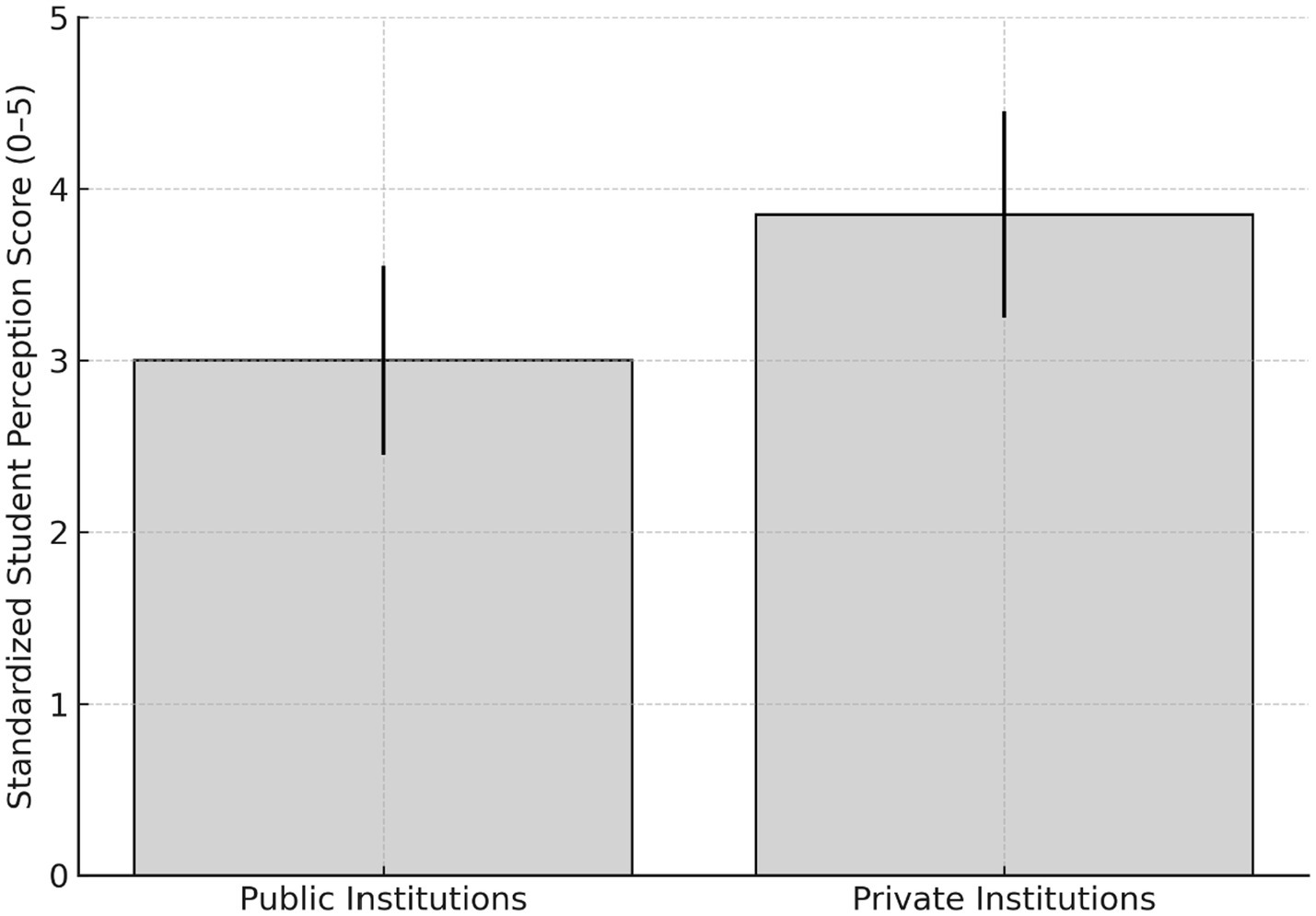

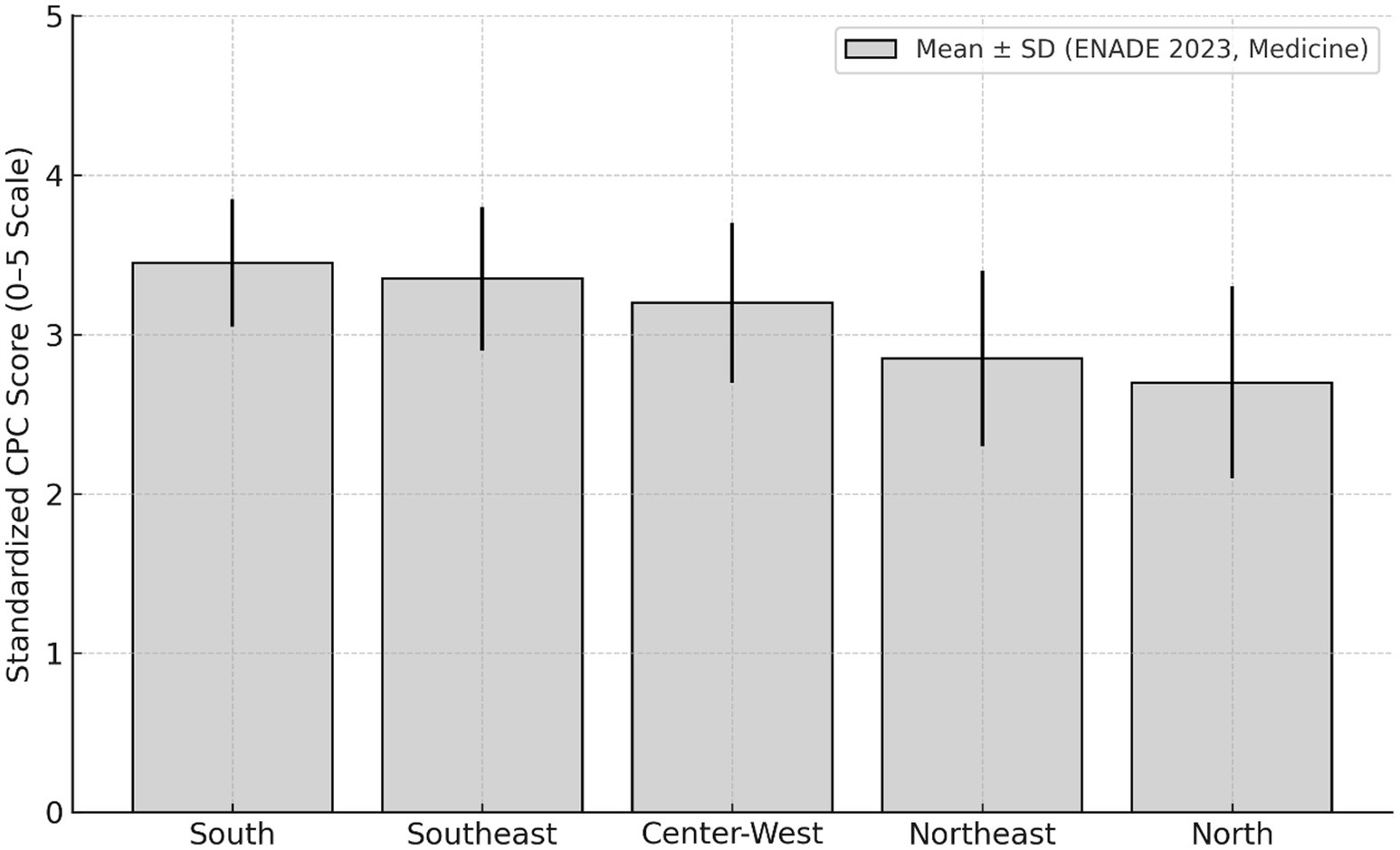

Results: Federal public institutions showed a mean PCC of 2.82 ± 0.38; state, 2.74 ± 0.42; and municipal, 2.65 ± 0.45. Private for-profit institutions had 2.72 ± 0.48, and non-profit institutions 2.85 ± 0.42. The Indicator of Difference Between Observed and Expected Performance (IDOEP) component, which accounts for 35% of the PCC, was higher in for-profit private institutions (3.70 ± 0.68) compared to public ones (2.65 ± 0.50), reflecting limitations in adjusting for socioeconomic intake profiles. Student perception scores were also higher in private institutions (3.85 ± 0.60) than in public ones (3.00 ± 0.55). Regionally, PCC means were higher in the South (3.45 ± 0.40) and Southeast (3.35 ± 0.45) than in the Northeast (2.85 ± 0.55) and North (2.70 ± 0.60).

Conclusion: Results suggest that the current ENADE/PCC model may mask structural and regional inequalities, favoring institutional strategies focused on large-scale enrollment with lower admission requirements.

Introduction

History of medical education in Brazil and the development of National Curriculum Guidelines

Medical education in Brazil has colonial roots, with the first medical schools created in Salvador and Rio de Janeiro in 1808, tied to the arrival of the Portuguese royal court (1). For over a century, training followed a biomedical, hospital-centered model based on isolated disciplines, with limited integration between theory and practice or alignment with population health needs. While several reforms were proposed throughout the 20th century, only in the late 1990s did a consistent national movement for broader, more systematic change emerge. The National Education Guidelines and Framework Law (LDB No. 9.394/96) (2) laid the foundation for reforming curricula across higher education in Brazil, enabling the development of National Curriculum Guidelines (DCNs) for undergraduate Medical Programs (3).

The first Medical DCNs, approved in 2001 (3), defined the graduate profile as a generalist, critical, reflective, and humanistic professional, capable of working according to the principles of the Brazilian Unified Health System (SUS) (4). These guidelines sought to overcome the traditional Flexnerian model, promoting active teaching methodologies, greater integration between teaching and service, and a focus on population health needs (3, 5).

In 2014, updated Medical DCNs were approved (CNE/CES Resolution No. 3/2014) (6), incorporating critiques and lessons accumulated over more than a decade. These guidelines reaffirmed the commitment to competency-based education, lifelong learning, and the social responsibility of training institutions (6).

Despite this progress, implementation remains uneven. Many schools face challenges related to rigid curricula, insufficient funding, inequality among institutions, and lack of qualified practice settings (7, 8). Public initiatives like the More Doctors Program (PMM) (9) aimed to strengthen medical education by expanding undergraduate and residency training and improving regional distribution of professionals.

In this context, external quality assessment of Medical Programs plays a central role in regulation and accountability. Since 2004, the National Student Performance Exam (ENADE) (10) has been the primary instrument for evaluating student performance in line with the DCNs. ENADE results are used to calculate the Preliminary Course Concept (PCC), which is computed by the National Institute for Educational Studies and Research Anísio Teixeira (INEP) and informs course recognition and renewal decisions by the Ministry of Education (10). However, little is known about whether national large-scale evaluations such as ENADE and the PCC adequately reflect these structural inequalities or support curricular improvement aligned with the DCNs.

In 2025, the National Medical Students Assessment Exam (ENAMED) (11) will replace ENADE for medical programs, with the aim of establishing an annual evaluation. However, it remains uncertain whether ENAMED will address the current misalignment between assessments and the 2014 DCNs, which emphasize competencies for work within the SUS. Although ENAMED may allow new graduates to use their scores when applying for medical residency, it has not been clarified whether ENADE’s evaluation criteria will be retained.

The 2014 National Curriculum Guidelines (CNE/CES Resolution No. 3/2014) (12) marked a significant advancement by specifying the competencies expected of medical graduates and promoting integration between teaching, health services, and communities. Yet their broad language allows varied institutional interpretations, complicating standardization. Implementation has also been hindered by unequal institutional capacity, insufficient practice settings, and limited faculty training in active methodologies. Challenges persist in integrating education with the SUS because of administrative barriers and fragile partnerships, such as the Organizational Contract for Public Teaching-Health Action (COAPES) (13), which often lack safeguards for pedagogical quality in the hiring of clinical preceptors (14). Although the DCNs promote formative, competency-based evaluation, summative exams remain dominant, and limited investment in faculty development contributes to the persistence of traditional, lecture-based approaches.

Objectives

This study aims to analyze how medical schools in Brazil are evaluated through the 2023 edition of the National Student Performance Exam (ENADE) (10) and the associated Preliminary Course Concept (PCC). It investigates the structure and weighting of key indicators, particularly the ENADE score, the Indicator of Difference Between Observed and Expected Performance (IDOEP) (10), and student questionnaire components. The analysis also examines disparities between public and private institutions regarding infrastructure, faculty qualifications, employment models, and student perceptions. Additionally, the study highlights methodological limitations of the current evaluation model, including the absence of on-site visits, statistical modeling assumptions, and reliance on subjective self-reported instruments.

Methods

Study design

This study is a documentary analysis with a descriptive and exploratory approach, dedicated exclusively to the evaluation of undergraduate medical programs in Brazil. It adopts a qualitative-quantitative design based on public data and academic reports, aiming to examine the 2023 ENADE (10) results for Medicine and the components used to calculate the PCC, identifying methodological limitations and inequalities among different types of institutions.

Data sources

The study used multiple public sources of information. These included official INEP spreadsheets reporting 2023 ENADE results for Medicine (15) (Supplementary Data), including graduating students’ scores, IDOEP values, and continuous PCC scores. It also drew on the official ENADE student perception questionnaire, administered by INEP, which includes standardized items evaluating pedagogical organization, infrastructure, and training opportunities. Additionally, institutional performance reports and spreadsheets used in the PCC calculation were obtained from the INEP Open Data Portal and official public repositories of the Ministry of Education.

Data analysis procedures

Data were extracted for each Medical Program, and programs were classified by administrative category: federal, state, and municipal public institutions, and private for-profit and non-profit institutions. Public institutions were disaggregated in this way to reflect the marked heterogeneity in funding, governance structures, and academic support across Brazil’s public higher education sectors, which a simple public vs. private comparison could obscure. ENADE scores, IDOEP values, and PCC results were compared to identify disparities and performance patterns across institution types. The PCC formula for Medicine was examined in detail, considering its components and weights: ENADE score (20%), IDOEP (35%), faculty profile (30%), and student perception (15%). Finally, the study reviewed the instruments and procedures used for evaluation, highlighting methodological limitations identified in technical documents and academic reports.
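The weighting described above amounts to a weighted sum of four component scores. The following is a minimal sketch, assuming each component has already been standardized to the 0–5 scale; the function name and the example program’s component values are hypothetical, and INEP’s technical note remains the reference for how each component is standardized and subdivided.

```python
# Minimal sketch of the continuous PCC as a weighted sum of its four components.
# Assumption: every component is already standardized to the 0-5 scale.
PCC_WEIGHTS = {
    "enade": 0.20,       # ENADE score
    "idoep": 0.35,       # observed vs. expected performance indicator
    "faculty": 0.30,     # faculty profile (qualification and employment regime)
    "perception": 0.15,  # student questionnaire
}


def continuous_pcc(components: dict[str, float], weights: dict[str, float] = PCC_WEIGHTS) -> float:
    """Return the weighted sum of standardized component scores (0-5 scale)."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    for name in weights:
        score = components[name]
        if not 0.0 <= score <= 5.0:
            raise ValueError(f"{name} score {score} is outside the 0-5 scale")
    return sum(weights[name] * components[name] for name in weights)


# Hypothetical program: weak on ENADE and faculty, strong on IDOEP and perception.
example = {"enade": 2.0, "idoep": 4.0, "faculty": 2.0, "perception": 4.0}
print(round(continuous_pcc(example), 2))  # 3.0
```

Because IDOEP carries the largest single weight, this hypothetical program reaches a continuous PCC of 3.0 despite an ENADE score of only 2.0.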

Interpretation strategy

Data were interpreted descriptively and comparatively to highlight inequalities among Medical Programs in Brazil. The adequacy of the current model for assessing the quality of medical education was then analyzed.

Ethical considerations

The research used only publicly available data and institutional reports without any identification of individual students or faculty members, ensuring adherence to applicable ethical principles.

Statistics

Quantitative data were analyzed descriptively, with calculation of means, medians, standard deviations, and distribution of scores among higher education institutions (HEIs) offering medical programs. Comparisons were made by administrative category of HEIs (federal, state, municipal public institutions, and private for-profit or non-profit). Variations in PCC components were also examined, including ENADE scores (15), IDOEP values, faculty qualification and employment regime indicators, and student questionnaire results. Electronic spreadsheets were used for organizing and calculating descriptive statistics. Results were presented in tables and graphs to facilitate comparison among institution groups and to highlight regional and institutional disparities. Inferential or multivariate statistical techniques were not applied, as the data used are aggregated, census-type, and not derived from probabilistic samples. In such cases, methodological literature emphasizes that descriptive and exploratory analyses are the most appropriate approach, focusing on contextual interpretation rather than inferential generalization.
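The descriptive procedure above can be sketched with Python’s standard library alone. This is a minimal illustration: the records are hypothetical placeholders rather than the INEP spreadsheets, and because the text does not state whether population or sample standard deviations were reported, the use of `statistics.pstdev` (treating each group as a census, as the data are described) is an assumption.

```python
import statistics
from collections import defaultdict

# Hypothetical (category, continuous PCC) records; NOT the ENADE 2023 data.
RECORDS = [
    ("federal", 2.9), ("federal", 2.6), ("federal", 3.1),
    ("private_for_profit", 2.2), ("private_for_profit", 3.4), ("private_for_profit", 2.5),
]


def summarize_by_category(rows):
    """Return n, mean, median and standard deviation of scores for each category."""
    groups = defaultdict(list)
    for category, score in rows:
        groups[category].append(score)
    return {
        category: {
            "n": len(scores),
            "mean": statistics.mean(scores),
            "median": statistics.median(scores),
            # Population SD (census assumption); use statistics.stdev for a sample SD.
            "sd": statistics.pstdev(scores),
        }
        for category, scores in groups.items()
    }


for category, s in summarize_by_category(RECORDS).items():
    print(f"{category}: n={s['n']}, mean={s['mean']:.2f} ± {s['sd']:.2f}, median={s['median']:.2f}")
```

Reporting the median alongside the mean helps flag the skewed, segmented distributions described in the Results, where a few very high or very low programs can shift a group mean.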

Results

General overview of ENADE 2023 in medicine

In 2023, Brazil’s estimated population was approximately 203 million. The 309 active medical schools evaluated by ENADE therefore correspond to approximately 1.52 medical schools per million inhabitants. In the same year, about 31,054 students graduated, or approximately 153 graduating physicians per million inhabitants. These density indicators are given for context only; Figure 1 shows the distribution of schools by state. The PCC is calculated on a continuous scale from 0 to 5 and converted into an ordinal concept (1 to 5) using thresholds defined by INEP (15). The available data show substantial dispersion in results, with national means near the lower limit of the range for concept 4 and an unequal distribution across institution types.

Figure 1. Number of medical schools by state (ENADE 2023). Distribution of the 309 medical schools evaluated by ENADE 2023 across Brazilian states. Values represent the number of schools in each state (14, 24). AP, Amapá; RR, Roraima; AC, Acre; SE, Sergipe; AL, Alagoas; PA, Pará; AM, Amazonas; DF, Federal District; ES, Espírito Santo; MS, Mato Grosso do Sul; RN, Rio Grande do Norte; RO, Rondônia; TO, Tocantins; MA, Maranhão; MT, Mato Grosso; PI, Piauí; CE, Ceará; PB, Paraíba; PE, Pernambuco; GO, Goiás; SC, Santa Catarina; RS, Rio Grande do Sul; PR, Paraná; RJ, Rio de Janeiro; BA, Bahia; MG, Minas Gerais; SP, São Paulo.
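The density figures and the continuous-to-ordinal conversion described in the overview above can be reproduced with a short calculation. This is a sketch: the band cut-offs used here (0.945, 1.945, 2.945, 3.945) are those commonly published by INEP for the PCC, but they are an assumption in this example and should be checked against the INEP technical note for the 2023 cycle; the function name is hypothetical.

```python
from bisect import bisect_right

# Figures reported in the text for 2023.
POPULATION_MILLIONS = 203.0
SCHOOLS_EVALUATED = 309
GRADUATES = 31_054

print(f"{SCHOOLS_EVALUATED / POPULATION_MILLIONS:.2f} schools per million")  # 1.52 schools per million
print(f"{GRADUATES / POPULATION_MILLIONS:.0f} graduates per million")  # 153 graduates per million

# ASSUMED lower bounds of concepts 2, 3, 4 and 5 on the continuous 0-5 scale.
# Verify against INEP's technical note before relying on them.
CONCEPT_LOWER_BOUNDS = [0.945, 1.945, 2.945, 3.945]


def ordinal_concept(continuous_pcc: float) -> int:
    """Map a continuous PCC (0-5) to an ordinal concept (1-5) using the assumed cut-offs."""
    if not 0.0 <= continuous_pcc <= 5.0:
        raise ValueError("continuous PCC must lie on the 0-5 scale")
    # bisect_right counts how many lower bounds are <= the score.
    return 1 + bisect_right(CONCEPT_LOWER_BOUNDS, continuous_pcc)


print(ordinal_concept(2.78))  # 3
```

Under these assumed cut-offs, a continuous PCC of 2.78 falls just below the lower bound of concept 4 (2.945), which is consistent with national means lying near that boundary.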

The continuous PCC results (0–5 scale) for medical programs in 2023 revealed similar means across institutional categories but with differentiated distributions at the scale’s extremes. Federal and state public institutions presented a mean PCC of 2.78 ± 0.40, while private institutions showed an overall mean of 2.79 ± 0.45. Within the private sector, internal differences were evident: for-profit private institutions had a mean of 2.72 ± 0.48, while non-profit institutions reached 2.85 ± 0.42 (Table 1). The distribution by ordinal concept bands (1 to 5) showed that, among the top 100 PCC scores, approximately 62% were private institutions, particularly non-profit ones. In contrast, about 70% of courses with PCC 1 or 2 were for-profit private institutions. Public institutions were more frequently concentrated in the intermediate and higher bands.

ENADE performance (graduate scores)

The ENADE score (15), which accounts for 20% of the PCC calculation, showed consistent differences between public and private institutions in 2023. Federal and state public institutions had a standardized mean of 3.85 ± 0.45, while private institutions had a lower mean of 2.48 ± 0.60. Within the private sector, for-profit institutions averaged 2.40 ± 0.62 and non-profit institutions 2.65 ± 0.58. The same difference was observed in the test components. For general training, public institutions averaged 3.84 compared with 2.47 for private institutions; for specific knowledge (which accounts for 75% of the ENADE score), public institutions reached 3.70 versus 2.40 for private ones. Some high-performing private universities were nonetheless among the top national scores, with ENADE values ranging from 4.6 to 4.9. Figure 2 shows the standardized ENADE scores (0–5 scale) by institutional category: federal and state public institutions presented higher mean ENADE scores than private institutions, and non-profit private programs performed slightly better than for-profit ones. Thus, public institutions led on the ENADE score (20% of the PCC), whereas private institutions presented higher averages in IDOEP (35%) and student perception (15%).

Figure 2. Standardized ENADE score (0–5) by institutional category. Mean ENADE scores (0–5 scale) with standard deviation shown by institutional category. Federal and state public institutions show higher average scores compared to private institutions.
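Since specific knowledge accounts for 75% of the ENADE score, general training accounts for the remaining 25%. The sketch below assumes the ENADE score is a simple weighted mean of the two component scores on the 0–5 scale; INEP’s standardization steps are not reproduced, and the function name and input values are hypothetical.

```python
def enade_score(general_training: float, specific_knowledge: float) -> float:
    """Combine the two ENADE test components (25% general, 75% specific), both on a 0-5 scale."""
    for value in (general_training, specific_knowledge):
        if not 0.0 <= value <= 5.0:
            raise ValueError("component scores must lie on the 0-5 scale")
    return 0.25 * general_training + 0.75 * specific_knowledge


print(enade_score(4.0, 2.0))  # 2.5
```

In this hypothetical case, a strong general-training result (4.0) cannot offset a weak specific-knowledge result (2.0), because the specific component dominates the composite.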

Indicator of Difference Between Observed and Expected Performance

The IDOEP (15), which comprises 35% of the PCC, exhibited a pattern opposite to that of the raw ENADE score. Private institutions, especially for-profit ones, showed considerably higher IDOEP means: 3.70 ± 0.68 for for-profit private programs and 3.40 ± 0.60 for non-profit ones. In contrast, federal and state public institutions had a substantially lower mean of 2.65 ± 0.50. In distributional terms, over 60% of private programs achieved IDOEP scores ≥ 3.5, whereas only 20–25% of public programs reached this upper range (Figure 3).

Figure 3. IDOEP score (0–5) by institutional category. Mean IDOEP scores with standard deviation for medical programs in Brazil (2023). The mean IDOEP score was 3.70 ± 0.68 for for-profit private institutions, 3.40 ± 0.60 for non-profit private institutions, and 2.65 ± 0.50 for federal and state public institutions. IDOEP, Indicator of Difference Between Observed and Expected Performance.

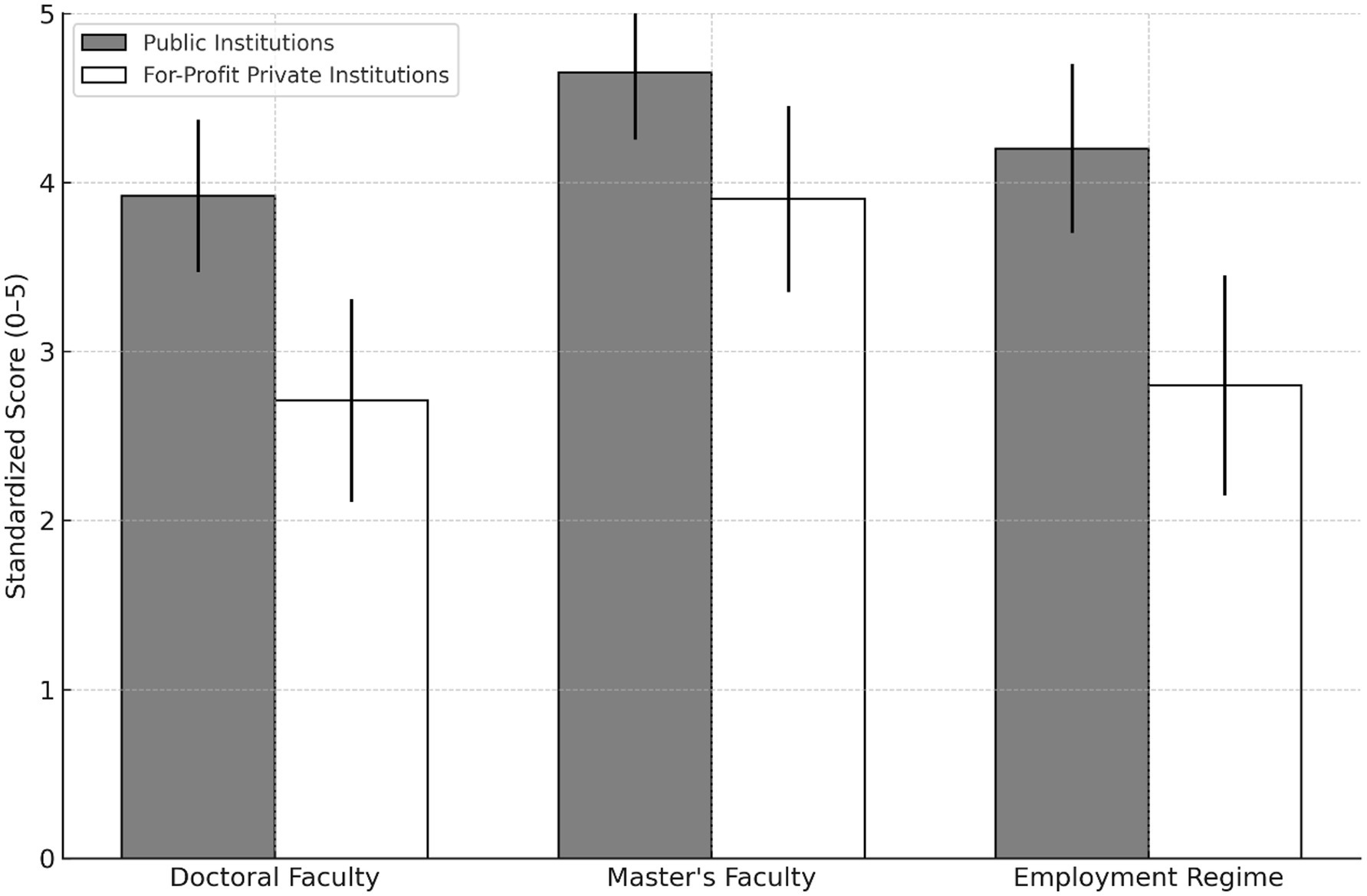

Faculty profile component

The “Faculty Profile” component, which accounts for 30% of the PCC, revealed clear differences between federal and state public institutions and for-profit private institutions. On average, public institutions had standardized scores of 3.92 ± 0.45 for the proportion of doctoral faculty, 4.65 ± 0.40 for master’s-level faculty, and 4.20 ± 0.50 for employment regime, indicating a predominance of highly qualified faculty with full-time or exclusive dedication. Conversely, for-profit private institutions showed lower means on all indicators: 2.71 ± 0.60 for doctoral faculty, 3.90 ± 0.55 for master’s-level faculty, and 2.80 ± 0.65 for employment regime (Figure 4).

Figure 4. Faculty qualification and employment regime (0–5 scale) by institution type. Mean standardized scores comparing public and for-profit private medical schools on doctoral and master’s degrees and faculty employment regime, showing higher values for public institutions.

Student perception (student questionnaire)

The “Student Perception” component, accounting for 15% of the PCC calculation, showed marked differences between public and private institutions. Based on the questionnaire administered to graduating students, the standardized mean was 3.00 ± 0.55 for public institutions and 3.85 ± 0.60 for private institutions, indicating a consistent pattern of higher private-sector scores in this dimension (Figure 5) (15). Additionally, approximately 85% of the top 100 scores recorded on the student questionnaire belonged to private institutions, with only 10 to 15 public programs appearing in this upper range.

Figure 5. Student perception scores by institution type. Mean standardized scores (0–5 scale) with standard deviation for graduating student self-reported perception, comparing public and private medical schools in Brazil (ENADE 2023).

Regional variation

Analysis of the 2023 ENADE data for Medicine (15) revealed marked regional disparities in PCC results. Standardized means of the continuous PCC (0–5 scale) varied consistently by region: 3.45 ± 0.40 in the South, 3.35 ± 0.45 in the Southeast, 3.20 ± 0.50 in the Center-West, 2.85 ± 0.55 in the Northeast, and 2.70 ± 0.60 in the North (Figure 6). This variation was also reflected in the distribution of ordinal concepts (1 to 5): approximately 30–35% of programs in the South and Southeast achieved PCC 4 or 5 (considered good or excellent quality indicators), while around 45% of programs in the North and Northeast fell into the lowest bands (concepts 1 or 2). Even when the analysis was limited to federal public institutions, important differences persisted: federal programs in the South and Southeast had a mean PCC of 3.60 ± 0.35, while those in the North and Northeast averaged 3.05 ± 0.45.

Figure 6. Regional disparities in mean PCC scores for medical schools (ENADE 2023). Mean standardized PCC scores (0–5 scale) with standard deviation for Brazilian regions (ENADE 2023, medicine). ENADE, National Student Performance Exam; PCC, Preliminary Course Concept.

Synthesis of findings

Despite similar overall means between public and private institutions, there is greater internal inequality within the private sector, with both centers of excellence and many programs with insufficient scores. The current evaluation model (ENADE + IDOEP + student perception) favors programs admitting students with lower ENEM entrance scores (boosting IDOEP) and with strong perceived infrastructure, which can benefit certain private institutions. Regional and institutional disparities underscore the need for more context-sensitive evaluation, especially in designing public policies for regulation and support (Table 2).

Discussion

This paper seeks to contribute to the debate on the evaluation and regulation of medical education in Brazil by presenting a detailed analysis of the 2023 ENADE data for Medical Schools (15). By exploring national averages, disparities by administrative category and region, and the specific components of the PCC, the analysis provides evidence to rethink the current evaluation model (16).

ENADE was established as part of the National Higher Education Evaluation System (SINAES) with the aim of assessing graduating students’ performance in relation to curriculum guidelines, skills, and competencies (10, 11). In theory, it was intended to serve as a quality-inducing instrument, guiding regulation, oversight, and funding. However, studies show that, over the years, ENADE has not systematically demonstrated its ability to promote concrete improvements or reduce inequalities in quality across medical programs. This is partly due to the summative and punitive nature of the model, the limited formative use of results by institutions, and weak mechanisms for monitoring and inducing change (17, 18).

Internationally, many countries require standardized licensing exams for all graduating medical students (e.g., the USMLE in the United States (19), the MCCQE in Canada (20), and the KMLE in South Korea (21)). However, these exams assess individual readiness for practice rather than serving as regulatory tools for ranking institutions; in these systems, institutional evaluation relies instead on external reviews, on-site visits, and qualitative evidence. In contrast, the Brazilian ENADE (and its successor, ENAMED) assesses all graduating students but uses the results to evaluate institutions, relying mainly on the ENADE score itself and on the IDOEP. This structural difference raises concerns about fairness, validity, and the ability to accurately reflect educational quality across diverse contexts (22, 23).

Results reveal that although the overall means of the continuous PCC (0–5 scale) are very similar between public and private institutions (2.78 to 2.79), there is significant heterogeneity within these groups. Non-profit institutions had means comparable to or higher than federal public institutions (2.85 ± 0.42 vs. 2.82 ± 0.38), while state, municipal, and for-profit private institutions showed lower values. Moreover, the private sector included both centers of excellence (concept 5) and most of the Medical Programs classified as concepts 1 and 2, indicating a segmented and unequal landscape (24, 25).

Decomposition of the PCC components highlighted important methodological limitations. The IDOEP, which carries 35% of the final score, showed higher averages in for-profit private institutions (3.70 ± 0.68) than in public ones (2.65 ± 0.50). This difference stems from how the IDOEP is calculated: as the difference between observed ENADE performance and the performance expected from students’ ENEM entrance exam scores (15). Students in private programs generally have lower ENEM scores, which lowers the expected-performance baseline and artificially inflates the measured added value, without adequately adjusting for socioeconomic factors (10, 26). Given that the IDOEP has the highest individual weight in the PCC, this bias can significantly affect institutional rankings and incentivize market strategies focused on large-scale student recruitment.

To better reflect the curricular competencies defined by DCNs (12), the weight of the ENADE exam in the PCC calculation could be increased to 35–40%, ensuring that direct, standardized measures of student knowledge receive appropriate emphasis. Meanwhile, the IDOEP could be recalibrated to 25–30% using improved multilevel models that more fully adjust for socioeconomic and institutional factors. Such changes would align the Brazilian system with international best practices that prioritize direct assessments of competence for licensing (as in the USMLE or MCCQE) (19, 20) while still recognizing the value-added component. Statistical approaches like principal component analysis (PCA) could help validate the relative contributions of each metric, ensuring a more robust, fair, and context-sensitive assessment framework (22, 27).
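The effect of such a reweighting on institutional rankings can be explored with a simple sensitivity check. In the sketch below, the ENADE (37.5%) and IDOEP (27.5%) weights are midpoints of the ranges suggested above; how the remaining 35% would be split between faculty profile and student perception is not specified in the text, so keeping their current 2:1 ratio is a hypothetical assumption, as are the two program profiles.

```python
# Current PCC weights, as described in the text.
CURRENT_WEIGHTS = {"enade": 0.20, "idoep": 0.35, "faculty": 0.30, "perception": 0.15}
# Hypothetical reweighting: midpoints of the suggested ranges, remainder split 2:1.
PROPOSED_WEIGHTS = {
    "enade": 0.375,
    "idoep": 0.275,
    "faculty": 0.35 * 2 / 3,
    "perception": 0.35 / 3,
}

# Hypothetical component profiles on the 0-5 scale.
PROGRAMS = {
    "high_enade": {"enade": 4.0, "idoep": 2.5, "faculty": 3.5, "perception": 3.0},
    "high_idoep": {"enade": 2.5, "idoep": 4.0, "faculty": 3.0, "perception": 4.0},
}


def weighted_score(components: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted sum of component scores; weights are expected to sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[name] * components[name] for name in weights)


def rank(programs: dict[str, dict[str, float]], weights: dict[str, float]) -> list[str]:
    """Program names ordered from highest to lowest weighted score."""
    return sorted(programs, key=lambda name: weighted_score(programs[name], weights), reverse=True)


for label, weights in (("current", CURRENT_WEIGHTS), ("proposed", PROPOSED_WEIGHTS)):
    scores = {name: round(weighted_score(PROGRAMS[name], weights), 2) for name in rank(PROGRAMS, weights)}
    print(label, scores)
```

With these invented profiles, the program that leads on IDOEP ranks first under the current weights, but the ranking reverses under the hypothetical scheme; real program-level data would be needed to judge how often such reversals occur.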

Another important component, Student Perception (15% of the PCC), showed higher means in private institutions (3.85 ± 0.60) than in public ones (3.00 ± 0.55), potentially reflecting investments in marketing and in perceived infrastructure, mostly during the early, pre-clinical semesters of the course. Notably, a considerable number of private institutions use high-standard public hospitals during the clinical cycle through contractual agreements (COAPES) (13). Therefore, for many private Medical Schools, both the lack of on-site visits in the evaluation process and the absence of their own teaching hospitals limit the PCC’s ability to capture important structural and pedagogical aspects (7, 8). In the United Kingdom, the General Medical Council (GMC) conducts regular inspections of Medical Schools using a multi-method approach that includes document review, faculty interviews, and on-site observations (23). While the National Student Survey (NSS) provides valuable student feedback on course quality, it is treated as a qualitative input rather than a direct regulatory score (15). Student perceptions serve as an early warning system, triggering deeper audits or targeted reviews if issues are flagged (23). In Portugal, national reflections on the profile of recently graduated physicians also emphasize the need for strong integration between education and health systems, similar to the intentions of Brazil’s DCNs, highlighting the importance of community-based training and interdisciplinary competencies (28). By contrast, the Brazilian system currently assigns a fixed 15% weight in the PCC calculation to a single self-reported questionnaire administered only to graduating students, without complementary on-site visits or external validation. This design risks encouraging institutional strategies that optimize student satisfaction scores without necessarily ensuring robust educational quality or addressing structural deficiencies.

Regional analysis confirmed historical inequalities: the South (3.45 ± 0.40) and Southeast (3.35 ± 0.45) had higher means than the North (2.70 ± 0.60) and Northeast (2.85 ± 0.55), even among federal institutions. These differences reflect disparities in funding, teaching infrastructure, and human resources, consistent with evidence highlighting the difficulty of retaining professionals in less developed regions (24, 29).

Beyond these methodological issues, recent literature highlights the need for more effective government regulation to address the impacts of Brazil’s accelerated and uneven expansion of medical education, which currently comprises 448 active Medical Schools (e-MEC System) (30, 31). National experience shows that although public policies such as the More Doctors Program have succeeded in increasing the number of courses and places, especially in the private sector, this expansion has not always been accompanied by robust mechanisms for quality monitoring and equity. Studies show that unregulated growth, particularly of for-profit private schools, is associated with lower teaching quality, excessively large classes, less qualified faculty, and greater regional and social inequalities in the distribution of graduates (9, 24, 29). Furthermore, most faculty members with master’s or doctoral degrees are employed by public medical schools, where most of Brazil’s postgraduate academic programs are located; when they also teach at private Medical Schools, they are hired on an hourly or part-time basis, without any significant institutional commitment. Therefore, strengthening state regulation, through clearly defined minimum quality standards, systematic oversight, on-site inspections, and more comprehensive and transparent evaluation instruments, is essential to align the growth of the training system with the needs of the Unified Health System and with the goal of reducing regional and social health inequalities.

In the Brazilian context, other studies indicate that Medical Schools with better ENADE performance are associated with factors such as smaller class sizes, the presence of stricto sensu postgraduate programs, more highly qualified faculty, and longer institutional histories (32). These variables, currently not directly included in the PCC, suggest that educational quality requires strong, long-term investment in infrastructure, research, and faculty development. In Brazil, public universities account for almost 95% of scientific research.

Additionally, the current model lacks mechanisms to systematically capture faculty perspectives on teaching conditions and institutional infrastructure. The absence of instruments that reflect teachers’ views on laboratory quality, teaching hospitals, primary care networks, and pedagogical resources limits the analysis of the real conditions of training (18). Likewise, fundamental elements such as investment in stricto sensu postgraduate programs, strengthening university hospitals, and integrating research and extension activities into undergraduate education are not consistently evaluated, even though they are essential for articulating teaching, service, and community and for developing socially committed health professionals (32–34).

Despite concentrating a significant portion of Brazil’s scientific output and postgraduate (stricto sensu) programs in health, the country’s top public medical schools have historically played a limited and poorly coordinated role in shaping national undergraduate policies. This lack of institutional leadership in discussions on curricular guidelines and evaluation models contributes to a disconnect between academic excellence and existing regulatory frameworks. In many instances, strategic decisions regarding medical education are heavily influenced by private sector interests or are based on diagnostic analyses that fail to reflect the realities of teaching in leading institutions. This political-academic void on the part of the most prestigious universities undermines the potential for building a more qualified, equitable regulatory system aligned with the practices already adopted by reference institutions in the training of critical, humanistic, and socially committed professionals for the SUS (34, 36).

Taken together, these aspects point to the need for a thorough review of the evaluation model, capable of incorporating broader, more participatory dimensions sensitive to regional and institutional contexts. Finally, reflecting on ENAMED (11) represents a strategic opportunity to overcome current distortions and move toward a fairer, formative evaluation system that does not reproduce existing inequalities and biases. While standardized national metrics such as the Preliminary Course Concept (PCC) provide valuable data for higher education regulation, they must be interpreted within the broader context of structural inequalities across institutions. Without contextual calibration, such indicators risk reinforcing existing disparities rather than promoting meaningful improvement. In particular, subjective components such as student perception may disproportionately influence PCC results, potentially favoring some private institutions or specific regions. However, given the limitations of the available data, this remains a hypothesis that requires further empirical investigation. This study reinforces the need for evaluation models that combine robust quantitative data with qualitative, locally informed insights, especially in systems as heterogeneous as Brazil’s. We advocate a more equitable and formative approach to medical course evaluation, one that supports continuous improvement and aligns more closely with national health priorities and the principles of the Unified Health System (SUS).

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

RR: Conceptualization, Formal analysis, Funding acquisition, Methodology, Supervision, Writing – original draft, Writing – review & editing. MN: Conceptualization, Writing – review & editing. AB: Conceptualization, Writing – review & editing. AP: Conceptualization, Writing – review & editing. AS: Conceptualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was supported by a FAPERJ grant awarded to Rogerio Rufino (E-26/200.311/2023).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. During the preparation of this work, the authors used ChatGPT-assisted technologies solely to enhance the readability and presentation of the English language.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fmed.2025.1679924/full#supplementary-material

References

1. Silva, CS, and Jardim, JR. Reflections on medical education in Brazil. J Bras Pneumol. (2025) 51:e20250033. doi: 10.36416/1806-3756/e20250033

2. Brasil. Lei n° 9.394, de 20 de dezembro de 1996. Estabelece as diretrizes e bases da educação nacional. Diário Oficial da União. (1996); Seção 1:27833. Available online at: https://www.planalto.gov.br/ccivil_03/leis/l9394.htm (accessed Jul 22, 2025).

3. Brasil; Ministério da Educação; Conselho Nacional de Educação; Câmara de Educação Superior. Resolução CNE/CES n° 4, de 7 de novembro de 2001. Institui Diretrizes Curriculares Nacionais do Curso de Graduação em Medicina. Diário Oficial da União. (2001); Seção 1:38. Available online at: https://www.in.gov.br/web/dou/-/resolucao-n-4-de-7-de-novembro-de-2001-28128111 (accessed Jul 22, 2025).

4. Brasil. Lei n° 8.080, de 19 de setembro de 1990. Dispõe sobre as condições para a promoção, proteção e recuperação da saúde, a organização e o funcionamento dos serviços correspondentes e dá outras providências. Diário Oficial da União. (1990); Seção 1:18055. Available online at: https://www.planalto.gov.br/ccivil_03/leis/l8080.htm (accessed Jul 22, 2025).

5. Ceccim, RB, and Feuerwerker, LC. Mudança na graduação das profissões de Saúde sob o eixo da integralidade [changes in undergraduate education in the health professions from the perspective of comprehensive training]. Cad Saude Publica. (2004) 20:1400–10. doi: 10.1590/s0102-311x2004000500036

6. Brasil; Ministério da Educação; Conselho Nacional de Educação; Câmara de Educação Superior. Resolução CNE/CES n° 3, de 20 de junho de 2014. Institui Diretrizes Curriculares Nacionais do Curso de Graduação em Medicina. Diário Oficial da União. (2014); Seção 1:8. Available online at: https://www.in.gov.br/materia/-/asset_publisher/Kujrw0TZC2Mb/content/id/23266129 (accessed Jul 22, 2025).

7. Feuerwerker, LCM. Estratégias de mudança na formação de profissionais de saúde: a experiência da Fundação Oswaldo Cruz. Interface (Botucatu). (1998) 2:91–112. doi: 10.1590/S1414-32831998000200005

8. Silva, SF. Avaliação da formação médica no Brasil: contribuições para a regulação da educação superior em saúde [tese]. São Paulo: Universidade de São Paulo, Faculdade de Medicina (2018).

9. Brasil. Lei n° 12.871, de 22 de outubro de 2013. Institui o Programa Mais Médicos, altera as Leis n° 8.745, de 9 de dezembro de 1993, e n° 6.932, de 7 de julho de 1981, e dá outras providências. Diário Oficial da União. (2013); Seção 1:1. Available online at: https://www.planalto.gov.br/ccivil_03/_ato2011-2014/2013/lei/l12871.htm (accessed Jul 22, 2025).

10. Brasil. Lei n° 10.861, de 14 de abril de 2004. Institui o Sistema Nacional de Avaliação da Educação Superior – SINAES e dá outras providências. Diário Oficial da União. (2004); Seção 1:1. Available online at: https://www.planalto.gov.br/ccivil_03/_ato2004-2006/2004/lei/l10.861.htm (accessed Jul 22, 2025).

11. Brasil; Ministério da Educação; Instituto Nacional de Estudos e Pesquisas Educacionais Anísio Teixeira. Edital n° 81, de 25 de junho de 2025. Diário Oficial da União. (2025); Edição 118, Seção 3:51. Available online at: https://www.in.gov.br/en/web/dou/-/edital-n-81-de-25-de-junho-de-2025-638165525 (accessed Jul 22, 2025).

12. Brasil; Ministério da Educação; Conselho Nacional de Educação; Câmara de Educação Superior. Resolução CNE/CES n° 3, de 20 de junho de 2014. Institui Diretrizes Curriculares Nacionais do Curso de Graduação em Medicina. Brasília: MEC; (2014). Available online at: https://www.gov.br/saude/pt-br/acesso-a-informacao/acoes-e-programas/pnsp/legislacao/resolucoes/rces003_14.pdf/view (accessed Jul 22, 2025).

13. Brasil; Ministério da Educação; Ministério da Saúde. Portaria Interministerial n° 1.127, de 4 de agosto de 2015. Define diretrizes para celebração de Contrato Organizativo de Ação Pública Ensino-Saúde (COAPES). Brasília: Diário Oficial da União. (2015) (accessed Jul 22, 2025).

14. Fadel, AVK, Coelho, ICMM, Zarpelon, LFB, and Almeida, MJ. Conquistas, desafios e fragilidades de um Contrato Organizativo de Ação Pública Ensino-Saúde. Revista de Saúde Pública do Paraná. (2022) 5. Available online at: http://revista.escoladesaude.pr.gov.br/index.php/rspp/article/view/670 (accessed Jul 22, 2025).

15. Instituto Nacional de Estudos e Pesquisas Educacionais Anísio Teixeira (INEP). Resultados do ENADE 2023. Brasília: INEP/MEC; (2024). Available online at: https://www.gov.br/inep/pt-br/areas-de-atuacao/avaliacao-e-exames-educacionais/enade/resultados (accessed Jul 22, 2025).

16. Cyrino, EG, Pinto, HA, Oliveira, FP, and Oliveira, MC. Repercussões das Diretrizes Curriculares Nacionais de 2014 nos projetos pedagógicos das novas escolas médicas. Interface (Botucatu). (2021) 25:e200358. doi: 10.1590/interface.200358

17. Vieira, APGF, Costa, MC, and Teixeira, CF. Políticas públicas e formação médica: a construção das diretrizes curriculares nacionais de 2014. Rev Polít Públicas. (2018) 22:493–508. Available online at: https://periodicos.ufpe.br/revistas/index.php/politicaspublicas/article/view/256922/43322 (accessed Jul 13, 2025).

18. Feuerwerker, LCM, and Sena, RR. Avaliação na educação superior: limites e possibilidades de uma experiência. EccoS – Revista Científica. (2020) 55:e18874. doi: 10.5585/eccos.n55.18874

19. United States Medical Licensing Examination (USMLE). About the USMLE. (2025). Available online at: https://www.usmle.org/about-usmle (accessed Jul 13, 2025).

20. Medical Council of Canada. Qualifying examination (MCCQE) part I. (2025). Available online at: https://mcc.ca/examinations/mccqe-part-i/ (accessed Aug 5, 2025).

21. Korea Health Personnel Licensing Examination Institute. Korean medical licensing examination. (2025). Available online at: https://www.kuksiwon.or.kr/eng/ (accessed Aug 5, 2025).

22. Ahmed, H, Kortz, M, and Carmody, JB. An update on medical school accreditation in the United States: implications for the single graduate medical education (GME) era. Cureus. (2023) 15:e34884. doi: 10.7759/cureus.34884

23. General Medical Council. Medical school quality assurance: Annual report 2022. London: GMC; (2022). Available online at: https://www.gmc-uk.org/-/media/documents/qa-annual-report-2022-final_pdf-97133425.pdf (accessed Aug 13, 2025).

24. Scheffer, M, Cassenote, A, Guilloux, AGA, Miotto, BA, Mainardi, GM, and Machado, MH. Demografia médica no Brasil 2023. São Paulo: Associação Médica Brasileira; (2023). Available online at: https://amb.org.br/wp-content/uploads/2023/02/DemografiaMedica2023_8fev-1.pdf (accessed Jul 22, 2025).

25. Dolci, JEL. The proliferation of medical schools in Brazil: a threat to the quality of medical education? Braz J Otorhinolaryngol. (2023) 89:101354. doi: 10.1016/j.bjorl.2023.101354

26. de Souza Nóbrega, JMV. Avaliação da qualidade da educação superior no Brasil: o ENADE e sua contribuição. Pesquisa Científica em Perspectiva Global. (2024) 3:3–16. doi: 10.56069/2676-0428.2024.486

27. O’Neill, LD, Morcke, AM, and Eika, B. The validity of student tutors’ judgments in early detection of struggling in medical school. A prospective cohort study. Adv Health Sci Educ Theory Pract. (2016) 21:1061–79. doi: 10.1007/s10459-016-9677-6

28. Ministério da Saúde (PT); Ministério da Ciência, Tecnologia e Ensino Superior (PT). Reflexão sobre o perfil do médico recém-formado em Portugal. Lisboa: República Portuguesa; (2018). Available online at: https://www.sns.gov.pt/wp-content/uploads/2018/11/Reflexao-sobre-o-perfil-do-medico-recem-formado-em-Portugal.pd (accessed Sep 7, 2025).

29. Educa Cetrus. Demografia Médica no Brasil 2025: Com mais de 1 milhão de médicos até 2035, como você vai se diferenciar? Educa Cetrus - Carreira Médica. (2025). Available online at: https://educa.cetrus.com.br/carreira-medica-demografia-medica-2025/ (accessed Jul 22, 2025).

30. Brasil; Ministério da Educação. Sistema e-MEC: Cadastro Nacional de Cursos e Instituições de Educação Superior. Brasília: MEC; (2025). Available online at: https://emec.mec.gov.br (accessed Jul 22, 2025).

31. Neto, TA, da Silva Fucuta Pereia, P, Nogueira, ML, Pereira de Gody, JM, and Moscardini, AC. Factors that affect the National Student Performance Examination Grades of Brazilian undergraduate medical programs. GMS J Med Educ. (2018) 35:Doc8. doi: 10.3205/zma001155

32. Cyrino, EG, Pinto, HA, Oliveira, FP, and Oliveira, MC. O desafio da avaliação das escolas médicas no Brasil: elementos para reflexão. ABCS Health Sci. (2015) 40:312–8. doi: 10.7322/abcshs.v40i3.787

33. Abdul Kadir, N, and Schütze, H. Medical educators’ perspectives on the barriers and enablers of teaching public health in the undergraduate medical schools: a systematic review. Glob Health Action. (2022) 15:2106052. doi: 10.1080/16549716.2022.2106052

Keywords: educational assessment, medical education, public policy, regional inequalities, ENADE

Citation: Rufino R, Neves MFT, Barbosa ADM, Povedano A and Schanaider A (2025) Evaluating medical education in Brazil: analysis of the National Student Performance Exam 2023. Front. Med. 12:1679924. doi: 10.3389/fmed.2025.1679924

Edited by:

Nina Pereza, University of Rijeka, Croatia

Reviewed by:

Beatriz Soares Garcia Rosa, University of Washington, United States

Everson Meireles, Federal University of Recôncavo da Bahia, Brazil

Copyright © 2025 Rufino, Neves, Barbosa, Povedano and Schanaider. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rogerio Rufino, rogerio.rufino@uerj.br

†ORCID: Rogerio Rufino, https://orcid.org/0000-0002-5071-6045

Mario Fritsch Toros Neves, https://orcid.org/0000-0002-0797-572X

Adauto Dutra Moraes Barbosa, https://orcid.org/0000-0003-2841-9877

Andrea Povedano, https://orcid.org/0000-0003-3552-558X

Alberto Schanaider, https://orcid.org/0000-0002-6645-633X
