- 1 Psychology Department, New York University, New York, NY, United States

- 2 Department of Clinical Psychology, Vrije Universiteit Amsterdam, Amsterdam, Netherlands

- 3 Methodology and Statistics Research Unit, Institute of Psychology, Leiden University, Leiden, Netherlands

- 4 Electrical Engineering, Mathematics and Computer Science Department, University of Twente, Enschede, Netherlands

- 5 Independent Researcher, Amsterdam, Netherlands

- 6 Department of Psychiatry, Centre Hospitalier Universitaire Sainte-Justine Research Center, University of Montreal, Montreal, QC, Canada

- 7 Mila – Quebec Artificial Intelligence Institute, University of Montreal, Montreal, QC, Canada

- 8 Diademics Pty Ltd., Mount Waverley, VIC, Australia

- 9 New York University-Max Planck Center for Language, Music, and Emotion, New York University, New York, NY, United States

Recent years have seen a dramatic increase in studies measuring brain activity, physiological responses, and/or movement data from multiple individuals during social interaction. For example, so-called “hyperscanning” research has demonstrated that brain activity may become synchronized across people as a function of a range of factors. Such findings not only underscore the potential of hyperscanning techniques to capture meaningful aspects of naturalistic interactions, but also raise the possibility that hyperscanning can be leveraged as a tool to help improve such naturalistic interactions. Building on our previous work showing that exposing dyads to real-time inter-brain synchrony neurofeedback may help boost their interpersonal connectedness, we describe the biofeedback application Hybrid Harmony, a Brain-Computer Interface (BCI) that supports the simultaneous recording of multiple neurophysiological datastreams and the real-time visualization and sonification of inter-subject synchrony. We report results from 236 dyads experiencing synchrony neurofeedback during naturalistic face-to-face interactions, and show that pairs' social closeness and affective personality traits can be reliably captured with the inter-brain synchrony neurofeedback protocol, which incorporates several different online inter-subject connectivity analyses that can be applied interchangeably. Hybrid Harmony can be used by researchers who wish to study the effects of synchrony biofeedback, and by biofeedback artists and serious game developers who wish to incorporate multiplayer situations into their practice.

Introduction

What does it mean to lose yourself in someone else? How is it possible that the mere physical presence of another human can make us believe we can conquer the world, or conversely, make us feel lonely and incapable? We know, both scientifically and intuitively, that relationships are crucial for our physical and mental well-being (Pietromonaco and Collins, 2017). But they are also sources of frustration in their fluid, messy mix of internal inconsistencies: love and hate, inclusion and exclusion, fascination and comfort, challenge and familiarity. Can we capture this seemingly subjective, fleeting, and elusive notion of “being on the same wavelength” with another person, with objective measurement tools? And if so, can we leverage this information to guide people in their interaction with others?

Successful social interactions require tight spatiotemporal coordination between participants at motor, perceptual, and cognitive levels. Around a decade ago, several labs began to use a variety of methods to record (neuro)physiological data from multiple people simultaneously, a technique known as “hyperscanning.” This has allowed researchers to study dynamic coordination in a range of social situations such as ensembles performing music, multiple people performing actions together, or carrying on a conversation (Babiloni et al., 2006; Tognoli et al., 2007; Dumas et al., 2010; Yun, 2013; Zamm et al., 2018b; see e.g., Hari et al., 2013; Babiloni and Astolfi, 2014; Czeszumski et al., 2020 for reviews). There now exists a growing body of work where pairs or groups of participants engage in social interactions while their brain activity, physiological responses, and (eye) movements are monitored.

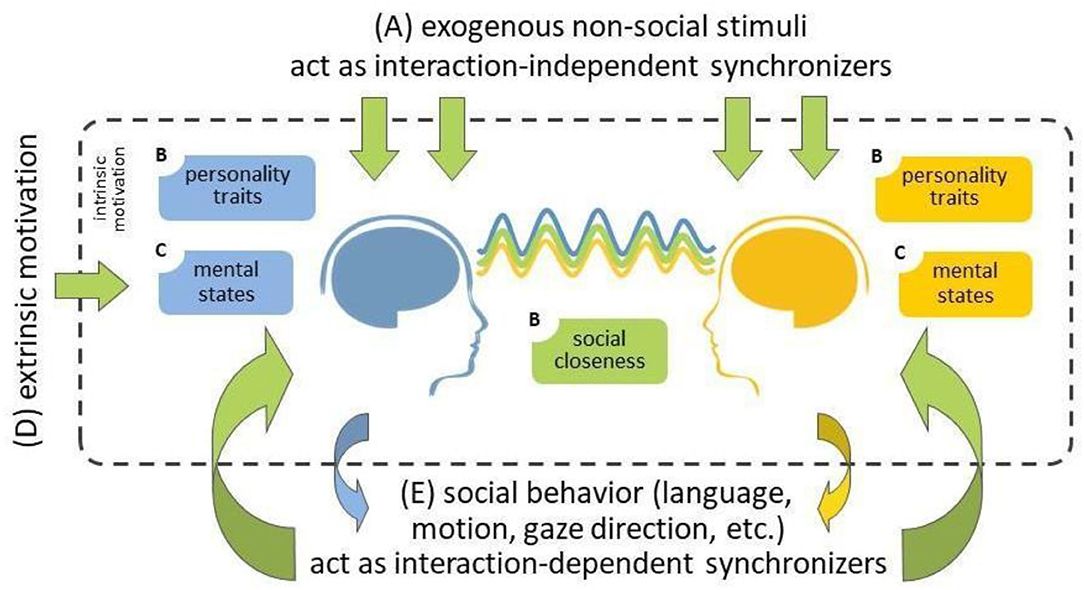

While hyperscanning research is also conducted using hemodynamic neuroimaging tools, including functional Magnetic Resonance Imaging (fMRI; Koike et al., 2016, 2019; Abe et al., 2019) and especially functional near-infrared spectroscopy (fNIRS; Scholkmann et al., 2013; Nozawa et al., 2016; Reindl et al., 2018), we here focus on electroencephalography (EEG) hyperscanning. The extent to which EEG activity becomes synchronized between people is correlated with a range of factors. For example, it has been widely demonstrated, in both single-brain laboratory research and hyperscanning studies, that shared attention to the same stimuli leads to similar brain responses across individuals, and consequently, higher inter-brain synchrony (Figure 1A; Hasson, 2004; Dikker et al., 2017; Czeszumski et al., 2020). Importantly, social behavior has also been shown to serve as an (exogenous) source of interpersonal synchrony (Figure 1E): behaviors such as joint action, language, eye contact, touch, and cooperation drive synchrony in various social contexts (Dumas et al., 2010; Dikker et al., 2014, 2021; Kinreich et al., 2017; Goldstein et al., 2018; Pérez et al., 2019; Reinero et al., 2021). Furthermore, both individuals' social closeness and personality traits (e.g., empathy) affect their social engagement during an interaction, and thus the extent to which their brain responses become synchronized (Figure 1B; Dikker et al., 2017, 2021; Kinreich et al., 2017; Goldstein et al., 2018; Bevilacqua et al., 2019). Participants' mental states (e.g., focus) similarly influence their engagement with each other, endogenously motivating them to make an effort to connect (Figure 1C; Dikker et al., 2017, 2021).

Figure 1. A summary of possible sources of inter-brain synchrony during social interaction (adapted from Dikker et al., 2021). (A) External non-social stimuli (top) and (E) social behavior (bottom) provide exogenous sources of shared stimulus entrainment and interpersonal social coordination, respectively, leading to similar brain responses, i.e., inter-brain synchrony. (B) Both individuals' social closeness and personality traits (e.g., affective empathy) affect their social engagement during the interaction, and thus the extent to which their brain responses become synchronized. (C) Participants' mental states (e.g., focus) similarly affect their engagement with each other, intrinsically (endogenously) motivating them to make an effort to connect. (D) Such engagement can be “boosted” via extrinsic motivation, which could subsequently lead to increased inter-brain synchrony.

Importantly, people can also be extrinsically motivated to socially engage with each other (Figure 1D). Specifically, our group recently reported that exposing people to a hyperscanning neurofeedback environment can motivate social engagement (Dikker et al., 2021). Using data from the interactive social neurofeedback installation The Mutual Wave Machine (wp.nyu.edu/mutualwavemachine), we showed that dyads who were explicitly made aware of the social relevance of the neurofeedback environment exhibited an increase in inter-brain coupling over time. This suggests that external factors may help boost interpersonal engagement, and raises the possibility that interpersonal synchrony biofeedback may be a fruitful avenue to pursue in such efforts.

However, while neurofeedback applications using data from individual brains are fairly widely used across scientific, clinical, educational, and artistic contexts (see e.g., van Hoogdalem et al., 2020 for a review), to our knowledge multi-person neurofeedback using hyperscanning EEG has been implemented primarily in game and art environments (see contributions in Kovacevic et al., 2015; Dikker et al., 2019, 2021; Nijholt, 2019; see Duan et al., 2013; Salminen et al., 2019 for examples of scientifically oriented dual-brain neurofeedback experiments). As a result, little is known about the possible effectiveness of hyperscanning neurofeedback in improving social communication.

This is further complicated by the fact that consensus is lacking with regard to how synchrony should be computed (Ayrolles et al., 2021). While some metrics have been shown to be “better” than others from a purely statistical perspective (Burgess, 2013), only very few scholars have attempted to map computational choices with regard to interpersonal neural connectivity to psychological processes or constructs (Dumas and Fairhurst, 2021; Hoehl et al., 2021). This distinguishes synchrony neurofeedback from other BCI applications, such as so-called “P3 spellers” (Fazel-Rezai et al., 2012), which are based on well-established neural signatures.

Because of the lack of consensus with regard to optimal synchrony metrics, we argue that it is desirable that multi-brain neurofeedback applications allow users to select from various synchrony metrics that can be used independently, and thus explore the utility of different metrics in different contexts.

To this end, we have developed Hybrid Harmony, a Brain-Computer Interface (BCI) that uses a hyperscanning approach to allow the collection of EEG data from two or more people simultaneously and enables users to visualize/sonify the extent to which participants' biometrics are coupled, choosing between different synchrony metrics. These metrics, described in section Connectivity Analysis, are developed in parallel with HyPyP, an open-source Python-based pipeline that allows researchers to compute and compare different inter-brain connectivity metrics on the same dataset (Ayrolles et al., 2021).

Software Description

Overview

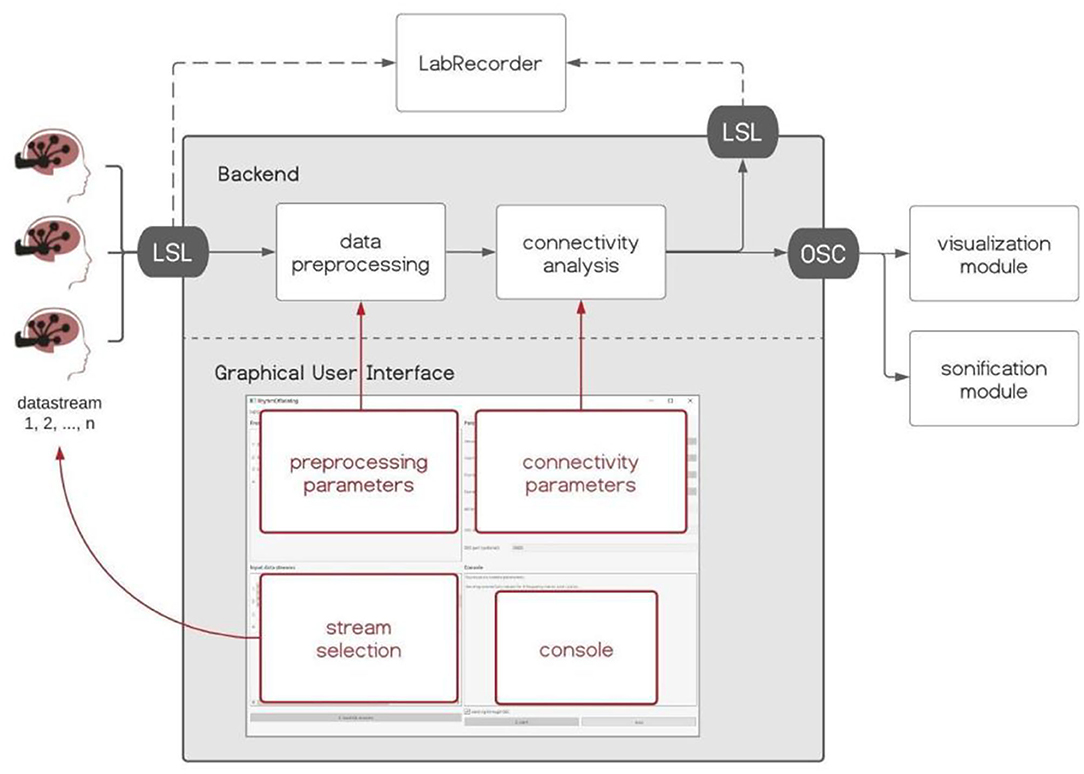

Hybrid Harmony is an open-source software package written in Python (https://github.com/RhythmsOfRelating/HybridHarmony), accompanied by a visualization module and a sonification module (Figure 2). The software consists of a backend that handles data acquisition and performs analyses, and a Graphical User Interface (GUI) made with PyQt5 (https://www.riverbankcomputing.com/software/pyqt/), where users can control parameters for the analyses (Figure 2). We introduce the software and discuss compatible hardware (EEG systems) in section Hardware, the processing pipeline including preprocessing and connectivity analysis in section Data Preprocessing, Connectivity Analysis, Normalization, and the visualization and sonification modules in section Visualization and Sonification. Data transfer protocols, i.e., LabStreamingLayer (LSL, https://github.com/sccn/labstreaminglayer) and Open Sound Control (OSC, Wright and Momeni, 2011), are described in Supplementary Material, Data Transfer Protocol. Detailed instructions can be found on the GitHub page.

Figure 2. Hybrid Harmony software. The backend and Graphical User Interface (GUI) of Hybrid Harmony are shown in the gray box. Hybrid Harmony performs data preprocessing and connectivity analysis on the incoming EEG data from LabStreamingLayer (LSL), and outputs synchrony values to LSL and Open Sound Control (OSC; detailed in Supplementary Material, The Data Transfer Protocol: Open Sound Control). The output can then be recorded by LabRecorder (Supplementary Material, Saving Data Through LSL) and be transformed into sensory experiences through the visualization and sonification modules (section Visualization and Sonification). The GUI enables the user to control parameters for preprocessing and connectivity analysis, as well as monitor the program status on the console.

Hardware

The tool is compatible with any EEG device that interfaces with LabStreamingLayer, and has been tested with MUSE (https://choosemuse.com/; Bhayee et al., 2016), Emotiv (EPOC and EPOC+; https://www.emotiv.com/; Williams et al., 2020), the SMARTING system from mBrainTrain (https://mbraintrain.com/; Grennan et al., 2021), and Brain Vision LiveAmp systems (https://www.brainproducts.com/; Fang et al., 2019). It can be expanded to accommodate other systems that export data to LabStreamingLayer.

Data Preprocessing

The first stage of data preprocessing is a buffering procedure that holds and segments incoming time series. Incoming streams from LSL are stored in a 30 s buffer that updates at the EEG data's sampling rate (e.g., 250 Hz for the Brain Vision LiveAmp system). Hybrid Harmony then selects the most recent time window for signal processing. The length of this window is set by the “window size” parameter and is 3 s by default. How often the analysis runs depends on the computational resources of the system Hybrid Harmony is running on; for example, on many of the systems we tested, data were analyzed roughly 3.5 times per second.
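The buffering step can be sketched as a fixed-length ring buffer from which the latest analysis window is sliced. This is a minimal sketch, assuming incoming LSL chunks have already been converted to NumPy arrays of shape (channels, samples); the `RingBuffer` class and its methods are hypothetical illustrations, not Hybrid Harmony's own code.

```python
import numpy as np


class RingBuffer:
    """Fixed-length multichannel buffer keeping only the most recent samples.

    Hypothetical sketch of a 30 s rolling buffer; not Hybrid Harmony's class.
    """

    def __init__(self, n_channels: int, fs: float, seconds: float = 30.0):
        self.fs = fs
        self.size = int(round(seconds * fs))  # e.g. 30 s * 250 Hz = 7500 samples
        self.data = np.zeros((n_channels, self.size))
        self.n_filled = 0  # number of valid samples stored so far

    def push(self, chunk: np.ndarray) -> None:
        """Append a (n_channels, n_samples) chunk, discarding the oldest samples."""
        n = chunk.shape[1]
        if n == 0:
            return
        if n >= self.size:
            # The chunk alone fills the buffer: keep only its tail
            self.data[:] = chunk[:, -self.size:]
        else:
            # Shift old samples left and write the new ones at the end
            self.data = np.roll(self.data, -n, axis=1)
            self.data[:, -n:] = chunk
        self.n_filled = min(self.size, self.n_filled + n)

    def latest_window(self, window_size: float = 3.0) -> np.ndarray:
        """Return the most recent `window_size` seconds as (n_channels, n_samples)."""
        n = int(round(window_size * self.fs))
        if n > self.n_filled:
            raise ValueError("not enough data buffered yet")
        return self.data[:, -n:]
```

With the defaults above, a 250 Hz stream yields a buffer of 7,500 samples and a 3 s analysis window of 750 samples; each analysis cycle simply calls `latest_window` on whatever has arrived so far.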

The time window is filtered with an infinite impulse response (IIR) filter (Oppenheim, 1999) into the frequency bands of interest (e.g., 8–12 Hz for the alpha band), and then Hilbert transformed to generate the instantaneous analytic signal (Oppenheim, 1999). Users can choose to output spectral power concurrently by selecting the “sending power values” checkbox, in which case power spectral density is computed and sent to LSL along with the connectivity values.
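The filter-then-Hilbert step can be sketched with SciPy. This assumes a 4th-order Butterworth band-pass design applied forward and backward (zero phase) as second-order sections; the filter type, order, and the function name `band_analytic_signal` are assumptions for illustration, and Hybrid Harmony's actual IIR design may differ.

```python
import numpy as np
from scipy.signal import butter, hilbert, sosfiltfilt


def band_analytic_signal(window: np.ndarray, fs: float, fmin: float, fmax: float,
                         order: int = 4) -> np.ndarray:
    """Band-pass filter a (n_channels, n_samples) window and return its analytic signal.

    Assumption: Butterworth IIR band-pass, applied with zero phase; the
    software's own filter design may differ.
    """
    # Second-order sections are numerically more stable than (b, a) coefficients
    sos = butter(order, [fmin, fmax], btype="bandpass", fs=fs, output="sos")
    # Filter every channel along the time axis
    filtered = sosfiltfilt(sos, window, axis=-1)
    # Hilbert transform yields the complex analytic signal:
    # its modulus is the instantaneous amplitude, its argument the instantaneous phase
    return hilbert(filtered, axis=-1)
```

For a 3 s window at 250 Hz, calling `band_analytic_signal(window, 250.0, 8.0, 12.0)` returns a complex array of the same shape, from which `np.abs` gives the alpha envelope and `np.angle` the alpha phase used by the connectivity metrics.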

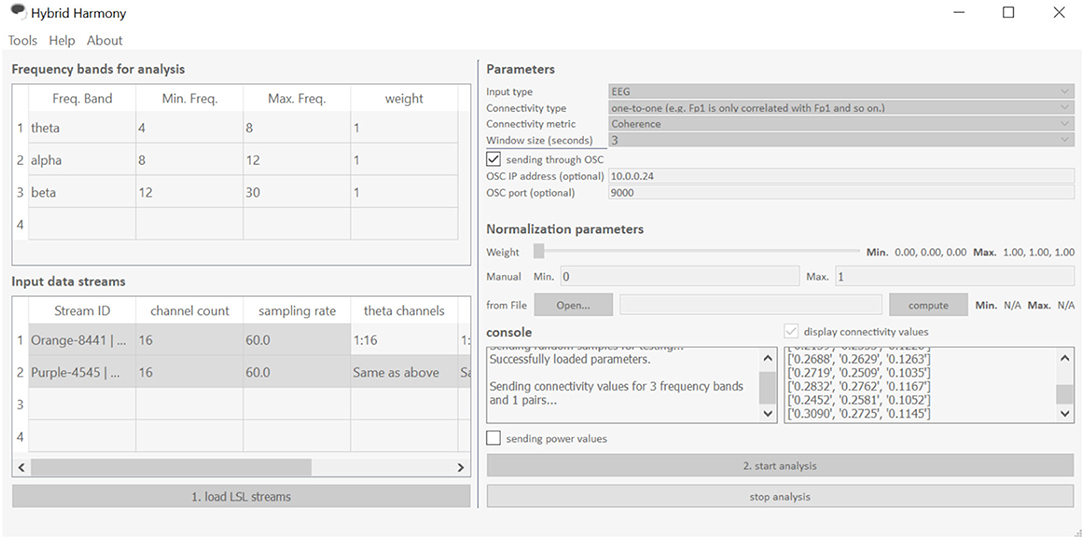

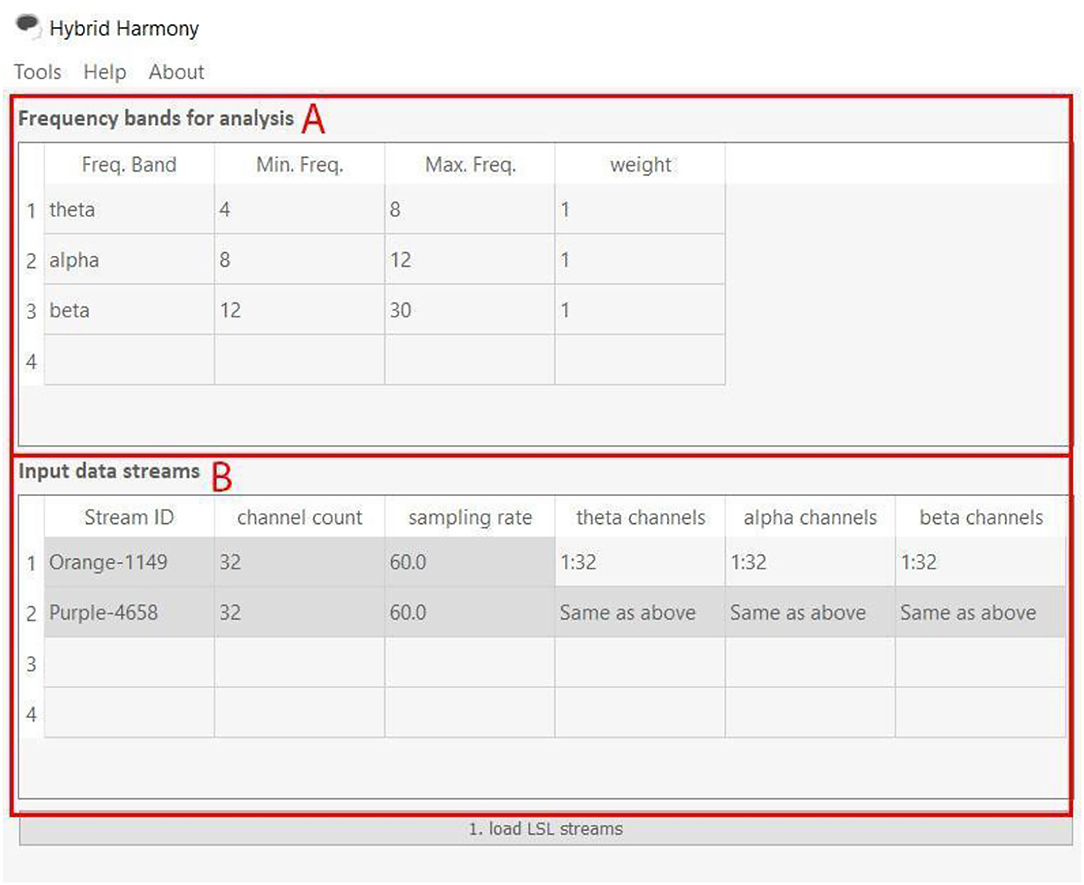

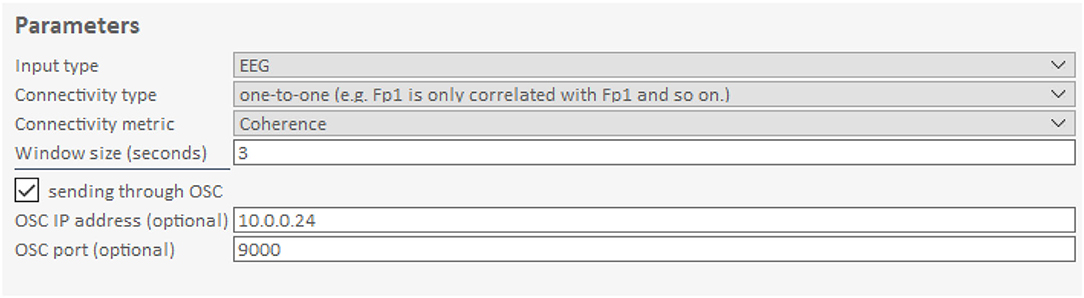

The GUI allows users to change processing parameters via “Frequency bands for analysis,” “Input data streams,” and “window size” (Figure 3). “Frequency bands for analysis” is an editable table specifying the frequency bands of interest, where “Freq. Band” denotes the frequency band name, “Min. Freq” and “Max. Freq” denote the lower and upper bounds of the frequency band, and “weight” determines how the different bands are weighted relative to one another. Figures 3, 4 show the default setup for this table. “Input data streams” displays the name, channel count, and sampling rate of the incoming streams, and its editable cells (e.g., “theta channels,” “alpha channels,” etc.) determine the specific channels to use for each frequency band. Lastly, the “window size” text field determines the length of the data segment for the analysis.
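The two tables can be pictured as a small configuration structure. In this hypothetical sketch, the band limits are those used in the validation case study below, the weights of 1.0 and the channel indices are assumptions, and `FrequencyBand` and `apply_weights` are illustrative names rather than part of Hybrid Harmony.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class FrequencyBand:
    """One row of the 'Frequency bands for analysis' table (illustrative)."""
    name: str
    min_freq: float
    max_freq: float
    weight: float = 1.0  # connectivity values for this band are multiplied by it

    def __post_init__(self) -> None:
        if not 0 < self.min_freq < self.max_freq:
            raise ValueError(f"invalid band limits for {self.name}")


# Band limits as in the validation case study; weights of 1.0 are assumed
BANDS = [
    FrequencyBand("delta", 1.0, 4.0),
    FrequencyBand("theta", 4.0, 8.0),
    FrequencyBand("alpha", 8.0, 12.0),
    FrequencyBand("beta", 12.0, 20.0),
]

# Hypothetical channel indices per band, mirroring the editable
# "<band> channels" columns of the "Input data streams" table
CHANNELS = {name: [0, 1, 2, 3] for name in ("delta", "theta", "alpha", "beta")}


def apply_weights(values: dict[str, float], bands: list[FrequencyBand]) -> dict[str, float]:
    """Multiply each band's connectivity value by that band's weight."""
    return {b.name: values[b.name] * b.weight for b in bands}
```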

Figure 3. The Hybrid Harmony GUI. The interface is divided into five sections: “Frequency bands for analysis,” “Input data streams,” “Parameters,” “Normalization parameters” and “Console,” the first four of which allow users to specify the parameters detailed in section Data Preprocessing, Connectivity Analysis, Normalization. The GUI facilitates three main actions shown as buttons “load LSL streams,” “start analysis,” and “stop analysis.” “Load LSL streams” will start the stream discovery (Supplementary Material, Stream Discovery Through LSL) in the backend; “start analysis” button initiates the analyses (section Connectivity Analysis and Normalization) and data transferring (Supplementary Material, Using LSL for Output). “Stop analysis” will pause the analyses and allow the parameters to be edited.

Figure 4. Frequency bands for analysis and Input data streams of the Hybrid Harmony GUI. The left half of the GUI, i.e., the “Frequency bands for analysis” and “Input data streams” tables control parameters for data preprocessing (section Data Preprocessing). (A) “Frequency bands for analysis” has four editable columns: Freq. Band: Name of the frequency band; Min. Freq.: The lower bound frequency for the band; Max. Freq.: The upper bound frequency for the band; weight: Weighting factor of the current band (connectivity values will be multiplied by this factor). (B) “input data streams” has three non-editable columns: Stream ID: Name of the EEG stream; channel count: number of EEG channels; sampling rate: sampling rate of the EEG streams. It also has editable columns, corresponding to frequency band names that users typed in (A). The columns theta channels, alpha channels, and beta channels thus determine channel indices to use for computing connectivity values.

Connectivity Analysis

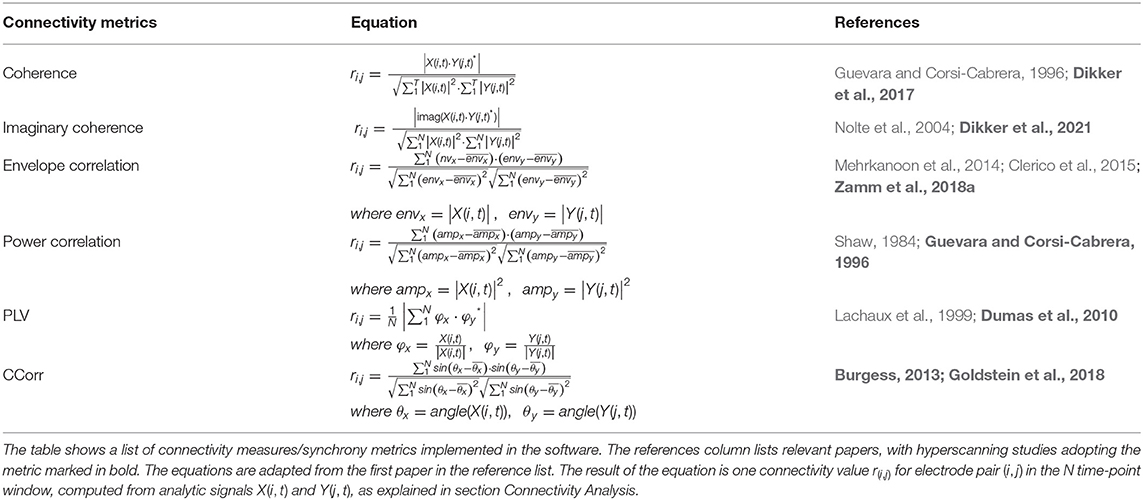

Connectivity Analysis takes the analytic signal from Data Preprocessing as input and computes one connectivity value for each electrode pair per frequency band in every participant pair. Connectivity values are then averaged across electrode pairs, so the output is one connectivity value per frequency band for every participant pair. The exact computations are adapted from the Python-based inter-brain analysis pipeline HyPyP (Ayrolles et al., 2021) and are listed in Table 1. The user may choose from a list of connectivity measures by changing the “Connectivity metric” parameter (Figure 5). Currently implemented metrics include coherence, imaginary coherence, envelope correlation, power correlation, phase-locking value (PLV), and circular correlation coefficient (CCorr). The mathematical equations and references for these metrics are provided in Table 1. In the equations, X(i, t) denotes the analytic signal for subject x at channel i and time point t, Y(j, t) is that for subject y at channel j and time point t, and the asterisk denotes the complex conjugate. The result of each equation is one synchrony value per electrode pair, written as r_{i,j}, and the computation is carried out for all electrode pairs (i, j) where i belongs to subject x's channels and j belongs to subject y's channels. Note that we compute all metrics from the analytic signal obtained in the previous step to streamline the computation: synchrony values are derived from analytic signals obtained with the Hilbert Transform (HT), as an alternative to the windowed Fast Fourier Transform (FFT). The analytic signal from the HT and the spectra from the windowed FFT both encode the amplitude and phase of the signal as complex numbers (as their modulus and argument, respectively), except that the analytic signal is “instantaneous,” while the windowed FFT is an average over a period (Kovach, 2017).
Therefore, while the cross-spectrum in coherence is usually the expected value of the product between the two signals' spectra, we used a computation (Table 1) adapted from Equation (1) in Kovach (2017), i.e., the cross-spectrum is expressed as the expectation of the product between X(i, t) and the complex conjugate of Y(j, t). This formulation is appropriate for investigating the synchronization of signals because it measures their similarity on a sample-by-sample basis, not just as an average over a relatively long time window.
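Because the equations of Table 1 are not reproduced in this text, the following is only a sketch of the six metrics for a single electrode pair, assuming the common HyPyP-style definitions computed directly from analytic signals `x` = X(i, ·) and `y` = Y(j, ·) (1-D complex arrays). Taking the absolute value of the imaginary part in imaginary coherence is an assumption, and the function names are illustrative rather than Hybrid Harmony's API.

```python
import numpy as np


def _cross_terms(x: np.ndarray, y: np.ndarray):
    """Cross-spectrum E[X Y*] and auto-spectra E[|X|^2], E[|Y|^2] from analytic signals."""
    sxy = np.mean(x * np.conj(y))
    sxx = np.mean(np.abs(x) ** 2)
    syy = np.mean(np.abs(y) ** 2)
    return sxy, sxx, syy


def coherence(x, y) -> float:
    # Squared magnitude of the normalized cross-spectrum
    sxy, sxx, syy = _cross_terms(x, y)
    return float(np.abs(sxy) ** 2 / (sxx * syy))


def imaginary_coherence(x, y) -> float:
    # |Im| of the normalized cross-spectrum (absolute value is an assumption)
    sxy, sxx, syy = _cross_terms(x, y)
    return float(np.abs(np.imag(sxy)) / np.sqrt(sxx * syy))


def envelope_correlation(x, y) -> float:
    # Pearson correlation of the amplitude envelopes |X| and |Y|
    return float(np.corrcoef(np.abs(x), np.abs(y))[0, 1])


def power_correlation(x, y) -> float:
    # Pearson correlation of the instantaneous power |X|^2 and |Y|^2
    return float(np.corrcoef(np.abs(x) ** 2, np.abs(y) ** 2)[0, 1])


def plv(x, y) -> float:
    # Magnitude of the mean unit phasor of the phase difference
    dphi = np.angle(x) - np.angle(y)
    return float(np.abs(np.mean(np.exp(1j * dphi))))


def circular_correlation(x, y) -> float:
    # Correlate sines of the phase deviations from each signal's circular mean
    phx, phy = np.angle(x), np.angle(y)
    mx = np.angle(np.mean(np.exp(1j * phx)))
    my = np.angle(np.mean(np.exp(1j * phy)))
    sx, sy = np.sin(phx - mx), np.sin(phy - my)
    return float(np.sum(sx * sy) / np.sqrt(np.sum(sx ** 2) * np.sum(sy ** 2)))
```

As a sanity check, two signals with identical envelopes and a constant phase lag of 0.3 rad give a coherence and PLV of 1 and an imaginary coherence of sin(0.3), which illustrates why the imaginary part is insensitive to zero-lag coupling.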

Currently, only one metric at a time can be employed, but a user can run multiple instances of the software and thus output multiple metrics simultaneously. The user can then record these streams using LabRecorder (Supplementary Material, Saving Data Through LSL). For visualization, it is possible to differentiate the streams based on the unique source_id in the metadata of the LSL stream, and choose only one to display. However, this feature is not yet developed in our visualization module.

Table 1. Connectivity metrics adapted from Ayrolles et al. (2021).

Figure 5. Connectivity parameters of the Hybrid Harmony GUI. The upper right part of the GUI, i.e., the “Parameters” section, controls the parameters for connectivity analysis (section Connectivity Analysis). Input type specifies the type of data (here: EEG). Connectivity type determines how the connectivity values are averaged across electrode pairs. Connectivity metric determines how synchrony between two signals is calculated. Window size is the length of the data segment over which synchrony is computed online. The checkbox sending through OSC determines whether connectivity values are sent through OSC (Supplementary Material, The Data Transfer Protocol: Open Sound Control) in addition to LSL. OSC IP address and OSC port specify the destination for the OSC data.

The metrics, which, as mentioned above, are a subset of those implemented in the hyperscanning analysis pipeline HyPyP (Ayrolles et al., 2021), include two variants of correlation (envelope and power correlation) and two of coherence (coherence and imaginary coherence), which are traditional linear methods to estimate brain connectivity, as well as two measures of phase synchrony (PLV and CCorr). While correlation methods are predominantly employed in hyperscanning fMRI studies to characterize joint action and shared attention (Koike et al., 2016), the case study below shows that envelope correlation, used as a neurofeedback synchrony signal, tracks socially relevant self-report measures. Coherence is more commonly used in fNIRS and EEG hyperscanning studies (Liu et al., 2016; Dikker et al., 2017; Miller et al., 2019). Imaginary coherence, i.e., the imaginary part of the (complex) coherency, was developed in response to coherence's susceptibility to spurious zero-lag synchrony, and has been found to reflect personality traits and social closeness in hyperscanning studies (Nolte et al., 2004; Dikker et al., 2021). For phase synchrony, we included PLV, which has been widely used in hyperscanning studies to capture joint action (Dumas et al., 2010), verbal interaction (Perez Repetto et al., 2017), decision-making (Tang et al., 2016), and other tasks. CCorr measures the covariance of the phase deviations (from each signal's circular mean) between two data streams, is less prone than PLV to detecting coincidental synchrony (Burgess, 2013), and has been used to investigate touch (Goldstein et al., 2018), learning (Bevilacqua et al., 2019), and language (Perez Repetto et al., 2017) in hyperscanning studies.

In addition to the connectivity metric, users can also choose the “connectivity type” (Figure 3), which determines which electrode pairs are combined. For each frequency band, the computation is carried out for every possible electrode pair between the participants, and the values are then averaged according to the “connectivity type” parameter. If “connectivity type” is “one-to-one,” only electrode pairs in matching positions are considered (e.g., the Fp1 channel of participant A is paired only with Fp1 of participants B, C, etc.); if it is set to “all-to-all,” all electrode pairs are included in the averaging.
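The two connectivity types differ only in which entries of the channel-by-channel matrix r_{i,j} enter the average. In this sketch, PLV stands in for any pairwise metric, both headsets are assumed to list their channels in the same order (so that matching positions lie on the diagonal), and `pairwise_matrix` and `average_connectivity` are hypothetical names.

```python
import numpy as np


def plv(x: np.ndarray, y: np.ndarray) -> float:
    """Phase-locking value; any metric with this signature can be used instead."""
    return float(np.abs(np.mean(np.exp(1j * (np.angle(x) - np.angle(y))))))


def pairwise_matrix(X: np.ndarray, Y: np.ndarray, metric=plv) -> np.ndarray:
    """r[i, j] for every channel i of subject x and every channel j of subject y.

    X, Y: analytic signals of shape (n_channels, n_samples) for one frequency band.
    """
    r = np.empty((X.shape[0], Y.shape[0]))
    for i in range(X.shape[0]):
        for j in range(Y.shape[0]):
            r[i, j] = metric(X[i], Y[j])
    return r


def average_connectivity(r: np.ndarray, connectivity_type: str = "one-to-one") -> float:
    """Collapse the electrode-pair matrix to one value for this band and dyad."""
    if connectivity_type == "one-to-one":
        # Matching positions only (Fp1-Fp1, ...): the diagonal, assuming same channel order
        if r.shape[0] != r.shape[1]:
            raise ValueError("one-to-one requires the same channels for both subjects")
        return float(np.mean(np.diag(r)))
    if connectivity_type == "all-to-all":
        # Every cross-subject electrode pair contributes equally
        return float(np.mean(r))
    raise ValueError(f"unknown connectivity type: {connectivity_type}")
```

Computing the full matrix mirrors the description above; a one-to-one implementation could skip the off-diagonal pairs to save computation, which matters when analyses run several times per second.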

Connectivity Analysis outputs data chunks to LSL as a “Marker” stream under the name “Rvalues.” The size of this data chunk depends on the number of subjects and the number of frequency bands chosen for analysis. For example, if there are 4 subjects and 4 frequency bands, the data chunk will be a vector of length 24 (6 combinations of pairs times 4 frequency bands). Additionally, if the checkbox “sending through OSC” is selected, the same data chunks will simultaneously be transmitted through the OSC protocol with parameters in “OSC IP address” and “OSC port.”
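The chunk length follows from counting unique subject pairs: with n subjects and B bands, each chunk holds n(n − 1)/2 × B values, e.g., 4 × 3 / 2 × 4 = 24. The sketch below labels each entry of a chunk; the pair-major ordering is a hypothetical assumption and should be checked against the actual “Rvalues” stream before relying on it.

```python
from itertools import combinations


def rvalues_layout(n_subjects: int, bands: list) -> list:
    """Label each entry of one 'Rvalues' chunk as (subject_a, subject_b, band).

    Hypothetical ordering: subject pairs first, then bands within each pair.
    """
    return [(a, b, band)
            for a, b in combinations(range(n_subjects), 2)
            for band in bands]


def chunk_length(n_subjects: int, n_bands: int) -> int:
    # n * (n - 1) / 2 unique subject pairs, one value per band for each pair
    return n_subjects * (n_subjects - 1) // 2 * n_bands
```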

Normalization

As part of Connectivity Analysis, connectivity values can be normalized with two options: manual normalization (labeled “Manual” in Figure 3) and baselining with a pre-recorded file (labeled “from file” in Figure 3). Normalization follows a Min-Max method, and the user can apply either option or a mixture of both, with the weighting adjusted by the “Weight” slider. The minimum and maximum limits are then weighted between the “Manual” and “from file” options.
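A minimal sketch of blended Min-Max normalization, assuming the slider weight w mixes the limits linearly (w = 1 uses only “Manual,” w = 0 only “from file”) and that normalized values are clipped to [0, 1]. The slider direction, the clipping, and the way `baseline_limits` derives limits from a pre-recorded file are all hypothetical.

```python
import numpy as np


def weighted_limits(manual, from_file, weight):
    """Blend (min, max) limits: weight=1 -> 'Manual' only, weight=0 -> 'from file' only."""
    lo = weight * manual[0] + (1.0 - weight) * from_file[0]
    hi = weight * manual[1] + (1.0 - weight) * from_file[1]
    return lo, hi


def min_max_normalize(r, lo, hi, clip=True):
    """Rescale connectivity values so that lo maps to 0 and hi maps to 1."""
    if hi <= lo:
        raise ValueError("max limit must exceed min limit")
    z = (np.asarray(r, dtype=float) - lo) / (hi - lo)
    # Clipping (an assumption) keeps the feedback signal within a fixed range
    return np.clip(z, 0.0, 1.0) if clip else z


def baseline_limits(baseline_values):
    """Hypothetical 'from file' limits: min and max of pre-recorded connectivity values."""
    v = np.asarray(baseline_values, dtype=float)
    return float(v.min()), float(v.max())
```

For example, blending manual limits (0, 1) with baseline limits (0.2, 0.6) at equal weight gives (0.1, 0.8), so a raw value of 0.45 maps to 0.5.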

Visualization and Sonification

Visualization

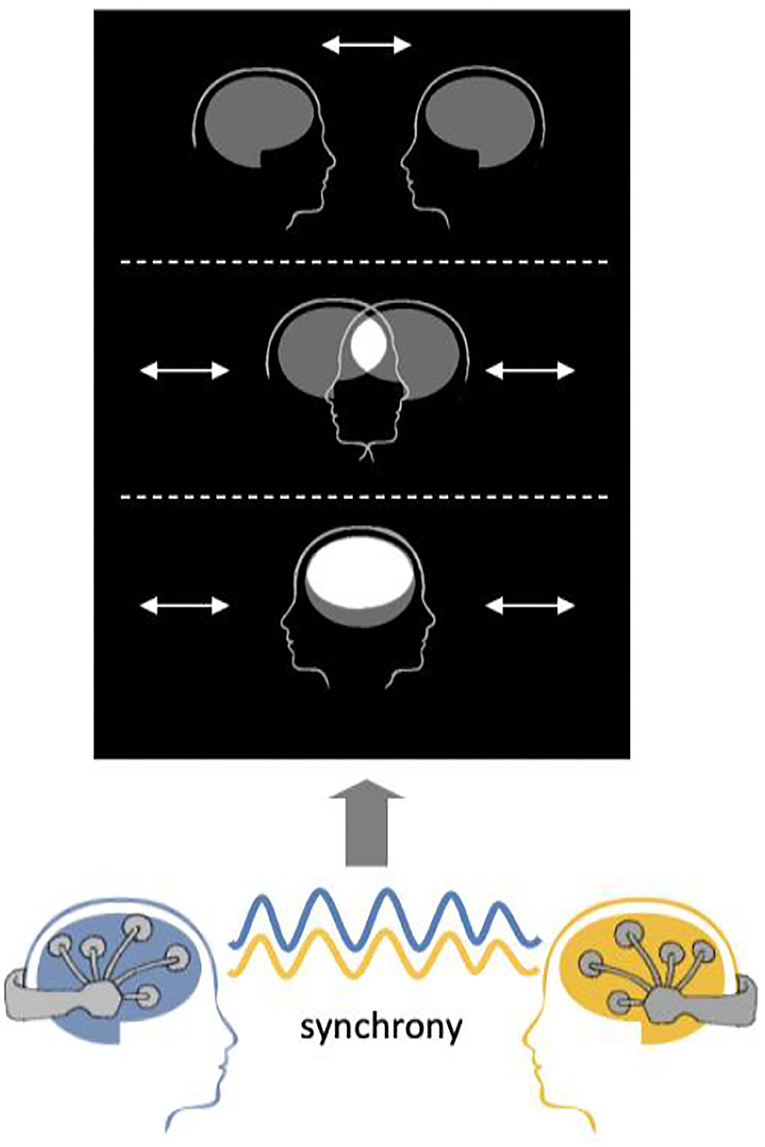

The example visualization protocol provided with the software is based on Mutual Brainwaves Lab (Figure 6), described in Supplementary Material, Mutual Brainwaves Lab. The visualization app was originally built in C++ using the openFrameworks toolkit (https://openframeworks.cc/), a general-purpose framework that wraps together several libraries to assist the creative process. An updated Python 3 version makes the application easier to deploy on different operating systems (Windows, macOS, Linux) and more maintainable and extendable for developers, thanks to Python's more accessible syntax and wide choice of libraries. The application relies on an OSC plugin that listens in real time for an OSC sender over the network. The GUI of the application is built using openFrameworks for the C++ version and PyQt5 (https://pypi.org/project/PyQt5/) for the Python version. When launched, it presents two avatars representing human heads with a brain icon, and a menu with various options to parameterize the interface. As soon as the OSC receiver starts receiving a stream of data from Hybrid Harmony running on the same network, the application translates the inter-brain synchrony into the distance between the avatar heads. The user can also set up sessions in which participants are encouraged to “score” higher synchrony within a limited timeframe.
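The core mapping of the visualization can be sketched as a function from normalized synchrony to avatar distance. This is illustrative only: the pixel range, the exponential smoothing (added here to damp jitter between updates that arrive a few times per second), and the `HeadDistance` class are assumptions, not the app's implementation.

```python
class HeadDistance:
    """Map normalized synchrony (0..1) to an on-screen distance between two avatars.

    Illustrative sketch: distance range and smoothing factor are hypothetical.
    """

    def __init__(self, min_dist: float = 0.0, max_dist: float = 300.0,
                 smoothing: float = 0.3):
        self.min_dist = min_dist
        self.max_dist = max_dist
        self.smoothing = smoothing  # 1.0 means no smoothing
        self._level = 0.0  # exponentially smoothed synchrony

    def update(self, sync: float) -> float:
        s = min(max(sync, 0.0), 1.0)  # clamp to the normalized range
        # Exponential moving average damps jitter between successive updates
        self._level = self.smoothing * s + (1.0 - self.smoothing) * self._level
        # Higher synchrony moves the heads closer together
        return self.max_dist - self._level * (self.max_dist - self.min_dist)
```

Each incoming OSC value would be passed to `update`, and the returned distance used to position the two heads; lower smoothing values give a calmer but slower-reacting display.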

Figure 6. Visualization schematic. Connectivity values are visualized as the distance between the two merging heads using the visualization module.

Sonification

Sonification of EEG data has been explored in projects such as EEGsynth (https://github.com/eegsynth/eegsynth), which interfaces electrophysiological recordings (e.g., EEG, EMG and ECG) with analog synthesizers and digital devices. Here, we demonstrate that Hybrid Harmony can be easily interfaced with a digital audio workstation (DAW) in real-time through an OSC (Supplementary Material, The Data Transfer Protocol: Open Sound Control) plugin, allowing for the control of audio parameters based on the connectivity values sent through OSC. We describe the protocol for the control of Ableton Live via LiveGrabber (https://www.showsync.com/tools#livegrabber), a set of free Ableton plugins. LiveGrabber receives messages from any OSC sender on the network and uses OSC messages to control track parameters in Ableton.

After specifying the OSC IP address and port in the Hybrid Harmony GUI, the output can be received by the GrabberReceiver plugin in Ableton (part of the LiveGrabber package). The TrackGrabber plugin allows for the control of track parameters (such as volume, reverb, panning, etc.) using the output from Hybrid Harmony in real time. To illustrate a simple sonification example, we have created a soundscape in which the volume of certain musical pitches can be modulated to create alternating moments of dissonance (harmonic tension or unpleasant sounding chords) and consonance (harmonic resolution or pleasant, stable chords). The volume of each pitch is directly controlled by connectivity parameters output through OSC, such that greater connectivity values (moments of increased interpersonal synchrony) correspond to more pleasant, stable sounding chords.
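The consonance/dissonance soundscape can be sketched as a mapping from one normalized connectivity value to per-pitch track volumes. The pitch set (a C major triad as the consonant layer, C#4 and F#4 as dissonant additions), the linear crossfade, and `chord_volumes` itself are hypothetical; in the setup described above, each volume would be routed via OSC to a TrackGrabber-controlled track.

```python
def chord_volumes(sync: float,
                  consonant=("C4", "E4", "G4"),
                  dissonant=("C#4", "F#4")) -> dict:
    """Map a normalized connectivity value to per-pitch track volumes (0..1).

    Hypothetical mapping: consonant pitches fade in and dissonant pitches fade
    out as synchrony rises; pitch names and the linear curve are assumptions.
    """
    s = min(max(sync, 0.0), 1.0)  # clamp to the normalized range
    volumes = {p: s for p in consonant}
    volumes.update({p: 1.0 - s for p in dissonant})
    return volumes
```

A linear crossfade is the simplest choice; a perceptually smoother result might use a nonlinear (e.g., decibel-based) curve.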

Validation

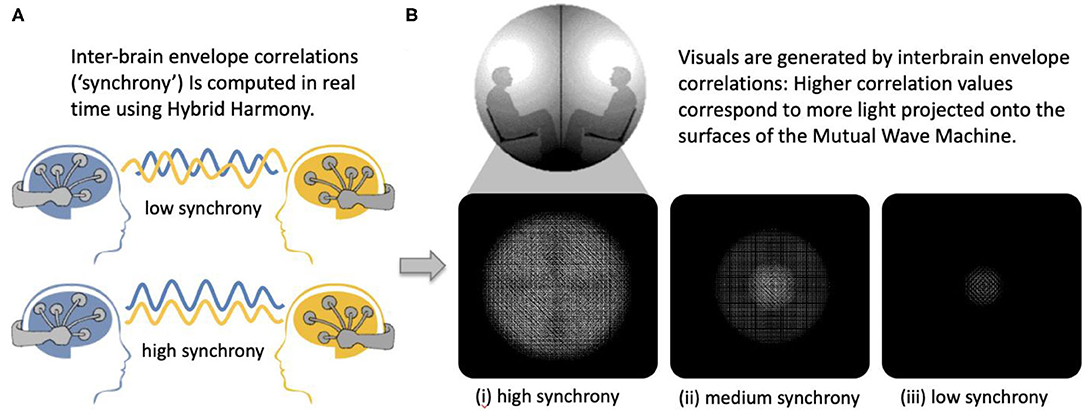

To validate that Hybrid Harmony can capture socially relevant self-report measures, we used a dataset of 243 dyads participating in the Mutual Wave Machine (Figure 7; see Supplementary Material, Case study: The Mutual Wave Machine for details), during which real-time envelope correlations were recorded and translated into light patterns projected onto the surface of two spheres (Hybrid Harmony parameters: “Frequency bands for analysis”: delta 1–4 Hz, theta 4–8 Hz, alpha 8–12 Hz, beta 12–20 Hz; “Connectivity type”: one-to-one; “Connectivity metric”: envelope correlation; “Window size”: 3 s).

Figure 7. The Mutual Wave Machine using inter-brain envelope correlations with Hybrid Harmony. (A) Inter-brain correlations between two participants wearing wireless EEG headsets were computed in real time. (B) Higher inter-brain correlation values correspond to more light projected on each of the surfaces, with the focus point behind each participant's head.

We first asked whether the average envelope correlation reflected dyads' personal distress (Davis, 1980), building on past research where we consistently find a negative relationship between personal distress and inter-brain synchrony (Dikker et al., 2017, 2021; Chen et al., 2021; Reinero et al., 2021). Indeed, we find that pairs' average personal distress was negatively correlated with their neurofeedback synchrony in theta [r(236) = −0.182, pFDR = 0.010], alpha [r(236) = −0.204, pFDR = 0.004] and beta [r(236) = −0.178, pFDR = 0.010].

We then asked whether social closeness (Aron et al., 1992) was positively related to pairs' envelope correlations. We reasoned that this is an interpersonal state measure that might be the target for a social neurofeedback intervention. Indeed, we find significant positive correlations between social closeness and pairs' neurofeedback synchrony in the alpha [r(236) = 0.264, pFDR <0.001] and beta bands [r(236) = 0.210, pFDR = 0.004].
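The analyses above correlate each dyad's average band-specific synchrony with a self-report score and report FDR-corrected p-values, where r(df) gives the degrees of freedom n − 2. The text does not specify the FDR procedure, so this sketch assumes the Benjamini-Hochberg method; `pearson_r` and `fdr_bh` are illustrative helpers, and no study data are included.

```python
import numpy as np


def pearson_r(a, b):
    """Pearson correlation and its degrees of freedom (n - 2), as reported in r(df)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.corrcoef(a, b)[0, 1]), a.size - 2


def fdr_bh(pvals):
    """Benjamini-Hochberg adjusted p-values (assumed FDR procedure)."""
    p = np.asarray(pvals, dtype=float)
    n = p.size
    order = np.argsort(p)
    # Scale the k-th smallest p-value by n / k
    scaled = p[order] * n / np.arange(1, n + 1)
    # Enforce monotonicity: each adjusted value is the minimum over higher ranks
    scaled = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(n)
    adjusted[order] = np.minimum(scaled, 1.0)
    return adjusted
```

In this scheme, the raw p-values from the per-band correlations (e.g., delta, theta, alpha, beta for one questionnaire measure) would be passed together to `fdr_bh`.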

While these results do not speak to the efficacy of Hybrid Harmony as a social neurofeedback tool, they confirm an important first step, namely that the neurofeedback output is correlated with socially relevant features that might be the target of neurofeedback interventions.

Discussion

We describe Hybrid Harmony, open-source software that allows researchers to explore interpersonal synchrony in a plug-and-play setup. The project builds on previous work from our group suggesting that incorporating synchrony neurofeedback in naturalistic social interactions may help increase synchrony and interpersonal connectedness, thereby raising the possibility that biofeedback may constitute a useful tool for exploring meaningful features of social interaction (Dikker et al., 2019, 2021).

As discussed in the Introduction, inter-brain synchrony has been shown to correlate with a range of personal and social characteristics and behaviors, underscoring its relevance in understanding naturalistic social interactions. Interpersonal biofeedback approaches may make it possible to map such social and psychological factors more precisely onto specific neurophysiological processes. For example, testing different types of synchrony in a neurofeedback environment might help inform the field about which metric is most indicative of social behavior and outcomes in which social contexts. Dyads may be more responsive to multi-brain neurofeedback based on, say, coherence during collaborative tasks, but more responsive to, say, envelope correlations during social sharing. Future findings of this nature will enrich our knowledge about the social relevance of these metrics and constitute a non-invasive way to probe possible causal links between inter-brain synchrony and social behavior (Moreau and Dumas, 2021; Novembre and Iannetti, 2021). Here we show in a dataset of 243 dyads that social closeness and affective traits can, in fact, be reflected in online synchrony neurofeedback measures, which is an important first step in this direction.

Future Directions and Challenges

Beyond inter-brain coupling, interpersonal synchrony has been examined in more depth in other aspects of behavior, including movement (Oullier et al., 2008; van Ulzen et al., 2008; Varlet et al., 2011), language (Pickering and Garrod, 2004) and physiological rhythms such as heart rate and respiration (Müller and Lindenberger, 2011; Noy et al., 2015). As described above, movement and physiological synchrony may be both cause and effect of inter-brain coupling. In future iterations of Hybrid Harmony, we hope to extend the software to incorporate multiple data streams including physiological and movement data. This would allow users to compare the social relevance of various forms of synchrony, and possibly to tease apart interrelationships between (neuro)physiological and behavioral coupling (e.g., Dumas et al., 2010; Mayo and Gordon, 2020; Pan et al., 2020). Similarly, while we prioritized EEG research in Hybrid Harmony, given the increasingly rich fNIRS hyperscanning literature (Liu et al., 2016; Nozawa et al., 2016; Miller et al., 2019) and recent successful work in fNIRS neurofeedback (Gvirts and Perlmutter, 2020; Kohl et al., 2020) we believe an extension of Hybrid Harmony to include metrics suitable for fNIRS data would be a very welcome and fruitful future direction for the software.

EEG systems' susceptibility to movement and environmental noise can greatly compromise data quality and introduce spurious synchrony into our measure. In controlled lab studies, motion artifacts are often carefully removed manually and through data decomposition (e.g., principal component analysis). In the neurofeedback setting, however, such procedures have not been widely implemented. In our practice, we tried to address the issue empirically with several solutions. For example, we piloted a version of the Mutual Wave Machine in which sudden motion-related fluctuations in the data were removed, but this dramatically changed the experience: participants often reacted enthusiastically to a sudden increase in light, only to be “punished” for their facial expressions, which would discourage them from engaging naturally with each other. We considered patching the data with correlations from non-contaminated stretches of data, but this would have required arbitrary choices. We therefore opted for an alternative solution in which participants were told explicitly that, because extensive head and facial movements can dramatically affect the EEG signal, what they were seeing could also be caused by synchronous noise or synchronous movement. While this option sufficed for the experiential side of the neurofeedback, it is suboptimal with regard to data fidelity. In future releases of the software, we will incorporate support for online data cleaning toolboxes (e.g., Mullen et al., 2015) and for EEG systems that provide built-in data cleaning options in their software, e.g., the SMARTING system by mBrainTrain (Lee et al., 2020).

In addition to challenges related to data cleaning, the real-time nature of the analysis poses challenges for interpretation. For instance, data fidelity is much higher when filtering and correlation analyses are applied to longer stretches of data, but in the type of analysis employed here, this would compromise the immediacy of the neurofeedback. It is therefore important to note that our real-time approach may not capture types of synchrony that are not temporally aligned over short intervals. For example, fMRI studies have suggested that inter-brain synchrony between speakers and listeners may involve delays of up to 8 seconds (Stephens et al., 2010a; Dikker et al., 2014; Misaki et al., 2021). Indeed, while successful joint action is typically associated with the coupling of motor movements (Dumas et al., 2010), being “in sync” or “on the same wavelength” is often taken to imply interactive alignment at the level of mental representations (Garrod and Pickering, 2009; Pickering and Garrod, 2013), usually involving more “abstract” constructs such as shared viewpoints (Van Berkum et al., 2009). Such alignment of mental representations may or may not be linked to convergence at the temporal level. In line with this dissociation, in two instances of the Mutual Wave Machine we asked participants to reflect on their “connection strategies.” Pairs who used either eye contact or joint action (mimicry, laughter, motion coordination) as a connection strategy exhibited an increase in inter-brain synchrony over time, as measured by Imaginary Coherence and Projected Power Correlation (Dikker et al., 2021). No such increase was observed for pairs who tried “thinking about the same thing.” While these results validate our approach for capturing synchrony during joint action, they do not exclude the possibility of synchrony at the level of abstract mental representations, given that such synchrony may entail more complex temporal dynamics.
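The tension between window length and immediacy can be illustrated with a minimal sketch of time-aligned, windowed correlation between two participants' signals. We assume Pearson correlation on two equally sampled streams (for example, band-power envelopes); the function names, window, and step are hypothetical, and Hybrid Harmony itself offers several other connectivity metrics.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def sliding_correlation(
    x: Sequence[float], y: Sequence[float], window: int, step: int
) -> List[float]:
    """Time-aligned correlation in windows of `window` samples, advancing by `step`."""
    if len(x) != len(y):
        raise ValueError("streams must have the same length")
    return [
        pearson(x[start:start + window], y[start:start + window])
        for start in range(0, len(x) - window + 1, step)
    ]


signal_a = [math.sin(0.1 * i) for i in range(200)]
signal_b = [-v for v in signal_a]  # perfectly anti-phase copy
values = sliding_correlation(signal_a, signal_b, window=50, step=25)
```

At a hypothetical sampling rate of 256 Hz, a 1,024-sample window spans 4 s: each value is more stable than one computed over a 256-sample (1 s) window, but it also reflects activity further in the past. Because samples are paired at the same time point, coupling in which one partner's signal lags the other's by several seconds would be underestimated or missed.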

In future iterations, we hope to incorporate alternatives to the time-aligned approach, such as introducing a temporal delay between the data streams and applying real-time adaptive normalization. These additions may increase sensitivity to endogenous synchronizers and facilitate more controlled experimental designs (Stephens et al., 2010b).
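One possible reading of these two additions is sketched below, assuming (i) a temporal delay implemented as a search over candidate lags for the maximum Pearson correlation and (ii) adaptive normalization implemented as an exponentially weighted running z-score. Both interpretations, and all names and parameter values, are hypothetical illustrations, not the design of any future Hybrid Harmony feature.

```python
import math
from typing import Optional, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


def lagged_correlation(x: Sequence[float], y: Sequence[float], lag: int) -> float:
    """Correlate x[t] with y[t + lag]; a positive lag means y trails x."""
    if lag >= 0:
        return pearson(x[: len(x) - lag], y[lag:])
    return pearson(x[-lag:], y[: len(y) + lag])


def best_lag(x: Sequence[float], y: Sequence[float], max_lag: int) -> Tuple[int, float]:
    """Return the (lag, correlation) pair with the highest r in [-max_lag, max_lag]."""
    candidates = [(lag, lagged_correlation(x, y, lag)) for lag in range(-max_lag, max_lag + 1)]
    return max(candidates, key=lambda item: item[1])


class RunningNormalizer:
    """Exponentially weighted running z-score; `alpha` sets how fast the baseline adapts."""

    def __init__(self, alpha: float) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.mean: Optional[float] = None
        self.var = 0.0

    def update(self, value: float) -> float:
        if self.mean is None:
            self.mean = value  # the first sample initialises the baseline
            return 0.0
        delta = value - self.mean
        self.mean += self.alpha * delta
        self.var = (1 - self.alpha) * (self.var + self.alpha * delta * delta)
        std = math.sqrt(self.var)
        return (value - self.mean) / std if std > 0 else 0.0


base = [math.sin(0.3 * i) + 0.5 * math.sin(0.07 * i) for i in range(300)]
delayed = [0.0] * 5 + base[:-5]  # second stream trails the first by 5 samples
lag, r = best_lag(base, delayed, max_lag=10)

normalizer = RunningNormalizer(alpha=0.1)
scores = [normalizer.update(v) for v in [1.0, 1.0, 1.0, 1.0, 10.0]]
```

With `alpha` closer to 0, the normalization adapts more slowly to drifts in a pair's baseline synchrony; a lag search widens the range of couplings the measure can detect, at the cost of more comparisons and a higher chance of spuriously large maxima.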

Finally, it is worth reiterating that very little is known about the correspondence between different synchrony metrics and socio-psychologically relevant factors. Although some metrics are theoretically preferable to others (e.g., CCorr is more robust to spurious synchrony than PLV), and some are more common in hyperscanning studies than in single-brain studies (e.g., phase synchrony is more common than coherence/correlation), the exact pros and cons of each metric require further investigation. As more becomes known about the mapping between psychological processes and inter-brain synchrony metrics, we will add guidance for users on the choice of metrics in different situations.
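As one illustration of why such metric differences matter, the sketch below computes PLV and a circular correlation coefficient (the form commonly used for CCorr, e.g., Burgess, 2013) from two series of instantaneous phases. Phase extraction (e.g., band-pass filtering followed by a Hilbert transform) is assumed to have been done already; the function names and simulated data are hypothetical.

```python
import cmath
import math
import random
from typing import Sequence


def plv(phase_a: Sequence[float], phase_b: Sequence[float]) -> float:
    """Phase-locking value: length of the mean unit vector of the phase differences."""
    total = sum(cmath.exp(1j * (a - b)) for a, b in zip(phase_a, phase_b))
    return abs(total) / len(phase_a)


def circular_mean(phases: Sequence[float]) -> float:
    """Angle (radians) of the sum of unit vectors at the given phases."""
    return cmath.phase(sum(cmath.exp(1j * p) for p in phases))


def ccorr(phase_a: Sequence[float], phase_b: Sequence[float]) -> float:
    """Circular correlation: centre each series on its circular mean, then correlate sines."""
    mean_a, mean_b = circular_mean(phase_a), circular_mean(phase_b)
    sin_a = [math.sin(a - mean_a) for a in phase_a]
    sin_b = [math.sin(b - mean_b) for b in phase_b]
    num = sum(x * y for x, y in zip(sin_a, sin_b))
    den = math.sqrt(sum(x * x for x in sin_a) * sum(y * y for y in sin_b))
    return num / den


# Simulated, independent phase series that both cluster around 0 rad.
rng = random.Random(0)
phases_a = [rng.vonmisesvariate(0.0, 4.0) for _ in range(2000)]
phases_b = [rng.vonmisesvariate(0.0, 4.0) for _ in range(2000)]
spurious_plv = plv(phases_a, phases_b)      # inflated despite independence
spurious_ccorr = ccorr(phases_a, phases_b)  # close to zero
```

Because each phase series is centred on its own circular mean before correlating, CCorr stays near zero when two independent signals merely share a preferred phase, whereas PLV is inflated (to about 0.75 in expectation for the von Mises concentration used here).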

Conclusion

In this study, we describe the background, functionality, and validation of Hybrid Harmony, a multi-person neurofeedback application for interpersonal synchrony. With its user-friendly interface and flexible design, Hybrid Harmony enables researchers to explore the interplay between synchrony as a computational method and the various psychological, cognitive, and social functions potentially associated with it.

Data Availability Statement

The datasets presented in this study can be found in online repositories at: https://github.com/RhythmsOfRelating.

Ethics Statement

Ethical review and approval were not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was provided by the participants' legal guardian/next of kin. Written informed consent was obtained from the individual(s), and minor(s)' legal guardian/next of kin, for the publication of any potentially identifiable images or data included in this article.

Author Contributions

SD, MO, and PC contributed to the conception and design of the study. MO, DM, SH, MR, GD, and PC developed the software. PC performed the statistical analysis. PC, SD, SH, MR, KS, DM, and GD wrote sections of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work was supported by Stichting Niemeijer Fonds, The Netherlands Organization for Scientific Research grants #275-89-018 and #406.18.GO.024, Creative Industries Fund NL, TodaysArt, Marina Abramovic Institute, and Fundación Telefónica.

Conflict of Interest

DM is co-director of Diademics Pty Ltd.

The handling Editor declared a shared affiliation, though no other collaboration, with one of the authors, MR.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We thank Brett Bayes, Laura Gwilliams, and Jean Jacques Warmerdham for their input in the project; and Brain Products, EMOTIV, MUSE, and mBrainTrain for their continued support of our work.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnrgo.2021.687108/full#supplementary-material

References

Abe, M. O., Koike, T., Okazaki, S., Sugawara, S. K., Takahashi, K., Watanabe, K., et al. (2019). Neural correlates of online cooperation during joint force production. Neuroimage 191, 150–161. doi: 10.1016/j.neuroimage.2019.02.003

Aron, A., Aron, E. N., and Smollan, D. (1992). Inclusion of other in the self scale and the structure of interpersonal closeness. J. Pers. Soc. Psychol. 63, 596–612. doi: 10.1037/0022-3514.63.4.596

Ayrolles, A., Brun, F., Chen, P., Djalovski, A., Beauxis, Y., Delorme, R., et al. (2021). HyPyP: a hyperscanning python pipeline for inter-brain connectivity analysis. Soc. Cogn. Affect. Neurosci. 16, 72–83. doi: 10.1093/scan/nsaa141

Babiloni, F., and Astolfi, L. (2014). Social neuroscience and hyperscanning techniques: past, present and future. Neurosci. Biobehav. Rev. 44, 76–93. doi: 10.1016/j.neubiorev.2012.07.006

Babiloni, F., Cincotti, F., Mattia, D., Mattiocco, M., Bufalari, S., De Vico Fallani, F., et al. (2006). “Neural basis for the brain responses to the marketing messages: an high resolution EEG study,” in Conference Proceedings: Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE Engineering in Medicine and Biology Society. Conference, New York, NY, 3676–3679.

Bevilacqua, D., Davidesco, I., Wan, L., Chaloner, K., Rowland, J., Ding, M., et al. (2019). Brain-to-brain synchrony and learning outcomes vary by student–teacher dynamics: evidence from a real-world classroom electroencephalography study. J. Cogn. Neurosci. 31, 401–411. doi: 10.1162/jocn_a_01274

Bhayee, S., Tomaszewski, P., Lee, D. H., Moffat, G., Pino, L., Moreno, S., et al. (2016). Attentional and affective consequences of technology supported mindfulness training: a randomised, active control, efficacy trial. BMC Psychol. 4:60. doi: 10.1186/s40359-016-0168-6

Burgess, A. P. (2013). On the interpretation of synchronization in EEG hyperscanning studies: a cautionary note. Front. Hum. Neurosci. 7:881. doi: 10.3389/fnhum.2013.00881

Chen, P., Kirk, U., and Dikker, S. (2021). Trait mindfulness predicts inter-brain coupling during naturalistic face-to-face interactions. bioRxiv [Preprint]. doi: 10.1101/2021.06.28.448432

Clerico, A., Gupta, R., and Falk, T. H. (2015). “Mutual information between inter-hemispheric EEG spectro-temporal patterns: a new feature for automated affect recognition,” in 2015 7th International IEEE/EMBS Conference on Neural Engineering (NER), Montpellier, 914–917.

Czeszumski, A., Eustergerling, S., Lang, A., Menrath, D., Gerstenberger, M., Schuberth, S., et al. (2020). Hyperscanning: a valid method to study neural inter-brain underpinnings of social interaction. Front. Hum. Neurosci. 14:39. doi: 10.3389/fnhum.2020.00039

Davis, M. H. (1980). A Multidimensional Approach to Individual Differences in Empathy. Available online at: https://www.uv.es/friasnav/Davis_1980.pdf (accessed March 29, 2021).

Dikker, S., Michalareas, G., Oostrik, M., Serafimaki, A., Kahraman, H. M., Struiksma, M. E., et al. (2021). Crowdsourcing neuroscience: inter-brain coupling during face-to-face interactions outside the laboratory. Neuroimage 227:117436. doi: 10.1016/j.neuroimage.2020.117436

Dikker, S., Montgomery, S., and Tunca, S. (2019). “Using synchrony-based neurofeedback in search of human connectedness,” in Brain Art: Brain-Computer Interfaces for Artistic Expression, ed A. Nijholt (Springer International Publishing), 161–206.

Dikker, S., Silbert, L. J., Hasson, U., and Zevin, J. D. (2014). On the same wavelength: predictable language enhances speaker–listener brain-to-brain synchrony in posterior superior temporal gyrus. J. Neurosci. 34, 6267–6272. doi: 10.1523/JNEUROSCI.3796-13.2014

Dikker, S., Wan, L., Davidesco, I., Kaggen, L., Oostrik, M., McClintock, J., et al. (2017). Brain-to-brain synchrony tracks real-world dynamic group interactions in the classroom. Curr. Biol. 27, 1375–1380. doi: 10.1016/j.cub.2017.04.002

Duan, L., Liu, W.-J., Dai, R.-N., Li, R., Lu, C.-M., Huang, Y.-X., et al. (2013). Cross-brain neurofeedback: scientific concept and experimental platform. PLoS ONE 8:e64590. doi: 10.1371/journal.pone.0064590

Dumas, G., and Fairhurst, M. T. (2021). Reciprocity and alignment: quantifying coupling in dynamic interactions. R. Soc. Open Sci. 8:210138. doi: 10.1098/rsos.210138

Dumas, G., Nadel, J., Soussignan, R., Martinerie, J., and Garnero, L. (2010). Inter-brain synchronization during social interaction. PLoS ONE 5:e12166. doi: 10.1371/journal.pone.0012166

Fang, Z., Ray, L. B., Owen, A. M., and Fogel, S. M. (2019). Brain activation time-locked to sleep spindles associated with human cognitive abilities. Front. Neurosci. 13:46. doi: 10.3389/fnins.2019.00046

Fazel-Rezai, R., Allison, B. Z., Guger, C., Sellers, E. W., Kleih, S. C., and Kübler, A. (2012). P300 brain computer interface: current challenges and emerging trends. Front. Neuroeng. 5:14. doi: 10.3389/fneng.2012.00014

Garrod, S., and Pickering, M. J. (2009). Joint action, interactive alignment, and dialog. Top. Cogn. Sci. 1, 292–304. doi: 10.1111/j.1756-8765.2009.01020.x

Goldstein, P., Weissman-Fogel, I., Dumas, G., and Shamay-Tsoory, S. G. (2018). Brain-to-brain coupling during handholding is associated with pain reduction. Proc. Natl. Acad. Sci. U.S.A. 115, E2528–E2537. doi: 10.1073/pnas.1703643115

Grennan, G., Balasubramani, P. P., Alim, F., Zafar-Khan, M., Lee, E. E., Jeste, D. V., et al. (2021). Cognitive and neural correlates of loneliness and wisdom during emotional bias. Cereb. Cortex 31, 3311–3322. doi: 10.1093/cercor/bhab012

Guevara, M. A., and Corsi-Cabrera, M. (1996). EEG coherence or EEG correlation? Int. J. Psychophysiol. 23, 145–153. doi: 10.1016/S0167-8760(96)00038-4

Gvirts, H. Z., and Perlmutter, R. (2020). What guides us to neurally and behaviorally align with anyone specific? A neurobiological model based on fNIRS hyperscanning studies. Neuroscientist 26, 108–116. doi: 10.1177/1073858419861912

Hari, R., Himberg, T., Nummenmaa, L., Hämäläinen, M., and Parkkonen, L. (2013). Synchrony of brains and bodies during implicit interpersonal interaction. Trends Cogn. Sci. 17, 105–106. doi: 10.1016/j.tics.2013.01.003

Hasson, U. (2004). Intersubject synchronization of cortical activity during natural vision. Science 303, 1634–1640. doi: 10.1126/science.1089506

Hoehl, S., Fairhurst, M., and Schirmer, A. (2021). Interactional synchrony: signals, mechanisms and benefits. Soc. Cogn. Affect. Neurosci. 16, 5–18. doi: 10.1093/scan/nsaa024

Kinreich, S., Djalovski, A., Kraus, L., Louzoun, Y., and Feldman, R. (2017). Brain-to-brain synchrony during naturalistic social interactions. Sci. Rep. 7:17060. doi: 10.1038/s41598-017-17339-5

Kohl, S. H., Mehler, D. M. A., Lührs, M., Thibault, R. T., Konrad, K., and Sorger, B. (2020). The potential of functional near-infrared spectroscopy-based neurofeedback – a systematic review and recommendations for best practice. Front. Neurosci. 14:594. doi: 10.3389/fnins.2020.00594

Koike, T., Sumiya, M., Nakagawa, E., Okazaki, S., and Sadato, N. (2019). What makes eye contact special? Neural substrates of on-line mutual eye-gaze: a hyperscanning fMRI study. eNeuro 6:ENEURO.0284-18.2019. doi: 10.1523/ENEURO.0284-18.2019

Koike, T., Tanabe, H. C., Okazaki, S., Nakagawa, E., Sasaki, A. T., Shimada, K., et al. (2016). Neural substrates of shared attention as social memory: a hyperscanning functional magnetic resonance imaging study. Neuroimage 125, 401–412. doi: 10.1016/j.neuroimage.2015.09.076

Kovacevic, N., Ritter, P., Tays, W., Moreno, S., and McIntosh, A. R. (2015). “My virtual dream”: collective neurofeedback in an immersive art environment. PLoS ONE 10:e0130129. doi: 10.1371/journal.pone.0130129

Kovach, C. K. (2017). A biased look at phase locking: brief critical review and proposed remedy. IEEE Trans. Signal Process. 65, 4468–4480. doi: 10.1109/TSP.2017.2711517

Lachaux, J. P., Rodriguez, E., Martinerie, J., and Varela, F. J. (1999). Measuring phase synchrony in brain signals. Hum. Brain Mapp. 8, 194–208.

Lee, Y.-E., Kwak, N.-S., and Lee, S.-W. (2020). A real-time movement artifact removal method for ambulatory brain-computer interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 2660–2670. doi: 10.1109/TNSRE.2020.3040264

Liu, N., Mok, C., Witt, E. E., Pradhan, A. H., Chen, J. E., and Reiss, A. L. (2016). NIRS-based hyperscanning reveals inter-brain neural synchronization during cooperative jenga game with face-to-face communication. Front. Hum. Neurosci. 10:82. doi: 10.3389/fnhum.2016.00082

Mayo, O., and Gordon, I. (2020). In and out of synchrony-behavioral and physiological dynamics of dyadic interpersonal coordination. Psychophysiology 57:e13574. doi: 10.1111/psyp.13574

Mehrkanoon, S., Breakspear, M., Britz, J., and Boonstra, T. W. (2014). Intrinsic coupling modes in source-reconstructed electroencephalography. Brain Connect. 4, 812–825. doi: 10.1089/brain.2014.0280

Miller, J. G., Vrtička, P., Cui, X., Shrestha, S., Hosseini, S. M. H., Baker, J. M., et al. (2019). Inter-brain synchrony in mother-child dyads during cooperation: an fNIRS hyperscanning study. Neuropsychologia 124, 117–124. doi: 10.1016/j.neuropsychologia.2018.12.021

Misaki, M., Kerr, K. L., Ratliff, E. L., Cosgrove, K. T., Simmons, W. K., Morris, A. S., et al. (2021). Beyond synchrony: the capacity of fMRI hyperscanning for the study of human social interaction. Soc. Cogn. Affect. Neurosci. 16, 84–92. doi: 10.1093/scan/nsaa143

Moreau, Q., and Dumas, G. (2021). Beyond correlation versus causation: multi-brain neuroscience needs explanation. Trends Cogn. Sci. 25, 542–543. doi: 10.1016/j.tics.2021.02.011

Mullen, T. R., Kothe, C. A. E., Chi, Y. M., Ojeda, A., Kerth, T., Makeig, S., et al. (2015). Real-time neuroimaging and cognitive monitoring using wearable dry EEG. IEEE Trans. Biomed. Eng. 62, 2553–2567. doi: 10.1109/TBME.2015.2481482

Müller, V., and Lindenberger, U. (2011). Cardiac and respiratory patterns synchronize between persons during choir singing. PLoS ONE 6:e24893. doi: 10.1371/journal.pone.0024893

Nijholt, A. (2019). Brain Art: Brain-Computer Interfaces for Artistic Expression. Springer. Available online at: https://play.google.com/store/books/details?id=qC6aDwAAQBAJ (accessed March 29, 2021).

Nolte, G., Bai, O., Wheaton, L., Mari, Z., Vorbach, S., and Hallett, M. (2004). Identifying true brain interaction from EEG data using the imaginary part of coherency. Clin. Neurophysiol. 115, 2292–2307. doi: 10.1016/j.clinph.2004.04.029

Novembre, G., and Iannetti, G. D. (2021). Hyperscanning alone cannot prove causality. Multibrain stimulation can. Trends Cogn. Sci. 25, 96–99. doi: 10.1016/j.tics.2020.11.003

Noy, L., Levit-Binun, N., and Golland, Y. (2015). Being in the zone: physiological markers of togetherness in joint improvisation. Front. Hum. Neurosci. 9:187. doi: 10.3389/fnhum.2015.00187

Nozawa, T., Sasaki, Y., Sakaki, K., Yokoyama, R., and Kawashima, R. (2016). Interpersonal frontopolar neural synchronization in group communication: an exploration toward fNIRS hyperscanning of natural interactions. Neuroimage 133, 484–497. doi: 10.1016/j.neuroimage.2016.03.059

Oppenheim, A. V. (1999). Discrete-Time Signal Processing. Pearson Education India. Available online at: http://182.160.97.198:8080/xmlui/bitstream/handle/123456789/489/13.%20Cepstrum%20Analysis%20and%20Homomorphic%20Deconvolution.pdf?sequence=14

Oullier, O., de Guzman, G. C., Jantzen, K. J., Lagarde, J., and Kelso, J. A. S. (2008). Social coordination dynamics: measuring human bonding. Soc. Neurosci. 3, 178–192. doi: 10.1080/17470910701563392

Pan, Y., Dikker, S., Goldstein, P., Zhu, Y., Yang, C., and Hu, Y. (2020). Instructor-learner brain coupling discriminates between instructional approaches and predicts learning. Neuroimage 211:116657. doi: 10.1016/j.neuroimage.2020.116657

Perez Repetto, L., Jasmin, E., Fombonne, E., Gisel, E., and Couture, M. (2017). Longitudinal study of sensory features in children with autism spectrum disorder. Autism Res. Treat. 2017:1934701. doi: 10.1155/2017/1934701

Pérez, A., Dumas, G., Karadag, M., and Duñabeitia, J. A. (2019). Differential brain-to-brain entrainment while speaking and listening in native and foreign languages. Cortex 111, 303–315. doi: 10.1016/j.cortex.2018.11.026

Pickering, M. J., and Garrod, S. (2004). The interactive-alignment model: developments and refinements. Behav. Brain Sci. 27, 212–225. doi: 10.1017/S0140525X04450055

Pickering, M. J., and Garrod, S. (2013). Authors' response: forward models and their implications for production, comprehension, and dialogue. Behav. Brain Sci. 36, 377–392. doi: 10.1017/S0140525X12003238

Pietromonaco, P. R., and Collins, N. L. (2017). Interpersonal mechanisms linking close relationships to health. Am. Psychol. 72, 531–542. doi: 10.1037/amp0000129

Reindl, V., Gerloff, C., Scharke, W., and Konrad, K. (2018). Brain-to-brain synchrony in parent-child dyads and the relationship with emotion regulation revealed by fNIRS-based hyperscanning. Neuroimage 178, 493–502. doi: 10.1016/j.neuroimage.2018.05.060

Reinero, D. A., Dikker, S., and Van Bavel, J. J. (2021). Inter-brain synchrony in teams predicts collective performance. Soc. Cogn. Affect. Neurosci. 16, 43–57. doi: 10.1093/scan/nsaa135

Salminen, M., Järvelä, S., Ruonala, A., Harjunen, V., Jacucci, G., Hamari, J., et al. (2019). Evoking physiological synchrony and empathy using social VR with biofeedback. IEEE Trans. Affect. Comput. 1. doi: 10.1109/TAFFC.2019.2958657

Scholkmann, F., Holper, L., Wolf, U., and Wolf, M. (2013). A new methodical approach in neuroscience: assessing inter-personal brain coupling using functional near-infrared imaging (fNIRI) hyperscanning. Front. Hum. Neurosci. 7:813. doi: 10.3389/fnhum.2013.00813

Shaw, J. C. (1984). Correlation and coherence analysis of the EEG: a selective tutorial review. Int. J. Psychophysiol. 1, 255–266. doi: 10.1016/0167-8760(84)90045-X

Stephens, G. J., Silbert, L. J., and Hasson, U. (2010a). Speaker–listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. U.S.A. 107, 14425–14430. doi: 10.1073/pnas.1008662107

Stephens, G. J., Silbert, L. J., and Hasson, U. (2010b). Speaker–listener neural coupling underlies successful communication. Proc. Natl. Acad. Sci. U.S.A. 107, 14425–14430. doi: 10.1073/pnas.1008662107

Tang, H., Mai, X., Wang, S., Zhu, C., Krueger, F., and Liu, C. (2016). Interpersonal brain synchronization in the right temporo-parietal junction during face-to-face economic exchange. Soc. Cogn. Affect. Neurosci. 11, 23–32. doi: 10.1093/scan/nsv092

Tognoli, E., Lagarde, J., DeGuzman, G. C., and Kelso, J. A. S. (2007). The phi complex as a neuromarker of human social coordination. Proc. Natl. Acad. Sci. U.S.A. 104, 8190–8195. doi: 10.1073/pnas.0611453104

Van Berkum, J. J. A., Holleman, B., Nieuwland, M., Otten, M., and Murre, J. (2009). Right or wrong? The brain's fast response to morally objectionable statements. Psychol. Sci. 20, 1092–1099. doi: 10.1111/j.1467-9280.2009.02411.x

van Hoogdalem, L. E., Feijs, H. M. E., Bramer, W. M., Ismail, S. Y., and van Dongen, J. D. M. (2020). The effectiveness of neurofeedback therapy as an alternative treatment for autism spectrum disorders in children: a systematic review. J. Psychophysiol. 35, 102–115. doi: 10.1027/0269-8803/a000265

van Ulzen, N. R., Lamoth, C. J. C., Daffertshofer, A., Semin, G. R., and Beek, P. J. (2008). Characteristics of instructed and uninstructed interpersonal coordination while walking side-by-side. Neurosci. Lett. 432, 88–93. doi: 10.1016/j.neulet.2007.11.070

Varlet, M., Marin, L., Lagarde, J., and Bardy, B. G. (2011). Social postural coordination. J. Exp. Psychol. Hum. Percept. Perform. 37, 473–483. doi: 10.1037/a0020552

Williams, N. S., McArthur, G. M., and Badcock, N. A. (2020). 10 years of EPOC: a scoping review of Emotiv's portable EEG device. bioRxiv [Preprint]. doi: 10.1101/2020.07.14.202085

Wright, M., and Momeni, A. (2011). OpenSound Control: State of the Art 2003. Available online at: http://opensoundcontrol.org/files/Open+Sound+Control-State+Of+The+Art.Pdf

Yun, K. (2013). On the same wavelength: face-to-face communication increases interpersonal neural synchronization. J. Neurosci. 33, 5081–5082. doi: 10.1523/JNEUROSCI.0063-13.2013

Zamm, A., Debener, S., Bauer, A.-K. R., Bleichner, M. G., Demos, A. P., and Palmer, C. (2018a). Amplitude envelope correlations measure synchronous cortical oscillations in performing musicians. Ann. N. Y. Acad. Sci. 1423, 251–263. doi: 10.1111/nyas.13738

Keywords: hyperscanning, neurofeedback, brain-computer-interface, EEG, inter-brain coupling, real-world neuroscience

Citation: Chen P, Hendrikse S, Sargent K, Romani M, Oostrik M, Wilderjans TF, Koole S, Dumas G, Medine D and Dikker S (2021) Hybrid Harmony: A Multi-Person Neurofeedback Application for Interpersonal Synchrony. Front. Neuroergon. 2:687108. doi: 10.3389/fnrgo.2021.687108

Received: 29 March 2021; Accepted: 16 June 2021;

Published: 12 August 2021.

Edited by:

Anton Nijholt, University of Twente, Netherlands

Reviewed by:

Shigeyuki Ikeda, RIKEN Center for Advanced Intelligence Project (AIP), Japan

Aleksandra Vuckovic, University of Glasgow, United Kingdom

Copyright © 2021 Chen, Hendrikse, Sargent, Romani, Oostrik, Wilderjans, Koole, Dumas, Medine and Dikker. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Suzanne Dikker, suzanne.dikker@nyu.edu
