- 1 Department of Radiology and Biomedical Imaging, University of California, San Francisco, San Francisco, CA, United States

- 2 VA Advanced Imaging Research Center, San Francisco Veteran Affairs Health Care System, San Francisco, CA, United States

- 3 Northern California Institute for Research and Education, San Francisco, CA, United States

- 4 Department of Neurology, University of California, San Francisco, San Francisco, CA, United States

- 5 UCSF Weill Institute for Neurosciences, San Francisco, CA, United States

- 6 School of Medicine, National University of Natural Medicine, Portland, OR, United States

- 7 Advanced MRI Technologies, Sebastopol, CA, United States

- 8 Helen Wills Neuroscience Institute, University of California, Berkeley, Berkeley, CA, United States

- 9 Department of Bioengineering and Therapeutic Sciences, University of California, San Francisco, San Francisco, CA, United States

- 10 Radiological Sciences Laboratory, Stanford University, Stanford, CA, United States

To facilitate high spatial–temporal resolution fMRI (≤1 mm³) at more broadly available field strengths (3T) and to better understand the neural underpinnings of joy, we used SE-based generalized Slice Dithered Enhanced Resolution (gSLIDER). This sequence increases SNR efficiency by using sub-voxel shifts along the slice direction. To improve the effective temporal resolution of gSLIDER, we used the temporal information within individual gSLIDER RF encodings to develop gSLIDER with Sliding Window Accelerated Temporal resolution (gSLIDER-SWAT). We first validated gSLIDER-SWAT using a classic hemifield checkerboard paradigm, demonstrating robust activation in primary visual cortex even with stimulus frequency increased to the Nyquist frequency of gSLIDER (i.e., TR = block duration). gSLIDER provided ~2× gain in tSNR over traditional SE-EPI. GLM and ICA results suggest improved signal detection with gSLIDER-SWAT’s nominal 5-fold higher temporal resolution that was not seen with simple temporal interpolation. Next, we applied gSLIDER-SWAT to investigate the neural networks underlying joy using naturalistic video stimuli. Regions significantly activated during joy included the left amygdala, specifically the basolateral subnuclei, and rostral anterior cingulate, both part of the salience network; the hippocampus, involved in memory; the striatum, part of the reward circuit; prefrontal cortex, part of the executive network and involved in emotion processing and regulation [bilateral mPFC/BA10/11, left MFG (BA46)]; and throughout visual cortex. This proof-of-concept study demonstrates the feasibility of measuring the networks underlying joy at high resolutions at 3T with gSLIDER-SWAT, and highlights the importance of continued innovation of imaging techniques beyond the limits of standard GE fMRI.

Introduction

Whole brain functional magnetic resonance imaging (fMRI) at high spatial–temporal resolution (≤1 mm³) is invaluable for studying smaller subcortical structures like the amygdala and the brain’s laminar and columnar functional organization (Yacoub et al., 2007, 2008; Kashyap et al., 2018). However, given that the tSNR is generally insufficient for sub-millimeter fMRI at 3T, these studies have been limited to ultra-high field strengths (≥7T) that are prohibitively expensive in most parts of the world. Furthermore, standard fMRI technology relies on GE-EPI that, especially at higher field strengths and resolutions, suffers from large vein bias and susceptibility-induced signal dropout (Engel et al., 1997; Norris et al., 2002; Parkes et al., 2005) in brain regions essential in emotion processing (Townsend and Altshuler, 2012). This limits our ability to acquire true, whole brain, high resolution fMRI. Even with advanced acceleration techniques, scanning the entire brain at high resolution currently requires unacceptably long repetition times (TR ≫ 3 s).

Spin-echo based generalized Slice Dithered Enhanced Resolution (gSLIDER), originally developed for sub-millimeter diffusion MRI at 3T (Setsompop et al., 2018), is a promising technique for enabling high-resolution fMRI at 3T (Beckett et al., 2021; Torrisi et al., 2023). It reduces both large vein bias and susceptibility-induced signal dropout relative to GE-EPI, and more than doubles SNR efficiency relative to traditional spin-echo based fMRI (Vu et al., 2018; Setsompop et al., 2018). However, for spins to properly relax between gSLIDER shots, the effective repetition time (TR) of gSLIDER is long (~18 s), which is incompatible with most fMRI paradigms and leaves the sequence vulnerable to blurring from head motion. To address the inherently low temporal resolution of gSLIDER fMRI, we developed a novel reconstruction method, Sliding Window Accelerated Temporal resolution (SWAT), that provides up to a five-fold increase in gSLIDER temporal resolution (TR ~ 3.6 s).

After validating gSLIDER-SWAT for high spatial–temporal resolution fMRI with a basic visual task, we applied it to investigate the neural networks underlying the emotion joy. Frontotemporal-limbic regions may benefit particularly from the enhanced spatial resolution and improved signal quality of this SE-based technique, as these regions are prone to susceptibility-induced signal dropout and geometric distortions with standard GE techniques due to their proximity to air-tissue interfaces (Ojemann et al., 1997; Beckett et al., 2020; Townsend et al., 2010, 2019; Yacoub et al., 2008). This integrated approach enables us to validate the gSLIDER-SWAT technique and demonstrate its application to address important neuroscientific questions that have been limited by conventional imaging approaches.

Emotion processing involves complex neural networks that detect, evaluate and regulate affective and visceral responses to environmental stimuli (Barrett et al., 2007). Emotions are characterized by specific patterns of neural and autonomic activation, coordinated through reciprocal connections between corticolimbic structures and systems governing physiological arousal. fMRI emotion studies show significant activation in the amygdala, insula, anterior cingulate cortex (ACC), medial prefrontal cortex (mPFC) and ventrolateral prefrontal cortex (vlPFC) (Phillips et al., 2003a). The amygdala is part of the salience network and is involved in emotion detection and processing, while medial and lateral regions of the PFC are critical for emotion modulation and regulation (Ochsner et al., 2012; Townsend et al., 2013). Recent work further describes the role of amygdala subregions in different emotions (Labuschagne et al., 2024), highlighting the importance of ultra-high resolution in this region.

Positive emotions also activate regions associated with reward processing (Schultz et al., 1997), including the ventral striatum, nucleus accumbens, ACC and orbitofrontal cortex (Wager et al., 2015; Suardi et al., 2016; Yang et al., 2020). Recent studies show that positive emotions encompass emotions with discrete neural representation (e.g., joy vs. awe vs. sexual desire) (Cowen and Keltner, 2017; Saarimäki et al., 2016, 2018), which has been shown across a range of stimuli (Cowen and Keltner, 2018, 2020; Cowen et al., 2019). fMRI studies examining joy in the context of music have found activation in these same reward-related regions (Koelsch and Skouras, 2014; Skouras et al., 2014). This neural evidence aligns with Buddhist contemplative traditions, which recognize joy (muditā) as one of four fundamental qualities of mind that can be intentionally cultivated and expanded (Davidson and Lutz, 2008; Esch, 2022). While traditional emotion research has predominantly focused on negative emotions, these ancient contemplative insights and innovative neuroscience methods motivate our investigation into the neural mechanisms underlying positive emotions, specifically joy.

Studies using naturalistic stimuli have begun to reveal the brain’s hierarchical temporal processing involving the hippocampus, attentional mechanisms, and basic and social emotional processing (Dayan et al., 2018; Jääskeläinen et al., 2021). There has only been one study to date investigating the neural underpinnings of joy using naturalistic video stimuli, despite its importance in studying emotion. A recent fMRI study decoded 27 categories of emotions (Horikawa et al., 2020). When decoding joy using a standard GE sequence at 3T, the authors reported significant cortical regions but not subcortical regions such as the amygdala. This was surprising given that the amygdala is activated in response to positive emotions in general (Bonnet et al., 2015; Townsend et al., 2017). Given the GE sequence, signal dropout in these inferior regions may have contributed to the lack of significant activity; thus, we investigated whether significant limbic activity can be detected with these same naturalistic joy stimuli using gSLIDER.

We hypothesize that gSLIDER-SWAT will provide a gain in temporal resolution by recapturing high frequency information and will increase the tSNR relative to traditional SE-EPI, resulting in improved detection of functional networks at high resolutions at 3T.

Methods

The study protocol was approved by the institutional review board at the University of California, San Francisco; each participant gave written informed consent before initiating the study. Due to scanner availability at the time, fMRI data were acquired from 2 healthy volunteers initially on a Siemens 3T Prisma using a 64ch head/neck coil, and then from 6 volunteers (age = 42 ± 13; 1F/4M) on a Siemens 3T Skyra using a 32ch head coil. Data from one volunteer were excluded due to excessive motion.

Sequence parameters

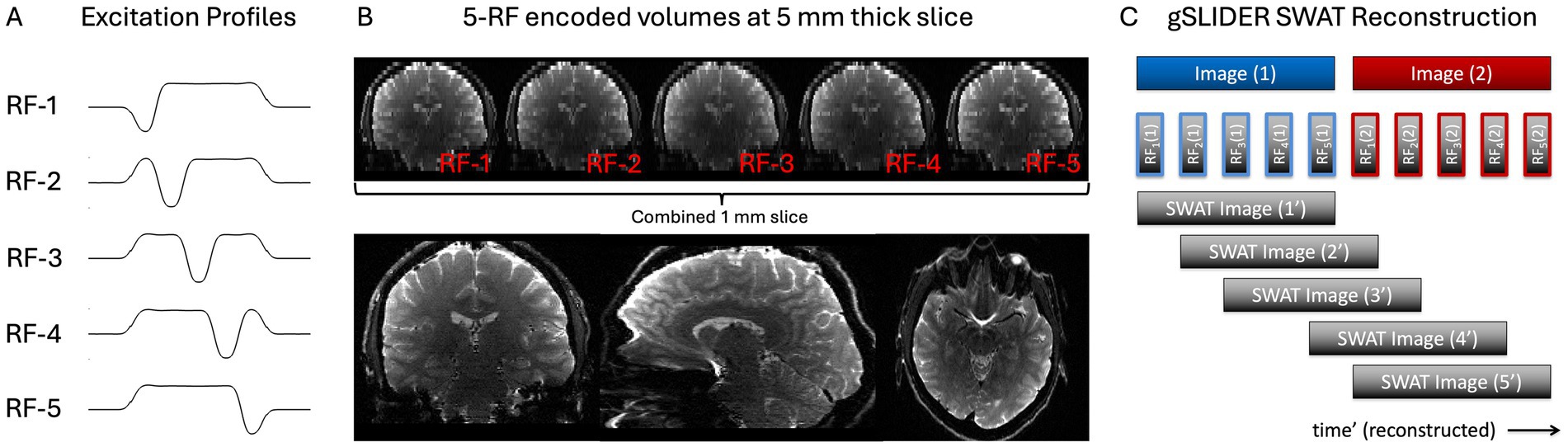

FOV = 220 × 220 × 130 mm³; resolution = 1 × 1 × 1 mm³; PF = 6/8; GRAPPA 3; TE = 69 ms; TR = 18 s (3.6 s per dithered volume). To achieve this resolution for gSLIDER factor 5, 26 thin-slabs (5 mm thick) were acquired, each acquired 5× with a different slice phase (Figures 1A,B). SE-EPI scans at 1 mm isotropic resolution were acquired for tSNR comparison using parameters matched to the gSLIDER scan (TR = 18 s). A short GE-EPI scan was run for image quality comparison using the same imaging parameters but with TE = 35 ms.
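
As a quick consistency check on the protocol numbers above, the slab geometry and timing can be worked through in a few lines. This is only an arithmetic sketch; the variable names are illustrative and are not taken from the sequence code.

```python
# Protocol values as stated in the text; names are illustrative.
gslider_factor = 5         # dithered RF encodings acquired per slab
n_slabs = 26               # thin-slabs covering the slice direction
slab_thickness_mm = 5.0    # thickness of each thin-slab
full_tr_s = 18.0           # time to acquire all five encodings

# Each slab is resolved into `gslider_factor` thin slices.
slice_thickness_mm = slab_thickness_mm / gslider_factor
# Total coverage along the slice direction (matches the 130 mm FOV).
coverage_mm = n_slabs * slab_thickness_mm
# Number of reconstructed slices.
n_slices = n_slabs * gslider_factor
# Time per dithered volume, i.e. the SWAT sampling interval.
tr_per_encoding_s = full_tr_s / gslider_factor

print(slice_thickness_mm, coverage_mm, n_slices, tr_per_encoding_s)
```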

Figure 1. gSLIDER factor 5 acquisition uses five thin-slab volumes that are five times the thickness of the final slice resolution (e.g., 5 mm slab → 1 mm slices). Each of the five thin-slab volumes is acquired with a different slice phase–dither encoding (A), and the volumes are then combined to create the high-resolution image (B). SWAT gSLIDER reconstruction for 5× gain in temporal resolution (C). Blue Image (1) and Red Image (2) represent two adjacent timepoints of the original gSLIDER method, each made up of five of their own dithered RF excitations.

gSLIDER reconstruction SWAT

We used custom MATLAB reconstruction code using magnitude, phase and B1 maps. The thin, 1 mm slices are reconstructed from the five sequentially acquired thin-slabs using standard linear regression with Tikhonov regularization: $z = (A^{T}A + \lambda I)^{-1}A^{T}b = A_{\mathrm{inv}}\,b$, where $A$ is the forward transformation matrix containing the spatial RF-encoding information, $\lambda$ is the regularization parameter (set to 0.1), $b$ is the concatenation of the acquired RF-encoded slab data, and $z$ is the high-resolution reconstruction. Bloch-simulated slab profiles were used to create the forward model ($A$), which allows the reconstruction to account for the small cross-talk/coupling between adjacent slabs (Setsompop et al., 2018).
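
The regularized inversion can be illustrated with a short NumPy sketch. It is not the MATLAB reconstruction code used in the study: the encoding matrix below is an idealised ±1 stand-in (an assumption for illustration, not the Bloch-simulated profiles), and the function name and toy signal are hypothetical.

```python
import numpy as np

def tikhonov_inverse(A, lam=0.1):
    """Return A_inv = (A^T A + lam * I)^(-1) A^T for a forward matrix A."""
    n = A.shape[1]
    # Solving the linear system avoids forming an explicit matrix inverse.
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T)

# Idealised 5x5 encoding matrix: +1 everywhere, -1 on the diagonal (assumption).
A = np.ones((5, 5)) - 2.0 * np.eye(5)

rng = np.random.default_rng(0)
z_true = rng.normal(size=5)   # hypothetical signal in five 1 mm sub-slices
b = A @ z_true                # noiseless slab measurements

z_hat = tikhonov_inverse(A, lam=0.1) @ b
rel_err = np.linalg.norm(z_hat - z_true) / np.linalg.norm(z_true)
print(rel_err)                # small, non-zero shrinkage caused by lam
```

With λ = 0 this toy system is inverted exactly; a non-zero λ trades a small bias for robustness to noise, which matters more for realistic, less well-conditioned slab profiles.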

For illustrative purposes, the matrix A for 5×-gSLIDER can be roughly approximated as: [−1 1 1 1 1; 1 −1 1 1 1; 1 1 −1 1 1; 1 1 1 −1 1; 1 1 1 1 −1]. This presumes that the underlying signal is stationary. While this is true for anatomical imaging like diffusion MRI, in fMRI each of the sequentially acquired slabs contains useful temporal information amenable to a sliding window reconstruction (Figure 1C), where for example the next TR could be reconstructed using the following shifted A matrix: [1 −1 1 1 1; 1 1 −1 1 1; 1 1 1 −1 1; 1 1 1 1 −1; −1 1 1 1 1]. We call this “view-sharing” approach Sliding Window Accelerated Temporal resolution (SWAT) and evaluate the expected 5× gain in temporal resolution and resultant statistical power. The sliding window reconstruction in gSLIDER-SWAT acts as a temporal frequency-domain filtered upsampling that unaliases and recovers, albeit at an attenuated level, higher-frequency hemodynamic components. This is done by exploiting the unique spatial–temporal information from the five RF excitation profiles per slab, which should enable detection of BOLD activity changes not visible with the original gSLIDER method.
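
The sliding-window idea can be sketched as follows, again using the idealised ±1 matrix rather than Bloch-simulated profiles. The function names, the toy signal and the single-slab setup are assumptions for illustration; the study's MATLAB implementation may differ in detail.

```python
import numpy as np

A = np.ones((5, 5)) - 2.0 * np.eye(5)   # idealised encoding matrix (assumption)
LAM = 0.1                               # regularization parameter from the text

def recon(A_win, b_win, lam=LAM):
    """Tikhonov-regularized reconstruction of one window of five encodings."""
    n = A_win.shape[1]
    return np.linalg.solve(A_win.T @ A_win + lam * np.eye(n), A_win.T @ b_win)

def gslider_standard(b):
    """One volume per non-overlapping block of five encodings."""
    n = len(A)
    return np.array([recon(A, b[t:t + n]) for t in range(0, len(b) - n + 1, n)])

def gslider_swat(b):
    """One volume per encoding: slide the window one shot at a time and
    cyclically shift the rows of A so they match the encodings in the window."""
    n = len(A)
    return np.array([recon(np.roll(A, -(t % n), axis=0), b[t:t + n])
                     for t in range(len(b) - n + 1)])

# Toy data: a stationary signal in five sub-slices, measured over 20 shots.
z = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
b = np.array([A[t % 5] @ z for t in range(20)])

standard = gslider_standard(b)   # 4 volumes
swat = gslider_swat(b)           # 16 volumes
print(standard.shape, swat.shape)
```

For this stationary toy signal every window returns the same estimate; the benefit of the sliding window only appears when the underlying signal changes between shots, as it does in fMRI.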

Task 1 Hemifield

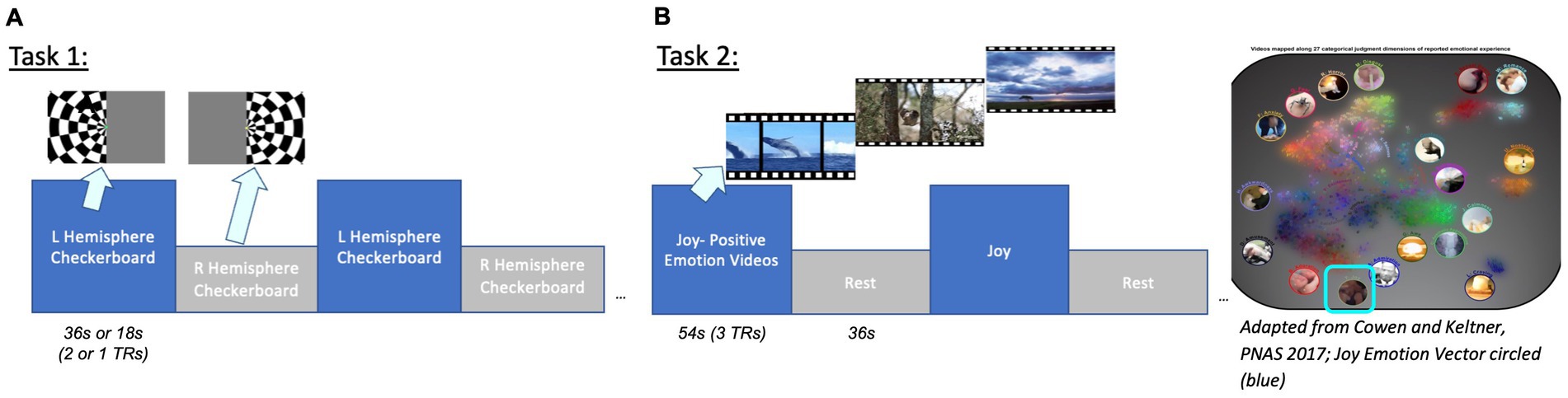

Initial evaluation of the gSLIDER-SWAT sequence was performed using a visual hemifield localizer stimulus consisting of alternating left vs. right visual hemifield flickering checkerboards: 36 s per hemifield block, T = 72 s, 9 repeats per run; 2 runs per subject (Figure 2). Subsequent testing increased the stimulation frequency to the Nyquist frequency of gSLIDER (18 s per hemifield block, T = 36 s, 18 repeats per run). Stimuli were presented using PsychoPy3 and an Avotec SV-6060 Projector. Throughout the hemifield stimulus, volunteers were tasked with focusing on a fixation dot at the center of the screen and pressing a button each time the dot turned yellow.
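
The relationship between block duration and the Nyquist limit can be made explicit with a small calculation. The helper names below are illustrative; the only inputs are the TRs and block durations given in the text.

```python
def nyquist_hz(tr_s):
    """Highest frequency representable when sampling every tr_s seconds."""
    return 1.0 / (2.0 * tr_s)

def task_frequency_hz(block_s):
    """Fundamental frequency of a left/right design: one cycle = two blocks."""
    return 1.0 / (2.0 * block_s)

slow_task = task_frequency_hz(36.0)    # 1/72 Hz, period T = 72 s
fast_task = task_frequency_hz(18.0)    # 1/36 Hz, period T = 36 s

nyq_gslider = nyquist_hz(18.0)         # 1/36 Hz for the original reconstruction
nyq_swat = nyquist_hz(3.6)             # 1/7.2 Hz for the SWAT reconstruction

print(slow_task, fast_task, nyq_gslider, nyq_swat)
```

With 18 s blocks the task frequency coincides with the Nyquist frequency of the original gSLIDER sampling, whereas it sits a factor of five below the SWAT limit.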

Figure 2. Task designs. Task 1: Visual hemifield localizer stimulus (36 s or 18 s blocks corresponding to 2 or 1 TRs). Task 2: Positive Emotion Joy naturalistic video stimuli (from Cowen and Keltner, 2017). Videos were clustered by emotion vector to group clips with the highest average cosine similarity. Groups of clips with the highest joy category and valence ratings were presented.

Task 2 Joy

Task 2 used positive-emotion video stimuli from Cowen and Keltner (2017). Clips were clustered by emotion vector to group those with the highest average cosine similarity, and the groups with the highest valence, arousal and joy category ratings were presented, with timing as in Task 1 (alternating videos on and rest). The clustering problem is defined as follows: given a collection of video clips, each with a duration and an emotion vector, group the clips such that each group contains clips with similar emotion vectors and the total duration of each group is ~60 s. To group the video clips, we used a greedy algorithm, an approach that makes the locally optimal choice at each step. To build a group, we greedily add the clip that has the highest average cosine similarity to the group and that also satisfies the group’s maximum duration constraint with some slack. This process is repeated until the total duration of the group reaches approximately 1 min, after which a new group is created. Videos with the highest joy category ratings and highest valence (example clips: Corgi puppies running in grass, babies playing with toys, a blind man seeing for the first time; average valence = 7.13; arousal = 5.93; joy rating = 0.25) were selected, with 54 s on alternating with 36 s rest (cross-hair fixation) for a total of 6 cycles = 540 s/run.
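
A minimal sketch of such a greedy grouping is given below. Several details are not specified in the text and are assumptions here: each group is seeded with the first unused clip, the slack is 5 s, and emotion vectors are non-zero. The function names and toy clips are hypothetical.

```python
import numpy as np

def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def greedy_groups(durations, vectors, target_s=60.0, slack_s=5.0):
    """Group clips so that each group is emotionally similar and lasts ~target_s."""
    remaining = list(range(len(durations)))
    groups = []
    while remaining:
        group = [remaining.pop(0)]            # assumption: seed with first unused clip
        total = durations[group[0]]
        while total < target_s:
            # Candidates that keep the group within the duration limit plus slack.
            fits = [i for i in remaining
                    if total + durations[i] <= target_s + slack_s]
            if not fits:
                break
            # Locally optimal choice: highest mean similarity to current members.
            best = max(fits, key=lambda i: np.mean(
                [cosine(vectors[i], vectors[j]) for j in group]))
            group.append(best)
            remaining.remove(best)
            total += durations[best]
        groups.append(group)
    return groups

# Toy example: four 30 s clips with two-dimensional emotion vectors.
durations = [30.0, 30.0, 30.0, 30.0]
vectors = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]])
groups = greedy_groups(durations, vectors)
print(groups)
```

In the toy example the two clips dominated by the first emotion dimension end up together, as do the two dominated by the second.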

Ljung-Box test

To validate the use of FILM to account for temporal autocorrelation in the fMRI time series, we performed Ljung-Box tests on the GLM residuals for gSLIDER (GS) and gSLIDER-SWAT. The Ljung-Box test assesses whether the autocorrelations of the residuals are significantly different from zero for a given number of lags (Ljung and Box, 1978). Given that the hemodynamic response function is on the order of 30–40 s, we selected a 36 s window; with TR_GS = 18 s and TR_SWAT = 3.6 s, this corresponds to nlag = 2 for GS and nlag = 10 for SWAT, chosen to capture both short- and long-range temporal dependencies (Yue et al., 2024).
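
For reference, the Ljung-Box statistic is Q = n(n + 2) Σ ρ_k² / (n − k), summed over lags k = 1…h and compared against a chi-square distribution. The sketch below is a self-contained illustration, not the analysis code used in the study: it uses h degrees of freedom with no adjustment for fitted model parameters, and the chi-square tail is a closed form that holds only for even degrees of freedom (which covers both lag choices here). Function names are hypothetical.

```python
import math
import numpy as np

def ljung_box_q(residuals, nlag):
    """Ljung-Box Q statistic for lags 1..nlag."""
    x = np.asarray(residuals, dtype=float)
    x = x - x.mean()
    n = len(x)
    denom = float(x @ x)
    q = 0.0
    for k in range(1, nlag + 1):
        rho_k = float(x[k:] @ x[:-k]) / denom   # sample autocorrelation at lag k
        q += rho_k ** 2 / (n - k)
    return n * (n + 2) * q

def chi2_sf_even(q, dof):
    """Chi-square upper-tail probability; closed form valid for even dof only."""
    if dof % 2 != 0:
        raise ValueError("closed form requires an even number of degrees of freedom")
    half = q / 2.0
    return math.exp(-half) * sum(half ** i / math.factorial(i)
                                 for i in range(dof // 2))

# Number of lags spanning the 36 s window at each sampling interval.
window_s = 36.0
nlag_gs = round(window_s / 18.0)
nlag_swat = round(window_s / 3.6)

# Toy residuals with strong autocorrelation (a slow sinusoid).
toy = np.sin(2 * np.pi * np.arange(150) / 20.0)
p_toy = chi2_sf_even(ljung_box_q(toy, nlag_swat), nlag_swat)
print(nlag_gs, nlag_swat, p_toy)
```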

fMRI analysis Hemifield

fMRI data processing was performed using FEAT (FMRI Expert Analysis Tool) v6.00, part of FSL (FMRIB’s Software Library, www.fmrib.ox.ac.uk/fsl). Independent Component Analysis (ICA) was performed on gSLIDER with and without SWAT reconstructions using FSL’s MELODIC, with signal and noise components manually identified. Pre-processed data were whitened and decomposed into sets of vectors to describe signal variation across temporal and spatial domains (Beckmann and Smith, 2004). GLM analysis was performed on the fast 18 s block stimuli data using FSL’s FEAT, which included pre-whitening using FILM (Woolrich et al., 2001).

As a control comparison to ensure that the observed benefits of SWAT reconstruction are not artificially inflated due merely to the presence of additional time points (i.e., up-sampled interpolation), we also performed simple 5-fold interpolation of the gSLIDER time series utilizing MATLAB’s interpolation function (which inserts zeros into the original signal and then applies a lowpass interpolation filter at half the Nyquist frequency to the expanded sequence). This implementation allows the original data to pass through unchanged, without adding any additional temporal information, and interpolates to minimize the mean-square error between the interpolated points and their ideal values.
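
The zero-insertion plus low-pass idea behind this control can be sketched in NumPy. This is not MATLAB's interpolation routine: the windowed-sinc filter, its length and the function name are assumptions chosen so that the original samples pass through unchanged.

```python
import numpy as np

def upsample_lowpass(x, factor=5, half_width=4):
    """Upsample by zero insertion followed by a windowed-sinc low-pass filter."""
    x = np.asarray(x, dtype=float)
    up = np.zeros(len(x) * factor)
    up[::factor] = x                                  # insert zeros between samples
    n = np.arange(-half_width * factor, half_width * factor + 1)
    # The sinc is zero at every non-zero multiple of `factor`, so the original
    # samples are reproduced exactly; the Hamming window truncates it smoothly.
    h = np.sinc(n / factor) * np.hamming(len(n))
    return np.convolve(up, h, mode="same")

rng = np.random.default_rng(0)
x = rng.normal(size=40)        # stand-in for a 40-volume gSLIDER time series
y = upsample_lowpass(x)        # 200 samples on the 5x finer time grid
print(len(y), np.allclose(y[::5], x))
```

Because the inserted samples are computed only from the original ones, this kind of upsampling adds time points without adding temporal information, which is what makes it a useful control for SWAT.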

fMRI Analysis Joy

GLM analysis preprocessing included: 2 mm smoothing, high-pass filter (90 s), FILM pre-whitening, 6 motion parameter regressors, and registration to the MNI 2 mm atlas. For random effects group analyses, n = 6 runs were included. Z (Gaussianised T/F) statistical images were thresholded using clusters determined by Z > 1.7 and a (corrected) cluster significance threshold of p = 0.05 (Worsley, 2001), unmasked.

Results

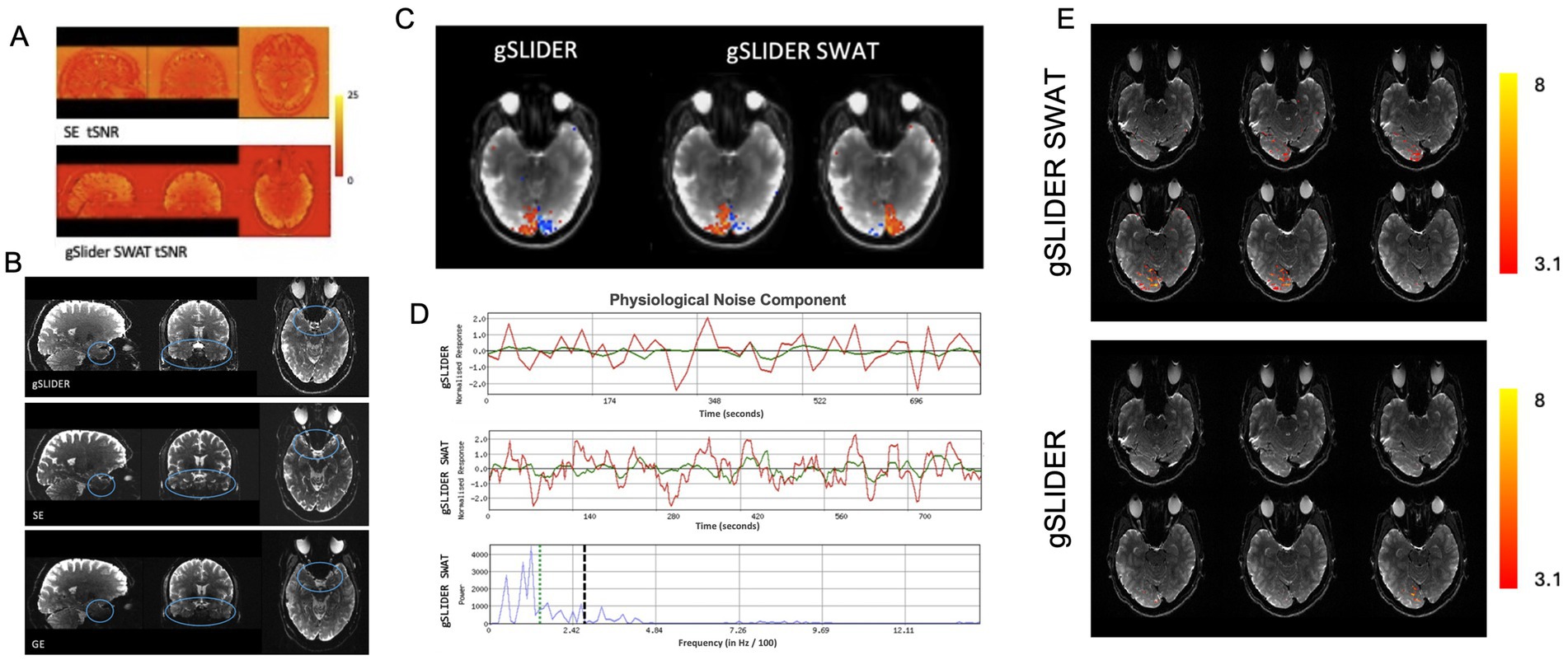

As expected, gSLIDER showed significant tSNR gains (~2×) over traditional SE (Figure 3A), along with improved signal coverage in regions of signal dropout, such as inferior frontal and temporal regions, compared to traditional GE-EPI (Figure 3B).
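
For readers unfamiliar with the metric, tSNR is conventionally computed voxel-wise as the temporal mean divided by the temporal standard deviation. The sketch below assumes that conventional definition; the exact preprocessing used for the comparison in Figure 3A is not specified here, and the function name and toy data are illustrative.

```python
import numpy as np

def tsnr(timeseries, axis=-1):
    """Voxel-wise temporal SNR: mean over time divided by std over time."""
    ts = np.asarray(timeseries, dtype=float)
    return ts.mean(axis=axis) / ts.std(axis=axis, ddof=1)

# Toy 4D data set (x, y, z, time): baseline 100 with +/-1 fluctuations.
fluct = np.tile([-1.0, 1.0], 25)                 # 50 time points
data = 100.0 + np.broadcast_to(fluct, (2, 2, 2, 50))
tsnr_map = tsnr(data)
print(tsnr_map.shape, tsnr_map[0, 0, 0])
```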

Figure 3. Advantages of gSLIDER SWAT. (A) Improved (×2) tSNR relative to SE-EPI. (B) Improved signal in inferior frontal and temporal regions compared to traditional GE. (C) Using FSL’s MELODIC analysis of visual hemifield task data, gSLIDER SWAT resolves individual hemispheric activations into two separate ICs, while gSLIDER without SWAT does not. (D) Time courses of a physiological noise component are shown without SWAT (top) and with SWAT (middle). The power spectrum of the gSLIDER SWAT time series (bottom) clearly shows power above the Nyquist frequency of gSLIDER without SWAT (vertical dashed black line). For reference, the hemifield task frequency is indicated by the vertical dotted green line. (E) BOLD activations (Z > 3.1) for hemifield localizer task with stimulus frequency set to the Nyquist frequency of gSLIDER (TR = block duration = 18 s) for gSLIDER-SWAT (top) and gSLIDER (bottom).

Ljung-Box

In the Ljung-Box tests of temporal autocorrelation, gSLIDER demonstrated no significant autocorrelations with or without FILM pre-whitening (all p > 0.29). In contrast, SWAT exhibited significant autocorrelations without pre-whitening (all p < 0.001), which were successfully mitigated with FILM (all p > 0.3). Consequently, all subsequent analyses incorporate FILM pre-whitening.

Hemifield

Figure 3 demonstrates the advantage of gaining high frequency information with the higher temporal sampling provided by gSLIDER-SWAT. With the 5-fold increase in temporal sampling frequency with SWAT, independent components corresponding to individual visual hemifield activation patterns are resolved, while the original reconstructed 18 s temporal resolution gSLIDER data is only able to resolve merged visual activity (Figure 3C; Supplementary Figure S1). From the gSLIDER-SWAT data, MELODIC detected ~3× more independent components compared to both the original gSLIDER and the 5-fold simple interpolation of the gSLIDER data (i.e., the control). Figure 3D shows the time courses of a physiological noise component with and without SWAT. The power spectrum of the gSLIDER-SWAT time series (bottom) clearly shows power above the Nyquist frequency of gSLIDER without SWAT (vertical dashed black line). For reference, the 36 s block hemifield task frequency is indicated by the vertical dotted green line. As expected, simple interpolation of gSLIDER data did not introduce power above the Nyquist frequency (Supplementary Figure S1A). Importantly, when the stimulus frequency was increased to the Nyquist frequency of gSLIDER (TR = block duration = 18 s), gSLIDER-SWAT was still able to detect robust activity throughout visual cortex while original gSLIDER was not (Figure 3E).

Joy

Initial GLM analysis showed less CNR with 1 mm³ SE compared to gSLIDER-SWAT during the Joy task, with SE showing minimal activation restricted to visual cortex (Figure 4A). Unlike SE, gSLIDER-SWAT showed significant activation in the extended amygdala, in medial prefrontal and orbitofrontal cortex, and throughout visual cortex. Next, group random effects analyses revealed significant activation in frontal and occipital regions with both gSLIDER and SWAT: bilateral frontal (superior/middle frontal gyri, BA10/11, including rostral PFC; anterior cingulate BA32); and bilateral occipital (primary visual, middle occipital gyri including V4 and V5 and temporo-parieto-occipital junction). With SWAT, additional significant activation was seen in the left extended amygdala/hippocampus (basolateral amygdalar nucleus; Z = 3.33), with no differences reaching significance in direct comparison. As a point of comparison between the reconstructions, the ACC (BA32) was significant at Z = 2.13 with GS and Z = 2.99 with SWAT; a 28% gain in this a priori region, which is located inferiorly near regions prone to susceptibility artifacts. At the individual subject level (Z > 2), significant results were also seen in the reward network, including the right nucleus accumbens and striatum; however, these activations did not reach statistical significance at the group level due to the limited sample size.

Figure 4. Joy. We first demonstrate improved CNR detection of the neural substrates of Joy - Fixation with gSLIDER-SWAT (A, circled) compared to SE. Random effects analyses (B, bottom table/panels; n = 6 runs) reveal significant activation in occipital and frontal regions using gSLIDER, and with gSLIDER-SWAT additionally in left hippocampus/extended amygdala-basolateral subregion (circled) (3T, 1 mm³; Z > 1.7 cluster corrected, p < 0.05, no masking).

Discussion

This is the first high spatial–temporal resolution fMRI study at 3T using gSLIDER-SWAT, which seeks to improve coverage and detection in areas of high signal dropout near critical brain structures like the amygdala. The amygdala, a complex structure with more than a dozen nuclei, is conserved across vertebrates and situated deep and medial in the temporal lobe in primates (Kim et al., 2011). Additionally, joy has recently been discussed conceptually across species as intense, brief, and event-driven (Nelson et al., 2023). High resolution (≤1 mm isotropic) is essential for investigating the functionally distinct amygdalar subnuclei, which are small, have unique neuroanatomical connectivity and serve discrete functions. Here, we found significant activation of the basolateral (BL) nucleus of the amygdala during the viewing of Joy stimuli. The BL nucleus is composed of glutamatergic pyramidal neurons (~80–90%) and GABAergic neurons, and BL interneurons receive extensive sensory inputs from cortical and thalamic regions. It has been implicated in emotional learning and memory, fear conditioning and anxiety through projections to areas like the central amygdala, prefrontal cortex and hippocampus (McDonald, 2020) and in reward processing through direct excitatory connections to the nucleus accumbens (Ambroggi et al., 2008).

Additionally, at the single subject level during joy, we saw significant activation in the nucleus accumbens and striatum, key regions in the dopaminergic reward system. These findings align with prior research on the mesolimbic system, providing evidence of its involvement in joy. Dopaminergic neurons from the ventral tegmental area (VTA) primarily contribute to the mesolimbic and mesocortical pathways, projecting dopamine to the NAc and PFC (Schultz et al., 1997; Young and Nusslock, 2016). The NAc serves as a hub that links reward-related behavior, integrating inputs from cortical areas involved in executive functions (PFC) with emotional and sensory information from the limbic system. This integration facilitates goal-directed behavior, motivation and learning, and has been implicated in up-regulating positive emotions (Rueschkamp et al., 2019).

The neural circuitry of emotion is critical to study as its dysfunction contributes to various neuropsychiatric conditions including mood disorders characterized by emotion dysregulation (Phillips et al., 2003b; Altshuler et al., 2005). Understanding how these neural networks operate in healthy and clinical populations can provide important insights into the neural basis of emotion processing and its disruption in psychiatric illness (Townsend and Altshuler, 2012; Njau et al., 2020). The amygdala and prefrontal cortex share bidirectional connections, and the BL amygdala has extensive reciprocal projections with the medial and orbital prefrontal regions - a circuit critical for emotional processing, learning and regulation. Studies have shown that disrupted connectivity between these regions is associated with impaired emotion regulation and mood dysregulation (Townsend et al., 2013). Advancing our understanding of emotion circuitry requires robust high-resolution neuroimaging methods that can reliably capture neural activity in regions like the orbitofrontal cortex and amygdala. We hope this study spurs further innovation in sequence development and other alternatives to traditional GE sequences for affective neuroscience fMRI.

With GE, fMRI signal acquisition in the amygdala and the orbitofrontal cortex/PFC can be compromised due to susceptibility artifacts arising from the adjacent air-filled cavities, particularly the sphenoid sinus and petrous portion of the temporal bone. These artifacts at the tissue-air interfaces lead to signal dropout and geometric distortions in the affected regions, limiting accurate measurement and localization. By incorporating novel SWAT reconstruction into gSLIDER, we were able to achieve both the spatial resolution necessary to resolve these small brain structures and the temporal resolution required for typical fMRI.

One recent study did apply gSLIDER to fMRI (Han et al., 2020). However, in order to achieve a high temporal resolution (TR = 1.5 s), they were limited to a gSLIDER factor of 2 and 1.5 mm isotropic spatial resolution. In contrast, our study utilizes a gSLIDER factor of 5 that provides a theoretical gain of 58% higher SNR efficiency, which facilitates the factor of 3.4× finer volumetric resolution and opens the door to high-resolution fMRI at 3T. Importantly, although our initial testing utilized 1 mm isotropic resolution without multiband to facilitate rapid image reconstruction, optimization and evaluation of our scan protocols, we anticipate that future work will be able to utilize higher resolutions (≤0.8 mm isotropic) in conjunction with multiband acceleration so as to maintain the same gSLIDER-SWAT TR of ~3.6 s.
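
The two figures quoted above can be reproduced with simple arithmetic, assuming that SNR efficiency scales with the square root of the gSLIDER factor and that both protocols use isotropic voxels. This is one reading that matches the stated numbers rather than a derivation given in the text; the variable names are illustrative.

```python
import math

factor_this_study = 5
factor_prior = 2

# Assumption: SNR efficiency scales as sqrt(gSLIDER factor).
snr_efficiency_gain = math.sqrt(factor_this_study / factor_prior) - 1.0

# Voxel volume ratio between 1.5 mm and 1 mm isotropic voxels.
voxel_volume_ratio = 1.5 ** 3 / 1.0 ** 3

print(round(snr_efficiency_gain * 100), voxel_volume_ratio)
```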

SWAT is an effective novel gSLIDER reconstruction method that can further enhance BOLD signal detection by reclaiming additional high frequency temporal information. The incorporation of SWAT reconstruction into gSLIDER is a significant improvement as it accelerates and adapts an originally slow diffusion MRI technique (TR = 18 s) to one with sufficient temporal resolution for most high-resolution fMRI applications (TR = 3.6 s). We validated the benefit of this faster sampling afforded by SWAT through several approaches, including visual stimulation at the Nyquist frequency of gSLIDER (Figure 3E). As expected when sampling at the Nyquist frequency, the ability to detect BOLD activity becomes extremely sensitive to the temporal alignment of the BOLD response and the acquisition of individual slices. This explains why just a single slice of the original gSLIDER showed significant BOLD activity. Importantly, while the broad width of the sliding window results in a temporal point-spread-function that attenuates higher temporal frequencies, it does not eliminate them (Figure 3D; Supplementary Figure S1). This is consistent with the findings of high-resolution fMRI studies evaluating the impact and optimization of spatial blurring versus sampling resolution on the ability to resolve mesoscale functional organization (Yacoub et al., 2008; Vu et al., 2018).

We also confirmed that the observed improvement in statistical power afforded by SWAT was not merely due to the faster sampling rate available (e.g., via simple 5-fold interpolation), since results using simple interpolation were similar to those of the original gSLIDER (Supplementary Figures S1–S3). However, accounting for temporal autocorrelation, a known characteristic of fMRI data, in the fMRI model for gSLIDER-SWAT is essential, as unmodeled autocorrelation may result in inflated statistics and false positives (Supplementary Figure S4). Future investigation into the impact of different autocorrelation mitigation methods on Type 1 and Type 2 errors in the context of SWAT may be of interest. In this study, the Ljung-Box test confirmed that use of FSL’s FILM pre-whitening was effective at removing the autocorrelations introduced by SWAT.

gSLIDER fMRI is an evolving technology that holds promise for advancing high-resolution neuroscience research at 3T by combining the benefits of SE with enhanced tSNR. Further refinement is required to address the current limitation of slab-boundary artifacts observed every fifth slice (Figure 1B). Future incorporation of novel techniques like pseudo Partition-encoded Simultaneous Multislab (pPRISM; Chang, 2023) may help to mitigate these limitations and facilitate continued optimization of gSLIDER for fMRI.

Conclusion

This study is the first to demonstrate the feasibility of high spatial–temporal resolution (≤1 mm³) fMRI at 3T using gSLIDER-SWAT, a novel gSLIDER reconstruction technique that shortens the effective TR to values typically used in fMRI and offers one alternative approach to traditional GE and SE sequences. When applied to investigate the neural correlates of joy using naturalistic stimuli in this pilot sample, gSLIDER-SWAT revealed activation in the salience network and emotion processing regions including the basolateral amygdala, anterior cingulate and prefrontal cortex; in visual regions; and in reward network regions like the nucleus accumbens and striatum at the individual subject level. While further optimization is needed to address the slab-boundary artifacts before the technique becomes a viable alternative, we hope this study advances the field of high-resolution fMRI at 3T and spurs additional sequence innovation. The combination of enhanced spatial and temporal resolution and reduced susceptibility artifacts offered by gSLIDER-SWAT may open possibilities for investigating functional organization in regions traditionally challenged by signal dropout.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by University of California, San Francisco. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

JT: Conceptualization, Investigation, Methodology, Software, Validation, Writing – original draft, Writing – review & editing, Data curation, Formal analysis, Visualization. AM: Investigation, Validation, Writing – original draft, Writing – review & editing, Data curation, Formal analysis, Visualization. ZN: Investigation, Writing – review & editing. AB: Software, Writing – review & editing, Conceptualization. BK: Conceptualization, Writing – review & editing. RA-A: Conceptualization, Writing – review & editing. CL: Conceptualization, Methodology, Software, Writing – review & editing. AV: Conceptualization, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. NIH NIBIB R01EB028670 and UCSF RAP 7504825 both funded the work presented in this study (both personnel and MRI scan time). UCSF RAP 7504825 paid for the publishing charges.

Acknowledgments

We gratefully acknowledge John Kornak for his statistical expertise and consultations, and Salvatore Torrisi for his fMRI expertise and consultations, both of which substantially improved our analytical approach and strengthened the methodological rigor of this work.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnimg.2025.1537440/full#supplementary-material

SUPPLEMENTARY FIGURE S1A | FSL’s MELODIC results for visual hemifield task data (36 s blocks) from an example subject (Subject 1) for original gSLIDER (top), 5-fold simple interpolated gSLIDER (middle), and gSLIDER-SWAT (bottom).

SUPPLEMENTARY FIGURE S1B | FSL’s MELODIC results for visual hemifield task data (36 s blocks) from an additional subject (Subject 2) for original gSLIDER (top), 5-fold simple interpolated gSLIDER (middle), and gSLIDER-SWAT (bottom).

SUPPLEMENTARY FIGURE S2 | FSL’s FEAT GLM results for visual hemifield task data (36 s blocks) from Subject 1 with versus without FILM pre-whitening for original gSLIDER (top), 5-fold simple interpolated gSLIDER (middle), and gSLIDER-SWAT (bottom).

SUPPLEMENTARY FIGURE S3 | Example voxel time courses for the visual hemifield task data (36 s blocks) from Subject 1 with FILM pre-whitening for original gSLIDER (top), 5-fold simple interpolated gSLIDER (middle), and gSLIDER-SWAT (bottom).

SUPPLEMENTARY FIGURE S4 | FSL’s FEAT GLM results for visual hemifield task data (36 s blocks) from Subject 1 comparing SE-EPI (left), gSLIDER (middle), and gSLIDER-SWAT (right).

References

Altshuler, L., Bookheimer, S., Proenza, M. A., Townsend, J., Sabb, F., Firestine, A., et al. (2005). Increased amygdala activation during mania: a functional magnetic resonance imaging study. Am. J. Psychiatry 162, 1211–1213. doi: 10.1176/appi.ajp.162.6.1211

Ambroggi, F., Ishikawa, A., Fields, H. L., and Nicola, S. M. (2008). Basolateral amygdala neurons facilitate reward-seeking behavior by exciting nucleus accumbens neurons. Neuron 59, 648–661. doi: 10.1016/j.neuron.2008.07.004

Barrett, L. F., Mesquita, B., Ochsner, K. N., and Gross, J. J. (2007). The experience of emotion. Annu. Rev. Psychol. 58, 373–403. doi: 10.1146/annurev.psych.58.110405.085709

Beckett, A. J. S., Dadakova, T., Townsend, J., Huber, L., Park, S., and Feinberg, D. A. (2020). Comparison of BOLD and CBV using 3D EPI and 3D GRASE for cortical layer functional MRI at 7T. Magn. Reson. Med. 84, 3128–3145. doi: 10.1002/mrm.28347

Beckett, A, Torrisi, S, Setsompop, K, Feinberg, D, and Vu, AT. (2021). Spin-echo generalized slice dithered enhanced resolution (gSLIDER) for high-resolution fMRI at 3T. Proc. ISMRM.

Beckmann, C. F., and Smith, S. M. (2004). Probabilistic independent component analysis for functional magnetic resonance imaging. IEEE Trans. Med. Imaging 23, 137–152. doi: 10.1109/TMI.2003.822821

Bonnet, L., Comte, A., Tatu, L., Millot, J. L., Moulin, T., and Medeiros de Bustos, E. (2015). The role of the amygdala in the perception of positive emotions: an "intensity detector". Front. Behav. Neurosci. 9:178. doi: 10.3389/fnbeh.2015.00178

Chang, WT. (2023). Pseudo partition-encoded simultaneous multislab (pPRISM) for submillimeter diffusion imaging without navigator and slab-boundary artifacts, ISMRM.

Cowen, A. S., and Keltner, D. (2017). Self-report captures 27 distinct categories of emotion bridged by continuous gradients. Proc. Natl. Acad. Sci. USA 114, E7900–E7909. doi: 10.1073/pnas.1702247114

Cowen, A. S., and Keltner, D. (2018). Clarifying the conceptualization, dimensionality, and structure of emotion: response to Barrett and colleagues. Trends Cogn. Sci. 22, 274–276. doi: 10.1016/j.tics.2018.02.003

Cowen, A. S., and Keltner, D. (2020). What the face displays: mapping 28 emotions conveyed by naturalistic expression. Am. Psychol. 75, 349–364. doi: 10.1037/amp0000488

Cowen, A. S., Laukka, P., Elfenbein, H. A., Liu, R., and Keltner, D. (2019). The primacy of categories in the recognition of 12 emotions in speech prosody across two cultures. Nat. Hum. Behav. 3, 369–382. doi: 10.1038/s41562-019-0533-6

Davidson, R. J., and Lutz, A. (2008). Buddha's brain: neuroplasticity and meditation. IEEE Signal Process. Mag. 25, 176–174. doi: 10.1109/MSP.2008.4431873

Dayan, E., Barliya, A., Gelder, B., Hendler, T., Malach, R., and Flash, T. (2018). Motion cues modulate responses to emotion in movies. Sci. Rep. 8:10881. doi: 10.1038/s41598-018-29111-4

Engel, S. A., Glover, G. H., and Wandell, B. A. (1997). Retinotopic organization in human visual cortex and the spatial precision of functional MRI. Cereb Cortex. 7, 181–192. doi: 10.1093/cercor/7.2.181

Esch, T. (2022). The abc model of happiness—neurobiological aspects of motivation and positive mood, and their dynamic changes through practice, the course of life. Biology 11:843. doi: 10.3390/biology11060843

Han, S., Liao, C., Manhard, M. K., Park, D. J., Bilgic, B., Fair, M. J., et al. (2020). Accelerated spin-echo functional MRI using multisection excitation by simultaneous spin-echo interleaving (MESSI) with complex-encoded generalized slice dithered enhanced resolution (cgSlider) simultaneous multislice echo-planar imaging. Magn. Reson. Med. 84, 206–220. doi: 10.1002/mrm.28108

Horikawa, T., Cowen, A. S., Keltner, D., and Kamitani, Y. (2020). The neural representation of visually evoked emotion is high-dimensional, categorical, and distributed across Transmodal brain regions. iScience 23:101060. doi: 10.1016/j.isci.2020.101060

Jääskeläinen, I. P., Sams, M., Glerean, E., and Ahveninen, J. (2021). Movies and narratives as naturalistic stimuli in neuroimaging. NeuroImage 224:117445. doi: 10.1016/j.neuroimage.2020.117445

Kashyap, S., Ivanov, D., Havlicek, M., Sengupta, S., Poser, B. A., and Uludag, K. (2018). Resolving laminar activation in human V1 using ultra-high spatial resolution fMRI at 7T. Sci. Rep. 8:17063. doi: 10.1038/s41598-018-35333-3

Kim, M. J., Loucks, R. A., Palmer, A. L., Brown, A. C., Solomon, K. M., Marchante, A. N., et al. (2011). The structural and functional connectivity of the amygdala: from normal emotion to pathological anxiety. Behav. Brain Res. 223, 403–410. doi: 10.1016/j.bbr.2011.04.025

Koelsch, S., and Skouras, S. (2014). Functional centrality of amygdala, striatum and hypothalamus in a "small-world" network underlying joy: an fMRI study with music. Hum. Brain Mapp. 35, 3485–3498. doi: 10.1002/hbm.22416

Labuschagne, I., Dominguez, J. F., Grace, S., Mizzi, S., Henry, J. D., Peters, C., et al. (2024). Specialization of amygdala subregions in emotion processing. Hum. Brain Mapp. 45:e26673. doi: 10.1002/hbm.26673

Ljung, G. M., and Box, G. E. P. (1978). On a measure of lack of fit in time series models. Biometrika 65, 297–303. doi: 10.1093/biomet/65.2.297

McDonald, A. J. (2020). Functional neuroanatomy of the basolateral amygdala: neurons, neurotransmitters, and circuits. Handb. Behav. Neurosci. 26, 1–38. doi: 10.1016/b978-0-12-815134-1.00001-5

Nelson, X. J., Taylor, A. H., Cartmill, E. A., Lyn, H., Robinson, L. M., Janik, V., et al. (2023). Joyful by nature: approaches to investigate the evolution and function of joy in non-human animals. Biol. Rev. Camb. Philos. Soc. 98, 1548–1563. doi: 10.1111/brv.12965

Njau, S., Townsend, J., Wade, B., Hellemann, G., Bookheimer, S., Narr, K., et al. (2020). Neural subtypes of euthymic bipolar I disorder characterized by emotion regulation circuitry. Biol. Psychiatry Cogn. Neurosci. Neuroimaging 5, 591–600. doi: 10.1016/j.bpsc.2020.02.011

Norris, D. G., Zysset, S., Mildner, T., and Wiggins, C. J. (2002). An investigation of the value of spin-echo-based fMRI using a Stroop color-word matching task and EPI at 3T. NeuroImage 15, 719–726. doi: 10.1006/nimg.2001.1005

Ochsner, K. N., Silvers, J. A., and Buhle, J. T. (2012). Functional imaging studies of emotion regulation: a synthetic review and evolving model of the cognitive control of emotion. Ann. N. Y. Acad. Sci. 1251, E1–E24. doi: 10.1111/j.1749-6632.2012.06751.x

Ojemann, J. G., Akbudak, E., Snyder, A. Z., McKinstry, R. C., Raichle, M. E., and Conturo, T. E. (1997). Anatomic localization and quantitative analysis of gradient refocused echo-planar fMRI susceptibility artifacts. NeuroImage 6, 156–167. doi: 10.1006/nimg.1997.0289

Parkes, L. M., Schwarzbach, J. V., Bouts, A. A., Deckers, R. H., Pullens, P., Kerskens, C. M., et al. (2005). Quantifying the spatial resolution of the gradient echo and spin echo BOLD response at 3 tesla. Magn. Reson. Med. 54, 1465–1472. doi: 10.1002/mrm.20712

Phillips, M. L., Drevets, W. C., Rauch, S. L., and Lane, R. (2003a). Neurobiology of emotion perception I: the neural basis of normal emotion perception. Biol. Psychiatry 54, 504–514. doi: 10.1016/S0006-3223(03)00168-9

Phillips, M. L., Drevets, W. C., Rauch, S. L., and Lane, R. (2003b). Neurobiology of emotion perception II: implications for major psychiatric disorders. Biol. Psychiatry 54, 515–528. doi: 10.1016/S0006-3223(03)00171-9

Rueschkamp, J. M. G., Brose, A., Villringer, A., and Gaebler, M. (2019). Neural correlates of up-regulating positive emotions in fMRI and their link to affect in daily life. Soc. Cogn. Affect. Neurosci. 14, 1049–1059. doi: 10.1093/scan/nsz079

Saarimäki, H., Ejtehadian, L. F., Glerean, E., Jääskeläinen, I. P., Vuilleumier, P., Sams, M., et al. (2018). Distributed affective space represents multiple emotion categories across the human brain. Soc. Cogn. Affect. Neurosci. 13, 471–482. doi: 10.1093/scan/nsy018

Saarimäki, H., Gotsopoulos, A., Jääskeläinen, I. P., Lampinen, J., Vuilleumier, P., Hari, R., et al. (2016). Discrete neural signatures of basic emotions. Cereb. Cortex 26, 2563–2573. doi: 10.1093/cercor/bhv086

Schultz, W., Dayan, P., and Montague, P. R. (1997). Neural substrate of prediction and reward. Science 275, 1593–1599. doi: 10.1126/science.275.5306.1593

Setsompop, K., Fan, Q., Stockmann, J., Bilgic, B., Huang, S., Cauley, S. F., et al. (2018). High-resolution in vivo diffusion imaging of the human brain with generalized slice dithered enhanced resolution: simultaneous multislice (gSlider-SMS). Magn. Reson. Med. 79, 141–151. doi: 10.1002/mrm.26653

Skouras, S., Gray, M., Critchley, H., and Koelsch, S. (2014). Superficial amygdala and hippocampal activity during affective music listening observed at 3 T but not 1.5 T fMRI. NeuroImage 1, 364–369. doi: 10.1016/j.neuroimage.2014.07.007

Suardi, A., Sotgiu, I., Costa, T., Cauda, F., and Rusconi, M. (2016). The neural correlates of happiness: a review of PET and fMRI studies using autobiographical recall methods. Cogn. Affect. Behav. Neurosci. 16, 383–392. doi: 10.3758/s13415-016-0414-7

Torrisi, S, Liao, C, Townsend, J, and Vu, AT. (2023). “Spin-echo-based generalized slice dithered enhanced resolution (gSLIDER) for mesoscale fMRI at 3 tesla.” in Proc. ISMRM.

Townsend, J., and Altshuler, L. L. (2012). Emotion processing and regulation in bipolar disorder: a review. Bipolar Disord. 14, 326–339. doi: 10.1111/j.1399-5618.2012.01021.x

Townsend, J. D., Eberhart, N. K., Bookheimer, S. Y., Eisenberger, N. I., Foland-Ross, L. C., Cook, I. A., et al. (2010). fMRI activation in the amygdala and the orbitofrontal cortex in unmedicated subjects with major depressive disorder. Psychiatry Res. 183, 209–217. doi: 10.1016/j.pscychresns.2010.06.001

Townsend, J. D., Torrisi, S. J., Lieberman, M. D., Sugar, C. A., Bookheimer, S. Y., and Altshuler, L. L. (2013). Frontal-amygdala connectivity alterations during emotion downregulation in bipolar I disorder. Biol. Psychiatry 73, 127–135. doi: 10.1016/j.biopsych.2012.06.030

Townsend, J.D., Vizueta, N., Sugar, C., Bookheimer, S.Y., Altshuler, L.L., and Brooks, J.O. (2017). Decreasing the negative and increasing the positive: emotion regulation using cognitive reappraisal and fMRI in bipolar I disorder euthymia. Society for Biological Psychiatry.

Townsend, J.D., Yi, H.G., Beckett, A., Leonard, M.K., Vu, A.T., Chang, E.F., et al. (2019). Non-invasive mapping of acoustic-phonetic speech features in human superior temporal gyrus using ultra-high field 7T fMRI, co-chair of session, society for Neuroscience.

Vu, A. T., Beckett, A., Setsompop, K., and Feinberg, D. A. (2018). Evaluation of SLIce dithered enhanced resolution simultaneous MultiSlice (SLIDER-SMS) for human fMRI. NeuroImage 164, 164–171. doi: 10.1016/j.neuroimage.2017.02.001

Wager, T. D., Kang, J., Johnson, T. D., Nichols, T. E., Satpute, A. B., and Barrett, L. F. (2015). A Bayesian model of category-specific emotional brain responses. PLoS Comput. Biol. 11:e1004066. doi: 10.1371/journal.pcbi.1004066

Woolrich, M. W., Ripley, B. D., Brady, J. M., and Smith, S. M. (2001). Temporal autocorrelation in univariate linear modelling of FMRI data. NeuroImage 14, 1370–1386. doi: 10.1006/nimg.2001.0931

Worsley, K. J. (2001). “Statistical analysis of activation images” in Functional MRI: An introduction to methods. eds. P. Jezzard, P. M. Matthews, and S. M. Smith (Oxford: Oxford University Press).

Yacoub, E., Harel, N., and Ugurbil, K. (2008). High-field fMRI unveils orientation columns in humans. Proc. Natl. Acad. Sci. USA 105, 10607–10612. doi: 10.1073/pnas.0804110105

Yacoub, E., Shmuel, A., Logothetis, N., and Ugurbil, K. (2007). Robust detection of ocular dominance columns in humans using Hahn spin Echo BOLD functional MRI at 7 tesla. NeuroImage 37, 1161–1177. doi: 10.1016/j.neuroimage.2007.05.020

Yang, M., Tsai, S. J., and Li, C. R. (2020). Concurrent amygdalar and ventromedial prefrontal cortical responses during emotion processing: a meta-analysis of the effects of valence of emotion and passive exposure versus active regulation. Brain Struct. Funct. 225, 345–363. doi: 10.1007/s00429-019-02007-3

Young, C. B., and Nusslock, R. (2016). Positive mood enhances reward-related neural activity. Soc. Cogn. Affect. Neurosci. 11, 934–944. doi: 10.1093/scan/nsw012

Keywords: joy, emotion, machine learning, gSLIDER, mesoscale, fMRI, SWAT

Citation: Townsend JD, Muller AM, Naeem Z, Beckett A, Kalisetti B, Abbasi-Asl R, Liao C and Vu AT (2025) Imaging joy with generalized slice dithered enhanced resolution and SWAT reconstruction: 3T high spatial–temporal resolution fMRI. Front. Neuroimaging. 4:1537440. doi: 10.3389/fnimg.2025.1537440

Edited by:

Irati Markuerkiaga, University of Mondragón, Spain

Reviewed by:

Fushun Wang, Nanjing University of Chinese Medicine, China

Xiu-Xia Xing, Beijing University of Technology, China

Tyler Morgan, National Institutes of Health (NIH), United States

Ebrahim Ghaderpour, Sapienza University of Rome, Italy

Copyright © 2025 Townsend, Muller, Naeem, Beckett, Kalisetti, Abbasi-Asl, Liao and Vu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: An Thanh Vu, an.vu@ucsf.edu

Jennifer D. Townsend, Angela Martina Muller, Zanib Naeem, Alexander Beckett, Bhavesh Kalisetti (4, 5), Reza Abbasi-Asl, Congyu Liao, An Thanh Vu