- 1 School of Electrical Engineering, Shenyang University of Technology, Shenyang, China

- 2 Department of Intelligent Mechanical System Engineering, Kochi University of Technology, Kochi, Japan

Background: Physiotherapy robots offer a feasible and promising route to safe and efficient treatment, and acupoint recognition is the core component that determines their precision. Although acupoint recognition on the hand and ear has been studied extensively, accurately locating acupoints on the human back remains challenging because the back lacks distinctive external features.

Methods: This paper designs a two-stage acupoint recognition method built on two cooperating detection networks. First, a lightweight RTMDet network extracts the effective back region from the image; the acupoint coordinates are then inferred within that region, which reduces the inference cost caused by irrelevant image content. The RTMPose network, based on the SimCC framework, converts acupoint coordinate regression into a classification problem over sub-pixel bins along the X and Y axes, significantly improving detection speed and accuracy, while the multi-layer feature fusion of CSPNeXt enhances feature extraction. We also designed a physiotherapy interaction interface and, using the three-dimensional coordinates of the acupoints, autonomously planned the task path of the physiotherapy robot.

Results: We tested the performance of acupoint recognition and physiotherapy task planning in the physiotherapy robot system. The experiments demonstrate the effectiveness of the method: it achieves a recall of 90.17% on human datasets with a detection error of about 5.78 mm, accurately identifies acupoints under different back postures, and reaches an inference speed of 30 FPS on a 4070Ti GPU. Finally, we carried out continuous physiotherapy tasks on multiple acupoints for a user.

Conclusion: The experimental results demonstrate the significant advantages and broad application potential of this method in improving the accuracy and reliability of autonomous acupoint recognition by physiotherapy robots.

1 Introduction

With the continuous deepening of population aging and the improvement of public health awareness, traditional Chinese medicine, as a natural therapy for regulating body functions, is gradually attracting more and more attention. Chinese medicine therapy stimulates specific acupoints to trigger local and systemic sensory mechanisms and biological responses in the body, and has significant therapeutic effects in relieving various types of pain, promoting blood circulation, improving physical comfort, and psychological relaxation (Kerautret et al., 2024; Li et al., 2025). However, traditional physical therapy services are expensive, vary in quality, and suffer from a shortage of professional therapists, making it difficult to meet growing health demands. As an innovative product integrating artificial intelligence, robotics technology and traditional Chinese medicine theory, physiotherapy robots offer a new solution and development direction to address this issue (Pang et al., 2019; Zhao et al., 2023). Several countries and regions have successively introduced relevant support policies, providing strong financial support and resource guarantees for research in this field, effectively promoting the application of physiotherapy robots in clinical rehabilitation, home nursing, and other scenarios (Zhao and Guo, 2023). Currently, there are a variety of physiotherapy robot products on the market that offer a range of functions (Yang et al., 2024), including massage, moxibustion, and tuina massage, among other traditional Chinese medicine physiotherapy methods. The level of intelligence is constantly improving, gradually promoting the development of intelligent physiotherapy toward personalization and precision.

Therapeutic acupoints are mainly distributed along the human meridian system and are a core component of traditional Chinese medicine theory. Physiotherapy robots rely on the precise localization of human acupoints to exert their therapeutic effects (Yu et al., 2024). However, autonomous acupoint recognition still faces many challenges, especially on the back. Compared with areas such as the face or limbs, the back lacks obvious structural landmarks, has relatively smooth skin texture, and varies greatly between individuals (Yang et al., 2025a). Improving the accuracy of acupoint recognition on the back therefore remains one of the challenges that physiotherapy robots must overcome. In this paper, we propose an acupoint recognition method for autonomous physiotherapy robot systems that combines high accuracy with dynamic performance to identify patients’ acupoints automatically. We model the localization of back acupoints as a keypoint recognition task and train a two-stage neural network on a dataset we built ourselves to predict the relevant acupoints. The acupoint recognition network is then applied to our self-built physiotherapy robot system, enabling the robot to perform physiotherapy tasks automatically. Our main contributions are as follows:

• An indirect data annotation method is proposed. Experts first mark the acupoints manually on the human body, the marks are then annotated in the images with annotation software, and finally the manual marks are removed from the images algorithmically, which systematically improves annotation consistency and the quality of the training data.

• A keypoint prediction network for back physiotherapy acupoints is designed on a two-stage architecture that combines target region detection with keypoint detection. The network is applied to the self-built physiotherapy robot system and significantly improves the accuracy and objectivity of localization.

2 Related work

2.1 Current research status of physiotherapy robots

Many universities and enterprises at home and abroad have invested in the research and development of traditional Chinese medicine physiotherapy robots. The field is gradually moving toward composite systems that integrate visual perception, force control feedback, and multi-probe coordination (Xing et al., 2021; Liu et al., 2024). However, physiotherapy robots still adapt poorly to individual differences in acupoint recognition and have limited robustness in complex application scenarios. Wang et al. (2018) designed a compact, space-saving portable back massage robot that optimizes electromagnetic force distribution through a 3D electromagnetic simulation model, ensuring that the robot can cover the entire back area and improving massage effectiveness; it has no acupoint recognition capability, however, and cannot perform precise physical therapy. Dong et al. (2022) designed a compliant parallel robotic massage arm that combines serial elastic actuators (SEA) to achieve unified force-position control without relying on a complete dynamic model. Sayapin (2017) developed an intelligent autonomous mobile massage robot. The device uses a three-degree-of-freedom motion system with a triangular topology and consists of three vacuum massage cups and the linear drives connected to them. It applies a sliding cupping method that slides and stretches muscle tissue to achieve a massage effect. Owing to structural design limitations, the robot may not adapt to the complex curves of the human body, and it has no acupoint positioning function. Choi et al. (2021) developed an abdominal massaging device named Bamk-001. This device is equipped with five thermoelectric modules and can stably provide a constant-temperature heat compress at 40 °C, bringing continuous warm therapeutic effects to the abdomen.
Meanwhile, with the coordinated operation of the five airbags, rhythmic pressure is applied to the patient’s abdomen through a clockwise circulation of inflation, thereby simulating the technique of artificial massage. However, this device is unable to precisely locate human acupoints. Its massage effect is limited to the entire abdominal area and lacks targeted acupoint stimulation functions. Therefore, it has limitations in application scenarios such as traditional Chinese medicine meridian therapy that require precise positioning. Zhu et al. (2023) designed a peristaltic wearable massage robot for the treatment of diseases related to lymph and blood circulation. This robot uses a fluid fabric muscle plate as the driver and is driven by a hydraulic transmission device, which enables the robot to provide dynamic compressive force to meet the massage requirements even at higher frequencies. This robot mainly relies on a fixed compressed wave pattern to improve lymphatic or blood circulation and is unable to precisely identify the locations of human acupoints. Autonomous acupoint recognition by physical therapy robots is the key foundation for realizing personalized physical therapy plans. Accurate acupoint recognition not only directly affects the accuracy and effectiveness of physical therapy, but also provides a reliable basis for technique selection, force control, and treatment path planning. Especially for the back area, there are many acupuncture points distributed over a wide area, and their precise location is particularly critical for effective therapeutic intervention.

2.2 Research status of acupoint recognition method

With the rapid advancement of computer vision, deep learning and sensor technology, researchers have attempted to apply methods such as image recognition, 3D reconstruction, infrared thermal imaging and neural networks to acupoint recognition to enhance its accuracy and degree of automation (Liu et al., 2023; Huang et al., 2025). Mamieva et al. (2023) proposed using a binocular telescope structure as the basis for a keypoint detection network to improve model construction effectiveness. This framework demonstrated high average precision (AP) values under multi-scale inference strategies and achieved excellent facial detection results. However, the model’s ability to process blurry images in low-light environments still needs improvement, and it relies heavily on high-performance external devices. Malekroodi et al. (2024) applied a real-time landmark detection system to identify anatomical landmarks in images, convert their coordinates into spatial coordinates corresponding to acupoints, and locate 38 specific acupoints on the face and hands. He also used a convolutional neural network (CNN) specifically optimized for pose estimation and trained it with restricted medical imaging data, to detect five key acupoints on the arms and hands. Zheng (2022) developed a mobile augmented reality (AR) system based on a facial landmark recognition network, combining deep learning models with traditional Chinese medicine bone measurement methods to achieve real-time identification and localization of facial acupoints. However, in practical applications, this method has certain limitations when dealing with complex situations such as facial rotation, and it relies on complete facial image input. Yuan et al. (2024) improved the YOLOv8-pose key point detection algorithm for facial acupoints. 
By integrating ECA attention to enhance acupoint feature extraction and replacing the original neck module with a more lightweight Slim-neck module, they provided significant reference value for facial acupoint localization and detection. However, the self-built dataset in the paper fails to fully cover all kinds of extreme scenarios, which may limit the generalization ability of the model when facing the diverse application scenarios in the real world. Wang et al. (2023) constructed a cascaded network model using HRNet as the backbone and introduced a dual attention mechanism combining SE and CA, effectively enhancing the model’s ability to perceive local key features. Seo et al. (2024) focused on the problem of accurately locating acupoints in 2D hand images, comparing the performance of two deep learning architectures, HRNet and ResNet, to explore methods for improving the accuracy of hand acupoint detection. Yang et al. (2025b) proposed a hybrid model RT-DEMT, which combines the efficient state space model Mamba with a Transformer module based on the DETR architecture, improving the accuracy and speed of back acupoint localization. This model primarily relies on visual images as input and has not yet integrated multimodal information such as infrared, depth maps, or human body structure point clouds. Therefore, its recognition robustness in complex environments still has certain limitations. Zhang et al. (2024) proposed a method for detecting back acupoints based on an improved OpenPose, which enhanced the reasoning speed. However, the number of evaluated acupoints is relatively small, and the robustness verification in complex scenarios still needs to be improved. In conclusion, acupoint recognition on the back is of great significance in application scenarios such as moxibustion and physical therapy. 
Due to the diversity of postural changes on the human back, the design and proposal of an acupoint recognition algorithm that can adapt to postural changes and lighting interference is of great theoretical and practical significance for improving the therapeutic effects and application value of physical therapy robots.

2.3 Physiotherapy robot

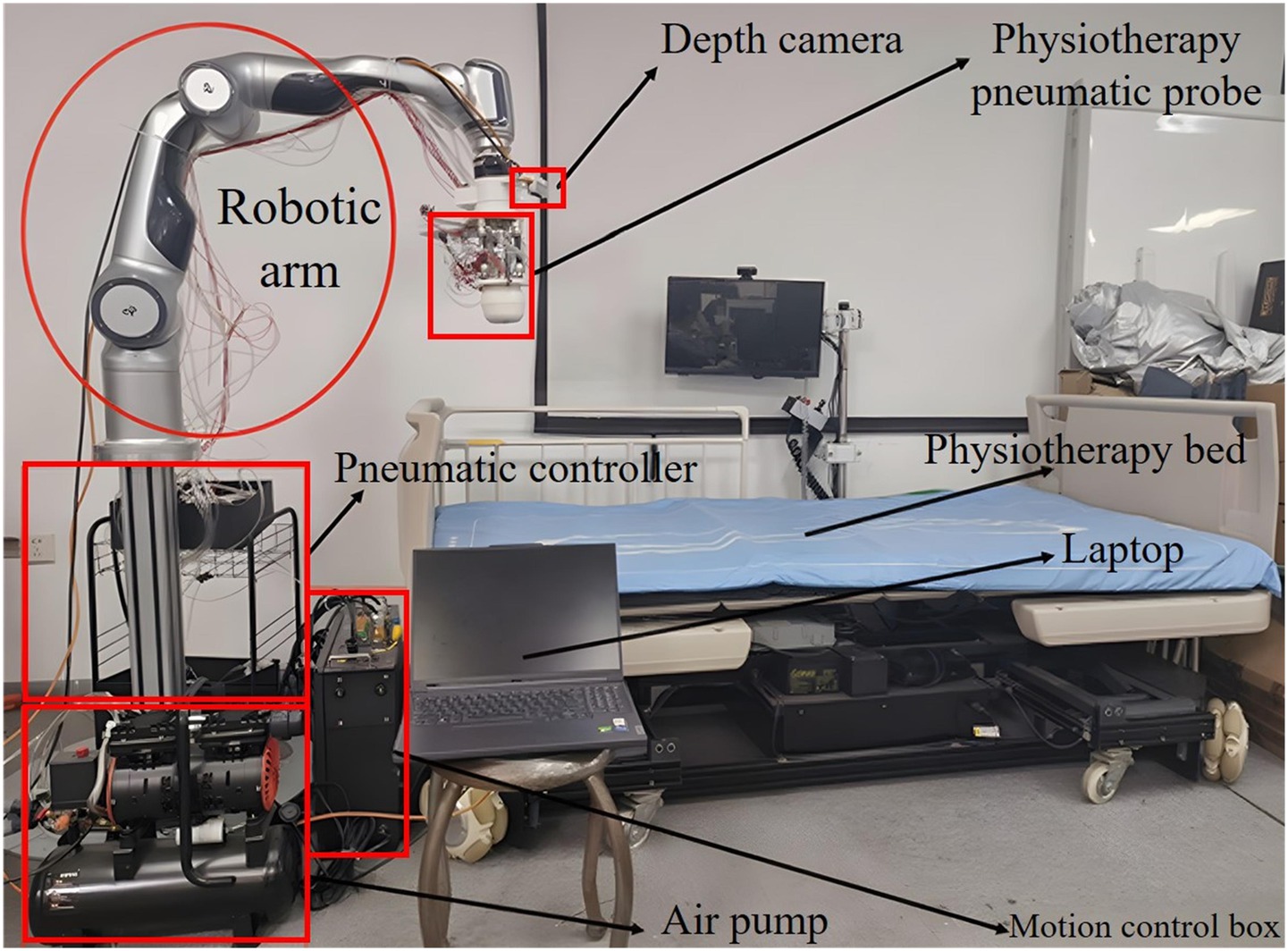

This paper establishes its own physical therapy robot hardware platform, as shown in Figure 1, which mainly consists of a physical therapy robotic arm, a robotic arm control box, a depth camera, a rigid-flexible coupling massage head and air pump, a computer, and a physiotherapy bed.

The robotic arm is a 7-degree-of-freedom serial manipulator that serves as the physiotherapy execution mechanism, completing tasks such as acupoint recognition and the reproduction of manual techniques. Its end is equipped with a pneumatic rigid-flexible coupling probe available in several forms, such as palm and finger. The air pump is a high-pressure pump with a PWM control system; it supplies high-pressure gas at different frequencies and pressures, so that the applied force is switched and controlled pneumatically. A D435i depth camera acquires real-time RGB images of the physiotherapy bed together with the corresponding depth images and sends them to the computer. The computer runs the human-computer interaction system, acquires the camera image data, and performs the main computations: acupoint recognition, generation of control instructions for the robotic arm, and planning of physiotherapy tasks. The physiotherapy bed supports various posture changes and height adjustments.

3 Method

In this section, the proposed network structure and localization framework are systematically described for the problem of acupoint recognition on the back. In Section 3.1, the acquisition process of the experimental dataset and its preprocessing strategy are introduced in detail. In Section 3.2, the constructed acupoint recognition network framework is described in detail. In Section 3.3, evaluation metrics for performance verification are proposed and explained, providing standards for subsequent experimental analysis. Finally, in Section 3.4, the coordinate transformation and trajectory planning of robots when performing physiotherapy tasks are explained.

3.1 Data set and processing

Since deep learning is a data-driven predictive method, the quality of the dataset has a significant impact on model performance. In the current process of constructing acupoint datasets, software annotation directly on images or automatic annotation using mathematical models is commonly used. Due to factors such as projection distortion and camera nonlinear distortion, visual errors are inevitable during the annotation process, and some areas cannot be accurately annotated due to a lack of sufficient positioning information. For this purpose, this study proposes an indirect data annotation method. First, frontline Chinese medicine experts are invited to perform manual annotation on the human body. Then, annotation software is used for software annotation. Finally, an algorithm is used to remove the manual markings from the images. This study recruited 100 people as volunteers. Before the experiment began, they were all informed in detail of the research purpose, experimental procedures and related precautions, and voluntarily signed the informed consent form on the basis of full understanding. The participants’ body types were evaluated based on the body mass index (BMI). Before the experiment, their height and weight were obtained through questionnaires and calculated according to BMI. Among these volunteers, 2 had a BMI less than 18.5, 80 had a BMI between 18.5 and 24, 15 had a BMI between 24 and 28, and 3 had a BMI more than 28. Our sample covered all BMI stratifications, among which normal body types accounted for the majority.

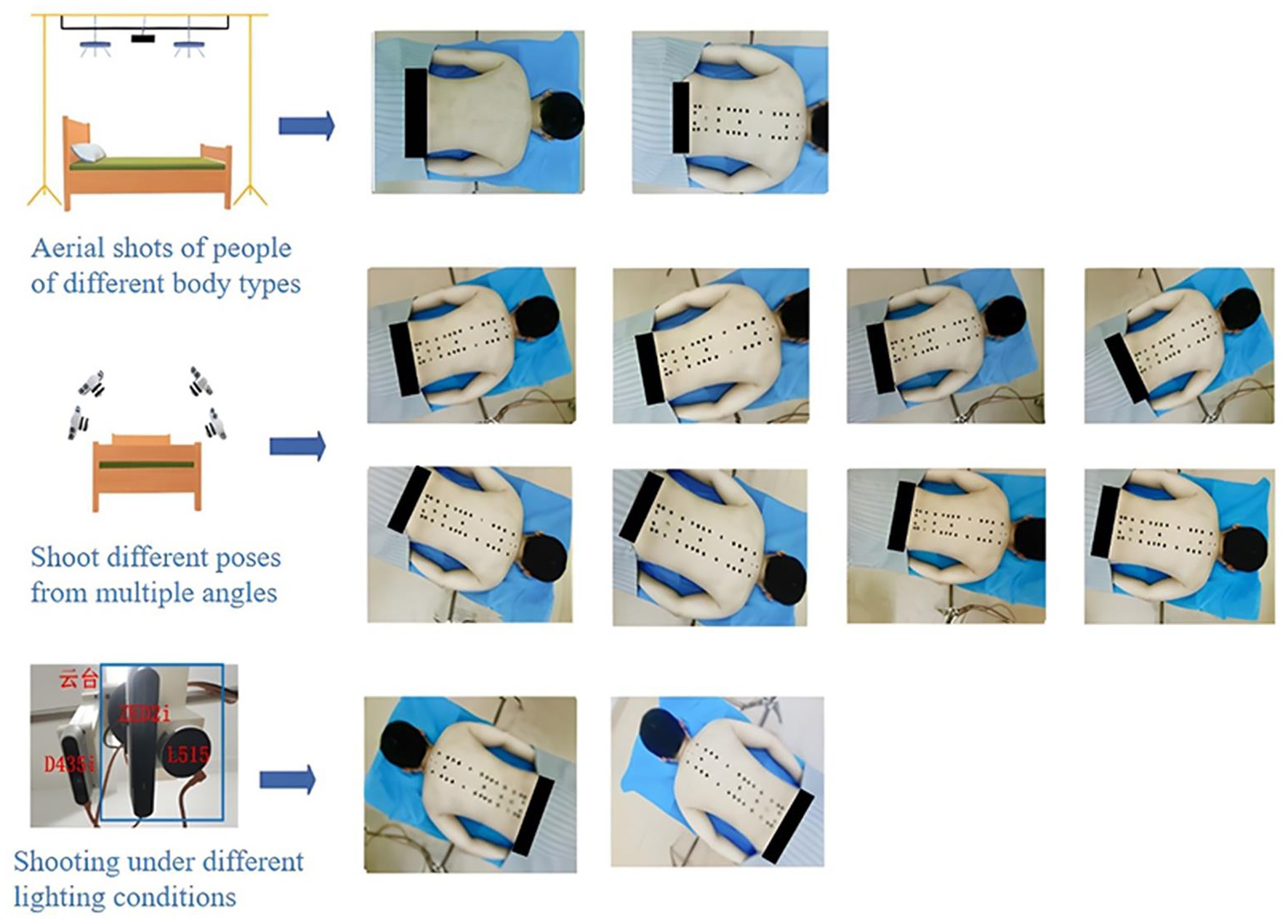

Data collection was carried out in a well-lit laboratory. Three different types of depth cameras were used to obtain multi-resolution images, and the back postures of the volunteers were captured from multiple angles. The specific steps for data collection are as follows:

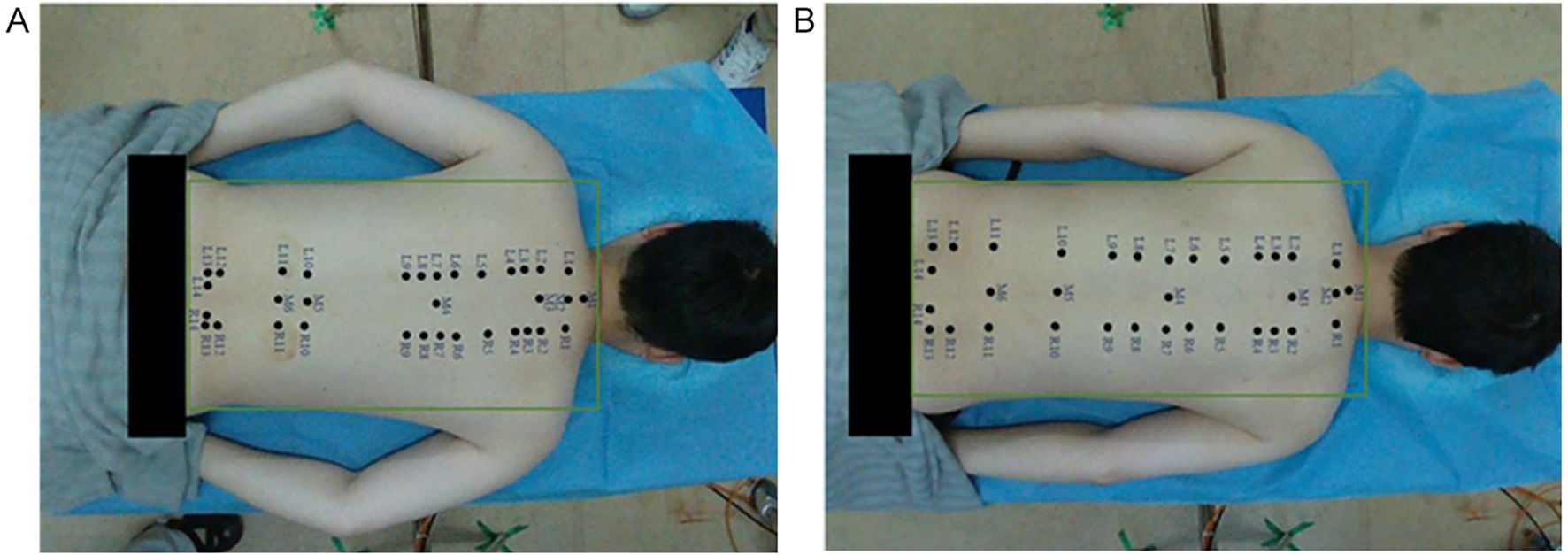

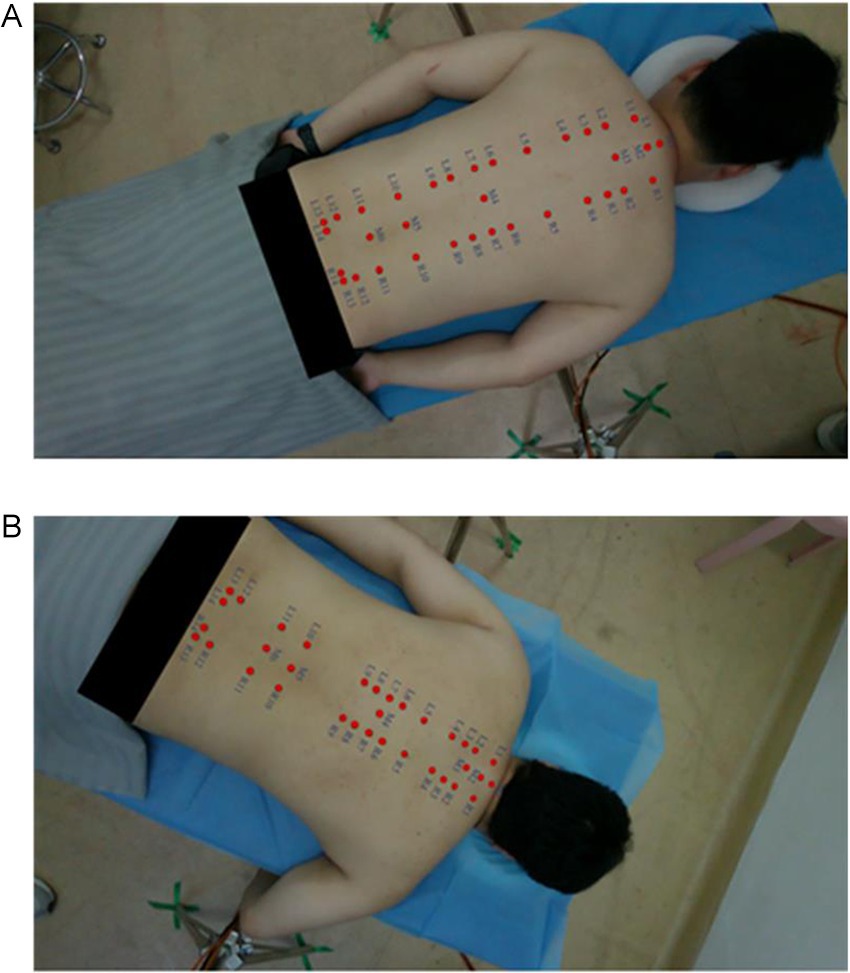

• Volunteers lay prone on the bed with their back clothing removed, and professional Chinese medicine experts identified their acupoints. For female subjects, the procedure was conducted solely by female professionals, and the subjects participated wearing underwear. After locating the acupoints, the experts marked the corresponding locations with round adhesive labels, as shown in Figure 2.

• After the acupoints were marked, a self-written data collection program controlled the camera gimbal to capture images of the volunteer’s back from different angles, including a horizontal view, multi-angle horizontal rotation, and multi-angle tilt. After the program finished collecting data, a mobile phone was used to take supplementary images from multiple random angles to enhance data diversity, as shown in Figure 3.

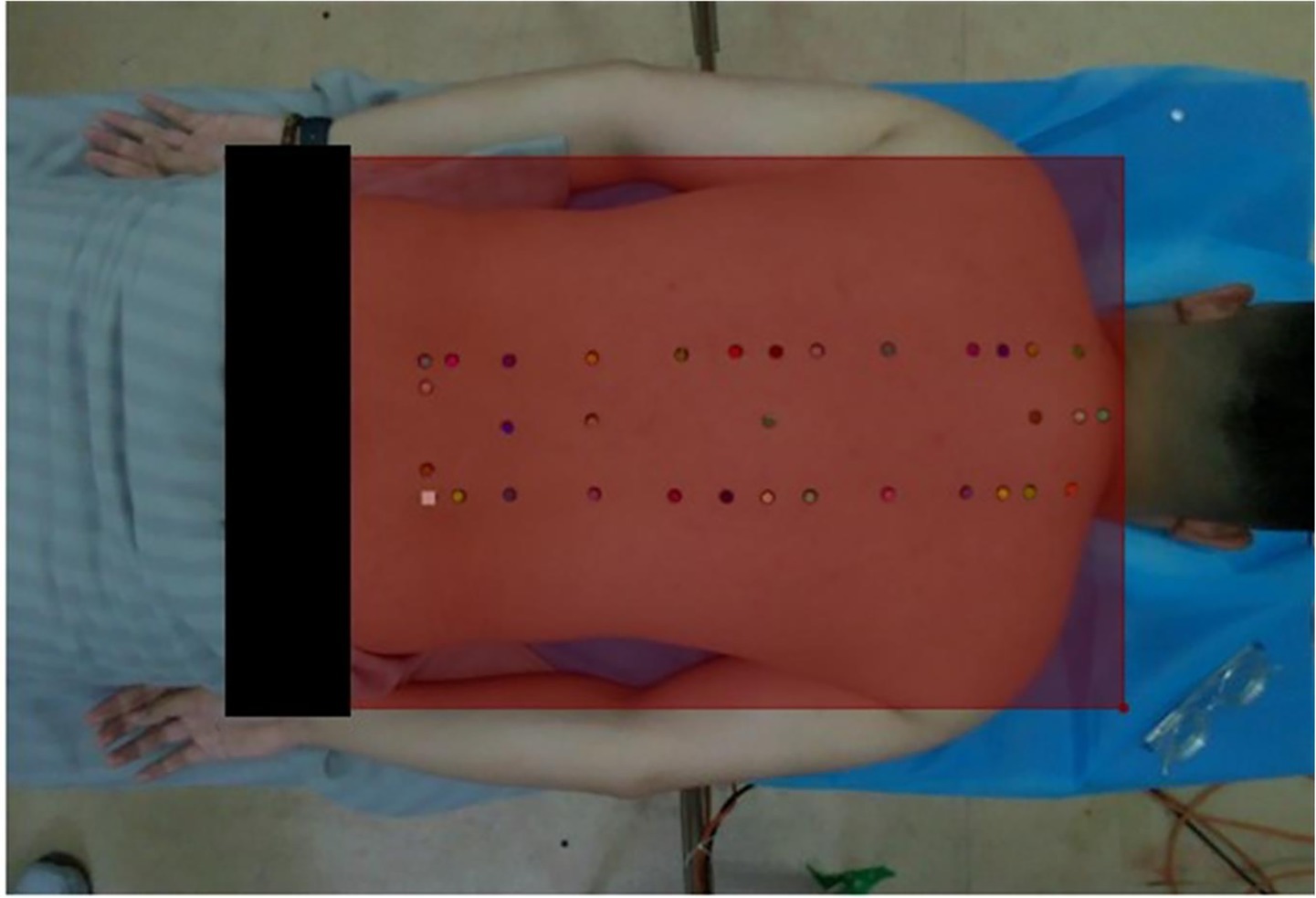

• To avoid the impact of factors such as angle, lighting changes, or annotation errors during data collection, the collected images were manually screened. After screening, the Labelme annotation tool was used to annotate the center points of the circular labels in the images precisely and to extract the pixel coordinates of the acupoints. Figure 4 shows an example of annotation.

Figure 2. The process of experts locating acupoints and applying labels. (A) The process of locating the acupoint. (B) Label the acupoints.

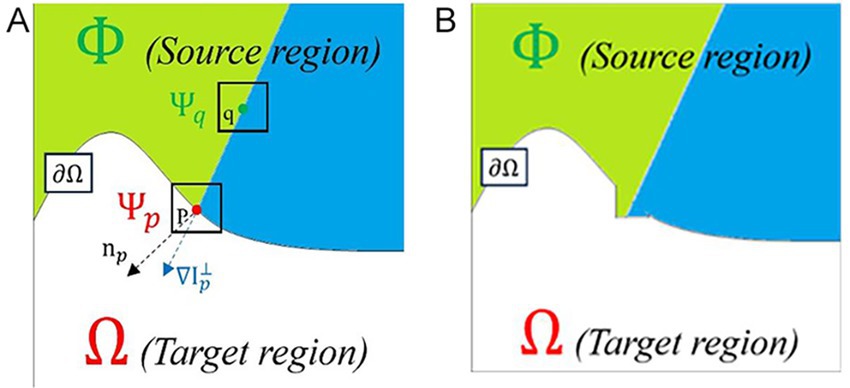

After completing the software annotation, according to the dataset production process, the images need to be de-labeled to obtain images without black labels and corresponding json files. For image de-labeling, this paper makes improvements based on the Criminisi image restoration algorithm (Yang et al., 2020; Li et al., 2024; Yao, 2019). The Criminisi algorithm is a patch filling method that determines the repair order by comparing the effective information of boundary pixels. It finds the optimal filling patch within the entire image range through matching calculation rules to complete the filling of the pixel, and then performs a boundary and priority update cycle after completing one filling. Figure 5 shows the repair process of pixel P using the Criminisi algorithm.

Figure 5. Schematic diagram of the Criminisi algorithm principle. (A) Before repair. (B) After repair.

In Figure 5A, the curve represents the boundary between the normal region and the region to be repaired. The area above the curve is the normal region, in which different colors represent different textures; the area below the curve is the target region to be repaired. P is a pixel on the boundary $\delta\Omega$, $\Psi_p$ is the pixel block to be repaired centered on P, $n_p$ is the unit normal vector of the boundary of the region to be repaired, $\nabla I_p^{\perp}$ is the unit isophote vector at P, $\Psi_q$ is the pixel block found by the search that is most similar to $\Psi_p$, and q is the center of $\Psi_q$. Figure 5B shows the result of repairing point P.

As filling progresses, the average confidence of the region gradually decreases and eventually approaches zero. Because the priority is computed as the product of the confidence term and the data term, a near-zero confidence drives the priority toward zero regardless of the data term, so the priority no longer reflects the regional information. The repair order then cannot be arranged reasonably, which ultimately degrades the repair result. To address this issue, the priority calculation is changed from a product form to a sum of exponentials with base e (Jin et al., 2023), and the confidence is limited to a certain range to avoid priority failure caused by low confidence. Equations (1)–(3) give the improved priority calculation.
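The effect of replacing the product with an exponential sum can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the classical priority is taken as $C(p) \cdot D(p)$, the modified priority is assumed to be $e^{C(p)} + e^{D(p)}$ with the confidence clamped to a floor, and the floor value `c_min` and both function names are hypothetical rather than taken from Equations (1)–(3).

```python
import math

def product_priority(confidence: float, data: float) -> float:
    """Classical Criminisi priority: product of confidence and data terms."""
    return confidence * data

def exp_sum_priority(confidence: float, data: float, c_min: float = 0.1) -> float:
    """Assumed variant: sum of base-e exponentials with a confidence floor."""
    clamped = min(max(confidence, c_min), 1.0)  # keep confidence in [c_min, 1]
    return math.exp(clamped) + math.exp(data)

# Late in the fill the confidence is almost zero, while the data term still
# distinguishes a weak edge (0.2) from a strong edge (0.9).
weak_edge = (1e-6, 0.2)
strong_edge = (1e-6, 0.9)
print(product_priority(*weak_edge), product_priority(*strong_edge))
print(exp_sum_priority(*weak_edge), exp_sum_priority(*strong_edge))
```

With the product form both candidates collapse to nearly the same value, whereas the exponential sum still ranks the strong edge clearly higher.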

When performing matching calculations, the Criminisi algorithm does not consider the texture characteristics of the region to be repaired and the normal region, so its search is somewhat blind. It mechanically repeats texture matching according to the matching rules and must traverse all pixels in the normal region of the image, resulting in excessive computing cost. We adopt an improved matching mechanism based on an elliptic model: the marked region is approximated by an ellipse and divided into four sub-regions along the ellipse’s axes of symmetry, and the matching search is confined to a reasonable range within which a local optimum is sought (Fan et al., 2025). Prioritizing nearby areas when searching for the patch to fill in this way not only shortens the matching time but also reduces the texture changes caused by mismatches.

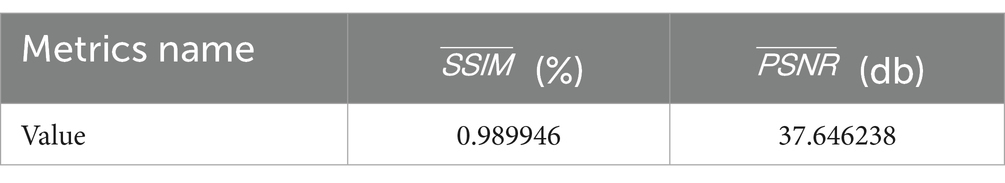

To compare the restoration quality of the original Criminisi algorithm and the improved de-labeling algorithm, we compute the average peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM) of the images processed by each algorithm and complement these metrics with a subjective visual assessment. PSNR and SSIM are calculated by Equations (4) and (5), respectively.

In these formulas, the maximum pixel value is taken separately for each color channel; $\mu_x$ and $\mu_y$ are the mean values of x and y, $\sigma_x^2$ and $\sigma_y^2$ are their variances, and $\sigma_{xy}$ is their covariance. The constants $c_1 = (k_1 L)^2$ and $c_2 = (k_2 L)^2$ stabilize the division, where $k_1$ and $k_2$ take values of 0.01 and 0.03 respectively and $L$ is the dynamic range of the images.
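As a minimal sketch of the two metrics, the following Python code computes PSNR as $10 \log_{10}(MAX^2 / MSE)$ and SSIM in its single-window form, $\frac{(2\mu_x\mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)}$. It assumes 8-bit images and evaluates SSIM once over the whole image, whereas common implementations average it over local windows; the function names are illustrative.

```python
import numpy as np

def psnr(x, y, max_val=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    mse = np.mean((x - y) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

def global_ssim(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM computed over the whole image (simplification)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

For color images, one option is to apply both functions per channel and average the results.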

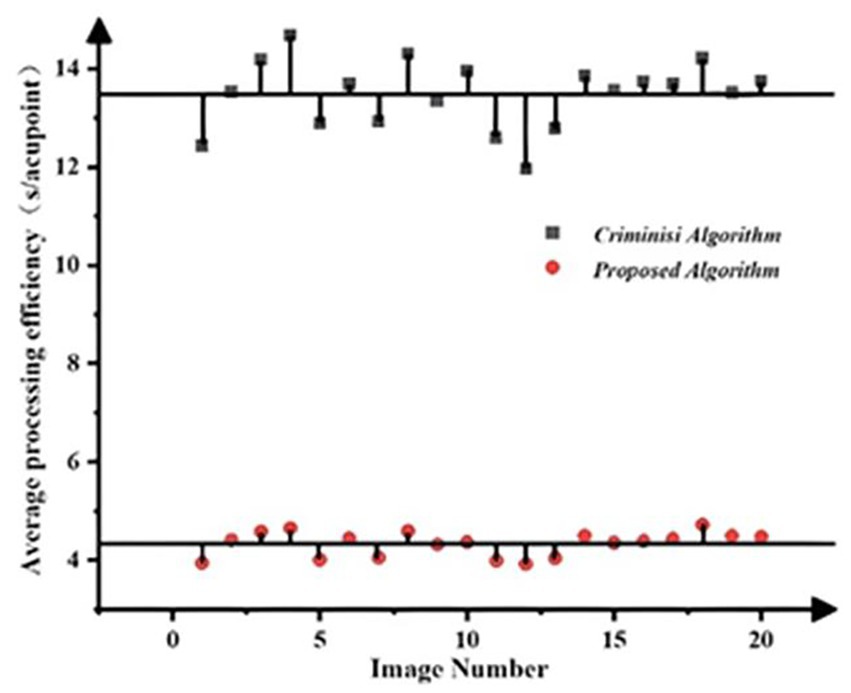

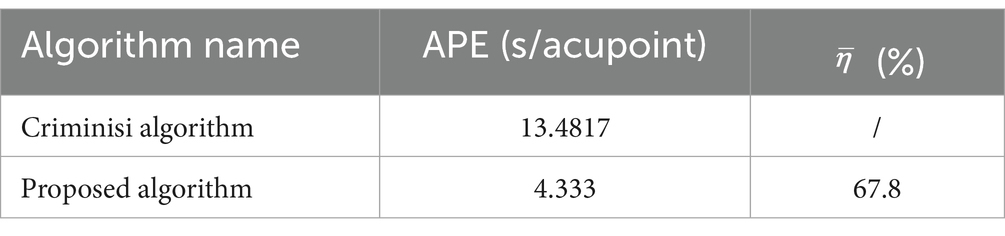

Since the number of acupoint markers is positively correlated with the image processing time, the processing speed of the algorithm for a single acupoint is defined as the average processing efficiency (APE) (unit: seconds per acupoint), as shown in Equation (6):

In the formula, $t$ represents the total time used by the algorithm to process a single image.

To evaluate the difference in processing efficiency between the Criminisi algorithm and the improved algorithm, we use the average efficiency improvement metric as defined in Equation (7).

In the formula, $t_C$ and $t_I$ represent the single-image processing times of the Criminisi algorithm and the improved algorithm respectively, n is the total number of samples, and $\eta$ represents the efficiency improvement for a single image.
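The two efficiency measures can be sketched in Python as follows. The sketch assumes that APE is the per-image processing time divided by the number of acupoint markers in that image, and that the per-image efficiency improvement is the relative time reduction $(t_C - t_I) / t_C$ averaged over n images. Both forms are inferred from the definitions above rather than copied from Equations (6) and (7), and the timings in the example are made-up values.

```python
def average_processing_efficiency(total_time_s: float, num_acupoints: int) -> float:
    """Seconds spent per acupoint marker in one image (assumed form of APE)."""
    return total_time_s / num_acupoints

def average_efficiency_improvement(t_criminisi, t_improved) -> float:
    """Mean relative time reduction of the improved algorithm over n images."""
    gains = [(tc - ti) / tc for tc, ti in zip(t_criminisi, t_improved)]
    return sum(gains) / len(gains)

# Illustrative timings only, not measurements from the experiments.
print(average_processing_efficiency(12.0, 24))
print(average_efficiency_improvement([10.0, 20.0], [5.0, 15.0]))
```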

To enhance the diversity of the dataset and thereby improve the generalization ability and recognition robustness of the model, we adopted single-image data augmentation, mainly random scaling, random cropping, and random horizontal flipping.
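Because the labels are keypoint coordinates, each geometric augmentation has to be applied to the acupoint coordinates as well as to the image. The NumPy sketch below shows one way to do this; the scale range, the 90% crop size, and the flip probability are illustrative assumptions, not the settings used for training.

```python
import numpy as np

def rescale(image, keypoints, factor):
    """Nearest-neighbour resize; keypoints scale by the same factor."""
    h, w = image.shape[:2]
    new_h = max(1, int(round(h * factor)))
    new_w = max(1, int(round(w * factor)))
    rows = np.minimum((np.arange(new_h) / factor).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / factor).astype(int), w - 1)
    return image[rows][:, cols], keypoints * factor

def crop(image, keypoints, x0, y0, width, height):
    """Crop a window and shift keypoints into the window's frame."""
    shifted = keypoints - np.array([x0, y0], dtype=float)
    return image[y0:y0 + height, x0:x0 + width], shifted

def hflip(image, keypoints):
    """Mirror the image left-right and mirror the x coordinates."""
    w = image.shape[1]
    flipped = keypoints.astype(float).copy()
    flipped[:, 0] = (w - 1) - flipped[:, 0]
    return image[:, ::-1], flipped

def augment(image, keypoints, rng):
    """Random scale, random crop, random horizontal flip (assumed ranges)."""
    image, keypoints = rescale(image, keypoints, rng.uniform(0.75, 1.25))
    h, w = image.shape[:2]
    cw, ch = max(1, int(0.9 * w)), max(1, int(0.9 * h))
    x0 = int(rng.integers(0, w - cw + 1))
    y0 = int(rng.integers(0, h - ch + 1))
    image, keypoints = crop(image, keypoints, x0, y0, cw, ch)
    if rng.random() < 0.5:
        image, keypoints = hflip(image, keypoints)
    return image, keypoints
```

One design point worth noting: after a horizontal flip, bilateral acupoints swap sides, so the indices of left and right keypoints would also need to be exchanged according to the dataset's own labeling scheme, which this sketch does not do.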

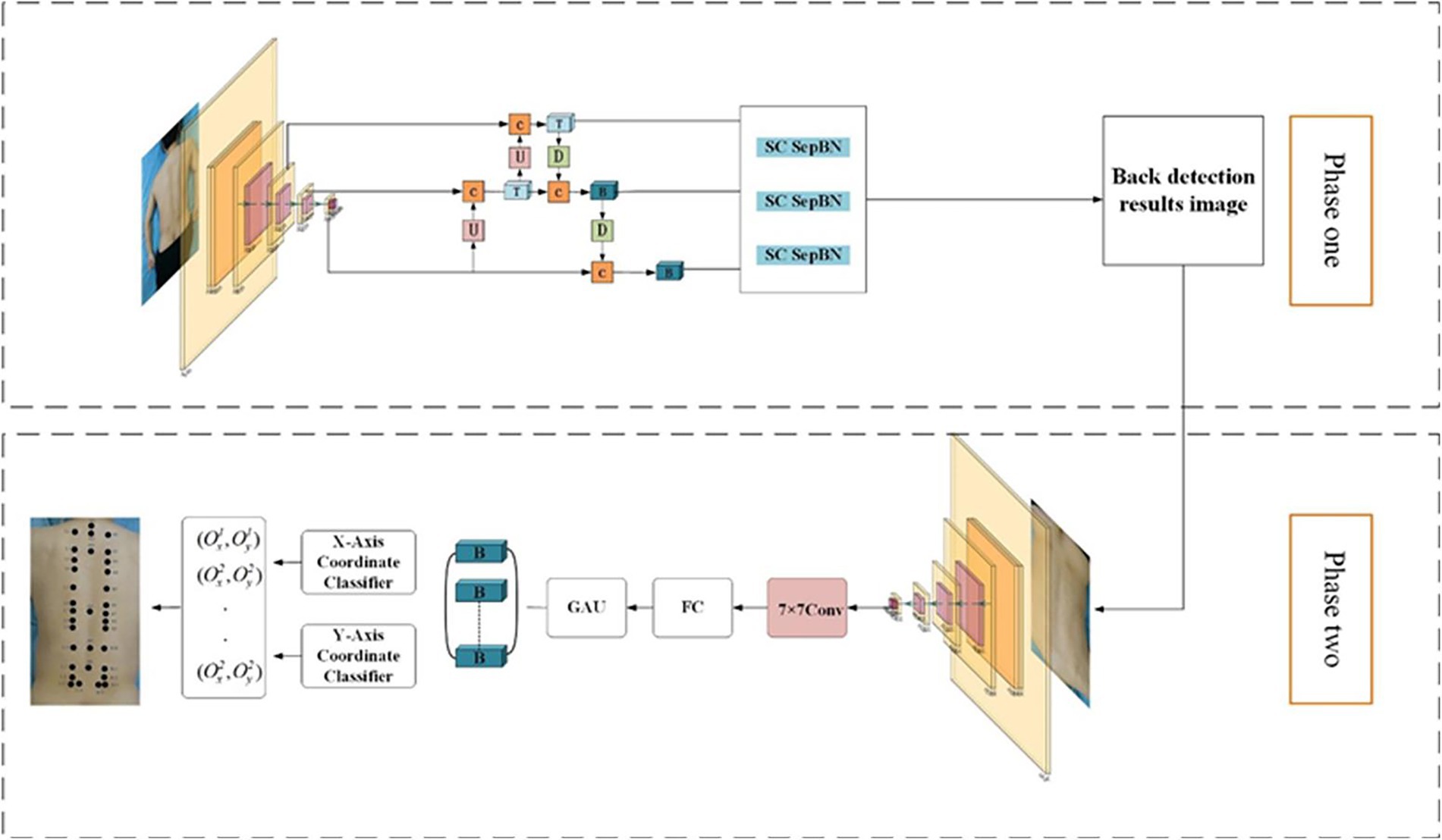

3.2 Acupoint recognition network model

The overall architecture of the network model in this study adopts a two-stage structure that combines target region detection with keypoint detection for acupoint recognition. In this framework, the RTMDet network model (Bakhtiyorov et al., 2025; Lyu et al., 2022; Zhang et al., 2025) first performs object detection on the entire image and obtains the bounding box of the back area. This bounding box is then passed as region-of-interest (ROI) information to the RTMPose network model (Jiang et al., 2023; Kang et al., 2025; Zheng et al., 2025) to guide the subsequent keypoint prediction. The entire image still serves as the input to RTMPose, but the keypoint search range is constrained by the bounding box, which reduces the interference of background noise and irrelevant information. The first-stage RTMDet model is thus responsible for accurately locating the back region, so that the second-stage prediction can focus on a smaller and more relevant area. The second-stage RTMPose model then locates the acupoint keypoints, concentrating computing resources on the key area and significantly improving prediction accuracy while preserving inference speed.

The designed acupoint recognition network architecture is shown in Figure 6. In the first stage, the RTMDet model efficiently detects the patient’s back area. The CSPNeXt backbone network extracts multi-scale features, which are combined with the PAFPN structure to enhance feature fusion. A dynamic label assignment strategy, SimOTA, optimizes sample matching, and the stage outputs precise bounding boxes of the back area together with their corresponding positions in the camera coordinate system. The second stage uses the RTMPose model to achieve accurate acupoint recognition. The GAU module (Hua et al., 2022) is a gated attention unit that enhances feature expression by combining the gating mechanism of GLU with single-head attention. Through the SimCC framework, coordinate prediction is converted into a sub-pixel classification task, and a KL divergence loss is used to optimize localization accuracy, yielding the precise location of each acupoint. This solution maintains real-time performance while balancing detection accuracy and computing efficiency.
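To make the SimCC idea concrete, the sketch below decodes keypoints from two one-dimensional classification outputs: each axis is divided into `split_ratio` bins per pixel, the most probable bin is selected on each axis, and the bin index is divided by `split_ratio` to recover a sub-pixel coordinate. The split ratio of 2, the array shapes, and the helper `roi_to_image` (which assumes the ROI is resized directly to the network input without padding) are assumptions for illustration, not details confirmed by this paper.

```python
import numpy as np

def simcc_decode(x_logits, y_logits, split_ratio=2.0):
    """Decode keypoints from per-axis bin scores.

    x_logits: (K, W * split_ratio) scores over horizontal bins
    y_logits: (K, H * split_ratio) scores over vertical bins
    Returns (K, 2) coordinates in input-image pixels.
    """
    x_bins = np.argmax(x_logits, axis=1)
    y_bins = np.argmax(y_logits, axis=1)
    return np.stack([x_bins, y_bins], axis=1) / split_ratio

def roi_to_image(coords, bbox_xyxy, input_size):
    """Map coordinates from the network input back to the full image."""
    x1, y1, x2, y2 = bbox_xyxy
    in_w, in_h = input_size
    scale = np.array([(x2 - x1) / in_w, (y2 - y1) / in_h])
    return coords * scale + np.array([x1, y1])
```

The finer the bins, the smaller the quantization error, at the cost of longer classification vectors per keypoint.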

This paper trains the network with the AdamW optimizer and a Flat CosineLR learning rate schedule. The first half of training uses AdamW with a fixed learning rate of 0.05, and the second half follows Flat CosineLR. AdamW applies decoupled weight decay in place of L2 regularization, which helps prevent overfitting. Flat CosineLR first keeps the learning rate constant for a period (the flat phase) and then gradually reduces it to a minimum value along a cosine curve. This strategy helps the model converge quickly in the early stages of training and fine-tune its parameters in the later stages.
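The shape of such a schedule can be written as a small Python function. This is a sketch under assumptions: the flat phase covers the first half of training, decay follows a half cosine, and the minimum learning rate `min_lr` (here 0) is a hypothetical parameter that the text does not specify.

```python
import math

def flat_cosine_lr(step, total_steps, base_lr=0.05, min_lr=0.0, flat_ratio=0.5):
    """Constant learning rate during the flat phase, cosine decay afterwards."""
    flat_steps = int(total_steps * flat_ratio)
    if step < flat_steps:
        return base_lr
    # Progress through the decay phase, clipped to [0, 1].
    progress = (step - flat_steps) / max(1, total_steps - flat_steps)
    progress = min(progress, 1.0)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))

# Learning rate at a few points of a 100-step run.
for s in (0, 49, 50, 75, 100):
    print(s, flat_cosine_lr(s, 100))
```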

3.3 Evaluation indicator

Four quantities are defined as evaluation indicators for acupoint recognition accuracy: the average pixel error of acupoint prediction in a single image and the corresponding actual error, and the average recognition pixel error over the detection samples and the corresponding actual error. The specific expressions are given in Equations (8)–(13):

Among them, $\hat{P}$ and $P$ are, respectively, the predicted distribution matrix and the true distribution matrix of the acupoints in the image, $m$ is the number of identified acupoints, and $n$ is the number of volunteers tested.
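One plausible reading of these indicators is sketched below: the per-image pixel error is the mean Euclidean distance between predicted and annotated acupoints, the actual error rescales it by a millimetres-per-pixel factor, and the sample-level indicators average both over all test images. The conversion factor `mm_per_pixel` and the function names are assumptions, since the exact expressions of Equations (8)–(13) are not reproduced here.

```python
import numpy as np

def mean_pixel_error(pred, gt):
    """Mean Euclidean distance in pixels over the acupoints of one image."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    return float(np.linalg.norm(pred - gt, axis=1).mean())

def dataset_errors(preds, gts, mm_per_pixel):
    """Average pixel error and physical error (mm) over n test images.

    mm_per_pixel holds one assumed scale factor per image.
    """
    px = [mean_pixel_error(p, g) for p, g in zip(preds, gts)]
    mm = [e * s for e, s in zip(px, mm_per_pixel)]
    return sum(px) / len(px), sum(mm) / len(mm)
```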

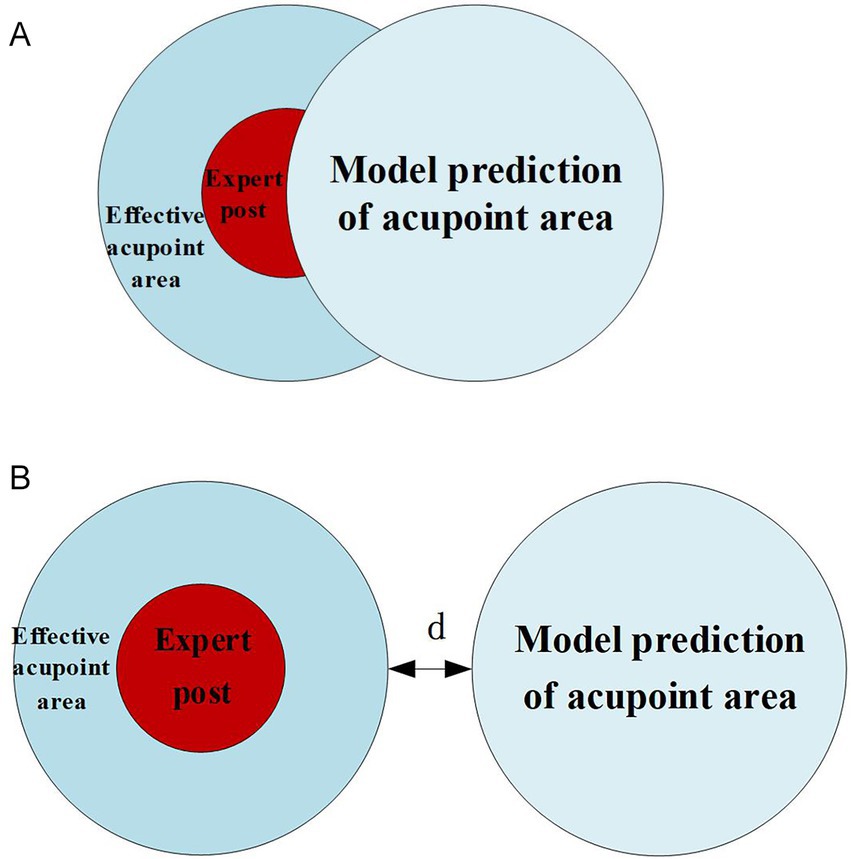

Based on the acupoint labels used during data collection and acupoint selection, the position marked by the experts is taken as the center of the effective acupoint area, which is set to a 10 mm circular region. The acupoint predicted by the model is likewise treated as a 10 mm circular region. By analyzing the spatial relationship between the two circular regions, the error of the model’s predicted acupoint relative to the effective region is quantified. This defines the accuracy of acupoint recognition and the prediction error of acupoints, as shown in Figure 7.

Figure 7. Relationship between model prediction and effective acupoint regions. (A) Effective identification (TP). (B) Existing error (FN).

If the predicted area of the model overlaps with the effective area of the label set, it is considered an effective recognition and is recorded as a positive example. If the two do not overlap, it indicates that there is an error in recognition and is recorded as a negative example. At this time, the error is denoted as d. When evaluating the model, recall is used to judge the overall accuracy of the model in acupoint recognition in a single image expressed by Equation (14).

where n is the number of volunteers tested, and TP and FN correspond, respectively, to the positive and negative examples described above. The formula gives the average recognition accuracy over single images.
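The overlap criterion and the averaged recall can be sketched as follows. The sketch assumes that 10 mm is the diameter of both circles, so that two circles overlap when the distance between their centers is smaller than 10 mm, and that recall is computed per image as TP / (TP + FN) before averaging over the n test images. If 10 mm is instead the radius, the threshold doubles.

```python
def is_true_positive(distance_mm: float, diameter_mm: float = 10.0) -> bool:
    """Two equal circles overlap when their centers are closer than 2r = diameter."""
    return distance_mm < diameter_mm

def mean_recall(distances_per_image, diameter_mm: float = 10.0) -> float:
    """Average of per-image recall TP / (TP + FN)."""
    recalls = []
    for distances in distances_per_image:
        tp = sum(is_true_positive(d, diameter_mm) for d in distances)
        recalls.append(tp / len(distances))
    return sum(recalls) / len(recalls)

# Center distances in mm for two hypothetical images.
print(mean_recall([[2.0, 12.0], [5.0, 9.9, 3.0, 11.0]]))
```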

3.4 Robot physiotherapy task planning

The coordinates of the acupoints output by the acupoint recognition model are the pixel coordinates of the acupoints in the image. During actual physiotherapy, the control of the physiotherapy end is based on the coordinates of the base coordinate system. Therefore, it is necessary to convert the recognized acupoints into the coordinates of the base coordinate system. Due to the different resolutions of depth cameras and color cameras, in order to obtain depth data at pixel coordinates, resolution conversion is required, that is, the registration of depth images and color images. The specific calculation method adopts the equivalence method, as shown in Equations (15) and (16). The image pixel coordinates are interpolated and filled through the normalization factor, that is, the color image coordinates correspond to the coordinates under the depth image, and the current depth value is the depth value z of the pixel coordinates under the color image.

Among them, $(u, v)$ and $(u_d, v_d)$ are the current pixel coordinates of the acupoint and the coordinates converted to the resolution of the depth map, $k$ is the normalization factor, $z$ is the depth value at the current pixel coordinates, and $D$ is the depth data.
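A minimal sketch of this resolution conversion is given below. It assumes that the color and depth images share the same field of view, so that the mapping reduces to scaling the pixel coordinates by the ratio of the two resolutions; a full registration would also account for the extrinsics between the two sensors. The function names and the example resolutions are illustrative.

```python
import numpy as np

def color_to_depth_pixel(u, v, color_size, depth_size):
    """Scale a color-image pixel (u, v) to depth-image indices."""
    color_w, color_h = color_size
    depth_w, depth_h = depth_size
    kx, ky = depth_w / color_w, depth_h / color_h  # normalization factors
    u_d = min(int(round(u * kx)), depth_w - 1)
    v_d = min(int(round(v * ky)), depth_h - 1)
    return u_d, v_d

def depth_at_color_pixel(depth, u, v, color_size):
    """Depth value z for a pixel given in color-image coordinates."""
    depth_h, depth_w = depth.shape
    u_d, v_d = color_to_depth_pixel(u, v, color_size, (depth_w, depth_h))
    return float(depth[v_d, u_d])
```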

Then, convert the pixel coordinates to the camera coordinate system coordinates. Since the D435i camera is a planar camera, a central perspective model is adopted for modeling. The conversion relationship between image coordinates and camera coordinates is shown in Equations (17)–(20):

Thus, Equations (21) and (22) can be obtained.

The parameters in the above calculation, such as the focal length and principal point, can all be calibrated with the traditional checkerboard method based on Zhang Zhengyou’s calibration method and are contained in the camera’s intrinsic parameter matrix after calibration, as shown in Equation (23).

Among them, $f$ is the focal length of the camera, $d_x$ and $d_y$ are the width and height of each pixel, and $(u_0, v_0)$ are the coordinates of the principal point, that is, the intersection of the image plane and the optical axis.
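Under the central perspective model, with $f_x = f / d_x$ and $f_y = f / d_y$, a pixel $(u, v)$ with depth $z$ maps to camera coordinates $x_c = (u - u_0) z / f_x$, $y_c = (v - v_0) z / f_y$, $z_c = z$. The sketch below implements this back-projection; lens distortion is ignored, and the intrinsic values in the example are made up rather than calibration results.

```python
import numpy as np

def intrinsic_matrix(fx, fy, u0, v0):
    """Pinhole intrinsic matrix with focal lengths in pixels."""
    return np.array([[fx, 0.0, u0],
                     [0.0, fy, v0],
                     [0.0, 0.0, 1.0]])

def deproject(u, v, z, K):
    """Back-project pixel (u, v) with depth z into the camera frame."""
    fx, fy = K[0, 0], K[1, 1]
    u0, v0 = K[0, 2], K[1, 2]
    return np.array([(u - u0) * z / fx, (v - v0) * z / fy, z])

K = intrinsic_matrix(600.0, 600.0, 320.0, 240.0)  # illustrative values
print(deproject(380.0, 240.0, 1.2, K))
```

Projecting the result back with `K @ p / p[2]` recovers the original pixel, which is a convenient sanity check after calibration.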

Finally, after obtaining the coordinates in the camera coordinate system, they need to be converted to the coordinates in the base coordinate system and provided to the planning system for control, satisfying the following relationship as defined by Equation (24).

Among them, $P_b$ and $P_c$ are the homogeneous coordinates in the base coordinate system and the camera coordinate system respectively, and $T_c^b$ is the homogeneous coordinate transformation matrix between the camera coordinate system and the base.
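The camera-to-base conversion is a single homogeneous matrix product, $P_b = T_c^b P_c$. The sketch below applies a 4×4 transform to a point in the camera frame; the matrix in the example is a made-up rotation and translation, whereas in practice it would come from hand-eye calibration.

```python
import numpy as np

def camera_to_base(p_cam, T_base_cam):
    """Transform a 3D point from the camera frame to the robot base frame."""
    p_h = np.append(np.asarray(p_cam, dtype=float), 1.0)  # homogeneous form
    return (T_base_cam @ p_h)[:3]

# Illustrative transform: 90 degree rotation about z plus a translation.
T = np.array([[0.0, -1.0, 0.0, 0.5],
              [1.0,  0.0, 0.0, 0.0],
              [0.0,  0.0, 1.0, 0.2],
              [0.0,  0.0, 0.0, 1.0]])
print(camera_to_base([0.1, 0.0, 1.0], T))
```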

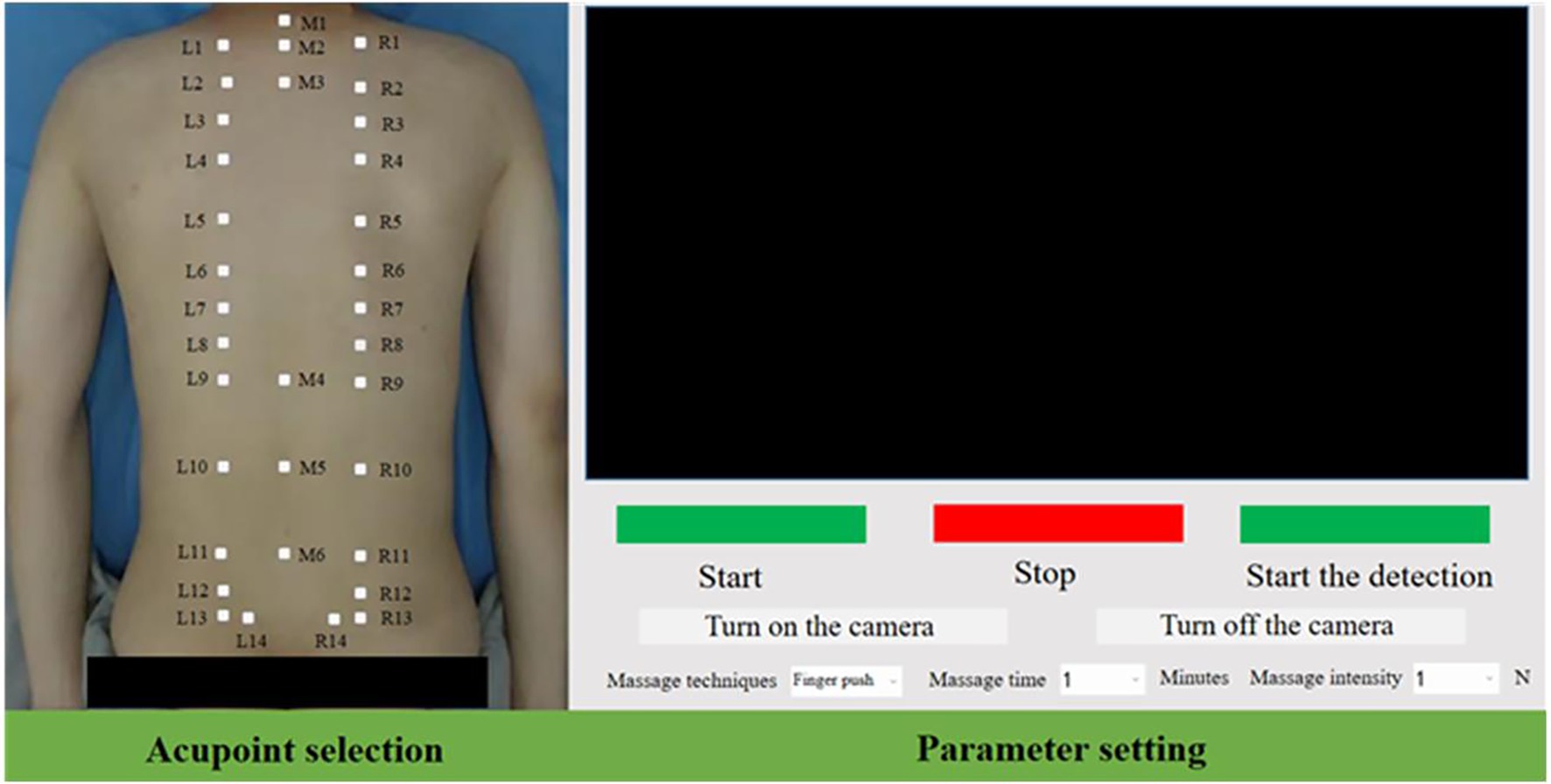

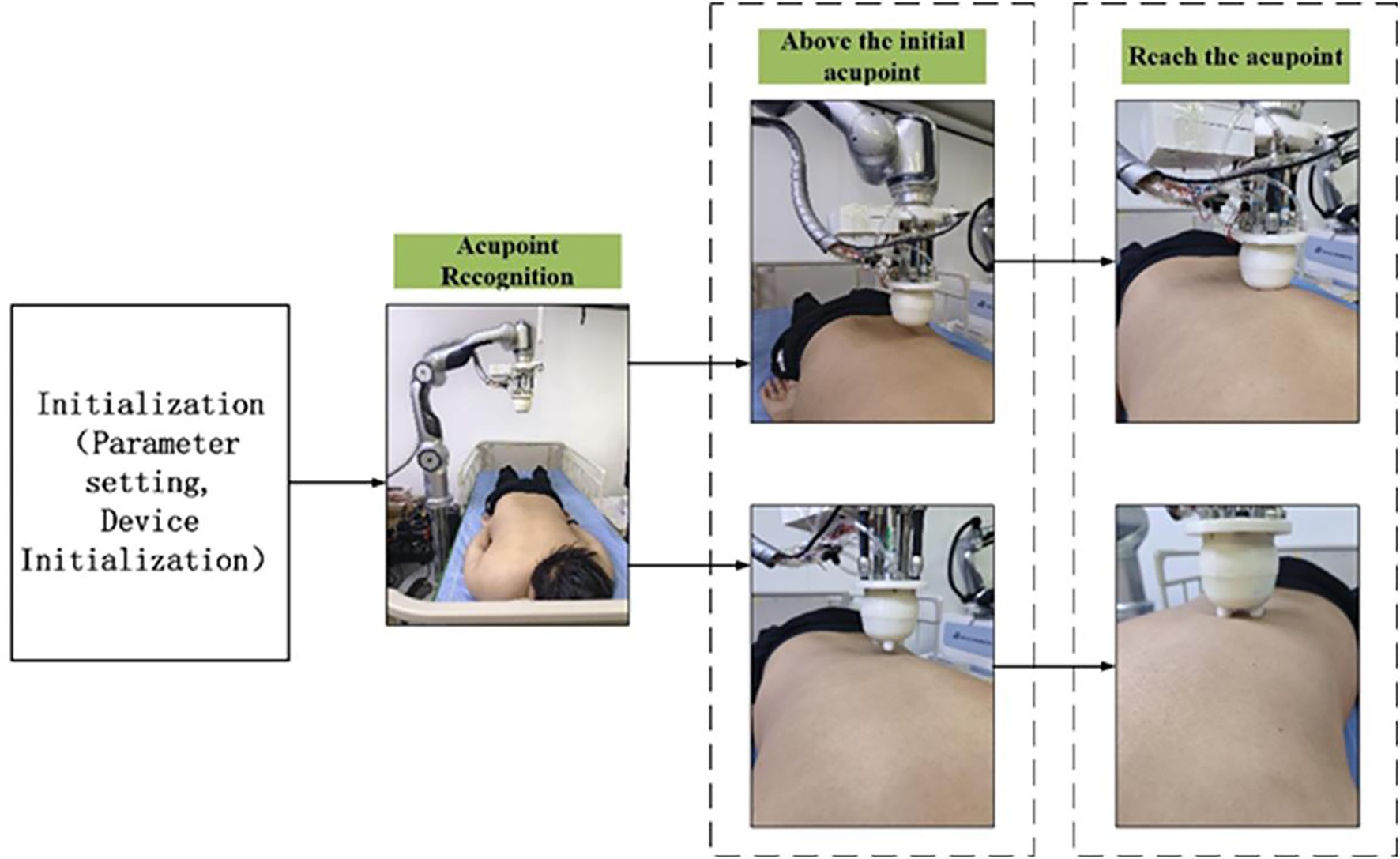

According to the physiotherapy diagnosis plan, the operator sets the acupoints to be treated, the physiotherapy techniques, and parameters such as force and time through the human-machine interface. As the actuator, the physiotherapy robotic arm moves to the acupoint recognition points in sequence to perform acupoint recognition. Once recognition is complete, it locates the acupoints to be treated one by one, according to the acupoint names stored in the system, and performs physiotherapy with the configured techniques. To facilitate control of the physiotherapy process, the human-computer interaction interface and the control integration were implemented in Qt. The interface is shown in Figure 8.

After the physiotherapy task is received, the full trajectory of the robotic arm consists mainly of the following five steps:

1. The robotic arm moves from the initial posture to the posture for acupoint recognition.

2. The robotic arm moves from the position for acupoint recognition to above the first physiotherapy acupoint.

3. The robotic arm performs physiotherapy actions in accordance with the physiotherapy plan.

4. After physiotherapy at the current acupoint is completed, the robotic arm proceeds to the next physiotherapy acupoint.

5. After the current physiotherapy stage is completed, the robotic arm returns from the last acupoint to the acupoint recognition point.

To enhance the flexibility of human-computer interaction during physical therapy, a different trajectory planning method is adopted for each step. The first three steps and the fifth step are interpolated in joint angle space with the S-velocity curve planning algorithm to improve flexibility, while the fourth step uses arc curve planning in Cartesian space.
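The idea of smooth joint-space interpolation can be sketched as follows. Note that this sketch does not implement the S-velocity curve algorithm itself, which is commonly built from several acceleration segments with bounded jerk; it substitutes a simpler quintic time scaling that shares the key property of zero velocity and zero acceleration at both ends of the motion. The function name `smooth_joint_trajectory` and the joint values are hypothetical.

```python
import numpy as np

def smooth_joint_trajectory(q_start, q_goal, duration, n_samples):
    """Interpolate joint angles with a quintic time scaling (stand-in
    for an S-shaped velocity profile, not the S-curve algorithm itself)."""
    q_start = np.asarray(q_start, dtype=float)
    q_goal = np.asarray(q_goal, dtype=float)
    t = np.linspace(0.0, duration, n_samples)
    tau = t / duration                      # normalized time in [0, 1]
    # s(0) = 0, s(1) = 1, with zero first and second derivatives at both ends.
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    q = q_start + np.outer(s, q_goal - q_start)
    return t, q

# Hypothetical two-joint move lasting 2 s, sampled at 5 points.
t, q = smooth_joint_trajectory([0.0, 0.0], [1.0, 2.0], duration=2.0, n_samples=5)
print(q)
```

Because every joint follows the same scaling function, all joints start and stop together, which is the usual reason for interpolating in joint space for point-to-point moves.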

4 Experiments and results

Recognition performance is reflected mainly in the accuracy and real-time performance of physiotherapy key point recognition. We integrated the acupoint recognition network into the physiotherapy robot system and designed accuracy and real-time experiments to verify the performance of the recognition system. We then carried out a physiotherapy task trajectory planning experiment to verify the applicability and reliability of the entire detection system in the robot physiotherapy scenario.

An acupoint recognition test platform was set up. The D435i camera was installed at the end of the robotic arm at a height of 1 m above the bed, and 40 data sets were collected for testing acupoint accuracy and real-time detection efficiency. The computer was configured with a 4070Ti GPU, and the camera resolution was set to 640 × 480. Under these conditions, each pixel was measured to correspond to an actual distance of approximately k = 1.3 mm.

4.1 Result analysis and evaluation of the improved Criminisi algorithm

The marked images were processed one by one with the Criminisi algorithm and the improved algorithm, and the experimental process data were recorded and saved. The PSNR and SSIM of each image after processing by the two algorithms were then calculated. The results are shown in Table 1.

As can be seen from Table 1, the SSIM of the images processed by the two algorithms is relatively high, while the PSNR is close to 40 dB. These two objective indicators show that the restoration quality of the two algorithms is similar, although not identical.
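For reference, PSNR follows directly from the mean squared error between a reference image and the restored image. The sketch below is a generic implementation for 8-bit images; the function name `psnr` is illustrative, and which image serves as the reference in the evaluation above is not specified here, so that choice is left to the caller.

```python
import numpy as np

def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    ref = np.asarray(reference, dtype=np.float64)
    res = np.asarray(restored, dtype=np.float64)
    mse = np.mean((ref - res) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

# Hypothetical example: every pixel differs by one gray level (MSE = 1).
a = np.zeros((4, 4), dtype=np.uint8)
b = np.ones((4, 4), dtype=np.uint8)
print(psnr(a, b))
```

Under this definition, a PSNR near 40 dB for 8-bit images corresponds to a root-mean-square pixel difference of roughly 2.5 gray levels.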

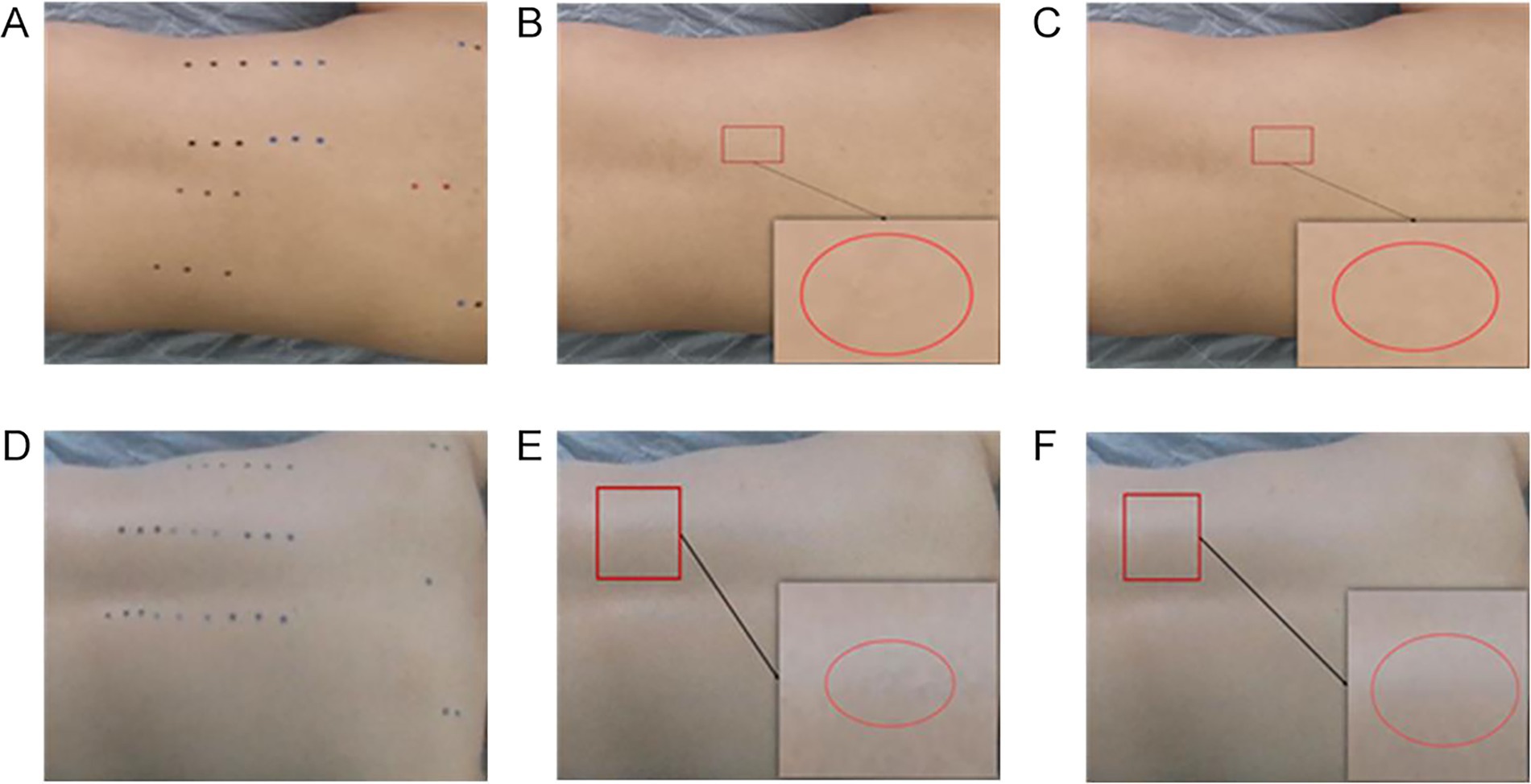

In Figure 9, Figures 9B,C show the results of de-labeling Figure 9A with the Criminisi algorithm and the improved algorithm, respectively, and Figures 9E,F show the results of de-labeling Figure 9D with the Criminisi algorithm and the improved algorithm, respectively. Comparing the images before and after processing, together with their local magnifications, shows that the Criminisi algorithm is prone to discontinuous boundaries and abrupt pixel changes between patches after restoration. Under the same conditions, the improved algorithm effectively alleviates these problems, so that the repaired area blends more naturally with the surrounding skin and the transition appears smoother. Since the application scenario of this study is acupoint recognition, the coherence and naturalness of the repaired area are important, and the proposed algorithm meets this demand better.

Figure 9. Comparison of the repair effects of the de-labeling algorithm. (A) Sample I. (B) Criminisi algorithm. (C) Improved algorithm. (D) Sample II. (E) Criminisi algorithm. (F) Improved algorithm.

The APE of each image and the average APE were calculated for the Criminisi algorithm and the improved algorithm. The APE of the two algorithms is plotted in Figure 10, and the repair efficiency evaluation is given in Table 2.

First, as can be seen from Figure 10, the APE of the Criminisi algorithm is significantly greater than that of the improved algorithm, indicating that the improved matching mechanism effectively enhances computational efficiency and saves processing time. Moreover, the per-image processing efficiency of the algorithm fluctuates only slightly around its mean value, indicating strong stability. Second, as shown in Table 2, the average APE of the Criminisi algorithm is 13.4817. After the improvement, the average APE is reduced by 9.1487, and the algorithm efficiency is increased by 67.8%. The improved algorithm can therefore effectively speed up acupoint de-labeling and is of practical value for processing large-scale acupoint datasets.

4.2 Accuracy test for acupoint recognition

To evaluate the accuracy of the acupoint recognition method, this study selected several volunteers as test subjects, collected predicted coordinates, and calculated evaluation indicators.

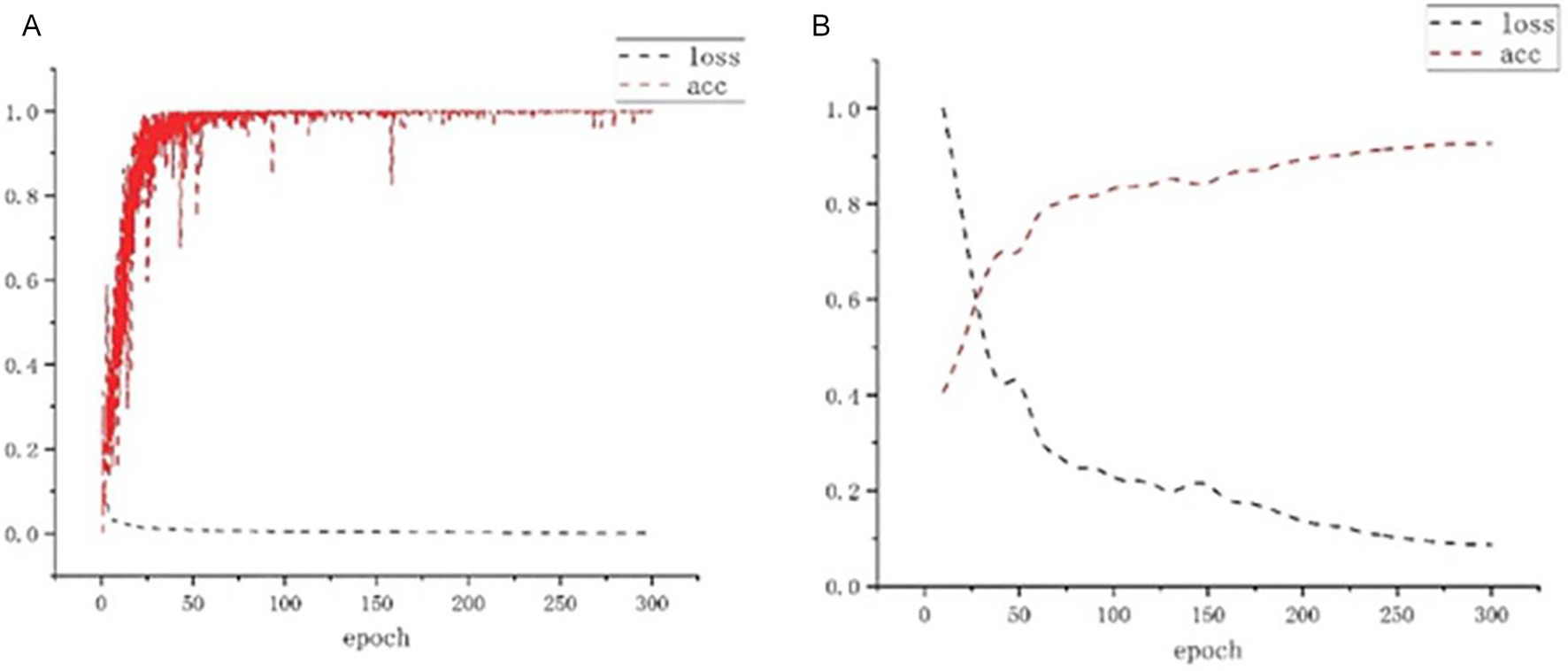

A total of 1,000 high-quality samples were selected from the dataset and split between the training set and the validation set in a ratio of 8:2. The model was trained for 300 epochs, and the validation set error was calculated every 10 epochs. The training results of the acupoint detection model are shown in Figure 11. As can be seen from Figure 11A, the accuracy is already relatively high at around 50 epochs, and after 300 epochs the accuracy on the training set is close to 1. Figure 11B shows that the accuracy on the validation set exceeds 80% at around 150 epochs and exceeds 90% after 300 epochs. Figure 12 shows the prediction results of the trained acupoint recognition model. M1–M6 are the acupoints along the middle of the human back, L1–L14 are the acupoints on the left side of the back, and R1–R14 are the acupoints on the right side of the back.

Figure 11. Loss and accuracy curves. (A) Training set loss and accuracy. (B) Validation set loss and accuracy.

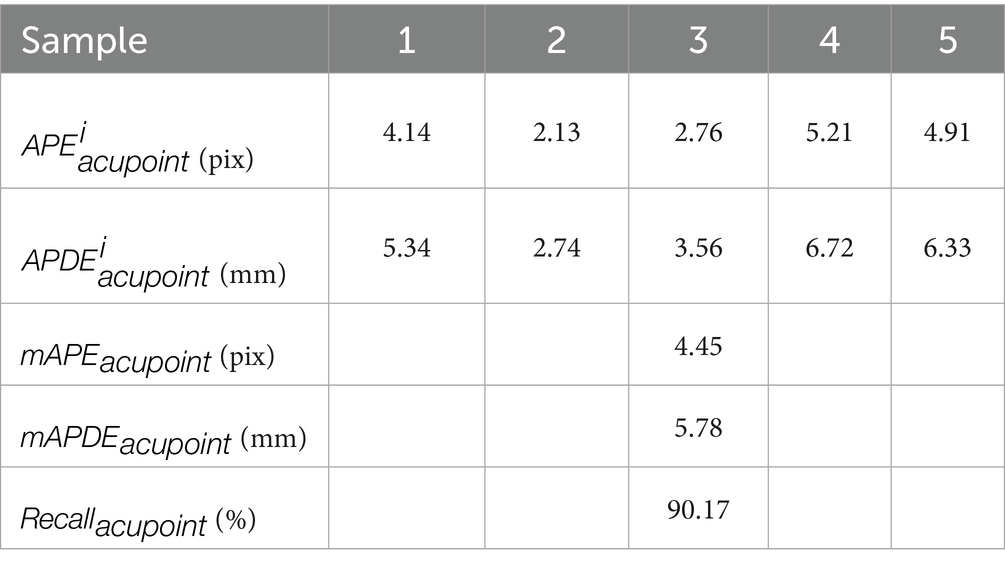

We carried out a statistical analysis over 40 samples. To illustrate how representative the results are, Table 3 lists the relevant parameters of five samples together with the average over all samples. The average pixel error of acupoint recognition is 4.45 pixels; after conversion with the scale coefficient k, the corresponding physical error is approximately 5.78 mm. The sample variance is 2.91, and the average recall reaches approximately 90.17%. During task execution, this error stems mainly from the positioning error along the X and Y axes and the approximation error of the proportional geometric measurement; along the Z axis, it also includes the error of the sensor depth information. The results show that the detection method is accurate enough to meet the requirements of most physiotherapy scenarios, such as massage and moxibustion.
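The conversion from pixel error to physical error can be reproduced with a few lines of Python. The sketch assumes that the per-acupoint error is the Euclidean distance between predicted and annotated pixel coordinates, which is an assumption about the metric rather than a stated definition; the function name `mean_error_px_and_mm` and the sample coordinates are hypothetical.

```python
import numpy as np

def mean_error_px_and_mm(pred, truth, k_mm_per_px=1.3):
    """Mean Euclidean pixel error and its physical equivalent in mm."""
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    err_px = np.linalg.norm(pred - truth, axis=1)  # one distance per acupoint
    mean_px = float(err_px.mean())
    return mean_px, mean_px * k_mm_per_px

# Hypothetical predictions and annotations for two acupoints.
mean_px, mean_mm = mean_error_px_and_mm([[3, 4], [10, 10]], [[0, 0], [10, 10]])
print(mean_px, mean_mm)
```

With the scale coefficient k = 1.3 mm per pixel, the reported 4.45-pixel average corresponds to 4.45 × 1.3 ≈ 5.785 mm, in line with the approximately 5.78 mm stated above.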

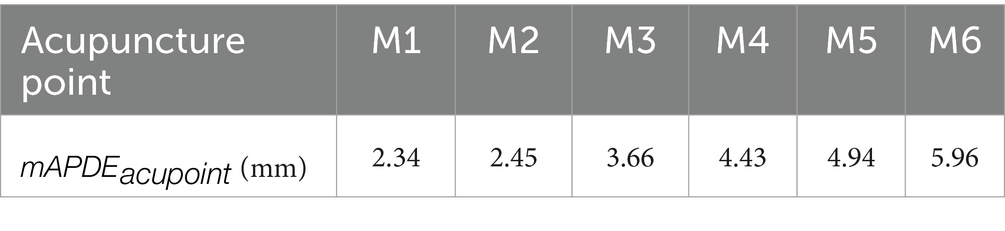

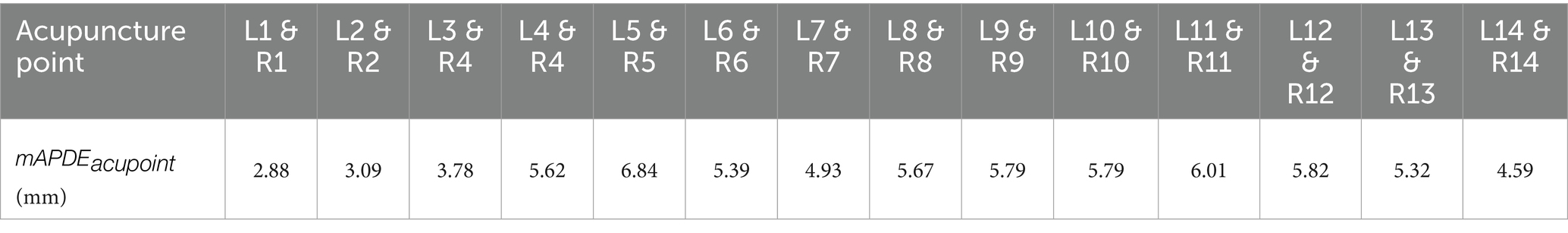

We also measured the average error of each acupoint for 20 subjects and calculated the accuracy of each acupoint individually. The accuracy statistics of the acupoints located on the spine are shown in Table 4, and those of the acupoints on both sides of the spine are shown in Table 5, where the acupoints at the same height on the two sides were treated as a pair and averaged. The accuracy of the acupoints on the spine is generally higher than that of the acupoints on both sides, and the accuracy of M1 and M2 is higher than that of M5 and M6. This is because experts often take M1 and M2 as marking benchmarks and mark them first. From the perspective of the human musculoskeletal system, these two acupoints also have more obvious features than M5 and M6, and acupoints with weaker features are harder to detect. In addition, experts often mark the acupoints on the spine first and then locate the paired acupoints on both sides, especially those at the same height, so the accuracy of the paired acupoints depends directly on the accuracy of the acupoints on the spine.

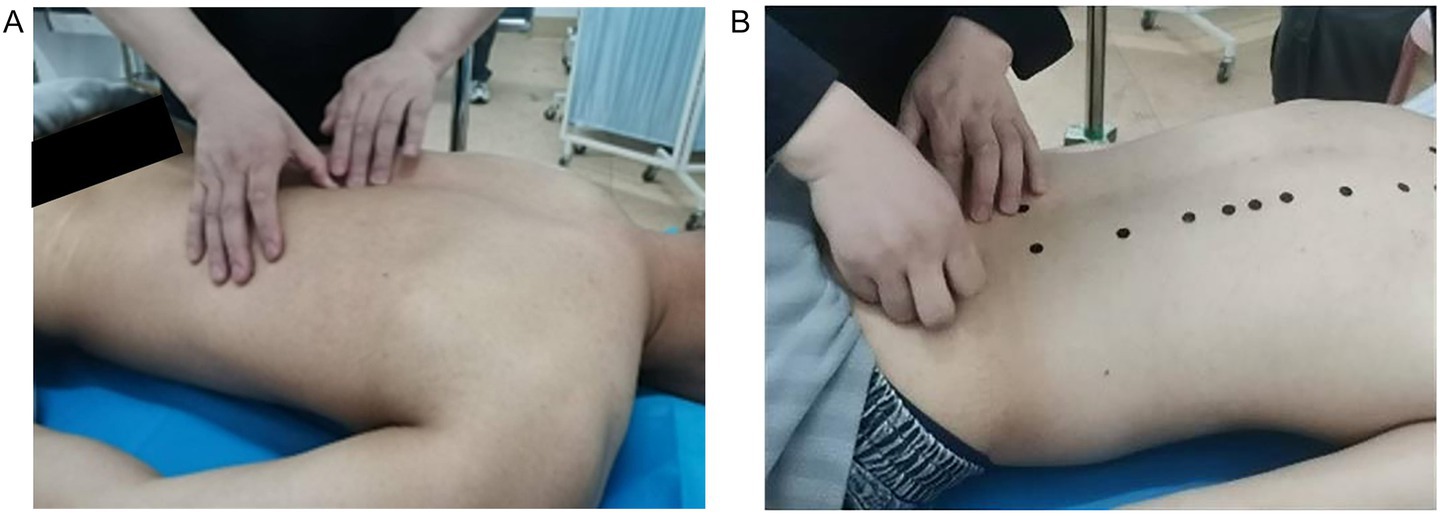

4.3 Real-time testing of acupoint recognition

In physical therapy scenarios, the patient’s body moves and the lighting changes, so the recognized acupoint locations must be updated continuously. The acupoint recognition system must therefore be able to update its detections in real time. To evaluate this capability, the detection speed of acupoint recognition, that is, the number of image frames inferred per second, is used as the real-time evaluation index for acupoint prediction. Volunteers were asked to change their body postures dynamically, and the detection results under different postures were tested.

The detection results of the acupoint recognition model are shown in Figure 13, where the red areas indicate the acupoint locations predicted by the model. From left to right, the images show dynamic acupoint recognition on the back under different postures. After the detection stabilized, the number of frames detected per second was recorded, and the average was 30 FPS. The method therefore detects efficiently and is well suited to this application.
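One simple way to obtain such a frame-rate figure is to time repeated inference calls after a short warm-up, as sketched below. The function `measure_fps` and the stand-in inference function are hypothetical; in the actual system the callable would wrap the full two-stage recognition pipeline.

```python
import time

def measure_fps(infer, frames, warmup=5):
    """Average frames per second of `infer`, ignoring warm-up frames."""
    frames = list(frames)
    timed = frames[warmup:]
    if not timed:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        infer(frame)                      # let the pipeline stabilize first
    start = time.perf_counter()
    for frame in timed:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed

# Hypothetical stand-in for the recognition pipeline: about 10 ms per frame.
def fake_infer(frame):
    time.sleep(0.01)

print(measure_fps(fake_infer, range(20), warmup=5))
```

Excluding the first frames mirrors the practice of recording the frame count only after detection has stabilized.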

4.4 Experiment on trajectory planning for physiotherapy tasks

Through the human-machine interface operation, parameters such as the physiotherapy technique, duration and intensity were selected, and then the physiotherapy began. The experimental process is shown in Figure 14.

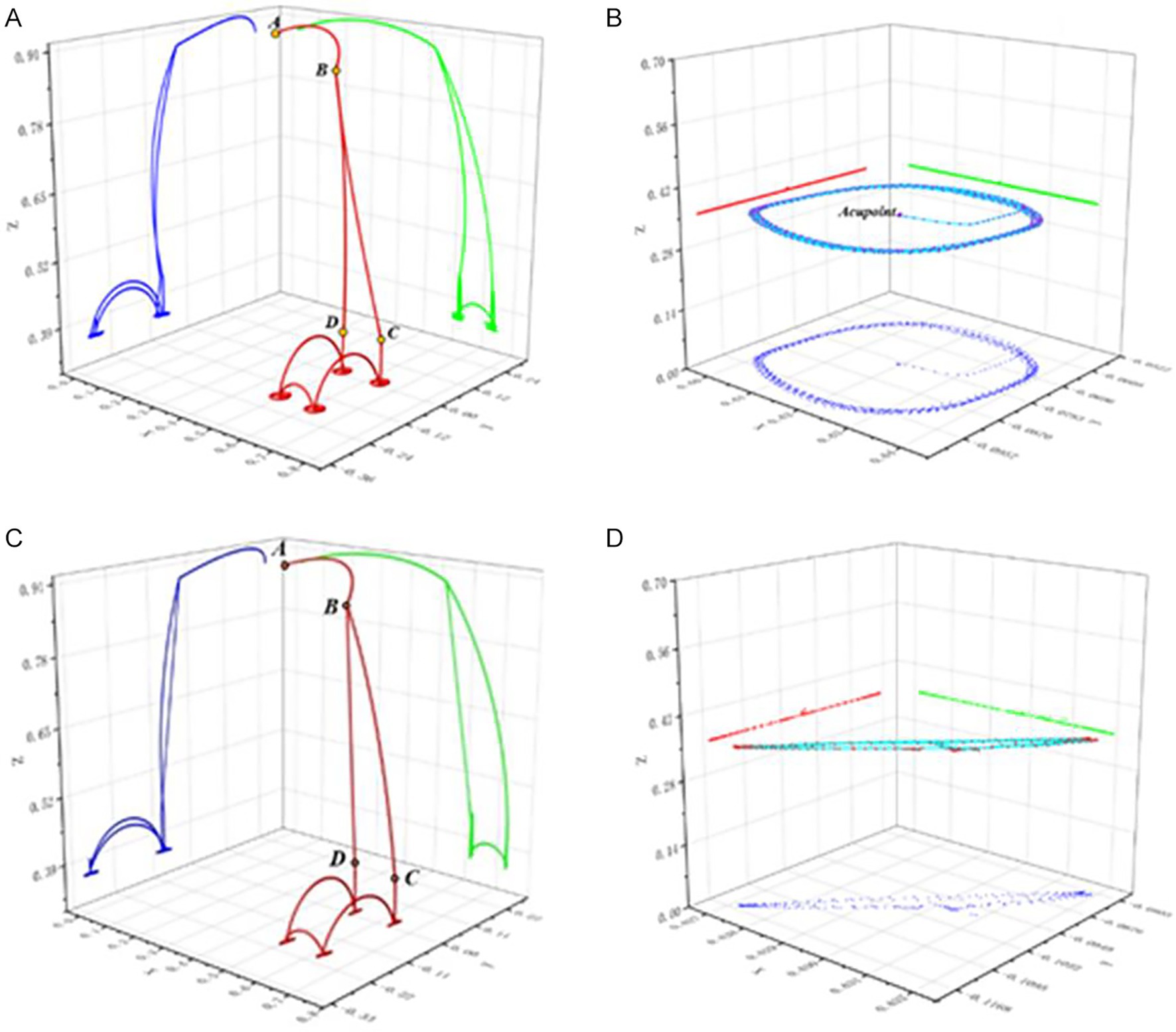

In this section, four acupoints on the back were selected for the full-process physiotherapy trajectory planning experiment. Four techniques were planned in sequence, and the end poses and trajectory planning data were recorded. The full-process trajectories of each technique and the typical trajectories of the techniques are shown in Figure 15. Point A is the system initialization pose, and point B is the waiting point for acupoint recognition. The system moves from point A to point B for acupoint recognition. After recognition is completed, points C and D, which lie above the first and last acupoints to be treated, respectively, are selected based on the chosen acupoints. The AB, BC, and CD sections are planned using the S-velocity curve.

Figure 15. Physiotherapy task planning trajectory diagram. (A) The entire process trajectory of physiotherapy task planning I. (B) The typical trajectory of physiotherapy task planning I. (C) The entire process trajectory of physiotherapy task planning II. (D) The typical trajectory of physiotherapy task planning II.

4.5 Comprehensive analysis

Table 6 compares the proposed method with existing acupoint recognition methods along four dimensions: positioning accuracy, convenience of use, cost, and scope of application. The comparison shows that although the method in Zheng (2022) stands out in convenience and cost and has a wide scope of application, its positioning accuracy is relatively low. The method in Wang et al. (2023) has high positioning accuracy but is inconvenient to use, costly, and limited in scope of application. The method in Zhang et al. (2024) has advantages in convenience and cost, but its positioning accuracy is moderate and its scope of application is limited. Overall, our method achieves a more balanced and competitive performance across these four dimensions, providing a more practical solution for acupoint recognition.

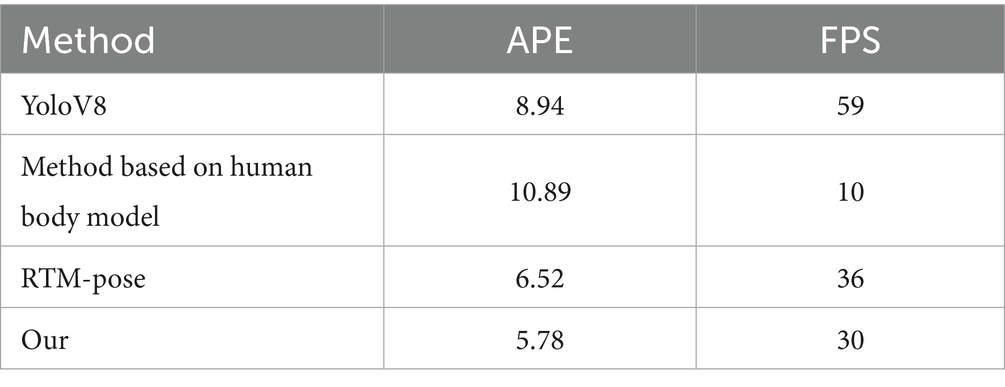

We evaluated the proposed method and current mainstream key point detection methods under the same experimental conditions, using exactly the same hardware configuration and the same data preprocessing process to eliminate the influence of environmental differences on the results. The comparative experiment uses the test dataset. Recognition accuracy is quantified by the acupoint prediction error (APE) computed over all effective pixels, and real-time performance is quantified by the detection speed, that is, the number of image frames processed per second (FPS). As Table 7 shows, the proposed method achieves the best APE, significantly lower than that of the other methods. In terms of inference efficiency, it is slightly slower than YOLOv8 but faster than the method based on a human body model, and the gap to RTMPose is within an acceptable range. Overall, our method strikes a good balance between accuracy and efficiency and has an advantage in the accuracy of acupoint coordinate prediction. It can better meet the high accuracy requirements of acupoint recognition in physiotherapy scenarios and lays a solid foundation for the precise execution of subsequent physiotherapy actions.

5 Discussion

This paper studies autonomous acupoint recognition for physiotherapy robots. To address the dense distribution of acupoints on the back, their indistinct external features, and changes in posture and lighting, a two-stage detection method combining RTMDet and RTMPose is proposed, and the model is generalized to acupoint recognition under dynamic conditions. Experiments demonstrate the robustness and practicality of the proposed method in complex environments. In acupoint localization, accurately identifying the positions of key points depends not only on local texture features but also on the overall structure and contextual semantic information. Traditional single-stage networks often struggle to balance target detection against precise key point prediction. The two-stage architecture alleviates this conflict by decoupling target detection from key point localization: the first-stage RTMDet network focuses on the back region of the human body and eliminates redundant interfering information, while the second-stage key point localization network, RTMPose, focuses on high-precision acupoint recognition within the cropped local region. Through module division and task decoupling, this structure not only improves the robustness and generalization ability of the model but also provides better spatial constraints and feature support for precise acupoint recognition in complex contexts. Based on the defined accuracy error metric, the method achieves a recall of 90.17% on the human body dataset, with a detection error of around 5.78 mm. Moreover, it can still detect in real time when volunteers move and change posture, and the accuracy is almost unaffected. The average frame rate remains above 30 frames per second, indicating that the system can meet the requirements of real-time application scenarios. However, because the data were collected in a well-lit, controlled laboratory environment that differs from actual application scenarios, different light intensities may affect the accuracy of acupoint recognition. In addition, the data came mainly from subjects with healthy body types and did not include cases of conditions such as scoliosis, so generalization to users with scoliosis or similar physical conditions is reduced. In future research, we plan to introduce other perception methods to build a multimodal fusion acupoint recognition system that enhances the adaptability and accuracy of the model in complex clinical scenarios. Meanwhile, we have expanded our research scope from the back to other parts of the human body, such as the abdomen and ears, gradually establishing a high-quality database of acupoints throughout the body.

6 Conclusion

In response to the imbalance between the demand for and supply of physical therapy for the aging and sub-healthy population, the application of robots to physical therapy has become a highly promising solution. However, the current level of automation in the acupoint recognition of physical therapy robots is insufficient, and recognition accuracy still leaves room for improvement. For dataset collection and production, a high-precision dataset production method was designed, which involves manual labeling first and then algorithmic de-labeling of the marked images, thereby improving the quality of the dataset. Based on a deep learning model, a two-stage acupoint recognition model is proposed. Object detection adopts network architectures such as CSPNeXt and enhances detection speed and accuracy through a multi-head sharing mechanism. Key point detection is based on the SimCC architecture, which converts the regression problem into a classification problem, improving detection speed and raising detection accuracy to the sub-pixel level. By transforming the coordinates, we planned the task execution trajectory of the physiotherapy robot so that it can precisely reach the target acupoints when performing physiotherapy actions. The model has been verified experimentally: the average pixel error of acupoint recognition is 4.45 pixels, the actual physical error is about 5.78 mm, and the average recall is about 90.17%. This indicates that the detection method is highly accurate and can meet the needs of most physiotherapy scenarios. This research helps promote the deep integration of traditional Chinese medicine and artificial intelligence technology and drives the development of physiotherapy robots.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

YZ: Validation, Conceptualization, Methodology, Writing – original draft. SS: Writing – original draft, Investigation, Software. DZ: Funding acquisition, Writing – review & editing, Supervision. JY: Project administration, Resources, Writing – review & editing. SW: Supervision, Writing – review & editing, Validation.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was funded by the National Natural Science Foundation of China (Grant No. 92248304), Key Research and Development Projects of Artificial Intelligence (Grant No. 2023JH26/10200018), the Basic Research Project of Liaoning Provincial Department of Education (Grant No. LJ212410142073), and the Dreams Foundation of Jianghuai Advance Technology Center (Grant No. ZM01Z016).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bakhtiyorov, S., Umirzakova, S., Musaev, M., Abdusalomov, A., and Whangbo, T. K. (2025). Real-time object detector for medical diagnostics (RTMDet): a high-performance deep learning model for brain tumor diagnosis. Bioengineering 12:274. doi: 10.3390/bioengineering12030274

Choi, Y. I., Kim, K. O., Chung, J. W., Kwon, K. A., Kim, Y. J., Kim, J. H., et al. (2021). Effects of automatic abdominal massage device in treatment of chronic constipation patients: a prospective study. Dig. Dis. Sci. 66, 3105–3112. doi: 10.1007/s10620-020-06626-3

Dong, H., Feng, Y., Qiu, C., Pan, Y., He, M., and Chen, I. M. (2022). Enabling massage actions: an interactive parallel robot with compliant joints. Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). 4632–4637.

Fan, J., Jin, L., Li, P., Liu, J., Wu, Z. G., and Chen, W. (2025). Coevolutionary neural dynamics considering multiple strategies for nonconvex optimization. Tsinghua Sci. Technol. doi: 10.26599/TST.2025.9010120

Hua, W., Dai, Z., Liu, H., and Le, Q. V. (2022). Transformer quality in linear time. Proc. Mach. Learn. Res. 162, 9099–9117. Available at: https://proceedings.mlr.press/v162/hua22a.html

Huang, H., Jin, L., and Zeng, Z. (2025). A momentum recurrent neural network for sparse motion planning of redundant manipulators with majorization–minimization. IEEE Trans. Ind. Electron., 1–10. doi: 10.1109/TIE.2025.3566731

Jiang, T., Lu, P., Zhang, L., Ma, N., Han, R., Lyu, C., et al. (2023). RTMPose: Real-Time Multi-Person Pose Estimation based on MMPose. arXiv. Available online at: https://arxiv.org/abs/2303.07399. [Epub ahead of preprint]

Jin, L., Wei, L., and Li, S. (2023). Gradient-based differential neural-solution to time-dependent nonlinear optimization. IEEE Trans. Autom. Control 68, 620–627. doi: 10.1109/TAC.2022.3144135

Kang, Z., Shi, G., Zhu, Y., Li, F., Li, X., and Wang, H. (2025). Development of a model for measuring sagittal plane parameters in 10–18-year old adolescents with idiopathic scoliosis based on RTMpose deep learning technology. J. Orthop. Surg. Res. 20:41. doi: 10.1186/s13018-024-05334-2

Kerautret, Y., Di Rienzo, F., Eyssautier, C., and Guillot, A. (2024). Comparative efficacy of robotic and manual massage interventions on performance and well-being: a randomized crossover trial. Sports Health 16, 650–660. doi: 10.1177/19417381231190869

Li, J., Fei, Z., Xie, Y., Deng, D., Ming, X., and Niu, F. (2025). A review of acupoint localization based on deep learning. Chin. Med. 20:116. doi: 10.1186/s13020-025-01173-3

Li, F. F., Zuo, H. M., Jia, Y. H., and Qiu, J. (2024). A developed Criminisi algorithm based on particle swarm optimization (PSO-CA) for image inpainting. J. Supercomput. 80, 16611–16629. doi: 10.1007/s11227-024-06099-5

Liu, M., Chen, L., Du, X., Jin, L., and Shang, M. (2023). Activated gradients for deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 34, 2156–2168. doi: 10.1109/TNNLS.2021.3106044

Liu, M., Li, Y., Chen, Y., and Jin, L. (2024). A distributed competitive and collaborative coordination for multirobot systems. IEEE Trans. Mob. Comput. 23, 11436–11448. doi: 10.1109/TMC.2024.3397242

Lyu, C., Zhang, W., Huang, H., Zhou, Y., Wang, Y., Liu, Y., et al. (2022). Rtmdet: an empirical study of designing real-time object detectors. arXiv. Available online at: https://arxiv.org/abs/2212.07784. [Epub ahead of preprint]

Malekroodi, H. S., Seo, S. D., Choi, J., Na, C. S., Lee, B. I., and Yi, M. (2024). Real-time location of acupuncture points based on anatomical landmarks and pose estimation models. Front. Neurorobot. 18:1484038. doi: 10.3389/fnbot.2024.1484038

Mamieva, D., Abdusalomov, A. B., Mukhiddinov, M., and Whangbo, T. K. (2023). Improved face detection method via learning small faces on hard images based on a deep learning approach. Sensors 23:502. doi: 10.3390/s23010502

Pang, Z., Zhang, B., Yu, J., Sun, Z., and Gong, L. (2019). Design and analysis of a Chinese medicine based humanoid robotic arm massage system. Appl. Sci. 9:4294. doi: 10.3390/app9204294

Sayapin, S. N. (2017). Intelligence self-propelled planar parallel robot for sliding cupping-glass massage for back and chest. International Conference of Artificial Intelligence, Medical Engineering, Education. 166–175.

Seo, S. D., Madusanka, N., Malekroodi, H. S., Na, C. S., Yi, M., and Lee, B. I. (2024). Accurate acupoint localization in 2D hand images: evaluating HRNet and ResNet architectures for enhanced detection performance. Curr. Med. Imaging 20:e15734056315235. doi: 10.2174/0115734056315235240820080406

Wang, H., Liu, L., Wang, Y., and Du, S. (2023). Hand acupuncture point localization method based on a dual-attention mechanism and cascade network model. Biomed. Opt. Express 14, 5965–5978. doi: 10.1364/BOE.501663

Wang, W., Zhang, P., Liang, C., and Shi, Y. (2018). Design path planning improvement and test of a portable massage robot on human back. Int. J. Adv. Robot. Syst. 15:1729881418786631. doi: 10.1177/1729881418786631

Xing, K., Chen, D., Xue, R., and Wang, D. (2021). Flexible physiotherapy massage robot. 2021 IEEE International Conference on Robotics and Biomimetics (ROBIO 2021). December 6–9, 2021: Sanya, China. 417–421.

Yang, S., Li, Y., Huang, L., Liu, J., Teng, Y., Zou, H., et al. (2025a). Exploring an innovative deep learning solution for acupuncture point localization on the weak feature body surface of the human back. IEEE J. Biomed. Health Inform. 29, 4599–4611. doi: 10.1109/JBHI.2024.3511128

Yang, S., Liang, H., Wang, Y., Cai, H., and Chen, X. (2020). Image inpainting based on multi-patch match with adaptive size. Appl. Sci. 10:4921. doi: 10.3390/app10144921

Yang, J., Lim, K. H., Mohabbat, A. B., Fokken, S. C., Johnson, D. E., Calva, J. J., et al. (2024). Robotics in massage: a systematic review. Health Serv. Res. Manag. Epidemiol. 11:23333928241230948. doi: 10.1177/23333928241230948

Yang, S., Zang, Q., Zhang, C., Huang, L., and Xie, Y. (2025b). Rt-DEMT: a hybrid real-time acupoint detection model combining mamba and transformer. arXiv. Available online at: https://arxiv.org/abs/2502.11179. [Epub ahead of preprint]

Yao, F. (2019). Damaged region filling by improved criminisi image inpainting algorithm for thangka. Clust. Comput. 22, 13683–13691. doi: 10.1007/s10586-018-2068-4

Yu, Z., Zhang, S., Zhang, L., Wen, C., Yu, S., Sun, J., et al. (2024). Design and performance evaluation of a home-based automatic acupoint identification and treatment system. IEEE Access 12, 25491–25500. doi: 10.1109/ACCESS.2024.3359717

Yuan, Z., Shao, P., Li, J., Wang, Y., Zhu, Z., Qiu, W., et al. (2024). YOLOv8-ACU: improved YOLOv8-pose for facial acupoint detection. Front. Neurorobot. 18:1355857. doi: 10.3389/fnbot.2024.1355857

Zhang, Y., Jia, Y., and Tang, Y. (2025). Accurate detection of arbitrary ship directions using SAR based on RTMDet. Remote Sens. Lett. 16, 156–169. doi: 10.1080/2150704X.2024.2440666

Zhang, Y., Zeng, B., Xian, Y., and Yang, R. (2024). Detection of back acupoints based on improved OpenPose. FAIML 2024: Proceedings of the 3rd International Conference on Frontiers of Artificial Intelligence and Machine Learning. 329–335.

Zhao, L., and Guo, Y. (2023). Development of rehabilitation and assistive robots in China: dilemmas and solutions. J. Shanghai Jiaotong Univ. 28, 382–390. doi: 10.1007/s12204-023-2596-9

Zhao, D., Sun, X., Shan, B., Yang, Z., Yang, J., Liu, H., et al. (2023). Research status of elderly-care robots and safe human-robot interaction methods. Front. Neurosci. 17:1291682. doi: 10.3389/fnins.2023.1291682

Zheng, C. (2022). Research on AR system for facial acupoint recognition based on deep learning. Qingdao: Qingdao University of Science and Technology.

Zheng, W., Zhang, M., Dong, R., Qiu, M., and Wang, W. (2025). Feasibility and accuracy of an RTMPose-based Markerless motion capture system for single-player tasks in 3 × 3 basketball. Sensors 25:4003. doi: 10.3390/s25134003

Keywords: physiotherapy robot, acupoint recognition, RTMDet network, RTMPose network, physiotherapy task

Citation: Zhang Y, Sun S, Zhao D, Yang J and Wang S (2025) A novel intelligent physiotherapy robot based on dynamic acupoint recognition method. Front. Neurorobot. 19:1696824. doi: 10.3389/fnbot.2025.1696824

Edited by: Mei Liu, Multi-Scale Medical Robotics Center Limited, China

Reviewed by: Ali Raza, University of Engineering and Technology, Taxila, Pakistan; Jiawang Tan, Chinese Academy of Sciences (CAS), China; Liang Xuan, Jianghan University, China

Copyright © 2025 Zhang, Sun, Zhao, Yang and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Donghui Zhao
