- Department of Psychology, Catholic University of Brasília (UCB), Brasília, Brazil

This theoretical article proposes an integrative model to understand the dissemination of misinformation on social networks, articulating three central pillars of social and cognitive psychology: overconfidence bias, social conformity, and cognitive dissonance. It is argued that these factors interact to favor the perception of legitimacy and repeated sharing of false information, even in the face of corrective evidence. The model describes a psychosocial cycle composed of exposure, judgment, action, dissonance, and rationalization. We identify theoretical conditions for the delegitimization of misinformation and analyze how the digital environment intensifies the described psychosocial mechanisms. The model contributes to theoretical reflection by articulating previously isolated constructs into an integrated explanatory theory, offering implications for both future research and practical interventions in combating misinformation.

Introduction

The dissemination of misinformation on digital social networks has become a central concern in the contemporary sociopolitical landscape, especially in Brazil, where the impact of false information has been significant in various domains, including electoral processes and public health. This phenomenon, often framed within a broader context of “information disorder” (Wardle and Derakhshan, 2017), represents not only an informational challenge but also a complex psychosocial problem that demands interdisciplinary analyses (Nascimento and Silva, 2024). Research shows that 72% of Brazilians reported encountering false news on social media in the past 6 months, and 81% believe this information can substantially influence election results (Federal Senate, 2024). This scenario is exacerbated by Brazilians' high confidence in social media as an information source. A 2024 report by the Organization for Economic Cooperation and Development (OECD) indicated that Brazil ranked last among 21 countries evaluated for the ability to identify fake news, making it the country whose population is most likely to believe false news.

The problem is multifaceted and was recently characterized by the World Economic Forum (2024) in its global risks report as the most serious issue for the next 2 years, surpassing even extreme climate events, pollution, or social inequality. Robert J. Shiller, Nobel laureate in Economics, argues that narratives spread much as viruses do, shaping behavior in fundamental aspects of social life (Shiller, 2022).

During the COVID-19 pandemic, for example, there was a proliferation of false information related to vaccines, often framed within a charged political context (Soares et al., 2021), which negatively impacted vaccination rates and trust in health authorities. Even after factual corrections, many people continue to believe and share information proven to be false (Cunha and Santos, 2020). This persistence of misinformation, a phenomenon known as the continued influence effect (Lewandowsky et al., 2012), suggests the existence of deep psychological and social mechanisms that sustain its perceived legitimacy.

Despite the growing literature on misinformation, there is a gap regarding integrative theoretical models that articulate the various psychological and social processes involved in the acceptance and dissemination of false information. Much of the research has focused on isolated aspects of the phenomenon, such as specific cognitive biases or algorithmic dynamics of digital platforms, without offering a systemic view that connects these elements in a coherent explanatory cycle.

It is important to clarify that although we name the framework the Psychosocial Cycle of Misinformation, the proposal is not a theory built from first principles, but an integrative model that synthesizes and interrelates existing psychological constructs into a coherent explanatory cycle.

This article proposes to advance this knowledge by presenting the Psychosocial Cycle of Misinformation, an integrative theoretical model that articulates three central pillars of social and cognitive psychology: overconfidence bias, social conformity, and cognitive dissonance. It is argued that these factors interact to favor the legitimation and repeated sharing of false information, even in the face of corrective evidence.

The proposed model describes a psychosocial cycle composed of five interdependent stages: exposure to socially relevant information, judgment and decision to share, receiving feedback or correction, regulation of dissonance, and reinforcement of behavior and group status. This cycle explains how individuals are exposed to, process, disseminate, and rationalize false information on social networks, contributing to the understanding of the mechanisms that sustain the persistence of misinformation in the digital environment.

Additionally, the cycle addresses the role of emotions, which not only accompany social judgments but often guide, reinforce, or replace cognitive rationalizations in contexts of high public visibility and symbolic polarization.

The theoretical contribution of this work lies in the articulation of well-established psychological and social constructs in an original explanatory model, which not only identifies the factors that contribute to the legitimation of misinformation but also describes their dynamic interactions and points to possible breaking points in the cycle. Furthermore, the proposed model offers a conceptual framework for the development of more effective interventions in combating misinformation, considering the psychosocial complexity of the phenomenon. In the following sections, we will present the theoretical foundation of the three pillars that support the model, detail the psychosocial cycle of misinformation, discuss the conditions for the delegitimization of misinformation, and analyze how the digital environment intensifies the mechanisms of psychosocial legitimation. Finally, we will explore the theoretical and practical implications of the proposed model, as well as its limitations and directions for future research.

Theoretical foundation

Overconfidence bias and informational judgment

Overconfidence bias refers to the systematic tendency to overestimate the accuracy of one's judgments, available knowledge, or personal ability relative to other individuals (Moore and Healy, 2008; Bazerman and Tenbrunsel, 2011). This bias commonly manifests in three forms: overprecision (excessive certainty about the accuracy of one's beliefs), overestimation (inflated assessment of one's abilities), and overplacement (perception of superiority relative to peers). In social media contexts, individuals with high overconfidence may be less inclined to verify information, relying exclusively on their own criteria and subjective perceptions of validity (Cheng et al., 2017).

This bias does not operate in isolation and frequently interacts with other important biases that help preserve a positive self-image, avoid threats, sustain illusions, or distort the assessment of one's competence, such as confirmation bias (Nickerson, 1998), information avoidance bias (Golman et al., 2017), hindsight bias (Fischhoff, 1975), illusion of control (Langer, 1975), optimistic bias or unrealistic optimism (Sharot, 2011), Dunning-Kruger effect (Kruger and Dunning, 1999), self-serving bias (Miller and Ross, 1975), and third-person effect (Davison, 1983). When operating together, these biases produce self-reinforcing cycles that make overconfidence particularly resistant to change.

While overplacement refers to the individual's belief in being more competent than others in identifying misinformation, the third-person effect concerns the perception that others are more vulnerable to misinformation than oneself. Although both are comparative self-assessments, they operate on different evaluative dimensions—competence vs. influence.

In a study on vaccine hesitancy, a non-linear relationship between education and vaccine acceptance was verified. Individuals with intermediate levels of education (complete elementary/incomplete high school and complete high school/incomplete higher education) demonstrated greater hesitation, while those with higher or very low levels of education showed less hesitation (Nascimento, 2024).

This pattern adds a layer of complexity to the overconfidence bias. It can be interpreted in light of the Dunning-Kruger effect: research indicates that individuals with moderate levels of knowledge often display greater overconfidence than those with either low or high levels of knowledge (Ehrlinger et al., 2008; Motta et al., 2018). Individuals with partial knowledge may overestimate their understanding of complex scientific issues, whereas those with very little knowledge tend to recognize their limitations and those with advanced knowledge recognize the complexity of the topic. Knowing enough to feel confident, but not enough to recognize the limits of their understanding, the partially informed are especially susceptible to misinformation.

In the context of misinformation on social networks, overconfidence bias plays a fundamental role in informational judgment and the decision to share content, contributing to the dissemination of false information. Gil de Zúñiga et al. (2017) identified a phenomenon called “News-Finds-Me” (NFM), a widespread perception in which individuals believe that important information will naturally reach them through social networks without active effort. NFM is associated with a low-attention cognitive style toward news, marked by little involvement with or reflection on the content accessed and by a greater propensity to consider news credible. Social networks thus assume the role of an information source that creates the sensation of being informed, replacing the active and engaged search for news.

In a study with 1,014 adults in the United States, researchers found that 55.2% of participants endorsed this mentality, being more common among young users and people who use social networks extensively.

This NFM perception is directly associated with overconfidence in the ability to identify fake news. Researchers observed that individuals with strong NFM perception were more likely to consider fake news credible and, consequently, to share it. More concerning still, about 40% of participants who expressed confidence in their ability to identify fake news were, in reality, less capable than average of distinguishing between true and false information.

The study also revealed a significant relationship between NFM perception and Third-Person Perception, a cognitive bias in which individuals believe they are less influenced by misinformation than others. This combination creates an “illusion of knowledge,” in which passive consumption of news through social networks generates a false sense of being well-informed, leading to the dismissal of verification processes due to the subjective conviction of being correct (Tian and Willnat, 2025).

Digital platforms promote an illusion of expertise in which anyone can take a position on any topic or person. Knowledge is fragmented and often lacks a clear authority: information frequently circulates in short formats (posts, tweets, short videos), decontextualized and disconnected from a larger body of knowledge. At the same time, traditional cues of authority (institutional credentials, peer review) are often absent or obscured. Algorithms may give more visibility to engaging content than to factual content or content from authoritative sources. This makes it difficult for users to discern who really has expertise and what information is reliable, which increases confidence in individual “common sense,” reinforced by self-validation and by the pursuit of opinion leadership through “likes” or favorable comments.

Individuals who accumulate high engagement may be perceived as “opinion leaders” or influencers, achieving social rank through pathways of prestige and dominance (Cheng et al., 2013), even if their popularity is due more to the ability to generate engagement than to the quality or veracity of their information. This creates a system in which popularity can supplant authority based on knowledge. In the absence of clear authorities, and faced with a fragmented flow of often contradictory information, people may rely more heavily on their own “common sense” or intuition, which is frequently shaped by cognitive biases.

Thus, excessive confidence in one's own informational discernment capabilities, combined with dependence on algorithms and peer networks that reinforce existing beliefs, creates favorable conditions for the acceptance and dissemination of misinformation.

Within the digital environment, this process fosters what we define as digital overconfidence—the inflated belief in one's own ability to discern truth from falsehood, amplified by algorithmic affirmation, low informational friction, and frequent social endorsement (likes, shares). In contrast, one potential protective factor is epistemic humility, defined as the acknowledgment of the limitations of one's knowledge and a willingness to revise beliefs in the face of new evidence, especially under conditions of uncertainty or contradiction.

Social conformity and group validation

Social conformity, the alignment of attitudes or behaviors with group norms, can be divided into two main forms: informational conformity, in which people facing an ambiguous or uncertain situation rely on others to define it and privately accept the result; and normative conformity, in which people yield to group pressure to avoid criticism and obtain social acceptance, without necessarily accepting the group's position privately (Asch, 1956; Cialdini and Goldstein, 2004; Aronson et al., 2015). This phenomenon, widely studied in social psychology since the classic experiments of Sherif and Asch in the mid-20th century, acquires new dimensions and mechanisms in the contemporary digital environment.

Social conformity gains new dimensions when analyzed in light of the concept of “false consensus,” defined as the “impression of consensus where people tend to believe in those discourses that the majority of others seem to support” (Recuero, 2020). This phenomenon explains how the perception of majority support socially legitimizes certain beliefs, even when they do not actually represent a consensual position. On social networks, false consensus is amplified by echo chambers and informational bubbles, where selective exposure to similar content creates the illusion of unanimity.

The influence of authority, an element contemplated in social conformity, deserves specific highlight in light of recent research. Studies have identified that the “legitimacy of the authority that endorses the discourse” (Penteado et al., 2022; Massarani et al., 2023) constitutes a determining factor in the acceptance and sharing of misinformation.

In a literature review on misinformation (Nascimento and Silva, 2024), an additional dimension of this phenomenon was revealed: the “ability to exploit ignorance to gain more power” (Barcelos et al., 2021), in which misinformation is strategically used to shape knowledge according to specific interests. This perspective shows how authority not only influences conformity but can also be instrumentalized to amplify the cycle of misinformation.

In a study on vaccine hesitancy, a strong association was found between political orientation and attitude toward vaccination. Individuals with right-wing and center-right political orientation demonstrated greater hesitation or rejection of COVID-19 vaccination, while those with left-wing and center-left orientation showed a “definite” willingness to get vaccinated (Nascimento, 2024). This online group conformity has been shown to translate into real-world political mobilization on a massive scale (Bond et al., 2012).

Although the referenced study did not control for group-influence variables, this political polarization in the acceptance of scientific information is consistent with a role for social conformity: the decision to accept or reject vaccination goes beyond an objective evaluation of scientific evidence and is strongly influenced by individuals' political identity and their need to conform to the norms and beliefs of the group with which they identify (Nascimento, 2024).

The study also revealed a significant association between religious affiliation and vaccine hesitancy, with evangelicals demonstrating greater hesitation compared to people without religion. This finding shows how different epistemic communities can socially legitimize distinct positions regarding scientific information (Nascimento, 2024).

The influence of religion on vaccine hesitancy suggests that, within certain religious communities, vaccine hesitancy may be socially rewarded, reinforcing group identity and social cohesion.

This mechanism can offer elements for understanding how social conformity not only influences individual decisions but also contributes to the maintenance of shared beliefs, even when they contradict scientific evidence.

In digital environments, where groups are structured in ideological communities and informational bubbles, conformity becomes a powerful force of cohesion and validation. It operates in real time, under public or restricted visibility, and social sanction, whether positive or negative, is instantaneous. Silva et al. (2025) conducted a literature review on the formation of informational bubbles in digital communication, analyzing algorithmic metrics within the social networks Facebook, Twitter, and TikTok. The researchers identified that these bubbles are strongly influenced by the metrics of algorithms that analyze users' digital traces to direct content of interest.

The study demonstrated that direct and indirect engagement interactions with content are the main mechanisms used by algorithms to generate informational bubbles. The bubbles not only limit exposure to diverse viewpoints but also reinforce group homogeneity and mutual validation of beliefs, including false information. The acceptance of information shared within the group and active participation in the diffusion of these messages function as signals of belonging and loyalty.

The phenomenon of informational bubbles has significant consequences for modern society, including addictions, intellectual impoverishment, and, in more severe cases, risks to physical and mental health. In the context of misinformation, these bubbles create environments where false information can be widely accepted and shared without critical questioning, as long as it aligns with the predominant beliefs of the group.

We call this phenomenon “algorithmic social conformity”: a form of conformity amplified on digital platforms, where algorithms organize information flows in a way that reinforces group homogeneity. The public visibility of social reactions (likes, comments, shares) transforms adherence to beliefs into symbolic performance, intensifying the desire for belonging and acceptance. This mechanism creates social pressure to accept and share information validated by the group, regardless of its factual veracity.

Cognitive dissonance and self-regulation strategies

Cognitive dissonance theory, proposed by Festinger (1957), establishes that incongruence between cognitions, or between cognitions and behaviors, generates a state of psychological discomfort that motivates the individual to restore internal coherence. This theory has proven particularly relevant for understanding how people respond when confronted with evidence that contradicts their beliefs, especially in the context of misinformation. Neves and Oliveira (2024) conducted a conceptual articulation between the post-truth phenomenon and Cognitive Dissonance Theory, demonstrating how this theory, later imported by the field of communication, helps understand the process of information selection/exclusion by the public. Festinger pointed out in his empirical study that people tend to seek information to reinforce their own prior beliefs or opinions, a phenomenon directly related to the dissemination of fake news.

Additionally, the psychological discomfort experienced in the face of inconsistencies between cognitions, or between cognitions and behaviors, is in itself emotionally disturbing, which underscores the importance of affect. Emotions such as guilt, shame, and embarrassment emerge here as self-regulation mechanisms that motivate defensive strategies (denial, rationalization) or, under favorable psychosocial conditions, openness to belief revision (Festinger, 1957).

When an individual perceives that they have shared incorrect information, especially if this conflicts with their positive self-image (e.g., “I am discerning,” “I am not easily deceived”), the resulting dissonance requires resolution. Common strategies to regulate this discomfort include denying the validity of the correction, devaluing corrective sources, or rationalizing the false information.

In the digital environment, cognitive dissonance is aggravated by public exposure, where corrections can be perceived as public attacks. When corrected in digital spaces, individuals experience intensified dissonance, as the error becomes visible and potentially stigmatizing. Instead of favoring belief revision, this encourages defensive reactions such as denial, counterattack, or public rationalization, hindering the reconfiguration of the original belief. The personalization of information also makes uncomfortable truths easier to dismiss: if a correction contradicts one's bubble, it is easier to rationalize than to change. Furthermore, the persistence of digital content in the form of memes, screenshots, and videos makes social forgetting of the error difficult.

Neves and Oliveira (2024) concluded that Cognitive Dissonance Theory proved valid as a theoretical, bibliographical, and conceptual basis for elucidating, at least in part, the post-truth phenomenon and the dissemination of fake news. The tendency to selectively seek information that confirms pre-existing beliefs, reject contradictory information, and rationalize beliefs even in the face of contrary evidence are fundamental psychological mechanisms that explain resistance to factual corrections and the persistence of false beliefs.

These three theoretical constructs (overconfidence bias, social conformity, and cognitive dissonance) do not operate in isolation but interact in complex ways that potentiate the legitimation of misinformation. After considering the role of emotions, we present an integrative model that articulates these three pillars in a psychosocial cycle explaining how individuals are exposed to, process, disseminate, and rationalize false information on social networks.

The role of emotions

Emotions play a pivotal role in the acceptance and dissemination of misinformation on social networks. Rather than functioning as automatic reactions to content, they act as modulators of social judgment, sharing behavior, and belief defense—guiding how individuals interpret, align with, or reject information.

Festinger (1957) emphasized that the discomfort produced by cognitive dissonance is not purely rational but deeply affective, involving emotions such as guilt, shame, and embarrassment. These feelings motivate individuals to restore internal coherence, either by revising their beliefs or by rationalizing and dismissing corrective evidence.

In parallel, Cialdini and Goldstein (2004) showed that social conformity is regulated not only by calculations of social approval but also by self-conscious emotions such as pride and admiration, which reinforce group alignment and identity expression.

Theories of overconfidence bias (Moore and Healy, 2008) likewise highlight the role of affect in sustaining inflated judgments. Feelings of epistemic pride, self-assurance, and perceived competence can hinder the recognition of error and promote the confident spread of misinformation.

Contemporary research on moral and self-conscious emotions (Tangney et al., 2007; Haidt, 2012) further supports this view, showing that affects such as indignation, loyalty, and gratitude shape the construction of collective beliefs and social norms. Thus, adherence to or rejection of informational content—whether true or false—depends not only on argument quality but also on the emotional and identity relevance that content holds in a given social context.

Taken together, these findings suggest that the persistence of misinformation is not merely a cognitive error but often reflects strategic affective responses. These are guided by psychological needs for belonging, identity protection, and social validation. In the following sections, we examine how emotional forces interact with cognitive and social mechanisms to sustain the psychosocial cycle of misinformation in digital environments.

Integrative model: the psychosocial cycle of misinformation

Based on the analysis of the three central pillars—overconfidence bias, social conformity, and cognitive dissonance—as well as the identification of the modulating role of emotions in belief legitimation processes, it becomes possible to integrate these elements into a broader theoretical structure. It is observed that these factors do not operate in isolation but interact in a dynamic and self-reinforcing manner, favoring the acceptance and dissemination of misinformation in digital environments.

Based on this articulation, we propose the Psychosocial Cycle of Misinformation, an integrative model that describes the interdependent stages through which individuals are exposed to, judge, share, and rationalize false information, even in the face of corrective evidence. This cycle incorporates cognitive, social, and affective processes, revealing how the legitimation of misinformation is sustained by mechanisms of identity protection, social validation, and emotional preservation.

In the following sections, we will present the five stages that compose the cycle, exploring how overconfidence bias, pressure for social conformity, regulation of cognitive dissonance, and emotional influence interact to structure and strengthen the persistence of misinformation in contemporary social networks.

Stages of the psychosocial cycle of misinformation

Exposure to socially relevant information

The cycle begins when the individual is exposed to potentially false information, which may or may not have been validated by their social group or digital bubble. This exposure may be incidental, but it is often structured by the algorithms of digital platforms which, as described by Silva et al. (2025), analyze users' digital traces to direct content of interest. The implicit norm of the group (what one should believe and share) acts as a pressure factor for conformity.

At this stage, informational bubbles play a crucial role in limiting exposure to diverse viewpoints and reinforcing group homogeneity. The visibility of social reactions (likes, comments, shares) transforms adherence to beliefs into symbolic performance, intensifying the desire for belonging and acceptance. This environment creates favorable conditions for the initial acceptance of false information, as long as it aligns with the predominant beliefs of the group.

Judgment and decision to share

In the second stage, the individual evaluates the credibility of the received information and decides whether to share it. It is at this moment that overconfidence bias exerts a determining influence. As evidenced by Tian and Willnat (2025), the “News-Finds-Me” phenomenon affects the perception of one's own ability to evaluate the veracity of information, creating an “illusion of knowledge” in which passive consumption of news generates a false sense of being well-informed.

The decision to share then rests on the subjective conviction of being correct, which dispenses with verification. Digital platforms reinforce this conviction by promoting an illusion of expertise in which anyone can take a position on any topic: knowledge is fragmented and clear authority is absent, which increases confidence in individual common sense, self-validated by likes, shares, or favorable comments.

Social conformity operates at this stage as well, in real time and under public visibility: the individual is motivated to share information that aligns with the group's beliefs in order to obtain social validation and reinforce their belonging. The fear of social exclusion or criticism for not sharing “important” information can also motivate sharing without prior verification.

The effects of overconfidence and Dunning-Kruger are especially pronounced among individuals who are partially informed and rely heavily on social media for news, particularly when combined with strong “news-finds-me” perceptions. These conditions foster a subjective sense of being informed, which undermines skepticism and verification.

Receiving feedback or correction

After sharing information, the individual may receive feedback indicating that the shared content is false or misleading. This correction can come from various sources: fact-checking agencies, other users, or even the platform itself through warning labels. At this moment, cognitive dissonance emerges as a central mechanism.

The dissonance arises from the incongruence between the behavior performed (sharing false information) and the positive self-image that most individuals maintain about their informational discernment (“I am not easily deceived,” “I am careful with what I share”). This psychological discomfort is intensified when the correction occurs publicly, potentially threatening not only the individual's self-image but also their social status within the group.

Regulation of dissonance

Faced with the discomfort of cognitive dissonance, the individual employs various strategies to restore internal coherence. Instead of accepting the correction and revising the belief, which would threaten both self-image and group belonging, most individuals resort to defensive strategies:

a) Questioning the credibility of the corrective source (“fact-checkers are biased”);

b) Selectively seeking information that confirms the original belief;

c) Minimizing the importance of the error (“it doesn't matter if it's not 100% true, the essence is correct”);

d) Attributing the error to external factors (“I was misled by others”);

e) Reinterpreting the original information to make it seem more compatible with the facts.

These strategies allow the individual to maintain both their positive self-image and their alignment with the group, despite having shared false information. The emotional component is crucial at this stage, as feelings of embarrassment, shame, or threat to identity intensify defensive reactions and hinder the acceptance of corrections.

Reinforcement of behavior and group status

In the final stage, by justifying their conduct and maintaining alignment with the group, the individual preserves their self-image and social position. This reinforcement leads to the persistence of the behavior and the continuous legitimation of misinformation, closing the cycle. The sharing of information aligned with the group's beliefs, even if false, is socially rewarded with approval, engagement, and symbolic status.

This social reinforcement not only consolidates the specific false belief but also strengthens the behavioral pattern of sharing without careful verification, increasing the probability of repeating the cycle with new false information. Additionally, each successful repetition of the cycle (without significant negative consequences) increases the individual's confidence in their informational judgment, potentiating the overconfidence bias for future interactions.

Mediation and moderation mechanisms

In this model, cognitive dissonance acts as a mediator between confrontation with error (receiving feedback) and the subsequent behavioral response (regulation of dissonance). The level of dissonance experienced determines the intensity of the rationalization strategies employed and, consequently, the resistance to correction of false information.

The “Regulation of Dissonance” stage in the cycle can be enriched by the perspective of “informational guerrilla warfare, which seeks hegemony of meaning over the other discourse” (Recuero and Soares, 2020). This approach shows how the regulation of dissonance is not just an individual psychological process, but also a social phenomenon where different narratives compete for legitimacy.

The “disputes between a disinformative discourse and an informative discourse” (Recuero and Soares, 2020) occur in an asymmetric field, where the former often has structural advantages: it is emotionally engaging, cognitively simple, and socially validated. This perspective complements the cycle by making explicit the power dynamics underlying the persistence of misinformation even in the face of corrections.

In addition, recent studies contribute further empirical support to the moderating and mediating mechanisms proposed in the model. For instance, Chen et al. (2022) demonstrate that incidental exposure to counter-attitudinal information on social media can lead to either polarization or depolarization depending on the level of cognitive elaboration, suggesting that cognitive dissonance may mediate belief change when elaboration is encouraged, but reinforce resistance when defensive processing prevails. Similarly, Masood et al. (2024) show that online political expression tends to increase perceived disagreement and incivility, particularly when social identity is salient, indicating that social conformity and identity-based dynamics can intensify affective responses and obstruct corrective feedback. Furthermore, Meng and Wang (2024) find that lower network diversity and higher reliance on messaging platforms like WhatsApp are associated with lower trust and higher belief in misinformation, suggesting that informational homogeneity moderates the influence of overconfidence and conformity by limiting corrective exposure. Together, these findings reinforce the model's proposition that psychosocial mechanisms such as dissonance regulation, identity-driven conformity, and digital overconfidence are not static but vary depending on contextual and structural factors in the digital environment.

Social conformity, in turn, functions as a moderator, influencing the relationship between exposure to information and the decision to share, as well as between the regulation of dissonance and the reinforcement of behavior. In contexts of strong group cohesion, pressure for conformity intensifies the propensity to share information validated by the group and hinders the adoption of a corrective stance after feedback. The desire for conformity can lead individuals to accept and even share misinformation that initially causes dissonance, in order to avoid social exclusion. The social cost of disagreeing with the group may outweigh the discomfort of maintaining a dissonant belief.

Overconfidence, finally, reinforces resistance to corrections and feeds the dissemination cycle, moderating the relationship between informational judgment and the decision to share, as well as between receiving feedback and regulating dissonance. Individuals with low overconfidence are more prone to informational conformity, on the presumption that others know more. Individuals with higher levels of overconfidence, by contrast, may resist informational conformity more, except when overprecision is combined with confirmation bias, in which case there is a greater tendency to dispense with verification before sharing and to resist corrections more intensely afterward. With respect to normative conformity, the individual may overestimate how well they are viewed by the group when sharing certain information, so that sharing comes to be seen as a way of amplifying their status or alignment with the group and of asserting themselves as an opinion leader.

Studies indicate that “there is a tendency for people to receive, validate, and reproduce information that confirms their own points of view” (Araújo and Oliveira, 2020; Recuero, 2020). This process of selectively seeking information that reinforces pre-existing beliefs creates a feedback cycle that intensifies overconfidence. The “introjection of information that reinforces a certain understanding” (Recuero, 2020) establishes cognitive structures resistant to correction, explaining why, even in the face of contrary evidence, individuals remain confident in their original beliefs. This mechanism complements the understanding of the “Judgment and Decision to Share” stage in the psychosocial cycle, in which overconfidence operates as a moderator.

In this cycle, social conformity and overconfidence bias not only coexist but reinforce each other in social media contexts. The overestimation of one's own cognitive abilities (overconfidence) can reduce the necessary skepticism toward misinformation, while the search for acceptance and prestige (normative conformity) amplifies the motivation to share information with excessive confidence, even when incorrect. Additionally, overconfidence intensifies dissonance and, paradoxically, reinforces adherence to false information, instead of promoting correction.

In sum, overconfidence bias prevents the detection or acceptance of errors; social conformity pressures for the adoption of dominant narratives in the group, and the resulting cognitive dissonance is resolved through rationalization, not necessarily correction.
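
One conventional way to make the mediating and moderating roles described above concrete, offered only as an illustrative sketch and not as a specification taken from the model, is a pair of linear mediation equations plus an interaction term for moderation. All symbols are assumptions introduced here for illustration: $F$ (receipt of corrective feedback), $D$ (experienced dissonance), $R$ (resistance to correction), $J$ (informational judgment), $O$ (overconfidence), and $S$ (propensity to share), each measured for individual $i$.

$$
\begin{aligned}
D_i &= \alpha_0 + a\,F_i + \varepsilon_{D,i} \\
R_i &= \beta_0 + c'\,F_i + b\,D_i + \varepsilon_{R,i} \\
S_i &= \gamma_0 + \gamma_1 J_i + \gamma_2 O_i + \gamma_3\,(J_i \times O_i) + \varepsilon_{S,i}
\end{aligned}
$$

Under this reading, the product $a \cdot b$ is the indirect effect of feedback on resistance that passes through dissonance (mediation), while a nonzero $\gamma_3$ would indicate that overconfidence changes the strength of the link between judgment and sharing (moderation). An analogous interaction term with a conformity index could represent the moderating role of social conformity. The linear form is a simplifying assumption; the cycle's feedback dynamics would likely require longitudinal or nonlinear extensions.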

Furthermore, three main affective functions are perceived in the psychosocial cycle of misinformation:

• Cycle amplification: emotions such as pride, anger, and euphoria increase conviction and willingness to share false information, strengthening resistance to corrections.

• Modulation according to context: emotions such as shame, embarrassment, gratitude, and empathy adjust the response to information according to public visibility, group reaction, and degree of identity threat.

• Disruption and epistemic openness: emotions such as guilt, doubt, and humility increase the likelihood of belief revision, favoring interruption of the cycle.

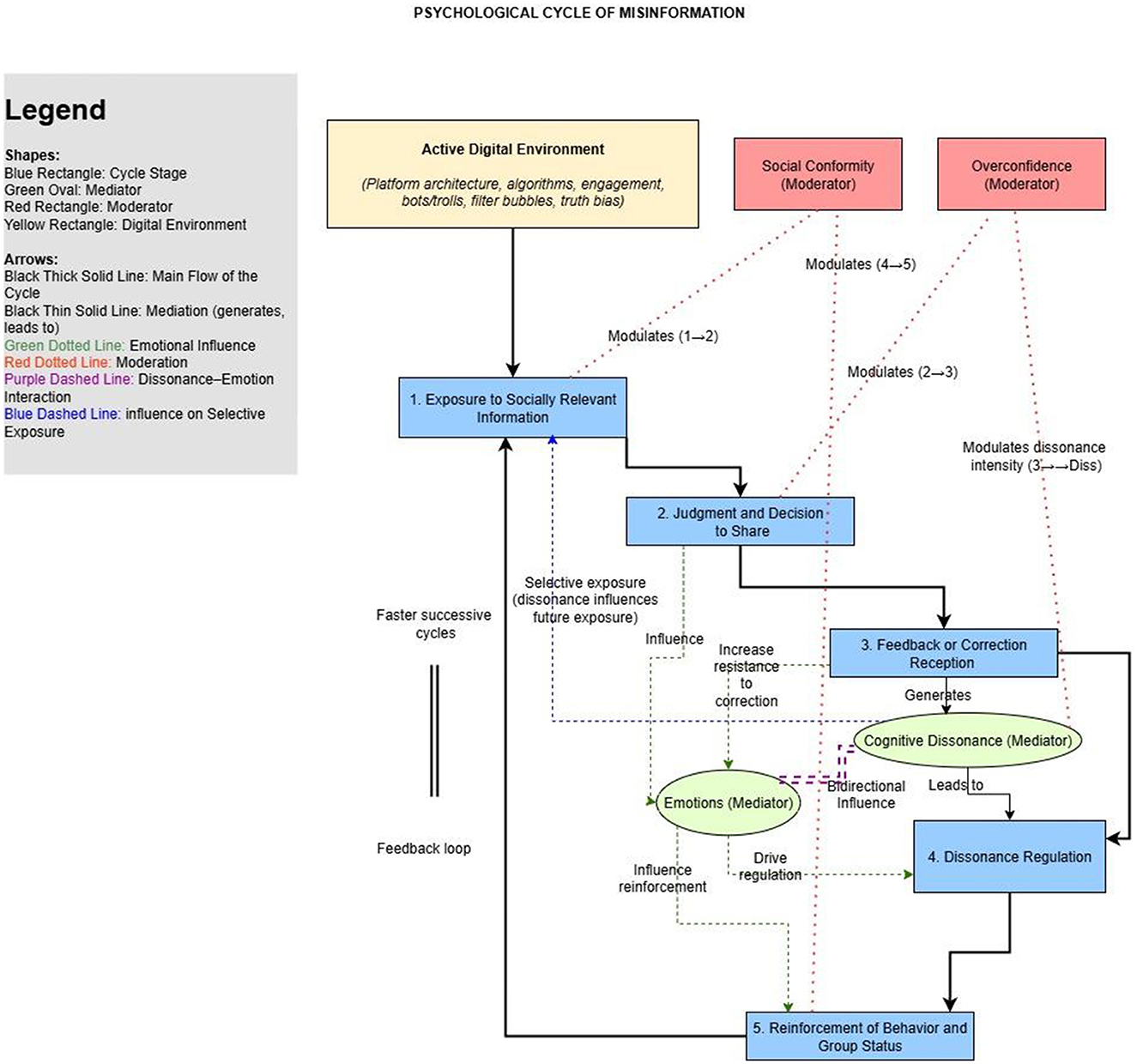

Each stage of the cycle is, therefore, influenced by specific emotions, which can function as catalysts, intensifiers, or attenuators of the cognitive and social processes already described. This dynamic interaction gives the model greater explanatory capacity, by recognizing that the persistence of misinformation is not just the result of reasoning errors or social pressures, but also of emotional processes deeply rooted in the maintenance of identity and group cohesion. This process favors not only the acceptance of false information but its active defense and repeated sharing, creating a pernicious and self-reinforcing cycle of legitimation and dissemination of fake news (see Figure 1 for the graphical representation of the model).

Figure 1. Graphical representation of the psychosocial cycle of misinformation. The cycle comprises five interdependent stages—exposure, judgment and sharing, feedback, dissonance regulation, and reinforcement—sustained by the interaction of overconfidence bias, social conformity, and cognitive dissonance. Emotional factors act as modulators, amplifying or attenuating the dynamics at each stage. This cycle explains the psychosocial legitimation and persistence of misinformation in digital environments. Shapes: blue rectangle: cycle stage; green oval: mediator; red rectangle: moderator; yellow rectangle: digital environment; arrows: black thick solid line: main flow of the cycle; black thin solid line: mediation (generates, leads to); green dotted line: emotional influence; red dotted line: moderation; purple dashed line: dissonance-emotion interaction; blue dashed line: influence on selective exposure.

This cyclical model offers an integrative explanation for the persistence of misinformation, articulating individual psychological processes and social dynamics in a feedback system. By identifying the interactions between overconfidence bias, social conformity, and cognitive dissonance, the model reveals how these factors reinforce each other, creating a resilient cycle of legitimation of misinformation.

The cycle is represented as a circular sequence of five stages connected by arrows, illustrating feedback dynamics. Each stage is color-coded and mapped to psychological constructs and emotional modulators. The external layer indicates amplification loops, showing how emotional responses reinforce or disrupt each mechanism.

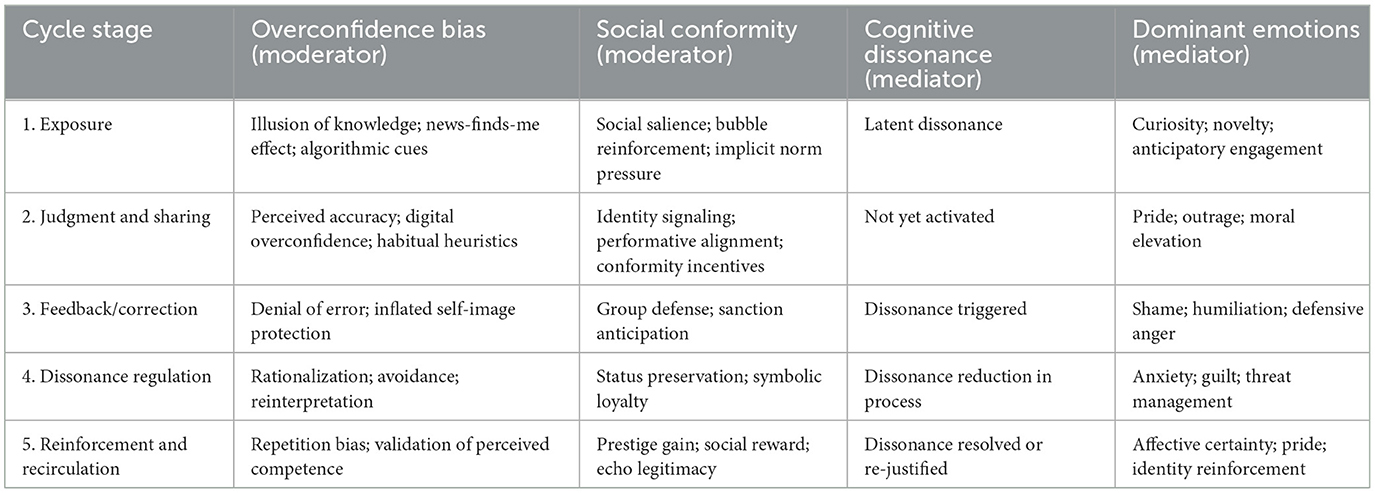

To improve conceptual clarity, we present a summary matrix in Table 1, cross-tabulating the five stages of the psychosocial cycle with associated psychological biases, conformity dynamics, dissonance activation, and dominant emotional responses.

Table 1. Cross-matrix of the psychosocial cycle stages with associated psychological mechanisms and dominant emotions.

Each stage of the cycle engages specific psychological processes (overconfidence, conformity, dissonance) and is modulated by distinct emotional profiles that shape whether misinformation is reinforced or disrupted.

In the next section, we will explore how this cycle can be interrupted, identifying theoretical conditions that, if present, tend to weaken or break the process of legitimation of misinformation.

Interruption of the cycle: conditions for the delegitimization of misinformation

We propose that the persistence of misinformation stems from a psychosocial cycle, in which social conformity, overconfidence, and cognitive dissonance interact to protect and reaffirm false beliefs. However, the very cyclical structure of the model reveals critical breaking points, whose interferences can interrupt the feedback and significantly reduce the strength of misinformation.

We identify, below, five theoretical conditions that, if present, tend to weaken or break the legitimation cycle.

Reduction of homogeneous group exposure

The diversification of social networks, contact with different viewpoints, and the weakening of ideological bubbles could weaken the pressure of normative and informational conformity or, at least, decrease the speed of convergence by consensus (Fazelpour and Steel, 2022), although this issue still depends on empirical support and specific analysis of social networks. One can envision that the absence of initial group validation could weaken the automatic acceptance of misinformation and favor doubt, affecting confidence, conformity, and consensus formation. Silva et al. (2025) demonstrated that informational bubbles are caused by the algorithmic metrics of social networks that analyze users' digital traces to direct content of interest. Interventions that alter these algorithms to expose users to a greater diversity of perspectives can reduce informational homogeneity and, consequently, weaken the first stage of the legitimation cycle.

Studies on political polarization on social networks suggest that controlled exposure to contrary viewpoints, when conducted in non-confrontational contexts, can reduce the extremity of positions and increase willingness to consider contradictory evidence. This cross-exposure acts directly in weakening social conformity as a moderator of the misinformation cycle (Lilliana et al., 2022).

Recognition of one's own fallibility

Interventions that promote critical thinking and awareness of one's own biases can reduce overconfidence bias. Cohen et al. (2007), in discussing self-affirmation, show that when people become aware of their own biases and are willing to consider the possibility of error, they become less closed to contrary viewpoints. Although the article does not use the expression “overconfidence bias,” the concept of close-mindedness (cognitive closure) is closely related to the bias of strongly believing one is right. In this sense, openness to the possibility of error increases the willingness to verify information and adopt epistemically humble postures.

Tian and Willnat (2025) identified that approximately 40% of participants who expressed confidence in their ability to identify fake news were, in reality, less capable than average. This mismatch between confidence and actual competence suggests that educational interventions that concretely demonstrate this discrepancy can promote a more realistic calibration of informational self-confidence.
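
As a minimal sketch of how a mismatch between confidence and actual competence could be quantified, the example below computes an overplacement gap: a participant's self-rated percentile minus their actual percentile on a fake-news detection test. The function names, the scoring scheme, and the five participants are hypothetical and invented purely for illustration; they are not data or procedures from Tian and Willnat (2025).

```python
def percentile_rank(score, all_scores):
    """Percentage of participants (0-100) scoring strictly below `score`."""
    below = sum(1 for s in all_scores if s < score)
    return 100.0 * below / len(all_scores)


def overplacement(self_percentile, score, all_scores):
    """Self-rated percentile minus actual percentile; positive means overplacement."""
    return self_percentile - percentile_rank(score, all_scores)


# Hypothetical participants (made-up values): detection-test score out of 10
# and the percentile at which each participant places themselves.
participants = [
    {"id": "p1", "score": 4, "self_percentile": 80},
    {"id": "p2", "score": 9, "self_percentile": 70},
    {"id": "p3", "score": 6, "self_percentile": 60},
    {"id": "p4", "score": 3, "self_percentile": 30},
    {"id": "p5", "score": 8, "self_percentile": 90},
]

scores = [p["score"] for p in participants]

# Participants who rate themselves above the midpoint but actually rank below it.
confident_but_low_ranked = [
    p["id"]
    for p in participants
    if p["self_percentile"] > 50 and percentile_rank(p["score"], scores) < 50
]

for p in participants:
    gap = overplacement(p["self_percentile"], p["score"], scores)
    print(f"{p['id']}: overplacement = {gap:+.0f} percentile points")

print("Confident but low-ranked:", confident_but_low_ranked)
```

Showing participants their own gap is one conceivable form of the calibration-oriented educational feedback discussed above; whether such feedback actually changes sharing behavior remains an empirical question.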

Aspects such as “open dialogue” and self-persuasion can facilitate the recognition of weaknesses in one's own position, thereby increasing the willingness to verify information and adopt a more modest stance toward personal convictions. This process aligns with the notion of epistemic humility, particularly when fostered by interventions that promote internal reflection or respectful exposure to divergent opinions (Müller et al., 2020). Although we did not identify an explicit analysis of overconfidence bias in this literature, taken together it supports the idea that making room for the possibility of error and stimulating critical examination of one's own beliefs can help in the “de-intensification” of rigid positions and the adoption of epistemically more humble postures.

Thus, the development of a posture of “epistemic humility,” the recognition of the limits of one's own knowledge and one's own discernment capacity, can increase the willingness to verify information before sharing it and to reconsider positions after receiving corrections. This condition acts directly in weakening overconfidence bias as an amplifier of the misinformation cycle.

Constructive confrontation of dissonance

Environments that allow belief revisions without stigmatization or humiliation favor the healthy resolution of cognitive dissonance. This avoids motivated defensiveness and allows the incorporation of corrections without identity loss.

Neves and Oliveira (2024) highlighted that, according to Festinger's theory, people tend to seek information to reinforce their own prior beliefs or opinions when confronted with cognitive dissonance. However, when belief revision is socially valued as a sign of intelligence and intellectual openness, instead of being seen as weakness or inconsistency, resistance to correction can be significantly reduced (Harmon-Jones and Mills, 2019; Gawronski, 2012). Contexts that normalize changing opinion based on new evidence and that separate personal identity from specific beliefs facilitate the constructive confrontation of dissonance. At this moment, emotions act as modulators that can amplify or reduce the cycle. Emotions such as pride and anger tend to amplify the cycle, emotions such as shame and empathy can modulate according to the social context, and emotions such as guilt, doubt, and humility present potential to break the cycle, by promoting epistemic openness and constructive regulation of dissonance. They are emotional mechanisms of protection, validation, and/or belonging. This condition acts directly in the fourth stage of the cycle (regulation of dissonance), redirecting the strategies for resolving dissonance toward the incorporation of correction instead of the rationalization of the false belief.

Absence of social reinforcement for misinformation

When misinformation is not socially rewarded, whether by engagement, group support, or symbolic status, the sharing behavior tends to weaken. The absence of reinforcement weakens the repetition of the cycle.

Digital platforms currently reward engagement, regardless of the veracity of the content. Emotionally provocative content, including misinformation, frequently generates more engagement than more nuanced factual information. Alterations in the reward mechanisms of platforms, such as reducing the visibility of content identified as potentially false or implementing frictions in the sharing process, can reduce the social reinforcement associated with the dissemination of misinformation (Munger and Phillips, 2022).

This point indicates the need to advance the debate on the regulation of social networks, especially with regard to the social reinforcement of misinformation, which is a crucial element of the cycle.

Additionally, social norms that value accuracy and fact verification, instead of the speed and emotional impact of sharing, can alter the structure of social rewards associated with the dissemination of information. This condition acts directly in the fifth stage of the cycle (reinforcement of behavior and group status), weakening the continuous legitimation of misinformation.

Development of media and informational literacy

Although not explicitly mentioned in the original model, a fifth condition for the delegitimization of misinformation emerges from the integrated analysis of the three theoretical pillars: the development of media and informational literacy competencies. These competencies include the ability to critically evaluate information sources, understand how the algorithms of digital platforms shape exposure to content, and recognize informational manipulation techniques.

Educational interventions focused on media literacy can increase resistance to misinformation, reducing both belief in fake news and the propensity to share it. These interventions act simultaneously on the three pillars of the model: they reduce overconfidence by demonstrating the complexity of the contemporary informational environment; they weaken social conformity by promoting independent evaluation of information; and they facilitate the constructive confrontation of dissonance by providing tools for the critical analysis of content.

It is important to emphasize that these conditions do not operate in isolation but interact in complex ways. For example, the diversification of informational exposure can be more effective when combined with the development of media literacy, which provides the tools to critically process the diversity of perspectives. Similarly, the constructive confrontation of dissonance is facilitated by the reduction of overconfidence through the promotion of epistemic humility.

In the next section, we will explore how the described psychosocial mechanisms are intensified in the contemporary digital environment, making the cycle of legitimation of misinformation more resilient and resistant to interruption.

The digital intensification of the psychosocial legitimation of misinformation

The psychosocial mechanisms described in the cycle—social conformity, obedience, overconfidence, and cognitive dissonance—are not exclusive to the digital era. Historically, different societies have legitimized and disseminated false beliefs through collective processes that involved obedience to group norms, social validation of leadership, motivated rationalizations, and resistance to belief change. We can recall historical cases such as heresy trials during the Inquisition, totalitarian propaganda in authoritarian regimes, and the spread of rumors in traditional communities to illustrate the existence of social cycles of legitimation of untruths.

In the Inquisition trials and in the propaganda of totalitarian regimes, the main objective was not necessarily to deceive, in the sense of transmitting information known to be false to manipulate public perception. The objective was more often to consolidate authority, reinforce dogmas, or exercise moral and social control, frequently with the use of false information. Many inquisitors genuinely believed they were fighting evil and saw their actions as protection of the faith (Peters, 1988), and many political leaders under totalitarianism may have believed their own narratives, making it difficult to separate “conscious lie” from “ideological self-deception” (Arendt, 1958).

Additionally, rumors in traditional communities may not arise with the objective of deceiving, but spontaneously, with a mixture of beliefs, orality, and symbolic dynamics (Berger and Luckmann, 1991).

Thus, the legitimation of lies in these contexts was predominantly institutional and/or cultural and not distributed in digital networks as we see today, where there is selective exposure (Bakshy et al., 2015).

In modern times, misinformation is linked to mass society, communication media, and mainly to the digital context, so that digital social networks have intensified these mechanisms in an unprecedented way, making the psychosocial cycle faster, more visible, more emotionally engaging, and more resistant to correction. This section analyzes how the contemporary digital environment potentiates each element of the cycle of legitimation of misinformation.

Beyond psychological and social mechanisms, these dynamics are embedded in broader systemic structures. The attention economy and platform capitalism incentivize emotionally charged, polarizing, and misleading content through engagement-based algorithms (Zuboff, 2019; Wu, 2016). Misinformation thrives in this environment not just due to cognitive vulnerabilities, but because false or extreme content often performs better in monetized digital architectures. Therefore, the psychosocial cycle of misinformation is not only intensified by individual-level processes, but structurally sustained by economic and political logics of the contemporary media ecosystem.

Algorithmic and amplified social conformity

Digital environments organize information flows in ideological bubbles, reinforcing group homogeneity and mutual validation. Silva et al. (2025) demonstrated that the algorithmic metrics of social networks analyze users' digital traces to direct content of interest, creating informational bubbles that limit exposure to diverse viewpoints.

The public visibility of social reactions (likes, comments, shares) transforms adherence to beliefs into symbolic performance, intensifying the desire for belonging and acceptance. Unlike face-to-face contexts, where conformity can be expressed in a more subtle and private manner, digital platforms make conformity (or dissent) highly visible and quantifiable.

Additionally, recommendation algorithms tend to amplify content that generates greater engagement, frequently favoring emotionally provocative information, including misinformation. This mechanism creates a feedback cycle where extreme or false content receives greater visibility, increasing the normative pressure for conformity and progressively legitimizing misinformation.

According to Nascimento and Silva (2024), “the structural characteristics of platforms (Twitter, Facebook, WhatsApp) facilitate engagement with false content,” creating an environment conducive to the accelerated dissemination of misinformation. The affordances of social networks not only amplify the reach of false information but also intensify the psychological mechanisms underlying the misinformation cycle. There are even indications that this is profitable for the platforms, given the greater retention of attention.

For example, reward systems based on engagement (likes, shares) reinforce overconfidence, while the visibility of social metrics potentiates conformity. Simultaneously, personalization algorithms create homogeneous informative environments that reduce exposure to corrections, hindering the experience of cognitive dissonance.

Social conformity in the digital environment is, therefore, algorithmically structured and amplified, intensifying its role as a moderator in the cycle of legitimation of misinformation. Informational bubbles not only limit exposure to diverse viewpoints but also create the illusion of social consensus, where minority beliefs can appear majority within the individual's personalized informational ecosystem.

Digitalized overconfidence

Unrestricted access to publication and the decline of traditional epistemological authority produce a certain “illusory democratization of expertise,” in which everyone perceives themselves as bearers of legitimate knowledge (Tian and Willnat, 2025).

The engagement received on social networks functions as positive feedback, increasing confidence in one's own judgments, even when erroneous. Comments, likes, and shares are interpreted as validation of the informational quality of the shared content, reinforcing the overconfidence bias.

Additionally, the ease of access to online information creates an illusion of knowledge, in which the ability to quickly locate information is confused with deep understanding of this information. This “search fallacy” contributes to overconfidence, leading individuals to overestimate their ability to discern true from false information.

The digital environment also facilitates the formation of “epistemic echo chambers” (Nguyen, 2020), in which individuals with similar beliefs validate each other, creating the perception that their knowledge is superior to that of other groups. This dynamic intensifies overplacement, one of the forms of overconfidence bias, where individuals perceive their capabilities as superior to those of others.

Dissonance aggravated by public exposure

When corrected in digital spaces, individuals experience intensified cognitive dissonance, as the error becomes visible and potentially stigmatizing. Instead of favoring belief revision, this encourages defensive reactions such as denial, counterattack, or public rationalization, hindering the reconfiguration of the original belief.

Neves and Oliveira (2024) highlight that, according to Festinger's theory, people tend to seek information to reinforce their own prior beliefs or opinions when confronted with cognitive dissonance. In the digital environment, where corrections are often public and can be perceived as attacks on identity or the group, this tendency is amplified.

The permanent and retrievable nature of digital interactions also contributes to the intensification of dissonance. Unlike face-to-face contexts, where statements can be forgotten or reinterpreted over time, the digital environment preserves past statements, making contradictions and errors more salient and difficult to ignore or reinterpret.

Furthermore, the political and ideological polarization frequently observed on social networks increases the identity load associated with specific beliefs. When a false belief is strongly associated with group identity, factual corrections can be interpreted as attacks on the group and the individual's social identity, intensifying dissonance and resistance to correction.

Acceleration and amplification of the complete cycle

Beyond intensifying each individual component, the digital environment accelerates and amplifies the complete cycle of legitimation of misinformation. The speed of information dissemination on social networks allows the cycle to complete in a matter of hours or minutes, in contrast to the days or weeks it could take in pre-digital contexts.

The scale of dissemination is also amplified, with false information potentially reaching millions of people in a short period. This quantitative amplification has qualitative effects, creating the perception that widely shared information must contain some element of truth, simply due to its ubiquity.

The digital environment also facilitates the formation of transnational communities united by shared beliefs, including false beliefs. These communities can persist and evolve independently of local contexts, creating alternative informational ecosystems where misinformation is continuously legitimized and refined.

Implications for interventions

The digital intensification of the mechanisms of legitimation of misinformation has significant implications for the development of effective interventions. Approaches that may have been sufficient in pre-digital contexts, such as simple factual correction, are often inadequate given the speed, scale, and emotional intensity of the digital legitimation cycle.

Effective interventions must consider the specificities of the digital environment, including:

1. The need for algorithms that promote informational diversity instead of homogeneity, reducing algorithmic social conformity;

2. Mechanisms that calibrate informational confidence, such as feedback on the accuracy of past judgments, countering digital overconfidence;

3. Spaces that allow corrections that do not threaten identity, mitigating dissonance aggravated by public exposure;

4. Approaches that consider the speed and scale of digital dissemination, including early detection and rapid intervention.
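
As a minimal sketch of the second item above (feedback on the accuracy of past judgments), the example below summarizes a user's history of true/false judgments with a Brier score and a simple confidence-minus-accuracy gap. The Brier score is a standard calibration measure, but its use here, the function names, and the input format are assumptions made for illustration; the article does not prescribe a specific metric.

```python
def brier_score(confidences, outcomes):
    """Mean squared gap between stated confidence (0-1) and outcome (1 = judgment was correct)."""
    if not confidences or len(confidences) != len(outcomes):
        raise ValueError("confidences and outcomes must be non-empty and of equal length")
    return sum((c - o) ** 2 for c, o in zip(confidences, outcomes)) / len(confidences)


def calibration_feedback(confidences, outcomes):
    """Summarize past judgments; a positive gap means confidence exceeded accuracy."""
    mean_confidence = sum(confidences) / len(confidences)
    hit_rate = sum(outcomes) / len(outcomes)
    return {
        "brier": brier_score(confidences, outcomes),
        "mean_confidence": mean_confidence,
        "hit_rate": hit_rate,
        "overconfidence_gap": mean_confidence - hit_rate,
    }


# Hypothetical history: the user was 90% sure of four judgments, two of which were right.
history_confidence = [0.9, 0.9, 0.9, 0.9]
history_correct = [1, 0, 1, 0]

report = calibration_feedback(history_confidence, history_correct)
print(
    f"You were {report['mean_confidence']:.0%} confident on average, "
    f"but correct {report['hit_rate']:.0%} of the time."
)
```

A lower Brier score indicates better-calibrated judgments. How such feedback should be framed so that it does not itself trigger defensive dissonance regulation is a design question the sketch leaves open.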

In this way, we describe a cycle that has always existed, but which, in the era of social networks, gains speed, strength, and persistence, making misinformation more resilient to refutation. The digital intensification of legitimation is not only quantitative (more people reached), but qualitative: the cycle becomes more emotionally rewarding and identity-protective, raising the complexity of corrective interventions.

In order to empirically test the proposed cycle, future research may operationalize each construct using validated psychometric instruments. For instance, overconfidence can be measured using the Overclaiming Technique (Paulhus et al., 2003), social conformity through the Social Conformity Scale (Mehrabian and Stefl, 1995), and cognitive dissonance using dissonance arousal indices (Elliot and Devine, 1994). Experiments could manipulate variables such as group pressure, visibility of correction, or emotional framing to observe behavioral and attitudinal responses across cycle stages. Longitudinal studies may examine the stability and evolution of the cycle over time, particularly regarding emotional feedback and digital context. These empirical approaches would allow formal testing and refinement of the theoretical framework.

Discussion

The proposal in this article offers an integrative perspective to understand the mechanisms that sustain the persistence and dissemination of false information on social networks. By articulating three well-established theoretical pillars in social and cognitive psychology—overconfidence bias, social conformity, and cognitive dissonance—the model contributes to the advancement of knowledge about misinformation in multiple dimensions.

Synthesis of the main arguments

The Psychosocial Cycle of Misinformation model describes five interdependent stages that explain how individuals are exposed to, process, disseminate, and rationalize false information on social networks. In the first stage, the individual is exposed to potentially false information validated by their social group or digital bubble. In the second, overconfidence bias influences informational judgment, leading to the decision to share based on the subjective conviction of being correct. In the third stage, the receipt of factual corrections generates cognitive dissonance between the performed behavior and the self-image of competence. In the fourth, this dissonance is regulated through rationalization strategies, such as discrediting the corrective source. Finally, in the fifth stage, alignment with the group preserves the individual's self-image and social position, reinforcing the behavior and continuously legitimizing misinformation.

This cycle is intensified in the contemporary digital environment, where social conformity is algorithmically structured, overconfidence is amplified by received engagement, and cognitive dissonance is aggravated by public exposure. The speed, scale, and emotional intensity of digital interactions make the legitimation cycle more resilient and resistant to interruption.

Theoretical contributions of the model

The main theoretical contribution lies in the articulation of psychological and social constructs previously studied in isolation into an integrated explanatory model. Unlike approaches that focus exclusively on individual cognitive aspects or social dynamics, the proposed model demonstrates how these factors interact in a feedback cycle that explains the persistence of misinformation.

The model also advances theoretical understanding by identifying specific mechanisms of mediation and moderation. Cognitive dissonance acts as a mediator between confrontation with error and the subsequent behavioral response, while social conformity and overconfidence function as moderators that intensify or attenuate the relationships between different stages of the cycle.
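
One way to make the mediation and moderation claims above testable is to write them as a pair of regression equations. The following is only a minimal sketch under stated assumptions, not a specification taken from the model: the symbols X (confrontation with error, for example receipt of a correction), M (dissonance arousal), Y (the subsequent behavioral response, for example continued sharing), W (a moderator such as social conformity or overconfidence), and all coefficients are notation introduced here for illustration.

```latex
% Hypothetical first-stage moderated mediation; all symbols are illustrative assumptions.
\begin{align}
  M &= i_M + a_1 X + a_2 W + a_3 (X \times W) + \varepsilon_M \\
  Y &= i_Y + c' X + b\, M + \varepsilon_Y \\
  \text{conditional indirect effect of } X \text{ on } Y &= (a_1 + a_3 W)\, b
\end{align}
```

Under this sketch, mediation by dissonance corresponds to a nonzero indirect effect through b, and moderation corresponds to a nonzero a_3, so that the strength of the path from correction to dissonance depends on W. Which paths the moderators actually act on is an empirical question that the model leaves open.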

Additionally, the model contributes to the literature by identifying theoretical conditions for the delegitimization of misinformation. The reduction of homogeneous group exposure, recognition of one's own fallibility, constructive confrontation of dissonance, absence of social reinforcement for misinformation, and development of media literacy represent potential breaking points in the legitimation cycle.

Limitations of the proposed theory

Like any theoretical model, the Psychosocial Cycle has limitations that should be recognized. First, the model focuses predominantly on psychosocial processes, devoting less attention to structural, economic, and political factors that also influence the production and dissemination of misinformation. The attention economy that incentivizes sensationalist content, political interests in informational manipulation, and inequalities in access to quality education are elements that, although not central to the model, interact with the described psychosocial processes.

Second, the model assumes a certain homogeneity in psychological processes, when evidence suggests significant variations among individuals regarding susceptibility to cognitive biases, social conformity, and experience of dissonance. Factors such as personality traits, cognitive styles, and cultural differences may moderate the intensity and manifestation of the processes described in the model.

Third, while the model incorporates digital affordances and algorithmic amplification within the psychosocial cycle, it does not fully account for the structural and economic drivers that underlie the production and persistence of misinformation. Platform business models based on engagement maximization, monetization of outrage, and algorithmic personalization operate as systemic incentives that interact with—but also transcend—individual cognitive and social processes (Wu, 2016). Likewise, political agendas and the deregulation of media ecosystems shape the broader informational context in which psychosocial mechanisms unfold. Although these macro-level forces are not the primary focus of the model, their influence suggests that effective interventions require not only psychological insight but also structural change at the platform and policy levels.

A complementary element to the Psychosocial Cycle emerges from the thesis of “cultural cognition,” which describes how “personal and group values influence the formation of opinions and attitudes” (Nascimento and Silva, 2024), sometimes leading those with higher cognitive abilities to become even more polarized in their views (Kahan et al., 2012). This perspective reinforces the identity dimension of the misinformation cycle, in which content sharing is not just a matter of conformity, but also an affirmation of group belonging.

Cultural cognition helps explain why correction of false information frequently fails: when a belief is anchored in identity values, its revision implies not only a cognitive adjustment but a potential distancing from the reference group. This mechanism enriches the understanding of the “Regulation of Dissonance” stage in the cycle, where resistance to correction can be intensified by identity issues.

Implications for future research

The proposed model opens several avenues for future research. Empirical studies are needed to test the proposed relationships between the components of the cycle, particularly the mechanisms of mediation and moderation. Longitudinal research could examine how the cycle develops over time and how interventions at different points affect its dynamics. Investigations into individual differences in susceptibility to the legitimation cycle also represent a promising direction. Understanding how factors such as cognitive capacity, critical thinking, personality traits, and political orientation moderate the operation of the cycle can inform more personalized and effective interventions.

Comparative studies between different digital platforms and cultural contexts could examine how specific characteristics of informational environments affect the intensity and manifestation of the legitimation cycle. Similarly, research on the historical evolution of mechanisms of legitimation of misinformation could illuminate continuities and discontinuities between pre-digital and digital contexts.

The “conceptual nuances” between disinformation, misinformation, and erroneous information may trigger distinct psychosocial mechanisms, or the same mechanisms with varying intensities (Nascimento and Silva, 2024). Intentional misinformation, for example, is often designed to maximize emotional engagement and social conformity, while unintentional misinformation may depend more heavily on overconfidence for its dissemination. This differentiation will allow a refinement of the model in future research, potentially increasing its explanatory and predictive power. Finally, applied research could develop and test interventions based on the conditions for delegitimization identified by the model. Randomized controlled experiments could evaluate the relative effectiveness of interventions focused on reducing overconfidence, diversifying informational exposure, constructively confronting dissonance, and developing media literacy.

Potential practical applications

Beyond its theoretical contributions, the psychosocial cycle has significant practical implications for the development of strategies to combat misinformation. The model suggests that effective interventions should target the underlying psychosocial mechanisms that sustain the legitimation cycle, instead of focusing exclusively on the factual correction of specific false information.

For digital platforms, the model suggests algorithmic modifications that reduce informational homogeneity and alter reward mechanisms that currently favor emotionally provocative content regardless of its veracity. Functionalities that promote verification before sharing and provide feedback on the accuracy of past judgments could reduce overconfidence bias. For educators, the model highlights the importance of approaches that go beyond the transmission of factual knowledge, focusing on the development of metacognitive competencies, critical thinking, and media literacy. Educational programs that promote epistemic humility and normalize the revision of beliefs based on new evidence could reduce resistance to correction.

For communicators and journalists, the model suggests correction strategies that minimize identity threat and cognitive dissonance, such as the affirmation of shared values before factual correction and the framing of informational accuracy as a value that transcends different ideological groups.

Finally, for public policy makers, the model highlights the need for multidimensional approaches that combine platform regulation, education for media literacy, and awareness campaigns about the psychological processes that sustain misinformation.

Conclusion

This article proposed the Psychosocial Cycle of Misinformation, an integrative theoretical model that articulates three central pillars of social and cognitive psychology—overconfidence bias, social conformity, and cognitive dissonance—to understand the mechanisms that sustain the persistence and dissemination of false information on social networks.

The Psychosocial Cycle of Misinformation model describes how these factors interact in five interdependent stages: exposure to socially relevant information, judgment and decision to share, receiving feedback or correction, regulation of dissonance, and reinforcement of behavior and group status. This cycle explains how individuals are exposed to, process, disseminate, and rationalize false information, contributing to the understanding of the resilience of misinformation even in the face of corrective evidence.

The original contribution of this work lies in the articulation of previously isolated theoretical constructs into an integrated explanatory model that identifies not only the factors that contribute to the legitimation of misinformation but also their dynamic interactions and potential breaking points. By demonstrating how the contemporary digital environment intensifies these psychosocial mechanisms, the model also offers an explanation for the apparent amplification of the misinformation phenomenon in the era of social networks. The identification of theoretical conditions for the delegitimization of misinformation (reduction of homogeneous group exposure, recognition of one's own fallibility, constructive confrontation of dissonance, absence of social reinforcement for misinformation, and development of media literacy) represents a significant contribution to the development of more effective interventions in combating misinformation.

Although the model presents limitations, particularly in relation to the attention devoted to structural factors and individual variability in the described psychological processes, it offers a robust conceptual framework for future research and practical applications. Empirical studies are needed to test the proposed relationships between the components of the cycle, examine individual differences in susceptibility to the legitimation cycle, and develop and evaluate interventions based on the identified conditions for delegitimization.

In a scenario where misinformation represents a significant threat to democracy, public health, and social cohesion, we offer an integrative perspective that can inform more effective strategies to combat this phenomenon. By understanding the psychosocial mechanisms that sustain the legitimation of misinformation, we can develop interventions that not only correct specific false information but also interrupt the cycle that allows its persistence and dissemination.

The complexity of the misinformation phenomenon requires interdisciplinary approaches that consider individual psychological processes, social dynamics, and the characteristics of the informational environment together. The Psychosocial Cycle represents a step in this direction, offering a theoretical model that integrates multiple perspectives and can serve as a basis for future research and practical interventions.

Finally, it is important to recognize that combating misinformation is not just a technical or psychological issue, but also an ethical and political one. Any intervention strategy must balance concern for informational accuracy against respect for individual autonomy and freedom of expression. The Psychosocial Cycle does not offer definitive answers to these ethical dilemmas, but provides a conceptual framework that can inform more nuanced discussions about how to promote a healthier informational environment without compromising fundamental democratic values.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

IN: Conceptualization, Investigation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This study was supported in part by a tuition scholarship provided by the Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES), Brazil. The funder had no involvement in the design, execution, analysis, or interpretation of the research.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Correction Note

A correction has been made to this article. Details can be found at: 10.3389/frsps.2025.1621794.

Generative AI statement

The author(s) declare that Gen AI was used in the creation of this manuscript. This manuscript received editorial and formatting assistance from a generative AI tool (ChatGPT, developed by OpenAI), under the full intellectual control, critical judgment, and authorship of the submitting author. The AI was used exclusively to support linguistic refinement, translation, and structural consistency, without generating original scientific content or influencing the theoretical claims.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Araújo, R. F., and Oliveira, T. M. (2020). Misinformation and messages about hydroxychloroquine on Twitter: from political pressure to scientific dispute. AtoZ New Pract. Inform. Knowl. 9, 196–205.

Aronson, E., Wilson, T. D., and Akert, R. M. (2015). Social psychology, 8th Edn. Boston, MA: Pearson.

Asch, S. E. (1956). Studies of independence and conformity: I. A minority of one against a unanimous majority. Psychol. Monogr. Gen. Appl. 70, 1–70. doi: 10.1037/h0093718

Bakshy, E., Messing, S., and Adamic, L. A. (2015). Exposure to ideologically diverse news and opinion on Facebook. Science 348, 1130–1132. doi: 10.1126/science.aaa1160

Barcelos, R. H., Dantas, D. C., and Sénécal, S. (2021). Fake news and its impact on consumer behavior: a marketing communications perspective. J. Market. Commun. 27, 15–31.

Bazerman, M. H., and Tenbrunsel, A. E. (2011). Blind Spots: Why We Fail to Do What's Right and What to Do About It. Princeton, NJ: Princeton University Press. doi: 10.1515/9781400837991