- Institute for Infrastructure and Environment (IIE), School of Engineering, The University of Edinburgh, Edinburgh, United Kingdom

The biomechanics of head acceleration events (HAEs) in sport have received increasing attention due to growing concern over concussion and long-term neurodegenerative disease risk. While wearable sensors, such as instrumented mouthguards (iMGs), are now commonly used to measure HAEs, these devices face well-documented challenges, including poor skull coupling, limited compliance, and high false-positive rates. Video footage is routinely collected in sports for performance analysis, and is perhaps an underutilised source for both retrospective and in situ measurement of HAEs. Traditionally used to confirm HAE exposure in wearable sensor studies, video has more recently been explored as a quantitative tool in its own right. This review synthesises the current state of the art in video-based measurement of HAEs, with a particular focus on videogrammetric methods, including manual point tracking and model-based image matching. Recent advances in computer vision and deep learning that offer the potential to automate and extend these approaches are also examined. Key limitations of current video-based methods are discussed, alongside opportunities to improve their scalability, accuracy, and biomechanical insight. By consolidating evidence across traditional and emerging approaches, this review highlights the potential of video as a practical and valuable data source for the quantitative measurement and modelling of HAEs in sport.

1 Introduction

The high-speed and collision-intensive nature of contact sports such as association football (soccer), rugby, and American football exposes athletes to frequent head acceleration events (HAEs) (Tierney, 2021; Tooby et al., 2024), contributing to high rates of sports-related concussion (SRC) (Daneshvar et al., 2011; Daniel et al., 2012) and an increased risk of long-term neurodegenerative diseases (NDs), including chronic traumatic encephalopathy (CTE) (Mackay et al., 2019; McKee et al., 2009; Russell et al., 2021; 2022; Stewart et al., 2023). These concerns have attracted widespread media coverage and public attention, including through high-profile legal actions, such as the $765 million settlement between the NFL and former players in 2013 (Belson, 2013), the class-action lawsuit filed against the NHL (Kaplan, 2021), and ongoing legal proceedings in the UK involving the Rugby Football Union (RFU) and Rugby Players Association (RPA) (Bull, 2024). Going forward, there is therefore a clear need for improved understanding and monitoring of HAEs to inform preventative strategies and provide better protection to athletes across all levels of play.

The precise mechanisms by which SRC and HAE exposure contribute to ND risk remain unknown. Nevertheless, the 2020 report of the Lancet Commission on dementia prevention, intervention, and care included head injury as a modifiable risk factor for dementia for the first time (Livingston et al., 2020). In an attempt to quantify the burden of HAEs, recent efforts in the biomedical and biomechanical engineering fields have seen the adoption of wearable sensors for field-based HAE monitoring (Le Flao et al., 2022; O’Connor et al., 2017), and of advanced computational modelling strategies to quantify brain injury risk (Ji et al., 2022). However, wearable devices have several limitations, including sensor-skull coupling issues (Wu et al., 2016b), accuracy that varies with the proximity of the impact to the sensor (Le Flao et al., 2025), user compliance challenges (Jones et al., 2022; Kenny et al., 2024; Roe et al., 2024), and the need for extensive manual video confirmation to verify true positives (Kuo et al., 2018; Patton et al., 2020).

Video, however, is already widely used in sport for performance analysis and incident review, and offers a low-cost, non-invasive means of collecting exposure data retrospectively (Funk et al., 2022; Tierney et al., 2019). When combined with appropriate analysis techniques, video footage can support both qualitative assessments (e.g., verifying impact events or classifying impact scenarios (Rotundo et al., 2023; Sherwood et al., 2025)) and quantitative measurements of head motion and impact mechanics (Bailey et al., 2018; Gyemi et al., 2023; Stark N. E.-P. et al., 2025), making it increasingly valuable in HAE research.

Nevertheless, the use of video for quantitative measurement remains underdeveloped. Traditional videogrammetric methods, including point tracking and model-based image matching (MBIM), have been validated for measuring impact velocities in controlled environments (Bailey et al., 2018; Tierney et al., 2018a). However, they often rely on high-speed cameras, multiple calibrated views, and considerable manual effort, limiting their scalability in field settings (Stark N. E.-P. et al., 2025; Tierney et al., 2019). These constraints have historically prevented widespread deployment in large-scale epidemiological studies. More recently, advances in computer vision and deep learning, such as human and head pose estimation (Asperti and Filippini, 2023; Marchand et al., 2016) and action detection (Giancola et al., 2023; Rezaei and Wu, 2022), have shown potential for automating the extraction of motion data from standard broadcast or handheld video. These developments present new opportunities for applying video-based HAE analysis in both research and clinical settings.

This narrative review synthesises the literature on quantitative videogrammetric methods applied to consumer-grade video footage for analysing head, body and object motion resulting from HAEs. The focus is on approaches that estimate measurable kinematic parameters associated with HAEs, including positions, velocities, orientations, and trajectories. Emphasis is placed on both traditional methods and emerging deep learning solutions, evaluating their current accuracy, scalability, and relevance to broader HAE research interests.

The remainder of this review is structured as follows. Section 2 introduces the research areas associated with the measurement of HAEs, highlighting the expanding role of quantitative video analysis across the full spectrum of HAE research and the challenges that accompany it. This includes not only direct estimation of head kinematics, but also broader contextual measurements (such as player pose and inbound velocities) that can be extracted from video to support downstream tasks like physical or computational reconstructions. Section 3 covers the methodological details of traditional videogrammetry techniques (e.g., point tracking and MBIM) that have been developed and applied in HAE research, analysing their current capabilities and limitations with regard to measurement accuracy and the wider value they offer to the HAE practice and policy discussed in Section 2. Section 4 then explores recent advances in deep learning, with a focus on architectures and applications that offer near-term, actionable improvements to existing quantitative video-based HAE analysis and support the development of novel methodologies. Finally, Section 5 reflects on the current state of the field, highlighting key limitations in existing approaches, and outlining future directions for improving the accuracy, scalability, and practical impact of quantitative video analysis of HAEs.

2 Context and motivation for video-based quantification

Although the primary focus of this review is to evaluate the current state of video-based methods for quantitatively measuring kinematics associated with HAEs, it is important to situate these techniques within the broader landscape of HAE research. Video-derived measurements, despite current limitations, are used to support downstream applications such as physical reconstruction, computational modelling, and brain injury risk estimation. This section therefore provides a brief overview of these related domains to contextualise the role and potential value of videogrammetric methods in the wider HAE analysis field, before specific methodological details are discussed in Sections 3 and 4.

2.1 HAE background

The term head acceleration event (HAE) was introduced to address the limitations of the term head impact, which implies direct contact with the head and fails to capture inertial loading from indirect forces (Nguyen et al., 2019; Tierney, 2021). To clarify terminology and support standardisation, the 2022 Consensus Head Acceleration Measurement Practices (CHAMP) group defined a HAE as any event that induces an acceleration response of the head due to short-duration collision forces, applied either directly to the head or indirectly via the body. In contrast, a head impact event (HIE) refers specifically to events involving direct contact with the head (Arbogast et al., 2022).

To structure the field, CHAMP identified six priority areas relevant to HAE research:

1. Study design and statistical analysis (Rowson et al., 2022);

2. Laboratory validation of wearable kinematic devices (Gabler et al., 2022);

3. On-field validation and deployment of wearable kinematic devices (Kuo et al., 2022);

4. Video analysis of HAEs (Arbogast et al., 2022);

5. Physical reconstruction of HAEs (Funk et al., 2022);

6. Computational modelling of HAEs (Ji et al., 2022).

Each of these areas has been addressed by CHAMP through peer-reviewed technical manuscripts, with the exception of video analysis of HAEs, which is represented in the consensus framework only by a reporting checklist (Arbogast et al., 2022) and an unpublished companion manuscript describing the videogrammetry process (Neale et al., 2022). Despite growing interest in using video to support HAE measurement and interpretation, no existing review provides a comprehensive overview of quantitative video-based methods in the literature.

This narrative review addresses that gap by examining how videogrammetric approaches have been used to extract quantitative kinematic parameters from standard video footage, as well as identifying future trends for research in the field. While wearable sensors remain the dominant tool for direct kinematic measurement, video data (particularly from consumer-grade cameras) offers a scalable, complementary avenue for quantifying HAEs. The remainder of this section therefore aims to situate video analysis within the broader HAE ecosystem, highlighting the motivations, benefits, and limitations of its use in both research and applied sport contexts.

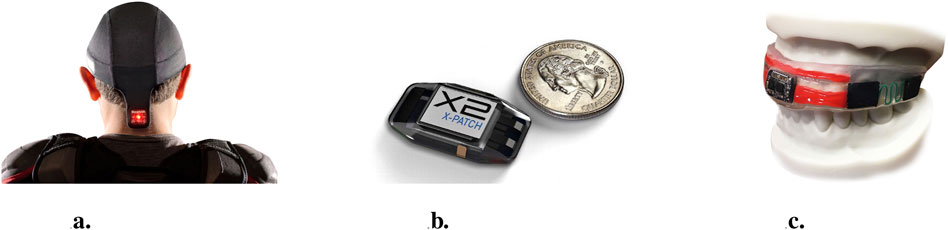

2.2 Direct measurement

In the context of this work, direct measurement of HAEs refers to the measurement of kinematic properties (position, velocity, and acceleration) of the head during HAEs. To accomplish this, a range of approaches to measuring head kinematics have been proposed in the literature, from wearable sensors such as headgear, skin patches and mouthguards instrumented with gyroscopes and/or accelerometers (see Figure 1) (Le Flao et al., 2022; Patton, 2016), to videogrammetry approaches where HAE parameters are manually extracted from video footage (Bailey et al., 2018; Gyemi et al., 2023; Stark N. E.-P. et al., 2025; Tierney, 2021).

Figure 1. Sensor-based approaches to HAE measurement: (a) skull cap (Luna, 2013), (b) skin patch (Linendoll, 2013), and (c) mouthguard (Wu, 2020).

To detect and record HAEs with wearable sensors, a threshold for linear acceleration (e.g.,

To remove false positives from sensor-detected events, many head impact exposure studies also employ manual video analysis to visually confirm detected instances of head impact (Basinas et al., 2022; Patton et al., 2020). However, this is a highly time-consuming and resource-intensive process. For example, in one study, 163 h of video footage was manually reviewed by a team of 14 raters to verify 217 impact instances (Kuo et al., 2018).
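As a minimal sketch of the threshold-triggered recording and subsequent video verification described above, the Python example below flags candidate events where a resultant linear acceleration trace first rises above a trigger threshold, merges crossings that fall within a short lockout window, and computes the proportion of flagged events confirmed on video. The threshold and lockout values, the function names and the synthetic signal are illustrative assumptions, not parameters taken from the studies cited.

```python
import numpy as np


def detect_events(accel_g, fs_hz, threshold_g=10.0, lockout_s=0.1):
    """Return sample indices where resultant acceleration (g) rises above
    threshold_g; crossings within lockout_s of an accepted event are merged.
    Both defaults are illustrative placeholders, not recommended values."""
    above = np.asarray(accel_g) >= threshold_g
    # Rising edges: above threshold now, but not at the previous sample
    previous = np.concatenate(([False], above[:-1]))
    onsets = np.flatnonzero(above & ~previous)
    lockout = int(round(lockout_s * fs_hz))
    events, last = [], None
    for idx in onsets:
        if last is None or idx - last >= lockout:
            events.append(int(idx))
            last = idx
    return events


def positive_predictive_value(n_flagged, n_confirmed):
    """Fraction of sensor-flagged events confirmed as true HAEs on video."""
    return n_confirmed / n_flagged if n_flagged else float("nan")


# Synthetic 1 kHz trace with three bursts; the second falls inside the lockout
signal = np.zeros(1000)
signal[100:105] = 20.0
signal[150:153] = 15.0
signal[500:506] = 30.0
flagged = detect_events(signal, fs_hz=1000)
```

In practice, the number of confirmed events would come from human raters reviewing footage around each flagged timestamp; the ratio returned by `positive_predictive_value` simply summarises how many sensor triggers survive that review.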

The use of video to qualitatively verify HAE incidence has been well documented in other reviews (Basinas et al., 2022; Patton et al., 2020), and recent studies have expanded on this by introducing qualitative descriptors, such as tackle technique, phase of play, and action of player, into human-rater video analysis frameworks (Rotundo et al., 2023; Sherwood et al., 2025; Woodward et al., 2025). This review, however, focuses on approaches that can be used to extract quantitative HAE outcome measures directly from video footage using videogrammetric techniques. Point tracking and MBIM methods, for example, have previously been used to extract head kinematics from video footage. A complete overview of these techniques, including specific implementation details and practical considerations, is given in Section 3.

At present, a significant limitation of videogrammetric approaches to direct measurement is that, without sufficient camera sampling rates, they are limited to capturing only the pre- and post-HAE kinematics (Bailey et al., 2018). According to the Nyquist-Shannon theorem, a signal must be sampled at a rate at least twice its highest frequency to avoid aliasing. Wu et al. (2016a) demonstrated that, in unhelmeted sports, gyroscopes with bandwidths of at least

2.3 Reconstruction and modelling

In the absence of detailed kinematic data for the head during a HAE (as is typical with videogrammetric measurement due to insufficient frame rate/resolution), there are additional downstream computational and physical reconstruction steps which have been utilised alongside quantitative video analysis to gain higher fidelity estimates of head kinematics.
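The sampling limitation noted in Section 2.2 can be illustrated with a toy example. The sketch below assumes an idealised 10 ms half-sine acceleration pulse (a simplifying assumption, not a measured HAE) and reports the largest value observed when the signal is only sampled at frame instants. The function names, pulse shape, amplitude and onset time are all hypothetical.

```python
import numpy as np


def half_sine_pulse(t, peak, duration, onset):
    """Idealised half-sine acceleration pulse, an assumed stand-in for an impact."""
    inside = (t >= onset) & (t <= onset + duration)
    return np.where(inside, peak * np.sin(np.pi * (t - onset) / duration), 0.0)


def sampled_peak(peak, duration, onset, fps, window_s=0.2):
    """Largest pulse value seen when the signal is sampled only at frame instants."""
    t = np.arange(0.0, window_s, 1.0 / fps)
    return float(half_sine_pulse(t, peak, duration, onset).max())


def nyquist_min_rate(max_freq_hz):
    """Minimum sampling rate (Hz) to avoid aliasing a band-limited signal."""
    return 2.0 * max_freq_hz


# A 10 ms, 50 g pulse starting at an arbitrary instant between video frames
peaks = {fps: sampled_peak(50.0, 0.010, 0.0123, fps) for fps in (25, 50, 1000)}
```

With the assumed onset, no 25 fps frame falls within the pulse, so the event is invisible in the sampled signal, whereas sampling at 1000 Hz recovers a value close to the true peak. The result at intermediate frame rates depends on where the pulse happens to fall between frames.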

Computational reconstruction approaches typically involve the use of mathematical models of the human body, such as MADYMO (MAthematical DYnamic MOdels) (Bailly et al., 2017; Fréchède and McIntosh, 2007; Fréchède and McIntosh, 2009; Gildea et al., 2024; McIntosh et al., 2014; Tierney and Simms, 2017; Tierney et al., 2018b; Tierney and Simms, 2019; Tierney et al., 2021), commercially developed finite element models such as the Total Human Model for Safety (THUMS) (Chen et al., 2015; Sharma and Smith, 2024; Yuan et al., 2024b), and custom models developed for specific impact scenarios (Cazzola et al., 2017; Johnson et al., 2016; Perkins et al., 2022). It should be noted that the validation criteria used for these models vary widely: many human body models were originally developed for automotive crash applications and validated using post-mortem human subject (PMHS) experiments, in which the bodies of voluntary donors are used as test specimens (Wdowicz and Ptak, 2023). Caution must therefore be exercised when applying these models to sports-related HAEs, particularly where active muscle control has been identified as a significant factor (Tierney and Simms, 2019).

Physical reconstructions, on the other hand, use surrogate models of the human body in laboratory setups, such as crash test dummies or anthropomorphic test devices (ATDs). These typically include a model of the head instrumented with accelerometers and gyroscopes to measure head kinematics during reconstructed HAEs (Funk et al., 2022). The Hybrid III (Hubbard and McLeod, 1974) and NOCSAE (Hodgson, 1975) headforms have been most commonly used in the literature for physical reconstruction of HAEs. However, the surrogates used vary widely in biofidelity and sophistication, from headforms rigidly attached to test frames to systems including the head, neck, torso, and limbs with matched joint angles. It has been highlighted that these surrogates may not be able to match an athlete’s pre-impact posture due to their limited head-neck adjustability (Funk et al., 2022), and concerns about the unrealistically high stiffness of the Hybrid III neck have also been raised (Gibson et al., 1995), leading to the recent development of more biofidelic, sport-specific surrogate necks (e.g., Farmer et al., 2022).

A key consideration in the development of any reconstruction is the choice of HAE parameters used in the reconstruction setup. For computational reconstructions, these parameters define the initial conditions of the simulation, such as head and full-body pose, inbound velocities, and impact location and direction (Bailly et al., 2017; Gildea et al., 2024; Yuan et al., 2024a). For physical reconstructions, they are used to set up the test rig, including the initial position of the surrogate headform and the impactor used to recreate the HAE (Funk et al., 2022; Zimmerman et al., 2023). In both cases, quantitative video analysis has been identified as a valuable source of data for estimating these parameters (Funk et al., 2022; Tierney, 2021).
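To illustrate how video-derived inbound velocities might be turned into initial conditions for a reconstruction, the sketch below computes the relative impact speed and the unit direction of the relative velocity for two players. It assumes both velocity vectors have already been estimated in a common world frame (e.g., by point tracking); the function name and example values are hypothetical, and a real setup would typically also need impact location and head orientation.

```python
import numpy as np


def relative_impact_velocity(v_striking, v_struck):
    """Relative speed (m/s) and unit direction of the relative velocity between
    two players, given inbound velocity vectors in a common world frame."""
    v_rel = np.asarray(v_striking, float) - np.asarray(v_struck, float)
    speed = float(np.linalg.norm(v_rel))
    # Guard against a zero relative velocity, for which direction is undefined
    direction = v_rel / speed if speed > 0 else np.zeros_like(v_rel)
    return speed, direction


# Hypothetical pitch-plane velocities (m/s) estimated from video just before contact
speed, direction = relative_impact_velocity([5.0, 0.0], [-3.0, 1.0])
```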

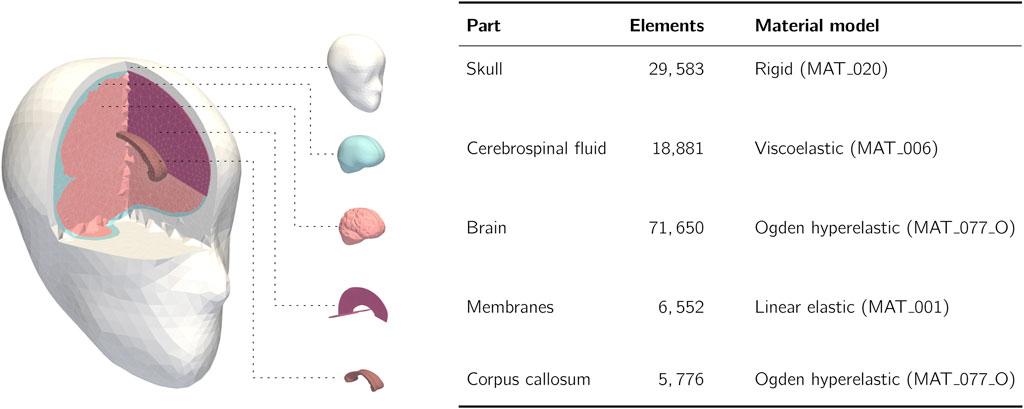

Both reconstruction types are used to generate detailed kinematic data for the head, which often serve as input to subsequent injury analysis using brain injury criteria (BIC) that link kinematic data to the risk of brain injury. Simpler approaches use peak kinematic values (Laksari et al., 2020; Denny-Brown and Russell, 1941; Holbourn, 1943), reduced-order physical models (Gabler et al., 2018; 2019; Laksari et al., 2020; Takahashi and Yanaoka, 2017) and statistical model fitting (Rowson and Duma, 2013; Greenwald et al., 2008), while more advanced approaches use finite element head models (FEHMs), validated against a range of differing criteria (Dixit and Liu, 2017; Madhukar and Ostoja-Starzewski, 2019; McGill et al., 2020), to estimate brain strain, a critical parameter for assessing brain injury risk (Hajiaghamemar et al., 2020; Hajiaghamemar and Margulies, 2021; O’Keeffe et al., 2020; Wu et al., 2020). One such model, the University of Edinburgh’s 50th-percentile male FEHM (EdiFEHM), is depicted in Figure 2.

Figure 2. The University of Edinburgh’s Finite Element Head Model (EdiFEHM), with details of its structural components and material models. Adapted from McGill (2022).
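As one concrete example of a simple kinematics-based criterion, the sketch below computes peak resultant values and the Head Injury Criterion (HIC), a long-established linear-acceleration criterion from automotive safety that is not among the criteria cited above. It assumes uniformly sampled resultant acceleration in g and time in seconds; the function names are hypothetical, and a 15 ms maximum window corresponds to the HIC15 variant.

```python
import numpy as np


def peak_resultant(components):
    """Peak of the resultant (Euclidean norm) of an (N, 3) kinematic time series."""
    return float(np.linalg.norm(np.asarray(components, float), axis=1).max())


def head_injury_criterion(t_s, a_res_g, max_window_s=0.015):
    """HIC = max over [t1, t2] of (t2 - t1) * (mean acceleration in g) ** 2.5.

    Assumes uniformly sampled time t_s (s) and resultant acceleration a_res_g (g);
    max_window_s=0.015 gives HIC15."""
    t = np.asarray(t_s, float)
    a = np.asarray(a_res_g, float)
    # Cumulative trapezoidal integral of acceleration, starting from zero
    cum = np.concatenate(([0.0], np.cumsum(0.5 * (a[1:] + a[:-1]) * np.diff(t))))
    max_lag = int(round(max_window_s / (t[1] - t[0])))
    best = 0.0
    # Check every window length up to the limit, vectorised over start times
    for lag in range(1, min(max_lag, len(t) - 1) + 1):
        width = t[lag:] - t[:-lag]
        mean_a = np.clip((cum[lag:] - cum[:-lag]) / width, 0.0, None)
        best = max(best, float(np.max(width * mean_a ** 2.5)))
    return best


# Sanity check: constant 50 g for 20 ms, sampled at 10 kHz
t = np.linspace(0.0, 0.02, 201)
hic15 = head_injury_criterion(t, np.full_like(t, 50.0))
```

For a constant 50 g acceleration lasting longer than the window, HIC15 reduces to 0.015 × 50^2.5 ≈ 265, which provides a convenient check on the implementation.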

The peak 95th percentile of maximum principal strain

To link video analysis with brain injury modelling, several early-stage workflows have emerged in which pose and velocity estimates derived from video are used to estimate full six-degree-of-freedom head kinematics, which then drive brain injury predictions. For instance, Yuan et al. (2024a) proposed a pipeline in which human pose (joint angles) extracted from monocular footage is used to initialise a biomechanical human body model simulation, with the resulting head kinematics used as input to a FEHM to estimate brain strain. However, this approach has only been demonstrated in a single case study involving a skiing accident, in which predicted high-strain regions were compared with magnetic resonance imaging (MRI) and computed tomography (CT) images of a diagnosed traumatic brain injury (TBI). Commercial interest is also growing, with a recent US patent application from Brainware AI (Karton et al., 2025) describing a system for predicting brain injury directly from video using machine learning techniques, bypassing traditional sensing hardware.
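Brain strain predicted by a FEHM in such pipelines is often summarised by the 95th percentile maximum principal strain referred to above. Conventions differ between studies, so the sketch below shows two common aggregations of an element-wise strain history: the peak over time of the 95th percentile across elements, and the 95th percentile of each element's peak strain. The array layout (time steps × elements) and the function names are assumptions.

```python
import numpy as np


def peak_mps95(mps):
    """Peak over time of the 95th percentile (across elements) of MPS.

    mps: array of shape (n_timesteps, n_elements)."""
    return float(np.percentile(np.asarray(mps, float), 95, axis=1).max())


def mps95_of_element_peaks(mps):
    """95th percentile (across elements) of each element's peak MPS over time."""
    return float(np.percentile(np.asarray(mps, float).max(axis=0), 95))


# Synthetic history: every element follows the same temporal profile
profile = np.array([0.0, 0.5, 1.0, 0.5])
element_scale = np.linspace(0.0, 0.2, 101)
history = np.outer(profile, element_scale)
```

The two conventions agree when all elements peak simultaneously, as in the synthetic history above, but diverge when different regions peak at different times; stating which definition is used therefore matters when comparing strain-based results across studies.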

Despite these developments, no workflows that integrate video-derived measurements directly into FEHMs or other brain injury models have yet been validated or deployed at scale. This remains a clear gap in the field, owing largely to the video quality and sampling rate limitations discussed in Section 2.2. To address this, the remainder of this review examines the suitability of current videogrammetric techniques for extracting quantitative head kinematics from video, beginning with traditional methods and their limitations, before turning to emerging deep learning-based approaches and their potential to overcome existing barriers.

3 Traditional videogrammetry

3.1 Overview

As highlighted by Neale et al. (2022) in the CHAMP report on video analysis of HAEs, video footage can be used to extract both qualitative and quantitative information. Qualitative approaches do not involve precise measurements, but instead rely on visual inspection to identify or confirm HAEs. This can include manually reviewing footage to verify impacts detected by wearable sensors (Kuo et al., 2018; Patton, 2016; Patton et al., 2020), or to classify descriptive features such as tackle type, impact location, and game context (Rotundo et al., 2023; Sherwood et al., 2025; Woodward et al., 2025). By contrast, quantitative approaches involve the extraction of measurable data from video using videogrammetric techniques, such as the positions, orientations, and velocities of the head and other body segments. It is important to distinguish between these two methods, as this review focuses specifically on quantitative video analysis techniques for reconstructing and analysing the mechanics of HAEs. These measurements enable a more detailed biomechanical analysis beyond simple impact verification, and can provide valuable inputs to downstream applications, including physical or computational models for the evaluation of specific HAE case studies.

Photogrammetry is defined as “the science and art of making precise and reliable measurements from images” (Gruen, 1997; Förstner and Wrobel, 2016). Extending this principle to moving images leads to videogrammetry: the science and art of making precise and reliable measurements from video, or still frames extracted from video (Neale et al., 2022; Gruen, 1997). Most quantitative video analysis approaches for HAEs are based on this principle. Traditionally, these techniques can be broadly categorised into manual methods (including point tracking and MBIM) and optoelectronic marker-based tracking methods (Colyer et al., 2018). Modern advances in computer vision and deep learning have also enabled more sophisticated approaches to video-based motion estimation, which build on or replace traditional manual methods. These emerging methods are discussed in detail in Section 4.

In this review, only methods that rely on standard consumer-grade video are considered. This focus reflects the aim to review scalable techniques that can be implemented flexibly in both laboratory and field settings using readily available video cameras, without the need for specialised equipment such as reflective markers, high-end optical tracking systems, or other dedicated hardware. By relying solely on standard video, these methods are adaptable to a wide range of sports contexts and can be applied retrospectively to footage captured during normal play or training, making them practical and widely accessible tools for the study of HAEs.

It is important to note that even with standard video footage there can be significant variation between studies in terms of camera setups, calibration methods, and tracking techniques depending on the context in which a method is developed and applied. This variability can lead to differences in the accuracy and reliability of the measurements obtained, particularly when applying a method outwith the context in which it was developed. For example, a method developed for high-frame-rate laboratory video may not perform well when applied to low-frame-rate broadcast footage (Tierney et al., 2019).

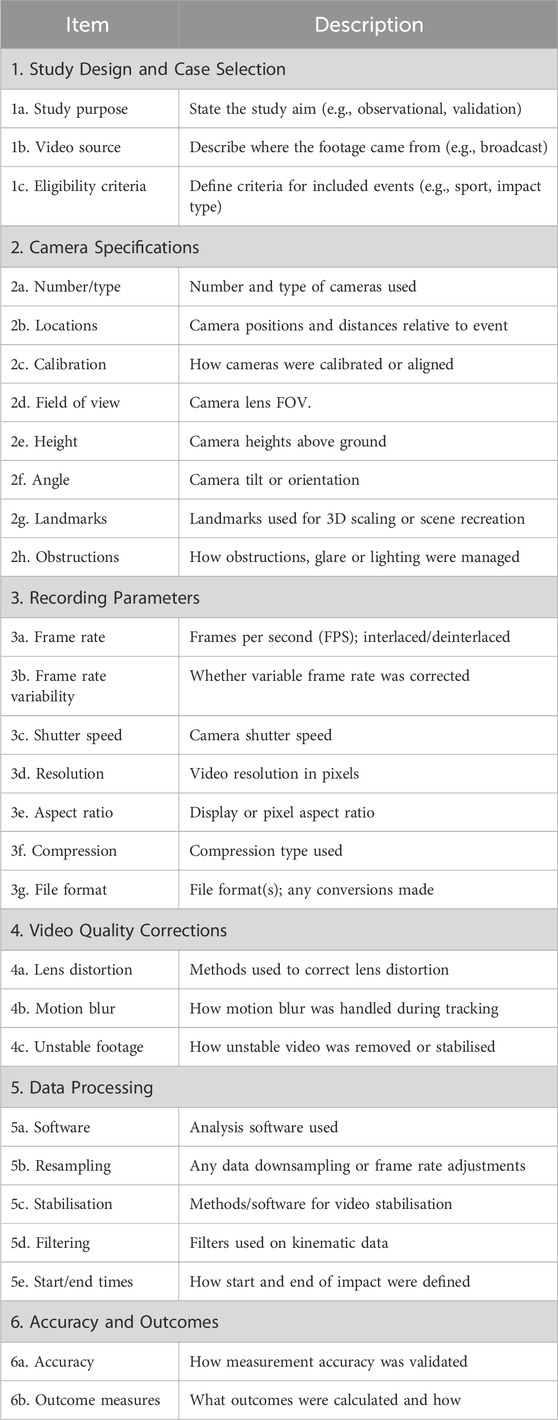

These differences can introduce challenges in comparing results across studies and methods, as well as in interpreting the findings in the context of HAE biomechanics. The CHAMP checklist of information to include when reporting video analysis of HAEs, summarised in Table 1, therefore provides a valuable framework with which methods can be compared and evaluated, and enables complete transparency and reproducibility of methods (Arbogast et al., 2022). While the full checklist is useful for comprehensive reporting of a methodology, including every checklist item in detail for each study considered in this review would add little value. Instead, a streamlined reporting structure is adopted in the comparison tables (e.g., Table 2), which broadly summarise essential elements such as video source, calibration method, outcome measures, and validation approach, so as to support consistent discussion.

Table 1. Adapted CHAMP checklist for reporting quantitative video reconstruction studies of head acceleration events (Arbogast et al., 2022).

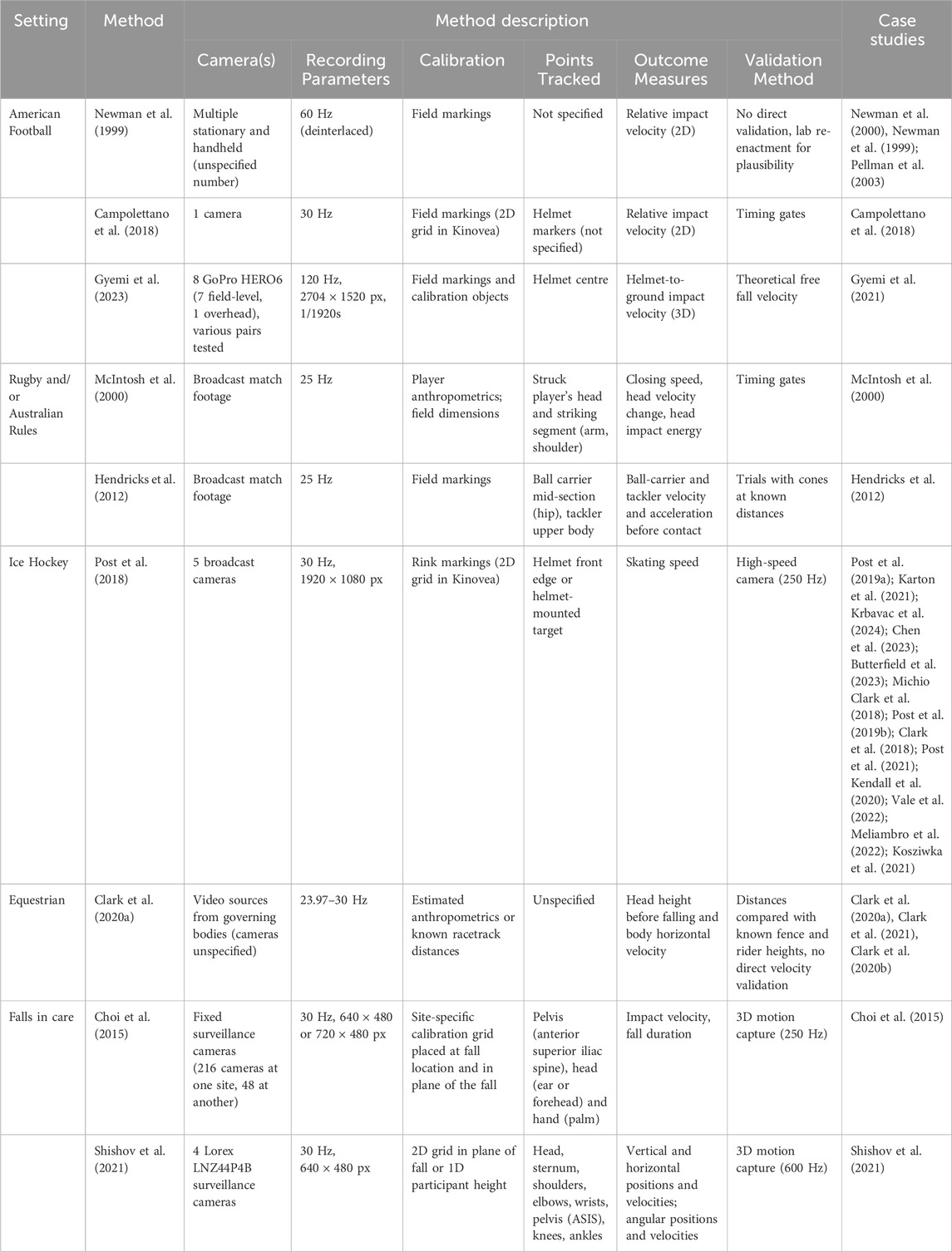

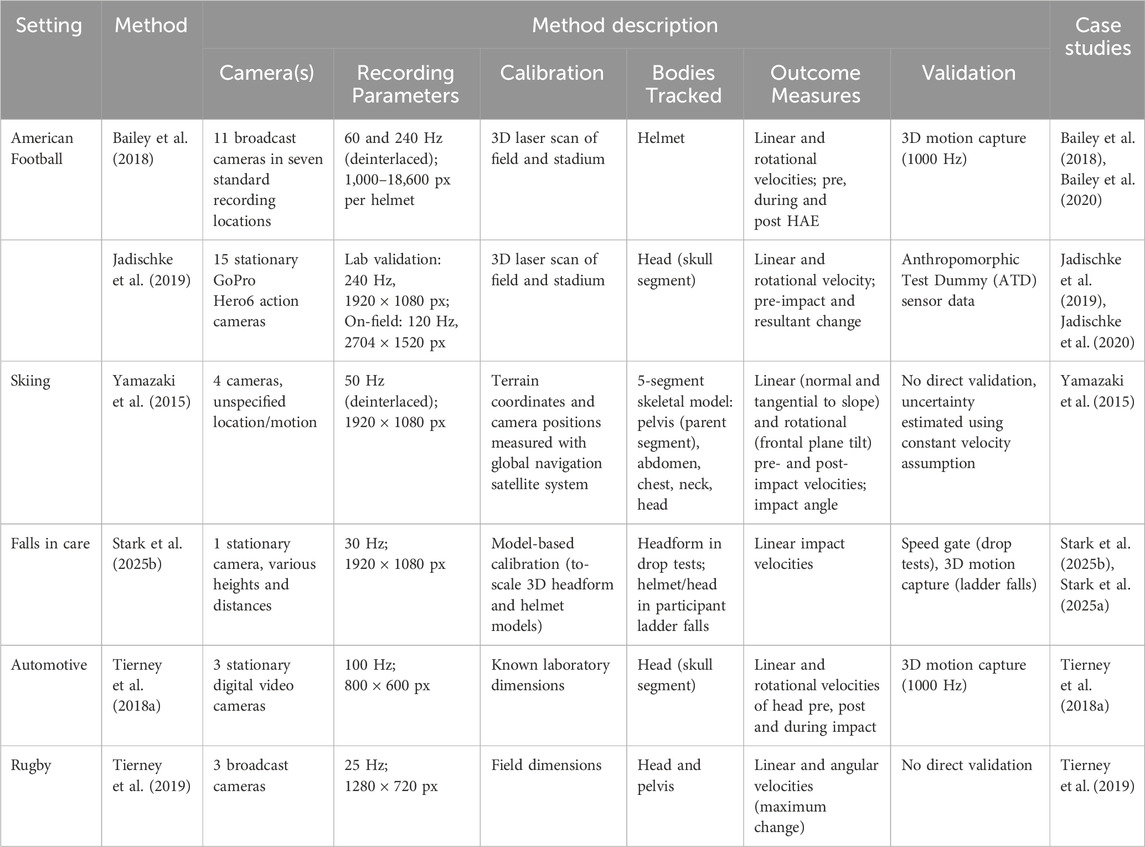

Table 2. Overview of selected point tracking methods used in estimating kinematic outcomes associated with HAEs, including method description and case studies where the method is applied.

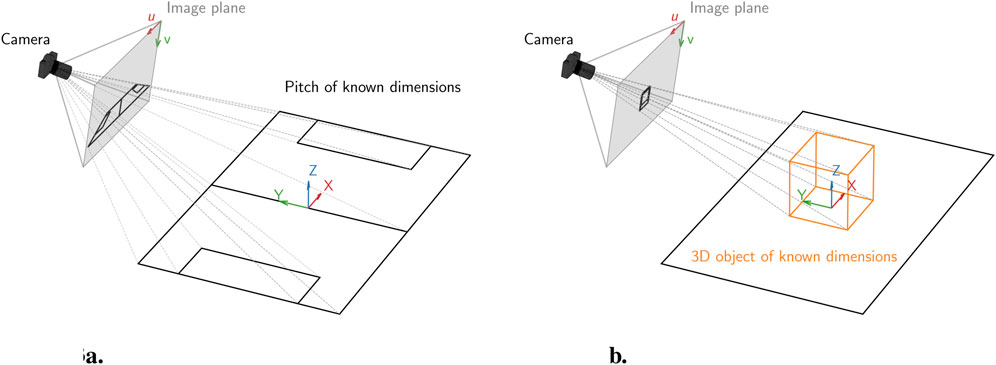

3.2 Camera calibration

In general, the mathematical objective of videogrammetric approaches is to estimate the position and/or orientation of an object in 3D space (or 2D assuming planar motion) based on a combination of 2D image coordinates over time. Traditionally, a crucial precursor to this process is a camera calibration procedure to establish the relationship between 2D image coordinates and 3D world coordinates. The calibration process involves determining the intrinsic (focal length, principal point and distortion coefficients) and extrinsic (position and orientation in the world) parameters of a camera, and can be performed using calibration objects with known geometry or by leveraging fixed known reference geometry in the scene.
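The intrinsic and extrinsic parameters described above combine in the standard pinhole projection model. The sketch below projects 3D world points to pixel coordinates, assuming a distortion-free camera with zero skew; lens distortion coefficients would require an additional non-linear step that is omitted here. All names and numerical values are illustrative.

```python
import numpy as np


def intrinsic_matrix(fx, fy, cx, cy):
    """Intrinsic matrix K: focal lengths and principal point in pixels, zero skew."""
    return np.array([[fx, 0.0, cx], [0.0, fy, cy], [0.0, 0.0, 1.0]])


def project(K, R, t, points_world):
    """Project (N, 3) world points to (N, 2) pixels with x ~ K [R | t] X."""
    X = np.asarray(points_world, float)
    X_cam = X @ R.T + t          # extrinsics: world frame -> camera frame
    x = X_cam @ K.T              # intrinsics: camera frame -> homogeneous pixels
    return x[:, :2] / x[:, 2:3]  # perspective division


# Hypothetical camera 10 m from the world origin, looking along the world Z axis
K = intrinsic_matrix(1000.0, 1000.0, 960.0, 540.0)
uv = project(K, np.eye(3), np.array([0.0, 0.0, 10.0]), [[1.0, 0.5, 0.0]])
```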

Direct Linear Transformation (DLT) is one of the most widely used calibration and reconstruction techniques in biomechanics and sports analysis (Abdel-Aziz et al., 2015; Hedrick, 2008). DLT relates 2D image coordinates to 3D world coordinates via a linear mapping, which can be robustly estimated when a sufficient number of known reference points are visible. Its simplicity, flexibility, and compatibility with both controlled laboratory and field environments have made DLT the backbone of traditional multi-camera calibration workflows in many sports applications.

In single-camera (monocular) setups where it is reasonable to assume that all tracked motion occurs on a known planar surface (e.g., a football pitch or ice rink), 2D-DLT is generally used (Hedrick, 2008) (although more complex non-linear techniques also exist to address issues such as radial lens distortions (Zhang, 1999; Dunn et al., 2012)). 2D-DLT establishes a projective mapping between 2D world coordinates

Here,

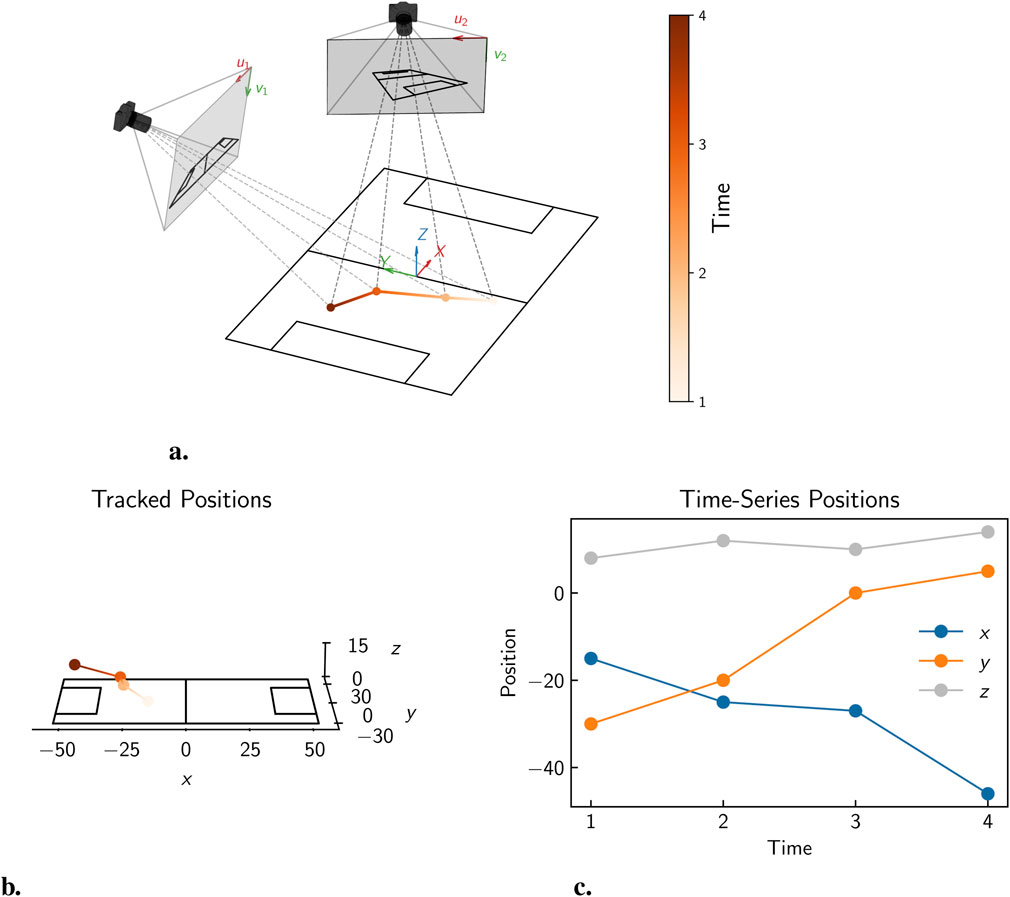
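A minimal sketch of the planar 2D-DLT described above, written in Python with NumPy, is shown below. It estimates the 3 × 3 homography that maps image coordinates onto a planar world surface (e.g., pitch coordinates in metres) from at least four point correspondences, using the coordinate normalisation recommended by Hartley and Zisserman (2004). The function names and the synthetic "true" homography are hypothetical, and lens distortion is assumed to have been removed beforehand.

```python
import numpy as np


def _normalise(points):
    """Similarity transform moving points to zero mean and mean distance sqrt(2)."""
    pts = np.asarray(points, float)
    centre = pts.mean(axis=0)
    scale = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - centre, axis=1))
    T = np.array([[scale, 0.0, -scale * centre[0]],
                  [0.0, scale, -scale * centre[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.column_stack([pts, np.ones(len(pts))]) @ T.T
    return homog[:, :2], T


def fit_homography(image_pts, world_pts):
    """2D-DLT: estimate H mapping image (u, v) to planar world (X, Y), >= 4 points."""
    img_n, T_img = _normalise(image_pts)
    wld_n, T_wld = _normalise(world_pts)
    rows = []
    for (u, v), (X, Y) in zip(img_n, wld_n):
        # Each correspondence gives two equations linear in the nine entries of H
        rows.append([u, v, 1.0, 0.0, 0.0, 0.0, -X * u, -X * v, -X])
        rows.append([0.0, 0.0, 0.0, u, v, 1.0, -Y * u, -Y * v, -Y])
    # Least-squares solution: right singular vector with the smallest singular value
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    H_n = Vt[-1].reshape(3, 3)
    H = np.linalg.inv(T_wld) @ H_n @ T_img  # undo the normalisation
    return H / H[2, 2]


def image_to_world(H, uv):
    """Map one pixel coordinate onto the calibrated plane."""
    p = H @ np.array([uv[0], uv[1], 1.0])
    return p[:2] / p[2]


# Synthetic check: generate world points from a known homography, then recover it
H_true = np.array([[0.05, 0.01, -10.0], [0.002, 0.08, -5.0], [1e-4, 2e-4, 1.0]])
img = [(100.0, 200.0), (1800.0, 150.0), (1700.0, 1000.0),
       (200.0, 950.0), (960.0, 540.0), (500.0, 700.0)]
wld = [image_to_world(H_true, p) for p in img]
H_fit = fit_homography(img, wld)
```

With real footage, the world points would be surveyed or known field markings and the image points their digitised pixel locations; more than four well-spread points help to average out digitisation error.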

When full 3D motion tracking is required (such as capturing a helmet’s movement through space to estimate the velocity of a fall), 3D DLT must be used. This requires synchronised views from at least two calibrated cameras with overlapping fields of view. A set of known 3D reference points

The matrix

Figure 3. Illustration of the camera calibration process using (a) known reference points in the scene, such as field markings for 2D planar calibration, and (b) a typical 3D calibration object, such as a chessboard or calibration grid, for 3D calibration.
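For the 3D case, the sketch below estimates a 3 × 4 projection matrix (11 independent parameters) from at least six non-coplanar reference points with known world coordinates, using the linear DLT and a singular value decomposition. For brevity it omits coordinate normalisation and lens distortion, both of which are advisable with real pixel data, and it fixes the arbitrary scale by dividing by the bottom-right entry, which assumes that entry is non-zero. Function names and the synthetic camera are hypothetical.

```python
import numpy as np


def fit_projection_matrix(world_pts, image_pts):
    """3D DLT: estimate the 3x4 matrix P from >= 6 non-coplanar 3D-2D pairs."""
    rows = []
    for (X, Y, Z), (u, v) in zip(world_pts, image_pts):
        Xh = [X, Y, Z, 1.0]
        # u = (p1 . Xh) / (p3 . Xh) and v = (p2 . Xh) / (p3 . Xh), rearranged linearly
        rows.append(Xh + [0.0] * 4 + [-u * c for c in Xh])
        rows.append([0.0] * 4 + Xh + [-v * c for c in Xh])
    _, _, Vt = np.linalg.svd(np.asarray(rows, float))
    P = Vt[-1].reshape(3, 4)
    return P / P[2, 3]  # fix the arbitrary scale (assumes P[2, 3] != 0)


def project_points(P, world_pts):
    """Project (N, 3) world points with P and return (N, 2) pixel coordinates."""
    pts = np.asarray(world_pts, float)
    Xh = np.column_stack([pts, np.ones(len(pts))])
    x = Xh @ P.T
    return x[:, :2] / x[:, 2:3]


# Synthetic calibration object: corners of a 1 m cube seen by a known camera
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
P_true = K @ np.hstack([np.eye(3), np.array([[0.1], [-0.2], [5.0]])])
cube = np.array([[x, y, z] for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)])
pixels = project_points(P_true, cube)
P_fit = fit_projection_matrix(cube, pixels)
```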

In both 2D and 3D calibration contexts, the placement of cameras plays a critical role in reconstruction accuracy. For 3D calibration using a multi-camera setup, wide angular separation between cameras is important to ensure strong triangulation geometry and reduce depth ambiguity (Hartley and Zisserman, 2004). Cameras should ideally capture the scene from multiple angles with overlapping fields of view, and their positions should be stable and known throughout the recording. In 2D calibrations (for example, using known field markings), the camera must capture a sufficient portion of the calibrated surface (e.g., a flat pitch) without extreme perspective distortion (Szeliski, 2022). Oblique or high-angle views can reduce the reliability of 2D-to-3D mappings, especially when estimating motion in depth.
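Once two or more cameras are calibrated, the 3D position of a point seen in both views can be recovered by triangulation. The sketch below uses linear (DLT) triangulation for two views, as described by Hartley and Zisserman (2004), assuming known 3 × 4 projection matrices and undistorted pixel coordinates; the function name and the synthetic two-camera setup are hypothetical.

```python
import numpy as np


def triangulate(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one point seen by two calibrated cameras."""
    u1, v1 = uv1
    u2, v2 = uv2
    # Each view contributes two linear constraints on the homogeneous point
    A = np.array([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]


# Synthetic setup: second camera rotated 30 degrees about the vertical axis
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
c, s = np.cos(np.pi / 6), np.sin(np.pi / 6)
R2 = np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([R2, np.array([[-3.0], [0.0], [0.0]])])
X_true = np.array([0.2, -0.1, 6.0])
x1 = P1 @ np.append(X_true, 1.0)
x2 = P2 @ np.append(X_true, 1.0)
X_est = triangulate(P1, P2, x1[:2] / x1[2], x2[:2] / x2[2])
```

With narrowly separated cameras, the two back-projected rays meet at a shallow angle, so small pixel errors translate into large errors along the depth direction; this is the geometric reason for preferring wide angular separation.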

In scenarios where the camera is not stationary (as is often the case for “in-the-wild” sports video or handheld footage), DLT calibration can be performed independently for each frame, provided that enough reliable 2D–3D correspondences are visible. This results in a time-varying projection matrix (

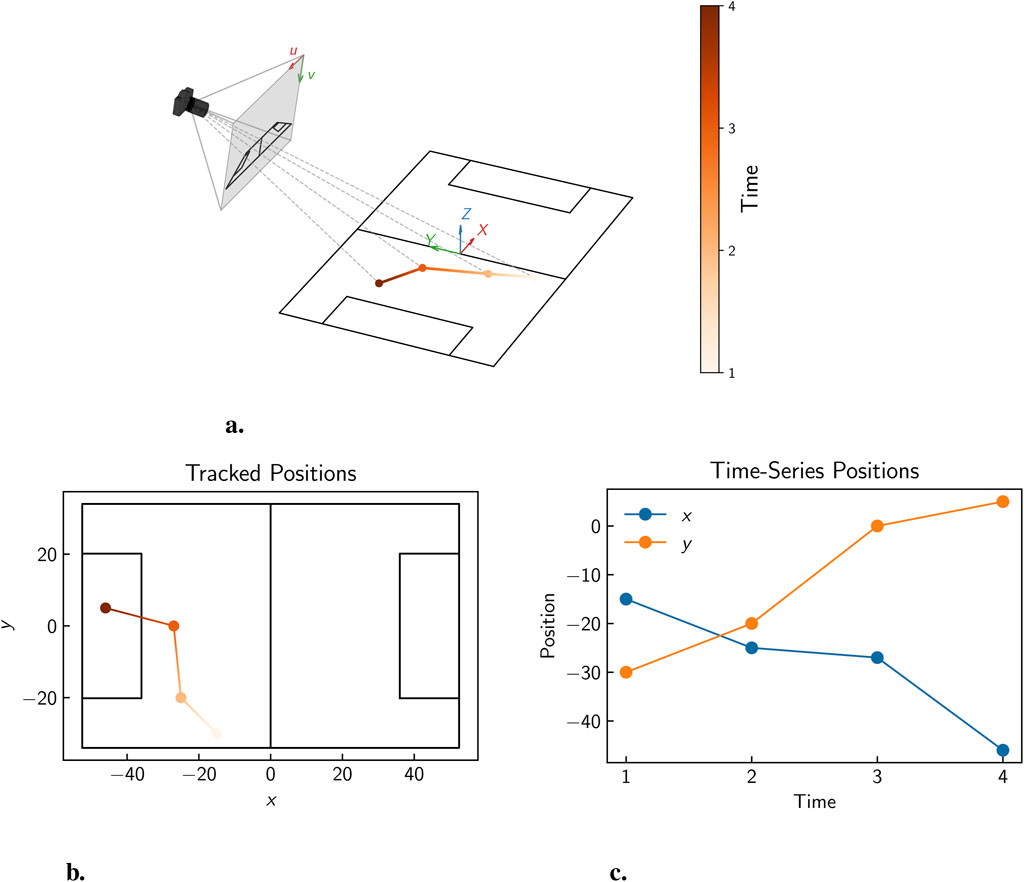
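For a moving camera, each tracked pixel position should be mapped through the calibration for its own frame before differencing, otherwise camera panning is mistaken for player motion. The sketch below assumes a per-frame image-to-world homography is already available (for example, from a planar DLT fitted to visible pitch markings in each frame) and returns world positions and finite-difference velocities. Function names and the synthetic panning example are hypothetical.

```python
import numpy as np


def image_to_world(H, uv):
    """Map a pixel (u, v) onto the pitch plane with an image-to-world homography."""
    p = H @ np.array([uv[0], uv[1], 1.0])
    return p[:2] / p[2]


def world_track_and_velocity(homographies, pixels, fps):
    """Map each frame's tracked pixel through that frame's own homography,
    then finite-difference the world positions to velocities (m/s)."""
    world = np.array([image_to_world(H, uv) for H, uv in zip(homographies, pixels)])
    velocity = np.diff(world, axis=0) * fps
    return world, velocity


# Synthetic example: player runs at 2 m/s along X while the camera pans
fps, px_per_m, n = 25.0, 20.0, 10
k = np.arange(n)
x_true = 10.0 + 2.0 * k / fps
pan_px = 50.0 * k  # hypothetical horizontal pan of 50 px per frame
pixels = np.column_stack([px_per_m * x_true + pan_px, np.full(n, px_per_m * 5.0 + 100.0)])
homographies = [np.array([[1 / px_per_m, 0.0, -p / px_per_m],
                          [0.0, 1 / px_per_m, -100.0 / px_per_m],
                          [0.0, 0.0, 1.0]]) for p in pan_px]
world, velocity = world_track_and_velocity(homographies, pixels, fps)
```

In this synthetic case, naively differencing the raw pixel coordinates and applying a single fixed scale would suggest a speed of 64.5 m/s rather than the true 2 m/s, because the pan dominates the apparent image motion.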

3.3 Point tracking

Once cameras have been calibrated, points of interest (such as anatomical landmarks, joint centres or the estimated centre of mass of the head) can be identified and tracked across successive video frames. In general, point tracking methods involve the identification and subsequent tracking of specific points or landmarks on an athlete’s body or equipment over a sequence of images. Historically, this process was performed through “manual digitisation” or “point-click” techniques, whereby researchers meticulously clicked on and marked points of interest frame-by-frame (Colyer et al., 2018; Yeadon and Challis, 1994; Hedrick, 2008). While advancements have introduced semi-automated tracking algorithms, these often still require initial manual seeding to define the points of interest (Hedrick, 2008). Recent developments in computer vision and deep learning have also enabled fully automated markerless tracking systems, which will be discussed in more detail in Section 4.

In the context of HAEs, accurate point tracking has primarily been used to estimate impact velocities and other collision parameters, which in turn inform physical reconstructions, computational simulations, and injury risk assessments. Some of the earliest attempts at estimating head accelerations in sporting collisions relied on point tracking techniques in the absence of wearable sensors to measure player speeds around the instant of impact, which were then used in physical reconstructions to estimate head kinematics (McIntosh et al., 2000; Newman et al., 1999; Withnall et al., 2005).
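As an illustration of how player speed around the instant of impact might be estimated from digitised positions, the sketch below fits a constant-velocity model by least squares over several pre-impact frames and compares it with a naive two-frame difference. The window length, the constant-velocity assumption, the synthetic digitisation error and the function names are illustrative assumptions, not the procedures of the cited studies.

```python
import numpy as np


def fitted_velocity(t_s, positions_m):
    """Least-squares constant-velocity fit to (N, D) positions; returns (D,) velocity."""
    t = np.asarray(t_s, float)
    A = np.column_stack([t - t.mean(), np.ones_like(t)])
    coeffs, *_ = np.linalg.lstsq(A, np.asarray(positions_m, float), rcond=None)
    return coeffs[0]  # first row holds the slope (velocity) for each axis


def two_frame_velocity(t_s, positions_m):
    """Naive estimate from the final two frames only, for comparison."""
    p = np.asarray(positions_m, float)
    return (p[-1] - p[-2]) / (t_s[-1] - t_s[-2])


# Eight pre-impact frames at 25 fps; true velocity (6, -1) m/s with +/-2 cm
# alternating digitisation error added to the X coordinate
t = np.arange(8) / 25.0
noise = 0.02 * (-1.0) ** np.arange(8)
positions = np.column_stack([6.0 * t + noise, -1.0 * t])
v_fit = fitted_velocity(t, positions)
v_naive = two_frame_velocity(t, positions)
```

Fitting over several frames averages out random digitisation error at the cost of assuming the velocity is approximately constant within the window, so the window should end before contact begins.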

A range of both 2D and 3D point tracking methods have been developed in the literature, with the choice of method often depending on the available camera setups in a chosen setting and the specific requirements of the analysis. In 2D point tracking, a single calibrated camera is used to track points in a plane, while in 3D point tracking, two or more calibrated cameras are used to reconstruct the 3D position of points in space. A schematic illustration of the process of 2D point tracking using a single camera is shown in Figure 4. In this example, a point in 3D space is viewed by a single calibrated camera, and the tracked

Figure 4. Illustration of 2D point tracking, showing (a) the movement of a point in 3D space over time, viewed by a single calibrated camera, (b) the tracked

Figure 5. Illustration of 3D point tracking, showing (a) the movement of a point in 3D space over time, viewed by two calibrated cameras, (b) the reconstructed

Table 2 provides a summary of a number of point tracking methods which have been used in the literature to estimate kinematic outcome measures associated with HAEs. Where specified in the original studies, the table includes details of the setting and method specifications (including camera configurations, recording specifications, calibration approaches, points tracked, kinematic outcomes, and validation methods) in order to provide context for each of the methods in a manner consistent with the CHAMP reporting checklist in Table 1. Table 2 also includes references to relevant case studies demonstrating practical applications of these methods in real-world scenarios.

Note that when a camera’s sample rate is referred to as deinterlaced, this means that the original interlaced video (typical of broadcast footage) has been converted to a progressive format, effectively doubling the frame rate for analysis purposes. Because the two fields of an interlaced frame are captured at different instants, a moving object can appear in two different positions within a single frame; deinterlacing separates these into two sequential images, each showing a single position, thereby improving the accuracy of motion tracking and kinematic calculations. However, the accuracy of deinterlaced footage depends on the method used: for instance, simple line-doubling or field-repeating methods may introduce artefacts, while motion-compensated deinterlacing offers more accurate reconstruction at the cost of computational complexity (Sugiyama and Nakamura, 1999). As such, appropriate deinterlacing techniques should be applied and clearly reported when analysing interlaced footage in HAE studies (Neale et al., 2022).
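The simplest form of deinterlacing mentioned above can be sketched as follows: each interlaced frame is split into its two fields, and each field is line-doubled back to full height, yielding a progressive sequence at twice the original frame rate. The sketch assumes top-field-first ordering and an even number of rows; the field order must be checked for the footage being analysed, motion-compensated methods are not shown, and the function names are hypothetical.

```python
import numpy as np


def split_fields(frame):
    """Split an interlaced frame of shape (H, W[, C]) into its two fields.
    Assumes top-field-first ordering, which must be checked for real footage."""
    return frame[0::2], frame[1::2]


def line_double(field):
    """'Bob' deinterlacing: repeat each field line to restore the full frame height."""
    return np.repeat(field, 2, axis=0)


def deinterlace(frames, fps):
    """Return a progressive sequence with two images per interlaced frame, and its rate."""
    progressive = []
    for frame in frames:
        first, second = split_fields(frame)
        progressive.extend([line_double(first), line_double(second)])
    return progressive, 2.0 * fps


# Toy 4 x 2 "frame": even rows belong to the first field, odd rows to the second
frame = np.arange(8).reshape(4, 2)
progressive, rate = deinterlace([frame], fps=25.0)
```

Line doubling ignores the half-line vertical offset between the two fields, which is one source of the artefacts noted above; tracked vertical positions from such footage should be interpreted with that offset in mind.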

As shown in Table 2, a wide variety of camera configurations, calibration procedures, tracking strategies, and outcome measures have led to a diverse range of point tracking methods for analysing HAEs. These methods have supported a range of practical applications, influencing both research and policy. In some cases (such as studies in American football, rugby, and Australian Rules football), the velocities estimated by point tracking have been used primarily as surrogate measures of impact magnitude, without being applied in further modelling or reconstruction. For example, Campolettano et al. (2018) noted that their method, alongside wearable sensors, could be used directly to inform the design of impact testing conditions that better reflect youth football collisions.

In contrast, other studies have further leveraged point tracking as a foundation for more in-depth biomechanical analysis. The approach developed by Post et al. (2018), which involved tracking helmet markers to estimate skating speed in ice hockey, has been widely applied in follow-up HAE research. Extracted velocities from this method have been used to drive physical reconstructions investigating the influence of athlete age (Chen et al., 2023), playing position (Butterfield et al., 2023), and rule changes related to body contact (Krbavac et al., 2024), among other applications. Crucially, these reconstructions have enabled further evaluation of brain injury risk based on the resulting head kinematics, demonstrating that a relatively simple point tracking approach can provide the foundation for sophisticated biomechanical modelling and injury risk assessment.

However, it should be emphasised that each method has been designed and validated within the context of a specific sport or activity, and may not be directly transferable to other settings without additional validation. For example, several studies in American football (Taylor et al., 2019; Karton et al., 2020; Vale et al., 2022; Meliambro et al., 2022) have cited the accuracy reported by Post et al. (2018), whose 2D point tracking method measured player impact velocities in ice hockey, in support of their own measurement approaches. However, differences in camera configurations, calibration protocols, and tracked features mean that such validation results may not generalise beyond their original context. This highlights the importance of initiatives such as the CHAMP project, which advocates for transparent reporting and rigorous validation of video-based analysis methods for HAEs (Arbogast et al., 2022).

The observed differences in camera setups across point tracking studies also have important implications for the scalability and wider applicability of these methods. In particular, the financial and logistical demands associated with high-end camera configurations can limit their use beyond elite sporting contexts. For example, studies by McIntosh et al. (2000) and Hendricks et al. (2012), conducted in rugby and Australian Rules football, used footage from multiple broadcast-quality cameras to reconstruct player kinematics. While such setups were shown to yield high-fidelity data, and may be readily available in elite environments where broadcast footage is routinely captured, they are clearly less suited to large-scale research efforts focused on non-professional settings, where such infrastructure is unlikely to exist. By contrast, lower-cost approaches such as the single pitchside camera method used by Campolettano et al. (2018), or the multi-GoPro configuration employed by Gyemi et al. (2021), offer more practical and affordable alternatives. These methods can be deployed more flexibly in training sessions or amateur competitions with minimal setup, reducing both financial barriers and the practical burden of collecting and processing large volumes of video data.

This variety in methods and their practical applications has also contributed to a range of reported accuracies. When measuring the velocities of ice skaters during slow and fast trials, Post et al. (2018) observed variations in the accuracy of their 2D point tracking method, with mean absolute errors of approximately 0.2–0.7 m/s for slow skating speeds (around 4.5 m/s) and 0.4–1.3 m/s for fast speeds (around 7 m/s). Hendricks et al. (2012) reported mean differences of 0.11–0.62 m/s between their video-derived speeds and timing gates on rugby fields, and in American football, Campolettano et al. (2018) measured player velocities ranging from 0.2 to 5.4 m/s, reporting mean errors under 10% (suggesting absolute errors up to about 0.5 m/s). Using a 3D point tracking method with multiple cameras, Gyemi et al. (2023) validated helmet impact velocities in controlled drop tests (4.5–6.1 m/s) and found relative errors below 3.4% in optimal camera configurations, equivalent to absolute errors below 0.22 m/s, but noted higher errors (up to 10.9% or 0.55 m/s) when camera angles were suboptimal. However, inconsistencies in validation procedures, sample sizes, and the choice of reference standards make it difficult to directly compare the accuracy of different methods. Standardised reporting of camera placements, calibration steps, and statistical validation metrics will support clearer cross-study comparisons, but direct comparison will remain challenging due to the inherent variability in sports contexts, camera setups, and tracking techniques.

In addition, few studies have explicitly quantified the manual workload associated with these approaches. Nevertheless, it is evident that methods requiring manual digitisation or semi-automated tracking with manual point seeding are inherently limited in their scalability, particularly for exposure studies that demand the analysis of hundreds or thousands of HAEs across many frames. For example, Campolettano et al. (2018) analysed only a subset (50) of the 336 high-acceleration game impacts recorded, citing practical limitations such as insufficient field markings or camera movement preventing the establishment of a reliable reference grid. These constraints highlight how manual or labour-intensive methods, while feasible for focused case studies, can become prohibitively time-consuming in larger-scale applications.

Finally, another crucial limitation of most point tracking methods is that they are primarily used to estimate translational parameters, such as linear velocity or displacement, while providing little or no information about the orientation of rigid bodies such as the head. This is because tracking a single point on an object does not constrain its rotation in three-dimensional space. Resolving rotational degrees of freedom reliably therefore requires three or more non-collinear markers (Marchand et al., 2016), which brings the challenges of marker visibility and extensive manual effort. As a result, point tracking methods typically do not capture the full six degrees of freedom motion of the head during HAEs. This is a significant drawback, since rotational motion has been shown to be a major contributor to brain injury risk (Ji et al., 2014; Bian and Mao, 2020; Kleiven, 2013). Consequently, alternative approaches have been developed in an effort to estimate both translation and rotation parameters for HAEs.
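Why three or more non-collinear markers are needed can be made concrete with the Kabsch (SVD) method, a standard least-squares solution for the rotation and translation that best map marker positions in a reference configuration onto their measured 3D positions. The sketch assumes that 3D marker positions have already been reconstructed (for example, by multi-camera point tracking) and that marker correspondences are known. It rejects collinear configurations, because rotation about the line through the markers is then undetermined.

```python
import numpy as np

def rigid_fit(ref, obs):
    """Least-squares rotation R and translation t such that obs ~ ref @ R.T + t.

    ref: (N, 3) marker positions in the body (e.g. head) frame;
    obs: (N, 3) measured positions of the same markers; N >= 3, non-collinear.
    """
    ref, obs = np.asarray(ref, float), np.asarray(obs, float)
    ref_c, obs_c = ref.mean(axis=0), obs.mean(axis=0)
    if np.linalg.matrix_rank(ref - ref_c) < 2:
        raise ValueError("markers are collinear: rotation about their line is undetermined")
    cov = (ref - ref_c).T @ (obs - obs_c)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(cov)
    d = np.sign(np.linalg.det(Vt.T @ U.T))         # avoid returning a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = obs_c - R @ ref_c
    return R, t
```

Repeating the fit frame by frame gives a head orientation time series, from which angular velocity can be derived.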

3.4 Model-based image matching

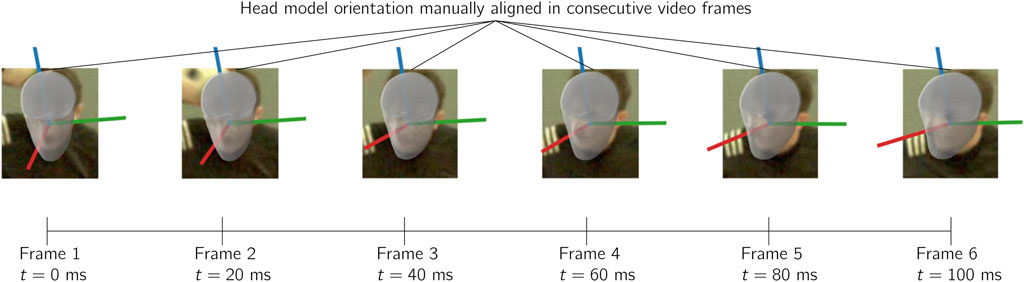

An alternative to point tracking methods is the use of model-based image matching (MBIM) techniques, which involve aligning a 3D model of an object of interest (e.g., a human body or helmet) with the corresponding observed instance of the object in the video frames. Originally developed and validated to measure 6 DOF motion for the pelvis, hip and knee during simple movements such as jogging and side step cutting in a laboratory setting (Krosshaug and Bahr, 2005), MBIM methods have since been adapted to estimate kinematic parameters associated with HAEs in a variety of sports contexts. Figure 6 illustrates an example in which a 3D head model has been aligned with the observed head in frames of a video depicting a head impact.

Several studies cite the use of “uncalibrated” video as a benefit of MBIM methods (Stark N. E.-P. et al., 2025; Krosshaug and Bahr, 2005). While these studies do not use explicit camera calibration prior to video recording, they (as with a number of the point tracking methods in the previous section) utilise objects of known dimensions present in the scene to establish the scale and spatial references needed for meaningful measurement. For example, some studies achieve this by using known field markings (Tierney et al., 2018a) or full 3D scans of stadiums (Bailey et al., 2018; Jadischke et al., 2019) to reconstruct camera positions and orientations relative to a global coordinate system, enabling measurement of both the absolute position and orientation of the head or helmet in a global frame. Stark N. E.-P. et al. (2025) bypass the need for environmental calibration altogether by matching a 3D headform or helmet model of known size directly to the video. This model-based calibration defines a local scale around the head itself, enabling estimates of head position, depth motion, and rotational pose relative to the camera to be obtained even in footage where the wider scene remains unmeasured. Therefore, while MBIM methods may not require explicit camera calibration in the traditional sense, a reference object or model of known size remains essential for accurate measurement.
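In the cited studies, model alignment is performed by hand. As an automated analogue of the same principle (a swapped-in technique, not the procedure of any study cited here), the sketch below fits the six degree of freedom pose of a rigid model with known dimensions to 2D image observations of its landmarks by minimising reprojection error, which is the classical perspective-n-point problem. It assumes a pinhole camera with known focal length and principal point, known landmark correspondences, and hypothetical landmark coordinates in metres. The known model size is what fixes the scale of the recovered translation.

```python
import numpy as np
from scipy.optimize import least_squares
from scipy.spatial.transform import Rotation

def project(points, rotvec, t, f, c):
    """Pinhole projection of (N, 3) model points posed by a rotation vector and
    translation (camera frame, z forward); f in pixels, c = principal point."""
    cam = Rotation.from_rotvec(rotvec).apply(points) + t
    return f * cam[:, :2] / cam[:, 2:3] + c

def fit_pose(model_pts, image_pts, f, c, x0):
    """Estimate the pose [rotvec, t] that minimises reprojection error.

    x0 is an initial guess, analogous to roughly placing the model by hand."""
    def residuals(x):
        return (project(model_pts, x[:3], x[3:], f, c) - image_pts).ravel()
    sol = least_squares(residuals, x0)
    return sol.x[:3], sol.x[3:]
```

Applied frame by frame, the fitted poses give position and orientation time series of the kind produced by manual MBIM, subject to the same need for differentiation and smoothing.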

Table 3 summarises a number of MBIM methods which have been used in the literature to estimate kinematic outcome measures associated with HAEs. As with Table 2, the table includes details of the setting and method specifications in a similar manner to the CHAMP reporting checklist in Table 1.

Table 3. Overview of selected model-based image matching methods used in estimating kinematic outcomes associated with HAEs, including method description and case studies where the method is applied.

As shown in Table 3, there is once again considerable variation in camera setups used across MBIM studies. These range from multi-camera broadcast systems (Bailey et al., 2018), to pitch-side GoPro arrays (Jadischke et al., 2019), and even single-camera surveillance footage (Stark N. E.-P. et al., 2025). As noted earlier, calibration procedures also differ greatly in both cost and practicality. At one end of the spectrum are resource-intensive approaches involving full 3D scans of stadium environments (Bailey et al., 2018; Jadischke et al., 2019), while other studies used more accessible methods involving localised calibration by aligning a 3D model of the headform or helmet directly to the video frames (Stark N. E.-P. et al., 2025).

These differences extend beyond calibration to the broader implementation of MBIM, particularly in how six degree of freedom model alignment, and subsequent calculation of kinematic data, is achieved. Studies have used a range of software environments, including commercial packages such as Poser (Tierney et al., 2018a) and SideFX Houdini (Bailey et al., 2018), as well as in-house tools developed using the Godot game engine (Stark N. E.-P. et al., 2025). The model positioning methodology also varies. For instance, Bailey et al. (2018) describe a two-stage process in which the translational path of an American football helmet is first estimated by fitting an outline to the helmet in each frame, followed by manual adjustment of all six degrees of freedom to align the virtual and video helmet markings. Other studies describe approaches such as self-assessing alignment based on minimising discrepancies in visible facial features (e.g., edges, nose, eyes, mouth) (Stark N. E.-P. et al., 2025), or manipulating a 3D skeleton model frame-by-frame to match the skull orientation to that of the cadaver model present in the video (Tierney et al., 2018a). Once model alignment is complete, positions and orientations are typically converted into velocities using numerical differentiation. To mitigate the noise introduced by this numerical differentiation process, various strategies have been employed, ranging from fitting interpolating cubic spline polynomials (Tierney et al., 2018a) to applying traditional filtering techniques, such as low-pass Butterworth filters guided by fast Fourier transforms of the positional data (Bailey et al., 2018).
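The two smoothing strategies mentioned above can be sketched as follows for a sampled position (or orientation angle) series, assuming SciPy is available. The cutoff frequency and filter order are user choices and the values used here are illustrative; Bailey et al. (2018) guided this choice with an FFT of the positional data, which is not reproduced here. The spline variant interpolates the samples exactly, whereas a smoothing spline would additionally attenuate digitisation noise.

```python
import numpy as np
from scipy.signal import butter, filtfilt
from scipy.interpolate import CubicSpline

def velocity_butterworth(pos, fs, cutoff, order=2):
    """Low-pass filter positions with a zero-lag (forward-backward) Butterworth
    filter, then differentiate by central differences.

    pos: (T, D) samples at fs Hz; cutoff in Hz (must be below fs / 2)."""
    b, a = butter(order, cutoff, btype="low", fs=fs)
    smooth = filtfilt(b, a, pos, axis=0)
    return np.gradient(smooth, 1.0 / fs, axis=0)

def velocity_spline(t, pos):
    """Differentiate an interpolating cubic spline fitted through the samples."""
    return CubicSpline(t, pos, axis=0)(t, 1)
```

Because `filtfilt` applies the filter twice, the effective attenuation is that of the designed filter squared, which should be considered when reporting the cutoff.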

As with point tracking, the variety in setups associated with MBIM methods has led to a range of reported accuracies. Using multiple camera views and environmental alignment, Tierney et al. (2018a) reported errors ranging from

Despite methodological differences between MBIM and point tracking techniques, the outcome measures reported in MBIM studies are often similar to those derived from point tracking (primarily translational kinematics), with the notable addition of rotational velocity estimates in some cases. As with point tracking studies, the extracted velocity data are rarely used beyond basic comparisons of raw magnitudes. For instance, Bailey et al. (2020) used MBIM to compare impact velocities across different contact scenarios (e.g., helmet-to-helmet, helmet-to-shoulder) with the goal of producing a biomechanical characterisation of concussive events, but did not extend their analysis into further modelling or simulation.

Of the studies listed in Table 3, only Stark N. E.-P. et al. (2025) used MBIM-derived velocities as input for downstream modelling beyond simple magnitude comparisons. In their study of elderly falls, linear head impact velocities obtained from MBIM were used to inform drop-weight impact testing conditions. Outside the peer-reviewed literature, England (2025) also applied an MBIM approach to extract helmet orientation at the moment of impact between ball and helmet in cricket, which was then used to support physical reconstructions of injury events. To the authors’ knowledge, however, no published computational modelling studies of HAEs have explicitly detailed the use of MBIM-derived kinematics to initialise their simulations, despite the clear potential of MBIM to provide full-body pose data for this purpose (Bailly et al., 2017; Frechede and McIntosh, 2007; Fréchède and Mcintosh, 2009; Gildea et al., 2024; McIntosh et al., 2014; Tierney and Simms, 2017; Tierney et al., 2018b; Tierney and Simms, 2019; Tierney et al., 2021). For example, Fréchède and Mcintosh (2009) describe using HYPERMESH to manually recreate pre-impact player positions for multibody simulation in MADYMO (a method later referenced by McIntosh et al. (2014)) but do not provide methodological detail or validation of a complete MBIM protocol. In other cases, researchers have opted for alternative approaches: Tierney and Simms (2019) used marker-based motion capture to stage rugby union tackles in the lab, from which initial conditions for MADYMO simulations were extracted, while Gildea et al. (2024) employed a deep learning-based pose estimation method (see Section 4) to initialise similar computational reconstructions of cyclist falls.

A significant drawback of MBIM, perhaps limiting its widespread use for informing model positioning for computational and physical reconstructions, is the time-consuming nature of the manual model alignment process. For example, a case study of a single rugby HAE required an estimated 60 h of manual effort by one researcher to complete the MBIM procedure across three camera views, where only the orientation of the head was estimated in each frame (Tierney et al., 2019). When the orientations of multiple body segments are required, this effort would increase substantially. Results are also sensitive to small inconsistencies in how the model is positioned and scaled in each frame, which Stark N. E.-P. et al. (2025) addressed by using an iterative re-tracking process to refine alignment until the measurement error fell below a set threshold, emphasising the need for well-trained operators. This repeated refinement further adds to the time demands, limiting the practicality of MBIM for large datasets or real-time applications.

4 Deep learning

As discussed earlier, videogrammetric analysis of HAEs has often been constrained by practical and technical challenges such as occlusions, frame rate restrictions, highly labour-intensive processes, and the difficulty of recovering six degrees of freedom motion from uncalibrated monocular footage. In recent years, advances in machine learning (particularly deep learning) have provided powerful solutions to these challenges in other domains, enabling scalable and automated extraction of complex spatiotemporal features from video data. These methods are now gaining traction in the context of HAE analysis, and more specifically in quantitative video analysis, where they hold significant promise for reducing manual workload and enhancing the accuracy and completeness of motion estimation.

Traditional machine learning (ML) methods (such as support vector machines and random forests) have been effective in processing lower-dimensional wearable sensor data. However, these methods do not scale effectively to the high-dimensional, non-linear nature of video data. The shift to deep learning has therefore not only improved performance but also enabled new applications, particularly in video-based pose estimation and motion reconstruction.

This section traces the evolution of machine learning techniques relevant to the quantitative analysis of HAEs, beginning with early sensor-based applications of classical methods and progressing toward recent advances in deep learning for image- and video-based approaches. It also draws on developments from related domains that demonstrate promising potential for advancing HAE quantification specifically through video analysis.

4.1 Context and motivation

Traditional machine learning methods have played a foundational role in classifying, detecting, and quantifying HAEs from wearable sensor data. These approaches typically involve hand-engineered features derived from time-series signals such as linear acceleration and angular velocity. For example, Wu et al. (2017) used a support vector machine to detect impacts from accelerometer data, while Gabler et al. (2020) expanded this work by benchmarking multiple classification algorithms and handcrafted feature sets. Zhan et al. (2023) applied random forests to subtype impacts and used nearest-neighbour regression to estimate brain strain, showing the potential of classical ML models in injury modelling. Other applications include distinguishing between head and body impacts (Goodin et al., 2021) and generating synthetic head impact signals using low-rank representations via principal component analysis (PCA) (Arrué et al., 2020).
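To illustrate the hand-engineered feature approach described above, the sketch below computes a few simple features from one impact's sensor time series and predicts a target value (for example, a brain strain metric obtained from prior simulations) by nearest-neighbour regression. The feature set, the value of k and the standardisation step are illustrative assumptions, not the features or model settings used by Zhan et al. (2023) or the other cited studies.

```python
import numpy as np

def kinematic_features(lin_acc, ang_vel, dt):
    """Hand-engineered features from one impact: (T, 3) linear acceleration
    and (T, 3) angular velocity time series sampled every dt seconds."""
    ang_acc = np.gradient(ang_vel, dt, axis=0)
    return np.array([
        np.linalg.norm(lin_acc, axis=1).max(),   # peak resultant linear acceleration
        np.linalg.norm(ang_vel, axis=1).max(),   # peak resultant angular velocity
        np.linalg.norm(ang_acc, axis=1).max(),   # peak resultant angular acceleration
    ])

def knn_regress(X_train, y_train, X_query, k=3):
    """Predict targets as the mean of the k nearest training impacts in
    standardised feature space (features scaled by training mean and std)."""
    mu, sd = X_train.mean(axis=0), X_train.std(axis=0)
    sd[sd == 0] = 1.0                            # avoid division by zero
    Zt, Zq = (X_train - mu) / sd, (X_query - mu) / sd
    d = np.linalg.norm(Zq[:, None, :] - Zt[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    return y_train[nearest].mean(axis=1)
```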

More relevant to quantitative video analysis are the earliest algorithmic attempts to automate the estimation of human and object motion from video. Silhouette-based techniques such as the visual hull method (Laurentini, 1994) formed the foundation for early markerless motion capture systems (Corazza et al., 2010), enabling coarse reconstruction of human movement without physical markers. As machine learning methods developed, classical algorithms were adopted for pose and motion estimation. These included the use of random decision forests for real-time 3D human pose estimation from depth images (Shotton et al., 2011), support vector machines paired with handcrafted spatiotemporal features for action recognition (Wang et al., 2011), and kernel ridge regression and nearest-neighbour approaches for estimating full-body 3D pose from monocular video (Ionescu et al., 2014). However, these early methods were typically constrained to laboratory environments with controlled lighting and camera setups. In the context of HAEs, practical limitations, such as the scarcity of ground truth data in real-world sport settings and the visual challenges posed by occlusions and fast motion (to which early methods were not robust), have limited their widespread adoption for quantitative video analysis in this field.

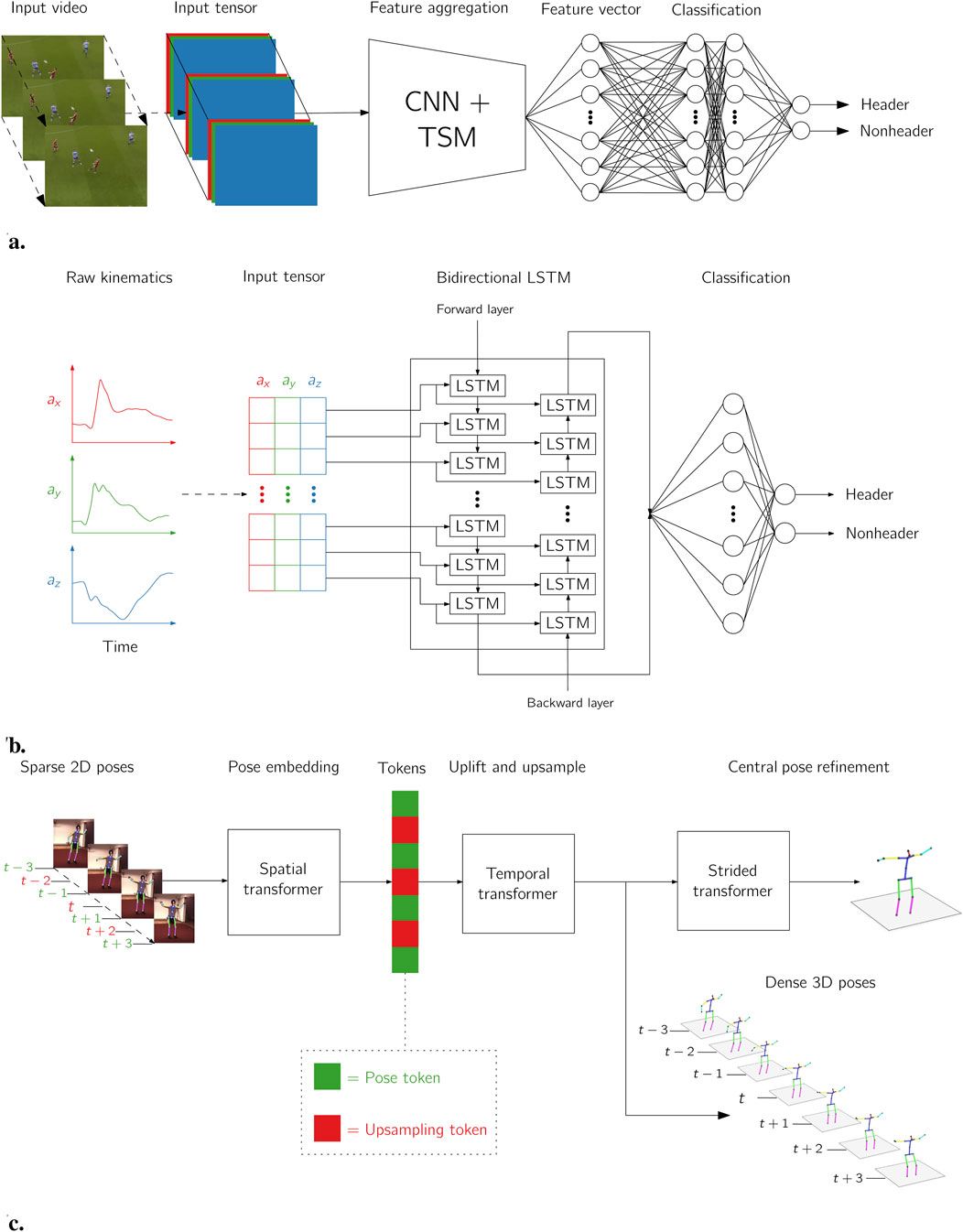

The advent of deep learning (LeCun et al., 2015) has driven rapid advancements in video analysis, significantly expanding what is possible when estimating human movement from “in-the-wild” images (Bogo et al., 2016). Convolutional Neural Networks (CNNs) have emerged as the foundational architecture for spatial feature extraction in images and video. Introduced by Lecun et al. (1998) and popularised in computer vision tasks by Krizhevsky et al. (2012), CNNs learn spatial filters that capture local patterns such as edges, shapes, and textures. In the context of HAE analysis, Rezaei and Wu (2022) used a CNN backbone to classify football headers from cropped video clips. To capture temporal dependencies, Recurrent Neural Networks (RNNs), and particularly long short-term memory networks (LSTMs) (Hochreiter and Schmidhuber, 1997), have been widely used. These models process sequential data by maintaining hidden states that evolve over time, making them well suited to recognising events that unfold across multiple timesteps or frames. Kern et al. (2022) employed LSTM networks to detect HAEs in time-series data from wearable sensors. More recently, transformer architectures (Vaswani et al., 2017) have gained popularity due to their scalability and their ability to model long-range dependencies using self-attention mechanisms. These models have seen early applications in biomechanics, including pose tracking, video frame interpolation, and temporal upsampling. Einfalt et al. (2023), for instance, used a transformer-based model to lift low-frame-rate 2D pose estimates to 3D and upsample them to higher temporal resolutions. Figure 7 illustrates representative examples of the CNN, LSTM, and transformer architectures mentioned above.

Figure 7. Examples of deep learning architectures applied to video analysis of HAEs and pose estimation domains: (a) a convolutional neural network (CNN) architecture for header classification (Rezaei and Wu, 2022), (b) a recurrent neural network (RNN) architecture for header detection (Kern et al., 2022), and (c) a transformer architecture for temporal upsampling (Einfalt et al., 2023).
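The self-attention mechanism referred to above can be illustrated in its simplest single-head form. In the sketch below, each row of `X` is assumed to be a feature vector for one video frame (for example, flattened 2D keypoints), and the projection matrices, which a trained transformer would learn, are random placeholders. Each output row is a weighted average over all frames, which is how attention relates distant frames directly. Practical transformer architectures add multiple heads, positional encodings and feed-forward layers, which are omitted here.

```python
import numpy as np

def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over a sequence.

    X: (T, d) per-frame feature vectors; Wq, Wk: (d, d_k); Wv: (d, d_v).
    Returns the (T, d_v) outputs and the (T, T) attention weights."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[1])
    scores -= scores.max(axis=1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)  # row-wise softmax
    return weights @ V, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(6, 4))                        # 6 frames, 4 features each
Wq, Wk, Wv = rng.normal(size=(3, 4, 3))            # untrained placeholder weights
out, w = self_attention(X, Wq, Wk, Wv)
```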

With modern deep learning methods demonstrating strong performance in human pose estimation, and video-based motion analysis more broadly, their application to HAEs is beginning to emerge. The remainder of this section highlights these early deep learning efforts, both those applied directly to HAE analysis and promising approaches from adjacent domains that offer clear potential for future adaptation.

4.2 Action detection

While this review focuses primarily on methods for the quantitative measurement of HAEs from video, it is important to acknowledge the foundational role that automated event detection and localisation plays in enabling such measurements at scale. In large video datasets, the initial task of identifying where and when HAEs occur is often the most time-consuming and labour-intensive stage of the analysis pipeline (as described in Section 2.2). Manual review workflows, such as those employed in many exposure studies, involve frame-by-frame inspection of footage to locate potential HAEs, a process that is not only slow and resource-intensive but also prone to inconsistency across annotators (Patton et al., 2020). Therefore, although these methods may not yield quantitative kinematic data directly, their ability to efficiently localise events of interest is critical for making subsequent measurement methods (e.g., point tracking, MBIM, or pose estimation) practically viable.

Deep learning has also been applied to HAE detection from wearable sensor data. Motiwale et al. (2016) explored the use of multilayer perceptrons for impact detection, while Kern et al. (2022) introduced long short-term memory (LSTM) networks to account for temporal dynamics. Raymond et al. (2022) developed a physics-informed neural network that integrates domain knowledge with data-driven learning. More recent works have extended deep learning applications to tasks such as kinematic denoising (Zhan et al., 2024a) and the estimation of impact characteristics (e.g., location, speed, force) from time-series sensor data using LSTM-based models (Zhan et al., 2024b).

With respect to video analysis, specifically, Giancola et al. (2023) and Rezaei and Wu (2022) fine-tuned existing CNN-based models to automatically detect headers in football, while Azadi et al. (2024) addressed a similar problem regarding impacts in ice hockey. Mohan et al. (2025) extended this line of work to rugby, using temporal action localisation techniques to detect head-on-head collisions. Reported model accuracies vary depending on sport and dataset complexity, as well as the evaluation metrics used to benchmark model performance. For example, Rezaei and Wu (2022) reported a sensitivity of 92.9% (indicating the model’s ability to correctly identify true head contact events) and a precision of 21.1%, reflecting a high number of false positives relative to true positives. Mohan et al. (2025) reported a lower sensitivity of 68%, suggesting more missed events, while Azadi et al. (2024) reported only a validation accuracy of 87%, which measures the overall proportion of correctly classified instances in a held-out validation set, without distinguishing between false positives and false negatives.
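The difference between these metrics can be made concrete with confusion-matrix counts. The counts below are hypothetical: the true positives, false positives and false negatives are chosen to reproduce a sensitivity of 92.9% and a precision of about 21.1%, and the number of true negatives (non-contact clips) is arbitrary. They are not the counts of any cited study.

```python
def detection_metrics(tp, fp, fn, tn):
    """Event-detection metrics from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),       # share of true events detected
        "precision": tp / (tp + fp),         # share of detections that are true events
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "false_pos_per_true_pos": fp / tp,
    }

# Hypothetical counts (see lead-in)
m = detection_metrics(tp=929, fp=3474, fn=71, tn=95526)
for name, value in m.items():
    print(f"{name}: {value:.3f}")
```

With these counts the accuracy is about 0.96 even though there are roughly 3.7 false positives for every true positive, because true negatives dominate. Accuracy alone can therefore conceal a high false-positive rate when true events are rare.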

The majority of these methods adopt a modular architecture, using object detection models to crop relevant video regions (e.g., around the ball or players), which are then passed to a separate action classification model. For example, Rezaei and Wu (2022) used a YOLO-based ball tracker to crop image patches, which were then fed into a temporal shift module (TSM) (Lin et al., 2019) with a ResNet-50 backbone (He et al., 2015). Similarly, Azadi et al. (2024) and Mohan et al. (2025) implemented player or head detection to crop and isolate regions of interest for classification. By contrast, Giancola et al. (2023) developed a fully end-to-end approach using uncropped broadcast video and popular architectures such as NetVLAD++ (Giancola and Ghanem, 2021) and PTS (Hong et al., 2022) for direct action spotting. These models differ substantially in complexity. The ResNet-50 backbone used in Rezaei and Wu (2022) includes around 25 million trainable parameters, making it computationally expensive to train from scratch. In contrast, Azadi et al. (2024) used a more compact long-term recurrent convolutional network (LRCN) (Donahue et al., 2016) with an estimated 100,000 parameters, while Mohan et al. (2025) describe a small 3D CNN of unspecified size. These simpler architectures offer advantages in deployment, albeit potentially at the cost of detection performance. Transfer learning and fine-tuning of pretrained models, such as ResNet-50 trained on the widely used Kinetics human action dataset (Kay et al., 2017), were employed by Rezaei and Wu (2022) to reduce the computational cost of training a large network from scratch and to leverage knowledge learned from similar datasets.
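The cropping stage of such a modular pipeline can be sketched as below. The detector is assumed to have already produced one object centre per frame (for instance, from a ball or head detector), and the patch size is a hypothetical choice; the resulting fixed-size clip is what would be passed to the action classifier. This is a simplified illustration, not the preprocessing of any cited study.

```python
import numpy as np

def crop_clip(frames, centres, size):
    """Cut a fixed-size square patch around a detected object in every frame.

    frames: (T, H, W) greyscale video; centres: (T, 2) detector outputs as
    (row, col) pixel coordinates; size: patch side length in pixels.
    Parts of a patch that fall outside the frame are zero-padded, so every
    patch has the same shape, as a downstream clip classifier requires."""
    frames = np.asarray(frames)
    _, h, w = frames.shape
    half = size // 2
    padded = np.pad(frames, ((0, 0), (half, size), (half, size)))
    rc = np.clip(np.round(centres).astype(int), 0, [h - 1, w - 1])
    patches = []
    for frame, (r, c) in zip(padded, rc):
        # Padding shifts original pixel (r, c) to (r + half, c + half), so this
        # slice covers original rows r - half .. r - half + size - 1.
        patches.append(frame[r:r + size, c:c + size])
    return np.stack(patches)          # (T, size, size) clip for the classifier
```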

In all cases discussed here, training has relied on manually annotated datasets of ground truth head impacts, often sourced from elite sport broadcast footage. This not only raises ethical and legal considerations for researchers (e.g., content rights), but also presents a highly labour-intensive bottleneck preceding model training. For example, the dataset used by Rezaei and Wu (2022) consisted of 4,843 manually annotated head contact events, while Azadi et al. (2024) manually annotated 150 events, later augmented to 600.

The issues discussed here highlight the practical importance of reusing pretrained models and aligning with existing open-source efforts where possible. For instance, the latest release of the SoccerNet action spotting challenge includes annotated headers (Cioppa et al., 2024), offering a useful benchmark for association football-specific HAE detection tasks. Such datasets can serve as a foundation for fine-tuning rather than full re-training of models from scratch, significantly reducing the development burden for prospective models in the field of HAE research.

4.3 Pose estimation

Given this review’s focus on quantitative video analysis methods from which kinematic parameters can be extracted, pose estimation is an area of significant interest for HAE research (Edwards et al., 2021; Tierney, 2021). In computer vision, the goal of pose estimation is to detect the position and orientation of a person or an object. This objective therefore aligns closely with the goals of videogrammetric techniques such as MBIM and, to a lesser extent, point tracking, which were discussed in Section 3.

Pose estimation can offer deeper biomechanical insight into HAEs than simple action detection approaches, as it enables the tracking of body segment kinematics throughout an event. In general, pose estimation involves predicting the 2D or 3D positions of anatomical landmarks across video frames, and can be applied to reconstruct full-body or joint-specific motion dynamics. Yuan et al. (2024a; 2024b) used pose estimation to model the dynamics of skiing and other fall events, while Gildea et al. (2024) applied similar techniques to reconstruct cyclist crash kinematics. In rugby, pose tracking has been used to quantify joint motion during staged tackles (Blythman et al., 2022) and to inform injury risk classification models based on tackle biomechanics (Martin et al., 2021; Nonaka et al., 2022). To date, pose estimation accuracy in the context of HAE analysis has only been evaluated in a controlled laboratory setting, by Blythman et al. (2022). In that study, a pre-trained 3D pose estimation model (Iskakov et al., 2019) achieved “out-of-the-box” mean per-joint position errors (the average Euclidean distance between predicted and ground truth joint locations, from marker-based motion capture) of approximately
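The mean per-joint position error (MPJPE) defined above can be computed as in the sketch below. Reported MPJPE values also depend on whether predicted and ground truth skeletons are aligned before comparison (for example, at a root joint or by a Procrustes fit), so the alignment convention should be stated alongside any value; the optional root alignment shown here is one common variant.

```python
import numpy as np

def mpjpe(pred, gt, root=None):
    """Mean per-joint position error.

    pred, gt: (T, J, 3) joint positions (e.g. in metres) for T frames, J joints.
    If `root` is a joint index, both skeletons are first translated so that
    this joint sits at the origin (root-aligned variant)."""
    pred, gt = np.asarray(pred, float), np.asarray(gt, float)
    if root is not None:
        pred = pred - pred[:, root:root + 1]
        gt = gt - gt[:, root:root + 1]
    return np.linalg.norm(pred - gt, axis=-1).mean()
```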

Several studies have identified the potential of pose estimation for HAE analysis (Edwards et al., 2021; Tierney, 2021; Rezaei and Wu, 2022); however, systematic validation of these methods in real-world sports settings using large-scale ground truth pose datasets remains in its early stages. Efforts such as the WorldPose dataset (Jiang et al., 2024), used to benchmark monocular pose estimation in broadcast football footage, mark important progress toward closing this gap, albeit in a related but distinct domain to HAEs. The dataset includes 88 association football video sequences with approximately 2.5 million ground truth full-body poses. Notably, constructing such a dataset is significantly more complex than constructing datasets for action detection models. As part of their annotation process, Jiang et al. (2024) used multi-camera bounding box tracking and initial 2D and 3D pose estimation, followed by bootstrapping and bundle adjustment to correct inaccuracies in the initial predictions, particularly those caused by occlusions or poor visibility. As a result, developing a comparable dataset specifically for HAE analysis would demand substantial financial resources and manual effort.
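The step of lifting matched 2D detections from several calibrated cameras to 3D, which is shared with the 3D point tracking discussed in Section 3, can be sketched with linear (DLT) triangulation. The camera matrices are assumed to be known from calibration and the 2D observations to be matched across views. Bundle adjustment generally goes further by jointly refining camera parameters and 3D structure to minimise reprojection error; that refinement is not shown here.

```python
import numpy as np

def triangulate(projections, points_2d):
    """Linear (DLT) triangulation of one 3D point seen in two or more views.

    projections: sequence of 3x4 camera matrices P = K [R | t] from calibration;
    points_2d: matching sequence of (u, v) pixel observations of the point."""
    rows = []
    for P, (u, v) in zip(projections, points_2d):
        P = np.asarray(P, float)
        # u = (P[0] @ X) / (P[2] @ X) gives the linear constraint (u P[2] - P[0]) @ X = 0
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    X = vt[-1]                       # homogeneous solution with smallest residual
    return X[:3] / X[3]
```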

In parallel, practical deployment of player tracking and pose estimation in real-world sports settings is increasingly being explored through systems that rely on lower-quality, monocular video. For example, commercial tools such as Spiideo offer cloud-based player tracking using fixed-position broadcast-style cameras, which are already deployed in many elite and semi-professional sporting environments. Similarly, UAV-based videogrammetry has shown promise in capturing full-body kinematics from single or multiple aerial camera views, even in outdoor, unstructured environments. Approaches such as DroCap (Zhou et al., 2018) and FlyCap (Xu et al., 2016) have demonstrated that drone-mounted cameras can also be used to reconstruct 3D human pose. Despite indicating the potential for low-cost player tracking and pose estimation with more flexible camera setups, these methods come with additional technical and regulatory challenges that could further limit their widespread adoption in HAE contexts.

Furthermore, a key limitation of many generic human pose estimation models for head tracking lies in how they represent the head. Many models treat the head as a single point, or as a segment rigidly linked to the neck or torso, and therefore cannot capture its independent motion in all six degrees of freedom. Parametric human mesh recovery methods, such as HybrIK (Li et al., 2022), HybrIK-X (Li et al., 2023), and GLAMR (Yuan et al., 2022), enable more detailed recovery of local head motion, although they often still rely on coarse body-level cues. More precise results have been achieved using dedicated head pose estimation networks that directly regress six-degree-of-freedom pose from cropped head regions (Hempel et al., 2022; 2024; Martyniuk et al., 2022; Cobo et al., 2024). A recent benchmark by Kupyn et al. (2024) showed that specialised head pose models outperformed full-body estimators on challenging sequences, supporting their adoption for accurate video-based head motion recovery. Hasija and Takhounts (2022) bypassed explicit head pose estimation altogether by using a deep learning model combining convolutional neural networks (CNNs) and long short-term memory (LSTM) networks to predict head kinematics directly from frames of simulated crash videos, achieving correlation coefficients for predicted peak angular velocity of 0.73, 0.85, and 0.92 for the X, Y, and Z components, respectively. However, the model was trained and evaluated entirely on synthetic data under ideal conditions, which limits its applicability to real-world scenarios.
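Head pose estimates are per-frame orientations, so kinematic quantities such as angular velocity must be derived by differencing those orientations over time. The sketch below assumes per-frame head orientations are available as 3×3 rotation matrices sampled at a known frame rate, and estimates body-frame angular velocity from each pair of consecutive frames using the matrix logarithm (axis-angle form) of the relative rotation. The function names are illustrative, the method assumes the head rotates by well under 180° between frames, and real pipelines would typically filter noisy pose estimates first.

```python
import numpy as np

def rotation_log(R):
    """Axis-angle vector (rad) of a 3x3 rotation matrix (matrix logarithm).
    Assumes the rotation angle is well below pi, as between consecutive frames."""
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    # The skew-symmetric part of R encodes the axis scaled by 2*sin(theta).
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    if theta < 1e-8:
        return 0.5 * w  # small-angle limit: w is approximately 2*theta*axis
    return theta / (2.0 * np.sin(theta)) * w

def angular_velocity(rotations, fps):
    """Body-frame angular velocity (rad/s) between consecutive orientations.

    rotations: array (frames, 3, 3) of head orientation matrices.
    Returns an array (frames - 1, 3); row i is the mean rate over frames i -> i+1.
    """
    rotations = np.asarray(rotations, dtype=float)
    dt = 1.0 / fps
    # Relative rotation expressed in the head frame: R_i^T R_{i+1}.
    return np.array([rotation_log(rotations[i].T @ rotations[i + 1]) / dt
                     for i in range(len(rotations) - 1)])

def rot_z(angle):
    """Rotation matrix for a rotation of `angle` rad about the z axis."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

# Synthetic check: constant 10 rad/s rotation about z, sampled at 100 fps.
fps = 100.0
frames = np.array([rot_z(10.0 * k / fps) for k in range(5)])
omega = angular_velocity(frames, fps)
print(omega[0])  # close to [0, 0, 10] rad/s
```

Working with rotation matrices rather than differencing Euler angles avoids wrap-around and gimbal-lock artefacts; the finite-difference step is still limited by the source frame rate.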

4.4 Video quality enhancement

A potentially more actionable avenue in the near term is the use of deep learning for video quality enhancement. Stark N. E.-P. et al. (2025) noted the potential benefit of applying advanced algorithms, such as motion deblurring techniques or deep learning-based video enhancement, as a preliminary step to mitigate the effects of motion blur in MBIM analysis.
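To give a sense of scale for the blur in question, the streak left by a feature moving parallel to the image plane can be approximated as its speed multiplied by the exposure time, divided by the spatial scale of the footage. The sketch below uses hypothetical values (head speed, exposure time, and millimetres per pixel are assumptions, not figures from the cited studies) and models the blur as a uniform 1D kernel, a simple constant-velocity approximation rather than the degradation model of any specific deblurring network.

```python
import numpy as np

def blur_extent_px(speed_m_s, exposure_s, mm_per_px):
    """Approximate streak length (pixels) of a feature moving parallel to the
    image plane at constant speed during one exposure."""
    return speed_m_s * 1000.0 * exposure_s / mm_per_px

def linear_blur_kernel(length_px):
    """Uniform 1D kernel modelling constant-velocity motion over the exposure."""
    n = max(1, int(round(length_px)))
    return np.full(n, 1.0 / n)

# Hypothetical example: 6 m/s head speed, 1/250 s exposure, 4 mm per pixel.
length = blur_extent_px(6.0, 1 / 250, 4.0)
kernel = linear_blur_kernel(length)
signal = np.zeros(21)
signal[10] = 1.0                     # a single-pixel marker, e.g. a helmet dot
blurred = np.convolve(signal, kernel, mode="same")
print(f"blur extent: {length:.1f} px")  # blur extent: 6.0 px
print(np.count_nonzero(blurred))        # 6: the marker is smeared over 6 pixels
```

Even a few pixels of smear makes the centre of a small landmark ambiguous, which is why blur mitigation is attractive before manual point tracking or model alignment.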

Deep learning-based motion deblurring and denoising methods offer a promising means of improving low-quality footage for quantitative analysis of HAEs. Models such as DeblurGAN-v2 (Kupyn et al., 2019) and Restormer (Zamir et al., 2022) have shown strong performance in recovering detail from motion-blurred images and video frames. More generally, efforts have also addressed increasing the spatial (pixel) resolution of low-resolution images and videos. For example, ESRGAN (Wang et al., 2018) and Real-ESRGAN (Wang X. et al., 2021) use generative adversarial networks to enhance image resolution while preserving realistic textures and sharpness. Video-specific approaches such as BasicVSR++ (Chan et al., 2021) extend this capability to temporal sequences, maintaining consistency across frames. These spatial enhancement techniques may prove particularly useful where precise localisation of visual features is required, such as helmet markings, facial keypoints, or anatomical joint centres used for landmark tracking and model alignment. However, these models do not recover true resolution in a physical sense. Instead, they infer plausible high-frequency content from priors learned from their training data, and should therefore be applied with caution, particularly to footage whose characteristics differ substantially from that training data.
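The point about physical resolution can be illustrated with a toy example. Under a simple assumed imaging model (2× box downsampling of a 1D intensity profile, a stand-in for a real camera and not the degradation model used to train any of the networks above), two different high-resolution profiles can produce exactly the same low-resolution observation. Any upscaler must then choose between them using learned priors rather than information present in the footage.

```python
import numpy as np

def downsample_2x(signal):
    """Average non-overlapping pairs of samples (a simple 2x box downsampler)."""
    signal = np.asarray(signal, dtype=float)
    return signal.reshape(-1, 2).mean(axis=1)

# Two high-resolution profiles of a one-pixel marker at neighbouring positions...
a = np.array([0, 0, 1, 0, 0, 0, 0, 0], dtype=float)  # marker on pixel 2
b = np.array([0, 0, 0, 1, 0, 0, 0, 0], dtype=float)  # marker on pixel 3
# ...yield identical low-resolution observations, so the sub-pixel
# position of the marker cannot be recovered from the low-resolution data alone.
print(np.array_equal(downsample_2x(a), downsample_2x(b)))  # True
```

In practice, a super-resolution network would place the marker wherever its training data suggests is most plausible, which may or may not correspond to the true position.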

In the wider field of biomechanics, there is also growing interest in using deep learning to enhance the temporal resolution of video, which is often a limiting factor when analysing footage recorded at low frame rates. Although such techniques have not yet been widely applied in HAE analysis, they have shown promise in related domains. For example, Einfalt et al. (2023) demonstrated that transformer-based models can learn to upsample low-frame-rate pose data, thereby reconstructing kinematics at finer temporal scales. Similarly, Dunn et al. (2023) applied video frame interpolation to recover sub-frame motion details in human gait analysis. These approaches could be especially valuable in sports applications, where much of a high-speed impact may occur between frames, and accurately capturing fine-grained motion dynamics is critical for understanding injury mechanisms and improving predictive modelling.
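The effect of frame rate can be shown with a synthetic signal. The sketch below assumes an idealised half-sine angular velocity pulse (a hypothetical 10 ms duration and 30 rad/s peak, chosen for illustration rather than taken from measured HAEs), samples it at 50 fps and at 1000 Hz, and compares the recovered peaks. Linear interpolation of the 50 fps samples is included to show that naive upsampling cannot restore a peak that fell between frames, which is the gap that learned temporal upsampling and frame interpolation methods aim to address.

```python
import numpy as np

def half_sine_pulse(t, onset, duration=0.010, peak=30.0):
    """Hypothetical angular velocity pulse (rad/s): a half-sine of the given
    duration (s) and peak, zero outside [onset, onset + duration]."""
    tau = np.asarray(t, dtype=float) - onset
    inside = (tau >= 0.0) & (tau <= duration)
    return np.where(inside, peak * np.sin(np.pi * tau / duration), 0.0)

onset = 0.013                            # hypothetical: impact starts 13 ms into the clip
t_video = np.arange(0.0, 0.1, 1 / 50)    # 50 fps video frames
t_ref = np.arange(0.0, 0.1, 1 / 1000)    # 1000 Hz reference sampling
w_video = half_sine_pulse(t_video, onset)
w_ref = half_sine_pulse(t_ref, onset)
# Naive temporal upsampling: linear interpolation onto the 1000 Hz grid.
# It can never exceed the largest video sample, so a missed peak stays missed.
w_interp = np.interp(t_ref, t_video, w_video)

print(f"1000 Hz peak: {w_ref.max():.1f} rad/s")          # 30.0
print(f"50 fps peak: {w_video.max():.1f} rad/s")          # 24.3
print(f"interpolated peak: {w_interp.max():.1f} rad/s")   # 24.3
```

The size of the underestimate depends on where the impact falls relative to the frame times, so the same event filmed twice can yield different peaks; learned methods try to reduce this by drawing on priors about how human motion evolves between frames.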

Together, these video enhancement techniques may serve as valuable preprocessing tools that improve the reliability and accuracy of downstream quantitative methods, especially when analysing HAEs in low-quality or existing video datasets where reshooting is not an option. However, as with all of the deep learning methods discussed in this section, care must be taken, particularly where models are applied in scenarios that differ significantly from their training data.

5 Discussion

As shown throughout this review, quantitative video analysis represents a promising yet underutilised approach for the study of HAEs in sport. While wearable sensors remain the primary method for measuring HAE kinematics in the field, they are subject to several limitations, including poor coupling to the skull (Wu et al., 2016b), accuracy that varies with the proximity of the impact to the sensor (Le Flao et al., 2025), user discomfort or non-compliance (Roe et al., 2024), and high false-positive rates that necessitate time-consuming manual verification (Patton et al., 2020). Video-based approaches, especially quantitative rather than qualitative ones, offer a flexible, scalable, and non-invasive alternative that can supplement or, in certain contexts, replace sensor-based methods by providing estimates of key kinematic parameters associated with HAEs.

An overview of the videogrammetric tools and techniques currently used to extract HAE kinematics from video has been provided, with a particular focus on the current capabilities of widely used point tracking and MBIM methods. These approaches, while validated in controlled environments (Bailey et al., 2018; Tierney et al., 2018a), remain labour-intensive and are limited by the frame rate and camera coverage of the source footage. As such, their application to routine “in-the-wild” sports analysis (i.e., in real-world, uncontrolled settings outside the laboratory) remains limited in practice. MBIM pipelines typically require hours of manual effort per impact (Tierney et al., 2019), largely because the model must be aligned manually across multiple camera views, which constrains their scalability to large datasets or real-time settings.

Recent developments in deep learning and computer vision present significant opportunities to overcome these limitations. Markerless pose estimation models (Zheng et al., 2023), specialised head pose networks (Asperti and Filippini, 2023), and action detection pipelines (Giancola et al., 2023; Rezaei and Wu, 2022) now enable the automatic detection and tracking of head, body, and object motion directly from video, including broadcast footage. Video quality enhancement steps based on modern deep learning algorithms may further improve the accuracy of both traditional and deep learning approaches. Temporal upsampling methods (Einfalt et al., 2023) also offer the potential to recover motion signals at frame rates exceeding that of the source video, opening the door to higher-fidelity kinematic reconstruction where the camera sampling rate would otherwise be a limiting factor.

Despite these advances, several key challenges remain. First, many current pose estimation models still represent the head as a single point, or as a segment rigidly linked to the neck or torso, and so cannot resolve its full six-degree-of-freedom motion. Second, the generalisability of these models to real-world, high-occlusion sports environments remains an open question; many pose estimation networks are trained on clean, lab-style datasets with well-lit scenes and unobstructed views. Third, there is a lack of standardised evaluation protocols for validating these models in the context of HAE biomechanics. Efforts to incorporate quantitative video measures into workflows that yield downstream injury-relevant metrics, such as peak angular acceleration or predicted brain strain (Zhan et al., 2021), remain in their infancy (Yuan et al., 2024a; Karton et al., 2025), so their accuracy at scale has yet to be demonstrated.
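Deriving a metric such as peak angular acceleration from video-based kinematics typically involves differentiating an angular velocity estimate, which amplifies measurement noise. The sketch below is illustrative only: it assumes a single-axis angular velocity trace sampled at a known rate, uses central differences via `numpy.gradient`, and optionally applies a simple centred moving average before differentiating. The filter, window length, and noise level are hypothetical choices; biomechanics pipelines more commonly use, for example, low-pass Butterworth filters, whose settings strongly influence the reported peak and should be stated and validated.

```python
import numpy as np

def moving_average(x, window):
    """Centred moving average with an odd window; near the ends the window is
    truncated to the available samples."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    x = np.asarray(x, dtype=float)
    half = window // 2
    return np.array([x[max(0, i - half):i + half + 1].mean() for i in range(len(x))])

def peak_angular_acceleration(omega, fs, smooth_window=None):
    """Peak absolute angular acceleration (rad/s^2) from an angular velocity
    trace (rad/s) sampled at fs Hz, using central differences (numpy.gradient)."""
    omega = np.asarray(omega, dtype=float)
    if smooth_window is not None:
        omega = moving_average(omega, smooth_window)
    alpha = np.gradient(omega, 1.0 / fs)
    return float(np.max(np.abs(alpha)))

# Synthetic check: a 20 rad/s, 10 Hz sinusoid sampled at 1000 Hz, whose true
# peak angular acceleration is 20 * 2 * pi * 10 (about 1257 rad/s^2).
fs = 1000.0
t = np.arange(0.0, 1.0, 1.0 / fs)
clean = 20.0 * np.sin(2 * np.pi * 10.0 * t)
noisy = clean + np.random.default_rng(0).normal(0.0, 0.5, t.size)  # hypothetical noise

true_peak = 20.0 * 2 * np.pi * 10.0
clean_peak = peak_angular_acceleration(clean, fs)
raw_peak = peak_angular_acceleration(noisy, fs)
smoothed_peak = peak_angular_acceleration(noisy, fs, smooth_window=11)
print(f"true {true_peak:.0f}, clean {clean_peak:.0f}, "
      f"noisy raw {raw_peak:.0f}, noisy smoothed {smoothed_peak:.0f} rad/s^2")
```

Without smoothing, modest noise in angular velocity inflates the apparent peak acceleration well above the true value; smoothing reduces this at the cost of slightly attenuating genuine short-duration peaks.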

To realise the full potential of video-based HAE analysis, several future research directions are recommended:

In summary, it appears that quantitative video analysis has the potential to transition from a labour-intensive supplementary tool to a viable method for measuring, reconstructing, and modelling HAEs in sport. Realising this potential, however, will require continued methodological development and rigorous validation efforts. With these advances, video-based approaches could play a central role in large-scale exposure surveillance, retrospective concussion analysis, and, ultimately, real-time decision support to enhance athlete safety.

Author contributions

TA: Writing – review and editing, Conceptualization, Writing – original draft, Visualization, Data curation. FT-D: Supervision, Project administration, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The authors would like to acknowledge the support given to this project by the Engineering and Physical Sciences Research Council (EPSRC), UKRI, United Kingdom (Grant Number: EP/W524384/1).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abdel-Aziz, Y. I., Karara, H. M., and Hauck, M. (2015). Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Photogrammetric Eng. and Remote Sens. 81, 103–107. doi:10.14358/PERS.81.2.103

Arbogast, K. B., Caccese, J. B., Buckley, T. A., McIntosh, A. S., Henderson, K., Stemper, B. D., et al. (2022). Consensus head acceleration measurement practices (CHAMP): origins, methods, transparency and disclosure. Ann. Biomed. Eng. 50, 1317–1345. doi:10.1007/s10439-022-03025-9

Arrué, P., Toosizadeh, N., Babaee, H., and Laksari, K. (2020). Low-rank representation of head impact kinematics: a data-driven emulator. Front. Bioeng. Biotechnol. 8, 555493. doi:10.3389/fbioe.2020.555493

Asperti, A., and Filippini, D. (2023). Deep learning for head pose estimation: a survey. SN Comput. Sci. 4, 349. doi:10.1007/s42979-023-01796-z

Azadi, A., Dehghan, P., Graham, R., and Hoshizaki, B. (2024). “A deep learning network for detecting head impacts in ice hockey from 2D game video,” in Proceedings. International IRCOBI Conference on the Biomechanics of Impacts (Stockholm, Sweden).

Bailey, A., Funk, J., Lessley, D., Sherwood, C., Crandall, J., Neale, W., et al. (2018). Validation of a videogrammetry technique for analysing American football helmet kinematics. Sports Biomech. 19, 678–700. doi:10.1080/14763141.2018.1513059

Bailey, A. M., Sherwood, C. P., Funk, J. R., Crandall, J. R., Carter, N., Hessel, D., et al. (2020). Characterization of concussive events in professional American football using videogrammetry. Ann. Biomed. Eng. 48, 2678–2690. doi:10.1007/s10439-020-02637-3

Bailly, N., Llari, M., Donnadieu, T., Masson, C., and Arnoux, P. J. (2017). Head impact in a snowboarding accident. Scand. J. Med. and Sci. Sports 27, 964–974. doi:10.1111/sms.12699

Basinas, I., McElvenny, D. M., Pearce, N., Gallo, V., and Cherrie, J. W. (2022). A systematic review of head impacts and acceleration associated with soccer. Int. J. Environ. Res. Public Health 19, 5488. doi:10.3390/ijerph19095488

Bian, K., and Mao, H. (2020). Mechanisms and variances of rotation-induced brain injury: a parametric investigation between head kinematics and brain strain. Biomechanics Model. Mechanobiol. 19, 2323–2341. doi:10.1007/s10237-020-01341-4

Blythman, R., Saxena, M., Tierney, G. J., Richter, C., Smolic, A., and Simms, C. (2022). Assessment of deep learning pose estimates for sports collision tracking. J. Sports Sci. 40, 1885–1900. doi:10.1080/02640414.2022.2117474

Bogo, F., Kanazawa, A., Lassner, C., Gehler, P., Romero, J., and Black, M. J. (2016). “Keep it SMPL: automatic estimation of 3D human pose and shape from a single image,” in Computer Vision – ECCV 2016, 561–578. doi:10.1007/978-3-319-46454-1_34

Bull, A. (2024). Rugby brain injury lawsuit stuck in legal limbo – and players are still suffering. The Guardian. Available online at: https://www.theguardian.com/sport/2024/oct/20/rugby-brain-injury-lawsuit-stuck-in-legal-limbo-and-players-are-still-suffering

Butterfield, J. P., Andrew, K., Clara, R., Michael, A., and Hoshizaki, T. B. (2023). A video analysis examination of the frequency and type of head impacts for player positions in youth ice hockey and FE estimation of their impact severity. Sports Biomech. 0, 1–17. doi:10.1080/14763141.2023.2186941

Campolettano, E. T., Gellner, R. A., and Rowson, S. (2018). “Relationship between impact velocity and resulting head accelerations during head impacts in youth football,” in Proceedings. International IRCOBI Conference on the Biomechanics of Impacts.

Cazzola, D., Holsgrove, T. P., Preatoni, E., Gill, H. S., and Trewartha, G. (2017). Cervical spine injuries: a whole-body musculoskeletal model for the analysis of spinal loading. PLOS ONE 12, e0169329. doi:10.1371/journal.pone.0169329

Chan, K. C. K., Zhou, S., Xu, X., and Loy, C. C. (2021). BasicVSR++: improving video super-resolution with enhanced propagation and alignment. arXiv preprint. doi:10.48550/arXiv.2104.13371