- 1Computer, Electrical and Mathematical Science and Engineering Division, King Abdullah University of Science and Technology, Thuwal, Saudi Arabia

- 2Rain Neuromorphics, San Francisco Inc. CA, San Francisco, United States

With the rapid development of machine learning, Deep Neural Network (DNN) exhibits superior performance in solving complex problems like computer vision and natural language processing compared with classic machine learning techniques. On the other hand, the rise of the Internet of Things (IoT) and edge computing set a demand on executing those complex tasks on corresponding devices. As the name suggested, deep neural networks are sophisticated models with complex structures and millions of parameters, which overwhelm the capacity of IoT and edge devices. To facilitate the deployment, quantization, as one of the most promising methods, is proposed to alleviate the challenge in terms of memory usage and computation complexity by quantizing both the parameters and data flow in the DNN model into formats with shorter bit-width. Consistently, dedicated hardware accelerators are developed to further boost the execution efficiency of DNN models. In this work, we focus on Convolutional Neural Network (CNN) as an example of DNNs and conduct a comprehensive survey on various quantization and quantized training methods. We also discuss various hardware accelerator designs for quantized CNN (QCNN). Based on the review of both algorithm and hardware design, we provide general software-hardware co-design considerations. Based on the analysis, we discuss open challenges and future research directions for both algorithms and corresponding hardware designs of quantized neural networks (QNNs).

1 Introduction

Convolutional Neural Network (CNN) is one of the fundamental building blocks in modern computer vision systems proven to be effective in image classification, video processing, and object detection. The state-of-the-art CNNs are capable of performing very complex image classification tasks with an accuracy comparable to or even outperforming a human (Krizhevsky et al., 2017; Simonyan and Zisserman, 2014; He et al., 2016; Pham et al., 2021). However, the size of a state-of-the-art CNN can reach hundreds of megabytes preventing it from being deployed on edge or IoT devices for vision-related applications. Moreover, a 32-bit floating-point format is used for data representation in state-of-the-art CNN models. This leads to the challenges of deployment of these models to edge/IoT devices with restricted memory bandwidth, throughput, computation resources, and battery life, especially for real-time applications. Hence, there is an increasing demand for compact efficient CNN hardware maintaining acceptable performance.

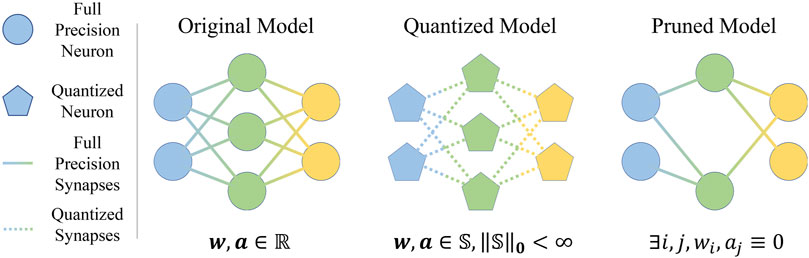

Due to redundant parameters of state-of-the-art CNN models (Han et al., 2015), pruning and quantization techniques can be used to reduce the size or number of CNN weights (Janowsky, 1989; Fiesler et al., 1990; Courbariaux et al., 2015). The conceptual comparison between quantization and pruning is shown in Figure 1. Quantization is a compression technique, that reduces the number of bits used for computation leading to CNN size reduction and hardware-friendly operations, e.g., integer arithmetic or bit-wise operations, rather than full precision floating-point operations (Neill, 2020; Guo, 2018; Qin et al., 2020; Kulkarni et al., 2022; Rokh et al., 2022; Gholami et al., 2021). Both pruning and quantization are important techniques for reducing model size and computational complexity. However, in this work, we specifically focus on quantization techniques and their impact on hardware implementations.

Figure 1. Comparison between the original, quantized and pruned models.

In addition to quantization and pruning, which facilitate the hardware implementation of CNNs, dedicated hardware accelerator designs can further improve energy and computation efficiency. The major motivation to develop specific neural network hardware comes from the memory bottleneck of the traditional von Neumann architectures (CPUs/GPUs), especially noticeable when deploying memory-dense applications, e.g., CNNs with millions of parameters. Specific hardware accelerators, e.g., FPGA-based (Umuroglu et al., 2017; Zhang et al., 2021), ASIC-based (Chang and Chang, 2019; Biswas and Chandrakasan, 2018) or In-memory computing (IMC) based (Sun et al., 2018b; Ankit et al., 2019) designs, help to address von Neumann bottleneck issues and deploy CNNs on low-power devices. Therefore, in this work, we try to provide a comprehensive review of specific hardware designs of quantized CNNs (QCNNs) and connect software-based QCNN methodologies with hardware deployment.

While previous studies have primarily reviewed neural network compression and quantization techniques from an algorithmic perspective (Neill, 2020; Guo, 2018; Qin et al., 2020; Kulkarni et al., 2022; Rokh et al., 2022; Gholami et al., 2021), they barely pay attention hardware implementations and often overlook the critical interplay between these algorithms and their hardware implementations. In contrast, our work bridges this gap by surveying both quantization algorithms and a wide range of QCNN-specific acceleration hardware. Furthermore, we offer insights into the challenges and open problems in QCNN hardware accelerator design, along with general guidelines for effective software-hardware co-design. Our main contributions are as follows:

• Integrated Review of Algorithms and Hardware: We survey various quantization techniques for CNNs alongside a detailed review of dedicated hardware accelerators—such as ASIC- and FPGA-based designs—that implement these methods. This dual perspective highlights how algorithmic choices impact hardware performance and vice versa.

• Guidelines for Software-Hardware Co-Design: We discuss practical strategies for co-designing quantization algorithms and hardware architectures. By outlining design trade-offs and optimization strategies, we provide a roadmap for developing CNN systems that maintain high performance under strict energy and resource constraints.

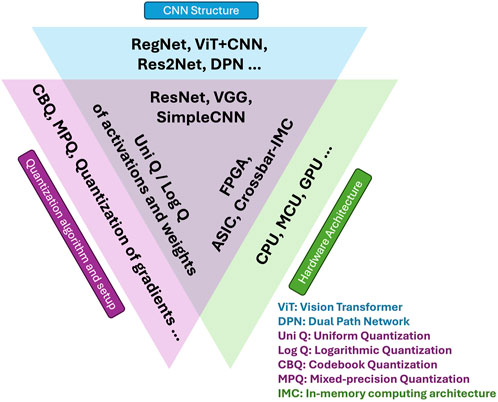

Both quantization algorithms, QCNN acceleration hardware design, and even network structure designs are rapidly evolving research topics. Hence, it is challenging to encompass an exhaustive survey of all relevant literature. The focus of this work is specifically narrowed to hardware accelerators for QCNNs that are tailored to maximize energy efficiency (e.g., ASIC- and FPGA-based designs), as opposed to those designed with an emphasis on scalability and peak performance. In alignment with this focus, the review of quantization algorithms and CNN models is mainly on those that are prevalently adopted by energy-efficient QCNN hardware accelerator designs. Given the defined scope of this paper, it is noteworthy that some cutting-edge CNN models (e.g., Vision Transformer + CNN(Guo et al., 2022), RegNet (Xu et al., 2022), DPN(Chen et al., 2017), Res2Net (Gao et al., 2019)), quantization techniques (e.g., codebook quantization, gradient quantization), and QCNN hardware platforms (e.g., GPUs, CPUs) will not be reviewed in extensive detail, as they are not common for energy-efficient on-edge processing. An overview illustrating the scope of this paper is provided in Figure 2. Nonetheless, we acknowledge and value the significant contributions of researchers in both fields who are missed by this work and extend our apologies to our readers for the inevitable limitations in coverage.

The remaining part of this paper is organized as follows. Section 2 introduces the basics of convention CNN and QCNN. Section 3 presents different quantization methods commonly used to quantize CNNs. In Section 4, different methods to generate a QNN are illustrated and benchmarked. In Section 5, various hardware accelerator designs are reviewed. Section 6 presents the future outlook on algorithms leading to more efficient QCNNs and corresponding hardware accelerator implementations. Section 7 concludes the paper.

2 Convolutional neural network

2.1 Full precision convolutional neural network

2.1.1 Convolution layer and fully connected layer

Inspired by the hierarchy model of the visual nervous system, Fukushima proposed the first neural network similar to modern-day convolution layers (Fukushima, 1980). LeCun et al. introduced the “LeNet-5” CNN in (LeCun et al., 1989; Lecun et al., 1998) for handwritten digit recognition systems, which is referred to as the first modern CNN trained with gradient-based backpropagation including all the essential building blocks in modern CNNs (convolution layers, pooling layers, and fully connected layers). Convolution layers are used to extract spatial features due to their spatial invariance.

Following the convolution layers, the fully connected layers are used to classify the abstracted features from the convolution layers and generate the final classification output.

2.1.2 Other auxiliary layers

In modern CNN architectures, additional auxiliary layers, including pooling, normalization, and dropout layers, are used along with the main convolution and fully connected layers. The pooling layers reduce the sizes of the convolution layers by sub-sampling the output feature maps with max/average operations.

As CNNs are becoming deeper and more complex, they also tend to be hard to be trained. To solve this problem, Ioffe et al. proposed the batch normalization technique in (Ioffe and Szegedy, 2015). Batch normalization normalizes the data over a mini-batch during training as

The other challenge in deep CNN training is overfitting. Srivastava et al. proposed the dropout technique in (Srivastava et al., 2014). By inserting dropout layers after convolution and fully connected layers, a certain portion of neurons is randomly chosen and dropped out during training, equivalent to training an ensemble of networks with different connections.

2.2 Typical convolutional neural networks

The design of CNNs has been widely explored recently. However, due to the limitation of IoT and edge devices, to the best of our knowledge, the latest CNN models like the RegNet family (Xu et al., 2022) and CNN-transformer hybrid architectures (Guo et al., 2022) are not implemented on dedicated hardware accelerators. Instead, only limited types of CNNs are referred to in the study of QCNNs. In this section, the commonly used CNNs in the study of hardware-related and mobile device-related QCNNs are introduced rather than the state-of-the-art CNN models.

2.2.1 LeNet-5

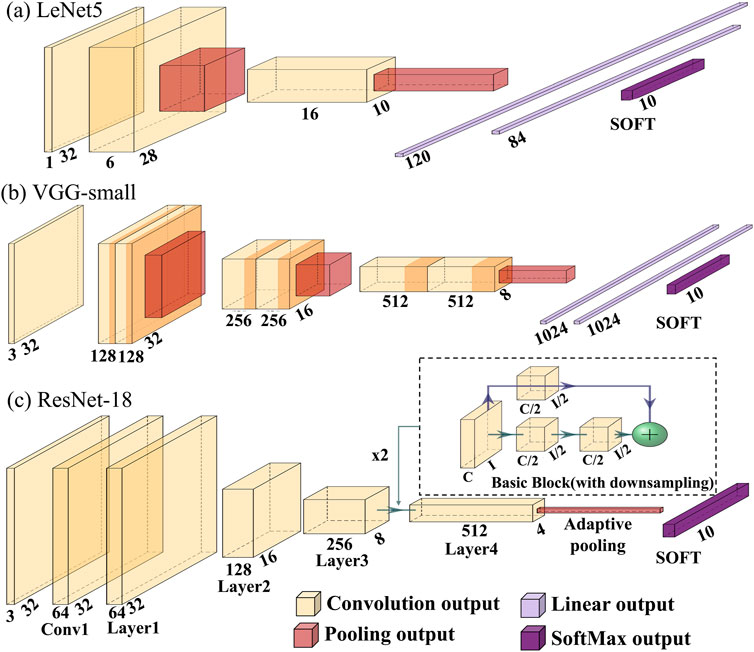

LeNet-5 (LeCun et al., 1989) is one of the earliest modern CNN architectures. It is designed for handwritten digit recognition with the Modified National Institute of Standards and Technology (MNIST) dataset. The structure of LeNet-5 can be shown in Figure 3a. It is often introduced in the studies as a case of light-weighted CNN.

2.2.2 VGG family

Supported by GPU acceleration, CNNs have become deeper. One such network is AlexNet (Krizhevsky et al., 2017), which can support large datasets classification, e.g., Imagenet. AlexNet adapts a rectified-linear unit (ReLU) as the non-linear activation function. The other example of deep CNN is VGGNet, which achieves high accuracy using small convolution kernel sizes. Such deep networks contain up to a hundred million parameters overwhelming the edge/IoT-based implementations of these networks due to the limited memory and resources. Therefore, a simplified version of VGGNet, VGG-small (VGG-9), is designed for a smaller dataset (CIFAR-10) (Courbariaux et al., 2015). The structure of the VGG-small is shown in Figure 3b.

2.2.3 ResNet family

The challenges of deep neural networks with simply cascaded layers are vanishing or exploding gradient issues during the training. To address this issue, the ResNet CNN model was proposed, which is divided into small blocks (He et al., 2016). Each block consists of a few (usually 2 or 3) convolution layers, with an identity bypass connecting the input and output of the block to alleviate vanishing and exploding gradient problems. This allows the implementation of narrower but deeper networks, showing better performance than wider, shallower networks. Due to the modular design, the ResNet architecture can be scaled to up to a thousand layers. Figure 3c shows the network structure of a ResNet-18 modified for the CIFAR-10 dataset.

2.2.4 CNNs for mobile devices and TinyML

To achieve better power efficiency and lower inference latency on modern mobile devices, the complicated CNNs need to be redesigned. MobileNet (Howard et al., 2017) proposes a network design with depth-wise separable convolution layers (Sifre and Mallat, 2014). Compared with traditional convolution layers, the depth-wise separable convolution layers reduce both the number of computations and the number of parameters by

To scale up a CNN further, compound scaling factors are introduced in EfficientNet (Tan and Le, 2019). They scale up the width, depth, and resolution simultaneously leading to higher accuracy when more floating point operations (FLOPs) are allowed by the hardware setup. To generate a baseline CNN model with a certain target number of FLOPs, Neural Architecture Search (NAS) can be used. Then, a grid search can be performed with the generated baseline model to acquire the compound scaling factors for this model.

To further extend AI towards the edge, the concept of TinyML was proposed (Warden and Situnayake, 2019). TinyML includes hardware, software, and algorithms that enable on-device sensor data analysis on the edge. It also includes network structure design and optimization targeting low-power edge devices like microcontroller units (MCUs). Even though MobileNet and EfficientNet are optimized towards compactness, they still overwhelm the RAM size of typical MCUs. To overcome this memory bottleneck challenge, the MCUNet framework is proposed in (Lin J. et al., 2020). MCUNet adopts a two-stage NAS to generate an optimized model towards throughput and accuracy while meeting the memory constraint. The two-stage NAS is co-designed with a memory-efficient inference library supporting code generator-based compilation, model-adaptive memory scheduling, computation kernel specialization, and in-place depth-wise convolution. MCUNetV2 (Lin et al., 2021) introduces patch-by-patch inference scheduling in the inference library and receptive filed redistribution in NAS to further reduce peak memory consumption caused by the imbalanced memory distribution in CNNs.

2.3 Quantized convolutional neural network

To enable efficient deployment of CNNs on edge or IoT devices, CNNs need to be compressed. Various techniques have been developed for neural network compression, including pruning (Janowsky, 1989; Han et al., 2015; Guo et al., 2020), low-rank tensor approximation (Denton et al., 2014; Jaderberg et al., 2014), and quantization (Teng et al., 2019; Gong et al., 2014; Courbariaux et al., 2015; Hubara et al., 2017; Sun et al., 2020). In this work, we focus on quantization methods, which can be independently applied with other compression methods.

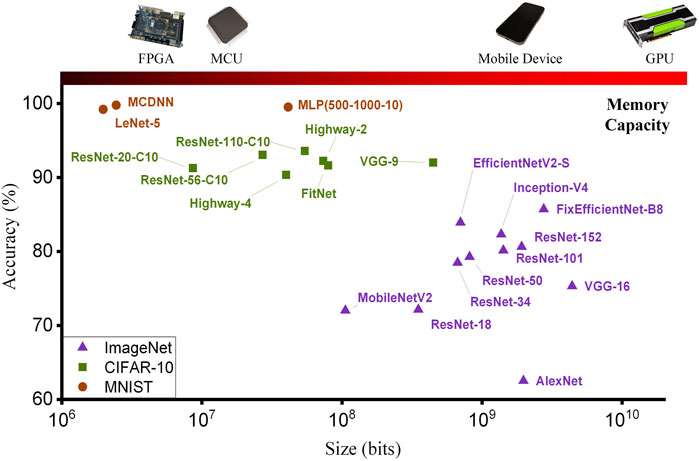

We summarize the achieved accuracies of various full-precision CNNs targeting different datasets in Figure 4. Due to the small memory size of MCUs and FPGAs, full-precision CNNs are too large to be deployed on such devices. To reduce the memory footprint, the parameters in the neural network can be quantized into a format with a shorter bit-width compared with the original 32-bit floating-point format. In (Gupta et al., 2015; Zhou et al., 2016; Rastegari et al., 2016), the authors quantize the weights of CNN into different bit-width formats achieving up to 32 times the size reduction with a cost of mild accuracy degradation. To reduce the issues of limited computation ability and power in IoT/edge devices, activation quantization has been proposed leading to more efficient computation kernels. For instance, when using binary quantization, multiply-accumulate (MAC) operations in a traditional full-precision neural network can be replaced by energy-efficient combination of XNOR and bit counting operation (Hubara et al., 2017; Rastegari et al., 2016; Zhou et al., 2016). Similar to weight quantization, accuracy degradation caused by quantization is acceptable.

Figure 4. Comparison of convolutional neural network (CNN) model sizes against the memory capacities of various hardware platforms. For FPGA, memory capacity corresponds to on-chip block random access memory (BRAM), while standard random access memory (RAM) for other platforms.

Generally, QCNN is commonly referred to as a CNN with a quantized format of parameters (weights) and data flow (activations) to achieve a smaller network size and more efficient computation. Weights in CNN account for the majority of the size of the neural network. As the number of weights in the convolution and fully connected layers are in the order of

3 Quantization methods

Quantization maps a continuous interval into a set of discrete values. There are various mapping algorithms, which can be categorized into two subsets, deterministic quantization, and stochastic quantization. In deterministic quantization, the quantized value and original value have a one-to-one mapping. While the stochastically quantized value is sampled from a certain probability distribution parameterized by the original value. Sampling from a distribution requires more computation than a deterministic calculation. Additionally, gradient estimation is difficult with stochastic quantization leading to training complexity (Bengio, 2013). Hence, we focus on deterministic quantization algorithms.

3.1 Uniform quantization

Uniform quantization is the most commonly used quantization algorithm, which divides an interval into equal sub-intervals where all the data is represented by a single value. Each sub-interval corresponds to a set of linear uniformly distributed discrete values. Uniformly quantized value

where

Another widely used uniform quantization is n-bits integer quantization (Equation 3):

In practice, the full-precision parameter

Generally, uniform quantization is simple, as all operations on the quantized parameters are either integer or bit-wise operations, suitable to be executed by arithmetic logic units (ALUs) in von Neumann systems, gate-level circuits in ASICs, and lookup tables (LUTs) in FPGA. However, uniform quantization inherently exhibits a poor dynamic range. With

3.2 Low-precision floating point format and logarithmic quantization

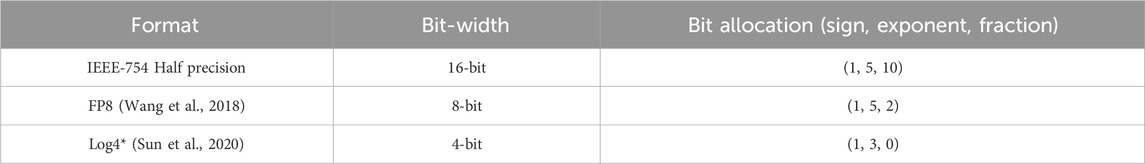

One of the straightforward approaches to reduce data precision (reduce bit-width) while maintaining dynamic range is to truncate the commonly used IEEE-754 single-precision floating-point format to half-precision format. Examples of low-precision floating point formats are presented in Table 1. To further reduce the bit-width and computation complexity while maximizing the dynamic range, a 4-bit radix-4 logarithmic quantization algorithm (Sun et al., 2020) can be used in the format of

Derived from Equation 4, the ratio between the largest and smallest magnitude can be expressed as

Table 1. Examples of different floating point format and logarithmic quantization format (* Log4 is a radix-4 format).

From the hardware point of view, the multiplication operation of logarithmically quantized data can be simply implemented with shift operations. However, the sum operation puts high requirements on accumulators due to the high dynamic range. Therefore, to support logarithmic quantization, the accumulator usually has large bit-width of the output or supports a floating point sum operation.

3.3 Codebook quantization

The parameters of a well-trained neural network follow a certain distribution, which is neither linear nor logarithmic. To represent these parameters, the quantized value set and corresponding mapping rules can be customized resulting in a codebook-style quantization. In (Gong et al., 2014), the quantized value set is found by k-mean clustering (Equation 5):

Then, each value

A similar method is adopted and implemented on hardware in (Lee et al., 2017). In (Han et al., 2015), a network is quantized by codebook quantization using Equation 6 and finetuned. In (Teng et al., 2019), codebook quantization is applied, where the most frequent values form a quantization value set instead of the cluster centroids. The codebook is updated after every epoch during training. Uniform quantization and logarithmic quantization can be treated as a special case of codebook quantization with the quantized value showing uniform or logarithmic distribution.

The hardware requirements to implement codebook quantization depend on the values in the codebook. For instance, if these values are floating-point values, the hardware should support floating-point operations. Compared to uniform and logarithmic methods, codebook quantization brings additional overhead of reading the codebook.

3.4 Mixed-precision quantization

Different parts of the neural network tend to exhibit different levels of abstraction and expression ability (Chu et al., 2021). Hence, different quantization parameters can be chosen for different parts of the neural network to ensure optimum model size without accuracy degradation, which is named mixed-precision quantization (MPQ) (Rakka et al., 2022). MPQ can provide a full-precision accuracy while maintaining the same model size as extremely low bit-width quantization (Nguyen et al., 2020; Kim et al., 2020). In MPQ, quantization parameters (bit-width, scale factors, quantization boundaries, etc.) for different parts of the neural network can be determined by some specification/metrics of the corresponding part (Ma et al., 2021; Yao et al., 2021), by differentiable optimization (Li et al., 2020; Habi et al., 2020), or by reinforcement learning (Wang et al., 2020; Elthakeb et al., 2019).

MPQ implementation requires additional hardware support and creates hardware overhead to handle the heterogeneity brought by MPQ (Nguyen et al., 2020; Wu et al., 2021). Any quantization method, e.g., uniform, logarithmic or codebook, can be used to create a mixed-precision model.

4 How to generate a quantized neural network?

There are two main approaches to generate a quantized neural network (QNN) model: (1) quantizing a well-trained full-precision model, known as Post-Training Quantization (PTQ), and (2) training or fine-tuning the model with quantization effects incorporated, referred to as Quantization-Aware Training (QAT). PTQ is typically faster and more efficient in terms of runtime, energy consumption, and computation cost because it uses a small calibration dataset without modifying the model weights. However, PTQ often results in lower performance compared to QAT (Jiang et al., 2022; Gholami et al., 2021; Rokh et al., 2022). As discussed in subsequent sections, current edge-oriented hardware accelerators do not fully support neural network training. Consequently, in edge-oriented vision applications (where QCNNs are commonly deployed), models are usually prepared offsite—on servers where runtime, energy consumption, and computational cost are less critical—making the extra overhead of QAT acceptable in exchange for improved accuracyMenghani (2023). To fully exploit the advantages of PTQ, instead of applying it to edge-oriented vision tasks, PTQ is frequently employed in domains like large language models, where updating weights is prohibitively expensive even with modern computational resources Shen et al. (2024a). Given these considerations, we focus on QAT methods in this paper.

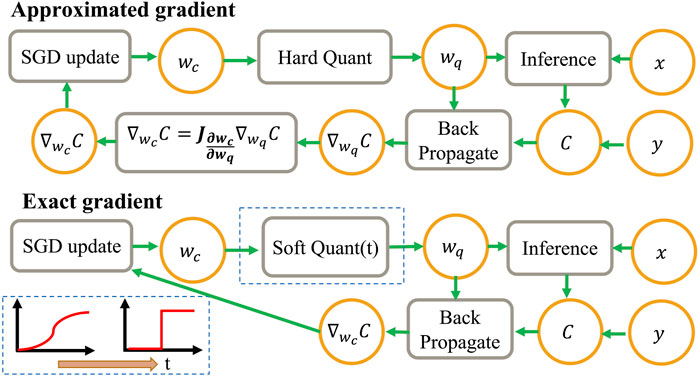

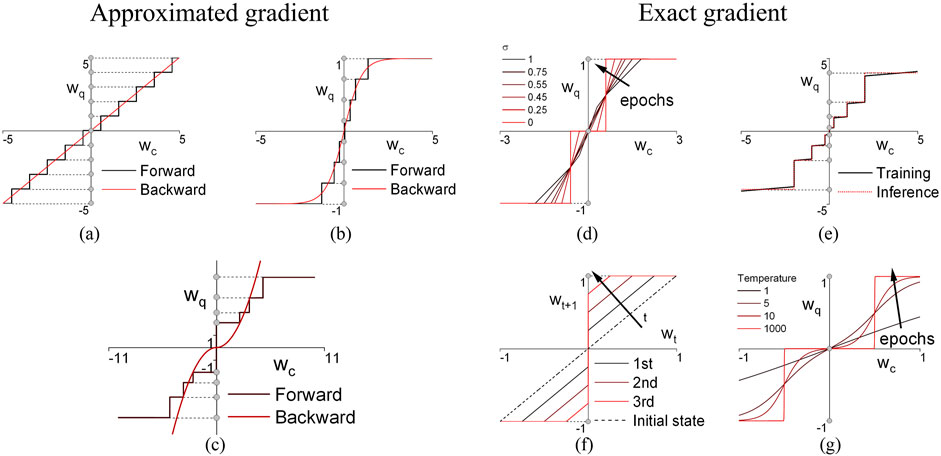

The major challenge for training QNNs is the stair-like nature of the quantization function, resulting in zero gradients. Therefore, traditional stochastic gradient descent (SGD)-based training methods cannot be applied directly for QNN training. Hence, the key challenge in QNN training is backpropagation methods. Based on the backpropagation of the loss, QNN training methods can be categorized into (1) approximated gradient methods with exact gradients and (2) exact gradient methods with gradual quantization (Figure 5).

4.1 Approximated gradient under exact quantization

One of the solutions to the zero-gradient problem of the quantization function is to generate an approximated gradient to update the weights. The most straightforward approximation strategy is called the straight-through estimator (STE), which offers a simple and efficient way to backpropagate gradients through quantization functions. In STE, the Jacobian matrix

4.1.1 Binary-connect and QNN

One of the first CNNs with binarized weights trained using STE is presented in (Courbariaux et al., 2015) (shown in Figure 6). This model achieved the accuracy comparable with floating-point models. Following the idea of training QNN using STE, this method is extended to n-bits uniform quantization of both weights and activations in (Hubara et al., 2017). In n-bit quantization (Hubara et al., 2017), the constraints are added to the weights and gradient values (a binary case example) (Equation 7):

where

Figure 6. Visualization of weights before and after quantization during training using different training methods. (a) Basic STE. (b) DoReFa. (c) LNS-Madam. (d) ANA. (e) nBitQNN. (f) ProxQuant. (g) Sigmoid QN.

4.1.2 DoReFa-Net

In (Zhou et al., 2016), the method to quantize weights, activations, and gradients is presented (Figure 6) (Equation 8):

where

As shown in Equation 9, a layer-wise scale factor

4.1.3 LQ-Net

Even though Binary-Connect and Dorefa are based on uniform quantization, the data distribution of weights and activations in a well-trained neural network is non-uniform. In (Zhang et al., 2018), to reduce the quantization error, a quantized value is obtained as:

where

Training using this method consists of optimization of the quantizer (vector

4.1.4 LNS-Madam

As logarithmic quantization offers a better dynamic range than uniform quantization, tailored logarithmic number system (LNS) with fractional exponents is proposed in (Zhao et al., 2022) and represented as (Equation 12):

where

Low-precision LNS training framework based on a modified Madam optimizer presented in (Bernstein et al., 2020) directly optimizes the exponents in the LNS enabling 8-bit low-precision training. Figure 6 visualize the relation between quantized weight

4.1.5 PACT

In (Choi et al., 2018), the parameterized clipping activation (PACT) algorithm to quantize an activation to low bit-width without significant accuracy drop is proposed. One of the challenges of activation quantization is to decide the clipping range of a quantizer. A manual-designed clipping range is hard to adapt to different activation value distributions from various neural network architectures. An excessively small or large clipping range causes important values to be clipped or vanish in a quantization step size. PACT determines the clipping range automatically via gradient update (Equation 13):

where

4.1.6 Summary

The relations between the continuous and quantized versions of the weights for both forward and backward passes are visualized in Figure 6. Overall, approximated gradient based training methods approximate the gradient according to a “trend line” (red line in Figure 6). Also, these training methods execute the forward pass using quantized values, showing the potential of being deployed to low-end devices. However, most of the methods use full precision weights to aggregate the full precision gradients. The possibilities of training QNNs using low-precision latent weights (Banner et al., 2018; Gupta et al., 2015; Zhao et al., 2022) and gradients (Zhou et al., 2016; Rastegari et al., 2016; Sun et al., 2020) have been explored. Such methods make approximated gradient-based training methods to be good candidates for deployment on low-end devices.

4.2 Exact gradient with a gradual quantization

Besides approximated gradient methods, the other solution to the zero-gradient problem is a “soft” quantization using the time-evolving quantization function with non-zero derivatives, which converges to a “hard” quantization function as training proceeds.

4.2.1 Additive noise annealing (ANA)

In (Spallanzani et al., 2019), the expectations of quantized values and corresponding gradients are defined and derived considering a noise being added to the full-precision values. Consider

where

Figure 6 shows an example of different expectations

4.2.2 ProxQuant

Unlike other methods, the ProxQuant algorithm (Bai et al., 2018) does not modify traditional SGD-based training, being directly compatible with SGD optimizers, e.g., Momentum SGD and Adam. The key point of the ProxQuant algorithm is adding a regularization process after each SGD update (Equation 15):

where

Since the

4.2.3 nBitQNN

In (Chen et al., 2020), a QNN training method that mixes the full precision weights and quantized weights to generate mixed-precision QNN is applied as (Equation 16):

where

4.2.4 Quantization using sigmoid and hyperbolic tangent

In (Yang et al., 2019), the quantization function reformulated using a combination of shifted and scaled step functions:

where

where

In (Gong et al., 2019), the differentiable soft quantization framework (DSQ) is proposed, which shares a similar idea of quantizing data piece-wisely using a series of evolving hyperbolic tangent basis functions. Different from (Yang et al., 2019), the evolution of the basis functions is not performed explicitly with the training progress. A characteristic variable (describing the error between hard quantization and basis functions) is calculated and minimized during training. Additionally, DSQ adopts trainable clipping ranges similar to PACT (Choi et al., 2018).

4.2.5 Summary

Exact gradient methods adopt evolving quantization functions to guarantee a feasible gradient during training and reach hard quantization at the end of training. However, this implies that these methods need to be operated with full precision data. Hence, they are more suitable for a cloud-based execution generating QNNs to be deployed on edge devices.

4.3 Fake and real quantization

Most quantization studies implement their algorithms in software (e.g., using Pytorch, Tensorflow) (Li et al., 2021). Therefore, all the quantization operations and quantized data are simulated in floating-point format, which can be referred to as “Fake Quantization”. On the contrary, when the quantized models are deployed on hardware, the quantized data is processed by executors supporting the corresponding format (e.g., 8-bit integer arithmetic unit for 8-bit uniform quantization). This can be referred to as “Real Quantization”. The data format mismatch between fake and real quantization may cause unavoidable data value differences. Adaption from fake quantization to real quantization considering different hardware architectures is discussed in Section 5.

4.4 Benchmarking

In this section, the training methods mentioned in Section 4 are benchmarked under different configurations using corresponding open-source codes modified where required.

4.4.1 Network and training configurations

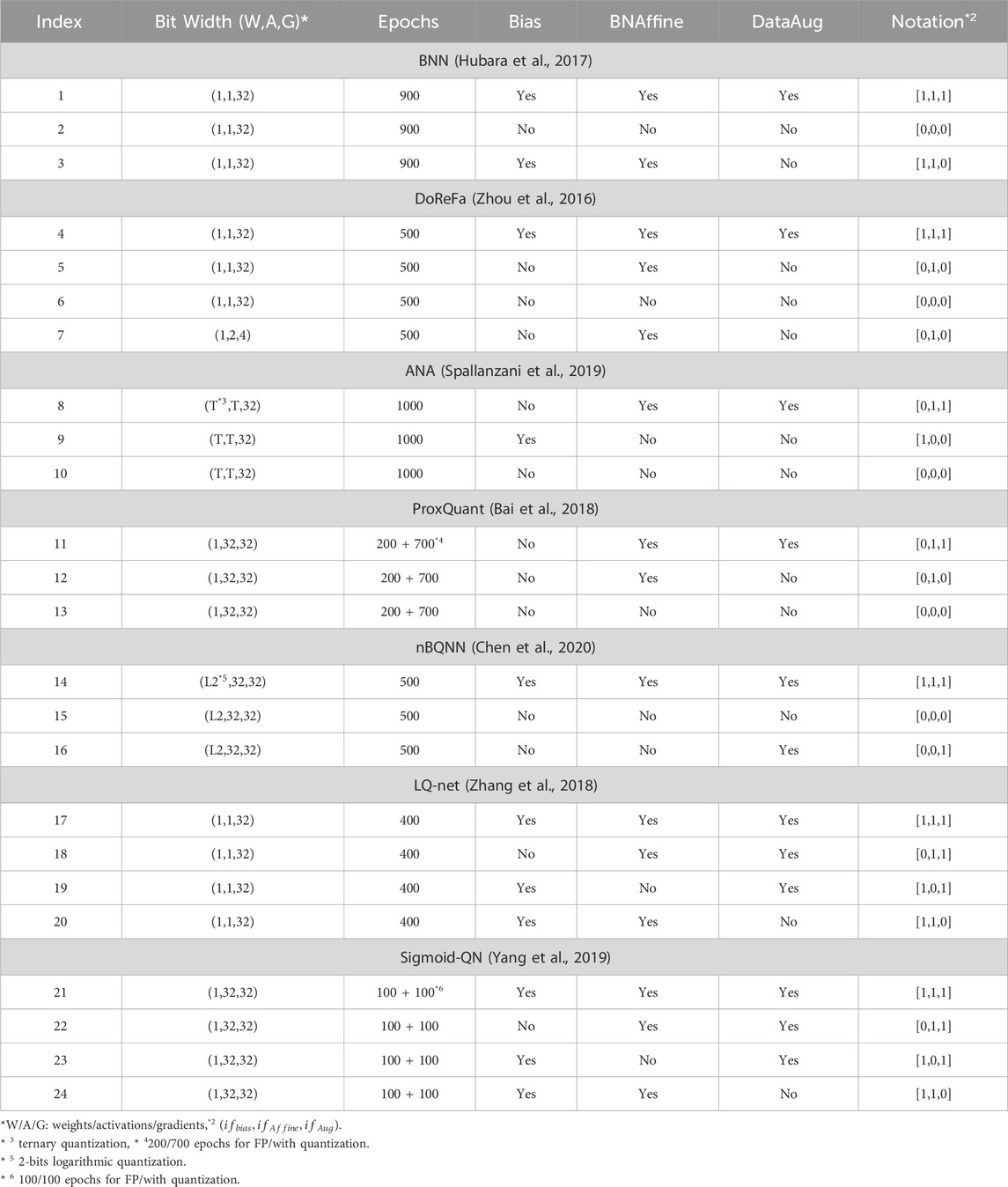

In the aforementioned works, different configurations of either the network or training are used. In all the original works, the affine operation of the batch-norm layer is enabled and all the trainable parameters for affine operation are not quantized. While in our experiments the affine operation is only enabled according to configurations. Affine operation adds two full precision trainable parameters at each output channel. They do not affect the performance during inference with batch normalization fusing, however, during training they degrade the compression rate and increase the computation complexity. To improve generalization ability, all the aforementioned methods involve data augmentation. To analyze the influence of each setting, we benchmark the aforementioned training methods using the settings summarized in Table 2.

4.4.2 Experiments

The dataset and neural network structure adopted in the experiments are CIFAR-10 and VGG-small, respectively. We prioritize the official code provided by the authors during the benchmark and keep a minimum modification. For a fair comparison, the data augmentation conducted in the experiments only includes random cropping and random horizontal flipping. The number of training epochs is chosen to be large enough for each case to be well-trained.

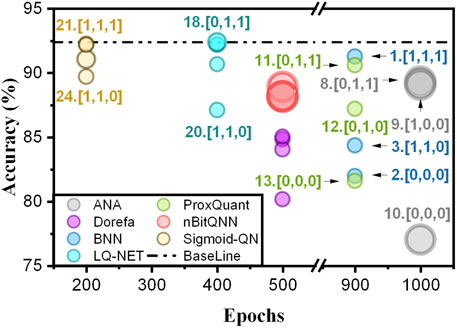

The results of different cases are visualized in Figure 7. To compare the relative size of the networks in different cases, we calculate the averaged bit-width using the following equation (Equation 19):

where

Figure 7. Visualization of the benchmark results, with important cases marked using “Index” and “Notation” in Table 2. The circle diameter represents the size of the model.

Overall, all the methods can achieve an accuracy higher than 85

An average bit-width is represented by the diameter of the circles in Figure 7, which shows that the additional parameters from the biases and affine operations occupy a small portion of the network size. Even though shift-based batch normalization is introduced in (Hubara et al., 2017) and realized in (Zhijie et al., 2020), batch normalization still brings overhead during training.

LQ-net and Sigmoid-QN achieve the best accuracy close to the full precision baseline model at the cost of additional scale factors and the first and the last layers are not quantized. The BNN method can achieve an accuracy of over 90% while maintaining the size of a binary neural network with all the layers quantized without scale factors. However, it is sensitive to bias and batch normalization parameters. ProxQuant and nBitQNN also achieve high accuracy, but they do not quantize the activations. The ANA method also demonstrates high accuracy but has a larger model size and is sensitive to changes. DoReFa is robust against configuration changes and supports gradient quantization. However, the first and last layers are not quantized in DoReFa, and layer-wise scaling factors are adopted bringing overhead in terms of compression rate and computation complexity.

4.5 Strategies to improve QNN performance

This section introduces general strategies helping to improve the performance of QNN, including learning rate scheduling and trade-offs between accuracy and model complexity.

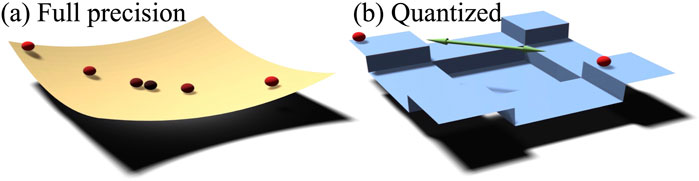

4.5.1 Learning rate scheduling

For QNNs, the selection of learning rate is more critical than full precision networks. A low learning rate leads to slow convergence, while a high learning rate causes an unstable weight update. Figure 8 shows the gradient descent process for both full precision and quantized networks. In full precision models, the loss surface is smooth, the gradients shrink down as the loss approaches the minima (Figure 8a). In QNNs, the loss surface is stair-liked. If the gradients are calculated and referred to as the quantized value (methods using approximated gradients in Section 4.1), the gradient preserves a constant value regardless of the current position on the flat plateau of the loss surface, causing a cross over the minimum (Figure 8b). Hence, without gradient shrinking down as in the full precision case, the training of QNNs must be performed with lower learning rates compared with a full precision case. This phenomenon is confirmed by experiments in (Tang et al., 2017).

QNN training usually starts with lower learning rates compared to full-precision networks. For instance, the learning rate for training full precision VGG network in (Simonyan and Zisserman, 2014) starts at

4.5.2 Trade for a higher accuracy

The majority of QNN training methods are offline methods, which can be performed on high-performance computation platforms, e.g., cloud servers. Meanwhile, some QNN applications are not strictly constrained by computation power or computation time. Hence, there are some strategies to increase the QNN accuracy at the cost of computation complexity or computation time.

The easiest way to improve accuracy without increasing a model size is to increase activation precision. For example, if the same network is trained with the DoReFa-Net method, a model trained with 2-bit activations achieves the accuracy of 86.5

where

In (Tang et al., 2017), a new regularization term substituting commonly-used L2 norm regularization is proposed. In binary QNN, the ideal quantized parameters take the values of {-1, 1}. However, the traditional L2 norm regularization term forces the parameters to approach zero, which contradicts the distribution of the parameters in a binarized network, resulting in frequent weight fluctuation during training. Hence, new regularization biases the update of parameters toward their designated quantized value (e.g., in binary quantization case, the parameters are biased toward

where

The other QNN training strategies, including two-stage optimization (TS), progressive quantization (PQ), and guided training, are shown in (Zhuang et al., 2018). TS consists of two steps: (1) weight quantization during training, and (2) activation quantization with trained weights. TS helps to avoid local minima when training a network from scratch. Moreover, instead of quantizing directly to the fixed bit-width (e.g., 2 bits), the network is quantized progressively (e.g., 32-bits

The other method to improve QNN accuracy is relaxing the compression rate if the application is not strictly constrained by memory size. In (Chu et al., 2021), mixed-precision QNN is proposed. As the features propagate along the network, they become more abstract and separable. Therefore, each layer of QNN can be quantized using a set of decreasing bit-widths as the layer goes downstream. For example, VGG-7 is quantized using {8-4-2-1-1-1}-bits for each layer from the input to the output, respectively. For the CIFAR-10 dataset, this network shows an accuracy of 93.22

In (Sakr et al., 2022), tensor clipping during QNN training is considered. Commonly, the tensor values are scaled based on the maximum in this tensor; however such scaling results in a large quantization step (especially for uniform quantization). As most of the tensor values are small, maximum scaling makes quantization bit-insufficient. In (Sakr et al., 2022), an algorithm to determine the optimal clipping and scaling factor for each tensor during each iteration of neural network training is shown. The optimal clipping factor minimizes the overall mean squared error including quantization error and clipped error.

For conventional quantization-aware training methods, it is common to use STE for gradient estimation. However, STE can cause gradient explosion by assigning clipped values with constant gradients. Though assigning zero gradients to the clipped values can avoid such explosion, the clipped values are prevented from being trained equivalent to shrink model size. To mitigate these two challenges, a magnitude-based gradient estimator, which assigns smaller gradients to the clipped values away from the threshold, is proposed in (Sakr et al., 2022). By applying both the optimal clipping values and gradient estimator,

5 Hardware implementation of QNNs

Efficient hardware implementation of a deep neural network for low-resource hardware requires compression techniques, including quantization, pruning, and Huffman coding (Chen et al., 2020). Usually, reduction of energy consumption, memory access, and data transfers between memory and computation units is achieved by simplifying computationally complex operations, e.g., floating-point computations. In this section, we discuss three main types of QNN implementations: FPGA-based solutions, ASIC solutions, and emerging non-volatile memory (NVM) based CNN implementations. This review focuses on state-of-the-art works considering the studies from the last 5 years with systematic neural network evaluations on hardware.

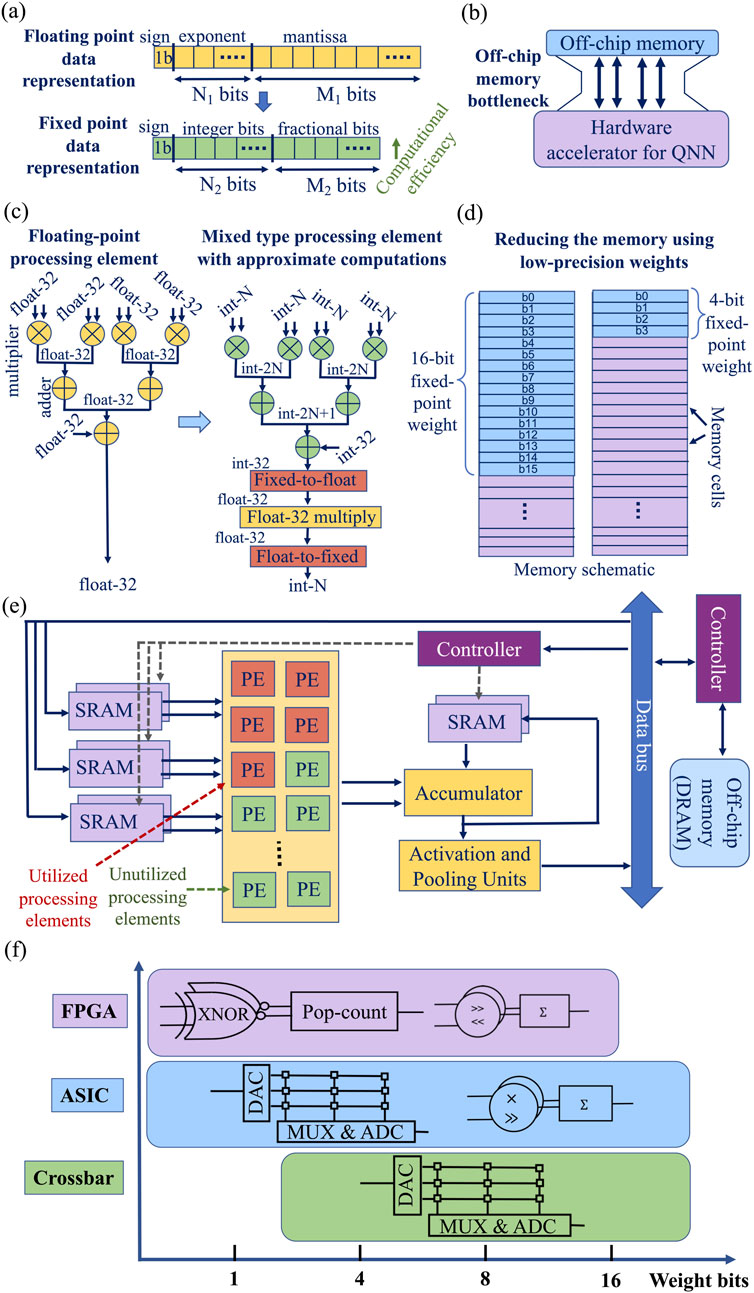

In QNN hardware, different quantization schemes require different bit configurations (Ryu et al., 2020). QNN hardware can be divided into 3 main groups based on the quantization scheme: (1) fixed-point arithmetic, (2) power-of-two quantization (logarithmic quantization), and (3) binary representation. Compared to the floating-point representation, fixed-point arithmetic keeps the location of the radix point fixed (Figure 9a). The power-of-two scheme represents the weights in the form of

Figure 9. (a) Floating-point versus fixed-point operations. (b) Off-chip memory bottleneck. (c) An example of moving from floating point operations to approximate operations in a processing element (Wei et al., 2019). (d) Reducing the memory space with low-precision computation. (e) Example of QNN accelerator (PE-processing element), modified from (Chang and Chang, 2019). (f) PE preference for different hardware architecture under different bit-width.

QNN hardware accelerators focus on different issues affecting hardware efficiency, including data movement, hardware efficiency, memory consumption, and hardware utilization.

Similar to any neural network hardware design, one of the main challenges of QNN hardware is data movement between the QNN accelerator and off-chip memory storing QNN weights (Figure 9b). Typically, data movement is more energy-consuming than computation (Chen et al., 2016). This problem is targeted by in-memory computing-based QNN accelerators (Krestinskaya et al., 2023). The other challenge is to improve the energy efficiency of QNN hardware while preserving the performance accuracy. This can be addressed by combining fixed-point approximate operations with floating-point operations to create mixed-type processing elements (Figure 9c) (Wei et al., 2019). Memory consumption problem is tackled by lowering data (weights) precision (Figure 9d) (Wei et al., 2019). Various data reuse strategies are explored to improve memory efficiency (Ankit et al., 2019; Yao et al., 2020; Song et al., 2017; Shafiee et al., 2016). Hardware efficiency often comes with the cost of hardware utilization, when some processing elements (PEs) remain unused (Figure 9e). Data utilization, data flow complexity, and resource allocation along with resource parallelisms should be considered in any QNN design, especially for FPGA-based QNNs (Chang and Chang, 2019). Different processing engine designs are summarized in Figure 9f. For FPGA-based accelerators, the “on-chip” part only refers to the parallel acceleration parts implemented on FPGA fabrics. For some FPGA accelerator designs, the host CPU is either implemented using on-chip ARM processors or directly synthesized using resources on FPGA fabrics.

The other challenge of translating QNN to hardware implementation is relate to algorithm-hardware co-design challenges (Zhang et al., 2021). This involves finding the trade-offs between performance accuracy and hardware cost. The transition from software design of QNN to hardware implementation involves loops unrolling in a software algorithm, mapping software computations to particulate hardware blocks, array partitioning, matrix decomposition, loop pipelining, etc. In addition, it is also important to accommodate the quantization and processing differences in different types of layers. For example, convolution layers are computational-centric (few parameters requiring many computations), while fully connected layers are memory-centric (many parameters used once requiring loading from external memory in some cases contributing to the hardware efficiency limitations) (Qiu et al., 2016). The other challenge is to accommodate layer-wise and mixed-precision quantization implemented in software into the hardware, which varies from one QNN design to the other.

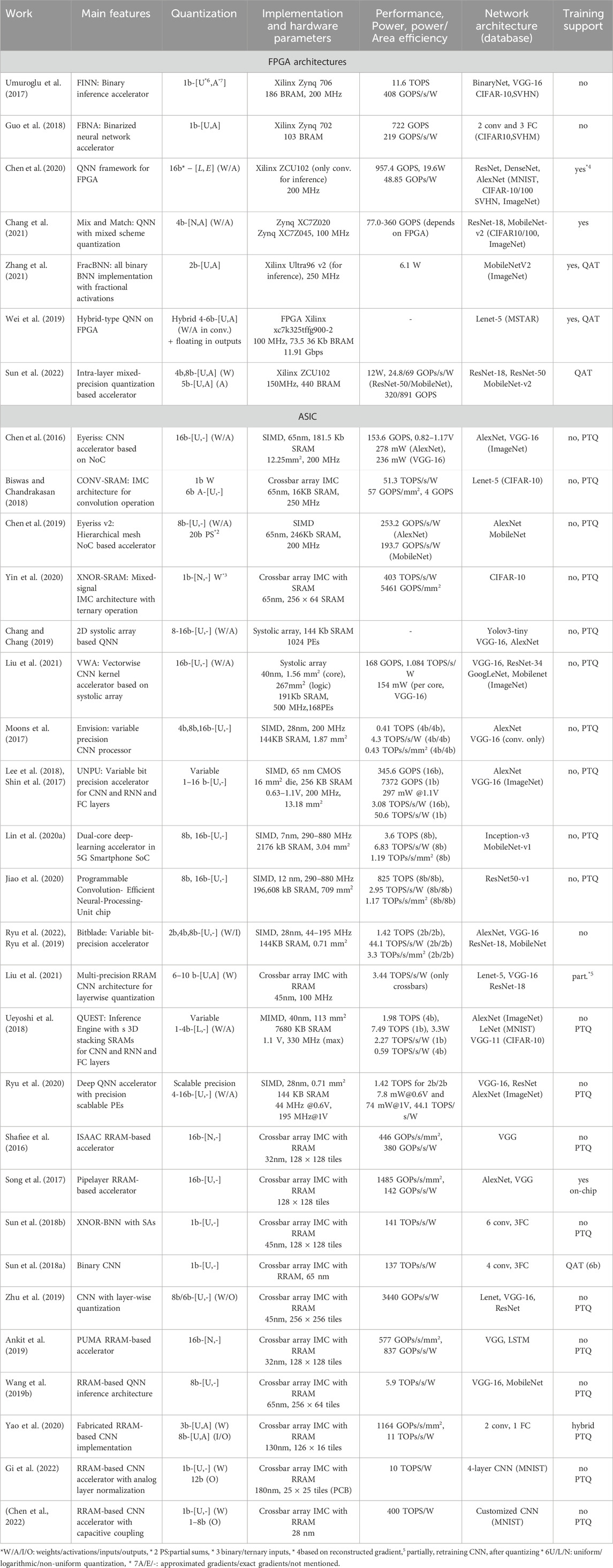

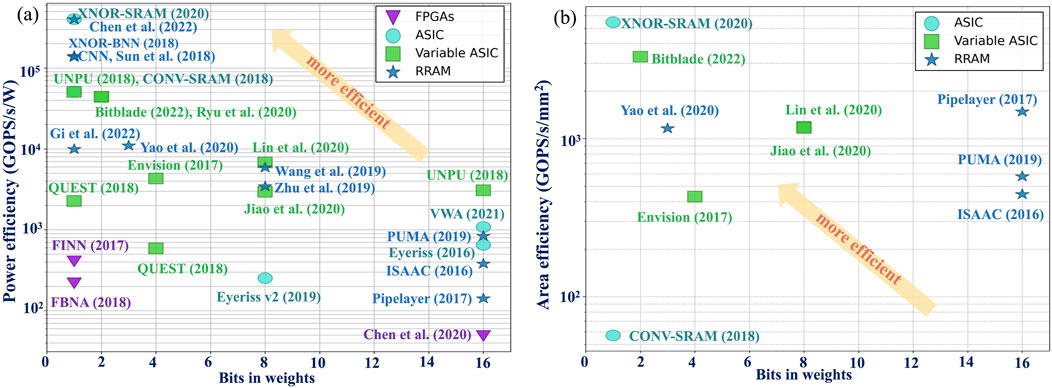

This section focuses on QNN architectures implemented on FPGA and ASIC, and related open challenges. Also, designs with emerging non-volatile memory devices are considered. Table 3 shows the summary of QNN hardware architectures. Figure 9 illustrates the area and power efficiency of different QNN architectures with respect to the precision of weights. The performance of RRAM-based architectures and SRAM-based ASIC implementations of QNNs are comparable in terms of power efficiency, while FPGA-based designs compromise energy efficiency due to hardware reconfigurability. This section also provides general guidelines on software-hardware co-design of QNN accelerators.

5.1 FPGA-based implementations of QNNs

Field Programmable Gate Arrays (FPGAs) are designed for fixed point computations implemented using lookup tables (LUTs). Even though floating-point computation is possible to implement on FPGA using Digital Signal Processing (DSP) blocks, this is expensive and inefficient. To convert a QNN design into FPGA-based hardware implementation, different frameworks can be created to automate such conversion (Umuroglu et al., 2017; 2020). For example, LogicNets framework converts trained QNNs into equivalent netlists of truth tables for FPGA including network sparsity exploration to reduce neuron fan-in (Umuroglu et al., 2020). Fan-in reduction contributes to efficient LUT-based QNN implementations on FPGA.

5.1.1 Binarized and multi-bit precision neural networks on FPGA

Binarized Neural Networks (BNNs) are the most resource-efficient QNN designs on FPGA (Figure 10) (Umuroglu et al., 2017; Guo et al., 2018; Zhang et al., 2021; Qin et al., 2020). One of the most well-known BNN accelerators on FPGA is FINN (Umuroglu et al., 2017), which automatically converts Theano-trained BNN to synthesizable C++ description with optimized hardware blocks to synthesize a bitfile through High-Level Synthesis (HLS) software to deploy to FPGA. The binarization of neural network weights reduces memory consumption and improves computation speed. Typically, the input layer of BNN is not binarized to preserve input features and accuracy. To binarize the input layer in BNN, binary padding can be used to provide resource parallelism and scalability for FPGA-based implementations, as in (Guo et al., 2018; Zhang et al., 2021).

Figure 10. Power (a) and area (b) efficiency of QNN hardware implementations with respect to weight precision.

Maintaining high accuracy after binarization is one of the main challenges of BNN, which requires specific training methods. Real-to-Binary Net framework proposed in (Martinez et al., 2020) performs progressive teacher-student training. Starting with a full-precision teacher model and a student model with soft-binarized activations (using

There have been several multi-bit FPGA-based implementations of QNN proposed recently (Ding et al., 2019; Hu et al., 2022; Chen et al., 2020). One of the most common quantized weights representations used for FPGA-based QNNs is the power-of-two method quantization method, as in FlightNN (Ding et al., 2019). To improve hardware efficiency further, the multiplication operations can be replaced by a lightweight shift operation (Ding et al., 2019) or be approximated by a different number of shift-and-add operations (Chen et al., 2020). To improve QNN efficiency further, the design of DSP blocks for quantized MAC operations can be optimized (Hu et al., 2022).

5.1.2 Mixed-precision and hybrid neural networks on FPGA

Mixed-precision and hybrid QNN designs require additional design considerations for efficient implementation. Inconsistent precision throughout the neural network layers can affect the utilization of heterogeneous FPGA hardware resources (Chang et al., 2021). In the Mix-and-Match FPGA-based QNN optimization framework (Chang et al., 2021), this problem is avoided using mixed quantization, combining sum-of-power-of-2 (SP2) and fixed-point quantization schemes for different rows of weight matrix due to different distribution of weights in different rows. The quantization scheme can also be adjusted for the distribution of the weights. For example, the Mix-and-Match framework uses the quantization scheme suitable for Gaussian-like weight distribution, where multiplication arithmetic is replaced with logic shifters and adders that can be implemented on FPGA using LUTs.

Hybrid quantization can also be used to improve QNN accuracy and efficiency in FPGA-based implementations. For example, in hybrid-type inference in (Wei et al., 2019), both convolution kernels (feature maps) and parameters are quantized to a signed integer, while integer/floating mixed calculations are used for the outputs. In the inference phase, the weights and activations in convolution layers are quantized, while the dot product output is de-quantized and represented as a 32-bit floating-point number before batch-normalization operation. The floating-point batch normalization output is fetched to the activation function, and the activation function output is quantized to integer representation. This helps to reduce the number of LUTs, flip-flops, DSP blocks, and BRAM blocks in the design.

Mixed-precision can also be used for intra-layer quantization. In (Sun et al., 2022), a mixed-precision algorithm combines a majority of low-precision weights, e.g., 4 bits, with a minority of high-precision weights, e.g., 8 bits, within a layer. The weights leading to high quantization errors are assigned to be of high precision. Moreover, in (Sun et al., 2022), quantization optimization techniques, including DSP packing, weight reordering, and data packing, are used.

5.2 ASIC implementations of QNNs

ASIC implementations of QNNs can be broadly categorized into conventional digital and mixed-signal designs, such as systolic arrays (Chang and Chang, 2019; Liu et al., 2021) or single/multiple instruction multiple data (S/MIMD)-based architectures with multiple cores (Lee et al., 2018; Shin et al., 2017), as well as designs leveraging emerging technologies like In-memory computing (IMC) (Krestinskaya et al., 2022; 2024a) and neuromorphic computing (Shen G. et al., 2024; Matinizadeh et al., 2024). Among these emerging technologies, SRAM- and RRAM-based IMC implementations have advanced the most, therefore, this work primarily focuses on them. The key distinction between IMC-based designs and traditional von Neumann architectures, where memory and processing units are separate, is that computation occurs directly within the memory. IMC designs can be based on either volatile (SRAMs and DRAMs) or non-volatile memory devices, e.g., resistive random-access memory devices (RRAMs), phase-change memory devices (PCM or PCRAM), etc (Krestinskaya et al., 2023).

5.2.1 Fixed-precision ASIC implementations of QNN

Based on Figure 9, ASIC implementations of QNNs are more efficient than FPGA-based implementations, as they are usually hardwired in an optimum way and cannot be reconfigured. Same as FPGA-based designs, ASIC-based implementations also use a shift operator instead of the multipliers via power-of-two quantization to improve energy efficiency. The multiplication can also be converted to two shift operations and one addition, as in LightNN (Ding et al., 2017; Ding et al., 2018). Also, approximate multiplication can be used, which drops the least significant powers of two limiting the number of shifts and adds (Ding et al., 2018). Some ASIC accelerator designs retain a certain level of flexibility (Moon et al., 2022; Lee S. K. et al., 2021). In (Moon et al., 2022), a framework supporting from 1 to 4-bit of arbitrary base quantization (Park et al., 2017) is proposed. For arbitrary base quantization, hardware blocks performing sorting, grouping, and population counting are adopted. In (Lee S. K. et al., 2021), an accelerator supporting both 8/16-bit floating point format and 2/4-bit integer format, where data pipelines for these formats are separated and implemented in dedicated hardware, is proposed. This accelerator supports both training and inference using floating point and integer data correspondingly.

The hardware efficiency of QNN accelerators is affected by architecture hierarchy, organization of processing elements (PEs), network-on-chip (NoC) structure, and the type of NoC. For example, Eyeriss is the other accelerator using 16-bit fixed-point computation, where data movement and DRAM access are reduced by reusing data locally (Chen et al., 2016). The improved version of Eyeriss, Eyeriss v2 (Chen et al., 2019), has a hierarchical mesh NoC with sparse PE architecture adaptable to the different amounts of data reuse and bandwidth requirements aiming to improve resource utilization.

CONV-SRAM (Biswas and Chandrakasan, 2018) and XNOR-SRAM (Yin et al., 2020) architectures are the other ASIC QNN accelerators to improve energy efficiency and reduce the number of computations. In (Yin et al., 2020), binary weights and ternary data representation [-1,0,1] for XNOR-and-accumulate operation are used. To improve computation speed and reduce memory access, systolic array-based CNN implementation can be used (Chang and Chang, 2019; Liu et al., 2021). In (Chang and Chang, 2019), a systolic array-based CNN, VWA, aiming for high hardware utilization with a low area overhead and suitable for different sizes of convolution kernels with 8-bit fixed point computation is shown. In (Liu et al., 2021), the systolic array-based accelerator with 8/16-bit integer linear symmetric quantization of both activations and weights in convolution and fully connected layers is illustrated.

In IMC-based implementations, SRAM-based QNN architectures, such as CONV-SRAM (Biswas and Chandrakasan, 2018) and XNOR-SRAM (Yin et al., 2020), offer greater energy efficiency than traditional designs. Meanwhile, RRAM-based QNNs provide high computational density, energy efficiency, non-volatility, and scalability (Krestinskaya et al., 2022; Smagulova et al., 2023). With non-volatile multi-level memories, MAC operations occur in the analog domain, enabling higher storage density and faster computations (Krestinskaya and James, 2020). In IMC architectures, memory devices in a crossbar structure multiply row voltages by device conductances (weights), with accumulated column current as the MAC output. Quantization in multi-level IMC arises from the limited conductance levels per device (Zhu et al., 2019). Activation quantization is managed by peripheral DACs and ADCs. However, non-volatile IMC devices face variations, non-linear switching, and conductance drift, which require mitigation techniques.

In IMC-based binarized neural networks (BNNs), weights are represented using 1-bit or multi-level devices, utilizing only a high-resistive state (HRS) and a low-resistive state (LRS). Low-bit IMC designs are simpler, more robust, and less susceptible to device variations than higher-bit IMC architectures. Several RRAM-based BNN implementations have been proposed, including those in (Sun et al., 2018b; a). In (Sun et al., 2018b), MAC operations are performed using XNOR logic, enabling the replacement of complex, power-hungry ADCs with 1-bit sense amplifiers (SAs) (Sun et al., 2018a). To enhance area efficiency (Chen et al., 2022), introduces an RRAM-based accelerator using capacitive coupling (1T1R1C) cells with binary weights and multi-bit output. In (Gi et al., 2022), an RRAM-based accelerator with analog layer normalization is proposed, eliminating the need to store intermediate layer outputs in external memory. Meanwhile (Kim et al., 2022), presents an ADC-free RRAM-based BNN, reducing hardware overhead compared to conventional RRAM-based IMC architectures with ADCs (Sun et al., 2018b).

Multi-bit IMC-based QNN implementations have the advantage of higher computation density, however, may suffer from ADC complexity (Krestinskaya et al., 2022). In IMC architectures, high-precision neural network weights are often formed by combining several low-bit IMC devices in a crossbar (Krestinskaya et al., 2023). The design combining several 1-bit RRAM cells for higher precision weights are shown in (Shafiee et al., 2016; Song et al., 2017; Ankit et al., 2019; Wang Q. et al., 2019), where higher bit weight, e.g., 8 or 16 bits, are represented by 2-bit and 4-bit devices. Most IMC-based QNN accelerators process high-precision inputs using low-precision DACs and serial encoding, as seen in ISAAC (Shafiee et al., 2016), Pipelayer (Song et al., 2017), and PUMA (Ankit et al., 2019). ISAAC reduces ADC precision requirements by storing weights in both original and flipped forms to maximize zero-sums (Shafiee et al., 2016). Pipelayer enhances efficiency by leveraging intra-layer parallelism for training and inference (Song et al., 2017). PUMA employs a Network-on-Chip (NoC) architecture, where multiple cores, each integrating an RRAM crossbar and CMOS peripherals, facilitate scalable computation, additionally, its specialized instruction set architecture (ISA) and spatial architecture explicitly capture various access and reuse patterns, reducing the energy cost of moving data (Ankit et al., 2019). A fabricated CNN architecture presented in (Yao et al., 2020) adopts hybrid training to mitigate device variations and uses multiple copies of identical kernels in different parts of the memristor array so that the same weight data can be applied in parallel to different inputs. The network is first trained off-chip and then fine-tuned on-chip to improve robustness against hardware non-idealities.

5.2.2 Variable-precision and layer-wise quantization in ASIC implementations of QNN

Variable precision in ASIC QNN implementations aims to optimize the energy efficiency and the number of memory accesses without reducing the performance accuracy (Jiao et al., 2020; Ueyoshi et al., 2018). Fully fabricated CNN accelerators with variable precision are demonstrated in (Lin C.-H. et al., 2020; Jiao et al., 2020). State-of-the-art variable-precision QNN designs support flexibility and can vary the precision of neural network weights, as in a unified neural processing unit (UNPU) (Lee et al., 2018; Shin et al., 2017) supporting convolution, fully connected, and recurrent network layers. UNPU also explores the full architecture hierarchy of QNN accelerator, including 2-D mesh type NoC with the unified DNN cores including weights memory and PE performing MAC operation, 1-D SIMD core, RISC controller for instructions execution, aggregation core, and two external gateways connected to this NoC. The main aim of UNPU is to achieve the trade-off between accuracy and energy consumption.

Variable precision configuration can be controlled by additional circuit blocks supporting the variable quantization and additional hardware modifications. For example, in Envision (Moons et al., 2017), a dynamic-voltage-accuracy-frequency-scalable (DVAFS) multiplier switching on and off sub-multipliers to control the precision is used. The main drawback of such an approach is inefficient hardware utilization for low-precision operation, e.g., for 4-bit precision configuration, only 25% of sub-multipliers are utilized. In the other variable-precision accelerator, Bit Fusion (Sharma et al., 2018), bit-level processing elements dynamically fuse to match the bit-width of individual DNN layers aiming to reduce computation and communication costs. It divides the MAC operations into multiple operations to support variable precision reducing the number of required resources. In BitBlade (Ryu et al., 2022; 2019), a bit-wise summation method based on

IMC-based QNN designs with layer-wise quantization and variable precision are demonstrated in (Zhu et al., 2019; Liu et al., 2021; Umuroglu et al., 2020).

5.3 QNN hardware challenges and open problems

5.3.1 Memory access issues

The complexity of state-of-the-art network models and the number of weights stored in the memory grows exponentially with the network size. Therefore, memory access and communication between memory and processor becomes the main bottleneck for speed and energy consumption rather than computation. According to (Wang J. et al., 2019), the bus bandwidth between the memory and processing unit is around 167 GB/s, while the reading operation bandwidth in traditional SRAM memories is 328 TB/s. The trend is the same for the energy spent on data transmission between the memory and processing unit. If the readout operation requires an energy of 1.6pJ, the data transmission may take up to 42 pJ in the same system (Wang J. et al., 2019). Overall, the problem with memory access is common for all types of neural network hardware implementations. Even though QNN designs target the reduction of memory accesses by lowering the computation precision, thus reducing the number of stored bits, the memory access problem is still relevant.

The problem of memory access is addressed by IMC-based designs keeping the processing of MAC operations close to the memory. However, the local or external memory is required to store the outputs of intermediate layers in the inference and preserve the gradients during the training. Even though the memory access challenge is reduced in IMC-based QNN implementations, IMC-based architectures can experience other problems related to the immaturity of non-volatile memory, which is the cause of device non-idealities. In addition, thorough design considerations are still required to create efficient QNN architectures, especially for on-chip QNN training.

5.3.2 Hardware overhead and hardware utilization in variable and reconfigurable precision designs

Flexibility and reconfigurability of the architecture are key for moving from task-specific to general-purpose neural network architectures. However, this reconfigurability leads to area overhead and hardware underutilization (Ryu et al., 2020). In QNN designs with variable bit precision and layer-wise quantization, the implementation of bit-reconfigurable designs and circuits is necessary to ensure minimum hardware overhead and the efficient utilization of hardware resources. Several mixed-precision quantization frameworks mentioned in previous sections focus on improving energy efficiency; however, they do not consider the control circuits overhead to implement mixed-precision models. For example, FPGA-based QNN architecture FlightNN is based on mixed-precision convolution filters on FPGA, while does not discuss the challenges of a full architecture implementation and scheduling (Ding et al., 2019).

Variable precision within and between QNN layers and adaptive quantization based on the distribution of weights and activations (Ding et al., 2018) may also lead to inefficient resource utilization. In many cases of variable bit precision in QNNs, the extra weights are simply switched off causing hardware utilization inefficiency. This problem is also valid for QNN on-chip training, where full precision computation is often required for weight update while the inference is typically quantized. To implement this, a QNN accelerator should support both full-precision and fixed-precision quantization. While full-precision computations are not used during the inference leading to inefficient hardware utilization.

5.3.3 Lack of efficient on-chip training on quantized hardware

Training complexity and duration are the other QNN challenges. The lack of differentiable gradients in QNN training leads to more training iterations compared to full-precision networks. Moreover, QNN training algorithms use full-precision computations for weight updates (Ding et al., 2018). Therefore, transferring such an algorithm to low-power hardware for on-chip training is complicated leading to the lack of QNN on-chip training architectures. In addition, such architectures may require variable precision support, and additional hardware overhead for routing, computation, and additional memory to store intermediate outputs during the training.

Several QNN frameworks make attempts to simplify the on-chip training on QNNs (Wei et al., 2019). For example, the reconstructed gradients in backpropagation can be used to solve the vanishing gradient problem instead of STE (Chen et al., 2020). Merging quantization and de-quantization operations can be used to perform “fake quantization” to improve QNN accuracy with low bit-precision (Liu et al., 2021). However, some functions still require full-precision computation. Implementation of QNN training algorithms with low-precision weight updates is also possible. For example, in LNS-Madam training precision is reduced to 4 bits combining a logarithmic number system (LNS) and a multiplicative weight update (Zhao et al., 2022). However, such algorithms and related hardware implementation for low-precision QNN training is still an open challenge.

5.3.4 Automated mixed-precision quantization

In some cases, it may be difficult to find the optimum quantization precision within or between the layers manually. Therefore, the automated mixed-precision quantization techniques are used to convert a software-based QNN to a hardware implementation (Benmeziane et al., 2021). Automated mixed precision quantization is a part of hardware-aware neural network search (HW-NAS). Various optimization techniques, from constrained problem optimization to reinforcement learning and evolutionary algorithm-based methods, which automatically assign multiple bits to the layer, can be applied for automated mixed-precision quantization. The main problems in this domain include a large search space and the high computational cost required for such a search. Also, many approaches do not consider hardware-related metrics in such optimization.

5.4 General considerations for hardware-software co-design in QNN

Hardware-software co-design implies efficient mapping and optimization of a software-based neural network to hardware Krestinskaya et al. (2024a), Krestinskaya et al. (2024b). For full-precision networks, this can be accomplished by compilers and software development kits (SDK), e.g., GLOW (Rotem et al., 2018), ONNX (ONNX, 2024), and TensorRT (for Nvidia GPUs) (Nvidia, 2024), focusing on the optimization of instruction scheduling and memory allocation based on the target platform specifications. Similarly, this can be done for QNNs with moderate bit-widths

A QNN accelerator can be divided into two parts: the off-chip hosting computer and the on-chip acceleration hardware. The off-chip host computer runs the software application, transmits the data between off-chip storage (e.g., DRAM) and on-chip data buffers, and reconfigures the on-chip hardware by sending control signals. The on-chip hardware executes neural network operations parallelly with arrays of processing engines. The interaction between these two parts should be optimized. By the level of operation executed on-chip each time, the accelerator designs can be divided into three categories: network-level acceleration, layer-level acceleration, and tensor-level acceleration. In network-level acceleration designs, a complete neural network is implemented on-chip achieving the best throughput and efficiency while being capable of processing only simple QNN models due to the limited on-chip storage capacity. With increased complexity and quantization bits, the accelerator design alternates to layer-level or even tensor-level acceleration with lower throughput and efficiency due to the frequent loading of data for different layers/tensors. Different accelerators require specific hardware-software co-design and optimization techniques to reach the optimum efficiency.

5.4.1 Processing element (PE) optimization

According to Table 3, FPGA-based accelerator designs favor low bit-width quantization (Umuroglu et al., 2017; Guo et al., 2018; Zhang et al., 2021). With binary quantization, the MAC operations can be replaced by XNOR and bit count operations, which can be efficiently implemented using LUTs. While with higher bit-width uniform quantization, the MAC operation is more efficient on DSP blocks (Chang et al., 2021). Meanwhile, some designs adopt logarithmic quantization on weights simplifying multiply operation to the shift operation carried out by LUTs (Chen et al., 2020).

The PE implementation of an ASIC QNN accelerator can be divided into two categories based on the domain where the computation is performed: (1) analog domain-based IMC with SRAM and RRAM (Biswas and Chandrakasan, 2018; Yin et al., 2020), and (2) digital domain with classical digital adders and multipliers (Chen et al., 2016; 2019; Chang and Chang, 2019). In the first category, an SRAM and RRAM cell stores one or more bits of data requiring analog or mixed-signal computation level optimizations (e.g., crossbar and peripheral circuits). SRAMs have fast and efficient writing capabilities, in turn, the design can be easily reconfigured to different weight values. Therefore, the SRAM-based accelerators perform layer-level or tensor-level acceleration. Different from SRAMs, the non-volatile memory elements do not support runtime write operation; however, such cells are more area-efficient and dense. Hence, network-level acceleration with higher bit-width is more suitable for non-volatile memory-based crossbar designs.

In the second category, the ASIC implementation of adders and multipliers can benefit from explicit optimization. Therefore, compared with FPGA-based accelerators, the ASIC implementation favors a data format with a higher bit-width

5.4.2 Auxiliary operations optimization

Except for the major matrix-vector multiplication operations in neural network inference, other operations like batch normalization, activation, pooling, etc., are noted as auxiliary operations in this section. In ASIC- and FPGA-based designs, one of the optimizations of auxiliary operations in QNN is the operation fusion. For example, the batch normalization layer first normalizes the tensor based on historical statistical data and then linearly affines the tensor. During inference, these two operations can be fused into one linear transform of the tensor, with both the normalization and affine parameters being constant:

In Equation 22,

The other possible fusion is binary quantization and ReLU, where the scale term in the fused operation can be omitted if the output is directly quantized (e.g., not a bypass in a ResNet). The ReLU activation function can be implemented as a compare-with-zero logic. It should be noticed that such compare-with-zero logic is not equivalent to the sign function (returns zero when input is zero) used to perform binary quantization in the software training phase. Hence, it is important to explicitly output either 1 or

Compared to FPGA, crossbar-based accelerators can efficiently execute most operations, e.g., convolution, linear operations, etc. However, operations like pooling, activation, and batch normalization need to be performed in the peripheral auxiliary blocks, commonly in the digital domain (rarely in analog (Krestinskaya et al., 2018)). Hence, crossbar array-based designs typically do not involve batch normalization fusion.

5.4.3 Data routing and reconfigurability

As the ASIC-based accelerators focus mostly on layer-level or tensor-level acceleration, to maximize the throughput and hardware utilization, the data flow should be routed efficiently and the PE arrangement should be reconfigured flexibly. To reduce unnecessary data movement, different levels of data reuse are implemented by broadcasting and multi-casting the common input to multiple PEs. Additionally, the inputs to the PEs are multiplexed increasing the reconfigurability of the PE array. Furthermore, the PEs can be organized into a network-on-chip which substantially increases both data routing efficiency and the reconfigurability of the PE array (Chen et al., 2019). In general, a data reuse strategy should be designed according to the accelerated operation. For instance, traditional convolution should have a different data reuse strategy from depth-wise separable convolutions.

5.4.4 Data value mismatch

Different from FPGA or ASIC implementations, the crossbar array-based accelerators perform computation in the analog domain. Therefore, there is a performance gap when pre-trained QNNs are directly deployed on the crossbars, due to device and circuit non-idealities of the crossbar and IMC cells. These non-idealities cause data mismatches between software and hardware. These non-idealities include device variation, non-linear switching, conductance drift, ADC/DAC non-linearity, mismatch, etc (Krestinskaya et al., 2019). To reduce the performance gap, these non-idealities should be modeled and explicitly considered during neural network training (Xiao et al., 2022).

6 Discussion and future directions

In this paper, we discuss different types of quantization and QNN training methods. These methods can generate well-trained QNNs featuring comparable accuracy as full-precision models. However, high accuracy is achieved at the cost of involving full-precision parameters (like scale factors). Even though these full precision parameters do not cause an obvious model size increase (shown in Figure 7), they introduce computation overhead, especially when there’s no dedicated floating-point unit in the hardware accelerators. Meanwhile, during backward propagation, all the aforementioned methods rely on full-precision weights or fixed-point weights with large bit-width to accumulate the gradient.

Various hardware accelerator designs (introduced in Section 5) can achieve higher computation efficiencies compared with traditional general-purpose computation units (GPU/CPU). However, most of the accelerator designs only support efficient neural network inference rather than training. Additionally, higher reconfigurability is expected from the accelerator designs, which is a key component for edge online learning or federated learning.

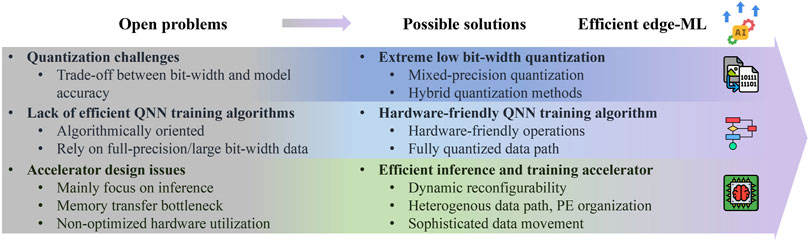

From algorithm and hardware co-design perspectives, we propose future directions for both the QNN algorithms and accelerator designs as summarized in Figure 11.

Figure 11. Technology advancements towards efficient edge machine learning (algorithm, hardware co-design).

6.1 Extreme low bit-width quantization

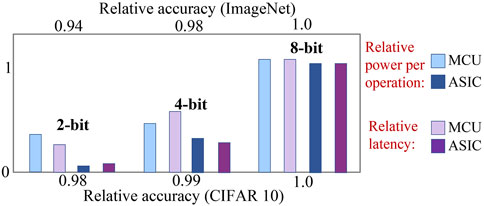

Figure 12 shows the influence of quantization precision on power consumption and latency with different hardware platforms. For MCU-based platforms, the latency and power consumption scale down with the quantization precision due to the fixed length of the arithmetic units. While for ASIC-based accelerators, the latency and power consumption scale down drastically with the quantization precision. With a simple dataset (CIFAR-10), the model accuracy experiences less degradation than a complex dataset (ImageNet) as the quantization precision decreases. Figure 12 shows that a close-to-FP accuracy can be obtained as the quantization bit-width is larger than 4-bit. Consequently, the majority of the QNN hardware designs shown in Table 3 adopt a quantization precision higher than 4-bit. Hence, there is a demand to improve model accuracy under sub-4-bit quantization scenarios. With low-bit quantization, the hardware platforms can be more energy efficient and fast, especially with ASIC-based platforms.

Figure 12. Qualitative study on relative power consumption per operation and latency under different precision quantization with MCU-oriented acceleration kernel (Garofalo et al., 2021) and ASIC-based NN accelerator (Ryu et al., 2022). Accuracy relative to full precision models is shown with the corresponding bit-width tested with CIFAR-10 (Yang et al., 2020) and ImageNet dataset (Zhang et al., 2018).

6.2 Study of the quantization of biases and batch normalization parameters during training

Compared to training, biases and batch normalization parameters can be fused into the following layer during inference. There are no studies offering a systematic discussion or implementation of quantization towards biases or batch normalization parameters during training. Meanwhile, comparing the accuracy resulting from cases with and without biases or affine operations (Figure 7), these operations play an important role in guaranteeing high performance accuracy. Hence, there is a strong demand for a systematic study of the quantization algorithms towards biases and batch normalization parameters during training. Only with quantized biases and batch normalization parameters expensive floating-point operations can be completely removed from the data path, which is a key point for efficient hardware accelerator design that supports training.

6.3 QNN training methods relying only on hardware-friendly operations (integer arithmetic operation, shift, bit-wise operation)

All QNN training methods depend on floating-point or long bit-width fixed-point parameters to accumulate the gradient preventing them from being deployed on low-end edge or IoT devices. At the same time, out of privacy concerns, machine learning methods, e.g., federated learning, require local training on low-end devices. Since there are some existing works (Sun et al., 2018b; Zhou et al., 2016; Sun et al., 2020) supporting the quantization of gradients during the backpropagation, the critical part of developing QNN training methods relying only on hardware-friendly operations is finding a substitution of the floating-point format in accumulating gradients. Therefore, it is worth exploring the fusion of new gradient accumulating methods and existing gradient quantization methods.

6.4 Hardware accelerator design supporting both efficient inference and training

The existing hardware accelerator designs focus more on inference rather than training assuming that costly training can be performed on powerful servers or clusters. However, as IoT technology, edge computing, and corresponding privacy concerns arise, it is required to switch neural network training from a centralized manner to a more distributed one. This trend puts a requirement on the hardware design to support not only the inference but also training. As analyzed in the previous sections, different data in the neural network, like weights, activations, and gradients, possess different ranges and distributions. This results in different types of quantization methods being applied to different data. To support both inference and training, the hardware architecture should be based on a heterogeneous design and compatible with various quantization methods and support arithmetic operations and corresponding data formats while maintaining high efficiency.

6.5 Dynamically reconfigurable accelerator designs

In addition to inference and training support, hardware reconfigurability is also critical. The edge device (e.g., mobile phone) may be required to run different applications and tasks. Neural networks running on the hardware accelerator could have different network structures, quantization methods, precision, and execution time requirements. All these factors bring challenges to the hardware design to be dynamically reconfigurable, especially in ASIC and non-volatile memory-based hardware architectures.

7 Conclusion