- 1 The University of Utah, Salt Lake City, UT, United States

- 2 Pacific Islands Ocean Observing System, School of Ocean and Earth Science and Technology, University of Hawai‘i at Mānoa, Honolulu, HI, United States

Comprehensive and objective evaluation of all observing assets, tools, and services within an ocean observing system is essential to maximize effectiveness and efficiency; yet, it often eludes programs due to the complexity of such robust evaluation. In order to address this need, the Pacific Islands Ocean Observing System (PacIOOS) transformed an evaluation matrix developed for the energy sector to one suitable for ocean observing. The resulting innovation is a decision analysis methodology that factors in multiple attributes (market, risk, and performance factors) and allows for selective weighting of attributes based on system maturity, external forcing, and consumer demand. This evaluation process is coupled with an annual review of priorities with respect to stakeholder needs and the program’s 5-year strategic framework in order to assess the system’s components. The results provide information needed to assess the effectiveness, efficiency, and impact of each component within the system, and inform a decision-making process that determines additional investment, refinement, sustainment, or retirement of individual observing assets, services, or component groups. Regularly evaluating and taking action to improve, modify, or terminate weak system components allows for the continuous improvement of PacIOOS services by ensuring resources are directed to the priorities of the stakeholder community. The methodology described herein is presented as an innovative opportunity for others looking for a systematic approach to evaluate their observing systems to inform program-level decision-making as they develop, refine, and distribute data and information products.

Introduction

Program evaluation, defined as “systematic investigation to determine the success of a specific program,” (Barker, 2003) is an important tool used by organizational managers to inform decision-making with respect to program effectiveness. Coastal and ocean observing programs, in particular regional observing systems, are striving to increase the quality and quantity of the public-facing tools and services they deliver while maximizing value in a relatively flat-growth budget environment. Comprehensive and objective evaluation of all observing assets, tools, and services within an observing system is essential to inform the optimal allocation of resources; yet, the multiple – sometimes conflicting – objectives, preferences, and value tradeoffs inherent within these hybrid scientific-public service programs necessitate a complex evaluation protocol that often eludes observing systems.

Federal disinvestment in Research and Development in the years following the Great Recession has resulted in consistently flat budgets for ocean observing programs nationwide. Level funding, while more desirable than actual funding cuts, still results in a need to make tough decisions with regard to resource allocation. In a typical year, program costs increase for salaries, wages, and fringe benefits, and buying power decreases due to inflation. In other years, negotiated indirect cost rates increase, resulting in what is, in effect, a direct cut to the program. While horizontal cuts can help alleviate the impacts for some time, there is a tipping point in budgets at which system services cannot operate effectively, requiring the consideration of vertical (whole service) cuts. In 2012, the Pacific Islands Ocean Observing System (PacIOOS), the first federally certified regional component of the U.S. Integrated Ocean Observing System (IOOS®; Ostrander and Lautenbacher, 2015), started to reach this tipping point, and vertical cuts needed to be considered. The PacIOOS management team and Governing Council agreed that an objective method to evaluate the various components was necessary. In order to better assess program effectiveness and inform the allocation of annual and future investment, PacIOOS transformed an evaluation matrix developed for the energy sector (Waganer, 1998; Waganer and the ARIES Team, 2000) to one suitable for a coastal ocean observing system.

The resulting innovation is a decision analysis methodology that factors in multiple attributes (e.g., market, risk, economic, and performance factors) and allows for selective weighting of attributes based on system maturity, external forcing, and consumer demand. In 2013, PacIOOS began utilizing this evaluation process coupled with an annual review of stakeholder priorities and the program’s 5-year strategic framework to assess the system’s components. The results provide information to analyze the effectiveness, efficiency, and impact of each component within the system and inform a decision-making process that determines additional investment, refinement, sustainment, or retirement of individual observing assets, services, or component groups.

Regularly evaluating and taking action to improve, modify, or terminate weak system components allows for the continuous improvement of PacIOOS services by directing limited resources toward the evolving priorities of stakeholders. The methodology described herein is presented as an innovative opportunity for others looking for a systematic approach to evaluate their observing systems to inform program-level decision-making as they develop, refine, and distribute timely, accurate, and reliable data and information products.

Innovating an Effective Decision Analysis Methodology

Evaluation Attributes

In order to ensure a balanced appraisal of diverse PacIOOS services (e.g., high-frequency radars, wave buoys, data systems, outreach programs, etc.), the program requires a decision analysis methodology that factors in multiple attributes and allows for selective weighting of attributes based on enterprise maturity and external forcing. Review of modern evaluation systems in corporate and non-profit systems (Conley, 1987; Keeney and Raiffa, 1993; Waganer, 1998; Wholey et al., 2010) yields numerous potential attributes an enterprise can consider when evaluating service effectiveness. Because both depend on what the decision makers perceive to be important, the identification of attributes for evaluation and the weighting of those attributes may be somewhat subjective; however, the chosen attributes are valuable not only for a consistent annual review but also for the analysis of trends over multiple evaluation cycles.

Market, risk, and economic factors were considered in the selection of evaluation attributes for PacIOOS. Market factors help determine the potential for each service to fulfill a needed role among stakeholders, while economic considerations allow for the review of competing services, resource commitment, and future cost considerations. Risk is assessed in the return on capital, maturity of the service, annual resource requirements, and independent performance of the assessed service.

A sample of the attributes gleaned from systems examined (Conley, 1987; Keeney and Raiffa, 1993; Waganer, 1998; Wholey et al., 2010) but not selected by PacIOOS for evaluation purposes includes the following: liquidity; time to market; leverage (debt); profit; policy landscape; public perception; revenue diversity; prestige; competitiveness; return on investment; depletion of valued resource; supply chain dependence; environmental impact. As evidenced by this list, even in commercial models, revenue and other economic factors are not the only attributes of interest when evaluating the success of a company or a system. The institutional vision, mission, and values, or guiding principles, of an organization provide the foundation by which that organization should be measured and evaluated. PacIOOS’ core vision, mission, and guiding principles are the framework that informed which attributes to employ for this evaluation process. The attributes selected by PacIOOS for use in the evaluation of each program service are described below.

Need

Has the service been identified by stakeholders as critical and desired? If so, does a large and diverse (geography, organization type, interest sector) cohort of the stakeholder community desire the service?

Uniqueness

Do any other providers deliver a similar or identical service to stakeholders in the PacIOOS region? If so, does the similar service provide a greater, lesser, or same level of utility?

Potential

Is this service, as intended, conceived, and fully applied, likely to significantly improve the health, safety, economy, or environment of stakeholders in the region?

Financial Capital

Is the current annual funding for operating the service (not including recapitalization) sufficient for stable operation of the service?

Human Capital

Does the service require a significant commitment of human capital above those individuals required for direct operations and maintenance (i.e., does it need individuals from other enterprise components like data management, outreach, executive leadership) to continue operation?

Integration

Is the service part of a regional, national, or global network of complementary services (e.g., sole regional node within the United States national high frequency radar network)? If so, is it a critical component within the larger network? Also, has the service been integrated into the operations of local stakeholders (e.g., wave buoy data used to inform a National Weather Service surf forecast) and/or into other program operations (e.g., high frequency radar data ingested into regional ocean model)?

Technical Maturity

Does the service need improvement to function as intended? Is the technology still under development?

Saturation

Does the entire identified stakeholder base utilize the service? Can more customers be identified to utilize the service?

Required Service

Is the service required through an agreement maintained with a partner (i.e., service contract), a core part of the national IOOS® program, and/or the recipient of directed federal resources (e.g., supplemental appropriation to purchase specific equipment)?

Incremental Cost

Does the service need to be repaired, recapitalized, or is further investment needed?

Performance

Is the service reliable? How often is the service unavailable per year?

Scoring

The program’s executive leadership and board assigned a weight (ranging from 1 to 3) to each attribute, based on their perception of the importance of that attribute to the total assessment of the program. Specific attribute values for each service are scored on a scale ranging from 1 (lowest score) to 5 (highest score), based on an established rubric for each attribute. The assignment of clear attribute values, and consistent use of them, is an important step toward removing subjectivity from the annual evaluation process and helping ensure a higher degree of consistency between annual cycles, evaluators, and funding scenarios.

For example, the first attribute in the evaluation is Need. The evaluators assign a score under Need for each program service evaluated based on the following rubric:

Need

Has the service been identified by stakeholders as critical and desired? Does a large and diverse (geography, organization type, interest sector) cohort of the stakeholder community desire the service?

(1) No desire for service has been identified within stakeholder community.

(2) Service is viewed as “nice to have” by some but not considered to be critical by any.

(3) Service is desired by a diverse set of stakeholders, and has the potential to become critical to their operations.

(4) Service has been identified as a critical tool for a small set of the stakeholder community.

(5) A large and diverse stakeholder base identifies the service as critical.

For PacIOOS, the wave buoy program typically scores a 5 under Need, as there are numerous and diverse stakeholders that identify the information these assets provide across our region as critical. Stakeholders include, but are not limited to, the National Weather Service, the United States Coast Guard, the United States Navy, natural resource managers, university researchers, ocean engineers, commercial fishermen, and recreational ocean users. Through stakeholder engagement, surveys, and other feedback received, it is clear that the wave buoys aid all of these stakeholders by providing real-time information that is deemed critical for their decision-making needs.
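One way to keep rubric wording and scores tied together across evaluators and annual cycles is to store the rubric as a lookup table. The following is a minimal sketch in Python that encodes the Need rubric quoted above; the names `NEED_RUBRIC` and `describe_need_score` are hypothetical and are not part of any PacIOOS tooling.

```python
# Hypothetical encoding of the Need rubric quoted above (score -> criterion).
NEED_RUBRIC = {
    1: "No desire for service has been identified within stakeholder community.",
    2: "Service is viewed as 'nice to have' by some but not considered to be critical by any.",
    3: "Service is desired by a diverse set of stakeholders, and has the potential "
       "to become critical to their operations.",
    4: "Service has been identified as a critical tool for a small set of the "
       "stakeholder community.",
    5: "A large and diverse stakeholder base identifies the service as critical.",
}


def describe_need_score(score: int) -> str:
    """Return the rubric criterion for a Need score, rejecting values outside 1-5."""
    if score not in NEED_RUBRIC:
        raise ValueError(f"Need score must be an integer from 1 to 5, got {score!r}")
    return NEED_RUBRIC[score]


# The wave buoy program typically scores a 5 under Need.
print(describe_need_score(5))
```

Rejecting out-of-range values at the point of entry is a design choice of this sketch; it simply keeps every recorded score traceable to a rubric line.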

After the attributes and weights are established, an additive utility theory methodology is used to quantitatively evaluate each service. An additive, rather than a multiplicative, utility function was chosen for this process because a score of zero under any attribute would return a total score of zero under a multiplicative function. Multiplicative scoring might be appropriate if all aspects of the enterprise were fully developed; however, enterprise maturity and continual evolution of PacIOOS services necessitate careful and objective consideration of services that are under development. Using an additive utility function will partially penalize developing services, but not eliminate them from further consideration (Waganer and the ARIES Team, 2000).
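The difference between the two approaches can be seen in a small numerical sketch. The Python below is illustrative only: the function names, the three-attribute example, and the specific multiplicative form (a product of weight-value terms) are assumptions made here, not the formulation used by PacIOOS or by Waganer.

```python
from math import prod


def additive_score(weights, values):
    """Sum of weight * value across attributes."""
    return sum(w * v for w, v in zip(weights, values))


def multiplicative_score(weights, values):
    """Product of weight * value across attributes (assumed form, for contrast only)."""
    return prod(w * v for w, v in zip(weights, values))


# Made-up numbers: three attributes, the last one scored zero.
weights = [3, 2, 1]
values = [5, 4, 0]

print(additive_score(weights, values))        # 3*5 + 2*4 + 1*0 = 23
print(multiplicative_score(weights, values))  # 15 * 8 * 0 = 0
```

The single zero lowers the additive total but does not erase the contribution of the other attributes, whereas it collapses the multiplicative total to zero.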

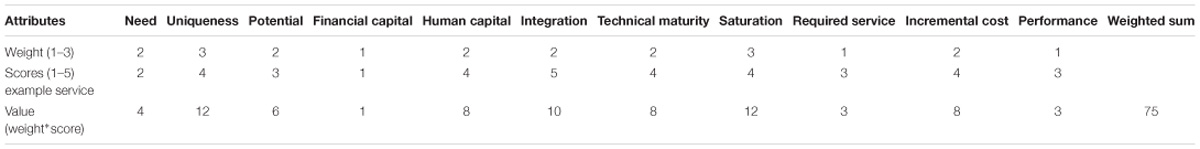

The score for each service within the program is determined by summing, across all attributes, the product of the weight and value for each attribute. The weighted score calculation, illustrated below as an equation, has the potential to produce scores ranging from 0 to 210. Table 1 provides a complete example calculation of a weighted score for a PacIOOS service.
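In symbols, the calculation described in this paragraph can be written as shown here. The notation is assumed for illustration and is not taken from the original equation: $S_j$ is the weighted score of service $j$, $w_i$ is the weight assigned to attribute $i$, $v_{ij}$ is the value scored for service $j$ under attribute $i$, and $n$ is the number of attributes.

$$
S_j = \sum_{i=1}^{n} w_i \, v_{ij}
$$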

As noted in the previous section, while the assigned weights might seem arbitrary, both the weights and the rubric remain consistent across time. This allows for the opportunity to examine each individual service as well as the overall system performance from year to year and over time.

Decision-Making

The resulting assignment of annual scores for each PacIOOS service is a major component of an established review process to inform program-level decision-making, but it is not the only component of the process. Stakeholder needs and priorities garnered during ongoing engagement, realities of current capacities of the program and across the region, and the program’s 5-year strategic framework are also essential pieces of this matrix. PacIOOS’ Governing Council, other signatory partners, and PacIOOS staff and researchers all inform the goals and objectives within the 5-year strategic framework. In this manner, they also help inform the annual decision-making of the program.

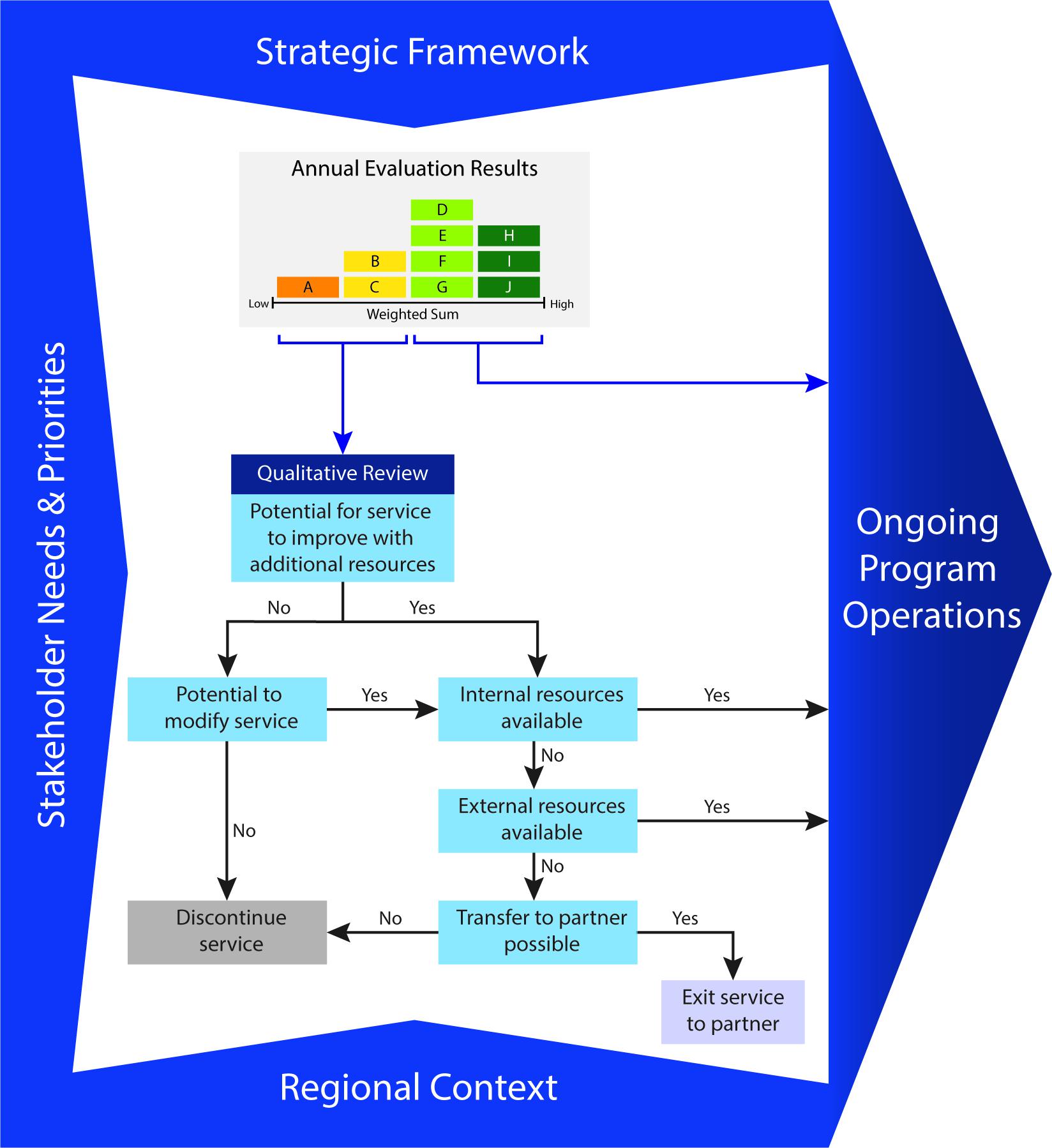

If a tool or service scores poorly during evaluation, an in-depth qualitative analysis is carried out to examine the reasons behind the result. PacIOOS management explores whether the low-scoring service could improve with the allocation of additional resources (e.g., funding, staff time, outreach, etc.), or whether there are opportunities to modify the service in some manner to improve its performance. If so, and internal resources are available to make such improvements or modifications, then the necessary adjustments to effort and funding are implemented as part of the program. If internal resources are not available to enhance or modify the component as needed, management examines opportunities for external resources to support the desired changes. Where feasible, the program helps to secure such external resources to facilitate the improvement of the service for the enhanced benefit of stakeholders. If external resources are not available, the program may examine whether a partner organization has the capacity to improve upon the service or to operate it. If there are no such options, the resulting decision may be to discontinue the service. In other cases, when the program cannot identify opportunities to modify a low-scoring service in order to improve its performance, this is also a signal to the program to seriously consider discontinuing the service.
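The sequence of questions in this qualitative review can be sketched as a simple decision function. The Python below is a hypothetical illustration of the order of questions described in the paragraph; the class, field names, and returned labels are assumptions made here, and in practice the review is a discussion among people rather than an automated rule.

```python
from dataclasses import dataclass


@dataclass
class ReviewFindings:
    """Answers gathered while reviewing a low-scoring service (hypothetical fields)."""
    can_improve_or_modify: bool  # could added resources or a modification help?
    internal_resources: bool     # are program resources available for the change?
    external_resources: bool     # can outside resources be secured instead?
    partner_can_operate: bool    # could a partner improve or operate the service?


def recommend(findings: ReviewFindings) -> str:
    """Walk the questions in the order the text describes and return a suggested outcome."""
    if not findings.can_improve_or_modify:
        return "consider discontinuing"
    if findings.internal_resources:
        return "improve or modify with internal resources"
    if findings.external_resources:
        return "improve or modify with external resources"
    if findings.partner_can_operate:
        return "transfer to or improve with a partner"
    return "consider discontinuing"


# Example: improvement is possible, but only a partner has the capacity.
print(recommend(ReviewFindings(True, False, False, True)))
```

The function returns a recommendation rather than a decision, reflecting that scores and review findings inform, but do not replace, management judgment.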

This inquiry process helps to ensure the continuation of successful services, the refinement or reinvestment in developing services, and the termination or transfer of underperforming services. Indeed, PacIOOS has employed this methodology to inform difficult decisions such as vertical cuts, refinement of service aim, and bolstering support for other components. As illustrated in Figure 1, this decision-making process ensures the outcomes of the evaluation scoring are both incorporated into and informed by ongoing stakeholder engagement, long-term strategic goals and objectives, and the regional context of the program. Inter-annual consideration of service scores, by attribute, allows PacIOOS to regularly evaluate program success toward achieving maximum service value.

Utilizing Evaluation Results to Improve: PacIOOS Examples

In 2014, following 2 years of annual evaluation, PacIOOS implemented its first vertical cut. There were two services that year that scored much lower than the other services evaluated. At this point, the qualitative review (see Figure 1) was employed. For service A, it was clear that additional resources would not improve the service performance. Rather, it was a situation in which assets inherited from a partner served a very localized (almost individual researcher) need, at a high relative cost, and were poorly aligned with both the strategic goals of the program and the defined needs of the broad stakeholder community. Relevant federal and local partners viewed the assets as “nice to have” but not critical and were not interested in providing additional support, leading PacIOOS to discontinue funding service A.

For the other low-scoring service, labeled here as service B, in-depth discussions with staff, researchers, leadership, and regional liaisons highlighted some significant aspects of the issue. While some of the assets within service B were of value to stakeholders, a few were underutilized and performing so poorly that they were bringing the assessment of the whole service down. However, the assets within service B addressed a long-term strategic goal of the program: increasing observational coverage across the region geographically. In addition, the service had the potential to help address a strategic objective to foster the capabilities for ocean observing in the Insular Pacific and to address identified needs of stakeholders. As with service A, it did not seem that additional resources alone would improve the service. The team then asked whether the service could be modified in some way to improve it. After examining numerous options within the larger context of the program’s guiding principles (i.e., culturally rooted, stakeholder-driven, collaborative, science-based, and accessible) and the long-term strategic goals and objectives, leadership implemented a pilot project: the instruments were removed from the two worst-performing sites and made available to shorter-term (6 months to 2 years) projects of program partners, along with training on how to maintain and operate the instruments and data management support. This pilot project has succeeded in forging new partnerships, helping address stakeholder needs, increasing capacity in the region, and communicating the benefits of ocean observing in general and of PacIOOS specifically, aligning well with the values and goals of the program.

Four years later, this small instrument loaner program has aided five different partners serving stakeholder needs across locations in Hawai‘i, the Federated States of Micronesia, Palmyra Atoll, and Palau. The low evaluation score in the case of service B objectively shed light on a weakness within the program and provided an opportunity to examine its potential for improvement. In the end, the team was able to improve it through modification, thereby lifting up the entire service.

These examples highlight the importance of conducting evaluation within a clearly defined program mission and a strategic plan that guides action toward specific program goals. The evaluation is set up with the assumption that an organization’s structure is already built upon a foundation of a strong vision, mission, guiding principles, and strategic goals. However, these specific values, guiding principles, and goals will certainly vary among observing systems to some degree. A low evaluation score does not automatically mean a service should be discontinued, but it does signal the need for a deeper discussion about the goals of the service, the goals and values of the program, and options for making both of them better.

Summary

While optimal program effectiveness is a rather subjective goal, there are tools for evaluation and decision-making to help managers and leaders arrive at relatively objective conclusions. This paper describes an innovative approach that PacIOOS’ leadership and management refined and adopted in order to account for market, risk, economic, and performance factors as they collaborate to sustain and enhance a regional coastal and ocean observing system that addresses stakeholder needs.

Once the evaluation tool is established, personnel that are intimately familiar with all aspects of the program and its various tools and services utilize it to provide a more objective base to inform their decision-making. Paired with other programmatic and stakeholder knowledge, evaluation results can be used to ensure a program remains relevant, forward looking, and effective.

Ocean observing systems across the globe, at all scales from deep ocean to coastal waters, share a common vision to help improve the lives and livelihoods of people and communities. We encourage those in the coastal and ocean observing world to continue to think about how they can have a greater impact on the varied stakeholders in their respective communities, regions, states, and nations. Indeed, in ocean observing, stakeholder needs, observing and data management technologies, program capacities, and more continuously evolve. In order to remain relevant to stakeholders and partners and be as effective as possible, observing systems need to evaluate how they allocate limited resources. As such, this process is helpful for developing as well as more mature programs. It is never too late to evaluate and reassess a program’s success, however it may be defined.

PacIOOS has benefited from this methodology over the past 6 years, and we hope it may inspire or help others that are looking for a way to measure the effectiveness (however they define it) of their various components or efforts and utilize those outputs to inform programmatic decision-making. Although the specific attributes chosen might not be the same ones that another ocean observing system prefers to use, the framework of how to refine and employ such a methodology may prove beneficial.

This methodology also highlights the value of looking to other industries or organizational frameworks for potentially innovative ideas that may translate to coastal and ocean observing systems. While it may not always be optimal to take a corporate mentality when operating an observing system, there are lessons to be learned and tools that can be adjusted to accommodate other needs and circumstances. Transforming tools made for others to fit the needs of ocean observing can save resources, improve efficiency, and ultimately, increase the benefits to society.

Author Contributions

CO conceived and designed the evaluation process. CO, MI, and FL refined the process. MI organized the development of the manuscript and, with CO, contributed the language that served as the foundation of the manuscript. FL designed the figures and tables. All authors contributed to manuscript revision and have read and approved the submitted version.

Funding

The decision analysis methodology described herein was developed, and continues to be utilized, by PacIOOS under funding from the National Oceanic and Atmospheric Administration via IOOS® Awards #NA11NOS0120039, “Developing the Pacific Islands Ocean Observing System” and #NA16NOS0120024, “Enhancing and Sustaining the Pacific Islands Ocean Observing System.”

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors acknowledge current and former members of the PacIOOS Governing Council who, through their leadership in the advancement of PacIOOS, have greatly contributed to the evolution of the evaluation process detailed herein.

References

Keeney, R. L., and Raiffa, H. (1993). Decisions With Multiple Objectives: Preferences and Value Tradeoffs. Cambridge: Cambridge University Press. doi: 10.1017/CBO9781139174084

Ostrander, C. E., and Lautenbacher, C. C. (2015). Seal of approval for ocean observations. Nature 525:321. doi: 10.1038/525321d

Waganer, L. M. (1998). Can fusion do better than boil water for electricity? Fusion Technol. 34:496. doi: 10.13182/FST98-A11963661

Waganer, L. M., and the ARIES Team (2000). Assessing a new direction for fusion. Fusion Eng. Design 48, 467–472. doi: 10.1016/S0920-3796(00)00156-3

Keywords: ocean observing, program evaluation, prioritization, Pacific Islands, program analysis

Citation: Ostrander CE, Iwamoto MM and Langenberger F (2019) An Innovative Approach to Design and Evaluate a Regional Coastal Ocean Observing System. Front. Mar. Sci. 6:111. doi: 10.3389/fmars.2019.00111

Received: 30 October 2018; Accepted: 22 February 2019;

Published: 18 March 2019.

Edited by:

Justin Manley, Just Innovation Inc., United States

Reviewed by:

Oscar Schofield, Rutgers, The State University of New Jersey, United States

LaVerne Ragster, University of the Virgin Islands, US Virgin Islands

Copyright © 2019 Ostrander, Iwamoto and Langenberger. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Melissa M. Iwamoto, melissa.iwamoto@hawaii.edu
