- State Key Laboratory of Robotics and System, Harbin Institute of Technology (HIT), Harbin, China

Underwater images often suffer from various degradations, such as color distortion, reduced visibility, and uneven illumination, caused by light absorption, scattering, and artificial lighting. However, most existing methods address only one or two types of degradation and do not offer a comprehensive solution to underwater image degradation. This limitation hinders the application of vision technology in underwater scenarios. In this paper, we propose a framework for enhancing the quality of multi-degraded underwater images. The framework is distinctive in its ability to concurrently address color degradation, hazy blur, and non-uniform illumination by fusing RGB and Event signals. Specifically, an adaptive underwater color compensation algorithm is first proposed, informed by an analysis of the color degradation characteristics prevalent in underwater images. This compensation algorithm is then integrated with a white balance algorithm to achieve color correction. Next, a dehazing method is developed that fuses sharpened images and gamma-corrected images to restore blurry details in RGB images and event reconstruction images. Finally, an illumination map is extracted from the RGB image, and a multi-scale fusion strategy is employed to merge the illumination map with the event reconstruction image, effectively enhancing the details in dark and bright areas. The proposed method restores color fidelity, enhances image contrast and sharpness, and preserves details of the original scene. Extensive experiments on the public dataset DAVIS-NUIUIED and our dataset DAVIS-MDUIED demonstrate that the proposed method outperforms state-of-the-art methods in enhancing multi-degraded underwater images.

1 Introduction

The underwater environment possesses unique physical properties and complex lighting conditions, resulting in a degradation of image quality when captured by traditional cameras. Generally, the selective absorption and light scattering of water can induce color deviation, reduced contrast, and blurred details in underwater images (Zhang et al., 2022b). Moreover, artificial lighting can distribute non-uniform illumination across images, leading to a bright center, darker periphery, or even overexposure. These image degradation issues may have adverse implications for underwater applications, including ocean exploration (Chen et al., 2020), underwater object detection (Liu et al., 2020; Wang et al., 2021), and robot control (Cho and Kim, 2017).

In recent years, various enhancement methods have been developed to address the degraded quality of RGB images captured by traditional cameras in underwater environments (Jian et al., 2021), but they all have limitations. Physical model-based methods (Akkaynak and Treibitz, 2019; Xie et al., 2021) use estimated underwater imaging model parameters to reverse the degradation process and obtain clearer images. However, these methods rely heavily on the model’s assumptions, lack generalizability, and may produce unstable results when enhancing multi-degraded images. Non-physical model-based methods (Fu et al., 2014; Dong et al., 2022) can enhance image contrast and brightness by adjusting the pixel values of degraded underwater images but may suffer from over-enhancement. Deep learning-based methods (Guo et al., 2019; Peng et al., 2023) have shown remarkable performance in underwater image enhancement. However, the complexity and variability of the underwater environment make it challenging to obtain high-quality underwater training images, and a single trained network may not cover multiple underwater degradation problems. Additionally, due to the limited dynamic range of traditional cameras, images captured in unevenly illuminated underwater environments are prone to detail loss, and existing enhancement methods have difficulty restoring details in bright areas. Therefore, further research and development are needed to overcome these limitations and improve the quality of multi-degraded underwater images, particularly in unevenly illuminated areas.

Event cameras are novel bio-inspired sensors with high dynamic range and low latency (Gallego et al., 2020), enabling them to capture scene information in non-uniform illumination environments. As such, they provide a promising solution to the degradation of underwater images. Unlike traditional cameras, event cameras output asynchronous and discrete event streams rather than RGB images (Gallego and Scaramuzza, 2017). Several methods have been proposed to reconstruct intensity images from the event stream, such as HF (Scheerlinck et al., 2019) and E2VID (Rebecq et al., 2019). These techniques effectively preserve details within both bright and dark areas but do not retain color information. To address this issue, some image restoration methods based on the fusion of events and RGB images have been proposed (Pini et al., 2018; Paikin et al., 2021; Bi et al., 2022b, 2023). Among these, Bi et al. (2022b) proposed an Event and RGB signals fusion method for underwater image enhancement, successfully restoring details in bright areas while maintaining the original RGB image’s color. However, this method overlooks color distortion and detail blurriness in underwater images, which are crucial factors affecting image quality.

In this study, we present an enhanced version of our previous work introduced in Bi et al. (2022b). The proposed method adds color correction and dehazing modules, which can effectively address color degradation, detail blurring, and non-uniform illumination simultaneously. Our new contributions can be summarized as follows.

1. We propose a novel method for enhancing multi-degraded underwater images by fusing Event and RGB signals. To the best of our knowledge, this is a pioneering attempt to employ Event and RGB signals fusion to address multiple degradation problems prevalent in underwater images.

2. A color correction method for underwater images is proposed, leveraging an adaptive color compensation algorithm and white balance technology. Notably, the adaptive color compensation algorithm is introduced guided by the color degradation characteristics observed in underwater images.

3. An underwater dehazing method that utilizes the fusion of sharpening maps and artificial multi-exposure images is proposed. Taking into account the characteristics of event reconstruction images and RGB images, distinct fusion strategies are designed to enhance the details of both image types.

4. We conduct extensive experiments on the public dataset DAVIS-NUIUIED and our dataset DAVIS-MDUIED to assess the effectiveness of the proposed method. The experimental results establish the superiority of our method in color correction, contrast enhancement, and detail recovery of underwater images, as compared to the state-of-the-art methods.

The rest of the article is organized as follows. Section 2 reviews existing underwater RGB image enhancement methods and RGB/Event signal fusion methods. Section 3 describes the proposed method and its implementation in detail. Section 4 presents experimental studies for performance evaluation. Section 5 summarizes the key findings of our research.

2 Related works

2.1 Underwater RGB image enhancement methods

Existing underwater RGB image enhancement methods can be broadly classified into three categories: physical model-based methods (Chiang and Chen, 2011), non-physical model-based methods (Bai et al., 2020), and deep learning-based methods (Wang et al., 2017).

2.1.1 Physical model-based methods

The physical model-based approach is employed to estimate the parameters of underwater image degradation by utilizing manually crafted prior assumptions, which are then reversed to restore image quality. These priors include red channel prior (Galdran et al., 2015), underwater dark channel prior (Yang et al., 2011; Liang et al., 2021), minimum information loss (Li et al., 2016), and attenuation curve prior (Liu and Liang, 2021), etc. For example, Serikawa and Lu (2014) combined the traditional dark channel prior with a joint trilateral filter to achieve underwater image dehazing. Li et al. (2016) proposed a novel underwater image dehazing algorithm based on the minimum information loss principle and histogram distribution prior, aiming to enhance the visibility and contrast of the image. Song et al. (2020) proposed an improved background light estimation model, which applies the underwater dark channel and light attenuation to estimate the model parameters, achieving color correction and enhancement of underwater images. Marques and Albu (2020) created two lighting models for detail recovery and dark removal, respectively, followed by combining these outputs through a multi-scale fusion strategy. Similarly, Xie et al. (2021) introduced a novel red channel prior guided underwater normalized total variation model to deal with underwater image haze and blur. Even though physical model-based methods can improve color bias and visibility of underwater images in some cases, they are sensitive to prior assumptions. These methods may not effectively restore underwater images and may lack flexibility in practical applications if the prior assumptions do not align with the underwater scene.

2.1.2 Non-physical model-based methods

Utilizing non-physical models to directly adjust the pixel values of degraded underwater images can improve visual quality. Some of the representative methods include histogram-based (Hitam et al., 2013), Retinex-based (Zhuang et al., 2022), fusion-based (Yin et al., 2023), and white balance methods (Tao et al., 2020). For instance, Ancuti et al. (2012) and Zhao et al. (2021) introduced a fusion-based underwater dehazing approach, aiming to restore image texture details and improve contrast by integrating different versions of images. Azmi et al. (2019) proposed a novel color enhancement approach for underwater images inspired by natural landscape images. This method comprises three stages: color cast neutralization, dual-image fusion, and mean value equalization. Zhang et al. (2022b) introduced local adaptive color correction and local adaptive contrast enhancement methods to enhance underwater images. Similarly, Zhang et al. (2022a) proposed a fusion method inspired by Retinex to merge contrast-enhanced and detail-enhanced versions of underwater images. While non-physical model-based methods can increase the contrast and visual quality of underwater images to some extent, they do not account for the underwater imaging mechanism, which makes it challenging to achieve high-quality restoration of degraded underwater images.

2.1.3 Deep learning-based methods

Deep learning-based methods have made significant progress in visual tasks such as underwater image dehazing and super-resolution (Islam et al., 2020), thanks to powerful computing capabilities and abundant training data. Guo et al. (2019) proposed a new multi-scale dense block method that combines residual learning, dense connectivity, and multi-scale operations to enhance underwater images without constructing an underwater degradation model. Furthermore, Jiang et al. (2022) designed a target-oriented perception adversarial fusion network based on underwater degradation factors. Liu et al. (2022) proposed an unsupervised twin adversarial contrastive learning network to achieve task-oriented image enhancement and better generalization capability. Recently, Peng et al. (2023) introduced a U-shaped Transformer network and constructed a large underwater dataset, the first application of Transformer models to underwater image enhancement tasks. However, deep learning-based methods require a large amount of high-quality underwater image data, which is challenging to collect. Additionally, the performance of a singular well-trained network diminishes when confronted with diverse manifestations of underwater image degradation, thereby limiting the application of these methods.

In general, the three types of enhancement methods have demonstrated their ability to improve color and contrast in specific scenarios. However, these methods primarily focus on enhancing images with one or two types of degradations. In reality, underwater image degradation is complex and encompasses various factors. Additionally, existing methods encounter difficulties handling uneven illumination caused by artificial lighting. This limitation primarily arises from the limited dynamic range of traditional cameras, which results in a significant loss of details in bright areas of RGB images captured under non-uniform lighting conditions. Unlike previous underwater image enhancement methods, we propose a multi-degradation underwater image enhancement approach that addresses color degradation, hazy blur, and uneven illumination issues. Significantly, our framework incorporates event cameras, capitalizing on their high dynamic range capabilities. This innovative method adeptly preserves details in both bright and dark areas of the scene, even when subjected to uneven lighting conditions.

2.2 RGB/Event signal fusion methods

Event cameras are a type of biomimetic sensor that differ from standard cameras in their working principles (Messikommer et al., 2020). While standard cameras output intensity frames at a fixed frequency, each pixel of an event camera can independently sense changes in the brightness of a moving scene and output them as an event stream (Rebecq et al., 2018). To bridge the gap between event cameras and traditional cameras, and to apply traditional camera algorithms to event cameras, several methods have been proposed to reconstruct events into intensity images. One approach, proposed by Scheerlinck et al. (2019), uses complementary filters and continuous-time equations to generate high dynamic range images. Cadena et al. (2021) developed a SPADE-E2VID neural network model inspired by the SPADE model, which employs a many-to-one training method to shorten training time. Zou et al. (2021) proposed a convolutional recurrent neural network that leverages features from adjacent events to achieve fast image reconstruction. While the reconstructed images from events have a high dynamic range, they lack color information.

Recently, researchers have proposed several methods to reconstruct images with high dynamic range and color by integrating Event and RGB signals. Pini et al. (2018) proposed a color image synthesis framework based on conditional generative adversarial networks. Paikin et al. (2021) introduced an event-frame interpolation network that combines frames and event streams to generate high dynamic range images while preserving the original color information. In addition, for underwater image enhancement, Bi et al. (2022b) proposed a novel method that leverages the complementary advantages of event cameras and traditional cameras to restore details in regions with uneven illumination. However, this method does not address the issues of color degradation and detail blurring in underwater images.

Compared with our previous work (Bi et al., 2022b), we add an adaptive color correction method to adjust the color of underwater images. Additionally, we introduce an underwater image dehazing method that combines sharpened images and gamma-corrected images to improve image visibility. As a result, the proposed method can simultaneously solve the problems of color degradation, haze, and uneven illumination in underwater images.

3 Proposed method

In underwater image capture, standard cameras frequently face challenges such as color distortion, decreased visibility, and non-uniform illumination. Our previous work (Bi et al., 2022b) demonstrated that combining event cameras with traditional cameras can help address the issue of uneven underwater illumination. However, this method only applies to clear water and cannot overcome the problems of color degradation and haze in underwater images. To overcome these limitations, we introduce a framework for the fusion of RGB and Event signals, aiming to enhance multi-degraded underwater images.

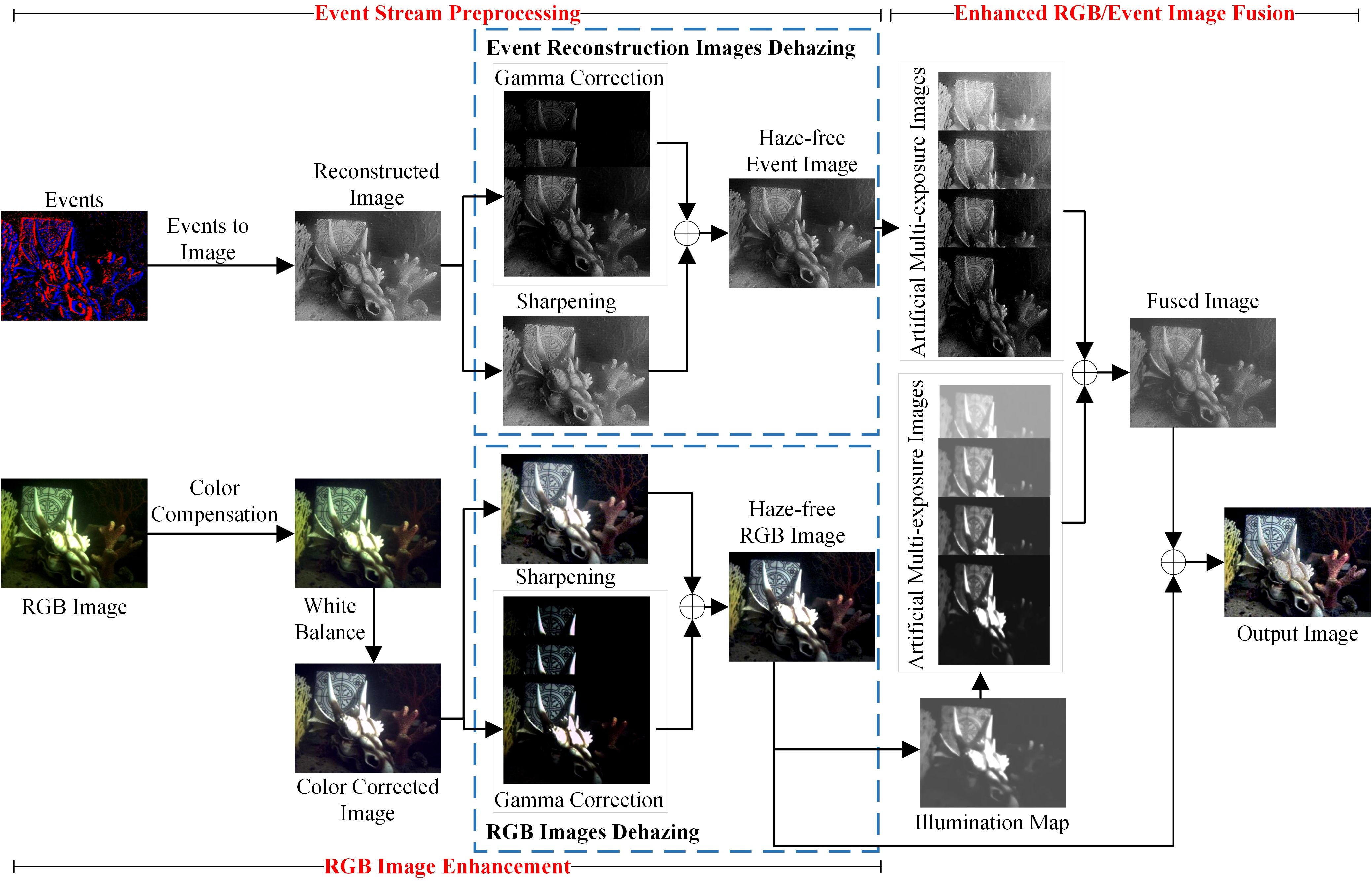

Figure 1 depicts the flowchart of the proposed method, which comprises three principal components: RGB image enhancement, event stream preprocessing, and enhanced RGB/Event image fusion. In the RGB image enhancement stage, we propose a color correction method that uses adaptive color compensation and white balance to reduce color deviation in underwater environments. To reduce haze and scattered light, we then apply a fusion method based on sharpening maps and gamma-corrected images, combined with a fusion strategy designed for RGB images. In the event stream preprocessing stage, we reconstruct the event stream into an intensity image using the E2VID method (Rebecq et al., 2019). Then, we use the proposed fusion dehazing method to restore the details and clarity of the image. In the fusion stage for the enhanced RGB and event reconstruction images, we utilize the RRDNET method to extract an illumination map from the RGB image (Zhu et al., 2020). After that, we employ gamma correction to adjust the brightness of the illumination map and the event-reconstructed image to generate two sets of multi-exposure image sequences. Subsequently, we apply the pyramid fusion strategy to fuse the images and restore the details of the bright areas. Finally, relying on the Retinex theory (Li et al., 2018), we convert the fused image into an RGB image, which serves as the final enhanced image.

3.1 Underwater RGB images color correction

In marine environments, light of different wavelengths attenuates at different rates, so applying the traditional gray world assumption directly, without accounting for this, can introduce color distortion in the corrected underwater images. To avoid this, color compensation must first be performed on degraded underwater images. This paper proposes an adaptive color compensation method for underwater images by analyzing the color distribution characteristics of natural terrestrial landscapes and degraded underwater images. Afterward, we utilize the gray world algorithm (Buchsbaum, 1980) to correct the color bias of the light source.

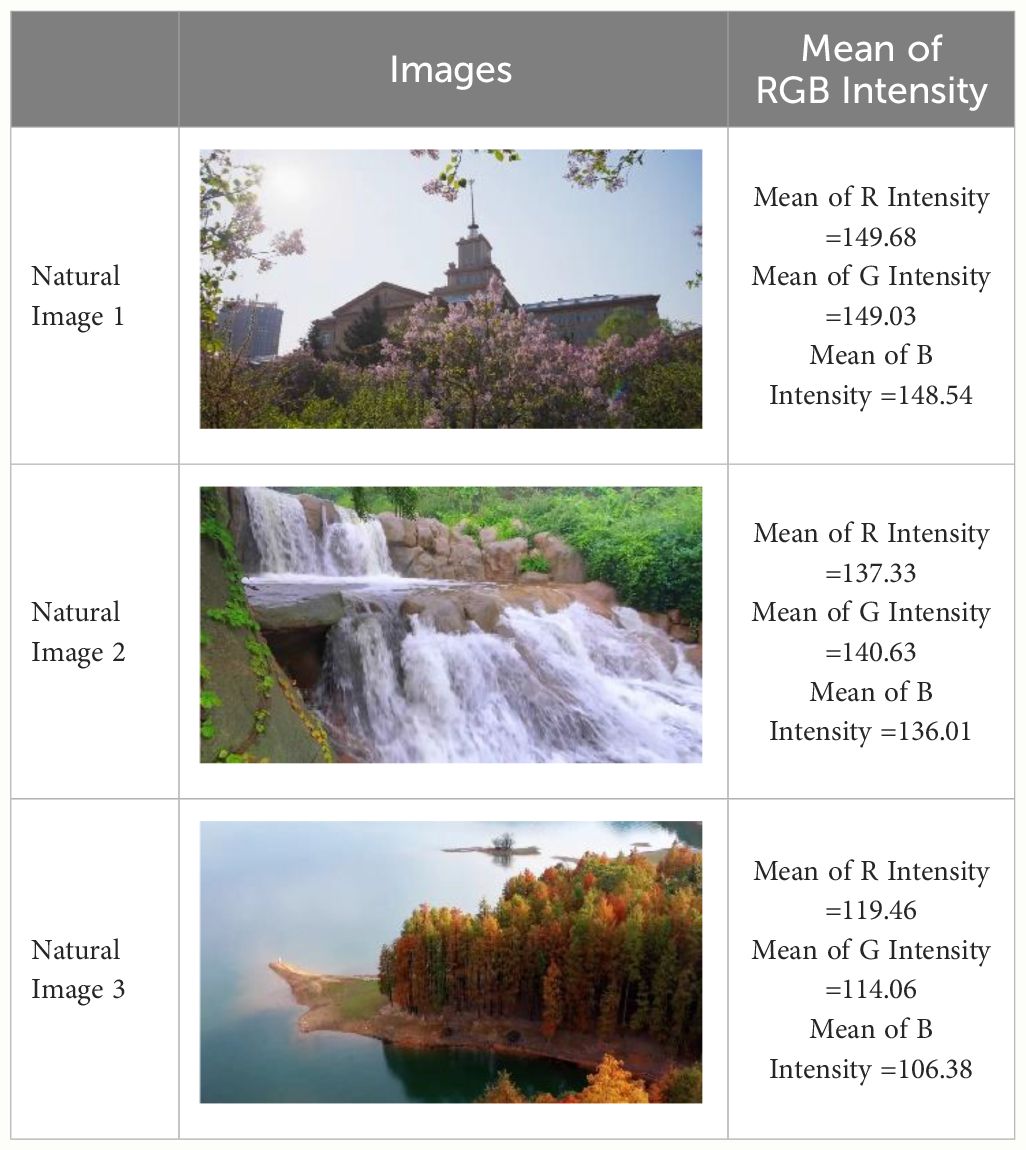

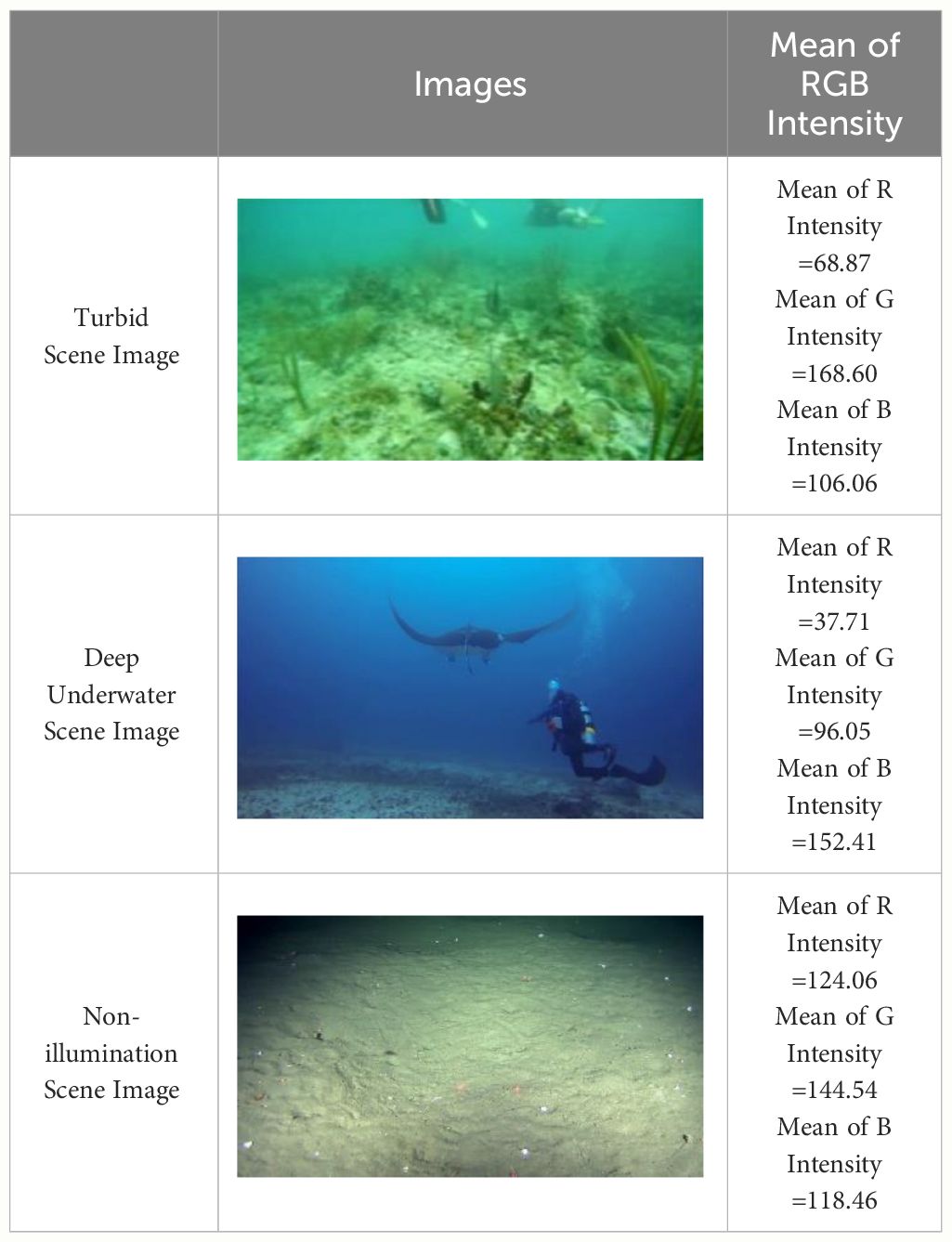

Due to the absorption of light by the aquatic medium, underwater images undergo substantial color degradation, which differs from the color characteristics of natural landscape images. Tables 1 and 2 present the average intensity values of the red, green, and blue (RGB) color channels for typical natural landscape images and degraded underwater images, respectively. Through comparative analysis, the following conclusions can be drawn:

1) In natural landscape images captured on terrestrial surfaces without degradation, colors exhibit a uniform distribution.

2) For images taken in turbid water scenes, the green channel’s wavelength is less absorbed and relatively well-preserved. Thus, it is advisable to utilize the green channel as a reference to compensate for the red and blue channels.

3) When images are acquired from deep-water scenes, the wavelength of the blue channel is relatively short, which can be preserved relatively well. Therefore, the blue channel is utilized as a reference to compensate for the red and green channels.

4) For images taken in underwater uneven lighting scenes, the values of the three color channels commonly exhibit relatively higher levels, thus rendering restoration unnecessary.

We propose an adaptive underwater image color compensation method based on the above analysis of terrestrial natural scene images and underwater scene images. First, the three color channels are ranked by their mean values. Then, using the least attenuated channel as a reference, we calculate the gain factors and compensate the remaining two color channels. The average of the red, green, and blue channels is computed as shown in Equation (1):

$$\bar{I}_c = \frac{1}{H \times W} \sum_{x=1}^{H} \sum_{y=1}^{W} I_c(x, y), \quad c \in \{R, G, B\} \tag{1}$$

where $H$ and $W$ represent the height and width of the input image, respectively. We divide the three RGB color channels into the high-quality color channel $I_h$, the medium color channel $I_m$, and the low-quality color channel $I_l$ according to their average values in descending order.

Next, to address the attenuation in the medium color channel $I_m$ and the low-quality color channel $I_l$, the high-quality color channel $I_h$ is selected as the reference channel. The gain factor J is defined as the difference between the average values of the high-quality color channel $I_h$ and the medium color channel $I_m$, and the gain factor K is the difference between the average values of the high-quality color channel $I_h$ and the low-quality color channel $I_l$. The gain factors J and K are calculated as shown in Equations (2) and (3), respectively:

$$J = \bar{I}_h - \bar{I}_m \tag{2}$$

$$K = \bar{I}_h - \bar{I}_l \tag{3}$$

where $\bar{I}_h$, $\bar{I}_m$, and $\bar{I}_l$ are the average values of $I_h$, $I_m$, and $I_l$, respectively.

By using the gain factors J and K, we compensate for the attenuation of the medium and low-quality color channels at every pixel position $(x, y)$, as calculated in Equations (4) and (5).

The proposed adaptive underwater image color correction method effectively mitigates the presence of color casts caused by underwater conditions. However, it should be noted that the method cannot fully address color casts in regions influenced by light sources. To overcome this limitation, the white balance algorithm based on gray world assumptions (Buchsbaum, 1980) is employed subsequently to compensate for the attenuation channel. Adjusting the color of each pixel in the image to a neutral gray that conforms to the gray world assumption effectively corrects color degradation in areas influenced by the light source. This procedure enhances the overall natural appearance of the image and alleviates color distortion issues caused by underwater conditions.
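The color correction steps can be illustrated with a minimal NumPy sketch. It ranks the channels by their means, derives the gain factors J and K, and then applies gray world white balance. The function names are hypothetical, and the per-pixel compensation rule used here (adding a gain-weighted share of the reference channel) is only an assumption standing in for Equations (4) and (5), which may differ in form.

```python
import numpy as np

def adaptive_color_compensation(img):
    """img: float array in [0, 1], shape (H, W, 3), channels ordered R, G, B."""
    means = img.reshape(-1, 3).mean(axis=0)   # per-channel average, Equation (1)
    hi, mid, lo = np.argsort(means)[::-1]     # high-, medium-, low-quality channel
    J = means[hi] - means[mid]                # gain factor for the medium channel
    K = means[hi] - means[lo]                 # gain factor for the low-quality channel
    out = img.copy()
    # ASSUMPTION: compensation adds a gain-weighted share of the reference
    # channel at every pixel; the paper's Equations (4) and (5) may differ.
    out[..., mid] = img[..., mid] + J * img[..., hi]
    out[..., lo] = img[..., lo] + K * img[..., hi]
    return np.clip(out, 0.0, 1.0), (J, K)

def gray_world(img):
    """Scale each channel so that its mean matches the global gray level."""
    means = img.reshape(-1, 3).mean(axis=0)
    scale = means.mean() / np.maximum(means, 1e-6)
    return np.clip(img * scale, 0.0, 1.0)

# Example: a greenish image, as might be captured in turbid water
image = np.full((4, 4, 3), [0.2, 0.6, 0.4])
compensated, (J, K) = adaptive_color_compensation(image)
balanced = gray_world(compensated)
```

In this example the green channel is the reference, so the blue and red channels are raised before white balance equalizes the channel means.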

3.2 Underwater images dehazing

To tackle the challenges of blurriness and haze in underwater images, we present a straightforward yet efficient underwater dehazing technique. Drawing inspiration from the methodology outlined in Ancuti and Ancuti (2013), our approach similarly adopts a multi-scale fusion framework. However, unlike Ancuti and Ancuti (2013), our fusion framework incorporates both sharp images and multi-exposure images as inputs. The inclusion of sharp images serves to accentuate edge delineation and improve local visibility by merging them with gamma-corrected images. As depicted in Figure 1, our approach entails separate processing of event reconstruction images and RGB images via parallel dehazing processes.

3.2.1 Intensity image reconstruction from events

We utilize the E2VID method (Rebecq et al., 2019) to reconstruct intensity images from events captured by event cameras. First, we establish a convolutional recurrent network based on the UNet architecture. This network can learn to reconstruct intensity frames from asynchronous event data. Subsequently, we employ the Event Simulator (ESIM) (Rebecq et al., 2018) to generate a series of simulated event sequences for network training. The actual event stream is then partitioned into discrete time blocks and fed into the trained network to reconstruct events into intensity images.
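The partitioning of the event stream into time blocks can be sketched as follows. This is an illustrative simplification rather than the E2VID preprocessing itself: the event layout `(t, x, y, polarity)`, the function names, and the polarity-sum frame are assumptions, and E2VID builds richer spatio-temporal event tensors before feeding them to the network.

```python
import numpy as np

def split_events_by_time(events, block_duration):
    """Split events (N, 4) with columns (t, x, y, polarity), sorted by t,
    into consecutive blocks that each span block_duration time units."""
    t = events[:, 0]
    block_id = ((t - t[0]) // block_duration).astype(int)
    cuts = np.flatnonzero(np.diff(block_id)) + 1  # indices where the block changes
    return np.split(events, cuts)                 # empty blocks are skipped

def accumulate(block, height, width):
    """Sum signed polarities per pixel (a simplified stand-in for an event tensor)."""
    frame = np.zeros((height, width), dtype=np.int32)
    x = block[:, 1].astype(int)
    y = block[:, 2].astype(int)
    sign = np.where(block[:, 3] > 0, 1, -1)
    np.add.at(frame, (y, x), sign)                # handles repeated pixel indices
    return frame

# Example with integer timestamps (e.g., microseconds)
events = np.array([[0, 1, 1, 1], [10, 1, 1, 1], [20, 0, 0, 0],
                   [50, 2, 1, 1], [51, 2, 1, 0], [120, 0, 2, 1]])
blocks = split_events_by_time(events, 50)
first_frame = accumulate(blocks[0], height=3, width=3)
```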

3.2.2 Derived inputs

We generate a sharpened image and a series of multi-exposure images from the input image (event reconstruction image or RGB image).

Sharpened image. To improve the texture details of an image, we introduce the concept of a sharpened image, which is a sharpness-enhanced version of the reconstructed intensity image or the color-corrected RGB image. We use the normalized unsharp masking method (Ramponi et al., 1996) to sharpen the input images, as expressed in Equation (6):

$$I_{\mathrm{sharp}} = \frac{I + \mathcal{N}\{I - G * I\}}{2} \tag{6}$$

where $G * I$ denotes the blurred version of the input image $I$, and $\mathcal{N}\{\cdot\}$ represents the linear normalization operator.
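A minimal sketch of normalized unsharp masking on a single-channel image is given below. The Gaussian kernel, its standard deviation, and the min-max form of the normalization operator are assumptions made for illustration; the function names are hypothetical.

```python
import numpy as np

def gaussian_blur(img, sigma=2.0):
    """Separable Gaussian blur of a 2D image with reflective borders."""
    radius = int(3 * sigma)
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-offsets**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    h, w = img.shape
    p = np.pad(img, radius, mode="reflect")
    rows = sum(k * p[:, i:i + w] for i, k in enumerate(kernel))    # horizontal pass
    return sum(k * rows[i:i + h, :] for i, k in enumerate(kernel)) # vertical pass

def normalize(img):
    """Linear (min-max) normalization to [0, 1]; assumed form of N{.}."""
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)

def sharpen(img, sigma=2.0):
    """Average the input with its normalized high-frequency residual."""
    return (img + normalize(img - gaussian_blur(img, sigma))) / 2.0

rng = np.random.default_rng(0)
image = rng.random((32, 32))
sharpened = sharpen(image)
```

Because both terms lie in [0, 1], their average stays in range without any extra clipping.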

Gamma-corrected image sequences. We use the gamma correction method to adjust the dynamic range of images and generate artificial multi-exposure image sequences that enhance the contrast in foggy regions. Gamma correction is a method of adjusting the brightness and contrast of an image by applying a non-linear transformation to its pixel values. Studies demonstrate that when γ > 1, the visibility of hazy areas in the output image improves, but the contrast in dark areas decreases. Conversely, when γ < 1, the visibility of details in darker regions is improved, but it affects the details in bright areas. The formula for gamma correction is shown in Equation (7).

$$I_{\mathrm{out}} = \alpha I^{\gamma} \tag{7}$$

where α and γ are positive constants. Figure 2 demonstrates the effects of gamma correction operation on an image. Figure 2A shows the original image. The resulting image exhibits reduced contrast and heightened haziness when γ < 1, as illustrated in Figure 2B. Conversely, for γ > 1, as depicted in Figures 2C–E, the gamma-corrected images showcase heightened contrast and improved visibility, with distinct details in brighter areas. Figure 2C achieves a softer dehazing effect while preserving detailed information, whereas Figure 2E emphasizes brightness contrast and clarity at the expense of some finer details. To optimize image quality and restore intricate details, we employ three distinct γ values (γ = 1.5, 2, 2.5) to generate diverse exposure images.

Figure 2 The results of gamma correction operations. (A) Original image. (B) Corrected image with γ=0.5. (C) Corrected image with γ=1.5. (D) Corrected image with γ=2.0. (E) Corrected image with γ=2.5.
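The generation of the artificial multi-exposure sequence can be sketched in a few lines. The power-law form with α = 1 and intensities normalized to [0, 1] is assumed here, and the function names are hypothetical.

```python
import numpy as np

def gamma_correct(img, gamma, alpha=1.0):
    """Power-law transform of an image with values in [0, 1]."""
    return np.clip(alpha * np.power(img, gamma), 0.0, 1.0)

def multi_exposure(img, gammas=(1.5, 2.0, 2.5)):
    """Build the artificial exposure sequence with the three gamma values from the text."""
    return [gamma_correct(img, g) for g in gammas]

image = np.array([[0.25, 0.5, 1.0]])
sequence = multi_exposure(image)
```

For intensities in [0, 1], every γ > 1 darkens mid-tones while leaving pure white unchanged, which stretches the contrast of bright, hazy regions.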

3.2.3 Fusion weights of event reconstruction images

Since event reconstruction images lack color information, saturation is not a factor to consider during the image fusion. This study utilizes contrast and exposure as metrics to achieve the fusion of four inputs from event reconstruction images: one sharpened version and three gamma-corrected versions.

Contrast weight map $C_i$: we apply Laplacian filtering to each input image’s luminance channel and use the absolute value of the filtering result to calculate the global contrast. A higher contrast weight indicates better preservation of image texture details, resulting in a clearer image. The contrast weight map is computed as in Equation (8):

$$C_i(x, y) = \left| L * \mathcal{I}_i(x, y) \right| \tag{8}$$

where $L$ denotes the Laplacian operator and $\mathcal{I}_i$ represents the luminance of the i-th input image.

Exposure weight map $E_i$: images with different exposure levels contain varying degrees of detail information, with well-exposed pixels exhibiting clearer details. In optimal exposure conditions, pixel brightness values should be close to 0.5. The calculation of $E_i$ is shown in Equation (9):

$$E_i(x, y) = \exp\left( -\frac{\left( \mathcal{I}_i(x, y) - 0.5 \right)^2}{2\sigma^2} \right) \tag{9}$$

where σ is a parameter representing the standard deviation, influencing the width of the Gaussian distribution in the exponential term. σ is usually set to 0.2 (Lee et al., 2018; Zhang et al., 2018; Xu et al., 2022).

To obtain the final weight map $W_i$ for each input, we compute the product of the contrast weight map and the exposure weight map, which gives $W_i(x, y) = C_i(x, y) \cdot E_i(x, y)$. Subsequently, we normalize the weight map at every pixel position by dividing each weight value by the sum of the weight values at that position, as follows: $\bar{W}_i(x, y) = W_i(x, y) / \sum_{k} W_k(x, y)$. This normalization ensures that the weight values at each position sum to 1.
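The contrast and exposure weights and their normalization can be sketched as follows. The 4-neighbor Laplacian stencil and the small constant `eps`, added to avoid division by zero in flat regions, are assumptions that are not stated in the text; the function names are hypothetical.

```python
import numpy as np

def laplacian_abs(lum):
    """Contrast weight: absolute response of a 4-neighbor Laplacian."""
    p = np.pad(lum, 1, mode="edge")
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    return np.abs(lap)

def exposure_weight(lum, sigma=0.2):
    """Exposure weight: Gaussian centered on the ideal brightness 0.5."""
    return np.exp(-((lum - 0.5) ** 2) / (2.0 * sigma ** 2))

def normalized_weights(inputs, eps=1e-6):
    """Per-input weight maps that sum to 1 at every pixel (eps is an assumption)."""
    w = np.stack([laplacian_abs(i) * exposure_weight(i) + eps for i in inputs])
    return w / w.sum(axis=0, keepdims=True)

rng = np.random.default_rng(0)
inputs = [rng.random((8, 8)) for _ in range(4)]  # one sharpened + three gamma versions
weights = normalized_weights(inputs)
```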

3.2.4 Fusion weights of RGB images

To enhance the visual fidelity of RGB images, we adopt a contrast weight map, saliency weight map, and saturation weight map to efficiently blend four inputs from RGB images.

Saliency weight map $S_j$: we detect the saliency level of adjacent pixels and extract regions with high saliency for fusion, resulting in images with clear boundaries. To measure saliency levels, we employ the saliency estimator developed by Achanta et al. (2009), which utilizes color and brightness features to estimate saliency and can swiftly generate full-resolution saliency maps with clear boundaries.

Saturation weight map $T_j$: regions with high saturation are extracted to generate images with more vibrant and true-to-life colors. To compute the weight values, we calculate the standard deviation of the color channels $R_j$, $G_j$, and $B_j$ at each pixel position, which reflects the color saturation of that position. Regions with higher saturation are assigned greater weight values in the map, resulting in better preservation of their color during the image fusion process. The saturation weight map is expressed in Equation (10):

$$T_j = \sqrt{\frac{1}{3}\left[ (R_j - L_j)^2 + (G_j - L_j)^2 + (B_j - L_j)^2 \right]} \tag{10}$$

where $L_j$ is the average of the three RGB color channel values.

In the RGB image dehazing process, the final weight map for each input is $W_j(x, y) = C_j(x, y) \cdot S_j(x, y) \cdot T_j(x, y)$, and the weight map is normalized as $\bar{W}_j(x, y) = W_j(x, y) / \sum_{k} W_k(x, y)$.
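The saturation weight and the combination of the three RGB weight maps can be sketched as below. The contrast and saliency maps are taken as given inputs (no saliency estimator is implemented here), the function names are hypothetical, and the constant `eps` is an assumption added to avoid division by zero.

```python
import numpy as np

def saturation_weight(rgb):
    """Per-pixel standard deviation of R, G, B around their mean."""
    lum = rgb.mean(axis=2, keepdims=True)
    return np.sqrt(((rgb - lum) ** 2).mean(axis=2))

def rgb_weights(contrast, saliency, saturation, eps=1e-6):
    """Multiply the three maps per input and normalize across inputs."""
    w = np.stack(contrast) * np.stack(saliency) * np.stack(saturation) + eps
    return w / w.sum(axis=0, keepdims=True)

# A gray pixel has zero saturation weight; a pure red pixel has a large one
pixels = np.array([[[0.5, 0.5, 0.5], [1.0, 0.0, 0.0]]])
sat = saturation_weight(pixels)
```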

3.2.5 Multi-scale fusion process

To avoid unwanted artifacts in the fused image, we adopt the multi-scale image fusion method proposed by Burt and Adelson (1983) to fuse the sharpness map and the multi-exposure images. Taking the RGB image as an example, we first decompose the sharpness map and multi-exposure images into Laplacian pyramids $L_l\{I_j\}$, and their corresponding normalized weight maps into Gaussian pyramids $G_l\{\bar{W}_j\}$. The Laplacian pyramid represents the detail information of an image, while the Gaussian pyramid represents its blurred (low-frequency) information. The calculation for the l-th level of the Gaussian pyramid is shown in Equation (11):

$$G_l(x, y) = \sum_{m=-2}^{2} \sum_{n=-2}^{2} w(m, n)\, G_{l-1}(2x + m, 2y + n), \quad 1 \le l \le N,\ 0 \le x < R_l,\ 0 \le y < C_l \tag{11}$$

where $w(m, n)$ is the Gaussian filter template, $N$ is the maximal level of the pyramid, and $C_l$ and $R_l$ represent the column and row number of the l-th level pyramid, respectively.

Subsequently, we derive the Laplacian pyramid by computing the difference between adjacent layers of the Gaussian pyramid (Wang and Chang, 2011). The detailed calculation is expressed in Equations (12) and (13).

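Then, we combine the Laplacian pyramids $L_l\{I_j\}$ of the inputs and the Gaussian pyramids $G_l\{\bar{W}_j\}$ of the normalized weight maps at each level using Equation (14):

$$F_l(x, y) = \sum_{j} G_l\{\bar{W}_j(x, y)\} \cdot L_l\{I_j(x, y)\} \tag{14}$$

where $l$ represents the level of the pyramid, and · denotes element-wise multiplication.

Finally, we reconstruct the dehazed RGB image by upsampling and adding up the fused pyramid levels, as shown in Equation (15):

$$I_{\mathrm{dehazed}}(x, y) = \sum_{l} U\{F_l(x, y)\} \tag{15}$$

where $U\{\cdot\}$ is the upsampling operator.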
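The multi-scale fusion can be sketched for single-channel inputs as follows. The 5-tap binomial kernel, the reflective border handling, and the coarse-to-fine collapse used for reconstruction are assumptions in the spirit of Burt and Adelson (1983); the function names are hypothetical, and an RGB image would be processed channel by channel.

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=float) / 16.0  # assumed filter template

def blur(img):
    """Separable 5-tap binomial blur with reflective borders."""
    h, w = img.shape
    p = np.pad(img, 2, mode="reflect")
    rows = sum(KERNEL[k] * p[:, k:k + w] for k in range(5))
    return sum(KERNEL[k] * rows[k:k + h, :] for k in range(5))

def down(img):
    return blur(img)[::2, ::2]

def up(img, shape):
    """Upsample to `shape` by zero insertion followed by blurring."""
    out = np.zeros(shape)
    out[::2, ::2] = img
    return 4.0 * blur(out)

def gaussian_pyramid(img, levels):
    pyr = [img]
    for _ in range(levels - 1):
        pyr.append(down(pyr[-1]))
    return pyr

def laplacian_pyramid(img, levels):
    g = gaussian_pyramid(img, levels)
    return [g[l] - up(g[l + 1], g[l].shape) for l in range(levels - 1)] + [g[-1]]

def fuse(inputs, weights, levels=3):
    """Blend Laplacian levels of the inputs with Gaussian levels of the weights."""
    fused = None
    for img, w in zip(inputs, weights):
        terms = [gw * lp for gw, lp in
                 zip(gaussian_pyramid(w, levels), laplacian_pyramid(img, levels))]
        fused = terms if fused is None else [f + t for f, t in zip(fused, terms)]
    out = fused[-1]
    for l in range(levels - 2, -1, -1):   # collapse from coarse to fine
        out = fused[l] + up(out, fused[l].shape)
    return out

rng = np.random.default_rng(0)
a, b = rng.random((32, 32)), rng.random((32, 32))
result = fuse([a, b], [np.full_like(a, 0.5), np.full_like(b, 0.5)])
```

Blending the weights at coarse scales and the image details at fine scales is what suppresses the halo artifacts that a direct per-pixel weighted sum tends to produce.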

3.3 RGB/Event image fusion

To tackle the issue of uneven illumination in degraded underwater images, we utilize a multi-scale fusion method to blend the enhanced event-reconstructed image with the enhanced RGB image. This method is similar to that of Bi et al. (2022b) and aims to recover fine details in both bright and dark areas while retaining color information. The specific steps are as follows:

1) Extraction of illuminance map. We employ the RRDNET image decomposition method proposed in Zhu et al. (2020), a three-branch convolutional neural network that extracts an illuminance map from the enhanced RGB image. This is done to prepare for subsequent fusion with the enhanced event reconstruction image.

2) Generation of artificial multi-exposure image sequences. We use the gamma correction method (Equation 7) to adjust the exposure of the illuminance map and the enhanced event reconstruction image, resulting in two multi-exposure image sequences.

3) Designing weight maps. To ensure that more scene details are preserved and have consistent brightness during the image fusion process, we use the three features, namely contrast, exposure, and average luminance, to set the weight map for each artificial exposure image.

4) Image fusion. We use the multi-scale fusion strategy described in Section 3.2.5 to fuse the multi-exposure image sequences and weight maps, generating the fused image.

5) Image restoration. Based on the Retinex theory (Li et al., 2018), we restore the fused image to an RGB image, and the specific calculation method is represented as Equation (16).
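
The restoration step can be illustrated with a short sketch under the Retinex model, in which an image is the product of reflectance and illumination. It is assumed here that the reflectance is obtained by dividing the enhanced RGB image by its illumination map and that the fused image then takes the place of the illumination. This is an illustrative assumption and not necessarily the exact form of Equation (16); the function name and the constant `eps` are hypothetical.

```python
import numpy as np

def retinex_recompose(rgb, illumination, fused, eps=1e-6):
    """Replace the illumination of an RGB image by a fused illumination map.

    rgb:          (H, W, 3) enhanced RGB image in [0, 1]
    illumination: (H, W) illumination map extracted from rgb
    fused:        (H, W) fused image from the multi-scale fusion step
    """
    reflectance = rgb / (illumination[..., None] + eps)  # Retinex: image = R * L
    return np.clip(reflectance * fused[..., None], 0.0, 1.0)

rng = np.random.default_rng(0)
rgb = rng.random((4, 4, 3))
illum = 0.2 + 0.8 * rng.random((4, 4))
restored = retinex_recompose(rgb, illum, illum)  # unchanged illumination returns rgb
```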

4 Experiments

This section thoroughly assesses the proposed method’s effectiveness, utilizing four distinct approaches: qualitative analysis, quantitative analysis, ablation study, and application testing. Qualitative and quantitative analyses are performed to compare the performance of our method with other state-of-the-art underwater image enhancement methods on two datasets and to assess its effectiveness in multi-degraded underwater scenes. Ablation experiments are conducted to measure the contribution of different components of the proposed method to its overall performance. Lastly, application testing is carried out to verify the feasibility and efficacy of our method in practical underwater applications.

4.1 Experimental settings

4.1.1 Comparison methods

We compare the proposed method with 9 state-of-the-art methods, namely, the color balance and fusion (CBAF) method (Ancuti et al., 2017), the image blurriness and light absorption (IBLA) method (Peng and Cosman, 2017), the contrast and information enhancement of underwater images (CIEUI) method (Sethi and Sreedevi, 2019), the contour bougie morphology (CBM) method (Yuan et al., 2020), the texture enhancement model based on blurriness and color fusion (TEBCF) method (Yuan et al., 2021), the underwater shallow neural network (Shallow-uwnet) (Naik et al., 2021), the minimal color loss and locally adaptive contrast enhancement (MLLE) method (Zhang et al., 2022b), the hyper-laplacian reflectance priors (HLRP) method (Zhuang et al., 2022), and the events and frame fusion (EAFF) non-uniform illumination underwater image enhancement method (Bi et al., 2022b).

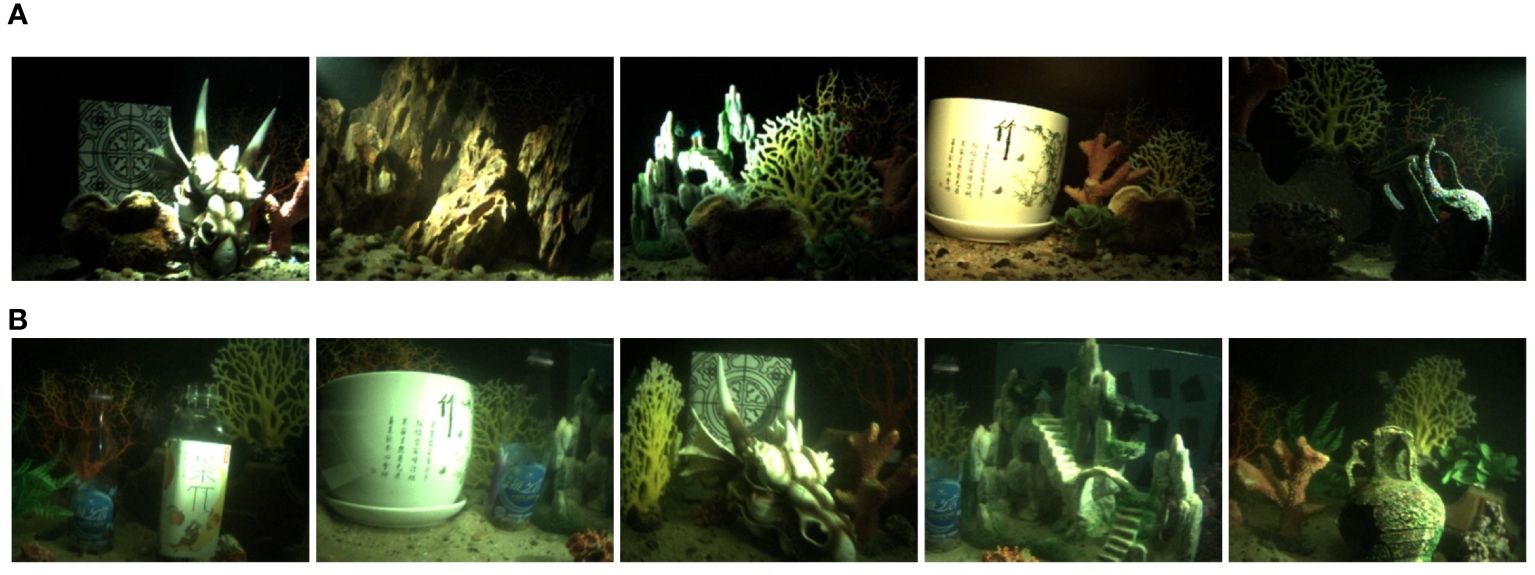

4.1.2 Datasets

Our experiment employed two datasets: the DAVIS-NUIUIED dataset (Bi et al., 2022a) and the multi-degraded underwater image enhancement dataset (DAVIS-MDUIED). Both datasets were captured using the event camera DAVIS346, which can simultaneously acquire RGB images and event streams. The DAVIS-NUIUIED dataset was captured in unevenly illuminated clear water scenes. We selected 5 representative recording scenes (“Head”, “Mountain”, “Rockery”, “Flowerpot”, and “Vase”) from it, as shown in Figure 3A. This dataset serves as a benchmark for evaluating the ability of various algorithms to restore details in underwater images with non-uniform illumination. The DAVIS-MDUIED dataset was collected in scenes with both uneven illumination and turbid water quality. As depicted in Figure 3B, we constructed 5 different recording scenes (“Bottle”, “Flowerpot”, “Head”, “Rockery”, and “Vase”). This dataset was specifically designed to evaluate the capability of various methods to address haze, color degradation, and uneven illumination simultaneously.

Figure 3 Experimental datasets. (A) Five scenes from the DAVIS-NUIUIED dataset. (B) Five scenes from the DAVIS-MDUIED dataset.

4.1.3 Evaluation metrics

We employ four popular no-reference image assessment metrics to evaluate enhanced image quality.

These metrics include Average Gradient (AG) (Du et al., 2017), Edge Intensity (EI) (Wang et al., 2012), Underwater Image Quality Measurement (UIQM) (Panetta et al., 2015), and Natural Image Quality Evaluator (NIQE) (Mittal et al., 2012).

Average Gradient (AG): AG is a metric that quantifies the average gradient of an image, with a higher AG score indicating the presence of greater texture detail within the image. The formula for calculating AG is shown in Equation (17).

where M and N represent the height and width of the image F, respectively.
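As a rough illustration, AG can be computed with forward differences as below. This follows one commonly used definition of the average gradient; the exact normalization in Equation (17) may differ, and the function name `average_gradient` is an assumption.

```python
import numpy as np

def average_gradient(img):
    """Average gradient of a 2-D grayscale image F of size M x N.

    Uses forward differences over the (M-1) x (N-1) interior grid and
    averages sqrt((dx^2 + dy^2) / 2), a commonly used AG definition.
    """
    f = np.asarray(img, dtype=np.float64)
    dx = f[1:, :-1] - f[:-1, :-1]   # difference between vertically adjacent pixels
    dy = f[:-1, 1:] - f[:-1, :-1]   # difference between horizontally adjacent pixels
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))

# Usage: a flat image has no texture, a ramp has a constant gradient.
flat = np.ones((6, 6))
ramp = np.tile(np.arange(6, dtype=np.float64), (6, 1))
ag_flat = average_gradient(flat)
ag_ramp = average_gradient(ramp)
```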

Edge Intensity (EI): EI evaluates the edge intensity of an image, and a higher score implies improved edge intensity in the image. EI can be represented by Equation (18).

where the two gradient terms denote the image gradients in the horizontal and vertical directions, respectively. They are computed as in Equations (19) and (20).
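A minimal sketch of an edge-intensity computation follows. It assumes the horizontal and vertical gradients are obtained with 3x3 Sobel kernels, which is a common choice but is not confirmed by the text here; the helper names `filter3x3` and `edge_intensity` are hypothetical.

```python
import numpy as np

# Sobel kernels (assumed; the paper's Equations (19) and (20) may differ).
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T

def filter3x3(img, kernel):
    """Cross-correlate a 2-D image with a 3x3 kernel, edge-replicated borders."""
    h, w = img.shape
    p = np.pad(img, 1, mode="edge")
    out = np.zeros((h, w), dtype=np.float64)
    for di in range(3):
        for dj in range(3):
            out += kernel[di, dj] * p[di:di + h, dj:dj + w]
    return out

def edge_intensity(img):
    """Mean gradient magnitude sqrt(gx^2 + gy^2) over all pixels."""
    f = np.asarray(img, dtype=np.float64)
    gx = filter3x3(f, SOBEL_X)   # horizontal gradient
    gy = filter3x3(f, SOBEL_Y)   # vertical gradient
    return float(np.mean(np.hypot(gx, gy)))

# Usage: a horizontal ramp has only a horizontal gradient component.
ramp = np.tile(np.arange(5, dtype=np.float64), (5, 1))
ei_ramp = edge_intensity(ramp)
```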

Underwater Image Quality Measurement (UIQM): UIQM is a linear combination of three individual metrics, i.e., underwater image colorfulness measure (UICM), underwater image sharpness measure (UISM), and underwater image contrast measure (UIConM), which collectively represent the quality of an underwater image. A higher score in UIQM indicates the enhanced quality of the underwater image. UIQM is represented as Equation (21).

where c1, c2, and c3 are obtained through multiple linear regression (MLR).
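Written out from the description above, the linear combination takes the following form. The coefficient values shown are those commonly quoted from Panetta et al. (2015); whether Equation (21) uses exactly these values is an assumption.

```latex
\mathrm{UIQM} = c_1 \cdot \mathrm{UICM} + c_2 \cdot \mathrm{UISM} + c_3 \cdot \mathrm{UIConM},
\qquad c_1 = 0.0282,\; c_2 = 0.2953,\; c_3 = 3.5753
```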

Natural Image Quality Evaluator (NIQE): NIQE evaluates the overall naturalness of an image, where a lower score implies better naturalness. The approach extracts Natural Scene Statistics (NSS) features separately from a corpus of natural images and from the test image. Each set of features is then fitted with a Multivariate Gaussian (MVG) model. The distance between the two models is subsequently calculated and serves as the quality measure of the test image.
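The final step, the distance between the two MVG models, can be sketched as follows. The form used is the one given by Mittal et al. (2012), with the two covariance matrices averaged; the NSS feature extraction itself is omitted, and the function names are hypothetical.

```python
import numpy as np

def fit_mvg(features):
    """Fit an MVG model: mean vector and covariance of row-wise feature vectors."""
    x = np.asarray(features, dtype=np.float64)
    return x.mean(axis=0), np.cov(x, rowvar=False)

def mvg_distance(mu1, cov1, mu2, cov2):
    """sqrt((mu1 - mu2)^T ((cov1 + cov2) / 2)^-1 (mu1 - mu2)).

    A pseudo-inverse is used so that a singular pooled covariance does not fail.
    """
    diff = np.asarray(mu1, dtype=np.float64) - np.asarray(mu2, dtype=np.float64)
    pooled = (np.asarray(cov1, dtype=np.float64) + np.asarray(cov2, dtype=np.float64)) / 2.0
    return float(np.sqrt(diff @ np.linalg.pinv(pooled) @ diff))

# Usage with synthetic stand-ins for NSS features (rows are patches).
rng = np.random.default_rng(0)
natural_mu, natural_cov = fit_mvg(rng.normal(0.0, 1.0, size=(200, 4)))
test_mu, test_cov = fit_mvg(rng.normal(0.5, 1.0, size=(200, 4)))
score = mvg_distance(natural_mu, natural_cov, test_mu, test_cov)
```

A larger distance means the test image statistics deviate more from the natural-image model, which corresponds to a worse (higher) NIQE score.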

4.2 Comparisons on the DAVIS-NUIUIED dataset

4.2.1 Qualitative comparisons

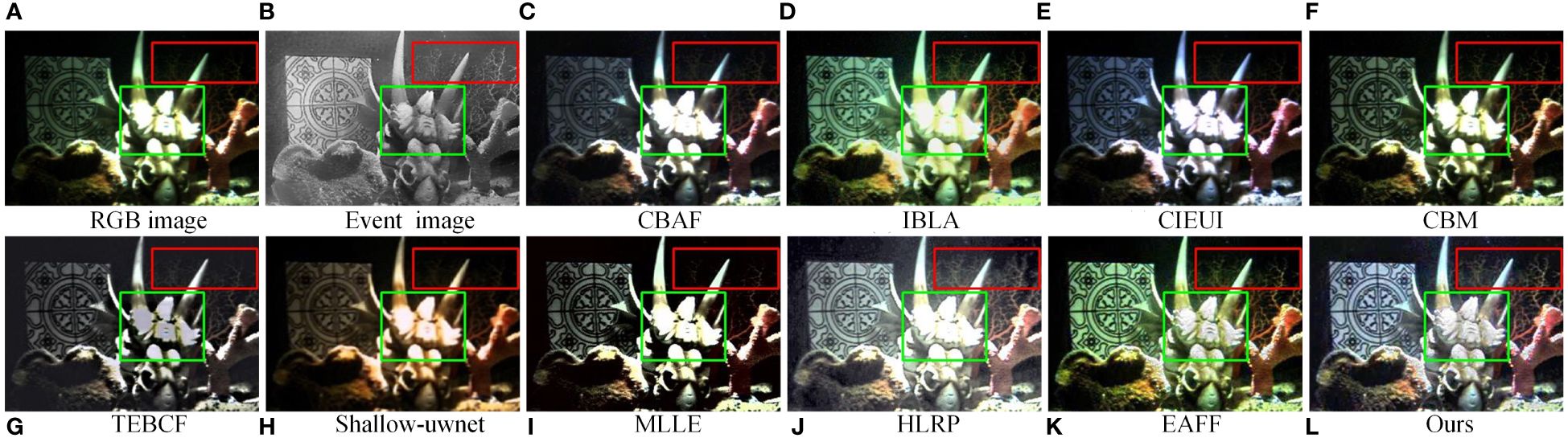

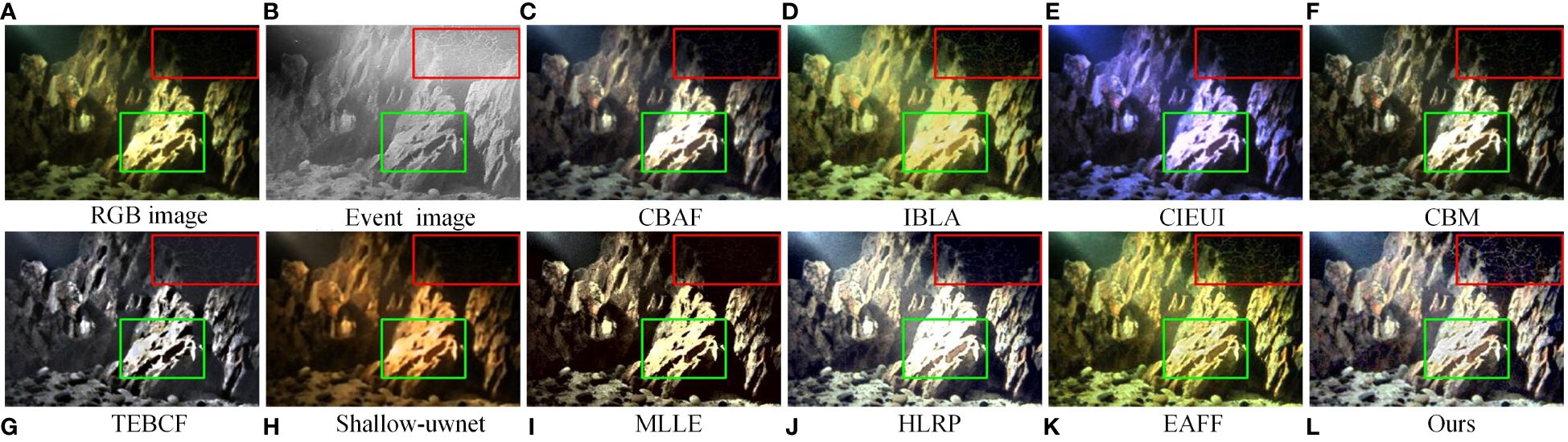

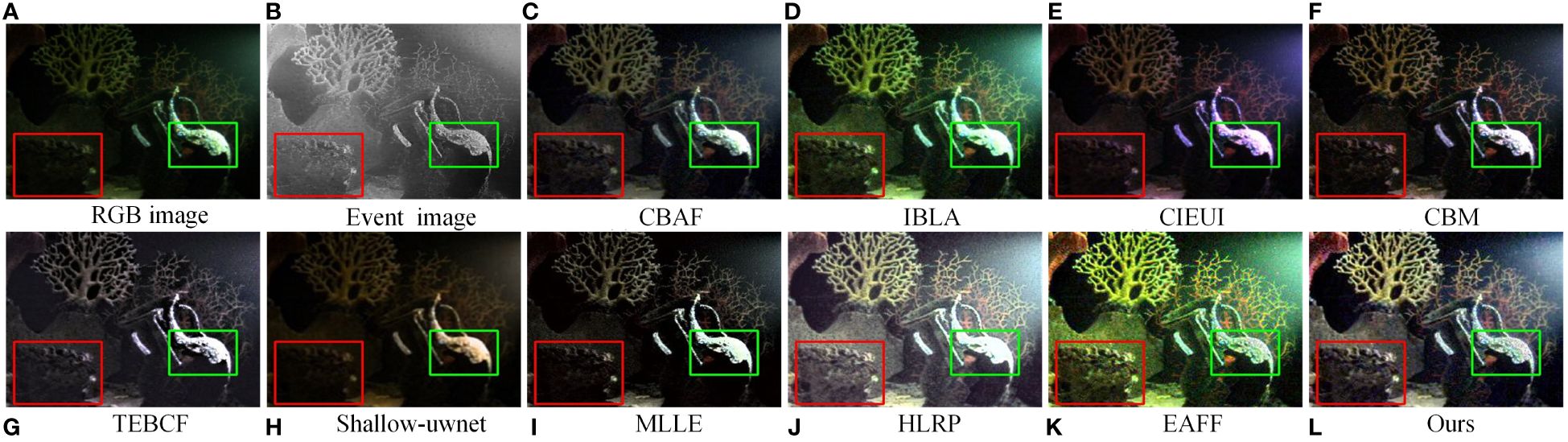

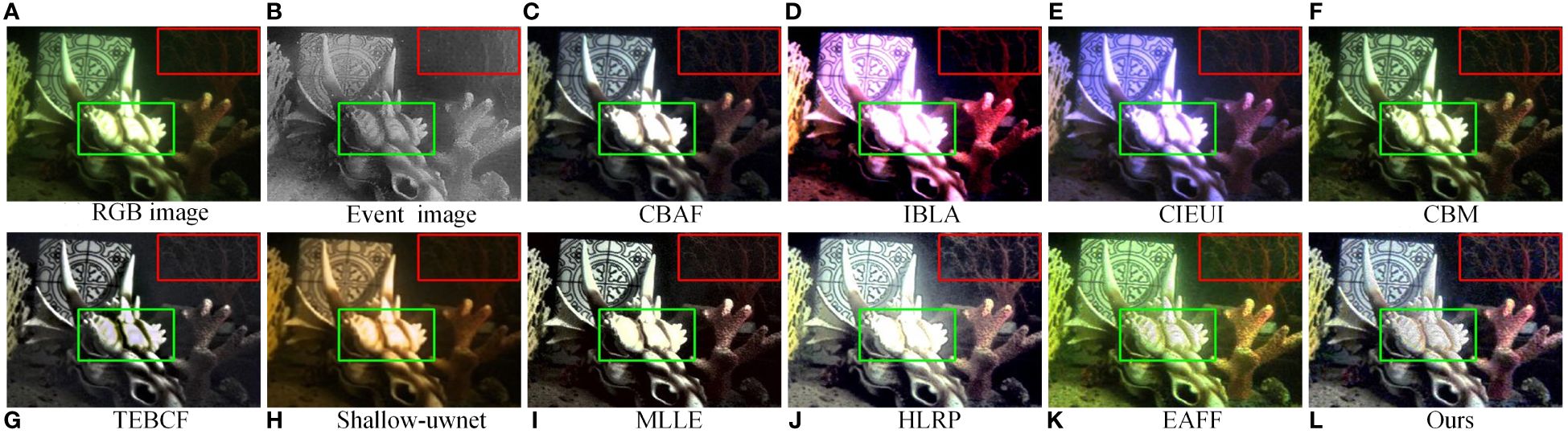

To illustrate the effectiveness of the proposed method in enhancing underwater images with non-uniform illumination, we conduct a series of comparative experiments on the DAVIS-NUIUIED dataset. The enhancement results achieved by the different methods are shown in Figures 4–8. Figures 4A, 5A, 6A, 7A, 8A are the original RGB images (RGB images), and Figures 4B, 5B, 6B, 7B, 8B are the intensity images reconstructed from the event stream by the E2VID method (Event images). Among the compared methods, CBAF and HLRP perform well in eliminating color aberration. However, CBAF falls short in contrast enhancement and in restoring details in dark and bright areas, while HLRP effectively enhances image contrast but may lead to overexposure, causing a more severe loss of details in bright areas. The IBLA method can restore details in dark areas but oversaturates the colors and introduces halos around bright areas, as exemplified by the green rectangles in Figures 4D, 5D. The CIEUI, TEBCF, and Shallow-uwnet methods suppress halos to some extent, but they still exhibit inadequate contrast and fail to address color degradation, as is especially visible in Figures 5E, G, H, 8E, G, H. Specifically, the CIEUI method excessively compensates for red and blue light, resulting in a purple-tinted enhanced image; the TEBCF method generates images with a dark tone; and the Shallow-uwnet method introduces a yellow tone. Although the CBM and MLLE methods improve image contrast somewhat, they still cannot restore details in the dark and bright regions, such as those marked by the rectangles in Figures 4F, I, 5F, I, 6F, I, 7F, I, 8F, I. The EAFF method recovers details in the dark and bright regions effectively and improves contrast, but it cannot eliminate color aberration, as depicted in Figures 4K, 5K, 6K, 7K, 8K. In contrast, the proposed method effectively recovers details in dark and bright regions, improves contrast and visibility, corrects color deviation, and generates images with realistic colors and clear details.

Figure 4 Visual comparison on the image ‘Head’ in the DAVIS-NUIUIED dataset (Bi et al., 2022a). (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

Figure 5 Visual comparison on the image ‘Mountains’ in the DAVIS-NUIUIED dataset (Bi et al., 2022a). (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

Figure 6 Visual comparison on the image ‘Rockery’ in the DAVIS-NUIUIED dataset (Bi et al., 2022a). (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

Figure 7 Visual comparison on the image ‘Flowerpot’ in the DAVIS-NUIUIED dataset (Bi et al., 2022a). (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

Figure 8 Visual comparison on the image ‘Vase’ in the DAVIS-NUIUIED dataset (Bi et al., 2022a). (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

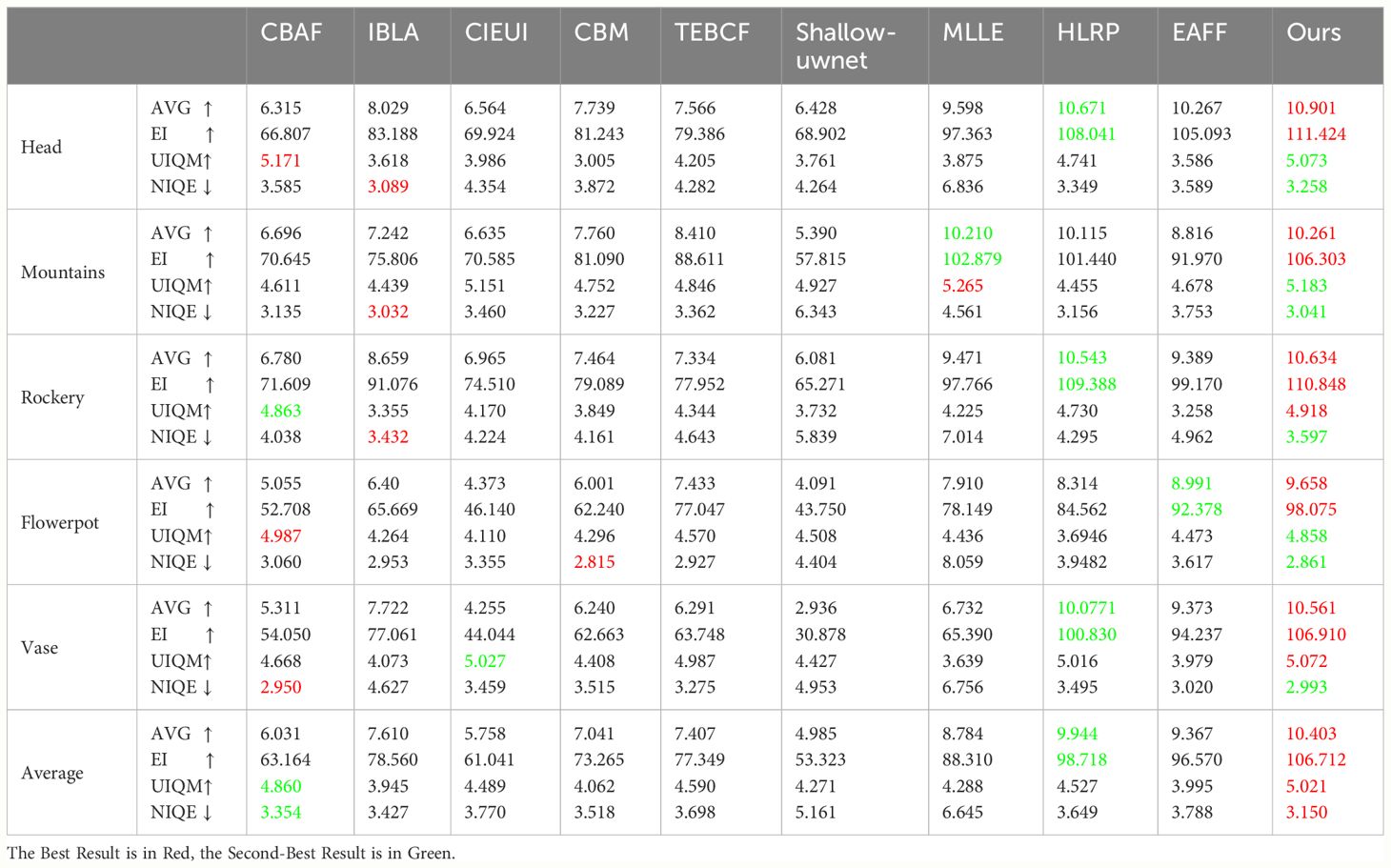

4.2.2 Quantitative comparisons

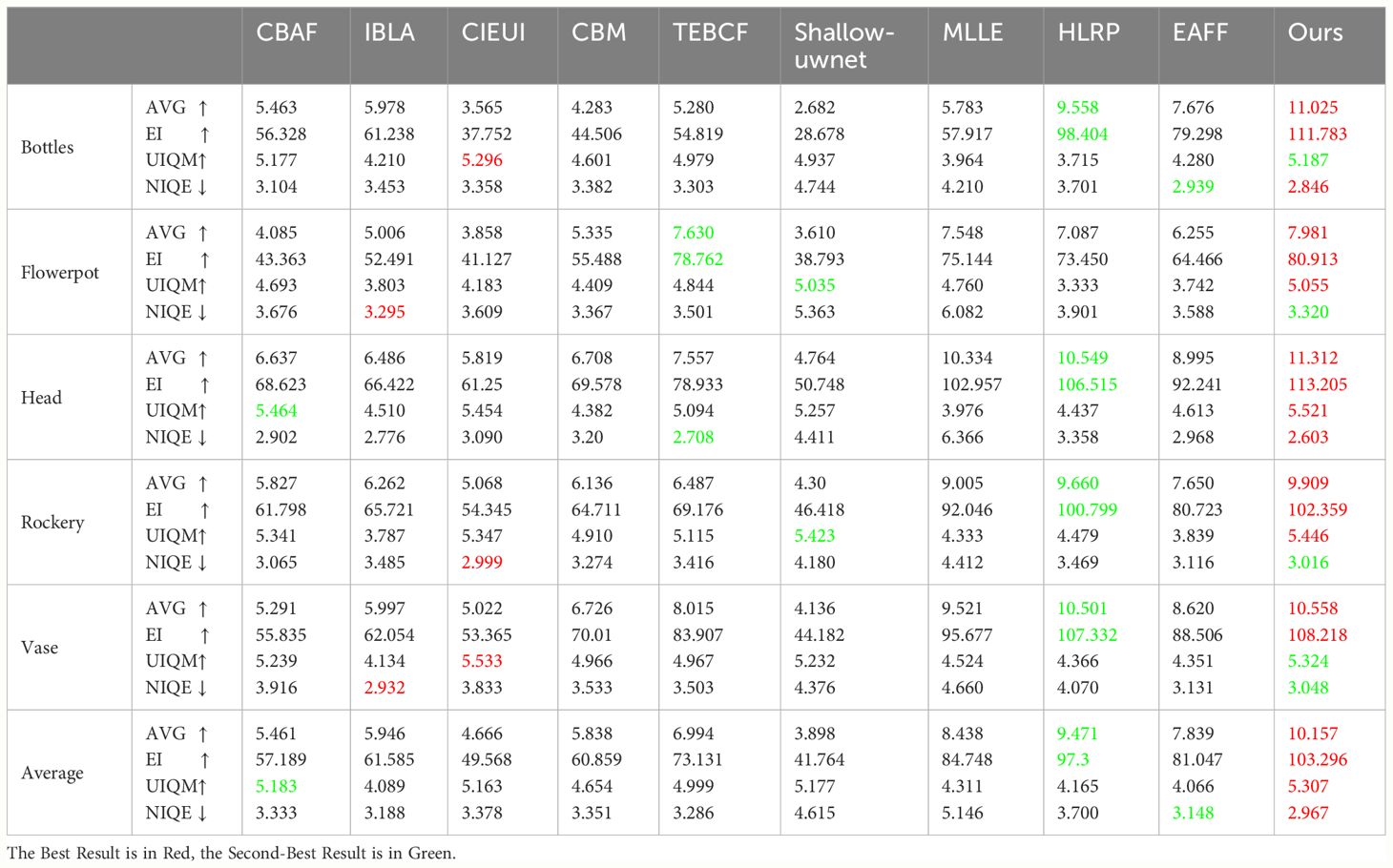

To assess the performance of the proposed method in underwater image color correction and detail restoration, we conduct a quantitative assessment on the DAVIS-NUIUIED dataset using four well-established metrics: AG (Du et al., 2017), EI (Wang et al., 2012), UIQM (Panetta et al., 2015), and NIQE (Mittal et al., 2012). The evaluation results are presented in Table 3. Our approach attains the top rankings in AG and EI, indicating that the enhanced images exhibit richer texture details. Although the proposed method ranks second on some individual images for the UIQM and NIQE metrics, it secures first place in the average value, demonstrating its robustness in enhancing underwater image quality and visual experience. Together, the qualitative and quantitative comparison results demonstrate the capability of our method in color correction and detail restoration of underwater images.

Table 3 Quantitative comparisons on the DAVIS-NUIUIED Dataset (Bi et al., 2022a).

4.3 Comparisons on the DAVIS-MDUIED dataset

4.3.1 Qualitative comparisons

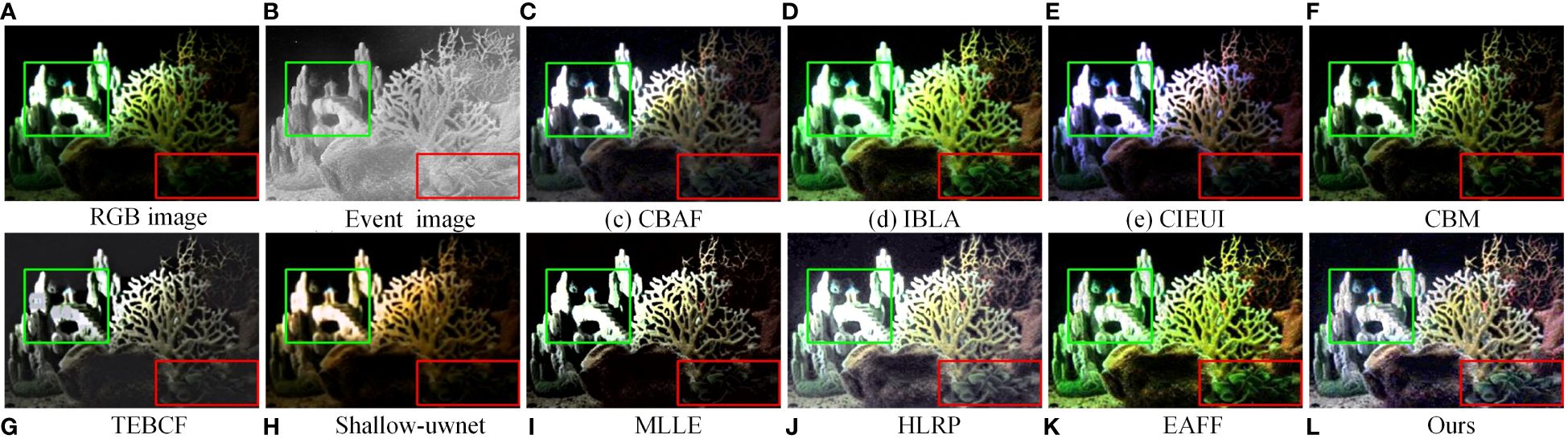

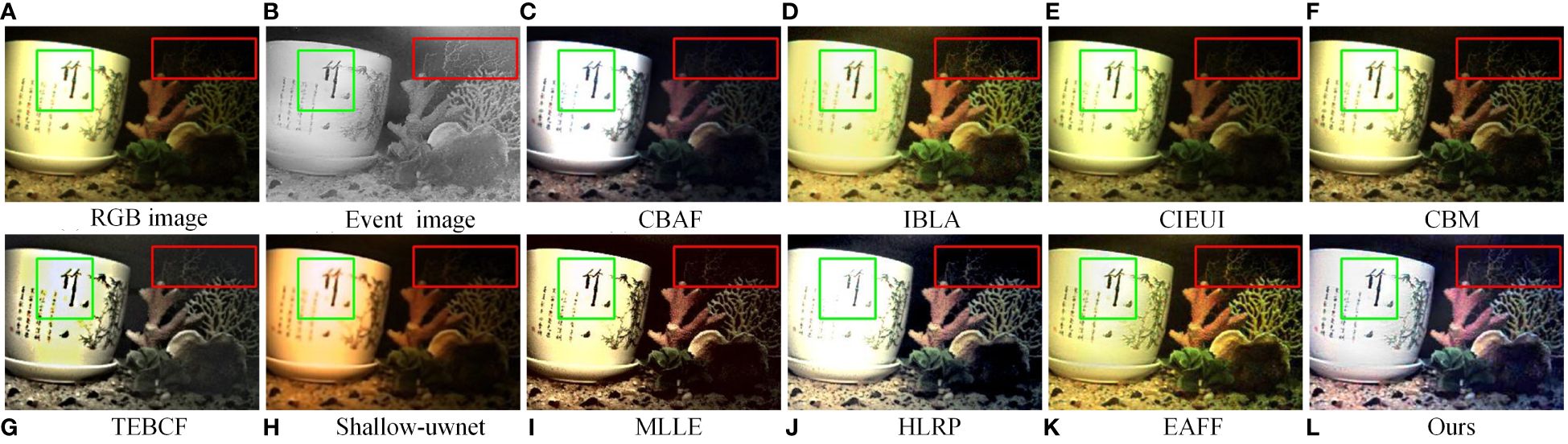

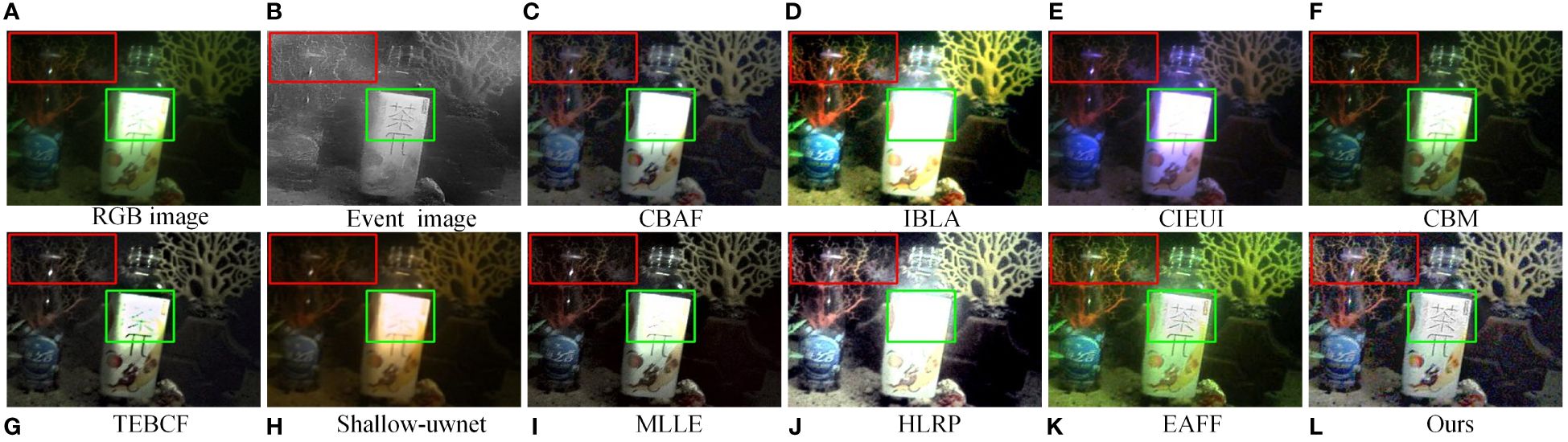

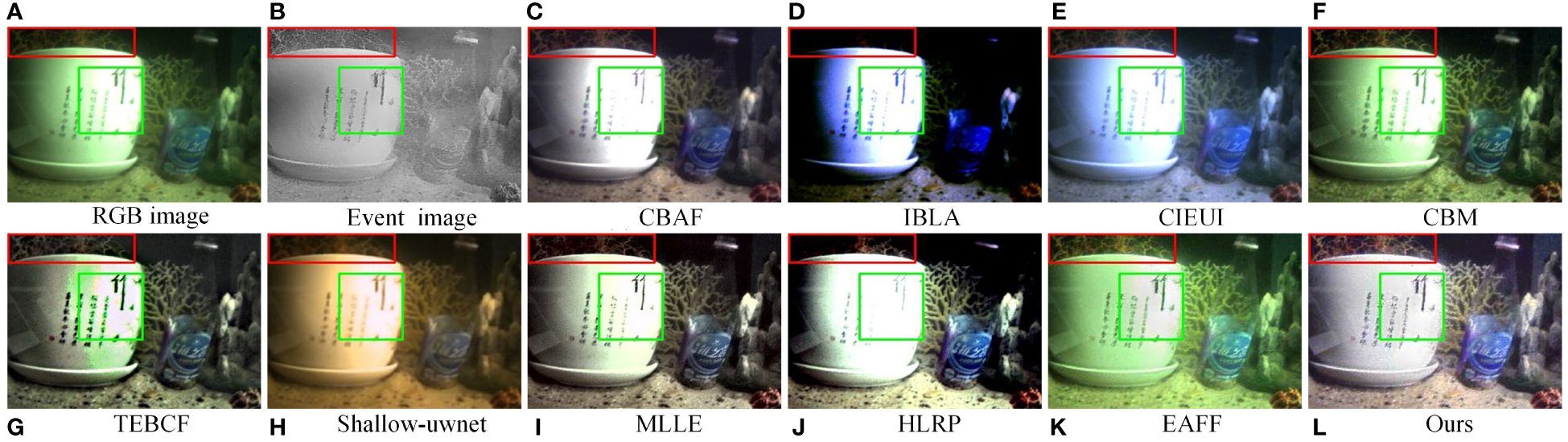

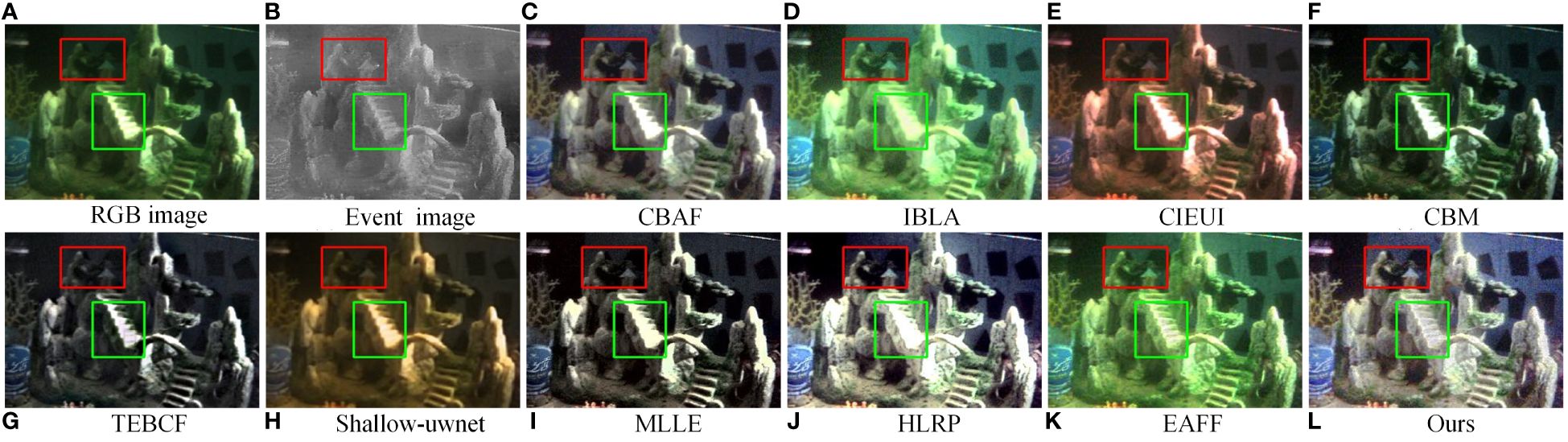

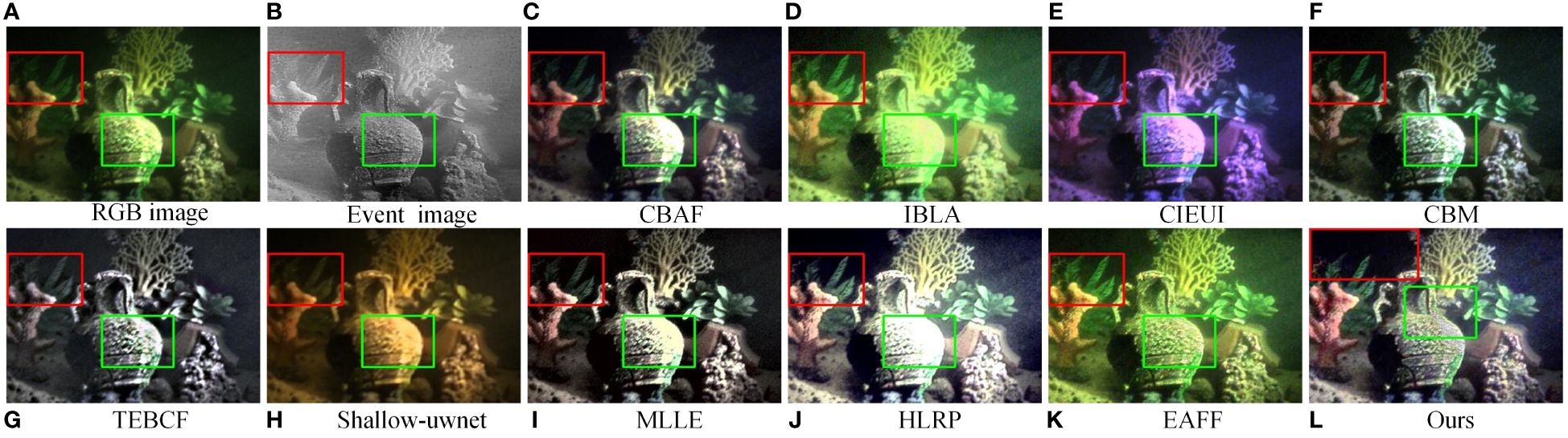

To further validate the capability of the proposed method to simultaneously address color degradation, haze, and non-uniform illumination in underwater images, we conduct comparative experiments on the DAVIS-MDUIED dataset. Figures 9C–L, 10C–L, 11C–L, 12C–L, 13C–L display the enhancement results of 9 state-of-the-art methods and our method on the DAVIS-MDUIED dataset. We find that the IBLA, CIEUI, Shallow-uwnet, and EAFF methods are unable to eliminate color deviations, leading to unwanted color distortions in the enhanced images. Furthermore, the IBLA, Shallow-uwnet, and EAFF methods fail to completely remove haze, particularly in the overexposed areas (green rectangles in Figures 9–13), resulting in low contrast in the enhanced images. Additionally, the CIEUI, Shallow-uwnet, and MLLE methods do not effectively restore details in the dark regions (red rectangles in Figures 9–13), reducing the visibility of the enhanced images. Moreover, the CBAF, IBLA, CIEUI, CBM, TEBCF, MLLE, and HLRP methods do not satisfactorily restore details in the bright regions and may introduce over-enhancement and local halos. In contrast, our method effectively corrects color deviations and eliminates haze while restoring details in both dark and bright regions, without introducing over-enhancement or halos. As a result, the proposed method exhibits the best visual effects among all the evaluated methods.

Figure 9 Visual comparison on the image ‘Bottles’ in the DAVIS-MDUIED dataset. (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

Figure 10 Visual comparison on the image ‘Flowerpot’ in the DAVIS-MDUIED dataset. (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

Figure 11 Visual comparison on the image ‘Head’ in the DAVIS-MDUIED dataset. (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

Figure 12 Visual comparison on the image ‘Rockery’ in the DAVIS-MDUIED dataset. (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

Figure 13 Visual comparison on the image ‘Vase’ in the DAVIS-MDUIED dataset. (A) Original RGB image. (B) Event reconstruction image. (C) CBAF (Ancuti et al., 2017). (D) IBLA (Peng and Cosman, 2017). (E) CIEUI (Sethi and Sreedevi, 2019). (F) CBM (Yuan et al., 2020). (G) TEBCF (Yuan et al., 2021). (H) Shallow-uwnet (Naik et al., 2021). (I) MLLE (Zhang et al., 2022b). (J) HLRP (Zhuang et al. 2022). (K) EAFF (Bi et al., 2022b). (L) Ours.

4.3.2 Quantitative comparisons

Table 4 presents the quantitative analysis results of the image enhancement methods on the DAVIS-MDUIED dataset. Our method ranks among the top-performing methods on the AG (Du et al., 2017), EI (Wang et al., 2012), UIQM (Panetta et al., 2015), and NIQE (Mittal et al., 2012) metrics. Specifically, our method achieves the best results in the AG and EI metrics, indicating that it can better restore valuable details and enhance contrast. For the UIQM and NIQE metrics, our method is superior to most of the comparison methods and ranks in the top two, which indicates that it enhances underwater images with satisfactory chromaticity, sharpness, and contrast, creating pleasing visual effects. The consistency between the qualitative and quantitative analysis results validates the efficacy of our method in correcting color distortions, improving visibility and contrast, and restoring details in both dark and bright regions of underwater images.

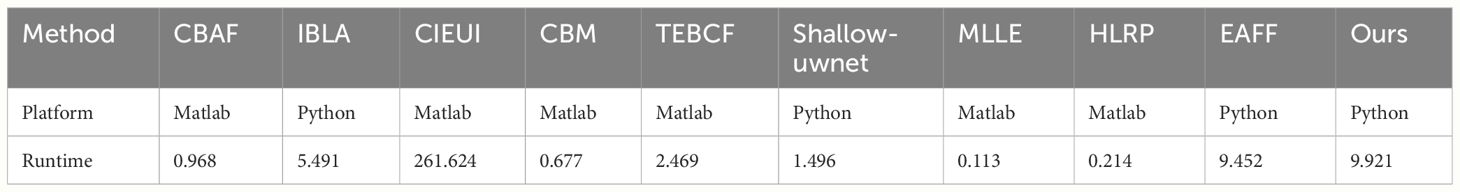

4.4 Complexity evaluation

To assess the runtime performance of the proposed method, we conducted a comparative analysis against the competing methods. Matlab was used to implement the CBAF, CIEUI, CBM, TEBCF, MLLE, and HLRP methods, while Python was used for IBLA, Shallow-uwnet, EAFF, and our method. Table 5 presents the average runtime of the various underwater image enhancement methods. Notably, the proposed method has a relatively long runtime compared with the other methods, which is its primary limitation. This limitation arises mainly from the time-intensive extraction of the illuminance map from RGB images with the RRDNET method before integration with the event reconstruction images, which requires approximately 10 seconds. Our future research efforts will concentrate on resolving this challenge.

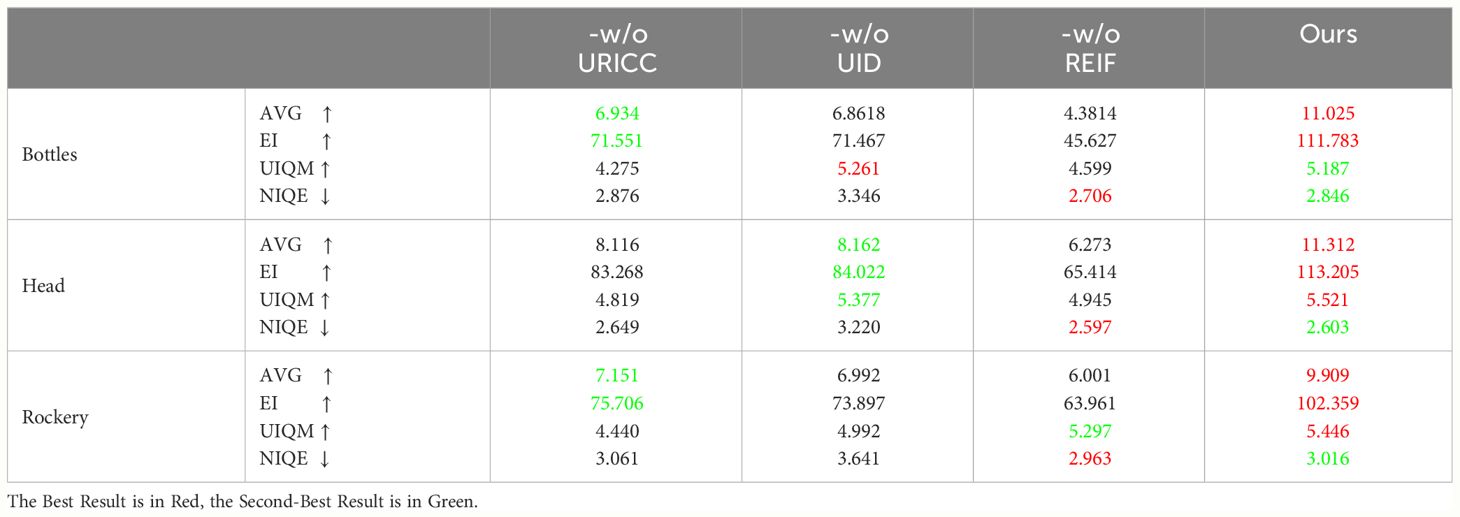

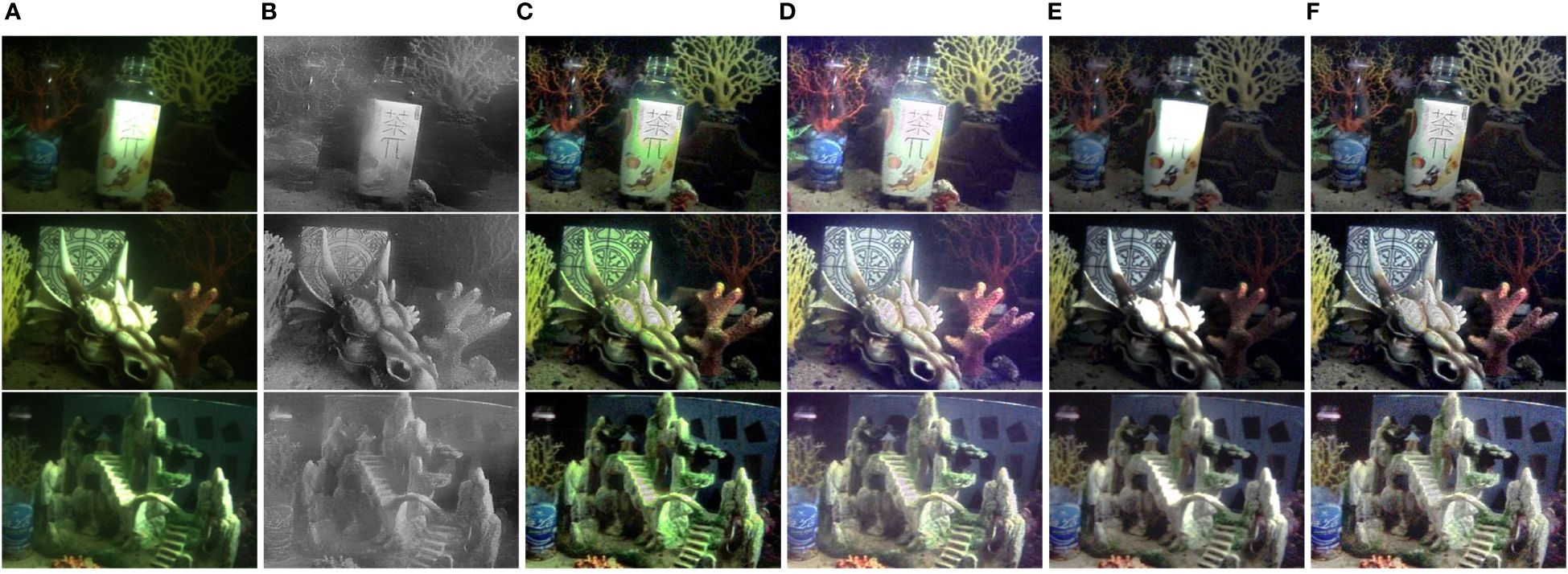

4.5 Ablation study

To demonstrate the efficacy of our method’s core components, we perform several ablation experiments on the DAVIS-MDUIED dataset, namely: (a) the proposed method without underwater RGB image color correction (w/o URICC), (b) the proposed method without underwater image dehazing (w/o UID), (c) the proposed method without RGB/Event image fusion (w/o REIF).

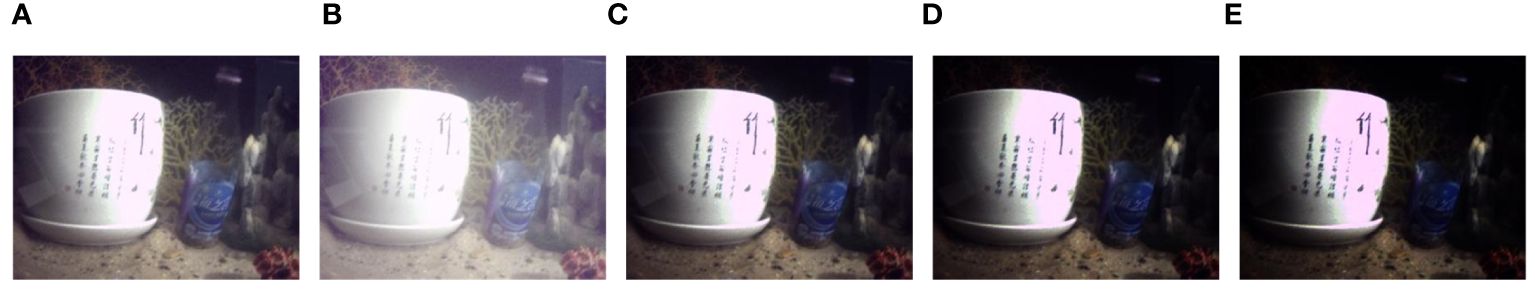

Figure 14 illustrates the visual comparison results of these ablation experiments. Our findings are as follows: 1) w/o URICC fails to perform color correction on underwater images; 2) w/o UID successfully removes color deviation and restores image texture, but it is unable to eliminate haze; 3) w/o REIF restores color and improves visibility, but it cannot restore details in bright areas; 4) The complete implementation of the proposed method, which includes all essential components, produces the most visually appealing outcome.

Figure 14 Ablation results for each core component of the proposed method on the DAVIS-MDUIED dataset. (A) Raw RGB images. (B) Event reconstruction images. (C) w/o URICC. (D) w/o UID. (E) w/o REIF. (F) Our method (full model).

The quantitative analysis results of the ablation models on the DAVIS-MDUIED dataset using four no-reference metrics are shown in Table 6. The full model achieves the best performance across the AG, EI, and UIQM metrics, indicating that our method enhances underwater images with rich texture details and good quality. For the NIQE metric, the w/o REIF model attains the best result, although its difference from the full model is relatively small. In terms of the AG, EI, and UIQM metrics, however, the full model significantly outperforms the w/o REIF model. This suggests that, when fusing RGB images and event-reconstructed images, our method accepts a slight compromise in image naturalness to achieve better restoration of image details and an overall improvement in underwater image quality. Overall, the combination of these key components underlies the performance of our method.

4.6 Applications

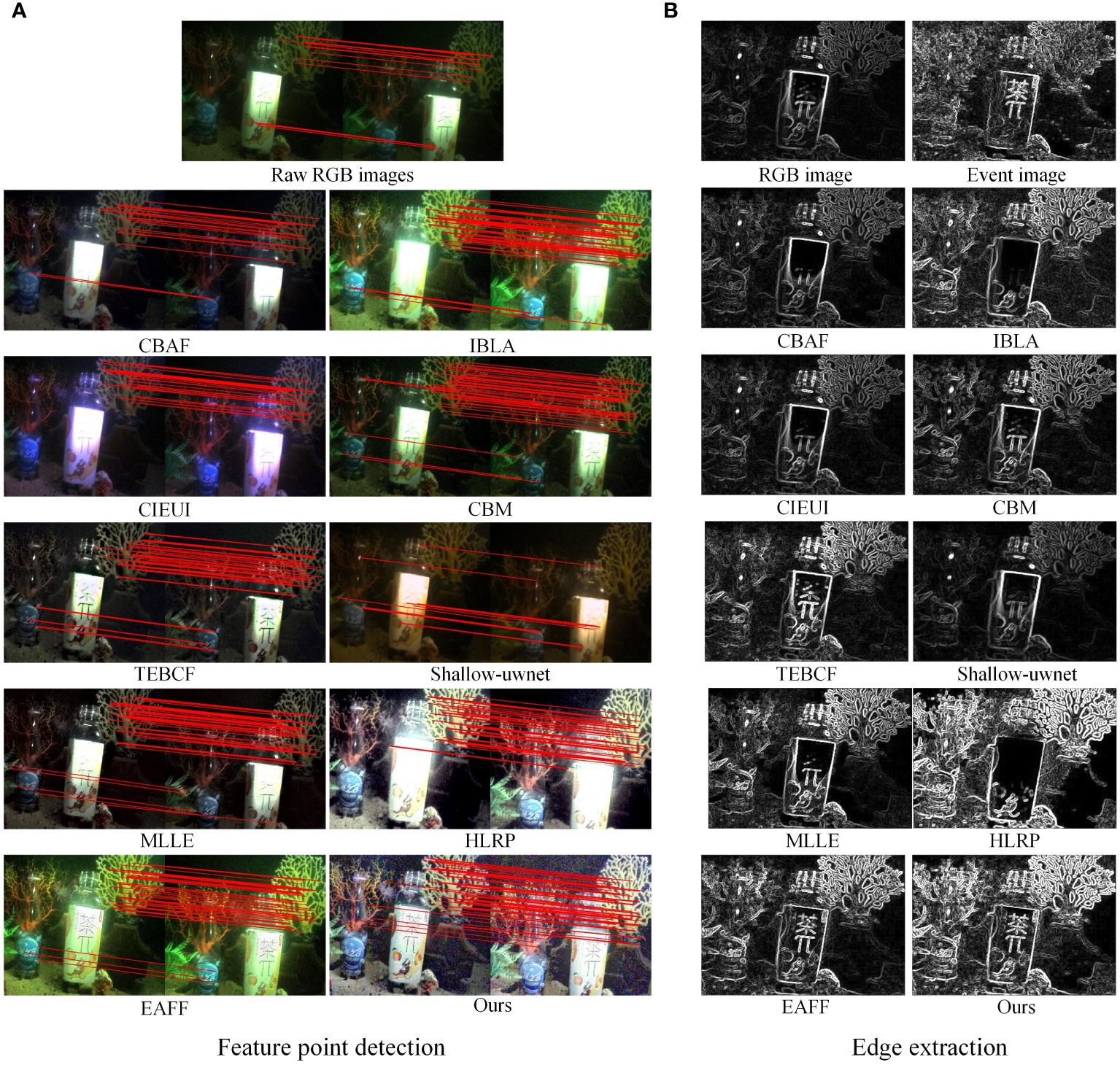

To provide a comprehensive evaluation of the proposed method, we choose to apply it to two common computer vision tasks: feature point detection and edge extraction.

4.6.1 Feature point detection

Image feature point detection is a fundamental task in computer vision, which aims to automatically detect points with significant features. These feature points have broad applications in computer vision, such as image matching, target tracking, and 3D reconstruction. We use the Harris algorithm (Harris and Stephens, 1988) to detect feature points in two original underwater images and their enhanced versions, and the detection results are presented in Figure 15A. The Harris local feature matching points obtained from the two original underwater images and their corresponding enhanced versions are 18, 21, 55, 36, 56, 46, 14, 52, 54, 50, and 64, respectively. The experimental results demonstrate that the images enhanced by our method perform well in key point detection, leading to a significant increase in the number of detected feature points.
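For readers unfamiliar with the detector, the Harris corner response can be sketched as below. This is an illustrative NumPy version, not the implementation used in the experiments: it uses a box window instead of a Gaussian, the values of `k`, `radius`, and `rel_threshold` are assumptions, and `count_corners` counts pixels above a threshold without non-maximum suppression.

```python
import numpy as np

def box_filter(img, radius=1):
    """Mean over a (2r+1) x (2r+1) window with edge-replicated borders."""
    h, w = img.shape
    p = np.pad(img, radius, mode="edge")
    k = 2 * radius + 1
    out = np.zeros((h, w), dtype=np.float64)
    for di in range(k):
        for dj in range(k):
            out += p[di:di + h, dj:dj + w]
    return out / (k * k)

def harris_response(img, k=0.04, radius=1):
    """Harris response R = det(M) - k * trace(M)^2 of the structure tensor M."""
    f = np.asarray(img, dtype=np.float64)
    iy, ix = np.gradient(f)            # derivatives along rows, then columns
    sxx = box_filter(ix * ix, radius)
    syy = box_filter(iy * iy, radius)
    sxy = box_filter(ix * iy, radius)
    return sxx * syy - sxy ** 2 - k * (sxx + syy) ** 2

def count_corners(img, rel_threshold=0.01):
    """Count pixels whose response exceeds a fraction of the maximum response."""
    r = harris_response(img)
    if r.max() <= 0:
        return 0
    return int(np.count_nonzero(r > rel_threshold * r.max()))

# Usage: a bright square on a dark background has four corners.
square = np.zeros((20, 20))
square[5:15, 5:15] = 1.0
n_strong = count_corners(square)
```

The response is positive near corners, negative along straight edges, and close to zero in flat regions, so enhancement that sharpens local structure tends to raise the number of pixels passing the threshold.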

Figure 15 Application testing on two computer vision tasks. (A) Testing on feature point detection. (B) Testing on edge extraction.

4.6.2 Edge extraction

Image edge extraction is a crucial task in computer vision, with the primary objective of extracting the position and shape of object edges. It has diverse applications, such as object detection, image segmentation, and 3D reconstruction. We use the Sobel operator (Kutty et al., 2014) to extract significant edges from two original underwater images and their enhanced versions. As depicted in Figure 15B, the images enhanced by our method yield clearer and more complete edge structures for prominent targets, indicating that our method benefits underwater image edge extraction.

5 Conclusion

In this paper, we propose a novel approach for enhancing multi-degraded underwater images via the fusion of RGB and Event signals. The proposed method comprises three main modules: adaptive color correction, dehazing through the integration of a sharpened image and artificial multi-exposure images, and RGB/Event image fusion. Extensive experiments conducted on the DAVIS-NUIUIED and DAVIS-MDUIED datasets demonstrate the superior performance of the proposed method in enhancing multi-degraded underwater images. The quantitative and qualitative comparison results show that the proposed method generates images with natural colors, enhanced contrast, improved details, and superior visual quality. Furthermore, ablation studies confirm the effectiveness of the three key modules in our method. Additionally, we further validate the practical value of our enhanced images through application experiments. However, the use of the RRDNET algorithm in our method leads to increased computation time. In future work, we aim to refine the algorithm’s details and reduce its computational complexity.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

XB: Conceptualization, Investigation, Methodology, Software, Writing – original draft. PW: Funding acquisition, Methodology, Validation, Writing – review & editing. WG: Funding acquisition, Supervision, Writing – review & editing. FZ: Resources, Supervision, Writing – review & editing, Methodology. LS: Funding acquisition, Supervision, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work was supported by the National Natural Science Foundation of China (U2013602, 52075115, 51521003, 61911530250), National Key R&D Program of China (2020YFB13134, 2022YFB4601800), Self-Planned Task (SKLRS202001B, SKLRS202110B) of State Key Laboratory of Robotics and System (HIT).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Achanta R., Hemami S., Estrada F., Susstrunk S. (2009). “Frequency-tuned salient region detection,” in 2009 IEEE conference on computer vision and pattern recognition. 1597–1604 (IEEE). doi: 10.1109/CVPR.2009.5206596

Akkaynak D., Treibitz T. (2019). “Sea-thru: A method for removing water from underwater images,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 1682–1691. doi: 10.1109/CVPR.2019.00178

Ancuti C. O., Ancuti C. (2013). Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 22, 3271–3282. doi: 10.1109/TIP.2013.2262284

Ancuti C. O., Ancuti C., De Vleeschouwer C., Bekaert P. (2017). Color balance and fusion for underwater image enhancement. IEEE Trans. image Process. 27, 379–393. doi: 10.1109/TIP.2017.2759252

Ancuti C., Ancuti C. O., Haber T., Bekaert P. (2012). “Enhancing underwater images and videos by fusion,” in 2012 IEEE conference on computer vision and pattern recognition. 81–88 (IEEE). doi: 10.1109/CVPR.2012.6247661

Azmi K. Z. M., Ghani A. S. A., Yusof Z. M., Ibrahim Z. (2019). Natural-based underwater image color enhancement through fusion of swarm-intelligence algorithm. Appl. Soft Comput. 85, 105810. doi: 10.1016/j.asoc.2019.105810

Bai L., Zhang W., Pan X., Zhao C. (2020). Underwater image enhancement based on global and local equalization of histogram and dual-image multi-scale fusion. IEEE Access 8, 128973–128990. doi: 10.1109/ACCESS.2020.3009161

Bi X., Li M., Zha F., Guo W., Wang P. (2023). A non-uniform illumination image enhancement method based on fusion of events and frames. Optik 272, 170329. doi: 10.1016/j.ijleo.2022.170329

Bi X., Wang P., Wu T., Zha F., Xu P. (2022a). DAVIS-NUIUIED: A DAVIS-based non-uniform illumination underwater image enhancement dataset. doi: 10.6084/m9.figshare.19719898

Bi X., Wang P., Wu T., Zha F., Xu P. (2022b). Non-uniform illumination underwater image enhancement via events and frame fusion. Appl. Optics 61, 8826–8832. doi: 10.1364/AO.463099

Buchsbaum G. (1980). A spatial processor model for object colour perception. J. Franklin Institute 310, 1–26. doi: 10.1016/0016-0032(80)90058-7

Burt P. J., Adelson E. H. (1983). The laplacian pyramid as a compact image code. IEEE Transactions On Commun. 31, 532–540. doi: 10.1109/TCOM.1983.1095851

Cadena P. R. G., Qian Y., Wang C., Yang M. (2021). Spade-e2vid: Spatially-adaptive denormalization for event-based video reconstruction. IEEE Trans. Image Process. 30, 2488–2500. doi: 10.1109/TIP.2021.3052070

Chen L., Jiang Z., Tong L., Liu Z., Zhao A., Zhang Q., et al. (2020). Perceptual underwater image enhancement with deep learning and physical priors. IEEE Trans. Circuits Syst. Video Technol. 31, 3078–3092. doi: 10.1109/TCSVT.2020.3035108

Chiang J. Y., Chen Y.-C. (2011). Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. image Process. 21, 1756–1769. doi: 10.1109/TIP.2011.2179666

Cho Y., Kim A. (2017). “Visibility enhancement for underwater visual slam based on underwater light scattering model,” in 2017 IEEE International Conference on Robotics and Automation (ICRA). (IEEE), 710–717. doi: 10.1109/ICRA.2017.7989087

Dong L., Zhang W., Xu W. (2022). Underwater image enhancement via integrated rgb and lab color models. Signal Processing: Image Commun. 104, 116684. doi: 10.1016/j.image.2022.116684

Du J., Li W., Xiao B. (2017). Anatomical-functional image fusion by information of interest in local laplacian filtering domain. IEEE Trans. Image Process. 26, 5855–5866. doi: 10.1109/TIP.2017.2745202

Fu X., Zhuang P., Huang Y., Liao Y., Zhang X.-P., Ding X. (2014). “A retinex-based enhancing approach for single underwater image,” in 2014 IEEE international conference on image processing (ICIP). (IEEE), 4572–4576. doi: 10.1109/ICIP.2014.7025927

Galdran A., Pardo D., Picón A., Alvarez-Gila A. (2015). Automatic red-channel underwater image restoration. J. Visual Commun. Image Representation 26, 132–145. doi: 10.1016/j.jvcir.2014.11.006

Gallego G., Delbrück T., Orchard G., Bartolozzi C., Taba B., Censi A., et al. (2020). Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 44, 154–180. doi: 10.1109/TPAMI.2020.3008413

Gallego G., Scaramuzza D. (2017). Accurate angular velocity estimation with an event camera. IEEE Robotics Automation Lett. 2, 632–639. doi: 10.1109/LRA.2016.2647639

Guo Y., Li H., Zhuang P. (2019). Underwater image enhancement using a multiscale dense generative adversarial network. IEEE J. Oceanic Eng. 45, 862–870. doi: 10.1109/JOE.2019.2911447

Harris C., Stephens M. (1988). “A combined corner and edge detector,” in Alvey vision conference, Vol. 15. 10–5244 (Citeseer). Available at: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=88cdfbeb78058e0eb2613e79d1818c567f0920e2

Hitam M. S., Awalludin E. A., Yussof W. N. J. H. W., Bachok Z. (2013). “Mixture contrast limited adaptive histogram equalization for underwater image enhancement,” in 2013 International conference on computer applications technology (ICCAT). (IEEE), 1–5. doi: 10.1109/ICCAT.2013.6522017

Islam M. J., Luo P., Sattar J. (2020). Simultaneous enhancement and super-resolution of underwater imagery for improved visual perception. arXiv preprint arXiv:2002.01155. doi: 10.48550/arXiv.2002.01155

Jian M., Liu X., Luo H., Lu X., Yu H., Dong J. (2021). Underwater image processing and analysis: A review. Signal Processing: Image Commun. 91, 116088. doi: 10.1016/j.image.2020.116088

Jiang Z., Li Z., Yang S., Fan X., Liu R. (2022). Target oriented perceptual adversarial fusion network for underwater image enhancement. IEEE Trans. Circuits Syst. Video Technol. 32, 6584–6598. doi: 10.1109/TCSVT.2022.3174817

Kutty S. B., Saaidin S., Yunus P. N. A. M., Hassan S. A. (2014). “Evaluation of canny and sobel operator for logo edge detection,” in 2014 International Symposium on Technology Management and Emerging Technologies. (IEEE), 153–156. doi: 10.1109/ISTMET.2014.6936497

Lee S.-h., Park J. S., Cho N. I. (2018). “A multi-exposure image fusion based on the adaptive weights reflecting the relative pixel intensity and global gradient,” in 2018 25th IEEE international conference on image processing (ICIP). (IEEE), 1737–1741. doi: 10.1109/ICIP.2018.8451153

Li C.-Y., Guo J.-C., Cong R.-M., Pang Y.-W., Wang B. (2016). Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 25, 5664–5677. doi: 10.1109/TIP.2016.2612882

Li M., Liu J., Yang W., Sun X., Guo Z. (2018). Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans. Image Process. 27, 2828–2841. doi: 10.1109/TIP.2018.2810539

Liang Z., Ding X., Wang Y., Yan X., Fu X. (2021). Gudcp: Generalization of underwater dark channel prior for underwater image restoration. IEEE Trans. Circuits Syst. Video Technol. 32, 4879–4884. doi: 10.1109/TCSVT.2021.3114230

Liu R., Fan X., Zhu M., Hou M., Luo Z. (2020). Real-world underwater enhancement: Challenges, benchmarks, and solutions under natural light. IEEE Trans. Circuits Syst. Video Technol. 30, 4861–4875. doi: 10.1109/TCSVT.2019.2963772

Liu R., Jiang Z., Yang S., Fan X. (2022). Twin adversarial contrastive learning for underwater image enhancement and beyond. IEEE Trans. Image Process. 31, 4922–4936. doi: 10.1109/TIP.2022.3190209

Liu K., Liang Y. (2021). Underwater image enhancement method based on adaptive attenuation-curve prior. Optics express 29, 10321–10345. doi: 10.1364/OE.413164

Marques T. P., Albu A. B. (2020). “L2uwe: A framework for the efficient enhancement of lowlight underwater images using local contrast and multi-scale fusion,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). 538–539. doi: 10.1109/CVPRW50498.2020.00277

Messikommer N., Gehrig D., Loquercio A., Scaramuzza D. (2020). “Event-based asynchronous sparse convolutional networks,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part VIII 16 (Springer), 415–431. doi: 10.1007/978-3-030-58598-3_25

Mittal A., Soundararajan R., Bovik A. C. (2012). Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 20, 209–212. doi: 10.1109/LSP.2012.2227726

Naik A., Swarnakar A., Mittal K. (2021). “Shallow-uwnet: Compressed model for underwater image enhancement (student abstract),” in The Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-21), Vol. 35. 15853–15854. doi: 10.1609/aaai.v35i18.17923

Paikin G., Ater Y., Shaul R., Soloveichik E. (2021). “Efi-net: Video frame interpolation from fusion of events and frames,” in 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 1291–1301. doi: 10.1109/CVPRW53098.2021.00142

Panetta K., Gao C., Agaian S. (2015). Human-visual-system-inspired underwater image quality measures. IEEE J. Oceanic Eng. 41, 541–551. doi: 10.1109/JOE.2015.2469915

Peng Y.-T., Cosman P. C. (2017). Underwater image restoration based on image blurriness and light absorption. IEEE Trans. image Process. 26, 1579–1594. doi: 10.1109/TIP.2017.2663846

Peng L., Zhu C., Bian L. (2023). “U-shape transformer for underwater image enhancement,” in Computer Vision–ECCV 2022 Workshops: Tel Aviv, ISRAEL, October 23–27, 2022, Proceedings, Part II (Springer), 290–307. doi: 10.1007/978-3-031-25063-7_18

Pini S., Borghi G., Vezzani R. (2018). Learn to see by events: Color frame synthesis from event and rgb cameras. arXiv preprint arXiv:1812.02041. doi: 10.48550/arXiv.1812.02041

Ramponi G., Strobel N. K., Mitra S. K., Yu T.-H. (1996). Nonlinear unsharp masking methods for image contrast enhancement. J. electronic Imaging 5, 353–366. doi: 10.1117/12.242618

Rebecq H., Gehrig D., Scaramuzza D. (2018). “Esim: an open event camera simulator,” in Conference on robot learning. (PMLR), 969–982. Available at: https://proceedings.mlr.press/v87/rebecq18a.html.

Rebecq H., Ranftl R., Koltun V., Scaramuzza D. (2019). High speed and high dynamic range video with an event camera. IEEE Trans. Pattern Anal. Mach. Intell. 43, 1964–1980. doi: 10.1109/TPAMI.2019.2963386

Scheerlinck C., Barnes N., Mahony R. (2019). “Continuous-time intensity estimation using event cameras,” in Computer Vision–ACCV 2018: 14th Asian Conference on Computer Vision, Perth, Australia, December 2–6, 2018, Revised Selected Papers, Part V (Springer), 308–324. doi: 10.1007/978-3-030-20873-8_20

Serikawa S., Lu H. (2014). Underwater image dehazing using joint trilateral filter. Comput. Electrical Eng. 40, 41–50. doi: 10.1016/j.compeleceng.2013.10.016

Sethi R., Sreedevi I. (2019). Adaptive enhancement of underwater images using multi-objective pso. Multimedia Tools Appl. 78, 31823–31845. doi: 10.1007/s11042-019-07938-x

Song W., Wang Y., Huang D., Liotta A., Perra C. (2020). Enhancement of underwater images with statistical model of background light and optimization of transmission map. IEEE Trans. Broadcasting 66, 153–169. doi: 10.1109/TBC.2019.2960942

Tao Y., Dong L., Xu W. (2020). A novel two-step strategy based on white-balancing and fusion for underwater image enhancement. IEEE Access 8, 217651–217670. doi: 10.1109/ACCESS.2020.3040505

Wang Y., Cao Y., Zhang J., Wu F., Zha Z.-J. (2021). Leveraging deep statistics for underwater image enhancement. ACM Trans. Multimedia Comput. Commun. Appl. (TOMM) 17, 1–20. doi: 10.1145/3489520

Wang W., Chang F. (2011). A multi-focus image fusion method based on laplacian pyramid. J. Comput. 6, 2559–2566. doi: 10.4304/jcp.6.12.2559-2566

Wang Y., Du H., Xu J., Liu Y. (2012). “A no-reference perceptual blur metric based on complex edge analysis,” in 2012 3rd IEEE International Conference on Network Infrastructure and Digital Content. (IEEE), 487–491. doi: 10.1109/ICNIDC.2012.6418801

Wang Y., Zhang J., Cao Y., Wang Z. (2017). “A deep cnn method for underwater image enhancement,” in 2017 IEEE international conference on image processing (ICIP). (IEEE), 1382–1386. doi: 10.1109/ICIP.2017.8296508

Xie J., Hou G., Wang G., Pan Z. (2021). A variational framework for underwater image dehazing and deblurring. IEEE Trans. Circuits Syst. Video Technol. 32, 3514–3526. doi: 10.1109/TCSVT.2021.3115791

Xu K., Wang Q., Xiao H., Liu K. (2022). Multi-exposure image fusion algorithm based on improved weight function. Front. Neurorobotics 16, 846580. doi: 10.3389/fnbot.2022.846580

Yang H.-Y., Chen P.-Y., Huang C.-C., Zhuang Y.-Z., Shiau Y.-H. (2011). “Low complexity underwater image enhancement based on dark channel prior,” in 2011 Second International Conference on Innovations in Bio-inspired Computing and Applications. (IEEE), 17–20. doi: 10.1109/IBICA.2011.9

Yin M., Du X., Liu W., Yu L., Xing Y. (2023). Multiscale fusion algorithm for underwater image enhancement based on color preservation. IEEE Sens. J. 23, 7728–7740. doi: 10.1109/JSEN.2023.3251326

Yuan J., Cai Z., Cao W. (2021). Tebcf: Real-world underwater image texture enhancement model based on blurriness and color fusion. IEEE Trans. Geosci. Remote Sens. 60, 1–15. doi: 10.1109/TGRS.2021.3110575

Yuan J., Cao W., Cai Z., Su B. (2020). An underwater image vision enhancement algorithm based on contour bougie morphology. IEEE Trans. Geosci. Remote Sens. 59, 8117–8128. doi: 10.1109/TGRS.2020.3033407

Zhang W., Dong L., Xu W. (2022a). Retinex-inspired color correction and detail preserved fusion for underwater image enhancement. Comput. Electron. Agric. 192, 106585. doi: 10.1016/j.compag.2021.106585

Zhang W., Liu X., Wang W., Zeng Y. (2018). Multi-exposure image fusion based on wavelet transform. Int. J. Advanced Robotic Syst. 15, 1729881418768939. doi: 10.1177/1729881418768939

Zhang W., Zhuang P., Sun H.-H., Li G., Kwong S., Li C. (2022b). Underwater image enhancement via minimal color loss and locally adaptive contrast enhancement. IEEE Trans. Image Process. 31, 3997–4010. doi: 10.1109/TIP.2022.3177129

Zhao W., Rong S., Li T., Cao X., Liu Y., He B. (2021). “Underwater single image enhancement based on latent low-rank decomposition and image fusion,” in Twelfth International Conference on Graphics and Image Processing (ICGIP 2020) (SPIE) 11720, 357–365. doi: 10.1117/12.2589359

Zhu A., Zhang L., Shen Y., Ma Y., Zhao S., Zhou Y. (2020). “Zero-shot restoration of underexposed images via robust retinex decomposition,” in 2020 IEEE International Conference on Multimedia and Expo (ICME). (IEEE), 1–6.

Zhuang P., Wu J., Porikli F., Li C. (2022). Underwater image enhancement with hyper-laplacian reflectance priors. IEEE Trans. Image Process. 31, 5442–5455. doi: 10.1109/TIP.2022.3196546

Keywords: underwater image enhancement, RGB/Event camera, color correction, dehazing, non-uniform illumination

Citation: Bi X, Wang P, Guo W, Zha F and Sun L (2024) RGB/Event signal fusion framework for multi-degraded underwater image enhancement. Front. Mar. Sci. 11:1366815. doi: 10.3389/fmars.2024.1366815

Received: 07 January 2024; Accepted: 19 April 2024; Published: 30 May 2024.

Edited by:

David Alberto Salas Salas De León, National Autonomous University of Mexico, Mexico

Reviewed by:

Xuebo Zhang, Northwest Normal University, China

Manigandan Muniraj, Vellore Institute of Technology (VIT), India

Copyright © 2024 Bi, Wang, Guo, Zha and Sun. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Fusheng Zha, zhafusheng@hit.edu.cn
