Abstract

In behavioral neuroscience, many experiments are developed in 1 or 2 spatial dimensions, but when scientists tackle problems in 3-dimensions (3D), they often face problems or new challenges. Results obtained for lower dimensions are not always extendable in 3D. In motor planning of eye, gaze or arm movements, or sensorimotor transformation problems, the 3D kinematics of external (stimuli) or internal (body parts) must often be considered: how to describe the 3D position and orientation of these objects and link them together? We describe how dual quaternions provide a convenient way to describe the 3D kinematics for position only (point transformation) or for combined position and orientation (through line transformation), easily modeling rotations, translations or screw motions or combinations of these. We also derive expressions for the velocities of points and lines as well as the transformation velocities. Then, we apply these tools to a motor planning task for manual tracking and to the modeling of forward and inverse kinematics of a seven-dof three-link arm to show the interest of dual quaternions as a tool to build models for these kinds of applications.

1. Introduction

Our environment is 3-dimensional (3D) and our body can be represented as a rigid multibody system evolving in that 3D environment, with different body parts moving relative to each other. Accurately describing the 3D kinematics of such systems is important for diverse applications in neuroscience which involve 3D kinematics: (1) forward kinematics of different 3D systems: the position and/or orientation of an end-effector (a tool held by the hand for example), (2) 3D Reference frame transformation or reference frame encoding [for example: 3D vestibulor-ocular reflex (VOR), 3D visuomotor transformation, 3D eye-head gaze shifts, 3D spatial updating, …], and (3) inverse kinematics: the set of joint angles corresponding to a given end-effector position and velocity. In practice, these models allow us to analyze 3D data acquired through behavioral experiments as well as to make predictions.

To model the kinematics of a rigid multibody system, we use the framework of the geometric algebra introduced by David Hestenes (see the textbook Hestenes, 1986), which easily applies to and is very convenient to model mechanical systems, in particular the kinematics. Hestenes applied this approach to some eye (Hestenes, 1994a) and arm reaching (Hestenes, 1994b) movements. Actually, he chose the geometric algebra of quaternions which have been sometimes used to model 3D eye, head and arm movements (see for example: (Tweed and Vilis, 1987; Straumann et al., 1991; Hore et al., 1992; Haslwanter, 1995; Crawford and Guitton, 1997). Quaternions allow us to easily deal with rotations but not with translations. Yet we have to deal with translations since the origins of reference frames attached to each body generally do not coincide. For example, the eye rotation center is offset from the head rotation center. In order to deal with translations, in addition to the rotation, we will use the geometric algebra of dual quaternions (see Bayro-Corrochano, 2003). Dual quaternions also allow to elegantly represent a screw motion (Funda and Paul, 1990b; Aspragathos and Dimitros, 1998), defined as a combined rotation and translation along the rotation axis. They have been used in Blohm and Crawford (2007) to model combined 3D eye and head rotations in the context of 3D visuomotor transformation for reaching movements. While dual quaternions have not been widely used in the neuroscience community, they have been applied in other fields. Indeed, they have been employed with success in computer vision (Walker et al., 1991; Phong et al., 1993; Goddard and Abidi, 1998; Torsello et al., 2011) or in robotics (Daniilidis, 1999; Bayro-Corrochano et al., 2000).

The goal of this paper is to provide a tutorial of the dual quaternion geometric algebra to the neuroscientists and then to show its interests and advantages to several applications in sensorimotor control. First, we summarize the theory necessary to introduce the different applications. The different dual quaternion operations are described and we also provide our MATLAB implementation of these operations in supplementary materials for the potential interested reader. Then we describe several applications using the dual quaternion formalism.

There are several reasons to use dual quaternions to represent the 3D kinematics. First, it is a compact and geometrically meaningful way of representing 3D rotations, translations and screw motions (Funda and Paul, 1990a; Kim and Kumar, 1990; Aspragathos and Dimitros, 1998; Daniilidis, 1999; Kavan et al., 2008). Furthermore, there are no singularities when representing 3D rotations (this is not the case if a Fick, Helmoltz or Euler representation is chosen), because a rotation is represented through a single angle θ and the axis of rotation n (Chou, 1992; Grassia, 1998). Moreover computational requirements (in terms of storage and speed) are lower than for other methods, including homogeneous coordinates (Funda et al., 1990; Funda and Paul, 1990b; Walker et al., 1991; Aspragathos and Dimitros, 1998), which could be important for behavioral experiments needing real-time data and processing (for example, a forward kinematic model applied to on-line raw data) but also for post-processing treatments in data analysis and model predictions. In an implementation perspective again, the neuroscience researcher concerned with the practical implementation of the transformations using dual quaternions will most likely want to consider many transformations simultaneously. For example, when analyzing experimental data, or when making predictions (from a few thousand to many more). In this context, dual quaternions are much more convenient than homogenous matrices for example. Indeed, we easily deal with an arbitrary number N of simultaneous dual quaternions transformations (using standard matrix operations), while for homogeneous coordinates, it requires the use of 3D tensors, which is possible but not easy to implement. Yet neuroscience researchers want a tool efficient and easy to use at the same time. Last but not least the dual quaternion formalism can be used to model point and lines transformations (Bayro-Corrochano, 2003), which is not the case for other formalisms like homogeneous coordinates (only point transformations). Yet applications sometimes demand that we combine both types of transformations (see section 3.3 of this article) and dual quaternions provide a unified and easy way to do that. We would have had to employ two different approaches to perform kinematic operations on points and lines if we had not used dual quaternions.

2. Theory

In this section, we provide a tutorial or short description of the dual quaternion algebra based on the literature (Hestenes,

1994a; Bayro-Corrochano,

2003). The reader familiar with these concepts can skip this part and move to section 3. However, as far as we know of, many readers in the sensorimotor science community are not familiar with dual quaternions and therefore they will find in this section many useful dual quaternions operations which can be useful to model their 3D problem in neuroscience. Moreover, we provide a MATLAB implementation for each of these operations, for the reader who would like to use dual quaternions but who perhaps would be restrained by the implementation. This toolbox is available for download at the followings urls:

On the File exchange of Matlab Central: http://www.mathworks.com/matlabcentral/fileexchange/39288. Users are able to provide feedback, comment and rate the files.

In the following, we introduce the dual quaternion algebra, based on the quaternion algebra. Then we introduce the rotation and translation operations using the dual quaternion algebra. Afterwards we describe how points and lines can be encoded using dual quaternions, before explaining how applying the rotation or translation on these objects. Then we describe what a screw motion is and how it is encoded using a dual quaternion. Finally we briefly talk about the implementation and conversion to other formalisms.

2.1. Basics

2.1.1. Quaternion geometric algebra

First let us define the geometric product of two 3D vectors and (see Hestenes, 1994a; Bayro-Corrochano, 2003). It is denoted and can be expressed as: where is the classical dot product yielding a scalar number and is a new entity called a bivector, resulting from the wedge product of and . This wedge product is antisymmetric: . We will see later how to compute it in practice. Now let us consider three orthonormal basis vectors in a right-handed reference frame, , , and . From the definition of the geometric product and the antisymmetric property of the wedge product, we can get that:

Therefore, we obtain three basis bivectors: , , and . Then the unit right-handed pseudoscalar i is defined (see Hestenes, 1994a) as: i is thus an entity which is called a trivector. The i symbol is used by analogy with the complex numbers. Indeed, using the properties of the geometric product and the definition of i, we have: i2 = −1. i is a duality operator since we can notice that:

Therefore, the three basis bivectors are dual entities to the three basis vectors and vice-versa, which implies that for every bivector a, there exists a corresponding vector such that . In the following, we will always use the bold notation for bivectors, and top arrows for vectors. In particular, the bivector obtained by the wedge product can be expressed as: where is a vector representing the classical cross product of the vectors and . A quaternion is the sum of a scalar and a bivector (Hestenes, 1994a): and can be obtained as the geometric product of two vectors, as shown by Equation (1). The sum of two quaternions and is a quaternion computed as follows:

The multiplication of two quaternions and is a quaternion . It is computed as (see details in Appendix A):

Other quaternion operations are also useful. The conjugate of a quaternion A is defined by:

The norm of a quaternion A is denoted: . The inverse of a quaternion A is denoted A−1 and defined as .

2.1.2. Dual quaternion geometric algebra

A dual quaternion D is defined by where D0 and D1 are two quaternions and ϵ is a mathematical operator with the property that:

The multiplication of two dual quaternions D = D0 + ϵD1 and E = E0 + ϵE1 is another dual quaternion F = F0 + ϵF1 and using the property (2), F is equal to:

We notice that the dual quaternion multiplication involves three quaternion multiplications.

Several conjugates exist for the dual quaternion D = D0 + ϵD1, which are used depending on the operations needed. The first one is obtained by applying the quaternion conjugate to each quaternion composing the dual quaternion.

The conjugate of a dual number can also be used:

And both operations can also be combined:

The following identities are also useful:

The norm of a dual quaternion D is a dual number (a dual number is of the form a = a0 + ϵa1 where a0 and a1 are real scalar numbers and ϵ is the same operator as for dual quaternions, with the property that ϵ2 = 0). The dual quaternion norm is computed in the following way (see Kavan et al., 2008): where ||D0|| is the quaternion norm of D0 and [Q]scalar is the scalar part q0 of a quaternion Q = q0 + q. The inverse of a dual quaternion A = A0 + ϵA1 can be computed and is written (see Kavan et al., 2008):

If the non-dual part, A0, of the dual quaternion A has a zero norm, then A has no inverse.

In the next sections, the dual quaternions representing rotations, translations and or screw motions, as well as points and lines, are described using unitary dual quaternions, i.e., dual quaternions with a norm equal to 1. They are unitary under the condition that:

Therefore, unit dual quaternions belong to a 6-dimensional manifold and are specified by six different parameters. From now on, we always use the bivector notation in order to avoid writing the i symbol at each equation. Anyway, remember that each bivector a is directly related to its counterpart vector by the relation .

2.2. Rotations and translations

In this section, we describe the dual quaternions associated with rotations and translations, as well as their velocity: rotational velocity, translational velocity. These operators will be used and combined later to carry out complex kinematic transformations.

2.2.1. Rotation

By Euler's theorem, any 3D rotation may be described by a unique unitary rotation axis n and a single rotation angle θ. In the dual quaternion framework, a pure rotation (whose rotation axis goes through the origin of the reference frame) is described by: where n is a bivector representing the unitary rotation axis coordinates expressed in the reference frame and θ is the rotation angle. Actually, Rrot is a quaternion, or a dual quaternion with a dual part (the part premultiplied by ϵ) equal to 0.

2.2.2. Translation

A pure translation is defined by its unitary axis t and its distance d. A translation dual quaternion Ttrans is written under the following form:

2.2.3. Rotational velocity

Potential users should pay attention to the fact that in 3-D, the rotational velocity quaternion is not the derivative of the rotation quaternion (this is also the case if classical rotation matrices are considered). It has been shown by Hestenes (1986) that the derivative of the rotation quaternion with respect to time can be written as: where is a rotational velocity dual quaternion which has a dual part equal to zero, and which has a non-dual part with a zero scalar component. Ωrot represents the rotational velocity. Kinematically, the norm of Ωrot, represents the instantaneous angular velocity while the normalized vector represents the instantaneous rotation axis. Also, it can be shown that:

2.2.4. Translational velocity

The translational velocity dual quaternion is derived from the translation dual quaternion Ttrans. Differentiating the expression of the translation dual quaternion Ttrans, we obtain: where .

2.3. Description of point and line positions and velocities

Here we describe how we can describe either points (location and velocity) or lines (location and velocity) using the dual quaternion formalism.

2.3.1. Point position and velocity

Following what is done by Bayro-Corrochano (2003) for example, a 3D physical point P with coordinates x in a given reference frame R is represented by the dual quaternion X = 1 + ϵx.

The velocity ẋ of point P is represented by the dual quaternion V = Ẋ = ϵẋ.

2.3.2. Line position and velocity

Dual quaternions provide a convenient way to represent lines (Daniilidis, 1999; Bayro-Corrochano, 2003). A line can be represented by six coordinates (Plücker coordinates), specifying the line orientation n and the position of an arbitrary point of the line in space, p. The line representation is moreover independent of the choice of the point p. A line dual quaternion L is written:

We can notice that m is indeed independent of the choice of p since the cross product depends only on the component of orthogonal to , and this orthogonal component is obviously the same for every point on the line L. Note also that a line dual quaternion is unitary.

The line velocity, , is obtained by computing the derivative of the line dual quaternion L:

We see that the line velocity dual quaternion is related to the rate of change of , as well as the rate of change of . Note that Equation (6) does not depend on the arbitrary choice of and its rate of change (see proof in Appendix B).

2.4. Kinematic operations on points and lines

Now we describe the kinematic transformations on point and lines, as the dual quaternion formalism allows us to use the same kinematic operators for point or line transformations, which is a major advantage.

Let us consider the reference frame R′, with the same origin as reference frame R, but lying in a different orientation. The transformation between both reference frames is a pure rotation with axis n and angle θ. Then, a point P has the following coordinates in the R′ frame (passive rotation): where Rrot is the rotation quaternion. is the conjugate quaternion: . For rotations dual quaternions, , since rotation dual quaternions have a dual component equal to zero. Therefore, in the following, we use R*rot instead of since it is shorter to write.

From another point of view, we may want to know the new position of a mobile point P after a rotation Rrot applied to a reference position P0 = 1 + ϵx0 in a given reference frame R (an active rotation):

Let us now assume that reference frame R″ has the same orientation as R but R″ origin is offset from R origin. The transformation between both reference frames is a simple translation along a given unitary axis t and distance d. The unitary axis t is parallel to the line connecting the origins of frames R″ and R and is directed from R″ origin toward R origin. In R″ frame, point P has coordinates: where Ttrans is the translation dual quaternion. The conjugate dual quaternion that we use for point transformation (see Bayro-Corrochano, 2003) is . We can also apply the same reasoning for the active translation of a given point.

The velocity ẋ of the 3D physical point P with coordinates x in a given reference frame R is represented by the dual quaternion . It can be of interest to compute the velocity of P in the frame R′, which is itself rotating compared to R. In that reference frame R′, the velocity dual quaternion V′ is:

Using relationship (4), expression (10) may be developed:

We can observe that if frame R′ does not move compared to frame R, Ωrot = 0, then the only difference is that V′ has its coordinates expressed in the R′ frame instead of the R frame.

From another point of view, we may want to know the velocity Ṗ of a mobile point P during a rotation Rrot(t) applied to a reference position P0 = 1 + ϵx0 with reference velocity Ṗ0 in a given reference frame R (an active rotation):

Let us now compute the velocity V″ of point P in the reference frame R″ that is translated with respect to R. The transformation between both reference frames is a simple translation motion along a given unitary axis t and distance d(t). In R″ frame, the velocity of point P is:

For some applications, we may be interested in applying the kinematic operators to lines instead of points. For example, we may be interested in the orientation of an end-effector (in addition to the position) and/or the way this orientation changes with time. Lines provide a useful way to answer those questions. An example will be described in the applications. The operations are exactly identical to those described for point transformations except that another dual quaternion conjugate is used: the quaternion conjugate, A*, instead of the mixed conjugate, (see Equations 7, 9). This slight difference is due to the way that points and lines are encoded with dual quaternions (Bayro-Corrochano, 2003).

2.5. Screw motion

Before moving to the applications, we describe a last (less known) kinematic operator: a screw displacement, or screw motion. First we explain what a screw motion is and how this kinematic operation is encoded with dual quaternions. Then we derive the screw motion velocity. Finally we show how screw motion can be applied easily to both points and lines.

The reader should be aware that the screw motion is not a revolutionary kinematic operator, but it is a simple alternative, providing a single dual quaternion directly for an operation which can be also obtained by combining a translation along and a rotation around an axis line which is offset from the origin. Whatever the approach chosen by the user, the final result for your application will be the same. However, screw motion dual quaternions provide a more compact way to write the kinematics for this type of movement.

2.5.1. Screw motion definition

A screw motion describes a rotation of angle θ about a line whose direction is specified by a unitary axis n and whose location can be described by an arbitrarily chosen point on the line, with coordinates a, followed/combined by a translation of distance d along this axis n. a is an arbitrary point of the line and this line does not necessarily go through the origin, such that the screw motion formalism also can be used to describe rotations about eccentric points—in contrast to quaternions and rotation matrices, which can only characterize rotations about the origin. A screw motion can be represented by the following dual quaternion:

Note that the quantity is independent of the vector a we choose on the line to describe the line location. Equation (12) is derived (see Daniilidis, 1999; Bayro-Corrochano, 2003) by starting from the rotation quaternion R describing a rotation of angle θ around the unitary axis n. However, as previously noted, the quaternion formulation implicitly assumes that the rotation axis line passes through the reference frame origin. If the rotation line passes through a point with coordinates a, a general expression for the rotation dual quaternion whose axis is offset by a, RT, is obtained by applying a translation operator to the quaternion R, by left-multiplying it by the conjugate of TL and right-multiply it by TL. It is quite similar to the translation operation that we apply to lines (instead of rotation dual quaternion here) and that we described in section 2.4 :

The dual quaternion RT describes the same rotation but takes the offset between the reference frame origin and the line into account. RT is a transformation of R and therefore TL had to be applied from both sides. To obtain the screw motion dual quaternion M, we need to combine a translation along the line axis to the rotation RT (here we combine operators instead of transforming one operator, so there is only a simple left-multiplication):

2.5.2. Screw motion velocity

The screw motion velocity dual quaternion, Ṁ, is derived from the screw motion dual quaternion expression, M, in Equation (12). Indeed, a screw motion can be decomposed into a translational part and a rotational part, as shown in section 2.5.1. We have:

Therefore, where the second line is obtained through a simple calculation (translation has no effect on a velocity dual quaternion). We can observe the contributions of several terms related to the translational velocity along the screw axis, the translational velocity of the screw axis itself and the rotational velocity term.

Similarly to rotation and translation operations, we may easily apply screw motion operations on points or lines.

2.5.3. Screw motion applied to points

Let us consider now an active screw motion M = RTT applied to a reference point P0. The resulting position is then:

If we consider again the active screw motion M = RTT applied to the reference point P0 with velocity Ṗ0, the resulting velocity is then:

As will be seen in the applications, we can linearly combine several rotations, translations and screw motions in a compact expression, facilitating the geometrical interpretation and the clarity.

2.5.4. Screw motion applied to lines

Let us describe the final parameters of the line L = n + ϵm resulting from applying a screw motion described by M = RTT to a reference line L0 = n0 + ϵm0. Compared to the expression used for points (see Equation 14), the only slight difference is the use of another conjugate, as previously mentioned (see Bayro-Corrochano, 2003).

Knowing the structure of a line dual quaternion (see Equation 5), we can extract the line parameters n and m. Since where is any point located on line L, we can choose a particular by adding an additional constraint. An example would be to take the point which is the closest to the origin.

We can also compute the line velocity resulting from a screw motion M to a reference line L0 with velocity .

Again, we can retrieve the parameters ṅ and ṁ. Having retrieved a particular point p of the line, we can extract its velocity (orthogonally to the line orientation n).

In the Appendix C, we also describe some useful dual quaternions identities which may be used in the diverse sensorimotor applications that we will present in section 3. In the next section, we discuss the implementation of dual quaternions.

2.6. Implementation of dual quaternions

From an implementation perspective, we can write a quaternion as a four-element vector Atab: where where T is the transpose matrix operator. If we consider N quaternions simultaneously, we just create a 4 × N matrix where each column is a the vector representation of the corresponding quaternion.

Using this representation, the quaternion multiplication is implemented using matricial operations:

We can also consider N simultaneous multiplications of two quaternions. This is achieved by adapting the above formula to matrices instead of vectors. These simultaneous operations are not just for theoretical purposes or generalizations. Indeed, again addressing to the neuroscientists communities, we often have to deal with hundreds or thousands of trials in behavioral experiments. Often, we compare our data to model predictions. In this framework, let us consider that we use quaternions for our model predictions. In this case, it is much faster to transform N quaternions (corresponding to N trials) simultaneously than to run them individually through a for loop.

A dual quaternion can be represented using a 8-dimensional vector Dtab (which can also be considered as the juxtaposition of two 4-dimensional vectors representing the two quaternion components of the dual quaternion):

Again, we can generalize to N dual quaternions by using a 8 × N matrix. The dual quaternion multiplication is implemented using matricial operations and the quaternion multiplication defined in Equation (15):

We will not describe how all dual quaternion operators and transformation are implemented, as most of them are easy or just a consequence of the encoding of a general dual quaternion that we just described. However, for the reader who would not like to implement herself/himself all the dual quaternion operations in her/his favorite language, we provide in supplementary materials a dual quaternion toolbox written in MATLAB providing functions implementing all the dual quaternions operations previously mentioned. Furthermore, an example and a read me file are also available. A document listing several quaternion and dual quaternion Matlab toolboxes developed by others is also provided. In this way, the potential user has access to our toolbox but also to others, and therefore he can judge which one is the most suitable for him/herself. We wanted to develop our own Matlab toolbox because the other ones did not gather all the functionalities we needed.

After introducing the dual quaternion algebra in this first part and how it can be applied in kinematics, we now move to the second part of this manuscript. This part describes how dual quaternions and their derivatives can be used to easily describe several applications from sensorimotor control in neuroscience.

3. Applications

The dual quaternion algebra is very convenient to express the motion of rigid bodies, especially our body parts. In the following, we describe several applications of this theory. First we describe the reference frame transformations required in the 3D visuomotor transformation for reaching and tracking movements. Then we describe the forward and inverse kinematics problem for a two-link arm focusing only on the end-effector position. For that problem, we will use point transformations. Finally we focus on the forward and inverse kinematics problem for a three-link arm holding a tool whose position and orientation matters. We will use both point and line transformations. One huge advantage of dual quaternions is the fact that we can use them for both point and line transformations, which is not the case with other formalisms (homogeneous matrices are not suitable for line transformation for example, while dual matrices were developed to tackle line transformations and not point transformations).

3.1. Reference frame transformation: movement planning

Here we study reference frame transformations in the context of visuomotor transformations. For instance, visually guided arm movements to reach for a seen object. Indeed, the brain has to transform the visual information about the target of interest into a set of motor commands for the arm muscles. To this end, the brain plans the movement ahead (see Shadmehr and Wise, 2005). However, the transformation between retinal information and the spatial motor plan is not trivial (see Blohm and Crawford, 2007), since our eyes and head move relative to the body (and thus relative to the shoulder, insertion point of the arm). For instance, let us consider the motion of a target in space. If the head is rolled toward the left or right shoulder, the projection of the spatial motion onto the retina will be different depending on the head roll angle (Leclercq et al., 2012). Therefore, the brain should take the head roll into account in order to generate an accurate motor plan for the arm. This transformation amounts to expressing the retinal motion into a spatial motion, thus it is a reference frame transformation problem.

In the following we describe two transformations. First we describe how we express the retinal position and motion of a target, e.g., a tennis ball, as a function of the spatial trajectory of this target and the 3D eye-head-shoulder geometry. This transformation is useful for a neuroscientist dealing with behavioral experiments. Indeed, the spatial target position and velocity are often specified by designing the experiment (by choice of the experimenter), while the retinal position and motion can not be measured directly. These signals need to be estimated using the transformation model that we develop in this first part and measured and/or known signals about the 3D eye-head-shoulder kinematics. Then we describe the inverse transformation which computes the spatial position and velocity of a target from the knowledge of the retinal position and velocity as well as the 3D eye-head-shoulder geometry. This transformation is important from a neuroscience perspective as it is the transformation that the brain should implement in order to generate a spatially accurate arm movement (see Blohm and Crawford, 2007; Leclercq et al., 2012). Furthermore, using this theoretical transformation, we can easily test hypotheses about the availability, accuracy or precision of a signal (retinal and/or extra-retinal) in the brain. For that last application, we use the retinal position and velocity estimated through the first transformation described above.

In this context, dual quaternions provide a useful tool to express these transformations since they provide a geometrically meaningful way of expressing them, and they are easily implemented using a dual quaternion toolbox (as we provide it in the supplementary materials).

3.1.1. Computing the retinal position and motion

Figure 1 depicts a situation where a subject is confronted with a moving object (e.g., a ball), the target (denoted P), and she/he is going to interact with it (track or catch the target). Here we describe how the retinal position and velocity of the target are expressed as a function of the spatial position and velocity, using the dual quaternion formalism to express the 3D kinematics. In an experiment, we typically specify P position and velocity in a spatial reference frame, for example, the environment reference frame, or the shoulder reference frame in this case since we assume the shoulder remains fixed in the environment. So we need to estimate what the position and the velocity of the projection of P onto the retina are.

Figure 1

The picture represents a front view of the eye-head-shoulder system. The pointing target position (black star) and velocity (dashed arrow) are represented. These position and velocity can be represented in different reference frames, represented on the figure. These reference frames are: SS (shoulder-centered shoulder-fixed), HH (head-centered head-fixed), and EE (eye-centered eye-fixed). The offsets between the rotation centers are also represented: the the head position in eye-centered head-fixed coordinates, and tsh the shoulder position in head-centered shoulder-fixed coordinates.

In order to compute the P projection trajectory onto the retina, it is obvious that we need to know P kinematics in shoulder (space) coordinates and also what the kinematics of the eye-head-shoulder rigid body system are. In addition to a spatial reference frame, a right-handed orthonormal reference frame is attached to each rigid body (the head, the eye and the shoulder in this example, see Figure 1). Let us assume that P trajectory is known in the shoulder reference frame, PSS(t) = (PSSx, PSSy, PSSz). PSS(t) refers to P position in the Shoulder-centered Shoulder-fixed reference frame (-centered refers to the reference frame origin while -fixed denotes that the orientation of the axes is constant with respect to the specified rigid body) as a function of time.

The first step is to compute P trajectory (position and velocity) in an eye-centered eye-fixed reference frame, denoted PEE(t) and PĖE. By combining eye-in-head rotation (REH quaternion), offset between eye and head rotation centers (THE dual quaternion), head-on-shoulder rotation (RHS quaternion), and offset between head and shoulder rotation centers (TSH dual quaternion), we obtain the following expression for P position in eye-centered eye-fixed coordinates as a function of P position in shoulder-centered shoulder-fixed coordinates (using point transformations, as described in section 2.4; see also Appendix E for the derivation).

where the rotation quaternions are expressed as a function of the rotation angles and unitary axis.

For the P velocity, we differentiate Equation (16). And after a few calculations (see Appendix E), we obtain: where PEH (resp.PHS) is the target position expressed in eye-centered head-fixed (resp. head-centered shoulder-fixed) coordinates. They can be computed, similarly to the expression in Equation (16), as:

There are three terms in the right side of this expression: the first one depends on the eye-in-head rotational velocity, ΩEH. The second one depends on the head-on-shoulder rotational velocity, ΩHS. The third term is related to the target velocity in the shoulder-centered shoulder-fixed reference frame, PṠS. When the eye-head-shoulder configuration is static during a trial, only the last term remains. In this case, the only change in velocity is due to the different orientations of the reference frames.

The first step for computing the retinal position and motion was to express the target position and velocity in a reference frame centered on the eye and fixed to the eye, PEE(t) and PĖE. Then the second step is to compute the projection of the target position onto the retina and the resulting velocity of this projection, which is the retinal position and velocity available for further processing in the brain. Computationally, it amounts to project PEE = 1 + ϵ PEE onto a sphere of radius reye (see Figure 2A). For simplicity, we assume reye = 1 (it is just a scaling factor).

where where is the distance of the target (in eye-centered coordinates). The velocity of the projection is computed as follows:

The resulting dual quaternion is . Note that if the motion of the target is spherical with the eye as center (isodistance trajectory), then , and the expression simplifies to . However, most of the time, the distance is not constant, for example, if the target is moving in a frontoparallel plane. can be expressed as (see Appendix F):

Figure 2

Geometry of the velocity projection (A) and velocity reconstruction (B). (A) Projection of P position and velocity onto the eye (actually we plot the reversed retinal projection and velocity projection, to be directly comparable to the spatial position and velocity). References to the reference frame are omitted on the figure for the sake of clarity. The two components which add to obtain are represented :  . (B) Reconstruction of P position and velocity. The two components which add to obtain ṖEE are represented:

. (B) Reconstruction of P position and velocity. The two components which add to obtain ṖEE are represented:  .

.

In this first part, we have computed the retinal target projection vector in eye-centered eye-fixed coordinates from the knowledge of target motion in spatial coordinates. This transformation is useful because we do not know the projection vector in retinal coordinates in advance. Therefore, from an experimental or simulation point of view, this transformation is important. Moreover the above transformation could be useful for the brain if the target is also known to the brain in spatial (or also head) coordinates (for example: auditory target, proprioceptive target in addition to the visual information about the target). Indeed, multisensory integration is carried out using multiple reference frames (McGuire and Sabes, 2009) and therefore auditory (head coordinates) information should be expressed in eye coordinates. This transformation could also be useful if the brain was to implement a forward model, predicting the sensory consequences of the arm motor command, in retinal coordinates.

Now we move to the inverse transformation, which computes the target motion in spatial (or shoulder) coordinates, with the retinal position and velocity as input. This transformation must be carried out by the brain in order to specify a spatially accurate motor plan for the arm (see Blohm and Crawford, 2007; Leclercq et al., 2012).

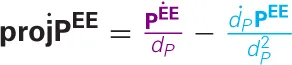

3.1.2. Computing the spatial position and velocity

Now we describe the model with projPEE and as inputs, and PSS and PṠS as outputs. These outputs would be used to specify the motor plan (direction, velocity) of an arm tracking movement for example (Leclercq et al., 2012). Using this model, predictions about the motor plan can therefore be easily computed under different hypotheses of incomplete transformation. These hypotheses are made by asking whether extra-retinal signals (3D eye and head positions, 3D eye and head velocities) are available to the brain and whether they are biased or not, and whether they are highly or not much variable (in the framework of bayesian estimation for example).

We start the complete transformation by reversing the above described model. From projPEE and , we first compute PEE and PĖE (see Figure 2B). Theoretically,

We observe that dP is necessary to reconstruct PEE. It is interesting to consider how the brain estimates dP. Most of the time, our vision is binocular and dP can be estimated with the use of binocular cues (see Blohm et al., 2008). However, vision is sometimes monocular (e.g., Leclercq et al. (2012) patched one eye in order to have only monocular vision). In this case, no binocular clues are available to estimate target depth. But monocular cues and a priori information also help to have an estimation of the target depth, but they are quite reduced for point-like target movements in complete darkness (see Leclercq et al., 2012). A paradigm of tracking in depth would be interesting to test, in monocular and binocular situations. That would allow to differentiate between monocular and binocular clues in order to estimate depth in the context of arm movements.

Theoretically, we also have: thus also has to be estimated for the estimation of the target velocity in space.

To compute the target position in spatial coordinates, we invert Equation (16), using the fact that the kinematic dual quaternions are unitary and therefore, for any unitary dual quaternion A, we have A−1 = A*. Applying that, we have: where and

For the target velocity in spatial coordinates, we inverse the relationship (17) and obtain:

These two transformations are implemented by the brain for the planning of spatially accurate movements, as it has been shown by Blohm and Crawford (2007) and Leclercq et al. (2012). These transformations are useful for the experimenter, in order to estimate the retinal position and motion of a target, and also to make different predictions on the motor plan generated by the brain, depending on hypotheses made about the signals available to the brain (accuracy, precision). However, we believe that dual quaternions are just a tool to easily model this transformation, and they are most likely not used by the brain as a way to implement these transformations. Most likely, these transformations are implemented in a distributed way by a neural network. Blohm et al. (2009) and Blohm (2012) show how these transformations could be achieved by the brain in a distributed way using a biologically inspired artificial neural network. Moreover they relate the properties of the artificial neurons to those of real neurons. Let us mention that dual quaternions are used in these theoretical studies to generate a huge number of input–output pairs (retinal motion as input and spatial motion as output).

3.2. Two-link arm movements: forward and inverse kinematics for end-effector position

We will now consider another example of active movements: 3D arm movements. In section 3.2, we focus on the end-effector trajectory of a two-link arm, describing its forward kinematics and the inverse kinematics using the dual quaternion formalism applied to point transformations. In section 3.3, we consider a three-link arm holding a tool (a screwdriver for example) to emphasize the end-effector orientation importance in several everyday life situations. The dual quaternion formalism is again used in order to model the forward and inverse kinematics for the orientation and position of the end-effector, but it is also applied to lines, in order to deal with the orientation transformation.

3.2.1. Forward kinematics for end-effector position

In the following, we consider the forward kinematics of the end-effector position of a two-link arm (see Figure 3). The forward kinematics consists in computing the end-effector position (and sometimes orientation) from the knowledge of the joints kinematics (rotation angles and axes, joint velocities). The advantage of dual quaternions to represent such transformations is the compactness and geometrical significance to express the joint kinematics.

Figure 3

Two-link arm top view. The shoulder insertion point, elbow and end-effector location are represented. The different reference frames are also represented: body-fixed shoulder-centered (BS, in red), upper arm -fixed shoulder-centered (US, in blue), and the lower arm -fixed elbow centered (LE, in green). The rotation angles of the shoulder and elbow are respectively denoted φ and θ.

Assuming a length of a (resp. b) for the upper arm (resp. lower arm), the position PBS of the end-effector P (B for body-fixed coordinates and S for shoulder-centered coordinates) can be expressed as: where S stands for shoulder, U for upper arm, E for elbow and L for lower arm. RUB represents the upper arm rotation around the shoulder compared to a reference position (rotation angle: φ = 0). RLU represents the lower arm rotation around the elbow compared to a reference position (θ = 0). represents translation dual quaternion associated with the offset between the elbow and the shoulder, in shoulder-centered upper-arm fixed coordinates. PLE is the (fixed) position of point P in lower-arm fixed elbow-centered coordinates:

Note that we can represent the forward kinematics of the end-effector if we know the parameters of the shoulder and elbow rotations (for example, angle and rotation axis), whatever the degrees of freedom.

We can also express the velocity ṖBS of the end-effector P: where we obviously assume that ṖLE = 0. ΩUB and ΩLU represent the rotational velocities of the upper arm around the shoulder and of the lower arm around the elbow.

3.2.2. Inverse kinematics from end-effector position

Estimating the joint kinematic parameters from the knowledge of the end-effector position and velocity (and sometimes the orientation, see next) is a much more difficult task in general. This process is called inverse kinematics. It can be difficult for several reasons. First, we could solve the inverse kinematics equations (e.g., Equation 19) for the joint kinematic parameters, but theses equations are highly non-linear and no general method exists to solve this problem. For a particular case, it is sometimes possible and advantageous to compute analytical solutions, but it can not always be done. Numerical methods are also widely used to solve this problem, and in the following we will use this approach, which allows us to develop one general method for several different problems. Then, the kinematic system is usually redundant (like the human arm), meaning that there is an infinity of joint configurations that can achieve the prescribed end-effector kinematics. Finally, there are some geometrical configurations in which there are singularities and which tend to complicate the resolution of the inverse kinematics problem. This is a well studied problem in the field of robotics (Klein and Huang, 1983; Cheng and Gupta, 1991; Wang and Chen, 1991; Sciavicco and Siciliano, 1996; Tolani, 2000). Here, we take the two-link arm as an example and develop a methodology from our dual quaternion formalism to compute the inverse kinematics numerically.

The inverse kinematics problem is complicated, especially because the degree of freedom (dof) exceeds the dimension of the end-effector motion (this is called redundancy). For the two-link arm, we consider four degrees of freedom (three at the shoulder and one at the elbow), while the end-effector motion is only 3D. Therefore, there is an infinity of solutions to the problem.

First, we simplify the problem: we assume that the two-link arm moves in the horizontal plane, which reduces the joint dof to 2, yielding a well-posed problem for inverse kinematics. In this case, the velocity dual quaternion representing the 2D velocity of point P may be written as (see details in Appendix G.1): where n is the rotation axis of the shoulder and the elbow (since we only consider planar motions) and where JDQ is an array composed of two point velocity dual quaternions (from an implementation perspective, JDQ is an 8 × 2 array, see section 2.6). We can build the 2 × 2 jacobian matrix J by extracting the 2D vector part of the velocity dual quaternion composing each column of JDQ (see Equation 20):

The dual quaternion equation (20) can then be expressed in a matrix form: where ṖBS is the 2D velocity vector component of the velocity dual quaternion ṖBS. We then compute and as a function of ṖBS to solve the inverse kinematics problem, assuming we know the current position of the joints, θ and φ, since the jacobian matrix J depends on these parameters: J = J(θ, φ). Indeed, J depends on PBS, and PBS depends on the rotation dual quaternions RUB and RLU (see Equation 17). Since θ is the rotation angle of RLU and φ is the rotation angle of RUB, it shows that J depends on θ and φ. Also, RUB and PUE depend on φ and appear in the expression of J.

However, we can solve for and by inverting J only if J is invertible. It is the case in most geometrical configurations of the two-link arm (indeed, J depends on φ and θ, so does the rank of J), except when θ = 0 (or θ = 180°), which represents a situation where the upper and lower arms are aligned. In this case, J is not invertible (its rank is equal to 1), and we can not apply the above method. This situation can correspond to two cases. If ṖBS has a component parallel to the aligned arm, then there are no solutions for and . If ṖBS is orthogonal to the aligned arm, then there exists an infinity of solutions (in which case we can express the general form of the solution using the pseudo-inverse formalism, see later in the manuscript).

We notice that the differential velocity relationship linking the joint velocities and end-effector velocity is linear if we assume that θ and φ are known, and that will be the case in the numerical approach we use to solve the inverse kinematics problem. In order to solve the inverse kinematics problem for the joint positions, we can numerically integrate the joint velocities across time, knowing the joint positions at time t0 (an initial condition is needed). The simplest numerical integration is the Euler method: where Δt is the integration step and should not be too large to achieve reasonable accuracy. Then, the new rotation dual quaternions can be updated as a function of θ(t + Δt) and φ(t + Δt) and we can iterate this process.

Now we want to consider movements in 3D space, not restricted to the plane. In this context, the shoulder has three-dof and the elbow has one-dof. It is clear that there are too many dof compared to the 3D motion of the end-effector, and intuitively many shoulder-elbow configurations will lead to the same end-effector position and velocity. In the following we explain one approach which can be taken to obtain one specific solution for the joints, as well as a general formula to express the set of all possible solutions.

The idea is to start with the dual quaternion expression of the velocity of the end-effector (e.g., Equation 19) and rewrite it in a matrix expression. We move from the dual quaternion representation to the matrix notation because we use tools from the matrix algebra (e.g., the pseudo-inverse matrix) to derive the inverse kinematics results (see below).

The relationship between the end-effector velocity ṖBS and the rotational velocities of the shoulder, ΩUB, and the elbow, ΩLU, is described by Equation (19). Using the fact that: (see Appendix G.2) we can show that Equation (19) can be expressed as a linear matrix expression (see Appendix G.3 for the derivation): where ṖBS is the dual bivector part of ṖBS, RMUB is the rotation matrix associated with the rotation dual quaternion RUB [see Equation (D.2) in Appendix D for how to compute this rotation matrix] and is the anti-symmetric matrix of rank 2 associated with the cross product: if , it can also be written where: where a = (a1a2a3). Note that this matrix has always rank 2 since for an equation in : , the component of parallel to can be arbitrary. All the solutions lie on a line.

Coming back to Equation (22), we see that the matrix J is of size 3 × 6 and we want to solve for the unknowns ΩUB and ΩLU. Since we consider one-dof at the elbow, the vector ΩLU must be aligned with a specific rotation axis nelbow = [0 0 1] (in upper arm fixed coordinates) since we assume that the elbow rotates around an axis which is orthogonal to both the lower and upper arms (see also Figure 3 to see why this axis is along the z-axis). This constraint can be written as . Therefore, we add this constraint to the kinematics equation (22) to obtain:

Now this modified matrix J has generally rank 5 since has rank 2 (but the rank can be even lower in certain geometrical configurations of the two-link arm, as explained previously with the two-dof two-links arm). Indeed, because we can rotate the two-link arm system around an axis linking the shoulder and the end-effector, without changing the end-effector position and velocity, there is an infinity of solutions (ΩUB, ΩLU) which lie in a one-dimensional manifold, which is mathematically defined as the kernel of J, i.e., the set Ker(J) = {x ∈ ℝ6 such that Jx = 0}. Therefore, since there is an infinity of solutions, we need some criterion that may be optimized to choose one optimal solution (according to this criterion), while Equation (23) is a constraint for the optimization problem.

The matrix J−1 is not defined and thus we choose to use a generalized inverse matrix (which exists for any matrix): the pseudo-inverse [see Klein and Huang (1983) for the use of pseudo-inverse in inverse kinematics], also called the Moore–Penrose inverse. We will denote it by J+. Therefore, one solution of the redundant system given by Equation (23) is given by:

This solution is actually the solution with minimal norm (Klein and Huang, 1983; Sciavicco and Siciliano, 1996), which minimizes the joint velocities in the context of the four-dof two-link arm. The general solution can also be expressed (see Sciavicco and Siciliano, 1996): where w can be any vector in ℝ6, and I is the identity matrix of order 6 (for this example). In practice, Equation (24) requires that we explicitly compute the pseudo-inverse J+. The interested reader can refer to Appendix G.4 to see how this can be done.

Once we have a solution for the joint velocities , we can numerically integrate the joint velocities across time, knowing the joints positions at time t0. Several methods for numerical integration exist (see for example, Atkinson, 1989) and are used in the context of inverse kinematics (see Cheng and Gupta, 1991). Here, we will use the simple Euler method to update the rotation quaternions RUB and RLU. It is a bit more tricky than in the simple two-dof two-link arm (see Equation 21). Indeed, given that , we can update the rotation dual quaternion as: but the major problem is that in general R(t + Δt) is not a rotation quaternion anymore, and we have to normalize it by the norm of R(t + Δ t) to ensure it is a rotation dual quaternion again. From a computational perspective, Funda et al. (1990) showed that the normalization operation is carried out much faster with quaternions than with rotation matrices, which is one of the advantages of using quaternions over rotation matrices. One alternative for the computation is the following. In angular vector notation, the magnitude of the vector represents the rotation angle while the normalized unit vector represents the rotation axis. Using this fact, the rotational displacement between time t and t + Δt is characterized by the angular vector ΩΔt which is of the form θn. Then, this angular vector can be expressed as a rotation dual quaternion (see Equation 3), ΔR. Then,

However, by using such a numerical integration scheme, errors arise since the numerical integration is not perfect. These errors propagate from one iteration to the other and the reconstructed end-effector location (using the forward kinematic model, see Equation 18) will drift from the real end-effector position (see Sciavicco and Siciliano, 1996). In order to avoid this problem, we take the position error between the reconstructed end-effector position, , and the real (or desired) end-effector position, PBS, into account. Actually, we apply the correction scheme described in Sciavicco and Siciliano (1996). The vector error at time t is: where FK(.) is the forward kinematic function described by Equation (18). We take this error into account by adding a vector term proportional to the error in Equation (23): where K is a positive definite (usually diagonal) matrix. We can for example choose K as the identity matrix multiplied by a factor k1, which is tuned by the user. Then, we can simply compute a solution using the same pseudo-inverse technique as described above [see Equation (24) to this modified problem].

We described how we deal with the inverse kinematics of the end-effector position of a two-link arm but we can generalize this procedure to a n-link arm. The interested reader can refer to Appendix G.5.

3.2.3. Inverse kinematics: numerical simulation

Here we test the numerical method for inverse kinematics developed in section 3.2.2 on the four-dof two-link arm. Figure 4 shows one particular example of inverse kinematics for this two-link arm. First, we choose an end-effector motion with a bell-shaped velocity profile and a straight line path in the 3D workspace (see Figure 4B), to mimic hand velocity profiles described in the neuroscience literature (Abend et al., 1982; Atkeson and Hollerbach, 1985). From the subject perspective, the hand is moving from a central position to the right, up and away from the shoulder. The initial configuration of the arm is represented in black (see Figure 4A). From the end-effector motion, we apply the inverse kinematics technique described in the previous section to estimate the joint rotations over time for the movement (Figure 4C).

Figure 4

Numerical simulation example of inverse kinematics of the four-dof two-link arm. For this simulation, we used k0 = 1000 and k1 = 1 (see Appendix G.4) and a simulation step of 1 ms duration. (A) Top view (top), behind view (middle), and side view (bottom) of the computed inverse kinematics for the joints. The end-effector (point P) motion in 3D space is described by the blue line. The initial two-link arm configuration is represented in black. Upper arm (dark gray) and lower arm (light gray) are represented at different instants during the end-effector motion. (B) The end-effector speed (left) and displacement (right) are described as a function of time: the movement is a typical bell-shaped speed profile lasting 800 ms and with a peak velocity of 100 cm/s. The end-effector displacement is about 25 cm. (C) The estimated elbow (left) and shoulder (right) rotation angles are represented as a function of time. For the shoulder, there are three components of rotation: around the x-axis (red line), the y-axis (green line), and the z-axis (blue line). (D) Representation of the position error, the norm of the difference between the estimated and actual end-effector position, as a function of time. The maximal error is about 0.02 cm.

The estimation accuracy was assessed by computing the norm of the position error, i.e., the difference between the true end-effector position and the estimated end-effector position. The end-effector position was estimated by using the forward kinematic model (see Equation 19) and the estimated joint angles (and therefore rotation quaternions). We can observe (Figure 4D) that the position error was always smaller than 0.02 cm. This position error depends on the value of k1 (see Equation 26) that we use. For this simulation, we used k1 = 1000. The larger k1 the smaller is the position error, because we penalize the position error more with larger values of k1. But k1 can not be too large for discrete time systems. If k1 is too large, the system will be unstable and the error will grow. The threshold value for k1 depends on the simulation step Δt (see Appendix G.6 for details). For our simulation, we used Δt = 1 ms and therefore we could not choose a value for k1 larger than 2000.

We tested several directions in space for the end-effector motions and our inverse kinematics algorithm was successful in every case, inferring joint angles for the elbow and shoulder. Note that this method does not necessarily reproduce the joints angles observed in human subjects but it yields the solution with the smallest joint angle rotations.

3.3. Three-link arm movements: forward and inverse kinematics for end-effector position and orientation

In this section, we describe the forward and inverse kinematics for the end-effector position and orientation of a three-link arm. The main difference compared to section 3.2 is that we perform line transformations (using dual quaternions) to transform the orientation.

For this section, we decided to provide an example where we directly use screw motion to model the joints (shoulder, elbow, and wrist) movements, but we could have kept the same approach as in section 3.2 by alternating rotations and translation (offsets). Similarly, we could have used screw motion dual quaternions as well in section 3.2 for the two-link arm example.

3.3.1. Forward kinematics for end-effector position and orientation

Here, we describe a seven-dof arm with three links (see Figure 5): the upper and lower arms as well as the wrist. We consider a seven-dof joint model: three for the shoulder, one for the elbow, and three for the wrist. Up to here, we could just apply the methodology described above in section 3.2. However, now we consider an example where the end-effector orientation matters. For example, Figure 5 shows that the hand holds a screwdriver, and we are interested in the location and orientation of this end-effector.

Figure 5

seven-dof three-link arm top view. The shoulder insertion point, elbow and wrist are represented as well as the end-effector (a screwdriver) location and orientation. The different reference frames are also represented: body-fixed shoulder-centered (BS, in red), upper arm -fixed shoulder-centered (US, in blue), lower arm -fixed elbow centered (LE, in green), and hand-fixed wrist-centered (HW, in magenta). The rotation angles of the shoulder, elbow, and wrist are respectively denoted φ, θ, and α. The configuration in gray represents the reference configuration.

In order to describe the end-effector (the screwdriver) orientation (in body-fixed coordinates), we now use the formalism of line dual quaternions (see section 2.3.2). Let

be the line dual quaternion describing the reference line passing through the screwdriver in its reference configuration [see the gray configuration in Figure

5: the arm is fully extended, φ = θ = α = 0° and

n0= (0 1 0)

Tand

r0= (

a+

b+

c0 0)

T]. The forward kinematics describing the end-effector line position,

L(

t) =

n(

t) + ϵ

m(

t), is described by line transformations, using the tools described in the previous sections (again, here we use screw motions but we could have used rotations and translations offsets):

where:

SUB = RUB = cos(φ/2) + nUB sin(φ/2) is a pure rotation quaternion describing the 3D rotation of the upper arm around the shoulder.

- SLU

=

T*ESRLUTESis a screw motion dual quaternion describing the 1D rotation of the lower arm around the elbow, whose rotation axis is offset from the origin (the shoulder):

− RLU = cos(θ/2) + nLU sin(θ/2) is a pure rotation quaternion describing the 3D rotation of the lower arm around the elbow.

− TES = 1 + ϵtes/2 where tes = [a 0 0]T is the offset between the elbow and the shoulder in the reference configuration.

- SHL

= T

*WSRHLTWSis a screw motion dual quaternion describing the 3D rotation of the hand around the wrist, whose rotation axis is offset from the origin (the shoulder):

− RHL = cos(α/2) + nHL sin(α/2) is a pure rotation quaternion describing the 3D rotation of the hand around the wrist.

− TWS = 1 + ϵtws/2 where tws = [a + b 0 0]T is the offset between the wrist and the shoulder in the reference configuration.

We can also derive the expression for the end-effector line velocity,

, deriving the above expressions:

where

Lw = S*HLL0SHL is the end-effector line position after applying the wrist rotation to the reference line L0.

Le = S*LULwSLU is the end-effector line position after applying the elbow rotation to the line Lw.

Remembering the description of a screw motion velocity dual quaternion Ṁ (see Equation 13) in terms of the pure rotational component, Ω, the offset velocity term ṪL and the translational velocity term along the screw axis Ṫ, we can express these screw motion velocities for the seven-dof three-link arm. For each of the screw motion dual quaternions considered here, only the rotational part is moving, in which case Ṁ simplifies to . Therefore, it is quite easy to compute .

From the line forward kinematics for position and velocity, we can derive the orientation of the end-effector, n(t), as well as its rate of change, ṅ(t). The actual position and velocity of the end-effector position is more tricky to obtain. Indeed, we can retrieve the quantities m(t) and ṁ(t), but with these quantities, we can only infer the component of the end-effector position orthogonal to the line orientation. And, even if the end-effector position is known, we can not uniquely determine the end-effector velocity component along the line orientation (see also Appendix B). Therefore, in parallel to the line transformation applied to the end-effector line, a position transformation should be applied to the end-effector position. The transformation equations applied to the reference point dual quaternion P0 = 1 + ϵr0 are similar to Equations (27) and (28) except that we use the conjugate definition instead of S* for point transformation (as described in section 2.4).

3.3.2. Inverse kinematics from end-effector position and orientation

In the case of line transformations, we are also interested in estimating the joint kinematic parameters from the knowledge of the end-effector position and orientation as well as the velocity and orientation rate of change. The problems and the challenges are similar to what we saw before in section 3.2.2 except for the construction of the jacobian matrix J which links the joint-velocities to the end-effector velocity and orientation rate of change. For the interested reader, Appendix H describes in detail how to compute this jacobian matrix J for the three-link arm and also generalizes to the n-link arm. Then we can proceed similarly to what is shown in section 3.2.2 to solve the inverse kinematics problem.

4. Discussion

In many applications of behavioral neuroscience or vision, there is a need to represent the 3D position and/or orientation of objects as well as their velocity. These objects may be external objects/stimuli that a human subject has to deal with (e.g., the position and orientation of a target object). For example, in order to catch the ball, a rugby player needs to estimate the 3D motion of the ball but also the time course of its orientation, since the ball is non-spherical. This object can also be a body part (e.g., the eye, the head, and the hand), whose position and/or orientation is of interest for the brain to monitor body movements. Dual quaternions provide a convenient compact and geometrically meaningful way to describe either position (through point dual quaternions) or position and orientation (through line dual quaternions). Moreover, dual quaternions provide a way to describe natural geometrical transformations like rotations, translations, or screw motions, and these geometrical transformations can easily be combined together and applied to points or lines. In this paper, we described the useful concepts of the dual quaternion geometric algebra and how dual quaternions can be used to model these transformations. We also described the dual quaternion formalism to cope with velocity: rotational velocity, screw motion velocity, point velocity, and line velocity. Then we applied these concepts to a few examples in behavioral neuroscience: the 3D eye-head-shoulder system reference frame transformation needed for the accurate planning of manual tracking movements. Another example was provided with the forward kinematics for the multi-link arm, either considering the end-effector (the hand) position alone, or considering the end-effector position and/or orientation. Finally we also derived the inverse kinematics of the same multi-link arm from the dual quaternion formalism. In these applications, we do not claim that the brain actually uses dual quaternions to implement these transformations. However, these complex transformations are easily expressed mathematically using dual quaternions, which helps the neuroscience researcher to make predictions, for a theoretical goal or just in designing an experiment.

A main advantage of dual quaternions is that we can combine several rotations and/or translations. Therefore, it is quite easy to compute and write the expression for complex systems. We used that advantage throughout our applications. Another advantage of using quaternions (or dual quaternions) to represent rotations is that it is an efficient way to parameterize rotations, without any singularity (Chou, 1992; Grassia, 1998), which occur when using the classical ways to parameterize rotations (Euler angles for instance). An important advantage of dual quaternions is the compactness in terms of memory requirements: we only need 8 elements to represent them, compared to 12 for homogeneous matrices (Kim and Kumar, 1990; Funda and Paul, 1990a; Aspragathos and Dimitros, 1998; Daniilidis, 1999; Kavan et al., 2008).

Quaternions have been widely used to model 3D eye rotations (Tweed and Vilis, 1987; Hestenes, 1994a; Haslwanter, 1995; Crawford and Guitton, 1997; Bayro-Corrochano, 2003) while dual quaternions have been used less (see Bayro-Corrochano, 2003; Blohm and Crawford, 2007; Leclercq et al., 2012). Here, we described how the dual quaternion formalism can be used to model the multi-body kinematics, which can be useful for modeling purposes in applications like motor planning for eye and arm movements, 3D eye-head gaze shifts, 3D VOR, 3D updating, computing predictions of an inverse or forward internal model.

Dual quaternions have been commonly used in robotics and computer vision, for various purposes. Quaternion parametrization of rotations are used in graphics applications (Grassia, 1998; Tolani, 2000; Azariadis, 2001; Kavan et al., 2008; Ding et al., 2012). Tolani (2000) developed a real-time inverse kinematic technique for anthropomorphic limbs. In Ding et al. (2012), they develop an image processing technique which is built upon a so-called dual quaternion Fourier transform. According to Grassia (1998), quaternions are the best choice to interpolate 3D rotations. Indeed, when we interpolate element by element between two rotations quaternions, it interpolates on the geodesic (shortest) path onto the sphere (Shoemake, 1985; Dam et al., 1998). Dual quaternions are also used for the process of pose estimation, which consists in estimating the position and orientation of an object. In this context, dual quaternions allow to solve simultaneously for both the position and orientation components (Daniilidis, 1999; Bayro-Corrochano et al., 2000) in the context of robotics (hand-eye calibration). Pose estimation is also used with dual quaternions in computer vision (Walker et al., 1991; Phong et al., 1993; Torsello et al., 2011), but with a projective component in addition. In Goddard and Abidi (1998), they used the iterative extended Kalman filter for this purpose of pose estimation in order to deal with uncertainty. Forward kinematics equations for robotic manipulators have been derived with dual quaternions (Kim and Kumar, 1990) and compared with other methods (Aspragathos and Dimitros, 1998). Perez and McCarthy (2004) use dual quaternions for the synthesis of constrained robotic systems where several serial constrained (less than six-dof) manipulators are combined. Inverse kinematics techniques have been developed using dual quaternions for six-dof manipulators (Aydin and Kucuk, 2006; Sariyildiz and Temeltas, 2009) and compared to other methods (Sariyildiz et al., 2011). They are also used in the context of cooperative control of multiple manipulators: several robotic manipulators (Adorno et al., 2010) or a robotic manipulator interacting with a human arm (Adorno et al., 2011). In Pham et al. (2010), they develop a control law using dual quaternion to control simultaneously the position and orientation of a robotic manipulator.

Thus dual quaternions are powerful mathematical constructs that are widely used in robotics and computer vision, and could make important contributions to neuroscience research.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Statements

Acknowledgments

This paper presents research results of the Belgian Network DYSCO (Dynamical Systems, Control, and Optimization), funded by the Interuniversity Attraction Poles Programme, initiated by the Belgian State Science Policy Office. The scientific responsibility rests with its author(s). This work was also supported by the Belgian Fonds National de la Recherche Scientifique, the Fondation pour la Recherche Scientifique Médicale, and internal research grants from the Université catholique de Louvain (Fonds Spéciaux de Recherche, Action de Recherche Concertée), The Botterell Foundation (Queen's University, Canada), NSERC (Canada), and the Ontario Research Fund (Canada).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1

AbendW.BizziE.MorassoP. (1982). Human arm trajectory formation. Brain105, 331–348. 10.1093/brain/105.2.331

2

AdornoB.FraisseP.DruonS. (2010). Dual position control strategies using the cooperative dual task-space framework, in Intelligent Robots and Systems (IROS), 2010 IEEE/RSJ International Conference, (Taipei), 3955–3960.

3

AdornoB. V.BoA. P. L.FraisseP. (2011). Interactive manipulation between a human and a humanoid: when robots control human arm motion, in 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, (San Fransisco, CA), 4658–4663

4

AspragathosN. A.DimitrosJ. K. (1998). A comparative study of three methods for robot kinematics. IEEE Trans. Syst. Man Cyberne. B Cybern. 28, 135–145. 10.1109/3477.662755

5

AtkesonC. G.HollerbachJ. M. (1985). Kinematic features of unrestrained vertical arm movements. J. Neurosci. 5, 2318–2330.

6

AtkinsonK. (1989). An introduction to numerical analysis, 2nd Edn. New York, NY: John Wiley Edition.

7

AydinY.KucukS. (2006). Quaternion based inverse kinematics for industrial robot manipulators with Euler Wrist, in 2006 IEEE International Conference on Mechatronics, (Budapest), 581–586.

8

AzariadisP. (2001). Computer graphics representation and transformation of geometric entities using dual unit vectors and line transformations. Comput. Graph. 25, 195–209.

9

Bayro-CorrochanoE. (2003). Modeling the 3D kinematics of the eye in the geometric algebra framework. Pattern Recogn. 36, 2993–3012.

10

Bayro-CorrochanoE.DaniilidisK.SommerG. (2000). Motor algebra for 3D kinematics: the case of the hand-eye calibration. J. Math. Imaging Vis. 13, 79–100.

11

BlohmG. (2012). Simulating the cortical 3D visuomotor transformation of reach depth. PLoS ONE7:e41241. 10.1371/journal.pone.0041241

12

BlohmG.CrawfordJ. D. (2007). Computations for geometrically accurate visually guided reaching in 3-D space. J. Vis. 7, 4.1–4.22. 10.1167/7.5.4

13

BlohmG.KeithG. P.CrawfordJ. D. (2009). Decoding the cortical transformations for visually guided reaching in 3D space. Cereb. Cortex19, 1372–1393. 10.1093/cercor/bhn177

14

BlohmG.KhanA. Z.RenL.SchreiberK. M.CrawfordJ. D. (2008). Depth estimation from retinal disparity requires eye and head orientation signals. J. Vis. 8, 3.1–3.23. 10.1167/8.16.3

15

ChengH.GuptaK. (1991). A study of robot inverse kinematics based upon the solution of differential equations. J. Robot. Syst. 8, 159–175.

16

ChouJ. (1992). Quaternion kinematic and dynamic differential equations. IEEE Trans. Robot. Automat. 8, 53–64.

17

CrawfordJ. D.GuittonD. (1997). Visual-motor transformations required for accurate and kinematically correct saccades. J. Neurophysiol. 78, 1447–1467.

18

DamE.KochM.LillholmM. (1998). Quaternions, interpolation and animation. Technical report, University of Copenhagen.

19

DaniilidisK. (1999). Hand-eye calibration using dual quaternions. Int. J. Robot. Res. 18, 286–298.

20

DingZ.YuY.WangB.ZhangL. (2012). An approach for visual attention based on biquaternion and its application for ship detection in multispectral imagery. Neurocomputing76, 9–17.

21

FundaJ.PaulR. P. (1990a). A computational analysis of screw transformations for robotics. IEEE Trans. Robot. Automat. 6, 348–356.

22

FundaJ.PaulR. P. (1990b). A computational analysis of screw transformations in robotics. IEEE Trans. Robot. 6, 348–356.

23

FundaJ.TaylorR. H.PaulR. P. (1990). On homogeneous transforms, quaternions, and computational efficiency. IEEE Trans. Robot. Automat. 6, 382–388.

24

GoddardJ. S.AbidiM. A. (1998). Pose and motion estimation using dual quaternion-based extended Kalman filtering, in Proceedings of SPIE Conference on Three-Dimensional Image Capture and Applications. Vol. 3313, (San Jose, CA), 189–200.

25

GrassiaF. (1998). Practical parameterization of rotations using the exponential map. J. Graph. Tools3, 29–48.

26

HaslwanterT. (1995). Mathematics of three-dimensional eye rotations. Vis. Res. 35, 1727–1739. 10.1016/0042-6989(94)00257-M

27

HestenesD. (1986). New Foundations for Classical Mechanics. Dordrecht: D. Reidel Publishing Company.

28

HestenesD. (1994a). Invariant body kinematics: I. Saccadic and compensatory eye movements. Neural Netw. 7, 65–77.

29

HestenesD. (1994b). Invariant body kinematics: II. Reaching and neurogeometry. Neural Netw. 7, 79–88.

30

HoreJ.WattsS.VilisT. (1992). Constraints on arm position when pointing in three dimensions: Donders' law and the Fick gimbal strategy. J. Neurophysiol. 68, 374–383.

31

KavanL.CollinsS.ŽáraJ.O'SullivanC. (2008). Geometric skinning with approximate dual quaternion blending. ACM Trans. Graph. 27, 1–23.

32

KimJ.-H.KumarV. R. (1990). Kinematic of robot manipulators via line transformations. J. Robot. Syst. 7, 649–674.

33

KleinC.HuangC. (1983). Review of pseudoinverse control for use with kinematically redundant manipulators. IEEE Trans. Syst. Man Cybern. SMC-13, 245–250.

34

LeclercqG.BlohmG.LefèvreP. (2012). Accurate planning of manual tracking requires a 3D visuomotor transformation of velocity signals. J. Vis. 12, 1–21. 10.1167/12.5.6

35

McGuireL. M. M.SabesP. N. (2009). Sensory transformations and the use of multiple reference frames for reach planning. Nat. Neurosci. 12, 1056–1061. 10.1038/nn.2357

36

PerezA.McCarthyJ. M. (2004). Dual quaternion synthesis of constrained robotic systems. J. Mech. Des. 126, 425.

37

PhamH.PerdereauV.AdornoB.FraisseP. (2010). Position and orientation control of robot manipulators using dual quaternion feedback, in Intelligent Robots and Systems (IROS), 2010 IEEE/RSJ International Conference, (Taipei), 658–663.

38

PhongT.HoraudR.YassineA.PhamD. (1993). Optimal estimation of object pose from a single perspective view, in 1993 (4th) International Conference on Computer Vision (Berlin: IEEE Computer Society Press), 534–539.

39