- Washington University in St. Louis, St. Louis, MO, United States

The education sector has benefited enormously from integrating digital-technology-driven tools and platforms. In recent years, artificial-intelligence-based methods have come to be considered the next generation of technology that can enhance the educational experience for students, teachers, and administrative staff alike. The concurrent growth of the necessary infrastructure, digitized data, and general social awareness has propelled these efforts further. In this review article, we investigate how artificial intelligence, machine learning, and deep learning methods are being used to support the education process. We do this through the lens of a novel categorization approach. We consider the involvement of AI-driven methods in the education process in its entirety, from student admissions, course scheduling, and content generation in the proactive planning phase to knowledge delivery, performance assessment, and outcome prediction in the reactive execution phase. We outline and analyze the major research directions under proactive and reactive engagement of AI in education using a representative group of 195 original research articles published in the past two decades, i.e., 2003–2022. We discuss the paradigm shifts in the proposed solution approaches, particularly with respect to the choice of data and algorithms over this time. We further discuss how the COVID-19 pandemic influenced this field of active development, as well as the existing infrastructural challenges and ethical concerns pertaining to the global adoption of artificial intelligence for education.

1. Introduction

Integrating computer-based technology and digital learning tools can enhance the learning experience for students and the knowledge delivery process for educators (Lin et al., 2017; Mei et al., 2019). It can also help accelerate administrative tasks related to education (Ahmad et al., 2020). Researchers have therefore continued to push the boundaries of including computer-based applications in classroom and virtual learning environments. In the past two decades specifically, artificial intelligence (AI) based learning tools and technologies have received significant attention in this regard. In 2015, the United Nations General Assembly recognized the need to impart quality education at the primary, secondary, technical, and vocational levels as one of its seventeen Sustainable Development Goals (SDGs) (United Nations, 2015). With this recognition, research and development at the frontiers of artificial intelligence for education is anticipated to remain in the spotlight globally (Vincent-Lancrin and van der Vlies, 2020).

In the past, there has been considerable discourse about how the adoption of AI-driven methods might alter the way we perceive education (Dreyfus, 1999; Feenberg, 2017). However, many of the earlier debates did not recognize the full potential of artificial intelligence because the supporting infrastructure was lacking. Only very recently have AI-powered techniques become usable in classroom environments. Since the beginning of the twenty-first century, the semiconductor industry has made rapid progress in manufacturing chips that can handle computations efficiently at scale. This growth trajectory is anticipated to continue in the coming decade, with a focus on wireless communication, data storage, and computational resource development (Burkacky et al., 2022). With this parallel progress, using AI-driven platforms and tools to support students, educators, and policy-makers in education appears more feasible than ever.

The process of educating a student begins well before the student starts attending lectures and working through lecture materials. In a traditional classroom setting, administrative staff and educators begin preparations several weeks before the term starts: making admissions decisions, scheduling classes to optimize resources, and curating course content and preliminary assignment materials. In an online learning environment, similar effort goes into structuring the course content and marketing the course to students. Once the term starts, educators focus on delivering the course material, assigning and grading work to assess progress, and providing additional support to students who might benefit from it. Students are expected to acquire knowledge regularly, ask clarifying questions, and seek help to master the material. Administrative staff are less hands-on in this phase; they remain involved to ensure smooth and efficient overall progress. Education is therefore a multi-step process with many inter-dependencies and different stakeholders. Throughout this manuscript, we refer to this multi-step process as the end-to-end education process.

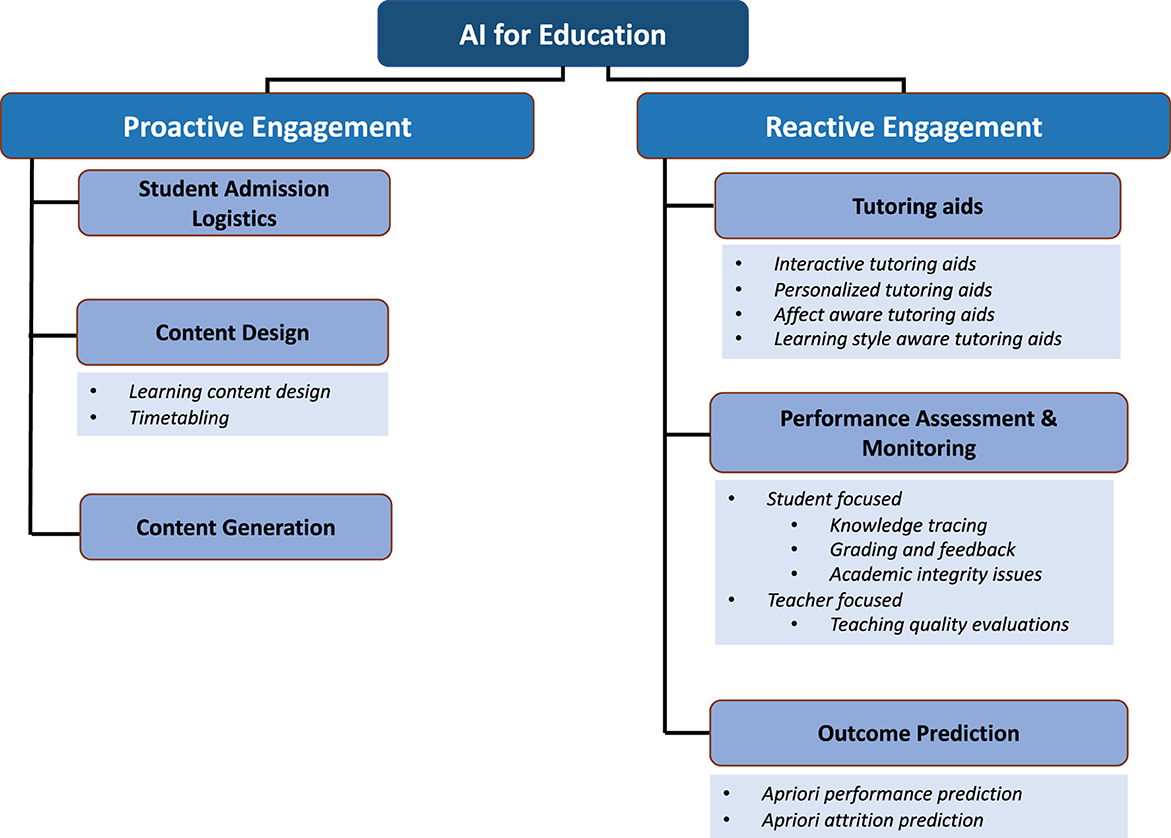

In this article, we review how machine learning and artificial intelligence can be used in different phases of the end-to-end education process, from planning and scheduling to knowledge delivery and assessment. To systematically identify the areas of active research on the engagement of AI in education, we first introduce a broad categorization of research articles in the literature into those that address tasks prior to knowledge delivery and those that are relevant during knowledge delivery, i.e., proactive vs. reactive engagement with education. Proactive involvement of AI in education comes from its use in student admission logistics, curriculum design, scheduling, and teaching content generation. Reactive involvement of AI is considerably broader in scope: AI-based methods can be used for designing intelligent tutoring systems, assessing performance, and predicting student outcomes. The schematic in Figure 1 presents an overview of our categorization approach. We have selected a sample set of research articles under each category and identified the key problem statements addressed using AI methods in the past 20 years. We believe that our categorization approach exposes researchers to the wide scope of using AI for the educational process. At the same time, it allows readers to identify when a certain AI-driven tool might be applicable and what the key challenges and concerns with using it at that time are. The article further summarizes for expert researchers how the use of datasets and algorithms has evolved over the years and the scope for future research in this domain.

Through this review article, we aim to address the following questions:

• What were the widely studied applications of artificial intelligence in the end-to-end education process in the past two decades? How did the 2020 outbreak of the COVID-19 pandemic influence the research landscape in this domain? Looking back over the past two decades, has the use of AI for education widened or narrowed the gap between population groups with respect to access to quality education?

• How has the choice of datasets and algorithms in AI-driven tools and platforms evolved over this period—particularly in addressing the active research questions in the end-to-end education process?

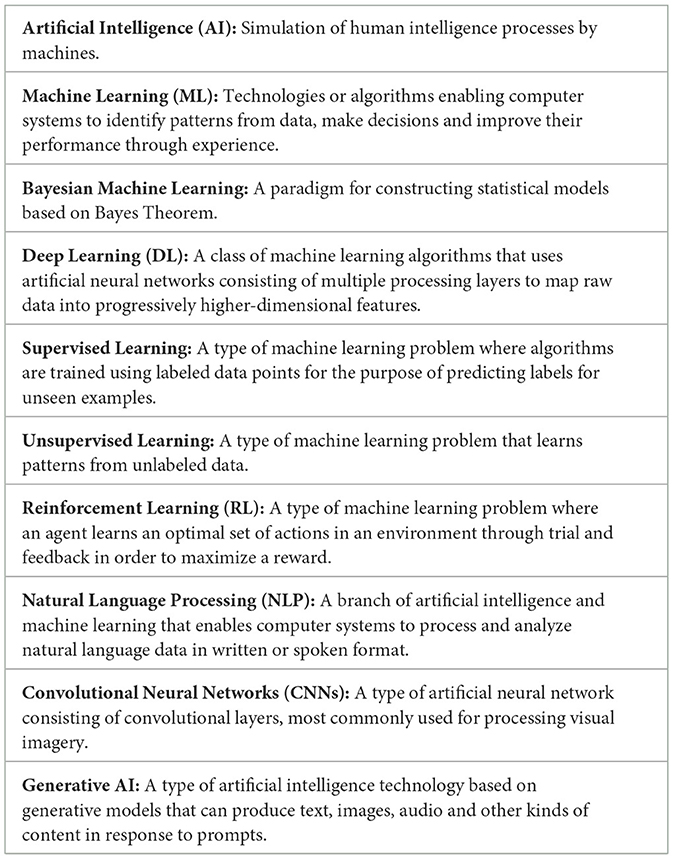

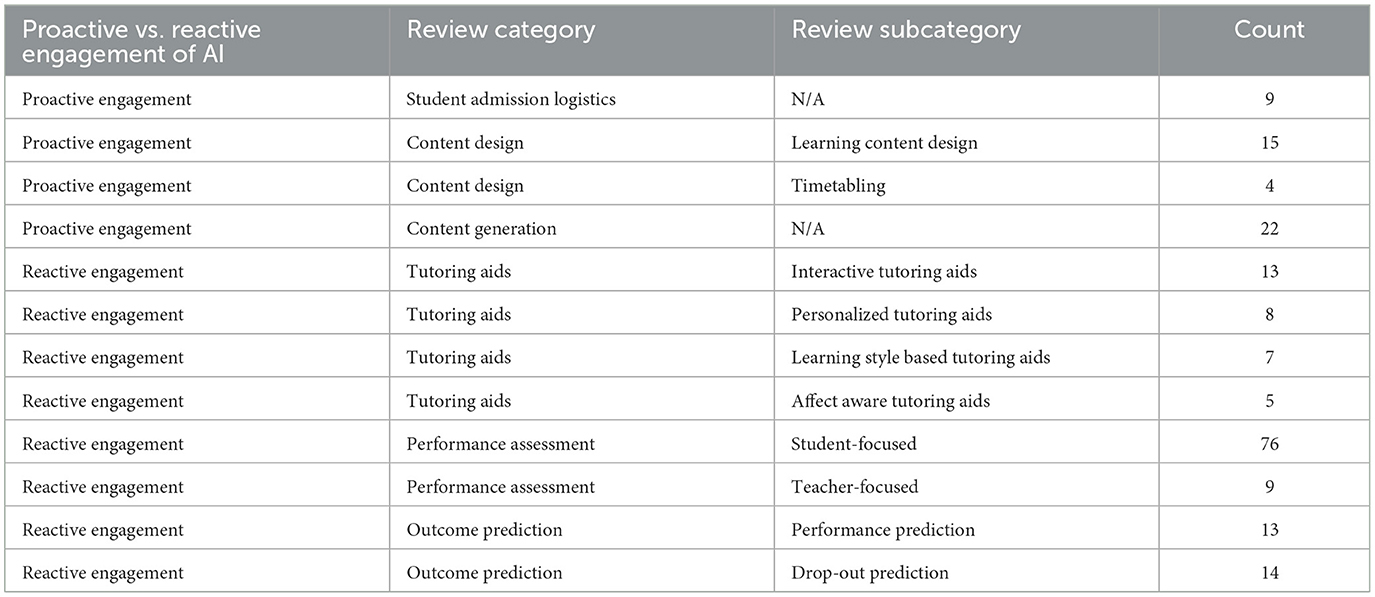

The rest of this review article is organized as follows. In Section 2, we define the scope of this review, outline the paper selection strategy, and present summary statistics. In Section 3, we contextualize our contribution in light of technical review articles published in the domain of AIEd in the past 5 years. In Section 4, we present our categorization approach and review the scientific and technical contributions in each category. Finally, in Section 5, we discuss the major trends observed in AIEd research over the past two decades, discuss how the COVID-19 pandemic is reshaping the AIEd landscape, and point out existing limitations to the global adoption of AI-driven tools for education. Additionally, Table 1 provides a glossary of the technical terms and abbreviations used throughout the paper.

2. Scope definition

The term artificial intelligence (AI) was coined in 1956 by John McCarthy (Haenlein and Kaplan, 2019). Since the first generally acknowledged work of McCulloch and Pitts on conceptualizing artificial neurons, AI has gone through several dormant periods and shifts in research focus. A plethora of computational techniques is currently available, ranging from algorithms that learn to perform pre-defined tasks through exposure to somewhat noisy observational data, i.e., machine learning (ML), to more sophisticated approaches that learn to map high-dimensional observations to representations in a lower-dimensional space, i.e., deep learning (DL). More recently, researchers and social scientists have increasingly used AI-based techniques to address social issues and to build toward a sustainable future (Shi et al., 2020). In this article, we focus on how one such aspect of social development, education, might benefit from the use of artificial intelligence, machine learning, and deep learning methods.

2.1. Paper search strategy

To analyze recent trends in this field (i.e., AIEd), we sampled research articles published in peer-reviewed conferences and journals over the past 20 years, i.e., between 2003 and 2022, using the Google Scholar search engine. We identified our selected corpus of 195 research articles through a multi-step process. First, we identified a set of systematic reviews, survey papers, and perspective papers published in the domain of artificial intelligence for education (AIEd) between 2018 and 2022. To identify these review papers, we used the keywords “artificial intelligence for education”, “artificial intelligence for education review articles”, and similar combinations in Google Scholar. We critically reviewed these papers and identified the research domains under AIEd that have received much attention in the past 20 years (i.e., 2003–2022) and that are closely tied to the end-to-end education process. Once these research domains were identified, we performed a deep-dive search using relevant keywords for each research area to identify an initial set of technical papers in each sub-domain (for example, for the category tutoring aids, we used several keywords, including intelligent tutoring systems, intelligent tutoring aids, computer-aided learning systems, and affect-aware learning systems). Both authors then reviewed each paper in this initial set thoroughly and refined the set based on the significance of the problem statement, the data used, and the algorithm proposed, retaining a final set of 195 research articles.

2.2. Inclusion and exclusion criteria

Since the term artificial intelligence was coined, there has been considerable debate in the scientific community about the scope of artificial intelligence. Delineating its boundaries is especially challenging because the field is subject to rapid technological change. A deep-dive analysis of this debate is beyond the scope of this paper. Instead, we state in this section our inclusion and exclusion criteria for selecting the articles on AI for education that surfaced in our search. For this review article, we include research articles that use methods such as optimal search strategies (e.g., breadth-first search, depth-first search), density estimation, machine learning, Bayesian machine learning, deep learning, and reinforcement learning. We do not include in our corpus original research that proposes concepts and methods rooted in operations research, evolutionary algorithms, adaptive control theory, or robotics. We only consider peer-reviewed articles published in English. We do not include patented technologies and copyrighted EdTech software systems unless the authors have published peer-reviewed articles outlining the same contributions.

2.3. Summary statistics

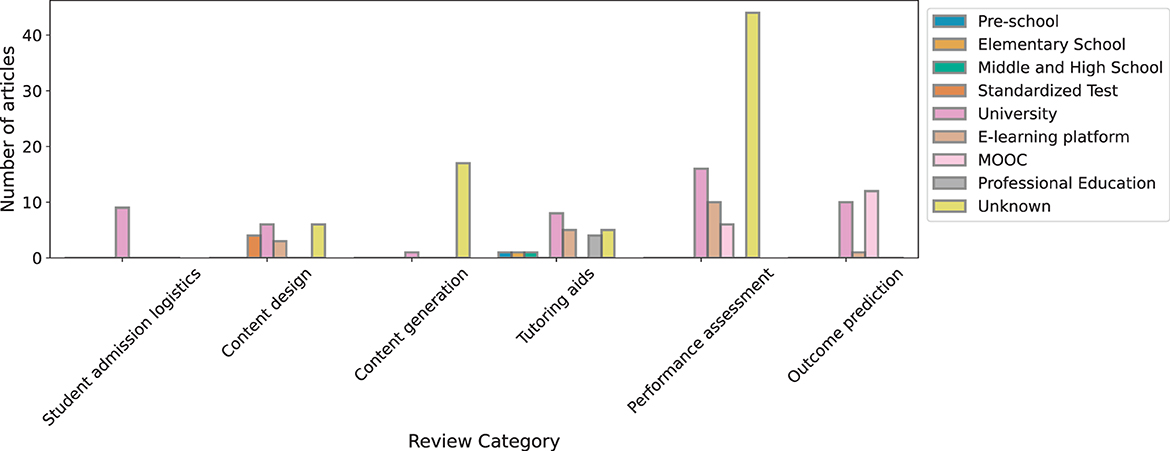

With the scope of our review defined above, we now provide summary statistics for the 195 technical articles covered in this review. Figure 2 shows the distribution of the included scientific and technical articles over the past two decades. We also examined the technical contributions in each category of our categorization approach with respect to the target audiences they cater to (see Figure 3). We identify the following target audience groups for educational technologies: pre-school students, elementary school students, middle and high school students, university students, standardized test examinees, students on e-learning platforms, students of MOOCs, and students in professional/vocational education. Articles that do not clearly mention the audience group were assigned to the “Unknown” target audience category.

Figure 3. Distribution of reviewed technical articles across categories and target audience categories.

In Section 4, we introduce our categorization and take a deep dive into the breadth of technical contributions in each category. Where applicable, we have further identified specific research problems currently receiving much attention as sub-categories within a category. Table 2 shows the distribution of significant research problems within each category.

We defer the analysis of the trends identified from these summary plots to Section 5 of this paper.

3. Related works

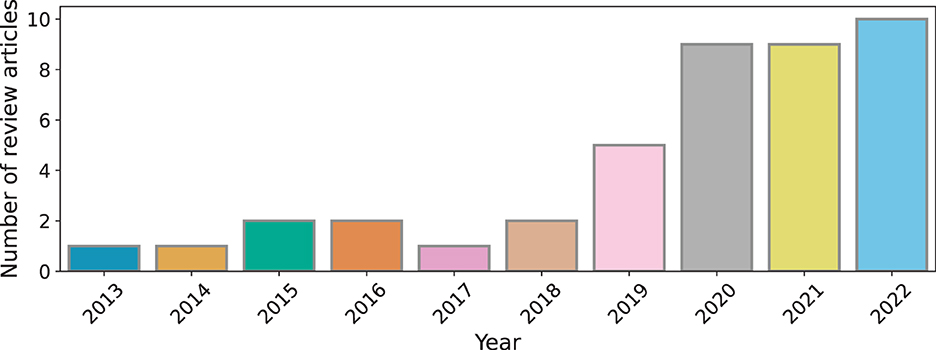

Artificial intelligence as a research area in technology has evolved gradually since the 1950s. Similarly, the use of computer-based technology to support education has been actively developing since the 1980s. However, only in the past few decades has there been significant emphasis on adopting digital technologies, including AI-driven technologies, in practice (Alam, 2021). In particular, the introduction of open-source generative AI algorithms has spurred critical analyses of how AI can and should be used in the education sector (Baidoo-Anu and Owusu Ansah, 2023; Lund and Wang, 2023). Against this backdrop of emerging developments, the number of review articles surveying technical progress in the AIEd discipline has also increased in the last decade (see Figure 4). To generate Figure 4, we used Google Scholar as the search engine with the keywords artificial intelligence for education, artificial intelligence for education review articles, and similar combinations using domain abbreviations. In this section, we discuss the premise of the review articles published in the last 5 years and situate this article with respect to previously published technical reviews.

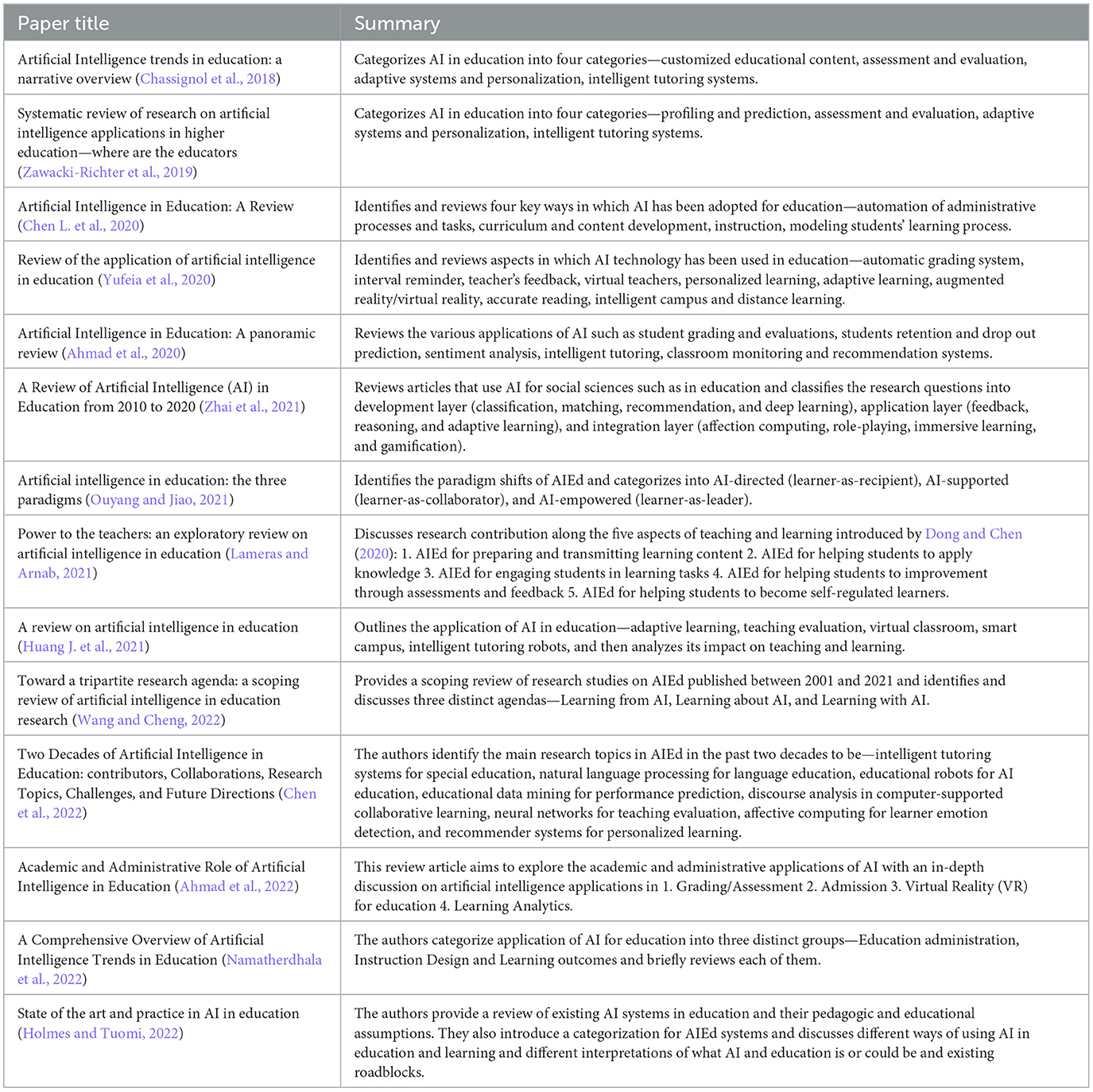

Among the review articles identified through the keyword search on Google Scholar and published between 2018 and 2022, two thematic categories emerge: (i) Technical reviews with categorization: review articles that group research contributions based on distinguishing factors such as problem statement and solution methodology (Chassignol et al., 2018; Zawacki-Richter et al., 2019; Ahmad et al., 2020, 2022; Chen L. et al., 2020; Yufeia et al., 2020; Huang J. et al., 2021; Lameras and Arnab, 2021; Ouyang and Jiao, 2021; Zhai et al., 2021; Chen et al., 2022; Holmes and Tuomi, 2022; Namatherdhala et al., 2022; Wang and Cheng, 2022). (ii) Perspectives on challenges, trends, and roadmap: review articles that highlight the current state of research in a domain and offer a critical analysis of its challenges and future roadmap (Fahimirad and Kotamjani, 2018; Humble and Mozelius, 2019; Malik et al., 2019; Pedro et al., 2019; Bryant et al., 2020; Hwang et al., 2020; Alam, 2021; Schiff, 2021). Closely linked with (i) are review articles that dive deep into developments within a particular sub-category of AIEd, such as AIEd in the context of early childhood education (Su and Yang, 2022) and online higher education (Ouyang F. et al., 2022). We have designed this review article to belong to category (i). We distinguish between the different research problems in AIEd by their timeline of engagement in the end-to-end education process and then perform a deeper review of ongoing research efforts in each category. To the best of our knowledge, this paper is the first to present such a distinction between proactive and reactive involvement of AI in education together with a granular review of the significant research questions in each category (see schematic in Figure 1).

In Table 3, we have outlined the context of recently published technical reviews with categorization.

Table 3. Contextualization with respect to technical reviews published in the past 5 years (2018–2022).

4. Engaging artificial intelligence driven methods in stages of education

4.1. Proactive vs. reactive engagement of AI—An introduction

In the introductory section of this article, we outlined how education is a multi-step process involving different stakeholders along its timeline. Accordingly, we identify two distinct phases of engaging AI in the end-to-end education process. The first is proactive engagement of AI, where efforts aim to design and curate content and to ensure optimal use of resources. The second is reactive engagement of AI, where efforts aim to ensure that students acquire the necessary information and skills from the sessions they attend and to provide feedback as needed.

In this review article, we distinguish between the scientific and technical contributions in the field of AIEd through the lens of these two distinct phases. This categorization is significant for the following reasons:

• First, through this hierarchical categorization approach, one can gauge the range of education problems that can be addressed using artificial intelligence. AI research on personalized tutoring aids and systems indeed had a head start and is currently a mature area of research. However, the scope of using AI in the end-to-end education process is broad and rapidly evolving.

• Second, this categorization approach provides a retrospective overview of milestones achieved in AIEd through continuous improvement and enrichment of the data and algorithms leveraged in building AI models.

• Third, as this review touches upon both the classroom and administrative aspects of education, readers can form a perspective on the myriad infrastructural and ethical challenges to the widespread adoption of AI-driven methods in education.

Within these broad categories, we further break down and analyze the research problems that have been addressed using AI. For instance, in the proactive engagement phase, AI-based algorithms can be leveraged for student admission logistics, curriculum and schedule design, and course content creation. In the reactive engagement phase, on the other hand, AI-based methods can be used for designing intelligent tutoring systems (ITS), assessing performance, and predicting student outcomes (see Figure 1). Another important distinction between the two phases lies in the nature of the data available for developing models. While the former primarily makes use of historical data points or pre-existing estimates of available resources and expected learning outcomes, the latter has at its disposal a growing pool of data points from the ongoing learning process, and can therefore be more adaptive and initiate faster pedagogical interventions in response to changing scopes and requirements.

4.2. Proactive engagement of AI for education

4.2.1. Student admission logistics

In the past, although a number of studies used statistical or machine learning-based approaches to analyze or model student admissions decisions, these approaches played little role in the actual admissions process (Bruggink and Gambhir, 1996; Moore, 1998). However, in the face of growing numbers of applicants, educational institutions are increasingly turning to AI-driven approaches to review applications and make admission decisions efficiently. For example, the Department of Computer Science at the University of Texas at Austin (UTCS) introduced an explainable AI system called GRADE (Graduate Admissions Evaluator) that uses logistic regression on past admission records to estimate the probability that a new applicant will be admitted to its graduate program (Waters and Miikkulainen, 2014). While GRADE did not make the final admission decision, it reduced both the number of full application reviews and the experts' review time per application. Zhao et al. (2020) used features extracted from students' application materials, together with their subsequent performance in the program of study, to predict an incoming applicant's potential performance and identify the students best suited for the program. An important admissions metric for educational institutions is the yield rate, i.e., the rate at which accepted students decide to enroll at a given school. Machine learning has been used to predict students' enrollment decisions, which can help institutions make strategic admission decisions that improve their yield rate and optimize resource allocation (Jamison, 2017). Additionally, whether students enroll in majors suited to their backgrounds and prior academic performance is also indicative of future success. Machine learning has also been used to classify students into suitable majors in an attempt to set them up for academic success (Assiri et al., 2022).
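To make the GRADE-style setup concrete, the following is a minimal logistic-regression sketch in Python. It is not GRADE's implementation: the two features (normalized GPA and test score), the six past records, and the helper names `train_logistic_regression` and `admit_probability` are hypothetical, and the model is fit by plain batch gradient descent on the log-loss.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic_regression(
    X: Sequence[Sequence[float]], y: Sequence[int],
    lr: float = 0.1, epochs: int = 2000,
) -> Tuple[List[float], float]:
    """Fit weights and bias by batch gradient descent on the mean log-loss."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    n = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # derivative of the log-loss with respect to the logit
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def admit_probability(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical past records: [normalized GPA, normalized test score]; 1 = admitted.
X_past = [[0.9, 0.8], [0.8, 0.9], [0.85, 0.7], [0.4, 0.5], [0.3, 0.6], [0.5, 0.3]]
y_past = [1, 1, 1, 0, 0, 0]

w, b = train_logistic_regression(X_past, y_past)
strong = admit_probability(w, b, [0.88, 0.85])
weak = admit_probability(w, b, [0.35, 0.40])
print(f"strong applicant: {strong:.2f}, weak applicant: {weak:.2f}")
```

Consistent with how GRADE was used, a probability like this is better suited to prioritizing applications for expert review than to deciding admissions on its own.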

Another research direction in this domain approaches the admissions problem from the students' perspective, predicting the probability that an applicant will be admitted to a particular university so that applicants can better target universities based on their profiles and on university rankings (AlGhamdi et al., 2020; Goni et al., 2020; Mridha et al., 2022). Notably, several such works find students' prior GPA (Grade Point Average) to be the most significant factor in admissions decisions (Young and Caballero, 2019; El Guabassi et al., 2021).

Given the high stakes involved and the significant consequences of admissions decisions for students' futures, there has been considerable discourse on the ethical considerations of using AI in such applications, including fairness, transparency, and privacy (Agarwal, 2020; Finocchiaro et al., 2021). Aside from the obvious risks of worthy applicants being rejected or unworthy applicants being admitted, such systems can perpetuate biases that past human decision-making has embedded in the training data (Bogina et al., 2022). For example, such systems might show unintentional bias with respect to demographics, gender, race, or income group. Bogina et al. (2022) advocated for explainable models for making admission decisions, as well as proper system testing and balancing before deployment to end users. Emelianov et al. (2020) showed that demographic parity mechanisms such as group-specific admission thresholds improve not only the fairness of the selection process in such systems but also its utility. Despite concerns about fairness and ethics, university students in a recent survey interestingly rated algorithmic decision-making (ADM) higher than human decision-making (HDM) for admission decisions in terms of both procedural and distributive fairness (Marcinkowski et al., 2020).
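The idea of a group-specific admission threshold can be illustrated with a small sketch. It only shows how per-group cut-offs enforce demographic parity (equal selection rates across groups) on a fixed set of model scores; it is not the selection model analyzed by Emelianov et al. (2020), and the groups, scores, and the helpers `group_thresholds` and `admit` are hypothetical.

```python
from typing import Dict, List, Tuple

Applicant = Tuple[str, float]  # (group label, model score)


def group_thresholds(applicants: List[Applicant], selection_rate: float) -> Dict[str, float]:
    """Choose one score cut-off per group so that each group admits the same fraction.

    Assumes distinct scores within a group; ties at a cut-off would admit extra applicants.
    """
    by_group: Dict[str, List[float]] = {}
    for group, score in applicants:
        by_group.setdefault(group, []).append(score)
    thresholds: Dict[str, float] = {}
    for group, scores in by_group.items():
        ranked = sorted(scores, reverse=True)
        k = max(1, round(selection_rate * len(ranked)))  # seats for this group
        thresholds[group] = ranked[k - 1]                 # admit scores >= k-th best
    return thresholds


def admit(applicants: List[Applicant], thresholds: Dict[str, float]) -> List[Applicant]:
    return [(g, s) for g, s in applicants if s >= thresholds[g]]


# Hypothetical scores from a model trained on historical (possibly biased) decisions.
applicants = [("A", 0.9), ("A", 0.8), ("A", 0.7), ("A", 0.6),
              ("B", 0.55), ("B", 0.5), ("B", 0.4), ("B", 0.3)]

# A single global cut-off admitting the top 4 overall selects only group A.
global_cut = sorted((s for _, s in applicants), reverse=True)[3]
global_admitted = [(g, s) for g, s in applicants if s >= global_cut]

# Group-specific cut-offs admit the top half of each group.
thresholds = group_thresholds(applicants, selection_rate=0.5)
parity_admitted = admit(applicants, thresholds)
print(thresholds)  # {'A': 0.8, 'B': 0.5}
```

The selection rate here is a policy parameter set by the institution rather than something the model learns.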

4.2.2. Content design

In the context of education, we can define content as (i) the learning content for a course, curriculum, or test; and (ii) class schedules/timetables. In this section, we discuss AI/ML approaches for designing and structuring both.

(i) Learning content design: Prior to the start of the learning process, educators and administrators are responsible for identifying an appropriate set of courses for a curriculum, an appropriate set of contents for a course, or an appropriate set of questions for a standardized test. In course and curriculum design, there is a large body of work using traditional systematic and relational approaches (Kessels, 1999); however, the last decade has seen several works using AI-informed curriculum design approaches. For example, Ball et al. (2019) use classical ML algorithms to identify factors, arising before students declare their majors, that adversely affect university graduation rates, and advocate curriculum changes to alleviate these factors. Rawatlal (2017) uses tree-based approaches on historical records to prioritize the prerequisite structure of a curriculum in order to determine effective student progression routes. Somasundaram et al. (2020) propose an Outcome Based Education (OBE) approach in which the expected outcomes of a degree program, such as job roles and skills, are identified first, and the courses required to reach these outcomes are then proposed by modeling the curriculum using ANNs. Doroudi (2019) suggests a semi-automated curriculum design approach that automatically curates low-cost, learner-generated content for future learners, but argues that more work is needed to explore data-driven approaches to curating pedagogically useful peer content.
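What a prerequisite structure constrains can be shown with a short sketch: any valid progression route must be a topological order of the prerequisite graph. The code below is a plain topological sort (Kahn's algorithm), not Rawatlal's tree-based prioritization; the course names and the helper `progression_order` are hypothetical.

```python
from collections import deque
from typing import Dict, List


def progression_order(prereqs: Dict[str, List[str]]) -> List[str]:
    """Return one course order in which every prerequisite precedes its dependents.

    prereqs maps each course to the courses that must be completed first.
    Raises ValueError if the prerequisite graph contains a cycle.
    """
    courses = set(prereqs)
    for reqs in prereqs.values():
        courses.update(reqs)
    indegree = {c: 0 for c in courses}
    dependents: Dict[str, List[str]] = {c: [] for c in courses}
    for course, reqs in prereqs.items():
        for r in reqs:
            indegree[course] += 1
            dependents[r].append(course)
    # Start from courses with no unmet prerequisites; sorting keeps the result deterministic.
    queue = deque(sorted(c for c in courses if indegree[c] == 0))
    order: List[str] = []
    while queue:
        c = queue.popleft()
        order.append(c)
        for d in sorted(dependents[c]):
            indegree[d] -= 1
            if indegree[d] == 0:
                queue.append(d)
    if len(order) != len(courses):
        raise ValueError("prerequisite cycle detected")
    return order


# Hypothetical curriculum fragment.
prereqs = {
    "Calc I": [],
    "Programming": [],
    "Calc II": ["Calc I"],
    "Linear Algebra": ["Calc I"],
    "Machine Learning": ["Calc II", "Linear Algebra", "Programming"],
}
order = progression_order(prereqs)
print(order)
```

A data-driven approach such as the one cited above would go further and rank among the many valid orders using historical student outcomes.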

For designing standardized tests such as the TOEFL, SAT, or GRE, an essential criterion is to select questions with a consistent difficulty level across test papers for fair evaluation. This is also useful in classroom settings, when teachers want to avoid plagiarism by setting multiple sets of test papers or want to design a sequence of assignments or exams in increasing order of difficulty. This can be done through Question Difficulty Prediction (QDP), also called Question Difficulty Estimation (QDE), which estimates the skill level needed to answer a question correctly. Question difficulty was historically estimated by pretesting on students or from expert ratings, both of which are expensive, time-consuming, subjective, and often vulnerable to leakage or exposure (Benedetto et al., 2022). Rule-based algorithms relying on difficulty features extracted by experts were also proposed for automatic difficulty estimation (Grivokostopoulou et al., 2014; Perikos et al., 2016). As data-driven solutions became more popular, a common approach combined linguistic features (Mothe and Tanguy, 2005; Stiller et al., 2016), readability scores (Benedetto et al., 2020a; Yaneva et al., 2020), and/or word frequency features (Benedetto et al., 2020a,b; Yaneva et al., 2020) with ML algorithms such as linear regression, SVMs, tree-based approaches, and neural networks for downstream classification or regression, depending on the problem setup. With automatic testing systems and the ready availability of large quantities of historical test logs, deep learning has been increasingly used for feature extraction (word embeddings, question representations, etc.) and/or difficulty estimation (Fang et al., 2019; Lin et al., 2019; Xue et al., 2020). Attention strategies have been used to model the difficulty contribution of each sentence in reading problems (Huang et al., 2017), or to model recall (how hard it is to recall the knowledge assessed by the question) and confusion (how hard it is to separate the correct answer from distractors) (Qiu et al., 2019). Domain adaptation techniques have also been proposed to alleviate the need for difficulty-labeled question data for each new course by aligning the new course's difficulty distribution with that of a resource-rich course (Huang Y. et al., 2021). AlKhuzaey et al. (2021) point out that a majority of data-driven QDP approaches concern language learning and medicine, possibly spurred on by the large number of international and national-level standardized language proficiency tests and medical licensing exams.
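The feature-based QDP pipeline can be sketched with a single readability feature and linear regression. The Flesch Reading Ease formula below is the standard one, but the syllable counter is a rough heuristic, and the four labeled questions, their difficulty values, and the helper names are hypothetical; real systems combine many features with stronger models.

```python
import re
from typing import Sequence, Tuple


def count_syllables(word: str) -> int:
    """Rough heuristic: count runs of vowels, discounting a silent final 'e'."""
    word = word.lower()
    n = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and n > 1:
        n -= 1
    return max(1, n)


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease: higher scores indicate easier-to-read text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


# Hypothetical labeled items: (question text, observed difficulty in [0, 1]).
labeled = [
    ("What is the cat doing?", 0.1),
    ("Where did the boy go after school?", 0.2),
    ("Which factors contributed to the industrial revolution in Britain?", 0.6),
    ("Evaluate the epistemological implications of the author's argumentation.", 0.9),
]
xs = [flesch_reading_ease(q) for q, _ in labeled]
ys = [d for _, d in labeled]
intercept, slope = fit_line(xs, ys)

new_question = "Why do leaves change colour in autumn?"
predicted = intercept + slope * flesch_reading_ease(new_question)
print(f"slope={slope:.4f}, predicted difficulty={predicted:.2f}")
```

The negative slope learned here encodes the intuition that less readable questions tend to be harder; with only one feature, the model says nothing about distractor quality or required knowledge.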

(ii) Timetabling: The Educational Timetabling Problem (ETP) deals with assigning classes or exams to a limited number of time-slots such that certain constraints (e.g., the availability of teachers, students, classrooms, and equipment) are satisfied. It can be divided into three types: course timetabling, school timetabling, and exam timetabling (Zhu et al., 2021). Timetabling not only ensures proper resource allocation; its design considerations (e.g., the number of courses per semester, lectures per day, and free time-slots per day) also have a noticeable impact on student attendance behavior and academic performance (Larabi-Marie-Sainte et al., 2021). Popular approaches in this domain, such as mathematical optimization, meta-heuristic, hyper-heuristic, hybrid, and fuzzy logic approaches (Zhu et al., 2021; Tan et al., 2021), are mostly beyond the scope of our paper (see Section 2.2). That said, machine learning has often been used in conjunction with such mathematical techniques to obtain better-performing algorithms. For example, Kenekayoro (2019) used supervised learning to approximate the evaluation of candidate solutions to optimization problems, a critical step in heuristic approaches. Reinforcement learning has been used to select low-level heuristics in hyper-heuristic approaches (Obit et al., 2011; Özcan et al., 2012) or to obtain a suitable search neighborhood in mathematical optimization problems (Goh et al., 2019).
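The role of reinforcement learning inside a hyper-heuristic can be sketched on a toy exam-timetabling instance. This is a simplified illustration, loosely in the spirit of utility-score heuristic selection, and not the exact method of Obit et al. (2011) or Özcan et al. (2012): the instance, the two low-level heuristics, the score bounds [0, 10], and the "accept non-worsening moves" rule are all assumptions made for the example.

```python
import random
from itertools import combinations
from typing import Callable, Dict, List, Set, Tuple

Assignment = Dict[str, int]  # exam -> time-slot index


def conflicts(assign: Assignment, enrolments: List[Set[str]]) -> int:
    """Hard-constraint violations: pairs of one student's exams placed in the same slot."""
    return sum(
        1
        for exams in enrolments
        for a, b in combinations(sorted(exams), 2)
        if assign[a] == assign[b]
    )


def move_one(assign: Assignment, slots: int, rng: random.Random) -> Assignment:
    """Low-level heuristic 1: move a random exam to a random slot."""
    new = dict(assign)
    new[rng.choice(sorted(new))] = rng.randrange(slots)
    return new


def swap_two(assign: Assignment, slots: int, rng: random.Random) -> Assignment:
    """Low-level heuristic 2: swap the slots of two random exams."""
    new = dict(assign)
    a, b = rng.sample(sorted(new), 2)
    new[a], new[b] = new[b], new[a]
    return new


def rl_hyper_heuristic(exams: List[str], enrolments: List[Set[str]], slots: int,
                       iterations: int = 500, seed: int = 0) -> Tuple[Assignment, int, int]:
    rng = random.Random(seed)
    heuristics: List[Callable[[Assignment, int, random.Random], Assignment]] = [move_one, swap_two]
    scores = [5] * len(heuristics)  # utility score per heuristic, kept in [0, 10]
    current = {e: rng.randrange(slots) for e in exams}
    cost = start_cost = conflicts(current, enrolments)
    for _ in range(iterations):
        best = max(scores)
        idx = rng.choice([i for i, s in enumerate(scores) if s == best])
        candidate = heuristics[idx](current, slots, rng)
        new_cost = conflicts(candidate, enrolments)
        if new_cost < cost:
            scores[idx] = min(scores[idx] + 1, 10)  # reward an improving heuristic
        else:
            scores[idx] = max(scores[idx] - 1, 0)   # penalize a non-improving one
        if new_cost <= cost:                         # accept non-worsening moves
            current, cost = candidate, new_cost
    return current, start_cost, cost


# Hypothetical instance: five exams; each set lists the exams one student sits.
exams = ["E1", "E2", "E3", "E4", "E5"]
enrolments = [{"E1", "E2"}, {"E2", "E3"}, {"E3", "E4"}, {"E4", "E5"}, {"E1", "E5"}]
timetable, start_cost, final_cost = rl_hyper_heuristic(exams, enrolments, slots=3)
print(start_cost, final_cost, timetable)
```

The learning component only decides which heuristic to apply next; the search itself remains a heuristic method of the kind this review otherwise leaves out of scope.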

4.2.3. Content generation

The difference between content design and content generation is that of curation versus creation. While the former focuses on selecting and structuring the contents for a course/curriculum in a way most appropriate for achieving the desired learning outcomes, the latter deals with generating the course material itself. AI has been widely adopted to generate and improve learning content prior to the start of the learning process, as discussed in this section.

Automatically generating questions from narrative or informational text, or automatically generating problems for analytical concepts are becoming increasingly important in the context of education. Automatic question generation (AQG) from teaching material can be used to improve learning and comprehension of students, assess information retention from the material and aid teachers in adding Supplementary material from external sources without the time-intensive process of authoring assessments from them. They can also be used as a component in intelligent tutoring systems to drive engagement and assess learning. AQG essentially consists of two aspects: content selection or what to ask, and question construction or how to ask it (Pan et al., 2019), traditionally considered as separate problems. Content selection for questions was typically done using different statistical features (sentence length, word/sentence position, word frequency, noun/pronoun count, presence of superlatives, etc.) (Agarwal and Mannem, 2011) or NLP techniques such as syntactic or semantic parsing (Heilman, 2011; Lindberg et al., 2013), named entity recognition (Kalady et al., 2010) and topic modeling (Majumder and Saha, 2015). Machine learning has also been used in such contexts, e.g., to classify whether a certain sentence is suitable to be used as a stem in cloze questions (passage with a portion occluded which needs to be replaced by the participant) (Correia et al., 2012). The actual question construction, on the other hand, traditionally adopted rule-based methods like transformation-based approaches (Varga and Ha, 2010) or template-based approaches (Mostow and Chen, 2009). The former rephrased the selected content using the correct question key-word after deleting the target concept, while the latter used pre-defined templates that can each capture a class of questions. 
Heilman and Smith (2010) used an overgenerate-and-rank approach, overgenerating candidate questions and then ranking them with supervised learning, but still relied on handcrafted generation rules. Following the success of neural language models, and concurrent with the release of large-scale machine reading comprehension datasets (Nguyen et al., 2016; Rajpurkar et al., 2016), question generation was later framed as a sequence-to-sequence learning problem that directly maps a sentence (or the entire passage containing it) to a question (Du et al., 2017; Zhao et al., 2018; Kim et al., 2019), and can thus be trained end-to-end (Pan et al., 2019). Reinforcement learning-based approaches that exploit the rich structural information in the text have also been explored in this context (Chen Y. et al., 2020). While text is the most common type of input in AQG, such systems have also been developed for structured databases (Jouault and Seta, 2013; Indurthi et al., 2017), images (Mostafazadeh et al., 2016), and videos (Huang et al., 2014). They are typically evaluated by experts on the quality of the generated questions in terms of relevance, grammatical and semantic correctness, usefulness, clarity, and similar criteria.
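To make the classical two-stage view of AQG concrete, the sketch below pairs a toy content-selection score (the number of key terms a sentence contains, with a penalty for very short or very long sentences) with template-style question construction that occludes a key term to form a cloze item. The scoring rule, the caller-supplied `key_terms` set, and all function names are illustrative assumptions, not the method of any cited work.

```python
import re


def split_sentences(text):
    # Naive splitter on sentence-final punctuation (a real system would use an NLP tokenizer).
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def words_of(sentence):
    return re.findall(r"[a-z]+", sentence.lower())


def selection_score(sentence, key_terms):
    # Content selection ("what to ask"): toy mix of statistical features, namely the number
    # of key terms present minus a penalty for very short or very long sentences.
    words = words_of(sentence)
    key_hits = sum(1 for w in words if w in key_terms)
    length_penalty = 0 if 6 <= len(words) <= 30 else 1
    return key_hits - length_penalty


def make_cloze(sentence, answer):
    # Question construction ("how to ask"): occlude the first occurrence of the answer term.
    pattern = re.compile(r"\b" + re.escape(answer) + r"\b", re.IGNORECASE)
    return pattern.sub("_____", sentence, count=1)


def generate_cloze_questions(text, key_terms, k=1):
    # sorted() is stable even with reverse=True, so ties keep their order in the text.
    ranked = sorted(split_sentences(text), key=lambda s: selection_score(s, key_terms), reverse=True)
    questions = []
    for sentence in ranked[:k]:
        answer = next((w for w in words_of(sentence) if w in key_terms), None)
        if answer is not None:
            questions.append((make_cloze(sentence, answer), answer))
    return questions
```

For example, with the passage "Photosynthesis converts light energy into chemical energy in plants. It happens daily. Chlorophyll absorbs light mainly in the blue and red wavelengths." and key terms `{"photosynthesis", "chlorophyll"}`, the short middle sentence is penalized and the other two sentences become cloze items with their key term blanked out.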

Automatically generating problems of similar difficulty to a given problem can greatly help teachers set individualized practice problems that discourage plagiarism while still ensuring fair evaluation (Ahmed et al., 2013). It also lets students work through as many (and as diverse) training exercises as they need to master the underlying concepts (Keller, 2021). In this context, mathematical word problems (MWPs), an established way of developing math modeling skills in K-12 education, have attracted significant research interest. Early work on automatic MWP generation took a template-based approach, in which an existing problem is generalized into a template and a solution space fitting this template is explored to generate new problems (Deane and Sheehan, 2003; Polozov et al., 2015; Koncel-Kedziorski et al., 2016). Following the same shift as in AQG, Zhou and Huang (2019) proposed an approach using Recurrent Neural Networks (RNNs) that encodes math expressions and topic words to automatically generate such problems. Subsequent research in this direction has focused on improving the topic relevance, expression relevance, language coherence, completeness, and validity of the generated problems using a spectrum of approaches (Liu et al., 2021; Wang et al., 2021; Wu et al., 2022).
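A minimal sketch of template-based MWP generation, assuming a single hypothetical template generalized from a seed problem and two made-up validity constraints (an answer cap as a crude stand-in for difficulty, and distinct operands). Enumerating slot values with `itertools.product` plays the role of exploring the solution space; the cited systems use far richer templates and constraint solving.

```python
import itertools

# Hypothetical template generalized from a seed problem such as
# "Ann has 5 apples and buys 3 more. How many apples does she have now?"
TEMPLATE = "{name} has {a} {item} and buys {b} more. How many {item} does {name} have now?"


def generate_problems(names, items, value_range, max_answer, limit):
    """Enumerate slot fillings and keep only those satisfying the (assumed) template constraints."""
    problems = []
    for name, item, a, b in itertools.product(names, items, value_range, value_range):
        answer = a + b  # the equation attached to this template: x = a + b
        if answer > max_answer or a == b:  # difficulty cap and distinct-operand constraint
            continue
        problems.append((TEMPLATE.format(name=name, a=a, b=b, item=item), answer))
        if len(problems) == limit:
            break
    return problems
```

Calling `generate_problems(["Ann"], ["apples"], range(2, 5), max_answer=7, limit=10)` yields six problems, each paired with its answer so it can also be graded automatically.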

At the other end of the content generation spectrum lie systems that generate solutions from the content and related questions, including Automatic Question Answering (AQA) systems, Machine Reading Comprehension (MRC) systems, and automatic quantitative reasoning problem solvers (Zhang D. et al., 2019). These systems have achieved impressive breakthroughs with research into large language models and are widely regarded as a stepping stone toward Artificial General Intelligence (AGI), since they require sophisticated natural language understanding and logical inference capabilities. However, their applicability and usefulness in educational settings remain to be seen.

4.3. Reactive engagement of AI for education

4.3.1. Tutoring aids

Technology has long been used to help learners achieve their learning goals. More focused efforts to develop computer-based tutoring systems began after Bloom's finding (Bloom, 1984) that students who received tutoring in addition to group classes performed two standard deviations better than those who only attended group classes. Given this early start, research on Intelligent Tutoring Systems (ITS) is more mature than most other areas of AIEd research. Fundamentally, ITS designs differ in their underlying assumptions about what augments a student's knowledge acquisition process. The review by Alkhatlan and Kalita (2018) provides a comprehensive timeline and overview of research in this domain. Instead of repeating the findings of previous reviews, we distinguish between ITS designs through the lens of their underlying hypotheses. We identified four hypotheses that currently receive much attention from the research community: (i) emphasis on tutor-tutee interaction, (ii) emphasis on personalization, (iii) inclusion of affect and emotion, and (iv) consideration of specific learning styles. Note that tutoring is itself an interactive process, so most designs in this category have a basic interactive setup. However, contributions in categories (ii) through (iv) place other concepts at the focal point of their tutoring aid design.

(i) Interactive tutoring aids: Previous research in education (Jackson and McNamara, 2013) has pointed out that when a student actively interacts with the educator or the course content, the student stays engaged in the learning process for longer. Learning systems that leverage this hypothesis can be categorized as interactive tutoring aids. These frameworks allow the student to communicate (verbally or through actions) with the teacher or teaching entity (robots or software) and receive feedback or instructions as needed.

Early interactive tutoring aids for teaching and support consisted of rule-based systems mirroring interactions between an expert teacher and a student (Arroyo et al., 2004; Olney et al., 2012) or between peer companions (Movellan et al., 2009). These template rules produced output based on the student's inputs. Over time, interactive tutoring systems gradually shifted to inferring the student's state in real time from their interactions with the system and providing fine-tuned feedback or instructions based on that inference. For instance, Gordon and Breazeal (2015) used a Bayesian active learning algorithm to assess a student's word reading skills while the student was being taught by a robot. Presently, a significant number of frameworks in this category use chatbots as a proxy for a teacher or teaching assistant (Ashfaque et al., 2020). These recent designs can use a wide variety of data, such as text and speech, and rely on a combination of sophisticated, resource-intensive deep learning algorithms to infer and further customize interactions with the student. For example, Pereira (2016) presents “@dawebot”, which uses NLP techniques to train students through multiple-choice quizzes. Afzal et al. (2020) present a conversational medical school tutor that uses NLP and natural language understanding (NLU) to understand the user's intent and present concepts associated with a clinical case.

Hint construction and partial solution generation are yet another way to keep students interactively engaged. For instance, Green et al. (2011) used Dynamic Bayes Nets to construct a curriculum of hints and associated problems. Wang and Su (2015), in their iGeoTutor architecture, helped students master geometry theorems by applying search strategies (e.g., depth-first search) starting from partially complete proofs. Pande et al. (2021) aim to improve individual and self-regulated learning in group assignments through a conversational system, built using NLU and dialogue management components, that prompts students to reflect on lessons learnt while directing them to partial solutions.
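The search idea behind hint generation from partial proofs can be sketched as a depth-first search over rule applications. In this illustration, facts are plain strings, each rule is a hypothetical `(name, premises, conclusion)` triple, the student's steps are assumed to be valid, and the hint is simply the first remaining step of a proof found by DFS; iGeoTutor's actual rule base and search procedure are not reproduced here.

```python
def find_proof(facts, rules, goal, depth_limit=10):
    """Depth-first search for a sequence of rule applications that derives `goal` from `facts`."""
    def dfs(known, path, depth):
        if goal in known:
            return path
        if depth == depth_limit:
            return None
        for name, premises, conclusion in rules:
            # Only try rules whose premises are all established and whose conclusion is new.
            if conclusion not in known and premises <= known:
                result = dfs(known | {conclusion}, path + [(name, conclusion)], depth + 1)
                if result is not None:
                    return result
        return None  # dead end: backtrack

    return dfs(frozenset(facts), [], 0)


def next_hint(facts, rules, goal, student_steps):
    """Complete the student's partial proof (steps assumed valid) and return the next step to suggest."""
    proof = find_proof(set(facts) | set(student_steps), rules, goal)
    if proof is None:
        return None  # no proof is reachable from the current partial proof
    return proof[0] if proof else "goal already reached"


# Hypothetical rule base for a tiny triangle-congruence exercise.
RULES = [
    ("SAS congruence", frozenset({"AB=DE", "angle B=angle E", "BC=EF"}), "triangle ABC congruent to DEF"),
    ("corresponding parts of congruent triangles", frozenset({"triangle ABC congruent to DEF"}), "AC=DF"),
]
GIVEN = {"AB=DE", "angle B=angle E", "BC=EF"}
```

A student who has not started receives the SAS step as a hint; once they have written the congruence, the hint advances to the final step. A real tutor would phrase the returned step as a prompt rather than reveal it outright.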

Certain professional and vocational training programs, such as those in biology, medicine, and the military, require practical experience. Supported by the boom in infrastructure, many such programs are now adopting AI-driven augmented reality (AR) and virtual reality (VR) lesson plans. Interconnected modules driven by computer vision, NLU, NLP, text-to-speech (TTS), and information retrieval algorithms facilitate lessons and/or assessments in biology (Ahn et al., 2018), surgery and medicine (Mirchi et al., 2020), pathological laboratory analysis (Taoum et al., 2016), and military leadership training (Gordon et al., 2004).

(ii) Personalized tutoring aids: Because every student is unique, personalizing instruction and teaching content can positively impact learning outcomes (Walkington, 2013); tutoring systems that do this can be categorized as personalized learning systems or personalized tutoring aids. Personalization during instruction can occur through course content sequencing and the display of prompts and additional resources, among other means.

The sequence in which a student reviews course topics plays an important role in their mastery of a concept. One criticism of early computer-based learning tools was their “one approach fits all” mode of execution. To address this limitation, personalized instructional sequencing approaches were adopted. In an early development, Idris et al. (2009) developed a course sequencing method that mirrored the role of an instructor using soft computing techniques such as self-organizing maps and feed-forward neural networks. Lin et al. (2013) use decision trees trained on student background information to recommend personalized learning paths for creativity learning. Reinforcement learning (RL) lends itself naturally to this task: an optimal policy (a sequence of instructional activities) is inferred, conditioned on the student's cognitive state (estimated through knowledge tracing), so as to maximize a learning-related reward function. As knowledge delivery platforms become increasingly virtual and thereby generate more data, deep reinforcement learning has been widely applied to instructional sequencing (Reddy et al., 2017; Upadhyay et al., 2018; Pu et al., 2020; Islam et al., 2021). Doroudi (2019) presents a systematic review of RL-induced instructional policies that were evaluated on students and concludes that over half of them outperformed all the baselines they were tested against.
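The RL framing of instructional sequencing can be illustrated with tabular Q-learning on a toy simulated student, a deliberately simplified stand-in for the deep RL methods cited above. The assumptions are strong and hypothetical: three topics forming a prerequisite chain, a state equal to the number of topics mastered (where a real system would use a knowledge-tracing estimate), an 80% chance that teaching the next topic succeeds, and a reward of -1 per activity so that the policy learns to reach mastery quickly.

```python
import random

N_TOPICS = 3   # hypothetical prerequisite chain: topic 0 -> topic 1 -> topic 2
P_LEARN = 0.8  # assumed chance that teaching the next unmastered topic succeeds


def step(state, action, rng):
    """Simulated student: `state` is the number of topics mastered so far.
    Only teaching the next topic in the chain can advance the state."""
    if action == state and rng.random() < P_LEARN:
        state += 1
    return state, -1.0, state == N_TOPICS  # -1 per activity rewards faster mastery


def q_learning(episodes=2000, alpha=0.1, gamma=0.95, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * N_TOPICS for _ in range(N_TOPICS)]  # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:  # explore
                action = rng.randrange(N_TOPICS)
            else:  # exploit the current estimates
                action = max(range(N_TOPICS), key=lambda a: q[state][a])
            nxt, reward, done = step(state, action, rng)
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


def greedy_policy(q):
    """Activity to choose in each non-terminal state."""
    return [max(range(N_TOPICS), key=lambda a: q[s][a]) for s in range(N_TOPICS)]
```

With these settings, the learned greedy policy teaches topic `s` in state `s`, i.e., it recovers the prerequisite order without being told it. Deep RL variants replace the table with a neural network so that the state can be a high-dimensional knowledge-tracing estimate.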

To display a set of relevant resources personalized to a student's state, an algorithmic search is carried out over a knowledge repository. For instance, Kim and Shaw (2009) use information retrieval and NLP techniques in two frameworks: PedaBot, which allows students to connect past discussions to the current discussion thread, and MentorMatch, which facilitates student collaboration customized to students' current needs. Both PedaBot and MentorMatch use text data from a live discussion board in addition to textbook glossaries. To reduce information overload and allow learners to navigate e-learning platforms easily, the Deep Learning-Based Course Recommender System (DECOR) was recently proposed (Li and Kim, 2021); this architecture comprises neural network-based recommendation systems trained on student behavior and course-related data.
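Retrieval of relevant resources can be sketched with TF-IDF vectors and cosine similarity, a common information retrieval baseline. This is an assumed illustration of the general idea, not the PedaBot or DECOR implementation; the smoothed IDF formula is one standard choice among several.

```python
import math
import re
from collections import Counter


def tokenize_words(text):
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(docs):
    """One sparse TF-IDF vector (a dict) per document, plus the smoothed IDF table."""
    tokenized = [tokenize_words(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(toks).items()} for toks in tokenized]
    return vectors, idf


def cosine(u, v):
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm = math.sqrt(sum(w * w for w in u.values())) * math.sqrt(sum(w * w for w in v.values()))
    return dot / norm if norm else 0.0


def recommend(query, resources, k=2):
    """Rank repository items by similarity to a student's post.
    Query terms never seen in the repository get weight 0."""
    vectors, idf = tfidf_vectors(resources)
    q = {t: c * idf.get(t, 0.0) for t, c in Counter(tokenize_words(query)).items()}
    ranked = sorted(range(len(resources)), key=lambda i: cosine(q, vectors[i]), reverse=True)
    return [resources[i] for i in ranked[:k]]
```

For a student post such as "my recursive function never reaches the base case", repository entries on recursion and base cases rank above an unrelated entry on binary search. Note that the exact-match tokenizer treats "recursive" and "recursion" as different terms, one of the gaps that stemming, embeddings, or learned recommenders address.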

(iii) Affect aware tutoring aids: Prior research suggests incorporating the learner's affect and behavioral state into the design of tutoring systems, as doing so enhances the effectiveness of the teaching process (Woolf et al., 2009; San Pedro et al., 2013). Arroyo et al. (2014) suggest that cognition, meta-cognition, and affect should be modeled using real-time data and used to design intervention strategies. A student's affect and behavioral state can generally be inferred from sensor data that tracks minute physical movements (eye gaze, facial expression, posture, etc.). While initial approaches in this direction required sensor data, collecting and using such data is substantially constrained by ethical and legal concerns. “Sensor-free” approaches have therefore been proposed that use data such as student self-evaluations and/or the student's interaction logs with the tutoring system. Arroyo et al. (2010) and Woolf et al. (2010) use interaction data to build affect detector models: the raw data are first distilled into meaningful features, which are then fed into simple classifier models that detect individual affective states. DeFalco et al. (2018) compare the use of sensor and interaction data for delivering motivational prompts during military training. Botelho et al. (2017) use RNNs to enhance the performance of sensor-free affect detection models. In their review of affect and emotion aware tutoring aids, Harley et al. (2017) explore in depth the different use cases for affect aware intelligent tutoring aids, such as enriching user experience, better curating learning material and assessments, and delivering prompts for appraisal and navigational instructions, as well as the progress of research in each direction.
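The sensor-free pipeline described above (distill raw interaction logs into features, then apply a simple classifier) might look like the sketch below. The event schema, the three features, the nearest-centroid classifier, the feature scaling, and the two affect labels are all hypothetical choices made for illustration; the cited detectors use richer features and validated labels.

```python
from statistics import mean


def distill_features(log):
    """Clip-level features from raw events; each event is (action, seconds) with action in
    {"attempt_correct", "attempt_wrong", "hint"} (an assumed log schema)."""
    n = len(log)
    return (
        sum(action == "hint" for action, _ in log) / n,           # hint-request rate
        sum(action == "attempt_wrong" for action, _ in log) / n,  # error rate
        mean(seconds for _, seconds in log),                      # mean seconds per action
    )


def train_centroids(labeled_logs):
    """Average the feature vectors of the training clips for each affect label."""
    by_label = {}
    for log, label in labeled_logs:
        by_label.setdefault(label, []).append(distill_features(log))
    return {label: tuple(mean(col) for col in zip(*feats)) for label, feats in by_label.items()}


def detect_affect(log, centroids, scale=(1.0, 1.0, 30.0)):
    """Nearest-centroid prediction; `scale` (assumed) keeps the seconds feature from dominating."""
    f = distill_features(log)

    def distance(centroid):
        return sum(((x - c) / s) ** 2 for x, c, s in zip(f, centroid, scale))

    return min(centroids, key=lambda label: distance(centroids[label]))
```

Trained on a rapid sequence of wrong attempts and hint requests labeled "frustrated" and a slower, mostly correct sequence labeled "concentrating", the detector assigns new clips to whichever pattern they resemble more.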

(iv) Learning style aware tutoring aids: Yet another perspective in ITS research pertains to customizing course content according to students' learning styles for better outcomes. Kolb (1976), Pask (1976), Honey and Mumford (1986), and Felder (1988), among others, proposed different approaches to categorizing students' learning styles. Traditionally, an individual's learning style was inferred via a self-administered questionnaire. More recently, however, machine learning methods are being used to categorize learning styles more efficiently from noisy subject data. Lo and Shu (2005), Villaverde et al. (2006), Alfaro et al. (2018), and Bajaj and Sharma (2018) use the completed questionnaire and/or other data sources, such as students' interaction and behavioral data, as input and feed the extracted features into feed-forward neural networks for classification. Unsupervised methods such as self-organizing maps (SOMs) trained on curated features have also been used for automatic learning style identification (Zatarain-Cabada et al., 2010). For categorization under the Felder and Silverman learning style model, counts of student visits to different sections of the e-learning platform were found to be more informative (Bernard et al., 2015; Bajaj and Sharma, 2018), whereas for categorization under the Kolb learning style model, student performance and preference features were found to be more relevant. Machine learning approaches have also been proposed for learning style-based learning path design. In Mota (2008), learning styles are first identified through a questionnaire and represented on a polar map; neural networks are then used to predict the best presentation layout of the learning objective for a student.
It is worth pointing out, however, that in recent years, rather than customizing course content to certain pre-defined learning styles, research efforts have increasingly focused on curating course material based on how an individual's overall preferences vary over time (Chen and Wang, 2021).

4.3.2. Performance assessment and monitoring

A critical component of the knowledge delivery phase is assessing student performance by tracing their knowledge development and providing grades and/or constructive feedback on assignments and exams, while ensuring that academic integrity is upheld. Conversely, it is also important to evaluate the quality and effectiveness of teaching, which has a tangible impact on students' learning outcomes. AI-driven performance assessment and monitoring tools have been widely developed for both learners and educators. Since most evaluation material is textual, NLP-based models in particular have a major presence in this domain. We divide this section into student-focused and teacher-focused approaches, depending on the group such applications directly target.

(i) Student-focused:

Knowledge tracing. An effective way of monitoring students' learning progress is knowledge tracing, which models students' knowledge development in order to predict their ability to answer the next problem correctly given their current mastery of knowledge concepts. This benefits not only students, by identifying areas they need to work on, but also educators, by supporting targeted exercises, personalized learning recommendations, and adaptive teaching strategies (Liu et al., 2019). An important step in such systems is cognitive modeling, which models the latent characteristics of students based on their current knowledge state. Traditional approaches to cognitive modeling include factor analysis methods, which estimate student knowledge by learning a function (logistic in most cases) of various factors related to the students, course materials, learning and forgetting behavior, etc. (Pavlik and Anderson, 2005; Cen et al., 2006; Pavlik et al., 2009). Another research direction explores Bayesian inference approaches that update student knowledge states using probabilistic graphical models such as Hidden Markov Models (HMMs) fit on past performance records (Corbett and Anderson, 1994), with substantial research devoted to personalizing the model parameters based on student ability and exercise difficulty (Yudelson et al., 2013; Khajah et al., 2014). Recommender system techniques based on matrix factorization have also been proposed, which predict future scores from a student-exercise performance matrix with partially known scores (Thai-Nghe et al., 2010; Toscher and Jahrer, 2010). Abdelrahman et al. (2022) provide a comprehensive taxonomy of recent deep learning approaches to knowledge tracing. Deep knowledge tracing (DKT) was one of the first such models; it used recurrent neural networks to model the latent knowledge state and its temporal dynamics in order to predict future performance (Piech et al., 2015a).
Extensions along this direction include incorporating external memory structures to enhance the representational power of knowledge states (Zhang et al., 2017; Abdelrahman and Wang, 2019), incorporating attention mechanisms to learn the relative importance of past questions in predicting the current response (Pandey and Karypis, 2019; Ghosh et al., 2020), leveraging textual information from exercise materials to improve prediction performance (Su et al., 2018; Liu et al., 2019), and incorporating forgetting behavior by considering factors related to the timing and frequency of past practice opportunities (Nagatani et al., 2019; Shen et al., 2021). Graph neural network-based architectures were recently proposed to better capture dependencies among knowledge concepts, or between questions and their underlying knowledge concepts (Nakagawa et al., 2019; Tong et al., 2020; Yang et al., 2020). Specific to programming, Wang et al. (2017) used sequences of embedded program submissions to train RNNs that predict performance on the current or next programming exercise. However, as Abdelrahman et al. (2022) point out, handling non-textual content such as images, mathematical equations, or code snippets to learn richer embedding representations of questions or knowledge concepts remains relatively unexplored in knowledge tracing.
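As a concrete instance of the HMM-based Bayesian approach (Corbett and Anderson, 1994), the standard Bayesian Knowledge Tracing update can be written in a few lines: a Bayesian posterior on the observed response, followed by a learning transition. The parameter values below are illustrative placeholders; in practice they are fit per skill (or personalized per student) from performance records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BKTParams:
    p_init: float = 0.2   # P(L0): skill already known before practice (illustrative value)
    p_learn: float = 0.15 # P(T): unlearned -> learned after one practice opportunity
    p_guess: float = 0.2  # P(G): correct answer despite not knowing the skill
    p_slip: float = 0.1   # P(S): wrong answer despite knowing the skill


def predict_correct(p_known, prm):
    """Probability of a correct response given the current mastery estimate."""
    return p_known * (1 - prm.p_slip) + (1 - p_known) * prm.p_guess


def update(p_known, correct, prm):
    """One BKT step: posterior P(known | observed response), then the learning transition."""
    if correct:
        num = p_known * (1 - prm.p_slip)
        den = num + (1 - p_known) * prm.p_guess
    else:
        num = p_known * prm.p_slip
        den = num + (1 - p_known) * (1 - prm.p_guess)
    posterior = num / den
    return posterior + (1 - posterior) * prm.p_learn


def trace(responses, prm=BKTParams()):
    """Return the predicted P(correct) before each response and the final mastery estimate."""
    p = prm.p_init
    predictions = []
    for r in responses:
        predictions.append(predict_correct(p, prm))  # prediction made before seeing r
        p = update(p, r, prm)
    return predictions, p
```

With these placeholder parameters, the first prediction is 0.34 and one correct answer raises the mastery estimate from 0.2 to 0.6; three consecutive correct answers push it above 0.95, a common mastery threshold. DKT and its successors replace these four hand-interpretable parameters with learned recurrent representations.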

Grading and feedback. While technological developments have made it easier to deliver content to learners at scale, scoring their submitted work and providing feedback at a similar scale remains a difficult problem. Assessing multiple-choice and fill-in-the-blank questions is easy to automate, but automating the assessment of open-ended questions (e.g., short answers, essays, reports, code samples) and questions requiring multi-step reasoning (e.g., theorem proving, mathematical derivations) is considerably harder. Automatic evaluation nonetheless remains an important problem because it not only reduces the burden on teaching assistants and graders, but also removes grader-to-grader variability in assessment and accelerates students' learning by providing real-time feedback (Srikant and Aggarwal, 2014).

In the context of written prose, a number of Automatic Essay Scoring (AES) and Automatic Short Answer Grading (ASAG) systems have been developed to reliably evaluate compositions that learners produce in response to a given prompt; these systems are typically trained on a large set of written samples pre-scored by expert raters (Shermis and Burstein, 2003; Dikli, 2006). Over the last decade, AI-based essay grading tools have evolved from handcrafted features such as word/sentence count, mean word/sentence length, n-grams, word error rates, POS tags, grammar, and punctuation (Adamson et al., 2014; Phandi et al., 2015; Cummins et al., 2016; Contreras et al., 2018) to features extracted automatically by deep neural network variants (Taghipour and Ng, 2016; Dasgupta et al., 2018; Nadeem et al., 2019; Uto and Okano, 2020). Such systems have been developed not only for holistic scoring (assessing essay quality with a single score) but also for more fine-grained evaluation along specific dimensions of essay quality, such as organization (Persing et al., 2010), prompt adherence (Persing and Ng, 2014), thesis clarity (Persing and Ng, 2013), argument strength (Persing and Ng, 2015), and thesis strength (Ke et al., 2019). Since it is often expensive to obtain expert-rated essays for training each time a new prompt is introduced, considerable attention has been given to cross-prompt scoring using multi-task, domain adaptation, or transfer learning techniques, with both handcrafted (Phandi et al., 2015; Cummins et al., 2016) and automatically extracted features (Li et al., 2020; Song et al., 2020). Moreover, because feedback is a critical aspect of drafting and revising essays, AES systems are increasingly being incorporated into Automated Writing Evaluation (AWE) systems that provide formative feedback along with (or instead of) final scores and therefore have greater pedagogical usefulness (Hockly, 2019).
For example, AWE systems have been developed for providing feedback on errors in grammar, usage and mechanics (Burstein et al., 2004) and text evidence usage in response-to-text student writings (Zhang H. et al., 2019).
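The handcrafted-feature generation of AES systems can be illustrated by extracting a few of the surface features listed above and combining them linearly. The tokenization is deliberately naive, and the weights passed to `linear_score` are hypothetical; a real system would fit them (e.g., by regression) on expert-scored essays.

```python
import re
from statistics import mean


def essay_features(essay):
    """Shallow handcrafted features of the kind used by early AES systems (naive tokenization)."""
    sentences = [s for s in re.split(r"[.!?]+", essay) if s.strip()]
    words = re.findall(r"[A-Za-z']+", essay)
    return {
        "word_count": len(words),
        "sentence_count": len(sentences),
        "mean_word_length": mean(len(w) for w in words) if words else 0.0,
        "mean_sentence_length": len(words) / len(sentences) if sentences else 0.0,
        "punctuation_count": sum(essay.count(p) for p in ",;:"),
    }


def linear_score(features, weights, bias=0.0):
    """Holistic score as a weighted sum of features; weights would be fit on expert-scored essays."""
    return bias + sum(weights.get(name, 0.0) * value for name, value in features.items())
```

For the two-sentence essay "Schools should start later. Students need sleep, and rested students learn better!", the extractor reports 12 words, 2 sentences, a mean sentence length of 6.0, and one internal punctuation mark. Features this shallow are easy to game, which is one motivation for the learned representations discussed above.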

AI-based evaluation tools are also heavily used in computer science education, particularly for programming, given the inherent structure and logic of code. Traditional approaches to automated grading of source code, such as test-case based assessment (Douce et al., 2005) and assessment using code metrics (e.g., lines of code, number of variables, number of statements), are simple but neither robust nor effective at evaluating program quality.
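To illustrate test-case based assessment and its limitation, the sketch below runs a submission against input/expected-output pairs and reports the pass rate. The factorial exercise and its off-by-one bug are invented for illustration, and a real grader would also sandbox and time-limit the submitted code.

```python
def grade_with_tests(student_fn, test_cases):
    """Test-case based assessment: fraction of (args, expected) pairs the submission passes.
    Exceptions count as failures."""
    passed = 0
    feedback = []
    for args, expected in test_cases:
        try:
            result = student_fn(*args)
        except Exception as exc:
            feedback.append(f"{args}: raised {type(exc).__name__}")
            continue
        if result == expected:
            passed += 1
        else:
            feedback.append(f"{args}: expected {expected!r}, got {result!r}")
    return passed / len(test_cases), feedback


def student_factorial(n):
    result = 1
    for i in range(1, n):  # off-by-one bug: should be range(1, n + 1)
        result *= i
    return result


TESTS = [((0,), 1), ((1,), 1), ((3,), 6), ((5,), 120)]
```

Here the buggy submission passes the two edge cases and fails the other two, for a score of 0.5. The number says nothing about why the code fails or how well it is written otherwise, which is the weakness noted above.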

A more useful direction for automatic grading measures the similarity between abstract representations (control flow graphs, system dependence graphs) of the student's program and those of correct implementations (Wang et al., 2007; Vujošević-Janičić et al., 2013). Such similarity measurements can also be used to construct meaningful clusters of source code and to propagate feedback to student submissions based on the cluster they belong to (Huang et al., 2013; Mokbel et al., 2013). Srikant and Aggarwal (2014) extract informative features from abstract representations of code to train machine learning models on expert-rated evaluations, yielding a finer-grained evaluation of code quality. Piech et al. (2015b) used RNNs to learn program embeddings that can propagate human comments on student programs to orders of magnitude more submissions. A bottleneck in automatic program evaluation is the availability of labeled code samples. Approaches proposed to overcome this issue include learning question-independent features from code samples (Singh et al., 2016; Tarcsay et al., 2022) and zero-shot learning using human-in-the-loop rubric sampling (Wu et al., 2019).
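A much-simplified version of representation-based comparison can be sketched with Python's standard `ast` module: each program is reduced to a bag of abstract syntax tree node types, and programs are compared by cosine similarity. This is a stand-in of our own choosing for the control flow and dependence graph comparisons cited above; it ignores identifier names and statement order, which is why renamed but structurally identical solutions score as equal.

```python
import ast
import math
from collections import Counter


def node_type_profile(source):
    """Bag of AST node types: a crude abstract representation that ignores names and formatting."""
    return Counter(type(node).__name__ for node in ast.walk(ast.parse(source)))


def structural_similarity(src_a, src_b):
    """Cosine similarity between the node-type profiles of two programs."""
    a, b = node_type_profile(src_a), node_type_profile(src_b)
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm
```

Pairwise similarities like these could then feed a standard clustering algorithm, so that feedback written for one representative submission is propagated to the rest of its cluster.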

Elsewhere, driven by the maturing of automatic speech recognition technology, AI-based assessment tools have been used for mispronunciation detection in computer-assisted language learning (Li et al., 2009, 2016; Zhang et al., 2020) and for the more complex problem of spontaneous speech evaluation, where the student's response is not known a priori (Shashidhar et al., 2015). Mathematical language processing (MLP) has been used for automatic assessment of open-response mathematical questions (Lan et al., 2015; Baral et al., 2021), mathematical derivations (Tan et al., 2017), and geometric theorem proving (Mendis et al., 2017), where grades for previously unseen student solutions are predicted (or propagated from expert-provided grades), sometimes along with partial credit assignment. Zhang et al. (2022), moreover, overcome the limitation of having to train a separate model per question by using multi-task and meta-learning tools that promote generalizability to previously unseen questions.
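One simple way to flag mispronunciations, assuming an ASR front end that outputs a phone sequence, is to align the recognized phones with the canonical pronunciation by Levenshtein alignment and report the substitutions, deletions, and insertions. The ARPAbet-style phone labels in the example are illustrative, and this alignment-only approach is an assumption for exposition; the cited systems typically also rely on acoustic confidence scores.

```python
def align_phones(canonical, recognized):
    """Levenshtein alignment of expected vs. recognized phones.
    Returns (operation, expected, recognized) edits with operation in
    {"sub", "del" (expected phone missing), "ins" (extra phone)}."""
    n, m = len(canonical), len(recognized)
    cost = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        cost[i][0] = i
    for j in range(1, m + 1):
        cost[0][j] = j
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            mismatch = canonical[i - 1] != recognized[j - 1]
            cost[i][j] = min(cost[i - 1][j - 1] + mismatch, cost[i - 1][j] + 1, cost[i][j - 1] + 1)
    # Backtrace from the bottom-right cell to recover one minimal edit sequence.
    edits, i, j = [], n, m
    while i > 0 or j > 0:
        if i > 0 and j > 0 and cost[i][j] == cost[i - 1][j - 1] + (canonical[i - 1] != recognized[j - 1]):
            if canonical[i - 1] != recognized[j - 1]:
                edits.append(("sub", canonical[i - 1], recognized[j - 1]))
            i, j = i - 1, j - 1
        elif i > 0 and cost[i][j] == cost[i - 1][j] + 1:
            edits.append(("del", canonical[i - 1], None))
            i -= 1
        else:
            edits.append(("ins", None, recognized[j - 1]))
            j -= 1
    return list(reversed(edits))
```

For instance, a learner who says "think" as "sink" would be reported as substituting S for TH, which a tutor could turn into targeted feedback on that phone.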

Academic integrity issues. Another aspect of performance assessment and monitoring is upholding academic integrity by detecting plagiarism and other forms of academic or research misconduct. In their review of academic plagiarism detection in text (e.g., essays, reports, research papers), Foltýnek et al. (2019) classify forms of plagiarism in increasing order of obfuscation, from verbatim and near-verbatim copying to translation, paraphrasing, idea-preserving plagiarism, and ghostwriting. Correspondingly, plagiarism detection methods have been developed for increasingly complex types of plagiarism, and they widely adopt NLP and ML-based techniques for each (Foltýnek et al., 2019). For example, lexical detection methods use n-grams (Alzahrani, 2015) or vector space models (Vani and Gupta, 2014) to create document representations that are subsequently thresholded or clustered (Vani and Gupta, 2014) to identify suspicious documents. Syntax-based methods rely on part-of-speech (PoS) tagging (Gupta et al., 2014), frequency of PoS tags (Hürlimann et al., 2015), or comparison of syntactic trees (Tschuggnall and Specht, 2013). Semantics-based methods employ techniques such as word embeddings (Ferrero et al., 2017), Latent Semantic Analysis (Soleman and Purwarianti, 2014), Explicit Semantic Analysis (Meuschke et al., 2017), and word alignment (Sultan et al., 2014), often in conjunction with other ML-based techniques for downstream classification (Alfikri and Purwarianti, 2014; Hänig et al., 2015). Complementary to such text-based methods, approaches that use non-textual elements such as citations, math expressions, and figures also adopt machine learning for plagiarism detection (Pertile et al., 2016). Foltýnek et al. (2019) also provide a comprehensive summary of how classical ML algorithms such as tree-based methods, SVMs, and neural networks have been successfully used to combine more than one type of detection method into the best-performing meta-system. More recently, deep learning models such as variants of convolutional and recurrent neural network architectures have also been used for plagiarism detection (El Mostafa Hambi, 2020; El-Rashidy et al., 2022).
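A lexical detection method of the n-gram kind can be sketched as word-trigram Jaccard similarity followed by thresholding. The threshold of 0.3 and the example texts are arbitrary assumptions; real systems tune thresholds on labeled data, and this check will miss the more obfuscated forms (translation, paraphrase, idea-preserving plagiarism) listed above.

```python
import re


def word_ngrams(text, n=3):
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def jaccard(a, b):
    return len(a & b) / len(a | b) if a | b else 0.0


def flag_suspicious(submission, sources, n=3, threshold=0.3):
    """Flag sources whose word n-gram overlap with the submission meets an (assumed) threshold.
    Returns (score, source_name) pairs, highest score first."""
    sub = word_ngrams(submission, n)
    scores = {name: jaccard(sub, word_ngrams(text, n)) for name, text in sources.items()}
    return sorted(((s, name) for name, s in scores.items() if s >= threshold), reverse=True)
```

A near-verbatim copy that merely prepends a short phrase to a source sentence still shares most of its trigrams with that source and is flagged, while an unrelated source scores zero.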

In computer science education, where programming assignments are used to evaluate students, source code plagiarism can likewise be classified by increasing levels of obfuscation (Faidhi and Robinson, 1987). Detection typically involves transforming the code into a high-dimensional feature representation and then measuring code similarity. Besides traditional features based on structural or syntactic properties of programs (Ji et al., 2007; Lange and Mancoridis, 2007), NLP-based approaches such as n-grams (Ohmann and Rahal, 2015), topic modeling (Ullah et al., 2021), character and word embeddings (Manahi, 2021), and character-level language models (Katta, 2018) are increasingly used for robust code representations. Similarly, for downstream similarity modeling or classification, unsupervised (Acampora and Cosma, 2015) and supervised (Bandara and Wijayarathna, 2011; Manahi, 2021) machine learning and deep learning algorithms are popular.
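To show why normalized representations help against low-level obfuscation, the sketch below lexes Python source with the standard `tokenize` module, replaces identifiers and literals with placeholder tokens, and compares token 4-gram sets with Jaccard similarity. The choice of n and this normalization scheme are assumptions for illustration, not those of the cited works; dropping layout tokens also discards some structure.

```python
import io
import keyword
import tokenize


def normalized_tokens(source):
    """Lex Python source, mapping identifiers to ID, numbers to NUM and strings to STR,
    so that renaming variables does not change the representation. Keywords and
    operators are kept; layout tokens (NEWLINE, INDENT, ...) are dropped."""
    out = []
    for tok in tokenize.generate_tokens(io.StringIO(source).readline):
        if tok.type == tokenize.NAME:
            out.append(tok.string if keyword.iskeyword(tok.string) else "ID")
        elif tok.type == tokenize.NUMBER:
            out.append("NUM")
        elif tok.type == tokenize.STRING:
            out.append("STR")
        elif tok.type == tokenize.OP:
            out.append(tok.string)
    return out


def code_similarity(src_a, src_b, n=4):
    """Jaccard similarity between the sets of normalized token n-grams of two programs."""
    def grams(src):
        toks = normalized_tokens(src)
        return {tuple(toks[i:i + n]) for i in range(len(toks) - n + 1)}

    a, b = grams(src_a), grams(src_b)
    return len(a & b) / len(a | b) if a | b else 0.0
```

A submission that only renames every variable in a classmate's solution scores 1.0 under this representation, whereas a raw character comparison would see many differences.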

It is worth noting that AI itself makes plagiarism detection an uphill battle. With the increasing prevalence of easily accessible large language models like InstructGPT (Ouyang L. et al., 2022) and ChatGPT (Blog, 2022) that are capable of producing natural-sounding essays and short answers, and even working code snippets in response to a text prompt, it is now easier than ever for dishonest learners to misuse such systems for authoring assignments, projects, research papers or online exams. How plagiarism detection approaches, along with teaching and evaluation strategies, evolve around such systems remains to be seen.

(ii) Teacher-focused: Teaching Quality Evaluations (TQEs) are important sources of information for determining teaching effectiveness and ensuring that learning objectives are being met. Their findings can be used to improve teaching skills through appropriate training and support, and they also play a significant role in employment and tenure decisions and in the professional growth of teachers. Such evaluations have traditionally been performed by analyzing student evaluations, peer evaluations among teachers, teacher self-evaluations, and expert evaluations (Hu, 2021), which are labor-intensive to analyze at scale. Machine learning and deep learning algorithms can help by performing sentiment analysis of student comments on teacher performance (Esparza et al., 2017; Gutiérrez et al., 2018; Onan, 2020), which provides a snapshot of student attitudes toward teachers and their overall learning experiences. Further, such quantified sentiments and emotional valence scores have been used to predict students' recommendation scores for teachers in order to determine prominent factors that influence student evaluations (Okoye et al., 2022). Vijayalakshmi et al. (2020) use student ratings related to class planning, presentation, management, and student participation to directly predict instructor performance.
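Sentiment analysis of student comments can be illustrated with a multinomial Naive Bayes classifier with add-one smoothing, one classical baseline among the ML and DL methods cited above. The training comments and their labels are invented, so the resulting model is only a toy.

```python
import math
import re
from collections import Counter


def tokenize_words(text):
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSentiment:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing over bag-of-words features."""

    def fit(self, comments, labels):
        self.labels = sorted(set(labels))
        self.log_priors = {c: math.log(labels.count(c) / len(labels)) for c in self.labels}
        self.counts = {c: Counter() for c in self.labels}
        for text, c in zip(comments, labels):
            self.counts[c].update(tokenize_words(text))
        self.vocab = set().union(*self.counts.values())
        self.totals = {c: sum(self.counts[c].values()) for c in self.labels}
        return self

    def predict(self, text):
        def log_posterior(c):
            denom = self.totals[c] + len(self.vocab)
            # Words never seen in training are ignored.
            return self.log_priors[c] + sum(
                math.log((self.counts[c][w] + 1) / denom) for w in tokenize_words(text) if w in self.vocab
            )

        return max(self.labels, key=log_posterior)
```

Aggregating such per-comment predictions per instructor gives the kind of attitude snapshot described above; modern systems replace the bag-of-words model with pretrained language models.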

Apart from helping extract insights from teacher evaluations, AI can also be used to evaluate teaching strategies on the basis of other data from the learning process. For example, Duzhin and Gustafsson (2018) used a symbolic regression-based approach to evaluate the impact of assignment structure and collaboration type on student scores, which course instructors can use for self-evaluation. Several works use a combination of student ratings and attributes of the course and the instructor to predict instructor performance and investigate factors affecting learning outcomes (Mardikyan and Badur, 2011; Ahmed et al., 2016; Abunasser et al., 2022).

4.3.3. Outcome prediction

While a course is ongoing, one way to assess students' knowledge development is through graded assignments and projects. Beyond this, educators can also benefit from automatic prediction of students' performance and automatic identification of students at risk of not completing a course. This can be accomplished by monitoring students' patterns of engagement with the course material together with their demographic information. Such a priori knowledge of a student's likely outcome enables the design of effective intervention strategies. Presently, most K-12, undergraduate, and graduate students rely on computer and web-based infrastructure when the necessary resources are available (Bulman and Fairlie, 2016). A rich source of data indicating student state is therefore generated whenever a student interacts with course modules. Before computers became such an integral component of education, researchers frequently used surveys and questionnaires to gauge student engagement, sentiment, and attrition probability. In this section, we summarize research developments in AI for early prediction of student outcomes, covering both final performance and the likelihood of dropout.

Early research in outcome prediction focused on building explanatory regression-based models of student retention using college records (Dey and Astin, 1993). The active research direction gradually shifted to the more complex and more actionable problems of determining whether a student will complete a program (Dekker et al., 2009), estimating the time a student will take to complete a degree (Herzog, 2006), and predicting a student's final performance (Nghe et al., 2007) given the current student state. In the following paragraphs, we discuss research contributions to outcome prediction, distinguishing between performance prediction in assessments and course attrition prediction. We discuss these separately because poor performance in an assessment does not necessarily lead to course non-completion.

(i) A priori performance prediction: A priori prediction of a student's performance has several benefits: it allows students to evaluate their course selection, and it allows educators to evaluate progress and offer additional assistance as needed. Not surprisingly, therefore, AI-based methods have been proposed to automate this important task in the education process.

Initial research articles on performance prediction estimated time to degree completion (Herzog, 2006) using student demographic, academic, residential, and financial aid information, student parent data, and school transfer records. In a related theme, researchers have also mapped performance prediction onto a final exam grade prediction problem (e.g., excellent, good, fair, fail; Nghe et al., 2007; Bydžovská, 2016; Dien et al., 2020). This granular prediction allows educators to assess which students require additional tutoring. Baseline algorithms in this context include Decision Trees, Support Vector Machines, Random Forests, and Artificial Neural Networks, used for regression or classification depending on the problem setup. Researchers have aimed to improve the performance of these predictors by including relevant information such as student engagement and interactions (Ramesh et al., 2013; Bydžovská, 2016), the role of external incentives (Jiang et al., 2014), and previous performance records (Tamhane et al., 2014). Xu et al. (2017) proposed that a student's performance, or their anticipated time of graduation, should be predicted progressively (using an ensemble machine learning method) over the duration of the student's tenure, since the academic state of the student is ever-evolving and can be traced through their student records. Generalizing performance prediction to non-traditional modes of learning, such as hybrid or blended learning and online learning, has benefited from the inclusion of additional information sources such as web-browsing information (Trakunphutthirak et al., 2019), discussion forum activity, and student study habits (Gitinabard et al., 2019).
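To make the grade-band classification setup concrete, the sketch below grows a small decision tree, one of the baseline algorithms named above, choosing splits by Gini impurity. It is a minimal illustration under stated assumptions, not any cited author's method: the three features (prior GPA, attendance rate, forum posts) and the eight training records are hypothetical, and in practice one would use an established library and much larger data.

```python
from collections import Counter

# Hypothetical feature order: [prior_gpa, attendance_rate, forum_posts].
# Labels use the grade bands mentioned in the text.

def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels):
    """Return (feature, threshold) minimizing weighted Gini impurity,
    or None if no split improves on the parent node."""
    best, best_score = None, gini(labels)
    n = len(rows)
    for f in range(len(rows[0])):
        for t in sorted({r[f] for r in rows}):
            left = [y for r, y in zip(rows, labels) if r[f] <= t]
            right = [y for r, y in zip(rows, labels) if r[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if score < best_score:
                best, best_score = (f, t), score
    return best

def build_tree(rows, labels, max_depth=3):
    """Grow a tree recursively; each leaf holds the majority grade band."""
    majority = Counter(labels).most_common(1)[0][0]
    if max_depth == 0 or len(set(labels)) == 1:
        return majority
    split = best_split(rows, labels)
    if split is None:
        return majority
    f, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[f] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[f] > t]
    return (f, t,
            build_tree([r for r, _ in left], [y for _, y in left], max_depth - 1),
            build_tree([r for r, _ in right], [y for _, y in right], max_depth - 1))

def predict(tree, row):
    """Walk internal nodes (tuples) until a leaf (a grade-band string)."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree

# Toy, made-up training records: [prior_gpa, attendance_rate, forum_posts]
X = [[3.8, 0.95, 12], [3.5, 0.90, 8], [2.9, 0.80, 5], [3.0, 0.75, 4],
     [2.2, 0.60, 1], [1.9, 0.40, 0], [3.9, 0.98, 15], [2.5, 0.70, 2]]
y = ["excellent", "good", "fair", "fair", "fail", "fail", "excellent", "fair"]

tree = build_tree(X, y, max_depth=3)
print(predict(tree, [3.7, 0.93, 10]))  # → excellent
print(predict(tree, [2.0, 0.50, 1]))   # → fail
```

Capping `max_depth` keeps the tree small and its splits readable, which matters when predictions are meant to inform tutoring decisions. A progressive scheme in the spirit of Xu et al. (2017) could refit such a model each term as new records arrive.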

In addition to exploring more informative and robust feature sets, recent work has found that deep learning based approaches can outperform traditional machine learning algorithms. For example, Waheed et al. (2020) used deep feed-forward neural networks and split the problem of predicting student grades into multiple binary classification problems, viz., Pass-Fail, Distinction-Pass, Distinction-Fail, and Withdrawn-Pass. Tsiakmaki et al. (2020) analyzed whether transfer learning (i.e., pre-training neural networks on student data from a different course) can be used to accurately predict student performance. Chui et al. (2020) used a generative adversarial network based architecture to address the challenge of low volumes of training data in alternative learning paradigms such as supportive learning. Dien et al. (2020) proposed extensive data pre-processing, using a min-max scaler, quantile transformation, etc., before passing the data to a deep learning model such as a one-dimensional convolutional network (CN1D) or a recurrent neural network. For comprehensive surveys of ML approaches to this topic, we refer readers to Rastrollo-Guerrero et al. (2020) and Hellas et al. (2018).
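The two pre-processing steps attributed to Dien et al. (2020) can be illustrated with simple column-wise transforms. The sketch below is a simplified, dependency-free analogue rather than their pipeline: min-max scaling maps a feature linearly onto [0, 1], and a rank-based quantile transform maps each value to its empirical quantile, so the output is spread roughly uniformly regardless of outliers. The quiz scores are hypothetical; libraries such as scikit-learn provide `MinMaxScaler` and `QuantileTransformer` for production use.

```python
def min_max_scale(values):
    """Map values linearly to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def quantile_transform(values):
    """Map each value to its empirical quantile in [0, 1].

    Tied values share the average of their ranks; a single value maps to 0.5.
    """
    n = len(values)
    if n == 1:
        return [0.5]
    order = sorted(range(n), key=lambda i: values[i])
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        # Extend j over a run of tied values.
        while j + 1 < n and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return [r / (n - 1) for r in ranks]

# Hypothetical quiz scores with one extreme outlier.
scores = [55, 60, 62, 70, 300]
print([round(v, 3) for v in min_max_scale(scores)])  # → [0.0, 0.02, 0.029, 0.061, 1.0]
print(quantile_transform(scores))                    # → [0.0, 0.25, 0.5, 0.75, 1.0]
```

With the outlier present, min-max scaling compresses the four typical scores into a narrow band near zero, while the quantile transform spreads them evenly; this robustness to skew is one common reason to apply quantile-style transforms before training neural models.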

(ii) A priori attrition prediction: Students dropping out before course completion is a concerning trend. This is particularly true in developing nations, where very few students finish primary school (Knofczynski, 2017). The outbreak of the COVID-19 pandemic exacerbated the situation through indefinite school closures, which led to learning losses and set back progress toward providing access to quality education (Moscoviz and Evans, 2022). The causes of dropping out of a course or a degree program can be diverse, but predicting drop-out early allows administrative staff and educators to intervene. To this end, there have been efforts to use machine learning algorithms to predict attrition.

Massive Open Online Courses (MOOCs): In the context of attrition, MOOCs deserve special mention. While MOOCs promise the democratization of education, one of the biggest concerns with them is the disparity between the number of students who sign up for a course and the number who actually complete it; drop-out rates in MOOCs are notably high (Hollands and Kazi, 2018; Reich and Ruipérez-Valiente, 2019). Yet, in order to make post-secondary and professional education more accessible, MOOCs have become more a practical necessity than an experiment, and the COVID-19 pandemic has only emphasized this necessity (Purkayastha and Sinha, 2021). In our literature search phase, we found a sizeable number of contributions to attrition prediction that use data from MOOC platforms. In this subsection, we include these as well as attrition prediction in traditional learning environments.

Early educational data mining methods for predicting student drop-out (Dekker et al., 2009) mostly used data sources such as student records (i.e., student demographics, academic, residential, gap year, and financial aid information) and administrative records (major administrative changes in education, records of student transfers) to train simple classifiers such as Logistic Regression, Decision Tree, BayesNet, and Random Forest. Selecting an appropriate set of features and designing explainable models has been important, as these later inform intervention (Aguiar et al., 2015). To this end, researchers have explored features such as students' prior experiences, motivation, and home environment (DeBoer et al., 2013) and student engagement with the course (Aguiar et al., 2014; Ramesh et al., 2014). With the inclusion of an online learning component (particularly relevant for MOOCs), the click-stream data and browser information generated allowed researchers to better understand student behavior in an ongoing course. Using historical click-stream data in conjunction with present click-stream data allowed Kloft et al. (2014) to effectively predict drop-outs weekly using a simple Support Vector Machine algorithm. This kind of data has also been helpful in understanding the traits indicative of decreased engagement (Sinha et al., 2014), the role of a social cohort structure (Yang et al., 2013), and the sentiment in student discussion boards and communities (Wen et al., 2014) leading up to student drop-out. He et al. (2015) address the concern that weekly predictions of the probability of a student dropping out might have wide variance by including smoothing techniques. On the other hand, as resources to intervene might be limited, Lakkaraju et al. (2015) recommend assigning a risk score per student rather than a binary label. Brooks et al. (2015) consider the level of activity of a student in bins of time during a semester as binary features (active vs. inactive) and then use these sequences as n-grams to predict drop-out. Recent developments in predicting student attrition propose the use of data acquired from disparate sources in addition to more sophisticated algorithms such as deep feed-forward neural networks (Imran et al., 2019) and a hybrid logit leaf model (Coussement et al., 2020).
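Two of the ideas above are easy to illustrate in a few lines: encoding a student's activity as a binary sequence over time bins and counting its n-grams, in the spirit of Brooks et al. (2015), and ranking students by a continuous risk score rather than a binary label when intervention capacity is limited, as Lakkaraju et al. (2015) recommend. This is a minimal sketch under stated assumptions, not either paper's model: the weekly activity flags are hypothetical, and the scoring rule (the share of all-inactive n-grams) is a hand-set, hypothetical stand-in for a trained classifier's output.

```python
from collections import Counter

def activity_ngrams(activity, n=2):
    """Count n-grams over a binary activity sequence (1 = active in that bin)."""
    tokens = "".join(str(a) for a in activity)
    return Counter(tokens[i:i + n] for i in range(len(tokens) - n + 1))

def inactivity_risk(activity, n=2):
    """Hypothetical risk score: share of n-grams that are entirely inactive.

    Stands in for the probability a trained drop-out model would output.
    """
    grams = activity_ngrams(activity, n)
    total = sum(grams.values())
    return grams["0" * n] / total if total else 0.0

def rank_by_risk(students, budget):
    """Return the `budget` student ids with the highest risk, highest first."""
    scored = sorted(students.items(),
                    key=lambda item: inactivity_risk(item[1]),
                    reverse=True)
    return [sid for sid, _ in scored[:budget]]

# Hypothetical weekly activity flags for three students over eight weeks.
students = {
    "s1": [1, 1, 1, 1, 1, 1, 1, 1],
    "s2": [1, 1, 0, 0, 0, 0, 0, 0],
    "s3": [1, 0, 1, 0, 0, 1, 0, 1],
}
print(activity_ngrams(students["s3"]))
print(rank_by_risk(students, budget=2))  # → ['s2', 's3']
```

In a real system the score would come from a trained model, for example one that takes the n-gram counts as features, and `budget` would reflect how many students staff can realistically contact.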

5. Discussion

In this article, we have investigated the involvement of artificial intelligence in the end-to-end educational process. We have highlighted specific research problems in both the planning and the knowledge delivery phases and reviewed the technological progress in addressing those problems over the past two decades. To the best of our knowledge, this distinction between proactive and reactive phases of education, accompanied by a technical deep-dive, is unique to this review.

5.1. Major trends in involvement of AI in the end-to-end education process

The growing interest in AIEd can be inferred from Figures 2 and 4, which show that both the count of technical contributions and the count of review articles on the topic have increased over the past two decades. Note that the number of technical contributions in 2021 and 2022 (assuming our sample of reviewed articles is representative of the population) might have fallen in part due to pandemic-related indefinite school closures and the shift to alternative learning models. These disruptions set back data collection, reporting, and annotation efforts owing to a number of factors, including lack of direct access to participants, unreliable network connectivity, and the need for enumerators to adapt to new training modes (Wolf et al., 2022). Another important observation from Figure 3 is that AIEd research in most categories focuses heavily on learners in universities, e-learning platforms, and MOOCs; work targeting pre-school and K-12 learners is conspicuously absent. A notable exception is research on tutoring aids, which receives nearly uniform attention across target audience groups.

In all categories, to different extents, we see a distinct shift from rule-based and statistical approaches, to classical ML, to deep learning methods, and from handcrafted features to automatically extracted features. This advancement goes hand-in-hand with the increasingly complex nature of the data used to train AIEd systems. Whereas earlier approaches used mostly static data (e.g., student records, administrative records, demographic information, surveys, and questionnaires), the use of more sophisticated algorithms necessitated (and in turn benefited from) more real-time and high-volume data (e.g., student-teacher and peer-peer interaction data, click-stream information, web-browsing data). The type of data used by AIEd systems also evolved from mostly tabular records to more text-based and even multi-modal data, spurred on by the emergence of large language models that can handle large quantities of such data.

Even though data-hungry models like deep neural networks have grown in popularity across almost all categories discussed here, AIEd often suffers from a lack of sufficient labeled data to train such systems. This is particularly true for small classes and new course offerings, or when existing curricula or tests are changed to incorporate new elements. As a result, another emerging trend in AIEd focuses on leveraging information from resource-rich courses or existing teaching and evaluation content through domain adaptation, transfer learning, few-shot learning, meta-learning, etc.

5.2. Impact of the COVID-19 pandemic on driving AI research at the frontier of education

The COVID-19 pandemic, possibly the most significant social disruptor in recent history, affected more than 1.5 billion students worldwide (UNESCO, 2022) and is believed to have had far-reaching consequences in the domain of education, possibly even generational setbacks (Tadesse and Muluye, 2020; Dorn et al., 2021; Spector, 2022). As lockdowns and social distancing mandated a hastened transition to fully virtual delivery of educational content, the pandemic era saw increasing adoption of video conferencing software and social media platforms for knowledge delivery, combined with more asynchronous formats of learning. These alternative media of communication were often accompanied by decreasing levels of learner engagement and satisfaction (Wester et al., 2021; Hollister et al., 2022). There was also a corresponding decrease in practical sessions, labs, and workshops, which are critical in some fields of education (Hilburg et al., 2020). However, the pandemic also accelerated the adoption of AI-based approaches in education. Pilot studies show that the pandemic led to a significant increase in the usage of AI-based e-learning platforms (Pantelimon et al., 2021). Moreover, a natural by-product of the transition to online learning environments is the generation and logging of more data points from the learning process (Xie et al., 2020), which AI-based methods can use to assess and drive student engagement and provide personalized feedback. Online teaching platforms also make it easier to incorporate web-based content, smart interactive elements, and asynchronous review sessions to keep students more engaged (Kexin et al., 2020; Pantelimon et al., 2021).

Several recent works have investigated the role of pandemic-driven remote and hybrid instruction in widening gaps in educational achievement by race, poverty level, and gender (Halloran et al., 2021; UNESCO, 2021; Goldhaber et al., 2022). A widespread transition to remote learning necessitates access to proper infrastructure (electricity, internet connectivity, and smart electronic devices that can support video conferencing apps and basic file sharing) as well as resources (learning material, textbooks, educational software, etc.), requirements that create barriers for low-income groups (Muñoz-Najar et al., 2021). Even within similar populations, unequal distribution of household chores, income-generating activities, and access to technology-enabled devices affects students of different genders disproportionately (UNESCO, 2021). Moreover, remote learning requires a level of tech-savviness on the part of students and teachers alike, which might be less prevalent among people with learning disabilities. In this context, Garg and Sharma (2020) outline the different ways AI is used in special needs education to develop adaptive and inclusive pedagogies. Salas-Pilco et al. (2022) review the different ways in which AI positively impacts the education of minority students, e.g., by facilitating performance and engagement improvement, student retention, and student interest in STEM/STEAM fields. Salas-Pilco et al. (2022) also outline the technological, pedagogical, and socio-cultural barriers to AIEd in inclusive education.

5.3. Existing challenges in adopting artificial intelligence for education

By 2023, artificial intelligence had permeated people's lives globally in one way or another (e.g., chat-bots for customer service, automated credit score analysis, personalized recommendations). At the same time, AI-driven technology for the education sector is gradually becoming a practical necessity globally. The question, therefore, is what barriers currently stand in the way of adopting AI for education globally in a safe and inclusive manner. We discuss some of our observations with regard to deploying existing AI-driven educational technology at scale.

5.3.1. Lack of concrete legal and ethical guidelines for AIEd research