- 1Digital Systems, University of Piraeus, Piraeus, Greece

- 2RapidMiner GmbH, Dortmund, Germany

- 3Universite Paris-Saclay, CNRS, Centrale Supelec, Laboratoire des Signaux et Systémes, Gif-sur-Yvette, France

With the vision to transform the current wireless network into a cyber-physical intelligent platform capable of supporting bandwidth-hungry and latency-constrained applications, both academia and industry have turned their attention to the development of artificial intelligence (AI) enabled terahertz (THz) wireless networks. In this article, we list the applications of THz wireless systems in the beyond fifth generation era and discuss their enabling technologies and fundamental challenges that can be formulated as AI problems. These problems are related to the physical, medium/multiple access control, radio resource management, network and transport layers. For each of them, we report the AI approaches that have been recognized as possible solutions in the technical literature, emphasizing their principles and limitations. Finally, we provide an insightful discussion concerning research gaps and possible future directions.

1 Introduction

As a response to the spectrum scarcity problem created by the aggressive proliferation of wireless devices and of quality-of-service (QoS) and quality-of-experience (QoE) hungry services, which are expected to support a broad range of diverse multi-scale and multi-environment applications, sixth-generation (6G) wireless networks adopt higher frequency bands, such as the terahertz (THz) band, which ranges from 0.1 to 10 THz (Boulogeorgos et al., 2018b; Boulogeorgos and Alexiou, 2020c; Boulogeorgos and Alexiou, 2020d; Boulogeorgos et al., 2018a). In more detail and according to the IEEE 802.15.3d standard (IEEE Standard for Information technology, 2009; IEEE Standard for High Data Rate Wireless Multi-Media Networks, 2017), THz wireless communications are recognized as the pillar technological enabler of a varied set of use cases stretching from in-body nano-scale networks to indoor and outdoor wireless personal/local area and fronthaul/backhaul networks. Nano-scale applications require compact transceiver designs and self-organized ad-hoc network topologies. On the other hand, macro-scale applications demand flexibility, sustainability, adaptability in an ever changing heterogeneous environment, and security. Moreover, supporting data rates that may reach 1 Tb/s and energy-efficient massive connectivity are only some of the key demands. To address the aforementioned requirements, artificial intelligence (AI) and novel structures capable of altering the wireless environment have been regarded as complementary pillars of 6G wireless THz systems.

AI is expected to enable a series of new features in next-generation networks, including, but not limited to, self-aggregation, context awareness, self-configuration as well as opportunistic deployment (Dang et al., 2020). In addition, integrating AI in wireless networks is envisioned to bring a revolutionary transformation of conventional cognitive radio systems into intelligent platforms by unlocking the full potential of radio signals and exploiting new degrees-of-freedom (DoF) (Letaief et al., 2019; Saad et al., 2020). Identifying this opportunity, a significant number of researchers turned their attention to AI-empowered wireless systems and specifically to machine learning (ML) algorithms (see e.g., (Wang J. et al., 2020; Liu Y. et al., 2020; Jiang, 2020; Boulogeorgos et al., 2021a) and references therein). In more detail, in (Wang J. et al., 2020), the authors reviewed the 30-year history of ML, highlighting its application in heterogeneous networks, cognitive radios, device-to-device communications and the Internet-of-Things (IoT). Likewise, in (Liu Y. et al., 2020), big data analysis is combined with ML in order to predict the requirements of mobile users and enhance the performance of “social network-aware wireless.” Moreover, in (Jiang, 2020), a brief survey was conducted that summarizes the basic physical layer (PHY) authentication schemes that employ ML in fifth generation (5G)-based IoT. Finally, in (Boulogeorgos et al., 2021a), the authors provided a systematic and comprehensive review of the ML approaches that were employed to address a number of nano-scale biomedical challenges, including ones that refer to molecular and nano-scale THz communications.

The methodologies presented in the aforementioned contributions are tightly connected to the communication technology characteristics and building blocks of radio and microwave communication systems and networks. These differ inherently from systems that operate in higher frequency bands, which can support higher data rates, and from propagation environments with conventional and unconventional structures, such as reconfigurable intelligent surfaces (RISs). Additionally, in order to quantify the efficiency of AI approaches, new key performance indicators (KPIs) need to be defined. Motivated by this, this article focuses on reporting the role of AI in THz wireless networks. In particular, we first identify the particularities of THz wireless systems that require the adoption of AI. Building upon this, we present a brief survey that summarizes the contributions in this area and focus on indicative AI approaches that are expected to play an important role in different layers of THz wireless networks. Finally, possible future research directions are provided.

The structure of the rest of the paper is as follows. Section 2 discusses the role of ML in THz wireless systems and networks. In particular, a systematic review is conducted concerning the ML algorithms that are used to solve PHY, medium access control (MAC), radio resource management (RRM), network and transport layer related problems. Moreover, in Section 2, the ML techniques that were employed in, or have the potential to be adopted by, THz wireless systems and networks are documented. In Section 3, the aforementioned ML algorithms are reviewed and a methodology to select a suitable ML algorithm is presented. Likewise, in Section 4, ML deployment strategies are discussed, while, in Section 5, future research directions are reported.

Notations

In this paper, matrices are denoted in bold, capital letters, while vectors in bold, lower case letters. The base-10 logarithm of x is given by

2 The Role of ML in THz Wireless Systems and Networks

Together with the promise of supporting high data-rate massive connectivity, THz wireless systems and networks come with several challenges. In particular, these challenges can be summarized as:

• Due to the high transmission frequency, i.e. the small wavelength, in the THz band, we can design highly directional antennas (with gains that may surpass 30 dBi) with unprecedentedly narrow beamwidths, which may be less than 4°. These antennas are used to counterbalance the high channel attenuation by establishing highly directional links. On the one hand, high directionality creates additional DoFs, which, if appropriately exploited, can enhance both dynamic spectrum access and network densification and thus boost the network’s connectivity capabilities. In this direction, new approaches to support intelligent interference monitoring and cognitive access are required. On the other hand, high directionality comes with the requirement of extremely accurate beam alignment between the fixed and moving communication nodes. To address this, low-latency beam tracking and channel estimation approaches need to be developed.

• Molecular absorption causes frequency- and distance-dependent path loss, which creates frequency windows that are unsuitable for establishing communication links (Boulogeorgos et al., 2018d). As a consequence, despite the high bandwidth availability in the THz band, windowed transmission with time-varying loss and per-window adaptive bandwidth and power usage is expected to be employed. This characteristic is expected to influence beamforming design as well as resource allocation and user association.

• The large penetration loss in the THz band, which may surpass 40 or even 50 dB (Kokkoniemi et al., 2016; Stratidakis et al., 2019; Petrov et al., 2020; Stratidakis et al., 2020a; Stratidakis et al., 2020b), renders the establishment of non-line-of-sight (non-LoS) links questionable. As a result, blockage avoidance schemes are needed. Note that in lower frequency bands, such as millimeter wave (mmW), the penetration loss is in the range of 20–30 dB (Mahapatra et al., 2015; Zhu et al., 2018; Zhang et al., 2020a).

• To exploit the spatial dimension of THz radio resources, support multi-user (MU) connectivity as well as increase the link capacity in heterogeneous environments of moving nodes, suitable beamforming and mobility management schemes that predict the number and motion of UEs need to be designed. Moreover, in mobile scenarios, accurate channel state information (CSI) is needed. In lower-frequency systems, this is achieved by performing frequent channel estimation. However, in THz wireless systems, due to the small transmission wavelength, the channel can be affected by slight variations in the micrometer scale (Sarieddeen et al., 2021). As a result, the frequency of channel estimation is expected to be extremely high and the corresponding overhead unaffordable. This motivates the design of prediction-based channel estimation and beam tracking approaches.

• From the hardware point of view, high-frequency transceivers suffer from a number of hardware imperfections. In particular, the error vector magnitude (EVM) of THz transceivers may even reach 0.4 (Koenig et al., 2013). It is questionable whether conventional mitigation approaches would be able to limit the impact of hardware imperfections. As a result, smarter signal detection approaches need to be developed. Of note, as stated in (Schenk, 2008; Boulogeorgos, 2016; Boulogeorgos and Alexiou, 2020b), in lower-frequency communication systems, including mmW, the EVM does not exceed 0.17.

• THz wireless systems are expected to support a large variety of applications with a diverse set of requirements as well as UEs equipped with antennas of different gains. To guarantee high availability and an association rate that tends to 100%, association schemes that take into account the nature of the THz resource block, the capabilities of the UEs’ transceivers, as well as the requirements of the applications need to be presented. Moreover, to ensure uninterrupted connectivity, these approaches should be highly adaptable to network topology changes. In other words, they should be able to predict network topology changes and proactively perform hand-overs.

• Conventional routing strategies account for neither the distance between communication nodes nor their memory limitations. However, in THz mobile scenarios, where the transmission range is limited to some tens of meters and the device memory is comparable to the packet length, routing may become a complex optimization problem.

• Finally, for several realistic scenarios, the aggregated data-rate of the fronthaul is expected to reach 1 Tb/s, which is comparable with the backhaul’s achievable data-rate. This may cause service latency due to data congestion in network nodes. To avoid this, intelligent traffic prediction and caching strategies need to be created to proactively bring the future-requested content near the end-user.

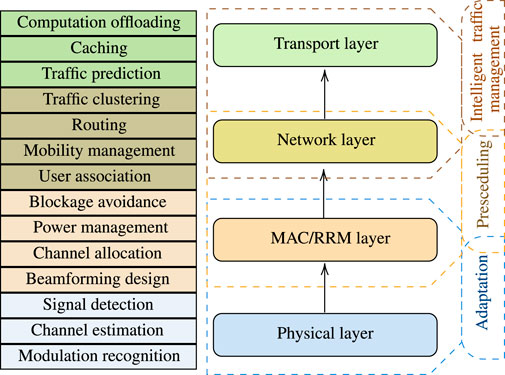

The rest of this section is focused on explaining the role of ML in THz wireless systems and networks by presenting the current state-of-the-art. As illustrated in Figure 1 and in order to provide a comprehensive understanding of the need to formulate ML problems and devise such solutions, we classify the ML problems into four categories, namely 1) PHY, 2) MAC and RRM, 3) network, and 4) transport. Of note, this classification is in line with the open systems interconnection (OSI) model. For each category, we identify the communications and networks problems for which ML solutions have been proposed as well as the need for utilizing them. Finally, this section provides a review of the ML problems and solutions that have been employed. Some of the aforementioned challenges have also been discussed for lower-frequency systems and networks. For the sake of completeness, in our literature review, we have also included contributions that, although they refer to lower frequencies, can find application in THz wireless systems and networks.

2.1 PHY Layer

The additional DoFs brought by the ultra-wide band THz channels as well as their spatial nature allow us to establish high data-rate links with limited transmission power. Moreover, advances in the field of communication-component design, fueled by new artificial materials, such as reconfigurable intelligent surfaces (RISs), created a controllable wireless propagation environment and thus offered new opportunities for simplifying the PHY layer processes and further increased the system’s DoFs (Boulogeorgos et al., 2021b). Finally, the use of direct conversion architectures (DCAs) in both transceivers created the need to utilize digital signal processing (DSP) algorithms in order to “decouple” the system’s reliability from the degradation caused by hardware imperfections. The computational complexity of these algorithms increases as the modulation order of the transmission signal increases. The aforementioned factors create a rather dynamic, high-dimensional and complex environment with processes that are hard or even impossible to express analytically. Motivated by this, the objectives of employing ML in the PHY layer are to provide adaptability to an ever changing wireless environment consisting of heterogeneous components (transceivers, RISs, active and passive relays, etc.) and to counteract the impact of transceiver hardware imperfections without increasing either the communication or the computation overhead.

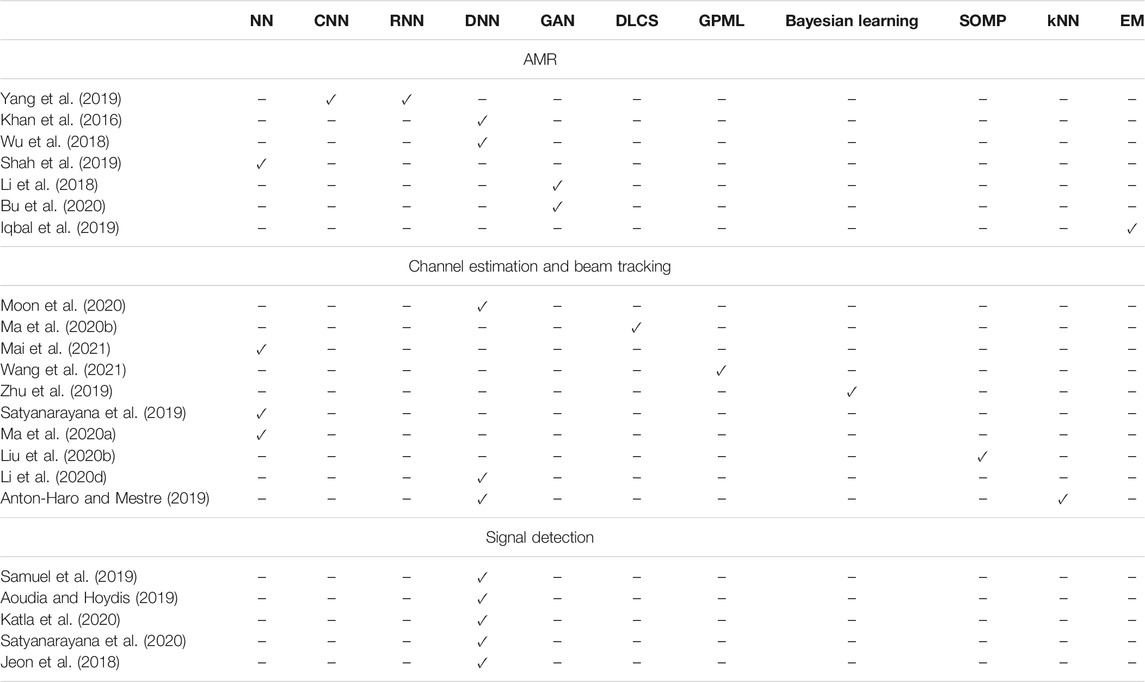

In this direction, several researchers have focused on presenting ML-related solutions for automatic modulation recognition (AMR) (Khan et al., 2016; Li et al., 2018; Wu et al., 2018; Iqbal et al., 2019; Shah et al., 2019; Yang et al., 2019; Bu et al., 2020), channel estimation (Satyanarayana et al., 2019; Zhu et al., 2019; Liu S. et al., 2020; Ma et al., 2020a; Ma et al., 2020b; Moon et al., 2020; Mai et al., 2021; Wang et al., 2021), and signal detection (Jeon et al., 2018; Aoudia and Hoydis, 2019; Samuel et al., 2019; Katla et al., 2020; Satyanarayana et al., 2020). In more detail, AMR has been identified as an important task for several wireless systems, since it enables dynamic spectrum access, interference monitoring, radio fault self-detection as well as other civil, government, and military applications. Moreover, it is considered a key enabler of intelligent/cognitive nano- and macro-scale receivers (RXs). Fast AMR can significantly improve the spectrum utilization efficiency (Mao et al., 2018). However, it is a very challenging task, since it depends on the variation of the wireless channel. This motivates the introduction of intelligence in this task. In this sense, in (Yang et al., 2019), the authors employed convolutional neural networks (CNNs) and recurrent neural networks (RNNs) in order to perform AMR. As inputs, the algorithms used the in-phase and quadrature (I/Q) components of the unknown received signal and classified it into one of the following modulation schemes: binary frequency shift keying (BFSK), differential quadrature phase shift keying (DQPSK), 16 quadrature amplitude modulation (16-QAM), quaternary pulse amplitude modulation (4PAM), minimum shift keying (MSK), and Gaussian minimum shift keying (GMSK). Moreover, in (Khan et al., 2016), a deep neural network (DNN) approach was discussed for identifying the received signal modulation in coherent RXs. The DNN comprises two auto-encoders and an output perceptron layer. To train and verify the ML network, two datasets of numerous amplitude histograms are used. After training, the network is capable of accurately extracting the modulation format of the signal after receiving a number of symbols.
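To illustrate how amplitude histograms of the received I/Q samples can act as features for AMR, a minimal Python sketch is given next. It is not the DNN of (Khan et al., 2016): the auto-encoders and perceptron layer are swapped for a plain nearest-reference-histogram rule, and the two candidate modulations, the noise level, the bin layout and all function names are assumptions made only for illustration.

```python
import math
import random

QPSK = [complex(i, q) for i in (-1, 1) for q in (-1, 1)]
QAM16 = [complex(i, q) for i in (-3, -1, 1, 3) for q in (-3, -1, 1, 3)]


def normalize(points):
    """Scale a constellation to unit average power."""
    scale = math.sqrt(sum(abs(p) ** 2 for p in points) / len(points))
    return [p / scale for p in points]


def received(points, n, noise_std, rng):
    """Draw n random symbols and add Gaussian noise to the I and Q components."""
    return [rng.choice(points)
            + complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std))
            for _ in range(n)]


def amplitude_histogram(samples, bins=16, max_amp=2.0):
    """Normalized histogram of |I + jQ|, used as the feature vector."""
    hist = [0.0] * bins
    for s in samples:
        idx = min(int(abs(s) / max_amp * bins), bins - 1)
        hist[idx] += 1.0 / len(samples)
    return hist


def classify(hist, references):
    """Return the label whose reference histogram is closest (squared distance)."""
    return min(references,
               key=lambda label: sum((a - b) ** 2
                                     for a, b in zip(hist, references[label])))


rng = random.Random(0)
schemes = {"QPSK": normalize(QPSK), "16-QAM": normalize(QAM16)}
# "Training": one reference histogram per modulation, built from labeled samples.
references = {name: amplitude_histogram(received(pts, 2000, 0.05, rng))
              for name, pts in schemes.items()}
# "Inference": classify fresh received samples whose modulation is unknown.
predictions = {name: classify(amplitude_histogram(received(pts, 500, 0.05, rng)),
                              references)
               for name, pts in schemes.items()}
print(predictions)
```

QPSK concentrates its amplitudes around a single level, whereas 16-QAM spreads them over three levels, which is why even this simple rule separates the two. Distinguishing constant-envelope schemes from each other would require phase or frequency features in addition to the amplitude.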

Similarly, in (Wu et al., 2018), the authors presented a DNN approach that is based on RNNs with short memory and is capable of exploiting the temporal and spatial correlation of the received samples in order to accurately extract their modulation type. In this contribution, as an input, the ML algorithm requires a predetermined number of samples. Meanwhile, in (Shah et al., 2019), the authors reported an extreme supervised-learning ML algorithm capable of accurately and time-efficiently estimating the modulation type of the received samples. The disadvantage of the aforementioned approaches is that the training period is long and, if the characteristics of the channel change, for example due to a RIS reconfiguration, a partial blockage phenomenon or the movement of the user equipment, the algorithm needs to be re-trained.

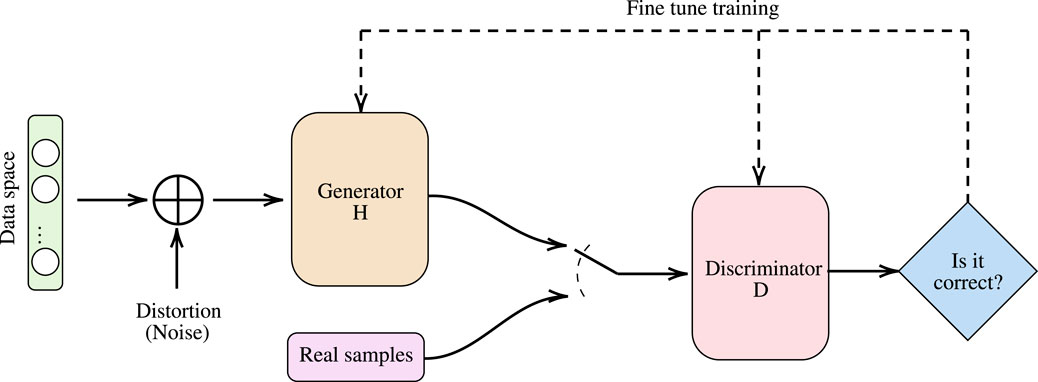

Additionally, in (Li et al., 2018), a semi-supervised deep convolutional generative adversarial network (GAN) was presented that consists of a pair of GANs that collaboratively create a powerful modulation discriminator. The ML network receives as inputs the I/Q components of a number of received signal samples and matches them to a set of modulation formats. The main disadvantages of this approach are its high computational resource demands and its sensitivity to variations in the received signal distribution. To deal with the data distribution variation, Bu et al. (Bu et al., 2020) introduced an adversarial transfer learning architecture that exploits transfer learning and is capable of achieving accuracy comparable to that of supervised learning approaches. However, this approach demands careful handling of former knowledge, since there may exist differences between wireless environments. In (Bu et al., 2020), the ML algorithm uses as an input the I/Q components of the received signal. Finally, in (Iqbal et al., 2019), an expectation maximization (EM) algorithm was employed in order to perform modulation mode detection and systematically differentiate between pulse- and carrier-based modulations. The presented results revealed the existence of a unique Pareto-optimal point for both the SNR and the classification threshold, where the error probability is minimized.

Another important task in the PHY layer is channel estimation and tracking. In particular, in order to employ highly directional beamforming in THz wireless systems, it is necessary to acquire channel information for all the transmitter (TX) and RX antenna pairs. The conventional approach that is supported by several standards, including IEEE 802.11ad and IEEE 802.11ay (Ghasempour et al., 2017; Silva et al., 2018; Boulogeorgos and Alexiou, 2019), depends on creating a set of transmission and reception beamforming vector pairs and scanning between them in order to identify the optimal one. During this process, the access point (AP) sends synchronization signals using all its possible beamforming vectors, while the user equipment (UE) performs energy detection in all possible reception directions. At the end of this process, the UE determines the optimal AP beamforming vector and feeds it back to the AP. Next, the roles of the AP and UE interchange in order to allow the AP to identify the optimal UE beamforming vector and feed it back to the UE. Notice that in the second phase, the AP locks its RX to its optimal beamforming vector. Then, channel estimation is performed using the optimal beamforming pair and a classical DSP technique (e.g., least squares, minimum mean square error, etc.). Let us assume that the AP and the UE respectively have $L_A$ and $L_U$ available beamforming vectors and that $N_a$ and $N_e$ received signal samples are needed for energy detection and channel estimation, respectively. As a consequence, the latency and power consumption due to channel estimation are proportional to $L_A L_U N_a + L_U N_a + N_e + 2$. This indicates that as the number of antennas at the TX and RX increases, the number of beamforming pairs also increases; thus, the training overhead increases significantly and the conventional channel estimation approach becomes more complex as well as time and power inefficient.

To better understand the importance of this challenge in THz wireless systems, let us refer to an indicative example. Assume that we would like to support a virtual reality (VR) application for which the transmission distance between the AP and the UE is 20 m and a data-rate of 20 Gb/s is required with an uncoded bit error rate (BER) in the order of $10^{-6}$. Furthermore, a false-alarm probability that is lower than 1% is required. This indicates that $N_a \geq 100$. Let us assume that the transmission bandwidth is 10 GHz, the transmission power is set to 10 dBm, while the receiver’s mixer conversion and miscellaneous losses are respectively 8 and 5 dB. Additionally, the RX’s low noise amplifier (LNA) gain is 25 dB, whereas the RX’s mixer and LNA noise figures are respectively 6 and 1 dB. If the transmission frequency is 287.28 GHz, both the TX and RX need to be equipped with antennas of 35 dBi gain. Such antennas have a beamwidth of 3.6°; hence, $L_A = L_U = 100$. Also, by assuming that $N_e = 100$, the channel estimation latency becomes equal to approximately 1 ms. Of note, VR requires a latency that is lower than 1 µs.
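To make the size of this training overhead concrete, the short Python sketch below evaluates the sample-count expression of the exhaustive beam search for the values of the example. The function names are hypothetical, and the per-sample duration of 1 ns is an assumption introduced here, since the text does not state the sampling period; the reading of the constant term is likewise only a plausible interpretation.

```python
def training_samples(l_a: int, l_u: int, n_a: int, n_e: int) -> int:
    """Received samples spent on beam search plus channel estimation.

    l_a * l_u * n_a : the AP sweeps its l_a beams while the UE scans l_u directions
    l_u * n_a       : roles interchange, the AP keeps its RX locked on its best beam
    n_e             : channel estimation on the selected beam pair
    2               : constant term of the expression (not explained in the text;
                      plausibly the two feedback messages)
    """
    return l_a * l_u * n_a + l_u * n_a + n_e + 2


def training_latency(samples: int, sample_duration_s: float) -> float:
    """Latency under the simplifying assumption of one sample per time slot."""
    return samples * sample_duration_s


samples = training_samples(l_a=100, l_u=100, n_a=100, n_e=100)
# sample_duration_s = 1e-9 is an assumed value, not taken from the text.
latency = training_latency(samples, sample_duration_s=1e-9)
print(samples, latency)
```

The first term dominates: with 100 beams on each side, the exhaustive sweep alone accounts for one million of the roughly 1.01 million samples, which is why the overhead grows so quickly as beams become narrower.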

To address the aforementioned problem, researchers turned their attention to regression and clustering ML-based methods. Specifically, in (Moon et al., 2020), the authors employed a DNN-based algorithm to predict the user’s channel in a sub-THz multiple-input multiple-output (MIMO) vehicular communications system. The ML algorithm of (Moon et al., 2020) takes as input the signals received by a predetermined number of APs and outputs a vector containing the estimated channel coefficients. Similarly, in (Ma et al., 2020b), the authors reported a deep learning compressed sensing (DLCS) algorithm for channel estimation in multi-user (MU) massive MIMO sub-THz systems. The DLCS is a supervised learning algorithm that takes as input a simulation-based generated received signal vector as well as real measurements and performs two functionalities, i.e. 1) beamspace channel amplitude estimation and 2) channel reconstruction. The results indicate that this approach can achieve a minimum mean square error that is comparable with that of the orthogonal matching pursuit scheme. Likewise, in (Mai et al., 2021), a manifold learning-extreme learning machine was presented for estimating highly directional channels. In more detail, manifold learning was employed for dimensionality reduction, whereas the extreme learning algorithm with one-shot training was employed for channel state information estimation. Moreover, in (Wang et al., 2021), the authors presented a Gaussian process based ML (GPML) algorithm to predict the channel in an unmanned aerial vehicle (UAV) aided coordinated multiple point (CoMP) communication system. The main idea was to provide real-time predictions of the location of the UAV and reconstruct the line-of-sight (LOS) channel between the AP and the UAV. Similarly, in (Zhu et al., 2019), a sparse Bayesian learning algorithm was introduced to estimate the propagation parameters of the wireless system. Meanwhile, in (Satyanarayana et al., 2019), the authors presented a neural network (NN)-based algorithm for channel prediction and showed that, after sufficient training, it can faithfully reproduce the channel state.

Likewise, in (Ma et al., 2020a), an NN-based algorithm, which consists of three hidden layers and one fully-connected layer, was reported to obtain the beam distribution vector and reproduce the channel state. The algorithm uses as inputs the I/Q components of the received signal. It is trained offline using simulations and is able to achieve similar performance, in terms of total training time slots and spectral efficiency, to previously proposed approaches, like adaptive compressed sensing, hierarchical search, and multi-path decomposition. In order for this approach to work properly, the simulation and real world data should follow the same distribution. Also, the propagation parameters of both types of data should coincide. Furthermore, in (Liu S. et al., 2020), the authors presented a deep denoising NN assisted compressive sensing broadband channel estimation algorithm that exploits the relation of angular-delay domain MIMO channels in sub-THz RIS-assisted wireless systems. In particular, the algorithm takes as inputs the received signals at a number of active elements of the RIS and forwards them to a compressive sensing block, which feeds the NN. The proposed approach outperformed the well-known simultaneous orthogonal matching pursuit (SOMP) algorithm in terms of normalized mean square error (NMSE). The main disadvantage of the deep denoising NN approach is that it is not adaptable to changes in the characteristics of the propagation environment. Furthermore, in (Li et al., 2020d), Li et al. reported a deep learning architecture for channel estimation in RIS-assisted THz MIMO systems. The idea behind this approach was to convert the channel estimation problem into a sparse recovery problem by exploiting the sparse nature of THz wireless channels. In this direction, the algorithm uses for training the I/Q components of received signals that carry pilot symbols. In the operation phase, the I/Q components of the received signal are used as inputs. Finally, in (Anton-Haro and Mestre, 2019), the authors employed k-nearest neighbors (kNN) and support vector classifiers (SVC) to estimate the angle-of-arrival (AoA) in hybrid beamforming wireless systems.
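As a toy illustration of how a kNN rule can map beam-domain measurements to an AoA estimate, consider the following Python sketch. It is not the scheme of (Anton-Haro and Mestre, 2019): the eight-element half-wavelength uniform linear array, the analog beam codebook, the noise level, the 1° training grid and all function names are assumptions chosen only to keep the example small.

```python
import cmath
import math
import random

N_ANT = 8                              # assumed ULA size, half-wavelength spacing
BEAMS = list(range(-60, 61, 10))       # assumed analog beam directions (degrees)


def steering(angle_deg):
    """Array response of the ULA for a plane wave arriving from angle_deg."""
    phase = math.pi * math.sin(math.radians(angle_deg))
    return [cmath.exp(1j * phase * n) for n in range(N_ANT)]


def beam_powers(angle_deg, noise_std, rng):
    """Feature vector: noisy normalized power observed through each analog beam."""
    a = steering(angle_deg)
    feats = []
    for beam in BEAMS:
        w = steering(beam)
        gain = abs(sum(wi.conjugate() * ai for wi, ai in zip(w, a))) / N_ANT
        feats.append(gain ** 2 + rng.gauss(0, noise_std))
    return feats


def knn_predict(query, train_x, train_y, k=3):
    """Average the labels of the k training points closest to the query."""
    order = sorted(range(len(train_x)),
                   key=lambda i: sum((q - x) ** 2
                                     for q, x in zip(query, train_x[i])))
    return sum(train_y[i] for i in order[:k]) / k


rng = random.Random(1)
train_y = [float(a) for a in range(-60, 61)]          # labeled AoAs, 1 degree apart
train_x = [beam_powers(a, 0.002, rng) for a in train_y]
estimate = knn_predict(beam_powers(23.4, 0.002, rng), train_x, train_y)
print(round(estimate, 1))
```

Because only beam powers are used, the receiver never needs per-antenna samples, which matches the constraint of analog or hybrid front ends. The accuracy is bounded by the density of the training grid, and the labeled set has to be collected again whenever the codebook or the array changes.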

Signal detection is another PHY layer task. Conventional approaches require accurate estimation of both the channel model and the impact of hardware imperfections in order to design suitable equalizers and detectors. However, as the wireless environment becomes more complex and the influence of hardware imperfections becomes more severe, due to the high number of transmission and reception antennas, data detection becomes more challenging. This fact motivates the study of ML-based solutions for end-to-end signal detection, in which no equalization of the channel and of the hardware imperfections is required. In this sense, in (Samuel et al., 2019), the authors employed a DNN architecture for data detection, whereas, in (Aoudia and Hoydis, 2019), the effectiveness of deep learning for end-to-end signal detection was reported. Similarly, in (Katla et al., 2020), the authors presented a deep learning assisted approach for beam index modulation detection in high-frequency massive MIMO systems. Additionally, in (Satyanarayana et al., 2020), the authors demonstrated the use of a deep learning assisted soft-demodulator in multi-set space-time shift keying millimeter wave (mmW) wireless systems. To cancel the impact of hardware imperfections without employing equalization units, a supervised ML signal detection approach was presented in (Jeon et al., 2018).
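The principle of detecting symbols without an explicit equalizer can be sketched in a few lines of Python. The example below is a generic nearest-centroid detector trained on pilot symbols and should not be read as the algorithm of any of the cited works; the distortion model (an unknown complex gain followed by an I/Q amplitude mismatch), the pilot length, the noise level and all names are assumptions made for illustration.

```python
import random

QPSK = [complex(1, 1), complex(-1, 1), complex(-1, -1), complex(1, -1)]


def front_end(symbol, rng, noise_std=0.05):
    """Hypothetical distortion: unknown complex gain, I/Q amplitude mismatch, noise."""
    y = complex(0.3, 0.8) * symbol              # unknown channel gain and rotation
    y = complex(1.2 * y.real, 0.8 * y.imag)     # I/Q amplitude imbalance at the RX
    return y + complex(rng.gauss(0, noise_std), rng.gauss(0, noise_std))


def learn_centroids(pilot_labels, pilot_rx):
    """Supervised step: average the received pilots of each transmitted symbol index."""
    sums, counts = {}, {}
    for label, y in zip(pilot_labels, pilot_rx):
        sums[label] = sums.get(label, 0j) + y
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def detect(y, centroids):
    """Decide for the symbol index whose learned centroid is nearest to y."""
    return min(centroids, key=lambda label: abs(y - centroids[label]))


rng = random.Random(2)
pilot_labels = [i % 4 for i in range(40)]       # 10 pilots per QPSK symbol
pilot_rx = [front_end(QPSK[i], rng) for i in pilot_labels]
centroids = learn_centroids(pilot_labels, pilot_rx)

data_labels = [rng.randrange(4) for _ in range(1000)]
decisions = [detect(front_end(QPSK[i], rng), centroids) for i in data_labels]
errors = sum(d != t for d, t in zip(decisions, data_labels))
print(errors)
```

The detector never estimates the gain or the imbalance separately; both are absorbed into the learned centroids, which is the sense in which channel and hardware imperfections are handled jointly rather than equalized one by one.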

To sum up, PHY-specific ML algorithms usually employ as inputs the I/Q components of the received signal samples. In the case of modulation recognition, due to the lack of labeled data for training, unsupervised learning approaches are adopted. On the other hand, in channel estimation and signal detection, pilot signals can be exploited to train the ML algorithm. As a consequence, for channel estimation, supervised learning approaches are the usual choice. In particular, as observed in Table 2, both supervised and unsupervised learning approaches were applied that return classification, regression, dimensionality reduction and density estimation rules. Interestingly, it is observed that for signal detection only DNN-based algorithms have been reported. Finally, it is worth noting that the main requirement for the algorithm selection in most cases was to provide high adaptation to the THz wireless system. It is worth mentioning that, for the sake of completeness, Table 2 includes some contributions that, although they do not refer to THz wireless systems, can be straightforwardly applied to them. For example, (Shah et al., 2019; Yang et al., 2019) and (Li et al., 2018) can be applied to any system that employs I/Q modulation and demodulation approaches, whereas (Khan et al., 2016) is suitable for the ones that use coherent RXs. Of note, according to (Boulogeorgos et al., 2018b), coherent RXs are a usual choice in THz wireless fiber extenders. Similarly, since an inherent characteristic of THz channels is the high temporal correlation, the ML methodology presented in (Wu et al., 2018) is expected to find application in THz wireless systems. Additionally, despite the fact that the ML algorithms in (Zhu et al., 2019; Ma et al., 2020a; Liu S. et al., 2020; Ma et al., 2020b; Katla et al., 2020; Moon et al., 2020; Satyanarayana et al., 2020; Mai et al., 2021; Wang et al., 2021) were applied to mmW systems and exploit the spatio-temporal and directional characteristics of the channels in this band, the same approach can be used in THz systems, which have similar particularities. Finally, the ML approach presented in Jeon et al. (2018) can be applied in any MIMO system regardless of the operating frequency.

2.2 MAC and RRM Layer

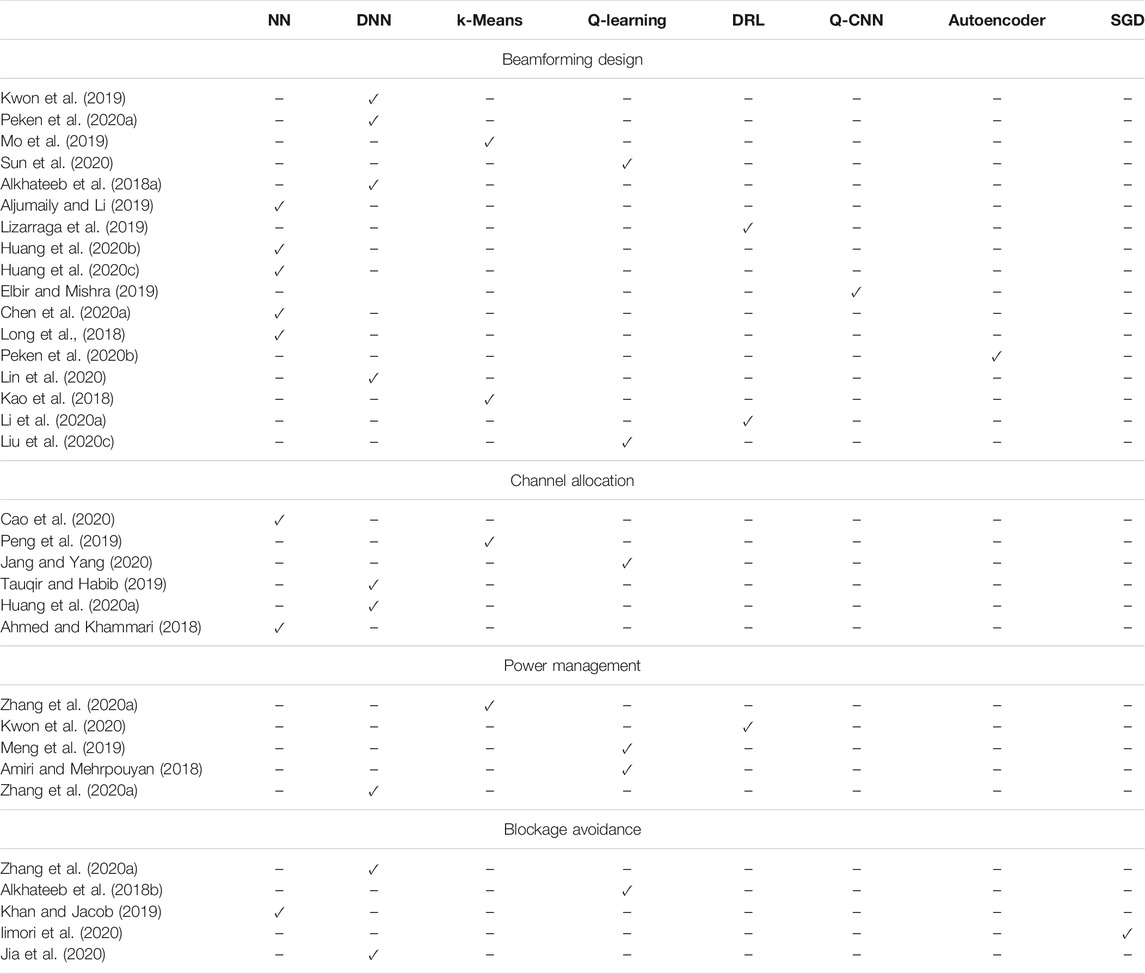

The MAC and RRM layers are responsible for providing uninterrupted high quality-of-experience (QoE) to multiple end users. In contrast to lower-frequency communications, where omni-directional or quasi-omni-directional links are established, in THz wireless systems both the AP/base station (BS) and the UE employ beamforming. As a result, space becomes an additional resource alongside time and frequency. The optimal exploitation of this threefold nature of the channel requires the design of beamforming approaches and channel allocation strategies. Moreover, to satisfy the end users’ data rate demands and to support non-orthogonal multiple access (NOMA), power management policies are required. Finally, to address the impact of blockage, the wireless THz network needs to obtain environmental awareness, and proactive blockage avoidance mechanisms are a necessity. Motivated by the above, the rest of this section discusses ML-based beamforming designs, channel allocation strategies, power management schemes and blockage avoidance mechanisms.

Beamforming design is a crucial task for MIMO and MU-MIMO THz wireless systems. However, conventional beamforming approaches strongly rely on accurate channel estimation; as a result, their complexity is relatively high. To simplify the beamforming design process, a great amount of research effort was put into investigating ML-based approaches. In more detail, in (Kwon et al., 2019), the authors presented a DNN for determining the optimal beamforming vectors that maximize the sum rate in a two-user multiple-input single-output (MISO) wireless system. The ML algorithm uses as inputs the real and imaginary parts of the complex MISO channel coefficients as well as the transmitted power. For training the ML model, the cross-entropy is used as a cost function, which evaluates the error as the difference between the probability distributions of the labeled data and of the model outputs. Three DNN architectures of varying complexity that approximate the singular value decomposition used by the hybrid beamformer were discussed in (Peken et al., 2020a). The architectures take as inputs the real and imaginary components of the MIMO channel coefficients. The first architecture predicts a predetermined number of the most important singular values and vectors of a given channel matrix by employing a single DNN. The second architecture employs k DNNs. Each one of them returns the largest singular value and the corresponding right and left singular vectors of the MIMO channel matrix. The third architecture is suitable for channel matrices of rank-1 and outputs a predetermined number of singular values and vectors by recursively using the same DNN. The architectures are trained by comparing the estimated channel matrices with the channel matrices reconstructed by the ML models. The proposed ML-based architectures were shown, by means of Monte Carlo simulations, to improve the system’s data rate by up to 50–70% compared to conventional approaches.
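For reference, the quantity that these DNNs learn to approximate, namely the dominant singular value of the channel matrix together with its left and right singular vectors, can be computed conventionally by power iteration, and further triplets follow by removing the rank-1 component already found. The Python sketch below shows this classical baseline, not the DNNs of (Peken et al., 2020a); it assumes a small real-valued matrix for brevity, and the function names are hypothetical.

```python
import math


def matvec(m, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def dominant_singular_triplet(h, iters=200):
    """Power iteration on H^T H: returns (sigma, u, v) of the largest singular value.

    Assumes a real matrix with a unique largest singular value and a start
    vector that is not orthogonal to the dominant right singular vector.
    """
    ht = transpose(h)
    v = [1.0] * len(h[0])
    for _ in range(iters):
        v = matvec(ht, matvec(h, v))
        n = norm(v)
        v = [x / n for x in v]
    hv = matvec(h, v)
    sigma = norm(hv)
    u = [x / sigma for x in hv]
    return sigma, u, v


def deflate(h, sigma, u, v):
    """Remove the rank-1 component sigma * u * v^T from H."""
    return [[h[i][j] - sigma * u[i] * v[j] for j in range(len(v))]
            for i in range(len(u))]


H = [[3.0, 0.0], [0.0, 4.0], [0.0, 0.0]]   # toy real-valued channel matrix
s1, u1, v1 = dominant_singular_triplet(H)
s2, u2, v2 = dominant_singular_triplet(deflate(H, s1, u1, v1))
print(round(s1, 6), round(s2, 6))
```

Obtaining one triplet at a time and deflating loosely mirrors the idea of extracting singular values sequentially. The right singular vector can serve as the transmit beamforming direction and the left one as the receive combiner.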

The main disadvantage of the ML architectures presented in (Peken et al., 2020a) is that they require a large number of channel matrix estimates for training, which may generate an unaffordable latency in a fast-changing environment. To counterbalance this, in (Mo et al., 2019), an unsupervised K-means algorithm is employed, which exploits the electric-field response of each antenna element in order to design beam codebooks that optimize the average received power gain of UEs that are located within a cluster. Although this approach does not require long training periods, it is sensitive to drastic changes of the wireless environment, which may arise due to the movement of users or scatterers/blockers. Meanwhile, in (Sun et al., 2020), the authors formulated an interference management problem by means of coordinated beamforming in ultra-dense networks that aims at “almost real-time” sum rate maximization. To solve the aforementioned problem, a Q-learning based ML algorithm was introduced, which only requires large-scale channel fading parameters and achieves similar results to the ones of the corresponding analytical approach that demands full channel state information. Note that the Q-learning algorithm in (Sun et al., 2020) takes as input the state of the system, i.e., a logarithmic transformation of the 2-norm of the MIMO channel matrix. Likewise, in (Alkhateeb et al., 2018a), the authors reported a centralized deep learning based algorithm for coordinated beamforming vector design in high-mobility and highly directional wireless systems. This algorithm uses as inputs the I/Q components of a virtual omni-directional received signal and outputs a prediction of the optimal beamforming vectors. To train the deep learning algorithm, pilot symbols are employed, which are exchanged between the UE and the base stations (BSs) within the coherence time. This approach provides high adaptability at the cost of significant overhead, due to the messages that need to be exchanged between the BSs and a central/cloud processing unit. In (Aljumaily and Li, 2019), a supervised NN, which is based on singular value decomposition, was employed to design hybrid beamformers in massive MIMO systems that are capable of mitigating the impact of limited-resolution phase shifters. The proposed approach is executed in a single BS and achieves a higher spectral efficiency compared with unsupervised learning ones. However, the performance enhancement demands a relatively long training period.

To address this drawback, in (Lizarraga et al., 2019), the authors presented a reinforcement learning algorithm to jointly design the analog and digital layer vectors of a hybrid beamformer in large-antenna wireless systems. The algorithm takes as input the achievable data rate and returns the phase shifts of each antenna element. The disadvantage of this algorithm is that it is unable to achieve the same performance as the corresponding supervised ML one. Moreover, in (Huang S. et al., 2020), the authors studied the use of an extreme learning machine for jointly optimizing transmit and receive hybrid beamforming in MU-MIMO wireless systems. The algorithm requires as inputs the real and imaginary parts of the MIMO channel coefficients and returns an estimate of the optimal beamforming vectors. As a training cost function, the difference between the targeted and achievable SNR is used. In (Huang et al., 2020c), extreme learning machines and NNs were employed in order to extract the transmit and receive beamforming vectors in full-duplex massive MIMO systems.

In (Elbir and Mishra, 2019), a CNN with quantized weights (Q-CNN) was utilized as a solution to the problem of jointly designing transmit and receive hybrid beamforming vectors. As input, the real and imaginary components of the MIMO channel matrix are used. Q-CNN has limited memory and low-overhead demands; hence, it is suitable for deployment in mobile devices. Furthermore, in (Chen J. et al., 2020), three NN-based approaches for designing hybrid beamforming schemes were reported. The first one is based on mapping various hybrid beamformers to NNs, thus transforming the non-convex beamforming codeword design optimization problem into an NN training one. The second approach is an extension of the first one that aims at optimizing the beam vectors for the case of MU access. In comparison to the aforementioned approaches, the third one takes into account the hardware limitations, namely low-resolution phase shifters and analog-to-digital converters (ADCs). Simulation results revealed that the proposed approaches outperform analytical ones in terms of BER. All the aforementioned approaches in (Chen J. et al., 2020) require as input the MIMO channel matrix. In (Long et al., 2018), the authors presented a support vector machine (SVM) algorithm for analog beam selection in hybrid beamforming MIMO systems that uses as inputs the complex coefficients of the channel samples. This approach provides near-optimal uplink sum rates with reduced complexity compared to conventional strategies. On the other hand, it requires sufficient training data, which leads to long training periods when the characteristics of the wireless channel change.

To counter the aforementioned problem, unsupervised learning approaches were employed in several works (Kao et al., 2018; Peken et al., 2020b; Lin et al., 2020). In more detail, in (Peken et al., 2020b), an autoencoding-based SVD methodology was used in order to estimate the optimal beamforming codes at the TX and RX, while, in (Lin et al., 2020), Lin et al. introduced a deep NN architecture for beamforming design that outperforms several previously presented deep learning approaches. Additionally, in (Kao et al., 2018), an ML-based clustering strategy with feature selection was employed to design three-dimensional (3D) beamforming. In particular, the algorithm has three steps. In the first step, it uses pre-collected data in order to obtain a set of eigenbeams, while, in the second one, the aforementioned set is used to estimate the channel state information, which in the third step is fed to a Rosenbrock search engine. The first two steps are executed offline, whereas the third one is an online process. As the number of antenna elements increases, the size of the eigenbeam vector set also increases; thus, the practicality of this approach may be questionable in massive MIMO systems. All the aforementioned ML algorithms employ as input the MIMO channel matrix.

ML was also employed to optimize the beamforming vectors of relay- and reflection-assisted highly directional THz links. For example, in (Li L. et al., 2020), Li et al. presented a cross-entropy, deep reinforcement learning-based hybrid beamforming vector estimation scheme for an unmanned aerial vehicle (UAV)-assisted massive MIMO network. The deep reinforcement learning method was employed in order to minimize the AP transmission power by jointly optimizing the AP and RIS active and passive beamforming vectors. Finally, in (Liu et al., 2020c), Liu et al. used Q-learning in order to jointly design the movement of the UAV, the phase shifts of the RIS, and the power allocation policy from the UAV to mobile UEs, as well as to determine the dynamic decoding order of a NOMA scheme, in a RIS-UAV assisted THz wireless system. Both ML algorithms utilized in (Li L. et al., 2020) and (Liu et al., 2020c) use as input the estimated channel coefficient matrix.

Another challenging and important task in THz wireless networks is channel allocation. Of note, in this band, the radio resource block (RRB) has three dimensions, i.e., time, frequency, and space. As a result, the additional DoF, namely space, creates a more complex resource allocation problem. Inspired by this fact, several contributions studied the use of ML approaches in order to design suitable resource allocation policies. Indicative examples are (Ahmed and Khammari, 2018; Peng et al., 2019; Tauqir and Habib, 2019; Huang H. et al., 2020; Cao et al., 2020; Jang and Yang, 2020). In particular, in (Cao et al., 2020), a centralized NN was employed to return the channel allocation strategy that minimizes the co-channel interference in an ultra-dense wireless network. The NN takes as input a binary matrix that contains the user-channel association and estimates the uplink SINR. In (Peng et al., 2019), a centralized, supervised, cluster-based ML scheme for interference management and channel allocation that takes into account the time-varying network load was introduced. As inputs to the algorithm, the RRB allocation data as well as the acknowledgement (ACK) and negative acknowledgement (NACK) data collected from the network are used. The algorithm outputs an estimate of the interference intensity.
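As a worked illustration of the quantity that a network such as the one in (Cao et al., 2020) is described as estimating, the sketch below evaluates the uplink SINR directly from a binary user-channel association matrix. The single-cell model, equal transmit powers, the gain values, and the function name `uplink_sinr` are simplifying assumptions introduced here, not details taken from the cited work.

```python
def uplink_sinr(assoc, gains, tx_power=1.0, noise=0.5):
    """Per-user uplink SINR for a binary user-channel association matrix.

    assoc[u][c] == 1 when user u transmits on channel c (exactly one
    channel per user is assumed). Users sharing a channel interfere
    with each other; users on different channels do not.
    """
    num_users = len(assoc)
    sinr = []
    for u in range(num_users):
        c = assoc[u].index(1)  # channel assigned to user u
        interference = sum(
            tx_power * gains[v]
            for v in range(num_users)
            if v != u and assoc[v][c] == 1
        )
        sinr.append(tx_power * gains[u] / (noise + interference))
    return sinr

# Hypothetical example: users 0 and 1 share channel 0, user 2 is alone on channel 1
assoc = [[1, 0], [1, 0], [0, 1]]
gains = [1.0, 0.5, 0.25]
sinr = uplink_sinr(assoc, gains)
```

Here user 2 sees no co-channel interference, so its SINR reduces to its SNR, whereas users 0 and 1 limit each other. A learned allocator would search over `assoc` for the matrix that keeps such interference terms small.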

To deal with the ever changing topology and time-varying channel conditions of ultra-dense mobile wireless networks, in (Jang and Yang, 2020), a deep Q-learning model was presented that uses quantized local AP and UE channel state information to cooperatively allocate the channels in a downlink scenario. The algorithm is self-adaptive and does not require any training. Additionally, in (Tauqir and Habib, 2019), a DNN was used that takes as inputs the channel state information and returns the spatial resource block (i.e. beam) allocation in massive MIMO wireless systems. Moreover, in (Huang H. et al., 2020), a deep learning approach was proposed for channel and power allocation in MIMO-NOMA wireless systems that aims at maximizing the sum data rate and energy efficiency of the overall network. The approach uses as inputs the channel vectors, precoding matrix and the power allocation factors. Finally, in (Ahmed and Khammari, 2018), a feedforward NN that takes as inputs the uplink channel state information and returns a channel allocation strategy in a rank and power constrained massive MIMO wireless system, was employed.

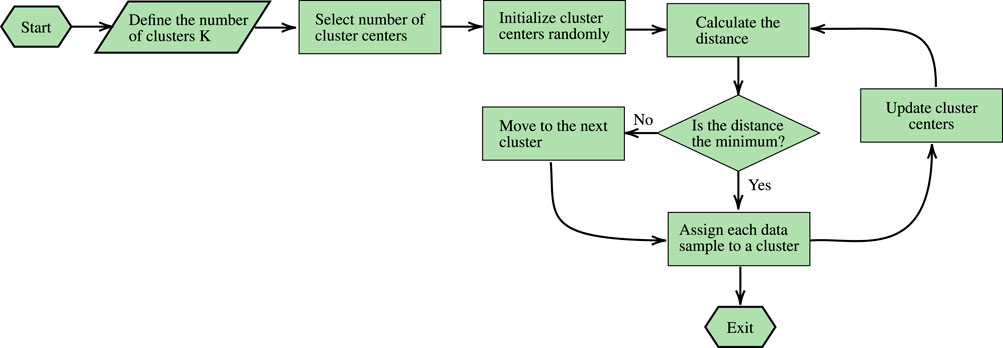

For multi-antenna THz transceivers, the hardware complexity and power management become a burden toward practical implementation (Han et al., 2015). To lighten the power management process, several researchers turned to ML. For instance, in (Zhang et al., 2020a), the K-means algorithm was employed to cluster the users of a NOMA-THz wireless network and to maximize the energy efficiency by optimizing the power allocation. The K-means algorithm in (Zhang et al., 2020a) requires as inputs the number of clusters, the set of users, and the channel vectors, and outputs the UE-AP association matrix. Moreover, in (Kwon et al., 2020), Kwon et al. reported a self-adaptive scheme, based on the DRL deterministic policy gradient, for BS power control and proactive cache allocation toward BSs in distributed Internet-of-vehicles (IoV) networks. In (Kwon et al., 2020), the system’s state, which is the input of the DRL, takes into account the available and total buffer capacity of each BS as well as the average quality state of the provisioned video at each UE. Meanwhile, in (Meng et al., 2019), a transfer learning approach that is based on the Q-learning algorithm was used to allocate power in MU cellular networks, in which each cell has a different user density. Similarly, Q-learning was employed in (Amiri and Mehrpouyan, 2018) to develop a self-organized power allocation strategy in mmW networks. Finally, in (Zhang et al., 2020b), Zhang et al. presented a semi-supervised learning and DNN approach for sub-channel and power allocation in directional NOMA wireless THz networks. The algorithm requires as inputs the set of users and their channel vectors as well as a predetermined number of clusters.
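The clustering step mentioned above can be sketched with a plain K-means routine. This is an illustrative example only, not the algorithm of (Zhang et al., 2020a): the two-dimensional user features stand in for real-valued channel descriptors, the farthest-point initialization is chosen here to keep the run deterministic, and the names `kmeans` and `membership` are hypothetical.

```python
import numpy as np

def kmeans(points, k, iters=20):
    """Plain K-means with a deterministic farthest-point initialization."""
    points = np.asarray(points, dtype=float)
    # Init: start from the first point, then repeatedly add the point
    # that is farthest from all centers chosen so far.
    centers = [points[0]]
    for _ in range(1, k):
        d = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[np.argmax(d)])
    centers = np.array(centers, dtype=float)

    for _ in range(iters):
        # Assignment step: nearest center for every user
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = np.argmin(dists, axis=1)
        # Update step: each center moves to the mean of its members
        for j in range(k):
            if np.any(labels == j):
                centers[j] = points[labels == j].mean(axis=0)
    return labels, centers

# Hypothetical per-user features (e.g. derived from channel vectors)
users = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
         [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]]
labels, centers = kmeans(users, k=2)

# One-hot user-to-cluster membership matrix (rows: users, columns: clusters)
membership = np.eye(2, dtype=int)[labels]
```

The number of clusters is an input, as in the description above; a power allocation stage would then operate on each cluster separately.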

Another burden that THz wireless systems face is blockage. Sudden blockage of the THz LOS path causes communication interruptions and thus has a detrimental impact on the system’s reliability. Further, re-connections to the same or another BS/AP demand high beam training overhead, which in turn results in high latency. To avoid this, some contributions discuss the use of ML to predict the positions of dynamic (moving) obstacles and their probability of blocking the LOS between the AP/BS and the UE, in order to proactively hand over users to other APs/BSs. Towards this direction, in (Alkhateeb et al., 2018b), a reinforcement learning algorithm was used to create a proactive hand-off blockage avoidance strategy. The state of the system, which is defined by the beam index of each AP/BS at every time step, is used as an input to the reinforcement learning agent. Moreover, in (Khan and Jacob, 2019), NN and CoMP clustering were employed to predict the channel state and avoid blockage. In the scenario under investigation, the authors consider dynamic blockers (i.e., cars) that perform deterministic motion. The presented algorithm uses as inputs the UE location as well as the system’s clock time. Similarly, in (Iimori et al., 2020), stochastic gradient descent (SGD) was employed to design outage-minimizing robust directional CoMP systems by selecting communication paths that minimize the blockage probability. To this end, the algorithm uses as inputs an initial estimate of the beamforming vector as well as the channel state information and returns the optimized beamforming vector. Finally, in (Jia et al., 2020), a DNN was employed to provide environmental awareness to an RIS-assisted wireless sub-THz network that performs beam switching between direct and RIS-assisted connectivity in order to avoid blockage. The inputs of the algorithm in (Jia et al., 2020) are the network topology and the links’ line-of-sight conditions.

To conclude and according to Table 3, supervised, unsupervised, and reinforcement learning were employed to solve different problems in the MAC layer. In particular, for beamforming design, where the main requirement is adaptation to the ever-changing propagation environment, unsupervised learning was used to cluster UEs, supervised learning was applied to design appropriate codebooks, and reinforcement learning was employed for beam refinement and fast adaptation. As a consequence, reinforcement learning approaches are attractive for mobile and non-deterministically varying wireless environments. On the other hand, supervised learning approaches are more suitable for static environments or environments that change in a deterministic way. A key requirement of supervised learning approaches is the need to be trained on a set of channel or received signal vectors that are accompanied by an achievable performance indicator. The indicator can be the data rate, the outage probability, or any other KPI of interest. Likewise, unsupervised clustering and supervised learning were used for channel allocation, which was performed based on the UE communication demands. Due to the lack of extensive training data sets and the need for adaptation, unsupervised and reinforcement learning approaches were employed for power management. Finally, supervised and reinforcement learning were used to provide proactive policies for blockage avoidance based on statistical or instantaneous information, respectively. Of note, some of the contributions in Table 3 refer to wireless systems that employ the same technological enablers as THz communications without specifying the operating frequency band. Indicative examples are (Aljumaily and Li, 2019; Elbir and Mishra, 2019; Kwon et al., 2019; Peken et al., 2020a; Liu et al., 2020c; Lin et al., 2020; Sun et al., 2020), which discuss ML approaches for beamforming design in analog and hybrid beamforming systems, as well as (Ahmed and Khammari, 2018; Peng et al., 2019; Tauqir and Habib, 2019; Cao et al., 2020; Jang and Yang, 2020), which present ML-based channel allocation approaches for ultra-dense networks in which the communication channels are highly directional. Evidently, the aforementioned approaches are suitable for THz wireless deployments.

2.3 Network Layer

The ultra-wideband, extremely directional nature of the sub-THz and THz links, in combination with the non-uniform UE spatial distribution, may lead to inefficient user association when the classical minimum-distance criterion is employed. Networks operating at such frequencies can be considered noise- and blockage-limited, due to the fact that high path and penetration losses attenuate the interference (Boulogeorgos et al., 2018b; Papasotiriou et al., 2018). Hence, user association metrics designed for interference-limited homogeneous systems are not well suited to sub-THz and THz networks (Boulogeorgos A.-A. A. A. et al., 2018). As a result, user association should be designed to meet the dominant throughput requirements and to guarantee a low blockage probability. Another challenge that user association schemes need to face is the user orientation, which is observed to have a detrimental effect on the performance of THz wireless systems (Boulogeorgos et al., 2019; Boulogeorgos and Alexiou, 2020a).

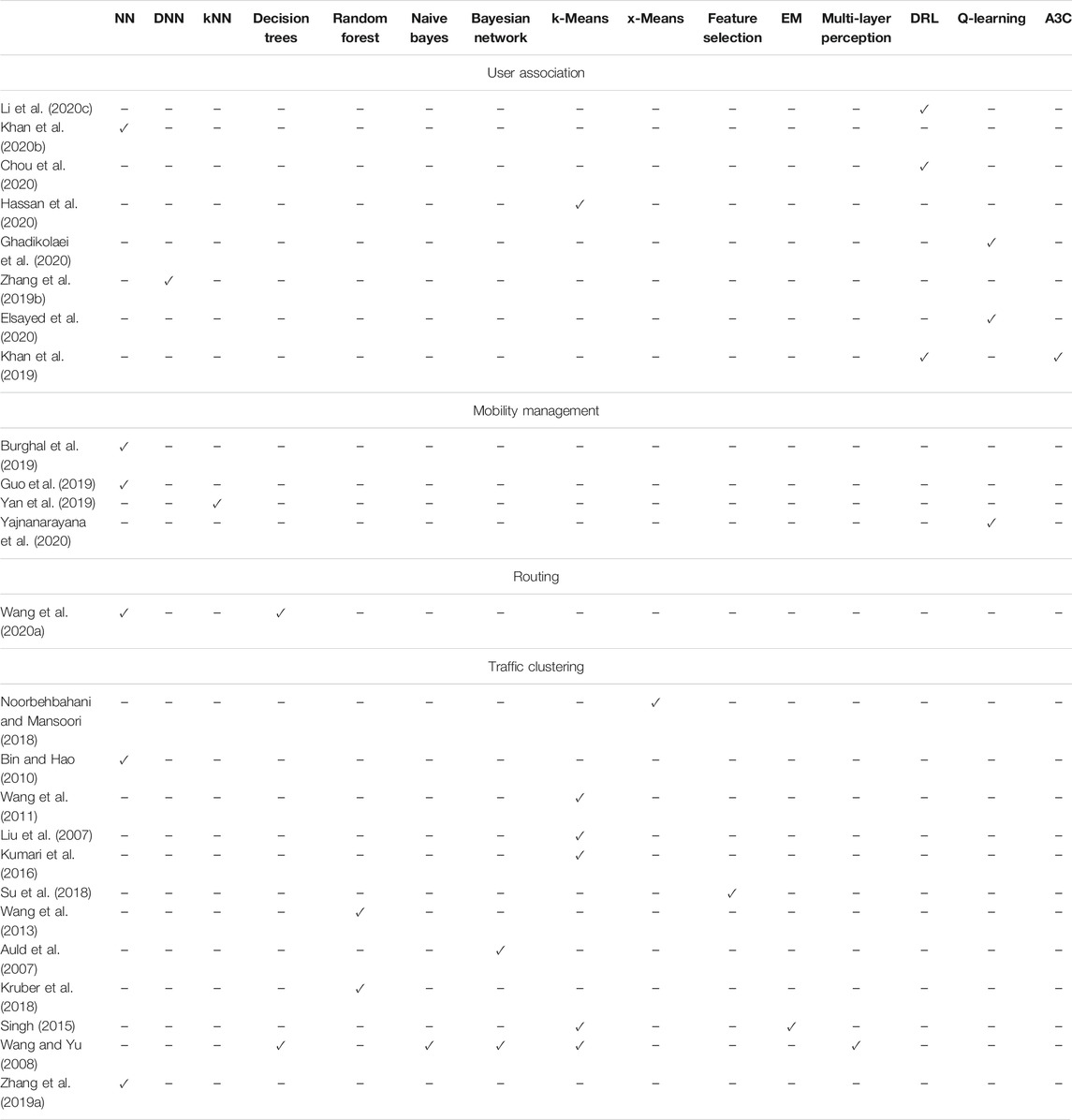

Scanning the technical literature, we can identify several contributions that employ ML for user association in sub-THz and THz wireless networks (Zhang H. et al., 2019; Khan et al., 2019; Liu R. et al., 2020; Khan L. U. et al., 2020; Chou et al., 2020; Li et al., 2020c; Elsayed et al., 2020; Ghadikolaei et al., 2020; Hassan et al., 2020). In more detail, in (Liu R. et al., 2020), the authors employed multi-label classification ML that takes as input both topological and network characteristics and returns a user association policy that satisfies the users’ latency demands. Meanwhile, in (Li et al., 2020c), the authors presented an online deep reinforcement learning (DRL) based algorithm for heterogeneous networks, where multiple parallel DNNs generate user association solutions and shared memory is used to store the best association scheme. Moreover, in (Khan L. U. et al., 2020), Khan et al. introduced a federated learning approach to jointly minimize the latency and the effect of model accuracy losses due to channel uncertainties. The inputs of the ML algorithm presented in (Khan L. U. et al., 2020) are the device association and the resource block matrices, while the output is the resource allocation matrix. Likewise, in (Chou et al., 2020), a deep gradient reinforcement learning based policy was presented as a solution to the joint user association and resource allocation problem in mobile edge computing. The reinforcement learning agent of (Chou et al., 2020) takes as input the system state that is described by the current backhaul and resource block usage.

In (Hassan et al., 2020), two clustering approaches, namely least standard deviation user clustering and redistribution of BSs load-based clustering, were presented that take into account the characteristics of both radio frequency (RF) and THz as well as the traffic load across the network in order to provide appropriate associations in RF and THz heterogeneous networks. Furthermore, in (Ghadikolaei et al., 2020), a transfer learning methodology was employed for inter-operator spectrum sharing in mmW cellular networks. The aforementioned methodology takes as inputs the network topology, the association matrix, the coordination matrix, and the effective channels, and outputs an approximation of the achievable data rate. In (Zhang H. et al., 2019), an asynchronous distributed DNN based scheme, which takes as input the channel coefficient matrix, was reported as a solution to the joint user association and power minimization problem. In (Elsayed et al., 2020), Elsayed et al. reported a transfer Q-learning based strategy for joint user-cell association and selection of the number of beams for the purpose of maximizing the aggregate network capacity in NOMA-mmW networks. Finally, in (Khan et al., 2019), the authors exploited distributed deep reinforcement learning (DDRL) and the asynchronous advantage actor-critic (A3C) algorithm to design a low-complexity algorithm that returns a suboptimal solution for the vehicle-cell association problem in mmW. The DDRL takes as input the current state of the network, which is described by a predetermined number of channel observations as well as the current achievable and required data rates.

After associating UEs to APs, uninterrupted connectivity needs to be guaranteed. However, the network is continuously undergoing change; thus, its management should be adaptive as well. This is where the conventional heuristic-based exploration of the state space needs to be extended to support UE mobility in an online manner. Inspired by this, several contributions presented ML-based mobility management solutions that aim at accurately tracking the UE and proactively steering the AP and UE beams (Burghal et al., 2019; Guo et al., 2019) as well as performing hand-overs between beams and APs/BSs (Yan et al., 2019; Ali et al., 2020). In particular, in (Burghal et al., 2019), an RNN with a modified cost function, which takes as input the observed received signal as well as the previous AoA estimate, was employed to track the AoA in a mmW network. The proposed approach was shown to outperform the corresponding Kalman-based one in terms of accuracy. Moreover, in (Guo et al., 2019), a long short-term memory (LSTM) structure was designed to predict the user position in order to proactively perform beam steering in mmW vehicular networks. The structure uses as input the channel vector estimated by the BS. Additionally, in (Yan et al., 2019), the authors employed kNN to predict handover decisions without involving time-consuming target selection and beam training processes in mmW vehicle-to-infrastructure (V2I) wireless topologies. Finally, in (Yajnanarayana et al., 2020), centralized Q-learning that takes into account the current received signal strength was employed in order to provide real-time control of the hand-over process between neighboring BSs in directional wireless systems.
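A kNN-based handover predictor of the kind mentioned above can be sketched in a few lines. The features used here (vehicle position along the road and speed), the BS labels, and the function name `knn_predict` are hypothetical choices made for illustration; the text does not specify the features used in (Yan et al., 2019).

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Majority vote among the k training samples closest to the query.

    train is a list of (feature_vector, label) pairs; distances are
    Euclidean. Returns the most frequent label among the k neighbours.
    """
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical history: (position in m, speed in m/s) -> serving BS after handover
history = [
    ((0.0, 10.0), "BS1"), ((5.0, 12.0), "BS1"), ((10.0, 11.0), "BS1"),
    ((40.0, 10.0), "BS2"), ((45.0, 12.0), "BS2"), ((50.0, 11.0), "BS2"),
]

target_near = knn_predict(history, (8.0, 11.0))
target_far = knn_predict(history, (44.0, 11.0))
```

Because the prediction is a lookup over past decisions, no target search or beam training is needed at decision time, which is the benefit highlighted above.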

Another challenging task of THz wireless networks is routing. The limited transmission range, in combination with the transmission power constraints and memory limitations of mobile devices, renders conventional routing strategies that are employed in lower-frequency networks unsuitable for THz ones. Motivated by this, in (Wang C.-C. et al., 2020), the authors presented a reinforcement learning routing algorithm and compared it with NN and decision tree-based solutions. The results showed that the reinforcement learning approach not only provides online routing optimization suggestions but also outperforms the NN and decision tree ones. To the best of the authors’ knowledge, the aforementioned contribution is the only published one that discusses ML-based routing policies in high-frequency wireless networks.

To create a fully automated THz wireless network or even integrate THz technologies into current cellular networks, one of the essential problems that a network manager needs to solve is traffic clustering. Accurate traffic clustering allows the detection of suspicious data and can aid in the identification of security gaps. However, as the diversity of the data increases, because they are generated by different types of sources, e.g., sensors, artificial/virtual reality devices, robotics, etc., traffic clustering may become a difficult task. Additionally, labeled samples are usually scarce and difficult to obtain.

To address the aforementioned challenges, several researchers turned to ML. In particular, in (Noorbehbahani and Mansoori, 2018), Noorbehbahani et al. presented a semi-supervised method for traffic classification, which is based on the x-means clustering algorithm and a label propagation technique. This approach takes as input network traffic flow data. It was tested on real data and achieved 95% accuracy using a limited amount of labeled data. Similarly, in (Bin and Hao, 2010), the authors reported a semi-supervised classification method that exploits both labeled and unlabeled samples. This method combines offline particle swarm optimization (PSO), which clusters the labeled and unlabeled samples of the dataset, with a mapping approach that enables matching clusters to applications.
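The cluster-to-application mapping idea used by such semi-supervised methods can be illustrated as follows. The majority-vote rule, the toy cluster assignments, and the names `map_clusters_to_apps` and `classify` are assumptions made for this sketch; the cited works may use different mapping rules.

```python
from collections import Counter, defaultdict

def map_clusters_to_apps(cluster_ids, labels):
    """Map each cluster to the most frequent label among its labeled flows.

    labels[i] is an application name, or None when flow i is unlabeled.
    Clusters without any labeled flow are left out of the mapping.
    """
    votes = defaultdict(Counter)
    for cid, lab in zip(cluster_ids, labels):
        if lab is not None:
            votes[cid][lab] += 1
    return {cid: counter.most_common(1)[0][0] for cid, counter in votes.items()}

def classify(cluster_ids, mapping, default="unknown"):
    """Propagate the cluster label to every flow in that cluster."""
    return [mapping.get(cid, default) for cid in cluster_ids]

# Hypothetical output of a clustering stage, with only three labeled flows
cluster_ids = [0, 0, 0, 1, 1, 2, 2, 2]
labels = ["video", None, "video", "web", None, None, None, None]

mapping = map_clusters_to_apps(cluster_ids, labels)
predicted = classify(cluster_ids, mapping)
```

Three labels are enough to classify five of the eight flows in this toy case, which reflects why such schemes are attractive when labeled samples are scarce. Cluster 2 stays "unknown" because it contains no labeled flow.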

In (Wang et al., 2011), Wang et al. applied the K-means algorithm, taking into account the correlations of network domain background information and transforming them into pair-wise must-link constraints that are incorporated in the clustering process. Experimental results highlighted that incorporating constraints in the clustering process can significantly improve the overall accuracy. Furthermore, in (Liu et al., 2007), Liu et al. employed feature selection to identify optimal feature sets and log transformation to improve the accuracy of K-means based network traffic classification. Another use of K-means was presented in (Kumari et al., 2016), where the authors used it to detect network cyber-attacks. In particular, the K-means in (Kumari et al., 2016) takes as input network traffic-related data and identifies irregularities. Moreover, a network traffic feature selection scheme that provides accurate suspicious flow detection was reported in (Su et al., 2018), whereas, in (Wang et al., 2013), random forest was employed to perform the same task. Also, Bayesian ML was used in (Auld et al., 2007), which takes as input transmission control protocol (TCP) traffic flows in order to identify internet traffic. Although this approach does not require access to packet content, it demands a significant set of training data. To deal with the lack of training data, in (Kruber et al., 2018), the authors presented an unsupervised random forest clustering methodology for automatic network traffic categorization. Meanwhile, in (Singh, 2015), the performance of EM and K-means based algorithms for network traffic clustering was compared, and it was shown that K-means outperforms EM in terms of accuracy. In addition, in (Wang and Yu, 2008), the authors evaluated and compared the effectiveness of a number of supervised, unsupervised, and feature selection algorithms in the real-time traffic classification problem, which takes as input the network’s statistical characteristics. Naive Bayes, Bayesian networks, multilayer perceptron, decision trees, K-means, and best-first search were among the algorithms whose performance was quantified. The results revealed that, in terms of accuracy, decision trees outperform all the other aforementioned algorithms. Finally, in (Zhang C. et al., 2019), the authors combined LSTM with CNN in order to develop a two-layer convolution LSTM mechanism capable of accurately clustering traffic generated by different application types.

Table 4 connects the published contributions to the ML algorithms that were applied in the network layer. Note that some of the contributions in this table do not explicitly refer to THz networks; however, the presented algorithms can find applications in THz wireless systems, due to commonalities in system and network topologies. For example, in (Li et al., 2020c), the ML approach can be used in any heterogeneous network that consists of macro-, pico- and femto-cells. Notice that femto-cells can be established in the THz band. Similarly, the contributions in (Liu et al., 2007; Bin and Hao, 2010; Noorbehbahani and Mansoori, 2018; Zhang H. et al., 2019; Khan L. U. et al., 2020; Chou et al., 2020) and (Kumari et al., 2016) can be applied in any femto-cell network that supports high data traffic demands, such as THz wireless networks, independently of the operating frequency. Moreover, (Auld et al., 2007; Wang et al., 2013; Kruber et al., 2018; Su et al., 2018; Burghal et al., 2019; Guo et al., 2019; Khan et al., 2019; Yan et al., 2019; Elsayed et al., 2020; Ghadikolaei et al., 2020) and (Wang and Yu, 2008) refer to mmW networks that support data rates in the order of 100 Gb/s at 60 GHz. In such networks, both the AP and the UEs employ high-gain antennas. Notice that THz wireless networks are also designed to support data rates in the order of 100 Gb/s and establish highly directional links in order to ensure an acceptable transmission range. Thus, the aforementioned contributions can be adapted to THz wireless networks.

From Table 4, we observe that, depending on the nature of the network, i.e., fixed or mobile topology, supervised and reinforcement learning approaches are respectively employed for user association. Additionally, when the network had prior knowledge of the possible UE direction, supervised learning approaches were employed for mobility management. On the other hand, in problems in which the UE motion is stochastic, reinforcement learning mechanisms were adopted. For routing, where accuracy plays an important role and no instantaneous adaptation is required, supervised learning was used. Finally, for traffic clustering, both supervised and unsupervised learning were employed. In more detail, unsupervised learning seems an attractive approach when searching for data irregularities.

2.4 Transport Layer

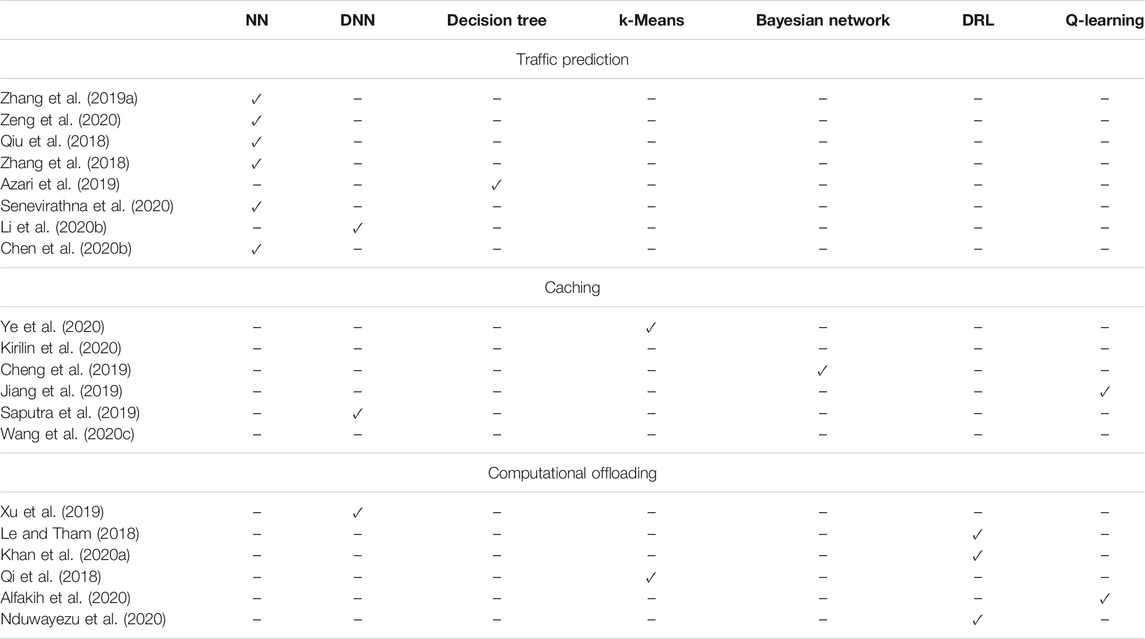

In order to design self-managed wireless networks that embrace efficient automation, accurate traffic prediction is necessary. However, traffic prediction is a challenging task due to the nonlinear and complex nature of traffic patterns. In the face of this challenge, ML-based approaches were recently discussed. In this sense, in (Zhang C. et al., 2019), Zhang et al. presented a deep transfer learning algorithm that combines spatial-temporal cross-domain NNs with LSTM in order to extract the relationship between cross-domain datasets and external factors that influence the traffic generation. Building upon the extracted relationship, traffic predictions were conducted. Similarly, in (Zeng et al., 2020), a cross-service and regional fusion transfer learning strategy, which was based on spatial-temporal cross-domain NNs, was reported. The model reported in (Zeng et al., 2020) takes as input wireless cellular traffic data of the Milan area. Likewise, in (Qiu et al., 2018), the authors investigated the use of RNNs that are fed with traffic data to extract the spatio-temporal correlation of the data and thus improve the data traffic prediction. Moreover, a CNN was used in (Zhang et al., 2018) to predict the traffic demands in cellular wireless networks.

In (Azari et al., 2019), a decision tree and random forest based method was presented for user traffic prediction that enables proactive resource management. The algorithm inputs are the number and size of both uplink and downlink packets, the ratio of the number of uplink to downlink packets, as well as the communication protocol used. Additionally, in (Senevirathna et al., 2020), an LSTM architecture was designed in order to predict the traffic variations in a machine-type communication scenario, in which the devices access the network in a random fashion. The algorithm takes as input the network traffic flow. In (Li Y. et al., 2020), SVM was employed to predict video traffic and improve the video stream quality in beyond the fifth generation (B5G) networks. Finally, in (Chen M. et al., 2020), a single NN architecture was employed, in which the weights connected to the output are adjusted by means of linear regression, whereas the other weights are randomly initialized. Simulation results revealed that this approach outperforms LSTM in terms of execution time.
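The last architecture above, with random hidden weights and output weights fitted by linear regression, can be illustrated with the following sketch. It is written under stated assumptions and is not the code of (Chen M. et al., 2020): the synthetic traffic trace with a 24-sample period, the window of 4 past samples, the tanh activation, the hidden-layer size, and the names `fit_random_hidden_net` and `predict` are all hypothetical.

```python
import numpy as np

def fit_random_hidden_net(X, y, hidden=32, seed=0):
    """Fit only the output weights; hidden weights stay at their random values."""
    rng = np.random.default_rng(seed)
    W = rng.standard_normal((X.shape[1], hidden))  # random, never trained
    b = rng.standard_normal(hidden)
    hidden_out = np.tanh(X @ W + b)
    # Output weights via ordinary least squares (one linear solve)
    beta, *_ = np.linalg.lstsq(hidden_out, y, rcond=None)
    return W, b, beta

def predict(model, X):
    W, b, beta = model
    return np.tanh(X @ W + b) @ beta

# Synthetic, normalized traffic trace with a 24-sample period (assumption)
t = np.arange(200)
traffic = 0.5 + 0.4 * np.sin(2 * np.pi * t / 24)

# Sliding windows: predict the next sample from the previous 4
window = 4
X = np.stack([traffic[i:i + window] for i in range(len(traffic) - window)])
y = traffic[window:]

model = fit_random_hidden_net(X, y)
preds = predict(model, X)
mse = float(np.mean((preds - y) ** 2))
```

Since no iterative backpropagation is involved, training reduces to a single least-squares problem, which is consistent with the execution-time advantage over LSTM reported above.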

Wireless content caching in THz wireless networks is an attractive way to reduce the service latency and alleviate backhaul pressure. The main idea is to proactively transfer the content to be requested by a single UE or a cluster of UEs to the nearest possible BS/AP. Towards this direction, several researchers presented ML-based caching policies (Cheng et al., 2019; Jiang et al., 2019; Saputra et al., 2019; Wang X. et al., 2020; Kirilin et al., 2020; Ye et al., 2020). Specifically, in (Ye et al., 2020), the authors reported a device to primary and secondary BS clustering approach based on the requested content location in mmW ultra-dense wireless networks. Moreover, in (Kirilin et al., 2020), the authors presented a reinforcement learning architecture, which increases the caching hit rate by deciding whether or not to admit a requested object into the content delivery network, and whether to evict contents when the cache is full. Moreover, in (Cheng et al., 2019), a Bayesian learning method was used to predict personal preferences and estimate the individual content request probability, which reflects these preferences, in order to precache the most popular contents at the wireless network edge, e.g., at small-cell BSs. The algorithm takes as input the channel matrix. Meanwhile, in (Jiang et al., 2019), the authors proposed a Q-learning based approach to coordinate the caching decisions in a mobile device-to-device network. Additionally, in (Saputra et al., 2019), a DNN architecture, which takes as inputs the identifiers of each user and of the requested content, was introduced that enables the network’s mobile edge nodes to collaborate and exchange information in order to minimize the content demand prediction error while ensuring that no mobile user privacy is leaked. Finally, in (Wang X. et al., 2020), a federated reinforcement learning based algorithm was presented that allows BSs to cooperatively devise a common predictive model by employing, as initial local training inputs, the first-round training parameters of the BSs, and by exchanging near-optimal local parameters between the participating BSs.

Besides the paramount role that latency plays in THz wireless systems, another challenge that needs to be addressed is the limited computing resources and battery of mobile UEs. To address this challenge, computation offloading approaches were proposed. These approaches demand intelligence in order to decide to which of the network nodes the tasks should be offloaded in order to guarantee the applications' latency demands. Towards this direction, in (Xu et al., 2019), the authors described a DNN architecture that minimizes the total task transmission latency and overhead by optimizing the task placement in cloud and edge computing nodes based on the computation resources and load of the participating nodes. Likewise, in (Le and Tham, 2018), the authors solved the same problem by applying deep reinforcement learning and assuming that the tasks can be offloaded to nearby cloudlets by means of device-to-device communications. Similarly, in (Khan I. et al., 2020), Khan et al. used DRL to maximize the energy efficiency of cloud and mobile edge computing assisted wireless networks that support a large variety of machine type applications, under the constraints of computing power resources and delays. Moreover, in (Qi et al., 2018), Qi et al. presented an unsupervised K-means clustering algorithm in order to identify center user group sets that enhance task offloading and allow load balancing. Additionally, in (Alfakih et al., 2020), the authors reported a Q-learning based algorithm that decides whether a UE's tasks should be offloaded to the nearest edge server, an adjacent edge server, or the remote cloud in order to minimize the total system cost, which is quantified in terms of energy consumption and computing time delay. In this algorithm, the uploading and downloading bandwidths define the state of the agent. Finally, in (Nduwayezu et al., 2020), the authors applied DRL in order to decide whether to execute a task locally or remotely based on the computation rate maximization criterion in a mobile edge computing assisted NOMA system. As input, the algorithm takes the input data size and workload.

Table 5 documents the different ML algorithms that have been used in the transport layer. From this table, it becomes evident that supervised learning approaches have been the usual choice for traffic prediction problems, while unsupervised and reinforcement learning ones have usually been employed to solve caching and computation offloading problems. Of note, most of the contributions in Table 5 refer to networks that have less backhaul than aggregated communication and computational fronthaul resources, without specifying their operating frequency. This is a common characteristic of stand-alone THz wireless deployments. As a consequence, they can be applied in THz wireless networks.

3 A Methodology to Select a Suitable ML Algorithm

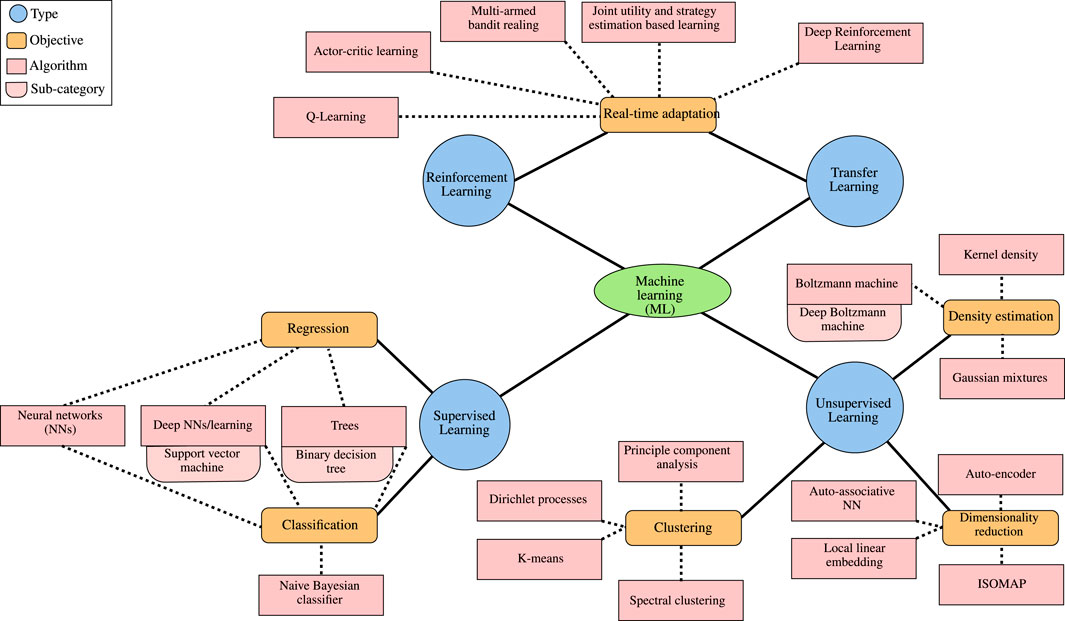

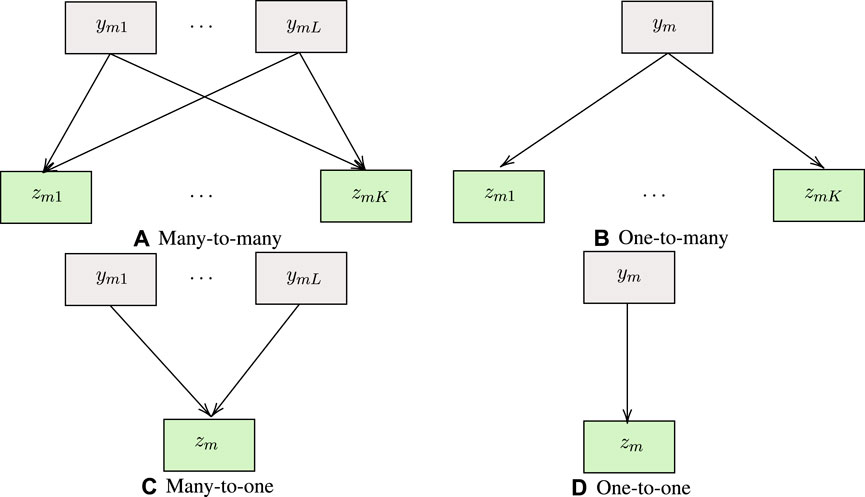

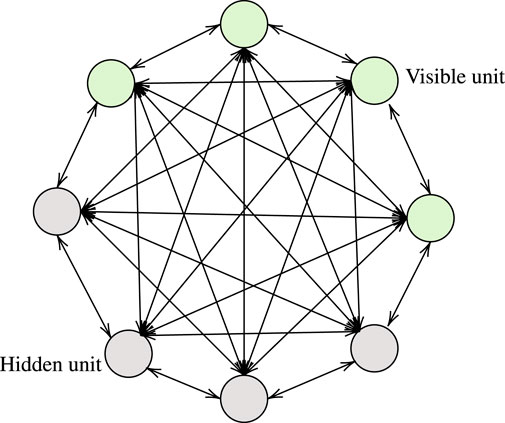

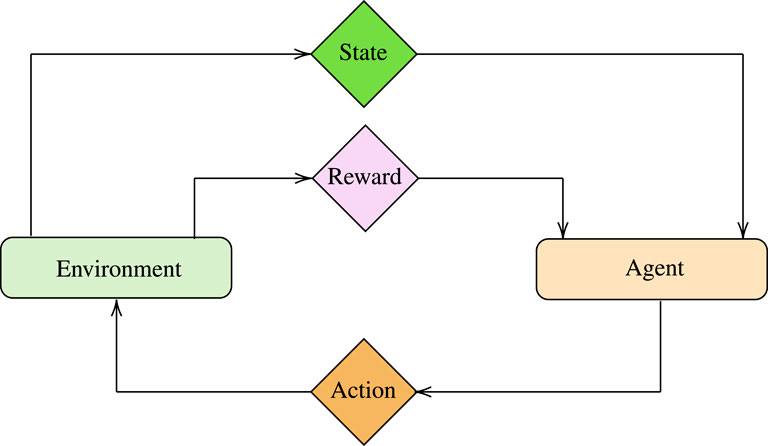

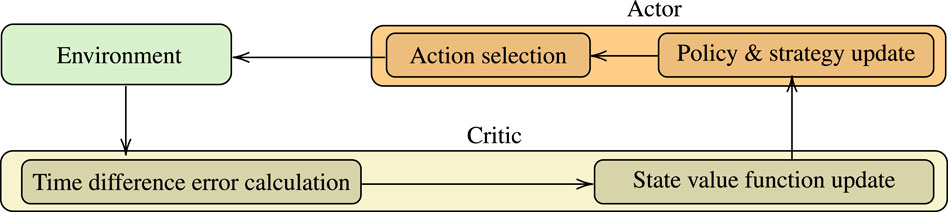

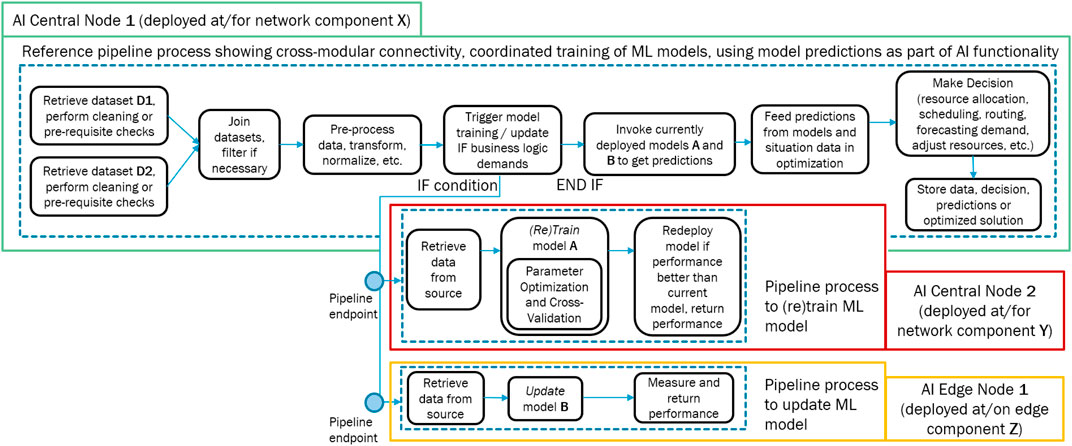

As illustrated in Figure 2, ML can be classified into four categories, namely 1) supervised, 2) unsupervised, 3) reinforcement, and 4) transfer learning. In what follows, we report the main features of each category and revisit indicative ML algorithms emphasizing their operation, training process, advantages and disadvantages.

3.1 Supervised Learning

Supervised learning focuses on extracting a mapping function between the input and output values based on a labeled dataset. As a consequence, it can be applied as a solution to regression and classification problems. In more detail, in this paper, the following supervised learning algorithms are discussed.

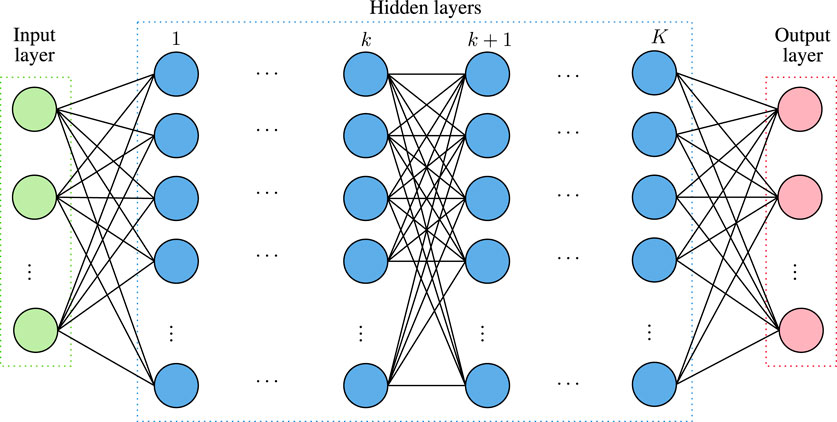

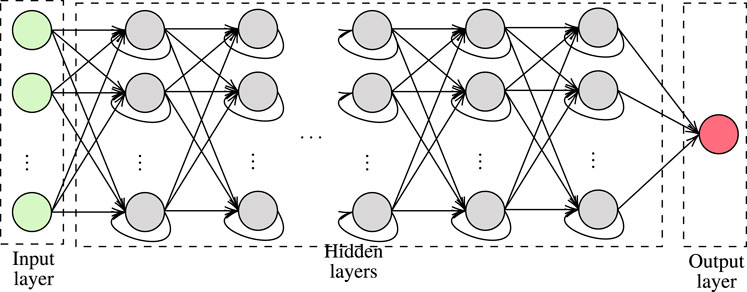

• NNs: are computing machines inspired by biological NNs. In more detail and as illustrated in Figure 3, they consist of three types of layers, namely input, hidden, and output. Each layer has a certain number of nodes, called neurons, that process their input signal. A neuron in layer k applies a linear or non-linear transformation, called activation function, to the input data and forwards its output through a number of edges, which connect the neurons of layer k with the ones of layer k + 1. In more detail, let

being the input vector of the k−th layer of the NN, and

being the weight vector of the n−th node of the k−th layer, then the output of this node would be

or equivalently

where

The learning process aims at finding the optimal parameters wk,n so that

to be as close as possible to the target yk,n. To achieve this a cost/error function

The analytical differentiation of Eq. 6 is usually impossible; thus, numerical optimization methods are applied. The most commonly used methods are gradient descent as well as single and batch perceptron training (Graupe, 2013).
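As a hedged illustration of the neuron model and of gradient-based training described above, the following Python sketch computes the output of a neuron as an activation function applied to the weighted sum of its inputs, and fits the weights of a single neuron by batch gradient descent. The sigmoid activation, the mean squared error cost, the learning rate, the toy data, and all function names are assumptions made for this example; they are not taken from the equations of this section.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(x, w, activation=sigmoid):
    """Output of one neuron: activation of the weighted sum of its inputs."""
    return activation(sum(wi * xi for wi, xi in zip(w, x)))

def layer_output(x, W, activation=sigmoid):
    """Outputs of all neurons of a layer; W holds one weight vector per neuron."""
    return [neuron_output(x, w, activation) for w in W]

def mse(X, y, w):
    """Assumed cost: mean squared error between neuron outputs and targets."""
    return sum((neuron_output(x, w) - t) ** 2 for x, t in zip(X, y)) / len(y)

def train_neuron(X, y, lr=0.5, epochs=2000, seed=0):
    """Batch gradient descent on the assumed MSE cost for one sigmoid neuron."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in X[0]]
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, t in zip(X, y):
            out = neuron_output(x, w)
            # Chain rule: d(out - t)^2 / dw_i = 2 (out - t) * out (1 - out) * x_i
            delta = 2.0 * (out - t) * out * (1.0 - out)
            for i, xi in enumerate(x):
                grad[i] += delta * xi / len(y)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

# Toy usage: learn a logical OR; the last input component is a constant bias term.
X = [[0, 0, 1], [0, 1, 1], [1, 0, 1], [1, 1, 1]]
y = [0, 1, 1, 1]
w = train_neuron(X, y)
print("cost before:", mse(X, y, [0.0, 0.0, 0.0]), "cost after:", mse(X, y, w))
```

The training loop only uses the cost through its gradient, which is why a different differentiable cost could be swapped in by changing the `delta` line.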

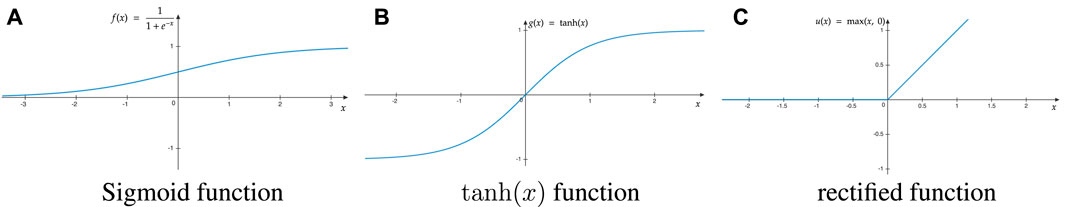

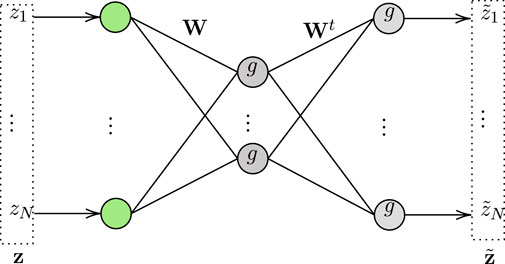

• DNNs: are NNs with multiple hidden layers that, as depicted in Figure 4, commonly employ tanh, sigmoid, or rectified linear unit activation functions. The operation of a DNN is divided into two phases, i.e., training and execution. The training phase employs labeled data in order to extract the weights of the DNN. Usually, SGD with the back-propagation algorithm is employed for this task. In general, as the number of hidden layers increases, the amount of training data that is required increases; however, the classification or regression accuracy also increases. In the execution phase, the DNN returns proper decisions based on its inputs, even when the input values were not part of the training data set. As a result, the main challenge of DNNs is to optimally select their weights (Zhang L. et al., 2019).

Two special types of DNNs, namely CNNs and RNNs, have been extensively used in THz wireless systems and networks.

RNNs can be used for regression and classification. In contrast to conventional DNNs and as illustrated in Figure 5, an RNN introduces feedback by connecting the neurons of layer k with the ones of previous layers. In other words, it creates a memory that allows information from past inputs to be carried over to the processing of future inputs (Lipton et al., 2015). As a result, fewer tensor operations in comparison to the corresponding DNN need to be implemented, which translates into lower computational complexity and training latency. Building upon this advantage, RNNs have been widely used for a large variety of applications ranging from automatic modulation recognition, where channel correlation was discovered by exploiting the recurrent property, to traffic prediction, in which the spatial-temporal correlation of the data may play an important role.
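The memory effect of the recurrent connections can be sketched with a minimal single-layer recurrent update, in which the hidden state computed for one input is fed back when the next input is processed. This is an illustrative simplification and not the architecture of Figure 5; the tanh activation and the names `rnn_step`, `run_rnn`, `W_in`, `W_rec`, and `b` are assumptions.

```python
import math

def rnn_step(x_t, h_prev, W_in, W_rec, b):
    """One recurrent update: new hidden state from the current input and the previous state."""
    h_new = []
    for n in range(len(b)):
        z = b[n]
        z += sum(W_in[n][i] * x_t[i] for i in range(len(x_t)))
        z += sum(W_rec[n][j] * h_prev[j] for j in range(len(h_prev)))  # feedback path
        h_new.append(math.tanh(z))
    return h_new

def run_rnn(sequence, W_in, W_rec, b):
    """Process a sequence of input vectors and return the final hidden state."""
    h = [0.0] * len(b)
    for x_t in sequence:
        h = rnn_step(x_t, h, W_in, W_rec, b)
    return h

# Two sequences that differ only in their first sample end in different states,
# because the recurrent weight carries the first input forward in time.
with_memory_a = run_rnn([[1.0], [0.0]], [[1.0]], [[0.5]], [0.0])
with_memory_b = run_rnn([[0.0], [0.0]], [[1.0]], [[0.5]], [0.0])
# With the recurrent weight set to zero, the first sample is forgotten.
no_memory_a = run_rnn([[1.0], [0.0]], [[1.0]], [[0.0]], [0.0])
no_memory_b = run_rnn([[0.0], [0.0]], [[1.0]], [[0.0]], [0.0])
```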

Finally, CNNs have been employed as solutions to several THz wireless network problems, from AMR to traffic prediction. Their objective is to identify local correlations within the data and exploit them in order to reduce the number of parameters as we move from the input to the output through the hidden layers. In this type of network, a hidden layer may play the role of a convolution, a rectified linear unit (ReLU), a pooling, or a flattening layer (Boulogeorgos et al., 2020). Convolution layers are used to extract the distinguishing features of each sample, while ReLUs impose decision boundaries. Likewise, pooling layers are responsible for down-sampling the spatial dimensions. Last, flattening is used to reorganize the values of high-dimensional matrices into vectors.
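The four layer roles listed above can be sketched in a few lines of plain Python. This is a toy, single-channel illustration under stated assumptions: the 5×5 input, the 2×2 filter, and the 2×2 max-pooling window are hypothetical values chosen for readability, and no training is performed.

```python
def conv2d(image, kernel):
    """Valid 2-D cross-correlation, as used in CNN convolution layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)] for r in range(out_h)]

def relu(fmap):
    """Rectified linear unit applied element-wise."""
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling that down-samples the spatial dimensions."""
    return [[max(fmap[r + i][c + j] for i in range(size) for j in range(size))
             for c in range(0, len(fmap[0]) - size + 1, size)]
            for r in range(0, len(fmap) - size + 1, size)]

def flatten(fmap):
    """Reorganize a 2-D feature map into a vector."""
    return [v for row in fmap for v in row]

image = [[1, 2, 0, 1, 3],
         [0, 1, 2, 3, 1],
         [1, 0, 1, 2, 2],
         [2, 1, 0, 1, 0],
         [0, 2, 1, 0, 1]]
kernel = [[1, -1],
          [-1, 1]]  # hypothetical 2x2 filter

features = flatten(max_pool(relu(conv2d(image, kernel))))
print(features)
```

In this example, the 25 input values are reduced to a vector of 4 features, which illustrates how the number of values shrinks from the input towards the output.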

• SVM: can be employed as a solution for both high-dimensional regression and classification problems by mapping the original feature space into a higher-dimensional one, in which the discriminability of the classes is increased (Gholami and Fakhari, 2017). In other words, SVM aims at creating a space in which the minimum distance between the nearest points is maximized. In this direction, let us describe the new space as a linear transformation of the original one, which can be described according to the following kernel function:

where b and c are SVM optimization parameters, while

where lm is the label of the m−th class. As a consequence, the optimization problem that describes SVM can be formulated as

This problem may return a non-linear classification or regression of the original space. A weakness of SVM is that training is computationally expensive, especially as the training data size increases. In practice, training an SVM model can take a long time as the number of dimensions (features) of the dataset increases, and the problem is exacerbated when the number of data points grows beyond a few hundred thousand. SVM has been extensively used for traffic clustering. The main challenge is the optimal selection of the kernel function. This function may be a linear, a polynomial, a radial, or an NN one. To devise a suitable kernel function, we usually apply inner product operations between input samples over the Hilbert space in order to extract feature mappings.
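To make the kernel options more concrete, the following sketch implements commonly used textbook forms of the linear, polynomial, radial, and NN (sigmoid) kernels, and checks numerically that a degree-2 polynomial kernel equals an inner product in an explicitly mapped feature space. The parameter names (`degree`, `coef0`, `gamma`, `scale`, `offset`) and the explicit map `phi` are assumptions of this example and do not correspond to the symbols used in the equations of this section.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def linear_kernel(u, v):
    return dot(u, v)

def polynomial_kernel(u, v, degree=2, coef0=1.0):
    return (dot(u, v) + coef0) ** degree

def rbf_kernel(u, v, gamma=0.5):
    """Radial kernel: depends only on the squared distance between the samples."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))

def nn_kernel(u, v, scale=1.0, offset=0.0):
    """Sigmoid ("NN") kernel."""
    return math.tanh(scale * dot(u, v) + offset)

def phi(x):
    """Explicit feature map of the homogeneous degree-2 polynomial kernel (2-D input)."""
    return [x[0] ** 2, math.sqrt(2.0) * x[0] * x[1], x[1] ** 2]

def gram_matrix(samples, kernel):
    """Pairwise kernel evaluations, i.e., inner products in the mapped space."""
    return [[kernel(u, v) for v in samples] for u in samples]

u, v = [1.0, 2.0], [3.0, 4.0]
implicit = polynomial_kernel(u, v, degree=2, coef0=0.0)  # computed in the original space
explicit = dot(phi(u), phi(v))                           # computed in the mapped space
print(implicit, explicit)
```

The agreement of `implicit` and `explicit` is the reason kernels are attractive: the inner product in the higher-dimensional space is obtained without ever constructing the mapped vectors.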

• KNN: A widely-used algorithm for classification is KNN. KNN consists of three steps, namely 1) distance calculation, 2) neighbor identification, and 3) label voting. To provide a comprehensive understanding of KNN, let us define a set of N training samples as

In the second step, the K most similar labeled samples, i.e., with the lowest distance from

In THz wireless systems, KNN has been employed for channel estimation and beam tracking as well as for mobility management purposes. Its main challenge is the appropriate selection of K. On the one hand, a large K can counterbalance the negative impact of noise. On the other hand, it may blur the boundary of each class. This calls for heuristic approaches that return approximations for K.
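The three KNN steps and the sensitivity to K can be sketched as follows. The Euclidean distance, the majority vote, the toy samples, and the function name `knn_classify` are assumptions made for illustration only.

```python
import math
from collections import Counter

def knn_classify(train, query, k=3):
    """train: list of (feature_vector, label) pairs; query: feature vector."""
    # Step 1: distance calculation (Euclidean distance is an assumed choice).
    distances = [(math.dist(x, query), label) for x, label in train]
    # Step 2: neighbor identification, i.e., the k closest labeled samples.
    neighbors = sorted(distances, key=lambda d: d[0])[:k]
    # Step 3: label voting among the selected neighbors.
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Toy data: class "A" near the origin, class "B" far away,
# plus one noisy "B" sample placed inside the "A" cluster.
train = [((0.0, 0.0), "A"), ((0.0, 1.0), "A"), ((1.0, 0.0), "A"),
         ((5.0, 5.0), "B"), ((5.0, 6.0), "B"), ((6.0, 5.0), "B"),
         ((0.4, 0.4), "B")]
query = (0.5, 0.5)

for k in (1, 3, 7):
    print(k, knn_classify(train, query, k))
```

With K = 1 the noisy sample decides the label, with K = 3 the vote of the surrounding cluster suppresses the noise, and with K = 7 distant samples of the other class dominate the vote, which illustrates both sides of the trade-off discussed above.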

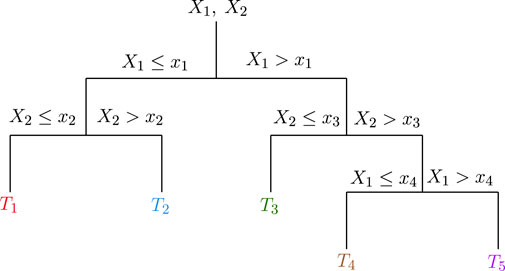

• Decision trees: are considered one of the most attractive ML approaches for both regression and classification, due to their simplicity and intelligibility. They are defined by recursively segmenting the input space in order to create a local model for each one of the resulting regions. To provide a comprehensive understanding of the operation of decision trees, we consider an indicative tree that is depicted in Figure 6. We represent the target values by the tree's leaves, while branches stand for observations. In more detail, the first node checks whether the observation X1 is lower or higher than the threshold x1. If X1 ≤ x1, then we check whether the observation X2 is lower or higher than another threshold x2. If both X1 ≤ x1 and X2 ≤ x2, the decision tree returns the target value T1. On the other hand, if X1 ≤ x1 and X2 > x2, the target value T2 is returned. Similarly, if X1 > x1, the decision tree checks whether X2 is lower than the threshold x3. If a positive answer is returned, the target value is set to T3; otherwise, it checks whether X1 is lower or higher than x4. If it is lower, the target value T4 is returned; otherwise, T5 is returned.
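The traversal of the indicative tree can be written as nested comparisons, with T2 returned when X1 ≤ x1 and X2 > x2. The following sketch is only an illustration of this logic; the function name and the numerical thresholds used in the usage lines are hypothetical.

```python
def indicative_tree(X1, X2, x1, x2, x3, x4):
    """Return the leaf label reached by the observations (X1, X2)."""
    if X1 <= x1:
        # Left branch: the decision depends on X2 and the threshold x2.
        return "T1" if X2 <= x2 else "T2"
    # Right branch (X1 > x1): first compare X2 against x3.
    if X2 < x3:
        return "T3"
    # Otherwise compare X1 against x4.
    return "T4" if X1 < x4 else "T5"

# Hypothetical thresholds, chosen only to exercise every leaf.
thresholds = dict(x1=1.0, x2=2.0, x3=3.0, x4=4.0)
leaves = [indicative_tree(X1, X2, **thresholds)
          for X1, X2 in [(0, 1), (0, 5), (2, 1), (2, 5), (6, 5)]]
print(leaves)
```

Each leaf corresponds to one axis-aligned region of the (X1, X2) plane, which is the sense in which the tree segments the input space.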

In general, the decision tree model can be analytically expressed as

where

Moreover, Rm stands for the m−th decision region, and rm represents the mean response of this region. Finally, M represents the total number of regions. From Eq. 11, it becomes evident that training a decision tree can be translated into finding the optimal partitioning, i.e., the optimal regions Rm with m ∈ [1, M]. This is usually an NP-hard optimization problem, and its solution requires the implementation of greedy algorithms.

Although decision trees are easy to implement, they come with some fundamental limitations. In particular, they have lower accuracy in comparison with NNs and DNNs. This is due to the greedy nature of the training process. Another disadvantage of decision trees is that they are sensitive to changes in the input data. In other words, even small changes to the inputs may greatly affect the structure of the tree. In more detail, due to the hierarchical nature of the training process, errors that are caused at the top layers of the decision tree affect the rest of its structure.

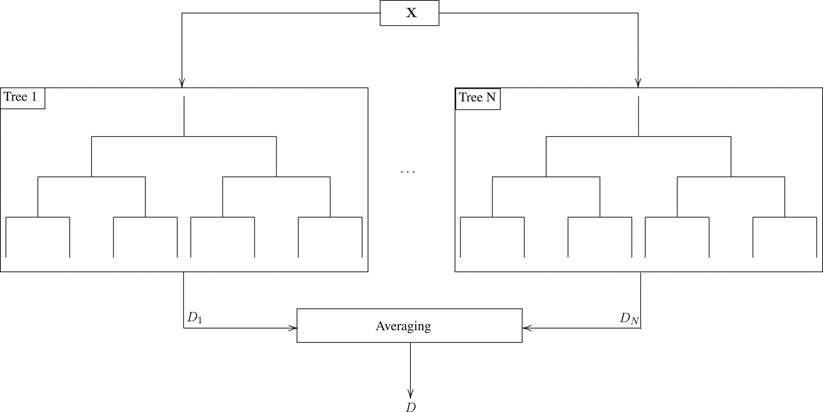

• Random forests: improve the accuracy of decision trees by averaging several estimations. In more detail and as illustrated in Figure 7, instead of training a single tree, the random forest methodology is based on training N different trees using different sets of data, which are randomly chosen. The outputs of the N trees are averaged; hence, the random forest model can be described as

with

The main challenge of random forests is to guarantee that the trees operate as uncorrelated predictors. To achieve this, the training data is randomly divided into subsets, where each subset is used to train a tree. One of the ideas behind the random splitting of the training data into subsets is that it makes the model resilient to outliers and overfitting. For example, if there is an outlier in one or more subsets, the model's accuracy is not skewed by it, since the outcome of this ensemble model reflects the joint decision of all trees. Moreover, since different trees have “seen” different data distributions (due to random subsetting), the model is expected to handle overfitting better. At the same time, there exists a trade-off between accuracy and training latency/overhead. In more detail, as the number of trees increases, and thus the accuracy of the random forest improves, the training set needs to be enlarged. Therefore, both the training latency and the overhead in the network increase. Another disadvantage of random forests is that the model is not as easy to interpret as a single, non-ensemble model, such as a decision tree.
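The averaging of several trees trained on random subsets can be sketched as follows. For brevity, each "tree" is a one-split regression tree on a scalar input, and each subset is drawn with replacement (bootstrap sampling); both choices, as well as the toy data and the names `fit_stump`, `fit_forest`, and `predict_forest`, are assumptions of this example rather than the exact procedure of Figure 7.

```python
import random

def fit_stump(data):
    """Greedy one-split regression tree on scalar inputs; data is a list of (x, y)."""
    best = None
    xs = sorted({x for x, _ in data})
    for lo, hi in zip(xs, xs[1:]):
        thr = (lo + hi) / 2.0
        left = [y for x, y in data if x <= thr]
        right = [y for x, y in data if x > thr]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        err = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        if best is None or err < best[0]:
            best = (err, thr, ml, mr)
    if best is None:  # all inputs identical: predict the mean everywhere
        m = sum(y for _, y in data) / len(data)
        return lambda x: m
    _, thr, ml, mr = best
    return lambda x: ml if x <= thr else mr

def fit_forest(data, n_trees=25, seed=0):
    """Train each tree on a random subset drawn with replacement (assumed)."""
    rng = random.Random(seed)
    trees = []
    for _ in range(n_trees):
        subset = [rng.choice(data) for _ in data]
        trees.append(fit_stump(subset))
    return trees

def predict_forest(trees, x):
    """The forest output is the average of the individual tree outputs."""
    return sum(tree(x) for tree in trees) / len(trees)

# Toy data: a step from 0 to 1 at x = 5.
data = [(float(x), 0.0 if x < 5 else 1.0) for x in range(10)]
forest = fit_forest(data)
print(predict_forest(forest, 1.0), predict_forest(forest, 8.0))
```

Since every tree sees a different subset, the split thresholds differ from tree to tree, and the averaged prediction near the step is smoother than that of any single tree. The cost is that `n_trees` models have to be trained instead of one.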

• Naive Bayesian classifier: aims at choosing the class that maximizes the a posteriori probability of occurrence. In particular, let us define the vector x ∈{1,…,R}S, where R stands for the number of values for each feature, while D represents the number of features. The naive Bayesian classifier assigns a class conditional probability,

Moreover, by “naively” assuming that, for a given class label Ct, the features are conditionally independent,