- ¹ Kathmandu University School of Education, Lalitpur, Nepal

- ² Department of English, United International University, Dhaka, Bangladesh

- ³ Centre for Foundation and Continuing Education, Universiti Malaysia Terengganu, Kuala Nerus, Terengganu, Malaysia

- ⁴ Department of English and Modern Languages, International University of Business Agriculture and Technology, Dhaka, Bangladesh

Integrating generative AI (GenAI) into qualitative research offers opportunities for innovation but intensifies core epistemological, ontological, and ethical challenges. This article conceptualizes the meta-crisis of generativity: a convergence of Denzin and Lincoln's three crises of representation (blurring human/AI authorship), legitimation (questioning trust in AI-generated claims), and praxis (ambiguity in non-human participation). We examine how human-GenAI collaboration challenges researchers' voice, knowledge validity, and ethical agency across research paradigms. To navigate this meta-crisis, we propose strategic approaches: preserving positionality via voice annotation and reflexive bracketing (representation); ensuring trustworthiness through algorithmic audits and adapted validity checklists (legitimation); and redefining agency via participatory transparency and posthuman ethics (praxis). Synthesizing these, we expand qualitative rigor criteria, such as credibility and reflexivity, into collaborative frameworks that emphasize algorithmic accountability. The meta-crisis is thus an invitation to reanimate the critical ethos of qualitative research through interdisciplinary collaboration, balancing the potential of GenAI with ethical accountability while preserving humanistic foundations.

1 Introduction

OpenAI introduced ChatGPT in November 2022 as a widely accessible conversational generative artificial intelligence (GenAI) system with advanced language processing capabilities (Dahal, 2024b). Integrating GenAI tools into qualitative research has opened transformative possibilities, along with profound ethical, epistemological, and ontological challenges. Building on the foundational “crises” of representation, legitimation, and praxis articulated in the 1990s (Denzin and Lincoln, 2005), this article introduces the meta-crisis of generativity: a convergence of uncertainties arising when human researchers collaborate with GenAI to produce knowledge.

The advent of GenAI tools has brought about a paradigm shift in qualitative research (Agarwal, 2025; Bai and Wang, 2025; Baytas and Ruediger, 2025; Bozkurt et al., 2024; Chan and Hu, 2023; Dahal, 2024b; Haouam, 2025; Hughes et al., 2025; Karataş et al., 2025; Owoahene Acheampong and Nyaaba, 2024; Yildirim et al., 2025; Zawacki-Richter et al., 2019; Zhou et al., 2024). While these tools promise efficiency and enhanced analytical capabilities (Agarwal, 2025; Arosio, 2025; Bai and Wang, 2025; BaiDoo-Anu and Owusu Ansah, 2023; Baytas and Ruediger, 2025; Bennis and Mouwafaq, 2025; Bozkurt et al., 2024; Burleigh and Wilson, 2024; Chan and Hu, 2023; Dahal, 2023, 2024a; Drinkwater Gregg et al., 2025; Fui-Hoon Nah et al., 2023; García-López and Trujillo-Liñán, 2025; Haouam, 2025; Hitch, 2024; Hughes et al., 2025; Ilieva et al., 2025; Jack et al., 2025; Karataş et al., 2025; Lakhe Shrestha et al., 2025; Moura et al., 2025; Nguyen-Trung, 2025; Owoahene Acheampong and Nyaaba, 2024; Sun et al., 2025; Wood and Moss, 2024; Yildirim et al., 2025; Zawacki-Richter et al., 2019; Zhang et al., 2025; Zhou et al., 2024), they raise critical concerns regarding authorship, representation, legitimacy, and praxis. These concerns resonate with the foundational crises conceptualized by Denzin and Lincoln in the 1990s (Denzin and Lincoln, 2005). In this transformed research climate, we propose that these crises be understood as components of a broader challenge: the meta-crisis of generativity.

Integrating GenAI into qualitative research thus creates remarkable opportunities for innovation while intensifying foundational epistemological, ontological, and ethical challenges (BaiDoo-Anu and Owusu Ansah, 2023; Bennis and Mouwafaq, 2025; García-López and Trujillo-Liñán, 2025; Hitch, 2024; Ilieva et al., 2025; Moura et al., 2025; Nguyen-Trung, 2025; Sun et al., 2025). This article conceptualizes the meta-crisis of generativity as a convergence of three interconnected crises adapted from the seminal work of Denzin and Lincoln (2005): representation (who speaks?), legitimation (can we trust this knowledge?), and praxis (who participates in change?). By exploring the implications of human-GenAI collaboration, the article examines how qualitative researchers can navigate the erosion of the authorial voice, the opacity of AI-generated knowledge claims, and the ethical ambiguities surrounding non-human agency in traditional, modern, and community-oriented qualitative research.

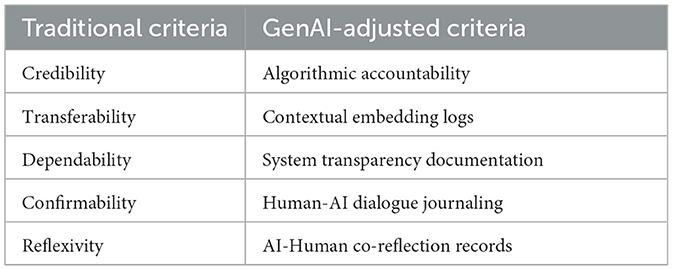

First, we discuss the crisis of representation, which explores the ontological tensions between human subjectivity and GenAI's synthetic objectivity, and propose strategies such as voice annotation and reflexive bracketing to preserve the researcher's positionality. Second, we examine the crisis of legitimation, which arises from epistemological uncertainties associated with AI's hidden biases and algorithmic opacity, and advocate algorithmic audits and adapted validity checklists to ensure trustworthiness. Third, the crisis of praxis examines ethical dilemmas in traditional, modern, and community-oriented qualitative research when GenAI tools act as co-participants, calling for frameworks of participatory transparency and posthuman ethics that redefine agency. Synthesizing these challenges, the article proposes a revised framework for qualitative rigor, reimagining traditional criteria like credibility and reflexivity through the lens of algorithmic accountability and human-AI dialogue.

We therefore argue that the meta-crisis is not a threat but an invitation to reanimate the critical ethos of qualitative research, one that demands interdisciplinary collaboration to balance GenAI's generative potential with ethical accountability. Integrating interpretive, critical, and postmodern paradigms empowers researchers to leverage GenAI as a provocateur while preserving the humanistic foundations of qualitative inquiry. To this end, this article addresses the following questions:

• How can researchers keep their own thoughts and personal views visible in their work when AI tools, built on incomplete and biased data, might replace or distort their understanding of people's stories?

• Can we still trust research that uses AI, even though AI systems are often unclear and carry hidden biases? What new rules or checks do we need to implement to ensure that AI-generated insights are fair and reliable?

• If AI is used as a collaborator in research with communities, how do we deal with the fact that it is not human, while still respecting the community's right to make decisions, take responsibility, and push for empowerment or change?

2 Literature review

To contextualize the authors' reflections and provide supporting evidence, we reviewed existing literature on the meta-crisis of generativity and on adapting qualitative research quality criteria in the era of GenAI.

3 Methodology

This perspective article is based on the authors' personal insights and opinions, drawing on their experiences with the meta-crisis of generativity and with adapting qualitative research quality criteria in the era of GenAI.

3.1 Data sources

The authors' personal experiences and observations, along with relevant literature, are the foundation of this article.

4 Meta-crisis of generativity

The meta-crisis of generativity underscores the profound disruption of qualitative research principles brought about by the integration of GenAI into knowledge production (Dahal, 2024a; García-López and Trujillo-Liñán, 2025; Moura et al., 2025). This crisis unfolds across three interrelated dimensions: representation (ontology), legitimation (epistemology), and praxis (ethics). The crisis of representation asks how to distinguish between human and AI voices in co-authored texts and how to authentically convey lived experiences when GenAI-generated content, shaped by biased and fragmented datasets, mimics objectivity and potentially silences the researcher's reflexive voice. The crisis of legitimation challenges the trustworthiness of AI-assisted research, as opaque algorithms and biased training data undermine traditional standards of credibility and transferability. The crisis of praxis interrogates the ethical implications of AI acting as a participant or agent in change-oriented research, such as autoethnography or Participatory Action Research (PAR), raising concerns about power imbalances and accountability. Understanding these dimensions is essential for adapting qualitative methodologies to integrate GenAI responsibly, which requires methodological innovation and reflexivity to uphold research integrity.

4.1 Crisis of representation: “Who speaks?”—ontological challenges

The crisis of representation asks: How do we authentically portray lived experiences when the researcher's voice is entangled with GenAI's synthetic text? Traditional qualitative research foregrounds the researcher's subjectivity; GenAI disrupts this by introducing a seemingly “objective” synthetic voice trained on biased, fragmented datasets. One of the most significant resulting challenges is the blurred line between content generated by AI and content produced by human researchers. Qualitative research is grounded in subjective interpretations that reflect the unique perspectives and insights of human researchers, whereas AI-generated outputs often present information in a seemingly objective manner, making it difficult to attribute specific parts of the research to either the AI or the human contributors. This indistinct boundary complicates both the determination of authorship and the interpretation of nuance in the findings.

4.1.1 GenAI's impact on ontology

GenAI tools like ChatGPT generate text that lacks embodied experience, cultural context, and emotional resonance, which can strip human nuance from research outputs. When AI drafts field notes or analyzes data, the researcher's reflexive voice also risks being diluted, potentially erasing positionality, a crucial element of qualitative research. Illustrative cases point to this problem. For example, in a hybrid autoethnography on migrant labor in which ChatGPT drafted interview summaries, cultural nuances were inadvertently flattened. Similarly, in a collaborative study on mental health, AI-generated themes conflicted with participants' lived realities. Both cases underline the ontological challenges of integrating GenAI into qualitative research.

4.1.2 Strategies for clarifying representation

To ensure transparency in GenAI-assisted qualitative research, researchers can implement several integrated strategies. First, explicitly document all AI involvement in methodology sections through disclosure statements that specify its roles in data collection, analysis, or writing. Second, employ voice labeling with typographic cues (e.g., distinct fonts or tags that mark AI-generated text versus human reflections) to distinguish authorship. Third, practice reflexive bracketing: position GenAI as a “provocateur” for initial drafts, then critically interrogate its outputs through iterative human-AI dialogue to surface biases. Fourth, formalize co-authorship frameworks that ethically credit GenAI contributions (e.g., acknowledging tools like GPT-4 as “non-human collaborators”). Finally, maintain a reflective journal that tracks AI interactions and content modifications, supplemented by peer debriefing to assess the alignment between human interpretation and AI-generated content. Together, these approaches preserve research integrity while leveraging GenAI's analytical capabilities.
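The voice-labeling strategy can be illustrated with a small data structure. The Python sketch below is a hypothetical illustration, not a tool the authors describe: the origin categories (`HUMAN`, `AI`, `AI_REVISED`), the bracketed tags, and the function names are assumptions. It tags each passage of a draft with its origin, renders inline voice labels, and reports each voice's share of the word count, a figure a researcher might report in a disclosure statement.

```python
from dataclasses import dataclass
from enum import Enum

# Hypothetical sketch: categories, tags, and names are illustrative assumptions.


class Voice(Enum):
    """Possible origins of a passage of research text."""
    HUMAN = "human"            # written by the researcher
    AI = "ai"                  # generated by a GenAI tool, unedited
    AI_REVISED = "ai-revised"  # GenAI draft critically revised by the researcher


@dataclass
class Segment:
    text: str
    voice: Voice


def render_with_labels(segments: list[Segment]) -> str:
    """Prefix each segment with a bracketed voice label, one segment per line."""
    return "\n".join(f"[{seg.voice.value}] {seg.text}" for seg in segments)


def voice_shares(segments: list[Segment]) -> dict[str, float]:
    """Return each voice's share of the total word count (0.0 to 1.0)."""
    counts = {voice.value: 0 for voice in Voice}
    for seg in segments:
        counts[seg.voice.value] += len(seg.text.split())
    total = sum(counts.values())
    if total == 0:
        return {name: 0.0 for name in counts}
    return {name: n / total for name, n in counts.items()}


if __name__ == "__main__":
    draft = [
        Segment("Participants described long shifts abroad.", Voice.AI),
        Segment("The researcher notes this summary understates the strain.", Voice.HUMAN),
        Segment("Remittances were framed as family duty.", Voice.AI_REVISED),
    ]
    print(render_with_labels(draft))
    print(voice_shares(draft))
```

Word counts are a deliberately crude proxy; a team might instead count sentences or characters, but the point is that authorship becomes visible and reportable rather than implicit.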

4.2 Crisis of legitimation: “Can we trust this knowledge?”—epistemological challenges

The crisis of legitimation centers on epistemological concerns: how can we validate the knowledge claims produced through AI-human collaboration? Legitimation concerns the trustworthiness of knowledge claims, and GenAI tools are trained on extensive datasets that often contain biases (e.g., excluding marginalized voices), which raises significant questions about the validity and reliability of findings generated with AI assistance. Combined with opaque algorithms, these biases undermine traditional validity criteria such as credibility and transferability, so addressing them is crucial if the knowledge produced is to be credible and trustworthy.

4.2.1 GenAI's impact on epistemology

GenAI tools can reproduce structural inequalities embedded in their training corpora (e.g., racial, gender, or cultural biases), leading to hidden biases in AI-generated analyses. Moreover, researchers cannot fully trace how AI tools code data or generate themes, which undermines interpretive transparency. This “black box” nature of AI analysis challenges the epistemological foundations of qualitative research. For instance, a GenAI system analyzing data from marginalized communities may miss cultural nuance because of gaps in its training data, raising important questions about the epistemological validity of AI-assisted qualitative research.

4.2.2 Strategies for strengthening trustworthiness

To safeguard the validity of AI-assisted qualitative research, researchers should implement multifaceted strategies: (1) conduct algorithmic audits with data scientists to document training data sources, decision pathways, and analytical roles; (2) employ triangulation by cross-verifying AI outputs with independent human coding, multi-tool comparisons, and traditional techniques like member-checking and thick description; (3) maintain rigorous audit trails logging all AI interactions, prompt iterations, and textual modifications; (4) embed bias mitigation through critical interrogation of AI assumptions and counter-frameworks that challenge dominant discourses; and (5) adapt validity checklists built on established trustworthiness criteria (e.g., Lincoln and Guba, 1985) to include AI transparency metrics and protocols such as trustworthiness audits. These measures uphold epistemological integrity while harnessing the analytical capabilities of GenAI.
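The triangulation step in (2) can be made concrete by comparing an AI tool's code assignments with independent human coding of the same excerpts. The Python sketch below uses Cohen's kappa, a standard chance-corrected agreement statistic that this article does not itself prescribe; it is swapped in here as one plausible check. The function names, code labels, and excerpt IDs are hypothetical. Low agreement is a prompt for member-checking and discussion, not a verdict on which coder is right.

```python
from collections import Counter

# Hypothetical sketch: Cohen's kappa is one possible agreement measure;
# names and example codes are illustrative assumptions.


def cohens_kappa(human: list[str], ai: list[str]) -> float:
    """Chance-corrected agreement between two coders on the same excerpts."""
    if len(human) != len(ai) or not human:
        raise ValueError("need two equal-length, non-empty code lists")
    n = len(human)
    observed = sum(h == a for h, a in zip(human, ai)) / n
    human_freq = Counter(human)
    ai_freq = Counter(ai)
    # Agreement expected by chance if each coder assigned codes
    # independently at their own observed rates.
    expected = sum(human_freq[c] * ai_freq[c] for c in human_freq) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used the same single code throughout
    return (observed - expected) / (1 - expected)


def disagreements(excerpt_ids: list[str], human: list[str], ai: list[str]) -> list[tuple[str, str, str]]:
    """List excerpts where the coders differ, to route them to human review."""
    return [(eid, h, a) for eid, h, a in zip(excerpt_ids, human, ai) if h != a]


if __name__ == "__main__":
    ids = ["E1", "E2", "E3", "E4", "E5", "E6"]
    human = ["family", "work", "work", "health", "family", "work"]
    ai = ["family", "work", "health", "health", "work", "work"]
    print(round(cohens_kappa(human, ai), 3))
    print(disagreements(ids, human, ai))
```

Here the coders agree on four of six excerpts, but kappa discounts the agreement expected by chance, which is why it is lower than the raw 67% match. The disagreement list, rather than the single statistic, is what feeds back into reflexive analysis.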

4.3 Crisis of praxis: “Who participates in change?”—ethical challenges

In methodologies such as autoethnography and Participatory Action Research (PAR), researchers actively engage as participants in the research process. This involvement prompts a provocative question: should AI tools also be recognized as co-participants in the design, execution, and reporting of research? The question matters because it challenges traditional notions of participation and acknowledges the significant role AI can play in shaping research outcomes. PAR prioritizes co-creation with communities to drive social change, yet when GenAI tools act as “participants” (e.g., designing surveys or analyzing narratives), their role as non-human agents raises ethical dilemmas.

4.3.1 GenAI's impact on praxis

When GenAI tools are used in PAR, they can create power imbalances, as communities may perceive AI as an extension of institutional authority. They also introduce agency ambiguity: can an AI tool be a “change agent” if it lacks intentionality? For example, in a PAR project on school transformation in which ChatGPT was used to analyze stakeholder interviews, community members expressed distrust, perceiving AI as an extension of institutional authority rather than a neutral analytical tool. This case underlines the ethical complications of integrating GenAI into participatory research methodologies.

4.3.2 Strategies for ethical integration of AI in research praxis

To navigate the Crisis of Praxis, researchers must ethically reconceptualize GenAI's role by acknowledging its agency as a co-constructor of knowledge rather than a neutral tool. Drawing on actor-network theory (Latour, 2005) and posthuman ethics, this involves: (1) collaborative boundary-setting with communities (e.g., limiting AI to “scribe” roles to mitigate power imbalances); (2) participatory transparency through plain-language disclosure in consent processes and accountability statements; and (3) critical reflexivity via ethical guidelines that uphold autonomy and informed consent. These approaches reframe non-human participation while preserving the humanistic foundations of qualitative research.

5 Adapting quality criteria for the GenAI era: toward a new framework

The Meta-Crisis of Generativity demands a paradigm shift in how we define quality in qualitative research. Traditional criteria for evaluating qualitative research, as established by Lincoln and Guba (1985), need to be reimagined to account for the integration of GenAI tools.

5.1 Contemporary paradigms

The interpretivist, criticalist, and postmodernist research paradigms demand adapted quality criteria that balance human subjectivity with GenAI's generative potential. Within interpretivism, AI can identify latent themes in data; however, researchers should manually contextualize cultural nuances by revising AI-generated thematic maps through member checking to ensure alignment with participants' lived experiences (Combrinck, 2024). Criticalism can leverage GenAI to expose power structures (e.g., detecting gender bias in corporate documents), though such insights require validation by marginalized stakeholders to confirm inequities. Postmodernism uniquely embraces GenAI's capacity to destabilize singular “truths,” employing it to generate contradictory narratives for ideological deconstruction, such as competing interpretations of social events that reveal underlying tensions.

5.2 A new framework for qualitative rigor

We propose a new framework for evaluating quality in GenAI-assisted qualitative research, grounded in the interpretivist, criticalist, and postmodernist research paradigms. Table 1 shows the traditional and GenAI-adjusted criteria.

To maintain research rigor while leveraging GenAI, qualitative researchers should adopt three interconnected principles: (1) generative transparency, in which researchers document all human-AI interactions in research logs; (2) ethical relationality, which prioritizes human accountability over AI efficiency to ensure ethical compliance; and (3) postmodern pluralism, which embraces GenAI as a critical “provocateur” that challenges human-centric epistemology (BaiDoo-Anu and Owusu Ansah, 2023; Burleigh and Wilson, 2024; Dahal, 2023; Haouam, 2025; Zhang et al., 2025). These adapted criteria enable researchers to harness GenAI's potential while safeguarding the integrity essential to qualitative inquiry.
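Generative transparency depends on research logs that others can audit. One way to make such a log tamper-evident, offered here as a hypothetical sketch rather than an established protocol, is to chain each entry to the previous one with a SHA-256 hash, so that a later edit to any earlier entry is detectable. The class name `ResearchLog` and the fields `tool`, `prompt`, `output`, and `human_action` are assumptions chosen for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical sketch: class and field names are illustrative assumptions.
GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(payload: dict) -> str:
    """SHA-256 of the entry's canonical (key-sorted) JSON form."""
    canonical = json.dumps(payload, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class ResearchLog:
    entries: list = field(default_factory=list)

    def record(self, tool: str, prompt: str, output: str, human_action: str) -> dict:
        """Append one human-AI interaction, linked to the previous entry."""
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "tool": tool,
            "prompt": prompt,
            "output": output,
            "human_action": human_action,  # e.g. accepted, revised, rejected
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; False if any entry was altered or reordered."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def to_jsonl(self) -> str:
        """Serialize entries as JSON Lines for archiving with the study."""
        return "\n".join(json.dumps(e, ensure_ascii=False) for e in self.entries)


if __name__ == "__main__":
    log = ResearchLog()
    log.record("ChatGPT", "Summarize interview 3", "Draft summary ...", "revised")
    log.record("ChatGPT", "Suggest themes", "Theme list ...", "rejected")
    print(log.verify())
```

Hash chaining only shows that a log was altered after the fact; it cannot show that every interaction was logged, so it complements rather than replaces the reflexive journal and peer debriefing.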

6 Discussion

The integration of GenAI into qualitative research has triggered what can be described as a meta-crisis of generativity: an entanglement of Denzin and Lincoln's (1994) foundational crises of representation, legitimation, and praxis (Agarwal, 2025; Bai and Wang, 2025; Baytas and Ruediger, 2025; Haouam, 2025; Ilieva et al., 2025; Yildirim et al., 2025). This convergence challenges the epistemological and ethical foundations of qualitative inquiry. GenAI's so-called “synthetic objectivity” often masks the researcher's subjectivity, diluting positionality and flattening cultural nuance, particularly when AI is used to generate field notes or thematic codes. This is evident in cases such as autoethnographies of migrant labor and of STEAM teachers, where lived experiences risk being erased. Such representational issues feed directly into legitimation crises, as opaque AI algorithms and biased training data undermine trustworthiness, breaching credibility standards such as those proposed by Lincoln and Guba (1985). In participatory contexts such as Participatory Action Research (PAR), GenAI's role as a “co-participant” introduces new power dynamics, with communities potentially perceiving AI as a proxy for institutional authority, thereby destabilizing the ethics of co-creation.

These challenges necessitate integrated strategies to maintain qualitative rigor (Dahal, 2023). For representation, techniques like voice annotation and reflexive bracketing can foreground human voices, while co-authorship frameworks that credit AI (e.g., GPT-4) as a non-human collaborator help mitigate ontological erasure. To address legitimation, algorithmic audits and triangulation practices enhance transparency, while member-checking, alongside thick description, anchors AI outputs in human interpretation.
In terms of praxis, participatory transparency (such as limiting AI to scribe roles with community consent) helps strike a balance between efficiency and ethical relationality. Rigor itself must be reimagined: algorithmic accountability can replace traditional credibility by documenting training data biases, while human-AI dialogue journaling offers a new form of confirmability by tracing how human reflexivity shapes AI outputs. Postmodern pluralism, meanwhile, leverages GenAI to challenge hegemonic narratives and foster counter-narratives (Haouam, 2025). Rather than signaling an endpoint, this meta-crisis invites a reanimation of the critical ethos of qualitative research. It calls for interdisciplinary collaboration among data scientists, ethicists, and communities to co-design culturally attuned AI tools for contexts across the Global East and West. Actor-network theory (Latour, 2005) offers a lens for reconceptualizing AI as an actor within knowledge networks, one that demands ethical frameworks for non-human agency. Ultimately, paradigmatic synergy, drawing on interpretivist, critical, and postmodern traditions, can resist techno-determinism and center the voices of marginalized individuals. Yet tensions remain unresolved: can AI ever embody phronesis, or practical wisdom, in participatory change? The field urgently needs standardized disclosure protocols for AI use, decolonized training datasets to prevent epistemic violence, and longitudinal studies to assess the long-term impact of GenAI on research praxis.

7 Conclusion

As qualitative research adapts to the integration of GenAI tools, the challenges of authorship, trustworthiness, and ethical praxis require proactive strategies to maintain research integrity. The Meta-Crisis of Generativity, comprising the Crisis of Representation, the Crisis of Legitimation, and the Crisis of Praxis, demands methodological innovation and reflexivity; it is not a threat, but an invitation to reanimate the critical spirit of qualitative research. By adapting Denzin and Lincoln's account of these crises (Denzin and Lincoln, 2005), researchers can harness GenAI's potential while safeguarding ethical rigor. This requires interdisciplinary collaboration among ethicists, communities, and engineers to forge tools and norms that honor the humanistic roots of qualitative research. By transparently documenting AI's involvement, critically assessing claims, and ethically integrating AI into research praxis, qualitative researchers can navigate this evolving landscape while upholding the foundational values of rigor, trust, and ethical responsibility. Future discussions should therefore focus on establishing best practices for AI-human collaboration in qualitative research, developing ethical guidelines, and refining methodologies to align with contemporary interpretivist, criticalist, and postmodernist paradigms. As the field evolves, qualitative researchers must engage in continuous dialogue to ensure that AI serves as a tool for enhancement rather than a disruptor of research integrity.

8 Limitations

This article's limitations include:

• The purely conceptual nature of the subject matter.

• The subjective nature of personal insights and opinions.

• Limited generalizability, given the focus on the authors' own experiences of the meta-crisis of generativity and of adapting qualitative research quality criteria in the era of GenAI.

9 Recommendations

The recommendations of the article include:

• Label AI-generated content clearly and use GenAI as a draft initiator, followed by human critique to retain positionality and nuance.

• Audit AI training data and triangulate outputs with human validation methods, such as member-checking.

• Disclose AI's role, obtain consent, and set boundaries—e.g., limiting AI to support roles in participatory research.

• Utilize GenAI-specific criteria, such as algorithmic accountability and dialogue journals, to replace traditional validity criteria.

• Partner with ethicists, engineers, and communities to build culturally sensitive AI tools and ethical standards.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Author contributions

ND: Supervision, Methodology, Investigation, Writing – review & editing, Funding acquisition, Writing – original draft, Conceptualization, Formal analysis, Visualization, Project administration, Resources, Validation. MH: Writing – review & editing, Methodology, Writing – original draft, Conceptualization, Funding acquisition. AO: Funding acquisition, Writing – review & editing, Conceptualization, Writing – original draft. MN: Funding acquisition, Writing – review & editing, Writing – original draft, Methodology. HK: Methodology, Writing – original draft, Funding acquisition, Writing – review & editing.

Funding

The authors declare that financial support was received for the research and/or publication of this article. Partial publication grant was received from the Institute for Advanced Research Publication Grant of United International University, for the publication of this article. Ref. No.: IAR-2025-Pub-081.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that GenAI was used in the creation of this manuscript. We wish to acknowledge the use of ChatGPT (GPT-3.5 or GPT-4.0), DeepSeek, and Copilot for this article. ChatGPT and DeepSeek were used to brainstorm and structure the content, while Copilot was used to refine the language and ensure consistent flow and cohesion across sentences and paragraphs. The authors have evaluated the text and approve it for publication.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Agarwal, A. (2025). Optimizing employee roles in the era of generative AI: a multi-criteria decision-making analysis of co-creation dynamics. Cogent Soc. Sci. 11:2476737. doi: 10.1080/23311886.2025.2476737

Arosio, L. (2025). Generative AI as a teaching tool for social research methodology: addressing challenges in higher education. Societies 15:157. doi: 10.3390/soc15060157

Bai, Y., and Wang, S. (2025). Impact of generative AI interaction and output quality on university students' learning outcomes: a technology-mediated and motivation-driven approach. Sci. Rep. 15:24054. doi: 10.1038/s41598-025-08697-6

BaiDoo-Anu, D., and Owusu Ansah, L. (2023). Education in the era of generative Artificial Intelligence (AI): understanding the potential benefits of ChatGPT in promoting teaching and learning. J. AI 7, 52–62. doi: 10.61969/jai.1337500

Baytas, C., and Ruediger, D. (2025). Making AI Generative for Higher Education: Adoption and Challenges among Instructors and Researchers. doi: 10.18665/sr.322677

Bennis, I., and Mouwafaq, S. (2025). Advancing AI-driven thematic analysis in qualitative research: a comparative study of nine generative models on Cutaneous Leishmaniasis data. BMC Med. Inform. Decis. Mak. 25:124. doi: 10.1186/s12911-025-02961-5

Bozkurt, A., Xiao, J., Farrow, R., Bai, J. Y. H., Nerantzi, C., Moore, S., et al. (2024). The manifesto for teaching and learning in a time of generative AI: a critical collective stance to better navigate the future. Open Praxis 16, 487–513. doi: 10.55982/openpraxis.16.4.777

Burleigh, C., and Wilson, A. M. (2024). Generative AI: is authentic qualitative research data collection possible? J. Educ. Technol. Syst. 53, 89–115. doi: 10.1177/00472395241270278

Chan, C. K. Y., and Hu, W. (2023). Students' voices on generative AI: perceptions, benefits, and challenges in higher education. Int. J. Educ. Technol. High. Educ. 20:43. doi: 10.1186/s41239-023-00411-8

Combrinck, C. A. (2024). A tutorial for integrating generative AI in mixed methods data analysis. Discov. Educ. 3:116. doi: 10.1007/s44217-024-00214-7

Dahal, N. (2023). Ensuring quality in qualitative research: a researcher's reflections. Qual. Rep. 28, 2298–2317. doi: 10.46743/2160-3715/2023.6097

Dahal, N. (2024a). “Ethics” and “Integrity” in research in the era of generative AI: are we ready to contribute to scientific inquiries? GS Spark: Journal of Applied Academic Discourse 2, 1–6. doi: 10.5281/zenodo.14828619

Dahal, N. (2024b). How can generative AI (GenAI) enhance or hinder qualitative studies? A critical appraisal from South Asia, Nepal. Qual. Rep. 29, 722–733. doi: 10.46743/2160-3715/2024.6637

Denzin, N. K., and Lincoln, Y. S. (eds.). (1994). Handbook of Qualitative Research. Thousand Oaks, CA: Sage.

Denzin, N. K., and Lincoln, Y. S. (eds.). (2005). “Introduction: the discipline and practice of qualitative research,” in Handbook of Qualitative Research, 3rd Edition. Thousand Oaks, CA: Sage, 1–32.

Drinkwater Gregg, K., Ryan, O., Katz, A., Huerta, M., and Sajadi, S. (2025). Expanding possibilities for generative AI in qualitative analysis: fostering student feedback literacy through the application of a feedback quality rubric. J. Eng. Educ. 114:e70024. doi: 10.1002/jee.70024

Fui-Hoon Nah, F., Zheng, R., Cai, J., Siau, K., and Chen, L. (2023). Generative AI and ChatGPT: applications, challenges, and AI-human collaboration. J. Inform. Technol. Case Appl. Res. 25, 277–304. doi: 10.1080/15228053.2023.2233814

García-López, I. M., and Trujillo-Liñán, L. (2025). Ethical and regulatory challenges of Generative AI in education: a systematic review. Front. Educ. (Lausanne) 10:1565938. doi: 10.3389/feduc.2025.1565938

Haouam, I. (2025). Leveraging AI for advancements in qualitative research methodology. J. Art. Intel. 7, 85–114. doi: 10.32604/jai.2025.064145

Hitch, D. (2024). Artificial Intelligence augmented qualitative analysis: the way of the future? Qual. Health Res. 34, 595–606. doi: 10.1177/10497323231217392

Hughes, L., Malik, T., Dettmer, S., Al-Busaidi, A. S., and Dwivedi, Y. K. (2025). Reimagining higher education: navigating the challenges of generative AI adoption. Inform. Syst. Front. 1–23. doi: 10.1007/s10796-025-10582-6

Ilieva, G., Yankova, T., Ruseva, M., and Kabaivanov, S. (2025). A framework for generative AI-Driven assessment in higher education. Information 16:472. doi: 10.3390/info16060472

Jack, T., Cooper, A., and Flower, L. (2025). Generative AI in Social Science: A snapshot of the Current Integration of Charming Chatbots and Cautiously Complementary Tools in Research. doi: 10.2139/ssrn.5299982

Karataş, F., Eriçok, B., and Tanrikulu, L. (2025). Reshaping curriculum adaptation in the age of artificial intelligence: mapping teachers' AI-driven curriculum adaptation patterns. Br. Educ. Res. J. 51, 154–180. doi: 10.1002/berj.4068

Lakhe Shrestha, B. L., Dahal, N., Hasan, Md. K., Paudel, S., and Kapar, H. (2025). Generative AI on professional development: a narrative inquiry using TPACK framework. Front. Educ. (Lausanne) 10:1550773. doi: 10.3389/feduc.2025.1550773

Latour, B. (2005). Reassembling the Social: An Introduction to Actor-Network-Theory. Oxford: Oxford University Press.

Lincoln, Y. S., and Guba, E. G. (1985). Naturalistic Inquiry. Thousand Oaks, CA: Sage, 289–331. doi: 10.1016/0147-1767(85)90062-8

Moura, A., Fraga, S., Ferreira, P. D., and Amorim, M. (2025). Are we still at this point?: Persistent misconceptions about the adequacy, rigor and quality of qualitative health research. Front. Pub. Health 13:1586414. doi: 10.3389/fpubh.2025.1586414

Nguyen-Trung, K. (2025). ChatGPT in thematic analysis: can AI become a research assistant in qualitative research? Qual. Quant. 1–34. doi: 10.1007/s11135-025-02165-z

Owoahene Acheampong, I., and Nyaaba, M. (2024). Review of qualitative research in the era of generative artificial intelligence. SSRN Electron. J. doi: 10.2139/ssrn.4686920

Sun, H., Kim, M., Kim, S., and Choi, L. (2025). A methodological exploration of generative artificial intelligence (AI) for efficient qualitative analysis on hotel guests' delightful experiences. Int. J. Hosp. Manag. 124:103974. doi: 10.1016/j.ijhm.2024.103974

Wood, D., and Moss, S. H. (2024). Evaluating the impact of students' generative AI use in educational contexts. J. Res. Innov. Teach. Learn. 17, 152–167. doi: 10.1108/JRIT-06-2024-0151

Yildirim, H. M., Güvenç, A., and Can, B. (2025). Reporting quality in qualitative research using artificial intelligence and the COREQ checklist: a systematic review of tourism literature. Int. J. Qual. Methods 24:16094069251351487. doi: 10.1177/16094069251351487

Zawacki-Richter, O., Marín, V. I., Bond, M., and Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education – where are the educators? Int. J. Educ. Technol. High. Educ. 16:39. doi: 10.1186/s41239-019-0171-0

Zhang, H., Wu, C., Xie, J., Lyu, Y., Cai, J., and Carroll, J. M. (2025). Harnessing the power of AI in qualitative research: exploring, using and redesigning ChatGPT. Comput. Hum. Behav. Artif. Hum. 4:100144. doi: 10.1016/j.chbah.2025.100144

Keywords: generative AI, qualitative research, crisis of representation, crisis of legitimation, crisis of praxis, algorithmic bias, posthuman ethics

Citation: Dahal N, Hasan MK, Ounissi A, Haque MN and Kapar H (2025) Navigating the meta-crisis of generativity: adapting qualitative research quality criteria in the era of generative AI. Front. Res. Metr. Anal. 10:1685968. doi: 10.3389/frma.2025.1685968

Received: 14 August 2025; Accepted: 16 October 2025;

Published: 04 November 2025.

Edited by:

Patrick Ngulube, University of South Africa, South Africa

Reviewed by:

Celeste-Marie Combrinck, University of Pretoria, South Africa

Copyright © 2025 Dahal, Hasan, Ounissi, Haque and Kapar. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Niroj Dahal, niroj@kusoed.edu.np

†ORCID: Niroj Dahal orcid.org/0000-0001-7646-1186

Md. Kamrul Hasan orcid.org/0000-0003-2353-4673

Amine Ounissi orcid.org/0000-0002-7318-1880

Md. Nurul Haque orcid.org/0000-0003-2046-8586

Hiralal Kapar orcid.org/0009-0000-9123-4600
