- Human-Computer Interaction (HCI) Group, Informatik, University of Würzburg, Würzburg, Germany

Measurements of physiological parameters provide an objective, often non-intrusive, and (at least semi-)automatic evaluation and utilization of user behavior. In addition, specific hardware devices of Virtual Reality (VR) often ship with built-in sensors, e.g. eye-tracking and movement sensors. Hence, the combination of physiological measurements and VR applications seems promising. Several approaches have investigated the applicability and benefits of this combination for various fields of applications. However, the range of possible application fields, coupled with potentially useful and beneficial physiological parameters, types of sensors, target variables and factors, and analysis approaches and techniques is manifold. This article provides a systematic overview and an extensive state-of-the-art review of the usage of physiological measurements in VR. We identified 1,119 works that make use of physiological measurements in VR. Within these, we identified 32 approaches that focus on the classification of characteristics of experience, common in VR applications. The first part of this review categorizes the 1,119 works by field of application, i.e. therapy, training, entertainment, and communication and interaction, as well as by the specific target factors and variables measured by the physiological parameters. An additional category summarizes general VR approaches applicable to all specific fields of application since they target typical VR qualities. In the second part of this review, we analyze the target factors and variables regarding the respective methods used for an automatic analysis and, potentially, classification. For example, we highlight which measurement setups have been proven to be sensitive enough to distinguish different levels of arousal, valence, anxiety, stress, or cognitive workload in the virtual realm. This work may prove useful for all researchers who want to use physiological data in VR and need a good overview of prior approaches, their benefits, and their potential drawbacks.

1 Introduction

Virtual Reality (VR) provides the potential to expose people to a large variety of situations. One advantage it has over the exposure to real situations is that the creator of the virtual environment can easily and reliably control the stimuli that are presented to an immersed person (Vince, 2004). Usually, the presented stimuli are not arbitrary but intentionally chosen to evoke a certain experience in the user, e.g. anxiety, relaxation, stress, or presence. Researchers require tools that help them to determine whether the virtual environment fulfills its purpose and how users respond to certain stimuli. Evaluation methods are an essential part of the development and research of VR.

Evaluation techniques can be divided into implicit and explicit methods (Moon and Lee, 2016; Marín-Morales et al., 2020). Explicit methods require users to explicitly and actively express their own experience. Hence, they can also be called subjective methods. Examples include interviews, thinking-aloud, and questionnaires. In the evaluation of VR, questionnaires are the most prominent explicit method. They are very versatile and designed for the quantification of various characteristics of experience. Some assess VR-specific phenomena, e.g. presence (Slater et al., 1994; Witmer and Singer, 1998), simulator sickness (Kennedy et al., 1993), or the illusion of virtual body-ownership (Roth and Latoschik, 2020). Other questionnaires capture more generic characteristics of experience, but are still useful in many VR scenarios, e.g. workload (Hart and Staveland, 1988) or affective reactions (Watson et al., 1988; Bradley and Lang, 1994).

However, questionnaires and other explicit methods also have some disadvantages. There is a variety of self-report biases that can manipulate the way people respond to questions. A common example is the social desirability bias. It refers to the idea that subjects tend to choose a response that they expect to meet social expectations instead of one that reflects their true experience (Corbetta, 2003; Grimm, 2010). Other common examples of response biases are the midpoint bias, where people tend to choose neutral answers (Morii et al., 2017), or extreme responding, where people tend to choose the extreme choices on a rating scale (Robins et al., 2009). In general, there is a variety of characteristics and circumstances that can negatively influence the human capacity to evaluate oneself. For a detailed description of erroneous self-assessment of humans, refer to Dunning et al. (2004). Another point that can limit the validity of questionnaires is that one never knows if the questions were understood by participants (Rowley, 2014). The complexity of the information and the language skills of the respondents can influence how questions are interpreted and thus how answers turn out (Redline et al., 2003; Richard and Toffoli, 2009). Another problem is that explicit methods often separate the evaluation from the underlying stimulus. Thus, they rely on a correct recapitulation of experience. People might not be able to remember exactly how they were feeling when they were interacting with software (Cairns and Cox, 2008). This is especially relevant for VR, as leaving the virtual environment can lead to a change in the evaluation of the experience (Schwind et al., 2019). In addition, some mental processes are not even accessible to consciousness and are therefore not recorded by explicit methods (Barsade et al., 2009).

Implicit evaluation methods avoid many of those drawbacks. In contrast to the explicit measures, they do not require the user to actively participate in the evaluation. Rather, they analyze the user behavior based on the response to a certain stimulus or event. This can be done either by direct observation or by analysis of physiological data. These implicit methods can also be referred to as objective methods as they do not rely on the ability of subjects to assess their own condition. Implicit evaluation that is based on physiological data has the advantage that it can assess both automatic and deliberate processes. With automatic processes, we refer to organic activations that are unconsciously controlled by the autonomic nervous system, e.g. bronchial dilation or the activation of the sweat secretion (Jänig, 2008; Laight, 2013). These activations cannot be observed from the outside. With measures like electrodermal activity, electrocardiography, or electroencephalography, however, we can assess them. This allows quantification of how current stimuli are processed by the nervous system (Jänig, 2008; Laight, 2013). Deliberate processes, on the other hand, do not depend on unconscious activations of the autonomic nervous system. Nevertheless, physiological measures can help to understand these processes. Electromyography, for example, measures the strength of contraction of skeletal muscles (De Luca, 2006). Thus, this signal can also depend on voluntary control by the human being.

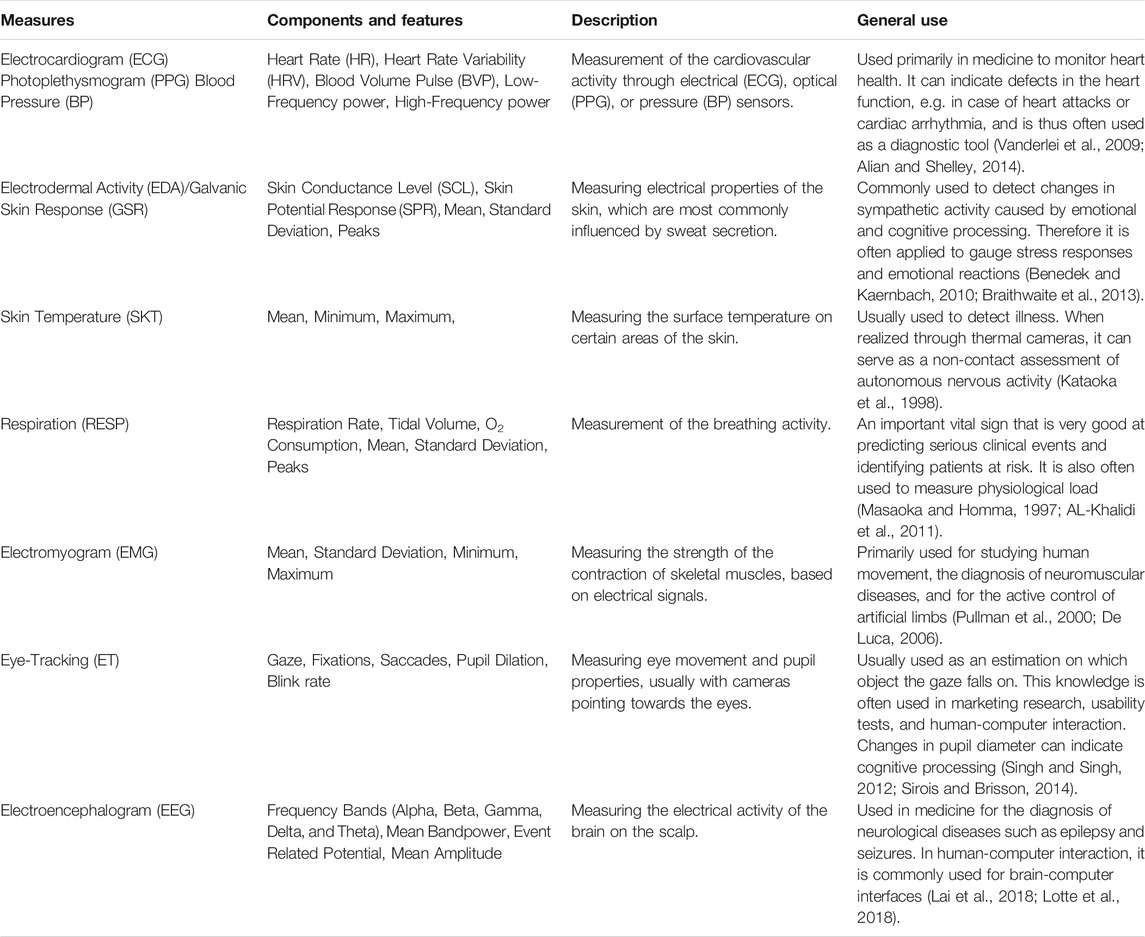

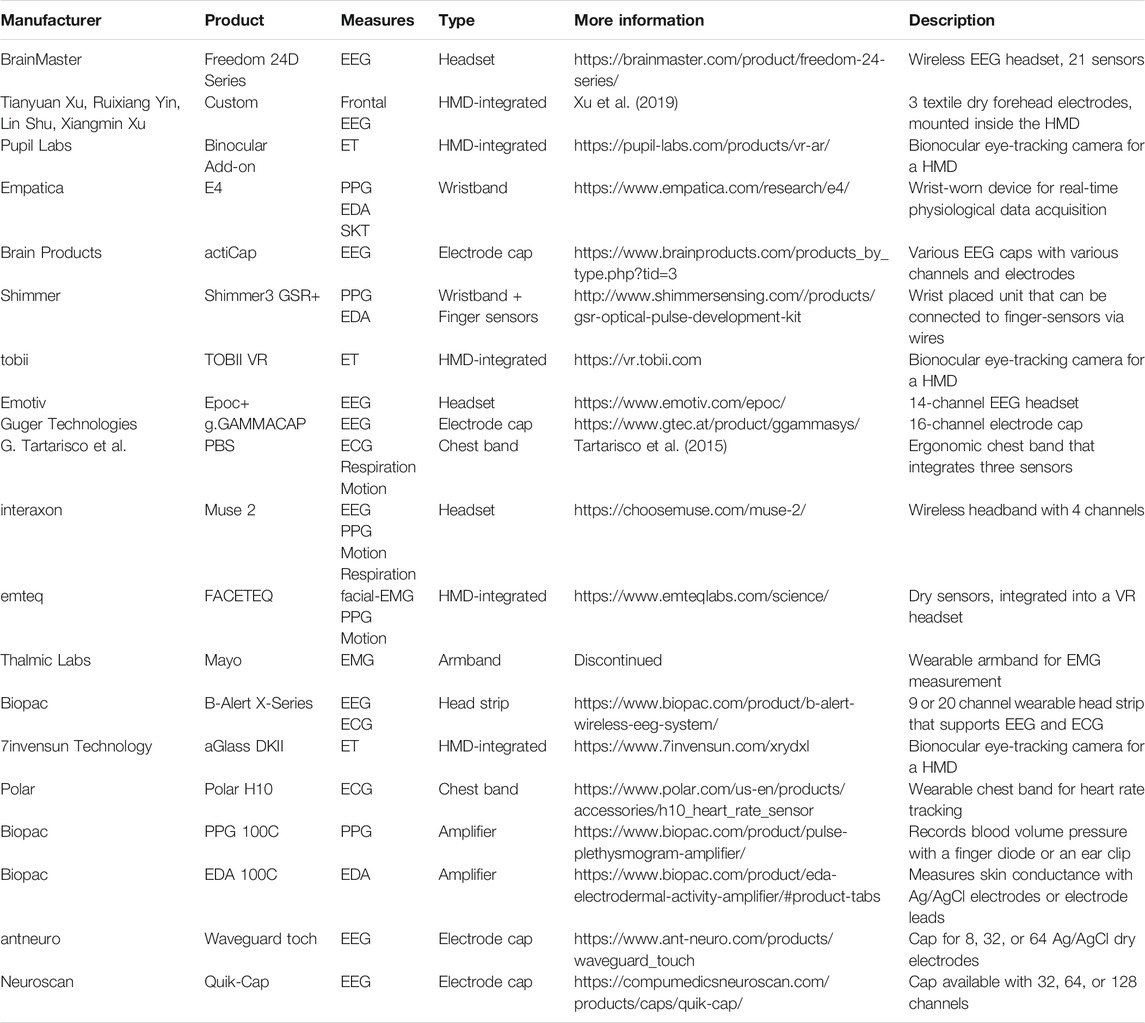

Physiological measurements offer decisive advantages. They can be taken during exposure, they do not depend on memory, they can capture sub-conscious states, data can be collected fairly unobtrusively, and they yield quantitative data that can be leveraged for machine-learning approaches. A depiction of the discussed structure of evaluation methods can be found in Figure 1. An overview of the physiological measures that are considered in this work can be found in Table 1. It also contains abbreviations for the measurements that are used from now on.

FIGURE 1. Categorization of evaluation methods. The overview should not be considered comprehensive, but merely as an orientation.

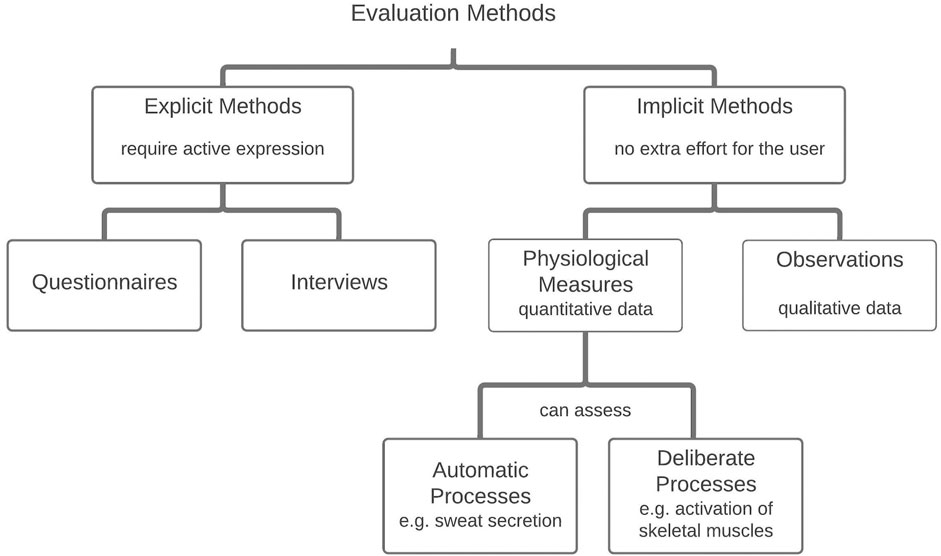

The availability of easy-to-use wearable sensors is spurring the use of physiological data. EEG headsets such as the EPOC+ or the Muse 2; wrist- and chest-worn trackers from POLAR, fitbit, and Apple; as well as the EMPATICA E4 all make it easier to collect physiological data. In addition, VR headsets already come with built-in sensors that can be used for behavior analysis. Data from gyroscopes and accelerometers, included in VR headsets and controllers, provide direct information about movement patterns. Moreover, eye-tracking devices from tobii or Pupil Labs can be used to easily extend VR headsets so they deliver even more data, e.g. pupil dilation or blinking rate.

Due to the aforementioned advantages in combination with the availability of easy-to-use sensors and low-cost head-mounted displays (HMD) (Castelvecchi, 2016), the number of research approaches that combine physiological data with VR has increased considerably in recent years. In their meta-review about emotion recognition in VR with physiological data, Marín-Morales et al. (2020) even report an exponential growth of this field. Researchers who want to use physiological measures for their own VR application, however, are faced with a very rapidly growing field that offers a wide range of possibilities. As previously implied, there are a variety of signals that can be collected with a variety of sensors. While it is clear that a presence questionnaire is used to assess presence, such a 1-to-1 linkage of measure and experience is not possible for physiological measures. Their usage in VR applications is therefore anything but trivial. A structured reappraisal of the field is necessary.

This systematic review consists of two parts that address the following issues:

In the first part of this article, we examine the different use cases of physiological measurements in VR. We collect a broad selection of works that use physiological measures to assess the state of the user in the virtual realm. Then we categorize the works into specific fields of application and explain the functionality of the physiological measurements within those fields. As a synthesis of this part, we describe a list of the main purposes of using physiological data in VR. This serves as a broad, state of the art overview of how physiological measures can be used in the field of VR.

However, knowing what this data can be used for is only half the battle. We still need to know how to work with this data in order to gain knowledge about a user’s experience. Hence, in the second part of this paper, we discuss concrete ways to collect and interpret physiological data in VR. Classification approaches tell us a lot about how to obtain data and what can be deduced from it. To be precise, this includes approaches that classify different levels of certain characteristics of experience. The works from this domain usually adopt the characteristics of experience as their independent variable. Subjects in those studies were exposed to stimuli known to elicit a certain experience, such as anxiety. These studies then examined the extent to which the change in experience was reflected in physiological measurements. Thus, the works focus on the physiological measures themselves and their ability to quantify a particular experience. This review of classifiers, therefore, provides a clear overview of signals, sensors, tools, and algorithms that have been sensitive enough to distinguish different levels of the targeted experience in a VR setup. They show concrete procedures for extracting the information that is hidden in the physiological data.

2 Methods

On the basis of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement (Liberati et al., 2009), we searched and assessed literature to find papers that make use of physiological data in VR. We always searched for one specific signal in combination with VR, so the search terms consisted of two parts that are connected with an AND. Thus, the individual search terms can be summarized in one big query that can be described like this: (“Virtual Reality” OR “Virtual Environment” OR “VR” OR “HMD”) AND (Pupillometry OR “Pupil* Size” OR “Pupil* Diameter” OR “Pupil* Dilation” OR “Pupil” OR “Eye Tracking” OR “Eye-Tracking” OR “Eye-Tracker*” OR “Gaze Estimation” OR “Gaze Tracking” OR “Gaze-Tracking” OR “Eye Movement” OR “EDA” OR “Electrodermal Activity” OR “Skin Conductance” OR “Galvanic Skin Response” OR “GSR” OR “Skin Potential Response” OR “SPR” OR “Skin Conductance Response” OR “SCR” OR “EMG” OR “Electromyography” OR “Muscle Activity” OR “Respiration” OR “Breathing” OR “Heart Rate” OR “Pulse” OR “Skin Temperature” OR “Thermal Imaging” OR “Surface Temperature” OR “Blood Pressure” OR “Blood Volume Pressure” OR “EEG” OR “Electroencephalography”). The terms had to be included in the title, abstract, or keywords of an article. Queried databases were ACM Digital Library, Web of Science, PubMed, APA PsycInfo, PsynDex, and IEEE Xplore. The date of the search was October 15, 2020.
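The two-part structure of the search terms can be illustrated with a minimal Python sketch. The helper names (`or_group`, `build_query`, `per_signal_queries`) are hypothetical, the term lists are abbreviated from the query above, and every term is quoted for simplicity; the exact syntax accepted by each database is not covered here.

```python
# Sketch only: abbreviated term lists taken from the query described above.
VR_TERMS = ["Virtual Reality", "Virtual Environment", "VR", "HMD"]
SIGNAL_TERMS = ["Pupillometry", "Eye Tracking", "EDA", "EMG", "Heart Rate", "EEG"]


def or_group(terms):
    """Join terms with OR, quote each term, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(vr_terms, signal_terms):
    """Combine the VR part and the signal part with a single AND."""
    return or_group(vr_terms) + " AND " + or_group(signal_terms)


def per_signal_queries(vr_terms, signal_terms):
    """One query per signal, mirroring 'one specific signal in combination with VR'."""
    return [build_query(vr_terms, [signal]) for signal in signal_terms]


query = build_query(VR_TERMS, SIGNAL_TERMS)
print(query)
```

Running the individual queries from `per_signal_queries` and merging the results corresponds to the single combined query, provided duplicates are removed afterwards.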

We gathered the results and inserted them into a database together with some extra papers that were known to be relevant for the topic. We removed duplicates and then started with the screening process. Papers were excluded if they were from completely different domains (VR, for example, can not only stand for “Virtual Reality”), if new sensors or algorithms were only introduced (but not actually used), if they only dealt with augmented reality, or if they just presented the idea of using physiological data and VR (but did not actually do so). Furthermore, we excluded poster presentations, abstracts, reviews, and works that were not written in English. Thus, the works left after this screening process all present a use case in which the sought-after physiological measures were used together with a VR application. We usually screened papers on the basis of title and abstract. About 10% required inspection of the full text to determine if they met the criteria. If the full text of those works was not available, they were also excluded. We used the papers that were left after this process for the first part of this work. During the screening process, we began to note certain recurring fields of application and compiled a list of categories and sub-categories of fields of application. We then tagged the papers with these categories according to their field of application. We also used tags to note the purpose for which the physiological data was used.

As already explained in the introduction (Section 1), in the further course of the review we focused on classification approaches. During the aforementioned tagging process, we identified papers that deal with some kind of classification. Those papers were then examined for their eligibility to be included in the second part of the review. The criterion for the inclusion of a paper here was that it is a work that presents a classification based on physiological data which was captured during exposure to immersive VR (CAVE-based or HMD-based). In order to be included, the work also had to distinguish different levels of current experience (e.g. high vs. low stress) and not different groups of people (e.g. children with and without ADHD). Excluded were classifiers that aim at the recognition of user input, an adaptation of the system, or the recognition of the used technology. Also excluded were works that just look for correlations between signals and certain events, and classification approaches that are based on desktop VR or non-physiological data.

3 Results

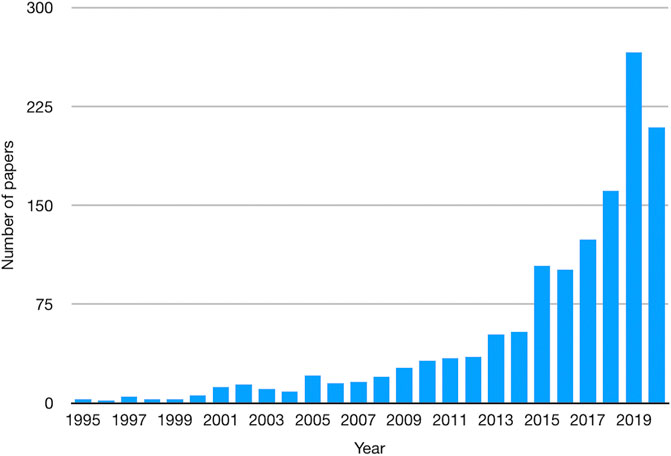

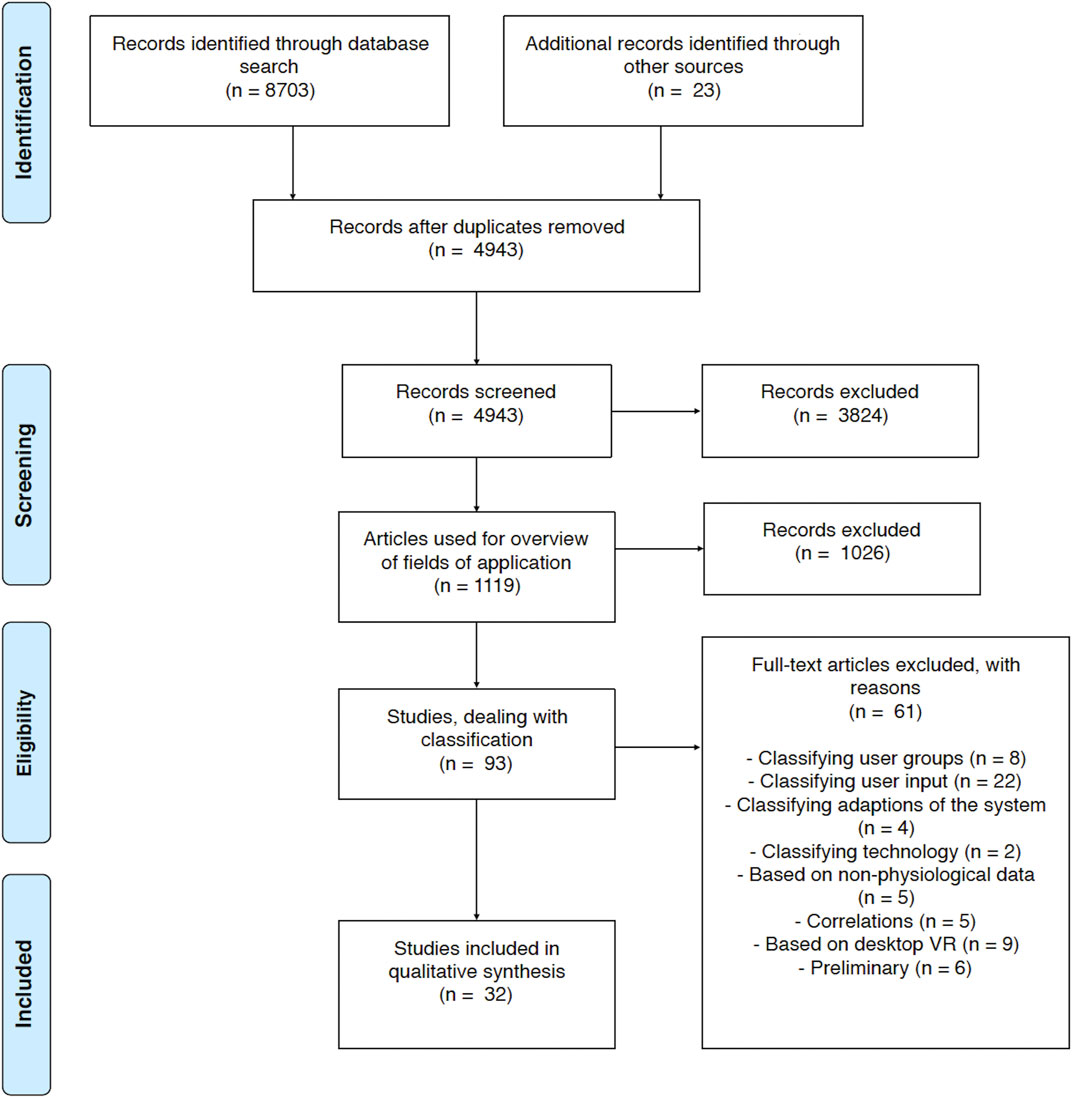

An overview of the specific phases of the search for literature and the results can be seen in Figure 2. In total, the literature research yielded 4,943 different works. After the first screening process, 1,408 works were left over. They all show examples of how physiological data can be used in immersive VR to assess the state of the user. Figure 3 shows the distribution of the papers over the years since 1995.

FIGURE 2. Process and Results of the Literature Review. Diagram is adapted from Liberati et al. (2009).

From this point forward, we chose to continue with works from 2013 and later. Thus, we shifted the focus to current trends. The numbers show that most of the papers were published in recent years (see Figure 3). The year 2013 was the first year for which we found more than 50 papers. This left 1,119 of the 1,408 papers that were published in 2013 or later. During the screening process, described in Section 2, we identified five major fields of application to which most works can be assigned. These domains are therapy and rehabilitation, training and education, entertainment, functional VR properties and general VR properties. In the first part of the discussion section, we use this domain division to give a broad overview of the usage of physiological measures in VR (see Section 4.1).

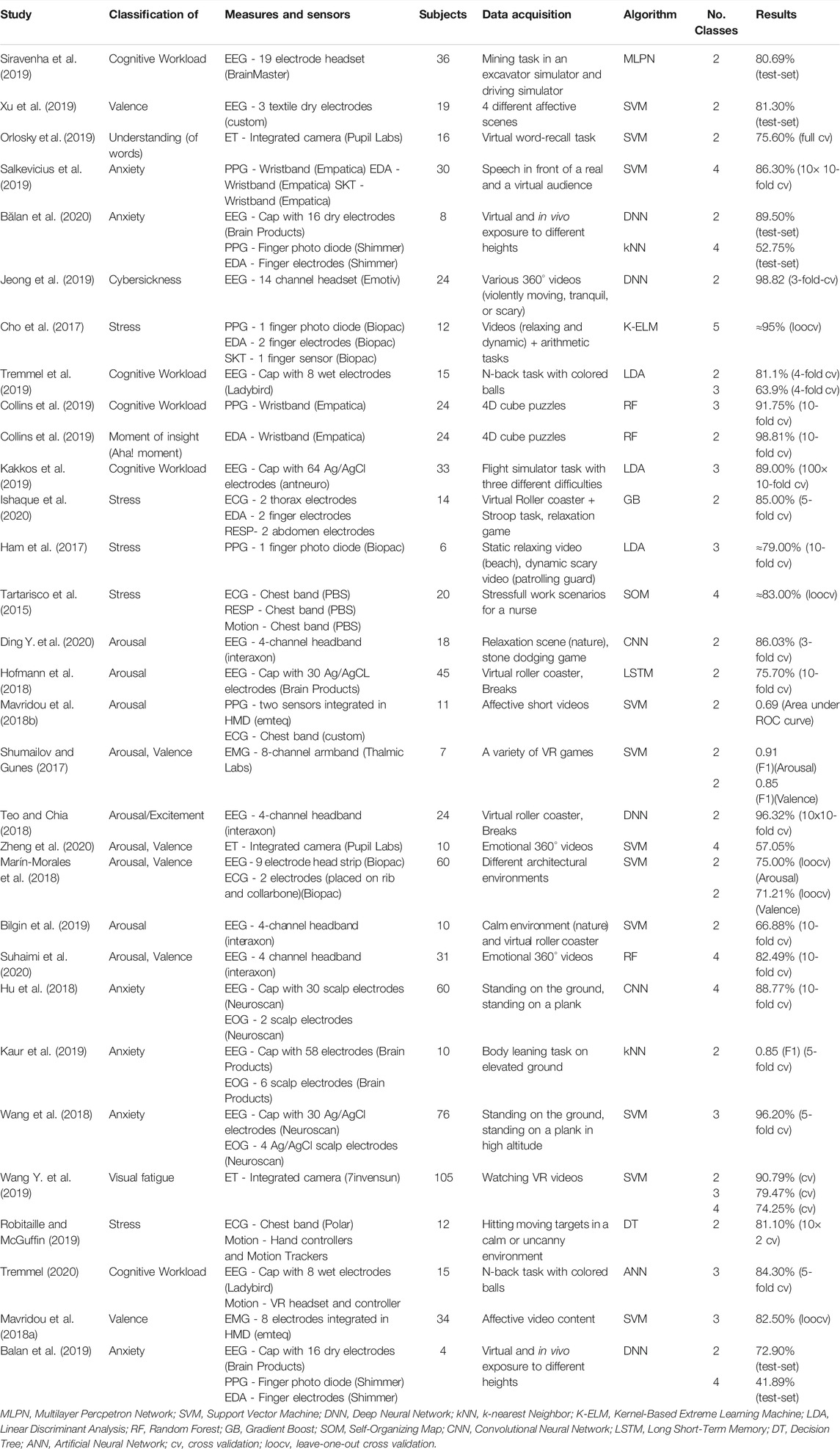

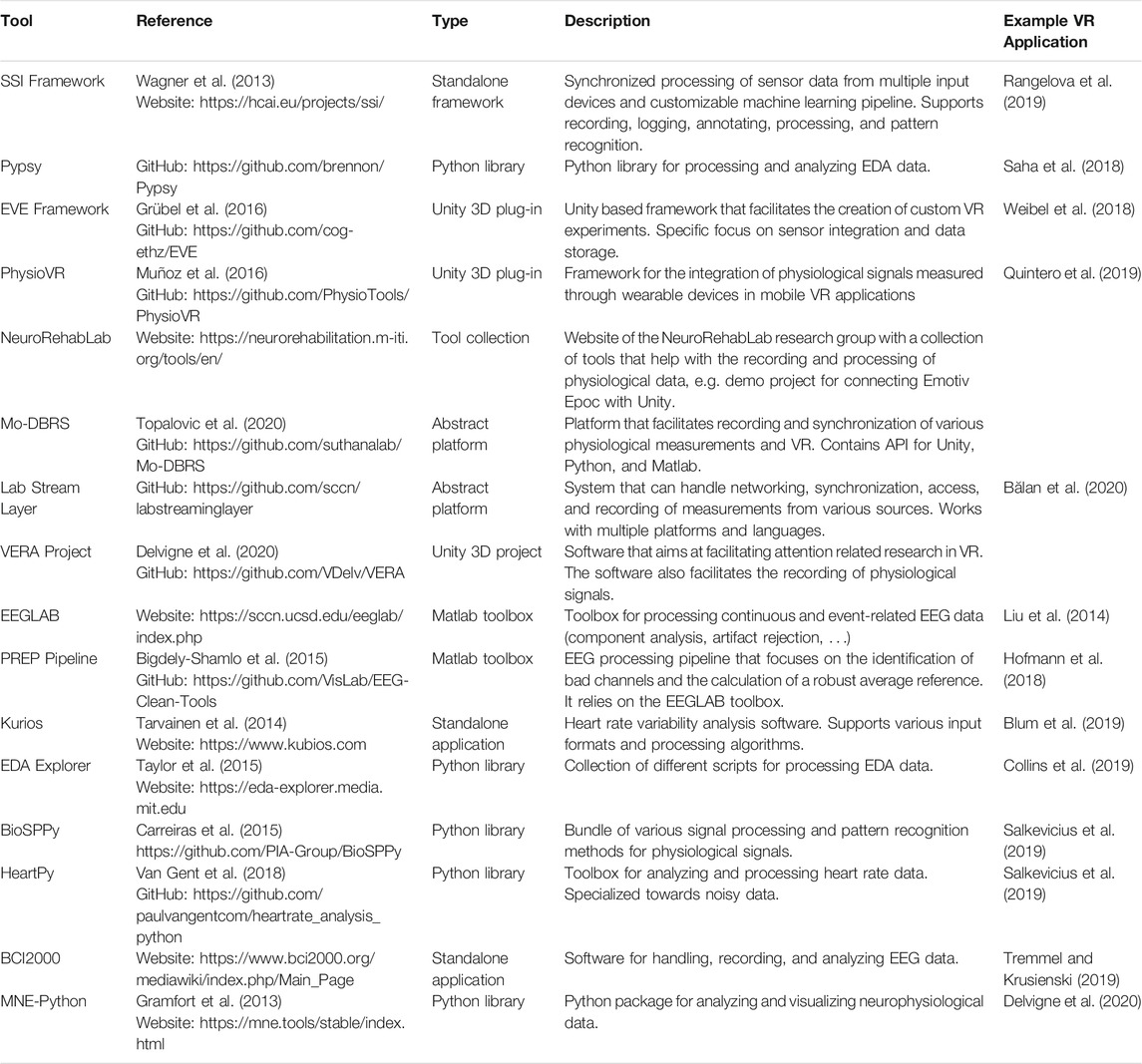

After screening and checking for eligibility, 32 works that deal with the classification of experience in VR were left for further qualitative analysis. Each of the 32 works uses physiological measures as dependent variables. As independent variables they manipulate the intensity of a target characteristic of experience. Thus, the works show the extent to which the physiological measures were able to reflect the manipulation of the independent variable. In our results, the most commonly assessed characteristic of experience was arousal, used in nine works, followed by valence and anxiety, both used in six works. Five works classify stress, and four, cognitive workload. The following characteristics of experience were measured in only one work: visual fatigue, moments of insight, cybersickness, and understanding. An overview of the 32 works can be found in Table 2. This overview shows which characteristics of experience the works assessed, which measures and sensors they used for their approach, and which classification algorithms were chosen for the interpretation of the data. Table 3 provides a separate overview of the sensors that were used in the 32 works. In Table 4 we list different tools that were used in various classification approaches to record, synchronize, and process the physiological data. In the second part of the discussion, we deal with the listed characteristics of experience individually and summarize the corresponding approaches with a focus on signals and sensors (see Section 4.2).

TABLE 2. Overview of classification approaches based on physiological data collected in full-immersive VR. If not stated otherwise, the values presented in the Results column usually refer to the accuracy that was achieved in a cross validation or on an extra test-set. These values serve only as a rough guide to the success of the method and are not comparable 1-to-1.

TABLE 4. Various tools that were used in the identified classification approaches for the recording, synchronization, and processing of physiological data.

4 Discussion

As already indicated in Section 3, the discussion of this work is divided into two parts.

4.1 Part 1: Fields of Application for Physiological Data in Virtual Reality

In the first part, we give a categorized overview of the copious use cases of physiological measures in VR. This overview is based on the 1,119 works and the fields of application that we identified during the screening process. This section is structured according to those fields. We highlight which works belong to which fields and how physiological measures are used. We summarize this overview by listing meta purposes for which physiological data are used in VR. This overview cannot cover all the works that are out there. What we describe are the types of work that have occurred most frequently. After all, this is an abstraction of the field. With only a few exceptions, all the examples we list here are HMD-based approaches.

4.1.1 Therapy and Rehabilitation

Therapy and rehabilitation applications are frequent fields of application for physiological measurements. Here, we talk about approaches that try to reduce or completely negate the effects or causes of diseases and accidents.

4.1.1.1 Exposure Therapy

A very common type of therapy that leverages physiological data in virtual reality is exposure therapy. Heart rate, skin conductivity, or the respiration rate are often used to quantify anxiety reactions to stimuli that can be related to a phobia. Common examples for this are public speaking situations (Kothgassner et al., 2016; Kahlon et al., 2019), standing on elevated places (Gonzalez et al., 2016; Ramdhani et al., 2019), confrontations with spiders (Hildebrandt et al., 2016; Mertens et al., 2019), being locked up in a confined space (Shiban et al., 2016b; Tsai et al., 2018), or reliving a war-scenario (Almeida et al., 2016; Maples-Keller et al., 2019).

Physiological measures can also be used to evaluate the progress of the therapy. Shiban et al. (2017) created a virtual exposure application for the treatment of aviophobia. Heart rate and skin conductance were measured as indicators for the fear elicited by a virtual airplane flight. The exposure session consisted of three flights, while a follow-up test session, one week later, contained two flights. By analyzing the psychophysiological data throughout the five flights, the researchers were able to show that patients continuously got used to the fear stimulus.

Another way in which the physiological data can be utilized is for an automatic adaptation of the exposure therapy system. Bălan et al. (2020) used a deep learning approach for the creation of a fear-level classifier based on heart rate, GSR, and EEG data. This classifier was then used as part of a virtual acrophobia therapy in which the immersed person stands on the roof of a building. Based on a target anxiety level and the output of the fear classifier, the system can steer the height of the building and thus the intensity of the exposure. A similar approach comes from Herumurti et al. (2019) in the form of an exercise system for people with public speaking anxiety. Here, the behavior of a virtual audience depends on the heart rate of the user, i.e. the audience pays attention, pays no attention, or mocks the speaker.
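The adaptation step of such a closed-loop exposure system can be sketched as a simple controller. This is a minimal illustration and not the implementation of Bălan et al. (2020): the integer fear levels, the unit of height (floors), the step size, and the function name `next_height` are all assumptions, and the classifier that produces `predicted_fear` is not part of the sketch.

```python
def next_height(current_height, predicted_fear, target_fear,
                step=1, min_height=0, max_height=10):
    """Return the building height (in floors) for the next exposure step.

    Assumption: fear levels are ordinal integers (e.g. 0 = relaxed, 3 = high fear).
    Exposure is intensified while the predicted fear is below the target,
    reduced while it is above the target, and held when both match.
    """
    if predicted_fear < target_fear:
        proposed = current_height + step
    elif predicted_fear > target_fear:
        proposed = current_height - step
    else:
        proposed = current_height
    # Keep the height inside the range the virtual scene supports.
    return max(min_height, min(max_height, proposed))


# Hypothetical session: classifier outputs for five consecutive time windows.
height = 2
for fear in [0, 1, 2, 3, 2]:
    height = next_height(height, predicted_fear=fear, target_fear=2)
    print(height)
```

A rule of this kind keeps the user close to the target anxiety level without requiring manual intervention by the therapist; real systems would likely smooth the classifier output before acting on it.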

What is also often done in research with exposure therapy applications is the comparison of different stimuli, systems, or groups of people. Physiological signals often represent a reference value that enhances such approaches. Comparisons have been made between traditional and virtual exposure therapy (Levy et al., 2016), fear-inducing stimuli in VR and AR (Li et al., 2017; Yeh et al., 2018), or just between phobic and healthy subjects (Breuninger et al., 2017; Kishimoto and Ding, 2019; Freire et al., 2020; Malta et al., 2020).

4.1.1.2 Relaxation Applications

Many approaches work with the idea of using a virtual environment to let people escape from their current situation and immerse themselves in a more relaxing environment. Physiological stress indicators can help to assess the efficacy of these environments. Common examples of this include scenes with a forest (Yu et al., 2018; Browning et al., 2019; Wang X. et al., 2019; De Asis et al., 2020), a beach (Ahmaniemi et al., 2017; Anderson et al., 2017), mountains (Ahmaniemi et al., 2017; Zhu et al., 2019) or an underwater scenario (Soyka et al., 2016; Liszio et al., 2018; Fernandez et al., 2019). Other works go one step further and manipulate the virtual environment based on the physiological status of the immersed person. So-called biofeedback applications are very common in the realm of relaxation applications and aim to make the users aware of their inner processes. The way this feedback looks can be very different. Blum et al. (2019) chose a virtual beach scene at sunset with palms, lamps, and a campfire. Their system calculates a real-time feedback parameter based on the heart rate variability as an indicator for relaxation. This parameter determines the cloud coverage in the sky and whether the campfire and lamps are lit or not. Fominykh et al. (2018) present a similar virtual beach where the sea waves become higher and the clouds become darker when the heart rate of the user rises. Patibanda et al. (2017) present the serious game Life Tree, which aims to teach a stress-reducing breathing technique. The game revolves around a tree that is bare at the start. By exhaling, the player can blow leaves towards the tree. The color of the leaves becomes green if the player breathes rhythmically and brown if not. Also, the color of the tree itself changes as the player practices correct breathing. Parenthoen et al. (2015) realized biofeedback with the help of EEG data by animating ocean waves according to surface cerebral electromagnetic waves of the immersed person. Most of these works aim at transferring the users from their stressful everyday life into a meditative state. Refer to Döllinger et al. (2021) for a systematic review of such works.
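A heart-rate-variability-driven feedback parameter of the kind described for the beach scene can be sketched as follows. The sketch uses RMSSD, a standard time-domain HRV metric, as a stand-in; it is not claimed to be the parameter computed by Blum et al. (2019). The mapping bounds (`low`, `high`), the threshold, the direction of the mapping (higher HRV means fewer clouds), and all function names are assumptions for illustration.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("at least two RR intervals are required")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def cloud_coverage(hrv_ms, low=20.0, high=80.0):
    """Map an HRV value to cloud coverage in [0, 1].

    Assumption: higher HRV is read as more relaxation and clears the sky.
    """
    relaxation = (hrv_ms - low) / (high - low)
    relaxation = max(0.0, min(1.0, relaxation))
    return 1.0 - relaxation


def campfire_lit(hrv_ms, threshold=50.0):
    """Binary scene element: light the campfire once HRV reaches a threshold."""
    return hrv_ms >= threshold


# Hypothetical RR intervals from the most recent analysis window.
window = [800, 810, 790, 800]
hrv = rmssd(window)
print(round(hrv, 2), cloud_coverage(hrv), campfire_lit(hrv))
```

In a running application, the window would slide over the incoming RR intervals so that the scene follows the user's state continuously.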

Relaxation applications can not only be used to escape the stress of everyday life but also as a distraction from painful medical procedures and conditions. This was applied in different contexts, e.g. during intravenous cannulation of cancer patients (Wong et al., 2020), preparation for knee surgery (Robertson et al., 2017), a stay in an intensive care unit (Ong et al., 2020), or a dental extraction procedure (Koticha et al., 2019). Physiological stress indicators are commonly used to compare the effects of the virtual distraction to control groups (Ding et al., 2019; Hoxhallari et al., 2019; Rao et al., 2019).

4.1.1.3 Physical Therapy

VR stroke therapies often aim for the rehabilitation of impaired extremities. Here, virtual environments are commonly used to enhance motivation with gamification (Ma et al., 2018; Solanki and Lahiri, 2020) or to offer additional feedback, e.g. with a virtual mirror (Patel et al., 2015; Patel et al., 2017). In the domain of motor-rehabilitation, EMG-data can be of particular importance. It can be used to demonstrate the basic effectiveness of the system by showing that users of the application really activate targeted muscles (Park et al., 2016; Drolet et al., 2020). This is of special interest when impairments do not allow visible movement of the target-limb (Patel et al., 2015). Another strategy to combine VR stroke therapy and EMG signals is a feedback approach. Here, the strength of the muscle activation is made available to the user visually or audibly which can result in positive therapy effects, as the user becomes aware of internal processes (Dash et al., 2019; Vourvopoulos et al., 2019). The use of psychophysiological data in stroke-therapy is not necessarily restricted to EMG. Calabro et al. (2017) created a virtual gait training for lower limb paralysis and compared it to a non-VR version of the therapy. With the help of EEG measurements, they showed that the VR version was especially useful for activating brain areas that are responsible for motor learning.

Parkinson’s disease also requires motor rehabilitation. Researchers have used physiological data to assess the anxiety experience of Parkinson patients with impaired gait under different elevations or on a virtual plank (Ehgoetz Martens et al., 2015; Ehgoetz Martens et al., 2016; Kaur et al., 2019). This data has helped researchers and therapists to understand the experience of the patients and to adapt the therapy accordingly.

4.1.1.4 Addiction Therapy

Another field of application where physiological measurements prove useful is the therapy of drug addictions. Gamito et al. (2014) showed that virtual cues have the potential to elicit a craving for nicotine in smokers. With the help of eye-tracking, they demonstrated that smokers exhibit a significantly higher number of eye fixations on cigarettes and tobacco packages. In similar studies, Thompson-Lake et al. (2015) and García-Rodríguez et al. (2013) showed that virtual, smoking-related cues can cause an increase in the heart rate of addicts. Yong-Guang et al. (2018) and Ding X. et al. (2020) did the same for methamphetamine users. They found evidence that meth-users show significant differences in EEG, GSR, and heart rate variability measurements when being exposed to drug-related stimuli in a virtual environment. Based on this, Wang Y.-G. et al. (2019) created a VR counter-conditioning procedure for methamphetamine users. With this virtual therapy, they were able to suppress cue-induced reactions in patients with meth-dependence. The use of physiological data to study the effects of addiction-related stimuli that are presented in VR has also been applied to gambling (Bruder and Peters, 2020; Detez et al., 2019).

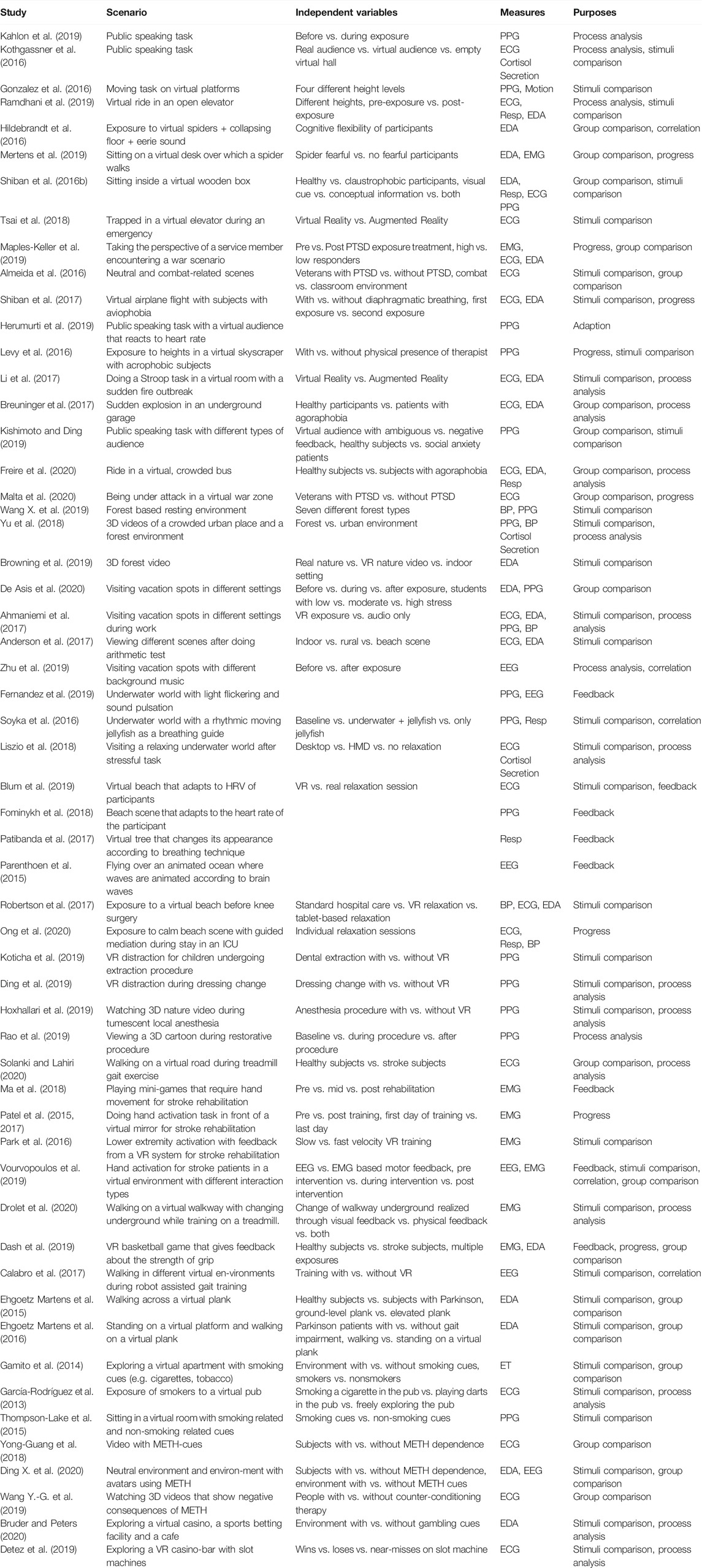

A summary of the works discussed in this section can be found in Table 5.

TABLE 5. Overview of the works from the field of therapy and rehabilitation that were discussed in Section 4.1.1. The Measures column refers to the physiological measures used in the work. The entries of the Independent Variables column often do not cover everything that was considered in the work. Entries in the Purposes column refer to the categories listed in Section 4.1.6.

4.1.2 Training and Education

A considerable number of VR applications help people to learn new skills, enhance existing ones, or acquire knowledge in a certain area. A major reason why physiological data comes in handy in training and teaching applications is its potential to indicate cognitive workload and the stress state of a human subject.

4.1.2.1 Simulator Training

Training simulators from various domains include an estimation of mental workload based on physiological data, e.g. surgery training (Gao et al., 2019), virtual driving (Bozkir et al., 2019), or flight simulation (Zhang S. et al., 2017). One way to use knowledge about cognitive load is by adapting task difficulty. Dey et al. (2019a) created a VR training task that requires the user to select a target object, defined by a combination of shape and color. The system uses the EEG alpha band signal to determine how demanding the task is. Based on this information the system can steer the difficulty of the task by altering the number and properties of distractors. In this way, it can be ensured that the task is neither too easy nor too difficult. In the application of Faller et al. (2019) the user has to navigate a plane through rings. The difficulty, i.e. the size of the rings, can be adjusted based on EEG data. With this approach, they were able to keep trainees on an arousal level that is ideal for learning.
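The idea of steering task difficulty from an EEG-derived workload estimate can be sketched as a small rule-based controller. This is an illustration under stated assumptions, not the method of Dey et al. (2019a) or Faller et al. (2019): the alpha power is taken as already computed, lower alpha power relative to a resting baseline is assumed to indicate higher load, and the tolerance band, limits, and the function name `adapt_distractors` are hypothetical.

```python
def adapt_distractors(n_distractors, alpha_power, baseline_alpha,
                      tolerance=0.1, min_n=0, max_n=20):
    """Return the number of distractors for the next trial.

    Assumption: alpha power clearly below the user's baseline signals high
    workload (make the task easier); alpha power clearly above the baseline
    signals low workload (make the task harder).
    """
    if baseline_alpha <= 0:
        raise ValueError("baseline_alpha must be positive")
    ratio = alpha_power / baseline_alpha
    if ratio > 1.0 + tolerance:
        proposed = n_distractors + 1   # task seems too easy
    elif ratio < 1.0 - tolerance:
        proposed = n_distractors - 1   # task seems too demanding
    else:
        proposed = n_distractors       # within the comfortable band
    return max(min_n, min(max_n, proposed))


# Hypothetical alpha power readings (arbitrary units) for consecutive trials.
n = 5
for alpha in [12.0, 12.5, 10.2, 8.0]:
    n = adapt_distractors(n, alpha_power=alpha, baseline_alpha=10.0)
    print(n)
```

The tolerance band prevents the difficulty from oscillating on small fluctuations of the signal, which is one simple way to keep the task neither too easy nor too difficult.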

In certain fields, physiological measures can even be used to determine the difference between experts and novices. Clifford et al. (2018) worked with a VR application for the training of aerial firefighting. It is a multi-user system that requires communication from the trainees. To cause additional stress, the system includes a scenario where the communication is distorted. They evaluated the system with novice and experienced firefighters. By analyzing the heart rate variability of subjects, they were able to show that the communication disruptions were effective in eliciting stress in the subjects. More interestingly, however, experts showed an increased ability to maintain their heart rate variability, compared to the inexperienced firefighters. This indicates that they were better able to cope with the stress (Clifford et al., 2020). Currie et al. (2019) worked with a similar approach. Their virtual training environment is focused on a high-fidelity surgical procedure. Eye-tracking was used to gain information about the attention patterns of users. A study with novice and expert operators showed that the expert group had significantly greater dwell time and fixations on support displays (screens with X-ray or vital signs). Melnyk et al. (2021) showed how this knowledge can be used to support learning, as they augmented surgical training by using expert gaze patterns to guide the trainees.

In simpler cases, stress and workload indicators are used to substantiate the basic effectiveness of virtual stimuli in training. Physiological indicators can be used to show that implemented scenarios are really able to elicit desired stress responses (Loreto et al., 2018; Prachyabrued et al., 2019; Spangler et al., 2020).

4.1.2.2 Virtual Classrooms

Physiological measures can also benefit classical teacher-student scenarios. Rahman et al. (2020) present a virtual education environment in which the teacher is provided with a visual representation of the gaze behavior of students. This allows the teachers to identify distracted or confused students, which can benefit the transfer of knowledge. Yoshimura et al. (2019) developed a strategy to deal with inattentive listeners. They constructed an educational virtual environment in which eye-tracking is used to identify distracted students. The system can then present visual cues, e.g. arrows or lines, that direct the attention of the pupils towards critical objects that are currently discussed. In the educational environment of Khokhar et al. (2019), the knowledge about inattentive students is provided to a pedagogical agent. Sakamoto et al. (2020) tested whether pupil metrics are suitable for gaining information about the comprehension of people. They recorded gaze behavior during a learning task in VR and compared this to the subjective comprehension ratings of subjects. In a similar example, Orlosky et al. (2019) used eye movement and pupil size data to build a support vector machine that predicts whether a user understood a given term or not. Even the experience of flow can be assessed with the help of physiological indicators (Bian et al., 2016). Information about attention and comprehension of students can be used to optimize teaching scenarios. It is an illustrative example of how physiological data can augment virtual learning spaces and create possibilities that would be unthinkable in real-life ones.

4.1.2.3 Physical Training

Our discussion of training applications has thus far revolved around mental training. However, there are also applications for physical training in VR. Again, physiological data can be used to emphasize the basic effectiveness of the application. Changes in heart rate or oxygen consumption can show that virtual training elicits physical exertion and give insights into the extent of it (Mishra and Folmer, 2018; Xie et al., 2018; Debska et al., 2019; Kivelä et al., 2019). This can also provide a reference value for the comparison of real-life and virtual exercising. Works like Zeng et al. (2017) and McDonough et al. (2020) compared a VR-based bike exercise with a traditional one. Their assessment of exertion with the help of BP measurements showed no significant difference between the virtual and analog exercises. Measurements of the subjectively perceived exertion, however, showed that participants of a VR-based training felt significantly less physiological fatigue.

As in other fields of application, physiological data can be used to adapt the virtual environment. Campbell and Fraser (2019) present an application where the trainee rides a stationary bike while wearing an HMD. In the virtual environment, the user is represented by a cyclist avatar. The goal of the training is to cover as much distance as possible in the virtual world. However, the speed at which the avatar moves is determined by the heart rate of the user. This way, the difficulty cannot be reduced by simply reducing the resistance of the bike, and unfit users have the opportunity to cover more distance. In the exercise environment of Kirsch et al. (2019), the music tempo is adapted to the user’s heart rate, which was perceived as motivating by the trainees. Other works just take the physiological data and display it to the users so they can keep track of their real-time physical exertion (Yoo et al., 2018; Kojić et al., 2019; Greinacher et al., 2020).
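A heart-rate-driven avatar speed of the kind described above could be sketched as follows. The mapping is an assumption made for illustration: it normalizes the current heart rate to the individual range between resting and maximum heart rate, so that equal relative effort yields equal speed. The function name, the parameters, and the `top_speed` value are hypothetical and do not reproduce the implementation of Campbell and Fraser (2019).

```python
def avatar_speed(heart_rate, resting_hr, max_hr, top_speed=12.0):
    """Map heart rate to avatar speed via relative effort (illustrative only).

    Effort is the fraction of the individual range between resting and
    maximum heart rate, clamped to [0, 1]; top_speed is an assumed value
    in metres per second.
    """
    if max_hr <= resting_hr:
        raise ValueError("max_hr must exceed resting_hr")
    effort = (heart_rate - resting_hr) / (max_hr - resting_hr)
    effort = max(0.0, min(1.0, effort))  # clamp values outside the range
    return effort * top_speed
```

Because the mapping depends on effort relative to the individual range rather than on pedalling power, lowering the bike resistance does not by itself increase the speed.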

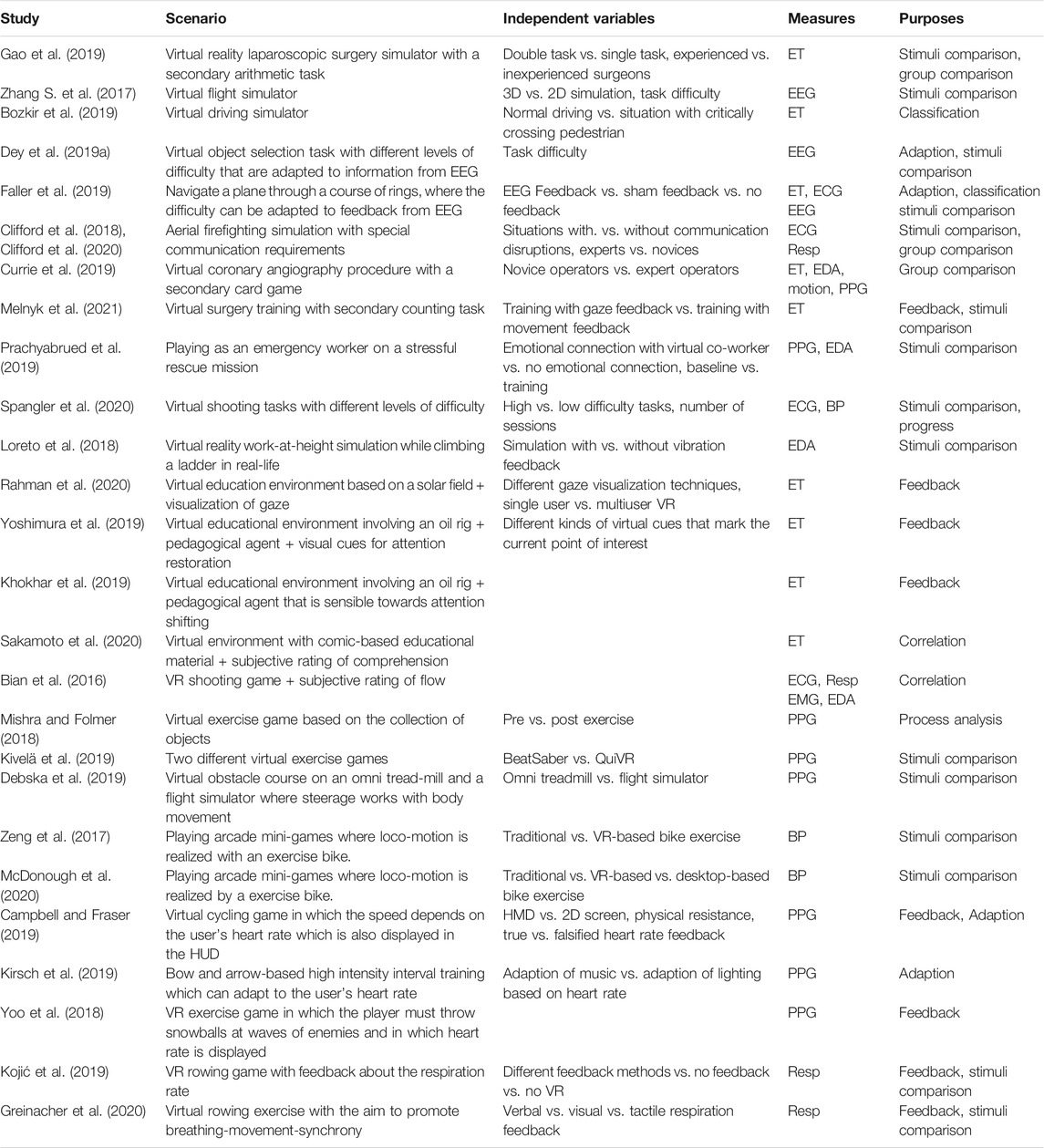

A summary of the works discussed in this section can be found in Table 6.

TABLE 6. Overview of the works from the field of training and education that were discussed in Section 4.1.2. The Measures column refers to the physiological measures used in the work. The entries of the Independent Variables column often do not cover everything that was considered in the work. Entries in the Purposes column refer to the categories listed in Section 4.1.6.

4.1.3 Entertainment

Another field of application comprises VR systems that are primarily built for entertainment purposes, i.e. games and videos. Often, researchers use physiological data to get information about the arousal a video or a game elicits (Shumailov and Gunes, 2017; Ding et al., 2018; Mavridou et al., 2018b; Ishaque et al., 2020). Physiological measures can also be used as explicit game features. For example, progress may be denied if a player is unable to adjust their heart rate to a certain level (Houzangbe et al., 2019; Mosquera et al., 2019). Additionally, the field of view in a horror game can be adjusted depending on the heartbeat (Houzangbe et al., 2018). Kocur et al. (2020) present a novel way to help novice users in a shooter game by introducing a gaze-based aiming assistant. If the user does not hit a target with their shot, the assistant can guess what the actual target was, based on the gaze. When the shot is close enough to the intended target, it hits nevertheless. Moreover, eye-tracking can be used to optimize VR video streaming. Yang et al. (2019) used gaze-tracking to analyze the user’s attention and leveraged this knowledge to reduce the bandwidth of video streaming by reducing the quality of those parts of a scene that the user is not looking at.
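The idea behind a gaze-based aiming assistant can be sketched in a few lines: the gazed-at target is taken as the intended one, and a missed shot still counts if it passes close enough to that target. The angular tolerances, the function names, and the dictionary of target positions are hypothetical; the sketch assumes non-zero direction vectors and is not the implementation of Kocur et al. (2020).

```python
import math


def _angle_deg(u, v):
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    cos = max(-1.0, min(1.0, dot / norm))  # guard against rounding errors
    return math.degrees(math.acos(cos))


def assisted_hit(origin, gaze_dir, shot_dir, targets,
                 max_gaze_deg=5.0, max_shot_deg=10.0):
    """Return the name of the target credited with a hit, or None.

    targets maps a name to a 3D position. Both tolerances are assumed
    values chosen for illustration.
    """
    best_name, best_angle = None, float("inf")
    for name, pos in targets.items():
        to_target = tuple(p - o for p, o in zip(pos, origin))
        angle = _angle_deg(gaze_dir, to_target)
        if angle < best_angle:
            best_name, best_angle = name, angle
    # No target is close enough to the gaze ray: nothing was intended.
    if best_name is None or best_angle > max_gaze_deg:
        return None
    to_target = tuple(p - o for p, o in zip(targets[best_name], origin))
    # Credit the hit only if the shot passed near the gazed-at target.
    if _angle_deg(shot_dir, to_target) <= max_shot_deg:
        return best_name
    return None
```

For example, with a target straight ahead and a shot that deviates by a few degrees, the function returns that target's name, whereas a shot that deviates by 45 degrees returns `None`.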

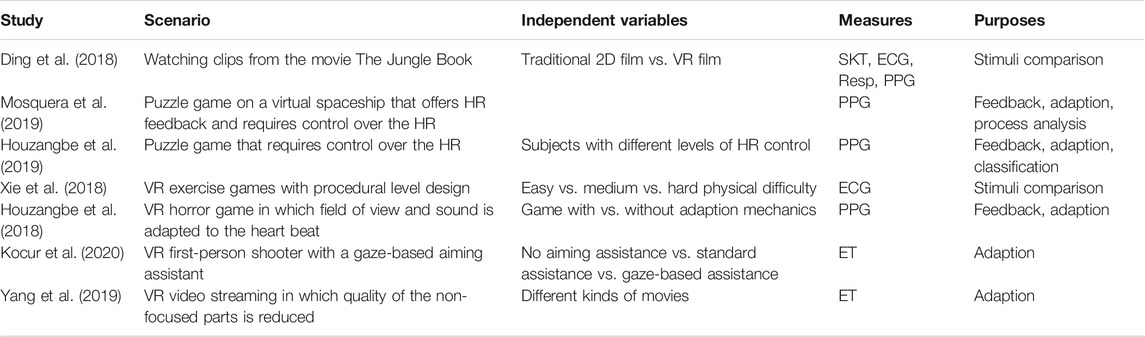

A summary of the works discussed in this section can be found in Table 7.

TABLE 7. Overview of the works from the field of entertainment that were discussed in Section 4.1.3. The Measures column refers to the physiological measures used in the work. The entries of the Independent Variables column often do not cover everything that was considered in the work. Entries in the Purposes column refer to the categories listed in Section 4.1.6.

4.1.4 Functional Virtual Reality Properties

Within this section, we describe applications that make use of common techniques of VR. These are applications that include embodiment, agent interaction, or multiuser VR. We are talking about functional properties that may or may not be part of the system. These properties can also be part of the fields of application that we discussed before, e.g. therapy and training. Nevertheless, we have identified them as separate fields because physiological measurements have their own functions in applications that use embodiment, agent interaction, or multiuser VR. Researchers who want to use those techniques in their own applications can find separate information about the role of the physiological measurements here.

4.1.4.1 Applications With Embodiment

A range of VR applications use avatars as a representation of the user in the virtual realm (Lugrin et al., 2018; Lugrin et al., 2019b; Wolf et al., 2020). VR has the potential to elicit the illusion of owning a digital body, which can be referred to as the Illusion of Virtual Body Ownership (Lugrin et al., 2015; Roth et al., 2017). This concept is an extension of the rubber-hand illusion (Botvinick and Cohen, 1998), which has the consequence that the feeling of ownership is often based on the synchrony of multisensory information, e.g. visuo-tactile or visuo-motor (Tsakiris et al., 2006; Slater et al., 2008). Physiological data can provide information about whether and to what extent the virtual body is perceived as one's own. One way to provide objective evidence for the illusion of body ownership is to threaten the artificial body part while measuring the skin response to get information about whether the person shows an anxiety reaction (Armel and Ramachandran, 2003; Ehrsson et al., 2007). One of the most common paradigms still used in more recent literature is to threaten the virtual body (part) with a knife stab (González-Franco et al., 2014; Ma and Hommel, 2015; Preuss and Ehrsson, 2019). Alchalabi et al. (2019) present an approach that uses EEG data to estimate embodiment. They worked with a conflict between visual feedback and motor control. That means subjects had to perform a walking task on a treadmill that was replicated by their virtual representation. However, the avatar stopped walking prematurely while the subject was still moving in real life. This modification in feedback was reflected in EEG data, and results showed a strong correlation between the subjective level of embodiment and brain activation over the motor and pre-motor cortex. Relations between EEG patterns and the illusion of body ownership were also shown in virtual variations of the rubber-hand illusion (González-Franco et al., 2014; Skola and Liarokapis, 2016). Furthermore, there is also evidence that the feeling of ownership and agency over a virtual body or limb can be reflected in skin temperature regulation (Macauda et al., 2015; Tieri et al., 2017).

Other works connect embodiment and physiological measures by investigating how the behavior or properties of an avatar can change physiological responses. In their study, Czub and Kowal (2019) introduced a visuo-respiratory conflict, i.e. the avatar that represented the subjects showed a different respiration rate than its owner. They found out that the immersed subjects actually adapted their respiration rate to their virtual representation. The frequency of breathing increased when the breathing animation of the avatar was played faster and vice versa. Kokkinara et al. (2016) showed that activity of the virtual body, i.e. climbing a hill, can increase the heart rate of subjects, even if they are sitting on a chair in real life.

Another link between physiological measures and body-ownership can be made when those measures are used as input for the behavior of the avatar. Betka et al. (2020) executed a study in which they measured the respiration rate of the subjects and mapped it onto the avatar that was used as their virtual representation. Results showed that congruency of breathing behavior is an important factor for the sense of agency and the sense of ownership over the virtual body.

4.1.4.2 Applications With Agent Interaction

In the last section, we focused on applications that use avatars to embody users in the virtual environment. Now we move from the virtual representation of the user to the virtual representation of an artificial intelligence, so-called agents (Luck and Aylett, 2000).

Physiological data is often used to analyze and understand the interaction between a human user and agents. The study of Gupta et al. (2019), which was revised in Gupta et al. (2020), aimed to learn about the trust between humans and agents. The primary task of this study comprised a shape selection where subjects had to find a target object that was defined by shape and color. An agent was implemented that gave hints about the direction in which the object could be found. There were two versions of the agent: one version always gave an accurate hint and the other one did not. With the help of a secondary task, an additional workload was induced. EEG, GSR, and heart rate variability were captured throughout the experiment as objective indicators of the cognitive workload of the subjects. In the EEG data, Gupta et al. (2020) found a significant main effect for the accuracy of the agent’s hints. That means subjects who received correct hints showed less cognitive load. The authors interpret this as a sign of trust towards the agent, as the subjects did not seem to put any additional effort into the shape selection task as soon as they got the correct hints from the virtual assistant. In another example, Krogmeier et al. (2019) investigated the effects of bumping into a virtual character. In their study, they manipulated the haptic feedback during the collision. They explored how this encounter and the introduction of haptic feedback changed the physiological arousal of the subjects, gauged with EDA. In a related study, Swidrak and Pochwatko (2019) showed a heart-rate deceleration in people who are touched by a virtual human. Another facet of human-agent interaction is the role of different facial expressions and how they affect physiological responses (Mueller et al., 2017; Ravaja et al., 2018; Kaminskas and Sciglinskas, 2019).

Other works investigate different kinds of agents and use physiological data as a reference for their comparison. Volante et al. (2016) investigated different styles of virtual humans, i.e. visually realistic vs. cartoon-like vs. sketch-like. The agents were depicted as patients in a hospital which showed progressive deterioration of their medical condition. With the help of EDA data, they were able to quantify emotional responses towards those avatars and analyze how these responses were affected by the visual appearance. Other works compared gaze behavior during contact with real people and agents (Syrjamaki et al., 2020) or the responses to virtual crowds showing different emotions (Volonte et al., 2020).

Another category can be seen in studies that leverage agents to simulate certain scenarios and use physiological data to test the efficacy of these scenarios to elicit desired emotional responses. At this point, there is a relatively large overlap with the previously discussed exposure therapies (Section 4.1.1.1). Applications aimed at the treatment of social anxiety often include the exposure to a virtual audience that aims to generate a certain atmosphere (Herumurti et al., 2019; Lugrin et al., 2019a; Streck et al., 2019). Kothgassner et al. (2016) asked participants of their study to speak in front of a real and a virtual audience. Heart rate, heart rate variability, and saliva cortisol secretion were assessed. For both groups, these stress indicators increased similarly, which demonstrates the fundamental usefulness of such therapy systems, as the physiological response to a virtual audience was comparable to a real one. Other studies investigated stress reactions depending on the size (Mostajeran et al., 2020) or displayed emotions (Barreda-Angeles et al., 2020) of the audience. The potential of virtual audiences to elicit stress is not only applicable to people with social anxiety. Research approaches that investigate human behavior and experience under stress can use a speech task in front of a virtual audience as a stressor. This can be referred to as the Trier Social Stress Test, which was often transferred to the virtual realm (Delahaye et al., 2015; Shiban et al., 2016a; Kothgassner et al., 2019; Zimmer et al., 2019; Kerous et al., 2020). Social training applications that work with virtual audiences are also available specifically for people with autism. Again, physiological measurements help to understand the condition of the user and thus to adjust the training (Kuriakose et al., 2013; Bekele et al., 2016; Simões et al., 2018). Physical training applications can also use physiological data to determine the effect of agents. Murray et al. (2016) worked with a virtual aerobic exercise, i.e. rowing on an ergometer. One cohort of their study had a virtual companion that performed the exercise alongside the subject. In a related study, Haller et al. (2019) investigated the effect of a clapping virtual audience on the performance in a high-intensity interval training. In both examples, the effect of the agents was evaluated with a comparison of the heart rate. The heart rate indicates changes in the physical effort and can thus show whether the presence of agents changes training behavior.

4.1.4.3 Applications With Multiuser Virtual Reality

In multiuser VR applications, two or more users can be present and interact with each other at the same time (Schroeder, 2010). This concept offers the possibility of exchanging physiological data among those users. Dey et al. (2018) designed three different collaborative virtual environments comprising puzzles that must be solved together. They evaluated those environments in a user study in which one group got auditory and haptic feedback about the heart rate of the partner. Results indicated that participants who received the feedback felt the presence of the collaborator more. There is even evidence that the heart rate feedback received from a partner can cause an adaption of one's own heart rate (Dey et al., 2019b). In a similar approach, Salminen et al. (2019) used an application that shares EEG and respiration information among subjects in a virtual meditation exercise. The feedback was depicted as a glowing aura that pulsates according to the respiration rate and is visualized with different colors, depending on brain activity. Users who had this kind of feedback perceived more empathy towards the other user. Desnoyers-Stewart et al. (2019) built an application that deliberately aims to achieve such synchronization of physiological signals in order to establish a connection between users. Another way in which multiuser VR applications can benefit from physiological measures is in terms of communication. Lou et al. (2020) present a hardware solution that uses EMG sensors to track facial muscle activity. These activities are then translated to a set of facial expressions that can be displayed by an avatar. This offers the possibility of adding nonverbal cues to interpersonal communication in VR.

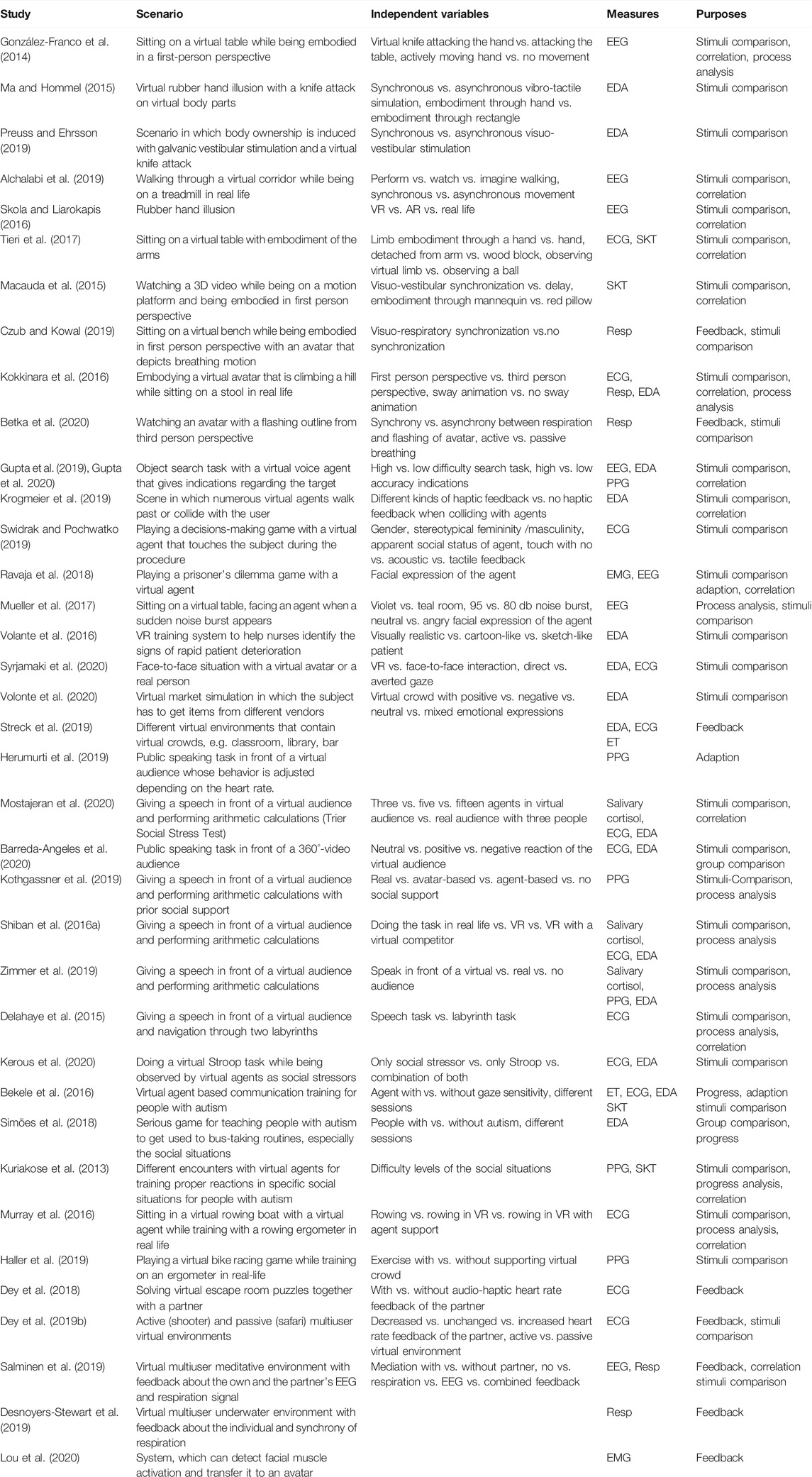

A summary of the works discussed in this section can be found in Table 8.

TABLE 8. Overview of the works from the field of functional VR properties that were discussed in Section 4.1.4. The Measures column refers to the physiological measures used in the work. The entries of the Independent Variables column often do not cover everything that was considered in the work. Entries in the Purposes column refer to the categories listed in Section 4.1.6.

4.1.5 General Virtual Reality Properties

Our last field of application focuses on properties that are relevant for every VR application as they are inherent to the medium itself. These are cybersickness and presence. Here we are talking about non-functional properties of a VR system, as they can occur to varying degrees. These varying degrees of cybersickness and presence are either actively manipulated or passively observed. In both cases, consideration of physiological measurements can provide interesting insights.

4.1.5.1 Presence

Presence describes the experience of a user to be situated in the virtual instead of the real environment (Witmer and Singer, 1998). Hence, knowledge about the extent to which a virtual environment can elicit the feeling of presence in a user is relevant in most VR applications. Beyond the classic presence questionnaires from Slater et al. (1994) or Witmer and Singer (1998), there are also approaches that aim to determine presence based on physiological data.

Athif et al. (2020) present a comprehensive study that relates presence factors to physiological signals. They worked with a VR forest scenario in which the player needs to collect mushrooms that spawn randomly. This scenario was implemented in six different gradations based on the four factors of presence described by Witmer and Singer (1998). These are distraction, control, sensory, and realism. The base version fulfilled the requirements for all these factors. Four versions suppressed one factor each and one version suppressed all the factors simultaneously. In the study, participants were presented with each of the scenarios while their physiological reactions were measured. Data showed that EEG features indicated changes in presence particularly well, while ECG and EDA features did not. Signals from temporal and parietal regions of the brain showed correlations with the suppression of the specific presence factors. In a similar investigation, Dey et al. (2020) implemented two versions of a cart ride through a virtual jungle. Their high presence version was realized through higher visual fidelity, more control, and object-specific sound. In this setup, they were able to show a significant increase in the heart rate of people exposed to the high presence version, whereas EDA showed no systematic changes. The study of Deniaud et al. (2015) showed correlations between presence questionnaire scores, skin conductance, and heart rate variability. Other studies, in turn, found the heart rate or EDA data to be weak indicators for presence (Felnhofer et al., 2014; Felnhofer et al., 2015). Szczurowski and Smith (2017) suggest gauging presence through a comparison of virtual and real stimuli. Accordingly, a high presence is characterized by the exposure to the virtual stimulus eliciting similar physiological responses as the exposure to the real stimulus. As such, one could take any physiological measure to gauge presence, as long as one has a comparative value from a real-life stimulus.

The exact relationship between specific physiological measures and the experience of presence still seems ambiguous. This may also be due to the fact that the concept of presence is understood and defined differently. Only recently, Latoschik and Wienrich (2021) introduced a new theoretical model for VR experiences which also shows a new perspective on presence. Just as the understanding of presence evolves, so does the measurement of it.

4.1.5.2 Cybersickness

Cybersickness can be described as a set of adverse symptoms that are induced by the visual stimuli of virtual and augmented reality applications (Stauffert et al., 2020). Common symptoms include headache, dizziness, nausea, disorientation, or fatigue (Kennedy et al., 1993; LaViola, 2000). There are multiple theories on what might be the causes of cybersickness, the most common of which revolve around sensory mismatches and postural instability (Rebenitsch and Owen, 2016).

Besides questionnaires and tests for postural instability, the assessment of the physiological state of a VR user is one of the common ways to measure cybersickness (Rebenitsch and Owen, 2016). In recent years, researchers used several approaches to assess physiological measures and find out how much they correlate with cybersickness. Gavgani et al. (2017) used a virtual roller-coaster ride that subjects were asked to ride on three consecutive days. This roller-coaster ride was quite effective at inducing cybersickness, as only one of fourteen subjects completed all rides while the others terminated theirs due to nausea. However, it took the participants significantly more time to abort the ride on the third day, compared to the first, which suggests habituation. During the 15-min rides, heart rate, respiration rate, and skin conductance were monitored and participants had to give a subjective assessment of their felt motion sickness. Results demonstrated that the nausea level of subjects continuously increased over the course of the ride. The measurement of the forehead skin conductance was the best physiological correlate to the gradually increasing nausea. A virtual roller-coaster ride was also leveraged in the study of Cebeci et al. (2019). Here, pupil dilation, heart rate, blink count, and saccades were analyzed. In this study, the average heart rate and the saccade mean speed were the highest when cybersickness symptoms occurred. Moreover, they found a correlation between the blink count, nausea, and oculomotor discomfort (Kennedy et al., 1993). Approaches that use physiological data to assess cybersickness mainly use this data for the sake of comparison. This can serve to gain knowledge about the connection of unpleasant VR experiences and latency jitter (Stauffert et al., 2018), navigation techniques (Líndal et al., 2018), or the display type (Guna et al., 2019; Gersak et al., 2020; Guna et al., 2020). Plouzeau et al. (2018) used cybersickness indicators in an adaption mechanism for their VR application. They introduced a navigation method that allows the user to move and rotate in the virtual environment with the help of two joysticks. The acceleration of the navigation is adapted according to an objective indicator for simulator sickness, i.e. EDA. When the EDA increases, the acceleration decreases proportionally and vice versa.
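The proportional adaption just described, where rising EDA lowers the navigation acceleration and falling EDA raises it, can be written as a one-line update rule. The gain, the bounds, and the function name are hypothetical values chosen for illustration; they are not taken from Plouzeau et al. (2018).

```python
def adapt_acceleration(acceleration, eda_prev, eda_now,
                       gain=0.5, min_acc=0.1, max_acc=3.0):
    """Lower acceleration when EDA rises and raise it when EDA falls.

    The change is proportional to the EDA difference between two
    consecutive readings; gain and bounds are assumed, illustrative values.
    """
    new_acc = acceleration - gain * (eda_now - eda_prev)
    # Keep the result inside a usable range for navigation.
    return max(min_acc, min(max_acc, new_acc))
```

The clamping step is an addition of this sketch: without a lower bound, a sustained EDA increase would eventually bring navigation to a standstill.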

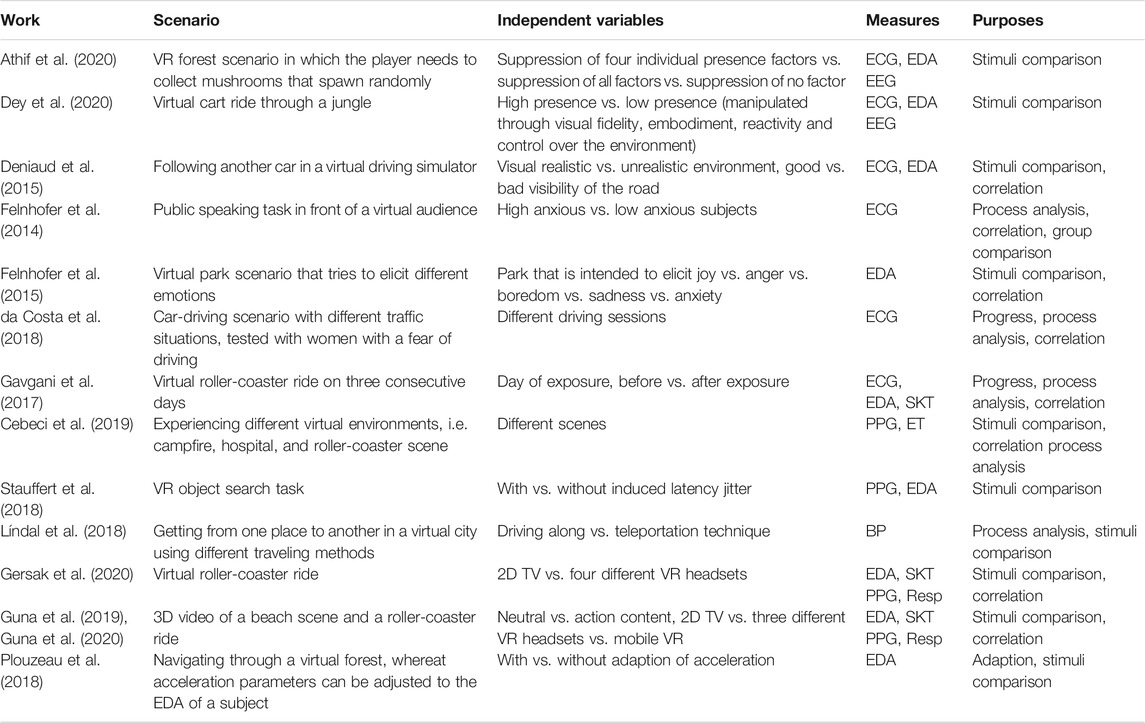

A summary of the works discussed in this section can be found in Table 9.

TABLE 9. Overview of the works from the field of general VR properties that were discussed in Section 4.1.5. The Measures column refers to the physiological measures used in the work. The entries of the Independent Variables column often do not cover everything that was considered in the work. Entries in the Purposes column refer to the categories listed in Section 4.1.6.

4.1.6 High-Level Purposes

Throughout this section, we gave an overview of the usage of physiological measures in VR to assess the state of the user. We listed fields of application and concretely explained how physiological measures are used in them. Across the fields of application, physiological measures are used for recurring purposes. To summarize this overview we turn to the meta-level to highlight these recurring themes for the usage of physiological data in VR. The categories are not mutually exclusive and are not always clearly separable.

• Stimuli Comparison: Physiological measures can be used to determine how the response to a virtual stimulus compares to the response to another (virtual) stimulus. In these cases the independent variable is the stimulus and the dependent variable is the physiological measure. Examples include works that compare responses to real life situations and their virtual counterparts (Chang et al., 2019; Syrjamaki et al., 2020). Others compare how different kinds of virtual audiences impact stress responses (Barreda-Angeles et al., 2020; Mostajeran et al., 2020).

• Group Comparison: Physiological measures can be used to determine how the response to the same stimulus compares between groups of people. In these studies the independent variable is the user group and the dependent variable is the physiological measure. Examples include works that compare phobic with non-phobic subjects (Breuninger et al., 2017; Kishimoto and Ding, 2019) or subjects with and without autism (Simões et al., 2018).

• Process Analysis: Physiological measures can be used to determine how the response changes over the course of a virtual simulation. In these cases the independent variable is the time of measurement and the dependent variable is the physiological measure. Thus, the effect of the appearance of a certain stimulus can be determined, e.g. a knife attack (González-Franco et al., 2014) or a noise burst (Mueller et al., 2017).

• Progress: Physiological measures can be used to determine a change in response to the same stimulus throughout multiple exposures. In these studies the independent variable is the number of exposures or sessions and the dependent variable is the physiological measure. This is often done to quantify the progress of a therapy or training (Lee et al., 2015; Shiban et al., 2017) but can, for example, also be used to determine a habituation to cybersickness-inducing stimuli (Gavgani et al., 2017).

• Correlation: Physiological measures can be used to establish a relationship between the measure and a second variable. Usually, both measures are dependent variables of the research setup. Typical examples assess the relationship between physiological and subjective measurements, e.g. of embodiment (Alchalabi et al., 2019) or cybersickness (John, 2019).

• Classification: Physiological measures can be used to differentiate users based on the response to a virtual stimulus. The goal of these approaches is to determine if the information in the physiological data is sufficient to reflect the changes in the independent variable. Examples can be the classification of specific groups of people, e.g. healthy and addicted people (Ding X. et al., 2020) or people under low and high stress (Ishaque et al., 2020).

• Feedback: Physiological measures can be presented to the user or a second person to make latent and unconscious processes visible. This is particularly common in relaxation applications where the stress level can be visualized for the user (Patibanda et al., 2017; Blum et al., 2019) but it can also be used to inform the supervisor of a therapy or training session about the user’s condition (Bayan et al., 2018; Streck et al., 2019). This purpose differs from the previous ones in that the physiological measurement is no longer intended to indicate the manipulation of an independent variable.

• Adaption: Physiological measurements can be used to adapt the system status to the state of the user. A typical example is the adaption of training and therapy systems based on effort and stress indicators (Campbell and Fraser, 2019; Bălan et al., 2020). This is similar to the feedback purpose in that the measurements here are used to make changes to the system and not to allow comparisons. While feedback approaches are really just focused on visualizing the physiological data, here it is more about changing the behavior of the application.

4.2 Part 2: Characteristics of Experience and Their Measurement in Virtual Reality

In the second part of the discussion, we focus on the results of the search for classification approaches depicted in Table 2. Here, we discuss approaches that expose participants to a particular VR stimulus that is known to trigger a particular characteristic of experience. The focus of the studies is on how well this manipulation is reflected by the physiological measurements. We use those classification approaches to show which measures, sensors, and algorithms have been used to gauge the targeted characteristic of experience. A universal solution to measure and interpret those specific experiences does not exist, as this is usually context dependent. So what this work cannot do is to give strict guidelines for which measures should be used for which case. The field is too diverse and the focus of the work too broad.

A comparison of the accuracy of the specific approaches should be treated with caution, as the reported values were partly obtained under different circumstances. Results serve as a rough guide to how well the classification works and should not be compared 1-to-1. All the classifiers reported here are, in principle, successful in distinguishing different levels of an experience. This means all examples show combinations of signals, sensors, and algorithms that can work for the assessment of experience in VR.

Our review of classification approaches showed that in immersive VR there are some main characteristics of experience that are predominantly assessed with the help of physiological data. These experiences are arousal, valence, stress, anxiety, and cognitive workload. Those constructs are similar and interrelated. Stress and anxiety can be seen as a form of hyperarousal and cognitive workload itself can be seen as a stress factor (Gaillard, 1993; Blanco et al., 2019). Nevertheless, most of the works focus on one of the characteristics of experience and they have different approaches to elicit and assess them. The discussion is separated according to these characteristics of experience. The reader should still keep in mind that the constructs are related.

4.2.1 Arousal and Valence

Studies from this domain usually base their work on the Circumplex Model of Affect (Russell and Mehrabian, 1977; Posner et al., 2005). This model arranges human emotions in a two-dimensional coordinate system. One axis of this coordinate system represents arousal, i.e. the activation of the neural system, and one axis represents valence, i.e. how positive or negative an emotion is perceived. Hence, classifiers from this category usually distinguish high and low levels of arousal or positive and negative valence. Arousal-inducing scenes often comprise a virtual roller-coaster ride (Hofmann et al., 2018; Teo and Chia, 2018; Bilgin et al., 2019) or dynamic mini-games (Shumailov and Gunes, 2017; Ding Y. et al., 2020). Emotional scenes are often used to manipulate the valence of people (Shumailov and Gunes, 2017; Mavridou et al., 2018b; Zheng et al., 2020). Such scenes can be taken from a database (Samson et al., 2016) or be tested in a pre-study to see what emotions they trigger (Zhang W. et al., 2017).
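The two-dimensional arrangement can be illustrated with a small helper that assigns a (valence, arousal) pair to one of the four quadrants, which correspond to the four classes of a combined arousal and valence classification. The function name, the label strings, and the midpoint of 0.0 (assuming both axes are centered on zero) are assumptions made for this sketch.

```python
def quadrant(valence, arousal, midpoint=0.0):
    """Assign a (valence, arousal) pair to one of four quadrants.

    Assumes both axes share the same neutral midpoint; values exactly on
    the midpoint are counted as high arousal or positive valence here.
    """
    arousal_label = "high-arousal" if arousal >= midpoint else "low-arousal"
    valence_label = "positive-valence" if valence >= midpoint else "negative-valence"
    return f"{arousal_label}/{valence_label}"
```

A binary arousal or valence classifier corresponds to using only one of the two labels, while a four-class classifier distinguishes all quadrants at once.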

The most commonly used physiological measure for the classification of arousal and valence is EEG. The trend here seems to be towards more comfortable wearable EEG sensors, e.g. an EEG headset (Teo and Chia, 2018; Bilgin et al., 2019; Ding Y. et al., 2020; Suhaimi et al., 2020) or textile electrodes inside the HMD (Xu et al., 2019). Some works use cardiovascular data in addition to the EEG information (Marín-Morales et al., 2018; Mavridou et al., 2018b). Deviating from the EEG approach, Zheng et al. (2020) leveraged pupillometry and Shumailov and Gunes (2017) leveraged forearm EMG to classify arousal and valence. Both examples also worked with comfortable and easy-to-setup sensors.

The deep neural network of Teo and Chia (2018) for the two-level classification of emotional arousal achieved an accuracy of 96.32% in a 10-fold cross validation. This result was achieved using only the data from the Muse 4-channel EEG headband. For a binary valence classification, Shumailov and Gunes (2017) reported an F1 value of 0.85. This value was achieved with the help of a support vector machine and EMG armband data captured while subjects played VR games. The highest value for classifying arousal and valence at the same time (four classes) comes from Suhaimi et al. (2020). Their random-forest classifier achieved an accuracy of 82.49% in a 10-fold cross validation, distinguishing four different emotions that are embedded in the valence-arousal model.
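For readers less familiar with the reported metrics, the following Python sketch shows how accuracy and the F1 value are computed from true and predicted labels, and how sample indices can be split into 10 folds. It is a generic illustration under simple assumptions (contiguous, unshuffled folds; the helper names are hypothetical) and does not reproduce the evaluation pipeline of any cited study.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def k_fold_indices(n_samples, k=10):
    """Yield (train, test) index lists; each sample is tested exactly once."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


if __name__ == "__main__":
    y_true = [1, 1, 0, 0, 1]  # hypothetical labels, e.g. positive valence = 1
    y_pred = [1, 0, 0, 1, 1]
    print(accuracy(y_true, y_pred), f1_score(y_true, y_pred))
```

In a k-fold cross validation, a classifier is trained on each `train` split and evaluated on the matching `test` split, and the per-fold scores are then averaged. Whether the folds separate participants or only samples influences how optimistic the resulting score is, which is one reason the reported values are hard to compare directly.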

When a researcher wants to assess arousal in a virtual environment, EEG signals appear to be the go-to indicators. In addition, cardiovascular data appears to be useful for this purpose. Six out of the eight presented arousal classifiers successfully used one or both of these signals to distinguish high and low arousal in the virtual realm. The systematic review of Marín-Morales et al. (2020) on the recognition of emotions in VR generally confirms this impression. They list sixteen works that assessed arousal in VR, fifteen of which used EEG or heart rate variability signals. However, the review of Marín-Morales et al. (2020) also shows that nine of the sixteen works used EDA data to estimate arousal, a signal that did not appear among recent classification algorithms. One reason for this could be that, among the works listed by Marín-Morales et al. (2020), the older ones tended to leverage EDA for arousal assessment, whereas the present work only considers literature from the last few years. Nevertheless, this does not mean that EDA measurements are no longer important for estimating arousal. Only recently, Granato et al. (2020) found the skin conductance level to be one of the most informative features for the assessment of arousal. Also worth mentioning is the work of Shumailov and Gunes (2017), which showed that forearm EMG is also suitable for the classification of arousal levels. They showed this in a setup where subjects moved a lot as they were playing VR games, while other approaches usually gather their data in a setup where subjects must remain still. Due to movement artifacts, it is questionable to what extent the other classifiers are transferable to setups that include a lot of motion. As for the sensors, various works showed that EEG data collected with easy-to-use headsets is sufficient to distinguish arousal levels in VR (Teo and Chia, 2018; Bilgin et al., 2019; Ding Y. et al., 2020).

The differentiation of positive and negative valence appears to be similar to that of arousal. Our results showed that EEG data was used most frequently for its assessment. Also notable is the attempt to classify arousal based on facial expressions. Even if an HMD is worn, this is possible through facial EMG (Mavridou et al., 2018a).

4.2.2 Stress

Studies that work on the classification of stress often use some kind of dynamic or unpredictable virtual environment to elicit the desired responses, e.g. a roller-coaster ride (Ishaque et al., 2020) or a guard patrolling a dark room (Ham et al., 2017). Stress is usually regulated with an additional assignment, e.g. an arithmetic task (Cho et al., 2017) or a Stroop task (Ishaque et al., 2020).

Looking at the signals used to classify stress, it is noticeable that every approach measures cardiovascular activity, either with optical sensors on the finger (Cho et al., 2017; Ham et al., 2017) or with electrical sensors (Tartarisco et al., 2015; Robitaille and McGuffin, 2019; Ishaque et al., 2020). Additional measures used by these studies are EDA (Cho et al., 2017; Ishaque et al., 2020), skin temperature (Cho et al., 2017), respiration (Ishaque et al., 2020), and motion activity (Tartarisco et al., 2015; Robitaille and McGuffin, 2019).

The kernel-based extreme learning machine of Cho et al. (2017) distinguishes five stress levels and achieved an accuracy of over 95% in a leave-one-out cross validation. Their classifier was trained with PPG, EDA, and skin temperature signals that were gathered relatively simply with four finger electrodes. In an even simpler setup, with only one finger-worn PPG sensor and a Linear Discriminant Analysis, Ham et al. (2017) achieved an accuracy of approximately 80% for three different classes. Tartarisco et al. (2015) took an approach with a wearable chest band. They collected ECG, respiration, and motion data and trained a neuro-fuzzy neural network that achieved an accuracy of 83% for four different classes.

Traditionally, heart rate variability is regarded as one of the most important indicators of stress (Melillo et al., 2011; Kim et al., 2018). This coincides with our results, as the most commonly used signals for stress classification were PPG and ECG. This impression is also confirmed when considering non-classification approaches in VR. For example, if one looks at the VR adaptations of the Trier Social Stress Test mentioned in Section 4.1.4, one finds that heart activity is measured in all the listed examples. Two other signals frequently used in research to indicate changes in stress level are EDA (Kurniawan et al., 2013; Anusha et al., 2017; Bhoja et al., 2020) and skin temperature (Vinkers et al., 2013; Herborn et al., 2015). Both signals and heart rate variability were compared by Cho et al. (2017) in VR. Their results indicated that PPG and EDA provided more information about the stress level of the immersed people than skin temperature, and that PPG features were best suited for the distinction of stress. The combination of EDA and cardiovascular data seems to be a good compromise for measuring stress in VR.
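To make the notion of heart rate variability features more concrete, the following Python sketch computes three common time-domain measures from a series of inter-beat (RR) intervals, as they could be derived from PPG or ECG peaks. The function name and the example values are hypothetical, and the chosen features (mean heart rate, SDNN, RMSSD) are standard textbook measures rather than the specific feature sets of the cited studies.

```python
import math
import statistics


def hrv_features(rr_ms):
    """Compute simple time-domain HRV features from RR intervals in ms."""
    if len(rr_ms) < 2:
        raise ValueError("at least two RR intervals are required")
    # Differences between successive inter-beat intervals.
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return {
        # Mean heart rate in beats per minute (60,000 ms per minute).
        "mean_hr_bpm": 60000.0 / statistics.mean(rr_ms),
        # SDNN: sample standard deviation of the intervals.
        "sdnn_ms": statistics.stdev(rr_ms),
        # RMSSD: root mean square of successive differences.
        "rmssd_ms": math.sqrt(statistics.mean([d * d for d in diffs])),
    }


if __name__ == "__main__":
    # Hypothetical RR intervals from a short recording window.
    print(hrv_features([800, 810, 790, 800]))
```

Features of this kind are typically computed per time window and then passed, possibly together with EDA features, to a classifier. Real recordings additionally require artifact removal, which this sketch omits.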

Our results also suggest that the better classification results were achieved with the help of more obtrusive sensors like multiple electrodes on the fingers or the body (Cho et al., 2017; Ishaque et al., 2020). This is somewhat problematic, as many VR scenarios require a considerable amount of movement interaction, and individual electrodes distributed over the body could be bothersome. Approaches with more comfortable chest bands showed somewhat worse accuracy values, yet were able to effectively classify different levels of stress (Tartarisco et al., 2015; Robitaille and McGuffin, 2019). Future research could aim at improving the quality of stress indicators in VR based on unobtrusive sensors. In addition to chest bands, wrist-worn devices could be used, given that they can deliver the important cardiovascular and EDA signals. Moreover, the focus in these scenarios is only on creating stress. Relaxation environments that do the opposite could also provide data to train future classifiers.

4.2.3 Cognitive Workload

As the name suggests, VR studies that work on the estimation of cognitive workload often use mentally demanding tasks that allow for a manipulation with different levels of difficulty. These can be abstract assignments like the n-back task (Tremmel et al., 2019; Tremmel, 2020) or a cube puzzle (Collins et al., 2019), but also more concrete scenarios like a flight simulator with different difficulties (Kakkos et al., 2019). It is in these scenarios that cognitive workload differs from the other characteristics of experience listed here. While the other experiences can be placed somewhere in the Circumplex Model of Affect and therefore have an emotional character, the focus here is on a mental effort that must be performed by the subjects. In contrast to the stress simulations, here it is purely a matter of the cognitive demands of the tasks and not of environmental factors that are supposed to create additional stress.

Most frequently, cognitive workload classifiers worked with an EEG signal (Kakkos et al., 2019; Siravenha et al., 2019; Tremmel et al., 2019; Tremmel, 2020). An exception to this is the work of Collins et al. (2019), who approached the classification of workload in VR with PPG and EDA signals.

It is also Collins et al. (2019) who reached the highest accuracy among the cognitive workload classifiers listed here. Based on information about cardiovascular activity, collected with a wristband, they created a random forest classifier that predicts three different levels of cognitive workload with an accuracy of 91.75%. Among the EEG-based approaches, Kakkos et al. (2019) report the highest accuracy. With data from 64 scalp electrodes, they trained a linear discriminant analysis classifier that reached an accuracy of 89% for a prediction of three different workload levels.

Older studies established heart rate features as the most reliable predictors of cognitive workload (Hancock et al., 1985; Vogt et al., 2006). More recent works argue for EEG data as the most promising signal for classifying workload (Christensen et al., 2012; Hogervorst et al., 2014). This trend is also visible in our results, as almost all of the classifiers for workload used EEG. However, Collins et al. (2019) showed that a cognitive workload classification in VR can also work with PPG signals. Thus, both heart rate and EEG features seem to be usable for workload classification in VR. A recent review on the usage of physiological data to assess cognitive workload also shows that cardiovascular and EEG data are two main measures for this purpose (Charles and Nixon, 2019). Charles and Nixon (2019) report that the second most used signals in the assessment of cognitive workload are ocular measures, i.e. blink rate and pupil size. Those measures did not appear at all in the classifiers for workload that we found. Closing this gap could be a task for future research, especially because of the availability of sensors that allow capturing pupillometry data inside an HMD.

Regarding the sensors, we found that all the EEG devices that were used for a workload classification were quite cumbersome (caps with multiple wet electrodes). The classification with more comfortable devices like the Emotiv Epoc or the Muse headset is still pending. For pulse sensors, Collins et al. (2019) already showed that the data from a convenient wristband can be sufficient to distinguish workload levels. However, more examples are needed to confirm this impression.

4.2.4 Anxiety

The classification of anxiety is closely related to the virtual exposure therapies presented in Section 4.1.1. This becomes particularly clear when one considers the scenarios in which the data for the classifiers were gathered. The scenario is either the exposure to different altitudes (Hu et al., 2018; Wang et al., 2018; Bălan et al., 2020) or a speech in front of a crowd (Salkevicius et al., 2019).

The studies of Hu et al. (2018) and Wang et al. (2018) work with more cumbersome sensors, i.e. over 30 scalp electrodes, for capturing EEG data. The convolutional neural network of Hu et al. (2018) reached an accuracy of 88.77% in a 10-fold cross validation when classifying four different levels of acrophobia. The support vector machine of Wang et al. (2018) reached an accuracy of 96.20% in a 5-fold cross validation, yet only distinguished three levels of fear.

Salkevicius et al. (2019) present a VR anxiety classification based on a wearable sensor. With the help of the Empatica E4 wristband sensor, they collected PPG, EDA, and skin temperature data. They created a fusion-based support vector machine that classifies four different levels of anxiety. In a 10 × 10-fold cross validation, it reached an accuracy of 86.10%, which is comparable to what Hu et al. (2018) achieved with a more elaborate setup of over 30 electrodes.
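The following Python sketch illustrates one common reading of a 10 × 10-fold cross validation, namely a 10-fold cross validation that is repeated 10 times with a different random shuffling of the samples each time. This interpretation, the function name, and the fixed seed are assumptions made for illustration; the sketch is not the procedure of Salkevicius et al. (2019).

```python
import random


def repeated_k_fold(n_samples, k=10, repeats=10, seed=0):
    """Yield (train, test) index lists for `repeats` shuffled k-fold runs."""
    rng = random.Random(seed)  # fixed seed keeps the splits reproducible
    for _ in range(repeats):
        order = list(range(n_samples))
        rng.shuffle(order)
        for fold in range(k):
            # Every k-th shuffled index, starting at `fold`, forms the test set.
            test = order[fold::k]
            held_out = set(test)
            train = [i for i in order if i not in held_out]
            yield train, test


if __name__ == "__main__":
    splits = list(repeated_k_fold(n_samples=50))
    print(len(splits))  # number of train/test splits produced
```

Averaging the accuracy over all splits reduces the dependence of the estimate on one particular random partition of the data.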

Anxiety is usually characterized by sympathetic activation. Therefore, in the past many studies have found correlations between anxiety levels and numerous features of cardiovascular activity and EDA measurements (Kreibig, 2010). In VR applications, too, most researchers use heart rate variability and EDA data to make anxiety measurable (Marín-Morales et al., 2020). In our results, however, this combination only appeared in the study of Salkevicius et al. (2019). From this work, it can be concluded that heart rate variability, EDA, and skin temperature data are in general suitable for distinguishing different anxiety levels in VR. Moreover, it showed that the fusion of these three signals can considerably increase the quality of the prediction, which is particularly useful when using a wristband that can conveniently deliver this data like the Empatica E4.

Our results indicate the suitability of EEG data as a sensitive measure for anxiety in VR. Each of the fear-related approaches, except that of Salkevicius et al. (2019), used knowledge of the brain activity for classification. Additionally, the combination with cardiovascular measures seems to work well (Balan et al., 2019; Bălan et al., 2020). As with cognitive workload, the EEG data here has mainly been captured with comprehensive electrode setups. Future work could pursue classification with more comfortable headsets. Future anxiety classification approaches could also include EMG signals from the orbicularis oculi muscle. This measure can serve to identify startle responses (Maples-Keller et al., 2019; Mertens et al., 2019).

4.2.5 Other Classifiers