- 1iSpace Lab, School of Interactive Arts and Technology, Burnaby, BC, Canada

- 2Microsoft Corporation, Seattle, WA, United States

- 3Institute of Visual Computing, Bonn-Rhein-Sieg University of Applied Sciences, Sankt Augustin, Germany

- 4VdH-IT, Weimar, Germany

When users in virtual reality cannot physically walk and self-motions are instead only visually simulated, spatial updating is often impaired. In this paper, we report on a study that investigated whether HeadJoystick, an embodied leaning-based flying interface, could improve performance in a 3D navigational search task that relies on maintaining situational awareness and spatial updating in VR. We compared it to Gamepad, a standard flying interface. For both interfaces, participants were seated on a swivel chair and controlled simulated rotations by physically rotating. They either leaned (forward/backward, right/left, up/down) or used the Gamepad thumbsticks for simulated translation. In a gamified 3D navigational search task, participants had to find eight balls within 5 min. Those balls were hidden amongst 16 randomly positioned boxes in a dark environment devoid of any landmarks. Compared to the Gamepad, participants collected more balls using the HeadJoystick. It also reduced the distance travelled, motion sickness, and mental task demand. Moreover, the HeadJoystick was rated better in terms of ease of use, controllability, learnability, overall usability, and self-motion perception. However, participants reported that HeadJoystick could be more physically fatiguing after prolonged use. Overall, participants felt more engaged with HeadJoystick, enjoyed it more, and preferred it. Together, this provides evidence that leaning-based interfaces like HeadJoystick can provide an affordable and effective alternative for flying in VR and potentially telepresence drones.

1 Introduction

Spatial updating is a largely automated mental process of establishing and maintaining the spatial relationship between ourselves and our immediate surroundings as we move around Wang (2016). That is, as we move through our environment and self-to-object relationships constantly change in non-trivial ways, our mind helps us to remain oriented by automatically updating our spatial knowledge of where we are with respect to relevant nearby objects in our surroundings. This ability allows us to navigate and interact with our immediate environment almost effortlessly Wang and Spelke (2002), Loomis and Philbeck (2008), McNamara et al. (2008). Spatial updating can also support complex activities like driving, climbing, diving, flying, or playing sports.

While spatial updating is mostly automatic or even obligatory (i.e., hard to suppress) during natural walking, it cannot be deliberately triggered when merely imagining self-motions Rieser (1989), Presson and Montello (1994), Farrell and Robertson (1998), Wang (2004). Similarly, spatial updating is impaired if self-motions are only visually simulated in virtual reality (VR) and people are not physically walking, especially when reliable landmarks are missing Klatzky et al. (1998). This has been demonstrated by comparing physical walking with a head-mounted display (HMD) to hand-held controller operated locomotion in VR Klatzky et al. (1998), Ruddle and Lessels (2006), Riecke et al. (2010). Moreover, a large percentage of participants completely fail to update rotations that are not physically performed but only visually simulated in VR Klatzky et al. (1998), Riecke (2008). This illustrates how critical it is to support reliable and automatic spatial updating in VR through, e.g., more embodied interaction and locomotion methods that can tap into such automatized and low-cognitive-load mechanisms.

While physical walking in VR is often considered the “gold standard” and can reliably elicit automatic spatial updating with low cognitive load, it is often not feasible due to restrictions on the available free-space walking area and/or safety concerns Steinicke et al. (2013). Moreover, walking does not allow for full 3D (flying or diving) locomotion, where there is currently no comparable or “gold standard” locomotion interface. To address this gap, we investigate in this study if an embodied leaning-based flying interface can help improve users’ navigation performance in a 3D navigational search task that requires spatial updating, as well as improve other usability, performance, and user experience aspects in comparison to a commonly used dual-thumbstick flying interface. Recent research indicates that more embodied interfaces such as leaning-based interfaces can indeed improve task performance in a ground-based navigational search task, and almost reach the performance levels of physical walking Nguyen-Vo et al. (2019). However, it remains an open research question if such benefits of leaning-based interfaces would generalize to full 3D locomotion (flying) where an additional degree of freedom (DoF) needs to be controlled. If such embodied and affordable 3D locomotion interfaces could indeed improve navigation tasks relying on spatial updating, this could have substantial benefits for a variety of scenarios and use cases in both VR and immersive telepresence (UAV/drone) flying, as spatial updating is essential for reducing cognitive load during locomotion and thus leaving more cognitive resources for other tasks. These scenarios include training, disaster or emergency response management, embodied virtual tourism, or flying untethered (as no hand controllers are needed). With the advancement in both VR and drone technologies, affordable use cases across educational, commercial, health, and recreational sectors lie ahead.

To tackle this challenge, we conducted a user study to compare HeadJoystick, an embodied leaning-based flying interface adapted from Hashemian et al. (2020) (discussed in detail in Section 2.1), with Gamepad, a standard controller-based interface. We compared these interfaces in a novel 3D (flying) navigational search task. This task is a 3D generalization of a standard paradigm used to assess spatial updating and situational awareness (and other supporting aspects such as the user’s ability to maneuver and forage effectively, and to memorize which targets they have already visited) in ground-based VR or real-world navigation Ruddle (2005), Ruddle and Lessels (2006), Ruddle and Lessels (2009), Riecke et al. (2010), Ruddle et al. (2011b), Fiore et al. (2013), Ruddle (2013), Nguyen-Vo et al. (2018), Nguyen-Vo et al. (2019). Further, we investigated if using HeadJoystick could help to reduce motion sickness and task load. Finally, we triangulated our findings through a post-experiment questionnaire and open-ended interviews.

2 Related Works

2.1 Locomotion in VR

In VR, hand-held controllers cannot provide physical self-motion cues that would normally accompany real-world locomotion. Since these non-visual cues, such as vestibular and proprioceptive cues, are missing, they cannot support the visual self-motion cues provided by the HMD, making it challenging to provide an embodied and compelling sensation of self-motion (vection) for the user Riecke and Feuereissen (2012), Lawson and Riecke (2014). This lack of non-visual and embodied self-motion cues has also been shown to impair performance in navigational search tasks requiring spatial updating Ruddle and Lessels (2009), Riecke et al. (2010) and spatial tasks such as directional estimates Chance et al. (1998), Klatzky et al. (1998), homing Kearns et al. (2002), pointing Waller et al. (2004), Ruddle et al. (2011b), Ruddle (2013) and estimation of distance traveled Sun et al. (2004). Moreover, missing body-based sensory information has also been shown to increase cognitive load Marsh et al. (2013), Nguyen-Vo et al. (2019) and motion sickness Aykent et al. (2014), Lawson (2014).

To provide at least some of these essential body-based cues, a variety of systems have been proposed and investigated, including large omnidirectional treadmills for ground-based locomotion and full-scale VR flight simulators Groen and Bles (2004), Ruddle et al. (2011a), Krupke et al. (2016), Perusquía-Hernández et al. (2017). Although these simulators provide a more believable experience of walking/flying using vestibular/proprioceptive sensory cues, the cost and maintenance needs of the equipment, complicated setups, required extensive safety measures, and weight and space requirements of some designs make them unfeasible for general VR home users.

VR researchers have designed leaning-based locomotion interfaces as a low-cost alternative that provides embodied control and partial body-based sensory information. In these interfaces, leaning or stepping away from the center towards the desired direction instantiates a virtual motion in that direction. User studies have shown promising results for ground locomotion, such as improvement in spatial perception and orientation Harris et al. (2014), Kruijff et al. (2015), Nguyen-Vo et al. (2019), the sensation of self-motion, i.e., vection Kruijff et al. (2016), Riecke (2006), immersion Marchal et al. (2011), presence Kitson et al. (2015a), engagement Kitson et al. (2015a), Kruijff et al. (2016), Harris et al. (2014) and reduced cognitive load Marsh et al. (2013). Leaning-based interfaces (or a variation of stepping away from the center instead of just leaning) have also been adapted to 3D (flying) locomotion with similar effects. As we are mainly concerned with 3D locomotion in this experiment, we discuss these studies below in detail.

2.1.1 Flying in Real/Virtual Environments With Two DoF Leaning-Based Interfaces

Below we discuss several relevant leaning-based flying interfaces that allow users to control two DoF. While this is not sufficient for full control of 3D flight (which requires four DoF), they provide useful insights and inspiration.

Schulte et al. (2016) presented upper-body leaning-based flying interfaces using either the Kinect or Wii Balance Board. Both interfaces rely on the (novel) metaphor of riding a dragon. Leaning in the sagittal and coronal planes controls the dragon’s pitch and combined yaw and roll, respectively. Although the dragon normally travels at a constant speed, a hand gesture tracked by the Kinect can temporarily increase that speed.

Miehlbradt et al. (2018) suggested a similar upper-body leaning-based interface. Using Kinect, the user’s torso motion is used to perform five distinct behaviors (constant forward motion, right-banked turn (roll), left-banked turn (roll), upward pitch, and downward pitch). Users’ performance (accuracy) with the leaning-based interface was better than a joystick and comparable to Birdly, a commercial mechanical interface for flying like a bird in VR Rheiner (2014).

Rognon et al. (2018) designed an upper-body soft exoskeleton, FlyJacket, that controls a fixed-wing drone flying at a constant speed. Participants use an HMD to view the real-world unmanned aerial vehicle (UAV) perspective, and control the pitch and roll through their torso leaning using an inertial measurement unit (IMU). Though FlyJacket showed no significant performance improvement compared to a standard two-thumbstick remote controller (RC), participants found it to be more natural, more intuitive, and less uncomfortable.

2.1.2 Flying in Real/Virtual Environments With Four DoF Leaning-Based Interfaces

Higuchi and Rekimoto (2013) developed a system, flying-head, that synchronizes a human head with UAV motions. Users see the UAV’s camera feed through an HMD, and control the UAV’s horizontal movement by walking around, elevation by crouching, and orientation by physically rotating. In a user study, their interface was found to be better than a joystick in completion time, accuracy, ease of control, ease of use and enjoyment. However, the interface implements a position control paradigm (1:1 mapping of the user’s head and UAV position). This makes long distance navigation of the UAV impractical as its movement is limited to the user’s head movement in the real world.

Cirio et al. (2009)’s and Marsh et al. (2013)’s designs solve that problem through a concept of hybrid position/rate control. In this design, there is a 1:1 zone (positional mapping) where the user can move freely and perform everyday actions like bending and ducking. If the user leaves the zone, the interface applies velocity in the direction in which they crossed the threshold. Marsh et al.’s study showed that using this interface in place of controllers lessens the cognitive load. In Cirio et al.’s study, participants completed the task faster compared to “freezing at the boundary and going back to the center” or redirected walking. However, this interface was only useful when users needed to travel from one point in space to another in a relatively straight line, and they struggled when navigation included constant twists and turns. This is also seen in Cirio et al.’s study, where the deviation from the ideal path was largest for this interface.
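The hybrid position/rate idea can be illustrated with a minimal sketch. It is not taken from Cirio et al. or Marsh et al.: the spherical zone shape, the `zone_radius_m` and `gain_per_s` values, and the choice to scale speed with the overshoot beyond the boundary are all assumptions made for illustration only.

```python
import math

def hybrid_velocity(head_offset, zone_radius_m=0.5, gain_per_s=1.0):
    """Return a virtual velocity (m/s) for a tracked head offset (m).

    head_offset is the user's position relative to the zone center.
    Inside the zone the mapping is purely positional (1:1), so no extra
    velocity is applied. Outside, velocity points in the direction in
    which the user left the zone. Scaling by overshoot is an assumption.
    """
    dist = math.sqrt(sum(c * c for c in head_offset))
    if dist <= zone_radius_m:
        return (0.0, 0.0, 0.0)
    scale = gain_per_s * (dist - zone_radius_m) / dist
    return tuple(scale * c for c in head_offset)
```

Under these assumed parameters, a user standing 0.2 m from the center produces no rate-controlled motion, while a user 1.0 m from the center is moved at 0.5 m/s along the same direction.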

Pittman et al. (2014) proposed “Head-Translation” and “Head-Rotation” with a very small 1:1 zone. In Head-Translation and Head-Rotation, a user controls the UAV’s velocity (both magnitude and direction) in the horizontal plane by physically moving in the desired direction or tilting their head in the desired direction, respectively. In both interfaces, standing on tiptoe or squatting changes the elevation of the UAV, while returning the body to its original position halts the UAV. As they use velocity (i.e., rate) control, their interface supports sharp turns. However, it is not possible to perform actions like ducking and rolling. Among the six flying interfaces compared, Wiimote, a hand-held controller, showed the shortest completion time for passing through the waypoints. It also yielded better ratings for predictability, ease of use, and comfort. However, participants used a monitor instead of HMD with Wiimote. Excluding the Wiimote condition, the participants preferred Head-Rotation the most. However, participants had a hard time locating their original heading with Head-Translation and drifted away from their starting position.

Xia et al. (2019) also developed a VR telepresence UAV system with velocity control instead of position control. Similar to “Head-Translation,” a user controls the UAV’s velocity in the horizontal plane by moving in the desired direction. Their interface updates the reference point to mitigate the problem of drifting users. Whenever the user gets far away from the reference point, stepping in the opposite direction of the UAV flight automatically updates the stepped back position as the new reference point. The user no longer needs to keep track of the origin. However, during prolonged use, the reference point can keep moving away from the center of the tracked space and eventually out of the tracking space.

Hashemian et al. (2020) developed a seated leaning-based interface, “HeadJoystick,” with a virtual quadcopter model. In their model, a user freely rotates the swivel chair to control the simulated rotation. The user leans in the direction they want to navigate. Further, they attach a tracker to the back of the chair to account for any difference between the head’s resting position and the chair’s center. The implementation, based on the tracker’s orientation, updates the reference point as the chair rotates. This allows the user to rotate freely without worrying about the initial reference point. Assessing the interface in a maneuvering task, Hashemian et al. concluded that HeadJoystick improved both user experience and performance. They found the leaning-based interface to perform better than hand-held controllers in terms of accuracy, precision, ease of use, ease of learning, usability, long term use, presence, immersion, a sensation of self-motion, workload, and enjoyment.

To summarize, the studies mentioned above show that leaning-based interfaces can be a low-cost and relatively easy alternative for providing embodied control in VR. Hashemian et al.’s iteration of a flying leaning-based interface addresses the shortcomings of previous designs (except for actions like ducking and rolling; actions not required in this study’s task). However, Hashemian et al. only used a fast maneuvering (waypoint travel) task Hashemian et al. (2020), and there seems to be no prior research investigating human spatial updating ability and situational awareness using embodied flying interfaces. Interfaces designed for maneuvering should support high precision of motion without compromising speed, while interfaces made for exploration and search should support spatial knowledge acquisition and information gathering by freeing cognitive resources Bowman et al. (2004). Thus, both kinds of travel are important, but they require interfaces to support different kinds of motion. This motivated us to design and conduct this study, which will shed light on whether HeadJoystick is suitable only for maneuvering tasks, or whether it can support navigational search and the underlying automatic spatial updating processes as well.

2.2 Navigational Search

Navigational search is one of the established tasks that rely on spatial updating and situational awareness Ruddle and Jones (2001), Lessels and Ruddle (2005), Ruddle (2005), Ruddle and Lessels (2006), Ruddle and Lessels (2009), Riecke et al. (2010), Ruddle et al. (2011b), Fiore et al. (2013), Nguyen-Vo et al. (2018), Nguyen-Vo et al. (2019). It is a complex spatial task with high ecological validity as it is equivalent to a person walking around a cluttered room looking for target objects Ruddle and Jones (2001). Ruddle and Lessels introduced a variant of a navigational search task in a series of experiments studying spatial updating as a key mechanism underlying task performance Lessels and Ruddle (2005), Ruddle and Lessels (2006), Ruddle and Lessels (2009). In their version, participants were located in a virtual rectangular room with a regular arrangement of 33 pedestals. Sixteen of those pedestals had a box on top of them, and half of those boxes contained a hidden object inside. The objective was to collect all eight hidden objects while minimizing revisits to previously visited boxes. To do this, participants need to be able to maneuver and forage effectively, and remember which targets they have already visited. Especially when there are no reliable landmarks that support re-orientation (as in our study), navigational search tasks critically rely on participants’ ongoing situational awareness and more specifically their ability to spatially update where the various boxes are with respect to themselves as they constantly move around the area: once they get lost or lose track of where the already-visited boxes are with respect to their own position and orientation (i.e., failure to spatially update), their performance will drop noticeably. That is, without a locomotion method affording automatic spatial updating, such landmark-free navigational search tasks cannot be performed effectively, making spatial updating a necessary (but not sufficient) condition for effective task performance (see chapter IV of Riecke, 2003).

Lessels and Ruddle (2005) showed that the task was trivial to perform when walking in the real world. Even when the field of view (FOV) was restricted to 20° × 16° and thus much smaller than the FOV of current HMDs, performance for real world walking was not significantly reduced. However, when the task was performed in VR, performance was significantly reduced whenever visual cues provided via HMD were not accompanied by real walking, both in a real-rotation and visual-only condition Ruddle and Lessels (2006), Ruddle and Lessels (2009).

Later, Riecke et al. (2010) pointed out that in Ruddle and Lessels’ setup, navigators could use the room’s geometry, a rectangular arrangement of the pedestals and the regular orientation of the objects to maintain global orientation. To avoid these confounds, they removed the surrounding room, removed the pedestals without the boxes, refrained from using a landmark-rich environment, and randomly positioned and oriented all objects for each trial in their experiment. With this modified experimental design, participants performed substantially better when they were allowed to physically rotate compared to visual-only simulation. Physical walking provided additional (but smaller) performance benefits.

Nguyen-Vo et al. (2018) showed that if a participant can walk out of the array of boxes and look at the whole scene, they could memorize the overall layout of the boxes and plan their trajectory. Studies also suggest that even a single viewing of the layout can help a user retain spatial orientation knowledge, including relative distances, directions, and scale Shelton and McNamara (1997), Zhang et al. (2011). This implied that participants just needed to memorize a pre-planned trajectory instead of needing to gradually build up their spatial knowledge as they navigated, especially if they could see the layout from an advantageous position in a fully lit room. To ensure continuous spatial updating in participants, Nguyen-Vo et al. (2019) later experimented in a dark virtual environment with a virtual headlamp attached to the avatar’s head. The virtual lamp illuminated only half of the play area and prevented participants from ever seeing the overall layout and all boxes at once. Using this experimental design, Nguyen-Vo et al. compared four levels of translational cues and controls (none, upper-body leaning while sitting, whole-body leaning while standing/stepping, physical walking) accompanied by full rotational cues in all conditions. Their findings show that even providing partial body-based translational cues can help to bring performance to the level of real walking, whereas just using the hand-held controller significantly reduced both performance and usability.

In summary, the navigational search task has gone through numerous iterations, with each iteration addressing previously found confounds. Successful and effective completion of the task critically relies on spatial updating as discussed above. Here, we build on and extend this task by including, for the first time, vertical locomotion in a navigational search task to study full 3D locomotion, similar to what drones and many computer games provide.

3 Motivation and Goal

To close this gap in the literature, this paper mainly investigates whether leaning-based flying interfaces like HeadJoystick can not only improve maneuvering ability Hashemian et al. (2020) compared to the standard 2-thumbstick flying interfaces, but also improve navigation in a task reliant on automatic spatial updating, which is critical for effective and low-cognitive-load navigation and situational awareness Rieser (1989), Presson and Montello (1994), Klatzky et al. (1998), Farrell and Robertson (1998), Riecke et al. (2007). Further, we want to ground the applicability of the interface by studying its impact on motion sickness and task load, as well as diverse aspects of user experience and usability. Reduced cognitive/task load can be an indicator of improved spatial updating, as automatic spatial updating by its very definition will automatize part of the spatial orientation challenges and thus reduce task load (TLX mental load, effort and frustration). Similarly, if an interface induces high motion sickness (disorientation, dizziness) then it can adversely impact spatial updating.

3.1 RQ1: Does HeadJoystick Improve Navigational Search Performance Compared to a Hand-Held Controller for 3D Locomotion?

In the HeadJoystick interface, the upper body is leaning in the direction of the simulated motion. This partial body movement of leaning provides minimal vestibular cues that are at least somewhat consistent with the virtual translation. This partial consistency is both spatial (i.e., leaning movements provide some proprioceptive and vestibular cues that are aligned with the acceleration direction in VR) and temporal, in that leaning directly controls the simulated self-motion without additional delay, thus providing almost immediate feedback. These (although limited) embodied translational cues have been shown to help reduce disorientation in 2D navigational search Nguyen-Vo et al. (2019). Hence, we hypothesize that they should yield improved task performance (by more effectively supporting spatial updating) for the HeadJoystick in 3D navigation as well (H1).

3.2 RQ2: Does HeadJoystick Help to Reduce Motion Sickness in 3D Navigation?

When physically stationary individuals view compelling visual representations of self-motion without any matching non-visual cues, it can cause unease and induce motion sickness Hettinger et al. (1990), Cheung et al. (1991), Riecke et al. (2015). This can cause illness in the user or even incapacitate them, limiting the utility of VR Hettinger and Riccio (1992). Further, sensory conflict is most prominent during the change in velocity (acceleration/deceleration) Bonato et al. (2008), Keshavarz et al. (2015).

Previous studies for ground-based locomotion have also shown that if virtual locomotion is accompanied by matching body-based sensory information similar to real-world locomotion, it can help to reduce motion sickness Aykent et al. (2014), Lawson (2014). However, the literature indicates mixed results for partial body-based sensory information. Some ground-based locomotion studies reported no significant difference in motion sickness between leaning-based interfaces and hand-held controllers Marchal et al. (2011), Hashemian and Riecke (2017a), while others reported significant reductions of motion sickness with a leaning-based interface Nguyen-Vo et al. (2019). Further, as far as the authors know, the literature does not provide a definitive answer on whether the benefits of such implementations translate to flying locomotion. Rognon et al. hypothesized increased motion sickness with the remote controller to explain their results, but did not report explicit measurements. In Pittman and LaViola’s study, participants using Wiimote reported significantly less motion sickness than those using head-rotation and head-translation, but the Wiimote condition did not use an HMD. Further, only five out of 18 participants reported more than 10% of total SSQ score after the experiment. Hashemian et al. (2020) found a significant difference (again, a change of <10% of total SSQ score) in motion sickness between real-rotation with leaning-based translation and controller-based translation and rotation conditions; however, when real-rotation was used with both leaning-based and controller-based translation, the difference was not statistically significant.

Despite these conflicting findings, HeadJoystick is designed to provide at least some vestibular and proprioceptive cues that aid the visual self-motion perception provided by the HMD Hashemian et al. (2020). Handheld controllers like a Gamepad cannot provide these physical self-motion cues. To change the velocity in HeadJoystick the user has to physically lean in the direction of acceleration, thus providing at least some vestibular self-motion cues in the correct direction and thus reducing the visual-vestibular cue conflict. Thus, we hypothesize HeadJoystick should reduce motion sickness and thus potentially allow for more extended headset usage (H2). We performed a planned contrast to see if there is any trend in change in motion sickness even if both interfaces should produce minimal motion sickness.

3.3 RQ3: How do HeadJoystick and Gamepad Affect the Overall User Experience and Usability in 3D Navigation?

For the HeadJoystick interface, the simulated motion is consistent with the direction of the upper body. So, any point in the space can be reached by freely leaning towards that direction. However, each thumbstick on a Gamepad is constrained to control only two DoF. So, even with real-rotation, to control three degrees of translation simultaneously the user needs to proportionately combine inputs from those two thumbsticks to travel in the desired 3D direction. Alternatively, users could only use one thumbstick at a time and alternate their input to the thumbsticks, and keep switching their plane of movement until they reach the target. Hashemian et al. (2020)’s study also showed that participants found HeadJoystick to be easier to learn and use than Gamepad. Thus, we hypothesize that HeadJoystick should be more intuitive to use and learn, and users should be able to use HeadJoystick more effectively, even without any previous exposure (H3).
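The difference between the two control schemes can be made concrete with a small sketch. It is illustrative only: the axis assignment (left thumbstick for horizontal translation, vertical axis of the right thumbstick for elevation) and the function names are assumptions, not the mapping used in the study.

```python
import math

def direction_to_target(position, target):
    """Unit vector (x, y, z) from position to target; y is up."""
    delta = [t - p for p, t in zip(position, target)]
    length = math.sqrt(sum(d * d for d in delta))
    if length == 0.0:
        return (0.0, 0.0, 0.0)
    return tuple(d / length for d in delta)

def gamepad_inputs_for(direction):
    """Split one 3D direction across two thumbsticks (assumed layout).

    Flying in a straight line towards the target requires holding both
    deflections in exactly this proportion at the same time.
    """
    x, y, z = direction
    return {"left_stick": (x, z), "right_stick_y": y}

def lean_input_for(direction):
    """With a leaning interface, the lean direction is the 3D direction."""
    return direction
```

For a target 3 m above and 4 m in front of the user, the direction is approximately (0, 0.6, 0.8): a Gamepad user would need to hold the left stick at 0.8 forward and the right stick at 0.6 up simultaneously, whereas a leaning user leans along that single direction.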

In addition to the three specific aspects mentioned above, we are also interested in more generally exploring how the two interfaces affect user experience and usability. In Hashemian et al. (2020)’s study, participants rated HeadJoystick as providing overall better user experience and usability than Gamepad. We hypothesize that these findings can be replicated even in a different environment with a different task (navigational search based on spatial updating, instead of maneuvering).

4 Methods

4.1 Participants

Of the 25 users who participated in our experiment, we excluded three and performed the analysis with the remaining 22 participants (10 female), 19–32 years old (M = 24.0, SD = 3.70). Fifteen of them casually/regularly played video games on a computer or a gaming console; 13 had used 3D navigation with video games, 3D modeling, or flight simulators; and 15 had used an HMD before. Among the three excluded participants, one experienced motion sickness during the study and dropped out of the experiment. The other two excluded participants showed unusually high SSQ scores. Although we did not observe anything unusual during the experiment, we realized they had reported high SSQ scores even before the start of the study. Due to this discrepancy and unreliability, we excluded their data as well. The study had the approval of the SFU Research Ethics Board (#2018s0649).

4.2 Virtual Environment and Task

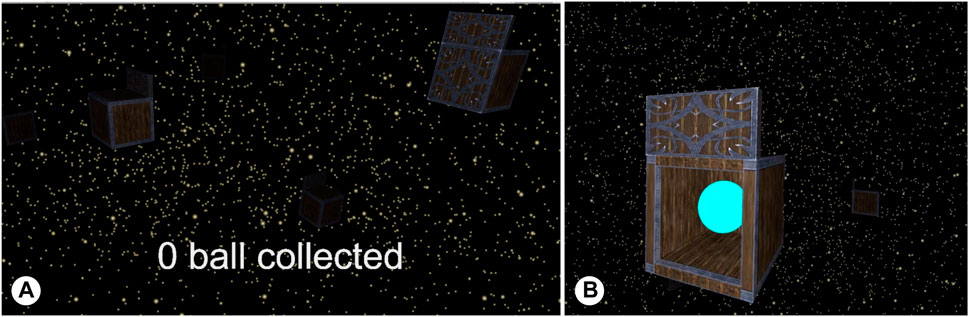

As our main goal was to investigate how well different interfaces support participants’ spatial updating and situational awareness, we carefully designed the virtual environment (Figure 1) and task to avoid potential confounds reported in prior work (see Section 2.2). Specifically, as our main focus was to investigate and compare locomotion interfaces and spatial orientation/updating performance, we carefully avoided all landmarks or global orientation cues. The experimental task was, apart from the changes described below, similar to the ground-based navigational search task in Nguyen-Vo et al. (2019)’s experiment, but generalized for 3D locomotion (flying). To generalize for 3D, we tested different shapes and sizes of the play area prior to the main experiment. If the play area was too small, a user could quickly collect all eight balls without revisiting the boxes. If the virtual area was too big, they easily got lost in the vast void space with no global orientation cues (landmarks). We also observed that participants would keep going out of the play area when it was spherical. As a result of iterative pilot testing, we determined that a cylindrical virtual area 6 m in diameter and 3 m in height would be a good fit for our experiment.

FIGURE 1. (A) A snapshot of a participant’s view of the play area (6 m × 3 m cylinder) with the closed boxes. The light attached to the avatar’s head lit half of the play area, 4.24 m. Boxes became dimly lit as their distance increased from the player and stopped being visible when they were further than 4.24 m. (B) The target, a blue ball, seen after approaching the box.

During each trial, sixteen boxes were randomly placed within this area, with eight of the boxes containing a blue ball (see Figure 1). The remaining eight boxes served as decoys. The participants’ objective was to efficiently collect as many balls as possible. In our navigational search task, participants started each trial from the center of the cylinder. A trial ended when participants found all eight balls or the trial ran for 5 min. We chose to limit the trial length to reduce motion sickness.
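A trial layout of this kind could be generated roughly as in the following sketch. Only the cylinder dimensions and the box and ball counts come from the text; uniform sampling over the volume, the random box yaw, the absence of a minimum spacing between boxes, and all names are assumptions for illustration.

```python
import math
import random

RADIUS_M = 3.0   # 6 m diameter play area
HEIGHT_M = 3.0   # 3 m high play area
N_BOXES = 16
N_BALLS = 8

def sample_point_in_cylinder(rng):
    """Sample a point uniformly inside the cylinder (y is up)."""
    # The square root keeps the density uniform over the disc area.
    r = RADIUS_M * math.sqrt(rng.random())
    theta = rng.uniform(0.0, 2.0 * math.pi)
    y = rng.uniform(0.0, HEIGHT_M)
    return (r * math.cos(theta), y, r * math.sin(theta))

def generate_trial(seed=None):
    """Return 16 boxes, 8 of which hide a ball and 8 of which are decoys."""
    rng = random.Random(seed)
    ball_ids = set(rng.sample(range(N_BOXES), N_BALLS))
    return [
        {
            "position": sample_point_in_cylinder(rng),
            "yaw_deg": rng.uniform(0.0, 360.0),  # assumed random facing
            "has_ball": i in ball_ids,
        }
        for i in range(N_BOXES)
    ]
```

Passing a fixed `seed` reproduces the same layout, which is convenient when the same arrangement needs to be replayed for debugging or analysis.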

Participants were explicitly told that the criteria for efficiency were the number of balls collected, the total distance traveled, and the number of revisits. Since it was possible to complete the game by collecting all the balls before 5 min, we also recorded the completion time.

4.3 Interaction

To check if there was a ball inside a box, participants needed to approach it from its front side, indicated by an additional banner (Figure 1B). The box automatically opened when participants’ viewpoints were close (within 90 cm from the box’s center) and facing the front side (within ±45° from the box’s forward vector). To prevent the accidental collection of the balls, the user needed to keep the box open for one second. The user was alerted through a ticking sound as the box opened, and it was followed by a “ding” sound for collection.
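The opening rule can be sketched as a simple geometric test plus a dwell timer. This is a minimal sketch, not the authors' implementation: it assumes that "facing the front side" means the viewpoint lies inside a 45° cone around the box's forward vector, and the names `is_box_open` and `DwellTimer` are hypothetical.

```python
import math

OPEN_DISTANCE_M = 0.90      # viewpoint must be within 90 cm of the box centre
OPEN_HALF_ANGLE_DEG = 45.0  # and within +/-45 degrees of the box's forward vector
DWELL_TIME_S = 1.0          # the box must stay open this long before collection


def is_box_open(viewpoint, box_center, box_forward):
    """True if the viewpoint is close enough to the box and in front of it."""
    to_viewer = [v - c for v, c in zip(viewpoint, box_center)]
    distance = math.sqrt(sum(d * d for d in to_viewer))
    if distance > OPEN_DISTANCE_M:
        return False
    if distance == 0.0:
        return True  # degenerate case: viewpoint exactly at the box centre
    forward_len = math.sqrt(sum(f * f for f in box_forward))
    cos_angle = sum(d * f for d, f in zip(to_viewer, box_forward)) / (distance * forward_len)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.degrees(math.acos(cos_angle)) <= OPEN_HALF_ANGLE_DEG


class DwellTimer:
    """Accumulates open time; resets whenever the box closes (assumed behavior)."""

    def __init__(self):
        self.open_time = 0.0

    def update(self, box_open, dt):
        """Advance by dt seconds and return True once the ball should be collected."""
        self.open_time = self.open_time + dt if box_open else 0.0
        return self.open_time >= DWELL_TIME_S
```

In a per-frame loop, `update(is_box_open(...), dt)` would be called once per frame for each box; whether the original timer resets when the box closes is not stated in the text.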

As colliding with the boxes and any subsequent physics simulation would disorient the user and even induce motion sickness, we switched off collision detection for the boxes and the user could pass through them. However, to prevent participants from peeking into the boxes from the other sides, the ball became visible only when a box was approached from the front side.

4.4 Locomotion Modes

Hand-based controllers are still the most common interfaces for navigation in VR, especially when physical walking is not feasible. To choose among the hand-based controllers for the experiment, we compared the Vive controller that came with the headset and a gamepad. Through our pilot testing, we learned that participants used their thumbs to control both kinds of controllers and released their thumbs to come to a halt. As the Vive controller’s trackpad provides no physical feedback that indicates its center, participants had difficulty providing proper input once they had released their thumb, and as a result took time to adjust their input. A gamepad has spring-loaded thumbsticks that return to their center when released. Because of this, the user can locate the center much more quickly. Further, a gamepad’s thumbsticks are similar in design to the most common remote controllers for drones. We therefore chose to compare the HeadJoystick interface with a gamepad. This choice also adds comparability with the original paper by Hashemian et al. (2020), which proposed the HeadJoystick interface.

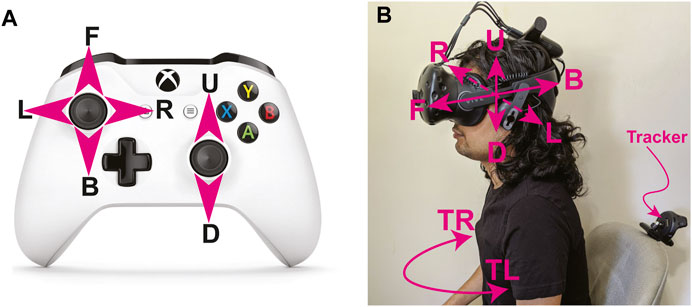

For both the Gamepad and HeadJoystick conditions, participants rotated the swivel chair they were sitting on to control the simulated rotations in VR. However, they translated in different manners. We chose to include only interfaces that allow physical rotation because the importance of rotation in spatial updating has already been demonstrated multiple times Klatzky et al. (1998), Riecke et al. (2010). Further, implementing physical rotations is no longer an issue, as HMDs are becoming increasingly wireless and therefore have no cables that could become entangled.

4.4.1 Gamepad Interface

For the Gamepad interface, the left control stick controlled horizontal translation velocities as illustrated in Figure 2. The right control stick controlled upward/downward translation speeds. Although physical rotation controlled yaw, for simplicity, we will refer to this interface as the Gamepad throughout the paper.

FIGURE 2. (A) In Gamepad, the left thumbstick controls the horizontal velocity (Forward, Backward, Left, Right) and the right thumbstick controls the vertical velocity (Up, Down). (B) In HeadJoystick, the user’s head position controls the velocity. In both cases, physical rotation (Turning Right, Turning Left) is applied.

4.4.2 HeadJoystick Interface

In the HeadJoystick interface, head position determined the translation. The interface calibrated the zero-point before each use. Moving the user’s head in any particular direction from that zero-point made the player move in the same direction. The distance of the head from the zero-point determined the speed of the virtual motion. To stop the motion, the user had to bring their head back to the zero-point. As a result, leaning forward and backward caused the user to move forward and backward, leaning left or right caused sideways motions, stretching their body up or slouching down created upward or downward motions, and coming back to the center stopped the motion. Many prior implementations of this kind of interface include a small 1:1 zone (also known as idle, dead, or neutral zone) where physical head motion corresponds to 1:1 mapped virtual motion, to allow users to brake or remain stationary more easily. However, during the pilot testing for this study, we observed that our exponential curve (relating head deflection to virtual translation speed, see Section 4.5) is fairly flat around the zero-point and was sufficient for the user to easily slow down and collect the balls. Hence, we opted not to include a 1:1 zone. This also helped to reduce the amount users had to lean to travel at faster speeds.
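The mapping from head offset to velocity can be sketched as follows. This is only an illustration under stated assumptions: the exact function used in the study is not reproduced here, so the form `alpha * (exp(beta * d) - 1)`, the parameter values, and the name `head_to_velocity` are all hypothetical. The sketch merely shows a curve that is zero at the zero-point, relatively flat nearby, and increasingly steep with larger deflection.

```python
import math

ALPHA = 0.5  # hypothetical speed scale factor (the paper's alpha is a maximum speed factor)
BETA = 4.0   # hypothetical steepness of the exponential curve


def head_to_velocity(head_pos, zero_point, alpha=ALPHA, beta=BETA):
    """Map the head offset from the calibrated zero-point to a velocity vector."""
    offset = [h - z for h, z in zip(head_pos, zero_point)]
    deflection = math.sqrt(sum(o * o for o in offset))
    if deflection == 0.0:
        return [0.0, 0.0, 0.0]  # head at the zero-point: no motion
    # Assumed form: speed is zero at rest and grows exponentially with deflection.
    speed = alpha * (math.exp(beta * deflection) - 1.0)
    # Travel direction equals the direction of the head offset.
    return [speed * o / deflection for o in offset]
```

Because the assumed curve is convex, doubling the lean more than doubles the speed, which is consistent with the observation that small deflections near the zero-point produce only slow motion.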

4.5 Motion Control Model

The velocity calculation is based on a scaled exponential function, similar to the function for smooth translation proposed in LaViola et al.’s (2001) study on leaning-based locomotion.

where α is the maximum speed factor, β controls the steepness of the exponential curve,

4.6 Experimental Design and Procedure

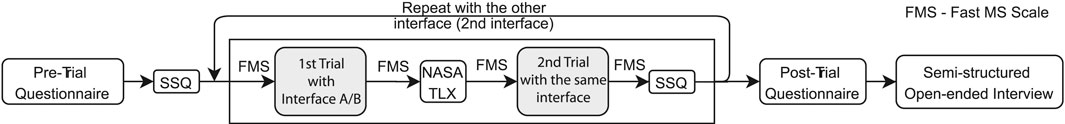

In this experiment, we compared the performance of the HeadJoystick and Gamepad interfaces. We collected the users’ behavioral data while they were performing the tasks. After completing the trials, we asked them to fill out a questionnaire and conducted a semi-structured open-ended interview. We deployed a 2-blocked, repeated-measures experimental design. All participants performed the navigational search task twice for each interface, totaling four trials and thus up to 20 min of VR exposure. The order of the interfaces was counterbalanced to account for order and maturation effects.

The overall procedure is illustrated in Figure 3. After reading and signing the informed consent form, participants filled out a pre-experiment questionnaire before starting the experiment, asking about their age, gender and previous experience with video games and HMDs. Then, they were guided through the tasks and tried out both interfaces before the actual experiment started. Before they began the experiment, they filled out the Simulator Sickness Questionnaire (SSQ) Kennedy et al. (1993). They started with one of the two interfaces. They performed two trials with the first interface. Participants were asked to take off the Vive Headset after the first trial and fill out NASA’s Task Load Index (TLX) Hart and Staveland (1988) to reduce the potential for motion sickness. After completing the second trial, they filled out the SSQ again. The questionnaires were strategically placed between each trial to provide a short break between the trials. They repeated the same procedure including two trials with the interface they had not used in the first two trials.

Further, to assess motion sickness issues, we asked them to estimate their current state of motion sickness before and after each trial. They rated their motion sickness on a scale of 0–100. A rating of “0” meant “I am completely fine and have no motion sickness symptoms” and “100” meant “I am feeling very sick and about to throw up.” Based on their rating, we recommended that they go ahead with the trial, take a longer break, or drop out of the experiment. This scale was adapted from the Fast Motion Sickness Scale (FMS), which ranges from 0 to 20 Keshavarz and Hecht (2011).

Before switching the interface, participants were asked to take a minimum 5 min break, including the time required to fill out the questionnaires. In addition to taking a mandatory break, participants were encouraged to take a short walk or drink water.

After completing all four trials, they completed a post-study survey questionnaire (detailed in Section 5.2.3) and responded verbally to semi-structured open-ended interview questions. The whole study took, on average, about 1 h to complete.

5 Results

5.1 Behavioral Measures

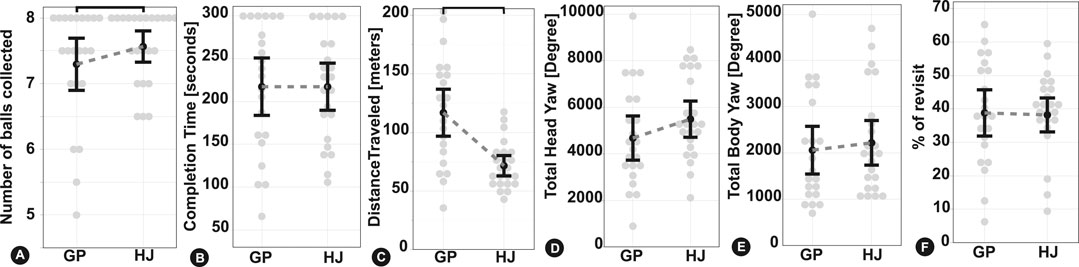

Six quantitatively measured behavioral data types are summarized and plotted in Figure 4. Improved spatial updating for a given interface would be expected to increase the number of balls collected, and reduce task completion time and the number of revisits needed. Similarly, if an interface better supports spatial awareness, this might yield an increase in participants’ head and body rotation, and reduce travel distance to reach the same number of targets. All six measures were analyzed using 2 × 2 × 2 repeated-measures ANOVAs with the independent variables interface (HeadJoystick vs. Gamepad), repetition (first vs. second trial), and group, i.e., the order of interface assignment (GamepadFirst vs. HeadJoystickFirst). Since neither repetition, group, any interaction with group, nor the interaction between interface and repetition showed any significant effects for any of the dependent variables (all p′s > 0.05), we only report the main effects of interface below. Unless stated otherwise, all test assumptions for ANOVA were confirmed in each case; p < 0.05 was considered a significant effect and p < 0.10 a marginally significant effect.

FIGURE 4. Behavioral data plot for Gamepad (GP) and HeadJoystick (HJ) with an overall mean (black dots), individual participants’ average (gray dots) and error bars at 95% Confidence Intervals (CI).

5.1.1 Participants Collected Significantly More Balls When Using HeadJoystick

Figure 4A. Participants collected all eight balls in 31 out of 44 trials with HeadJoystick and 26 out of 44 trials with Gamepad. All participants were able to collect at least six balls with HeadJoystick and at least four balls with Gamepad. On average, participants were able to collect more balls when using HeadJoystick (M = 7.61, SD = 0.655) than Gamepad (M = 7.30, SD = 1.01),

5.1.2 Task Completion Time did not Differ Between Interfaces

Figure 4B. Participants reached the time limit of 5 min in 13 trials when using the HeadJoystick vs. 18 trials when using the Gamepad. The fastest participant finished the task in 69 s with HeadJoystick and 48 s with Gamepad. Repeated measures ANOVA showed no significant difference in completion time between HeadJoystick (M = 214 s, SD = 76.5 s) and Gamepad (M = 217 s, SD = 84.6 s),

5.1.3 Participants Travelled Significantly Less While Using HeadJoystick

Figure 4C. Participants travelled from 33.5 to 143.9 m with HeadJoystick and from 30.8 to 277.4 m with Gamepad. ANOVA showed that participants overall travelled significantly less with the HeadJoystick (M = 72.3 m, SD = 28.3 m) than the Gamepad (M = 116.8 m, SD = 56.9 m), F (1, 42) = 25.4, p < 0.001,

5.1.4 HeadJoystick Marginally Increased Overall Head Rotations, but not Body Rotations

Figures 4D,E. We recorded the users’ body rotation (rotation of the chair) and head rotation (rotation of HMD) because it would inform us if either of the interfaces restricted or reduced looking around and thus potentially hindered situational awareness. The accumulated body rotation for HeadJoystick (M = 2,255°, SD = 1,360°) and Gamepad (M = 2,061°, SD = 1,260°) did not differ statistically, F (1, 42) = 0.782, p = 0.382,

5.1.5 There was no Significant Difference Between % of Revisit (Ratio of Revisited Boxes to the Total Number of Boxes Visited)

Figure 4F. Only five participants (two with Gamepad, three with HeadJoystick) had no revisits to the target boxes before the trial completed. Some participants travelled slowly and visited only a few boxes. Others travelled quickly and visited as many boxes as possible. Since the total number of revisits depends on the total number of targets visited by the participants, we analyzed the ratio of revisited boxes to the total number of boxes visited by the participants. The mean % revisits was not significantly different, F (1, 42) = 0.018, p = 0.894,
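The revisit percentage described above can be sketched in a few lines. The sketch assumes that a "revisit" is any visit to a box that was already visited earlier in the same trial and that the denominator is the total number of box visits; the text does not spell out these details, and the function name `percent_revisits` is hypothetical.

```python
def percent_revisits(visit_sequence):
    """Percentage of box visits in a trial that were revisits (assumed definition)."""
    seen = set()
    revisits = 0
    for box_id in visit_sequence:
        if box_id in seen:
            revisits += 1  # this box was already opened earlier in the trial
        seen.add(box_id)
    if not visit_sequence:
        return 0.0  # no visits at all: define the ratio as zero
    return 100.0 * revisits / len(visit_sequence)
```

For a visit order such as `[1, 2, 3, 2, 1]`, two of the five visits are revisits, giving 40%. Normalizing in this way keeps fast explorers, who open many boxes, comparable with slow ones.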

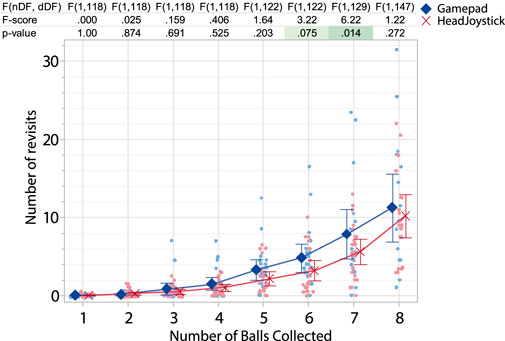

FIGURE 5. Total target (boxes) revisited by the participants as they collected new balls. Light shades of dots represent the mean values per participant. ⧫ (blue) and X (red) indicate the mean for Gamepad and HeadJoystick interface respectively, CI = 95%. Table above shows the planned contrast between the interfaces as the number of revisits accumulated with number of balls collected.

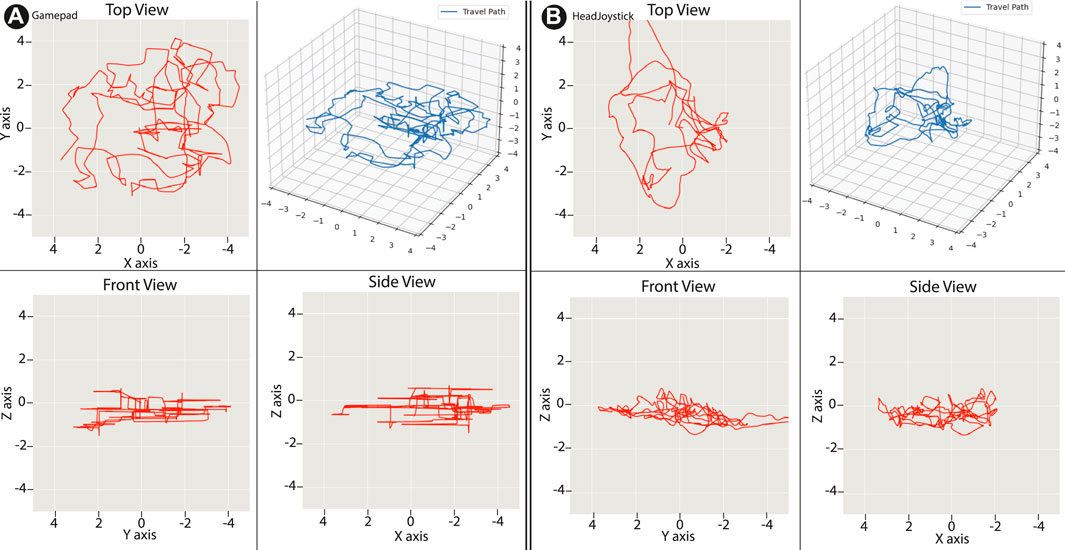

We also recorded the travel paths of the trials to investigate potential behavioral differences between the interfaces during navigation. Since putting the travel paths of all users and trials in a single graph created a dense plot in which individual travel instances were impossible to distinguish, we show representative travel paths for Gamepad and HeadJoystick from a randomly selected participant (Figure 6). As the figure illustrates, with the Gamepad, participants restricted themselves to controlling no more than two translational DoF at a time, while with HeadJoystick, they controlled all available DoFs simultaneously. This is indicated by the straight horizontal and vertical lines with almost perpendicular turns with Gamepad (front and side views, Figure 6A) and curved paths with HeadJoystick in all projections (Figure 6B). Plots of almost all travel paths of individual trials showed similar trends and are submitted as Supplementary Material for reference.

FIGURE 6. Representative travel path (isometric, top, front and side) for (A) Gamepad and (B) HeadJoystick from a randomly selected participant.

5.2 Subjective Ratings

5.2.1 Motion Sickness

5.2.1.1 Simulator Sickness Questionnaire

The time of SSQ measurement (0: before the experiment; 1: after completing the trials with the first interface; 2: after completing the trials with the second interface) was one of the independent variables (within-subject factor). The order of assignment of the first interface (group: HeadJoystickFirst vs. GamepadFirst) was the second independent variable (between-subject factor). We chose to interpret the data with the time of SSQ measurement rather than the interfaces themselves because motion sickness accumulates over time. We performed a two-way mixed ANOVA using those two factors. Greenhouse-Geisser correction was applied whenever the assumption of sphericity was violated. As discussed in Section 3, we also compared Pre-Experiment SSQ scores to SSQ scores after using the first and second interfaces as planned contrasts with Bonferroni correction, summarized in Table 1. Finally, we tested the correlation between the distance travelled and motion sickness as participants travelled significantly more with Gamepad. However, linear correlation analysis showed that motion sickness did not correlate with travelled distance for either of the interfaces (all p′s > 5%).

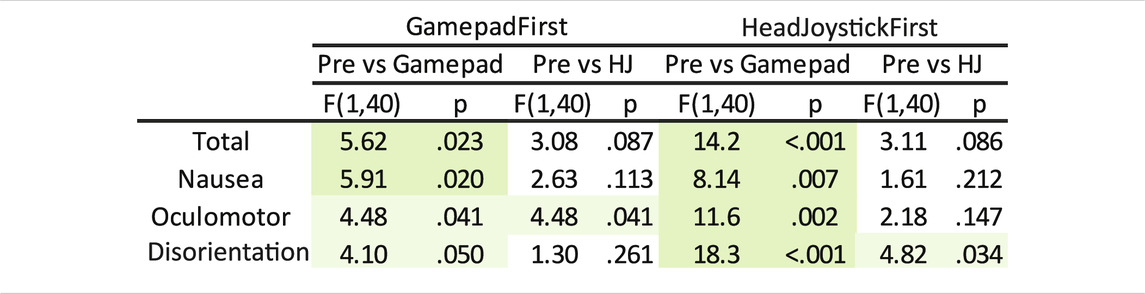

TABLE 1. LSMeans contrasts were used to compare the Pre-Experiment SSQ score with the SSQ score after Gamepad use and after HeadJoystick use. Bonferroni correction was applied to account for the two comparisons. Significant differences (p ≤ 2.5%) are highlighted in green, and a lighter shade of green indicates marginally significant results (p ≤ 5%).

Each trial produced only minimal motion sickness on average, and the average SSQ score after the experiment was 16.8% (or 39.7 on the SSQ scale from 0 to 235.62). The highest SSQ score reached by any participant was 44.4% (104.7). We can see from Figure 7 that for an average participant, when they used Gamepad as their first interface (blue line), SSQ total and its sub-scales increased from Pre-Experiment to after using the Gamepad, and stayed at the same level or even decreased after switching to HeadJoystick. For an average participant using HeadJoystick as their first interface (red line), not only did SSQ total and its sub-scales increase after using the HeadJoystick, they continued to increase after switching to Gamepad. The inferential statistical analysis below shows the same pattern.

FIGURE 7. User’s self-report of motion sickness using SSQ questionnaire before the experiment started (“Pre-Experiment”), after using the first interface, and after using the second interface. Blue and red lines indicate an average participant using Gamepad and HeadJoystick as their first interface respectively. ⧫ = Gamepad interface, X = HeadJoystick interface, CI = 95%.

5.2.1.1.1 Total SSQ Scores Increased Significantly After Using Gamepad and Increased Marginally After Using HeadJoystick

Figure 7A. ANOVA revealed a main effect of time (Pre-Experiment, after the first interface, and after the second interface),

5.2.1.1.2 Participants Got Significantly Nauseous After Using Gamepad While there was no Significant Change With HeadJoystick

Figure 7B. ANOVA revealed a main effect of time,

5.2.1.1.3 Participants had Oculomotor Issues After Using Gamepad While There were Mixed Results With HeadJoystick

Figure 7C. ANOVA revealed a main effect of time,

5.2.1.1.4 Participants had Disorientation Issues After Using Gamepad While There were Mixed Results With HeadJoystick

Figure 7D. ANOVA revealed a main effect of time,

5.2.1.2 Fast MS Scale

5.2.1.2.1 Participants Reported Higher FMS Increase After Using Gamepad than After Using HeadJoystick

Figure 7E. Participants’ self-reported motion sickness score (Scale: 0–100) given before and after each trial was analyzed using 2-factor repeated measures ANOVA. The results show that self-reported motion sickness score increased overall from before to after a trial,

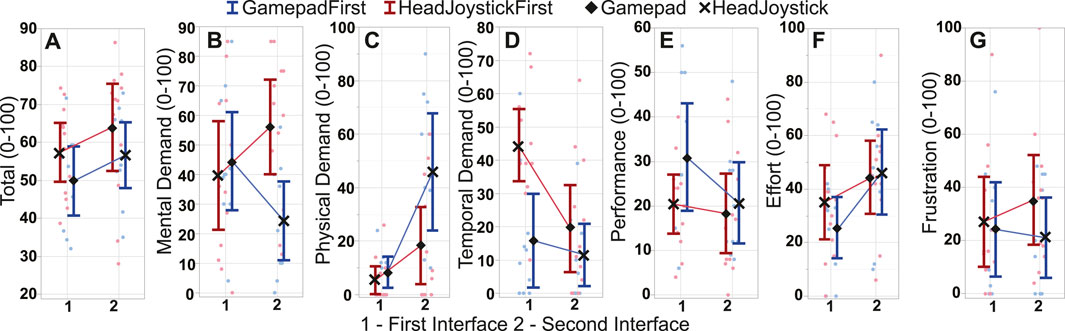

5.2.2 Task Load

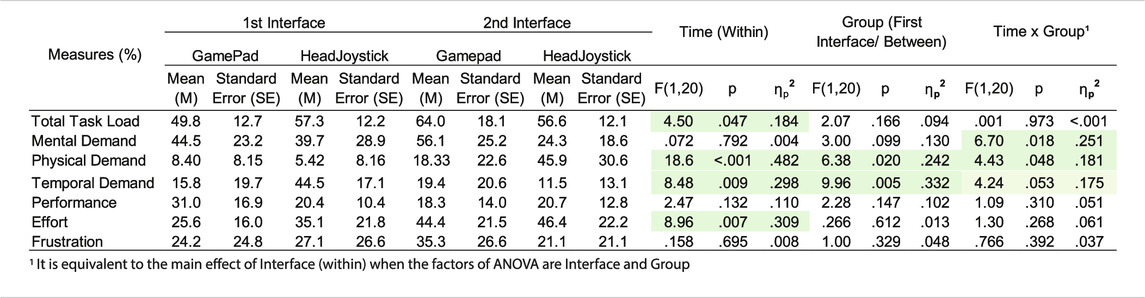

The final weighted score as well as the six individual sub-scores from the NASA Task Load Index (TLX) were analyzed with two-way mixed ANOVAs with the factors time (first vs. second interface; within-subject factor) and interface order (participant group: GamepadFirst or HeadJoystickFirst; between-subject factor), with statistical results, means, and standard errors summarized in Table 2. We chose to analyze the TLX with time rather than interface as a main factor because it considers the effect of switching from HeadJoystick to Gamepad and vice versa. Further, this 2 × 2 ANOVA has factors with only two levels each. Therefore, the interaction between time and group (time × group) is equivalent to the main effect of interface in an ANOVA analysis with interface as one of the factors. To make the results easier to interpret and compare, we have scaled each measurement to 0–100.
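The equivalence between the time × group interaction and the interface effect can be checked numerically on cell means. The sketch below assumes equal group sizes and uses made-up cell means chosen only for illustration (they are not data from the study); the function names are hypothetical. In the GamepadFirst group, time 1 is Gamepad and time 2 is HeadJoystick, and the reverse holds for the HeadJoystickFirst group.

```python
def interaction_contrast(cells):
    """time x group interaction: difference between the groups' second-minus-first changes."""
    gp_first_change = cells[("GamepadFirst", 2)] - cells[("GamepadFirst", 1)]
    hj_first_change = cells[("HeadJoystickFirst", 2)] - cells[("HeadJoystickFirst", 1)]
    return gp_first_change - hj_first_change


def interface_difference(cells):
    """HeadJoystick minus Gamepad, averaged over the two (equally sized) groups."""
    headjoystick = (cells[("GamepadFirst", 2)] + cells[("HeadJoystickFirst", 1)]) / 2
    gamepad = (cells[("GamepadFirst", 1)] + cells[("HeadJoystickFirst", 2)]) / 2
    return headjoystick - gamepad


# Illustrative (made-up) cell means on a 0-100 scale, keyed by (group, time).
cells = {
    ("GamepadFirst", 1): 50.0,       # Gamepad used first
    ("GamepadFirst", 2): 30.0,       # then HeadJoystick
    ("HeadJoystickFirst", 1): 34.0,  # HeadJoystick used first
    ("HeadJoystickFirst", 2): 52.0,  # then Gamepad
}
```

With these values the interaction contrast is (30 − 50) − (52 − 34) = −38, exactly twice the interface difference of 32 − 51 = −19, so testing one against zero is the same as testing the other.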

TABLE 2. NASA Task Load demands for both interfaces are analyzed with ANOVA. Significant differences (p ≤ 5%) are highlighted in green, and a lighter shade of green indicates marginally significant results (p ≤ 10%).

5.2.2.1 The Participants Felt the Task was Overall More Demanding With the Second Interface Irrespective of the Group

Figure 8A and Table 2. However, there was no significant effect of group and no significant interaction between time and group for total NASA TLX scores. This equivalently means that there was no significant main effect of the interface.

FIGURE 8. NASA TLX in %, after using the first interface and then the second interface. The blue line and red line indicate participants using Gamepad and HeadJoystick, respectively, for their first trial. ⧫ = Gamepad interface, X = HeadJoystick interface, CI = 95%.

5.2.2.2 Participants Felt HeadJoystick was Mentally Less Demanding

Figure 8B. The analysis did not show a main effect of time or group. However, there was a significant interaction between time and group. Irrespective of the group, the mental demand with the first interface was around 50%. However, when the participants switched from Gamepad to HeadJoystick they found the mental demand to be significantly reduced, whereas in the group that switched to Gamepad from HeadJoystick, the mental demand ratings went significantly up for the second interface. This is corroborated by the significant overall effect of interface, with significantly higher mental demand ratings for the Gamepad (M = 50.8, SD = 24.5) than HeadJoystick (M = 32.7, SD = 5.43),

5.2.2.3 Participants Felt HeadJoystick was Physically More Demanding

Figure 8C. The analysis showed a main effect of time as well as group. The second interface was rated as more physically demanding (M = 30.9, SD = 29.5) than the first interface (M = 6.77, SD = 8.11) and the GamepadFirst group found the task to be more physically demanding (M = 27.2, SD = 4.47) than the HeadJoystickFirst group (M = 11.9, SD = 4.08). There was also an interaction between time × group. Thus, the physical demand of the HeadJoystick (M = 23.8, SD = 29.4) was rated higher than that of Gamepad (M = 13.8, SD = 18.0),

5.2.2.4 Participants Found Gamepad to Marginally Decrease Temporal Demand (Time Pressure)

Figure 8D. As with the physical demand, there was a main effect of time as well as group. In general, the second interface (M = 15.8, SD = 17.7) had lower temporal demand than the first interface (M = 31.5, SD = 23.1). In particular, the group that switched from HeadJoystick to Gamepad reported lower time pressure, registering a marginally significant interaction; i.e., Gamepad (M = 17.8, SD = 19.8) had marginally lower temporal demand than HeadJoystick (M = 29.5, SD = 22.6).

5.2.2.5 The Participants Felt the Task Needed More Effort in their Second Trial Irrespective of the Interface

Figure 8F. The second interface (M = 45.3, SD = 21.3) had significantly higher effort ratings than the first interface (M = 30.8, SD = 19.6). There was no significant difference between the groups or interaction between time and group.

There were no statistically significant main effects or interactions on performance or frustration (Figures 8E,G).

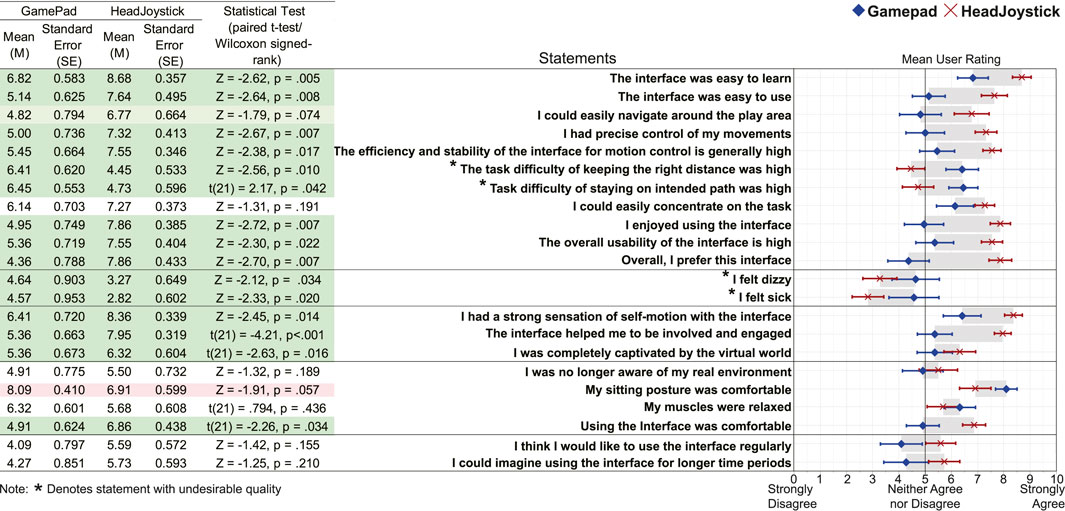

5.2.3 Post-Experiment Questionnaire and Interview

Participants filled out a post-experiment questionnaire with 22 questions for each of the two interfaces, addressing different aspects like usability and performance, motion sickness, comfort, and immersion. The ratings were compared using t-tests, or Wilcoxon signed-rank tests whenever the assumption of normality was violated.

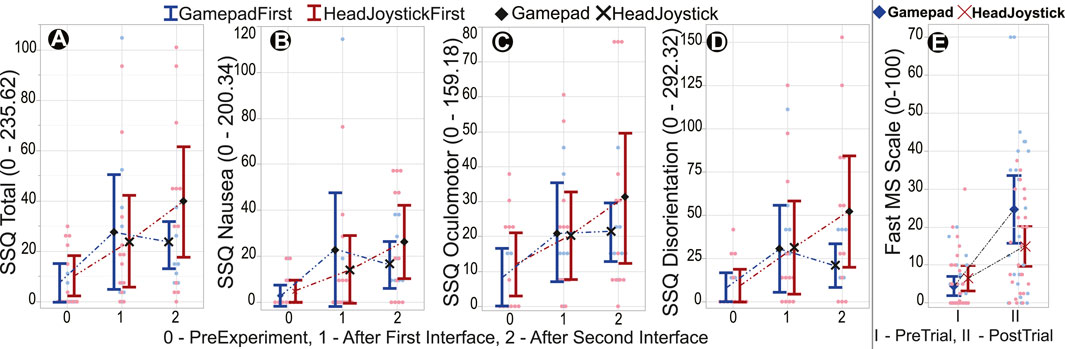

Figure 9 summarizes descriptive and inferential statistics. As seen from the plot, while participants did not have a strong opinion about Gamepad for the majority of the statements (most averages were near 5, neither agree nor disagree), for HeadJoystick they had a stronger positive opinion (positive statements) or stronger negative opinion (negative statements). Compared to the Gamepad, the HeadJoystick interface was rated as easier to learn, easier to use, gave more control, and was more enjoyable and preferable. It also made them less motion sick than Gamepad while increasing immersion and vection. That is, all significant effects were in favor of the HeadJoystick over the Gamepad. Both interfaces were judged to provide a comfortable sitting position (Gamepad, M = 6.83, SD = 0.551; HeadJoystick, M = 7.92, SD = 0.394), although the Gamepad provided a slightly (but only marginally significantly) more comfortable sitting posture.

FIGURE 9. User rating regarding statements about usability and preference, motion sickness, immersion, comfort, and long-term use. Green and lighter shade of green respectively indicate significant (p ≤ 5%) and marginally significant (p ≤ 10%) differences in favor of HeadJoystick. Lighter shade of red indicates marginally higher ratings in favor of Gamepad (p ≤ 10%). Note: * denotes statements with undesirable quality (reversed scale). Effectively, the green highlights in those statements with * indicate that HeadJoystick had lower task difficulty and made participants less motion sick. ⧫ = Gamepad interface, × = HeadJoystick interface, CI = 95%.

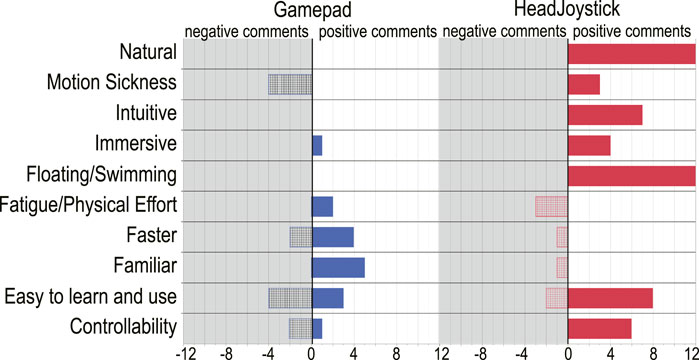

We performed semi-structured open-ended interviews with the participants after they completed the post-experiment questionnaire to get more insight into their choices and the underlying reasons for those choices. Sixteen out of 22 participants mentioned in the interview that they preferred HeadJoystick over Gamepad. As seen in Figure 10, the recurrent themes among the participants for preferring the HeadJoystick over Gamepad were that HeadJoystick made them less sick, it was easier to learn and use, it was intuitive, it provided better controllability, it felt natural, there was a stronger sense of self-motion (floating/swimming), and the virtual environment felt more immersive. Even though these participants preferred HeadJoystick over Gamepad, they still felt that Gamepad had the advantage of familiarity, that HeadJoystick would be fatiguing after long use, and that “it would be hard to stand still with HeadJoystick” {P13}.

FIGURE 10. Thematic count of participants’ response in post-experiment interview. The x-axis represents the number of participants mentioning the themes on the y-axis. Bars to the left (gray area) correspond to the number of negative comments about that topic whereas bars to the right (white background) represent the number of positive comments.

Five participants preferred Gamepad over HeadJoystick. The recurrent themes among the participants for preferring the Gamepad were familiarity, ease of control, speed, and lower physical effort. However, even among those who preferred Gamepad over HeadJoystick, some mentioned that the HeadJoystick made them less sick and a few appreciated the novel approach to VR locomotion.

Among the listed thematic counts in Figure 10, motion sickness turned out to be such a predominant concern with Gamepad that when we asked, “How was your experience?” as the first question after the experiment, several participants immediately responded:

“Felt like throwing up after using Gamepad” {P03}

“Fun with the HeadJoystick but got dizzying after using Gamepad” {P18}

“Gamepad was terrible … made me almost sick” {P13}

“…Gamepad made me sick … ” {P25}

To understand why participants preferred one interface over the other, we also specifically asked, “What did you like about the locomotion interfaces?” The minimal cognitive load and intuitiveness of the HeadJoystick were among the most consistent responses.

“HeadJoystick is more intuitive. You don’t really have to learn to use it.” {P17}

“There was no cognitive load … with the HeadJoystick, motion was intuitive and [I] could concentrate more in the task.” {P13}

“Head one [HeadJoystick] is more intuitive. It feels like I am swimming.” {P23}

As for the Gamepad, participants liked that they were “familiar” {P16, P22} with its mechanics. However, they were split between “easy to use” {P12} due to its familiarity and “difficult” {P25} due to confinement in a single plane, i.e., “moved in either vertical direction or moved in the horizontal plane” {P01} as well as the apparent disjunction between “two different kind of movements” {P09, P23}, i.e., physical rotation and controller translation.

Other reasons for preferring one interface over the other included enjoyment, better control, naturalness, and required effort:

“Because [HeadJoystick] was easier to learn. More enjoyable-feels like flying in VR.” {P05}

“[HeadJoystick] gives more control and [is] more precise.” {P09}

“[HeadJoystick] is more matched to the body. Felt similar to scuba diving.” {P14}

“HeadJoystick is easy to control and felt more immersive.” {P15}

“The Vive did not fit perfectly. It was also heavy. So, I was wary about moving properly for the HeadJoystick.” {P06}

“Gamepad made me dizzy, but still, it required less effort.” {P18}

We also asked a specific question regarding their strategies with both interfaces. When we asked, “Did you use any strategies? Were they different for the different interfaces?”, many participants indicated using different search strategies depending on which interface they used. For Gamepad, they tended to first search horizontally, then go up or down and search on that new level, and so on, while avoiding motions that involved all three translational degrees of freedom. For example, P12 stated that they “stayed in [the] same level, searched there then changed altitude”. In contrast, for HeadJoystick they “looked around in circle” {P13}. Participants’ descriptions of their travel strategies, moving in distinct planes with the Gamepad but more fluidly through 3D space with the HeadJoystick, mirror the plots of their trajectories in Figure 6.

6 Discussion

This paper presents the first study exploring the effect of partial body-based self-motion cues, in the form of a leaning-based interface, on spatial orientation/updating while flying in virtual 3D space. Currently, flying is typically achieved through low-fidelity interfaces like a gamepad, joystick, keyboard, or point-and-click teleportation, or through high-fidelity interfaces with actuators or motors, like motion platforms McMahan et al. (2011). We explore a relatively novel flying interface (HeadJoystick) that tries to bring together the advantages of both low- and high-fidelity interfaces: it is embodied, inexpensive, easy to set up, provides at least minimal translational motion cueing and full rotational cues, and is capable of controlling all four DoFs needed for full flight control, as discussed in more detail in Hashemian et al. (2020).

Though past studies have shown that leaning-based interfaces can improve spatial perception and orientation in ground-based (2D) locomotion Harris et al. (2014), Nguyen-Vo et al. (2019), leaning-based interfaces with the capability to control all four DoFs needed for full flight control have not been scrutinized in these contexts. We discuss the findings of our experiment in the context of our main research questions below.

6.1 RQ1: Does HeadJoystick Improve Navigational Search Performance Compared to a Hand-Held Controller for 3D Locomotion?

Participants collected significantly more balls with HeadJoystick, while being more efficient, i.e., travelling less distance. These quantitative findings indicate improved navigational search performance for the HeadJoystick. We propose that the better performance of HeadJoystick can be mainly attributed to two factors. First, participants mentioned a strong sense of self-motion in our study when using the HeadJoystick. They felt they were “actually floating” {P19} and moving in the space. The importance of non-visual and embodied self-motion cues in a variety of spatial orientation and updating tasks has been shown in a number of studies Chance et al. (1998), Klatzky et al. (1998), Kearns et al. (2002), Sun et al. (2004), Waller et al. (2004), Ruddle and Lessels (2009), Riecke et al. (2010), Ruddle et al. (2011b), Ruddle (2013), Harris et al. (2014), Riecke et al. (2015), as discussed in Section 2.1. Further, in Nguyen-Vo et al. (2019)’s study, the interfaces providing partial body-based sensory information (in particular, leaning-based minimal translational self-motion cues) performed significantly better than controller-based locomotion in a task heavily reliant on spatial updating, even though real rotation was applied in all cases (standing and sitting interfaces). Together with these earlier findings, the current study confirms that the improved navigational search performance of a well-designed leaning-based interface for ground-based locomotion Nguyen-Vo et al. (2019) does indeed generalize to 3D locomotion (flying). As we argued in Section 2.2, spatial updating is a necessary prerequisite for effective navigational search. This suggests that the improved performance for the HeadJoystick might be related to it better supporting automatic spatial updating, although further research is needed to disambiguate spatial updating from other potential underlying mechanisms.

Second, the Gamepad and HeadJoystick interfaces used in the current study facilitated different kinds of motions and subsequently led to different locomotion trajectories and potential underlying search strategies-at least when the vertical direction was involved. For instance, HeadJoystick afforded changing the travel direction in all three axes with a single head motion while Gamepad required combination of two thumbsticks. Participants stated in the post-experiment interview that they searched on different horizontal levels with the Gamepad and searched in a more spiraling fashion with HeadJoystick. These statements are corroborated by the difference in travel paths between the interfaces illustrated in Figure 6 and the Supplementary Material. With Gamepad the participants’ search pattern reflected a switching between the motion control between two hands (and thumbsticks), i.e., they typically did not make use of the bi-manual control of motion to go directly towards a selected target and change height at the same time. Hence, when seen from above the foraging paths look qualitatively similar, whereas the side view highlights the switch between horizontal and vertical locomotion. However, with the HeadJoystick, their movements seemed to be less restricted to any plane or axis. This could be based on participants being reluctant to control multiple degrees of freedom simultaneously when using the Gamepad: participants mostly controlled just one DoF at a time (e.g., forward translation) in combination with full body rotations with their head mostly facing forward. In contrast, HeadJoystick seems to much better facilitate traveling in any direction and controlling all three translational degrees of freedom simultaneously as evident from participant quotes and differences in movement trajectories. 
This assumption is supported by the travel paths shown in Figure 6 and the Supplementary Material, where the top-down views for both interfaces are fairly similar, but the side/front views show a stark contrast. Future research is needed to investigate if these different interfaces might lead to different foraging strategies (such as different orders in which the boxes were visited), or if participants use overall fairly similar foraging strategies but separate horizontal and vertical locomotion due to different affordances of the interfaces. The observed locomotion trajectories seem to suggest that the order of boxes visited did not depend much on the locomotion interface, at least for the first few boxes. However, as the task became successively harder with fewer balls left to find, participants using the Gamepad seemed to travel further and revisit more boxes, potentially because they got lost more easily and their situational awareness and spatial updating ability were reduced. Further research is needed to investigate these aspects and more specifically address underlying strategies and processes.

In sum, the above analysis suggests that the standard two-thumbstick controller creates a mapping problem between the interface input and the resulting effect (simulated self-motion in VR), especially when used for 3D (flying) locomotion. This is supported by participant feedback, e.g., {P01} mentioned that “With gamepad I moved in either vertical direction or moved in the horizontal plane. With HeadJoystick it was a combination. So, it felt much easier”. Motivated in part by the observed challenges in controlling multiple degrees of freedom with separate hands/thumbsticks in the current Gamepad condition, in a recent spatial updating study we implemented a controller-directed steering mechanism where the direction of the hand-held controller indicates the direction in which the user wants to travel Adhikari et al. (2021). However, that study showed that even adding some level of embodiment (the controller direction) and not requiring two-handed operation was still not enough to support the same spatial updating performance as observed with leaning-based interfaces. We propose that the additional vestibular/proprioceptive cues provided by the head/trunk movement of leaning-based interfaces might help reduce the intersensory cue conflict in VR when users cannot physically walk, and more effectively trigger compelling sensations of self-motion and support automatic spatial updating, thus improving navigation performance while reducing cognitive load. Carefully designed future studies are needed to test these hypotheses and, for example, disambiguate contributions of spatial updating from other potential underlying factors.

Note that using the HeadJoystick led to a marginally significant increase in overall head (but not body) rotations, suggesting that participants might be looking around more and/or looking more into their periphery. This might contribute to an increased situational awareness with HeadJoystick.

The behavior of being confined to planes or axes is quite common for input devices that separate control dimensions. Accordingly, studies of user performance in 3D tasks with 2D input devices have shown performance decrements, especially for selection and manipulation tasks Zhai (1998). Basically, six DoF need to be mapped to controllers that usually only afford two DoF (e.g., a micro-joystick). While precision (accuracy) may be higher (an issue we will reflect on below), trials tend to take longer than with 3D input devices, as different control axes have to be controlled independently and serially (e.g., in the case of a mouse). Even when multiple axes can be controlled in parallel by using two controllers at once with two hands (or fingers, e.g., by using a gamepad), analyses of the movement paths in such tasks still show jagged patterns (a sign of non-optimal movement paths), similar to our typical paths in Figure 6. Moreover, such control also requires coordination between both hands. The comparison between HeadJoystick and Gamepad shows that HeadJoystick performs better for larger (coarse) movements, as is evident from our task (quickly traveling from one location to another while maintaining spatial orientation). Surprisingly, fine-grained movement has also been shown to be better with HeadJoystick than with Gamepad, as shown in Hashemian et al. (2020)’s maneuvering task (travelling through narrow tunnels). While some of these benefits have also been shown for specialized 3D desktop input devices (in contrast to handheld “free-air” input devices), these devices tend to require much training to control them precisely. In contrast, users of HeadJoystick did improve performance over time (steady learning slope), yet not as drastically as shown for 3D desktop input devices, which had a steep slope over multiple usage sessions and where users started with low performance Zhai (1998).

Despite these interface-specific locomotion strategies, there was no significant difference in task completion time or in the percentage of revisits to the boxes. The completion time is influenced by the auto-termination of the program after 5 min, which terminated 13 HeadJoystick trials and 20 Gamepad trials. As for the revisits, the participants complained that the task was “difficult” {P14} and that they easily “lost track” {P1, P20, P25} with both interfaces. Removing any global orientation cues dropped the percentage of perfect trials (no revisits) in HMD with real walking from 90% in Ruddle and Lessels’ studies Ruddle and Lessels (2006, 2009) to 13.9% in Riecke et al. (2010)’s study. Further limiting overall visibility, such that the whole layout of boxes could never be seen at once but had to be integrated during locomotion, further decreased the percentage of perfect trials. There were no perfect trials without revisits in Nguyen-Vo et al. (2019)’s ground-based locomotion study, and just 5.68% in this study (5 out of 88 trials). Thus, similar to the ground-based locomotion study, we did not find any difference between the interfaces for revisits. However, Figure 5 shows a trend towards more revisits with the Gamepad than with HeadJoystick. Because the data have been split across eight different balls, though, they are too sparse to support reliable statistical conclusions. Further studies concentrating on how the interfaces’ performance changes with increasing difficulty could shed more light on the matter.

6.2 RQ2: Does HeadJoystick Help to Reduce Motion Sickness?

Table 1 shows a clear advantage for the HeadJoystick in comparison to the Gamepad interface. Gamepad caused a significant increase in motion sickness (for total SSQ and the Nausea sub-scale) independent of whether it was the first or second interface. When Gamepad was used as the second interface, oculomotor and disorientation issues also significantly increased compared to Pre-Experiment scores. Further, even with the Gamepad as the first interface, oculomotor (p = 0.041) and disorientation (p = 0.050) issues showed a marginal increase compared to Pre-Experiment scores (that would be significant if we had not applied the fairly conservative Bonferroni correction). Although HeadJoystick caused a marginal increase in oculomotor issues when it was the first interface and a marginally significant increase in disorientation when it was the second interface, there was no significant increase in the overall motion sickness score or any of the sub-scales. In sum, there was a consistent increase in the overall score and some of the SSQ subscores for the Gamepad, and only marginal increases in oculomotor and disorientation scores for the HeadJoystick. This confirms our hypothesis 2.
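To illustrate why a p-value such as 0.041 can be below the conventional 0.05 level yet fail to survive a Bonferroni correction, the following minimal sketch applies the correction to a small family of tests. The number of comparisons (four) and the last two p-values are assumptions made purely for illustration; only 0.041 and 0.050 are taken from the text above, and the function name `bonferroni_reject` is hypothetical.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Return one boolean per p-value: True if it survives Bonferroni correction.

    Each p-value is compared against alpha divided by the number of tests.
    """
    m = len(p_values)
    if m == 0:
        return []
    threshold = alpha / m
    return [p <= threshold for p in p_values]


# 0.041 and 0.050 are quoted in the text; 0.001 and 0.200 are hypothetical
# fillers, and the family size of four is an assumption for illustration.
p_values = [0.041, 0.050, 0.001, 0.200]

uncorrected = [p <= 0.05 for p in p_values]  # plain per-test decision
corrected = bonferroni_reject(p_values)      # per-test threshold is 0.05 / 4

print(uncorrected)
print(corrected)
```

Under these assumptions, the two quoted p-values pass the uncorrected test but not the corrected one, which is the pattern described as a "marginal increase" above.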

The single-item Fast Motion-Sickness Scale (FMS) also showed a distinct difference in motion sickness between Gamepad and HeadJoystick. With a more straightforward rating system ranging from “0-I am completely fine and have no motion sickness symptoms” to “100-I am feeling very sick and about to throw up”, the participants reported that their motion sickness increased significantly more with Gamepad than with HeadJoystick. Further, one of the participants completed the tasks with the HeadJoystick but dropped out due to motion sickness after trying the Gamepad. Two more participants let us know after the trial that they were “about to drop the trial” {P03, P06} with the Gamepad before it auto-terminated due to the time limit.

These quantitative findings are corroborated by the participants’ responses in open-ended interviews. Previous ground-based locomotion studies have shown that combining visual motion with body-based sensory information can help to make people less sick Aykent et al. (2014), Lawson (2014). However, the results have been mixed for leaning-based interfaces. Marchal et al. (2011)’s study (Joyman) and Hashemian and Riecke (2017a)’s study (SwivelChair) showed no significant difference in motion sickness between a leaning-based interface and a hand-based controller. Nguyen-Vo et al. (2019)’s study showed a significant motion sickness reduction for a standing interface (NaviBoard) as compared to a hand-held controller, but not for a sitting interface (NaviChair). Similarly, Hashemian et al. (2020)’s flying study (HeadJoystick) showed a significant reduction in motion sickness for real rotation with leaning-based translation compared to controller-based translation and rotation conditions, but varying only the translation mechanism did not produce a statistically significant change. A closer look at Marchal et al.’s study shows that a 7-point Likert scale was used to measure motion sickness, and both conditions barely caused any motion sickness (average of 6, where 7 meant no motion sickness symptoms). Further, Hashemian and Riecke’s SwivelChair and Nguyen-Vo et al.’s NaviChair were not compared with pre-experiment SSQ scores but only with SSQ scores after using Gamepad (HeadJoystick vs. Gamepad) Hashemian and Riecke (2017a), Nguyen-Vo et al. (2019). Hashemian et al.’s study compared the motion sickness of the interfaces by subtracting the pre-experiment SSQ values from those of each interface (HeadJoystick − Pre-experiment vs. Gamepad − Pre-experiment). In these three studies, the leaning-based interfaces (SwivelChair, NaviChair and HeadJoystick) showed a trend towards lower motion sickness than the controller condition, but fell short of statistical significance.
In our study, we performed a planned contrast to compare all the conditions with pre-experiment SSQ scores (HeadJoystick vs. Pre-experiment and Gamepad vs. Pre-experiment). Combining this comparison with the results from the FMS, post-experiment questionnaires and participants’ testimony shows that Gamepad makes participants significantly more motion sick than HeadJoystick, and that the SSQ might be too conservative a measure for registering those differences. Even in the meta-analysis of the effectiveness of the SSQ, in spite of a strong correlation between SSQ scores and participant drop-out, the participants who dropped out still reported a total SSQ score of only 39.63 on average out of 235.62 (16.8%) Balk et al. (2013). Thus, we recommend using the SSQ for comparing the pre- vs. post-use motion sickness scores for each interface, rather than just comparing post-use motion sickness scores among the interfaces.
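The recommendation above (comparing each interface against its own pre-use baseline) and the 16.8% figure can be sketched in a few lines. This is a minimal illustration rather than the analysis used in the study: the per-participant scores are hypothetical placeholders, and the helper names `change_from_baseline` and `percent_of_max` are assumptions. Only the values 39.63 and 235.62 come from the text.

```python
SSQ_TOTAL_MAX = 235.62  # maximum total SSQ score, as quoted in the text


def percent_of_max(score, max_score=SSQ_TOTAL_MAX):
    """Express a total SSQ score as a percentage of the maximum score."""
    return 100.0 * score / max_score


def change_from_baseline(pre, post):
    """Per-participant SSQ change (post-use minus pre-use)."""
    if len(pre) != len(post):
        raise ValueError("pre and post must have one score per participant")
    return [after - before for before, after in zip(pre, post)]


# Hypothetical total SSQ scores for three participants (illustration only).
pre_scores = [3.74, 0.0, 7.48]
post_scores = [18.7, 3.74, 7.48]

changes = change_from_baseline(pre_scores, post_scores)
mean_change = sum(changes) / len(changes)

# Average score of drop-outs reported in the cited meta-analysis.
dropout_percent = round(percent_of_max(39.63), 1)
print(dropout_percent)  # 16.8
```

Working with change scores keeps each participant as their own control, so differences in baseline susceptibility do not mask an interface effect.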

Therefore, our study, along with Hashemian et al. (2020)’s study, indicates that a partial body-based locomotion interface can help to reduce motion sickness not only for ground-based locomotion but also for flying locomotion. In addition, participants also reported a significantly stronger sensation of self-motion with the HeadJoystick in the post-experiment questionnaire (Section 5.2.3). They described their HeadJoystick experience as “natural” in post-experiment interviews and compared it to “swimming” or “floating,” see Figure 10. This counters the concern sometimes mentioned in the literature that increasing vection might also increase motion sickness Hettinger et al. (1990), Hettinger and Riccio (1992), Stoffregen and Smart (1998), Smart et al. (2002). Instead, our data show that increasing vection can, in fact, be accompanied by reduced motion sickness, e.g., if a carefully designed embodied interface like HeadJoystick is used. That is, vection is not in general a sufficient prerequisite or predictor for motion sickness, and often does not even correlate with motion sickness; see the discussion in Keshavarz et al. (2015), Riecke and Jordan (2015).

6.3 RQ3: How do HeadJoystick and Gamepad Affect the Overall User Experience and Usability?

In terms of cognitive load, the participants felt HeadJoystick could be used with significantly lower mental demand than Gamepad. Users also rated HeadJoystick to be significantly easier to learn, easier to use, and more precise. Though some participants preferred Gamepad for its familiarity, others complained about the apparent disjunction between physical rotation and controller translation. Previous studies have also documented participants complaining about this disjunction Hashemian et al. (2020), Hashemian and Riecke (2017b). All of these observations confirm our hypothesis 3, that HeadJoystick should be more intuitive to use and learn, and that users should be able to use HeadJoystick more effectively, even without any previous exposure.