- 1Foundation of Research and Technology (FORTH)–Greece, Institute of Computer Science, Heraklion, Greece

- 2Department of Computer Science, University of Crete, Heraklion, Greece

- 3ORamaVR, Heraklion, Greece

- 4Department of Mathematics and Applied Mathematics, University of Crete, Heraklion, Greece

- 5Department of Emergency Medicine, Inselspital, University Hospital of Bern, Bern, Switzerland

Efficient and riskless training of healthcare professionals is imperative as the battle against the Covid-19 pandemic still rages. Recent advances in the field of Virtual Reality (VR), at both the software and hardware level, have unlocked the true potential of VR medical education (Hooper et al., The Journal of Arthroplasty, 2019, 34 (10), 2278–2283; Almarzooq et al., Virtual learning during the COVID-19 pandemic: a disruptive technology in graduate medical education, 2020; Wayne et al., Medical education in the time of COVID-19, 2020; Birrenbach et al., JMIR Serious Games, 2021, 9 (4), e29586). The main objective of this work is to describe the algorithms, models and architecture of a medical VR simulation that aims to train medical personnel and volunteers in properly performing Covid-19 swab testing and using protective measures, based on a world-standard hygiene protocol. The learning procedure is carried out in a novel and gamified way that facilitates skill transfer from the virtual to the real world, with performance that matches and even exceeds traditional methods, as shown in detail in (Birrenbach et al., JMIR Serious Games, 2021, 9 (4), e29586). In this work we provide all computational science methods and models, together with the necessary algorithms and architecture, to realize this ambitious and complex task, verified via an in-depth usability study with year 3–6 medical school students.

1 Introduction

SARS-CoV-2, a virus first detected in Wuhan, China in December 2019, swiftly spread worldwide and caused the ongoing Covid-19 pandemic. Having infected tens of millions and caused more than 2.4 million confirmed deaths, it is an unprecedented crisis for humanity. Healthcare professionals around the globe strive against the virus, most of the time without sufficient resources.

One of the most important issues that the impaired healthcare system has to deal with is the effective and secure training of medical personnel, medical students, physicians, nurses and even volunteers. The restrictions and regulations, such as social distancing, that were imposed worldwide by governments, hindered the training progress of these people. Courses in medical schools were canceled or became virtual to protect the undergraduate students, leading to sub-optimal training. Active medical staff were diminished and exhausted, as doctors and their teams got infected and quarantined afterwards, as the protocol dictates.

The increased pressure posed by the extended Covid-19 pandemic inevitably created the need for crucial changes in the landscape of medical education (Edigin et al., 2020; Wayne et al., 2020). Under the current circumstances, the impaired healthcare system can recuperate only if doctors in the front line are given powerful tools. One such tool is the effective training of medical staff, which helps tackle the personnel deficiency and also enables doctors to adapt to the new health protocols and procedures deployed in the fight against SARS-CoV-2.

To this end, Virtual Reality (VR) can provide substantial aid by utilizing its powerful training enhancement techniques. Using VR medical simulations based on the World Health Organization (WHO) guidelines (Almarzooq et al., 2020; Tabatabai, 2020), medical trainees can be educated in an infection-free, safe environment, with reduced stress and anxiety (Besta, 2021). There is growing evidence that the usage of suitable applications (CoronaVRus, 2020; Singh et al., 2020; EonReality, 2021; SituationCovid, 2021) in VR, Augmented Reality (AR) and Mixed Reality (XR) delivers performance outcomes that are not only on par with traditional methods (Hooper et al., 2019; Kaplan et al., 2020) but, in some cases, even outperform them.

Several VR medical training applications have appeared in the past few years, supported by either Windows VR or SteamVR compatible headsets. The design of such applications is intriguing, as several issues have to be addressed for the user to have a fully immersive experience that avoids the so-called uncanny valley phenomenon (see Section 3.3. Challenges). Interesting implementation challenges arise in each application, depending on the nature of the original training procedure that is simulated. The success of the final product is evaluated by the overall experience of the users when it is compared with, or used in parallel with, the respective traditional learning method.

In this work, we present a novel VR application called “Covid19 VR Strikes Back” (CVRSB) that is publicly available (see Section 3.2. Application Features) for all mobile and desktop-based Head-Mounted Displays (HMDs). In short, CVRSB aims at a faster and more efficient training experience for medical trainees regarding the acquisition of a nasopharyngeal swab and the proper handling of Personal Protective Equipment (PPE) during donning/doffing.

A detailed description of the application, including its scope, is presented in Section 2, whereas implementation characteristics and special features are described in Section 3. Details of a clinical study that demonstrates usability testing with the trainees (medical students) who used our application can be found in Section 4. Lastly, the conclusions of our work can be found in Section 5.

2 Description and Scope of the CVRSB Application

2.1 The Real Medical Problems Addressed

The Nasopharyngeal Swab. A significant weapon of doctors against the virus is the ability to rapidly and infallibly determine if someone is infected via a swab test, usually from the nasopharynx region (Vandenberg et al., 2020; Wyllie et al., 2020). Although the procedure itself is quite simple (Marty et al., 2020), a protocol containing essential guidelines, such as the proper donning and doffing of personal equipment, must be followed to reduce the risk of contamination between the patient and the medical staff and to avoid compromising the reliability of the test results (Hong et al., 2020).

The most common way of detecting an infected person, even without obvious symptoms, is via a nasopharyngeal swab test. This procedure involves collecting a specimen of nasal secretions from the back of the nose and throat of the patient. The sample can then be used either for a rapid point-of-care antigen test or for more sophisticated polymerase chain reaction (PCR) based testing. The strict protocol of the sampling procedure has to be followed to the letter by the personnel; otherwise, the test results may be compromised or, in the worst-case scenario, the medical staff may be contaminated.

PPE Donning and Doffing. As the world faces the global Covid-19 pandemic, the necessity of using personal protective equipment has never been greater. The proper use of masks, gloves, goggles and gowns acts as the best shield against the virus and reduces the rate of spreading. In high-risk procedures, such as a nasopharyngeal swab test, the proper use of PPE is dictated by a strict health security protocol that must be followed by the professionals in order to minimize potential risks. However, there is ample evidence of incorrect technique for handling PPE and hand hygiene among healthcare personnel (Beam et al., 2011; Hung et al., 2015; John et al., 2017; Nagoshi et al., 2019; Verbeek et al., 2020).

Our CVRSB application was designed with a health protocol in mind that describes in detail the hygiene rules governing state-of-the-art swab testing and PPE donning/doffing. This detailed protocol is provided by WHO, CDC (World Health Organization, 2009; Centers for Disease Contr, 2019) and by state hospitals; in our case, it was our collaborators at the University of Bern who supplied the security parameters and explained them to us.

2.2 A Detailed Description of the CVRSB Features and Capabilities

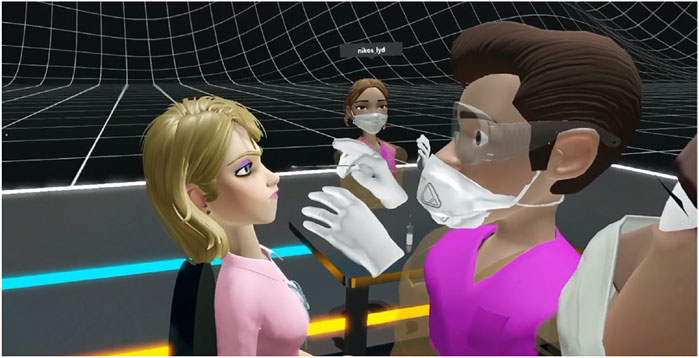

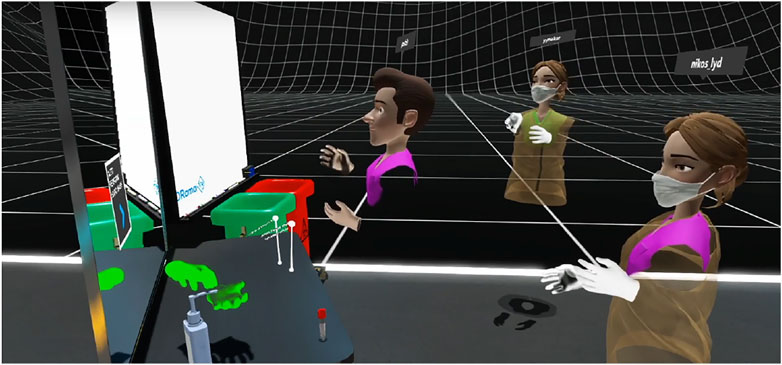

Our project “Covid-19 VR Strikes Back” is an openly accessible simulation that supports all current SteamVR-enabled desktop HMDs, as well as untethered, mobile HMDs. Its main objective is to train healthcare professionals, medical personnel and volunteers in Covid-19 testing and protective measures. Using our application, trainees learn how to perform swabs and how to get in and out of PPE, in an engaging, gamified manner that facilitates skill transfer from the virtual to the real world (see Figure 1, Figure 2, Figure 3, Figure 4 and Figure 5).

FIGURE 1. A collaborative, shared, networked virtual experience where the instructor demonstrates to the trainees the proper technique to perform the nasopharyngeal swab testing. Note the HIO rendering methodology described in this work to avoid the uncanny valley for virtual human rendering as well as interaction.

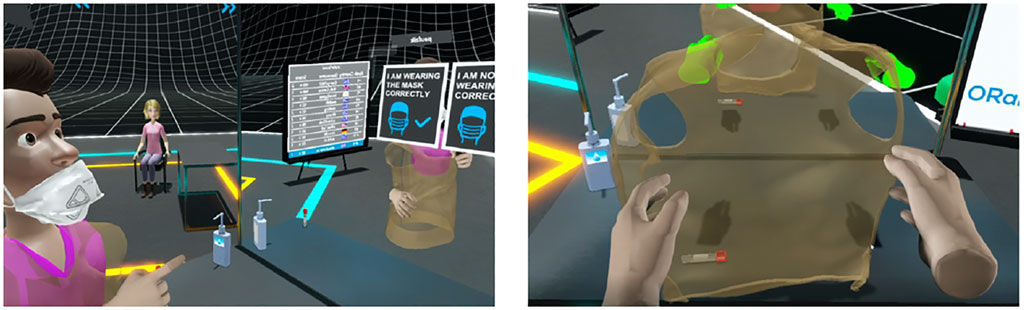

FIGURE 2. An experienced doctor describes the proper way to discard gloves and protective equipment. The whiteboard can be used to write down notes and make remarks whereas, in future updates, presentations can also be made.

FIGURE 3. Left: The instructor uses cognitive questions to help trainees understand the correct usage of a mask. Right: The trainees are asked to wear their gown in a proper way. A reflective mirror enhances the learning experience by providing a better cognitive understanding of the user’s actions and surroundings.

FIGURE 4. (Left) The trainee writes the patient’s name on the swab. (Right) Inserting the swab into the testing tube.

FIGURE 5. Using the CVRSB application, the users will learn to perform a nasopharyngeal swab test but also acquire better knowledge and understanding of the hygiene protocol that, among others, involves proper hand hygiene (left) and correct removal of used gloves (right).

This module is ideal for recently graduated doctors or medical students, fast-tracked through graduation to be used as frontline doctors in response to the coronavirus pandemic (Lapolla and Mingoli, 2020). This Covid-19 multisensory VR training, the first of its kind, aims to engage the user's body, give trainees perspectives that they cannot easily attain in reality, lead to high-quality measurements and, ultimately, be infinitely repeatable.

Using cheap, accessible Head-Mounted Displays (HMDs), medical trainees can download and run applications that provide step-by-step instructions for various operations. The aim of such applications is to provide the opportunity to perform specific medical procedures, such as a Covid-19 test or a surgery, in a virtual environment, usually with remote guidance from a specialist. This long-distance collaboration complies with the imposed social distancing measures, reducing the chances of spreading the disease. It also enables the trainees to practice techniques countless times without additional cost while providing the necessary analytics that will help them understand and accelerate their progress.

3 Implementation and Innovations

3.1 The MAGES SDK

The VR software development kit (SDK) upon which we based the CVRSB application is the MAGES SDK, developed by ORamaVR. MAGES is the world’s first hyper-realistic VR-based authoring SDK platform for accelerated surgical training and assessment, introduced in (Papagiannakis et al., 2020). Its embedded visual scripting engine lets a user create prototypes of VR psychomotor simulations of medical operations rapidly and in an almost code-free way. Since CVRSB is based on MAGES, it naturally inherits a set of novel features.

The under-the-hood Geometric Algebra (GA) interpolation engine allows for up to a 4x reduction in network data transfer, as well as lower CPU/GPU usage, with respect to traditional implementations (Kamarianakis et al., 2021). As a consequence, a higher number of concurrent users in the same collaborative virtual environment is supported. The CVRSB cooperative mode has been tested to work with at least 20 users from different countries and with different headsets. Using a GA representation to store and transmit rotation and translation data also enables a set of diverse modules that can be used in creative ways to enrich the immersive experience.
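To illustrate why interpolating on the receiving side can lower network traffic, the following Python sketch reconstructs intermediate poses between two sparsely transmitted keyframes. It is only an illustration under stated assumptions: it uses ordinary quaternion slerp and linear interpolation of positions as a stand-in, not the GA multivector representation used by MAGES, and all function names are hypothetical.

```python
import math


def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # flip one end so that we travel along the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:  # nearly parallel: plain lerp avoids dividing by ~0
        out = tuple(a + t * (b - a) for a, b in zip(q0, q1))
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        out = tuple(s0 * a + s1 * b for a, b in zip(q0, q1))
    norm = math.sqrt(sum(c * c for c in out))
    return tuple(c / norm for c in out)


def lerp(p0, p1, t):
    """Linear interpolation between two 3D positions."""
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))


def interpolate_pose(key0, key1, t):
    """Each keyframe is (position, rotation); t in [0, 1] blends key0 -> key1."""
    return lerp(key0[0], key1[0], t), slerp(key0[1], key1[1], t)
```

With such a scheme a sender could transmit fewer poses and let each receiver fill in the frames in between; the savings reported above come from the GA-based engine, not from this sketch.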

The analytics engine incorporated in MAGES allows for efficient detection and logging of the user actions in a format suitable for further processing. The respective module is employed to detect deviations from the expected course of action, e.g., a warning UI will advise a trainee who tries to move close to the examinee without using PPE. Furthermore, the GA representation unlocks interactive modules that enable tearing, cutting and drilling on the models’ skin, which can be used in creative ways as part of more complicated VR actions (Kamarianakis and Papagiannakis, 2021).

3.2 Application Features

Multiplayer. The MAGES SDK supports one active user-instructor and a large number of spectators, owing to its GA-based networking layer. Cooperative mode has been tested to work with a total of at least 20 users from different countries and with different headsets.

Analytics and assessment. Error and warning UIs inform users when they deviate from the expected course of action, e.g., when moving close to the examinee without using PPE. Analytics for each action are uploaded and made available through the ORamaVR portal for further assessment, which is especially helpful for the supervision of students’ progress. A global Leaderboard was introduced, containing the global ranking of all CVRSB users, independent of the device used.

Virtual Whiteboard. We implemented an interactive whiteboard, ideal for VR webinars. The instructor or the participants may write using the available markers. Over our past sessions it has been used to properly demonstrate the correct placement of the PPE. In the future we aim to support live presentation with annotation features from the presenter.

Avatar customization. Users can customize their avatars to distinguish themselves from other participants in the cooperative mode. Users may choose skin color, gender and scrub color to match their preferences.

Reflective mirror. The integrated reflective mirror allows for better immersion during PPE donning and doffing, compensating for the virtual doctor's lack of a human body.

Hardware agnostic. The CVRSB application can be deployed on all SteamVR compatible headsets, Windows Mixed Reality headsets and Android-based untethered VR headsets. This is due to the MAGES SDK, which can generate hardware-agnostic training scenarios.

Available at no cost. The application is free to download for the following platforms:

• Desktop VR: https://elearn.oramavr.com/cvrsb/,

• Vive Focus Plus: http://bit.ly/3sarvbK,

• Oculus Quest: http://bit.ly/3k2WUK8.

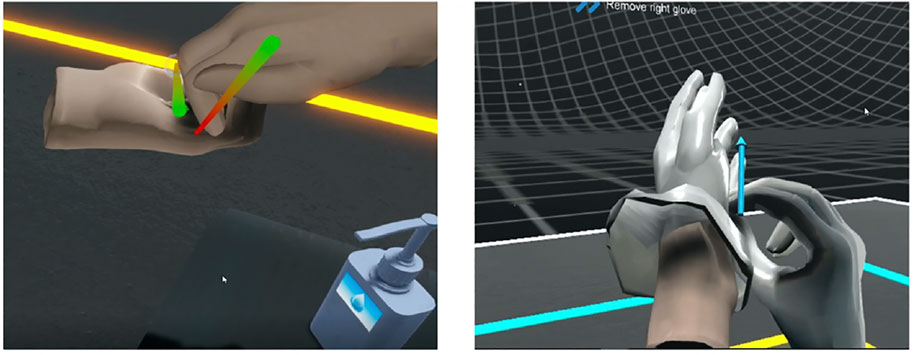

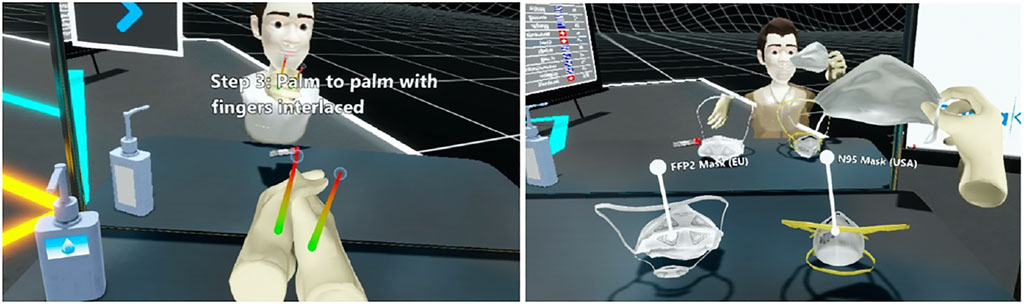

3.3 Challenges

Many challenges had to be confronted while implementing the CVRSB application; a major one concerned the hand disinfection protocol. The MAGES SDK uses a velocity-based interaction system in which the virtual hands follow the VR controllers by generating a velocity vector that leads them to the current position of the controllers. Such an implementation makes interaction with physical items feel more robust; however, it causes issues when the hands apply reciprocal forces, as they start jittering and moving in unexpected directions due to their physical colliders. To overcome this challenge, we developed the washing hands Action (see more about Actions in Section 3.4. From Visual Storyboard to Scenegraph) in a cognitive way. When the user moves the controllers close to each other, we magnetize the physical hands and apply the washing animation (see Figure 6, Left). To stop the washing animation and separate the hands, users move the controllers apart. Additionally, users can influence the animation speed by following interactive trails that indicate the proper hand movements.
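The two mechanisms described above can be sketched in a few lines of Python. This is a minimal illustration, not the MAGES implementation (which runs inside Unity): the function and class names, the speed clamp and the two distance thresholds are assumptions made for the example, and the hysteresis between attaching and releasing is one plausible way to keep the hands from flickering between states.

```python
import math


def follow_velocity(hand_pos, controller_pos, dt, max_speed=10.0):
    """Velocity that steers the virtual hand towards the controller.

    The hand is not teleported; it receives a velocity that would close the
    gap within one time step, clamped to `max_speed` (an assumed limit).
    """
    delta = [c - h for c, h in zip(controller_pos, hand_pos)]
    dist = math.sqrt(sum(d * d for d in delta))
    if dist == 0.0:
        return (0.0, 0.0, 0.0)
    speed = min(dist / dt, max_speed)
    return tuple(d / dist * speed for d in delta)


class HandWashState:
    """Attach the hands when the controllers are close, release when apart."""

    def __init__(self, attach_dist=0.10, release_dist=0.20):
        self.attach_dist = attach_dist    # metres, hypothetical value
        self.release_dist = release_dist  # metres, hypothetical value
        self.washing = False

    def update(self, left_controller, right_controller):
        gap = math.dist(left_controller, right_controller)
        if not self.washing and gap < self.attach_dist:
            self.washing = True   # magnetize hands, start washing animation
        elif self.washing and gap > self.release_dist:
            self.washing = False  # controllers moved apart: stop and separate
        return self.washing
```

While `washing` is true, the hands would be driven by the animation rather than by `follow_velocity`, which sidesteps the reciprocal forces between the two hand colliders.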

FIGURE 6. (Left) It is crucial for the trainees to sanitize their hands properly. CVRSB provides guided instructions from the WHO with visual help and haptic feedback. The colorful lines indicate the proper placement of the hand. If the VR controllers deviate from the virtual hands, a vibration is applied to alert the users. (Right) The trainees decide which mask to use among three available types.

Another challenge we faced was the implementation of the swab insertion into the nasopharynx, due to Unity’s physics system. We configured a custom pose for the virtual hands to grab the swab properly using only the fingertips. However, when pressure was applied to the nasopharynx, the swab popped out due to the forces applied. For this reason, we implemented a custom insertion mechanic that uses configurable joints to disable physical movements of the swab while it is in contact with the nasopharynx. When the swab is removed, we automatically re-apply physical forces. We also made the nasal cavity transparent to let the users see the swab’s insertion depth.

The donning and doffing of the gown are processes that need attention to avoid any contamination. During this process the medical staff should reach behind their back to fasten the gown. This gesture is not feasible with VR controllers due to tracking limitations, as the controllers must always be visible from the headset’s cameras (inside-out tracking). We introduced cognitive questions to bypass the need to fasten the gown. In our case, once the users have put on the gown, we ask them whether it is properly fastened or not. To enhance immersion, we also placed a virtual mirror in front of the users so that they can see themselves during the donning and doffing. This feature improves the perception of the gown and the virtual avatar. A variety of cognitive questions are also used to enhance the learning experience (see Figure 6, Right).

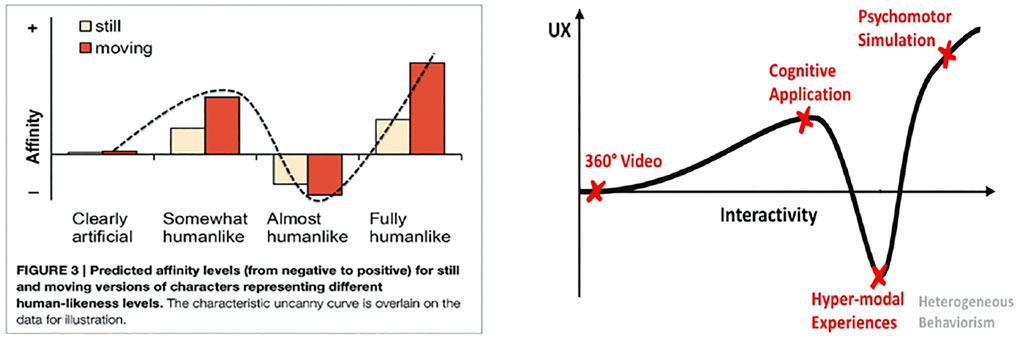

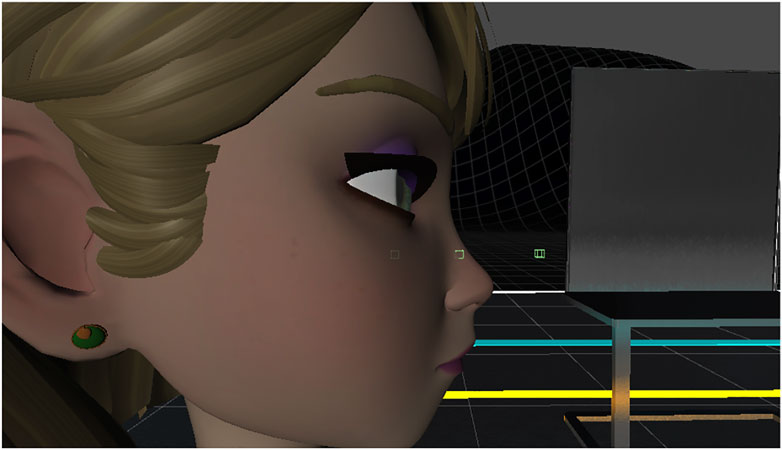

Finally, a challenge that had to be overcome was the Uncanny Valley (UV) phenomenon regarding character representation. According to this phenomenon, the user experience improves as the complexity of the character representation grows, but only up to a certain point, called the uncanny valley. After this point, people feel a sense of unease or even revulsion in response to highly realistic human representations (see Figure 7, Left). To avoid the uncanny valley, we used our novel “hybrid inside-out” (HIO) rendering methodology for virtual reality characters. A key insight of HIO is that non-anthropomorphic external character appearance (out) and realistic internal anatomy (inside) can be combined in a hybrid VR rendering simulation approach for networked, deformable, interactable psychomotor and cognitive training simulations. Based on HIO, we introduce non-photorealistic modelling and rendering of non-anthropomorphic embodied conversational agents and avatars. With this technique, early qualitative user study evaluations have shown that trainees can focus entirely on the learning objectives without being distracted by any UV effects (Kätsyri et al., 2015). Therefore, by employing such suitable models in our application to represent human avatars (see Figure 1, Figure 6, Figure 12 and Figure 13), we further enhance the learning experience and improve the training outcome for our users.

FIGURE 7. Left: The Uncanny Valley (UV) effect: affinity (empathy, likeness, attractiveness) vs human-likeness from (Kätsyri et al., 2015). Right: The same phenomenon is observed when comparing the user experience vs the interactivity (level of detail and automation of every action) (Zikas et al., 2020).

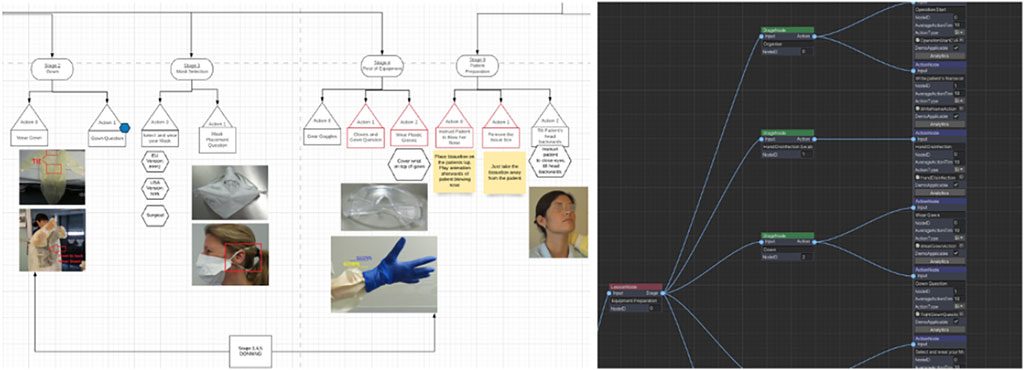

3.4 From Visual Storyboard to Scenegraph

In this section we demonstrate how we created the scenegraph from the visual storyboard. To faithfully recreate a medical procedure in VR, we have to understand its basic steps and milestones. The best way to obtain such a break-down analysis is to consult professionals specialized in this method. In our case, the Subject Matter Expert (SME) material required for the design of a complete storyboard tailored for the VR training module was provided by our collaborators at the University Hospital of Bern in Switzerland and at New York University in the United States. Our medical advisors also provided all required information to reconstruct a visual storyboard with clearly defined steps, called Actions in MAGES.

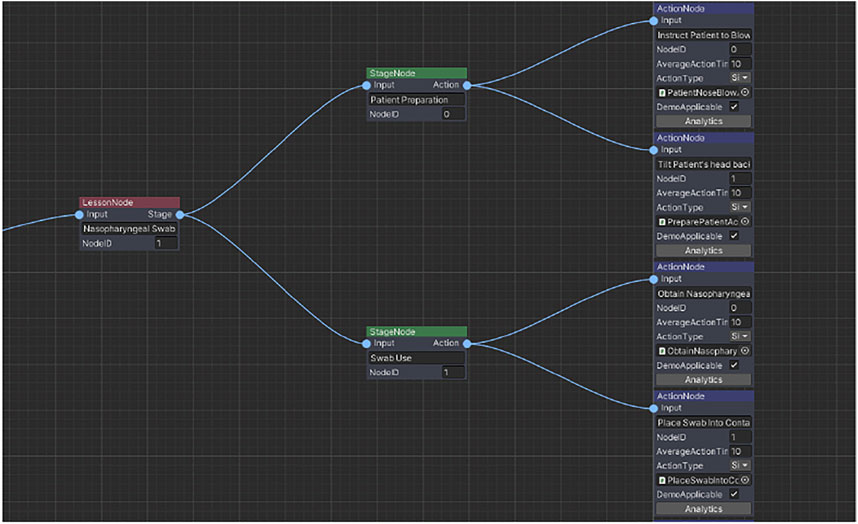

Given the storyboard, the next step is to translate this visual information into a training scenegraph (Zikas et al., 2020). This acyclic graph holds the structure of our training scenario, describing all the steps and the possible branching due to deviations from the expected path (see Figure 8). The MAGES SDK provides the scenegraph editor, a built-in visual editor for generating the training scenegraph rapidly and without code.

FIGURE 8. On the left, the steps that must be followed to properly complete a swab test form a diagram. On the right, this diagram is translated into the so-called scenegraph, which is used by MAGES to register the user’s progress.

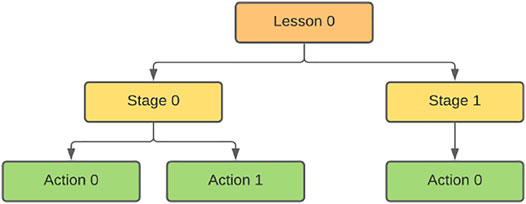

The training scenegraph consists of hierarchical nodes organized in three levels: 1) Lessons, 2) Stages and 3) Actions (see Figure 9). We use three levels of hierarchy to better reconstruct the scenario. Each Action corresponds to information obtained from the storyboard and the medical experts.

FIGURE 9. This image represents a simple scenegraph tree. At the root, the Lesson reflects an autonomous part of the training scenario. In our case we have dedicated Lessons for the donning, swab and the doffing. The Stages are used as educational subdivisions. The Action nodes are considered the heart of each MAGES module, containing the programmable behavior, the 3D assets and everything needed to describe the scenario in VR.
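As a rough picture of this three-level hierarchy, the Python sketch below models Lessons, Stages and Actions as nested records and walks them in order to find the next pending Action. It is an illustration only: the class layout, the helper names and the individual Action names are hypothetical and do not reflect how MAGES stores its scenegraph, and the branching for deviations mentioned earlier is left out for brevity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Action:
    name: str
    done: bool = False


@dataclass
class Stage:
    name: str
    actions: List[Action] = field(default_factory=list)


@dataclass
class Lesson:
    name: str
    stages: List[Stage] = field(default_factory=list)


def iter_actions(lessons):
    """Yield (lesson name, stage name, action) in scenario order."""
    for lesson in lessons:
        for stage in lesson.stages:
            for action in stage.actions:
                yield lesson.name, stage.name, action


def next_action(lessons):
    """Return the first Action that is not yet done, or None when finished."""
    for path in iter_actions(lessons):
        if not path[2].done:
            return path
    return None


# Hypothetical scenario: three Lessons; the swab Lesson has two Stages
# and four Actions. All node names are made up for the example.
scenario = [
    Lesson("Donning", [Stage("PPE", [Action("Wear gown"), Action("Wear mask")])]),
    Lesson("Swab", [
        Stage("Sampling", [Action("Insert swab"), Action("Remove swab")]),
        Stage("Storage", [Action("Insert swab into tube"), Action("Label tube")]),
    ]),
    Lesson("Doffing", [Stage("PPE", [Action("Remove gloves")])]),
]
```

Marking an Action as done and calling `next_action(scenario)` again advances through the Stages and Lessons, which is the kind of progress registration the scenegraph is used for.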

The developer needs to study this material to successfully create a faithful Action. The most significant component of an Action is its dedicated script which embeds all the logical steps associated with its functionality. It also consists of one or more Prefabs (interactive objects) and information regarding the analytics. The Action coding is minimal due to the programming design patterns utilized in MAGES. One of the most useful features of MAGES SDK is the Action classification mechanism. In training scenarios, there are certain repetitive behaviors that can be grouped to achieve rapid prototyping. For this reason, we integrated native Action templates (e.g., UseAction, RemoveAction, InsertAction, ToolAction, etc.); these Action prototypes improve code structure and allow the implementation of a common methodology across the development team. For example, the UseAction template is mainly used in situations where we need to pick an object and hold it in a specific area for a predefined period of time.
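The behavior attributed to the UseAction template, holding an object inside a specific area for a predefined period of time, amounts to a dwell timer. The Python sketch below shows that idea in isolation; the class name is hypothetical, and the choice to reset the timer when the object leaves the area is an assumption rather than documented MAGES behavior.

```python
class UseActionSketch:
    """Completes once an object has stayed inside an area long enough."""

    def __init__(self, required_seconds):
        self.required_seconds = required_seconds
        self.elapsed = 0.0
        self.performed = False

    def tick(self, inside_area, dt):
        """Advance by `dt` seconds; return True once the Action is performed."""
        if self.performed:
            return True
        if inside_area:
            self.elapsed += dt
            if self.elapsed >= self.required_seconds:
                self.performed = True
        else:
            # Assumption: leaving the area restarts the count.
            self.elapsed = 0.0
        return self.performed
```

A game loop would call `tick` once per frame with the result of a collider overlap test and the frame time.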

3.5 Swab Action: From Conceptualization to Implementation

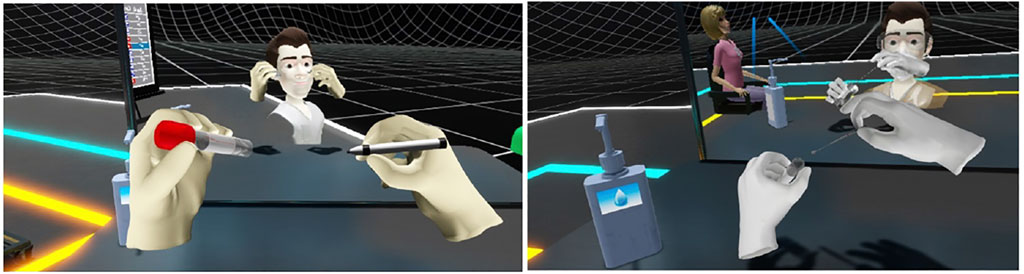

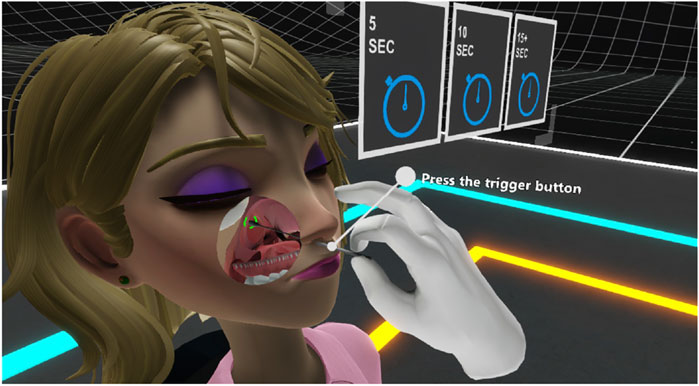

This section demonstrates the creation of the swab Action. In this Action, the user needs to take the sterile swab, insert it into the patient’s nostril for a certain amount of time and then place it carefully in the testing tube for further processing.

The first stage involves the user taking the swab from the testing tube and inserting it into the patient’s nostril. We implemented this behavior as a UseAction, since it allows the user to pick an object and place it in a specific area for a predefined time. This stage of the Action consists of four steps.

3.5.1 Step 1: Action prefabs

To implement the UseAction, we need the following Prefabs:

• Interactable Prefab: This is the swab that the user should use to perform the Action.

• Use Collider: This collider is responsible for performing the Action when triggered by the interactable prefab.

• Animated Hologram: This item is used to instruct the user regarding the correct procedure of completing the Action.

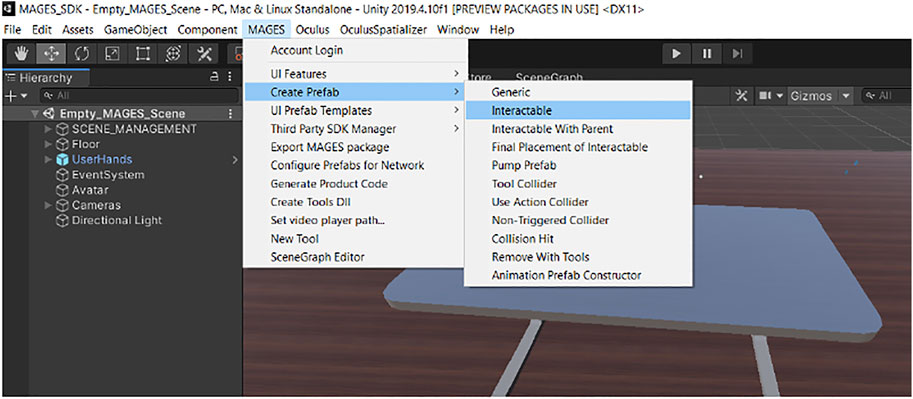

The MAGES SDK provides easy-to-use UI menus to automatically generate those prefabs (see Figure 10). After generating the prefabs, we can customize their behaviors from the embedded components.

FIGURE 10. The MAGES SDK contains UI menus that can be used to provide a code-free experience to users creating new content.

3.5.2 Step 2: Action script

The next step is to code the Action script, which is responsible for initializing the Action, executing it and setting all parameters related to the VR behavior. Below you can see the script associated with the swab Action.
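As a language-agnostic illustration of how such a script is structured, the Python sketch below mirrors the pattern of a class deriving from UseAction and overriding its initialization. It is not MAGES code: MAGES targets Unity, the base class here is a stand-in written for this example, the snake_case methods merely echo the Initialize, SetUsePrefab and SetHoloObject methods named in the text, and the prefab names passed as arguments are hypothetical.

```python
class UseAction:
    """Stand-in base class written for this example (not the SDK class)."""

    def __init__(self):
        self.use_collider = None
        self.interactable = None
        self.hologram = None
        self.initialized = False

    def set_use_prefab(self, collider_name, interactable_name):
        # Echoes SetUsePrefab: trigger collider first, interactable second.
        self.use_collider = collider_name
        self.interactable = interactable_name

    def set_holo_object(self, hologram_name):
        # Echoes SetHoloObject: hologram that guides the user.
        self.hologram = hologram_name

    def initialize(self):
        # Echoes Initialize: here it only flags the Action as ready.
        self.initialized = True


class SwabAction(UseAction):
    """Swab Action expressed as a specialization of the generic template."""

    def initialize(self):
        # Hypothetical prefab names, chosen for readability.
        self.set_use_prefab("NostrilTriggerCollider", "Swab")
        self.set_holo_object("SwabHologram")
        super().initialize()
```

The point of the pattern is that the subclass only declares which prefabs take part in the Action, while the shared behavior lives in the template.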

This script reflects the behavior of our Action. In more detail, the class, derived from the UseAction class, inherits the needed methods and functionalities. The Initialize method is called when this Action starts, to instantiate and configure all the prefabs linked with it. We override the Initialize method to include the necessary callbacks. The SetUsePrefab method takes two arguments: the first is the trigger collider placed inside the patient’s nostril and the second is the interactable prefab, in our case the swab. The SetHoloObject method instantiates a hologram for this Action to show the user the proper orientation of the swab inside the nostril. It is very important to insert the swab at the correct angle and to the proper depth; otherwise, the practitioner may hurt the patient or take an insufficient sample.

3.5.3 Step 3: Connection with scenegraph

After generating the Action script, we have to link it to the training scenegraph, which is easily done with the Visual Scripting editor (see Figure 11). After creating the Action node in the editor, the developer can bind the Action script to the new node simply by dragging and dropping the script onto the dedicated input field.

FIGURE 11. This screenshot from the Visual Scripting editor shows the swab Lesson (red node), containing two Stages (green nodes) and four Actions (blue nodes).

3.5.4 Step 4: Action customization

Up to this point we have created a simple UseAction in which the user needs to take the swab and insert it into the patient’s nostril to take the Covid-19 sample. However, as this is a rather basic VR behavior, we included additional components and mechanics to enhance user immersion and user friendliness.

The first challenge was to make the swab insertion more engaging. Let us note here that the velocity-based interaction system that is normally used provides a smooth experience for the user. The grabbed objects follow the hand using velocities but are not attached with a parent–child relation. In this way, the forces and complex interactions applied to the objects are smoother. In the alpha version of the application, simple colliders were used around the nostril to insert the swab. However, this implementation caused a lot of jittering of the swab and made the insertion difficult.

To overcome this issue, we switched to a different approach. In front of the nostril, we lined up three trigger colliders to identify whether the swab is placed correctly, paying attention to its distance and orientation (see Figure 13). When all three colliders are triggered, meaning the swab is aligned, we apply a configurable joint at runtime that only allows positional and rotational movements on the axis parallel to the swab. This enables the back-and-forth movement of the swab. When the swab touches the walls of the nasopharynx canal, a time counter checks whether the swab has been in contact for long enough, and then performs the Action. After this, the user needs to pull the swab out of the nasopharynx canal. When the swab is no longer in contact with one of the three trigger colliders outside the nostril, the configurable joint is removed, allowing the swab to be moved naturally without any restrictions.
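The logic of this mechanic can be sketched outside Unity as a small state holder. The Python code below is an approximation under stated assumptions: it replaces the configurable joint with a projection of the requested displacement onto the swab axis, it handles translation only (the rotational freedom about the axis is omitted), and the class name, the default contact duration and the rule that contact must be continuous are all hypothetical.

```python
def project_on_axis(displacement, axis):
    """Return the component of `displacement` along the unit vector `axis`."""
    along = sum(d * a for d, a in zip(displacement, axis))
    return tuple(along * a for a in axis)


class SwabInsertionSketch:
    """Tracks alignment, axis locking and the contact timer for the swab."""

    def __init__(self, swab_axis, contact_seconds=2.0):
        self.swab_axis = swab_axis              # unit vector along the swab
        self.contact_seconds = contact_seconds  # assumed dwell time
        self.locked = False
        self.contact_time = 0.0
        self.performed = False

    def update(self, triggers_hit, touching_wall, dt):
        # Locked while all three trigger colliders report the swab; released
        # as soon as one of them loses contact.
        self.locked = all(triggers_hit)
        if self.locked and touching_wall:
            self.contact_time += dt
            if self.contact_time >= self.contact_seconds:
                self.performed = True
        else:
            self.contact_time = 0.0  # assumption: contact must be continuous
        return self.performed

    def constrain(self, hand_displacement):
        # While locked, only motion along the swab axis is let through.
        if self.locked:
            return project_on_axis(hand_displacement, self.swab_axis)
        return tuple(hand_displacement)
```

Sideways hand motion is simply discarded while the swab is locked, which is what removes the jitter caused by the swab pushing against the nostril colliders.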

FIGURE 12. When the instructor inserts the swab in order to reach the nasopharynx, a transparency layer enhances the trainee’s perception regarding the correct angle and depth of insertion.

This mechanic was implemented on top of the basic functionalities of the UseAction using the provided features of MAGES SDK.

As a final UX addition, to enhance the visualization of the swab insertion, we implemented a custom cutout shader that culls vertices of the face and the nasopharynx canal to give the user a better view of the process. When the swab is close enough to the nostril, we enable this effect to make the selected areas transparent (see Figure 12). After taking the sample, the user needs to insert the swab into the testing tube. This stage of the Action is implemented using an InsertAction, which needs a similar configuration.

FIGURE 13. This image shows how the three colliders were positioned to identify whether the swab was properly inserted, in order to lock it in place.

The same methodology as the one presented in Section 3.5 was replicated successfully for the PPE donning/doffing learning objectives, as well as for the hand disinfection mechanisms in VR, using the underlying MAGES platform. Using the information provided by our medical collaborators from the University of Bern, we translated the steps of the training scenegraph into Actions and Prefabs. Most Actions use the UseAction and InsertAction prototypes, as they involve picking objects and placing them into specific areas respectively, e.g., placing the specimen into a tube, placing the user’s VR hands inside the gloves or disposing of the used PPE in the correct bin. The most intriguing challenges we faced when making these Actions, in order to avoid Uncanny Valley effects, are described in Section 3.3 (Challenges). We chose to describe the swab Action in detail in order to illustrate the complete process of ‘VR-ifying’ a medical therapeutic procedure. The novelty and scalability of MAGES allowed us to replicate the same methodology for the other two medical procedures, which differ in 3D content, using the same interaction and simulation metaphors.

3.6 Analytics Engine: In VR We Can Track Everything

It is very important that medical training simulations include a trainee evaluation method that presents users with their errors and their progress. The main objective of this evaluation is to help them identify the actions that they performed well and the ones where there is room for improvement. The MAGES SDK provides a built-in analytics engine to configure, track and visualize a detailed analytics report at the end of each session.

Each Action can be configured to track specific analytic scoring factors. For example, in CVRSB the contamination risk is high when interacting with PPE and the swab. For this reason, we introduced contaminated areas and surfaces, to track if the user touches or enters certain regions of the VR environment.
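A contamination check of this kind can be pictured as a set of named regions plus a log of entry events. The Python sketch below is a simplified illustration: it assumes axis-aligned box regions and point positions, whereas the application relies on colliders inside the engine, and the class names, field names and record layout are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """Axis-aligned box that counts as contaminated for certain objects."""
    name: str
    lo: tuple
    hi: tuple
    triggers: frozenset  # names of the objects allowed to trigger the region

    def contains(self, p):
        return all(l <= c <= h for l, c, h in zip(self.lo, p, self.hi))


class ContaminationLog:
    """Records one error each time a tracked object enters a region."""

    def __init__(self, regions):
        self.regions = list(regions)
        self.errors = []
        self._inside = set()

    def track(self, obj_name, position, timestamp):
        for region in self.regions:
            if obj_name not in region.triggers:
                continue
            key = (obj_name, region.name)
            if region.contains(position):
                if key not in self._inside:  # log on entry, not every frame
                    self._inside.add(key)
                    self.errors.append(
                        {"time": timestamp, "object": obj_name, "region": region.name}
                    )
            else:
                self._inside.discard(key)
```

The accumulated `errors` list is the kind of data that could later feed an assessment report.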

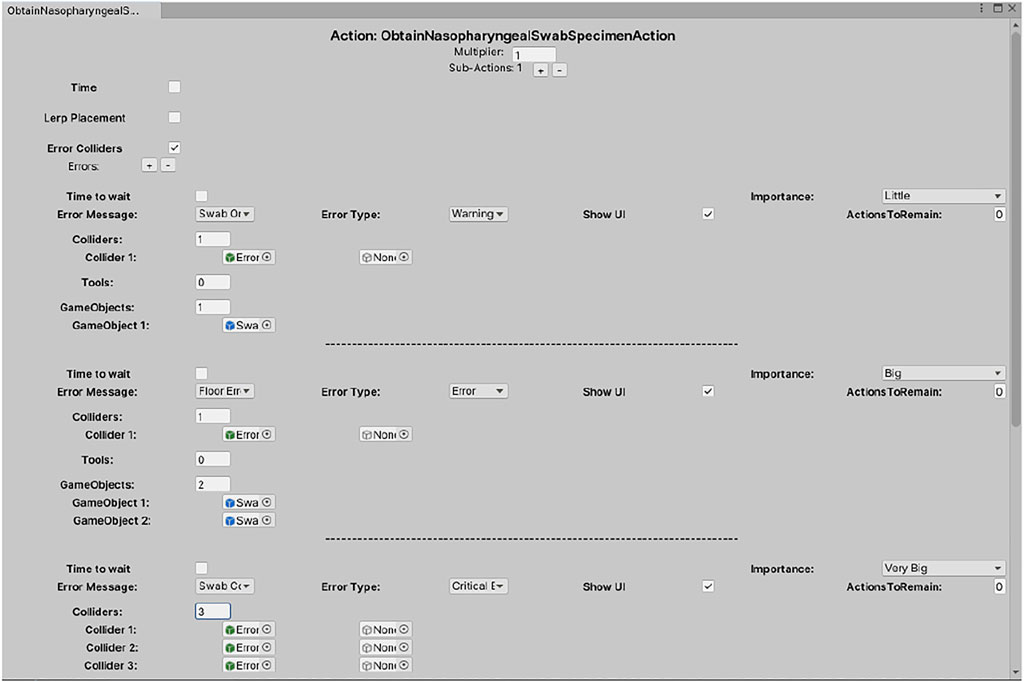

The analytics can be configured from the Visual Scripting editor seen in Figure 14.

FIGURE 14. This is the analytic editor for the swab Action. In this example we configured the contamination regions and which objects can trigger them. Later those errors will appear on the assessment report and on the ORamaVR portal site.

3.7 The Uncanny Valley of Interactivity

As mentioned before, the Uncanny Valley usually refers to a sense of unease and discomfort when people look at increasingly realistic virtual humans. This theory became popular with the rise of humanoid robots, referring to a graph of emotional reaction against the similarity of a robot to human appearance and movement. However, UV does not apply only to robotics and virtual humans. Other manifestations of the Uncanny Valley, such as UV in haptics (Berger et al., 2018), started to gain attention, showing that if the fidelity of the haptic sensation increases but is not rendered in accordance with other sensory feedback, the subjective impression of realism actually gets worse, not better. The UV phenomenon has been shown to exist in medical VR training simulations too (Zikas et al., 2020).

In VR, developers have a lot of flexibility with different headsets, controllers and various mechanics, and therefore the possibilities to develop an Action are endless. However, developers need to find the most intuitive solution to implement psychomotor Actions in a meaningful way. During the development of CVRSB, we faced this issue due to the psychomotor nature of this training application. The first prototypes we developed contained complex tasks, leading the users to fail the scenarios, not due to a lack of capability but due to a limited understanding of the stages of the performed Action. Even if they knew how to perform certain tasks in real life, they had issues transferring those skills to the virtual environment (see Figure 7, Right).

A practical example where we fell into the uncanny valley of interactivity in CVRSB was the swab Action. When the user inserts the sterile swab into the patient’s nostril, he/she needs to rotate it a number of times. In our first implementation, the user had to rotate the swab by pressing the trigger button, which performed the rotational movement with an animation. However, this was not intuitive, and the first testers had trouble performing this Action because they didn’t know what to do; pressing a trigger button is not part of a real swab testing procedure. We therefore decided to simplify this Action (moving to the right side of the valley) and automate the process. As soon as the trainee inserts the swab into the nostril and reaches the desired depth, the swab automatically rotates to collect the specimen. This may not be a true psychomotor Action, as it is not normal for the swab to rotate automatically; however, during the second testing phase, users completed the scenario successfully without issues in this Action.
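The simplified behavior can be sketched as a small state update driven only by insertion depth. This is an illustrative model, not the CVRSB implementation: the class name, the depth threshold and the number of rotations are hypothetical values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class SwabAction:
    """Simplified swab Action: rotation starts automatically at target depth."""
    target_depth_cm: float     # hypothetical threshold, not a value from the paper
    required_rotations: int    # hypothetical number of turns
    rotations_done: int = 0
    rotating: bool = False

    @property
    def completed(self):
        return self.rotations_done >= self.required_rotations

    def update(self, insertion_depth_cm):
        """Call once per frame with the current insertion depth."""
        # No trigger press is needed: depth alone starts or stops the rotation.
        self.rotating = insertion_depth_cm >= self.target_depth_cm
        if self.rotating and not self.completed:
            # One animated turn per update, to keep the sketch short.
            self.rotations_done += 1
```

The design choice illustrated here is that the trainee's only input is the physical insertion movement; the rotation, which had no natural controller mapping, is handled by the system.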

In general, to avoid the creation of a complex VR behavior that would fall in the uncanny valley of interactivity, we may either simplify the Action highlighting the cognitive side, or make it more realistic, paying attention not to remain inside the valley. The decision depends on the design and objectives of each training module.

3.8 Collaboration and Interactive Learning

The learning process is a collaborative experience requiring two or more people: the instructor and the students. In CVRSB, we used the built-in collaborative capabilities of MAGES SDK with some minimal changes adapted to our needs. By default, there are two distinct user roles in projects generated by the collaborative module of MAGES. The first one is called the server, which is the first user that creates the online session. Usually this is the course instructor. The rest of the users that join the session are called clients.

The first user to create the session is also the instructor of the session. This means that he/she can interact with the virtual objects and perform the Actions. The rest of the users cannot interact with the medical equipment, since they are just observers. However, they can write on the whiteboard using the available markers. We designed the collaborative mode in this way to avoid clients contaminating the medical equipment. In the future, we aim to develop a collaborative mode where every client will be able to perform donning and doffing and the instructor will be able to select a student to perform the swab Action.
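The role rules described above can be summarized in a short sketch. The names below are illustrative and do not come from the MAGES SDK; the sketch also assumes that the instructor may use the whiteboard markers, which the text does not state explicitly.

```python
from enum import Enum


class Role(Enum):
    SERVER = "server"  # first user, creates the session (usually the instructor)
    CLIENT = "client"  # users who join later, as observers


# Hypothetical object categories; the names are illustrative only.
PERMISSIONS = {
    Role.SERVER: {"medical_equipment", "whiteboard_marker"},
    Role.CLIENT: {"whiteboard_marker"},
}


class Session:
    """Assigns roles in join order and answers interaction queries."""

    def __init__(self):
        self.users = {}

    def join(self, user):
        """The first user to join becomes the server; the rest are clients."""
        role = Role.SERVER if not self.users else Role.CLIENT
        self.users[user] = role
        return role

    def can_interact(self, user, object_category):
        return object_category in PERMISSIONS[self.users[user]]
```

Keeping the permissions in a single table makes the planned extension (letting the instructor grant the swab Action to a selected student) a matter of updating that table at runtime.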

4 Usability Testing

4.1 Description of the Experiment

We performed a randomized controlled pilot study in medical students at the University of Bern, Switzerland, to explore media-specific variables of the CVRSB application influencing training outcomes, such as usability, satisfaction, workload, emotional response, simulator sickness, and the experience of presence and immersion, compared to traditional training methods.

4.2 Setting, Participants, Ethical Approval

The study took place at the Emergency Department of the University Hospital Bern, Switzerland, in September 2020. A convenience sample of medical students (year 3–6 out of a 6-year medical curriculum) participated on a voluntary basis. The local ethics committee deemed our study exempt from approval, as the project does not fall under the Human Research Act (BASEC-No: Req-2020-00889). TCS and TB devised the study and carried out the evaluation.

4.3 Baseline Investigation

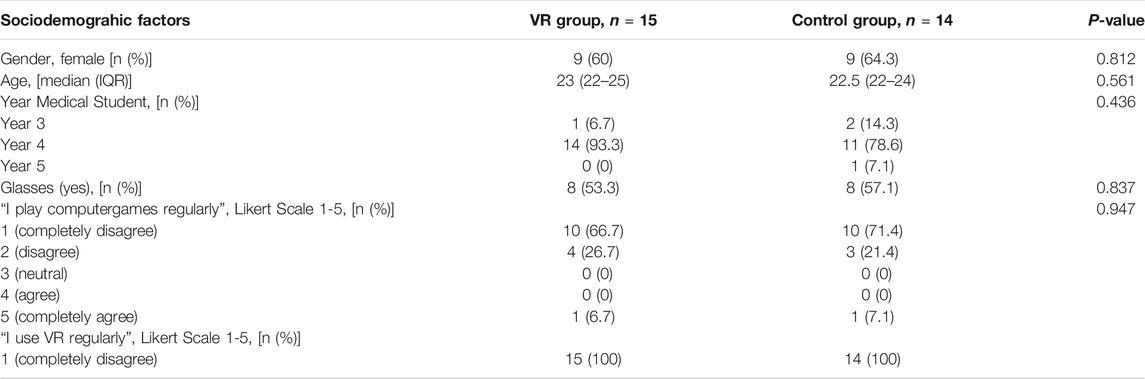

Sociodemographic factors, prior training and experience in hand hygiene/PPE use, taking of respiratory samples (nasopharyngeal swab) as well as prior experience with VR were collected in a printed survey.

4.4 Intervention

Participants were randomized to either the intervention group (VR simulation) or control group (traditional learning methods) in a 1:1 ratio using a computer-generated system to receive training for correct and safe acquisition of a nasopharyngeal swab and proper use of PPE, lasting about 30 min.
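As an illustration of what a computer-generated 1:1 allocation can look like, the sketch below shuffles the participant list and splits it in half. This is a generic example and not the system used in the study; the function name, the seed parameter and the rule for an odd number of participants are assumptions.

```python
import random


def allocate_1_to_1(participant_ids, seed=None):
    """Randomly split participants into two arms of (nearly) equal size."""
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    ids = list(participant_ids)
    rng.shuffle(ids)
    half = len(ids) // 2
    # Assumption: with an odd count, the extra participant joins the intervention arm.
    return {"control": sorted(ids[:half]), "intervention": sorted(ids[half:])}
```

For example, `allocate_1_to_1(range(1, 30), seed=7)` assigns 29 hypothetical participant numbers to two arms of 14 and 15.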

The intervention group received training with the CVRSB application, version 1.1.6, using the Oculus Rift S head-mounted display device and hand controllers (Facebook Inc., Menlo Park, California, United States). The participants had two runs in the simulation in single-player mode. The training was accompanied by an experienced physician.

The control group was instructed by an experienced physician using traditional learning methods (printed instructions, local instruction videos on PPE donning/doffing, as well as formal videos on proper hand hygiene according to the WHO and on taking a correct nasopharyngeal sample). Training took about 20–30 min.

4.5 Variables of Media Use

Evaluation of both groups regarding variables of media use was carried out according to established questionnaires directly after the training.

Usability for both training modules was assessed using the System Usability Scale (SUS), which is composed of 10 questions with a five-point Likert attitude scale (Brooke, 1996), and the After-Scenario Questionnaire (ASQ) (Lewis, 1991), which assesses the ease of task completion, satisfaction with completion time and satisfaction with supporting information on a 7-point Likert scale (total score ranges from 1 = full satisfaction to 7 = poor satisfaction). Reliability and validity of the SUS are high (Sauro, 2011).
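For readers unfamiliar with the SUS, the sketch below shows the standard scoring rule (Brooke, 1996): odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name is our own and the example responses are invented.

```python
def sus_score(responses):
    """Compute the System Usability Scale score from ten 1-5 responses."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly ten responses, each from 1 to 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


# Invented example: a neutral answer (3) on every item.
print(sus_score([3] * 10))  # 50.0
```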

The User Satisfaction Evaluation Questionnaire (USEQ) has six questions with a five-point Likert scale to evaluate user satisfaction (total score ranges from 6 = poor satisfaction to 30 = excellent satisfaction) (Gil-Gómez et al., 2017).

For the VR simulation, “visually-induced motion sickness” was assessed with four items (nausea, headache, blurred vision, dizziness) according to the Simulator Sickness Questionnaire (SSQ) adapted from Kennedy et al. (total score ranges from 1 = no simulator sickness to 5 = strong simulator sickness) (Kennedy et al., 1993).

Presence and immersion in the virtual world were determined according to the 6-item questionnaire developed by Slater, Usoh, and Steed (total score ranges from 1 = no immersion to 7 = full immersion) (Usoh et al., 2006).

For both groups, perceived subjective workload on a scale from 0 to 100 was assessed using the NASA-Task Load Index (Hart and Staveland, 1988).
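The overall weighted workload of the NASA-Task Load Index combines six subscale ratings (0 to 100) with weights obtained from 15 pairwise comparisons between the subscales, so the weights sum to 15. A minimal sketch of this standard computation follows; the dictionary keys and the example numbers are our own and are not study data.

```python
SUBSCALES = ("mental", "physical", "temporal",
             "performance", "effort", "frustration")


def tlx_weighted_score(ratings, weights):
    """Overall NASA-TLX workload: weighted mean of six 0-100 ratings."""
    if set(ratings) != set(SUBSCALES) or set(weights) != set(SUBSCALES):
        raise ValueError("ratings and weights must cover all six subscales")
    if sum(weights.values()) != 15:
        raise ValueError("weights come from 15 pairwise comparisons and must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15


# Invented example values for one participant.
example_ratings = {"mental": 60, "physical": 20, "temporal": 40,
                   "performance": 30, "effort": 50, "frustration": 10}
example_weights = {"mental": 5, "physical": 1, "temporal": 3,
                   "performance": 2, "effort": 4, "frustration": 0}
overall = tlx_weighted_score(example_ratings, example_weights)
```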

The Self-Assessment Manikin (SAM), a picture-oriented three-item questionnaire, was used to measure pleasure of the response (from positive to negative), perceived arousal/excitement (from high to low levels), and perceptions of dominance/control (from low to high levels), each on a five-point scale, associated with the participants’ affective reaction to the learning module (Bradley and Lang, 1994).

4.6 Statistics

Data were analyzed in SPSS Statistics Version 22 (IBM, Zurich, Switzerland) and Stata 16.1 (StataCorp, College Station, Texas, United States). The intervention and control groups were compared regarding baseline characteristics by means of the Chi-square test and the Wilcoxon rank-sum test, as applicable. For the comparison of the variables of media use, the Wilcoxon rank-sum test was used. For all tests, a p-value <0.05 was considered significant.
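The Wilcoxon rank-sum test compares two independent groups by ranking all observations together and summing the ranks of one group. The sketch below implements the two-sided test with a normal approximation and without tie or continuity correction, so its p-values may differ slightly from those reported by SPSS or Stata, particularly for small samples. The function names are our own and the sample values are invented, not study data.

```python
import math


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_test(group_a, group_b):
    """Return (rank sum of group_a, z statistic, two-sided p-value)."""
    n1, n2 = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    w = sum(ranks[:n1])
    expected = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - expected) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, z, p


# Invented questionnaire scores for two small groups.
w, z, p = rank_sum_test([1, 2, 3], [4, 5, 6])
```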

5 Results

In total, n = 29 students completed the study (control group, n = 14; intervention group, n = 15). Details of the sociodemographic characteristics are available in Table 1. 60% of the participants in the VR group were females, median age was 23 (IQR 22-25), 94% did not play computer games regularly, none used VR regularly. No significant differences were found regarding gender, mean age, educational level in medical school, need to wear glasses, previous experience with computer games and VR. Likewise, previous education and experience regarding hand disinfection, use of PPE, and taking nasopharyngeal swabs did not show any significant differences.

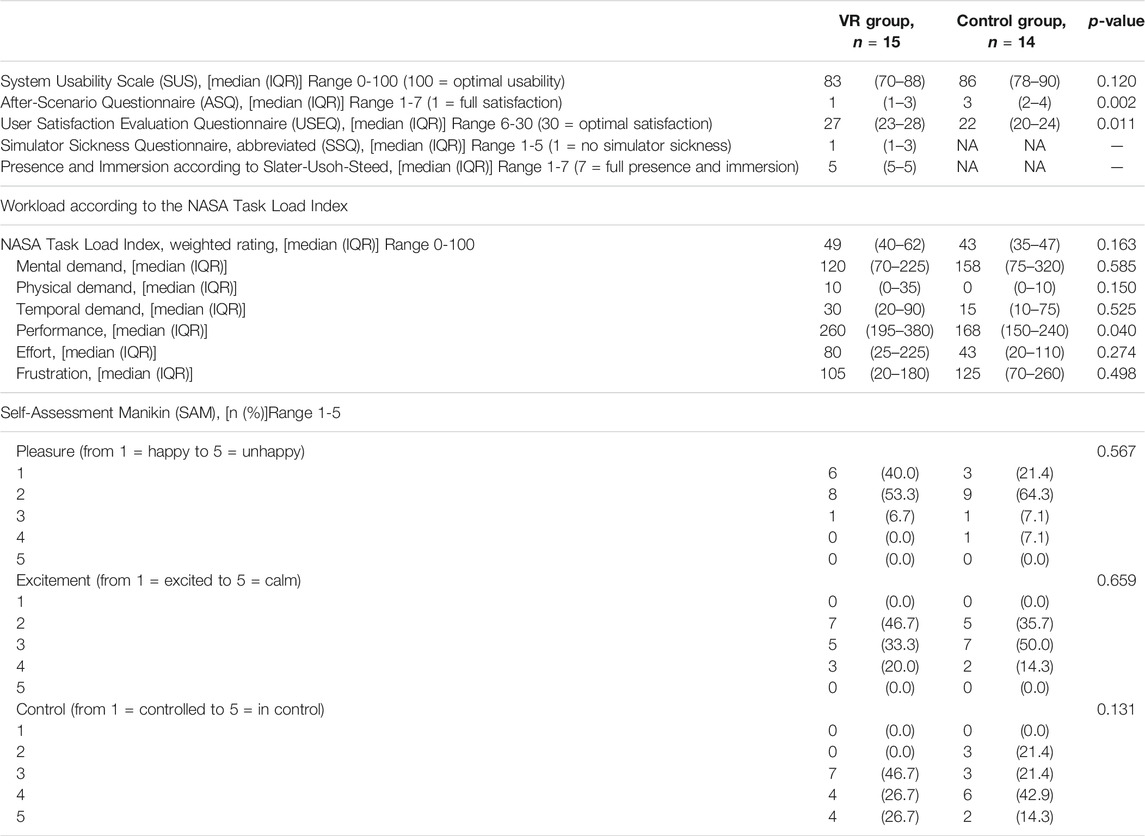

Results of the intervention survey regarding usability, satisfaction, subjective workload, emotional response, simulator sickness, sense of presence and immersion are detailed in Table 2.

System usability as assessed by the System Usability Scale (SUS) was good to excellent (median score in the intervention group 83, IQR 78-90), with no significant difference between the two groups (p = 0.120).

The After-Scenario Questionnaire (ASQ) revealed a significantly better result for the VR module (median score 1 (IQR 1-3) in the intervention group vs. 3 (IQR 2-4) in the control group, p = 0.0002), as did the User Satisfaction Evaluation Questionnaire (USEQ) (median score 27 out of 30 (IQR 23-28) in the intervention group vs. 22 out of 30 (IQR 0-24) in the control group, p = 0.011).

The median score in the 4-item Simulator Sickness Questionnaire in the intervention group was 1 (IQR 1-3), thus revealing a good tolerability of the VR simulation.

Presence and immersion in the virtual world according to the questionnaire of Slater, Usoh and Steed were high (median 5 on a scale from 1 to 7, IQR 5-5).

The overall weighted rating of the workload according to the NASA-Task Load Index showed no significant difference between the groups (median 49 (IQR 40-62) in the intervention group vs. 43 (IQR 35-47) in the control group, p = 0.163).

No significant differences were detected regarding the emotional response in the Self-Assessment Manikin (SAM).

5.1 Conclusion Drawn

We demonstrated good usability of the CVRSB application in training medical students in the correct performance of hand hygiene, use of personal protective equipment, and obtaining a nasopharyngeal swab specimen. Satisfaction measured in the ASQ and USEQ was even higher compared to traditional learning methods. The VR application was well tolerated, and immersion and sense of presence in the virtual environment, which correlate positively with training effectiveness (Mantovani and Castelnuovo, 2003; Lerner et al., 2020), were high.

Whereas data on usability scoring systems have long been available for many technical appliances, there is little guidance on how to select and perform usability evaluations for VR health-related interventions (Zhang et al., 2020). For post-hoc questionnaires, we chose commonly available and recommended assessment approaches such as the SUS and NASA-Task Load Index, as well as newer approaches such as the USEQ. Whereas we found no difference in the SUS between the groups, ASQ and USEQ results were significantly better in the VR group. One possible explanation is that these two questionnaires focus more on user satisfaction, whereas the SUS focuses more on general usability. The higher satisfaction might partly be explained by the novelty effect (an increase in perceived usability of a technology due to its newness), as our study population did not use VR regularly.

In comparison, Lerner et al. (2020) reported a high subjective training effectiveness and satisfying usability (mean SUS score 66) for their immersive room-scaled multi-user 3-dimensional VR simulation environment in a sample of emergency physicians, who also did not use VR regularly.

Limitations of our study include the single-center design, small group sample, and the missing assessment of physiological measures (e.g., galvanic skin response, heart rate variability). Furthermore, the impact of the novelty effect on these VR-naïve participants must be considered.

Further evaluations regarding the short- and long-term effectiveness of the VR simulation using this study cohort have recently been published (Birrenbach et al., 2021). Hand disinfection performance and correct use of PPE were evaluated using a fluorescent marker and UV-light scanning, and a manikin setup was used to create a simulation scenario for obtaining the nasopharyngeal swab. Both groups performed significantly better after training, with the effect sustained over 1 month. After training, the VR group performed significantly better in taking a nasopharyngeal swab.

Further studies are needed to gain deeper insight into the relationships between VR features, presence experience, usability, learning processes, and learning effectiveness. In the future, we also aim to introduce a more advanced cooperative mode in which each participant performs the swab testing separately in a booth while guided by the instructor, and all participants share the same virtual environment and benefit from the social interaction.

6 Conclusion

We present the “Covid-19: Virtual Reality Strikes Back” project, a VR simulation application, publicly available free of charge for all available HMDs as well as Desktop VR. Our application offers a fast and safe method for cooperative, gamified, remote training to assist healthcare professionals, with good utility and even better satisfaction compared to traditional training. The significance of using VR in medical training, and especially the impact of using CVRSB, is supported by researchers of medical institutions who actively use the software. Providing such a powerful VR tool to augment the training of medical personnel brings humanity one step closer to the exit from this pandemic and accelerates the world’s transition to medical VR training.

Data Availability Statement

The raw data supporting the conclusion of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the kantonale Ethikkommission Bern (BASEC-No: Req-2020-00889). The patients/participants provided their written informed consent to participate in this study.

Author Contributions

PZ, SK, NL, MK, EG, MK, GE, IK, and AA, guided by Prof. Papagiannakis, created the CVRSB Application and contributed to the writing of this paper, apart from Section 5. Birrenbach, Exadaktylos and Sauter performed the clinical trials described in Section 5. All authors meet the criteria needed and therefore qualify for authorship.

Funding

The CVRSB project was co-financed by European Regional Development Fund of the European Union and Greek national funds through the Operational Program Competitiveness, Entrepreneurship and Innovation, under the call RESEARCH - CREATE—INNOVATE (project codes: T1EDK-01149 and T1EDK-01448). The project also received funding from the European Union’s Horizon 2020 research and innovation program under grant agreement No 871793. TS holds an endowed professorship supported by the Touring Club Switzerland. The sponsor has no influence on the research conducted, in particular on the results or the decision to publish.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Almarzooq, Z. I., Lopes, M., and Kochar, A. (2020). Virtual Learning during the COVID-19 Pandemic: A Disruptive Technology in Graduate Medical Education. Washington DC: American College of Cardiology Foundation.

Beam, E. L., Gibbs, S. G., Boulter, K. C., Beckerdite, M. E., and Smith, P. W. (2011). A Method for Evaluating Health Care Workers' Personal Protective Equipment Technique. Am. J. Infect. Control. 39 (5), 415–420. doi:10.1016/j.ajic.2010.07.009

Berger, C. C., Gonzalez-Franco, M., Ofek, E., and Hinckley, K. (2018). The Uncanny valley of Haptics. Sci. Robot 3 (17). doi:10.1126/scirobotics.aar7010

Besta, F. I. I. N. C. (2021). MIND-VR: Virtual Reality for COVID-19 Operators' Psychological Support. Available at: https://clinicaltrials.gov/ct2/show/NCT04611399.

Birrenbach, T., Zbinden, J., Papagiannakis, G., Exadaktylos, A. K., Müller, M., Hautz, W. E., et al. (2021). Effectiveness and Utility of Virtual Reality Simulation as an Educational Tool for Safe Performance of COVID-19 Diagnostics: Prospective, Randomized Pilot Trial. JMIR Serious Games 9 (4), e29586. doi:10.2196/29586

Bradley, M. M., and Lang, P. J. (1994). Measuring Emotion: the Self-Assessment Manikin and the Semantic Differential. J. Behav. Ther. Exp. Psychiatry 25 (1), 49–59. doi:10.1016/0005-7916(94)90063-9

Brooke, J. (1996). “SUS-A Quick and Dirty Usability Scale,” in Usability Evaluation in Industry (London: Taylor & Francis), 189–194.

Centers for Disease Control and Prevention (2019). Protecting Healthcare Personnel | HAI | CDC [Internet]. Available at: https://www.cdc.gov/hai/prevent/ppe.html.

CoronaVRus (2020). CoronaVRus Application. Available at: http://bit.ly/3autS2U.

Edigin, E., Eseaton, P. O., Shaka, H., Ojemolon, P. E., Asemota, I. R., and Akuna, E. (2020). Impact of COVID-19 Pandemic on Medical Postgraduate Training in the United States. Med. Educ. Online 25 (1), 1774318. doi:10.1080/10872981.2020.1774318

EonReality (2021). Covid-19 Virtual Rapid Test. Available at: https://eonreality.com/xr-medical/.

Gil-Gómez, J.-A., Manzano-Hernández, P., Albiol-Pérez, S., Aula-Valero, C., Gil-Gómez, H., and Lozano-Quilis, J.-A. (2017). USEQ: A Short Questionnaire for Satisfaction Evaluation of Virtual Rehabilitation Systems. Sensors 17 (7), 1589. doi:10.3390/s17071589

Hart, S. G., and Staveland, L. E. (1988). “Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research,” in Human Mental Workload. Advances in Psychology, Vol. 52. Editors P. A. Hancock and N. Meshkati (North-Holland), 139–183. Available at: http://www.sciencedirect.com/science/article/pii/S0166411508623869. doi:10.1016/s0166-4115(08)62386-9

Hong, K. H., Lee, S. W., Kim, T. S., Huh, H. J., Lee, J., Kim, S. Y., et al. (2020). Guidelines for Laboratory Diagnosis of Coronavirus Disease 2019 (COVID-19) in Korea. Ann. Lab. Med. 40 (5), 351–360. doi:10.3343/alm.2020.40.5.351

Hooper, J., Tsiridis, E., Feng, J. E., Schwarzkopf, R., Waren, D., Long, W. J., et al. (2019). Virtual Reality Simulation Facilitates Resident Training in Total Hip Arthroplasty: A Randomized Controlled Trial. The J. Arthroplasty 34 (10), 2278–2283. doi:10.1016/j.arth.2019.04.002

Hung, P.-P., Choi, K.-S., and Chiang, V. C.-L. (2015). Using Interactive Computer Simulation for Teaching the Proper Use of Personal Protective Equipment. Comput. Inform. Nurs. 33 (2), 49–57. doi:10.1097/cin.0000000000000125

John, A., Tomas, M. E., Hari, A., Wilson, B. M., and Donskey, C. J. (2017). Do medical Students Receive Training in Correct Use of Personal Protective Equipment? Med. Education Online 22 (1), 1264125. doi:10.1080/10872981.2017.1264125

Kamarianakis, M., Lydatakis, N., and Papagiannakis, G. (2021). Never ‘Drop the Ball’ in the Operating Room: An Efficient Hand-Based VR HMD Controller Interpolation Algorithm, for Collaborative, Networked Virtual Environments. In Advances in Computer Graphics. CGI 2021. Lecture Notes in Computer Science. Editor N. Magnenat-Thalmann. Cham: Springer 13002. doi:10.1007/978-3-030-89029-2_52

Kamarianakis, M., and Papagiannakis, G. (2021). An All-In-One Geometric Algorithm for Cutting, Tearing, and Drilling Deformable Models. Adv. Appl. Clifford Algebras 31 (3), 58. doi:10.1007/s00006-021-01151-6

Kaplan, A. D., Cruit, J., Endsley, M., Beers, S. M., Sawyer, B. D., and Hancock, P. A. (2020). “The Effects of Virtual Reality, Augmented Reality, and Mixed Reality as Training Enhancement Methods: A Meta-Analysis,” Human Factors, 0018720820904229.

Kätsyri, J., Förger, K., Mäkäräinen, M., and Takala, T. (2015). A Review of Empirical Evidence on Different Uncanny valley Hypotheses: Support for Perceptual Mismatch as One Road to the valley of Eeriness. Front. Psychol. 6, 390. doi:10.3389/fpsyg.2015.00390

Kennedy, R. S., Lane, N. E., Berbaum, K. S., and Lilienthal, M. G. (1993). Simulator Sickness Questionnaire: An Enhanced Method for Quantifying Simulator Sickness. Int. J. Aviation Psychol. 3 (3), 203–220. doi:10.1207/s15327108ijap0303_3

Lapolla, P., and Mingoli, A. (2020). COVID-19 Changes Medical Education in Italy: Will Other Countries Follow? Postgrad. Med. J. 96 (1137), 375–376. doi:10.1136/postgradmedj-2020-137876

Lerner, D., Mohr, S., Schild, J., Göring, M., and Luiz, T. (2020). An Immersive Multi-User Virtual Reality for Emergency Simulation Training: Usability Study. JMIR Serious Games [Internet]. Available at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7428918/.

Lewis, J. (1991). Psychometric Evaluation of an After-Scenario Questionnaire for Computer Usability Studies: The ASQ. SIGCHI Bull. 1 (23), 78–81. doi:10.1145/122672.122692

Mantovani, F., and Castelnuovo, G. (2003). “The Sense of Presence in Virtual Training: Enhancing Skills Acquisition and Transfer of Knowledge through Learning Experience in Virtual Environments,” in Being There: Concepts, Effects and Measurement of User Presence in Synthetic Environments. Editors G. Riva, F. Davide, and W. A. Ijsselsteijn (Amsterdam: Ios Press), 167–182.

Marty, F. M., Chen, K., and Verrill, K. A. (2020). How to Obtain a Nasopharyngeal Swab Specimen. N. Engl. J. Med. 382 (22), e76. doi:10.1056/nejmvcm2010260

Nagoshi, Y., Cooper, L. A., Meyer, L., Cherabuddi, K., Close, J., Dow, J., et al. (2019). Application of an Objective Structured Clinical Examination to Evaluate and Monitor Interns' Proficiency in Hand hygiene and Personal Protective Equipment Use in the United States. J. Educ. Eval. Health Prof. 16, 31. doi:10.3352/jeehp.2019.16.31

Papagiannakis, G., Zikas, P., Lydatakis, N., Kateros, S., Kentros, M., Geronikolakis, E., et al. (2020). MAGES 3.0: Tying the knot of medical VR. In ACM SIGGRAPH 2020 Immersive Pavilion (SIGGRAPH '20). New York, NY: Association for Computing Machinery, 1–2. doi:10.1145/3388536.3407888

Sauro, J. (2011). SUStisfied? Little-Known System Usability Scale Facts. User Experience Mag. 10 (3). Available at: https://uxpamagazine.org/sustified/.

Singh, R. P., Javaid, M., Kataria, R., Tyagi, M., Haleem, A., and Suman, R. (2020). Significant Applications of Virtual Reality for COVID-19 Pandemic. Diabetes Metab. Syndr. Clin. Res. Rev. 14 (4), 661–664. doi:10.1016/j.dsx.2020.05.011

SituationCovid (2021). SituationCovid Oculus Page. Available at: http://ocul.us/3aynIPA.

Tabatabai, S. (2020). COVID-19 Impact and Virtual Medical Education. J. Adv. Med. Educ. Prof. 8 (3), 140–143. doi:10.30476/jamp.2020.86070.1213

Usoh, M., Catena, E., Arman, S., and Slater, M. (2006). “Using Presence Questionnaires in Reality” [Internet]. Cambridge: MIT Press. Available at: https://www.mitpressjournals.org/doix/abs/10.1162/105474600566989.

Vandenberg, O., Martiny, D., Rochas, O., van Belkum, A., and Kozlakidis, Z. (2020). Considerations for Diagnostic COVID-19 Tests. Nat. Rev. Microbiol., 1–13. doi:10.1038/s41579-020-00461-z

Verbeek, J. H., Rajamaki, B., Ijaz, S., Sauni, R., Toomey, E., Blackwood, B., et al. (2020). Personal Protective Equipment for Preventing Highly Infectious Diseases Due to Exposure to Contaminated Body Fluids in Healthcare Staff. Cochrane Database Syst. Rev. 5, CD011621. doi:10.1002/14651858.CD011621.pub5

Wayne, D. B., Green, M., and Neilson, E. G. (2020). Medical Education in the Time of COVID-19. American Association for the Advancement of Science.

World Health Organization (2009). WHO | WHO Guidelines on Hand hygiene in Health Care [Internet]. WHO. Available at: https://www.who.int/gpsc/5may/tools/9789241597906/en/.

Wyllie, A. L., Fournier, J., Casanovas-Massana, A., Campbell, M., Tokuyama, M., Vijayakumar, P., et al. (2020). Saliva or Nasopharyngeal Swab Specimens for Detection of SARS-CoV-2. N. Engl. J. Med. 383 (13), 1283–1286. doi:10.1056/nejmc2016359

Zhang, T., Booth, R., Jean-Louis, R., Chan, R., Yeung, A., Gratzer, D., et al. (2020). A Primer on Usability Assessment Approaches for Health-Related Applications of Virtual Reality. JMIR Serious Games 8 (4), e18153. doi:10.2196/18153

Keywords: virtual reality, medical training, COVID-19, virtual reality application, effective training, swab testing

Citation: Zikas P, Kateros S, Lydatakis N, Kentros M, Geronikolakis E, Kamarianakis M, Evangelou G, Kartsonaki I, Apostolou A, Birrenbach T, Exadaktylos AK, Sauter TC and Papagiannakis G (2022) Virtual Reality Medical Training for COVID-19 Swab Testing and Proper Handling of Personal Protective Equipment: Development and Usability. Front. Virtual Real. 2:740197. doi: 10.3389/frvir.2021.740197

Received: 12 July 2021; Accepted: 22 December 2021;

Published: 04 February 2022.

Edited by:

Georgina Cardenas-Lopez, National Autonomous University of Mexico, Mexico

Reviewed by:

Vangelis Lympouridis, University of Southern California, United States

David Alejandro Pérez Ferrara, National Autonomous University of Mexico, Mexico

Daniel Jeronimo González Sánchez, National Autonomous University of Mexico, Mexico

Copyright © 2022 Zikas, Kateros, Lydatakis, Kentros, Geronikolakis, Kamarianakis, Evangelou, Kartsonaki, Apostolou, Birrenbach, Exadaktylos, Sauter and Papagiannakis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Manos Kamarianakis, kamarianakis@uoc.gr
