- ¹Department of Psychology, University of Salford, Salford, United Kingdom

- ²Aston Laboratory for Immersive Virtual Environments, Aston Institute of Health and Neurodevelopment, Aston University, Birmingham, United Kingdom

- ³School of Psychology, University College Dublin, Dublin, Ireland

Introduction

Joint attention, defined as the coordination of orienting between two or more people toward an object, person or event (Billeci et al., 2017; Mundy and Newell, 2007; Scaife and Bruner, 1975), is one of the essential mechanisms of social interaction (Chevalier et al., 2020). Joint attention can be signalled through both verbal and visual cues, with gaze direction providing an important visual signal of attention, and thus of joint attention (Kleinke, 1986; Emery, 2000; Land and Tatler, 2009). It is therefore crucial to understand how eye gaze is used as part of joint attention in social scenarios. Importantly, the emergence of virtual agents and social robots offers an opportunity both to better understand these processes as they may occur in human-to-human interaction and to enable realistic human-to-agent/robot interaction.

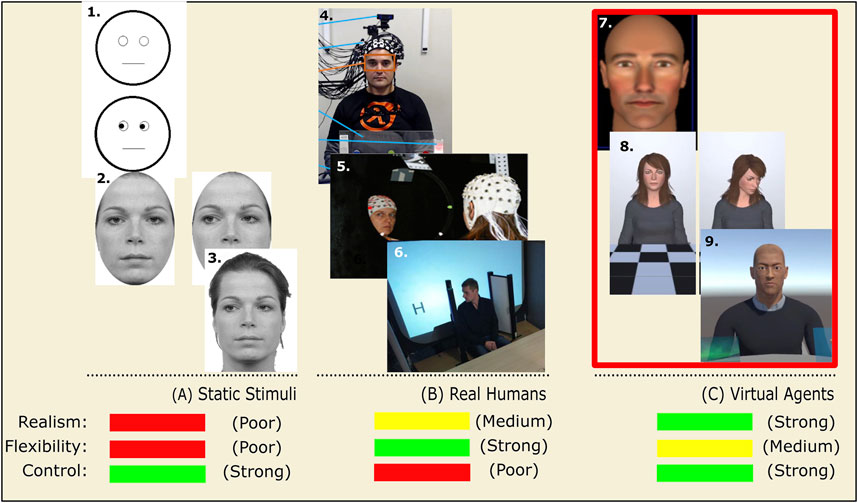

Traditionally, the influence of gaze on attentional orienting has been studied in cueing paradigms based on Posner’s original spatial cueing task (e.g., Posner, 1980). Studies using simple, static face stimuli, e.g., photographs of real faces or drawings of schematic faces (see Box 1, panel A), show that gaze provides a very strong attentional cue (Frischen et al., 2007). Although the basic cueing effect has also been replicated with non-social cues such as arrows or direction words (Hommel et al., 2001; Ristic et al., 2002; Tipples, 2002, 2008), it has been argued that the strength and immediacy of gaze cueing demonstrate something special about how we respond to social information, and in particular to eye gaze (Frischen et al., 2007; Kampis and Southgate, 2020; Stephenson et al., 2021).
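In Posner-style cueing tasks, the cueing effect is conventionally expressed as the mean response time on invalid trials (target appears opposite the gazed-at location) minus the mean response time on valid trials (target appears at the gazed-at location). The sketch below illustrates that arithmetic only; the `Trial` record, its field names and the choice to analyse correct trials only are assumptions for illustration, not the procedure of any study cited here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    """One hypothetical cueing trial (field names are illustrative)."""
    cue_direction: str  # where the face looks: "left" or "right"
    target_side: str    # where the target appears: "left" or "right"
    rt_ms: float        # response time in milliseconds
    correct: bool       # whether the response was correct


def cueing_effect(trials: list[Trial]) -> float:
    """Mean RT on invalid trials minus mean RT on valid trials (correct trials only).

    A positive value means responses were faster when the target appeared
    where the face was looking, i.e. attention shifted with the gaze cue.
    """
    valid = [t.rt_ms for t in trials
             if t.correct and t.cue_direction == t.target_side]
    invalid = [t.rt_ms for t in trials
               if t.correct and t.cue_direction != t.target_side]
    if not valid or not invalid:
        raise ValueError("need at least one correct valid and one correct invalid trial")
    return mean(invalid) - mean(valid)
```

Restricting the calculation to correct trials is a common choice, but exclusion criteria such as response-time trimming differ between studies and are deliberately left out of this sketch.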

BOX 1 Examples of the range of stimuli used in the research presented in this review, rated on realism, flexibility (of movement) and experimental control. The left side begins with highly controlled but unrealistic and inflexible static face stimuli (A). Next, we show the poorly controlled but flexible and potentially realistic approach of using real humans (B); arguably, realism is weakened here by the contrived nature of the studies and environments, and further still by the experimentally necessary but conspicuous brain imaging equipment in the examples shown. Finally, on the right, dynamic virtual agents (C), whose eyes and heads can move, arguably achieve greater realism and control, as well as reasonable movement flexibility. The examples shown have either been reproduced with permission or created for this box to be representative of the stimuli used. 1. Schematic faces, e.g., Friesen and Kingstone (1998); 2. “Dynamic” photograph gaze-shift stimuli in which a direct-gaze face is presented before an averted-gaze face*, e.g., Chen et al. (2021); 3. Averted-gaze photograph stimuli*, e.g., Driver et al. (1999); 4. Human-human interaction, reproduced with permission from Dravida et al. (2020); 5. Human-human shared attention paradigm, reproduced with permission from Lachat et al. (2012); 6. Human as gaze cue, example used with permission from Cole et al. (2015); 7. Dynamic virtual agent head, reproduced with permission from Caruana et al. (2020); 8. Virtual agent as gaze cue (lab stimuli), as used in Gregory (2021); 9. Virtual agent as interaction partner with realistic gaze movement (lab stimuli), Kelly et al. (in prep.; see video youtu.be/sgrxOpYP91E).

*Face images from the Radboud Faces Database, Langner et al. (2010).

While research into gaze cueing phenomena to date has been informative, the use of simplistic and most often static face stimuli, such as photographs of real faces, drawings of schematic faces or even just eyes (see Box 1, panel A), together with the frequent use of unrealistic tasks and environments (e.g., a face image “floating” in a 2D spatial environment), has been highlighted as problematic if we truly wish to understand social processes (Gobel et al., 2015; Risko et al., 2012, 2016; Zaki and Ochsner, 2009). For example, while research in traditional 2D settings shows that participants tend to focus on the eye region of faces (Birmingham et al., 2008), research conducted in real-life settings shows participants avoiding direct eye contact (Foulsham et al., 2011; Laidlaw et al., 2011; Mansour and Kuhn, 2019). Since making eye contact and following another person’s gaze direction are crucial aspects of joint attention, it is extremely important to look for alternative approaches that better reflect real-life social interaction.

Investigating Joint Attention Using Real Humans

One obvious option for better understanding how joint attention manifests in real-world social interaction is to observe interaction between real humans. However, while observational studies can be informative, experimental studies are also needed to build theories and test hypotheses about the nature of human interaction. Indeed, a number of empirical studies have used real human interaction and, in principle, replicate known gaze cueing and joint attention effects (Cole et al., 2015; Dravida et al., 2020; Lachat et al., 2012; see Box 1, panel B). However, using real humans as interaction partners in such studies has a number of important limitations, which constrain the kinds of studies and the level of nuance possible.

First, such studies are resource heavy, requiring a confederate’s concentration and time, which limits the number of repetitions possible (trials and participants). Second, study design is limited; for example, it is impossible to make subtle, millisecond-level changes to the timing of gaze shifts, to change the identity of the gaze cue during the task, or to change other aspects of stimulus presentation. Third, experimental control is limited: real people will not perform the same action in the same way multiple times during an experimental session, and are unlikely to behave in exactly the same way across different experimental sessions. Further, they may produce many involuntary, nuanced facial expressions, such as smirking or raising their eyebrows, as well as uncontrollable microexpressions (e.g., Porter and ten Brinke, 2008; Porter et al., 2012), potentially affecting the validity of the study (e.g., Kuhlen and Brennan, 2013). Finally, when human confederates are used, participants often take part in a very unnatural “social” experience, i.e., engaging in a highly artificial task in a lab environment while being stared at by a stranger who is not communicating in a particularly natural way (see Box 1, panel B).

Therefore, while using real humans provides real-life dynamic interaction, this comes at the cost of experimental control and of the complexity of the designs that can be implemented. While others have suggested the use of social robots (e.g., Chevalier et al., 2020), here we propose that virtual agents are the optimum alternative for the experimental study of joint attention.

Investigating Joint Attention Using Virtual Agents

Virtual agents (VAs; also referred to as virtual humans or characters) are defined as computer-generated virtual reality characters with human-like appearances, in contrast to avatars, which are humanoid representations of a user in a virtual world (Pan and Hamilton, 2018). VAs offer the opportunity to conduct social interaction studies with greater realism while retaining experimental control. Importantly, social behaviours similar to those observed during real human interaction have been found during interactions with VAs (for reviews and further discussion see Bombari et al., 2015; Kothgassner and Felnhofer, 2020; Pan and Hamilton, 2018).

The use of VAs is highly cost effective, with ready-made or easily modified assets available on several platforms (e.g., Mixamo: www.mixamo.com; Sketchfab: sketchfab.com; MakeHuman: www.makehumancommunity.org). These VA assets can then be manipulated for the purposes of a study using freely available software, such as Blender for animation and Unity3D for experimental development and control. VAs can be presented in fully immersive VR through head-mounted displays, in augmented reality, or through basic computer setups, each of which has advantages and disadvantages in terms of expense, portability and immersion (Pan and Hamilton, 2018). Importantly, even using VA-based stimuli (e.g., dynamic video recordings) in traditional screen-based studies offers considerably more social nuance and realism than static images of disembodied heads (e.g., Gregory, 2021; see Box 1, panel C). Indeed, gaze is associated with an intention to act (Land and Tatler, 2009), and it is difficult to imagine a static head performing a goal-directed action.

When using VAs as social partners, researchers may need to consider factors such as the realism of the VAs, including the issue of the “uncanny valley”, and whether the participant perceives the VA as a social partner at all. It may be argued that people will not interact with VAs in the same way as they do with real humans. However, research indicates that this concern is unfounded (Bombari et al., 2015; Kothgassner and Felnhofer, 2020; Pan and Hamilton, 2018); indeed, research in moral psychology demonstrates strong, realistic responses in VR (Francis et al., 2016; Francis et al., 2017; Niforatos et al., 2020).

The “uncanny valley” refers to our loss of affinity for computer-generated agents when they fail to attain human-like realism (Mori et al., 2012). This loss of affinity can serve as a reminder that the VA is not real, limiting the naturalness of the interaction. However, it is important to note that the same arguments of unnaturalness can also be applied to some studies using real humans, as discussed above and shown in Box 1, panel B. Therefore, even rudimentary VAs can be beneficial when investigating social interaction. Indeed, research suggests that even the most basic VA can be successful if its eyes are communicative, with responses to gaze in human-to-agent interactions being comparable to those in human-human interaction (Ruhland et al., 2015).

Joint attention as initiated through eye gaze is therefore an area ripe for the use of VAs, because it is generally investigated in isolation from verbal cues and from body movements, which can affect responses independently (e.g., Mazzarella et al., 2012); this mitigates concerns regarding the complexity of producing realistic speech and action (Pan and Hamilton, 2018). Importantly, for joint attention research, VAs with fully animated eyes can mimic the dynamic behaviour of human gaze with high precision (e.g., Ruhland et al., 2015). Similar to whole-body motion capture for animation, real-time and/or recorded human eye movements can be applied to VAs, e.g., by using head-mounted displays (HMDs) with built-in eye tracking such as the HTC Vive Pro Eye (see video: https://youtu.be/sgrxOpYP91E). This creates the impression of a highly naturalistic interaction while retaining the key aspects of experimental control, going significantly beyond traditional gaze cueing studies in which gaze is presented statically in the desired directions (see Box 1, panel A). VAs also allow investigation of social interaction with real human gaze sequences, as well as the possibility of a closed-loop system in which the VA’s behaviour is determined by the user’s gaze (Kelly et al., 2020). This allows fundamental investigations into how a person responds when their gaze is followed.
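To make the closed-loop idea concrete, the sketch below shows one possible gaze-contingent rule in which the VA follows the user’s gaze. It is a hypothetical illustration, not the system of Kelly et al. (2020): the class name, the area-of-interest (AOI) labels, the object set and the 300 ms dwell threshold are all assumptions. It also assumes that each eye-tracking sample has already been mapped to an AOI label, a step that in practice would be handled by the experiment engine.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass
class GazeFollowingAgent:
    """Hypothetical closed-loop rule: the agent follows the user's gaze.

    update() is called once per eye-tracking sample, with the sample already
    mapped to an AOI label such as "agent_face" or "cup". It returns the AOI
    the agent should now look at ("user" means eye contact).
    """
    objects: FrozenSet[str] = frozenset({"cup", "book"})  # assumed object AOIs
    dwell_threshold_ms: float = 300.0  # assumed dwell time before the agent follows
    agent_gaze: str = "user"           # the agent starts by looking at the user
    _current_aoi: Optional[str] = None
    _dwell_start_ms: float = 0.0

    def update(self, t_ms: float, user_aoi: str) -> str:
        if user_aoi != self._current_aoi:
            # The user's gaze has moved to a new AOI: restart the dwell timer.
            self._current_aoi = user_aoi
            self._dwell_start_ms = t_ms
        dwell_ms = t_ms - self._dwell_start_ms
        if user_aoi in self.objects and dwell_ms >= self.dwell_threshold_ms:
            # Sustained fixation on an object: the agent looks at the same object.
            self.agent_gaze = user_aoi
        elif user_aoi == "agent_face":
            # The user looks back at the agent: the agent re-establishes eye contact.
            self.agent_gaze = "user"
        # Any other AOI (e.g. "elsewhere") leaves the agent's gaze unchanged.
        return self.agent_gaze
```

The dwell threshold is there so that the agent does not chase every brief glance; because the rule is explicit, its parameters (threshold, follow latency, which objects count) can be varied systematically across conditions, which is exactly the kind of control a human confederate cannot offer.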

Going beyond eye gaze, VAs also enable the consistent use of age-matched stimuli, especially when investigating child development or aging, where it may be difficult to recruit age-matched confederates. Age matching, as well as controlled age mismatching, can be vital for understanding changes in joint attention and other aspects of social cognition across the lifespan (e.g., Slessor et al., 2010), and for investigating differences in children with learning difficulties related to ADHD and autism diagnoses (e.g., Bradley and Newbutt, 2018; Jyoti et al., 2019). VAs also facilitate investigations of cueing by a controlled variety of different “people”, as well as of more general multiagent joint attention effects (Capozzi et al., 2015; Capozzi et al., 2018). Indeed, VAs allow full control over the distance between partners and over their gaze behaviour while permitting a natural configuration of people in a room, which is difficult to achieve with multiple human confederates.

Virtual scenarios also enable manipulation of the environment in which the interaction occurs and of the stimuli presented as part of the task. This allows investigation of how interactions may differ between indoor and outdoor environments, or between formal learning environments such as a classroom and less formal environments. Future investigations of joint attention in more dynamic, interactive VR scenarios could therefore also examine how participants explore virtual worlds with their virtual social partners.

Conclusion

The use of virtual reality and virtual agents (VAs) in studying human interaction gives researchers a high level of experimental control while allowing participants to engage naturally in a realistic experimental environment. This enables researchers to study the nuances of joint attention, and of social interaction more generally, without the common pitfalls of either simplistic static face stimuli or studies using real humans. Compared with human-to-human interaction, the functionality and control offered by VAs compensate for any lack of realism that may be experienced. Arguably, it is more natural to interact with a VA presented in a realistic VR environment than with a real human in unusual experimental settings or headgear (see Box 1). Further, although social robots have been proposed as an ideal alternative to human-to-human interaction, particularly in terms of control and realism of eye gaze interaction (Chevalier et al., 2020), VAs, especially when presented in fully immersive VR environments, are more versatile, more cost effective and potentially more realistic than social robots. VAs offer virtually unlimited options for quick adjustments to appearance (e.g., age), environment and kinematics, as well as much greater control over the experimental setting. Given the accelerating emergence of the so-called “Metaverse”, we therefore argue that future breakthroughs in understanding human social interaction will likely come from investigations using VAs that are believed to have genuine agency in a task. VAs that can react to a participant’s behaviour, and in particular to their gaze behaviour, open the door to countless experiments that can provide stronger insights into how joint attention affects our cognition.

Author Contributions

SG and CK contributed equally to this work and share first authorship. KK is the senior author. All authors worked together on conceiving and drafting the manuscript, collaborating on a shared Google document. All authors read and approved the submitted version.

Funding

This work was supported by a Leverhulme Trust early career fellowship (ECF-2018-130) awarded to S.E.A. Gregory.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Billeci, L., Narzisi, A., Tonacci, A., Sbriscia-Fioretti, B., Serasini, L., Fulceri, F., et al. (2017). An Integrated EEG and Eye-Tracking Approach for the Study of Responding and Initiating Joint Attention in Autism Spectrum Disorders. Sci. Rep. 7 (1), 1–13. doi:10.1038/s41598-017-13053-4

Birmingham, E., Bischof, W. F., and Kingstone, A. (2008). Gaze Selection in Complex Social Scenes. Vis. Cogn. 16 (2), 341–355. doi:10.1080/13506280701434532

Bombari, D., Schmid Mast, M., Canadas, E., and Bachmann, M. (2015). Studying Social Interactions through Immersive Virtual Environment Technology: Virtues, Pitfalls, and Future Challenges. Front. Psychol. 6 (June), 1–11. doi:10.3389/fpsyg.2015.00869

Bradley, R., and Newbutt, N. (2018). Autism and Virtual Reality Head-Mounted Displays: a State of the Art Systematic Review. Jet 12 (3), 101–113. doi:10.1108/JET-01-2018-0004

Capozzi, F., Bayliss, A. P., Elena, M. R., and Becchio, C. (2015). One Is Not Enough: Group Size Modulates Social Gaze-Induced Object Desirability Effects. Psychon. Bull. Rev. 22 (3), 850–855. doi:10.3758/s13423-014-0717-z

Capozzi, F., Bayliss, A. P., and Ristic, J. (2018). Gaze Following in Multiagent Contexts: Evidence for a Quorum-like Principle. Psychon. Bull. Rev. 25 (6), 2260–2266. doi:10.3758/s13423-018-1464-3

Caruana, N., Alhasan, A., Wagner, K., Kaplan, D. M., Woolgar, A., and McArthur, G. (2020). The Effect of Non-communicative Eye Movements on Joint Attention. Q. J. Exp. Psychol. 73 (12), 2389–2402. doi:10.1177/1747021820945604

Chen, Z., McCrackin, S. D., Morgan, A., and Itier, R. J. (2021). The Gaze Cueing Effect and its Enhancement by Facial Expressions Are Impacted by Task Demands: Direct Comparison of Target Localization and Discrimination Tasks. Front. Psychol. 12 (March), 696. doi:10.3389/fpsyg.2021.618606

Chevalier, P., Kompatsiari, K., Ciardo, F., and Wykowska, A. (2020). Examining Joint Attention with the Use of Humanoid Robots-A New Approach to Study Fundamental Mechanisms of Social Cognition. Psychon. Bull. Rev. 27 (2), 217–236. doi:10.3758/s13423-019-01689-4

Cole, G. G., Smith, D. T., and Atkinson, M. A. (2015). Mental State Attribution and the Gaze Cueing Effect. Atten Percept Psychophys 77 (4), 1105–1115. doi:10.3758/s13414-014-0780-6

Dravida, S., Noah, J. A., Zhang, X., and Hirsch, J. (2020). Joint Attention during Live Person-To-Person Contact Activates rTPJ, Including a Sub-component Associated with Spontaneous Eye-To-Eye Contact. Front. Hum. Neurosci. 14. doi:10.3389/fnhum.2020.00201

Driver, J., Davis, G., Ricciardelli, P., Kidd, P., Maxwell, E., and Baron-Cohen, S. (1999). Gaze Perception Triggers Reflexive Visuospatial Orienting. Vis. Cogn. 6 (5), 509–540. doi:10.1080/135062899394920

Emery, N. J. (2000). The Eyes Have It: The Neuroethology, Function and Evolution of Social Gaze. Neurosci. Biobehavioral Rev. 24 (6), 581–604. doi:10.1016/S0149-7634(00)00025-7

Foulsham, T., Walker, E., and Kingstone, A. (2011). The where, what and when of Gaze Allocation in the Lab and the Natural Environment. Vis. Res. 51 (17), 1920–1931. doi:10.1016/j.visres.2011.07.002

Francis, K. B., Howard, C., Howard, I. S., Gummerum, M., Ganis, G., Anderson, G., et al. (2016). Virtual Morality: Transitioning from Moral Judgment to Moral Action? PLoS ONE 11 (10), e0164374. doi:10.1371/journal.pone.0164374

Francis, K. B., Terbeck, S., Briazu, R. A., Haines, A., Gummerum, M., Ganis, G., et al. (2017). Simulating Moral Actions: An Investigation of Personal Force in Virtual Moral Dilemmas. Sci. Rep. 7 (1), 1–11. doi:10.1038/s41598-017-13909-9

Friesen, C. K., and Kingstone, A. (1998). The Eyes Have It! Reflexive Orienting Is Triggered by Nonpredictive Gaze. Psychon. Bull. Rev. 5 (3), 490–495. doi:10.3758/BF03208827

Frischen, A., Bayliss, A. P., and Tipper, S. P. (2007). Gaze Cueing of Attention: Visual Attention, Social Cognition, and Individual Differences. Psychol. Bull. 133 (4), 694–724. doi:10.1037/0033-2909.133.4.694

Gobel, M. S., Kim, H. S., and Richardson, D. C. (2015). The Dual Function of Social Gaze. Cognition 136, 359–364. doi:10.1016/j.cognition.2014.11.040

Gregory, S. E. A. (2021). Investigating Facilitatory versus Inhibitory Effects of Dynamic Social and Non-social Cues on Attention in a Realistic Space. Psychol. Res. doi:10.1007/s00426-021-01574-7

Hommel, B., Pratt, J., Colzato, L., and Godijn, R. (2001). Symbolic Control of Visual Attention. Psychol. Sci. 12 (5), 360–365. doi:10.1111/1467-9280.00367

Jyoti, V., Gupta, S., and Lahiri, U. (2019). “Virtual Reality Based Avatar-Mediated Joint Attention Task for Children with Autism: Implication on Performance and Physiology,” in 2019 10th International Conference on Computing, Communication and Networking Technologies, ICCCNT, IIT KANPUR, July 2019, IEEE, 1–7. doi:10.1109/ICCCNT45670.2019.8944467

Kampis, D., and Southgate, V. (2020). Altercentric Cognition: How Others Influence Our Cognitive Processing. Trends Cogn. Sci. 24, 945–959. doi:10.1016/j.tics.2020.09.003

Kelly, C., Bernardet, U., and Kessler, K. (2020). “A Neuro-VR Toolbox for Assessment and Intervention in Autism: Brain Responses to Non-verbal, Gaze and Proxemics Behaviour in Virtual Humans,” in 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), March 2020, United Kingdom, (IEEE), 565–566. doi:10.1109/vrw50115.2020.00134

Kleinke, C. L. (1986). Gaze and Eye Contact: A Research Review. Psychol. Bull. 100 (1), 78–100. doi:10.1037/0033-2909.100.1.78

Kothgassner, O. D., and Felnhofer, A. (2020). Does Virtual Reality Help to Cut the Gordian Knot between Ecological Validity and Experimental Control? Ann. Int. Commun. Assoc. 44, 210–218. Routledge. doi:10.1080/23808985.2020.1792790

Kuhlen, A. K., and Brennan, S. E. (2013). Language in Dialogue: When Confederates Might Be Hazardous to Your Data. Psychon. Bull. Rev. 20 (1), 54–72. doi:10.3758/s13423-012-0341-8

Lachat, F., Conty, L., Hugueville, L., and George, N. (2012). Gaze Cueing Effect in a Face-To-Face Situation. J. Nonverbal Behav. 36 (3), 177–190. doi:10.1007/s10919-012-0133-x

Laidlaw, K. E. W., Foulsham, T., Kuhn, G., and Kingstone, A. (2011). Potential Social Interactions Are Important to Social Attention. Proc. Natl. Acad. Sci. 108 (14), 5548–5553. doi:10.1073/pnas.1017022108

Land, M., and Tatler, B. W. (2009). Looking and Acting: Vision and Eye Movements in Natural Behaviour. Oxford University Press.

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H. J., Hawk, S. T., and van Knippenberg, A. (2010). Presentation and Validation of the Radboud Faces Database. Cogn. Emot. 24 (8), 1377–1388. doi:10.1080/02699930903485076

Mansour, H., and Kuhn, G. (2019). Studying "natural" Eye Movements in an "unnatural" Social Environment: The Influence of Social Activity, Framing, and Sub-clinical Traits on Gaze Aversion. Q. J. Exp. Psychol. 72 (8), 1913–1925. doi:10.1177/1747021818819094

Mazzarella, E., Hamilton, A., Trojano, L., Mastromauro, B., and Conson, M. (2012). Observation of Another's Action but Not Eye Gaze Triggers Allocentric Visual Perspective. Q. J. Exp. Psychol. 65 (12), 2447–2460. doi:10.1080/17470218.2012.697905

Mori, M., MacDorman, K., and Kageki, N. (2012). The Uncanny Valley [From the Field]. IEEE Robot. Automat. Mag. 19 (2), 98–100. doi:10.1109/MRA.2012.2192811

Mundy, P., and Newell, L. (2007). Attention, Joint Attention, and Social Cognition. Curr. Dir. Psychol. Sci. 16 (5), 269–274. doi:10.1111/j.1467-8721.2007.00518.x

Niforatos, E., Palma, A., Gluszny, R., Vourvopoulos, A., and Liarokapis, F. (2020). Would You Do It?: Enacting Moral Dilemmas in Virtual Reality for Understanding Ethical Decision-Making. Conf. Hum. Factors Comput. Syst. - Proc. 1–12. doi:10.1145/3313831.3376788

Pan, X., and Hamilton, A. F. C. (2018). Why and How to Use Virtual Reality to Study Human Social Interaction: The Challenges of Exploring a New Research Landscape. Br. J. Psychol. 109 (3), 395–417. doi:10.1111/bjop.12290

Porter, S., and ten Brinke, L. (2008). Reading between the Lies. Psychol. Sci. 19 (5), 508–514. doi:10.1111/j.1467-9280.2008.02116.x

Porter, S., ten Brinke, L., and Wallace, B. (2012). Secrets and Lies: Involuntary Leakage in Deceptive Facial Expressions as a Function of Emotional Intensity. J. Nonverbal Behav. 36 (1), 23–37. doi:10.1007/s10919-011-0120-7

Posner, M. I. (1980). Orienting of Attention. Q. J. Exp. Psychol. 32 (1), 3–25. doi:10.1080/00335558008248231

Risko, E. F., Laidlaw, K., Freeth, M., Foulsham, T., and Kingstone, A. (2012). Social Attention with Real versus Reel Stimuli: toward an Empirical Approach to Concerns about Ecological Validity. Front. Hum. Neurosci. 6 (May), 1–11. doi:10.3389/fnhum.2012.00143

Risko, E. F., Richardson, D. C., and Kingstone, A. (2016). Breaking the Fourth Wall of Cognitive Science. Curr. Dir. Psychol. Sci. 25 (1), 70–74. doi:10.1177/0963721415617806

Ristic, J., Friesen, C. K., and Kingstone, A. (2002). Are Eyes Special? It Depends on How You Look at It. Psychon. Bull. Rev. 9 (3), 507–513. doi:10.3758/BF03196306

Ruhland, K., Peters, C. E., Andrist, S., Badler, J. B., Badler, N. I., Gleicher, M., et al. (2015). A Review of Eye Gaze in Virtual Agents, Social Robotics and HCI: Behaviour Generation, User Interaction and Perception. Computer Graphics Forum 34 (6), 299–326. doi:10.1111/cgf.12603

Scaife, M., and Bruner, J. S. (1975). The Capacity for Joint Visual Attention in the Infant. Nature 253 (5489), 265–266. doi:10.1038/253265a0

Slessor, G., Laird, G., Phillips, L. H., Bull, R., and Filippou, D. (2010). Age-related Differences in Gaze Following: Does the Age of the Face Matter? Journals Gerontol. Ser. B: Psychol. Sci. Soc. Sci. 65B (5), 536–541. doi:10.1093/geronb/gbq038

Stephenson, L. J., Edwards, S. G., and Bayliss, A. P. (2021). From Gaze Perception to Social Cognition: The Shared-Attention System. Perspect. Psychol. Sci. 16, 553–576. doi:10.1177/1745691620953773

Tipples, J. (2002). Eye Gaze Is Not Unique: Automatic Orienting in Response to Uninformative Arrows. Psychon. Bull. Rev. 9 (2), 314–318. doi:10.3758/BF03196287

Tipples, J. (2008). Orienting to Counterpredictive Gaze and Arrow Cues. Perception & Psychophysics 70 (1), 77–87. doi:10.3758/PP.70.1.77

Keywords: joint attention, virtual agents, dynamic gaze, avatars, social interaction, ecological validity, virtual reality, social cognition

Citation: Gregory SEA, Kelly CL and Kessler K (2021) Look Into my “Virtual” Eyes: What Dynamic Virtual Agents add to the Realistic Study of Joint Attention. Front. Virtual Real. 2:798899. doi: 10.3389/frvir.2021.798899

Received: 20 October 2021; Accepted: 16 November 2021;

Published: 16 December 2021.

Edited by:

Evelien Heyselaar, Radboud University, Netherlands

Reviewed by:

Hiroshi Ashida, Kyoto University, Japan

Copyright © 2021 Gregory, Kelly and Kessler. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Samantha E. A. Gregory, s.e.a.gregory@salford.ac.uk

†These authors have contributed equally to this work and share first authorship
