- School of Behavioral and Brain Sciences, Center for BrainHealth, The University of Texas at Dallas, Dallas, TX, United States

Low immersion virtual reality (LIVR) is a computer-generated, three-dimensional virtual environment that allows for authentic social interactions through a personal avatar, or digital representation of oneself. Lab-based delivery of LIVR social skills interventions has been shown to support social learning through controlled, targeted practice. Recent advances in remote technology may allow LIVR-based social skills training to overcome accessibility barriers by delivering it to youth in their homes. This study investigated the impact of 10 h of Charisma™ Virtual Social Training (CHARISMA-VST), a LIVR-based intervention, on social skills in children and adolescents who struggle socially, delivered via either in-person or remote training protocols. Specifically, the aims examined the impact of both training location (in-person vs. remote access) and diagnosis (parent report of autism spectrum disorder (ASD) diagnosis vs. parent report of another, non-ASD diagnosis) on objective measures of social skill change following CHARISMA-VST. Researchers delivered CHARISMA-VST via Charisma 1.0, a customized virtual gaming environment. Sixty-seven participants (49 males, 18 females) aged 9–17 years with parent-reported social challenges completed ten 1-h CHARISMA-VST sessions, during which nine social cognitive strategies were taught and then practiced within a LIVR environment with interspersed social coaching. Four social cognitive domains were measured before and after training: emotion recognition, social inferencing, social attribution, and social self-schemata. Results revealed improvements in all four domains, with medium to large effect sizes, following CHARISMA-VST.
There was no moderating effect of training location on emotion recognition, social inferencing, or social self-schemata, suggesting comparable gains whether participants accessed the technology in their own homes or from a school or specialty center. There was no moderating effect of ASD versus non-ASD diagnosis on performance measures, suggesting CHARISMA-VST may be effective in improving social skills in individuals beyond its originally intended population of individuals with ASD. These encouraging findings from this pilot intervention study provide some of the first evidence that new virtual technology tools, as exemplified by CHARISMA-VST, may improve one of the most important aspects of human behavior, social skills and human connectedness, in youth with a range of social competency challenges.

1 Introduction

Virtual reality (VR) offers a unique gateway to optimizing the intersection of immersive technology and social skill training for pediatric populations. Commonly defined as a computer-generated, three-dimensional virtual environment, low immersion virtual reality (LIVR) promotes social and emotional wellness, engaged learning, and targeted practice of pivotal social skills (Kriz, 2003; Miller and Bugnariu, 2016; Freeman et al., 2017; Kaplan-Rakowski and Gruber, 2019). Authentic immersive design is centered on the idea that a simulation must capture the basic truth of what it represents and evoke a sense of realism that can enable individuals to share space, experiences, thoughts, and emotion (Jerald, 2015; Parsons, 2016; Jacobson et al., 2017; Scavarelli et al., 2020). Researchers have recently considered the quality and utilization of LIVR to reach individuals with autism spectrum disorders (ASD) and found moderate evidence for its effectiveness using a traditional laboratory-based delivery model to train skills such as emotion recognition, social communication, and cognition (Grinberg, 2018; Mesa-Gresa et al., 2018; Karami et al., 2021; Farashi et al., 2022; Zhang et al., 2022). Furthermore, VR applications for pediatric populations not diagnosed with ASD, but struggling with engagement have also shown positive effects on social behavior (Grinberg et al., 2014; Ip et al., 2018; Rus-Calafell et al., 2018; Tan et al., 2018; Nijman et al., 2019).

Foundational principles established in early VR work by Greenleaf and Tovar (1994) suggest a highly adaptable interface that harnesses a person’s strongest ability (what the user can do and control best) within a familiar environment. In contrast to LIVR, high immersion technology such as VR head-mounted displays (HMDs) also provides an avenue for social skills training; however, developing and deploying HMD programming requires a high level of technical proficiency as well as adult supervision of the youth user. Additionally, although fully immersive environments are becoming more mainstream in the gaming industry, discomfort is a concern for HMD systems, as headsets (e.g., Oculus Rift, HTC VIVE) have historically been associated with “cybersickness”, characterized by eye strain, headache, dizziness, and/or nausea (Caserman et al., 2021). As such, the negative sensory, ocular, and physical effects that prolonged VR exposure may have on children under 13 require careful consideration, making it difficult to deliver HMD programming across multiple pediatric age groups (Malihi et al., 2020; Kaimara et al., 2022). LIVR provides the opportunity for pediatric users to experience the therapeutic benefits of VR programming without the added risks of high immersion HMD systems.

Remote access is the act of connecting to networks or computers at another location via the internet. In a post-COVID-19 pandemic world, requests for and access to remotely delivered clinical services are on the rise. Remote access may entail using a desktop or portable computer combined with standard audio-video teleconferencing to offer in-home accessibility for pediatric populations, and it can promote an engaging and accessible clinical environment for both the provider and the client (Dechsling et al., 2021; Pandey and Vaughn, 2021). A recent meta-analysis of remotely delivered psychosocial services in adult populations concluded that being physically present with a client does not appear essential to generating positive outcomes, as remote treatment effects were largely equivalent to those of in-person interventions (Batastini et al., 2021). However, further research on remote treatment effects in pediatric populations is warranted.

Computer-based LIVR social training programs may broaden opportunities to provide virtual, first-person, and gamified training experiences that, paired with remote clinical services, make interventions more accessible for youth. Prior work by our research group at the Center for BrainHealth using computer-generated 3D LIVR gaming technology suggests positive effects of virtual reality social cognition training on social skills for children and adolescents with both ASD and non-ASD conditions (Didehbani et al., 2016; Johnson et al., 2021). This multi-user virtual environment has been used to facilitate non-scripted social interaction role-play between clinicians, who act as “peer” avatars, and individuals with social deficits. Recent advancements in our LIVR include features that may deepen the sense of immersion and, through remote access, lower the technology barrier. As a result, updated LIVR software, Charisma 1.0, and a related virtual reality social cognition training were designed for use in this study. Charisma™ Virtual Social Training (CHARISMA-VST) is a personalized, avatar-driven 10-session training that allows participants to engage in an authentic virtual experience, combining social cognitive strategy training, strength-based coaching, and real-time practice in our LIVR environment.

Given the promise of virtual technology for delivering social skills training remotely, the purpose of this study was to 1) measure changes in social skills in youth with social difficulties following a 10-session CHARISMA-VST on measures of emotion recognition, social inferencing, social attribution, and social self-schemata, and 2) examine the impact of training location (in-person vs. remote access) and diagnosis (parent-reported primary diagnosis of ASD vs. parent-reported non-ASD diagnosis) on social skill change following CHARISMA-VST.

We hypothesized that children with social difficulties would demonstrate significant improvement following CHARISMA-VST in emotion recognition, social inferencing, and social attribution, consistent with our previous laboratory-based pediatric virtual reality social cognition studies (Didehbani et al., 2016; Johnson et al., 2021). Moreover, the literature suggests a positive effect of social cognitive training on the formation of social schemata (Markus, 1997); therefore, we hypothesized a significant improvement in social self-schemata following CHARISMA-VST. Additionally, we hypothesized that changes in the social cognition outcome measures would not differ significantly between in-person and remote delivery, because our LIVR technology advancements allow in-person and remote participants to complete study activities in a similar manner regardless of training location. Finally, because past versions of our virtual reality social cognition training yielded positive effects on social skills outcomes for youth with and without an ASD diagnosis (Johnson et al., 2021), we hypothesized that changes in the social cognition outcome measures would not differ significantly between ASD and non-ASD participants.

2 Methods and materials

2.1 Participants

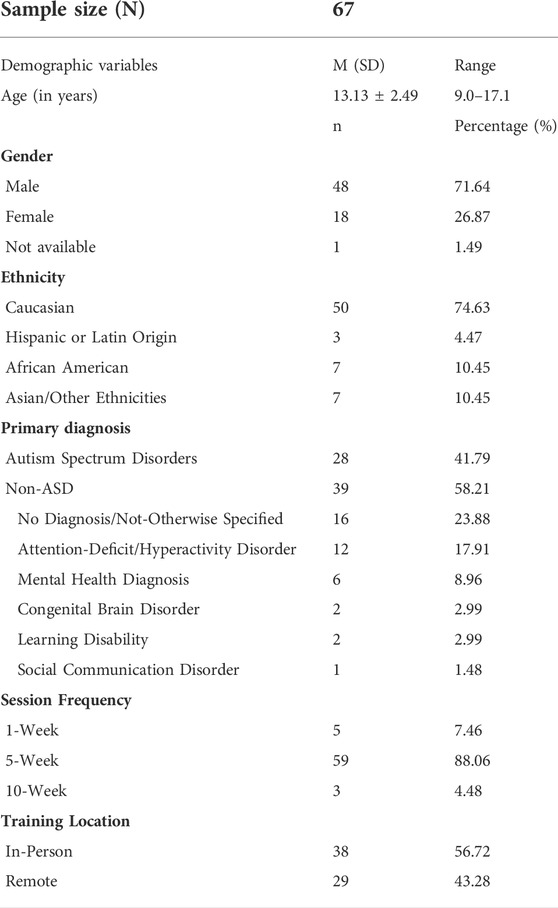

Sixty-seven pediatric participants (49 males, 18 females) took part in the CHARISMA-VST study. Participants were aged 9 to 17 years (M = 13.13, SD = 2.49) and received the 10-session CHARISMA-VST. Research was conducted in accordance with the standards of The University of Texas at Dallas Institutional Review Board, number 16–49. Participants were recruited by word of mouth or through online advertisement. A parent or guardian indicated interest by completing an inquiry form on the Center for BrainHealth webpage and then completed a phone screening with a research clinician to confirm eligibility for the training (i.e., age, ability to speak English in phrases of 4–5 words, and ability to follow two-step directions). All parents and participants were informed about the study protocol before written informed consent and assent were obtained. Upon enrollment, parents also selected the delivery location of CHARISMA-VST, which was delivered either 1) on-site, at the Center for BrainHealth or the participant’s school using an assigned computer, or 2) remotely, from the participant’s own home using a personal computer. Because the training was non-diagnostic, researchers relied on parent report of primary and secondary diagnoses (or the absence of a diagnosis) collected at intake. Some parents elected to provide copies of reports (e.g., neuropsychological or school-based assessments, developmental pediatrician reports) confirming the diagnosis. Within the sample, parents of 28 participants reported a primary diagnosis of ASD, while parents of the remaining 39 reported social challenges without an ASD diagnosis.
The non-ASD group included other diagnoses, such as attention-deficit/hyperactivity disorder (ADHD), learning disability, congenital brain disorder, social communication disorder, and mental health diagnoses, as well as no diagnosis/not otherwise specified. See Table 1 for additional participant demographic information.

2.2 Charisma 1.0 platform

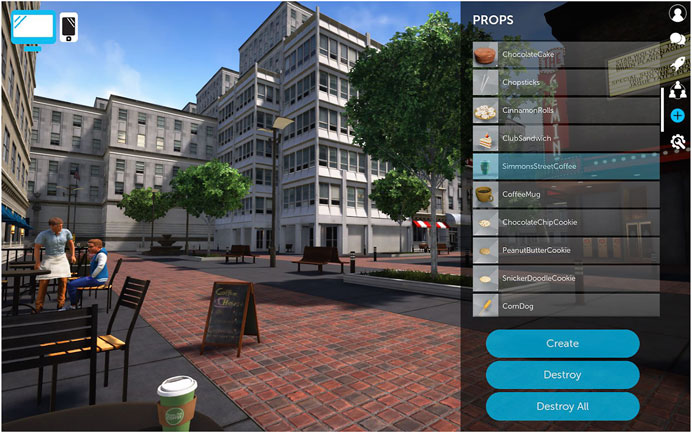

Building on the successes of the LIVR prototype used in previous iterations of our virtual reality social cognition training, Charisma 1.0 is a game-based virtual environment designed to facilitate social training between a research clinician and a participant. Charisma 1.0, which includes a software launcher to streamline installs, updates, and feature enhancements, is built on Unreal Engine 4 and packaged for Windows 10. Technology advancements include 1) an avatar creator that provided additional options for customization, 2) an open virtual world with research clinician-driven fast-travel, 3) a prop spawning panel that allowed research clinicians and participants to engage in more interactive and immersive scenarios, and 4) cloud-based virtual machines that offered participants remote access to Charisma 1.0.

The customized avatar creator allows users to build an avatar as unique as they are. Research clinicians and participants select desired facial features, height, skin tone, hair color and style, clothing, and accessories such as hats, jewelry, gloves, and shoes (Figure 1). This feature gave users more agency in their self-representation in the virtual world. The avatar creator also enabled research clinicians to create a library of “faux friend” avatars that could be modified and adapted to more fully represent participants’ peer group and community. Research clinicians drive themed interactions and select from a variety of avatars to portray differing affective characteristics, such as sociable peers or nefarious strangers. Embodied avatars with distinct personality traits and fluctuating conversational styles aim to create a sense of realism and familiarity. Research clinicians further customized this library with the voice manipulation software MorphVox, which allowed them to modify the pitch, timbre, and quality of their voice. When a clinician role-played as another persona/avatar, the voice the participant heard was appropriate for the gender and age of that avatar.

FIGURE 1. Charisma 1.0 avatar creator. Participants have the option to customize their avatar within the virtual world to create a sense of authenticity.

Charisma 1.0’s open virtual world is a sprawling city center with multiple environments and props that facilitate several types of social scenarios, ranging from public interactions, such as buying coffee at a café, to relational one-on-one interactions, such as playdates or get-togethers at the community movie theater (Figure 2). The open world also includes a school, an apartment building with a manager’s office, two apartments, and an outdoor central city square. This format allowed users to transition seamlessly from one scenario and setting to the next. Because the world is open, research clinicians needed a quick and efficient way to traverse it, so fast-travel, the ability to teleport from one location to another, was implemented. Fast-travel allowed research clinicians to teleport themselves alone or together with the participant. This feature supported transitions between “faux friend” avatars and served as a tool for supporting participant navigation as needed.

FIGURE 2. The LIVR open world and additional prop features were designed to facilitate dynamic interactions.

Research clinicians could dynamically contextualize scenarios based on the immediate needs of the participant, rather than adhering to the constraints of a static virtual world. A prop spawning panel with over 100 interactive props allowed research clinicians to be even more responsive to participant needs and heightened the authentic feel of interactions. Research clinicians controlled what additional props (e.g., food, money, computers, basketballs) appeared, and when, where, and how they could be used, which made higher levels of interactivity possible. Participants were not able to spawn or destroy props; instead, they were instructed to ask for a desired prop during LIVR real-time social engagement. Once the research clinician spawned it, the participant could interact with, pick up, move, and set down the prop. Spawned objects were physics-responsive and behaved as expected; for example, a basketball would rebound from the hoop, fall to the ground, roll, and come to a stop in the distance.

Lastly, there was a call to expand the types of devices upon which Charisma 1.0 could be installed and delivered. Previous versions of the software were limited to Windows machines with a dedicated graphics processing unit (GPU), which excluded some participants from accessing the software. For this study, to expand access to Charisma 1.0, we utilized cloud-based virtual machines via Paperspace, a fully managed cloud GPU platform. Additionally, an internet connection capable of streaming high-definition video was required to stream the software from the cloud-based virtual machine directly to the participant’s computer. This remote technology allowed participants to use at-home computers with a Windows or Apple operating system to access the LIVR. Participants could then launch a specifically assigned, password protected virtual machine and join a Charisma 1.0 server through Paperspace.

2.3 Clinical protocol

This was a non-randomized pilot intervention examining the impact of CHARISMA-VST on social skills. Parents selected a training cadence of 1, 5, or 10 weeks for their child to complete the 10 CHARISMA-VST sessions. On average, participants completed the 10 sessions in 42.7 days (SD = 16.82). Most participants (88.06%) completed the training in 5 weeks, with two 1-h sessions per week, and were seen on average every 2 to 5 days. The remaining participants completed their 10 sessions either at an accelerated 1-week cadence (7.46%), meeting daily for 2 h, or at a decelerated 10-week cadence (4.48%), meeting once per week for 1 h.
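The cadence percentages can be converted back to group sizes. The short Python check below is only arithmetic on the numbers reported above (N = 67). It assumes each percentage was computed from the full sample and rounded to two decimals.

```python
# Convert the reported cadence percentages back to participant counts (N = 67).
N = 67
reported_pct = {"5-week": 88.06, "1-week": 7.46, "10-week": 4.48}

counts = {cadence: round(pct / 100 * N) for cadence, pct in reported_pct.items()}

for cadence, n in counts.items():
    # Recompute the percentage from the integer count to confirm it matches the report.
    print(f"{cadence}: n = {n} ({100 * n / N:.2f}%)")
# 5-week: n = 59 (88.06%)
# 1-week: n = 5 (7.46%)
# 10-week: n = 3 (4.48%)

# The three groups should account for the whole sample.
assert sum(counts.values()) == N
```

Under that assumption, the reported percentages correspond to 59, 5, and 3 participants in the 5-, 1-, and 10-week cadences, respectively.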

2.4 CHARISMA-VST delivery model

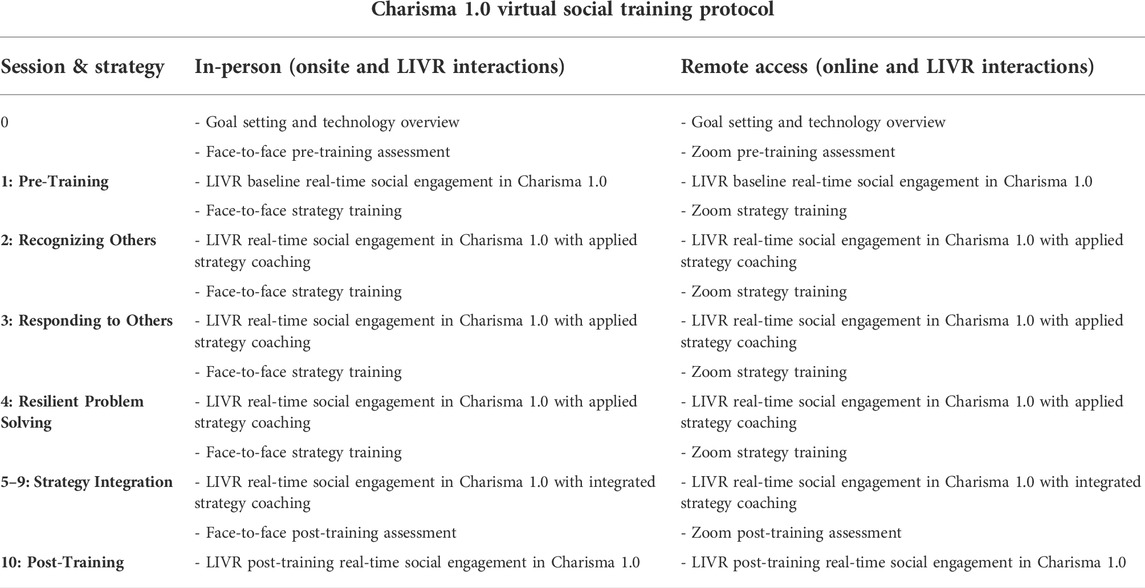

All participants completed a 30-min intake interview with a research clinician, consisting of a 10-min interview in which participants discussed their social goals and a 20-min “technology orientation” to confirm appropriate technology setup and access to a computer with a keyboard, a webcam, and headphones with a built-in microphone (if preferred). Within 1 week of the intake interview, participants began the 10-session CHARISMA-VST either in person or remotely (Table 2).

TABLE 2. The following model was used to deliver CHARISMA-VST to both in-person and remote participants.

In-person participants (n = 38) met with a research clinician at the designated location (the Center for BrainHealth or the participant’s school). During the CHARISMA-VST sessions, in-person participants completed the pre- and post-training assessment tasks and strategy training in face-to-face interactions with research clinicians. LIVR real-time social engagement between participants and research clinicians took place in Charisma 1.0 using on-site laboratory computers.

Remote participants (n = 29) were given a private, personal link to log in to a video and audio-conferencing tool (Zoom) and met with a research clinician from their homes. During the CHARISMA-VST sessions, remote participants completed the pre- and post-training assessment tasks and strategy training with research clinicians over Zoom. LIVR real-time social engagement between participants and research clinicians took place in Charisma 1.0 from participants’ personal computers.

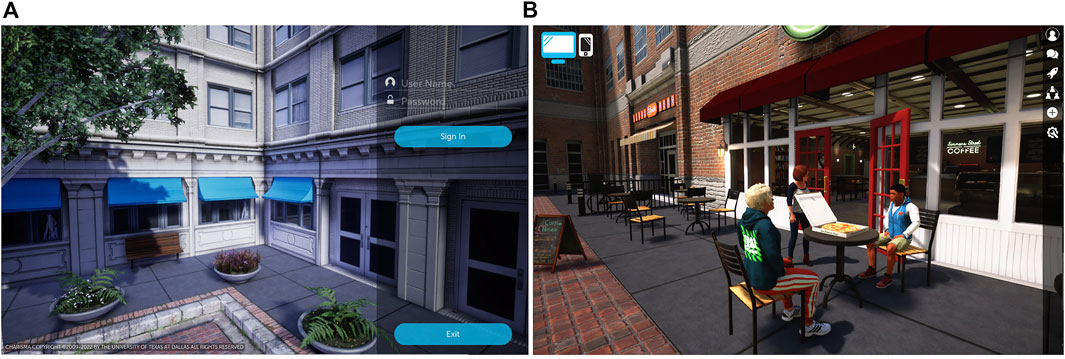

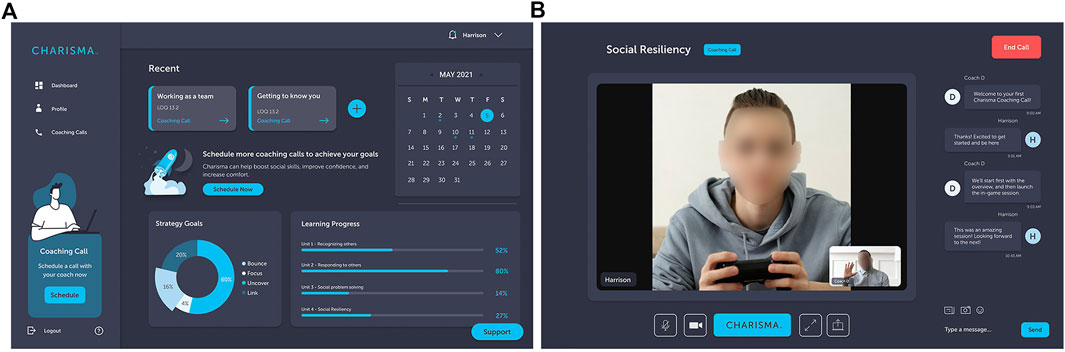

To access Charisma 1.0, research clinicians created password-protected servers and instructed all participants to launch the Charisma 1.0 gaming software. In-person participants launched Charisma 1.0 in a private room from an on-site computer with the software installed. Participants receiving the training remotely on their home computer used Paperspace, which hosted Charisma 1.0 on cloud-based virtual machines. Each participant and research clinician then joined a designated server from their individual computers (Figure 3A). Both the research clinician and the participant used the LIVR to engage in real-time, authentic social interactions through a personal avatar, or digital representation of oneself, in a customized virtual gaming environment (Figure 3B). Research clinicians played a dual role: coach-facilitator and peer “faux friend” avatar. As faux friends, they role-played as social models, encouraged and challenged participants, and provided opportunities to practice skills toward increased social awareness and understanding through a narrative arc within the LIVR.

FIGURE 3. Charisma 1.0 virtual world. (A) Participants used a personalized login code to sign in to the virtual training platform. (B) Participants used their avatar to interact with clinician and faux friend avatars.

Sessions 1 through 10 were each 60-min individual CHARISMA-VST sessions between a single research clinician and a participant. Sessions 1 (pre-training) and 10 (post-training) consisted of assessment tasks; no strategy training or social coaching was provided during these assessment sessions. Participants completed brief assessments of emotion recognition, social inferencing, social attribution, and social self-schemata outside of the LIVR, and then completed four clinician-driven LIVR real-time social engagement scenarios in Charisma 1.0. These LIVR interactions gave clinicians qualitative information about participants’ social interaction style and skill.

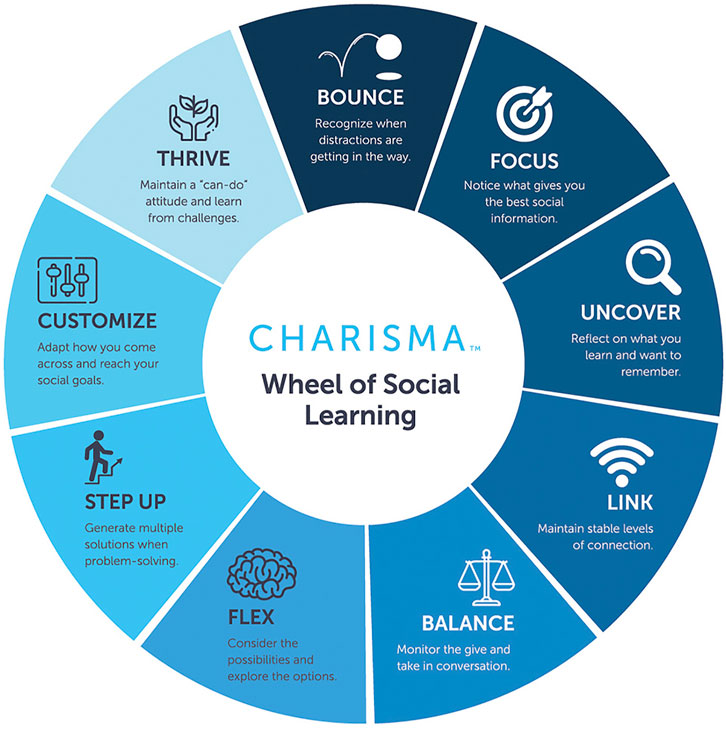

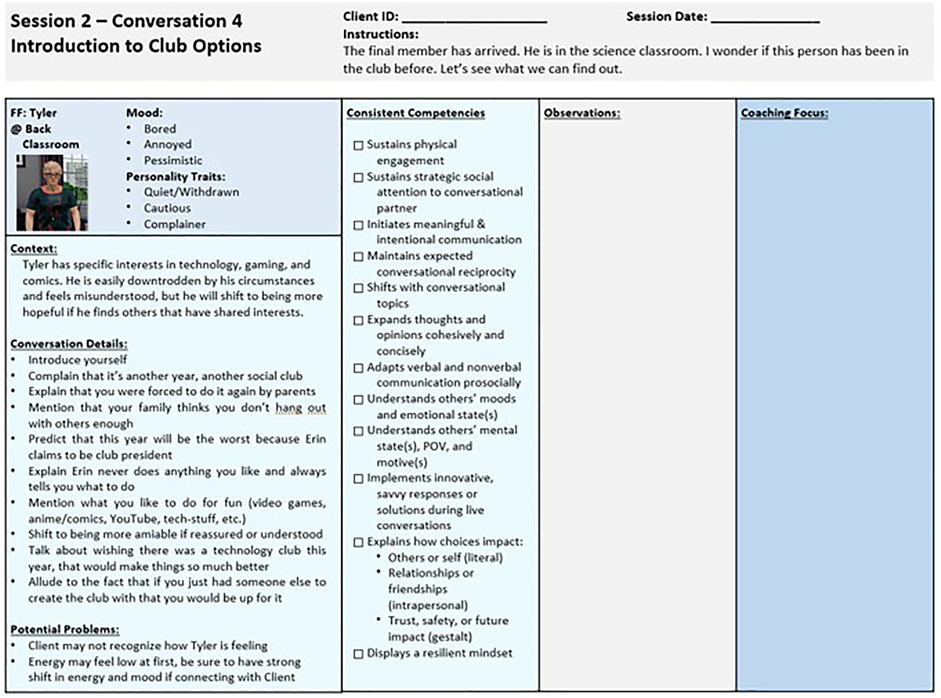

Sessions 2 to 9 encompassed social strategy training, which covered three core areas: Recognizing Others (building social understanding), Responding to Others (building social connection), and Resilient Problem Solving (building social resiliency and assertion). During Sessions 2 to 4, participants spent the first 15 min of each session learning three of the nine social cognitive strategies through an interactive PowerPoint presentation with the research clinician (Figure 4). The remaining 45 min were allotted to applying the strategies in four social interaction scenarios role-played between the research clinician “faux friend” and the participant in the LIVR. During Sessions 5 to 9, participants continued to practice the nine strategies in the LIVR across four social interaction scenarios per session with the research clinician “faux friend”. Overall, participants engaged in eight sessions of LIVR real-time social engagement scenarios with social coaching, totaling 32 “faux friend” training interactions.
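The session structure described above can be summarized as a small data model. This is a hypothetical Python sketch: the `Session` class and `build_schedule` function are illustrative names, not part of Charisma 1.0 or the training manual. It encodes the 10-session schedule and confirms the counts stated in the text, namely nine strategies introduced across Sessions 2 to 4 and 32 coached faux friend interactions across Sessions 2 to 9.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Session:
    number: int
    kind: str                   # "assessment" or "training"
    new_strategies: int = 0     # strategies introduced in the 15-min presentation
    coached_scenarios: int = 0  # LIVR "faux friend" scenarios with social coaching


def build_schedule() -> List[Session]:
    """Hypothetical encoding of the 10-session CHARISMA-VST protocol."""
    schedule = []
    for n in range(1, 11):
        if n in (1, 10):
            # Pre/post assessment: four LIVR scenarios are run, but without
            # coaching, so they are not counted as training interactions.
            schedule.append(Session(n, "assessment"))
        elif n <= 4:
            # Sessions 2-4: three new strategies, then four practice scenarios.
            schedule.append(Session(n, "training", new_strategies=3, coached_scenarios=4))
        else:
            # Sessions 5-9: practice only, four scenarios per session.
            schedule.append(Session(n, "training", coached_scenarios=4))
    return schedule


schedule = build_schedule()
training = [s for s in schedule if s.kind == "training"]
print(len(training))                                  # 8
print(sum(s.new_strategies for s in schedule))        # 9
print(sum(s.coached_scenarios for s in schedule))     # 32
```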

2.5 CHARISMA-VST intervention design

Learning in virtual environments allows participants to cognitively construct knowledge for themselves as they interact with the virtual world and observe the consequences of their actions, controlling the avatar independently and communicating directly through speech, movement, and props within the space (Bailenson et al., 2008). For each of the 10 LIVR social interaction role-play sessions, a standardized CHARISMA-VST training manual was designed with four conversations per session representing common child- and adolescent-oriented themes, such as meeting new peers, working in groups, and standing up for oneself. Consistent with work by Standen and Brown (2006), three key areas of curriculum development were considered. First, scenarios encouraged participants to learn by making mistakes without suffering real-world consequences; themes such as “stranger danger”, standing up to bullies, and navigating conflict provided safe practice with difficult social encounters. Second, conversational details were manipulated to meet participants in their comfort zone, and the complexity of the interaction was then increased gradually in ways that real-world exchanges do not allow. For example, research clinicians scaffolded conversations to match participant skill level by providing cues in the form of extra pausing within and between conversational exchanges, making indirect statements followed by direct questions, and highlighting certain emotional contexts and social cues through affect, prosody, and the physical proximity of the avatars. Third, strategies were applied through experience, not simply through scripts or video models of what others can do. All participants experienced taking social risks and problem solving through challenging social interactions, while the research clinician retained flexibility in the faux friend’s responses.
For example, in Session 2, a faux friend, ‘Tyler’, tells the participant he feels forced into a new school club by his parents (Figure 5). For a participant with basic theory of mind goals, the intended resolution of this scenario could be to recognize Tyler’s interests and mood and then attempt to cheer him up. However, for a participant working toward more complex social goals, such as social problem solving or resiliency, ‘Tyler’ could reject the participant’s first attempt to relate to the situation, giving the participant an opportunity to consider other ways to connect (e.g., sharing a personal experience, asking more about family dynamics, or professing to feel similarly).

FIGURE 5. Conversations are outlined in the session manual to provide some guidance, while maintaining the opportunity for dynamic interaction.

An integrated social coaching approach promoted strategy-informed interactions, providing a structured framework to guide research clinicians in top-down cognitive coaching between interactions. Strength-based coaching prompts included 1) gestalt interpretation and real-life application (e.g., summarize what was helpful to you today and how it could help you at school this week), 2) synthesis of motives, intentions, and impressions (e.g., what strategy were you using to figure out why Tyler was treating you that way?), 3) verbal explanation and expression of thoughts, emotions, and actions (e.g., how and why did you express your opinion and stand up for yourself?), and 4) insightful queries about participants’ attention, comfort, and confidence in recognizing and responding in social situations (e.g., on a scale from one to five, how focused/comfortable/confident were you in that conversation?). Overall, social coaching moved participants toward exploring a perceived sense of social self during moments of strife and success.

2.6 Measures

2.6.1 Emotion recognition

The Affect Recognition subtest of the Developmental Neuropsychological Assessment, Second Edition (NEPSY-II AR; Korkman et al., 2007), part of the NEPSY-II Social Perception domain, was used to measure participants’ ability to recognize others’ emotions. This subtest includes three task areas that require participants to select photographs of children who are feeling the same way. NEPSY-II AR has high reliability coefficients (rs = 0.85–0.87) and moderate test-retest coefficients (rs = 0.50–0.58; Brooks et al., 2009).

2.6.2 Social inferencing

The Making Inferences subtest of the Social Language Development Test (SLDT; Elementary and Adolescent Editions; Bowers et al., 2008; Bowers et al., 2010) was administered to formally assess participants’ ability to use contextual clues (i.e., facial expressions, gestures, and posture) to infer a pictured character’s perspective. In this subtest, the student takes the perspective of a person in a photograph and states, as a direct quote, what that person is thinking. The second question in each item asks the student to identify the relevant visual clues supporting the character’s thought. Each response is scored 1 or 0 based on relevance and quality. The SLDT has demonstrated good test-retest reliability (SLDT-Elementary κ = 0.79, SLDT-Adolescent κ = 0.82), excellent interrater reliability (SLDT-Elementary 84%, SLDT-Adolescent 85%), and good content and criterion validity.
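To illustrate the 0/1 scoring rule, the sketch below tallies raw Making Inferences subscores from per-item response scores. It is a hypothetical helper, not the published SLDT scoring procedure. It assumes each item yields one inference score and one visual-clue score, and it assumes the raw total is the sum of the two subscores; that assumption is consistent with the coefficients reported in Section 3.2 (1.79 + 1.78 ≈ 3.60), but the text does not state it. Administration rules from the test manual are not modeled.

```python
def score_making_inferences(items):
    """Tally raw subscores from a list of (inference, clue) item scores.

    Hypothetical helper: each response is assumed to be scored 1 or 0
    for relevance and quality, as described in the text.
    """
    inference_total = 0
    clue_total = 0
    for inference, clue in items:
        if inference not in (0, 1) or clue not in (0, 1):
            raise ValueError("each response must be scored 0 or 1")
        inference_total += inference
        clue_total += clue
    # Assumption: the subtest raw total is the sum of the two subscores.
    return {
        "inference": inference_total,
        "clues": clue_total,
        "total": inference_total + clue_total,
    }


# Toy example with four items (not study data).
print(score_making_inferences([(1, 1), (1, 0), (0, 0), (1, 1)]))
# {'inference': 3, 'clues': 2, 'total': 5}
```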

2.6.3 Social attribution

The Social Attribution Task (SAT) measured participants’ accuracy when describing personified objects interacting socially (Abell et al., 2000). In this experimental measure, adapted from the original videos of Heider and Simmel (1944), participants were asked to narrate the movements of blue and red triangles presented in six separate brief videos. In the current study, two different sets of six videos were used for each participant, and which set was administered at pretest versus posttest was randomized. For the first three videos, participants were instructed, “Watch each video. At the end of each video, you will describe what you think the triangles were doing”. Before the last three videos, participants were prompted, “Pretend the triangles are people and tell me what they are doing”. Narratives were recorded, transcribed, and double-scored by two blind raters. Following the methods of Castelli et al. (2000), each video was given an accuracy score on a three-point Likert scale (Heider and Simmel, 1944). More points were awarded when the participant used specific, purposeful vocabulary to describe the movement and interaction of the triangles (e.g., “two people dancing with each other” received more points than “two triangles moving around”).

2.6.4 Social self-schemata

The three-word interview task measured participants’ subjective sense of social self before and after the training. Participants were asked, “If you could describe your social self, using three different words, what would you say?” Each social word was given a valence score on a three-point Likert scale, positive (3), neutral (2), or negative (1), and words that were not social descriptors received 0. Results were recorded and double-scored by two blind raters. More points were awarded when participants used specific, positive vocabulary to describe their sense of social self (e.g., “friendly (3), compassionate (3), nice (3)” received more points than “gamer (0), ok (2), annoying (1)”).
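To make the scoring rule concrete, the Python sketch below totals one participant’s three words. Two assumptions are labeled here and in the code. First, the per-word codes come from a hypothetical lookup containing only the example words given above; in the study, codes were assigned by two blind raters, not by a fixed dictionary. Second, the three word scores are summed into a 0–9 total, which the text does not state explicitly.

```python
# Hypothetical lookup holding only the example words from the text.
# In the study, codes were assigned by two blind raters, not by a fixed dictionary.
EXAMPLE_CODES = {
    "friendly": 3,       # positive social descriptor
    "compassionate": 3,  # positive social descriptor
    "nice": 3,           # positive social descriptor
    "ok": 2,             # neutral social descriptor
    "annoying": 1,       # negative social descriptor
    "gamer": 0,          # non-social descriptor
}


def self_schema_score(words, codes=EXAMPLE_CODES):
    """Sum the valence codes of exactly three words (assumed 0-9 total)."""
    if len(words) != 3:
        raise ValueError("the task asks for exactly three words")
    # A KeyError here means the word has not been coded yet.
    return sum(codes[word.lower()] for word in words)


print(self_schema_score(["friendly", "compassionate", "nice"]))  # 9
print(self_schema_score(["gamer", "ok", "annoying"]))            # 3
```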

2.7 Procedure

After informed consent and assent were given, participants’ demographic information and clinical diagnosis were collected through a form completed by their parents during the 30-min intake interview prior to Session 1. Based on parent preference, participants were assigned to one of the training locations (in-person vs. remote access). Participants then took part in Session 1 and completed the pre-training test (“pretest”), consisting of assessment tasks in emotion recognition, social inferencing, social attribution, and social self-schemata administered outside of the LIVR. After the CHARISMA-VST training intervention, participants completed the post-training test (“posttest”) as part of Session 10, in which the same set of assessment tasks was administered.

3 Results

To test our hypotheses, linear mixed-effects models were used to evaluate the main effect of time (pretest vs. posttest), the interaction between time and training location (in-person vs. remote), and the interaction between time and ASD diagnosis (ASD vs. non-ASD) on assessment task scores, with participant as a random effect. Cohen’s d was reported as the effect size (Cohen, 1988).
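Written out, one model consistent with this description is sketched below. It assumes a random intercept $u_j$ for each participant $j$, measurement occasion $i$, dummy coding (Time: 0 = pretest, 1 = posttest; Location: 0 = remote, 1 = in-person; ASD: 0 = non-ASD, 1 = ASD), and both interactions estimated in a single model. The text does not state these details, so the coding and model structure are assumptions, and the interpretation of $\beta_1$ (the time effect for the reference group under this coding) depends on them.

```latex
\begin{aligned}
\text{Score}_{ij} ={}& \beta_0 + \beta_1\,\text{Time}_{ij} + \beta_2\,\text{Location}_{j} + \beta_3\,\text{ASD}_{j} \\
&+ \beta_4\,(\text{Time}_{ij} \times \text{Location}_{j}) + \beta_5\,(\text{Time}_{ij} \times \text{ASD}_{j}) + u_{j} + \varepsilon_{ij}, \\
u_{j} \sim{}& \mathcal{N}(0, \sigma_u^2), \qquad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
\end{aligned}
```

With nlme, a model of this form could be specified as `lme(score ~ time * location + time * asd, random = ~ 1 | id, data = d)`, where `score`, `time`, `location`, `asd`, `id`, and `d` are hypothetical variable names.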

R (R Core Team, 2020) and RStudio (v. 2022.7.01; RStudio Team, 2022) were used for data analysis, with the tidyverse package (Wickham et al., 2019) for data manipulation and visualization and the nlme package (v. 3.1-148; Pinheiro et al., 2020) for the linear mixed-effects models.

3.1 Emotion recognition

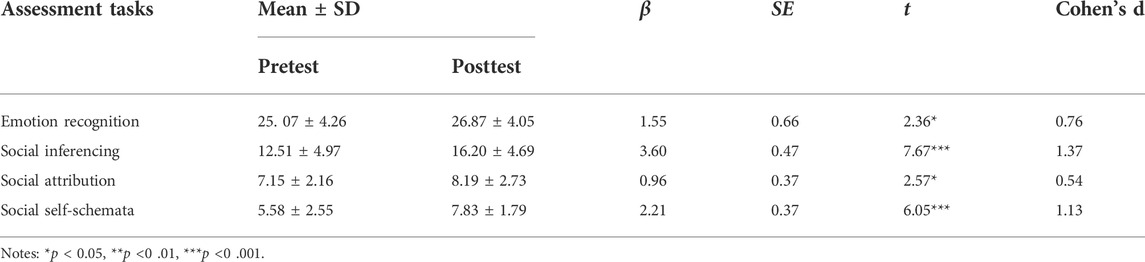

Results showed a significant main effect of time on NEPSY-II scores. Posttest scores were significantly greater than pretest scores (β = 1.55, SE = 0.66, t = 2.36, p < 0.05, Cohen’s d = 0.76), a medium to large effect size for improvement in emotion recognition following CHARISMA-VST. See Table 3. The change in NEPSY-II scores over time was not moderated by training location or ASD diagnosis.
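The paper reports Cohen’s d (Cohen, 1988) but does not state which standardizer was used for these pre-post contrasts. As an illustration only, the sketch below computes two common variants on hypothetical scores: d standardized by the pooled pre/post standard deviation, and d_z, which standardizes by the SD of the within-person differences. Neither is claimed to be the authors' formula; the point is that the two can differ substantially for the same data, which matters when comparing effect sizes across studies.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d_pooled(pre, post):
    """Mean change divided by the pooled SD of the pre and post scores.

    With equal group sizes the pooled SD reduces to sqrt((s_pre^2 + s_post^2) / 2).
    """
    pooled_sd = sqrt((stdev(pre) ** 2 + stdev(post) ** 2) / 2)
    return (mean(post) - mean(pre)) / pooled_sd


def cohens_d_z(pre, post):
    """Mean within-person change divided by the SD of those changes."""
    diffs = [after - before for before, after in zip(pre, post)]
    return mean(diffs) / stdev(diffs)


# Hypothetical scores for four participants (not study data).
pre = [10, 12, 14, 16]
post = [12, 13, 17, 18]
print(round(cohens_d_pooled(pre, post), 2), round(cohens_d_z(pre, post), 2))  # prints: 0.72 2.45
```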

3.2 Social inferencing

Results showed a significant main effect of time on SLDT scores. Posttest scores were significantly greater than pretest scores (β = 3.60, SE = 0.47, t = 7.67, p < 0.001, Cohen’s d = 1.37), a large effect size for the improvement in inferring others’ perspectives following CHARISMA-VST. See Table 3. The change in scores over time was not moderated by training location or ASD diagnosis.

In addition, participants had significantly higher raw scores for inferring a pictured character’s perspective at posttest than at pretest (β = 1.79, SE = 0.24, t = 7.37, p < 0.001, Cohen’s d = 1.32), suggesting a large training effect following CHARISMA-VST. Neither training location nor ASD diagnosis affected the pretest-to-posttest change in this score.

Participants also had higher raw scores at posttest than at pretest for identifying visual contextual clues that support the character’s thought (β = 1.78, SE = 0.33, t = 5.35, p < 0.001, Cohen’s d = 0.96), indicating a large training effect following CHARISMA-VST. The change in this score was not affected by training location or ASD diagnosis.

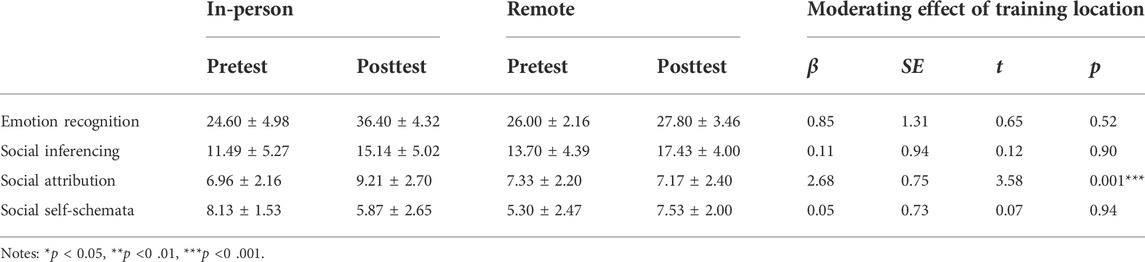

3.3 Social attribution

Results showed a significant main effect of time on SAT scores. Posttest scores were significantly greater than pretest scores (β = 0.96, SE = 0.37, t = 2.57, p < 0.05, Cohen’s d = 0.54), a medium effect size for the increase in accurate, specific, and purposeful vocabulary used to describe movement and social interaction following CHARISMA-VST. See Table 3. In addition, there was a significant interaction between time and training location (β = 2.68, SE = 0.75, t = 3.58, p < 0.001), indicating that social attribution scores changed differently for in-person and remote participants. Specifically, participants who trained in-person improved their social attribution scores (β = 2.30, SE = 0.52, t = 4.39, p < 0.001, Cohen’s d = 1.29), whereas those who trained remotely showed no significant improvement. The change in SAT scores over time did not differ between participants with and without an ASD diagnosis.
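A time × location interaction asks whether the average pre-post change differs between groups, which is a difference in differences. The sketch below computes that contrast directly from hypothetical scores (not study data) to show what the interaction term captures. It is an illustration under the assumption of 0/1 coding and complete pre and post data for every participant; in that simple case the interaction coefficient corresponds to this cell-mean contrast, whereas with missing data or additional covariates the model-based estimate will differ.

```python
from statistics import mean


def mean_change_by_group(records):
    """Average pre-to-post change per group.

    `records` is an iterable of (group, pretest_score, posttest_score) tuples.
    """
    changes = {}
    for group, pretest, posttest in records:
        changes.setdefault(group, []).append(posttest - pretest)
    return {group: mean(values) for group, values in changes.items()}


def time_by_group_contrast(records, focal="in-person", reference="remote"):
    """Difference in mean change between two groups (a difference in differences).

    With complete pre/post data and 0/1 coding, this is the cell-mean contrast
    that corresponds to a time x location interaction coefficient.
    """
    change = mean_change_by_group(records)
    return change[focal] - change[reference]


# Hypothetical SAT-like scores (not study data).
records = [
    ("in-person", 4, 7),
    ("in-person", 5, 7),
    ("remote", 5, 5),
    ("remote", 6, 5),
]
print(mean_change_by_group(records))    # prints: {'in-person': 2.5, 'remote': -0.5}
print(time_by_group_contrast(records))  # prints: 3.0
```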

3.4 Social self-schemata

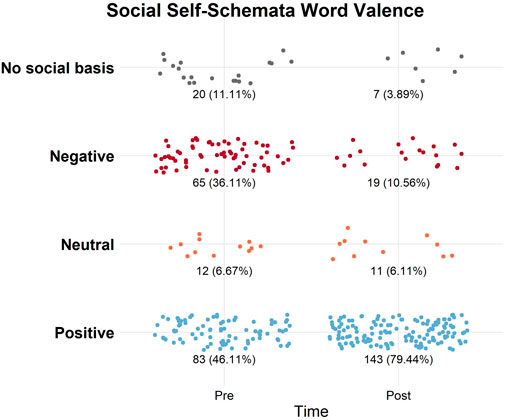

Results showed a significant main effect of time on social self-schemata scores. Posttest scores were significantly greater than pretest scores (β = 2.21, SE = 0.37, t = 6.05, p < 0.001, Cohen’s d = 1.13), indicating a large training effect on the use of specific, positive vocabulary to describe one’s sense of social self following CHARISMA-VST. See Table 3. Specifically, participants used more positive words and fewer negative and non-social words to describe their sense of social self at posttest than at pretest (Figure 6). The change in scores over time was not moderated by training location or ASD diagnosis.

FIGURE 6. Social self-schemata word valence across time. Number of word responses in each valence category used to describe the sense of social self at the pre and post interviews (N = 180). The number of no social basis (grey) and negative (red) words decreased between the pre and post interviews, more positive words (blue) were used after the training, and the number of neutral words (orange) was comparable between pre and post.

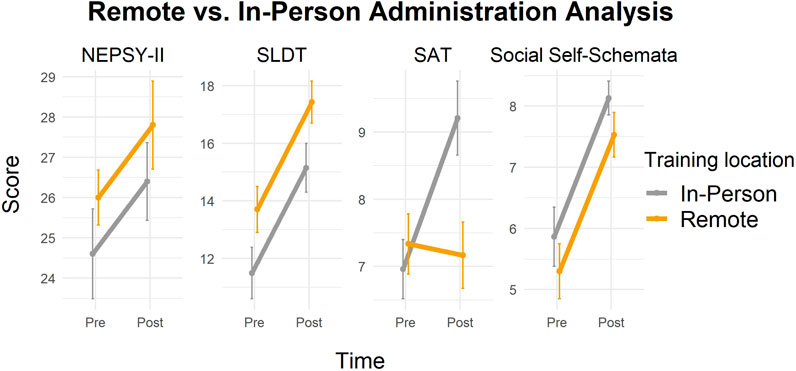

3.5 Summary of in-person vs remote administration analysis

This section summarizes the comparison of in-person vs remote training outcomes described in Sections 3.1–3.4, for which a linear mixed-effects model was fitted to each assessment measure with time as a within-subject variable and training location as a between-subject variable. Emotion recognition (NEPSY-II), social inferencing (SLDT), and social self-schemata scores increased from pretest to posttest in the same manner for in-person and remote participants. Social attribution (SAT) accuracy scores increased from pretest to posttest for in-person participants; however, no increase was seen for remote participants (Table 4 and Figure 7).

FIGURE 7. Changes in assessment task scores in participants with in-person vs remote training. Emotion recognition (NEPSY-II), social inferencing (SLDT), and social self-schemata increased from pretest to posttest in the same manner in participants regardless of training location.

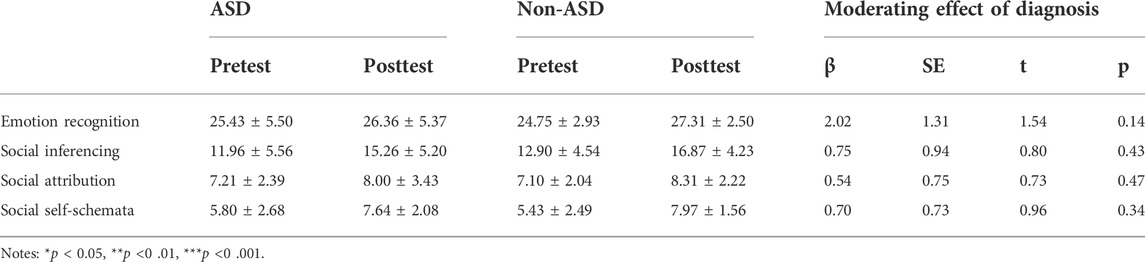

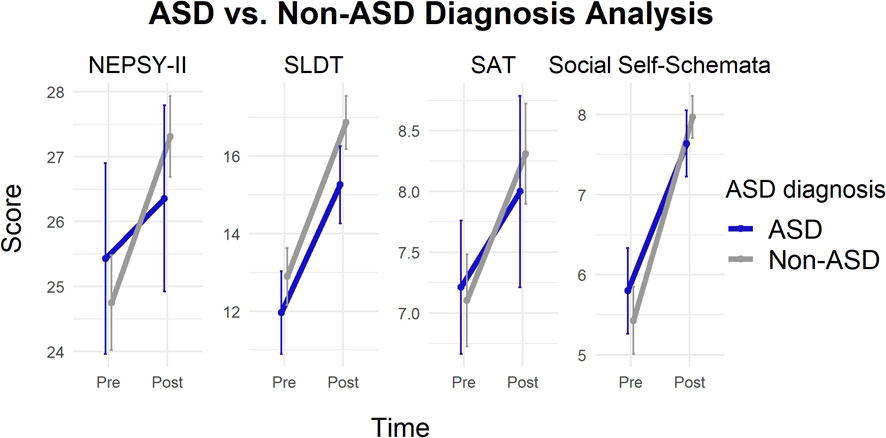

3.6 Summary of ASD vs non-ASD diagnosis analysis

This section summarizes the comparison of ASD vs non-ASD participant training outcomes described in Sections 3.1–3.4, for which a linear mixed-effects model was fitted to each assessment measure with time as a within-subject variable and diagnosis as a between-subject variable. Emotion recognition (NEPSY-II), social inferencing (SLDT), social attribution (SAT), and social self-schemata scores increased from pretest to posttest in the same manner for participants with and without an ASD diagnosis (Table 5 and Figure 8).

TABLE 5. Comparison of ASD vs non-ASD participant training outcomes on four measures of social cognition.

FIGURE 8. Changes in assessment task scores in participants with ASD vs non-ASD. Emotion recognition (NEPSY-II), social inferencing (SLDT), social attribution (SAT), and social self-schemata increased from pretest to posttest in the same manner in participants regardless of diagnosis condition.

4 Discussion

The first aim of this analysis was to measure social skill changes in children with social difficulties following Charisma™ Virtual Social Training (CHARISMA-VST). We hypothesized that children with social difficulties would demonstrate significant improvement in emotion recognition, social inferencing, social attribution, and social self-schemata following CHARISMA-VST. Supporting our hypotheses, the current findings show statistically significant improvements in emotion recognition, social inferencing, and social attribution accuracy. These findings are consistent with the training outcomes from our previous work (Didehbani et al., 2016; Johnson et al., 2021). Additionally, an added measure of social self-schemata indicated that CHARISMA-VST improved participants’ sense of social self, suggesting a potential mindset shift toward social resiliency. This is important because information about the self is continually informed by, and evolves with, one’s personal and social experiences across the life span (Cervone, 2021). The significant shift from negative to positive attributions expressed by our participants suggests that they had authentic social experiences while interacting with clinicians through the training protocol. Shifting one’s social self-schema from negative to positive attributions is not frequently addressed in behavioral social skill training but may be relevant to a comprehensive approach to developing social skill interventions.

Toward the second aim, we compared in-person vs remote training outcomes to examine changes in social skill development among pediatric participants. We hypothesized that there would be no difference between remote and in-person administration in improvements in emotion recognition, social inferencing, social self-schemata, and social attribution. This hypothesis was supported for three of the four outcome measures: emotion recognition, social inferencing, and social self-schemata. Contrary to our expectations, however, only participants who completed the training in-person showed significant pre-post gains in social attribution accuracy; remote participants did not. This outcome is surprising given that both in-person and remote participants received the same virtual social training curriculum. It is possible that research clinicians who met with participants onsite observed client social behaviors that influenced training interactions within Charisma 1.0. However, we believe this is unlikely because there was no effect of remote vs in-person administration on skills such as social inferencing and emotion recognition. Additional exploration is warranted to explain this finding. Overall, this study allowed for broader reach and increased accessibility, showing that CHARISMA-VST could be delivered at home, with participants meeting the research clinician online, with largely similar efficacy to onsite, in-person delivery.

Furthermore, this study extended our previous work by comparing the training outcomes of individuals with and without an ASD diagnosis. We hypothesized that there would be no significant difference between ASD and non-ASD youth participants in changes on any of our social cognition outcome measures. Consistent with this hypothesis, social cognitive outcomes changed similarly in individuals with and without an ASD diagnosis; the observed effects were not driven by participants with ASD. These findings suggest that while our virtual reality social cognition training was originally designed to support individuals with autism spectrum disorder (Kandalaft et al., 2013; Didehbani et al., 2016; Yang et al., 2017), this program appears to be effective also at supporting social learners with neurodiverse backgrounds, including those without any formal diagnosis. In addition, the location effect on the SAT score was not influenced by participants’ diagnoses. Within our sample, although more children with an ASD diagnosis trained in-person (n = 17) than remotely (n = 11), the proportion of children with ASD did not differ significantly between the two training locations.

Charisma 1.0 was focused on building an immersive and accessible solution. Findings suggest that technology advancements over our previous work that were designed to increase authenticity, such as a more ecologically valid and diverse avatar creator, offered research clinicians and participants added flexibility to create avatars in their own likeness. This customization feature also made it possible to build an avatar library with gender, cultural, and age diversity across faux friend characters, lending itself to expanded representation and relatedness. Second, traversing the open-world environment with salient props gave participants opportunities to drive their own social experience by navigating within and between contextual social environments and interactive elements that simulated their real-life experiences. Last, previous versions of our LIVR required participants to be on-site to access the virtual environment via a Windows computer with preinstalled software. In this study, the use of virtual machines allowed Charisma 1.0 to be accessed from Apple operating systems, which had previously not been possible. Hosting Charisma 1.0 on virtual machines also opened access to participants whose computers did not meet the minimum hardware requirements for local download and installation of the software. Our current findings suggest that remote technology such as virtual machines can enable delivery of this type of social skills intervention to populations that may not previously have had access. Making Charisma 1.0 more widely available was achieved through enhancements to many of the backend processes and infrastructure established in previous versions. As the software improved, so did our development processes: the Charisma 1.0 infrastructure allowed updates and patches to be released as soon as they were completed, enabling quick correction of software bugs and rapid response to feedback from user acceptance testing. Even with the ability to deploy updates in this manner, we still faced limitations due to the technical fluency needed to operate the software successfully.

5 Future directions

5.1 CHARISMA-VST digital health platform

These preliminary data inform our own virtual reality environment design and provide evidence of the potential of an immersive digital health platform as a promising tool for professionals supporting social skill development. To expand empirical research into translational clinical services, future work should include training dedicated Charisma coaches to work with pediatric clients using a refined CHARISMA-VST protocol. Moving forward, CHARISMA-VST could be offered as a ‘one click’ digital health solution that provides an easily scalable and authentic way to train pivotal social skills. Secure parallel CHARISMA-VST portals could be developed for Charisma clients and coaches to provide an end-to-end user experience without the need for multiple software programs. For Charisma coaches, tasks such as user management (creating and managing client profiles, scheduling sessions), installing the desktop application, and meeting virtually with a client via integrated audio and video conferencing could be easily managed. The client-focused portal could provide a simplified user experience through fully integrated communications and access to the Charisma LIVR via a browser-delivered Web Real-Time Communication (WebRTC) stream (Figure 9). This would be achieved by integrating Twilio video conferencing into the user portals to connect coaches and clients. Additionally, accessing the LIVR through an on-demand, scalable server system (Amazon GameLift) and on-demand virtual client machines (Amazon EC2 and Unreal Engine 4) would eliminate the need for multiple software programs. The browser-delivered WebRTC stream could connect users to the Charisma LIVR when they click a button in their video coaching session, which would open a new browser window connected to the Charisma LIVR application.

FIGURE 9. Charisma digital health platform. (A) The dashboard displays previous and upcoming sessions. (B) The coach and client meet using an integrated audio-video conferencing call.
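The proposed one-click flow can be sketched as a small state machine. Everything here is hypothetical: the class names, states, and URL are illustrative, and the sketch deliberately does not call Twilio, Amazon GameLift, or Amazon EC2. `InstancePool` is an in-memory stand-in for the on-demand machines those services would provide, so only the ordering of steps (schedule, join the video call, click to launch the LIVR stream, end and release the machine) is modeled.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional


class SessionState(Enum):
    SCHEDULED = auto()
    IN_CALL = auto()   # coach and client connected by video conferencing
    IN_LIVR = auto()   # client is streaming the LIVR in a browser window
    ENDED = auto()


@dataclass
class InstancePool:
    """Hypothetical stand-in for an on-demand pool of cloud machines.

    A real deployment would request capacity from a hosting service; this
    in-memory list only models acquiring and releasing an instance.
    """
    free: List[str]

    def acquire(self) -> str:
        if not self.free:
            raise RuntimeError("no LIVR instance available")
        return self.free.pop()

    def release(self, instance_id: str) -> None:
        self.free.append(instance_id)


@dataclass
class CoachingSession:
    coach: str
    client: str
    state: SessionState = SessionState.SCHEDULED
    instance_id: Optional[str] = None

    def join_call(self) -> None:
        if self.state is not SessionState.SCHEDULED:
            raise RuntimeError(f"cannot join call from {self.state.name}")
        self.state = SessionState.IN_CALL

    def launch_livr(self, pool: InstancePool) -> str:
        """The 'one click' step: reserve an instance and return a stream URL."""
        if self.state is not SessionState.IN_CALL:
            raise RuntimeError("the LIVR can only be launched from a live call")
        self.instance_id = pool.acquire()
        self.state = SessionState.IN_LIVR
        # Placeholder URL (hypothetical); a browser window would open this stream.
        return f"https://livr.example.com/stream/{self.instance_id}"

    def end(self, pool: InstancePool) -> None:
        """End the session and return any reserved instance to the pool."""
        if self.instance_id is not None:
            pool.release(self.instance_id)
            self.instance_id = None
        self.state = SessionState.ENDED
```

Keeping the launch step gated on an active call mirrors the design described above, where the LIVR is opened from within the coaching session rather than as a separate program.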

Additionally, of specific interest to researchers at the Center for BrainHealth is the creation of a suite of immersion options, ranging from low-immersion, single-player applications for reviewing and refreshing strategy teaching to fully immersive HMD social training experiences. Developers are also encouraged to collect ongoing, incremental user experience data to test and improve usability with targeted pediatric populations and to identify feasibility considerations. Following best practice in continued translational research may require a shift from individual and group performance data on standardized measures of social cognition toward client-centered health care and personalized social coaching programs that track individual performance across multiple settings and levels of immersion.

5.2 Interactive analysis of social emotional reasoning and resilient thinking

As a mainstream clinical tool, VR offers the potential to optimize social behavior evaluation, training, and treatment environments through immersive, dynamic 3D stimulus presentations, within which sophisticated interaction, behavioral tracking, and performance recording of real-world behaviors can occur (Rizzo and Kim, 2005). Research clinicians at the Center for BrainHealth aim to evaluate a mechanism to categorize and track social behavior and propose the following social cognitive constructs: 1) Strategic Social Attention: blocking out distractions and directing social attention to important verbal and non-verbal cues to recognize what others are doing, saying, thinking, and feeling; 2) Discourse: initiating, maintaining, adapting, and balancing conversational flow to get to know others and be known; 3) Theory of Mind: noticing one’s own and others’ thoughts, perspectives, and mental and emotional states to interpret and reason through others’ motives and intentions; 4) Expressive Reasoning: engaging in critical thinking and social problem-solving to cohesively explain social experiences and decisions, as well as their potential impact; and 5) Transformation: reflecting personally to better understand the social self, see the bigger picture, and embrace social risks with a dynamic, savvy, growth mindset. These five core social cognitive constructs could be compiled into the Interactive Analysis of Social Emotional Reasoning and Resilient Thinking (I-ASSER2T). The I-ASSER2T™ is designed to identify lagging or mastered social competencies during live conversation within the LIVR and to inform social coaching techniques. Such a tool may be used to track progress on target social behaviors across varying social contexts and to use data-driven performance markers to examine the complexity and nuances of live social interactions in real time. Blakemore (2019) notes that adolescents are more adept at completing complicated social cognition tasks in the laboratory than at dealing with situations that arise in everyday life; more naturalistic paradigms may therefore be useful for tracking social behavior. Future CHARISMA-VST research may further explore the standardization, validity, and utilization of the I-ASSER2T™ social cognitive behavioral checklist to measure social competency within and across LIVR role-played social interactions, as well as in generalized, real-life face-to-face conversations. Additionally, advanced gaming systems that use deep machine learning and artificial intelligence to control multicomputer interaction may lead to more automated clinical integration of embedded coaching prompts and behavior tracking within the software.
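As an illustration of the kind of data structure such a checklist implies, the sketch below records, for each of the five constructs, whether it was demonstrated in a given conversation and context, and labels it lagging or mastered. It is a hypothetical sketch: the class names, the 80% mastery threshold, and the three-observation minimum are assumptions chosen for illustration, not the I-ASSER2T’s actual scoring rules, which the text notes have yet to be standardized and validated.

```python
from collections import defaultdict
from enum import Enum


class Construct(Enum):
    STRATEGIC_SOCIAL_ATTENTION = "Strategic Social Attention"
    DISCOURSE = "Discourse"
    THEORY_OF_MIND = "Theory of Mind"
    EXPRESSIVE_REASONING = "Expressive Reasoning"
    TRANSFORMATION = "Transformation"


class ConstructChecklist:
    """Hypothetical checklist that tracks the five constructs across conversations.

    The mastery threshold and minimum observation count are illustrative
    assumptions, not published I-ASSER2T criteria.
    """

    def __init__(self, mastery_threshold=0.8, min_observations=3):
        self.mastery_threshold = mastery_threshold
        self.min_observations = min_observations
        # construct -> list of (context, demonstrated) observations
        self._observations = defaultdict(list)

    def record(self, construct, context, demonstrated):
        """Log whether a construct was demonstrated in one conversation."""
        self._observations[construct].append((context, bool(demonstrated)))

    def status(self, construct, context=None):
        """Classify a construct as 'mastered', 'lagging', or 'insufficient data'.

        If `context` is given (e.g. "LIVR" or "face-to-face"), only
        observations from that context are considered.
        """
        observations = [
            shown
            for ctx, shown in self._observations.get(construct, [])
            if context is None or ctx == context
        ]
        if len(observations) < self.min_observations:
            return "insufficient data"
        rate = sum(observations) / len(observations)
        return "mastered" if rate >= self.mastery_threshold else "lagging"

    def summary(self, context=None):
        """Status of every construct, keyed by its display name."""
        return {c.value: self.status(c, context) for c in Construct}
```

Filtering by context is one way to compare LIVR role-plays with real-life, face-to-face conversations, as the paragraph above proposes.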

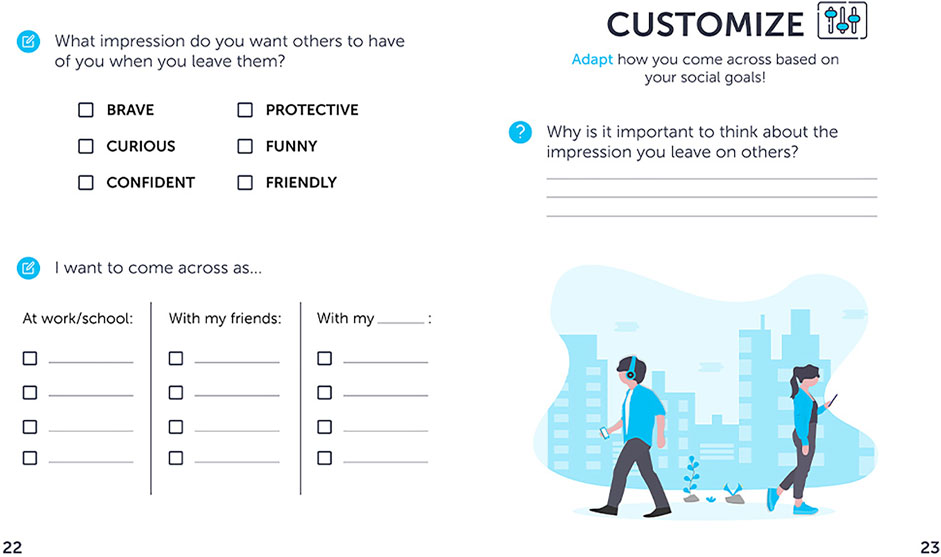

5.3 Professional development for CHARISMA-VST

The view that emerges from this study suggests that the field of VR assessment and training has progressed from pilot stages of development, including multiple proof-of-concept studies, to a potential LIVR clinical solution for training social skills in youth. As such, CHARISMA-VST strategies have been developed into project deliverables in the form of student workbooks and interactive digital training content (Figure 10).

FIGURE 10. Strategy workbook page. Future Charisma™ Virtual Social Coaching will offer both interactive digital training modules and a corresponding workbook for strategy training.

The CHARISMA-VST protocol, LIVR Charisma software, and I-ASSER2T have been made available to select professionals across clinical and educational settings via an approved continuing education course, the Charisma Coach Training, offered through the Center for BrainHealth. These providers are currently evaluating the materials and gathering user feedback by offering CHARISMA-VST to students and clients in their private practices and school settings. Feedback from the Charisma Coach Training is ongoing and will be continually analyzed as part of the user experience process across multiple diverse settings, such as college and university autism clinics, home health care services, private practices, secondary schools for students with learning disabilities, state vocational training institutions, and non-profit organizations.

6 Conclusion

In this paper, we present encouraging initial research results that mark a shift from laboratory-based research to reaching clients remotely, in their homes. This technological advancement may eliminate the need for youth to interact with clinicians onsite while maintaining clinical benefits. Easily customizable environments, a library of avatars, and the ability to synchronously deploy updates were notable achievements for remote training delivery. These enhancements highlighted the need for a one-click solution in which all connecting software is fully integrated, and they set the stage for Charisma 1.0 to move beyond its humble beginnings toward an end-to-end digital health solution for social coaching. Opportunities to integrate Charisma 1.0 into other use cases, such as cultural immersion, language training, workplace etiquette training, and vocational and rehabilitative therapies, are promising. These encouraging findings show the potential of CHARISMA-VST as a remotely accessible, low immersion virtual reality social cognition training for pediatric populations with and without neurodiverse backgrounds. Overall, these results provide initial evidence to support a shift in delivery and reflect the clinical and technological advancements in the field of low immersion virtual reality social cognition training.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by The University of Texas at Dallas Institutional Review Board. Written informed consent to participate in this study was provided by the participants’ legal guardian/next of kin.

Author contributions

MJ is a lead author and co-principal investigator and leads the youth and family innovations and Charisma Virtual Social Training labs at the Center for BrainHealth. A speech-language pathologist, she focuses her research on social–emotional development, pediatric neurodiversity, and training professionals. AT is a co-principal investigator. He leads Charisma and technology initiatives at the Center for BrainHealth. His focus is on virtual reality, human-computer interfaces, biosensors, and the use of these technologies for improving brain health. KT is a clinician and brain health coach. Her research contributions focus on data collection and analysis, manuscript preparation, and collaboration on the social cognition initiatives at the Center for BrainHealth. SL is a doctoral candidate at The University of Texas at Dallas, Center for BrainHealth. Her research focuses on brain health in pediatric and adult populations and the effects of cognitive training on overall wellbeing. ZC is a doctoral candidate affiliated with The University of Texas at Dallas, Center for BrainHealth. Her research focuses on the application of virtual reality in psychology and clinical studies. SC is the Founder and Chief Director of the Center for BrainHealth. Her research is committed to maximizing cognitive potential across the entire lifespan.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2022.1004162/full#supplementary-material

References

Abell, F., Happé, F., and Frith, U. (2000). Do triangles play tricks? Attribution of mental states to animated shapes in normal and abnormal development. Cogn. Dev. 15, 1–16. doi:10.1016/S0885-2014(00)00014-9

Bailenson, J. N., Yee, N., Blascovich, J., Beall, A. C., Lundblad, N., and Jin, M. (2008). The use of immersive virtual reality in the learning sciences: Digital transformations of teachers, students, and social context. J. Learn. Sci. 17 (1), 102–141. doi:10.1080/10508400701793141

Batastini, A. B., Paprzycki, P., Jones, A., and MacLean, N. (2021). Are videoconferenced mental and behavioral health services just as good as in-person? A meta-analysis of a fast-growing practice. Clin. Psychol. Rev. 83, 101944. doi:10.1016/j.cpr.2020.101944

Blakemore, S. J. (2019). Adolescence and mental health. Lancet 393, 2030–2031. doi:10.1016/S0140-6736(19)31013-X

Bowers, L., Huisingh, R., and LoGiudice, C. (2008). Social Language development test: Elementary. East Moline, IL: LinguiSystems.

Bowers, L., Huisingh, R., and LoGiudice, C. (2010). Social Language development test: Adolescent. East Moline, IL: LinguiSystems.

Brooks, B. L., Sherman, E. M. S., and Strauss, E. (2009). NEPSY-II: A developmental neuropsychological assessment, second edition. Child. Neuropsychol. 16, 80–101. doi:10.1080/09297040903146966

Caserman, P., Garcia-Agundez, A., Gámez Zerban, A., and Göbel, S. (2021). Cybersickness in current-generation virtual reality head-mounted displays: systematic review and outlook. Virtual Real. 25, 1153–1170. doi:10.1007/s10055-021-00513-6

Castelli, F., Happé, F., Frith, U., and Frith, C. (2000). Movement and mind: A functional imaging study of perception and interpretation of complex intentional movement patterns. NeuroImage 12, 314–325. doi:10.1006/nimg.2000.0612

Cervone, D. (2021). “The KAPA model of personality structure and dynamics,” in The handbook of personality dynamics and processes. Editor J. F. Rauthmann (Cambridge, MA: Academic Press), 601–620.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Hillsdale, NJ: Lawrence Erlbaum Associates.

Dechsling, A., Shic, F., Zhang, D., Marschik, P. B., Esposito, G., Orm, S., et al. (2021). Virtual reality and naturalistic developmental behavioral interventions for children with autism spectrum disorder. Res. Dev. Disabil. 111, 103885. doi:10.1016/j.ridd.2021.103885

Didehbani, N., Allen, T., Kandalaft, M., Krawczyk, D., and Chapman, S. B. (2016). Virtual reality social cognition training for children with high functioning autism. Comput. Hum. Behav. 62, 703–711. doi:10.1016/j.chb.2016.04.033

Farashi, S., Bashirian, S., Jenabi, E., and Razjouyan, K. (2022). Effectiveness of virtual reality and computerized training programs for enhancing emotion recognition in people with autism spectrum disorder: a systematic review and meta-analysis. Int. J. Dev. Disabil. 68, 1–17. doi:10.1080/20473869.2022.2063656

Freeman, D., Reeve, S., Robinson, A., Ehlers, A., Clark, D., Spanlang, B., et al. (2017). Virtual reality in the assessment, understanding, and treatment of mental health disorders. Psychol. Med. 47, 2393–2400. doi:10.1017/S003329171700040X

Greenleaf, W. J., and Tovar, M. A. (1994). Augmenting reality in rehabilitation medicine. Artif. Intell. Med. 6, 289–299. doi:10.1016/0933-3657(94)90034-5

Grinberg, A. M., Careaga, J. S., Mehl, M. R., and O'Connor, M. F. (2014). Social engagement and user immersion in a socially based virtual world. Comput. Hum. Behav. 36, 479–486. doi:10.1016/j.chb.2014.04.008

Grinberg, A. M. (2018). Accepting and growing from "bad therapy" as a young clinician. J. Palliat. Med. 21, 103–104. doi:10.1089/jpm.2017.0312

Heider, F., and Simmel, M. (1944). An experimental study of apparent behavior. Am. J. Psychol. 57, 243–259. doi:10.2307/1416950

Ip, H. H. S., Wong, S. W. L., Chan, D. F. Y., Byrne, J., Li, C., Yuan, V. S. N., et al. (2018). Enhance emotional and social adaptation skills for children with autism spectrum disorder: A virtual reality enabled approach. Comput. Educ. 117, 1–15. doi:10.1016/j.compedu.2017.09.010

Jacobson, D., Chapman, R., Ye, C., and Os, J. V. (2017). A project-based approach to executive education. Decis. Sci. J. Innovative Educ. 15, 42–61. doi:10.1111/dsji.12116

Jerald, J. (2015). The VR book: Human-centered design for virtual reality. New York, NY: Association for Computing Machinery; San Rafael, CA: Morgan & Claypool Publishers.

Johnson, M. T., Troy, A. H., Tate, K. M., Allen, T. T., Tate, A. M., and Chapman, S. B. (2021). Improving classroom communication: The effects of virtual social training on communication and assertion skills in middle school students. Front. Educ. (Lausanne). 6, 678640. doi:10.3389/feduc.2021.678640

Kaimara, P., Oikonomou, A., and Deliyannis, I. (2022). Could virtual reality applications pose real risks to children and adolescents? A systematic review of ethical issues and concerns. Virtual Real. 26, 697–735. doi:10.1007/s10055-021-00563-w

Kandalaft, M. R., Didehbani, N., Krawczyk, D. C., Allen, T. T., and Chapman, S. B. (2013). Virtual reality social cognition training for young adults with high-functioning autism. J. Autism Dev. Disord. 43, 34–44. doi:10.1007/s10803-012-1544-6

Kaplan-Rakowski, R., and Gruber, A. (2019). “Low-immersion versus high-immersion virtual reality: Definitions, classification, and examples with a foreign language focus [conference presentation],” in Proceedings of the Innovation in Language Learning International Conference, Florence, Italy, November 14, 2019.

Karami, B., Koushki, R., Arabgol, F., Rahmani, M., and Vahabie, A. H. (2021). Effectiveness of virtual/augmented reality-based therapeutic interventions on individuals with autism spectrum disorder: A comprehensive meta-analysis. Front. Psychiatry 12, 665326. doi:10.3389/fpsyt.2021.665326

Korkman, M., Kirk, U., and Kemp, S. (2007). NEPSY-II review. J. Psychoeduc. Assess. 28, 175–182. doi:10.1177/0734282909346716

Kriz, W. C. (2003). Creating effective learning environments and learning organizations through gaming simulation design. Simul. Gaming 34, 495–511. doi:10.1177/1046878103258201

Malihi, M., Nguyen, J., Cardy, R. E., Eldon, S., Petta, C., and Kushki, A. (2020). Short report: Evaluating the safety and usability of head-mounted virtual reality compared to monitor-displayed video for children with autism spectrum disorder. Autism 24 (7), 1924–1929. doi:10.1177/1362361320934214

Markus, H. (1997). Self-schemata and processing information about the self. J. Pers. Soc. Psychol. 35, 63–78. doi:10.1037/0022-3514.35.2.63

Mesa-Gresa, P., Gil-Gomez, H., Lozano-Quilis, J. A., and Gil-Gomez, J. A. (2018). Effectiveness of virtual reality for children and adolescents with autism spectrum disorder: An evidence-based systematic review. Sensors 18, 2486. doi:10.3390/s18082486

Miller, H. L., and Bugnariu, N. (2016). Level of immersion in virtual environments impacts the ability to assess and teach social skills in autism spectrum disorder. Cyberpsychol. Behav. Soc. Netw. 19, 246–256. doi:10.1089/cyber.2014.0682

Nijman, S. A., Veling, W., Greaves-Lord, K., Vermeer, R. R., Vos, M., Zandee, C. E. R., et al. (2019). Dynamic interactive social cognition training in virtual reality (DISCoVR) for social cognition and social functioning in people with a psychotic disorder: study protocol for a multicenter randomized controlled trial. BMC Psychiatry 19, 272. doi:10.1186/s12888-019-2250-0

Pandey, V., and Vaughn, L. (2021). The potential of virtual reality in social skills training for autism: Bridging the gap between research and adoption of virtual reality in occupational therapy practice. Open J. Occup. Ther. 9, 1–12. doi:10.15453/2168-6408.1808

Parsons, S. (2016). Authenticity in virtual reality for assessment and intervention in autism: A conceptual review. Educ. Res. Rev. 19, 138–157. doi:10.1016/j.edurev.2016.08.001

Pinheiro, J., Bates, D., DebRoy, S., Sarkar, D., and R Core Team (2020). nlme: Linear and nonlinear mixed effects models. R package version 3.1-148. Available at: https://CRAN.R-project.org/package=nlme (Accessed September 8, 2022).

R Core Team (2020). R: A language and environment for statistical computing. R Foundation for Statistical Computing. Available at: http://www.r-project.org/ (Accessed September 8, 2022).

Rizzo, A., and Kim, G. J. (2005). A SWOT analysis of the field of virtual reality rehabilitation and therapy. Presence. (Camb). 14, 119–146. doi:10.1162/1054746053967094

RStudio Team (2022). RStudio: Integrated Development for R. Boston, MA: RStudio, PBC. Available at: http://www.rstudio.com/ (Accessed September 8, 2022).

Rus-Calafell, M. R., Garety, P., Sason, E., Craig, T. J. K., and Valmaggia, L. R. (2018). Virtual reality in the assessment and treatment of psychosis: a systematic review of its utility, acceptability, and effectiveness. Psychol. Med. 48, 362–391. doi:10.1017/S0033291717001945

Scavarelli, A., Arya, A., and Teather, R. J. (2020). Virtual reality and augmented reality in social learning spaces: a literature review. Virtual Real. 25, 257–277. doi:10.1007/s10055-020-00444-8

Standen, P., and Brown, D. J. (2006). Virtual reality and its role in removing the barriers that turn cognitive impairments into intellectual disability. Virtual Real. 10, 241–252. doi:10.1007/s10055-006-0042-6

Tan, B. L., Lee, S. A., and Lee, J. (2018). Social cognitive interventions for people with schizophrenia: A systematic review. Asian J. Psychiatr. 35, 115–131. doi:10.1016/j.ajp.2016.06.013

Wickham, H., Averick, M., Bryan, J., Chang, W., McGowan, L., François, R., et al. (2019). Welcome to the tidyverse. J. Open Source Softw. 4 (43), 1686. doi:10.21105/joss.01686

Yang, D. Y. J., Allen, T., Abdullahi, S. M., Pelphrey, K. A., Volkmar, F. R., and Chapman, S. B. (2017). Brain responses to biological motion predict treatment outcome in young adults with autism receiving Virtual Reality Social Cognition Training: Preliminary findings. Behav. Res. Ther. 93, 55–66. doi:10.1016/j.brat.2017.03.014

Keywords: virtual reality, social cognition, pediatric, digital health, immersion

Citation: Johnson M, Tate AM, Tate K, Laane SA, Chang Z and Chapman SB (2022) Charisma™ virtual social training: A digital health platform and protocol. Front. Virtual Real. 3:1004162. doi: 10.3389/frvir.2022.1004162

Received: 26 July 2022; Accepted: 21 October 2022;

Published: 14 November 2022.

Edited by:

Valentino Megale, Softcare Studios, Italy

Reviewed by:

Joey Ka-Yee Essoe, Johns Hopkins Medicine, United States

Tetsunari Inamura, National Institute of Informatics, Japan

Copyright © 2022 Johnson, Tate, Tate, Laane, Chang and Chapman. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Maria Johnson, Maria.johnson@utdallas.edu
