- ¹Human-Computer Interaction, University of Hamburg, Hamburg, Germany

- ²Virtual and Augmented Reality Lab (VARLab), University of Bologna, Bologna, Italy

- ³HIT Lab NZ, University of Canterbury, Christchurch, New Zealand

Introduction: Virtual Reality (VR) applications often require two-handed interactions, which can pose accessibility challenges for users with missing limbs or limited mobility in the arms or hands. This paper investigates how to make bimanual input more accessible and inclusive using electromyography and motion tracking.

Methods: Through an inclusive user-centered design approach, we developed three interaction techniques after interviewing a person with unilateral upper limb differences. To assess baseline metrics on the efficiency and usability of the three prototypes, a user study was conducted with 26 participants without upper limb differences.

Results and discussion, study 1: We found that these interaction techniques can be as efficient as unimanual interaction, even without prior training, showing the potential of electromyography and motion tracking for bimanual interaction in VR.

Methods, study 2: In a second user study, feedback was gathered from four participants with unilateral upper limb impairments to refine the interaction techniques and identify accessibility barriers in the design.

Results and discussion, study 2: Results of the thematic analysis indicate that people with upper limb differences enjoyed the proposed bimanual interaction techniques and suggested improvements in ergonomics and system stability.

1 Introduction

Many real-world tasks are inherently bimanual, requiring coordinated hand use where each hand assumes a distinct role—typically, a primary hand for action and a secondary hand for support or stabilization (Hinckley et al., 1997; Sainburg, 2005).

Even in activities often perceived as one-handed, such as writing, the non-dominant hand plays a crucial role by steadying the paper while the dominant hand writes. Technical tasks further highlight the necessity of bimanual coordination: assembling mechanical components usually involves holding parts in place with one hand while manipulating a tool like a screwdriver or soldering iron with the other. In laboratory settings, technicians frequently use one hand to operate delicate instruments while simultaneously recording data or adjusting parameters with the other. Surgeons, too, depend on precise bimanual coordination to manipulate instruments, using one hand for action and the other for retraction or stabilization. This principle extends to interactions with visual display systems. For example, people often hold a smartphone in one hand while tapping, scrolling, or typing with the other. Similarly, laptop users may use one hand to navigate with the trackpad or mouse while the other executes keyboard commands. These everyday scenarios underscore how deeply bimanual coordination is embedded in our interactions with technical systems and digital interfaces.

Given the ubiquity and importance of bimanual coordination in everyday contexts, it is no surprise that interactive technologies, particularly immersive systems such as VR, increasingly replicate and rely on two-handed input paradigms to support naturalistic interaction. In particular, VR has gained traction across various domains, including entertainment, education, and healthcare (Al-Ansi et al., 2023; Bansal et al., 2022; Ansari et al., 2021). In VR environments, bimanual interactions are commonly replicated to enhance realism and immersion, aligning with users' preferences for bimanual engagement (Ullrich et al., 2011). However, VR has been critiqued as an ableist technology¹ (Gerling and Spiel, 2021), predominantly designed for able-bodied individuals. Many VR systems rely on dual-controller input or hand tracking with both hands, lacking essential accessibility features and alternative input methods for users with upper limb differences or limited fine motor skills (Yildirim, 2024). Upper limb differences, whether congenital or acquired, encompass a wide spectrum of variations in the structure and function of the arms, hands, and fingers (Le and Scott-Wyard, 2015; Bae et al., 2009; 2018). With over 5.6 million individuals in the United States alone affected by limb loss or differences, the imperative for inclusive design is not merely ethical but also practical and necessary².

Assistive technologies such as EMG-controlled prosthetics (Gailey et al., 2017; Liu et al., 2013), motion tracking systems (Cordella et al., 2016; Jarque-Bou et al., 2021), and gaze or voice-based controls (Porter and Zammit, 2023) have demonstrated promise in expanding access to digital interfaces. These modalities illustrate that it is possible to reimagine interaction beyond conventional bimanual input.

In VR, Gerling and Spiel (2021) proposed three key hardware design strategies: embracing diverse body types, challenging design norms, and investing in individualized solutions. However, hardware barriers persist. Mott et al. (2020) identified seven major accessibility obstacles, including the difficulty of manipulating dual motion controllers and inaccessible buttons for users with upper limb impairments. Naikar et al. (2024) found that only two out of 39 free VR experiences offered a one-handed mode, while Yildirim (2024) reported that only five out of 16 VR applications were fully usable with one hand. Beyond hardware, interaction techniques also pose challenges. Franz et al. (2023) highlighted that users prioritize enjoyment, presence, and exercise over efficiency when selecting locomotion techniques, emphasizing the need for diverse options.

Despite progress in desktop accessibility, 3D immersive systems remain constrained by outdated paradigms. Existing guidelines such as WCAG 2.1³ offer useful principles for web and mobile content but do not translate readily to VR contexts. Although initiatives such as XR Access⁴ and Meta's VRCs⁵ provide preliminary accessibility frameworks, they often focus on visual, auditory, or cognitive impairments, leaving physical impairments such as upper limb loss insufficiently addressed.

Our study addresses this gap by exploring electromyography (EMG) and motion capture technology as input methods that enable users with diverse motor abilities to engage with VR. These technologies can replicate two fundamental functions of conventional controller or hand-tracking interaction in VR. Selecting, usually done by pointing with the controller or the hand, can be realized with any motion capture system. Confirming, typically done with a controller button or a hand-tracking gesture, can be realized by applying a simple threshold function to the EMG data, so that the user only needs to briefly flex the appropriate muscle to confirm the selection. By introducing these alternative interaction techniques, we demonstrate how accessibility in VR can be improved at both the software and hardware levels, reducing reliance on standardized input methods and expanding usability for a broader range of users.

However, it is important to clarify the intended user group. The interaction techniques developed in this study are primarily suited for individuals with partial upper limb functionality, particularly those with unilateral limb differences who retain residual muscle control (e.g., in the biceps) that can be leveraged for EMG input or upper limb motion tracking (Gailey et al., 2017). For users with complete limb absence, especially without viable musculature, the current methods may not be immediately applicable. Nonetheless, the inclusive design principles and input flexibility explored here offer a foundation for extending accessibility to a broader population. Future adaptations could integrate other modalities such as foot-based EMG, voice commands, or gaze-based interaction to accommodate more severe impairments (Siean and Vatavu, 2021; Yue et al., 2023; Porter and Zammit, 2023).
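The split between selecting (driven by a pose stream) and confirming (driven by a binary signal) can be illustrated with a small state machine. This is a minimal sketch under our own assumptions, not the study software (which ran in Unity): the class `SelectConfirm` and its `update` method are hypothetical, and counting a confirmation only on the frame where the signal switches on is our design choice, so that holding a button or keeping a muscle tensed does not trigger repeated selections.

```python
from typing import Optional


class SelectConfirm:
    """Hypothetical helper: commits the currently pointed-at target when a
    confirm signal switches from off to on (e.g., a button press or an EMG
    value crossing a threshold)."""

    def __init__(self) -> None:
        self._prev_confirm = False

    def update(self, hovered: Optional[str], confirm: bool) -> Optional[str]:
        # Only the transition off -> on counts as a confirmation.
        rising_edge = confirm and not self._prev_confirm
        self._prev_confirm = confirm
        if rising_edge and hovered is not None:
            return hovered
        return None


# Usage: the pointer hovers over "blue", moves to "red", then the user confirms.
sc = SelectConfirm()
frames = [("blue", False), ("red", False), ("red", True), ("red", True), (None, False)]
print([sc.update(h, c) for h, c in frames])  # [None, None, 'red', None, None]
```

Because the confirm input is just a boolean, the same logic applies whether it comes from a controller button or from an EMG threshold.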

We developed three VR interaction techniques in an inclusive user-centered design approach. We conducted an interview with a participant with unilateral limb differences to gain insights into his experience with conventional PC gaming using mouse and keyboard, and into what could be transferred to VR input systems. One of the core results of the interview was that the participant uses the affected side as much as possible in everyday life as well as for gaming. Based on our findings, we designed three interaction techniques with varying levels of secondary hand involvement, allowing for different distributions of interaction responsibilities between the primary and the secondary (affected) hand.

To evaluate the interaction techniques, we assessed their usability and efficiency in comparison to one-handed interaction with a single motion controller, leading to our first research question:

RQ1 How do the proposed inclusive bimanual interaction techniques differ in usability and efficiency?

To address this research question, we conducted a user study with 26 participants without upper limb differences.

Building on these findings, we then developed alternative input methods that enabled the use of the affected side for pointing, confirming, or both. This led to our second research question:

RQ2 How do people with upper limb differences assess different levels of responsibility of the secondary hand?

To answer RQ2, we conducted an additional user study with four participants with unilateral upper limb differences.

In the first phase of this iterative user-centered design process (Houde, 1992; Buxton and Sniderman, 1980), we conducted an interview with a single experienced participant to learn about his experience with PC and gaming console usage. Although this may reduce the generalizability of the findings and increase the risk of bias in early design iterations, the subsequent iterations of the user-centered design process involve a broader audience, including three additional participants with unilateral limb differences. This approach is expected to strengthen the validity of our findings by incorporating diverse perspectives and refining the design based on broader user feedback (Holtzblatt and Beyer, 1997).

The contribution of this paper can be summarized as follows:

The paper is structured as follows: Section 2 reviews related work on bimanual interaction, adaptive hardware, and VR accessibility for users with upper limb differences. Section 3 details the materials, methods, and user-centered design approach used to develop the interaction techniques. Section 4 presents the design process, including an interview with a one-handed participant who is experienced in computer and gaming console usage, a usability study with 26 participants, and feedback from participants with upper limb differences. Section 5 discusses the findings and future research directions, while Section 6 concludes with key takeaways and recommendations for improving VR accessibility.

2 Related work

This section reviews adaptive hardware and sensor-based input systems designed to support users with motor impairments. It highlights how these innovations have shaped accessible computing and identifies key limitations in their application to immersive VR systems.

2.1 Adaptive hardware and alternative input systems

In the field of PC and console gaming, alternative input devices such as the Xbox Adaptive Controller⁶ and Quadstick⁷ are available, providing customizable configurations with external switches, joysticks, and sip-and-puff devices that allow users with varying motor abilities to interact with digital systems (Fanucci et al., 2011; Dicianno et al., 2010; Bierre et al., 2005). Additionally, ergonomic one-handed keyboards and foot-operated input interfaces further extend accessibility options (Sears et al., 2007; Angelocci et al., 2008; Velloso et al., 2015). Voice-controlled interfaces and eye-tracking systems also offer viable solutions for hands-free interaction, particularly for users with severe physical disabilities (Kanakaprabha et al., 2023; Majaranta and Räihä, 2002; Pradhan et al., 2018). These systems collectively expand the range of accessible input methods, enabling personalized configurations tailored to the user's motor capabilities.

2.2 Sensor-based input systems: EMG and motion tracking

Emerging wearable sensor-based input systems, such as electromyography (EMG) and motion tracking, offer non-invasive alternatives for assistive technology. EMG-based systems detect muscle activity, translating neural signals into functional movements and enabling users to control devices with minimal physical effort. Many systems provide real-time, high-fidelity muscle data and are widely applied in rehabilitation, prosthetic control, and ergonomic research (Merletti and Farina, 2016). Research has demonstrated EMG's potential for prosthetic adaptation and assistive interfaces, improving both digital accessibility and physical mobility for individuals with motor impairments (Choi and Kim, 2007; Resnik et al., 2018; Subasi, 2019). Beyond EMG, motion-tracking technologies contribute to mobility solutions, rehabilitation, and assistive robotics. Such systems can be used to analyze movement patterns, providing objective insights for rehabilitation interventions (Sletten et al., 2021; Nirme et al., 2020; Wan Idris et al., 2019). Studies highlight the role of motion tracking in prosthetic control, where it enhances the precision of powered prosthetic devices, and in upper limb rehabilitation (Varghese et al., 2018; Cowan et al., 2012; Sethi et al., 2020). Integrating biological and sensor-based input systems into VR accessibility solutions has the potential to redefine interaction paradigms, reducing reliance on conventional controllers and expanding usability for individuals with diverse mobility needs. As these technologies evolve, they present new opportunities for inclusive and adaptive interaction techniques in immersive environments.

2.3 Interaction design strategies and user autonomy in VR

While sensor-based and adaptive hardware solutions expand the technical toolkit for accessibility, the design of interaction strategies in VR plays an equally critical role in shaping user experience. Yamagami et al. (2022) proposed a design space for mapping unilateral input to bimanual VR interactions, demonstrating that system-assisted techniques can help compensate for physical limitations. However, their findings also revealed a significant trade-off: heavy reliance on automated assistance can diminish user autonomy, engagement, and self-efficacy. These insights underscore the importance of developing interaction techniques that are both accessible and user-driven—preserving agency and fostering meaningful engagement in immersive environments.

2.4 Gaps in VR accessibility

This review of related work reveals key limitations in the accessibility of VR systems, particularly for users with upper limb differences. While adaptive hardware and alternative input methods—such as EMG and motion tracking—have made significant strides in traditional computing and rehabilitation contexts, their integration into immersive VR remains limited. To address this gap, the present study focuses on developing autonomous, user-driven interaction techniques that do not rely on system-assisted interventions or mirrored hand behaviors. Instead, we propose flexible input strategies that align with individual motor capabilities, supporting greater inclusivity and independence within immersive environments.

3 Materials and methods

This section outlines the hardware design choices and the user-centered design process used in developing and evaluating the interaction techniques.

3.1 Materials

Features designed for people with disabilities can also benefit a wider audience. This is referred to as the curb-cut effect (Blackwell, 2017; Lawson, 2015). Curb cuts are small ramps that make sidewalks accessible for wheelchair users, but they are also appreciated by people with luggage or strollers, or by those who have difficulty climbing steps. The curb-cut effect is related to the idea of universal design, a set of guidelines for creating environments that are usable by everyone, independent of their abilities. Universal design consists of seven principles (Story et al., 1998; Vanderheiden, 1998):

1. Equitable Use: The design is useful to people with diverse abilities.

2. Flexibility in Use: The design accommodates a wide range of individual preferences and abilities.

3. Simple and Intuitive Use: Use of the design is easy to understand, regardless of the user’s experience, knowledge, language skills, or current concentration level.

4. Perceptible Information: The design communicates necessary information effectively to the user, regardless of ambient conditions or the user’s sensory abilities.

5. Tolerance for Error: The design minimizes hazards and the adverse consequences of accidental or unintended actions.

6. Low Physical Effort: The design can be used efficiently and comfortably and with a minimum of fatigue.

7. Size and Space for Approach and Use: Appropriate size and space are provided for approach, reach, manipulation, and use regardless of the user's body size, posture, or mobility.

These guidelines were central to our hardware selection, ensuring that each component supports accessibility, adaptability, and inclusivity. This is reflected in our choices of EMG technology, marker-based motion tracking, and VR hardware, all selected to accommodate diverse motor abilities and provide flexible interaction options.

3.2 Electromyography (EMG) technology

EMG is an emerging technology that is becoming increasingly commercially available⁸,⁹,¹⁰. Wireless solutions exist that offer a good range of movement and are resistant to water or sweat. The sensor captures the electrical signal that occurs when a muscle contracts. It can be attached to various superficial muscles across the body that the user can consciously control. This makes EMG usable for people with diverse abilities and health conditions, and it can also be adapted to individual preferences or abilities (Guidelines 1 and 2). Moreover, the sensors and the whole system can be small, and the EMG system can be operated by flexing the corresponding muscle without moving any body part. This not only saves space but also allows EMG sensors to be used as an input method without being observable by others (Guideline 7).

The EMG signal was acquired using the Delsys Trigno Lite System¹¹ together with the Trigno Avanti Sensor¹². The sensors were set to a mode that outputs rectified EMG data (EMG RMS, 148 sa/s RMS update rate, 100 ms RMS window, 20–450 Hz EMG bandwidth). Participants attached the sensor with medical tape to the upper arm on their secondary side, over the biceps. They were then instructed to tighten the biceps without moving the arm. For classification, we used a threshold technique. During preliminary tests, we observed the resting potential to be below 0.01 mV, while even slight contractions of the biceps usually exceeded 0.05 mV. We therefore set the threshold to 0.03 mV, which was easy to activate while keeping false-positive classifications low. During both studies, participants could practice activating the threshold and fine-tune it if necessary until they felt comfortable. The data was transferred via Bluetooth to the Trigno Lite System, which is connected via USB to the PC running the necessary software, and was live-streamed into Unity via the API.
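The threshold classification can be sketched as follows. This is an illustrative sketch, not the study implementation: it assumes the RMS envelope arrives as a sequence of values in millivolts, reuses the 0.03 mV threshold reported above, and reports only contraction onsets (samples where the envelope first rises above the threshold) rather than every sample above it; the function name `detect_contractions` is hypothetical.

```python
from typing import Iterable, List

DEFAULT_THRESHOLD_MV = 0.03  # threshold value reported in the text


def detect_contractions(rms_mv: Iterable[float],
                        threshold_mv: float = DEFAULT_THRESHOLD_MV) -> List[int]:
    """Return the sample indices at which the EMG RMS envelope rises above
    the threshold (contraction onsets). Samples that stay above the
    threshold after an onset are not reported again."""
    onsets: List[int] = []
    above = False
    for i, value in enumerate(rms_mv):
        is_above = value > threshold_mv
        if is_above and not above:
            onsets.append(i)
        above = is_above
    return onsets


# Illustrative values only (not recorded data): rest, a brief flex, rest, a second flex.
stream = [0.004, 0.006, 0.041, 0.058, 0.047, 0.008, 0.005, 0.062, 0.009]
print(detect_contractions(stream))  # [2, 7]
```

Passing a different `threshold_mv` corresponds to the per-participant fine-tuning described above.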

3.3 Motion tracking setup

There are several approaches to motion tracking. Most current VR headsets come with built-in, camera-based hand tracking, such as the Pico 4¹³, Varjo XR-3¹⁴, or Meta Quest¹⁵. These algorithms are usually optimized to detect hands with five fingers. For other hand structures, tracking quality can decrease, or the hand may not be recognized at all. However, some tracking technologies are independent of the appearance of the tracked body part, such as marker-based tracking with infrared cameras (e.g., motion capture systems by Optitrack¹⁶, Vicon¹⁷, or Qualisys¹⁸), which makes them usable with diverse bodies (Guideline 1). These systems are also flexible to use, as the markers can be placed on different body parts (Guideline 2). In addition, the markers can be small and do not require a large interaction space for the user (Guideline 7).

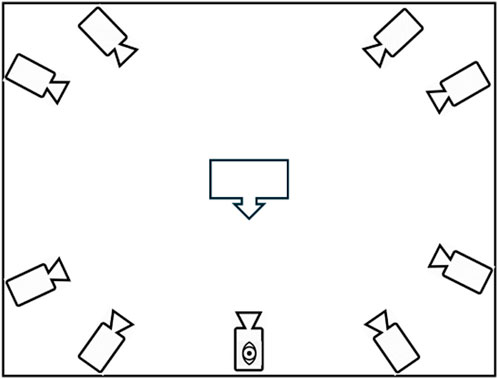

We used the Qualisys Miqus Motion Capture System, which provides high-accuracy, real-time motion tracking data. In our setup, we used nine cameras¹⁹ (see Figure 1): eight mounted to the walls at a height of approximately 3 m and one at eye level, to ensure that the user's hands were tracked at all times and to avoid occlusion. The cameras detect passive markers at 120 Hz. We used a marker cluster²⁰ for rigid body tracking, attached with a stretchable strip. The system is connected to the PC via LAN, and the data was live-streamed directly into Unity via the API.

Figure 1. Schematic illustration of the motion tracking setup. The user (arrow) was sitting on a chair, facing a tracking camera at eye level (marked with an eye). Eight cameras were mounted on the walls at a height of approximately 3 m.

3.4 VR system and computing setup

We used the Meta Quest 3 with one motion controller. It provides a visible field of view (FoV) of 110° horizontally and 96° vertically, a

4 User-centered design

We followed a user-centered design process, similar to Harte et al. (2017), based on the process model of human-centered design defined in ISO 9241-210²⁴. In the first step, we invited a person with unilateral limb differences to share his experience with gaming and PC usage as well as his wishes and ideas for VR input methods. From the results, we derived concepts for novel VR interaction techniques. To evaluate the interaction techniques, we conducted two studies: first, we tested the interaction methods in terms of usability and efficiency with a broad audience; second, we invited four participants with unilateral limb differences to provide feedback.

4.1 Initial data acquisition and development of the user interfaces

This section describes the first phase of the user-centered design process, where we focused on understanding user needs and gathering insights.

4.1.1 Methodology

To achieve this, we conducted a semi-structured interview with a person with unilateral upper limb differences to learn about his habits and preferences when using PC and gaming consoles.

The interview aimed to understand how individuals with unilateral limb impairments interact with conventional input devices (e.g., keyboard, mouse, controllers), the challenges they encounter, and potential strategies to overcome them. Additionally, we explored the participant's insights on how the position of the affected limb could be effectively visualized in VR. In this exploratory phase of our research (Hanington, 2012), our objective was to gather in-depth qualitative insights that could inform the design of our prototypes and provide initial guidance for our project. The data were analyzed using content analysis with inductive coding. Based on our findings, we developed three interaction techniques.

We recruited one male participant from the age group 25 to 34 through personal contact, who stated having a missing hand from birth. He is a computer science student with experience in both PC and console gaming and has previously used a VR headset. His background provided a strong foundation for the user-centered design process, as he could offer insights not only from an end-user perspective on accessibility in PC and gaming environments but also from a technical standpoint, given his expertise in hardware and software capabilities.

This also aligns with the recommendation by Kruse et al. (2022), which emphasizes the importance of involving experienced users in the evaluation process. Since first-time VR users may struggle to identify design flaws due to the novelty of the technology, selecting a participant with prior exposure to VR ensures more informed feedback, leading to a more effective assessment of accessibility challenges. The participant also volunteered to take part in the study presented in Section 4.3.

Questions were designed to elicit information on the participant's daily use of input devices (e.g., keyboard, mouse, controllers, VR systems), his strategies for interacting with both conventional and VR systems, and the challenges he faced in various contexts. Additionally, we explored his preferences for avatar representations, control schemes, and feedback mechanisms in VR environments. The participant was encouraged to discuss his ideal VR experience, with particular attention to how control systems could be made more accessible for users with upper limb differences. To ensure thorough documentation, the interview was audio-recorded and later transcribed for analysis.

4.1.2 Summary of interview results

The interview provided insights into the participant's adaptations in gaming due to unilateral upper limb differences. He primarily uses a keyboard with his affected left hand and a right-handed mouse, remapping controls for better usability, for example by placing functions near the WASD keys and using a mouse with numerous programmable keys. Despite extensive gaming experience, he faces challenges with devices like the Nintendo Switch, notably in Mario Kart, where control remapping is not possible, restricting the level of control he has in the game.

Regarding VR, he expressed frustration with two-handed setups, as he could only utilize one control option in shooter games, limiting his gameplay experience. He also emphasized a desire for more customizable control options and locomotion methods in VR systems.

Overall, the participant seeks adaptable control schemes and clear feedback mechanisms in both traditional gaming and virtual environments.

Moreover, the interview included the possibility for the participant to try out the EMG system. He was asked to try out different muscles on his affected arm that seemed suitable for him to use for VR interaction. The signal strength was visible on a monitor. He preferred using the biceps, as it balances ease of use and signal strength for him.

Additionally, he stated that he prefers abstract or creative avatars over realistic ones.

4.1.3 Conclusion

In summary, flexible control schemes, adaptability, and intuitive input methods appear to be crucial for users of VR systems. The participant's desire for individual customization suggests that users may expect to tailor their control experience to personal needs and preferences. His preference for abstract or creative avatars suggests that realistic representations in VR may not appeal to every user, possibly due to the uncanny valley effect. Furthermore, his choice of the biceps as the preferred muscle for EMG input, based on its balance of ease of use and signal strength, underscores the importance of efficient input methods for such applications. These insights can assist developers and designers in adopting user-centered approaches and enhancing accessibility and user experience in future VR systems.

Insights related to upper-limb use in gaming/VR have inspired the development of interaction techniques (e.g., mapping all functions onto one hand is used as a comparative condition, as it appears to be a viable alternative to the presented EMG/tracker interaction). Other responses represent subjective opinions or individual user behaviors and will therefore not be directly incorporated into the design. Instead, various hand visualizations will be developed, which will later be tested with four participants.

4.1.4 Design of interaction techniques

Our design was guided by the central question: What role should the secondary hand play in VR? To explore this, we employed motion tracking for pointing and EMG signals for confirmation, investigating three distinct techniques. The first technique relied solely on motion tracking for pointing on the affected side, allowing users to interact through hand movements. The second technique focused exclusively on EMG-based confirmation, enabling interaction through muscle signals. The third technique combined both motion tracking and EMG confirmation on the affected side, aiming to balance precision and usability. By evaluating these techniques, we sought to determine the most effective method for enhancing accessibility and interaction in VR environments.

To systematically evaluate these techniques, we defined four interaction conditions, each varying the role of the secondary hand in VR interaction:

Figure 2. A participant with unilateral upper limb differences illustrating the different tested interaction techniques based on EMG and motion tracking. From left to right: Interaction with only one motion controller, secondary hand is responsible for confirming, secondary hand is responsible for selecting, and secondary hand has confirming and selecting responsibility.
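The four conditions can be summarized as combinations of a pointing source and a confirmation source. The mapping below is our reading of the condition labels (C, C + EMG, C + MT, EMG + MT) together with the Figure 2 caption, where in C + EMG the secondary hand confirms and in C + MT it selects; the enum, class, and field names are hypothetical and only serve to make the division of responsibilities explicit.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Pointer(Enum):
    CONTROLLER = "controller ray (primary hand)"
    MOTION_TRACKING = "marker-tracked forearm (secondary side)"


class Confirm(Enum):
    BUTTON = "controller button (primary hand)"
    EMG = "biceps EMG threshold (secondary side)"


@dataclass(frozen=True)
class Condition:
    name: str
    pointer: Pointer
    confirm: Confirm

    def secondary_roles(self) -> List[str]:
        """Sub-tasks handled by the secondary (affected) side in this condition."""
        roles: List[str] = []
        if self.pointer is Pointer.MOTION_TRACKING:
            roles.append("select")
        if self.confirm is Confirm.EMG:
            roles.append("confirm")
        return roles


CONDITIONS = [
    Condition("C", Pointer.CONTROLLER, Confirm.BUTTON),
    Condition("C + EMG", Pointer.CONTROLLER, Confirm.EMG),
    Condition("C + MT", Pointer.MOTION_TRACKING, Confirm.BUTTON),
    Condition("EMG + MT", Pointer.MOTION_TRACKING, Confirm.EMG),
]

for cond in CONDITIONS:
    print(f"{cond.name:<9} secondary side: {cond.secondary_roles() or ['none']}")
```

Treating pointing and confirming as independent inputs is what makes the 2 × 2 structure of the conditions visible: each condition shifts zero, one, or both sub-tasks to the secondary side.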

4.2 Usability and efficiency study

In the context of universal design, we investigated the novel interaction techniques in terms of efficiency and usability in order to locate and eliminate possible design errors. For this purpose, we invited 26 student participants to test the interaction techniques. We did not restrict this study to people with upper limb differences, in order to obtain feedback from a broader audience, including people with VR experience. This allowed us to present a more refined prototype in the third stage of this user-centered design process, which focuses on feedback from people with unilateral limb differences, who could then concentrate on user-specific aspects.

4.2.1 User study setup

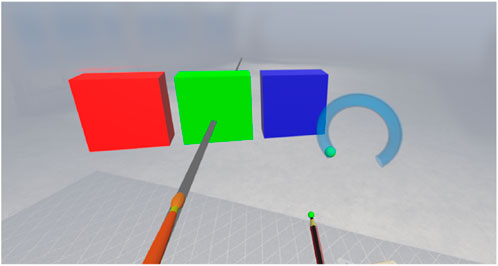

The virtual version of the Quest controller in the primary hand was always visible in VR. When motion tracking was used, a gray ray was visible, with its origin spatially registered to the position of the marker on the (secondary) lower arm. If motion tracking was not used, the ray was attached to the VR controller in the primary hand. The EMG sensor was not visualized in the virtual environment (VE). The setup for right-handed participants can be seen in Figure 3.

Figure 3. A screenshot from the perspective of a right-handed participant in the EMG + MT condition.
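Registering the ray to the tracked forearm amounts to turning a rigid-body pose (position plus orientation) into a ray and testing it against the selectable color panels. The sketch below rests on assumptions that the text does not state: the ray points along the rigid body's local +z axis, orientations are unit quaternions in (w, x, y, z) order, and each panel is approximated by a sphere of fixed radius. All function names are hypothetical, and a real implementation would have to reconcile the differing coordinate conventions of Qualisys, Unity, and the Quest.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate vector v by unit quaternion q (equivalent to q * v * q^-1)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q_vec, v)
    tx = 2 * (y * vz - z * vy)
    ty = 2 * (z * vx - x * vz)
    tz = 2 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_vec, t)
    return (vx + w * tx + (y * tz - z * ty),
            vy + w * ty + (z * tx - x * tz),
            vz + w * tz + (x * ty - y * tx))


def pose_to_ray(position: Vec3, orientation: Quat,
                local_forward: Vec3 = (0.0, 0.0, 1.0)) -> Tuple[Vec3, Vec3]:
    """Ray origin at the tracked marker cluster, direction = rotated forward axis."""
    return position, rotate(orientation, local_forward)


def pick_target(origin: Vec3, direction: Vec3,
                targets: Dict[str, Vec3], radius: float = 0.05) -> Optional[str]:
    """Return the hit target closest along the ray, or None if nothing is hit."""
    norm = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / norm for c in direction)
    best, best_t = None, math.inf
    for name, center in targets.items():
        oc = tuple(c - o for c, o in zip(center, origin))
        t = sum(a * b for a, b in zip(oc, d))  # distance along the ray to closest approach
        if t < 0:
            continue  # target lies behind the ray origin
        dist_sq = sum(a * a for a in oc) - t * t  # squared distance from ray to center
        if dist_sq <= radius * radius and t < best_t:
            best, best_t = name, t
    return best


# A forearm pose rotated 90 degrees about the y axis points along +x.
half = math.sqrt(0.5)
origin, direction = pose_to_ray((0.0, 1.0, 0.0), (half, 0.0, half, 0.0))
panels = {"red": (1.0, 1.0, 0.0), "blue": (0.0, 1.0, 1.0)}
print(pick_target(origin, direction, panels))  # red
```

The same `pick_target` call works for the controller-attached ray in the conditions without motion tracking; only the source of the pose changes.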

We designed a two-part task: i) a primary task requiring higher dexterity, always performed with the primary hand, and ii) a secondary task requiring less dexterity, with different levels of responsibility for the secondary hand. The primary task required participants to move a virtual ball through a pipe using a virtual pen. The color of the ball changed regularly, and the ball could only be moved if the color of the pen matched the ball color. The pen color could be changed by pointing at the corresponding color panel (either with the controller or with the motion-tracked arm, depending on the condition), and the selection was confirmed either by pressing a button on the controller or by tensing the biceps with the EMG sensor. The four conditions are described in detail in the following:

We used 8 different tubes with different levels of curvature. Each appeared twice in each condition, resulting in 16 trials per condition. The virtual setup was adjusted to fit the handedness of the participant. The pen initially appeared closer to the primary side of the participant, and the color selection panel appeared on the contralateral side, facing toward the user’s head at a 45-degree angle. This ensured that for the conditions including motion tracking (on the secondary hand), the hand would not interfere with the primary hand holding the pen. The ball always appeared on the left side of the tube and ended on the right side.
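The per-condition trial set and the handedness-dependent layout can be sketched as follows. The counts (8 tubes, each shown twice, giving 16 trials) and the rule that the pen starts on the primary side with the color panel on the contralateral side come from the text; shuffling the trial order with a seeded random generator, and the function names `trial_order` and `layout`, are our own assumptions for illustration.

```python
import random
from typing import Dict, List

N_TUBES = 8        # tubes with different curvature (from the text)
REPETITIONS = 2    # each tube appears twice per condition (from the text)


def trial_order(seed: int) -> List[int]:
    """16 trials per condition: every tube index twice, in shuffled order.
    Shuffling is an assumption; the text does not state the order."""
    trials = [tube for tube in range(N_TUBES) for _ in range(REPETITIONS)]
    random.Random(seed).shuffle(trials)
    return trials


def layout(handedness: str) -> Dict[str, str]:
    """Pen on the primary side, color panel on the contralateral side."""
    if handedness not in ("left", "right"):
        raise ValueError("handedness must be 'left' or 'right'")
    other = "left" if handedness == "right" else "right"
    return {"pen": handedness, "color_panel": other}


order = trial_order(seed=1)
print(len(order), sorted(order) == sorted(list(range(N_TUBES)) * 2))  # 16 True
print(layout("left"))  # {'pen': 'left', 'color_panel': 'right'}
```

Seeding the generator per participant and condition keeps the trial sequence reproducible for later analysis.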

We chose a within-subjects design with four conditions (C, C + EMG, C + MT, EMG + MT), counterbalanced using a Latin square.
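The text does not specify which Latin square was used. One common choice for an even number of conditions is a balanced (Williams) Latin square, in which every condition appears once in each position and every condition immediately precedes every other condition exactly once. The sketch below, with a hypothetical function name, constructs such a square for the four conditions; participant k would then be assigned row k mod 4.

```python
def balanced_latin_square(conditions):
    """Williams design for an even number of conditions.

    Row p is the base sequence 0, 1, n-1, 2, n-2, ... shifted by p (mod n).
    """
    n = len(conditions)
    if n % 2 != 0:
        raise ValueError("this construction needs an even number of conditions")
    rows = []
    for p in range(n):
        row = []
        low, high = 0, 0  # counters walking up from 0 and down from n-1
        for i in range(n):
            if i < 2 or i % 2 == 1:
                value = low
                low += 1
            else:
                value = n - 1 - high
                high += 1
            row.append(conditions[(value + p) % n])
        rows.append(row)
    return rows


CONDITIONS = ["C", "C + EMG", "C + MT", "EMG + MT"]
orders = balanced_latin_square(CONDITIONS)
```

With 26 participants assigned cyclically, rows 0 and 1 are each used seven times and rows 2 and 3 six times, so the counterbalancing cannot be perfectly even.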

4.2.2 Hypotheses

Researchers have shown that bimanual interaction can be more efficient than unimanual interaction (Lévesque et al., 2013), and there is some indication that people prefer bimanual over unimanual interaction (Ullrich et al., 2011). We developed three novel bimanual interaction techniques based on EMG and motion tracking, and we hypothesize that they will offer better usability and efficiency than unimanual interaction. However, due to the novelty and unfamiliarity of the system and the technology used, especially EMG, we expect increased mental and physical demand, while we expect the other NASA-TLX sub-scales to improve. Our hypotheses are the following:

H1 Task completion time is lower for C + EMG, C + MT, and EMG + MT than for C, because people are used to working with both hands simultaneously.

H2 Usability is better for C + EMG, C + MT, and EMG + MT than for C, because people prefer bimanual over unimanual task completion (Ullrich et al., 2011).

Regarding the NASA-TLX sub-scales, we do not expect a change in overall task load, because we expect the sub-scales to shift in different directions: the overall score may therefore have a similar magnitude but a different composition.
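The argument that opposing sub-scale shifts can leave the overall score unchanged can be made concrete with the unweighted ("raw") NASA-TLX, computed here as the mean of the six sub-scale ratings. Whether the study used the raw or the weighted variant is not stated, and the ratings below are invented purely for illustration; they are not study data.

```python
SUBSCALES = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def raw_tlx(ratings):
    """Unweighted NASA-TLX: mean of the six sub-scale ratings (0-100 scale)."""
    missing = set(SUBSCALES) - set(ratings)
    if missing:
        raise ValueError(f"missing sub-scales: {sorted(missing)}")
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)


# Hypothetical ratings, not data from the study.
unimanual = {"mental": 40, "physical": 30, "temporal": 50,
             "performance": 40, "effort": 50, "frustration": 40}
bimanual = {"mental": 55, "physical": 45, "temporal": 40,
            "performance": 30, "effort": 45, "frustration": 35}

# Mental and physical demand rise by 15 each, while the other four sub-scales
# fall by 30 in total, so both profiles share the same overall score (about 41.7).
```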

In particular, we hypothesize:

H3a Mental Demand is higher for the three proposed interaction techniques, compared to unimanual interaction.

H3b Physical Demand is higher for the three proposed interaction techniques, compared to unimanual interaction.

H3c Temporal Demand is lower for the three proposed interaction techniques, compared to unimanual interaction.

H3d Performance is higher for the three proposed interaction techniques, compared to unimanual interaction.

H3e Effort is lower for the three proposed interaction techniques, compared to unimanual interaction.

H3f Frustration is lower for the three proposed interaction techniques, compared to unimanual interaction.

4.2.3 Participants

We recruited 26 participants (7 female, 19 male) via the student participant pool. Participants received either financial compensation or study credits, as they preferred. Eight participants were aged 18-24, 16 were aged 25-34, and two were aged 35-44. Three participants were left-handed and 23 right-handed. None of them had upper limb differences.

4.2.4 Procedure

After giving informed consent, participants filled out the demographic part of the questionnaire. The EMG sensor was attached to the biceps of the secondary arm, and participants could see the EMG signal on a monitor in front of them to practice the activation. They were given as much time as necessary to practice flexing the biceps to produce a short signal. Next, the marker of the motion tracking system was attached to the lower arm on the secondary side. Participants were then asked to put on the HMD and familiarize themselves with the task environment. In each condition, the task was explained, and participants could ask questions and practice until they felt ready to start. They then completed 16 trials. After each condition, participants answered the System Usability Scale (Brooke, 1996) and the NASA Task Load Index (Hart and Staveland, 1988).

4.2.5 Results

We evaluated the interaction techniques in terms of efficiency, measured by task completion time; usability, measured with the System Usability Scale (Brooke, 1996); and task load, measured with the NASA Task Load Index (Hart and Staveland, 1988). The results and their analysis are presented in the following.
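For reference, the standard SUS scoring rule (Brooke, 1996) converts ten 1-5 Likert responses into a 0-100 score: odd-numbered (positively worded) items contribute the response minus 1, even-numbered (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5. The function name below is illustrative.

```python
def sus_score(responses):
    """Compute a System Usability Scale score from ten responses (1-5) in item order."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers from 1 to 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


neutral = sus_score([3] * 10)  # all neutral answers give 50.0
```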

4.2.5.1 Task completion time

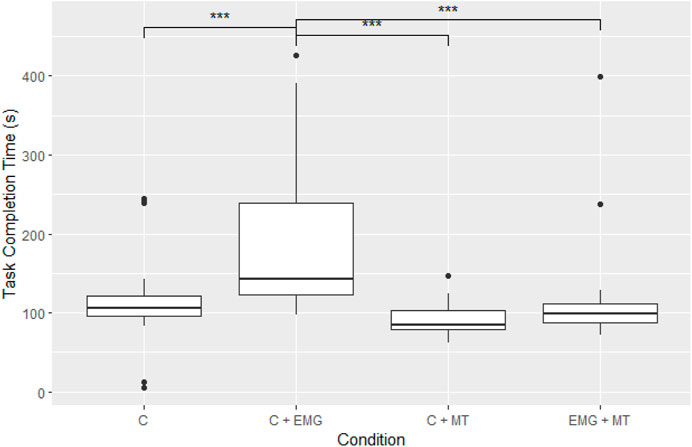

For analyzing task completion time, we summed the times of all 16 trials per condition for each participant. A Shapiro-Wilk test and inspection of the Q-Q plot showed that the normality assumption for residuals was not met. We therefore analyzed the data with a Friedman test, which revealed a statistically significant difference in task completion time between the four input methods.
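The aggregation and test described above can be sketched in dependency-free Python. The trial tuple format and function names are hypothetical, and the software the authors used is not stated; in practice, `scipy.stats.friedmanchisquare(*samples)` computes the same tie-corrected statistic. To stay self-contained, the p-value uses the closed-form chi-square tail for three degrees of freedom, which only holds for exactly four conditions.

```python
import math
from collections import defaultdict


def summed_completion_times(trials):
    """Sum trial times per (participant, condition) from (participant, condition, seconds)."""
    totals = defaultdict(float)
    for participant, condition, seconds in trials:
        totals[(participant, condition)] += seconds
    return totals


def average_ranks(values):
    """Rank values 1..k within one participant; ties receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        for pos in range(start, end + 1):
            ranks[order[pos]] = (start + end) / 2 + 1
        start = end + 1
    return ranks


def friedman_test(blocks):
    """Tie-corrected Friedman chi-square for n participants x 4 conditions."""
    n, k = len(blocks), len(blocks[0])
    if k != 4:
        raise ValueError("closed-form p-value implemented for k = 4 only")
    rank_sums = [0.0] * k
    tie_term = 0
    for row in blocks:
        for c, r in enumerate(average_ranks(row)):
            rank_sums[c] += r
        for value in set(row):
            t = row.count(value)
            tie_term += t ** 3 - t
    q = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    q /= 1 - tie_term / (n * k * (k * k - 1))
    q = max(q, 0.0)  # guard against tiny negative values from rounding
    # Survival function of the chi-square distribution with 3 degrees of freedom.
    p = math.erfc(math.sqrt(q / 2)) + math.sqrt(2 * q / math.pi) * math.exp(-q / 2)
    return q, p
```

Each row of `blocks` holds one participant's four summed times in a fixed condition order.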

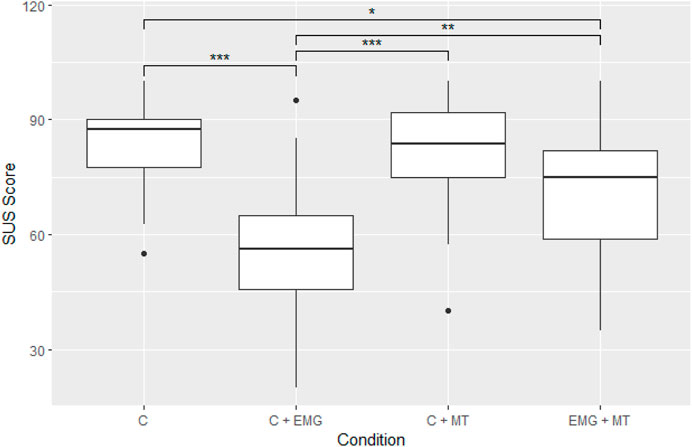

4.2.5.2 Usability

A Shapiro-Wilk test and inspection of the Q-Q plot showed that the normality assumption was met. A one-way repeated measures ANOVA was performed to compare the effect of input method on system usability and revealed a significant difference between at least two conditions.
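A one-way repeated measures ANOVA partitions the total variance into a condition effect, a participant effect, and residual error, and tests the condition effect against the error term. The sketch below computes the F statistic and degrees of freedom from a participants-by-conditions table; it applies no sphericity correction, and the function name is hypothetical. Library routes include `statsmodels.stats.anova.AnovaRM`, and a p-value can be obtained with `scipy.stats.f.sf(F, df_conditions, df_error)`.

```python
def rm_anova_oneway(data):
    """One-way repeated measures ANOVA.

    data[i][j] is the score (e.g., SUS) of participant i in condition j.
    Returns (F, df_conditions, df_error). No sphericity correction is applied.
    """
    n, k = len(data), len(data[0])
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # residual after removing both effects
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    f_value = (ss_cond / df_cond) / (ss_error / df_error)
    return f_value, df_cond, df_error
```

Removing the participant effect is what distinguishes this from a between-subjects ANOVA: stable individual differences in SUS ratings do not inflate the error term.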

4.2.5.3 Task load

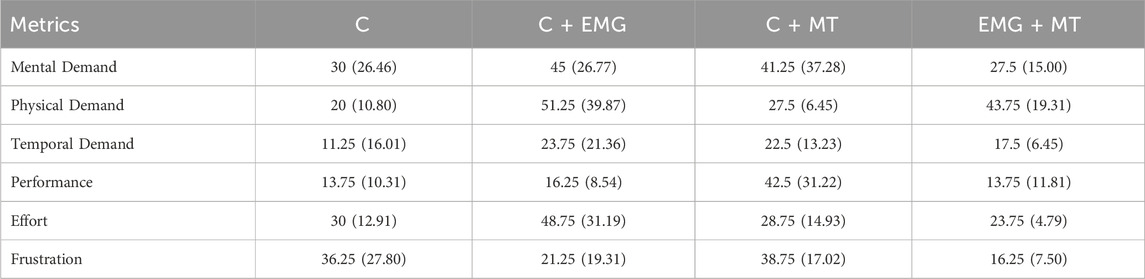

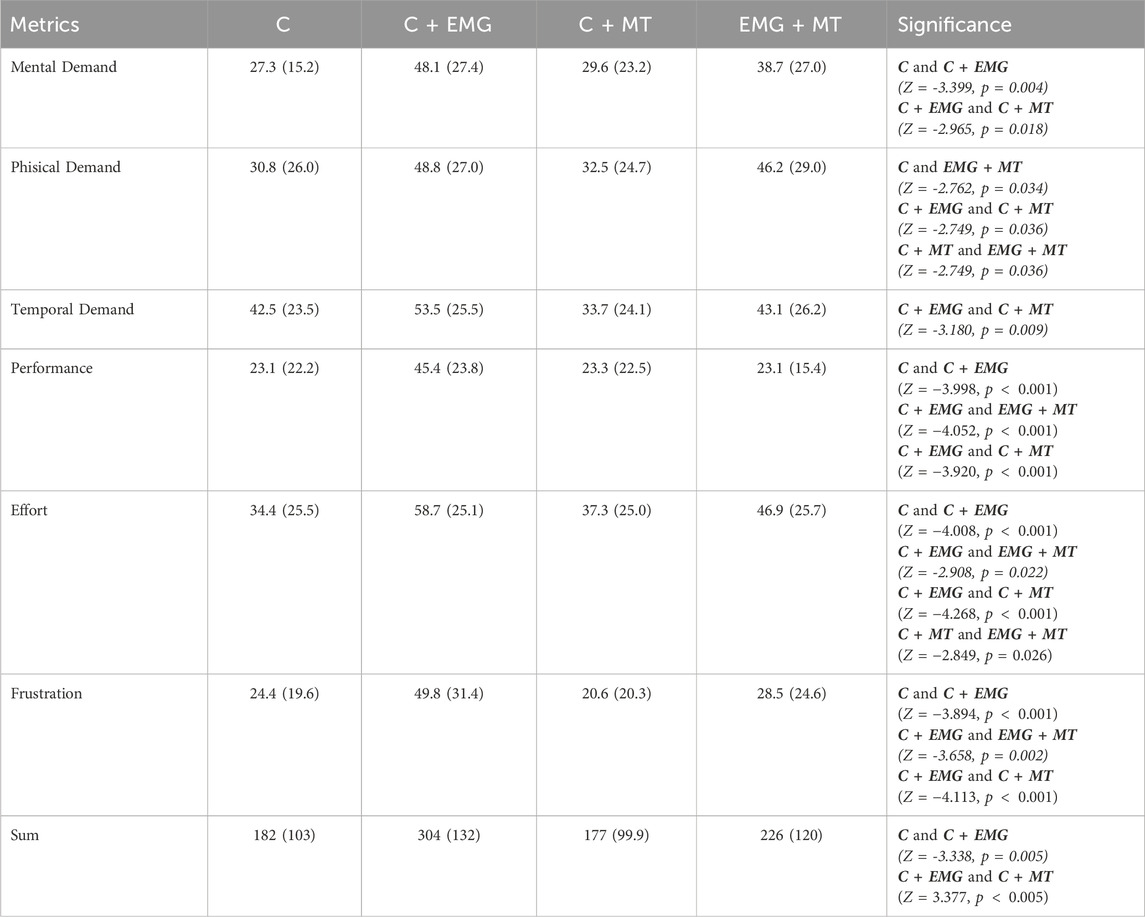

We evaluated the NASA-TLX (Hart and Staveland, 1988) results for each of the six sub-scales. The means and SDs are listed in Table 1.

Table 1. Mean and standard deviations (SDs) of the NASA-TLX questionnaire and statistical comparisons.

A Shapiro-Wilk test and inspection of the Q-Q plot showed that the normality assumption for residuals was not met for every sub-scale. A Friedman test showed a significant effect of interaction method on each sub-scale (see Table 1).

Post-hoc tests with Bonferroni correction revealed significant differences between interaction methods in each NASA-TLX sub-scale, as listed in Table 1.
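With four conditions there are six pairwise comparisons, so the Bonferroni correction multiplies each raw p-value by six (equivalently, tests each at 0.05 / 6, about 0.0083). The text does not name the pairwise test itself (for ordinal ratings a Wilcoxon signed-rank test is a common choice); the sketch below only shows the correction, and the raw p-values in it are hypothetical.

```python
from itertools import combinations

CONDITIONS = ("C", "C + EMG", "C + MT", "EMG + MT")


def bonferroni(p_values):
    """Multiply each raw p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}


pairs = list(combinations(CONDITIONS, 2))  # 6 pairwise comparisons for 4 conditions
raw = dict(zip(pairs, [0.004, 0.03, 0.2, 0.001, 0.5, 0.04]))  # hypothetical values
adjusted = bonferroni(raw)
```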

4.2.6 Discussion

In the following, the findings on task completion time, usability, and task load for the three proposed interaction techniques are discussed in detail.

4.2.6.1 Task completion time

We found that C + EMG had a significantly higher task completion time than all other tested interaction methods. In some trials, participants took longer than 3 seconds to change the color successfully. Although participants could practice tensing the muscle beforehand, some had difficulty reaching the necessary activation level during the experiment. This usually happened in the first and last trials, suggesting that learning to activate the muscle reliably requires more practice and that repeated activation tires the muscle. However, the same issue also appeared in EMG + MT, which had task completion times in a similar range to C and C + MT, indicating that activating the EMG signal was not the main problem in C + EMG.

We also observed participants accidentally changing the color after setting it correctly, because they moved the primary hand back to the ball in the pipe along the shortest path, which for some colors crossed other color panels. The threshold we used to simulate a "pressed button" signal was determined during the prototyping phase. It was set relatively low so that every participant could reach it. Some participants seemed to need more time than others to relax the muscle again, so a more elaborate detection algorithm than a simple threshold may be necessary for this interaction method. We observed two strategies that participants developed to avoid accidental selections. First, many participants tried not to move the ray over or through other color panels when moving back to the ball, which appears to have increased task completion time. Second, some participants simply waited for the ball to change to the current color of the pen. This suggests that they feared they would not change the color quickly enough anyway, and therefore considered waiting for an unknown amount of time (the next color was selected randomly) the better option. It could also indicate that activating the muscle required too much effort.
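One candidate for such an alternative is an edge-triggered detector with hysteresis: a selection fires only when the EMG envelope rises above a press level, and the detector re-arms only after the envelope falls below a lower release level. A still-contracted muscle therefore cannot trigger further selections while the ray sweeps across other panels. This is a sketch of a technique the study did not use; the levels, the envelope values, and the class name are hypothetical.

```python
from dataclasses import dataclass


def level_trigger(envelope, threshold):
    """Simple threshold: 'pressed' on every sample at or above the threshold."""
    return [a >= threshold for a in envelope]


@dataclass
class HysteresisTrigger:
    """Edge-triggered EMG 'button' with separate press and release levels."""
    press_level: float
    release_level: float
    pressed: bool = False

    def __post_init__(self):
        if self.release_level >= self.press_level:
            raise ValueError("release_level must be below press_level")

    def update(self, amplitude: float) -> bool:
        """Return True only on the sample where a new press begins."""
        if not self.pressed and amplitude >= self.press_level:
            self.pressed = True
            return True
        if self.pressed and amplitude < self.release_level:
            self.pressed = False  # re-arm only after the muscle has relaxed
        return False


# Hypothetical envelope: one sustained contraction, relaxation, then a second contraction.
envelope = [0.05, 0.4, 0.6, 0.5, 0.3, 0.25, 0.1, 0.05, 0.5]
trigger = HysteresisTrigger(press_level=0.3, release_level=0.15)
events = [i for i, a in enumerate(envelope) if trigger.update(a)]
```

With the simple threshold at 0.3, five samples count as "pressed", so any panel hovered during that window could be selected; the hysteresis trigger fires only twice, at indices 1 and 8.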

Interestingly, task completion time in the other interaction method that included EMG (EMG + MT) was significantly lower than in C + EMG. Here, we observed that users simply kept the motion-tracked pointer on the color panel matching the current ball color. This was possible because dividing the tasks between both sides allowed one hand to stay at the pipe and the other on the color panel.

Although EMG + MT yielded shorter task completion times than C + EMG, our findings do not support H1, which predicted lower completion times than C for all three bimanual techniques. All interaction methods seem to have task completion times in a similar range, except for C + EMG, with the problem of the still-activated muscle discussed above.

People appear to be quite efficient with a single controller. This might be related to a training effect from using a mouse cursor, where sequential task execution is also common in everyday use.

Notably, two of the three novel interaction methods reached a similar level of efficiency without much practice, even though they relied on new technologies.

4.2.6.2 Usability

The SUS data show a similar pattern: C + EMG performs worse than the other conditions, which fits our observations discussed above. Due to activation and deactivation issues, this condition was harder than the others, which is also reflected in the SUS score. Again, in the other condition with EMG, deactivation was not an issue, because participants could keep the ray on the color panel and operate the pen with the controller. However, a significant difference was also found between C and EMG + MT, with lower usability scores for EMG + MT. This may be due to the color selection still being hard to confirm via muscle activation. Thus, the data do not support H2: the two conditions involving EMG (C + EMG and EMG + MT) received lower usability ratings than C, and C + MT did not differ significantly from C. Moreover, C + EMG and C + MT differed, although the secondary hand had a similar level of responsibility in both. This indicates that motion tracking per se is easier to use without much practice than EMG.

4.2.6.3 Task load

On every NASA-TLX sub-scale, C + EMG was rated significantly worse than at least one other condition. The same holds for the summed overall score: C + EMG had a significantly higher task load than both C and C + MT.

4.3 Feedback from users with upper limb differences

We invited four participants with unilateral impairments of the upper limb to provide feedback in a semi-structured think-aloud process. This evaluation provides valuable insights into which interaction methods are most accessible, effective, and user-friendly for users with upper limb differences.

4.3.1 User study setup

The technical setup, including the design of the four conditions, was similar to that of Section 4.2. To allow freer exploration of the interaction techniques, we simplified the game: only one type of tube was used, and participants were asked to move the ball freely back and forth through the tube. The ball still changed its color and was moved with the pen, but, unlike in the first experiment, it could also be moved when the colors did not match. Users gained one point per second while the pen and the ball had the same color and were touching. The score was displayed on a panel in VR. Participants explored each condition for about 2 minutes. Furthermore, unlike in Study 1, the users' secondary hand was not visualized at all, to avoid drawing attention away from the interaction mechanics.
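The scoring rule (one point per second of matching, touching contact) can be sketched as a per-frame accumulation. The frame tuple format and function name are hypothetical, and the text does not say whether partial seconds carry over when contact is interrupted; this sketch assumes they do. Integer milliseconds are used so that repeated additions do not drift as floating-point frame times would.

```python
def accumulate_score(frames):
    """Return points earned: one per full second of matched contact.

    `frames` is a sequence of (frame_ms, colors_match, touching) tuples
    (hypothetical format). Matched time accumulates across interruptions.
    """
    matched_ms = 0
    for frame_ms, colors_match, touching in frames:
        if colors_match and touching:
            matched_ms += frame_ms
    return matched_ms // 1000


frames = [(250, True, True)] * 6 + [(250, False, True)] * 4 + [(250, True, True)] * 5
score = accumulate_score(frames)  # 11 matched frames = 2750 ms, i.e., 2 points
```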

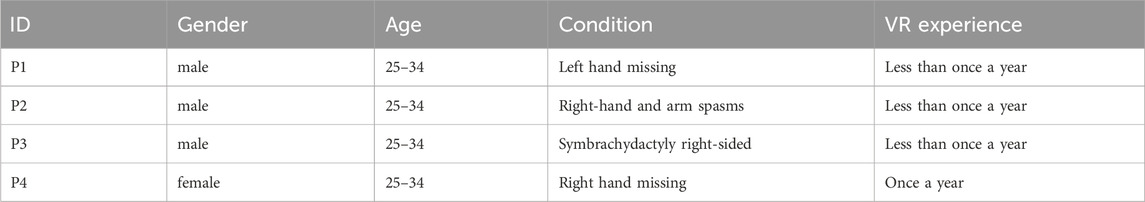

4.3.2 Participants

The study involved four participants aged 25-34, all of whom had unilateral upper limb impairments from birth or early childhood (see Table 2). Among them was participant P1, who had also taken part in the initial interview presented in Section 4.1. Three were male, and one was female. One male participant had a missing left hand, while another had spasms in the right hand and arm caused by meningitis during infancy. A third male participant had symbrachydactyly, resulting in limited finger development and no functional grasp. The female participant had a missing right hand. Regarding their experience with VR systems, three participants reported using VR less than once a year, and one reported using VR approximately once a year.

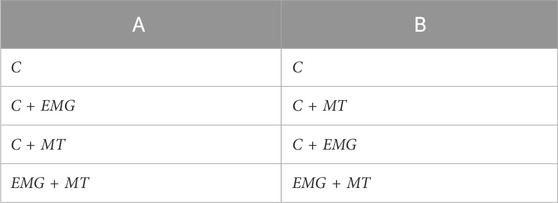

4.3.3 Procedure

The study began with a briefing on the objectives, the consent process, and a pre-test questionnaire collecting demographic data and prior VR experience. Participants were then asked to put on the HMD and familiarize themselves with the task environment. From the larger-scale usability study, we learned that participants need both to understand the task and to become familiar with the sensor technologies. We therefore always presented the baseline condition C (only the controller in the primary hand, no additional sensors) first, because many people have used some kind of controller before and can thus focus on the task itself. Moreover, we always presented the condition combining both sensors with the controller (EMG + MT) last, to ensure that participants had experienced each sensor technology in its own condition before combining them. This resulted in two condition orders, A and B, shown in Table 3. Two participants completed order A and two completed order B.
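Fixing C as the first and EMG + MT as the last condition leaves exactly two possible orders, which is why only two orders were needed. The enumeration below illustrates this; which of the two orders is labeled A in Table 3 is not reproduced here, and the function name is hypothetical.

```python
from itertools import permutations

CONDITIONS = ("C", "C + EMG", "C + MT", "EMG + MT")


def constrained_orders(conditions, first, last):
    """All condition orders that start with `first` and end with `last`."""
    return [order for order in permutations(conditions)
            if order[0] == first and order[-1] == last]


orders = constrained_orders(CONDITIONS, first="C", last="EMG + MT")
# -> [('C', 'C + EMG', 'C + MT', 'EMG + MT'), ('C', 'C + MT', 'C + EMG', 'EMG + MT')]
```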

The EMG sensor and the motion capture marker were attached only when required by the current condition and removed afterward, to provide the most realistic experience of each prototype. As in the first study, the EMG sensor was always attached to the biceps of the secondary arm, and the motion tracking marker to the lower arm on the secondary side.

Participants were given as much time as necessary to practice each condition before they could freely explore each condition for up to 2 min. After completing each condition, participants provided immediate feedback through verbal interviews and SUS (Brooke, 1996) and NASA-TLX (Hart and Staveland, 1988) questionnaires, discussing usability, comfort, and physical effort. In these interviews, participants reflected on their experiences in each condition, offering detailed insights into specific challenges and preferences. After all interaction techniques were completed, participants took part in a final interview where they compared the different techniques, provided suggestions for improvement, and shared their preferences. Subsequently, the participants were asked to put on the headset again, and three different visualization options for the secondary hand (see Figure 6) were shown to them one by one. The participants then shared their preferences for the visualization of the secondary hand related to these choices, or provided any other suggestions they had.

Figure 6. Suggested visualizations for the secondary hand. From left to right: White motion controller, black motion controller, wand.

4.3.4 Data collection and analysis

The qualitative data were collected through semi-structured interviews. These interviews were designed to be open-ended, allowing participants to freely discuss their experiences. The analysis employed a thematic approach, in which the transcribed interview data were systematically coded by two experimenters to identify key topics, challenges, and preferences articulated by participants. The resulting codes were then grouped into broader themes that captured recurring patterns and critical insights.

4.3.5 Results

We analyzed the data in terms of usability and task load and performed a reflexive thematic analysis (Braun and Clarke, 2006) of the users’ feedback.

4.3.5.1 Usability and task load results

The average SUS score was 71.88 (SD = 14.40), indicating moderate usability of the overall setup. Table 4 shows the NASA-TLX results for each of the four conditions on all subscales.

Descriptively, using condition C as a baseline, C + EMG increases mental, physical, and temporal demand and effort but reduces frustration, indicating a trade-off between workload and user comfort. C + MT substantially improves perceived performance and slightly increases mental and temporal demand without noticeably raising physical demand. EMG + MT balances workload by reducing effort and frustration, although physical and temporal demand remain higher than in the baseline.

4.3.5.2 Thematic comparison across conditions

The study’s evaluation provided valuable insights into how users adapted to and perceived each condition, highlighting both challenges and positive experiences. The following subsections explore these interactions, focusing on user feedback, to compare the different techniques and identify areas for improvement in the design of interaction methods for individuals with upper limb differences.

User Experience: Across the conditions, users' experiences evolved as they adapted to the various tasks and controls. In C, although the initial learning curve for understanding the controller and its grip function was steep, users eventually found the controls simple and satisfying. One remarked, “once you have understood this to some extent and tried it out a bit, then it was quite simple to use.” In C + EMG, users appreciated the innovative approach of using both arms for different inputs and found the sensor's small, unobtrusive design appealing. One user noted, “The cool thing is that you somehow do not even notice the sensor. It’s small and light and not bulky.” The novelty of independent inputs for each arm made the experience more engaging. However, some users had difficulties with muscle tension and coordination, with one explaining, “I had a bit of a problem with relaxing my arm again.” C + MT introduced issues with the beam direction and intuitive control, making the experience feel less fluid than in the previous conditions. One user commented that “the problem was that it was pointing in the wrong direction, and it was a bit unintuitive.” However, using both hands for different tasks was still considered a positive aspect. Users enjoyed the cognitive challenge of dividing attention between tasks, with one noting, “It was fun because I could split the task more efficiently.” Yet this condition was physically more demanding, as users found it strenuous to keep their arms raised for extended periods. In EMG + MT, users found the experience smooth and enjoyable, particularly appreciating the novelty of performing distinct tasks with each arm. One participant likened it to playing drums, saying, “It felt a little bit like I had two hands, like when playing drums, where each hand has a different rhythm.” Despite the fun and ease of use, muscle tension remained a challenge, especially for users with less-developed muscle groups. One user pointed out, “Muscle tension is still not for me the go-to remedy,” underscoring the physical difficulty of controlling the game through muscle contraction. In sum, while the user experience improved with each condition as users adapted, challenges related to physical effort, coordination, and muscle fatigue persisted, revealing key areas where further refinement could improve both comfort and ease of use.

Learning Curve and Adaptation: The learning curve and adaptation varied notably across the conditions, with users gradually becoming more comfortable and efficient as they progressed. In C, the initial challenge was to understand how the grip function worked and which controller buttons were responsible for specific actions. One user explained, “Once you have understood this to some extent and tried it out a bit, then it was simple to use.” While the learning curve was steep at first, practice allowed users to become familiar with the controls, making the task manageable over time. In C + EMG, users found that the experience required more adjustment. They commented that “it takes a bit more getting used to

Suggested Improvements and Technical Difficulties: Users made various suggestions across the conditions, reflecting their evolving understanding of the system and their preferences for optimizing the experience. In C, participants emphasized the need for more ergonomic hand positioning that mimics real-life tasks, with one user stating, “The hand position would have been more ergonomic or more similar to real life.” In C + EMG, feedback and threshold settings were crucial, with one participant suggesting that better haptic feedback would improve the experience. Users also found it unnatural to use their biceps for gaming input and highlighted the need to lower the activation threshold to make tasks less physically taxing. Suggestions in C + MT focused on fine-tuning the layout of the controls, with users proposing adjustments such as moving them “further to the left and further down.” One imaginative suggestion involved adding dynamic elements, like “having a machine gun on my right shoulder.” In EMG + MT, while users appreciated the ease of use, they proposed starting with both hands simultaneously for a smoother experience and acknowledged the importance of properly set thresholds for fluid interaction. Overall, participants pointed to the need for ergonomic adjustments, better feedback, and optimized threshold settings to improve usability.

However, technical and operational issues affected the user experience in several conditions. In C, users reported technical problems, including complete picture freezes and lagging, which caused frustration and interrupted the task flow. Comments like “now it is stuck” highlighted the system’s unreliability. In C + EMG, users encountered fewer outright failures but faced issues with the EMG sensor, which lacked the tactile feedback and speed of traditional buttons. One participant noted that the impulse required to trigger a response took longer than intended, affecting fluidity. While EMG + MT showed improved system reliability, it still had subtle issues related to sensor responsiveness. Overall, conditions C and EMG + MT were affected by broader technical difficulties that hindered performance. The absence of significant difficulties in C + EMG and C + MT suggests that these conditions were more stable, but the recurring issues in C and EMG + MT underline the need to improve system stability and EMG sensor responsiveness to create a smoother user experience.

Exemplifying: Participants drew parallels between the conditions and daily tasks to varying degrees. In C, users related the experience to familiar activities such as writing or other everyday tasks that involve single-handed operation. One user noted, “Yes, I can do that when I’m writing my master’s thesis.” In EMG + MT, users made a more specific comparison to complex activities that require independent hand movements. One participant likened the experience to “one hand making a movement or having a rhythm and the other hand in an entirely different rhythm,” drawing a parallel to playing drums, where each hand operates separately but in coordination. This analogy captures the novelty of performing distinct tasks with each arm, which felt unusual yet familiar in terms of multitasking. For C + EMG and C + MT, no explicit comparisons to everyday tasks were mentioned, which suggests that users may have found these actions less intuitive or less aligned with routine tasks in their daily lives.

Visualization of the Secondary Hand: The visualization of the hand strongly influenced users’ experiences, with feedback highlighting the need for intuitive and immersive representations that align physical and virtual interaction. Many users expressed dissatisfaction when the visualization felt disconnected from their actual movements. For instance, one user noted that the controller felt as though it was “floating and not connected to my hand,” which caused discomfort and a lack of embodiment. This disconnect between visual feedback and physical control was a recurring issue, and users proposed that the representation should feel more integrated with their movements. Additionally, participants preferred a visualization that matches the theme or setting of the virtual experience. Some users mentioned that in specific environments, such as fantasy games, a wand or lightsaber would make more sense, while in other scenarios a more realistic representation of the hand might be preferable. One participant explained, “If we are in a fantasy world

5 General discussion and future work

Regarding the technologies used, we found that EMG signal strength can vary between users more than we expected from the development phase. Even though we chose a very low amplitude value as the threshold, some participants had a harder time than others reaching it, so the effort required to use this input mechanism differed between participants, which probably biased the results. Furthermore, some people needed longer than others to figure out how to contract the biceps without any other body movement. In future studies, it would be interesting to see whether people prefer to choose themselves the muscle on which the EMG sensor is placed. In general, EMG sensors need to be integrated in a more user-friendly way to be useful to a broader audience.
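One way to address differing signal strengths, which the study did not use, is a per-participant calibration: record a short rest window and a short maximal contraction during practice, then place the threshold a fixed fraction of the way between the two RMS amplitudes. The sketch below assumes this approach; the fraction of 0.2, the recordings, and the function names are hypothetical.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of a window of EMG samples."""
    if not samples:
        raise ValueError("empty window")
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def calibrated_threshold(rest_samples, max_samples, fraction=0.2):
    """Place the threshold a fixed fraction of the way from rest to maximal contraction."""
    rest, peak = rms(rest_samples), rms(max_samples)
    if peak <= rest:
        raise ValueError("maximal contraction must exceed the rest level")
    return rest + fraction * (peak - rest)


# Hypothetical recordings for two participants with different signal strengths.
rest = [0.01, -0.01] * 50
strong = [0.5, -0.5] * 50
weak = [0.1, -0.1] * 50
strong_threshold = calibrated_threshold(rest, strong)  # about 0.108
weak_threshold = calibrated_threshold(rest, weak)      # about 0.028
```

Under this scheme, every participant needs roughly the same relative effort to cross the threshold, rather than the same absolute amplitude.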

For motion tracking, on the other hand, we did not experience such problems. Mapping the movement of the arm directly into the virtual world seems to be an easy and promising concept to explore further. While we used an expensive external camera rig, hand tracking is already available on many current HMDs and should be extended by manufacturers to support a broader variety of upper limbs.

We developed two interaction techniques in which the secondary hand handled only one mechanic (either selecting or confirming). While the EMG-based technique for confirming (C + EMG) did not perform as well as the other conditions on many measures, probably because of implementation issues, the motion tracking-based technique for selecting (C + MT) performed significantly better than the EMG-only one. This suggests that providing a controller with only limited functionality, so that a task has to be completed with both sides, is a promising concept. Hardware and software developers should strive for more flexible input designs that offer different levels of responsibility for each hand. Even with conventional input methods such as controllers and hand tracking, solutions could be realized in which the secondary hand is responsible only for selecting, while the primary hand takes on more responsibility.

When the secondary hand was responsible for both selecting and confirming (EMG + MT), participants tended to enjoy the condition, even though it relied on the relatively hard-to-use EMG system. This is consistent with previous findings on user preference for bimanual over unimanual tasks (Ullrich et al., 2011).

The findings from the second user study highlight the critical role of adaptive interaction techniques in enhancing the usability and accessibility of VR environments for individuals with upper limb differences. While participants initially faced a steep learning curve, particularly in conditions using EMG sensors, usability improved with practice, indicating that familiarity with the system can mitigate some early challenges. The dual-hand tasks introduced cognitive engagement, which participants generally appreciated; however, muscle tension and coordination difficulties remained significant barriers to sustained use. Ergonomic considerations emerged as a key area for improvement: participants suggested that more natural hand positioning, along with fine-tuned input thresholds, could reduce physical strain and improve overall comfort. System stability also played a major role in the user experience. Technical difficulties, such as lagging and misalignment, disrupted the task flow and contributed to user frustration, emphasizing the need for more reliable performance in future iterations. Another important factor was the visualization of the secondary hand. Participants preferred visual feedback that felt intuitive and connected to their movements and suggested context-sensitive representations, such as a magic wand in fantasy environments, to increase immersion and engagement. Regarding task load, compared with the unimanual condition, EMG + MT reduced effort and frustration while physical and temporal demand remained high, yielding a balanced workload despite higher costs in these areas. Finally, the interaction techniques were received well not only by users with upper limb differences but also by a broader audience, showing the value of universal design.

Taken together, we suggest that VR systems should be designed in a more inclusive way, not only to enable all users to use them but also because everyone could benefit from novel input modalities and various input techniques to choose from.

6 Conclusion

In this work, we developed three alternative VR input techniques in a user-centered design process and evaluated them in two user studies. To answer research question RQ1, user satisfaction with the bimanual interaction techniques was similar to or lower than with unimanual interaction. Both conditions that included EMG received lower usability ratings than the controller-only baseline, suggesting that more familiarization time is needed to use this technology easily. However, the usability of the bimanual technique with motion tracking only did not differ significantly from the baseline, suggesting two things: first, motion tracking is an intuitive input mechanic that can be used easily; second, limiting the secondary hand's responsibility (selecting only) does not impair the user experience. Regarding efficiency, all conditions were on a similar level except C + EMG, which we attribute to a design issue in the virtual environment. Neither the motion tracking-only condition nor the condition combining EMG and motion tracking differed significantly from the baseline. Thus, even though EMG was harder to use, users completed the task in a comparable time. This indicates that bimanual interaction can be as efficient as unimanual interaction. To answer research question RQ2, users with upper limb differences enjoyed performing tasks with different arms, underscoring not only the importance of software-based accessibility solutions such as unimanual input modes, but also users’ desire to use their secondary hand in VR.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The requirement of ethical approval was waived by Universität Hamburg, Fachbereich Informatik, Ethikkommission for the studies involving humans. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

JH: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Project administration, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review and editing. SH: Conceptualization, Data curation, Formal Analysis, Investigation, Resources, Software, Validation, Visualization, Writing – original draft, Writing – review and editing. SS: Conceptualization, Data curation, Formal Analysis, Methodology, Project administration, Supervision, Writing – original draft, Writing – review and editing. GM: Funding acquisition, Project administration, Resources, Supervision, Writing – original draft, Writing – review and editing. FS: Funding acquisition, Resources, Supervision, Writing – original draft, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The authors of this paper received funding from the German Research Foundation (DFG) and the National Science Foundation of China in the project Crossmodal Learning (CML), TRR 169/C8 grant No 261402652, the European Union’s Horizon Europe research and innovation program under grant agreement No. 101135025, PRESENCE project, and Germany’s Ministry of Education and Research (BMBF), grant No 16SV8878, HIVAM project.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The authors declare that generative AI was used in the creation of this manuscript. OpenAI’s GPT-4o model was used to improve the writing clarity of an early draft of this paper.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1https://www.ablegamers.org/thoughts-on-accessibility-and-vr/

2https://amputee-coalition.org/wp-content/uploads/Prevalence-of-Limb-Loss-and-Limb-Difference-in-the-United-States_Implications-for-Public-Policy.pdf

3https://www.w3.org/WAI/WCAG22/quickref/

5https://developers.meta.com/horizon/blog/introducing-the-accessibility-vrcs/

6https://www.xbox.com/accessories/controllers/xbox-adaptive-controller

8https://physio.kinvent.com/product-page/portable-emg-k-myo/

9https://www.biometricsltd.com/surface-emg-sensor.htm

10https://www.pluxbiosignals.com/products/electromyography-emg

11https://delsys.com/trigno-lite/

12https://delsys.com/trigno-avanti/

13https://www.picoxr.com/products/pico4

14https://varjo.com/products/varjo-xr-3/

19https://www.qualisys.com/cameras/miqus/

20https://www.qualisys.com/accessories/marker-accessories/small-cluster/

21https://vr-compare.com/headset/metaquest3

23https://www.khronos.org/openxr/

24https://www.iso.org/standard/52075.html

References

Al-Ansi, A. M., Jaboob, M., Garad, A., and Al-Ansi, A. (2023). Analyzing augmented reality (AR) and virtual reality (VR) recent development in education. Soc. Sci. and Humanit. Open 8, 100532. doi:10.1016/j.ssaho.2023.100532

Angelocci, R., Lacho, K. J., Lacho, K. D., and Galle, W. (2008). Entrepreneurs with disabilities: the role of assistive technology, current status and future outlook. Proc. Acad. Entrepreneursh. (Citeseer) 14, 1–5.

Ansari, S. Z. A., Shukla, V. K., Saxena, K., and Filomeno, B. (2021). “Implementing virtual reality in entertainment industry,” in Cyber intelligence and information retrieval: proceedings of CIIR 2021 (Springer), 561–570.

Bae, D. S., Barnewolt, C. E., and Jennings, R. W. (2009). Prenatal diagnosis and treatment of congenital differences of the hand and upper limb. JBJS 91, 31–39. doi:10.2106/jbjs.i.00072

Bae, D. S., Canizares, M. F., Miller, P. E., Waters, P. M., and Goldfarb, C. A. (2018). Functional impact of congenital hand differences: early results from the congenital upper limb differences (CoULD) registry. J. Hand Surg. 43, 321–330. doi:10.1016/j.jhsa.2017.10.006

Bansal, G., Rajgopal, K., Chamola, V., Xiong, Z., and Niyato, D. (2022). Healthcare in metaverse: a survey on current metaverse applications in healthcare. IEEE Access 10, 119914–119946. doi:10.1109/access.2022.3219845

Bierre, K., Chetwynd, J., Ellis, B., Hinn, D. M., Ludi, S., and Westin, T. (2005). “Game not over: accessibility issues in video games,” in Proc. of the 3rd international conference on universal access in human-computer interaction, 22–27.

Braun, V., and Clarke, V. (2006). Using thematic analysis in psychology. Qual. Res. Psychol. 3, 77–101. doi:10.1191/1478088706qp063oa

Brooke, J. (1996). “SUS: a ‘quick and dirty’ usability scale,” in Usability evaluation in industry. Boca Raton, FL: CRC Press, 207–212. doi:10.1201/9781498710411-35

Buxton, W., and Sniderman, R. (1980). “Iteration in the design of the human-computer interface,” in Proc. of the 13th annual meeting, human factors association of Canada, 72–81.

Choi, C., and Kim, J. (2007). “A real-time EMG-based assistive computer interface for the upper limb disabled,” in IEEE 10th international conference on rehabilitation robotics (IEEE), 459–462.

Cordella, F., Ciancio, A. L., Sacchetti, R., Davalli, A., Cutti, A. G., Guglielmelli, E., et al. (2016). Literature review on needs of upper limb prosthesis users. Front. Neurosci. 10, 209. doi:10.3389/fnins.2016.00209

Cowan, R. E., Fregly, B. J., Boninger, M. L., Chan, L., Rodgers, M. M., and Reinkensmeyer, D. J. (2012). Recent trends in assistive technology for mobility. J. neuroengineering rehabilitation 9, 20–28. doi:10.1186/1743-0003-9-20

Dicianno, B. E., Cooper, R. A., and Coltellaro, J. (2010). Joystick control for powered mobility: current state of technology and future directions. Phys. Med. rehabilitation Clin. N. Am. 21, 79–86. doi:10.1016/j.pmr.2009.07.013

Fanucci, L., Iacopetti, F., and Roncella, R. (2011). “A console interface for game accessibility to people with motor impairments,” in 2011 IEEE international Conference on consumer electronics-berlin (ICCE-Berlin) (IEEE), 206–210.

Franz, R. L., Yu, J., and Wobbrock, J. O. (2023). “Comparing locomotion techniques in virtual reality for people with upper-body motor impairments,” in The 25th international ACM SIGACCESS Conference on Computers and accessibility (New York, NY, USA: ACM), 1–15. doi:10.1145/3597638.3608394

Gailey, A., Artemiadis, P., and Santello, M. (2017). Proof of concept of an online EMG-based decoding of hand postures and individual digit forces for prosthetic hand control. Front. neurology 8, 7. doi:10.3389/fneur.2017.00007

Gerling, K., and Spiel, K. (2021). “A critical examination of virtual reality technology in the context of the minority body,” in Proceedings of the 2021 CHI conference on human factors in computing systems, 1–14.

Hanington, B. (2012). Universal methods of design: 100 ways to research complex problems, develop innovative ideas, and design effective solutions.

Hart, S. G., and Staveland, L. E. (1988). Development of NASA-TLX (task load Index): results of empirical and theoretical research. Adv. Psychol. (Elsevier) 52, 139–183. doi:10.1016/S0166-4115(08)62386-9

Harte, R., Glynn, L., Rodríguez-Molinero, A., Baker, P. M., Scharf, T., Quinlan, L. R., et al. (2017). A human-centered design methodology to enhance the usability, human factors, and user experience of connected health systems: a three-phase methodology. JMIR Hum. Factors 4, e8. doi:10.2196/humanfactors.5443

Hinckley, K., Pausch, R., Proffitt, D., Patten, J., Kassell, N., et al. (1997). “Cooperative bimanual action,” in Proceedings of the ACM SIGCHI conference on human factors in computing systems, 27–34.

Holtzblatt, K., and Beyer, H. (1997). Contextual design: defining customer-centered systems. Elsevier.

Houde, S. (1992). Iterative design of an interface for easy 3-d direct manipulation. Proc. SIGCHI Conf. Hum. factors Comput. Syst., 135–142. doi:10.1145/142750.142772

Jarque-Bou, N. J., Sancho-Bru, J. L., and Vergara, M. (2021). A systematic review of EMG applications for the characterization of forearm and hand muscle activity during activities of daily living: results, challenges, and open issues. Sensors 21, 3035. doi:10.3390/s21093035

Kanakaprabha, S., Arulprakash, P., Praveena, S., Preethi, G., Hussain, T. S., and Vignesh, V. (2023). “Mouse cursor controlled by eye movement for individuals with disabilities,” in 2023 7th international conference on intelligent computing and control systems ICICCS (IEEE), 1750–1758.

Kruse, L., Karaosmanoglu, S., Rings, S., and Steinicke, F. (2022). “Evaluating difficulty adjustments in a VR exergame for younger and older adults: transferabilities and differences,” in Proceedings of the 2022 ACM symposium on spatial user interaction, 1–11.

Lawson, D. D. (2015). Building a methodological framework for establishing a socio-economic business case for inclusion: the curb cut effect of accessibility accommodations as a confounding variable and a criterion variable

Le, J. T., and Scott-Wyard, P. R. (2015). Pediatric limb differences and amputations. Phys. Med. rehabilitation Clin. N. Am. 26, 95–108. doi:10.1016/j.pmr.2014.09.006

Lévesque, J.-C., Laurendeau, D., and Mokhtari, M. (2013). “An asymmetric bimanual gestural interface for immersive virtual environments,” in Virtual augmented and mixed reality. Designing and developing augmented and virtual environments. Editor R. Shumaker (Berlin, Heidelberg: Springer), 192–201. doi:10.1007/978-3-642-39405-8_23

Liu, L., Liu, P., Clancy, E. A., Scheme, E., and Englehart, K. B. (2013). Electromyogram whitening for improved classification accuracy in upper limb prosthesis control. IEEE Trans. Neural Syst. Rehabilitation Eng. 21, 767–774. doi:10.1109/tnsre.2013.2243470

Majaranta, P., and Räihä, K.-J. (2002). “Twenty years of eye typing: systems and design issues,” in Proceedings of the 2002 symposium on Eye tracking research and applications, 15–22.

Merletti, R., and Farina, D. (2016). Surface electromyography: physiology, engineering, and applications. John Wiley and Sons.

Mott, M., Tang, J., Kane, S., Cutrell, E., and Ringel Morris, M. (2020). “‘I just went into it assuming that I wouldn’t be able to have the full experience’: understanding the accessibility of virtual reality for people with limited mobility,” in Proceedings of the 22nd international ACM SIGACCESS conference on computers and accessibility, 1–13.

Naikar, V. H., Subramanian, S., and Tigwell, G. W. (2024). “Accessibility feature implementation within free VR experiences,” in Extended abstracts of the CHI conference on human factors in computing systems, 1–9.

Nirme, J., Haake, M., Gulz, A., and Gullberg, M. (2020). Motion capture-based animated characters for the study of speech–gesture integration. Behav. Res. methods 52, 1339–1354. doi:10.3758/s13428-019-01319-w

Porter, C., and Zammit, G. (2023). “Blink, pull, nudge or tap? the impact of secondary input modalities on eye-typing performance,” in International conference on human-computer interaction (Springer), 238–258.

Pradhan, A., Mehta, K., and Findlater, L. (2018). “‘Accessibility came by accident’: use of voice-controlled intelligent personal assistants by people with disabilities,” in Proceedings of the 2018 CHI Conference on human factors in computing systems, 1–13.

Resnik, L., Huang, H., Winslow, A., Crouch, D. L., Zhang, F., and Wolk, N. (2018). Evaluation of EMG pattern recognition for upper limb prosthesis control: a case study in comparison with direct myoelectric control. J. neuroengineering rehabilitation 15, 23–13. doi:10.1186/s12984-018-0361-3

Sainburg, R. L., et al. (2005). Differential specializations for control of trajectory and position. Exp. Brain Res. 164, 305–320.

Sears, A., Young, M., and Feng, J. (2007). “Physical disabilities and computing technologies: an analysis of impairments,” in The human-computer interaction handbook Boca Raton, FL: CRC Press, 855–878.

Sethi, A., Ting, J., Allen, M., Clark, W., and Weber, D. (2020). Advances in motion and electromyography based wearable technology for upper extremity function rehabilitation: a review. J. Hand Ther. 33, 180–187. doi:10.1016/j.jht.2019.12.021

Siean, A.-I., and Vatavu, R.-D. (2021). “Wearable interactions for users with motor impairments: systematic review, inventory, and research implications,” in Proceedings of the 23rd international ACM SIGACCESS conference on computers and accessibility, 1–15.

Sletten, H. S., Eikevåg, S. W., Silseth, H., Grøndahl, H., and Steinert, M. (2021). Force orientation measurement: evaluating ski sport dynamics. IEEE Sensors J. 21, 28050–28056. doi:10.1109/jsen.2021.3124021

Story, M. F., Mueller, J. L., and Mace, R. L. (1998). The universal design file: designing for people of all ages and abilities

Subasi, A. (2019). “Electromyogram-controlled assistive devices,” in Bioelectronics and medical devices (Elsevier), 285–311.

Ullrich, S., Knott, T., Law, Y. C., Grottke, O., and Kuhlen, T. (2011). “Influence of the bimanual frame of reference with haptics for unimanual interaction tasks in virtual environments,” in 2011 IEEE symposium on 3D user interfaces (3DUI) (IEEE), 39–46.

Vanderheiden, G. C. (1998). Universal design and assistive technology in communication and information technologies: alternatives or complements? Assist. Technol. 10, 29–36. doi:10.1080/10400435.1998.10131958

Varghese, R. J., Freer, D., Deligianni, F., Liu, J., Yang, G.-Z., and Tong, R. (2018). “Wearable robotics for upper-limb rehabilitation and assistance: a review of the state-of-the-art challenges and future research,” in Wearable technology in medicine and health care, 23–69.

Velloso, E., Schmidt, D., Alexander, J., Gellersen, H., and Bulling, A. (2015). The feet in human–computer interaction: a survey of foot-based interaction. ACM Comput. Surv. (CSUR) 48, 1–35. doi:10.1145/2816455

Wan Idris, W. M. R., Rafi, A., Bidin, A., Jamal, A. A., and Fadzli, S. A. (2019). A systematic survey of martial art using motion capture technologies: the importance of extrinsic feedback. Multimedia tools Appl. 78, 10113–10140. doi:10.1007/s11042-018-6624-y

Yamagami, M., Junuzovic, S., Gonzalez-Franco, M., Ofek, E., Cutrell, E., Porter, J. R., et al. (2022). Two-in-one: a design space for mapping unimanual input into bimanual interactions in VR for users with limited movement. ACM Trans. Accessible Comput. 15, 1–25. doi:10.1145/3510463

Yildirim, C. (2024). “Designing with two hands in mind? a review of mainstream VR applications with upper-limb impairments in mind,” in Proceedings of the 16th international Workshop on immersive Mixed and virtual environment systems (Bari, Italy: ACM), 29–34. doi:10.1145/3652212.3652224

Keywords: virtual reality, user-centered design, universal design, upper limb differences, bimanual interaction, EMG, motion tracking

Citation: Hartfill J, Hajahmadi S, Schmidt S, Marfia G and Steinicke F (2025) Embracing differences in virtual reality: inclusive user-centered design of bimanual interaction techniques. Front. Virtual Real. 6:1586875. doi: 10.3389/frvir.2025.1586875

Received: 03 March 2025; Accepted: 22 April 2025;

Published: 13 May 2025.

Edited by:

Eliot Winer, Iowa State University, United States

Reviewed by: