- 1Department of Communication Sciences and Disorders, University of Kentucky, Lexington, KY, United States

- 2Department of Hearing, Speech & Language Sciences, Ohio University, Athens, OH, United States

- 3School of Allied Health & Communicative Disorders, Northern Illinois University, DeKalb, IL, United States

Affective prosody, the expression of emotion via speech, is critical for successful communication. In dementia of the Alzheimer’s type (DAT), impairments in expressive prosody may contribute to interpersonal difficulties, yet the underlying acoustic and neural mechanisms are not well understood. This exploratory study examined affective prosody production and cortical activation in individuals with DAT using a multimodal approach. Ten participants with DAT completed three speech tasks designed to elicit happy, sad, and neutral emotional tones. Acoustic features were extracted using Praat software, and cerebral hemodynamics were recorded using functional near-infrared spectroscopy (fNIRS), focusing on oxygenated (HbO) and total (HbT) hemoglobin levels across hemispheres. Multinomial logistic regression showed that initial fundamental frequency and speech rate significantly predicted emotional condition. Paired-sample t-tests revealed nominally significant hemispheric differences in HbO (happy and neutral conditions) and HbT (happy and sad conditions) during affective speech, although none survived false discovery rate correction. These findings suggest that individuals with DAT may exhibit reduced modulation of affective prosody and altered patterns of hemispheric activation during emotionally expressive speech. While preliminary and limited by the absence of a control group, this study highlights behavioral and neural features that may contribute to communication challenges in DAT and provides a foundation for future research on affective prosody as a potential target for intervention or monitoring.

1 Introduction

Affective prosody, the modulation of pitch, loudness, tempo, and rhythm to express emotion, is fundamental to effective communication. It conveys emotional intent beyond the literal meaning of speech and plays a key role in social interaction. Prosody is commonly divided into two types: linguistic and affective. While linguistic prosody conveys syntactic and pragmatic information, affective prosody expresses the speaker’s emotional state (Banse and Scherer, 1996; Beach, 1991).

Prosodic impairments have been observed across a range of neurological and communication disorders, including aphasia, Parkinson’s disease, and traumatic brain injury. In aphasia, disruptions in both expressive and receptive prosody have been documented, reflecting damage to right-hemisphere or interhemispheric networks (Baum and Pell, 1999; Patel et al., 2018; Ross and Monnot, 2008). Similarly, dysprosody in Parkinson’s disease has been consistently reported and linked to motor and basal ganglia dysfunction (Harel et al., 2004; Skodda et al., 2010; Pell et al., 2006). Findings from traumatic brain injury and other neuropathologies further underscore prosody’s vulnerability to diffuse neural damage (Ilie et al., 2017; Bornhofen and McDonald, 2008; Belyk and Brown, 2017). Together, these literatures highlight prosody as a sensitive marker of neuropathology more broadly.

Within this broader context, dementia of the Alzheimer’s type (DAT), the most common form of dementia, represents a particularly important case, as prosodic changes may provide insight into both communication impairments and underlying neural dysfunction. Although primarily associated with cognitive decline, dementia has increasingly been linked to prosodic impairments. Affective prosody, in particular, engages distributed interhemispheric networks that are often compromised in DAT (Lian et al., 2020; Qi et al., 2019). However, how individuals with DAT express affective prosody and the associated neural mechanisms remain poorly understood. Most prior studies on prosodic changes in individuals with dementia have focused on the perception of affective prosody, yielding mixed results. Some report impaired comprehension of affective prosody in DAT and frontotemporal dementia (Taler et al., 2008; Rohrer et al., 2012), while others suggest that people with dementia have a preserved ability to comprehend affective prosody and intact emotional processing (Koff et al., 1999; Kumfor and Piguet, 2012). Even less is known about expressive prosody in dementia, particularly in relation to its neural underpinnings.

This pilot study explored the expression of affective prosody and its cortical correlates in individuals with DAT using functional near-infrared spectroscopy (fNIRS). fNIRS is a noninvasive, portable neuroimaging modality well-suited for studying real-time communication in clinical populations (Kato et al., 2018; Meilán et al., 2012; Ferdinando et al., 2023). We addressed two research questions:

1. How do individuals with DAT manipulate acoustic features (e.g., pitch, rate) to express different emotions (happiness, sadness, neutral)?

2. What cortical activation patterns are associated with affective prosody production in DAT?

Given that expressive affective prosody in DAT has not been fully investigated, our hypotheses were exploratory and informed by related literature. Prior work on perception suggests that individuals with DAT may have difficulty processing affective prosody (Taler et al., 2008; Rohrer et al., 2012), and right hemisphere networks are known to play a central role in prosodic expression (Ross and Monnot, 2008). Accordingly, we expected that individuals with DAT would show minimal variation in acoustic features across emotions and no significant hemispheric differences in cortical activation during emotional speech production.

2 Methods

2.1 Participants

This study was approved by the Institutional Review Board of Northern Illinois University (HS23-0135), and all procedures adhered to ethical standards and regulatory guidelines. Participants were eligible if they (1) were aged 65 years or older, (2) had a documented diagnosis of dementia of the Alzheimer’s type (DAT) confirmed by a board-certified neurologist or neuropsychologist, and (3) scored between 1 and 20 on the Saint Louis University Mental Status (SLUMS) examination (Morley and Tumosa, 2002), indicating mild to moderate cognitive impairment. The SLUMS is a validated cognitive screening tool that assesses orientation, memory, attention, language, and executive function, with reliability and validity comparable to or exceeding other brief cognitive assessments (Spencer et al., 2022; Dautzenberg et al., 2020). Exclusion criteria included current use of psychiatric medications or a history of comorbid neurological conditions.

Ten individuals with DAT were enrolled (4 male, 6 female; mean age = 71.5 years, SD = 6.31), all of whom self-identified as non-Hispanic White and native English speakers. Educational backgrounds varied: five had some college experience, three were high school graduates, one held an associate degree, and one a bachelor’s degree. All participants were right-handed, nonsmokers at the time of testing, and demonstrated sufficient cognitive and communicative abilities to follow multi-step instructions necessary for task compliance. Hair characteristics were inspected to ensure adequate scalp-optode contact, and fNIRS headbands were adjusted to optimize signal quality. Cognitive status was confirmed by SLUMS scores within the specified range.

This project was designed as an exploratory brief report, and no demographically matched control group was included. Our focus was to establish the feasibility of combining acoustic analysis and fNIRS in individuals with DAT, thereby generating preliminary data to inform larger controlled studies.

2.2 Procedures

After obtaining informed assent and consent from participants and their legal guardians, demographic information (e.g., age, education, race/ethnicity) was collected, and cognitive status was assessed using the SLUMS examination. Participants then completed three spontaneous speech tasks: (1) recounting a happy life event (happy), (2) describing a sad life event (sad), and (3) explaining how to make a peanut butter and jelly sandwich (neutral). For the emotional tasks, participants were prompted to recall and narrate a personally meaningful experience (e.g., “Tell me about a time when you felt very happy/sad”). This autobiographical recall method is known to reactivate emotions and their physiological correlates, which in turn influence vocal production and prosodic patterns (Murphy et al., 2021; Amir et al., 2000; Krasnodębska et al., 2024; Labov and Waletzky, 1997). For the neutral task, participants described the steps of making a peanut butter and jelly sandwich as if instructing someone unfamiliar with the procedure. Such procedural descriptions rely on overlearned, automatic routines, engage subcortical networks, and typically elicit factual, affect-free speech (Lum et al., 2012; Seger et al., 2011; Leaman and Archer, 2023). These tasks were selected to elicit naturalistic emotional and neutral speech, enhancing ecological validity compared to traditional reading or repetition paradigms (Kato et al., 2018; Meilán et al., 2012; Horley et al., 2010; Meilán et al., 2014; Testa et al., 2001; Wright et al., 2018). Speech was recorded in a quiet room using a Sony PCM-M10 digital recorder, with a 10 cm mouth-to-microphone distance at a 45-degree angle. Task order was randomized, and brief rest periods were provided between tasks. Each session lasted less than 20 minutes.

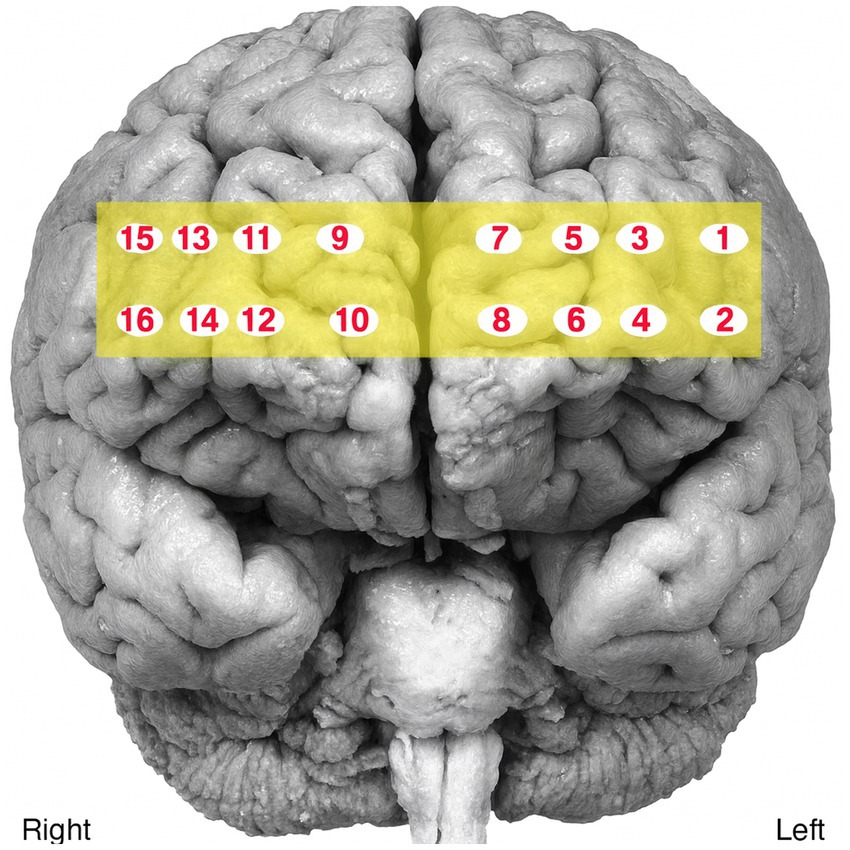

Cortical activity was recorded during speech production using a 16-channel functional near-infrared spectroscopy (fNIRS) system (fNIR 400, Biopac) with two wavelengths (730 nm and 850 nm). The sensor band was placed bilaterally on the participants’ foreheads to monitor prefrontal activation (Figure 1). Signals were sampled at 2 Hz and recorded using COBI Studio and fNIRSOFT software. The fNIRS signals were visually inspected to confirm proper contact of the sensor band with the participants’ foreheads and to identify any interference from hair beneath the sensors (raw signal values >4,000 mV or <400 mV). Data preprocessing included applying a 0.1 Hz low-pass filter to reduce noise. Motion artifacts and signal saturation were detected using sliding-window motion artifact rejection (SMAR), with lower and upper thresholds set at 3 and 50, respectively. A 30 s rest interval was inserted between tasks to allow hemodynamic responses to return to baseline, as recommended for fNIRS protocols (Yang and Hong, 2021).
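The raw-signal screening and low-pass filtering steps can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the COBI Studio or fNIRSoft implementation: the 400–4,000 mV acceptance range, the 2 Hz sampling rate, and the 0.1 Hz cutoff come from the text, whereas the Hamming-windowed sinc FIR design, the 31-tap length, the edge padding, and the helper names are assumptions, since the filter type applied by fNIRSoft is not specified. The SMAR step is not reproduced here.

```python
import math

FS_HZ = 2.0                              # sampling rate reported in the text
CUTOFF_HZ = 0.1                          # low-pass cutoff reported in the text
RAW_MIN_MV, RAW_MAX_MV = 400.0, 4000.0   # raw-signal acceptance range from the text


def channel_in_range(raw_mv):
    """Hypothetical helper: True if every raw sample lies within the acceptance range."""
    return all(RAW_MIN_MV <= v <= RAW_MAX_MV for v in raw_mv)


def lowpass_fir_taps(num_taps=31, cutoff_hz=CUTOFF_HZ, fs_hz=FS_HZ):
    """Assumed design: Hamming-windowed sinc low-pass taps with unit gain at DC."""
    if num_taps % 2 == 0:
        raise ValueError("use an odd number of taps so the filter can be centered")
    fc = cutoff_hz / fs_hz               # cutoff in cycles per sample
    mid = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        k = n - mid
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]     # normalize so a flat signal passes unchanged


def lowpass(signal, taps):
    """Apply symmetric taps centered on each sample (no phase delay).

    The signal is padded with its first and last values so the output
    has the same length as the input.
    """
    half = len(taps) // 2
    padded = [signal[0]] * half + list(signal) + [signal[-1]] * half
    return [sum(taps[j] * padded[i + j] for j in range(len(taps)))
            for i in range(len(signal))]
```

Because the taps are symmetric and applied centered on each sample, the filter shifts no hemodynamic change in time relative to task onsets; a longer filter would sharpen the transition band at the cost of more samples affected by edge padding.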

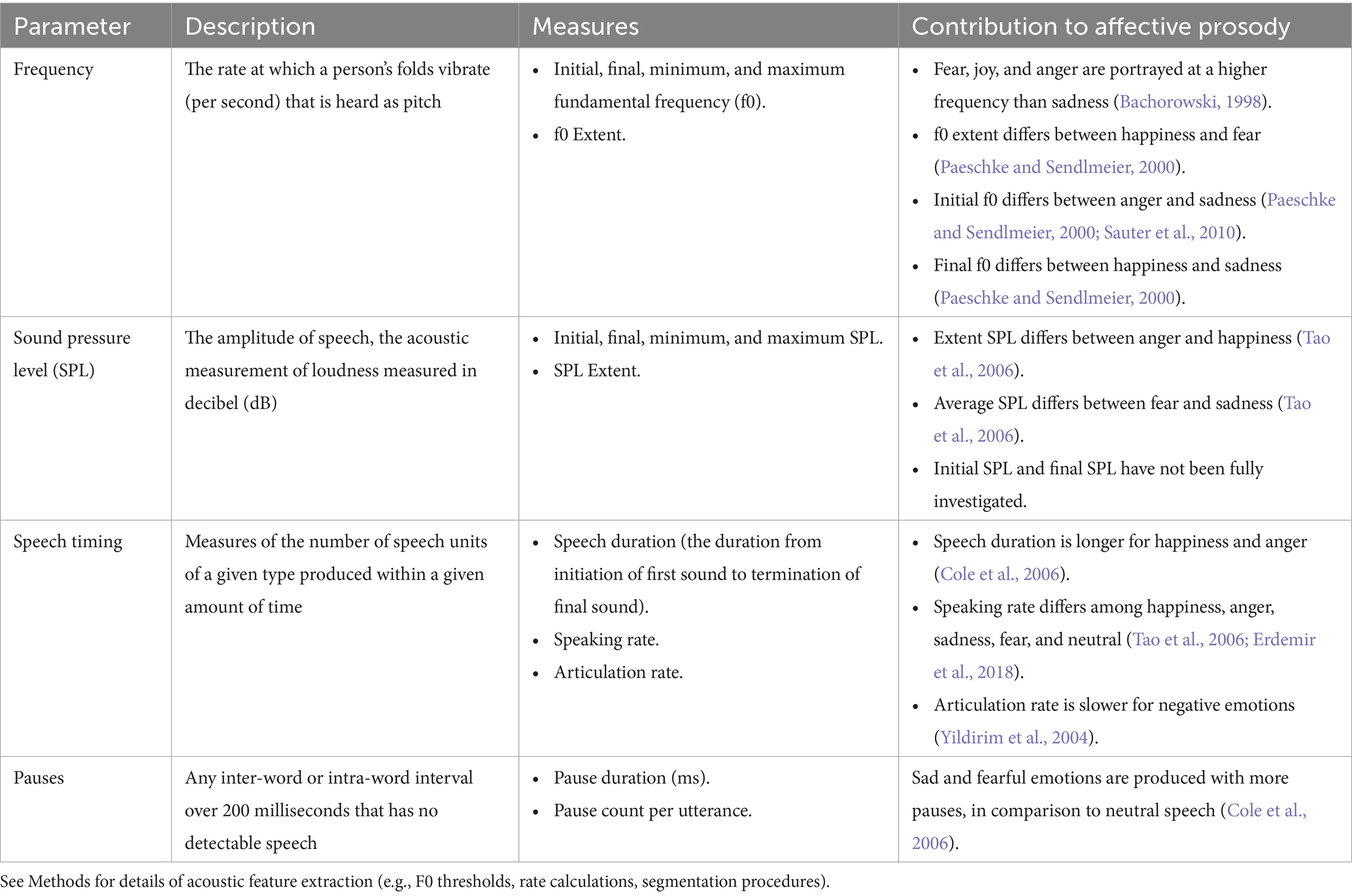

Speech recordings were parsed into utterances by two independent raters, with disagreements resolved through discussion (initial agreement rate = 89%). Audio files were processed in Praat software to extract acoustic features of prosody (e.g., pitch, intensity, speech rate). Unvoiced segments were removed, and prosodic markers were extracted as described in Table 1. F0 was extracted in Praat using a pitch-tracking range of 75–500 Hz. Speech rate was calculated as the number of syllables divided by total speech time, while articulation rate was calculated as the number of syllables divided by articulation time (excluding pauses >200 ms). Utterances were used as the unit of analysis. Speech duration was measured at the utterance level, defined as the total time from the onset of the first sound to the offset of the final sound, including all intervening pauses. All segmentation of syllables, pauses, and utterance boundaries was performed manually in Praat by three trained research assistants, with 87% initial agreement and 100% agreement after reconciliation.
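The two rate measures share a numerator and differ only in their denominator. A minimal sketch, assuming each utterance has already been annotated with its syllable count, its total duration (first-sound onset to final-sound offset), and the durations of its internal pauses; the function names and example values are hypothetical, while the two formulas and the 200 ms pause criterion follow the text.

```python
PAUSE_THRESHOLD_S = 0.200  # pauses longer than 200 ms are removed from articulation time


def speech_rate(n_syllables, total_time_s):
    """Syllables per second over the whole utterance, pauses included."""
    return n_syllables / total_time_s


def articulation_time(total_time_s, pause_durations_s, threshold_s=PAUSE_THRESHOLD_S):
    """Total time minus every pause strictly longer than the threshold."""
    return total_time_s - sum(p for p in pause_durations_s if p > threshold_s)


def articulation_rate(n_syllables, total_time_s, pause_durations_s):
    """Syllables per second of articulation time (long pauses excluded)."""
    return n_syllables / articulation_time(total_time_s, pause_durations_s)


# Hypothetical utterance: 12 syllables over 4.0 s with three internal pauses
pauses = [0.15, 0.50, 0.35]
print(speech_rate(12, 4.0))                # 3.0 syllables/s
print(articulation_rate(12, 4.0, pauses))  # 12 / 3.15, about 3.81 syllables/s
```

The 0.15 s pause stays in articulation time because it does not exceed the 200 ms criterion; consequently, articulation rate is always at least as high as speech rate for the same utterance.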

The fNIRS data were analyzed using the modified Beer–Lambert Law to calculate relative concentrations of oxygenated hemoglobin (HbO) and total hemoglobin (HbT) in the left and right hemispheres. Overall, channels 5, 7, 11, and 14 were determined to be of poor quality; therefore, the raw data from these channels were excluded from preprocessing and all subsequent analyses.
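The modified Beer–Lambert law relates the change in optical density at each wavelength to the concentration changes of oxygenated (HbO) and deoxygenated (HbR) hemoglobin: ΔOD(λ) = [ε_HbO(λ)·ΔHbO + ε_HbR(λ)·ΔHbR]·d·DPF(λ), where ε are extinction coefficients, d is the source–detector distance, and DPF is the differential pathlength factor. With two wavelengths (730 and 850 nm), this becomes a 2 × 2 linear system, and HbT = HbO + HbR. The sketch below solves it by Cramer's rule; the extinction coefficients, distance, and DPF values in the example are placeholders chosen for illustration, not the values used by fNIRSoft, and the helper names are hypothetical.

```python
import math


def delta_od(intensity, baseline_intensity):
    """Change in optical density (base-10) relative to a baseline light intensity."""
    return -math.log10(intensity / baseline_intensity)


def mbll_two_wavelength(d_od, eps, distance_cm, dpf):
    """Solve the two-wavelength modified Beer-Lambert system for (dHbO, dHbR, dHbT).

    d_od: {wavelength_nm: delta OD}
    eps:  {wavelength_nm: (eps_HbO, eps_HbR)}, in units matching the OD convention
    dpf:  {wavelength_nm: differential pathlength factor}
    """
    w1, w2 = 730, 850
    # Divide each delta OD by its effective path length d * DPF
    y1 = d_od[w1] / (distance_cm * dpf[w1])
    y2 = d_od[w2] / (distance_cm * dpf[w2])
    e11, e12 = eps[w1]  # y1 = e11 * dHbO + e12 * dHbR
    e21, e22 = eps[w2]  # y2 = e21 * dHbO + e22 * dHbR
    det = e11 * e22 - e12 * e21
    if det == 0:
        raise ValueError("extinction coefficient matrix is singular")
    d_hbo = (y1 * e22 - e12 * y2) / det
    d_hbr = (e11 * y2 - e21 * y1) / det
    return d_hbo, d_hbr, d_hbo + d_hbr


# Placeholder coefficients (illustrative only): HbR absorbs more at 730 nm, HbO more at 850 nm
EPS = {730: (0.4, 1.1), 850: (1.0, 0.7)}
DPF = {730: 6.0, 850: 6.0}
print(mbll_two_wavelength({730: 0.012, 850: 0.024}, EPS, 2.5, DPF))
```

Because 730 nm lies below and 850 nm above the wavelength at which HbO and HbR absorb equally, the two equations are well conditioned, which is why this wavelength pair can separate the two chromophores.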

All statistical analyses were performed using Stata Version 17. Multinomial logistic regression was used to model emotional condition (Happy, Sad, or Neutral) as a function of continuous acoustic predictors. Paired-sample t-tests were conducted to assess hemispheric differences in HbO and HbT during each speech condition. To control for multiple comparisons within analytic families, the Benjamini–Hochberg false discovery rate (FDR) procedure (α = 0.05) was applied separately to the acoustic analyses (multinomial logistic regression predictors) and fNIRS hemisphere contrasts.
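The Benjamini–Hochberg step-up procedure ranks the m p-values in a family, scales each by m divided by its rank, and then enforces monotonicity from the largest p-value downward; a test is declared significant when its adjusted value (q) is at most α = 0.05. A minimal pure-Python sketch follows. It is a generic implementation of the procedure, not necessarily the routine used in the original Stata analysis, and the function names are hypothetical.

```python
def benjamini_hochberg(p_values):
    """Return BH-adjusted q-values in the same order as p_values."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    q = [0.0] * m
    running_min = 1.0  # caps q at 1 and enforces monotonicity
    for rank in range(m, 0, -1):  # walk from the largest p down to the smallest
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        q[i] = running_min
    return q


def reject(p_values, alpha=0.05):
    """True for each test whose BH-adjusted q-value is at or below alpha."""
    return [q <= alpha for q in benjamini_hochberg(p_values)]


# Illustrative family of two p-values
print(benjamini_hochberg([0.039, 0.029]))  # [0.039, 0.039]
```

As an arithmetic illustration, treating the two acoustic p-values reported in the Results (0.039 and 0.029) as a family of two yields q = 0.039 for both; the text does not state the family size, so this is not a reconstruction of the authors' computation.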

3 Results

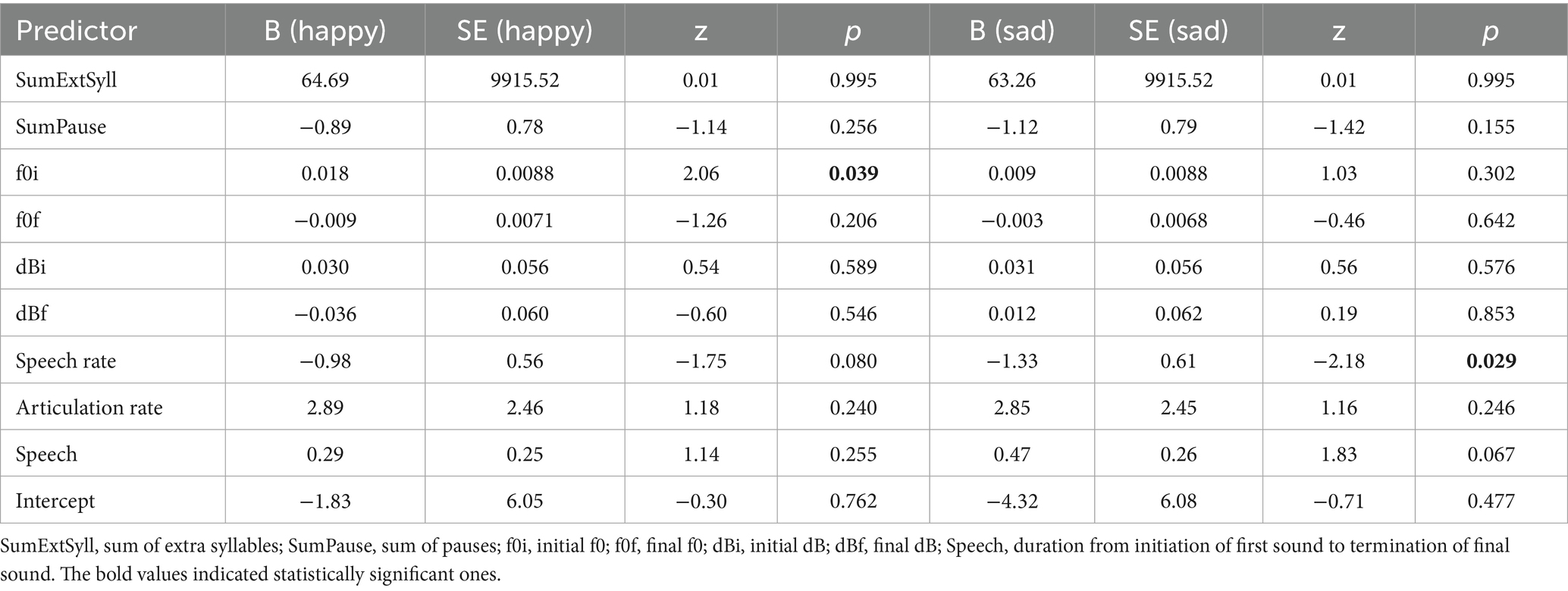

The likelihood-ratio test indicated that the multinomial logistic regression model fit the data significantly better than the null model, χ2(18) = 50.92, p < 0.001 (Table 1). The model yielded a pseudo R2 of 0.2618 (approximately 26%) for utterance type (i.e., the emotional condition of the utterances: Happy, Sad, Neutral), reflecting the proportional improvement in log likelihood over the null model. For the comparison between the neutral and happy tasks, the initial fundamental frequency (f0i) was a significant predictor (β = 0.018, standard error [SE] = 0.009, z = 2.06, p = 0.039, 95% confidence interval [CI] = [0.001, 0.035]). For the comparison between the neutral and sad tasks, speech rate was a significant predictor (β = −1.325, SE = 0.607, z = −2.18, p = 0.029, 95% CI = [−2.515, −0.135]). Importantly, both effects (f0i for Neutral vs. Happy and speech rate for Neutral vs. Sad) remained significant after FDR correction within the acoustic family (q = 0.039 for both; Table 2).
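Each coefficient in a multinomial logistic model is a log relative-risk ratio for one emotional condition versus the base category, and the reported z, p, and 95% CI follow from β and its SE under the Wald approximation: z = β/SE, p = 2[1 − Φ(|z|)], and CI = β ± 1.96·SE. Exponentiating β gives the relative-risk ratio that Stata's mlogit reports with its rrr option. The sketch below recomputes these quantities from the rounded values in the text. For speech rate it closely reproduces the reported statistics, whereas for f0i the rounded β and SE give z = 2.00 rather than the reported 2.06, presumably because both are rounded to three decimals. The function name is hypothetical.

```python
import math

Z_975 = 1.959963984540054  # 97.5th percentile of the standard normal distribution


def wald_summary(beta, se):
    """Wald z, two-sided p-value, 95% CI for beta, and the relative-risk ratio exp(beta)."""
    z = beta / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    ci = (beta - Z_975 * se, beta + Z_975 * se)
    return {"z": z, "p": p, "ci": ci, "rrr": math.exp(beta)}


# Speech rate, Neutral vs. Sad comparison, using the rounded values from the text
print(wald_summary(-1.325, 0.607))
```

A relative-risk ratio of about 0.27 (exp(−1.325)) means each one-unit increase in speech rate multiplies the relative risk of the non-base condition by roughly 0.27, holding the other predictors constant; which condition served as the base category is not stated in the text.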

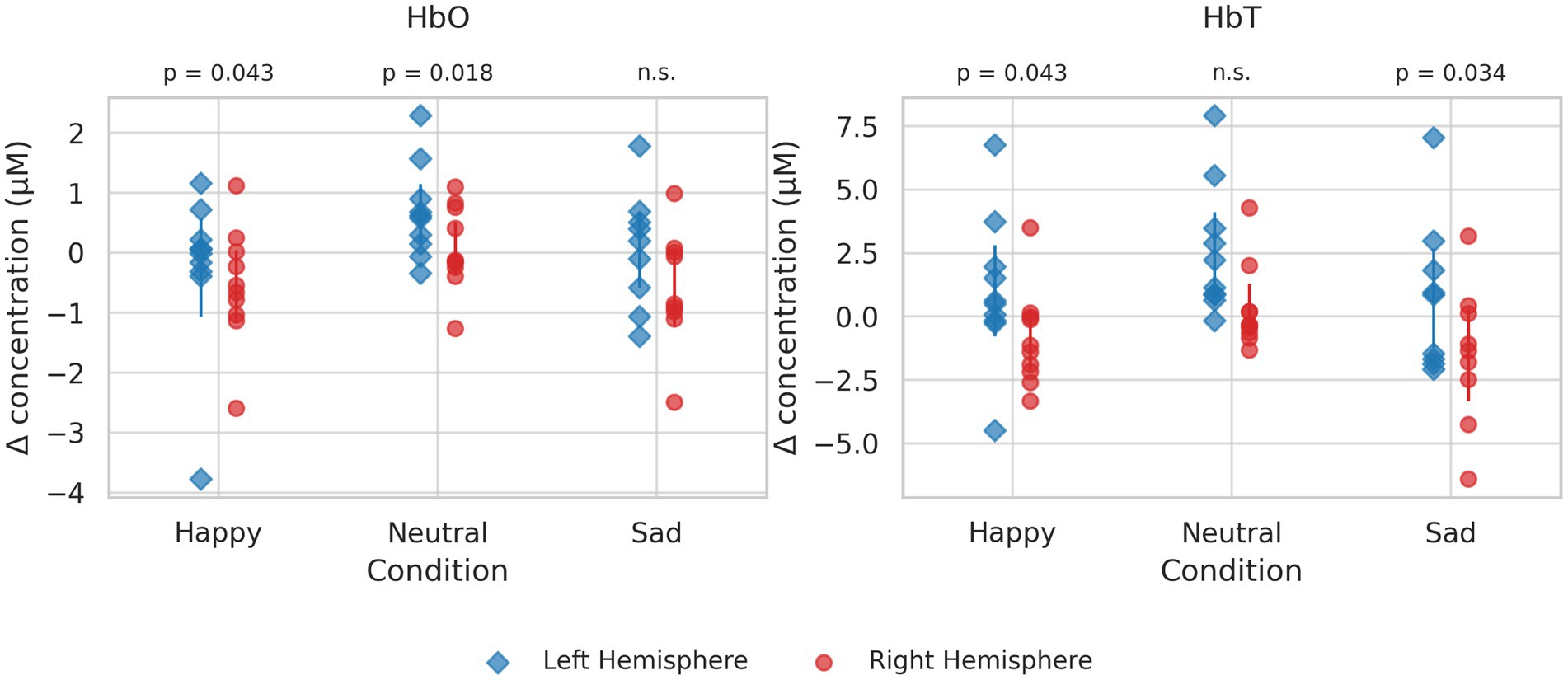

HbO showed nominally significant differences between the two hemispheres during the happy (t(5) = 0.699, p = 0.043) and neutral (t(5) = 3.459, p = 0.018) tasks. HbT also showed nominally significant hemispheric differences during the happy (t(5) = 2.691, p = 0.043) and sad (t(5) = 2.900, p = 0.034) speech tasks. However, none of these effects survived FDR correction (HbO: Happy q = 0.065, Neutral q = 0.065; HbT: Happy q = 0.065, Sad q = 0.065), so these findings should be interpreted as preliminary. Figure 2 illustrates the fNIRS results.

Figure 2. Panels show oxygenated hemoglobin (HbO, left) and total hemoglobin (HbT, right) across three conditions (happy, neutral, sad). Each point represents an individual participant. Vertical error bars indicate the group mean ± 95% confidence interval for each hemisphere within each condition. Statistical comparisons between hemispheres are displayed below the panel titles.
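For a paired-sample t-test, the statistic is the mean of the within-participant differences (here, left minus right hemisphere) divided by its standard error, with n − 1 degrees of freedom; the reported t(5) values therefore correspond to six paired observations per contrast. A minimal sketch using only the Python standard library; the hemisphere values are made up for illustration, and converting t to a p-value requires the t distribution (for example, scipy.stats.ttest_rel), which is not reimplemented here.

```python
import math
from statistics import mean, stdev


def paired_t(left, right):
    """Paired-sample t statistic and degrees of freedom for left-minus-right differences."""
    if len(left) != len(right) or len(left) < 2:
        raise ValueError("need at least two paired values with equal lengths")
    diffs = [a - b for a, b in zip(left, right)]
    n = len(diffs)
    sd = stdev(diffs)  # sample SD with an n - 1 denominator
    if sd == 0:
        raise ValueError("differences have zero variance")
    return mean(diffs) / (sd / math.sqrt(n)), n - 1


# Hypothetical HbO values for six participants (arbitrary units)
left_hbo = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
right_hbo = [0.0, 1.0, 1.0, 3.0, 4.0, 4.0]
t, df = paired_t(left_hbo, right_hbo)
print(f"t({df}) = {t:.3f}")  # t(5) = 6.325
```

The sign of t indicates which hemisphere showed the larger response: positive values mean left exceeded right on average under this left-minus-right convention.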

4 Discussion

This pilot study explored the production of affective prosody and its neural correlates in individuals with DAT using fNIRS during spontaneous speech tasks. By combining acoustic and neuroimaging analyses, we aimed to assess feasibility and identify preliminary patterns of impairment relevant to affective communication in dementia.

Prosody is essential for expressing emotion and intent in everyday interactions. In neurotypical populations, specific acoustic patterns, such as higher pitch and intensity for happiness or slower speech rate for sadness, have been consistently observed across emotional states (Yildirim et al., 2004; Huttunen et al., 2019). In contrast, our findings suggest that individuals with DAT demonstrate reduced modulation of affective prosody. Only two acoustic markers (i.e., initial fundamental frequency (f0i) and speech rate) emerged as significant predictors of emotional condition, with additional trends observed for speech duration. These results, which remained significant after FDR correction, align with previous work showing diminished expressive abilities in individuals with cognitive impairment (Horley et al., 2010; Haider et al., 2020; Oh et al., 2023) and underscore the challenges individuals with DAT may face in conveying emotion through speech.

With respect to neural activation, participants exhibited predominantly left-hemisphere engagement during both emotional and neutral speech tasks. However, although several contrasts reached nominal significance, none survived FDR correction, and thus these findings should be regarded as preliminary. In healthy individuals, emotional processing is typically supported by bilateral and interhemispheric networks (Frühholz and Grandjean, 2012; Grandjean et al., 2005; Meyer et al., 2004; Mitchell and Ross, 2013; Pell and Leonard, 2005; Wildgruber et al., 2004; Balconi et al., 2015), which are often disrupted in DAT (Lian et al., 2020; Qi et al., 2019). Prior neuroimaging studies of emotion processing in healthy adults have yielded inconsistent results regarding lateralization (Bendall et al., 2016; Kreplin and Fairclough, 2013; Vytal and Hamann, 2010), highlighting the complexity of these networks and the potential for further insights through dementia-focused research. Despite their preliminary nature, the findings of this study are broadly consistent with prior evidence of altered hemispheric lateralization in dementia (Amlerova et al., 2022; Cadieux and Greve, 1997; Hazelton et al., 2023; Kumfor et al., 2016) and raise the possibility that impaired interhemispheric integration may contribute to reduced affective prosody expression.

The ability to express emotion clearly is critical for meaningful communication, particularly in care settings. Impairments in affective prosody may contribute to miscommunication between individuals with dementia and caregivers, potentially affecting emotional well-being, social connection, and care outcomes. The present findings have translational implications by pointing to specific acoustic and neural features that may be targeted in future interventions to improve communicative functioning in dementia.

Several limitations must be acknowledged. First, the small sample size, homogeneity of participants, and absence of a control group substantially limit the generalizability of our findings. In particular, the fNIRS results should be considered preliminary; although several contrasts reached nominal significance, none remained significant after FDR correction, underscoring the risk of Type I error in this small sample. Without controls, dementia-related changes cannot be disentangled from those attributable to normal aging. In addition, the statistical analyses are likely underpowered for a dataset of this size, and no a priori power analysis was conducted given the feasibility-oriented nature of this pilot. Consistent with the exploratory nature of the study, our claims are intentionally conservative and limited to feasibility and descriptive patterns within DAT.

Second, the study was limited in the scope of emotional categories examined. Only happy and sad narratives, alongside a neutral procedural task, were included, which does not capture the full range of affective prosody relevant to everyday communication (e.g., anger, fear, surprise, or mixed emotions). Moreover, we did not perform perceptual validation of the elicited utterances (e.g., listener ratings), leaving open the question of whether the intended emotions were reliably perceived. The neuroimaging analysis was also restricted to prefrontal regions, whereas prosody production engages broader cortical and subcortical networks that were not measured here.

Third, the acoustic feature set used in this study was limited, relying mainly on pitch, intensity, timing, and pause measures. Modern approaches to acoustic analysis, including spectral, cepstral, and perturbation-based features, have shown sensitivity in identifying cognitive impairment (Ding et al., 2023). In addition, measures of voice quality (e.g., jitter, shimmer, harmonics-to-noise ratio), which are important correlates of emotional expression (Schewski et al., 2025), were not evaluated here. The exclusion of these features limits the depth of interpretation and may underestimate the full extent of prosodic change in DAT.

Building on these limitations, several directions for future research are warranted. To address issues of sample size and generalizability, larger and more demographically diverse cohorts should be recruited, and matched control groups incorporated, to distinguish dementia-specific changes from those associated with normal aging. Power analyses based on effect sizes from preliminary studies should be used to guide recruitment and ensure adequately powered hypothesis-driven designs (Faul et al., 2007; Riley et al., 2020).

Expanding the scope of emotional categories beyond happiness and sadness will provide a more comprehensive view of affective prosody in dementia. Incorporating additional emotions such as anger, fear, or surprise, as well as mixed or ambiguous states, will capture the complexity of real-world communication. Perceptual validation of elicited utterances through independent listener ratings will also be critical to confirm that speech is reliably perceived as intended. At the neural level, future studies should extend cortical coverage beyond the prefrontal regions examined here, and ideally include subcortical structures, to better characterize the distributed networks supporting affective prosody.

Finally, future work should broaden the acoustic feature set beyond pitch, intensity, timing, and pauses. Incorporating spectral, cepstral, perturbation-based, and other advanced measures, and ideally integrating multimodal acoustic–linguistic analyses, may yield more sensitive and specific markers of cognitive decline. Together, these advances will strengthen the interpretability of findings and help clarify whether prosodic changes are uniquely associated with DAT or reflect broader age-related processes.

Despite these limitations, this study demonstrates the feasibility of using fNIRS to investigate affective prosody production in individuals with DAT and highlights behavioral and neural features that may underlie communication challenges in this population. These findings provide a foundation for larger-scale, hypothesis-driven studies that can support the development of targeted communication interventions and contribute to early functional markers of dementia progression.

Data availability statement

Due to the sensitive nature of the raw audio recordings and the potential risk of participant re-identification, these data cannot be made publicly available. De-identified, coded datasets underlying the analyses may be shared by the authors upon reasonable request, and decisions will be made on a case-by-case basis in accordance with institutional and ethical guidelines.

Ethics statement

This study involving humans was approved by the Institutional Review Board of Northern Illinois University. This study was conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants’ legal guardians or next of kin, and verbal assent was obtained from all participants.

Author contributions

CO: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Visualization, Writing – original draft, Writing – review & editing. I-SK: Conceptualization, Formal analysis, Funding acquisition, Investigation, Methodology, Resources, Software, Visualization, Data curation, Supervision, Writing – review & editing. AF: Investigation, Resources, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This study was supported by the Advancing Scholarship in Research and Education grant from the College of Health Sciences and Professions of Ohio University.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. It was used for language editing and formatting.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Amir, N., Ron, S., and Laor, N. (2000). “Analysis of an emotional speech corpus in Hebrew based on objective criteria” in Proceedings of the ISCA Workshop on Speech and Emotion, 29–32.

Amlerova, J., Laczó, J., Nedelska, Z., Laczó, M., Zhang, B., Sheardova, K., et al. (2022). Emotional prosody recognition is impaired in Alzheimer’s disease. Alzheimers Res. Ther. 14:50. doi: 10.1186/s13195-022-00989-7

Bachorowski, J.-A. (1998). Vocal expression and perception of emotion. Curr. Dir. Psychol. Sci. 8, 53–57. doi: 10.1111/1467-8721.00013

Balconi, M., Grippa, E., and Vanutelli, M. E. (2015). What hemodynamic (fNIRS), electrophysiological (EEG) and autonomic integrated measures can tell us about emotional processing. Brain Cogn. 95, 67–76. doi: 10.1016/j.bandc.2015.02.001

Banse, R., and Scherer, K. (1996). Acoustic profiles in vocal emotion expression. J. Pers. Soc. Psychol. 70, 614–636. doi: 10.1037//0022-3514.70.3.614

Baum, S. R., and Pell, M. D. (1999). The neural bases of prosody: insights from lesion studies and neuroimaging. Aphasiology 13, 581–608. doi: 10.1080/026870399401957

Beach, C. M. (1991). The interpretation of prosodic patterns at points of syntactic structure ambiguity: evidence for cue trading relations. J. Mem. Lang. 30, 644–663. doi: 10.1016/0749-596X(91)90030-N

Belyk, M., and Brown, S. (2017). The origins of the vocal brain in humans. Neurosci. Biobehav. Rev. 77, 177–193. doi: 10.1016/j.neubiorev.2017.03.014

Bendall, R. C. A., Eachus, P., and Thompson, C. (2016). A brief review of research using near-infrared spectroscopy to measure activation of the prefrontal cortex during emotional processing: the importance of experimental design. Front. Hum. Neurosci. 10:529. doi: 10.3389/fnhum.2016.00529

Bornhofen, C., and McDonald, S. (2008). Emotion perception deficits following traumatic brain injury: a review of the evidence and rationale for intervention. J. Int. Neuropsychol. Soc. 14, 511–525. doi: 10.1017/S1355617708080703

Cadieux, N. L., and Greve, K. W. (1997). Emotion processing in Alzheimer’s disease. J. Int. Neuropsychol. Soc. 3, 411–419. doi: 10.1017/S1355617797004116

Cole, J., Kim, H., Choi, H., and Hasegawa-Johnson, M. (2006). Prosodic effects on acoustic cues to stop voicing and place of articulation: evidence from radio news speech. J. Phon. 35, 180–209. doi: 10.1016/j.wocn.2006.03.004

Dautzenberg, G., Lijmer, J., and Beekman, A. (2020). Diagnostic accuracy of the Montreal cognitive assessment (MoCA) for cognitive screening in old age psychiatry: determining cutoff scores in clinical practice. Avoiding spectrum bias caused by healthy controls. Int. J. Geriatr. Psychiatry 35, 261–269. doi: 10.1002/gps.5227

Ding, H., Hamel, A. P., Karjadi, C., Ang, T. F. A., Lu, S., Thomas, R. J., et al. (2023). Association between acoustic features and brain volumes: the Framingham heart study. Front. Dement. 2:1214940. doi: 10.3389/frdem.2023.1214940

Erdemir, A., Walden, T. A., Jefferson, C. M., Choi, D., and Jones, R. M. (2018). The effect of emotion on articulation rate in persistence and recovery of childhood stuttering. J. Fluen. Disord. 56, 1–17. doi: 10.1016/j.jfludis.2017.11.003

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Ferdinando, H., Moradi, S., Korhonen, V., Helakari, H., Kiviniemi, V., and Myllylä, T. (2023). Spectral entropy provides separation between Alzheimer’s disease patients and controls: a study of fNIRS. Eur. Phys. J. Spec. Top. 232, 655–662. doi: 10.1140/epjs/s11734-022-00753-w

Frühholz, S., and Grandjean, D. (2012). Multiple subregions in superior temporal cortex are differentially sensitive to vocal expressions: a quantitative meta-analysis. Neurosci. Biobehav. Rev. 36, 2071–2083. doi: 10.1016/j.neubiorev.2012.06.005

Grandjean, D., Sander, D., Pourtois, G., Schwartz, S., Seghier, M. L., Scherer, K. R., et al. (2005). The voices of wrath: brain responses to angry prosody in meaningless speech. Nat. Neurosci. 8, 145–146. doi: 10.1038/nn1381

Haider, F., de la Fuente, S., and Luz, S. (2020). An assessment of paralinguistic acoustic features for detection of Alzheimer's dementia in spontaneous speech. IEEE J. Sel. Top. Signal Process 14, 272–281. doi: 10.1109/jstsp.2019.2955022

Harel, B. T., Cannizzaro, M., Cohen, H., Reilly, N., and Snyder, P. J. (2004). Acoustic characteristics of parkinsonian speech: a potential biomarker of early disease progression and treatment. J. Neurolinguistics 17, 439–453. doi: 10.1016/j.jneuroling.2004.06.001

Hazelton, J. L., Devenney, E., Ahmed, R., Burrell, J., Hwang, Y., Piguet, O., et al. (2023). Hemispheric contributions toward interoception and emotion recognition in left- vs right-semantic dementia. Neuropsychologia 188:108628. doi: 10.1016/j.neuropsychologia.2023.108628

Horley, K., Reid, A., and Burnham, D. (2010). Emotional prosody perception and production in dementia of the Alzheimer's type. J. Speech Lang. Hear. Res. 53, 1132–1146. doi: 10.1044/1092-4388(2010/09-0030)

Huttunen, T., Toivonen, H., Suni, A., and Väyrynen, E. (2019). “The temporal domain of speech and its relevance in the context of emotion” in Speech and language Technology in Education. eds. A. Šimić and M. Ptaszyński (Springer), 61–73.

Ilie, G., Cusimano, M. D., and Li, W. (2017). Prosodic processing post-traumatic brain injury—a systematic review. Syst. Rev. 6:1. doi: 10.1186/s13643-016-0385-3

Kato, S., Homma, A., and Sakuma, T. (2018). Easy screening for mild Alzheimer’s disease and mild cognitive impairment from elderly speech. Curr. Alzheimer Res. 15, 104–110. doi: 10.2174/1567205014666171120144343

Koff, E., Zaitchik, D., Montepare, J., and Albert, M. S. (1999). Emotion processing in the visual and auditory domains by patients with Alzheimer’s disease. J. Int. Neuropsychol. Soc. 5, 32–40. doi: 10.1017/S1355617799511053

Krasnodębska, P., Szkiełkowska, A., Pollak, A., Romaniszyn-Kania, P., Bugdol, M., Bugdol, M., et al. (2024). Analysis of the relationship between emotion intensity and electrophysiology parameters during a voice examination of opera singers. Int. J. Occup. Med. Environ. Health 37, 84–97. doi: 10.13075/ijomeh.1896.02272

Kreplin, U., and Fairclough, S. H. (2013). Activation of the rostromedial prefrontal cortex during the experience of positive emotion in the context of esthetic experience. An fNIRS study. Front. Hum. Neurosci. 7:879. doi: 10.3389/fnhum.2013.00879

Kumfor, F., Landin-Romero, R., Devenney, E., Hutchings, R., Grasso, R., Hodges, J. R., et al. (2016). On the right side? A longitudinal study of left- versus right-lateralized semantic dementia. Brain 139, 986–998. doi: 10.1093/brain/awv387

Kumfor, F., and Piguet, O. (2012). Disturbance of emotion processing in frontotemporal dementia: a synthesis of cognitive and neuroimaging findings. Neuropsychol. Rev. 22, 280–297. doi: 10.1007/s11065-012-9201-6

Labov, W., and Waletzky, J. (1997). Narrative analysis: oral versions of personal experience. J. Narrat. Life Hist. 7, 3–38. doi: 10.1075/jnlh.7.02nar

Leaman, M. C., and Archer, B. (2023). Choosing discourse types that align with person-centered goals in aphasia rehabilitation: a clinical tutorial. Perspect. ASHA Spec. Interest Groups 8, 54–273. doi: 10.1044/2023_PERSP-22-00160

Lian, C., Liu, M., Zhang, J., and Shen, D. (2020). Hierarchical fully convolutional network for joint atrophy localization and Alzheimer's disease diagnosis using structural MRI. IEEE Trans. Pattern Anal. Mach. Intell. 42, 880–893. doi: 10.1109/tpami.2018.2889096

Lum, J. A. G., Conti-Ramsden, G., Page, D., and Ullman, M. T. (2012). Working, declarative and procedural memory in specific language impairment. Cortex J. Devoted Study Nerv. Syst. Behav. 48, 1138–1154. doi: 10.1016/j.cortex.2011.06.001

Meilán, J. J., Martínez-Sánchez, F., Carro, J., López, D. E., Millian-Morell, L., and Arana, J. M. (2014). Speech in Alzheimer’s disease: can temporal and acoustic parameters discriminate dementia? Dement. Geriatr. Cogn. Disord. 37, 327–334. doi: 10.1159/000356726

Meilán, J. J., Martínez-Sánchez, F., Carro, J., Sánchez, J. A., and Pérez, E. (2012). Acoustic markers associated with impairment in language processing in Alzheimer’s disease. Span. J. Psychol. 15, 487–494. doi: 10.5209/rev_sjop.2012.v15.n2.38859

Meyer, M., Steinhauer, K., Alter, K., Friederici, A. D., and von Cramon, D. Y. (2004). Brain activity varies with modulation of dynamic pitch variance in sentence melody. Brain Lang. 89, 277–289. doi: 10.1016/S0093-934X(03)00357-2

Mitchell, R. L. C., and Ross, E. D. (2013). fMRI evidence for the effect of verbal complexity on lateralisation of the neural response associated with decoding prosodic emotion. Neuropsychologia 51, 1749–1757. doi: 10.1016/j.neuropsychologia.2013.05.011

Morley, J. E., and Tumosa, N. (2002). Saint Louis University Mental Status Examination (SLUMS) [database record]. APA PsycTests. doi: 10.1037/t27282-000

Murphy, S., Melandri, E., and Bucci, W. (2021). The effects of story-telling on emotional experience: An experimental paradigm. J. Psycholinguist. Res. 50, 117–142. doi: 10.1007/s10936-021-09765-4

Oh, C., Morris, R. J., Wang, X., and Raskin, M. S. (2023). Analysis of emotional prosody as a tool for differential diagnosis of cognitive impairments: a pilot research. Front. Psychol. 14:1129406. doi: 10.3389/fpsyg.2023.1129406

Paeschke, A., and Sendlmeier, W. (2000). Prosodic characteristics of emotional speech: measurements of fundamental frequency movements. ISCA Archive. Available online at: https://www.iscaspeech.org/archive_open/archive_papers/speech_emotion/spem_075.pdf (accessed Sep 12, 2025).

Patel, S., Oishi, K., Wright, A., Sutherland-Foggio, H., Saxena, S., Sheppard, S. M., et al. (2018). Right hemisphere regions critical for expression of emotion through prosody. Front. Neurol. 9:224. doi: 10.3389/fneur.2018.00224

Pell, M. D., Cheang, H. S., and Leonard, C. L. (2006). The impact of Parkinson’s disease on vocal-prosodic communication from the perspective of listeners. Brain Lang. 97, 123–134. doi: 10.1016/j.bandl.2005.08.010

Pell, M. D., and Leonard, C. L. (2005). Processing emotional tone from speech in Parkinson's disease: a role for the basal ganglia. Cogn. Affect. Behav. Neurosci. 5, 153–162. doi: 10.3758/cabn.5.2.153

Qi, Z., An, Y., Zhang, M., Li, H.-J., and Lu, J. (2019). Altered cerebro-cerebellar limbic network in AD Spectrum: a resting-state fMRI study. Front. Neural Circuits 13:72. doi: 10.3389/fncir.2019.00072

Riley, R. D., Ensor, J., Snell, K. I. E., Harrell, F. E., Martin, G. P., Reitsma, J. B., et al. (2020). Calculating the sample size required for developing a clinical prediction model. BMJ 368:m441. doi: 10.1136/bmj.m441

Rohrer, J. D., Sauter, D., Scott, S., Rossor, M. N., and Warren, J. D. (2012). Receptive prosody in nonfluent primary progressive aphasias. Cortex 48, 308–316. doi: 10.1016/j.cortex.2010.09.004

Ross, E. D., and Monnot, M. (2008). Neurology of affective prosody and its functional–anatomic organization in right hemisphere. Brain Lang. 104, 51–74. doi: 10.1016/j.bandl.2007.04.007

Sauter, D. A., Eisner, F., Calder, A. J., and Scott, S. K. (2010). Perceptual cues in nonverbal vocal expressions of emotion. Q. J. Exp. Psychol. 63, 2251–2272. doi: 10.1080/17470211003721642

Schewski, L., Doss, M. M., Beldi, G., and Keller, S. (2025). Measuring negative emotions and stress through acoustic correlates in speech: a systematic review. PLoS One 20:e0328833. doi: 10.1371/journal.pone.0328833

Seger, C. A., Dennison, C. S., Lopez-Paniagua, D., Peterson, E. J., and Roark, A. A. (2011). Dissociating hippocampal and basal ganglia contributions to category learning using stimulus novelty and subjective judgments. NeuroImage 55, 1739–1753. doi: 10.1016/j.neuroimage.2011.01.026

Skodda, S., Visser, W., and Schlegel, U. (2010). Short- and long-term dopaminergic effects on dysprosody in Parkinson’s disease. J. Neural Transm. 117, 197–205. doi: 10.1007/s00702-009-0351-5

Spencer, R. J., Noyes, E. T., Bair, J. L., and Ransom, M. T. (2022). Systematic review of the psychometric properties of the Saint Louis University Mental Status (SLUMS) Examination. Clin. Gerontol. 45, 454–466. doi: 10.1080/07317115.2022.2032523

Taler, V., Baum, S. R., Chertkow, H., and Saumier, D. (2008). Comprehension of grammatical and emotional prosody is impaired in Alzheimer’s disease. Neuropsychology 22, 188–195. doi: 10.1037/0894-4105.22.2.188

Tao, J., Kang, Y., and Li, A. (2006). Prosody conversion from neutral speech to emotional speech. IEEE Trans. Audio Speech Lang. Process. 14, 1145–1154. doi: 10.1109/tasl.2006.876113

Testa, J. A., Beatty, W. W., Gleason, A. C., Orbelo, D. M., and Ross, E. D. (2001). Impaired affective prosody in AD: relationship to aphasic deficits and emotional behaviors. Neurology 57, 1474–1481. doi: 10.1212/wnl.57.8.1474

Vytal, K., and Hamann, S. (2010). Neuroimaging support for discrete neural correlates of basic emotions: a voxel-based meta-analysis. J. Cogn. Neurosci. 22, 2864–2885. doi: 10.1162/jocn.2009.21366

Wildgruber, D., Hertrich, I., Riecker, A., Erb, M., Anders, S., Grodd, W., et al. (2004). Distinct frontal regions subserve evaluation of linguistic and emotional aspects of speech intonation. Cereb. Cortex 14, 1384–1389. doi: 10.1093/cercor/bhh099

Wright, A., Saxena, S., Sheppard, S. M., and Hillis, A. E. (2018). Selective impairments in components of affective prosody in neurologically impaired individuals. Brain Cogn. 124, 29–36. doi: 10.1016/j.bandc.2018.04.001

Yang, D., and Hong, K. S. (2021). Quantitative assessment of resting-state for mild cognitive impairment detection: a functional near-infrared spectroscopy and deep learning approach. J. Alzheimer's Dis. 80, 647–663. doi: 10.3233/JAD-201163

Keywords: dementia, speech, acoustics, fNIRS, emotion

Citation: Oh C, Kim I-S and Feltis A (2025) Affective prosody and cortical activation in dementia of the Alzheimer’s type: an exploratory acoustic and fNIRS study. Front. Dement. 4:1681602. doi: 10.3389/frdem.2025.1681602

Edited by:

Alexander V. Glushakov, University of Virginia, United States

Reviewed by:

Vesa Olavi Korhonen, Oulu University Hospital, Finland

Fasih Haider, University of Edinburgh, United Kingdom

Copyright © 2025 Oh, Kim and Feltis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chorong Oh, ChorongOh@uky.edu

Chorong Oh
