- (1) Center of Innovation for Complex Chronic Healthcare, Edward Hines Jr. VA Hospital, Hines, IL, United States

- (2) Iowa City VA Medical Center, Center for Access and Delivery Research and Evaluation, United States Department of Veterans Affairs, Iowa City, IA, United States

- (3) Department of Medicine, School of Medicine, Case Western Reserve University, Cleveland, OH, United States

- (4) Feinberg School of Medicine, Northwestern University, Chicago, IL, United States

- (5) VA National Infectious Diseases Service, MDRO Prevention Division, Washington, DC, United States

- (6) Rocky Mountain Regional VA Medical Center, Center of Innovation for Veteran Centered and Value Driven Care, Aurora, CO, United States

- (7) Department of Medicine, University of Colorado Anschutz Medical Campus, Aurora, CO, United States

- (8) VA Salt Lake City Health Care System, Informatics, Decision Enhancement, and Analytics Sciences Center, Salt Lake City, UT, United States

- (9) Department of Internal Medicine, Division of Epidemiology, Spencer Fox Eccles School of Medicine, University of Utah, Salt Lake City, UT, United States

- (10) Medicine Service, VA Portland Healthcare System, Portland, OR, United States

- (11) Department of Medicine, School of Medicine, Oregon Health and Science University, Portland, OR, United States

- (12) VA Central Office, VA National Pathology and Laboratory Medicine Program Office, Washington, DC, United States

- (13) Iowa City VA Medical Center, Iowa City VA Health Care System, Veterans Health Administration, United States Department of Veterans Affairs, Iowa City, IA, United States

- (14) Carver College of Medicine, Pittsburgh VA Medical Center, The University of Iowa, Iowa City, IA, United States

- (15) Center for Health Equity Research and Promotion, Pittsburgh, PA, United States

- (16) Division of General Internal Medicine, University of Pittsburgh, Pittsburgh, PA, United States

Background: The Veterans Health Administration (VHA) launched VA Bug Alert (VABA) to identify admitted patients who are infected or colonized with multidrug-resistant organisms (MDROs) in real time and promote timely infection prevention measures. However, initial VABA adoption was suboptimal. The objective of this project was to compare the effectiveness of standard vs. enhanced implementation strategies for improving VABA adoption.

Methods: 121 VA healthcare facilities were evaluated for adoption of VABA (at least 1 user registered at a facility) April 2021–September 2022. All facilities initially received standard implementation, which included: VABA revisions based on end-user feedback, education, and internal facilitation via monthly meetings with the MDRO Prevention Division of the VHA National Infectious Diseases Service. Surveys evaluated VABA perspectives among MDRO Prevention Coordinators (MPCs) and/or Infection Preventionists (IPs) before and after initial standard implementation. Facilities not registered for VABA following initial standard implementation (n = 31) were cluster-randomized to continue to receive standard implementation or enhanced implementation (audit and feedback reports and external facilitation via guided interviews to assess VABA use barriers). Percentages of facilities adopting VABA at baseline, after standard implementation (Follow-up 1), and after the enhanced vs. standard implementation trial period (Follow-up 2) were assessed and compared across time points using McNemar’s test. VABA adoption was compared by trial condition using Fisher's exact test.

Results: Before education, 25% of 167 MPC/IP survey respondents across 116 facilities reported no knowledge/use of VABA. After education, 82% of 92 survey respondents across 80 facilities reported intending to use VABA. At baseline, 40% of facilities were registered for VABA. Registrations increased significantly by Follow-up 1 (75%, p < 0.01) and Follow-up 2 (89%, p < 0.01). Adoption did not differ significantly by assigned implementation condition but was higher among facilities that completed all components of enhanced implementation than among those that did not (87.5% vs. 43.5%, p = 0.045). Guided interviews revealed key factors influencing VABA registration, including perceived fit, implementation activities, and organizational context (e.g., staffing resources).

Conclusions: Implementation efforts dramatically increased VABA registrations. Incorporating interview feedback to increase VABA's fit with users' needs may increase its use and help reduce MDRO spread in VA.

Background

Antimicrobial-resistant infections are a critical threat to public health. Each year in the United States (U.S.), they cause three million illnesses, 48,000 deaths, and $35 billion in excess costs (1). Multidrug-resistant organisms (MDROs) such as carbapenem-resistant Enterobacterales (CRE) are especially important; the Centers for Disease Control and Prevention (CDC) designates CRE as an urgent threat to public health in the U.S. (1). Patients who are infected/colonized with MDROs may transmit MDROs to others.

The CDC and World Health Organization (WHO) recommend identifying such patients and placing them on isolation/contact precautions (2, 3). Early identification ensures that appropriate precautions are enacted as soon as possible, minimizing the opportunity for MDRO transmission. However, actionable information on whether patients were colonized or infected with MDRO(s) during prior hospital admissions is often not readily available (4, 5). This information gap can delay isolation (4), increasing the risk of MDRO spread and contributing to MDRO outbreaks in hospitals.

The U.S. Veterans Health Administration (VHA) launched a tool, now called VA Bug Alert (VABA), to expedite identification of patients with a history of MDRO infection/colonization (6). VHA is a large, nationwide, integrated healthcare system that uses a centralized electronic health record. This allows VHA to track and monitor MDRO-positive patients longitudinally and between hospitals system-wide. VABA alerts local facilities when such patients are admitted. Upon interfacility transfer, VABA identifies MDRO-positive patients more promptly than previous protocols that relied on manual tracking. Similar system-wide electronic alerts have been found to improve compliance with isolation precautions (4, 7), and guidelines recommend such alerts for preventing methicillin-resistant Staphylococcus aureus (MRSA) infection and transmission (8).

Development and implementation of the tool were overseen by the MDRO Prevention Division of the VHA's National Infectious Diseases Service. Prior efforts to implement VABA included education provided to VA MDRO prevention coordinators (MPCs) and infection preventionists (IPs) nationwide in June 2020. In May 2021, the MDRO Prevention Division conducted an unpublished survey of 163 MPCs and/or IPs (response rate = 100%). Only 32.5% of survey participants were registered users of VABA. Reported reasons for non-use included a lack of knowledge about VABA's existence (62.7%) and about how to register for it (37.3%). Among those who did report using VABA, only 30.2% were routine users. Users indicated that including more organisms (69.8%) and the specimen source (54.7%) in VABA would make it more useful and increase utilization.

The MDRO Prevention Division and VABA developers launched a quality improvement initiative to improve VABA adoption and ultimately achieve VA-wide implementation. For this initiative, they partnered with the VA Combating Antimicrobial Resistance through Rapid Implementation of Guidelines and Evidence (CARRIAGE) II Quality Enhancement Research Initiative (QUERI) Program. This partnership is henceforth called the VABA Group.

To accomplish the goals of the initiative, the VABA Group employed an adaptive protocol (9, 10) composed of strategies that were previously successful for implementing evidence-based practice interventions. Strategies delivered to all sites included facilitation from the MDRO Prevention Division (i.e., internal facilitation), education, and tailoring and adaptation (11–25). Because some sites were expected not to register shortly after deployment of these initial “standard” implementation strategies, the CARRIAGE II team also provided external facilitation and audit and feedback to such sites (15, 26, 27). These “enhanced” implementation strategies have been useful with late adopters (10, 25, 28, 29).

The current paper aims to describe these implementation efforts and their evaluation. We assessed the effects of implementation strategies on VABA adoption (i.e., registration) over time. We also compared the impact of enhanced vs. continued standard implementation on late adopters. We describe how target user perspectives were evaluated at multiple periods. These perspectives were used to refine and individualize implementation activities at subsequent periods. Implications for implementation science and future directions are discussed.

Methods

Ethical considerations for human subjects

This project was conducted as part of the CARRIAGE II QUERI program. This program was designated by the VHA National Infectious Diseases Service as a non-research quality improvement project for program implementation and evaluation purposes, in accordance with VHA Program Guide 1200.21. Participants' decision to participate in surveys was taken as an indication of their consent. Verbal consent was obtained for participation in and audio recording of guided interviews.

Study design and setting

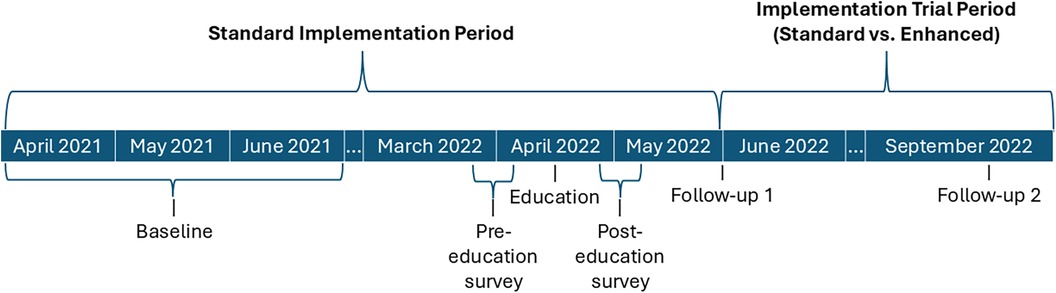

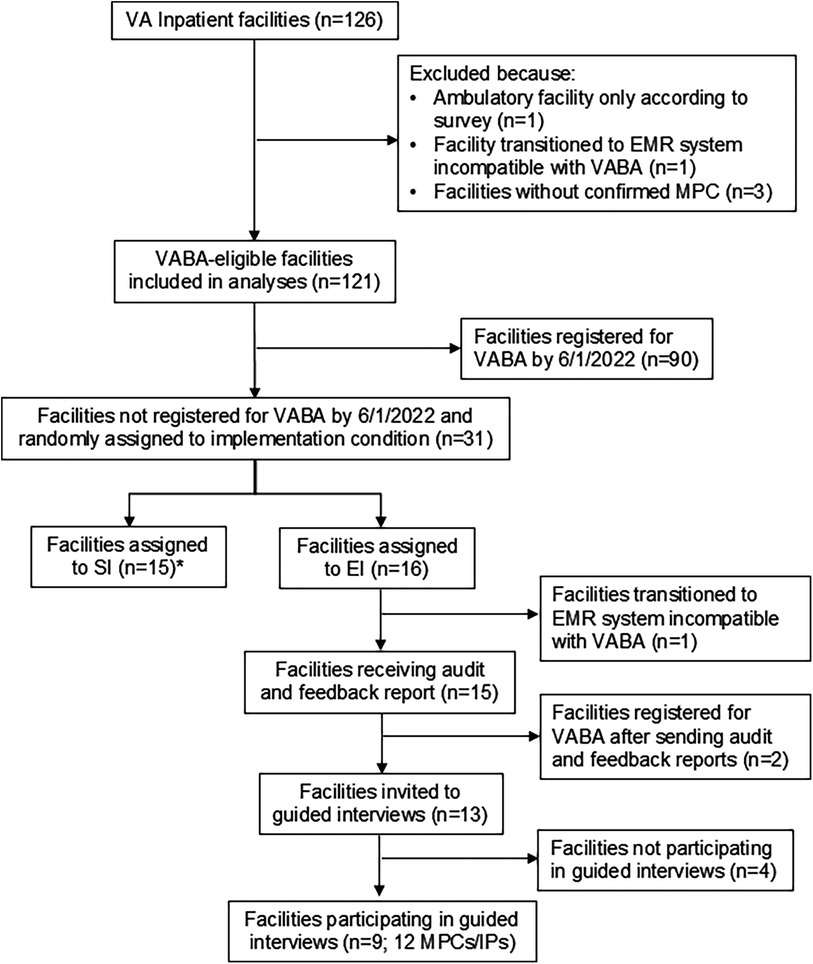

VA facilities were eligible for VABA use if they had an MPC, saw inpatients, and had not transitioned to an electronic health record vendor that was incompatible with VABA at the time. These facilities included stand-alone, single-hospital facilities and integrated, multi-hospital facilities. Eligible facilities (n = 121) and individual MPCs and IPs were evaluated for VABA registration and VABA beliefs and use using a pre-post design. Study procedures occurred from March 2021 through October 2022. A timeline of implementation and measurement periods is shown in Figure 1. Briefly, standard implementation was delivered to all sites in the Standard Implementation Period. Adoption was measured at baseline and at Follow-up 1 (after the Standard Implementation Period). Facilities unregistered by Follow-up 1 entered a cluster-randomized controlled trial evaluating the impact of standard vs. enhanced implementation during the Implementation Trial Period on registration at Follow-up 2. Guided interviews were conducted with enhanced implementation facilities to assess barriers to VABA use. The Consolidated Standards of Reporting Trials (CONSORT) guidelines were used to report the trial design.

Figure 1. Implementation timeline. Timeline of implementation activities and registration (adoption) outcome measurement.

Intervention

The Template for Intervention Description and Replication (TIDieR) checklist was used to compile this description of the intervention (30). VABA was originally developed as an alert system and web report for patients with CRE (6). Its goal was to target interfacility movement and transfers between all VA facilities, a key measure for preventing MDRO transmission. VABA's development has been described previously (6).

Briefly, VABA includes cases of MRSA, vancomycin-resistant Enterococcus (VRE), and other organisms. VABA uses information from the VA Corporate Data Warehouse, a database of VA-wide patient-level health characteristics and care utilization (31). It provides automatic (push) email alerts for new admissions with prior positive results for these organisms (within the past year for MRSA, with no time limit for other included organisms) and for current admissions with new positive results. Alert settings can be customized by pathogen and by the source facility of the culture result (internal or external to the target user's facility). VABA also allows users to pull and sort reports of MDRO-positive admissions and recent weekend discharges, with information on positive surveillance swabs and clinical cultures for each report entry. The target users for VABA are MPCs and IPs, who are responsible for monitoring, tracking, and reporting MDROs in VA.
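The admission-alert rule described above can be illustrated as a simple filter over a patient's prior positive results. The Python below is a minimal sketch under stated assumptions, not VABA's actual implementation: the record layout, the names `PositiveResult`, `AlertSettings`, and `admission_alert`, and the reading of "past year" as 365 days are all hypothetical, and only the admission-time check is shown (alerts for new positive results during a stay are omitted).

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical lookback rule: MRSA results count only within the past year
# (assumed here to mean 365 days); other organisms have no time limit.
LOOKBACK = {"MRSA": timedelta(days=365)}


@dataclass
class PositiveResult:
    organism: str     # e.g. "MRSA", "VRE", "CRE"
    collected: date   # specimen collection date
    facility: str     # facility where the positive culture was resulted


@dataclass
class AlertSettings:
    organisms: frozenset  # pathogens the user chose to be alerted about
    source: str           # "internal", "external", or "any"


def admission_alert(results, admit_date, admit_facility, settings):
    """Return the prior positive results that would trigger an admission alert."""
    hits = []
    for r in results:
        if r.organism not in settings.organisms or r.collected > admit_date:
            continue  # pathogen not selected, or result is not a prior result
        window = LOOKBACK.get(r.organism)  # None means no time limit
        if window is not None and admit_date - r.collected > window:
            continue  # outside the lookback window
        internal = r.facility == admit_facility
        if (settings.source == "internal" and not internal) or (
            settings.source == "external" and internal
        ):
            continue  # excluded by the source-facility setting
        hits.append(r)
    return hits


history = [
    PositiveResult("MRSA", date(2020, 1, 10), "Facility A"),  # ~20 months before admission
    PositiveResult("CRE", date(2015, 6, 1), "Facility A"),    # old, but CRE has no limit
    PositiveResult("VRE", date(2021, 3, 2), "Facility B"),    # VRE not selected below
]
settings = AlertSettings(organisms=frozenset({"MRSA", "CRE"}), source="external")
hits = admission_alert(history, date(2021, 9, 1), "Facility B", settings)
# hits contains only the CRE result
```

Keeping the per-organism lookback in a lookup table makes the "no time limit" default explicit and lets additional organism-specific windows be added without changing the filter logic.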

Implementation strategies

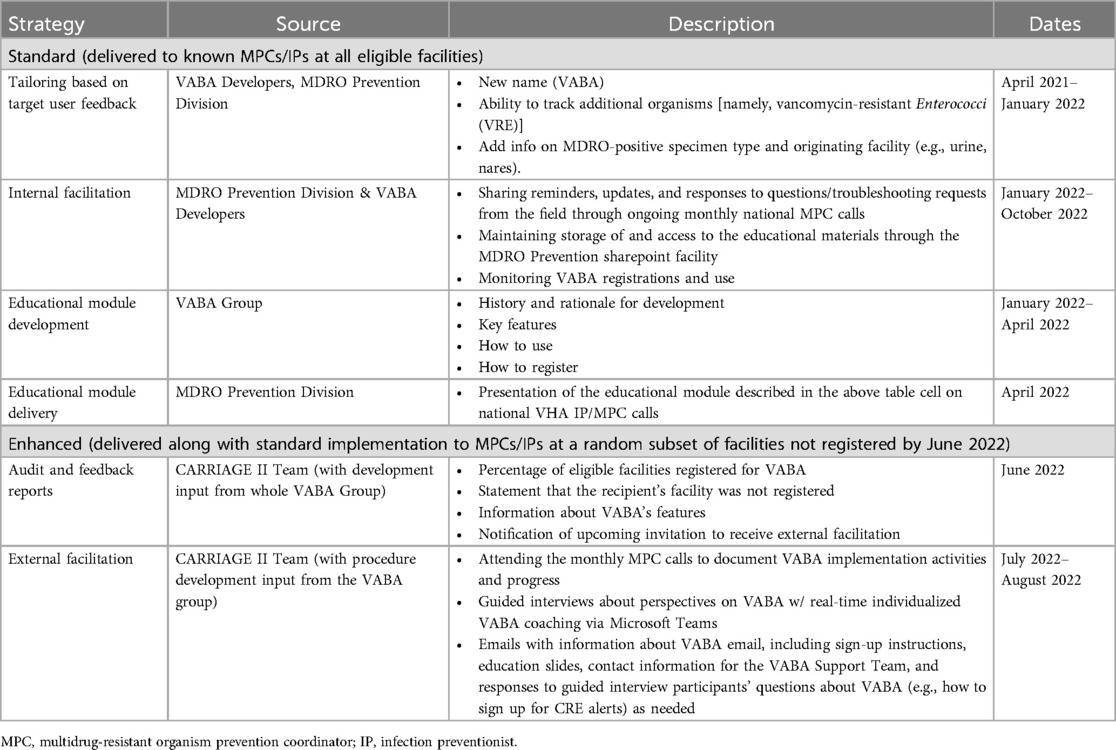

Implementation strategies are described in detail in Table 1. Standard strategies included: (1) tailoring of VABA to include new features based on target end-user feedback, (2) development and delivery of a VABA educational module to target users, and (3) ongoing internal facilitation by the MDRO Prevention Division. Enhanced implementation strategies included standard strategies plus: (1) audit and feedback reports on VABA registration (see Supplementary Figure S1) and (2) external facilitation from members of the CARRIAGE II team.

External facilitation included guided, semi-structured interviews held via Microsoft (MS) Teams with at least one MPC/IP from a target facility. These guided interviews were facilitation sessions in which individualized, real-time VABA coaching was offered and provided to those who accepted the offer. Interviewers first assessed participants' knowledge, attitudes, and beliefs regarding VABA use. They then offered information about how VABA may help participants meet their MDRO prevention needs, which participants could accept or decline. For participants who accepted, this information was tailored in real time based on their post-education survey-reported reasons for not registering for VABA or the specific needs they noted in the guided interview. For example, facilitators showed participants how to obtain close-to-real-time admission notifications of patients whose MDROs were identified at VA facilities other than the end user's own (a key feature of VABA).

Within the same guided interview, participants were also asked for their impressions of the information shared (if they accepted additional information), their VABA registration and use intentions, and recommendations for improving VABA and/or its implementation efforts. External facilitators had backgrounds in implementation science, social psychology, social work, and epidemiology, and they received basic training about VABA from the developers and MDRO Prevention Division. The VABA Group met quarterly, with additional meetings scheduled as needed, to share updates and preliminary results regarding VABA adoption and implementation (e.g., recommendations from the CARRIAGE II team for VABA design and internal facilitation based on guided interview results).

Randomization

All facilities initially received standard implementation (see Figure 2 for cohort inclusion). Facilities that were confirmed by the MDRO Prevention Division to have an MPC and that had not registered by Follow-up 1 (n = 31) were randomized to continued standard implementation alone (n = 15) or to enhanced implementation (n = 16). Random assignment was performed by a member of the implementation team (C.R.), who entered VA facility codes into an online random assignment tool (32) and assigned them to standard vs. enhanced implementation. The CARRIAGE II implementation team was not blinded to condition, as they provided enhanced implementation only to sites in the enhanced implementation condition. C.R. conducted the analyses unblinded to condition.

Figure 2. Registration analyses cohort creation/inclusion-exclusion diagram. *Facilities that transferred to a new electronic health record system were identified and excluded from enhanced implementation after random assignment to implementation condition; only those that were included in the random assignment (n = 2, one in the enhanced implementation condition, one in the standard implementation condition) are included in analyses.
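Cluster random assignment of whole facilities to the two conditions can be sketched as follows. The project used an online random assignment tool (32); this Python version is a swapped-in equivalent for illustration only, and the facility codes, the `assign_conditions` helper, and the seed are hypothetical. It allocates 16 of 31 facilities to enhanced and 15 to standard implementation, matching the group sizes reported for the trial.

```python
import random


def assign_conditions(facility_codes, n_enhanced, seed=None):
    """Randomly allocate whole facilities (clusters) to enhanced vs. standard."""
    rng = random.Random(seed)  # seeded generator so the allocation can be reproduced
    shuffled = list(facility_codes)
    rng.shuffle(shuffled)
    # The first n_enhanced facilities in the shuffled order get enhanced implementation
    return {
        code: "enhanced" if i < n_enhanced else "standard"
        for i, code in enumerate(shuffled)
    }


codes = [f"FAC{i:03d}" for i in range(1, 32)]  # 31 hypothetical facility codes
arms = assign_conditions(codes, n_enhanced=16, seed=2022)
```

Randomizing at the facility level, rather than per user, keeps all MPCs/IPs at one facility in the same condition, which matches facility-level registration as the outcome.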

Outcomes

The primary outcome measure of adoption was VABA registrations at the 121 eligible VA facilities. Registration was defined by having at least one user register at a given facility, as many VA facilities may only have 1 MPC/IP tasked with tracking MDROs. Percentage of facilities registered for VABA was evaluated at baseline, Follow-up 1, and Follow-up 2 (Figure 1). Secondary outcomes evaluated included self-reported knowledge, use of, and intentions to register for VABA through pre- and post-education surveys of target users. Barriers to and facilitators of adoption were also identified from guided interviews.
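The facility-level definition of adoption can be made concrete with a short sketch. The data layout (user/facility pairs) and the helper name `facility_adoption_pct` are hypothetical; the point is that several registered users at one facility count once, and registrations from facilities outside the eligible set do not count.

```python
def facility_adoption_pct(eligible, registrations):
    """Percentage of eligible facilities with at least one registered user.

    eligible: set of facility codes
    registrations: iterable of (user_id, facility_code) pairs
    """
    registered = {fac for _user, fac in registrations if fac in eligible}
    return 100 * len(registered) / len(eligible)


eligible = {"A", "B", "C", "D"}  # hypothetical facility codes
regs = [("u1", "A"), ("u2", "A"), ("u3", "C"), ("u4", "Z")]  # Z is not eligible
pct = facility_adoption_pct(eligible, regs)  # A and C count once each -> 50.0
```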

Data collection

VABA registration was obtained from the MDRO Prevention Division. Data on facility characteristics were obtained from administrative records in the VA's Corporate Data Warehouse from fiscal years 2021–2022 (October 2020–September 2022; Figure 1). These characteristics included geographic region, complexity, and rates of VABA-eligible MDROs (MRSA, CRE, and VRE). The list of unique facilities for VABA registration analyses was obtained from VHA Support Service Center (VSSC) administrative records and the VA facility listing website (33).

Voluntary, identifiable (non-anonymous) pre- and post-education surveys were delivered via email using SurveyMonkey by the MDRO Prevention Division to a total of 651 target users across 121 facilities with active MPCs/IPs nationwide. Because education session attendance was unknown, surveys were sent to all known target users regardless of whether they attended the education session (surveys are provided in Supplementary Appendices 1 and 2). Recipients included 283 MPCs, 61 IPs, and 307 individuals serving as both MPC and IP.

After randomization, facilities randomized to enhanced implementation were invited via email to participate in guided interviews. Up to one email reminder, one MS Teams message, and one phone call were sent to potential guided interview participants. If an MPC could not be reached at that facility, the implementation team identified and contacted the local facility's IP. Our purposive sampling strategy involved a limited pool of eligible key informants (i.e., target users at unregistered sites). Given this, guided interviews were conducted until recruitment attempts were exhausted rather than until thematic saturation was reached (34).

The guided interview guide (Supplementary Appendix 3) was developed by the research team using concepts from user-centered design and piloted in the Standard Implementation Period (35, 36). Participants were told that guided interviews would last a maximum of 30 min. Guided interviews were audio recorded and transcribed using MS Teams. Transcripts were uploaded into MAXQDA, a qualitative data management and analysis software program (VERBI Software, Berlin, Germany), for subsequent analysis.

Data analysis

Pre- and post-education surveys were analyzed using descriptive statistics, including frequencies and percentages. Facility-level VABA adoption (the percentage of facilities with at least one registrant for VABA) was compared between baseline and follow-up using McNemar's test. Facility complexity and geographic region were compared by facility registration status at baseline and Follow-up 1 using Chi-square tests, and MDRO incidence-rate differences by registration status were obtained. Facility characteristics (complexity and geographic region) of the standard vs. enhanced implementation groups in the cluster-randomized controlled implementation trial were compared using Fisher's exact test to check for differences between groups. Follow-up 2 adoption rates were compared by implementation condition using Fisher's exact test, and MDRO incidence-rate differences by implementation group were obtained.
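McNemar's test compares paired proportions using only the discordant pairs, here facilities whose registration status changed between two time points. The sketch below implements the exact (binomial) form; the text does not state whether the exact or the asymptotic form was used, and the discordant counts in the example are hypothetical, not study data. The asymptotic form would instead refer $(|b-c|-1)^2/(b+c)$ to a chi-square distribution with 1 degree of freedom.

```python
from math import comb


def mcnemar_exact(b, c):
    """Exact (binomial) two-sided McNemar test.

    b: facilities registered at baseline but not at follow-up
    c: facilities not registered at baseline but registered at follow-up
    Concordant facilities (same status at both time points) do not enter the test.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of change
    k = min(b, c)
    # Under H0, each discordant pair is equally likely to go either way: Bin(n, 0.5)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical discordant counts for illustration only (not study data)
p = mcnemar_exact(b=1, c=10)  # 2 * 12 / 2048 = 0.01171875
```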

Implementation groups were defined by assigned implementation condition in an intent-to-treat analysis, by whether the facility completed a guided interview in a second analysis, and by whether the facility received VABA coaching (i.e., not only participated in a guided interview, but agreed to hear more information and guidance about VABA during the guided interview and thereby “completed full external facilitation,” as we henceforth describe these facilities) in a post-hoc sensitivity analysis. In this analysis, all sites that did not complete full external facilitation were grouped together. This category therefore contained standard implementation sites, enhanced implementation sites that did not complete guided interviews, and an enhanced implementation site that completed a guided interview but declined additional information about VABA (and thus did not complete full external facilitation). We also examined differences in facility characteristics by guided interview completion status to identify any differences that may have potentially biased results.
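The three analytic groupings can be expressed as simple classification rules over per-facility trial records. This is an illustrative sketch only: the `TrialFacility` record and the function names are hypothetical, and the example facility mirrors the one described above that completed a guided interview but declined additional information.

```python
from dataclasses import dataclass


@dataclass
class TrialFacility:
    code: str
    arm: str                 # assigned condition: "enhanced" or "standard"
    interviewed: bool        # completed a guided interview
    accepted_coaching: bool  # accepted additional VABA information during the interview


def itt_group(f: TrialFacility) -> str:
    # Intent-to-treat: group by assigned condition regardless of participation
    return f.arm


def interview_group(f: TrialFacility) -> str:
    # Second analysis: group by guided interview completion
    return "interviewed" if f.interviewed else "not interviewed"


def full_facilitation_group(f: TrialFacility) -> str:
    # Post-hoc sensitivity analysis: only facilities that were interviewed AND
    # accepted coaching completed full external facilitation; all others,
    # including every standard-arm facility, are pooled together.
    completed = f.arm == "enhanced" and f.interviewed and f.accepted_coaching
    return "full" if completed else "not full"


# Hypothetical facility: interviewed but declined additional information
declined = TrialFacility("FAC001", "enhanced", interviewed=True, accepted_coaching=False)
groups = (itt_group(declined), interview_group(declined), full_facilitation_group(declined))
# groups == ("enhanced", "interviewed", "not full")
```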

VABA utilization data are available only at the facility level. Therefore, for multi-hospital facilities, the highest-complexity hospital with MDRO admissions during the evaluation period was chosen for analyses, as these hospitals likely weighed most heavily in their target users' VABA adoption decisions. All quantitative analyses were conducted using StataMP 17 (37). The threshold for statistical significance was set at p < 0.05 for all analyses involving inferential statistics.
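The selection of one representative hospital per multi-hospital facility can be sketched as below. Two assumptions are not stated in the text and are labeled here: the complexity ordering uses the VHA levels 1a, 1b, 1c, 2, and 3 with 1a as the most complex, and ties keep the first hospital encountered. The record layout and hospital codes are hypothetical.

```python
# Assumed VHA complexity ordering, most complex first (lower rank = more complex).
COMPLEXITY_RANK = {"1a": 0, "1b": 1, "1c": 2, "2": 3, "3": 4}


def representative_hospitals(hospitals):
    """Map each facility to its highest-complexity hospital with MDRO admissions.

    hospitals: list of dicts with keys "facility", "hospital", "complexity",
    and "mdro_admissions" (count during the evaluation period).
    Ties keep the first hospital encountered.
    """
    best = {}
    for h in hospitals:
        if h["mdro_admissions"] == 0:
            continue  # hospitals without MDRO admissions are not candidates
        current = best.get(h["facility"])
        if current is None or COMPLEXITY_RANK[h["complexity"]] < COMPLEXITY_RANK[current["complexity"]]:
            best[h["facility"]] = h
    return {fac: h["hospital"] for fac, h in best.items()}


hospitals = [
    {"facility": "F1", "hospital": "H1", "complexity": "1a", "mdro_admissions": 0},
    {"facility": "F1", "hospital": "H2", "complexity": "1c", "mdro_admissions": 5},
    {"facility": "F1", "hospital": "H3", "complexity": "2", "mdro_admissions": 3},
    {"facility": "F2", "hospital": "H4", "complexity": "3", "mdro_admissions": 1},
]
chosen = representative_hospitals(hospitals)  # {"F1": "H2", "F2": "H4"}
```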

Guided interview responses were coded using an inductive/deductive approach (38). Deductive codes were identified using the Systems Engineering Initiative for Patient Safety (SEIPS) 2.0 framework (39). SEIPS 2.0 specifies how work system elements influence “processes” (e.g., care processes, teamwork) and “outcomes.” Elements include “tools/technologies,” “tasks,” “persons,” “internal environment,” and “organization.” All work system elements may interact with each other. See Supplementary Table S1 for the coding scheme. SEIPS is based on decades of healthcare research and has been successfully applied to understanding the adoption and implementation of infection prevention measures and healthcare information technology, including systems used in VA to access information from other facilities (40–49). Our inductive approach allowed themes on how work system components interact and shape VABA adoption to emerge (38, 50).

All transcripts were double-coded by trained qualitative researchers (CR, CCG, AMH) with disagreements resolved via consensus for 100% agreement. A matrix approach was used to examine intersecting codes and organize excerpts into themes for sensemaking purposes. We report on themes that were deemed well-represented within the data (i.e., that were reported by two or more facilities). Participants were not provided the opportunity to validate transcripts or findings.

Results

Pre- and post-education surveys

Demographics and responses of MPCs/IPs to pre- and post-education survey questions are presented in Supplementary Table S2. A total of 167 individuals across 116 VA facilities (95.9% of the 121 facilities) responded to the pre-education survey. VABA familiarity and use were low before the education session: only 25.1% (n = 42) were familiar with VABA's previous iteration and had used it. Among these users, the most commonly reported frequency of use was monthly (28.6%); only 9.5% used VABA every day.

92 individuals across 80 VA facilities completed the post-education survey (facility response rate = 66.1%). Of these, 76 (81.7%) said they planned to register for VABA. Among the 16 participants who reported that they would not or were unsure whether they would register, the most common reason cited for not registering was that they already had a way to obtain the information provided by VABA (e.g., a commercially available tool; n = 11, 70.6%). Of the subset who planned to register for VABA (n = 76), 71.1% said they would use the feature to create custom email alerts.

Facility characteristics and VABA adoption

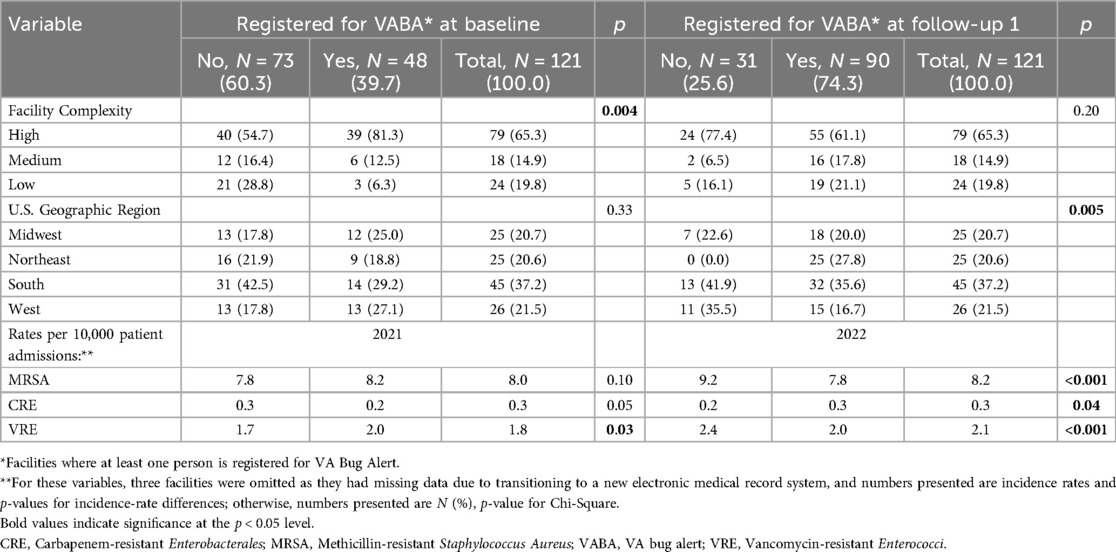

Exclusions and participation in each component of standard and enhanced implementation are displayed in Figure 2. Characteristics of the 121 VABA-eligible facilities are compared by registration status at baseline and after standard implementation in Table 2. At baseline, facilities that were registered were of higher complexity (p-value = 0.004) and had higher rates of VRE cases per 10,000 patients (p-value = 0.03) than those that were not registered. At Follow-up 1, there were significant geographic differences (p = 0.005): no unregistered facilities were from the Northeast, and unregistered facilities were more commonly from the South and West. Fiscal year 2022 MRSA and VRE rates were lower among facilities that had registered by Follow-up 1 than among those that had not (p-value < 0.001 for both), while CRE rates were higher (p-value = 0.04). The adoption rate was 40% at baseline and 75% at Follow-up 1 (p-value < 0.0001).

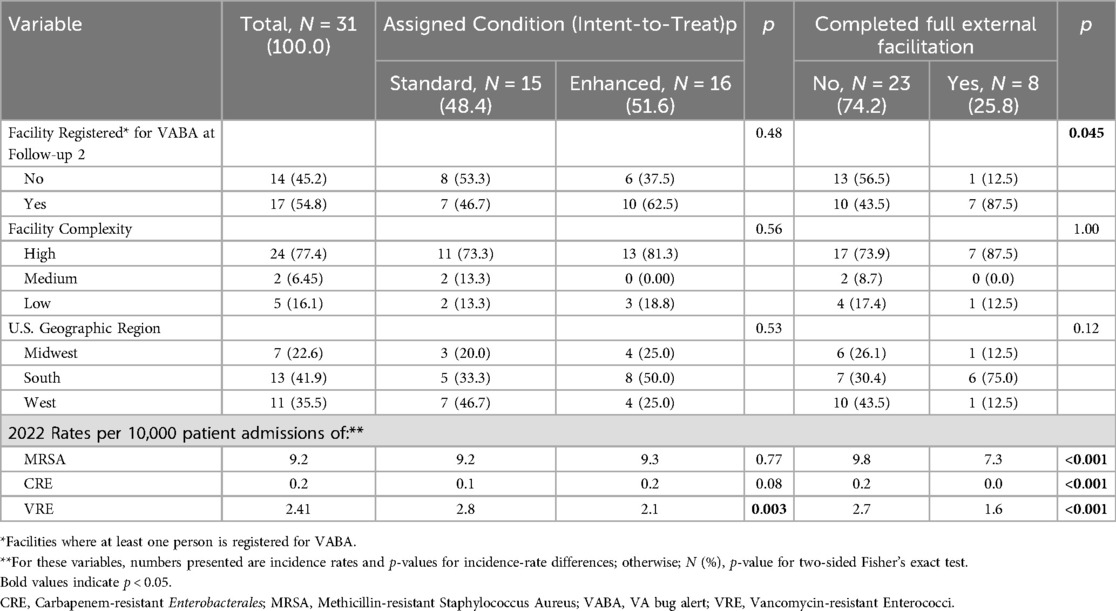

Of the 31 facilities that were not registered at Follow-up 1 and that had confirmed MPCs, 16 were randomized to enhanced implementation, and 15 were randomized to continued standard implementation. Of the 16 enhanced implementation facilities, 9 completed guided interviews. Of these, 8 facilities accepted additional information and coaching about VABA during the guided interviews. Thus, across both conditions, 8 facilities completed full external facilitation, and 23 did not.

Adoption of VABA and facility characteristics by implementation condition

Table 3 displays facility characteristics and registration status at Follow-up 2 by assigned implementation condition (the intent-to-treat analysis) and by whether facilities completed full external facilitation. There were no significant differences in facility complexity or geographic region by type of implementation assigned or received. However, VRE incidence rates per 10,000 patients were significantly higher among facilities assigned to standard implementation than among those assigned to enhanced implementation (p-value = 0.003). Furthermore, rates of CRE, VRE, and MRSA per 10,000 patients were significantly higher among the 23 facilities that did not complete full external facilitation (i.e., did not both participate in a guided interview and accept coaching about VABA during it) than among the 8 that did (p-value < 0.001 for all).
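For readers unfamiliar with the rate scale, a rate per 10,000 patients and a difference between two groups reduce to simple arithmetic. The sketch below assumes pooled counts within each group and a denominator of patients (the exact denominator definition is not stated in the text); the helper name and the counts are hypothetical, not study data.

```python
def rate_per_10k(cases: int, patients: int) -> float:
    """Cases per 10,000 patients (pooled over the facilities in a group)."""
    return 10_000 * cases / patients


# Hypothetical pooled counts for two groups of facilities (not study data)
rate_a = rate_per_10k(12, 30_000)  # 4.0 per 10,000
rate_b = rate_per_10k(5, 25_000)   # 2.0 per 10,000
rate_difference = rate_a - rate_b  # 2.0 per 10,000
```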

At Follow-up 2, 89.2% of eligible facilities were registered for VABA (n = 107), which was a significant increase from baseline (p-value = 0.0001). Overall, 10 enhanced implementation facilities (62.5%) registered for VABA, compared to 7 standard implementation facilities (46.7%) (p = 0.48). A sensitivity analysis found that while 7 of the 8 facilities completing full external facilitation registered for VABA (87.5%), only 10 of the 23 facilities that did not complete full external facilitation registered for VABA (43.5%), such that completing full external facilitation was associated with significantly more frequent VABA registration (p = 0.045).
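The two trial comparisons above can be checked directly from the reported counts (10 of 16 enhanced vs. 7 of 15 standard facilities registered; 7 of 8 vs. 10 of 23 by full external facilitation). The sketch below implements a two-sided Fisher's exact test using one common definition: it sums the hypergeometric probabilities of all tables with the same margins whose probability does not exceed that of the observed table. The helper name is hypothetical; with these counts it reproduces the reported p-values to the reported precision.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows are groups; columns are (registered, not registered).
    """
    row1, col1, n = a + b, a + c, a + b + c + d

    def weight(k):
        # Number of ways to observe k registrations in row 1 given the margins
        return comb(col1, k) * comb(n - col1, row1 - k)

    lo, hi = max(0, row1 - (n - col1)), min(row1, col1)
    observed = weight(a)
    # Integer weights avoid floating-point ties when comparing table probabilities
    extreme = sum(weight(k) for k in range(lo, hi + 1) if weight(k) <= observed)
    return extreme / comb(n, row1)


# Intent-to-treat: enhanced 10 of 16 registered vs. standard 7 of 15
p_itt = fisher_exact_two_sided(10, 16 - 10, 7, 15 - 7)
# Sensitivity analysis: full external facilitation 7 of 8 vs. 10 of 23 otherwise
p_full = fisher_exact_two_sided(7, 8 - 7, 10, 23 - 10)
print(f"{p_itt:.2f} {p_full:.3f}")  # 0.48 0.045
```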

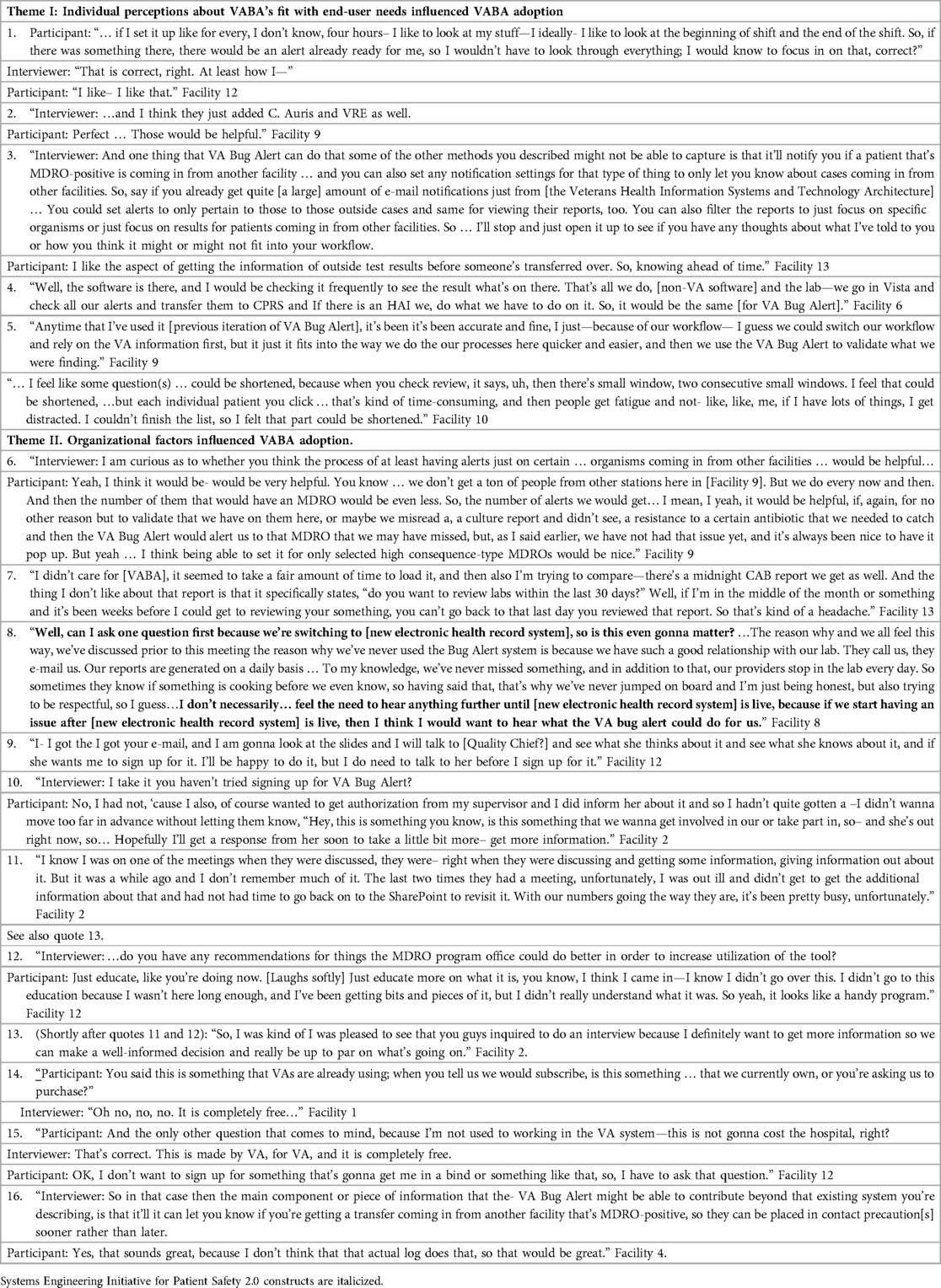

Factors influencing adoption: guided interview results

Guided interviews were completed by participants from 8 facilities (out of 13 eligible facilities in the enhanced implementation condition; response rate = 61.5%). Guided interviews lasted 20.5 min on average (range, 12.1–29.0 min). Emergent themes centered on factors influencing VABA adoption, including perceptions about VABA, organizational factors, and implementation activities (Table 4).

Table 4. Key themes and illustrative quotes regarding factors influencing VABA registration from guided interviews.

Theme I: Individual perceptions about VABA's fit with end-user needs (e.g., preferences, intended tasks, existing processes or workflows, and usability needs) influenced adoption. For example, one participant liked that they could customize alert settings to their timing preferences (e.g., to receive alerts at the start of a shift; quote 1). Another user described alerts for select organisms as useful (quote 2). Yet another user liked that VABA provided advance alerts of cases coming from other facilities (i.e., a useful feature of VABA; quote 3). VABA adoption also appeared to be supported to the extent that users perceived VABA as fitting their existing processes or workflows (quotes 4 and 5). Conversely, viewing VABA as time-consuming or difficult to use (i.e., low usability) seemed to inhibit VABA adoption (quote 6).

Theme II: Organizational factors influenced adoption. Some organizational factors appeared to influence the perceived fit of VABA with users' needs, for example, the facility's volume of MDRO cases that could be identified by VABA as opposed to other available tools (quote 7). Similarly, VABA adoption was impeded by perceived incompatibility with other technologies provided by the organization (quotes 8 and 9). Other organizational factors influenced adoption without directly shaping perceptions about VABA's fit with users' needs. For instance, some target users reported needing to obtain supervisor permission before they could adopt VABA after coaching (quotes 10 and 11). Staffing challenges such as absences, turnover, or high workload made it difficult to attend education sessions (quotes 12 and 13) or to review the presentation slides after the session (quote 12).

Theme III: Implementation activities (e.g., external facilitation) appeared to influence adoption, and their influence hinged on the extent to which they could compensate for organizational barriers or highlight relevant positive attributes of VABA. Specifically, quotes 13 and 14 suggest that the one-on-one guided interview format of external facilitation may have helped compensate for these participants' inability to attend the education session (quotes 12 and 13). Quotes 15 and 16 show external facilitators pointing out that VABA is free within VA (reportedly an important precondition of VABA adoption according to quote 16). Furthermore, participants responded favorably to information about VABA attributes that fit their needs. Such attributes included VABA's interfacility transfer tracking abilities (quote 17) and alert customizability (quotes 1 and 7).

Discussion

Key findings

Across 121 VA facilities, VABA adoption was low at baseline. Registration increased after standard implementation, by which point three-quarters of facilities had adopted VABA. At Follow-up 2, adoption did not differ significantly by assigned implementation condition; however, in a post-hoc sensitivity analysis, completing the coaching component of external facilitation was associated with greater VABA adoption. This may have been because coaching compensated for key organizational influences, such as staffing constraints, that limited the reach of education sessions. Coaching also appeared to convey important adoption-relevant information, for instance, about VABA's fit with user needs. Overall, our efforts appeared largely successful for increasing VABA adoption.

Target users' perceptions that VABA fit their preferences and needs were related to adoption, as particularly illustrated by guided interview findings. Furthermore, survey respondents who reportedly did not intend to adopt VABA often indicated that they already had a method of obtaining the information provided by VABA. In other words, for this subset of participants, VABA did not have additional perceived usefulness beyond that of other tools they were already using. These findings are consistent with prior evidence illustrating the importance of perceived usefulness and usability on technology use (51–54). VABA's compatibility with existing processes was another important determinant of adoption. Similarly, other studies have found that implementation of antimicrobial stewardship interventions is linked to the interventions' fit or compatibility with existing processes (55–57). Our findings also echo work outlining the role of innovation adaptability, complexity, design, relative advantage over alternative tools, and inner setting compatibility in implementation (58).

Organizational factors also shaped VABA adoption, in part by limiting the reach or impact of implementation activities. For instance, VABA adoption was constrained by the perceived need to obtain supervisor permission to register, even following external facilitation. Staffing challenges at unregistered facilities also impaired attendance at large-group education sessions, consistent with a prior systematic review (26).

Implementation activities shaped adoption. The significant increase in registration from baseline to after follow-up 1 is concordant with past reports demonstrating the effectiveness of provider training as an implementation strategy (59, 60). We also found that external facilitation (specifically, the coaching component of guided interviews) increased registration. This finding partially echoes past work demonstrating the effectiveness of external facilitation (9, 10). Consistent with prior evaluations (61), the success of implementation activities for promoting adoption also appeared to hinge on the extent to which they could influence target users' perceptions. External facilitation highlighting VABA's customizable features (i.e., adaptability) appeared effective for fostering adoption, perhaps because it demonstrated that VABA can meet end-user preferences and needs, as illustrated by the exchange in Table 4, quote 1.

Implementation activities' success also seemed to depend on the extent to which they could surmount organizational barriers to implementation. External facilitation provided additional support for staff who were unable to attend education sessions due to staffing constraints, also consistent with a past systematic review (26). Strategies more commonly recommended to reduce challenges with available resources, such as obtaining more funding to hire additional staff, may be infeasible in some cases (58, 62). Approaches such as external facilitation may be particularly helpful in such instances by setting aside time in busy target users' schedules for one-on-one interaction.

Implications for future innovation design and implementation efforts

Findings emphasize the need to identify and address organizational barriers and end user needs or preferences when designing and implementing technological interventions (26, 62). Our results also illustrate the importance of engaging key stakeholders for identifying these key considerations (12). Tailoring of implementation to local context can be guided by stakeholder feedback (17–20, 25).

Our findings and expert recommendations also suggest which implementation strategies may be most useful for addressing specific barriers in future innovation design and implementation efforts. For instance, stakeholder engagement should involve individuals beyond target users, such as their supervisors. This strategy can help obtain clear support and permission for intervention use. This suggestion is consistent with Miake-Lye and colleagues' recommendation to promote "visibility with multi-level leadership" of late adopters (26). It also aligns with experts' endorsement of informing local opinion leaders to address issues with key stakeholders and opinion leaders (58, 62).

When the perceived compatibility of an intervention is low, it may be beneficial to promote adaptability and implement cyclical small tests of change (25, 49). A systematic review highlighted two strategies: (1) enhancing the adaptability of innovations and (2) refining them based on feedback from trials with late adopters. These strategies may increase an innovation's appeal or perceived advantage relative to alternative tools (26, 58). Additionally, one project that used the SEIPS 2.0 model to evaluate a tool designed to convey safety-related information about patients from outside facilities (similar to the current project) found that tool usage improved when its fit with the intended task (i.e., usefulness) was enhanced (49). User-centered design can guide tailoring and (re)design to promote implementation by incorporating end-user perspectives into intervention design and implementation, helping ensure that user needs are met (35, 36, 58, 63–65). More studies should explore this possibility (66).

Strengths and limitations

This project has several strengths. Our adaptive trial design, multiple periods of data collection, and novel guided interview approach allowed us to refine our implementation strategies in response to differences in beliefs across users and shifting contexts over time. Our semi-structured guided interview, which included suggested facilitator responses to comments from interview participants, promoted standardization of the provision and measurement of often "black box" external facilitation processes (67). It also helped address complexities associated with navigating the dual roles of interviewer and external facilitator. To our knowledge, this is the first report describing a formalized method and guide for collecting data on barriers and facilitators, providing external facilitation support, and collecting data on initial reactions to external facilitation in a single interview. This method thus provides structure to the interactive problem-solving processes of external facilitation (15).

Our analysis included guided interview text comprising VABA guidance from external facilitators/interviewers in addition to participant responses. This approach allowed us to extract the content conveyed during the guided interviews that appeared to promote adoption intentions. Furthermore, access to VABA utilization metrics allowed us to measure behavior directly, rather than relying solely on intentions reported in surveys and guided interviews.

We used the SEIPS 2.0 framework, which connects work system inputs with processes and outcomes and allows for interactions between work system elements. This in turn gives flexibility for understanding and contextualizing implementation of technological interventions. We were thus able to identify relationships between technology adoption and other work system elements from the ground up, without preconceived notions. These relationships correspond to prior implementation science constructs even though we did not look for those constructs a priori. This correspondence provides particularly compelling evidence for the role of these constructs in adoption.

Despite its strengths, this project also had some limitations. Because education session attendance data were unavailable, we were unable to calculate survey response rates among attendees. Because VABA utilization metrics were only available at the integrated facility/healthcare system level, in which some facilities comprise multiple medical centers, we were unable to assess the role of implementation strategies and possible covariates in registration at each medical center (68). Furthermore, VABA registrants may have included individuals using VABA for administrative or reporting purposes rather than frontline MDRO prevention efforts. Thus, our registration numbers may include users other than target users. The ineligibility of some facilities for enhanced implementation and VABA use was discovered after randomization and implementation had begun. Thus, the analyses include some facilities not eligible for VABA (69, 70).

The small sample of facilities participating in the trial limited the statistical power to detect effects of enhanced implementation. Furthermore, higher VABA adoption among sites that received enhanced implementation as intended (which included the guided interviews) than among sites that did not may have been influenced by their lower MDRO rates rather than by the external facilitation itself. Thus, evaluation of the effectiveness of external facilitation efforts from this study must be interpreted with caution, as resource-intensive strategies such as external facilitation may not always be merited. This may be particularly true in cases such as the current initiative, in which late adopters were scarce.

The scarcity of non-adopters attests to the success of the education session and tailoring of the tool based on prior target user surveys. This latter finding lends further credence to our recommendations regarding the importance of tailoring design based on user input. However, because all sites received standard implementation at a minimum, we cannot rule out the possibility that history effects, such as decreased competing priorities following the COVID-19 pandemic, drove increased registration from baseline. Finally, the increased VABA use from baseline in response to standard and enhanced implementation may be due to prolonged exposure to implementation efforts (i.e., time) rather than the merits of any one implementation strategy (e.g., internal facilitation).

Conclusions

Overall, design tailoring and adaptation, education, and facilitation successfully promoted adoption of an MDRO alert tool in the VHA. These strategies were particularly successful to the extent that they addressed organizational factors and shaped end-user perceptions regarding the usability and utility of VABA. Future work includes in-depth analysis of internal facilitation processes. We will also continue to refine VABA design, implementation activities, and evaluation methods based on current findings and participant recommendations in collaboration with the MDRO Prevention Division. The goal of these activities will be to increase and sustain VABA use among registrants. Finally, we will evaluate the effects of VABA implementation and use on clinical processes (e.g., timeliness of isolation/contact precautions) and patient care outcomes (e.g., MDRO transmission).

Data availability statement

The datasets presented in this article are not readily available because sharing the underlying data directly would compromise participants' anonymity, and permissions from VA are needed to obtain the data. We are committed to collaborating and sharing these data to maximize their value to improve the health and health care of Veterans and others, to the greatest degree consistent with current Department of Veterans Affairs regulations and policy. We can provide access to the programming code used to conduct quantitative analyses. Requests to access the datasets should be directed to cara.ray@va.gov.

Author contributions

CR: Data curation, Formal analysis, Investigation, Methodology, Project administration, Visualization, Writing – original draft, Writing – review & editing. CG: Data curation, Formal analysis, Investigation, Methodology, Writing – review & editing. AH: Formal analysis, Methodology, Writing – review & editing. GW: Writing – review & editing, Methodology. NH: Writing – review & editing, Investigation, Methodology, Resources, Supervision. MF: Methodology, Writing – review & editing. MJ: Methodology, Software, Writing – review & editing, Funding acquisition. CP: Software, Methodology, Writing – review & editing, Funding acquisition. JK: Resources, Supervision, Writing – review & editing. ME: Resources, Supervision, Methodology, Writing – review & editing. KS: Methodology, Writing – review & editing. CE: Conceptualization, Methodology, Funding acquisition, Supervision, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the Department of Veterans Affairs (VA), Veterans Health Administration, Office of Research and Development, Health Services Research and Development (HSR&D) Quality Enhancement Research Initiative [grant number QUE 20-016 to CE] and Research Career Scientist Award [RCS 20-192 to CE].

Acknowledgments

We would like to acknowledge:

• Linda Flarida for fielding the pre- and post-education surveys and providing the data and preliminary results to the authors.

• Swetha Ramanathan, PhD, for her assistance with developing the VABA education materials and evaluation surveys.

• Tina Willson for her role in developing and maintaining VABA, developing the education slides and user guide, and providing VA Bug Alert utilization metrics and education regarding the tool's features to the quality improvement team.

• Reside “Lorie” Jacob for providing data from VA administrative records regarding MDRO cases and facility characteristics.

An earlier version of this work was presented at the Society for Healthcare Epidemiology of America (71). The current manuscript contains new data collected, and findings from new analyses conducted, since then.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Author Disclaimer

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the U.S. government.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frhs.2025.1566454/full#supplementary-material.

Abbreviations

CARRIAGE, combating antimicrobial resistance through rapid implementation of guidelines and evidence; CRE, carbapenem-resistant Enterobacterales; IP, infection preventionist; MDRO, multidrug-resistant organisms; MPC, MDRO program coordinator; MRSA, methicillin-resistant Staphylococcus aureus; VA, Department of Veterans Affairs; VABA, VA Bug Alert; VHA, Veterans Health Administration; VRE, vancomycin-resistant enterococci.

References

1. Centers for Disease Control and Prevention. Antibiotic Resistance Threats in the United States, 2019. Atlanta, GA: U.S. Department of Health and Human Services, CDC (2019).

2. World Health Organization. Guidelines for the Prevention and Control of Carbapenem-Resistant Enterobacteriaceae, Acinetobacter Baumannii and Pseudomonas Aeruginosa in Health Care Facilities. Geneva: World Health Organization (2017).

3. Siegel JD, Rhinehart E, Jackson M, Chiarello L. Management of multidrug-resistant organisms in health care settings, 2006. Am J Infect Control. (2007) 35(10):S165–S93. doi: 10.1016/j.ajic.2007.10.006

4. Kac G, Grohs P, Durieux P, Trinquart L, Gueneret M, Rodi A, et al. Impact of electronic alerts on isolation precautions for patients with multidrug-resistant bacteria. Arch Intern Med. (2007) 167(19):2086–90. doi: 10.1001/archinte.167.19.2086

5. Robinson JO, Phillips M, Christiansen KJ, Pearson JC, Coombs GW, Murray RJ. Knowing prior methicillin-resistant Staphylococcus aureus (MRSA) infection or colonization status increases the empirical use of glycopeptides in MRSA bacteraemia and may decrease mortality. Clin Microbiol Infect. (2014) 20(6):530–5. doi: 10.1111/1469-0691.12388

6. Pfeiffer CD, Jones MM, Klutts JS, Francis QA, Flegal HM, Murray AO, et al. Development and implementation of a nationwide multidrug-resistant organism tracking and alert system for veterans affairs medical centers. Infect Control Hosp Epidemiol. (2024) 45(9):1073–8. doi: 10.1017/ice.2024.79

7. Borbolla D, Taliercio V, Schachner B, Gomez Saldano AM, Salazar E, Luna D, et al. Isolation of patients with vancomycin resistant enterococci (VRE): efficacy of an electronic alert system. In: Mantas J, Andersen SK, Mazzoleni MC, Blobel B, Quaglini S, Moen A, editors. Quality of Life Through Quality of Information. Amsterdam: IOS Press (2012). p. 698–702.

8. Yokoe DS, Advani SD, Anderson DJ, Babcock HM, Bell M, Berenholtz SM, et al. Executive summary: a compendium of strategies to prevent healthcare-associated infections in acute-care hospitals: 2022 updates. Infect Control Hosp Epidemiol. (2023) 44(10):1540–54. doi: 10.1017/ice.2023.138

9. Kilbourne AM, Almirall D, Eisenberg D, Waxmonsky J, Goodrich DE, Fortney JC, et al. Protocol: adaptive implementation of effective programs trial (ADEPT): cluster randomized SMART trial comparing a standard versus enhanced implementation strategy to improve outcomes of a mood disorders program. Implement Sci. (2014) 9(1):132. doi: 10.1186/s13012-014-0132-x

10. Kilbourne AM, Abraham KM, Goodrich DE, Bowersox NW, Almirall D, Lai Z, et al. Cluster randomized adaptive implementation trial comparing a standard versus enhanced implementation intervention to improve uptake of an effective re-engagement program for patients with serious mental illness. Implement Sci. (2013) 8:1–14. doi: 10.1186/1748-5908-8-136

11. Ritchie MJ, Parker LE, Kirchner JE. From novice to expert: methods for transferring implementation facilitation skills to improve healthcare delivery. Implement Sci Commun. (2021) 2(1):39. doi: 10.1186/s43058-021-00138-5

12. Ritchie M, Dollar K, Miller C, Smith J, Oliver K, Kim B, et al. Using Implementation Facilitation to Improve Healthcare (Version 3). Veterans Health Administration, Behavioral Health Quality Enhancement Research Initiative (QUERI). 2020. (2023).

13. Ritchie MJ, Parker LE, Kirchner JE. From novice to expert: a qualitative study of implementation facilitation skills. Implement Sci Commun. (2020) 1:1–12. doi: 10.1186/s43058-020-00006-8

14. Kirchner J, Edlund CN, Henderson K, Daily L, Parker LE, Fortney JC. Using a multi-level approach to implement a primary care mental health (PCMH) program. Fam Syst Health. (2010) 28(2):161–74. doi: 10.1037/a0020250

15. Stetler CB, Legro MW, Rycroft-Malone J, Bowman C, Curran G, Guihan M, et al. Role of “external facilitation” in implementation of research findings: a qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implement Sci. (2006) 1:23. doi: 10.1186/1748-5908-1-23

16. Dogherty EJ, Harrison MB, Graham ID. Facilitation as a role and process in achieving evidence-based practice in nursing: a focused review of concept and meaning. Worldviews Evid Based Nurs. (2010) 7(2):76–89. doi: 10.1111/j.1741-6787.2010.00186.x

17. Penney LS, Damush TM, Rattray NA, Miech EJ, Baird SA, Homoya BJ, et al. Multi-tiered external facilitation: the role of feedback loops and tailored interventions in supporting change in a stepped-wedge implementation trial. Implement Sci Commun. (2021) 2(1):82. doi: 10.1186/s43058-021-00180-3

18. Scott K, Jarman S, Moul S, Murphy CM, Yap K, Garner BR, et al. Implementation support for contingency management: preferences of opioid treatment program leaders and staff. Implement Sci Commun. (2021) 2(1):47. doi: 10.1186/s43058-021-00149-2

19. Ridgeway JL, Branda ME, Gravholt D, Brito JP, Hargraves IG, Hartasanchez SA, et al. Increasing risk-concordant cardiovascular care in diverse health systems: a mixed methods pragmatic stepped wedge cluster randomized implementation trial of shared decision making (SDM4IP). Implement Sci Commun. (2021) 2:1–14. doi: 10.1186/s43058-021-00145-6

20. Pittman JO, Rabin B, Almklov E, Afari N, Floto E, Rodriguez E, et al. Adaptation of a quality improvement approach to implement eScreening in VHA healthcare settings: innovative use of the Lean Six Sigma Rapid Process Improvement Workshop. Implement Sci Commun. (2021) 2:1–11. doi: 10.1186/s43058-021-00132-x

21. Ritchie MJ, Kirchner JE, Parker LE, Curran GM, Fortney JC, Pitcock JA, et al. Evaluation of an implementation facilitation strategy for settings that experience significant implementation barriers. Implement Sci. (2015) 10(1):A46. doi: 10.1186/1748-5908-10-S1-A46

22. Kirchner JE, Ritchie MJ, Pitcock JA, Parker LE, Curran GM, Fortney JC. Outcomes of a partnered facilitation strategy to implement primary care–mental health. J Gen Intern Med. (2014) 29(4):904–12. doi: 10.1007/s11606-014-3027-2

23. Chinman M, Goldberg R, Daniels K, Muralidharan A, Smith J, McCarthy S, et al. Implementation of peer specialist services in VA primary care: a cluster randomized trial on the impact of external facilitation. Implement Sci. (2021) 16:1–13. doi: 10.1186/s13012-021-01130-2

24. Smith SN, Liebrecht CM, Bauer MS, Kilbourne AM. Comparative effectiveness of external vs blended facilitation on collaborative care model implementation in slow-implementer community practices. Health Serv Res. (2020) 55(6):954–65. doi: 10.1111/1475-6773.13583

25. Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. (2017) 44(2):177–94. doi: 10.1007/s11414-015-9475-6

26. Miake-Lye I, Mak S, Lambert-Kerzner A, Lam C, Delevan D, Secada P, et al. Scaling beyond early adopters: a systematic review and key informant perspectives. VA ESP project #05-226. (2019).

27. Dulko D. Audit and feedback as a clinical practice guideline implementation strategy: a model for acute care nurse practitioners. Worldviews Evid Based Nurs. (2007) 4(4):200–9. doi: 10.1111/j.1741-6787.2007.00098.x

28. Barwick M, Brown J, Petricca K, Stevens B, Powell BJ, Jaouich A, et al. The implementation playbook: study protocol for the development and feasibility evaluation of a digital tool for effective implementation of evidence-based innovations. Implement Sci Commun. (2023) 4(1):21. doi: 10.1186/s43058-023-00402-w

29. Jamtvedt G, Young JM, Kristoffersen DT, O’Brien MA, Oxman AD. Does telling people what they have been doing change what they do? A systematic review of the effects of audit and feedback. BMJ Qual Saf. (2006) 15(6):433–6. doi: 10.1136/qshc.2006.018549

30. Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. Br Med J. (2014) 348:g1687. doi: 10.1136/bmj.g1687

31. Vincent BM, Wiitala WL, Burns JA, Iwashyna TJ, Prescott HC. Using veterans affairs corporate data warehouse to identify 30-day hospital readmissions. Health Serv Outcomes Res Methodol. (2018) 18(3):143–54. doi: 10.1007/s10742-018-0178-3

32. Random Assignment: BestRandoms; (2024). Available online at: https://www.bestrandoms.com/random-assignment (Accessed May 24, 2024).

33. U.S. Department of Veterans Affairs. Facility Listing—Locations. Washington, DC: U.S. Department of Veterans Affairs (2024). Available online at: https://www.va.gov/directory/guide/rpt_fac_list.cfm (Accessed May 22, 2024).

34. Hamilton AB, Finley EP. Qualitative methods in implementation research: an introduction. Psychiatry Res. (2019) 280:112516. doi: 10.1016/j.psychres.2019.112516

35. Dopp AR, Parisi KE, Munson SA, Lyon AR. A glossary of user-centered design strategies for implementation experts. Transl Behav Med. (2018) 9(6):1057–64. doi: 10.1093/tbm/iby119

36. Dopp AR, Parisi KE, Munson SA, Lyon AR. Aligning implementation and user-centered design strategies to enhance the impact of health services: results from a concept mapping study. Implement Sci Commun. (2020) 1(1):17. doi: 10.1186/s43058-020-00020-w

39. Holden RJ, Carayon P, Gurses AP, Hoonakker P, Hundt AS, Ozok AA, et al. SEIPS 2.0: a human factors framework for studying and improving the work of healthcare professionals and patients. Ergonomics. (2013) 56(11):1669–86. doi: 10.1080/00140139.2013.838643

40. Barker AK, Brown K, Siraj D, Ahsan M, Sengupta S, Safdar N. Barriers and facilitators to infection control at a hospital in northern India: a qualitative study. Antimicrob Resist Infect Control. (2017) 6(1):35. doi: 10.1186/s13756-017-0189-9

41. Ford JH, Nora AT, Crnich CJ. Moving behavioral interventions in nursing homes from planning to action: a work system evaluation of a urinary tract infection toolkit implementation. Implement Sci Commun. (2023) 4(1):156. doi: 10.1186/s43058-023-00535-y

42. Gurses AP, Marsteller JA, Ozok AA, Xiao Y, Owens S, Pronovost PJ. Using an interdisciplinary approach to identify factors that affect clinicians’ compliance with evidence-based guidelines. Crit Care Med. (2010) 38:S282–91. doi: 10.1097/CCM.0b013e3181e69e02

43. Keating JA, Parmasad V, McKinley L, Safdar N. Integrating infection control and environmental management work systems to prevent Clostridioides difficile infection. Am J Infect Control. (2023) 51(12):1444–8. doi: 10.1016/j.ajic.2023.06.008

44. Musuuza JS, Hundt AS, Carayon P, Christensen K, Ngam C, Haun N, et al. Implementation of a Clostridioides difficile prevention bundle: understanding common, unique, and conflicting work system barriers and facilitators for subprocess design. Infect Control Hosp Epidemiol. (2019) 40(8):880–8. doi: 10.1017/ice.2019.150

45. Parmasad V, Keating J, McKinley L, Evans C, Rubin M, Voils C, et al. Frontline perspectives of C. difficile infection prevention practice implementation within veterans affairs health care facilities: a qualitative study. Am J Infect Control. (2023) 51(10):1124–31. doi: 10.1016/j.ajic.2023.03.014

46. Yanke E, Carayon P, Safdar N. Translating evidence into practice using a systems engineering framework for infection prevention. Infect Control Hosp Epidemiol. (2014) 35(9):1176–82. doi: 10.1086/677638

47. Yanke E, Zellmer C, Van Hoof S, Moriarty H, Carayon P, Safdar N. Understanding the current state of infection prevention to prevent Clostridium difficile infection: a human factors and systems engineering approach. Am J Infect Control. (2015) 43(3):241–7. doi: 10.1016/j.ajic.2014.11.026

48. Sijm-Eeken M, Marcilly R, Jaspers M, Peute L. Organizational and human factors in green medical informatics—a case study in Dutch hospitals. Stud Health Technol Inform. (2023) 305:537–540. doi: 10.3233/SHTI230552

49. Snyder ME, Nguyen KA, Patel H, Sanchez SL, Traylor M, Robinson MJ, et al. Clinicians’ use of health information exchange technologies for medication reconciliation in the U.S. Department of veterans affairs: a qualitative analysis. BMC Health Serv Res. (2024) 24(1):1194. doi: 10.1186/s12913-024-11690-w

50. Ryan GW, Bernard HR. Techniques to identify themes. Field Methods. (2003) 15(1):85–109. doi: 10.1177/1525822X02239569

51. Davis FD. A Technology Acceptance Model for Empirically Testing New End-User Information Systems: Theory and Results. Cambridge, MA: Massachusetts Institute of Technology (1985).

52. Ajzen I. Attitudes, traits, and actions: dispositional prediction of behavior in personality and social psychology. Adv Exp Soc Psychol. (1987) 20:1–63. doi: 10.1016/S0065-2601(08)60411-6

53. Ajzen I. From Intentions to Actions: A Theory of Planned Behavior. In: Kuhl J, Beckmann J, editors. Action Control: From Cognition to Behavior. Berlin, Heidelberg: Springer (1985). p. 11–39.

54. Holden RJ, Karsh BT. The technology acceptance model: its past and its future in health care. J Biomed Inform. (2010) 43(1):159–72. doi: 10.1016/j.jbi.2009.07.002

55. Katz MJ, Gurses AP, Tamma PD, Cosgrove SE, Miller MA, Jump RL. Implementing antimicrobial stewardship in long-term care settings: an integrative review using a human factors approach. Clin Infect Dis. (2017) 65(11):1943–51. doi: 10.1093/cid/cix566

56. Keller SC, Tamma PD, Cosgrove SE, Miller MA, Sateia H, Szymczak J, et al. Ambulatory antibiotic stewardship through a human factors engineering approach: a systematic review. J Am Board Fam Med. (2018) 31(3):417–30. doi: 10.3122/jabfm.2018.03.170225

57. Carayon P, Thuemling T, Parmasad V, Bao S, O’Horo J, Bennett NT, et al. Implementation of an antibiotic stewardship intervention to reduce prescription of fluoroquinolones: a human factors analysis in two intensive care units. J Patient Saf Risk Manag. (2021) 26(4):161–71. doi: 10.1177/25160435211025417

58. Damschroder LJ, Reardon CM, Widerquist MAO, Lowery J. The updated consolidated framework for implementation research based on user feedback. Implement Sci. (2022) 17(1):75. doi: 10.1186/s13012-022-01245-0

59. Jackson CB, Quetsch LB, Brabson LA, Herschell AD. Web-based training methods for behavioral health providers: a systematic review. Admin Policy Ment Health. (2018) 45:587–610. doi: 10.1007/s10488-018-0847-0

60. Kelly JA, Leonard NR. Orientation and training: preparing agency administrators and staff to replicate an HIV prevention intervention. AIDS Educ Prev. (2000) 12:75–86. Available online at: https://www.proquest.com/scholarly-journals/orientation-training-preparing-agency/docview/198001920/se-2 11063071

61. Amoako-Gyampah K, Salam AF. An extension of the technology acceptance model in an ERP implementation environment. Inform Manag. (2004) 41(6):731–45. doi: 10.1016/j.im.2003.08.010

62. Waltz TJ, Powell BJ, Fernandez ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement Sci. (2019) 14(1):42. doi: 10.1186/s13012-019-0892-4

63. McCurdie T, Taneva S, Casselman M, Yeung M, McDaniel C, Ho W, et al. Mhealth consumer apps: the case for user-centered design. Biomed Instrum Technol. (2012) 46(s2):49–56. doi: 10.2345/0899-8205-46.s2.49

64. Abras C, Maloney-Krichmar D, Preece J. User-centered design. In: Bainbridge W, editor. Encyclopedia of Human-Computer Interaction. Thousand Oaks: Sage Publications (2004). 37(4):445–56.

66. Munson SA, Friedman EC, Osterhage K, Allred R, Pullmann MD, Areán PA, et al. Usability issues in evidence-based psychosocial interventions and implementation strategies: cross-project analysis. J Med Internet Res. (2022) 24(6):e37585. doi: 10.2196/37585

67. Kilbourne AM, Geng E, Eshun-Wilson I, Sweeney S, Shelley D, Cohen DJ, et al. How does facilitation in healthcare work? Using mechanism mapping to illuminate the black box of a meta-implementation strategy. Implement Sci Commun. (2023) 4(1):53. doi: 10.1186/s43058-023-00435-1

68. Verenes CG. VA Facility addresses, identification numbers, and territorial jurisdictions. In: Consolidated Address and Territorial Bulletin. 1-N. Washington, DC: Department of Veterans Affairs Office of Administration (2007), p. 1–99.

69. Newell DJ. Intention-to-treat analysis: implications for quantitative and qualitative research. Int J Epidemiol. (1992) 21(5):837–41. doi: 10.1093/ije/21.5.837

Keywords: infection prevention, implementation facilitation, user-centered design, veterans affairs, multidrug-resistant organism (MDRO)

Citation: Ray C, Goedken C, Hughes AM, Wilson GM, Hicks NR, Fitzpatrick MA, Jones MM, Pfeiffer C, Klutts JS, Evans ME, Suda KJ and Evans CT (2025) Standard vs. enhanced implementation strategies to increase adoption of a multidrug-resistant organism alert tool: a cluster randomized trial. Front. Health Serv. 5:1566454. doi: 10.3389/frhs.2025.1566454

Received: 24 January 2025; Accepted: 4 August 2025;

Published: 18 September 2025.

Edited by:

Aimee Campbell, Columbia University, United States

Reviewed by:

Katrina Maree Long, Monash University, Australia

Samuel Cumber, University of the Free State, South Africa

Copyright: © 2025 Ray, Goedken, Hughes, Wilson, Hicks, Fitzpatrick, Jones, Pfeiffer, Klutts, Evans, Suda and Evans. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Cara Ray, cara.ray@va.gov
