Error in Figure/Table

In the published article, there were errors in Figure 6 and Figure 7 as published. The image for Figure 6 was labeled as Figure 7 and the image for Figure 7 was labeled as Figure 6. In addition, there were values referenced in the caption of Figure 6 which were not applicable to that Figure. The corrected Figure 6 and Figure 7 and their captions appear below.

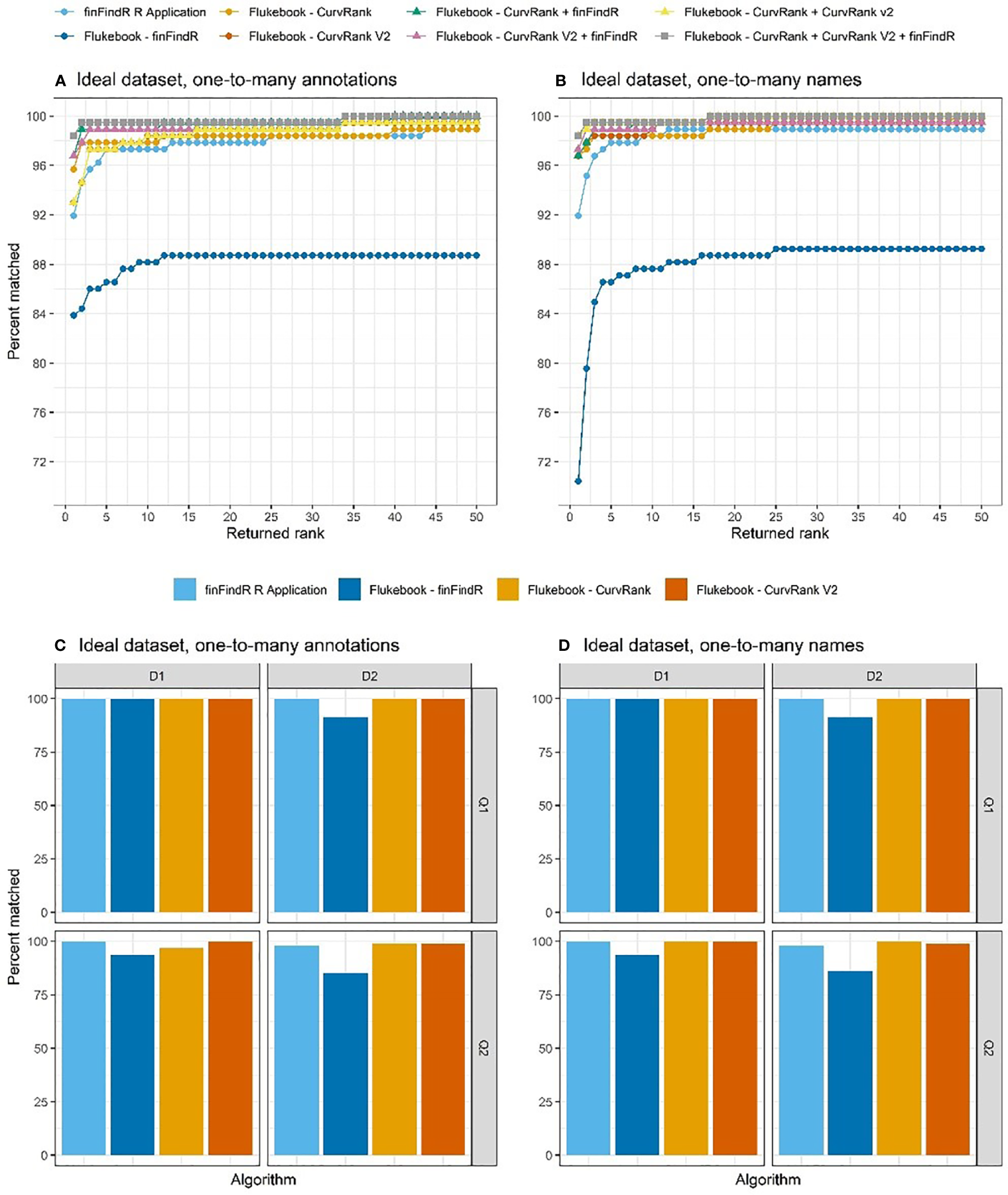

Figure 6

The percentage of images correctly matched by each algorithm and combination of algorithms (Flukebook algorithms only) and their cumulative rank position for the ideal tests in the (A) one-to-many annotations comparisons and (B) the one-to-many names comparisons; as well as the percentage of images of varying image quality and fin distinctiveness correctly matched by the independent algorithms for the ideal tests in the (C) one-to-many annotations comparisons and (D) the one-to-many names comparisons. For reference, Q1 = excellent quality image, Q2 = average quality image, D1 = very distinctive fin, and D2 = average amount of distinctive features on fin (Urian et al., 1999; Urian et al., 2014).

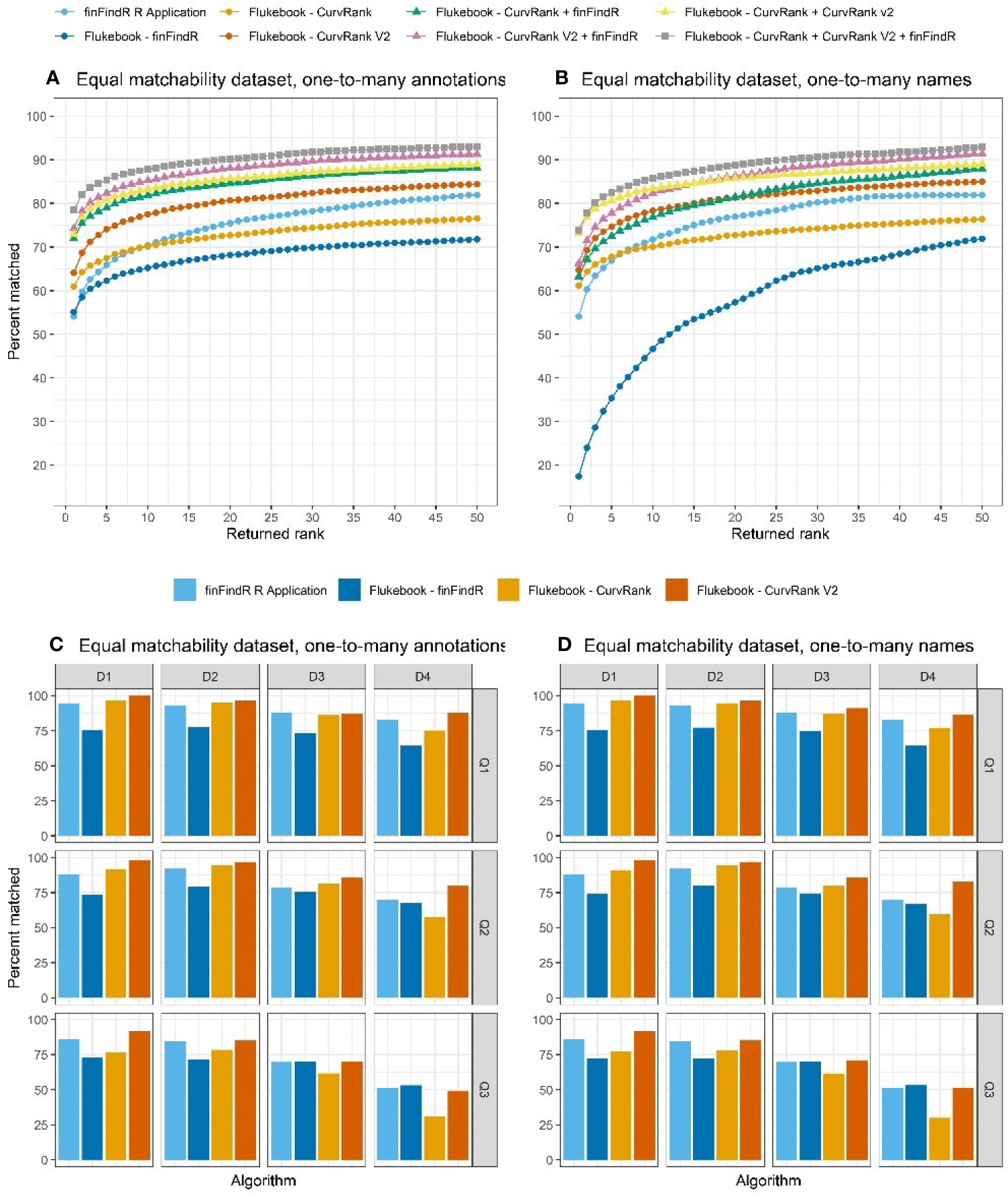

Figure 7

The percentage of images correctly matched by each algorithm and combination of algorithms (Flukebook algorithms only) and their cumulative rank position for the equal matchability tests in the (A) one-to-many annotations comparisons and (B) the one-to-many names comparisons; as well as the percentage of images of varying image quality and fin distinctiveness correctly matched by the independent algorithms for the equal matchability tests in the (C) one-to-many annotations comparisons and (D) the one-to-many names comparisons. For reference, Q1 = excellent quality image, Q2 = average quality image, Q3 = poor quality image, D1 = very distinctive fin, D2 = average amount of distinctive features on fin, D3 = low distinctiveness, and D4 = not distinct fin (Urian et al., 1999; Urian et al., 2014).

In the published article, there was an error in Table 1 as published. A few of the percentages listed of images in the first ranked position for the ideal tests in the one-to-many names comparisons were incorrect. The corrected Table 1 and its caption appear below.

Table 1

| Algorithm(s) evaluated | One-to-many annotations (top X) | One-to-many annotations (first position) | One-to-many names (top X) | One-to-many names (first position) |
|---|---|---|---|---|
| Comprehensive Test (N = 604) | | | | |
| finFindR R Application | 86.09% top 50 | 69.21% first position | 90.07% top 50 | 71.03% first position |
| Flukebook - finFindR | 78.31% top 50 | 66.39% first position | 79.14% top 50 | 52.32% first position |
| Flukebook - CurvRank | 82.95% top 50 | 70.20% first position | 82.28% top 50 | 70.03% first position |
| Flukebook - CurvRank v2 | 88.08% top 50 | 72.85% first position | 88.58% top 50 | 75.17% first position |
| Flukebook - finFindR + CurvRank | 89.57% top 50 | 77.98% first position | 89.57% top 50 | 73.51% first position |
| Flukebook - finFindR + CurvRank v2 | 91.56% top 50 | 79.64% first position | 92.05% top 50 | 76.99% first position |
| Flukebook - CurvRank + CurvRank v2 | 90.56% top 50 | 79.14% first position | 89.74% top 50 | 78.81% first position |
| Flukebook - CurvRank + CurvRank v2 + finFindR | 92.55% top 50 | 81.62% first position | 92.38% top 50 | 79.80% first position |
| Ideal Test (N = 186) | | | | |
| finFindR R Application | 98.92% top 50 | 91.94% first position | 98.92% top 50 | 91.94% first position |
| Flukebook - finFindR | 88.71% top 50 | 83.87% first position | 89.25% top 50 | 70.43% first position |
| Flukebook - CurvRank | 98.92% top 50 | 95.70% first position | 100.00% top 47 | 96.77% first position |
| Flukebook - CurvRank v2 | 99.46% top 50 | 93.01% first position | 99.46% top 50 | 96.77% first position |
| Flukebook - finFindR + CurvRank | 100.00% top 40 | 96.77% first position | 100.00% top 17 | 96.77% first position |
| Flukebook - finFindR + CurvRank v2 | 99.46% top 50 | 96.77% first position | 99.46% top 11 | 97.31% first position |
| Flukebook - CurvRank + CurvRank v2 | 100.00% top 33 | 97.85% first position | 100.00% top 17 | 98.39% first position |
| Flukebook - CurvRank + CurvRank v2 + finFindR | 100.00% top 33 | 98.39% first position | 100.00% top 17 | 98.39% first position |
| Equal Matchability Test (N = 2,485) | | | | |
| finFindR R Application | 81.88% top 49 | 54.10% first position | 81.88% top 49 | 54.10% first position |
| Flukebook - finFindR | 71.67% top 49 | 55.09% first position | 71.71% top 49 | 17.46% first position |
| Flukebook - CurvRank | 76.41% top 49 | 60.95% first position | 76.23% top 49 | 61.15% first position |
| Flukebook - CurvRank v2 | 84.32% top 49 | 64.08% first position | 84.90% top 49 | 64.74% first position |
| Flukebook - finFindR + CurvRank | 88.06% top 49 | 71.99% first position | 87.78% top 49 | 63.25% first position |
| Flukebook - finFindR + CurvRank v2 | 91.21% top 49 | 74.27% first position | 91.31% top 49 | 66.28% first position |
| Flukebook - CurvRank + CurvRank v2 | 88.84% top 49 | 72.98% first position | 88.85% top 49 | 73.19% first position |
| Flukebook - CurvRank + CurvRank v2 + finFindR | 93.01% top 49 | 78.43% first position | 92.80% top 49 | 73.92% first position |

The percentage of correct matches within the top-X ranked positions and the first position for each dataset comparison test (i.e., comprehensive, ideal, and equal matchability tests for the one-to-many annotations and one-to-many names comparisons) and each algorithm evaluated (the finFindR R application, and the CurvRank, CurvRank v2, and finFindR algorithms and their combinations integrated into Flukebook).

Note that the comprehensive and ideal tests evaluated the top 50-ranked positions, while the equal matchability tests evaluated the top 49-ranked positions.
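As an illustration of how a "top-X" or "first position" percentage of this kind can be computed, the following is a minimal sketch rather than the authors' actual procedure. It assumes each query image has a recorded 1-based rank at which its correct match appeared (or `None` if the correct match was not returned); the function name `top_k_match_rate` and the example ranks are hypothetical and are not data from the study.

```python
def top_k_match_rate(ranks, k):
    """Percentage of query images whose correct match appears at rank <= k.

    `ranks` holds, for each query image, the 1-based rank of the correct
    individual in the returned results, or None if it was not returned.
    (Hypothetical helper for illustration only.)
    """
    if not ranks:
        raise ValueError("ranks must not be empty")
    hits = sum(1 for r in ranks if r is not None and r <= k)
    return 100.0 * hits / len(ranks)


# Hypothetical ranks for five query images (not data from the study).
example_ranks = [1, 3, None, 1, 52]

top_50 = top_k_match_rate(example_ranks, 50)  # correct match within the top 50
first = top_k_match_rate(example_ranks, 1)    # correct match in first position
print(f"{top_50:.2f}% top 50, {first:.2f}% first position")
```

Setting `k = 1` gives the first-position percentage, and setting `k = 49` would correspond to the cutoff used for the equal matchability tests.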

Text Correction

In the published article, there was an error in the text. In the fourth sentence of the first paragraph of the Discussion, the authors referred to the wrong figure panels.

A correction has been made to the Discussion, paragraph one. This sentence previously stated:

“For example, match success was over 98.92% in the top 50-ranked positions for the finFindR R application, and the CurvRank and CurvRank v2 algorithms within Flukebook in both the one-to-many annotations comparisons and the one-to-many names comparisons of Q1, Q2 and D1, D2 images (Table 1, Figures 7A, B)”.

The corrected sentence appears below:

“For example, match success was over 98.92% in the top 50-ranked positions for the finFindR R application, and the CurvRank and CurvRank v2 algorithms within Flukebook in both the one-to-many annotations comparisons and the one-to-many names comparisons of Q1, Q2 and D1, D2 images (Table 1, Figures 6A, B)”.

The authors apologize for these errors and state that they do not change the scientific conclusions of the article in any way. The original article has been updated.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Keywords

photographic-identification, photo-id, computer vision, finFindR, CurvRank, Flukebook, bottlenose dolphin, Tursiops truncatus

Citation

Tyson Moore RB, Urian KW, Allen JB, Cush C, Parham JR, Blount D, Holmberg J, Thompson JW and Wells RS (2022) Corrigendum: Rise of the machines: Best practices and experimental evaluation of computer-assisted dorsal fin image matching systems for bottlenose dolphins. Front. Mar. Sci. 9:998145. doi: 10.3389/fmars.2022.998145

Received

19 July 2022

Accepted

30 August 2022

Published

21 September 2022

Volume

9 - 2022

Edited and reviewed by

Lars Bejder, University of Hawaii at Manoa, United States

Copyright

© 2022 Tyson Moore, Urian, Allen, Cush, Parham, Blount, Holmberg, Thompson and Wells.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Reny B. Tyson Moore, renytysonmoore@gmail.com

This article was submitted to Marine Megafauna, a section of the journal Frontiers in Marine Science
