Abstract

Ocean practices, understood as a wide spectrum of methodologies supporting ocean-related research, operations, and applications, are constantly developed and improved to enable informed decision-making. Practices start from the idea of an individual or a group and often evolve towards what can be called good or best practices. This bottom-up approach may in principle result in different evolutionary paths for each practice, and ultimately generate situations where it is not clear to a new user how to compare two practices aiming at the same objective and determine which one is best. Also, although a best practice is supposed to be the result of a multi-institutional collaborative effort based on the principles of evidence, repeatability and comparability, the literature does not yet define a set of individual requirements that a practice must meet to be considered a good, a better, and ultimately a best practice. This paper proposes a method for addressing those questions and presents a new maturity model for ocean practices, built upon maturity models for systems and software developed and adopted over recent decades. The model provides attributes for assessing both the maturity of the practice description and that of its implementation. It also provides a framework for analyzing gaps and suggesting actions for practice evolution. The model has been tested against a series of widely adopted practices, and the results are reported and discussed. This work facilitates a common approach for developing and assessing practices, from which greater interoperability and trust can be achieved.

1 Introduction

A best practice has been defined as a “methodology that has repeatedly produced superior results relative to other methodologies with the same objective; to be fully elevated to a best practice, a promising method will have been adopted and employed by multiple organizations” (Pearlman et al., 2019).

Best practices provide significant benefits: the use of shared and well-documented methods supports global and regional interoperability across the value chain, from requirement setting, through observations, to data management and ultimately to end-user applications and societal impacts (Figure 1). By offering transparency, best practices engender trust in data and information. Their documentation facilitates capacity development, and they maintain provenance for data and information.

Figure 1

Ocean observing value chain.

Formally defined, documented practices should ensure that people knowledgeable in the field can successfully execute the practice and have the same outcomes that the process creators achieved. As a practice matures, broader adoption will test the practice in many environments to ascertain the applicability to diverse regional missions where, for example, low-cost solutions may be required.

In formulating a maturity model for ocean practices, several key questions should be addressed as a practice matures: Which method can be considered a best practice, and is there a measure of maturity to identify best practices? Does the process described in the practice follow guidelines or standards produced by experts? Is the documentation easy to find and easy to read? Is the format of the practice documentation suitable for machine-to-machine discoverability? Has the practice been reviewed by independent experts? (Hörstmann et al., 2020). For operational systems, questions relating to long-term implementation should also be addressed (Mantovani et al., 2023).

This paper focuses on the maturity characteristics of a practice, covering both its implementation and its documentation. It addresses the above questions and formulates a new maturity model identifying the attributes that enable a practice to become a good, better or best practice. A sufficiently detailed maturity model is beneficial in many ways. It guides users toward choosing a more mature practice. For new (or reluctant) users, a better practice has documentation that is sufficient for consistent replication. If the document structure and related metadata follow a standard format, users can more easily compare practices. Users will know what is needed to implement a practice and whether quality assurance procedures are available for using it. For mature practices, user feedback mechanisms will also help with selection. For practice developers, the maturity model offers clear guidelines for continuous improvement of practices. It can also foster inter-institutional and international collaboration toward practice standardization.

The ocean-focused model derives from work over many decades on defining the maturity of space systems, software development and, more recently, project management. This work is reviewed in Section 2. The new maturity model is presented in Section 3. Then, in Section 4, the maturity model is evaluated through application to three case studies: High Frequency Radar (HFR), multibeam ocean floor monitoring, and quality assurance for sea level observations. An assessment is also carried out for current GOOS/OBPS-endorsed practices.

2 Existing practice maturity models

For a maturity model to be of continuing value, certain tradeoffs should be considered. For example, if a model is general, it can last without review for a long time, but it does not provide very specific guidelines for evaluating and evolving a practice. On the other hand, specific criteria can provide guidance for evolving practices, but they should be reviewed regularly in order to keep up with technology evolution. Practices across disciplines can vary and a maturity model may not be appropriate for all practices if the level descriptions are too specific. A summary of the benefits and challenges is provided in Table 1.

Table 1

| Benefits |
|---|
| The path to maturity and a best practice is clearly documented |
| Gaps in documentation and capabilities are identified and can be addressed |
| Maturity structure supports monitoring of implementation, evolution and sustainability of practices |
| Users are aware of the practice maturity in selecting a practice for adoption |
| Practices can be compared according to selected criteria |
| Transparency increases trust in adopting practices |
| Challenges |
| Maturity models can be static snapshots that do not keep up with technology evolution (they require regular review to evolve) |
| The path through each level may not be optimized |
| Practices across disciplines can vary and a maturity model may not be appropriate for all practices |
| Maturity criteria may be subjective rather than objective |
| Maturity model implementation may demand additional effort whose cost/benefit is unclear, as are the quantitative implementation costs and infrastructure/human requirements |
| An independent organization may be required to assess maturity criteria that are subjective |
| Achieving widespread recognition and adoption of the maturity model |

Benefits and challenges in using practice maturity models.

Maturity models usually comprise three or more levels, from initial concept to a fully mature practice. The ocean community currently uses three-level maturity models: the Framework for Ocean Observing (FOO) (Lindstrom et al., 2012) and the Ocean Best Practices System (OBPS) (OceanBestPractices System (OBPS), 2024) each have three levels. For the OBPS, these are:

Concept: A practice is being developed at one or more institutions but has not been agreed to by the community; requirements and form for a methodology are understood (but the practice may not be documented).

Pilot or Demonstrated: Practice is being demonstrated and validated; limited consensus exists on widespread use or in any given situation.

Mature: Practice is well demonstrated for a given objective, documented and peer reviewed; practice is commonly used by more than one organization.

While the three levels represent a logical progression of maturity, their description does not provide enough detail to accurately identify the appropriate level for a practice. It is unclear at what point a practice becomes a good practice or even a better practice. The three-level maturity scale is also focused on the practice itself, but does not account for aspects such as training, user feedback, routine updates, nor guidance on how to write and make available practice documentation.

In project management, software development and system development, the number of levels typically ranges between four and nine. For complex, long-term projects that involve many different teams and last one or more decades, maturity models with more levels may be used. NASA ran complex space system developments, monitoring technology from inception to space flight hardware over decades, using a nine-level Technology Readiness Level maturity model (Hirshorn and Jefferies, 2016). Similarly, NOAA uses a nine-level system to track the evolution of an idea into a mature system (NOAA, 2019).

Experience developing and using maturity models in industry and academia suggests that a five-level structure may be optimal for most applications (Chrissis et al., 2011). Maturity models are broadly used in industry, covering a wide range of subject areas where they monitor the efficiency of business processes (Tarhan et al., 2016; Lutkevich, 2024), such as project management (Rosenstock et al., 2000; Pennypacker and Grant, 2002) or software development (ISO/IEC 15504). The models have several objectives: to benchmark internal performance, to catalyze performance improvements, and to create and evolve a common language for understanding performance. The latter supports a commitment to engaging all stakeholders. In these business models, maturity is expressed by assigning levels according to the degree to which processes are documented and followed (Newsom, 2024).

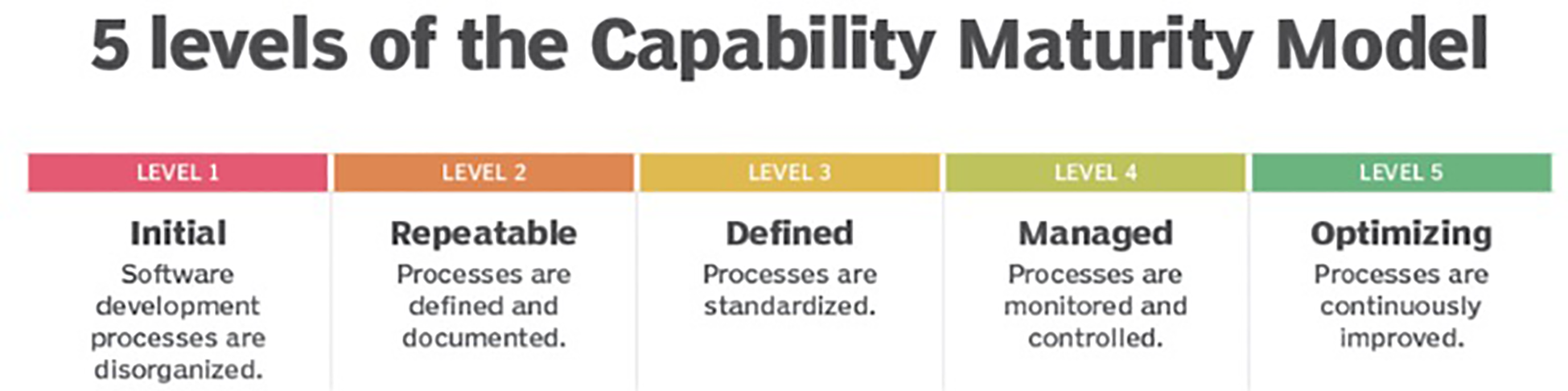

For software development, there are complementary paths for assessing maturity, e.g. the International Organization for Standardization (ISO) 9001 standards (ISO 9001:2015, 2024) or the Capability Maturity Model (CMM) (Lutkevich, 2024). The main difference between CMM and ISO 9001 lies in their respective purposes: ISO 9001 specifies a minimal acceptable quality level for software processes, while CMM establishes a framework for continuous process improvement. ISO, in collaboration with the International Electrotechnical Commission (IEC), has also published a series of guidelines known as the Software Process Improvement and Capability Determination (SPICE) framework (ISO/IEC 15504). CMM is more explicit than the ISO standard in defining the means to be employed. For its implementation, the CMM describes a five-level evolutionary path of increasingly organized and systematically more mature processes (Figure 2) (Lutkevich, 2024). IOOS also chose a five-level model in its 2010 blueprint for full capability (U.S. IOOS Office, 2010).

Figure 2

Five levels of the capability maturity model, adapted from (Lutkevich, 2024).

The ocean maturity model defined in this paper also uses five levels. Five levels offer a granularity that has enough detail to differentiate between maturity levels without being overly intricate. The five levels, with appropriate sublevels, allow organizations to identify areas to focus their efforts for improvement.

3 A new ocean practices maturity model

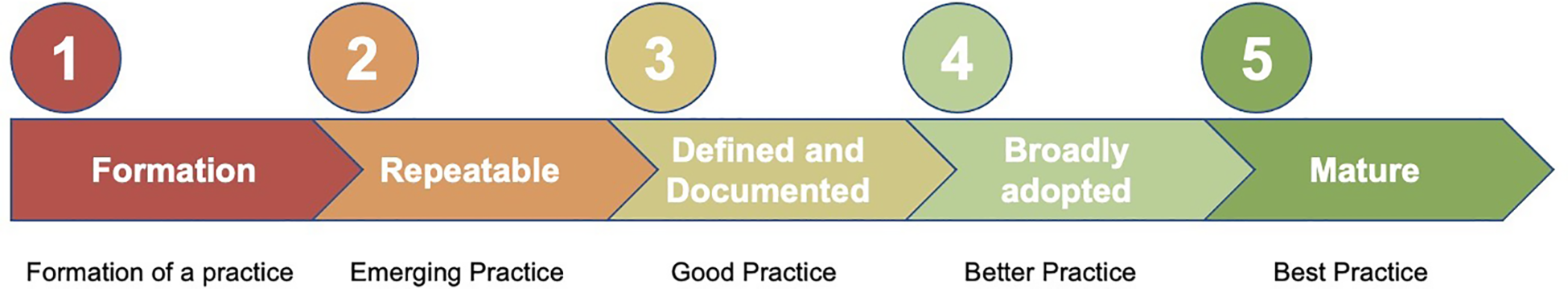

A key question in designing an ocean-focused five-level maturity model is how the five levels can be formulated to stimulate the evolution of a practice into an endorsed, widely adopted best practice. The description of levels for software evolution in Figure 2 provides a foundation. For oceans, Levels 1 and 2 are similar to the software model and focus on moving the ad hoc practice of Level 1 toward a repeatable practice (Level 2). At Level 3, practices should be open, defined and fully documented so they can be replicated by other experts. At Level 4, practices are well developed and adopted across multiple institutions. Level 5 includes mature practices that are even more widely implemented, have user feedback and are endorsed by experts. Figure 3 offers a high-level sketch of each level. Since this is not sufficient by itself, more details for each level are provided in Section 3.1 below.

Figure 3

Five levels of ocean practice maturity model.

3.1 Ocean practices maturity model attributes

The maturity model levels described above have been expanded into a number of attributes inspired by four foundational elements. The first is multi-institutional adoption and use of the practice. The second is complete and open documentation of the practice, including the process description and related metadata, held in a sustained repository. The third is community engagement and feedback in evolving and endorsing the practice. The fourth is sustaining the practice and its related experience, which involves, for example, training in its use and updates in response to changing technologies.

There are two parallel processes included in maturity assessments. First is the maturity of a practice and its documentation. The second is the maturity of the implementation of the practice, particularly for operational systems. Both the practice maturity and the implementation maturity are considered in building the maturity model. The new ocean maturity model described in this paper is designed to support diverse elements of the ocean value chain, which includes observations, modeling and applications. It strikes a balance so that enough detail is included to support practice evolution while still offering criteria general enough to keep the maturity model stable and valid over time. For best practices to evolve, tools to monitor implementation are needed as well as feedback loops with users (Przeslawski et al., 2021). Ideally, there is a central repository which collects feedback information, supports optimization and keeps track of the evolution. The evolution of practice maturity is promoted at all levels. The implementation maturity is visible only in levels four and five when the practice is mature enough to be considered for an operational or complex research environment. For example, Level 5 in Table 2 includes user feedback loops, diagnostic tools and protocols for evolution.

Table 2

| Level | Description | Attributes required to achieve the level | Score increment |
|---|---|---|---|
| 1 | Formation of Practice | 1.1 Practice is ad hoc with little documentation. | |
| 2 | Emerging Practice - Repeatable | 2.1 Practice is defined and may be documented. | .50 |
| | | 2.2 Practice is repeatable by the practice creator. | .50 |
| | | Each of the above provides a score increment toward Level 2. Items from Levels 2 and 3 may be used to achieve Level 2. | |
| 3 | Good Practice - Defined and documented | 3.1 Practice is formally documented and supported by searchable metadata. | .30 |
| | | 3.2 Practice documentation is openly available in a sustained repository with a DOI. | .30 |
| | | 3.3 Practice documentation is sufficient for the practice to be replicated by practitioners with prior knowledge in similar practices. | .30 |
| | | 3.4 Practice document formats and metadata conform at least to some existing guidelines. | .10 |
| | | Each of the above attributes provides a score increment toward Level 3. Items from Levels 3 and 4 may be used to achieve Level 3. All items in Level 2 must be completed before Level 3 can be achieved. | |
| 4 | Better Practice - Developed and Adopted | 4.1 Practice is recognized and actively used by multiple institutions but not necessarily formally endorsed. | .25 |
| | | 4.2 Practice document describes either explicitly or implicitly how practitioners can verify their successful implementation of the practice. | .20 |
| | | 4.3 Practice documentation is sufficient for the practice to be replicated by users without prior knowledge of similar practices. | .20 |
| | | 4.4 Guidelines are available for evolution of the practice and its documentation, such as updates or reviews, together with procedures for user feedback. | .20 |
| | | 4.5 Practice documentation has standardized formats and comprehensive metadata conforming to OBPS or other global standards. | .10 |
| | | 4.6 Practice documents and metadata are machine-readable. | .05 |
| | | Each of the above attributes provides a score increment toward Level 4. Attributes from Levels 4 and 5 may be used to achieve Level 4. All items in Level 3 must be completed before Level 4 can be achieved. | |
| 5 | Best Practice - Mature | 5.1 Practice is reviewed and endorsed by a multi-institutional expert panel following OBPS/GOOS endorsement protocols. | .35 |
| | | 5.2 Practice is adopted at least regionally. | .20 |
| | | 5.3 Practice includes a process for quality assessment. | .15 |
| | | 5.4 Practice has specific protocols for supporting improvements, including user feedback loops. | .10 |
| | | 5.5 Implementation of the practice has formal tools (such as checklists, software checkers, assessment procedures, etc.) to verify implementation. | .10 |
| | | 5.6 Practice has materials for training (either described in the practice document or in separate linked documents). | .10 |
| | | Each of the above attributes provides a score increment toward Level 5. All items in both Levels 4 and 5 must be satisfied for a practice to be at Level 5. | |

The five levels of maturity for ocean practices and the attributes required to reach a level.

The score increments shown are used to quantify the maturity level. More detailed descriptions of some terms used in this table are given below.

DOI: A DOI is a permanent digital identifier of an object, any object — physical, digital, or abstract. A DOI is a unique number made up of a prefix and a suffix separated by a forward slash. DOIs identify objects persistently. They allow things to be uniquely identified and accessed reliably. (https://www.doi.org/the-identifier/what-is-a-doi/).

Feedback loop: e.g., a collaborative environment, with tracking capabilities for versions, comments, users (with authentication), where everyone can read comments from everyone. Not necessarily built by practice authors, it could be a third-party tool enabled by practice authors for this purpose.

Formal monitoring tools: tools for tracking and self-assessment, primarily for practitioners, but useful also for others.

Machine-readable: Product output that is in a structured format, which can be consumed by a computer program using consistent processing logic. For example, a PDF file is machine readable if it has either been computer generated or run through an OCR converter to convert it from a scanned PDF to searchable PDF.

OBPS: Ocean Best Practices System (https://www.oceanbestpractices.org/).

Quality assessment: QA focuses on preventing defects or errors in the processes used to develop products. It is a proactive approach aimed at ensuring that the processes are designed and implemented effectively to produce high-quality products.

Searchable metadata: the type of metadata that allows content to be found by a search phrase that has been used to describe the content in the metadata fields.

Standardized formats: referring to standardized formats and metadata conforming to OBPS document templates, see Ocean Best Practices System (2023).

Sustained repository: a permanent repository that has continuing funding and strategies in place for submissions, data curation, preservation, and dissemination to ensure that the stored information remains relevant, reliable, and accessible over time.

In defining the attributes for each level, there are many trade-offs about what constitutes a good, better or best practice. Applying these maturity assessment criteria to practices is a combination of fact and judgment. In defining the attributes of each level, a focused effort was made to make as many of them as possible quantitative and to minimize subjective judgements. In some cases, two similar attributes are assigned to two different levels; at the higher level, the attribute is more formal in its structure or more pervasive in its use. For example, at Level 4 a practice is used by multiple institutions, while at Level 5 the practice is adopted at least regionally. Similarly, at Level 4 a practice document describes how practitioners can verify their successful implementation of the practice, while at Level 5 the implementation of the practice has formal tools to accomplish this task.

Level 5 introduces endorsement. To guide users in method selection, an endorsement process was developed through a collaboration of the Global Ocean Observing System (GOOS) and OBPS which provides guidelines for endorsement (Hermes, 2020; Przeslawski et al., 2023). Based on this experience, OBPS has defined an endorsement process that can be extended to a broader range of disciplines (Bushnell and Pearlman, 2024). An example of an endorsed practice is the Ocean Gliders Oxygen SOP V1. (Lopez-Garcia et al., 2022).

In applying labels to levels four and five, it is recognized that better or best could be relative to a specific environment. A better practice for the coastal regions and a better practice in open ocean may be best for their locations, but may differ in their description and implementation. Thus, multiple practices may exist simultaneously for the same high level objective. For example, measurements of dissolved oxygen in seawater are quite commonly used by biologists as a measure of water quality. Low oxygen levels stress organisms and anoxic conditions create dead zones. Oxygen levels are also used by physical oceanographers as water mass tracers to estimate how much time has passed since deep water was last in contact with the atmosphere, requiring measurements with a higher accuracy. To make the measurements, a wide variety of practices may be employed, each with benefits and drawbacks. It is best to modify the traditional titrimetric method used in open ocean waters when making the same measurement in coastal waters with high organic loads (American Public Health Association (APHA), 1976).

3.2 Ocean practices maturity model assessment

Assignment of a maturity level to a practice must be transparent and easy to use for those employing the maturity model as a tool for self-assessing the maturity of their practice. It must also allow straightforward interpretation by users who want to decide whether to adopt a practice. To this end, a quantitative scoring system was defined for assessing a practice. Each attribute of a level is assigned a specific score, and the attribute scores within each level sum to one. This holds for all levels. While the choice of one as the sum is arbitrary, it makes the assessment within and across levels more straightforward. The scoring also introduces weighting, to recognize that not all attributes at a given level are equally important when progressing to a good, better or best practice. This was done by assigning non-uniform scores to the attributes within each level. Attribute scores, and thus their priority within each level, are based on the authors’ experience and on inputs from other experts in the ocean community. Since the number of attributes differs between levels but their scores must sum to one within each level, individual attribute scores from different levels are not meant to be comparable, i.e. no concept of absolute priority is defined.
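The sum-to-one property can be checked mechanically. The sketch below is an illustration, not part of the published model: it transcribes the attribute weights of Levels 2 to 5 from Table 2 into a Python dictionary (the names `WEIGHTS` and `level_score` are ours), verifies that each level sums to one, and adds up the weights of the attributes a practice satisfies. Level 1 is omitted because its single attribute has no listed weight.

```python
from math import isclose

# Attribute weights (score increments) transcribed from Table 2.
# Level 1 is omitted: its single attribute (1.1) has no listed weight.
WEIGHTS: dict[int, dict[str, float]] = {
    2: {"2.1": 0.50, "2.2": 0.50},
    3: {"3.1": 0.30, "3.2": 0.30, "3.3": 0.30, "3.4": 0.10},
    4: {"4.1": 0.25, "4.2": 0.20, "4.3": 0.20, "4.4": 0.20, "4.5": 0.10, "4.6": 0.05},
    5: {"5.1": 0.35, "5.2": 0.20, "5.3": 0.15, "5.4": 0.10, "5.5": 0.10, "5.6": 0.10},
}

# Within every level the attribute weights must sum to one.
for level, attrs in WEIGHTS.items():
    assert isclose(sum(attrs.values()), 1.0), f"Level {level} weights do not sum to 1"


def level_score(level: int, satisfied: set[str]) -> float:
    """Sum the weights of the satisfied attributes of one level."""
    unknown = satisfied - set(WEIGHTS[level])
    if unknown:
        raise ValueError(f"Not Level {level} attributes: {sorted(unknown)}")
    return sum(WEIGHTS[level][a] for a in satisfied)
```

For instance, `level_score(4, {"4.2", "4.5", "4.6"})` gives 0.35 (up to floating-point rounding), the Level 4 contribution used in the Table 3 example.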

Using this scoring, the maturity level of a practice is calculated from the items needed to achieve each level (see Table 2) according to a few simple rules. To be awarded full compliance with a level, a score of 1.0 is required in that level (i.e. all the attributes of that level are fulfilled). However, there will be times in the evolution of a practice when not all of the attributes needed to achieve a level have been satisfied, while some attributes from higher levels have been. To avoid restricting developers, the score for a level can be obtained as the sum of the scores of all fulfilled attributes of that level and of the level above it. In addition, to be declared at a certain level, all items in the lower levels must be completed. In other words, maturity Level N is achieved when all attributes from Level 1 to N are fulfilled. In the more realistic case in which not all levels are fully completed, Level N can be achieved when: a) all the attributes of Levels 1 to N-1 are fully satisfied and b) the attributes fulfilled in Level N and Level N+1 give a score >= 1. Levels N+2 and above cannot contribute to the score, in order to encourage a balanced evolution of the practice.
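The two rules can be expressed as a short function. This is a minimal sketch under stated assumptions: a practice is described by a hypothetical mapping `scores` from level number to the sum of the weights of the attributes it satisfies at that level (Level 1 counted as 1.0 once its single attribute is met), and a small tolerance absorbs floating-point rounding. The function name `acknowledged_level` is illustrative.

```python
TOL = 1e-9  # absorbs floating-point rounding in sums such as 0.70 + 0.35


def acknowledged_level(scores: dict[int, float], max_level: int = 5) -> int:
    """Return the highest maturity level N such that
    (a) every level from 1 to N-1 is fully satisfied (score 1.0), and
    (b) the scores of Level N and Level N+1 sum to at least 1.0.
    Levels N+2 and above never contribute. Returns 0 if no level is reached.
    """
    achieved = 0
    for n in range(1, max_level + 1):
        # Rule (a): all lower levels must be complete.
        if any(scores.get(k, 0.0) < 1.0 - TOL for k in range(1, n)):
            break
        # Rule (b): only Level N and the level directly above it count.
        # At the top level there is no N+1, so Level N alone must reach 1.0.
        combined = scores.get(n, 0.0)
        if n < max_level:
            combined += scores.get(n + 1, 0.0)
        if combined >= 1.0 - TOL:
            achieved = n
    return achieved
```

With the Table 3 values (Levels 1 and 2 complete, 0.70 at Level 3, 0.35 at Level 4), `acknowledged_level` returns 3. Moving that practice's extra credit from Level 4 to Level 5 would leave it at Level 2, reflecting the rule that Levels N+2 and above do not contribute.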

For an example of the assessment calculation see Table 3. The table considers a practice that has completed Level 2 and is working toward recognition at Level 3. It has satisfied three of four attributes for Level 3 and has a score of 0.70, but does not yet have a score of 1.00 at Level 3. However, it may use actions from Level 4 that it has completed to get credit toward Level 3 completion, but it cannot use any activities from two levels higher, in this case, Level 5. In the case of Table 3, three attributes of Level 4 are satisfied: the practice document describes how practitioners can verify their successful implementation of the practice, the practice has been submitted to a sustained repository in a standardized format, and practice documents and metadata are machine-readable, which together have an associated score of 0.35. Adding this to the 0.70 from Level 3 attributes that are satisfied, gives a total score of 1.05 and the practice is acknowledged to be at Level 3, a “good practice”. It is also necessary that all items at lower levels (in this case Levels 1 and 2) have been fully completed so that there are no residual holes in the maturity evolution. The rationale for not using scores from two levels above the current level is to focus the maturity evolution at no more than two levels and not have an effort distributed across the entire maturity spectrum. This helps users more clearly understand if a practice is good, better or best.
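Writing S_n for the sum of the weights of the satisfied attributes at Level n (notation introduced here for illustration, with S_6 = 0 so that Level 5 requires S_5 = 1), the acknowledgement rule and the arithmetic of this example read:

```latex
\text{Level } N \text{ acknowledged} \iff \left(S_k = 1 \;\; \forall\, k < N\right) \wedge \left(S_N + S_{N+1} \ge 1\right)

S_3 + S_4 = 0.70 + (\underbrace{0.20}_{4.2} + \underbrace{0.10}_{4.5} + \underbrace{0.05}_{4.6}) = 0.70 + 0.35 = 1.05 \ge 1
```

Level 5 credit, if any, would not enter this sum.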

Table 3

| Comply | Score | Level 3 attribute |
|---|---|---|
| | .30 | 3.1 Practice is formally documented and supported by searchable metadata. |
| | .30 | 3.2 Practice documentation is openly available in a sustained repository with a DOI. |
| | .30 | 3.3 Practice documentation is sufficient for the practice to be replicated by practitioners with prior knowledge in similar practices. |
| ✓ | .10 | 3.4 Practice document formats and metadata conform at least to some existing guidelines. |
| | .70 | Total for Level 3 |
| | | Level 4 attribute |
| ✗ | .25 | 4.1 Practice is recognized and actively used by multiple institutions but not necessarily formally endorsed. |
| ✓ | .20 | 4.2 Practice document describes either explicitly or implicitly how practitioners can verify their successful implementation of the practice. |
| ✗ | .20 | 4.3 Practice documentation is sufficient for the practice to be replicated by users without prior knowledge of similar practices. |
| ✗ | .20 | 4.4 Guidelines are available for evolution of the practice and its documentation, such as updates or reviews, together with procedures for user feedback. |
| ✓ | .10 | 4.5 Practice documentation has standardized formats and comprehensive metadata conforming to OBPS or other global standards. |
| ✓ | .05 | 4.6 Practice documents and metadata are machine-readable. |
| | .35 | Total for Level 4 |
| | 1.05 | Overall score for Level 3 acknowledgement (>= 1.00 means the practice is at Level 3) |
| | 3.05 | Overall score |
| | 2 full + 2 half | Overall stars |

Example of scoring in the maturity model.

✓ = complies with attribute; ✗ = does not satisfy this attribute. The analysis represents two full stars (Levels 1 and 2 completed) and two half stars (Levels 3 and 4 only partially fulfilled) and gives an overall score of 3.05.

To consider the full capability of a practice in terms of readiness for each level, and to provide a quick visual reference complementing the scoring results above, a practice can be assigned from one to five stars corresponding to its maturity level attributes. Each full star indicates that a specific level has been completed. Half stars may be used for a partially completed level. Since this is a qualitative score, a half star does not mean that a score of 0.50 was achieved in that level; it means that some score was achieved in that level. A qualitative score of 5 stars is equal to the quantitative score of 5.00, meaning that the practice is a “Best Practice” and has fulfilled all attributes in the maturity model. The quantitative score and the number of stars may change as the practice matures. Specific examples of this scoring system are given in the next section, where documented practices related to three ocean observing technologies are considered and their maturity is assessed.
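The star rating and the overall score can be derived from the same per-level scores. The sketch below is one reading of the text, with two assumptions flagged: full stars are counted only for consecutive completed levels starting from Level 1, and the overall score is the number of completed levels plus the combined score (Levels N and N+1) of the next level, which reproduces the 3.05 reported for Table 3. The function names are hypothetical.

```python
TOL = 1e-9  # tolerance for floating-point comparisons


def completed_levels(scores: dict[int, float], max_level: int = 5) -> int:
    """Count consecutive fully satisfied levels, starting from Level 1."""
    count = 0
    for n in range(1, max_level + 1):
        if scores.get(n, 0.0) < 1.0 - TOL:
            break
        count += 1
    return count


def stars(scores: dict[int, float], max_level: int = 5) -> tuple[int, int]:
    """Return (full stars, half stars); a half star marks any later level with some credit."""
    full = completed_levels(scores, max_level)
    half = sum(1 for n in range(full + 1, max_level + 1) if scores.get(n, 0.0) > TOL)
    return full, half


def overall_score(scores: dict[int, float], max_level: int = 5) -> float:
    """Completed levels plus the combined Level N / N+1 score of the next level (assumption)."""
    full = completed_levels(scores, max_level)
    if full == max_level:
        return float(max_level)
    nxt = full + 1
    # At the top level, nxt + 1 does not exist and contributes 0.
    combined = scores.get(nxt, 0.0) + scores.get(nxt + 1, 0.0)
    return round(full + combined, 2)
```

For the Table 3 practice, `stars` returns (2, 2) and `overall_score` returns 3.05, matching the caption; a practice meeting every attribute gets (5, 0) and 5.0.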

4 Application of the maturity assessment

In this section, three practices are presented and analyzed to show how the maturity scale is applied. They relate to different ocean observing technologies and methodologies: High Frequency Radar (HFR), a multi-beam scanner and a sea level quality control process. While these all concern observations, they represent different segments of ocean observing. In addition, interviews were conducted with the lead authors of seven OBPS/GOOS-endorsed practices to consider whether these practices are good, better or best practices. A summary of the lead authors’ self-assessments from the interviews, giving the maturity rating, is provided in Section 4.4.

All the exemplars in Section 4 have completed the attributes of Levels 1, 2 and 3, and therefore have a guaranteed starting score of 3 (and 3 full stars). Thus, the discussions and tables below focus on Levels 4 and 5. For the three examples of HFR, multi-beam scanner and sea level quality control, the maturity tables include comments on each attribute to clarify how the criteria are applied in the ratings.

4.1 Assessment of high frequency radar practices

4.1.1 What is HFR?

In oceanography, HFR is a remote sensing technology employed for monitoring ocean surface currents, waves, and wind direction. Operating in the HF radio frequency band (3-30 MHz), these radar systems extract ocean surface current velocity information from the Doppler shift induced by the movement of the ocean surface (Gurgel, 1994; Gurgel et al., 1999; Lorente et al., 2022; Reyes et al., 2022). They are typically deployed in networks along coastlines, providing information in extended coastal zones and up to 200 km from the shoreline.
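The Doppler principle mentioned above can be made concrete with the standard first-order Bragg relations (textbook physics, not specific to the practices assessed here). Radar energy is backscattered mainly by ocean waves of half the radar wavelength λ; in deep water these waves produce a Doppler peak at f_B = sqrt(g / (π λ)), and a surface current shifts that peak by Δf = 2 v_r / λ. The sketch assumes deep-water waves and a monostatic radar; the function names are illustrative.

```python
import math

G = 9.81            # gravitational acceleration (m/s^2)
C = 299_792_458.0   # speed of light (m/s)


def bragg_frequency(radar_freq_hz: float) -> float:
    """Doppler shift (Hz) of the first-order Bragg peak with no current.

    Assumes deep water, where waves of length L = wavelength / 2 travel at
    sqrt(g * L / (2 * pi)); the two-way Doppler shift is 2 * speed / wavelength.
    """
    wavelength = C / radar_freq_hz
    return math.sqrt(G / (math.pi * wavelength))


def radial_velocity(observed_peak_hz: float, radar_freq_hz: float) -> float:
    """Radial surface current (m/s) from the offset of the observed Bragg peak.

    Positive values mean flow toward the radar when the positive
    (approaching-wave) Bragg peak is used.
    """
    wavelength = C / radar_freq_hz
    offset = observed_peak_hz - bragg_frequency(radar_freq_hz)
    return offset * wavelength / 2.0
```

For a 13.5 MHz system (λ ≈ 22.2 m), `bragg_frequency` gives about 0.375 Hz, and a peak observed 0.02 Hz above it corresponds to a radial current of about 0.22 m/s.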

4.1.2 What issue in ocean observing is it addressing?

HF radars are complementary to other existing instruments used in oceanography for monitoring ocean currents and waves. Traditional oceanographic methods often involve deploying buoys or drifters, or moored Acoustic Doppler Current Profilers (ADCPs). Such conventional instrumentation can be limited in spatial and/or temporal coverage, may not provide real-time data, and does not sample the upper layer of the water column, where ocean-atmosphere exchanges are most pronounced. HFRs provide non-intrusive, wide-area coverage of coastal regions, offering real-time data at high spatial and temporal resolution from easily accessible shore locations. Their primary output is a 2-dimensional map of ocean surface currents. Gridded or single-point wave (significant wave height, period, direction) and wind (direction) data can also be derived (Roarty et al., 2019a).

4.1.3 What are the challenges?

The challenges of HFR technology and related research activities are multiple and are synthesized in recent papers (Rubio et al., 2017; Roarty et al., 2019b; de Vos et al., 2020; Lorente et al., 2022; Reyes et al., 2022). Given the many applications in operational oceanography, the research community identified the efficient and standardized management of near-real-time HFR-derived velocity maps as a priority. To obtain surface current vectors, an HFR installation must include at least two radar sites, each measuring the radial velocity component along its look direction when operated in the standard monostatic configuration. Sources of uncertainty in the velocity estimate include variations of the radial current component within the radar scattering patch or over the duration of the radar measurement, incorrect antenna patterns, errors in the empirical determination of the first-order lines, and environmental noise (Rubio et al., 2017). When radials are combined into total vectors, additional geometric errors can affect the accuracy of the HFR data, as quantified by the Geometric Dilution Of Precision (GDOP) (Chapman et al., 1997).
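Why at least two sites are needed, and how geometry inflates errors, can be sketched with a least-squares combination of radials. Each radial is the projection of the total current onto one look direction, so a single site constrains only one component. The formulation below, including GDOP taken as the square root of the trace of (AᵀA)⁻¹, is a common textbook form assumed for illustration rather than the method of the practices assessed here; the angle convention, function name and values are hypothetical.

```python
import numpy as np


def combine_radials(radial_speeds, look_angles_deg):
    """Least-squares total current (u east, v north) from radial components.

    Each radial is modelled as r_i = u*cos(theta_i) + v*sin(theta_i), where
    theta_i is the direction of the radial unit vector, measured
    counterclockwise from east (a convention assumed for this sketch).
    Returns (u, v, gdop).
    """
    theta = np.radians(np.asarray(look_angles_deg, dtype=float))
    A = np.column_stack([np.cos(theta), np.sin(theta)])
    if np.linalg.matrix_rank(A) < 2:
        raise ValueError("need at least two non-collinear look directions")
    r = np.asarray(radial_speeds, dtype=float)
    (u, v), *_ = np.linalg.lstsq(A, r, rcond=None)
    # GDOP: factor by which radial errors are amplified in the total vector.
    gdop = float(np.sqrt(np.trace(np.linalg.inv(A.T @ A))))
    return float(u), float(v), gdop


# Same true current seen by two sites at right angles, then 10 degrees apart.
true_u, true_v = 0.3, 0.1
for angles in ([0.0, 90.0], [0.0, 10.0]):
    radials = [true_u * np.cos(np.radians(a)) + true_v * np.sin(np.radians(a))
               for a in angles]
    u, v, gdop = combine_radials(radials, angles)
    print(angles, round(u, 3), round(v, 3), round(gdop, 2))
```

For two radials this GDOP reduces to √2 / |sin Δθ|: about 1.41 for orthogonal look directions and about 8.1 when they differ by only 10°, which is why GDOP thresholds are commonly applied to total vectors.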

The need to ensure the best possible quality of near-real-time (NRT) data products motivated the community to define a practice including the most suitable QA/QC protocols, based on metrics that can be computed, with reasonable computational effort, directly from the received data and a set of reference threshold values.
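A minimal sketch of such threshold-based tests, cheap enough to run on every incoming total-vector map. The two tests shown (a maximum-speed test and a GDOP test), the threshold values, the function names and the 1 = pass / 4 = fail flag convention are illustrative assumptions; the actual test lists and thresholds are those defined in the practices discussed below.

```python
import numpy as np

# QARTOD/IOC-style flag values (convention assumed for this sketch).
PASS, FAIL = 1, 4

# Hypothetical reference thresholds; real values are set by each practice.
MAX_SPEED_M_S = 1.2
MAX_GDOP = 2.0


def velocity_threshold_test(u, v, max_speed=MAX_SPEED_M_S):
    """Flag total vectors whose speed exceeds a reference maximum."""
    speed = np.hypot(np.asarray(u, dtype=float), np.asarray(v, dtype=float))
    return np.where(speed > max_speed, FAIL, PASS)


def gdop_threshold_test(gdop, max_gdop=MAX_GDOP):
    """Flag total vectors computed with poor radial intersection geometry."""
    return np.where(np.asarray(gdop, dtype=float) > max_gdop, FAIL, PASS)


u = [0.20, 1.10, 0.05]
v = [0.10, 0.90, -0.30]
gdop = [1.4, 1.5, 3.0]
# FAIL > PASS numerically, so the element-wise maximum keeps the worst flag.
combined = np.maximum(velocity_threshold_test(u, v), gdop_threshold_test(gdop))
print(combined.tolist())  # [1, 4, 4]
```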

4.1.4 What is the maturity of the HFR practices?

Two practices related to HFR technology are analyzed in this section. Both address quality control (QC) of HFR data, and they were developed in two different regions, Europe and Australia.

The first practice is selected from the Joint European Research Infrastructure for Coastal Observatories (JERICO) inventory: Recommendation Report 2 on improved common procedures for HFR QC analysis, JERICO-NEXT Deliverable 5.14, Version 2.0 (Corgnati et al., 2024).

In 2014, EuroGOOS launched the HFR Task Team to advance the establishment of an operational HFR network in Europe, focusing on coordinated data management and on integrating basic products into major marine data distribution platforms. A core group of this task team was then involved in a series of projects (Lorente et al., 2022) that supported the development of practices for HFR system operations, including surface current data management.

JERICO-NEXT Deliverable D5.14 is the practice describing the data model to be applied to HFR-derived surface current data in order to comply with international standards. The document, available from the OBPS repository, reflects the maturity of the practice: it relies on standards (NetCDF data format, CF conventions, INSPIRE directives) and widely accepted procedures (QARTOD manual, US HFR network best practices), and it was drafted in the multi-institutional context described above.

The second practice is that of the Ocean Radar Facility at the University of Western Australia (UWA) (de Vos et al., 2020; Cosoli and Grcic, 2024), which is also available in the OBPS repository. The Facility, first established in 2009, is part of Australia's Integrated Marine Observing System (IMOS). Best practices and workflows were established in collaboration with the Australian Ocean Data Network (AODN) to document near-real-time (NRT) and delayed-mode (DM) data flows and planning, clarify responsibilities, improve communication, ensure transparency, and aid deployment reporting. They are refreshed periodically to reflect changes in network settings and hardware or software upgrades. Standard practices include using near-surface currents from independent platforms to optimize radar settings and to assess QA/QC tests quantitatively. Optimized thresholds are then established from the regional variability of current regimes, for both near-real-time and delayed-mode products, rather than being set a priori.
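The idea of deriving thresholds from regional variability rather than fixing them a priori can be sketched as follows: a maximum-speed threshold is taken from a high percentile of current speeds measured by independent near-surface platforms (for example drifters or moored ADCPs), and the radar-versus-reference RMS difference serves as a simple skill metric when comparing radar settings. The percentile, safety margin, function names and synthetic data are hypothetical and do not reproduce the procedure in the IMOS manual.

```python
import numpy as np


def regional_speed_threshold(reference_speeds, percentile=99.5, margin=1.1):
    """Maximum-speed threshold derived from observed regional currents.

    `percentile` and `margin` are hypothetical tuning parameters.
    """
    speeds = np.asarray(reference_speeds, dtype=float)
    speeds = speeds[np.isfinite(speeds)]
    if speeds.size == 0:
        raise ValueError("no valid reference speeds")
    return float(np.percentile(speeds, percentile) * margin)


def rms_difference(radar, reference):
    """RMS difference between co-located radar and in situ samples (m/s)."""
    radar = np.asarray(radar, dtype=float)
    reference = np.asarray(reference, dtype=float)
    valid = np.isfinite(radar) & np.isfinite(reference)
    if not valid.any():
        raise ValueError("no co-located valid pairs")
    return float(np.sqrt(np.mean((radar[valid] - reference[valid]) ** 2)))


# Synthetic stand-in for drifter speeds; real use would load platform data.
rng = np.random.default_rng(seed=0)
drifter_speeds = np.abs(rng.normal(loc=0.3, scale=0.15, size=1000))
print(f"velocity threshold: {regional_speed_threshold(drifter_speeds):.2f} m/s")
print(f"RMS difference: {rms_difference([0.10, 0.20, np.nan], [0.12, 0.18, 0.30]):.3f} m/s")
```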

The maturity of each of these HFR practices is assessed in Table 4, which details their compliance with the Level 4 and Level 5 attributes.

Table 4

| Attribute (weight) | JERICO-NEXT D5.14 (Corgnati et al., 2024) | IMOS Ocean Radar (Cosoli and Grcic, 2024) |
|---|---|---|
| Practice | Recommendation Report 2 on improved common procedures for HFR QC analysis, JERICO-NEXT Deliverable 5.14, Version 2.0 | Quality Control procedures for IMOS Ocean Radar Manual, Version 3.0 |
| Subject of the practice | Near-real-time data quality control methods; data and metadata model implementation methods for High Frequency Radar derived surface currents | Data quality control methods for near-real-time and delayed-mode products; data formats and metadata for High Frequency Radar derived surface currents |
| Material available | Manual, software tools | Manual (updated) |
| Level 4 | | |
| 4.1 Practice is recognized and actively used by multiple institutions but not necessarily formally endorsed. (.25) | Yes. Recognized by JERICO partners, EuroGOOS, SeaDataNet partners, Copernicus In Situ TAC partners and EMODnet Physics. Employed by multiple institutions through the EU HFR Node services. | Yes. |
| 4.2 Practice document describes either explicitly or implicitly how practitioners can verify their successful implementation of the practice. (.20) | Yes. The practice documentation explicitly describes means to verify the implementation. A formal verification can be performed at the EU HFR Node level to assess compliance with the data and metadata model suggested by the practice. | Yes, as formal evaluation (i.e. CF compliance) and assessment are performed and documented. |
| 4.3 Practice documentation is sufficient for the practice to be replicated by users without prior knowledge of similar practices. (.20) | Yes. It relies on a common statistical approach to data quality control and refers to accepted standards for environmental data formats and metadata vocabularies. | Yes. Commonly used statistical tests are implemented, along with platform-specific tests that require access to low-level files in proprietary format. |
| 4.4 Guidelines are available for evolution of practice and its documentation, such as updates or reviews and also have procedures for user feedback. (.20) | Yes. Review of the practice and its documentation is coordinated by the EuroGOOS HFR Task Team. The practice documentation is published on GitHub for user feedback, support and troubleshooting. | A periodic review process is coordinated by IMOS Community Practices and AODN, and the result is then submitted to Ocean Best Practices. |
| 4.5 Practice documentation has standardized formats and comprehensive metadata conforming to OBPS or other global standards. (.10) | Practice documentation follows the structure of the OBPS data management template (for the data model subsection). | Yes, OBPS format. |
| 4.6 Practice documents and metadata are machine-readable. (.05) | Document is in a computer-generated PDF format. | Document is in a computer-generated PDF/A format. |
| Total for Level 4 | 1.00 | 1.00 |
| Level 5 | | |
| 5.1 Practice is reviewed and endorsed by a multi-institutional expert panel following OBPS/GOOS endorsement protocols. (.35) | No. | No. |
| 5.2 Practice is adopted at least regionally. (.20) | Yes. It represents the European data and metadata model and is adopted by multiple European institutions (see 4.1). | Yes, as the Facility acts as the single data supplier for the whole of Australia. |
| 5.3 Practice includes process for quality assessment. (.15) | Yes. It includes algorithms for data QC. | Yes. |
| 5.4 Practice has specific protocols for supporting improvements including user feedback loops. (.10) | Yes. The practice is also published on GitHub, providing a user feedback loop, support and troubleshooting. | Registration of data users. |
| 5.5 Implementation of practice has formal tools (such as checklists, software checkers, assessment procedure, etc.) to verify implementation. (.10) | Yes. Software tools for implementing the practice are publicly available in GitHub and Zenodo repositories. | Yes. Automatic CF compliance checkers are available as standard AODN practice. |
| 5.6 Practice has materials for training (either described in the practice document or in a separate linked document(s)). (.10) | Yes. Training sessions and related material are managed by the EuroGOOS HFR Task Team. | Yes, although training is managed by AODN rather than directly by the Facility. |
| Total for Level 5 | .65 | .65 |
| Overall score | 4.65 | 4.65 |

Maturity assessment of HFR practices.

Level 4: the practice of Corgnati et al. (2024) fully satisfies the Level 4 attributes. The EuroGOOS HFR Task Team and its operational asset, the European HFR Node (EU HFR Node), are committed to promoting the multi-institutional adoption and implementation of the European QC, data and metadata model for real-time HFR data. Thanks to this commitment, specific procedures are in place, and described in the practice documentation, for regularly reviewing and updating the data model documentation, for feedback loops with users, for training on the application of the data model, and for assessing its correct adoption. The EU HFR Node also provides an operational service that collects HFR data, quality-checks them and converts them according to the QC and data model; this workflow operationally checks adherence to file format conventions and metadata in the NRT and DM pipelines.

Cosoli and Grcic (2024) report that all Level 4 attributes are met, while acknowledging that advanced skills may be required to replicate some of the proposed tests and approaches. Feedback is received directly from data users when they notice inconsistencies in the data products or need clarification about data formats and content. In addition, the data collection center (Australian Ocean Data Network, AODN) operationally checks adherence to file format conventions and metadata in its NRT and DM pipelines, and ensures that the Ocean Radar Facility satisfies standard IMOS community practices.

Level 5: the practice of Corgnati et al. (2024) partially satisfies the Level 5 attributes (five of six). The commitment and operational service of the EuroGOOS HFR Task Team and the EU HFR Node described above promote the global adoption of the practice and support its evolution. The missing attribute is formal endorsement of the practice, which is still in progress.

Cosoli and Grcic (2024) report that five of the six Level 5 attributes are met; here too the missing attribute is formal review and endorsement, and there is consensus on the fundamental need for it.

The comparison illustrates another potential use of the maturity model: when similar practices share the same objective, it is worth assessing whether they can converge into a single practice. Because the two practices have nearly the same maturity, their authors (Corgnati et al., 2024; Cosoli and Grcic, 2024) have agreed to pursue this convergence. The effort was motivated by the maturity model analysis of this use case and envisions extending the investigation to other practices adopted in the same field, e.g. the QARTOD manual for HFR data QC (U.S. Integrated Ocean Observing System, 2022b), with the aim of producing a single, endorsed reference practice for the international HFR community. Convergence will be discussed further in a dedicated future document. The dialogue will also be extended to other national and regional HFR networks (Roarty et al., 2019b; Jena et al., 2019b), aiming at global harmonization of HFR practices and application of the maturity model.

4.2 Assessment of multibeam practice

Multibeam echosounders are marine acoustic systems that generate data from the interaction between underwater sound waves and physical obstacles, which can be either on the seabed or in the water column. Data acquisition involves a transmitter that emits sound pulses across multiple beams and a receiver that records their echoes. The two-way travel time between transmission and reception, combined with the speed of sound in water, yields a depth measurement (bathymetry), and the strength of the returned signal can be used to infer seabed hardness (backscatter). The main use of multibeam echosounders is to generate high-resolution maps of the physical features of the seafloor over a broad spatial area. These maps can then be used for a range of purposes, including characterizing key seabed features (Post et al., 2022; Wakeford et al., 2023), choosing benthic sampling locations (Bax and Williams, 2001), and producing habitat maps (Misiuk and Brown, 2024). Acoustic techniques can also be used to detect and predict the spatial extent of broad ecological communities such as kelp and sessile invertebrates (Rattray et al., 2013; Bridge et al., 2020). All of these contribute to the establishment and management of marine parks (Lucieer et al., 2024). Occasionally, multibeam data are used to detect change from repeated surveys (Rattray et al., 2013).
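How a two-way travel time becomes a sounding can be sketched with the straight-ray approximation. The sketch assumes a constant nominal sound speed of 1500 m/s, a level transducer and no ray bending; operational processing instead applies a measured sound velocity profile together with vessel motion, position and tide corrections. The function name and parameters are hypothetical.

```python
import math


def beam_depth(two_way_time_s, beam_angle_deg, sound_speed_m_s=1500.0, draft_m=0.0):
    """Depth and across-track distance for one beam (straight-ray approximation).

    two_way_time_s: time between transmission and reception of the echo.
    beam_angle_deg: beam angle from vertical (0 = nadir).
    Assumes constant sound speed and a level transducer at depth `draft_m`.
    """
    if two_way_time_s < 0:
        raise ValueError("travel time must be non-negative")
    slant_range = sound_speed_m_s * two_way_time_s / 2.0  # one-way path length
    theta = math.radians(beam_angle_deg)
    depth = slant_range * math.cos(theta) + draft_m
    across_track = slant_range * math.sin(theta)
    return depth, across_track


# A nadir beam and an outer beam with the same 0.2 s two-way travel time.
for angle in (0.0, 60.0):
    d, x = beam_depth(0.2, angle)
    print(f"beam {angle:4.1f} deg: depth {d:6.1f} m, across-track {x:6.1f} m")
```

The same travel time maps to very different depths depending on the beam angle, which is one reason why accurate sound speed and attitude data matter most for the outer beams.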

Until recently, multibeam practices were neither unified nor publicly accessible, making it difficult to collate and compare data from different surveys, particularly for characterizing and managing Australia's vast marine park network (Przeslawski et al., 2019). The current version of the Australian Multibeam Guidelines was released in 2020 as a national best practice to guide multibeam data collection, processing and distribution (Picard et al., 2020). This version is more mature than previous iterations and therefore provides a useful example for the entire maturity assessment framework, as shown in Table 5.

Table 5

| Attribute (weight) | Comments |
|---|---|
| Level 4 | |
| 4.1 Practice is recognized and actively used by multiple institutions but not necessarily formally endorsed. (.25) | Multiple institutions use these guidelines, including government and national research agencies. |
| 4.2 Practice document describes either explicitly or implicitly how practitioners can verify their successful implementation of the practice. (.20) | Practice is part of a suite of marine sampling practices that describe how to appropriately cite and confirm implementation. |
| 4.3 Practice documentation is sufficient for the practice to be replicated by users without prior knowledge of similar practices. (.20) | The nature of the practice requires technical specialization and complex, expensive equipment, but the documentation is as clear and detailed as possible for non-experts. |
| 4.4 Guidelines are available for evolution of practice and its documentation, such as updates or reviews and also have procedures for user feedback. (.20) | Practice is part of a suite of marine sampling practices that have version control and maintenance plans. User feedback is solicited through an online form. |
| 4.5 Practice documentation has standardized formats and comprehensive metadata conforming to OBPS or other global standards. (.10) | Appendix H of the practice (Picard et al., 2020) details the required metadata in line with the International Hydrographic Organization. |
| 4.6 Practice documents and metadata are machine-readable. (.05) | Document is presented in two forms, both machine-readable: 1) standard PDF, 2) webpage (GitHub). |
| Total for Level 4 | 1.00 |
| Level 5 | |
| 5.1 Practice is reviewed and endorsed by a multi-institutional expert panel following OBPS/GOOS endorsement protocols. (.35) | Endorsement had not yet been received as of March 2024; awaiting the OBPS endorsement process. |
| 5.2 Practice is adopted at least regionally. (.20) | Practice has one of the highest uptakes of all Australian marine sampling practices (Przeslawski et al., 2021). |
| 5.3 Practice includes process for quality assessment. (.15) | Included in the section 'Quality assessment/uncertainty scheme' (Github - AusSeabed, 2024). |
| 5.4 Practice has specific protocols for supporting improvements including user feedback loops. (.10) | Practice is managed through the government initiative AusSeabed and is also part of a suite of marine sampling practices (Github - Field Manuals, 2024) that have version control and maintenance plans. User feedback is solicited through an online form. |
| 5.5 Implementation of practice has formal tools (such as checklists, software checkers, assessment procedure, etc.) to verify implementation. (.10) | Uptake is measured through citations and Google Analytics via AusSeabed and the NESP Marine and Coastal Hub. |
| 5.6 Practice has materials for training (either described in the practice document or in a separate linked document(s)). (.10) | Relevant training and information materials are managed through AusSeabed's resource webpage (AusSeabed, 2024). |
| Total for Level 5 | .65 |
| Overall score | 4.65 |

Maturity assessment of Multibeam practice.

- Level 1-2: Prior to 2018, the multibeam practices used within Australia were developed separately and documented internally by the relevant institutions. Practitioners could not discover most of these in-house practices, each of which was written for a different purpose (e.g. research and monitoring, hydrographic charting) and at a different level of detail.
- Level 3: In 2018, national multibeam guidelines were independently released by two different consortia, AusSeabed and the NESP Marine Biodiversity Hub (Przeslawski et al., 2019), each representing multiple institutions. The practices were released and promoted, and they were lodged on national government webpages as well as in the OBPS Repository.
- Level 4: In 2020, the two guidelines were merged into a new version and released as part of the national marine sampling best practices for Australian waters (Przeslawski et al., 2023). This version began to be adopted by multiple institutions in Australia (e.g. Geoscience Australia, CSIRO, Australian Hydrographic Office), with rapidly increasing uptake and impact.
- Level 5: By 2024, the Australian Multibeam Guidelines were broadly used throughout Australia as a best practice for seabed and hydrographic mapping. Their maintenance is the responsibility of the AusSeabed Steering Committee, and related training and data tools are available through AusSeabed (AusSeabed, 2024). The Australian Government recommends them in permit applications for marine park monitoring and research. Formal endorsement will be sought through the OBPS endorsement process established in 2024 (Bushnell and Pearlman, 2024); once it is obtained, the guidelines will be at Level 5 maturity.

4.3 Assessment of QARTOD real-time water level quality control practice

4.3.1 Why observe and disseminate water levels in real-time?

Water levels are measured using a variety of technologies, including pressure sensors, acoustic rangefinders (guided or unguided), float/encoder systems and, more recently, microwave rangefinders. The number of applications requiring ever-lower latencies, and therefore real-time dissemination, has steadily increased. These include support for safe and efficient maritime commerce, storm surge and inundation observations, and even tsunami observations. Edwing (2019) reports that the economic benefits of real-time observations, including sea level observations provided every six minutes, are on the order of $10M per year, while accidents are substantially reduced.

4.3.2 What are the challenges associated with real-time water level observations?

“It seems a very simple task to make correct tidal observations; but in my experience, I have found no observations which require such constant care and attention…” - Alexander Dallas Bache, Second Superintendent of the Coast Survey, 1854.

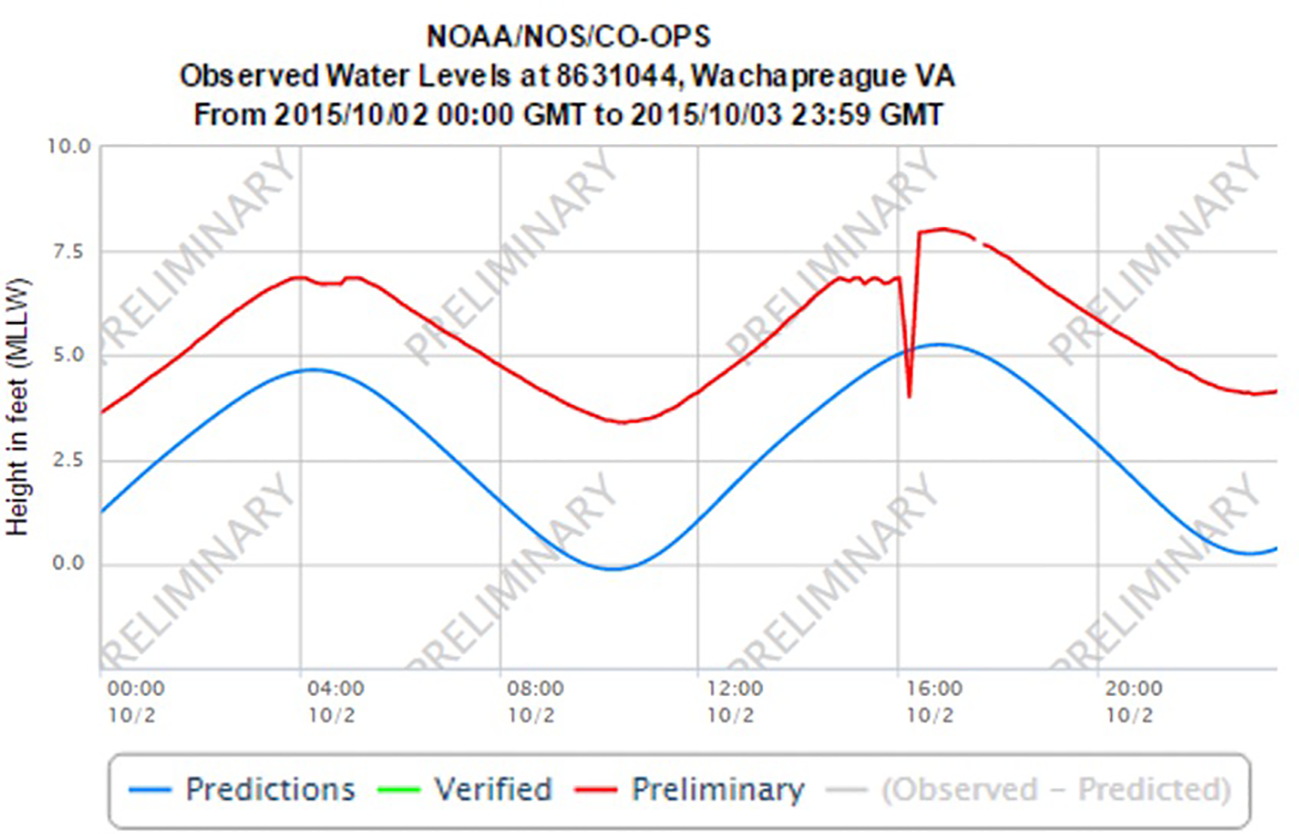

Flaws often detected in real time include data spikes, invariant flat lines, abrupt offsets or shifts, telemetry dropouts, and other equipment failures (Figure 4). Real-time QC can reduce downtime and repair costs by alerting operators to data flaws more quickly and by assisting with troubleshooting. Other flaws in water level data, such as slow sensor drift, platform subsidence, and errors associated with biofouling, cannot be detected in real time.

Figure 4

The flawed data disseminated in real time on October 2, 2015, are associated with a storm surge that brought water levels too close to the acoustic sensor. The abrupt shift seen shortly after 16:00 on October 2, 2015, results from switching to an alternative sensor at this site.
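Two of the real-time checks for the flaws named above, spike and flat line, can be sketched as follows. The structure (compare each point with the average of its two neighbours; count how many preceding points repeat the current value within a tolerance) follows the general logic of QARTOD-style tests, but the thresholds, counts and function names are hypothetical, and the authoritative test definitions are those in the RT WL QC manual. Flags use the 1/2/3/4/9 convention (pass, not evaluated, suspect, fail, missing).

```python
import numpy as np

# QARTOD / IOC (2013) primary flag values.
PASS, NOT_EVALUATED, SUSPECT, FAIL, MISSING = 1, 2, 3, 4, 9


def spike_test(values, suspect_threshold, fail_threshold):
    """Flag points that deviate from the mean of their two neighbours."""
    x = np.asarray(values, dtype=float)
    flags = np.full(x.shape, NOT_EVALUATED, dtype=int)  # end points lack a neighbour
    if x.size >= 3:
        ref = (x[:-2] + x[2:]) / 2.0
        dev = np.abs(x[1:-1] - ref)
        inner = np.where(dev > fail_threshold, FAIL,
                         np.where(dev > suspect_threshold, SUSPECT, PASS))
        inner[np.isnan(dev)] = NOT_EVALUATED  # a neighbour is missing
        flags[1:-1] = inner
    flags[np.isnan(x)] = MISSING
    return flags


def flat_line_test(values, suspect_count, fail_count, tolerance):
    """Flag points repeated (within `tolerance`) by the preceding observations."""
    x = np.asarray(values, dtype=float)
    flags = np.full(x.shape, PASS, dtype=int)
    for i in range(x.size):
        if np.isnan(x[i]):
            flags[i] = MISSING
            continue
        if i < suspect_count:
            flags[i] = NOT_EVALUATED  # not enough history yet
            continue
        run = 0  # consecutive previous points within tolerance of x[i]
        for j in range(i - 1, -1, -1):
            if abs(x[j] - x[i]) <= tolerance:
                run += 1
            else:
                break
        if run >= fail_count:
            flags[i] = FAIL
        elif run >= suspect_count:
            flags[i] = SUSPECT
    return flags


# Hypothetical 6-minute water levels (m) with one spike and a stuck sensor.
wl = [1.00, 1.02, 1.03, 1.60, 1.05, 1.06, 1.06, 1.06, 1.06, 1.06]
print(spike_test(wl, suspect_threshold=0.10, fail_threshold=0.30).tolist())
print(flat_line_test(wl, suspect_count=3, fail_count=4, tolerance=0.001).tolist())
```

In this simple version the neighbours of a large spike are themselves flagged as suspect, because the spike distorts their reference value; operational implementations handle such cases according to the manual.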

4.3.3 What is QARTOD?

The Quality Assurance/Quality Control of Real-Time Oceanographic Data (QARTOD) project began as a grassroots effort in 2003, when approximately 75 oceanographers and data managers met at NOAA's National Data Buoy Center to initiate an effort to standardize the quality control of a variety of observations. Several years later, the first manuals were drafted, but no authority was ready to accept them. In 2013, the U.S. Integrated Ocean Observing System (IOOS) program (U.S. IOOS Office, 2010) adopted the QARTOD project. To date, thirteen manuals (U.S. Integrated Ocean Observing System, 2022a) have been created and endorsed by IOOS.

Rigorous community input is the key to creating a QARTOD manual. First, a small committee of subject matter experts (SMEs) produces a draft, which is then reviewed by a larger group of users. The edited draft is reviewed again by all relevant IOOS partners (e.g. the National Oceanic and Atmospheric Administration (NOAA), the U.S. Army Corps of Engineers (USACE), and the Environmental Protection Agency (EPA)). The resulting draft is further reviewed by international agencies interested in the effort, and the initial SME committee then provides a final review. The manual is submitted to IOOS for acceptance and signed by the Director of IOOS. It is then posted on the IOOS website and submitted to both the NOAA Institutional Repository and the OBPS repository. Throughout this process, an adjudication matrix records comments and responses. The manuals are updated periodically to ensure that they remain relevant and accurate and that they incorporate emerging QC capabilities. This QARTOD process is fully described in the QARTOD Project Plan Update 2022-2026 (U.S. Integrated Ocean Observing System, 2022a) and in Prospects for Real-Time Quality Control Manuals, How to Create Them, and a Vision for Advanced Implementation (U.S. Integrated Ocean Observing System, 2020).

The practices described in the manuals have been adopted internationally by governmental and private sector entities, and have also been used in the classroom in graduate-level programs. The manuals are intended to provide guidance to a broad variety of observers with different capabilities. Each manual describes a series of increasingly challenging tests, each identified as required, recommended, or suggested, so that data managers with varying capabilities can participate in real-time quality control.

Initially, the target audience for the QC manuals was the eleven IOOS Regional Associations (RA), which cover the entire coast of the US and represent a geographically distributed group of users. The RAs are required to adopt QARTOD QC standards to obtain Regional Coastal Observing Systems (RCOS) certification.

4.3.4 What is the maturity of QARTOD quality control for real-time water levels?

For this example, the real-time water level QC (RT WL QC) is evaluated for its maturity level (see Table 6). The RT WL QC manual (U.S. Integrated Ocean Observing System, 2021) was first created in 2014, updated in April 2016, and again in March 2021. A total of 271 comments were logged from 33 individuals representing 20 institutions who contributed to the manual.

Table 6

| Attribute (weight) | Comments |
|---|---|
| Level 4 | |
| 4.1 Practice is recognized and actively used by multiple institutions but not necessarily formally endorsed. (.25) | Implemented by the Global Sea-Level Observing System (GLOSS), a private engineering firm in Australia (OMC International) for dynamic under-keel clearance, and a water level sensor manufacturer (Hohonu). |
| 4.2 Practice document describes either explicitly or implicitly how practitioners can verify their successful implementation of the practice. (.20) | Each QC test description is accompanied by an example of the test. |
| 4.3 Practice documentation is sufficient for the practice to be replicated by users without prior knowledge of similar practices. (.20) | The manual is designed to train data managers to implement QC. |
| 4.4 Guidelines are available for evolution of practice and its documentation, such as updates or reviews and also have procedures for user feedback. (.20) | Update procedures are described in the QARTOD Project Plan, which is itself updated every five years. |
| 4.5 Practice documentation has standardized formats and comprehensive metadata conforming to OBPS or other global standards. (.10) | The QC data flag format matches the Intergovernmental Oceanographic Commission (2013) flagging standard. |
| 4.6 Practice documents and metadata are machine-readable. (.05) | All NOAA repository documents are required to be machine-readable (Section 508 compliance). |
| Total for Level 4 | 1.00 |
| Level 5 | |
| 5.1 Practice is reviewed and endorsed by a multi-institutional expert panel following OBPS/GOOS endorsement protocols. (.35) | The manual is endorsed by U.S. IOOS, an organization of multiple Regional Associations, and was approved by OBPS to be identified as endorsed in the repository. |
| 5.2 Practice is adopted at least regionally. (.20) | GLOSS, OMC International, Hohonu. |
| 5.3 Practice includes process for quality assessment. (.15) | The purpose of the manual is to standardize real-time QC, a significant component of QA. |
| 5.4 Practice has specific protocols supporting continuous improvement including user feedback loops. (.10) | Capabilities include: 1) a designated QARTOD National Coordinator; 2) a QARTOD Board of Advisors of about 12 members that meets quarterly; 3) a request in the manual to contact either of these to report usage of the practice; 4) an annual meeting of the U.S. IOOS Data Management and Cyberinfrastructure (DMAC) community, the designated target audience for QARTOD manuals, where any user can initiate discussion of QARTOD issues. The update process is described in the QARTOD Project Plan, which is also updated every five years. The two RT WL QC manual updates were initiated by the National Coordinator, who solicited input from the reviewers of the previous manual versions. |
| 5.5 Implementation of practice has formal tools (such as checklists, software checkers, assessment procedure, etc.) to verify implementation. (.10) | The QARTOD manual does not include implementation monitoring tools. IOOS Regional Associations, which are required to implement QARTOD QC, self-report implementation. |
| 5.6 Practice has materials for training (either described in the practice document or in a separate linked document(s)). (.10) | The RT WL QC manual is itself the training material. |
| Total for Level 5 | .90 |
| Overall score | 4.90 |

Maturity assessment of QARTOD Real-Time Water Level Quality Control practice.

Level 1-2: The initial RT WL QC manual exceeded Levels 1 and 2 upon publication.

Level 3: All QARTOD manuals satisfy Level 3 guidelines.

Level 4: Documented examples of institutions implementing the QARTOD RT WL QC manual include:

- In UNESCO/IOC (2020), the use of QARTOD WL QC tests is described for the Global Sea-Level Observing System (GLOSS, 2024).
- In Hofmann and Healy (2017), the authors describe using the RT WL QC manual to calculate vessel dynamic under-keel clearance in Australian ports.
- Hohonu, a commercial manufacturer of water level gauges (Hohonu, 2024), states on its frequently asked questions page: “Processed QA/QC data - “Cleaned” data following QARTOD methodologies, implemented over four years of collaboration with SECOORA, Axiom Data Science, and NOAA CO-OPS”.

Regarding the sufficiency of the practice documentation (attribute 4.3), the purpose of QARTOD manuals is precisely to convey standardized QC practices to the data managers of the eleven U.S. IOOS Regional Associations. Collectively, these data managers have used the QC manuals to create GitHub pages that further support standardization.

All QARTOD manuals are posted on the U.S. IOOS web page (U.S IOOS Website, 2024) and reside in both the NOAA Institutional Repository (NOAA repository, 2024) and the OBPS repository. Because the QARTOD acronym is distinctive, the manuals are readily found with any internet search engine.

The process for updating all QARTOD manuals is described in the QARTOD Project Plan update (U.S. Integrated Ocean Observing System, 2022a); as noted above, this manual has been updated twice. The format of the QC test results (QC data flags) is described in U.S. Integrated Ocean Observing System (2020) and adheres to the IOC standard described in Intergovernmental Oceanographic Commission (2013).
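The flag values referred to above can be sketched as an enumeration, together with one possible way of combining the results of several tests into a single flag per observation. The numeric values 1, 2, 3, 4 and 9 are those of the IOC (2013) primary-level scheme used by QARTOD; the precedence rule, class name and function name are assumptions for illustration, not prescribed by the manual.

```python
from enum import IntEnum


class QCFlag(IntEnum):
    """Primary flag values of the IOC (2013) scheme, with QARTOD names."""
    PASS = 1
    NOT_EVALUATED = 2
    SUSPECT = 3   # "suspect or of high interest"
    FAIL = 4
    MISSING = 9


# Assumed precedence for this sketch: the first flag found wins.
PRECEDENCE = (QCFlag.MISSING, QCFlag.FAIL, QCFlag.SUSPECT, QCFlag.PASS)


def aggregate(test_flags):
    """Combine per-test flags for one observation into a single flag."""
    flags = {QCFlag(f) for f in test_flags}  # raises ValueError on unknown codes
    for flag in PRECEDENCE:
        if flag in flags:
            return flag
    return QCFlag.NOT_EVALUATED  # no test could be evaluated


print(aggregate([1, 1, 3]).name)  # SUSPECT
print(aggregate([2, 2]).name)     # NOT_EVALUATED
```

Using an `IntEnum` keeps the flags interoperable with integer-valued flag variables in data files while giving them readable names in code.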

All documents in the NOAA Institutional Repository are required to comply with Section 508 (U.S. Access Board, 2024). Section 508 is a U.S. accessibility standard designed to give individuals with disabilities equal access to electronic documents, which in practice requires documents to be machine-readable by assistive technologies. Since January 18, 2018, all government documents posted online have been required to be Section 508 compliant.

Level 5: QARTOD RT WL QC tests have been implemented by multiple international entities. The manual focuses on quality control, a critical aspect of quality assessment. Feedback is obtained through quarterly QARTOD Board of Advisors meetings and through broadly distributed requests for comments issued by the National Coordinator during manual updates. These updates are either incremental (no change to QC tests already implemented) or substantial (requested by the community and requiring changes to an implemented test), as described in U.S. Integrated Ocean Observing System (2022a). Finally, the purpose of the manual is precisely to train data managers in real-time data quality control. The only Level 5 attribute not met is 5.5, since the manual includes no formal tools to verify implementation (Table 6).

4.4 Assessment of endorsed practices

Interviews were conducted with the primary author of each of seven practices endorsed by GOOS/OBPS and held in the Ocean Best Practices System Repository (Table 7). All of these practices had completed the attributes of Levels 1, 2 and 3; these levels are therefore omitted from the table, which shows only Levels 4 and 5.

Table 7

| Attribute (weight) | Core Argo Floats (Morris et al., 2023) | OOI BG (Palevsky et al., 2023) | Glider Oxygen (López-García et al., 2022) | GO-SHIP Nutrient (Becker et al., 2020) | GO-SHIP Plankton (Boss et al., 2020) | Baited Remote Survey (Langlois et al., 2020) | XBT QA Practices (Parks et al., 2021) |
|---|---|---|---|---|---|---|---|
| Level 4 | | | | | | | |
| 4.1 Practice is recognized and actively used by multiple institutions but not necessarily formally endorsed. (.25) | | | | | | | |
| 4.2 Practice document describes either explicitly or implicitly how practitioners can verify their successful implementation of the practice. (.20) | | | | | | | |
| 4.3 Practice documentation is sufficient for the practice to be replicated by users without prior knowledge of similar practices. (.20) | | | | | | | |
| 4.4 Guidelines are available for evolution of practice and its documentation, such as updates or reviews and also have procedures for user feedback. (.20) | | | | | | | |
| 4.5 Practice documentation has standardized formats and comprehensive metadata conforming to OBPS or other global standards. (.10) | | | | | | | |
| 4.6 Practice documents and metadata are machine-readable. (.05) | | | | | | | |
| Total for Level 4 | .80 | .80 | 1.00 | .80 | .80 | .80 | 1.00 |
| Level 5 | | | | | | | |
| 5.1 Practice is reviewed and endorsed by a multi-institutional expert panel following OBPS/GOOS endorsement protocols. (.35) | | | | | | | |
| 5.2 Practice is adopted at least regionally. (.20) | | | | | | | |
| 5.3 Practice includes process for quality assessment. (.15) | | | | | | | |
| 5.4 Practice has specific protocols for supporting improvements including user feedback loops. (.10) | | | | | | | |
| 5.5 Implementation of practice has formal tools (such as checklists, software checkers, assessment procedure, etc.) to verify implementation. (.10) | | | | | | | |
| 5.6 Practice has materials for training (either described in the practice document or in a separate linked document(s)). (.10) | | | | | | | |
| Total for Level 5 | .90 | .90 | .35 | .90 | .80 | .65 | .80 |
| Overall score | 4.70 | 4.70 | 4.35 | 4.70 | 4.60 | 4.45 | 4.80 |

Overview of the assessment of endorsed practices by their lead authors. In the table, V means a practice complies with the attribute, X means a practice does not comply with the attribute (X*: a new version that is compliant in this area will be published soon), and ? means there is not sufficient information to determine compliance. Since the interviews, the maturity model has been refined to clarify the attributes.

The interviews covered both practice maturity and the ease or difficulty of applying the attributes at each maturity level. The assessment also identified gaps in practice capabilities that should be addressed in moving to a fully mature best practice (Level 5). Generally, the endorsed practices included guidance for replicating the practice, usually by experts and sometimes by new users. For some of these practices this is understandable, as technologies such as the DNA applications in the GO-SHIP plankton endorsed practice require expertise to implement.

It is important to recognize that endorsement is not synonymous with being a mature best practice. The endorsement criteria (Hermes, 2020; Bushnell and Pearlman, 2024) do not include all attributes of a Level 5 mature practice; the missing attributes include continuous improvement and training. Endorsement is therefore necessary but not sufficient: to be mature, a practice must be endorsed, but an endorsed practice may not include all the attributes needed to be fully mature. These differences are apparent in the interviews summarized in Table 7.

The lead authors' responses for their endorsed practices are given in Table 7. Under the maturity model's scoring system, in which credit can be given for attributes of the next higher level, all of the endorsed practices were at Level 4 (“Better Practice”). Most of the practices do not address several Level 5 attributes, including guidelines and protocols for continuous improvement, formal monitoring of implementation, and documented training materials. These attributes are a foundation for sustaining and improving practices and are essential for a best practice. During the interviews, many of the lead authors agreed that these elements are important and indicated that, as a result of the interviews, they will address them in the next version of their practice. Setting such goals for practices is one benefit of the maturity model.
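The overall scores in Table 7 follow a simple arithmetic pattern: each equals 3 (one point for each of Levels 1 to 3, which all seven practices satisfied) plus the Level 4 and Level 5 totals, for example 3 + .80 + .90 = 4.70 for Core Argo Floats. The Python sketch below reproduces that arithmetic under stated assumptions. The attribute weights are taken from Table 7; the function names, the representation of compliance as a set of attribute identifiers, and the rule that the reported level is the integer part of the score are illustrative assumptions, not part of the published model (the last one does agree with all seven practices being rated Level 4). The attribute set in the usage lines is hypothetical, not an interview result.

```python
from math import floor

# Attribute weights for Levels 4 and 5, as listed in Table 7 (each level sums to 1.00).
LEVEL_WEIGHTS = {
    4: {"4.1": 0.25, "4.2": 0.20, "4.3": 0.20, "4.4": 0.20, "4.5": 0.10, "4.6": 0.05},
    5: {"5.1": 0.35, "5.2": 0.20, "5.3": 0.15, "5.4": 0.10, "5.5": 0.10, "5.6": 0.10},
}


def level_total(level, satisfied):
    """Weighted fraction (0 to 1) of a level's attributes found in `satisfied`."""
    weights = LEVEL_WEIGHTS[level]
    return round(sum(w for attr, w in weights.items() if attr in satisfied), 2)


def overall_score(satisfied, completed_levels=3):
    """Assumed rule: one point per fully completed lower level, plus the weighted
    totals at Levels 4 and 5. Level 5 credit is added even if Level 4 is incomplete,
    mirroring credit for attributes of the next higher level."""
    return round(completed_levels + level_total(4, satisfied) + level_total(5, satisfied), 2)


def maturity_level(score):
    """Assumption: the reported maturity level is the integer part of the score."""
    return floor(score)


# Hypothetical practice: meets every Level 4 attribute except 4.4
# and every Level 5 attribute except 5.5.
met = {"4.1", "4.2", "4.3", "4.5", "4.6", "5.1", "5.2", "5.3", "5.4", "5.6"}
score = overall_score(met)
print(score, maturity_level(score))  # 4.7 4
```

Rounding to two decimals keeps the results comparable with the two-decimal values in Table 7 and hides floating-point noise from summing the weights.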

5 Summary and recommendations

Whether the issue is climate, marine litter or productivity of aquaculture, practices are created and evolve to observe and analyze conditions in support of decision making. Many practices in ocean research and applications are created to address a particular measurement for understanding the ocean and coastal environment. Sometimes these practices align or are naturally complementary; sometimes they take different approaches to the same end goal. This makes it difficult for practitioners to decide which method to use when they engage in new projects or applications.

Two important questions follow: what is a good practice, and, ultimately, what is a best practice?

To address these questions systematically, a maturity model for ocean practices was developed. The model builds on four decades of experience in creating maturity models for systems and software. A key challenge is choosing the degree of generality or specificity needed for a practical model. General models endure and are useful for strategic guidance; more detailed models are effective for assessing the exact state of maturity but may need to evolve more often.

For ocean practices, a five-level model was chosen to balance generality and specificity. The model has detailed attributes at each level to make the assessment more quantitative, and these attributes also identify the actions needed to move toward higher levels of maturity. The model addresses both the maturity of the practice description (documentation) and the maturity of its implementation. Implementation maturity, in particular the sustainability and evolution of a practice, is generally not addressed by experts, yet it is essential for fostering regional and global interoperability of practices.

The model was tested against widely adopted practices, some of which had also been formally endorsed through the GOOS/OBPS endorsement process. This testing showed that a number of Level 5 attributes, relating to the sustainment and evolution of the practice, were not satisfied. The practice authors agreed that there were gaps in their practice attributes and found the model very useful in defining next steps for upgrading their practice to a best practice.
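Because each attribute names a concrete capability, unmet attributes translate directly into next steps. The Python sketch below illustrates this for Level 5, assuming a practice's status is recorded as a set of attribute identifiers. The weights come from Table 7 and the short labels paraphrase the Table 7 attribute text; the function name is hypothetical, and ranking gaps by weight is an illustrative prioritization, not a rule of the maturity model.

```python
# Level 5 attributes: (weight from Table 7, short paraphrased label).
LEVEL5_ATTRIBUTES = {
    "5.1": (0.35, "multi-institutional review and endorsement"),
    "5.2": (0.20, "adoption at least regionally"),
    "5.3": (0.15, "process for quality assessment"),
    "5.4": (0.10, "protocols for improvement, including user feedback loops"),
    "5.5": (0.10, "formal tools to verify implementation"),
    "5.6": (0.10, "training materials"),
}


def level5_gaps(satisfied):
    """List unmet Level 5 attributes as (id, weight, label), highest weight first.

    sorted() is stable even with reverse=True, so attributes with equal
    weights keep their Table 7 order.
    """
    gaps = [
        (attr, weight, label)
        for attr, (weight, label) in LEVEL5_ATTRIBUTES.items()
        if attr not in satisfied
    ]
    return sorted(gaps, key=lambda gap: gap[1], reverse=True)


# Hypothetical endorsed, regionally adopted practice lacking the sustainment attributes.
for attr, weight, label in level5_gaps({"5.1", "5.2"}):
    print(f"{attr} ({weight:.2f}): {label}")
```

Summing the weights of the listed gaps gives the Level 5 credit still available, which offers one way to express how far a practice is from full maturity.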

Wider use of the maturity model is needed and will be promoted through its incorporation by the various organizations that guide ocean research and applications. Additional attributes for the model are under study. One question is whether the maturity matrix should include an attribute crediting a practice that is usable by a wide range of stakeholders with different resource availability. This is important, and an OBPS task team is addressing it by examining the applicability of practices in regions with limited human or infrastructure resources. The challenge is to define quantitative criteria to measure this attribute on a global scale. It is anticipated that this and other topics will be addressed in the next evolution of the maturity model.

In summary, the maturity model can identify key gaps in the evolution of a practice. It provides a definition for good, better, and best practices. The maturity model is a “living” concept which is expected to evolve over time. The work here provides a necessary foundation for widespread dialog on maturing and globalizing practices to support understanding processes, interoperability, and trust.

Statements

Data availability statement

The original contributions presented in the study are included in the article/supplementary material. Further inquiries can be directed to the corresponding author.

Author contributions

CM: Conceptualization, Writing – original draft, Writing – review & editing, Methodology, Visualization. JP: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing. AR: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing. RP: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing. MB: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing. PS: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing. LC: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing. EA: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing. SC: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing. HR: Conceptualization, Methodology, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. The Funders are not responsible for any use that may be made of the information it contains. LC, CM, JP, AR, PS, received funding from the JERICO-S3 European Union’s Horizon 2020 research and innovation programme under grant agreement No. 871153. Project Coordinator: Ifremer, France. LC, CM, JP, AR, PS, received funding from EuroSea, the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 862626. Project Coordinator: GEOMAR, Germany. JP and PS received funding from ObsSea4Clim. ObsSea4Clim “Ocean observations and indicators for climate and assessments” is funded by the European Union. Grant Agreement number: 101136548. DOI: 10.3030/101136548. Contribution nr. #1. Project Coordinator Danmarks Meteorologiske Institut (DMI). EA has received funding from Mercator Ocean International. MB received funding support from the U.S. Integrated Ocean Observing System (U.S. IOOS®) under prime contract 1305-M2-21F-NCNA0208. SC received funding from Australia's Integrated Marine Observing System, enabled by the National Collaborative Research Infrastructure Strategy (NCRIS). JP and PS received support under the European Climate, Infrastructure and Environment Executive Agency (CINEA) under contract number CINEA/EMFAF/2023/3.5.1/SI2.916032 “Standards and Best Practices in Ocean Observation” CINEA/2023/OP/0015.

Acknowledgments

The authors would like to thank lead authors of endorsed practices that have engaged in the testing of the maturity model. These include (in alphabetical order) Susan Becker, Emmanuel Boss, Tim Langlois, Patricia Lopez-Garcia, Tammy Morris, Hilary Palevsky, Justine Parks. Australia’s Integrated Marine Observing System (IMOS) is enabled by the National Collaborative Research Infrastructure Strategy (NCRIS). It is operated by a consortium of institutions as an unincorporated joint venture, with the University of Tasmania as Lead Agent. The work of the IOC Ocean Best Practices System, co-sponsored by GOOS and IODE, has provided a foundation for the development of this paper. This includes the procedures for endorsement of practices done collaboratively by GOOS and OBPS.

Conflict of interest

RP was employed by company RPS Australia Asia Pacific and MB was employed by CoastalObsTechServices LLC.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Glossary

| Convergence | Agreement on a recommendation for a common practice in the selected areas |

| Endorsement | To approve openly; to express support or approval publicly and definitely; to recommend. |

| Harmonization | Practices which improve the comparability of variables from separate studies, permitting the pooling of data collected in different ways, and reducing study heterogeneity. |

| Interoperability | The ability of two or more systems to exchange and mutually use data, metadata, information, or system parameters using established protocols or standards. Involves standardizing technologies, data and analysis methods to facilitate collaboration and information sharing among various stakeholders in the ocean community. |

| Maturity | A mature process or technology is one that has been in use for long enough that most of its initial faults and inherent problems have been removed or reduced by further development. |

| Maturity level | A measure describing the state of development or readiness of a practice. |

| Metadata | Data that describes other data. Meta is a prefix that in most information technology usages means “an underlying definition or description.” Metadata summarizes basic information about data, which can make finding and working with particular instances of data easier; metadata may also be applied to descriptions of methodologies. |

| NOAA Institutional Repository | a digital library of scientific literature and research produced by the National Oceanic and Atmospheric Administration (https://repository.library.noaa.gov/) |