Abstract

Esophageal cancer (EC) remains a significant health challenge globally, with increasing incidence and high mortality rates. Despite advances in treatment, there remains a need for improved diagnostic methods and understanding of disease progression. This study addresses the significant challenges in the automatic classification of EC, particularly in distinguishing its primary subtypes: adenocarcinoma and squamous cell carcinoma, using histopathology images. Traditional histopathological diagnosis, while being the gold standard, is subject to subjectivity and human error and imposes a substantial burden on pathologists. This study proposes a binary class classification system for detecting EC subtypes in response to these challenges. The system leverages deep learning techniques and tissue-level labels for enhanced accuracy. We utilized 59 high-resolution histopathological images from The Cancer Genome Atlas (TCGA) Esophageal Carcinoma dataset (TCGA-ESCA). These images were preprocessed, segmented into patches, and analyzed using a pre-trained ResNet101 model for feature extraction. For classification, we employed five machine learning classifiers: Support Vector Classifier (SVC), Logistic Regression (LR), Decision Tree (DT), AdaBoost (AD), Random Forest (RF), and a Feed-Forward Neural Network (FFNN). The classifiers were evaluated based on their prediction accuracy on the test dataset, yielding results of 0.88 (SVC and LR), 0.64 (DT and AD), 0.82 (RF), and 0.94 (FFNN). Notably, the FFNN classifier achieved the highest Area Under the Curve (AUC) score of 0.92, indicating its superior performance, followed closely by SVC and LR, with a score of 0.87. This suggested approach holds promising potential as a decision-support tool for pathologists, particularly in regions with limited resources and expertise. The timely and precise detection of EC subtypes through this system can substantially enhance the likelihood of successful treatment, ultimately leading to reduced mortality rates in patients with this aggressive cancer.

Introduction

Esophageal cancer (EC), which has seen a concerning rise in prevalence over the past 4 decades (Napier et al., 2014), now ranks as the eighth most common cancer and the sixth leading cause of cancer-related mortality worldwide (Pakzad et al., 2016). This aggressive disease presents a formidable challenge, with 5-year survival rates between 15% and 25% (Pennathur et al., 2013). In 2020 alone, approximately 600,000 new EC cases were reported, tragically resulting in an estimated 540,000 fatalities (Sung et al., 2021). The origins of this malignancy typically lie in the mucosa, the innermost layer of the esophagus, and from there, it can infiltrate various vital organs, including the stomach, liver, lungs, chest, blood vessels, and lymph nodes. EC comprises two primary subtypes: Esophageal Squamous Cell Carcinoma (SCC) and Adenocarcinoma (ADC), along with a handful of relatively uncommon small-cell carcinomas like melanoma, sarcoma and lymphoma (Abbas and Krasna, 2017; Yang et al., 2020).

In medical diagnostics, histopathological image analysis is the gold standard for cancer diagnosis (Gurcan et al., 2009; Tomaszewski, 2021). Histopathological images provide a comprehensive view of diseases, capturing many cytological features that furnish detailed diagnostic insights. These features encompass heightened mitotic activity, cellular enlargement, nuclear hyperchromasia, irregular nuclear chromatin distribution, prominent and sizable nucleoli, cellular and nuclear pleomorphism, increased cellularity, abnormalities in nuclear membranes, cellular discohesiveness, and the presence of tumor necrosis in the background, also known as tumor diathesis. Unlike mammography or CT scans, these cytological characteristics are not easily discernible through other imaging techniques (Al-Abbadi, 2011). Nevertheless, conventional methods employed to analyze histopathology whole-slide images (WSI) have limitations, notably in time consumption and observer variability. Traditionally, pathologists scrutinize tissue samples under a microscope, having stained them with Haematoxylin and Eosin (H&E) to enhance tissue organ contrast (He et al., 2012). However, this labour-intensive visual inspection and subjective interpretive process require pathologists to examine multiple regions of interest within each slide.

Furthermore, the inherent subjectivity in visual interpretation can introduce variations among different observers, leading to inconsistent diagnoses and assessments (Allison et al., 2014). Hence, a compelling need exists for more efficient and objective approaches to WSI analysis, such as advanced digital pathology techniques that leverage image analysis algorithms, artificial intelligence, and machine learning. These cutting-edge methods aim to automate and streamline the analysis process, diminishing the reliance on manual examination and enhancing efficiency, consistency, and accuracy in evaluating histopathology WSI.

Artificial intelligence (AI) and deep learning (DL) diagnostic systems are becoming increasingly popular, opening up new possibilities in image analysis (Guo et al., 2023; Muller et al., 2023; Wang et al., 2023; Xiao et al., 2023). By harnessing shape and texture attributes alongside higher-order spatial features that capture intricate pixel-level relationships, these systems elevate images into high-dimensional features, vastly enhancing their capability for detection and classification (Kumar et al., 2012). While feature extraction-based classification systems, which rely on handcrafted features to characterize cancer subtypes, have seen advancements, researchers increasingly recognize the potency of deep neural networks (DNN) for automatic feature extraction and comprehensive visual analysis (Kermany et al., 2018; Shimizu and Nakayama, 2020; Zhou et al., 2020). Unlike traditional manual approaches, deep learning’s automatic feature extraction through multiple non-linear transformation layers empowers it to capture a broad spectrum of generalizable and high-level features, particularly suited for intricate tasks like medical image analysis. Remarkably, both DL and machine learning (ML) have been deployed in intriguing ways for the diagnosis, prognosis prediction (Tran et al., 2021), and classification of various cancer types, utilizing diverse imaging modalities such as histopathology, CT scans, and MRI imaging. Their ability to handle vast medical data and discern complex patterns promises to revolutionize cancer diagnosis and treatment. In the context of EC patients, correct staging, treatment planning, and prognostication are pivotal for improving patient outcomes and survival rates (Huang et al., 2022). Unfortunately, EC is frequently diagnosed at advanced stages, owing to its non-specific early symptoms and the challenge of accessing the esophagus, resulting in a bleak prognosis. Thus, the importance of early detection cannot be overstated, as it holds the key to timely interventions, exploration of a broader range of treatment options, and, ultimately, better long-term survival for patients.

A recent investigation (Wagner and Cosgrove, 2023) utilized ResNet-18 for endometrial subtype classification, employing Tissue level annotation of WSIs from the (TCGA-UCEC) dataset. This study achieved the best AUC of 0.82 in predicting the subtypes of cancer. In another research (Zhao et al., 2023), a DL model based on ResNet50, utilizing 523 annotated whole-slide images, demonstrated the capability to classify invasive non-mucinous lung adenocarcinoma subtypes with an AUC of 0.80. A study has employed transfer learning to extract features, subsequently utilizing various machine learning models for classification, achieving an accuracy of 0.93 (Kumar A and Mubarak, 2022). Another study presents a two-stage deep transfer learning method for subclass classification in histopathology images, overcoming limitations in traditional patch-based approaches. While employing patch-based classification, the results are aggregated for slide-level classification. The study attains automatic classification of epithelial ovarian carcinoma WSIs with an accuracy of 0.87 (Wang et al., 2020).

Contemporary studies on esophageal cancer (EC) subtype classification predominantly rely on patch-level annotations, introducing different challenges. The reliance on such annotations has been associated with inter-observer variability and limitations in capturing the comprehensive spatial and morphological distinctions inherent in esophageal cancer tissues (Paul and Mukherjee, 2015). The imperative for direct pathologist involvement in the annotation process not only imposes heightened time and resource demands but also intensifies the potential for divergent annotation practices. The repeated emphasis on direct pathologist involvement extends the temporal and resource investment and underscores the susceptibility to variations in annotation practices. These variations further challenge the integration and comparability of results across diverse research studies, hindering the establishment of a cohesive and standardized understanding of EC subtypes (Evans et al., 2023).

Addressing this gap, we aimed to develop an advanced DL-based automated diagnostic system, leveraging state-of-the-art deep learning techniques for feature engineering and machine learning, to accurately classify different subtypes of esophageal cancer using H&E images of the esophagus. While adhering to fundamental predictive modelling procedures, we have introduced an innovative approach by incorporating a median-based technique to effectively address the inherent variability in the number of tumor tiles across Whole Slide Images (WSIs) and demonstrate resilience to outliers, enhancing the stability and reliability of our model. We trained our model on a dataset comprising 42 high-resolution WSI and subsequently validated it using 17 additional images.

Methodology

Data collection

The Cancer Genome Atlas Esophageal Carcinoma Collection (TCGA-ESCA) cohort comprises diagnostic and tissue slides for 182 subjects. Diagnostic slides, which provide detailed information about tissue phenotypic heterogeneity (Chen et al., 2022), were exclusively focused upon due to their significance in histologic analysis. This study did not consider tissue slides, often used for genomic analysis (Gutman et al., 2013). As such, only 156 subjects with diagnostics slides available in TCGA-ESCA were selected, as shown in Figure 1. Following rigorous filtering criteria by excluding the WSIs with poor visual quality, extensive blurring, abnormal staining, and ink marks. Finally, 59 high-quality diagnostic WSIs were obtained from the TCGA-ESCA cohort. This curated dataset, consisting of H&E stained WSIs categorized into SCC and ADC, ensured consistency and quality for subsequent research and analysis. Significantly, the TCGA cohort’s open accessibility without authentication requirements greatly facilitated our investigative activities.

FIGURE 1

Exclusion criteria: The complete data selection process, incorporating exclusion criteria and showcasing the data distribution in train and test sets.

Preprocessing of histopathology images

Training DNN and ML models directly on entire gigapixel H&E WSI presents substantial computational challenges with current standard computing resources. We adopted strategies such as tiling and down-sampling (Srinidhi et al., 2021). High-resolution images are broken down into smaller, more manageable patches. Within these WSIs, there were both informative and non-informative regions. The Canny edge detection algorithm was employed for its ability to differentiate and outline tissue boundaries, leveraging its capability to identify noisy edges effectively. The method offers criteria of strong edge detection, precise localization, and single response, making it a suitable choice for our purpose (Canny, 1986). Subsequently, masks were generated to demarcate the boundary between the background and the tissue (foreground) using a graph-based segmentation method. The method was chosen due to its adeptness in handling pixel intensity variations, ensuring resilience against challenges posed by staining and tissue characteristic variations, and fostering consistent and reliable boundary demarcation (Felzenszwalb and Huttenlocher, 2004). These mask images were then organized into a grid of tiles based on specified dimensions to form patches. Patches comprising background information were discarded, while those primarily containing tissue (foreground) information were retained for further analysis. Various techniques, including random sampling, Otsu, and adaptive thresholding, can be used to eliminate background tiles (Otsu, 1979; Kalton, 2011; Roy et al., 2014). We utilized the Otsu method, a widely used thresholding algorithm (Goh et al., 2018; Huang et al., 2021; Singh et al., 2021), to discard unwanted background images from the generated patch set due to its thresholding capability for varying image characteristics, allowing for optimal discrimination between tissue and background in an unsupervised manner. Tiles containing less than 10% tissue were excluded and classified as background by setting the threshold to 0.1. Histology images were preprocessed using PyHIST (Muñoz-Aguirre et al., 2020), a TIFF/SVS file format segmentation tool. Nonoverlapping patches measuring 512 × 512 pixels were extracted from each WSI, and H&E images with a magnification greater than ×20 were only considered for this study.

Data normalization

Histopathology images exhibit inherent color variations stemming from differences in staining concentration, varied equipment usage, and occasional inconsistencies in tissue sectioning. These color disparities within WSI introduce increased variability into the training data, potentially impacting the model’s efficacy during training. Various methods exist for image color normalization, including the Reinhard Algorithm (RA) (Reinhard et al., 2001), Histogram Specification (Hussain et al., 2018), and Structure-Preserving Color Normalization (Vahadane et al., 2016), with RA notably demonstrating superior performance in DL models. The RA method computes a chosen reference image’s mean and standard deviation. During the color correction process, the color characteristics of one image are aligned with those of another. Careful consideration was given to the reference image selection, as normalization outcomes can be influenced if the chosen reference image exhibits darker colors (Roy et al., 2021). The application of Reinhard color normalization to our dataset is based on established practices in the literature (Lakshmanan et al., 2019; Qu et al., 2021), demonstrating its superior performance compared to other techniques. This normalization method effectively mitigated the complexity of the extracted features, thereby enhancing the overall quality of our analysis.

Model training and evaluation

We employed a two-step approach for classification, as outlined in Figure 2. In the initial step, we performed feature extraction on all the tiles within the WSI using a pre-trained CNN (ResNet101) (He et al., 2016). This process generated a 2048-dimensional feature vector for each tile in the WSI. Consequently, we constructed a feature matrix of size N x 2048 for each subject, where N represents the number of tumor tiles within the WSI. This variable significantly differs across WSIs. The variance in the number of patches generated during the tiling process impacts the model’s prediction efficiency. To address this, we calculated the median of the stacked feature vectors from all the WSI tiles along the vertical axis, thereby creating a single feature vector with a size of 2048. These feature vectors were subsequently input into various classification algorithms to predict the EC subtypes. The experimentation was conducted on an NVIDIA Tesla V100 GPU with 32 GB memory, boasting 15,360 CUDA Cores and a processing power of 2.976 TFLOPS. On average, normalization per tile required 0.25 s, and Feature extraction exhibited an average time of 0.03 s per tile. These metrics underscore the computational efficiency of the algorithms on the specified high-performance GPU architecture. We employed performance metrics such as Accuracy, AUC, Recall, F1-score, and Precision to assess the proposed model’s efficacy in classifying EC subtypes. Accuracy provides a comprehensive assessment of correctness in both classes, particularly effective in balanced class distributions. AUC evaluates the model’s discriminatory ability across various thresholds. Recall quantifies the model’s efficacy in identifying positive instances out of total positive instances, which is crucial in scenarios prioritizing sensitivity. Precision measures the accuracy of actual positive predictions, offering insights into the model’s specificity. Lastly, the F1 score strikes a balance between precision and recall, furnishing the evaluation of overall model performance in classification tasks.

FIGURE 2

Experimentation pipeline: The process involves taking each color-normalized tile and passing it through a pre-trained model to obtain a 2048-dimensional feature vector. These feature vectors from all tiles belonging to the same patient are then combined into a single feature vector of size 2048 and used as input for various machine-learning models to make predictions about esophageal cancer (EC) subtypes.

During the model training and evaluation, we utilized a Feed-Forward Neural Network (FFNN) configured with only two layers. The first layer, with a size of 2048, was designed to accept the input feature vector, preserving compatibility with the size of the extracted features obtained during the feature extraction process using pretrained models. The second layer was employed for classification, strategically reintegrating the last layer of the pretrained model, which was initially removed during the feature extraction process. This approach allows for an efficient utilization of the deep hierarchical representations learned by the model. The choice of two dense layers with Rectified Linear Unit (ReLU) activation function (Agarap, 2018), a learning rate of 10–3 over 800 epochs, and the Adam as optimizer (Kingma and Ba, 2015) was opted due to the ability of DL to complex information through multiple layers (Bebis and Georgiopoulos, 1994). In addition to FFNN, we utilized various machine learning classifiers for comparative analysis following the previous studies (Singh et al., 2023; Saxe and Berlin, 2015). AdaBoost, an ensemble learning method, was chosen for its ability to combine weak classifiers, such as decision trees, to form a robust classifier (Freund and Schapire, 1997). Decision Tree, a non-parametric algorithm, was also employed for its tree-like structure and adaptability to handle noise and missing data (Cycles, 1989). Random Forest, another ensemble method was selected to enhance classification performance by combining multiple decision trees through bootstrap sampling and feature subsetting (BREIMAN, 2021). Logistic Regression, a binary classification algorithm, served as a widely used and interpretable model, estimating probabilities for esophageal carcinoma subtypes based on input feature vectors (Peng et al., 2002). Finally, the Support Vector Classifier (SVC), known for its robustness and versatility in handling both linear and non-linearly separable data, was applied for effective classification between esophageal cancer subtypes (Busuttil, 2003). Each model brought a unique strategy to the analysis, contributing to a comparative analysis of our proposed approach, which provides valuable insights into the suitability of various machine learning algorithms.

Results

High-resolution histopathological dataset of ADC and SCC

Following a meticulous qualitative analysis that excluded small-sized images and those with significant blurring artefacts and ink marks, we systematically organized a dataset comprising 59 high-resolution histopathological H&E stained images of ADC and ESCC. This dataset was then divided into distinct training and testing sets, maintaining a balanced 70:30 ratio. After extracting WSI tiles and carefully selecting tumor tiles, the training and testing sets for EC comprised 191,798 and 48,665 tiles, respectively. The distribution of these tiles is visually depicted in Figure 3 for both the train and test sets. Moreover, the demographic and other characteristics of all patients included in our experimentation are summarized in Table 1.

FIGURE 3

Patch Variation and Distribution: This figure visually presents the patch variation after preprocessing, showing the data distribution between training and testing sets. Patch counts range from as high as 6,000 patches per slide to as low as 1,200 patches per slide. The variation is handled by calculating the median of all the features per WSI to form a single feature vector.

TABLE 1

| Characteristics | Data set | ||

|---|---|---|---|

| ADC | SCC | ||

| Gender | Male | 26 | 25 |

| Female | 5 | 3 | |

| Age | ≤60 | 9 | 21 |

| >60 | 22 | 7 | |

| Vital Status | Dead | 14 | 8 |

| Alive | 17 | 20 | |

| Alcohol History | Yes | 26 | 19 |

| No | 5 | 9 | |

| Location | Lower Third | 29 | 11 |

| Middle Third | - | 12 | |

| Upper third | 2 | 3 | |

| Unspecified Location | - | 2 | |

Patient characteristics on the 59 cases from TCGA - ESCA cohort.

Enhancing digital pathology by data-preprocessing

Preprocessing of digital pathology played a crucial role in addressing differences among images, including variations in color, illumination, and imperfections like artefacts or noise (Figure 4). These variations and imperfections can be effectively minimized or corrected by applying preprocessing techniques, ensuring more consistent and reliable image quality across diverse datasets. The advantages of digital pathology still need to be increased to overcome its limitations: WSI requires considerably large storage volumes (since each image can need 2–3 GB). Moreover, as depicted in Figure 5, these WSIs exhibit undesirable blank backgrounds, which were eliminated during this stage. Despite efforts toward standardization, attaining impeccable color calibration across all samples remains challenging (Lyon et al., 1994). This challenge drives the adoption and implementation of color normalization techniques, which have led to increased efficiency in our model. Figure 6 provides a visual difference before and after color normalization, and the general normalization process is shown in (Figure 7).

FIGURE 4

WSI Noise and Artefacts: (A) an example of noise in WSI (random or unwanted variations in image data), (B) an example of artefact in the Whole Slide Image of our dataset. (Artefacts can include staining artefacts, tissue folding or tearing, scanner artefacts, or any other unintended alterations that may occur during slide preparation, scanning, or digitization).

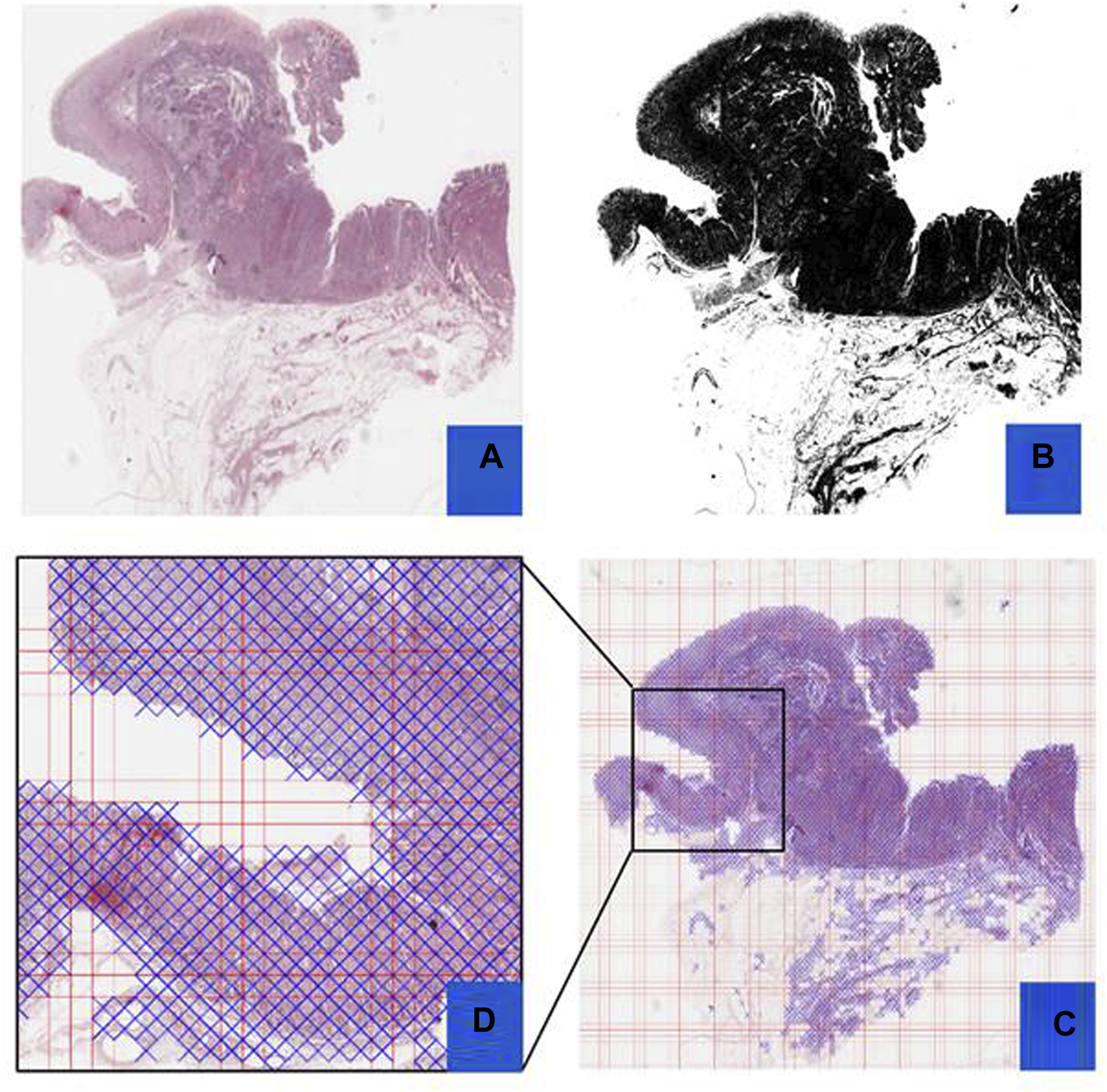

FIGURE 5

Preprocessing Steps: This figure outlines the preprocessing steps using PyHIST. Panel (A) displays an H&E WSI from TCGA, (B) illustrates a mask generated during preprocessing, (C) presents a Mask Grid created for patching WSI, and (D) shows a zoomed view of tiles selected from the grid.

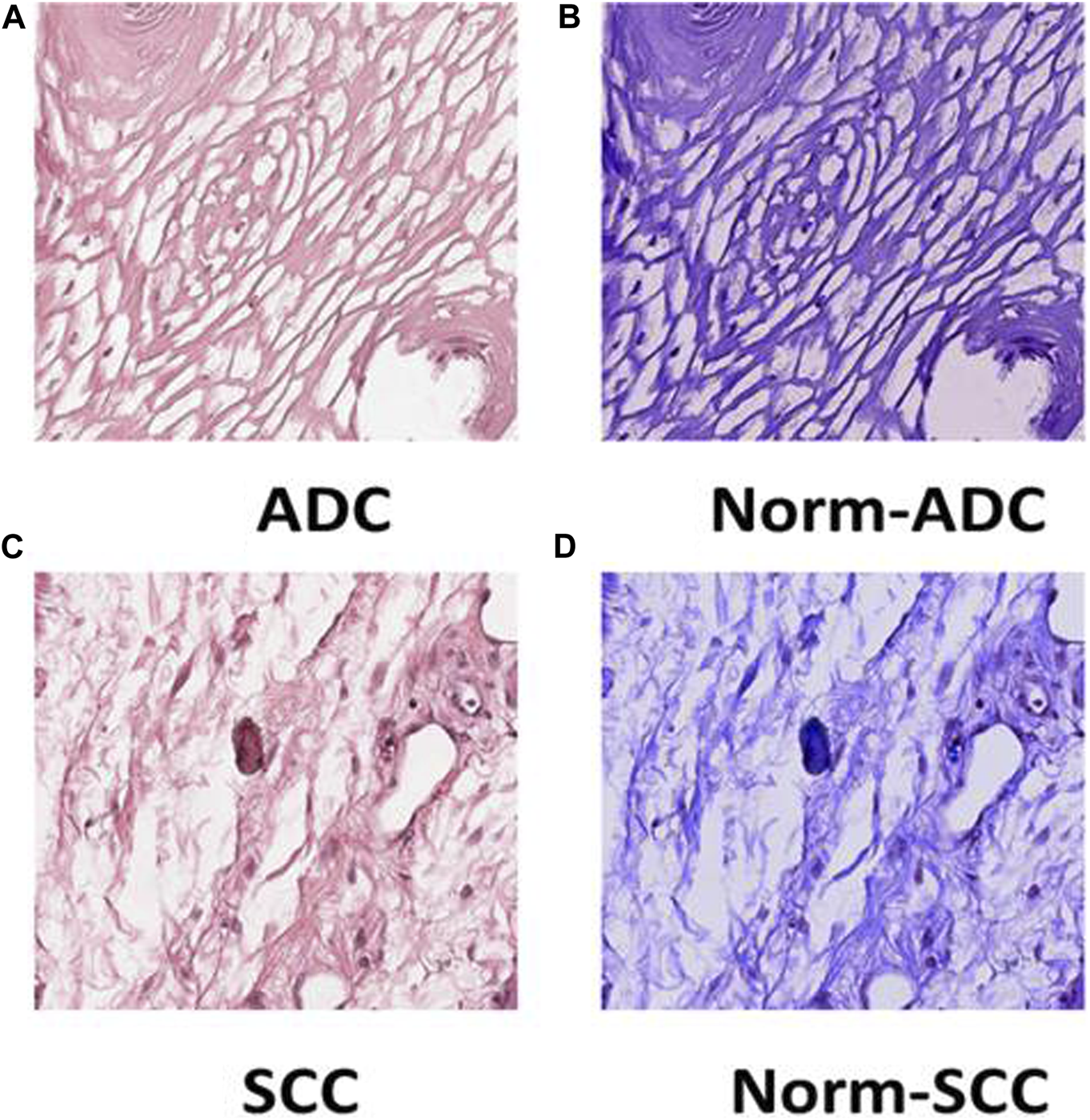

FIGURE 6

Tile Transformation: The figure exhibits the normalization process for ADC and SCC tiles. Panel (A) displays an original tile of ADC WSI after patching, (B) shows the ADC tile after normalization, (C) presents an original tile of SCC WSI after patching, and (D) illustrates the SCC tile after normalization.

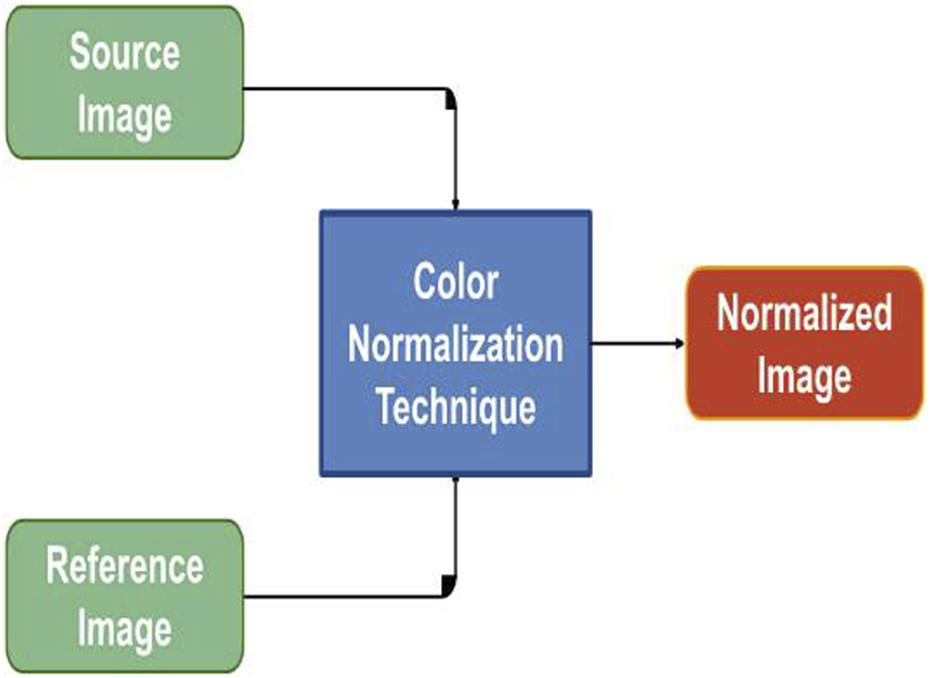

FIGURE 7

Color Normalization Flowchart: The figure provides a flow chart representing the color normalization process for images with different color variations. This process enhances the consistency of color across images, ensuring uniformity in the dataset.

Performance evaluation and comparative analysis

We utilized confusion matrices, as illustrated in Figure 8, to assess the overall accuracy of our classification models. Calculating recall, precision, and F1 score from the confusion matrix values provided a comprehensive evaluation of model performance for both SCC and ADC classes. This approach aligns with established practices, reflecting evaluation matrices utilized in previous studies (Le and Ou, 2016; Le et al., 2017). The FFNN stands out as the top-performing model, achieving an impressive classification rate of 86% for SCC patients and 100% for ADC patients in the validation set. Our feature-based classification method successfully demonstrated the use of machine learning in accurate classification of esophageal squamous cell carcinoma and adenocarcinoma. The SVC and LR models also demonstrated strong performance, achieving prediction accuracies of 86% for SCC patients and 90% for ADC patients. The RFC provided acceptable predictions of 71% for SCC patients and 90% for ADC patients. AdaBoost achieved a perfect prediction rate (100%) for SCC patients but presented the lowest performance of 40% only for ADC patients. The Decision Tree model also exhibited the least effectiveness, with correct classification rates of 85% for SCC patients and 50% for ADC patients.

FIGURE 8

Confusion matrices illustrating the performance of five ML classifiers [represented as (A, B, D, E, F)]. (C) shows the confusion matrix of the FFNN classifier with the best values for classifying esophageal cancer subtypes. The matrices display the true positive (TP), true negative (TN), false positive (FP), and false negative (FN) values for each model, offering insights into their predictive capabilities and error distributions.

Due to the large size, WSIs were divided into smaller patches for analysis, and image features were extracted at a ×20 magnification level. The results from different ML classification methods, utilizing identical methodology and data distribution, were compared while maintaining the training and test ratio. These classifiers use the feature vectors of size N x 2048 as input, extracted using ResNet101. The evaluation considered essential performance metrics such as precision, recall, F1-score, accuracy, and AUC. The results in Table 2 demonstrated that the feed-forward neural network (FFNN) model outperformed other ML models with an accuracy of 94.11% and an AUC score of 92%.

TABLE 2

| Feature extraction | Algorithms | Precision | Recall | F1-score | ACC | AUC | |||

|---|---|---|---|---|---|---|---|---|---|

| SCC | ADC | SCC | ADC | SCC | ADC | ||||

| Resnet101 | SVC | 0.86 | 0.9 | 0.86 | 0.9 | 0.86 | 0.9 | 0.88 | 0.87 |

| LR | 0.86 | 0.9 | 0.86 | 0.9 | 0.86 | 0.9 | 0.88 | 0.87 | |

| DT | 0.55 | 0.83 | 0.86 | 0.5 | 0.67 | 0.62 | 0.64 | 0.68 | |

| AdaBoost | 0.54 | 1 | 1 | 0.4 | 0.7 | 0.57 | 0.64 | 0.7 | |

| RFC | 0.6 | 0.86 | 0.86 | 0.6 | 0.71 | 0.71 | 0.82 | 0.72 | |

| FFNN | 1 | 0.91 | 0.86 | 1 | 0.92 | 0.95 | 0.94 | 0.92 | |

| InceptionV3 | SVC | 0.75 | 0.89 | 0.86 | 0.80 | 0.80 | 0.84 | 0.82 | 0.82 |

| LR | 0.70 | 1.0 | 1.0 | 0.70 | 0.82 | 0.82 | 0.82 | 0.85 | |

| DT | 0.67 | 0.73 | 0.57 | 0.80 | 0.62 | 0.76 | 0.71 | 0.68 | |

| AdaBoost | 0.71 | 0.80 | 0.71 | 0.80 | 0.71 | 0.80 | 0.76 | 0.75 | |

| RFC | 0.67 | 0.73 | 0.57 | 0.80 | 0.62 | 0.76 | 0.71 | 0.68 | |

| FFNN | 0.67 | 0.88 | 0.86 | 0.70 | 0.75 | 0.78 | 0.76 | 0.77 | |

| Resnet50 | SVC | 0.80 | 0.75 | 0.57 | 0.90 | 0.67 | 0.82 | 0.76 | 0.73 |

| LR | 0.62 | 0.78 | 0.71 | 0.70 | 0.67 | 0.74 | 0.71 | 0.70 | |

| DT | 0.57 | 0.70 | 0.57 | 0.70 | 0.57 | 0.70 | 0.65 | 0.63 | |

| AdaBoost | 0.67 | 0.88 | 0.86 | 0.70 | 0.75 | 0.78 | 0.76 | 0.77 | |

| RFC | 0.67 | 0.73 | 0.57 | 0.80 | 0.62 | 0.76 | 0.71 | 0.68 | |

| FFNN | 0.75 | 0.89 | 0.86 | 0.80 | 0.80 | 0.84 | 0.82 | 0.82 | |

Results of WSI classification.

Similarly, the SVC and LR models displayed comparable performance, achieving an accuracy of 88% and an AUC of 0.87, surpassing the performance of the remaining models. Notably, the DT and AdaBoost models exhibited the lowest accuracy at 64%. We observed that while FFNN outperforms all other algorithms in terms of accuracy, the imbalance in the dataset was better handled by SVC and LR, where the difference in recall between SCC and ADC is the least, showing that both classes have a similar number of misclassifications and accurate classifications. These metrics offer a holistic assessment of the model’s performance, highlighting its strengths and weaknesses. Such insights are invaluable for refining and advancing classification models in subsequent studies. Additionally, hyperparameters were carefully tuned using a grid search approach in our model training process, except for FFNN. They were optimized for each model to achieve the best performance on the test set (Table 3).

TABLE 3

| Model | Hyperparameter | Search space | Best value |

|---|---|---|---|

| AdaBoost | max_depth | [2, 11] | 6 |

| min_samples_leaf | [5, 10] | 10 | |

| learning_rate | [0.01, 0.1] | 0.1 | |

| n_estimators | [10, 50, 250, 1,000] | 50 | |

| Decision Tree (DT) | max_features | [‘auto’, ‘sqrt’, ‘log2'] | auto |

| ccp_alpha | [0.1, 0.01, 0.001] | 0.01 | |

| criterion | [‘gini’, ‘entropy'] | gini | |

| Random Forest (RF) | max_depth | [10, 15] | 11 |

| max_features | [0, 14] | 4 | |

| Logistic Regression (LR) | C | [0.1, 1, 10, 100] | 0.1 |

| penalty | [‘l1', ‘l2'] | l1 | |

| solver | [‘newton-cg’, ‘lbfgs’, ‘liblinear'] | liblinear | |

| Support Vector Classifier (SVC) | C | [0.1, 1, 10, 100] | 0.1 |

| kernel | [‘rbf’, ‘linear’] | linear | |

| gamma | [‘scale’, ‘auto'] | scale |

Hyperparameters settings for selected models.

For a comparative analysis (refer to Table 2) involving transfer learning, we employed pre-trained InceptionV3 and ResNet50 models for feature extraction. The results showed that our proposed architecture outperformed other models, utilizing ResNet101 for feature extraction. The accuracy of our proposed model was 0.94, surpassing the best classification accuracy of 0.82 achieved by the other two models, thereby establishing the suitability of ResNet101 for the classification of esophageal cancer into Adenocarcinoma and Squamous Cell Carcinoma from histopathology images.

Discussion and conclusion

Manual histopathological diagnosis of esophageal cancer subtypes poses challenges, emphasizing the need for a sophisticated and automated classification system. Our study adopts a tissue-level diagnostic approach leveraging deep learning and machine learning techniques to construct a reliable model, aiming to alleviate the limitations associated with manual diagnosis, providing a more efficient and objective solution.

We precisely curated the dataset TCGA-ESCA, ensuring consistency and quality while addressing potential variations in size, color intensities, and equipment usage. The preprocessing phase involved strategic techniques such as tiling, down-sampling, and edge detection to manage computational challenges and enhance the model’s efficiency. These methods facilitated the extraction of informative patches from high-resolution whole-slide images (WSI) for further analysis. Moreover, a data normalization technique was adopted, where inherent color variations in histopathology images are addressed using the Reinhard Algorithm. This normalization process proves crucial in reducing variability and enhancing the model’s overall efficacy during training.

Our study emphasizes tissue-level classification rather than patch-level analysis. While patch-level annotations (Hou et al., 2016; Mi et al., 2021) have gained popularity for cancer sub-type detection in digital pathology, relying solely on them has notable drawbacks. A primary concern is the substantial manual annotation effort required for this approach, involving the careful marking and annotation of individual patches within the WSIs, making the process time-consuming and labour-intensive. However, depending solely on patch-level analysis may result in overlooking crucial contextual information within the slide. By focusing exclusively on isolated patches, there is a risk of missing important features and spatial relationships between regions in the slide. This limitation can lead to inaccurate or incomplete detection, as the analysis may fail to capture the full extent of the disease and its characteristics. Moreover, a patch-level analysis may be susceptible to sampling bias (Hägele et al., 2020), as the selected patches may not adequately represent the entire slide, potentially causing the omission of critical regions of interest crucial for accurate cancer subtype identification.

Furthermore, the challenges of intratumoral heterogeneity pose a significant obstacle for patch-level cancer subtype detection. Cancer subtypes can exhibit spatial variability within a single tumor, with distinct regions displaying different cellular characteristics and molecular profiles. By relying solely on patch-level analysis, there is a risk of obtaining inconsistent or ambiguous results, as the selected patches may not fully capture the heterogeneity present within the tumor. This limitation can impact the reliability and accuracy of the cancer subtype detection, particularly when attempting to classify tumors with diverse intratumoral characteristics. We assessed the method’s effectiveness in detecting esophageal subtype carcinoma from H&E WSIs. We found that ML can accurately classify cancer subtypes in EC from H&E WSIs. Pathologists, who have a laborious, time-consuming, and easily misinterpreted diagnostic process, could benefit significantly from automated cancer subtype detection by having less work to do.

Recognizing that patch-level cancer subtype detection can be integrated with additional contextual information from WSI, such as slide-level annotations, can help overcome the limitations associated with patch-level analysis. By incorporating a broader perspective and considering the overall tissue architecture and spatial relationships within the slide, the accuracy and reliability of cancer subtype detection can be improved. Further research and development efforts are necessary to address these limitations and refine the performance of patch-level cancer subtype detection methods in the context of a digital pathology-based cancer diagnosis. Addressing data scarcity in tasks linked to histopathology is also crucial to maximizing the effectiveness of deep learning systems. High-quality and massive amounts of disease data with detailed tile annotations will be required to accelerate model building. DL models, are particularly suitable for large-scale data analysis (Jan et al., 2019), depending on the amount of data provided, making it challenging to apply them to real-world circumstances. Unfortunately, having enough data for DL models in most real-world circumstances is not feasible, especially in medical science (Zewdie et al., 2021). As an alternative, transfer learning has shown promising results for computer vision problems where only a few training samples are available (refer to Table 4). A feature extractor trained on various pathological datasets may produce better outcomes than our cancer sub-type-specific model.

TABLE 4

| Study | Organ | Approach | Classifier | Dataset | Patients | Results |

|---|---|---|---|---|---|---|

| Wagner and Cosgrove (2023) | Uterus | Image level annotation | CNN | TCGA-UCEC | 657 | 0.82 (AUC) |

| Zhao et al. (2023) | Lung | pixel-level annotation | CNN | Beijing Chest Hospital | 523 | 0.80 (AUC) |

| Kumar A and Mubarak (2022) | Esophagus | Image level annotation | SVM | Kvasir dataset | 1800 | 0.93 (ACC) |

| Wang et al. (2020) | Ovarian Cancer | Patch level annotation | Random Forest | Vancouver General Hospital | 305 | 0.87 (ACC) |

| Proposed Method | Esophagus | Image level annotation | FFNN | TCGA-ESCA | 59 | 0.94 (ACC) |

Comparison of studies with proposed architecture.

Utilizing transfer learning with ResNet101 on histopathological imagery, our study achieved superior performance with a Feed Forward Neural Network, boasting 94% accuracy and 0.92 AUC. Support Vector Classifier and Logistic Regression followed closely with an 88% accuracy, outperforming Random Forest Classifier (RFC) at 82%, while Decision Tree (DT) and AdaBoost lagged with 64% accuracy. This approach was centred on examining H&E stained WSIs, renowned for their capacity to preserve intricate microscopic tissue properties, thereby providing a robust foundation for detailed cancer tissue analysis. Looking ahead, we propose the inclusion of WSIs from additional databases in future investigations. These datasets should ideally share resolutions and characteristics similar to those used in this study to ensure consistency and reliability in comparative analyses. A thorough assessment of these representations and their parameters could offer insightful perspectives on the accuracy and repeatability of diagnostic algorithms, especially considering the distinctive nature of realistic versus pathological imagery domains. To sum up, our research proves that ResNet101-based transfer learning outperforms other methods in analyzing histopathological images and presents new opportunities for improving cancer diagnosis techniques. By utilizing the capabilities of sophisticated neural network architectures, there is considerable potential to increase the precision and speed of cancer detection from histological images. In addition, we recognize the importance of addressing the interpretability of the proposed model and plan to incorporate explainable AI techniques as part of our future research.

Statements

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material, further inquiries can be directed to the corresponding authors.

Author contributions

SA: Data curation, Formal Analysis, Investigation, Validation, Writing–original draft, Writing–review and editing. ABA: Data curation, Formal Analysis, Investigation, Validation, Writing–original draft. TM: Resources, Writing–review and editing. AB: Writing–review and editing. AA-S: Writing–review and editing. MK: Writing–review and editing. AAs: Conceptualization, Supervision, Writing–review and editing, Resources. MM: Conceptualization, Funding acquisition, Supervision, Writing–review and editing, Resources. MB: Supervision, Writing–original draft, Writing–review and editing, Conceptualization, Resources.

Funding

The author(s) declare that financial support was received for the research, authorship, and/or publication of this article. This study was funded, in part, by a Research Grant (Grant number: ID No. 2022-16465) from the Indian Council of Medical Research (ICMR) Govt. of India, New Delhi and a Core Research Grant (CRG/2021/003805) from Science and Engineering Research Board (SERB), Govt. of India, New Delhi to MM. Promotion of University Research and Scientific Excellence (PURSE) (SR/PURSE/2022/121) grant from the Department of Science and Technology, Govt. of India, New Delhi to the Islamic University of Science and Technology (IUST), Awantipora.

Conflict of interest

Author MK was employed by DigiBiomics Inc.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1

Abbas G. Krasna M. (2017). Overview of esophageal cancer. Ann. Cardiothorac. Surg.6 (2), 131–136. 10.21037/acs.2017.03.03

2

Agarap A. F. (2018). Deep Learn. using Rectified Linear Units (ReLU)2 (1), 2–8. 10.48550/arXiv.1803.08375

3

Al-Abbadi M. A. (2011). Basics of cytology. Avicenna J. Med.01 (01), 18–28. 10.4103/2231-0770.83719

4

Allison K. H. Reisch L. M. Carney P. A. Weaver D. L. Schnitt S. J. O'Malley F. P. et al (2014). Understanding diagnostic variability in breast pathology: lessons learned from an expert consensus review panel. Histopathology65 (2), 240–251. 10.1111/his.12387

5

Bebis G. Georgiopoulos M. (1994). Feed-forward neural networks: why network size is so important. IEEE Potentials, 27–31. Available: http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=329294.

6

Breiman L. E. O. (2021). Random forests. Mach. Learn., 542–545. 10.1023/A:1010933404324

7

Busuttil S. (2003). “Support vector machines,” in 1st Computer Science Annual Workshop (CSAW’03), 34–39.

8

Canny J. (1986). A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell.8 (6), 679–698. 10.1109/TPAMI.1986.4767851

9

Chen R. J. Lu M. Y. Wang J. Williamson D. F. K. Rodig S. J. Lindeman N. I. et al (2022). Pathomic fusion: an integrated framework for fusing histopathology and genomic features for cancer diagnosis and prognosis. IEEE Trans. Med. Imaging41 (4), 757–770. 10.1109/TMI.2020.3021387

10

Cycles S. (1989). Decision trees. Cycle1897, 44–45. Figure 1. 10.1007/0-387-25465-X

11

Evans H. Hero E. Minhas F. Wahab N. Dodd K. Sahota H. et al (2023). Standardized clinical annotation of digital histopathology slides at the point of diagnosis. Mod. Pathol.36 (11), 100297. 10.1016/j.modpat.2023.100297

12

Felzenszwalb P. F. Huttenlocher D. P. (2004). Efficient graph-based image segmentation. Int. J. Comput. Vis.59 (2), 167–181. 10.1023/B:VISI.0000022288.19776.77

13

Freund Y. Schapire R. E. (1997). A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci.55 (1), 119–139. 10.1006/jcss.1997.1504

14

Goh T. Y. Basah S. N. Yazid H. Aziz Safar M. J. Ahmad Saad F. S. (2018). Performance analysis of image thresholding: Otsu technique. Measurement114, 298–307. 10.1016/j.measurement.2017.09.052

15

Guo B. Liu H. Niu L. (2023). Integration of natural and deep artificial cognitive models in medical images: BERT-based NER and relation extraction for electronic medical records. Front. Neurosci.17, 1266771. 10.3389/fnins.2023.1266771

16

Gurcan M. N. Boucheron L. E. Can A. Madabhushi A. Rajpoot N. M. Yener B. (2009). Histopathological image analysis: a review. IEEE Rev. Biomed. Eng.2 (2), 147–171. 10.1109/RBME.2009.2034865

17

Gutman D. A. Cobb J. Somanna D. Park Y. Wang F. Kurc T. et al (2013). Cancer Digital Slide Archive: an informatics resource to support integrated in silico analysis of TCGA pathology data. J. Am. Med. Inf. Assoc.20 (6), 1091–1098. 10.1136/amiajnl-2012-001469

18

Hägele M. Seegerer P. Lapuschkin S. Bockmayr M. Samek W. Klauschen F. et al (2020). Resolving challenges in deep learning-based analyses of histopathological images using explanation methods. Sci. Rep.10 (1), 6423–6512. 10.1038/s41598-020-62724-2

19

He K. Zhang X. Ren S. Sun J. (2016). Deep residual learning for image recognition. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit.2016, 770–778. 10.1109/CVPR.2016.90

20

He L. Long L. R. Antani S. Thoma G. R. (2012). Histology image analysis for carcinoma detection and grading. Comput. Methods Programs Biomed.107 (3), 538–556. 10.1016/j.cmpb.2011.12.007

21

Hou L. Samaras D. Kurc T. M. Gao Y. Davis J. E. Saltz J. H. (2016). Patch-based convolutional neural network for whole slide tissue image classification. Proc. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit.2016, 2424–2433. 10.1109/CVPR.2016.266

22

Huang C. Dai Y. Chen Q. Chen H. Lin Y. Wu J. et al (2022). Development and validation of a deep learning model to predict survival of patients with esophageal cancer. Front. Oncol.12 (August), 971190. 10.3389/fonc.2022.971190

23

Huang C. Li X. Wen Y. (2021). AN OTSU image segmentation based on fruitfly optimization algorithm. Alex. Eng. J.60 (1), 183–188. 10.1016/j.aej.2020.06.054

24

Hussain K. Rahman S. Rahman M. M. Khaled S. M. Abdullah-Al Wadud M. Hossain Khan M. A. et al (2018). A histogram specification technique for dark image enhancement using a local transformation method. IPSJ Trans. Comput. Vis. Appl.10 (1), 3. 10.1186/s41074-018-0040-0

25

Jan B. Farman H. Khan M. Imran M. Islam I. U. Ahmad A. et al (2019). Deep learning in big data Analytics: a comparative study. Comput. Electr. Eng.75, 275–287. 10.1016/j.compeleceng.2017.12.009

26

Kalton G. (2011). Simple random sampling. Introd. Surv. Sampl., 9–16. 10.4135/9781412984683.n2

27

Kermany D. S. Goldbaum M. Cai W. Valentim C. C. S. Liang H. Baxter S. L. et al (2018). Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell172 (5), 1122–1131. 10.1016/j.cell.2018.02.010

28

Kingma D. P. Ba J. L. (2015). Adam: a method for stochastic optimization. 3rd Int. Conf. Learn. Represent. ICLR 2015 - Conf. Track Proc., 1–15. 10.48550/arXiv.1412.6980

29

Kumar V. Gu Y. Basu S. Berglund A. Eschrich S. A. Schabath M. B. et al (2012). Radiomics: the process and the challenges. Magn. Reson. Imaging30 (9), 1234–1248. 10.1016/j.mri.2012.06.010

30

Kumar A C. Mubarak D. M. N. (2022). Classification of early stages of esophageal cancer using transfer learning. IRBM43 (4), 251–258. 10.1016/j.irbm.2021.10.003

31

Lakshmanan B. Anand S. Jenitha T. (2019). Stain removal through color normalization of Haematoxylin and Eosin images: a review. J. Phys. Conf. Ser.1362 (1), 012108. 10.1088/1742-6596/1362/1/012108

32

Le N.-Q.-K. Nguyen T.-T.-D. Ou Y.-Y. (2017). Identifying the molecular functions of electron transport proteins using radial basis function networks and biochemical properties. J. Mol. Graph. Model.73, 166–178. 10.1016/j.jmgm.2017.01.003

33

Le N.-Q.-K. Ou Y.-Y. (2016). Incorporating efficient radial basis function networks and significant amino acid pairs for predicting GTP binding sites in transport proteins. BMC Bioinforma.17 (Suppl. 19), 501. 10.1186/s12859-016-1369-y

34

Lyon H. O. De Leenheer A. P. Horobin R. W. Lambert W. E. Schulte E. K. Van Liedekerke B. et al (1994). Standardization of reagents and methods used in cytological and histological practice with emphasis on dyes, stains and chromogenic reagents. Histochem. J.26 (7), 533–544. 10.1007/BF00158587

35

Mi W. Li J. Guo Y. Ren X. Liang Z. Zhang T. et al (2021). Deep learning-based multi-class classification of breast digital pathology images. Cancer Manag. Res.13, 4605–4617. 10.2147/CMAR.S312608

36

Muller J. J. Wang R. Milddleton D. Alizadeh M. Kang K. C. Hryczyk R. et al (2023). Machine learning-based classification of chronic traumatic brain injury using hybrid diffusion imaging. Front. Neurosci.17, 1182509. 10.3389/fnins.2023.1182509

37

Muñoz-Aguirre M. Ntasis V. F. Rojas S. Guigó R. (2020). PyHIST: a histological image segmentation tool. PLoS Comput. Biol.16 (10), e1008349–9. 10.1371/journal.pcbi.1008349

38

Napier K. J. Scheerer M. Misra S. (2014). Esophageal cancer: a review of epidemiology, pathogenesis, staging workup and treatment modalities. World J. Gastrointest. Oncol.6 (5), 112–120. 10.4251/wjgo.v6.i5.112

39

Otsu N. (1979). A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man. Cybern.20 (1), 62–66. 10.1109/TSMC.1979.4310076

40

Pakzad R. Mohammadian-Hafshejani A. Khosravi B. Soltani S. Pakzad I. Mohammadian M. et al (2016). The incidence and mortality of esophageal cancer and their relationship to development in Asia. Ann. Transl. Med.4 (2), 29. 10.3978/j.issn.2305-5839.2016.01.11

41

Paul A. Mukherjee D. P. (2015). Mitosis detection for invasive breast cancer grading in histopathological images. IEEE Trans. Image Process.24 (11), 4041–4054. 10.1109/TIP.2015.2460455

42

Peng C. Y. J. Lee K. L. Ingersoll G. M. (2002). An introduction to logistic regression analysis and reporting. J. Educ. Res.96 (1), 3–14. 10.1080/00220670209598786

43

Pennathur A. Gibson M. K. Jobe B. A. Luketich J. D. (2013). Oesophageal carcinoma. Lancet381 (9864), 400–412. 10.1016/S0140-6736(12)60643-6

44

Qu H. Zhou M. Yan Z. Wang H. Rustgi V. K. Zhang S. et al (2021). Genetic mutation and biological pathway prediction based on whole slide images in breast carcinoma using deep learning. npj Precis. Oncol.5 (1), 87. 10.1038/s41698-021-00225-9

45

Reinhard E. Ashikhmin M. Gooch B. Shirley P. Pre S. Reinhard et al (2001). Color transfer between images. Appl. Percept.21, 34–41. 10.1109/38.946629

46

Roy P. Dutta S. Dey N. Dey G. Chakraborty S. Ray R. (2014). “Adaptive thresholding: a comparative study,” in 2014 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), Kanyakumari, India, 10-11 July 2014, 1182–1186. 10.1109/ICCICCT.2014.6993140

47

Roy S. Panda S. Jangid M. (2021). Modified reinhard algorithm for color normalization of colorectal cancer histopathology images. Eur. Signal Process. Conf., 1231–1235. 10.23919/EUSIPCO54536.2021.9616117

48

Saxe J. Berlin K. (2015). “Deep neural network based malware detection using two dimensional binary program features,” in 2015 10th International Conference on Malicious and Unwanted Software (MALWARE), Fajardo, PR, USA, 20-22 October 2015, 11–20. 10.1109/MALWARE.2015.7413680

49

Shimizu H. Nakayama K. I. (2020). Artificial intelligence in oncology. Cancer Sci.111 (5), 1452–1460. 10.1111/cas.14377

50

Singh N. Bhandari A. K. Kumar I. V. (2021). Fusion-based contextually selected 3D Otsu thresholding for image segmentation. Multimed. Tools Appl.80 (13), 19399–19420. 10.1007/s11042-021-10706-5

51

Singh P. Borgohain S. K. Sarkar A. K. Kumar J. Sharma L. D. (2023). Feed-forward deep neural network (FFDNN)-Based deep features for static malware detection. Int. J. Intell. Syst.2023, 1–20. 10.1155/2023/9544481

52

Srinidhi C. L. Ciga O. Martel A. L. (2021). Deep neural network models for computational histopathology: a survey. Med. Image Anal.67 (1), 101813–102148. 10.1016/j.media.2020.101813

53

Sung H. Ferlay J. Siegel R. L. Laversanne M. Soerjomataram I. Jemal A. et al (2021). Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. Ca. Cancer J. Clin.71 (3), 209–249. 10.3322/caac.21660

54

Tomaszewski J. E. (2021). Overview of the role of artificial intelligence in pathology: the computer as a pathology digital assistant. Artif. Intell. Deep Learn. Pathol., 237–262. 10.1016/B978-0-323-67538-3.00011-7

55

Tran K. A. Kondrashova O. Bradley A. Williams E. D. Pearson J. V. Waddell N. (2021). Deep learning in cancer diagnosis, prognosis and treatment selection. Genome Med.13 (1), 152–217. 10.1186/s13073-021-00968-x

56

Vahadane A. Peng T. Sethi A. Albarqouni S. Wang L. Baust M. et al (2016). Structure-preserving color normalization and sparse stain separation for histological images. IEEE Trans. Med. Imaging35 (8), 1962–1971. 10.1109/TMI.2016.2529665

57

Wagner V. Cosgrove C. (2023). Predicting endometrial cancer molecular classification from histology slides using deep learning (030). Gynecol. Oncol.176, S23. 10.1016/j.ygyno.2023.06.495

58

Wang L. Wang C. Liu C. (2023). Trends in using deep learning algorithms in biomedical prediction systems. Front. Neurosci.17, 1256351. 10.3389/fnins.2023.1256351

59

Wang Y. et al (2020). Classification of epithelial ovarian carcinoma whole-slide pathology images using deep transfer learning, 1–5. Available: http://arxiv.org/abs/2005.10957.

60

Xiao D. Zhu F. Jiang J. Niu X. (2023). Leveraging natural cognitive systems in conjunction with ResNet50-BiGRU model and attention mechanism for enhanced medical image analysis and sports injury prediction. Front. Neurosci.17, 1273931. 10.3389/fnins.2023.1273931

61

Yang J. Liu X. Cao S. Dong X. Rao S. Cai K. (2020). Understanding esophageal cancer: the challenges and opportunities for the next decade. Front. Oncol.10 (September), 1727–1813. 10.3389/fonc.2020.01727

62

Zewdie E. T. Tessema A. W. Simegn G. L. (2021). Classification of breast cancer types, sub-types and grade from histopathological images using deep learning technique. Health Technol.11 (6), 1277–1290. 10.1007/s12553-021-00592-0

63

Zhao Y. He S. Zhao D. Ju M. Zhen C. Dong Y. et al (2023). Deep learning-based diagnosis of histopathological patterns for invasive non-mucinous lung adenocarcinoma using semantic segmentation. BMJ Open13 (7), e069181. 10.1136/bmjopen-2022-069181

64

Zhou X. Li C. Rahaman M. M. Yao Y. Ai S. Sun C. et al (2020). A comprehensive review for breast histopathology image analysis using classical and deep neural networks. IEEE Access8, 90931–90956. 10.1109/ACCESS.2020.2993788

Summary

Keywords

histopathology, deep learning, machine learning, transfer learning, image processing, whole slide image, patching, normalization

Citation

Aalam SW, Ahanger AB, Masoodi TA, Bhat AA, Akil ASA-S, Khan MA, Assad A, Macha MA and Bhat MR (2024) Deep learning-based identification of esophageal cancer subtypes through analysis of high-resolution histopathology images. Front. Mol. Biosci. 11:1346242. doi: 10.3389/fmolb.2024.1346242

Received

29 November 2023

Accepted

23 February 2024

Published

19 March 2024

Volume

11 - 2024

Edited by

Xin Zhang, Jiangmen Central Hospital, China

Reviewed by

Nguyen Quoc Khanh Le, Taipei Medical University, Taiwan

Shigao Huang, Air Force Medical University, China

Updates

Copyright

© 2024 Aalam, Ahanger, Masoodi, Bhat, Akil, Khan, Assad, Macha and Bhat.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Muzafar Rasool Bhat, Muzafar.rasool@islamicuniversity.edu.in; Muzafar A. Macha, muzafar.macha@iust.ac.in, muzafar.aiiims@gmail.com

Disclaimer

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.