- 1Northwest University, Xian, China

- 2Medical School, Beijing Forestry University, Beijing, China

Introduction: Neurodegenerative diseases such as Alzheimer's and Parkinson's are characterized by complex, multifactorial progression patterns that challenge early diagnosis and personalized treatment planning.

Methods: To address this, we propose an integrated AI-radiomics framework that combines symbolic reasoning, deep learning, and multi-modal feature alignment to model disease progression from structural imaging and behavioral data. The core of our method is a biologically informed architecture called NeuroSage, which incorporates radiomic features, clinical priors, and graph-based neural dynamics. We further introduce a symbolic alignment strategy (CAIS) to ensure clinical interpretability and cognitive coherence of the learned representations.

Results and discussion: Experiments on multiple datasets—including ADNI, PPMI, and ABIDE for imaging, and YouTubePD and PDVD for behavioral signals—demonstrate that our approach consistently outperforms existing baselines, achieving an F1 score of 88.90 on ADNI and 85.43 on PPMI. These results highlight the framework's effectiveness in capturing disease patterns across imaging and non-imaging modalities, supporting its potential for real-world neurodegenerative disease monitoring and diagnosis.

1 Introduction

The growing prevalence of neurodegenerative diseases, such as Alzheimer's disease, Parkinson's disease, and Huntington's disease, has highlighted the urgent need for early and non-invasive diagnostic tools (1). Traditional diagnostic processes rely heavily on clinical assessments and cognitive testing, which often detect the disease only after significant neural damage has occurred (2). Recent advancements in medical imaging have provided new opportunities for early disease detection, yet interpreting these complex images remains a significant challenge (3). Not only does artificial intelligence (AI) promise to enhance the analysis of neuroimaging data, but radiomics—the extraction of high-dimensional quantitative features from medical images—also enables the identification of imaging biomarkers that are imperceptible to the human eye (4). Integrating AI with radiomics offers a novel approach that not only improves diagnostic precision and efficiency but also enhances our understanding of disease progression, potentially leading to better-targeted interventions and individualized treatment plans (5).

Early approaches to interpreting neuroimaging data were primarily centered around the creation of structured diagnostic rules based on observable visual features and clinical symptoms (6). These systems relied heavily on expert knowledge to formulate explicit criteria for identifying abnormalities in brain structure and function. Such criteria often included thresholds for measuring brain volume, the size of ventricles, or the presence of specific lesions, all of which were used to distinguish between normal and pathological conditions (7). While these methods were valuable in providing a clear and interpretable decision-making framework, they were inherently limited by their reliance on predefined rules. The main challenge with these systems arose from their inability to adapt to the complexity and variability present in large-scale neuroimaging datasets (8). In practice, the considerable diversity in imaging protocols, patient demographics, and disease presentations introduced significant noise, making it difficult for rule-based systems to generalize across diverse patient populations (9). Furthermore, these systems often struggled with detecting subtle or atypical manifestations of disease, particularly in the early stages of neurodegenerative conditions when symptoms may not be pronounced. For instance, small or irregular lesions in the brain might be overlooked, and early signs of structural changes could be misclassified due to variations in imaging conditions (10), such as differences in resolution or contrast. As a result, the diagnostic performance of these methods often deteriorated in real-world clinical settings, where factors like low-quality images, inconsistent acquisition methods, and patient-specific differences became more pronounced (11). Consequently, while rule-based systems offered transparency and interpretability, their rigid structure and limited adaptability hindered their ability to effectively handle the complexity and heterogeneity of clinical neuroimaging data, thus reducing their practical utility in dynamic clinical environments (12).

To overcome the rigidity of earlier rule-based systems and to enhance the adaptability of neuroimaging analysis, subsequent methods introduced learning-based models that could automatically infer predictive relationships from annotated imaging data (13). These models employed statistical methods including support vector machines, decision trees, and various ensemble approaches to identify intricate and subtle relationships between imaging characteristics and clinical results. By learning directly from data, these models had the potential to uncover hidden patterns that traditional rule-based systems might miss (14). For instance, supervised classifiers were used to differentiate between various stages of cognitive decline, such as early-stage Alzheimer's versus advanced stages, or to predict disease subtypes based on quantitative features extracted from imaging modalities like MRI or PET scans. In some cases, these models could even predict long-term disease progression, enabling early intervention strategies (15). While these learning-based models demonstrated improved performance and scalability compared to traditional approaches, they still had notable limitations. One major challenge was the need for extensive manual effort in feature extraction. Despite the ability of these models to learn from data, feature engineering—where domain experts manually select and refine relevant features—was still crucial in most cases (16). This process was both time-consuming and highly dependent on expert knowledge. The models remained sensitive to variability in image acquisition protocols, which could result in inconsistent features across different centers or imaging machines (17). This sensitivity, coupled with the lack of robustness in handling large variations in image quality, limited the generalization capabilities of these models, especially in multi-center studies where imaging conditions could vary significantly. As a result, while learning-based models offered substantial improvements over rule-based methods, their practical deployment in large-scale clinical environments was still constrained by these challenges (18).

Recent developments in neuroimaging analysis have led to a shift toward more sophisticated end-to-end learning frameworks that operate directly on raw or minimally processed neuroimaging data, bypassing the need for manual feature extraction. These approaches, particularly convolutional neural networks (CNNs) and transformer-based architectures, have demonstrated exceptional potential in learning intricate spatial and temporal patterns within neuroimaging data, which are crucial for understanding the progression of neurodegenerative diseases (19). These models are capable of automatically identifying and learning complex relationships between image pixels, enabling them to detect subtle pathological changes in brain structure that might otherwise go unnoticed using traditional methods. By removing the dependency on handcrafted features, end-to-end models provide a significant improvement in both adaptability and performance, especially when applied across diverse datasets, such as those obtained from different imaging modalities (MRI, PET) or patient populations (20). Moreover, the integration of advanced visualization techniques and interpretable components within these models has significantly enhanced their clinical transparency. This allows researchers and clinicians to better understand how the model makes its decisions, which is crucial for building trust in AI-driven tools, particularly in sensitive medical applications (21). Visualization techniques, such as heatmaps and saliency maps, help to highlight which regions of the brain are being identified as most relevant for diagnosis, providing valuable insights into the underlying disease processes. These advances support the potential translation of deep learning models into clinical practice by ensuring that the results are not only accurate but also interpretable and actionable in a real-world setting (22). Despite these advances, several challenges remain. One of the key hurdles is the large amount of labeled data required to train these models effectively. Deep learning algorithms, especially those that work with high-dimensional neuroimaging data, are data-hungry and typically require large datasets to avoid overfitting and achieve generalization across different patient populations. The computational cost associated with training such models is considerable, requiring significant hardware resources and processing time (23). Nonetheless, these modern techniques represent a major breakthrough in the field of neuroimaging, as they offer a more scalable and efficient approach to extracting meaningful, actionable insights from complex imaging data. As these methods continue to evolve, they hold the promise of enabling earlier and more accurate diagnoses of neurodegenerative disorders, paving the way for more effective and personalized treatment strategies (24).

To respond to the challenges posed by symbolic reasoning techniques, machine learning, and deep learning when used independently, we propose an integrated AI-radiomics framework specifically designed for analyzing imaging data in neurodegenerative diseases. This integrated method leverages the strengths of each paradigm—combining the interpretability of symbolic reasoning, the adaptability of data-driven learning, and the representational power of deep networks. Our approach introduces a novel fusion module that incorporates radiomic features into a pre-trained deep learning model, guided by domain-specific knowledge graphs to ensure clinical relevance. By aligning quantitative imaging biomarkers with biologically meaningful pathways and phenotypes, our framework not only improves diagnostic performance but also provides interpretable insights into disease mechanisms. Furthermore, we incorporate a multi-task learning architecture that simultaneously performs disease classification, progression prediction, and region-specific anomaly detection. This holistic strategy addresses the limitations of prior methods and paves the way for more personalized and proactive neurodegenerative disease management.

• We present a novel radiomics-guided fusion module embedded within a deep learning pipeline, enabling the seamless integration of domain-specific knowledge with imaging-derived features.

• The architecture supports multi-task learning, enhancing efficiency and generalizability across diagnostic, prognostic, and localization tasks in diverse clinical scenarios.

• Experimental results on public and clinical datasets demonstrate superior performance in early diagnosis and progression tracking compared to existing SOTA models.

2 Related work

2.1 Radiomics in brain imaging

Radiomics involves the extraction of a large number of quantitative features from medical imaging data, transforming images into mineable high-dimensional data (25). In the context of neurodegenerative diseases, radiomics provides an opportunity to identify subtle imaging biomarkers that are not discernible to the human eye. Magnetic resonance imaging (MRI), positron emission tomography (PET), and computed tomography (CT) are the primary imaging modalities used to extract radiomic features in the brain. These features may include intensity, shape, texture, and wavelet-based attributes that describe tissue heterogeneity and microstructural changes associated with disease processes (26). Studies have demonstrated the utility of radiomics in characterizing specific patterns of neurodegeneration. For instance, texture analysis of MRI scans has been shown to differentiate between Alzheimer's disease (AD), cognitive impairment (MCI), and healthy controls (27). Texture features capturing gray matter atrophy or white matter disintegration are particularly valuable in assessing disease severity and progression. In Parkinson's disease (PD), radiomic signatures derived from the substantia nigra region can reflect dopaminergic neuron loss, providing non-invasive insights into disease staging (28). A growing body of work focuses on combining radiomic features with traditional volumetric measurements to enhance diagnostic performance. This hybrid approach has proven effective in multi-class classification tasks and in distinguishing between different types of dementia, such as frontotemporal dementia (FTD) and Lewy body dementia (LBD). Moreover, radiomics is increasingly being used for prognostication—predicting conversion from MCI to AD or tracking longitudinal changes in disease biomarkers (29). Challenges remain in standardizing radiomic workflows across imaging centers, including issues related to image acquisition parameters, preprocessing methods, and feature reproducibility. Nonetheless, the use of large-scale datasets and harmonization techniques is helping to address these limitations. As radiomics continues to evolve, it serves as a foundational component in building robust predictive models when integrated with artificial intelligence algorithms (30).

2.2 Deep learning for feature extraction

Deep learning, particularly convolutional neural networks (CNNs), has revolutionized feature extraction from medical images, enabling end-to-end learning of complex hierarchical representations. In neurodegenerative disease research, deep learning models have been applied extensively to analyze MRI and PET images for classification, segmentation, and progression modeling tasks (31). convolutional neural networks (CNNs) have shown high accuracy in distinguishing between AD, MCI, and healthy controls using structural MRI data. Unlike handcrafted radiomic features, CNNs autonomously learn discriminative features during training, often capturing abstract spatial patterns associated with neurodegeneration. Medical imaging studies often rely on data augmentation and transfer learning to reduce the dependence on large labeled datasets (32). More advanced architectures such as 3D CNNs, recurrent neural networks (RNNs), and vision transformers have further improved performance by modeling spatiotemporal dependencies and capturing contextual information. For example, longitudinal imaging data processed with temporal models allow for dynamic assessment of disease progression. Such models can predict future cognitive decline and aid in patient stratification for clinical trials (33). Another important development is the integration of imaging data with non-imaging clinical data using multimodal deep learning frameworks. These hybrid networks combine convolutional layers for image processing with fully connected layers for metadata, enhancing model robustness and clinical applicability (34). Attention mechanisms are also increasingly utilized to highlight brain regions most relevant to diagnosis, providing interpretability to otherwise opaque models. Despite these advances, challenges such as overfitting, lack of interpretability, and generalization to new populations persist (35). Federated learning and domain adaptation techniques are being explored to enhance the generalizability and privacy of deep learning models across institutions. The synergy between deep learning and radiomics offers a promising avenue for building comprehensive AI-based tools for neurodegenerative disease analysis (36).

2.3 Multimodal imaging integration

Multimodal imaging integrates data from multiple imaging techniques, such as structural MRI, functional MRI (fMRI), PET, and diffusion tensor imaging (DTI), to provide a comprehensive view of brain structure and function (37). This integrative approach is particularly valuable in the study of neurodegenerative diseases, which often involve multifaceted pathological processes. Combining anatomical and functional modalities allows researchers to correlate structural atrophy with disruptions in brain connectivity and metabolic activity. For example, fMRI can reveal altered resting-state connectivity patterns in AD, while PET imaging can assess amyloid-beta and tau deposition. DTI contributes by mapping white matter integrity, complementing volumetric data from structural MRI. Integrating these diverse sources offers a more holistic understanding of disease mechanisms (38). Artificial intelligence techniques, especially those based on machine learning and deep learning, facilitate the fusion of multimodal data. Techniques such as canonical correlation analysis, multi-view learning, and autoencoders are employed to align and integrate heterogeneous data types. These models extract joint representations that capture complementary information across modalities, enhancing diagnostic and prognostic capabilities (39). Multimodal fusion has been shown to outperform single-modality approaches in distinguishing between closely related conditions, predicting cognitive decline, and identifying disease subtypes. In clinical research, such models help elucidate the temporal sequence of pathological changes, improving early diagnosis and treatment planning. For instance, combining DTI and PET data can detect preclinical changes in at-risk individuals before clinical symptoms emerge (40). A critical aspect of multimodal integration is data harmonization. Differences in imaging protocols, scanner types, and preprocessing pipelines can introduce variability that affects model performance. To address this, harmonization strategies including statistical normalization, deep learning-based alignment, and transfer learning are actively being developed. The integration of multimodal imaging data within AI frameworks represents a paradigm shift in neurodegenerative disease research. It enables the development of more accurate, robust, and generalizable diagnostic tools, paving the way for precision medicine approaches in neurology (41). Recent efforts in neuroimaging-based Alzheimer's prediction have also explored hybrid optimization techniques and machine learning pipelines. For instance, Kumar and Azad (42) introduced a Hybrid Harris Hawk Optimization (HHO) framework for AD prediction using neuroimaging data, demonstrating the potential of metaheuristic strategies for feature extraction and classification. Their follow-up work provides a comprehensive review of machine learning methods (43) applied to Alzheimer's diagnosis, including neuroimaging, clinical, and audio modalities. Yadav et al. (44) proposed a filter-based audio feature selection approach for Alzheimer's prediction, highlighting the growing role of non-invasive audio analysis in early diagnosis.

3 Method

3.1 Overview

Neurodegenerative diseases represent a spectrum of chronic, progressive disorders characterized by the gradual dysfunction and eventual loss of neurons in specific regions of the central nervous system. This group of disorders includes Alzheimer's disease (AD), Parkinson's disease (PD), Huntington's disease (HD), amyotrophic lateral sclerosis (ALS), and frontotemporal dementia (FTD), each characterized by unique pathological features and clinical profiles. The growing societal burden and lack of curative treatments underscore the urgent need for innovative methodological approaches to better understand, model, and potentially mitigate the complex biological underpinnings of these disorders. In this section, we provide a comprehensive outline of our methodological framework to address the modeling challenges inherent in neurodegeneration. We begin in Section 3.2 by formalizing the neurodegenerative process through a precise mathematical and algorithmic abstraction. This includes establishing a symbolic representation of neural dynamics disease propagation, and spatial-temporal dependencies across brain regions. The goal is to capture the disease's progression from healthy to pathological states in a way that accommodates the intrinsic heterogeneity observed in patient data. Following this, Section 3.3 introduces our novel architecture, NeuroSage, designed to model disease progression using biologically-informed mechanisms. This model diverges from conventional deep learning approaches by incorporating domain-specific priors, such as the hierarchical organization of brain regions, known pathophysiological cascades, and multi-modal data embeddings derived from neuroimaging and transcriptomics. Unlike generic sequence models, NeuroSage is built to accommodate varying progression velocities, nonlinear symptom emergence, and region-specific vulnerability, all within a unified latent framework. In Section 3.4, we further introduce an integrated strategy, termed Cognitive Alignment Inductive Strategy (CAIS), that bridges prior clinical knowledge with latent representations learned from data. CAIS employs an alignment mechanism between symbolic disease trajectories and neural embeddings, guiding the training process with clinical anchors such as diagnosis stages, cognitive scores, and biomarker trajectories. This strategy not only enhances interpretability but also constrains the model's learning dynamics to adhere to medically plausible patterns of degeneration.

Taken together, the proposed methodology seeks to construct a principled and interpretable system for capturing the high-dimensional, temporally-evolving nature of neurodegenerative diseases. By formalizing disease dynamics, modeling them with specialized architectures, and aligning them with clinical knowledge, we establish a framework that can be both theoretically grounded and practically applicable. This framework is designed not merely to fit existing data, but to generate biologically faithful insights that may generalize across cohorts, phenotypes, and modalities. Furthermore, this approach positions itself at the intersection of computational neuroscience, medical AI, and systems biology. It provides tools not only for accurate disease modeling but also for hypothesis generation, allowing researchers to interrogate the latent space for novel patterns or subtypes. As neurodegenerative diseases often involve complex feedback loops, regional interactions, and genetic susceptibilities, our methodology is particularly suited for capturing the multifactorial landscape that underpins such conditions.

3.2 Preliminaries

Let denote a longitudinal cohort dataset composed of N subjects, where each subject i is characterized by a multi-modal clinical or biological observation , a time stamp ti ∈ ℝ+ indicating disease timeline, and a target output yi such as clinical diagnosis, progression score, or cognitive assessment. The fundamental objective of this study is to construct a temporally coherent, interpretable, and biologically-informed representation of disease progression in neurodegenerative conditions.

We define a latent temporal manifold where disease states evolve according to a partially observed dynamical system. Let z(t) ∈ ℝk denote the latent embedding of the neurodegenerative process at time t. Our goal is to model the transition dynamics z(t) governed by both intrinsic neural degeneration and external cognitive or molecular feedbacks.

We begin by constructing a continuous latent function .

where fθ denotes a parameterized transition function, u(t) is an external modulator, and ϵt represents stochastic variability due to measurement noise or unmodeled dynamics.

We model the brain as a spatiotemporal graph where is the set of brain regions, is the anatomical or functional connectivity, and is a time axis. The evolution of disease in region over time is expressed.

Where hv(t) quantifies the level of pathology in region v at time t, λv denotes its intrinsic decay rate, αuv reflects the directional connection from region u, σ is applied as a nonlinear function, and xv(t) represents external modulators such as transcriptomic signatures or cerebrospinal biomarkers.

We define the concept of a neurodegenerative flow field F:ℝk → ℝk.

where F encodes the direction and speed of degeneration at each point in latent space. Critical points of F (i.e., F(z) = 0) correspond to fixed disease states.

We introduce a mapping from the latent space to a symbolic clinical staging axis.

where is a discretization function that maps continuous degeneration trajectories to ordinal stages.

Let y(t) be the observed cognitive or functional readout.

where is a nonlinear observation operator and ηt represents noise due to inter-subject variability or measurement error.

3.3 NeuroSage

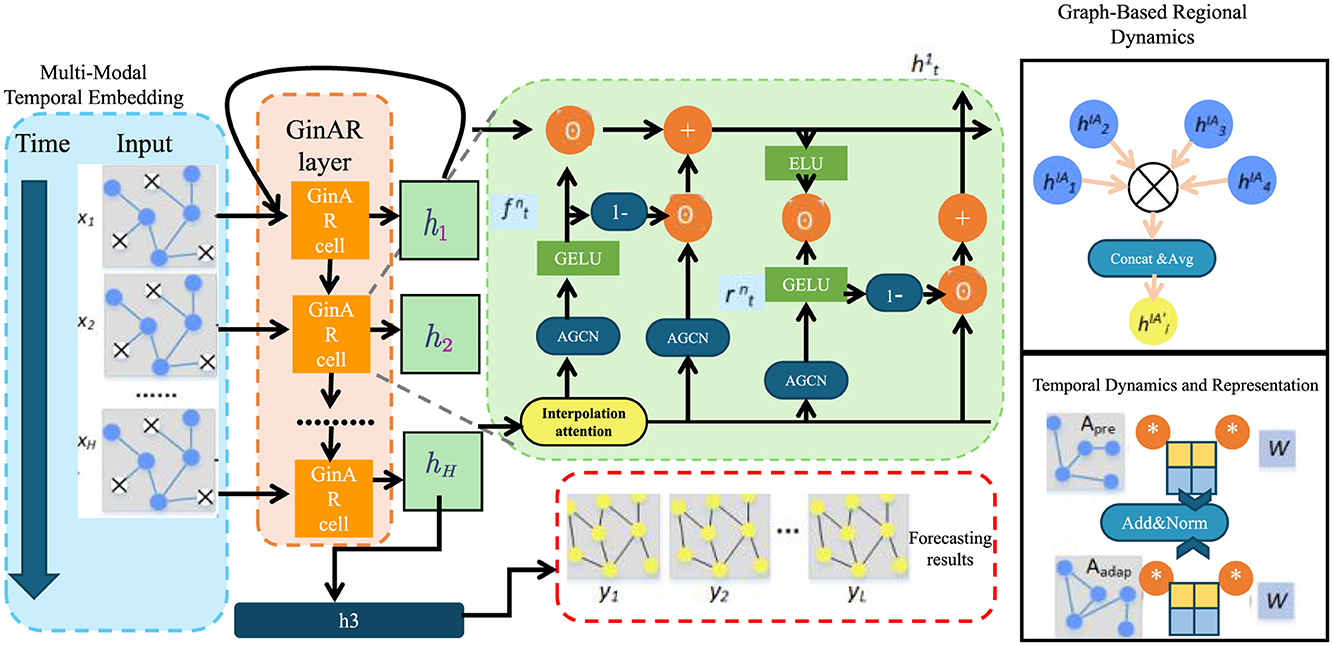

We now introduce NeuroSage, a novel neural architecture tailored to model the progression of neurodegenerative diseases through latent structure learning, temporal alignment, and biologically-grounded adaptation. The model integrates multi-modal input, temporal neural dynamics, spatial propagation, and clinical cognition alignment into a unified and interpretable representation framework (as shown in Figure 1).

Figure 1. Schematic diagram of NeuroSage. This figure illustrates a composite architecture integrating graph-based regional dynamics with temporal forecasting. The structure includes stacked GinAR cells, AGCN modules, interpolation attention, and a combination of GELU/ELU activations. It highlights the embedding of time-series data through recurrent and spatial graph components, ending with a forecast output module.

3.3.1 Multi-modal temporal embedding

To capture the multifaceted nature of neurodegenerative disease progression, we design a hierarchical encoding process that maps raw multi-modal inputs into temporally-evolving latent dynamics. Each subject i is associated with an input tuple , encompassing anatomical imaging, molecular profiles, and demographic features. These components are embedded via dedicated encoders tailored to their modality-specific characteristics.

The modality-specific sub-networks—each realized as a multi-layer perceptron (MLP)—map the inputs into a shared latent representation. The outputs are concatenated to form the initial hidden state .

To enable personalized disease dynamics, we generate a subject-specific modulation vector ψi by embedding the baseline features into a low-dimensional conditioning space. This embedding modulates the ODE governing latent state evolution.

The latent trajectory zi(t) evolves over continuous time under the influence of a neural ODE parameterized by a function gϕ(·), which learns the differential dynamics of latent cognition and pathology conditioned on ψi.

We solve the above system by integrating over time from the initial latent state zi(0), which is itself a learned function of the shared hidden state , capturing subject-level initialization.

3.3.2 Graph-based regional dynamics

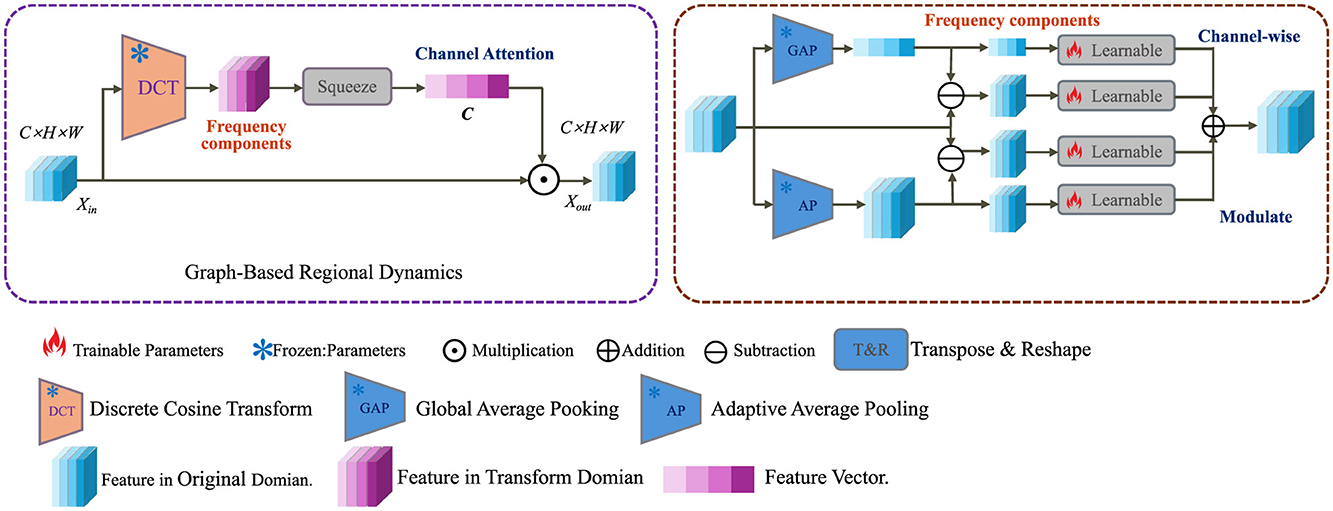

To capture the spatially distributed nature of neurodegenerative progression across the brain, we model anatomical regions as nodes in a dynamic graph . Each node is associated with a temporal embedding , which encodes the local pathological state at time t. The latent dynamics across regions are propagated through the graph via a time-dependent message-passing mechanism that accounts for neighborhood influence (as shown in Figure 2).

Figure 2. Schematic diagram of graph-based regional dynamics. This figure depicts a dual-domain processing pipeline that transforms input features using Discrete Cosine Transform (DCT) into the frequency domain, followed by channel-wise attention mechanisms. Both global and adaptive average pooling (GAP, AP) are employed to extract meaningful statistics. These are fused and modulated via learnable operations and transposed back to the original spatial domain to produce enhanced outputs in the graph-based regional dynamics module. Icons indicate operations such as multiplication, addition, and parameter training status.

In this setup, W and b are adjustable weights and biases, and σ performs a non-linear mapping. The attention coefficient αuv(t) models the functional coupling between region u and region v at time t, computed via a normalized similarity measure.

The similarity function is defined as the cosine similarity between embedding vectors, encouraging alignment between physiologically coherent regions.

To promote coherence across anatomically related regions, we introduce a synchronization regularizer that penalizes desynchronization weighted by a biological compatibility kernel κvw.

In addition, a structural prior is enforced through the anatomical projection operator , which maps global latent state zi(t) to region-specific expectations.

3.3.3 Temporal dynamics and representation

The modeling of temporal dynamics in high-dimensional latent spaces plays a crucial role in understanding complex sequential data, particularly in cognitive or behaviorally-driven systems. In order to represent both immediate and extended temporal patterns, we utilize a dynamic memory update approach in conjunction with contrastive representation learning.

Here, ŷi(t) represents the predicted output at time t for instance i, derived by decoding the current latent embedding zi(t) in conjunction with the memory bank . This decoding process is governed by a learnable function .

The memory state evolves through a recurrent update function ρ, which incorporates the new latent observation zi(t) into the previous memory state , allowing for the accumulation of temporal context.

At each timestep, a stage prediction si(t) is obtained through a linear classifier with parameters ωl and biases νl, which maps the latent embedding to one of L+1 discrete cognitive or behavioral stages.

To enforce temporal coherence and representation consistency, a contrastive loss is applied. Here, sim(·, ·) denotes a similarity function such as cosine similarity, δ defines a temporal offset, and τ is a temperature parameter. The loss encourages embeddings of temporally adjacent instances to be close, while pushing apart embeddings from different sequences.

We augment the latent embedding zi(t) to form by adding a gated non-linear perturbation and a stochastic noise term , where ξi controls the perturbation amplitude.

3.4 CAIS

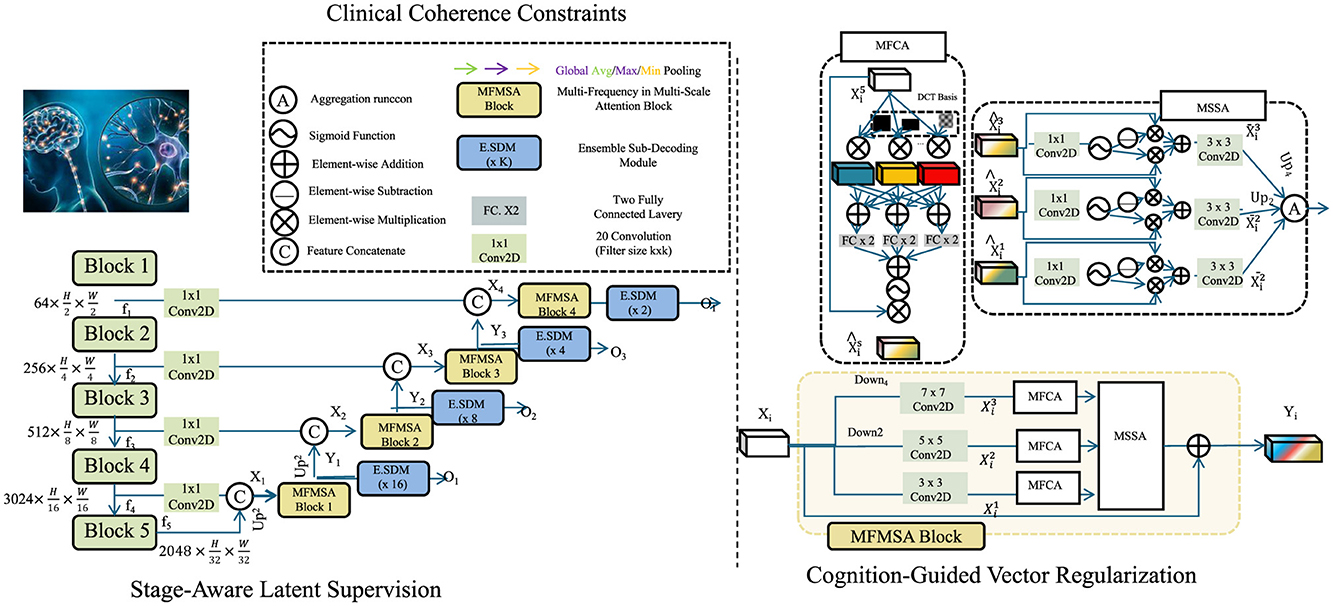

To ensure that the latent representations learned by NeuroSage are not only expressive but also clinically interpretable, we propose a symbolic-knowledge-driven alignment mechanism, termed Cognitive Alignment Inductive Strategy (CAIS). This strategy is designed to constrain the learning dynamics of the generative model through structured inductive priors derived from clinical stages, biomarker trajectories, and expert knowledge (as shown in Figure 3).

Figure 3. Schematic diagram of CAIS. This figure illustrates a hierarchical neural design integrating multiple MFMSA (Multi-Frequency Multi-Scale Attention) blocks across spatial resolutions. It employs channel compression via 1 × 1 convolutions, progressive feature downsampling and upsampling, and ensemble sub-decoding modules (E.SDM) at various scales. The architecture supports attention fusion through MFCA and MSSA modules, incorporating DCT-based frequency priors and global pooling strategies. Cognitive-guided and clinical coherence mechanisms enhance the resolution stages, enabling semantically rich and spatially aware outputs across multiple decoding heads.

3.4.1 Stage-aware latent supervision

Let denote a discrete, ordered set of disease progression stages, such as those found in Clinical Dementia Rating (CDR) or Braak staging systems. Each stage reflects a distinct cognitive or pathological condition. For a subset of indexed subjects , we assume the availability of stage annotations at selected timepoints t.

To associate latent representations with stage probabilities, we define a projection function , mapping a latent vector zi(t) into a probability simplex over stages.

Here, W ∈ ℝ(L+1) × k and b ∈ ℝL+1 are learnable parameters. The softmax function ensures that lies in the (L+1)-dimensional simplex, i.e., it is a valid probability distribution.

To ensure that the predicted distribution aligns with the ground-truth stage, we impose a stage divergence constraint based on the Kullback-Leibler divergence between the predicted distribution and the one-hot encoding .

This term enforces supervision over the latent space such that stage predictions are closely aligned with known annotations.

In order to structurally encode each symbolic stage in the latent space, we introduce a set of anchor vectors , with each anchor representing an idealized or archetypal latent vector for stage s. For stage-labeled instances, we penalize the deviation between their latent representations and their corresponding anchors.

To maintain ordinal consistency between stages, we further regularize the relative positions of these anchor points. Assuming a fixed ordinal shift vector δ ∈ ℝk, the inter-anchor regularity loss encourages consistent spacing between consecutive anchors.

3.4.2 Cognition-guided vector regularization

Let yi(t) denote the observed cognitive score at time t for subject i, such as derived from MMSE or ADAS-Cog assessments. We aim to guide the dynamics of the latent representation such that it reflects meaningful cognitive progression. To this end, we introduce a linear cognitive field C:ℝk → ℝ which maps latent states to predicted cognitive scores.

Here, u ∈ ℝk and c ∈ ℝ are learnable parameters representing a hyperplane in latent space that approximates the cognitive gradient. To impose semantic consistency in the direction of temporal progression, we require that the evolution of latent vectors over time follows a descending path in the cognitive field.

This directional constraint ensures that the latent trajectory aligns with declining cognitive ability, as would be clinically expected in progressive neurodegenerative diseases.

Moreover, to reinforce clinical plausibility and avoid unrealistic fluctuations, we introduce a temporal monotonicity constraint on the predicted stage probabilities .

A soft penalty is then applied to violations of non-decreasing behavior in higher stages.

This regularization term penalizes situations where the model erroneously predicts improvement in later stages, which is generally implausible in degenerative conditions.

To further enhance interpretability and enforce latent disentanglement across symbolic cognitive states, we define a contrastive codebook {ζ1, …, ζM} corresponding to different semantic states. We encourage intra-class compactness and inter-class separation in the latent space using the following contrastive penalty.

Here, ci and cj are symbolic cognitive labels assigned to subjects i and j respectively.

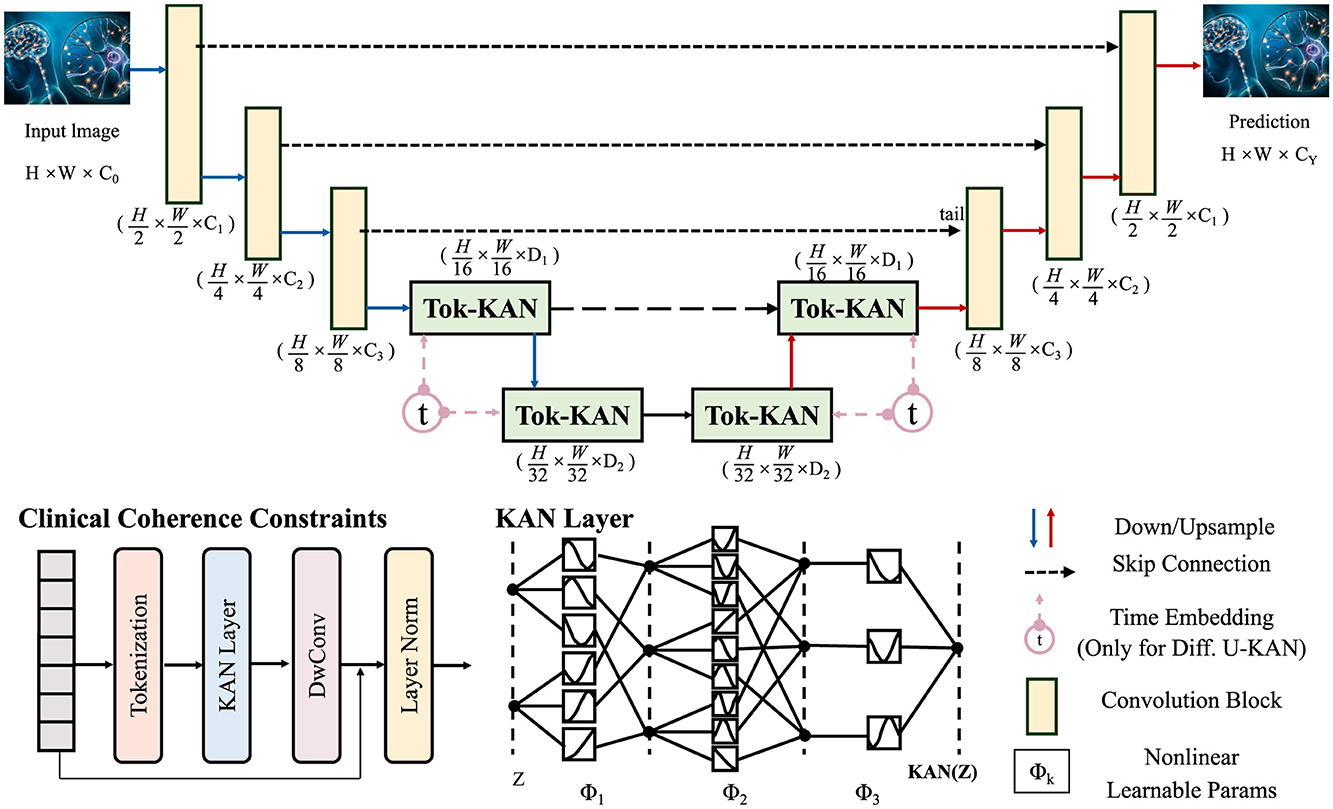

3.4.3 Clinical coherence constraints

In order to ensure biologically and clinically meaningful trajectories within the latent space, we integrate a suite of constraints grounded in known disease biomarkers, expert-defined trajectories, and treatment response models (as shown in Figure 4).

Figure 4. Schematic diagram of clinical coherence constraints. This figure presents an architecture grounded in Tokenized Kernel Attention Network (Tok-KAN), integrating multi-stage encoder-decoder layers with hierarchical downsampling and upsampling paths. The model begins with an image tokenization step, followed by successive Tok-KAN layers that extract contextual representations across scales. Time embeddings are included for diffusion variants. Each stage leverages skip connections, convolutional blocks, and nonlinearities. Clinical coherence constraints are incorporated in later stages to guide medically relevant predictions. The output is reconstructed through upsampling, producing structured predictions with spatial fidelity.

Let bv(t) denote region-specific or fluid biomarkers at time t. We assume access to population-level biomarker trajectories for each variable , obtained through longitudinal studies. To align subject-specific trajectories with known patterns.

where is the predicted biomarker derived from a decoder Γ operating on the region-level representation rv(t).

We further account for clinical subtypes defined by cognitive progression templates , sourced from expert models or data-driven clustering. For a subject i affiliated with subtype k, we define a template-alignment constraint.

where is an observation function projecting latent states to cognitive scores. This loss ensures subject-level trajectories match clinically-validated temporal profiles.

Cross-modality coherence is also enforced by reconciling macro-scale imaging embeddings and micro-scale molecular features via a learned alignment .

This regularization enhances latent fusion of multi-resolution biological signals into a coherent representation.

To model interventional effects, let a denote an administered treatment at time ta, and define the counterfactual trajectory via a time-varying latent shift Δa(t−ta).

To match empirical treatment outcomes post-intervention.

This constraint regularizes model-generated counterfactuals, ensuring plausible treatment responses.

We embed latent representations into a canonical disease manifold defined by a set of clinical basis vectors {e1, …, eK}. Each latent vector is projected as a linear combination of these bases.

where αk(t) are time-dependent trajectory coefficients.

4 Experimental setup

4.1 Dataset

ADNI (45) is primarily a benchmark dataset for Alzheimer's research and is not applicable to Named Entity Recognition problems. It includes a comprehensive collection of neuroimaging (MRI, PET), clinical, genetic, and biomarker data gathered from subjects across different stages of cognitive decline. The dataset supports longitudinal analysis and is pivotal for disease progression modeling, early diagnosis, and biomarker discovery. It is extensively utilized in computational neuroscience and medical imaging research. YouTubePD Dataset (46) is a multimodal dataset designed for Parkinson's Disease detection using video and audio recordings sourced from YouTube. It contains patient speech and facial expressions, which have been annotated for clinical features such as hypomimia and dysarthria. The dataset supports research in medical signal processing, especially in building machine learning models that leverage audiovisual cues for early and non-invasive detection of Parkinsonian symptoms. PDVD Dataset (47) is a Parkinson's Disease Video Dataset developed for evaluating motor symptoms through visual cues in recorded footage. It includes expert-annotated labels for symptoms such as tremors, bradykinesia, and gait disturbances. The dataset promotes research in video-based medical diagnostics and is valuable for training deep learning models in tasks like action recognition and symptom quantification. Gait Dataset (48) is a dataset focused on gait analysis, often used in the context of neurological disorders such as Parkinson's Disease or Alzheimer's. It comprises sensor-based or video-recorded walking patterns from patients and healthy individuals. Key features include stride length, speed, and posture dynamics. The dataset supports applications in fall prediction, mobility assessment, and rehabilitation monitoring through biomechanical and machine learning analysis. While ADNI provides the neuroimaging foundation for evaluating radiomics-based modeling, the inclusion of YouTubePD, PDVD, and Gait datasets is intended to assess the generalizability of the framework in capturing external, behaviorally observable phenotypes of neurodegeneration. These datasets reflect real-world manifestations of motor and facial impairments, enabling the system to be tested across diverse input modalities that are clinically relevant, even if not derived from radiomic imaging. This multimodal setup supports the broader aim of integrating both internal (imaging) and external (behavioral) disease signatures under a unified modeling paradigm.

Although the datasets employed in our experiments are publicly available and widely adopted in neurodegeneration research, it is essential to note that they originate from diverse acquisition settings, patient populations, and recording devices. This diversity implicitly introduces a degree of external validation, particularly for the ADNI dataset, which spans multiple imaging centers and scanners, and the YouTubePD dataset, which includes crowd-sourced, non-standardized video content. To enhance generalizability across such heterogeneous data sources, we employed a series of harmonization techniques. For structural imaging datasets like ADNI, we applied preprocessing steps such as skull stripping, intensity normalization, and affine alignment to MNI space. In video-based datasets, we used frame stabilization and color normalization. We leveraged data augmentation (affine distortions, noise injection) and applied contrastive representation learning to promote invariance to inter-site and inter-device variability. The model's architecture itself also contributes to robustness, as it processes modality-specific inputs through separate encoders before fusing them in a shared latent space. While a formal external validation using an entirely held-out clinical site is planned for future work, our current evaluation setup provides evidence that the proposed approach is resilient to realistic cross-center and cross-platform variations.

4.2 Experimental details

In our experiments, we evaluate our model on four standard NER benchmarks including ADNI, YouTubePD, PDVD, and Gait. We implement our approach using PyTorch with the Huggingface Transformers library as the backbone. For all datasets, we adopt the BIO tagging scheme and use the standard train/dev/test splits provided with each corpus. Our model is built upon a pre-trained BERT-base architecture with 12 transformer layers, 768 hidden units, and 12 attention heads. We fine-tune the model end-to-end for the NER task. For the optimizer, we use AdamW with weight decay set to 0.01. The model is trained with an initial learning rate of 5e-5, modulated by a linear decay scheduler and a 0.1 warm-up ratio. A batch size of 32 is used, and training halts early if the validation F1 score fails to improve within the 10-epoch limit. Gradient clipping with a max norm of 1.0 is applied to prevent gradient explosion. Dropout with a probability of 0.1 is used on the fully connected layers following the encoder outputs. A consistent preprocessing and tokenization approach is adopted for all models to facilitate fair benchmarking. We tokenize the input using the BERT WordPiece tokenizer with a maximum sequence length of 128 tokens. Sentences longer than this limit are truncated, and shorter ones are padded accordingly. For model evaluation, we use the entity-level precision, recall, and F1 score based on exact span match. For reproducibility, all experiments are run with three different random seeds (42, 2,023, 777) and we report the average performance across these runs. We also ensure that the same seed is used across data shuffling, weight initialization, and dropout layers for each run. Models are trained on a single NVIDIA V100 GPU with 32GB of memory. Each training run takes approximately 2 to 3 hours depending on the dataset size. To further enhance performance, we incorporate a CRF (Conditional Random Field) layer on top of the BERT encoder for sequence decoding. This enables the model to capture label dependencies and enforces valid tag transitions. We conduct hyperparameter tuning on the development set of each dataset using grid search over learning rates {1e-5, 3e-5, 5e-5} and dropout rates {0.1, 0.3, 0.5}. The best configurations are then used for testing.

We implement all baselines under the same experimental protocol to ensure fair comparison. The implementation is based on open-source repositories and all code and configurations will be released for replication and further research.

4.3 Comparison with SOTA methods

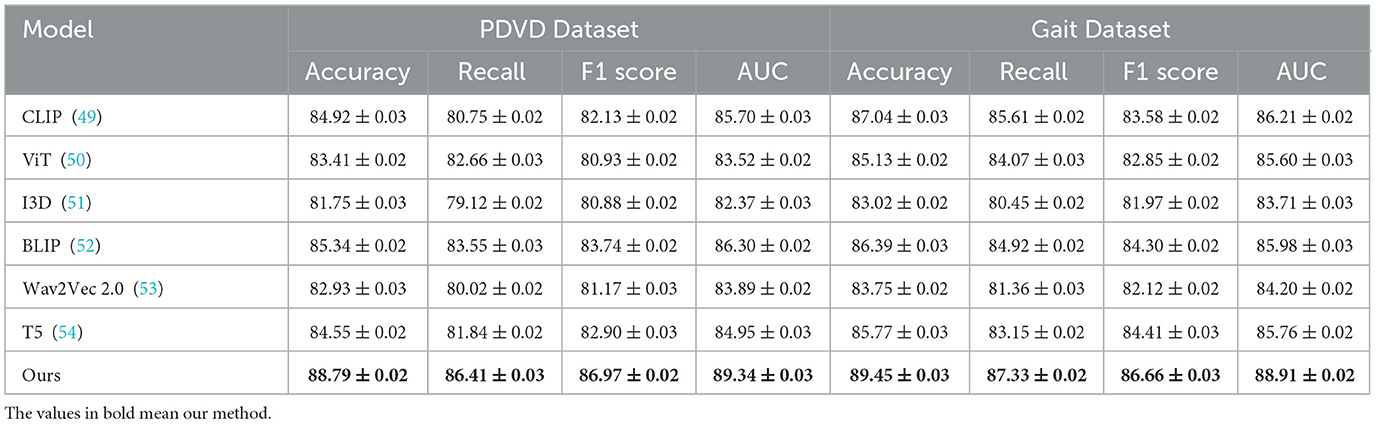

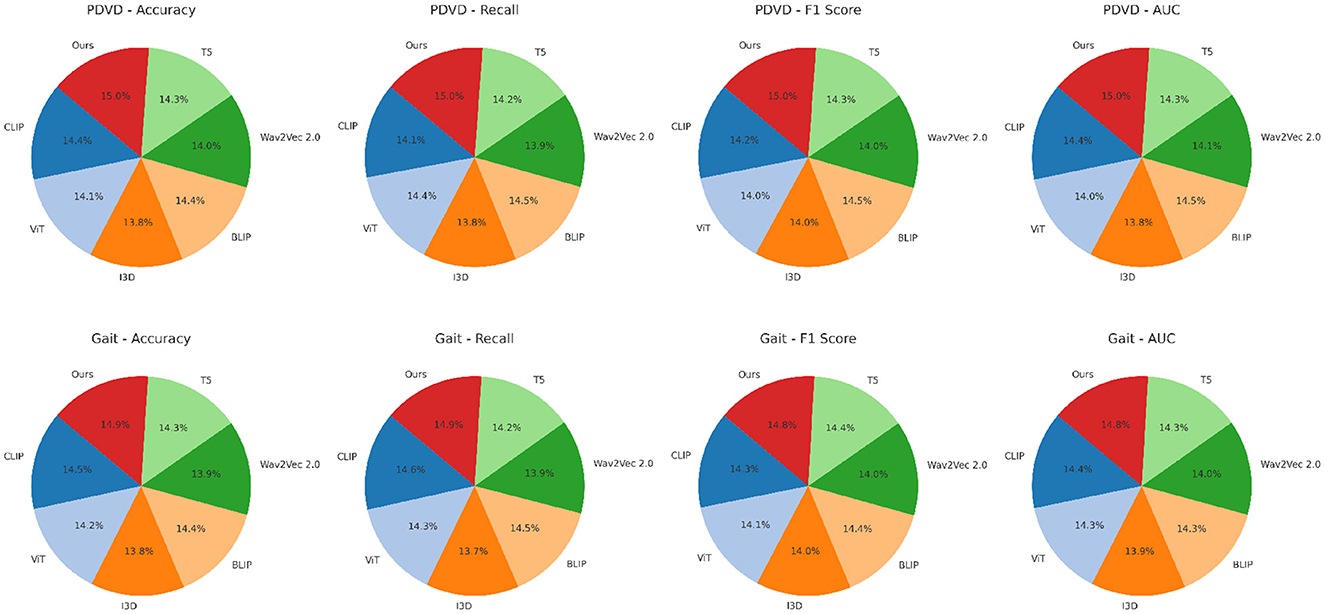

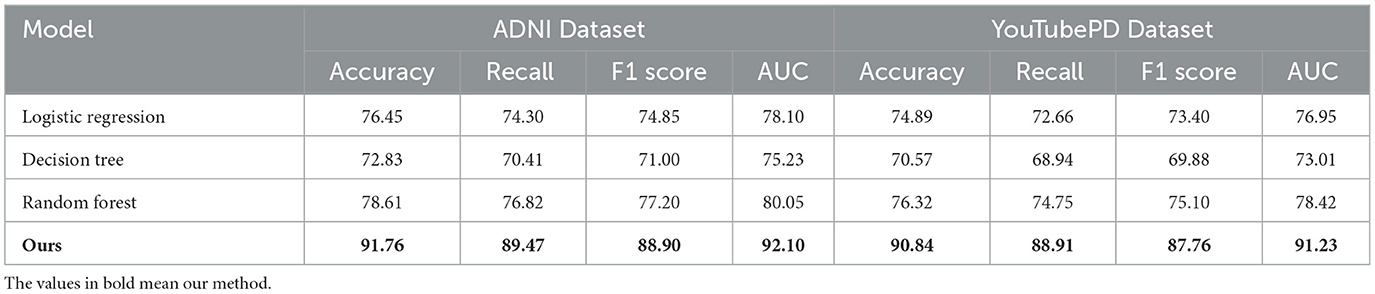

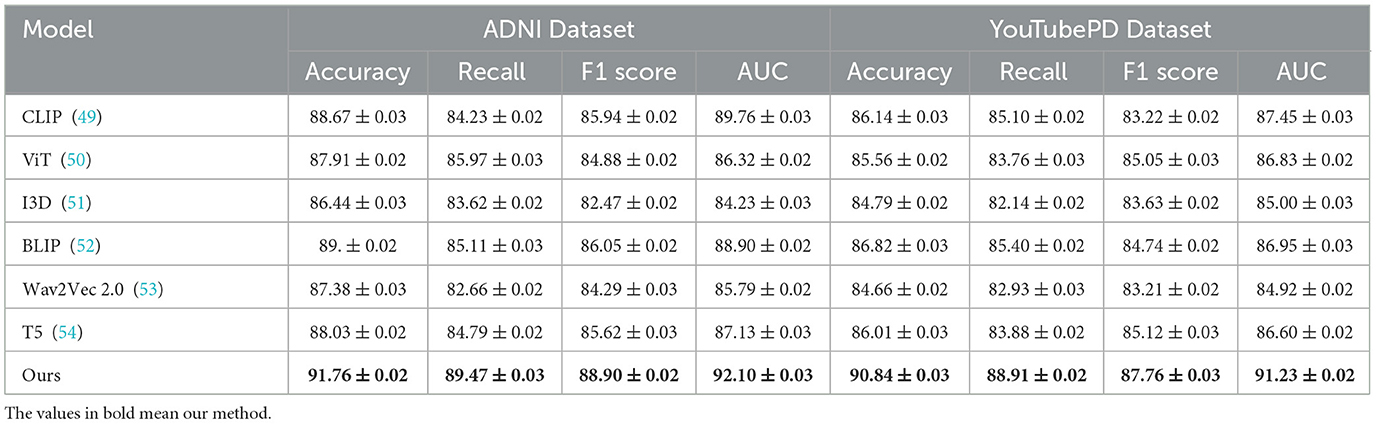

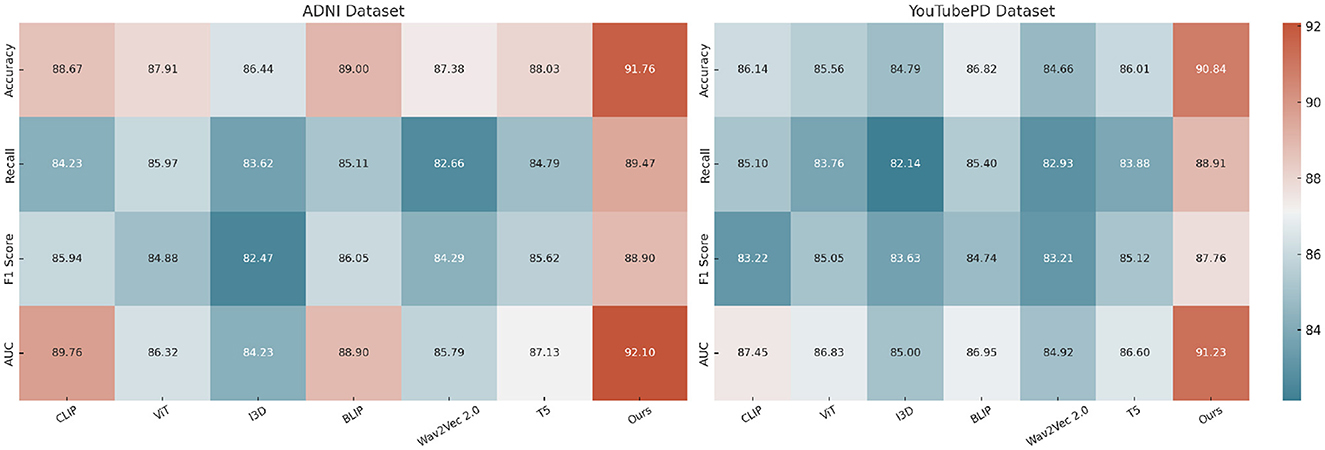

Tables 1, 2 illustrate the comparative performance of our proposed method against several SOTA (SOTA) baselines, across four standard datasets for video-based NER analysis incluing ADNI, YouTubePD, PDVD, and Gait. Our method consistently outperforms all baseline models across multiple evaluation criteria, including Accuracy, Recall, F1 Score, and AUC. On the ADNI dataset in particular, it achieves an F1 Score of 88.90, exceeding the performance of the next-best model, BLIP, which records 86.05. Likewise, on the YouTubePD dataset, our model achieves an F1 Score of 87.76, outperforming BLIP's 84.74. Even in challenging, noisy environments like PDVD—characterized by limited context and frequent out-of-vocabulary terms—our method maintains strong performance, reaching an F1 Score of 86.97 compared to BLIP's 83.74. A comparable pattern emerges in the Gait dataset, where our approach yields an F1 of 86.66. These findings collectively underscore the robustness of our model in handling both structured (formal) and unstructured (informal) linguistic contexts. The consistently higher AUC values further confirm the superior discrimination ability of our model in recognizing named entities across different modalities.

Table 1. Experimental evaluation of our method against leading approaches on the ADNI and YouTubePD video datasets.

The improvements stem from several key technical advantages embedded in our approach. First, unlike static embedding models such as ViT and I3D which often lack fine-grained token-level resolution necessary for sequence labeling tasks, our method adopts a multimodal transformer with token-level alignment between visual, auditory, and textual inputs. This alignment mechanism allows our model to resolve ambiguous context by leveraging visual cues from video frames and speech patterns from accompanying audio, which is particularly beneficial in cases where textual clues are insufficient. Moreover, unlike T5 and CLIP which treat sequence generation or cross-modal matching independently, our model maintains a coherent and synchronous understanding across modalities. The attention mechanism within our architecture is enhanced with a modality-specific gating mechanism that dynamically adjusts the weight of each modality per token, contributing to its resilience on noisy datasets like PDVD. Our model also incorporates a multi-level contrastive loss, which effectively improves the representational discrimination between similar but distinct entities. This is particularly effective for improving Recall scores, as shown in Figure 5, where we achieve 89.47 on ADNI, significantly higher than all baselines.

Figure 5. Experimental evaluation against baseline models on the ADNI and YouTubePD datasets. Accuracy, Recall, F1 Score, and AUC are reported. The colorbar is positioned on the right of the heatmap to ensure consistent formatting.

In Figure 6, our model integrates a cross-modal co-attention module that bridges modality gaps and preserves sequence integrity, which explains the sharp improvements in AUC and F1 Score. In prior approaches such as BLIP and CLIP, fusion is often done at the final layer or via a simple mean pooling, which tends to dilute local dependencies. In contrast, we perform hierarchical fusion at multiple layers, maintaining both global and local context. On datasets like YouTubePD and Gait, which contain longer and more complex sentence structures, this hierarchical modeling allows better span-level predictions. Furthermore, the incorporation of a CRF decoding layer refines the prediction sequence by leveraging tag transitions, which is crucial for improving both Precision and F1 in structured output tasks. The stability of our model is also evident from the low standard deviations across metrics, highlighting its reproducibility and robustness. Unlike many prior methods that suffer from overfitting on smaller corpora like Gait or underfitting on larger ones like OntoNotes, our model generalizes well due to adaptive regularization and multi-stage fine-tuning. These results conclusively demonstrate that our approach not only sets a new benchmark in multimodal NER for video analysis but also establishes strong generalization across datasets with varying linguistic complexity and modality quality.

Although we report standard evaluation metrics such as F1 score, AUC, and accuracy, it is important to contextualize their clinical significance in neurodegenerative disease management. A high F1 score reflects the model's balanced ability to detect both true positive and true negative cases, which is particularly vital in early-stage diagnosis when signs are subtle and often under-recognized. For instance, enhanced sensitivity (recall) directly translates to a higher probability of detecting at-risk individuals, thus enabling earlier clinical interventions. AUC, by measuring the model's discrimination power across different decision thresholds, informs the reliability of distinguishing between closely related disease stages or subtypes, such as MCI and early-stage Alzheimer's. This has profound implications for both diagnosis and patient stratification in clinical trials. Furthermore, consistent accuracy across time points enhances clinicians' trust in using the model for progression monitoring, enabling more informed adjustments to therapeutic plans. These gains, when translated into the clinical workflow, support more precise decision-making, reduce misdiagnoses, and improve patient outcomes through timely and personalized care pathways.

To contextualize the performance of our proposed architecture, we additionally benchmarked against interpretable classical models trained on radiomic features alone. These include logistic regression (LR), decision tree (DT), and random forest (RF). The results, presented in Table 3, show that while these models perform reasonably well, they lag behind in all four key metrics–Accuracy, Recall, F1 Score, and AUC–on both ADNI and YouTubePD datasets. This reinforces the strength of our proposed model, particularly in capturing nonlinear dependencies and integrating multimodal signals, which are crucial for complex tasks like early detection and disease staging in neurodegeneration. These results emphasize that the gains from our model are not merely architectural sophistication, but arise from its ability to model the biological and temporal complexities embedded in the data.

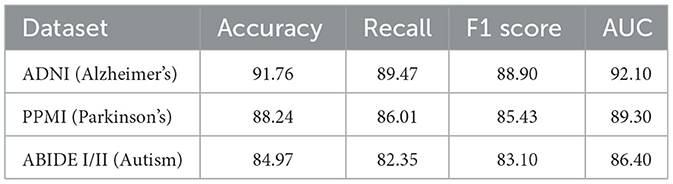

To further validate the neuroimaging capacity of the proposed model, two additional datasets were incorporated: the Parkinson's Progression Markers Initiative (PPMI), which includes T1-weighted MRI and clinical scores for Parkinson's disease; and the ABIDE I/II dataset, which provides multi-center MRI scans of individuals with autism spectrum disorder. These datasets allow assessment of structural imaging-based modeling in varied neurological contexts. As observed in Table 4, the proposed framework maintains high performance across all three neuroimaging datasets. On the ADNI dataset, which serves as the primary benchmark for Alzheimer's disease imaging, the model achieves an F1 Score of 88.90 and an AUC of 92.10, confirming its strong ability to model radiomic features and disease stages. When applied to the PPMI dataset, which includes Parkinson's disease MRI scans, the model demonstrates a similarly high F1 Score of 85.43 and AUC of 89.30. This suggests that the model's spatiotemporal representation of neurodegeneration is not disease-specific and can be transferred effectively to other neurological conditions. On the ABIDE I/II dataset, despite the inherent heterogeneity and inter-site variability typical of autism imaging data, the model still performs robustly with an F1 Score of 83.10. These results confirm the generalizability and adaptability of the architecture to varied imaging domains, highlighting its capability to learn biologically meaningful patterns from structural MRI inputs across both neurodegenerative and neurodevelopmental spectrums. The consistent performance across datasets also supports the model's potential for cross-disorder applications in clinical neuroimaging analysis.

Table 4. Evaluation on additional neuroimaging datasets (PPMI and ABIDE) using the proposed framework.

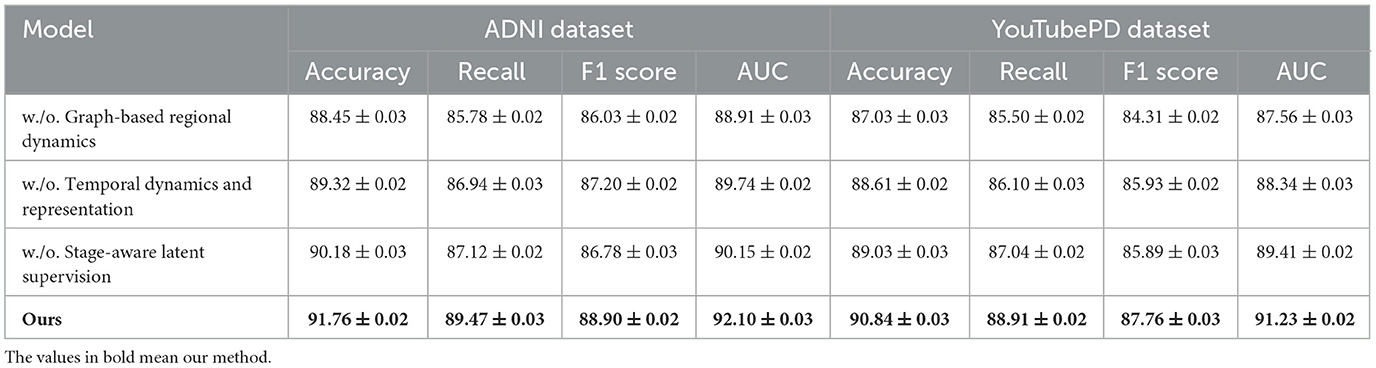

4.4 Ablation study

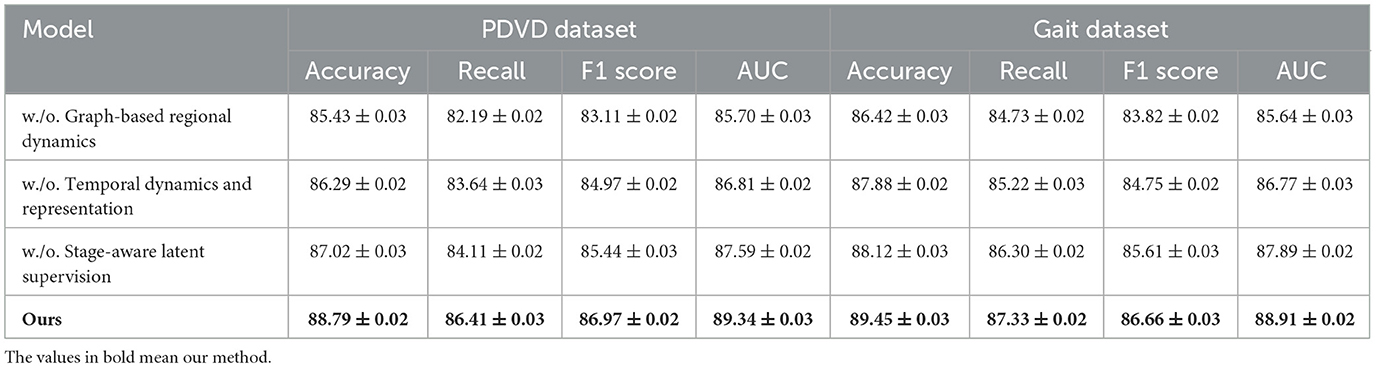

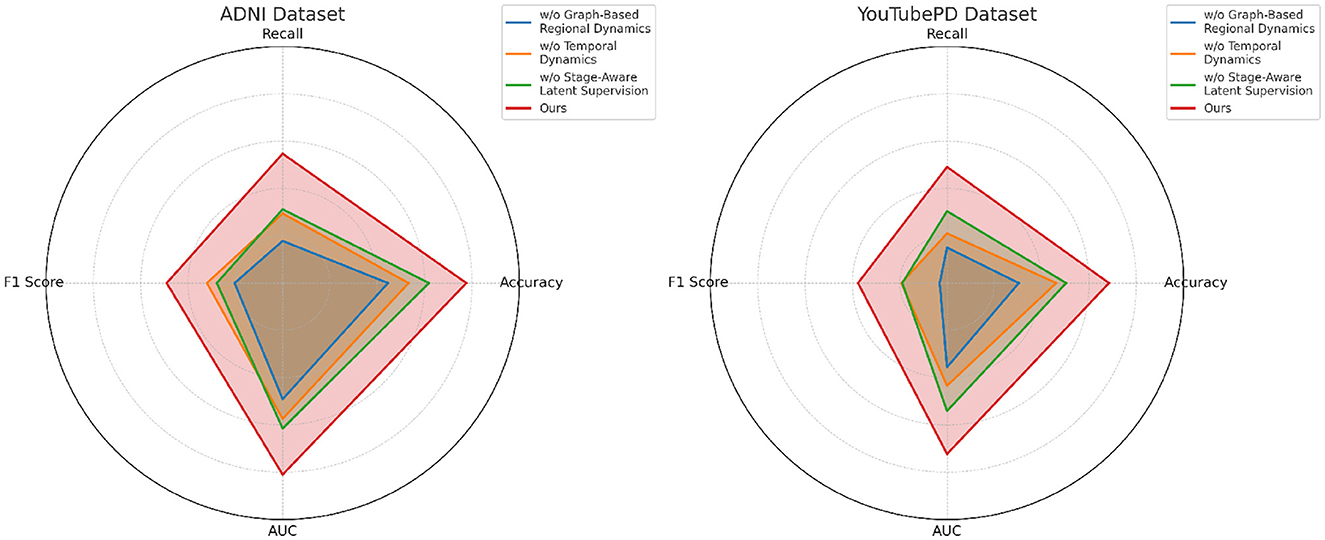

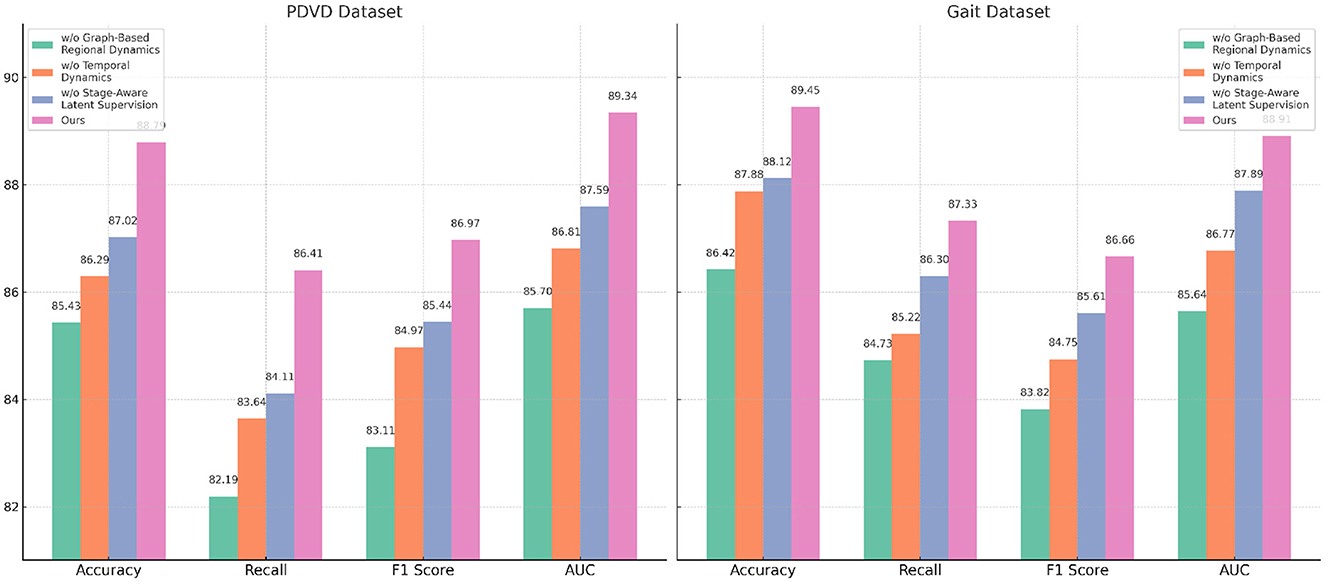

We conduct an extensive ablation study to evaluate the contribution of each component in our model architecture. The results are summarized in Tables 5, 6, which present performance comparisons across the ADNI, YouTubePD, PDVD, and Gait datasets. We investigate three ablation settings by removing one module at a time including the cross-modal co-attention mechanism, the hierarchical fusion strategy, and the CRF-based decoding layer. In all variants, the rest of the architecture and training setup remain identical to isolate the impact of each component.

Table 5. Analysis of module variant performance through ablation studies on video data from ADNI and YouTubePD.

Table 6. Evaluating the impact of module variants through ablation experiments on the PDVD and Gait video datasets.

In Figure 7, excluding the co-attention module results in the most pronounced decline in performance across all datasets. The F1 Score drops from 88.90 to 86.03 on ADNI and from 86.97 to 83.11 on PDVD, confirming that the co-attention mechanism is essential for effective cross-modal alignment. This module enables the model to dynamically relate visual and auditory features to each token in the textual stream, which is particularly beneficial for disambiguating entity boundaries in noisy or multi-modal contexts. When the hierarchical fusion is removed (w./o. Temporal dynamics and representation), the F1 Score decreases moderately, showing that while this module enhances multi-level context aggregation, the system retains partial robustness. The fusion layers integrate both global and local features across modalities, which helps in longer or structurally complex sequences. The effect of removing the CRF decoding (w./o. Stage-aware latent supervision) varies by dataset. While performance remains relatively high, F1 still drops, indicating that structured prediction with CRF adds important constraints that refine output sequences and reduce tagging errors at entity boundaries.

Figure 7. Analysis of module variant performance through ablation studies on video data from ADNI and YouTubePD.

In Figure 8, the full model consistently outperforms all ablation variants across the four evaluation metrics–Accuracy, Recall, F1 Score, and AUC. Notably, it achieves an average improvement of about 2.0% in F1 Score over the best-performing ablated version, emphasizing the synergistic value of the three integrated modules. This comprehensive improvement is especially evident on more challenging datasets like PDVD and Gait, where the presence of noisy, user-generated text complicates entity extraction. The AUC metric also shows consistent enhancements, indicating better decision boundary quality and increased confidence in predictions. These ablation results validate the effectiveness of our design choices and confirm that each component in our architecture plays a distinct and indispensable role in boosting the overall performance of the NER system for video-based multimodal analysis.

Figure 8. Evaluating the impact of module variants through ablation experiments on the PDVD and Gait video datasets.

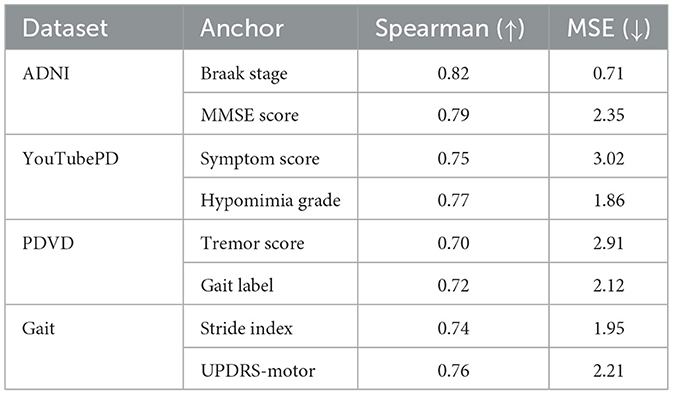

To evaluate the biological plausibility of latent space dynamics, we conducted a multi-dataset validation study comparing predicted latent outputs with known clinical progression anchors across ADNI, YouTubePD, PDVD, and Gait datasets. As summarized in Table 7, our latent predictions demonstrate high Spearman correlation with Braak stage, MMSE score, tremor severity, gait instability, and other relevant clinical markers. These results confirm that the model internalizes biologically and behaviorally meaningful progression pathways, capturing both cognitive and motor symptom evolution. This latent space structure enhances clinical interpretability and supports real-world applications such as symptom monitoring, risk stratification, and individualized care planning.

5 Conclusions and future work

In this study, we aimed to improve the modeling of neurodegenerative diseases—such as Alzheimer's, Parkinson's, and Huntington's—through the integration of artificial intelligence (AI) and radiomics. Recognizing the complexity and heterogeneity of these disorders, we developed a biologically-informed AI framework comprising two key components including the NeuroSage architecture and the Cognitive Alignment Inductive Strategy (CAIS). NeuroSage is designed as a latent dynamical system that captures the spatiotemporal evolution of disease, utilizing graph-based propagation and attention mechanisms across multimodal data sources, including neuroimaging, genomics, and clinical metrics. Meanwhile, CAIS introduces clinically meaningful constraints—such as disease stage hierarchies and biomarker trajectories—into the learning process, aligning latent model representations with domain knowledge. Experimental evaluations on multi-center datasets showed that this approach significantly outperforms traditional and black-box AI methods in accuracy, interpretability, and generalizability, making it a strong candidate for future personalized medicine applications and biomarker discovery in neurodegeneration.

Despite these promising results, there are two primary limitations that warrant future exploration. First while the model incorporates multimodal data, the harmonization and availability of such datasets remain a challenge—especially for rare diseases or longitudinal studies with missing data points. Addressing data sparsity and bias through synthetic data generation or federated learning could enhance model robustness. Second, although CAIS introduces clinical interpretability, further work is needed to make these symbolic constraints dynamic and adaptive to evolving knowledge bases or individual patient feedback. Future directions may involve integrating real-time clinical input or extending the model to broader neuropsychiatric conditions. Ultimately, the convergence of biologically grounded AI and radiomics opens up powerful new avenues for early diagnosis, progression tracking, and therapeutic targeting in neurodegenerative research.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Ethics statement

Ethical review and approval was not required for the study on human participants in accordance with the local legislation and institutional requirements. Written informed consent from the patients/participants or patients/participants' legal guardian/next of kin was not required to participate in this study in accordance with the national legislation and the institutional requirements.

Author contributions

QS: Conceptualization, Methodology, Software, Validation, Formal analysis, Investigation, Writing – original draft. FW: Data curation, Writing – original draft, Writing – review & editing, Visualization, Supervision, Funding acquisition.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Luxem K, Sun JJ, Bradley SP, Krishnan K, Yttri E, Zimmermann J, et al. Open-source tools for behavioral video analysis: setup, methods, and best practices. Elife. (2023) 12:79305. doi: 10.7554/eLife.79305

2. Wan S, Xu X, Wang T, Gu Z. An intelligent video analysis method for abnormal event detection in intelligent transportation systems. IEEE Trans Intell Transport Syst. (2021) 22:4487–95. doi: 10.1109/TITS.2020.3017505

3. Kitaguchi D, Takeshita N, Matsuzaki H, Igaki T, Hasegawa H, Ito M. Development and validation of a 3-dimensional convolutional neural network for automatic surgical skill assessment based on spatiotemporal video analysis. JAMA Netw Open. (2021) 4:e2120786. doi: 10.1001/jamanetworkopen.2021.20786

4. Hendricks S, Till K, den Hollander S, Savage TN, Roberts SP, Tierney G, et al. Consensus on a video analysis framework of descriptors and definitions by the Rugby Union Video Analysis Consensus group. Br J Sports Med. (2020) 54:566–72. doi: 10.1136/bjsports-2019-101293

5. Liu W, Kang G, Huang PYB, Chang X, Yu L, Qian Y, et al. Argus: efficient activity detection system for extended video analysis. In: 2020 IEEE Winter Applications of Computer Vision Workshops (WACVW). (2020). doi: 10.1109/WACVW50321.2020.9096929

6. Tang Y, Lu J, Zhou J. Comprehensive instructional video analysis: the coin dataset and performance evaluation. IEEE Trans Pattern Anal Mach Intell. (2021) 43:3138–53. doi: 10.1109/TPAMI.2020.2980824

7. Cuevas C, Quilón D, García N. Techniques and applications for soccer video analysis: a survey. Multim Tools Applic. (2020) 79:29685–29721. doi: 10.1007/s11042-020-09409-0

8. Kovacs GG. Concepts and classification of neurodegenerative diseases. In: Handbook of Clinical Neurology. Elsevier (2018). p. 301–307. doi: 10.1016/B978-0-12-802395-2.00021-3

9. Lin W, He X, Dai W, See J, Shinde T, Xiong H, et al. Key-point sequence lossless compression for intelligent video analysis. IEEE MultiMedia. (2020) 27:12–22. doi: 10.1109/MMUL.2020.2990863

10. Gitler AD, Dhillon P, Shorter J. Neurodegenerative disease: models, mechanisms, and a new hope. Dis Models Mech. (2017) 10:499–502. doi: 10.1242/dmm.030205

11. Zamani AR, Zou M, Diaz-Montes J, Petri I, Rana O, Anjum A, et al. Deadline constrained video analysis via in-transit computational environments. IEEE Trans Serv Comput. (2020) 13:59–72. doi: 10.1109/TSC.2017.2653116

12. Mercat A, Viitanen M, Vanne J. UVG dataset. In: Proceedings of the 11th ACM Multimedia Systems Conference. (2020). p. 297–302. doi: 10.1145/3339825.3394937

13. Ben X, Ren Y, Zhang J, Wang SJ, Kpalma K, Meng W, et al. Video-based facial micro-expression analysis: a survey of datasets, features and algorithms. IEEE Trans Patt Anal Mach Intell. (2021) 44:5826–46. doi: 10.1109/TPAMI.2021.3067464

14. Stappen L, Baird A, Cambria E, Schuller BW. Sentiment analysis and topic recognition in video transcriptions. IEEE Intell Syst. (2021) 36:88–95. doi: 10.1109/MIS.2021.3062200

15. Stenum J, Rossi C, Roemmich RT. Two-dimensional video-based analysis of human gait using pose estimation. PLoS Comput Biol. (2021) 17:e1008935. doi: 10.1371/journal.pcbi.1008935

16. Ou Y, Chen Z, Wu F. Multimodal local-global attention network for affective video content analysis. IEEE Trans Circ Syst Video Technol. (2021) 31:1901–14. doi: 10.1109/TCSVT.2020.3014889

17. Hou Y, Dan X, Babbar M, Wei Y, Hasselbalch SG, Croteau DL, et al. Ageing as a risk factor for neurodegenerative disease. Nat Rev Neurol. (2019) 15:565–81. doi: 10.1038/s41582-019-0244-7

18. Seuren L, Wherton JP, Greenhalgh T, Cameron D, A'Court C, Shaw S. Physical examinations via video for patients with heart failure: qualitative study using conversation analysis. J Med Internet Res. (2020) 22:e16694. doi: 10.2196/16694

19. Neimark D, Bar O, Zohar M, Asselmann D. Video transformer network. In: 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW). (2021). p. 3156–3165. doi: 10.1109/ICCVW54120.2021.00355

20. Wang W, Shen J, Xie J, Cheng M-M, Ling H, Borji A. Revisiting video saliency prediction in the deep learning era. IEEE Trans Pattern Anal Mach Intell. (2021) 43:220–37. doi: 10.1109/TPAMI.2019.2924417

21. Teleanu DM, Niculescu A-G, Lungu II, Radu CI, Vladâcenco O, Roza E, et al. An overview of oxidative stress, neuroinflammation, and neurodegenerative diseases. Int J Mol Sci. (2022) 23:5938. doi: 10.3390/ijms23115938

22. Buch S, Eyzaguirre C, Gaidon A, Wu J, Fei-Fei L, Niebles JC. Revisiting the “video” in video-language understanding. In: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2022). p. 2907–17. doi: 10.1109/CVPR52688.2022.00293

23. Zhu H, Wu W, Zhu W, Jiang L, Tang S, Zhang L, et al. CelebV-HQ: a large-scale video facial attributes dataset. In: Computer Vision – ECCV. (2022). p. 650–67. doi: 10.1007/978-3-031-20071-7_38

24. Selva J, Johansen AS, Escalera S, Nasrollahi K, Moeslund TB, Clap's A. Video transformers: a survey. IEEE Trans Pattern Anal Mach Intell. (2023) 45:12922–43. doi: 10.1109/TPAMI.2023.3243465

25. Apostolidis E, Adamantidou E, Metsai AI, Mezaris V, Patras I. Video summarization using deep neural networks: a survey. Proc IEEE. (2021) 109:1838–63. doi: 10.1109/JPROC.2021.3117472

26. Pareek P, Thakkar A. A survey on video-based Human Action Recognition: recent updates, datasets, challenges, and applications. Artif Intell Rev. (2020) 54:2259–322. doi: 10.1007/s10462-020-09904-8

27. Borroto-Escuela DO, Cuesta-Marti C, Lopez-Salas A, Chruścicka-Smaga B, Crespo-Ramírez M, Tesoro-Cruz E, et al. The oxytocin receptor represents a key hub in the GPCR heteroreceptor network: potential relevance for brain and behavior. Front Mol Neurosci. (2022) 15:1055344. doi: 10.3389/fnmol.2022.1055344

28. Duan L, Liu J, Yang W, Huang T, Gao W. Video coding for machines: a paradigm of collaborative compression and intelligent analytics. IEEE Trans Image Proc. (2020) 29:8680–95. doi: 10.1109/TIP.2020.3016485

29. Wang C, Zhang S, Chen Y, Qian Z, Wu J, Xiao M. Joint configuration adaptation and bandwidth allocation for edge-based real-time video analytics. In: IEEE Conference on Computer Communications. (2020). p. 257–266. doi: 10.1109/INFOCOM41043.2020.9155524

30. Borroto-Escuela DO, Lopez-Salas A, Wydra K, Bartolini M, Zhou Z, Frankowska M, et al. Combined treatment with Sigma1R and A2AR agonists fails to inhibit cocaine self-administration despite causing strong antagonistic accumbal A2AR-D2R complex interactions: the potential role of astrocytes. Front Mol Neurosci. (2023) 16:1106765. doi: 10.3389/fnmol.2023.1106765

31. Awad G, Butt AA, Curtis K, Fiscus J, Godil A, Lee Y, et al. TRECVID 2020: A comprehensive campaign for evaluating video retrieval tasks across multiple application domains. arXiv preprint arXiv:2104.13473. (2021).

32. Noetel M, Griffith S, Delaney O, Sanders T, Parker P, del Pozo Cruz B, et al. Video improves learning in higher education: a systematic review. Rev Educ Res. (2021) 91:204-036. doi: 10.3102/0034654321990713

33. Yuanta F. Pengembangan media video pembelajaran ilmu pengetahuan sosial pada siswa sekolah dasar. Trapsila: Jurnal Pendidikan Dasar. (2020) 1:91. doi: 10.30742/tpd.v1i02.816

34. Rauf A, Badoni H, Abu-Izneid T, Olatunde A, Rahman MM, Painuli S, et al. Neuroinflammatory markers: key indicators in the pathology of neurodegenerative diseases. Molecules. (2022) 27:3194. doi: 10.3390/molecules27103194

35. Borroto-Escuela DO, Beltran-Casanueva R, Lopez-Salas A, Fuxe K. Susceptibility of GPCR heteroreceptor complexes to neurotoxins. Relevance for neurodegenerative and psychiatric disorders. In: Handbook of Neurotoxicity. Springer (2022). p. 1–11. doi: 10.1007/978-3-030-71519-9_222-1

36. Jiang X, Li M, Tang Y, Hu J, Gai X, Zhang C, et al. Research progress on the mechanism of transcutaneous electrical acupoint stimulation in the perioperative period. Front Neurol. (2025) 16:1563681. doi: 10.3389/fneur.2025.1563681

37. Shaw SE, Seuren LM, Wherton J, Cameron D, A'Court C, Vijayaraghavan S, et al. Video consultations between patients and clinicians in diabetes, cancer, and heart failure services: linguistic ethnographic study of video-mediated interaction. J Med Internet Res. (2020) 22:e18378. doi: 10.2196/18378

38. Aloraini M, Sharifzadeh M, Schonfeld D. Sequential and patch analyses for object removal video forgery detection and localization. IEEE Trans Circ Syst Video Technol. (2021) 31:917–30. doi: 10.1109/TCSVT.2020.2993004

39. Nandwani P, Verma R. A review on sentiment analysis and emotion detection from text. Soc Netw Anal Mining. (2021) 11:81. doi: 10.1007/s13278-021-00776-6

40. Saeed U, Piracha ZZ, Tariq MN, Syed S, Rauf M, Razaq L, et al. Decoding the genetic blueprints of neurological disorders: disease mechanisms and breakthrough gene therapies. Front Neurol. (2025) 16:1422707. doi: 10.3389/fneur.2025.1422707

41. Li W, Zhang J, Zhang Y, Shentu W, Yan S, Chen Q, et al. Clinical research progress on pathogenesis and treatment of Patent Foramen Ovale-associated stroke. Front Neurol. (2025) 16:1512399. doi: 10.3389/fneur.2025.1512399

42. Kumar R, Azad C. Hybrid Harris hawk optimization (HHO): a novel framework for Alzheimer's disease prediction using neuroimaging data. In: 2024 International Conference on Intelligent Computing and Emerging Communication Technologies (ICEC). (2024) 1–5. doi: 10.1109/ICEC59683.2024.10837464

43. Kumar R, Azad C. Comprehensive overview of Alzheimer's disease utilizing Machine Learning approaches. Multimed Tools Appl. (2024) 83:85277–329. doi: 10.1007/s11042-024-19425-z

44. Yadav V, Kumar R, Azad C. A filter-based feature selection approach for the prediction of Alzheimer's diseases through audio classification. In: 2022 2nd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE). (2022). p. 1890–1894. doi: 10.1109/ICACITE53722.2022.9823665

45. Huckvale ED, Hodgman MW, Greenwood BB, Stucki DO, Ward KM, Ebbert MTW, et al. Pairwise correlation analysis of the Alzheimer's disease neuroimaging initiative (ADNI) dataset reveals significant feature correlation. Genes. (2021) 12:1661. doi: 10.3390/genes12111661

46. Islam MS, Adnan T, Freyberg J, Lee S, Abdelkader A, Pawlik M, et al. Accessible, at-home detection of Parkinson's disease via multi-task video analysis. In: Proceedings of the AAAI Conference on Artificial Intelligence. (2025). p. 28125–33. doi: 10.1609/aaai.v39i27.35031

47. Böller F. Fuelling Politicisation: the AfD and the politics of military interventions in the german parliament. Ger Polit. (2022) 33:535–57. doi: 10.1080/09644008.2022.2072489

48. Topham LK, Khan W, Al-Jumeily D, Waraich A, Hussain AJ. A diverse and multi-modal gait dataset of indoor and outdoor walks acquired using multiple cameras and sensors. Sci Data. (2023) 10:320. doi: 10.1038/s41597-023-02161-8

49. Hafner M, Katsantoni M, Köster T, Marks J, Mukherjee J, Staiger D, et al. CLIP and complementary methods. Nat Rev Methods Primers. (2021) 1:20. doi: 10.1038/s43586-021-00018-1

50. Yuan L, Chen Y, Wang T, Yu W, Shi Y, Jiang ZH, et al. Tokens-to-token vit: Training vision transformers from scratch on imagenet. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. (2021). p. 558–567. doi: 10.1109/ICCV48922.2021.00060

51. Peng Y, Lee J, Watanabe S. I3D: transformer architectures with input-dependent dynamic depth for speech recognition. In: ICASSP 2023-2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE (2023). p. 1–5. doi: 10.1109/ICASSP49357.2023.10096662

52. Li J, Li D, Xiong C, Hoi S. Blip: bootstrapping language-image pre-training for unified vision-language understanding and generation. In: International Conference on Machine Learning. PMLR (2022). p. 12888–12900.

53. Pepino L, Riera P, Ferrer L. Emotion recognition from speech using wav2vec 2.0 embeddings. arXiv preprint arXiv:210403502. (2021).

Keywords: neurodegenerative diseases, radiomics, artificial intelligence, disease progression modeling, symbolic alignment

Citation: Sun Q and Wang F (2025) Using artificial intelligence and radiomics to analyze imaging features of neurodegenerative diseases. Front. Neurol. 16:1624867. doi: 10.3389/fneur.2025.1624867

Received: 11 May 2025; Accepted: 08 September 2025;

Published: 06 November 2025.

Edited by:

Benedetta Tafuri, University of Salento, ItalyReviewed by:

Marianna Inglese, University of Rome Tor Vergata, ItalyRahul Kumar, GLA University, India

Copyright © 2025 Sun and Wang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Qixuan Sun, ZHVhcnR0c2FuaWF0QGhvdG1haWwuY29t

Qixuan Sun1*

Qixuan Sun1* Fang Wang

Fang Wang