- 1Department of Agricultural and Biosystems Engineering, North Dakota State University, Fargo, ND, United States

- 2Department of Crop and Soil Sciences, North Carolina State University, Raleigh, NC, United States

The fusion of unmanned aerial system (UAS) and satellite imagery has emerged as a pivotal strategy in advancing precision agriculture. This review examines the integration of high-resolution UAS and satellite imagery through pixel-based, feature-based, and decision-based fusion methods. It investigates optimization techniques, spectral synergy, temporal strategies, and challenges in data fusion, highlighting insights such as enhanced biomass estimation through UAS–satellite synergy, improved nitrogen stress detection in maize, and refined crop type mapping using multi-temporal fusion. The combined spectral information from UAS and satellite sources proves instrumental in crop monitoring and biomass estimation. Temporal optimization strategies account for crop phenology, spatial resolution, and budget constraints, enabling effective and continuous monitoring. The review systematically addresses challenges in spatial and temporal resolution, radiometric calibration, data synchronization, and processing techniques, and discusses practical solutions. Integrated UAS and satellite data benefit precision agriculture through improved resolution, monitoring capability, resource allocation, and crop performance evaluation. A comparative analysis indicates that combined data outperform single-source data, particularly for specific crops and scenarios. Researchers show a preference for pixel-based fusion methods, selecting fusion approaches according to their specific goals. These findings contribute to the evolving landscape of precision agriculture and suggest avenues for future research. Future work should explore advanced fusion methodologies that incorporate machine learning algorithms and conduct cross-crop application studies to broaden applicability and tailor insights to specific crops.

Highlights

• Integration of UAS and satellite imagery revolutionizes precision agriculture by optimizing spatial resolution and spectral synergy.

• Pixel-based, feature-based, and decision-based fusion methods enhance spatial and spectral resolution for detailed crop characterization.

• Temporal optimization strategies balance crop phenology, spatial resolution, and budget constraints for effective and continuous monitoring.

1 Introduction

Precision agriculture has brought about a revolution in the agricultural industry with few precedents in agriculture's long history (Sung, 2018). This revolution in farming operations is driven by the need for sustainable resource management and increased productivity (Patil Shirish and Bhalerao, 2013). In principle, precision agriculture uses technology to maximize agricultural yield (Zhang, 2016), reduce resource waste (Karunathilake et al., 2023), and ultimately help guarantee food security for a rapidly growing world population (Waqas et al., 2023). Satellite remote sensing data provide invaluable insights into the condition of fields and crops, serving as a crucial tool in this transition. Satellites act as eyes in the sky, capturing data that enable agricultural landscapes to be monitored and analyzed with precision (Radočaj et al., 2022). However, while satellites provide essential macroscopic perspectives, addressing the finer details of modern agricultural challenges often requires complementary solutions.

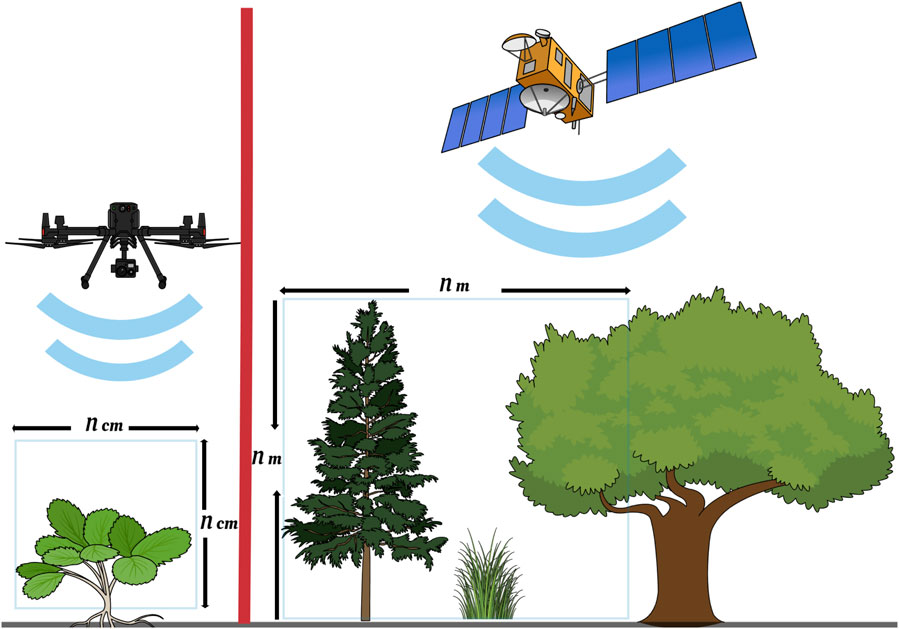

Satellites and unmanned aerial systems (UASs) are two platforms used to acquire remotely sensed data, each with particular benefits and drawbacks. From their high altitude in space, satellites provide a wide, macroscopic view of the Earth's surface. Because each image covers a large swath of land, satellites are the tool of choice for monitoring large agricultural areas. However, their spatial and temporal resolutions are often too coarse to capture the fine features needed for detailed crop monitoring (Saxena et al., 2021). Conversely, UASs obtain close-range, high-resolution images, but their limited flight duration and relatively small coverage area are seen as the main drawbacks of the technology, especially by large agricultural enterprises (Zhang et al., 2021). These complementary strengths and weaknesses have prompted exploration into how the two technologies can work together to meet the demands of modern precision agriculture.

The integration of UAS and satellite imagery offers precision agriculture a compelling solution through a mutually beneficial synergy. By combining the advantages of both technologies, one can improve the spatial resolution of satellite imagery and bridge the gap between macroscopic and microscopic views (Chen et al., 2019; Messina et al., 2020). This integration has significant potential to enhance the accuracy of crop classification and health assessments, enabling farmers and researchers to better understand and respond to agricultural challenges. Despite these promising benefits, several challenges persist. They include differences in data formats between satellite and UAS platforms, variations in spatial and temporal resolution, and the need for proper radiometric and geometric calibration to ensure data consistency. In addition, fusing datasets collected under varying environmental conditions or with different sensor specifications can introduce uncertainties that complicate analysis. Further research addressing these issues is essential to fully unlock the benefits of this synergistic approach to precision agriculture.

The integration of UAS data with satellite imagery holds significant promise for both researchers and plant breeders and has the potential to transform agricultural practices. By combining these technologies, plant breeders gain a comprehensive understanding of crop conditions across larger areas, allowing targeted interventions and optimized resource allocation (Sankaran et al., 2021). Timely monitoring by satellites, coupled with the flexibility of UAS deployment, enables proactive decision-making and swift issue resolution (Agarwal et al., 2018). Moreover, data fusion techniques extract valuable insights that accelerate the breeding process by efficiently identifying promising crop varieties. These techniques allow researchers to monitor crop growth, assess traits such as drought tolerance, pest resistance, and yield potential, and make data-driven decisions with greater accuracy. By combining detailed local data with large-scale trends, breeders can significantly reduce the time required for field trials, optimize resource allocation, and improve the success rate of developing new crop varieties tailored to specific environmental conditions. This integration also overcomes challenges associated with data collection, enabling the creation of high-resolution within-field maps that facilitate site-specific management and optimize resource use (Ahmad et al., 2022).

Comprehensive, up-to-date information specifically on this topic is scarce. Therefore, a systematic literature review (SLR) was conducted to synthesize the latest information on the integration of UAS and satellite imagery for precision agricultural practices. The specific research questions addressed by the SLR are:

• Q1: What are the dominant types of sensors and data sources used in UAS–satellite integration for precision agriculture?

• Q2: Which fusion methods are most commonly applied, and how are they categorized?

• Q3: What are the main applications of data fusion in precision agriculture (e.g., yield prediction, crop classification)?

• Q4: What challenges are reported in implementing data fusion techniques, and how have they been addressed?

• Q5: What are the research gaps and future directions in this field?

Each of these questions is systematically addressed in Sections 3.1–3.9 of the manuscript. This review contributes uniquely to the precision agriculture field by providing a systematic synthesis of recent developments in the fusion of UAS and satellite imagery specifically for precision agriculture, focusing on three major fusion levels: pixel-, feature-, and decision-based methods. Unlike previous reviews, which broadly covered remote sensing platforms or sensor types (Rudd et al., 2017; Alexopoulos et al., 2023), our work examines the comparative performance of fusion approaches across key agricultural tasks such as crop classification, yield prediction, and nutrient estimation. Furthermore, we highlight implementation challenges and practical considerations, drawing on recent empirical studies to offer actionable insights for researchers and practitioners. This targeted analysis fills a critical gap by linking fusion methodology with agronomic outcomes, providing a framework to guide future cross-crop and cross-platform data integration studies.

Overall, this study aims to evaluate the extent to which precision agricultural practices can benefit from the combined use of UAS and satellite data and to assess its impact on decision-making by farmers, plant breeders, researchers, and other stakeholders. Through a comparative analysis, this SLR seeks to determine whether integrating UAS and satellite imagery yields more insight than using either source independently, with a focus on the situations and crop types in which integration proves most effective.

2 Materials and methods

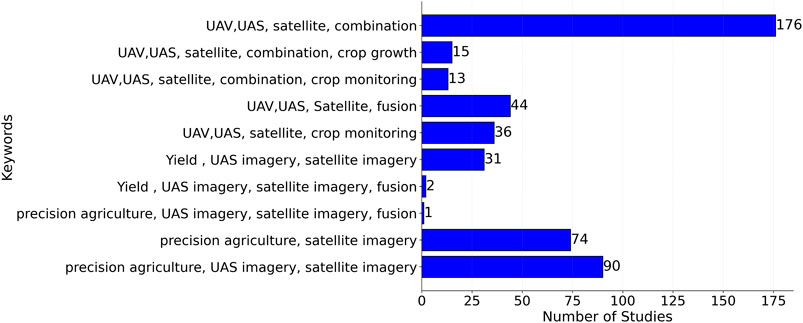

Literature for this review was selected from scholarly sources using the SLR approach. To gain a thorough understanding of the potential of combining UAS and satellite images for crop monitoring, SLR guidelines were followed to select and examine academic publications. The process began with a preliminary search phase that queried a wide range of scholarly databases using keywords related to the research questions. The number of records returned for selected keyword combinations is presented in Figure 1.

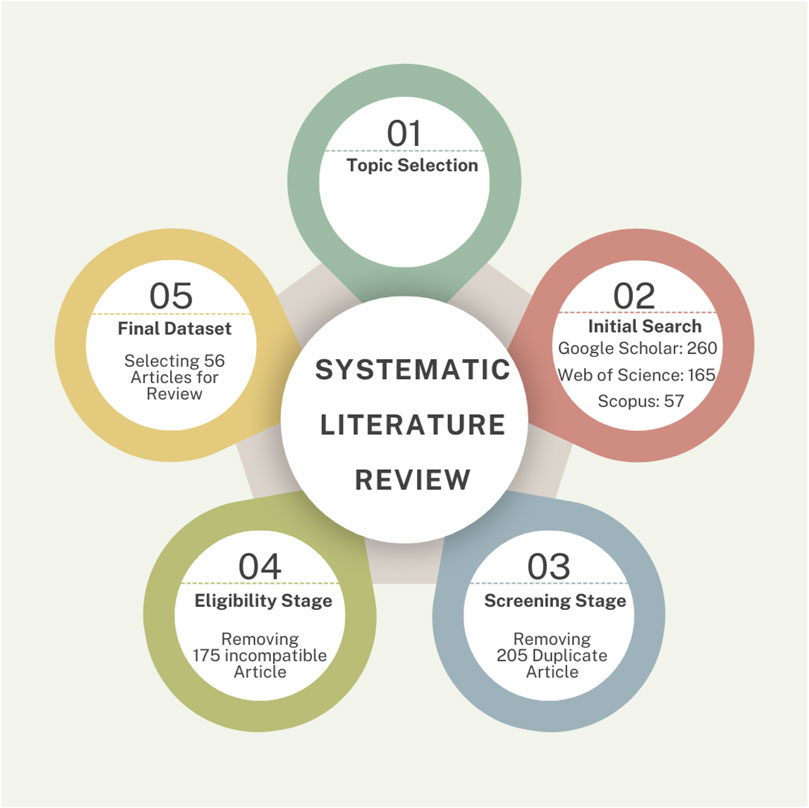

The initial search yielded 260 publications from Google Scholar, 165 from Web of Science, and 57 from Scopus (Figure 2). During initial screening, 205 of them were identified as duplicates and removed. Throughout the eligibility phase, each article was assessed to ensure that it complied with the established study goals. At the end of the screening phase, a further 175 papers were eliminated, and 56 relevant articles were ultimately selected. These publications served as the basis for a detailed examination of combining UAS and satellite imagery for crop monitoring.

Figure 2. Systematic literature review methodology for the combination of UAS and satellite–based imagery for precision agriculture.

3 Results and discussion

3.1 Overview and classification of remote sensing data and fusion strategies

3.1.1 Overview of reviewed studies

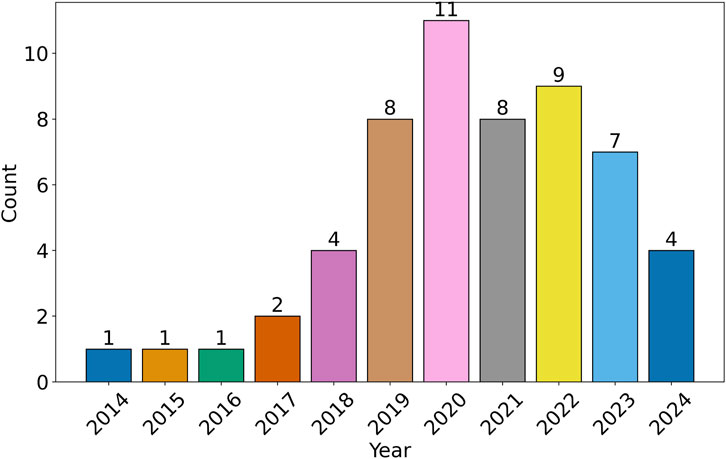

Between 2014 and 2024, the number of research projects on the combined use of UAS and satellite imagery for precision agriculture generally increased, with the highest number of studies within the review scope observed in 2020 (Figure 3). This upward trend reflects growing recognition of the value of image fusion techniques in precision agriculture. Notably, a marked increase is observed after 2018, which may be attributed to several factors. First, the wider availability and affordability of UAS platforms and high-resolution satellite data during this period made multi-source data integration more accessible. Second, advances in machine learning and deep learning algorithms improved the ability to process and interpret large, heterogeneous datasets, encouraging researchers to explore fusion-based approaches. Third, growing concerns over climate variability and sustainable resource management likely prompted a surge in research aimed at improving crop monitoring and decision-making. Advances in remote sensing technology, including high-resolution imagery and hyperspectral sensors, also provided precise data for applications such as crop monitoring and soil analysis, helping to address the complexity of agricultural challenges (Sishodia et al., 2020). UASs emerged as cost-effective and flexible tools, offering higher spatial and temporal resolution than traditional satellite methods, which makes them suitable for localized observations and for integration with satellite data to achieve broader coverage (Rudd et al., 2017).
Furthermore, the integration of machine learning and artificial intelligence enabled the processing of complex datasets to optimize yield prediction and resource management (Wang D. et al., 2022). This shift is also driven by increasing global food demand and the need for sustainable practices to address climate and resource constraints (Farhad et al., 2024).

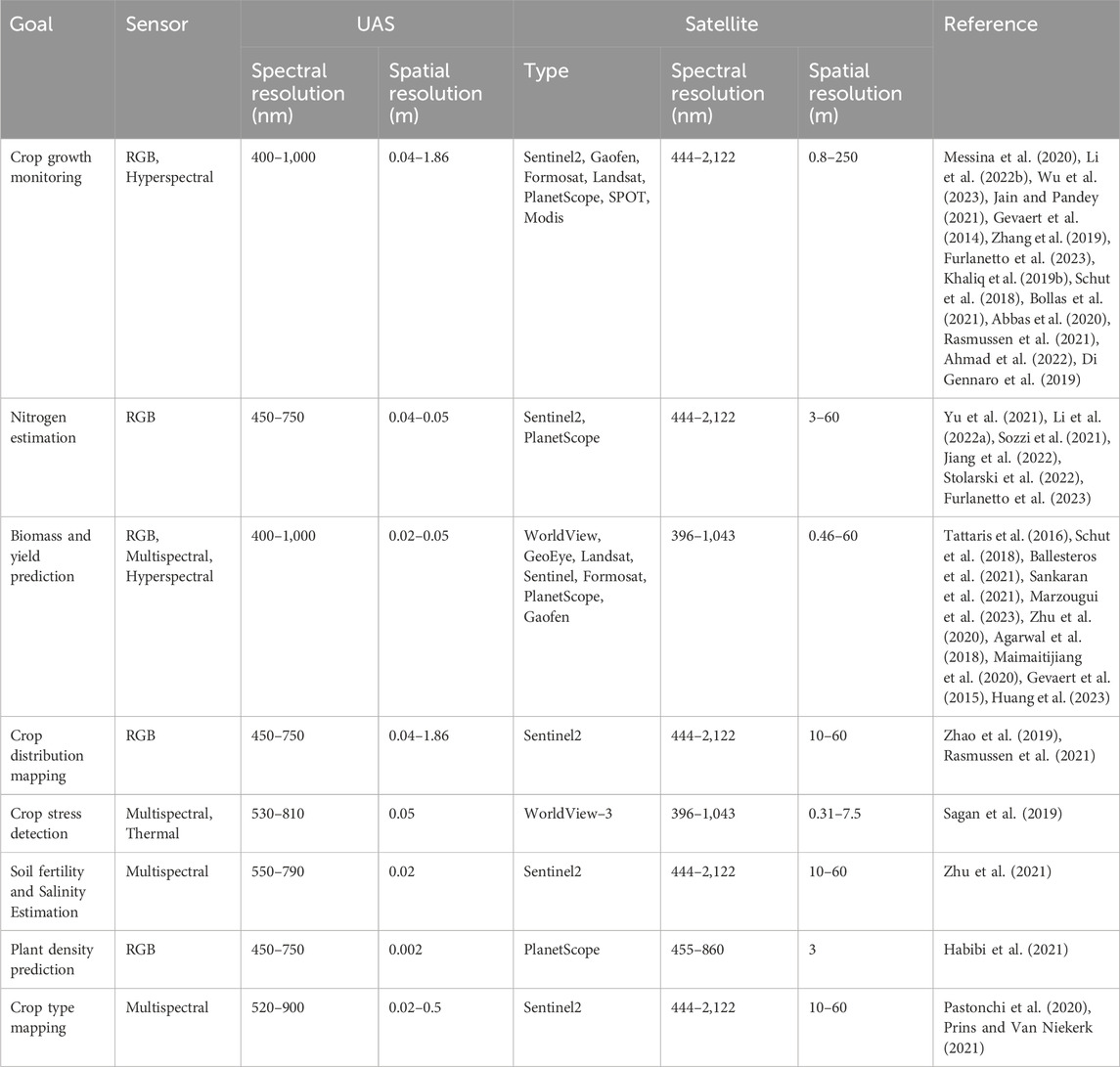

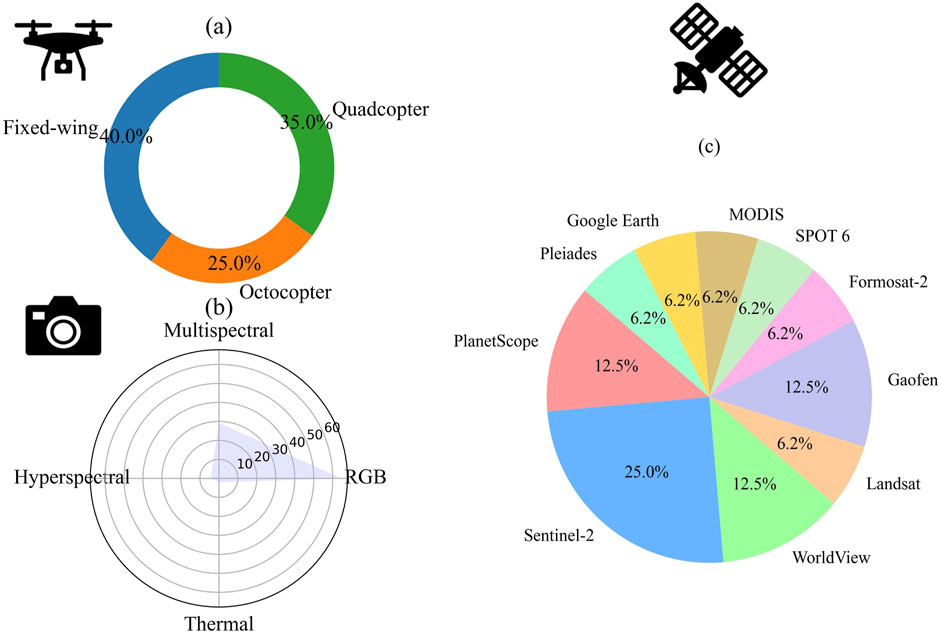

Table 1 presents an overview of the diverse research goals in precision agriculture and the UAS and satellite sensors employed for each objective. Researchers have used a wide range of sensor capabilities to address specific agricultural challenges. UAS-mounted sensors covered a spectral range from 400 nm to 1,000 nm, capturing diverse aspects of crop information with considerable flexibility. Their spatial resolution ranged from a fine 3 mm to a coarser 1.86 m, accommodating both detailed and larger-scale data collection. Satellite sensors, in turn, covered a broader spectral range from 396 nm to 2,122 nm, enabling the monitoring of a wide spectrum of crop characteristics. Spatially, satellite imagery offered resolutions from 31 cm to 60 m, allowing researchers to balance fine-grained detail with broader landscape context. This wealth of sensor options enables tailored solutions for various research objectives and underscores the adaptability of remote sensing technology in precision agriculture.
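To make the resolution gap between the two platforms concrete, the short calculation below estimates how many UAS pixels fall inside a single satellite pixel for resolution pairs taken from the ranges above. It is a back-of-the-envelope sketch that assumes square, aligned pixels and ignores sensor point-spread effects; the function name is hypothetical.

```python
def uas_pixels_per_satellite_pixel(satellite_gsd_m: float, uas_gsd_m: float) -> float:
    """Approximate number of UAS pixels inside one satellite pixel.

    GSD = ground sampling distance (pixel size on the ground, in meters).
    Assumes square pixels and ignores point-spread and misregistration effects.
    """
    if satellite_gsd_m <= 0 or uas_gsd_m <= 0:
        raise ValueError("ground sampling distances must be positive")
    return (satellite_gsd_m / uas_gsd_m) ** 2


# Resolution pairs drawn from the ranges reported above (meters)
for sat_gsd, uas_gsd in [(0.31, 0.003), (10.0, 0.003), (60.0, 0.003), (10.0, 1.86)]:
    n = uas_pixels_per_satellite_pixel(sat_gsd, uas_gsd)
    print(f"{sat_gsd} m satellite pixel vs {uas_gsd} m UAS pixel: about {n:,.0f} UAS pixels")
```

For example, a single 10 m Sentinel-2 pixel spans roughly 11 million pixels at 3 mm resolution, which is why UAS data are usually aggregated before being compared with satellite pixels.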

Table 1. Classification of research goals that use a combination of UAS and satellite remote sensing.

3.1.2 Fusion approaches by methodological level

In the literature, research goals and sensor choices converge, reflecting the ability of both UAS and satellite technologies to adapt to a range of agricultural challenges. The selection of a sensor depends on the specific requirements of the research objective (Table 1). For example, in crop growth monitoring, researchers have employed a variety of sensors, from RGB to hyperspectral, to capture the intricate dynamics of plant development. Similarly, for nitrogen estimation, researchers have relied on RGB and multispectral sensors, indicating the adaptability of these technologies to this specific application. This adaptability stems from the sensors' diverse spectral capabilities, which enable detection of plant traits across the electromagnetic spectrum, and from their scalability across applications, with RGB sensors being cost-effective and hyperspectral sensors offering precision for nutrient analysis. These sensors correlate well with biophysical parameters such as chlorophyll content and can be deployed on varied platforms, providing flexibility in spatial and temporal resolution. Additionally, advances in sensor fusion enhance their utility by combining the strengths of multiple sensor types (Ruihong and Ying, 2019). This flexibility extends to goals such as aboveground biomass prediction and yield estimation, crop distribution mapping, crop stress detection, soil fertility and salinity estimation, plant density prediction, and crop type mapping. Overall, the diversity of research goals and sensor choices highlights the versatility of UAS and satellite imagery in addressing the multifaceted requirements of crop monitoring in precision agriculture. UAS and satellite image synergy has been employed predominantly for crop growth monitoring and biomass/yield estimation, which account for 40% and 29% of the reviewed studies, respectively.
For example, combining UAS-derived canopy structure with satellite-based spectral information improves the accuracy of biomass estimation by reducing soil effects and addressing saturation of vegetation indices (Maimaitijiang et al., 2020; Shamaoma et al., 2024). Hyperspectral and multispectral sensors on UAS platforms have also been shown to enhance aboveground biomass estimation through refined vegetation indices and canopy metrics (Yue et al., 2017). Integrating multi-temporal UAV-derived crop type information with satellite data has further enabled robust modeling of plant growth and crop type mapping under heterogeneous field conditions (Zabala, 2017). These integrated approaches demonstrate the potential for accurate monitoring and improved yield prediction, particularly when spectral and structural data from both platforms are combined (Poley and McDermid, 2020).

3.1.3 Classification of remote sensing platforms

Fixed-wing UASs were the most commonly employed drones, used in 40% of the reviewed studies (Figure 4a). The existing literature does not provide substantial evidence that the choice of UAS type significantly affects accuracy when UAS and satellite data are integrated for precision agriculture. Thus, while drone selection may be driven by specific operational needs, its impact on the overall precision of crop monitoring remains inconclusive within the scope of the reviewed research. RGB and multispectral sensors are the predominant choices for UAS-based data collection (Figure 4b). This preference can be attributed to their capacity to meet both the spectral and spatial requirements of plant analysis in smaller agricultural areas. For example, Maimaitijiang et al. (2020) used RGB sensors to assess canopy coverage and crop growth stages in soybean fields, demonstrating high accuracy and ease of deployment. Similarly, Yu et al. (2021) used multispectral sensors to estimate canopy nitrogen weight in wheat because of their ability to efficiently capture critical vegetation indices such as NDVI and NDRE. Their wide acceptance is further explained by their cost-effectiveness and ease of use compared with hyperspectral and thermal sensors, which makes RGB and multispectral sensors the favored options for researchers in precision agriculture. Thermal and hyperspectral sensors are used less often, likely because of their higher cost or specialized application requirements (e.g., water stress detection or advanced vegetation analysis).

Figure 4. Prevalence of UAS types (a), UAS sensors (b), and satellite platforms (c) in reviewed studies in precision agriculture.

Sentinel-2 stands out as the most prevalent source of satellite imagery (Figure 4c). This prominence can be attributed to two key factors: (i) it offers a well-balanced combination of spectral (444 nm–2,122 nm) and spatial (10 m–60 m) resolution that meets the requirements of plant studies at medium and large scales; and (ii) its data are free to download and use. WorldView, PlanetScope, and Gaofen are the main alternatives to Sentinel-2, although fees may apply for their use. However, these satellites are distinguished by their high spatial resolution, ranging from 0.31 to 3 m, which makes them suitable for direct comparison with UAS-derived images. MODIS, SPOT 6, Formosat-2, Pleiades, and Google Earth imagery each account for a smaller share of studies (6.2% each) because their spatial, spectral, or temporal resolution is less suited to agricultural applications than that of Sentinel-2 or Landsat. For example, MODIS has a coarse spatial resolution, making it less effective for fine-grained, field-level analyses, whereas SPOT 6 and Pleiades provide high-resolution imagery but are often expensive, limiting their adoption in cost-sensitive agricultural applications. Overall, Sentinel-2's cost-effectiveness, combined with its spectral and spatial capabilities, makes it a standout choice, while WorldView, PlanetScope, and Gaofen offer compelling alternatives for synergistic use with UAS imagery in precision agriculture studies. Detailed specifications of these satellites can be found in Zhang et al. (2020).

3.2 Integration techniques

Using the high-resolution information in UAS images to improve the spatial resolution of satellite images yields a more detailed perspective of crop conditions (Ahmad et al., 2022; Ballesteros et al., 2021; Jain and Pandey, 2021; Stolarski et al., 2022; Mancini et al., 2019) and reveals finer-scale variations in the crop canopy (Furlanetto et al., 2023). For example, UAS imagery refined satellite-derived vegetation indices such as NDVI and NDRE, enabling accurate nitrogen stress assessment during maize's critical growth stages (Maimaitijiang et al., 2020). The integration of UAS and satellite images can be optimized to enhance the spatial resolution of satellite imagery for effective crop monitoring by following the steps described below:

3.2.1 Plan coordinated data acquisition

Coordinating the timing of image acquisitions is imperative to ensure coverage of the same area of interest (Messina et al., 2020; Li Y. et al., 2022) and to capture crops at similar growth stages, enhancing data compatibility (Marzougui et al., 2023). For instance, UAS imagery is valuable at pivotal moments in the crop growth cycle when detailed insights are required, while satellite images offer broader field overviews at regular intervals (Messina et al., 2020). It is also vital to account for factors that affect the relationship between UAS and satellite data, including environmental conditions, the difference in spatial resolution between the two sources, and the spatial heterogeneity of observed objects (Zhu et al., 2021). Such considerations are important for accurate estimation when combining UAS and satellite data. For instance, integrating UAV-derived canopy structure with WorldView-2/3 spectral data improves soybean biomass and nitrogen estimation by addressing issues such as soil effects and vegetation index saturation (Maimaitijiang et al., 2020). Similarly, UAS imagery supports WorldView-3- and RapidEye-based classification, achieving accuracy comparable to field surveys in wetland monitoring (Gray et al., 2018). In vineyards, this integration improves temporal and spatial resolution, which is critical for managing high-value crops (Brook et al., 2020). Additionally, UAV–satellite synergy effectively detects yield and fertilizer variability on smallholder farms, supporting targeted interventions (Schut et al., 2018).
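Coordinated acquisition can be checked with a simple date-matching step: each UAS flight is paired with the nearest satellite acquisition, and the pair is kept only if the two dates are close enough for the crop to be at a similar growth stage. The sketch below assumes a hypothetical ±3-day tolerance and a list of satellite dates that have already been screened for clouds; neither the function name nor the tolerance comes from the reviewed studies.

```python
from datetime import date


def pair_acquisitions(uas_dates, satellite_dates, max_gap_days=3):
    """Pair each UAS flight date with the nearest satellite acquisition date.

    Returns (uas_date, satellite_date, gap_days) tuples. Flights with no satellite
    acquisition within max_gap_days are returned as (uas_date, None, None).
    max_gap_days is a hypothetical tolerance; in practice it should reflect how
    quickly the crop changes at that stage.
    """
    pairs = []
    for flight in sorted(uas_dates):
        if satellite_dates:
            nearest = min(satellite_dates, key=lambda d: abs((d - flight).days))
            gap = abs((nearest - flight).days)
            if gap <= max_gap_days:
                pairs.append((flight, nearest, gap))
                continue
        pairs.append((flight, None, None))
    return pairs


# Hypothetical flight dates and cloud-free satellite acquisition dates
uas_flights = [date(2023, 6, 10), date(2023, 7, 2)]
satellite_passes = [date(2023, 6, 8), date(2023, 6, 13), date(2023, 6, 28), date(2023, 7, 8)]
for uas_day, sat_day, gap in pair_acquisitions(uas_flights, satellite_passes):
    print(uas_day, "->", sat_day, "gap (days):", gap)
```

Flights left unpaired signal where an extra flight, a different satellite product, or a wider tolerance would be needed.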

3.2.2 Calibrate and validate UAS and satellite imagery

Radiometric and geometric calibration of both UAS and satellite images is essential for achieving consistency and accuracy (Mancini et al., 2019; Pastonchi et al., 2020; Li Y. et al., 2022). Radiometric calibration normalizes sensor responses for atmospheric effects and variations in illumination, allowing accurate comparison of spectral data across time and platforms (Bollas et al., 2021; Lacerda et al., 2022). This step is critical for applications such as vegetation monitoring, where indices like NDVI depend on precise spectral reflectance values (Manivasagam et al., 2021). Validating the acquired data against ground truth field measurements further enhances the reliability of the integrated data by aligning modeled outputs with real-world conditions (Bollas et al., 2021; Lacerda et al., 2022). For instance, validating crop health or leaf area index (LAI) estimates with field data helps refine algorithms and improve prediction accuracy in wheat (Waldner et al., 2019). Geometric calibration, on the other hand, corrects spatial distortions caused by sensor motion, lens distortion, or topographic variation, ensuring spatial accuracy for analyses such as field alignment and multi-source data integration (Maimaitijiang et al., 2020). It can be achieved using ground control points (GCPs) or image matching techniques, including nearest neighbor (NN) and brute force (BF) matching (Abbas et al., 2020), which enable the images to be aligned pixel by pixel (Pastonchi et al., 2020). The UAS images are then processed to remove distortions, artifacts, and outliers (Abbas et al., 2020; Huang et al., 2023) and to create orthomosaics or digital surface models (DSMs) of the crop area (Chen et al., 2019).
A moving window approach that adapts to the dimensions of the UAS image (Wu et al., 2023) effectively addresses the challenge of mutually matching the images. By dynamically adjusting the window size to the characteristics of the UAS image, this approach achieved accurate alignment and comparison with other images regardless of their spatial resolutions.
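The radiometric and geometric calibration steps described above can be illustrated with deliberately simple models. The sketch below assumes (i) an empirical line calibration, in which digital numbers (DNs) from a UAS band are mapped linearly to reflectance using calibration panels of known reflectance, and (ii) a first-order (affine) geometric model fitted to GCPs by least squares. Both are common textbook choices, not necessarily what the cited studies used; the function names and the panel and GCP values are hypothetical, and operational workflows typically rely on atmospheric correction for satellite data and on photogrammetry software for UAS orthomosaics.

```python
import numpy as np


def empirical_line(panel_dn, panel_reflectance):
    """Fit reflectance = gain * DN + offset from calibration panels of known reflectance."""
    gain, offset = np.polyfit(np.asarray(panel_dn, float), np.asarray(panel_reflectance, float), deg=1)
    return gain, offset


def fit_affine(src_xy, dst_xy):
    """Least-squares 2-D affine transform from GCP pairs (needs >= 3 non-collinear points)."""
    src = np.asarray(src_xy, float)
    dst = np.asarray(dst_xy, float)
    design = np.column_stack([src, np.ones(len(src))])  # rows of [x, y, 1]
    coeffs, *_ = np.linalg.lstsq(design, dst, rcond=None)  # (3, 2) coefficient matrix
    return coeffs


def apply_affine(coeffs, xy):
    """Map (N, 2) source coordinates (e.g., UAS pixel positions) to target map coordinates."""
    xy = np.asarray(xy, float)
    return np.column_stack([xy, np.ones(len(xy))]) @ coeffs


def gcp_rmse(coeffs, src_xy, dst_xy):
    """Root-mean-square positional residual at the GCPs, in target units."""
    residuals = apply_affine(coeffs, src_xy) - np.asarray(dst_xy, float)
    return float(np.sqrt(np.mean(np.sum(residuals ** 2, axis=1))))


# Hypothetical example: three reflectance panels and four GCPs
gain, offset = empirical_line([1000, 3000, 5000], [0.08, 0.28, 0.48])
reflectance = gain * np.array([2000.0, 4000.0]) + offset  # calibrate image DNs

gcp_pixels = [(0, 0), (100, 0), (0, 100), (100, 100)]
gcp_map = [(500000 + 0.05 * x, 4200000 - 0.05 * y) for x, y in gcp_pixels]
coeffs = fit_affine(gcp_pixels, gcp_map)
print(reflectance, apply_affine(coeffs, [(50, 50)]), gcp_rmse(coeffs, gcp_pixels, gcp_map))
```

The GCP residual RMSE is a simple check that the registration error is small relative to the satellite pixel size before the two sources are compared pixel by pixel.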

3.2.3 Use image fusion techniques

The fusion of UAS and satellite images can be realized through pixel-based or feature-based methods, both of which enhance the spatial resolution of satellite imagery (Messina et al., 2020; Tattaris et al., 2016; Jain and Pandey, 2021). This integration significantly improves the understanding of within-field variability by combining the high spatial resolution of UAS data with the broad temporal and spectral coverage of satellite imagery (Schut et al., 2018). The resulting synergy provides a more comprehensive view of crop conditions and enables precision agriculture practices to address intra-field heterogeneity effectively. Studies also highlight how this integration improves temporal monitoring. For example, during maize's critical growth stages, UAS data refined satellite-derived vegetation indices such as NDVI and NDRE, resulting in precise nitrogen stress assessments (Maimaitijiang et al., 2020). Another study on yield prediction showed that combining UAS-derived structural features with satellite spectral data improved correlations between vegetation indices and crop yield, leading to more accurate harvest forecasts (Marzougui et al., 2023). Pixel-based integration combines the pixel values at corresponding locations in the images, whereas object-based integration segments the images into meaningful objects and then integrates the information at the object level (Pastonchi et al., 2020; Khaliq et al., 2019a; Abbas et al., 2020).
Pixel-based fusion techniques reported in the literature include the spatial and temporal adaptive reflectance fusion model (STARFM) (Gevaert et al., 2014), spectral harmonization (Nurmukhametov et al., 2022), unmixing-based data fusion (Gevaert et al., 2014; Gevaert et al., 2015), pan-sharpening (Gevaert et al., 2014; Khaliq et al., 2019a; Sozzi et al., 2021), and model-based harmonization techniques such as machine learning (ML) (Bollas et al., 2021; Maimaitijiang et al., 2020; Prins and Van Niekerk, 2021) and deep learning (DL) (Mazzia et al., 2020; Khaliq et al., 2019b). ML algorithms can be trained on combined UAS and satellite data to improve the spatial resolution of satellite images: they learn the relationship between low-resolution satellite images and the corresponding high-resolution UAS images and then generate enhanced versions of the satellite images (Sankaran et al., 2021).
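As an illustration of pixel-based fusion, the sketch below implements a simple ratio-based pan-sharpening scheme in the spirit of smoothing filter-based intensity modulation (SFIM): a high-resolution UAS band plays the role of the panchromatic band, and spatial detail is injected into a coarse satellite band as the ratio of the UAS band to its degraded version. It is a minimal sketch chosen for brevity, not the specific method of the studies cited above; it assumes the two images are already radiometrically and geometrically co-registered and that the satellite pixel size is an exact integer multiple (`factor`) of the UAS pixel size.

```python
import numpy as np


def sfim_sharpen(coarse_band, fine_pan, factor, eps=1e-6):
    """Ratio-based (SFIM-style) sharpening of a coarse satellite band with a fine UAS band.

    coarse_band: (H, W) satellite band on the coarse grid.
    fine_pan:    (H * factor, W * factor) co-registered high-resolution UAS band.
    eps:         small constant that avoids division by zero in dark areas.
    """
    coarse = np.asarray(coarse_band, float)
    pan = np.asarray(fine_pan, float)
    h, w = coarse.shape
    if pan.shape != (h * factor, w * factor):
        raise ValueError("fine_pan must be exactly `factor` times larger than coarse_band")

    def upsample(a):
        # Nearest-neighbor upsampling: replicate each coarse pixel into a factor x factor block
        return np.repeat(np.repeat(a, factor, axis=0), factor, axis=1)

    # Degrade the UAS band to the satellite grid by averaging non-overlapping blocks
    pan_low = pan.reshape(h, factor, w, factor).mean(axis=(1, 3))
    # Inject fine spatial detail as the ratio of the UAS band to its smoothed version
    return upsample(coarse) * pan / (upsample(pan_low) + eps)


# Hypothetical 1 x 2 satellite band sharpened with a 2 x 4 UAS band (factor 2)
coarse = np.array([[0.2, 0.4]])
uas = np.array([[1.0, 3.0, 2.0, 2.0], [1.0, 3.0, 2.0, 2.0]])
print(sfim_sharpen(coarse, uas, factor=2))
```

Because each block of the sharpened output averages back (up to `eps`) to the original satellite value, the ratio approach preserves the satellite radiometry at its native scale while redistributing it according to the UAS spatial pattern.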

3.2.4 Utilize image classification and analysis

Image classification algorithms and analysis techniques can be applied to the fused images to extract relevant information on crop health, pest and disease detection, growth stage monitoring, yield potential, and crop response (Tattaris et al., 2016; Chen et al., 2019; Wu et al., 2023; Schut et al., 2018; Peter et al., 2020). ML algorithms trained on ground truth data can classify and map different crop types or detect specific crop conditions (e.g., disease or nutrient deficiency). The fused image and the extracted features serve as inputs to these algorithms for accurate classification and monitoring (Khaliq et al., 2019a), identifying patterns, detecting anomalies, and extracting valuable information for crop monitoring (Bollas et al., 2021).
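The classification workflow can be sketched as training on ground-truth pixels from a fused feature stack and predicting classes for unlabeled pixels. Operational studies use more expressive ML and DL classifiers; the sketch below substitutes a nearest-centroid classifier purely to keep the example short. The feature values (imagined as a satellite NDVI and a UAS-derived canopy cover fraction) and class names are hypothetical, and in practice features on different scales should be standardized first.

```python
import numpy as np


def train_nearest_centroid(features, labels):
    """Compute one mean feature vector (centroid) per class from ground-truth samples."""
    X = np.asarray(features, float)
    y = np.asarray(labels)
    classes = np.unique(y)
    centroids = np.vstack([X[y == c].mean(axis=0) for c in classes])
    return classes, centroids


def predict_nearest_centroid(features, classes, centroids):
    """Assign each sample to the class whose centroid is closest in Euclidean distance."""
    X = np.asarray(features, float)
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)  # (n_samples, n_classes)
    return classes[np.argmin(dists, axis=1)]


# Hypothetical fused features per pixel: [satellite NDVI, UAS canopy cover fraction]
train_X = [[0.80, 0.30], [0.75, 0.35], [0.20, 0.10], [0.25, 0.15]]
train_y = ["crop", "crop", "soil", "soil"]
classes, centroids = train_nearest_centroid(train_X, train_y)
print(predict_nearest_centroid([[0.70, 0.30], [0.30, 0.10]], classes, centroids))
```

Swapping in a stronger classifier changes only the train and predict functions; the fused feature stack and ground-truth labels stay the same.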

3.2.5 Time series analysis

In addition to spatial resolution, the temporal resolution of UAS and satellite images should be considered for comprehensive analysis (Bollas et al., 2021). Collecting UAS and satellite images at different time points throughout the growing season enables time series analysis (Zhu et al., 2020), which allows crop growth and development to be monitored over time (Bollas et al., 2021; Sankaran et al., 2021; Prins and Van Niekerk, 2021). Integrating both data sources provides a more complete picture of crop health and can help identify patterns and trends that may not be apparent from a single image (Li M. et al., 2022; Zhao et al., 2019; Sagan et al., 2019). Studies have highlighted the importance of temporal resolution in UAS and satellite imagery integration. For instance, combining UAS and Sentinel-2 data for vineyards enabled time series analysis of vegetation indices, capturing plant responses to environmental changes throughout the growing season (Brook et al., 2020). In estuarine environments, UAS data supplemented satellite imagery to dynamically monitor vegetation after disturbances, demonstrating the importance of high temporal resolution (Gray et al., 2018).
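A minimal sketch of merging the two sources into a single seasonal series is shown below. It assumes the UAS and satellite index values have already been harmonized to a common scale, that observations are keyed by day of year, and that UAS values take precedence on days when both exist (a hypothetical rule). Linear interpolation with `numpy.interp` is the simplest gap-filling choice; smoother curve fitting is often preferred in practice.

```python
import numpy as np


def merge_time_series(uas_obs, sat_obs, season_days):
    """Merge UAS and satellite index observations into one series over season_days.

    uas_obs, sat_obs: dicts {day_of_year: value}; UAS values override satellite
    values on shared days. Days between observations are filled by linear
    interpolation; days outside the observed range take the nearest end value.
    """
    merged = dict(sat_obs)
    merged.update(uas_obs)  # prefer the higher-resolution UAS value when both exist
    days = sorted(merged)
    return np.interp(
        np.asarray(season_days, float),
        np.asarray(days, float),
        np.asarray([merged[d] for d in days], float),
    )


# Hypothetical NDVI observations (day of year -> value)
satellite_ndvi = {150: 0.30, 170: 0.50}
uas_ndvi = {160: 0.60, 170: 0.55}
print(merge_time_series(uas_ndvi, satellite_ndvi, range(145, 180, 5)))
```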

3.3 Spectral synergy

The spectral attributes of UAS and satellite images complement one another through their inherent strengths (Tattaris et al., 2016; Li Y. et al., 2022). Recent studies illustrate the practical value of this spectral synergy by showing how integrating UAS and satellite imagery can enhance biophysical estimates. For instance, Puliti et al. (2017) combined UAS-derived photogrammetric point clouds with Sentinel-2 imagery and limited field data to estimate growing stock volume across large forested areas in Norway, achieving a cost-effective solution for regional forest resource assessment. Similarly, Wang et al. (2019) used UAS-LiDAR data together with Sentinel-2 imagery to estimate the aboveground biomass of mangroves in China, improving accuracy over traditional field-based approaches.

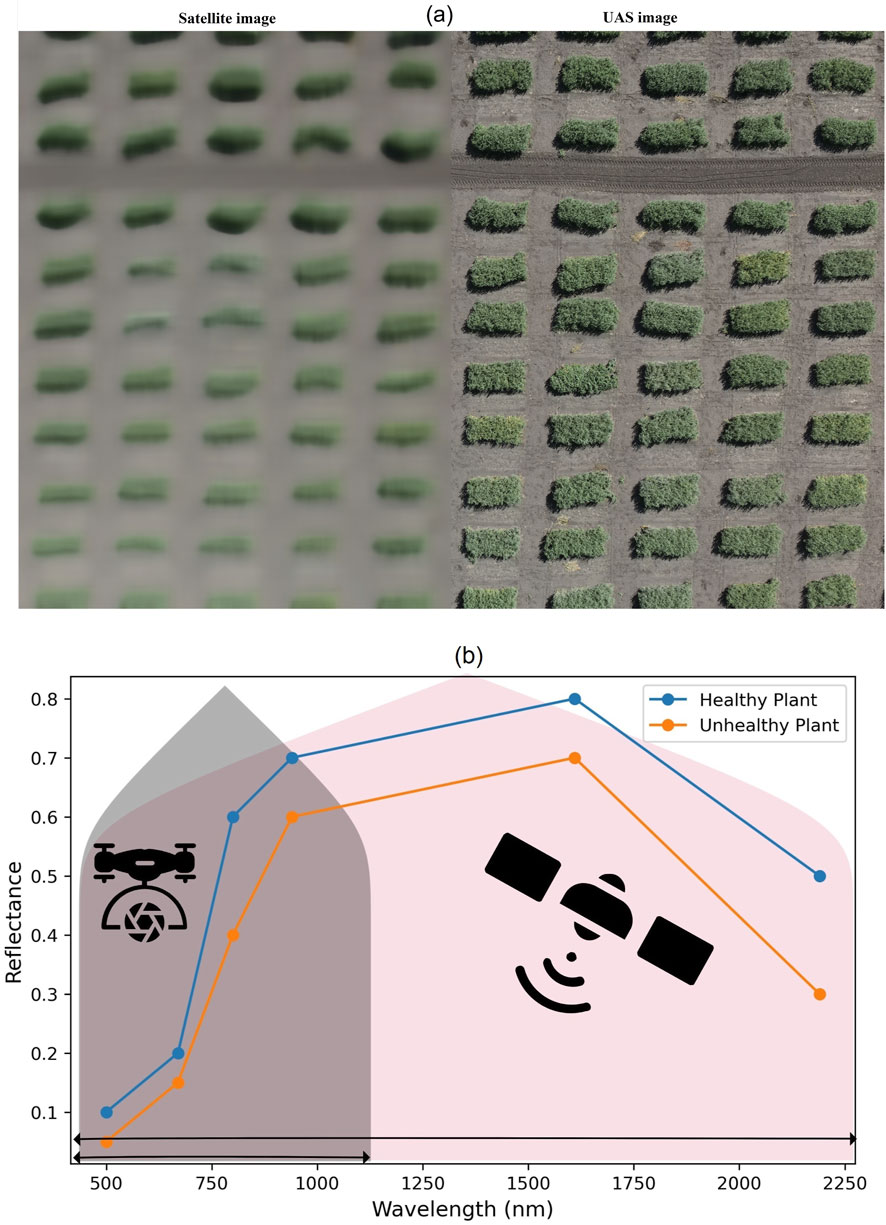

UAS imagery provides high-resolution data at the plot level, allowing more detailed analysis of crop health and classification, whereas satellite imagery has lower resolution but covers larger areas (Figure 5a). UASs offer flexibility in flight planning and sensor configuration, allowing targeted data collection based on specific crop management needs (Schut et al., 2018; Khaliq et al., 2019a; Bollas et al., 2021). As a result, UASs can provide more frequent updates on crop health and changes over time (Li M. et al., 2022). Although UAS operations are affected by weather, UASs fly below the clouds and can capture images under partially cloudy conditions, allowing reliable data acquisition, continuous crop health monitoring, and accurate classification analysis, unlike satellites, whose views may be blocked by cloud cover (Tattaris et al., 2016; Ballesteros et al., 2021; Rasmussen et al., 2021).

Figure 5. Differences between UAS and satellite images in terms of spatial (a) and spectral (b) resolution.

UASs can be custom-built to carry multiple sensors, including thermal and multispectral sensors, whereas satellites carry a variety of multispectral sensors that users cannot customize (Figure 5b). By integrating these sensors, a broader range of vegetation indices and spectral signatures can be derived for monitoring crop health and physiology (Tattaris et al., 2016; Pastonchi et al., 2020), classifying crop types, identifying stress factors, and estimating crop leaf area index and chlorophyll content (Chen et al., 2019; Jiang et al., 2022). The high-resolution data from UASs can serve as ground truth for validating and calibrating satellite data (Sozzi et al., 2021), with applications in crop classification, crop health assessment, and the development of satellite-derived indices and models (Tattaris et al., 2016; Jain and Pandey, 2021). UAS and satellite imagery capture different parts of the electromagnetic spectrum, providing complementary spectral information (Lacerda et al., 2022). UASs often capture imagery in the visible, near-infrared, and thermal infrared bands, while satellites typically capture imagery in the visible, near-infrared, and shortwave infrared bands (Wu et al., 2023). Overall, however, the spectral range of satellite sensors largely encompasses that of UAS sensors (Figure 5b), and the main difference lies in spatial resolution (Figure 5a). Fusing the spectral records from both sources therefore provides a more comprehensive basis for various agricultural applications (Li Y. et al., 2022; Chen et al., 2019).
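As a minimal sketch of how UAS data might serve as a reference for calibrating satellite data, the Python example below computes NDVI from UAS red and near-infrared bands, averages it over blocks matching an assumed satellite pixel footprint, and fits a linear gain and offset that map satellite NDVI onto the aggregated UAS values. It assumes the rasters are already co-registered and that the UAS grid dimensions are exact multiples of the aggregation factor; the synthetic data, the factor of 10, and all function names are illustrative and not taken from the cited studies.

```python
import numpy as np

def ndvi(nir, red):
    """NDVI = (NIR - Red) / (NIR + Red), returning 0 where the denominator is 0."""
    denom = nir + red
    safe = np.where(denom != 0, denom, 1.0)
    return np.where(denom != 0, (nir - red) / safe, 0.0)

def block_mean(img, factor):
    """Average non-overlapping factor x factor blocks (UAS grid -> satellite grid).

    Assumes co-registered rasters whose shape is an exact multiple of `factor`.
    """
    h, w = img.shape
    return img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))

def fit_linear_calibration(sat, ref):
    """Least-squares gain and offset such that gain * sat + offset approximates ref."""
    A = np.column_stack([sat.ravel(), np.ones(sat.size)])
    (gain, offset), *_ = np.linalg.lstsq(A, ref.ravel(), rcond=None)
    return gain, offset

# Synthetic example: a 100 x 100 UAS scene aggregated by a hypothetical factor of 10.
rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:100, 0:100]
red = 0.08 - 0.04 * xx / 99 + rng.normal(0, 0.005, (100, 100))
nir = 0.30 + 0.20 * yy / 99 + rng.normal(0, 0.01, (100, 100))
uas_ref = block_mean(ndvi(nir, red), 10)  # 10 x 10 reference NDVI
# Simulated satellite NDVI: biased and slightly noisy relative to the UAS reference.
sat_ndvi = 0.9 * uas_ref - 0.02 + rng.normal(0, 0.002, uas_ref.shape)
gain, offset = fit_linear_calibration(sat_ndvi, uas_ref)
calibrated = gain * sat_ndvi + offset
```

Averaging NDVI rather than averaging reflectance before computing NDVI is a design choice in this sketch; the two give slightly different values, and either may be appropriate depending on the application.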

3.4 Temporal optimization

Understanding the growth stages and phenological changes of the crop is essential as different crops have different growth patterns and critical stages that require monitoring. For example, during the early stages, frequent monitoring may be required to detect emergence and assess establishment, while during the reproductive stage, monitoring may focus on yield estimation (Messina et al., 2020; Ballesteros et al., 2021). The timing of image acquisition should align with critical stages of crop development and management activities (Jain and Pandey, 2021; Jiang et al., 2022). Key stages include planting, emergence, vegetative growth, flowering, fruiting, and senescence. It is important to capture images before and after important management practices such as fertilization, irrigation, and pest control (Schut et al., 2018). The temporal resolution of the imagery should align with the rate of change in the crop. Rapidly changing crops may require more frequent image acquisition, while slower-growing crops may require less frequent monitoring. It is important to strike a balance between capturing dynamic changes and avoiding unnecessary data collection.

The spatial resolution of the imagery should be appropriate for capturing the desired level of detail. Higher spatial resolution is beneficial for detecting small-scale variations within the field, such as disease hotspots or nutrient deficiencies. However, higher-resolution imagery often comes at a higher cost and may require more processing time (Marzougui et al., 2023). Weather conditions can affect both the quality of the imagery and the ability to capture meaningful information: cloud cover, for example, can obstruct satellite imagery, while strong winds or rain may prevent UAS flights. Monitoring weather forecasts and selecting suitable time windows for image acquisition is therefore important (Mazzia et al., 2020). The frequency and timing of image acquisition should also reflect budget constraints and available resources: UAS flights require equipment, personnel, and time, while satellite imagery may involve subscription costs. In summary, optimizing the frequency and timing of UAS and satellite image acquisition for precision agriculture requires weighing crop phenology, temporal and spatial resolution, weather conditions, available resources, and the costs and benefits of different approaches within the available budget (Messina et al., 2020; Li Y. et al., 2022; Wu et al., 2023; Ballesteros et al., 2021; Di Gennaro et al., 2019).

Varying satellite revisit times and weather conditions can lead to missed opportunities to capture critical growth stages, either because of cloud cover or because of long intervals between acquisitions. Careful consideration of satellite revisit frequency and weather conditions is therefore crucial when planning data collection, and selecting a satellite imagery provider with a shorter revisit period may be beneficial for timely and frequent monitoring. Integrating UAS imaging alongside satellite data provides more detailed and frequent monitoring at specific growth stages. Although both platforms remain subject to weather-related constraints, the combined approach outperforms either platform alone and therefore warrants dedicated optimization strategies (Sankaran et al., 2021).
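To make this planning logic concrete, the Python sketch below flags critical growth-stage windows that contain no sufficiently clear satellite acquisition and proposes a UAS flight at each window's midpoint. The 5-day revisit interval, the 20% cloud threshold, the stage windows, and the function name are hypothetical assumptions for illustration; an operational plan would also account for weather forecasts, flight restrictions, and budget.

```python
from datetime import date, timedelta

def plan_uas_flights(stage_windows, acquisitions, max_cloud=0.2):
    """Propose UAS flight dates for stage windows lacking a clear satellite scene.

    stage_windows: dict of stage name -> (start_date, end_date), both inclusive.
    acquisitions:  list of (date, cloud_fraction) pairs for satellite overpasses.
    Returns a dict of stage name -> proposed flight date (window midpoint).
    """
    clear = [d for d, cloud in acquisitions if cloud <= max_cloud]
    plan = {}
    for stage, (start, end) in stage_windows.items():
        if not any(start <= d <= end for d in clear):
            plan[stage] = start + timedelta(days=(end - start).days // 2)
    return plan

# Hypothetical 5-day revisit starting 1 June with per-scene cloud fractions.
overpasses = [date(2024, 6, 1) + timedelta(days=5 * i) for i in range(12)]
clouds = [0.1, 0.8, 0.9, 0.05, 0.7, 0.6, 0.95, 0.1, 0.3, 0.0, 0.5, 0.9]
windows = {
    "emergence": (date(2024, 6, 3), date(2024, 6, 12)),
    "flowering": (date(2024, 6, 20), date(2024, 7, 3)),
    "grain fill": (date(2024, 7, 10), date(2024, 7, 20)),
}
plan = plan_uas_flights(windows, list(zip(overpasses, clouds)))
```

In this example the grain-fill window already contains a clear overpass, so UAS flights are proposed only for emergence and flowering.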

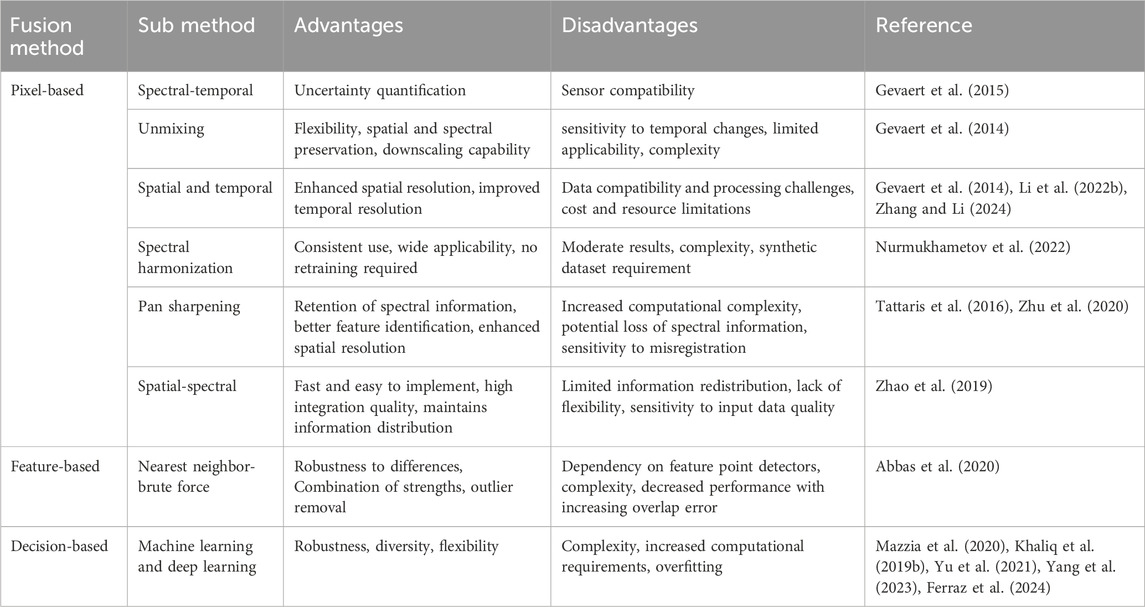

3.5 Data fusion methods

Fusion refers to the process of combining different types of imagery or data to create a more comprehensive and detailed picture of an object (Nurmukhametov et al., 2022). Fusion techniques aim to enhance the spatial and spectral resolution of the data, allowing for more accurate and precise analysis of plant traits (Tattaris et al., 2016). Typically, this involves merging low- and high-resolution imagery into a single high-resolution image that combines detailed spatial information with spectral data (Gevaert et al., 2014). To systematically analyze data fusion approaches, we adopted the widely accepted classification into pixel-level, feature-level, and decision-level fusion. This taxonomy, rooted in the remote sensing literature (Pohl and Van Genderen, 1998), reflects the stage of the processing pipeline at which data from multiple sensors are integrated: pixel-based fusion operates at the data level, feature-based fusion occurs after feature extraction, and decision-based fusion combines the outputs of independent models. This structure allows for a comparative understanding of the methodological complexity, performance, and suitability of each approach for different agricultural applications.

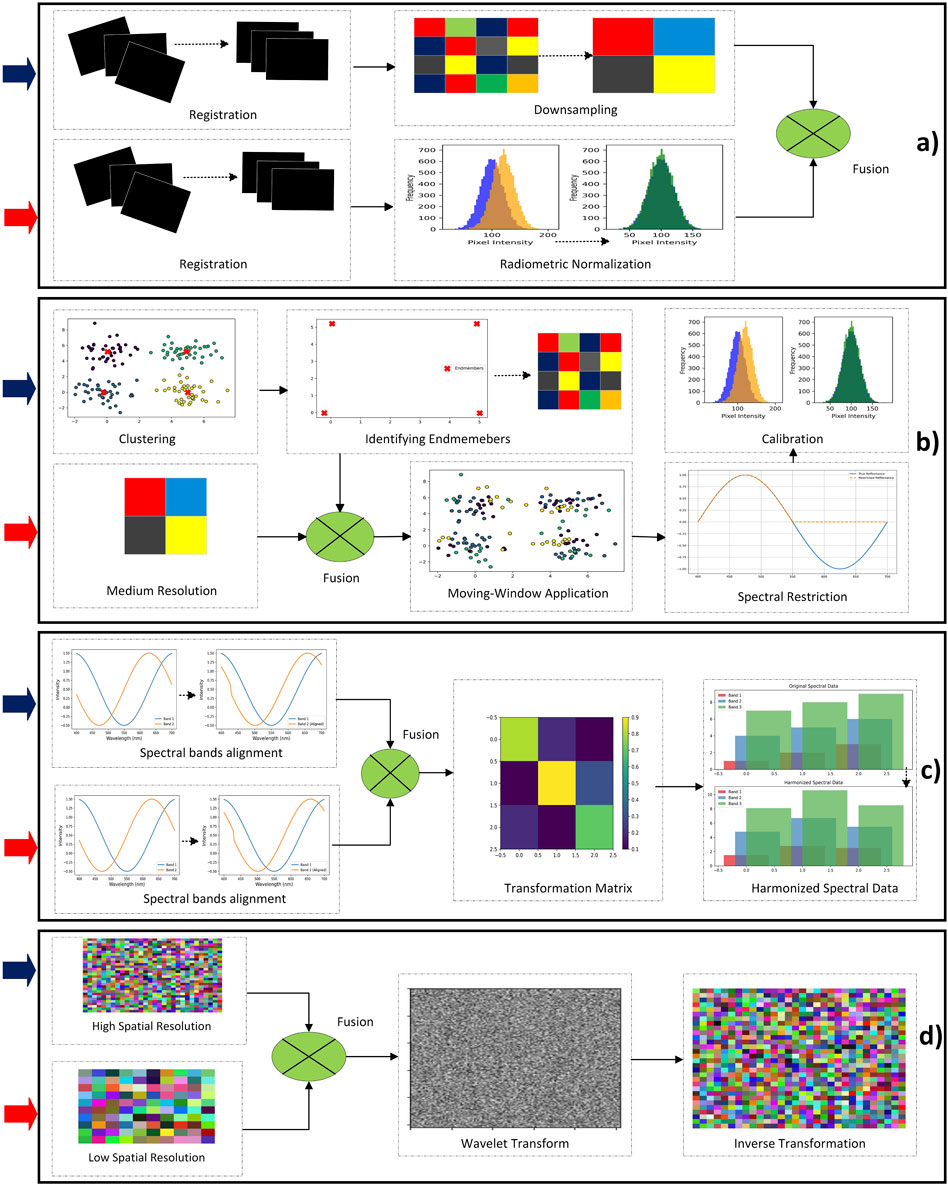

3.5.1 Spatial-temporal method

Pixel-based fusion combines the pixel values from corresponding locations in UAS and satellite images to create a single fused image. This enhances the spatial resolution of the satellite imagery by incorporating high-resolution details from the UAS images. Major examples of pixel-based fusion include spatio-temporal fusion (STF), unmixing-based data fusion, spectral harmonization, pan-sharpening, spatial-spectral fusion, and spectral-temporal fusion. STF, which combines medium-resolution satellite imagery with high-resolution UAS imagery, has been used, for example, to merge multispectral satellite imagery with hyperspectral UAS imagery (Gevaert et al., 2014). The STF framework consists of several steps, including registration, radiometric normalization, preliminary fusion, and reflectance reconstruction (Figure 6a). By combining the benefits of both platforms, the framework aims to generate continuous imagery with high spatial and temporal resolution (Li Y. et al., 2022).
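The Python sketch below illustrates the core idea behind many STF methods under strong simplifying assumptions: the temporal change observed in the coarse satellite imagery between two dates is upsampled and added to a fine-resolution UAS image from the first date. It follows the four steps named above only loosely (registration is assumed already done, radiometric normalization is a single linear fit, and reconstruction is reduced to clipping), omits the similar-pixel weighting of STARFM, and is not the framework of Li Y. et al. (2022); all names and data are hypothetical.

```python
import numpy as np

def block_mean(img, f):
    """Aggregate a fine grid to the coarse grid by averaging f x f blocks."""
    h, w = img.shape
    return img.reshape(h // f, f, w // f, f).mean(axis=(1, 3))

def upsample_nearest(img, f):
    """Replicate each coarse pixel into an f x f block on the fine grid."""
    return np.kron(img, np.ones((f, f)))

def simple_stf(fine_t1, coarse_t1, coarse_t2, f):
    """Predict a fine-resolution image at t2 from fine(t1), coarse(t1), and coarse(t2).

    Assumes co-registered images with fine dimensions equal to f times the coarse ones.
    """
    # Radiometric normalization: linear fit of satellite values to aggregated UAS values at t1.
    A = np.column_stack([coarse_t1.ravel(), np.ones(coarse_t1.size)])
    (gain, offset), *_ = np.linalg.lstsq(A, block_mean(fine_t1, f).ravel(), rcond=None)
    c1 = gain * coarse_t1 + offset
    c2 = gain * coarse_t2 + offset
    # Preliminary fusion: inject the normalized coarse temporal change into the fine image.
    pred = fine_t1 + upsample_nearest(c2 - c1, f)
    # Simplified reconstruction: keep predictions within the valid reflectance range.
    return np.clip(pred, 0.0, 1.0)

# Synthetic check: a uniform +0.05 reflectance change seen by a biased coarse sensor.
rng = np.random.default_rng(1)
fine_t1 = rng.uniform(0.1, 0.5, (40, 40))
fine_t2 = fine_t1 + 0.05
coarse_t1 = 0.8 * block_mean(fine_t1, 8) + 0.01
coarse_t2 = 0.8 * block_mean(fine_t2, 8) + 0.01
pred_t2 = simple_stf(fine_t1, coarse_t1, coarse_t2, 8)
```

Because the change is applied uniformly within each coarse pixel, this sketch cannot recover changes that differ between cover types inside one satellite pixel; methods such as STARFM add spatial and spectral weighting to address this.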

Figure 6. Image data fusion workflows: (a) Spatial-temporal; (b) Unmixing-based; (c) Spectral harmonization; (d) Pan-sharpening. The solid blue arrows

3.5.2 Unmixing-based method

Unmixing-based data fusion combines data from multiple sensors with different spectral characteristics to create a more complete and accurate representation of the observed scene (Gevaert et al., 2015). It removes the requirement for corresponding spectral bands between the sensors and allows additional spectral bands from medium-spatial-resolution sensors to be downscaled. The unmixing process decomposes mixed pixel spectra into their constituent endmembers and the corresponding fractional abundances (Figure 6b). This method has also been used to fuse multispectral satellite imagery with high-resolution datasets (Gevaert et al., 2014).
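A minimal Python sketch of unmixing-based fusion is shown below, assuming that a co-registered UAS image has already been classified into a small number of classes. The class fractions inside each coarse satellite pixel define a linear mixing model, which is solved by least squares for one endmember spectrum per class; each fine pixel then receives the spectrum of its class. Practical implementations typically solve the system within moving windows and add constraints or regularization, which this sketch omits; all names and data are hypothetical.

```python
import numpy as np

def unmixing_fusion(coarse, class_map, n_classes, f):
    """Downscale coarse spectra using a fine-resolution class map.

    coarse:    (H, W, B) satellite reflectance on the coarse grid.
    class_map: (H*f, W*f) integer labels in [0, n_classes) from the UAS image.
    Returns an (H*f, W*f, B) image in which each fine pixel holds its class endmember.
    """
    H, W, B = coarse.shape
    # Group fine pixels by the coarse pixel that contains them: (H*W, f*f).
    blocks = class_map.reshape(H, f, W, f).transpose(0, 2, 1, 3).reshape(H * W, f * f)
    # Fractional abundance of each class within each coarse pixel: (H*W, n_classes).
    fractions = np.stack([(blocks == k).mean(axis=1) for k in range(n_classes)], axis=1)
    # Linear mixing model coarse = fractions @ endmembers, solved for all bands at once.
    endmembers, *_ = np.linalg.lstsq(fractions, coarse.reshape(H * W, B), rcond=None)
    return endmembers[class_map]

# Synthetic example: two classes (soil-like, canopy-like) and two bands (red, NIR).
rng = np.random.default_rng(2)
true_endmembers = np.array([[0.20, 0.25],   # class 0: soil-like
                            [0.05, 0.45]])  # class 1: vegetation-like
class_map = rng.integers(0, 2, (20, 20))
fine_true = true_endmembers[class_map]                        # (20, 20, 2)
coarse = fine_true.reshape(4, 5, 4, 5, 2).mean(axis=(1, 3))  # 5 x 5 blocks -> (4, 4, 2)
fused = unmixing_fusion(coarse, class_map, 2, 5)
```

Note that the satellite bands never need a counterpart on the UAS sensor: the UAS image contributes only the class map, which is why this family of methods can downscale bands the UAS does not measure.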

3.5.3 Spectral harmonization method

Spectral harmonization refers to the process of aligning the spectral characteristics of data acquired from different platforms to ensure consistency and comparability (Nurmukhametov et al., 2022). Suitable methods include empirical approaches, such as principal component (PC)-based methods and root-polynomial correction (RPC), and physical approaches, such as model-based spectral harmonization (MBSH) (Zhao et al., 2019). These models are trained using reference data and can then predict the response of one sensor from the response of another. Training involves determining the weights or coefficients of a transformation matrix that maps the spectral responses of the source sensor to those of the destination sensor. Finally, the transformation matrix is applied to the spectral data from the source sensor (Figure 6c) to obtain harmonized spectral data for the destination sensor (Nurmukhametov et al., 2022).
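As an illustration of the empirical route, the Python sketch below fits a transformation matrix by least squares from paired reference spectra of a source and a destination sensor, either on the raw bands (a plain linear mapping) or on a degree-2 root-polynomial expansion, in which each band is augmented with the square root of every pairwise band product. The band counts, synthetic data, and function names are assumptions for illustration; PC-based methods and physical approaches such as MBSH are not shown.

```python
import numpy as np
from itertools import combinations

def root_poly_features(X):
    """Degree-2 root-polynomial expansion of non-negative spectra X (n_samples, n_bands)."""
    roots = [np.sqrt(X[:, i] * X[:, j]) for i, j in combinations(range(X.shape[1]), 2)]
    return np.column_stack([X] + roots)

def fit_transform_matrix(src, dst, root_poly=False):
    """Least-squares matrix M such that features(src) @ M approximates dst."""
    F = root_poly_features(src) if root_poly else src
    M, *_ = np.linalg.lstsq(F, dst, rcond=None)
    return M

def apply_transform(src, M, root_poly=False):
    """Map source-sensor spectra into the destination sensor's band space."""
    F = root_poly_features(src) if root_poly else src
    return F @ M

# Synthetic reference data: 4 source bands mapped to 4 destination bands.
rng = np.random.default_rng(3)
src = rng.uniform(0.02, 0.6, (200, 4))
M_true = np.eye(4) + rng.normal(0, 0.05, (4, 4))
dst = src @ M_true
M_lin = fit_transform_matrix(src, dst)
M_rp = fit_transform_matrix(src, dst, root_poly=True)
harmonized = apply_transform(src, M_rp, root_poly=True)
```

Scaling all input bands by a constant scales the root-polynomial output by the same constant, which distinguishes this expansion from an ordinary polynomial one and makes the mapping insensitive to the overall illumination level.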

3.5.4 Pan sharpening method

Pan-sharpening is another method; it fuses high-resolution panchromatic imagery (UAS) with low-resolution multispectral imagery (satellite) (Tattaris et al., 2016). The process begins by acquiring both panchromatic and multispectral images of the same area. The panchromatic image provides high spatial resolution but lacks spectral information, whereas the multispectral image provides spectral information at lower spatial resolution (Zhu et al., 2020). Component substitution and multi-resolution analysis are the main families of pan-sharpening algorithms. In component substitution, the bands of the low-resolution multispectral imagery are, for example, converted into intensity-hue-saturation (IHS) components using the IHS transform, and the panchromatic band, after histogram matching, replaces the intensity component. In multi-resolution analysis, the imagery is decomposed into scale levels (for example, with a wavelet transform), and spatial detail from the panchromatic band, matched to each decomposed layer, is injected into the corresponding layers of the multispectral image (Figure 6d). An inverse transformation is then applied to reconstruct imagery with enhanced spatial resolution (Marzougui et al., 2023).
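For a concrete picture of component substitution, the Python sketch below implements the generalized (fast) IHS variant, in which the intensity component is approximated as the mean of the multispectral bands; substituting the matched panchromatic band for it is then equivalent to adding the difference between pan and intensity to every band. It assumes the multispectral bands have already been resampled to the panchromatic grid and uses mean and standard deviation matching as a simple stand-in for full histogram matching; names and data are hypothetical.

```python
import numpy as np

def match_mean_std(src, ref):
    """Linearly stretch src so that its mean and standard deviation match ref."""
    return (src - src.mean()) / src.std() * ref.std() + ref.mean()

def fast_ihs_pansharpen(ms_up, pan):
    """Generalized IHS pan-sharpening.

    ms_up: (H, W, B) multispectral bands resampled to the panchromatic grid.
    pan:   (H, W) high-resolution panchromatic band.
    """
    intensity = ms_up.mean(axis=2)                # intensity component
    pan_matched = match_mean_std(pan, intensity)  # simplified histogram matching
    # Replacing I by the matched pan and inverting the transform adds (pan - I) to each band.
    return ms_up + (pan_matched - intensity)[..., None]

# Synthetic example: a 3-band image and a panchromatic band carrying extra detail.
rng = np.random.default_rng(4)
ms_up = rng.uniform(0.05, 0.4, (32, 32, 3))
pan = ms_up.mean(axis=2) + rng.normal(0, 0.02, (32, 32))
sharpened = fast_ihs_pansharpen(ms_up, pan)
```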

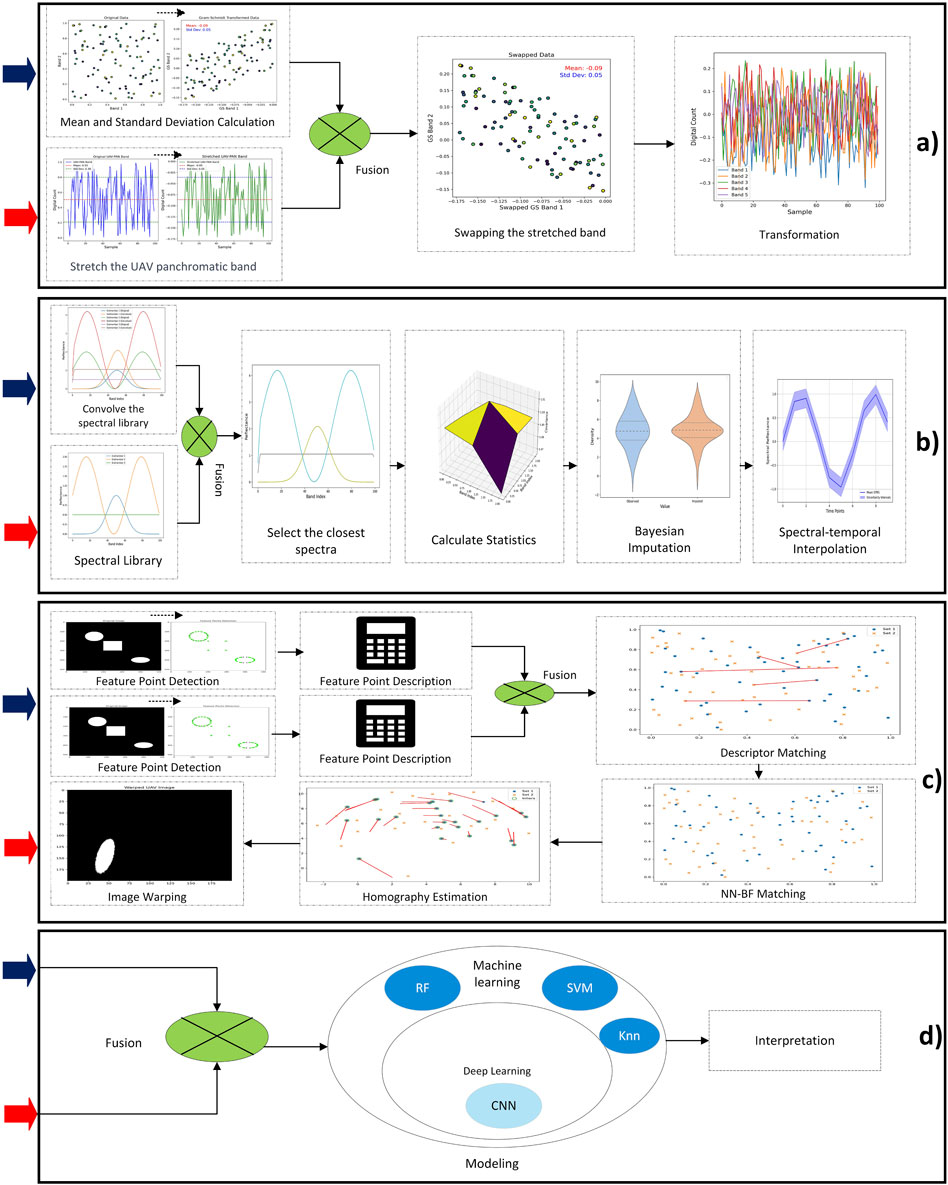

3.5.5 Spatial-spectral method

The spatial-spectral fusion technique uses the Gram-Schmidt (GS) transformation to combine UAS images of high spatial but low spectral resolution with satellite images of higher spectral resolution. The procedure first calculates the mean and standard deviation of the UAS panchromatic band (UAS-PAN) and of the first band (GS1) obtained by the GS transform. The UAS panchromatic band is then stretched so that its mean and standard deviation match those of GS1. The stretched high-resolution panchromatic band replaces GS1, and the data are transformed back into the original multispectral band space (Figure 7a), yielding higher-resolution multispectral bands (Zhao et al., 2019).
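The Python sketch below uses a commonly cited equivalent formulation of GS pan-sharpening rather than an explicit Gram-Schmidt orthogonalization: with the simulated low-resolution panchromatic band taken as the mean of the multispectral bands, swapping the stretched panchromatic band for GS1 and inverting the transform amounts to adding the panchromatic detail to each band, scaled by a band-specific gain cov(MS_k, I) / var(I). The equal band weights, synthetic data, and names are assumptions for illustration, not the implementation used by Zhao et al. (2019).

```python
import numpy as np

def gs_pansharpen(ms_up, pan):
    """Gram-Schmidt pan-sharpening in its equivalent component-substitution form.

    ms_up: (H, W, B) multispectral bands resampled to the panchromatic grid.
    pan:   (H, W) high-resolution panchromatic band.
    """
    intensity = ms_up.mean(axis=2)  # simulated low-resolution pan (equal band weights)
    # Stretch the pan band to the mean and standard deviation of the simulated pan.
    pan_matched = (pan - pan.mean()) / pan.std() * intensity.std() + intensity.mean()
    detail = pan_matched - intensity
    i_c = intensity - intensity.mean()
    fused = np.empty(ms_up.shape, dtype=float)
    for k in range(ms_up.shape[2]):
        band_c = ms_up[..., k] - ms_up[..., k].mean()
        gain = (band_c * i_c).mean() / i_c.var()  # cov(MS_k, I) / var(I)
        fused[..., k] = ms_up[..., k] + gain * detail
    return fused

# Synthetic example: three correlated bands and a panchromatic band with extra detail.
rng = np.random.default_rng(5)
base = rng.uniform(0.05, 0.4, (32, 32))
ms_up = np.stack([0.5 * base + 0.02, 0.8 * base, 1.2 * base + rng.normal(0, 0.01, (32, 32))], axis=2)
pan = base + rng.normal(0, 0.02, (32, 32))
fused = gs_pansharpen(ms_up, pan)
```

Unlike the IHS variant, each band receives a different share of the panchromatic detail according to how strongly it covaries with the simulated pan, which tends to reduce spectral distortion in bands weakly related to the panchromatic range.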

Figure 7. Image data fusion workflows: (a) Spatial-spectral; (b) Spectral-temporal; (c) Feature-based; (d) Decision-level. The solid blue arrows

3.5.6 Spectral-temporal method

The spectral-temporal method uses Bayesian inference to combine different sources of data. In this approach, a vector of true spectral reflectance factors is inferred from several noisy observations. The mathematical formulation sets up a linear Gaussian system in which the observations are represented as the product of a matrix and the true reflectance factors, plus a noise term. The matrix selects the available images, and the noise is assumed to follow a zero-mean Gaussian distribution. The Bayesian approach accounts for the uncertainty of each measurement and allows these uncertainties to be quantified (Figure 7b). It also uses prior information, such as the covariance between spectral bands of similar signatures, to impute multispectral reflectance spectra to hyperspectral intervals. This helps retain the physical features characteristic of vegetation spectra when combining multispectral and hyperspectral images (Gevaert et al., 2015).
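The linear Gaussian formulation described above can be sketched in a few lines of Python. With observations y = Hx + e, noise e ~ N(0, R), and a prior x ~ N(m0, S0) that encodes the expected reflectance and the band-to-band covariance, the posterior is Gaussian with covariance P = (S0^-1 + H^T R^-1 H)^-1 and mean m = P (S0^-1 m0 + H^T R^-1 y). This is the textbook conjugate update, offered as a minimal sketch rather than the exact model of Gevaert et al. (2015); the six-band prior, the observation matrix, and the noise levels below are hypothetical.

```python
import numpy as np

def bayesian_fusion(y, H, R, prior_mean, prior_cov):
    """Posterior mean and covariance of x for y = H x + e, e ~ N(0, R), x ~ N(prior_mean, prior_cov)."""
    prior_prec = np.linalg.inv(prior_cov)
    R_inv = np.linalg.inv(R)
    post_cov = np.linalg.inv(prior_prec + H.T @ R_inv @ H)
    post_mean = post_cov @ (prior_prec @ prior_mean + H.T @ R_inv @ y)
    return post_mean, post_cov

# Hypothetical 6-band "hyperspectral" reflectance vector with a vegetation-like prior.
prior_mean = np.array([0.04, 0.07, 0.05, 0.30, 0.42, 0.45])
idx = np.arange(6)
prior_cov = 0.01 * 0.8 ** np.abs(idx[:, None] - idx[None, :])  # neighbouring bands correlated
# Rows 0-1: broad satellite bands averaging bands 0-2 and 3-5; rows 2-3: UAS bands 3 and 4.
H = np.array([
    [1/3, 1/3, 1/3, 0, 0, 0],
    [0, 0, 0, 1/3, 1/3, 1/3],
    [0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 1, 0],
])
R = np.diag([0.01**2, 0.01**2, 0.005**2, 0.005**2])
y = np.array([0.06, 0.40, 0.33, 0.41])
post_mean, post_cov = bayesian_fusion(y, H, R, prior_mean, prior_cov)
post_sd = np.sqrt(np.diag(post_cov))  # per-band uncertainty after fusion
```

The posterior covariance is what provides the uncertainty quantification mentioned above: bands observed directly by the UAS end up with small posterior variance, while bands seen only through broad satellite bands rely more heavily on the prior covariance.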

3.5.7 Feature-based method

A feature-based fusion method is a technique used in image registration to combine and integrate information from multiple feature points to accurately align and match images (Abbas et al., 2020). The first step is to detect feature points in each image. Feature points are distinctive locations or regions in an image that can be easily identified and matched with corresponding points in another image. Once the feature points are detected, their descriptors are computed. Descriptors are numerical representations of the local image appearance around each feature point, typically normalized with respect to the point's scale and orientation. The next step is to match the descriptors between the two images by comparing the descriptor of each feature point in one image with those of all feature points in the other, with the goal of finding the best matches according to a similarity measure. In the feature-based fusion method, the matches obtained from the descriptor matching step are combined and fused to generate a final set of accurate matches (Figure 7c). This fusion process considers factors such as the quality of matches, geometric constraints, and statistical measures. Once accurate matches are obtained, the images can be aligned and registered based on the correspondences between feature points. Alignment involves estimating the transformation parameters, such as translation, rotation, and scaling, that best align the images (Abbas et al., 2020).

The main method used for feature-based fusion in precision agriculture is the nearest neighbor-brute force (NN-BF) method, which combines the strengths of the NN and BF descriptor matching strategies to register images. It first identifies corresponding feature point descriptor matches between images of the training set based on their overlap error (Abbas et al., 2020). These matches are then matched against the descriptors of the test set using the NN and BF strategies. Finally, the resulting matches are processed with random sample consensus (RANSAC) to remove outliers and estimate a homography for image registration. In remote sensing image registration, the NN-BF method has shown improved performance compared with approaches based on other feature detectors and descriptors, such as the scale-invariant feature transform (SIFT), speeded-up robust features (SURF), and oriented FAST and rotated BRIEF (ORB).
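To illustrate the general matching-then-RANSAC pipeline, the Python sketch below performs exhaustive (brute-force) nearest-neighbour matching of precomputed descriptors with a ratio test, then estimates a homography with RANSAC using the direct linear transform. It is a generic textbook sketch, not the NN-BF method of Abbas et al. (2020); it assumes descriptors and keypoint coordinates have already been extracted (for example by SIFT or ORB in a real pipeline), omits coordinate normalization for brevity, and uses hypothetical names, thresholds, and synthetic data.

```python
import numpy as np

def brute_force_match(desc_a, desc_b, ratio=0.8):
    """Match each descriptor in desc_a to its nearest neighbour in desc_b (exhaustive search).

    A match is kept only if the best distance is below `ratio` times the second best.
    Returns an (M, 2) array of (index_in_a, index_in_b) pairs.
    """
    d = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(d, axis=1)
    rows = np.arange(len(desc_a))
    keep = d[rows, order[:, 0]] < ratio * d[rows, order[:, 1]]
    return np.column_stack([rows[keep], order[keep, 0]])

def homography_dlt(src, dst):
    """Direct linear transform: 3 x 3 homography mapping src (N, 2) onto dst (N, 2), N >= 4."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)  # null-space vector = homography up to scale
    return H / H[2, 2]

def project(H, pts):
    """Apply homography H to (N, 2) points."""
    p = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return p[:, :2] / p[:, 2:3]

def ransac_homography(src, dst, n_iter=500, thresh=2.0, seed=0):
    """Fit homographies to random 4-point samples and keep the one with most inliers."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iter):
        idx = rng.choice(len(src), 4, replace=False)
        H = homography_dlt(src[idx], dst[idx])
        inliers = np.linalg.norm(project(H, src) - dst, axis=1) < thresh
        if inliers.sum() > best.sum():
            best = inliers
    return homography_dlt(src[best], dst[best]), best  # refit on all inliers

# Synthetic descriptors: desc_a is a noisy, shuffled copy of desc_b.
rng = np.random.default_rng(6)
desc_b = rng.normal(size=(50, 32))
perm = rng.permutation(50)
desc_a = desc_b[perm] + rng.normal(scale=0.01, size=(50, 32))
matches = brute_force_match(desc_a, desc_b)

# Synthetic correspondences: 40 exact matches and 10 outliers displaced far from the true mapping.
H_true = np.array([[1.02, 0.01, 5.0], [-0.01, 0.98, -3.0], [1e-5, 2e-5, 1.0]])
src = rng.uniform(0, 100, (50, 2))
dst = project(H_true, src)
dst[40:] += np.array([30.0, -25.0])
H_est, inlier_mask = ransac_homography(src, dst)
```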

3.5.8 Decision-level method

Decision-level fusion makes decisions based on information from both UAS and satellite images, often using machine learning (ML), deep learning (DL), or statistical techniques. Methods such as deep neural networks (DNN), convolutional neural networks (CNN), random forest (RF), and support vector machines (SVM) can be employed to fuse information from multiple sources and improve the quality of moderate-resolution platforms (Mazzia et al., 2020; Yu et al., 2021). In this context, the decision-based fusion approach aims to refine satellite images using UAS imagery (Figure 7d). The ML and DL models are trained to learn a mapping function between the satellite images and ground truth instances extracted from the UAS images. By optimizing the model parameters against a loss function, these models learn a nonlinear mapping that improves the coherence between the predicted satellite pixels and the UAS pixels (Yu et al., 2021; Khaliq et al., 2019b). By leveraging the benefits of both UAS and satellite imagery, decision fusion enables vegetation monitoring with improved spatial and temporal resolution (Khaliq et al., 2019b; Mazzia et al., 2020). Table 2 presents the advantages and disadvantages of various image fusion techniques.
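Decision-based fusion was characterized at the start of Section 3.5 as combining the outputs of independent models. One simple form of this, sketched below in Python as an illustration rather than the approach of the cited studies, is weighted soft voting: class probabilities from a UAS-based and a satellite-based classifier are averaged per pixel using source weights, and the most probable class is selected. The probabilities and weights shown are hypothetical; in practice the weights might be derived from each model's validation accuracy.

```python
import numpy as np

def decision_fusion(prob_list, weights=None):
    """Weighted soft voting over per-source class probabilities.

    prob_list: list of (n_pixels, n_classes) probability arrays, one per source model.
    weights:   optional per-source weights (normalized to sum to 1 here).
    Returns (fused_probabilities, fused_labels).
    """
    probs = np.stack(prob_list)  # (n_sources, n_pixels, n_classes)
    w = np.ones(len(prob_list)) if weights is None else np.asarray(weights, dtype=float)
    fused = np.tensordot(w / w.sum(), probs, axes=1)  # weighted mean over sources
    return fused, fused.argmax(axis=1)

# Hypothetical per-pixel probabilities for two classes (e.g., healthy vs. stressed).
uas_probs = np.array([[0.70, 0.30], [0.40, 0.60], [0.55, 0.45]])
sat_probs = np.array([[0.60, 0.40], [0.80, 0.20], [0.30, 0.70]])
fused, labels = decision_fusion([uas_probs, sat_probs], weights=[0.6, 0.4])
```

Here the second and third pixels show the two sources disagreeing; the fused label follows whichever source is more confident after weighting, which is the practical appeal of fusing at the decision level without harmonizing the inputs.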

Regarding the fusion of UAS and satellite images for precision agriculture, researchers have primarily favored pixel-based fusion methods (70% of cases), followed by decision-based (23%) and feature-based (7%) approaches. However, there is no clear consensus on which fusion method is most accurate, mainly because comprehensive comparative studies are lacking; this gap in the literature points to a promising area for future research. The key factors in selecting a fusion method are the intrinsic traits of the data sources, such as spatial resolution, spectral range, and temporal resolution. The choice of method should align with the specific goal, whether that is enhancing spatial detail, preserving spectral information, or integrating various data sources (Sozzi et al., 2021). Computational complexity must also be considered, especially for large datasets, to ensure that the method is both efficient and scalable (Zhu et al., 2020). Ultimately, choosing a fusion method requires a thorough assessment of these factors, tailored to the specific data and objectives of each application (Mancini et al., 2019).

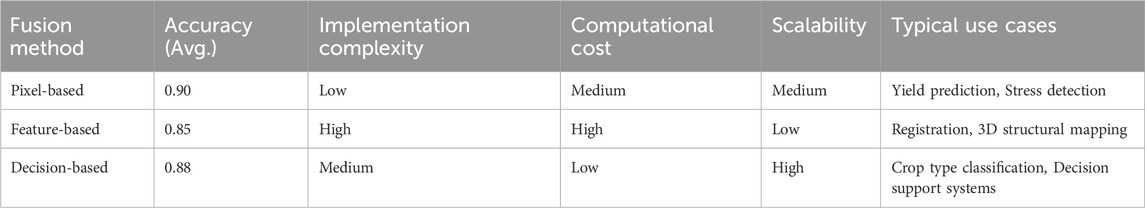

To strengthen the comparative analysis of fusion method performance, we synthesized results from the 56 reviewed studies to evaluate how each method performs across key precision agriculture tasks. As shown in Table 3, pixel-based fusion methods achieved the highest average classification accuracy (90%) and were consistently preferred in tasks such as crop type mapping, stress detection, and yield prediction. This preference is not solely due to performance; pixel-based methods are generally easier to implement and require fewer computational resources compared to feature- or decision-based approaches. While feature-based methods provided enhanced structural detail, especially in vegetation segmentation tasks, they involved higher computational complexity and longer processing times. Decision-based fusion methods showed robust generalization across multi-sensor sources but often lacked the spatial precision needed for fine-grained agricultural assessments. These findings illustrate that the choice of fusion strategy is context-dependent, influenced not only by accuracy but also by operational efficiency, sensor characteristics, and end-use application.

3.6 Data fusion challenges and solutions

The challenges associated with integrating UAS and satellite images for precision agriculture pose significant hurdles that affect the reliability and effectiveness of the combined data. A primary challenge is the disparity in spatial and temporal resolution between UASs and satellites (Li Y. et al., 2022; Jiang et al., 2022), which can hinder seamless data integration (Pastonchi et al., 2020). Coordinated data collection schedules and enhanced fusion techniques offer a viable solution by ensuring synchronized acquisition from both platforms (Tattaris et al., 2016; Wu et al., 2023), which addresses spatial and temporal misalignments and fosters a more cohesive integration of UAS and satellite data (Jain and Pandey, 2021; Lacerda et al., 2022). Other studies support this view: synchronized data acquisition has been shown to minimize spatial and temporal misalignments, enabling the effective blending of UAS and satellite datasets (Lu and Shi, 2024), and fusion frameworks such as the high-resolution spatiotemporal image fusion (HISTIF) method have improved the coherence of integrated data, allowing daily monitoring with high spatial detail (Jiang et al., 2020). However, these techniques have drawbacks. Advanced fusion processes require computational resources and expertise, posing a barrier to widespread adoption, especially in resource-constrained settings. Moreover, errors in radiometric and geometric calibration can exacerbate integration challenges and reduce the overall accuracy of the combined outputs (Collings et al., 2011; Bansod et al., 2017).

Recent advances highlight the potential of Spatio-Temporal-Spectral (STS) fusion frameworks for addressing these challenges. For instance, the Spatial and Temporal Adaptive Reflectance Fusion Model (STARFM) coupled with Consistent Adjustment of the Climatology to Actual Observations (CACAO) has shown superior performance in combining spatial, temporal, and spectral data from UAS and Sentinel-2 images (Zhang and Li, 2024). This method achieves precise downscaling of Sentinel-2 images, yielding results that are spectrally and spatially consistent with high-resolution UAS observations. By integrating the strengths of multiple sensors, such frameworks enhance the reliability of fused datasets, particularly in resolving spatial mismatches and temporal inconsistencies. Furthermore, the Spatio-Spectral fusion component of the STS framework can predict missing spectral bands, such as red-edge and NIR, that are critical for agricultural monitoring. This approach not only bridges spectral gaps but also mitigates the impact of geographic registration errors at large spatial scales, a common issue when fusing UAS and satellite data (Zhang and Li, 2024). However, these frameworks also have limitations: the computational intensity of STS methods and the need for precise calibration of input data remain significant barriers. As noted in recent studies, integrating CACAO into Spatio-Spectral fusion ensures pixel-level reflectance accuracy but requires further refinement to minimize errors in downscaled spectral bands, especially in complex agricultural landscapes. Despite these challenges, the potential benefits for crop monitoring, yield prediction, and resource optimization justify continued investment in refining these methods. Scalable and cost-effective approaches, including AI-driven algorithms for super-resolution and automated spectral calibration, could further enhance their applicability. This aligns with Zhang and Li (2024), who suggest that future research should explore integrating deep learning models with STS fusion methods to improve spatial and spectral detail recovery while addressing the accessibility and scalability challenges associated with computational resources.

Radiometric calibration discrepancies between UAS and satellite sensors pose a significant challenge in integrating data for precision agriculture, as they introduce variations in measured values and lead to inconsistencies in indices like NDVI and LAI (Lacerda et al., 2022; Maimaitijiang et al., 2020). Addressing these discrepancies requires the development of standardized calibration procedures and harmonized algorithms to ensure compatibility and comparability of datasets across platforms. Studies have shown that standardized protocols not only improve data accuracy but also facilitate seamless integration of high-resolution UAS imagery with the broader temporal coverage of satellite data (Prins and Van Niekerk, 2021; Stolarski et al., 2022). In addition, advanced fusion frameworks, such as HISTIF, have demonstrated the ability to mitigate radiometric mismatches and geometric distortions, enhancing the reliability of integrated datasets (Jiang et al., 2020). While resource-intensive, these solutions offer significant benefits for precision agriculture, including improved crop monitoring, better yield predictions, and optimized resource use, making the investment in calibration advancements worthwhile. Future research should focus on scalable, cost-effective calibration methodologies to make these benefits accessible to resource-constrained settings, ensuring a broader application of integrated remote sensing technologies in agriculture.
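One widely used standardized procedure for radiometric calibration of UAS imagery is the empirical line method, in which panels of known reflectance placed in the scene are used to fit a per-band linear relation between raw digital numbers (DN) and surface reflectance. The Python sketch below assumes panel DN values have already been extracted from the imagery; the panel reflectances, DN values, and function names are hypothetical, and the cited studies may use different calibration protocols.

```python
import numpy as np

def empirical_line(panel_dn, panel_reflectance):
    """Per-band gain and offset mapping raw DN to reflectance.

    panel_dn, panel_reflectance: (n_panels, n_bands) arrays; at least two panels needed.
    """
    n_bands = panel_dn.shape[1]
    gains, offsets = np.empty(n_bands), np.empty(n_bands)
    for b in range(n_bands):
        # np.polyfit with degree 1 returns [slope, intercept].
        gains[b], offsets[b] = np.polyfit(panel_dn[:, b], panel_reflectance[:, b], 1)
    return gains, offsets

def apply_calibration(dn_image, gains, offsets):
    """Convert an (H, W, n_bands) DN image to reflectance, band by band."""
    return dn_image * gains + offsets

# Hypothetical three-panel target (5%, 20%, 50% reflectance) imaged in red and NIR bands.
panel_reflectance = np.array([[0.05, 0.05], [0.20, 0.20], [0.50, 0.50]])
panel_dn = panel_reflectance * np.array([20000.0, 15000.0]) + np.array([500.0, 800.0])
gains, offsets = empirical_line(panel_dn, panel_reflectance)
scene_dn = np.full((2, 2, 2), [1500.0, 6800.0])  # DN of a hypothetical vegetation patch
scene_reflectance = apply_calibration(scene_dn, gains, offsets)
```

Applying the same panel-based procedure across flights is one way to make UAS reflectance, and indices such as NDVI derived from it, comparable with atmospherically corrected satellite surface reflectance.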

Data synchronization, in terms of both acquisition time and location, introduces complexities arising from the short-duration, small-area coverage of UAS data compared with the single, wide-swath images captured by satellites (Furlanetto et al., 2023; Jiang et al., 2022). For instance, UAS-based vegetation indices collected in a specific field often require higher temporal resolution for phenological studies, whereas satellite imagery provides broader coverage but at coarser spatial resolution. Coordinated data collection schedules, interpolation, and fusion techniques play crucial roles in overcoming these challenges. A comparative study by Amankulova et al. (2024) demonstrated that fusing PlanetScope and Sentinel-2 data improved the accuracy of crop yield predictions, highlighting the value of coordinated data collection and interpolation techniques. Temporal interpolation methods such as linear and spline interpolation have been employed successfully to align UAS data with satellite revisit times. A case study by Jenerowicz et al. (2017) showed that integrating UAS and Landsat data through methods such as pan-sharpening, which fuses high-spatial-resolution RGB imagery from UAS platforms with multispectral satellite data, effectively bridges spatial and spectral resolution gaps and enhances both spatial and spectral accuracy. Additionally, georeferencing and coregistration techniques ensure accurate spatial alignment between UAS and satellite data, resolving issues related to their disparate acquisition characteristics (Jain and Pandey, 2021). For example, ground control points (GCPs) and automated image registration algorithms enabled the seamless integration of high-resolution UAS images for urban mapping (Salas López et al., 2022). Other studies, such as Wang P. et al. (2022), have reported similar challenges but emphasized that AI-driven methods, such as deep learning-based super-resolution, can further improve data fusion outcomes by enhancing the spatial resolution of satellite imagery to match UAS data. Such advances suggest potential pathways for improving synchronization and integration.
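As a minimal sketch of the temporal interpolation step, the Python example below linearly interpolates a sparse UAS time series (for example, plot-mean NDVI) to satellite acquisition dates, returning NaN for dates outside the UAS observation period rather than extrapolating. The dates and values are hypothetical; spline interpolation would follow the same pattern with a different interpolator.

```python
import numpy as np
from datetime import date

def align_to_satellite_dates(uas_dates, uas_values, sat_dates):
    """Linearly interpolate UAS observations to satellite dates (NaN outside the UAS span)."""
    x = np.array([d.toordinal() for d in uas_dates], dtype=float)
    y = np.asarray(uas_values, dtype=float)
    order = np.argsort(x)  # np.interp requires increasing sample positions
    x, y = x[order], y[order]
    xi = np.array([d.toordinal() for d in sat_dates], dtype=float)
    out = np.interp(xi, x, y)
    out[(xi < x[0]) | (xi > x[-1])] = np.nan  # do not extrapolate
    return out

uas_dates = [date(2024, 6, 5), date(2024, 6, 19), date(2024, 7, 3)]
uas_ndvi = [0.30, 0.58, 0.72]
sat_dates = [date(2024, 6, 1), date(2024, 6, 12), date(2024, 6, 26), date(2024, 7, 10)]
ndvi_on_sat_dates = align_to_satellite_dates(uas_dates, uas_ndvi, sat_dates)
# -> [nan, 0.44, 0.65, nan]
```

Using ordinal day numbers rather than day-of-year keeps the interpolation correct for seasons that span a calendar year boundary.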

The divergence in processing techniques and algorithms required for UAS and satellite data poses a notable challenge in the integration process (Jain and Pandey, 2021; Pastonchi et al., 2020). For example, UAS imagery typically involves high-resolution, small-scale data processed with specialized software such as Pix4D or Agisoft Metashape, whereas satellite data often require large-scale multispectral analysis with tools such as Google Earth Engine or ENVI. This discrepancy can lead to inefficiencies when integrating the two data types for applications such as precision agriculture. Standardizing workflows emerges as a key solution, enhancing data compatibility and streamlining the processing pipeline (Stolarski et al., 2022). For example, the end-to-end deep convolutional neural network proposed by Tsai et al. (2017) has shown success in harmonizing datasets, improving output accuracy and reducing processing time. Compared with traditional approaches, in which integration often involved significant manual intervention, such standardization reduces the potential for errors, making integration more efficient and results more consistent and reliable (Marzougui et al., 2023; Habibi et al., 2021). Pakdil and Çelik (2022) reported similar results, confirming that standardized processing workflows not only enhance integration efficiency but also improve the scalability of geospatial projects. However, the cost and accessibility of UAS platforms and satellite data introduce financial and operational constraints (Marzougui et al., 2023; Sankaran et al., 2021; Pastonchi et al., 2020). While UAS platforms provide greater flexibility in data acquisition, their operational costs can be prohibitive for small-scale users. In contrast, satellite data, while often more accessible, may lack the spatial resolution required for certain applications. Integrating the two technologies through cost-sharing models and open-access satellite platforms such as Sentinel-2 may partially mitigate these constraints. This is consistent with Politi et al. (2019), who underscore the importance of innovation and collaboration in overcoming these obstacles.

Optimizing UAS data collection strategies (Li M. et al., 2022) and considering the use of open-source or freely available satellite imagery (Mazzia et al., 2020) can represent strategic measures to enhance cost-effectiveness. However, relying solely on free satellite data may limit flexibility regarding the timing of data collection and available options for imagery processing. Therefore, a balanced approach that incorporates both UAS and satellite data sources, while also considering the potential limitations of freely available satellite imagery, is recommended for maximizing cost-effectiveness and flexibility in data acquisition and processing. Collaboration with industry stakeholders and research institutions further addresses accessibility challenges by providing valuable resources and shared data, ultimately making UAS platforms and satellite data more accessible and cost-effective (Marzougui et al., 2023).

Finally, to address the challenges of data heterogeneity, one practical approach involves the development of preprocessing pipelines that standardize spatial resolution and radiometric properties across UAS and satellite datasets. For example, pan-sharpening techniques can be applied to coarsely resolved satellite imagery to improve alignment with UAS imagery. For temporal alignment, interpolation or data assimilation methods can be used to reconcile differences in acquisition dates. To tackle computational load, cloud-based platforms such as Google Earth Engine and Amazon Web Services (AWS) allow scalable processing of fused datasets. Additionally, implementing machine learning frameworks, such as decision-level fusion using ensemble models (Bazrafkan et al., 2024b), can improve robustness by leveraging diverse input sources without needing complete harmonization. These strategies offer practical paths for overcoming fusion-related barriers in precision agriculture applications.

3.7 Impact on precision agriculture

The integration of UAS and satellite data in precision agriculture significantly enhances decision-making for farmers and plant breeders. For instance, one study demonstrated how UAS imagery enabled real-time monitoring of nitrogen deficiencies within cornfields, while satellite data provided insights into regional growth patterns and drought stress over a season (Yu et al., 2021). This dual-level monitoring allowed precise application of nitrogen fertilizer, reducing costs and improving yields. Integrating UAS and Sentinel-2 imagery with the spatio-temporal fusion (STF) framework proposed by Li Y. et al. (2022) led to significant quantitative improvements in monitoring crop growth: compared with the traditional STARFM method, the framework reduced the spatial texture error (measured by local binary patterns) by more than 0.10 and the edge error (measured with the Roberts edge operator) by more than 0.25. Furthermore, the correlation coefficient (

3.8 Comparative analysis

In specific scenarios and for particular crop types, the synergistic use of UAS and satellite images surpasses the individual utility of each source, providing enhanced insights. Notably, crops in row configurations (e.g., vineyards, orchards) (Mazzia et al., 2020; Nurmukhametov et al., 2022; Mancini et al., 2019; Huang et al., 2023; Khaliq et al., 2019b), crops with larger field sizes or homogeneous canopy structures (e.g., wheat, rice, cotton, chickpea, potato) (Tattaris et al., 2016; Ahmad et al., 2022; Habibi et al., 2021), and crops with complex canopy structures or high spatial variability (e.g., maize, soybean, dry pea) (Marzougui et al., 2023; Jiang et al., 2022) would benefit from this integration.

Recent research suggests that, in precision agriculture, cover-crop spectra can provide valuable information rather than merely noise, as they have historically been treated. For instance, studies on vineyards have shown that cover-crop spectral data, acquired through UASs and moderate-resolution satellite imagery such as Sentinel-2, significantly influence the accuracy of grape yield and quality predictions (Williams et al., 2024). This finding challenges the traditional focus on vine spectra alone and underscores the potential of using cover-crop spectral variation to enhance predictive models. Cover-crop spectra captured by both UASs and satellites can therefore provide indirect yet meaningful insights into crop quality and yield variability. Moreover, integrating high-resolution UAS imagery with moderate-resolution satellite data can mitigate the limitations of mixed pixels in moderate-resolution data such as Sentinel-2. For example, vineyard monitoring studies have demonstrated that, despite spatial mixing within each pixel, Sentinel-2 data robustly describe variations in grape quality parameters, because cover-crop spectra dominate the mixed-pixel signal over vine spectra. This insight aligns with the broader goal of combining both technologies to achieve cost-effective, scalable monitoring solutions for precision agriculture (Williams et al., 2024).
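The mixed-pixel effect can be illustrated with a linear spectral mixing model, in which a moderate-resolution pixel is approximated as the area-weighted sum of the reflectance of each cover type it contains. In this sketch, a classified UAS map (0 = vine, 1 = cover crop) supplies the sub-pixel fractions for each coarse pixel. The class codes, the endmember reflectance values, and the function names are hypothetical and chosen only for illustration. The linear model also ignores multiple scattering and shadowing between vine rows.

```python
import numpy as np


def class_fractions(class_map, factor: int, n_classes: int) -> np.ndarray:
    """Fraction of each class within every factor x factor block of a
    fine-resolution classified map. Returns shape (rows, cols, n_classes)."""
    class_map = np.asarray(class_map)
    rows = (class_map.shape[0] // factor) * factor
    cols = (class_map.shape[1] // factor) * factor
    blocks = class_map[:rows, :cols].reshape(rows // factor, factor, cols // factor, factor)
    return np.stack([(blocks == k).mean(axis=(1, 3)) for k in range(n_classes)], axis=-1)


def linear_mixture(fractions: np.ndarray, endmembers: np.ndarray) -> np.ndarray:
    """Area-weighted mix: fractions (rows, cols, classes) @ endmembers
    (classes, bands) -> modeled coarse-pixel reflectance (rows, cols, bands)."""
    return fractions @ endmembers


# Hypothetical example: one vine row (class 0) in a 4 x 4 block of cover crop (class 1).
uas_classes = np.ones((4, 4), dtype=int)
uas_classes[:, 0] = 0
fractions = class_fractions(uas_classes, factor=4, n_classes=2)

# Illustrative red and NIR reflectance for vine (row 0) and cover crop (row 1).
endmembers = np.array([[0.05, 0.40],
                       [0.08, 0.30]])
mixed = linear_mixture(fractions, endmembers)
```

With a quarter of the coarse pixel covered by vines, the cover crop contributes three quarters of the modeled signal in each band, which illustrates how an inter-row cover crop can dominate a Sentinel-2 pixel.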

Although existing research demonstrates the benefits of this integration for various crop types, the optimal integration methods and techniques for maximizing insights across different agricultural contexts remain poorly understood. Future research should address these gaps by exploring innovative approaches, such as multi-temporal monitoring during key phenological stages (e.g., veraison) and optimizing data acquisition timing to reduce spectral variability caused by environmental factors (Williams et al., 2024). By advancing methodologies tailored to specific crop characteristics and environmental conditions, the synergistic use of UAS and satellite imagery can further transform precision agriculture.

3.9 Research gaps identified for future works

The SLR reveals only a limited number of studies combining UASs and satellite imagery for precision agriculture, compared with studies using either platform alone. This disparity may arise from technological challenges, economic constraints, or limitations in data integration. Despite the various advantages of combining the two platforms, the scarcity of research suggests either a gap in understanding or that exploration of UAS-satellite synergies for precision agriculture is still at an early stage. One significant gap is the lack of studies evaluating the performance of the same data fusion model, applied to data from UAS-mounted and satellite sensors, across a variety of crops. Different crops exhibit unique characteristics, growth patterns, and nutrient requirements. Evaluating a fusion approach on various crops not only tests its generalizability and robustness but also reveals the specific challenges and opportunities associated with each crop. This allows the fusion approach to be refined and optimized for specific crop types, enhancing its applicability across a broad spectrum of agricultural contexts (Prins and Van Niekerk, 2021; Sozzi et al., 2021; Furlanetto et al., 2023).

While fusing UAS and satellite imagery is promising, practical applications face significant challenges. One of the primary obstacles is the variability in sensor configurations and data acquisition conditions, which can lead to inconsistencies in spectral and spatial resolution, particularly in large-scale agricultural applications (Zhang and Li, 2024). These inconsistencies necessitate advanced preprocessing techniques, such as image registration and normalization, to mitigate discrepancies between UAS and satellite data. Furthermore, adapting this fusion technology to diverse agricultural scenarios requires addressing site-specific factors such as crop heterogeneity, spectral variability, and dynamic growth conditions. For example, while CA-STARFM has demonstrated its effectiveness in reducing errors across spectral and spatial dimensions, its performance may vary with vegetation type and with the presence of mixed land covers, such as bare soil and water, that are common in agricultural landscapes (Zhang and Li, 2024). This highlights the need for further refinement of modeling techniques and for adaptive algorithms that can adjust to diverse agricultural practices and climatic conditions. Another critical research gap involves improving segmentation algorithms, as accurate segmentation is pivotal for separating vegetation from the background in UAS and satellite imagery. Fusion of satellite and UAS data relies on effective segmentation to improve the accuracy of vegetation monitoring, because more precise isolation of vegetation pixels yields cleaner vegetation information from each data source. Better segmentation algorithms therefore have the potential to improve the overall performance and reliability of image fusion for vegetation monitoring.
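As a simple baseline for the vegetation/background segmentation discussed above, an RGB image can be thresholded on the Excess Green index (ExG = 2g - r - b, computed from chromatic coordinates) with a threshold selected automatically by Otsu's method. This is a classical technique, not one of the adaptive or deep learning approaches the text calls for. It assumes bare soil is the main background and is likely to struggle with shadows, crop residue, or weeds. The helper names and the synthetic pixel values are hypothetical.

```python
import numpy as np


def excess_green(rgb) -> np.ndarray:
    """Excess Green index 2g - r - b on chromatic coordinates (each band
    divided by R + G + B). Input shape: (rows, cols, 3)."""
    rgb = np.asarray(rgb, dtype=float)
    total = rgb.sum(axis=-1)
    total[total == 0] = 1.0  # avoid division by zero on black pixels
    r, g, b = (rgb[..., i] / total for i in range(3))
    return 2 * g - r - b


def otsu_threshold(values, bins: int = 256) -> float:
    """Otsu's method: choose the histogram split that maximizes the
    between-class variance. Values >= the returned threshold form the upper class."""
    hist, edges = np.histogram(np.ravel(values), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist).astype(float)  # pixel count up to and including each bin
    w1 = w0[-1] - w0                    # pixel count above each bin
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    # Bin i spans [edges[i], edges[i + 1]), so the split lies at edges[i + 1].
    return float(edges[1:][np.argmax(between)])


def vegetation_mask(rgb) -> np.ndarray:
    """True where a pixel is classified as vegetation."""
    exg = excess_green(rgb)
    return exg >= otsu_threshold(exg)


# Synthetic 2 x 2 image: two green plant pixels and two brown soil pixels.
image = np.array([[[30, 200, 30], [150, 120, 90]],
                  [[150, 120, 90], [30, 200, 30]]], dtype=float)
mask = vegetation_mask(image)
```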

In addition, improving radiometric calibration is imperative for the accuracy and reliability of UAS and satellite image fusion in future work. Several challenges need attention, including shifting light conditions during UAS image capture, insufficient calibration methods, variability in radiometric calibration, and reproducibility issues. Shifting light conditions complicate accurate radiometric calibration and can introduce errors that reduce the accuracy of the fused image (Rasmussen et al., 2021). Current calibration methods for UAS and satellite images may prove insufficient, and the approximate nature of radiometric calibration algorithms for UAS imagery raises concerns about their accuracy (Svensgaard et al., 2019). The calibration process, which converts sensor radiance into reflectance, is influenced by factors such as camera characteristics and spectral differences between sensors, which affect the accuracy of this conversion. Reproducibility is also a greater concern for UAS imagery than for satellite imagery and affects the absolute values of vegetation indices derived from UAS images. Factors such as cloud cover and camera type can influence the consistency and reliability of the fused images (Rasmussen et al., 2016; Rasmussen et al., 2021). Addressing these radiometric calibration challenges is essential for advancing the accuracy and reliability of UAS and satellite image fusion for various precision agriculture applications.
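One common way to convert UAS sensor values to reflectance is the empirical line method, which fits a per-band linear relation between the values recorded over calibration panels and the panels' known reflectance. The sketch below assumes a linear sensor response and panels imaged under the same illumination as the field. It does not by itself correct for illumination changes during a flight, which would require repeated panel captures or an irradiance sensor. The panel reflectances, digital numbers, and function names are hypothetical values chosen to keep the arithmetic easy to follow.

```python
import numpy as np


def fit_empirical_line(panel_dn, panel_reflectance):
    """Least-squares fit of reflectance = gain * DN + offset for one band."""
    gain, offset = np.polyfit(np.asarray(panel_dn, dtype=float),
                              np.asarray(panel_reflectance, dtype=float), deg=1)
    return float(gain), float(offset)


def apply_empirical_line(dn, gain: float, offset: float) -> np.ndarray:
    """Convert raw digital numbers of the same band to reflectance."""
    return gain * np.asarray(dn, dtype=float) + offset


# Hypothetical three-panel setup for a single band.
panel_dn = [1000, 4000, 10000]
panel_reflectance = [0.05, 0.20, 0.50]
gain, offset = fit_empirical_line(panel_dn, panel_reflectance)
field_reflectance = apply_empirical_line([[6000, 2000]], gain, offset)
```

With these values, the fitted gain is 5e-5 per digital number and the offset is effectively zero, so a pixel recorded at 6000 maps to a reflectance of 0.30. Real panels rarely fall on a perfect line, and the residuals of the fit give a first check on calibration quality.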

To enable robust cross-crop applications of data fusion models, future studies should design comparative experiments that evaluate the same fusion algorithm across multiple crop types that differ in canopy architecture, growth-stage reflectance dynamics, and background soil conditions. For instance, a fusion approach integrating UAS and satellite data could be tested on structurally and phenologically distinct crops such as maize, wheat, and chickpea. Such studies should employ standardized preprocessing pipelines, consistent feature sets (e.g., vegetation indices, canopy metrics), and uniform evaluation metrics (e.g., RMSE, R²). Model generalizability can then be assessed through transfer learning or through cross-validation in which a model trained on one crop (e.g., wheat) is tested on a morphologically different crop (e.g., soybean). This approach allows crop-specific limitations and opportunities to be identified, thereby enhancing the adaptability and robustness of fusion frameworks across diverse agricultural systems. To address segmentation challenges in heterogeneous fields, advanced deep learning architectures such as U-Net, DeepLabv3+, or Mask R-CNN could be adopted. These models have shown high precision in extracting crop boundaries from high-resolution imagery (Bazrafkan et al., 2024a) and can be trained on annotated datasets using transfer learning. Radiometric calibration can be improved through the standardized use of calibration panels (Wang and Myint, 2015), atmospheric correction tools such as Sen2Cor (Main-Knorn et al., 2017) for satellite imagery, and empirical line calibration for UAS data.
Future research could also explore harmonization strategies using Bidirectional Reflectance Distribution Function (BRDF) (Marschner et al., 2000) models to compensate for view angle differences and lighting variability.
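The leave-one-crop-out design described above can be sketched as follows: a model is fitted on all crops but one and evaluated on the held-out crop with RMSE and R². For brevity, the sketch uses ordinary least squares on two hypothetical fused features in place of a real fusion model, and the data are synthetic. The held-out chickpea samples carry a constant offset of 0.3 that the maize and wheat samples lack, mimicking a crop-specific bias. The function names are hypothetical.

```python
import numpy as np


def rmse(y_true, y_pred) -> float:
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def r_squared(y_true, y_pred) -> float:
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)


def leave_one_crop_out(X, y, crops) -> dict:
    """Fit ordinary least squares on all crops except one, then score the
    held-out crop. Repeats for every crop and returns {crop: {"rmse", "r2"}}."""
    X, y, crops = np.asarray(X, float), np.asarray(y, float), np.asarray(crops)
    results = {}
    for crop in np.unique(crops):
        test = crops == crop
        train = ~test
        a_train = np.column_stack([X[train], np.ones(train.sum())])  # add intercept column
        coef, *_ = np.linalg.lstsq(a_train, y[train], rcond=None)
        a_test = np.column_stack([X[test], np.ones(test.sum())])
        pred = a_test @ coef
        results[str(crop)] = {"rmse": rmse(y[test], pred), "r2": r_squared(y[test], pred)}
    return results


# Synthetic data: two hypothetical fused features (e.g., a vegetation index and
# canopy height) and a biomass-like target with a chickpea-specific offset.
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(30, 2))
crops = np.repeat(["maize", "wheat", "chickpea"], 10)
y = 2.0 * X[:, 0] + 0.5 * X[:, 1] + 1.0 + 0.3 * (crops == "chickpea")
scores = leave_one_crop_out(X, y, crops)
```

Because maize and wheat follow the same relation exactly, the model trained on them misses the chickpea offset, and the chickpea RMSE equals that offset (0.3). In a real study, such a jump in held-out error would flag a crop for which the fusion model does not transfer.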

4 Conclusion

This systematic literature review highlights the transformative potential of integrating UAS and satellite imagery in precision agriculture. By focusing on optimizing spatial resolution, leveraging spectral synergies, and employing temporal strategies, the review identifies pixel-based, feature-based, and decision-based fusion methods as pivotal in enhancing the spatial and spectral resolution required for detailed crop monitoring and assessment. The complementary spectral features of UAS and satellite data prove particularly valuable for applications such as crop growth monitoring, nitrogen estimation, biomass prediction, and yield forecasting. Temporal optimization strategies underscore the necessity of aligning data collection with crop phenology while balancing spatial resolution and budget constraints. The review systematically addresses challenges in spatial and temporal resolution, radiometric calibration, data synchronization, and processing disparities. Solutions such as coordinated data collection, advanced fusion techniques, and standardized workflows demonstrate significant improvements in integration efficiency and accuracy. The integration of UAS and satellite data enhances precision agriculture by improving spatial and temporal monitoring capabilities, optimizing resource allocation, and enabling more precise crop performance assessments. Comparative analyses reveal the advantages of combining UAS and satellite data for specific crops and scenarios, particularly where high spatial resolution and broader coverage are required. Pixel-based fusion methods are frequently preferred due to their ability to preserve spatial detail, though considerations around computational complexity remain critical. Future research should focus on advancing machine learning (ML) and deep learning (DL) algorithms for data fusion, which promise to improve precision and scalability. 
Cross-crop applications should also be prioritized to generalize findings and provide tailored solutions for diverse agricultural contexts. Additionally, the integration of UAS RGB sensors with multispectral satellite imagery, such as Sentinel-2, has emerged as a widely adopted approach, proving effective for crop type mapping, nitrogen estimation, and yield prediction. Continued exploration of ML and DL-driven fusion frameworks will further refine the integration process, offering robust, cost-effective, and scalable solutions for the evolving needs of precision agriculture. By addressing these opportunities and challenges, this integrated approach has the potential to revolutionize agricultural practices, fostering sustainable, efficient, and precise farming systems.

Author contributions

AB: Writing – original draft, Software, Conceptualization, Formal Analysis, Visualization, Data curation, Methodology, Validation, Writing – review and editing, Resources, Investigation. CI: Writing – review and editing. NB: Writing – review and editing. PF: Writing – review and editing, Supervision, Project administration, Resources, Funding acquisition.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was funded by the North Dakota Department of Agriculture through the Specialty Crop Block Grant Program (21-316 and 20-489) and by USDA-NIFA Hatch Projects ND01488, ND01493, and ND01513. The viewpoints, findings, conclusions, or suggestions articulated in this document are attributed to the author(s) and do not necessarily represent the perspective of the funding organizations.

Acknowledgments

The authors express their gratitude to the Northern Pulse Growers Association for the funding support in the development of the NDSU advanced pea breeding lines.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Generative AI was used in the creation of this manuscript. Generative AI was partially utilized during the preparation of this manuscript solely for the purpose of correcting and refining English language usage.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Abbas, M., Saleem, S., Subhan, F., and Bais, A. (2020). Feature points-based image registration between satellite imagery and aerial images of agricultural land. Turkish J. Electr. Eng. Comput. Sci. 28, 1458–1473. doi:10.3906/elk-1907-92

Agarwal, A., Singh, A. K., Kumar, S., and Singh, D. (2018). “Critical analysis of classification techniques for precision agriculture monitoring using satellite and drone,” in 2018 IEEE 13th international conference on industrial and information systems (ICIIS), Rupnagar, India, 01-02 December 2018 (IEEE), 83–88.

Ahmad, N., Iqbal, J., Shaheen, A., Ghfar, A., Al-Anazy, M. M., and Ouladsmane, M. (2022). Spatio-temporal analysis of chickpea crop in arid environment by comparing high-resolution UAV image and LANDSAT imagery. Int. J. Environ. Sci. Technol. 19, 6595–6610. doi:10.1007/s13762-021-03502-z