- ¹Department of Psychology, Graduate School of Letters, Kyoto University, Kyoto, Japan

- ²Japan Society for the Promotion of Science, Tokyo, Japan

Humans perceive self-motion using multisensory information, with vision playing a dominant role, a property exploited in virtual reality (VR) technologies. Previous studies reported that visual motion presented in the lower visual field (LoVF) induces a stronger illusion of self-motion (vection) than visual motion in the upper visual field (UVF). However, it remained unknown whether this LoVF superiority in vection is based on the retinotopic frame or on the environmental frame of reference. Here, we investigated the influences of retinotopic and environmental frames on the LoVF superiority of vection. We presented a planar surface extending along the depth axis in one of four visual fields (upper, lower, right, or left). The texture on the surface moved forward or backward. Participants reported vection while observing the visual stimulus through a VR head-mounted display (HMD) in a sitting posture or a lateral recumbent position. Visual motion induced stronger vection when presented in the LoVF than in the UVF in both postures. Notably, vection ratings in the LoVF were higher in the sitting posture than in the recumbent position. Moreover, recumbent participants reported stronger vection when the stimulus was presented in the gravitationally lower field than in the gravitationally upper field. These results demonstrate the contribution of multiple spatial frames to self-motion perception and imply the importance of the ground surface.

Introduction

Perceiving the position and movement of one's body is essential for acting appropriately in the environment. Humans perceive self-motion using multisensory information (Berthoz et al., 1975; Zacharias and Young, 1981; Fitzpatrick and McCloskey, 1994; Greenlee et al., 2016). The vestibular system plays a primary role: the semicircular canals detect rotational acceleration, and the otolith organs detect translational acceleration. In addition to angular and linear acceleration, the vestibular system signals head posture with respect to gravity (Jamali et al., 2019). However, vestibular responses adapt during motion at constant velocity (Fernandez and Goldberg, 1976; St George et al., 2011), emphasizing the need for integrating vestibular and other sensory signals when self-motion is perceived over longer periods (DeAngelis and Angelaki, 2012). Vision can compensate for the insensitivity of the vestibular system to constant-velocity self-motion; it also provides rich information about self-motion, such as heading direction (Warren et al., 1988), and visual signals of self-motion persist at constant velocity, although their buildup is relatively sluggish (Waespe and Henn, 1977). When only visual information is available for detecting self-motion, as during locomotion at a constant velocity, observers cannot distinguish visually simulated self-motion from real self-motion. In fact, stationary observers often feel as if they are moving when they continuously observe optic flow, a coherent visual motion pattern that simulates self-motion. This illusory self-motion is referred to as vection (Fischer and Kornmüller, 1930; Palmisano et al., 2015), and it is relevant to vision-based virtual reality (VR) technologies, in which vestibular or proprioceptive stimulation is difficult to provide.
Although vection was originally defined as a visually induced illusion (Palmisano et al., 2015), vestibular and proprioceptive cues can affect vection (Kano, 1991; Lepecq et al., 1999, 2006; Seno et al., 2011; Tanahashi et al., 2012; Ash et al., 2013; Seno et al., 2014; Weech and Troje, 2017), extending vection research to multisensory processing. Investigating multisensory influences on vection could also benefit VR applications in which a visual display is combined with nonvisual displays such as a motion simulator or galvanic vestibular stimulation.

It has been reported that optic flow in the lower visual field (LoVF) induces stronger vection than optic flow in the upper visual field (UVF) (Telford and Frost, 1993; Sato et al., 2007). Heading estimation from optic flow is also more precise when optic flow is presented in the LoVF than in the UVF (D'Avossa and Kersten, 1996). This LoVF superiority in self-motion perception is consistent with general visual asymmetries between the UVF and LoVF revealed by a large number of studies (Skrandies, 1987; Previc, 1990, 1998). For example, luminance and contrast sensitivities are higher in the LoVF than in the UVF (see review in Previc [1990]). Spatial resolution (Carrasco et al., 2002; Talgar and Carrasco, 2002) and temporal resolution (Previc, 1990) are also greater in the LoVF. In motion perception, coherent motion detection is also better in the LoVF (Edwards and Badcock, 1993; Zito et al., 2016), as is performance on other motion tasks, such as chromatic motion sensitivity (Bilodeau and Faubert, 1997) and moving target detection (Lakha and Humphreys, 2005). In addition, shape perception is better in the LoVF than in the UVF (Schmidtmann et al., 2015). In attention studies, performance on attention-demanding tasks, such as conjunction detection and attentional tracking, was better when the tasks were performed in the LoVF than in the UVF (He et al., 1996), although this finding was disputed by another study (Carrasco et al., 1998). It has also been reported that attention is weighted more heavily toward the LoVF than the UVF (Dobkins and Rezec, 2004). At the neural level, the latency of visually evoked potentials is shorter (Skrandies, 1987; Kremláček et al., 2004), and the mismatch negativity to visual motion is greater (Amenedo et al., 2007), for LoVF presentation than for UVF presentation.
Some studies have claimed an ecological importance of the LoVF (Gibson, 1950; Skrandies, 1987; Previc, 1998; Dobkins and Rezec, 2004): objects on the ground are more stable and therefore provide more reliable information on self-motion than those in the sky (Gibson, 1950). Based on the ecological dominance of the ground, first proposed by Gibson (1950), several studies used optic flow displays projected on the floor to produce vection effectively (Trutoiu et al., 2009; Riecke, 2010; Tamada and Seno, 2015).

Because the studies that revealed greater reliance on the LoVF for self-motion perception tested observers in an upright posture, it is unclear whether this LoVF superiority arises from the retinotopic or the environmental reference frame. The most plausible cause of the LoVF superiority in vection would be the greater intensity of visual input in the retinotopic LoVF: an anatomical study revealed a higher density of retinal ganglion cells representing the LoVF (Curcio and Allen, 1990), and functional magnetic resonance imaging (fMRI) studies with participants in the supine position revealed faster and stronger representations of LoVF inputs in V1–V3 (Chen et al., 2004; Liu et al., 2006; O'Connell et al., 2016). On the other hand, the ecological importance of the ground surface (Gibson, 1950; Previc, 1998) has been proposed to underlie the LoVF superiority. That is, the LoVF superiority in vection could be caused by the environmental frame rather than the retinotopic frame, because the location of the ground plane is usually determined by the environmental (gravitational) frame. Note that the gravitational frame of reference is determined not only by visual but also by vestibular, visceral, and proprioceptive cues (Lacquaniti et al., 2015). The effects of retinotopic and environmental frames can be separated by testing participants in a non-upright posture.

In this study, we examined the effects of retinotopic and environmental frames on the LoVF superiority in vection by testing observers in upright and recumbent postures. We divided the visual field into halves horizontally or vertically and presented radial optic flow in one of the four hemifields (UVF; LoVF; RVF, right visual field; LeVF, left visual field) while observers sat upright or lay on their side. When observers lie on their side, the retinotopic vertical axis becomes orthogonal to the gravitational axis, so stimuli in the UVF and LoVF are located environmentally to the side. Conversely, for recumbent observers, surfaces presented in the right and left visual fields lie gravitationally above or below the viewpoint.

We tested two hypotheses: (1) stronger vection would be induced in the retinotopic LoVF, because visual input is superior in the LoVF even in the supine posture (Chen et al., 2004; Liu et al., 2006; O'Connell et al., 2016) and stronger visual input can enhance vection (Brandt et al., 1973); and (2) optic flow in the gravitationally lower field (LGVF: lower gravity visual field) would enhance vection, as suggested by its ecological validity. Note that these two hypotheses are not mutually exclusive and could both be supported.

Methods

Participants

The sample size was determined by a power analysis in G*Power (http://www.gpower.hhu.de/). Assuming a paired t test with effect size d = 0.5, α = 0.05, and power (1 − β) = 0.8, we obtained an estimated sample size of 27 participants. Because there were 16 counterbalancing groups (posture order, block order in the sitting condition, block order in the recumbent condition, and recumbent direction), we assigned two participants to each group. As a result, a total of 32 healthy adults took part in the experiment (mean age = 23.03 years, SD = 4.85 years). All participants were recruited from Kyoto University. They had normal visual acuity or visual acuity corrected to normal with contact lenses, because some glasses can distort the peripheral visual field. Three participants were left-handed, one was ambidextrous, and the others were right-handed. None had a history of vestibular disorders. They were not informed of the purpose of the study. All provided written informed consent; the procedure was in accordance with the ethical standards of the Declaration of Helsinki and was approved by the local ethics committee of Kyoto University.
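The power analysis can be approximated without G*Power. The sketch below uses a common normal approximation with a small-sample correction term, n ≈ ((z₁₋α + z₁₋β)/d)² + z₁₋α²/2, rather than the exact noncentral t computation that G*Power performs; the function name is hypothetical. Note that 0.8 is the power (1 − β), so β = 0.2. Under this approximation, 27 is obtained only for a one-tailed test, while a two-tailed test would require 34; the text does not state which was used.

```python
from math import ceil
from statistics import NormalDist


def approx_paired_t_sample_size(d: float, alpha: float = 0.05,
                                power: float = 0.8, tails: int = 1) -> int:
    """Approximate number of pairs needed for a paired t test.

    Normal approximation plus a small-sample correction term; this is not
    the exact noncentral-t calculation used by G*Power.
    """
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / tails)  # critical value for one- or two-tailed alpha
    z_power = z(power)              # power = 1 - beta
    n = ((z_alpha + z_power) / d) ** 2 + z_alpha ** 2 / 2
    return ceil(n)


print(approx_paired_t_sample_size(0.5, tails=1))  # 27
print(approx_paired_t_sample_size(0.5, tails=2))  # 34
```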

Apparatus

Visual stimuli were presented on an HTC VIVE HMD (HTC Corporation, Taoyuan, Taiwan), which has dual AMOLED displays with a resolution of 1,080 × 1,200 pixels per eye and a field of view of approximately 110° (diagonal). The refresh rate of the displays was 90 Hz. The lens separation of the HMD was adjusted to each participant's interpupillary distance. Positional and rotational tracking of the HMD was enabled. The experiment was controlled by a BTO desktop PC running Windows 10 (Microsoft, Redmond, WA, USA). An Xbox One gamepad (Microsoft) was used as the input device. In the recumbent condition, participants lay on a foam mattress in the lateral recumbent position and rested their heads on a buckwheat pillow.

Stimuli

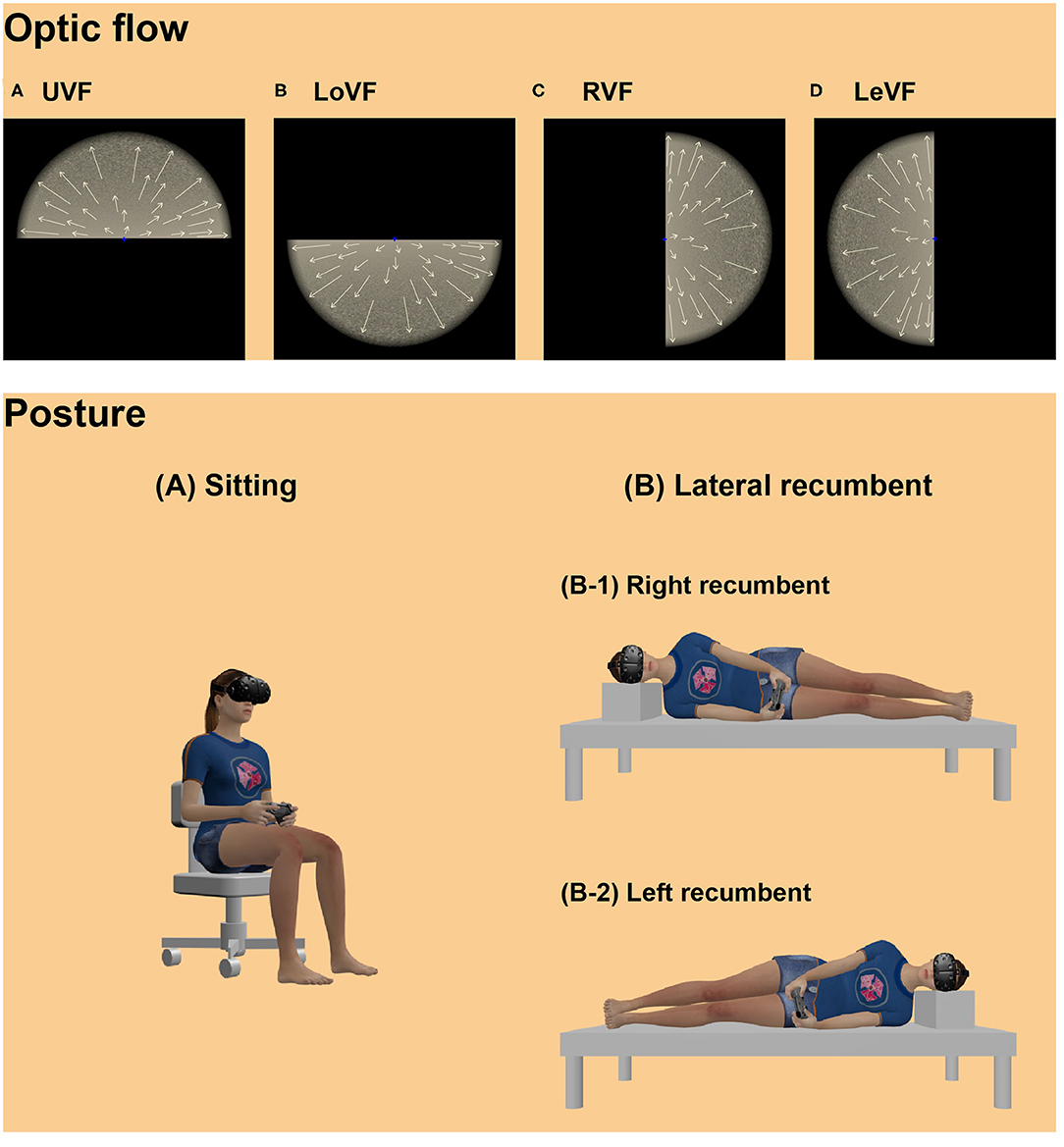

Stimuli were created using the Unity engine 2019.1.5f1 (Unity Technologies, San Francisco, CA, USA) and presented on the HMD with binocular disparities. A planar surface with a grain noise texture was presented against a black background in one of four locations relative to the viewpoint: upper, lower, right, or left (Figure 1, upper panel). These locations are referred to as UVF, LoVF, RVF, and LeVF, respectively. In the UVF and LoVF conditions, the surface was parallel to the transverse plane and located 4 m above or below the viewpoint, respectively. In the RVF and LeVF conditions, the surface was parallel to the sagittal plane and located 4 m to the right or left of the viewpoint, respectively. The stimuli were presented through a round aperture with a radius of 38° and a blurred edge. The viewpoint moved either forward or backward at a constant speed of 9.4 m/s, producing expanding or contracting optic flow, respectively, in each eye. The near and far clipping planes were set at 0.01 and 1,000 m from the viewpoint.

Figure 1. Illustrations of the optic flow displays (upper panel) and postural conditions (lower panel) in the VR scene. The upper panel shows optic flow stimuli presented in the (A) UVF, (B) LoVF, (C) RVF, and (D) LeVF, moving radially; arrows on the optic flow indicate motion vectors. Note that the visual fields were defined relative to the participants' egocentric axes. Participants observed the optic flow stimulus while (A) sitting upright or (B) lying on their side: half of the participants lay right side down (right recumbent: B-1), and the other half lay left side down (left recumbent: B-2). Licensed 3D models: “HTC Vive” Eternal Realm, available at https://sketchfab.com/3d-models/htc-vive-4818cdb261714a70a08991a3d4ed3749; “Xbox One Controller Free” paxillop, available at https://sketchfab.com/3d-models/xbox-one-controller-free-97fb54c001f84a6896b6ce8eb7a1814d (all licensed under CC BY 4.0).
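The surface distance and viewpoint speed together determine how fast the texture moves in the visual field. For a point on a surface parallel to the direction of travel at perpendicular distance h, seen at angle θ from the heading direction, translation at speed v gives an angular speed of ω = v·sin²θ / h (from θ = atan(h/z) with dz/dt = −v). The sketch evaluates this along the meridian perpendicular to the surface for h = 4 m and v = 9.4 m/s; it assumes the 38° aperture is centered on the heading direction, which the text does not state explicitly, and the function name is hypothetical. Under these assumptions, speed rises from zero at the horizon to about 51°/s at the aperture edge.

```python
import math


def flow_angular_speed_deg(theta_deg: float, h: float = 4.0, v: float = 9.4) -> float:
    """Angular speed (deg/s) of a surface point seen theta_deg away from the heading.

    The surface is parallel to the direction of travel at perpendicular
    distance h (m); the viewpoint translates at speed v (m/s).
    omega = v * sin(theta)**2 / h in rad/s; the magnitude is the same
    for forward (expanding) and backward (contracting) motion.
    """
    theta = math.radians(theta_deg)
    return math.degrees(v * math.sin(theta) ** 2 / h)


# Angular speed at several angles from the heading direction (assumed aperture center)
for ecc in (5, 15, 25, 38):
    print(f"{ecc:2d} deg from heading -> {flow_angular_speed_deg(ecc):5.1f} deg/s")
```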

Procedure

Design

Four blocks of eight trials were carried out. In two blocks, participants sat comfortably on an office chair, holding a gamepad with both hands on their laps. In the other two blocks, they lay on their right or left side, holding a gamepad with both hands in front of their bodies (Figure 1, lower panel). To counterbalance the recumbent direction, half of the participants were asked to lie on their right side and the other half on their left side. In each of the sitting and recumbent sessions, one block included two trials for each combination of visual field (UVF or LoVF) and motion direction (expanding or contracting), while the other block included two trials for each combination of the RVF or LeVF with the two motion directions. The order of stimulus presentation was randomized within each block. The two blocks in each posture were conducted successively in counterbalanced order, and the order of the postures was counterbalanced across participants.
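The counterbalancing and block structure can be made concrete with a short sketch. It enumerates the 16 counterbalancing groups listed under Participants (2 posture orders × 2 block orders when sitting × 2 block orders when recumbent × 2 recumbent directions), assigns two participants per group, and builds one shuffled eight-trial block. The factor labels and function names are hypothetical; the actual Unity implementation is not described in the text.

```python
import itertools
import random

POSTURE_ORDERS = [("sitting", "recumbent"), ("recumbent", "sitting")]
BLOCK_ORDERS = [("vertical", "lateral"), ("lateral", "vertical")]
RECUMBENT_SIDES = ["left", "right"]
FIELDS = {"vertical": ("UVF", "LoVF"), "lateral": ("RVF", "LeVF")}
DIRECTIONS = ("expanding", "contracting")


def counterbalance_groups():
    """Posture order x sitting block order x recumbent block order x recumbent side."""
    return list(itertools.product(POSTURE_ORDERS, BLOCK_ORDERS,
                                  BLOCK_ORDERS, RECUMBENT_SIDES))


def assign_participants(n_participants=32):
    """Cycle through the groups so each group receives the same number of people."""
    groups = counterbalance_groups()
    return {pid: groups[pid % len(groups)] for pid in range(n_participants)}


def make_block(block_type, rng, reps=2):
    """Eight trials (2 fields x 2 directions x 2 repetitions) in random order."""
    trials = [(field, direction)
              for field in FIELDS[block_type]
              for direction in DIRECTIONS
              for _ in range(reps)]
    rng.shuffle(trials)
    return trials


print(len(counterbalance_groups()))  # 16
print(make_block("vertical", random.Random(1)))
```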

Training

Before the main experiment began, participants performed four practice trials in the sitting posture to familiarize themselves with the procedure. The training procedure was identical to that of the main experiment except for the optic flow stimulus: to reduce possible practice effects on the stimuli used in the main experiment, the training trials presented radially expanding or contracting optic flow produced by a random-dot cloud distributed over the whole visual field. The speed of the viewpoint in the training was identical to that in the main experiment. Participants were allowed to perform additional practice trials if they were not confident of the procedure, but none requested them.

Tasks

Participants performed two types of task: an online vection report and a retrospective rating. The online task was performed during the optic flow presentation: participants were asked to keep pressing the A button of the gamepad whenever they experienced vection. After the presentation, participants performed the retrospective task by rating the overall magnitude of vection on a scale from 0 to 100, using criteria adopted from Bubka et al. (2008). A value of 0 meant that participants felt totally stationary and only the optic flow appeared to move. A value of 100 meant that participants felt that they were moving parallel to the stationary surface. In total, we obtained three vection measures: latency, duration, and strength rating. Latency was defined as the interval between optic flow onset and the first button press. Duration was computed as the total time during which participants pressed the button during the optic flow presentation.
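As a sketch of how the two online measures follow from the button record, assume each trial yields a list of (press, release) times in seconds relative to optic flow onset. This data format, the clipping of presses that extend past the 15-s presentation, and leaving latency undefined (None) for trials without any press are assumptions; the text does not specify them.

```python
from typing import List, Optional, Tuple

STIM_DURATION = 15.0  # optic flow presentation (s)


def vection_latency(presses: List[Tuple[float, float]]) -> Optional[float]:
    """Time from optic flow onset to the first button press (None if never pressed)."""
    starts = [press for press, _ in presses if 0.0 <= press < STIM_DURATION]
    return min(starts) if starts else None


def vection_duration(presses: List[Tuple[float, float]]) -> float:
    """Total time the button was held within the presentation window."""
    total = 0.0
    for press, release in presses:
        start = max(press, 0.0)            # ignore time before onset
        end = min(release, STIM_DURATION)  # ignore time after offset
        if end > start:
            total += end - start
    return total


trial = [(3.2, 7.0), (8.5, 16.0)]  # hypothetical press/release times (s)
print(vection_latency(trial))   # 3.2
print(vection_duration(trial))  # approximately 10.3 (3.8 s + 6.5 s)
```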

Trial Preparation

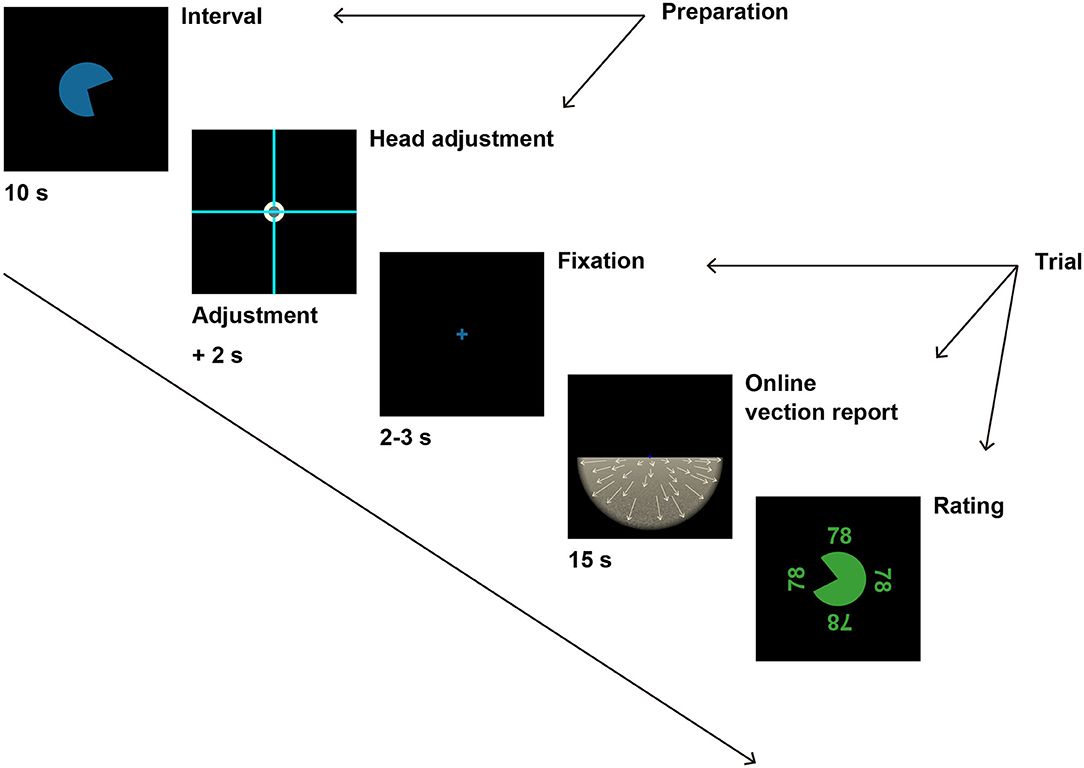

Figure 2 illustrates the experimental procedure. Before each trial, a black background was presented for 10 s as an interval, with a round timer at the center of the VR scene indicating the time remaining until the next trial. The start position of the timer was randomized in each trial. After the interval, the timer disappeared, and two light blue crosses spanning the display appeared instead. One cross was positioned at the center of the VR scene and aligned with earth-vertical as a reference (scene-fixed cross), whereas the other was aligned with the head axis and moved with the participant's head (head-fixed cross). A white circle (3.3°) was positioned at the center of the scene-fixed cross, and a gray circle (1.8°) was positioned at the center of the head-fixed cross. To start the next trial, participants aligned the two crosses by moving their heads, which brought their head orientation to straight ahead before each trial. In the recumbent position, participants aligned the crosses with the head-fixed cross rotated by 90° in roll. When the head orientation was within ±2° of straight ahead in pitch, roll, and yaw, the gray circle on the head-fixed cross turned red to indicate that the participant was ready for the next trial. When participants maintained the correct head orientation for 2 s, the scene-fixed and head-fixed crosses disappeared, and the next trial started automatically.

Figure 2. Schematic illustration of the experimental procedure. After a 10-s interval, participants aligned the head-fixed cross with the scene-fixed cross to prepare for the next trial. Once participants had kept the two crosses aligned for 2 s, the alignment crosses disappeared and a fixation cross appeared. The fixation cross was followed by the optic flow stimulus after a random duration between 2 and 3 s. Participants reported vection by pressing a button during the optic flow presentation. After the 15-s optic flow presentation, participants rated the overall magnitude of vection by moving a round gauge. See Procedure in Methods for details.
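The trial-start criterion can be expressed as a dwell-time check. The sketch assumes head orientation samples (time, pitch, roll, yaw) in seconds and degrees relative to straight ahead, with the roll target set to 90° for the recumbent position, and assumes that the 2-s timer restarts whenever any angle leaves the ±2° window. The sample format, the restart rule, and the function name are assumptions, not details given in the text.

```python
from typing import Iterable, Optional, Tuple

TOLERANCE_DEG = 2.0  # allowed deviation in pitch, roll, and yaw
HOLD_S = 2.0         # required time within tolerance


def trial_start_time(samples: Iterable[Tuple[float, float, float, float]],
                     roll_target: float = 0.0) -> Optional[float]:
    """Return the time at which the head has stayed aligned for HOLD_S seconds.

    samples: (t, pitch, roll, yaw); roll_target is 0 when sitting and
    +/-90 when lying on one side (assumed sign convention).
    """
    aligned_since = None
    for t, pitch, roll, yaw in samples:
        errors = (pitch, roll - roll_target, yaw)
        if all(abs(e) <= TOLERANCE_DEG for e in errors):
            if aligned_since is None:
                aligned_since = t      # the gray circle would turn red here
            if t - aligned_since >= HOLD_S:
                return t
        else:
            aligned_since = None       # alignment lost: restart the 2-s timer
    return None


# Hypothetical 90-Hz stream from a recumbent participant holding roll near 90 deg
stream = [(i / 90, 0.5, 89.0, -1.0) for i in range(300)]
print(trial_start_time(stream, roll_target=90.0))  # 2.0
```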

Trial Procedure

At the beginning of each trial, a black background with a blue fixation cross (1.3°) at the center was presented for a random duration between 2 and 3 s, followed by the optic flow stimulus. Participants fixated on the fixation cross throughout the optic flow presentation and performed the online vection report during the stimulus presentation, which lasted 15 s. After the stimulus presentation, participants reported the overall magnitude of vection by moving a round gauge at the center of the scene. The start position and the initial value of the gauge were both randomized for each trial. The value of the gauge was displayed on its four sides (upper, lower, right, and left), with each displayed value oriented radially with respect to the center of the gauge.

Written instructions were displayed only in the training session. In the main experiment, no text was displayed except for the rating values arranged radially around the gauge, because written text could provide a cue to the downward direction for the recumbent participants. Instead, we gave verbal instructions before each postural condition to remind participants of the task.

Results

Two of the 32 participants were excluded from the analyses because they did not experience sufficient vection (ratings above zero in fewer than 30% of all trials and online button responses in fewer than 15% of all trials). Of the remaining participants, the online responses (latency and duration) of one participant were excluded because the button was never pressed in any trial even though the rating scores were above zero.

We first confirmed that optic flow direction (contraction/expansion) had no significant effect on the vection measures averaged for each participant (strength rating: t(29) = −1.15, p = 0.260, d = −0.13; latency: t(28) = 0.94, p = 0.353, d = 0.09; duration: t(28) = −1.02, p = 0.316, d = −0.10). We therefore averaged the data across motion directions in the subsequent analyses.

We then analyzed the vertically divided visual fields (UVF and LoVF) and the laterally divided visual fields (RVF and LeVF) separately, because comparisons between vertical and lateral fields (e.g., UVF vs. RVF) were not of interest.
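The exclusion rule combines two per-participant proportions. The sketch below reads the "and" in the criterion literally, so a participant is excluded only when both proportions fall below their thresholds; the input format (one rating and one pressed/not-pressed flag per trial) and the function name are hypothetical.

```python
from typing import Sequence


def insufficient_vection(ratings: Sequence[float], pressed: Sequence[bool],
                         rating_min: float = 0.30, press_min: float = 0.15) -> bool:
    """Exclusion rule as read from the text: both proportions must fall below threshold.

    ratings: retrospective rating per trial (0-100)
    pressed: whether the button was pressed at least once in that trial
    """
    prop_rated = sum(r > 0 for r in ratings) / len(ratings)
    prop_pressed = sum(bool(p) for p in pressed) / len(pressed)
    return prop_rated < rating_min and prop_pressed < press_min


# Hypothetical participant: 32 trials, two non-zero ratings, no button presses
print(insufficient_vection([0] * 30 + [20, 10], [False] * 32))  # True
```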

Effects of LoVF/UVF and Posture

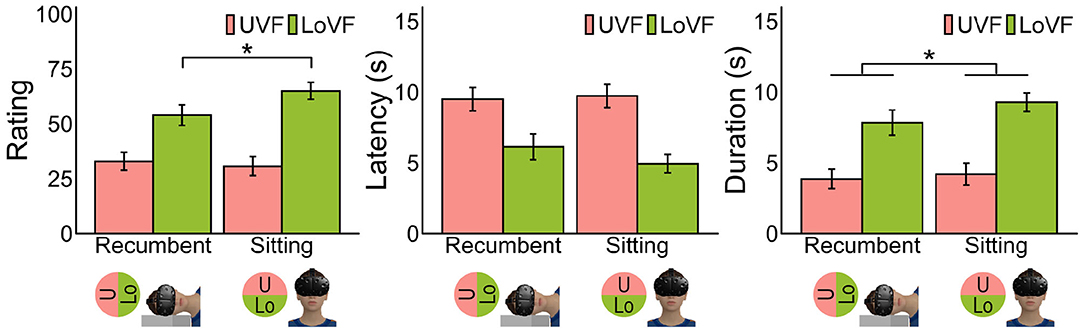

The left panel of Figure 3 shows the mean vection ratings across participants for each posture and visual field. Vection was stronger in the LoVF than in the UVF in both postures, and vection in the LoVF was weaker in the recumbent position than in the sitting posture. These observations were supported by a two-way repeated-measures analysis of variance (ANOVA) with posture (sitting/recumbent) and vertical visual field (UVF/LoVF) as factors. The main effect of visual field was significant [F(1,31) = 43.12, p < 0.001, η2 = 0.250], but the main effect of posture was not [F(1,31) = 3.67, p = 0.065, η2 = 0.006]. The interaction between visual field and posture was significant [F(1,31) = 5.09, p = 0.031, η2 = 0.014]. The simple main effect of visual field was significant in both the recumbent position [F(1,31) = 14.76, p < 0.001, η2 = 0.156] and the sitting posture [F(1,31) = 52.25, p < 0.001, η2 = 0.360]. The simple main effect of posture was significant in the LoVF [F(1,31) = 7.01, p = 0.013, η2 = 0.050] but not in the UVF [F(1,31) = 0.45, p = 0.507, η2 = 0.002].

Figure 3. Mean vection measures for each posture and vertical visual field. Error bars represent the standard error of the mean. Pictures at the bottom illustrate each postural condition and visual field. *p < 0.05.

The LoVF superiority was also found in the other two measures. The main effect of visual field was significant for latency [Figure 3, middle panel; F(1,30) = 38.85, p < 0.001, η2 = 0.174] and duration [Figure 3, right panel; F(1,30) = 46.81, p < 0.001, η2 = 0.227], with shorter latency and longer duration for the LoVF than for the UVF. The main effect of posture was not significant for latency [F(1,30) = 1.07, p = 0.309, η2 = 0.002] but was significant for duration [F(1,30) = 4.27, p = 0.047, η2 = 0.009]. The interaction between visual field and posture was not significant for either measure [latency: F(1,30) = 2.80, p = 0.105, η2 = 0.005; duration: F(1,30) = 1.52, p = 0.227, η2 = 0.003].
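In a 2 × 2 within-subject design, each effect has one numerator degree of freedom, so its F(1, n − 1) statistic equals the square of a one-sample t statistic computed on a per-participant contrast score. The sketch below illustrates this equivalence with the standard library only; the cell keys are hypothetical, it stops at F because the standard library has no F or t distribution for p values, and it does not reproduce the reported η² values, whose exact definition is not stated.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Dict, List


def one_sample_t(scores: List[float]) -> float:
    """t statistic for the mean of per-participant scores against zero."""
    return mean(scores) / (stdev(scores) / sqrt(len(scores)))


def rm_anova_2x2(cells: Dict[str, List[float]]) -> Dict[str, float]:
    """F(1, n-1) for each effect of a 2 x 2 within-subject design via contrasts.

    cells maps 'sit_UVF', 'sit_LoVF', 'rec_UVF', 'rec_LoVF' to lists of
    per-participant means in the same participant order.
    """
    rows = list(zip(cells["sit_UVF"], cells["sit_LoVF"],
                    cells["rec_UVF"], cells["rec_LoVF"]))
    field = [(sl + rl) - (su + ru) for su, sl, ru, rl in rows]        # LoVF vs. UVF
    posture = [(su + sl) - (ru + rl) for su, sl, ru, rl in rows]      # sitting vs. recumbent
    interaction = [(sl - su) - (rl - ru) for su, sl, ru, rl in rows]  # difference of differences
    return {name: one_sample_t(c) ** 2
            for name, c in (("visual field", field), ("posture", posture),
                            ("field x posture", interaction))}


# Hypothetical ratings for four participants
example = {"sit_UVF": [30, 20, 45, 10], "sit_LoVF": [55, 40, 60, 35],
           "rec_UVF": [28, 25, 40, 12], "rec_LoVF": [45, 30, 52, 30]}
print(rm_anova_2x2(example))
```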

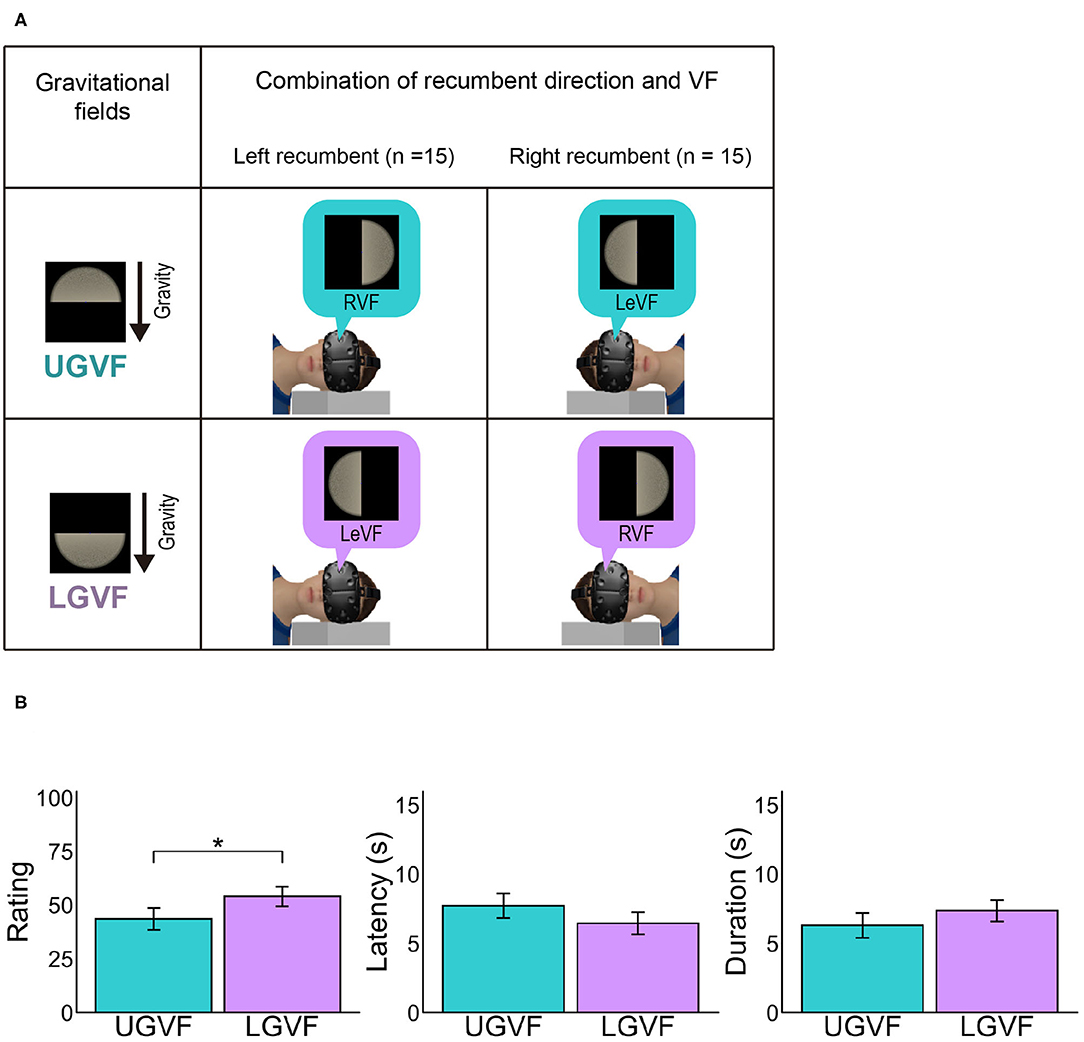

Effects of Gravitational Visual Field

Vection ratings in the LoVF might have been weaker in the recumbent position than in the sitting posture because the LoVF corresponds to the environmentally lower field in the sitting posture but not in the recumbent position. To directly examine the effect of environmentally upper and lower locations, we relabeled the lateral visual fields in the gravitational frame of reference. Because the recumbent condition was crucial for this comparison, the visual fields located gravitationally above and below the viewpoint in the recumbent position were defined as the upper gravity visual field (UGVF) and the LGVF, respectively. For the left lateral recumbent group (n = 15), the RVF and LeVF were labeled as the UGVF and LGVF, respectively, whereas the labels were reversed for the right lateral recumbent group (n = 15) (Figure 4A). We then conducted paired t tests comparing the LGVF and UGVF in the recumbent position.
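The relabeling is a per-participant mapping from the retinotopic lateral fields to gravitational labels, determined by the recumbent side. The sketch below encodes the mapping stated above and computes the LGVF − UGVF difference that enters the paired comparison; the dictionary layout and function name are hypothetical.

```python
from typing import Dict

# Lying left side down places the left visual field gravitationally below the viewpoint.
GRAVITY_LABELS = {
    "left":  {"RVF": "UGVF", "LeVF": "LGVF"},
    "right": {"RVF": "LGVF", "LeVF": "UGVF"},
}


def to_gravity_frame(lateral_scores: Dict[str, float], recumbent_side: str) -> Dict[str, float]:
    """Relabel one participant's RVF/LeVF means as UGVF/LGVF."""
    mapping = GRAVITY_LABELS[recumbent_side]
    return {mapping[field]: score for field, score in lateral_scores.items()}


scores = to_gravity_frame({"RVF": 42.0, "LeVF": 55.0}, "left")  # hypothetical means
print(scores)                           # {'UGVF': 42.0, 'LGVF': 55.0}
print(scores["LGVF"] - scores["UGVF"])  # 13.0
```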

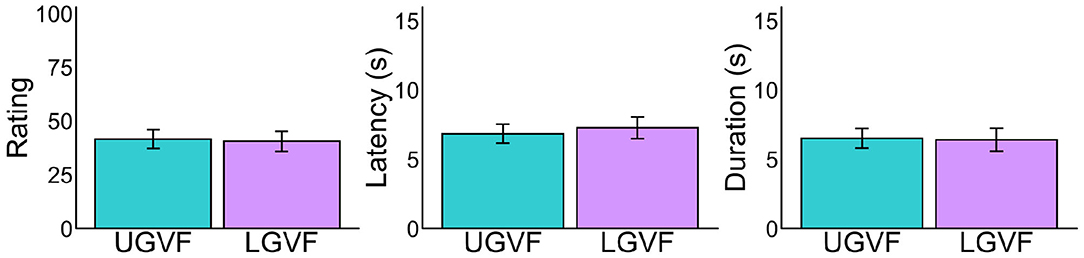

Figure 4. (A) Illustration of the upper gravity visual field (UGVF) and lower gravity visual field (LGVF). (B) Mean vection measures for each lateral visual field. The UGVF refers to the hemifield positioned gravitationally above the viewpoint when participants lay on their side, and the LGVF refers to the hemifield positioned gravitationally below it. Error bars represent the standard error of the mean across participants. *p < 0.05.

The left panel of Figure 4B shows the mean vection ratings across participants in the UGVF and LGVF in the recumbent condition. The difference between the UGVF and LGVF was significant [t(31) = −2.32, p = 0.027, d = −0.378], with stronger vection ratings in the LGVF than in the UGVF. In contrast, the difference between the UGVF and LGVF was not significant for latency [t(30) = 1.73, p = 0.094, d = 0.270] or duration [t(30) = −1.43, p = 0.162, d = −0.228], although the pattern of results was similar to that of the ratings (i.e., shorter latency and longer duration in the LGVF than in the UGVF).

No Evidence for Biases Across Lateral Visual Fields

To examine whether the above difference between the UGVF and LGVF could reflect individual biases between the LeVF and RVF, we analyzed the results in the sitting condition (Figure 5). Although the LeVF and RVF were environmentally lateral in the sitting posture, we labeled these fields according to each participant's recumbent direction, as in the recumbent condition. As shown in Figure 5, no difference between the UGVF and LGVF was evident in the sitting condition for any of the three measures [ratings: t(31) = 0.32, p = 0.752, d = 0.040; latency: t(30) = −0.96, p = 0.345, d = −0.103; duration: t(30) = 0.21, p = 0.837, d = 0.023].

Figure 5. Mean vection measures for each lateral visual field in the sitting posture. Error bars represent the standard error of the mean. Note that the UGVF and LGVF in the sitting posture were defined by each participant's recumbent direction in the recumbent condition. See Figure 4A for details of the UGVF and LGVF.

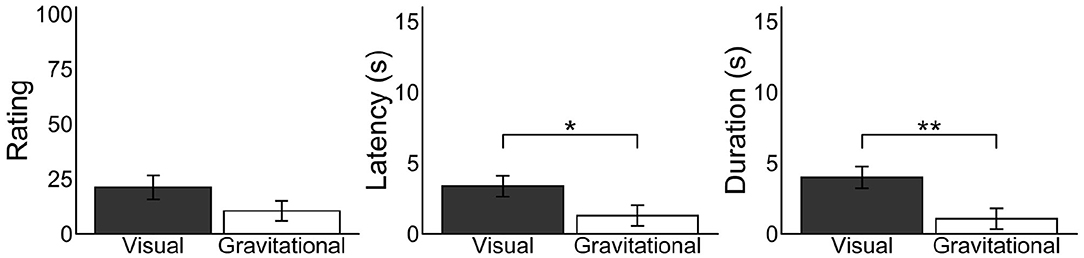

Effects of Retinotopically and Gravitationally Lower Visual Fields

We next examined whether the retinotopic or the environmental frame is of primary importance in enhancing vection. For each participant, the effect of the retinotopically lower visual field was calculated as the vection response in the LoVF minus that in the UVF in the recumbent position, and the effect of the gravitationally lower visual field was calculated as the response in the LGVF minus that in the UGVF in the recumbent position (Figure 6). The retinotopic effect produced a significantly greater reduction in latency [t(30) = −2.20, p = 0.035, d = 0.51] and a greater increase in duration [t(30) = 3.19, p = 0.0034, d = 0.70] than the environmental effect, whereas the difference for ratings was not significant [t(31) = 2.00, p = 0.055, d = 0.37]. These results suggest that the retinotopic effect is larger and more robust than the environmental effect, but also that the environmental effect is not trivial.

Figure 6. Mean differences in vection measures for the vertically and laterally divided visual fields in the recumbent position. Bars in the visual condition represent the difference in vection responses between the LoVF and UVF conditions in the recumbent position for each participant, representing the effect of the retinotopically lower visual field. Bars in the gravitational condition represent the difference in vection responses between the LGVF and UGVF conditions in the recumbent position for each participant, representing the effect of the gravitationally lower visual field. Error bars represent the standard error of the mean. *p < 0.05, **p < 0.01.
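The comparison of the two effects reduces to a paired t test on two difference scores per participant. The sketch uses hypothetical recumbent-position means for three participants and the standard-library statistics module; it does not reproduce the reported effect sizes, because the Cohen's d variant used is not stated. For latency, a more negative difference indicates a larger benefit of the lower field.

```python
from math import sqrt
from statistics import mean, stdev
from typing import List


def paired_t(x: List[float], y: List[float]) -> float:
    """Paired t statistic for x - y (df = n - 1)."""
    diffs = [a - b for a, b in zip(x, y)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


# Hypothetical per-participant means in the recumbent position
lovf, uvf = [60.0, 45.0, 70.0], [40.0, 35.0, 50.0]
lgvf, ugvf = [50.0, 42.0, 55.0], [45.0, 38.0, 52.0]

retinotopic = [lo - up for lo, up in zip(lovf, uvf)]     # LoVF - UVF
gravitational = [lo - up for lo, up in zip(lgvf, ugvf)]  # LGVF - UGVF
print(retinotopic, gravitational)
print(paired_t(retinotopic, gravitational))
```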

Discussion

In this study, we examined the effects of retinotopic and environmental frames on the LoVF superiority in vection (Telford and Frost, 1993; Sato et al., 2007) by comparing results in sitting and recumbent postures. Our findings are threefold. First, we found a robust effect of retinotopic location: visual motion presented in the LoVF induced stronger vection than that in the UVF in both the sitting and recumbent postures, as shown by all three vection measures. Second, we observed an interaction between visual field and posture on vection strength ratings: vection ratings were higher when participants viewed LoVF displays while seated than while lying on their side. Finally, recumbent participants gave stronger vection ratings when the stimulus was presented in the gravitationally lower but retinotopically lateral location than in the gravitationally upper location. The second and third findings were statistically supported only by vection ratings, with small effect sizes, suggesting that retinotopy is the primary factor in the LoVF superiority and that the effect of the environmental frame is not very robust. The larger effect of the retinotopic frame was also supported by the direct comparison of retinotopic and gravitational effects (see Effects of Retinotopically and Gravitationally Lower Visual Fields). As a side note, vection durations for the vertically divided visual fields were shorter in the recumbent position than in the sitting posture, in line with a previous study that found reduced vection responses in the lateral recumbent position compared with the erect posture (Guterman et al., 2012). However, the effect of posture was significant only for duration.

The retinotopic effects of visual field are consistent with the anatomical finding of an asymmetry in ganglion cell distribution between the upper and lower retina (Curcio and Allen, 1990) and with fMRI studies, conducted in the supine posture, showing faster and stronger representations in the human primary visual cortex for visual stimuli presented in the LoVF than in the UVF (Chen et al., 2004; Liu et al., 2006; O'Connell et al., 2016). Stronger vection in the LoVF in both sitting and recumbent postures suggests that the intensity of visual input at early processing stages affects self-motion perception.

The current results imply an effect of the environmental frame, as well as the retinotopic frame, on self-motion perception. In this study, vection ratings in the LoVF condition were higher in the sitting posture than in the recumbent position. This result suggests that the LoVF superiority in vection is not only caused by retinotopy but is also modulated by the environmental frame. The weaker LoVF ratings in the recumbent position could be explained by the fact that lying on one's side moves the LoVF to an environmentally lateral location, possibly removing the benefit of the gravitationally lower location. The benefit of the gravitationally lower location was also shown by the finding that optic flow presented in the LGVF induced higher vection ratings than optic flow in the UGVF for recumbent participants. These results imply that the LoVF superiority, even in the sitting posture, is partially attributable to the advantage of the gravitationally lower location. The effects of the environmental frame observed in the current study are in line with the idea of the ecological importance of the ground plane, that is, that objects near the ground are more reliable for self-motion perception than objects near the sky (Gibson, 1950). Note again that the effects of the environmental frame were supported only by the ratings, with small effect sizes.

As the visual stimuli in this experiment had no explicit information about gravity (such as the direction of furniture in the room [Howard and Hu, 2001]), other nonvisual information including vestibular and somatosensory inputs should have been used to detect the direction of gravity (Dakin and Rosenberg, 2018) to form the environmental frame in the visual scene. Multisensory information about gravity might be related to the current results that suggested the influence of environmental frame.

Considering the effect of environmental frame on vection, LoVF superiority for the recumbent observers could be induced because of the body frame as well as the visual retinotopy, as the direction of vertical (Mittelstaedt, 1983) or upright (Dyde et al., 2006) is known to be biased toward the body axis. In addition, perception of verticality might affect the LoVF superiority in vection, as the ground surface could be ecologically relevant when it is orthogonal to the gravity. Vection might be more efficiently induced by the planer surface that is orthogonal to the perceived vertical than the true vertical, especially when the perceived vertical direction is biased in the recumbent position. Further research is required to separate the retinotopic effect and the effect of body axis on the superiority of LoVF.

One question worthy of future research for VR applications is how pictorial cues contribute to the effect of visual location on vection. Howard and Hu (2001) reported that pictorial cues dominated over the body and gravity axes in judgments of the downward direction, and that they also interacted with the body and gravity axes. Given that most VR environments simulate naturalistic visual scenes, whether visual location within the pictorial frame of reference affects vection, and how it interacts with other reference frames, should be studied further.

Data Availability Statement

The datasets presented in this article are not readily available because of ethical restrictions on raw data availability. Requests to access the datasets should be directed to fujimoto.kanon.63a@st.kyoto-u.ac.jp.

Ethics Statement

The studies involving human participants were reviewed and approved by the local ethics committee of Kyoto University. The participants provided their written informed consent to participate in this study.

Author Contributions

All authors reviewed and edited the manuscript and contributed to the design of the study. KF performed the experiments, analyzed the data, and wrote the manuscript with feedback from HA.

Funding

This study was supported by JSPS Grants-in-Aid for Scientific Research (15H01984 and 19K03367 to HA; 20J10183 to KF) and by the HAYAO NAKAYAMA Foundation for Science & Technology and Culture.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Juno Kim and his Sensory Processes Innovation Network (SPINet) for valuable comments and suggestions.

References

Amenedo, E., Pazo-Alvarez, P., and Cadaveira, F. (2007). Vertical asymmetries in pre-attentive detection of changes in motion direction. Int. J. Psychophysiol. 64, 184–189. doi: 10.1016/j.ijpsycho.2007.02.001

Ash, A., Palmisano, S., Apthorp, D., and Allison, R. S. (2013). Vection in depth during treadmill walking. Perception 42, 562–576. doi: 10.1068/p7449

Berthoz, A., Pavard, B., and Young, L. R. (1975). Perception of linear horizontal self-motion induced by peripheral vision (linearvection) basic characteristics and visual-vestibular interactions. Exp. Brain Res. 23, 471–489. doi: 10.1007/BF00234916

Bilodeau, L., and Faubert, J. (1997). Isoluminance and chromatic motion perception throughout the visual field. Vision Res. 37, 2073–2081. doi: 10.1016/S0042-6989(97)00012-6

Brandt, T., Dichgans, J., and Koenig, E. (1973). Differential effects of central versus peripheral vision on egocentric and exocentric motion perception. Exp. Brain Res. 16, 476–491. doi: 10.1007/BF00234474

Bubka, A., Bonato, F., and Palmisano, S. (2008). Expanding and contracting optic-flow patterns and vection. Perception 37, 704–711. doi: 10.1068/p5781

Carrasco, M., Wei, C., Yeshurun, Y., and Orduña, I. (1998). Do attentional effects differ across visual fields? Perception 27(Suppl.1):24.

Carrasco, M., Williams, P. E., and Yeshurun, Y. (2002). Covert attention increases spatial resolution with or without masks: support for signal enhancement. J. Vis. 2:4. doi: 10.1167/2.6.4

Chen, H., Yao, D., and Liu, Z. (2004). A study on asymmetry of spatial visual field by analysis of the fMRI BOLD response. Brain Topogr. 17, 39–46. doi: 10.1023/B:BRAT.0000047335.00110.6a

Curcio, C. A., and Allen, K. A. (1990). Topography of ganglion cells in human retina. J. Comp. Neurol. 300, 5–25. doi: 10.1002/cne.903000103

Dakin, C. J., and Rosenberg, A. (2018). Gravity estimation and verticality perception. Handb. Clin. Neurol. 159, 43–59. doi: 10.1016/B978-0-444-63916-5.00003-3

D'Avossa, G., and Kersten, D. (1996). Evidence in human subjects for independent coding of azimuth and elevation for direction of heading from optic flow. Vision Res. 36, 2915–2924. doi: 10.1016/0042-6989(96)00010-7

DeAngelis, G., and Angelaki, D. (2012). “Visual–vestibular integration for self-motion perception,” in The Neural Bases of Multisensory Processes, eds M. M. Murray and M. T. Wallace (Boca Raton, FL: CRC Press/Taylor & Francis).

Dobkins, K., and Rezec, A. (2004). Attentional weighting: a possible account of visual field asymmetries in visual search? Spat. Vis. 17, 269–293. doi: 10.1163/1568568041920203

Dyde, R. T., Jenkin, M. R., and Harris, L. R. (2006). The subjective visual vertical and the perceptual upright. Exp. Brain Res. 173, 612–622. doi: 10.1007/s00221-006-0405-y

Edwards, M., and Badcock, D. R. (1993). Asymmetries in the sensitivity to motion in depth: a centripetal bias. Perception 22, 1013–1023. doi: 10.1068/p221013

Fernandez, C., and Goldberg, J. M. (1976). Physiology of peripheral neurons innervating otolith organs of the squirrel monkey. II. Directional selectivity and force response relations. J. Neurophysiol. 39, 985–995. doi: 10.1152/jn.1976.39.5.985

Fischer, H. M., and Kornmüller, A. E. (1930). Optokinetisch ausgelöste Bewegungswahrnehmungen und optokinetischer Nystagmus. J. Psychol. Neurol. 41, 273–308.

Fitzpatrick, R., and McCloskey, D. I. (1994). Proprioceptive, visual and vestibular thresholds for the perception of sway during standing in humans. J. Physiol. 478, 173–186. doi: 10.1113/jphysiol.1994.sp020240

Greenlee, M. W., Frank, S. M., Kaliuzhna, M., Blanke, O., Bremmer, F., Churan, J., et al. (2016). Multisensory integration in self motion perception. Multisens. Res. 29, 525–556. doi: 10.1163/22134808-00002527

Guterman, P. S., Allison, R. S., Palmisano, S., and Zacher, J. E. (2012). Influence of head orientation and viewpoint oscillation on linear vection. J. Vestibul. Res. Equil. Orient. 22, 105–116. doi: 10.3233/VES-2012-0448

He, S., Cavanagh, P., and Intriligator, J. (1996). Attentional resolution and the locus of visual awareness. Nature 383, 334–337. doi: 10.1038/383334a0

Howard, I. P., and Hu, G. (2001). Visually induced reorientation illusions. Perception 30, 583–600. doi: 10.1068/p3106

Jamali, M., Carriot, J., Chacron, M. J., and Cullen, K. E. (2019). Coding strategies in the otolith system differ for translational head motion vs. static orientation relative to gravity. Elife 8:e45573. doi: 10.7554/eLife.45573

Kano, C. (1991). The perception of self-motion induced by peripheral visual information in sitting and supine postures. Ecol. Psychol. 3, 241–252. doi: 10.1207/s15326969eco0303_3

Kremláček, J., Kuba, M., Chlubnová, J., and Kubová, Z. (2004). Effect of stimulus localisation on motion-onset VEP. Vision Res. 44, 2989–3000. doi: 10.1016/j.visres.2004.07.002

Lacquaniti, F., Bosco, G., Gravano, S., Indovina, I., La Scaleia, B., Maffei, V., et al. (2015). Gravity in the brain as a reference for space and time perception. Multisens. Res. 28, 397–426. doi: 10.1163/22134808-00002471

Lakha, L., and Humphreys, G. (2005). Lower visual field advantage for motion segmentation during high competition for selection. Spat. Vis. 18, 447–460. doi: 10.1163/1568568054389570

Lepecq, J. C., De Waele, C., Mertz-Josse, S., Teyssèdre, C., Tran Ba Huy, P., Baudonnière, P. M., et al. (2006). Galvanic vestibular stimulation modifies vection paths in healthy subjects. J. Neurophysiol. 95, 3199–3207. doi: 10.1152/jn.00478.2005

Lepecq, J. C., Giannopulu, I., Mertz, S., and Baudonnière, P. M. (1999). Vestibular sensitivity and vection chronometry along the spinal axis in erect man. Perception 28, 63–72. doi: 10.1068/p2749

Liu, T., Heeger, D. J., and Carrasco, M. (2006). Neural correlates of the visual vertical meridian asymmetry. J. Vis. 6, 1294–1306. doi: 10.1167/6.11.12

Mittelstaedt, H. (1983). A new solution to the problem of the subjective vertical. Naturwissenschaften 70, 272–281. doi: 10.1007/BF00404833

O'Connell, C., Ho, L. C., Murphy, M. C., Conner, I. P., Wollstein, G., Cham, R., et al. (2016). Structural and functional correlates of visual field asymmetry in the human brain by diffusion kurtosis MRI and functional MRI. Neuroreport 27, 1225–1231. doi: 10.1097/WNR.0000000000000682

Palmisano, S., Allison, R. S., Schira, M. M., and Barry, R. J. (2015). Future challenges for vection research: definitions, functional significance, measures, and neural bases. Front. Psychol. 6:193. doi: 10.3389/fpsyg.2015.00193

Previc, F. H. (1990). Functional specialization in the lower and upper visual fields in humans: Its ecological origins and neurophysiological implications. Behav. Brain Sci. 13, 519–542. doi: 10.1017/S0140525X00080018

Previc, F. H. (1998). The neuropsychology of 3-D space. Psychol. Bull. 124, 123–164. doi: 10.1037/0033-2909.124.2.123

Riecke, B. E. (2010). “Compelling self-motion through virtual environments without actual self-motion – using self-motion illusions (‘Vection’) to improve VR user experience,” in Virtual Reality, ed J. J. Kim (London: InTech).

Sato, T., Seno, T., Kanaya, H., and Hukazawa, H. (2007). “The ground is more effective than the sky - the comparison of the ground and the sky in effectiveness for vection,” in Proceedings of ASIAGRAPH 2007 (Shanghai; Tokyo: Virtual Reality Society of Japan), 103–108.

Schmidtmann, G., Logan, A. J., Kennedy, G. J., Gordon, G. E., and Loffler, G. (2015). Distinct lower visual field preference for object shape. J. Vis. 15:18. doi: 10.1167/15.5.18

Seno, T., Ito, H., and Sunaga, S. (2011). Inconsistent locomotion inhibits vection. Perception 40, 747–750. doi: 10.1068/p7018

Seno, T., Palmisano, S., Riecke, B. E., and Nakamura, S. (2014). Walking without optic flow reduces subsequent vection. Exp. Brain Res. 233, 275–281. doi: 10.1007/s00221-014-4109-4

Skrandies, W. (1987). “The upper and lower visual field of man: electrophysiological and functional differences,” in Progress in Sensory Physiology, eds H. Autrum, D. Ottoson, E. R. Perl, R. F. Schmidt, H. Shimazu, and W. D. Willis (Berlin; Heidelberg: Springer), 1–93.

St George, R. J., Day, B. L., and Fitzpatrick, R. C. (2011). Adaptation of vestibular signals for self-motion perception. J. Physiol. 589, 843–853. doi: 10.1113/jphysiol.2010.197053

Talgar, C. P., and Carrasco, M. (2002). Vertical meridian asymmetry in spatial resolution: visual and attentional factors. Psychon. Bull. Rev. 9, 714–722. doi: 10.3758/BF03196326

Tamada, Y., and Seno, T. (2015). Roles of size, position, and speed of stimulus in vection with stimuli projected on a ground surface. Aerosp. Med. Hum. Perform. 86, 794–802. doi: 10.3357/AMHP.4206.2015

Tanahashi, S., Ujike, H., and Ukai, K. (2012). Visual rotation axis and body position relative to the gravitational direction: effects on circular vection. i-Perception 3, 804–819. doi: 10.1068/i0479

Telford, L., and Frost, B. J. (1993). Factors affecting the onset and magnitude of linear vection. Percept. Psychophys. 53, 682–692. doi: 10.3758/BF03211744

Trutoiu, L. C., Mohler, B. J., Schulte-Pelkum, J., and Bülthoff, H. H. (2009). Circular, linear, and curvilinear vection in a large-screen virtual environment with floor projection. Comput. Graph. 33, 47–58. doi: 10.1016/j.cag.2008.11.008

Waespe, W., and Henn, V. (1977). Neuronal activity in the vestibular nuclei of the alert monkey during vestibular and optokinetic stimulation. Exp. Brain Res. 27, 523–538. doi: 10.1007/BF00239041

Warren, W. H., Morris, M. W., and Kalish, M. (1988). Perception of translational heading from optical flow. J. Exp. Psychol. Hum. Percept. Perform. 14, 646–660. doi: 10.1037/0096-1523.14.4.646

Weech, S., and Troje, N. F. (2017). Vection latency is reduced by bone-conducted vibration and noisy galvanic vestibular stimulation. Multisens. Res. 30, 65–90. doi: 10.1163/22134808-00002545

Zacharias, G. L., and Young, L. R. (1981). Influence of combined visual and vestibular cues on human perception and control of horizontal rotation. Exp. Brain Res. 41, 159–171. doi: 10.1007/BF00236605

Keywords: vection, lower visual field, reference frame, gravity, posture, virtual reality

Citation: Fujimoto K and Ashida H (2020) Roles of the Retinotopic and Environmental Frames of Reference on Vection. Front. Virtual Real. 1:581920. doi: 10.3389/frvir.2020.581920

Received: 10 July 2020; Accepted: 18 September 2020;

Published: 24 November 2020.

Edited by:

Mar Gonzalez-Franco, Microsoft Research, United States

Reviewed by:

Yuki Yamada, Kyushu University, Japan
Maria Gallagher, Cardiff University, United Kingdom

Copyright © 2020 Fujimoto and Ashida. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kanon Fujimoto, fujimoto.kanon.63a@st.kyoto-u.ac.jp