- 1Luminex Technologies, Boulder, CO, United States

- 2Information Technology Laboratory, NIST, Boulder, CO, United States

Extended reality devices are enabling new approaches and procedures in the medical field. However, many in the medical community are unfamiliar with these technologies and how to evaluate them for a given application. This article presents an overview of the available international visual performance standards for virtual and augmented reality devices to better inform users and developers on the critical metrics and methods commonly used to assess the devices. These standards provide guidance to minimize collisions and falls during casual use, reduce visual discomfort and fatigue during extended use in areas such as pain management, training, and surgery, and increase efficiency and outcomes through improved image quality and readability of visual information. The article is intended to assist users with identifying which visual performance parameters may be critical for their application and directs the user to the relevant standards where the detailed evaluation methods can be obtained.

1 Introduction

The rapid development of virtual reality (VR) and augmented reality (AR) devices has enabled a broad range of applications, from entertainment to medical procedures. These devices can render immersive environments which merge the physical and virtual worlds into an extended reality (XR) that allows users to interact with computer-generated elements. In the medical field, these technologies are being used in areas such as pain management (Spiegel et al., 2019), preoperative planning and intraoperative navigation (Gupta et al., 2021), and even real-time guidance from a remote surgeon (Huang et al., 2019). However, the initial adoption of XR devices into medical applications has been hampered by the limitations of the technology. For example, wearing XR devices may increase the risk of collisions and falls. This is especially a concern for VR users since the real world is often obscured from them. This can be somewhat mitigated by restricting the movement of the VR user, or by using external cameras that provide an image of the real world. These safety risks can be further reduced by using AR devices, since the user can directly see the real world. However, safety is still a concern since wearing AR devices typically produces a dimmer real scene and decreases the field of view of the user. Bright lighting environments may also reduce the visibility of the virtual image rendered by the AR device. In applications where prolonged use of XR devices is necessary, the weight/balance and virtual image content of the device can cause physical as well as visual discomfort, leading to muscle strain and nausea. Visual discomfort can be induced by the image content (e.g., visually induced motion sickness and photosensitive seizures) and image quality (e.g., vergence-accommodation mismatch). In addition, the virtual image quality of the XR device will also impact the readability and effective use of the device.
The adverse impact on image quality from insufficient XR spatial resolution, contrast, and viewing in bright environments was demonstrated in prior work (Beams et al., 2022). But the XR industry is actively evolving to address many of these safety, comfort, and image quality issues. The industry has also published numerous standards to assess these devices and guide XR users toward their appropriate application. However, many in the medical field are unfamiliar with XR technology and lack the knowledge to adequately evaluate the suitability of the devices for the task (Beams et al., 2022). This review seeks to provide an overview of the published visual performance standards related to XR safety, visual comfort, and image quality in order to guide the user and medical system developer in identifying the critical performance metrics for their application, and to direct them to the standards where the detailed evaluation methods for those metrics can be obtained.

Standards are often used to facilitate greater trade and ensure a minimum level of quality. International standards organizations like the International Telecommunication Union (ITU), the International Organization for Standardization (ISO), and the International Electrotechnical Commission (IEC) are recognized by the World Trade Organization in international treaties. The international standards published by these three organizations have been approved by numerous member countries and offer the highest level of adoption and conformity. This review will focus on the applicable international standards for evaluating XR visual performance. However, these international standards typically require several years to develop and be approved. Often individual country standards or industry standards are developed first and then adopted as international standards. This review also includes these national and industry standards where there are gaps in the international standards.

2 Safety, comfort, and visual fatigue

2.1 Safety and comfort

The XR device design will impact user safety, headset comfort, and visual comfort. Several standards have identified important characteristics that influence the safety and ergonomics of the headset. Underwriters Laboratories (UL) recently published an American/Canadian national standard focused on the safety aspects of using XR devices, typically in commercial or industrial settings (ANSI, 2025). In addition to considering the physical characteristics of the headset (such as biomechanical stress, heat exposure, and biocompatibility) for safely wearing the headset, this national standard also highlights visual performance characteristics that should be considered when viewing information with the headset. For example, wearing a headset typically limits the user’s view of the real world (real scene field of view). This optical occlusion from the headset limits the user’s spatial awareness and can increase the risk of collisions and falls. This standard provides guidance on assessing risk and mitigating collision and fall hazards, but it does not offer a method for measuring the headset real scene field of view. However, IEC 63145-22-20 does provide a standard measurement procedure for determining the headset field of view of the real scene for an AR device (IEC, 2024). The real scene field of view can be based on a specified change in luminance, Michelson contrast, or color when performing an angular scan in the horizontal, vertical, and diagonal directions.

Another safety-related issue addressed in the ANSI 8400 standard involves poor visibility due to low optical transmittance of AR devices (ANSI, 2025). If the headset transmittance is not sufficiently high for a given ambient lighting environment, the user may not see warning signs or avoid hazards. The standard refers to other conventional eye and face protection standards for recommended transmission levels and measuring methods. However, these eye and face protection standards do not consider the geometric constraints and diffractive optical components typically used by XR devices. The recent publication of IEC 63145-10 specifically addresses AR devices and their optical transmission depending on how they are used (IEC, 2023). This IEC standard refers to IEC 63145-22-10 for the directional transmittance measurement procedure of the AR device (IEC, 2020).

Display flicker is another safety issue covered by ANSI 8400 (ANSI, 2025). In general, flicker is an unwanted temporal artifact that is distracting to the user of the headset. However, if the light flashes are of a certain frequency, amplitude, and color, they can induce a photosensitive seizure (ISO, 2016). In addition to the induced seizure, the user is also susceptible to a fall hazard. The ANSI 8400 standard provides a short method and threshold requirements for avoiding photosensitive seizures due to unwanted flicker artifacts from the XR device hardware. However, these flashes can also unintentionally occur in video content if the content creator is unaware of this issue. ISO 9241-391 provides requirements that can be used to assess the potential of inducing photosensitive seizures from video content (ISO, 2016).

2.2 Visually induced motion sickness

Immersive XR technologies can cause visually induced motion sickness (VIMS) (ISO, 2020a). This not only causes discomfort, but also increases the risk of falls and collisions. ANSI 8400 identifies a large motion-to-photon latency, a mismatch between the user’s interpupillary distance and the distance between the centers of the XR lenses, motion-induced blur, and an unstable vertical visual reference as XR device characteristics that can lead to VIMS (ANSI, 2025). The national standard offers conformance levels for the corresponding metrics and gives brief descriptions of their measuring methods. In addition to being caused by the XR hardware, VIMS can also be induced by the video content. ISO 9241-394 provides guidance to content creators for mitigating VIMS (ISO, 2020b). This guidance relates the prevalence of VIMS to the total amount of rotation in the pitch, yaw, and roll directions.

2.3 Visual fatigue

Humans use binocular vision to obtain important information about a real scene. The brain fuses the slightly different views from the left and right eye to perceive the shape and depth of real objects. This information is critical when performing detailed procedures like surgery. XR devices try to create virtual images that simulate real objects with the same sense of 3D perception. However, these virtual simulations are often imperfect and the brain has trouble reconciling the virtual image. This can cause visual discomfort, visual fatigue, and even nausea. ISO 9241-392 considers several factors that can cause visual fatigue when using stereoscopic images (ISO, 2015). One factor is the interpupillary distance of the user. The distance between the centers of the XR lenses needs to match the user’s interpupillary distance (ANSI, 2025). Another is interocular geometric differences between the virtual images presented to the left and right eye. Real images are always well aligned. However, the XR device can have a vertical misalignment, a rotational misalignment, and a magnification difference between the device eyepieces, all of which can cause visual fatigue. Luminance, contrast, color, crosstalk, and temporal differences between the left and right virtual image can also cause visual fatigue. ISO 9241-392 offers guidance for mitigating some of these factors, but not all. The standard does not provide procedures for measuring these attributes, but IEC 63145-20-10 does give standard procedures for measuring the luminance, contrast, and color (IEC, 2019a). A procedure for measuring crosstalk between stereoscopic images is given in the Information Display Measurements Standard (IDMS) section 17.2.2 (IDMS, 2024). This measures the amount of light leakage from a white field in one eye to a dark field in the other eye.
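The crosstalk idea described above can be illustrated with a short sketch. The black-level-corrected ratio used here is a commonly used form and is an assumption; it is not quoted from IDMS section 17.2.2, and the function and parameter names are hypothetical.

```python
def stereo_crosstalk(l_leak: float, l_black: float, l_white: float) -> float:
    """Fraction of the other eye's white field that leaks into this eye.

    l_leak  : luminance in this eye when it shows black and the other eye shows white
    l_black : luminance in this eye when both eyes show black
    l_white : luminance in this eye when it shows white and the other eye shows black
    All three values must be in the same units (e.g., cd/m^2).
    NOTE: assumed black-level-corrected form, not taken from a standard.
    """
    signal = l_white - l_black
    if signal <= 0:
        raise ValueError("white luminance must exceed black luminance")
    return (l_leak - l_black) / signal


# Hypothetical readings: 1.5 cd/m^2 leakage, 0.5 cd/m^2 black, 100.5 cd/m^2 white
crosstalk = stereo_crosstalk(1.5, 0.5, 100.5)
print(f"crosstalk = {crosstalk:.1%}")
```

Subtracting the black level keeps the display's own dark-state luminance from being counted as leakage.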

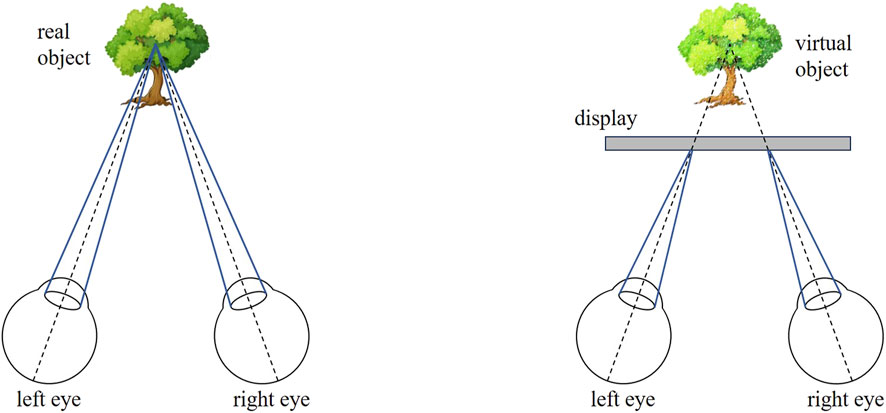

Vergence-accommodation mismatch (or conflict) is another important factor (Hoffman et al., 2019). When viewing real scenes, our eyes simultaneously focus on an object and rotate inward to create a converging angle centered at the object (see left side of Figure 1). However, XR devices often create virtual images with a fixed focus, while the left and right virtual images can have variable horizontal disparity or vergence angle. This means that the apex of the vergence angle could be in front of or behind the focal distance of the device. The right side of Figure 1 shows the situation where the eyes are focused on the display but converge on the virtual image behind the display. If there is a significant difference between the position of the vergence apex and the focus position, it can lead to visual fatigue. ISO 9241-392 summarizes the research on the relationship between vergence and focus (accommodation) and highlights Percival’s zone of comfort, which gives an allowable tolerance for the mismatch where a clear single binocular image can be seen (ISO, 2015). This tolerance grows with increasing distance, making the vergence-accommodation mismatch more important in close, arm’s-length applications. Although ISO 9241-392 offers a mitigation method for vergence-accommodation mismatch, it does not provide measurement procedures for determining the focus distance and vergence angle. The virtual image focal distance can be determined using IEC 63145-20-20, but measuring the vergence angle is not standardized (IEC, 2019b).

Figure 1. Illustration of vergence and accommodation. Left example shows the normal situation where the eyes focus at the object and converge at the same distance. Right example shows the possible case where the eyes focus on the display but converge on the virtual object behind the display.

Measuring the vergence angle of an XR device is complicated by the precise determination of the eye point position of the headset. XR devices have an optical exit pupil position where the user is to place their eyeball for best performance. This is the eye point of the device, and is generally along the optical axis of the left and right eyepiece of the XR device. This eye point is used as the reference position where the entrance pupil of a light measuring device (LMD) should be placed for determining many of the visual performance parameters of the XR device. IEC 63145-20-10 and IDMS section 19.3 offer several methods that could be used to estimate the eye point position of an XR device, but a single method has yet to be standardized (IEC, 2019a; IDMS, 2024). However, recent studies on eye point determination by using optical aberrations suggest a promising approach (Beams et al., 2024). Once the eye point for the left and right eyepiece of the XR device is determined, the LMD optical axis at each eye point is then typically rotated to align with the center of the virtual image. The total angle between the two directions is the vergence angle.
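The geometry behind the vergence angle and its mismatch with the focal distance can be sketched as follows. This is a simplified illustration that assumes a fixation point straight ahead on the midline between two eye points; the function names and the example values (63 mm eye point separation, 2 m focal distance, 0.5 m vergence distance) are hypothetical and not taken from any standard.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Total angle between the two lines of sight that meet at distance_m."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def vergence_distance_m(ipd_m: float, angle_deg: float) -> float:
    """Distance to the vergence apex for a measured total vergence angle."""
    return ipd_m / (2.0 * math.tan(math.radians(angle_deg) / 2.0))


def va_mismatch_diopters(focus_distance_m: float, vergence_dist_m: float) -> float:
    """Vergence demand minus accommodation demand, in diopters (1/m)."""
    return 1.0 / vergence_dist_m - 1.0 / focus_distance_m


# Hypothetical headset: eye points 63 mm apart, virtual image focused at 2 m,
# content rendered so that the eyes converge at 0.5 m.
angle = vergence_angle_deg(0.063, 0.5)
print(f"vergence angle: {angle:.2f} deg")
print(f"mismatch: {va_mismatch_diopters(2.0, 0.5):.2f} D")
```

Expressing the mismatch in diopters rather than meters reflects why the same offset in distance matters more for near content than for far content.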

It should also be noted that due to risks associated with prolonged use of the XR device (such as thermal and biomechanical stress, and viewing visual distortions), ANSI 8400 recommends that these devices should not be used by children under the age of 12 (ANSI, 2025).

3 Virtual image quality and legibility

3.1 Terminology and equipment

Using common terminology is a fundamental aspect of standards that fosters accurate understanding. For this review, we will focus on the terminology necessary to evaluate the visual performance of XR technologies. To this end, IEC 63145-1-1 provides a useful background to many of the terms and concepts used with this technology, while IEC 63145-1-2 provides a more comprehensive glossary of the relevant terms (IEC, 2018; IEC, 2022).

The equipment necessary for measuring the visual performance of XR devices will depend on the specific attribute to be measured. However, there are some common characteristics that are typically needed. All display light measurements should use instruments which have a photopic response to measure luminance, or which acquire the tristimulus values for color (typically chromaticity) measurements (CIE, 2018). Unlike conventional LMDs used to measure direct view displays (e.g., TVs and monitors), LMDs used for XR measurements should have a small (2–5 mm diameter) entrance pupil to simulate the human eye under typical lighting conditions (IEC, 2019a). It is also operationally useful to have the entrance pupil located at the front of the LMD. Example concepts are illustrated in IDMS section 19.2 (IDMS, 2024). LMD manufacturers sometimes implement this by placing an external aperture in front of a conventional LMD and calibrating it in that position. However, when the LMD is not specifically designed with the entrance pupil in front, it may be necessary to calibrate the LMD at all of the focal distances that will be used.

The measurement process often requires moving the LMD (or the XR device) to determine the eye point, scan the virtual image field of view, scan the viewing space behind the headset, etc. This typically requires a goniometric system (or robot) with 6 degrees of freedom (three rotation axes and three translation axes). This goniometric system should be able to perform angular scans using a spot-measuring LMD by pivoting about its entrance pupil (called pupil rotation), or pivoting 10 mm behind the entrance pupil (called eye rotation) to simulate the gaze motion of the human eye. Alternatively, an LMD mounted on a 6-axis robot can also provide the necessary scanning motions. If an imaging LMD (camera) is used to measure the entire virtual image field of view from a fixed orientation, then these measurements will not fully capture the impact of eye gaze.
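The difference between pupil rotation and eye rotation can be made concrete with a small geometric sketch. It assumes a single horizontal scan axis, with x pointing sideways and z pointing from the eye toward the device, and with the entrance pupil at the origin when the scan angle is zero. The function name and coordinate convention are illustrative assumptions.

```python
import math


def entrance_pupil_position_mm(scan_angle_deg: float, pivot_offset_mm: float = 10.0):
    """Position (x, z) of the LMD entrance pupil after rotating by scan_angle_deg.

    pivot_offset_mm is the distance of the pivot point behind the entrance pupil:
    0 mm corresponds to pupil rotation, 10 mm to eye rotation as described above.
    """
    theta = math.radians(scan_angle_deg)
    x = pivot_offset_mm * math.sin(theta)          # sideways shift of the pupil
    z = pivot_offset_mm * (math.cos(theta) - 1.0)  # pupil moves back from the device
    return x, z


for angle in (0, 15, 30):
    x, z = entrance_pupil_position_mm(angle)
    print(f"{angle:>2} deg: pupil shifts {x:.2f} mm sideways, {z:.2f} mm along axis")
```

With pupil rotation the entrance pupil stays fixed while the viewing direction changes; with eye rotation it sweeps along a 10 mm arc, so an off-axis measurement also samples a different part of the eye-box.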

3.2 Luminance, luminance uniformity, contrast, and field of view

General guidance for reading information on displays in an office environment is given in ISO 9241-303 and further detailed in ISO 9241-307 (ISO, 2008a; ISO, 2008b). Although these visual ergonomic standards were intended for conventional direct view displays, given the lack of equivalent guidance specific to XR devices, they can serve as a useful starting point for XR technologies.

ISO 9241-307 specifies a minimum level of luminance under dim lighting environments to adequately perceive information, and IEC 63145-20-10 provides a detailed procedure to measure the XR device luminance (ISO, 2008b; IEC, 2019a). The luminance level will need to increase with increasing background luminance in order to achieve sufficient luminance contrast (white to dark luminance, LW/LK) for legibility. For good visual performance and comfortable reading, ISO 9241-303 recommends a luminance contrast higher than 3:1, or the equivalent Michelson contrast modulation depth of (LW − LK)/(LW + LK) = 0.5 (ISO, 2008a). The measured Michelson contrast tends to decrease as the separation between white and dark regions gets smaller (that is, at higher spatial or angular frequency). Measuring the Michelson contrast over a range of spatial frequencies using black/white grille test patterns can determine the resolution at which an XR device still provides the modulation depth needed for legible text or for perceiving small image features. IEC 63145-20-20 provides a procedure for measuring the Michelson contrast of the virtual image, while IEC 63145-22-20 gives the analogous procedure for measuring the resolution in a real scene when viewed through the headset (IEC, 2019b; IEC, 2024). Due to the potential impact of the display pixel structure or XR optical design, the Michelson contrast is generally measured with both a vertical and a horizontal grille pattern. However, recent research suggests that a concentric ring pattern may give a more general description of the radial behavior of Michelson contrast (Beams et al., 2024).
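The relationship between the 3:1 luminance contrast and the 0.5 modulation depth, and the use of a grille sweep to find a limiting resolution, can be sketched as below. The function names, the grille frequencies, and the contrast readings are hypothetical illustration values, not data from any standard or device.

```python
def michelson_contrast(l_white: float, l_dark: float) -> float:
    """Michelson contrast (LW - LK) / (LW + LK)."""
    return (l_white - l_dark) / (l_white + l_dark)


def michelson_from_ratio(contrast_ratio: float) -> float:
    """Michelson contrast equivalent to a luminance contrast ratio LW/LK."""
    return (contrast_ratio - 1.0) / (contrast_ratio + 1.0)


def limiting_frequency(frequencies, contrasts, min_modulation=0.5):
    """Highest measured grille frequency that still meets min_modulation.

    Returns None when no measured frequency meets the threshold.
    """
    passing = [f for f, c in zip(frequencies, contrasts) if c >= min_modulation]
    return max(passing) if passing else None


print(michelson_from_ratio(3.0))  # a 3:1 ratio corresponds to 0.5

# Hypothetical grille sweep (cycles per degree) and measured Michelson contrast
freqs = [5, 10, 15, 20]
measured = [0.9, 0.7, 0.5, 0.3]
print(limiting_frequency(freqs, measured))
```

In practice the sweep would be repeated for vertical and horizontal grilles, and the lower of the two limiting frequencies would be the more conservative figure.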

The luminance uniformity of the virtual image also needs to be acceptable in order for the other luminance-based metrics to be of consistent quality over the image. ISO 9241-307 specifies the luminance non-uniformity in terms of the ratio of maximum to minimum luminance measured as the LMD performs an angular scan from the center of the image outward, with the non-uniformity allowed to gradually increase with increasing angles (ISO, 2008b). However, IEC 63145-20-10 uses an alternate luminance non-uniformity method where 5 or 9 positions are measured at the virtual image center and near the edges (IEC, 2019a).
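As a minimal sketch, the max-to-min ratio described above for ISO 9241-307 can be applied to a set of sampled positions. Using that ratio on a 9-point sample is an assumption made for illustration; the text does not state which formula IEC 63145-20-10 applies to its 5 or 9 positions, and the luminance values below are hypothetical.

```python
def luminance_nonuniformity_ratio(luminances) -> float:
    """Ratio of maximum to minimum luminance over the sampled positions."""
    values = list(luminances)
    if not values or min(values) <= 0:
        raise ValueError("need at least one positive luminance reading")
    return max(values) / min(values)


# Hypothetical 9-point readings in cd/m^2 (center first, then edge positions)
nine_point = [100, 90, 95, 80, 85, 92, 88, 97, 91]
print(luminance_nonuniformity_ratio(nine_point))
```

A ratio of 1.0 means a perfectly uniform image; larger values indicate stronger fall-off somewhere in the sampled field.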

An angular scanning method is employed by IEC 63145-20-10 to determine the virtual image field of view (based on luminance) in the horizontal, vertical, and diagonal directions (IEC, 2019a). The field of view boundaries are defined as the angles at which the luminance drops to a specified fraction of the center luminance. This luminance-based field of view measures the perceived angular span of light created by the virtual image, but it does not necessarily mean that the image is readable. Additional information can be obtained by measuring the field of view based on Michelson contrast using a specified modulation depth to determine the boundaries (IEC, 2019b). It should be noted that either of these field of view measurements can be implemented by using pupil rotation or eye rotation, where eye rotation considers the natural movement of eye gaze.
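The boundary definition above can be illustrated with a short sketch that finds the angles where a scanned luminance profile falls to a chosen fraction of the center luminance. The 0.5 fraction, the linear interpolation between samples, and the scan data are assumptions for illustration; the actual fraction and procedure are specified in the standard.

```python
def fov_from_scan(angles_deg, luminances, fraction=0.5):
    """Return (left_edge, right_edge, span) in degrees for one scan direction.

    angles_deg must be sorted in ascending order and include a sample near 0.
    """
    center = min(range(len(angles_deg)), key=lambda i: abs(angles_deg[i]))
    threshold = fraction * luminances[center]

    def edge(step):
        i = center
        # Walk outward while the next sample is still at or above the threshold.
        while 0 <= i + step < len(angles_deg) and luminances[i + step] >= threshold:
            i += step
        j = i + step
        if not 0 <= j < len(angles_deg):
            return angles_deg[i]  # scan ended before the luminance dropped
        # Interpolate linearly between the last passing and first failing sample.
        t = (luminances[i] - threshold) / (luminances[i] - luminances[j])
        return angles_deg[i] + t * (angles_deg[j] - angles_deg[i])

    left, right = edge(-1), edge(+1)
    return left, right, right - left


# Hypothetical horizontal scan: angle in degrees, luminance in cd/m^2
angles = [-30, -20, -10, 0, 10, 20, 30]
lum = [10, 60, 95, 100, 95, 60, 10]
print(fov_from_scan(angles, lum))
```

The same routine could be applied to a Michelson contrast profile by passing contrast values and an absolute modulation threshold in place of the luminance fraction, with a small change to how the threshold is computed.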

3.3 Color, color uniformity, and color gamut

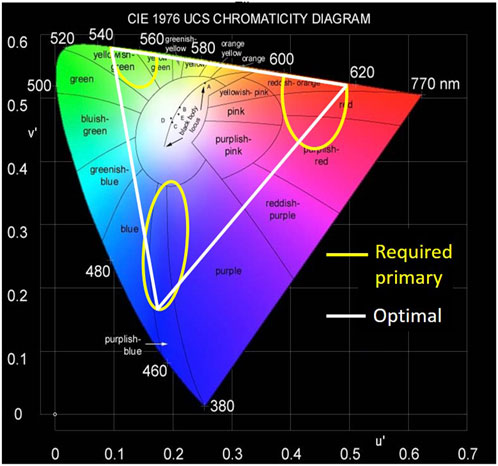

The color used in an XR image is another important way to render information. This can be in the form of color text, false color maps, or color images. ISO 9241-307 provides recommendations on what the white point and red, green, and blue primary colors should be to achieve reasonable color imagery (ISO, 2008b). Figure 2 illustrates the ISO 9241-307 acceptable range of primary colors as represented in the CIE 1976 chromaticity diagram. The measurement of the color primaries enables an estimation of the display’s range of colors (color gamut), where a larger gamut increases the number of distinguishable colors that can be used and offers a better opportunity to accurately represent the colors of the original object (IEC, 2019a). The white triangle in Figure 2 represents the maximum range of achievable colors using the acceptable primaries. However, the ISO 9241-307 acceptable primaries are a bit dated and modern displays can now exceed this color range. ISO 9241-307 also recommends color uniformity tolerances over specified fields of view to ensure that the color information be consistently represented over the image (ISO, 2008b). However, the measurement procedure given in IEC 63145-20-10 uses 5 to 9 positions in the image center and edges (IEC, 2019a).

Figure 2. Primary color requirements specified by ISO 9241-307 represented in the CIE 1976 chromaticity diagram. The enclosed yellow regions identify the range of chromaticities that are acceptable as red, green and blue primaries. The white triangle identifies the optimal color gamut available based on those primaries.
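To illustrate how measured primaries translate into a gamut in the CIE 1976 diagram, the sketch below converts CIE 1931 (x, y) chromaticities to (u', v') and computes the area of the resulting triangle. The sRGB/Rec. 709 primaries are used only as a familiar example; they are an assumption here and are not the acceptable ranges specified in ISO 9241-307.

```python
def xy_to_uv_prime(x: float, y: float):
    """Convert CIE 1931 (x, y) chromaticity to CIE 1976 (u', v')."""
    d = -2.0 * x + 12.0 * y + 3.0
    return 4.0 * x / d, 9.0 * y / d


def triangle_area(p1, p2, p3) -> float:
    """Area of the gamut triangle spanned by three chromaticity points."""
    return abs(
        p1[0] * (p2[1] - p3[1])
        + p2[0] * (p3[1] - p1[1])
        + p3[0] * (p1[1] - p2[1])
    ) / 2.0


# Example primaries in CIE 1931 xy (sRGB / Rec. 709, illustration only)
red = xy_to_uv_prime(0.64, 0.33)
green = xy_to_uv_prime(0.30, 0.60)
blue = xy_to_uv_prime(0.15, 0.06)

print("red u'v':", tuple(round(c, 4) for c in red))
print("gamut area in u'v':", round(triangle_area(red, green, blue), 4))
```

Comparing areas in the (u', v') plane rather than the (x, y) plane is a common choice because the 1976 diagram is more perceptually uniform, although area alone does not say which colors are covered.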

3.4 Eye-box based on luminance or Michelson contrast

The eye-box (or qualified viewing space) is the volume behind an XR eyepiece where the user can see the full virtual image field of view. As discussed above, the XR field of view can be based on luminance or Michelson contrast. Therefore, the eye-box is also based on either the luminance or Michelson contrast field of view. IEC 63145-20-10 provides the procedure for translating the LMD pupil in the space behind the left or right XR eyepiece to determine the volumetric boundary where the luminance-based field of view is maintained (IEC, 2019a). IEC 63145-20-20 gives a similar translation procedure but uses the field of view based on Michelson contrast to define the boundary of the eye-box volume (IEC, 2019b).

3.5 Additional visual performance characteristics

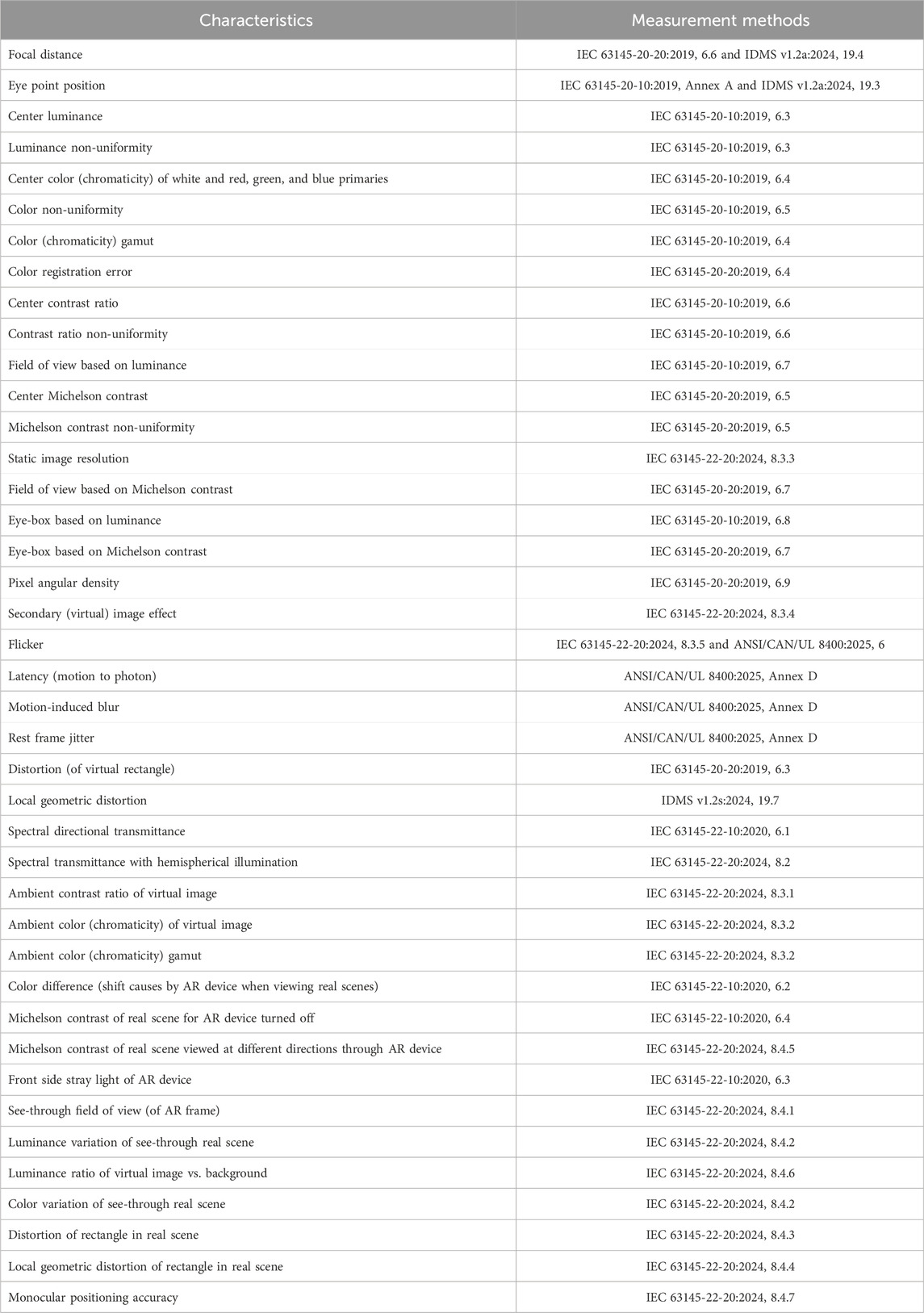

In addition to the XR visual performance characteristics mentioned above, there are numerous other XR attributes that may be important depending on the application. For example, IEC 63145-20-20 gives a procedure for measuring the geometric distortion at the outer boundary of the virtual image (IEC, 2019b). This may be critical when evaluating sizes or registering virtual images to real objects. However, the geometric distortion is generally not linear from the center of the virtual image to the edges. In that case, the local geometric distortion measurement specified in IDMS section 19.7 could prove more useful when using the virtual image for positioning and assisted surgical navigation (IDMS, 2024). Since AR devices are often used in bright environments, IEC 63145-22-20 offers several measurements for evaluating the influence of the ambient light on the contrast and color of the virtual image (IEC, 2024). A more comprehensive compilation of XR measuring methods is given in Table 1.

4 Discussion

New XR products are rapidly being developed with increasing complexity. This review reflects the current status of XR measurement standards. Given that international standards take several years to develop and publish, there is inherently a lag between the latest technology and its measurement standards. These standards will inevitably need to be revised to adapt to new innovations, but the general ergonomic requirements for comfortable viewing are expected to largely remain unchanged. Until more XR-specific visual ergonomic requirements are standardized, the generic guidance given in ISO 9241-303 (and its implementation to direct view displays in ISO 9241-307) was used in this review as a benchmark for users to evaluate XR devices for their needs (ISO, 2008a; ISO, 2008b). But as noted by a recent FDA consensus paper on medical XR, even for a given XR device, the necessary evaluation methods and performance characteristics will depend on the intended use of the device (Beams et al., 2022). The consensus paper also provides useful guidance on evaluating and validating the standard methods for medical applications. By making the user aware of the ergonomic requirements and methods of related display technologies, this review assists the user in making reasonable initial choices for their application. This review strived to highlight the important ergonomic requirements and reference the most relevant XR-specific standard measuring methods. There are also many XR measurements listed in Table 1 that have no industry ergonomic guidance. These standard measuring methods identify XR device characteristics that may still be important to the user. They provide the user a metric to quantify the characteristic, and a measuring procedure to gauge improvements.

Although there has been good progress toward developing a broad range of XR measurement methods, there are still metrology gaps that need to be addressed related to spatial image quality, temporal image quality, and usability (Beams et al., 2022). For example, a robust eye point position measurement needs to be standardized. This is important since the eye point position serves as the starting reference for many other measurements. A more accurate method is also needed to determine the focal distance and how it changes within the virtual image (called field curvature). And as eye-tracking technologies and related dynamic rendering methods become incorporated into XR devices, new procedures will be needed to incorporate simulated eye movement into the measurements. It should also be noted that aside from some of the measurements discussed in regard to visual fatigue, the standards to date have focused on monocular characteristics. More guidance is needed on binocular characteristics and their corresponding measuring methods. And finally, as new technologies using holographic or light field displays become available, they will likely require a significant revision to the existing methods. Thus, the dynamic nature of XR technologies will inevitably require the evolution of their measurement standards.

Author contributions

JP: Writing – original draft, Writing – review and editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

Author JP was employed by Luminex Technologies.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

ANSI (2025). Standard for safety: virtual reality, augmented reality and mixed reality technology equipment. UL Solutions. ANSI/CAN/UL 8400:2025. Available online at: https://www.shopulstandards.com/ProductDetail.aspx?productId=UL8400_1_S_20230428.

Beams, R., Brown, E., Cheng, W. C., Joyner, J. S., Kim, A. S., Kontson, K., et al. (2022). Evaluation challenges for the application of extended reality devices in medicine. J. Digit. Imaging 35, 1409–1418. doi:10.1007/s10278-022-00622-x

Beams, R., Zhao, C., and Badano, A. (2024). Image quality characterization of near-eye displays. J. Soc. Inf. Disp. 33, 101–121. doi:10.1002/jsid.2015

CIE (2018). Colorimetry. Ed 4. International Commission on Illumination. CIE 15:2018. doi:10.25039/TR.015.2018

Gupta, A., Ruijters, D., and Flexman, M. L. (2021). “Augmented reality for interventional procedures,” in Digital surgery. Editor S. Atallah (Cham: Springer). doi:10.1007/978-3-030-49100-0_17

Hoffman, D. M., Girshick, A. R., Akeley, K., and Banks, M. S. (2019). Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. J. Vis. 8, 1–30. doi:10.1167/8.3.33

Huang, E. Y., Knight, S., Guetter, C. R., Davis, C. H., Moller, M., Slama, E., et al. (2019). Telemedicine and telementoring in the surgical specialties: a narrative review. Am. J. Surg. 218, 760–766. doi:10.1016/j.amjsurg.2019.07.018

IEC (2018). Eyewear display – Part 1-1: generic introduction. International Electrotechnical Commission. IEC 63145-1-1:2018. Available online at: https://webstore.iec.ch/.

IEC (2019a). Eyewear display – Part 20-10: fundamental measurement methods – optical properties. International Electrotechnical Commission. IEC 63145-20-10:2019. Available online at: https://webstore.iec.ch/.

IEC (2019b). Eyewear display – Part 20-20: fundamental measurement methods – image quality. International Electrotechnical Commission. IEC 63145-20-20:2019. Available online at: https://webstore.iec.ch/.

IEC (2020). Eyewear display – Part 22-10: specific measurement methods for AR – optical properties. International Electrotechnical Commission. IEC 63145-22-10:2020. Available online at: https://webstore.iec.ch/.

IEC (2022). Eyewear display – Part 1-2: generic - terminology. International Electrotechnical Commission. IEC 63145-1-2:2022. Available online at: https://webstore.iec.ch/.

IEC (2023). Eyewear display – Part 10: specifications. International Electrotechnical Commission. IEC 63145-10:2023. Available online at: https://webstore.iec.ch/.

IEC (2024). Eyewear display – Part 22-20: specific measurement methods for AR – image quality. International Electrotechnical Commission. IEC 63145-22-20:2024. Available online at: https://webstore.iec.ch/.

IDMS (2024). Information Display Measurements Standard. International Committee for Display Metrology, Society for Information Display. Available online at: https://www.sid.org/Standards/ICDM.

ISO (2008a). Ergonomics of human-system interaction – Part 303: requirements for electronic visual displays. International Organization for Standardization. ISO 9241-303:2008. Available online at: https://www.iso.org/store.html/.

ISO (2008b). Ergonomics of human-system interaction – Part 307: analysis and compliance test methods for electronic visual displays. International Organization for Standardization. ISO 9241-307:2008. Available online at: https://www.iso.org/store.html/.

ISO (2015). Ergonomics of human-system interaction – Part 392: ergonomic recommendations for the reduction of visual fatigue from stereoscopic images. International Organization for Standardization. ISO 9241-392:2015. Available online at: https://www.iso.org/store.html/.

ISO (2016). Ergonomics of human-system interaction – Part 391: requirements, analysis and compliance test methods for the reduction of photosensitive seizures. International Organization for Standardization. ISO 9241-391:2016. Available online at: https://www.iso.org/store.html/.

ISO (2020a). Ergonomics of human-system interaction – Part 393: structured literature review of visually induced motion sickness during watching electronic images. International Organization for Standardization. ISO 9241-393:2020. Available online at: https://www.iso.org/store.html/.

ISO (2020b). Ergonomics of human-system interaction – Part 394: ergonomic requirements for reducing undesirable biomedical effects of visually induced motion sickness during watching electronic images. International Organization for Standardization. ISO 9241-394:2020. Available online at: https://www.iso.org/store.html/.

Keywords: virtual reality, augmented reality, optical measurement, standards, visual fatigue, visual performance

Citation: Penczek J (2025) Visual performance standards for virtual and augmented reality. Front. Virtual Real. 6:1575870. doi: 10.3389/frvir.2025.1575870

Received: 13 February 2025; Accepted: 17 June 2025;

Published: 16 July 2025.

Edited by:

Vangelis Lympouridis, University of Southern California, United States

Reviewed by:

Omar Janeh, University of Technology, Iraq

Robert LiKamWa, Arizona State University, United States

Ramkrishna Mondal, All India Institute of Medical Sciences (Patna), India

Chumin Zhao, United States Food and Drug Administration, United States

Copyright © 2025 Penczek. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: John Penczek

†ORCID: John Penczek, orcid.org/0000-0002-8817-4624