- 1Department of Industrial and Manufacturing Systems Engineering, Iowa State University, Ames, IA, United States

- 2Department of English, Iowa State University, Ames, IA, United States

Introduction: Socialization is crucial for facilitating disciplinary enculturation, yet traditional classroom instruction often lacks authentic socialization opportunities, limiting students’ exposure to their disciplinary communities. To address this gap, this study develops an immersive Virtual Reality-Artificial Intelligence (VR-AI) environment that simulates academic conference poster sessions. Learners interact with AI-driven agents, engaging in discussions and receiving real-time feedback on research communication. This study focuses on developing, operationalizing, and evaluating the fidelity of the VR-AI environment across four key dimensions: physical, functional, psychological, and social fidelity.

Methods: Twenty participants tested the environment, completing two learning tasks: engaging with poster presenters and reflecting with a major professor. Fidelity was assessed using mixed methods, including presence questionnaires, workload assessments, behavioral observations, and semi-structured interviews.

Results: Findings indicate high physical and functional fidelity, with participants describing the environment as immersive and reflective of real-world academic settings. Psychological fidelity was also well represented, as learners engaged in cognitively demanding research discussions and rhetorical reflection. However, social fidelity remained a challenge, as AI agents struggled with conversational turn-taking and response length, reducing the authenticity of academic exchanges.

Discussion: These findings highlight the potential of VR-AI environments for disciplinary socialization while underscoring the need for refined AI-driven interaction designs to support more fluid, reciprocal dialogue.

1 Introduction

This study aims to operationalize, develop, and evaluate a Virtual Reality-Artificial Intelligence (VR-AI) learning environment to aid graduate students in developing the socio-rhetorical knowledge of their discipline. Developing socio-rhetorical knowledge is crucial in mastering research genres across an academic discipline. Genres can be defined as “socially recognized ways of using language” (Hyland, 2007, p. 149) that emerge to fulfill recurring communicative needs. These genres may include research articles, conference presentations, book reviews, and poster presentations, amongst many others.

Genre knowledge involves understanding the expectations and conforming to the conventions of the target disciplinary community. As identified by Tardy et al. (2020), four key domains are necessary for genre acquisition: formal knowledge (structural features and conventions), rhetorical knowledge (purpose and audience expectations), process knowledge (creation and distribution steps), and subject-matter knowledge (disciplinary content). This study focuses on rhetorical knowledge to help novice members of the disciplinary community better understand and adapt to the communicative situations and expectations of research-related genres. Rhetorical knowledge must occupy a more prominent place in technology-assisted academic writing in both research and pedagogy; otherwise, theoretically imbalanced instruction will expose novice writers to an overemphasis on subject-matter knowledge at the expense of rhetorical knowledge, even though each is necessary and neither is sufficient on its own (Tardy, 2005). Arguably, developing rhetorical knowledge is a complex process that requires novice scholars’ socialization into their disciplinary communities (Casanave, 2002; Johns and Swales, 2002). Successful rhetorical knowledge development involves being socialized into the socio-disciplinary expectations of the discourse community and the ability to make appropriate rhetorical choices in communication based on those expectations (Hyland, 2018). Because the primary task of a researcher is to convince other researchers within the disciplinary community of their scientific contribution, rhetorical knowledge is paramount. As rhetorical knowledge can be successfully acquired through a process of socialization, early and frequent exposure to the socio-rhetorical practices of a disciplinary community is crucial (Kobayashi et al., 2017; Tardy et al., 2020).

Rhetorical knowledge is a type of implicit insider knowledge that novice scholars such as graduate students cannot acquire in formal course settings alone (Ahn and Lee, 2016; Lee and Park, 2020; Yang, 2006). While classroom instruction creates conditions for learning about their disciplinary community, it is limited in that students cannot participate in the actual practices of the community itself. Socialization into the community can only be achieved through interactions with core members who have a stronger, more proficient grasp of the conventions and practices of their discipline (Duff, 2010). However, research has shown that many graduate students lack access to their disciplinary communities (Duff, 2007; Haneda, 2006) and that this lack of access is detrimental to their overall proficiency in producing research genres (Tardy et al., 2020; Zappa-Hollman, 2007). This gap underscores the need for innovative pedagogical approaches that can bridge the divide between classroom learning and real-world academic practice.

Emerging technologies like Virtual Reality (VR) and Artificial Intelligence (AI) hold potential for addressing this need, particularly when the two are integrated to create a situated learning environment. Situated learning theory posits that meaningful learning can only take place if it is embedded in the social and physical context within which it will be used (Brown et al., 1989). According to instructional technologists, situated learning environments need to provide authentic context, authentic activities, access to expert performances, multiple perspectives and guidance, collaborative knowledge construction, and reflection to articulate tacit knowledge (Herrington and Oliver, 2000). The technological affordances of VR and AI can be used to create meaningful situated learning environments. VR allows designers to immerse learners in simulated settings that replicate real-world disciplinary communities and practices, while AI-driven generative agents can emulate the communication of expert community members’ knowledge, values, and interactional patterns. For instance, a VR-AI environment of an academic conference poster session could allow learners to present research to other scholars, observe more experienced presenters, respond to audience critiques, and interact with scholars from a wide range of fields and perspectives. Through tailored activities, designers can scaffold collaborative knowledge construction (e.g., negotiating feedback with other researchers) and prompt reflection on tacit norms, allowing learners to exercise their socio-rhetorical understanding in real time.

Guidelines on how to develop situated learning environments are scarce, particularly within the realm of commercial-grade VR and Generative AI. A key challenge in developing situated learning environments lies in aligning learning objectives, theoretical underpinnings, and technological affordances to ensure their design supports the intended pedagogical outcome. Recent systematic reviews of the use of VR technologies in higher education found a rise in their adoption but have also noted a significant lack of explicit pedagogical frameworks in the development and use of these environments (Chen et al., 2024; Radianti et al., 2020), resulting in poorly designed instructional activities and ambiguity in learning outcomes. Similarly, recent systematic reviews of AI in higher education have called for adopting educational theories in the use of AI technologies (Chen et al., 2020; Ouyang et al., 2022; Zawacki-Richter et al., 2019). While generative AI agents like GPTs can simulate human-like interactions, their pedagogical effectiveness hinges on training protocols that embed learning theories and objectives. Guidelines on evaluating a situated learning environment are also limited, leading to the adoption of environments without sufficient testing and validation. In order for a situated learning environment to be successful, it is necessary first to establish whether it captures fundamental features of the actual task and environment, and whether it elicits realistic behavior. The design of these features demands careful attention to fidelity, that is, the degree to which simulations replicate real-world systems (Harris et al., 2020). While many types of fidelity exist, this study focuses on physical (visual and sensory realism), functional (realistic use and behavior of objects), psychological (replication of cognitive demands), and social fidelity (realism of interpersonal interactions), as these aspects are particularly important for pedagogical effectiveness. For training and learning purposes, the environment needs to be only as “real” as required to achieve the desired learning outcomes. Without intentional alignment of pedagogical goals, fidelity dimensions, and technological capabilities, simulations risk fostering superficial engagement.

This study aims to address these gaps by developing, operationalizing, and evaluating a genre-based VR-AI environment that simulates a conference poster session, a context chosen for its density of socialization activities, including real-time feedback exchanges, interactive negotiations, and models of expert performances (Tan et al., 2023a). The intended learning outcome is to enhance learners’ social and rhetorical understanding of their disciplinary communities. The research objectives of this study are as follows:

1. Development: To describe the development of the learning environment grounded in learning theories, creating a design rationale that aligns VR/AI affordances with socio-rhetorical learning goals.

2. Operationalization: To provide directions for training and integrating GPT agents as pedagogical proxies, using discipline-specific corpora and rhetorical schemas to encode authentic interactional patterns characteristic of research communication.

3. Evaluation: To test and validate the learning environment’s physical, functional, psychological, and social fidelity as a poster session for pedagogical use.

As scholarship in academic research communication continues to evolve in the digital age, this study contributes to the broader discourse on addressing the challenges of academic socialization while also providing a framework for the design and evaluation of VR-AI learning environments that can be adapted to other educational contexts.

2 Related works

This study develops and evaluates a VR-AI learning environment aimed at fostering socio-rhetorical knowledge, a key domain of genre knowledge. This section examines genre theory to understand the role of rhetorical knowledge and how it is developed through social interactions. This study draws from research on situated learning theory and genre theory (Swales, 1990), leveraging their accounts of the learning process through which socio-rhetorical knowledge can be developed. Building on this foundation, the study explores the affordances of VR and AI in creating a situated learning environment. Finally, this study reviews fidelity as an evaluative framework to test and validate the environment on its pedagogical usefulness. By grounding the integration of emerging technologies in learning theories, this work aims to serve as a valuable guideline for the design and evaluation of VR and AI learning environments.

2.1 Genre knowledge and genre-based pedagogies

At their core, genres can be defined as “socially recognized ways of using language” (Hyland, 2007, p. 149) that emerge to fulfill recurring communicative needs. Acquiring knowledge of a genre is a complex and multilayered process as members within these communities need to know not just the preferred forms of a genre but how and when a genre can be used to further a community’s goals, the common practices and values of the genre users, the identity taken on in using the genre, the subject-matter of their discipline, and how to exploit the genre for their own agendas (Tardy et al., 2020). Tardy et al. (2020) provide a comprehensive framework detailing the four crucial domains for genre knowledge acquisition:

• Formal knowledge: Understanding of the structural features, conventions, and stylistic norms of a genre (e.g., organization, formatting, language use)

• Rhetorical knowledge: Understanding of genre’s purpose, audience expectations, and strategies to help achieve its goals

• Process knowledge: Understanding of steps involved in creating, distributing, and using the genre (e.g., drafting, revising, peer review process, publishing process)

• Subject-matter knowledge: Understanding of disciplinary content

This study focuses on rhetorical knowledge for several critical reasons. Firstly, despite its importance, rhetorical knowledge is often marginalized in formal curricula (Tardy, 2005; Zhang and Zhang, 2021). Rhetorical knowledge is often assumed to develop implicitly through exposure to academic discourse, leading educators to prioritize subject-matter knowledge and technical skills over the nuanced teaching of rhetorical competence. Secondly, while most genre-based approaches in the classrooms aim to raise rhetorical awareness, these approaches still fall short in providing authentic context and opportunities for practice. Due to the nature of formal classroom education, classroom activities are often decontextualized, with assignments focused on producing texts for the instructor rather than engaging with real-world audiences or purposes (Devitt et al., 2003; Hyland, 2007). For instance, while popular tasks like analyzing model texts or practicing rhetorical moves (i.e., communicative goals) can raise awareness of genre conventions and do indeed contribute to developing rhetorical knowledge, they still fail to immerse students in the dynamic social contexts where genres are actively negotiated and used (Freedman and Medway, 2003; Worden, 2018). Consequently, genre-based practitioners have advocated for more situated and authentic practices that integrate rhetorical instruction with meaningful, context-rich activities to better socialize learners (Hyland, 2022; Tardy, 2005). These limitations highlight the need for pedagogical approaches that immerse learners in authentic social contexts—making situated learning an ideal complement.

2.2 Situated learning for rhetorical knowledge acquisition

Situated learning theory offers a valuable pedagogical framework for addressing the limitations of genre-based pedagogy by suggesting how learners can develop rhetorical knowledge through meaningful participation in communities of practice. Defined as a process where individuals acquire knowledge and skills by engaging in authentic activities within real-life contexts (Lave and Wenger, 1991), situated learning emphasizes participation over abstraction. Derived from Sociocultural Theory (Vygotsky, 1978), situated learning builds on the idea that learning occurs through social interaction and mediation, with expert members scaffolding newcomers’ understanding of community norms. It also extends sociocultural theory by introducing the concept of legitimate peripheral participation, which describes how novices gradually transition from peripheral observers to full participants in a community of practice as they gain expertise and integrate into the sociocultural practices of the group.

This theory is particularly instructive for rhetorical knowledge acquisition because it emphasizes authentic engagement within disciplinary communities, which are essentially communities of practice. Such engagement is an essential component typically missing from traditional classrooms (Kobayashi et al., 2017; Tardy et al., 2020; Zappa-Hollman and Duff, 2015). Through repeated interactions with experts and meaningful tasks, such as presenting at simulated conferences, novices can internalize the socio-rhetorical logic underpinning disciplinary communication. Herrington and Oliver (2000) identify nine essential elements for creating effective situated learning environments:

1. Provide authentic contexts that reflect the way knowledge will be used in real life

2. Provide authentic activities

3. Provide access to expert performances for the modeling of processes

4. Provide multiple roles and perspectives

5. Support collaborative construction of knowledge

6. Promote reflection to enable abstractions to be formed

7. Promote articulation to enable tacit knowledge to be made explicit

8. Provide coaching and scaffolding by the teacher at critical times

9. Provide authentic assessment of learning within the tasks

However, traditional classroom activities often fail to meet these criteria, as creating situated learning activities is very difficult in the formal classroom setting (Ahn and Lee, 2016; Lee and Park, 2020; Yang, 2006). Emerging technologies like VR and AI offer promising avenues to address these challenges by simulating immersive environments where learners can engage with realistic rhetorical situations while receiving scaffolded feedback akin to expert guidance within communities of practice. By bridging this gap between theory and application, situated learning complements genre-based pedagogy and addresses its shortcomings by teaching rhetorical knowledge in near-authentic ways.

2.3 VR and AI affordances for creating situated learning environments

2.3.1 VR affordances

The growing field of VR has spurred extensive discussions on its affordances for learning, particularly in creating immersive and interactive environments (Burdea and Coiffet, 2003; Slater et al., 2022; Steffen et al., 2019). Kaplan-Rakowski and Gruber (2019) define VR environments (VREs) as “computer-generated 360° virtual spaces that can be perceived as being spatially realistic due to the high immersion afforded by a head-mounted device” (p. 552). Immersion, a key feature of VR, refers to the subjective impression of being fully engaged in a comprehensive, realistic experience (Dede, 2009). Drawing on Steffen et al.’s (2019) framework, VR technology enables immersive learning experiences by creating, recreating, enhancing, or diminishing aspects of physical reality in simulated environments. These affordances align with Herrington and Oliver’s (2000) nine essential elements of situated learning environments by allowing instructors to create contexts and activities that mirror real-world contexts and practices, providing access to expert performances through modeled behaviors, and enabling learners to assume multiple roles and perspectives. For example, VR can simulate academic poster sessions where learners present research and interact with AI agents representing members of their target disciplinary community. Such immersive tasks support collaborative knowledge construction, foster reflection on rhetorical strategies, and promote articulation of tacit knowledge in ways that traditional classrooms cannot.

Despite this potential, systematic reviews have consistently highlighted that most VR-based educational interventions focus on the technological capabilities of VR, while only a few provide theoretical models (Chen et al., 2024; Fowler, 2015; Pellas et al., 2021; Radianti et al., 2020) or explicitly align VR affordances with the design of a VRE (Fromm et al., 2024; McGowin et al., 2021). The lack of theoretical grounding can inadvertently lead to inconsistent learning outcomes (Radianti et al., 2020). Poorly designed instructional activities in immersive environments can reduce their effectiveness in achieving pedagogical goals (Chen et al., 2024). Theoretically informed instructional designs are needed to maximize the educational potential of VR applications (Pellas et al., 2021). These insights underscore the importance of aligning VR affordances with learning theories to ensure meaningful and effective learning experiences.

2.3.2 AI affordances

AI offers significant affordances in higher education, particularly in learning, teaching, assessment, and administration (Chiu et al., 2023; Zawacki-Richter et al., 2019). Empirical research has shown that, in some instances, AI can enhance online instruction and learning quality through high-accuracy prediction, personalized recommendations, and increased engagement and participation (Ouyang et al., 2022). In the context of this study, AI could be used to create authentic communities of practice by simulating dynamic interactions with mentors and other community members. Through the use of GPT models, AI agents can be trained to embody the rhetorical knowledge, conventions, and identities unique to specific disciplinary communities, enabling learners to engage with these agents as believable community members. These affordances also align well with Herrington and Oliver’s (2000) framework for situated learning environments. AI agents can act as mentors or peers, offering tailored feedback, modeling disciplinary norms, and facilitating collaborative tasks. AI can also support collaborative knowledge construction through interactive dialogue with agents and promote reflection and articulation of research content by enabling learners to receive immediate feedback on their communication strategies. Additionally, AI systems can be designed to provide scaffolding at critical moments by adapting responses to learners’ needs and offering formative assessments embedded within tasks. By aligning these affordances with situated learning principles, AI can serve as a powerful tool for fostering rhetorical knowledge acquisition and preparing learners for effective participation in their disciplinary communities.

Despite these promising affordances, systematic reviews have highlighted a critical gap in aligning AI applications with robust educational theories. For example, Ouyang et al. (2022) found that while AI applications in higher education have demonstrated potential for improving academic performance and engagement, their effectiveness is often undermined by a lack of integration with learning theories or long-term empirical validation. Similarly, Chen et al. (2020) emphasized the need for theoretically grounded designs to maximize the pedagogical impact of AI systems. Addressing these challenges requires not only integrating AI with established learning theories but also ensuring that AI fosters meaningful and context-rich interactions. These reviews recommend incorporating authentic characteristics and real-time process data to enhance agent accuracy, which is especially important when an agent is used as a tutoring system. Researchers have also called for more transparency in how AI applications are trained (İpek et al., 2023), along with more interdisciplinary collaboration between educators and technologists to ensure AI systems meet complex educational needs effectively (Chiu et al., 2023; Zawacki-Richter et al., 2019).

2.4 Fidelity as an evaluative framework for situated learning environments

The increasing adoption of learning environments in education is promising; however, for learning to be effective, it is essential to establish whether the environment captures the fundamental features of the real task and environment while eliciting realistic behaviors (Harris et al., 2020). Fidelity, defined as the accuracy between “the real-life” contextual circumstances and the digitally created immersive environment, is central to this evaluation (Bjørn et al., 2024).

2.4.1 Types of fidelity for situated learning

Numerous conceptualizations of fidelity exist, including environmental fidelity (Rehmann, 1995), equipment or technical fidelity (Hontvedt and Øvergård, 2020), social fidelity (Bjørn et al., 2024; Sinatra et al., 2021), psychological fidelity (Schiflett et al., 2004; Harris et al., 2020), functional fidelity (Alexander et al., 2005), and physical fidelity (Birbara and Pather, 2021; Harris et al., 2020), among many others. While it is often assumed that higher fidelity automatically enhances learning outcomes, this claim lacks empirical support (Schiflett et al., 2004; Sinatra et al., 2021). Moreover, achieving high levels of fidelity in many of these dimensions can be resource-intensive and time-consuming. Therefore, it is important to carefully determine which aspects of the real-world environment should be implemented and to design scenarios, artifacts, and activities that directly support learning objectives (Bjørn et al., 2024). Since situated learning environments aim to replicate authentic contexts, facilitate expert interactions, and provide scaffolded practice and reflection, this study focuses on four key fidelity types: physical fidelity, functional fidelity, psychological fidelity, and social fidelity.

2.4.2 Physical fidelity

Physical fidelity refers to the degree of realism provided by the visual and physical elements of a virtual environment, including its visual representation, behavior, and adherence to the laws of physics (Harris et al., 2020). In situated learning environments, physical fidelity is often associated with the visual resemblance of objects and spaces to their real-world counterparts. For example, in a poster session setting, physical fidelity ensures that elements such as poster boards, research posters, and seating arrangements mirror their real-world equivalents. While research suggests that low- and even zero-physical fidelity environments can serve as efficient training tools (Toups Dugas et al., 2011), high physical fidelity can still enhance immersion and engagement (Slater et al., 2022) and thus should not be isolated from aspects of learning (Lowell and Tagare, 2023). To assess physical fidelity, researchers typically use direct participant reports, presence questionnaires, and behavioral observations to determine whether users perceive the environment as visually realistic and immersive (Harris et al., 2020).

2.4.3 Functional fidelity

Functional fidelity refers to the extent to which artifacts (or virtual objects) and interactions replicate the real-world functionality of their counterparts, particularly in how actions within the environment align with real-world affordances (Bjørn et al., 2024). Unlike physical fidelity, which is concerned with whether artifacts look like the real world, functional fidelity is concerned with whether artifacts serve their functions like the real world (Alexander et al., 2005). For example, in a poster session environment, a research poster should serve as an actual multimodal component, allowing users to read and use the information during interactions in meaningful ways. Functional fidelity is typically measured by observing the object’s functional behaviors during task execution (e.g., whether learners interact with posters as they would in real life) and by collecting user feedback through surveys, interviews, or questionnaires to evaluate whether the simulation supports realistic interactions and effective learning (Alexander et al., 2005).

2.4.4 Psychological fidelity

Psychological fidelity refers to the degree to which a training environment replicates the psychological demands, decision-making, and cognitive processes of real-world tasks (Gray, 2019). This includes the extent to which learners must analyze information, adapt their responses in real-time, manage cognitive load, and engage in authentic social interactions, ensuring that the task elicits the same mental effort, problem-solving, and communicative complexity required in real-world scenarios. Psychological fidelity is often considered the most relevant attribute for the transfer of learning (Harris et al., 2020). To achieve psychological fidelity, learning environments must identify the cognitive and performance requirements of real-world tasks and design scenarios that elicit these processes effectively. Measurements are then used to evaluate whether the simulation successfully engages users in these processes. Cognitive load is also a particularly relevant measure in education and training, as maintaining an optimal level of load is critical for successful learning outcomes (Kirschner, 2002).

2.4.5 Social fidelity

Social fidelity, also referred to as interactional fidelity in socio-technical systems (Hontvedt and Øvergård, 2020), is defined as the extent to which the social aspects of a virtual character emulate real-world social interactions (Sinatra et al., 2021). This includes the realism of content and interaction patterns, enabling learners and agents to collaborate, coordinate, and create shared meaning effectively. Pedagogical agents are simulated characters that interact socially with users to support learning. They can serve as teachers, coaches, peers, or emotional supporters, and can influence learning in profound ways (Sinatra et al., 2021). Social fidelity is typically assessed using a combination of behavioral observations and self-reported measures. Behavioral assessments focus on user engagement with virtual agents and metrics such as time-on-task, while self-reported measures involve questionnaires or interviews to capture users’ perceptions of social presence, connectedness, and the naturalness of their interactions with the agents (Sinatra et al., 2021).

Because fidelity is crucial for any learning environment, the realism of the environment needs to be evaluated before it is adopted for instruction. This evaluation process is integral to the operationalization of the VR-AI environment, where the theoretical fidelity dimensions are translated into tangible design and learning tasks to ensure the environment functions as intended.
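To make the link between the four fidelity types and their typical measures concrete, the mapping reviewed in Sections 2.4.2 to 2.4.5 can be sketched as a small data structure. This is an illustrative summary only: the class name `FidelityDimension`, the constant `FIDELITY_PLAN`, and the helper `dimensions_using` are hypothetical names introduced here, and the guiding questions paraphrase the definitions above rather than reproduce an instrument used in the study.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class FidelityDimension:
    """One fidelity type, its guiding question, and its typical measures."""
    name: str
    guiding_question: str
    measures: Tuple[str, ...]


# Hypothetical summary of Sections 2.4.2-2.4.5; wording is paraphrased.
FIDELITY_PLAN = (
    FidelityDimension(
        "physical",
        "Do objects and spaces look like their real-world counterparts?",
        ("direct participant reports", "presence questionnaires",
         "behavioral observations"),
    ),
    FidelityDimension(
        "functional",
        "Do artifacts serve the same functions as in the real world?",
        ("behavioral observations during task execution",
         "surveys, interviews, or questionnaires"),
    ),
    FidelityDimension(
        "psychological",
        "Does the task elicit the cognitive demands of the real task?",
        ("cognitive load assessment",),
    ),
    FidelityDimension(
        "social",
        "Do agent interactions emulate real-world social interactions?",
        ("behavioral observations (e.g., time-on-task)",
         "questionnaires or interviews"),
    ),
)


def dimensions_using(plan: Tuple[FidelityDimension, ...], keyword: str) -> List[str]:
    """Return the names of dimensions with a measure containing `keyword`."""
    return [d.name for d in plan if any(keyword in m for m in d.measures)]


if __name__ == "__main__":
    for dim in FIDELITY_PLAN:
        print(f"{dim.name}: {', '.join(dim.measures)}")
```

A lookup such as `dimensions_using(FIDELITY_PLAN, "behavioral observations")` shows which dimensions can share a single observation protocol, which may help when planning a mixed-methods evaluation.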

3 VR-AI environment operationalization

This section first explains how the learning environment was designed based on educational theories, explicit learning outcomes, and technological affordances. It then details the fine-tuning of AI agents and the development of learning tasks.

3.1 VR-AI environment development

3.1.1 Developing the virtual environment

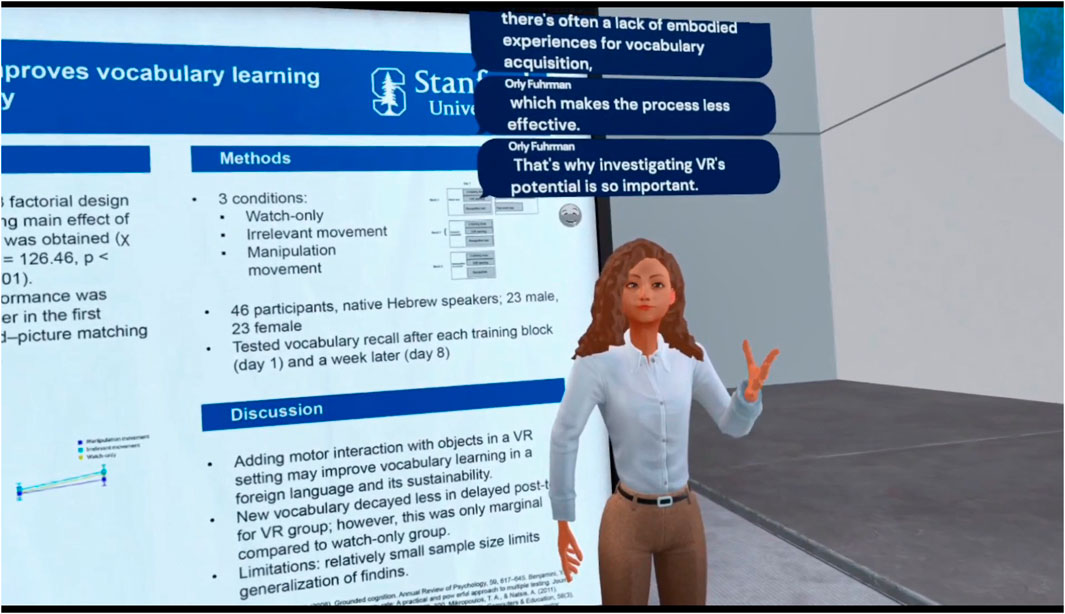

The VR environment was developed using Unity3D software and deployed on a Meta Quest 3 headset. The visual setup of the conference hall and poster boards was determined by the primary researcher’s own experience and confirmed by domain experts. Assets from the Unity Asset Store were used as objects in the poster session environment. To ensure readability, the posters were billboard-sized instead of the typical 64″ x 48″ dimensions. The posters were from real researchers; one was adapted with informed consent and permission, and the other was created based on Fuhrman et al.’s (2021) research article. Both posters served as functional artifacts containing the four major research sections, graphs, and relevant visuals. The AI agents’ visual representations were created using Ready Player Me, a 3D Avatar Creator.

3.1.2 Developing the learning tasks

Within the environment’s narrative, learners attend three conference days (three learning sessions) with scaffolded tasks. On the first day of the conference, the Major Professor agent guides the learner around the conference hall and invites the learner to observe how experts interact with the Poster Presenters. On the second day of the conference, the Major Professor encourages the learner to interact with the Poster Presenters independently. On the third and final day of the conference, the learner is tasked with presenting their own research. On each of these days, the Major Professor explicitly instructs learners to pay attention to the socio-rhetorical conventions of the tasks.

In this paper, we report on the development and piloting of only Day 2. Day 2 was chosen to demonstrate proof-of-concept because of its pedagogical and technical complexity: both the Poster Presenter and Major Professor agents needed to demonstrate spontaneous yet believable socio-rhetorical behavior and dialogue. There were two tasks for Day 2: interacting with the Poster Presenters and completing a guided reflection with the Major Professor. The tasks are further detailed below:

Upon entering the environment, the learner was greeted by the Major Professor, who welcomed them to the conference and the poster session. He explained that poster sessions are a great opportunity to learn about research and connect with other scholars. The Major Professor encouraged them to read the posters and ask the Poster Presenters questions. He suggested a list of key questions:

1. Why is this an important topic to study?

2. What problem is this research addressing?

3. How did you study this?

4. What are your main findings?

5. What is the biggest takeaway from this study?

6. What are the practical applications of these findings?

Following this, learners interacted with two Poster Presenters about their research (see Figure 1). Each Poster Presenter gave a brief overview of their study and invited comments and questions. The participants engaged in conversation with both presenters.

Upon task completion, learners returned to the Major Professor. The Major Professor led a reflection session using guided questions such as, “How did the presenter make a case for the importance of their study?” or “What would have been a better way of showing the impact of the study?” At the end of the session, the Major Professor congratulated the participant on a job well done and wished them luck in preparing for Day 3 of the poster session.

3.1.3 Customizing the AI agents

The AI agents were instantiated using InWorld, an AI game-engine platform that creates virtual characters with customized personalities and domain-specific knowledge. Since InWorld integrates GPTs, it allows for the adaptation of discourse community knowledge to develop agents that behave as credible members of their academic discipline. The technology enables dynamic, real-time conversations, facilitating natural back-and-forth dialogue between participants and the agents. Each agent was fine-tuned and configured to present information in ways consistent with disciplinary values and practices. Their responses were informed by a dataset of authentic dialogue samples collected from domain experts in a previous pilot study (Tan et al., 2023b). The output was further refined using common prompts aligned with the task’s goals and intended learning outcomes.

Each Poster Presenter agent was first assigned a physical appearance and a name. Then, each presenter was given unique background information and a distinct personality to differentiate them from one another. For example, one of the presenters is named Yvette Fahey. She is a second-year PhD student in the Department of Industrial Engineering at a large Midwestern university in the United States who loves to play Overcooked 2 in her free time and has seven cats. She has a friendly demeanor and tends to give comprehensive but engaging responses. Each agent was also given context about their environment, role, and relationship to the other agents through natural-language descriptions. For example:

{Character} is currently a Poster Presenter at Prestigious Conference 2024.

{Character} is standing in front of her poster board and is excited to share her research with scholars worldwide. {Character} references her poster often when presenting her research.

{Character}’s major advisor is John Smith, the keynote speaker for this conference.

Next, they were each given domain-specific knowledge about their own research study. For example, Yvette has the following description for her introduction section:

{Character}’s introduction section: This study describes the process of developing a teamwork measurement system by investigating cooperative video game environments for teamwork assessment. The work aims to provide teamwork measurement and testbed design guidance through cooperative games. Cooperative video games have been shown to foster prosocial behaviors, communication, and cooperative activities. However, work is needed to establish the relationship between cooperative features and teamwork behaviors and investigate the internal validity of these environments as assessment testbeds.
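As a rough illustration of how the layered agent description above (identity, scene context, domain knowledge) could be organized, the following Python sketch assembles the pieces into a single natural-language character description. It is not InWorld's API: the `AgentProfile` class, its field names, and `render_description` are hypothetical names introduced only for this example, and the `{Character}` placeholder is filled by plain string replacement.

```python
from dataclasses import dataclass, field


@dataclass
class AgentProfile:
    """Hypothetical container for one agent's configuration (not InWorld's API)."""
    name: str
    role: str
    personality: str
    scene_context: list[str] = field(default_factory=list)
    knowledge: dict[str, str] = field(default_factory=dict)


def render_description(profile: AgentProfile) -> str:
    """Fill the {Character} placeholder and join all parts into one text block."""
    lines = [f"{profile.name} is a {profile.role}. {profile.personality}"]
    lines += profile.scene_context
    lines += [f"{{Character}}'s {section} section: {text}"
              for section, text in profile.knowledge.items()]
    return "\n".join(line.replace("{Character}", profile.name) for line in lines)


yvette = AgentProfile(
    name="Yvette Fahey",
    role="second-year PhD student in Industrial Engineering",
    personality="She is friendly and gives comprehensive but engaging responses.",
    scene_context=[
        "{Character} is currently a Poster Presenter at Prestigious Conference 2024.",
        "{Character}'s major advisor is John Smith, the keynote speaker for this conference.",
    ],
    knowledge={
        "introduction": "This study describes the process of developing a teamwork "
                        "measurement system using cooperative video game environments.",
    },
)

description = render_description(yvette)
print(description)
```

Keeping identity, scene context, and section-level knowledge in separate fields makes it straightforward to reuse the same scene context for several presenters while swapping only the research content.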

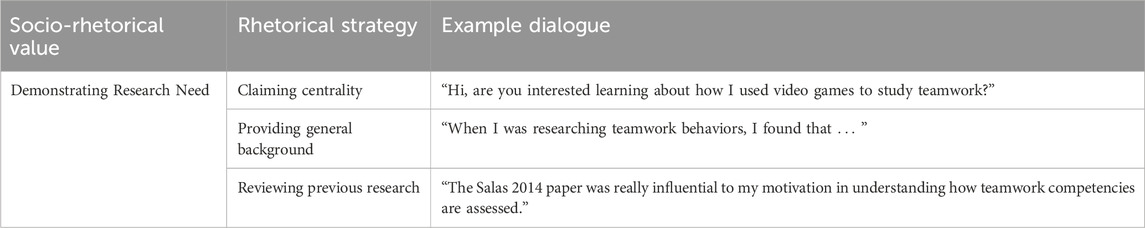

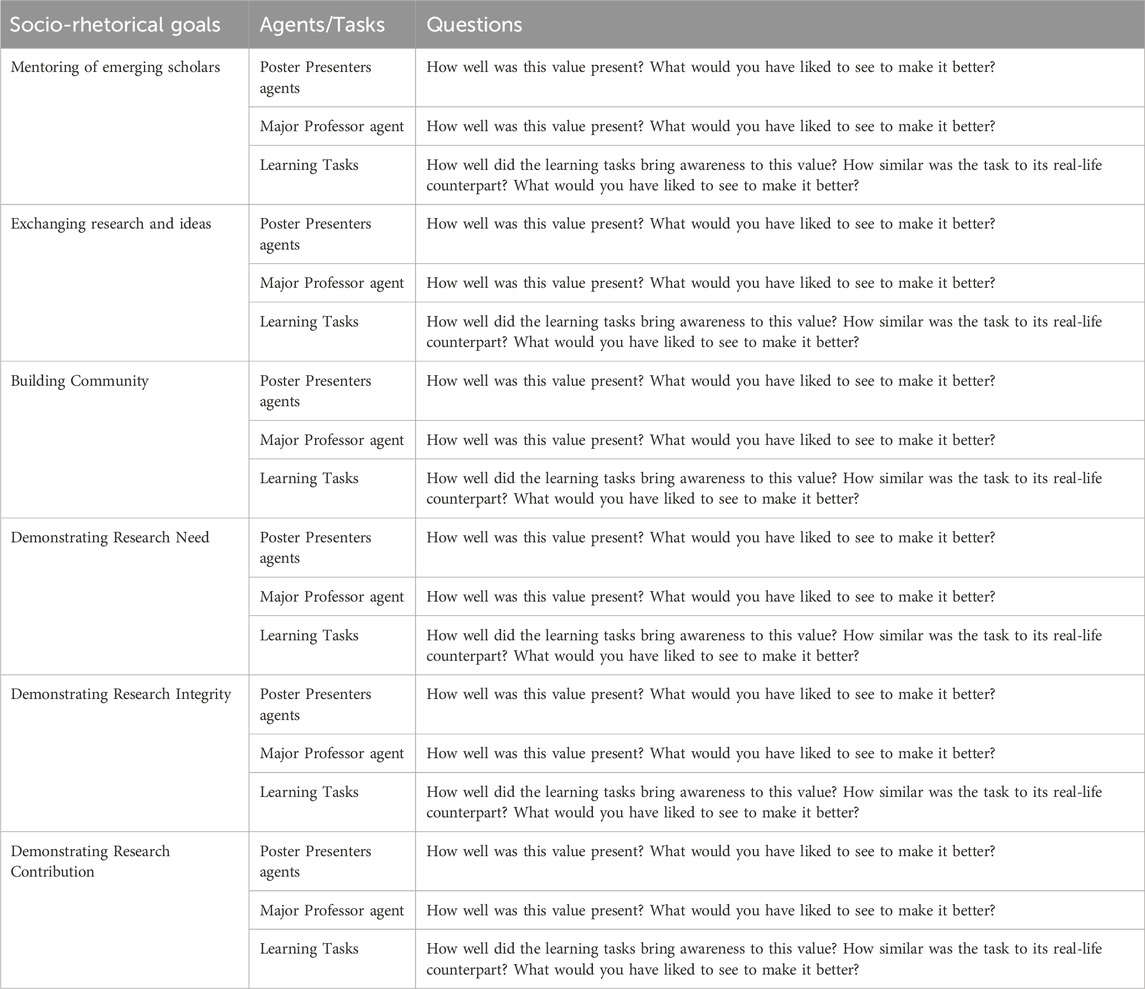

The last step was to imbue the Poster Presenters with the unique socio-rhetorical goals of the disciplinary community. The poster session move/step framework (communicative goals and strategies), adapted from a prior pilot study (Tan et al., 2023b) and previous research (Swales and Feak, 2012; Yoon and Casal, 2020), was used to ensure that each move and step included specific training phrases. Dialogue examples for Moves 1, 7, and 8 are detailed in Table 1.

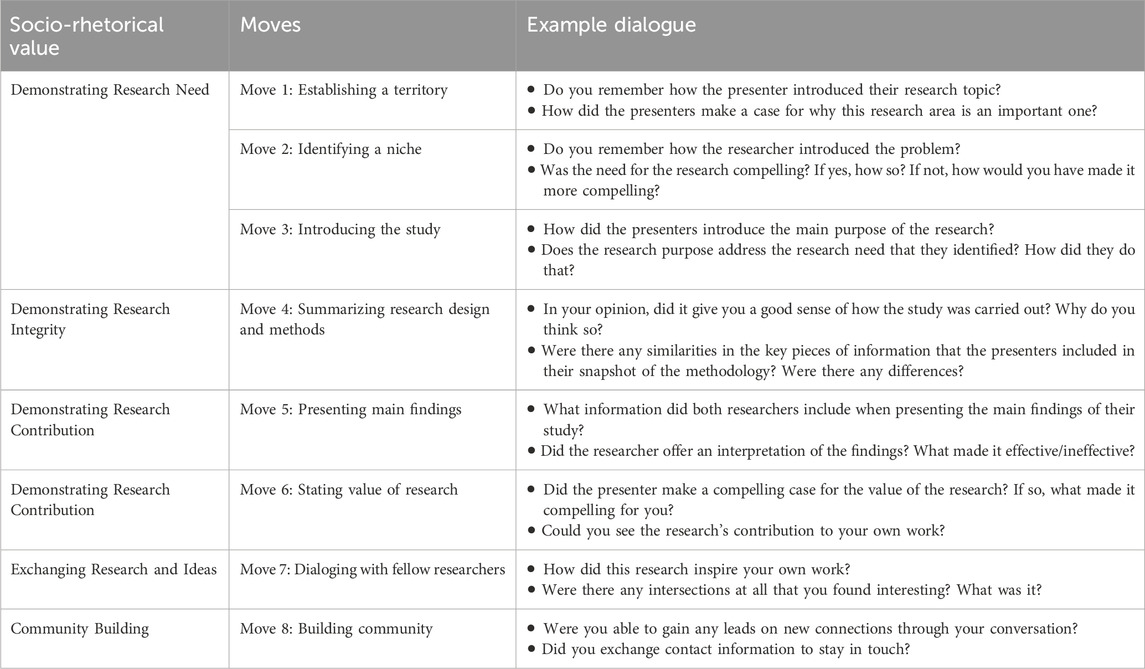

The Major Professor agent, Professor Long, went through a similar instantiation process where he was given a physical appearance, name, personality, and socio-rhetorical knowledge of the community. Unlike the Poster Presenters, the Major Professor was programmed to take the learner through a guided debriefing at the end of the poster session. The debriefing aimed to raise awareness of the disciplinary community’s socio-rhetorical knowledge. The Major Professor did so by asking reflective questions based on the learner’s experience after the learning task. To ensure that each move was addressed, the poster session move/step framework was used (see Table 2).

3.1.4 Summarizing the VR-AI learning environment

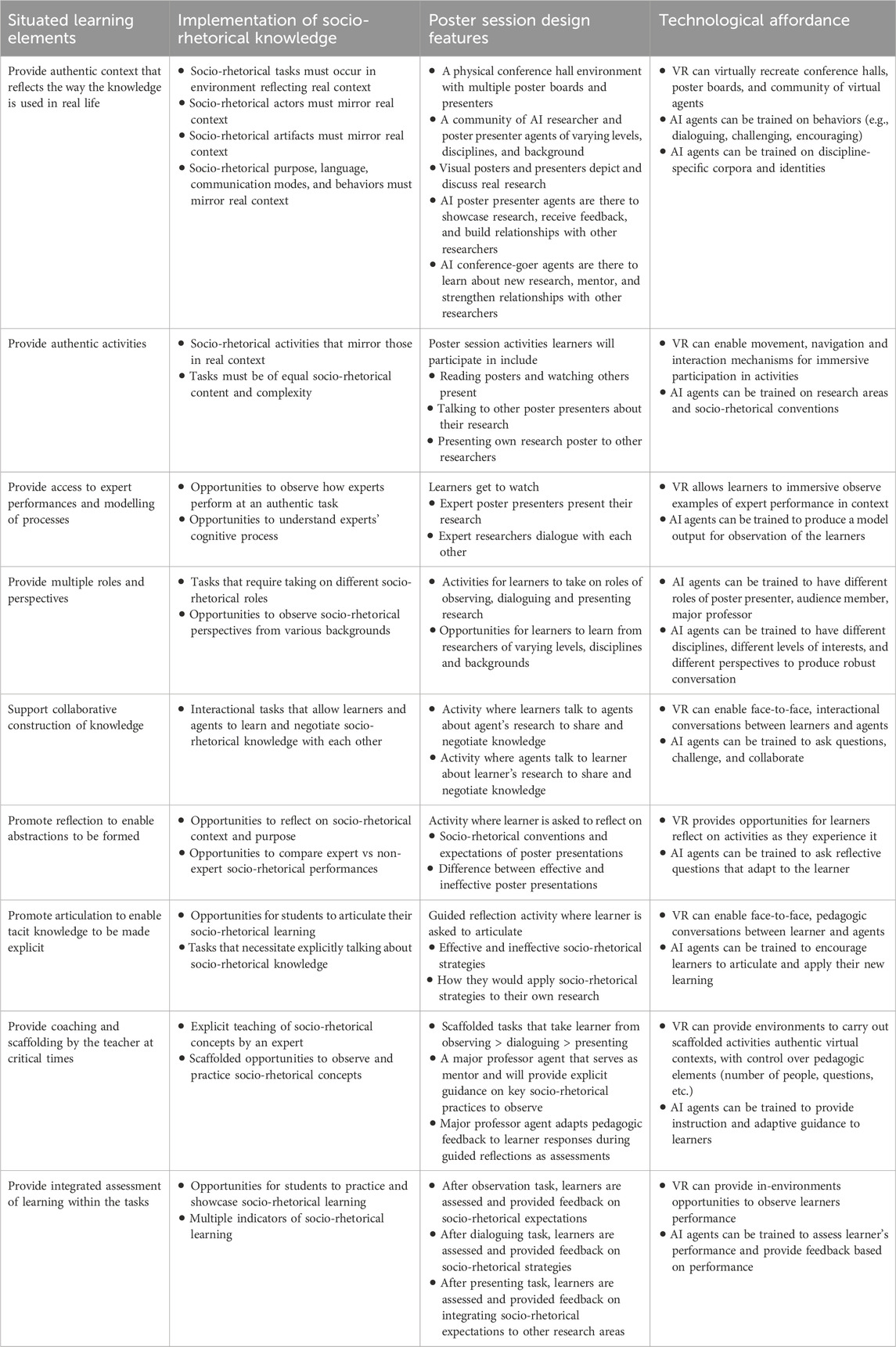

Requirements were developed to translate learning theories, objectives, and theoretical affordances into design features. Elements for situated learning environments were drawn from Herrington and Oliver (2000). Socio-rhetorical knowledge elements were gathered from a prior study (Tan et al., 2023b), which identified three social goals (initiating newcomers, facilitating research exchange, and building community) and three rhetorical goals (demonstrating research need, research integrity, and research contribution). Additionally, authentic user behavior, dialogue, language use, and expectations from the same study informed the design features of the learning environment.

Table 3 synthesizes this alignment, mapping Herrington and Oliver (2000) situated learning elements to socio-rhetorical learning goals, design features, and technological implementations. For instance, the authentic context requirement was operationalized through a virtual conference hall populated with AI agents representing diverse researchers, while authentic activities were embedded in learning tasks where learners negotiated feedback with AI agents. The AI-driven agents, trained on discipline-specific corpora, were designed to emulate expert behaviors, such as questioning research integrity or modeling rhetorical strategies like demonstrating research contribution. VR affordances, such as spatial immersion, enabled learners to engage in activities mirroring real-world academic practices (e.g., presenting posters, critiquing research). This aligned approach ensured that each design choice directly supported the acquisition of socio-rhetorical knowledge, the ultimate learning objective.

4 Methods

4.1 Research objectives

The environment was evaluated for its physical, functional, psychological, and social fidelity to ensure it met pedagogical requirements.

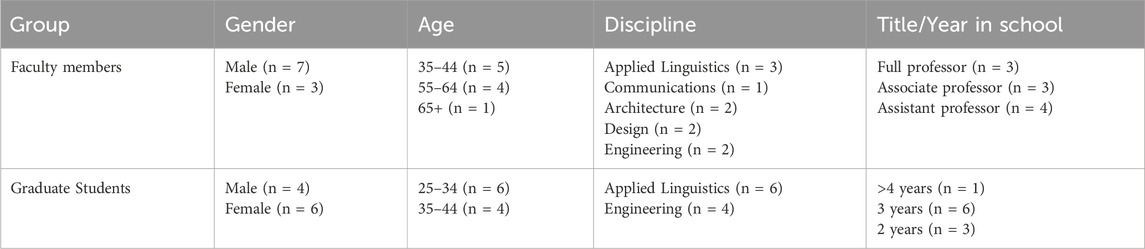

4.2 Participants

Ten faculty members and 10 graduate students participated in a test session. Faculty members had to be an assistant, associate, or full professor and must have attended more than 10 academic conferences. Students had to be currently enrolled as graduate students and have attended at least one poster session at a conference (see Table 4). All participants had normal or corrected-to-normal vision.

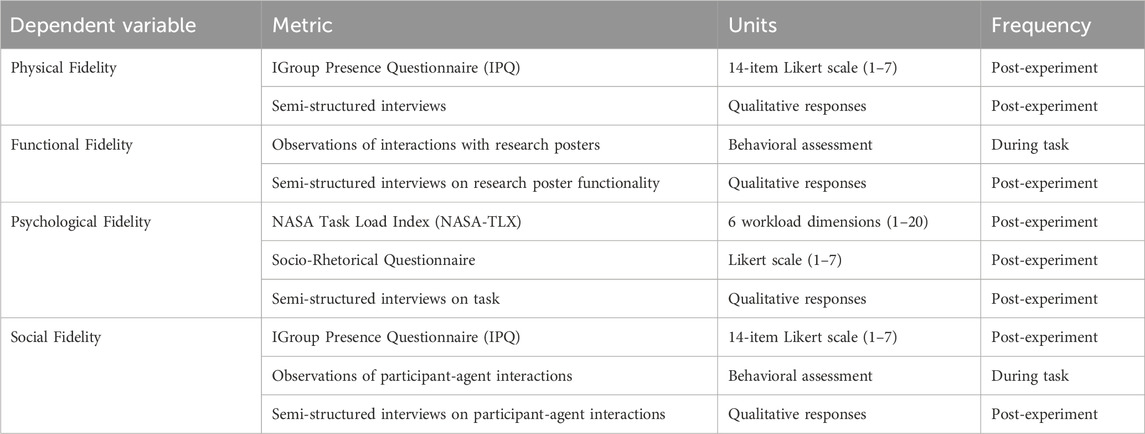

4.3 Measures

Table 5 lists the dependent variables, measurement metrics, and data collection frequency for evaluating fidelity in the VR-AI learning environment.

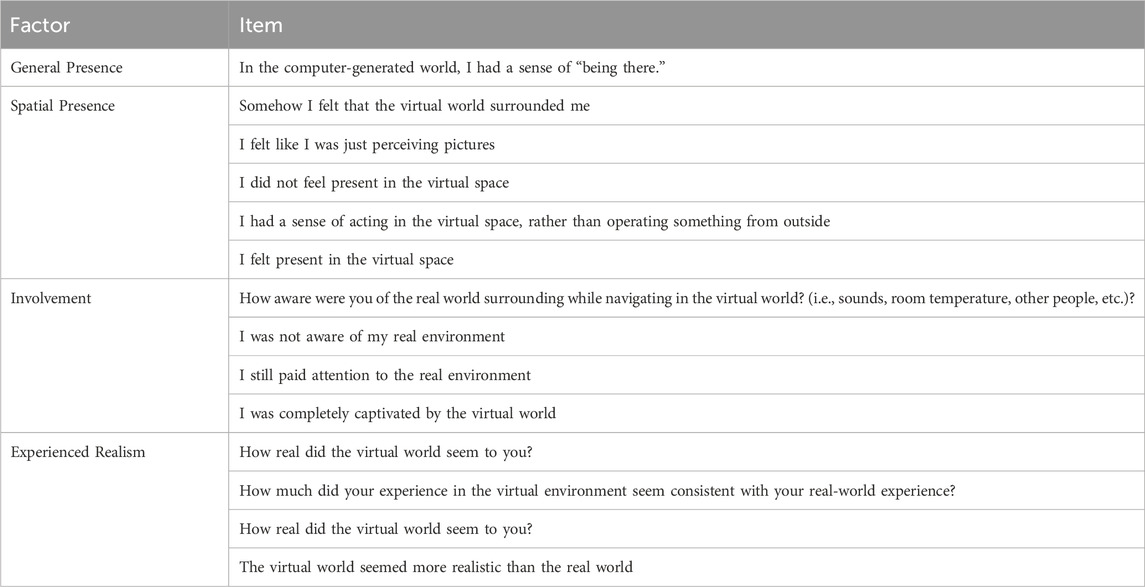

Physical fidelity was evaluated using the Igroup Presence Questionnaire (IPQ) (Igroup, 2000) to assess realism and the extent to which participants felt like they were “at a real conference” engaging with “real people.” The IPQ consists of 14 questions measuring four factors: General Presence, Spatial Presence, Involvement, and Experienced Realism (Table 6).

Additionally, interview data were gathered to elicit qualitative insights into participants’ perceptions of realism. To make the raw IPQ scores easier to interpret, Melo et al.’s (2023) qualitative grading description tool was used (see Table 7).

Table 7. Melo et al. (2023) qualitative grading description tool for the IPQ.

Functional fidelity was evaluated using observational data and interviews. Users’ interactions with artifacts (research posters) were observed to see whether they reflected real-world conference behavior, such as reading, questioning, and discussing content. Interviews further explored participants’ perceptions of the usability and functionality of these artifacts.

Psychological fidelity was evaluated using the NASA-TLX (Hart and Staveland, 1988) to measure the perceived demands of the task and a 7-point Likert-scale questionnaire assessing how well the socio-rhetorical values of the discourse community were embodied in the agents and learning tasks (see Table 8). Follow-up interviews were conducted to determine whether the task reflected the psychological aspects of research discussions of critically processing, synthesizing, and articulating research thoughts.

Social fidelity was evaluated using relevant sub-scales of the IPQ (Igroup, 2000), observational data, and a semi-structured interview. Observations focused on participant engagement with the AI agents, while interviews captured perceptions of social presence, conversational flow, and authenticity in interactions.

4.4 Procedure

Participants were welcomed into a private room fitted with a Meta Quest 2 headset, and their Interpupillary Distance (IPD) was adjusted to ensure visual comfort and optimal clarity. They were given a brief tutorial on how to use the controllers and headset. All movement within the VR environment was controlled with the joysticks on the controller and did not require physical movement. Participants stood in place while they navigated virtually in the VR. Once comfortable, participants were instructed to stand within a 4′x4′ marked area, ensuring a safe, obstruction-free space.

Participants were then virtually transported into the poster session environment, where they completed Day 2 of the poster session scenario. During the 30-min learning task, participants engaged in observing, questioning, and discussing research posters with AI agents. Participant interactions were screen-recorded and observed throughout the session to assess how they engaged with research posters and AI agents.

Immediately after completing the task, participants completed three questionnaires to assess different aspects of the virtual learning environment: (1) the Igroup Presence Questionnaire (IPQ) to measure physical and social fidelity, (2) the NASA Task Load Index (NASA-TLX) to assess cognitive workload, and (3) a 7-point Likert-scale questionnaire to evaluate how well the socio-rhetorical values of the discourse community were embodied in the AI agents and learning tasks. Following the questionnaires, a 25-min semi-structured interview assessed participants’ psychological and social fidelity perceptions. The interview focused on three dimensions: the Poster Presenters, the Major Professor, and the Learning Tasks, exploring whether these elements aligned with the discourse community’s socio-rhetorical expectations. Participants were also asked about usability issues (e.g., frustrations, pain points, areas for improvement).

4.5 Data analysis

The IPQ, NASA-TLX, and socio-rhetorical value ratings were analyzed using descriptive statistics. For the semi-structured interview data, thematic analysis (Byrne, 2022) was employed. A deductive approach was adopted, as the process was guided by the research objectives and the design of the interview questions. Specifically, a priori codes were created for each of the six socio-rhetorical goals, in addition to codes for usability and improvement suggestions. New codes were also generated based on recurring participant responses.
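The descriptive analysis of the questionnaire data can be sketched as follows. The ratings below are made-up placeholder values, not study data, and the function name `describe` is hypothetical. The sketch only shows how a subscale mean (M) and standard deviation (SD) of the kind reported in the Results could be computed from per-participant scores; it assumes the sample standard deviation, which the article does not specify.

```python
from statistics import mean, stdev


def describe(scores: list[float]) -> tuple[float, float]:
    """Return (M, SD) rounded to one decimal; SD is the sample standard deviation."""
    return round(mean(scores), 1), round(stdev(scores), 1)


# Placeholder per-participant subscale scores (illustrative only, NOT study data).
ratings = {
    "spatial_presence": [6.0, 5.0, 4.0, 7.0, 5.0],
    "experienced_realism": [3.0, 5.0, 2.0, 4.0, 6.0],
}

summary = {subscale: describe(scores) for subscale, scores in ratings.items()}
for subscale, (m, sd) in summary.items():
    print(f"{subscale}: M = {m}, SD = {sd}")
```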

5 Results

5.1 Physical fidelity

5.1.1 IPQ

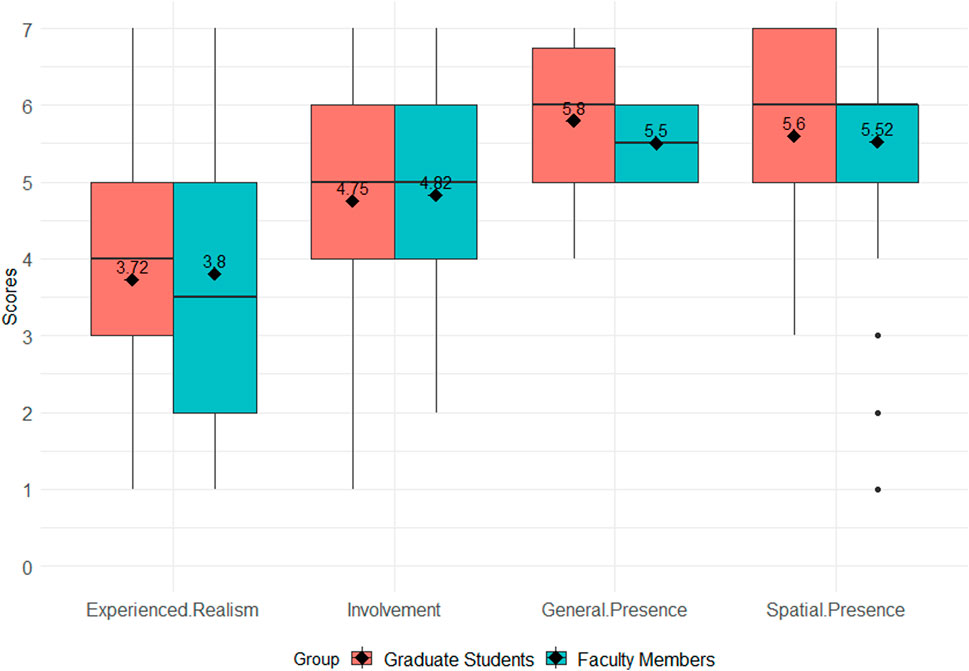

All participants rated the environment highly in spatial presence (M = 5.5, SD = 1.2), general presence (M = 5.6, SD = 0.7), and involvement (M = 4.7, SD = 1.5). However, experienced realism received a lower rating (M = 3.8, SD = 1.6) (see Figure 2). Using Melo et al. (2023) qualitative grading description tool, all of the subscales received an “A” grade except for experienced realism, which received a “B.”

5.1.2 Observational and interview data

Observations indicated that all participants were immersed in the environment and appeared to respect the general physics of the world, such as avoiding physical objects and walking around people. Interview responses supported these findings, with all participants describing the virtual environment as immersive and engaging.

“Environment-wise, it was kind of dead on. The conference hall, and the poster presentations. And there are people milling about, and there’s like a person that comes up to the presenter.”

“The characters, they all looked very real. And the environment too, it’s like what you can see in a conference.”

“It’s super close to what a poster session is. Big room, people walking around, I liked that. And that you can walk around and visit any person was cool.”

5.2 Functional fidelity

5.2.1 Observational data

Observations revealed that half of the participants (n = 10) read the research poster before engaging with the Poster Presenter, while the other half either became too engaged in the interaction or simply overlooked this step. Those who did engage with the poster skimmed each major section (Introduction, Results, Discussion, Methods) and used the content to guide their discussion with the presenter. Some even challenged the presenter’s methods (n = 7) and asked about the literature review (n = 5).

5.2.2 Interview data

Interview responses supported the observational data. While participants generally found the posters functional, some noted that the oversized poster boards made it difficult to glance back and forth while conversing, requiring them to walk back and forth, a deviation from real-life interactions.

“There was a challenging visual thing. I needed to be close enough for them to hear me, but I cannot see the panels.”

“I think I wanted smaller panels to fit within the field of vision, but at the same time I liked how readable it was.”

“If I was further away then the labels became hard to read, but if I moved closer then I could not see the presenter, so I felt like.”

5.3 Psychological fidelity

5.3.1 NASA-TLX

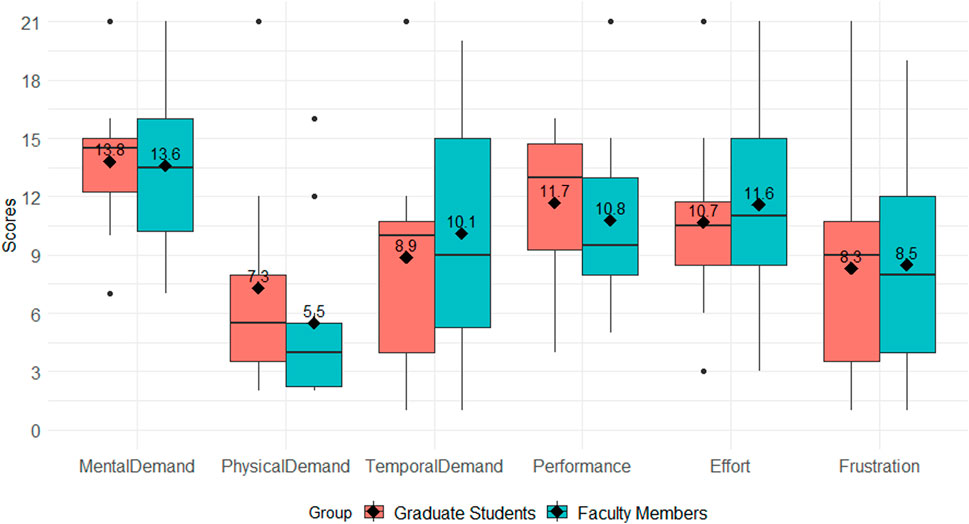

Participants reported the following scores for each NASA-TLX subscale: mental demand (M = 13.7, SD = 3.9), physical demand (M = 6.4, SD = 5.2), temporal demand (M = 9.5, SD = 6.2), performance (M = 11.2, SD = 5.0), effort (M = 11.2, SD = 5.0), and frustration (M = 8.4, SD = 5.7) (see Figure 3).

5.3.2 Socio-rhetorical questionnaire

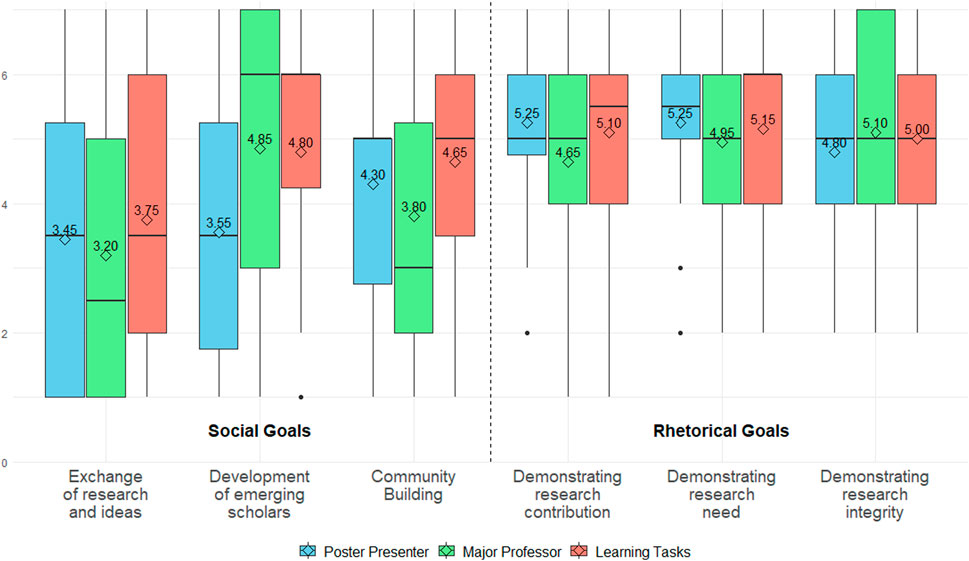

The overall mean rating for rhetorical goals was 5.20 (SD = 1.49), and the relatively small standard deviations indicate general agreement among participants. Demonstrating Research Need (M = 5.25, SD = 1.25) and Demonstrating Research Contribution (M = 5.25, SD = 1.37) scored the highest. Demonstrating Research Integrity also had high ratings (M = 5.1, SD = 1.80). These scores suggest that the rhetorical goals were well represented in the environment (see Figure 4).

The overall mean rating for social goals was 4.05 (SD = 2.09), and the large standard deviations indicate greater variability in participant responses. Community Building (M = 4.25, SD = 1.99) and Development of Emerging Scholars (M = 4.80, SD = 1.99) received moderately high scores, except in ratings of the Poster Presenters, who were not designed to “mentor” the learner. Exchange of Research and Ideas received lower ratings (M = 3.45, SD = 2.21). These scores suggest that while social goals were present in the environment, participants had more varied perceptions of their representation.

5.3.3 Semi-structured interviews

Overall, participants reported that the learning tasks (dialoguing with the Poster Presenters and reflecting with the Major Professor) demonstrated psychological fidelity by engaging them in research communication and rhetorical reflection. The Poster Presenter task successfully engaged participants in processing, synthesizing, and articulating research ideas, requiring them to construct questions and evaluate responses in ways that mirrored real-world academic exchanges. Most (n = 8) found the cognitive demands appropriate, though some (n = 4) noted that the Poster Presenters could have provided more depth and adaptability in their responses to follow-up questions.

The reflection task with the Major Professor demonstrated mixed psychological fidelity, reinforcing rhetorical awareness but introducing cognitive workload that reduced opportunities for active engagement. Most faculty participants (n = 7) noted that the Major Professor effectively guided learners through key research communication strategies, with many stating that his mentoring closely resembled their own teaching styles. Some faculty participants (n = 5) even reported that he provided more substantial mentorship than they typically do, offering clear conventions and structured guidance on how to engage in academic discourse. Students (n = 7) noted that the Major Professor was a helpful mentor, gave them key conventions to look out for, and provided guidance on how to participate in these conventions. Examples of participant excerpts include:

“I’ve never had a major professor give me pointers for a poster session. I've always kind of just been like, turned loose at a conference. And I would just fake it the entire time. So, this was helpful, that I come back to interact and be given encouragement.”

“It’s a nice set up, telling the students what to look out for and then the debriefing. The professor gave a good structure so that even new graduate students can participate and ask good questions.”

However, students found the task cognitively demanding. Many struggled to remember the six prompts provided by the Major Professor. They felt that the debriefing required them to retrieve information, making the experience feel more like a memory test than a critical reflection exercise. Participants reported feeling unprepared and pressured, noting that in a real setting, they would not be expected to recall every detail from multiple poster discussions, making the task feel artificial and disconnected from authentic academic experiences. Examples of participant excerpts include:

“And I thought, I’m never going to be able to remember all of this. Even though I knew they were typical questions. I think I remembered maybe three or four of them. But then also being able to remember the answers from two different people … I knew I was not going to be able to remember.”

“Why is the professor asking me things that he did not warn me about? It felt like an exam and it was a bit stressful. And I thought, oh no, I’m going to fail this test.”

“I think the questions, they were too many questions. Yeah, maybe highlight something and then I can focus on it. Because it's kind of hard to memorize.”

5.4 Social fidelity

5.4.1 IPQ (experienced realism)

The sub-scale experienced realism of the IPQ was used as a measure of social fidelity. Experienced realism received a lower rating (M = 3.8, SD = 1.6) (see Figure 2). Using Melo et al. (2023) qualitative grading description tool, this subscale received a “B” grade.

5.4.2 Observational and interview data

Overall, observational data showed poor social fidelity, particularly in the AI agents’ inability to manage conversational flow. The agents struggled with turn-taking in two key ways: first, they frequently misinterpreted participant pauses as conversation endings, leading to interruptions; second, they delivered overly long, one-sided responses, making it difficult for participants to interject or steer the conversation. As a result, participants hesitated to engage in dialogue, fearing they would either be cut off too soon or subjected to an extended monologue.

Participant interview responses corroborated these observations. All participants reported that conversational interactions were poor as they were constantly interrupted by the AI agents, particularly the Major Professor, and the agents’ responses were too long and one-sided. Below are interview excerpts:

“He [Major Professor] did not wait for me to talk after the prompts and did not allow for a moment to allow a person to speak. I wished we would have had more of a dialogue. And he waited for my responses. I think that could be really valuable.”

“The Major Professor especially was word vomiting but in a very, very long winded type of way. Sometimes, he even generated new responses as I was talking. Sense making was hard.”

“I wanted to be able to interrupt the presenters. I wanted shorter responses so that I could ask them more questions.”

6 Discussion

This study aimed to develop, operationalize, and evaluate a VR-AI learning environment that facilitates the development of socio-rhetorical knowledge through disciplinary socialization. The environment’s design was grounded in genre theory (defining what must be learned) and situated learning theory (determining how it is learned). Based on these principles, the study developed key features of the virtual environment, customized AI-driven agents, and structured learning tasks to align with authentic academic interactions and learning outcomes. The final step involved evaluating the environment’s physical, functional, psychological, and social fidelity to determine its effectiveness and pedagogical viability. Ensuring that a situated learning environment successfully captures the fundamental features of real-world tasks and contexts is critical to its educational effectiveness.

Overall, physical and functional fidelity were rated highly, with participants frequently describing the simulation as feeling and operating “just like a real poster session.” The realistic design of the environment, coupled with artifacts high in functional fidelity, successfully immersed learners in authentic socio-disciplinary practices. Similarly, psychological fidelity was high as the cognitive demands required to engage with the environment’s tasks closely mirrored those encountered in real-world settings. Learners were required to critically engage with research presentations, analyze rhetorical strategies, and reflect on communicative effectiveness–tasks that align with the cognitive processes necessary for research socialization. While the cognitive workload for the guided reflection task was found to be too high, the results suggest that the simulation successfully supported learners in developing their awareness of socio-rhetorical conventions.

However, social fidelity was low, with participants frequently describing conversations as “not organic.” Turn-taking (when speakers exchange turns) and turn length (how long each speaker talks) emerged as the key problems, as the AI-driven agents struggled to facilitate natural dialogue. Participants noted that the lack of fluid, reciprocal exchanges limited their ability to engage meaningfully in discussions about research quality and key disciplinary concepts. Given that learning is inherently social, the interaction design must be improved to better support dynamic, back-and-forth discussions that more accurately reflect real-world academic exchanges. Enhancing these interactions will be crucial for ensuring that learners can fully engage in socio-rhetorical learning.

These interactional challenges stem from fundamental limitations in AI-driven conversation management, specifically its simplified detection of turn-taking. Turn-taking, a core aspect of human dialogue, is governed by complex linguistic and non-linguistic cues that signal when a speaker’s turn has ended and the next speaker should begin (Sacks et al., 1974). Human speakers naturally regulate these transitions through subtle cues such as pitch, intonation, pausing, and gaze direction. In contrast, the AI-driven agents in this study relied on voice activity detection, which erroneously assumed that any pause signaled the end of a turn. This misinterpretation frequently led to interruptions, disrupting the natural flow of conversation and reducing engagement. Additionally, the agents often delivered excessively long, one-sided responses, violating Grice’s (1975) Maxim of Quantity, which states that contributions to a conversation should be as informative as necessary but not overly verbose. These extended monologues made interactions feel unnatural, diminishing the sense of reciprocal exchange and frustrating participants.
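The failure mode described above can be illustrated with a toy end-of-turn detector. This is a simplified sketch and not the platform's actual implementation: the frame-level speech flags, the `silence_frames` threshold, and the function name `detect_turn_end` are assumptions introduced for illustration. It shows how a detector that treats any sufficiently long pause as the end of a turn can cut a speaker off mid-thought.

```python
from typing import Optional


def detect_turn_end(speech_flags: list[bool], silence_frames: int) -> Optional[int]:
    """Return the frame index at which a pause-only detector declares the turn over.

    speech_flags holds one bool per audio frame (True = voice activity detected).
    The turn is declared finished after `silence_frames` consecutive silent frames
    that follow at least one frame of speech; None means no end was detected.
    """
    heard_speech = False
    silent_run = 0
    for index, is_speech in enumerate(speech_flags):
        if is_speech:
            heard_speech = True
            silent_run = 0
        elif heard_speech:
            silent_run += 1
            if silent_run >= silence_frames:
                return index
    return None


# Toy utterance: speech, a thinking pause of 3 frames, more speech, then final silence.
utterance = [True, True, True, False, False, False, True, True,
             False, False, False, False, False]

early_cutoff = detect_turn_end(utterance, silence_frames=3)  # fires during the pause
tolerant = detect_turn_end(utterance, silence_frames=5)      # waits for the final silence
print(early_cutoff, tolerant)
```

Raising the silence threshold avoids the interruption in this toy case but delays every response, and it still ignores the prosodic and gaze cues that human speakers rely on.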

To address these limitations and increase social fidelity, several improvements are recommended. First, implementing a push-to-talk feature can provide a clear signal for turn transitions, giving participants greater control over conversational flow and reducing unintended interruptions. Second, adjusting response length is essential; agents should be programmed to provide concise, targeted responses (e.g., limiting turns to 50 words during general dialogue and up to 80 words for structured presentations or mentoring). Third, training agents to ask more questions and engage in active turn-taking will encourage more dynamic and cooperative exchanges, better simulating real-world academic discussions. These refinements will be crucial in fostering more natural, interactive, and pedagogically effective conversations within the VR-AI learning environment.
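The response-length recommendation can be sketched as a simple post-processing step. This is one possible approach under stated assumptions, not the authors' implementation: the mode names, the `WORD_LIMITS` table, and `cap_response` are hypothetical, and the 50- and 80-word limits are the example values suggested above. The function keeps whole sentences until the word budget would be exceeded.

```python
import re

# Example word budgets taken from the recommendation above; mode names are hypothetical.
WORD_LIMITS = {"dialogue": 50, "presentation": 80}


def cap_response(text: str, mode: str = "dialogue") -> str:
    """Keep leading whole sentences of `text` that fit within the mode's word budget.

    If even the first sentence exceeds the budget, it is cut at the word limit.
    """
    limit = WORD_LIMITS[mode]
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    kept: list[str] = []
    used = 0
    for sentence in sentences:
        count = len(sentence.split())
        if used + count > limit:
            break
        kept.append(sentence)
        used += count
    if not kept:  # first sentence alone is too long: hard-truncate it
        return " ".join(text.split()[:limit])
    return " ".join(kept)


long_reply = " ".join(["We measured teamwork in a cooperative game."] * 12)  # 84 words
short_reply = cap_response(long_reply, mode="dialogue")
print(len(short_reply.split()))
```

Cutting at sentence boundaries keeps the shortened turn grammatical. In practice a length limit would more likely be requested in the agent's instructions, with a cap like this acting only as a safeguard.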

6.1 Implications

This work contributes to the growing body of research on using immersive simulations with social interactions for education and training. While immersive simulations have long been used for technical training in fields such as aviation (Caro, 1973; Guthridge and Clinton-Lisell, 2023), athletics (Gray, 2019; Miles et al., 2012), and surgery (Hashimoto et al., 2018; Sutherland et al., 2006), these studies have primarily focused on hardware and software requirements to ensure reliable training outcomes. With the rise of GenAI, attention has shifted towards simulations that also support social realism. Emerging applications have begun to arise in fields like patient care (Lowell and Tagare, 2023; Carnell et al., 2022) and education (Chheang et al., 2024; Mulvaney et al., 2024), where learners must learn how to navigate social complexity while performing in their task domain. This is especially beneficial for work that requires the development of teamwork and collaboration, socialization, or interpersonal skills.

As this is a burgeoning field, this study contributes to the body of work by identifying the types of fidelity required for immersive, socially rich learning experiences that allow learners to participate not just in authentic contexts, but also in authentic interactions. Physical fidelity is essential for establishing presence, making it crucial to incorporate realistic materials and artifacts, such as authentic research posters and academic settings, to simulate real-world participation. Functional fidelity ensures that these artifacts operate meaningfully within the virtual space, allowing learners to interact with research materials in ways that mirror authentic disciplinary practices. Psychological fidelity is critical for fostering cognitive engagement, requiring tasks that replicate the mental processes involved in evaluating, analyzing, and discussing research. Finally, social fidelity underpins collaborative knowledge construction and disciplinary socialization, necessitating interaction designs that enable fluid, reciprocal exchanges that reflect real-world academic discourse. While this work was designed around a poster session context focusing on disciplinary socialization, its underlying learning mechanisms can be applied to other educational and training contexts requiring immersive and socially interactive experiences. It offers theoretical and practical contributions to the development of situated simulations, as well as methodological guidance for evaluating how specific design features can be aligned with learning goals and intended learner outcomes.

At the same time, fidelity levels must be carefully calibrated to avoid cognitive overload, as excessively high or low fidelity can hinder learning by either overwhelming learners or limiting their ability to engage in higher-order thinking (Champney et al., 2017). Rather than maximizing fidelity across all dimensions, situated learning environments should balance realism with pedagogical considerations, ensuring an authentic yet cognitively manageable experience that facilitates meaningful engagement and skill transfer. In the context of this VR-AI learning environment, achieving the right balance is crucial to ensuring that learners can authentically engage in socio-rhetorical practices without unnecessary distractions or barriers. For instance, functional fidelity in the research posters was effective because it replicated a real academic setting, allowing learners to engage in familiar knowledge negotiation. Conversely, low social fidelity in the AI agents hindered authentic research dialogue.

6.2 Limitations and future work

While this study demonstrates the potential of VR-AI learning environments for fostering socio-rhetorical learning, several limitations highlight areas for future improvement. First, social fidelity remains a challenge, as AI-driven agents struggled with natural turn-taking, response length, and dynamic interaction, limiting the authenticity of academic discourse. Future work should focus on enhancing AI-driven conversational models to better replicate the fluid, reciprocal exchanges essential for research socialization. Second, the study’s participant sample, though representative of a disciplinary community, was relatively small, which may affect the generalizability of findings. Expanding the study across diverse academic disciplines and larger student populations will provide a more comprehensive understanding of how different learners engage with VR-AI environments. Additionally, this study primarily relied on a self-reported questionnaire to assess the psychological fidelity of the primary learning construct, socio-rhetorical knowledge. However, psychological fidelity is critical, as it has been established as the most important factor for real-world transfer (Gray, 2019; Harris et al., 2020). Given the complexity of this construct, self-reports alone may be insufficient, and a more robust way of evaluating psychological fidelity is needed. For example, many studies have used a combination of self-reports and objective measures such as eye-tracking to evaluate gaze behavior (Frederiksen et al., 2020; Vine et al., 2014), psychophysiological measurements to evaluate stress (Slater et al., 2006) and cardiovascular activity (Ćosić et al., 2010), and EEGs to measure neural activity (Brouwer et al., 2010; Tromp et al., 2018). Finally, while this study described the development and evaluation of a VR-AI learning environment, it did not assess learning outcomes or skill transfer. Future research should include longitudinal studies to examine how engagement in VR-AI environments translates into intended learning outcomes over time.

7 Conclusion

This study explored how a VR-AI learning environment can facilitate socio-rhetorical learning by immersing graduate students in simulated academic interactions. By integrating trained GPT agents and structured learning tasks within a multi-fidelity framework, the simulation successfully replicated key aspects of disciplinary socialization. Findings showed that while physical, functional, and psychological fidelity were well-embodied, social fidelity remained a challenge, highlighting the complexities of designing AI-driven conversational interactions. Despite this, the VR-AI environment provided an authentic and immersive space for learners to engage with their discourse communities and navigate academic discourse in a way that traditional classroom settings often fail to achieve.

This work makes several key contributions. First, it demonstrates how situated learning theory can be operationalized in VR-AI environments to bridge the gap between classroom instruction and real-world academic participation. Second, because existing research evaluating pedagogically grounded learning environments remains limited, this work provides a structured approach to examining how different fidelity dimensions influence learning experiences. Finally, as academic research communication continues to evolve in the digital age, this study contributes to the broader discourse on addressing the challenges of academic socialization, while also providing a framework for the design and evaluation of VR-AI learning environments that can be adapted to other educational contexts.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Iowa State University Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

AT: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Project administration, Software, Validation, Visualization, Writing – original draft, Writing – review and editing. MD: Conceptualization, Methodology, Writing – review and editing. EC: Conceptualization, Methodology, Writing – review and editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Ahn, T. Y., and Lee, S.-M. (2016). User experience of a mobile speaking application with automatic speech recognition for EFL learning. Br. J. Educ. Technol. 47 (4), 778–786. doi:10.1111/bjet.12354

Alexander, A. L., Brunyé, T., Sidman, J., and Weil, S. A. (2005). From gaming to training: a review of studies on fidelity, immersion, presence, and buy-in and their effects on transfer in PC-based simulations and games.

Birbara, N. S., and Pather, N. (2021). Real or not real: the impact of the physical fidelity of virtual learning resources on learning anatomy. Anat. Sci. Educ. 14 (6), 774–787. doi:10.1002/ase.2022

Bjørn, P., Han, M. L., Parezanovic, A., and Larsen, P. (2024). Social fidelity in cooperative virtual reality maritime training. Human–Computer Interact., 1–25. doi:10.1080/07370024.2024.2372716

Brouwer, A.-M., Neerincx, M. A., Kallen, V. L., Van Der Leer, L., and Brinke, M. T. (2010). “Virtual reality exposure and neuro-bio feedback to help coping with traumatic events,” in Proceedings of the 28th annual European conference on cognitive ergonomics, 367–369. doi:10.1145/1962300.1962388

Brown, J. S., Collins, A., and Duguid, P. (1989). Situated cognition and the culture of learning. Educ. Res. 18 (1), 32–42. doi:10.3102/0013189x018001032

Byrne, D. (2022). A worked example of Braun and Clarke’s approach to reflexive thematic analysis. Qual. and Quantity 56 (3), 1391–1412. doi:10.1007/s11135-021-01182-y

Caro, P. W. (1973). Aircraft simulators and pilot training. Hum. Factors 15 (6), 502–509. doi:10.1177/001872087301500602

Casanave, C. P. (2002). Writing games: multicultural case studies of academic literacy practices in higher education. Mahwah, N.J: Lawrence Erlbaum Associates. Available online at: https://web.p.ebscohost.com/ehost/ebookviewer/ebook/ZTAwMHhuYV9fMTI5NjQyX19BTg2?sid=b4a3b6c6-eb26-4aef-b118-d63f3ed69b64@redis&vid=0&format=EB&rid=1.

Champney, R. K., Stanney, K. M., Milham, L., Carroll, M. B., and Cohn, J. V. (2017). An examination of virtual environment training fidelity on training effectiveness. Int. J. Learn. Technol. 12 (1), 42. doi:10.1504/IJLT.2017.083997

Chen, L., Chen, P., and Lin, Z. (2020). Artificial intelligence in education: a review. IEEE Access 8, 75264–75278. doi:10.1109/ACCESS.2020.2988510

Chen, Y., Li, M., Huang, C., Cukurova, M., and Ma, Q. (2024). A systematic review of research on immersive technology-enhanced writing education: the current state and a research agenda. IEEE Trans. Learn. Technol. 17, 919–938. doi:10.1109/TLT.2023.3341420

Chheang, V., Sharmin, S., Márquez-Hernández, R., Patel, M., Rajasekaran, D., Caulfield, G., et al. (2024). “Towards anatomy education with generative AI-based virtual assistants in immersive virtual reality environments,” in 2024 IEEE international conference on artificial intelligence and eXtended and virtual reality (AIxVR), 21–30. doi:10.1109/AIxVR59861.2024.00011

Chiu, T. K. F., Xia, Q., Zhou, X., Chai, C. S., and Cheng, M. (2023). Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Comput. Educ. Artif. Intell. 4, 100118. doi:10.1016/j.caeai.2022.100118

Ćosić, K., Popović, S., Kukolja, D., Horvat, M., and Dropuljić, B. (2010). Physiology-driven adaptive virtual reality stimulation for prevention and treatment of stress related disorders. Cyberpsychology, Behav. Soc. Netw. 13 (1), 73–78. doi:10.1089/cyber.2009.0260

Dede, C. (2009). Immersive interfaces for engagement and learning. Science 323 (5910), 66–69. doi:10.1126/science.1167311

Devitt, A. J., Bawarshi, A., and Reiff, M. J. (2003). Materiality and genre in the study of discourse communities. Coll. Engl. 65 (5), 541–558. doi:10.2307/3594252

Duff, P. A. (2007). Second language socialization as sociocultural theory: insights and issues. Lang. Teach. 40 (4), 309–319. doi:10.1017/S0261444807004508

Duff, P. A. (2010). Language socialization into academic discourse communities. Annu. Rev. Appl. Linguistics 30, 169–192. doi:10.1017/S0267190510000048

Fowler, C. (2015). Virtual reality and learning: where is the pedagogy? Br. J. Educ. Technol. 46 (2), 412–422. doi:10.1111/bjet.12135

Frederiksen, J. G., Sørensen, S. M. D., Konge, L., Svendsen, M. B. S., Nobel-Jørgensen, M., Bjerrum, F., et al. (2020). Cognitive load and performance in immersive virtual reality versus conventional virtual reality simulation training of laparoscopic surgery: a randomized trial. Surg. Endosc. 34 (3), 1244–1252. doi:10.1007/s00464-019-06887-8

Fromm, J., Stieglitz, S., and Mirbabaie, M. (2024). Virtual reality in digital education: an affordance network perspective on effective use behavior. ACM SIGMIS Database DATABASE Adv. Inf. Syst. 55 (2), 14–41. doi:10.1145/3663682.3663685

Fuhrman, O., Eckerling, A., Friedmann, N., Tarrasch, R., and Raz, G. (2021). The moving learner: object manipulation in virtual reality improves vocabulary learning. J. Comput. Assisted Learn. 37 (3), 672–683. doi:10.1111/jcal.12515

Gray, R. (2019). “Virtual environments and their role in developing perceptual-cognitive skills in sports,” in Anticipation and decision making in sport. Editors M. Williams, and R. C. Jackson. doi:10.4324/9781315146270

Grice, H. P. (1975). “Logic and conversation,” in Syntax and semantics, Vol. 3: Speech acts. Editors P. Cole, and J. L. Morgan (New York, NY: Academic Press), 41–58. doi:10.1163/9789004368811_003

Guthridge, R., and Clinton-Lisell, V. (2023). Evaluating the efficacy of virtual reality (VR) training devices for pilot training. J. Aviat. Technol. Eng. 12 (2). doi:10.7771/2159-6670.1286

Haneda, M. (2006). Classrooms as communities of practice: a reevaluation. TESOL Q. 40 (4), 807–817. doi:10.2307/40264309

Harris, D. J., Bird, J. M., Smart, P. A., Wilson, M. R., and Vine, S. J. (2020). A framework for the testing and validation of simulated environments in experimentation and training. Front. Psychol. 11, 605. doi:10.3389/fpsyg.2020.00605

Hart, S. G., and Staveland, L. E. (1988). “Development of NASA-TLX (task load index): results of empirical and theoretical research,” in Adv. Psychol. Editors P. A. Hancock, and N. Meshkati (North-Holland), 52, 139–183. doi:10.1016/S0166-4115(08)62386-9

Hashimoto, D. A., Petrusa, E., Phitayakorn, R., Valle, C., Casey, B., and Gee, D. (2018). A proficiency-based virtual reality endoscopy curriculum improves performance on the Fundamentals of Endoscopic Surgery examination. Surg. Endosc. 32 (3), 1397–1404. doi:10.1007/s00464-017-5821-5

Herrington, J., and Oliver, R. (2000). An instructional design framework for authentic learning environments. ETR&D 48, 23–48. doi:10.1007/BF02319856

Hontvedt, M., and Øvergård, K. I. (2020). Simulations at work—a framework for configuring simulation fidelity with training objectives. Comput. Support. Coop. Work (CSCW) 29 (1–2), 85–113. doi:10.1007/s10606-019-09367-8

Hyland, K. (2007). Genre pedagogy: language, literacy and L2 writing instruction. J. Second Lang. Writ. 16 (3), 148–164. doi:10.1016/j.jslw.2007.07.005

Hyland, K. (2018). “Genre and second language writing,” in The TESOL encyclopedia of English language teaching. Editor J. I. Liontas (Wiley). doi:10.1002/9781118784235.eelt0535

Hyland, K. (2022). English for specific purposes: what is it and where is it taking us? Esp. Today 10 (2), 202–220. doi:10.18485/esptoday.2022.10.2.1

İpek, Z. H., Gözüm, A. İ. C., Papadakis, S., and Kallogiannakis, M. (2023). Educational applications of the ChatGPT AI system: a systematic review research. Educ. Process Int. J. 12 (3). doi:10.22521/edupij.2023.123.2

Johns, A. M., and Swales, J. M. (2002). Literacy and disciplinary practices: opening and closing perspectives. J. Engl. Acad. Purp. 1 (1), 13–28. doi:10.1016/S1475-1585(02)00003-6

Kaplan-Rakowski, R., and Gruber, A. (2019). “Low-immersion versus high-immersion virtual reality: definitions, classification, and examples with a foreign language focus,” in Proceedings of the Innovation in Language Learning International Conference 2019 (Florence: Pixel).

Kirschner, P. A. (2002). Cognitive load theory: implications of cognitive load theory on the design of learning. Learn. Instr. 12 (1), 1–10. doi:10.1016/S0959-4752(01)00014-7

Kobayashi, M., Zappa-Hollman, S., and Duff, P. A. (2017). “Academic discourse socialization,” in Language socialization. Editors P. A. Duff, and S. May (Springer International Publishing), 239–254. doi:10.1007/978-3-319-02255-0_18

Lave, J., and Wenger, E. (1991). Situated learning: legitimate peripheral participation. Cambridge University Press.

Lee, S.-M., and Park, M. (2020). Reconceptualization of the context in language learning with a location-based AR app. Comput. Assist. Lang. Learn. 33 (8), 936–959. doi:10.1080/09588221.2019.1602545

Lowell, V. L., and Tagare, D. (2023). Authentic learning and fidelity in virtual reality learning experiences for self-efficacy and transfer. Comput. and Educ. X Real. 2, 100017. doi:10.1016/j.cexr.2023.100017

McGowin, G., Fiore, S. M., and Oden, K. (2021). Learning affordances: theoretical considerations for design of immersive virtual reality in training and education. Proc. Hum. Factors Ergonomics Soc. Annu. Meet. 65 (1), 883–887. doi:10.1177/1071181321651293

Melo, M., Gonçalves, G., Vasconcelos-Raposo, J., and Bessa, M. (2023). How much presence is enough? Qualitative scales for interpreting the Igroup presence questionnaire score. IEEE Access 11, 24675–24685. doi:10.1109/ACCESS.2023.3254892

Miles, H. C., Pop, S. R., Watt, S. J., Lawrence, G. P., and John, N. W. (2012). A review of virtual environments for training in ball sports. Comput. and Graph. 36 (6), 714–726. doi:10.1016/j.cag.2012.04.007

Mulvaney, P., Rooney, B., Friehs, M. A., and Leader, J. F. (2024). Social VR design features and experiential outcomes: narrative review and relationship map for dyadic agent conversations. Virtual Real. 28 (1), 45. doi:10.1007/s10055-024-00941-0

Ouyang, F., Zheng, L., and Jiao, P. (2022). Artificial intelligence in online higher education: a systematic review of empirical research from 2011 to 2020. Educ. Inf. Technol. 27 (6), 7893–7925. doi:10.1007/s10639-022-10925-9

Pellas, N., Mystakidis, S., and Kazanidis, I. (2021). Immersive Virtual Reality in K-12 and Higher Education: a systematic review of the last decade scientific literature. Virtual Real. 25 (3), 835–861. doi:10.1007/s10055-020-00489-9

Radianti, J., Majchrzak, T. A., Fromm, J., and Wohlgenannt, I. (2020). A systematic review of immersive virtual reality applications for higher education: design elements, lessons learned, and research agenda. Comput. and Educ. 147, 103778. doi:10.1016/j.compedu.2019.103778

Rehmann, A. J. (1995). A handbook of flight simulation fidelity requirements for human factors research: (472002008-001). doi:10.1037/e472002008-001

Sacks, H., Schegloff, E. A., and Jefferson, G. (1974). A simplest systematics for the organization of turn-taking for conversation. Language 50 (4), 696–735. doi:10.2307/412243

Schiflett, S. G., Elliott, L. R., Salas, E., and Coovert, M. D. (2004). Scaled worlds: development, validation, and applications. Surrey, England: Ashgate. doi:10.4324/9781315243771

Sinatra, A. M., Pollard, K. A., Files, B. T., Oiknine, A. H., Ericson, M., and Khooshabeh, P. (2021). Social fidelity in virtual agents: impacts on presence and learning. Comput. Hum. Behav. 114, 106562. doi:10.1016/j.chb.2020.106562

Slater, M., Antley, A., Davison, A., Swapp, D., Guger, C., Barker, C., et al. (2006). A virtual reprise of the Stanley Milgram obedience experiments. PLoS ONE 1 (1), e39. doi:10.1371/journal.pone.0000039